├── .github
│   └── workflows
│       ├── ci.yml
│       ├── regenerate-tutorials.yml
│       └── release.yml
├── CODEOWNERS
├── LICENSE
├── README.md
├── alloy
│   ├── send-logs-to-loki
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   └── step5.md
│   ├── send-metrics-to-prometheus
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── previous-tutorial-setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   └── structure.json
├── assets
│   ├── ILE.png
│   ├── full-stack-ile.png
│   ├── logo.png
│   ├── loki-ile.png
│   └── loki-ile.svg
├── docs
│   ├── examples
│   │   ├── complete-docs-example.md
│   │   ├── intro-to-logging-fluentd-fluent-bit.md
│   │   ├── k8s-monitoring-example.md
│   │   ├── sandbox-transformer-walk-through.md
│   │   └── using-defaults.md
│   └── transformer.md
├── full-stack
│   ├── assets
│   │   ├── grafana_logo.png
│   │   ├── grot-4.png
│   │   └── mltp.png
│   ├── intro-to-mltp
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   └── step1.md
│   ├── structure.json
│   ├── tutorial-enviroment-completed
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   └── step1.md
│   └── tutorial-enviroment
│       ├── finished.md
│       ├── index.json
│       ├── intro.md
│       ├── setup.sh
│       └── step1.md
├── grafana
│   ├── alerting-get-started-pt2
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   ├── step7.md
│   │   └── step8.md
│   ├── alerting-get-started-pt3
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   └── step6.md
│   ├── alerting-get-started-pt4
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   └── step6.md
│   ├── alerting-get-started-pt5
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   └── step7.md
│   ├── alerting-get-started-pt6
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   └── step7.md
│   ├── alerting-get-started
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── alerting-loki-logs
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   └── step5.md
│   ├── assets
│   │   ├── data_sources.png
│   │   ├── data_sources_list.png
│   │   ├── grafana_logo.png
│   │   ├── graph.png
│   │   ├── grot-4.png
│   │   ├── query.png
│   │   ├── save_and_test.png
│   │   └── search.png
│   ├── fo11y
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── grafana-basics
│   │   ├── assets
│   │   │   ├── prometheus.yml
│   │   │   └── prometheus_datasource.yml
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── grafana-fundamentals
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step10.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   ├── step7.md
│   │   ├── step8.md
│   │   └── step9.md
│   └── structure.json
├── loki
│   ├── alloy-kafka-logs
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── alloy-otel-logs
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   └── step3.md
│   ├── assets
│   │   ├── grot-4.png
│   │   └── loki-logo.png
│   ├── fluentbit-loki-tutorial
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   └── step3.md
│   ├── intro-to-ingest-otel
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   └── step3.md
│   ├── intro-to-ingest
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── intro-to-logging-fluentd-fluentbit
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── intro-to-logging
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   └── step1.md
│   ├── k8s-monitoring-helm
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   ├── step7.md
│   │   └── step8.md
│   ├── logcli-tutorial
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   ├── loki-getting-started-tutorial
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   ├── step5.md
│   │   ├── step6.md
│   │   ├── step7.md
│   │   └── step8.md
│   ├── loki-quickstart
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── setup.sh
│   │   ├── step1.md
│   │   └── step2.md
│   ├── otel-collector-getting-started
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   └── step3.md
│   ├── structure-of-logs
│   │   ├── finished.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── setup.sh
│   │   └── step1.md
│   ├── structure.json
│   └── what-is-loki
│       ├── finished.md
│       ├── index.json
│       ├── intro.md
│       ├── setup.sh
│       ├── step1.md
│       └── step2.md
├── mimir
│   ├── play-with-mimir
│   │   ├── docker-compose-update.sh
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   └── step5.md
│   └── structure.json
├── pyroscope
│   ├── ride-share-tutorial
│   │   ├── docker-compose-update.sh
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   └── step4.md
│   └── structure.json
├── sandbox-developer
│   ├── sandbox-transformer-walk-through
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   ├── step3.md
│   │   ├── step4.md
│   │   └── step5.md
│   └── structure.json
├── scripts
│   ├── check-out-branch.bash
│   └── manage-pr.bash
├── tempo
│   ├── quick-start
│   │   ├── docker-compose-update.sh
│   │   ├── finish.md
│   │   ├── index.json
│   │   ├── intro.md
│   │   ├── preprocessed.md
│   │   ├── step1.md
│   │   ├── step2.md
│   │   └── step3.md
│   └── structure.json
├── tools
│   ├── alloy-proxy
│   │   ├── config.alloy
│   │   └── dockerfile
│   ├── course-tracker
│   │   └── config.alloy
│   └── transformer
│       ├── .gitignore
│       ├── .golangci.yml
│       ├── directives.go
│       ├── extend.go
│       ├── go.mod
│       ├── go.sum
│       ├── goldmark
│       │   ├── extension
│       │   │   └── table.go
│       │   ├── goldmark.go
│       │   ├── killercoda
│       │   │   └── killercoda.go
│       │   └── renderer
│       │       └── markdown
│       │           ├── block.go
│       │           ├── block_test.go
│       │           ├── inline.go
│       │           ├── inline_test.go
│       │           └── markdown.go
│       ├── hack
│       │   └── generate-directives
│       │       └── main.go
│       ├── killercoda
│       │   └── index.go
│       ├── main.go
│       ├── preprocess.go
│       ├── preprocess_test.go
│       ├── transform.go
│       └── transform_test.go
└── workshops
    ├── adventure
    │   ├── docker-compose-update.sh
    │   ├── finish.md
    │   ├── index.json
    │   ├── intro.md
    │   ├── preprocessed.md
    │   ├── step1.md
    │   └── step2.md
    ├── course-tracker-test
    │   ├── finish.md
    │   ├── index.json
    │   ├── intro.md
    │   ├── preprocessed.md
    │   ├── setup.sh
    │   ├── step1.md
    │   ├── step2.md
    │   ├── step3.md
    │   ├── step4.md
    │   ├── step5.md
    │   ├── step6.md
    │   ├── step7.md
    │   └── step8.md
    ├── game-of-traces
    │   ├── docker-compose-update.sh
    │   ├── finish.md
    │   ├── index.json
    │   ├── intro.md
    │   ├── preprocessed.md
    │   ├── step1.md
    │   ├── step2.md
    │   ├── step3.md
    │   └── step4.md
    └── structure.json

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: Run Continuous Integration

2 |

3 | permissions: {}

4 |

5 | on:

6 |   pull_request:

7 |

8 | jobs:

9 |   go-test:

10 |     if: github.repository == 'grafana/killercoda'

11 |     runs-on: ubuntu-latest

12 |     steps:

13 |       - uses: actions/checkout@11bd71901bbe5b1630ceea73d27597364c9af683 # v4.2.2

14 |         with:

15 |           path: killercoda

16 |           persist-credentials: false

17 |       - uses: actions/setup-go@3041bf56c941b39c61721a86cd11f3bb1338122a # v5.2.0

18 |         with:

19 |           go-version-file: killercoda/tools/transformer/go.mod

20 |       - run: go test ./...

21 |         working-directory: killercoda/tools/transformer

22 |

--------------------------------------------------------------------------------

/CODEOWNERS:

--------------------------------------------------------------------------------

1 | # Lines starting with '#' are comments.

2 | # Each line is a file pattern followed by one or more owners.

3 |

4 | # More details are here: https://help.github.com/articles/about-codeowners/

5 |

6 | # The '*' pattern is global owners.

7 |

8 | # Order is important. The last matching pattern has the most precedence.

9 | # The folders are ordered as follows:

10 |

11 | # In each subsection folders are ordered first by depth, then alphabetically.

12 | # This should make it easy to add new rules without breaking existing ones.

13 |

14 | * @Jayclifford345

15 |

16 | /.github/ @jdbaldry

17 | /CODEOWNERS @Jayclifford345 @jdbaldry

18 | /scripts/ @jdbaldry

19 | /tools/ @jdbaldry

20 | /transformer.md @jdbaldry

21 |

--------------------------------------------------------------------------------
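The comments above state that the last matching pattern takes precedence. The following Go sketch illustrates that rule for the three pattern shapes this file uses: `*`, a rooted directory such as `/tools/`, and a rooted file such as `/transformer.md`. It is a simplified, hypothetical matcher (`rule`, `matches`, `ownersFor`, and `codeowners` are illustrative names), not GitHub's implementation, which follows gitignore-style rules with many more cases.

```go
package main

import (
	"fmt"
	"strings"
)

// rule pairs one CODEOWNERS pattern with its owners.
type rule struct {
	pattern string
	owners  []string
}

// codeowners is a hypothetical subset of the ordered rules in the file above.
var codeowners = []rule{
	{"*", []string{"@Jayclifford345"}},
	{"/.github/", []string{"@jdbaldry"}},
	{"/scripts/", []string{"@jdbaldry"}},
	{"/tools/", []string{"@jdbaldry"}},
	{"/transformer.md", []string{"@jdbaldry"}},
}

// matches reports whether a rooted path such as "/tools/x.go" matches pattern.
// Simplified: only "*", rooted directories ("/dir/"), and rooted files ("/file").
func matches(pattern, path string) bool {
	switch {
	case pattern == "*":
		return true
	case strings.HasSuffix(pattern, "/"):
		// A trailing slash matches everything under that directory.
		return strings.HasPrefix(path, pattern)
	default:
		// A rooted file pattern matches that exact path, or a directory of that name.
		return path == pattern || strings.HasPrefix(path, pattern+"/")
	}
}

// ownersFor returns the owners of the last rule that matches path.
func ownersFor(rules []rule, path string) []string {
	var owners []string
	for _, r := range rules {
		if matches(r.pattern, path) {
			owners = r.owners // a later match overrides any earlier one
		}
	}
	return owners
}

func main() {
	for _, p := range []string{"/alloy/structure.json", "/tools/transformer/main.go"} {
		fmt.Println(p, ownersFor(codeowners, p))
	}
}
```

Because `ownersFor` keeps overwriting its result, a file under `/tools/` resolves to `@jdbaldry` even though the global `*` rule also matches, which is why the broad pattern sits at the top of the file.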

/alloy/send-logs-to-loki/finish.md:

--------------------------------------------------------------------------------

1 | # Summary

2 |

3 | You have installed and configured Alloy, and sent logs from your local host to your local Grafana stack.

4 |

5 | In the [next tutorial](https://grafana.com/docs/alloy/latest/tutorials/send-metrics-to-prometheus/), you learn more about configuration concepts and metrics.

6 |

--------------------------------------------------------------------------------

/alloy/send-logs-to-loki/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Use Grafana Alloy to send logs to Loki",

3 | "description": "Learn how to use Grafana Alloy to send logs to Loki",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md"

7 | },

8 | "steps": [

9 | {

10 | "text": "step1.md"

11 | },

12 | {

13 | "text": "step2.md"

14 | },

15 | {

16 | "text": "step3.md"

17 | },

18 | {

19 | "text": "step4.md"

20 | },

21 | {

22 | "text": "step5.md"

23 | }

24 | ],

25 | "finish": {

26 | "text": "finish.md"

27 | }

28 | },

29 | "backend": {

30 | "imageid": "ubuntu"

31 | }

32 | }

33 |

--------------------------------------------------------------------------------
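The `index.json` files in this repository share one shape: an intro page (optionally with a `foreground` script), an ordered list of steps, a finish page, and a backend image. As a sketch that covers only the fields appearing in this repository (Killercoda may accept more), that shape can be decoded in Go as follows. The names `scenario`, `page`, `parseScenario`, and `pages` are hypothetical, and the embedded example is trimmed from `send-metrics-to-prometheus/index.json`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// page is one Markdown page, optionally paired with a script run in the terminal.
type page struct {
	Text       string `json:"text"`
	Foreground string `json:"foreground,omitempty"`
}

// scenario mirrors the index.json fields used in this repository.
type scenario struct {
	Title       string `json:"title"`
	Description string `json:"description"`
	Details     struct {
		Intro  page   `json:"intro"`
		Steps  []page `json:"steps"`
		Finish page   `json:"finish"`
	} `json:"details"`
	Backend struct {
		ImageID string `json:"imageid"`
	} `json:"backend"`
}

// parseScenario decodes the contents of an index.json file.
func parseScenario(data []byte) (scenario, error) {
	var s scenario
	err := json.Unmarshal(data, &s)
	return s, err
}

// pages lists every Markdown file a scenario references, in display order.
func pages(s scenario) []string {
	files := []string{s.Details.Intro.Text}
	for _, st := range s.Details.Steps {
		files = append(files, st.Text)
	}
	return append(files, s.Details.Finish.Text)
}

const example = `{
  "title": "Use Grafana Alloy to send metrics to Prometheus",
  "details": {
    "intro": {"text": "intro.md", "foreground": "previous-tutorial-setup.sh"},
    "steps": [{"text": "step1.md"}, {"text": "step2.md"}],
    "finish": {"text": "finish.md"}
  },
  "backend": {"imageid": "ubuntu"}
}`

func main() {
	s, err := parseScenario([]byte(example))
	if err != nil {
		panic(err)
	}
	fmt.Println(s.Backend.ImageID, s.Details.Intro.Foreground, pages(s))
}
```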

/alloy/send-logs-to-loki/intro.md:

--------------------------------------------------------------------------------

1 | # Use Grafana Alloy to send logs to Loki

2 |

3 | This tutorial shows you how to configure Alloy to collect logs from your local machine, filter non-essential log lines, send them to Loki, and use Grafana to explore the results.

4 |

5 | # Before you begin

6 |

7 | To complete this tutorial:

8 |

9 | - You must have a basic understanding of Alloy and telemetry collection in general.

10 | - You should be familiar with Prometheus, PromQL, Loki, LogQL, and basic Grafana navigation.

11 |

--------------------------------------------------------------------------------

/alloy/send-logs-to-loki/step1.md:

--------------------------------------------------------------------------------

1 | # Install Alloy and start the service

2 |

3 | > This online sandbox environment is based on an Ubuntu image and has Docker pre-installed. To install Alloy in the sandbox, perform the following steps.

4 |

5 | ## Linux

6 |

7 | Install and run Alloy on Linux.

8 |

9 | 1. [Install Alloy](https://grafana.com/docs/alloy/latest/set-up/install/linux/).

10 | 1. To view the Alloy UI within the sandbox, Alloy must run on all interfaces. Run the following command before you start the Alloy service.

11 |

12 | ```bash

13 | sed -i -e 's/CUSTOM_ARGS=""/CUSTOM_ARGS="--server.http.listen-addr=0.0.0.0:12345"/' /etc/default/alloy

14 | ```{{exec}}

15 | 1. [Run Alloy](https://grafana.com/docs/alloy/latest/set-up/run/linux/).

16 |

17 | You can access the Alloy UI at [http://localhost:12345]({{TRAFFIC_HOST1_12345}}).

18 |

--------------------------------------------------------------------------------
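The `sed` expression in this step rewrites the empty `CUSTOM_ARGS=""` assignment in `/etc/default/alloy` so that the Alloy UI listens on all interfaces. To make the substitution explicit, the Go sketch below applies the same literal, first-match-per-line replacement to a string. It is an illustration only: `setListenAddr` is a hypothetical helper, not part of Alloy, and the sample input is abridged rather than the real contents of `/etc/default/alloy`.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	emptyArgs  = `CUSTOM_ARGS=""`
	listenArgs = `CUSTOM_ARGS="--server.http.listen-addr=0.0.0.0:12345"`
)

// setListenAddr mirrors `sed -e 's/CUSTOM_ARGS=""/.../'`: on each line, the
// first literal occurrence of emptyArgs is replaced and other text is untouched.
func setListenAddr(content string) string {
	lines := strings.Split(content, "\n")
	for i, line := range lines {
		lines[i] = strings.Replace(line, emptyArgs, listenArgs, 1)
	}
	return strings.Join(lines, "\n")
}

func main() {
	// Abridged, hypothetical sample of the defaults file.
	before := "# sample\nCUSTOM_ARGS=\"\"\n"
	fmt.Print(setListenAddr(before))
}
```

Running the substitution twice is harmless: once `CUSTOM_ARGS` holds a value, the empty-quotes pattern no longer matches.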

/alloy/send-logs-to-loki/step4.md:

--------------------------------------------------------------------------------

1 | # Reload the configuration

2 |

3 | 1. Copy your local `config.alloy`{{copy}} file into the default Alloy configuration file location.

4 |

5 | ```bash

6 | sudo cp config.alloy /etc/alloy/config.alloy

7 | ```{{exec}}

8 | 1. Call the `/-/reload`{{copy}} endpoint to tell Alloy to reload the configuration file without a system service restart.

9 |

10 | ```bash

11 | curl -X POST http://localhost:12345/-/reload

12 | ```{{exec}}

13 |

14 | > This step uses the Alloy UI on `localhost`{{copy}} port `12345`{{copy}}. If you chose to run Alloy in a Docker container, make sure you use the `--server.http.listen-addr=`{{copy}} argument. If you don't use this argument, the [debugging UI](https://grafana.com/docs/alloy/latest/troubleshoot/debug/#alloy-ui) won't be available outside of the Docker container.

15 | 1. Optional: You can also reload the Alloy system service to load the configuration file.

16 |

17 | ```bash

18 | sudo systemctl reload alloy

19 | ```{{exec}}

20 |

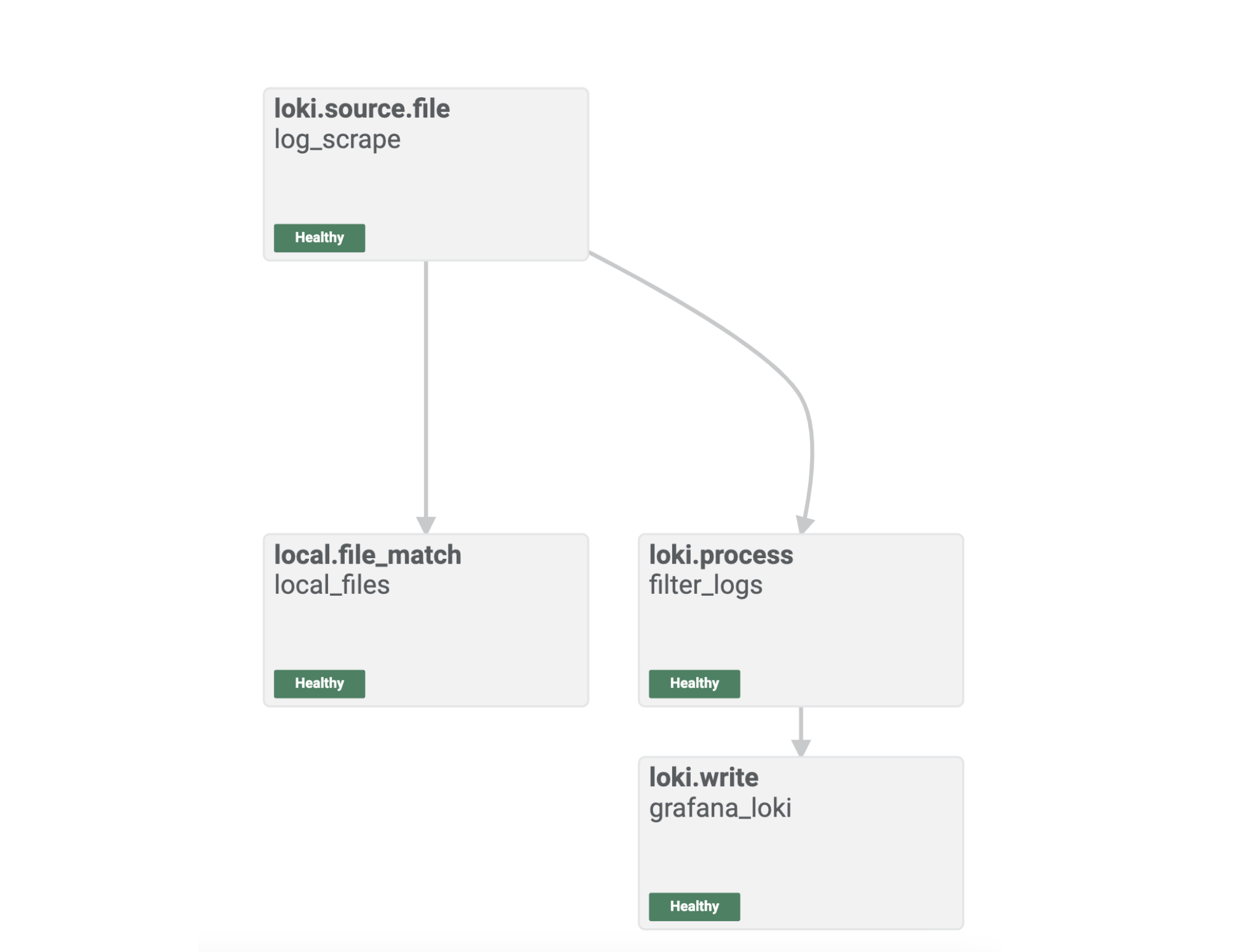

21 | # Inspect your configuration in the Alloy UI

22 |

23 | Open [http://localhost:12345]({{TRAFFIC_HOST1_12345}}) and click the **Graph** tab at the top.

24 | The graph should look similar to the following:

25 |

26 |

27 |

28 | The Alloy UI shows you a visual representation of the pipeline you built with your Alloy component configuration.

29 |

30 | You can see that the components are healthy, and you are ready to explore the logs in Grafana.

31 |

--------------------------------------------------------------------------------

/alloy/send-logs-to-loki/step5.md:

--------------------------------------------------------------------------------

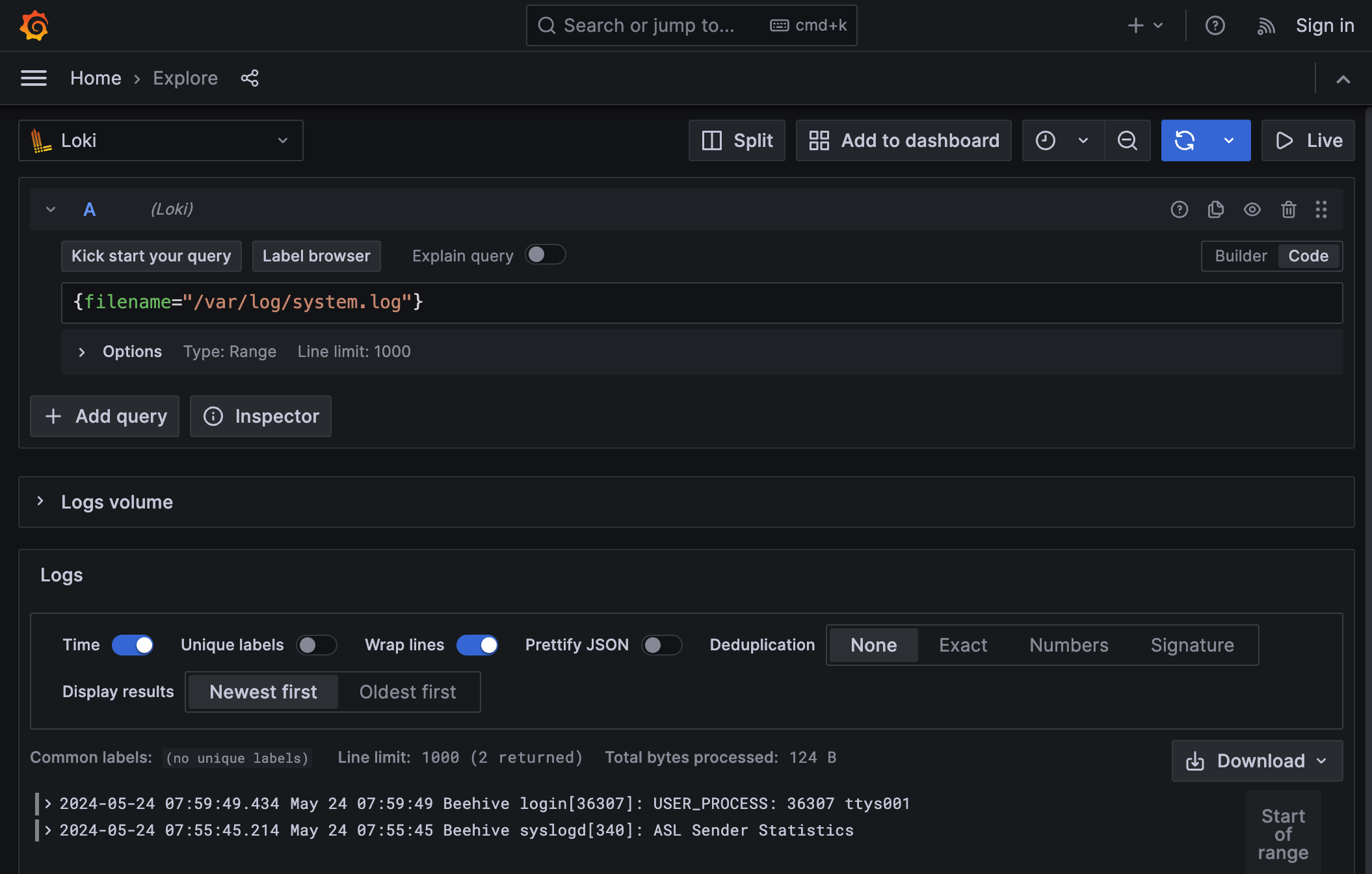

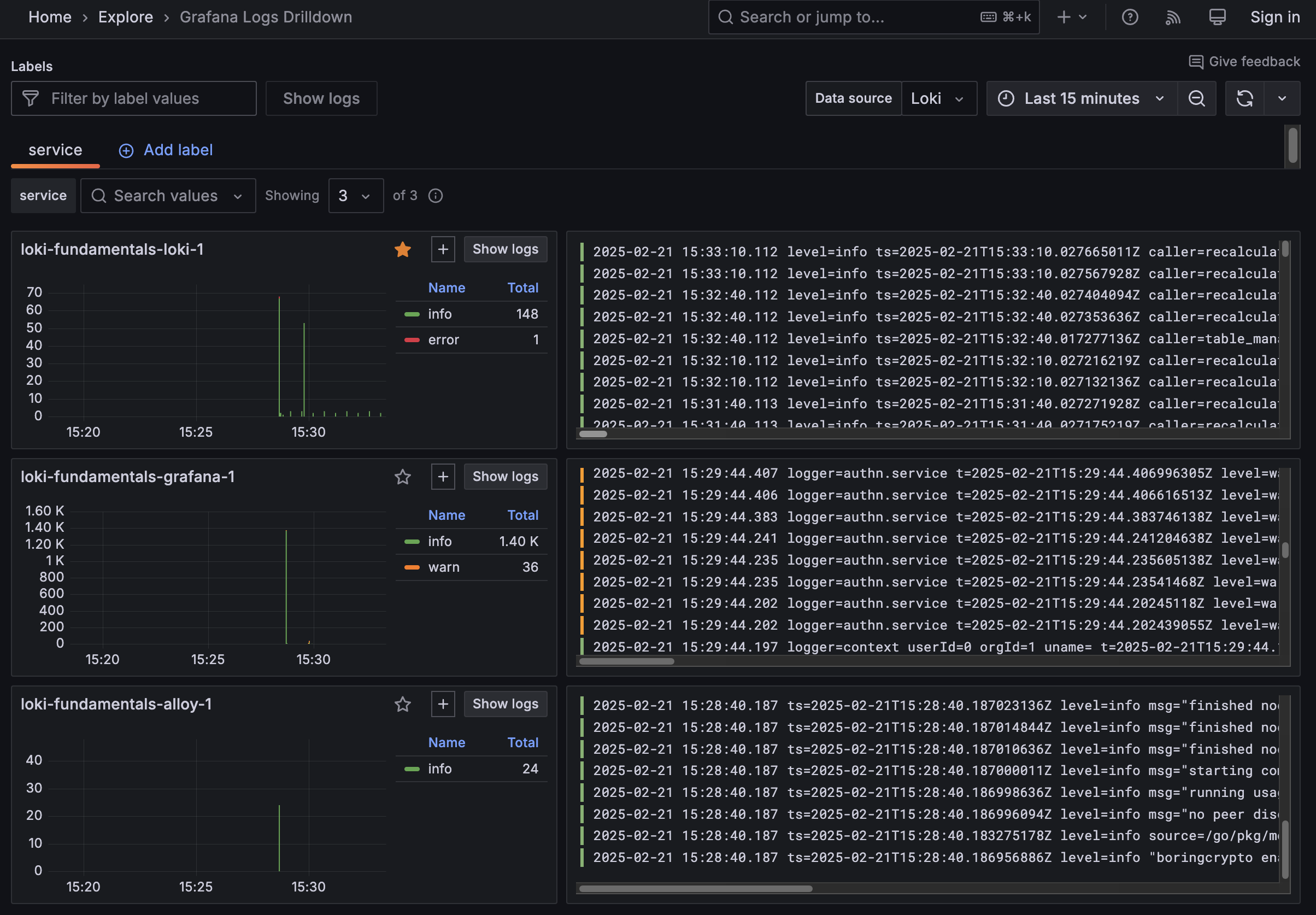

1 | # Log in to Grafana and explore Loki logs

2 |

3 | Open [http://localhost:3000/explore]({{TRAFFIC_HOST1_3000}}/explore) to access **Explore** feature in Grafana.

4 |

5 | Select Loki as the data source and click the **Label Browser** button to select a file that Alloy has sent to Loki.

6 |

7 | Here you can see that logs are flowing through to Loki as expected, and the end-to-end configuration was successful.

8 |

9 |

10 |

--------------------------------------------------------------------------------
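The **Label Browser** lists the label names that Loki has indexed. To run a similar check from code, the sketch below queries Loki's `GET /loki/api/v1/labels` endpoint. It assumes Loki is reachable at `http://localhost:3100` (Loki's default port) from where the code runs, which this tutorial does not state. `fetchLabels` and `parseLabels` are hypothetical helper names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"time"
)

// labelsResponse matches the JSON body of GET /loki/api/v1/labels.
type labelsResponse struct {
	Status string   `json:"status"`
	Data   []string `json:"data"`
}

// parseLabels decodes a labels response and rejects non-success payloads.
func parseLabels(body []byte) ([]string, error) {
	var r labelsResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	if r.Status != "success" {
		return nil, fmt.Errorf("unexpected status %q", r.Status)
	}
	return r.Data, nil
}

// fetchLabels asks Loki which label names it currently knows about.
func fetchLabels(client *http.Client, lokiURL string) ([]string, error) {
	resp, err := client.Get(lokiURL + "/loki/api/v1/labels")
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return parseLabels(body)
}

func main() {
	client := &http.Client{Timeout: 5 * time.Second}
	// Assumption: Loki listens on its default port 3100.
	labels, err := fetchLabels(client, "http://localhost:3100")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("labels:", labels)
}
```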

/alloy/send-metrics-to-prometheus/finish.md:

--------------------------------------------------------------------------------

1 | # Summary

2 |

3 | You have configured Alloy to collect and process metrics from your local host and send them to your local Grafana stack.

4 |

--------------------------------------------------------------------------------

/alloy/send-metrics-to-prometheus/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Use Grafana Alloy to send metrics to Prometheus",

3 | "description": "Learn how to send metrics to Prometheus",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md",

7 | "foreground": "previous-tutorial-setup.sh"

8 | },

9 | "steps": [

10 | {

11 | "text": "step1.md"

12 | },

13 | {

14 | "text": "step2.md"

15 | },

16 | {

17 | "text": "step3.md"

18 | },

19 | {

20 | "text": "step4.md"

21 | }

22 | ],

23 | "finish": {

24 | "text": "finish.md"

25 | }

26 | },

27 | "backend": {

28 | "imageid": "ubuntu"

29 | }

30 | }

31 |

--------------------------------------------------------------------------------

/alloy/send-metrics-to-prometheus/intro.md:

--------------------------------------------------------------------------------

1 | # Use Grafana Alloy to send metrics to Prometheus

2 |

3 | In the [previous tutorial](https://grafana.com/docs/alloy/latest/tutorials/send-logs-to-loki/), you learned how to configure Alloy to collect and process logs from your local machine and send them to Loki.

4 |

5 | This tutorial shows you how to configure Alloy to collect and process metrics from your local machine, send them to Prometheus, and use Grafana to explore the results.

6 |

7 | > Since this tutorial builds on the previous one, a setup script is automatically run to ensure you have the necessary prerequisites in place. This should take no longer than 1 minute to complete. You may begin the tutorial when you see this message: `Installation script has now been completed. You may now begin the tutorial.`{{copy}}

8 |

--------------------------------------------------------------------------------

/alloy/send-metrics-to-prometheus/step2.md:

--------------------------------------------------------------------------------

1 | # Reload the configuration

2 |

3 | Copy your local `config.alloy`{{copy}} file into the default Alloy configuration file location.

4 |

5 | ```bash

6 | sudo cp config.alloy /etc/alloy/config.alloy

7 | ```{{exec}}

8 |

9 | Call the `/-/reload`{{copy}} endpoint to tell Alloy to reload the configuration file without a system service restart.

10 |

11 | ```bash

12 | curl -X POST http://localhost:12345/-/reload

13 | ```{{exec}}

14 |

15 | > This step uses the Alloy UI on `localhost`{{copy}} port `12345`{{copy}}. If you chose to run Alloy in a Docker container, make sure you use the `--server.http.listen-addr=`{{copy}} argument. If you don't use this argument, the [debugging UI](https://grafana.com/docs/alloy/latest/troubleshoot/debug/#alloy-ui) won't be available outside of the Docker container.

16 |

17 | Optional: You can also reload the Alloy system service to load the configuration file:

18 |

19 | ```bash

20 | sudo systemctl reload alloy

21 | ```{{exec}}

22 |

--------------------------------------------------------------------------------

/alloy/send-metrics-to-prometheus/step3.md:

--------------------------------------------------------------------------------

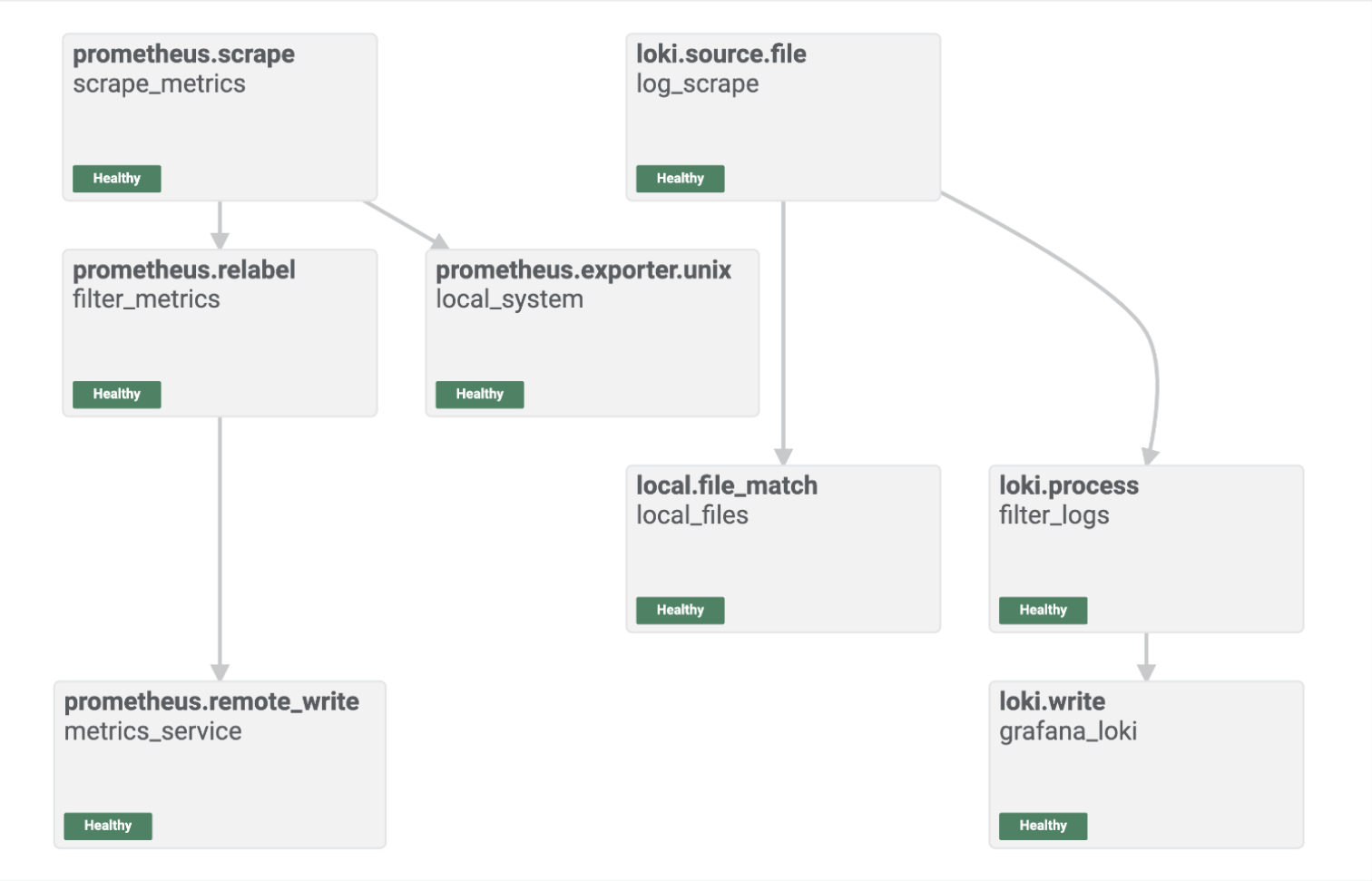

1 | # Inspect your configuration in the Alloy UI

2 |

3 | Open [http://localhost:12345]({{TRAFFIC_HOST1_12345}}) and click the **Graph** tab at the top.

4 | The graph should look similar to the following:

5 |

6 |

7 |

8 | The Alloy UI shows you a visual representation of the pipeline you built with your Alloy component configuration.

9 |

10 | You can see that the components are healthy, and you are ready to explore the metrics in Grafana.

11 |

--------------------------------------------------------------------------------

/alloy/send-metrics-to-prometheus/step4.md:

--------------------------------------------------------------------------------

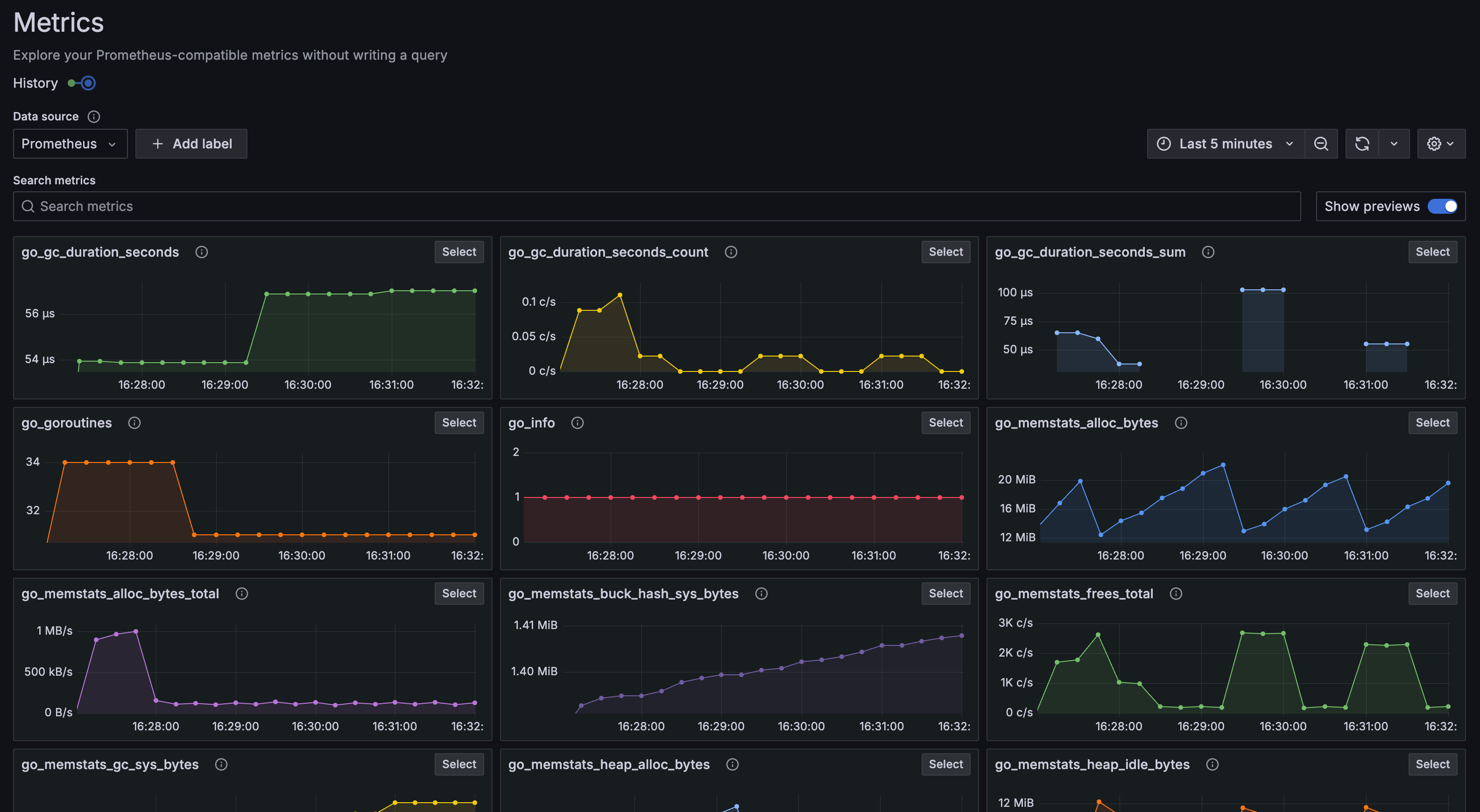

1 | # Log into Grafana and explore metrics in Prometheus

2 |

3 | Open [http://localhost:3000/explore/metrics/]({{TRAFFIC_HOST1_3000}}/explore/metrics/) to access the **Metrics Drilldown** feature in Grafana.

4 |

5 | From here you can visually explore the metrics sent to Prometheus by Alloy.

6 |

7 |

8 |

9 | You can also build PromQL queries manually to explore the data further.

10 |

11 | Open [http://localhost:3000/explore]({{TRAFFIC_HOST1_3000}}/explore) to access the **Explore** feature in Grafana.

12 |

13 | Select Prometheus as the data source and click the **Metrics Browser** button to select the metric, labels, and values for your labels.

14 |

15 | Here you can see that metrics are flowing through to Prometheus as expected, and the end-to-end configuration was successful.

16 |

--------------------------------------------------------------------------------
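The **Metrics Browser** check above can also be scripted against the Prometheus HTTP API, which answers instant PromQL queries at `GET /api/v1/query`. The Go sketch below counts the series a query returns. It assumes Prometheus is reachable at `http://localhost:9090` (its default port) and that an `up` series exists; neither is stated in this tutorial. `countSeries` and `parseSeriesCount` are hypothetical helpers.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/url"
	"time"
)

// sample is one series in an instant-vector result.
type sample struct {
	Metric map[string]string `json:"metric"`
	Value  [2]any            `json:"value"` // [unix timestamp, "sample value"]
}

// queryResponse covers the parts of an /api/v1/query response used here.
type queryResponse struct {
	Status string `json:"status"`
	Data   struct {
		ResultType string   `json:"resultType"`
		Result     []sample `json:"result"`
	} `json:"data"`
}

// parseSeriesCount decodes a response and returns how many series matched.
func parseSeriesCount(body []byte) (int, error) {
	var r queryResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return 0, err
	}
	if r.Status != "success" {
		return 0, fmt.Errorf("unexpected status %q", r.Status)
	}
	return len(r.Data.Result), nil
}

// countSeries runs an instant PromQL query and reports how many series matched.
func countSeries(client *http.Client, promURL, query string) (int, error) {
	resp, err := client.Get(promURL + "/api/v1/query?query=" + url.QueryEscape(query))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, err
	}
	return parseSeriesCount(body)
}

func main() {
	client := &http.Client{Timeout: 5 * time.Second}
	// Assumptions: Prometheus on its default port and an `up` series present.
	n, err := countSeries(client, "http://localhost:9090", "up")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("series returned:", n)
}
```

The body is parsed even for error status codes, because Prometheus reports query errors as JSON with `"status": "error"`.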

/alloy/structure.json:

--------------------------------------------------------------------------------

1 | {

2 | "items": [

3 | { "path": "send-logs-to-loki", "title": "Use Grafana Alloy to send logs to Loki"},

4 | { "path": "send-metrics-to-prometheus", "title": "Use Grafana Alloy to send metrics to Prometheus"}

5 | ]

6 | }

--------------------------------------------------------------------------------
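Each category's `structure.json` lists tutorial directories by `path`, and every tutorial directory in the tree above contains an `index.json`. A check that keeps the two in sync might look like the following Go sketch. It is not part of the repository's CI, `missingIndexes` is a hypothetical name, and `main` assumes it is run from the repository root.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io/fs"
	"os"
	"path"
)

// structure mirrors a category's structure.json: an ordered list of tutorials.
type structure struct {
	Items []struct {
		Path  string `json:"path"`
		Title string `json:"title"`
	} `json:"items"`
}

// missingIndexes returns every item whose "<path>/index.json" does not exist,
// according to the supplied exists function.
func missingIndexes(data []byte, exists func(name string) bool) ([]string, error) {
	var s structure
	if err := json.Unmarshal(data, &s); err != nil {
		return nil, err
	}
	var missing []string
	for _, item := range s.Items {
		if !exists(path.Join(item.Path, "index.json")) {
			missing = append(missing, item.Path)
		}
	}
	return missing, nil
}

func main() {
	// Assumption: run from the repository root; "alloy" is one category directory.
	category := os.DirFS("alloy")
	data, err := fs.ReadFile(category, "structure.json")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	missing, err := missingIndexes(data, func(name string) bool {
		_, err := fs.Stat(category, name)
		return err == nil
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("missing index.json:", missing)
}
```

Passing an `exists` function rather than a directory keeps the check independent of the filesystem, so it can be exercised with an in-memory set of file names.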

/assets/ILE.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/assets/ILE.png

--------------------------------------------------------------------------------

/assets/full-stack-ile.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/assets/full-stack-ile.png

--------------------------------------------------------------------------------

/assets/logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/assets/logo.png

--------------------------------------------------------------------------------

/assets/loki-ile.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/assets/loki-ile.png

--------------------------------------------------------------------------------

/full-stack/assets/grafana_logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/full-stack/assets/grafana_logo.png

--------------------------------------------------------------------------------

/full-stack/assets/grot-4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/full-stack/assets/grot-4.png

--------------------------------------------------------------------------------

/full-stack/assets/mltp.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/grafana/killercoda/19bfb5e03680c28ae023eec22fe4531ea7467d22/full-stack/assets/mltp.png

--------------------------------------------------------------------------------

/full-stack/intro-to-mltp/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Introduction to Metrics, Logs, Traces and Profiles in Grafana",

3 | "description": "This demo provides an introduction to the concepts of Metrics, Logs, Traces and Profiles in Grafana.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md",

7 | "foreground": "setup.sh"

8 | },

9 | "steps": [

10 | {

11 | "text": "step1.md"

12 | }

13 | ],

14 | "finish": {

15 | "text": "finished.md"

16 | }

17 | }

18 | ,

19 | "backend": {

20 | "imageid": "ubuntu-4GB"

21 | }

22 | }

--------------------------------------------------------------------------------

/full-stack/intro-to-mltp/setup.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | echo "RUNNING SETUP SCRIPT"

4 |

5 | # Clone the tutorial environment repository if it doesn't already exist

6 | if [ ! -d "intro-to-mltp" ]; then

7 | git clone https://github.com/grafana/intro-to-mltp.git || { echo "Failed to clone repository"; exit 1; }

8 | fi

9 |

10 | # Enter the directory and switch to the required branch

11 | cd intro-to-mltp && git checkout killercoda || { echo "Failed to enter directory or check out branch"; exit 1; }

12 |

13 | echo "Building training instance...."

14 | docker-compose -f docker-compose-no-beyla.yml up -d

15 | echo "Catch any failed containers...."

16 | docker-compose -f docker-compose-no-beyla.yml up -d

17 |

18 |

19 | # Update and install required packages

20 | echo "Updating and installing required packages..."

21 | sudo apt-get update && sudo apt-get install -y figlet; clear; echo -e "\e[32m$(figlet -f standard 'Intro to')\e[0m"; echo -e "\e[33m$(figlet -f standard 'MLTP')\e[0m"

--------------------------------------------------------------------------------

/full-stack/intro-to-mltp/step1.md:

--------------------------------------------------------------------------------

1 |

2 | # Your Tutorial Environment

3 |

4 | Your tutorial environment is ready to go. You can find the tutorial environment navigation URLs below.

5 |

6 | **Note:** *You must use the URLs provided within this section as they automatically connect to the localhost of the virtual environment.*

7 |

8 | ## Tutorial Environment Navigation URLs

9 |

10 | Grafana: **[http://localhost:3000]({{TRAFFIC_HOST1_3000}})**

11 |

12 | This is the URL for the Grafana UI. This is where the majority of the tutorial will take place.

13 |

14 |

--------------------------------------------------------------------------------

/full-stack/structure.json:

--------------------------------------------------------------------------------

1 | {

2 | "items": [

3 | { "path": "tutorial-enviroment", "title": "Tutorial Environment"},

4 | { "path": "tutorial-enviroment-completed", "title": "Tutorial Environment (Completed)"},

5 | { "path": "intro-to-mltp", "title": "Introduction To MLTP"}

6 | ]

7 | }

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/finished.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | # Tutorial: Grafana Fundamentals Complete

5 | This is the completed Grafana Fundamentals training environment. In this training environment, you learned the fundamentals of Grafana, a powerful open-source platform for visualizing and analyzing data. Whether you are a developer, system administrator, or data analyst, this course provided you with the knowledge and skills to effectively use Grafana for monitoring and visualization.

6 |

7 | ## What's Next?

8 | Now that you have completed the Grafana Fundamentals course, you can explore more advanced topics such as:

9 | - [Grafana Plugins](https://grafana.com/grafana/plugins)

10 | - [Grafana Dashboards](https://grafana.com/docs/grafana/latest/dashboards)

11 | - [Grafana API](https://grafana.com/docs/grafana/latest/http_api)

12 |

13 |

14 |

15 | ## Reporting Issues

16 | If you encounter any issues with the environment, please report them to the [GitHub repository](https://github.com/grafana/killercoda)

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Grafana Fundamentals (Completed)",

3 | "description": "In this tutorial, you’ll learn how to use Grafana to set up a monitoring solution for your application. This training environment is already completed.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md",

7 | "foreground": "setup.sh"

8 | },

9 | "steps": [

10 | {

11 | "text": "step1.md"

12 | }

13 | ],

14 | "finish": {

15 | "text": "finished.md"

16 | }

17 | }

18 | ,

19 | "backend": {

20 | "imageid": "ubuntu"

21 | }

22 | }

--------------------------------------------------------------------------------


/full-stack/tutorial-enviroment-completed/intro.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | # Grafana Fundamentals (Completed)

5 |

6 | Welcome!

7 |

8 | In this training environment, you will learn the fundamentals of Grafana, a powerful open-source platform for visualizing and analyzing data. Whether you are a developer, system administrator, or data analyst, this course will provide you with the knowledge and skills to effectively use Grafana for monitoring and visualization.

9 |

10 | You can find the course content here: [Grafana Fundamentals](https://grafana.com/tutorials/grafana-fundamentals/).

11 |

12 | **Note:** *This is a completed environment, where all the steps have been completed for you. If you would like to complete the steps yourself, please go to the [Grafana Fundamentals](https://killercoda.com/grafana-labs/course/full-stack/tutorial-environment) course.*

13 |

14 | ## Housekeeping

15 |

16 | This environment runs an install script on startup automatically. Your training environment is ready to go once the script has completed and you see the following message:

17 |

18 | ```plaintext

19 | GRAFANA FUNDAMENTALS

20 | ```

21 |

22 | Continue to the next step to find the tutorial environment navigation URLs.

23 |

24 | ## Reporting Issues

25 | If you encounter any issues with the environment, please report them to the [GitHub repository](https://github.com/grafana/killercoda)

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/setup.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | echo "RUNNING SETUP SCRIPT"

4 |

5 | # Clone the tutorial environment repository if it doesn't already exist

6 | if [ ! -d "tutorial-environment" ]; then

7 | git clone https://github.com/grafana/tutorial-environment.git || { echo "Failed to clone repository"; exit 1; }

8 | fi

9 |

10 | # Enter the directory and switch to the required branch

11 | cd tutorial-environment && git checkout killercoda || { echo "Failed to checkout branch"; exit 1; }

12 |

13 | echo "Building training instance...."

14 | docker-compose up -d || { echo "Failed to start docker containers"; exit 1; }

15 |

16 | # Update and install required packages

17 | echo "Updating and installing required packages..."

18 | sudo apt-get update && sudo apt-get install -y figlet; clear; echo -e "\e[32m$(figlet -f standard 'Grafana')\e[0m"; echo -e "\e[33m$(figlet -f standard 'Fundamentals')\e[0m"

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/step1.md:

--------------------------------------------------------------------------------

1 |

2 | # Your Tutorial Environment

3 |

4 | Your tutorial environment is ready to go. You can find the tutorial environment navigation URLs below.

5 |

6 | **Note:** *You must use the URLs provided within this section as they automatically connect to the localhost of the virtual environment.*

7 |

8 | ## Tutorial Environment Navigation URLs

9 |

10 | Grafana: **[http://localhost:3000]({{TRAFFIC_HOST1_3000}})**

11 |

12 | This is the URL for the Grafana UI. From here, you can access the dashboards and data visualizations created in the tutorial.

13 |

14 | Grafana News: **[http://localhost:8081]({{TRAFFIC_HOST1_8081}})**

15 |

16 | This URL will direct you to the sample application for this tutorial. This simulator lets you post links and vote for the ones you like. This is important for generating traffic and errors to populate our Grafana dashboards.

17 |

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/finished.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | # Grafana Fundamentals (Completed)

5 |

6 | Welcome!

7 |

8 | In this training environment, you will learn the fundamentals of Grafana, a powerful open-source platform for visualizing and analyzing data. Whether you are a developer, system administrator, or data analyst, this course will provide you with the knowledge and skills to effectively use Grafana for monitoring and visualization.

9 |

10 | You can find the course content here: [Grafana Fundamentals](https://grafana.com/tutorials/grafana-fundamentals/).

11 |

12 | **Note:** *This is a completed environment, where all the steps have been completed for you. If you would like to complete the steps yourself, please go to the [Grafana Fundamentals](https://killercoda.com/grafana-labs/course/full-stack/tutorial-environment) course.*

13 |

14 | ## Housekeeping

15 |

16 | This environment runs an install script on startup automatically. Your training environment is ready to go once the script has completed and you see the following message:

17 |

18 | ```plaintext

19 | GRAFANA FUNDAMENTALS

20 | ```

21 |

22 | Continue to the next step to find the tutorial environment navigation URLs.

23 |

24 | ## Reporting Issues

25 | If you encounter any issues with the environment, please report them to the [GitHub repository](https://github.com/grafana/killercoda)

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/setup.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | echo "RUNNING SETUP SCRIPT"

4 |

5 | # Clone the tutorial environment repository if it doesn't already exist

6 | if [ ! -d "tutorial-environment" ]; then

7 | git clone https://github.com/grafana/tutorial-environment.git || { echo "Failed to clone repository"; exit 1; }

8 | fi

9 |

10 | # Enter the directory and switch to the required branch

11 | cd tutorial-environment && git checkout killercoda || { echo "Failed to checkout branch"; exit 1; }

12 |

13 | echo "Building training instance...."

14 | docker-compose up -d || { echo "Failed to start docker containers"; exit 1; }

15 |

16 | # Update and install required packages

17 | echo "Updating and installing required packages..."

18 | sudo apt-get update && sudo apt-get install -y figlet; clear; echo -e "\e[32m$(figlet -f standard 'Grafana')\e[0m"; echo -e "\e[33m$(figlet -f standard 'Fundamentals')\e[0m"

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment-completed/step1.md:

--------------------------------------------------------------------------------

1 |

2 | # Your Tutorial Environment

3 |

4 | Your tutorial environment is ready to go. You can find the tutorial environment navigation URLs below.

5 |

6 | **Note:** *You must use the URLs provided within this section as they automatically connect to the localhost of the virtual environment.*

7 |

8 | ## Tutorial Environment Navigation URLs

9 |

10 | Grafana: **[http://localhost:3000]({{TRAFFIC_HOST1_3000}})**

11 |

12 | This is the URL for the Grafana UI. From here, you can access the dashboards and data visualizations created in the tutorial.

13 |

14 | Grafana News: **[http://localhost:8081]({{TRAFFIC_HOST1_8081}})**

15 |

16 | This URL will direct you to the sample application for this tutorial. This simulator lets you post links and vote for the ones you like. This is important for generating traffic and errors to populate our Grafana dashboards.

17 |

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/finished.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | # Tutorial: Grafana Fundamentals Complete

5 | This is the completed Grafana Fundamentals training environment. In this training environment, you learned the fundamentals of Grafana, a powerful open-source platform for visualizing and analyzing data. Whether you are a developer, system administrator, or data analyst, this course provided you with the knowledge and skills to effectively use Grafana for monitoring and visualization.

6 |

7 | ## What's Next?

8 | Now that you have completed the Grafana Fundamentals course, you can explore more advanced topics such as:

9 | - [Grafana Plugins](https://grafana.com/grafana/plugins)

10 | - [Grafana Dashboards](https://grafana.com/docs/grafana/latest/dashboards)

11 | - [Grafana API](https://grafana.com/docs/grafana/latest/http_api)

12 |

13 |


14 |

15 | ## Reporting Issues

16 | If you encounter any issues with the environment, please report them to the [GitHub repository](https://github.com/grafana/killercoda).

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Grafana Fundamentals",

3 | "description": "In this tutorial, you’ll learn how to use Grafana to set up a monitoring solution for your application.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md",

7 | "foreground": "setup.sh"

8 | },

9 | "steps": [

10 | {

11 | "text": "step1.md"

12 | }

13 | ],

14 | "finish": {

15 | "text": "finished.md"

16 | }

17 | },

18 | "backend": {

19 | "imageid": "ubuntu"

20 | }

21 | }


--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/intro.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | # Grafana Fundamentals

5 |

6 | Welcome!

7 |

8 | In this training environment, you will learn the fundamentals of Grafana, a powerful open-source platform for visualizing and analyzing data. Whether you are a developer, system administrator, or data analyst, this course will provide you with the knowledge and skills to effectively use Grafana for monitoring and visualization.

9 |

10 | You can find the course content here: [Grafana Fundamentals](https://grafana.com/tutorials/grafana-fundamentals/).

11 |

12 | **Note:** *In this environment, we provide only the initial setup of the tutorial environment. You can then follow the steps in the Grafana Fundamentals tutorial to complete it. If you would like a completed version of the tutorial, visit the [Grafana Fundamentals (Completed)](https://katacoda.com/full-stack/courses/tutorial-environment-completed) course.*

13 |

14 | ## Housekeeping

15 |

16 | This environment runs an install script on startup automatically. Your training environment is ready to go once the script has completed and you see the following message:

17 |

18 | ```plaintext

19 | GRAFANA FUNDAMENTALS

20 | ```

21 |

22 | Continue to the next step to find the tutorial environment navigation URLs.

23 |

24 | ## Reporting Issues

25 | If you encounter any issues with the environment, please report them to the [GitHub repository](https://github.com/grafana/killercoda).

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/setup.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | echo "RUNNING SETUP SCRIPT"

4 |

5 | # Clone the tutorial environment repository if it doesn't already exist

6 | if [ ! -d "tutorial-environment" ]; then

7 | git clone https://github.com/grafana/tutorial-environment.git || { echo "Failed to clone repository"; exit 1; }

8 | fi

9 |

10 | # Enter the repository directory

11 | cd tutorial-environment || { echo "Failed to enter tutorial-environment directory"; exit 1; }

12 |

13 | echo "Building training instance...."

14 | docker-compose up -d || { echo "Failed to start docker containers"; exit 1; }

15 |

16 | # Update and install required packages

17 | echo "Updating and installing required packages..."

18 | sudo apt-get update && sudo apt-get install -y figlet; clear; echo -e "\e[32m$(figlet -f standard 'Grafana')\e[0m"; echo -e "\e[33m$(figlet -f standard 'Fundamentals')\e[0m"

--------------------------------------------------------------------------------

/full-stack/tutorial-enviroment/step1.md:

--------------------------------------------------------------------------------

1 |

2 | # Your Tutorial Environment

3 |

4 | Your tutorial environment is ready to go. You can find the tutorial environment navigation URLs below.

5 |

6 | **Note:** *You must use the URLs provided within this section as they automatically connect to the localhost of the virtual environment.*

7 |

8 | ## Tutorial Environment Navigation URLs

9 |

10 | Grafana: **[http://localhost:3000]({{TRAFFIC_HOST1_3000}})**

11 |

12 | This is the URL for the Grafana UI. This is where the majority of the tutorial will take place.

13 |

14 | Grafana News: **[http://localhost:8081]({{TRAFFIC_HOST1_8081}})**

15 |

16 | This URL will direct you to the sample application for this tutorial. This simulator lets you post links and vote for the ones you like. This is important for generating traffic and errors to populate our Grafana dashboards.

17 |
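If you want to confirm from the environment's terminal that both services are answering before opening the links, you can poll each URL until it responds or a timeout expires. The sketch below assumes `curl` is installed and uses Grafana's `/api/health` endpoint. The `wait_for_url` helper name and its 120-second default timeout are illustrative choices, not part of the tutorial environment.

```bash
#!/usr/bin/env bash
# Sketch: wait until an HTTP endpoint responds successfully, or give up after a timeout.
# Assumes curl is installed; wait_for_url is a hypothetical helper, not part of the environment.

wait_for_url() {
  local url="$1"
  local timeout="${2:-120}"   # total seconds to keep retrying (illustrative default)
  local waited=0

  # -s: no progress output, -f: treat HTTP 4xx/5xx as failure,
  # -o /dev/null: discard the body, --max-time: bound each attempt.
  until curl -sf -o /dev/null --max-time 5 "$url"; do
    if (( waited >= timeout )); then
      echo "Timed out waiting for ${url}" >&2
      return 1
    fi
    sleep 2
    waited=$(( waited + 2 ))
  done
  echo "${url} is up"
}
```

For example, run `wait_for_url http://localhost:3000/api/health && wait_for_url http://localhost:8081` inside the environment. Inside the VM, `localhost` refers to the VM itself, so these plain URLs work there even though the browser links above must use the generated hostnames.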

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/finish.md:

--------------------------------------------------------------------------------

1 | # Summary

2 |

3 | In this tutorial, you have learned how Grafana Alerting can route individual alert instances using the labels generated by the data-source query and match these labels with notification policies, which in turn route alert notifications to specific contact points.

4 |

5 | If you run into any problems, you are welcome to post questions in our [Grafana Community forum](https://community.grafana.com/).

6 |

7 | # Learn more in [Grafana Alerting: Group alert notifications](http://www.grafana.com/tutorials/alerting-get-started-pt3/)

8 |

9 | In [Get started with Grafana Alerting: Group alert notifications](http://www.grafana.com/tutorials/alerting-get-started-pt3/) you learn how to group alert notifications effectively.

10 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Get started with Grafana Alerting - Multi-dimensional alerts and how to route them",

3 | "description": "Learn to use alert instances and route notifications by labels to contacts.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md"

7 | },

8 | "steps": [

9 | {

10 | "text": "step1.md"

11 | },

12 | {

13 | "text": "step2.md"

14 | },

15 | {

16 | "text": "step3.md"

17 | },

18 | {

19 | "text": "step4.md"

20 | },

21 | {

22 | "text": "step5.md"

23 | },

24 | {

25 | "text": "step6.md"

26 | },

27 | {

28 | "text": "step7.md"

29 | },

30 | {

31 | "text": "step8.md"

32 | }

33 | ],

34 | "finish": {

35 | "text": "finish.md"

36 | }

37 | },

38 | "backend": {

39 | "imageid": "ubuntu"

40 | }

41 | }

42 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/intro.md:

--------------------------------------------------------------------------------

1 | This tutorial is a continuation of the [Grafana Alerting - Create and receive your first alert](http://www.grafana.com/tutorials/alerting-get-started/) tutorial.

2 |

3 | In this guide, we dig into more complex yet equally fundamental elements of Grafana Alerting: **alert instances** and **notification policies**.

4 |

5 | After introducing each component, you will learn how to:

6 |

7 | - Configure an alert rule that returns more than one alert instance

8 | - Create notification policies that route firing alert instances to different contact points

9 | - Use labels to match alert instances and notification policies

10 |

11 | Learning about alert instances and notification policies is useful if you have more than one contact point in your organization, or if your alert rule returns a number of metrics that you want to handle separately by routing each alert instance to a specific contact point. The tutorial will introduce each concept, followed by how to apply both concepts in a real-world scenario.

12 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/step1.md:

--------------------------------------------------------------------------------

1 | To demonstrate the observation of data using the Grafana stack, download and run the following files.

2 |

3 | 1. Clone the [tutorial environment repository](https://www.github.com/grafana/tutorial-environment).

4 |

5 | ```

6 | git clone https://github.com/grafana/tutorial-environment.git

7 | ```{{exec}}

8 | 1. Change to the directory where you cloned the repository:

9 |

10 | ```

11 | cd tutorial-environment

12 | ```{{exec}}

13 | 1. Run the Grafana stack:

14 |

15 | ```bash

16 | docker-compose up -d

17 | ```{{exec}}

18 |

19 | The first time you run `docker-compose up -d`{{copy}}, Docker downloads all the necessary resources for the tutorial. This might take a few minutes, depending on your internet connection.

20 |

21 | NOTE:

22 |

23 | If you already have Grafana, Loki, or Prometheus running on your system, you might see errors, because the Docker image is trying to use ports that your local installations are already using. If this is the case, stop the services, then run the command again.

24 |
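Before running `docker-compose up -d`, you can check whether any of the ports the stack needs are already taken. This sketch relies on Bash's `/dev/tcp` redirection, so it must run under Bash rather than `sh`. The port list is an assumption. Ports 3000 (Grafana) and 8081 (Grafana News) come from this tutorial environment. Ports 3100 (Loki) and 9090 (Prometheus) are those projects' usual defaults and may not match the tutorial's compose file.

```bash
#!/usr/bin/env bash
# Sketch: report which local TCP ports already have a listener.
# port_in_use and check_ports are hypothetical helpers built on Bash's /dev/tcp redirection.

port_in_use() {
  local port="$1"
  # Opening /dev/tcp/HOST/PORT succeeds only if something accepts the connection.
  # The subshell closes file descriptor 3 automatically when it exits.
  (exec 3<>"/dev/tcp/127.0.0.1/${port}") 2>/dev/null
}

check_ports() {
  local busy=0 port
  for port in "$@"; do
    if port_in_use "$port"; then
      echo "Port ${port} is already in use"
      busy=1
    fi
  done
  return "$busy"
}
```

For example, `check_ports 3000 3100 8081 9090 || echo "Stop the conflicting services, then run docker-compose up -d again."`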

--------------------------------------------------------------------------------

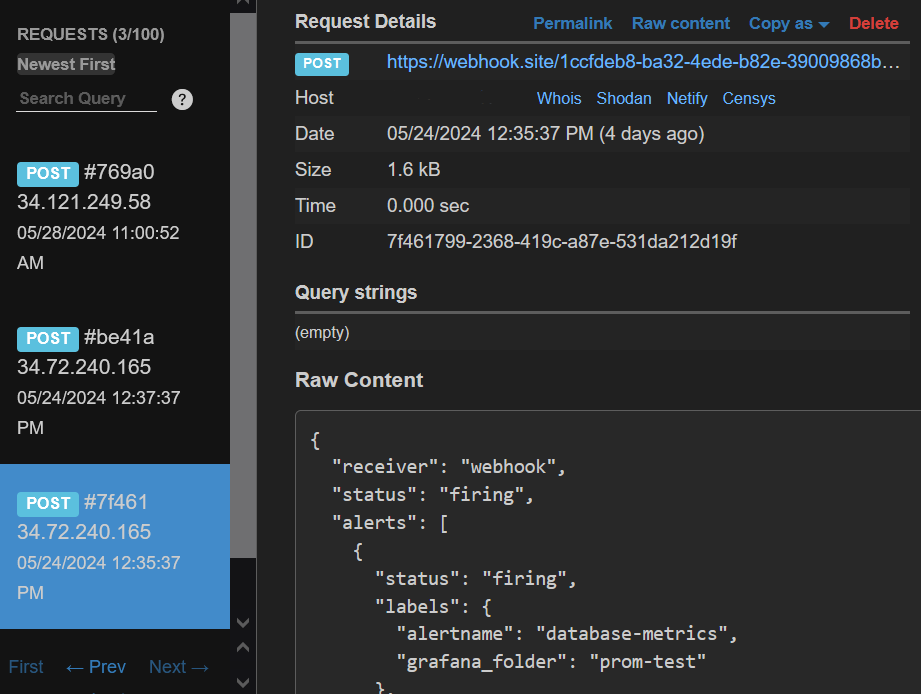

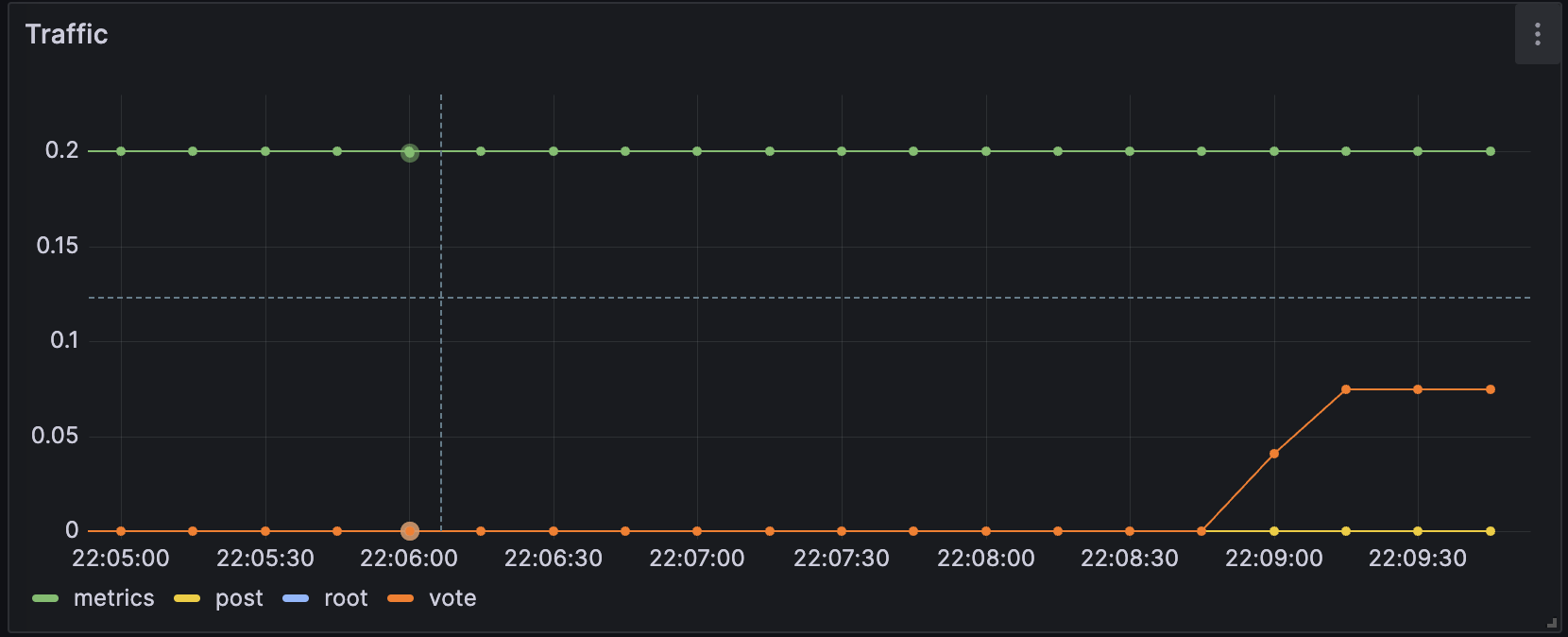

/grafana/alerting-get-started-pt2/step2.md:

--------------------------------------------------------------------------------

1 | # Alert instances

2 |

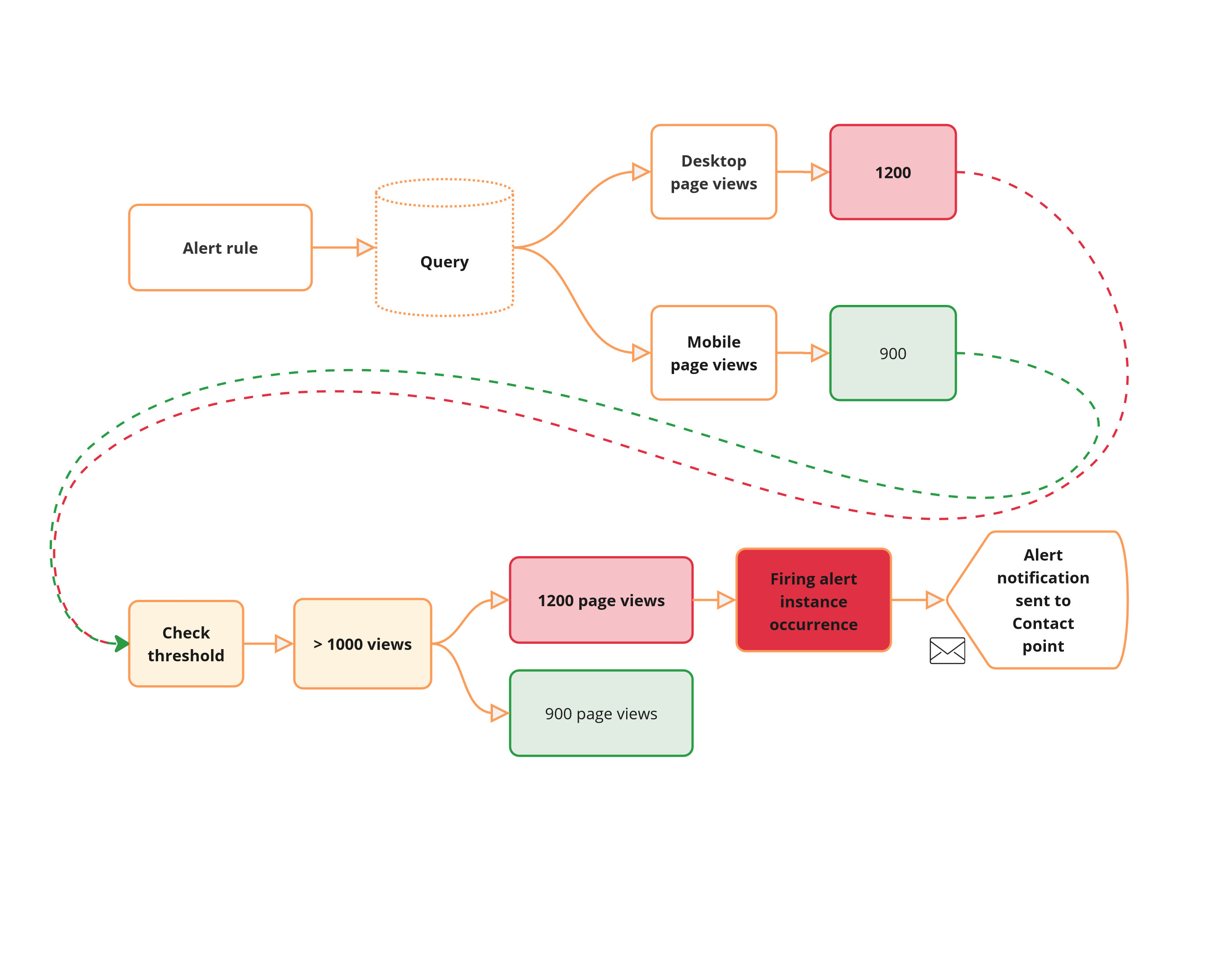

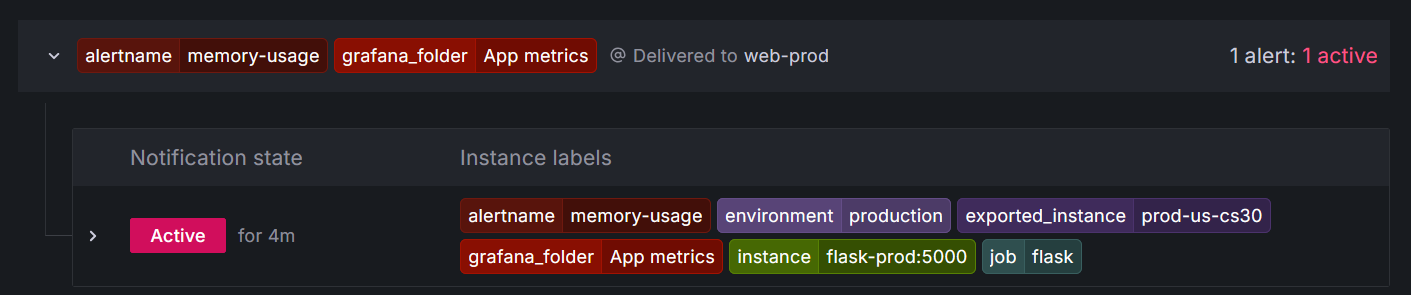

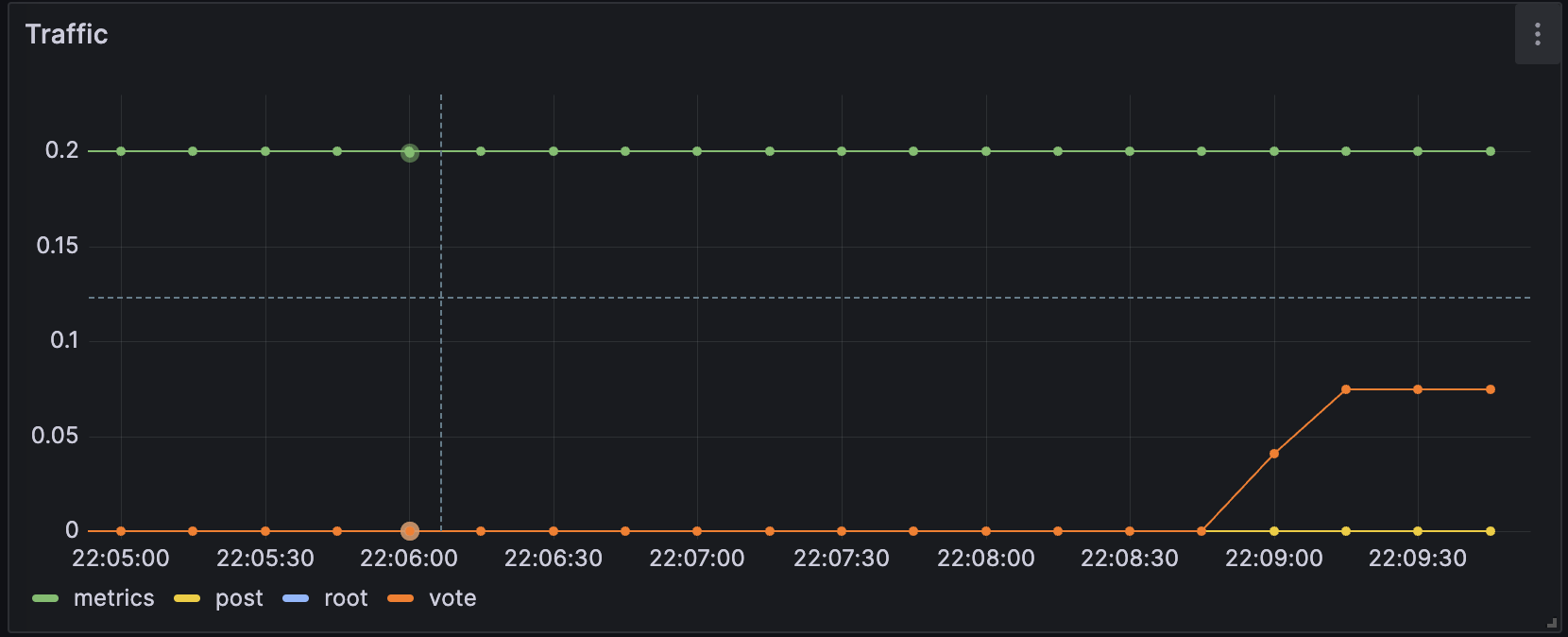

3 | An [alert instance](https://grafana.com/docs/grafana/latest/alerting/fundamentals/#alert-instances) is an event that matches a metric returned by an alert rule query.

4 |

5 | Let's consider a scenario where you're monitoring website traffic using Grafana. You've set up an alert rule to trigger an alert instance if the number of page views exceeds a certain threshold (more than `1000`{{copy}} page views) within a specific time period, say, over the past `5`{{copy}} minutes.

6 |

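7 | If the query returns more than one time-series, each time-series represents a different metric or aspect being monitored. For example, the page views might be split into one time-series for desktop devices and another for mobile devices. In this case, the alert rule is applied individually to each time-series.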

8 |

9 |

10 |

11 | In this scenario, each time-series is evaluated independently against the alert rule, which creates one alert instance per time-series. The time-series for desktop page views meets the threshold and therefore produces an alert instance in the **Firing** state, for which an alert notification is sent. The mobile alert instance remains in the **Normal** state.

12 |
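The per-series evaluation can be sketched in a few lines of Bash. The same condition (more than `1000` page views) is applied to each series on its own, which yields one alert instance state per series. The series values are hypothetical, and the sketch ignores any pending period. Grafana performs this evaluation itself; the loop only illustrates the idea.

```bash
#!/usr/bin/env bash
# Sketch: evaluate one threshold condition independently for each time series.
# The series and their values are hypothetical sample data; no pending period is modeled.

threshold=1000

# Each entry is "labels value": one line per time series returned by the query.
series=(
  "device=desktop 1200"
  "device=mobile 800"
)

evaluate_series() {
  local entry labels value state
  for entry in "${series[@]}"; do
    read -r labels value <<< "$entry"
    # Each series produces its own alert instance with its own state.
    if (( value > threshold )); then
      state="Firing"
    else
      state="Normal"
    fi
    echo "${labels} ${state}"
  done
}
```

Calling `evaluate_series` prints one line per alert instance: `device=desktop Firing` followed by `device=mobile Normal`.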

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/step3.md:

--------------------------------------------------------------------------------

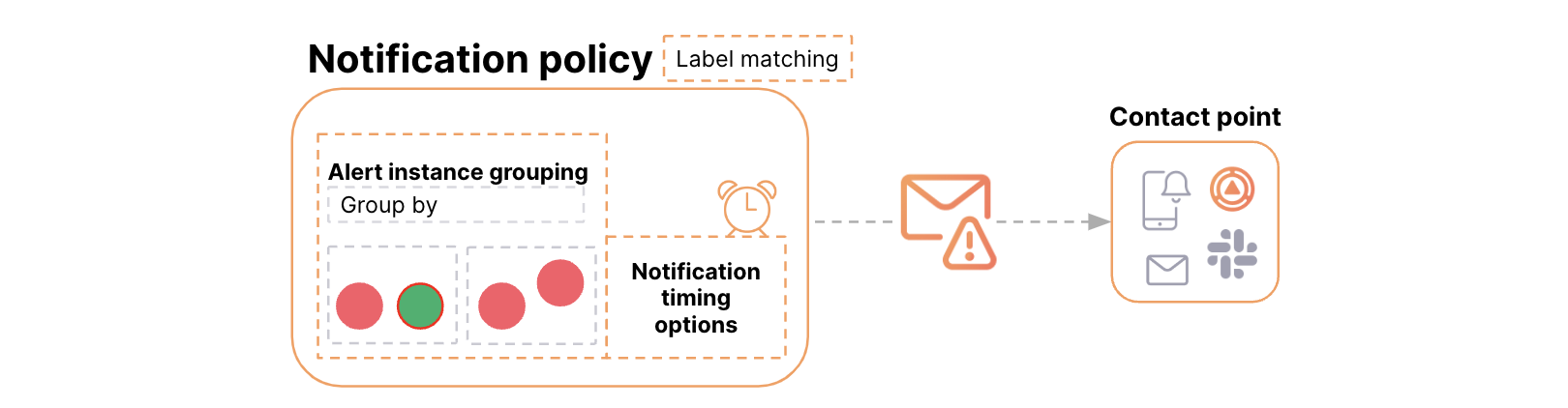

1 | # Notification policies

2 |

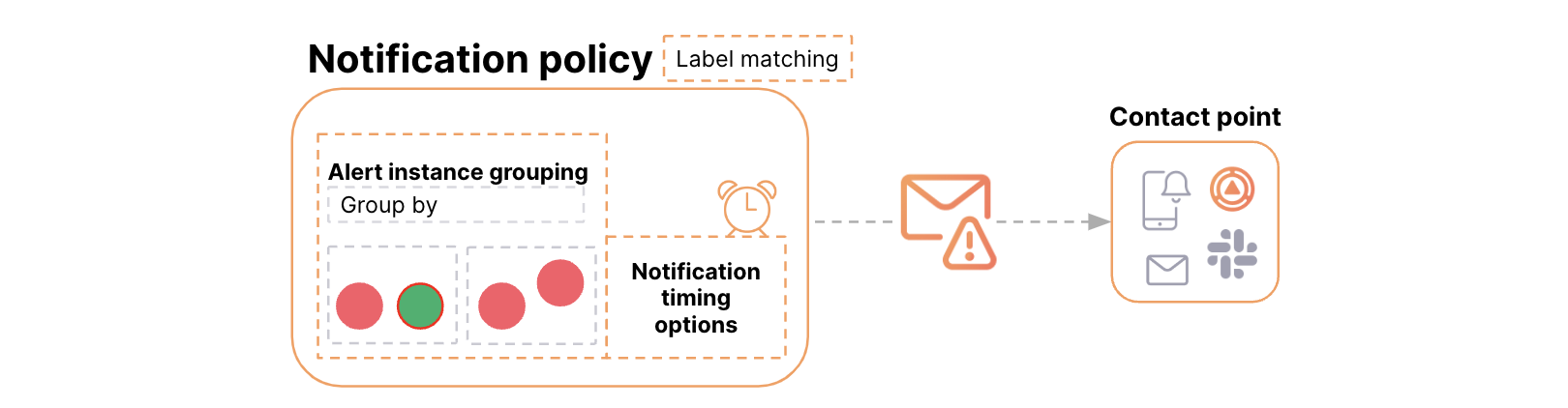

3 | [Notification policies](https://grafana.com/docs/grafana/latest/alerting/fundamentals/notifications/notification-policies/) route alerts to different communication channels, reducing alert noise and providing control over when and how alerts are sent. For example, you might use notification policies to ensure that critical alerts about server downtime are sent immediately to the on-call engineer. Another use case could be routing performance alerts to the development team for review and action.

4 |

5 | Key Characteristics:

6 |

7 | - Route alert notifications by matching alerts and policies with labels

8 | - Manage when to send notifications

9 |

10 |

11 |

12 | In the above diagram, alert instances and notification policies are matched by labels. For instance, the label `team=operations`{{copy}} matches the alert instances “**Pod stuck in CrashLoop**” and “**Disk Usage -80%**” to child policies that send alert notifications to a particular contact point.

13 |
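Label matching can be sketched as a first-match lookup. Each child policy holds one `label=value` matcher and a contact point. An alert instance that matches no child policy falls back to the default policy. The matchers and contact point names are hypothetical, and the single-matcher model is a simplification. Real Grafana policies support several matchers, regex operators, nested policies, and continued matching.

```bash
#!/usr/bin/env bash
# Sketch: route an alert instance to a contact point by matching its labels
# against child policies, falling back to the default policy.
# All matchers and contact point names are hypothetical.

default_contact_point="default-email"

# Child policies in evaluation order: "matcher contact_point".
child_policies=(
  "team=operations ops-oncall"
  "team=development dev-team"
)

# route_alert takes the alert instance's labels as separate "key=value" arguments.
route_alert() {
  local policy matcher contact label
  for policy in "${child_policies[@]}"; do
    read -r matcher contact <<< "$policy"
    for label in "$@"; do
      if [[ "$label" == "$matcher" ]]; then
        echo "$contact"   # first matching child policy wins (no continued matching)
        return 0
      fi
    done
  done
  echo "$default_contact_point"
}
```

For example, `route_alert alertname=DiskUsage team=operations` prints `ops-oncall`. An instance labeled only `team=finance` falls through to `default-email`.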

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/step4.md:

--------------------------------------------------------------------------------

1 | # Create notification policies

2 |

3 | Create a notification policy if you want to handle metrics returned by alert rules separately by routing each alert instance to a specific contact point.

4 |

5 | 1. Visit [http://localhost:3000]({{TRAFFIC_HOST1_3000}}), where Grafana should be running.

6 | 1. Navigate to **Alerts & IRM > Alerting > Notification policies**.

7 | 1. In the Default policy, click **+ New child policy**.

8 | 1. In the field **Label** enter `device`{{copy}}, and in the field **Value** enter `desktop`{{copy}}.

9 | 1. From the **Contact point** drop-down, choose **Webhook**.

10 |

11 | If you don’t have any contact points, add a [Contact point](https://grafana.com/tutorials/alerting-get-started/#create-a-contact-point).

12 | 1. Click **Save Policy**.

13 |

14 | This new child policy routes alerts that match the label `device=desktop`{{copy}} to the Webhook contact point.

15 | 1. **Repeat the steps above to create a second child policy** to match another alert instance. For labels use: `device=mobile`{{copy}}. Use the Webhook integration for the contact point. Alternatively, experiment by using a different Webhook endpoint or a [different integration](https://grafana.com/docs/grafana/latest/alerting/configure-notifications/manage-contact-points/#supported-contact-point-integrations).

16 |
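You can also script this setup instead of using the UI. Grafana exposes an alerting provisioning HTTP API in which `PUT /api/v1/provisioning/policies` replaces the entire notification policy tree. The sketch below only builds a JSON body with two child policies that mirror the steps above. The following details are assumptions to check against the provisioning API documentation for your Grafana version:

- the contact point names (`grafana-default-email` for the default policy, `Webhook` for the children)
- the `admin:admin` credentials in the commented `curl` call
- the exact body shape

Sending this body overwrites any existing policies.

```bash
#!/usr/bin/env bash
# Sketch: build a notification policy tree with one child policy per device label.
# Receiver (contact point) names are passed in; the values used below are assumptions.

build_policy_tree() {
  local default_receiver="$1" child_receiver="$2"
  cat <<EOF
{
  "receiver": "${default_receiver}",
  "routes": [
    { "receiver": "${child_receiver}", "object_matchers": [["device", "=", "desktop"]] },
    { "receiver": "${child_receiver}", "object_matchers": [["device", "=", "mobile"]] }
  ]
}
EOF
}

# Hypothetical usage (not run here); this REPLACES the whole policy tree:
# build_policy_tree grafana-default-email Webhook |
#   curl -s -X PUT -u admin:admin -H "Content-Type: application/json" \
#        --data @- http://localhost:3000/api/v1/provisioning/policies
```

Policies created through the provisioning API are typically locked for editing in the UI unless the request also sends the `X-Disable-Provenance: true` header. Verify this behavior for your version before relying on it.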

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt2/step5.md:

--------------------------------------------------------------------------------

1 | # Create an alert rule that returns alert instances

2 |

3 | The alert rule that you are about to create is meant to monitor web traffic page views. The objective is to explore what an alert instance is and how to leverage routing individual alert instances by using label matchers and notification policies.

4 |

--------------------------------------------------------------------------------

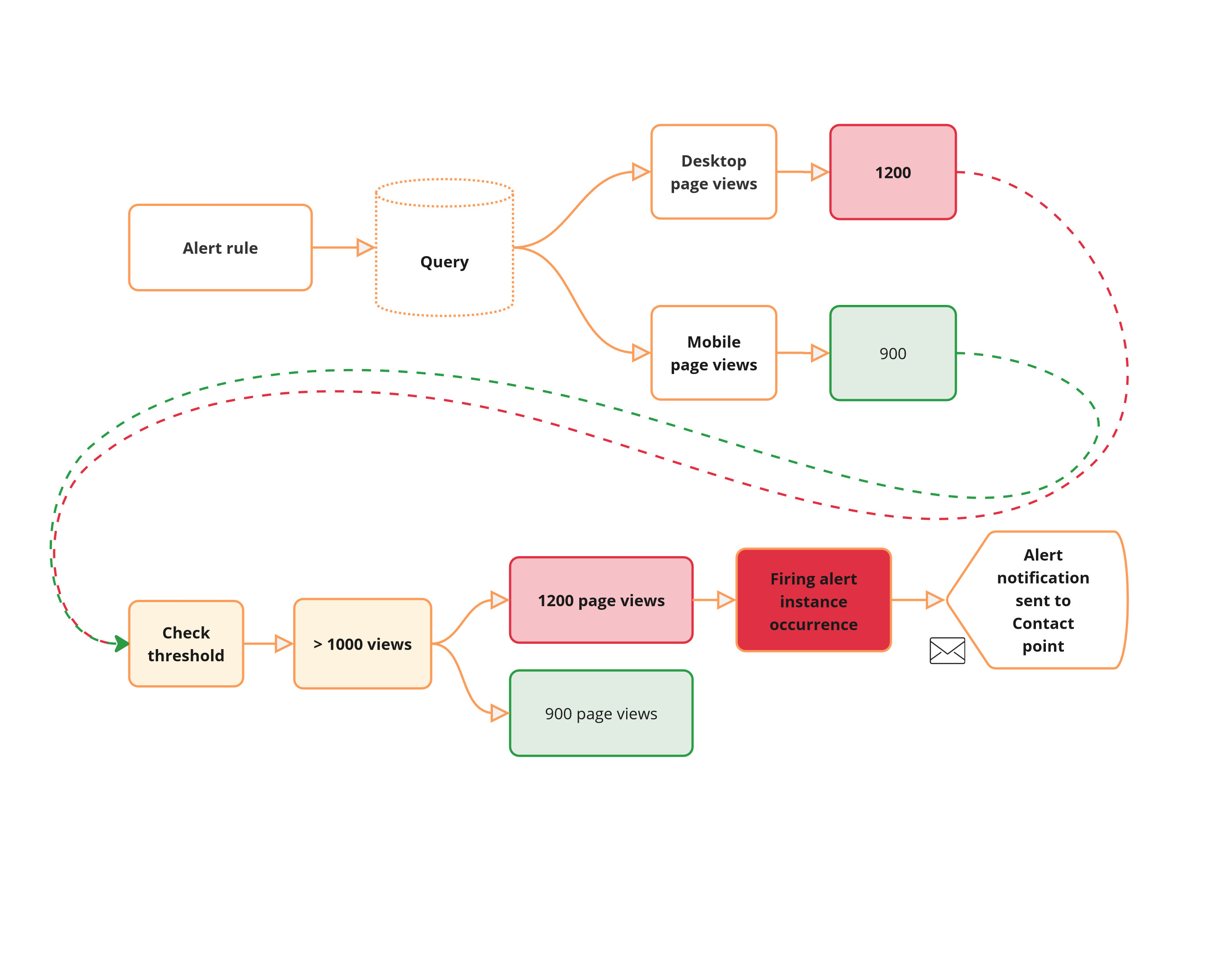

/grafana/alerting-get-started-pt2/step8.md:

--------------------------------------------------------------------------------

1 | # Receive alert notifications

2 |

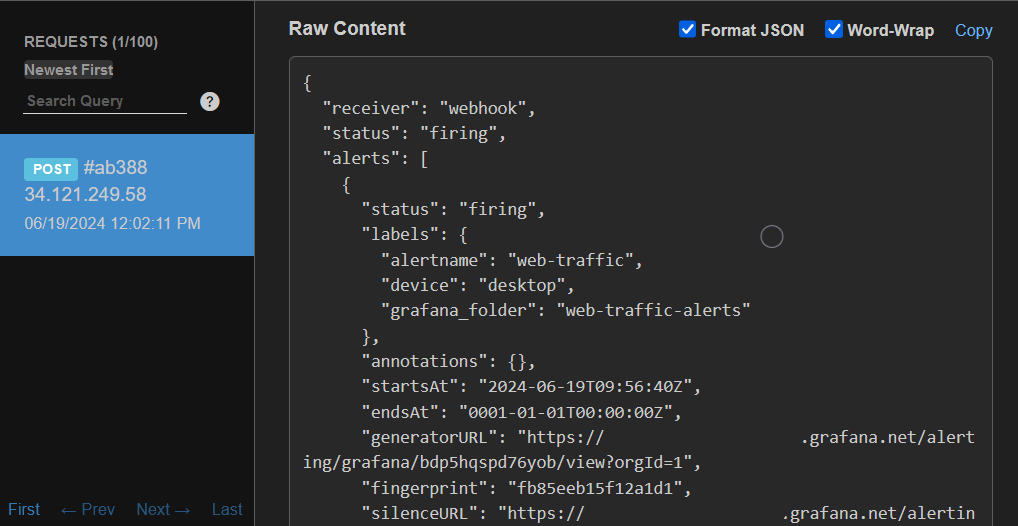

3 | Now that the alert rule has been configured, you should receive alert [notifications](http://grafana.com/docs/grafana/next/alerting/fundamentals/alert-rule-evaluation/state-and-health/#notifications) in the contact point whenever the alert triggers and gets resolved. In our example, each alert instance should be routed separately as we configured labels to match notification policies. Once the evaluation interval has concluded (1m), you should receive an alert notification in the Webhook endpoint.

4 |

5 |

6 |

7 | The alert notification details show that the alert instance corresponding to the website views from desktop devices was correctly routed through the notification policy to the Webhook contact point. The notification also shows that the instance is in the **Firing** state and includes the label `device=desktop`{{copy}}, which is what makes routing the alert instance possible.

8 |

9 | Feel free to change the CSV data in the alert rule to trigger the routing of the alert instance that matches the label `device=mobile`{{copy}}.

10 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt3/finish.md:

--------------------------------------------------------------------------------

1 | # Conclusion

2 |

3 | By configuring **notification policies** and using **labels** (such as _region_), you can group alert notifications based on specific criteria and route them to the appropriate teams. Fine-tuning **timing options** (group wait, group interval, and repeat interval) can further reduce noise and ensure notifications remain actionable without overwhelming on-call engineers.

4 |

5 | # Learn more in [Grafana Alerting: Template your alert notifications](http://www.grafana.com/tutorials/alerting-get-started-pt4/)

6 |

7 | In [Get started with Grafana Alerting: Template your alert notifications](http://www.grafana.com/tutorials/alerting-get-started-pt4/) you learn how to use templates to create customized and concise notifications.

8 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt3/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Get started with Grafana Alerting - Group alert notifications",

3 | "description": "Learn how to group alert notifications effectively to reduce noise and streamline communication in Grafana Alerting.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md"

7 | },

8 | "steps": [

9 | {

10 | "text": "step1.md"

11 | },

12 | {

13 | "text": "step2.md"

14 | },

15 | {

16 | "text": "step3.md"

17 | },

18 | {

19 | "text": "step4.md"

20 | },

21 | {

22 | "text": "step5.md"

23 | },

24 | {

25 | "text": "step6.md"

26 | }

27 | ],

28 | "finish": {

29 | "text": "finish.md"

30 | }

31 | },

32 | "backend": {

33 | "imageid": "ubuntu"

34 | }

35 | }

36 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt3/intro.md:

--------------------------------------------------------------------------------

1 | This tutorial is a continuation of the [Get started with Grafana Alerting - Alert routing](http://www.grafana.com/tutorials/alerting-get-started-pt2/) tutorial.

2 |

3 | Grouping in Grafana Alerting reduces notification noise by combining related alert instances into a single, concise notification. This is useful for on-call engineers, ensuring they focus on resolving incidents instead of sorting through a flood of notifications.

4 |

5 | Grouping is configured using labels in the notification policy. These labels reference those generated by alert instances or configured by the user.

6 |

7 | Notification policies also allow you to define how often notifications are sent for each group of alert instances.

8 |

9 | In this tutorial, you will:

10 |

11 | - Learn how alert rule grouping works.

12 | - Create a notification policy to handle grouping.

13 | - Define alert rules for a real-world scenario.

14 | - Receive and review grouped alert notifications.

15 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt3/step1.md:

--------------------------------------------------------------------------------

1 | To demonstrate the observation of data using the Grafana stack, download and run the following files.

2 |

3 | 1. Clone the [tutorial environment repository](https://www.github.com/grafana/tutorial-environment).

4 |

5 | ```

6 | git clone https://github.com/grafana/tutorial-environment.git

7 | ```{{exec}}

8 | 1. Change to the directory where you cloned the repository:

9 |

10 | ```

11 | cd tutorial-environment

12 | ```{{exec}}

13 | 1. Run the Grafana stack:

14 |

15 | ```bash

16 | docker-compose up -d

17 | ```{{exec}}

18 |

19 | The first time you run `docker-compose up -d`{{copy}}, Docker downloads all the necessary resources for the tutorial. This might take a few minutes, depending on your internet connection.

20 |

21 | NOTE:

22 |

23 | If you already have Grafana, Loki, or Prometheus running on your system, you might see errors, because the Docker image is trying to use ports that your local installations are already using. If this is the case, stop the services, then run the command again.

24 |

--------------------------------------------------------------------------------

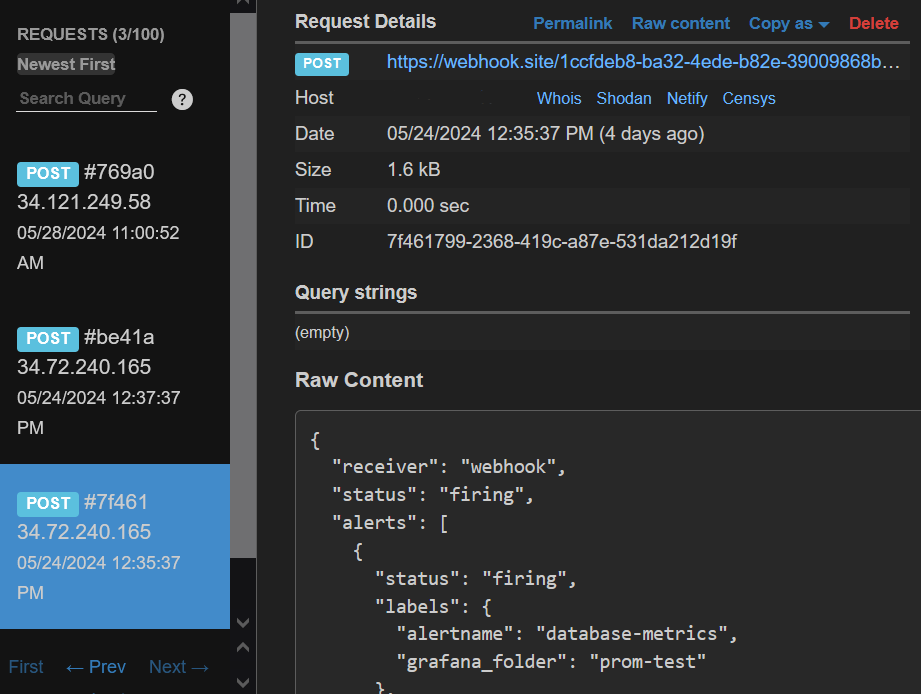

/grafana/alerting-get-started-pt3/step2.md:

--------------------------------------------------------------------------------

1 | # How alert rule grouping works

2 |

3 | Alert notification grouping is configured with **labels** and **timing options**:

4 |

5 | - **Labels** map alert rules to notification policies and define the grouping.

6 | - **Timing options** control when and how often notifications are sent.

7 |

8 |

9 |

10 | ## Types of Labels

11 |

12 | **Reserved labels** (default):

13 |

14 | - Automatically generated by Grafana, e.g., `alertname`{{copy}}, `grafana_folder`{{copy}}.

15 | - Example: `alertname="High CPU usage"`{{copy}}.

16 |

17 | **User-configured labels**:

18 |

19 | - Added manually to the alert rule.

20 | - Example: `severity`{{copy}}, `priority`{{copy}}.

21 |

22 | **Query labels**:

23 |

24 | - Returned by the data source query.

25 | - Example: `region`{{copy}}, `service`{{copy}}, `environment`{{copy}}.

26 |

27 | ## Timing Options

28 |

29 | - **Group wait**: Time before sending the first notification.

30 | - **Group interval**: Time between notifications for a group.

31 | - **Repeat interval**: Time before resending notifications for an unchanged group.

32 |

33 | Alert instances that share the **same values** for the labels a notification policy groups by are grouped together, and timing options determine notification frequency.

34 |

35 | For more details, see:

36 |

37 | - [Grouping Alerts](https://grafana.com/docs/grafana/latest/alerting/fundamentals/notifications/group-alert-notifications/)

38 | - [Alert Labels](https://grafana.com/docs/grafana/latest/alerting/fundamentals/alert-rules/annotation-label/#label-types)

39 |
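As a rough illustration of grouping, the following sketch buckets alert instances by the value of one group-by label (`region`). It prints one summary line per group, standing in for one notification per group. It requires Bash 4 or later for associative arrays. The alert instances are hypothetical, and real Grafana grouping can combine several labels.

```bash
#!/usr/bin/env bash
# Sketch: group alert instances by a single label and emit one line per group.
# Requires Bash 4+ (associative arrays). Alert data is hypothetical.

# Each alert instance: "alertname region".
alerts=(
  "HighCPU us-west"
  "HighMemory us-west"
  "HighLatency us-east"
)

group_by_region() {
  local -A groups=()
  local entry name region key
  for entry in "${alerts[@]}"; do
    read -r name region <<< "$entry"
    # Append the alert name to its region's group, comma-separated.
    groups[$region]+="${groups[$region]:+,}${name}"
  done
  # Sort group keys so the output order is deterministic.
  for key in $(printf '%s\n' "${!groups[@]}" | sort); do
    echo "region=${key}: ${groups[$key]}"
  done
}
```

Three alert instances collapse into two groups: `region=us-east: HighLatency` and `region=us-west: HighCPU,HighMemory`.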

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt3/step3.md:

--------------------------------------------------------------------------------

1 | # A real-world example of alert grouping in action

2 |

3 | ## Scenario: monitoring a distributed application

4 |

5 | You’re monitoring metrics like CPU usage, memory utilization, and network latency across multiple regions. Some of these alert rules include labels such as `region: us-west`{{copy}} and `region: us-east`{{copy}}. If multiple alert rules trigger across these regions, they can result in notification floods.

6 |

7 | ## How to manage grouping

8 |

9 | To group alert rule notifications:

10 |

11 | 1. **Define labels**: Use `region`{{copy}}, `metric`{{copy}}, or `instance`{{copy}} labels to categorize alerts.

12 | 1. **Configure notification policies**:

13 | - Group alerts by the **query label** "region".

14 | - Example:

15 | - Alert notifications for `region: us-west`{{copy}} go to the West Coast team.

16 | - Alert notifications for `region: us-east`{{copy}} go to the East Coast team.

17 | 1. Specify the **timing options** for sending notifications to control their frequency.

18 | - Example:

19 | - **Group interval**: determines how often updates for the same alert group are sent. By default, this interval is set to 5 minutes, but you can make it shorter or longer based on your needs.

20 |
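To see how group wait and group interval interact, consider a simplified timeline for a single group that keeps receiving new alert instances. The first notification waits for the group wait, and each later update goes out at most once per group interval. The sketch uses Grafana's defaults of 30 seconds group wait and 5 minutes group interval. It deliberately ignores the repeat interval and the case where the group stops changing.

```bash
#!/usr/bin/env bash
# Sketch: compute when notifications for one alert group can be sent,
# assuming the group changes before every group interval elapses.
# Simplified model: ignores the repeat interval and resolved alerts.

group_wait=30        # seconds (Grafana default)
group_interval=300   # seconds (Grafana default: 5m)

# notification_times FIRST_ALERT_SECONDS COUNT
notification_times() {
  local first_alert="$1" count="$2" i
  local t=$(( first_alert + group_wait ))   # first notification after the group wait
  for (( i = 0; i < count; i++ )); do
    echo "$t"
    t=$(( t + group_interval ))             # next update one group interval later
  done
}
```

For a group whose first alert fires at second 0, `notification_times 0 3` prints `30`, `330`, and `630`.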

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt4/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Get started with Grafana Alerting - Template your alert notifications",

3 | "description": "Learn how to use templates to create customized and concise notifications.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md"

7 | },

8 | "steps": [

9 | {

10 | "text": "step1.md"

11 | },

12 | {

13 | "text": "step2.md"

14 | },

15 | {

16 | "text": "step3.md"

17 | },

18 | {

19 | "text": "step4.md"

20 | },

21 | {

22 | "text": "step5.md"

23 | },

24 | {

25 | "text": "step6.md"

26 | }

27 | ],

28 | "finish": {

29 | "text": "finish.md"

30 | }

31 | },

32 | "backend": {

33 | "imageid": "ubuntu"

34 | }

35 | }

36 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt4/intro.md:

--------------------------------------------------------------------------------

1 | This tutorial is a continuation of the [Get started with Grafana Alerting - Grouping notifications](http://www.grafana.com/tutorials/alerting-get-started-pt3/) tutorial.

2 |

3 | In this tutorial, you will learn:

4 |

5 | - The two types of templates in Grafana Alerting: label and annotation templates, and notification templates.

6 | - How to configure alert rules with summary and description annotations.

7 | - How to create a notification template that integrates with alert rule annotations.

8 | - How to use a built-in notification template to group and format multiple alert instances.

9 | - How to preview alert notifications by leveraging alert instances in the notification template payload.

10 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt4/step1.md:

--------------------------------------------------------------------------------

1 | To observe data using the Grafana stack, download and run the following files.

2 |

3 | 1. Clone the [tutorial environment repository](https://www.github.com/grafana/tutorial-environment).

4 |

5 | ```

6 | git clone https://github.com/grafana/tutorial-environment.git

7 | ```{{exec}}

8 | 1. Change to the directory where you cloned the repository:

9 |

10 | ```

11 | cd tutorial-environment

12 | ```{{exec}}

13 | 1. Run the Grafana stack:

14 |

15 | ```bash

16 | docker-compose up -d

17 | ```{{exec}}

18 |

19 | The first time you run `docker-compose up -d`{{copy}}, Docker downloads all the necessary resources for the tutorial. This might take a few minutes, depending on your internet connection.
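Grafana may not respond immediately after the containers start. This minimal sketch polls Grafana's `/api/health` endpoint until it reports a healthy database. It assumes that the stack publishes Grafana on `http://localhost:3000`, which is Grafana's default port. Check the repository's compose file for the port it actually uses.

```python
import http.client
import json
import time
import urllib.request


def wait_for_grafana(url="http://localhost:3000/api/health", timeout_s=120, poll_s=2):
    """Poll Grafana's health endpoint until it reports a healthy database or timeout_s passes."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                body = json.loads(resp.read().decode("utf-8"))
                if resp.status == 200 and body.get("database") == "ok":
                    return True
        except (OSError, ValueError, http.client.HTTPException):
            # Connection refused, HTTP error, timeout, or non-JSON body: still starting.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


if __name__ == "__main__":
    print("Grafana ready" if wait_for_grafana() else "Grafana did not become ready in time")
```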

20 |

21 | NOTE:

22 |

23 | If you already have Grafana, Loki, or Prometheus running on your system, you might see errors because the Docker containers try to use ports that your local installations already use. If this is the case, stop those services, then run the command again.

24 |
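You can check for port conflicts before you run `docker-compose up -d`. This sketch assumes the stack uses each tool's default port: Grafana 3000, Loki 3100, and Prometheus 9090. The tutorial's compose file may publish different ports. The sketch only detects that a port is taken. It can't tell which program is using the port.

```python
import socket

# Assumed default ports; the tutorial's compose file may publish different ones.
DEFAULT_PORTS = {"Grafana": 3000, "Loki": 3100, "Prometheus": 9090}


def port_in_use(port, host="127.0.0.1"):
    """Return True if something already accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1)
        return sock.connect_ex((host, port)) == 0


def busy_ports(ports=DEFAULT_PORTS):
    """Return the subset of ports that are already taken."""
    return {name: port for name, port in ports.items() if port_in_use(port)}


if __name__ == "__main__":
    conflicts = busy_ports()
    for name, port in conflicts.items():
        print(f"Port {port} ({name}) is already in use; stop that service first.")
    if not conflicts:
        print("No port conflicts found.")
```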

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt4/step5.md:

--------------------------------------------------------------------------------

1 | # Apply the template to your contact point

2 |

3 | 1. Apply the template to your contact point.

4 | - Navigate to **Alerts & IRM** > **Alerting** > **Contact points**.

5 | - Edit your contact point.

6 | 1. In the **Optional [integration] settings** section (for example, **Optional Email settings**):

7 | - Click **Edit Message**.

8 | - Under **Select notification template**, search for `custom.firing_and_resolved_alerts`{{copy}}.

9 | - Click **Save**.

10 | 1. Save your contact point.

11 |

--------------------------------------------------------------------------------

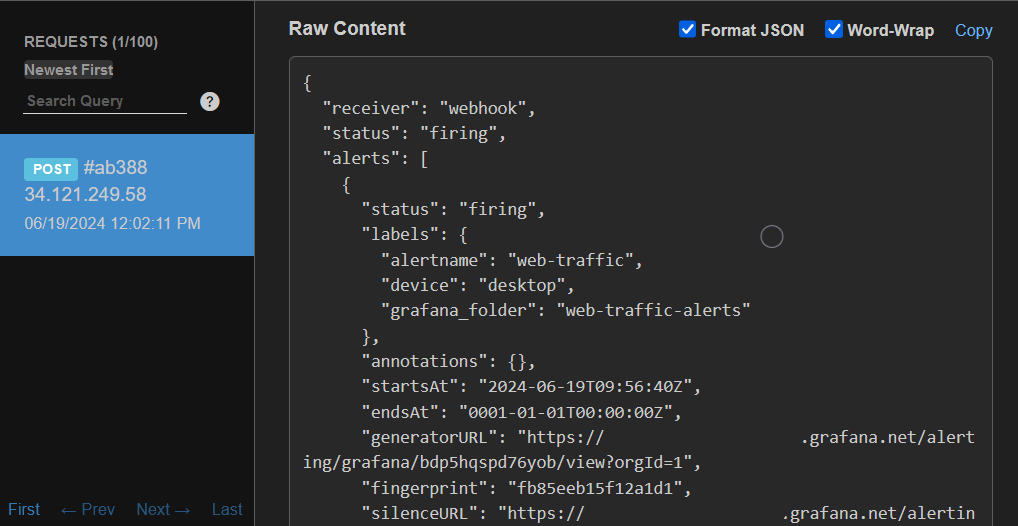

/grafana/alerting-get-started-pt4/step6.md:

--------------------------------------------------------------------------------

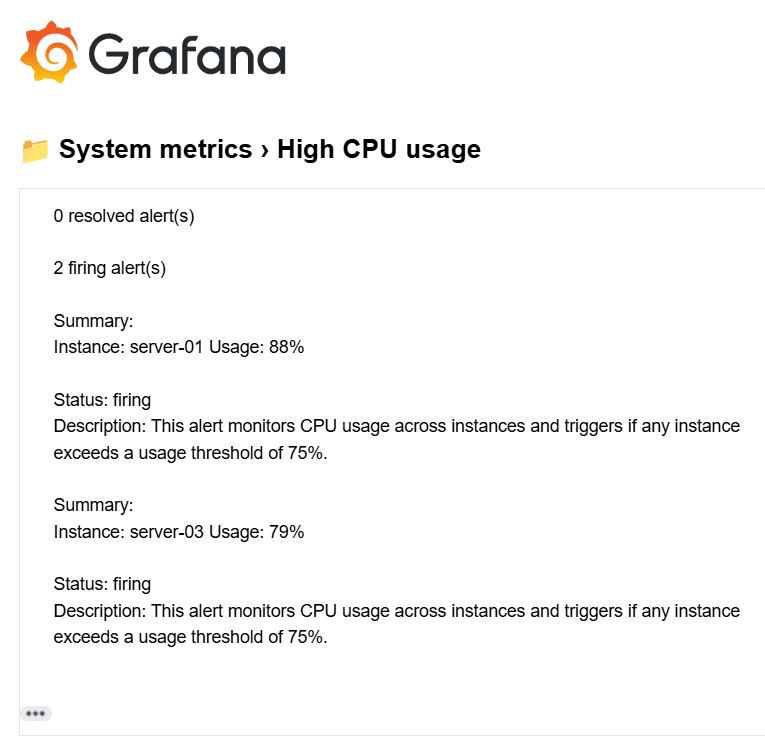

1 | # Receiving notifications

2 |

3 | Now that the template is applied to your contact point, you should receive notifications formatted with it.

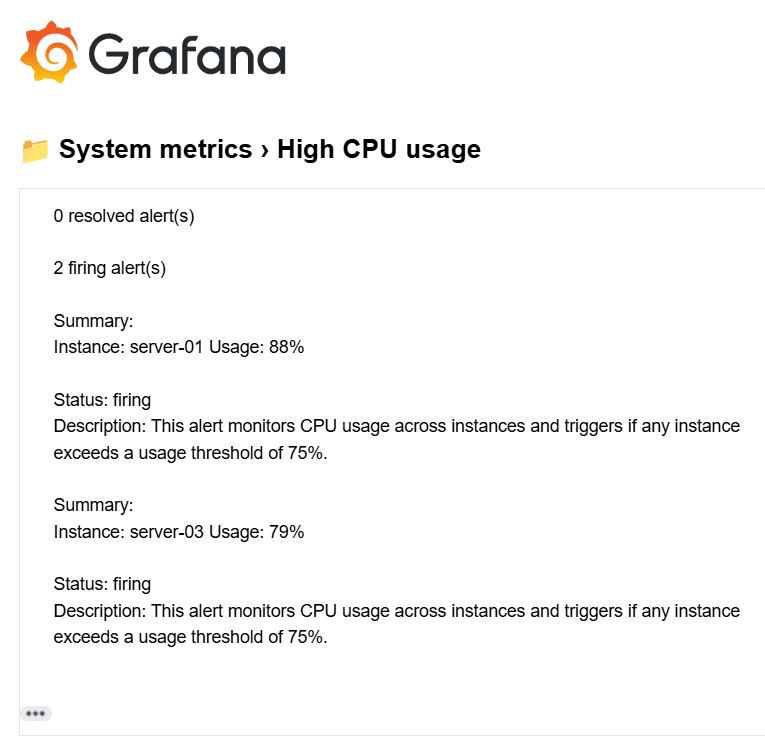

4 |

5 | Note: you might need to pause the alert rule evaluation and resume it to trigger the notification.

6 |

7 |

8 |

9 | In the screen capture, you can see how the notification template groups the alert instances into two sections: **firing alerts** and **resolved alerts**. Each section includes only the key details for each alert, which keeps the message concise and focused. The message also includes the summary and description annotations you created earlier, which show the affected instance and its CPU usage.

10 |
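In Grafana, `custom.firing_and_resolved_alerts` is a Go template. The Python sketch below only mirrors the structure described above. It splits alert instances into a firing section and a resolved section and shows the alert name, the summary, and, when present, the description. The field names, alert names and annotation texts are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AlertInstance:
    status: str  # "firing" or "resolved"
    labels: dict = field(default_factory=dict)
    annotations: dict = field(default_factory=dict)


def render(alerts):
    """Group alert instances into a firing section and a resolved section."""
    lines = []
    for status, title in (("firing", "Firing alerts"), ("resolved", "Resolved alerts")):
        section = [a for a in alerts if a.status == status]
        if not section:
            continue  # omit empty sections to keep the message short
        lines.append(f"{title} ({len(section)}):")
        for a in section:
            # Only key details: alert name plus the summary annotation.
            name = a.labels.get("alertname", "unknown")
            lines.append(f"- {name}: {a.annotations.get('summary', '')}")
            if a.annotations.get("description"):
                lines.append(f"  {a.annotations['description']}")
    return "\n".join(lines)


alerts = [
    AlertInstance("firing", {"alertname": "HighCPU", "instance": "server-1"},
                  {"summary": "High CPU on server-1", "description": "CPU usage is 92%"}),
    AlertInstance("resolved", {"alertname": "HighCPU", "instance": "server-2"},
                  {"summary": "CPU back to normal on server-2"}),
]
print(render(alerts))
```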

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt5/finish.md:

--------------------------------------------------------------------------------

1 | # Conclusion

2 |

3 | By using notification policies, you can route alerts based on query values, directing them to the appropriate teams.

4 |

5 | # Learn more in [Grafana Alerting - Link alerts to visualizations](http://www.grafana.com/tutorials/alerting-get-started-pt6/)

6 |

7 | In [Grafana Alerting - Link alerts to visualizations](http://www.grafana.com/tutorials/alerting-get-started-pt6/) you will create alerts using Prometheus data and link them to your graphs.

8 |

9 | Explore related topics covered in this tutorial:

10 |

11 | - Understand how alert routing works in [Get started with Grafana Alerting - Alert routing](http://www.grafana.com/tutorials/alerting-get-started-pt2/).

12 | - Learn how templating works in [Get started with Grafana Alerting - Templating](http://www.grafana.com/tutorials/alerting-get-started-pt4/).

13 | - More [examples on templating labels](https://grafana.com/docs/grafana/latest/alerting/alerting-rules/templates/examples/).

14 |

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt5/index.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Get started with Grafana Alerting - Route alerts using dynamic labels",

3 | "description": "Learn how to dynamically route alert notifications.",

4 | "details": {

5 | "intro": {

6 | "text": "intro.md"

7 | },

8 | "steps": [

9 | {

10 | "text": "step1.md"

11 | },

12 | {

13 | "text": "step2.md"

14 | },

15 | {

16 | "text": "step3.md"

17 | },

18 | {

19 | "text": "step4.md"

20 | },

21 | {

22 | "text": "step5.md"

23 | },

24 | {

25 | "text": "step6.md"

26 | }

27 | ],

28 | "finish": {

29 | "text": "finish.md"

30 | }

31 | },

32 | "backend": {

33 | "imageid": "ubuntu"

34 | }

35 | }

36 |

--------------------------------------------------------------------------------

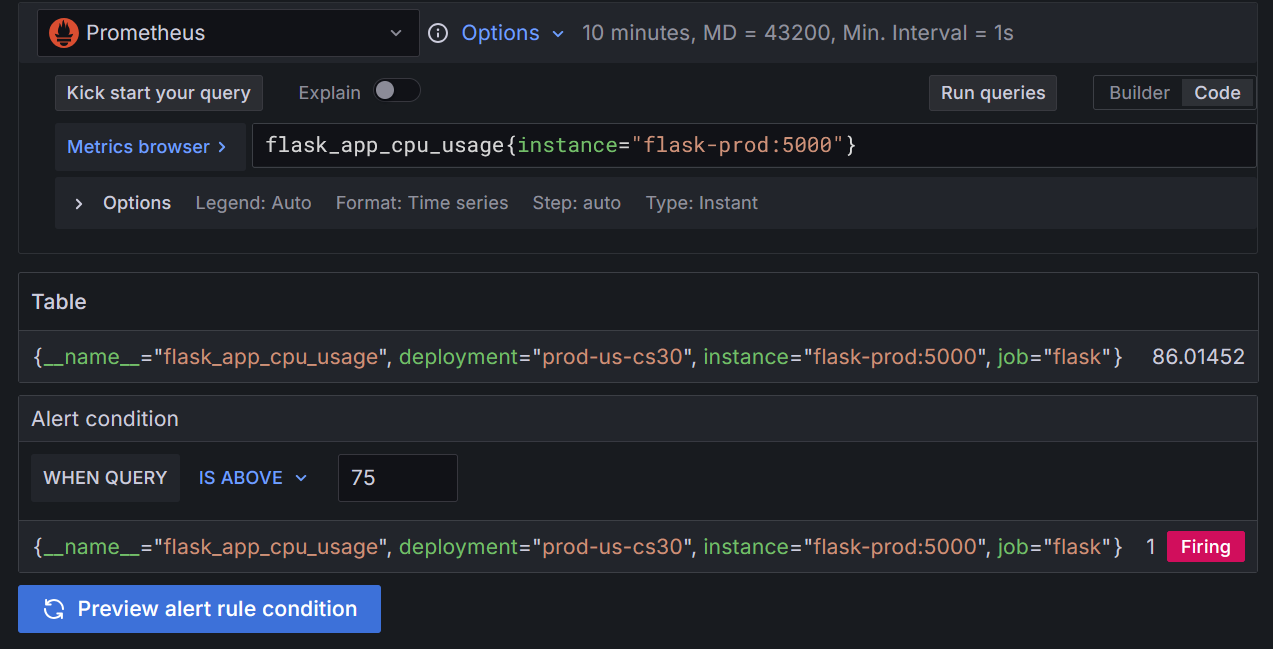

/grafana/alerting-get-started-pt5/intro.md:

--------------------------------------------------------------------------------

1 | The Get started with Grafana Alerting - Dynamic routing tutorial is a continuation of the [Get started with Grafana Alerting - Templating](http://www.grafana.com/tutorials/alerting-get-started-pt4/) tutorial.

2 |

3 | Imagine you are managing a web application or a fleet of servers, tracking critical metrics such as CPU, memory, and disk usage. Monitoring is essential, but well-managed alerts let your team act on issues without being overwhelmed by noise.

4 |

5 | In this tutorial, you will learn how to:

6 |

7 | - Leverage notification policies for **dynamic routing based on query values**: use notification policies to route alerts based on dynamically generated labels, so that critical alerts reach the on-call team and less urgent ones go to a general monitoring channel.

8 |
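The dynamic routing idea can be sketched as two steps. First, the alert rule derives a label from the query value. Second, a notification policy matches that label and picks a contact point. In Grafana, the first step is a label template in the alert rule and the second is the notification policy tree. This Python version only mirrors the flow. The thresholds, the `severity` label values and the contact point names are hypothetical.

```python
# Hypothetical thresholds and contact point names, for illustration only.
CRITICAL_AT = 90.0
WARNING_AT = 75.0


def severity_for(value):
    """Derive a label value from the query result, as a templated label would."""
    if value >= CRITICAL_AT:
        return "critical"
    if value >= WARNING_AT:
        return "warning"
    return "info"


# Child policies checked top-down; the first match wins (no "continue matching").
POLICIES = [({"severity": "critical"}, "on-call-team")]
DEFAULT_CONTACT_POINT = "general-monitoring"


def contact_point_for(labels):
    """Return the contact point of the first policy whose matchers all match."""
    for matchers, contact_point in POLICIES:
        if all(labels.get(k) == v for k, v in matchers.items()):
            return contact_point
    return DEFAULT_CONTACT_POINT  # fall back to the default policy


for cpu in (95.2, 80.0, 40.0):
    labels = {"instance": "server-1", "severity": severity_for(cpu)}
    print(cpu, labels["severity"], contact_point_for(labels))
# 95.2 critical on-call-team
# 80.0 warning general-monitoring
# 40.0 info general-monitoring
```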

--------------------------------------------------------------------------------

/grafana/alerting-get-started-pt5/step1.md:

--------------------------------------------------------------------------------

1 | # Set up the Grafana stack

2 |

3 | To observe data using the Grafana stack, download and run the following files.

4 |

5 | 1. Clone the [tutorial environment repository](https://github.com/tonypowa/grafana-prometheus-alerting-demo.git).

6 |