├── .gitignore
├── LICENSE.md
├── README.md
├── animate.py
├── assets
│   ├── driving.mp4
│   ├── src.png
│   └── visual_vox1.png
├── augmentation.py
├── config
│   └── vox-adv-256.yaml
├── crop-video.py
├── data
│   ├── celeV_cross_id_evaluation.csv
│   ├── utils.py
│   ├── vox256.csv
│   ├── vox_cross_id_animate.csv
│   ├── vox_cross_id_evaluation.csv
│   ├── vox_cross_id_evaluation_best_frame.csv
│   └── vox_evaluation.csv
├── demo.py
├── demo_multi.py
├── depth
│   ├── __init__.py
│   ├── depth_decoder.py
│   ├── layers.py
│   ├── models
│   │   └── opt.json
│   ├── pose_cnn.py
│   ├── pose_decoder.py
│   └── resnet_encoder.py
├── evaluation_dataset.py
├── face-alignment
│   ├── .gitattributes
│   ├── .gitignore
│   ├── Dockerfile
│   ├── LICENSE
│   ├── README.md
│   ├── conda
│   │   └── meta.yaml
│   ├── docs
│   │   └── images
│   │       ├── 2dlandmarks.png
│   │       └── face-alignment-adrian.gif
│   ├── examples
│   │   ├── demo.ipynb
│   │   └── detect_landmarks_in_image.py
│   ├── face_alignment
│   │   ├── __init__.py
│   │   ├── api.py
│   │   ├── detection
│   │   │   ├── __init__.py
│   │   │   ├── blazeface
│   │   │   │   ├── __init__.py
│   │   │   │   ├── blazeface_detector.py
│   │   │   │   ├── detect.py
│   │   │   │   ├── net_blazeface.py
│   │   │   │   └── utils.py
│   │   │   ├── core.py
│   │   │   ├── dlib
│   │   │   │   ├── __init__.py
│   │   │   │   └── dlib_detector.py
│   │   │   ├── folder
│   │   │   │   ├── __init__.py
│   │   │   │   └── folder_detector.py
│   │   │   └── sfd
│   │   │       ├── __init__.py
│   │   │       ├── bbox.py
│   │   │       ├── detect.py
│   │   │       ├── net_s3fd.py
│   │   │       └── sfd_detector.py
│   │   └── utils.py
│   ├── requirements.txt
│   ├── setup.cfg
│   ├── setup.py
│   ├── test
│   │   ├── facealignment_test.py
│   │   ├── smoke_test.py
│   │   └── test_utils.py
│   └── tox.ini
├── frames_dataset.py
├── kill_port.py
├── logger.py
├── modules
│   ├── AdaIN.py
│   ├── dense_motion.py
│   ├── discriminator.py
│   ├── dynamic_conv.py
│   ├── generator.py
│   ├── keypoint_detector.py
│   ├── model.py
│   ├── model_dataparallel.py
│   └── util.py
├── reconstruction.py
├── requirements.txt
├── run.py
├── run_dataparallel.py
├── sync_batchnorm
│   ├── __init__.py
│   ├── batchnorm.py
│   ├── comm.py
│   ├── replicate.py
│   └── unittest.py
├── train.py
├── train_dataparallel.py
└── utils.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__

2 | log

3 | *.pth*

4 | readmesam*

5 | *.jpg

6 | *.mp4

7 | run.sh

8 | source.png

9 | tools

10 | *.pt

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 | ## :book: Depth-Aware Generative Adversarial Network for Talking Head Video Generation (CVPR 2022)

3 |

4 | :fire: If DaGAN is helpful in your photos/projects, please help to :star: it or recommend it to your friends. Thanks:fire:

5 |

6 |

7 | :fire: Seeking collaboration and internship opportunities. :fire:

8 |

9 |

10 | > [[Paper](https://arxiv.org/abs/2203.06605)] [[Project Page](https://harlanhong.github.io/publications/dagan.html)] [[Demo](https://huggingface.co/spaces/HarlanHong/DaGAN)] [[Poster Video](https://www.youtube.com/watch?v=nahsJNjWzGo&t=1s)]

11 |

12 |

13 | > [Fa-Ting Hong](https://harlanhong.github.io), Longhao Zhang, Li Shen, [Dan Xu](https://www.danxurgb.net)

14 | > The Hong Kong University of Science and Technology

15 | > Alibaba Cloud

16 |

17 | ### Cartoon Sample

18 | https://user-images.githubusercontent.com/19970321/162151632-0195292f-30b8-4122-8afd-9b1698f1e4fe.mp4

19 |

20 | ### Human Sample

21 | https://user-images.githubusercontent.com/19970321/162151327-f2930231-42e3-40f2-bfca-a88529599f0f.mp4

22 |

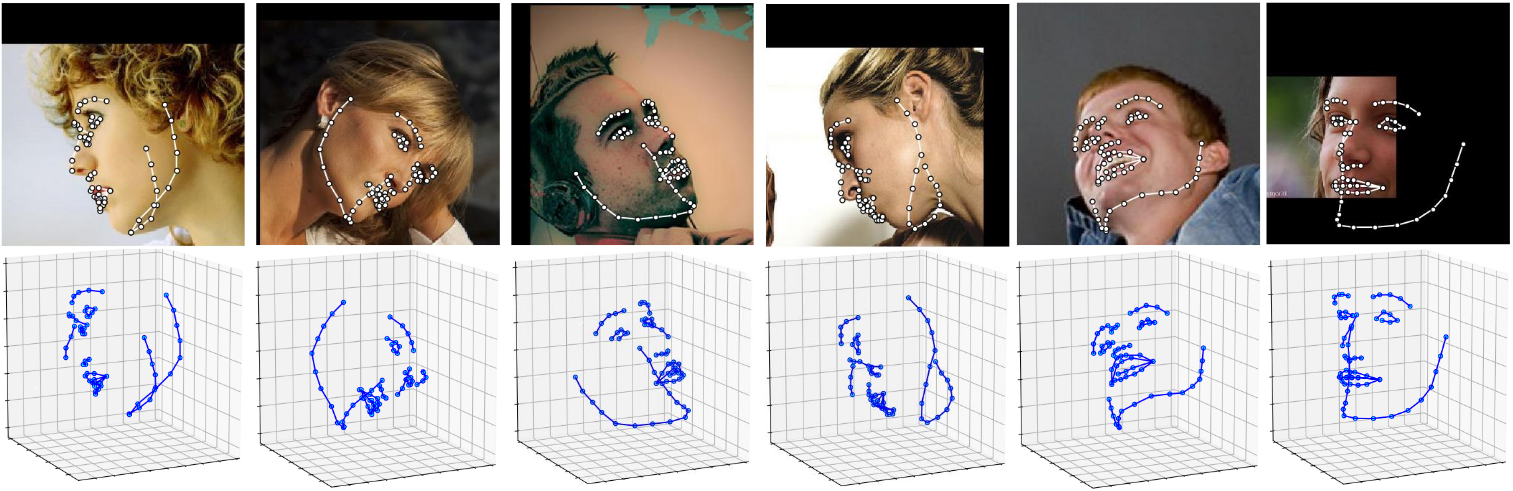

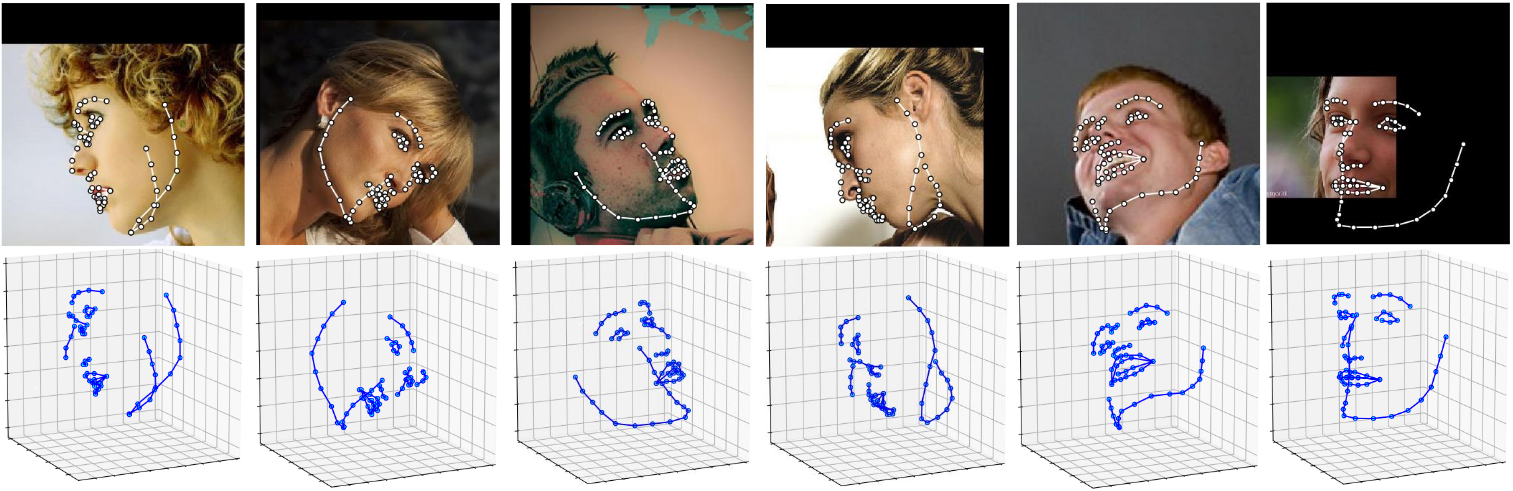

23 | ### Voxceleb1 Dataset

24 |

25 |

26 |

27 |

28 | :triangular_flag_on_post: **Updates**

29 | - :fire::fire::white_check_mark: July 20, 2023: Our new talking head work **[MCNet](https://harlanhong.github.io/publications/mcnet.html)** was accepted by ICCV 2023. MCNet does not require training a facial depth network, which makes it more convenient for users to test and fine-tune.

30 | - :fire::fire::white_check_mark: July 26, 2022: The normal DataParallel training scripts were released, since some researchers informed me that they ran into **DistributedDataParallel** problems. Please try to train your own model using this [command](#dataparallel). Also, we removed the line `with torch.autograd.set_detect_anomaly(True)` to speed up training.

31 | - :fire::fire::white_check_mark: June 26, 2022: The repo of our face depth network is released. Please refer to [Face-Depth-Network](https://github.com/harlanhong/Face-Depth-Network) and feel free to email me if you run into any problems.

32 | - :fire::fire::white_check_mark: June 21, 2022: [Digression] I am looking for research intern/research assistant opportunities in Europe next year. Please contact me if you think I'm qualified for your position.

33 | - :fire::fire::white_check_mark: May 19, 2022: The depth face model (50 layers) trained on Voxceleb2 is released! (The corresponding DaGAN checkpoint will be released soon.) Click the [LINK](https://hkustconnect-my.sharepoint.com/:f:/g/personal/fhongac_connect_ust_hk/EkxzfH7zbGJNr-WVmPU6fcABWAMq_WJoExAl4SttKK6hBQ?e=fbtGlX)

34 |

35 | - :fire::fire::white_check_mark: April 25, 2022: Integrated into Huggingface Spaces 🤗 using Gradio. Try out the web demo: [HarlanHong/DaGAN](https://huggingface.co/spaces/HarlanHong/DaGAN) (GPU version will come soon!)

36 | - :fire::fire::white_check_mark: Add **[SPADE model](https://hkustconnect-my.sharepoint.com/:f:/g/personal/fhongac_connect_ust_hk/EjfeXuzwo3JMn7s0oOPN_q0B81P5Wgu_kbYJAh7uSAKS2w?e=XNZl3K)**, which produces **more natural** results.

37 |

38 |

39 | ## :wrench: Dependencies and Installation

40 |

41 | - Python >= 3.7 (we recommend [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

42 | - [PyTorch >= 1.7](https://pytorch.org/)

43 | - Optional: NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

44 | - Optional: Linux

45 |

46 | ### Installation

47 | We now provide a *clean* version of DaGAN, which does not require customized CUDA extensions.

48 |

49 | 1. Clone repo

50 |

51 | ```bash

52 | git clone https://github.com/harlanhong/CVPR2022-DaGAN.git

53 | cd CVPR2022-DaGAN

54 | ```

55 |

56 | 2. Install dependent packages

57 |

58 | ```bash

59 | pip install -r requirements.txt

60 |

61 | ## Install the Face Alignment lib

62 | cd face-alignment

63 | pip install -r requirements.txt

64 | python setup.py install

65 | ```

66 | ## :zap: Quick Inference

67 |

68 | We use the paper version as an example. More models can be found [here](https://hkustconnect-my.sharepoint.com/:f:/g/personal/fhongac_connect_ust_hk/EjfeXuzwo3JMn7s0oOPN_q0B81P5Wgu_kbYJAh7uSAKS2w?e=KaQcPk).

69 |

70 | ### YAML configs

71 | See ```config/vox-adv-256.yaml``` for a description of each parameter.

72 |

73 | ### Pre-trained checkpoint

74 | The pre-trained checkpoint of the face depth network and our DaGAN checkpoints can be found at the following link: [OneDrive](https://hkustconnect-my.sharepoint.com/:f:/g/personal/fhongac_connect_ust_hk/EjfeXuzwo3JMn7s0oOPN_q0B81P5Wgu_kbYJAh7uSAKS2w?e=KaQcPk).

75 |

76 | **Inference!**

77 | To run a demo, download a checkpoint and run the following command:

78 |

79 | ```bash

80 | CUDA_VISIBLE_DEVICES=0 python demo.py --config config/vox-adv-256.yaml --driving_video path/to/driving --source_image path/to/source --checkpoint path/to/checkpoint --relative --adapt_scale --kp_num 15 --generator DepthAwareGenerator

81 | ```

82 | The result will be stored in ```result.mp4```. The driving videos and source images should be cropped before they can be used in our method. To obtain some semi-automatic crop suggestions, you can use ```python crop-video.py --inp some_youtube_video.mp4```. It will generate ffmpeg commands for the crops.

83 |

84 |

85 |

86 |

87 | ## :computer: Training

88 |

89 |

90 | ### Datasets

91 |

92 | 1) **VoxCeleb**. Please follow the instructions at https://github.com/AliaksandrSiarohin/video-preprocessing.

93 |

94 | ### Train on VoxCeleb

95 | To train a model on a specific dataset, run:

96 | ```

97 | CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --master_addr="0.0.0.0" --master_port=12348 run.py --config config/vox-adv-256.yaml --name DaGAN --rgbd --batchsize 12 --kp_num 15 --generator DepthAwareGenerator

98 | ```

99 | Or

100 |

101 | ```

102 | CUDA_VISIBLE_DEVICES=0,1,2,3 python run_dataparallel.py --config config/vox-adv-256.yaml --device_ids 0,1,2,3 --name DaGAN_voxceleb2_depth --rgbd --batchsize 48 --kp_num 15 --generator DepthAwareGenerator

103 | ```

104 |

105 |

106 |

107 |

108 | The code will create a folder in the log directory (each run will create a new name-specific directory).

109 | Checkpoints will be saved to this folder.

110 | To check the loss values during training see ```log.txt```.

111 | By default, the batch size is tuned to run on 8 GeForce RTX 3090 GPUs (the best performance is reached after about 150 epochs). You can change the batch size in the train_params section of the ```.yaml``` file.

112 |

113 |

114 | Also, you can watch the training loss by running the following command:

115 | ```bash

116 | tensorboard --logdir log/DaGAN/log

117 | ```

118 | If you kill the process in the middle of training for any reason, a zombie process may remain. You can kill it using our provided tool:

119 | ```bash

120 | python kill_port.py PORT

121 | ```

122 |

123 | ### Training on your own dataset

124 | 1) Resize all the videos to the same size, e.g. 256x256. The videos can be in '.gif' or '.mp4' format, or a folder of images.

125 | We recommend the latter: for each video, make a separate folder with all the frames in '.png' format. This format is lossless and has better I/O performance.

126 |

127 | 2) Create a folder ```data/dataset_name``` with 2 subfolders ```train``` and ```test```, put training videos in the ```train``` and testing in the ```test```.

128 |

129 | 3) Create a config ```config/dataset_name.yaml```; in dataset_params, set the root directory with ```root_dir: data/dataset_name```. Also adjust the number of epochs in train_params.
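
The three steps above can be sketched as a small script. This is a minimal sketch and not part of the repository: `make_dataset_skeleton` and `dataset_params_snippet` are hypothetical helpers, `dataset_name` is a placeholder, and only the `root_dir` key named in step 3 is produced. The remaining config sections would presumably be copied from ```config/vox-adv-256.yaml```.

```python
import tempfile
from pathlib import Path


def make_dataset_skeleton(project_root, dataset_name):
    """Create data/<dataset_name>/{train,test} and return the dataset root."""
    dataset_root = Path(project_root) / "data" / dataset_name
    for split in ("train", "test"):
        (dataset_root / split).mkdir(parents=True, exist_ok=True)
    return dataset_root


def dataset_params_snippet(dataset_name):
    """Return the YAML lines that point dataset_params at the new dataset."""
    return "dataset_params:\n  root_dir: data/{}\n".format(dataset_name)


# Hypothetical usage in a throwaway directory (assumption: not a real checkout).
project_root = tempfile.mkdtemp()
root = make_dataset_skeleton(project_root, "dataset_name")
split_names = sorted(p.name for p in root.iterdir())
snippet = dataset_params_snippet("dataset_name")
print(split_names)
print(snippet)
```

Each video would then go into ```train``` or ```test``` as its own folder of '.png' frames, as recommended in step 1.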

130 |

131 |

132 |

133 | ## :scroll: Acknowledgement

134 |

135 | Our DaGAN implementation is inspired by [FOMM](https://github.com/AliaksandrSiarohin/first-order-model). We appreciate the authors of [FOMM](https://github.com/AliaksandrSiarohin/first-order-model) for making their code available to the public.

136 |

137 | ## :scroll: BibTeX

138 |

139 | ```

140 | @inproceedings{hong2022depth,

141 | title={Depth-Aware Generative Adversarial Network for Talking Head Video Generation},

142 | author={Hong, Fa-Ting and Zhang, Longhao and Shen, Li and Xu, Dan},

143 | booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

144 | year={2022}

145 | }

146 |

147 | @article{hong2023dagan,

148 | title={DaGAN++: Depth-Aware Generative Adversarial Network for Talking Head Video Generation},

149 | author={Hong, Fa-Ting and Shen, Li and Xu, Dan},

150 | journal={IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},

151 | year={2023}

152 | }

153 | ```

154 |

155 | ### :e-mail: Contact

156 |

157 | If you have any questions or collaboration needs (for research or commercial purposes), please email `fhongac@cse.ust.hk`.

158 |

--------------------------------------------------------------------------------

/animate.py:

--------------------------------------------------------------------------------

1 | import os

2 | from tqdm import tqdm

3 |

4 | import torch

5 | from torch.utils.data import DataLoader

6 |

7 | from frames_dataset import PairedDataset

8 | from logger import Logger, Visualizer

9 | import imageio

10 | from scipy.spatial import ConvexHull

11 | import numpy as np

12 | import depth

13 | from sync_batchnorm import DataParallelWithCallback

14 |

15 |

16 | def normalize_kp(kp_source, kp_driving, kp_driving_initial, adapt_movement_scale=False,

17 | use_relative_movement=False, use_relative_jacobian=False):

18 | if adapt_movement_scale:

19 | source_area = ConvexHull(kp_source['value'][0].data.cpu().numpy()).volume

20 | driving_area = ConvexHull(kp_driving_initial['value'][0].data.cpu().numpy()).volume

21 | adapt_movement_scale = np.sqrt(source_area) / np.sqrt(driving_area)

22 | else:

23 | adapt_movement_scale = 1

24 |

25 | kp_new = {k: v for k, v in kp_driving.items()}

26 |

27 | if use_relative_movement:

28 | kp_value_diff = (kp_driving['value'] - kp_driving_initial['value'])

29 | kp_value_diff *= adapt_movement_scale

30 | kp_new['value'] = kp_value_diff + kp_source['value']

31 |

32 | if use_relative_jacobian:

33 | jacobian_diff = torch.matmul(kp_driving['jacobian'], torch.inverse(kp_driving_initial['jacobian']))

34 | kp_new['jacobian'] = torch.matmul(jacobian_diff, kp_source['jacobian'])

35 | return kp_new

36 |

37 |

38 | def animate(config, generator, kp_detector, checkpoint, log_dir, dataset,opt):

39 | log_dir = os.path.join(log_dir, 'animation')

40 | png_dir = os.path.join(log_dir, 'png')

41 | animate_params = config['animate_params']

42 |

43 | dataset = PairedDataset(initial_dataset=dataset, number_of_pairs=animate_params['num_pairs'])

44 | dataloader = DataLoader(dataset, batch_size=1, shuffle=False, num_workers=1)

45 |

46 | if checkpoint is not None:

47 | Logger.load_cpk(checkpoint, generator=generator, kp_detector=kp_detector)

48 | else:

49 | raise AttributeError("Checkpoint should be specified for mode='animate'.")

50 |

51 | if not os.path.exists(log_dir):

52 | os.makedirs(log_dir)

53 |

54 | if not os.path.exists(png_dir):

55 | os.makedirs(png_dir)

56 |

57 |

58 | depth_encoder = depth.ResnetEncoder(18, False).cuda()

59 | depth_decoder = depth.DepthDecoder(num_ch_enc=depth_encoder.num_ch_enc, scales=range(4)).cuda()

60 | loaded_dict_enc = torch.load('depth/models/weights_19/encoder.pth')

61 | loaded_dict_dec = torch.load('depth/models/weights_19/depth.pth')

62 | filtered_dict_enc = {k: v for k, v in loaded_dict_enc.items() if k in depth_encoder.state_dict()}

63 | depth_encoder.load_state_dict(filtered_dict_enc)

64 | depth_decoder.load_state_dict(loaded_dict_dec)

65 | depth_decoder.eval()

66 | depth_encoder.eval()

67 | generator.eval()

68 | kp_detector.eval()

69 |

70 | for it, x in tqdm(enumerate(dataloader)):

71 | with torch.no_grad():

72 | predictions = []

73 | visualizations = []

74 |

75 | driving_video = x['driving_video'].cuda()

76 | source_frame = x['source_video'][:, :, 0, :, :].cuda()

77 |

78 | outputs = depth_decoder(depth_encoder(source_frame))

79 | depth_source = outputs[("disp", 0)]

80 | outputs = depth_decoder(depth_encoder(driving_video[:, :, 0]))

81 | depth_driving = outputs[("disp", 0)]

82 |

83 | source = torch.cat((source_frame,depth_source),1)

84 | driving = torch.cat((driving_video[:, :, 0],depth_driving),1)

85 |

86 | kp_source = kp_detector(source)

87 | kp_driving_initial = kp_detector(driving)

88 |

89 | for frame_idx in range(driving_video.shape[2]):

90 | driving_frame = driving_video[:, :, frame_idx].cuda()

91 | outputs = depth_decoder(depth_encoder(driving_frame))

92 | depth_map = outputs[("disp", 0)]

93 | driving = torch.cat((driving_frame,depth_map),1)

94 | kp_driving = kp_detector(driving)

95 |

96 | kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,

97 | kp_driving_initial=kp_driving_initial, **animate_params['normalization_params'])

98 | out = generator(source_frame, kp_source=kp_source, kp_driving=kp_norm)

99 |

100 | out['kp_driving'] = kp_driving

101 | out['kp_source'] = kp_source

102 | out['kp_norm'] = kp_norm

103 |

104 | del out['sparse_deformed']

105 |

106 | predictions.append(np.transpose(out['prediction'].data.cpu().numpy(), [0, 2, 3, 1])[0])

107 |

108 | visualization = Visualizer(**config['visualizer_params']).visualize(source=source_frame,

109 | driving=driving_frame, out=out)

110 | visualization = visualization

111 | visualizations.append(visualization)

112 |

113 | predictions = np.concatenate(predictions, axis=1)

114 | result_name = "-".join([x['driving_name'][0], x['source_name'][0]])

115 | imageio.imsave(os.path.join(png_dir, result_name + '.png'), (255 * predictions).astype(np.uint8))

116 |

117 | image_name = result_name + animate_params['format']

118 | imageio.mimsave(os.path.join(log_dir, image_name), visualizations)

119 |

120 |
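
The keypoint normalization in `normalize_kp` above can be illustrated without PyTorch or SciPy. The following is a minimal sketch under stated assumptions: keypoints are plain `(x, y)` tuples, the jacobian branch is omitted, and `convex_hull_area` and `relative_keypoints` are hypothetical stand-ins (the original uses `scipy.spatial.ConvexHull(...).volume`, which is the hull area for 2-D points).

```python
import math


def convex_hull_area(points):
    """Area of the 2-D convex hull of `points` (monotone chain + shoelace)."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return 0.0

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = lower[:-1] + upper[:-1]
    twice_area = sum(
        x0 * y1 - x1 * y0
        for (x0, y0), (x1, y1) in zip(hull, hull[1:] + hull[:1])
    )
    return abs(twice_area) / 2.0


def relative_keypoints(kp_source, kp_driving, kp_driving_initial, adapt_scale=False):
    """Move source keypoints by the driving displacement, optionally rescaled."""
    scale = 1.0
    if adapt_scale:
        scale = math.sqrt(convex_hull_area(kp_source)) / math.sqrt(
            convex_hull_area(kp_driving_initial))
    return [
        (sx + scale * (dx - ix), sy + scale * (dy - iy))
        for (sx, sy), (dx, dy), (ix, iy) in zip(kp_source, kp_driving, kp_driving_initial)
    ]


source = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]   # hull area 4
initial = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]  # hull area 1
driving = [(x + 0.5, y) for x, y in initial]                # shifted right by 0.5

moved = relative_keypoints(source, driving, initial, adapt_scale=True)
print(moved)
```

Because the source hull is four times larger than the initial driving hull, the scale is 2, so a driving shift of 0.5 becomes a shift of 1.0 in the source keypoints.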

--------------------------------------------------------------------------------

/assets/driving.mp4:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/harlanhong/CVPR2022-DaGAN/052ad359a019492893c098e8717dc993cdf846ed/assets/driving.mp4

--------------------------------------------------------------------------------

/assets/src.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/harlanhong/CVPR2022-DaGAN/052ad359a019492893c098e8717dc993cdf846ed/assets/src.png

--------------------------------------------------------------------------------

/assets/visual_vox1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/harlanhong/CVPR2022-DaGAN/052ad359a019492893c098e8717dc993cdf846ed/assets/visual_vox1.png

--------------------------------------------------------------------------------

/augmentation.py:

--------------------------------------------------------------------------------

1 | """

2 | Code from https://github.com/hassony2/torch_videovision

3 | """

4 |

5 | import numbers

6 |

7 | import random

8 | import numpy as np

9 | import PIL

10 |

11 | from skimage.transform import resize, rotate

12 | # from skimage.util import pad

13 | # import numpy.pad as pad

14 | import torchvision

15 |

16 | import warnings

17 |

18 | from skimage import img_as_ubyte, img_as_float

19 |

20 |

21 | def crop_clip(clip, min_h, min_w, h, w):

22 | if isinstance(clip[0], np.ndarray):

23 | cropped = [img[min_h:min_h + h, min_w:min_w + w, :] for img in clip]

24 |

25 | elif isinstance(clip[0], PIL.Image.Image):

26 | cropped = [

27 | img.crop((min_w, min_h, min_w + w, min_h + h)) for img in clip

28 | ]

29 | else:

30 | raise TypeError('Expected numpy.ndarray or PIL.Image ' +

31 | 'but got list of {0}'.format(type(clip[0])))

32 | return cropped

33 |

34 |

35 | def pad_clip(clip, h, w):

36 | im_h, im_w = clip[0].shape[:2]

37 | pad_h = (0, 0) if h < im_h else ((h - im_h) // 2, (h - im_h + 1) // 2)

38 | pad_w = (0, 0) if w < im_w else ((w - im_w) // 2, (w - im_w + 1) // 2)

39 |

40 | return np.pad(clip, ((0, 0), pad_h, pad_w, (0, 0)), mode='edge')

41 |

42 |

43 | def resize_clip(clip, size, interpolation='bilinear'):

44 | if isinstance(clip[0], np.ndarray):

45 | if isinstance(size, numbers.Number):

46 | im_h, im_w, im_c = clip[0].shape

47 | # Min spatial dim already matches minimal size

48 | if (im_w <= im_h and im_w == size) or (im_h <= im_w

49 | and im_h == size):

50 | return clip

51 | new_h, new_w = get_resize_sizes(im_h, im_w, size)

52 | size = (new_w, new_h)

53 | else:

54 | size = size[1], size[0]

55 |

56 | scaled = [

57 | resize(img, size, order=1 if interpolation == 'bilinear' else 0, preserve_range=True,

58 | mode='constant', anti_aliasing=True) for img in clip

59 | ]

60 | elif isinstance(clip[0], PIL.Image.Image):

61 | if isinstance(size, numbers.Number):

62 | im_w, im_h = clip[0].size

63 | # Min spatial dim already matches minimal size

64 | if (im_w <= im_h and im_w == size) or (im_h <= im_w

65 | and im_h == size):

66 | return clip

67 | new_h, new_w = get_resize_sizes(im_h, im_w, size)

68 | size = (new_w, new_h)

69 | else:

70 | size = size[1], size[0]

71 | if interpolation == 'bilinear':

72 | pil_inter = PIL.Image.BILINEAR

73 | else:

74 | pil_inter = PIL.Image.NEAREST

75 | scaled = [img.resize(size, pil_inter) for img in clip]

76 | else:

77 | raise TypeError('Expected numpy.ndarray or PIL.Image ' +

78 | 'but got list of {0}'.format(type(clip[0])))

79 | return scaled

80 |

81 |

82 | def get_resize_sizes(im_h, im_w, size):

83 | if im_w < im_h:

84 | ow = size

85 | oh = int(size * im_h / im_w)

86 | else:

87 | oh = size

88 | ow = int(size * im_w / im_h)

89 | return oh, ow

90 |

91 |

92 | class RandomFlip(object):

93 | def __init__(self, time_flip=False, horizontal_flip=False):

94 | self.time_flip = time_flip

95 | self.horizontal_flip = horizontal_flip

96 |

97 | def __call__(self, clip):

98 | if random.random() < 0.5 and self.time_flip:

99 | return clip[::-1]

100 | if random.random() < 0.5 and self.horizontal_flip:

101 | return [np.fliplr(img) for img in clip]

102 |

103 | return clip

104 |

105 |

106 | class RandomResize(object):

107 | """Resizes a list of (H x W x C) numpy.ndarray to the final size

108 | The larger the original image is, the more time it takes to

109 | interpolate

110 | Args:

111 | interpolation (str): Can be one of 'nearest', 'bilinear'

112 | defaults to nearest

113 | ratio (tuple): (min, max) range of the random scaling factor

114 | """

115 |

116 | def __init__(self, ratio=(3. / 4., 4. / 3.), interpolation='nearest'):

117 | self.ratio = ratio

118 | self.interpolation = interpolation

119 |

120 | def __call__(self, clip):

121 | scaling_factor = random.uniform(self.ratio[0], self.ratio[1])

122 |

123 | if isinstance(clip[0], np.ndarray):

124 | im_h, im_w, im_c = clip[0].shape

125 | elif isinstance(clip[0], PIL.Image.Image):

126 | im_w, im_h = clip[0].size

127 |

128 | new_w = int(im_w * scaling_factor)

129 | new_h = int(im_h * scaling_factor)

130 | new_size = (new_w, new_h)

131 | resized = resize_clip(

132 | clip, new_size, interpolation=self.interpolation)

133 |

134 | return resized

135 |

136 |

137 | class RandomCrop(object):

138 | """Extract random crop at the same location for a list of videos

139 | Args:

140 | size (sequence or int): Desired output size for the

141 | crop in format (h, w)

142 | """

143 |

144 | def __init__(self, size):

145 | if isinstance(size, numbers.Number):

146 | size = (size, size)

147 |

148 | self.size = size

149 |

150 | def __call__(self, clip):

151 | """

152 | Args:

153 | img (PIL.Image or numpy.ndarray): List of videos to be cropped

154 | in format (h, w, c) in numpy.ndarray

155 | Returns:

156 | PIL.Image or numpy.ndarray: Cropped list of videos

157 | """

158 | h, w = self.size

159 | if isinstance(clip[0], np.ndarray):

160 | im_h, im_w, im_c = clip[0].shape

161 | elif isinstance(clip[0], PIL.Image.Image):

162 | im_w, im_h = clip[0].size

163 | else:

164 | raise TypeError('Expected numpy.ndarray or PIL.Image ' +

165 | 'but got list of {0}'.format(type(clip[0])))

166 |

167 | clip = pad_clip(clip, h, w)

168 | im_h, im_w = clip.shape[1:3]

169 | x1 = 0 if w == im_w else random.randint(0, im_w - w)

170 | y1 = 0 if h == im_h else random.randint(0, im_h - h)

171 | cropped = crop_clip(clip, y1, x1, h, w)

172 |

173 | return cropped

174 |

175 |

176 | class RandomRotation(object):

177 | """Rotate entire clip randomly by a random angle within

178 | given bounds

179 | Args:

180 | degrees (sequence or int): Range of degrees to select from

181 | If degrees is a number instead of sequence like (min, max),

182 | the range of degrees, will be (-degrees, +degrees).

183 | """

184 |

185 | def __init__(self, degrees):

186 | if isinstance(degrees, numbers.Number):

187 | if degrees < 0:

188 | raise ValueError('If degrees is a single number, '

189 | 'must be positive')

190 | degrees = (-degrees, degrees)

191 | else:

192 | if len(degrees) != 2:

193 | raise ValueError('If degrees is a sequence, '

194 | 'it must be of len 2.')

195 |

196 | self.degrees = degrees

197 |

198 | def __call__(self, clip):

199 | """

200 | Args:

201 | clip (PIL.Image or numpy.ndarray): List of images to be rotated

202 | in format (h, w, c) in numpy.ndarray

203 | Returns:

204 | PIL.Image or numpy.ndarray: Rotated list of images

205 | """

206 | angle = random.uniform(self.degrees[0], self.degrees[1])

207 | if isinstance(clip[0], np.ndarray):

208 | rotated = [rotate(image=img, angle=angle, preserve_range=True) for img in clip]

209 | elif isinstance(clip[0], PIL.Image.Image):

210 | rotated = [img.rotate(angle) for img in clip]

211 | else:

212 | raise TypeError('Expected numpy.ndarray or PIL.Image ' +

213 | 'but got list of {0}'.format(type(clip[0])))

214 |

215 | return rotated

216 |

217 |

218 | class ColorJitter(object):

219 | """Randomly change the brightness, contrast and saturation and hue of the clip

220 | Args:

221 | brightness (float): How much to jitter brightness. brightness_factor

222 | is chosen uniformly from [max(0, 1 - brightness), 1 + brightness].

223 | contrast (float): How much to jitter contrast. contrast_factor

224 | is chosen uniformly from [max(0, 1 - contrast), 1 + contrast].

225 | saturation (float): How much to jitter saturation. saturation_factor

226 | is chosen uniformly from [max(0, 1 - saturation), 1 + saturation].

227 | hue(float): How much to jitter hue. hue_factor is chosen uniformly from

228 | [-hue, hue]. Should be >=0 and <= 0.5.

229 | """

230 |

231 | def __init__(self, brightness=0, contrast=0, saturation=0, hue=0):

232 | self.brightness = brightness

233 | self.contrast = contrast

234 | self.saturation = saturation

235 | self.hue = hue

236 |

237 | def get_params(self, brightness, contrast, saturation, hue):

238 | if brightness > 0:

239 | brightness_factor = random.uniform(

240 | max(0, 1 - brightness), 1 + brightness)

241 | else:

242 | brightness_factor = None

243 |

244 | if contrast > 0:

245 | contrast_factor = random.uniform(

246 | max(0, 1 - contrast), 1 + contrast)

247 | else:

248 | contrast_factor = None

249 |

250 | if saturation > 0:

251 | saturation_factor = random.uniform(

252 | max(0, 1 - saturation), 1 + saturation)

253 | else:

254 | saturation_factor = None

255 |

256 | if hue > 0:

257 | hue_factor = random.uniform(-hue, hue)

258 | else:

259 | hue_factor = None

260 | return brightness_factor, contrast_factor, saturation_factor, hue_factor

261 |

262 | def __call__(self, clip):

263 | """

264 | Args:

265 | clip (list): list of PIL.Image

266 | Returns:

267 | list PIL.Image : list of transformed PIL.Image

268 | """

269 | if isinstance(clip[0], np.ndarray):

270 | brightness, contrast, saturation, hue = self.get_params(

271 | self.brightness, self.contrast, self.saturation, self.hue)

272 |

273 | # Create img transform function sequence

274 | img_transforms = []

275 | if brightness is not None:

276 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_brightness(img, brightness))

277 | if saturation is not None:

278 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_saturation(img, saturation))

279 | if hue is not None:

280 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_hue(img, hue))

281 | if contrast is not None:

282 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_contrast(img, contrast))

283 | random.shuffle(img_transforms)

284 | img_transforms = [img_as_ubyte, torchvision.transforms.ToPILImage()] + img_transforms + [np.array,

285 | img_as_float]

286 |

287 | with warnings.catch_warnings():

288 | warnings.simplefilter("ignore")

289 | jittered_clip = []

290 | for img in clip:

291 | jittered_img = img

292 | for func in img_transforms:

293 | jittered_img = func(jittered_img)

294 | jittered_clip.append(jittered_img.astype('float32'))

295 | elif isinstance(clip[0], PIL.Image.Image):

296 | brightness, contrast, saturation, hue = self.get_params(

297 | self.brightness, self.contrast, self.saturation, self.hue)

298 |

299 | # Create img transform function sequence

300 | img_transforms = []

301 | if brightness is not None:

302 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_brightness(img, brightness))

303 | if saturation is not None:

304 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_saturation(img, saturation))

305 | if hue is not None:

306 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_hue(img, hue))

307 | if contrast is not None:

308 | img_transforms.append(lambda img: torchvision.transforms.functional.adjust_contrast(img, contrast))

309 | random.shuffle(img_transforms)

310 |

311 | # Apply to all videos

312 | jittered_clip = []

313 | for img in clip:

314 | jittered_img = img

315 | for func in img_transforms:

316 | jittered_img = func(jittered_img)

317 | jittered_clip.append(jittered_img)

318 | else:

319 | raise TypeError('Expected numpy.ndarray or PIL.Image ' +

320 | 'but got list of {0}'.format(type(clip[0])))

321 | return jittered_clip

322 |

323 |

324 | class AllAugmentationTransform:

325 | def __init__(self, resize_param=None, rotation_param=None, flip_param=None, crop_param=None, jitter_param=None):

326 | self.transforms = []

327 |

328 | if flip_param is not None:

329 | self.transforms.append(RandomFlip(**flip_param))

330 |

331 | if rotation_param is not None:

332 | self.transforms.append(RandomRotation(**rotation_param))

333 |

334 | if resize_param is not None:

335 | self.transforms.append(RandomResize(**resize_param))

336 |

337 | if crop_param is not None:

338 | self.transforms.append(RandomCrop(**crop_param))

339 |

340 | if jitter_param is not None:

341 | self.transforms.append(ColorJitter(**jitter_param))

342 |

343 | def __call__(self, clip):

344 | for t in self.transforms:

345 | clip = t(clip)

346 | return clip

347 |
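
As a worked example of the shorter-side resize rule used by `resize_clip` when `size` is a single number, the sketch below repeats `get_resize_sizes` from this file and evaluates it on two frame shapes. The 480x640 and 640x480 shapes and the target size 256 are illustrative assumptions, not values taken from the repository.

```python
def get_resize_sizes(im_h, im_w, size):
    """Scale so the shorter side equals `size`, keeping the aspect ratio."""
    if im_w < im_h:
        ow = size
        oh = int(size * im_h / im_w)
    else:
        oh = size
        ow = int(size * im_w / im_h)
    return oh, ow


# Landscape frame: height is the shorter side, so it becomes 256.
landscape = get_resize_sizes(480, 640, 256)
# Portrait frame: width is the shorter side, so it becomes 256.
portrait = get_resize_sizes(640, 480, 256)
print(landscape, portrait)
```

The longer side is truncated by `int`, so 256 * 640 / 480 = 341.33 becomes 341 in both cases.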

--------------------------------------------------------------------------------

/config/vox-adv-256.yaml:

--------------------------------------------------------------------------------

1 | dataset_params:

2 | root_dir: /data/fhongac/origDataset/vox1_frames

3 | frame_shape: [256, 256, 3]

4 | id_sampling: True

5 | pairs_list: data/vox256.csv

6 | augmentation_params:

7 | flip_param:

8 | horizontal_flip: True

9 | time_flip: True

10 | jitter_param:

11 | brightness: 0.1

12 | contrast: 0.1

13 | saturation: 0.1

14 | hue: 0.1

15 |

16 |

17 | model_params:

18 | common_params:

19 | num_kp: 10

20 | num_channels: 3

21 | estimate_jacobian: True

22 | kp_detector_params:

23 | temperature: 0.1

24 | block_expansion: 32

25 | max_features: 1024

26 | scale_factor: 0.25

27 | num_blocks: 5

28 | generator_params:

29 | block_expansion: 64

30 | max_features: 512

31 | num_down_blocks: 2

32 | num_bottleneck_blocks: 6

33 | estimate_occlusion_map: True

34 | dense_motion_params:

35 | block_expansion: 64

36 | max_features: 1024

37 | num_blocks: 5

38 | scale_factor: 0.25

39 | discriminator_params:

40 | scales: [1]

41 | block_expansion: 32

42 | max_features: 512

43 | num_blocks: 4

44 | use_kp: True

45 |

46 |

47 | train_params:

48 | num_epochs: 150

49 | num_repeats: 75

50 | epoch_milestones: []

51 | lr_generator: 2.0e-4

52 | lr_discriminator: 2.0e-4

53 | lr_kp_detector: 2.0e-4

54 | batch_size: 4

55 | scales: [1, 0.5, 0.25, 0.125]

56 | checkpoint_freq: 10

57 | transform_params:

58 | sigma_affine: 0.05

59 | sigma_tps: 0.005

60 | points_tps: 5

61 | loss_weights:

62 | generator_gan: 1

63 | discriminator_gan: 1

64 | feature_matching: [10, 10, 10, 10]

65 | perceptual: [10, 10, 10, 10, 10]

66 | equivariance_value: 10

67 | equivariance_jacobian: 10

68 | kp_distance: 10

69 | kp_prior: 0

70 | kp_scale: 0

71 | depth_constraint: 0

72 |

73 | reconstruction_params:

74 | num_videos: 1000

75 | format: '.mp4'

76 |

77 | animate_params:

78 | num_pairs: 50

79 | format: '.mp4'

80 | normalization_params:

81 | adapt_movement_scale: False

82 | use_relative_movement: True

83 | use_relative_jacobian: True

84 |

85 | visualizer_params:

86 | kp_size: 5

87 | draw_border: True

88 | colormap: 'gist_rainbow'

89 |
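
The `normalization_params` block above is passed to `normalize_kp` in `animate.py` by keyword unpacking. The sketch below mirrors that section as a plain dict and shows which normalization steps the flags enable. `enabled_steps` is a hypothetical helper written only for illustration, and the dict is typed by hand rather than parsed from the YAML file.

```python
# Plain-dict mirror of the animate_params section (values copied from the YAML above).
animate_params = {
    "num_pairs": 50,
    "format": ".mp4",
    "normalization_params": {
        "adapt_movement_scale": False,
        "use_relative_movement": True,
        "use_relative_jacobian": True,
    },
}


def enabled_steps(adapt_movement_scale=False, use_relative_movement=False,
                  use_relative_jacobian=False):
    """Hypothetical helper: list the normalization steps the flags switch on."""
    steps = []
    if adapt_movement_scale:
        steps.append("scale motion by sqrt(source hull area) / sqrt(driving hull area)")
    if use_relative_movement:
        steps.append("add driving displacement to source keypoints")
    if use_relative_jacobian:
        steps.append("apply relative jacobian to source jacobian")
    return steps


# Same call shape as normalize_kp(..., **animate_params['normalization_params']).
steps = enabled_steps(**animate_params["normalization_params"])
for step in steps:
    print("-", step)
```

Note that the jacobian step in `normalize_kp` sits inside the `use_relative_movement` branch, so it only takes effect when both flags are true, as they are in this config.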

--------------------------------------------------------------------------------

/crop-video.py:

--------------------------------------------------------------------------------

1 | import face_alignment

2 | import skimage.io

3 | import numpy

4 | from argparse import ArgumentParser

5 | from skimage import img_as_ubyte

6 | from skimage.transform import resize

7 | from tqdm import tqdm

8 | import os

9 | import imageio

10 | import numpy as np

11 | import warnings

12 | warnings.filterwarnings("ignore")

13 |

14 | def extract_bbox(frame, fa):

15 | if max(frame.shape[0], frame.shape[1]) > 640:

16 | scale_factor = max(frame.shape[0], frame.shape[1]) / 640.0

17 | frame = resize(frame, (int(frame.shape[0] / scale_factor), int(frame.shape[1] / scale_factor)))

18 | frame = img_as_ubyte(frame)

19 | else:

20 | scale_factor = 1

21 | frame = frame[..., :3]

22 | bboxes = fa.face_detector.detect_from_image(frame[..., ::-1])

23 | if len(bboxes) == 0:

24 | return []

25 | return np.array(bboxes)[:, :-1] * scale_factor

26 |

27 |

28 |

29 | def bb_intersection_over_union(boxA, boxB):

30 | xA = max(boxA[0], boxB[0])

31 | yA = max(boxA[1], boxB[1])

32 | xB = min(boxA[2], boxB[2])

33 | yB = min(boxA[3], boxB[3])

34 | interArea = max(0, xB - xA + 1) * max(0, yB - yA + 1)

35 | boxAArea = (boxA[2] - boxA[0] + 1) * (boxA[3] - boxA[1] + 1)

36 | boxBArea = (boxB[2] - boxB[0] + 1) * (boxB[3] - boxB[1] + 1)

37 | iou = interArea / float(boxAArea + boxBArea - interArea)

38 | return iou

39 |

40 |

41 | def join(tube_bbox, bbox):

42 | xA = min(tube_bbox[0], bbox[0])

43 | yA = min(tube_bbox[1], bbox[1])

44 | xB = max(tube_bbox[2], bbox[2])

45 | yB = max(tube_bbox[3], bbox[3])

46 | return (xA, yA, xB, yB)

47 |

48 |

49 | def compute_bbox(start, end, fps, tube_bbox, frame_shape, inp, image_shape, increase_area=0.1):

50 | left, top, right, bot = tube_bbox

51 | width = right - left

52 | height = bot - top

53 |

54 | #Computing aspect preserving bbox

55 | width_increase = max(increase_area, ((1 + 2 * increase_area) * height - width) / (2 * width))

56 | height_increase = max(increase_area, ((1 + 2 * increase_area) * width - height) / (2 * height))

57 |

58 | left = int(left - width_increase * width)

59 | top = int(top - height_increase * height)

60 | right = int(right + width_increase * width)

61 | bot = int(bot + height_increase * height)

62 |

63 | top, bot, left, right = max(0, top), min(bot, frame_shape[0]), max(0, left), min(right, frame_shape[1])

64 | h, w = bot - top, right - left

65 |

66 | start = start / fps

67 | end = end / fps

68 | time = end - start

69 |

70 | scale = f'{image_shape[0]}:{image_shape[1]}'

71 |

72 | return f'ffmpeg -i {inp} -ss {start} -t {time} -filter:v "crop={w}:{h}:{left}:{top}, scale={scale}" crop.mp4'

73 |

74 |

75 | def compute_bbox_trajectories(trajectories, fps, frame_shape, args):

76 | commands = []

77 | for i, (bbox, tube_bbox, start, end) in enumerate(trajectories):

78 | if (end - start) > args.min_frames:

79 | command = compute_bbox(start, end, fps, tube_bbox, frame_shape, inp=args.inp, image_shape=args.image_shape, increase_area=args.increase)

80 | commands.append(command)

81 | return commands

82 |

83 |

84 | def process_video(args):

85 | device = 'cpu' if args.cpu else 'cuda'

86 | fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, flip_input=False, device=device)

87 | video = imageio.get_reader(args.inp)

88 |

89 | trajectories = []

90 | previous_frame = None

91 | fps = video.get_meta_data()['fps']

92 | commands = []

93 | try:

94 | for i, frame in tqdm(enumerate(video)):

95 | frame_shape = frame.shape

96 | bboxes = extract_bbox(frame, fa)

97 | ## For each trajectory check the criterion

98 | not_valid_trajectories = []

99 | valid_trajectories = []

100 |

101 | for trajectory in trajectories:

102 | tube_bbox = trajectory[0]

103 | intersection = 0

104 | for bbox in bboxes:

105 | intersection = max(intersection, bb_intersection_over_union(tube_bbox, bbox))

106 | if intersection > args.iou_with_initial:

107 | valid_trajectories.append(trajectory)

108 | else:

109 | not_valid_trajectories.append(trajectory)

110 |

111 | commands += compute_bbox_trajectories(not_valid_trajectories, fps, frame_shape, args)

112 | trajectories = valid_trajectories

113 |

114 | ## Assign bbox to trajectories, create new trajectories

115 | for bbox in bboxes:

116 | intersection = 0

117 | current_trajectory = None

118 | for trajectory in trajectories:

119 | tube_bbox = trajectory[0]

120 | current_intersection = bb_intersection_over_union(tube_bbox, bbox)

121 | if intersection < current_intersection and current_intersection > args.iou_with_initial:

122 | intersection = bb_intersection_over_union(tube_bbox, bbox)

123 | current_trajectory = trajectory

124 |

125 | ## Create new trajectory

126 | if current_trajectory is None:

127 | trajectories.append([bbox, bbox, i, i])

128 | else:

129 | current_trajectory[3] = i

130 | current_trajectory[1] = join(current_trajectory[1], bbox)

131 |

132 |

133 | except IndexError as e:

134 | raise (e)

135 |

136 | commands += compute_bbox_trajectories(trajectories, fps, frame_shape, args)

137 | return commands

138 |

139 |

140 | if __name__ == "__main__":

141 | parser = ArgumentParser()

142 |

143 | parser.add_argument("--image_shape", default=(256, 256), type=lambda x: tuple(map(int, x.split(','))),

144 | help="Image shape")

145 | parser.add_argument("--increase", default=0.1, type=float, help='Increase bbox by this amount')

146 | parser.add_argument("--iou_with_initial", type=float, default=0.25, help="The minimal allowed iou with initial bbox")

147 | parser.add_argument("--inp", required=True, help='Input image or video')

148 | parser.add_argument("--min_frames", type=int, default=150, help='Minimum number of frames')

149 | parser.add_argument("--cpu", dest="cpu", action="store_true", help="cpu mode.")

150 |

151 |

152 | args = parser.parse_args()

153 |

154 | commands = process_video(args)

155 | for command in commands:

156 | print (command)

157 |

158 |
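
The two geometric helpers in `crop-video.py` can be checked in isolation. The sketch below restates `bb_intersection_over_union` (boxes are `(left, top, right, bottom)` with inclusive pixel coordinates, hence the `+ 1` terms) and the padding step of `compute_bbox`, which grows the shorter side more so that the padded box comes out roughly square. The names `iou_inclusive` and `expand_to_square` are illustrative. The sketch leaves out the clamping to the frame size and the ffmpeg command, and assumes a box with non-zero width and height.

```python
def iou_inclusive(box_a, box_b):
    """IoU of two (left, top, right, bottom) boxes with inclusive pixel coordinates."""
    x_a = max(box_a[0], box_b[0])
    y_a = max(box_a[1], box_b[1])
    x_b = min(box_a[2], box_b[2])
    y_b = min(box_a[3], box_b[3])
    # Negative extents mean the boxes do not overlap along that axis.
    inter = max(0, x_b - x_a + 1) * max(0, y_b - y_a + 1)
    area_a = (box_a[2] - box_a[0] + 1) * (box_a[3] - box_a[1] + 1)
    area_b = (box_b[2] - box_b[0] + 1) * (box_b[3] - box_b[1] + 1)
    return inter / float(area_a + area_b - inter)


def expand_to_square(left, top, right, bot, increase_area=0.1):
    """Pad a box by at least `increase_area` per side, padding the shorter side more."""
    width = right - left
    height = bot - top
    # Each side grows by at least `increase_area`; the shorter side grows enough
    # to match the longer side after its own padding.
    width_increase = max(increase_area, ((1 + 2 * increase_area) * height - width) / (2 * width))
    height_increase = max(increase_area, ((1 + 2 * increase_area) * width - height) / (2 * height))
    return (
        int(left - width_increase * width),
        int(top - height_increase * height),
        int(right + width_increase * width),
        int(bot + height_increase * height),
    )


same = iou_inclusive((0, 0, 9, 9), (0, 0, 9, 9))
apart = iou_inclusive((0, 0, 9, 9), (20, 20, 29, 29))
half = iou_inclusive((0, 0, 9, 9), (5, 0, 14, 9))
square = expand_to_square(100, 100, 200, 300)
print(same, apart, round(half, 3), square)
```

With the default `--iou_with_initial` of 0.25, the half-overlapping pair in the example would still count as the same trajectory, while the disjoint pair would start a new one.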

--------------------------------------------------------------------------------

/data/utils.py:

--------------------------------------------------------------------------------

1 | import os

2 | import random

3 | import csv

4 | import pdb

5 | import numpy as np

6 |

7 | def create_csv(path):

8 | videos = os.listdir(path)

9 | source = videos.copy()

10 | driving = videos.copy()

11 | random.shuffle(source)

12 | random.shuffle(driving)

13 | source = np.array(source).reshape(-1,1)

14 | driving = np.array(driving).reshape(-1,1)

15 | zeros = np.zeros((len(source),1))

16 | content = np.concatenate((source,driving,zeros),1)

17 | f = open('vox256.csv','w',encoding='utf-8')

18 | csv_writer = csv.writer(f)

19 | csv_writer.writerow(["source","driving","frame"])

20 | csv_writer.writerows(content)

21 | f.close()

22 |

23 |

24 | if __name__ == '__main__':

25 | create_csv('/data/fhongac/origDataset/vox1/test')
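
`create_csv` above pairs every video with a randomly chosen driving video and writes a constant `frame` column. The same idea can be sketched with the standard library only, using a context manager so the file is closed even if writing fails. The function name `write_pairs_csv`, the `seed` parameter, the integer `0` in the frame column, and the file names in the usage lines are assumptions for illustration, not the repository's behaviour.

```python
import csv
import os
import random
import tempfile


def write_pairs_csv(videos, out_path, seed=None):
    """Write randomly paired (source, driving) rows plus a constant frame index."""
    rng = random.Random(seed)  # seeded so a pairing can be reproduced
    source = list(videos)
    driving = list(videos)
    rng.shuffle(source)
    rng.shuffle(driving)
    # newline="" is what the csv module documentation recommends for writers.
    with open(out_path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["source", "driving", "frame"])
        for s, d in zip(source, driving):
            writer.writerow([s, d, 0])
    return len(source)


# Usage with made-up file names and a temporary output path.
names = ["id1.mp4", "id2.mp4", "id3.mp4"]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "pairs.csv")
    written = write_pairs_csv(names, path, seed=0)
    with open(path, newline="", encoding="utf-8") as f:
        rows = list(csv.reader(f))
print(rows[0], written)
```

As in the original, the two shuffles are independent, so a video can end up paired with itself. A cross-identity evaluation would need an extra check for that.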

--------------------------------------------------------------------------------

/demo.py:

--------------------------------------------------------------------------------

1 | import matplotlib

2 | matplotlib.use('Agg')

3 | import os, sys

4 | import yaml

5 | from argparse import ArgumentParser

6 | from tqdm import tqdm

7 | import modules.generator as GEN

8 | import imageio

9 | import numpy as np

10 | from skimage.transform import resize

11 | from skimage import img_as_ubyte

12 | import torch

13 | from sync_batchnorm import DataParallelWithCallback

14 | import depth

15 | from modules.keypoint_detector import KPDetector

16 | from animate import normalize_kp

17 | from scipy.spatial import ConvexHull

18 | from collections import OrderedDict

19 | import pdb

20 | import cv2

21 | if sys.version_info[0] < 3:

22 | raise Exception("You must use Python 3 or higher. Recommended version is Python 3.7")

23 |

24 | def load_checkpoints(config_path, checkpoint_path, cpu=False):

25 |

26 | with open(config_path) as f:

27 | config = yaml.load(f, Loader=yaml.FullLoader)

28 | if opt.kp_num != -1:

29 | config['model_params']['common_params']['num_kp'] = opt.kp_num

30 | generator = getattr(GEN, opt.generator)(**config['model_params']['generator_params'],**config['model_params']['common_params'])

31 | if not cpu:

32 | generator.cuda()

33 | config['model_params']['common_params']['num_channels'] = 4

34 | kp_detector = KPDetector(**config['model_params']['kp_detector_params'],

35 | **config['model_params']['common_params'])

36 | if not cpu:

37 | kp_detector.cuda()

38 | if cpu:

39 | checkpoint = torch.load(checkpoint_path, map_location=torch.device('cpu'))

40 | else:

41 | checkpoint = torch.load(checkpoint_path,map_location="cuda:0")

42 |

43 | ckp_generator = OrderedDict((k.replace('module.',''),v) for k,v in checkpoint['generator'].items())

44 | generator.load_state_dict(ckp_generator)

45 | ckp_kp_detector = OrderedDict((k.replace('module.',''),v) for k,v in checkpoint['kp_detector'].items())

46 | kp_detector.load_state_dict(ckp_kp_detector)

47 |

48 | if not cpu:

49 | generator = DataParallelWithCallback(generator)

50 | kp_detector = DataParallelWithCallback(kp_detector)

51 |

52 | generator.eval()

53 | kp_detector.eval()

54 |

55 | return generator, kp_detector

56 |

57 |

58 | def make_animation(source_image, driving_video, generator, kp_detector, relative=True, adapt_movement_scale=True, cpu=False):

59 | sources = []

60 | drivings = []

61 | with torch.no_grad():

62 | predictions = []

63 | depth_gray = []

64 | source = torch.tensor(source_image[np.newaxis].astype(np.float32)).permute(0, 3, 1, 2)

65 | driving = torch.tensor(np.array(driving_video)[np.newaxis].astype(np.float32)).permute(0, 4, 1, 2, 3)

66 | if not cpu:

67 | source = source.cuda()

68 | driving = driving.cuda()

69 | outputs = depth_decoder(depth_encoder(source))

70 | depth_source = outputs[("disp", 0)]

71 |

72 | outputs = depth_decoder(depth_encoder(driving[:, :, 0]))

73 | depth_driving = outputs[("disp", 0)]

74 | source_kp = torch.cat((source,depth_source),1)

75 | driving_kp = torch.cat((driving[:, :, 0],depth_driving),1)

76 |

77 | kp_source = kp_detector(source_kp)

78 | kp_driving_initial = kp_detector(driving_kp)

79 |

80 | # kp_source = kp_detector(source)

81 | # kp_driving_initial = kp_detector(driving[:, :, 0])

82 |

83 | for frame_idx in tqdm(range(driving.shape[2])):

84 | driving_frame = driving[:, :, frame_idx]

85 |

86 | if not cpu:

87 | driving_frame = driving_frame.cuda()

88 | outputs = depth_decoder(depth_encoder(driving_frame))

89 | depth_map = outputs[("disp", 0)]

90 |

91 | gray_driving = np.transpose(depth_map.data.cpu().numpy(), [0, 2, 3, 1])[0]

92 | gray_driving = 1-gray_driving/np.max(gray_driving)

93 |

94 | frame = torch.cat((driving_frame,depth_map),1)

95 | kp_driving = kp_detector(frame)

96 |

97 | kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,

98 | kp_driving_initial=kp_driving_initial, use_relative_movement=relative,

99 | use_relative_jacobian=relative, adapt_movement_scale=adapt_movement_scale)

100 | out = generator(source, kp_source=kp_source, kp_driving=kp_norm,source_depth = depth_source, driving_depth = depth_map)

101 |

102 | drivings.append(np.transpose(driving_frame.data.cpu().numpy(), [0, 2, 3, 1])[0])

103 | sources.append(np.transpose(source.data.cpu().numpy(), [0, 2, 3, 1])[0])

104 | predictions.append(np.transpose(out['prediction'].data.cpu().numpy(), [0, 2, 3, 1])[0])

105 | depth_gray.append(gray_driving)

106 | return sources, drivings, predictions,depth_gray

107 |

108 |

109 | def find_best_frame(source, driving, cpu=False):

110 | import face_alignment

111 |

112 | def normalize_kp(kp):

113 | kp = kp - kp.mean(axis=0, keepdims=True)

114 | area = ConvexHull(kp[:, :2]).volume

115 | area = np.sqrt(area)

116 | kp[:, :2] = kp[:, :2] / area

117 | return kp

118 |

119 | fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, flip_input=True,

120 | device='cpu' if cpu else 'cuda')

121 | kp_source = fa.get_landmarks(255 * source)[0]

122 | kp_source = normalize_kp(kp_source)

123 | norm = float('inf')

124 | frame_num = 0

125 | for i, image in tqdm(enumerate(driving)):

126 | kp_driving = fa.get_landmarks(255 * image)[0]

127 | kp_driving = normalize_kp(kp_driving)

128 | new_norm = (np.abs(kp_source - kp_driving) ** 2).sum()

129 | if new_norm < norm:

130 | norm = new_norm

131 | frame_num = i

132 | return frame_num

133 |

134 | if __name__ == "__main__":

135 | parser = ArgumentParser()

136 | parser.add_argument("--config", required=True, help="path to config")

137 | parser.add_argument("--checkpoint", default='vox-cpk.pth.tar', help="path to checkpoint to restore")

138 |

139 | parser.add_argument("--source_image", default='sup-mat/source.png', help="path to source image")

140 | parser.add_argument("--driving_video", default='sup-mat/source.png', help="path to driving video")

141 | parser.add_argument("--result_video", default='result.mp4', help="path to output")

142 |

143 | parser.add_argument("--relative", dest="relative", action="store_true", help="use relative or absolute keypoint coordinates")

144 | parser.add_argument("--adapt_scale", dest="adapt_scale", action="store_true", help="adapt movement scale based on convex hull of keypoints")

145 | parser.add_argument("--generator", type=str, required=True)

146 | parser.add_argument("--kp_num", type=int, required=True)

147 |

148 |

149 | parser.add_argument("--find_best_frame", dest="find_best_frame", action="store_true",

150 | help="Generate from the frame that is the most aligned with source. (Only for faces, requires face_alignment lib)")

151 |

152 | parser.add_argument("--best_frame", dest="best_frame", type=int, default=None,

153 | help="Set frame to start from.")

154 |

155 | parser.add_argument("--cpu", dest="cpu", action="store_true", help="cpu mode.")

156 |

157 |

158 | parser.set_defaults(relative=False)

159 | parser.set_defaults(adapt_scale=False)

160 |

161 | opt = parser.parse_args()

162 |

163 | depth_encoder = depth.ResnetEncoder(18, False)

164 | depth_decoder = depth.DepthDecoder(num_ch_enc=depth_encoder.num_ch_enc, scales=range(4))

165 | loaded_dict_enc = torch.load('depth/models/weights_19/encoder.pth', map_location='cpu')

166 | loaded_dict_dec = torch.load('depth/models/weights_19/depth.pth', map_location='cpu')

167 | filtered_dict_enc = {k: v for k, v in loaded_dict_enc.items() if k in depth_encoder.state_dict()}

168 | depth_encoder.load_state_dict(filtered_dict_enc)

169 | depth_decoder.load_state_dict(loaded_dict_dec)

170 | depth_encoder.eval()

171 | depth_decoder.eval()

172 | if not opt.cpu:

173 | depth_encoder.cuda()

174 | depth_decoder.cuda()

175 |

176 | source_image = imageio.imread(opt.source_image)

177 | reader = imageio.get_reader(opt.driving_video)

178 | fps = reader.get_meta_data()['fps']

179 | driving_video = []

180 | try:

181 | for im in reader:

182 | driving_video.append(im)

183 | except RuntimeError:

184 | pass

185 | reader.close()

186 |

187 | source_image = resize(source_image, (256, 256))[..., :3]

188 | driving_video = [resize(frame, (256, 256))[..., :3] for frame in driving_video]

189 | generator, kp_detector = load_checkpoints(config_path=opt.config, checkpoint_path=opt.checkpoint, cpu=opt.cpu)

190 |

191 | if opt.find_best_frame or opt.best_frame is not None:

192 | i = opt.best_frame if opt.best_frame is not None else find_best_frame(source_image, driving_video, cpu=opt.cpu)

193 | print ("Best frame: " + str(i))

194 | driving_forward = driving_video[i:]

195 | driving_backward = driving_video[:(i+1)][::-1]

196 | sources_forward, drivings_forward, predictions_forward,depth_forward = make_animation(source_image, driving_forward, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

197 | sources_backward, drivings_backward, predictions_backward,depth_backward = make_animation(source_image, driving_backward, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

198 | predictions = predictions_backward[::-1] + predictions_forward[1:]

199 | sources = sources_backward[::-1] + sources_forward[1:]

200 | drivings = drivings_backward[::-1] + drivings_forward[1:]

201 | depth_gray = depth_backward[::-1] + depth_forward[1:]

202 |

203 | else:

204 | # predictions = make_animation(source_image, driving_video, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

205 | sources, drivings, predictions,depth_gray = make_animation(source_image, driving_video, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

206 | imageio.mimsave(opt.result_video, [img_as_ubyte(p) for p in predictions], fps=fps)

207 | # imageio.mimsave(opt.result_video, [np.concatenate((img_as_ubyte(s),img_as_ubyte(d),img_as_ubyte(p)),1) for (s,d,p) in zip(sources, drivings, predictions)], fps=fps)

208 | # imageio.mimsave("gray.mp4", depth_gray, fps=fps)

209 | # merge the gray video

210 | # animation = np.array(imageio.mimread(opt.result_video,memtest=False))

211 | # gray = np.array(imageio.mimread("gray.mp4",memtest=False))

212 |

213 | # src_dst = animation[:,:,:512,:]

214 | # animate = animation[:,:,512:,:]

215 | # merge = np.concatenate((src_dst,gray,animate),2)

216 | # imageio.mimsave(opt.result_video, animate, fps=fps)

217 | #Transfer to gif

218 | # from moviepy.editor import *

219 | # clip = (VideoFileClip(opt.result_video))

220 | # clip.write_gif("{}.gif".format(opt.result_video))
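
When `--find_best_frame` or `--best_frame` is used, `demo.py` animates in two passes that both start at the chosen frame, since `make_animation` treats the first frame of whatever sequence it receives as the reference for relative motion. The sketch below isolates the split-and-stitch bookkeeping with a stand-in `animate` callable. The function name `animate_from_best_frame` and the string "predictions" are illustrative only.

```python
def animate_from_best_frame(frames, best, animate):
    """Run `animate` forward and backward from `best`, then restore the original order."""
    forward = frames[best:]              # best, best+1, ..., last
    backward = frames[:best + 1][::-1]   # best, best-1, ..., 0
    out_forward = animate(forward)
    out_backward = animate(backward)
    # The best frame appears in both passes, so drop it from the forward half.
    return out_backward[::-1] + out_forward[1:]


def fake_animate(sequence):
    """Stand-in for make_animation: one output per driving frame."""
    return [f"pred({frame})" for frame in sequence]


frames = list(range(6))
stitched = animate_from_best_frame(frames, best=2, animate=fake_animate)
print(stitched)
```

The stitched result has one prediction per driving frame, in the original temporal order, which is why the output video can be saved with the driving video's `fps` unchanged.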

--------------------------------------------------------------------------------

/demo_multi.py:

--------------------------------------------------------------------------------

1 | import matplotlib

2 | matplotlib.use('Agg')

3 | import os, sys

4 | import yaml

5 | from argparse import ArgumentParser

6 | from tqdm import tqdm

7 | import modules.generator as GEN

8 | import imageio

9 | import numpy as np

10 | from skimage.transform import resize

11 | from skimage import img_as_ubyte

12 | import torch

13 | from sync_batchnorm import DataParallelWithCallback

14 | import depth

15 | from modules.keypoint_detector import KPDetector

16 | from animate import normalize_kp

17 | from scipy.spatial import ConvexHull

18 | from collections import OrderedDict

19 | import pdb

20 | import cv2

21 | from glob import glob

22 | if sys.version_info[0] < 3:

23 | raise Exception("You must use Python 3 or higher. Recommended version is Python 3.7")

24 |

25 | def load_checkpoints(config_path, checkpoint_path, cpu=False):

26 |

27 | with open(config_path) as f:

28 | config = yaml.load(f,Loader=yaml.FullLoader)

29 | if opt.kp_num != -1:

30 | config['model_params']['common_params']['num_kp'] = opt.kp_num

31 | generator = getattr(GEN, opt.generator)(**config['model_params']['generator_params'],**config['model_params']['common_params'])

32 | if not cpu:

33 | generator.cuda()

34 | config['model_params']['common_params']['num_channels'] = 4

35 | kp_detector = KPDetector(**config['model_params']['kp_detector_params'],

36 | **config['model_params']['common_params'])

37 | if not cpu:

38 | kp_detector.cuda()

39 | if cpu:

40 | checkpoint = torch.load(checkpoint_path, map_location=torch.device('cpu'))

41 | else:

42 | checkpoint = torch.load(checkpoint_path,map_location="cuda:0")

43 |

44 | ckp_generator = OrderedDict((k.replace('module.',''),v) for k,v in checkpoint['generator'].items())

45 | generator.load_state_dict(ckp_generator)

46 | ckp_kp_detector = OrderedDict((k.replace('module.',''),v) for k,v in checkpoint['kp_detector'].items())

47 | kp_detector.load_state_dict(ckp_kp_detector)

48 |

49 | if not cpu:

50 | generator = DataParallelWithCallback(generator)

51 | kp_detector = DataParallelWithCallback(kp_detector)

52 |

53 | generator.eval()

54 | kp_detector.eval()

55 |

56 | return generator, kp_detector

57 |

58 |

59 | def make_animation(source_image, driving_video, generator, kp_detector, relative=True, adapt_movement_scale=True, cpu=False):

60 | sources = []

61 | drivings = []

62 | with torch.no_grad():

63 | predictions = []

64 | depth_gray = []

65 | source = torch.tensor(source_image[np.newaxis].astype(np.float32)).permute(0, 3, 1, 2)

66 | driving = torch.tensor(np.array(driving_video)[np.newaxis].astype(np.float32)).permute(0, 4, 1, 2, 3)

67 | if not cpu:

68 | source = source.cuda()

69 | driving = driving.cuda()

70 | outputs = depth_decoder(depth_encoder(source))

71 | depth_source = outputs[("disp", 0)]

72 |

73 | outputs = depth_decoder(depth_encoder(driving[:, :, 0]))

74 | depth_driving = outputs[("disp", 0)]

75 | source_kp = torch.cat((source,depth_source),1)

76 | driving_kp = torch.cat((driving[:, :, 0],depth_driving),1)

77 |

78 | kp_source = kp_detector(source_kp)

79 | kp_driving_initial = kp_detector(driving_kp)

80 |

81 | # kp_source = kp_detector(source)

82 | # kp_driving_initial = kp_detector(driving[:, :, 0])

83 |

84 | for frame_idx in tqdm(range(driving.shape[2])):

85 | driving_frame = driving[:, :, frame_idx]

86 |

87 | if not cpu:

88 | driving_frame = driving_frame.cuda()

89 | outputs = depth_decoder(depth_encoder(driving_frame))

90 | depth_map = outputs[("disp", 0)]

91 |

92 | gray_driving = np.transpose(depth_map.data.cpu().numpy(), [0, 2, 3, 1])[0]

93 | gray_driving = 1-gray_driving/np.max(gray_driving)

94 |

95 | frame = torch.cat((driving_frame,depth_map),1)

96 | kp_driving = kp_detector(frame)

97 |

98 | kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,

99 | kp_driving_initial=kp_driving_initial, use_relative_movement=relative,

100 | use_relative_jacobian=relative, adapt_movement_scale=adapt_movement_scale)

101 | out = generator(source, kp_source=kp_source, kp_driving=kp_norm,source_depth = depth_source, driving_depth = depth_map)

102 |

103 | drivings.append(np.transpose(driving_frame.data.cpu().numpy(), [0, 2, 3, 1])[0])

104 | sources.append(np.transpose(source.data.cpu().numpy(), [0, 2, 3, 1])[0])

105 | predictions.append(np.transpose(out['prediction'].data.cpu().numpy(), [0, 2, 3, 1])[0])

106 | depth_gray.append(gray_driving)

107 | return sources, drivings, predictions,depth_gray

108 |

109 |

110 | def find_best_frame(source, driving, cpu=False):

111 | import face_alignment

112 |

113 | def normalize_kp(kp):

114 | kp = kp - kp.mean(axis=0, keepdims=True)

115 | area = ConvexHull(kp[:, :2]).volume

116 | area = np.sqrt(area)

117 | kp[:, :2] = kp[:, :2] / area

118 | return kp

119 |

120 | fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, flip_input=True,

121 | device='cpu' if cpu else 'cuda')

122 | kp_source = fa.get_landmarks(255 * source)[0]

123 | kp_source = normalize_kp(kp_source)

124 | norm = float('inf')

125 | frame_num = 0

126 | for i, image in tqdm(enumerate(driving)):

127 | kp_driving = fa.get_landmarks(255 * image)[0]

128 | kp_driving = normalize_kp(kp_driving)

129 | new_norm = (np.abs(kp_source - kp_driving) ** 2).sum()

130 | if new_norm < norm:

131 | norm = new_norm

132 | frame_num = i

133 | return frame_num

134 |

135 | if __name__ == "__main__":

136 | parser = ArgumentParser()

137 | parser.add_argument("--config", required=True, help="path to config")

138 | parser.add_argument("--checkpoint", default='vox-cpk.pth.tar', help="path to checkpoint to restore")

139 |

140 | parser.add_argument("--source_folder", default='sup-mat/source.png', help="path to folder of source images")

141 | parser.add_argument("--driving_video", default='sup-mat/source.png', help="path to driving video")

142 | parser.add_argument("--save_folder", default='result.mp4', help="path to output folder")

143 |

144 | parser.add_argument("--relative", dest="relative", action="store_true", help="use relative or absolute keypoint coordinates")

145 | parser.add_argument("--adapt_scale", dest="adapt_scale", action="store_true", help="adapt movement scale based on convex hull of keypoints")

146 | parser.add_argument("--generator", type=str, required=True)

147 | parser.add_argument("--kp_num", type=int, required=True)

148 |

149 |

150 | parser.add_argument("--find_best_frame", dest="find_best_frame", action="store_true",

151 | help="Generate from the frame that is the most aligned with source. (Only for faces, requires face_alignment lib)")

152 |

153 | parser.add_argument("--best_frame", dest="best_frame", type=int, default=None,

154 | help="Set frame to start from.")

155 |

156 | parser.add_argument("--cpu", dest="cpu", action="store_true", help="cpu mode.")

157 |

158 |

159 | parser.set_defaults(relative=False)

160 | parser.set_defaults(adapt_scale=False)

161 |

162 | opt = parser.parse_args()

163 |

164 | depth_encoder = depth.ResnetEncoder(18, False)

165 | depth_decoder = depth.DepthDecoder(num_ch_enc=depth_encoder.num_ch_enc, scales=range(4))

166 | loaded_dict_enc = torch.load('depth/models/weights_19/encoder.pth', map_location='cpu')

167 | loaded_dict_dec = torch.load('depth/models/weights_19/depth.pth', map_location='cpu')

168 | filtered_dict_enc = {k: v for k, v in loaded_dict_enc.items() if k in depth_encoder.state_dict()}

169 | depth_encoder.load_state_dict(filtered_dict_enc)

170 | depth_decoder.load_state_dict(loaded_dict_dec)

171 | depth_encoder.eval()

172 | depth_decoder.eval()

173 | if not opt.cpu:

174 | depth_encoder.cuda()

175 | depth_decoder.cuda()

176 |

177 | reader = imageio.get_reader(opt.driving_video)

178 | fps = reader.get_meta_data()['fps']

179 | driving_video = []

180 | try:

181 | for im in reader:

182 | driving_video.append(im)

183 | except RuntimeError:

184 | pass

185 | reader.close()

186 |

187 | driving_video = [resize(frame, (256, 256))[..., :3] for frame in driving_video]

188 | generator, kp_detector = load_checkpoints(config_path=opt.config, checkpoint_path=opt.checkpoint, cpu=opt.cpu)

189 | if not os.path.exists(opt.save_folder):

190 | os.makedirs(opt.save_folder)

191 | sources = glob(opt.source_folder+"/*")

192 | for src in tqdm(sources):

193 | source_image = imageio.imread(src)

194 | source_image = resize(source_image, (256, 256))[..., :3]

195 | # predictions = make_animation(source_image, driving_video, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

196 | source_frames, drivings, predictions, depth_gray = make_animation(source_image, driving_video, generator, kp_detector, relative=opt.relative, adapt_movement_scale=opt.adapt_scale, cpu=opt.cpu)

197 | fn = os.path.basename(src)

198 | imageio.mimsave(os.path.join(opt.save_folder,fn+'.mp4'), [img_as_ubyte(p) for p in predictions], fps=fps)

199 |

--------------------------------------------------------------------------------

/depth/__init__.py:

--------------------------------------------------------------------------------

1 | from .resnet_encoder import ResnetEncoder

2 | from .depth_decoder import DepthDecoder

3 | from .pose_decoder import PoseDecoder

4 | from .pose_cnn import PoseCNN

5 |

6 |

--------------------------------------------------------------------------------

/depth/depth_decoder.py:

--------------------------------------------------------------------------------

1 | # Copyright Niantic 2019. Patent Pending. All rights reserved.

2 | #

3 | # This software is licensed under the terms of the Monodepth2 licence

4 | # which allows for non-commercial use only, the full terms of which are made

5 | # available in the LICENSE file.

6 |

7 | from __future__ import absolute_import, division, print_function

8 |

9 | import numpy as np

10 | import torch

11 | import torch.nn as nn

12 |

13 | from collections import OrderedDict

14 | from depth.layers import *

15 |

16 |

17 | class DepthDecoder(nn.Module):

18 | def __init__(self, num_ch_enc, scales=range(4), num_output_channels=1, use_skips=True):

19 | super(DepthDecoder, self).__init__()

20 |

21 | self.num_output_channels = num_output_channels

22 | self.use_skips = use_skips

23 | self.upsample_mode = 'nearest'

24 | self.scales = scales

25 |

26 | self.num_ch_enc = num_ch_enc

27 | self.num_ch_dec = np.array([16, 32, 64, 128, 256])

28 |

29 | # decoder

30 | self.convs = OrderedDict()

31 | for i in range(4, -1, -1):

32 | # upconv_0

33 | num_ch_in = self.num_ch_enc[-1] if i == 4 else self.num_ch_dec[i + 1]

34 | num_ch_out = self.num_ch_dec[i]

35 | self.convs[("upconv", i, 0)] = ConvBlock(num_ch_in, num_ch_out)

36 |

37 | # upconv_1

38 | num_ch_in = self.num_ch_dec[i]

39 | if self.use_skips and i > 0:

40 | num_ch_in += self.num_ch_enc[i - 1]

41 | num_ch_out = self.num_ch_dec[i]

42 | self.convs[("upconv", i, 1)] = ConvBlock(num_ch_in, num_ch_out)

43 |

44 | for s in self.scales:

45 | self.convs[("dispconv", s)] = Conv3x3(self.num_ch_dec[s], self.num_output_channels)

46 |

47 | self.decoder = nn.ModuleList(list(self.convs.values()))

48 | self.sigmoid = nn.Sigmoid()

49 |

50 | def forward(self, input_features):

51 | self.outputs = {}

52 |

53 | # decoder

54 | x = input_features[-1]

55 | for i in range(4, -1, -1):

56 | x = self.convs[("upconv", i, 0)](x)

57 | x = [upsample(x)]

58 | if self.use_skips and i > 0:

59 | x += [input_features[i - 1]]

60 | x = torch.cat(x, 1)

61 | x = self.convs[("upconv", i, 1)](x)

62 | if i in self.scales:

63 | self.outputs[("disp", i)] = self.sigmoid(self.convs[("dispconv", i)](x))

64 |

65 | return self.outputs

66 |
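
The decoder's `("disp", i)` outputs pass through a sigmoid, so they are normalised disparities in (0, 1) and not metric depth. `disp_to_depth` in `depth/layers.py` maps them to a depth range. Below is a scalar sketch of the same formula for a few values, assuming `min_depth=0.1` and `max_depth=100.0` purely for illustration.

```python
def disp_to_depth(disp, min_depth, max_depth):
    """Map a sigmoid disparity in [0, 1] to (scaled disparity, depth)."""
    min_disp = 1.0 / max_depth  # the farthest point has the smallest disparity
    max_disp = 1.0 / min_depth  # the nearest point has the largest disparity
    scaled_disp = min_disp + (max_disp - min_disp) * disp
    depth = 1.0 / scaled_disp
    return scaled_disp, depth


# Illustrative range; these bounds are assumptions, not values read from the repo.
results = {}
for disp in (0.0, 0.5, 1.0):
    scaled, depth = disp_to_depth(disp, min_depth=0.1, max_depth=100.0)
    results[disp] = (scaled, depth)
    print(f"disp={disp:.1f} scaled_disp={scaled:.3f} depth={depth:.3f}")
```

Because depth is the reciprocal of a linear function of the disparity, equal steps in the network output correspond to much larger depth steps far from the camera than close to it. The demo scripts never call this conversion: they feed the raw disparity to the keypoint detector and generator.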

--------------------------------------------------------------------------------

/depth/layers.py:

--------------------------------------------------------------------------------

1 | # Copyright Niantic 2019. Patent Pending. All rights reserved.

2 | #

3 | # This software is licensed under the terms of the Monodepth2 licence

4 | # which allows for non-commercial use only, the full terms of which are made

5 | # available in the LICENSE file.

6 |

7 | from __future__ import absolute_import, division, print_function

8 |

9 | import numpy as np

10 | import pdb

11 | import torch

12 | import torch.nn as nn

13 | import torch.nn.functional as F

14 |

15 |

16 | def disp_to_depth(disp, min_depth, max_depth):

17 | """Convert network's sigmoid output into depth prediction

18 | The formula for this conversion is given in the 'additional considerations'

19 | section of the paper.

20 | """

21 | min_disp = 1 / max_depth

22 | max_disp = 1 / min_depth

23 | scaled_disp = min_disp + (max_disp - min_disp) * disp

24 | depth = 1 / scaled_disp

25 | return scaled_disp, depth

26 |

27 |

28 | def transformation_from_parameters(axisangle, translation, invert=False):

29 | """Convert the network's (axisangle, translation) output into a 4x4 matrix

30 | """

31 | R = rot_from_axisangle(axisangle)

32 | t = translation.clone()

33 |

34 | if invert:

35 | R = R.transpose(1, 2)

36 | t *= -1

37 |

38 | T = get_translation_matrix(t)

39 |

40 | if invert:

41 | M = torch.matmul(R, T)

42 | else:

43 | M = torch.matmul(T, R)

44 |

45 | return M

46 |

47 |

48 | def get_translation_matrix(translation_vector):

49 | """Convert a translation vector into a 4x4 transformation matrix

50 | """

51 | T = torch.zeros(translation_vector.shape[0], 4, 4).to(device=translation_vector.device)

52 |

53 | t = translation_vector.contiguous().view(-1, 3, 1)

54 |

55 | T[:, 0, 0] = 1

56 | T[:, 1, 1] = 1

57 | T[:, 2, 2] = 1

58 | T[:, 3, 3] = 1

59 | T[:, :3, 3, None] = t

60 |

61 | return T

62 |

63 |

def rot_from_axisangle(vec):
    """Convert an axisangle rotation into a 4x4 transformation matrix
    (adapted from https://github.com/Wallacoloo/printipi)
    Input 'vec' has to be Bx1x3
    """
    angle = torch.norm(vec, 2, 2, True)
    axis = vec / (angle + 1e-7)

    ca = torch.cos(angle)
    sa = torch.sin(angle)
    C = 1 - ca

    x = axis[..., 0].unsqueeze(1)
    y = axis[..., 1].unsqueeze(1)
    z = axis[..., 2].unsqueeze(1)

    xs = x * sa
    ys = y * sa
    zs = z * sa
    xC = x * C
    yC = y * C
    zC = z * C
    xyC = x * yC
    yzC = y * zC
    zxC = z * xC

    rot = torch.zeros((vec.shape[0], 4, 4)).to(device=vec.device)

    rot[:, 0, 0] = torch.squeeze(x * xC + ca)
    rot[:, 0, 1] = torch.squeeze(xyC - zs)
    rot[:, 0, 2] = torch.squeeze(zxC + ys)
    rot[:, 1, 0] = torch.squeeze(xyC + zs)
    rot[:, 1, 1] = torch.squeeze(y * yC + ca)
    rot[:, 1, 2] = torch.squeeze(yzC - xs)
    rot[:, 2, 0] = torch.squeeze(zxC - ys)
    rot[:, 2, 1] = torch.squeeze(yzC + xs)
    rot[:, 2, 2] = torch.squeeze(z * zC + ca)
    rot[:, 3, 3] = 1

    return rot

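
# Illustrative sketch, not part of the original module: the 3x3 rotation block
# that rot_from_axisangle above fills in, computed for a single axis-angle
# vector using only the standard library. The helper name is hypothetical and
# the function returns nested lists rather than a tensor.
import math


def _example_rot_from_axisangle(vec):
    angle = math.sqrt(sum(v * v for v in vec))
    # Same 1e-7 guard against division by zero as the tensor version.
    x, y, z = (v / (angle + 1e-7) for v in vec)
    ca, sa = math.cos(angle), math.sin(angle)
    C = 1.0 - ca
    return [
        [x * x * C + ca, x * y * C - z * sa, z * x * C + y * sa],
        [x * y * C + z * sa, y * y * C + ca, y * z * C - x * sa],
        [z * x * C - y * sa, y * z * C + x * sa, z * z * C + ca],
    ]


# Usage: a quarter turn about the z axis, vec = (0.0, 0.0, math.pi / 2), gives
# a matrix close to [[0, -1, 0], [1, 0, 0], [0, 0, 1]].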

class ConvBlock(nn.Module):
    """Layer to perform a convolution followed by ELU
    """
    def __init__(self, in_channels, out_channels):
        super(ConvBlock, self).__init__()

        self.conv = Conv3x3(in_channels, out_channels)
        self.nonlin = nn.ELU(inplace=True)

    def forward(self, x):
        out = self.conv(x)
        out = self.nonlin(out)
        return out


class Conv3x3(nn.Module):
    """Layer to pad and convolve input
    """
    def __init__(self, in_channels, out_channels, use_refl=True):
        super(Conv3x3, self).__init__()

        if use_refl:
            self.pad = nn.ReflectionPad2d(1)
        else:
            self.pad = nn.ZeroPad2d(1)
        self.conv = nn.Conv2d(int(in_channels), int(out_channels), 3)

    def forward(self, x):
        out = self.pad(x)
        out = self.conv(out)
        return out


class BackprojectDepth(nn.Module):
    """Layer to transform a depth image into a point cloud
    """
    def __init__(self, batch_size, height, width):
        super(BackprojectDepth, self).__init__()

        self.batch_size = batch_size
        self.height = height
        self.width = width

        meshgrid = np.meshgrid(range(self.width), range(self.height), indexing='xy')
        self.id_coords = np.stack(meshgrid, axis=0).astype(np.float32)
        self.id_coords = nn.Parameter(torch.from_numpy(self.id_coords),
                                      requires_grad=False)

        self.ones = nn.Parameter(torch.ones(self.batch_size, 1, self.height * self.width),
                                 requires_grad=False)

        self.pix_coords = torch.unsqueeze(torch.stack(
            [self.id_coords[0].view(-1), self.id_coords[1].view(-1)], 0), 0)
        self.pix_coords = self.pix_coords.repeat(batch_size, 1, 1)
        self.pix_coords = nn.Parameter(torch.cat([self.pix_coords, self.ones], 1),
                                       requires_grad=False)

    def forward(self, depth, K, scale):
        # NOTE: K is rescaled in place, so the caller's tensor is modified.
        K[:, :2, :] = (K[:, :2, :] / (2 ** scale)).trunc()
        b = K.shape[0]
        inv_K = torch.linalg.inv(K)
        # inv_K = torch.cholesky_inverse(K)
        # Pad the 3x3 inverse to a 4x4 homogeneous matrix. The padding is
        # created on K's device and dtype so the layer is not tied to CUDA.
        pad = K.new_zeros(b, 3, 1)
        inv_K = torch.cat([inv_K, pad], -1)
        pad = K.new_tensor([0.0, 0.0, 0.0, 1.0]).view(1, 1, 4).expand(b, 1, 4)
        inv_K = torch.cat([inv_K, pad], 1)
        # Back-project every pixel to a ray, scale it by depth, and append a
        # row of ones to obtain homogeneous 3D points of shape (B, 4, H*W).
        cam_points = torch.matmul(inv_K[:, :3, :3], self.pix_coords)
        cam_points = depth.view(self.batch_size, 1, -1) * cam_points
        cam_points = torch.cat([cam_points, self.ones], 1)

        return cam_points


class Project3D(nn.Module):
    """Layer which projects 3D points into a camera with intrinsics K and at position T
    """
    def __init__(self, batch_size, height, width, eps=1e-7):
        super(Project3D, self).__init__()

        self.batch_size = batch_size
        self.height = height
        self.width = width
        self.eps = eps

    def forward(self, points, K, T, scale=0):
        # K[0, :] *= self.width // (2 ** scale)
        # K[1, :] *= self.height // (2 ** scale)
        # NOTE: K is rescaled in place, so the caller's tensor is modified.
        K[:, :2, :] = (K[:, :2, :] / (2 ** scale)).trunc()
        b = K.shape[0]
        # Pad the 3x3 intrinsics to a 4x4 homogeneous matrix. The padding is
        # created on K's device and dtype so the layer is not tied to CUDA.
        pad = K.new_zeros(b, 3, 1)
        K = torch.cat([K, pad], -1)
        pad = K.new_tensor([0.0, 0.0, 0.0, 1.0]).view(1, 1, 4).expand(b, 1, 4)
        K = torch.cat([K, pad], 1)
        P = torch.matmul(K, T)[:, :3, :]

        cam_points = torch.matmul(P, points)

        # Perspective divide, then rescale pixel coordinates to [-1, 1].
        pix_coords = cam_points[:, :2, :] / (cam_points[:, 2, :].unsqueeze(1) + self.eps)
        pix_coords = pix_coords.view(self.batch_size, 2, self.height, self.width)
        pix_coords = pix_coords.permute(0, 2, 3, 1)
        pix_coords[..., 0] /= self.width - 1
        pix_coords[..., 1] /= self.height - 1
        pix_coords = (pix_coords - 0.5) * 2
        return pix_coords

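
# Illustrative sketch, not part of the original module: the final rescaling in
# Project3D.forward above, applied to one pixel with plain Python numbers. It
# maps column 0 and column width - 1 to -1 and +1 (likewise for rows), which
# is the coordinate range expected by samplers such as
# torch.nn.functional.grid_sample. The helper name is hypothetical.
def _example_normalize_pixel(u, v, height, width):
    x = (u / (width - 1) - 0.5) * 2
    y = (v / (height - 1) - 0.5) * 2
    return x, y


# Usage: for a 3x5 image (height 3, width 5) the top-left pixel (0, 0) maps to
# (-1.0, -1.0), the centre pixel (2, 1) to (0.0, 0.0) and the bottom-right
# pixel (4, 2) to (1.0, 1.0).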

def upsample(x):
    """Upsample input tensor by a factor of 2
    """
    return F.interpolate(x, scale_factor=2, mode="nearest")


def get_smooth_loss(disp, img):
    """Computes the smoothness loss for a disparity image
    The color image is used for edge-aware smoothness
    """
    grad_disp_x = torch.abs(disp[:, :, :, :-1] - disp[:, :, :, 1:])
    grad_disp_y = torch.abs(disp[:, :, :-1, :] - disp[:, :, 1:, :])

    grad_img_x = torch.mean(torch.abs(img[:, :, :, :-1] - img[:, :, :, 1:]), 1, keepdim=True)
    grad_img_y = torch.mean(torch.abs(img[:, :, :-1, :] - img[:, :, 1:, :]), 1, keepdim=True)

    grad_disp_x *= torch.exp(-grad_img_x)
    grad_disp_y *= torch.exp(-grad_img_y)

    return grad_disp_x.mean() + grad_disp_y.mean()

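
# Illustrative sketch, not part of the original module: the edge-aware
# weighting from get_smooth_loss above, reduced to a single row of grayscale
# values so the effect is easy to verify. Each disparity gradient is scaled
# by exp(-image gradient), so disparity jumps that coincide with image edges
# are penalised less. The helper name is hypothetical.
import math


def _example_edge_aware_smoothness(disp_row, img_row):
    total = 0.0
    for i in range(len(disp_row) - 1):
        grad_disp = abs(disp_row[i] - disp_row[i + 1])
        grad_img = abs(img_row[i] - img_row[i + 1])
        total += grad_disp * math.exp(-grad_img)
    return total / (len(disp_row) - 1)


# Usage: the same disparity step costs 1.0 on a flat image row ([0.0, 0.0])
# but only exp(-1) when the image row has a unit edge ([0.0, 1.0]).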

class SSIM(nn.Module):
    """Layer to compute the SSIM loss between a pair of images
    """
    def __init__(self):
        super(SSIM, self).__init__()
        self.mu_x_pool = nn.AvgPool2d(3, 1)
        self.mu_y_pool = nn.AvgPool2d(3, 1)
        self.sig_x_pool = nn.AvgPool2d(3, 1)
        self.sig_y_pool = nn.AvgPool2d(3, 1)
        self.sig_xy_pool = nn.AvgPool2d(3, 1)

        self.refl = nn.ReflectionPad2d(1)

        self.C1 = 0.01 ** 2
        self.C2 = 0.03 ** 2

    def forward(self, x, y):
        x = self.refl(x)
        y = self.refl(y)

        mu_x = self.mu_x_pool(x)
        mu_y = self.mu_y_pool(y)

        sigma_x = self.sig_x_pool(x ** 2) - mu_x ** 2
        sigma_y = self.sig_y_pool(y ** 2) - mu_y ** 2
        sigma_xy = self.sig_xy_pool(x * y) - mu_x * mu_y
