├── .gitignore
├── LICENSE
├── README.md
├── application-deployment
│   ├── README.md
│   ├── consul-connect-with-nomad
│   │   ├── .gitignore
│   │   ├── ConsulIntention.png
│   │   ├── ConsulServices.png
│   │   ├── NomadUI.png
│   │   ├── README.md
│   │   ├── aws
│   │   │   ├── main.tf
│   │   │   ├── modules
│   │   │   │   └── nomadconsul
│   │   │   │       └── nomadconsul.tf
│   │   │   ├── outputs.tf
│   │   │   ├── packer
│   │   │   │   ├── README.md
│   │   │   │   └── packer.json
│   │   │   ├── terraform.tfvars.example
│   │   │   ├── user-data-client.sh
│   │   │   ├── user-data-server.sh
│   │   │   ├── variables.tf
│   │   │   └── vpc.tf
│   │   └── shared
│   │       ├── config
│   │       │   ├── consul.json
│   │       │   ├── consul_client.json
│   │       │   ├── consul_upstart.conf
│   │       │   ├── nomad.hcl
│   │       │   ├── nomad_client.hcl
│   │       │   └── nomad_upstart.conf
│   │       ├── jobs
│   │       │   └── catalogue-with-connect.nomad
│   │       └── scripts
│   │           ├── client.sh
│   │           ├── run-proxy.sh
│   │           ├── server.sh
│   │           └── setup.sh
│   ├── fabio
│   │   ├── README.md
│   │   └── fabio.nomad
│   ├── go-blue-green
│   │   ├── README.md
│   │   └── go-app.nomad
│   ├── go-vault-dynamic-mysql-creds
│   │   ├── README.md
│   │   ├── application.nomad
│   │   ├── golang_vault_setup.sh
│   │   └── policy-mysql.hcl
│   ├── haproxy
│   │   ├── README.md
│   │   └── haproxy.nomad
│   ├── http-echo
│   │   └── http-echo.nomad
│   ├── jenkins
│   │   └── jenkins-java.nomad
│   ├── microservices
│   │   ├── ConsulUI.png
│   │   ├── LICENSE
│   │   ├── NomadUI.png
│   │   ├── README.md
│   │   ├── SockShopApp.png
│   │   ├── aws
│   │   │   ├── delay-vault-aws
│   │   │   ├── main.tf
│   │   │   ├── modules
│   │   │   │   └── nomadconsul
│   │   │   │       └── nomadconsul.tf
│   │   │   ├── outputs.tf
│   │   │   ├── packer
│   │   │   │   └── packer.json
│   │   │   ├── terraform.tfvars.example
│   │   │   ├── user-data-client.sh
│   │   │   ├── user-data-server.sh
│   │   │   ├── variables.tf
│   │   │   └── vpc.tf
│   │   ├── shared
│   │   │   ├── config
│   │   │   │   ├── consul.json
│   │   │   │   ├── consul_client.json
│   │   │   │   ├── consul_upstart.conf
│   │   │   │   ├── nomad.hcl
│   │   │   │   ├── nomad_client.hcl
│   │   │   │   └── nomad_upstart.conf
│   │   │   ├── jobs
│   │   │   │   ├── sockshop.nomad
│   │   │   │   └── sockshopui.nomad
│   │   │   └── scripts
│   │   │       ├── client.sh
│   │   │       ├── server.sh
│   │   │       └── setup.sh
│   │   ├── slides
│   │   │   └── HashiCorpMicroservicesDemo.pptx
│   │   └── vault
│   │       ├── aws-policy.json
│   │       ├── nomad-cluster-role.json
│   │       ├── nomad-server-policy.hcl
│   │       ├── setup_vault.sh
│   │       └── sockshop-read.hcl
│   ├── nginx-vault-kv
│   │   ├── README.md
│   │   ├── kv_vault_setup.sh
│   │   ├── nginx-kv-secret.nomad
│   │   └── test.policy
│   ├── nginx-vault-pki
│   │   ├── README.md
│   │   ├── nginx-pki-secret.nomad
│   │   ├── pki_vault_setup.sh
│   │   └── policy-superuser.hcl
│   ├── nginx
│   │   ├── README.md
│   │   ├── kv_consul_setup.sh
│   │   └── nginx-consul.nomad
│   ├── redis
│   │   ├── README.md
│   │   └── redis.nomad
│   └── vault
│       └── vault_exec.hcl
├── assets
│   ├── Consul_GUI_redis.png
│   ├── Fabio_GUI_empty.png
│   ├── Fabio_GUI_goapp.png
│   ├── Nginx_Consul.png
│   ├── Nginx_PKI.png
│   ├── NomadLogo.png
│   ├── Nomad_GUI_redis.png
│   ├── Vault_GUI_leases.png
│   ├── Vault_GUI_main.png
│   ├── Vault_GUI_mysql.png
│   ├── go-app-v1.png
│   └── go-app-v2.png
├── multi-cloud
│   └── README.md
├── operations
│   ├── README.md
│   ├── multi-job-demo
│   │   ├── README.md
│   │   ├── aws
│   │   │   ├── acls
│   │   │   │   ├── anonymous.hcl
│   │   │   │   ├── dev.hcl
│   │   │   │   └── qa.hcl
│   │   │   ├── bootstrap_token
│   │   │   ├── get_bootstrap_token.sh
│   │   │   ├── main.tf
│   │   │   ├── modules
│   │   │   │   ├── network
│   │   │   │   │   ├── outputs.tf
│   │   │   │   │   ├── variables.tf
│   │   │   │   │   └── vpc.tf
│   │   │   │   └── nomadconsul
│   │   │   │       ├── nomadconsul.tf
│   │   │   │       ├── outputs.tf
│   │   │   │       ├── scripts
│   │   │   │       │   ├── user-data-client.sh
│   │   │   │       │   └── user-data-server.sh
│   │   │   │       └── variables.tf
│   │   │   ├── outputs.tf
│   │   │   ├── packer
│   │   │   │   ├── README.md
│   │   │   │   └── packer.json
│   │   │   ├── sentinel
│   │   │   │   ├── allow-docker-and-java-drivers.sentinel
│   │   │   │   ├── prevent-docker-host-network.sentinel
│   │   │   │   └── restrict-docker-images.sentinel
│   │   │   ├── stop_all_jobs.sh
│   │   │   ├── terraform.tfvars.example
│   │   │   └── variables.tf
│   │   └── shared
│   │       ├── config
│   │       │   ├── consul.json
│   │       │   ├── consul_client.json
│   │       │   ├── consul_upstart.conf
│   │       │   ├── nomad.hcl
│   │       │   ├── nomad_client.hcl
│   │       │   └── nomad_upstart.conf
│   │       ├── jobs
│   │       │   ├── catalogue.nomad
│   │       │   ├── sleep.nomad
│   │       │   ├── webserver-test.nomad
│   │       │   ├── website-dev.nomad
│   │       │   └── website-qa.nomad
│   │       └── scripts
│   │           ├── client.sh
│   │           ├── server.sh
│   │           └── setup.sh
│   ├── nomad-vault
│   │   └── README.md
│   ├── provision-nomad
│   │   ├── README.md
│   │   ├── best-practices
│   │   │   └── terraform-aws
│   │   │       ├── README.md
│   │   │       ├── gitignore.tf
│   │   │       ├── main.tf
│   │   │       ├── outputs.tf
│   │   │       ├── terraform.auto.tfvars
│   │   │       └── variables.tf
│   │   ├── dev
│   │   │   ├── terraform-aws
│   │   │   │   ├── README.md
│   │   │   │   ├── gitignore.tf
│   │   │   │   ├── main.tf
│   │   │   │   ├── outputs.tf
│   │   │   │   ├── terraform.auto.tfvars
│   │   │   │   └── variables.tf
│   │   │   └── vagrant-local
│   │   │       ├── README.md
│   │   │       ├── Vagrantfile
│   │   │       └── enterprise-binaries
│   │   │           └── README.md
│   │   ├── quick-start
│   │   │   └── terraform-aws
│   │   │       ├── README.md
│   │   │       ├── gitignore.tf
│   │   │       ├── main.tf
│   │   │       ├── outputs.tf
│   │   │       ├── terraform.auto.tfvars
│   │   │       └── variables.tf
│   │   └── templates
│   │       ├── best-practices-bastion-systemd.sh.tpl
│   │       ├── best-practices-consul-systemd.sh.tpl
│   │       ├── best-practices-nomad-client-systemd.sh.tpl
│   │       ├── best-practices-nomad-server-systemd.sh.tpl
│   │       ├── best-practices-vault-systemd.sh.tpl
│   │       ├── install-base.sh.tpl
│   │       ├── install-consul-systemd.sh.tpl
│   │       ├── install-docker.sh.tpl
│   │       ├── install-java.sh.tpl
│   │       ├── install-nomad-systemd.sh.tpl
│   │       ├── install-vault-systemd.sh.tpl
│   │       ├── quick-start-bastion-systemd.sh.tpl
│   │       ├── quick-start-consul-systemd.sh.tpl
│   │       ├── quick-start-nomad-client-systemd.sh.tpl
│   │       ├── quick-start-nomad-server-systemd.sh.tpl
│   │       └── quick-start-vault-systemd.sh.tpl
│   └── sentinel
│       ├── README.md
│       ├── jobs
│       │   ├── batch.nomad
│       │   ├── docs.nomad
│       │   ├── example.nomad
│       │   └── example_two_groups.nomad
│       └── sentinel_policies
│           ├── all_drivers_docker.sentinel
│           ├── allow-docker-and-java-drivers.sentinel
│           ├── bind-namespaces-to-clients.sentinel
│           ├── enforce_multi_dc.sentinel
│           ├── policy_per_namespace.sentinel
│           ├── prevent-docker-host-network.sentinel
│           ├── require-docker-digests.sentinel
│           ├── resource_check.sentinel
│           ├── restrict-docker-images-and-prevent-latest-tag.sentinel
│           ├── restrict_batch_deploy_time.sentinel
│           ├── restrict_docker_images.sentinel
│           └── restrict_namespace_to_dc.sentinel
├── provision
│   └── vagrant
│       ├── README.md
│       ├── Vagrantfile
│       ├── vault_init_and_unseal.sh
│       └── vault_nomad_integration.sh
└── workload-flexibility
    └── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | # Compiled files

2 | *.tfstate

3 | *.tfstate.backup

4 | *.tfstate.lock.info

5 |

6 | # Directories

7 | .terraform/

8 | .vagrant/

9 |

10 | # Ignored Terraform files

11 | *gitignore*.tf

12 |

13 | .vagrant

14 | nomad

15 | vault

16 | consul

17 | *.pem

18 | .DS_Store

19 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ----

2 | - Website: https://www.nomadproject.io

3 | - GitHub repository: https://github.com/hashicorp/nomad

4 | - IRC: `#nomad-tool` on Freenode

5 | - Announcement list: [Google Groups](https://groups.google.com/group/hashicorp-announce)

6 | - Discussion list: [Google Groups](https://groups.google.com/group/nomad-tool)

7 | - Resources: https://www.nomadproject.io/resources.html

8 |

9 |

10 |

11 | ----

12 |

13 | # Nomad-Guides

14 | Example usage of HashiCorp Nomad (Work In Progress)

15 |

16 | ## provision

17 | This area will contain instructions to provision Nomad and Consul as a first step to start using these tools.

18 |

19 | These may include use cases installing Nomad in cloud services via Terraform, or within virtual environments using Vagrant, or running Nomad in a local development mode.

20 |

21 | ## application-deployment

22 | This area will contain instructions and guides for deploying applications on Nomad, including examples of delivering secrets (from Vault) to your Nomad applications.

23 |

24 | ## operations

25 | This area will contain instructions for operating Nomad, covering topics such as configuring Sentinel policies, namespaces, and ACLs.

26 |

27 | ## `gitignore.tf` Files

28 |

29 | You may notice some [`gitignore.tf`](operations/provision-nomad/best-practices/terraform-aws/gitignore.tf) files in certain directories. `.tf` files whose names contain the word "gitignore" are ignored by git through a pattern in the [`.gitignore`](./.gitignore) file.

30 |

31 | If you have local Terraform configuration that you want ignored (like Terraform backend configuration), create a new file in the directory (separate from `gitignore.tf`) that contains the word "gitignore" (e.g. `backend.gitignore.tf`) and it won't be picked up as a change.

32 |

33 | ### Contributing

34 | We welcome contributions and feedback! For guide submissions, please see [the contributions guide](CONTRIBUTING.md).

35 |

--------------------------------------------------------------------------------
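
The `*gitignore*.tf` rule described in the README can be approximated with a shell glob. The sketch below is only an illustration: `matches_rule` is a hypothetical helper, and a shell `case` pattern stands in for git's own matching, which for a pattern without a slash applies to the file name at any directory depth. A file that is already tracked (such as the committed `gitignore.tf` files) stays tracked even though its name matches.

```bash
#!/bin/bash
# Hypothetical helper: reports whether a path's file name matches the
# "*gitignore*.tf" pattern from the root .gitignore.
# Only the base name is compared, because a gitignore pattern without a
# slash can match at any directory level.
matches_rule() {
  local name
  name=$(basename "$1")
  case "$name" in
    *gitignore*.tf) echo "match" ;;
    *)              echo "no match" ;;
  esac
}

matches_rule "backend.gitignore.tf"
matches_rule "operations/provision-nomad/dev/terraform-aws/gitignore.tf"
matches_rule "operations/provision-nomad/dev/terraform-aws/main.tf"
```

For an authoritative answer inside a real checkout, `git check-ignore -v <path>` reports which rule, if any, applies.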

/application-deployment/README.md:

--------------------------------------------------------------------------------

1 | # To be implemented

2 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/.gitignore:

--------------------------------------------------------------------------------

1 | *~

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/ConsulIntention.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/ConsulIntention.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/ConsulServices.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/ConsulServices.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/NomadUI.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/NomadUI.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/main.tf:

--------------------------------------------------------------------------------

1 | terraform {

2 | required_version = ">= 0.11.10"

3 | }

4 |

5 | provider "aws" {

6 | region = "${var.region}"

7 | }

8 |

9 | module "nomadconsul" {

10 | source = "./modules/nomadconsul"

11 |

12 | region = "${var.region}"

13 | ami = "${var.ami}"

14 | vpc_id = "${aws_vpc.catalogue.id}"

15 | subnet_id = "${aws_subnet.public-subnet.id}"

16 | server_instance_type = "${var.server_instance_type}"

17 | client_instance_type = "${var.client_instance_type}"

18 | key_name = "${var.key_name}"

19 | server_count = "${var.server_count}"

20 | client_count = "${var.client_count}"

21 | name_tag_prefix = "${var.name_tag_prefix}"

22 | cluster_tag_value = "${var.cluster_tag_value}"

23 | owner = "${var.owner}"

24 | ttl = "${var.ttl}"

25 | }

26 |

27 | #resource "null_resource" "start_catalogue" {

28 | # provisioner "remote-exec" {

29 | # inline = [

30 | # "sleep 180",

31 | # "nomad job run -address=http://${module.nomadconsul.primary_server_private_ips[0]}:4646 /home/ubuntu/catalogue-with-connect.nomad",

32 | # ]

33 |

34 | # connection {

35 | # host = "${module.nomadconsul.primary_server_public_ips[0]}"

36 | # type = "ssh"

37 | # agent = false

38 | # user = "ubuntu"

39 | # private_key = "${var.private_key_data}"

40 | # }

41 | # }

42 | #}

43 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/outputs.tf:

--------------------------------------------------------------------------------

1 | output "IP_Addresses" {

2 | sensitive = true

3 | value = <<

5 | exec > >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/client.sh "${region}" "${cluster_tag_value}" "${server_ip}"

7 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/user-data-server.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | exec > >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/server.sh "${server_count}" "${region}" "${cluster_tag_value}"

7 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/variables.tf:

--------------------------------------------------------------------------------

1 | variable "region" {

2 | description = "The AWS region to deploy to."

3 | default = "us-east-1"

4 | }

5 |

6 | variable "ami" {

7 | description = "AMI ID"

8 | default = "ami-01d821506cee7b2c4"

9 | }

10 |

11 | variable "vpc_cidr" {

12 | description = "VPC CIDR"

13 | default = "10.0.0.0/16"

14 | }

15 |

16 | variable "subnet_cidr" {

17 | description = "Subnet CIDR"

18 | default = "10.0.1.0/24"

19 | }

20 |

21 | variable "subnet_az" {

22 | description = "The AZ for the public subnet"

23 | default = "us-east-1a"

24 | }

25 |

26 | variable "server_instance_type" {

27 | description = "The AWS instance type to use for servers."

28 | default = "t2.medium"

29 | }

30 |

31 | variable "client_instance_type" {

32 | description = "The AWS instance type to use for clients."

33 | default = "t2.medium"

34 | }

35 |

36 | variable "key_name" {

37 | description = "name of pre-existing SSH key to be used for provisioner auth"

38 | }

39 |

40 | variable "private_key_data" {

41 | description = "contents of the private key"

42 | }

43 |

44 | variable "server_count" {

45 | description = "The number of servers to provision."

46 | default = "1"

47 | }

48 |

49 | variable "client_count" {

50 | description = "The number of clients to provision."

51 | default = "2"

52 | }

53 |

54 | variable "name_tag_prefix" {

55 | description = "prefixed to Name tag added to EC2 instances and other AWS resources"

56 | default = "nomad-consul"

57 | }

58 |

59 | variable "cluster_tag_value" {

60 | description = "Used by Consul to automatically form a cluster."

61 | default = "nomad-consul-demo"

62 | }

63 |

64 | variable "owner" {

65 | description = "Adds owner tag to EC2 instances"

66 | default = ""

67 | }

68 |

69 | variable "ttl" {

70 | description = "Adds TTL tag to EC2 instances for reaping purposes. Reaping is only done for instances deployed by HashiCorp SEs. In any case, -1 means no reaping."

71 | default = "-1"

72 | }

73 |

--------------------------------------------------------------------------------
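
`key_name` and `private_key_data` are the only variables above without a default, so Terraform needs a value for each. One way to supply them is through `TF_VAR_<name>` environment variables. The following is a minimal pre-flight sketch under that assumption; `check_required` and `REQUIRED_VARS` are hypothetical names, not part of this repository.

```bash
#!/bin/bash
# Variables that variables.tf declares without a default.
REQUIRED_VARS="key_name private_key_data"

# Hypothetical pre-flight check: succeeds only if every required variable
# is available as a non-empty TF_VAR_<name> environment variable.
check_required() {
  local missing=0 name value
  for name in $REQUIRED_VARS; do
    # Indirect lookup of TF_VAR_<name>; empty if the variable is unset.
    eval "value=\${TF_VAR_${name}-}"
    if [ -z "$value" ]; then
      echo "missing: TF_VAR_${name}"
      missing=1
    fi
  done
  return "$missing"
}

if check_required; then
  echo "all required variables are set"
fi
```

A caller could export both variables and run the check before `terraform plan`; values can equally be placed in a `terraform.tfvars` file based on `terraform.tfvars.example`.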

/application-deployment/consul-connect-with-nomad/aws/vpc.tf:

--------------------------------------------------------------------------------

1 | # Define the VPC.

2 | resource "aws_vpc" "catalogue" {

3 | cidr_block = "${var.vpc_cidr}"

4 | enable_dns_hostnames = true

5 |

6 | tags={

7 | Name = "${var.name_tag_prefix} VPC"

8 | }

9 | }

10 |

11 | # Create an Internet Gateway for the VPC.

12 | resource "aws_internet_gateway" "catalogue" {

13 | vpc_id = "${aws_vpc.catalogue.id}"

14 |

15 | tags={

16 | Name = "${var.name_tag_prefix} IGW"

17 | }

18 | }

19 |

20 | # Create a public subnet.

21 | resource "aws_subnet" "public-subnet" {

22 | vpc_id = "${aws_vpc.catalogue.id}"

23 | cidr_block = "${var.subnet_cidr}"

24 | availability_zone = "${var.subnet_az}"

25 | map_public_ip_on_launch = true

26 | depends_on = ["aws_internet_gateway.catalogue"]

27 |

28 | tags={

29 | Name = "${var.name_tag_prefix} Public Subnet"

30 | }

31 | }

32 |

33 | # Create a route table allowing all addresses access to the IGW.

34 | resource "aws_route_table" "public" {

35 | vpc_id = "${aws_vpc.catalogue.id}"

36 |

37 | route {

38 | cidr_block = "0.0.0.0/0"

39 | gateway_id = "${aws_internet_gateway.catalogue.id}"

40 | }

41 |

42 | tags={

43 | Name = "${var.name_tag_prefix} Public Route Table"

44 | }

45 | }

46 |

47 | # Now associate the route table with the public subnet

48 | # giving all public subnet instances access to the internet.

49 | resource "aws_route_table_association" "public-subnet" {

50 | subnet_id = "${aws_subnet.public-subnet.id}"

51 | route_table_id = "${aws_route_table.public.id}"

52 | }

53 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/consul.json:

--------------------------------------------------------------------------------

1 | {

2 | "log_level": "INFO",

3 | "server": true,

4 | "ui": true,

5 | "data_dir": "/opt/consul/data",

6 | "bind_addr": "0.0.0.0",

7 | "client_addr": "0.0.0.0",

8 | "advertise_addr": "IP_ADDRESS",

9 | "recursors": ["10.0.0.2"],

10 | "bootstrap_expect": SERVER_COUNT,

11 | "service": {

12 | "name": "consul"

13 | },

14 | "connect": {

15 | "enabled": true

16 | }

17 | }

18 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/consul_client.json:

--------------------------------------------------------------------------------

1 | {

2 | "ui": true,

3 | "log_level": "INFO",

4 | "data_dir": "/opt/consul/data",

5 | "bind_addr": "0.0.0.0",

6 | "client_addr": "0.0.0.0",

7 | "advertise_addr": "IP_ADDRESS",

8 | "recursors": ["10.0.0.2"],

9 | "connect": {

10 | "enabled": true

11 | }

12 | }

13 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/consul_upstart.conf:

--------------------------------------------------------------------------------

1 | description "Consul"

2 |

3 | start on runlevel [2345]

4 | stop on runlevel [!2345]

5 |

6 | respawn

7 |

8 | console log

9 |

10 | script

11 | if [ -f "/etc/service/consul" ]; then

12 | . /etc/service/consul

13 | fi

14 |

15 | exec /usr/local/bin/consul agent \

16 | -config-dir="/etc/consul.d" \

17 | -dns-port="53" \

18 | -retry-join "provider=aws tag_key=ConsulAutoJoin tag_value=CLUSTER_TAG_VALUE region=REGION" \

19 | >>/var/log/consul.log 2>&1

20 | end script

21 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/nomad.hcl:

--------------------------------------------------------------------------------

1 | data_dir = "/opt/nomad/data"

2 | bind_addr = "IP_ADDRESS"

3 |

4 | # Enable the server

5 | server {

6 | enabled = true

7 | bootstrap_expect = SERVER_COUNT

8 | }

9 |

10 | name = "nomad@IP_ADDRESS"

11 |

12 | consul {

13 | address = "IP_ADDRESS:8500"

14 | }

15 |

16 | telemetry {

17 | publish_allocation_metrics = true

18 | publish_node_metrics = true

19 | }

20 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/nomad_client.hcl:

--------------------------------------------------------------------------------

1 | data_dir = "/opt/nomad/data"

2 | bind_addr = "IP_ADDRESS"

3 | name = "nomad@IP_ADDRESS"

4 |

5 | # Enable the client

6 | client {

7 | enabled = true

8 | options = {

9 | driver.java.enable = "1"

10 | docker.cleanup.image = false

11 | }

12 | }

13 |

14 | consul {

15 | address = "IP_ADDRESS:8500"

16 | }

17 |

18 | telemetry {

19 | publish_allocation_metrics = true

20 | publish_node_metrics = true

21 | }

22 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/config/nomad_upstart.conf:

--------------------------------------------------------------------------------

1 | description "Nomad"

2 |

3 | start on runlevel [2345]

4 | stop on runlevel [!2345]

5 |

6 | respawn

7 |

8 | console log

9 |

10 | script

11 | if [ -f "/etc/service/nomad" ]; then

12 | . /etc/service/nomad

13 | fi

14 |

15 | exec /usr/local/bin/nomad agent \

16 | -config="/etc/nomad.d/nomad.hcl" \

17 | >>/var/log/nomad.log 2>&1

18 | end script

19 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/shared/jobs/catalogue-with-connect.nomad:

--------------------------------------------------------------------------------

1 | job "catalogue-with-connect" {

2 | datacenters = ["dc1"]

3 |

4 | constraint {

5 | attribute = "${attr.kernel.name}"

6 | value = "linux"

7 | }

8 |

9 | constraint {

10 | operator = "distinct_hosts"

11 | value = "true"

12 | }

13 |

14 | update {

15 | stagger = "10s"

16 | max_parallel = 1

17 | }

18 |

19 |

20 | # - catalogue - #

21 | group "catalogue" {

22 | count = 1

23 |

24 | restart {

25 | attempts = 10

26 | interval = "5m"

27 | delay = "25s"

28 | mode = "delay"

29 | }

30 |

31 | # - app - #

32 | task "catalogue" {

33 | driver = "docker"

34 |

35 | config {

36 | image = "rberlind/catalogue:latest"

37 | command = "/app"

38 | args = ["-port", "8080", "-DSN", "catalogue_user:default_password@tcp(${NOMAD_ADDR_catalogueproxy_upstream})/socksdb"]

39 | hostname = "catalogue.service.consul"

40 | network_mode = "bridge"

41 | port_map = {

42 | http = 8080

43 | }

44 | }

45 |

46 | service {

47 | name = "catalogue"

48 | tags = ["app", "catalogue"]

49 | port = "http"

50 | }

51 |

52 | resources {

53 | cpu = 100 # 100 MHz

54 | memory = 128 # 128MB

55 | network {

56 | mbits = 10

57 | port "http" {

58 | static = 8080

59 | }

60 | }

61 | }

62 | } # - end app - #

63 |

64 | # - catalogue connect upstream proxy - #

65 | task "catalogueproxy" {

66 | driver = "exec"

67 |

68 | config {

69 | command = "/usr/local/bin/run-proxy.sh"

70 | args = ["${NOMAD_IP_proxy}", "${NOMAD_TASK_DIR}", "catalogue"]

71 | }

72 |

73 | meta {

74 | proxy_name = "catalogue"

75 | proxy_target = "catalogue-db"

76 | }

77 |

78 | template {

79 | data = </dev/null

7 | }

8 |

9 | trap term SIGINT

10 |

11 | private_ip=$1

12 | local_dir=$2

13 | proxy=$3

14 | echo "main PID is $$"

15 | echo "private_ip is ${private_ip}"

16 | echo "local_dir is ${local_dir}"

17 | echo "proxy is ${proxy}"

18 | curl --request PUT --data @${local_dir}/${proxy}-proxy.json http://localhost:8500/v1/agent/service/register

19 |

20 | /usr/local/bin/consul connect proxy -http-addr http://${private_ip}:8500 -sidecar-for ${proxy} &

21 |

22 | child=$!

23 | wait "$child"

24 |

--------------------------------------------------------------------------------
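
The tail of `run-proxy.sh` shown above starts the proxy in the background, records its PID in `child`, and waits on it with a trap installed. A common reason for that shape is to forward a stop signal to the child process. The sketch below illustrates the pattern with `sleep` standing in for the proxy; the handler body and the self-sent signal are assumptions made for the demonstration, since the original handler is not fully visible here.

```bash
#!/bin/bash
# PID of this shell, used by the simulated "stop" below.
self=${BASHPID:-$$}
child=""

# Assumed handler: pass the termination request on to the child process.
term() {
  echo "forwarding signal to child"
  kill -TERM "$child" 2>/dev/null
}
trap term TERM INT

# Stand-in for the long-running proxy process.
sleep 30 &
child=$!

# Simulate a scheduler stopping the task after one second.
( sleep 1; kill -TERM "$self" ) &

# The first wait returns early when the trapped signal arrives and the
# handler runs; the second wait reaps the child once it has exited.
wait "$child" || true
wait "$child" || true
echo "child exited"
```

Without the trap, the script itself would exit on the signal while the background process could keep running.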

/application-deployment/consul-connect-with-nomad/shared/scripts/server.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | CONFIGDIR=/ops/shared/config

6 |

7 | CONSULCONFIGDIR=/etc/consul.d

8 | NOMADCONFIGDIR=/etc/nomad.d

9 | HOME_DIR=ubuntu

10 |

11 | # Wait for network

12 | sleep 15

13 |

14 | IP_ADDRESS=$(curl http://instance-data/latest/meta-data/local-ipv4)

15 | SERVER_COUNT=$1

16 | REGION=$2

17 | CLUSTER_TAG_VALUE=$3

18 |

19 | # Consul

20 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/consul.json

21 | sed -i "s/SERVER_COUNT/$SERVER_COUNT/g" $CONFIGDIR/consul.json

22 | sed -i "s/REGION/$REGION/g" $CONFIGDIR/consul_upstart.conf

23 | sed -i "s/CLUSTER_TAG_VALUE/$CLUSTER_TAG_VALUE/g" $CONFIGDIR/consul_upstart.conf

24 | cp $CONFIGDIR/consul.json $CONSULCONFIGDIR

25 | cp $CONFIGDIR/consul_upstart.conf /etc/init/consul.conf

26 |

27 | service consul start

28 | sleep 10

29 | export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500

30 |

31 | # Nomad

32 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/nomad.hcl

33 | sed -i "s/SERVER_COUNT/$SERVER_COUNT/g" $CONFIGDIR/nomad.hcl

34 | cp $CONFIGDIR/nomad.hcl $NOMADCONFIGDIR

35 | cp $CONFIGDIR/nomad_upstart.conf /etc/init/nomad.conf

36 | export NOMAD_ADDR=http://$IP_ADDRESS:4646

37 |

38 | echo "nameserver $IP_ADDRESS" | tee /etc/resolv.conf.new

39 | cat /etc/resolv.conf | tee --append /etc/resolv.conf.new

40 | mv /etc/resolv.conf.new /etc/resolv.conf

41 |

42 | # Add search service.consul at bottom of /etc/resolv.conf

43 | echo "search service.consul" | tee --append /etc/resolv.conf

44 |

45 | # Set env vars for tool CLIs

46 | echo "export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500" | tee --append /home/$HOME_DIR/.bashrc

47 | echo "export NOMAD_ADDR=http://$IP_ADDRESS:4646" | tee --append /home/$HOME_DIR/.bashrc

48 |

49 | # Start Docker

50 | service docker restart

51 |

52 | # Copy Nomad jobs and scripts to desired locations

53 | cp /ops/shared/jobs/* /home/ubuntu/.

54 | chown -R $HOME_DIR:$HOME_DIR /home/$HOME_DIR/

55 | chmod 666 /home/ubuntu/*

56 |

57 | # Start Nomad

58 | service nomad start

59 | sleep 60

60 |

--------------------------------------------------------------------------------
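
`server.sh` fills the `IP_ADDRESS` and `SERVER_COUNT` placeholders in the shared config files with `sed -i`. The sketch below shows the same substitution on a throwaway template, writing to standard output instead of editing in place. `render_config` is a hypothetical helper and `10.0.1.10` is an example address; the real script reads the address from the instance metadata endpoint.

```bash
#!/bin/bash
set -e

# Hypothetical helper: replace the IP_ADDRESS and SERVER_COUNT placeholders
# in a template and print the result.
render_config() {
  local template=$1 ip=$2 count=$3
  sed -e "s/IP_ADDRESS/$ip/g" -e "s/SERVER_COUNT/$count/g" "$template"
}

# Throwaway template shaped like a fragment of shared/config/nomad.hcl.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
bind_addr = "IP_ADDRESS"
server {
  enabled = true
  bootstrap_expect = SERVER_COUNT
}
name = "nomad@IP_ADDRESS"
EOF

rendered=$(render_config "$tmp" "10.0.1.10" "1")
echo "$rendered"
rm -f "$tmp"
```

Because the placeholders are plain words, any other occurrence of `IP_ADDRESS` or `SERVER_COUNT` in a file would be replaced as well, which is why the templates use them only as markers.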

/application-deployment/consul-connect-with-nomad/shared/scripts/setup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | : ${DEBUG=0}

4 | if [ "0" != "$DEBUG" ]; then

5 | set -x

6 | fi

7 |

8 | set -eu

9 |

10 | cd /ops

11 |

12 | CONFIGDIR=/ops/shared/config

13 |

14 | : ${CONSULVERSION=1.10.1}

15 | CONSULDOWNLOAD=https://releases.hashicorp.com/consul/${CONSULVERSION}/consul_${CONSULVERSION}_linux_amd64.zip

16 | CONSULCONFIGDIR=/etc/consul.d

17 | CONSULDIR=/opt/consul

18 |

19 | : ${NOMADVERSION=1.1.2}

20 | NOMADDOWNLOAD=https://releases.hashicorp.com/nomad/${NOMADVERSION}/nomad_${NOMADVERSION}_linux_amd64.zip

21 | NOMADCONFIGDIR=/etc/nomad.d

22 | NOMADDIR=/opt/nomad

23 |

24 | # Dependencies

25 | sudo apt-get install -y software-properties-common

26 | sudo apt-get update

27 | sudo apt-get install -y unzip tree redis-tools jq

28 | sudo apt-get install -y upstart-sysv

29 | sudo update-initramfs -u

30 |

31 | # Disable the firewall

32 | sudo ufw disable

33 |

34 | # Download Consul

35 | curl -L $CONSULDOWNLOAD > consul.zip

36 |

37 | ## Install Consul

38 | sudo unzip consul.zip -d /usr/local/bin

39 | sudo chmod 0755 /usr/local/bin/consul

40 | sudo chown root:root /usr/local/bin/consul

41 | sudo setcap "cap_net_bind_service=+ep" /usr/local/bin/consul

42 |

43 | ## Configure Consul

44 | sudo mkdir -p $CONSULCONFIGDIR

45 | sudo chmod 755 $CONSULCONFIGDIR

46 | sudo mkdir -p $CONSULDIR

47 | sudo chmod 755 $CONSULDIR

48 |

49 | # Download Nomad

50 | curl -L $NOMADDOWNLOAD > nomad.zip

51 |

52 | ## Install Nomad

53 | sudo unzip nomad.zip -d /usr/local/bin

54 | sudo chmod 0755 /usr/local/bin/nomad

55 | sudo chown root:root /usr/local/bin/nomad

56 |

57 | ## Configure Nomad

58 | sudo mkdir -p $NOMADCONFIGDIR

59 | sudo chmod 755 $NOMADCONFIGDIR

60 | sudo mkdir -p $NOMADDIR

61 | sudo chmod 755 $NOMADDIR

62 |

63 | # Docker

64 | sudo apt-get update

65 | sudo apt-get install -y apt-transport-https ca-certificates curl software-properties-common

66 | curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

67 | sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

68 | sudo apt-get update

69 | sudo apt-get install -y docker-ce=17.09.1~ce-0~ubuntu

70 | sudo usermod -aG docker ubuntu

71 |

--------------------------------------------------------------------------------
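
`setup.sh` pins tool versions with the `: ${VAR=default}` idiom, which assigns the default only when the variable is unset, so a value exported by the caller takes precedence. The following sketch isolates that idiom and the download URL layout used above; `release_url` is a hypothetical helper introduced for illustration.

```bash
#!/bin/bash
# Hypothetical helper: build a releases.hashicorp.com download URL using
# the same layout as CONSULDOWNLOAD and NOMADDOWNLOAD in setup.sh.
release_url() {
  local product=$1 version=$2
  echo "https://releases.hashicorp.com/${product}/${version}/${product}_${version}_linux_amd64.zip"
}

# Defaults apply only if the caller has not already set the variable.
: "${CONSULVERSION=1.10.1}"
: "${NOMADVERSION=1.1.2}"

release_url consul "$CONSULVERSION"
release_url nomad "$NOMADVERSION"
```

Running the script with, for example, `NOMADVERSION` exported beforehand changes only the Nomad URL and leaves the Consul default untouched.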

/application-deployment/fabio/fabio.nomad:

--------------------------------------------------------------------------------

1 |

2 | job "fabio" {

3 | datacenters = ["dc1"]

4 | type = "system"

5 |

6 | group "fabio" {

7 | count = 1

8 |

9 | task "fabio" {

10 | driver = "raw_exec"

11 |

12 | artifact {

13 | source = "https://github.com/fabiolb/fabio/releases/download/v1.5.4/fabio-1.5.4-go1.9.2-linux_amd64"

14 | }

15 |

16 | config {

17 | command = "fabio-1.5.4-go1.9.2-linux_amd64"

18 | }

19 |

20 | resources {

21 | cpu = 100 # 100 MHz

22 | memory = 128 # 128MB

23 | network {

24 | mbits = 10

25 | port "http" {

26 | static = 9999

27 | }

28 | port "admin" {

29 | static = 9998

30 | }

31 | }

32 | }

33 | }

34 | }

35 | }

--------------------------------------------------------------------------------

/application-deployment/go-blue-green/go-app.nomad:

--------------------------------------------------------------------------------

1 | job "go-app" {

2 | datacenters = ["dc1"]

3 | type = "service"

4 | update {

5 | max_parallel = 1

6 | min_healthy_time = "10s"

7 | healthy_deadline = "3m"

8 | auto_revert = false

9 | canary = 3

10 | }

11 | group "go-app" {

12 | count = 3

13 | restart {

14 | # The number of attempts to run the job within the specified interval.

15 | attempts = 10

16 | interval = "5m"

17 | # The "delay" parameter specifies the duration to wait before restarting

18 | # a task after it has failed.

19 | delay = "25s"

20 | mode = "delay"

21 | }

22 | ephemeral_disk {

23 | size = 300

24 | }

25 | task "go-app" {

26 | # The "driver" parameter specifies the task driver that should be used to

27 | # run the task.

28 | driver = "docker"

29 | config {

30 | # change to go-app-2.0

31 | image = "aklaas2/go-app-1.0"

32 | port_map {

33 | http = 8080

34 | }

35 | }

36 | resources {

37 | cpu = 500 # 500 MHz

38 | memory = 256 # 256MB

39 | network {

40 | mbits = 10

41 | port "http" {

42 | #static=8080

43 | }

44 | }

45 | }

46 | service {

47 | name = "go-app"

48 | tags = [ "urlprefix-/go-app", "go-app" ]

49 | port = "http"

50 | check {

51 | name = "alive"

52 | type = "tcp"

53 | interval = "10s"

54 | timeout = "2s"

55 | }

56 | }

57 | }

58 | }

59 | }

60 |

--------------------------------------------------------------------------------
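
In `go-app.nomad`, `canary = 3` equals the group `count = 3`, which is the usual way to get a blue/green rollout: the new version is started alongside the old one and traffic-serving allocations are only replaced after promotion. The sketch below walks through that flow as a dry run. `NOMAD_CMD`, `bump_image`, and `rollout` are hypothetical names, and the `nomad job promote` subcommand is assumed to be available in the installed Nomad version.

```bash
#!/bin/bash
# By default the commands are printed, not executed. Set NOMAD_CMD=nomad to
# run them against a real cluster.
NOMAD_CMD=${NOMAD_CMD:-echo nomad}

# Print a job file with the image switched from go-app-1.0 to go-app-2.0,
# as the "change to go-app-2.0" comment in the job suggests.
bump_image() {
  sed 's#aklaas2/go-app-1\.0#aklaas2/go-app-2.0#' "$1"
}

rollout() {
  local jobfile=$1
  # NOMAD_CMD is left unquoted on purpose so "echo nomad" splits into words.
  # Submitting the changed job starts the canary allocations.
  $NOMAD_CMD job run "$jobfile"
  # Inspect the deployment before deciding to promote.
  $NOMAD_CMD job status go-app
  # Promotion lets the new version replace the old allocations.
  $NOMAD_CMD job promote go-app
}

rollout go-app.nomad
```

With `auto_revert = false`, as in the job above, an unhealthy canary set is not rolled back automatically, so the status check before promotion matters.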

/application-deployment/go-vault-dynamic-mysql-creds/application.nomad:

--------------------------------------------------------------------------------

1 | job "app" {

2 | datacenters = ["dc1"]

3 | type = "service"

4 |

5 | update {

6 | stagger = "5s"

7 | max_parallel = 1

8 | }

9 |

10 | group "app" {

11 | count = 3

12 |

13 | task "app" {

14 | driver = "exec"

15 | config {

16 | command = "goapp"

17 | }

18 |

19 | env {

20 | VAULT_ADDR = "http://active.vault.service.consul:8200"

21 | APP_DB_HOST = "db.service.consul:3306"

22 | }

23 |

24 | vault {

25 | policies = [ "mysql" ]

26 | }

27 |

28 | artifact {

29 | source = "https://s3.amazonaws.com/ak-bucket-1/goapp"

30 | }

31 |

32 | resources {

33 | cpu = 500

34 | memory = 64

35 | network {

36 | mbits = 1

37 | port "http" {

38 | static = 8080

39 | }

40 | }

41 | }

42 |

43 | service {

44 | name = "app"

45 | tags = ["urlprefix-/app", "go-mysql-app"]

46 | port = "http"

47 | check {

48 | type = "http"

49 | name = "healthz"

50 | interval = "15s"

51 | timeout = "5s"

52 | path = "/healthz"

53 | }

54 | }

55 | }

56 | }

57 | }

58 |

--------------------------------------------------------------------------------

/application-deployment/go-vault-dynamic-mysql-creds/golang_vault_setup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | consul kv get service/vault/root-token | vault auth -

4 |

5 | POLICY='path "database/creds/readonly" { capabilities = [ "read", "list" ] }'

6 |

7 | echo "$POLICY" > policy-mysql.hcl

8 |

9 | vault policy-write mysql policy-mysql.hcl

10 |

--------------------------------------------------------------------------------

/application-deployment/go-vault-dynamic-mysql-creds/policy-mysql.hcl:

--------------------------------------------------------------------------------

1 | path "database/creds/readonly" { capabilities = [ "read", "list" ] }

2 |

--------------------------------------------------------------------------------

/application-deployment/haproxy/README.md:

--------------------------------------------------------------------------------

1 | HAPROXY example

2 |

3 | ### TLDR;

4 | ```bash

5 | # Assumes the Vagrantfile (lots of port forwarding); adjust IPs and ports accordingly

6 |

7 | vagrant@node1:/vagrant/application-deployment/haproxy$ nomad run haproxy.nomad

8 |

9 | vagrant@node1:/vagrant/application-deployment/haproxy$ nomad run /vagrant/application-deployment/go-blue-green/go-app.nomad

10 |

11 | #Golang app (routed via HAPROXY)

12 | http://localhost:9080/

13 |

14 | #Vault GUI:

15 | http://localhost:3200/ui/vault/auth

16 |

17 | #Consul GUI:

18 | http://localhost:3500/ui/#/dc1/services

19 |

20 | #Nomad GUI:

21 | http://localhost:3646/ui/jobs

22 |

23 | ```

24 |

25 |

26 |

27 |

28 |

29 | # GUIDE: TODO

--------------------------------------------------------------------------------

/application-deployment/haproxy/haproxy.nomad:

--------------------------------------------------------------------------------

1 | job "lb" {

2 | region = "global"

3 | datacenters = ["dc1"]

4 | type = "service"

5 | update { stagger = "10s"

6 | max_parallel = 1

7 | }

8 | group "lb" {

9 | count = 3

10 | restart {

11 | interval = "5m"

12 | attempts = 10

13 | delay = "25s"

14 | mode = "delay"

15 | }

16 | task "haproxy" {

17 | driver = "docker"

18 | config {

19 | image = "haproxy"

20 | network_mode = "host"

21 | port_map {

22 | http = 80

23 | }

24 | volumes = [

25 | "custom/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg"

26 | ]

27 | }

28 | template {

29 | #source = "haproxy.cfg.tpl"

30 | data = < >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/client.sh "${region}" "${cluster_tag_value}" "${server_ip}" "${vault_url}"

7 |

--------------------------------------------------------------------------------

/application-deployment/microservices/aws/user-data-server.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | exec > >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/server.sh "${server_count}" "${region}" "${cluster_tag_value}" "${token_for_nomad}" "${vault_url}"

7 |

--------------------------------------------------------------------------------

/application-deployment/microservices/aws/variables.tf:

--------------------------------------------------------------------------------

1 | variable "region" {

2 | description = "The AWS region to deploy to."

3 | default = "us-east-1"

4 | }

5 |

6 | variable "ami" {

7 | description = "AMI ID"

8 | default = "ami-009feb0e09775afc6"

9 | }

10 |

11 | variable "vpc_cidr" {

12 | description = "VPC CIDR"

13 | default = "10.0.0.0/16"

14 | }

15 |

16 | variable "subnet_cidr" {

17 | description = "Subnet CIDR"

18 | default = "10.0.1.0/24"

19 | }

20 |

21 | variable "subnet_az" {

22 | description = "The AZ for the public subnet"

23 | default = "us-east-1a"

24 | }

25 |

26 | variable "server_instance_type" {

27 | description = "The AWS instance type to use for servers."

28 | default = "t2.medium"

29 | }

30 |

31 | variable "client_instance_type" {

32 | description = "The AWS instance type to use for clients."

33 | default = "t2.medium"

34 | }

35 |

36 | variable "key_name" {}

37 |

38 | variable "private_key_data" {

39 | description = "contents of the private key"

40 | }

41 |

42 | variable "server_count" {

43 | description = "The number of servers to provision."

44 | default = "1"

45 | }

46 |

47 | variable "client_count" {

48 | description = "The number of clients to provision."

49 | default = "2"

50 | }

51 |

52 | variable "name_tag_prefix" {

53 | description = "prefixed to Name tag added to EC2 instances and other AWS resources"

54 | default = "nomad-consul"

55 | }

56 |

57 | variable "cluster_tag_value" {

58 | description = "Used by Consul to automatically form a cluster."

59 | default = "nomad-consul-demo"

60 | }

61 |

62 | variable "owner" {

63 | description = "Adds owner tag to EC2 instances"

64 | default = ""

65 | }

66 |

67 | variable "ttl" {

68 | description = "Adds TTL tag to EC2 instances for reaping purposes. Reaping is only done for instances deployed by HashiCorp SEs. In any case, -1 means no reaping."

69 | default = "-1"

70 | }

71 |

72 | variable "token_for_nomad" {

73 | description = "A Vault token for use by Nomad"

74 | }

75 |

76 | variable "vault_url" {

77 | description = "URL of your Vault server including port"

78 | }

79 |

--------------------------------------------------------------------------------

/application-deployment/microservices/aws/vpc.tf:

--------------------------------------------------------------------------------

1 | # Define the VPC.

2 | resource "aws_vpc" "sockshop" {

3 | cidr_block = "${var.vpc_cidr}"

4 | enable_dns_hostnames = true

5 |

6 | tags {

7 | Name = "${var.name_tag_prefix} VPC"

8 | }

9 | }

10 |

11 | # Create an Internet Gateway for the VPC.

12 | resource "aws_internet_gateway" "sockshop" {

13 | vpc_id = "${aws_vpc.sockshop.id}"

14 |

15 | tags {

16 | Name = "${var.name_tag_prefix} IGW"

17 | }

18 | }

19 |

20 | # Create a public subnet.

21 | resource "aws_subnet" "public-subnet" {

22 | vpc_id = "${aws_vpc.sockshop.id}"

23 | cidr_block = "${var.subnet_cidr}"

24 | availability_zone = "${var.subnet_az}"

25 | map_public_ip_on_launch = true

26 | depends_on = ["aws_internet_gateway.sockshop"]

27 |

28 | tags {

29 | Name = "${var.name_tag_prefix} Public Subnet"

30 | }

31 | }

32 |

33 | # Create a route table allowing all addresses access to the IGW.

34 | resource "aws_route_table" "public" {

35 | vpc_id = "${aws_vpc.sockshop.id}"

36 |

37 | route {

38 | cidr_block = "0.0.0.0/0"

39 | gateway_id = "${aws_internet_gateway.sockshop.id}"

40 | }

41 |

42 | tags {

43 | Name = "${var.name_tag_prefix} Public Route Table"

44 | }

45 | }

46 |

47 | # Now associate the route table with the public subnet

48 | # giving all public subnet instances access to the internet.

49 | resource "aws_route_table_association" "public-subnet" {

50 | subnet_id = "${aws_subnet.public-subnet.id}"

51 | route_table_id = "${aws_route_table.public.id}"

52 | }

53 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/consul.json:

--------------------------------------------------------------------------------

1 | {

2 | "log_level": "INFO",

3 | "server": true,

4 | "ui": true,

5 | "data_dir": "/opt/consul/data",

6 | "bind_addr": "IP_ADDRESS",

7 | "client_addr": "IP_ADDRESS",

8 | "advertise_addr": "IP_ADDRESS",

9 | "bootstrap_expect": SERVER_COUNT,

10 | "service": {

11 | "name": "consul"

12 | }

13 | }

14 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/consul_client.json:

--------------------------------------------------------------------------------

1 | {

2 | "ui": true,

3 | "log_level": "INFO",

4 | "data_dir": "/opt/consul/data",

5 | "bind_addr": "IP_ADDRESS",

6 | "client_addr": "IP_ADDRESS",

7 | "advertise_addr": "IP_ADDRESS"

8 | }

9 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/consul_upstart.conf:

--------------------------------------------------------------------------------

1 | description "Consul"

2 |

3 | start on runlevel [2345]

4 | stop on runlevel [!2345]

5 |

6 | respawn

7 |

8 | console log

9 |

10 | script

11 | if [ -f "/etc/service/consul" ]; then

12 | . /etc/service/consul

13 | fi

14 |

15 | exec /usr/local/bin/consul agent \

16 | -config-dir="/etc/consul.d" \

17 | -dns-port="53" \

18 | -recursor="150.10.20.2" \

19 | -retry-join "provider=aws tag_key=ConsulAutoJoin tag_value=CLUSTER_TAG_VALUE region=REGION" \

20 | >>/var/log/consul.log 2>&1

21 | end script

22 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/nomad.hcl:

--------------------------------------------------------------------------------

1 | data_dir = "/opt/nomad/data"

2 | bind_addr = "IP_ADDRESS"

3 |

4 | # Enable the server

5 | server {

6 | enabled = true

7 | bootstrap_expect = SERVER_COUNT

8 | }

9 |

10 | name = "nomad@IP_ADDRESS"

11 |

12 | consul {

13 | address = "IP_ADDRESS:8500"

14 | }

15 |

16 | vault {

17 | enabled = true

18 | address = "VAULT_URL"

19 | task_token_ttl = "1h"

20 | create_from_role = "nomad-cluster"

21 | token = "TOKEN_FOR_NOMAD"

22 | }

23 |

24 | telemetry {

25 | publish_allocation_metrics = true

26 | publish_node_metrics = true

27 | }

28 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/nomad_client.hcl:

--------------------------------------------------------------------------------

1 | data_dir = "/opt/nomad/data"

2 | bind_addr = "IP_ADDRESS"

3 | name = "nomad@IP_ADDRESS"

4 |

5 | # Enable the client

6 | client {

7 | enabled = true

8 | options = {

9 | driver.java.enable = "1"

10 | docker.cleanup.image = false

11 | }

12 | }

13 |

14 | consul {

15 | address = "IP_ADDRESS:8500"

16 | }

17 |

18 | vault {

19 | enabled = true

20 | address = "VAULT_URL"

21 | }

22 |

23 | telemetry {

24 | publish_allocation_metrics = true

25 | publish_node_metrics = true

26 | }

27 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/config/nomad_upstart.conf:

--------------------------------------------------------------------------------

1 | description "Nomad"

2 |

3 | start on runlevel [2345]

4 | stop on runlevel [!2345]

5 |

6 | respawn

7 |

8 | console log

9 |

10 | script

11 | if [ -f "/etc/service/nomad" ]; then

12 | . /etc/service/nomad

13 | fi

14 |

15 | exec /usr/local/bin/nomad agent \

16 | -config="/etc/nomad.d/nomad.hcl" \

17 | >>/var/log/nomad.log 2>&1

18 | end script

19 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/jobs/sockshopui.nomad:

--------------------------------------------------------------------------------

1 | job "sockshopui" {

2 | datacenters = ["dc1"]

3 |

4 | type = "system"

5 |

6 | constraint {

7 | attribute = "${attr.kernel.name}"

8 | value = "linux"

9 | }

10 |

11 | update {

12 | stagger = "10s"

13 | max_parallel = 1

14 | }

15 |

16 | # - frontend #

17 | group "frontend" {

18 |

19 | restart {

20 | attempts = 10

21 | interval = "5m"

22 | delay = "25s"

23 | mode = "delay"

24 | }

25 |

26 | # - frontend app - #

27 | task "front-end" {

28 | driver = "docker"

29 |

30 | config {

31 | image = "weaveworksdemos/front-end:master-ac9ca707"

32 | command = "/usr/local/bin/node"

33 | args = ["server.js", "--domain=service.consul"]

34 | hostname = "front-end.service.consul"

35 | network_mode = "sockshop"

36 | port_map = {

37 | http = 8079

38 | }

39 | }

40 |

41 | service {

42 | name = "front-end"

43 | tags = ["app", "frontend", "front-end"]

44 | port = "http"

45 | }

46 |

47 | resources {

48 | cpu = 100 # 100 MHz

49 | memory = 128 # 128MB

50 | network {

51 | mbits = 10

52 | port "http" {

53 | static = 80

54 | }

55 | }

56 | }

57 | } # - end frontend app - #

58 | } # - end frontend - #

59 | }

60 |

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/scripts/client.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | CONFIGDIR=/ops/shared/config

6 |

7 | CONSULCONFIGDIR=/etc/consul.d

8 | NOMADCONFIGDIR=/etc/nomad.d

9 | HOME_DIR=ubuntu

10 |

11 | # Wait for network

12 | sleep 15

13 |

14 | IP_ADDRESS=$(curl http://instance-data/latest/meta-data/local-ipv4)

15 | DOCKER_BRIDGE_IP_ADDRESS=(`ifconfig docker0 2>/dev/null|awk '/inet addr:/ {print $2}'|sed 's/addr://'`)

16 | REGION=$1

17 | CLUSTER_TAG_VALUE=$2

18 | SERVER_IP=$3

19 | VAULT_URL=$4

20 |

21 | # Install Java

22 | apt-get update

23 | apt install -y default-jre

24 |

25 | # Consul

26 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/consul_client.json

27 | sed -i "s/CLUSTER_TAG_VALUE/$CLUSTER_TAG_VALUE/g" $CONFIGDIR/consul_upstart.conf

28 | sed -i "s/REGION/$REGION/g" $CONFIGDIR/consul_upstart.conf

29 | cp $CONFIGDIR/consul_client.json $CONSULCONFIGDIR/consul.json

30 | cp $CONFIGDIR/consul_upstart.conf /etc/init/consul.conf

31 |

32 | service consul start

33 | sleep 10

34 | export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500

35 |

36 | # Nomad

37 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/nomad_client.hcl

38 | sed -i "s@VAULT_URL@$VAULT_URL@g" $CONFIGDIR/nomad_client.hcl

39 | cp $CONFIGDIR/nomad_client.hcl $NOMADCONFIGDIR/nomad.hcl

40 | cp $CONFIGDIR/nomad_upstart.conf /etc/init/nomad.conf

41 |

42 | service nomad start

43 | sleep 10

44 | export NOMAD_ADDR=http://$IP_ADDRESS:4646

45 |

46 | # Add hostname to /etc/hosts

47 | echo "127.0.0.1 $(hostname)" | tee --append /etc/hosts

48 |

49 | # Add Docker bridge network IP to /etc/resolv.conf (at the top)

50 | #echo "nameserver $DOCKER_BRIDGE_IP_ADDRESS" | tee /etc/resolv.conf.new

51 | echo "nameserver $IP_ADDRESS" | tee /etc/resolv.conf.new

52 | cat /etc/resolv.conf | tee --append /etc/resolv.conf.new

53 | mv /etc/resolv.conf.new /etc/resolv.conf

54 |

55 | # Add search service.consul at bottom of /etc/resolv.conf

56 | echo "search service.consul" | tee --append /etc/resolv.conf

57 |

58 | # Set env vars for tool CLIs

59 | echo "export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500" | tee --append /home/$HOME_DIR/.bashrc

60 | echo "export VAULT_ADDR=$VAULT_URL" | tee --append /home/$HOME_DIR/.bashrc

61 | echo "export NOMAD_ADDR=http://$IP_ADDRESS:4646" | tee --append /home/$HOME_DIR/.bashrc

62 |

63 | # Move daemon.json to /etc/docker

64 | echo "{\"hosts\":[\"tcp://0.0.0.0:2375\",\"unix:///var/run/docker.sock\"],\"cluster-store\":\"consul://$IP_ADDRESS:8500\",\"cluster-advertise\":\"$IP_ADDRESS:2375\",\"dns\":[\"$IP_ADDRESS\"],\"dns-search\":[\"service.consul\"]}" > /home/ubuntu/daemon.json

65 | mkdir -p /etc/docker

66 | mv /home/ubuntu/daemon.json /etc/docker/daemon.json

67 |

68 | # Start Docker

69 | service docker restart

70 |
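The `/etc/resolv.conf` handling in the script above (put a new `nameserver` first, keep the old entries, append a `search` domain) can be tried safely on a scratch file. This is a minimal sketch, not part of the original script: the IP address and the pre-existing `nameserver 10.0.0.2` line are made-up values, and a temporary file stands in for `/etc/resolv.conf`.

```bash
#!/bin/bash
set -e

# Hypothetical address; the real script reads it from the EC2 instance metadata.
IP_ADDRESS="10.0.1.15"

# Stand-in for /etc/resolv.conf so the real file is never touched.
RESOLV=$(mktemp)
printf 'nameserver 10.0.0.2\n' > "$RESOLV"

# Write the new first line, append the old contents, then swap the file in.
echo "nameserver $IP_ADDRESS" > "$RESOLV.new"
cat "$RESOLV" >> "$RESOLV.new"
mv "$RESOLV.new" "$RESOLV"

# Append the search domain at the bottom.
echo "search service.consul" >> "$RESOLV"

cat "$RESOLV"
```

Building the new file next to the old one and renaming it keeps the replacement a single `mv`, the same approach the script takes with `/etc/resolv.conf.new`.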

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/scripts/server.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | CONFIGDIR=/ops/shared/config

6 |

7 | CONSULCONFIGDIR=/etc/consul.d

8 | NOMADCONFIGDIR=/etc/nomad.d

9 | HOME_DIR=ubuntu

10 |

11 | # Wait for network

12 | sleep 15

13 |

14 | IP_ADDRESS=$(curl http://instance-data/latest/meta-data/local-ipv4)

15 | DOCKER_BRIDGE_IP_ADDRESS=(`ifconfig docker0 2>/dev/null|awk '/inet addr:/ {print $2}'|sed 's/addr://'`)

16 | SERVER_COUNT=$1

17 | REGION=$2

18 | CLUSTER_TAG_VALUE=$3

19 | TOKEN_FOR_NOMAD=$4

20 | VAULT_URL=$5

21 |

22 | # Consul

23 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/consul.json

24 | sed -i "s/SERVER_COUNT/$SERVER_COUNT/g" $CONFIGDIR/consul.json

25 | sed -i "s/REGION/$REGION/g" $CONFIGDIR/consul_upstart.conf

26 | sed -i "s/CLUSTER_TAG_VALUE/$CLUSTER_TAG_VALUE/g" $CONFIGDIR/consul_upstart.conf

27 | cp $CONFIGDIR/consul.json $CONSULCONFIGDIR

28 | cp $CONFIGDIR/consul_upstart.conf /etc/init/consul.conf

29 |

30 | service consul start

31 | sleep 10

32 | export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500

33 |

34 | # Nomad

35 | sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" $CONFIGDIR/nomad.hcl

36 | sed -i "s/SERVER_COUNT/$SERVER_COUNT/g" $CONFIGDIR/nomad.hcl

37 | sed -i "s@VAULT_URL@$VAULT_URL@g" $CONFIGDIR/nomad.hcl

38 | sed -i "s/TOKEN_FOR_NOMAD/$TOKEN_FOR_NOMAD/g" $CONFIGDIR/nomad.hcl

39 | cp $CONFIGDIR/nomad.hcl $NOMADCONFIGDIR

40 | cp $CONFIGDIR/nomad_upstart.conf /etc/init/nomad.conf

41 | export NOMAD_ADDR=http://$IP_ADDRESS:4646

42 |

43 | # Add hostname to /etc/hosts

44 | echo "127.0.0.1 $(hostname)" | tee --append /etc/hosts

45 |

46 | # Add Docker bridge network IP to /etc/resolv.conf (at the top)

47 | #echo "nameserver $DOCKER_BRIDGE_IP_ADDRESS" | tee /etc/resolv.conf.new

48 | echo "nameserver $IP_ADDRESS" | tee /etc/resolv.conf.new

49 | cat /etc/resolv.conf | tee --append /etc/resolv.conf.new

50 | mv /etc/resolv.conf.new /etc/resolv.conf

51 |

52 | # Add search service.consul at bottom of /etc/resolv.conf

53 | echo "search service.consul" | tee --append /etc/resolv.conf

54 |

55 | # Set env vars for tool CLIs

56 | echo "export CONSUL_HTTP_ADDR=$IP_ADDRESS:8500" | tee --append /home/$HOME_DIR/.bashrc

57 | echo "export VAULT_ADDR=$VAULT_URL" | tee --append /home/$HOME_DIR/.bashrc

58 | echo "export NOMAD_ADDR=http://$IP_ADDRESS:4646" | tee --append /home/$HOME_DIR/.bashrc

59 |

60 | # Move daemon.json to /etc/docker

61 | echo "{\"hosts\":[\"tcp://0.0.0.0:2375\",\"unix:///var/run/docker.sock\"],\"cluster-store\":\"consul://$IP_ADDRESS:8500\",\"cluster-advertise\":\"$IP_ADDRESS:2375\",\"dns\":[\"$IP_ADDRESS\"],\"dns-search\":[\"service.consul\"]}" > /home/ubuntu/daemon.json

62 | mkdir -p /etc/docker

63 | mv /home/ubuntu/daemon.json /etc/docker/daemon.json

64 |

65 | # Start Docker

66 | service docker restart

67 |

68 | # Create Docker Networks

69 | for network in sockshop; do

70 | if [ $(docker network ls | grep $network | wc -l) -eq 0 ]

71 | then

72 | docker network create -d overlay --attachable $network

73 | else

74 | echo docker network $network already created

75 | fi

76 | done

77 |

78 | # Copy Nomad jobs and scripts to desired locations

79 | cp /ops/shared/jobs/*.nomad /home/ubuntu/.

80 | chown -R $HOME_DIR:$HOME_DIR /home/$HOME_DIR/

81 | chmod 666 /home/ubuntu/*

82 |

83 | # Start Nomad

84 | service nomad start

85 | sleep 60

86 |
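The placeholder substitution that `server.sh` and `client.sh` apply to the files under `shared/config` can be reproduced in isolation. The following is a minimal sketch rather than the scripts themselves: the IP address, server count, and Vault URL are hypothetical values, the config is a trimmed stand-in for `nomad.hcl`, and GNU `sed` is assumed (as on the Ubuntu hosts the guide targets).

```bash
#!/bin/bash
set -e

# Hypothetical values; the real scripts receive these as positional arguments.
IP_ADDRESS="10.0.1.15"
SERVER_COUNT="3"
VAULT_URL="http://vault.example.com:8200"

# Work on a throwaway copy so nothing under /ops or /etc is touched.
WORKDIR=$(mktemp -d)
cat > "$WORKDIR/nomad.hcl" <<'EOF'
bind_addr = "IP_ADDRESS"
server {
  enabled = true
  bootstrap_expect = SERVER_COUNT
}
vault {
  address = "VAULT_URL"
}
EOF

# "/" works as the sed delimiter while the value contains no slash.
sed -i "s/IP_ADDRESS/$IP_ADDRESS/g" "$WORKDIR/nomad.hcl"
sed -i "s/SERVER_COUNT/$SERVER_COUNT/g" "$WORKDIR/nomad.hcl"
# The URL contains slashes, so "@" is used as the delimiter instead.
sed -i "s@VAULT_URL@$VAULT_URL@g" "$WORKDIR/nomad.hcl"

cat "$WORKDIR/nomad.hcl"
```

The switch to `@` for `VAULT_URL` mirrors the original scripts: a value containing `/` would otherwise terminate the `s/.../.../` expression early.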

--------------------------------------------------------------------------------

/application-deployment/microservices/shared/scripts/setup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 | cd /ops

5 |

6 | CONFIGDIR=/ops/shared/config

7 |

8 | CONSULVERSION=1.3.0

9 | CONSULDOWNLOAD=https://releases.hashicorp.com/consul/${CONSULVERSION}/consul_${CONSULVERSION}_linux_amd64.zip

10 | CONSULCONFIGDIR=/etc/consul.d

11 | CONSULDIR=/opt/consul

12 |

13 | NOMADVERSION=0.8.6

14 | NOMADDOWNLOAD=https://releases.hashicorp.com/nomad/${NOMADVERSION}/nomad_${NOMADVERSION}_linux_amd64.zip

15 | NOMADCONFIGDIR=/etc/nomad.d

16 | NOMADDIR=/opt/nomad

17 |

18 | # Dependencies

19 | sudo apt-get install -y software-properties-common

20 | sudo apt-get update

21 | sudo apt-get install -y unzip tree redis-tools jq

22 | sudo apt-get install -y upstart-sysv

23 | sudo update-initramfs -u

24 |

25 | # Disable the firewall

26 | sudo ufw disable

27 |

28 | # Download Consul

29 | curl -L $CONSULDOWNLOAD > consul.zip

30 |

31 | ## Install Consul

32 | sudo unzip consul.zip -d /usr/local/bin

33 | sudo chmod 0755 /usr/local/bin/consul

34 | sudo chown root:root /usr/local/bin/consul

35 | sudo setcap "cap_net_bind_service=+ep" /usr/local/bin/consul

36 |

37 | ## Configure Consul

38 | sudo mkdir -p $CONSULCONFIGDIR

39 | sudo chmod 755 $CONSULCONFIGDIR

40 | sudo mkdir -p $CONSULDIR

41 | sudo chmod 755 $CONSULDIR

42 |

43 | # Download Nomad

44 | curl -L $NOMADDOWNLOAD > nomad.zip

45 |

46 | ## Install Nomad

47 | sudo unzip nomad.zip -d /usr/local/bin

48 | sudo chmod 0755 /usr/local/bin/nomad

49 | sudo chown root:root /usr/local/bin/nomad

50 |

51 | ## Configure Nomad

52 | sudo mkdir -p $NOMADCONFIGDIR

53 | sudo chmod 755 $NOMADCONFIGDIR

54 | sudo mkdir -p $NOMADDIR

55 | sudo chmod 755 $NOMADDIR

56 |

57 | # Docker

58 | sudo apt-get update

59 | sudo apt-get install -y apt-transport-https ca-certificates curl software-properties-common

60 | curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

61 | sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

62 | sudo apt-get update

63 | sudo apt-get install -y docker-ce=17.09.1~ce-0~ubuntu

64 | sudo usermod -aG docker ubuntu

65 |

--------------------------------------------------------------------------------

/application-deployment/microservices/slides/HashiCorpMicroservicesDemo.pptx:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/microservices/slides/HashiCorpMicroservicesDemo.pptx

--------------------------------------------------------------------------------

/application-deployment/microservices/vault/aws-policy.json:

--------------------------------------------------------------------------------

1 | {

2 | "Version": "2012-10-17",

3 | "Statement": [

4 | {

5 | "Sid": "Stmt1426528957000",

6 | "Effect": "Allow",

7 | "Action": [

8 | "ec2:*",

9 | "iam:*"

10 | ],

11 | "Resource": [

12 | "*"

13 | ]

14 | }

15 | ]

16 | }

17 |

--------------------------------------------------------------------------------

/application-deployment/microservices/vault/nomad-cluster-role.json:

--------------------------------------------------------------------------------

1 | {

2 | "disallowed_policies": "nomad-server",

3 | "explicit_max_ttl": 0,

4 | "name": "nomad-cluster",

5 | "orphan": false,

6 | "period": 259200,

7 | "renewable": true

8 | }

9 |

--------------------------------------------------------------------------------

/application-deployment/microservices/vault/nomad-server-policy.hcl:

--------------------------------------------------------------------------------

1 | # Allow creating tokens under "nomad-cluster" role. The role name should be

2 | # updated if "nomad-cluster" is not used.

3 | path "auth/token/create/nomad-cluster" {

4 | capabilities = ["update"]

5 | }

6 |

7 | # Allow looking up "nomad-cluster" role. The role name should be updated if

8 | # "nomad-cluster" is not used.

9 | path "auth/token/roles/nomad-cluster" {

10 | capabilities = ["read"]

11 | }

12 |

13 | # Allow looking up the token passed to Nomad to validate the token has the

14 | # proper capabilities. This is provided by the "default" policy.

15 | path "auth/token/lookup-self" {

16 | capabilities = ["read"]

17 | }

18 |

19 | # Allow looking up incoming tokens to validate they have permissions to access

20 | # the tokens they are requesting. This is only required if

21 | # `allow_unauthenticated` is set to false.

22 | path "auth/token/lookup" {

23 | capabilities = ["update"]

24 | }

25 |

26 | # Allow revoking tokens that should no longer exist. This allows revoking

27 | # tokens for dead tasks.

28 | path "auth/token/revoke-accessor" {

29 | capabilities = ["update"]

30 | }

31 |

32 | # Allow checking the capabilities of our own token. This is used to validate the

33 | # token upon startup.

34 | path "sys/capabilities-self" {

35 | capabilities = ["update"]

36 | }

37 |

38 | # Allow our own token to be renewed.

39 | path "auth/token/renew-self" {

40 | capabilities = ["update"]

41 | }

42 |

--------------------------------------------------------------------------------

/application-deployment/microservices/vault/setup_vault.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Script to set up Vault for the Nomad/Consul demo

4 | echo "Before running this, you must export your"

5 | echo "AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY keys"

6 | echo "and your VAULT_ADDR and VAULT_TOKEN environment variables."

7 |

8 | # Set up the Vault AWS Secrets Engine

9 | echo "Setting up the AWS Secrets Engine"

10 | echo "Enabling the AWS secrets engine at path aws-tf"

11 | vault secrets enable -path=aws-tf aws

12 | echo "Providing Vault with AWS keys that can create other keys"

13 | vault write aws-tf/config/root access_key=$AWS_ACCESS_KEY_ID secret_key=$AWS_SECRET_ACCESS_KEY

14 | echo "Configuring default and max leases on generated keys"

15 | vault write aws-tf/config/lease lease=1h lease_max=24h

16 | echo "Creating the AWS deploy role and assigning policy to it"

17 | vault write aws-tf/roles/deploy policy=@aws-policy.json

18 |

19 | # Create sockshop-read policy

20 | vault policy write sockshop-read sockshop-read.hcl

21 |

22 | # Write the cataloguedb and userdb passwords to Vault

23 | vault write secret/sockshop/databases/cataloguedb pwd=dioe93kdo931

24 | vault write secret/sockshop/databases/userdb pwd=wo39c5h2sl4r

25 |

26 | # Setup Vault policy/role for Nomad

27 | echo "Setting up Vault policy and role for Nomad"

28 | echo "Writing nomad-server-policy.hcl to Vault"

29 | vault policy write nomad-server nomad-server-policy.hcl

30 | echo "Writing nomad-cluster-role.json to Vault"

31 | vault write auth/token/roles/nomad-cluster @nomad-cluster-role.json

32 |
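`setup_vault.sh` prints a reminder to export four environment variables but does not verify them before calling `vault`. One possible guard is sketched below. The helper name `require_env` is hypothetical and not part of the original script, and the sketch relies on bash indirect expansion.

```bash
#!/bin/bash

# Return non-zero and name the first missing variable, if any.
require_env() {
  local name
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    if [ -z "${!name:-}" ]; then
      echo "Missing required environment variable: $name" >&2
      return 1
    fi
  done
}

# Possible usage near the top of the script, using the variables its banner lists:
# require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY VAULT_ADDR VAULT_TOKEN || exit 1
```

Failing early this way avoids enabling the secrets engine and then stopping halfway because a later `vault write` received an empty key.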

--------------------------------------------------------------------------------

/application-deployment/microservices/vault/sockshop-read.hcl:

--------------------------------------------------------------------------------

1 | # Read Access to Sock Shop secrets

2 | path "secret/sockshop/*" {

3 | capabilities = ["read"]

4 | }

5 |

--------------------------------------------------------------------------------

/application-deployment/nginx-vault-kv/README.md:

--------------------------------------------------------------------------------

1 | # Nomad-Vault Nginx Key/Value

2 |

3 | ### TLDR;

4 | ```bash

5 | vagrant@node1:/vagrant/vault-examples/nginx/KeyValue$ ./kv_vault_setup.sh

6 | Successfully authenticated! You are now logged in.

7 | token: 25bf4150-94a4-7292-974c-9c3fa4c8ee53

8 | token_duration: 0

9 | token_policies: [root]

10 | Success! Data written to: secret/test

11 | Policy 'test' written.

12 |

13 | vagrant@node1:/vagrant/vault-examples/nginx/KeyValue$ nomad run nginx-kv-secret.nomad

14 |

15 | # In your browser, go to (runs on clients on static port 8080):

16 | http://localhost:8080/nginx-secret/

17 | #Good morning. secret: Live demos rock!!!

18 |

19 | ```

20 |

21 | # Guide: TODO

--------------------------------------------------------------------------------

/application-deployment/nginx-vault-kv/kv_vault_setup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | consul kv get service/vault/root-token | vault auth -

4 |

5 | vault write secret/test message='Live demos rock!!!'

6 |

7 | cat << EOF > test.policy

8 | path "secret/*" {

9 | capabilities = ["create", "read", "update", "delete", "list"]

10 | }

11 | EOF

12 |

13 | vault policy-write test test.policy

14 |

--------------------------------------------------------------------------------

/application-deployment/nginx-vault-kv/nginx-kv-secret.nomad:

--------------------------------------------------------------------------------

1 | job "nginx" {

2 | datacenters = ["dc1"]

3 | type = "service"

4 |

5 | group "nginx" {

6 | count = 3

7 |

8 | vault {

9 | policies = ["test"]

10 | }

11 |

12 | task "nginx" {

13 | driver = "docker"

14 |

15 | config {

16 | image = "nginx"

17 | port_map {

18 | http = 8080

19 | }

20 | port_map {

21 | https = 443

22 | }

23 | volumes = [

24 | "custom/default.conf:/etc/nginx/conf.d/default.conf"

25 | ]

26 | }

27 |

28 | template {

29 | data = < policy-superuser.hcl

81 |

82 | vault policy-write superuser policy-superuser.hcl

83 | ```

84 |

85 | Execute the script

86 | ```bash

87 | vagrant@node1:/vagrant/vault-examples/nginx/pki$ ./pki_vault_setup.sh

88 | ```

89 |

90 | ## Step 2: Run the Job

91 | ```bash

92 | vagrant@node1:/vagrant/vault-examples/nginx/PKI$ nomad run nginx-pki-secret.nomad

93 | ```

94 |

95 | ## Step 3: Validate Results

96 | The nginx containers should be running on port 443 of your Nomad clients (static port configuration).

97 |

98 | If using the Vagrantfile, go to your browser at:

99 | https://localhost:9443/

100 |

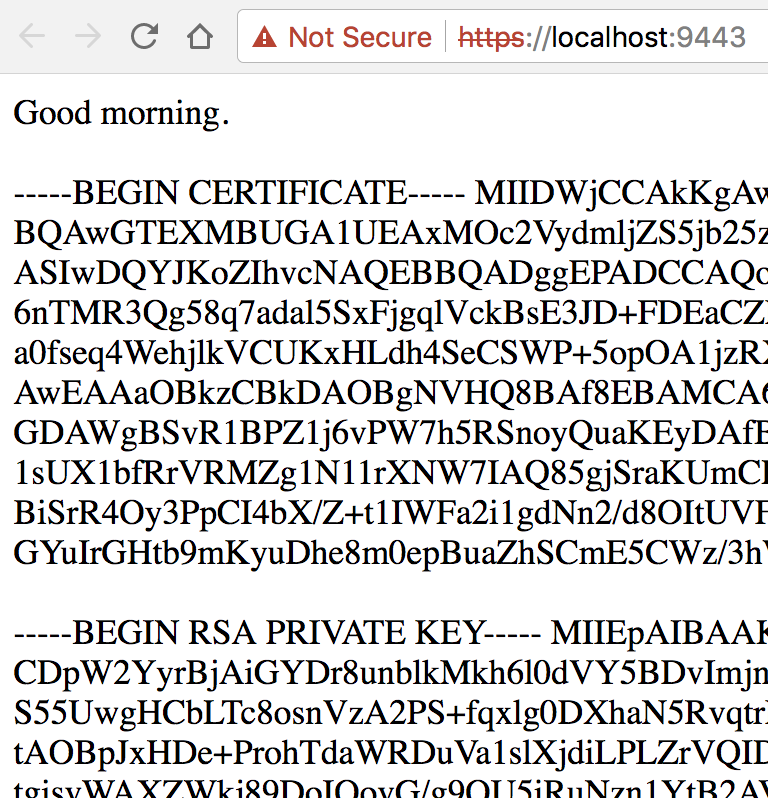

101 | Your browser should warn you about an untrusted cert. If you would like, you can import the root CA cert generated by the configuration script (`pki_vault_setup.sh`) into your browser.

102 |

103 | Once rendered, you should see a webpage showing the dynamic cert and key used by the Nginx task for its SSL config.

104 |

105 |

106 |

107 |

--------------------------------------------------------------------------------

/application-deployment/nginx-vault-pki/nginx-pki-secret.nomad:

--------------------------------------------------------------------------------

1 | job "nginx" {

2 | datacenters = ["dc1"]

3 | type = "service"

4 |

5 | group "nginx" {

6 | count = 3

7 |

8 | vault {

9 | policies = ["superuser"]

10 | }

11 |

12 | task "nginx" {

13 | driver = "docker"

14 |

15 | config {

16 | image = "nginx"

17 | port_map {

18 | http = 80

19 | }

20 | port_map {

21 | https = 443

22 | }

23 | volumes = [

24 | "custom/default.conf:/etc/nginx/conf.d/default.conf",

25 | "secret/cert.key:/etc/nginx/ssl/nginx.key",

26 | ]

27 | }

28 |

29 | template {

30 | data = <

65 |

10 |

11 | ----

12 |

13 | # Nomad-Guides

14 | Example usage of HashiCorp Nomad (Work In Progress)

15 |

16 | ## provision

17 | This area will contain instructions to provision Nomad and Consul as a first step to start using these tools.

18 |

19 | These may include use cases installing Nomad in cloud services via Terraform, or within virtual environments using Vagrant, or running Nomad in a local development mode.

20 |

21 | ## application-deployment

22 | This area will contain instructions and guides for deploying applications on Nomad, including examples of deploying secrets (from Vault) into your Nomad applications.

23 |

24 | ## operations

25 | This area will contain instructions for operating Nomad. This includes topics such as configuring Sentinel policies, namespaces, and ACLs.

26 |

27 | ## `gitignore.tf` Files

28 |

29 | You may notice some [`gitignore.tf`](operations/provision-consul/best-practices/terraform-aws/gitignore.tf) files in certain directories. `.tf` files that contain the word "gitignore" are ignored by git in the [`.gitignore`](./.gitignore) file.

30 |

31 | If you have local Terraform configuration that you want ignored (like Terraform backend configuration), create a new file in the directory (separate from `gitignore.tf`) that contains the word "gitignore" (e.g. `backend.gitignore.tf`) and it won't be picked up as a change.

32 |

33 | ### Contributing

34 | We welcome contributions and feedback! For guide submissions, please see [the contributions guide](CONTRIBUTING.md).

35 |

--------------------------------------------------------------------------------

/application-deployment/README.md:

--------------------------------------------------------------------------------

1 | # To be implemented

2 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/.gitignore:

--------------------------------------------------------------------------------

1 | *~

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/ConsulIntention.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/ConsulIntention.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/ConsulServices.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/ConsulServices.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/NomadUI.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hashicorp/nomad-guides/cdda5a0ebaaa2c009783c24e98817622d9b7593a/application-deployment/consul-connect-with-nomad/NomadUI.png

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/main.tf:

--------------------------------------------------------------------------------

1 | terraform {

2 | required_version = ">= 0.11.10"

3 | }

4 |

5 | provider "aws" {

6 | region = "${var.region}"

7 | }

8 |

9 | module "nomadconsul" {

10 | source = "./modules/nomadconsul"

11 |

12 | region = "${var.region}"

13 | ami = "${var.ami}"

14 | vpc_id = "${aws_vpc.catalogue.id}"

15 | subnet_id = "${aws_subnet.public-subnet.id}"

16 | server_instance_type = "${var.server_instance_type}"

17 | client_instance_type = "${var.client_instance_type}"

18 | key_name = "${var.key_name}"

19 | server_count = "${var.server_count}"

20 | client_count = "${var.client_count}"

21 | name_tag_prefix = "${var.name_tag_prefix}"

22 | cluster_tag_value = "${var.cluster_tag_value}"

23 | owner = "${var.owner}"

24 | ttl = "${var.ttl}"

25 | }

26 |

27 | #resource "null_resource" "start_catalogue" {

28 | # provisioner "remote-exec" {

29 | # inline = [

30 | # "sleep 180",

31 | # "nomad job run -address=http://${module.nomadconsul.primary_server_private_ips[0]}:4646 /home/ubuntu/catalogue-with-connect.nomad",

32 | # ]

33 |

34 | # connection {

35 | # host = "${module.nomadconsul.primary_server_public_ips[0]}"

36 | # type = "ssh"

37 | # agent = false

38 | # user = "ubuntu"

39 | # private_key = "${var.private_key_data}"

40 | # }

41 | # }

42 | #}

43 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/outputs.tf:

--------------------------------------------------------------------------------

1 | output "IP_Addresses" {

2 | sensitive = true

3 | value = < >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/client.sh "${region}" "${cluster_tag_value}" "${server_ip}"

7 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/user-data-server.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | exec > >(sudo tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1

6 | sudo bash /ops/shared/scripts/server.sh "${server_count}" "${region}" "${cluster_tag_value}"

7 |

--------------------------------------------------------------------------------

/application-deployment/consul-connect-with-nomad/aws/variables.tf:

--------------------------------------------------------------------------------

1 | variable "region" {

2 | description = "The AWS region to deploy to."

3 | default = "us-east-1"

4 | }

5 |

6 | variable "ami" {

7 | description = "AMI ID"

8 | default = "ami-01d821506cee7b2c4"

9 | }

10 |

11 | variable "vpc_cidr" {

12 | description = "VPC CIDR"

13 | default = "10.0.0.0/16"

14 | }

15 |

16 | variable "subnet_cidr" {

17 | description = "Subnet CIDR"

18 | default = "10.0.1.0/24"

19 | }

20 |

21 | variable "subnet_az" {