├── .clog.toml
├── .envrc
├── .github
│   ├── FUNDING.yml
│   └── workflows
│       ├── ci.yml
│       └── release.yml
├── .gitignore
├── CHANGELOG.md
├── Cargo.toml
├── IMPLEMENTATION.md
├── LICENSE
├── README.md
├── benches
│   └── bench.rs
├── bin
│   └── loom.sh
├── flake.lock
├── flake.nix
├── rust-toolchain.toml
├── src
│   ├── cfg.rs
│   ├── clear.rs
│   ├── implementation.rs
│   ├── iter.rs
│   ├── lib.rs
│   ├── macros.rs
│   ├── page
│   │   ├── mod.rs
│   │   ├── slot.rs
│   │   └── stack.rs
│   ├── pool.rs
│   ├── shard.rs
│   ├── sync.rs
│   ├── tests
│   │   ├── custom_config.rs
│   │   ├── loom_pool.rs
│   │   ├── loom_slab.rs
│   │   ├── mod.rs
│   │   └── properties.rs
│   └── tid.rs
└── tests
    └── reserved_bits_leak.rs

/.clog.toml:

--------------------------------------------------------------------------------

1 | [clog]

2 | # A repository link with the trailing '.git' which will be used to generate

3 | # all commit and issue links

4 | repository = "https://github.com/hawkw/sharded-slab"

5 | # A constant release title

6 | # subtitle = "sharded-slab"

7 |

8 | # specify the style of commit links to generate, defaults to "github" if omitted

9 | link-style = "github"

10 |

11 | # The preferred way to set a constant changelog. This file will be read for old changelog

12 | # data, then prepended to for new changelog data. It's the equivalent to setting

13 | # both infile and outfile to the same file.

14 | #

15 | # Do not use with outfile or infile fields!

16 | #

17 | # Defaults to stdout when omitted

18 | changelog = "CHANGELOG.md"

19 |

20 | # This sets the output format. There are two options "json" or "markdown" and

21 | # defaults to "markdown" when omitted

22 | output-format = "markdown"

23 |

24 | # If you use tags, you can set the following if you wish to only pick

25 | # up changes since your latest tag

26 | from-latest-tag = true

27 |

--------------------------------------------------------------------------------

/.envrc:

--------------------------------------------------------------------------------

1 | use flake;

--------------------------------------------------------------------------------

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | # These are supported funding model platforms

2 |

3 | github: [hawkw]

4 | patreon: # Replace with a single Patreon username

5 | open_collective: # Replace with a single Open Collective username

6 | ko_fi: # Replace with a single Ko-fi username

7 | tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel

8 | community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry

9 | liberapay: # Replace with a single Liberapay username

10 | issuehunt: # Replace with a single IssueHunt username

11 | otechie: # Replace with a single Otechie username

12 | custom: # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']

13 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI
2 |
3 | on:
4 |   push:
5 |     branches: ["main"]
6 |   pull_request:
7 |
8 | env:
9 |   RUSTFLAGS: -Dwarnings
10 |   RUST_BACKTRACE: 1
11 |   MSRV: 1.42.0
12 |
13 | jobs:
14 |   build:
15 |     name: Build (stable, ${{ matrix.target }})
16 |     runs-on: ubuntu-latest
17 |     strategy:
18 |       matrix:
19 |         target:
20 |           - x86_64-unknown-linux-gnu
21 |           - i686-unknown-linux-musl
22 |     steps:
23 |       - uses: actions/checkout@master
24 |       - name: Install toolchain
25 |         uses: actions-rs/toolchain@v1
26 |         with:
27 |           profile: minimal
28 |           toolchain: stable
29 |           target: ${{ matrix.target }}
30 |           override: true
31 |       - name: cargo build --target ${{ matrix.target }}
32 |         uses: actions-rs/cargo@v1
33 |         with:
34 |           command: build
35 |           args: --all-targets --target ${{ matrix.target }}
36 |
37 |   build-msrv:
38 |     name: Build (MSRV)
39 |     runs-on: ubuntu-latest
40 |     steps:
41 |       - uses: actions/checkout@master
42 |       - name: Install toolchain
43 |         uses: actions-rs/toolchain@v1
44 |         with:
45 |           profile: minimal
46 |           toolchain: ${{ env.MSRV }}
47 |           override: true
48 |       - name: cargo +${{ env.MSRV }} build
49 |         uses: actions-rs/cargo@v1
50 |         with:
51 |           command: build
52 |         env:
53 |           RUSTFLAGS: "" # remove -Dwarnings
54 |
55 |   build-nightly:
56 |     name: Build (nightly)
57 |     runs-on: ubuntu-latest
58 |     steps:
59 |       - uses: actions/checkout@master
60 |       - name: Install toolchain
61 |         uses: actions-rs/toolchain@v1
62 |         with:
63 |           profile: minimal
64 |           toolchain: nightly
65 |           override: true
66 |       - name: cargo +nightly build
67 |         uses: actions-rs/cargo@v1
68 |         with:
69 |           command: build
70 |         env:
71 |           RUSTFLAGS: "" # remove -Dwarnings
72 |
73 |   test:
74 |     name: Tests (stable)
75 |     needs: build
76 |     runs-on: ubuntu-latest
77 |     steps:
78 |       - uses: actions/checkout@master
79 |       - name: Install toolchain
80 |         uses: actions-rs/toolchain@v1
81 |         with:
82 |           profile: minimal
83 |           toolchain: stable
84 |           override: true
85 |       - name: Run tests
86 |         run: cargo test
87 |
88 |   test-loom:
89 |     name: Loom tests (stable)
90 |     needs: build
91 |     runs-on: ubuntu-latest
92 |     steps:
93 |       - uses: actions/checkout@master
94 |       - name: Install toolchain
95 |         uses: actions-rs/toolchain@v1
96 |         with:
97 |           profile: minimal
98 |           toolchain: stable
99 |           override: true
100 |       - name: Run Loom tests
101 |         run: ./bin/loom.sh
102 |
103 |   clippy:
104 |     name: Clippy (stable)
105 |     runs-on: ubuntu-latest
106 |     steps:
107 |       - uses: actions/checkout@v2
108 |       - name: Install toolchain
109 |         uses: actions-rs/toolchain@v1
110 |         with:
111 |           profile: minimal
112 |           toolchain: stable
113 |           components: clippy
114 |           override: true
115 |       - name: cargo clippy --all-targets --all-features
116 |         uses: actions-rs/clippy-check@v1
117 |         with:
118 |           token: ${{ secrets.GITHUB_TOKEN }}
119 |           args: --all-targets --all-features
120 |
121 |   rustfmt:
122 |     name: Rustfmt (stable)
123 |     runs-on: ubuntu-latest
124 |     steps:
125 |       - uses: actions/checkout@v2
126 |       - name: Install toolchain
127 |         uses: actions-rs/toolchain@v1
128 |         with:
129 |           profile: minimal
130 |           toolchain: stable
131 |           components: rustfmt
132 |           override: true
133 |       - name: Run rustfmt
134 |         uses: actions-rs/cargo@v1
135 |         with:
136 |           command: fmt
137 |           args: -- --check
138 |
139 |   all-systems-go:
140 |     name: "all systems go!"
141 |     needs:
142 |       - build
143 |       - build-msrv
144 |       # Note: we explicitly *don't* require the `build-nightly` job to pass,
145 |       # since the nightly Rust compiler is unstable. We don't want nightly
146 |       # regressions to break our build --- this CI job is intended for
147 |       # informational reasons rather than as a gatekeeper for merging PRs.
148 |       - test
149 |       - test-loom
150 |       - clippy
151 |       - rustfmt
152 |     runs-on: ubuntu-latest
153 |     steps:
154 |       - run: exit 0
155 |

--------------------------------------------------------------------------------

/.github/workflows/release.yml:

--------------------------------------------------------------------------------

1 | name: Release
2 |
3 | on:
4 |   push:
5 |     tags:
6 |       - v[0-9]+.*
7 |
8 | jobs:
9 |   create-release:
10 |     runs-on: ubuntu-latest
11 |     steps:
12 |       - uses: actions/checkout@v2
13 |       - uses: taiki-e/create-gh-release-action@v1
14 |         with:
15 |           # Path to changelog.
16 |           changelog: CHANGELOG.md
17 |           # Reject releases from commits not contained in branches
18 |           # that match the specified pattern (regular expression)
19 |           branch: main
20 |         env:
21 |           # (Required) GitHub token for creating GitHub Releases.
22 |           GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | target

2 | Cargo.lock

3 | .direnv

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 |

2 | ### v0.1.7 (2023-10-04)

3 |

4 |

5 | #### Bug Fixes

6 |

7 | * index out of bounds in `get()` and `get_owned()` (#88) ([fdbc930f](https://github.com/hawkw/sharded-slab/commit/fdbc930fb14b0f6f8b77cd6efdad5a1bdf8d3c04))

8 | * **unique_iter:** prevent panics if a slab is empty (#88) ([bd599e0b](https://github.com/hawkw/sharded-slab/commit/bd599e0b2a60a953f25f27ba1fa86682150e05c2), closes [#73](https://github.com/hawkw/sharded-slab/issues/73))

9 |

10 |

11 |

12 |

13 | ## 0.1.6 (2023-09-27)

14 |

15 |

16 | #### Features

17 |

18 | * publicly export `UniqueIter` (#87) ([e4d6482d](https://github.com/hawkw/sharded-slab/commit/e4d6482db05d5767b47eae1b0217faad30f2ebd5), closes [#77](https://github.com/hawkw/sharded-slab/issues/77))

19 |

20 | #### Bug Fixes

21 |

22 | * use a smaller `CustomConfig` for 32-bit tests (#84) ([828ffff9](https://github.com/hawkw/sharded-slab/commit/828ffff9f82cfc41ed66b4743563c4dddc97c1ce), closes [#82](https://github.com/hawkw/sharded-slab/issues/82))

23 |

24 |

25 |

26 |

27 | ## 0.1.5 (2023-08-28)

28 |

29 |

30 | #### Bug Fixes

31 |

32 | * **Slab:** invalid generation in case of custom config (#80) ([ca090279](https://github.com/hawkw/sharded-slab/commit/ca09027944812d024676029a3dde62d27ef22015))

33 |

34 |

35 |

36 |

37 | ### 0.1.4 (2021-10-12)

38 |

39 |

40 | #### Features

41 |

42 | * emit a nicer panic when thread count overflows `MAX_SHARDS` (#64) ([f1ed058a](https://github.com/hawkw/sharded-slab/commit/f1ed058a3ee296eff033fc0fb88f62a8b2f83f10))

43 |

44 |

45 |

46 |

47 | ### 0.1.3 (2021-08-02)

48 |

49 |

50 | #### Bug Fixes

51 |

52 | * set up MSRV in CI (#61) ([dfcc9080](https://github.com/hawkw/sharded-slab/commit/dfcc9080a62d08e359f298a9ffb0f275928b83e4), closes [#60](https://github.com/hawkw/sharded-slab/issues/60))

53 | * **tests:** duplicate `hint` mod defs with loom ([0ce3fd91](https://github.com/hawkw/sharded-slab/commit/0ce3fd91feac8b4edb4f1ece6aebfc4ba4e50026))

54 |

55 |

56 |

57 |

58 | ### 0.1.2 (2021-08-01)

59 |

60 |

61 | #### Bug Fixes

62 |

63 | * make debug assertions drop safe ([26d35a69](https://github.com/hawkw/sharded-slab/commit/26d35a695c9e5d7c62ab07cc5e66a0c6f8b6eade))

64 |

65 | #### Features

66 |

67 | * improve panics on thread ID bit exhaustion ([9ecb8e61](https://github.com/hawkw/sharded-slab/commit/9ecb8e614f107f68b5c6ba770342ae72af1cd07b))

68 |

69 |

70 |

71 |

72 | ## 0.1.1 (2021-01-04)

73 |

74 |

75 | #### Bug Fixes

76 |

77 | * change `loom` to an optional dependency ([9bd442b5](https://github.com/hawkw/sharded-slab/commit/9bd442b57bc56153a67d7325144ebcf303e0fe98))

78 |

79 |

80 | ## 0.1.0 (2020-10-20)

81 |

82 |

83 | #### Bug Fixes

84 |

85 | * fix `remove` and `clear` returning true when the key is stale ([b52d38b2](https://github.com/hawkw/sharded-slab/commit/b52d38b2d2d3edc3a59d3dba6b75095bbd864266))

86 |

87 | #### Breaking Changes

88 |

89 | * **Pool:** change `Pool::create` to return a mutable guard (#48) ([778065ea](https://github.com/hawkw/sharded-slab/commit/778065ead83523e0a9d951fbd19bb37fda3cc280), closes [#41](https://github.com/hawkw/sharded-slab/issues/41), [#16](https://github.com/hawkw/sharded-slab/issues/16))

90 | * **Slab:** rename `Guard` to `Entry` for consistency ([425ad398](https://github.com/hawkw/sharded-slab/commit/425ad39805ee818dc6b332286006bc92c8beab38))

91 |

92 | #### Features

93 |

94 | * add missing `Debug` impls ([71a8883f](https://github.com/hawkw/sharded-slab/commit/71a8883ff4fd861b95e81840cb5dca167657fe36))

95 | * **Pool:**

96 |   * add `Pool::create_owned` and `OwnedRefMut` ([f7774ae0](https://github.com/hawkw/sharded-slab/commit/f7774ae0c5be99340f1e7941bde62f7044f4b4d8))

97 |   * add `Arc::get_owned` and `OwnedRef` ([3e566d91](https://github.com/hawkw/sharded-slab/commit/3e566d91e1bc8cc4630a8635ad24b321ec047fe7), closes [#29](https://github.com/hawkw/sharded-slab/issues/29))

98 |   * change `Pool::create` to return a mutable guard (#48) ([778065ea](https://github.com/hawkw/sharded-slab/commit/778065ead83523e0a9d951fbd19bb37fda3cc280), closes [#41](https://github.com/hawkw/sharded-slab/issues/41), [#16](https://github.com/hawkw/sharded-slab/issues/16))

99 | * **Slab:**

100 |   * add `Arc::get_owned` and `OwnedEntry` ([53a970a2](https://github.com/hawkw/sharded-slab/commit/53a970a2298c30c1afd9578268c79ccd44afba05), closes [#29](https://github.com/hawkw/sharded-slab/issues/29))

101 |   * rename `Guard` to `Entry` for consistency ([425ad398](https://github.com/hawkw/sharded-slab/commit/425ad39805ee818dc6b332286006bc92c8beab38))

102 |   * add `slab`-style `VacantEntry` API ([6776590a](https://github.com/hawkw/sharded-slab/commit/6776590adeda7bf4a117fb233fc09cfa64d77ced), closes [#16](https://github.com/hawkw/sharded-slab/issues/16))

103 |

104 | #### Performance

105 |

106 | * allocate shard metadata lazily (#45) ([e543a06d](https://github.com/hawkw/sharded-slab/commit/e543a06d7474b3ff92df2cdb4a4571032135ff8d))

107 |

108 |

109 |

110 |

111 | ### 0.0.9 (2020-04-03)

112 |

113 |

114 | #### Features

115 |

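116 | * **Config:** validate concurrent refs ([9b32af58](https://github.com/hawkw/sharded-slab/commit/9b32af58), closes [#21](https://github.com/hawkw/sharded-slab/issues/21))

117 | * **Pool:**

118 |   * add `fmt::Debug` impl for `Pool` ([ffa5c7a0](https://github.com/hawkw/sharded-slab/commit/ffa5c7a0))

119 |   * add `Default` impl for `Pool` ([d2399365](https://github.com/hawkw/sharded-slab/commit/d2399365))

120 |   * add a sharded object pool for reusing heap allocations (#19) ([89734508](https://github.com/hawkw/sharded-slab/commit/89734508), closes [#2](https://github.com/hawkw/sharded-slab/issues/2), [#15](https://github.com/hawkw/sharded-slab/issues/15))

121 | * **Slab::take:** add exponential backoff when spinning ([6b743a27](https://github.com/hawkw/sharded-slab/commit/6b743a27))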

122 |

123 | #### Bug Fixes

124 |

125 | * incorrect wrapping when overflowing maximum ref count ([aea693f3](https://github.com/hawkw/sharded-slab/commit/aea693f3), closes [#22](https://github.com/hawkw/sharded-slab/issues/22))

126 |

127 |

128 |

129 |

130 | ### 0.0.8 (2020-01-31)

131 |

132 |

133 | #### Bug Fixes

134 |

135 | * `remove` not adding slots to free lists ([dfdd7aee](https://github.com/hawkw/sharded-slab/commit/dfdd7aee))

136 |

137 |

138 |

139 |

140 | ### 0.0.7 (2019-12-06)

141 |

142 |

143 | #### Bug Fixes

144 |

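145 | * **Config:** compensate for 0 being a valid TID ([b601f5d9](https://github.com/hawkw/sharded-slab/commit/b601f5d9))

146 | * **DefaultConfig:**

147 |   * const overflow on 32-bit ([74d42dd1](https://github.com/hawkw/sharded-slab/commit/74d42dd1), closes [#10](https://github.com/hawkw/sharded-slab/issues/10))

148 |   * wasted bit patterns on 64-bit ([8cf33f66](https://github.com/hawkw/sharded-slab/commit/8cf33f66))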

149 |

150 |

151 |

152 |

153 | ## 0.0.6 (2019-11-08)

154 |

155 |

156 | #### Features

157 |

158 | * **Guard:** expose `key` method #8 ([748bf39b](https://github.com/hawkw/sharded-slab/commit/748bf39b))

159 |

160 |

161 |

162 |

163 | ## 0.0.5 (2019-10-31)

164 |

165 |

166 | #### Performance

167 |

168 | * consolidate per-slot state into one AtomicUsize (#6) ([f1146d33](https://github.com/hawkw/sharded-slab/commit/f1146d33))

169 |

170 | #### Features

171 |

172 | * add Default impl for Slab ([61bb3316](https://github.com/hawkw/sharded-slab/commit/61bb3316))

173 |

174 |

175 |

176 |

177 | ## 0.0.4 (2019-21-30)

178 |

179 |

180 | #### Features

181 |

182 | * prevent items from being removed while concurrently accessed ([872c81d1](https://github.com/hawkw/sharded-slab/commit/872c81d1))

183 | * added `Slab::remove` method that marks an item to be removed when the last thread

184 |   accessing it finishes ([872c81d1](https://github.com/hawkw/sharded-slab/commit/872c81d1))

185 |

186 | #### Bug Fixes

187 |

188 | * nicer handling of races in remove ([475d9a06](https://github.com/hawkw/sharded-slab/commit/475d9a06))

189 |

190 | #### Breaking Changes

191 |

192 | * renamed `Slab::remove` to `Slab::take` ([872c81d1](https://github.com/hawkw/sharded-slab/commit/872c81d1))

193 | * `Slab::get` now returns a `Guard` type ([872c81d1](https://github.com/hawkw/sharded-slab/commit/872c81d1))

194 |

195 |

196 |

197 | ## 0.0.3 (2019-07-30)

198 |

199 |

200 | #### Bug Fixes

201 |

202 | * split local/remote to fix false sharing & potential races ([69f95fb0](https://github.com/hawkw/sharded-slab/commit/69f95fb0))

203 | * set next pointer _before_ head ([cc7a0bf1](https://github.com/hawkw/sharded-slab/commit/cc7a0bf1))

204 |

205 | #### Breaking Changes

206 |

207 | * removed potentially racy `Slab::len` and `Slab::capacity` methods ([27af7d6c](https://github.com/hawkw/sharded-slab/commit/27af7d6c))

208 |

209 |

210 | ## 0.0.2 (2019-03-30)

211 |

212 |

213 | #### Bug Fixes

214 |

215 | * fix compilation failure in release mode ([617031da](https://github.com/hawkw/sharded-slab/commit/617031da))

216 |

217 |

218 |

219 | ## 0.0.1 (2019-02-30)

220 |

221 | - Initial release

222 |

--------------------------------------------------------------------------------

/Cargo.toml:

--------------------------------------------------------------------------------

1 | [package]

2 | name = "sharded-slab"

3 | version = "0.1.7"

4 | authors = ["Eliza Weisman "]

5 | edition = "2018"

6 | documentation = "https://docs.rs/sharded-slab/"

7 | homepage = "https://github.com/hawkw/sharded-slab"

8 | repository = "https://github.com/hawkw/sharded-slab"

9 | readme = "README.md"

10 | rust-version = "1.42.0"

11 | license = "MIT"

12 | keywords = ["slab", "allocator", "lock-free", "atomic"]

13 | categories = ["memory-management", "data-structures", "concurrency"]

14 | description = """

15 | A lock-free concurrent slab.

16 | """

17 | exclude = [

18 | "flake.nix",

19 | "flake.lock",

20 | ".envrc",

21 | ".clog.toml",

22 | ".cargo",

23 | ".github",

24 | ".direnv",

25 | "bin",

26 | ]

27 |

28 | [badges]

29 | maintenance = { status = "experimental" }

30 |

31 | [[bench]]

32 | name = "bench"

33 | harness = false

34 |

35 | [dependencies]

36 | lazy_static = "1"

37 |

38 | [dev-dependencies]

39 | proptest = "1"

40 | criterion = "0.3"

41 | slab = "0.4.2"

42 | memory-stats = "1"

43 | indexmap = "1" # newer versions lead to "candidate versions found which didn't match" on 1.42.0

44 |

45 | [target.'cfg(loom)'.dependencies]

46 | loom = { version = "0.5", features = ["checkpoint"], optional = true }

47 |

48 | [target.'cfg(loom)'.dev-dependencies]

49 | loom = { version = "0.5", features = ["checkpoint"] }

50 |

51 | [package.metadata.docs.rs]

52 | all-features = true

53 | rustdoc-args = ["--cfg", "docsrs"]

54 |

55 | [lints.rust]

56 | unexpected_cfgs = { level = "warn", check-cfg = ['cfg(loom)', 'cfg(slab_print)'] }

57 |

--------------------------------------------------------------------------------

/IMPLEMENTATION.md:

--------------------------------------------------------------------------------

1 | Notes on `sharded-slab`'s implementation and design.

2 |

3 | # Design

4 |

5 | The sharded slab's design is strongly inspired by the ideas presented by

6 | Leijen, Zorn, and de Moura in [Mimalloc: Free List Sharding in

7 | Action][mimalloc]. In this report, the authors present a novel design for a

8 | memory allocator based on a concept of _free list sharding_.

9 |

10 | Memory allocators must keep track of what memory regions are not currently

11 | allocated ("free") in order to provide them to future allocation requests.

12 | The term [_free list_][freelist] refers to a technique for performing this

13 | bookkeeping, where each free block stores a pointer to the next free block,

14 | forming a linked list. The memory allocator keeps a pointer to the most

15 | recently freed block, the _head_ of the free list. To allocate more memory,

16 | the allocator pops from the free list by setting the head pointer to the

17 | next free block of the current head block, and returning the previous head.

18 | To deallocate a block, the block is pushed to the free list by setting its

19 | first word to the current head pointer, and the head pointer is set to point

20 | to the deallocated block. Most implementations of slab allocators backed by

21 | arrays or vectors use a similar technique, where pointers are replaced by

22 | indices into the backing array.
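For illustration, here is a minimal single-threaded sketch of the array-backed variant just described, in which each vacant slot stores the index of the next vacant slot and the slab keeps the index of the head. The `FreeListSlab` and `Slot` names are hypothetical; this is not sharded-slab's own code.

```rust
/// One slot of the backing vector (hypothetical type for this sketch).
enum Slot<T> {
    Occupied(T),
    /// A vacant slot stores the index of the next vacant slot, if any.
    Vacant { next: Option<usize> },
}

/// A single-threaded slab with an index-based free list (illustrative only).
struct FreeListSlab<T> {
    slots: Vec<Slot<T>>,
    /// Index of the most recently freed slot: the head of the free list.
    head: Option<usize>,
}

impl<T> FreeListSlab<T> {
    fn new() -> Self {
        FreeListSlab { slots: Vec::new(), head: None }
    }

    /// Pops the head of the free list (or grows the vector) and stores `value`.
    fn insert(&mut self, value: T) -> usize {
        match self.head {
            Some(idx) => {
                // The new head is the `next` index stored in the old head.
                self.head = match self.slots[idx] {
                    Slot::Vacant { next } => next,
                    Slot::Occupied(_) => unreachable!("free list points at an occupied slot"),
                };
                self.slots[idx] = Slot::Occupied(value);
                idx
            }
            None => {
                self.slots.push(Slot::Occupied(value));
                self.slots.len() - 1
            }
        }
    }

    /// Pushes slot `idx` onto the free list and returns the value it held.
    fn remove(&mut self, idx: usize) -> Option<T> {
        match self.slots.get(idx) {
            Some(Slot::Occupied(_)) => {}
            _ => return None, // out of bounds or already vacant
        }
        // The freed slot points at the old head, then becomes the new head.
        let old = std::mem::replace(&mut self.slots[idx], Slot::Vacant { next: self.head });
        self.head = Some(idx);
        match old {
            Slot::Occupied(value) => Some(value),
            Slot::Vacant { .. } => unreachable!(),
        }
    }

    fn get(&self, idx: usize) -> Option<&T> {
        match self.slots.get(idx) {
            Some(Slot::Occupied(value)) => Some(value),
            _ => None,
        }
    }
}
```

Both `insert` and `remove` are O(1), and the most recently freed slot is always the next one handed out.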

23 |

24 | When allocations and deallocations can occur concurrently across threads,

25 | they must synchronize access to the free list, either by putting the

26 | entire allocator state inside of a lock, or by using atomic operations to

27 | treat the free list as a lock-free structure (such as a [Treiber stack]). In

28 | both cases, there is a significant performance cost: even when the free

29 | list is lock-free, it is likely that a noticeable amount of time will be

30 | spent in compare-and-swap loops. Ideally, the global synchronization point

31 | created by the single global free list could be avoided as much as possible.

32 |

33 | The approach presented by Leijen, Zorn, and de Moura is to introduce

34 | sharding and thus increase the granularity of synchronization significantly.

35 | In mimalloc, the heap is _sharded_ so that each thread has its own

36 | thread-local heap. Objects are always allocated from the local heap of the

37 | thread where the allocation is performed. Because allocations are always

38 | done from a thread's local heap, they need not be synchronized.

39 |

40 | However, since objects can move between threads before being deallocated,

41 | _deallocations_ may still occur concurrently. Therefore, Leijen et al.

42 | introduce a concept of _local_ and _global_ free lists. When an object is

43 | deallocated on the same thread it was originally allocated on, it is placed

44 | on the local free list; if it is deallocated on another thread, it goes on

45 | the global free list for the heap of the thread from which it originated. To

46 | allocate, the local free list is used first; if it is empty, the entire

47 | global free list is popped onto the local free list. Since the local free

48 | list is only ever accessed by the thread it belongs to, it does not require

49 | synchronization at all, and because the global free list is popped from

50 | infrequently, the cost of synchronization has a reduced impact. A majority

51 | of allocations can occur without any synchronization at all; and

52 | deallocations only require synchronization when an object has left its

53 | parent thread (a relatively uncommon case).
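The local/remote split can be sketched for a single fixed-size page whose free slots are linked by index. This is a minimal sketch under stated assumptions: the names `PageFreeLists`, `NULL`, `alloc`, `free_local` and `free_remote` are hypothetical (not sharded-slab's API), slot payloads are omitted, and the local head is an `AtomicUsize` accessed only with plain loads and stores purely to keep the example in safe Rust. Nothing in the types stops two threads from calling `alloc` at once; the sketch relies on callers respecting the ownership rule.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Sentinel index meaning "empty list" (an assumption of this sketch).
const NULL: usize = usize::MAX;

/// Free-list bookkeeping for one page, split into a local list (owner only)
/// and a remote list (any thread). Slot payloads are omitted.
struct PageFreeLists {
    /// For each free slot, the index of the next free slot (or NULL).
    next: Vec<AtomicUsize>,
    /// Head of the local free list. Only the owning thread touches it, so
    /// plain loads/stores suffice; no compare-and-swap is ever needed.
    local_head: AtomicUsize,
    /// Head of the remote free list, pushed to concurrently by other threads.
    remote_head: AtomicUsize,
}

impl PageFreeLists {
    fn new(size: usize) -> Self {
        // Initially every slot is free: 0 -> 1 -> ... -> size - 1 -> NULL.
        let next = (0..size)
            .map(|i| AtomicUsize::new(if i + 1 < size { i + 1 } else { NULL }))
            .collect();
        let first = if size > 0 { 0 } else { NULL };
        PageFreeLists {
            next,
            local_head: AtomicUsize::new(first),
            remote_head: AtomicUsize::new(NULL),
        }
    }

    /// Owning thread only: pop from the local list. If it is empty, take the
    /// *entire* remote list with a single atomic swap and use that instead.
    fn alloc(&self) -> Option<usize> {
        let mut head = self.local_head.load(Ordering::Relaxed);
        if head == NULL {
            // Acquire pairs with the Release in `free_remote`, making the
            // `next` links written by remote threads visible here.
            head = self.remote_head.swap(NULL, Ordering::Acquire);
        }
        if head == NULL {
            return None; // no free slots in this page
        }
        let new_head = self.next[head].load(Ordering::Relaxed);
        self.local_head.store(new_head, Ordering::Relaxed);
        Some(head)
    }

    /// Owning thread only: push onto the local list without any CAS.
    fn free_local(&self, idx: usize) {
        let head = self.local_head.load(Ordering::Relaxed);
        self.next[idx].store(head, Ordering::Relaxed);
        self.local_head.store(idx, Ordering::Relaxed);
    }

    /// Any thread: push onto the remote list with a compare-and-swap loop.
    fn free_remote(&self, idx: usize) {
        let mut head = self.remote_head.load(Ordering::Relaxed);
        loop {
            self.next[idx].store(head, Ordering::Relaxed);
            match self.remote_head.compare_exchange_weak(
                head,
                idx,
                Ordering::Release,
                Ordering::Relaxed,
            ) {
                Ok(_) => return,
                Err(actual) => head = actual,
            }
        }
    }
}
```

Because the owner always empties the remote list in one `swap` rather than popping it entry by entry, the remote list only ever sees concurrent pushes, and the owner touches the shared head only when its local list runs dry.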

54 |

55 | [mimalloc]: https://www.microsoft.com/en-us/research/uploads/prod/2019/06/mimalloc-tr-v1.pdf

56 | [freelist]: https://en.wikipedia.org/wiki/Free_list

57 | [Treiber stack]: https://en.wikipedia.org/wiki/Treiber_stack

58 |

59 | # Implementation

60 |

61 | A slab is represented as an array of [`MAX_THREADS`] _shards_. A shard

62 | consists of a vector of one or more _pages_ plus associated metadata.

63 | Finally, a page consists of an array of _slots_ and head indices for the local

64 | and remote free lists.

65 |

66 | ```text

67 | ┌─────────────┐
68 | │ shard 1     │
69 | │             │    ┌─────────────┐        ┌────────┐
70 | │ pages───────┼───▶│ page 1      │        │        │
71 | ├─────────────┤    ├─────────────┤  ┌────▶│  next──┼─┐
72 | │ shard 2     │    │ page 2      │  │     ├────────┤ │
73 | ├─────────────┤    │             │  │     │XXXXXXXX│ │
74 | │ shard 3     │    │ local_head──┼──┘     ├────────┤ │
75 | └─────────────┘    │ remote_head─┼──┐     │        │◀┘
76 |       ...          ├─────────────┤  │     │  next──┼─┐
77 | ┌─────────────┐    │ page 3      │  │     ├────────┤ │
78 | │ shard n     │    └─────────────┘  │     │XXXXXXXX│ │
79 | └─────────────┘          ...        │     ├────────┤ │
80 |                    ┌─────────────┐  │     │XXXXXXXX│ │
81 |                    │ page n      │  │     ├────────┤ │
82 |                    └─────────────┘  │     │        │◀┘
83 |                                     └────▶│  next──┼───▶  ...
84 |                                           ├────────┤
85 |                                           │XXXXXXXX│
86 |                                           └────────┘

87 | ```

88 |

89 |

90 | The size of the first page in a shard is always a power of two, and every

91 | subsequent page added after the first is twice as large as the page that

92 | precedes it.

93 |

94 | ```text

95 |

96 | pg.
97 | ┌───┐   ┌─┬─┐
98 | │ 0 │───▶ │ │
99 | ├───┤   ├─┼─┼─┬─┐
100 | │ 1 │───▶ │ │ │ │
101 | ├───┤   ├─┼─┼─┼─┼─┬─┬─┬─┐
102 | │ 2 │───▶ │ │ │ │ │ │ │ │
103 | ├───┤   ├─┼─┼─┼─┼─┼─┼─┼─┼─┬─┬─┬─┬─┬─┬─┬─┐
104 | │ 3 │───▶ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │
105 | └───┘   └─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┘

106 | ```

107 |

108 | When searching for a free slot, the smallest page is searched first, and if

109 | it is full, the search proceeds to the next page until either a free slot is

110 | found or all available pages have been searched. If all available pages have

111 | been searched and the maximum number of pages has not yet been reached, a

112 | new page is then allocated.
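The search order above can be sketched with a toy shard. This sketch assumes a shard is modeled as a `Vec` of pages in which `None` marks a free slot; `INITIAL_PAGE_SIZE = 4` and `MAX_PAGES = 3` are arbitrary illustrative values, and the linear `position` scan is a simplification standing in for the per-page free lists, not how sharded-slab actually finds a free slot.

```rust
/// Illustrative values only (assumptions for this sketch).
const INITIAL_PAGE_SIZE: usize = 4;
const MAX_PAGES: usize = 3;

/// A toy shard: page `n` holds `INITIAL_PAGE_SIZE << n` slots; `None` = free.
struct Shard<T> {
    pages: Vec<Vec<Option<T>>>,
}

impl<T> Shard<T> {
    fn new() -> Self {
        Shard { pages: Vec::new() }
    }

    /// Stores `value` and returns `(page index, slot index)`, or gives the
    /// value back if every page is full and no more pages may be added.
    fn insert(&mut self, value: T) -> Result<(usize, usize), T> {
        // Search the existing pages, smallest first.
        for (page_idx, page) in self.pages.iter_mut().enumerate() {
            if let Some(slot_idx) = page.iter().position(|slot| slot.is_none()) {
                page[slot_idx] = Some(value);
                return Ok((page_idx, slot_idx));
            }
        }
        // All existing pages are full: allocate page `n` (holding
        // `INITIAL_PAGE_SIZE << n` slots) unless the page limit is reached.
        let n = self.pages.len();
        if n == MAX_PAGES {
            return Err(value);
        }
        let mut page: Vec<Option<T>> = (0..INITIAL_PAGE_SIZE << n).map(|_| None).collect();
        page[0] = Some(value);
        self.pages.push(page);
        Ok((n, 0))
    }
}
```

Searching smallest-first keeps live entries packed into the low pages, so a freed slot on page 0 is reused before any slot on page 1.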

113 |

114 | Since every page is twice as large as the previous page, and all page sizes

115 | are powers of two, we can determine which page contains a given offset by

116 | adding the initial page size to the offset, shifting the sum right by

117 | `INDEX_SHIFT` bits, and counting how many binary digits are needed to

118 | represent the result: that count is the page index. We can count the

119 | binary digits by counting the number of leading zeros (unused bit

120 | positions) in the number's binary representation and subtracting that

121 | count from the total number of bits in a word.

122 |

123 | The formula for determining the page number that contains an offset is thus:

124 |

125 | ```rust,ignore

126 | WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros()

127 | ```

128 |

129 | where `WIDTH` is the number of bits in a `usize`, and `INDEX_SHIFT` is

130 |

131 | ```rust,ignore

132 | INITIAL_PAGE_SIZE.trailing_zeros() + 1;

133 | ```
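The formula can be checked with a small, self-contained function. This sketch assumes `INITIAL_PAGE_SIZE = 32`, an illustrative value rather than necessarily the crate's default. `page_index` transcribes the formula above, while `page_start`, which returns the offset of the first slot on page `n`, is a hypothetical helper added only to check that every offset on a page maps back to that page.

```rust
/// Illustrative initial page size (an assumption); must be a power of two.
const INITIAL_PAGE_SIZE: usize = 32;
/// Number of bits in a `usize`.
const WIDTH: u32 = (std::mem::size_of::<usize>() * 8) as u32;
/// `INITIAL_PAGE_SIZE.trailing_zeros() + 1`, as defined above.
const INDEX_SHIFT: u32 = INITIAL_PAGE_SIZE.trailing_zeros() + 1;

/// Index of the page containing the slot at `offset`, per the formula above.
fn page_index(offset: usize) -> usize {
    (WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros()) as usize
}

/// Hypothetical helper: offset of the first slot on page `n`. Pages hold
/// INITIAL_PAGE_SIZE, 2 * INITIAL_PAGE_SIZE, 4 * INITIAL_PAGE_SIZE, ... slots,
/// so page `n` starts at INITIAL_PAGE_SIZE * (2^n - 1).
fn page_start(n: usize) -> usize {
    INITIAL_PAGE_SIZE * ((1 << n) - 1)
}
```

With these values `INDEX_SHIFT` is 6, so offsets 0 to 31 fall on page 0, 32 to 95 on page 1, 96 to 223 on page 2, and so on.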

134 |

135 | [`MAX_THREADS`]: https://docs.rs/sharded-slab/latest/sharded_slab/trait.Config.html#associatedconstant.MAX_THREADS

136 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2019 Eliza Weisman

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy

4 | of this software and associated documentation files (the "Software"), to deal

5 | in the Software without restriction, including without limitation the rights

6 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

7 | copies of the Software, and to permit persons to whom the Software is

8 | furnished to do so, subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in

11 | all copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

18 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

19 | THE SOFTWARE.

20 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # sharded-slab

2 |

3 | A lock-free concurrent slab.

4 |

5 | [![Crates.io][crates-badge]][crates-url]

6 | [![Documentation][docs-badge]][docs-url]

7 | [![CI Status][ci-badge]][ci-url]

8 | [![GitHub License][license-badge]][license]

9 | ![maintenance status][maint-badge]

10 |

11 | [crates-badge]: https://img.shields.io/crates/v/sharded-slab.svg

12 | [crates-url]: https://crates.io/crates/sharded-slab

13 | [docs-badge]: https://docs.rs/sharded-slab/badge.svg

14 | [docs-url]: https://docs.rs/sharded-slab/latest

15 | [ci-badge]: https://github.com/hawkw/sharded-slab/workflows/CI/badge.svg

16 | [ci-url]: https://github.com/hawkw/sharded-slab/actions?workflow=CI

17 | [license-badge]: https://img.shields.io/crates/l/sharded-slab

18 | [license]: LICENSE

19 | [maint-badge]: https://img.shields.io/badge/maintenance-experimental-blue.svg

20 |

21 | Slabs provide pre-allocated storage for many instances of a single data

22 | type. When a large number of values of a single type are required,

23 | this can be more efficient than allocating each item individually. Since the

24 | allocated items are the same size, memory fragmentation is reduced, and

25 | creating and removing new items can be very cheap.

26 |

27 | This crate implements a lock-free concurrent slab, indexed by `usize`s.

28 |

29 | **Note**: This crate is currently experimental. Please feel free to use it in

30 | your projects, but bear in mind that there's still plenty of room for

31 | optimization, and there may still be some lurking bugs.

32 |

33 | ## Usage

34 |

35 | First, add this to your `Cargo.toml`:

36 |

37 | ```toml

38 | sharded-slab = "0.1.7"

39 | ```

40 |

41 | This crate provides two types, [`Slab`] and [`Pool`], which provide slightly

42 | different APIs for using a sharded slab.

43 |

44 | [`Slab`] implements a slab for _storing_ small types, sharing them between

45 | threads, and accessing them by index. New entries are allocated by [inserting]

46 | data, moving it in by value. Similarly, entries may be deallocated by [taking]

47 | from the slab, moving the value out. This API is similar to a `Vec<Option<T>>`,

48 | but allows lock-free concurrent insertion and removal.

49 |

50 | In contrast, the [`Pool`] type provides an [object pool] style API for

51 | _reusing storage_. Rather than constructing values and moving them into

52 | the pool, as with [`Slab`], [allocating an entry][create] from the pool

53 | takes a closure that's provided with a mutable reference to initialize

54 | the entry in place. When entries are deallocated, they are [cleared] in

55 | place. Types which own a heap allocation can be cleared by dropping any

56 | _data_ they store, but retaining any previously-allocated capacity. This

57 | means that a [`Pool`] may be used to reuse a set of existing heap

58 | allocations, reducing allocator load.
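The in-place reuse described above can be illustrated without the crate. This is a toy, single-threaded sketch using only the standard library; `StringPool`, `alloc_with` and its `clear` method are hypothetical names, not sharded-slab's API. It shows the key property: clearing a `String` drops its contents but keeps its heap buffer, so the next allocation can reuse that capacity.

```rust
/// A toy, single-threaded pool of reusable `String` buffers (hypothetical;
/// not sharded-slab's `Pool`, which is concurrent and generic over `Clear`).
struct StringPool {
    slots: Vec<String>,
    /// Indices of slots that have been cleared and may be reused.
    free: Vec<usize>,
}

impl StringPool {
    fn new() -> Self {
        StringPool { slots: Vec::new(), free: Vec::new() }
    }

    /// Allocates an entry and initializes it in place via `init`.
    fn alloc_with<F: FnOnce(&mut String)>(&mut self, init: F) -> usize {
        let idx = match self.free.pop() {
            Some(idx) => idx, // reuse a cleared slot and its buffer
            None => {
                self.slots.push(String::new());
                self.slots.len() - 1
            }
        };
        init(&mut self.slots[idx]);
        idx
    }

    fn get(&self, idx: usize) -> &str {
        &self.slots[idx]
    }

    /// Deallocates an entry by clearing it in place: the text is dropped,
    /// but the heap buffer (its capacity) is kept for the next allocation.
    /// Returns `false` if `idx` is out of bounds or already cleared.
    fn clear(&mut self, idx: usize) -> bool {
        if idx >= self.slots.len() || self.free.contains(&idx) {
            return false;
        }
        self.slots[idx].clear();
        self.free.push(idx);
        true
    }
}
```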

59 |

60 | [`Slab`]: https://docs.rs/sharded-slab/latest/sharded_slab/struct.Slab.html

61 | [inserting]: https://docs.rs/sharded-slab/latest/sharded_slab/struct.Slab.html#method.insert

62 | [taking]: https://docs.rs/sharded-slab/latest/sharded_slab/struct.Slab.html#method.take

63 | [`Pool`]: https://docs.rs/sharded-slab/latest/sharded_slab/struct.Pool.html

64 | [create]: https://docs.rs/sharded-slab/latest/sharded_slab/struct.Pool.html#method.create

65 | [cleared]: https://docs.rs/sharded-slab/latest/sharded_slab/trait.Clear.html

66 | [object pool]: https://en.wikipedia.org/wiki/Object_pool_pattern

67 |

68 | ### Examples

69 |

70 | Inserting an item into the slab, returning an index:

71 |

72 | ```rust

73 | use sharded_slab::Slab;

74 | let slab = Slab::new();

75 |

76 | let key = slab.insert("hello world").unwrap();

77 | assert_eq!(slab.get(key).unwrap(), "hello world");

78 | ```

79 |

80 | To share a slab across threads, it may be wrapped in an `Arc`:

81 |

82 | ```rust

83 | use sharded_slab::Slab;

84 | use std::sync::Arc;

85 | let slab = Arc::new(Slab::new());

86 |

87 | let slab2 = slab.clone();

88 | let thread2 = std::thread::spawn(move || {

89 |     let key = slab2.insert("hello from thread two").unwrap();

90 |     assert_eq!(slab2.get(key).unwrap(), "hello from thread two");

91 |     key

92 | });

93 |

94 | let key1 = slab.insert("hello from thread one").unwrap();

95 | assert_eq!(slab.get(key1).unwrap(), "hello from thread one");

96 |

97 | // Wait for thread 2 to complete.

98 | let key2 = thread2.join().unwrap();

99 |

100 | // The item inserted by thread 2 remains in the slab.

101 | assert_eq!(slab.get(key2).unwrap(), "hello from thread two");

102 | ```

103 |

104 | If items in the slab must be mutated, a `Mutex` or `RwLock` may be used for

105 | each item, providing granular locking of items rather than of the slab:

106 |

107 | ```rust

108 | use sharded_slab::Slab;

109 | use std::sync::{Arc, Mutex};

110 | let slab = Arc::new(Slab::new());

111 |

112 | let key = slab.insert(Mutex::new(String::from("hello world"))).unwrap();

113 |

114 | let slab2 = slab.clone();

115 | let thread2 = std::thread::spawn(move || {

116 |     let hello = slab2.get(key).expect("item missing");

117 |     let mut hello = hello.lock().expect("mutex poisoned");

118 |     *hello = String::from("hello everyone!");

119 | });

120 |

121 | thread2.join().unwrap();

122 |

123 | let hello = slab.get(key).expect("item missing");

124 | let hello = hello.lock().expect("mutex poisoned");

125 | assert_eq!(hello.as_str(), "hello everyone!");

126 | ```

127 |

128 | ## Comparison with Similar Crates

129 |

130 | - [`slab`][slab crate]: Carl Lerche's `slab` crate provides a slab implementation with a

131 | similar API, implemented by storing all data in a single vector.

132 |

133 | Unlike `sharded-slab`, inserting and removing elements from the slab requires

134 | mutable access. This means that if the slab is accessed concurrently by

135 | multiple threads, it is necessary for it to be protected by a `Mutex` or

136 | `RwLock`. Items may not be inserted or removed (or accessed, if a `Mutex` is

137 | used) concurrently, even when they are unrelated. In many cases, the lock can

138 | become a significant bottleneck. On the other hand, `sharded-slab` allows

139 | separate indices in the slab to be accessed, inserted, and removed

140 | concurrently without requiring a global lock. Therefore, when the slab is

141 | shared across multiple threads, this crate offers significantly better

142 | performance than `slab`.

143 |

144 | However, the lock-free slab introduces some additional constant-factor

145 | overhead. This means that in use-cases where a slab is _not_ shared by

146 | multiple threads and locking is not required, `sharded-slab` will likely

147 | offer slightly worse performance.

148 |

149 | In summary: `sharded-slab` offers significantly improved performance in

150 | concurrent use-cases, while `slab` should be preferred in single-threaded

151 | use-cases.
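For contrast, here is a minimal sketch of the single-vector design described above. It follows the spirit of the `slab` crate but is not its code, and `VecSlab`, `Entry` and `insert_from_threads` are hypothetical names. Vacant entries form an intrusive free list of indices. Because `insert` and `remove` take `&mut self`, sharing the slab between threads means wrapping it in a lock, so even inserts into unrelated entries serialize on that one lock.

```rust
use std::sync::{Arc, RwLock};
use std::thread;

/// Each entry either holds a value or links to the next free entry.
enum Entry<T> {
    Occupied(T),
    Vacant(usize),
}

/// Hypothetical single-vector slab; `next_free == entries.len()` means the free list is empty.
struct VecSlab<T> {
    entries: Vec<Entry<T>>,
    next_free: usize,
}

impl<T> VecSlab<T> {
    fn new() -> Self {
        VecSlab { entries: Vec::new(), next_free: 0 }
    }

    /// Requires `&mut self`: concurrent callers must serialize through a lock.
    fn insert(&mut self, value: T) -> usize {
        let key = self.next_free;
        if key == self.entries.len() {
            // Free list is empty: grow the vector.
            self.entries.push(Entry::Occupied(value));
            self.next_free = key + 1;
        } else {
            // Pop the head of the free list.
            match std::mem::replace(&mut self.entries[key], Entry::Occupied(value)) {
                Entry::Vacant(next) => self.next_free = next,
                Entry::Occupied(_) => unreachable!("free list head was occupied"),
            }
        }
        key
    }

    fn remove(&mut self, key: usize) -> Option<T> {
        if !matches!(self.entries.get(key), Some(Entry::Occupied(_))) {
            return None;
        }
        // Push the freed entry onto the head of the free list.
        match std::mem::replace(&mut self.entries[key], Entry::Vacant(self.next_free)) {
            Entry::Occupied(value) => {
                self.next_free = key;
                Some(value)
            }
            Entry::Vacant(_) => unreachable!(),
        }
    }

    fn get(&self, key: usize) -> Option<&T> {
        match self.entries.get(key) {
            Some(Entry::Occupied(value)) => Some(value),
            _ => None,
        }
    }
}

/// Shares the slab across `n` threads behind one `RwLock`.
fn insert_from_threads(n: usize) -> Arc<RwLock<VecSlab<usize>>> {
    let slab = Arc::new(RwLock::new(VecSlab::new()));
    let handles: Vec<_> = (0..n)
        .map(|i| {
            let slab = Arc::clone(&slab);
            // Every insert takes the write lock, even though the threads
            // touch unrelated entries.
            thread::spawn(move || {
                slab.write().unwrap().insert(i);
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    slab
}
```

In this design every insert and remove goes through the single `RwLock`. `sharded-slab` avoids exactly that global lock.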

152 |

153 | [slab crate]: https://crates.io/crates/slab

154 |

155 | ## Safety and Correctness

156 |

157 | Most implementations of lock-free data structures in Rust require some

158 | amount of unsafe code, and this crate is not an exception. In order to catch

159 | potential bugs in this unsafe code, we make use of [`loom`], a

160 | permutation-testing tool for concurrent Rust programs. All `unsafe` blocks in

161 | this crate occur in accesses to `loom` `UnsafeCell`s. This means that when

162 | those accesses occur in this crate's tests, `loom` will assert that they are

163 | valid under the C11 memory model across multiple permutations of concurrent

164 | executions of those tests.

165 |

166 | In order to guard against the [ABA problem][aba], this crate makes use of

167 | _generational indices_. Each slot in the slab tracks a generation counter

168 | which is incremented every time a value is inserted into that slot, and the

169 | indices returned by `Slab::insert` include the generation of the slot when

170 | the value was inserted, packed into the high-order bits of the index. This

171 | ensures that if a value is inserted, removed, and a new value is inserted

172 | into the same slot in the slab, the key returned by the first call to

173 | `insert` will not map to the new value.
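To make the generational-index scheme concrete, here is a minimal single-threaded sketch. It is not the crate's implementation. The bit layout (`GEN_BITS`, `GEN_SHIFT`, `INDEX_MASK`), the `GenSlab` type and its linear free-slot search are all hypothetical, chosen only to show how a stale key stops matching once its slot is reused. The real index also packs a thread ID and a page address, with widths derived from `Config`.

```rust
// Hypothetical layout: generation in the top GEN_BITS of a u64 key,
// slot index in the remaining low bits.
const GEN_BITS: u32 = 8;
const GEN_SHIFT: u32 = 64 - GEN_BITS;
const GEN_MASK: u64 = (1 << GEN_BITS) - 1;
const INDEX_MASK: u64 = (1 << GEN_SHIFT) - 1;

fn pack(index: u64, generation: u64) -> u64 {
    ((generation & GEN_MASK) << GEN_SHIFT) | (index & INDEX_MASK)
}

fn unpack(key: u64) -> (u64, u64) {
    (key & INDEX_MASK, key >> GEN_SHIFT)
}

/// Single-threaded toy slab; each slot is (generation, value).
struct GenSlab<T> {
    slots: Vec<(u64, Option<T>)>,
}

impl<T> GenSlab<T> {
    fn new() -> Self {
        GenSlab { slots: Vec::new() }
    }

    fn insert(&mut self, value: T) -> u64 {
        // Reuse the first empty slot, or append a new one.
        let index = match self.slots.iter().position(|(_, v)| v.is_none()) {
            Some(i) => i,
            None => {
                self.slots.push((0, None));
                self.slots.len() - 1
            }
        };
        let slot = &mut self.slots[index];
        // Bump the slot's generation on every insert, wrapping within GEN_BITS.
        slot.0 = (slot.0 + 1) & GEN_MASK;
        slot.1 = Some(value);
        pack(index as u64, slot.0)
    }

    fn get(&self, key: u64) -> Option<&T> {
        let (index, generation) = unpack(key);
        match self.slots.get(index as usize) {
            // A key only matches if its generation is the slot's current one.
            Some((g, Some(value))) if *g == generation => Some(value),
            _ => None,
        }
    }

    fn remove(&mut self, key: u64) -> Option<T> {
        let (index, generation) = unpack(key);
        let slot = self.slots.get_mut(index as usize)?;
        if slot.0 != generation {
            return None; // stale key: the slot has been reused since
        }
        slot.1.take()
    }
}
```

In this sketch a key obtained before a remove-and-reinsert points at the same slot index but carries an older generation, so `get` returns `None` for it.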

174 |

175 | Since a fixed number of bits is set aside for storing the generation

176 | counter, the counter will wrap around after being incremented a number of

177 | times. To avoid situations where a returned index lives long enough to see the

178 | generation counter wrap around to the same value, it is good to be fairly

179 | generous when configuring the allocation of index bits.
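Some quick arithmetic shows why the generation width matters. A `bits`-wide counter returns to its starting value after `2^bits` inserts into the same slot, so a key that outlives that many reuses can alias a newer value. The helpers below are hypothetical. The 3-bit case matches the smallest generation width that `validate` in `src/cfg.rs` accepts.

```rust
/// Advance a `bits`-wide generation counter, wrapping on overflow
/// (hypothetical helper; assumes bits < 64).
fn next_generation(generation: u64, bits: u32) -> u64 {
    (generation + 1) & ((1u64 << bits) - 1)
}

/// Number of inserts into one slot before its generation repeats.
fn generation_period(bits: u32) -> u64 {
    1u64 << bits
}

/// True if a key minted at generation `start` would match again after
/// `reuses` further inserts into the same slot.
fn stale_key_aliases(start: u64, bits: u32, reuses: u64) -> bool {
    let mut generation = start;
    for _ in 0..reuses {
        generation = next_generation(generation, bits);
    }
    generation == start
}
```

Each extra generation bit doubles the period. With 3 bits a stale key aliases after only 8 reuses, while 16 bits pushes that out to 65,536.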

180 |

181 | [`loom`]: https://crates.io/crates/loom

182 | [aba]: https://en.wikipedia.org/wiki/ABA_problem

183 |

184 | ## Performance

185 |

186 | These graphs were produced by [benchmarks] of the sharded slab implementation,

187 | using the [`criterion`] crate.

188 |

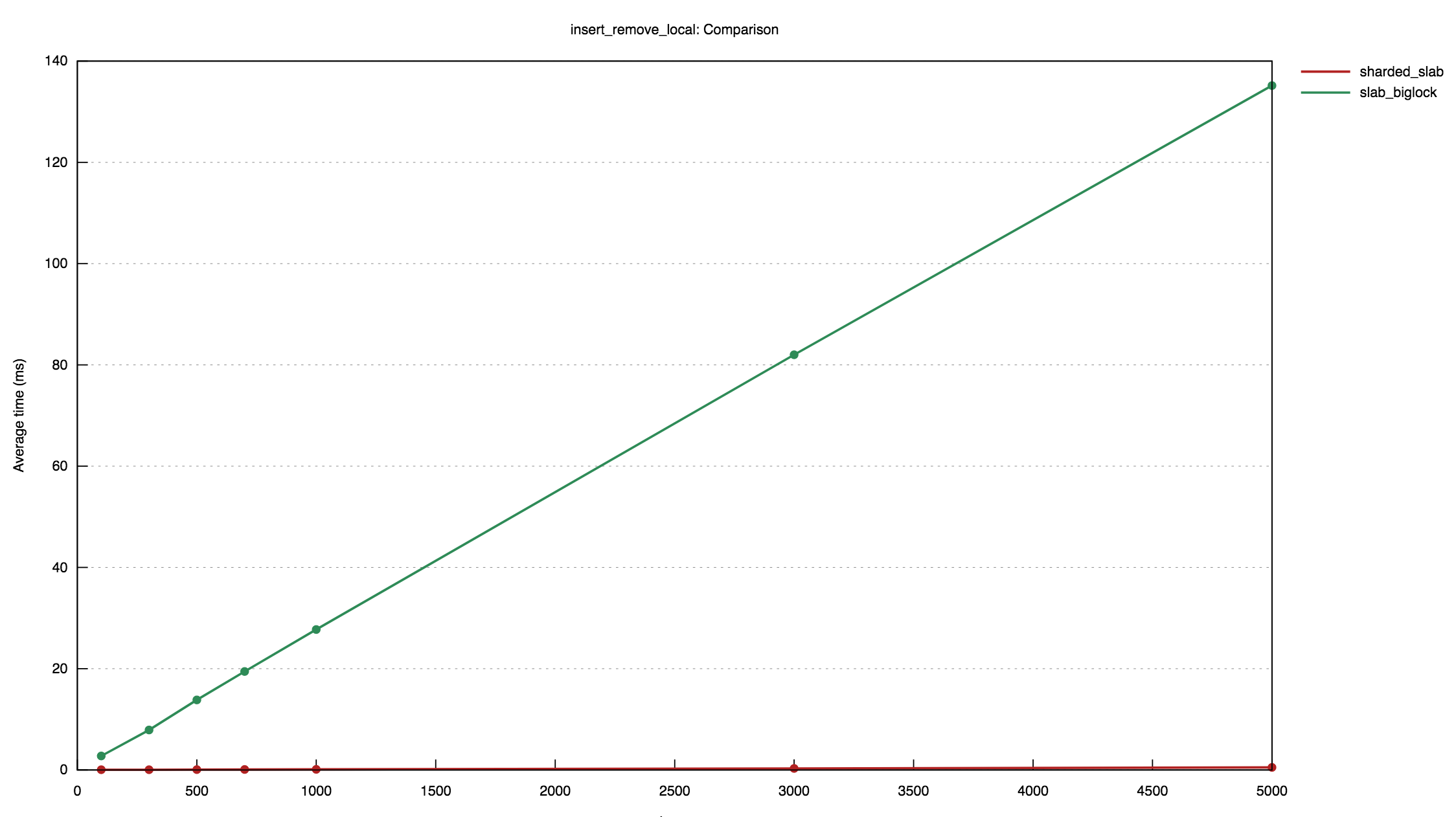

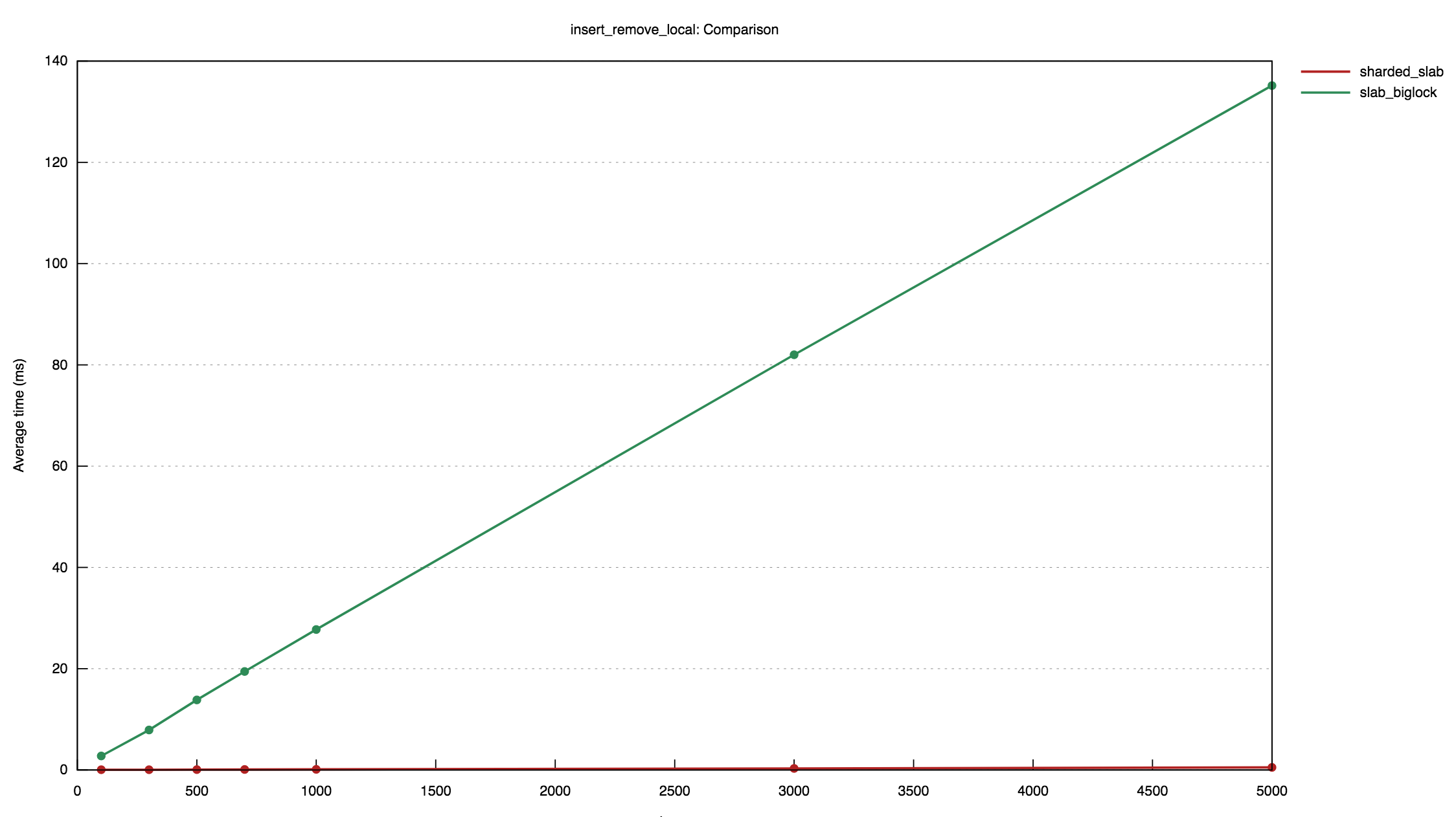

189 | The first shows the results of a benchmark where an increasing number of

190 | items are inserted and then removed into a slab concurrently by five

191 | threads. It compares the performance of the sharded slab implementation

192 | with a `RwLock`:

193 |

194 |  195 |

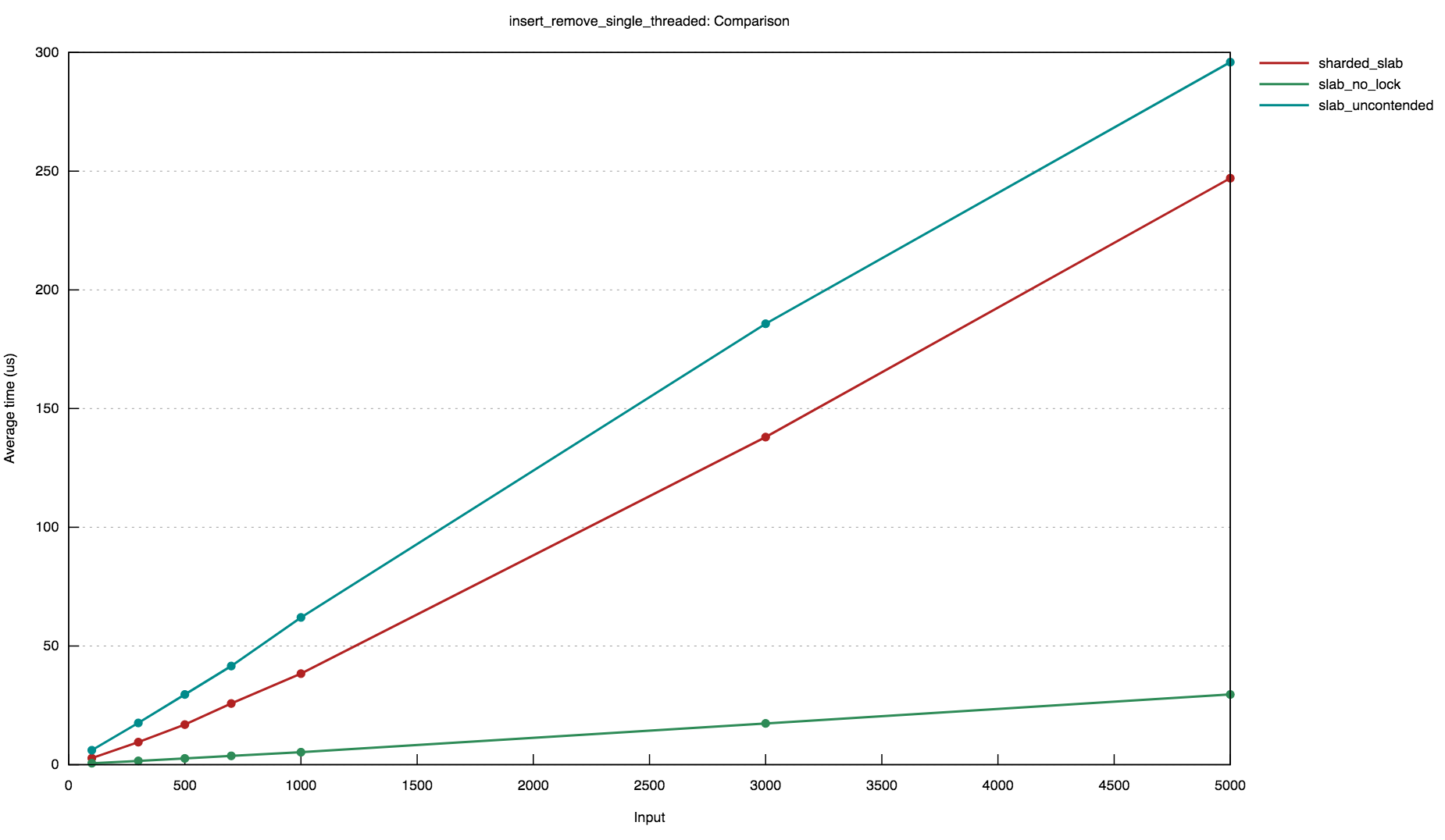

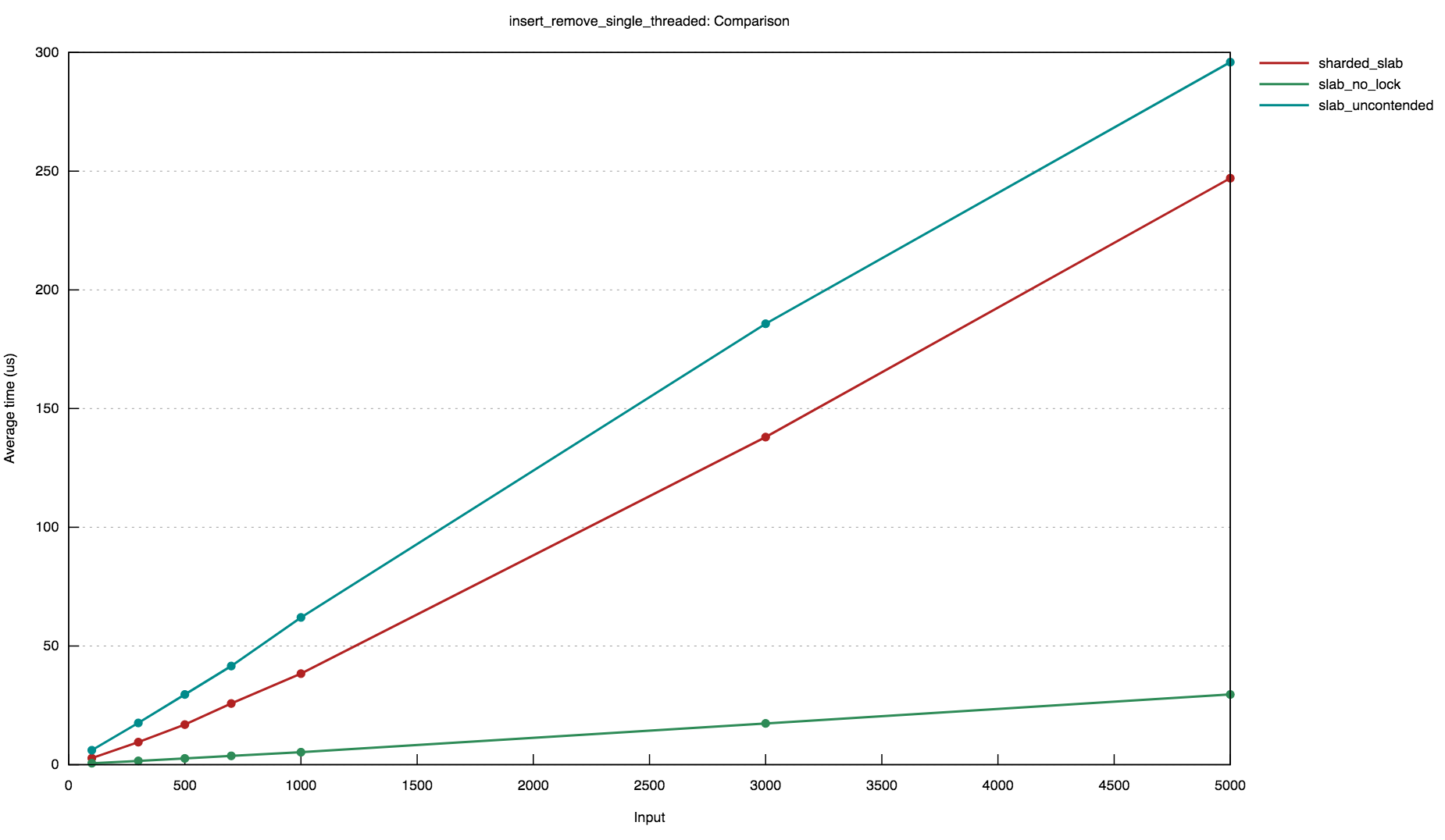

196 | The second graph shows the results of a benchmark where an increasing

197 | number of items are inserted and then removed by a _single_ thread. It

198 | compares the performance of the sharded slab implementation with an

199 | `RwLock` and a `mut slab::Slab`.

200 |

201 |

195 |

196 | The second graph shows the results of a benchmark where an increasing

197 | number of items are inserted and then removed by a _single_ thread. It

198 | compares the performance of the sharded slab implementation with an

199 | `RwLock` and a `mut slab::Slab`.

200 |

201 |  202 |

203 | These benchmarks demonstrate that, while the sharded approach introduces

204 | a small constant-factor overhead, it offers significantly better

205 | performance across concurrent accesses.

206 |

207 | [benchmarks]: https://github.com/hawkw/sharded-slab/blob/master/benches/bench.rs

208 | [`criterion`]: https://crates.io/crates/criterion

209 |

210 | ## License

211 |

212 | This project is licensed under the [MIT license](LICENSE).

213 |

214 | ### Contribution

215 |

216 | Unless you explicitly state otherwise, any contribution intentionally submitted

217 | for inclusion in this project by you, shall be licensed as MIT, without any

218 | additional terms or conditions.

219 |

--------------------------------------------------------------------------------

/benches/bench.rs:

--------------------------------------------------------------------------------

1 | use criterion::{criterion_group, criterion_main, BenchmarkId, Criterion};

2 | use std::{

3 | sync::{Arc, Barrier, RwLock},

4 | thread,

5 | time::{Duration, Instant},

6 | };

7 |

8 | #[derive(Clone)]

9 | struct MultithreadedBench<T> {

10 | start: Arc<Barrier>,

11 | end: Arc<Barrier>,

12 | slab: Arc<T>,

13 | }

14 |

15 | impl<T: Send + Sync + 'static> MultithreadedBench<T> {

16 | fn new(slab: Arc<T>) -> Self {

17 | Self {

18 | start: Arc::new(Barrier::new(5)),

19 | end: Arc::new(Barrier::new(5)),

20 | slab,

21 | }

22 | }

23 |

24 | fn thread(&self, f: impl FnOnce(&Barrier, &T) + Send + 'static) -> &Self {

25 | let start = self.start.clone();

26 | let end = self.end.clone();

27 | let slab = self.slab.clone();

28 | thread::spawn(move || {

29 | f(&start, &*slab);

30 | end.wait();

31 | });

32 | self

33 | }

34 |

35 | fn run(&self) -> Duration {

36 | self.start.wait();

37 | let t0 = Instant::now();

38 | self.end.wait();

39 | t0.elapsed()

40 | }

41 | }

42 |

43 | const N_INSERTIONS: &[usize] = &[100, 300, 500, 700, 1000, 3000, 5000];

44 |

45 | fn insert_remove_local(c: &mut Criterion) {

46 | // the 10000-insertion benchmark takes the `slab` crate about an hour to

47 | // run; don't run this unless you're prepared for that...

48 | // const N_INSERTIONS: &'static [usize] = &[100, 500, 1000, 5000, 10000];

49 | let mut group = c.benchmark_group("insert_remove_local");

50 | let g = group.measurement_time(Duration::from_secs(15));

51 |

52 | for i in N_INSERTIONS {

53 | g.bench_with_input(BenchmarkId::new("sharded_slab", i), i, |b, &i| {

54 | b.iter_custom(|iters| {

55 | let mut total = Duration::from_secs(0);

56 | for _ in 0..iters {

57 | let bench = MultithreadedBench::new(Arc::new(sharded_slab::Slab::new()));

58 | let elapsed = bench

59 | .thread(move |start, slab| {

60 | start.wait();

61 | let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

62 | for i in v {

63 | slab.remove(i);

64 | }

65 | })

66 | .thread(move |start, slab| {

67 | start.wait();

68 | let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

69 | for i in v {

70 | slab.remove(i);

71 | }

72 | })

73 | .thread(move |start, slab| {

74 | start.wait();

75 | let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

76 | for i in v {

77 | slab.remove(i);

78 | }

79 | })

80 | .thread(move |start, slab| {

81 | start.wait();

82 | let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

83 | for i in v {

84 | slab.remove(i);

85 | }

86 | })

87 | .run();

88 | total += elapsed;

89 | }

90 | total

91 | })

92 | });

93 | g.bench_with_input(BenchmarkId::new("slab_biglock", i), i, |b, &i| {

94 | b.iter_custom(|iters| {

95 | let mut total = Duration::from_secs(0);

96 | for _ in 0..iters {

97 | let bench = MultithreadedBench::new(Arc::new(RwLock::new(slab::Slab::new())));

98 | let elapsed = bench

99 | .thread(move |start, slab| {

100 | start.wait();

101 | let v: Vec<_> =

102 | (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

103 | for i in v {

104 | slab.write().unwrap().remove(i);

105 | }

106 | })

107 | .thread(move |start, slab| {

108 | start.wait();

109 | let v: Vec<_> =

110 | (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

111 | for i in v {

112 | slab.write().unwrap().remove(i);

113 | }

114 | })

115 | .thread(move |start, slab| {

116 | start.wait();

117 | let v: Vec<_> =

118 | (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

119 | for i in v {

120 | slab.write().unwrap().remove(i);

121 | }

122 | })

123 | .thread(move |start, slab| {

124 | start.wait();

125 | let v: Vec<_> =

126 | (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

127 | for i in v {

128 | slab.write().unwrap().remove(i);

129 | }

130 | })

131 | .run();

132 | total += elapsed;

133 | }

134 | total

135 | })

136 | });

137 | }

138 | group.finish();

139 | }

140 |

141 | fn insert_remove_single_thread(c: &mut Criterion) {

142 | // the 10000-insertion benchmark takes the `slab` crate about an hour to

143 | // run; don't run this unless you're prepared for that...

144 | // const N_INSERTIONS: &'static [usize] = &[100, 500, 1000, 5000, 10000];

145 | let mut group = c.benchmark_group("insert_remove_single_threaded");

146 |

147 | for i in N_INSERTIONS {

148 | group.bench_with_input(BenchmarkId::new("sharded_slab", i), i, |b, &i| {

149 | let slab = sharded_slab::Slab::new();

150 | b.iter(|| {

151 | let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

152 | for i in v {

153 | slab.remove(i);

154 | }

155 | });

156 | });

157 | group.bench_with_input(BenchmarkId::new("slab_no_lock", i), i, |b, &i| {

158 | let mut slab = slab::Slab::new();

159 | b.iter(|| {

160 | let v: Vec<_> = (0..i).map(|i| slab.insert(i)).collect();

161 | for i in v {

162 | slab.remove(i);

163 | }

164 | });

165 | });

166 | group.bench_with_input(BenchmarkId::new("slab_uncontended", i), i, |b, &i| {

167 | let slab = RwLock::new(slab::Slab::new());

168 | b.iter(|| {

169 | let v: Vec<_> = (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

170 | for i in v {

171 | slab.write().unwrap().remove(i);

172 | }

173 | });

174 | });

175 | }

176 | group.finish();

177 | }

178 |

179 | criterion_group!(benches, insert_remove_local, insert_remove_single_thread);

180 | criterion_main!(benches);

181 |
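`MultithreadedBench` times only the concurrent section. Every worker and the measuring thread meet at a start barrier and again at an end barrier. A std-only sketch of that pattern follows. `time_concurrent` is a hypothetical name, and the sketch sizes the barriers as `n_workers + 1` instead of hard-coding 5. Unlike the benchmark, it waits on the start barrier itself rather than passing it to the closure, so it offers no hook for per-worker setup before timing starts.

```rust
use std::sync::{Arc, Barrier};
use std::thread;
use std::time::{Duration, Instant};

/// Runs `work` on `n_workers` threads against shared `state` and returns
/// the time between the start and end barriers.
fn time_concurrent<T, F>(state: Arc<T>, n_workers: usize, work: F) -> Duration
where
    T: Send + Sync + 'static,
    F: Fn(&T) + Send + Sync + 'static,
{
    // One extra party for the measuring (calling) thread.
    let start = Arc::new(Barrier::new(n_workers + 1));
    let end = Arc::new(Barrier::new(n_workers + 1));
    let work = Arc::new(work);
    for _ in 0..n_workers {
        let start = Arc::clone(&start);
        let end = Arc::clone(&end);
        let state = Arc::clone(&state);
        let work = Arc::clone(&work);
        thread::spawn(move || {
            start.wait();
            (*work)(&*state);
            end.wait();
        });
    }
    start.wait();
    let t0 = Instant::now();
    // Returns once every worker has finished `work` and reached `end`.
    end.wait();
    t0.elapsed()
}
```

Because the measuring thread is one of the barrier's parties, the clock starts only after all workers are released and stops only after the last one finishes.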

--------------------------------------------------------------------------------

/bin/loom.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | # Runs Loom tests with defaults for Loom's configuration values.

3 | #

4 | # The tests are compiled in release mode to improve performance, but debug

5 | # assertions are enabled.

6 | #

7 | # Any arguments to this script are passed to the `cargo test` invocation.

8 |

9 | RUSTFLAGS="${RUSTFLAGS} --cfg loom -C debug-assertions=on" \

10 | LOOM_MAX_PREEMPTIONS="${LOOM_MAX_PREEMPTIONS:-2}" \

11 | LOOM_CHECKPOINT_INTERVAL="${LOOM_CHECKPOINT_INTERVAL:-1}" \

12 | LOOM_LOG=1 \

13 | LOOM_LOCATION=1 \

14 | cargo test --release --lib "$@"

15 |

--------------------------------------------------------------------------------

/flake.lock:

--------------------------------------------------------------------------------

1 | {

2 | "nodes": {

3 | "flake-utils": {

4 | "inputs": {

5 | "systems": "systems"

6 | },

7 | "locked": {

8 | "lastModified": 1692799911,

9 | "narHash": "sha256-3eihraek4qL744EvQXsK1Ha6C3CR7nnT8X2qWap4RNk=",

10 | "owner": "numtide",

11 | "repo": "flake-utils",

12 | "rev": "f9e7cf818399d17d347f847525c5a5a8032e4e44",

13 | "type": "github"

14 | },

15 | "original": {

16 | "owner": "numtide",

17 | "repo": "flake-utils",

18 | "type": "github"

19 | }

20 | },

21 | "nixpkgs": {

22 | "locked": {

23 | "lastModified": 1693158576,

24 | "narHash": "sha256-aRTTXkYvhXosGx535iAFUaoFboUrZSYb1Ooih/auGp0=",

25 | "owner": "NixOS",

26 | "repo": "nixpkgs",

27 | "rev": "a999c1cc0c9eb2095729d5aa03e0d8f7ed256780",

28 | "type": "github"

29 | },

30 | "original": {

31 | "owner": "NixOS",

32 | "ref": "nixos-unstable",

33 | "repo": "nixpkgs",

34 | "type": "github"

35 | }

36 | },

37 | "root": {

38 | "inputs": {

39 | "flake-utils": "flake-utils",

40 | "nixpkgs": "nixpkgs",

41 | "rust-overlay": "rust-overlay"

42 | }

43 | },

44 | "rust-overlay": {

45 | "inputs": {

46 | "flake-utils": [

47 | "flake-utils"

48 | ],

49 | "nixpkgs": [

50 | "nixpkgs"

51 | ]

52 | },

53 | "locked": {

54 | "lastModified": 1693188660,

55 | "narHash": "sha256-F8vlVcYoEBRJqV3pN2QNSCI/A2i77ad5R9iiZ4llt1A=",

56 | "owner": "oxalica",

57 | "repo": "rust-overlay",

58 | "rev": "23756b2c5594da5c1ad2f40ae2440b9f8a2165b7",

59 | "type": "github"

60 | },

61 | "original": {

62 | "owner": "oxalica",

63 | "repo": "rust-overlay",

64 | "type": "github"

65 | }

66 | },

67 | "systems": {

68 | "locked": {

69 | "lastModified": 1681028828,

70 | "narHash": "sha256-Vy1rq5AaRuLzOxct8nz4T6wlgyUR7zLU309k9mBC768=",

71 | "owner": "nix-systems",

72 | "repo": "default",

73 | "rev": "da67096a3b9bf56a91d16901293e51ba5b49a27e",

74 | "type": "github"

75 | },

76 | "original": {

77 | "owner": "nix-systems",

78 | "repo": "default",

79 | "type": "github"

80 | }

81 | }

82 | },

83 | "root": "root",

84 | "version": 7

85 | }

86 |

--------------------------------------------------------------------------------

/flake.nix:

--------------------------------------------------------------------------------

1 | # in flake.nix

2 | {

3 | description =

4 | "Flake containing a development shell for the `sharded-slab` crate";

5 |

6 | inputs = {

7 | nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";

8 | flake-utils.url = "github:numtide/flake-utils";

9 | rust-overlay = {

10 | url = "github:oxalica/rust-overlay";

11 | inputs = {

12 | nixpkgs.follows = "nixpkgs";

13 | flake-utils.follows = "flake-utils";

14 | };

15 | };

16 | };

17 |

18 | outputs = { self, nixpkgs, flake-utils, rust-overlay }:

19 | flake-utils.lib.eachDefaultSystem (system:

20 | let

21 | overlays = [ (import rust-overlay) ];

22 | pkgs = import nixpkgs { inherit system overlays; };

23 | rustToolchain = pkgs.pkgsBuildHost.rust-bin.stable.latest.default;

24 | nativeBuildInputs = with pkgs; [ rustToolchain pkg-config ];

25 | in with pkgs; {

26 | devShells.default = mkShell { inherit nativeBuildInputs; };

27 | });

28 | }

29 |

--------------------------------------------------------------------------------

/rust-toolchain.toml:

--------------------------------------------------------------------------------

1 | [toolchain]

2 | channel = "stable"

3 | profile = "default"

4 | targets = [

5 | "i686-unknown-linux-musl",

6 | "x86_64-unknown-linux-gnu",

7 | ]

--------------------------------------------------------------------------------

/src/cfg.rs:

--------------------------------------------------------------------------------

1 | use crate::page::{

2 | slot::{Generation, RefCount},

3 | Addr,

4 | };

5 | use crate::Pack;

6 | use std::{fmt, marker::PhantomData};

7 | /// Configuration parameters which can be overridden to tune the behavior of a slab.

8 | pub trait Config: Sized {

9 | /// The maximum number of threads which can access the slab.

10 | ///

11 | /// This value (rounded to a power of two) determines the number of shards

12 | /// in the slab. If a thread is created, accesses the slab, and then terminates,

13 | /// its shard may be reused and thus does not count against the maximum

14 | /// number of threads once the thread has terminated.

15 | const MAX_THREADS: usize = DefaultConfig::MAX_THREADS;

16 | /// The maximum number of pages in each shard in the slab.

17 | ///

18 | /// This value, in combination with `INITIAL_PAGE_SIZE`, determines how many

19 | /// bits of each index are used to represent page addresses.

20 | const MAX_PAGES: usize = DefaultConfig::MAX_PAGES;

21 | /// The size of the first page in each shard.

22 | ///

23 | /// When a page in a shard has been filled with values, a new page

24 | /// will be allocated that is twice as large as the previous page. Thus, the

25 | /// second page will be twice this size, and the third will be four times

26 | /// this size, and so on.

27 | ///

28 | /// Note that page sizes must be powers of two. If this value is not a power

29 | /// of two, it will be rounded to the next power of two.

30 | const INITIAL_PAGE_SIZE: usize = DefaultConfig::INITIAL_PAGE_SIZE;

31 | /// Sets a number of high-order bits in each index which are reserved from

32 | /// user code.

33 | ///

34 | /// Note that these bits are taken from the generation counter; if the page

35 | /// address and thread IDs are configured to use a large number of bits,

36 | /// reserving additional bits will decrease the period of the generation

37 | /// counter. These should thus be used relatively sparingly, to ensure that

38 | /// generation counters are able to effectively prevent the ABA problem.

39 | const RESERVED_BITS: usize = 0;

40 | }

41 |

42 | pub(crate) trait CfgPrivate: Config {

43 | const USED_BITS: usize = Generation::<Self>::LEN + Generation::<Self>::SHIFT;

44 | const INITIAL_SZ: usize = next_pow2(Self::INITIAL_PAGE_SIZE);

45 | const MAX_SHARDS: usize = next_pow2(Self::MAX_THREADS - 1);

46 | const ADDR_INDEX_SHIFT: usize = Self::INITIAL_SZ.trailing_zeros() as usize + 1;

47 |

48 | fn page_size(n: usize) -> usize {

49 | Self::INITIAL_SZ * 2usize.pow(n as _)

50 | }

51 |

52 | fn debug() -> DebugConfig<Self> {

53 | DebugConfig { _cfg: PhantomData }

54 | }

55 |

56 | fn validate() {

57 | assert!(

58 | Self::INITIAL_SZ.is_power_of_two(),

59 | "invalid Config: {:#?}",

60 | Self::debug(),

61 | );

62 | assert!(

63 | Self::INITIAL_SZ <= Addr::<Self>::BITS,

64 | "invalid Config: {:#?}",

65 | Self::debug()

66 | );

67 |

68 | assert!(

69 | Generation::<Self>::BITS >= 3,

70 | "invalid Config: {:#?}\ngeneration counter should be at least 3 bits!",

71 | Self::debug()

72 | );

73 |

74 | assert!(

75 | Self::USED_BITS <= WIDTH,

76 | "invalid Config: {:#?}\ntotal number of bits per index is too large to fit in a word!",

77 | Self::debug()

78 | );

79 |

80 | assert!(

81 | WIDTH - Self::USED_BITS >= Self::RESERVED_BITS,

82 | "invalid Config: {:#?}\nindices are too large to fit reserved bits!",

83 | Self::debug()

84 | );

85 |

86 | assert!(

87 | RefCount::<Self>::MAX > 1,

88 | "invalid Config: {:#?}\nmaximum concurrent references would be {}",

89 | Self::debug(),

90 | RefCount::<Self>::MAX,

91 | );

92 | }

93 |

94 | #[inline(always)]

95 | fn unpack<A: Pack<Self>>(packed: usize) -> A {

96 | A::from_packed(packed)

97 | }

98 |

99 | #[inline(always)]

100 | fn unpack_addr(packed: usize) -> Addr<Self> {

101 | Self::unpack(packed)

102 | }

103 |

104 | #[inline(always)]

105 | fn unpack_tid(packed: usize) -> crate::Tid<Self> {

106 | Self::unpack(packed)

107 | }

108 |

109 | #[inline(always)]

110 | fn unpack_gen(packed: usize) -> Generation<Self> {

111 | Self::unpack(packed)

112 | }

113 | }

114 | impl<C: Config> CfgPrivate for C {}

115 |

116 | /// Default slab configuration values.

117 | #[derive(Copy, Clone)]

118 | pub struct DefaultConfig {

119 | _p: (),

120 | }

121 |

122 | pub(crate) struct DebugConfig<C: Config> {

123 | _cfg: PhantomData<fn(C)>,

124 | }

125 |

126 | pub(crate) const WIDTH: usize = std::mem::size_of::<usize>() * 8;

127 |

128 | pub(crate) const fn next_pow2(n: usize) -> usize {

129 | let pow2 = n.count_ones() == 1;

130 | let zeros = n.leading_zeros();

131 | 1 << (WIDTH - zeros as usize - pow2 as usize)

132 | }

133 |

134 | // === impl DefaultConfig ===

135 |

136 | impl Config for DefaultConfig {

137 | const INITIAL_PAGE_SIZE: usize = 32;

138 |

139 | #[cfg(target_pointer_width = "64")]

140 | const MAX_THREADS: usize = 4096;

141 | #[cfg(target_pointer_width = "32")]

142 | // TODO(eliza): can we find enough bits to give 32-bit platforms more threads?

143 | const MAX_THREADS: usize = 128;

144 |

145 | const MAX_PAGES: usize = WIDTH / 2;

146 | }

147 |

148 | impl fmt::Debug for DefaultConfig {

149 | fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

150 | Self::debug().fmt(f)

151 | }

152 | }

153 |

154 | impl<C: Config> fmt::Debug for DebugConfig<C> {

155 | fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

156 | f.debug_struct(std::any::type_name::<C>())

157 | .field("initial_page_size", &C::INITIAL_SZ)

158 | .field("max_shards", &C::MAX_SHARDS)

159 | .field("max_pages", &C::MAX_PAGES)

160 | .field("used_bits", &C::USED_BITS)

161 | .field("reserved_bits", &C::RESERVED_BITS)

162 | .field("pointer_width", &WIDTH)

163 | .field("max_concurrent_references", &RefCount::<C>::MAX)

164 | .finish()

165 | }

166 | }

167 |

168 | #[cfg(test)]

169 | mod tests {

170 | use super::*;

171 | use crate::test_util;

172 | use crate::Slab;

173 |

174 | #[test]

175 | #[cfg_attr(loom, ignore)]

176 | #[should_panic]

177 | fn validates_max_refs() {

178 | struct GiantGenConfig;

179 |

180 | // Configure the slab with a very large number of bits for the generation

181 | // counter. This will only leave 1 bit to use for the slot reference

182 | // counter, which will fail to validate.

183 | impl Config for GiantGenConfig {

184 | const INITIAL_PAGE_SIZE: usize = 1;

185 | const MAX_THREADS: usize = 1;

186 | const MAX_PAGES: usize = 1;

187 | }

188 |

189 | let _slab = Slab::<usize>::new_with_config::<GiantGenConfig>();

190 | }

191 |

192 | #[test]

193 | #[cfg_attr(loom, ignore)]

194 | fn big() {

195 | let slab = Slab::new();

196 |

197 | for i in 0..10000 {

198 | println!("{:?}", i);

199 | let k = slab.insert(i).expect("insert");

200 | assert_eq!(slab.get(k).expect("get"), i);

201 | }

202 | }

203 |

204 | #[test]

205 | #[cfg_attr(loom, ignore)]

206 | fn custom_page_sz() {

207 | let slab = Slab::new_with_config::();

208 |

209 | for i in 0..4096 {

210 | println!("{}", i);

211 | let k = slab.insert(i).expect("insert");

212 | assert_eq!(slab.get(k).expect("get"), i);

213 | }

214 | }

215 | }

216 |
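The derived constants in `CfgPrivate` come from two small power-of-two helpers. The sketch below mirrors `next_pow2` and `page_size` on `u64`, so the results do not depend on pointer width. It also adds a hypothetical `shard_capacity` helper that sums the doubling page sizes. With the 64-bit `DefaultConfig` values, `MAX_SHARDS = next_pow2(4096 - 1) = 4096` and the first page holds 32 slots. The sketch assumes inputs small enough that the shifts do not overflow.

```rust
/// Word width for this sketch; `cfg.rs` uses the width of `usize`.
const WIDTH: u32 = 64;

/// Mirrors `next_pow2` from `cfg.rs`, on `u64`: rounds `n` up to the next
/// power of two (powers of two map to themselves, and 0 maps to 1).
const fn next_pow2(n: u64) -> u64 {
    let pow2 = n.count_ones() == 1;
    let zeros = n.leading_zeros();
    1 << (WIDTH - zeros - pow2 as u32)
}

/// Mirrors `CfgPrivate::page_size`: page `n` is `2^n` times the first page.
fn page_size(initial_page_size: u64, n: u32) -> u64 {
    next_pow2(initial_page_size) << n
}

/// Hypothetical helper: total slots in one shard once all `max_pages` pages
/// are allocated, i.e. the geometric series initial * (2^max_pages - 1).
fn shard_capacity(initial_page_size: u64, max_pages: u32) -> u64 {
    (0..max_pages).map(|n| page_size(initial_page_size, n)).sum()
}
```

Because every page doubles the previous one, a shard's capacity grows geometrically with `MAX_PAGES`. A small `INITIAL_PAGE_SIZE` therefore only delays growth and does not cap it.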

--------------------------------------------------------------------------------

/src/clear.rs:

--------------------------------------------------------------------------------

1 | use std::{collections, hash, ops::DerefMut, sync};

2 |

3 | /// Trait implemented by types which can be cleared in place, retaining any

4 | /// allocated memory.

5 | ///

6 | /// This is essentially a generalization of methods on standard library

7 | /// collection types, such as [`Vec::clear`], [`String::clear`], and

8 | /// [`HashMap::clear`]. These methods drop all data stored in the collection,

9 | /// but retain the collection's heap allocation for future use. Types such as

10 | /// `BTreeMap`, whose `clear` methods drop allocations, should not

11 | /// implement this trait.

12 | ///

13 | /// When implemented for types which do not own a heap allocation, `Clear`

14 | /// should reset the type in place if possible. If the type has an empty state

15 | /// or stores `Option`s, those values should be reset to the empty state. For

16 | /// "plain old data" types, which hold no pointers to other data and do not have

17 | /// an empty or initial state, it's okay for a `Clear` implementation to be a

18 | /// no-op. In that case, it essentially serves as a marker indicating that the

19 | /// type may be reused to store new data.

20 | ///

21 | /// [`Vec::clear`]: https://doc.rust-lang.org/stable/std/vec/struct.Vec.html#method.clear

22 | /// [`String::clear`]: https://doc.rust-lang.org/stable/std/string/struct.String.html#method.clear

23 | /// [`HashMap::clear`]: https://doc.rust-lang.org/stable/std/collections/struct.HashMap.html#method.clear

24 | pub trait Clear {

25 | /// Clear all data in `self`, retaining the allocated capacity.

26 | fn clear(&mut self);

27 | }

28 |

29 | impl<T> Clear for Option<T> {

30 | fn clear(&mut self) {

31 | let _ = self.take();

32 | }

33 | }

34 |

35 | impl<T> Clear for Box<T>

36 | where

37 | T: Clear,

38 | {

39 | #[inline]

40 | fn clear(&mut self) {

41 | self.deref_mut().clear()

42 | }

43 | }

44 |

45 | impl<T> Clear for Vec<T> {

46 | #[inline]

47 | fn clear(&mut self) {

48 | Vec::clear(self)

49 | }

50 | }

51 |

52 | impl<K, V, S> Clear for collections::HashMap<K, V, S>

53 | where

54 | K: hash::Hash + Eq,

55 | S: hash::BuildHasher,

56 | {

57 | #[inline]

58 | fn clear(&mut self) {

59 | collections::HashMap::clear(self)

60 | }

61 | }

62 |

63 | impl<T, S> Clear for collections::HashSet<T, S>

64 | where

65 | T: hash::Hash + Eq,

66 | S: hash::BuildHasher,

67 | {

68 | #[inline]

69 | fn clear(&mut self) {

70 | collections::HashSet::clear(self)

71 | }

72 | }

73 |

74 | impl Clear for String {

75 | #[inline]

76 | fn clear(&mut self) {

77 | String::clear(self)

78 | }

79 | }

80 |

81 | impl<T: Clear> Clear for sync::Mutex<T> {

82 | #[inline]

83 | fn clear(&mut self) {

84 | self.get_mut().unwrap().clear();

85 | }

86 | }

87 |

88 | impl<T: Clear> Clear for sync::RwLock<T> {

89 | #[inline]

90 | fn clear(&mut self) {

91 | self.write().unwrap().clear();

92 | }

93 | }

94 |

95 | #[cfg(all(loom, test))]

96 | impl<T: Clear> Clear for crate::sync::alloc::Track<T> {

97 | fn clear(&mut self) {

98 | self.get_mut().clear()

99 | }

100 | }

101 |
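Here is a short sketch of the contract described in the `Clear` docs: clearing drops the contents but keeps the allocation, so a value can be refilled without reallocating. The trait is a local stand-in with the same shape as the crate's `Clear`, defined locally so the example compiles without the crate. `reuse` is a hypothetical helper.

```rust
/// Local stand-in with the same shape as the crate's `Clear` trait.
trait Clear {
    /// Clear all data in `self`, retaining the allocated capacity.
    fn clear(&mut self);
}

impl<T> Clear for Vec<T> {
    fn clear(&mut self) {
        // Resolves to the inherent `Vec::clear`, which keeps capacity.
        Vec::clear(self)
    }
}

impl Clear for String {
    fn clear(&mut self) {
        String::clear(self)
    }
}

/// Hypothetical helper: empty `buf` in place, then refill it. Because
/// `clear` keeps the allocation, refilling within the old capacity does
/// not allocate again.
fn reuse<T: Clear>(buf: &mut T, fill: impl FnOnce(&mut T)) {
    buf.clear();
    fill(buf);
}
```

This is why the docs exclude types like `BTreeMap`. Their `clear` frees memory, so there would be no allocation left to reuse.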

--------------------------------------------------------------------------------

/src/implementation.rs:

--------------------------------------------------------------------------------

1 | // This module exists only to provide a separate page for the implementation

2 | // documentation.

3 |

4 | //! Notes on `sharded-slab`'s implementation and design.

5 | //!

6 | //! # Design

7 | //!

8 | //! The sharded slab's design is strongly inspired by the ideas presented by

9 | //! Leijen, Zorn, and de Moura in [Mimalloc: Free List Sharding in

10 | //! Action][mimalloc]. In this report, the authors present a novel design for a

11 | //! memory allocator based on a concept of _free list sharding_.

12 | //!

13 | //! Memory allocators must keep track of what memory regions are not currently

14 | //! allocated ("free") in order to provide them to future allocation requests.

15 | //! The term [_free list_][freelist] refers to a technique for performing this

16 | //! bookkeeping, where each free block stores a pointer to the next free block,

17 | //! forming a linked list. The memory allocator keeps a pointer to the most

18 | //! recently freed block, the _head_ of the free list. To allocate more memory,

19 | //! the allocator pops from the free list by setting the head pointer to the

20 | //! next free block of the current head block, and returning the previous head.

21 | //! To deallocate a block, the block is pushed to the free list by setting its

22 | //! first word to the current head pointer, and the head pointer is set to point

23 | //! to the deallocated block. Most implementations of slab allocators backed by

24 | //! arrays or vectors use a similar technique, where pointers are replaced by

25 | //! indices into the backing array.

26 | //!

27 | //! When allocations and deallocations can occur concurrently across threads,

28 | //! they must synchronize accesses to the free list; either by putting the

29 | //! entire allocator state inside of a lock, or by using atomic operations to

30 | //! treat the free list as a lock-free structure (such as a [Treiber stack]). In

31 | //! both cases, there is a significant performance cost — even when the free

32 | //! list is lock-free, it is likely that a noticeable amount of time will be

33 | //! spent in compare-and-swap loops. Ideally, the global synchronization point

34 | //! created by the single global free list could be avoided as much as possible.

35 | //!

36 | //! The approach presented by Leijen, Zorn, and de Moura is to introduce

37 | //! sharding and thus increase the granularity of synchronization significantly.

38 | //! In mimalloc, the heap is _sharded_ so that each thread has its own

39 | //! thread-local heap. Objects are always allocated from the local heap of the

40 | //! thread where the allocation is performed. Because allocations are always

41 | //! done from a thread's local heap, they need not be synchronized.

42 | //!

43 | //! However, since objects can move between threads before being deallocated,

44 | //! _deallocations_ may still occur concurrently. Therefore, Leijen et al.

45 | //! introduce a concept of _local_ and _global_ free lists. When an object is

46 | //! deallocated on the same thread it was originally allocated on, it is placed

47 | //! on the local free list; if it is deallocated on another thread, it goes on

48 | //! the global free list for the heap of the thread from which it originated. To

49 | //! allocate, the local free list is used first; if it is empty, the entire

50 | //! global free list is popped onto the local free list. Since the local free

51 | //! list is only ever accessed by the thread it belongs to, it does not require

52 | //! synchronization at all, and because the global free list is popped from

53 | //! infrequently, the cost of synchronization has a reduced impact. A majority

54 | //! of allocations can occur without any synchronization at all; and

55 | //! deallocations only require synchronization when an object has left its

56 | //! parent thread (a relatively uncommon case).

57 | //!

58 | //! [mimalloc]: https://www.microsoft.com/en-us/research/uploads/prod/2019/06/mimalloc-tr-v1.pdf

59 | //! [freelist]: https://en.wikipedia.org/wiki/Free_list

60 | //! [Treiber stack]: https://en.wikipedia.org/wiki/Treiber_stack

61 | //!

62 | //! # Implementation

63 | //!

64 | //! A slab is represented as an array of [`MAX_THREADS`] _shards_. A shard

65 | //! consists of a vector of one or more _pages_ plus associated metadata.

66 | //! Finally, a page consists of an array of _slots_ plus head indices for the local

67 | //! and remote free lists.

68 | //!

69 | //! ```text

70 | //! ┌─────────────┐

71 | //! │ shard 1 │

72 | //! │ │ ┌─────────────┐ ┌────────┐

73 | //! │ pages───────┼───▶│ page 1 │ │ │

74 | //! ├─────────────┤ ├─────────────┤ ┌────▶│ next──┼─┐

75 | //! │ shard 2 │ │ page 2 │ │ ├────────┤ │

76 | //! ├─────────────┤ │ │ │ │XXXXXXXX│ │

77 | //! │ shard 3 │ │ local_head──┼──┘ ├────────┤ │

78 | //! └─────────────┘ │ remote_head─┼──┐ │ │◀┘

79 | //! ... ├─────────────┤ │ │ next──┼─┐

80 | //! ┌─────────────┐ │ page 3 │ │ ├────────┤ │

81 | //! │ shard n │ └─────────────┘ │ │XXXXXXXX│ │

82 | //! └─────────────┘ ... │ ├────────┤ │

83 | //! ┌─────────────┐ │ │XXXXXXXX│ │

84 | //! │ page n │ │ ├────────┤ │

85 | //! └─────────────┘ │ │ │◀┘

86 | //! └────▶│ next──┼───▶ ...

87 | //! ├────────┤

88 | //! │XXXXXXXX│

89 | //! └────────┘

90 | //! ```

91 | //!

92 | //!

93 | //! The size of the first page in a shard is always a power of two, and every

94 | //! subsequent page added after the first is twice as large as the page that

95 | //! precedes it.

96 | //!

97 | //! ```text

98 | //!

99 | //! pg.

100 | //! ┌───┐ ┌─┬─┐

101 | //! │ 0 │───▶ │ │

102 | //! ├───┤ ├─┼─┼─┬─┐

103 | //! │ 1 │───▶ │ │ │ │

104 | //! ├───┤ ├─┼─┼─┼─┼─┬─┬─┬─┐

105 | //! │ 2 │───▶ │ │ │ │ │ │ │ │

106 | //! ├───┤ ├─┼─┼─┼─┼─┼─┼─┼─┼─┬─┬─┬─┬─┬─┬─┬─┐

107 | //! │ 3 │───▶ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │

108 | //! └───┘ └─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┘

109 | //! ```

110 | //!

111 | //! When searching for a free slot, the smallest page is searched first, and if

112 | //! it is full, the search proceeds to the next page until either a free slot is

113 | //! found or all available pages have been searched. If all available pages have

114 | //! been searched and the maximum number of pages has not yet been reached, a

115 | //! new page is then allocated.

116 | //!

117 | //! Since every page is twice as large as the previous page, and all page sizes

118 | //! are powers of two, we can determine the index of the page that contains a

119 | //! given address by adding the initial page size to it and shifting the sum

120 | //! down, so that every offset on the first page maps to zero, and then counting

121 | //! how many binary digits are needed to represent the result; that count tells

122 | //! us which power-of-two page size the address fits inside of. We find it by

123 | //! counting the leading zeros (unused high-order bits) in the result's binary

124 | //! representation and subtracting that count from the number of bits in a word.

125 | //!

126 | //! The formula for determining the page number that contains an offset is thus:

127 | //!

128 | //! ```rust,ignore

129 | //! WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros()

130 | //! ```

131 | //!

132 | //! where `WIDTH` is the number of bits in a `usize`, and `INDEX_SHIFT` is

133 | //!

134 | //! ```rust,ignore

135 | //! INITIAL_PAGE_SIZE.trailing_zeros() + 1;

136 | //! ```

137 | //!

138 | //! [`MAX_THREADS`]: https://docs.rs/sharded-slab/latest/sharded_slab/trait.Config.html#associatedconstant.MAX_THREADS

139 |

--------------------------------------------------------------------------------
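The page-index formula described in `implementation.rs` can be checked numerically. The following is a minimal sketch, assuming an initial page size of 32 (an illustrative value chosen here; in the crate the size comes from the `Config`). The helpers `page_index` and `page_index_linear` are hypothetical names: the first applies the leading-zeros formula, the second simply walks pages of doubling size until it finds the one containing the offset.

```rust
// Assumed value for illustration only; the crate reads its initial page size
// from the `Config` trait.
const INITIAL_PAGE_SIZE: usize = 32;
const WIDTH: usize = usize::BITS as usize;
const INDEX_SHIFT: u32 = INITIAL_PAGE_SIZE.trailing_zeros() + 1;

/// Hypothetical helper: page index via the leading-zeros formula.
fn page_index(offset: usize) -> usize {
    WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros() as usize
}

/// Hypothetical reference helper: walk pages whose sizes double each time
/// until reaching the page whose offset range contains `offset`.
fn page_index_linear(offset: usize) -> usize {
    let (mut start, mut size, mut page) = (0, INITIAL_PAGE_SIZE, 0);
    while offset >= start + size {
        start += size;
        size *= 2;
        page += 1;
    }
    page
}

fn main() {
    // With a first page of 32 slots: page 0 holds offsets 0..32,
    // page 1 holds 32..96, page 2 holds 96..224, page 3 holds 224..480.
    for offset in [0, 31, 32, 95, 96, 223, 224] {
        println!("offset {offset:>3} -> page {}", page_index(offset));
    }
    // The closed-form formula agrees with the linear walk for every offset checked.
    for offset in 0..100_000 {
        assert_eq!(page_index(offset), page_index_linear(offset));
    }
}
```

The formula does in constant time what the linear walk does in time proportional to the number of pages, which is why the page index can be recovered from a packed address without storing it separately.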

/src/iter.rs:

--------------------------------------------------------------------------------

1 | use std::{iter::FusedIterator, slice};

2 |

3 | use crate::{cfg, page, shard};

4 |

5 | /// An exclusive fused iterator over the items in a [`Slab`](crate::Slab).

6 | #[must_use = "iterators are lazy and do nothing unless consumed"]

7 | #[derive(Debug)]

8 | pub struct UniqueIter<'a, T, C: cfg::Config> {

9 | pub(super) shards: shard::IterMut<'a, Option<T>, C>,

10 | pub(super) pages: slice::Iter<'a, page::Shared<Option<T>, C>>,

11 | pub(super) slots: Option<page::Iter<'a, T, C>>,

12 | }

13 |

14 | impl<'a, T, C: cfg::Config> Iterator for UniqueIter<'a, T, C> {

15 | type Item = &'a T;

16 |

17 | fn next(&mut self) -> Option<Self::Item> {

18 | test_println!("UniqueIter::next");

19 | loop {

20 | test_println!("-> try next slot");

21 | if let Some(item) = self.slots.as_mut().and_then(|slots| slots.next()) {

22 | test_println!("-> found an item!");

23 | return Some(item);

24 | }

25 |

26 | test_println!("-> try next page");

27 | if let Some(page) = self.pages.next() {

28 | test_println!("-> found another page");

29 | self.slots = page.iter();

30 | continue;

31 | }

32 |

33 | test_println!("-> try next shard");

34 | if let Some(shard) = self.shards.next() {

35 | test_println!("-> found another shard");

36 | self.pages = shard.iter();

37 | } else {

38 | test_println!("-> all done!");

39 | return None;

40 | }

41 | }

42 | }

43 | }

44 |

45 | impl<T, C: cfg::Config> FusedIterator for UniqueIter<'_, T, C> {}

46 |

--------------------------------------------------------------------------------
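The three-level loop in `UniqueIter::next` (try the next slot, then the next page, then the next shard) can be illustrated without the crate's concurrent types. This is a minimal sketch assuming a hypothetical layout in which each shard is a `Vec` of pages and each page is a `Vec<Option<T>>`; `NestedIter`, `Shard`, and `Page` are illustrative names, not part of sharded-slab's API.

```rust
use std::iter::FusedIterator;
use std::slice;

// Hypothetical simplified layout (not sharded-slab's real types): a shard is
// a list of pages, and a page is a list of slots that may or may not be filled.
type Page<T> = Vec<Option<T>>;
type Shard<T> = Vec<Page<T>>;

/// Yields every occupied slot, exhausting slots, then pages, then shards.
struct NestedIter<'a, T> {
    shards: slice::Iter<'a, Shard<T>>,
    pages: slice::Iter<'a, Page<T>>,
    slots: Option<slice::Iter<'a, Option<T>>>,
}

impl<'a, T> NestedIter<'a, T> {
    fn new(shards: &'a [Shard<T>]) -> Self {
        // Start with no current page or slots; `next` pulls in the first shard.
        let no_pages: &'a [Page<T>] = &[];
        NestedIter { shards: shards.iter(), pages: no_pages.iter(), slots: None }
    }
}

impl<'a, T> Iterator for NestedIter<'a, T> {
    type Item = &'a T;

    fn next(&mut self) -> Option<Self::Item> {
        loop {
            // Try the next occupied slot on the current page, skipping empty ones.
            if let Some(item) = self
                .slots
                .as_mut()
                .and_then(|slots| slots.find_map(|slot| slot.as_ref()))
            {
                return Some(item);
            }

            // Current page exhausted: advance to the next page of this shard.
            if let Some(page) = self.pages.next() {
                self.slots = Some(page.iter());
                continue;
            }

            // Current shard exhausted: advance to the next shard, or finish.
            match self.shards.next() {
                Some(shard) => self.pages = shard.iter(),
                None => return None,
            }
        }
    }
}

// Once every shard is exhausted, each further call keeps returning `None`,
// so the iterator is fused, like `UniqueIter`.
impl<T> FusedIterator for NestedIter<'_, T> {}

fn main() {
    let shards: Vec<Shard<u32>> = vec![
        vec![vec![Some(1), None], vec![None, Some(2), Some(3), None]],
        vec![], // a shard with no pages allocated yet
        vec![vec![None, None], vec![Some(4), None, None, None]],
    ];
    let items: Vec<u32> = NestedIter::new(&shards).copied().collect();
    assert_eq!(items, vec![1, 2, 3, 4]);
    println!("{:?}", items);
}
```

Keeping the slot, page, and shard cursors as separate fields, rather than chaining `flat_map` calls, mirrors the structure of `UniqueIter` and keeps each level's state explicit.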

/src/macros.rs:

--------------------------------------------------------------------------------

1 | macro_rules! test_println {

2 | ($($arg:tt)*) => {

3 | if cfg!(test) && cfg!(slab_print) {

4 | if std::thread::panicking() {

5 | // getting the thread ID while panicking doesn't seem to play super nicely with loom's

6 | // mock lazy_static...

7 | println!("[PANIC {:>17}:{:<3}] {}", file!(), line!(), format_args!($($arg)*))

8 | } else {

9 | println!("[{:?} {:>17}:{:<3}] {}", crate::Tid::<crate::DefaultConfig>::current(), file!(), line!(), format_args!($($arg)*))

10 | }

11 | }

12 | }

13 | }

14 |

15 | #[cfg(all(test, loom))]

16 | macro_rules! test_dbg {

17 | ($e:expr) => {

18 | match $e {

19 | e => {

20 | test_println!("{} = {:?}", stringify!($e), &e);

21 | e

22 | }

23 | }

24 | };

25 | }

26 |

27 | macro_rules! panic_in_drop {

28 | ($($arg:tt)*) => {

29 | if !std::thread::panicking() {

30 | panic!($($arg)*)

31 | } else {

32 | let thread = std::thread::current();

33 | eprintln!(

34 | "thread '{thread}' attempted to panic at '{msg}', {file}:{line}:{col}\n\

35 | note: we were already unwinding due to a previous panic.",

36 | thread = thread.name().unwrap_or("<unnamed>"),

37 | msg = format_args!($($arg)*),

38 | file = file!(),

39 | line = line!(),

40 | col = column!(),

41 | );

42 | }

43 | }

44 | }

45 |

46 | macro_rules! debug_assert_eq_in_drop {

47 | ($this:expr, $that:expr) => {

48 | debug_assert_eq_in_drop!(@inner $this, $that, "")

49 | };

50 | ($this:expr, $that:expr, $($arg:tt)+) => {

51 | debug_assert_eq_in_drop!(@inner $this, $that, format_args!(": {}", format_args!($($arg)+)))

52 | };

53 | (@inner $this:expr, $that:expr, $msg:expr) => {

54 | if cfg!(debug_assertions) {

55 | if $this != $that {

56 | panic_in_drop!(

57 | "assertion failed ({} == {})\n left: `{:?}`,\n right: `{:?}`{}",

58 | stringify!($this),

59 | stringify!($that),

60 | $this,

61 | $that,

62 | $msg,

63 | )

64 | }

65 | }

66 | }

67 | }

68 |

--------------------------------------------------------------------------------
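The reason `panic_in_drop!` checks `std::thread::panicking()` is that panicking inside a destructor while the thread is already unwinding aborts the process. A minimal sketch of the same idea written as a plain function, assuming a hypothetical `Guard` type with an `outstanding` counter (neither the function nor `Guard` is part of sharded-slab, and the file/line/column reporting of the real macro is omitted):

```rust
use std::panic;
use std::thread;

/// Simplified stand-in for `panic_in_drop!`: panics only when the current
/// thread is not already unwinding; otherwise it logs to stderr, because a
/// second panic during unwinding would abort the process.
fn panic_in_drop(msg: &str) {
    if !thread::panicking() {
        panic!("{}", msg);
    } else {
        let current = thread::current();
        eprintln!(
            "thread '{}' attempted to panic at '{}'\nnote: we were already unwinding due to a previous panic.",
            current.name().unwrap_or("<unnamed>"),
            msg
        );
    }
}

/// Hypothetical guard that expects its counter to be back at zero when dropped.
struct Guard {
    outstanding: usize,
}

impl Drop for Guard {
    fn drop(&mut self) {
        if self.outstanding != 0 {
            panic_in_drop(&format!("{} references still outstanding", self.outstanding));
        }
    }
}

fn main() {
    // Silence the default panic message so the demo output stays readable.
    panic::set_hook(Box::new(|_| {}));

    // Invariant violated during normal execution: the drop panics as usual.
    let r1 = panic::catch_unwind(|| {
        let _g = Guard { outstanding: 1 };
    });
    assert!(r1.is_err());

    // Invariant violated while already unwinding: the drop only logs, so the
    // original panic propagates instead of aborting the process.
    let r2 = panic::catch_unwind(|| {
        let _g = Guard { outstanding: 2 };
        panic!("original failure");
    });
    assert!(r2.is_err());
}
```

`debug_assert_eq_in_drop!` builds on the same check, so a failed assertion in a destructor surfaces as a normal panic when possible and as a log line when the thread is already unwinding.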

/src/page/mod.rs:

--------------------------------------------------------------------------------

1 | use crate::cfg::{self, CfgPrivate};

2 | use crate::clear::Clear;

3 | use crate::sync::UnsafeCell;

4 | use crate::Pack;

5 |

6 | pub(crate) mod slot;

7 | mod stack;

8 | pub(crate) use self::slot::Slot;

9 | use std::{fmt, marker::PhantomData};

10 |

11 | /// A page address encodes the location of a slot within a shard (the page

12 | /// number and offset within that page) as a single linear value.

13 | #[repr(transparent)]

14 | pub(crate) struct Addr<C: cfg::Config = cfg::DefaultConfig> {

15 | addr: usize,

16 | _cfg: PhantomData<fn(C)>,

17 | }

18 |

19 | impl<C: cfg::Config> Addr<C> {

20 | const NULL: usize = Self::BITS + 1;

21 |

22 | pub(crate) fn index(self) -> usize {

23 | // Since every page is twice as large as the previous page, and all page sizes

24 | // are powers of two, we can determine the page index that contains a given

25 | // address by counting leading zeros, which tells us what power of two