├── .gitattributes

├── .github

├── FUNDING.yml

├── pull_request_template.md

└── workflows

│ └── ci.yml

├── .gitignore

├── LICENSE

├── LICENSE-APACHE

├── LICENSE-MIT

├── README.md

├── build.zig

└── src

├── MeasuredAllocator.zig

├── benchmark.zig

├── binaryfusefilter.zig

├── fusefilter.zig

├── main.zig

├── unique.zig

├── util.zig

└── xorfilter.zig

/.gitattributes:

--------------------------------------------------------------------------------

1 | * text=auto eol=lf

2 |

3 |

--------------------------------------------------------------------------------

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | github: emidoots

2 |

--------------------------------------------------------------------------------

/.github/pull_request_template.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | - [ ] By selecting this checkbox, I agree to license my contributions to this project under the license(s) described in the LICENSE file, and I have the right to do so or have received permission to do so by an employer or client I am producing work for whom has this right.

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI

2 | on:

3 | - push

4 | - pull_request

5 | jobs:

6 | test:

7 | runs-on: ubuntu-latest

8 | steps:

9 | - name: Checkout

10 | uses: actions/checkout@v2

11 | - name: Setup Zig

12 | run: |

13 | sudo apt install xz-utils

14 | sudo sh -c 'wget -c https://pkg.machengine.org/zig/zig-linux-x86_64-0.14.0-dev.2577+271452d22.tar.xz -O - | tar -xJ --strip-components=1 -C /usr/local/bin'

15 | - name: build

16 | run: zig build

17 | - name: test

18 | run: zig build test

19 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # This file is for zig-specific build artifacts.

2 | # If you have OS-specific or editor-specific files to ignore,

3 | # such as *.swp or .DS_Store, put those in your global

4 | # ~/.gitignore and put this in your ~/.gitconfig:

5 | #

6 | # [core]

7 | # excludesfile = ~/.gitignore

8 | #

9 | # Cheers!

10 | # -andrewrk

11 |

12 | .zig-cache/

13 | zig-out/

14 | /release/

15 | /debug/

16 | /build/

17 | /build-*/

18 | /docgen_tmp/

19 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright 2021, Hexops Contributors (given via the Git commit history).

2 |

3 | Licensed under the Apache License, Version 2.0 (see LICENSE-APACHE or http://www.apache.org/licenses/LICENSE-2.0)

4 | or the MIT license (see LICENSE-MIT or http://opensource.org/licenses/MIT), at

5 | your option. All files in the project without exclusions may not be copied,

6 | modified, or distributed except according to those terms.

7 |

--------------------------------------------------------------------------------

/LICENSE-APACHE:

--------------------------------------------------------------------------------

1 |

2 | Apache License

3 | Version 2.0, January 2004

4 | http://www.apache.org/licenses/

5 |

6 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

7 |

8 | 1. Definitions.

9 |

10 | "License" shall mean the terms and conditions for use, reproduction,

11 | and distribution as defined by Sections 1 through 9 of this document.

12 |

13 | "Licensor" shall mean the copyright owner or entity authorized by

14 | the copyright owner that is granting the License.

15 |

16 | "Legal Entity" shall mean the union of the acting entity and all

17 | other entities that control, are controlled by, or are under common

18 | control with that entity. For the purposes of this definition,

19 | "control" means (i) the power, direct or indirect, to cause the

20 | direction or management of such entity, whether by contract or

21 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

22 | outstanding shares, or (iii) beneficial ownership of such entity.

23 |

24 | "You" (or "Your") shall mean an individual or Legal Entity

25 | exercising permissions granted by this License.

26 |

27 | "Source" form shall mean the preferred form for making modifications,

28 | including but not limited to software source code, documentation

29 | source, and configuration files.

30 |

31 | "Object" form shall mean any form resulting from mechanical

32 | transformation or translation of a Source form, including but

33 | not limited to compiled object code, generated documentation,

34 | and conversions to other media types.

35 |

36 | "Work" shall mean the work of authorship, whether in Source or

37 | Object form, made available under the License, as indicated by a

38 | copyright notice that is included in or attached to the work

39 | (an example is provided in the Appendix below).

40 |

41 | "Derivative Works" shall mean any work, whether in Source or Object

42 | form, that is based on (or derived from) the Work and for which the

43 | editorial revisions, annotations, elaborations, or other modifications

44 | represent, as a whole, an original work of authorship. For the purposes

45 | of this License, Derivative Works shall not include works that remain

46 | separable from, or merely link (or bind by name) to the interfaces of,

47 | the Work and Derivative Works thereof.

48 |

49 | "Contribution" shall mean any work of authorship, including

50 | the original version of the Work and any modifications or additions

51 | to that Work or Derivative Works thereof, that is intentionally

52 | submitted to Licensor for inclusion in the Work by the copyright owner

53 | or by an individual or Legal Entity authorized to submit on behalf of

54 | the copyright owner. For the purposes of this definition, "submitted"

55 | means any form of electronic, verbal, or written communication sent

56 | to the Licensor or its representatives, including but not limited to

57 | communication on electronic mailing lists, source code control systems,

58 | and issue tracking systems that are managed by, or on behalf of, the

59 | Licensor for the purpose of discussing and improving the Work, but

60 | excluding communication that is conspicuously marked or otherwise

61 | designated in writing by the copyright owner as "Not a Contribution."

62 |

63 | "Contributor" shall mean Licensor and any individual or Legal Entity

64 | on behalf of whom a Contribution has been received by Licensor and

65 | subsequently incorporated within the Work.

66 |

67 | 2. Grant of Copyright License. Subject to the terms and conditions of

68 | this License, each Contributor hereby grants to You a perpetual,

69 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

70 | copyright license to reproduce, prepare Derivative Works of,

71 | publicly display, publicly perform, sublicense, and distribute the

72 | Work and such Derivative Works in Source or Object form.

73 |

74 | 3. Grant of Patent License. Subject to the terms and conditions of

75 | this License, each Contributor hereby grants to You a perpetual,

76 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

77 | (except as stated in this section) patent license to make, have made,

78 | use, offer to sell, sell, import, and otherwise transfer the Work,

79 | where such license applies only to those patent claims licensable

80 | by such Contributor that are necessarily infringed by their

81 | Contribution(s) alone or by combination of their Contribution(s)

82 | with the Work to which such Contribution(s) was submitted. If You

83 | institute patent litigation against any entity (including a

84 | cross-claim or counterclaim in a lawsuit) alleging that the Work

85 | or a Contribution incorporated within the Work constitutes direct

86 | or contributory patent infringement, then any patent licenses

87 | granted to You under this License for that Work shall terminate

88 | as of the date such litigation is filed.

89 |

90 | 4. Redistribution. You may reproduce and distribute copies of the

91 | Work or Derivative Works thereof in any medium, with or without

92 | modifications, and in Source or Object form, provided that You

93 | meet the following conditions:

94 |

95 | (a) You must give any other recipients of the Work or

96 | Derivative Works a copy of this License; and

97 |

98 | (b) You must cause any modified files to carry prominent notices

99 | stating that You changed the files; and

100 |

101 | (c) You must retain, in the Source form of any Derivative Works

102 | that You distribute, all copyright, patent, trademark, and

103 | attribution notices from the Source form of the Work,

104 | excluding those notices that do not pertain to any part of

105 | the Derivative Works; and

106 |

107 | (d) If the Work includes a "NOTICE" text file as part of its

108 | distribution, then any Derivative Works that You distribute must

109 | include a readable copy of the attribution notices contained

110 | within such NOTICE file, excluding those notices that do not

111 | pertain to any part of the Derivative Works, in at least one

112 | of the following places: within a NOTICE text file distributed

113 | as part of the Derivative Works; within the Source form or

114 | documentation, if provided along with the Derivative Works; or,

115 | within a display generated by the Derivative Works, if and

116 | wherever such third-party notices normally appear. The contents

117 | of the NOTICE file are for informational purposes only and

118 | do not modify the License. You may add Your own attribution

119 | notices within Derivative Works that You distribute, alongside

120 | or as an addendum to the NOTICE text from the Work, provided

121 | that such additional attribution notices cannot be construed

122 | as modifying the License.

123 |

124 | You may add Your own copyright statement to Your modifications and

125 | may provide additional or different license terms and conditions

126 | for use, reproduction, or distribution of Your modifications, or

127 | for any such Derivative Works as a whole, provided Your use,

128 | reproduction, and distribution of the Work otherwise complies with

129 | the conditions stated in this License.

130 |

131 | 5. Submission of Contributions. Unless You explicitly state otherwise,

132 | any Contribution intentionally submitted for inclusion in the Work

133 | by You to the Licensor shall be under the terms and conditions of

134 | this License, without any additional terms or conditions.

135 | Notwithstanding the above, nothing herein shall supersede or modify

136 | the terms of any separate license agreement you may have executed

137 | with Licensor regarding such Contributions.

138 |

139 | 6. Trademarks. This License does not grant permission to use the trade

140 | names, trademarks, service marks, or product names of the Licensor,

141 | except as required for reasonable and customary use in describing the

142 | origin of the Work and reproducing the content of the NOTICE file.

143 |

144 | 7. Disclaimer of Warranty. Unless required by applicable law or

145 | agreed to in writing, Licensor provides the Work (and each

146 | Contributor provides its Contributions) on an "AS IS" BASIS,

147 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

148 | implied, including, without limitation, any warranties or conditions

149 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

150 | PARTICULAR PURPOSE. You are solely responsible for determining the

151 | appropriateness of using or redistributing the Work and assume any

152 | risks associated with Your exercise of permissions under this License.

153 |

154 | 8. Limitation of Liability. In no event and under no legal theory,

155 | whether in tort (including negligence), contract, or otherwise,

156 | unless required by applicable law (such as deliberate and grossly

157 | negligent acts) or agreed to in writing, shall any Contributor be

158 | liable to You for damages, including any direct, indirect, special,

159 | incidental, or consequential damages of any character arising as a

160 | result of this License or out of the use or inability to use the

161 | Work (including but not limited to damages for loss of goodwill,

162 | work stoppage, computer failure or malfunction, or any and all

163 | other commercial damages or losses), even if such Contributor

164 | has been advised of the possibility of such damages.

165 |

166 | 9. Accepting Warranty or Additional Liability. While redistributing

167 | the Work or Derivative Works thereof, You may choose to offer,

168 | and charge a fee for, acceptance of support, warranty, indemnity,

169 | or other liability obligations and/or rights consistent with this

170 | License. However, in accepting such obligations, You may act only

171 | on Your own behalf and on Your sole responsibility, not on behalf

172 | of any other Contributor, and only if You agree to indemnify,

173 | defend, and hold each Contributor harmless for any liability

174 | incurred by, or claims asserted against, such Contributor by reason

175 | of your accepting any such warranty or additional liability.

176 |

177 | END OF TERMS AND CONDITIONS

178 |

179 | APPENDIX: How to apply the Apache License to your work.

180 |

181 | To apply the Apache License to your work, attach the following

182 | boilerplate notice, with the fields enclosed by brackets "[]"

183 | replaced with your own identifying information. (Don't include

184 | the brackets!) The text should be enclosed in the appropriate

185 | comment syntax for the file format. We also recommend that a

186 | file or class name and description of purpose be included on the

187 | same "printed page" as the copyright notice for easier

188 | identification within third-party archives.

189 |

190 | Copyright [yyyy] [name of copyright owner]

191 |

192 | Licensed under the Apache License, Version 2.0 (the "License");

193 | you may not use this file except in compliance with the License.

194 | You may obtain a copy of the License at

195 |

196 | http://www.apache.org/licenses/LICENSE-2.0

197 |

198 | Unless required by applicable law or agreed to in writing, software

199 | distributed under the License is distributed on an "AS IS" BASIS,

200 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

201 | See the License for the specific language governing permissions and

202 | limitations under the License.

203 |

--------------------------------------------------------------------------------

/LICENSE-MIT:

--------------------------------------------------------------------------------

1 | Copyright (c) 2021 Hexops Contributors (given via the Git commit history).

2 |

3 | Permission is hereby granted, free of charge, to any

4 | person obtaining a copy of this software and associated

5 | documentation files (the "Software"), to deal in the

6 | Software without restriction, including without

7 | limitation the rights to use, copy, modify, merge,

8 | publish, distribute, sublicense, and/or sell copies of

9 | the Software, and to permit persons to whom the Software

10 | is furnished to do so, subject to the following

11 | conditions:

12 |

13 | The above copyright notice and this permission notice

14 | shall be included in all copies or substantial portions

15 | of the Software.

16 |

17 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF

18 | ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED

19 | TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A

20 | PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT

21 | SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY

22 | CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

23 | OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR

24 | IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

25 | DEALINGS IN THE SOFTWARE.

26 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # fastfilter: Binary fuse & xor filters for Zig  2 |

3 | [](https://github.com/hexops/fastfilter/actions)

4 |

5 |

2 |

3 | [](https://github.com/hexops/fastfilter/actions)

4 |

5 |  6 |

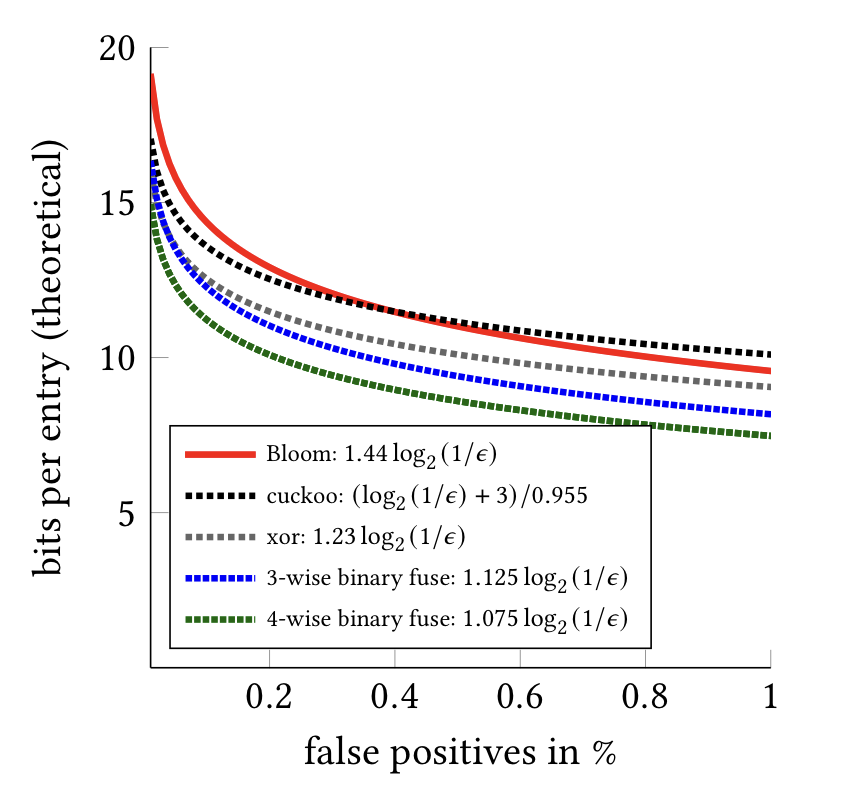

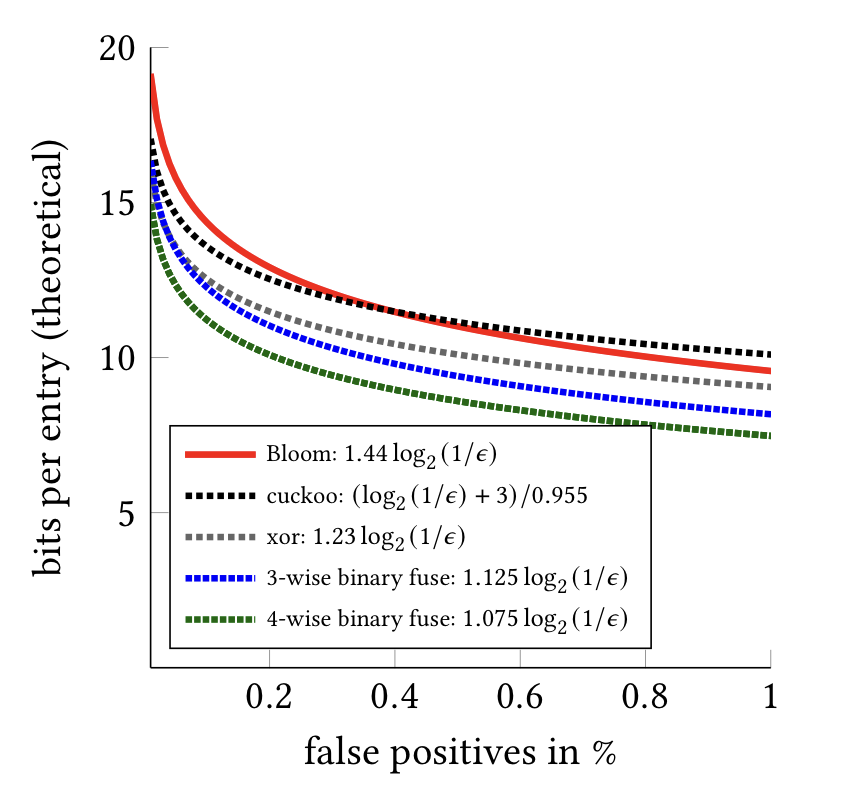

7 | Binary fuse filters & xor filters are probabilistic data structures which allow for quickly checking whether an element is part of a set.

8 |

9 | Both are faster and more concise than Bloom filters, and smaller than Cuckoo filters. Binary fuse filters are a bleeding-edge development and are competitive with Facebook's ribbon filters:

10 |

11 | * Thomas Mueller Graf, Daniel Lemire, Binary Fuse Filters: Fast and Smaller Than Xor Filters (_not yet published_)

12 | * Thomas Mueller Graf, Daniel Lemire, [Xor Filters: Faster and Smaller Than Bloom and Cuckoo Filters](https://arxiv.org/abs/1912.08258), Journal of Experimental Algorithmics 25 (1), 2020. DOI: 10.1145/3376122

13 |

14 | ## Benefits of Zig implementation

15 |

16 | This is a [Zig](https://ziglang.org) implementation, which provides many practical benefits:

17 |

18 | 1. **Iterator-based:** you can populate xor or binary fuse filters using an iterator, without keeping your entire key set in-memory and without it being a contiguous array of keys. This can reduce memory usage when populating filters substantially.

19 | 2. **Distinct allocators:** you can provide separate Zig `std.mem.Allocator` implementations for the filter itself and population, enabling interesting opportunities like mmap-backed population of filters with low physical memory usage.

20 | 3. **Generic implementation:** use `Xor(u8)`, `Xor(u16)`, `BinaryFuse(u8)`, `BinaryFuse(u16)`, or experiment with more exotic variants like `Xor(u4)` thanks to Zig's [bit-width integers](https://ziglang.org/documentation/master/#Runtime-Integer-Values) and generic type system.

21 |

22 | Zig's safety-checking and checked overflows has also enabled us to improve the upstream C/Go implementations where overflow and undefined behavior went unnoticed.[[1]](https://github.com/FastFilter/xor_singleheader/issues/26)

23 |

24 | ## Usage

25 |

26 | Decide if xor or binary fuse filters fit your use case better: [should I use binary fuse filters or xor filters?](#should-i-use-binary-fuse-filters-or-xor-filters)

27 |

28 | Get your keys into `u64` values. If you have strings, structs, etc. then use something like Zig's [`std.hash_map.getAutoHashFn`](https://ziglang.org/documentation/master/std/#std;hash_map.getAutoHashFn) to convert your keys to `u64` first. ("It is not important to have a good hash function, but collisions should be unlikely (~1/2^64).")

29 |

30 | Create a `build.zig.zon` file in your project (replace `$LATEST_COMMIT` with the latest commit hash):

31 |

32 | ```

33 | .{

34 | .name = "mypkg",

35 | .version = "0.1.0",

36 | .dependencies = .{

37 | .fastfilter = .{

38 | .url = "https://github.com/hexops/fastfilter/archive/$LATEST_COMMIT.tar.gz",

39 | },

40 | },

41 | }

42 | ```

43 |

44 | Run `zig build` in your project, and the compiler instruct you to add a `.hash = "..."` field next to `.url`.

45 |

46 | Then use the dependency in your `build.zig`:

47 |

48 | ```zig

49 | pub fn build(b: *std.Build) void {

50 | ...

51 | exe.addModule("fastfilter", b.dependency("fastfilter", .{

52 | .target = target,

53 | .optimize = optimize,

54 | }).module("fastfilter"));

55 | }

56 | ```

57 |

58 | In your `main.zig`, make use of the library:

59 |

60 | ```zig

61 | const std = @import("std");

62 | const testing = std.testing;

63 | const fastfilter = @import("fastfilter");

64 |

65 | test "mytest" {

66 | const allocator = std.heap.page_allocator;

67 |

68 | // Initialize the binary fuse filter with room for 1 million keys.

69 | const size = 1_000_000;

70 | var filter = try fastfilter.BinaryFuse8.init(allocator, size);

71 | defer filter.deinit(allocator);

72 |

73 | // Generate some consecutive keys.

74 | var keys = try allocator.alloc(u64, size);

75 | defer allocator.free(keys);

76 | for (keys, 0..) |key, i| {

77 | _ = key;

78 | keys[i] = i;

79 | }

80 |

81 | // Populate the filter with our keys. You can't update a xor / binary fuse filter after the

82 | // fact, instead you should build a new one.

83 | try filter.populate(allocator, keys[0..]);

84 |

85 | // Now we can quickly test for containment. So fast!

86 | try testing.expect(filter.contain(1) == true);

87 | }

88 | ```

89 |

90 | (you can just add this project as a Git submodule in yours for now, as [Zig's official package manager is still under way](https://github.com/ziglang/zig/issues/943).)

91 |

92 | Binary fuse filters automatically deduplicate any keys during population. If you are using a different filter type (you probably shouldn't be!) then keys must be unique or else filter population will fail. You can use the `fastfilter.AutoUnique(u64)(keys)` helper to deduplicate (in typically O(N) time complexity), see the tests in `src/unique.zig` for usage examples.

93 |

94 | ## Serialization

95 |

96 | To serialize the filters, you only need to encode these struct fields:

97 |

98 | ```zig

99 | pub fn BinaryFuse(comptime T: type) type {

100 | return struct {

101 | ...

102 | seed: u64,

103 | segment_length: u32,

104 | segment_length_mask: u32,

105 | segment_count: u32,

106 | segment_count_length: u32,

107 | fingerprints: []T,

108 | ...

109 | ```

110 |

111 | `T` will be the chosen fingerprint size, e.g. `u8` for `BinaryFuse8` or `Xor8`.

112 |

113 | Look at [`std.io.Writer`](https://sourcegraph.com/github.com/ziglang/zig/-/blob/lib/std/io/writer.zig) and [`std.io.BitWriter`](https://sourcegraph.com/github.com/ziglang/zig/-/blob/lib/std/io/bit_writer.zig) for ideas on actual serialization.

114 |

115 | Similarly, for xor filters you only need these struct fields:

116 |

117 | ```zig

118 | pub fn Xor(comptime T: type) type {

119 | return struct {

120 | seed: u64,

121 | blockLength: u64,

122 | fingerprints: []T,

123 | ...

124 | ```

125 |

126 | ## Should I use binary fuse filters or xor filters?

127 |

128 | If you're not sure, start with `BinaryFuse8` filters. They're fast, and have a false-positive probability rate of 1/256 (or 0.4%).

129 |

130 | There are many tradeoffs, primarily between:

131 |

132 | * Memory usage

133 | * Containment check time

134 | * Population / creation time & memory usage

135 |

136 | See the [benchmarks](#benchmarks) section for a comparison of the tradeoffs between binary fuse filters and xor filters, as well as how larger bit sizes (e.g. `BinaryFuse(u16)`) consume more memory in exchange for a lower false-positive probability rate.

137 |

138 | Note that _fuse filters_ are not to be confused with _binary fuse filters_, the former have issues with construction, often failing unless you have a large number of unique keys. Binary fuse filters do not suffer from this and are generally better than traditional ones in several ways. For this reason, we consider traditional fuse filters deprecated.

139 |

140 | ## Note about extremely large datasets

141 |

142 | This implementation supports key iterators, so you do not need to have all of your keys in-memory, see `BinaryFuse8.populateIter` and `Xor8.populateIter`.

143 |

144 | If you intend to use a xor filter with datasets of 100m+ keys, there is a possible faster implementation _for construction_ found in the C implementation [`xor8_buffered_populate`](https://github.com/FastFilter/xor_singleheader) which is not implemented here.

145 |

146 | ## Changelog

147 |

148 | The API is generally finalized, but we may make some adjustments as Zig changes or we learn of more idiomatic ways to express things. We will release v1.0 once Zig v1.0 is released.

149 |

150 | ### **v0.11.0**

151 |

152 | - fastfilter is now available via the Zig package manager.

153 | - Updated to the latest version of Zig nightly `0.12.0-dev.706+62a0fbdae`

154 |

155 | ### **v0.10.3**

156 |

157 | - Updated to the latest version of Zig `0.12.0-dev.706+62a0fbdae` (`build.zig` `.path` -> `.source` change.)

158 |

159 | ### **v0.10.2**

160 |

161 | - Fixed a few correctness / integer overflow/underflow possibilities where we were inconsistent with the Go/C implementations of binary fuse filters.

162 | - Added debug-mode checks for iterator correctness (wraparound behavior.)

163 |

164 | ### **v0.10.1**

165 |

166 | - Updated to the latest version of Zig `0.12.0-dev.706+62a0fbdae`

167 |

168 | ### **v0.10.0**

169 |

170 | - All types are now unmanaged (allocator must be passed via parameters)

171 | - Renamed `util.sliceIterator` to `fastfilter.SliceIterator`

172 | - `SliceIterator` is now unmanaged / does not store an allocator.

173 | - `SliceIterator` now stores `[]const T` instead of `[]T` internally.

174 | - `BinaryFuseFilter.max_iterations` is now a constant.

175 | - Added `fastfilter.MeasuredAllocator` for measuring allocations.

176 | - Improved usage example.

177 | - Properly free xorfilter/fusefilter fingerprints.

178 | - Updated benchmark to latest Zig version.

179 |

180 | ### **v0.9.3**

181 |

182 | - Fixed potential integer overflow.

183 |

184 | ### **v0.9.2**

185 |

186 | - Handle duplicated keys automatically

187 | - Added a `std.build.Pkg` definition

188 | - Fixed an unlikely bug

189 | - Updated usage instructions

190 | - Updated to Zig v0.10.0-dev.1736

191 |

192 | ### **v0.9.1**

193 |

194 | - Updated to Zig v0.10.0-dev.36

195 |

196 | ### **v0.9.0**

197 |

198 | - Renamed repository github.com/hexops/xorfilter -> github.com/hexops/fastfilter to account for binary fuse filters.

199 | - Implemented bleeding-edge (paper not yet published) "Binary Fuse Filters: Fast and Smaller Than Xor Filters" algorithm by Thomas Mueller Graf, Daniel Lemire

200 | - `BinaryFuse` filters are now recommended by default, are generally better than Xor and Fuse filters.

201 | - Deprecated traditional `Fuse` filters (`BinaryFuse` are much better.)

202 | - Added much improved benchmarking suite with more details on memory consumption during filter population, etc.

203 |

204 | ### **v0.8.0**

205 |

206 | initial release with support for Xor and traditional Fuse filters of varying bit sizes, key iterators, serialization, and a slice de-duplication helper.

207 |

208 | ## Benchmarks

209 |

210 | Benchmarks were ran on both a 2019 Macbook Pro and Windows 10 desktop machine using e.g.:

211 |

212 | ```

213 | zig run -O ReleaseFast src/benchmark.zig -- --xor 8 --num-keys 1000000

214 | ```

215 |

216 |

6 |

7 | Binary fuse filters & xor filters are probabilistic data structures which allow for quickly checking whether an element is part of a set.

8 |

9 | Both are faster and more concise than Bloom filters, and smaller than Cuckoo filters. Binary fuse filters are a bleeding-edge development and are competitive with Facebook's ribbon filters:

10 |

11 | * Thomas Mueller Graf, Daniel Lemire, Binary Fuse Filters: Fast and Smaller Than Xor Filters (_not yet published_)

12 | * Thomas Mueller Graf, Daniel Lemire, [Xor Filters: Faster and Smaller Than Bloom and Cuckoo Filters](https://arxiv.org/abs/1912.08258), Journal of Experimental Algorithmics 25 (1), 2020. DOI: 10.1145/3376122

13 |

14 | ## Benefits of Zig implementation

15 |

16 | This is a [Zig](https://ziglang.org) implementation, which provides many practical benefits:

17 |

18 | 1. **Iterator-based:** you can populate xor or binary fuse filters using an iterator, without keeping your entire key set in-memory and without it being a contiguous array of keys. This can reduce memory usage when populating filters substantially.

19 | 2. **Distinct allocators:** you can provide separate Zig `std.mem.Allocator` implementations for the filter itself and population, enabling interesting opportunities like mmap-backed population of filters with low physical memory usage.

20 | 3. **Generic implementation:** use `Xor(u8)`, `Xor(u16)`, `BinaryFuse(u8)`, `BinaryFuse(u16)`, or experiment with more exotic variants like `Xor(u4)` thanks to Zig's [bit-width integers](https://ziglang.org/documentation/master/#Runtime-Integer-Values) and generic type system.

21 |

22 | Zig's safety-checking and checked overflows has also enabled us to improve the upstream C/Go implementations where overflow and undefined behavior went unnoticed.[[1]](https://github.com/FastFilter/xor_singleheader/issues/26)

23 |

24 | ## Usage

25 |

26 | Decide if xor or binary fuse filters fit your use case better: [should I use binary fuse filters or xor filters?](#should-i-use-binary-fuse-filters-or-xor-filters)

27 |

28 | Get your keys into `u64` values. If you have strings, structs, etc. then use something like Zig's [`std.hash_map.getAutoHashFn`](https://ziglang.org/documentation/master/std/#std;hash_map.getAutoHashFn) to convert your keys to `u64` first. ("It is not important to have a good hash function, but collisions should be unlikely (~1/2^64).")

29 |

30 | Create a `build.zig.zon` file in your project (replace `$LATEST_COMMIT` with the latest commit hash):

31 |

32 | ```

33 | .{

34 | .name = "mypkg",

35 | .version = "0.1.0",

36 | .dependencies = .{

37 | .fastfilter = .{

38 | .url = "https://github.com/hexops/fastfilter/archive/$LATEST_COMMIT.tar.gz",

39 | },

40 | },

41 | }

42 | ```

43 |

44 | Run `zig build` in your project, and the compiler instruct you to add a `.hash = "..."` field next to `.url`.

45 |

46 | Then use the dependency in your `build.zig`:

47 |

48 | ```zig

49 | pub fn build(b: *std.Build) void {

50 | ...

51 | exe.addModule("fastfilter", b.dependency("fastfilter", .{

52 | .target = target,

53 | .optimize = optimize,

54 | }).module("fastfilter"));

55 | }

56 | ```

57 |

58 | In your `main.zig`, make use of the library:

59 |

60 | ```zig

61 | const std = @import("std");

62 | const testing = std.testing;

63 | const fastfilter = @import("fastfilter");

64 |

65 | test "mytest" {

66 | const allocator = std.heap.page_allocator;

67 |

68 | // Initialize the binary fuse filter with room for 1 million keys.

69 | const size = 1_000_000;

70 | var filter = try fastfilter.BinaryFuse8.init(allocator, size);

71 | defer filter.deinit(allocator);

72 |

73 | // Generate some consecutive keys.

74 | var keys = try allocator.alloc(u64, size);

75 | defer allocator.free(keys);

76 | for (keys, 0..) |key, i| {

77 | _ = key;

78 | keys[i] = i;

79 | }

80 |

81 | // Populate the filter with our keys. You can't update a xor / binary fuse filter after the

82 | // fact, instead you should build a new one.

83 | try filter.populate(allocator, keys[0..]);

84 |

85 | // Now we can quickly test for containment. So fast!

86 | try testing.expect(filter.contain(1) == true);

87 | }

88 | ```

89 |

90 | (you can just add this project as a Git submodule in yours for now, as [Zig's official package manager is still under way](https://github.com/ziglang/zig/issues/943).)

91 |

92 | Binary fuse filters automatically deduplicate any keys during population. If you are using a different filter type (you probably shouldn't be!) then keys must be unique or else filter population will fail. You can use the `fastfilter.AutoUnique(u64)(keys)` helper to deduplicate (in typically O(N) time complexity), see the tests in `src/unique.zig` for usage examples.

93 |

94 | ## Serialization

95 |

96 | To serialize the filters, you only need to encode these struct fields:

97 |

98 | ```zig

99 | pub fn BinaryFuse(comptime T: type) type {

100 | return struct {

101 | ...

102 | seed: u64,

103 | segment_length: u32,

104 | segment_length_mask: u32,

105 | segment_count: u32,

106 | segment_count_length: u32,

107 | fingerprints: []T,

108 | ...

109 | ```

110 |

111 | `T` will be the chosen fingerprint size, e.g. `u8` for `BinaryFuse8` or `Xor8`.

112 |

113 | Look at [`std.io.Writer`](https://sourcegraph.com/github.com/ziglang/zig/-/blob/lib/std/io/writer.zig) and [`std.io.BitWriter`](https://sourcegraph.com/github.com/ziglang/zig/-/blob/lib/std/io/bit_writer.zig) for ideas on actual serialization.

114 |

115 | Similarly, for xor filters you only need these struct fields:

116 |

117 | ```zig

118 | pub fn Xor(comptime T: type) type {

119 | return struct {

120 | seed: u64,

121 | blockLength: u64,

122 | fingerprints: []T,

123 | ...

124 | ```

125 |

126 | ## Should I use binary fuse filters or xor filters?

127 |

128 | If you're not sure, start with `BinaryFuse8` filters. They're fast, and have a false-positive probability rate of 1/256 (or 0.4%).

129 |

130 | There are many tradeoffs, primarily between:

131 |

132 | * Memory usage

133 | * Containment check time

134 | * Population / creation time & memory usage

135 |

136 | See the [benchmarks](#benchmarks) section for a comparison of the tradeoffs between binary fuse filters and xor filters, as well as how larger bit sizes (e.g. `BinaryFuse(u16)`) consume more memory in exchange for a lower false-positive probability rate.

137 |

138 | Note that _fuse filters_ are not to be confused with _binary fuse filters_, the former have issues with construction, often failing unless you have a large number of unique keys. Binary fuse filters do not suffer from this and are generally better than traditional ones in several ways. For this reason, we consider traditional fuse filters deprecated.

139 |

140 | ## Note about extremely large datasets

141 |

142 | This implementation supports key iterators, so you do not need to have all of your keys in-memory, see `BinaryFuse8.populateIter` and `Xor8.populateIter`.

143 |

144 | If you intend to use a xor filter with datasets of 100m+ keys, there is a possible faster implementation _for construction_ found in the C implementation [`xor8_buffered_populate`](https://github.com/FastFilter/xor_singleheader) which is not implemented here.

145 |

146 | ## Changelog

147 |

148 | The API is generally finalized, but we may make some adjustments as Zig changes or we learn of more idiomatic ways to express things. We will release v1.0 once Zig v1.0 is released.

149 |

150 | ### **v0.11.0**

151 |

152 | - fastfilter is now available via the Zig package manager.

153 | - Updated to the latest version of Zig nightly `0.12.0-dev.706+62a0fbdae`

154 |

155 | ### **v0.10.3**

156 |

157 | - Updated to the latest version of Zig `0.12.0-dev.706+62a0fbdae` (`build.zig` `.path` -> `.source` change.)

158 |

159 | ### **v0.10.2**

160 |

161 | - Fixed a few correctness / integer overflow/underflow possibilities where we were inconsistent with the Go/C implementations of binary fuse filters.

162 | - Added debug-mode checks for iterator correctness (wraparound behavior.)

163 |

164 | ### **v0.10.1**

165 |

166 | - Updated to the latest version of Zig `0.12.0-dev.706+62a0fbdae`

167 |

168 | ### **v0.10.0**

169 |

170 | - All types are now unmanaged (allocator must be passed via parameters)

171 | - Renamed `util.sliceIterator` to `fastfilter.SliceIterator`

172 | - `SliceIterator` is now unmanaged / does not store an allocator.

173 | - `SliceIterator` now stores `[]const T` instead of `[]T` internally.

174 | - `BinaryFuseFilter.max_iterations` is now a constant.

175 | - Added `fastfilter.MeasuredAllocator` for measuring allocations.

176 | - Improved usage example.

177 | - Properly free xorfilter/fusefilter fingerprints.

178 | - Updated benchmark to latest Zig version.

179 |

180 | ### **v0.9.3**

181 |

182 | - Fixed potential integer overflow.

183 |

184 | ### **v0.9.2**

185 |

186 | - Handle duplicated keys automatically

187 | - Added a `std.build.Pkg` definition

188 | - Fixed an unlikely bug

189 | - Updated usage instructions

190 | - Updated to Zig v0.10.0-dev.1736

191 |

192 | ### **v0.9.1**

193 |

194 | - Updated to Zig v0.10.0-dev.36

195 |

196 | ### **v0.9.0**

197 |

198 | - Renamed repository github.com/hexops/xorfilter -> github.com/hexops/fastfilter to account for binary fuse filters.

199 | - Implemented bleeding-edge (paper not yet published) "Binary Fuse Filters: Fast and Smaller Than Xor Filters" algorithm by Thomas Mueller Graf, Daniel Lemire

200 | - `BinaryFuse` filters are now recommended by default, are generally better than Xor and Fuse filters.

201 | - Deprecated traditional `Fuse` filters (`BinaryFuse` are much better.)

202 | - Added much improved benchmarking suite with more details on memory consumption during filter population, etc.

203 |

204 | ### **v0.8.0**

205 |

206 | initial release with support for Xor and traditional Fuse filters of varying bit sizes, key iterators, serialization, and a slice de-duplication helper.

207 |

208 | ## Benchmarks

209 |

210 | Benchmarks were ran on both a 2019 Macbook Pro and Windows 10 desktop machine using e.g.:

211 |

212 | ```

213 | zig run -O ReleaseFast src/benchmark.zig -- --xor 8 --num-keys 1000000

214 | ```

215 |

216 |

217 | Benchmarks: 2019 Macbook Pro, Intel i9 (1M - 100M keys)

218 |

219 | * CPU: 2.3 GHz 8-Core Intel Core i9

220 | * Memory: 16 GB 2667 MHz DDR4

221 | * Zig version: `0.12.0-dev.706+62a0fbdae`

222 |

223 | | Algorithm | # of keys | populate | contains(k) | false+ prob. | bits per entry | peak populate | filter total |

224 | |--------------|------------|------------|-------------|--------------|----------------|---------------|--------------|

225 | | binaryfuse8 | 1000000 | 37.5ms | 24.0ns | 0.00391115 | 9.04 | 22 MiB | 1 MiB |

226 | | binaryfuse16 | 1000000 | 45.5ms | 24.0ns | 0.00001524 | 18.09 | 24 MiB | 2 MiB |

227 | | binaryfuse32 | 1000000 | 56.0ms | 24.0ns | 0 | 36.18 | 28 MiB | 4 MiB |

228 | | xor2 | 1000000 | 108.0ms | 25.0ns | 0.2500479 | 9.84 | 52 MiB | 1 MiB |

229 | | xor4 | 1000000 | 99.0ms | 25.0ns | 0.06253865 | 9.84 | 52 MiB | 1 MiB |

230 | | xor8 | 1000000 | 103.4ms | 25.0ns | 0.0039055 | 9.84 | 52 MiB | 1 MiB |

231 | | xor16 | 1000000 | 104.7ms | 26.0ns | 0.00001509 | 19.68 | 52 MiB | 2 MiB |

232 | | xor32 | 1000000 | 102.2ms | 25.0ns | 0 | 39.36 | 52 MiB | 4 MiB |

233 | | | | | | | | | |

234 | | binaryfuse8 | 10000000 | 621.2ms | 36.0ns | 0.0039169 | 9.02 | 225 MiB | 10 MiB |

235 | | binaryfuse16 | 10000000 | 666.6ms | 102.0ns | 0.0000147 | 18.04 | 245 MiB | 21 MiB |

236 | | binaryfuse32 | 10000000 | 769.0ms | 135.0ns | 0 | 36.07 | 286 MiB | 43 MiB |

237 | | xor2 | 10000000 | 1.9s | 43.0ns | 0.2500703 | 9.84 | 527 MiB | 11 MiB |

238 | | xor4 | 10000000 | 2.0s | 41.0ns | 0.0626137 | 9.84 | 527 MiB | 11 MiB |

239 | | xor8 | 10000000 | 1.9s | 42.0ns | 0.0039369 | 9.84 | 527 MiB | 11 MiB |

240 | | xor16 | 10000000 | 2.2s | 106.0ns | 0.0000173 | 19.68 | 527 MiB | 23 MiB |

241 | | xor32 | 10000000 | 2.2s | 140.0ns | 0 | 39.36 | 527 MiB | 46 MiB |

242 | | | | | | | | | |

243 | | binaryfuse8 | 100000000 | 7.4s | 145.0ns | 0.003989 | 9.01 | 2 GiB | 107 MiB |

244 | | binaryfuse16 | 100000000 | 8.4s | 169.0ns | 0.000016 | 18.01 | 2 GiB | 214 MiB |

245 | | binaryfuse32 | 100000000 | 10.2s | 173.0ns | 0 | 36.03 | 2 GiB | 429 MiB |

246 | | xor2 | 100000000 | 28.5s | 144.0ns | 0.249843 | 9.84 | 5 GiB | 117 MiB |

247 | | xor4 | 100000000 | 27.4s | 154.0ns | 0.062338 | 9.84 | 5 GiB | 117 MiB |

248 | | xor8 | 100000000 | 28.0s | 153.0ns | 0.004016 | 9.84 | 5 GiB | 117 MiB |

249 | | xor16 | 100000000 | 29.5s | 161.0ns | 0.000012 | 19.68 | 5 GiB | 234 MiB |

250 | | xor32 | 100000000 | 29.4s | 157.0ns | 0 | 39.36 | 5 GiB | 469 MiB |

251 | | | | | | | | | |

252 |

253 | Legend:

254 |

255 | * **contains(k)**: The time taken to check if a key is in the filter

256 | * **false+ prob.**: False positive probability, the probability that a containment check will erroneously return true for a key that has not actually been added to the filter.

257 | * **bits per entry**: The amount of memory in bits the filter uses to store a single entry.

258 | * **peak populate**: Amount of memory consumed during filter population, excluding keys themselves (8 bytes * num_keys.)

259 | * **filter total**: Amount of memory consumed for filter itself in total (bits per entry * entries.)

260 |

261 |

262 |

263 |

264 | Benchmarks: Windows 10, AMD Ryzen 9 3900X (1M - 100M keys)

265 |

266 | * CPU: 3.79Ghz AMD Ryzen 9 3900X

267 | * Memory: 32 GB 2133 MHz DDR4

268 | * Zig version: `0.12.0-dev.706+62a0fbdae`

269 |

270 | | Algorithm | # of keys | populate | contains(k) | false+ prob. | bits per entry | peak populate | filter total |

271 | |--------------|------------|------------|-------------|--------------|----------------|---------------|--------------|

272 | | binaryfuse8 | 1000000 | 44.6ms | 24.0ns | 0.00390796 | 9.04 | 22 MiB | 1 MiB |

273 | | binaryfuse16 | 1000000 | 48.9ms | 25.0ns | 0.00001553 | 18.09 | 24 MiB | 2 MiB |

274 | | binaryfuse32 | 1000000 | 49.9ms | 25.0ns | 0.00000001 | 36.18 | 28 MiB | 4 MiB |

275 | | xor2 | 1000000 | 77.3ms | 25.0ns | 0.25000163 | 9.84 | 52 MiB | 1 MiB |

276 | | xor4 | 1000000 | 80.0ms | 25.0ns | 0.06250427 | 9.84 | 52 MiB | 1 MiB |

277 | | xor8 | 1000000 | 76.0ms | 25.0ns | 0.00391662 | 9.84 | 52 MiB | 1 MiB |

278 | | xor16 | 1000000 | 83.7ms | 26.0ns | 0.00001536 | 19.68 | 52 MiB | 2 MiB |

279 | | xor32 | 1000000 | 79.1ms | 27.0ns | 0 | 39.36 | 52 MiB | 4 MiB |

280 | | fuse8 | 1000000 | 69.4ms | 25.0ns | 0.00390663 | 9.10 | 49 MiB | 1 MiB |

281 | | fuse16 | 1000000 | 71.5ms | 27.0ns | 0.00001516 | 18.20 | 49 MiB | 2 MiB |

282 | | fuse32 | 1000000 | 71.1ms | 27.0ns | 0 | 36.40 | 49 MiB | 4 MiB |

283 | | | | | | | | | |

284 | | binaryfuse8 | 10000000 | 572.3ms | 33.0ns | 0.0038867 | 9.02 | 225 MiB | 10 MiB |

285 | | binaryfuse16 | 10000000 | 610.6ms | 108.0ns | 0.0000127 | 18.04 | 245 MiB | 21 MiB |

286 | | binaryfuse32 | 10000000 | 658.2ms | 144.0ns | 0 | 36.07 | 286 MiB | 43 MiB |

287 | | xor2 | 10000000 | 1.2s | 39.0ns | 0.249876 | 9.84 | 527 MiB | 11 MiB |

288 | | xor4 | 10000000 | 1.2s | 39.0ns | 0.0625026 | 9.84 | 527 MiB | 11 MiB |

289 | | xor8 | 10000000 | 1.2s | 41.0ns | 0.0038881 | 9.84 | 527 MiB | 11 MiB |

290 | | xor16 | 10000000 | 1.3s | 117.0ns | 0.0000134 | 19.68 | 527 MiB | 23 MiB |

291 | | xor32 | 10000000 | 1.3s | 147.0ns | 0 | 39.36 | 527 MiB | 46 MiB |

292 | | fuse8 | 10000000 | 1.1s | 36.0ns | 0.0039089 | 9.10 | 499 MiB | 10 MiB |

293 | | fuse16 | 10000000 | 1.1s | 112.0ns | 0.0000172 | 18.20 | 499 MiB | 21 MiB |

294 | | fuse32 | 10000000 | 1.1s | 145.0ns | 0 | 36.40 | 499 MiB | 43 MiB |

295 | | | | | | | | | |

296 | | binaryfuse8 | 100000000 | 6.9s | 167.0ns | 0.00381 | 9.01 | 2 GiB | 107 MiB |

297 | | binaryfuse16 | 100000000 | 7.2s | 171.0ns | 0.000009 | 18.01 | 2 GiB | 214 MiB |

298 | | binaryfuse32 | 100000000 | 8.5s | 174.0ns | 0 | 36.03 | 2 GiB | 429 MiB |

299 | | xor2 | 100000000 | 16.8s | 166.0ns | 0.249868 | 9.84 | 5 GiB | 117 MiB |

300 | | xor4 | 100000000 | 18.9s | 183.0ns | 0.062417 | 9.84 | 5 GiB | 117 MiB |

301 | | xor8 | 100000000 | 19.1s | 168.0ns | 0.003873 | 9.84 | 5 GiB | 117 MiB |

302 | | xor16 | 100000000 | 16.9s | 171.0ns | 0.000021 | 19.68 | 5 GiB | 234 MiB |

303 | | xor32 | 100000000 | 19.4s | 189.0ns | 0 | 39.36 | 5 GiB | 469 MiB |

304 | | fuse8 | 100000000 | 19.6s | 167.0ns | 0.003797 | 9.10 | 4 GiB | 108 MiB |

305 | | fuse16 | 100000000 | 20.8s | 171.0ns | 0.000015 | 18.20 | 4 GiB | 216 MiB |

306 | | fuse32 | 100000000 | 21.5s | 176.0ns | 0 | 36.40 | 4 GiB | 433 MiB |

307 | | | | | | | | | |

308 |

309 | Legend:

310 |

311 | * **contains(k)**: The time taken to check if a key is in the filter

312 | * **false+ prob.**: False positive probability, the probability that a containment check will erroneously return true for a key that has not actually been added to the filter.

313 | * **bits per entry**: The amount of memory in bits the filter uses to store a single entry.

314 | * **peak populate**: Amount of memory consumed during filter population, excluding keys themselves (8 bytes * num_keys.)

315 | * **filter total**: Amount of memory consumed for filter itself in total (bits per entry * entries.)

316 |

317 |

318 |

319 | ## Related readings

320 |

321 | * Blog post by Daniel Lemire: [Xor Filters: Faster and Smaller Than Bloom Filters](https://lemire.me/blog/2019/12/19/xor-filters-faster-and-smaller-than-bloom-filters)

322 | * Fuse Filters ([arxiv paper](https://arxiv.org/abs/1907.04749)), as described [by @jbapple](https://github.com/FastFilter/xor_singleheader/pull/11#issue-356508475) (note these are not to be confused with _binary fuse filters_.)

323 |

324 | ## Special thanks

325 |

326 | * [**Thomas Mueller Graf**](https://github.com/thomasmueller) and [**Daniel Lemire**](https://github.com/lemire) - _for their excellent research into xor filters, xor+ filters, their C implementation, and more._

327 | * [**Martin Dietzfelbinger**](https://arxiv.org/search/cs?searchtype=author&query=Dietzfelbinger%2C+M) and [**Stefan Walzer**](https://arxiv.org/search/cs?searchtype=author&query=Walzer%2C+S) - _for their excellent research into fuse filters._

328 | * [**Jim Apple**](https://github.com/jbapple) - _for their C implementation[[1]](https://github.com/FastFilter/xor_singleheader/pull/11) of fuse filters_

329 | * [**@Andoryuuta**](https://github.com/Andoryuuta) - _for providing substantial help in debugging several issues in the Zig implementation._

330 |

331 | If it was not for the above people, I ([@emidoots](https://github.com/emidoots)) would not have been able to write this implementation and learn from the excellent [C implementation](https://github.com/FastFilter/xor_singleheader). Please credit the above people if you use this library.

332 |

--------------------------------------------------------------------------------

/build.zig:

--------------------------------------------------------------------------------

1 | const std = @import("std");

2 |

3 | pub fn build(b: *std.Build) void {

4 | const optimize = b.standardOptimizeOption(.{});

5 | const target = b.standardTargetOptions(.{});

6 |

7 | _ = b.addModule("fastfilter", .{

8 | .root_source_file = b.path("src/main.zig"),

9 | });

10 |

11 | const main_tests = b.addTest(.{

12 | .name = "fastfilter-tests",

13 | .root_source_file = b.path("src/main.zig"),

14 | .target = target,

15 | .optimize = optimize,

16 | });

17 | const run_main_tests = b.addRunArtifact(main_tests);

18 |

19 | const test_step = b.step("test", "Run library tests");

20 | test_step.dependOn(&run_main_tests.step);

21 | }

22 |

--------------------------------------------------------------------------------

/src/MeasuredAllocator.zig:

--------------------------------------------------------------------------------

1 | /// This allocator takes an existing allocator, wraps it, and provides measurements about the

2 | /// allocations such as peak memory usage.

3 | const std = @import("std");

4 | const assert = std.debug.assert;

5 | const mem = std.mem;

6 | const Allocator = std.mem.Allocator;

7 |

8 | const MeasuredAllocator = @This();

9 |

10 | parent_allocator: Allocator,

11 | state: State,

12 |

13 | /// Inner state of MeasuredAllocator. Can be stored rather than the entire MeasuredAllocator as a

14 | /// memory-saving optimization.

15 | pub const State = struct {

16 | peak_memory_usage_bytes: usize = 0,

17 | current_memory_usage_bytes: usize = 0,

18 |

19 | pub fn promote(self: State, parent_allocator: Allocator) MeasuredAllocator {

20 | return .{

21 | .parent_allocator = parent_allocator,

22 | .state = self,

23 | };

24 | }

25 | };

26 |

27 | const BufNode = std.SinglyLinkedList([]u8).Node;

28 |

29 | pub fn init(parent_allocator: Allocator) MeasuredAllocator {

30 | return (State{}).promote(parent_allocator);

31 | }

32 |

33 | pub fn allocator(self: *MeasuredAllocator) Allocator {

34 | return .{

35 | .ptr = self,

36 | .vtable = &.{

37 | .alloc = allocFn,

38 | .resize = resizeFn,

39 | .free = freeFn,

40 | },

41 | };

42 | }

43 |

44 | fn allocFn(ptr: *anyopaque, len: usize, ptr_align: u8, ret_addr: usize) ?[*]u8 {

45 | const self = @as(*MeasuredAllocator, @ptrCast(@alignCast(ptr)));

46 | const result = self.parent_allocator.rawAlloc(len, ptr_align, ret_addr);

47 | if (result) |_| {

48 | self.state.current_memory_usage_bytes += len;

49 | if (self.state.current_memory_usage_bytes > self.state.peak_memory_usage_bytes) self.state.peak_memory_usage_bytes = self.state.current_memory_usage_bytes;

50 | }

51 | return result;

52 | }

53 |

54 | fn resizeFn(ptr: *anyopaque, buf: []u8, buf_align: u8, new_len: usize, ret_addr: usize) bool {

55 | const self = @as(*MeasuredAllocator, @ptrCast(@alignCast(ptr)));

56 | if (self.parent_allocator.rawResize(buf, buf_align, new_len, ret_addr)) {

57 | self.state.current_memory_usage_bytes -= buf.len - new_len;

58 | if (self.state.current_memory_usage_bytes > self.state.peak_memory_usage_bytes) self.state.peak_memory_usage_bytes = self.state.current_memory_usage_bytes;

59 | return true;

60 | }

61 | return false;

62 | }

63 |

64 | fn freeFn(ptr: *anyopaque, buf: []u8, buf_align: u8, ret_addr: usize) void {

65 | const self = @as(*MeasuredAllocator, @ptrCast(@alignCast(ptr)));

66 | self.parent_allocator.rawFree(buf, buf_align, ret_addr);

67 | self.state.current_memory_usage_bytes -= buf.len;

68 | }

69 |

--------------------------------------------------------------------------------

/src/benchmark.zig:

--------------------------------------------------------------------------------

1 | // zig run -O ReleaseFast src/benchmark.zig

2 |

3 | const std = @import("std");

4 | const time = std.time;

5 | const Timer = time.Timer;

6 | const xorfilter = @import("main.zig");

7 | const MeasuredAllocator = @import("MeasuredAllocator.zig");

8 |

9 | fn formatTime(writer: anytype, comptime spec: []const u8, start: u64, end: u64, division: usize) !void {

10 | const ns = @as(f64, @floatFromInt((end - start) / division));

11 | if (ns <= time.ns_per_ms) {

12 | try std.fmt.format(writer, spec, .{ ns, "ns " });

13 | return;

14 | }

15 | if (ns <= time.ns_per_s) {

16 | try std.fmt.format(writer, spec, .{ ns / @as(f64, @floatFromInt(time.ns_per_ms)), "ms " });

17 | return;

18 | }

19 | if (ns <= time.ns_per_min) {

20 | try std.fmt.format(writer, spec, .{ ns / @as(f64, @floatFromInt(time.ns_per_s)), "s " });

21 | return;

22 | }

23 | try std.fmt.format(writer, spec, .{ ns / @as(f64, @floatFromInt(time.ns_per_min)), "min" });

24 | return;

25 | }

26 |

27 | fn formatBytes(writer: anytype, comptime spec: []const u8, bytes: u64) !void {

28 | const kib = 1024;

29 | const mib = 1024 * kib;

30 | const gib = 1024 * mib;

31 | if (bytes < kib) {

32 | try std.fmt.format(writer, spec, .{ bytes, "B " });

33 | }

34 | if (bytes < mib) {

35 | try std.fmt.format(writer, spec, .{ bytes / kib, "KiB" });

36 | return;

37 | }

38 | if (bytes < gib) {

39 | try std.fmt.format(writer, spec, .{ bytes / mib, "MiB" });

40 | return;

41 | }

42 | try std.fmt.format(writer, spec, .{ bytes / gib, "GiB" });

43 | return;

44 | }

45 |

46 | fn bench(algorithm: []const u8, Filter: anytype, size: usize, trials: usize) !void {

47 | const allocator = std.heap.page_allocator;

48 |

49 | var filterMA = MeasuredAllocator.init(allocator);

50 | const filterAllocator = filterMA.allocator();

51 |

52 | var buildMA = MeasuredAllocator.init(allocator);

53 | const buildAllocator = buildMA.allocator();

54 |

55 | const stdout = std.io.getStdOut().writer();

56 | var timer = try Timer.start();

57 |

58 | // Initialize filter.

59 | var filter = try Filter.init(filterAllocator, size);

60 | defer filter.deinit(allocator);

61 |

62 | // Generate keys.

63 | var keys = try allocator.alloc(u64, size);

64 | defer allocator.free(keys);

65 | for (keys, 0..) |_, i| {

66 | keys[i] = i;

67 | }

68 |

69 | // Populate filter.

70 | timer.reset();

71 | const populateTimeStart = timer.lap();

72 | try filter.populate(buildAllocator, keys[0..]);

73 | const populateTimeEnd = timer.read();

74 |

75 | // Perform random matches.

76 | var random_matches: u64 = 0;

77 | var i: u64 = 0;

78 | var rng = std.Random.DefaultPrng.init(0);

79 | const random = rng.random();

80 | timer.reset();

81 | const randomMatchesTimeStart = timer.lap();

82 | while (i < trials) : (i += 1) {

83 | const random_key: u64 = random.uintAtMost(u64, std.math.maxInt(u64));

84 | if (filter.contain(random_key)) {

85 | if (random_key >= keys.len) {

86 | random_matches += 1;

87 | }

88 | }

89 | }

90 | const randomMatchesTimeEnd = timer.read();

91 |

92 | const fpp = @as(f64, @floatFromInt(random_matches)) * 1.0 / @as(f64, @floatFromInt(trials));

93 |

94 | const bitsPerEntry = @as(f64, @floatFromInt(filter.sizeInBytes())) * 8.0 / @as(f64, @floatFromInt(size));

95 | const filterBitsPerEntry = @as(f64, @floatFromInt(filterMA.state.peak_memory_usage_bytes)) * 8.0 / @as(f64, @floatFromInt(size));

96 | if (!std.math.approxEqAbs(f64, filterBitsPerEntry, bitsPerEntry, 0.001)) {

97 | @panic("sizeInBytes reporting wrong numbers?");

98 | }

99 |

100 | try stdout.print("| {s: <12} ", .{algorithm});

101 | try stdout.print("| {: <10} ", .{keys.len});

102 | try stdout.print("| ", .{});

103 | try formatTime(stdout, "{d: >7.1}{s}", populateTimeStart, populateTimeEnd, 1);

104 | try stdout.print(" | ", .{});

105 | try formatTime(stdout, "{d: >8.1}{s}", randomMatchesTimeStart, randomMatchesTimeEnd, trials);

106 | try stdout.print(" | {d: >12} ", .{fpp});

107 | try stdout.print("| {d: >14.2} ", .{bitsPerEntry});

108 | try stdout.print("| ", .{});

109 | try formatBytes(stdout, "{: >9} {s}", buildMA.state.peak_memory_usage_bytes);

110 | try formatBytes(stdout, " | {: >8} {s}", filterMA.state.peak_memory_usage_bytes);

111 | try stdout.print(" |\n", .{});

112 | }

113 |

114 | fn usage() void {

115 | std.log.warn(

116 | \\benchmark [options]

117 | \\

118 | \\Options:

119 | \\ --num-trials [int=10000000] number of trials / containment checks to perform

120 | \\ --help

121 | \\

122 | , .{});

123 | }

124 | pub fn main() !void {

125 | var buffer: [1024]u8 = undefined;

126 | var fixed = std.heap.FixedBufferAllocator.init(buffer[0..]);

127 | const args = try std.process.argsAlloc(fixed.allocator());

128 |

129 | var num_trials: usize = 100_000_000;

130 | var i: usize = 1;

131 | while (i < args.len) : (i += 1) {

132 | if (std.mem.eql(u8, args[i], "--num-trials")) {

133 | i += 1;

134 | if (i == args.len) {

135 | usage();

136 | std.process.exit(1);

137 | }

138 | num_trials = try std.fmt.parseUnsigned(usize, args[i], 10);

139 | }

140 | }

141 |

142 | const stdout = std.io.getStdOut().writer();

143 | try stdout.print("| Algorithm | # of keys | populate | contains(k) | false+ prob. | bits per entry | peak populate | filter total |\n", .{});

144 | try stdout.print("|--------------|------------|------------|-------------|--------------|----------------|---------------|--------------|\n", .{});

145 | try bench("binaryfuse8", xorfilter.BinaryFuse(u8), 1_000_000, num_trials);

146 | try bench("binaryfuse16", xorfilter.BinaryFuse(u16), 1_000_000, num_trials);

147 | try bench("binaryfuse32", xorfilter.BinaryFuse(u32), 1_000_000, num_trials);

148 | try bench("xor2", xorfilter.Xor(u2), 1_000_000, num_trials);

149 | try bench("xor4", xorfilter.Xor(u4), 1_000_000, num_trials);

150 | try bench("xor8", xorfilter.Xor(u8), 1_000_000, num_trials);

151 | try bench("xor16", xorfilter.Xor(u16), 1_000_000, num_trials);

152 | try bench("xor32", xorfilter.Xor(u32), 1_000_000, num_trials);

153 | try stdout.print("| | | | | | | | |\n", .{});

154 | try bench("binaryfuse8", xorfilter.BinaryFuse(u8), 10_000_000, num_trials / 10);

155 | try bench("binaryfuse16", xorfilter.BinaryFuse(u16), 10_000_000, num_trials / 10);

156 | try bench("binaryfuse32", xorfilter.BinaryFuse(u32), 10_000_000, num_trials / 10);

157 | try bench("xor2", xorfilter.Xor(u2), 10_000_000, num_trials / 10);

158 | try bench("xor4", xorfilter.Xor(u4), 10_000_000, num_trials / 10);

159 | try bench("xor8", xorfilter.Xor(u8), 10_000_000, num_trials / 10);

160 | try bench("xor16", xorfilter.Xor(u16), 10_000_000, num_trials / 10);

161 | try bench("xor32", xorfilter.Xor(u32), 10_000_000, num_trials / 10);

162 | try stdout.print("| | | | | | | | |\n", .{});

163 | try bench("binaryfuse8", xorfilter.BinaryFuse(u8), 100_000_000, num_trials / 100);

164 | try bench("binaryfuse16", xorfilter.BinaryFuse(u16), 100_000_000, num_trials / 100);

165 | try bench("binaryfuse32", xorfilter.BinaryFuse(u32), 100_000_000, num_trials / 100);

166 | try bench("xor2", xorfilter.Xor(u2), 100_000_000, num_trials / 100);

167 | try bench("xor4", xorfilter.Xor(u4), 100_000_000, num_trials / 100);

168 | try bench("xor8", xorfilter.Xor(u8), 100_000_000, num_trials / 100);

169 | try bench("xor16", xorfilter.Xor(u16), 100_000_000, num_trials / 100);

170 | try bench("xor32", xorfilter.Xor(u32), 100_000_000, num_trials / 100);

171 | try stdout.print("| | | | | | | | |\n", .{});

172 | try stdout.print("\n", .{});

173 | try stdout.print("Legend:\n\n", .{});

174 | try stdout.print("* **contains(k)**: The time taken to check if a key is in the filter\n", .{});

175 | try stdout.print("* **false+ prob.**: False positive probability, the probability that a containment check will erroneously return true for a key that has not actually been added to the filter.\n", .{});

176 | try stdout.print("* **bits per entry**: The amount of memory in bits the filter uses to store a single entry.\n", .{});

177 | try stdout.print("* **peak populate**: Amount of memory consumed during filter population, excluding keys themselves (8 bytes * num_keys.)\n", .{});

178 | try stdout.print("* **filter total**: Amount of memory consumed for filter itself in total (bits per entry * entries.)\n", .{});

179 | }

180 |

--------------------------------------------------------------------------------

/src/binaryfusefilter.zig:

--------------------------------------------------------------------------------

1 | const std = @import("std");

2 | const Allocator = std.mem.Allocator;

3 | const math = std.math;

4 | const testing = std.testing;

5 |

6 | const util = @import("util.zig");

7 | const Error = util.Error;

8 |

9 | const builtin = @import("builtin");

10 | const is_debug = builtin.mode == .Debug;

11 |

12 | /// BinaryFuse8 provides a binary fuse filter with 8-bit fingerprints.

13 | ///

14 | /// See `BinaryFuse` for more details.

15 | pub const BinaryFuse8 = BinaryFuse(u8);

16 |

17 | /// probability of success should always be > 0.5 so 100 iterations is highly unlikely (read:

18 | /// statistically unlikely to happen. If it does, probably we need to increase max_iterations or

19 | /// contact the fastfilter authors.)

20 | const max_iterations: usize = 100;

21 |

22 | /// A binary fuse filter. This is an extension of fuse filters:

23 | ///

24 | /// Dietzfelbinger & Walzer's fuse filters, described in "Dense Peelable Random Uniform Hypergraphs",

25 | /// https://arxiv.org/abs/1907.04749, can accomodate fill factors up to 87.9% full, rather than

26 | /// 1 / 1.23 = 81.3%. In the 8-bit case, this reduces the memory usage from 9.84 bits per entry to

27 | /// 9.1 bits.

28 | ///

29 | /// An issue with traditional fuse filters is that the algorithm requires a large number of unique

30 | /// keys in order for population to succeed, see [FastFilter/xor_singleheader#21](https://github.com/FastFilter/xor_singleheader/issues/21).

31 | /// If you have few (<~125k consecutive) keys, fuse filter creation would fail.

32 | ///

33 | /// By contrast, binary fuse filters, a revision of fuse filters made by Thomas Mueller Graf &

34 | /// Daniel Lemire do not suffer from this issue. See https://github.com/FastFilter/xor_singleheader/issues/21

35 | ///

36 | /// Note: We assume that you have a large set of 64-bit integers and you want a data structure to

37 | /// do membership tests using no more than ~8 or ~16 bits per key. If your initial set is made of

38 | /// strings or other types, you first need to hash them to a 64-bit integer.

39 | pub fn BinaryFuse(comptime T: type) type {

40 | return struct {

41 | seed: u64,

42 | segment_length: u32,

43 | segment_length_mask: u32,

44 | segment_count: u32,

45 | segment_count_length: u32,

46 | fingerprints: []T,

47 |

48 | const Self = @This();

49 |

50 | /// initializes a binary fuse filter with enough capacity for a set containing up to `size`

51 | /// elements.

52 | ///

53 | /// `deinit()` must be called by the caller to free the memory.

54 | pub fn init(allocator: Allocator, size: usize) !Self {

55 | const arity: u32 = 3;

56 | var segment_length = calculateSegmentLength(arity, size);

57 | if (segment_length > 262144) {

58 | segment_length = 262144;

59 | }

60 | const segment_length_mask = segment_length - 1;

61 | const size_factor: f64 = if (size == 0) 4 else calculateSizeFactor(arity, size);

62 | const capacity = if (size <= 1) 0 else @as(u32, @intFromFloat(@round(@as(f64, @floatFromInt(size)) * size_factor)));

63 | const init_segment_count: u32 = (capacity + segment_length - 1) / segment_length -% (arity - 1);

64 | var slice_length = (init_segment_count +% arity - 1) * segment_length;

65 | var segment_count = (slice_length + segment_length - 1) / segment_length;

66 | if (segment_count <= arity - 1) {

67 | segment_count = 1;

68 | } else {

69 | segment_count = segment_count - (arity - 1);

70 | }

71 | slice_length = (segment_count + arity - 1) * segment_length;

72 | const segment_count_length = segment_count * segment_length;

73 |

74 | return Self{

75 | .seed = undefined,

76 | .segment_length = segment_length,

77 | .segment_length_mask = segment_length_mask,

78 | .segment_count = segment_count,

79 | .segment_count_length = segment_count_length,

80 | .fingerprints = try allocator.alloc(T, slice_length),

81 | };

82 | }

83 |

84 | pub inline fn deinit(self: *const Self, allocator: Allocator) void {

85 | allocator.free(self.fingerprints);

86 | }

87 |

88 | /// reports the size in bytes of the filter.

89 | pub inline fn sizeInBytes(self: *const Self) usize {

90 | return self.fingerprints.len * @sizeOf(T) + @sizeOf(Self);

91 | }

92 |

93 | /// populates the filter with the given keys.

94 | ///

95 | /// The function could return an error after too many iterations, but it is statistically

96 | /// unlikely and you probably don't need to worry about it.

97 | ///

98 | /// The provided allocator will be used for creating temporary buffers that do not outlive the

99 | /// function call.

100 | pub fn populate(self: *Self, allocator: Allocator, keys: []u64) Error!void {

101 | var iter = util.SliceIterator(u64).init(keys);

102 | return self.populateIter(allocator, &iter);

103 | }

104 |

105 | /// Identical to populate, except it takes an iterator of keys so you need not store them

106 | /// in-memory.

107 | ///

108 | /// `keys.next()` must return `?u64`, the next key or none if the end of the list has been

109 | /// reached. The iterator must reset after hitting the end of the list, such that the `next()`

110 | /// call leads to the first element again.

111 | ///

112 | /// `keys.len()` must return the `usize` length.

113 | pub fn populateIter(self: *Self, allocator: Allocator, keys: anytype) Error!void {

114 | if (keys.len() == 0) {

115 | return;

116 | }

117 | var rng_counter: u64 = 0x726b2b9d438b9d4d;

118 | self.seed = util.rngSplitMix64(&rng_counter);

119 |

120 | var size = keys.len();

121 | const reverse_order = try allocator.alloc(u64, size + 1);

122 | defer allocator.free(reverse_order);

123 | @memset(reverse_order, 0);

124 |

125 | const capacity = self.fingerprints.len;

126 | const alone = try allocator.alloc(u32, capacity);

127 | defer allocator.free(alone);

128 |

129 | const t2count = try allocator.alloc(T, capacity);

130 | defer allocator.free(t2count);

131 | @memset(t2count, 0);

132 |

133 | const reverse_h = try allocator.alloc(T, size);

134 | defer allocator.free(reverse_h);

135 |

136 | const t2hash = try allocator.alloc(u64, capacity);

137 | defer allocator.free(t2hash);

138 | @memset(t2hash, 0);

139 |

140 | var block_bits: u5 = 1;

141 | while ((@as(u32, 1) << block_bits) < self.segment_count) {

142 | block_bits += 1;

143 | }

144 | const block: u32 = @as(u32, 1) << block_bits;

145 |

146 | const start_pos = try allocator.alloc(u32, @as(usize, 1) << block_bits);

147 | defer allocator.free(start_pos);

148 |

149 | var expect_num_keys: ?usize = null;

150 |

151 | var h012: [5]u32 = undefined;

152 |

153 | reverse_order[size] = 1;

154 | var loop: usize = 0;

155 | while (true) : (loop += 1) {

156 | if (loop + 1 > max_iterations) {

157 | // too many iterations, this is statistically unlikely to happen. If it does,

158 | // probably we need to increase max_iterations or contact the fastfilter authors

159 | return Error.KeysLikelyNotUnique;

160 | }

161 |

162 | var i: u32 = 0;

163 | while (i < block) : (i += 1) {

164 | // important : i * size would overflow as a 32-bit number in some

165 | // cases.

166 | start_pos[i] = @as(u32, @truncate((@as(u64, @intCast(i)) * size) >> block_bits));

167 | }

168 |

169 | const mask_block: u64 = block - 1;

170 | var got_num_keys: usize = 0;

171 | while (keys.next()) |key| {

172 | if (is_debug) got_num_keys += 1;

173 | const sum: u64 = key +% self.seed;

174 | const hash: u64 = util.murmur64(sum);

175 |

176 | const shift_count = @as(usize, 64) - @as(usize, block_bits);

177 | var segment_index: u64 = if (shift_count >= 63) 0 else hash >> @as(u6, @truncate(shift_count));

178 | while (reverse_order[start_pos[segment_index]] != 0) {

179 | segment_index += 1;

180 | segment_index &= mask_block;

181 | }

182 | reverse_order[start_pos[segment_index]] = hash;

183 | start_pos[segment_index] += 1;

184 | }

185 | if (is_debug) {

186 | if (expect_num_keys) |expect| {

187 | if (expect != got_num_keys) @panic("fastfilter: iterator illegal: does not wrap around");

188 | }

189 | expect_num_keys = got_num_keys;

190 | }

191 |

192 | var err = false;

193 | var duplicates: u32 = 0;

194 | i = 0;

195 | while (i < size) : (i += 1) {

196 | const hash = reverse_order[i];

197 | const h0 = self.fuseHash(0, hash);

198 | const h1 = self.fuseHash(1, hash);

199 | const h2 = self.fuseHash(2, hash);

200 | t2count[h0] +%= 4;

201 | t2hash[h0] ^= hash;

202 | t2count[h1] +%= 4;

203 | t2count[h1] ^= 1;

204 | t2hash[h1] ^= hash;

205 | t2count[h2] +%= 4;

206 | t2count[h2] ^= 2;

207 | t2hash[h2] ^= hash;

208 | // If we have duplicated hash values, then it is likely that the next comparison

209 | // is true

210 | if (t2hash[h0] & t2hash[h1] & t2hash[h2] == 0) {

211 | // next we do the actual test

212 | if (((t2hash[h0] == 0) and (t2count[h0] == 8)) or ((t2hash[h1] == 0) and (t2count[h1] == 8)) or ((t2hash[h2] == 0) and (t2count[h2] == 8))) {

213 | duplicates += 1;

214 | t2count[h0] -%= 4;

215 | t2hash[h0] ^= hash;

216 | t2count[h1] -%= 4;

217 | t2count[h1] ^= 1;

218 | t2hash[h1] ^= hash;

219 | t2count[h2] -%= 4;

220 | t2count[h2] ^= 2;

221 | t2hash[h2] ^= hash;

222 | }

223 | }

224 | err = (t2count[h0] < 4) or err;

225 | err = (t2count[h1] < 4) or err;

226 | err = (t2count[h2] < 4) or err;

227 | }

228 | if (err) {

229 | i = 0;

230 | while (i < size) : (i += 1) {

231 | reverse_order[i] = 0;

232 | }

233 | i = 0;

234 | while (i < capacity) : (i += 1) {

235 | t2count[i] = 0;

236 | t2hash[i] = 0;

237 | }

238 | self.seed = util.rngSplitMix64(&rng_counter);

239 | continue;

240 | }

241 |

242 | // End of key addition

243 | var Qsize: u32 = 0;

244 | // Add sets with one key to the queue.

245 | i = 0;

246 | while (i < capacity) : (i += 1) {

247 | alone[Qsize] = i;

248 | Qsize += if ((t2count[i] >> 2) == 1) @as(u32, 1) else @as(u32, 0);

249 | }

250 | var stacksize: u32 = 0;

251 | while (Qsize > 0) {

252 | Qsize -= 1;

253 | const index: u32 = alone[Qsize];

254 | if ((t2count[index] >> 2) == 1) {

255 | const hash = t2hash[index];

256 |

257 | //h012[0] = self.fuseHash(0, hash);

258 | h012[1] = self.fuseHash(1, hash);

259 | h012[2] = self.fuseHash(2, hash);

260 | h012[3] = self.fuseHash(0, hash); // == h012[0];

261 | h012[4] = h012[1];

262 | const found = t2count[index] & 3;

263 | reverse_h[stacksize] = found;

264 | reverse_order[stacksize] = hash;

265 | stacksize += 1;

266 | const other_index1 = h012[found + 1];

267 | alone[Qsize] = other_index1;

268 | Qsize += if ((t2count[other_index1] >> 2) == 2) @as(u32, 1) else @as(u32, 0);

269 |

270 | t2count[other_index1] -= 4;

271 | t2count[other_index1] ^= fuseMod3(T, found + 1);

272 | t2hash[other_index1] ^= hash;

273 |

274 | const other_index2 = h012[found + 2];

275 | alone[Qsize] = other_index2;

276 | Qsize += if ((t2count[other_index2] >> 2) == 2) @as(u32, 1) else @as(u32, 0);

277 | t2count[other_index2] -= 4;

278 | t2count[other_index2] ^= fuseMod3(T, found + 2);

279 | t2hash[other_index2] ^= hash;

280 | }

281 | }

282 | if (stacksize + duplicates == size) {

283 | // success

284 | size = stacksize;

285 | break;

286 | }

287 | @memset(reverse_order[0..size], 0);

288 | @memset(t2count[0..capacity], 0);

289 | @memset(t2hash[0..capacity], 0);

290 | self.seed = util.rngSplitMix64(&rng_counter);

291 | }

292 | if (size == 0) return;

293 |

294 | var i: u32 = @as(u32, @truncate(size - 1));

295 | while (i < size) : (i -%= 1) {

296 | // the hash of the key we insert next

297 | const hash: u64 = reverse_order[i];

298 | const xor2: T = @as(T, @truncate(util.fingerprint(hash)));

299 | const found: T = reverse_h[i];

300 | h012[0] = self.fuseHash(0, hash);

301 | h012[1] = self.fuseHash(1, hash);

302 | h012[2] = self.fuseHash(2, hash);

303 | h012[3] = h012[0];

304 | h012[4] = h012[1];

305 | self.fingerprints[h012[found]] = xor2 ^ self.fingerprints[h012[found + 1]] ^ self.fingerprints[h012[found + 2]];

306 | }

307 | }

308 |

309 | /// reports if the specified key is within the set with false-positive rate.

310 | pub inline fn contain(self: *const Self, key: u64) bool {

311 | const hash = util.mixSplit(key, self.seed);

312 | var f = @as(T, @truncate(util.fingerprint(hash)));

313 | const hashes = self.fuseHashBatch(hash);

314 | f ^= self.fingerprints[hashes.h0] ^ self.fingerprints[hashes.h1] ^ self.fingerprints[hashes.h2];

315 | return f == 0;

316 | }

317 |

318 | inline fn fuseHashBatch(self: *const Self, hash: u64) Hashes {

319 | const hi: u64 = mulhi(hash, self.segment_count_length);

320 | var ans: Hashes = undefined;

321 | ans.h0 = @as(u32, @truncate(hi));

322 | ans.h1 = ans.h0 + self.segment_length;

323 | ans.h2 = ans.h1 + self.segment_length;

324 | ans.h1 ^= @as(u32, @truncate(hash >> 18)) & self.segment_length_mask;

325 | ans.h2 ^= @as(u32, @truncate(hash)) & self.segment_length_mask;

326 | return ans;

327 | }

328 |

329 | inline fn fuseHash(self: *Self, index: usize, hash: u64) u32 {

330 | var h = mulhi(hash, self.segment_count_length);

331 | h +%= index * self.segment_length;

332 | // keep the lower 36 bits

333 | const hh: u64 = hash & ((@as(u64, 1) << 36) - 1);

334 | // index 0: right shift by 36; index 1: right shift by 18; index 2: no shift

335 | //

336 | // NOTE: using u64 here instead of size_it as in upstream C implementation; I think

337 | // that size_t may be incorrect for 32-bit platforms?

338 | const shift_count = (36 - 18 * index);

339 | if (shift_count >= 63) {

340 | h ^= 0 & self.segment_length_mask;

341 | } else {

342 | h ^= (hh >> @as(u6, @truncate(shift_count))) & self.segment_length_mask;

343 | }

344 | return @as(u32, @truncate(h));

345 | }

346 | };

347 | }

348 |

349 | inline fn mulhi(a: u64, b: u64) u64 {