├── .github
│   ├── CODEOWNERS
│   ├── SECURITY.md
│   └── FUNDING.yml
├── banner.jpg
├── challenge-labs
│   ├── GSP315
│   │   ├── images
│   │   │   ├── map.jpg
│   │   │   ├── trigger.png
│   │   │   ├── package-json.png
│   │   │   ├── code_function.png
│   │   │   └── labs_variable.png
│   │   └── index.md
│   ├── GSP341
│   │   ├── images
│   │   │   └── year.png
│   │   └── index.md
│   ├── GSP787
│   │   ├── images
│   │   │   ├── limit.png
│   │   │   ├── month.png
│   │   │   ├── deaths.png
│   │   │   ├── percentage.png
│   │   │   ├── looker_date.png
│   │   │   ├── date variable.png
│   │   │   └── start_close_date.png
│   │   └── index.md
│   ├── GSP305
│   │   ├── images
│   │   │   ├── bucket.png
│   │   │   └── kubernetes_cluster.png
│   │   └── index.md
│   ├── GSP306
│   │   ├── images
│   │   │   ├── DB_host.png
│   │   │   ├── DB_host2.png
│   │   │   ├── SSH_blog.png
│   │   │   ├── blog_demo.png
│   │   │   ├── SQL_instance.png
│   │   │   ├── vm_instances.png
│   │   │   └── IP_demo_blog_site.png
│   │   └── index.md
│   ├── GSP101
│   │   ├── images
│   │   │   ├── firewall.png
│   │   │   ├── vm_create.png
│   │   │   └── lab_variable.png
│   │   └── index.md
│   ├── GSP303
│   │   ├── images
│   │   │   ├── RDP_login.png
│   │   │   ├── IIS_install.png
│   │   │   ├── IIS_install2.png
│   │   │   ├── RDP_extension.png
│   │   │   ├── RDP_vm-bastionhost_creds.png
│   │   │   ├── RDP_vm-securehost_creds.png
│   │   │   ├── VM_instances_vm-bastionhost.png
│   │   │   └── VM_instances_vm-securehost.png
│   │   └── index.md
│   ├── GSP313
│   │   ├── images
│   │   │   ├── machine-type.png
│   │   │   ├── labs_variable.jpg
│   │   │   ├── labs_variable2.png
│   │   │   └── zone_variable_task2.jpg
│   │   └── index.md
│   ├── GSP319
│   │   ├── images
│   │   │   ├── fancy store.png
│   │   │   ├── export variable.png
│   │   │   ├── kubectl get svc.png
│   │   │   ├── labs variable.png
│   │   │   └── kubectl get services.png
│   │   └── index.md
│   ├── GSP322
│   │   ├── images
│   │   │   ├── bastion_ssh.png
│   │   │   ├── lab_variable.png
│   │   │   └── vm_instances.png
│   │   └── index.md
│   ├── GSP342
│   │   ├── images
│   │   │   └── lab_variable.png
│   │   └── index.md
│   ├── GSP345
│   │   ├── images
│   │   │   └── Instance ID.png
│   │   └── index.md
│   ├── GSP304
│   │   └── index.md
│   └── GSP301
│       └── index.md
├── README.md
└── LICENSE.md

/.github/CODEOWNERS:
--------------------------------------------------------------------------------
* @hiiruki
--------------------------------------------------------------------------------

/banner.jpg:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/banner.jpg
--------------------------------------------------------------------------------

/challenge-labs/GSP315/images/map.jpg:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP315/images/map.jpg
--------------------------------------------------------------------------------

/challenge-labs/GSP341/images/year.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP341/images/year.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/limit.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/limit.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/month.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/month.png
--------------------------------------------------------------------------------

/challenge-labs/GSP305/images/bucket.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP305/images/bucket.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/DB_host.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/DB_host.png
--------------------------------------------------------------------------------

/challenge-labs/GSP315/images/trigger.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP315/images/trigger.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/deaths.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/deaths.png
--------------------------------------------------------------------------------

/challenge-labs/GSP101/images/firewall.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP101/images/firewall.png
--------------------------------------------------------------------------------

/challenge-labs/GSP101/images/vm_create.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP101/images/vm_create.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/RDP_login.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/RDP_login.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/DB_host2.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/DB_host2.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/SSH_blog.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/SSH_blog.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/blog_demo.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/blog_demo.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/percentage.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/percentage.png
--------------------------------------------------------------------------------

/challenge-labs/GSP101/images/lab_variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP101/images/lab_variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/IIS_install.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/IIS_install.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/IIS_install2.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/IIS_install2.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/SQL_instance.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/SQL_instance.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/vm_instances.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/vm_instances.png
--------------------------------------------------------------------------------

/challenge-labs/GSP313/images/machine-type.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP313/images/machine-type.png
--------------------------------------------------------------------------------

/challenge-labs/GSP315/images/package-json.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP315/images/package-json.png
--------------------------------------------------------------------------------

/challenge-labs/GSP319/images/fancy store.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP319/images/fancy%20store.png
--------------------------------------------------------------------------------

/challenge-labs/GSP322/images/bastion_ssh.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP322/images/bastion_ssh.png
--------------------------------------------------------------------------------

/challenge-labs/GSP322/images/lab_variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP322/images/lab_variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP322/images/vm_instances.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP322/images/vm_instances.png
--------------------------------------------------------------------------------

/challenge-labs/GSP342/images/lab_variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP342/images/lab_variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP345/images/Instance ID.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP345/images/Instance%20ID.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/looker_date.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/looker_date.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/RDP_extension.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/RDP_extension.png
--------------------------------------------------------------------------------

/challenge-labs/GSP313/images/labs_variable.jpg:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP313/images/labs_variable.jpg
--------------------------------------------------------------------------------

/challenge-labs/GSP313/images/labs_variable2.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP313/images/labs_variable2.png
--------------------------------------------------------------------------------

/challenge-labs/GSP315/images/code_function.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP315/images/code_function.png
--------------------------------------------------------------------------------

/challenge-labs/GSP315/images/labs_variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP315/images/labs_variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP319/images/export variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP319/images/export%20variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP319/images/kubectl get svc.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP319/images/kubectl%20get%20svc.png
--------------------------------------------------------------------------------

/challenge-labs/GSP319/images/labs variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP319/images/labs%20variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/date variable.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/date%20variable.png
--------------------------------------------------------------------------------

/challenge-labs/GSP306/images/IP_demo_blog_site.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP306/images/IP_demo_blog_site.png
--------------------------------------------------------------------------------

/challenge-labs/GSP787/images/start_close_date.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP787/images/start_close_date.png
--------------------------------------------------------------------------------

/challenge-labs/GSP305/images/kubernetes_cluster.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP305/images/kubernetes_cluster.png
--------------------------------------------------------------------------------

/challenge-labs/GSP313/images/zone_variable_task2.jpg:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP313/images/zone_variable_task2.jpg
--------------------------------------------------------------------------------

/challenge-labs/GSP319/images/kubectl get services.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP319/images/kubectl%20get%20services.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/RDP_vm-bastionhost_creds.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/RDP_vm-bastionhost_creds.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/RDP_vm-securehost_creds.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/RDP_vm-securehost_creds.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/VM_instances_vm-bastionhost.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/VM_instances_vm-bastionhost.png
--------------------------------------------------------------------------------

/challenge-labs/GSP303/images/VM_instances_vm-securehost.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/hiiruki/google-cloudskillsboost/HEAD/challenge-labs/GSP303/images/VM_instances_vm-securehost.png
--------------------------------------------------------------------------------

/.github/SECURITY.md:
--------------------------------------------------------------------------------
# SECURITY POLICY

      .-""-.
     / .--. \
    / /    \ \
    | |    | |
    | |.-""-.|
   ///`.::::.`\
  ||| ::/  \:: ;
  ||; ::\__/:: ;
   \\\ '::::' /
    `=':-..-'`

## Reporting Security Issues

**Please do not report security vulnerabilities through public GitHub issues.**

If you discover a security issue in this repository, please report it to me by [email](mailto:hi@hiiruki.dev).

## Preferred Languages to Report a Vulnerability

I prefer all communications to be in English (EN).

--------------------------------------------------------------------------------

/.github/FUNDING.yml:
--------------------------------------------------------------------------------
# These are supported funding model platforms
# https://docs.github.com/en/repositories/managing-your-repositorys-settings-and-features/customizing-your-repository/displaying-a-sponsor-button-in-your-repository

github: hiiruki
patreon:
open_collective:
ko_fi: hiiruki
tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
liberapay: hiiruki
issuehunt: # Replace with a single IssueHunt username
otechie: # Replace with a single Otechie username
custom: ["https://trakteer.id/hiiruki/tip"]

--------------------------------------------------------------------------------

/README.md:
--------------------------------------------------------------------------------
# Google Cloud Skills Boost

This repository contains solutions for the [Google Cloud Skills Boost](https://www.cloudskillsboost.google/) challenge labs (formerly [Qwiklabs](https://go.qwiklabs.com/)). Challenge labs test your skills in [Google Cloud](https://cloud.google.com/); they are assessments, not step-by-step guides. If you are looking for guides, visit the [Google Cloud Documentation](https://cloud.google.com/docs), or use these solutions as a reference.

## Challenge Labs

- [GSP101](./challenge-labs/GSP101/index.md) Google Cloud Essential Skills
- [GSP301](./challenge-labs/GSP301/index.md) Deploy a Compute Instance with a Remote Startup Script
- [GSP303](./challenge-labs/GSP303/index.md) Configure Secure RDP using a Windows Bastion Host
- [GSP304](./challenge-labs/GSP304/index.md) Build and Deploy a Docker Image to a Kubernetes Cluster
- [GSP305](./challenge-labs/GSP305/index.md) Scale Out and Update a Containerized Application on a Kubernetes Cluster
- [GSP306](./challenge-labs/GSP306/index.md) Migrate a MySQL Database to Google Cloud SQL
- [GSP313](./challenge-labs/GSP313/index.md) Create and Manage Cloud Resources
- [GSP315](./challenge-labs/GSP315/index.md) Perform Foundational Infrastructure Tasks in Google Cloud
- [GSP319](./challenge-labs/GSP319/index.md) Build a Website on Google Cloud
- [GSP322](./challenge-labs/GSP322/index.md) Build and Secure Networks in Google Cloud
- [GSP341](./challenge-labs/GSP341/index.md) Create ML Models with BigQuery ML
- [GSP342](./challenge-labs/GSP342/index.md) Ensure Access & Identity in Google Cloud
- [GSP345](./challenge-labs/GSP345/index.md) Automating Infrastructure on Google Cloud with Terraform
- [GSP787](./challenge-labs/GSP787/index.md) Insights from Data with BigQuery

> **Note**: If a lab is labeled **deprecated**, the lab has been updated since this solution was written and the solution will no longer work as-is, but you can still use it to study.

## License

This content is licensed under the terms of the [Creative Commons Attribution-ShareAlike 4.0 International](./LICENSE.md) license.

--------------------------------------------------------------------------------

/challenge-labs/GSP304/index.md:
--------------------------------------------------------------------------------
# [GSP304] Build and Deploy a Docker Image to a Kubernetes Cluster

### [GSP304](https://www.cloudskillsboost.google/focuses/1738?parent=catalog)

---

Time: 1 hour 15 minutes
Difficulty: Intermediate
Price: 5 Credits

Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)

Last updated: May 25, 2023

---

## Challenge scenario

Your development team is interested in adopting a containerized microservices approach to application architecture. You need to test a sample application they have provided to make sure that it can be deployed to a Google Kubernetes Engine (GKE) cluster. The development group provided a simple Go application called `echo-web` with a Dockerfile and the associated build context, so you can build a Docker image immediately.

## Your challenge

To test the deployment, you need to download the sample application, then build the Docker container image with a tag that allows it to be stored in Container Registry. Once the image has been built, push it to Container Registry so that it can be deployed.

With the image prepared, create a Kubernetes cluster and then deploy the sample application to it.

1. An application image with a v1 tag has been pushed to the gcr.io repository

```bash
# Download and unpack the sample application from the project's bucket
mkdir echo-web
cd echo-web
gsutil cp gs://$DEVSHELL_PROJECT_ID/echo-web.tar.gz .
tar -xzf echo-web.tar.gz
rm echo-web.tar.gz
cd echo-web

# Build the image, tag it for Container Registry, and push it
docker build -t echo-app:v1 .
docker tag echo-app:v1 gcr.io/$DEVSHELL_PROJECT_ID/echo-app:v1
docker push gcr.io/$DEVSHELL_PROJECT_ID/echo-app:v1
```

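The build, tag, and push sequence above can also be collapsed into one build step. The sketch below is not the lab's required method: `build_and_push_echo_app` is a hypothetical helper, and it assumes you run it from the unpacked `echo-web` directory that holds the Dockerfile. Tagging the image with its full `gcr.io/PROJECT_ID/echo-app:TAG` path at build time makes the separate `docker tag` unnecessary. Call it as `build_and_push_echo_app "$DEVSHELL_PROJECT_ID" v1`.

```bash
# Hypothetical helper (not part of the lab): build the image already tagged
# for Container Registry, then push it. Run it from the directory that
# contains the Dockerfile.
build_and_push_echo_app() {
  local project_id="${1:-}" tag="${2:-v1}"
  if [[ -z "$project_id" ]]; then
    echo "usage: build_and_push_echo_app PROJECT_ID [TAG]" >&2
    return 2
  fi

  local image="gcr.io/${project_id}/echo-app:${tag}"
  # Tagging with the full registry path at build time replaces `docker tag`.
  docker build -t "$image" . || return 1
  docker push "$image"
}
```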
2. A new Kubernetes cluster exists (zone: us-central1-a)

```bash
# Set the default zone, then create a two-node cluster in it
gcloud config set compute/zone us-central1-a

gcloud container clusters create echo-cluster --num-nodes=2 --machine-type=n1-standard-2
```

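Cluster creation takes a few minutes, and `gcloud container clusters create` also writes the `kubectl` credentials for the new cluster, so once it reports RUNNING you can deploy. A minimal sketch of a status check follows; `cluster_is_running` is a hypothetical helper whose defaults assume the cluster name and zone used above. Poll it with `until cluster_is_running; do sleep 15; done`.

```bash
# Hypothetical helper (not part of the lab): succeed only when the GKE
# cluster reports status RUNNING. Defaults match the commands above.
cluster_is_running() {
  local name="${1:-echo-cluster}" zone="${2:-us-central1-a}" status
  status="$(gcloud container clusters describe "$name" \
    --zone "$zone" --format='value(status)')" || return 1
  [[ "$status" == "RUNNING" ]]
}
```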
3. Check that an application has been deployed to the cluster

```bash
kubectl create deployment echo-web --image=gcr.io/$DEVSHELL_PROJECT_ID/echo-app:v1
```

4. Test that a service exists that responds to requests like Echo-app

```bash
# Expose port 80 publicly and forward it to the app's port 8000
kubectl expose deployment echo-web --type=LoadBalancer --port 80 --target-port 8000
```

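The LoadBalancer Service only becomes reachable after GKE assigns it an external IP, which can take a minute or two. The polling sketch below assumes the `echo-web` Service created above; `wait_for_external_ip` is a hypothetical helper. Because the Service maps port 80 to the container's port 8000, the app should answer on plain HTTP, for example `curl "http://$(wait_for_external_ip echo-web)/"`.

```bash
# Hypothetical helper (not part of the lab): poll a LoadBalancer Service
# until it has an external IP, print the IP, and give up after N tries.
wait_for_external_ip() {
  local svc="${1:-echo-web}" tries="${2:-30}" ip="" i
  for ((i = 0; i < tries; i++)); do
    ip="$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)" || ip=""
    if [[ -n "$ip" ]]; then
      echo "$ip"
      return 0
    fi
    sleep 10
  done
  echo "no external IP for service $svc after $tries tries" >&2
  return 1
}
```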
## Congratulations!

[HOME](../../README.md)

--------------------------------------------------------------------------------

/challenge-labs/GSP101/index.md:
--------------------------------------------------------------------------------
# [GSP101] Google Cloud Essential Skills: Challenge Lab

### [GSP101](https://www.cloudskillsboost.google/focuses/1734?parent=catalog)

---

Time: 45 minutes
Difficulty: Intermediate
Price: 5 Credits

Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)

Last updated: May 26, 2023

---

## Challenge scenario

Your company is ready to launch a brand new product! Because you are entering a totally new space, you have decided to deploy a new website as part of the product launch. The new site is complete, but the person who built it left the company before they could deploy it.

## Your challenge

Your challenge is to deploy the site in the public cloud by completing the tasks below. You will use a simple Apache web server as a placeholder for the new site in this exercise. Good luck!

1. Create a Compute Engine instance and add the necessary firewall rules.

- In the **Cloud Console**, click the **Navigation menu** > **Compute Engine** > **VM Instances**.
- Click **Create instance**.
- Set the following values, using the instance name and zone given in your lab, and leave all other values at their defaults:

| Property | Value (type value or select option as specified) |
| --- | --- |
| Name | `INSTANCE_NAME` |
| Zone | `COMPUTE_ZONE` |

- Under **Firewall**, check **Allow HTTP traffic**.
- Click **Create**.

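If you prefer Cloud Shell to the Console, the same instance can be created with `gcloud`. This is a sketch, not the lab's required method: `create_web_vm` is a hypothetical helper, you pass it the name and zone your lab shows for `INSTANCE_NAME` and `COMPUTE_ZONE`, and it assumes the Console's **Allow HTTP traffic** checkbox corresponds to the `http-server` network tag plus a firewall rule opening TCP port 80 (the Console names that rule `default-allow-http`). Call it as `create_web_vm INSTANCE_NAME COMPUTE_ZONE`.

```bash
# Hypothetical helper (not part of the lab): CLI equivalent of the Console
# steps above. Usage: create_web_vm INSTANCE_NAME COMPUTE_ZONE
create_web_vm() {
  local name="${1:-}" zone="${2:-}"
  if [[ -z "$name" || -z "$zone" ]]; then
    echo "usage: create_web_vm INSTANCE_NAME COMPUTE_ZONE" >&2
    return 2
  fi

  # The http-server network tag is what "Allow HTTP traffic" attaches.
  gcloud compute instances create "$name" --zone="$zone" --tags=http-server || return 1

  # Open TCP port 80 to tagged instances, unless the rule already exists.
  if ! gcloud compute firewall-rules describe default-allow-http >/dev/null 2>&1; then
    gcloud compute firewall-rules create default-allow-http \
      --allow=tcp:80 --target-tags=http-server --source-ranges=0.0.0.0/0
  fi
}
```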
2. Configure the Apache2 web server on your instance.

- In the **Cloud Console**, click the **Navigation menu** > **Compute Engine** > **VM Instances**.
- Click **SSH** next to the `INSTANCE_NAME` instance.
- In the SSH session, switch to the root user:

```bash
sudo su -
```

Then install Apache and list the service states to confirm that `apache2` is running (it is marked `[ + ]`):

```bash
apt-get update
apt-get install apache2 -y

service --status-all
```

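The interactive session above can also be replaced by a single `gcloud compute ssh --command` call from Cloud Shell. A minimal sketch, assuming the instance runs the default Debian image (so `apt-get` and `systemctl` are available); `install_apache_remote` is a hypothetical helper, and `gcloud compute ssh` may prompt you to create an SSH key the first time it runs. Prefixing each command with `sudo` replaces the `sudo su -` root shell.

```bash
# Hypothetical helper (not part of the lab): install Apache on the VM over
# SSH without an interactive session, then print the service state.
install_apache_remote() {
  local name="${1:-}" zone="${2:-}"
  if [[ -z "$name" || -z "$zone" ]]; then
    echo "usage: install_apache_remote INSTANCE_NAME COMPUTE_ZONE" >&2
    return 2
  fi
  gcloud compute ssh "$name" --zone="$zone" \
    --command='sudo apt-get update && sudo apt-get install -y apache2 && systemctl is-active apache2'
}
```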
3. Test your server.

- In the **Cloud Console**, click the **Navigation menu** > **Compute Engine** > **VM Instances**.
- Open the instance's **External IP** in your browser over plain HTTP. Make sure the URL is `http://EXTERNAL_IP`, not `https://EXTERNAL_IP`.
- Verify that the **Apache2 Debian Default Page** appears.

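The browser check can also be scripted. This sketch assumes the instance's first network interface has an external IP (the default); `apache_page_is_up` is a hypothetical helper. It looks up the external IP with `gcloud`, requests the page over plain HTTP as required above, and searches the response for the default page's title. Call it as `apache_page_is_up INSTANCE_NAME COMPUTE_ZONE`.

```bash
# Hypothetical helper (not part of the lab): fetch http://EXTERNAL_IP/ and
# succeed if the Apache2 Debian default page is served.
apache_page_is_up() {
  local name="${1:-}" zone="${2:-}" ip
  ip="$(gcloud compute instances describe "$name" --zone="$zone" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)')" || return 1
  [[ -n "$ip" ]] || return 1
  # Plain HTTP on purpose: the firewall rule for this VM only opens port 80.
  curl -s --max-time 10 "http://${ip}/" | grep -q 'Apache2 Debian Default Page'
}
```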
## Congratulations!

[HOME](../../README.md)

--------------------------------------------------------------------------------

/challenge-labs/GSP301/index.md:
--------------------------------------------------------------------------------
# [GSP301] Deploy a Compute Instance with a Remote Startup Script

### [GSP301](https://www.cloudskillsboost.google/focuses/1735?parent=catalog)

---

Time: 1 hour
Difficulty: Intermediate
Price: 5 Credits

Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)

Last updated: May 22, 2023

---

## Challenge scenario

You have been given the responsibility of managing the configuration of your organization's Google Cloud virtual machines. You have decided to change the framework used to manage the deployment and configuration of machines: you want to make it easier to modify the startup scripts used to initialize a number of the compute instances. Instead of storing startup scripts directly in the instances' metadata, you have decided to store the scripts in a Cloud Storage bucket and configure the virtual machines to point to the relevant script file in the bucket.

A basic bash script called `install-web.sh`, which installs the Apache web server, has been provided for you as a sample startup script. You can download it from the Student Resources links on the left side of the page.

## Your challenge

Configure a Linux Compute Engine instance that installs the Apache web server software using a remote startup script. To confirm that Apache has been installed successfully, the Compute Engine instance must be accessible via HTTP from the internet.

## Task 1. Confirm that a Google Cloud Storage bucket exists that contains a file

In Cloud Shell, run the following commands to create a bucket named after your project and copy the sample script into it:

```bash
gsutil mb gs://$DEVSHELL_PROJECT_ID
gsutil cp gs://sureskills-ql/challenge-labs/ch01-startup-script/install-web.sh gs://$DEVSHELL_PROJECT_ID
```

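Before moving on, you can confirm that the copy worked. `gsutil -q stat` exits with status 0 when the object exists and non-zero otherwise, so it serves as a scriptable existence check. A minimal sketch: `startup_script_exists` is a hypothetical helper, and it defaults to the project-named bucket created above.

```bash
# Hypothetical helper (not part of the lab): succeed if install-web.sh is
# present in the bucket. Defaults to the bucket created above.
startup_script_exists() {
  local bucket="${1:-${DEVSHELL_PROJECT_ID:-}}"
  if [[ -z "$bucket" ]]; then
    echo "usage: startup_script_exists BUCKET" >&2
    return 2
  fi
  gsutil -q stat "gs://${bucket}/install-web.sh"
}
```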
## Task 2. Confirm that a compute instance has been created that has a remote startup script called install-web.sh configured

```bash
# startup-script-url makes the VM fetch and run the script from Cloud Storage at boot
gcloud compute instances create example-instance --zone=us-central1-a --tags=http-server --metadata startup-script-url=gs://$DEVSHELL_PROJECT_ID/install-web.sh
```

## Task 3. Confirm that an HTTP access firewall rule exists with a tag that applies to that virtual machine

```bash
# Allow TCP port 80 from anywhere to instances tagged http-server
gcloud compute firewall-rules create allow-http --target-tags http-server --source-ranges 0.0.0.0/0 --allow tcp:80
```

## Task 4. Connect to the server IP address using HTTP and get a non-error response

After creating the firewall rule in Task 3, wait a few minutes for the startup script to finish installing Apache, then check your score.

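Rather than waiting blindly, you can poll the server until Apache answers. This sketch assumes the instance name and zone from Task 2; `wait_for_http_ok` is a hypothetical helper. The startup script runs at boot, so the first attempts may fail until the package installation finishes. Running `wait_for_http_ok` with no arguments uses the Task 2 defaults.

```bash
# Hypothetical helper (not part of the lab): poll http://EXTERNAL_IP/ until
# it returns a non-error response, or give up after N attempts.
wait_for_http_ok() {
  local name="${1:-example-instance}" zone="${2:-us-central1-a}" tries="${3:-30}" ip i
  ip="$(gcloud compute instances describe "$name" --zone="$zone" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)')" || return 1
  [[ -n "$ip" ]] || return 1
  for ((i = 0; i < tries; i++)); do
    # -f makes curl fail on HTTP 4xx/5xx, so success means a non-error reply.
    if curl -fs -o /dev/null --max-time 5 "http://${ip}/"; then
      echo "http://${ip}/ is serving"
      return 0
    fi
    sleep 10
  done
  echo "no successful HTTP response from ${ip} after ${tries} tries" >&2
  return 1
}
```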
## Congratulations!

[HOME](../../README.md)

--------------------------------------------------------------------------------

/challenge-labs/GSP305/index.md:

--------------------------------------------------------------------------------

1 | # [GSP305] Scale Out and Update a Containerized Application on a Kubernetes Cluster

2 |

3 | ### [GSP305](https://www.cloudskillsboost.google/focuses/1739?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour

10 | Difficulty: Intermediate

11 | Price: 5 Credits

12 |

13 | Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)

14 |

15 | Last updated: May 25, 2023

16 |

17 | ---

18 |

19 | ## Challenge scenario

20 |

21 | You are taking over ownership of a test environment and have been given an updated version of a containerized test application to deploy. Your systems' architecture team has started adopting a containerized microservice architecture. You are responsible for managing the containerized test web applications. You will first deploy the initial version of a test application, called `echo-app` to a Kubernetes cluster called `echo-cluster` in a deployment called `echo-web`.

22 |

23 | Before you get started, open the navigation menu and select **Cloud Storage**. The last steps in the Deployment Manager script used to set up your environment creates a bucket.

24 |

25 | Refresh the Storage browser until you see your bucket. You can move on once your Console resembles the following:

26 |

27 |

28 |

29 | Check to make sure your GKE cluster has been created before continuing. Open the navigation menu and select **Kubernetes Engine** > **Clusters**.

30 |

31 | Continue when you see a green checkmark next to `echo-cluster`:
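If you would rather wait from Cloud Shell than refresh the console, a sketch like the following polls the cluster until `gcloud` reports `RUNNING`, the state behind the green checkmark. The zone `us-central1-a` is taken from the `get-credentials` command below. The function name and retry defaults are hypothetical.

```bash
# Wait until a GKE cluster reports status RUNNING.
# Usage: wait_for_cluster <cluster> <zone> [attempts=30] [delay_seconds=10]
wait_for_cluster() {
  local cluster="$1" zone="$2" attempts="${3:-30}" delay="${4:-10}" status="" i
  for ((i = 1; i <= attempts; i++)); do
    status=$(gcloud container clusters describe "$cluster" --zone "$zone" \
      --format='value(status)' 2>/dev/null)
    if [ "$status" = "RUNNING" ]; then
      echo "${cluster} is RUNNING"
      return 0
    fi
    sleep "$delay"
  done
  echo "${cluster} is not RUNNING yet (last status: ${status:-unknown})" >&2
  return 1
}
```

Usage: `wait_for_cluster echo-cluster us-central1-a`.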

32 |

33 |

34 |

35 | To deploy your first version of the application, run the following commands in Cloud Shell to get up and running:

36 |

37 | ```bash

38 | gcloud container clusters get-credentials echo-cluster --zone=us-central1-a

39 | ```

40 |

41 | ```bash

42 | kubectl create deployment echo-web --image=gcr.io/qwiklabs-resources/echo-app:v1

43 | ```

44 |

45 | ```bash

46 | kubectl expose deployment echo-web --type=LoadBalancer --port 80 --target-port 8000

47 | ```

48 |

49 | ## Your challenge

50 |

51 | You need to update the running `echo-app` application in the `echo-web` deployment from the v1 to the v2 code you have been provided. You must also scale out the application to 2 instances and confirm that they are all running.

52 |

53 | 1. Check that there is a tagged image in gcr.io for echo-app:v2.

54 |

55 | ```bash

56 | mkdir echo-web

57 | cd echo-web

58 | gsutil cp -r gs://$DEVSHELL_PROJECT_ID/echo-web-v2.tar.gz .

59 | tar -xzf echo-web-v2.tar.gz

60 | rm echo-web-v2.tar.gz

61 | docker build -t echo-app:v2 .

62 | docker tag echo-app:v2 gcr.io/$DEVSHELL_PROJECT_ID/echo-app:v2

63 | docker push gcr.io/$DEVSHELL_PROJECT_ID/echo-app:v2

64 | ```
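To confirm the push worked before moving on, you can list the tags in the repository. This is a sketch: `image_has_tag` is a hypothetical helper. Because gcloud may join several tags on one digest with a delimiter, it splits on both `;` and `,` before matching.

```bash
# Succeed if <tag> exists for the image repository <repository>.
# Usage: image_has_tag <repository> <tag>
image_has_tag() {
  local repo="$1" tag="$2"
  # Print the tags of every digest, put each tag on its own line, then match exactly.
  gcloud container images list-tags "$repo" --format='value(tags)' \
    | tr ';,' '\n\n' \
    | grep -qx -- "$tag"
}
```

Usage: `image_has_tag "gcr.io/$DEVSHELL_PROJECT_ID/echo-app" v2 && echo "echo-app:v2 found"`.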

65 |

66 | 2. Echo-app:v2 is running on the Kubernetes cluster.

67 |

68 | If you have not already deployed the first version of the application in the previous section, deploy it now:

69 |

70 | ```bash

71 | gcloud container clusters get-credentials echo-cluster --zone=us-central1-a

72 | kubectl create deployment echo-web --image=gcr.io/qwiklabs-resources/echo-app:v1

73 | kubectl expose deployment echo-web --type=LoadBalancer --port 80 --target-port 8000

74 | ```

75 |

76 | Edit the `echo-web` deployment (a `deployment.apps` object):

77 |

78 | ```bash

79 | kubectl edit deploy echo-web

80 | ```

81 |

82 | The manifest opens in the default editor (`vi`). Press `i` to enter insert mode, then change the `image:` value from `...:v1` to `...:v2`:

83 |

84 | `image: gcr.io/qwiklabs-resources/echo-app:v2`

85 |

86 | To save and exit, press **Esc**, type `:wq`, and press **Enter**.
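As a non-interactive alternative to the editor, `kubectl set image` can change the image and `kubectl rollout status` can wait for the rollout to finish. This sketch assumes the container is named `echo-app`, the name `kubectl create deployment` derives from the `echo-app:v1` image. You can check it with `kubectl get deployment echo-web -o jsonpath='{.spec.template.spec.containers[0].name}'`. The helper name is hypothetical.

```bash
# Point the echo-web deployment at a new image and wait for the rollout.
# Assumes the container inside the deployment is named "echo-app".
# Usage: update_echo_image <image>
update_echo_image() {
  local image="$1"
  kubectl set image deployment/echo-web "echo-app=${image}" &&
    kubectl rollout status deployment/echo-web --timeout=180s
}
```

Usage: `update_echo_image gcr.io/qwiklabs-resources/echo-app:v2`.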

87 |

88 | 3. The Kubernetes cluster deployment reports 2 replicas.

89 |

90 | ```bash

91 | kubectl scale deployment echo-web --replicas=2

92 | ```
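Scaling returns immediately, so the replicas may not be ready yet when the check runs. A minimal sketch that waits for the deployment's `readyReplicas` count follows. The field is empty until at least one pod is ready, which the function treats as 0. The name and defaults are illustrative.

```bash
# Wait until a deployment reports the expected number of ready replicas.
# Usage: wait_for_ready_replicas <deployment> <count> [attempts=30] [delay_seconds=5]
wait_for_ready_replicas() {
  local deploy="$1" want="$2" attempts="${3:-30}" delay="${4:-5}" ready="" i
  for ((i = 1; i <= attempts; i++)); do
    ready=$(kubectl get deployment "$deploy" -o jsonpath='{.status.readyReplicas}')
    if [ "${ready:-0}" = "$want" ]; then
      echo "${deploy}: ${ready}/${want} replicas ready"
      return 0
    fi
    sleep "$delay"
  done
  echo "${deploy}: only ${ready:-0}/${want} replicas ready" >&2
  return 1
}
```

Usage: `wait_for_ready_replicas echo-web 2`.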

93 |

94 | 4. The application must respond to web requests with V2.0.0.

95 |

96 | ```bash

97 | kubectl get service echo-web >/dev/null 2>&1 || kubectl expose deployment echo-web --type=LoadBalancer --port 80 --target-port 8000

98 |

99 | kubectl get svc

100 | ```
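Once `kubectl get svc` shows an external IP, you can check the response from Cloud Shell. The sketch below assumes that the page served by `echo-app:v2` contains the literal text `V2.0.0`. The function name is hypothetical.

```bash
# Look up a LoadBalancer service's external IP and check that its page contains some text.
# Usage: check_service_version <service> <expected-text>
check_service_version() {
  local svc="$1" expected="$2" ip
  ip=$(kubectl get service "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  if [ -z "$ip" ]; then
    echo "${svc} has no external IP yet; try again in a minute" >&2
    return 1
  fi
  # -F matches the expected text literally, so the dots in V2.0.0 are not regex wildcards.
  if curl -s --max-time 5 "http://${ip}/" | grep -qF -- "$expected"; then
    echo "${svc} at ${ip} responds with ${expected}"
  else
    echo "${svc} at ${ip} does not mention ${expected}" >&2
    return 1
  fi
}
```

Usage: `check_service_version echo-web V2.0.0`.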

101 |

102 | ## Congratulations!

103 |

104 |

105 |

106 |

110 |

111 |

112 | [HOME](../../README.md)

113 |

--------------------------------------------------------------------------------

/challenge-labs/GSP306/index.md:

--------------------------------------------------------------------------------

1 | # [GSP306] Migrate a MySQL Database to Google Cloud SQL

2 |

3 | ### [GSP306](https://www.cloudskillsboost.google/focuses/1740?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour 15 minutes

10 | Difficulty: Advanced

11 | Price: 7 Credits

12 |

13 | Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)

14 |

15 | Last updated: May 25, 2023

16 |

17 | ---

18 |

19 | ## Challenge scenario

20 |

21 | Your WordPress blog is running on a server that is no longer suitable. As the first part of a complete migration exercise, you are migrating the locally hosted database used by the blog to Cloud SQL.

22 |

23 | The existing WordPress installation is installed in the `/var/www/html/wordpress` directory in the instance called `blog` that is already running in the lab. You can access the blog by opening a web browser and pointing to the external IP address of the blog instance.

24 |

25 | The existing database for the blog is provided by MySQL running on the same server. The existing MySQL database is called `wordpress`, and the user **blogadmin**, with password __Password1*__, has full access to that database.

26 |

27 | ## Your challenge

28 |

29 | - You need to create a new Cloud SQL instance to host the migrated database

30 | - Once you have created the new database and configured it, you can then create a database dump of the existing database and import it into Cloud SQL.

31 | - When the data has been migrated, you will then reconfigure the blog software to use the migrated database.

32 |

33 | For this lab, the WordPress site configuration file is located at `/var/www/html/wordpress/wp-config.php`.

34 |

35 | To sum it all up, your challenge is to migrate the database to Cloud SQL and then reconfigure the application so that it no longer relies on the local MySQL database. Good luck!

36 |

37 | 1. Check that there is a Cloud SQL instance.

38 |

39 | Go to Cloud Shell and run the following commands:

40 |

41 | ```bash

42 | export ZONE=us-central1-a

43 |

44 | gcloud sql instances create wordpress --tier=db-n1-standard-1 --activation-policy=ALWAYS --zone $ZONE

45 | ```

46 |

47 | > **Note**: Creating the instance can take several minutes.

48 |

49 | Run the following command:

50 |

51 | ```bash

52 | export ADDRESS=[IP_ADDRESS]/32

53 | ```

54 |

55 | Replace `[IP_ADDRESS]` with the IP address shown in the `Demo Blog Site` field

56 |

57 |

58 |

59 | or with the external IP of the `blog` instance in **Compute Engine** > **VM instances**.

60 |

61 |

62 |

63 | For example:

64 |

65 | ```bash

66 | export ADDRESS=104.196.226.155/32

67 | ```
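Instead of copying the IP by hand, you can build the `/32` entry from the instance's external IP. This is a sketch. It assumes the `blog` VM's external IP is on its first network interface. The zone you pass must be the blog VM's zone, which the lab does not state explicitly. `external_ip_cidr` is a hypothetical helper.

```bash
# Print an instance's external IP as a /32 CIDR for --authorized-networks.
# Usage: external_ip_cidr <instance> <zone>
external_ip_cidr() {
  local instance="$1" zone="$2" ip
  ip=$(gcloud compute instances describe "$instance" --zone "$zone" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)')
  if [ -z "$ip" ]; then
    echo "No external IP found for ${instance}" >&2
    return 1
  fi
  echo "${ip}/32"
}
```

Usage (assuming `blog` runs in `$ZONE`): `export ADDRESS=$(external_ip_cidr blog "$ZONE")`.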

68 |

69 | Run the following command:

70 |

71 | ```bash

72 | gcloud sql users set-password --host % root --instance wordpress --password 'Password1*'

73 |

74 | gcloud sql instances patch wordpress --authorized-networks $ADDRESS --quiet

75 | ```

76 |

77 | 2. Check that there is a user database on the Cloud SQL instance.

78 |

79 | - In the **Cloud Console**, click the **Navigation menu** > **Compute Engine** > **VM Instances**.

80 | - Click **SSH** next to the `blog` instance.

81 | - Run the following command:

82 |

83 | ```bash

84 | MYSQLIP=$(gcloud sql instances describe wordpress --format="value(ipAddresses.ipAddress)")

85 |

86 | mysql --host=$MYSQLIP \

87 | --user=root --password

88 | ```

89 |

90 | > **Note**: When prompted, enter the password __Password1*__.

91 |

92 | Then run the following SQL statements:

93 |

94 | ```sql

95 | CREATE DATABASE wordpress;

96 | CREATE USER 'blogadmin'@'%' IDENTIFIED BY 'Password1*';

97 | GRANT ALL PRIVILEGES ON wordpress.* TO 'blogadmin'@'%';

98 | FLUSH PRIVILEGES;

99 | ```

100 |

101 | - Type `exit` to leave the MySQL shell.

102 |

103 | 3. Check that the blog instance is authorized to access Cloud SQL.

104 |

105 | In the SSH session on the `blog` instance (where `MYSQLIP` is still set from the previous step), run the following commands:

106 |

107 | ```bash

108 | sudo mysqldump -u root -p'Password1*' wordpress > wordpress_backup.sql

109 |

110 | mysql --host=$MYSQLIP --user=root -p'Password1*' --verbose wordpress < wordpress_backup.sql

111 |

112 | sudo service apache2 restart

113 | ```

114 |

115 | 4. Check that wp-config.php points to the Cloud SQL instance.

116 | - Run the following command:

117 |

118 | ```bash

119 | cd /var/www/html/wordpress/

120 |

121 | sudo nano wp-config.php

122 | ```

123 |

124 | - In the `DB_HOST` line, replace `localhost` with the **Public IP address** of the Cloud SQL instance (the value stored in `$MYSQLIP` earlier).

125 |

126 |

127 |

128 | From this:

129 |

130 |

131 |

132 | To this:

133 |

134 |

135 |

136 | - Press **Ctrl + O** and then press **Enter** to save your edited file. Press **Ctrl + X** to exit the nano editor.

137 | - Exit the SSH session.
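If you would rather not edit `wp-config.php` in nano, you could make the same change with `sed` before leaving the SSH session. This sketch assumes that `DB_HOST` is defined in the WordPress default single-quoted form, `define( 'DB_HOST', '...' );`. It replaces whatever value is there. `set_db_host` is a hypothetical helper, and `-E`/`-i` assume GNU sed, as found on Debian.

```bash
# Rewrite the DB_HOST value in a wp-config.php file.
# Usage: set_db_host <wp-config.php path> <host>
set_db_host() {
  local file="$1" host="$2"
  # Keep "define( 'DB_HOST', '" and the closing quote; swap only the value between them.
  sed -i -E "s/(define\( *'DB_HOST', *')[^']*(')/\1${host}\2/" "$file"
}
```

The file is owned by root. One way to run the helper with root privileges is: `sudo bash -c "$(declare -f set_db_host); set_db_host /var/www/html/wordpress/wp-config.php '$MYSQLIP'"`.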

138 |

139 | 5. Check that the blog still responds to requests.

140 |

141 | - In the **Cloud Console**, click the **Navigation menu** > **Compute Engine** > **VM Instances**.

142 | - Click the **External IP** of the `blog` instance.

143 | - Verify that the blog loads without errors.
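You can also check this from Cloud Shell. When WordPress cannot reach its database, it serves a page containing "Error establishing a database connection". The sketch below fetches the front page and fails if that message appears or nothing comes back. The helper name is hypothetical.

```bash
# Fetch the blog's front page and fail if WordPress reports a database error.
# Usage: check_blog <external-ip>
check_blog() {
  local ip="$1" body
  body=$(curl -s --max-time 10 "http://${ip}/")
  if [ -z "$body" ]; then
    echo "No response from ${ip}" >&2
    return 1
  fi
  if grep -q "Error establishing a database connection" <<<"$body"; then
    echo "WordPress cannot reach its database" >&2
    return 1
  fi
  echo "Blog at ${ip} responded without a database error"
}
```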

144 |

145 |

146 |

147 | ## Congratulations!

148 |

149 |

150 |

151 |

155 |

156 |

157 | [HOME](../../README.md)

158 |

--------------------------------------------------------------------------------

/challenge-labs/GSP322/index.md:

--------------------------------------------------------------------------------

1 | # [GSP322] Build and Secure Networks in Google Cloud: Challenge Lab

2 |

3 | ### [GSP322](https://www.cloudskillsboost.google/focuses/12068?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour

10 | Difficulty: Advanced

11 | Price: 7 Credits

12 |

13 | Quest: [Build and Secure Networks in Google Cloud](https://www.cloudskillsboost.google/quests/128)

14 |

15 | Last updated: May 26, 2023

16 |

17 | ---

18 |

19 | ## Setup

20 |

21 | Define the environment variables:

22 |

23 | ```bash

24 | export IAP_NETWORK_TAG=

25 | export INTERNAL_NETWORK_TAG=

26 | export HTTP_NETWORK_TAG=

27 | export ZONE=

28 | ```

29 |

30 | Fill in the variables with the values given in your lab instructions.

31 |

32 | To find the zone, open the **Navigation menu** > **Compute Engine** > **VM instances** and note the zone of the existing instances. In my case it was `us-east1-b`.

33 |

34 |

35 |

36 | To list all available zones:

37 |

38 | ```bash

39 | gcloud compute zones list

40 | ```

41 |

42 | Reference: [gcloud compute zones list](https://cloud.google.com/sdk/gcloud/reference/compute/zones/list)

43 |

44 |

45 |

46 | For example, in my case:

47 |

48 | ```bash

49 | export IAP_NETWORK_TAG=allow-ssh-iap-ingress-ql-901

50 | export INTERNAL_NETWORK_TAG=allow-ssh-internal-ingress-ql-803

51 | export HTTP_NETWORK_TAG=allow-http-ingress-ql-982

52 | export ZONE=us-east1-b

53 | ```
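An empty variable silently produces a broken command later. For example, `--target-tags` with no value would fail. A small sketch that checks all four variables before you continue follows. It uses bash indirect expansion (`${!name}`), and `require_vars` is a hypothetical helper.

```bash
# Fail with a message listing any named variables that are unset or empty.
# Usage: require_vars NAME1 NAME2 ...
require_vars() {
  local name missing=()
  for name in "$@"; do
    # ${!name} expands to the value of the variable whose name is stored in $name.
    [ -n "${!name:-}" ] || missing+=("$name")
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "Missing values for: ${missing[*]}" >&2
    return 1
  fi
}
```

Usage: `require_vars IAP_NETWORK_TAG INTERNAL_NETWORK_TAG HTTP_NETWORK_TAG ZONE`.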

54 |

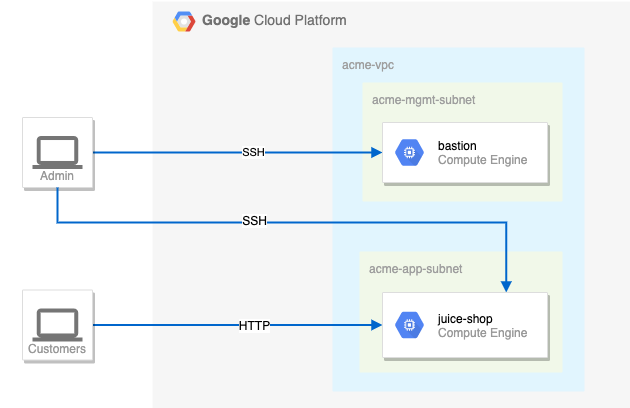

55 | ## Challenge scenario

56 |

57 | You are a security consultant brought in by Jeff, who owns a small local company, to help him with his very successful website (juiceshop). Jeff is new to Google Cloud and had his neighbour's son set up the initial site. The neighbour's son has since had to leave for college, but before leaving, he made sure the site was running.

58 |

59 | You need to help out Jeff and perform appropriate configuration for security. Below is the current situation:

60 |

61 |

62 |

63 | ## Your challenge

64 |

65 | You need to configure this simple environment securely. Your first challenge is to set up appropriate firewall rules and virtual machine tags. You also need to ensure that SSH is only available to the bastion via IAP.

66 |

67 | For the firewall rules, make sure:

68 |

69 | - The bastion host does not have a public IP address.

70 | - You can only SSH to the bastion and only via IAP.

71 | - You can only SSH to juice-shop via the bastion.

72 | - Only HTTP is open to the world for `juice-shop`.

73 |

74 | Tips and tricks:

75 |

76 | - Pay close attention to the network tags and the associated VPC firewall rules.

77 | - Be specific and limit the size of the VPC firewall rule source ranges.

78 | - Overly permissive permissions will not be marked correct.

79 |

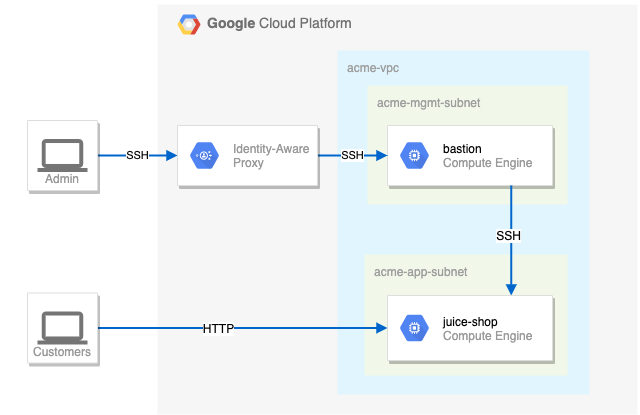

80 |

81 |

82 | Suggested order of actions:

83 |

84 | 1. Check the firewall rules. Remove the overly permissive rules.

85 |

86 | ```bash

87 | gcloud compute firewall-rules delete open-access

88 | ```

89 |

90 | Press `y` and then **Enter** to confirm (or add the global `--quiet` flag to skip the prompt).

91 |

92 | 2. Navigate to Compute Engine in the Cloud Console (**Navigation menu** > **Compute Engine** > **VM Instance**) and identify the bastion host. The instance should be stopped. Start the instance.

93 |

94 | ```bash

95 | gcloud compute instances start bastion --zone=$ZONE

96 | ```

97 |

98 | If this command returns an error, start the `bastion` instance manually from the **VM instances** page.

99 |

100 | 3. The bastion host is the one machine authorized to receive external SSH traffic. Create a firewall rule that allows [SSH (tcp/22) from the IAP service](https://cloud.google.com/iap/docs/using-tcp-forwarding). The firewall rule must be enabled for the bastion host instance using a network tag of `SSH_IAP_NETWORK_TAG`.

101 |

102 | ```bash

103 | gcloud compute firewall-rules create ssh-ingress --allow=tcp:22 --source-ranges 35.235.240.0/20 --target-tags $IAP_NETWORK_TAG --network acme-vpc

104 |

105 | gcloud compute instances add-tags bastion --tags=$IAP_NETWORK_TAG --zone=$ZONE

106 | ```

107 |

108 | 4. The `juice-shop` server serves HTTP traffic. Create a firewall rule that allows traffic on HTTP (tcp/80) to any address. The firewall rule must be enabled for the juice-shop instance using a network tag of `HTTP_NETWORK_TAG`.

109 |

110 | ```bash

111 | gcloud compute firewall-rules create http-ingress --allow=tcp:80 --source-ranges 0.0.0.0/0 --target-tags $HTTP_NETWORK_TAG --network acme-vpc

112 |

113 | gcloud compute instances add-tags juice-shop --tags=$HTTP_NETWORK_TAG --zone=$ZONE

114 | ```

115 |

116 | 5. You need to connect to `juice-shop` from the bastion using SSH. Create a firewall rule that allows traffic on SSH (tcp/22) from `acme-mgmt-subnet` network address. The firewall rule must be enabled for the `juice-shop` instance using a network tag of `SSH_INTERNAL_NETWORK_TAG`.

117 |

118 | ```bash

119 | gcloud compute firewall-rules create internal-ssh-ingress --allow=tcp:22 --source-ranges 192.168.10.0/24 --target-tags $INTERNAL_NETWORK_TAG --network acme-vpc

120 |

121 | gcloud compute instances add-tags juice-shop --tags=$INTERNAL_NETWORK_TAG --zone=$ZONE

122 | ```
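Before testing SSH, you can confirm that `juice-shop` now carries both tags. This is a sketch. gcloud may print the tag list joined by a delimiter, so the helper splits on `;` and `,`. `instance_has_tags` is a hypothetical name.

```bash
# Succeed only if an instance carries every listed network tag.
# Usage: instance_has_tags <instance> <zone> <tag>...
instance_has_tags() {
  local instance="$1" zone="$2" tag tags
  shift 2
  tags=$(gcloud compute instances describe "$instance" --zone "$zone" \
    --format='value(tags.items)' | tr ';,' '\n\n')
  for tag in "$@"; do
    grep -qx -- "$tag" <<<"$tags" || { echo "${instance} is missing tag ${tag}" >&2; return 1; }
  done
  echo "${instance} has tags: $*"
}
```

Usage: `instance_has_tags juice-shop "$ZONE" "$HTTP_NETWORK_TAG" "$INTERNAL_NETWORK_TAG"`.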

123 |

124 | 6. In the Compute Engine instances page, click the SSH button for the **bastion** host.

125 |

126 |

127 |

128 | Once connected, SSH to `juice-shop`.

129 |

130 | ```bash

131 | gcloud compute ssh juice-shop --internal-ip

132 | ```

133 |

134 | When prompted `Do you want to continue (Y/n)?`, press `y` and `enter`.

135 |

136 | When asked to generate an SSH key, press **Enter** at the passphrase prompts to use an empty passphrase.

137 |

138 | When prompted `Did you mean zone [us-east1-b] for instance: [juice-shop] (Y/n)?`, press `y` and `enter`.

139 |

140 |

141 |

142 | ## Congratulations!

143 |

144 |

145 |

146 |

150 |

151 |

152 | [HOME](../../README.md)

153 |

--------------------------------------------------------------------------------

/challenge-labs/GSP313/index.md:

--------------------------------------------------------------------------------

1 | # [GSP313] Create and Manage Cloud Resources: Challenge Lab

2 |

3 | ### [GSP313](https://www.cloudskillsboost.google/focuses/10258?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour

10 | Difficulty: Introductory

11 | Price: 1 Credit

12 |

13 | Quest: [Create and Manage Cloud Resources](https://www.cloudskillsboost.google/quests/120)

14 |

15 | Last updated: May 22, 2023

16 |

17 | ---

18 |

19 | ## Challenge scenario

20 |

21 | You have started a new role as a Junior Cloud Engineer for Jooli, Inc. You are expected to help manage the infrastructure at Jooli. Common tasks include provisioning resources for projects.

22 |

23 | You are expected to have the skills and knowledge for these tasks, so step-by-step guides are not provided.

24 |

25 | Some Jooli, Inc. standards you should follow:

26 |

27 | Create all resources in the default region or zone, unless otherwise directed.

28 |

29 | Naming normally uses the format _team-resource_; for example, an instance could be named **nucleus-webserver1**.

30 |

31 | Allocate cost-effective resource sizes. Projects are monitored, and excessive resource use will result in the containing project's termination (and possibly yours), so plan carefully. This is the guidance the monitoring team is willing to share: unless directed, use **f1-micro** for small Linux VMs, and use **n1-standard-1** for Windows or other applications, such as Kubernetes nodes.

32 |

33 | ## Your challenge

34 |

35 | As soon as you sit down at your desk and open your new laptop, you receive several requests from the Nucleus team. Read through each description, and then create the resources.

36 |

37 | ## Setup

38 |

39 | Export the following environment variables using the values from your lab instructions.

40 |

41 | ```bash

42 | export INSTANCE_NAME=

43 | export ZONE=

44 | export REGION=

45 | export PORT=

46 | export FIREWALL_NAME=

47 | ```

48 |

49 |

50 |

51 | You can find the zone in the Task 2 description.

52 |

53 |

54 |

55 | Region is just the first part of the zone. For example, if the zone is `us-east1-b`, then the region is `us-east1`.
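Because the region is just the zone without its final `-<letter>` suffix, bash parameter expansion can derive it for you. This is a minimal sketch, and `region_from_zone` is a hypothetical helper name.

```bash
# Derive the region from a zone by removing the last "-<suffix>".
# Usage: region_from_zone <zone>
region_from_zone() {
  local zone="$1"
  # ${zone%-*} removes the shortest trailing match of "-*", e.g. us-east1-b -> us-east1.
  echo "${zone%-*}"
}
```

Usage: `export REGION=$(region_from_zone "$ZONE")`.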

56 |

57 | Example:

58 |

59 | ```bash

60 | export INSTANCE_NAME=nucleus-jumphost-295

61 | export ZONE=us-central1-b

62 | export REGION=us-central1

63 | export PORT=8080

64 | export FIREWALL_NAME=accept-tcp-rule-633

65 | ```

66 |

67 | ## Task 1. Create a project jumphost instance

68 |

69 | **_Check the machine type required in your lab instructions; it may differ from the one used below, so change it if needed._**

70 |

71 |

72 | Go to Cloud Shell and run the following command:

73 |

74 | ```bash

75 | gcloud compute instances create $INSTANCE_NAME \

76 | --network nucleus-vpc \

77 | --zone $ZONE \

78 | --machine-type e2-micro \

79 | --image-family debian-10 \

80 | --image-project debian-cloud

81 | ```

82 |

83 | ## Task 2. Create a Kubernetes service cluster

84 |

85 | Go to Cloud Shell and run the following commands:

86 |

87 | ```bash

88 | gcloud container clusters create nucleus-backend \

89 | --num-nodes 1 \

90 | --network nucleus-vpc \

91 | --zone $ZONE

92 |

93 | gcloud container clusters get-credentials nucleus-backend \

94 | --zone $ZONE

95 | ```

96 |

97 | - Use the Docker container hello-app (`gcr.io/google-samples/hello-app:2.0`) as a placeholder.

98 |

99 | ```bash

100 | kubectl create deployment hello-server \

101 | --image=gcr.io/google-samples/hello-app:2.0

102 | ```

103 |

104 | - Expose the app on port `APP_PORT_NUMBER`.

105 |

106 | ```bash

107 | kubectl expose deployment hello-server \

108 | --type=LoadBalancer \

109 | --port $PORT

110 | ```
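The LoadBalancer service needs a minute or so to get an external IP. The sketch below waits for it and prints it so you can test the port yourself. The helper name and retry defaults are illustrative.

```bash
# Wait for a LoadBalancer service to receive an external IP, then print it.
# Usage: wait_for_external_ip <service> [attempts=30] [delay_seconds=10]
wait_for_external_ip() {
  local svc="$1" attempts="${2:-30}" delay="${3:-10}" ip="" i
  for ((i = 1; i <= attempts; i++)); do
    ip=$(kubectl get service "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "$delay"
  done
  echo "${svc} has no external IP after ${attempts} attempt(s)" >&2
  return 1
}
```

Usage: `curl "http://$(wait_for_external_ip hello-server):$PORT/"`.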

111 |

112 | ## Task 3. Set up an HTTP load balancer

113 |

114 | 1. Create startup-script.

115 |

116 | ```bash

117 | cat << EOF > startup.sh

118 | #! /bin/bash

119 | apt-get update

120 | apt-get install -y nginx

121 | service nginx start

122 | sed -i -- 's/nginx/Google Cloud Platform - '"\$HOSTNAME"'/' /var/www/html/index.nginx-debian.html

123 | EOF

124 | ```

125 |

126 | 2. Create instance template.

127 |

128 | ```bash

129 | gcloud compute instance-templates create web-server-template \

130 | --metadata-from-file startup-script=startup.sh \

131 | --network nucleus-vpc \

132 | --machine-type g1-small \

133 | --region $REGION

134 | ```

135 |

136 | 3. Create target pool.

137 |

138 | ```bash

139 | gcloud compute target-pools create nginx-pool --region=$REGION

140 | ```

141 |

142 | 4. Create managed instance group.

143 |

144 | ```bash

145 | gcloud compute instance-groups managed create web-server-group \

146 | --base-instance-name web-server \

147 | --size 2 \

148 | --template web-server-template \

149 | --region $REGION

150 | ```

151 |

152 | 5. Create a firewall rule named `FIREWALL_NAME` that allows HTTP traffic (tcp/80).

153 |

154 | ```bash

155 | gcloud compute firewall-rules create $FIREWALL_NAME \

156 | --allow tcp:80 \

157 | --network nucleus-vpc

158 | ```

159 |

160 | 6. Create a health check and set the named port (`http:80`) on the instance group.

161 |

162 | ```bash

163 | gcloud compute http-health-checks create http-basic-check

164 | gcloud compute instance-groups managed \

165 | set-named-ports web-server-group \

166 | --named-ports http:80 \

167 | --region $REGION

168 | ```

169 |

170 | 7. Create backend service, and attach the managed instance group with named port (http:80).

171 |

172 | ```bash

173 | gcloud compute backend-services create web-server-backend \

174 | --protocol HTTP \

175 | --http-health-checks http-basic-check \

176 | --global

177 |

178 | gcloud compute backend-services add-backend web-server-backend \

179 | --instance-group web-server-group \

180 | --instance-group-region $REGION \

181 | --global

182 | ```

183 |

184 | 8. Create URL map and target the HTTP proxy to route requests to your URL map.

185 |

186 | ```bash

187 | gcloud compute url-maps create web-server-map \

188 | --default-service web-server-backend

189 |

190 | gcloud compute target-http-proxies create http-lb-proxy \

191 | --url-map web-server-map

192 | ```

193 |

194 | 9. Create forwarding rule.

195 |

196 | ```bash

197 | gcloud compute forwarding-rules create http-content-rule \

198 | --global \

199 | --target-http-proxy http-lb-proxy \

200 | --ports 80

201 |

202 | gcloud compute forwarding-rules create $FIREWALL_NAME \

203 | --global \

204 | --target-http-proxy http-lb-proxy \

205 | --ports 80

206 | gcloud compute forwarding-rules list

207 | ```

208 |

209 | > **Note**: Wait for the load balancer to finish provisioning; this can take a few minutes. If a progress check fails, wait a few moments and try again.

210 |

211 | 10. Test traffic sent to your instances (**Optional**).

212 |

213 | - In the **Cloud Console**, click the **Navigation menu** > **Network services** > **Load balancing**.

214 | - Click on the load balancer that you just created (`web-server-map`).

215 | - In the **Backend** section, click on the name of the backend and confirm that the VMs are **Healthy**. If they are not healthy, wait a few moments and try reloading the page.

216 | - When the VMs are healthy, test the load balancer using a web browser, going to `http://IP_ADDRESS/`, replacing `IP_ADDRESS` with the load balancer's IP address.

217 |

218 | ## Congratulations!

219 |

220 |

221 |

222 |

226 |

227 |

228 | [HOME](../../README.md)

229 |

--------------------------------------------------------------------------------

/challenge-labs/GSP342/index.md:

--------------------------------------------------------------------------------

1 | # [GSP342] Ensure Access & Identity in Google Cloud: Challenge Lab

2 |

3 | ### [GSP342](https://www.cloudskillsboost.google/focuses/14572?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour 30 minutes

10 | Difficulty: Intermediate

11 | Price: 5 Credits

12 |

13 | Quest: [Ensure Access & Identity in Google Cloud](https://www.cloudskillsboost.google/quests/150)

14 |

15 | Last updated: May 26, 2023

16 |

17 | ---

18 |

19 | ## Challenge scenario

20 |

21 | You have started a new role as a junior member of the security team for the Orca team in Jooli Inc. Your team is responsible for ensuring the security of the Cloud infrastructure and services that the company's applications depend on.

22 |

23 | You are expected to have the skills and knowledge for these tasks, so don't expect step-by-step guides to be provided.

24 |

25 | ## Your challenge

26 |

27 | You have been asked to deploy, configure, and test a new Kubernetes Engine cluster that will be used for application development and pipeline testing by the Orca development team.

28 |

29 | As per the organisation's security standards you must ensure that the new Kubernetes Engine cluster is built according to the organisation's most recent security standards and thereby must comply with the following:

30 |

31 | - The cluster must be deployed using a dedicated service account configured with the least privileges required.

32 | - The cluster must be deployed as a Kubernetes Engine private cluster, with the public endpoint disabled, and the master authorized network set to include only the ip-address of the Orca group's management jumphost.

33 | - The Kubernetes Engine private cluster must be deployed to the `orca-build-subnet` in the Orca Build VPC.

34 |

35 | From a previous project you know that the minimum permissions required by the service account that is specified for a Kubernetes Engine cluster are covered by these three built-in roles:

36 |

37 | - `roles/monitoring.viewer`

38 | - `roles/monitoring.metricWriter`

39 | - `roles/logging.logWriter`

40 |

41 | These roles are specified in the Google Kubernetes Engine (GKE)'s Harden your cluster's security guide in the [Use least privilege Google service accounts](https://cloud.google.com/kubernetes-engine/docs/how-to/hardening-your-cluster#use_least_privilege_sa) section.

42 |

43 | You must bind the above roles to the service account used by the cluster as well as a custom role that you must create in order to provide access to any other services specified by the development team. Initially you have been told that the development team requires that the service account used by the cluster should have the permissions necessary to add and update objects in Google Cloud Storage buckets. To do this you will have to create a new custom IAM role that will provide the following permissions:

44 |

45 | - `storage.buckets.get`

46 | - `storage.objects.get`

47 | - `storage.objects.list`

48 | - `storage.objects.update`

49 | - `storage.objects.create`

50 |

51 | Once you have created the new private cluster you must test that it is correctly configured by connecting to it from the jumphost, `orca-jumphost`, in the management subnet `orca-mgmt-subnet`. As this compute instance is not in the same subnet as the private cluster you must make sure that the master authorized networks for the cluster includes the internal ip-address for the instance, and you must specify the `--internal-ip` flag when retrieving cluster credentials using the `gcloud container clusters get-credentials` command.

52 |

53 | All new cloud objects and services that you create should include the "orca-" prefix.

54 |

55 | Your final task is to validate that the cluster is working correctly by deploying a simple application to the cluster to test that management access to the cluster using the `kubectl` tool is working from the `orca-jumphost` compute instance.

56 |

57 | ## Setup

58 |

59 | Define variables:

60 |

61 | ```bash

62 | export CUSTOM_SECURIY_ROLE=

63 | export SERVICE_ACCOUNT=

64 | export CLUSTER_NAME=

65 | ```

66 |

67 | For example, in my case:

68 |

69 |

70 |

71 | ```bash

72 | export CUSTOM_SECURIY_ROLE=orca_storage_editor_923

73 | export SERVICE_ACCOUNT=orca-private-cluster-278-sa

74 | export CLUSTER_NAME=orca-cluster-995

75 | ```

76 |

77 | ## Task 1. Create a custom security role.

78 |

79 | Set the default zone to `us-east1-b`:

80 |

81 | ```bash

82 | gcloud config set compute/zone us-east1-b

83 | ```

84 |

85 | Create `role-definition.yaml` file.

86 |

87 | ```bash

88 | cat << EOF > role-definition.yaml

89 | title: "<TITLE>"

90 | description: "<DESCRIPTION>"

91 | stage: "ALPHA"

92 | includedPermissions:

93 | - storage.buckets.get

94 | - storage.objects.get

95 | - storage.objects.list

96 | - storage.objects.update

97 | - storage.objects.create

98 | EOF

99 | ```

100 |

101 | Replace `<TITLE>` and `<DESCRIPTION>` with the variables using [sed](https://linux.die.net/man/1/sed) command.

102 |

103 | ```bash

104 | sed -i "s/<TITLE>/$CUSTOM_SECURIY_ROLE/g" role-definition.yaml

105 | sed -i "s/<DESCRIPTION>/Permission/g" role-definition.yaml

106 | ```
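An alternative to the `sed` step is to let an unquoted heredoc expand the role name while the file is written. This is a sketch. `write_role_definition` is a hypothetical helper, and it keeps the same "Permission" description used above.

```bash
# Write the custom role definition with the title filled in directly.
# The unquoted EOF delimiter lets the shell expand ${title} inside the heredoc.
# Usage: write_role_definition <title> <file>
write_role_definition() {
  local title="$1" file="$2"
  cat << EOF > "$file"
title: "${title}"
description: "Permission"
stage: "ALPHA"
includedPermissions:
- storage.buckets.get
- storage.objects.get
- storage.objects.list
- storage.objects.update
- storage.objects.create
EOF
}
```

Usage: `write_role_definition "$CUSTOM_SECURIY_ROLE" role-definition.yaml`.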

107 |

108 | Create a custom security role

109 |

110 | ```bash

111 | gcloud iam roles create $CUSTOM_SECURIY_ROLE --project $DEVSHELL_PROJECT_ID --file role-definition.yaml

112 | ```

113 |

114 | ## Task 2. Create a service account.

115 |

116 | ```bash

117 | gcloud iam service-accounts create $SERVICE_ACCOUNT --display-name "${SERVICE_ACCOUNT} Service Account"

118 | ```

119 |

120 | ## Task 3. Bind a custom security role to a service account.

121 |

122 | ```bash

123 | gcloud projects add-iam-policy-binding $DEVSHELL_PROJECT_ID --member serviceAccount:$SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com --role roles/monitoring.viewer

124 |

125 | gcloud projects add-iam-policy-binding $DEVSHELL_PROJECT_ID --member serviceAccount:$SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com --role roles/monitoring.metricWriter

126 |

127 | gcloud projects add-iam-policy-binding $DEVSHELL_PROJECT_ID --member serviceAccount:$SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com --role roles/logging.logWriter

128 |

129 | gcloud projects add-iam-policy-binding $DEVSHELL_PROJECT_ID --member serviceAccount:$SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com --role projects/$DEVSHELL_PROJECT_ID/roles/$CUSTOM_SECURIY_ROLE

130 | ```
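The four bindings differ only in the role, so a loop expresses the same thing more compactly. This is a sketch with a hypothetical `bind_roles` helper. It stops at the first failed binding.

```bash
# Bind several roles to one service account.
# Usage: bind_roles <project> <service-account-email> <role>...
bind_roles() {
  local project="$1" member="serviceAccount:$2" role
  shift 2
  for role in "$@"; do
    gcloud projects add-iam-policy-binding "$project" \
      --member "$member" --role "$role" >/dev/null || return 1
  done
}
```

Usage: `bind_roles "$DEVSHELL_PROJECT_ID" "$SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com" roles/monitoring.viewer roles/monitoring.metricWriter roles/logging.logWriter "projects/$DEVSHELL_PROJECT_ID/roles/$CUSTOM_SECURIY_ROLE"`.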

131 |

132 | ## Task 4. Create and configure a new Kubernetes Engine private cluster

133 |

134 | ```bash

135 | gcloud config set compute/zone us-east1-b

136 |

137 | gcloud container clusters create $CLUSTER_NAME --num-nodes 1 --master-ipv4-cidr=172.16.0.64/28 --network orca-build-vpc --subnetwork orca-build-subnet --enable-master-authorized-networks --master-authorized-networks 192.168.10.2/32 --enable-ip-alias --enable-private-nodes --enable-private-endpoint --service-account $SERVICE_ACCOUNT@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com --zone us-east1-b

138 | ```
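The command above hard-codes `192.168.10.2/32` as the jumphost's internal address. If your `orca-jumphost` has a different IP, a sketch like this can print its internal IP as a `/32` to pass to `--master-authorized-networks`. It assumes the jumphost's first network interface is the one in `orca-mgmt-subnet`. `internal_ip_cidr` is a hypothetical helper.

```bash
# Print an instance's internal (primary) IP as a /32 CIDR.
# Usage: internal_ip_cidr <instance> <zone>
internal_ip_cidr() {
  local instance="$1" zone="$2" ip
  ip=$(gcloud compute instances describe "$instance" --zone "$zone" \
    --format='get(networkInterfaces[0].networkIP)')
  if [ -z "$ip" ]; then
    echo "No internal IP found for ${instance}" >&2
    return 1
  fi
  echo "${ip}/32"
}
```

Usage: `internal_ip_cidr orca-jumphost us-east1-b`.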

139 |

140 | ## Task 5. Deploy an application to a private Kubernetes Engine cluster.

141 |

142 | Connect to the `orca-jumphost` compute instance (SSH).

143 |

144 | ```bash

145 | gcloud compute ssh --zone "us-east1-b" "orca-jumphost"

146 | ```

147 |

148 | Define variables:

149 |

150 | ```bash

151 | export CUSTOM_SECURIY_ROLE=

152 | export SERVICE_ACCOUNT=

153 | export CLUSTER_NAME=

154 | ```

155 |

156 | For example, in my case:

157 |

158 |

159 |

160 | ```bash

161 | export CUSTOM_SECURIY_ROLE=orca_storage_editor_923

162 | export SERVICE_ACCOUNT=orca-private-cluster-278-sa

163 | export CLUSTER_NAME=orca-cluster-995

164 | ```

165 |

166 | Install the [gcloud auth plugin for Kubernetes](https://cloud.google.com/blog/products/containers-kubernetes/kubectl-auth-changes-in-gke):

167 |

168 | ```bash

169 | sudo apt-get install google-cloud-sdk-gke-gcloud-auth-plugin

170 | ```

171 |

172 | Create and expose a deployment in Kubernetes:

173 |

174 | ```bash

175 | gcloud container clusters get-credentials $CLUSTER_NAME --zone=us-east1-b --internal-ip

176 |

177 | kubectl create deployment hello-server --image=gcr.io/google-samples/hello-app:1.0

178 |

179 | kubectl expose deployment hello-server --name orca-hello-service --type LoadBalancer --port 80 --target-port 8080

180 | ```

181 |

182 | ## Congratulations!

183 |

184 |

185 |

186 | <div style="display: flex; justify-content: space-between;">

187 | <a style="text-align: left;" href="../GSP341/index.md"><= PREV (GSP341)</a>

188 | <a style="text-align: right;" href="../GSP345/index.md">NEXT (GSP345) =></a>

189 | </div>

190 | <br>

191 |

192 | [HOME](../../README.md)

193 |

--------------------------------------------------------------------------------

/challenge-labs/GSP303/index.md:

--------------------------------------------------------------------------------

1 | # [GSP303] Configure Secure RDP using a Windows Bastion Host

2 |

3 | ### [GSP303](https://www.cloudskillsboost.google/focuses/1737?parent=catalog)

4 |

5 |

6 |

7 | ---

8 |

9 | Time: 1 hour<br>

10 | Difficulty: Intermediate<br>

11 | Price: 5 Credits

12 |

13 | Quest: [Cloud Architecture: Design, Implement, and Manage](https://www.cloudskillsboost.google/quests/124)<br>

14 |

15 | Last updated: Sep 7, 2023

16 |

17 | ---

18 |

19 | ## Challenge scenario

20 |

21 | Your company has decided to deploy new application services in the cloud and your assignment is developing a secure framework for managing the Windows services that will be deployed. You will need to create a new VPC network environment for the secure production Windows servers.

22 |

23 | Production servers must initially be completely isolated from external networks and cannot be directly accessed from, or be able to connect directly to, the internet. In order to configure and manage your first server in this environment, you will also need to deploy a bastion host, or jump box, that can be accessed from the internet using the Microsoft Remote Desktop Protocol (RDP). The bastion host should only be accessible via RDP from the internet, and should only be able to communicate with the other compute instances inside the VPC network using RDP.

24 |

25 | Your company also has a monitoring system running from the default VPC network, so all compute instances must have a second network interface with an internal only connection to the default VPC network.

26 |

27 | ## Your challenge

28 |

29 | Deploy the secure Windows machine that is not configured for external communication inside a new VPC subnet, then deploy the Microsoft Internet Information Server on that secure machine.

30 |

31 | ## Task 1. Create the VPC network

32 |

33 | 1. Create a new VPC network called `securenetwork`

34 |

35 | Open Cloud Shell and run the following command:

36 |

37 | ```bash

38 | gcloud compute networks create securenetwork --project=$DEVSHELL_PROJECT_ID --subnet-mode=custom --mtu=1460 --bgp-routing-mode=regional

39 | ```

40 |

41 | 2. Then create a new VPC subnet called `secure-subnet` inside `securenetwork`:

42 |

43 | ```bash

44 | gcloud compute networks subnets create secure-subnet --project=$DEVSHELL_PROJECT_ID --range=10.0.0.0/24 --stack-type=IPV4_ONLY --network=securenetwork --region=us-central1

45 | ```

46 |

47 | 3. Once the network and subnet have been configured, configure a firewall rule that allows inbound RDP traffic (`TCP port 3389`) from the internet to the bastion host. This rule should be applied to the appropriate host using network tags.

48 |

49 | ```bash

50 | gcloud compute --project=$DEVSHELL_PROJECT_ID firewall-rules create secure-firewall --direction=INGRESS --priority=1000 --network=securenetwork --action=ALLOW --rules=tcp:3389 --source-ranges=0.0.0.0/0 --target-tags=rdp

51 | ```
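Before you deploy the VMs, you can optionally confirm the result of Task 1 from Cloud Shell. The sketch below defines a hypothetical helper, `verify_task1`, which is not part of the lab. It prints the CIDR range of `secure-subnet`, plus the allowed ports, source ranges, and target tags of the firewall rule whose name you pass in. It assumes the `us-central1` region used above.

```bash
# Hypothetical helper (not part of the lab): print what Task 1 created.
# Assumption: the region used in this guide; change it if your lab assigns another.
REGION="us-central1"

verify_task1() {
  # Name of the firewall rule you created, passed as the first argument.
  local rule="${1:?usage: verify_task1 FIREWALL_RULE_NAME}"

  # Prints the subnet's primary range (10.0.0.0/24 if you used the command above).
  gcloud compute networks subnets describe secure-subnet \
    --region="$REGION" \
    --format="value(ipCidrRange)"

  # Prints the allowed protocol/ports, source ranges, and target tags of the rule.
  gcloud compute firewall-rules describe "$rule" \
    --format="yaml(allowed,sourceRanges,targetTags)"
}
```

For example, run `verify_task1` followed by the exact rule name from your `firewall-rules create` command. The rule should allow `tcp` port `3389` from `0.0.0.0/0` to instances tagged `rdp`.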

52 |

53 | ## Task 2. Deploy your Windows instances and configure user passwords

54 |

55 | 1. Deploy a Windows 2016 server instance called `vm-securehost` with two network interfaces.

56 | 2. Configure the first network interface with an internal-only connection to the new VPC subnet, and the second network interface with an internal-only connection to the default VPC network. This is the secure server.

57 |

58 | ```bash

59 | gcloud compute instances create vm-securehost --project=$DEVSHELL_PROJECT_ID --zone=us-central1-a --machine-type=n1-standard-2 --network-interface=stack-type=IPV4_ONLY,subnet=secure-subnet,no-address --network-interface=stack-type=IPV4_ONLY,subnet=default,no-address --metadata=enable-oslogin=true --maintenance-policy=MIGRATE --provisioning-model=STANDARD --tags=rdp --create-disk=auto-delete=yes,boot=yes,device-name=vm-securehost,image=projects/windows-cloud/global/images/windows-server-2016-dc-v20230510,mode=rw,size=150,type=projects/$DEVSHELL_PROJECT_ID/zones/us-central1-a/diskTypes/pd-standard --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --labels=goog-ec-src=vm_add-gcloud --reservation-affinity=any

60 | ```

61 |

62 | 3. Deploy a second Windows 2016 server instance called `vm-bastionhost` with two network interfaces.

63 | 4. Configure the first network interface to connect to the new VPC subnet with an ephemeral public (external NAT) address, and the second network interface with an internal-only connection to the default VPC network. This is the jump box or bastion host.

64 |

65 | ```bash

66 | gcloud compute instances create vm-bastionhost --project=$DEVSHELL_PROJECT_ID --zone=us-central1-a --machine-type=n1-standard-2 --network-interface=network-tier=PREMIUM,stack-type=IPV4_ONLY,subnet=secure-subnet --network-interface=stack-type=IPV4_ONLY,subnet=default,no-address --metadata=enable-oslogin=true --maintenance-policy=MIGRATE --provisioning-model=STANDARD --tags=rdp --create-disk=auto-delete=yes,boot=yes,device-name=vm-bastionhost,image=projects/windows-cloud/global/images/windows-server-2016-dc-v20230510,mode=rw,size=150,type=projects/$DEVSHELL_PROJECT_ID/zones/us-central1-a/diskTypes/pd-standard --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --labels=goog-ec-src=vm_add-gcloud --reservation-affinity=any

67 | ```

68 |

69 | 5. After your Windows instances have been created, create a user account and reset the Windows passwords in order to connect to each instance.

70 | 6. The following `gcloud` commands create a user called `app_admin` and reset its password on `vm-bastionhost` and `vm-securehost`, both located in the `us-central1-a` zone:

71 |

72 | ```bash

73 | gcloud compute reset-windows-password vm-bastionhost --user app_admin --zone us-central1-a

74 | ```

75 |

76 |

77 |

78 | > **Note**: Take note of the password that is generated for the user account. You will need this to connect to the bastion host.

79 |

80 | ```bash

81 | gcloud compute reset-windows-password vm-securehost --user app_admin --zone us-central1-a

82 | ```

83 |

84 |

85 |

86 | > **Note**: Take note of the password that is generated for the user account. You will need this to connect to the secure host.

87 |

88 | 7. Alternatively, you can force a password reset from the Compute Engine console. You will have to repeat this for the second host, because the login credentials for that instance are different.
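You can also run the two `reset-windows-password` commands from step 6 together. The sketch below is a hypothetical helper, `reset_passwords`, which is not part of the lab. It assumes the `us-central1-a` zone and the `app_admin` user used above, and it passes `--quiet` so that the confirmation prompt is accepted with its default answer.

```bash
# Hypothetical helper (not part of the lab): reset app_admin's password on both hosts.
# Assumptions: the zone and username used in this guide.
ZONE="us-central1-a"
WIN_USER="app_admin"

reset_passwords() {
  local vm
  for vm in vm-bastionhost vm-securehost; do
    # Header so you can tell which password belongs to which host.
    echo "== ${vm} =="
    # --quiet accepts the "set or reset the password" prompt with its default;
    # the command prints the username, password, and IP address for the host.
    gcloud compute reset-windows-password "$vm" \
      --user="$WIN_USER" \
      --zone="$ZONE" \
      --quiet
  done
}
```

Run `reset_passwords` once both instances have finished booting, and copy each password from under its host's header.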

89 |

90 | ## Task 3. Connect to the secure host and configure Internet Information Server

91 |

92 | To connect to the secure host, you have to RDP into the bastion host first, and from there open a second RDP session to the internal (private) IP address of the secure host. You can connect to a Windows Compute Engine instance that has an external address by clicking the RDP button next to it on the Compute Engine VM instances page.
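You can look up the two addresses this requires with `gcloud` from Cloud Shell. The following is a minimal sketch that uses a hypothetical helper name, `show_rdp_targets`. It assumes the `us-central1-a` zone and that the first interface (`nic0`) of each VM is the one on `secure-subnet`, as in the Task 2 commands.

```bash
# Hypothetical helper (not part of the lab): show the addresses for both RDP hops.
# Assumptions: zone us-central1-a, and nic0 of each VM is attached to secure-subnet.
ZONE="us-central1-a"

show_rdp_targets() {
  # Hop 1: the bastion's external (NAT) IP on nic0, reachable from the internet.
  printf '%s' "vm-bastionhost external IP: "
  gcloud compute instances describe vm-bastionhost \
    --zone="$ZONE" \
    --format="get(networkInterfaces[0].accessConfigs[0].natIP)"

  # Hop 2: the secure host's internal IP on nic0, reachable from the bastion host.
  printf '%s' "vm-securehost internal IP: "
  gcloud compute instances describe vm-securehost \
    --zone="$ZONE" \
    --format="get(networkInterfaces[0].networkIP)"
}
```

The internal IP of `vm-securehost` should fall inside `secure-subnet` (`10.0.0.0/24` if you used the Task 1 command). That is the address you enter in Remote Desktop Connection on the bastion host.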

93 |

94 | 1. Connect to the bastion host using the RDP button in the Compute Engine console.

95 |

96 | For this, you can install the [Chrome RDP for Google Cloud Platform](https://chrome.google.com/webstore/detail/chrome-rdp-for-google-clo/mpbbnannobiobpnfblimoapbephgifkm) extension.

97 |

98 |

99 |

100 | 2. Go to Compute Engine > VM instances, click RDP next to `vm-bastionhost`, and sign in with the username `app_admin` and the password you copied for `vm-bastionhost`.

101 |

102 |

103 |

104 |

105 |

106 | When connected to a Windows server, you can launch the Microsoft RDP client with the command `mstsc.exe`, or search for `Remote Desktop Connection` in the Start menu. This lets you connect from the bastion host to other compute instances on the same VPC, even if those instances do not have a direct internet connection themselves.

107 |

108 | 3. Click Search, search for Remote Desktop Connection, and run it.

109 | 4. Paste the internal IP address of `vm-securehost` (its address on `secure-subnet`), then click Connect.

110 |

111 |

112 |

113 | 5. Sign in with the username `app_admin` and the password you copied for `vm-securehost`.

114 | 6. Click Search, type PowerShell, right-click Windows PowerShell, and select Run as administrator.

115 | 7. Run the following command to install IIS (Internet Information Server):

116 |

117 | ```powershell

118 | Install-WindowsFeature -Name Web-Server -IncludeManagementTools

119 | ```

120 |

121 |

122 |

123 |

124 |

125 | ## Congratulations!

126 |

127 |

128 |

129 | <div style="display: flex; justify-content: space-between;">

130 | <a style="text-align: left;" href="../GSP301/index.md"><= PREV (GSP301)</a>

131 | <a style="text-align: right;" href="../GSP304/index.md">NEXT (GSP304) =></a>

132 | </div>

133 | <br>

134 |

135 | [HOME](../../README.md)

136 |

--------------------------------------------------------------------------------

/challenge-labs/GSP341/index.md:

--------------------------------------------------------------------------------