├── aae
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── aae.py
├── gan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── gan.py
├── acgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── acgan.py
├── bgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── bgan.py
├── bigan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── bigan.py
├── ccgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── ccgan.py
├── cgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── cgan.py
├── cogan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── cogan.py
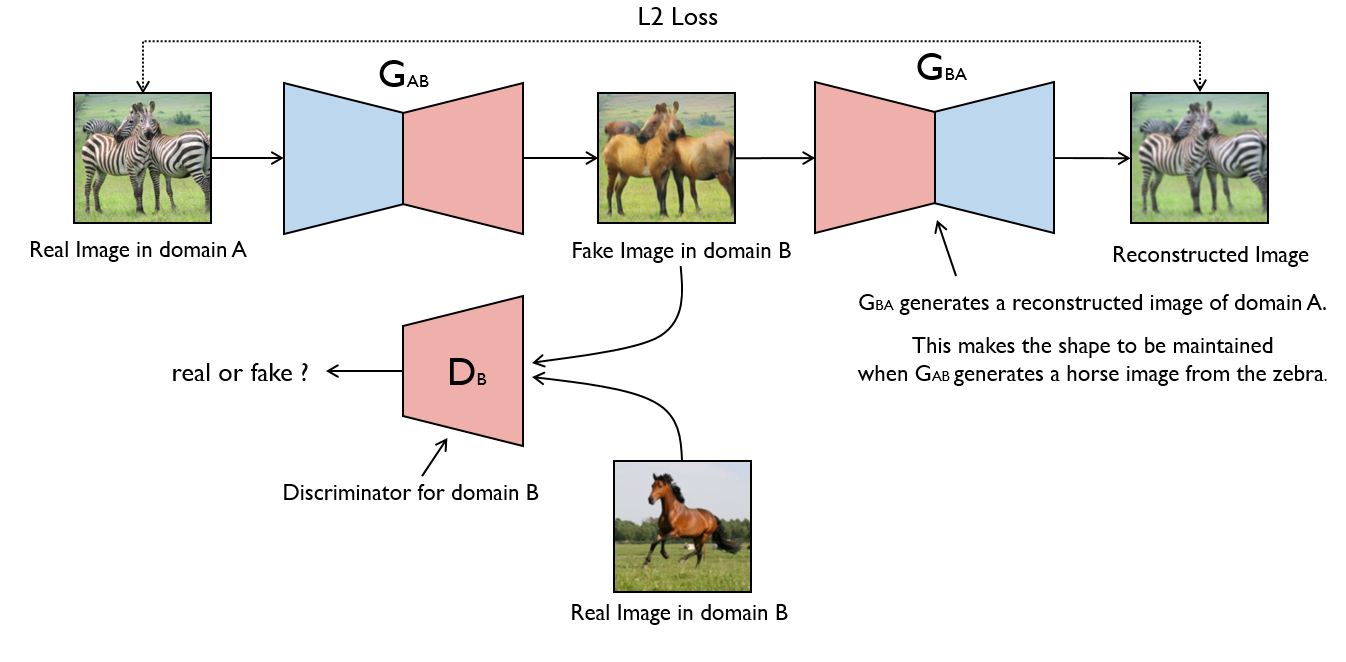
├── cyclegan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   ├── download_dataset.sh
│   ├── data_loader.py
│   └── cyclegan.py
├── dcgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── dcgan.py
├── discogan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   ├── download_dataset.sh
│   ├── data_loader.py
│   └── discogan.py
├── dualgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── dualgan.py
├── infogan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── infogan.py
├── lsgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── lsgan.py
├── pix2pix
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   ├── download_dataset.sh
│   ├── data_loader.py
│   └── pix2pix.py
├── pixelda
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   ├── test.py
│   ├── data_loader.py
│   └── pixelda.py
├── sgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── sgan.py
├── srgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   ├── data_loader.py
│   └── srgan.py
├── wgan
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── wgan.py
├── wgan_gp
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── wgan_gp.py
├── context_encoder
│   ├── images
│   │   └── .gitignore
│   ├── saved_model
│   │   └── .gitignore
│   └── context_encoder.py
├── assets
│   └── keras_gan.png
├── .gitignore
├── requirements.txt
├── LICENSE
└── README.md

/aae/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/gan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/aae/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/acgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/bgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/bigan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/ccgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cogan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cyclegan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/dcgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/discogan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/dualgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/gan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/infogan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/lsgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/pix2pix/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/pixelda/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/sgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/srgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/wgan/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/wgan_gp/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/acgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/bgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/bigan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/ccgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cogan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/cyclegan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/dcgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/discogan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/dualgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/infogan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/lsgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/pix2pix/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/pixelda/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/sgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/srgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/wgan/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/wgan_gp/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/context_encoder/images/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/context_encoder/saved_model/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------
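Every `images/` and `saved_model/` folder above uses the same two-line pattern. `*` ignores everything in the folder. The later negated rule `!.gitignore` re-includes the placeholder file, which keeps the otherwise empty folder in Git (Git does not track empty directories). The sketch below models only the "last matching pattern wins" precedence rule for plain file names in one directory. `is_ignored` is a hypothetical helper, not part of Git, and it leaves out Git's directory, anchoring and `**` rules:

```python
from fnmatch import fnmatch


def is_ignored(name, patterns):
    """Simplified .gitignore check for a file name in a single directory.

    Patterns are applied in order and the last one that matches decides;
    a leading '!' turns a match into "not ignored".
    """
    ignored = False
    for pattern in patterns:
        negate = pattern.startswith("!")
        glob = pattern[1:] if negate else pattern
        if fnmatch(name, glob):
            ignored = not negate
    return ignored


patterns = ["*", "!.gitignore"]
for name in [".gitignore", "0.png", "discriminator.json"]:
    print(name, "ignored" if is_ignored(name, patterns) else "tracked")
```

Order matters. Swapping the two lines to `["!.gitignore", "*"]` would ignore the placeholder as well.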

/assets/keras_gan.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/horoscopes/Keras-GAN/master/assets/keras_gan.png
--------------------------------------------------------------------------------

/.gitignore:
--------------------------------------------------------------------------------
*/images/*.png
*/images/*.jpg
*/*.jpg
*/*.png
*.json
*.h5
*.hdf5
.DS_Store
*/datasets

__pycache__
--------------------------------------------------------------------------------

/requirements.txt:
--------------------------------------------------------------------------------
keras
git+https://www.github.com/keras-team/keras-contrib.git
matplotlib
numpy
scipy
pillow
#urllib
#skimage
scikit-image
#gzip
#pickle
--------------------------------------------------------------------------------

/discogan/download_dataset.sh:
--------------------------------------------------------------------------------
#!/bin/bash
# Usage: bash download_dataset.sh <dataset_name>
set -e
mkdir -p datasets
FILE=$1
URL=https://people.eecs.berkeley.edu/~tinghuiz/projects/pix2pix/datasets/$FILE.tar.gz
TAR_FILE=./datasets/$FILE.tar.gz
TARGET_DIR=./datasets/$FILE/
wget "$URL" -O "$TAR_FILE"
mkdir -p "$TARGET_DIR"
tar -zxvf "$TAR_FILE" -C ./datasets/
rm "$TAR_FILE"
--------------------------------------------------------------------------------

/pix2pix/download_dataset.sh:
--------------------------------------------------------------------------------
#!/bin/bash
# Usage: bash download_dataset.sh <dataset_name>
set -e
mkdir -p datasets
FILE=$1
URL=https://people.eecs.berkeley.edu/~tinghuiz/projects/pix2pix/datasets/$FILE.tar.gz
TAR_FILE=./datasets/$FILE.tar.gz
TARGET_DIR=./datasets/$FILE/
wget "$URL" -O "$TAR_FILE"
mkdir -p "$TARGET_DIR"
tar -zxvf "$TAR_FILE" -C ./datasets/
rm "$TAR_FILE"
--------------------------------------------------------------------------------

/cyclegan/download_dataset.sh:
--------------------------------------------------------------------------------
#!/bin/bash
# Usage: bash download_dataset.sh <dataset_name>
set -e
FILE=$1

case "$FILE" in
    apple2orange|summer2winter_yosemite|horse2zebra|monet2photo|cezanne2photo|ukiyoe2photo|vangogh2photo|maps|cityscapes|facades|iphone2dslr_flower|ae_photos)
        ;;
    *)
        echo "Available datasets are: apple2orange, summer2winter_yosemite, horse2zebra, monet2photo, cezanne2photo, ukiyoe2photo, vangogh2photo, maps, cityscapes, facades, iphone2dslr_flower, ae_photos"
        exit 1
        ;;
esac

mkdir -p datasets
URL=https://people.eecs.berkeley.edu/~taesung_park/CycleGAN/datasets/$FILE.zip
ZIP_FILE=./datasets/$FILE.zip
TARGET_DIR=./datasets/$FILE/
wget "$URL" -O "$ZIP_FILE"
mkdir -p "$TARGET_DIR"
unzip "$ZIP_FILE" -d ./datasets/
rm "$ZIP_FILE"
--------------------------------------------------------------------------------
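The script above has three steps: check the name against a fixed list, download the zip, and unpack it into `./datasets/`. On systems without `bash`, `wget` or `unzip`, those steps could be sketched with the Python standard library instead. `cyclegan_dataset_paths` and `download_cyclegan_dataset` are hypothetical helpers, not part of the repository. The dataset list and URL pattern are copied from the script. Like the script, the sketch assumes each archive unpacks into a folder named after the dataset:

```python
import os
import urllib.request
import zipfile

# Dataset names accepted by cyclegan/download_dataset.sh
CYCLEGAN_DATASETS = {
    "apple2orange", "summer2winter_yosemite", "horse2zebra", "monet2photo",
    "cezanne2photo", "ukiyoe2photo", "vangogh2photo", "maps", "cityscapes",
    "facades", "iphone2dslr_flower", "ae_photos",
}
BASE_URL = "https://people.eecs.berkeley.edu/~taesung_park/CycleGAN/datasets/"


def cyclegan_dataset_paths(name, root="./datasets"):
    """Validate a dataset name and return (download URL, local zip path)."""
    if name not in CYCLEGAN_DATASETS:
        raise ValueError("Available datasets are: " + ", ".join(sorted(CYCLEGAN_DATASETS)))
    return BASE_URL + name + ".zip", os.path.join(root, name + ".zip")


def download_cyclegan_dataset(name, root="./datasets"):
    """Download the zip, extract it under root, then delete it (needs network access)."""
    url, zip_path = cyclegan_dataset_paths(name, root)
    os.makedirs(root, exist_ok=True)
    urllib.request.urlretrieve(url, zip_path)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(root)
    os.remove(zip_path)
```

Name validation lives in a pure function that touches no network. That makes it easy to check separately from the download.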

/pixelda/test.py:
--------------------------------------------------------------------------------
from __future__ import print_function, division

import matplotlib.pyplot as plt
from data_loader import DataLoader


# Configure MNIST and MNIST-M data loader
data_loader = DataLoader(img_res=(32, 32))

mnist, _ = data_loader.load_data(domain="A", batch_size=25)
mnistm, _ = data_loader.load_data(domain="B", batch_size=25)

r, c = 5, 5

for img_i, imgs in enumerate([mnist, mnistm]):

    # The loader returns images in [-1, 1]; imshow expects floats in [0, 1]
    imgs = 0.5 * imgs + 0.5

    #titles = ['Original', 'Translated']
    fig, axs = plt.subplots(r, c)
    cnt = 0
    for i in range(r):
        for j in range(c):
            axs[i,j].imshow(imgs[cnt])
            #axs[i, j].set_title(titles[i])
            axs[i,j].axis('off')
            cnt += 1
    fig.savefig("%d.png" % (img_i))
    plt.close()
--------------------------------------------------------------------------------
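`test.py` draws each 5×5 sample sheet with one matplotlib axis per image. An alternative, not what the script does, is to build the grid as a single numpy array, filling it row by row in the same order as the `cnt` counter. The sketch assumes a batch of `(H, W, C)` images in [-1, 1], as returned by `load_data`. `make_grid` is a hypothetical helper:

```python
import numpy as np


def make_grid(imgs, rows, cols):
    """Tile the first rows*cols images of an (N, H, W, C) batch in [-1, 1]
    into a single (rows*H, cols*W, C) mosaic in [0, 1], filled row by row."""
    n, h, w, ch = imgs.shape
    if n < rows * cols:
        raise ValueError("need at least rows * cols images")
    imgs = 0.5 * imgs[:rows * cols] + 0.5  # [-1, 1] -> [0, 1]
    # (rows, cols, H, W, C) -> (rows, H, cols, W, C) -> (rows*H, cols*W, C)
    grid = imgs.reshape(rows, cols, h, w, ch).transpose(0, 2, 1, 3, 4)
    return grid.reshape(rows * h, cols * w, ch)


batch = np.random.uniform(-1, 1, size=(25, 32, 32, 3))
grid = make_grid(batch, 5, 5)
print(grid.shape)  # (160, 160, 3)
```

The mosaic can then be written in one call with `plt.imsave("grid.png", grid)`.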

/LICENSE:
--------------------------------------------------------------------------------
MIT License

Copyright (c) 2017 Erik Linder-Norén

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
--------------------------------------------------------------------------------

/srgan/data_loader.py:
--------------------------------------------------------------------------------
# NOTE: scipy.misc.imread / scipy.misc.imresize need an older SciPy release
# (imread was removed in SciPy 1.2, imresize in SciPy 1.3) and Pillow.
import scipy.misc
from glob import glob
import numpy as np
import matplotlib.pyplot as plt

class DataLoader():
    def __init__(self, dataset_name, img_res=(128, 128)):
        self.dataset_name = dataset_name
        self.img_res = img_res

    def load_data(self, batch_size=1, is_testing=False):
        # The dataset folder has no train/test split; is_testing only disables flipping
        path = glob('./datasets/%s/*' % (self.dataset_name))

        batch_images = np.random.choice(path, size=batch_size)

        imgs_hr = []
        imgs_lr = []
        for img_path in batch_images:
            img = self.imread(img_path)

            # Low-resolution inputs are 4x smaller along each side
            h, w = self.img_res
            low_h, low_w = int(h / 4), int(w / 4)

            img_hr = scipy.misc.imresize(img, self.img_res)
            img_lr = scipy.misc.imresize(img, (low_h, low_w))

            # If training => do random flip
            if not is_testing and np.random.random() < 0.5:
                img_hr = np.fliplr(img_hr)
                img_lr = np.fliplr(img_lr)

            imgs_hr.append(img_hr)
            imgs_lr.append(img_lr)

        imgs_hr = np.array(imgs_hr) / 127.5 - 1.
        imgs_lr = np.array(imgs_lr) / 127.5 - 1.

        return imgs_hr, imgs_lr


    def imread(self, path):
        return scipy.misc.imread(path, mode='RGB').astype(np.float64)
--------------------------------------------------------------------------------
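The loader builds each training pair from one image: a high-resolution copy at `img_res` and a low-resolution input at a quarter of that size along each side. Both are flipped together and scaled to [-1, 1]. `scipy.misc.imresize` no longer exists in current SciPy, so here is a numpy-only sketch of the same pairing. It swaps in 4×4 block averaging (box downsampling) in place of imresize's interpolation. It also assumes the input is already at the high resolution, with both sides divisible by the factor. `make_hr_lr_pair` is a hypothetical helper:

```python
import numpy as np


def make_hr_lr_pair(img_hr, factor=4, flip=False):
    """Return (hr, lr) scaled to [-1, 1] from an (H, W, C) image in [0, 255].

    The low-resolution copy averages non-overlapping factor x factor blocks
    (box downsampling), which differs from scipy.misc.imresize's interpolation.
    """
    h, w, ch = img_hr.shape
    if h % factor or w % factor:
        raise ValueError("height and width must be divisible by factor")
    img_hr = img_hr.astype(np.float64)
    img_lr = img_hr.reshape(h // factor, factor, w // factor, factor, ch).mean(axis=(1, 3))
    if flip:  # flip both copies together so the pair stays aligned
        img_hr, img_lr = np.fliplr(img_hr), np.fliplr(img_lr)
    return img_hr / 127.5 - 1., img_lr / 127.5 - 1.


hr, lr = make_hr_lr_pair(np.zeros((128, 128, 3), dtype=np.uint8))
print(hr.shape, lr.shape)  # (128, 128, 3) (32, 32, 3)
```

If the goal is to match the original preprocessing closely, replace the block average with an interpolating resize, for example from Pillow or scikit-image.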

/pix2pix/data_loader.py:
--------------------------------------------------------------------------------
# NOTE: scipy.misc.imread / scipy.misc.imresize need an older SciPy release
# (imread was removed in SciPy 1.2, imresize in SciPy 1.3) and Pillow.
import scipy.misc
from glob import glob
import numpy as np
import matplotlib.pyplot as plt

class DataLoader():
    def __init__(self, dataset_name, img_res=(128, 128)):
        self.dataset_name = dataset_name
        self.img_res = img_res

    def load_data(self, batch_size=1, is_testing=False):
        data_type = "train" if not is_testing else "test"
        path = glob('./datasets/%s/%s/*' % (self.dataset_name, data_type))

        batch_images = np.random.choice(path, size=batch_size)

        imgs_A = []
        imgs_B = []
        for img_path in batch_images:
            img = self.imread(img_path)

            # Each file stores domain A (left half) next to domain B (right half)
            h, w, _ = img.shape
            _w = int(w/2)
            img_A, img_B = img[:, :_w, :], img[:, _w:, :]

            img_A = scipy.misc.imresize(img_A, self.img_res)
            img_B = scipy.misc.imresize(img_B, self.img_res)

            # If training => do random flip
            if not is_testing and np.random.random() < 0.5:
                img_A = np.fliplr(img_A)
                img_B = np.fliplr(img_B)

            imgs_A.append(img_A)
            imgs_B.append(img_B)

        imgs_A = np.array(imgs_A)/127.5 - 1.
        imgs_B = np.array(imgs_B)/127.5 - 1.

        return imgs_A, imgs_B

    def load_batch(self, batch_size=1, is_testing=False):
        data_type = "train" if not is_testing else "val"
        path = glob('./datasets/%s/%s/*' % (self.dataset_name, data_type))

        self.n_batches = int(len(path) / batch_size)

        for i in range(self.n_batches-1):
            batch = path[i*batch_size:(i+1)*batch_size]
            imgs_A, imgs_B = [], []
            for img in batch:
                img = self.imread(img)
                h, w, _ = img.shape
                half_w = int(w/2)
                img_A = img[:, :half_w, :]
                img_B = img[:, half_w:, :]

                img_A = scipy.misc.imresize(img_A, self.img_res)
                img_B = scipy.misc.imresize(img_B, self.img_res)

                if not is_testing and np.random.random() > 0.5:
                    img_A = np.fliplr(img_A)
                    img_B = np.fliplr(img_B)

                imgs_A.append(img_A)
                imgs_B.append(img_B)

            imgs_A = np.array(imgs_A)/127.5 - 1.
            imgs_B = np.array(imgs_B)/127.5 - 1.

            yield imgs_A, imgs_B


    def imread(self, path):
        return scipy.misc.imread(path, mode='RGB').astype(np.float64)
--------------------------------------------------------------------------------
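Each pix2pix training file stores the two domains side by side in one image. That is why the loader cuts at `w/2` and flips both halves on the same coin toss, so the pair stays pixel-aligned. Below is a minimal numpy sketch of only that split, flip and scale step, leaving out the resize. `split_pair` is a hypothetical name:

```python
import numpy as np


def split_pair(img, flip=False):
    """Split an (H, W, C) side-by-side image into A (left) and B (right) halves,
    optionally flip both the same way, and scale each to [-1, 1]."""
    h, w, _ = img.shape
    half_w = w // 2
    img_a, img_b = img[:, :half_w, :], img[:, half_w:, :]
    if flip:  # one shared flip keeps A and B aligned
        img_a, img_b = np.fliplr(img_a), np.fliplr(img_b)
    return img_a / 127.5 - 1., img_b / 127.5 - 1.


pair = np.zeros((256, 512, 3))
a, b = split_pair(pair)
print(a.shape, b.shape)  # (256, 256, 3) (256, 256, 3)
```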

/discogan/data_loader.py:
--------------------------------------------------------------------------------
# NOTE: scipy.misc.imread / scipy.misc.imresize need an older SciPy release
# (imread was removed in SciPy 1.2, imresize in SciPy 1.3) and Pillow.
import scipy.misc
from glob import glob
import numpy as np

class DataLoader():
    def __init__(self, dataset_name, img_res=(128, 128)):
        self.dataset_name = dataset_name
        self.img_res = img_res

    def load_data(self, batch_size=1, is_testing=False):
        data_type = "train" if not is_testing else "val"
        path = glob('./datasets/%s/%s/*' % (self.dataset_name, data_type))

        batch = np.random.choice(path, size=batch_size)

        imgs_A, imgs_B = [], []
        for img in batch:
            img = self.imread(img)
            h, w, _ = img.shape
            half_w = int(w/2)
            img_A = img[:, :half_w, :]
            img_B = img[:, half_w:, :]

            img_A = scipy.misc.imresize(img_A, self.img_res)
            img_B = scipy.misc.imresize(img_B, self.img_res)

            if not is_testing and np.random.random() > 0.5:
                img_A = np.fliplr(img_A)
                img_B = np.fliplr(img_B)

            imgs_A.append(img_A)
            imgs_B.append(img_B)

        imgs_A = np.array(imgs_A)/127.5 - 1.
        imgs_B = np.array(imgs_B)/127.5 - 1.

        return imgs_A, imgs_B

    def load_batch(self, batch_size=1, is_testing=False):
        data_type = "train" if not is_testing else "val"
        path = glob('./datasets/%s/%s/*' % (self.dataset_name, data_type))

        self.n_batches = int(len(path) / batch_size)

        for i in range(self.n_batches-1):
            batch = path[i*batch_size:(i+1)*batch_size]
            imgs_A, imgs_B = [], []
            for img in batch:
                img = self.imread(img)
                h, w, _ = img.shape
                half_w = int(w/2)
                img_A = img[:, :half_w, :]
                img_B = img[:, half_w:, :]

                img_A = scipy.misc.imresize(img_A, self.img_res)
                img_B = scipy.misc.imresize(img_B, self.img_res)

                if not is_testing and np.random.random() > 0.5:
                    img_A = np.fliplr(img_A)
                    img_B = np.fliplr(img_B)

                imgs_A.append(img_A)
                imgs_B.append(img_B)

            imgs_A = np.array(imgs_A)/127.5 - 1.
            imgs_B = np.array(imgs_B)/127.5 - 1.

            yield imgs_A, imgs_B

    def load_img(self, path):
        img = self.imread(path)
        img = scipy.misc.imresize(img, self.img_res)
        img = img/127.5 - 1.
        # Add a leading batch axis: (H, W, C) -> (1, H, W, C)
        return img[np.newaxis, :, :, :]

    def imread(self, path):
        return scipy.misc.imread(path, mode='RGB').astype(np.float64)
--------------------------------------------------------------------------------
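`load_batch` walks the `glob` result in consecutive, non-overlapping slices of `batch_size`. Its loop runs over `range(self.n_batches-1)`, so it yields one batch fewer than the `n_batches` it reports. The sketch below shows the slicing on its own: it yields every full batch and drops only the incomplete tail. `iter_batches` is a hypothetical helper that works on any list of paths:

```python
def iter_batches(paths, batch_size):
    """Yield consecutive, non-overlapping full batches from a list.

    Yields len(paths) // batch_size batches; leftover items are dropped.
    """
    n_batches = len(paths) // batch_size
    for i in range(n_batches):
        yield paths[i * batch_size:(i + 1) * batch_size]


for batch in iter_batches(["img_%d.jpg" % i for i in range(7)], 3):
    print(batch)
```

With 7 paths and `batch_size=3` this yields two batches, and the seventh path is skipped.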

/cyclegan/data_loader.py:
--------------------------------------------------------------------------------
# NOTE: scipy.misc.imread / scipy.misc.imresize need an older SciPy release
# (imread was removed in SciPy 1.2, imresize in SciPy 1.3) and Pillow.
import scipy.misc
from glob import glob
import numpy as np

class DataLoader():
    def __init__(self, dataset_name, img_res=(128, 128)):
        self.dataset_name = dataset_name
        self.img_res = img_res

    def load_data(self, domain, batch_size=1, is_testing=False):
        data_type = "train%s" % domain if not is_testing else "test%s" % domain
        path = glob('./datasets/%s/%s/*' % (self.dataset_name, data_type))

        batch_images = np.random.choice(path, size=batch_size)

        imgs = []
        for img_path in batch_images:
            img = self.imread(img_path)
            if not is_testing:
                img = scipy.misc.imresize(img, self.img_res)

                if np.random.random() > 0.5:
                    img = np.fliplr(img)
            else:
                img = scipy.misc.imresize(img, self.img_res)
            imgs.append(img)

        imgs = np.array(imgs)/127.5 - 1.

        return imgs

    def load_batch(self, batch_size=1, is_testing=False):
        # CycleGAN datasets ship trainA/trainB/testA/testB (no val split)
        data_type = "train" if not is_testing else "test"
        path_A = glob('./datasets/%s/%sA/*' % (self.dataset_name, data_type))
        path_B = glob('./datasets/%s/%sB/*' % (self.dataset_name, data_type))

        self.n_batches = int(min(len(path_A), len(path_B)) / batch_size)
        total_samples = self.n_batches * batch_size

        # Sample n_batches * batch_size paths from each domain (without
        # replacement) so both domains contribute the same number of images
        path_A = np.random.choice(path_A, total_samples, replace=False)
        path_B = np.random.choice(path_B, total_samples, replace=False)

        for i in range(self.n_batches-1):
            batch_A = path_A[i*batch_size:(i+1)*batch_size]
            batch_B = path_B[i*batch_size:(i+1)*batch_size]
            imgs_A, imgs_B = [], []
            for img_A, img_B in zip(batch_A, batch_B):
                img_A = self.imread(img_A)
                img_B = self.imread(img_B)

                img_A = scipy.misc.imresize(img_A, self.img_res)
                img_B = scipy.misc.imresize(img_B, self.img_res)

                if not is_testing and np.random.random() > 0.5:
                    img_A = np.fliplr(img_A)
                    img_B = np.fliplr(img_B)

                imgs_A.append(img_A)
                imgs_B.append(img_B)

            imgs_A = np.array(imgs_A)/127.5 - 1.
            imgs_B = np.array(imgs_B)/127.5 - 1.

            yield imgs_A, imgs_B

    def load_img(self, path):
        img = self.imread(path)
        img = scipy.misc.imresize(img, self.img_res)
        img = img/127.5 - 1.
        return img[np.newaxis, :, :, :]

    def imread(self, path):
        return scipy.misc.imread(path, mode='RGB').astype(np.float64)
--------------------------------------------------------------------------------
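The two CycleGAN domains are unpaired. `load_batch` therefore draws the same number of file paths from each domain folder, independently and without replacement, and then walks both lists in lockstep. Below is a sketch of just that sampling step over lists of file names. It uses NumPy's `Generator` API (`np.random.default_rng`) where the loader calls the legacy `np.random.choice`, and it loops over all `n_batches` rather than `n_batches-1`. `unpaired_batches` is a hypothetical helper:

```python
import numpy as np


def unpaired_batches(paths_a, paths_b, batch_size, rng=None):
    """Yield (batch_a, batch_b) from two unaligned domains.

    Each list is shuffled independently and truncated to the same total,
    n_batches * batch_size, so every batch holds batch_size items per domain.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_batches = min(len(paths_a), len(paths_b)) // batch_size
    total = n_batches * batch_size
    paths_a = rng.choice(paths_a, total, replace=False)
    paths_b = rng.choice(paths_b, total, replace=False)
    for i in range(n_batches):
        sl = slice(i * batch_size, (i + 1) * batch_size)
        yield paths_a[sl], paths_b[sl]


horses = ["horse_%d.jpg" % i for i in range(7)]
zebras = ["zebra_%d.jpg" % i for i in range(5)]
for batch_a, batch_b in unpaired_batches(horses, zebras, 2, rng=np.random.default_rng(0)):
    print(list(batch_a), list(batch_b))
```

Every file from the smaller domain gets used. The larger domain is subsampled each epoch.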

/pixelda/data_loader.py:
--------------------------------------------------------------------------------
import scipy
from glob import glob
import numpy as np
from keras.datasets import mnist
from skimage.transform import resize as imresize
import pickle
import os
import urllib.request
import gzip

class DataLoader():
    """Loads images from MNIST (domain A) and MNIST-M (domain B)"""
    def __init__(self, img_res=(128, 128)):
        self.img_res = img_res

        self.mnistm_url = 'https://github.com/VanushVaswani/keras_mnistm/releases/download/1.0/keras_mnistm.pkl.gz'

        # The formatted arrays are cached under ./datasets, so make sure it exists
        os.makedirs('datasets', exist_ok=True)

        self.setup_mnist(img_res)
        self.setup_mnistm(img_res)

    def normalize(self, images):
        return images.astype(np.float32) / 127.5 - 1.

    def setup_mnist(self, img_res):

        print ("Setting up MNIST...")

        if not os.path.exists('datasets/mnist_x.npy'):
            # Load the dataset
            (mnist_X, mnist_y), (_, _) = mnist.load_data()

            # Normalize and rescale images, then copy the gray channel to RGB
            mnist_X = self.normalize(mnist_X)
            mnist_X = np.array([imresize(x, img_res) for x in mnist_X])
            mnist_X = np.expand_dims(mnist_X, axis=-1)
            mnist_X = np.repeat(mnist_X, 3, axis=-1)

            self.mnist_X, self.mnist_y = mnist_X, mnist_y

            # Save formatted images
            np.save('datasets/mnist_x.npy', self.mnist_X)
            np.save('datasets/mnist_y.npy', self.mnist_y)
        else:
            self.mnist_X = np.load('datasets/mnist_x.npy')
            self.mnist_y = np.load('datasets/mnist_y.npy')

        print ("+ Done.")

    def setup_mnistm(self, img_res):

        print ("Setting up MNIST-M...")

        if not os.path.exists('datasets/mnistm_x.npy'):

            # Download the MNIST-M pkl file
            filepath = 'datasets/keras_mnistm.pkl.gz'
            if not os.path.exists(filepath.replace('.gz', '')):
                print('+ Downloading ' + self.mnistm_url)
                data = urllib.request.urlopen(self.mnistm_url)
                with open(filepath, 'wb') as f:
                    f.write(data.read())
                with open(filepath.replace('.gz', ''), 'wb') as out_f, \
                        gzip.GzipFile(filepath) as zip_f:
                    out_f.write(zip_f.read())
                os.unlink(filepath)

            # load MNIST-M images from pkl file
            with open('datasets/keras_mnistm.pkl', "rb") as f:
                data = pickle.load(f, encoding='bytes')

            # Normalize and rescale images
            mnistm_X = np.array(data[b'train'])
            mnistm_X = self.normalize(mnistm_X)
            mnistm_X = np.array([imresize(x, img_res) for x in mnistm_X])

            # MNIST-M labels are taken from the MNIST training labels
            self.mnistm_X, self.mnistm_y = mnistm_X, self.mnist_y.copy()

            # Save formatted images
            np.save('datasets/mnistm_x.npy', self.mnistm_X)
            np.save('datasets/mnistm_y.npy', self.mnistm_y)
        else:
            self.mnistm_X = np.load('datasets/mnistm_x.npy')
            self.mnistm_y = np.load('datasets/mnistm_y.npy')

        print ("+ Done.")


    def load_data(self, domain, batch_size=1):

        X = self.mnist_X if domain == 'A' else self.mnistm_X
        y = self.mnist_y if domain == 'A' else self.mnistm_y

        idx = np.random.choice(list(range(len(X))), size=batch_size)

        return X[idx], y[idx]
--------------------------------------------------------------------------------
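MNIST digits have a single channel while MNIST-M images are RGB. To give both domains the same `(H, W, 3)` shape, `setup_mnist` normalizes the digits to [-1, 1], adds a trailing channel axis, and repeats it three times. Below is a sketch of that conversion without the resize step. `gray_to_rgb` is a hypothetical name:

```python
import numpy as np


def gray_to_rgb(images):
    """Turn (N, H, W) uint8 grayscale images into (N, H, W, 3) float32 in [-1, 1]."""
    images = images.astype(np.float32) / 127.5 - 1.  # same scaling as normalize()
    images = np.expand_dims(images, axis=-1)          # (N, H, W) -> (N, H, W, 1)
    return np.repeat(images, 3, axis=-1)              # copy gray into R, G and B


digits = np.zeros((10, 28, 28), dtype=np.uint8)
print(gray_to_rgb(digits).shape)  # (10, 28, 28, 3)
```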

/lsgan/lsgan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout

5 | from keras.layers import BatchNormalization, Activation, ZeroPadding2D

6 | from keras.layers.advanced_activations import LeakyReLU

7 | from keras.layers.convolutional import UpSampling2D, Conv2D

8 | from keras.models import Sequential, Model

9 | from keras.optimizers import Adam

10 |

11 | import matplotlib.pyplot as plt

12 |

13 | import sys

14 |

15 | import numpy as np

16 |

17 | class LSGAN():

18 | def __init__(self):

19 | self.img_rows = 28

20 | self.img_cols = 28

21 | self.channels = 1

22 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

23 | self.latent_dim = 100

24 |

25 | optimizer = Adam(0.0002, 0.5)

26 |

27 | # Build and compile the discriminator

28 | self.discriminator = self.build_discriminator()

29 | self.discriminator.compile(loss='mse',

30 | optimizer=optimizer,

31 | metrics=['accuracy'])

32 |

33 | # Build the generator

34 | self.generator = self.build_generator()

35 |

36 | # The generator takes noise as input and generated imgs

37 | z = Input(shape=(self.latent_dim,))

38 | img = self.generator(z)

39 |

40 | # For the combined model we will only train the generator

41 | self.discriminator.trainable = False

42 |

43 | # The valid takes generated images as input and determines validity

44 | valid = self.discriminator(img)

45 |

46 | # The combined model (stacked generator and discriminator)

47 | # Trains generator to fool discriminator

48 | self.combined = Model(z, valid)

49 | # (!!!) Optimize w.r.t. MSE loss instead of crossentropy

50 | self.combined.compile(loss='mse', optimizer=optimizer)

51 |

52 | def build_generator(self):

53 |

54 | model = Sequential()

55 |

56 | model.add(Dense(256, input_dim=self.latent_dim))

57 | model.add(LeakyReLU(alpha=0.2))

58 | model.add(BatchNormalization(momentum=0.8))

59 | model.add(Dense(512))

60 | model.add(LeakyReLU(alpha=0.2))

61 | model.add(BatchNormalization(momentum=0.8))

62 | model.add(Dense(1024))

63 | model.add(LeakyReLU(alpha=0.2))

64 | model.add(BatchNormalization(momentum=0.8))

65 | model.add(Dense(np.prod(self.img_shape), activation='tanh'))

66 | model.add(Reshape(self.img_shape))

67 |

68 | model.summary()

69 |

70 | noise = Input(shape=(self.latent_dim,))

71 | img = model(noise)

72 |

73 | return Model(noise, img)

74 |

75 | def build_discriminator(self):

76 |

77 | model = Sequential()

78 |

79 | model.add(Flatten(input_shape=self.img_shape))

80 | model.add(Dense(512))

81 | model.add(LeakyReLU(alpha=0.2))

82 | model.add(Dense(256))

83 | model.add(LeakyReLU(alpha=0.2))

84 | # (!!!) No sigmoid: the output stays linear for the least-squares (MSE) loss

85 | model.add(Dense(1))

86 | model.summary()

87 |

88 | img = Input(shape=self.img_shape)

89 | validity = model(img)

90 |

91 | return Model(img, validity)

92 |

93 | def train(self, epochs, batch_size=128, sample_interval=50):

94 |

95 | # Load the dataset

96 | (X_train, _), (_, _) = mnist.load_data()

97 |

98 | # Rescale -1 to 1

99 | X_train = (X_train.astype(np.float32) - 127.5) / 127.5

100 | X_train = np.expand_dims(X_train, axis=3)

101 |

102 | # Adversarial ground truths

103 | valid = np.ones((batch_size, 1))

104 | fake = np.zeros((batch_size, 1))

105 |

106 | for epoch in range(epochs):

107 |

108 | # ---------------------

109 | # Train Discriminator

110 | # ---------------------

111 |

112 | # Select a random batch of images

113 | idx = np.random.randint(0, X_train.shape[0], batch_size)

114 | imgs = X_train[idx]

115 |

116 | # Sample noise as generator input

117 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

118 |

119 | # Generate a batch of new images

120 | gen_imgs = self.generator.predict(noise)

121 |

122 | # Train the discriminator

123 | d_loss_real = self.discriminator.train_on_batch(imgs, valid)

124 | d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

125 | d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

126 |

127 |

128 | # ---------------------

129 | # Train Generator

130 | # ---------------------

131 |

132 | g_loss = self.combined.train_on_batch(noise, valid)

133 |

134 | # Plot the progress

135 | print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

136 |

137 | # If at save interval => save generated image samples

138 | if epoch % sample_interval == 0:

139 | self.sample_images(epoch)

140 |

141 | def sample_images(self, epoch):

142 | r, c = 5, 5

143 | noise = np.random.normal(0, 1, (r * c, self.latent_dim))

144 | gen_imgs = self.generator.predict(noise)

145 |

146 | # Rescale images 0 - 1

147 | gen_imgs = 0.5 * gen_imgs + 0.5

148 |

149 | fig, axs = plt.subplots(r, c)

150 | cnt = 0

151 | for i in range(r):

152 | for j in range(c):

153 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

154 | axs[i,j].axis('off')

155 | cnt += 1

156 | fig.savefig("images/mnist_%d.png" % epoch)

157 | plt.close()

158 |

159 |

160 | if __name__ == '__main__':

161 | gan = LSGAN()

162 | gan.train(epochs=30000, batch_size=32, sample_interval=200)

163 |
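lsgan.py differs from gan.py mainly in the discriminator's linear output and the `mse` loss used for both models. With the 1/0 targets built in `train()`, that loss reduces to the least-squares objectives below. A minimal NumPy sketch, where `d_real` and `d_fake` are hypothetical arrays of raw discriminator outputs (not names from the repo):

```python
import numpy as np

def lsgan_d_loss(d_real, d_fake):
    """Discriminator loss: push real outputs toward 1 and fake outputs toward 0.

    Mirrors 0.5 * (mse(D(x), 1) + mse(D(G(z)), 0)) as averaged in train()."""
    return 0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2))

def lsgan_g_loss(d_fake):
    """Generator loss: push the discriminator's output on fakes toward 1."""
    return np.mean((d_fake - 1.0) ** 2)

# A perfect discriminator (1 on real, 0 on fake) has zero loss...
d_real = np.ones((4, 1))
d_fake = np.zeros((4, 1))
print(lsgan_d_loss(d_real, d_fake))  # 0.0
# ...while the generator is penalised by the squared distance to the target 1
print(lsgan_g_loss(d_fake))  # 1.0
```

Unlike the sigmoid/cross-entropy pair, the squared error keeps penalising fakes that are classified correctly but lie far from the target, which is the usual motivation given for LSGAN.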

--------------------------------------------------------------------------------

/gan/gan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout

5 | from keras.layers import BatchNormalization, Activation, ZeroPadding2D

6 | from keras.layers.advanced_activations import LeakyReLU

7 | from keras.layers.convolutional import UpSampling2D, Conv2D

8 | from keras.models import Sequential, Model

9 | from keras.optimizers import Adam

10 |

11 | import matplotlib.pyplot as plt

12 |

13 | import sys

14 |

15 | import numpy as np

16 |

17 | class GAN():

18 | def __init__(self):

19 | self.img_rows = 28

20 | self.img_cols = 28

21 | self.channels = 1

22 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

23 | self.latent_dim = 100

24 |

25 | optimizer = Adam(0.0002, 0.5)

26 |

27 | # Build and compile the discriminator

28 | self.discriminator = self.build_discriminator()

29 | self.discriminator.compile(loss='binary_crossentropy',

30 | optimizer=optimizer,

31 | metrics=['accuracy'])

32 |

33 | # Build the generator

34 | self.generator = self.build_generator()

35 |

36 | # The generator takes noise as input and generates imgs

37 | z = Input(shape=(self.latent_dim,))

38 | img = self.generator(z)

39 |

40 | # For the combined model we will only train the generator

41 | self.discriminator.trainable = False

42 |

43 | # The discriminator takes generated images as input and determines validity

44 | validity = self.discriminator(img)

45 |

46 | # The combined model (stacked generator and discriminator)

47 | # Trains the generator to fool the discriminator

48 | self.combined = Model(z, validity)

49 | self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

50 |

51 |

52 | def build_generator(self):

53 |

54 | model = Sequential()

55 |

56 | model.add(Dense(256, input_dim=self.latent_dim))

57 | model.add(LeakyReLU(alpha=0.2))

58 | model.add(BatchNormalization(momentum=0.8))

59 | model.add(Dense(512))

60 | model.add(LeakyReLU(alpha=0.2))

61 | model.add(BatchNormalization(momentum=0.8))

62 | model.add(Dense(1024))

63 | model.add(LeakyReLU(alpha=0.2))

64 | model.add(BatchNormalization(momentum=0.8))

65 | model.add(Dense(np.prod(self.img_shape), activation='tanh'))

66 | model.add(Reshape(self.img_shape))

67 |

68 | model.summary()

69 |

70 | noise = Input(shape=(self.latent_dim,))

71 | img = model(noise)

72 |

73 | return Model(noise, img)

74 |

75 | def build_discriminator(self):

76 |

77 | model = Sequential()

78 |

79 | model.add(Flatten(input_shape=self.img_shape))

80 | model.add(Dense(512))

81 | model.add(LeakyReLU(alpha=0.2))

82 | model.add(Dense(256))

83 | model.add(LeakyReLU(alpha=0.2))

84 | model.add(Dense(1, activation='sigmoid'))

85 | model.summary()

86 |

87 | img = Input(shape=self.img_shape)

88 | validity = model(img)

89 |

90 | return Model(img, validity)

91 |

92 | def train(self, epochs, batch_size=128, sample_interval=50):

93 |

94 | # Load the dataset

95 | (X_train, _), (_, _) = mnist.load_data()

96 |

97 | # Rescale -1 to 1

98 | X_train = X_train / 127.5 - 1.

99 | X_train = np.expand_dims(X_train, axis=3)

100 |

101 | # Adversarial ground truths

102 | valid = np.ones((batch_size, 1))

103 | fake = np.zeros((batch_size, 1))

104 |

105 | for epoch in range(epochs):

106 |

107 | # ---------------------

108 | # Train Discriminator

109 | # ---------------------

110 |

111 | # Select a random batch of images

112 | idx = np.random.randint(0, X_train.shape[0], batch_size)

113 | imgs = X_train[idx]

114 |

115 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

116 |

117 | # Generate a batch of new images

118 | gen_imgs = self.generator.predict(noise)

119 |

120 | # Train the discriminator

121 | d_loss_real = self.discriminator.train_on_batch(imgs, valid)

122 | d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

123 | d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

124 |

125 | # ---------------------

126 | # Train Generator

127 | # ---------------------

128 |

129 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

130 |

131 | # Train the generator (to have the discriminator label samples as valid)

132 | g_loss = self.combined.train_on_batch(noise, valid)

133 |

134 | # Plot the progress

135 | print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

136 |

137 | # If at save interval => save generated image samples

138 | if epoch % sample_interval == 0:

139 | self.sample_images(epoch)

140 |

141 | def sample_images(self, epoch):

142 | r, c = 5, 5

143 | noise = np.random.normal(0, 1, (r * c, self.latent_dim))

144 | gen_imgs = self.generator.predict(noise)

145 |

146 | # Rescale images 0 - 1

147 | gen_imgs = 0.5 * gen_imgs + 0.5

148 |

149 | fig, axs = plt.subplots(r, c)

150 | cnt = 0

151 | for i in range(r):

152 | for j in range(c):

153 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

154 | axs[i,j].axis('off')

155 | cnt += 1

156 | fig.savefig("images/%d.png" % epoch)

157 | plt.close()

158 |

159 |

160 | if __name__ == '__main__':

161 | gan = GAN()

162 | gan.train(epochs=30000, batch_size=32, sample_interval=200)

163 |
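In gan.py the combined model reuses `binary_crossentropy`, but the generator is trained with the `valid` (all-ones) labels. For a batch of fakes this makes the generator minimise -log D(G(z)), the common non-saturating alternative to minimising log(1 - D(G(z))), while the discriminator minimises -log(1 - D(G(z))) on the same images. A minimal NumPy sketch, where `bce` is a hand-written helper (not a Keras function) whose clipping is only assumed to resemble what Keras does internally:

```python
import numpy as np

def bce(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))

d_fake = np.array([[0.1], [0.5], [0.9]])  # hypothetical D(G(z)) outputs

# Generator step: fakes labelled `valid` (1), so the loss is -mean(log D(G(z)))
g_loss = bce(np.ones_like(d_fake), d_fake)

# Discriminator step on the same fakes: labelled `fake` (0),
# so the loss is -mean(log(1 - D(G(z))))
d_loss_fake = bce(np.zeros_like(d_fake), d_fake)

print(g_loss, d_loss_fake)
```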

--------------------------------------------------------------------------------

/bgan/bgan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout

5 | from keras.layers import BatchNormalization, Activation, ZeroPadding2D

6 | from keras.layers.advanced_activations import LeakyReLU

7 | from keras.layers.convolutional import UpSampling2D, Conv2D

8 | from keras.models import Sequential, Model

9 | from keras.optimizers import Adam

10 | import keras.backend as K

11 |

12 | import matplotlib.pyplot as plt

13 |

14 | import sys

15 |

16 | import numpy as np

17 |

18 | class BGAN():

19 | """Reference: https://wiseodd.github.io/techblog/2017/03/07/boundary-seeking-gan/"""

20 | def __init__(self):

21 | self.img_rows = 28

22 | self.img_cols = 28

23 | self.channels = 1

24 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

25 | self.latent_dim = 100

26 |

27 | optimizer = Adam(0.0002, 0.5)

28 |

29 | # Build and compile the discriminator

30 | self.discriminator = self.build_discriminator()

31 | self.discriminator.compile(loss='binary_crossentropy',

32 | optimizer=optimizer,

33 | metrics=['accuracy'])

34 |

35 | # Build the generator

36 | self.generator = self.build_generator()

37 |

38 | # The generator takes noise as input and generates imgs

39 | z = Input(shape=(self.latent_dim,))

40 | img = self.generator(z)

41 |

42 | # For the combined model we will only train the generator

43 | self.discriminator.trainable = False

44 |

45 | # The discriminator takes generated images as input and determines validity

46 | valid = self.discriminator(img)

47 |

48 | # The combined model (stacked generator and discriminator)

49 | # Trains the generator to fool the discriminator

50 | self.combined = Model(z, valid)

51 | self.combined.compile(loss=self.boundary_loss, optimizer=optimizer)

52 |

53 | def build_generator(self):

54 |

55 | model = Sequential()

56 |

57 | model.add(Dense(256, input_dim=self.latent_dim))

58 | model.add(LeakyReLU(alpha=0.2))

59 | model.add(BatchNormalization(momentum=0.8))

60 | model.add(Dense(512))

61 | model.add(LeakyReLU(alpha=0.2))

62 | model.add(BatchNormalization(momentum=0.8))

63 | model.add(Dense(1024))

64 | model.add(LeakyReLU(alpha=0.2))

65 | model.add(BatchNormalization(momentum=0.8))

66 | model.add(Dense(np.prod(self.img_shape), activation='tanh'))

67 | model.add(Reshape(self.img_shape))

68 |

69 | model.summary()

70 |

71 | noise = Input(shape=(self.latent_dim,))

72 | img = model(noise)

73 |

74 | return Model(noise, img)

75 |

76 | def build_discriminator(self):

77 |

78 | model = Sequential()

79 |

80 | model.add(Flatten(input_shape=self.img_shape))

81 | model.add(Dense(512))

82 | model.add(LeakyReLU(alpha=0.2))

83 | model.add(Dense(256))

84 | model.add(LeakyReLU(alpha=0.2))

85 | model.add(Dense(1, activation='sigmoid'))

86 | model.summary()

87 |

88 | img = Input(shape=self.img_shape)

89 | validity = model(img)

90 |

91 | return Model(img, validity)

92 |

93 | def boundary_loss(self, y_true, y_pred):

94 | """

95 | Boundary seeking loss.

96 | Reference: https://wiseodd.github.io/techblog/2017/03/07/boundary-seeking-gan/

97 | """

98 | return 0.5 * K.mean((K.log(y_pred) - K.log(1 - y_pred))**2)

99 |

100 | def train(self, epochs, batch_size=128, sample_interval=50):

101 |

102 | # Load the dataset

103 | (X_train, _), (_, _) = mnist.load_data()

104 |

105 | # Rescale -1 to 1

106 | X_train = X_train / 127.5 - 1.

107 | X_train = np.expand_dims(X_train, axis=3)

108 |

109 | # Adversarial ground truths

110 | valid = np.ones((batch_size, 1))

111 | fake = np.zeros((batch_size, 1))

112 |

113 | for epoch in range(epochs):

114 |

115 | # ---------------------

116 | # Train Discriminator

117 | # ---------------------

118 |

119 | # Select a random batch of images

120 | idx = np.random.randint(0, X_train.shape[0], batch_size)

121 | imgs = X_train[idx]

122 |

123 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

124 |

125 | # Generate a batch of new images

126 | gen_imgs = self.generator.predict(noise)

127 |

128 | # Train the discriminator

129 | d_loss_real = self.discriminator.train_on_batch(imgs, valid)

130 | d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

131 | d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

132 |

133 |

134 | # ---------------------

135 | # Train Generator

136 | # ---------------------

137 |

138 | g_loss = self.combined.train_on_batch(noise, valid)

139 |

140 | # Plot the progress

141 | print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

142 |

143 | # If at save interval => save generated image samples

144 | if epoch % sample_interval == 0:

145 | self.sample_images(epoch)

146 |

147 | def sample_images(self, epoch):

148 | r, c = 5, 5

149 | noise = np.random.normal(0, 1, (r * c, self.latent_dim))

150 | gen_imgs = self.generator.predict(noise)

151 | # Rescale images 0 - 1

152 | gen_imgs = 0.5 * gen_imgs + 0.5

153 |

154 | fig, axs = plt.subplots(r, c)

155 | cnt = 0

156 | for i in range(r):

157 | for j in range(c):

158 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

159 | axs[i,j].axis('off')

160 | cnt += 1

161 | fig.savefig("images/mnist_%d.png" % epoch)

162 | plt.close()

163 |

164 |

165 | if __name__ == '__main__':

166 | bgan = BGAN()

167 | bgan.train(epochs=30000, batch_size=32, sample_interval=200)

168 |
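The boundary-seeking loss in bgan.py equals half the squared logit of D(G(z)), so it reaches zero when the discriminator outputs 0.5, i.e. when generated samples sit on the decision boundary. Note that `boundary_loss` never reads `y_true`, so the `valid` labels passed to `combined.train_on_batch` do not affect the generator update. A minimal NumPy sketch; the clipping to [eps, 1 - eps] is an assumption added here to avoid log(0) when the sigmoid saturates, and is not part of bgan.py:

```python
import numpy as np

def boundary_loss(d_fake, eps=1e-7):
    """0.5 * mean((log D - log(1 - D))^2), i.e. half the squared logit of D.

    The clip is an addition for numerical safety, not in the original loss."""
    d = np.clip(d_fake, eps, 1.0 - eps)
    return 0.5 * np.mean((np.log(d) - np.log(1.0 - d)) ** 2)

# Zero exactly on the boundary D = 0.5, growing as D moves toward 0 or 1
print(boundary_loss(np.full((3, 1), 0.5)))  # 0.0
print(boundary_loss(np.array([[0.9]])))
```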

--------------------------------------------------------------------------------

/dcgan/dcgan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout

5 | from keras.layers import BatchNormalization, Activation, ZeroPadding2D

6 | from keras.layers.advanced_activations import LeakyReLU

7 | from keras.layers.convolutional import UpSampling2D, Conv2D

8 | from keras.models import Sequential, Model

9 | from keras.optimizers import Adam

10 |

11 | import matplotlib.pyplot as plt

12 |

13 | import sys

14 |

15 | import numpy as np

16 |

17 | class DCGAN():

18 | def __init__(self):

19 | # Input shape

20 | self.img_rows = 28

21 | self.img_cols = 28

22 | self.channels = 1

23 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

24 | self.latent_dim = 100

25 |

26 | optimizer = Adam(0.0002, 0.5)

27 |

28 | # Build and compile the discriminator

29 | self.discriminator = self.build_discriminator()

30 | self.discriminator.compile(loss='binary_crossentropy',

31 | optimizer=optimizer,

32 | metrics=['accuracy'])

33 |

34 | # Build the generator

35 | self.generator = self.build_generator()

36 |

37 | # The generator takes noise as input and generates imgs

38 | z = Input(shape=(self.latent_dim,))

39 | img = self.generator(z)

40 |

41 | # For the combined model we will only train the generator

42 | self.discriminator.trainable = False

43 |

44 | # The discriminator takes generated images as input and determines validity

45 | valid = self.discriminator(img)

46 |

47 | # The combined model (stacked generator and discriminator)

48 | # Trains the generator to fool the discriminator

49 | self.combined = Model(z, valid)

50 | self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

51 |

52 | def build_generator(self):

53 |

54 | model = Sequential()

55 |

56 | model.add(Dense(128 * 7 * 7, activation="relu", input_dim=self.latent_dim))

57 | model.add(Reshape((7, 7, 128)))

58 | model.add(UpSampling2D())

59 | model.add(Conv2D(128, kernel_size=3, padding="same"))

60 | model.add(BatchNormalization(momentum=0.8))

61 | model.add(Activation("relu"))

62 | model.add(UpSampling2D())

63 | model.add(Conv2D(64, kernel_size=3, padding="same"))

64 | model.add(BatchNormalization(momentum=0.8))

65 | model.add(Activation("relu"))

66 | model.add(Conv2D(self.channels, kernel_size=3, padding="same"))

67 | model.add(Activation("tanh"))

68 |

69 | model.summary()

70 |

71 | noise = Input(shape=(self.latent_dim,))

72 | img = model(noise)

73 |

74 | return Model(noise, img)

75 |

76 | def build_discriminator(self):

77 |

78 | model = Sequential()

79 |

80 | model.add(Conv2D(32, kernel_size=3, strides=2, input_shape=self.img_shape, padding="same"))

81 | model.add(LeakyReLU(alpha=0.2))

82 | model.add(Dropout(0.25))

83 | model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))

84 | model.add(ZeroPadding2D(padding=((0,1),(0,1))))

85 | model.add(BatchNormalization(momentum=0.8))

86 | model.add(LeakyReLU(alpha=0.2))

87 | model.add(Dropout(0.25))

88 | model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

89 | model.add(BatchNormalization(momentum=0.8))

90 | model.add(LeakyReLU(alpha=0.2))

91 | model.add(Dropout(0.25))

92 | model.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))

93 | model.add(BatchNormalization(momentum=0.8))

94 | model.add(LeakyReLU(alpha=0.2))

95 | model.add(Dropout(0.25))

96 | model.add(Flatten())

97 | model.add(Dense(1, activation='sigmoid'))

98 |

99 | model.summary()

100 |

101 | img = Input(shape=self.img_shape)

102 | validity = model(img)

103 |

104 | return Model(img, validity)

105 |

106 | def train(self, epochs, batch_size=128, save_interval=50):

107 |

108 | # Load the dataset

109 | (X_train, _), (_, _) = mnist.load_data()

110 |

111 | # Rescale -1 to 1

112 | X_train = X_train / 127.5 - 1.

113 | X_train = np.expand_dims(X_train, axis=3)

114 |

115 | # Adversarial ground truths

116 | valid = np.ones((batch_size, 1))

117 | fake = np.zeros((batch_size, 1))

118 |

119 | for epoch in range(epochs):

120 |

121 | # ---------------------

122 | # Train Discriminator

123 | # ---------------------

124 |

125 | # Select a random batch of images

126 | idx = np.random.randint(0, X_train.shape[0], batch_size)

127 | imgs = X_train[idx]

128 |

129 | # Sample noise and generate a batch of new images

130 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

131 | gen_imgs = self.generator.predict(noise)

132 |

133 | # Train the discriminator (real classified as ones and generated as zeros)

134 | d_loss_real = self.discriminator.train_on_batch(imgs, valid)

135 | d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

136 | d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

137 |

138 | # ---------------------

139 | # Train Generator

140 | # ---------------------

141 |

142 | # Train the generator (wants discriminator to mistake images as real)

143 | g_loss = self.combined.train_on_batch(noise, valid)

144 |

145 | # Plot the progress

146 | print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

147 |

148 | # If at save interval => save generated image samples

149 | if epoch % save_interval == 0:

150 | self.save_imgs(epoch)

151 |

152 | def save_imgs(self, epoch):

153 | r, c = 5, 5

154 | noise = np.random.normal(0, 1, (r * c, self.latent_dim))

155 | gen_imgs = self.generator.predict(noise)

156 |

157 | # Rescale images 0 - 1

158 | gen_imgs = 0.5 * gen_imgs + 0.5

159 |

160 | fig, axs = plt.subplots(r, c)

161 | cnt = 0

162 | for i in range(r):

163 | for j in range(c):

164 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

165 | axs[i,j].axis('off')

166 | cnt += 1

167 | fig.savefig("images/mnist_%d.png" % epoch)

168 | plt.close()

169 |

170 |

171 | if __name__ == '__main__':

172 | dcgan = DCGAN()

173 | dcgan.train(epochs=4000, batch_size=32, save_interval=50)

174 |
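The DCGAN layer sizes fit together as follows. A small pure-Python sketch that tracks the spatial size through each layer, assuming the usual Keras rule that a `padding="same"` convolution with stride s maps size n to ceil(n / s); `same_conv` is a hypothetical helper, not part of the repo:

```python
import math

def same_conv(size, stride):
    """Output size of a 'same'-padded convolution: ceil(size / stride)."""
    return math.ceil(size / stride)

# Generator: Dense -> Reshape to (7, 7, 128), then two UpSampling2D layers (x2 each)
g = 7
for _ in range(2):
    g *= 2
print(g)  # 28, matching img_rows / img_cols

# Discriminator: strided convs, with ZeroPadding2D((0,1),(0,1)) after the second
d = same_conv(28, 2)   # 14
d = same_conv(d, 2)    # 7
d += 1                 # ZeroPadding2D adds one row and one column -> 8
d = same_conv(d, 2)    # 4
d = same_conv(d, 1)    # 4
print(d, d * d * 256)  # 4 4096 features reach Flatten / Dense(1)
```

The sketch also suggests that the ZeroPadding2D layer does not change the final 4x4 size, since ceil(7 / 2) is already 4; it appears to change only where the zeros are placed.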

--------------------------------------------------------------------------------

/bigan/bigan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout, multiply, GaussianNoise

5 | from keras.layers import BatchNormalization, Activation, Embedding, ZeroPadding2D

6 | from keras.layers import MaxPooling2D, concatenate

7 | from keras.layers.advanced_activations import LeakyReLU

8 | from keras.layers.convolutional import UpSampling2D, Conv2D

9 | from keras.models import Sequential, Model

10 | from keras.optimizers import Adam

11 | from keras import losses

12 | from keras.utils import to_categorical

13 | import keras.backend as K

14 |

15 | import matplotlib.pyplot as plt

16 |

17 | import numpy as np

18 |

19 | class BIGAN():

20 | def __init__(self):

21 | self.img_rows = 28

22 | self.img_cols = 28

23 | self.channels = 1

24 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

25 | self.latent_dim = 100

26 |

27 | optimizer = Adam(0.0002, 0.5)

28 |

29 | # Build and compile the discriminator

30 | self.discriminator = self.build_discriminator()

31 | self.discriminator.compile(loss=['binary_crossentropy'],

32 | optimizer=optimizer,

33 | metrics=['accuracy'])

34 |

35 | # Build the generator

36 | self.generator = self.build_generator()

37 |

38 | # Build the encoder

39 | self.encoder = self.build_encoder()

40 |

41 | # The part of the BiGAN that trains the generator and encoder (discriminator frozen)

42 | self.discriminator.trainable = False

43 |

44 | # Generate image from sampled noise

45 | z = Input(shape=(self.latent_dim, ))

46 | img_ = self.generator(z)

47 |

48 | # Encode image

49 | img = Input(shape=self.img_shape)

50 | z_ = self.encoder(img)

51 |

52 | # Latent -> img is fake, and img -> latent is valid

53 | fake = self.discriminator([z, img_])

54 | valid = self.discriminator([z_, img])

55 |

56 | # Set up and compile the combined model

57 | # Trains generator to fool the discriminator

58 | self.bigan_generator = Model([z, img], [fake, valid])

59 | self.bigan_generator.compile(loss=['binary_crossentropy', 'binary_crossentropy'],

60 | optimizer=optimizer)

61 |

62 |

63 | def build_encoder(self):

64 | model = Sequential()

65 |

66 | model.add(Flatten(input_shape=self.img_shape))

67 | model.add(Dense(512))

68 | model.add(LeakyReLU(alpha=0.2))

69 | model.add(BatchNormalization(momentum=0.8))

70 | model.add(Dense(512))

71 | model.add(LeakyReLU(alpha=0.2))

72 | model.add(BatchNormalization(momentum=0.8))

73 | model.add(Dense(self.latent_dim))

74 |

75 | model.summary()

76 |

77 | img = Input(shape=self.img_shape)

78 | z = model(img)

79 |

80 | return Model(img, z)

81 |

82 | def build_generator(self):

83 | model = Sequential()

84 |

85 | model.add(Dense(512, input_dim=self.latent_dim))

86 | model.add(LeakyReLU(alpha=0.2))

87 | model.add(BatchNormalization(momentum=0.8))

88 | model.add(Dense(512))

89 | model.add(LeakyReLU(alpha=0.2))

90 | model.add(BatchNormalization(momentum=0.8))

91 | model.add(Dense(np.prod(self.img_shape), activation='tanh'))

92 | model.add(Reshape(self.img_shape))

93 |

94 | model.summary()

95 |

96 | z = Input(shape=(self.latent_dim,))

97 | gen_img = model(z)

98 |

99 | return Model(z, gen_img)

100 |

101 | def build_discriminator(self):

102 |

103 | z = Input(shape=(self.latent_dim, ))

104 | img = Input(shape=self.img_shape)

105 | d_in = concatenate([z, Flatten()(img)])

106 |

107 | model = Dense(1024)(d_in)

108 | model = LeakyReLU(alpha=0.2)(model)

109 | model = Dropout(0.5)(model)

110 | model = Dense(1024)(model)

111 | model = LeakyReLU(alpha=0.2)(model)

112 | model = Dropout(0.5)(model)

113 | model = Dense(1024)(model)

114 | model = LeakyReLU(alpha=0.2)(model)

115 | model = Dropout(0.5)(model)

116 | validity = Dense(1, activation="sigmoid")(model)

117 |

118 | return Model([z, img], validity)

119 |

120 | def train(self, epochs, batch_size=128, sample_interval=50):

121 |

122 | # Load the dataset

123 | (X_train, _), (_, _) = mnist.load_data()

124 |

125 | # Rescale -1 to 1

126 | X_train = (X_train.astype(np.float32) - 127.5) / 127.5

127 | X_train = np.expand_dims(X_train, axis=3)

128 |

129 | # Adversarial ground truths

130 | valid = np.ones((batch_size, 1))

131 | fake = np.zeros((batch_size, 1))

132 |

133 | for epoch in range(epochs):

134 |

135 |

136 | # ---------------------

137 | # Train Discriminator

138 | # ---------------------

139 |

140 | # Sample noise and generate img

141 | z = np.random.normal(size=(batch_size, self.latent_dim))

142 | imgs_ = self.generator.predict(z)

143 |

144 | # Select a random batch of images and encode

145 | idx = np.random.randint(0, X_train.shape[0], batch_size)

146 | imgs = X_train[idx]

147 | z_ = self.encoder.predict(imgs)

148 |

149 | # Train the discriminator (img -> z is valid, z -> img is fake)

150 | d_loss_real = self.discriminator.train_on_batch([z_, imgs], valid)

151 | d_loss_fake = self.discriminator.train_on_batch([z, imgs_], fake)

152 | d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

153 |

154 | # ---------------------

155 | # Train Generator

156 | # ---------------------

157 |

158 | # Train the generator (z -> img is valid and img -> z is invalid)

159 | g_loss = self.bigan_generator.train_on_batch([z, imgs], [valid, fake])

160 |

161 | # Plot the progress

162 | print ("%d [D loss: %f, acc: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss[0]))

163 |

164 | # If at save interval => save generated image samples

165 | if epoch % sample_interval == 0:

166 | self.sample_images(epoch)

167 |

168 | def sample_images(self, epoch):

169 | r, c = 5, 5

170 | z = np.random.normal(size=(r * c, self.latent_dim))

171 | gen_imgs = self.generator.predict(z)

172 |

173 | gen_imgs = 0.5 * gen_imgs + 0.5

174 |

175 | fig, axs = plt.subplots(r, c)

176 | cnt = 0

177 | for i in range(r):

178 | for j in range(c):

179 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

180 | axs[i,j].axis('off')

181 | cnt += 1

182 | fig.savefig("images/mnist_%d.png" % epoch)

183 | plt.close()

184 |

185 |

186 | if __name__ == '__main__':

187 | bigan = BIGAN()

188 | bigan.train(epochs=40000, batch_size=32, sample_interval=400)

189 |
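In bigan.py the discriminator judges (latent, image) pairs rather than images alone, and the generator/encoder step in `train()` reuses the same two kinds of pair with the labels swapped. A minimal NumPy sketch of the discriminator's input layout and label arrangement, assuming a batch of 4 and zero-filled stand-in images; the dictionary keys are hypothetical names used only for illustration:

```python
import numpy as np

batch_size, latent_dim, img_shape = 4, 100, (28, 28, 1)

z = np.random.normal(size=(batch_size, latent_dim))  # samples from the prior
imgs = np.zeros((batch_size,) + img_shape)            # stand-in for real MNIST images

# build_discriminator() concatenates z with the flattened image, so each row
# of the discriminator input is one (latent, image) pair: 100 + 28*28 = 884 values
d_in = np.concatenate([z, imgs.reshape(batch_size, -1)], axis=1)
print(d_in.shape)  # (4, 884)

valid = np.ones((batch_size, 1))
fake = np.zeros((batch_size, 1))

# Discriminator step: (E(x), x) -> valid, (z, G(z)) -> fake
d_targets = {"encoder_pair": valid, "generator_pair": fake}
# Generator/encoder step: the same two pairs with the labels swapped
g_targets = {"encoder_pair": fake, "generator_pair": valid}
```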

--------------------------------------------------------------------------------

/wgan/wgan.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function, division

2 |

3 | from keras.datasets import mnist

4 | from keras.layers import Input, Dense, Reshape, Flatten, Dropout

5 | from keras.layers import BatchNormalization, Activation, ZeroPadding2D

6 | from keras.layers.advanced_activations import LeakyReLU

7 | from keras.layers.convolutional import UpSampling2D, Conv2D

8 | from keras.models import Sequential, Model

9 | from keras.optimizers import RMSprop

10 |

11 | import keras.backend as K

12 |

13 | import matplotlib.pyplot as plt

14 |

15 | import sys

16 |

17 | import numpy as np

18 |

19 | class WGAN():

20 | def __init__(self):

21 | self.img_rows = 28

22 | self.img_cols = 28

23 | self.channels = 1

24 | self.img_shape = (self.img_rows, self.img_cols, self.channels)

25 | self.latent_dim = 100

26 |

27 | # The following parameters and optimizer are set as recommended in the paper

28 | self.n_critic = 5

29 | self.clip_value = 0.01

30 | optimizer = RMSprop(lr=0.00005)

31 |

32 | # Build and compile the critic

33 | self.critic = self.build_critic()

34 | self.critic.compile(loss=self.wasserstein_loss,

35 | optimizer=optimizer,

36 | metrics=['accuracy'])

37 |

38 | # Build the generator

39 | self.generator = self.build_generator()

40 |

41 | # The generator takes noise as input and generates imgs

42 | z = Input(shape=(self.latent_dim,))

43 | img = self.generator(z)

44 |

45 | # For the combined model we will only train the generator

46 | self.critic.trainable = False

47 |

48 | # The critic takes generated images as input and determines validity

49 | valid = self.critic(img)

50 |

51 | # The combined model (stacked generator and critic)

52 | self.combined = Model(z, valid)

53 | self.combined.compile(loss=self.wasserstein_loss,

54 | optimizer=optimizer,

55 | metrics=['accuracy'])

56 |

57 | def wasserstein_loss(self, y_true, y_pred):

58 | return K.mean(y_true * y_pred)

59 |

60 | def build_generator(self):

61 |

62 | model = Sequential()

63 |

64 | model.add(Dense(128 * 7 * 7, activation="relu", input_dim=self.latent_dim))

65 | model.add(Reshape((7, 7, 128)))

66 | model.add(UpSampling2D())

67 | model.add(Conv2D(128, kernel_size=4, padding="same"))

68 | model.add(BatchNormalization(momentum=0.8))

69 | model.add(Activation("relu"))

70 | model.add(UpSampling2D())

71 | model.add(Conv2D(64, kernel_size=4, padding="same"))

72 | model.add(BatchNormalization(momentum=0.8))

73 | model.add(Activation("relu"))

74 | model.add(Conv2D(self.channels, kernel_size=4, padding="same"))

75 | model.add(Activation("tanh"))

76 |

77 | model.summary()

78 |

79 | noise = Input(shape=(self.latent_dim,))

80 | img = model(noise)

81 |

82 | return Model(noise, img)

83 |

84 | def build_critic(self):

85 |

86 | model = Sequential()

87 |

88 | model.add(Conv2D(16, kernel_size=3, strides=2, input_shape=self.img_shape, padding="same"))

89 | model.add(LeakyReLU(alpha=0.2))

90 | model.add(Dropout(0.25))

91 | model.add(Conv2D(32, kernel_size=3, strides=2, padding="same"))

92 | model.add(ZeroPadding2D(padding=((0,1),(0,1))))

93 | model.add(BatchNormalization(momentum=0.8))

94 | model.add(LeakyReLU(alpha=0.2))

95 | model.add(Dropout(0.25))

96 | model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))

97 | model.add(BatchNormalization(momentum=0.8))

98 | model.add(LeakyReLU(alpha=0.2))

99 | model.add(Dropout(0.25))

100 | model.add(Conv2D(128, kernel_size=3, strides=1, padding="same"))

101 | model.add(BatchNormalization(momentum=0.8))

102 | model.add(LeakyReLU(alpha=0.2))

103 | model.add(Dropout(0.25))

104 | model.add(Flatten())

105 | model.add(Dense(1))

106 |

107 | model.summary()

108 |

109 | img = Input(shape=self.img_shape)

110 | validity = model(img)

111 |

112 | return Model(img, validity)

113 |

114 | def train(self, epochs, batch_size=128, sample_interval=50):

115 |

116 | # Load the dataset

117 | (X_train, _), (_, _) = mnist.load_data()

118 |

119 | # Rescale -1 to 1

120 | X_train = (X_train.astype(np.float32) - 127.5) / 127.5

121 | X_train = np.expand_dims(X_train, axis=3)

122 |

123 | # Adversarial ground truths

124 | valid = -np.ones((batch_size, 1))

125 | fake = np.ones((batch_size, 1))

126 |

127 | for epoch in range(epochs):

128 |

129 | for _ in range(self.n_critic):

130 |

131 | # ---------------------

132 | # Train Critic

133 | # ---------------------

134 |

135 | # Select a random batch of images

136 | idx = np.random.randint(0, X_train.shape[0], batch_size)

137 | imgs = X_train[idx]

138 |

139 | # Sample noise as generator input

140 | noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

141 |

142 | # Generate a batch of new images

143 | gen_imgs = self.generator.predict(noise)

144 |

145 | # Train the critic

146 | d_loss_real = self.critic.train_on_batch(imgs, valid)

147 | d_loss_fake = self.critic.train_on_batch(gen_imgs, fake)

148 | d_loss = 0.5 * np.add(d_loss_fake, d_loss_real)

149 |

150 | # Clip critic weights

151 | for l in self.critic.layers:

152 | weights = l.get_weights()

153 | weights = [np.clip(w, -self.clip_value, self.clip_value) for w in weights]

154 | l.set_weights(weights)

155 |

156 |

157 | # ---------------------

158 | # Train Generator

159 | # ---------------------

160 |

161 | g_loss = self.combined.train_on_batch(noise, valid)

162 |

163 | # Plot the progress

164 | print ("%d [D loss: %f] [G loss: %f]" % (epoch, 1 - d_loss[0], 1 - g_loss[0]))

165 |

166 | # If at save interval => save generated image samples

167 | if epoch % sample_interval == 0:

168 | self.sample_images(epoch)

169 |

170 | def sample_images(self, epoch):

171 | r, c = 5, 5

172 | noise = np.random.normal(0, 1, (r * c, self.latent_dim))

173 | gen_imgs = self.generator.predict(noise)

174 |

175 | # Rescale images 0 - 1

176 | gen_imgs = 0.5 * gen_imgs + 0.5

177 |

178 | fig, axs = plt.subplots(r, c)

179 | cnt = 0

180 | for i in range(r):

181 | for j in range(c):

182 | axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

183 | axs[i,j].axis('off')

184 | cnt += 1

185 | fig.savefig("images/mnist_%d.png" % epoch)

186 | plt.close()

187 |

188 |

189 | if __name__ == '__main__':

190 | wgan = WGAN()

191 | wgan.train(epochs=4000, batch_size=32, sample_interval=50)
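The training loop above labels real images `-1` and generated images `+1`, then averages the two critic losses. Assuming the critic is compiled with a loss that averages `y_true * y_pred` (the loss definition sits outside this excerpt, so treat that as an assumption), the reported critic loss is minus half the gap between the mean critic scores on real and generated images. The NumPy sketch below reproduces that arithmetic without Keras; the function names and the score values are hypothetical.

```python
import numpy as np


def wasserstein_loss(y_true, y_pred):
    """Mean of target * critic score (assumed to match the critic's Keras loss)."""
    return np.mean(y_true * y_pred)


def critic_loss(scores_real, scores_fake):
    """Average of the real-batch and fake-batch losses, mirroring train()."""
    valid = -np.ones_like(scores_real)  # target for real images
    fake = np.ones_like(scores_fake)    # target for generated images
    d_loss_real = wasserstein_loss(valid, scores_real)
    d_loss_fake = wasserstein_loss(fake, scores_fake)
    return 0.5 * (d_loss_fake + d_loss_real)


def generator_loss(scores_fake):
    """Generator step: generated images are scored against the 'valid' (-1) target."""
    return wasserstein_loss(-np.ones_like(scores_fake), scores_fake)


scores_real = np.array([[2.0], [1.0]])   # hypothetical critic outputs on real images
scores_fake = np.array([[-1.0], [0.0]])  # hypothetical critic outputs on fakes

# Equals -0.5 * (mean(scores_real) - mean(scores_fake)) = -0.5 * (1.5 - (-0.5))
print(critic_loss(scores_real, scores_fake))  # -1.0
print(generator_loss(scores_fake))            # 0.5
```

Minimizing the critic loss pushes real scores up and generated scores down, so as the critic learns to separate the two, its raw loss becomes more negative. The generator, trained against the `-1` target, is pushed to raise the critic's scores on its samples.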

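After every critic update, the loop clips each weight array of each critic layer into `[-clip_value, clip_value]`, the crude Lipschitz constraint used by the original WGAN. The sketch below reproduces that step in plain NumPy so it runs without Keras: each toy "layer" is a list of arrays standing in for what `layer.get_weights()` returns, and `clip_critic_weights` is a hypothetical helper name. The bound of `0.01` is an assumption; `self.clip_value` is set outside this excerpt, and 0.01 is the value suggested in the WGAN paper.

```python
import numpy as np


def clip_critic_weights(layer_weights, clip_value):
    """Return copies of every weight array clipped to [-clip_value, clip_value].

    `layer_weights` holds one entry per layer; each entry is the list of arrays
    a Keras layer's get_weights() would return (hypothetical stand-in).
    """
    return [[np.clip(w, -clip_value, clip_value) for w in weights]
            for weights in layer_weights]


# Two toy "layers": a dense kernel plus bias, and a layer with no parameters.
layers = [
    [np.array([[0.5, -0.002], [-0.3, 0.01]]), np.array([0.02, -0.05])],
    [],
]

clipped = clip_critic_weights(layers, clip_value=0.01)
print(clipped[0][0])
print(clipped[0][1])
```

Layers without parameters (such as `Flatten`, `LeakyReLU` or `Dropout`) return an empty list from `get_weights()`, so the clipping loop passes over them unchanged, as the second toy layer shows. `np.clip` returns new arrays, so the inputs are left untouched; the Keras loop writes the clipped copies back with `set_weights`.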

--------------------------------------------------------------------------------

/cgan/cgan.py:

--------------------------------------------------------------------------------

from __future__ import print_function, division

from keras.datasets import mnist
from keras.layers import Input, Dense, Reshape, Flatten, Dropout, multiply
from keras.layers import BatchNormalization, Activation, Embedding, ZeroPadding2D
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.convolutional import UpSampling2D, Conv2D
from keras.models import Sequential, Model
from keras.optimizers import Adam

import matplotlib.pyplot as plt

import numpy as np

class CGAN():
    def __init__(self):
        # Input shape
        self.img_rows = 28
        self.img_cols = 28
        self.channels = 1
        self.img_shape = (self.img_rows, self.img_cols, self.channels)
        self.num_classes = 10
        self.latent_dim = 100

        optimizer = Adam(0.0002, 0.5)

        # Build and compile the discriminator
        self.discriminator = self.build_discriminator()
        self.discriminator.compile(loss=['binary_crossentropy'],
            optimizer=optimizer,
            metrics=['accuracy'])

        # Build the generator
        self.generator = self.build_generator()

        # The generator takes noise and the target label as input
        # and generates the corresponding digit of that label
        noise = Input(shape=(self.latent_dim,))
        label = Input(shape=(1,), dtype='int32')
        img = self.generator([noise, label])

        # For the combined model we will only train the generator
        self.discriminator.trainable = False

        # The discriminator takes the generated image and its label as input
        # and determines the validity of that image-label pair
        valid = self.discriminator([img, label])

        # The combined model (stacked generator and discriminator)
        # Trains generator to fool discriminator
        self.combined = Model([noise, label], valid)
        self.combined.compile(loss=['binary_crossentropy'],
            optimizer=optimizer)

    def build_generator(self):

        model = Sequential()

        model.add(Dense(256, input_dim=self.latent_dim))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(1024))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(np.prod(self.img_shape), activation='tanh'))
        model.add(Reshape(self.img_shape))

        model.summary()

        noise = Input(shape=(self.latent_dim,))
        label = Input(shape=(1,), dtype='int32')
        # Map each class label to a learned vector of size latent_dim
        label_embedding = Flatten()(Embedding(self.num_classes, self.latent_dim)(label))

        # Condition the noise on the label by element-wise multiplication
        model_input = multiply([noise, label_embedding])
        img = model(model_input)

        return Model([noise, label], img)

    def build_discriminator(self):

        model = Sequential()

        model.add(Dense(512, input_dim=np.prod(self.img_shape)))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dropout(0.4))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dropout(0.4))
        model.add(Dense(1, activation='sigmoid'))
        model.summary()

        img = Input(shape=self.img_shape)
        label = Input(shape=(1,), dtype='int32')

        # Map each class label to a learned vector with one entry per pixel
        label_embedding = Flatten()(Embedding(self.num_classes, np.prod(self.img_shape))(label))
        flat_img = Flatten()(img)

        # Condition the flattened image on the label by element-wise multiplication
        model_input = multiply([flat_img, label_embedding])

        validity = model(model_input)