├── .gitignore

├── LICENSE

├── README.md

├── code

├── .gitkeep

├── draw_detail_2D.py

├── draw_detail_3D.py

├── draw_weight.py

├── gen_noise.py

└── gen_resnet.py

├── data

├── .gitkeep

├── casia-webface

│ └── .gitkeep

├── imdb-face

│ └── .gitkeep

└── ms-celeb-1m

│ └── .gitkeep

├── deploy

├── .gitkeep

├── resnet20_arcface_train.prototxt

├── resnet20_l2softmax_train.prototxt

├── resnet64_l2softmax_train.prototxt

└── solver.prototxt

├── figures

├── detail.png

├── strategy.png

├── webface_dist_2D_noise-0.png

├── webface_dist_2D_noise-20.png

├── webface_dist_2D_noise-40.png

├── webface_dist_2D_noise-60.png

├── webface_dist_3D_noise-0.png

├── webface_dist_3D_noise-20.png

├── webface_dist_3D_noise-40.png

└── webface_dist_3D_noise-60.png

└── layer

├── .gitkeep

├── arcface_layer.cpp

├── arcface_layer.cu

├── arcface_layer.hpp

├── caffe.proto

├── noise_tolerant_fr_layer.cpp

├── noise_tolerant_fr_layer.hpp

├── normL2_layer.cpp

├── normL2_layer.cu

└── normL2_layer.hpp

/.gitignore:

--------------------------------------------------------------------------------

1 | # Prerequisites

2 | *.d

3 |

4 | # Compiled Object files

5 | *.slo

6 | *.lo

7 | *.o

8 | *.obj

9 |

10 | # Precompiled Headers

11 | *.gch

12 | *.pch

13 |

14 | # Compiled Dynamic libraries

15 | *.so

16 | *.dylib

17 | *.dll

18 |

19 | # Fortran module files

20 | *.mod

21 | *.smod

22 |

23 | # Compiled Static libraries

24 | *.lai

25 | *.la

26 | *.a

27 | *.lib

28 |

29 | # Executables

30 | *.exe

31 | *.out

32 | *.app

33 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Harry Wong

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Noise-Tolerant Paradigm for Training Face Recognition CNNs

2 |

3 | Paper link: https://arxiv.org/abs/1903.10357

4 |

5 | Presented at **CVPR 2019**

6 |

7 | This is the code for the paper

8 |

9 |

10 | ### Contents

11 | 1. [Requirements](#Requirements)

12 | 1. [Dataset](#Dataset)

13 | 1. [How-to-use](#How-to-use)

14 | 1. [Diagram](#Diagram)

15 | 1. [Performance](#Performance)

16 | 1. [Contact](#Contact)

17 | 1. [Citation](#Citation)

18 | 1. [License](#License)

19 |

20 | ### Requirements

21 |

22 | 1. [**`Caffe`**](http://caffe.berkeleyvision.org/installation.html)

23 |

24 | ### Dataset

25 |

26 | Training dataset:

27 | 1. [**`CASIA-Webface clean`**](https://github.com/happynear/FaceVerification)

28 | 2. [**`IMDB-Face`**](https://github.com/fwang91/IMDb-Face)

29 | 3. [**`MS-Celeb-1M`**](https://www.microsoft.com/en-us/research/project/ms-celeb-1m-challenge-recognizing-one-million-celebrities-real-world/)

30 |

31 | Testing dataset:

32 | 1. [**`LFW`**](http://vis-www.cs.umass.edu/lfw/)

33 | 2. [**`AgeDB`**](https://ibug.doc.ic.ac.uk/resources/agedb/)

34 | 3. [**`CFP`**](http://www.cfpw.io/)

35 | 4. [**`MegaFace`**](http://megaface.cs.washington.edu/)

36 |

37 | Both the training data and testing data are aligned by the method described in [**`util.py`**](https://github.com/huangyangyu/SeqFace/blob/master/code/util.py)

38 |

39 | ### How-to-use

40 | Firstly, you can train the network in noisy dataset as following steps:

41 |

42 | step 1: add noise_tolerant_fr and relevant layers(at ./layers directory) to caffe project and recompile it.

43 |

44 | step 2: download training dataset to ./data directory, and corrupt training dataset in different noise ratio which you can refer to ./code/gen_noise.py, then generate the lmdb file through caffe tool.

45 |

46 | step 3: configure prototxt file in ./deploy directory.

47 |

48 | step 4: run caffe command to train the network by using noisy dataset.

49 |

50 | After training, you can evaluate the model on testing dataset by using [**`evaluate.py`**](https://github.com/huangyangyu/SeqFace/blob/master/code/LFW/evaluate.py).

51 |

52 | ### Diagram

53 |

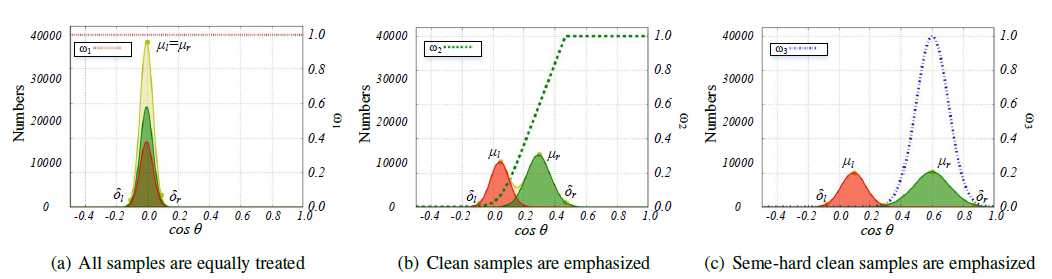

54 | The figure shows three strategies in different purposes. At the beginning of the training process, we focus on all samples; then we focus on easy/clean samples; at last we focus on semi-hard clean samples.

55 |

56 |

57 |

58 |

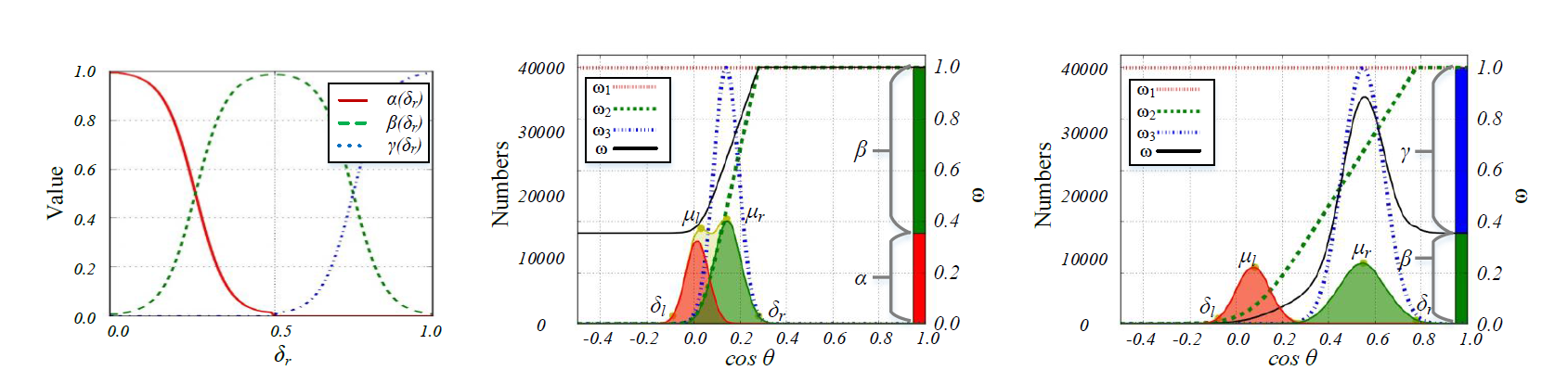

59 | The figure explains the fusion function of three strategies. The left part demonstrates three functions: **α(δr)**, **β(δr)**, and **γ(δr)**. The right part shows two fusion examples. According to the **ω**, we can see that the easy/clean samples are emphasized in the first example(**δr** < 0.5), and the semi-hard clean samples are emphasized in the second example(**δr** > 0.5).

60 | **For more detail, please click here to play [demo video](https://youtu.be/FxRoN_i7FLw).**

61 |

62 |

63 |

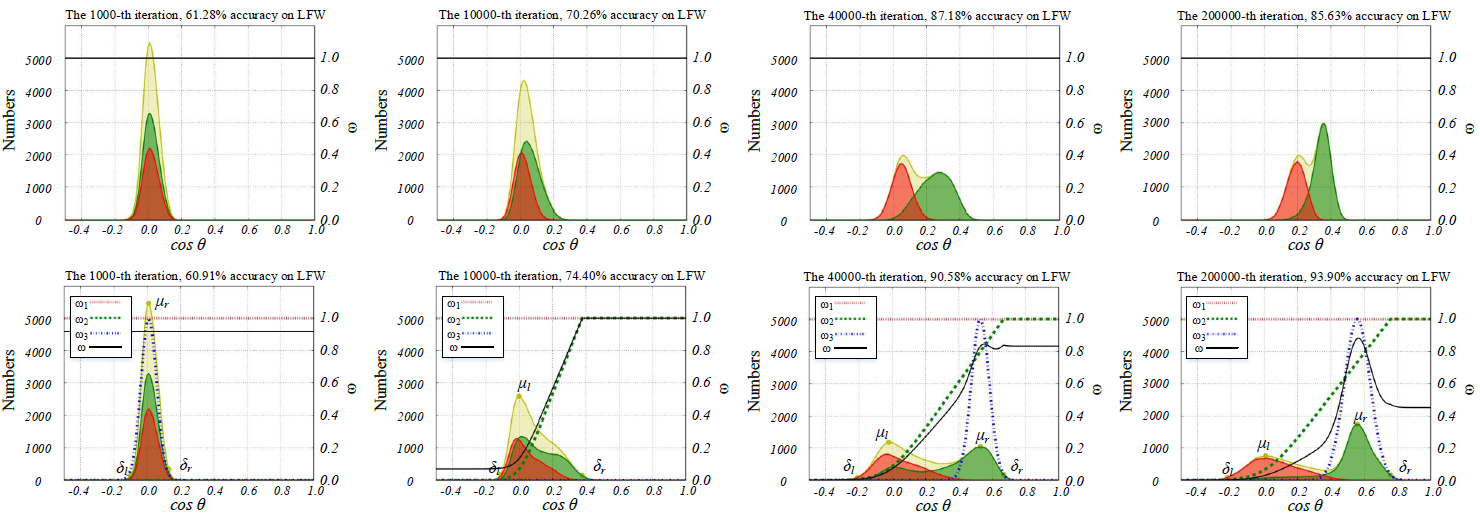

64 | The figure shows the 2D Histall of CNNcommon (up) and CNNm2 (down) under 40% noise rate.

65 |

66 |

67 |

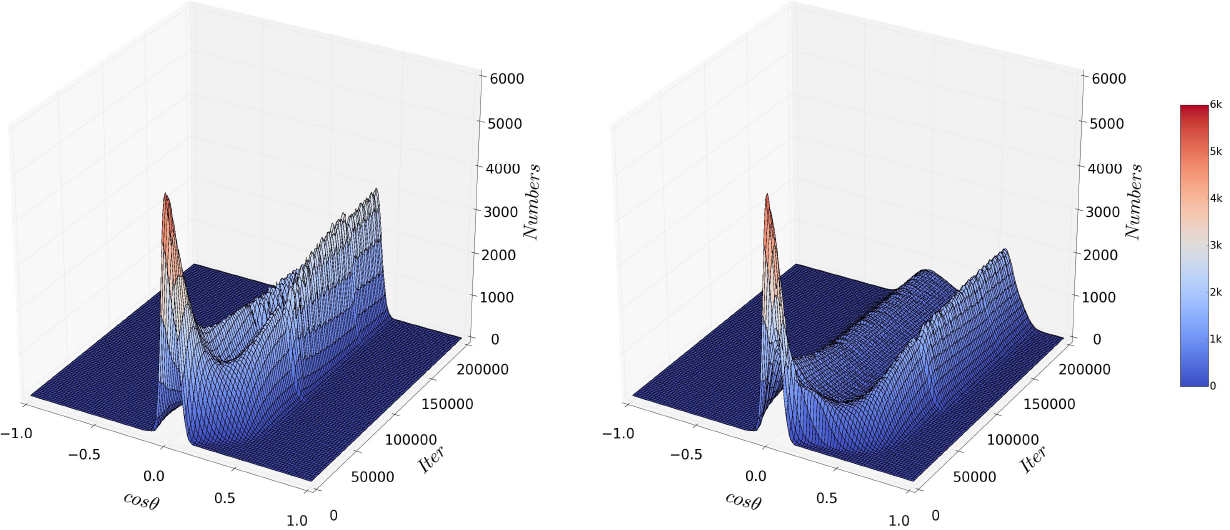

68 | The figure shows the 3D Histall of CNNcommon (left) and CNNm2 (right) under 40% noise rate.

69 |

70 |

71 | ### Performance

72 |

73 | The table shows comparison of accuracies(%) on **LFW**, **ResNet-20** models are used. CNNclean is trained with clean

74 | data WebFace-Clean-Sub by using the traditional method. CNNcommon is trained with noisy dataset WebFace-All by using the traditional method. CNNct is trained

75 | with noisy dataset WebFace-All by using our implemented Co-teaching(with pre-given noise rates). CNNm1 and CNNm2 are all trained with noisy dataset WebFace-All but through the proposed approach, and they respectively use the 1st and 2nd method to compute loss.

76 | Note: The WebFace-Clean-Sub is the clean part of the WebFace-All, the WebFace-All contains noise data with different rate as describe below.

77 |

78 | | Loss | Actual Noise Rate | CNNclean | CNNcommon | CNNct | CNNm1 | CNNm2 | Estimated Noise Rate |

79 | | --------- | ----------------- | ------------------- | -------------------- | ---------------- | ---------------- | ---------------- | -------------------- |

80 | | L2softmax | 0% | 94.65 | 94.65 | - | 95.00 | **96.28** | 2% |

81 | | L2softmax | 20% | 94.18 | 89.05 | 92.12 | 92.95 | **95.26** | 18% |

82 | | L2softmax | 40% | 92.71 | 85.63 | 87.10 | 89.91 | **93.90** | 42% |

83 | | L2softmax | 60% | **91.15** | 76.61 | 83.66 | 86.11 | 87.61 | 56% |

84 | | Arcface | 0% | 97.95 | 97.95 | - | 97.11 | **98.11** | 2% |

85 | | Arcface | 20% | **97.80** | 96.48 | 96.53 | 96.83 | 97.76 | 18% |

86 | | Arcface | 40% | 96.53 | 92.33 | 94.25 | 95.88 | **97.23** | 36% |

87 | | Arcface | 60% | 94.56 | 84.05 | 90.36 | 93.66 | **95.15** | 54% |

88 |

89 |

90 | ### Contact

91 |

92 | [Wei Hu](mailto:huwei@mail.buct.edu.cn)

93 |

94 | [Yangyu Huang](mailto:yangyu.huang.1990@outlook.com)

95 |

96 |

97 | ### Citation

98 |

99 | If you find this work useful in your research, please cite

100 | ```text

101 | @inproceedings{Hu2019NoiseFace,

102 | title = {Noise-Tolerant Paradigm for Training Face Recognition CNNs},

103 | author = {Hu, Wei and Huang, Yangyu and Zhang, Fan and Li, Ruirui},

104 | booktitle = {IEEE Conference on Computer Vision and Pattern Recognition},

105 | month = {June},

106 | year = {2019},

107 | address = {Long Beach, CA}

108 | }

109 | ```

110 |

111 |

112 | ### License

113 |

114 | The project is released under the MIT License

115 |

--------------------------------------------------------------------------------

/code/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/code/.gitkeep

--------------------------------------------------------------------------------

/code/draw_detail_2D.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding: utf-8

3 |

4 | import os

5 | import sys

6 | import cv2

7 | import math

8 | import json

9 | import numpy as np

10 | import matplotlib.pyplot as plt

11 | plt.switch_backend("agg")

12 |

13 | bins = 201

14 |

15 |

16 | def choose_iter(_iter, interval):

17 | if _iter == 1000:

18 | return True

19 | if _iter == 200000:

20 | return True

21 |

22 | k = _iter / interval

23 | if k % 2 != 0 or (k / 2) % (_iter / 20000 + 1) != 0:

24 | return False

25 | else:

26 | return True

27 |

28 |

29 | def get_pdf(X):

30 | pdf = bins * [0.0]

31 | for x in X:

32 | pdf[int(x*100)] += 1

33 | return pdf

34 |

35 |

36 | def mean_filter(pdf, fr=2):

37 | filter_pdf = bins * [0.0]

38 | for i in xrange(fr, bins-fr):

39 | for j in xrange(i-fr, i+fr+1):

40 | filter_pdf[i] += pdf[j] / (fr+fr+1)

41 | return filter_pdf

42 |

43 |

44 | def load_lfw(prefix):

45 | items = list()

46 | for line in open("./data/%s_lfw.txt" % prefix):

47 | item = line.strip().split()

48 | items.append((int(item[0]), float(item[1]), 100.0 * float(item[2])))

49 | items.append(items[-1])

50 | return items

51 |

52 |

53 | def draw_imgs(prefix, ratio, mode):

54 | lfw = load_lfw(prefix)

55 |

56 | frames = 0

57 | iters = list()

58 | threds = list()

59 | accs = list()

60 | alphas = list()

61 | betas = list()

62 | gammas = list()

63 | for _iter in xrange(1000, 200000+1, 100):

64 | if not choose_iter(_iter, 100):

65 | continue

66 | _iter1, _thred1, _acc1 = lfw[_iter/10000]

67 | _iter2, _thred2, _acc2 = lfw[_iter/10000+1]

68 | _thred = _thred1 + (_thred2 - _thred1) * (_iter - _iter1) / max(1, (_iter2 - _iter1))

69 | _acc = _acc1 + (_acc2 - _acc1) * (_iter - _iter1) / max(1, (_iter2 - _iter1))

70 | iters.append(_iter)

71 | threds.append(_thred)

72 | accs.append(_acc)

73 |

74 | log_file = "./data/%s_%d.txt" % (prefix, _iter)

75 | if not os.path.exists(log_file):

76 | print "miss: %s" % log_file

77 | continue

78 | lines = open(log_file).readlines()

79 |

80 | # X

81 | X = list()

82 | for i in xrange(bins):

83 | X.append(i * 0.01 - 1.0)

84 |

85 | # pdf

86 | pdf = list()

87 | for line in lines[3:bins+3]:

88 | item = line.strip().split()

89 | item = map(lambda x: float(x), item)

90 | pdf.append(item[1])

91 |

92 | # clean pdf

93 | clean_pdf = list()

94 | for line in lines[bins+3+1:bins+bins+3+1]:

95 | item = line.strip().split()

96 | item = map(lambda x: float(x), item)

97 | clean_pdf.append(item[1])

98 |

99 | # noise pdf

100 | noise_pdf = list()

101 | for line in lines[bins+bins+3+1+1:bins+bins+bins+3+1+1]:

102 | item = line.strip().split()

103 | item = map(lambda x: float(x), item)

104 | noise_pdf.append(item[1])

105 |

106 | # pcf

107 | pcf = list()

108 | for line in lines[bins+bins+bins+3+1+1+1:bins+bins+bins+bins+3+1+1+1]:

109 | item = line.strip().split()

110 | item = map(lambda x: float(x), item)

111 | pcf.append(item[1])

112 |

113 | # weight

114 | W = list()

115 | for line in lines[bins+bins+bins+bins+3+1+1+1+1:bins+bins+bins+bins+bins+3+1+1+1+1]:

116 | item = line.strip().split()

117 | item = map(lambda x: float(x), item)

118 | W.append(item[1])

119 |

120 | # mean filtering

121 | filter_pdf = mean_filter(pdf)

122 | filter_clean_pdf = mean_filter(clean_pdf)

123 | filter_noise_pdf = mean_filter(noise_pdf)

124 | # pcf

125 | sum_filter_pdf = sum(filter_pdf)

126 | pcf[0] = filter_pdf[0] / sum_filter_pdf

127 | for i in xrange(1, bins):

128 | pcf[i] = pcf[i-1] + filter_pdf[i] / sum_filter_pdf

129 | # mountain

130 | l_bin_id_ = 0

131 | r_bin_id_ = bins-1

132 | while pcf[l_bin_id_] < 0.005:

133 | l_bin_id_ += 1

134 | while pcf[r_bin_id_] > 0.995:

135 | r_bin_id_ -= 1

136 |

137 | m_bin_id_ = (l_bin_id_ + r_bin_id_) / 2

138 | t_bin_id_ = 0

139 | for i in xrange(bins):

140 | if filter_pdf[t_bin_id_] < filter_pdf[i]:

141 | t_bin_id_ = i

142 | t_bin_ids_ = list()

143 | for i in xrange(max(l_bin_id_, 5), min(r_bin_id_, bins-5)):

144 | if filter_pdf[i] >= filter_pdf[i-1] and filter_pdf[i] >= filter_pdf[i+1] and \

145 | filter_pdf[i] >= filter_pdf[i-2] and filter_pdf[i] >= filter_pdf[i+2] and \

146 | filter_pdf[i] > filter_pdf[i-3] and filter_pdf[i] > filter_pdf[i+3] and \

147 | filter_pdf[i] > filter_pdf[i-4] and filter_pdf[i] > filter_pdf[i+4] and \

148 | filter_pdf[i] > filter_pdf[i-5] and filter_pdf[i] > filter_pdf[i+5]:

149 | t_bin_ids_.append(i)

150 | i += 5

151 | if len(t_bin_ids_) == 0:

152 | t_bin_ids_.append(t_bin_id_)

153 | if t_bin_id_ < m_bin_id_:

154 | lt_bin_id_ = t_bin_id_

155 | rt_bin_id_ = t_bin_ids_[-1] if t_bin_ids_[-1] > m_bin_id_ else None

156 | #rt_bin_id_ = max(t_bin_ids_[-1], m_bin_id_)

157 | #rt_bin_id_ = t_bin_ids_[-1]

158 | else:

159 | rt_bin_id_ = t_bin_id_

160 | lt_bin_id_ = t_bin_ids_[0] if t_bin_ids_[0] < m_bin_id_ else None

161 | #lt_bin_id_ = min(t_bin_ids_[0], m_bin_id_)

162 | #lt_bin_id_ = t_bin_ids_[0]

163 |

164 | # weight1-3

165 | weights1 = list()

166 | weights2 = list()

167 | weights3 = list()

168 | weights = list()

169 | r = 2.576

170 | alpha = np.clip((r_bin_id_ - 100.0) / 100.0, 0.0, 1.0)

171 | #beta = abs(2.0 * alpha - 1.0)

172 | beta = 2.0 - 1.0 / (1.0 + math.exp(5-20*alpha)) - 1.0 / (1.0 + math.exp(20*alpha-15))

173 | if mode == 1:

174 | alphas.append(100.0)

175 | betas.append(0.0)

176 | gammas.append(0.0)

177 | else:

178 | alphas.append(100.0*beta*(alpha<0.5))

179 | betas.append(100.0*(1-beta))

180 | gammas.append(100.0*beta*(alpha>0.5))

181 | for bin_id in xrange(bins):

182 | # w1

183 | weight1 = 1.0

184 | # w2

185 | if lt_bin_id_ is None:

186 | weight2 = 0.0

187 | else:

188 | #weight2 = np.clip(1.0 * (bin_id - lt_bin_id_) / (r_bin_id_ - lt_bin_id_), 0.0, 1.0)# relu

189 | weight2 = np.clip(math.log(1.0 + math.exp(10.0 * (bin_id - lt_bin_id_) / (r_bin_id_ - lt_bin_id_))) / math.log(1.0 + math.exp(10.0)), 0.0, 1.0)# softplus

190 | # w3

191 | if rt_bin_id_ is None:

192 | weight3 = 0.0

193 | else:

194 | x = bin_id

195 | u = rt_bin_id_

196 | #a = ((r_bin_id_ - u) / r) if x > u else ((u - l_bin_id_) / r)# asymmetric

197 | a = (r_bin_id_ - u) / r# symmetric

198 | weight3 = math.exp(-1.0 * (x - u) * (x - u) / (2 * a * a))

199 | # w: merge w1, w2 and w3

200 | weight = alphas[-1]/100.0 * weight1 + betas[-1]/100.0 * weight2 + gammas[-1]/100.0 * weight3

201 |

202 | weights1.append(weight1)

203 | weights2.append(weight2)

204 | weights3.append(weight3)

205 | weights.append(weight)

206 |

207 | asize= 26

208 | ticksize = 24

209 | lfont1 = {

210 | 'family' : 'Times New Roman',

211 | 'weight' : 'normal',

212 | 'size' : 18,

213 | }

214 | lfont2 = {

215 | 'family' : 'Times New Roman',

216 | 'weight' : 'normal',

217 | 'size' : 24,

218 | }

219 | titlesize = 28

220 | glinewidth = 2

221 |

222 | fig = plt.figure(0)

223 | fig.set_size_inches(10, 20)

224 | ax1 = plt.subplot2grid((4,2), (0,0), rowspan=2, colspan=2)

225 | ax2 = plt.subplot2grid((4,2), (2,0), rowspan=1, colspan=2)

226 | ax3 = plt.subplot2grid((4,2), (3,0), rowspan=1, colspan=2)

227 |

228 | ax1.set_title(r"$The\ cos\theta\ distribution\ of\ WebFace-All(64K\ samples),\ $" + "\n" + r"$current\ iteration\ is\ %d$" % _iter, fontsize=titlesize)

229 | ax1.set_xlabel(r"$cos\theta$", fontsize=asize)

230 | ax1.set_ylabel(r"$Numbers$", fontsize=asize)

231 | ax1.tick_params(labelsize=ticksize)

232 | ax1.set_xlim(-0.5, 1.0)

233 | ax1.set_ylim(0.0, 6000.0)

234 | ax1.grid(True, linewidth=glinewidth)

235 |

236 | ax1.plot(X, filter_pdf, "y-", linewidth=2, label="Hist-all")

237 | ax1.plot(X, filter_clean_pdf, "g-", linewidth=2, label="Hist-clean")

238 | ax1.plot(X, filter_noise_pdf, "r-", linewidth=2, label="Hist-noisy")

239 | ax1.fill(X, filter_pdf, "y", alpha=0.25)

240 | ax1.fill(X, filter_clean_pdf, "g", alpha=0.50)

241 | ax1.fill(X, filter_noise_pdf, "r", alpha=0.50)

242 | # points

243 | if mode != 1:

244 | #p_X = [X[l_bin_id_], X[lt_bin_id_], X[rt_bin_id_], X[r_bin_id_]]

245 | #p_Y = [filter_pdf[l_bin_id_], filter_pdf[lt_bin_id_], filter_pdf[rt_bin_id_], filter_pdf[r_bin_id_]]

246 | p_X = [X[l_bin_id_], X[r_bin_id_]]

247 | p_Y = [filter_pdf[l_bin_id_], filter_pdf[r_bin_id_]]

248 | if lt_bin_id_ is not None:

249 | p_X.append(X[lt_bin_id_])

250 | p_Y.append(filter_pdf[lt_bin_id_])

251 | if rt_bin_id_ is not None:

252 | p_X.append(X[rt_bin_id_])

253 | p_Y.append(filter_pdf[rt_bin_id_])

254 | ax1.scatter(p_X, p_Y, color="y", linewidths=5)

255 | ax1.legend(["Hist-all", "Hist-clean", "Hist-noisy"], loc=1, ncol=1, prop=lfont1)

256 |

257 | _ax1 = ax1.twinx()

258 | _ax1.set_ylabel(r"$\omega$", fontsize=asize)

259 | _ax1.tick_params(labelsize=ticksize)

260 | _ax1.set_xlim(-0.5, 1.0)

261 | _ax1.set_ylim(0.0, 1.2)

262 |

263 | #_ax1.bar([1.0], [beta*(alpha<0.5)], color="r", width=0.1, align="center")

264 | #_ax1.bar([1.0], [(1-beta)], bottom=[beta*(alpha<0.5)], color="g", width=0.1, align="center")

265 | #_ax1.bar([1.0], [beta*(alpha>0.5)], bottom=[beta*(alpha<0.5) + (1-beta)], color="b", width=0.1, align="center")

266 |

267 | if mode == 1:

268 | _ax1.plot(X, weights, "k-", linewidth=2, label=r"$\omega$")

269 | _ax1.legend([r"$\omega$"], loc=2, ncol=2, prop=lfont2)

270 | else:

271 | _ax1.plot(X, weights1, "r:", linewidth=5, label=r"$\omega1$")

272 | _ax1.plot(X, weights2, "g--", linewidth=5, label=r"$\omega2$")

273 | _ax1.plot(X, weights3, "b-.", linewidth=5, label=r"$\omega3$")

274 | _ax1.plot(X, weights, "k-", linewidth=2, label=r"$\omega$")

275 | _ax1.legend([r"$\omega1$", r"$\omega2$", r"$\omega3$", r"$\omega$"], loc=2, ncol=2, prop=lfont2)

276 |

277 | ax2.set_title(r"$The\ accuracy\ on\ LFW,\ $" + "\n" + r"$current\ value\ is\ %.2f$%%" % accs[-1], fontsize=titlesize)

278 | ax2.yaxis.grid(True, linewidth=glinewidth)

279 | ax2.set_xlabel(r"$Iter$", fontsize=asize)

280 | ax2.set_ylabel(r"$Acc(\%)$", fontsize=asize)

281 | ax2.tick_params(labelsize=ticksize)

282 | ax2.set_xlim(0, 200000)

283 | ax2.set_ylim(50.0, 100.0)

284 | ax2.plot(iters, accs, "k-", linewidth=3, label="LFW")

285 | ax2.legend(["LFW"], loc=7, ncol=1, prop=lfont1)# center right

286 |

287 | ax3.set_title(r"$The\ Proportion\ of\ \omega1,\ \omega2\ and\ \omega3,\ $" + "\n" + r"$current\ value\ is\ $%.0f%%, %.0f%% and %.0f%%" % (alphas[-1], betas[-1], gammas[-1]), fontsize=titlesize)

288 | ax3.yaxis.grid(True, linewidth=glinewidth)

289 | ax3.set_xlabel(r"$Iter$", fontsize=asize)

290 | ax3.set_ylabel(r"$Proportion(\%)$", fontsize=asize)

291 | ax3.tick_params(labelsize=ticksize)

292 | ax3.set_xlim(0, 200000)

293 | ax3.set_ylim(0.0, 100.0)

294 | ax3.plot(iters, np.array(alphas), "r-", linewidth=1, label=r"$\alpha$")

295 | ax3.plot(iters, np.array(alphas) + np.array(betas), "g-", linewidth=1, label=r"$\beta$")

296 | ax3.plot(iters, np.array(alphas) + np.array(betas) + np.array(gammas), "b-", linewidth=1, label=r"$\gamma$")

297 | ax3.fill_between(iters, 0, np.array(alphas) + np.array(betas) + np.array(gammas), color="b", alpha=1.0)

298 | ax3.fill_between(iters, 0, np.array(alphas) + np.array(betas), color="g", alpha=1.0)

299 | ax3.fill_between(iters, 0, np.array(alphas), color="r", alpha=1.0)

300 | ax3.legend([r"$\alpha$", r"$\beta$", r"$\gamma$"], loc=7, ncol=1, prop=lfont2)# center right

301 |

302 | fig.tight_layout()

303 |

304 | plt.savefig("./figures/%s_2D_dist_%d.jpg" % (prefix, _iter))

305 | plt.close()

306 |

307 | frames += 1

308 | print "frames:", frames

309 | print "processed:", _iter

310 | sys.stdout.flush()

311 |

312 |

313 | def draw_video(prefix1, prefix2, ratio):

314 | draw_imgs(prefix1, ratio, mode=1)

315 | draw_imgs(prefix2, ratio, mode=2)

316 |

317 | fps = 25

318 | size = (2000, 2000)

319 | videowriter = cv2.VideoWriter("./figures/demo_2D_distribution_noise-%d%%.avi" % ratio, cv2.cv.CV_FOURCC(*"MJPG"), fps, size)

320 | for _iter in xrange(1000, 200000+1, 100):

321 | if not choose_iter(_iter, 100):

322 | continue

323 | image_file1 = "./figures/%s_2D_dist_%d.jpg" % (prefix1, _iter)

324 | img1 = cv2.imread(image_file1)

325 | h1, w1, c1 = img1.shape

326 |

327 | image_file2 = "./figures/%s_2D_dist_%d.jpg" % (prefix2, _iter)

328 | img2 = cv2.imread(image_file2)

329 | h2, w2, c2 = img2.shape

330 |

331 | assert h1 == h2 and w1 == w2

332 | img = np.zeros((h1, w1+w2, c1), dtype=img1.dtype)

333 |

334 | img[0:h1, 0:w1, :] = img1

335 | img[0:h1, w1:w1+w2, :] = img2

336 | videowriter.write(img)

337 |

338 |

339 | if __name__ == "__main__":

340 | prefixs = [

341 | (0, "p_casia-webface_noise-flip-outlier-1_1-0_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-0_Nsoftmax_FIT_exp"), \

342 | (20, "p_casia-webface_noise-flip-outlier-1_1-20_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-20_Nsoftmax_FIT_exp"), \

343 | (40, "p_casia-webface_noise-flip-outlier-1_1-40_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-40_Nsoftmax_FIT_exp"), \

344 | (60, "p_casia-webface_noise-flip-outlier-1_1-60_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-60_Nsoftmax_FIT_exp")

345 | ]

346 |

347 | for ratio, prefix1, prefix2 in prefixs:

348 | draw_video(prefix1, prefix2, ratio)

349 | print "processing noise-%d" % ratio

350 |

351 |

--------------------------------------------------------------------------------

/code/draw_detail_3D.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding: utf-8

3 |

4 | from mpl_toolkits.mplot3d import Axes3D

5 | import os

6 | import sys

7 | import cv2

8 | import math

9 | import json

10 | import numpy as np

11 | import matplotlib

12 | from matplotlib import ticker

13 | import matplotlib.pyplot as plt

14 | plt.switch_backend("agg")

15 |

16 | bins = 201

17 |

18 |

19 | def choose_iter(_iter, interval):

20 | if _iter == 1000:

21 | return True

22 | if _iter == 200000:

23 | return True

24 |

25 | k = _iter / interval

26 | if k % 1 != 0 or (k / 1) % (_iter / 40000 + 1) != 0:

27 | return False

28 | else:

29 | return True

30 |

31 |

32 | def get_pdf(X):

33 | pdf = bins * [0.0]

34 | for x in X:

35 | pdf[int(x*100)] += 1

36 | return pdf

37 |

38 |

39 | def mean_filter(pdf, fr=2):

40 | filter_pdf = bins * [0.0]

41 | for i in xrange(fr, bins-fr):

42 | for j in xrange(i-fr, i+fr+1):

43 | filter_pdf[i] += pdf[j] / (fr+fr+1)

44 | return filter_pdf

45 |

46 |

47 | def load_lfw(prefix):

48 | items = list()

49 | for line in open("./data/%s_lfw.txt" % prefix):

50 | item = line.strip().split()

51 | items.append((int(item[0]), float(item[1]), 100.0 * float(item[2])))

52 | items.append(items[-1])

53 | return items

54 |

55 |

56 | def draw_imgs(prefix, ratio, mode):

57 | lfw = load_lfw(prefix)

58 |

59 | frames = 0

60 | _X = list()

61 | _Y = list()

62 | _Z = list()

63 | iters = list()

64 | threds = list()

65 | accs = list()

66 | alphas = list()

67 | betas = list()

68 | gammas = list()

69 | for _iter in xrange(1000, 200000+1, 1000):

70 | _iter1, _thred1, _acc1 = lfw[_iter/10000]

71 | _iter2, _thred2, _acc2 = lfw[_iter/10000+1]

72 | _thred = _thred1 + (_thred2 - _thred1) * (_iter - _iter1) / max(1, (_iter2 - _iter1))

73 | _acc = _acc1 + (_acc2 - _acc1) * (_iter - _iter1) / max(1, (_iter2 - _iter1))

74 | iters.append(_iter)

75 | threds.append(_thred)

76 | accs.append(_acc)

77 |

78 | log_file = "./data/%s_%d.txt" % (prefix, _iter)

79 | if not os.path.exists(log_file):

80 | print "miss: %s" % log_file

81 | continue

82 | lines = open(log_file).readlines()

83 |

84 | # pdf

85 | pdf = list()

86 | for line in lines[3:bins+3]:

87 | item = line.strip().split()

88 | item = map(lambda x: float(x), item)

89 | pdf.append(item[1])

90 |

91 | # clean pdf

92 | clean_pdf = list()

93 | for line in lines[bins+3+1:bins+bins+3+1]:

94 | item = line.strip().split()

95 | item = map(lambda x: float(x), item)

96 | clean_pdf.append(item[1])

97 |

98 | # noise pdf

99 | noise_pdf = list()

100 | for line in lines[bins+bins+3+1+1:bins+bins+bins+3+1+1]:

101 | item = line.strip().split()

102 | item = map(lambda x: float(x), item)

103 | noise_pdf.append(item[1])

104 |

105 | # pcf

106 | pcf = list()

107 | for line in lines[bins+bins+bins+3+1+1+1:bins+bins+bins+bins+3+1+1+1]:

108 | item = line.strip().split()

109 | item = map(lambda x: float(x), item)

110 | pcf.append(item[1])

111 |

112 | # weight

113 | W = list()

114 | for line in lines[bins+bins+bins+bins+3+1+1+1+1:bins+bins+bins+bins+bins+3+1+1+1+1]:

115 | item = line.strip().split()

116 | item = map(lambda x: float(x), item)

117 | W.append(item[1])

118 |

119 | X = list()

120 | for i in xrange(bins):

121 | X.append(i * 0.01 - 1.0)

122 | _X.append(X)

123 | _Y.append(bins * [_iter])

124 | _Z.append(mean_filter(pdf))

125 |

126 | if not choose_iter(_iter, 1000):

127 | continue

128 |

129 | titlesize = 44

130 | asize = 44

131 | glinewidth = 2

132 |

133 | fig = plt.figure(0)

134 | fig.set_size_inches(24, 18)

135 | ax = Axes3D(fig)

136 | #ax.set_title(r"$The\ cos\theta\ distribution\ of\ $" + str(ratio) + "%" + r"$\ noisy\ training\ data\ over\ iteration$", fontsize=titlesize)

137 | ax.set_xlabel(r"$cos\theta$", fontsize=asize)

138 | ax.set_ylabel(r"$Iter$", fontsize=asize)

139 | ax.set_zlabel(r"$Numbers$", fontsize=asize)

140 | ax.tick_params(labelsize=32)

141 | ax.set_xlim(-1.0, 1.0)

142 | ax.set_ylim(0, 200000)

143 | ax.set_zlim(0.0, 6000.0)

144 | ax.grid(True, linewidth=glinewidth)

145 | surf = ax.plot_surface(_X, _Y, _Z, rstride=3, cstride=3, cmap=plt.cm.coolwarm, linewidth=0.1, antialiased=False)

146 | surf.set_clim([0, 6000])

147 | cbar = fig.colorbar(surf, shrink=0.5, aspect=10, norm=plt.Normalize(0, 6000))

148 | cbar.set_ticks([0, 1000, 2000, 3000, 4000, 5000, 6000])

149 | cbar.set_ticklabels(["0", "1k", "2k", "3k", "4k", "5k", "6k"])

150 | #cbar.locator = ticker.MaxNLocator(nbins=6)

151 | #cbar.update_ticks()

152 | cbar.ax.tick_params(labelsize=24)

153 |

154 | #print dir(ax)

155 | #_ax = ax.twiny()

156 | #_ax.set_ylim(0.0, 1.0)

157 | #_ax.plot(bins * [-1.0], iters, accs, label="LFW")

158 | #_ax.legend()

159 | #ax.plot(len(iters) * [-1.0], iters, 100.0 * np.array(accs), color="k", label="LFW")

160 | #ax.plot(len(iters) * [-1.0], iters, 60.0 * np.array(accs), color="k", label="LFW")

161 | #ax.legend()

162 |

163 | plt.savefig("./figures/%s_3D_dist_%d.jpg" % (prefix, _iter))

164 | plt.close()

165 |

166 | frames += 1

167 | print "frames:", frames

168 | print "processed:", _iter

169 | sys.stdout.flush()

170 |

171 |

172 | def draw_video(prefix1, prefix2, ratio):

173 | draw_imgs(prefix1, ratio, mode=1)

174 | draw_imgs(prefix2, ratio, mode=2)

175 |

176 | fps = 25

177 | #size = (4800, 1800)

178 | #size = (2400, 900)

179 | size = (2000, 750)

180 | videowriter = cv2.VideoWriter("./figures/demo_3D_distribution_noise-%d%%.avi" % ratio, cv2.cv.CV_FOURCC(*"MJPG"), fps, size)

181 | for _iter in xrange(1000, 200000+1, 1000):

182 | if not choose_iter(_iter, 1000):

183 | continue

184 | image_file1 = "./figures/%s_3D_dist_%d.jpg" % (prefix1, _iter)

185 | img1 = cv2.imread(image_file1)

186 | img1 = cv2.resize(img1, (1000, 750))##

187 | h1, w1, c1 = img1.shape

188 |

189 | image_file2 = "./figures/%s_3D_dist_%d.jpg" % (prefix2, _iter)

190 | img2 = cv2.imread(image_file2)

191 | img2 = cv2.resize(img2, (1000, 750))##

192 | h2, w2, c2 = img2.shape

193 |

194 | assert h1 == h2 and w1 == w2

195 | img = np.zeros((size[1], size[0], 3), dtype=img1.dtype)

196 |

197 | img[0:h1, 0:w1, :] = img1

198 | img[0:h1, w1:w1+w2, :] = img2

199 | videowriter.write(img)

200 |

201 |

202 | if __name__ == "__main__":

203 | prefixs = [

204 | (0, "p_casia-webface_noise-flip-outlier-1_1-0_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-0_Nsoftmax_FIT_exp"), \

205 | (20, "p_casia-webface_noise-flip-outlier-1_1-20_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-20_Nsoftmax_FIT_exp"), \

206 | (40, "p_casia-webface_noise-flip-outlier-1_1-40_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-40_Nsoftmax_FIT_exp"), \

207 | (60, "p_casia-webface_noise-flip-outlier-1_1-60_Nsoftmax_exp", "p_casia-webface_noise-flip-outlier-1_1-60_Nsoftmax_FIT_exp")

208 | ]

209 |

210 | for ratio, prefix1, prefix2 in prefixs:

211 | draw_video(prefix1, prefix2, ratio)

212 | print "processing noise-%d" % ratio

213 |

214 |

--------------------------------------------------------------------------------

/code/draw_weight.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding: utf-8

3 |

4 | import math

5 | import numpy as np

6 | import matplotlib

7 | import matplotlib.pyplot as plt

8 | plt.switch_backend("agg")

9 |

10 | def draw_weight():

11 | X = list()

12 | W1 = list()

13 | W2 = list()

14 | W3 = list()

15 | for i in xrange(-100, 101):

16 | x = 0.01 * i

17 |

18 | """

19 | # method1

20 | alpha = max(0.0, x)

21 | beta = abs(2.0 * alpha - 1.0)

22 | w1 = beta*(alpha<0.5)

23 | w2 = (1-beta)

24 | w3 = beta*(alpha>0.5)

25 | """

26 |

27 | # method2

28 | alpha = max(0.0, x)

29 | m = 20

30 | beta = 2 - 1 / (1 + math.e ** (m*(0.25-alpha))) - 1 / (1 + math.e ** (m*(alpha-0.75)))

31 | w1 = beta*(alpha<0.5)

32 | w2 = (1-beta)

33 | w3 = beta*(alpha>0.5)

34 |

35 | X.append(x)

36 | W1.append(w1)

37 | W2.append(w2)

38 | W3.append(w3)

39 |

40 | #plt.figure("Figure")

41 | #plt.title("Title")

42 | #plt.xlabel("x")

43 | #plt.ylabel("y")

44 | plt.grid(True)

45 | plt.xlim(-1.0, 1.0)

46 | plt.ylim(0.0, 1.0)

47 | #plt.yticks(color="w")

48 | #plt.xticks(color="w")

49 | plt.plot(X, W1, "r-", X, W2, "g--", X, W3, "b-.", linewidth=2)

50 | plt.savefig("../figures/W.jpg")

51 | plt.close()

52 |

53 |

54 | if __name__ == "__main__":

55 | draw_weight()

56 |

--------------------------------------------------------------------------------

/code/gen_noise.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding: utf-8

3 |

4 | import json

5 | import copy

6 | import random

7 | import collections

8 |

9 | #noise_ratio = 0.20

10 | noise_ratio = 0.40

11 | #noise_ratio = 0.60

12 |

13 | flip_outlier_ratio = 0.5

14 |

15 | def flip_noise():

16 | items = list()

17 | for line in open("ori_data.txt"):

18 | item = json.loads(line)

19 | item["ori_id"] = copy.deepcopy(item["id"])

20 | item["clean"] = True

21 | items.append(item)

22 | random.shuffle(items)

23 |

24 | m = int(round(noise_ratio * len(items)))

25 | ids = map(lambda item: item["id"][0], items[:m])

26 | random.shuffle(ids)

27 | for item, id in zip(items[:m], ids):

28 | if item["id"][0] != id:

29 | item["clean"] = False

30 | item["id"][0] = id

31 | for item in items[:m]:

32 | while item["clean"]:

33 | _item = random.choice(items[:m])

34 | if item["id"][0] != _item["ori_id"][0] and \

35 | item["ori_id"][0] != _item["id"][0]:

36 | id = item["id"][0]

37 | item["id"][0] = _item["id"][0]

38 | _item["id"][0] = id

39 | item["clean"] = _item["clean"] = False

40 | random.shuffle(items)

41 | for item in items:

42 | if item["clean"]:

43 | assert item["ori_id"][0] == item["id"][0]

44 | else:

45 | assert item["ori_id"][0] != item["id"][0]

46 |

47 | clean_data_file = open("clean_data.txt", "wb")

48 | flip_noise_data_file = open("flip_noise_data.txt", "wb")

49 | for item in items:

50 | if item["clean"]:

51 | clean_data_file.write(json.dumps(item) + "\n")

52 | else:

53 | flip_noise_data_file.write(json.dumps(item) + "\n")

54 | clean_data_file.close()

55 | flip_noise_data_file.close()

56 |

57 |

58 | def add_outlier_noise():

59 | # clean: (1 - noise_ratio)

60 | items = list()

61 | for line in open("clean_data.txt"):

62 | item = json.loads(line)

63 | items.append(item)

64 |

65 | # flip noise: flip_outlier_ratio * noise_ratio

66 | flip_noise_items = list()

67 | for line in open("flip_noise_data.txt"):

68 | item = json.loads(line)

69 | flip_noise_items.append(item)

70 | flip_num = int(len(flip_noise_items) * flip_outlier_ratio)

71 | assert len(flip_noise_items) >= flip_num

72 | random.shuffle(flip_noise_items)

73 | items.extend(flip_noise_items[:flip_num])

74 | flip_noise_items = flip_noise_items[flip_num:]

75 |

76 | # outlier noise: (1 - flip_outlier_ratio) * noise_ratio

77 | outlier_num = len(flip_noise_items)

78 | outlier_noise_items = list()

79 | for line in open("outlier_noise_data.txt"):

80 | item = json.loads(line)

81 | outlier_noise_items.append(item)

82 | assert len(outlier_noise_items) >= outlier_num

83 | random.shuffle(outlier_noise_items)

84 | for i in xrange(outlier_num):

85 | item = outlier_noise_items[i]

86 | item["id"] = flip_noise_items[i]["id"]

87 | items.append(item)

88 |

89 | # output

90 | random.shuffle(items)

91 | with open("data.txt", "wb") as f:

92 | for item in items:

93 | f.write(json.dumps(item) + "\n")

94 |

95 |

96 | if __name__ == "__main__":

97 | """

98 | input:

99 | orignal dataset: ori_data.txt

100 | megaface 1m dataset: outlier_noise_data.txt

101 | output:

102 | data.txt

103 | """

104 | flip_noise()

105 | add_outlier_noise()

106 |

107 |

--------------------------------------------------------------------------------

/code/gen_resnet.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding: utf-8

3 |

4 |

5 | def data_layer(layer_name, output_name1, output_name2, phase, crop_size=128, scale=0.0078125):

6 | context = '\

7 | layer {\n\

8 | name: "%s"\n\

9 | type: "Data"\n\

10 | top: "%s"\n\

11 | top: "%s"\n\

12 | include {\n\

13 | phase: %s\n\

14 | }\n\

15 | transform_param {\n\

16 | crop_size: %d\n\

17 | scale: %.7f\n\

18 | mean_file: "@model_dir/mean.binaryproto"\n\

19 | mirror: %s\n\

20 | }\n\

21 | data_param {\n\

22 | source: "@data_dir/%s_data_db/"\n\

23 | backend: LMDB\n\

24 | batch_size: %s\n\

25 | }\n\

26 | }\n\

27 | ' % (layer_name, output_name1, output_name2, phase, crop_size, scale, \

28 | "true" if phase == "TRAIN" else "false", \

29 | "train" if phase == "TRAIN" else "validation", \

30 | "@train_batch_size" if phase == "TRAIN" else "@test_batch_size")

31 | return context

32 |

33 |

34 | def conv_layer(layer_name, input_name, output_name, num_output, kernel_size=3, stride=1, pad=1, w_lr_mult=1, w_decay_mult=1, b_lr_mult=2, b_decay_mult=0, initializer="xavier"):

35 | initializer_context = '\

36 | type: "xavier"\n\

37 | ' if initializer == "xavier" else\

38 | '\

39 | type: "gaussian"\n\

40 | std: 0.01\n\

41 | '

42 |

43 | context = '\

44 | layer {\n\

45 | name: "%s"\n\

46 | type: "Convolution"\n\

47 | bottom: "%s"\n\

48 | top: "%s"\n\

49 | param {\n\

50 | lr_mult: %d\n\

51 | decay_mult: %d\n\

52 | }\n\

53 | param {\n\

54 | lr_mult: %d\n\

55 | decay_mult: %d\n\

56 | }\n\

57 | convolution_param {\n\

58 | num_output: %d\n\

59 | kernel_size: %d\n\

60 | stride: %d\n\

61 | pad: %d\n\

62 | weight_filler {\n%s\

63 | }\n\

64 | bias_filler {\n\

65 | type: "constant"\n\

66 | value: 0\n\

67 | }\n\

68 | }\n\

69 | }\n\

70 | ' % (layer_name, input_name, output_name, w_lr_mult, w_decay_mult, b_lr_mult, b_decay_mult, num_output, kernel_size, stride, pad, initializer_context)

71 | return context

72 |

73 |

74 | def relu_layer(layer_name, input_name, output_name):

75 | context = '\

76 | layer {\n\

77 | name: "%s"\n\

78 | type: "PReLU"\n\

79 | bottom: "%s"\n\

80 | top: "%s"\n\

81 | }\n\

82 | ' % (layer_name, input_name, output_name)

83 | return context

84 |

85 |

86 | def eltwise_layer(layer_name, input_name1, input_name2, output_name):

87 | context = '\

88 | layer {\n\

89 | name: "%s"\n\

90 | type: "Eltwise"\n\

91 | bottom: "%s"\n\

92 | bottom: "%s"\n\

93 | top: "%s"\n\

94 | eltwise_param {\n\

95 | operation: SUM\n\

96 | }\n\

97 | }\n\

98 | ' % (layer_name, input_name1, input_name2, output_name)

99 | return context

100 |

101 |

102 | def fc_layer(layer_name, input_name, output_name, num_output, w_lr_mult=1, w_decay_mult=1, b_lr_mult=2, b_decay_mult=0):

103 | context = '\

104 | layer {\n\

105 | name: "%s"\n\

106 | type: "InnerProduct"\n\

107 | bottom: "%s"\n\

108 | top: "%s"\n\

109 | param {\n\

110 | lr_mult: %d\n\

111 | decay_mult: %d\n\

112 | }\n\

113 | param {\n\

114 | lr_mult: %d\n\

115 | decay_mult: %d\n\

116 | }\n\

117 | inner_product_param {\n\

118 | num_output: %d\n\

119 | weight_filler {\n\

120 | type: "xavier"\n\

121 | }\n\

122 | bias_filler {\n\

123 | type: "constant"\n\

124 | value: 0\n\

125 | }\n\

126 | }\n\

127 | }\n\

128 | ' % (layer_name, input_name, output_name, w_lr_mult, w_decay_mult, b_lr_mult, b_decay_mult, num_output)

129 | return context

130 |

131 |

132 | def resnet(file_name="deploy.prototxt", layer_num=100):

133 | cell_num = 4

134 | if layer_num == 20:

135 | block_type = 1

136 | block_num = [1, 2, 4, 1]

137 | #block_num = [2, 2, 2, 2]

138 | elif layer_num == 34:

139 | block_type = 1

140 | block_num = [2, 4, 7, 2]

141 | elif layer_num == 50:

142 | block_type = 1

143 | #block_num = [2, 6, 13, 2]

144 | block_num = [3, 4, 13, 3]

145 | elif layer_num == 64:

146 | block_type = 1

147 | block_num = [3, 8, 16, 3]

148 | elif layer_num == 100:

149 | block_type = 1

150 | #block_num = [3, 12, 30, 3]

151 | block_num = [4, 12, 28, 4]

152 | else:

153 | block_type = 1

154 | # 12

155 | block_num = [1, 1, 1, 1]

156 | num_output = 32

157 |

158 | network = 'name: "resnet_%d"\n' % layer_num

159 | # data layer

160 | layer_name = "data"

161 | output_name1 = layer_name

162 | output_name2 = "label000"

163 | output_name = layer_name

164 | network += data_layer(layer_name, output_name1, output_name2, "TRAIN")

165 | network += data_layer(layer_name, output_name1, output_name2, "TEST")

166 | for i in xrange(cell_num):

167 | num_output *= 2

168 | # ceil

169 | # conv layer

170 | layer_name = "conv%d_%d" % (i+1, 1)

171 | input_name = output_name

172 | output_name = layer_name

173 | network += conv_layer(layer_name, input_name, output_name, num_output, stride=2, b_lr_mult=2, initializer="xavier")

174 | # relu layer

175 | layer_name = "relu%d_%d" % (i+1, 1)

176 | input_name = output_name

177 | output_name = output_name

178 | network += relu_layer(layer_name, input_name, output_name)

179 | # block

180 | for j in xrange(block_num[i]):

181 | # type1

182 | if block_type == 1:

183 | for k in xrange(2):

184 | # conv layer

185 | layer_name = "conv%d_%d" % (i+1, 2*j+k+2)

186 | input_name = output_name

187 | output_name = layer_name

188 | network += conv_layer(layer_name, input_name, output_name, num_output, stride=1, b_lr_mult=0, initializer="gaussian")

189 | # relu layer

190 | layer_name = "relu%d_%d" % (i+1, 2*j+k+2)

191 | input_name = output_name

192 | output_name = output_name

193 | network += relu_layer(layer_name, input_name, output_name)

194 | # type2

195 | elif block_type == 2:

196 | for k in xrange(3):

197 | # conv layer

198 | layer_name = "conv%d_%d" % (i+1, 2*j+k+2)

199 | input_name = output_name

200 | output_name = layer_name

201 | network += conv_layer(layer_name, input_name, output_name, num_output if k==2 else num_output/2, \

202 | kernel_size=(3 if k==1 else 1), \

203 | pad=(1 if k==1 else 0), \

204 | stride=1, b_lr_mult=0, initializer="gaussian")

205 | # relu layer

206 | layer_name = "relu%d_%d" % (i+1, 2*j+k+2)

207 | input_name = output_name

208 | output_name = output_name

209 | network += relu_layer(layer_name, input_name, output_name)

210 | else:

211 | print "error"

212 |

213 | # eltwise_layer

214 | layer_name = "res%d_%d" % (i+1, 2*j+3)

215 | input_name1 = "%s%d_%d" % ("conv" if j == 0 else "res", i+1, 2*j+1)

216 | input_name2 = "conv%d_%d" % (i+1, 2*j+3)

217 | output_name = layer_name

218 | network += eltwise_layer(layer_name, input_name1, input_name2, output_name)

219 | # fc layer

220 | layer_name = "fc%d" % (cell_num+1)

221 | input_name = output_name

222 | output_name = layer_name

223 | network += fc_layer(layer_name, input_name, output_name, num_output)

224 |

225 | # write network to file

226 | open(file_name, "wb").write(network)

227 |

228 |

229 | if __name__ == "__main__":

230 | layer_num = 20

231 | resnet(file_name="resnet_%d.prototxt" % layer_num, layer_num=layer_num)

232 |

233 |

--------------------------------------------------------------------------------

/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/data/.gitkeep

--------------------------------------------------------------------------------

/data/casia-webface/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/data/casia-webface/.gitkeep

--------------------------------------------------------------------------------

/data/imdb-face/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/data/imdb-face/.gitkeep

--------------------------------------------------------------------------------

/data/ms-celeb-1m/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/data/ms-celeb-1m/.gitkeep

--------------------------------------------------------------------------------

/deploy/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huangyangyu/NoiseFace/0b434a2c0eb664ca2af36c3bc619629fb27dcf3f/deploy/.gitkeep

--------------------------------------------------------------------------------

/deploy/resnet20_arcface_train.prototxt:

--------------------------------------------------------------------------------

1 | name: "face_res20net"

2 | layer {

3 | name: "data"

4 | type: "Data"

5 | top: "data"

6 | top: "label000"

7 | include {

8 | phase: TRAIN

9 | }

10 | transform_param {

11 | crop_size: 128

12 | scale: 0.0078125

13 | mean_value: 127.5

14 | mirror: true

15 | }

16 | data_param {

17 | source: "./data/casia-webface/p_noise-flip-outlier-1_1-40/converter_p_casia-webface_noise-flip-outlier-1_1-40/train_data_db/"

18 | backend: LMDB

19 | batch_size: 64

20 | }

21 | }

22 | layer {

23 | name: "data"

24 | type: "Data"

25 | top: "data"

26 | top: "label000"

27 | include {

28 | phase: TEST

29 | }

30 | transform_param {

31 | crop_size: 128

32 | scale: 0.0078125

33 | mean_value: 127.5

34 | mirror: false

35 | }

36 | data_param {

37 | source: "./data/casia-webface/p_noise-flip-outlier-1_1-40/converter_p_casia-webface_noise-flip-outlier-1_1-40/validation_data_db/"

38 | backend: LMDB

39 | batch_size: 8

40 | }

41 | }

42 | layer {

43 | name: "conv1_1"

44 | type: "Convolution"

45 | bottom: "data"

46 | top: "conv1_1"

47 | param {

48 | lr_mult: 1

49 | decay_mult: 1

50 | }

51 | param {

52 | lr_mult: 2

53 | decay_mult: 0

54 | }

55 | convolution_param {

56 | num_output: 64

57 | kernel_size: 3

58 | stride: 2

59 | pad: 1

60 | weight_filler {

61 | type: "xavier"

62 | }

63 | bias_filler {

64 | type: "constant"

65 | value: 0

66 | }

67 | }

68 | }

69 | layer {

70 | name: "relu1_1"

71 | type: "PReLU"

72 | bottom: "conv1_1"

73 | top: "conv1_1"

74 | }

75 | layer {

76 | name: "conv1_2"

77 | type: "Convolution"

78 | bottom: "conv1_1"

79 | top: "conv1_2"

80 | param {

81 | lr_mult: 1

82 | decay_mult: 1

83 | }

84 | param {

85 | lr_mult: 0

86 | decay_mult: 0

87 | }

88 | convolution_param {

89 | num_output: 64

90 | kernel_size: 3

91 | stride: 1

92 | pad: 1

93 | weight_filler {

94 | type: "gaussian"

95 | std: 0.01

96 | }

97 | bias_filler {

98 | type: "constant"

99 | value: 0

100 | }

101 | }

102 | }

103 | layer {

104 | name: "relu1_2"

105 | type: "PReLU"

106 | bottom: "conv1_2"

107 | top: "conv1_2"

108 | }

109 | layer {

110 | name: "conv1_3"

111 | type: "Convolution"

112 | bottom: "conv1_2"

113 | top: "conv1_3"

114 | param {

115 | lr_mult: 1

116 | decay_mult: 1

117 | }

118 | param {

119 | lr_mult: 0

120 | decay_mult: 0

121 | }

122 | convolution_param {

123 | num_output: 64

124 | kernel_size: 3

125 | stride: 1

126 | pad: 1

127 | weight_filler {

128 | type: "gaussian"

129 | std: 0.01

130 | }

131 | bias_filler {

132 | type: "constant"

133 | value: 0

134 | }

135 | }

136 | }

137 | layer {

138 | name: "relu1_3"

139 | type: "PReLU"

140 | bottom: "conv1_3"

141 | top: "conv1_3"

142 | }

143 | layer {

144 | name: "res1_3"

145 | type: "Eltwise"

146 | bottom: "conv1_1"

147 | bottom: "conv1_3"

148 | top: "res1_3"

149 | eltwise_param {

150 | operation: 1

151 | }

152 | }

153 | layer {

154 | name: "conv2_1"

155 | type: "Convolution"

156 | bottom: "res1_3"

157 | top: "conv2_1"

158 | param {

159 | lr_mult: 1

160 | decay_mult: 1

161 | }

162 | param {

163 | lr_mult: 2

164 | decay_mult: 0

165 | }

166 | convolution_param {

167 | num_output: 128

168 | kernel_size: 3

169 | stride: 2

170 | pad: 1

171 | weight_filler {

172 | type: "xavier"

173 | }

174 | bias_filler {

175 | type: "constant"

176 | value: 0

177 | }

178 | }

179 | }

180 | layer {

181 | name: "relu2_1"

182 | type: "PReLU"

183 | bottom: "conv2_1"

184 | top: "conv2_1"

185 | }

186 | layer {

187 | name: "conv2_2"

188 | type: "Convolution"

189 | bottom: "conv2_1"

190 | top: "conv2_2"

191 | param {

192 | lr_mult: 1

193 | decay_mult: 1

194 | }

195 | param {

196 | lr_mult: 0

197 | decay_mult: 0

198 | }

199 | convolution_param {

200 | num_output: 128

201 | kernel_size: 3

202 | stride: 1

203 | pad: 1

204 | weight_filler {

205 | type: "gaussian"

206 | std: 0.01

207 | }

208 | bias_filler {

209 | type: "constant"

210 | value: 0

211 | }

212 | }

213 | }

214 | layer {

215 | name: "relu2_2"

216 | type: "PReLU"

217 | bottom: "conv2_2"

218 | top: "conv2_2"

219 | }

220 | layer {

221 | name: "conv2_3"

222 | type: "Convolution"

223 | bottom: "conv2_2"

224 | top: "conv2_3"

225 | param {

226 | lr_mult: 1

227 | decay_mult: 1

228 | }

229 | param {

230 | lr_mult: 0

231 | decay_mult: 0

232 | }

233 | convolution_param {

234 | num_output: 128

235 | kernel_size: 3

236 | stride: 1

237 | pad: 1

238 | weight_filler {

239 | type: "gaussian"

240 | std: 0.01

241 | }

242 | bias_filler {

243 | type: "constant"

244 | value: 0

245 | }

246 | }

247 | }

248 | layer {

249 | name: "relu2_3"

250 | type: "PReLU"

251 | bottom: "conv2_3"

252 | top: "conv2_3"

253 | }

254 | layer {

255 | name: "res2_3"

256 | type: "Eltwise"

257 | bottom: "conv2_1"

258 | bottom: "conv2_3"

259 | top: "res2_3"

260 | eltwise_param {

261 | operation: 1

262 | }

263 | }

264 | layer {

265 | name: "conv2_4"

266 | type: "Convolution"

267 | bottom: "res2_3"

268 | top: "conv2_4"

269 | param {

270 | lr_mult: 1

271 | decay_mult: 1

272 | }

273 | param {

274 | lr_mult: 0

275 | decay_mult: 0

276 | }

277 | convolution_param {

278 | num_output: 128

279 | kernel_size: 3

280 | stride: 1

281 | pad: 1

282 | weight_filler {

283 | type: "gaussian"

284 | std: 0.01

285 | }

286 | bias_filler {

287 | type: "constant"

288 | value: 0

289 | }

290 | }

291 | }

292 | layer {

293 | name: "relu2_4"

294 | type: "PReLU"

295 | bottom: "conv2_4"

296 | top: "conv2_4"

297 | }

298 | layer {

299 | name: "conv2_5"

300 | type: "Convolution"

301 | bottom: "conv2_4"

302 | top: "conv2_5"

303 | param {

304 | lr_mult: 1

305 | decay_mult: 1

306 | }

307 | param {

308 | lr_mult: 0

309 | decay_mult: 0

310 | }

311 | convolution_param {

312 | num_output: 128

313 | kernel_size: 3

314 | stride: 1

315 | pad: 1

316 | weight_filler {

317 | type: "gaussian"

318 | std: 0.01

319 | }

320 | bias_filler {

321 | type: "constant"

322 | value: 0

323 | }

324 | }

325 | }

326 | layer {

327 | name: "relu2_5"

328 | type: "PReLU"

329 | bottom: "conv2_5"

330 | top: "conv2_5"

331 | }

332 | layer {

333 | name: "res2_5"

334 | type: "Eltwise"

335 | bottom: "res2_3"

336 | bottom: "conv2_5"

337 | top: "res2_5"

338 | eltwise_param {

339 | operation: 1

340 | }

341 | }

342 | layer {

343 | name: "conv3_1"

344 | type: "Convolution"

345 | bottom: "res2_5"

346 | top: "conv3_1"

347 | param {

348 | lr_mult: 1

349 | decay_mult: 1

350 | }

351 | param {

352 | lr_mult: 2

353 | decay_mult: 0

354 | }

355 | convolution_param {

356 | num_output: 256

357 | kernel_size: 3

358 | stride: 2

359 | pad: 1

360 | weight_filler {

361 | type: "xavier"

362 | }

363 | bias_filler {

364 | type: "constant"

365 | value: 0

366 | }

367 | }

368 | }

369 | layer {

370 | name: "relu3_1"

371 | type: "PReLU"

372 | bottom: "conv3_1"

373 | top: "conv3_1"

374 | }

375 | layer {

376 | name: "conv3_2"

377 | type: "Convolution"

378 | bottom: "conv3_1"

379 | top: "conv3_2"

380 | param {

381 | lr_mult: 1

382 | decay_mult: 1

383 | }

384 | param {

385 | lr_mult: 0

386 | decay_mult: 0

387 | }

388 | convolution_param {

389 | num_output: 256

390 | kernel_size: 3

391 | stride: 1

392 | pad: 1

393 | weight_filler {

394 | type: "gaussian"

395 | std: 0.01

396 | }

397 | bias_filler {

398 | type: "constant"

399 | value: 0

400 | }

401 | }

402 | }

403 | layer {

404 | name: "relu3_2"

405 | type: "PReLU"

406 | bottom: "conv3_2"

407 | top: "conv3_2"

408 | }

409 | layer {

410 | name: "conv3_3"

411 | type: "Convolution"

412 | bottom: "conv3_2"

413 | top: "conv3_3"

414 | param {

415 | lr_mult: 1

416 | decay_mult: 1

417 | }

418 | param {

419 | lr_mult: 0

420 | decay_mult: 0

421 | }

422 | convolution_param {

423 | num_output: 256

424 | kernel_size: 3

425 | stride: 1

426 | pad: 1

427 | weight_filler {

428 | type: "gaussian"

429 | std: 0.01

430 | }

431 | bias_filler {

432 | type: "constant"

433 | value: 0

434 | }

435 | }

436 | }

437 | layer {

438 | name: "relu3_3"

439 | type: "PReLU"

440 | bottom: "conv3_3"

441 | top: "conv3_3"

442 | }

443 | layer {

444 | name: "res3_3"

445 | type: "Eltwise"

446 | bottom: "conv3_1"

447 | bottom: "conv3_3"

448 | top: "res3_3"

449 | eltwise_param {

450 | operation: 1

451 | }

452 | }

453 | layer {

454 | name: "conv3_4"

455 | type: "Convolution"

456 | bottom: "res3_3"

457 | top: "conv3_4"

458 | param {

459 | lr_mult: 1

460 | decay_mult: 1

461 | }

462 | param {

463 | lr_mult: 0

464 | decay_mult: 0

465 | }

466 | convolution_param {

467 | num_output: 256

468 | kernel_size: 3

469 | stride: 1

470 | pad: 1

471 | weight_filler {

472 | type: "gaussian"

473 | std: 0.01

474 | }

475 | bias_filler {

476 | type: "constant"

477 | value: 0

478 | }

479 | }

480 | }

481 | layer {

482 | name: "relu3_4"

483 | type: "PReLU"

484 | bottom: "conv3_4"

485 | top: "conv3_4"

486 | }

487 | layer {

488 | name: "conv3_5"

489 | type: "Convolution"

490 | bottom: "conv3_4"

491 | top: "conv3_5"

492 | param {

493 | lr_mult: 1

494 | decay_mult: 1

495 | }

496 | param {

497 | lr_mult: 0

498 | decay_mult: 0

499 | }

500 | convolution_param {

501 | num_output: 256

502 | kernel_size: 3

503 | stride: 1

504 | pad: 1

505 | weight_filler {

506 | type: "gaussian"

507 | std: 0.01

508 | }

509 | bias_filler {

510 | type: "constant"

511 | value: 0

512 | }

513 | }

514 | }

515 | layer {

516 | name: "relu3_5"

517 | type: "PReLU"

518 | bottom: "conv3_5"

519 | top: "conv3_5"

520 | }

521 | layer {

522 | name: "res3_5"

523 | type: "Eltwise"

524 | bottom: "res3_3"

525 | bottom: "conv3_5"

526 | top: "res3_5"

527 | eltwise_param {

528 | operation: 1

529 | }

530 | }

531 | layer {

532 | name: "conv3_6"

533 | type: "Convolution"

534 | bottom: "res3_5"

535 | top: "conv3_6"

536 | param {

537 | lr_mult: 1

538 | decay_mult: 1

539 | }

540 | param {

541 | lr_mult: 0

542 | decay_mult: 0

543 | }

544 | convolution_param {

545 | num_output: 256

546 | kernel_size: 3

547 | stride: 1

548 | pad: 1

549 | weight_filler {

550 | type: "gaussian"

551 | std: 0.01

552 | }

553 | bias_filler {

554 | type: "constant"

555 | value: 0

556 | }

557 | }

558 | }

559 | layer {

560 | name: "relu3_6"

561 | type: "PReLU"

562 | bottom: "conv3_6"

563 | top: "conv3_6"

564 | }

565 | layer {

566 | name: "conv3_7"

567 | type: "Convolution"

568 | bottom: "conv3_6"

569 | top: "conv3_7"

570 | param {

571 | lr_mult: 1

572 | decay_mult: 1

573 | }

574 | param {

575 | lr_mult: 0

576 | decay_mult: 0

577 | }

578 | convolution_param {

579 | num_output: 256

580 | kernel_size: 3

581 | stride: 1

582 | pad: 1

583 | weight_filler {

584 | type: "gaussian"

585 | std: 0.01

586 | }

587 | bias_filler {

588 | type: "constant"

589 | value: 0

590 | }

591 | }

592 | }

593 | layer {

594 | name: "relu3_7"

595 | type: "PReLU"

596 | bottom: "conv3_7"

597 | top: "conv3_7"

598 | }

599 | layer {

600 | name: "res3_7"

601 | type: "Eltwise"

602 | bottom: "res3_5"

603 | bottom: "conv3_7"

604 | top: "res3_7"

605 | eltwise_param {

606 | operation: 1

607 | }

608 | }

609 | layer {

610 | name: "conv3_8"

611 | type: "Convolution"

612 | bottom: "res3_7"

613 | top: "conv3_8"

614 | param {

615 | lr_mult: 1

616 | decay_mult: 1

617 | }

618 | param {

619 | lr_mult: 0

620 | decay_mult: 0

621 | }

622 | convolution_param {

623 | num_output: 256

624 | kernel_size: 3

625 | stride: 1

626 | pad: 1

627 | weight_filler {

628 | type: "gaussian"

629 | std: 0.01

630 | }

631 | bias_filler {

632 | type: "constant"

633 | value: 0

634 | }

635 | }

636 | }

637 | layer {

638 | name: "relu3_8"

639 | type: "PReLU"

640 | bottom: "conv3_8"

641 | top: "conv3_8"

642 | }

643 | layer {

644 | name: "conv3_9"

645 | type: "Convolution"

646 | bottom: "conv3_8"

647 | top: "conv3_9"

648 | param {

649 | lr_mult: 1

650 | decay_mult: 1

651 | }

652 | param {

653 | lr_mult: 0

654 | decay_mult: 0

655 | }

656 | convolution_param {

657 | num_output: 256

658 | kernel_size: 3

659 | stride: 1

660 | pad: 1

661 | weight_filler {

662 | type: "gaussian"

663 | std: 0.01

664 | }

665 | bias_filler {

666 | type: "constant"

667 | value: 0

668 | }

669 | }

670 | }

671 | layer {

672 | name: "relu3_9"

673 | type: "PReLU"

674 | bottom: "conv3_9"

675 | top: "conv3_9"

676 | }

677 | layer {

678 | name: "res3_9"

679 | type: "Eltwise"

680 | bottom: "res3_7"

681 | bottom: "conv3_9"

682 | top: "res3_9"

683 | eltwise_param {

684 | operation: 1

685 | }

686 | }

687 | layer {

688 | name: "conv4_1"

689 | type: "Convolution"

690 | bottom: "res3_9"

691 | top: "conv4_1"

692 | param {

693 | lr_mult: 1

694 | decay_mult: 1

695 | }

696 | param {

697 | lr_mult: 2

698 | decay_mult: 0

699 | }

700 | convolution_param {

701 | num_output: 512

702 | kernel_size: 3

703 | stride: 2

704 | pad: 1

705 | weight_filler {

706 | type: "xavier"

707 | }

708 | bias_filler {

709 | type: "constant"

710 | value: 0

711 | }

712 | }

713 | }

714 | layer {

715 | name: "relu4_1"

716 | type: "PReLU"

717 | bottom: "conv4_1"

718 | top: "conv4_1"

719 | }

720 | layer {

721 | name: "conv4_2"

722 | type: "Convolution"

723 | bottom: "conv4_1"

724 | top: "conv4_2"

725 | param {

726 | lr_mult: 1

727 | decay_mult: 1

728 | }

729 | param {

730 | lr_mult: 0

731 | decay_mult: 0

732 | }

733 | convolution_param {

734 | num_output: 512

735 | kernel_size: 3

736 | stride: 1

737 | pad: 1

738 | weight_filler {

739 | type: "gaussian"

740 | std: 0.01

741 | }

742 | bias_filler {

743 | type: "constant"

744 | value: 0

745 | }

746 | }

747 | }

748 | layer {

749 | name: "relu4_2"

750 | type: "PReLU"

751 | bottom: "conv4_2"

752 | top: "conv4_2"

753 | }

754 | layer {

755 | name: "conv4_3"

756 | type: "Convolution"

757 | bottom: "conv4_2"

758 | top: "conv4_3"

759 | param {

760 | lr_mult: 1

761 | decay_mult: 1

762 | }

763 | param {

764 | lr_mult: 0

765 | decay_mult: 0

766 | }

767 | convolution_param {

768 | num_output: 512

769 | kernel_size: 3

770 | stride: 1

771 | pad: 1

772 | weight_filler {

773 | type: "gaussian"

774 | std: 0.01

775 | }

776 | bias_filler {

777 | type: "constant"

778 | value: 0

779 | }

780 | }

781 | }

782 | layer {

783 | name: "relu4_3"

784 | type: "PReLU"

785 | bottom: "conv4_3"

786 | top: "conv4_3"

787 | }

788 | layer {

789 | name: "res4_3"

790 | type: "Eltwise"

791 | bottom: "conv4_1"

792 | bottom: "conv4_3"

793 | top: "res4_3"

794 | eltwise_param {

795 | operation: 1

796 | }

797 | }

798 | layer {

799 | name: "fc5"

800 | type: "InnerProduct"

801 | bottom: "res4_3"

802 | top: "fc5"

803 | param {

804 | lr_mult: 1

805 | decay_mult: 1

806 | }

807 | param {

808 | lr_mult: 2

809 | decay_mult: 0

810 | }

811 | inner_product_param {

812 | num_output: 512

813 | weight_filler {

814 | type: "xavier"

815 | }

816 | bias_filler {

817 | type: "constant"

818 | value: 0

819 | }

820 | }

821 | }

822 | ####################### innerproduct ###################

823 | layer {

824 | name: "fc5_norm"

825 | type: "NormL2"

826 | bottom: "fc5"

827 | top: "fc5_norm"

828 | }

829 | layer {

830 | name: "fc6"

831 | type: "InnerProduct"

832 | bottom: "fc5_norm"

833 | top: "fc6"

834 | param {

835 | lr_mult: 1

836 | decay_mult: 1

837 | }

838 | inner_product_param {

839 | num_output: 10575

840 | normalize: true

841 | weight_filler {

842 | type: "xavier"

843 | }

844 | bias_term: false

845 | }

846 | }

847 | layer {

848 | name: "fc6_margin"

849 | type: "Arcface"

850 | bottom: "fc6"

851 | bottom: "label000"

852 | top: "fc6_margin"

853 | arcface_param {

854 | m: 0.5

855 | }

856 | }

857 | layer {

858 | name: "fix_cos"

859 | type: "NoiseTolerantFR"

860 | bottom: "fc6_margin"

861 | bottom: "fc6"

862 | bottom: "label000"

863 | top: "fix_cos"

864 | noise_tolerant_fr_param {

865 | shield_forward: false

866 | start_iter: 1

867 | bins: 200

868 | slide_batch_num: 1000

869 |

870 | value_low: -1.0

871 | value_high: 1.0

872 |

873 | #debug: true

874 | #debug_prefix: "p_casia-webface_noise-flip-outlier-1_1-40_Asoftmax_FIT"

875 | debug: false

876 | }

877 | }

878 | layer {

879 | name: "fc6_margin_scale"

880 | type: "Scale"

881 | bottom: "fix_cos"

882 | top: "fc6_margin_scale"

883 | param {

884 | lr_mult: 0

885 | decay_mult: 0

886 | }

887 | scale_param {

888 | filler {

889 | type: "constant"

890 | value: 32.0

891 | }

892 | bias_term: false

893 | }

894 | }

895 | ######################### softmax #######################

896 | layer {

897 | name: "loss/label000"

898 | type: "SoftmaxWithLoss"

899 | bottom: "fc6_margin_scale"

900 | bottom: "label000"

901 | top: "loss/label000"

902 | loss_weight: 1.0

903 | loss_param {

904 | ignore_label: -1

905 | }

906 | }

907 | layer {

908 | name: "accuracy/label000"

909 | type: "Accuracy"

910 | bottom: "fc6_margin_scale"

911 | bottom: "label000"

912 | top: "accuracy/label000"

913 | accuracy_param {

914 | top_k: 1

915 | ignore_label: -1

916 | }

917 | }

918 |

--------------------------------------------------------------------------------

/deploy/resnet20_l2softmax_train.prototxt:

--------------------------------------------------------------------------------

1 | name: "face_res20net"

2 | layer {

3 | name: "data"

4 | type: "Data"

5 | top: "data"

6 | top: "label000"

7 | include {

8 | phase: TRAIN

9 | }

10 | transform_param {

11 | crop_size: 128

12 | scale: 0.0078125

13 | mean_value: 127.5

14 | mirror: true

15 | }

16 | data_param {

17 | source: "./data/casia-webface/p_noise-flip-outlier-1_1-40/converter_p_casia-webface_noise-flip-outlier-1_1-40/train_data_db/"

18 | backend: LMDB

19 | batch_size: 64

20 | }

21 | }

22 | layer {

23 | name: "data"

24 | type: "Data"

25 | top: "data"

26 | top: "label000"

27 | include {

28 | phase: TEST

29 | }

30 | transform_param {

31 | crop_size: 128

32 | scale: 0.0078125

33 | mean_value: 127.5

34 | mirror: false

35 | }

36 | data_param {

37 | source: "./data/casia-webface/p_noise-flip-outlier-1_1-40/converter_p_casia-webface_noise-flip-outlier-1_1-40/validation_data_db/"

38 | backend: LMDB

39 | batch_size: 8

40 | }

41 | }

42 | layer {

43 | name: "conv1_1"

44 | type: "Convolution"

45 | bottom: "data"

46 | top: "conv1_1"

47 | param {

48 | lr_mult: 1

49 | decay_mult: 1

50 | }

51 | param {

52 | lr_mult: 2

53 | decay_mult: 0

54 | }

55 | convolution_param {

56 | num_output: 64

57 | kernel_size: 3

58 | stride: 2

59 | pad: 1

60 | weight_filler {

61 | type: "xavier"

62 | }

63 | bias_filler {

64 | type: "constant"

65 | value: 0

66 | }

67 | }

68 | }

69 | layer {

70 | name: "relu1_1"

71 | type: "PReLU"

72 | bottom: "conv1_1"

73 | top: "conv1_1"

74 | }

75 | layer {

76 | name: "conv1_2"

77 | type: "Convolution"

78 | bottom: "conv1_1"

79 | top: "conv1_2"

80 | param {

81 | lr_mult: 1

82 | decay_mult: 1

83 | }

84 | param {

85 | lr_mult: 0

86 | decay_mult: 0

87 | }

88 | convolution_param {

89 | num_output: 64

90 | kernel_size: 3

91 | stride: 1

92 | pad: 1

93 | weight_filler {

94 | type: "gaussian"

95 | std: 0.01

96 | }

97 | bias_filler {

98 | type: "constant"

99 | value: 0

100 | }

101 | }

102 | }

103 | layer {

104 | name: "relu1_2"

105 | type: "PReLU"

106 | bottom: "conv1_2"

107 | top: "conv1_2"

108 | }

109 | layer {

110 | name: "conv1_3"

111 | type: "Convolution"

112 | bottom: "conv1_2"

113 | top: "conv1_3"

114 | param {

115 | lr_mult: 1

116 | decay_mult: 1

117 | }

118 | param {

119 | lr_mult: 0

120 | decay_mult: 0

121 | }

122 | convolution_param {

123 | num_output: 64

124 | kernel_size: 3

125 | stride: 1

126 | pad: 1

127 | weight_filler {

128 | type: "gaussian"

129 | std: 0.01

130 | }

131 | bias_filler {

132 | type: "constant"

133 | value: 0

134 | }

135 | }

136 | }

137 | layer {

138 | name: "relu1_3"

139 | type: "PReLU"

140 | bottom: "conv1_3"

141 | top: "conv1_3"

142 | }

143 | layer {

144 | name: "res1_3"

145 | type: "Eltwise"

146 | bottom: "conv1_1"

147 | bottom: "conv1_3"

148 | top: "res1_3"

149 | eltwise_param {

150 | operation: 1

151 | }

152 | }

153 | layer {

154 | name: "conv2_1"

155 | type: "Convolution"

156 | bottom: "res1_3"

157 | top: "conv2_1"

158 | param {

159 | lr_mult: 1

160 | decay_mult: 1

161 | }

162 | param {

163 | lr_mult: 2

164 | decay_mult: 0

165 | }

166 | convolution_param {

167 | num_output: 128

168 | kernel_size: 3

169 | stride: 2

170 | pad: 1

171 | weight_filler {

172 | type: "xavier"

173 | }

174 | bias_filler {

175 | type: "constant"

176 | value: 0

177 | }

178 | }

179 | }

180 | layer {

181 | name: "relu2_1"

182 | type: "PReLU"

183 | bottom: "conv2_1"

184 | top: "conv2_1"

185 | }

186 | layer {

187 | name: "conv2_2"

188 | type: "Convolution"

189 | bottom: "conv2_1"

190 | top: "conv2_2"

191 | param {

192 | lr_mult: 1

193 | decay_mult: 1

194 | }

195 | param {

196 | lr_mult: 0

197 | decay_mult: 0

198 | }

199 | convolution_param {

200 | num_output: 128

201 | kernel_size: 3

202 | stride: 1

203 | pad: 1

204 | weight_filler {

205 | type: "gaussian"

206 | std: 0.01

207 | }

208 | bias_filler {

209 | type: "constant"

210 | value: 0

211 | }

212 | }

213 | }

214 | layer {

215 | name: "relu2_2"

216 | type: "PReLU"

217 | bottom: "conv2_2"

218 | top: "conv2_2"

219 | }

220 | layer {

221 | name: "conv2_3"

222 | type: "Convolution"

223 | bottom: "conv2_2"

224 | top: "conv2_3"

225 | param {

226 | lr_mult: 1

227 | decay_mult: 1

228 | }

229 | param {

230 | lr_mult: 0

231 | decay_mult: 0

232 | }

233 | convolution_param {

234 | num_output: 128

235 | kernel_size: 3

236 | stride: 1

237 | pad: 1

238 | weight_filler {

239 | type: "gaussian"

240 | std: 0.01

241 | }

242 | bias_filler {

243 | type: "constant"

244 | value: 0

245 | }

246 | }

247 | }

248 | layer {

249 | name: "relu2_3"

250 | type: "PReLU"

251 | bottom: "conv2_3"

252 | top: "conv2_3"

253 | }

254 | layer {

255 | name: "res2_3"

256 | type: "Eltwise"

257 | bottom: "conv2_1"

258 | bottom: "conv2_3"

259 | top: "res2_3"

260 | eltwise_param {

261 | operation: 1

262 | }

263 | }

264 | layer {

265 | name: "conv2_4"

266 | type: "Convolution"

267 | bottom: "res2_3"

268 | top: "conv2_4"

269 | param {

270 | lr_mult: 1

271 | decay_mult: 1

272 | }

273 | param {

274 | lr_mult: 0

275 | decay_mult: 0

276 | }

277 | convolution_param {

278 | num_output: 128

279 | kernel_size: 3

280 | stride: 1

281 | pad: 1

282 | weight_filler {

283 | type: "gaussian"

284 | std: 0.01

285 | }

286 | bias_filler {

287 | type: "constant"

288 | value: 0

289 | }

290 | }

291 | }

292 | layer {

293 | name: "relu2_4"

294 | type: "PReLU"

295 | bottom: "conv2_4"

296 | top: "conv2_4"

297 | }

298 | layer {

299 | name: "conv2_5"

300 | type: "Convolution"

301 | bottom: "conv2_4"

302 | top: "conv2_5"

303 | param {

304 | lr_mult: 1

305 | decay_mult: 1

306 | }

307 | param {

308 | lr_mult: 0

309 | decay_mult: 0

310 | }

311 | convolution_param {