├── .gitignore
├── README.md
├── computer-vision-study-group
│   ├── Notebooks
│   │   └── HuggingFace_vision_ecosystem_overview_(June_2022).ipynb
│   ├── README.md
│   └── Sessions
│       ├── Blip2.md
│       ├── Fiber.md
│       ├── FlexiViT.md
│       ├── HFVisionEcosystem.md
│       ├── HowDoVisionTransformersWork.md
│       ├── MaskedAutoEncoders.md
│       ├── NeuralRadianceFields.md
│       ├── PolarizedSelfAttention.md
│       └── SwinTransformer.md
├── gradio-blocks
│   └── README.md
├── huggan
│   ├── README.md
│   ├── __init__.py
│   ├── assets
│   │   ├── cyclegan.png
│   │   ├── dcgan_mnist.png
│   │   ├── example_model.png
│   │   ├── example_space.png
│   │   ├── huggan_banner.png
│   │   ├── lightweight_gan_wandb.png
│   │   ├── metfaces.png
│   │   ├── pix2pix_maps.png
│   │   └── wandb.png
│   ├── model_card_template.md
│   ├── pytorch
│   │   ├── README.md
│   │   ├── __init__.py
│   │   ├── cyclegan
│   │   │   ├── README.md
│   │   │   ├── __init__.py
│   │   │   ├── modeling_cyclegan.py
│   │   │   ├── train.py
│   │   │   └── utils.py
│   │   ├── dcgan
│   │   │   ├── README.md
│   │   │   ├── __init__.py
│   │   │   ├── modeling_dcgan.py
│   │   │   └── train.py
│   │   ├── huggan_mixin.py
│   │   ├── lightweight_gan
│   │   │   ├── README.md
│   │   │   ├── __init__.py
│   │   │   ├── cli.py
│   │   │   ├── diff_augment.py
│   │   │   └── lightweight_gan.py
│   │   ├── metrics
│   │   │   ├── README.md
│   │   │   ├── __init__.py
│   │   │   ├── fid_score.py
│   │   │   └── inception.py
│   │   └── pix2pix
│   │       ├── README.md
│   │       ├── __init__.py
│   │       ├── modeling_pix2pix.py
│   │       └── train.py
│   ├── tensorflow
│   │   └── dcgan
│   │       ├── README.md
│   │       ├── __init__.py
│   │       ├── requirements.txt
│   │       └── train.py
│   └── utils
│       ├── README.md
│       ├── __init__.py
│       ├── hub.py
│       └── push_to_hub_example.py
├── jax-controlnet-sprint
│   ├── README.md
│   ├── dataset_tools
│   │   ├── coyo_1m_dataset_preprocess.py
│   │   ├── create_pose_dataset.ipynb
│   │   └── data.py
│   └── training_scripts
│       ├── requirements_flax.txt
│       └── train_controlnet_flax.py
├── keras-dreambooth-sprint
│   ├── Dreambooth_on_Hub.ipynb
│   ├── README.md
│   ├── compute-with-lambda.md
│   └── requirements.txt
├── keras-sprint
│   ├── README.md
│   ├── deeplabv3_plus.ipynb
│   ├── example_image_2.jpeg
│   ├── example_image_3.jpeg
│   └── mnist_convnet.ipynb
├── open-source-ai-game-jam
│   └── README.md
├── requirements.txt
├── setup.py
├── sklearn-sprint
│   └── guidelines.md
└── whisper-fine-tuning-event
    ├── README.md
    ├── ds_config.json
    ├── fine-tune-whisper-non-streaming.ipynb
    ├── fine-tune-whisper-streaming.ipynb
    ├── fine_tune_whisper_streaming_colab.ipynb
    ├── interleave_streaming_datasets.ipynb
    ├── requirements.txt
    ├── run_eval_whisper_streaming.py
    └── run_speech_recognition_seq2seq_streaming.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Initially taken from Github's Python gitignore file

2 |

3 | # Byte-compiled / optimized / DLL files

4 | __pycache__/

5 | *.py[cod]

6 | *$py.class

7 |

8 | # C extensions

9 | *.so

10 |

11 | # tests and logs

12 | tests/fixtures/cached_*_text.txt

13 | logs/

14 | lightning_logs/

15 | lang_code_data/

16 |

17 | # Distribution / packaging

18 | .Python

19 | build/

20 | develop-eggs/

21 | dist/

22 | downloads/

23 | eggs/

24 | .eggs/

25 | lib/

26 | lib64/

27 | parts/

28 | sdist/

29 | var/

30 | wheels/

31 | *.egg-info/

32 | .installed.cfg

33 | *.egg

34 | MANIFEST

35 |

36 | # PyInstaller

37 | # Usually these files are written by a python script from a template

38 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

39 | *.manifest

40 | *.spec

41 |

42 | # Installer logs

43 | pip-log.txt

44 | pip-delete-this-directory.txt

45 |

46 | # Unit test / coverage reports

47 | htmlcov/

48 | .tox/

49 | .nox/

50 | .coverage

51 | .coverage.*

52 | .cache

53 | nosetests.xml

54 | coverage.xml

55 | *.cover

56 | .hypothesis/

57 | .pytest_cache/

58 |

59 | # Translations

60 | *.mo

61 | *.pot

62 |

63 | # Django stuff:

64 | *.log

65 | local_settings.py

66 | db.sqlite3

67 |

68 | # Flask stuff:

69 | instance/

70 | .webassets-cache

71 |

72 | # Scrapy stuff:

73 | .scrapy

74 |

75 | # Sphinx documentation

76 | docs/_build/

77 |

78 | # PyBuilder

79 | target/

80 |

81 | # Jupyter Notebook

82 | .ipynb_checkpoints

83 |

84 | # IPython

85 | profile_default/

86 | ipython_config.py

87 |

88 | # pyenv

89 | .python-version

90 |

91 | # celery beat schedule file

92 | celerybeat-schedule

93 |

94 | # SageMath parsed files

95 | *.sage.py

96 |

97 | # Environments

98 | .env

99 | .venv

100 | env/

101 | venv/

102 | ENV/

103 | env.bak/

104 | venv.bak/

105 |

106 | # Spyder project settings

107 | .spyderproject

108 | .spyproject

109 |

110 | # Rope project settings

111 | .ropeproject

112 |

113 | # mkdocs documentation

114 | /site

115 |

116 | # mypy

117 | .mypy_cache/

118 | .dmypy.json

119 | dmypy.json

120 |

121 | # Pyre type checker

122 | .pyre/

123 |

124 | # vscode

125 | .vs

126 | .vscode

127 |

128 | # Pycharm

129 | .idea

130 |

131 | # TF code

132 | tensorflow_code

133 |

134 | # Models

135 | proc_data

136 |

137 | # examples

138 | runs

139 | /runs_old

140 | /wandb

141 | /examples/runs

142 | /examples/**/*.args

143 | /examples/rag/sweep

144 |

145 | # data

146 | /data

147 | serialization_dir

148 |

149 | # emacs

150 | *.*~

151 | debug.env

152 |

153 | # vim

154 | .*.swp

155 |

156 | #ctags

157 | tags

158 |

159 | # pre-commit

160 | .pre-commit*

161 |

162 | # .lock

163 | *.lock

164 |

165 | # DS_Store (MacOS)

166 | .DS_Store

167 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Community Events @ 🤗

2 |

3 | A central repository for all community events organized by 🤗 HuggingFace. Come one, come all!

4 | We're constantly finding ways to democratise the use of ML across modalities and languages. This repo contains information about all past, present and upcoming events.

5 |

6 | ## Hugging Events

7 |

8 | | **Event Name** | **Dates** | **Status** |

9 | |-------------------------------------------------------------------------|-----------------|--------------------------------------------------------------------------------------------------------------|

10 | | [Open Source AI Game Jam 🎮 (First Edition)](/open-source-ai-game-jam) | July 7th - 9th, 2023 | Finished |

11 | | [Whisper Fine Tuning Event](/whisper-fine-tuning-event) | Dec 5th - 19th, 2022 | Finished |

12 | | [Computer Vision Study Group](/computer-vision-study-group) | Ongoing | Monthly |

13 | | [ML for Audio Study Group](https://github.com/Vaibhavs10/ml-with-audio) | Ongoing | Monthly |

14 | | [Gradio Blocks](/gradio-blocks) | May 16th - 31st, 2022 | Finished |

15 | | [HugGAN](/huggan) | Apr 4th - 17th, 2022 | Finished |

16 | | [Keras Sprint](keras-sprint) | June, 2022 | Finished |

17 |

--------------------------------------------------------------------------------

/computer-vision-study-group/README.md:

--------------------------------------------------------------------------------

1 | # Computer Vision Study Group

2 |

3 | This is a collection of all past sessions that have been held as part of the Hugging Face Computer Vision Study Group.

4 |

5 | | |Session Name | Session Link |

6 | |--- |--- | --- |

7 | |❓|How Do Vision Transformers Work? | [Session Sheet](Sessions/HowDoVisionTransformersWork.md) |

8 | |🔅|Polarized Self-Attention | [Session Sheet](Sessions/PolarizedSelfAttention.md)|

9 | |🍄|Swin Transformer | [Session Sheet](Sessions/SwinTransformer.md)|

10 | |🔮|Introduction to Neural Radiance Fields | [Session Sheet](Sessions/NeuralRadianceFields.md)|

11 | |🌐|Hugging Face Vision Ecosystem Overview (June 2022) | [Session Sheet](Sessions/HFVisionEcosystem.md)|

12 | |🪂|Masked Autoencoders Are Scalable Vision Learners | [Session Sheet](Sessions/MaskedAutoEncoders.md)|

13 | |🦊|Fiber: Coarse-to-Fine Vision-Language Pre-Training | [Session Sheet](Sessions/Fiber.md)|

14 | |⚔️ |FlexiViT: One Model for All Patch Sizes| [Session Sheet](Sessions/FlexiViT.md)|

15 | |🤖|BLIP-2: Bootstrapping Language-Image Pre-training| [Session Sheet](Sessions/Blip2.md)|

16 |

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/Blip2.md:

--------------------------------------------------------------------------------

1 | # BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=k0DAtZCCl1w&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/1Y_8Qu0CMlt7jvCd8Jw0c_ILh8LHB0XgnlrvXObe5FYs/edit?usp=sharing)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2301.12597) /

15 | [arxiv](https://arxiv.org/abs/2301.12597)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/salesforce/lavis

20 |

21 |

22 | ## Additional Resources 📚

23 | - [BLIP-2 Demo Space](https://huggingface.co/spaces/hysts/BLIP2-with-transformers)

24 | - [BLIP-2 Transformers Example Notebooks](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/BLIP-2) by Niels Rogge

25 | - [BLIP-2 Transformers Docs](https://huggingface.co/docs/transformers/model_doc/blip-2)

26 |

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/Fiber.md:

--------------------------------------------------------------------------------

1 | # Fiber: Coarse-to-Fine Vision-Language Pre-Training with Fusion in the Backbone

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=m9qhNGuWE2g&t=20s&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/1vSu27tE87ZM103_CkgqsW7JeIp2mrmyl/edit?usp=sharing&ouid=107717747412022342990&rtpof=true&sd=true)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2206.07643) /

15 | [arxiv](https://arxiv.org/abs/2206.07643)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/microsoft/fiber

20 |

21 |

22 | ## Additional Resources 📚

23 | - [Text to Pokemon](https://huggingface.co/spaces/lambdalabs/text-to-pokemon) HF Space to create your own Pokemon

24 | - [Paper to Pokemon](https://huggingface.co/spaces/hugging-fellows/paper-to-pokemon) derived from the above space - create your own Pokemon from a paper

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/FlexiViT.md:

--------------------------------------------------------------------------------

1 | # FlexiViT: One Model for All Patch Sizes

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=TlRYBgsl7Q8&t=977s&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/1rLAYr160COYQMUN0FDH7D9pP8qe1_QyXGvfbHkutOt8/edit?usp=sharing)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2212.08013) /

15 | [arxiv](https://arxiv.org/abs/2212.08013)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/google-research/big_vision

20 |

21 |

22 | ## Additional Resources 📚

23 | - [FlexiViT PR](https://github.com/google-research/big_vision/pull/24)

24 |

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/HFVisionEcosystem.md:

--------------------------------------------------------------------------------

1 | # Hugging Face Vision Ecosystem Overview (June 2022)

2 | Session by [Niels Rogge](https://github.com/NielsRogge)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=oL-xmufhZM8&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Additional Resources 📚

10 | - [Accompanying Notebook](../Notebooks/HuggingFace_vision_ecosystem_overview_(June_2022).ipynb)

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/HowDoVisionTransformersWork.md:

--------------------------------------------------------------------------------

1 | # How Do Vision Transformers Work

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Session Slides 🖥️

6 | [Google Drive](https://docs.google.com/presentation/d/1PewOHVABkxx0jO9PoJSQi8to_WNlL4HdDp4M9e4L8hs/edit?usp=drivesdks)

7 |

8 |

9 | ## Original Paper 📄

10 | [Hugging Face](https://huggingface.co/papers/2202.06709) /

11 | [arxiv](https://arxiv.org/pdf/2202.06709.pdf)

12 |

13 |

14 | ## GitHub Repo 🧑🏽💻

15 | https://github.com/xxxnell/how-do-vits-work

16 |

17 |

18 | ## Additional Resources 📚

19 | Hessian Matrices:

20 |

21 | - https://stackoverflow.com/questions/23297090/how-calculating-hessian-works-for-neural-network-learning

22 | - https://machinelearningmastery.com/a-gentle-introduction-to-hessian-matrices/

23 |

24 | Loss Landscape Visualization:

25 |

26 | - https://mathformachines.com/posts/visualizing-the-loss-landscape/

27 | - https://github.com/tomgoldstein/loss-landscape

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/MaskedAutoEncoders.md:

--------------------------------------------------------------------------------

1 | # Masked Autoencoders are Scalable Vision Learners

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=AC6flxUFLrg&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/10ZZ-Rl1D57VX005a58OmqNeOB6gPnE54/edit?usp=sharing&ouid=107717747412022342990&rtpof=true&sd=true)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2111.06377) /

15 | [arxiv](https://arxiv.org/abs/2111.06377)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/facebookresearch/mae

20 |

21 |

22 | ## Additional Resources 📚

23 | - [Transformers Docs ViTMAE](https://huggingface.co/docs/transformers/model_doc/vit_mae)

24 | - [Transformers ViTMAE Demo Notebook](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/ViTMAE) by Niels Rogge

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/NeuralRadianceFields.md:

--------------------------------------------------------------------------------

1 | # Introduction to Neural Radiance Fields

2 | Session by [Aritra](https://arig23498.github.io/) and [Ritwik](https://ritwikraha.github.io)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=U2XS7SxOy2s)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/e/2PACX-1vTQVnoTJGhRxDscNV1Mg2aYhvXP8cKODpB5Ii72NWoetCGrTLBJWx_UD1oPXHrzPtj7xO8MS_3TQaSH/pub?start=false&loop=false&delayms=3000)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2003.08934) /

15 | [arxiv](https://arxiv.org/abs/2003.08934)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/bmild/nerf

20 |

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/PolarizedSelfAttention.md:

--------------------------------------------------------------------------------

1 | # Polarized Self-Attention

2 | Session by [Satpal](https://github.com/satpalsr)

3 |

4 | ## Session Slides 🖥️

5 | [GitHub PDF](https://github.com/satpalsr/Talks/blob/main/PSA_discussion.pdf)

6 |

7 |

8 | ## Original Paper 📄

9 | [Hugging Face](https://huggingface.co/papers/2107.00782) /

10 | [arxiv](https://arxiv.org/pdf/2107.00782.pdf)

11 |

12 |

13 | ## GitHub Repo 🧑🏽💻

14 | https://github.com/DeLightCMU/PSA

15 |

--------------------------------------------------------------------------------

/computer-vision-study-group/Sessions/SwinTransformer.md:

--------------------------------------------------------------------------------

1 | # Swin Transformer

2 | Session by [johko](https://github.com/johko)

3 |

4 |

5 | ## Recording 📺

6 | [YouTube](https://www.youtube.com/watch?v=Ngikt-K1Ecc&t=305s&pp=ygUdaHVnZ2luZyBmYWNlIHN0dWR5IGdyb3VwIHN3aW4%3D)

7 |

8 |

9 | ## Session Slides 🖥️

10 | [Google Drive](https://docs.google.com/presentation/d/1RoFIC6vE55RS4WNqSlzNu3ljB6F-_8edtprAFXpGvKs/edit?usp=sharing)

11 |

12 |

13 | ## Original Paper 📄

14 | [Hugging Face](https://huggingface.co/papers/2103.14030) /

15 | [arxiv](https://arxiv.org/pdf/2103.14030.pdf)

16 |

17 |

18 | ## GitHub Repo 🧑🏽💻

19 | https://github.com/microsoft/Swin-Transformer

20 |

21 |

22 | ## Additional Resources 📚

23 | - [Transformers Docs Swin v1](https://huggingface.co/docs/transformers/model_doc/swin)

24 | - [Transformers Docs Swin v2](https://huggingface.co/docs/transformers/model_doc/swinv2)

25 | - [Transformers Docs Swin Super Resolution](https://huggingface.co/docs/transformers/model_doc/swin2sr)

--------------------------------------------------------------------------------

/gradio-blocks/README.md:

--------------------------------------------------------------------------------

1 | # Welcome to the [Gradio](https://gradio.app/) Blocks Party 🥳

2 |

3 |

4 |

5 |

6 | _**Timeline**: May 17th, 2022 - May 31st, 2022_

7 |

8 | ---

9 |

10 | We are happy to invite you to the Gradio Blocks Party, a community event in which we will create **interactive demos** for state-of-the-art machine learning models. Demos are powerful because they allow anyone, not just ML engineers, to try out models in the browser, give feedback on predictions, and identify trustworthy models. The event will take place from **May 17th to 31st**. We will be organizing this event on [GitHub](https://github.com/huggingface/community-events) and the [Hugging Face Discord channel](https://discord.com/invite/feTf9x3ZSB). Prizes will be given at the end of the event; see [Prizes](#prizes).

11 |

12 |

13 |

14 | ## What is Gradio?

15 |

16 | Gradio is a Python library that allows you to quickly build web-based machine learning demos, data science dashboards, or other kinds of web apps, entirely in Python. These web apps can be launched from wherever you use Python (Jupyter notebooks, Colab notebooks, a Python terminal, etc.) and shared with anyone instantly using Gradio's auto-generated share links. To learn more about Gradio, see the Getting Started Guide: https://gradio.app/getting_started/ and the new Hugging Face course chapter on Gradio: [Gradio Course](https://huggingface.co/course/chapter9/1?fw=pt).

17 |

18 | Gradio can be installed via pip and comes preinstalled in Hugging Face Spaces. To choose the Gradio version a Space uses, set `sdk_version` in the Space's README, for example `sdk_version: 3.0b8`.

19 |

20 | Run `pip install gradio` to install Gradio locally.

21 |

22 |

23 | ## What is Blocks?

24 |

25 | `gradio.Blocks` is a low-level API that allows you to have full control over the data flows and layout of your application. You can build very complex, multi-step applications using Blocks. If you have already used `gradio.Interface`, you know that you can easily create fully-fledged machine learning demos with just a few lines of code. The Interface API is very convenient but in some cases may not be sufficiently flexible for your needs. For example, you might want to:

26 |

27 | * Group together related demos as multiple tabs in one web app.

28 | * Change the layout of your demo instead of just having all of the inputs on the left and outputs on the right.

29 | * Have multi-step interfaces, in which the output of one model becomes the input to the next model, or have more flexible data flows in general.

30 | * Change a component's properties (for example, the choices in a Dropdown) or its visibility based on user input.

31 |

32 | To learn more about Blocks, see the [official guide](https://www.gradio.app/introduction_to_blocks/) and the [docs](https://gradio.app/docs/).
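
The points above can be illustrated with a minimal sketch. It is not taken from the event materials: the helper names `reverse_text`, `shout`, and `build_demo` are hypothetical, and it assumes a reasonably recent Gradio release in which `gr.Tab`, `gr.Row`, `gr.Textbox`, and `gr.Button` are available. It groups two demos as tabs and chains two steps so that the output of the first becomes the input of the second.

```python
def reverse_text(text: str) -> str:
    """Step 1 of the toy pipeline: reverse the input string."""
    return text[::-1]


def shout(text: str) -> str:
    """Step 2 of the toy pipeline: upper-case the text and add an exclamation mark."""
    return text.upper() + "!"


def build_demo():
    """Build (but do not launch) a Blocks app with two tabs."""
    # Imported lazily so the helper functions above stay usable without Gradio installed.
    import gradio as gr

    with gr.Blocks() as demo:
        # Tab 1: a two-step flow where step 2 reads the output box of step 1.
        with gr.Tab("Two-step pipeline"):
            with gr.Row():
                source = gr.Textbox(label="Input")
                reversed_box = gr.Textbox(label="Step 1: reversed")
                shouted_box = gr.Textbox(label="Step 2: shouted")
            step1 = gr.Button("Run step 1")
            step2 = gr.Button("Run step 2")
            step1.click(fn=reverse_text, inputs=source, outputs=reversed_box)
            step2.click(fn=shout, inputs=reversed_box, outputs=shouted_box)

        # Tab 2: a separate, related demo grouped into the same web app.
        with gr.Tab("Shout only"):
            plain = gr.Textbox(label="Input")
            loud = gr.Textbox(label="Output")
            gr.Button("Shout").click(fn=shout, inputs=plain, outputs=loud)

    return demo
```

To try it locally, call `build_demo().launch()`. In a Space, the same code would typically live in `app.py`.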

33 |

34 | ## What is Hugging Face Spaces?

35 |

36 | Spaces are a simple way to host ML demo apps directly on your profile or your organization’s profile on Hugging Face. This allows you to create your ML portfolio, showcase your projects at conferences or to stakeholders, and work collaboratively with other people in the ML ecosystem. Learn more about Spaces in the [docs](https://huggingface.co/docs/hub/spaces).

37 |

38 | ## How Do Gradio and Hugging Face work together?

39 |

40 | Hugging Face Spaces is a free hosting option for Gradio demos. Spaces comes with 3 SDK options: Gradio, Streamlit, and static HTML demos. Spaces can be public or private, and the workflow is similar to GitHub repos. There are currently over 2,000 Gradio Spaces on Hugging Face. Learn more about Spaces and Gradio: https://huggingface.co/docs/hub/spaces

41 |

42 | ## Event Plan

43 |

44 | The main components of the event are:

45 |

46 | 1. Learning about Gradio and the new Blocks feature

47 | 2. Building your own Blocks demo using Gradio and Hugging Face Spaces

48 | 3. Submitting your demo on Spaces to the Gradio Blocks Party Organization

49 | 4. Sharing your Blocks demo with a permanent shareable link

50 | 5. Winning prizes

51 |

52 |

53 | ## Example spaces using Blocks

54 |

55 |

57 | - [dalle-mini](https://huggingface.co/spaces/dalle-mini/dalle-mini)([Code](https://huggingface.co/spaces/dalle-mini/dalle-mini/blob/main/app/gradio/app.py))

58 | - [mindseye-lite](https://huggingface.co/spaces/multimodalart/mindseye-lite)([Code](https://huggingface.co/spaces/multimodalart/mindseye-lite/blob/main/app.py))

59 | - [ArcaneGAN-blocks](https://huggingface.co/spaces/akhaliq/ArcaneGAN-blocks)([Code](https://huggingface.co/spaces/akhaliq/ArcaneGAN-blocks/blob/main/app.py))

60 | - [gr-blocks](https://huggingface.co/spaces/merve/gr-blocks)([Code](https://huggingface.co/spaces/merve/gr-blocks/blob/main/app.py))

61 | - [tortoisse-tts](https://huggingface.co/spaces/osanseviero/tortoisse-tts)([Code](https://huggingface.co/spaces/osanseviero/tortoisse-tts/blob/main/app.py))

62 | - [CaptchaCracker](https://huggingface.co/spaces/akhaliq/CaptchaCracker)([Code](https://huggingface.co/spaces/akhaliq/CaptchaCracker/blob/main/app.py))

63 |

64 |

65 | ## To participate in the event

66 |

67 | - Join the organization for the Blocks event

68 |   - [https://huggingface.co/Gradio-Blocks](https://huggingface.co/Gradio-Blocks)

69 | - Join the Discord

70 |   - [discord](https://discord.com/invite/feTf9x3ZSB)

71 |

72 |

73 | Participants will build and share Gradio demos using the Blocks feature. We will share a list of ideas for Spaces that can be created using Blocks, and participants are also free to try out their own ideas. At the end of the event, Spaces will be evaluated and prizes will be given.

74 |

75 |

76 | ## Potential ideas for creating spaces:

77 |

78 |

79 | - Trending papers from https://paperswithcode.com/

80 | - Models from the Hugging Face Model Hub: https://huggingface.co/models

81 | - Models from other model hubs

82 |   - TensorFlow Hub: see example Gradio demos at https://huggingface.co/tensorflow

83 |   - PyTorch Hub: see example Gradio demos at https://huggingface.co/pytorch

84 |   - ONNX Model Hub: see example Gradio demos at https://huggingface.co/onnx

85 |   - PaddlePaddle Model Hub: see example Gradio demos at https://huggingface.co/PaddlePaddle

86 | - Your own ideas

87 |

88 |

89 | ## Prizes

90 | - 1st place winner based on likes

91 |   - [Hugging Face PRO subscription](https://huggingface.co/pricing) for 1 year

92 |   - Embedding your Gradio Blocks demo in the Gradio Blog

93 | - top 10 winners based on likes

94 |   - Swag from [Hugging Face merch shop](https://huggingface.myshopify.com/): t-shirts, hoodies, mugs of your choice

95 | - top 25 winners based on likes

96 |   - [Hugging Face PRO subscription](https://huggingface.co/pricing) for 1 month

97 | - Blocks event badge on HF for all participants!

98 |

99 | ## Prizes Criteria

100 |

101 | - Staff Picks

102 | - Most liked Spaces

103 | - Community Pick (voting)

104 | - Most Creative Space (voting)

105 | - Most Educational Space (voting)

106 | - CEO's pick (one prize for a particularly impactful demo), picked by @clem

107 | - CTO's pick (one prize for a particularly technically impressive demo), picked by @julien

108 |

109 |

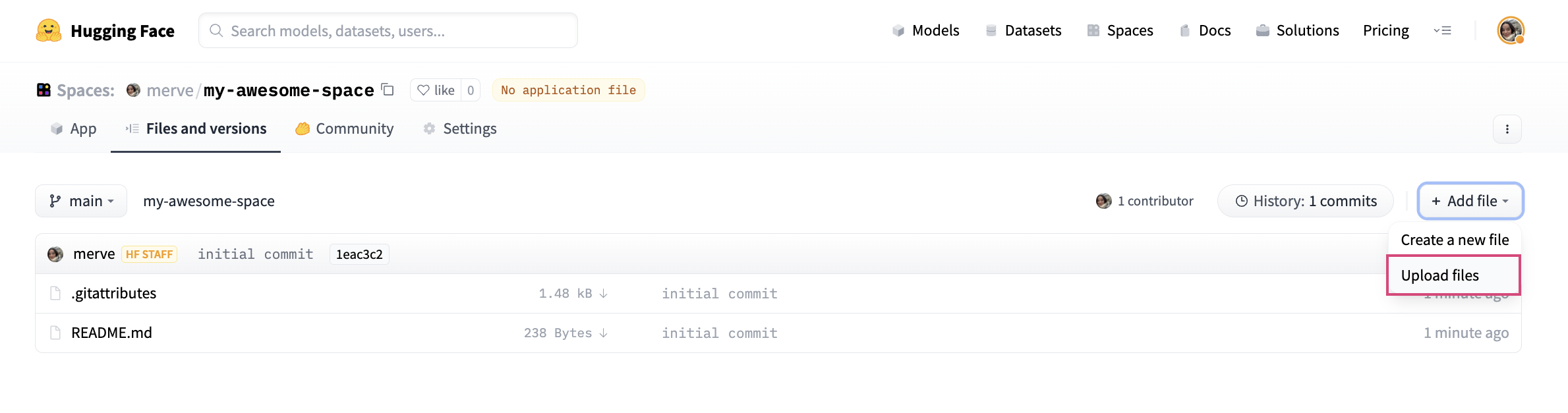

110 | ## Creating a Gradio demo on Hugging Face Spaces

111 |

112 | Once you have picked a model from the ideas above, or settled on an idea of your own, you can share it in a Space using Gradio.

113 |

114 | Read more about how to add [Gradio spaces](https://huggingface.co/blog/gradio-spaces).

115 |

116 | Steps to add Gradio Spaces to the Gradio Blocks Party org:

117 | 1. Create an account on Hugging Face

118 | 2. Join the Gradio Blocks Party Organization by clicking the "Join Organization" button on the organization page or by using the shared link above

119 | 3. Once your request is approved, add your Space using the Gradio SDK and share the link with the community!

120 |

121 | ## LeaderBoard for Most Popular Blocks Event Spaces based on Likes

122 |

123 | - See Leaderboard: https://huggingface.co/spaces/Gradio-Blocks/Leaderboard

124 |

--------------------------------------------------------------------------------

/huggan/__init__.py:

--------------------------------------------------------------------------------

1 | from pathlib import Path

2 |

3 | TEMPLATE_MODEL_CARD_PATH = Path(__file__).parent.absolute() / 'model_card_template.md'

--------------------------------------------------------------------------------

/huggan/assets/cyclegan.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/cyclegan.png

--------------------------------------------------------------------------------

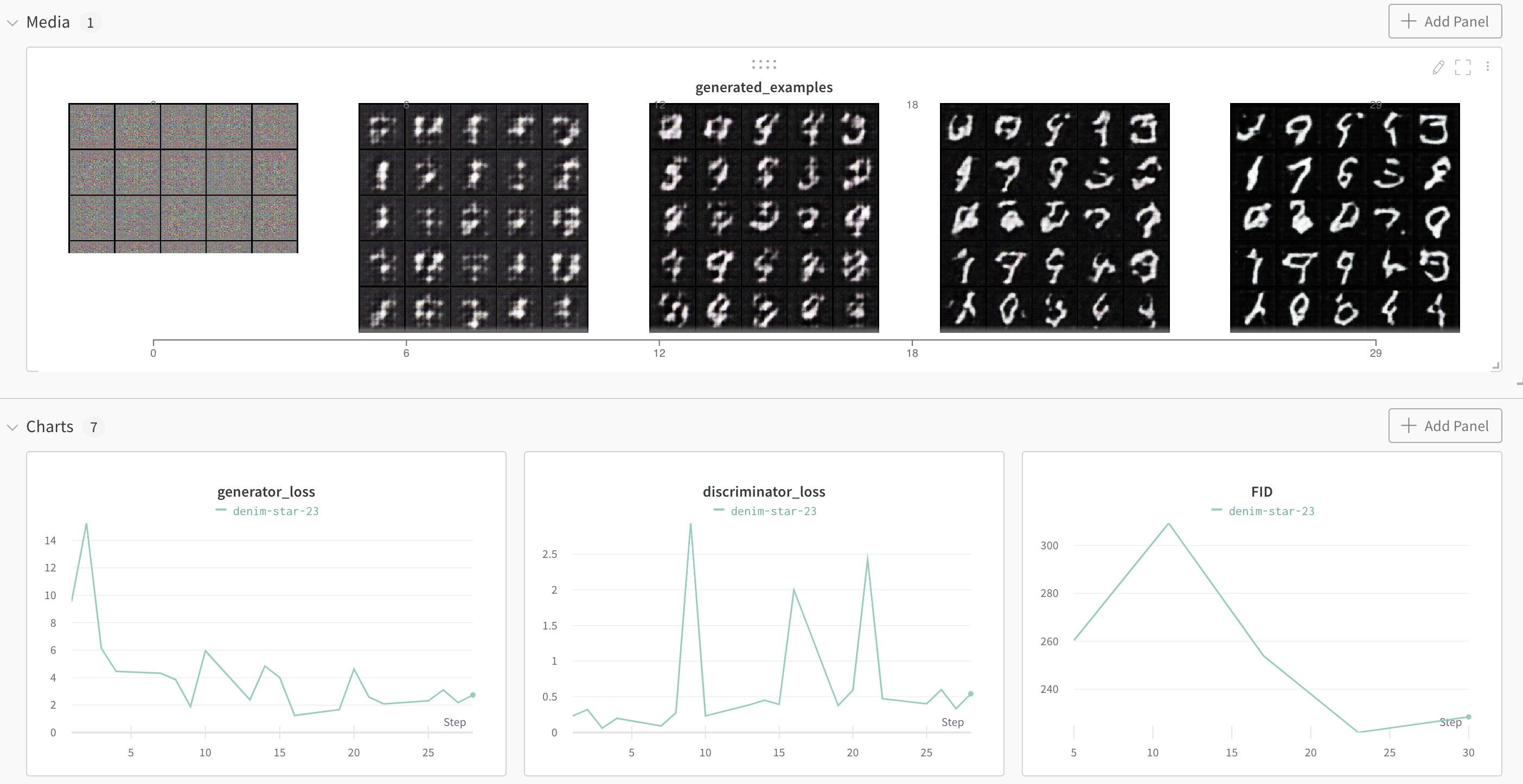

/huggan/assets/dcgan_mnist.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/dcgan_mnist.png

--------------------------------------------------------------------------------

/huggan/assets/example_model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/example_model.png

--------------------------------------------------------------------------------

/huggan/assets/example_space.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/example_space.png

--------------------------------------------------------------------------------

/huggan/assets/huggan_banner.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/huggan_banner.png

--------------------------------------------------------------------------------

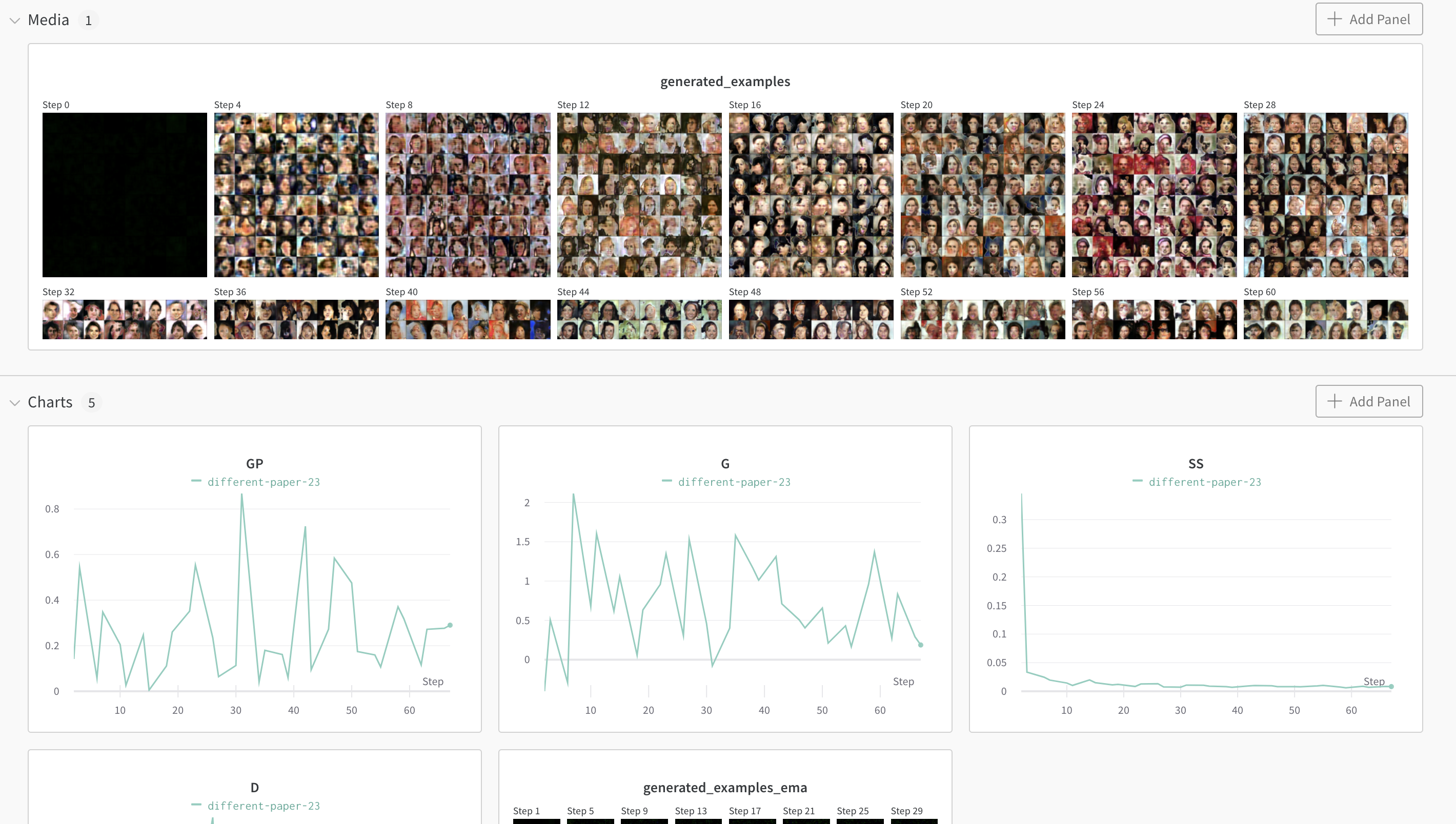

/huggan/assets/lightweight_gan_wandb.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/lightweight_gan_wandb.png

--------------------------------------------------------------------------------

/huggan/assets/metfaces.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/metfaces.png

--------------------------------------------------------------------------------

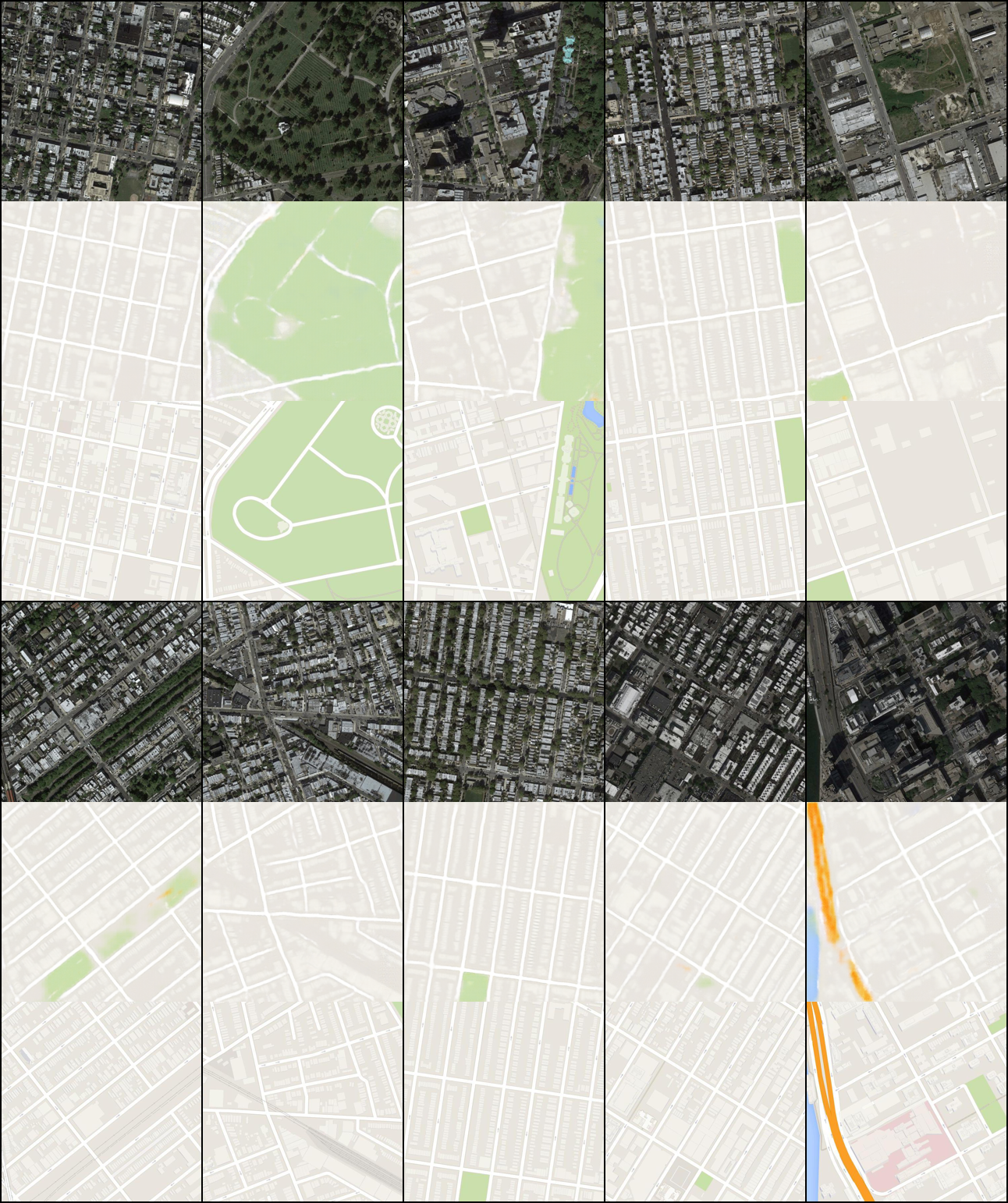

/huggan/assets/pix2pix_maps.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/pix2pix_maps.png

--------------------------------------------------------------------------------

/huggan/assets/wandb.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/wandb.png

--------------------------------------------------------------------------------

/huggan/model_card_template.md:

--------------------------------------------------------------------------------

1 | ---

2 | tags:

3 | - huggan

4 | - gan

5 | # See a list of available tags here:

6 | # https://github.com/huggingface/hub-docs/blob/main/js/src/lib/interfaces/Types.ts#L12

7 | # task: unconditional-image-generation or conditional-image-generation or image-to-image

8 | license: mit

9 | ---

10 |

11 | # MyModelName

12 |

13 | ## Model description

14 |

15 | Describe the model here (what it does, what it's used for, etc.)

16 |

17 | ## Intended uses & limitations

18 |

19 | #### How to use

20 |

21 | ```python

22 | # You can include sample code which will be formatted

23 | ```

24 |

25 | #### Limitations and bias

26 |

27 | Provide examples of latent issues and potential remediations.

28 |

29 | ## Training data

30 |

31 | Describe the data you used to train the model.

32 | If you initialized it with pre-trained weights, add a link to the pre-trained model card or repository with description of the pre-training data.

33 |

34 | ## Training procedure

35 |

36 | Preprocessing, hardware used, hyperparameters...

37 |

38 | ## Eval results

39 |

40 | ## Generated Images

41 |

42 | You can embed local or remote images using ``

43 |

44 | ### BibTeX entry and citation info

45 |

46 | ```bibtex

47 | @inproceedings{...,

48 | year={2020}

49 | }

50 | ```

--------------------------------------------------------------------------------

/huggan/pytorch/README.md:

--------------------------------------------------------------------------------

1 | # Example scripts (PyTorch)

2 |

3 | This directory contains a few example scripts that allow you to train famous GANs on your own data using a bit of 🤗 magic.

4 |

5 | More concretely, these scripts:

6 | - leverage 🤗 [Datasets](https://huggingface.co/docs/datasets/index) to load any image dataset from the hub (including your own, possibly private, dataset)

7 | - leverage 🤗 [Accelerate](https://huggingface.co/docs/accelerate/index) to instantly run the script on (multi-) CPU, (multi-) GPU, TPU environments, supporting fp16 and mixed precision as well as DeepSpeed

8 | - leverage 🤗 [Hub](https://huggingface.co/) to push the model to the hub at the end of training, allowing to easily create a demo for it afterwards

9 |

10 | Currently, it contains the following examples:

11 |

12 | | Name | Paper |

13 | | ----------- | ----------- |

14 | | [DCGAN](dcgan) | [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://arxiv.org/abs/1511.06434) |

15 | | [pix2pix](pix2pix) | [Image-to-Image Translation with Conditional Adversarial Networks](https://arxiv.org/abs/1611.07004) |

16 | | [CycleGAN](cyclegan) | [Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks](https://arxiv.org/abs/1703.10593)

17 | | [Lightweight GAN](lightweight_gan) | [Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis](https://openreview.net/forum?id=1Fqg133qRaI)

18 |

19 |

20 |

--------------------------------------------------------------------------------

/huggan/pytorch/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/pytorch/__init__.py

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/README.md:

--------------------------------------------------------------------------------

1 | # Training CycleGAN on your own data

2 |

3 | This folder contains a script to train [CycleGAN](https://arxiv.org/abs/1703.10593), leveraging the [Hugging Face](https://huggingface.co/) ecosystem for processing data and pushing the model to the Hub.

4 |

5 |

- [dalle-mini](https://huggingface.co/spaces/dalle-mini/dalle-mini) ([Code](https://huggingface.co/spaces/dalle-mini/dalle-mini/blob/main/app/gradio/app.py))
- [mindseye-lite](https://huggingface.co/spaces/multimodalart/mindseye-lite) ([Code](https://huggingface.co/spaces/multimodalart/mindseye-lite/blob/main/app.py))
- [ArcaneGAN-blocks](https://huggingface.co/spaces/akhaliq/ArcaneGAN-blocks) ([Code](https://huggingface.co/spaces/akhaliq/ArcaneGAN-blocks/blob/main/app.py))
- [gr-blocks](https://huggingface.co/spaces/merve/gr-blocks) ([Code](https://huggingface.co/spaces/merve/gr-blocks/blob/main/app.py))
- [tortoisse-tts](https://huggingface.co/spaces/osanseviero/tortoisse-tts) ([Code](https://huggingface.co/spaces/osanseviero/tortoisse-tts/blob/main/app.py))
- [CaptchaCracker](https://huggingface.co/spaces/akhaliq/CaptchaCracker) ([Code](https://huggingface.co/spaces/akhaliq/CaptchaCracker/blob/main/app.py))


## To participate in the event

- Join the organization for the Blocks event
  - [https://huggingface.co/Gradio-Blocks](https://huggingface.co/Gradio-Blocks)
- Join the Discord
  - [discord](https://discord.com/invite/feTf9x3ZSB)


Participants will build and share Gradio demos using the Blocks feature. We will share a list of ideas for Spaces that can be created using Blocks, and participants are free to try out their own ideas. At the end of the event, Spaces will be evaluated and prizes will be given.


## Potential ideas for creating spaces:


- Trending papers from https://paperswithcode.com/
- Models from the Hugging Face model hub: https://huggingface.co/models
- Models from other model hubs
  - TensorFlow Hub: see example Gradio demos at https://huggingface.co/tensorflow
  - PyTorch Hub: see example Gradio demos at https://huggingface.co/pytorch
  - ONNX model Hub: see example Gradio demos at https://huggingface.co/onnx
  - PaddlePaddle Model Hub: see example Gradio demos at https://huggingface.co/PaddlePaddle
- Participant ideas: try out your own ideas


## Prizes
- 1st place winner based on likes
  - [Hugging Face PRO subscription](https://huggingface.co/pricing) for 1 year
  - Embedding your Gradio Blocks demo in the Gradio Blog
- Top 10 winners based on likes
  - Swag from [Hugging Face merch shop](https://huggingface.myshopify.com/): t-shirts, hoodies, mugs of your choice
- Top 25 winners based on likes
  - [Hugging Face PRO subscription](https://huggingface.co/pricing) for 1 month
- Blocks event badge on HF for all participants!

## Prizes Criteria

- Staff Picks
- Most liked Spaces
- Community Pick (voting)
- Most Creative Space (voting)
- Most Educational Space (voting)
- CEO's pick (one prize for a particularly impactful demo), picked by @clem
- CTO's pick (one prize for a particularly technically impressive demo), picked by @julien


## Creating a Gradio demo on Hugging Face Spaces

Once you have picked a model from the choices above (or settled on your own idea), you can share it in a Space using Gradio.

Read more about how to add [Gradio Spaces](https://huggingface.co/blog/gradio-spaces).

Steps to add Gradio Spaces to the Gradio Blocks Party org:
1. Create an account on Hugging Face
2. Join the Gradio Blocks Party Organization by clicking the "Join Organization" button on the organization page or by using the shared link above
3. Once your request is approved, add your Space using the Gradio SDK and share the link with the community!

## Leaderboard for Most Popular Blocks Event Spaces based on Likes

- See Leaderboard: https://huggingface.co/spaces/Gradio-Blocks/Leaderboard

--------------------------------------------------------------------------------

/huggan/__init__.py:

--------------------------------------------------------------------------------

from pathlib import Path

TEMPLATE_MODEL_CARD_PATH = Path(__file__).parent.absolute() / 'model_card_template.md'

--------------------------------------------------------------------------------

/huggan/assets/cyclegan.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/cyclegan.png

--------------------------------------------------------------------------------

/huggan/assets/dcgan_mnist.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/dcgan_mnist.png

--------------------------------------------------------------------------------

/huggan/assets/example_model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/example_model.png

--------------------------------------------------------------------------------

/huggan/assets/example_space.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/example_space.png

--------------------------------------------------------------------------------

/huggan/assets/huggan_banner.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/huggan_banner.png

--------------------------------------------------------------------------------

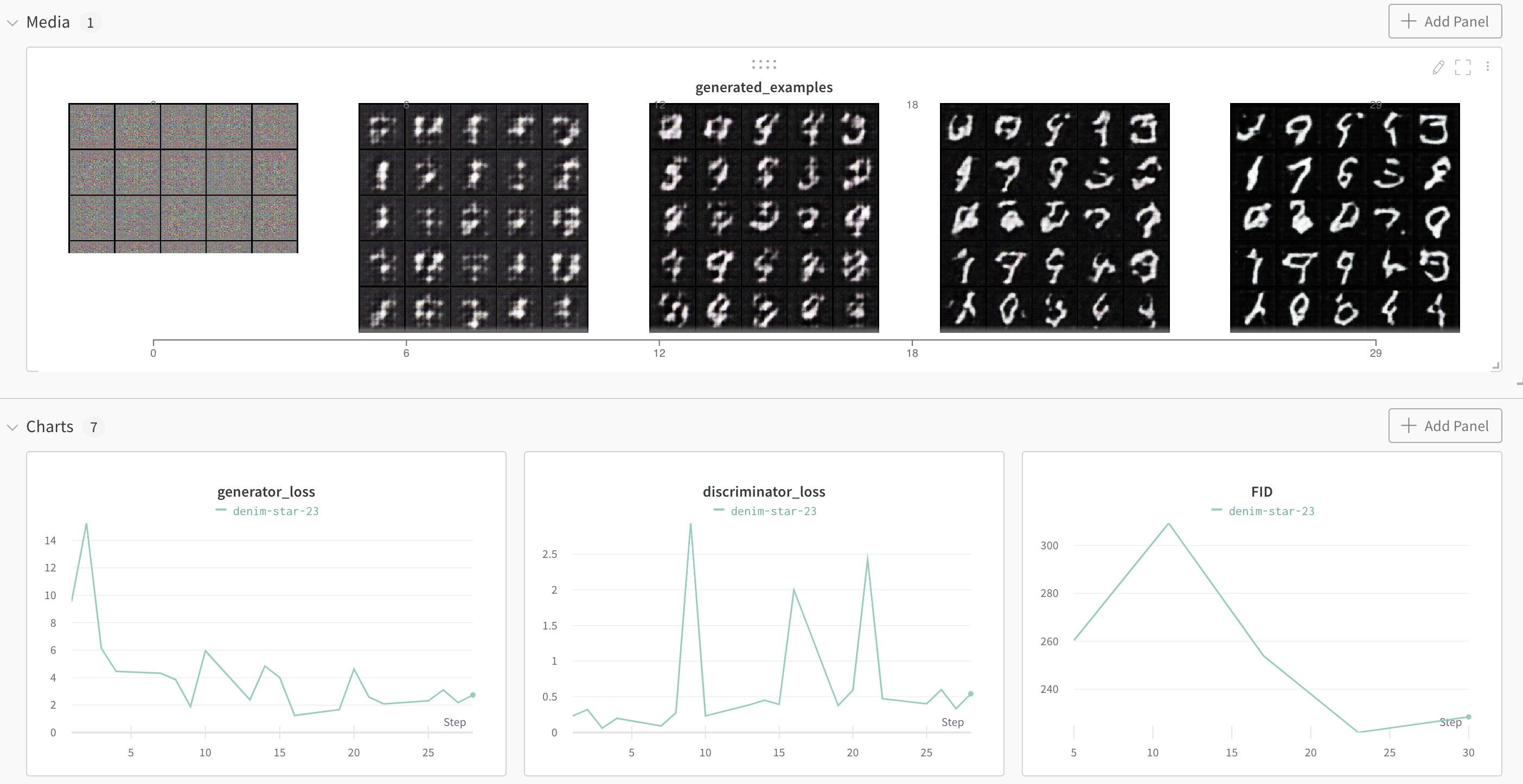

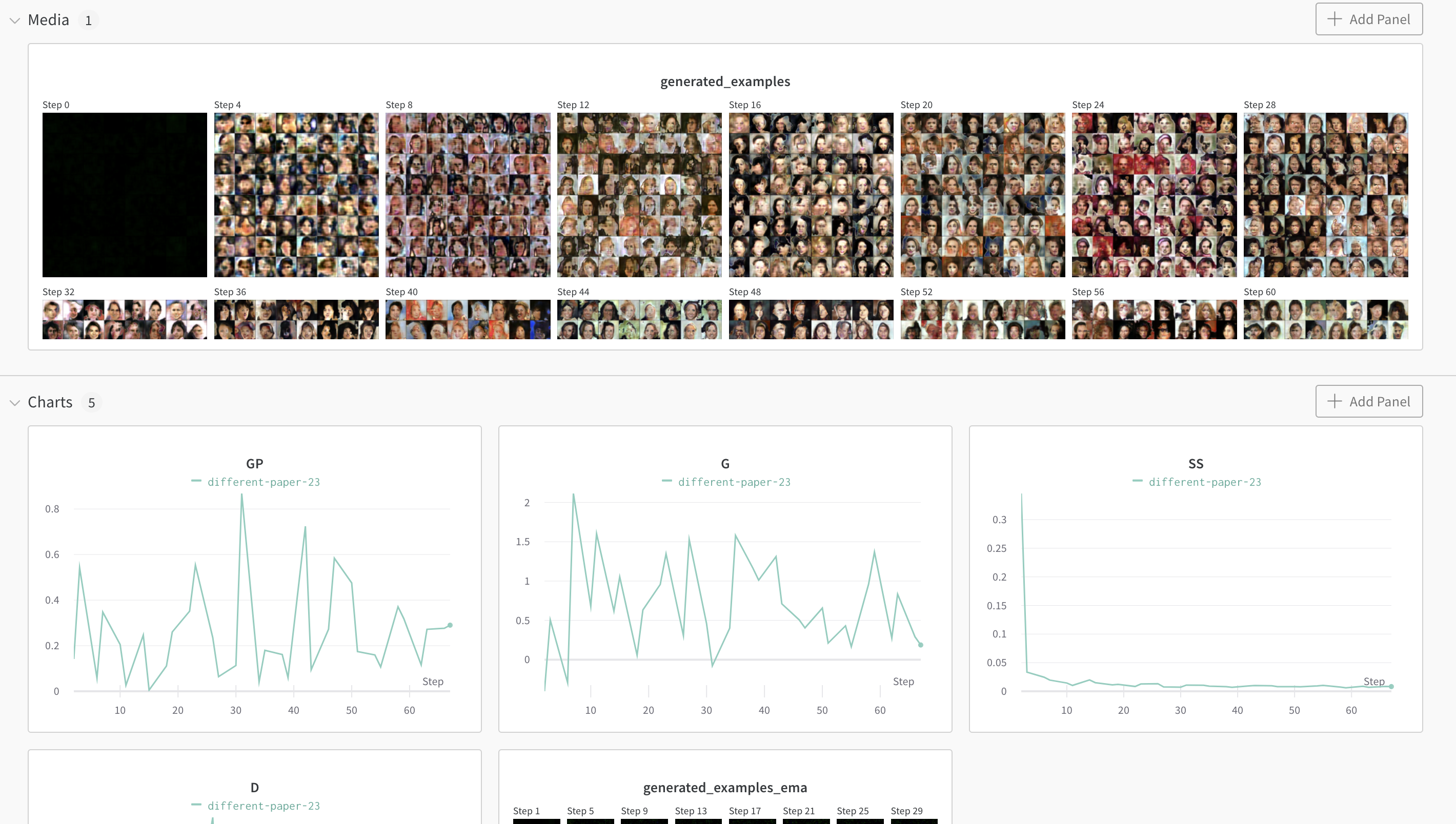

/huggan/assets/lightweight_gan_wandb.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/lightweight_gan_wandb.png

--------------------------------------------------------------------------------

/huggan/assets/metfaces.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/metfaces.png

--------------------------------------------------------------------------------

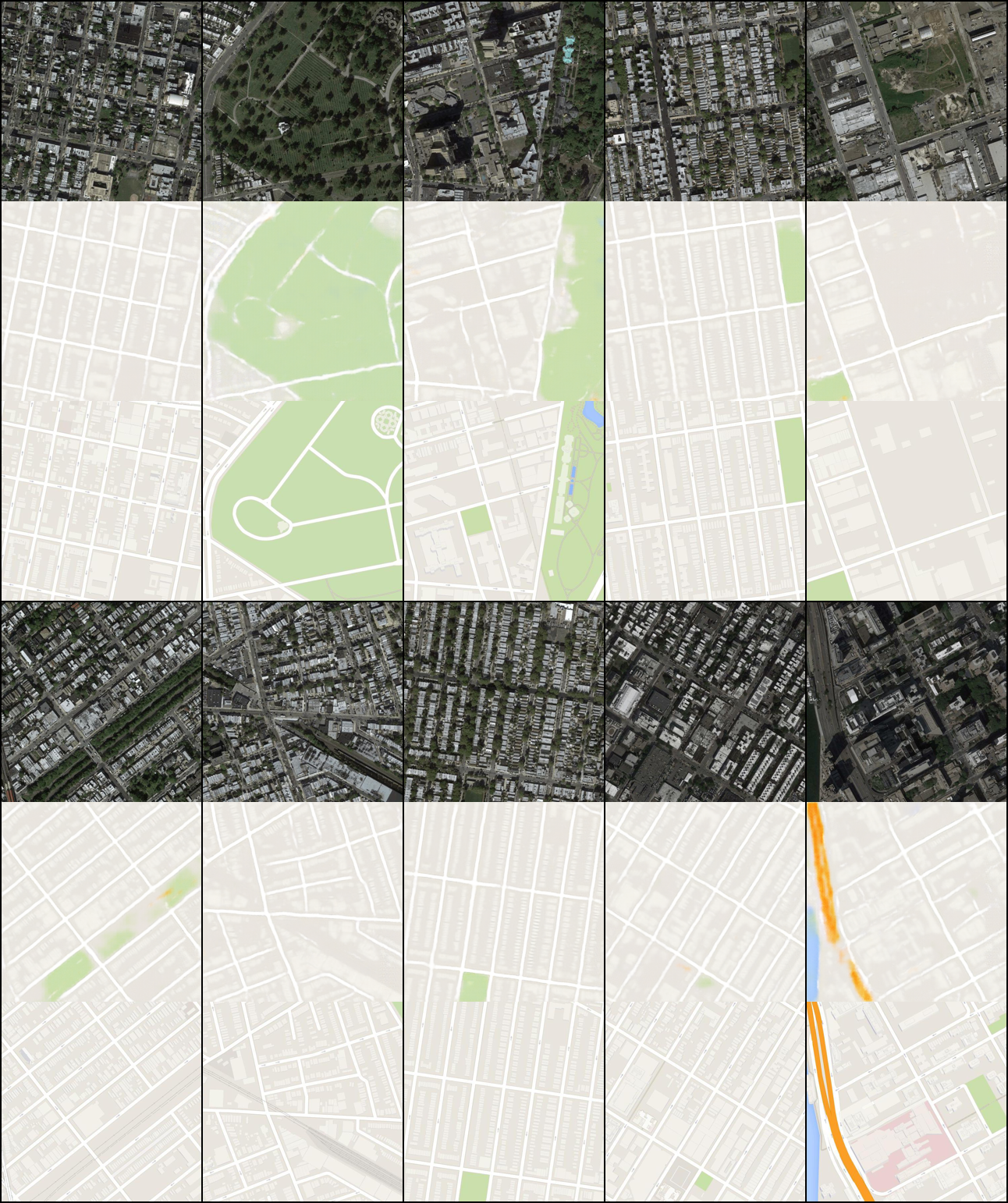

/huggan/assets/pix2pix_maps.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/pix2pix_maps.png

--------------------------------------------------------------------------------

/huggan/assets/wandb.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/assets/wandb.png

--------------------------------------------------------------------------------

/huggan/model_card_template.md:

--------------------------------------------------------------------------------

---
tags:
- huggan
- gan
# See a list of available tags here:
# https://github.com/huggingface/hub-docs/blob/main/js/src/lib/interfaces/Types.ts#L12
# task: unconditional-image-generation or conditional-image-generation or image-to-image
license: mit
---

# MyModelName

## Model description

Describe the model here (what it does, what it's used for, etc.)

## Intended uses & limitations

#### How to use

```python
# You can include sample code which will be formatted
```

#### Limitations and bias

Provide examples of latent issues and potential remediations.

## Training data

Describe the data you used to train the model.
If you initialized it with pre-trained weights, add a link to the pre-trained model card or repository with description of the pre-training data.

## Training procedure

Preprocessing, hardware used, hyperparameters...

## Eval results

## Generated Images

You can embed local or remote images using `![](...)`

### BibTeX entry and citation info

```bibtex
@inproceedings{...,
    year={2020}
}
```

--------------------------------------------------------------------------------

/huggan/pytorch/README.md:

--------------------------------------------------------------------------------

# Example scripts (PyTorch)

This directory contains a few example scripts that allow you to train famous GANs on your own data using a bit of 🤗 magic.

More concretely, these scripts:
- leverage 🤗 [Datasets](https://huggingface.co/docs/datasets/index) to load any image dataset from the hub (including your own, possibly private, dataset)
- leverage 🤗 [Accelerate](https://huggingface.co/docs/accelerate/index) to instantly run the script on (multi-) CPU, (multi-) GPU, TPU environments, supporting fp16 and mixed precision as well as DeepSpeed
- leverage 🤗 [Hub](https://huggingface.co/) to push the model to the hub at the end of training, which makes it easy to create a demo for it afterwards

Currently, it contains the following examples:

| Name | Paper |
| ----------- | ----------- |
| [DCGAN](dcgan) | [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://arxiv.org/abs/1511.06434) |
| [pix2pix](pix2pix) | [Image-to-Image Translation with Conditional Adversarial Networks](https://arxiv.org/abs/1611.07004) |
| [CycleGAN](cyclegan) | [Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks](https://arxiv.org/abs/1703.10593) |
| [Lightweight GAN](lightweight_gan) | [Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis](https://openreview.net/forum?id=1Fqg133qRaI) |

--------------------------------------------------------------------------------

/huggan/pytorch/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/pytorch/__init__.py

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/README.md:

--------------------------------------------------------------------------------

# Training CycleGAN on your own data

This folder contains a script to train [CycleGAN](https://arxiv.org/abs/1703.10593), leveraging the [Hugging Face](https://huggingface.co/) ecosystem for processing data and pushing the model to the Hub.

Example applications of CycleGAN. Taken from [this repo](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix).

The script leverages 🤗 Datasets for loading and processing data, and 🤗 Accelerate for instantly running on CPU, a single GPU, multiple GPUs, or TPU, with optional mixed precision.

## Launching the script

To train the model with the default parameters (200 epochs, 256x256 images, etc.) on [huggan/facades](https://huggingface.co/datasets/huggan/facades) in your environment, first run:

```bash
accelerate config
```

and answer the questions asked. Next, launch the script as follows:

```bash
accelerate launch train.py
```

This will create local "images" and "saved_models" directories, containing generated images and saved checkpoints over the course of training.
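
As a rough illustration of where those files end up: `train.py` builds the directory names with `"images/%s" % args.dataset_name` and `"saved_models/%s" % args.dataset_name`, so a dataset id that contains a slash, such as the default `huggan/facades`, produces a nested folder. The sketch below mirrors those two `os.makedirs` calls. The helper name `output_dirs` and its `root` parameter are hypothetical and exist only so the example can run inside a temporary directory.

```python
import os
import tempfile


def output_dirs(dataset_name, root="."):
    """Create and return the sample and checkpoint directories for a dataset.

    Mirrors the two os.makedirs calls in train.py. `root` is an assumption
    added for this sketch so nothing is written to the working directory.
    """
    images_dir = os.path.join(root, "images/%s" % dataset_name)
    models_dir = os.path.join(root, "saved_models/%s" % dataset_name)
    os.makedirs(images_dir, exist_ok=True)
    os.makedirs(models_dir, exist_ok=True)
    return images_dir, models_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        images_dir, models_dir = output_dirs("huggan/facades", root=tmp)
        # Show the paths relative to the temporary root.
        print(os.path.relpath(images_dir, tmp))
        print(os.path.relpath(models_dir, tmp))
```

With the default dataset, generated samples would therefore be looked up under `images/huggan/facades` rather than directly under `images`.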

To train on another dataset available on the hub, simply do:

```bash
accelerate launch train.py --dataset_name huggan/edges2shoes
```

Make sure to pick a dataset that defines "imageA" and "imageB" columns. You can always tweak the script if the column names are different.
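
If your dataset uses other column names, one possible tweak is to map them inside the batch transform. The sketch below works on a plain dict of lists, which is the batch format that `with_transform` hands to the `transforms` function in `train.py`. The function name `to_pair_batch` and the parameters `col_a`, `col_b`, and `transform` are hypothetical, and unlike the script this version returns only the `"A"` and `"B"` keys.

```python
def to_pair_batch(examples, col_a="imageA", col_b="imageB", transform=None):
    """Build a batch with "A" and "B" keys from two source columns.

    `examples` is a dict mapping column names to lists of items.
    `transform` is applied to every item; it defaults to the identity.
    """
    missing = [name for name in (col_a, col_b) if name not in examples]
    if missing:
        raise KeyError("dataset is missing column(s): %s" % ", ".join(missing))

    apply = transform if transform is not None else (lambda item: item)
    return {
        "A": [apply(item) for item in examples[col_a]],
        "B": [apply(item) for item in examples[col_b]],
    }


if __name__ == "__main__":
    # Strings stand in for images here; str.upper stands in for the
    # torchvision transform pipeline used by the training script.
    batch = {"photo": ["p0", "p1"], "sketch": ["s0", "s1"]}
    print(to_pair_batch(batch, col_a="photo", col_b="sketch", transform=str.upper))
```

Failing early with a `KeyError` that names the missing columns tends to be easier to debug than an error raised from inside a data loader worker.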

## Training on your own data

You can of course also train on your own images. For this, you can leverage Datasets' [ImageFolder](https://huggingface.co/docs/datasets/v2.0.0/en/image_process#imagefolder). Make sure to authenticate with the hub first, by running the `huggingface-cli login` command in a terminal, or the following in case you're working in a notebook:

```python
from huggingface_hub import notebook_login

notebook_login()
```

Next, run the following in a notebook/script:

```python
from datasets import load_dataset

# first: load dataset
# option 1: from local folder
dataset = load_dataset("imagefolder", data_dir="path_to_folder")
# option 2: from remote URL (e.g. a zip file)
dataset = load_dataset("imagefolder", data_files="URL to .zip file")

# next: push to the hub (assuming git-LFS is installed)
dataset.push_to_hub("huggan/my-awesome-dataset")
```

You can then simply pass the name of the dataset to the script:

```bash
accelerate launch train.py --dataset_name huggan/my-awesome-dataset
```

## Pushing model to the Hub

You can push your trained generator to the hub after training by specifying the `push_to_hub` flag.
Then, you can run the script as follows:

```bash
accelerate launch train.py --push_to_hub --model_name cyclegan-horse2zebra
```

As written, `train.py` also marks `--pytorch_dump_folder_path` as required whenever `--push_to_hub` is passed, so add it to the command above with a local output directory of your choice.

This is made possible by making the generator inherit from `PyTorchModelHubMixin`, available in the `huggingface_hub` library.
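
In `train.py`, `--model_name` and `--pytorch_dump_folder_path` are declared with `required="--push_to_hub" in sys.argv`, so they become mandatory only when the push flag is present. The following is a minimal sketch of that pattern with a reduced parser. The helpers `build_parser` and `parse` are hypothetical, and they take the argument list explicitly instead of reading `sys.argv` so the behavior can be exercised directly.

```python
import argparse


def build_parser(argv):
    """Return a parser whose hub-related options are required only when pushing."""
    push = "--push_to_hub" in argv
    parser = argparse.ArgumentParser()
    parser.add_argument("--push_to_hub", action="store_true")
    parser.add_argument("--model_name", type=str, required=push)
    parser.add_argument("--pytorch_dump_folder_path", type=str, required=push)
    return parser


def parse(argv):
    """Parse `argv` with a parser built for that same argument list."""
    return build_parser(argv).parse_args(argv)


if __name__ == "__main__":
    # Without --push_to_hub, both options may be omitted.
    print(parse([]))
    # With --push_to_hub, argparse exits with an error unless both are given.
    print(parse([
        "--push_to_hub",
        "--model_name", "cyclegan-horse2zebra",
        "--pytorch_dump_folder_path", "out",
    ]))
```

Passing `--push_to_hub` with only `--model_name` makes argparse print a usage error and exit, which is why a push command needs both options.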

# Citation

This repo is entirely based on Erik Linder-Norén's [PyTorch-GAN repo](https://github.com/eriklindernoren/PyTorch-GAN), but with added Hugging Face goodies.

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/huggingface/community-events/a2d9115007c7e44b4389e005ea5c6163ae5b0470/huggan/pytorch/cyclegan/__init__.py

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/modeling_cyclegan.py:

--------------------------------------------------------------------------------

import torch.nn as nn
import torch.nn.functional as F
import torch

from huggan.pytorch.huggan_mixin import HugGANModelHubMixin


##############################
#           RESNET
##############################


class ResidualBlock(nn.Module):
    def __init__(self, in_features):
        super(ResidualBlock, self).__init__()

        # Two 3x3 convolutions; reflection padding keeps the spatial size unchanged
        self.block = nn.Sequential(
            nn.ReflectionPad2d(1),
            nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features),
            nn.ReLU(inplace=True),
            nn.ReflectionPad2d(1),
            nn.Conv2d(in_features, in_features, 3),
            nn.InstanceNorm2d(in_features),
        )

    def forward(self, x):
        # Skip connection
        return x + self.block(x)


class GeneratorResNet(nn.Module, HugGANModelHubMixin):
    def __init__(self, input_shape, num_residual_blocks):
        super(GeneratorResNet, self).__init__()

        channels = input_shape[0]

        # Initial convolution block
        out_features = 64
        model = [
            # Pad by 3 (kernel_size // 2) so the 7x7 convolution preserves the spatial size.
            # The original padded by `channels`, which is only correct for 3-channel images.
            nn.ReflectionPad2d(3),
            nn.Conv2d(channels, out_features, 7),
            nn.InstanceNorm2d(out_features),
            nn.ReLU(inplace=True),
        ]
        in_features = out_features

        # Downsampling
        for _ in range(2):
            out_features *= 2
            model += [
                nn.Conv2d(in_features, out_features, 3, stride=2, padding=1),
                nn.InstanceNorm2d(out_features),
                nn.ReLU(inplace=True),
            ]
            in_features = out_features

        # Residual blocks
        for _ in range(num_residual_blocks):
            model += [ResidualBlock(out_features)]

        # Upsampling
        for _ in range(2):
            out_features //= 2
            model += [
                nn.Upsample(scale_factor=2),
                nn.Conv2d(in_features, out_features, 3, stride=1, padding=1),
                nn.InstanceNorm2d(out_features),
                nn.ReLU(inplace=True),
            ]
            in_features = out_features

        # Output layer (same fixed padding of 3 as the initial block)
        model += [nn.ReflectionPad2d(3), nn.Conv2d(out_features, channels, 7), nn.Tanh()]

        self.model = nn.Sequential(*model)

    def forward(self, x):
        return self.model(x)


##############################
#        Discriminator
##############################


class Discriminator(nn.Module):
    def __init__(self, channels):
        super(Discriminator, self).__init__()

        def discriminator_block(in_filters, out_filters, normalize=True):
            """Returns downsampling layers of each discriminator block"""
            layers = [nn.Conv2d(in_filters, out_filters, 4, stride=2, padding=1)]
            if normalize:
                layers.append(nn.InstanceNorm2d(out_filters))
            layers.append(nn.LeakyReLU(0.2, inplace=True))
            return layers

        self.model = nn.Sequential(
            *discriminator_block(channels, 64, normalize=False),
            *discriminator_block(64, 128),
            *discriminator_block(128, 256),
            *discriminator_block(256, 512),
            nn.ZeroPad2d((1, 0, 1, 0)),
            nn.Conv2d(512, 1, 4, padding=1)
        )

    def forward(self, img):
        return self.model(img)

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/train.py:

--------------------------------------------------------------------------------

import argparse
import os
import numpy as np
import itertools
from pathlib import Path
import datetime
import time
import sys

from PIL import Image

from torchvision.transforms import Compose, Resize, ToTensor, Normalize, RandomCrop, RandomHorizontalFlip
from torchvision.utils import save_image, make_grid

from torch.utils.data import DataLoader

from modeling_cyclegan import GeneratorResNet, Discriminator

from utils import ReplayBuffer, LambdaLR

from datasets import load_dataset

from accelerate import Accelerator

import torch.nn as nn
import torch


def parse_args(args=None):
    parser = argparse.ArgumentParser()
    parser.add_argument("--epoch", type=int, default=0, help="epoch to start training from")
    parser.add_argument("--num_epochs", type=int, default=200, help="number of epochs of training")
    parser.add_argument("--dataset_name", type=str, default="huggan/facades", help="name of the dataset")
    parser.add_argument("--batch_size", type=int, default=1, help="size of the batches")
    parser.add_argument("--lr", type=float, default=0.0002, help="adam: learning rate")
    parser.add_argument("--beta1", type=float, default=0.5, help="adam: decay of first order momentum of gradient")
    parser.add_argument("--beta2", type=float, default=0.999, help="adam: decay of second order momentum of gradient")
    parser.add_argument("--decay_epoch", type=int, default=100, help="epoch from which to start lr decay")
    parser.add_argument("--num_workers", type=int, default=8, help="Number of CPU threads to use during batch generation")
    parser.add_argument("--image_size", type=int, default=256, help="Size of images for training")
    parser.add_argument("--channels", type=int, default=3, help="Number of image channels")
    parser.add_argument("--sample_interval", type=int, default=100, help="interval between saving generator outputs")
    parser.add_argument("--checkpoint_interval", type=int, default=-1, help="interval between saving model checkpoints")
    parser.add_argument("--n_residual_blocks", type=int, default=9, help="number of residual blocks in generator")
    parser.add_argument("--lambda_cyc", type=float, default=10.0, help="cycle loss weight")
    parser.add_argument("--lambda_id", type=float, default=5.0, help="identity loss weight")
    parser.add_argument("--fp16", action="store_true", help="If passed, will use FP16 training.")
    parser.add_argument(
        "--mixed_precision",
        type=str,
        default="no",
        choices=["no", "fp16", "bf16"],
        help="Whether to use mixed precision. Choose "
        "between fp16 and bf16 (bfloat16). Bf16 requires PyTorch >= 1.10 "
        "and an Nvidia Ampere GPU.",
    )
    parser.add_argument("--cpu", action="store_true", help="If passed, will train on the CPU.")
    parser.add_argument(
        "--push_to_hub",
        action="store_true",
        help="Whether to push the model to the HuggingFace hub after training.",
    )
    # The two options below are required only when --push_to_hub is on the command line
    parser.add_argument(
        "--pytorch_dump_folder_path",
        required="--push_to_hub" in sys.argv,
        type=Path,
        help="Path to save the model. Will be created if it doesn't exist already.",
    )
    parser.add_argument(
        "--model_name",
        required="--push_to_hub" in sys.argv,
        type=str,
        help="Name of the model on the hub.",
    )
    parser.add_argument(
        "--organization_name",
        required=False,
        default="huggan",
        type=str,
        help="Organization name to push to, in case args.push_to_hub is specified.",
    )
    return parser.parse_args(args=args)


def weights_init_normal(m):
    classname = m.__class__.__name__
    if classname.find("Conv") != -1:
        torch.nn.init.normal_(m.weight.data, 0.0, 0.02)
        if hasattr(m, "bias") and m.bias is not None:
            torch.nn.init.constant_(m.bias.data, 0.0)
    elif classname.find("BatchNorm2d") != -1:
        torch.nn.init.normal_(m.weight.data, 1.0, 0.02)
        torch.nn.init.constant_(m.bias.data, 0.0)


def training_function(config, args):
    accelerator = Accelerator(fp16=args.fp16, cpu=args.cpu, mixed_precision=args.mixed_precision)

    # Create sample and checkpoint directories
    os.makedirs("images/%s" % args.dataset_name, exist_ok=True)
    os.makedirs("saved_models/%s" % args.dataset_name, exist_ok=True)

    # Losses
    criterion_GAN = torch.nn.MSELoss()
    criterion_cycle = torch.nn.L1Loss()
    criterion_identity = torch.nn.L1Loss()

    input_shape = (args.channels, args.image_size, args.image_size)
    # Calculate output shape of image discriminator (PatchGAN)
    output_shape = (1, args.image_size // 2 ** 4, args.image_size // 2 ** 4)

    # Initialize generator and discriminator
    G_AB = GeneratorResNet(input_shape, args.n_residual_blocks)
    G_BA = GeneratorResNet(input_shape, args.n_residual_blocks)
    D_A = Discriminator(args.channels)
    D_B = Discriminator(args.channels)

    if args.epoch != 0:
        # Load pretrained models
        G_AB.load_state_dict(torch.load("saved_models/%s/G_AB_%d.pth" % (args.dataset_name, args.epoch)))
        G_BA.load_state_dict(torch.load("saved_models/%s/G_BA_%d.pth" % (args.dataset_name, args.epoch)))
        D_A.load_state_dict(torch.load("saved_models/%s/D_A_%d.pth" % (args.dataset_name, args.epoch)))
        D_B.load_state_dict(torch.load("saved_models/%s/D_B_%d.pth" % (args.dataset_name, args.epoch)))
    else:
        # Initialize weights
        G_AB.apply(weights_init_normal)
        G_BA.apply(weights_init_normal)
        D_A.apply(weights_init_normal)
        D_B.apply(weights_init_normal)

    # Optimizers
    optimizer_G = torch.optim.Adam(
        itertools.chain(G_AB.parameters(), G_BA.parameters()), lr=args.lr, betas=(args.beta1, args.beta2)
    )
    optimizer_D_A = torch.optim.Adam(D_A.parameters(), lr=args.lr, betas=(args.beta1, args.beta2))
    optimizer_D_B = torch.optim.Adam(D_B.parameters(), lr=args.lr, betas=(args.beta1, args.beta2))

    # Learning rate update schedulers
    lr_scheduler_G = torch.optim.lr_scheduler.LambdaLR(
        optimizer_G, lr_lambda=LambdaLR(args.num_epochs, args.epoch, args.decay_epoch).step
    )
    lr_scheduler_D_A = torch.optim.lr_scheduler.LambdaLR(
        optimizer_D_A, lr_lambda=LambdaLR(args.num_epochs, args.epoch, args.decay_epoch).step
    )
    lr_scheduler_D_B = torch.optim.lr_scheduler.LambdaLR(
        optimizer_D_B, lr_lambda=LambdaLR(args.num_epochs, args.epoch, args.decay_epoch).step
    )

    # Buffers of previously generated samples
    fake_A_buffer = ReplayBuffer()
    fake_B_buffer = ReplayBuffer()

    # Image transformations
    transform = Compose([
        Resize(int(args.image_size * 1.12), Image.BICUBIC),
        RandomCrop((args.image_size, args.image_size)),
        RandomHorizontalFlip(),
        ToTensor(),
        Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ])

    def transforms(examples):
        # Applied lazily per batch: "imageA"/"imageB" columns become "A"/"B" tensors
        examples["A"] = [transform(image.convert("RGB")) for image in examples["imageA"]]
        examples["B"] = [transform(image.convert("RGB")) for image in examples["imageB"]]

        del examples["imageA"]
        del examples["imageB"]

        return examples

    dataset = load_dataset(args.dataset_name)
    transformed_dataset = dataset.with_transform(transforms)

    # Hold out 10% of the train split for sampling validation images
    splits = transformed_dataset['train'].train_test_split(test_size=0.1)
    train_ds = splits['train']
    val_ds = splits['test']

    dataloader = DataLoader(train_ds, shuffle=True, batch_size=args.batch_size, num_workers=args.num_workers)
    val_dataloader = DataLoader(val_ds, batch_size=5, shuffle=True, num_workers=1)

    def sample_images(batches_done):
        """Saves a generated sample from the test set"""
        batch = next(iter(val_dataloader))
        G_AB.eval()
        G_BA.eval()
        real_A = batch["A"]
        fake_B = G_AB(real_A)
        real_B = batch["B"]
        fake_A = G_BA(real_B)
        # Arrange images along x-axis
        real_A = make_grid(real_A, nrow=5, normalize=True)
        real_B = make_grid(real_B, nrow=5, normalize=True)
        fake_A = make_grid(fake_A, nrow=5, normalize=True)
        fake_B = make_grid(fake_B, nrow=5, normalize=True)
        # Arrange images along y-axis
        image_grid = torch.cat((real_A, fake_B, real_B, fake_A), 1)
        save_image(image_grid, "images/%s/%s.png" % (args.dataset_name, batches_done), normalize=False)

    G_AB, G_BA, D_A, D_B, optimizer_G, optimizer_D_A, optimizer_D_B, dataloader, val_dataloader = accelerator.prepare(G_AB, G_BA, D_A, D_B, optimizer_G, optimizer_D_A, optimizer_D_B, dataloader, val_dataloader)


    # ----------
    #  Training
    # ----------

    prev_time = time.time()
    for epoch in range(args.epoch, args.num_epochs):
        for i, batch in enumerate(dataloader):

            # Set model input
            real_A = batch["A"]
            real_B = batch["B"]

            # Adversarial ground truths
            valid = torch.ones((real_A.size(0), *output_shape), device=accelerator.device)
            fake = torch.zeros((real_A.size(0), *output_shape), device=accelerator.device)

            # ------------------
            #  Train Generators
            # ------------------

            G_AB.train()
            G_BA.train()

            optimizer_G.zero_grad()

            # Identity loss
            loss_id_A = criterion_identity(G_BA(real_A), real_A)
            loss_id_B = criterion_identity(G_AB(real_B), real_B)

            loss_identity = (loss_id_A + loss_id_B) / 2

            # GAN loss
            fake_B = G_AB(real_A)
            loss_GAN_AB = criterion_GAN(D_B(fake_B), valid)
            fake_A = G_BA(real_B)
            loss_GAN_BA = criterion_GAN(D_A(fake_A), valid)

            loss_GAN = (loss_GAN_AB + loss_GAN_BA) / 2

            # Cycle loss
            recov_A = G_BA(fake_B)
            loss_cycle_A = criterion_cycle(recov_A, real_A)
            recov_B = G_AB(fake_A)
            loss_cycle_B = criterion_cycle(recov_B, real_B)

            loss_cycle = (loss_cycle_A + loss_cycle_B) / 2

            # Total loss
            loss_G = loss_GAN + args.lambda_cyc * loss_cycle + args.lambda_id * loss_identity

            accelerator.backward(loss_G)
            optimizer_G.step()

            # -----------------------
            #  Train Discriminator A
            # -----------------------

            optimizer_D_A.zero_grad()

            # Real loss
            loss_real = criterion_GAN(D_A(real_A), valid)
            # Fake loss (on batch of previously generated samples)
            fake_A_ = fake_A_buffer.push_and_pop(fake_A)
            loss_fake = criterion_GAN(D_A(fake_A_.detach()), fake)
            # Total loss
            loss_D_A = (loss_real + loss_fake) / 2

            accelerator.backward(loss_D_A)
            optimizer_D_A.step()

269 |

270 | # -----------------------

271 | # Train Discriminator B

272 | # -----------------------

273 |

274 | optimizer_D_B.zero_grad()

275 |

276 | # Real loss

277 | loss_real = criterion_GAN(D_B(real_B), valid)

278 | # Fake loss (on batch of previously generated samples)

279 | fake_B_ = fake_B_buffer.push_and_pop(fake_B)

280 | loss_fake = criterion_GAN(D_B(fake_B_.detach()), fake)

281 | # Total loss

282 | loss_D_B = (loss_real + loss_fake) / 2

283 |

284 | accelerator.backward(loss_D_B)

285 | optimizer_D_B.step()

286 |

287 | loss_D = (loss_D_A + loss_D_B) / 2

288 |

289 | # --------------

290 | # Log Progress

291 | # --------------

292 |

293 | # Determine approximate time left

294 | batches_done = epoch * len(dataloader) + i

295 | batches_left = args.num_epochs * len(dataloader) - batches_done

296 | time_left = datetime.timedelta(seconds=batches_left * (time.time() - prev_time))

297 | prev_time = time.time()

298 |

299 | # Print log

300 | sys.stdout.write(

301 | "\r[Epoch %d/%d] [Batch %d/%d] [D loss: %f] [G loss: %f, adv: %f, cycle: %f, identity: %f] ETA: %s"

302 | % (

303 | epoch,

304 | args.num_epochs,

305 | i,

306 | len(dataloader),

307 | loss_D.item(),

308 | loss_G.item(),

309 | loss_GAN.item(),

310 | loss_cycle.item(),

311 | loss_identity.item(),

312 | time_left,

313 | )

314 | )

315 |

316 | # If at sample interval save image

317 | if batches_done % args.sample_interval == 0:

318 | sample_images(batches_done)

319 |

320 | # Update learning rates

321 | lr_scheduler_G.step()

322 | lr_scheduler_D_A.step()

323 | lr_scheduler_D_B.step()

324 |

325 | if args.checkpoint_interval != -1 and epoch % args.checkpoint_interval == 0:

326 | # Save model checkpoints

327 | torch.save(G_AB.state_dict(), "saved_models/%s/G_AB_%d.pth" % (args.dataset_name, epoch))

328 | torch.save(G_BA.state_dict(), "saved_models/%s/G_BA_%d.pth" % (args.dataset_name, epoch))

329 | torch.save(D_A.state_dict(), "saved_models/%s/D_A_%d.pth" % (args.dataset_name, epoch))

330 | torch.save(D_B.state_dict(), "saved_models/%s/D_B_%d.pth" % (args.dataset_name, epoch))

331 |

332 | # Optionally push to hub

333 | if args.push_to_hub:

334 | save_directory = args.pytorch_dump_folder_path

335 | if not save_directory.exists():

336 | save_directory.mkdir(parents=True)

337 |

338 | G_AB.push_to_hub(

339 | repo_path_or_name=save_directory / args.model_name,

340 | organization=args.organization_name,

341 | )

342 |

343 | def main():

344 | args = parse_args()

345 | print(args)

346 |

347 | # Make directory for saving generated images

348 | os.makedirs("images", exist_ok=True)

349 |

350 | training_function({}, args)

351 |

352 |

353 | if __name__ == "__main__":

354 | main()
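The ETA printed by the training loop is the number of remaining batches multiplied by the duration of the most recent batch. The sketch below isolates that arithmetic so it can be checked by hand. The helper name `estimate_time_left` and the sample numbers are illustrative assumptions and do not appear in the script.

```python
import datetime


def estimate_time_left(epoch, i, batches_per_epoch, num_epochs, seconds_per_batch):
    """Mirror the ETA arithmetic of the training loop (hypothetical helper)."""
    # Batches completed so far, counted across all epochs.
    batches_done = epoch * batches_per_epoch + i
    # Batches still to run until the final epoch ends.
    batches_left = num_epochs * batches_per_epoch - batches_done
    # Scale by the duration of the latest batch only.
    return datetime.timedelta(seconds=batches_left * seconds_per_batch)


# Assumed sample values: second epoch (index 1), batch 10 of 100, 5 epochs, 0.5 s per batch.
eta = estimate_time_left(epoch=1, i=10, batches_per_epoch=100, num_epochs=5, seconds_per_batch=0.5)
print(eta)
```

Because only the latest batch duration enters the estimate, the printed ETA can fluctuate from batch to batch. Averaging over several recent batches would smooth it.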

--------------------------------------------------------------------------------

/huggan/pytorch/cyclegan/utils.py:

--------------------------------------------------------------------------------

1 | import random

2 | import time

3 | import datetime

4 | import sys

5 |

6 | from torch.autograd import Variable

7 | import torch

8 | import numpy as np

9 |

10 | from torchvision.utils import save_image

11 |

12 |

13 | class ReplayBuffer:

14 | def __init__(self, max_size=50):

15 | assert max_size > 0, "Empty buffer or trying to create a black hole. Be careful."

16 | self.max_size = max_size

17 | self.data = []

18 |

19 | def push_and_pop(self, data):

20 | to_return = []

21 | for element in data.data:

22 | element = torch.unsqueeze(element, 0)

23 | if len(self.data) < self.max_size:

24 | self.data.append(element)

25 | to_return.append(element)

26 | else:

27 | if random.uniform(0, 1) > 0.5:

28 | i = random.randint(0, self.max_size - 1)

29 | to_return.append(self.data[i].clone())

30 | self.data[i] = element

31 | else:

32 | to_return.append(element)

33 | return torch.cat(to_return)
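The replacement policy of `push_and_pop` can be followed without PyTorch. The sketch below applies the same three branches to plain Python objects. `ListReplayBuffer` is a hypothetical stand-in written for illustration, not part of the repository, and it skips the `unsqueeze`, `clone`, and `torch.cat` steps of the original.

```python
import random


class ListReplayBuffer:
    """Torch-free illustration of the push_and_pop policy (hypothetical class)."""

    def __init__(self, max_size=50):
        assert max_size > 0
        self.max_size = max_size
        self.data = []

    def push_and_pop(self, items):
        to_return = []
        for item in items:
            if len(self.data) < self.max_size:
                # Buffer not yet full: store the new item and return it unchanged.
                self.data.append(item)
                to_return.append(item)
            elif random.uniform(0, 1) > 0.5:
                # Swap: return a stored item and put the new one in its slot.
                i = random.randint(0, self.max_size - 1)
                to_return.append(self.data[i])
                self.data[i] = item
            else:
                # Pass the new item through without storing it.
                to_return.append(item)
        return to_return


buffer = ListReplayBuffer(max_size=2)
first = buffer.push_and_pop(["fake_0", "fake_1"])  # fills the buffer
second = buffer.push_and_pop(["fake_2"])           # either swapped or passed through
print(first, second)
```

Once the buffer is full, roughly half of the samples handed to the discriminator come from earlier generator states, so the discriminator does not train only on the generator's latest outputs.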

34 |

35 |

36 | class LambdaLR:

37 | def __init__(self, n_epochs, offset, decay_start_epoch):

38 | assert (n_epochs - decay_start_epoch) > 0, "Decay must start before the training session ends!"

39 | self.n_epochs = n_epochs

40 | self.offset = offset

41 | self.decay_start_epoch = decay_start_epoch

42 |

43 | def step(self, epoch):

44 | return 1.0 - max(0, epoch + self.offset - self.decay_start_epoch) / (self.n_epochs - self.decay_start_epoch)
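`LambdaLR.step` returns a multiplier on the base learning rate: 1.0 until `decay_start_epoch`, then a linear ramp down to 0.0 at `n_epochs`. A callable like this is presumably passed as `lr_lambda` to `torch.optim.lr_scheduler.LambdaLR`, although the scheduler construction is not shown in this part of the listing. The sketch below copies the class so that it runs by itself. The values `n_epochs=200` and `decay_start_epoch=100` are assumptions chosen for illustration.

```python
class LambdaLR:
    """Copy of the helper above, repeated so this block is self-contained."""

    def __init__(self, n_epochs, offset, decay_start_epoch):
        assert (n_epochs - decay_start_epoch) > 0, "Decay must start before the training session ends!"
        self.n_epochs = n_epochs
        self.offset = offset
        self.decay_start_epoch = decay_start_epoch

    def step(self, epoch):
        # Multiplier stays at 1.0 before decay_start_epoch, then falls linearly to 0.0.
        return 1.0 - max(0, epoch + self.offset - self.decay_start_epoch) / (self.n_epochs - self.decay_start_epoch)


# Assumed illustrative settings: 200 epochs in total, decay starting at epoch 100.
schedule = LambdaLR(n_epochs=200, offset=0, decay_start_epoch=100)
multipliers = {epoch: round(schedule.step(epoch), 4) for epoch in (0, 100, 150, 200)}
print(multipliers)  # {0: 1.0, 100: 1.0, 150: 0.5, 200: 0.0}
```

The `offset` argument shifts the schedule, which is useful when resuming from a later starting epoch.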

--------------------------------------------------------------------------------

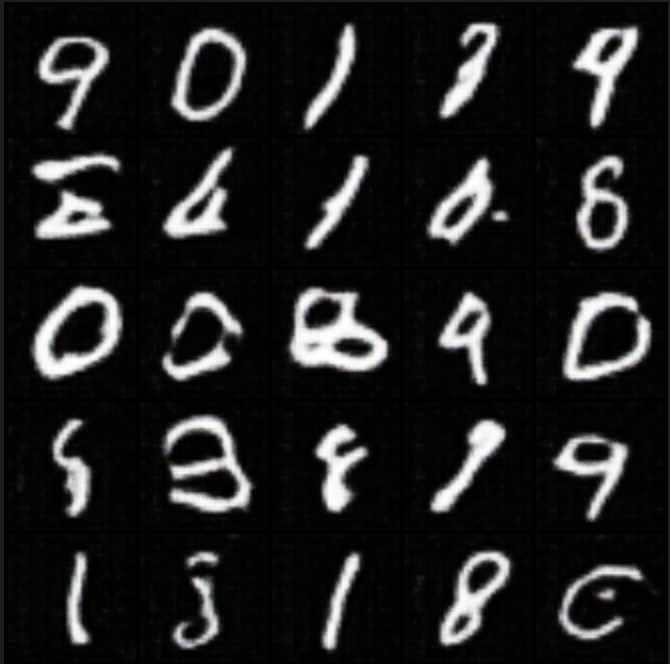

/huggan/pytorch/dcgan/README.md:

--------------------------------------------------------------------------------

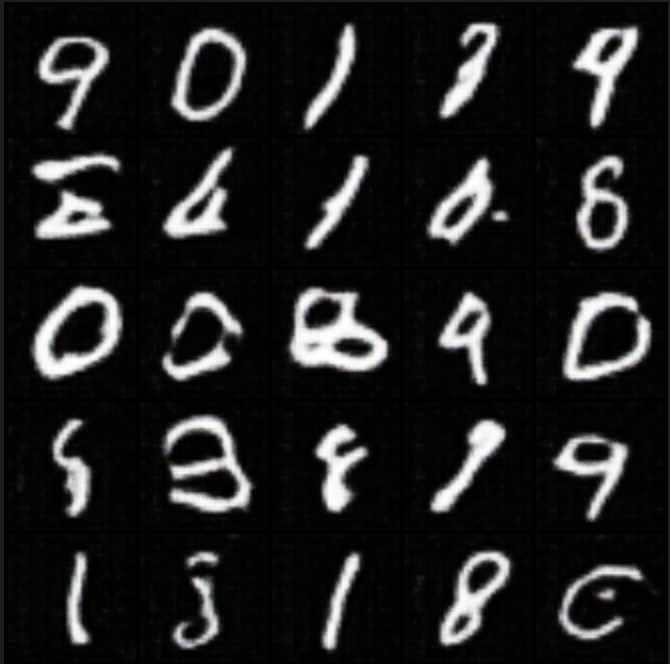

1 | # Train DCGAN on your custom data

2 |

3 | This folder contains a script to train [DCGAN](https://arxiv.org/abs/1511.06434) for unconditional image generation, leveraging the [Hugging Face](https://huggingface.co/) ecosystem for processing your data and pushing the model to the Hub.

4 |

5 | The script uses 🤗 Datasets to load and process data, and 🤗 Accelerate to run on CPU, a single GPU, multiple GPUs, or TPU without code changes; fp16/mixed precision is also supported.

6 |

7 |

8 |

9 |

10 |

11 |

12 | ## Launching the script

13 |

14 | To train the model with the default parameters (5 epochs, 64x64 images, etc.) on [MNIST](https://huggingface.co/datasets/mnist), first run:

15 |

16 | ```bash

17 | accelerate config

18 | ```

19 |

20 | and answer the questions asked about your environment. Next, launch the script as follows:

21 |

22 | ```bash

23 | accelerate launch train.py

24 | ```

25 |

26 | This creates a local "images" directory containing images generated over the course of training.

27 |