├── config.txt
├── logs
│   └── .gitignore
├── run.sh
├── .gitignore
├── favicon.png
├── world_map.png
├── functions
│   ├── strip.R
│   ├── form.R
│   ├── plot.R
│   ├── crawl.R
│   └── get.R
├── LICENSE
├── sandbox.R
├── _ui.R
├── README.md
├── _server.R
├── _daemon.R
├── analysis.R
└── style.css

/config.txt:

--------------------------------------------------------------------------------

1 | port = 3030

--------------------------------------------------------------------------------

/logs/.gitignore:

--------------------------------------------------------------------------------

1 | *

2 | !.gitignore

--------------------------------------------------------------------------------

/run.sh:

--------------------------------------------------------------------------------

1 | Rscript --vanilla _daemon.R

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | maps/

3 | tables/

4 | graphs/

5 | _secret.R

--------------------------------------------------------------------------------

/favicon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hxrts/spider/HEAD/favicon.png

--------------------------------------------------------------------------------

/world_map.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/hxrts/spider/HEAD/world_map.png

--------------------------------------------------------------------------------

/functions/strip.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - strip')

4 |

5 |

6 | strip <- function(string) { gsub('^\\s+|\\s+$', '', string) }

7 |

8 |

--------------------------------------------------------------------------------
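A quick check of `strip`, assuming only base R. The snippet repeats the one-line definition so it runs on its own and compares the result with base R's `trimws()`; the sample slugs are made up for illustration.

```r
# Same definition as functions/strip.R, repeated so the snippet is self-contained
strip <- function(string) { gsub('^\\s+|\\s+$', '', string) }

# Hypothetical inputs with leading/trailing spaces, a tab, and a newline
padded <- c('  research-tactics ', '\tprimer\n', 'root')

stripped <- strip(padded)
print(stripped)

# trimws() is the base R built-in and agrees with strip() on these inputs
print(identical(stripped, trimws(padded)))
```

Because `gsub` is vectorised, `strip` can be applied to a whole column at once, which is how `_daemon.R` uses it when parsing `config.txt`.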

/functions/form.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - form')

4 |

5 |

6 | FormArrows <- function(reply) { # format reply as source -> target table

7 |

8 | reply %>%

9 | filter(hierarchy != 'identity') %>%

10 | mutate(source = ifelse(hierarchy == 'child', query, slug)) %>%

11 | mutate(target = ifelse(hierarchy == 'child', slug, query)) %>%

12 | select(source, target) %>%

13 | unique

14 |

15 | }

16 |

17 |

18 | FormObjects <- function(reply) { # format reply as channel metadata table

19 |

20 | reply %>%

21 | select(title, slug, user.name, user.slug, length, type = status)

22 |

23 | }

24 |

--------------------------------------------------------------------------------
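To make the direction of the arrows concrete, here is a small sketch that runs `FormArrows` on a hand-made reply. It assumes `dplyr` is installed. The `reply` table and the `'parent'` label are hypothetical (the dump does not include `functions/get.R`, so the exact labels it emits are not shown); the function only distinguishes `'identity'`, `'child'`, and everything else.

```r
library(dplyr)

# FormArrows as defined in functions/form.R
FormArrows <- function(reply) {
  reply %>%
    filter(hierarchy != 'identity') %>%
    mutate(source = ifelse(hierarchy == 'child', query, slug)) %>%
    mutate(target = ifelse(hierarchy == 'child', slug, query)) %>%
    select(source, target) %>%
    unique
}

# Hypothetical reply for the query 'b': itself, one child 'c', one parent 'a'
reply <- data.frame(
  query     = 'b',
  slug      = c('b', 'c', 'a'),
  hierarchy = c('identity', 'child', 'parent'),
  stringsAsFactors = FALSE
)

arrows <- FormArrows(reply)
print(arrows)  # two rows: b -> c (child) and a -> b (non-child)
```

The identity row is dropped, a child row points from the queried channel to the child, and any other row points from the connected channel to the queried one.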

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2016 Sam Hart

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/sandbox.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | Server <- shinyServer(function(input, output, session) {

4 |

5 | # vis function

6 | Net <- function() { visNetwork(

7 | nodes = data.frame(id = 1:3),

8 | edges = data.frame(from = c(1,2), to = c(1,3))

9 | )}

10 |

11 | # output

12 | output$network_proxy <- renderVisNetwork({ Net() })

13 |

14 |

15 | # # observe

16 | # observer <- observe({

17 |

18 | # input$getAll

19 |

20 | # visNetworkProxy("network_proxy") %>%

21 | # visGetEdges()

22 |

23 | # visNetworkProxy("network_proxy") %>%

24 | # visGetNodes()

25 |

26 | # print(input$network_proxy_edges)

27 |

28 | # print(input$network_proxy_nodes)

29 |

30 | # })

31 |

32 |

33 | # # output

34 | # output$edges_data_from_shiny <- renderPrint({

35 | # input$network_proxy_edges

36 | # })

37 |

38 | # output$nodes_data_from_shiny <- renderPrint({

39 | # input$network_proxy_nodes

40 | # })

41 |

42 |

43 | # # cleanup

44 | # session$onSessionEnded(function() {

45 | # observer$suspend()

46 | # })

47 |

48 |

49 | })

50 |

51 |

52 |

53 | UI <- shinyUI(fluidPage(

54 | actionButton("getAll", "fetch"),

55 | visNetworkOutput("network_proxy", height = "400px"),

56 | verbatimTextOutput("edges_data_from_shiny"),

57 | verbatimTextOutput("nodes_data_from_shiny")

58 | ))

59 |

60 |

61 |

62 | Test <- function(){ shinyApp(

63 | UI, Server

64 | )}

65 |

66 |

67 |

68 |

69 | #'test' %>% str_c('https://api.are.na/v2/channels/', ., '/thumb') %>% fromJSON

--------------------------------------------------------------------------------

/_ui.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message('|-- ui')

4 |

5 |

6 | UI <- function(origin, pop, direction, up.initial, depth, type) {

7 |

8 | fluidPage(

9 |

10 | includeCSS('style.css'),

11 | headerPanel('spider'),

12 | fluidRow(

13 |

14 | tags$head(tags$link(rel = 'shortcut icon', href = 'https://rawgit.com/hxrts/spider/master/favicon.png')),

15 |

16 | column(6, wellPanel(

17 | textInput(

18 | inputId = 'origin',

19 | label = 'Origin channel ID · Are.na/sam-hart/research-tactics',

20 | value = origin,

21 | placeholder = origin))),

22 |

23 | column(6, wellPanel(textInput(

24 | inputId = 'pop',

25 | label = 'Prune channels · IDs comma separated',

26 | value = pop,

27 | placeholder = pop))),

28 |

29 | column(2, wellPanel(selectInput(

30 | inputId = 'direction',

31 | label = 'Direction',

32 | choices = c('down', 'up', 'down & up'),

33 | multiple = FALSE))),

34 |

35 | column(2, wellPanel(selectInput(

36 | inputId = 'up.initial',

37 | label = 'Origin crawl up',

38 | choices = c('yes', 'no'),

39 | multiple = FALSE))),

40 |

41 | column(2, wellPanel(selectInput(

42 | inputId = 'depth',

43 | label = 'Depth', choices = 1:4,

44 | multiple = FALSE))),

45 |

46 | column(2, wellPanel(selectInput(

47 | inputId = 'type',

48 | label = 'Type',

49 | choices = c('all', 'public', 'closed'),

50 | multiple = FALSE))),

51 |

52 | column(2, wellPanel(selectInput(

53 | inputId = 'private',

54 | label = 'Include private',

55 | choices = c('yes', 'no'),

56 | multiple = FALSE))),

57 |

58 | column(1, wellPanel(actionButton('buildGraph', 'Build'))),

59 |

60 | column(1, wellPanel(actionButton('clearGraph', 'Clear'))),

61 |

62 | column(12, visNetworkOutput('network_proxy', width = '100%', height = '1200px'))

63 | )

64 | )

65 | }

66 |

67 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

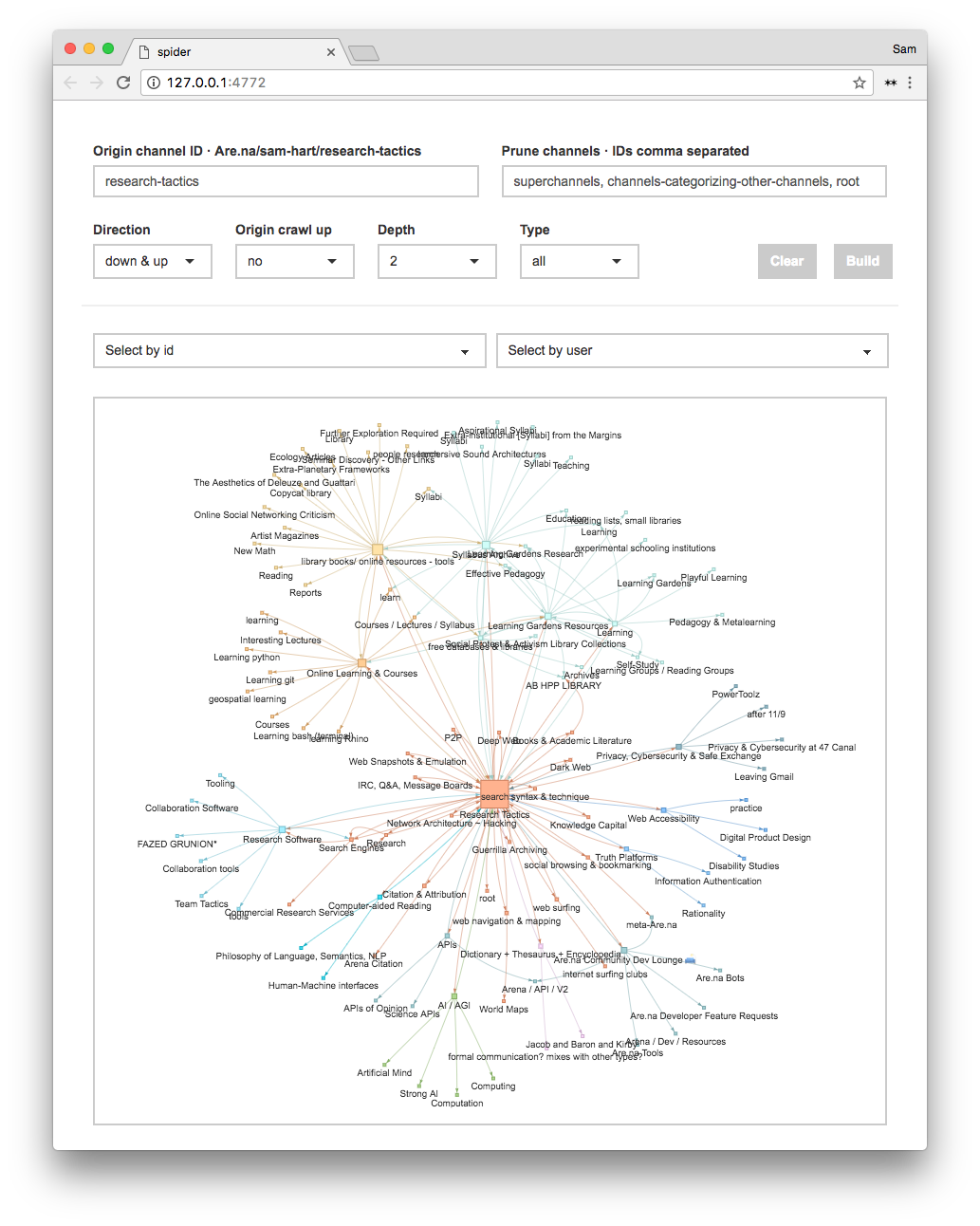

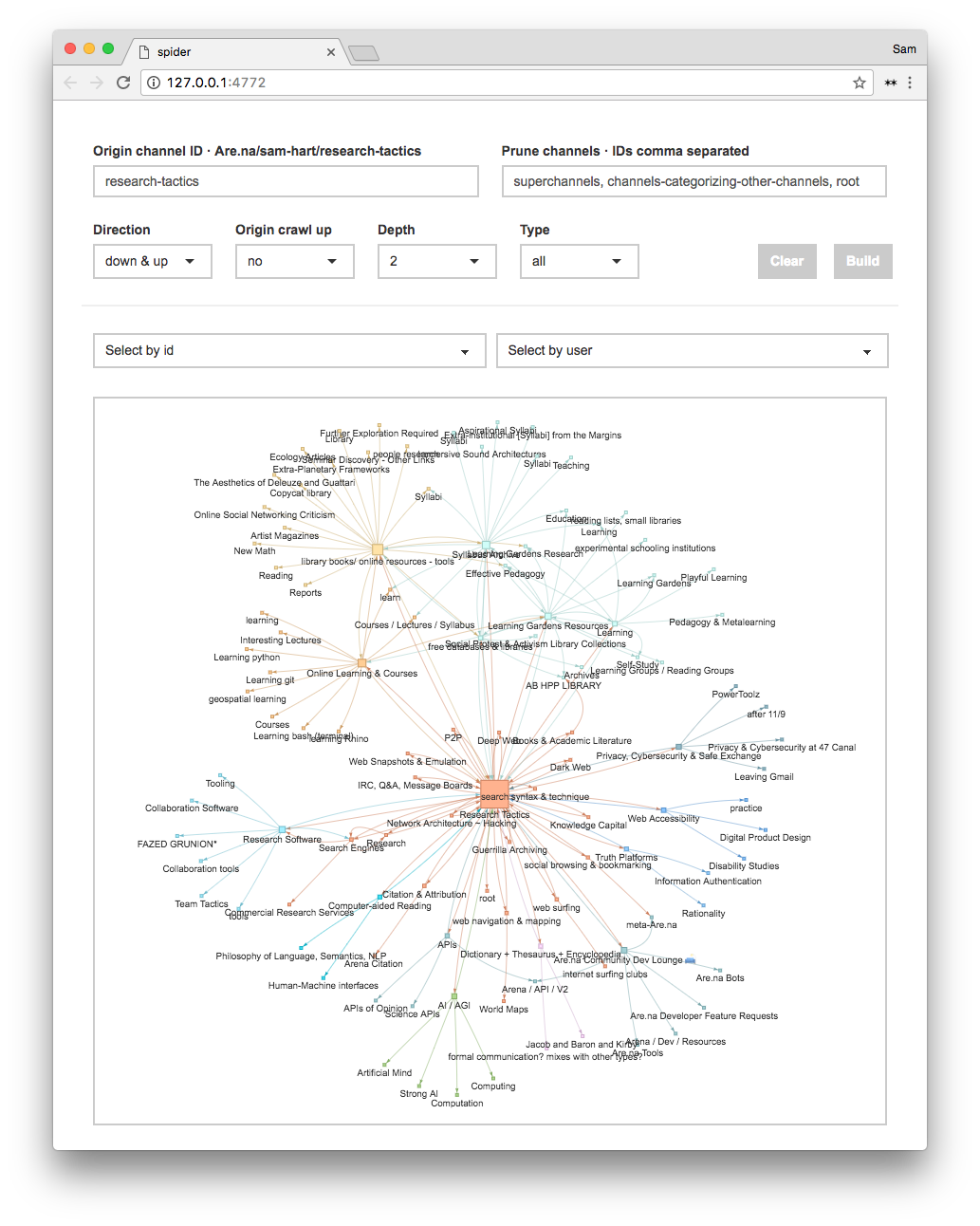

1 | # Are.na Spider

2 | Crawl connected Are.na channels and visualize the resulting network.

3 |

4 |

5 |

6 | ## Setup

7 |

8 | Working prototype, but there are a couple setup steps for first time use:

9 |

10 | - install [XQuartz](https://www.xquartz.org/) if you don't yet have it

11 | - install [R](https://www.r-project.org/)

12 | - clone the repo, move into app directory, set permissions for run script, and start R environment

13 |

14 | ```> git clone git@github.com:hxrts/spider.git && cd spider && chmod +x run.sh && R```

15 |

16 | - from R, install the pacman package manager and import remaining code to install libraries as needed, exit upon completion

17 |

18 | ```> install.packages('pacman') ; source('_daemon.R') ; q(save = 'no')```

19 |

20 | - in the root directory create a file called ```_secret.R```

21 | - register your app on [dev.are.na](https://dev.are.na/oauth/applications/new) and copy your personal access token.

22 | - edit ```_secret.R``` by pasting ```token = 'personal-access-token'``` and replacing ```personal-access-token``` with the token from [dev.are.na](https://dev.are.na)

23 |

24 |

25 | ## Run

26 |

27 | - [optional] specify local port in config.txt file

28 | - from spider directory run startup script ```> sh run.sh```

29 | - point your browser to the address displayed

30 |

31 | ## Usage

32 |

33 | Pick an origin channel and choose crawling parameters as desired. Clear the window after your search or add a new query to the existing graph.

34 |

35 | Note: Scraping is rather slow, especially for graphs of high degree. You can watch crawling progress in the command prompt. Please be patient.

36 |

37 |

38 | ## Stop

39 |

40 | - ```ctrl + c``` halts app and returns command prompt

41 |

42 | ## World Maps

43 |

44 | Add interesting results to the channel [World Maps](https://www.are.na/sam-hart/world-maps) with some information about the parameters used to generate the plot.

45 |

46 | ## Known Bugs

47 |

48 | I've run into issues building graphs with overlapping edges without clearing the previous build, especially when working from the initial graph. If the app encounters an error, the screen will become greyed out; refreshing the window should re-initialize it.

49 |

--------------------------------------------------------------------------------

/functions/plot.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - plot')

4 |

5 |

6 | PlotGraph <- function(objects, arrows, origin) {

7 | visNetwork(

8 | objects %>% unique,

9 | arrows %>% unique,

10 | # main = list(text = 'Are.na / ',

11 | # style = 'display: inline-block;

12 | # font-family: Arial;

13 | # color: #9d9d9d;

14 | # font-weight: bold;

15 | # font-size: 20px;

16 | # text-align: left'),

17 | # submain = list(text = str_c('',

19 | # objects %>% filter(id == origin) %$% label,

20 | # '

'),

21 | # style = 'margin-left: 0.3em;

22 | # display: inline;

23 | # font-family: Arial;

24 | # font-weight: bold;

25 | # font-size: 20px;

26 | # text-align: left'),

27 | width = '100%',

28 | height = '800px'

29 | ) %>%

30 | visLayout(improvedLayout = TRUE) %>%

31 | # visIgraphLayout(

32 | # #layout = 'layout_nicely',

33 | # layout = 'layout_with_fr',

34 | # physics = FALSE,

35 | # smooth = FALSE,

36 | # type = 'full',

37 | # randomSeed = NULL,

38 | # layoutMatrix = NULL

39 | # ) %>%

40 | visNodes(

41 | font = '20px arial #333',

42 | shape = 'square',

43 | shadow = FALSE,

44 | size = 10,

45 | scaling = list(min = 3, max = 30),

46 | borderWidthSelected = 0,

47 | labelHighlightBold = TRUE

48 | ) %>%

49 | visEdges(

50 | shadow = FALSE,

51 | arrows = list(to = list(enabled = TRUE, scaleFactor = 0.5)),

52 | selectionWidth = 1,

53 | hoverWidth = 1

54 | ) %>%

55 | visOptions(

56 | selectedBy = list(variable = 'user'),

57 | highlightNearest = list(enabled = TRUE,

58 | degree = 1,

59 | hover = TRUE),

60 | nodesIdSelection = list(useLabels = TRUE)

61 | ) %>%

62 | visInteraction(

63 | tooltipStyle =

64 | 'position: fixed;

65 | visibility: hidden;

66 | padding: 8px 12px 1px 12px;

67 | white-space: nowrap;

68 | font-family: Arial;

69 | font-size: 14px;

70 | color: #333;

71 | background-color: white;

72 | webkit-border-radius: 0;

73 | border-radius: 0;

74 | border: solid 2px #cbcbcb',

75 | hover = TRUE)

76 | }

77 |

78 |

--------------------------------------------------------------------------------

/_server.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message('|-- server')

4 |

5 |

6 | Server <- shiny::shinyServer(function(input, output, session) {

7 |

8 | # initialize variables

9 | old.nodes = old.edges = NULL

10 |

11 | # system time

12 | log.file <- str_c('logs/record_', format(Sys.time(), "%d-%m-%y"), '.txt')

13 |

14 |

15 | # output

16 | output$network_proxy <- renderVisNetwork({

17 |

18 | map <- Crawl(origin, depth, direction, type, private = NULL, up.initial, pop)

19 | PlotGraph(map$objects, map$arrows, map$origin)

20 |

21 | })

22 |

23 |

24 | # observe

25 | build <- observe({

26 |

27 | # listener

28 | input$buildGraph

29 |

30 | isolate(if(input$direction == 'down') {

31 | direction = 1

32 | } else if(isolate(input$direction) == 'down & up') {

33 | direction = 0

34 | } else {

35 | direction = -1

36 | })

37 |

38 | isolate(if(input$private == 'yes' && input$type == 'all' && exists('token')) {

39 | private = token

40 | } else {

41 | private = NULL

42 | })

43 |

44 | isolate(if(isolate(input$up.initial) == 'yes') {

45 | up.initial = 1

46 | } else {

47 | up.initial = 0

48 | })

49 |

50 | depth <- isolate(as.numeric(input$depth))

51 |

52 |

53 | isolate(if(input$buildGraph) {

54 |

55 | map <- isolate(Crawl(input$origin, depth, direction, input$type, private, up.initial, input$pop))

56 |

57 | visNetworkProxy('network_proxy') %>%

58 | visUpdateNodes(map$objects %>% unique) %>%

59 | visUpdateEdges(map$arrows %>% unique) %>%

60 | visLayout(improvedLayout = TRUE)

61 |

62 | })

63 |

64 | visNetworkProxy("network_proxy") %>%

65 | visGetEdges()

66 |

67 | visNetworkProxy("network_proxy") %>%

68 | visGetNodes()

69 |

70 | isolate(cat(input$origin, '\n', file = log.file, append = TRUE))

71 |

72 | })

73 |

74 | clear <- observe({

75 |

76 | input$clearGraph

77 |

78 | isolate(if(input$clearGraph) {

79 |

80 | if(!is.null(input$network_proxy_edges) & !is.null(input$network_proxy_nodes)) {

81 |

82 | old.edges <-

83 | input$network_proxy_edges %>%

84 | map(~ { dplyr::as_data_frame(rbind(unlist(.x))) }) %>%

85 | bind_rows

86 |

87 | old.nodes <-

88 | input$network_proxy_nodes %>%

89 | map(~ { dplyr::as_data_frame(rbind(unlist(.x))) }) %>%

90 | bind_rows

91 |

92 | visNetworkProxy('network_proxy') %>%

93 | visRemoveNodes(old.nodes$id) %>%

94 | visRemoveEdges(old.edges$id)

95 |

96 | }

97 |

98 | })

99 |

100 | })

101 |

102 | # cleanup

103 | session$onSessionEnded(function() {

104 | build$suspend()

105 | clear$suspend()

106 | unlink(log.file)

107 | })

108 |

109 | })

110 |

--------------------------------------------------------------------------------

/_daemon.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | # load helper functions, credentials, server and UI definitions

4 |

5 | message('\n|-- functions')

6 |

7 | source('functions/strip.R')

8 | source('functions/crawl.R')

9 | source('functions/form.R')

10 | source('functions/get.R')

11 | source('functions/plot.R')

12 |

13 | if(file.exists('_secret.R')) { source('_secret.R') }

14 | source('_server.R')

15 | source('_ui.R')

16 |

17 |

18 | #-----------------------

19 | message('|-- libraries')

20 | #-----------------------

21 |

22 |

23 | if(!'shiny' %in% rownames(installed.packages())) {

24 | install.packages('shiny')

25 | }

26 |

27 | pacman::p_load(dplyr, readr, tidyr, stringr, magrittr, purrr, rlist, httr, jsonlite, visNetwork, igraph, shiny)

28 |

29 |

30 | #--------------------

31 | message('|-- daemon')

32 | #--------------------

33 |

34 |

35 | Spider <- function(port = 3030){ shinyApp(

36 |

37 | UI(origin, pop, direction, up.initial, depth, type),

38 | Server,

39 | options = list(port = port)

40 |

41 | )}

42 |

43 |

44 | #------------------------------

45 | message('|-- initialization\n')

46 | #------------------------------

47 |

48 |

49 | # start parameters

50 | origin = 'research-tactics'

51 | direction = 1

52 | depth = 1

53 | type = 'all'

54 | seed = 0

55 | up.initial = 1

56 | pop = 'superchannels, channels-categorizing-other-channels, root, looseleaf, dhr-quick-reference, primer'

57 |

58 | set.seed(seed)

59 |

60 |

61 | # palette

62 | colors <- c(

63 | '#d99aac', '#ffa9c0', '#e2979f', '#ffc6bf', '#ffb18f',

64 | '#d3a088', '#efb17b', '#ffcc95', '#bba98d', '#ffe2a8',

65 | '#fff9d3', '#cfce81', '#a1b279', '#dcf3a7', '#ebffc3',

66 | '#b0d791', '#ccffd5', '#80b88e', '#b7ffd1', '#56bd9c',

67 | '#7deac8', '#74b8a7', '#3ec1ad', '#53d4c1', '#92ffef',

68 | '#affff3', '#6ef4e8', '#d1fef9', '#85f8ff', '#a5d1d6',

69 | '#47e2f6', '#02c0d8', '#a5f0ff', '#6ab6cb', '#4fdcff',

70 | '#94bcc9', '#82deff', '#b2d5e8', '#76b2dc', '#98d2ff',

71 | '#8ac7ff', '#75b1e8', '#b6a2d9', '#e3cdff', '#ffdeff',

72 | '#ca9dc8', '#edabe8', '#f09ccc', '#ffb7de', '#e4bfcb')

73 |

74 |

75 | # read in config

76 | config <- suppressMessages(read_delim('config.txt', delim = '=', col_names = FALSE))

77 |

78 |

79 | if(nrow(config) == 0) {

80 |

81 | message('config file empty, trying default port: 3030')

82 | port <- 3030

83 |

84 | } else {

85 | # parse config file

86 | params <-

87 | config %>%

88 | set_names(c('param', 'value')) %>%

89 | mutate_all(strip)

90 |

91 | # get port value

92 | port <-

93 | params %>%

94 | filter(param == 'port') %>%

95 | slice(1) %$%

96 | value %>%

97 | as.numeric

98 |

99 | message(str_c('using specified port: ', port))

100 | }

101 |

102 | # run spider

103 | Spider(port = port)

104 |

105 |

--------------------------------------------------------------------------------
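The config handling above can also be sketched in base R, which avoids the `readr` dependency for a one-line file. `ReadPort` is a hypothetical helper, not part of the repository; it assumes the same `param = value` layout as `config.txt` and falls back to 3030 when the file is missing, empty, or has no usable `port` entry.

```r
# Hypothetical helper (not in the repository): read `port` from a key = value file
ReadPort <- function(path, default = 3030) {
  if(!file.exists(path)) return(default)

  lines <- readLines(path, warn = FALSE)
  lines <- lines[grepl('=', lines, fixed = TRUE)]   # keep only key = value lines
  if(length(lines) == 0) return(default)

  parts  <- strsplit(lines, '=', fixed = TRUE)
  params <- trimws(vapply(parts, `[`, character(1), 1))
  values <- trimws(vapply(parts, `[`, character(1), 2))

  # first `port` entry wins; anything non-numeric falls back to the default
  port <- suppressWarnings(as.numeric(values[params == 'port'][1]))
  if(is.na(port)) default else port
}

# Usage with temporary files standing in for config.txt
cfg <- tempfile(fileext = '.txt')
writeLines('port = 8080', cfg)
print(ReadPort(cfg))

empty.cfg <- tempfile(fileext = '.txt')
writeLines(character(0), empty.cfg)
print(ReadPort(empty.cfg))
```

Returning the default rather than stopping mirrors the script's own behaviour for an empty config file.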

/functions/crawl.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - crawl')

4 |

5 |

6 | Crawl <- function(origin, depth = 1, direction = 0, type = 'all', private = NULL, up.initial = TRUE, pop = '') { # main crawling loop

7 |

8 | pool = list(origin)

9 | spent = list()

10 | arrows = list()

11 | objects = list()

12 | exit = 0

13 |

14 | pop %<>% strsplit(',') %>% map(~{ strip(.x) }) %>% unlist %>% list.filter(!. %in% origin)

15 |

16 | if(length(pop) == 0) {

17 | pop = ''

18 | }

19 |

20 | # crawl recursion

21 | for(level in 1:depth) {

22 |

23 | level.msg <- str_c('\n[[ level ', level, ' ]]\n')

24 | message(level.msg)

25 |

26 | if(level == 1) {

27 | if(is.null(private)) {

28 | channel.status <- str_c('https://are.na/', origin[1]) %>% GET %>% http_status %$% category

29 | } else {

30 | channel.status <- str_c('https://api.are.na/v2/channels/', origin[1], '/contents') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% http_status %$% category

31 | }

32 | if(channel.status != 'Success' | origin[1] %in% c('', 'explore', 'feed', 'tools', 'about', 'blog', 'pricing', 'import')) {

33 | message(str_c('no channel with the slug "', origin[1], '"'))

34 | pool = list('failure')

35 | depth = 1

36 | type = 'all'

37 | up.initial = FALSE

38 | }

39 | }

40 |

41 | if(level <= length(pool)) {

42 | if(pool[[level]] %>% length > 0) {

43 | if(up.initial == FALSE & depth == 1 & length(origin) == 1) {

44 |

45 | reply <-

46 | pool[[level]] %>%

47 | list.map(GetChannel(., 1, type, private)) %>%

48 | bind_rows %>%

49 | mutate(level = level)

50 |

51 | } else {

52 |

53 | queries <- split(pool[[level]], ceiling(seq_along(pool[[level]])/100))

54 |

55 | reply <- queries %>%

56 | map( ~ {

57 | sub.reply <-

58 | .x %>%

59 | list.map(GetChannel(., direction, type, private)) %>%

60 | bind_rows %>%

61 | mutate(level = level)

62 |

63 | if(level > 2) {

64 | message('\n-- pause --\n') ; Sys.sleep(5)

65 | }

66 |

67 | sub.reply

68 | }) %>%

69 | bind_rows

70 | }

71 |

72 | if(!is.null(pop)) {

73 | reply %<>% filter(!query %in% pop)

74 | }

75 |

76 | arrows[[level]] <- FormArrows(reply)

77 |

78 | objects[[level]] <- FormObjects(reply)

79 |

80 | spent[[level]] <- pool[[level]]

81 |

82 | pool[[level + 1]] <-

83 | arrows[[level]] %>%

84 | unlist %>%

85 | unname %>%

86 | unique %>%

87 | sort %>%

88 | list.filter(! . %in% unlist(spent))

89 | }

90 | }

91 | }

92 |

93 | web <- list(arrows = arrows %>% bind_rows %>% unique,

94 | objects = objects %>% bind_rows %>% unique)

95 |

96 | objects <-

97 | data_frame(id = web$objects$slug %>% unique) %>%

98 | left_join(web$objects, by = c('id' = 'slug')) %>%

99 | rename(label = title) %>%

100 | unique %>%

101 | group_by(id) %>%

102 | arrange(desc(length)) %>%

103 | slice(1) %>%

104 | ungroup %>%

105 | arrange(label)

106 |

107 | arrows <-

108 | web$arrows %>%

109 | mutate(id = str_c(source, '_', target)) %>%

110 | rename(from = source, to = target) %>%

111 | unique

112 |

113 | graph <- graph_from_data_frame(arrows, directed = TRUE, vertices = objects)

114 |

115 | objects$group <- walktrap.community(graph, steps = 6, modularity = TRUE) %>% membership

116 | objects$value <- betweenness(graph, directed = FALSE, normalized = TRUE)

117 |

118 | objects <-

119 | objects %>%

120 | mutate(color = sample(colors, 50)[objects$group]) %>%

121 | rename(user = user.name) %>%

122 | mutate(title = str_c(

123 | 'user: ', user,

124 | '<br>channel: ', label,

125 | '<br>id: ', id,

126 | '<br>type: ', type,

127 | '<br>blocks: ', length,

128 | '<br>betweenness: ', round(value, 2)

129 | ))

130 |

131 | message('\n[[ done ]]')

132 |

133 | list(origin = origin, objects = objects, arrows = arrows, graph = graph)

134 |

135 | }

136 |

137 |

--------------------------------------------------------------------------------
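The pool/spent bookkeeping in `Crawl` is easier to follow without the network calls. The sketch below is a simplified base-R illustration, not the repository's implementation: `links` is a hypothetical in-memory stand-in for the Are.na API, and `CrawlToy` only follows outgoing links, whereas the real function delegates to `GetChannel` (in `functions/get.R`, which is not part of this dump).

```r
# Hypothetical stand-in for the API: slug -> slugs it connects to
links <- list(a = c('b', 'c'), b = c('c', 'd'), c = 'a', d = 'e', e = character(0))

CrawlToy <- function(origin, depth = 1) {
  pool   <- list(origin)    # pool[[level]] holds the slugs to query at that level
  spent  <- character(0)    # slugs that have already been queried
  arrows <- list()

  for(level in seq_len(depth)) {
    frontier <- pool[[level]]
    if(length(frontier) == 0) break

    # collect one from -> to row per connection found at this level
    level.arrows <- do.call(rbind, lapply(frontier, function(slug) {
      targets <- links[[slug]]
      if(length(targets) == 0) return(NULL)
      data.frame(from = slug, to = targets, stringsAsFactors = FALSE)
    }))
    if(!is.null(level.arrows)) arrows[[level]] <- level.arrows

    spent <- c(spent, frontier)

    # next level: every slug seen at this level that has not been queried yet
    found <- if(is.null(level.arrows)) character(0) else unique(c(level.arrows$from, level.arrows$to))
    pool[[level + 1]] <- sort(setdiff(found, spent))
  }

  unique(do.call(rbind, arrows))
}

web <- CrawlToy('a', depth = 2)
print(web)
```

With a depth of 2 the crawl queries `a`, then `b` and `c`; `d` is discovered but never queried, so its link to `e` does not appear. Tracking `spent` is what keeps the cycle `a -> c -> a` from being crawled repeatedly.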

/analysis.R:

--------------------------------------------------------------------------------

1 | GetUser <- function(query, direction, type) { # query Are.na for a user's channel information

2 |

3 | message(query)

4 |

5 | contents = connections = NULL

6 | if(type == 'all') { type <- c('public', 'closed') }

7 |

8 | # contents

9 | if(direction != -1) {

10 |

11 | user.req <-

12 | str_c('https://api.are.na/v2/users/', query, '/channels') %>%

13 | fromJSON

14 |

15 | if(user.req$contents %>% length > 0) {

16 |

17 | user <-

18 | user.req$contents %>%

19 | .[,names(.) %in% c('title', 'slug', 'status', 'class', 'length')] %>%

20 | mutate(user.name = user.req$contents %$% user %$% `username`) %>%

21 | mutate(user.slug = user.req$contents %$% user %$% `slug`) %>%

22 | mutate(query = query, hierarchy = 'content') %>%

23 | filter(class == 'Channel')

24 | }

25 | }

26 |

27 | # return

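28 | if(!exists('user', inherits = FALSE)) return(data.frame()) # nothing fetched (direction == -1 or empty reply)

29 | user %>% filter(status %in% type)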

30 |

31 | }

32 |

33 |

34 |

35 | Graph <- function(web, lay, cluster, file, width = 15, height = 15, label.size, edge.width, node.min, node.max) {

36 |

37 | graph <-

38 | web$arrows %>%

39 | graph.data.frame(directed=TRUE)

40 |

41 | # node sizing

42 | pr <- page.rank(graph)$vector

43 |

44 | # choose layout type

45 | if(lay == 'fr') {

46 | layout <-

47 | layout_with_fr(graph,

48 | start.temp = 20,

49 | niter = 2000)

50 | }

51 |

52 | if(lay == 'drl') {

53 | layout <-

54 | layout_with_drl(graph,

55 | options = list(cooldown.attraction = 0.1, init.damping.mult = 2))

56 | }

57 |

58 | if(lay == 'kk') {

59 | layout <-

60 | layout_with_kk(graph)

61 | }

62 |

63 | if(lay == 'mds') {

64 | layout <-

65 | layout_with_mds(graph)

66 | }

67 |

68 | # graph clustering

69 | if(cluster == 'walktrap') {

70 | mem <-

71 | graph %>%

72 | walktrap.community(steps = 6, modularity = TRUE) %>%

73 | membership

74 | }

75 |

76 | if(cluster == 'betweenness') {

77 | mem <-

78 | graph %>%

79 | edge.betweenness.community(.) %>%

80 | membership

81 | }

82 |

83 | # draw plot

84 | pdf(file = str_c(file, '.pdf'), width = width, height = height)

85 | plot( graph,

86 | layout = layout,

87 | vertex.label.dist = 0.01,

88 | vertex.size = Mapper(pr, c(node.min, node.max)),

89 | vertex.color = colors[mem],

90 | vertex.frame.color = NA,

91 | vertex.label.color = 'black',

92 | vertex.label.family = 'Helvetica',

93 | vertex.label.cex = label.size,

94 | edge.arrow.size = 0.005,

95 | edge.width = edge.width,

96 | edge.color = '#d9d9d9' )

97 | dev.off()

98 |

99 | }

100 |

101 |

102 |

103 | Mapper <- function(x, range=c(0, 1), from.range = NA) {

104 |

105 | if(any(is.na(from.range))) from.range <- range(x, na.rm = TRUE)

106 |

107 | # check if all values are the same

108 | if(!diff(from.range)) {

109 | return(x * 0 + mean(range)) # constant input: map every value to the middle of the target range, keeping the shape of x

110 | }

111 |

112 | # map to [0,1]

113 | x <- (x - from.range[1])

114 | x <- x / diff(from.range)

115 |

116 | # handle single values

117 | if(diff(from.range) == 0) x <- 0

118 |

119 | # map from [0,1] to [range]

120 | if (range[1] > range[2]) x <- 1 - x

121 | x <- x*(abs(diff(range))) + min(range)

122 |

123 | x[x < min(range) | x > max(range)] <- NA

124 |

125 | x

126 | }

127 |

128 |

129 |

130 | #---------------

131 | # initialization

132 | #---------------

133 |

134 |

135 | label.size = 2.5

136 | edge.width = 5

137 | node.min = 0.2 # min node plot size

138 | node.max = 10 # max node plot size

139 | lay = 'fr' # layout type: fr | drl | kk | mds

140 | cluster = 'walktrap' # walktrap | betweenness

141 | width = 200

142 | height = 200

143 |

144 | #-------------

145 | # static graph

146 | #-------------

147 |

148 |

149 | #Graph(web, lay, cluster, file = origin, width, height, label.size, edge.width, node.min, node.max)

150 |

151 | #----------------------------

152 | # export csv for graphcommons

153 | #----------------------------

154 |

155 |

156 | # # edges

157 | # edges <-

158 | # web$arrows %>%

159 | # mutate(`From Type` = 'Channel', `Edge` = 'Contains', `To Type` = 'Channel', `Weight` = '') %>%

160 | # select( `From Type`,

161 | # `From Name` = source,

162 | # `Edge`,

163 | # `To Type`,

164 | # `To Name` = target,

165 | # `Weight` ) %>%

166 | # unique %>%

167 | # filter(row_number() > 1600)

168 |

169 |

170 | # # nodes

171 | # nodes <-

172 | # web$objects %>%

173 | # mutate(`Type` = 'Channel', `Description` = '') %>%

174 | # select( `Type`,

175 | # `Name` = slug,

176 | # `Description`,

177 | # `Length` = length,

178 | # `User Name` = user.name ) %>%

179 | # unique %>%

180 | # filter(row_number() > 1600)

181 |

182 |

183 | # # write table to xlsx

184 | # write.xlsx(list('Edges' = edges, 'Nodes' = nodes), file = "graph_commons.xlsx")

185 |

186 |

187 |

188 |

189 |

190 |

191 |

192 |

193 |

--------------------------------------------------------------------------------
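`Mapper` is a linear rescale from the observed range of `x` onto a target range, used in `Graph` to turn PageRank scores into vertex sizes. A vector-only sketch of the same idea follows; `Rescale` is a hypothetical name, the input scores are made up, and it omits `Mapper`'s `from.range` argument and its out-of-range masking.

```r
# Hypothetical vector-only version of the rescaling idea behind Mapper
Rescale <- function(x, to = c(0, 1)) {
  from <- range(x, na.rm = TRUE)

  # constant input: there is no spread to map, so use the middle of the target range
  if(diff(from) == 0) return(rep(mean(to), length(x)))

  # shift to start at 0, scale to [0, 1], then stretch onto the target range
  (x - from[1]) / diff(from) * diff(to) + to[1]
}

# Made-up scores mapped onto the node.min / node.max values from analysis.R
sizes <- Rescale(c(0.1, 0.2, 0.4), to = c(0.2, 10))
print(sizes)

# A constant vector maps to the midpoint of the target range
flat <- Rescale(c(5, 5, 5), to = c(0, 1))
print(flat)
```

The smallest score lands on the lower bound and the largest on the upper bound; a reversed target such as `c(10, 0.2)` inverts the mapping because `diff(to)` becomes negative.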

/style.css:

--------------------------------------------------------------------------------

1 |

2 | ::selection {

3 | background: none;

4 | }

5 | ::-moz-selection {

6 | background: none;

7 | }

8 |

9 | select::selection {

10 | background: none;

11 | }

12 | select::-moz-selection {

13 | background: none;

14 | }

15 |

16 | select *::selection {

17 | background: none;

18 | }

19 | select *::-moz-selection {

20 | background: none;

21 | }

22 |

23 | * {

24 | border-radius: 0 !important;

25 | outline: none !important;

26 | font-family: Arial;

27 | }

28 |

29 | a:link,

30 | a:visited,

31 | a:hover,

32 | a:active {

33 | color: #4d58ff;

34 | }

35 |

36 | h1 {

37 | display: none;

38 | }

39 |

40 | canvas {

41 | border: solid 2px #cbcbcb;

42 | }

43 |

44 | .container-fluid {

45 | margin: 30px 30px 40px 30px

46 | }

47 |

48 | #maindivnetwork_proxy {

49 | margin: 12px 12px 12px 12px;

50 | }

51 |

52 | .col-sm-1 {

53 | width: 80px;

54 | float: right;

55 | }

56 |

57 |

58 | .col-sm-2 {

59 | width: 150px;

60 | }

61 |

62 |

63 | .col-sm-6 {

64 | width: 50% !important;

65 | }

66 |

67 | @media (max-width: 768px) {

68 | .col-sm-6 {

69 | width: 100% !important;

70 | }

71 | }

72 |

73 |

74 | .dropdown {

75 | width: calc(50% - 20px) !important;

76 | margin-bottom: 20px;

77 | }

78 |

79 | @media (max-width: 768px) {

80 | .dropdown {

81 | width: calc(100% - 20px) !important;

82 | }

83 | }

84 |

85 | .col-sm-12 {

86 | width: 100%;

87 | display: inline-block;

88 | }

89 |

90 |

91 | .col-sm-1,

92 | .col-sm-2,

93 | .col-sm-6,

94 | .col-sm-12 {

95 | padding: 0;

96 | }

97 |

98 | .form-group {

99 | margin-bottom: 0;

100 | }

101 |

102 | .well {

103 | background: none;

104 | border: none;

105 | margin-bottom: 0;

106 | padding: 12px;

107 | box-shadow: none;

108 | }

109 |

110 | .action-button {

111 | color: #ffffff;

112 | font-weight: bold;

113 | margin-top: 1.75em;

114 | padding: 6px 12px 7px 11px;

115 | border: solid 2px #cbcbcb;

116 | box-shadow: none !important;

117 | background: #cbcbcb;

118 | }

119 |

120 | .action-button:hover {

121 | transition: background ease-in-out .05s;

122 | color: #ffffff;

123 | border-color: #919191;

124 | border: solid 2px #919191;

125 | box-shadow: none !important;

126 | background: #919191;

127 | }

128 |

129 | .action-button:focus {

130 | transition: background ease-in-out .05s;

131 | box-shadow: none !important;

132 | }

133 |

134 | .selectize-input {

135 | padding: 6px 12px 7px 11px;

136 | transition: border-color ease-in-out .05s;

137 | border: solid 2px #cbcbcb;

138 | border-color: #cbcbcb;

139 | box-shadow: none !important;

140 | border-radius: 0 !important;

141 |

142 | }

143 |

144 | .selectize-input.focus {

145 | padding: 6px 12px 7px 11px;

146 | transition: border-color ease-in-out .05s;

147 | border: solid 2px #9d9d9d;

148 | border-color: #9d9d9d;

149 | border-radius: 0 !important;

150 | }

151 |

152 | .dropdown {

153 | padding: 6px 12px 7px 11px;

154 | transition: border-color ease-in-out .05s;

155 | /*border: solid 2px #cbcbcb;*/

156 | /*border-color: #cbcbcb;*/

157 | box-shadow: none !important;

158 | border-radius: 0 !important;

159 | }

160 |

161 | .dropdown.focus {

162 | padding: 6px 12px 7px 11px;

163 | transition: border-color ease-in-out .05s;

164 | /*border: solid 2px #9d9d9d;*/

165 | /*border-color: #9d9d9d;*/

166 | border-color: #cbcbcb;

167 | border-radius: 0 !important;

168 | }

169 |

170 |

171 | .form-control {

172 | padding: 8px 12px 9px 11px;

173 | transition: border-color ease-in-out .05s;

174 | border: solid 2px #cbcbcb;

175 | box-shadow: none !important;

176 | height: 1px;

177 | min-height: 34px;

178 |

179 | }

180 |

181 | .form-control:focus {

182 | border: solid 2px #9d9d9d;

183 | box-shadow: none;

184 | }

185 |

186 | .form-control::selection {

187 | background: #d1d4ff;

188 | }

189 |

190 | .form-control::-moz-selection {

191 | background: #d1d4ff;

192 | }

193 |

194 | .tooltip-info::selection {

195 | background: #d1d4ff;

196 | }

197 |

198 | .tooltip-info::-moz-selection {

199 | background: #d1d4ff;

200 | }

201 |

202 | .tooltip-info *::selection {

203 | background: #d1d4ff;

204 | }

205 |

206 | .tooltip-info *::-moz-selection {

207 | background: #d1d4ff;

208 | }

209 |

210 |

211 | .dropdown,

212 | .dropdown:focus {

213 | margin-left: 14px;

214 | height: 33px !important;

215 | padding: 6px 12px 7px 11px !important;

216 | border: 0 !important;

217 | outline: 2px solid #cbcbcb !important;

218 | background-color: white;

219 | outline-offset: 0;

220 |

221 | -webkit-box-sizing: border-box;

222 | -moz-box-sizing: border-box;

223 | box-sizing: border-box;

224 | -webkit-appearance: none;

225 | -moz-appearance: none;

226 | }

227 |

228 | /*.dropdown:active {

229 | margin-left: 14px;

230 | height: 33px !important;

231 | padding: 6px 12px 7px 11px !important;

232 | border: 0 !important;

233 | background-color: white;

234 | outline-offset: 0;

235 |

236 | -webkit-box-sizing: border-box;

237 | -moz-box-sizing: border-box;

238 | box-sizing: border-box;

239 | -webkit-appearance: none;

240 | -moz-appearance: none;

241 | }

242 | */

243 | .dropdown,

244 | .dropdown:active {

245 | background-image:

246 | linear-gradient(45deg, transparent 50%, #333 50%),

247 | linear-gradient(135deg, #333 50%, transparent 50%),

248 | linear-gradient(to right, white, white);

249 | background-position:

250 | calc(100% - 20px) calc(1em + 2px),

251 | calc(100% - 15px) calc(1em + 2px),

252 | 100% 0;

253 | background-size:

254 | 5px 5px,

255 | 5px 5px,

256 | 2.5em 2.5em;

257 | background-repeat: no-repeat;

258 |

259 | }

260 |

261 |

262 | .dropdown:focus {

263 | outline: 2px solid #9d9d9d !important;

264 | }

265 |

266 | #network_proxy {

267 | padding-top: 30px;

268 | border-top: solid 2px #efefef;

269 | margin-top: 0;

270 | }

271 |

272 | #network_proxy hr {

273 | display: none;

274 | }

--------------------------------------------------------------------------------

/functions/get.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - get')

4 |

5 |

6 | #----------

7 | # functions

8 | #----------

9 |

10 |

11 | GetChannel <- function(query, direction = 0, type = 'all', private = NULL) { # query Are.na for channel information

12 |

13 | message(query)

14 |

15 | identity = contents = connections = NULL

16 |

17 | if(type == 'all') {

18 | type <- c('public', 'closed')

19 | if(!is.null(private)) {

20 | type <- c(type, 'private')

21 | }

22 | }

23 |

24 | if(direction == 0) {

25 | direction = c('identity', 'parent', 'child')

26 | } else if(direction == 1) {

27 | direction = c('identity', 'child')

28 | } else {

29 | direction = c('identity', 'parent')

30 | }

31 |

32 | try({

33 |

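34 | if(exists('depth') && depth > 3) { Sys.sleep(0.1) } # depth is not an argument of GetChannel; it is looked up in the enclosing (global) environment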

35 |

36 | # identity

37 | if(!is.null(private)) {

38 | identity.req <-

39 | tryCatch({

40 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

41 | }, warning = function(w) {

42 | Sys.sleep(4)

43 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

44 | }, error = function(e) {

45 | Sys.sleep(4)

46 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

47 | })

48 | } else {

49 | identity.req <-

50 | tryCatch({

51 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% fromJSON

52 | }, warning = function(w) {

53 | Sys.sleep(4)

54 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% fromJSON

55 | }, error = function(e) {

56 | Sys.sleep(4)

57 | str_c('https://api.are.na/v2/channels/', query, '/thumb') %>% fromJSON

58 | })

59 | }

60 |

61 |

62 | identity <-

63 | data_frame( query = query,

64 | title = identity.req$title,

65 | slug = identity.req$slug,

66 | status = identity.req$status,

67 | user.name = unlist(identity.req$user$username),

68 | user.slug = unlist(identity.req$user$slug),

69 | length = identity.req$length,

70 | hierarchy = 'identity',

71 | class = 'Channel' )

72 |

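73 | if(exists('depth') && depth > 3) { Sys.sleep(0.3) } # depth is not an argument of GetChannel; it is looked up in the enclosing (global) environment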

74 |

75 | # contents

76 | contents = data_frame(slug = '')

77 |

78 | if(!is.null(private)) {

79 | contents.req <-

80 | tryCatch({

81 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

82 | }, warning = function(w) {

83 | Sys.sleep(4)

84 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

85 | }, error = function(e) {

86 | Sys.sleep(4)

87 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

88 | })

89 | } else {

90 | contents.req <-

91 | tryCatch({

92 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% fromJSON

93 | }, warning = function(w) {

94 | Sys.sleep(4)

95 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% fromJSON

96 | }, error = function(e) {

97 | Sys.sleep(4)

98 | str_c('https://api.are.na/v2/channels/', query, '/contents') %>% fromJSON

99 | })

100 | }

101 |

102 | if(contents.req$contents %>% length > 0) {

103 |

104 | user.name <- contents.req$contents$user$username

105 | user.slug <- contents.req$contents$user$slug

106 |

107 | contents <-

108 | contents.req$contents %>%

109 | .[,names(.) %in% c('title', 'slug', 'status', 'class', 'length')] %>%

110 | mutate(user.name = user.name) %>%

111 | mutate(user.slug = user.slug) %>%

112 | mutate(query = query, hierarchy = 'content') %>%

113 | filter(class == 'Channel')

114 | }

115 |

116 | Sys.sleep(0.3)

117 |

118 | # connections

119 | if(!is.null(private)) {

120 | connections.req <-

121 | tryCatch({

122 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

123 | }, warning = function(w) {

124 | Sys.sleep(4)

125 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

126 | }, error = function(e) {

127 | Sys.sleep(4)

128 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% GET(add_headers(Authorization = str_c('bearer ', private))) %>% content(type = 'text', encoding = 'UTF-8') %>% fromJSON

129 | })

130 | } else {

131 | connections.req <-

132 | tryCatch({

133 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% fromJSON

134 | }, warning = function(w) {

135 | Sys.sleep(4)

136 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% fromJSON

137 | }, error = function(e) {

138 | Sys.sleep(4)

139 | str_c('https://api.are.na/v2/channels/', query, '/connections') %>% fromJSON

140 | })

141 | }

142 |

143 |

144 | if(connections.req$channels %>% length > 0) {

145 |

146 | user.name <- connections.req$channels$user$username

147 | user.slug <- connections.req$channels$user$slug

148 |

149 | connections <-

150 | connections.req$channels %>%

151 | .[,names(.) %in% c('title', 'slug', 'status', 'class', 'length')] %>%

152 | mutate(user.name = user.name) %>%

153 | mutate(user.slug = user.slug) %>%

154 | mutate(query = query, hierarchy = 'content') %>% # placeholder label; rows are relabelled 'child' or 'parent' after bind_rows below

155 | filter(class == 'Channel')

156 | }

157 |

158 | })

159 |

160 | bind_rows(identity, contents, connections) %>%

161 | filter(status %in% type | hierarchy == 'identity') %>%

162 | mutate(hierarchy = ifelse(hierarchy == 'identity', hierarchy, ifelse(slug %in% contents$slug, 'child', 'parent'))) %>%

163 | filter(hierarchy %in% direction) %>%

164 | unique

165 |

166 | }

167 |

168 |

--------------------------------------------------------------------------------

5 |

6 | ## Setup

7 |

8 | This is a working prototype, but there are a couple of setup steps for first-time use:

9 |

10 | - install [XQuartz](https://www.xquartz.org/) if you don't yet have it

11 | - install [R](https://www.r-project.org/)

12 | - clone the repo, move into the app directory, set permissions for the run script, and start an R session

13 |

14 | ```> git clone git@github.com:hxrts/spider.git && cd spider && chmod +x run.sh && R```

15 |

16 | - from R, install the pacman package manager, source ```_daemon.R``` to install the remaining libraries as needed, and quit when it completes

17 |

18 | ```> install.packages('pacman') ; source('_daemon.R') ; q(save = 'no')```

19 |

20 | - in the root directory create a file called ```_secret.R```

21 | - register your app on [dev.are.na](https://dev.are.na/oauth/applications/new) and copy your personal access token

22 | - edit ```_secret.R``` by pasting ```token = 'personal-access-token'```, replacing the placeholder with your token from [dev.are.na](https://dev.are.na)
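
The last two setup steps can also be done from the shell. This is a minimal sketch, assuming a POSIX shell run from the repo root. `APP_DIR` and `ARENA_TOKEN` are hypothetical variable names introduced here for illustration, and the `chmod 600` is an optional precaution that the steps above do not require:

```shell
# Hypothetical helper variables (not part of the repo).
APP_DIR="${APP_DIR:-.}"                               # root of the cloned spider repo
ARENA_TOKEN="${ARENA_TOKEN:-personal-access-token}"   # placeholder; substitute your own token

# Write the single assignment described in the setup steps.
printf "token = '%s'\n" "$ARENA_TOKEN" > "$APP_DIR/_secret.R"

# Optional: make the token file readable by the current user only.
chmod 600 "$APP_DIR/_secret.R"

# Show the result for a quick visual check.
cat "$APP_DIR/_secret.R"
```

The repo's .gitignore already lists ```_secret.R```, so the token file stays out of version control.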

23 |

24 |

25 | ## Run

26 |

27 | - [optional] specify local port in config.txt file

28 | - from spider directory run startup script ```> sh run.sh```

29 | - point your browser to the address displayed
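
The optional port step amounts to a one-line file. This is a sketch, assuming the app reads only the `port` key and expects the `port = <number>` format of the shipped config.txt (which sets 3030). `PORT` is a hypothetical variable used only in this example:

```shell
# PORT is a hypothetical variable for this example; 3030 is the value shipped in config.txt.
PORT="${PORT:-3030}"

# Overwrite config.txt with the single setting the shipped file contains.
printf 'port = %s\n' "$PORT" > config.txt

# Show the result for a quick visual check.
cat config.txt
```

After that, start the app with ```sh run.sh``` as described in the list above.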

30 |

31 | ## Usage

32 |

33 | Pick an origin channel and choose crawling parameters as desired. Clear the window after your search or add a new query to the existing graph.

34 |

35 | Note: Scraping is rather slow, especially for graphs of high degree. You can watch crawling progress in the command prompt. Please be patient.

36 |

37 |

38 | ## Stop

39 |

40 | - ```ctrl + c``` halts app and returns command prompt

41 |

42 | ## World Maps

43 |

44 | Add interesting results to the channel [World Maps](https://www.are.na/sam-hart/world-maps) with some information about the parameters used to generate the plot.

45 |

46 | ## Known Bugs

47 |

48 | I've run into issues building graphs with overlapping edges without clearing the previous build, especially when working from the initial graph. If the app encounters an error, the screen will become greyed out; refreshing the window should re-initialize it.

49 |

--------------------------------------------------------------------------------

/functions/plot.R:

--------------------------------------------------------------------------------

1 |

2 |

3 | message(' - plot')

4 |

5 |

6 | PlotGraph <- function(objects, arrows, origin) {

7 | visNetwork(

8 | objects %>% unique,

9 | arrows %>% unique,

10 | # main = list(text = 'Are.na / ',

11 | # style = 'display: inline-block;

12 | # font-family: Arial;

13 | # color: #9d9d9d;

14 | # font-weight: bold;

15 | # font-size: 20px;

16 | # text-align: left'),

17 | # submain = list(text = str_c('',

19 | # objects %>% filter(id == origin) %$% label,

20 | # '
