├── _config.yml
├── src
│   ├── segment
│   │   ├── mod.rs
│   │   ├── hint.rs
│   │   └── data.rs
│   ├── util
│   │   ├── mod.rs
│   │   ├── misc.rs
│   │   └── io.rs
│   ├── lib.rs
│   ├── config.rs
│   ├── error.rs
│   ├── bin
│   │   ├── tinkv-server.rs
│   │   └── tinkv.rs
│   ├── server.rs
│   ├── store.rs
│   └── resp.rs
├── .gitignore
├── .travis.yml
├── LICENSE
├── Cargo.toml
├── examples
│   ├── hello.rs
│   └── basic.rs
├── tests
│   └── store.rs
├── benches
│   └── store_benchmark.rs
└── README.md

/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-architect

--------------------------------------------------------------------------------

/src/segment/mod.rs:

--------------------------------------------------------------------------------

1 | mod data;

2 | mod hint;

3 |

4 | pub(crate) use data::{DataFile, Entry as DataEntry};

5 | pub(crate) use hint::HintFile;

6 |

--------------------------------------------------------------------------------

/src/util/mod.rs:

--------------------------------------------------------------------------------

1 | pub use io::{BufReaderWithOffset, BufWriterWithOffset, ByteLineReader, FileWithBufWriter};

2 | pub use misc::*;

3 |

4 | mod io;

5 | pub mod misc;

6 |

--------------------------------------------------------------------------------

/src/lib.rs:

--------------------------------------------------------------------------------

1 | //! A simple key-value storage.

2 | pub mod config;

3 | mod error;

4 | mod resp;

5 | mod segment;

6 | mod server;

7 | mod store;

8 | pub mod util;

9 |

10 | pub use error::{Result, TinkvError};

11 | pub use server::Server;

12 | pub use store::{OpenOptions, Store};

13 |

--------------------------------------------------------------------------------

/src/config.rs:

--------------------------------------------------------------------------------

1 | pub const REMOVE_TOMESTONE: &[u8] = b"%TINKV_REMOVE_TOMESTOME%";

2 | pub const DATA_FILE_SUFFIX: &str = ".tinkv.data";

3 | pub const HINT_FILE_SUFFIX: &str = ".tinkv.hint";

4 | pub const DEFAULT_MAX_DATA_FILE_SIZE: u64 = 1024 * 1024 * 10; // 10MB

5 | pub const DEFAULT_MAX_KEY_SIZE: u64 = 64;

6 | pub const DEFAULT_MAX_VALUE_SIZE: u64 = 65536;

7 |

--------------------------------------------------------------------------------
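
Note on the size limits above: the following is a minimal sketch of how a store might enforce `DEFAULT_MAX_KEY_SIZE` and `DEFAULT_MAX_VALUE_SIZE` before a write. `SizeError` and `check_sizes` are hypothetical names introduced for illustration (the crate's own `TinkvError` has `KeyIsTooLarge` and `ValueIsTooLarge` variants), and treating the limits as inclusive is an assumption.

```rust
// Constants copied from src/config.rs.
pub const DEFAULT_MAX_KEY_SIZE: u64 = 64;
pub const DEFAULT_MAX_VALUE_SIZE: u64 = 65536;

/// Hypothetical error type for this sketch only.
#[derive(Debug, PartialEq)]
pub enum SizeError {
    KeyIsTooLarge,
    ValueIsTooLarge,
}

/// Hypothetical helper: reject a write whose key or value exceeds the limits.
/// Assumption: a key or value of exactly the maximum size is still accepted.
pub fn check_sizes(
    key: &[u8],
    value: &[u8],
    max_key: u64,
    max_value: u64,
) -> Result<(), SizeError> {
    if key.len() as u64 > max_key {
        return Err(SizeError::KeyIsTooLarge);
    }
    if value.len() as u64 > max_value {
        return Err(SizeError::ValueIsTooLarge);
    }
    Ok(())
}

fn main() {
    let small = check_sizes(
        b"hello",
        b"world",
        DEFAULT_MAX_KEY_SIZE,
        DEFAULT_MAX_VALUE_SIZE,
    );
    println!("small write: {:?}", small);

    // A 65-byte key is one byte over the default key limit.
    let big_key = vec![b'k'; 65];
    let rejected = check_sizes(
        &big_key,
        b"v",
        DEFAULT_MAX_KEY_SIZE,
        DEFAULT_MAX_VALUE_SIZE,
    );
    println!("oversized key: {:?}", rejected);
}
```

Checking sizes up front keeps an oversized entry from ever reaching a data file.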

/.gitignore:

--------------------------------------------------------------------------------

1 | # Generated by Cargo

2 | # will have compiled files and executables

3 | /target/

4 |

5 | # Remove Cargo.lock from gitignore if creating an executable, leave it for libraries

6 | # More information here https://doc.rust-lang.org/cargo/guide/cargo-toml-vs-cargo-lock.html

7 | Cargo.lock

8 |

9 | # These are backup files generated by rustfmt

10 | **/*.rs.bk

11 |

12 | .vscode

13 |

14 | .tinkv

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: rust
2 | cache:
3 |   directories:
4 |     - target/
5 |
6 | script:
7 |   - rustup component add clippy && cargo clippy
8 |   - cargo test -- --nocapture
9 |
10 | after_success: |
11 |   cargo doc \
12 |   && echo '' > target/doc/index.html && \
13 |   sudo pip install ghp-import && \
14 |   ghp-import -n target/doc && \
15 |   git push -qf https://${TOKEN}@github.com/${TRAVIS_REPO_SLUG}.git gh-pages
16 |
17 | notifications:
18 |   email: false

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 iFaceless

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/src/util/misc.rs:

--------------------------------------------------------------------------------

1 | //! Define some helper functions.

2 | use std::path::Path;

3 | use std::time::{SystemTime, UNIX_EPOCH};

4 |

5 | pub fn current_timestamp() -> u128 {

6 | SystemTime::now()

7 | .duration_since(UNIX_EPOCH)

8 | .expect("time went backwards")

9 | .as_nanos()

10 | }

11 |

12 | pub fn checksum(data: &[u8]) -> u32 {

13 | crc::crc32::checksum_ieee(data)

14 | }

15 |

16 | pub fn parse_file_id(path: &Path) -> Option<u64> {

17 | path.file_name()?

18 | .to_str()?

19 | .split('.')

20 | .next()?

21 | .parse::<u64>()

22 | .ok()

23 | }

24 |

25 | pub fn to_utf8_string(value: &[u8]) -> String {

26 | String::from_utf8_lossy(value).to_string()

27 | }

28 |

29 | #[cfg(test)]

30 | mod tests {

31 | use super::*;

32 |

33 | #[test]

34 | fn test_parse_file_id() {

35 | let r = parse_file_id(Path::new("path/to/12345.tinkv.data"));

36 | assert_eq!(r, Some(12345 as u64));

37 |

38 | let r = parse_file_id(Path::new("path/to/.tinkv.data"));

39 | assert_eq!(r, None);

40 |

41 | let r = parse_file_id(Path::new("path/to"));

42 | assert_eq!(r, None);

43 | }

44 |

45 | #[test]

46 | fn test_to_utf8_str() {

47 | assert_eq!(to_utf8_string(b"hello, world"), "hello, world".to_owned());

48 | }

49 | }

50 |

--------------------------------------------------------------------------------
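
Note on the helpers above: a self-contained sketch of the two non-trivial ones, using only the standard library. `parse_file_id` repeats the logic of the listing with its type parameters written out. `crc32_ieee` is a hypothetical stand-in that implements the standard bitwise CRC-32 (IEEE) algorithm; it should agree with what `crc::crc32::checksum_ieee` computes, but that equivalence is an assumption here and is not checked against the crate.

```rust
use std::path::Path;

/// Take the file name, keep the part before the first '.', parse it as u64.
pub fn parse_file_id(path: &Path) -> Option<u64> {
    path.file_name()?
        .to_str()?
        .split('.')
        .next()?
        .parse::<u64>()
        .ok()
}

/// Bitwise CRC-32 with the reflected IEEE polynomial 0xEDB88320.
/// Hypothetical stand-in for the `crc` crate call used in the listing.
pub fn crc32_ieee(data: &[u8]) -> u32 {
    let mut crc: u32 = 0xFFFF_FFFF;
    for &byte in data {
        crc ^= u32::from(byte);
        for _ in 0..8 {
            // `mask` is all ones when the low bit is set, otherwise zero.
            let mask = (crc & 1).wrapping_neg();
            crc = (crc >> 1) ^ (0xEDB8_8320 & mask);
        }
    }
    !crc
}

fn main() {
    let id = parse_file_id(Path::new("path/to/12345.tinkv.data"));
    println!("file id: {:?}", id);
    println!("crc32: {:#010x}", crc32_ieee(b"123456789"));
}
```

The bitwise loop is slower than a table-driven CRC, but it is short enough to check by eye.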

/Cargo.toml:

--------------------------------------------------------------------------------

1 | [[example]]

2 | name = 'basic'

3 | path = 'examples/basic.rs'

4 |

5 | [[example]]

6 | name = 'hello'

7 | path = 'examples/hello.rs'

8 |

9 | [[bench]]

10 | name = 'store_benchmark'

11 | harness = false

12 |

13 | [package]

14 | name = 'tinkv'

15 | version = '0.10.0'

16 | authors = ['0xE8551CCB ']

17 | edition = '2018'

18 | description = 'A fast and simple key-value storage engine.'

19 | keywords = [

20 | 'database',

21 | 'key-value',

22 | 'storage',

23 | ]

24 | categories = ['database-implementations']

25 | license = 'MIT'

26 | readme = 'README.md'

27 | homepage = 'https://github.com/iFaceless/tinkv'

28 |

29 | [dependencies]

30 | clap = '2.33.1'

31 | structopt = '0.3.14'

32 | thiserror = '1.0.19'

33 | anyhow = '1.0.31'

34 | crc = '1.8.1'

35 | glob = '0.3.0'

36 | log = '0.4.8'

37 | pretty_env_logger = '0.4.0'

38 | impls = '1.0.3'

39 | bincode = '1.2.1'

40 | clap-verbosity-flag = '0.3.1'

41 | bytefmt = '0.1.7'

42 | lazy_static = '1.4.0'

43 | os_info = '2.0.6'

44 | sys-info = '0.7.0'

45 |

46 | [dependencies.serde]

47 | version = '1.0.111'

48 | features = ['derive']

49 |

50 | [dev-dependencies]

51 | assert_cmd = '0.11.0'

52 | predicates = '1.0.0'

53 | tempfile = '3.1.0'

54 | walkdir = '2.3.1'

55 | criterion = '0.3.2'

56 | sled = "0.32.0"

57 |

58 | [dev-dependencies.rand]

59 | version = '0.7'

60 | features = [

61 | 'std',

62 | 'small_rng',

63 | ]

64 |

--------------------------------------------------------------------------------

/examples/hello.rs:

--------------------------------------------------------------------------------

1 | use pretty_env_logger;

2 | use std::time;

3 | use tinkv::{self};

4 |

5 | fn main() -> tinkv::Result<()> {

6 | pretty_env_logger::init_timed();

7 | let mut store = tinkv::OpenOptions::new()

8 | .max_data_file_size(1024 * 100)

9 | .open("/usr/local/var/tinkv")?;

10 |

11 | let begin = time::Instant::now();

12 |

13 | const TOTAL_KEYS: usize = 1000;

14 | for i in 0..TOTAL_KEYS {

15 | let k = format!("hello_{}", i);

16 | let v = format!("world_{}", i);

17 | store.set(k.as_bytes(), v.as_bytes())?;

18 | store.set(k.as_bytes(), format!("{}_new", v).as_bytes())?;

19 | }

20 |

21 | let duration = time::Instant::now().duration_since(begin);

22 | let speed = (TOTAL_KEYS * 2) as f32 / duration.as_secs_f32();

23 | println!(

24 | "{} keys written in {} secs, {} keys/s",

25 | TOTAL_KEYS * 2,

26 | duration.as_secs_f32(),

27 | speed

28 | );

29 |

30 | let stats = store.stats();

31 | println!("{:?}", stats);

32 |

33 | store.compact()?;

34 |

35 | let mut index = 100;

36 | store.for_each(&mut |k, v| {

37 | index += 1;

38 |

39 | println!(

40 | "key={}, value={}",

41 | String::from_utf8_lossy(&k),

42 | String::from_utf8_lossy(&v)

43 | );

44 |

45 | if index > 5 {

46 | Ok(false)

47 | } else {

48 | Ok(true)

49 | }

50 | })?;

51 |

52 | let v = store.get("hello_1".as_bytes())?.unwrap_or_default();

53 | println!("{}", String::from_utf8_lossy(&v));

54 |

55 | let stats = store.stats();

56 | println!("{:?}", stats);

57 |

58 | Ok(())

59 | }

60 |

--------------------------------------------------------------------------------

/src/error.rs:

--------------------------------------------------------------------------------

1 | use std::io;

2 | use std::num::ParseIntError;

3 | use std::path::PathBuf;

4 | use thiserror::Error;

5 |

6 | /// The result of any operation.

7 | pub type Result<T> = ::std::result::Result<T, TinkvError>;

8 |

9 | /// The kind of error that could be produced during tinkv operation.

10 | #[derive(Error, Debug)]

11 | pub enum TinkvError {

12 | #[error(transparent)]

13 | ParseInt(#[from] ParseIntError),

14 | #[error("parse resp value failed")]

15 | ParseRespValue,

16 | #[error(transparent)]

17 | Io(#[from] io::Error),

18 | #[error(transparent)]

19 | Glob(#[from] glob::GlobError),

20 | #[error(transparent)]

21 | Pattern(#[from] glob::PatternError),

22 | #[error(transparent)]

23 | Codec(#[from] Box<bincode::ErrorKind>),

24 | /// Custom error definitions.

25 | #[error("crc check failed, data entry (key='{}', file_id={}, offset={}) was corrupted", String::from_utf8_lossy(.key), .file_id, .offset)]

26 | DataEntryCorrupted {

27 | file_id: u64,

28 | key: Vec<u8>,

29 | offset: u64,

30 | },

31 | #[error("key '{}' not found", String::from_utf8_lossy(.0))]

32 | KeyNotFound(Vec<u8>),

33 | #[error("file '{}' is not writeable", .0.display())]

34 | FileNotWriteable(PathBuf),

35 | #[error("key is too large")]

36 | KeyIsTooLarge,

37 | #[error("value is too large")]

38 | ValueIsTooLarge,

39 | #[error("{}", .0)]

40 | Custom(String),

41 | #[error(transparent)]

42 | Other(#[from] anyhow::Error),

43 | #[error("{} {}", .name, .msg)]

44 | RespCommon { name: String, msg: String },

45 | #[error("wrong number of arguments for '{}' command", .0)]

46 | RespWrongNumOfArgs(String),

47 | }

48 |

49 | impl TinkvError {

50 | pub fn new_resp_common(name: &str, msg: &str) -> Self {

51 | Self::RespCommon {

52 | name: name.to_owned(),

53 | msg: msg.to_owned(),

54 | }

55 | }

56 |

57 | pub fn resp_wrong_num_of_args(name: &str) -> Self {

58 | Self::RespWrongNumOfArgs(name.to_owned())

59 | }

60 | }

61 |

--------------------------------------------------------------------------------
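
Note on the error type above: a rough, standard-library-only sketch of what the `thiserror` attributes provide for a few of the variants, namely a `Display` implementation and `From` conversions so that `?` can lift inner errors. `SketchError`, `SketchResult` and `parse_id` are hypothetical names. The sketch is simplified and is not the derive's actual expansion; in particular it omits `source()` forwarding.

```rust
use std::fmt;
use std::io;
use std::num::ParseIntError;

/// Hypothetical, reduced version of the error enum for illustration.
#[derive(Debug)]
pub enum SketchError {
    ParseInt(ParseIntError),
    Io(io::Error),
    KeyNotFound(Vec<u8>),
}

impl fmt::Display for SketchError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            // Like `#[error(transparent)]`: reuse the inner error's message.
            SketchError::ParseInt(e) => write!(f, "{}", e),
            SketchError::Io(e) => write!(f, "{}", e),
            // Like `#[error("key '{}' not found", ...)]`.
            SketchError::KeyNotFound(k) => {
                write!(f, "key '{}' not found", String::from_utf8_lossy(k))
            }
        }
    }
}

impl std::error::Error for SketchError {}

// Like `#[from]`: these impls let `?` convert the inner error types.
impl From<ParseIntError> for SketchError {
    fn from(e: ParseIntError) -> Self {
        SketchError::ParseInt(e)
    }
}

impl From<io::Error> for SketchError {
    fn from(e: io::Error) -> Self {
        SketchError::Io(e)
    }
}

pub type SketchResult<T> = std::result::Result<T, SketchError>;

/// `?` turns a `ParseIntError` into `SketchError::ParseInt` via `From`.
pub fn parse_id(s: &str) -> SketchResult<u64> {
    Ok(s.parse::<u64>()?)
}

fn main() {
    println!("{:?}", parse_id("42"));
    println!("{}", SketchError::KeyNotFound(b"hello".to_vec()));
}
```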

/examples/basic.rs:

--------------------------------------------------------------------------------

1 | use pretty_env_logger;

2 | use std::time;

3 | use tinkv::{self, Store};

4 |

5 | fn main() -> tinkv::Result<()> {

6 | pretty_env_logger::init_timed();

7 | let mut store = Store::open(".tinkv")?;

8 |

9 | let begin = time::Instant::now();

10 |

11 | const TOTAL_KEYS: usize = 100000;

12 | for i in 0..TOTAL_KEYS {

13 | let k = format!("key_{}", i);

14 | let v = format!(

15 | "value_{}_{}_hello_world_this_is_a_bad_day",

16 | i,

17 | tinkv::util::current_timestamp()

18 | );

19 | store.set(k.as_bytes(), v.as_bytes())?;

20 | store.set(k.as_bytes(), v.as_bytes())?;

21 | }

22 |

23 | let duration = time::Instant::now().duration_since(begin);

24 | let speed = (TOTAL_KEYS * 2) as f32 / duration.as_secs_f32();

25 | println!(

26 | "{} keys written in {} secs, {} keys/s",

27 | TOTAL_KEYS * 2,

28 | duration.as_secs_f32(),

29 | speed

30 | );

31 |

32 | println!("initial: {:?}", store.stats());

33 |

34 | let v = store.get("key_1".as_bytes())?.unwrap_or_default();

35 | println!("key_1 => {:?}", String::from_utf8_lossy(&v));

36 |

37 | store.set("hello".as_bytes(), "tinkv".as_bytes())?;

38 | println!("after set 1: {:?}", store.stats());

39 |

40 | store.set("hello".as_bytes(), "tinkv 2".as_bytes())?;

41 | println!("after set 2: {:?}", store.stats());

42 |

43 | store.set("hello 2".as_bytes(), "tinkv".as_bytes())?;

44 | println!("after set 3: {:?}", store.stats());

45 |

46 | let value = store.get("hello".as_bytes())?;

47 | assert_eq!(value, Some("tinkv 2".as_bytes().to_vec()));

48 |

49 | store.remove("hello".as_bytes())?;

50 | println!("after remove: {:?}", store.stats());

51 |

52 | let value_not_found = store.get("hello".as_bytes())?;

53 | assert_eq!(value_not_found, None);

54 |

55 | store.compact()?;

56 | println!("after compaction: {:?}", store.stats());

57 |

58 | let v = store.get("key_1".as_bytes())?.unwrap();

59 | println!("key_1 => {:?}", String::from_utf8_lossy(&v));

60 |

61 | Ok(())

62 | }

63 |

--------------------------------------------------------------------------------

/src/bin/tinkv-server.rs:

--------------------------------------------------------------------------------

1 | //! TinKV server is a redis-compatible storage server.

2 | use clap_verbosity_flag::Verbosity;

3 | use std::error::Error;

4 | use std::net::SocketAddr;

5 |

6 | use log::debug;

7 | use structopt::StructOpt;

8 | use tinkv::{config, OpenOptions, Server};

9 |

10 | const DEFAULT_DATASTORE_PATH: &str = "/usr/local/var/tinkv";

11 | const DEFAULT_LISTENING_ADDR: &str = "127.0.0.1:7379";

12 |

13 | #[derive(Debug, StructOpt)]

14 | #[structopt(

15 | rename_all = "kebab-case",

16 | name = "tinkv-server",

17 | version = env!("CARGO_PKG_VERSION"),

18 | author = env!("CARGO_PKG_AUTHORS"),

19 | about = "TinKV is a redis-compatible key/value storage server.",

20 | )]

21 | struct Opt {

22 | #[structopt(flatten)]

23 | verbose: Verbosity,

24 | /// Set listening address.

25 | #[structopt(

26 | short = "a",

27 | long,

28 | value_name = "IP:PORT",

29 | default_value = DEFAULT_LISTENING_ADDR,

30 | parse(try_from_str),

31 | )]

32 | addr: SocketAddr,

33 | /// Set max key size (in bytes).

34 | #[structopt(long, value_name = "KEY-SIZE")]

35 | max_key_size: Option<u64>,

36 | /// Set max value size (in bytes).

37 | #[structopt(long, value_name = "VALUE-SIZE")]

38 | max_value_size: Option<u64>,

39 | /// Set max file size (in bytes).

40 | #[structopt(long, value_name = "FILE-SIZE")]

41 | max_data_file_size: Option<u64>,

42 | /// Sync all pending writes to disk after each write operation (defaults to false).

43 | #[structopt(long, value_name = "SYNC")]

44 | sync: bool,

45 | }

46 | fn main() -> Result<(), Box<dyn Error>> {

47 | let opt = Opt::from_args();

48 | if let Some(level) = opt.verbose.log_level() {

49 | std::env::set_var("RUST_LOG", format!("{}", level));

50 | }

51 | pretty_env_logger::init_timed();

52 |

53 | debug!("get tinkv server config from command line: {:?}", &opt);

54 | let store = OpenOptions::new()

55 | .max_key_size(

56 | opt.max_key_size

57 | .unwrap_or_else(|| config::DEFAULT_MAX_KEY_SIZE),

58 | )

59 | .max_value_size(

60 | opt.max_value_size

61 | .unwrap_or_else(|| config::DEFAULT_MAX_VALUE_SIZE),

62 | )

63 | .max_data_file_size(

64 | opt.max_data_file_size

65 | .unwrap_or_else(|| config::DEFAULT_MAX_DATA_FILE_SIZE),

66 | )

67 | .sync(opt.sync)

68 | .open(DEFAULT_DATASTORE_PATH)?;

69 |

70 | Server::new(store).run(opt.addr)?;

71 |

72 | Ok(())

73 | }

74 |

--------------------------------------------------------------------------------

/tests/store.rs:

--------------------------------------------------------------------------------

1 | use tempfile::TempDir;

2 | use tinkv::{self, Result, Store};

3 |

4 | #[test]

5 | fn get_stored_value() -> Result<()> {

6 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

7 | let mut store = Store::open(&tmpdir.path())?;

8 |

9 | store.set(b"version", b"1.0")?;

10 | store.set(b"name", b"tinkv")?;

11 |

12 | assert_eq!(store.get(b"version")?, Some(b"1.0".to_vec()));

13 | assert_eq!(store.get(b"name")?, Some(b"tinkv".to_vec()));

14 | assert_eq!(store.len(), 2);

15 |

16 | store.close()?;

17 |

18 | // open again, check persisted data.

19 | let mut store = Store::open(&tmpdir.path())?;

20 | assert_eq!(store.get(b"version")?, Some(b"1.0".to_vec()));

21 | assert_eq!(store.get(b"name")?, Some(b"tinkv".to_vec()));

22 | assert_eq!(store.len(), 2);

23 |

24 | Ok(())

25 | }

26 |

27 | #[test]

28 | fn overwrite_value() -> Result<()> {

29 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

30 | let mut store = Store::open(&tmpdir.path())?;

31 |

32 | store.set(b"version", b"1.0")?;

33 | assert_eq!(store.get(b"version")?, Some(b"1.0".to_vec()));

34 |

35 | store.set(b"version", b"2.0")?;

36 | assert_eq!(store.get(b"version")?, Some(b"2.0".to_vec()));

37 |

38 | store.close()?;

39 |

40 | // open again and check data

41 | let mut store = Store::open(&tmpdir.path())?;

42 | assert_eq!(store.get(b"version")?, Some(b"2.0".to_vec()));

43 |

44 | Ok(())

45 | }

46 |

47 | #[test]

48 | fn get_non_existent_key() -> Result<()> {

49 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

50 | let mut store = Store::open(&tmpdir.path())?;

51 |

52 | store.set(b"version", b"1.0")?;

53 | assert_eq!(store.get(b"version_foo")?, None);

54 | store.close()?;

55 |

56 | let mut store = Store::open(&tmpdir.path())?;

57 | assert_eq!(store.get(b"version_foo")?, None);

58 |

59 | Ok(())

60 | }

61 |

62 | #[test]

63 | fn remove_key() -> Result<()> {

64 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

65 | let mut store = Store::open(&tmpdir.path())?;

66 |

67 | store.set(b"version", b"1.0")?;

68 | assert!(store.remove(b"version").is_ok());

69 | assert_eq!(store.get(b"version")?, None);

70 |

71 | Ok(())

72 | }

73 |

74 | #[test]

75 | fn remove_non_existent_key() -> Result<()> {

76 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

77 | let mut store = Store::open(&tmpdir.path())?;

78 |

79 | assert!(store.remove(b"version").is_err());

80 |

81 | Ok(())

82 | }

83 |

84 | #[test]

85 | fn compaction() -> Result<()> {

86 | let tmpdir = TempDir::new().expect("unable to create tmp dir");

87 | let mut store = Store::open(&tmpdir.path())?;

88 |

89 | for it in 0..100 {

90 | for id in 0..1000 {

91 | let k = format!("key_{}", id);

92 | let v = format!("value_{}", it);

93 | store.set(k.as_bytes(), v.as_bytes())?;

94 | }

95 |

96 | let stats = store.stats();

97 | if stats.total_stale_entries <= 10000 {

98 | continue;

99 | }

100 |

101 | // trigger compaction

102 | store.compact()?;

103 |

104 | let stats = store.stats();

105 | assert_eq!(stats.size_of_stale_entries, 0);

106 | assert_eq!(stats.total_stale_entries, 0);

107 | assert_eq!(stats.total_active_entries, 1000);

108 |

109 | // close and reopen, check persisted data

110 | store.close()?;

111 |

112 | store = Store::open(&tmpdir.path())?;

113 |

114 | let stats = store.stats();

115 | assert_eq!(stats.size_of_stale_entries, 0);

116 | assert_eq!(stats.total_stale_entries, 0);

117 | assert_eq!(stats.total_active_entries, 1000);

118 |

119 | for id in 0..1000 {

120 | let k = format!("key_{}", id);

121 | assert_eq!(

122 | store.get(k.as_bytes())?,

123 | Some(format!("value_{}", it).as_bytes().to_vec())

124 | );

125 | }

126 | }

127 |

128 | Ok(())

129 | }

130 |

--------------------------------------------------------------------------------

/benches/store_benchmark.rs:

--------------------------------------------------------------------------------

1 | use criterion::{criterion_group, criterion_main, BatchSize, Criterion, ParameterizedBenchmark};

2 | use rand::prelude::*;

3 |

4 | use sled::{Db, Tree};

5 | use std::iter;

6 | use std::path::Path;

7 | use tempfile::TempDir;

8 | use tinkv::{self, Result, Store, TinkvError};

9 |

10 | #[derive(Clone)]

11 | pub struct SledStore(Db);

12 |

13 | impl SledStore {

14 | fn open<P: AsRef<Path>>(path: P) -> Self {

15 | let tree = sled::open(path).expect("failed to open db");

16 | SledStore(tree)

17 | }

18 |

19 | fn set(&mut self, key: String, value: String) -> Result<()> {

20 | let tree: &Tree = &self.0;

21 | tree.insert(key, value.into_bytes())

22 | .map(|_| ())

23 | .map_err(|e| TinkvError::Custom(format!("{}", e)))?;

24 | tree.flush()

25 | .map_err(|e| TinkvError::Custom(format!("{}", e)))?;

26 | Ok(())

27 | }

28 |

29 | fn get(&mut self, key: String) -> Result<Option<String>> {

30 | let tree: &Tree = &self.0;

31 | Ok(tree

32 | .get(key)

33 | .map_err(|e| TinkvError::Custom(format!("{}", e)))?

34 | .map(|i_vec| AsRef::<[u8]>::as_ref(&i_vec).to_vec())

35 | .map(String::from_utf8)

36 | .transpose()

37 | .map_err(|e| TinkvError::Custom(format!("{}", e)))?)

38 | }

39 | }

40 |

41 | fn set_benchmark(c: &mut Criterion) {

42 | let b = ParameterizedBenchmark::new(

43 | "tinkv-store",

44 | |b, _| {

45 | b.iter_batched(

46 | || {

47 | let tmpdir = TempDir::new().unwrap();

48 | (Store::open(&tmpdir.path()).unwrap(), tmpdir)

49 | },

50 | |(mut store, _tmpdir)| {

51 | for i in 1..(1 << 12) {

52 | store

53 | .set(format!("key_{}", i).as_bytes(), b"value")

54 | .unwrap();

55 | }

56 | },

57 | BatchSize::SmallInput,

58 | )

59 | },

60 | iter::once(()),

61 | )

62 | .with_function("sled_store", |b, _| {

63 | b.iter_batched(

64 | || {

65 | let tmpdir = TempDir::new().unwrap();

66 | (SledStore::open(&tmpdir.path()), tmpdir)

67 | },

68 | |(mut db, _tmpdir)| {

69 | for i in 1..(1 << 12) {

70 | db.set(format!("key_{}", i), "value".to_string()).unwrap();

71 | }

72 | },

73 | BatchSize::SmallInput,

74 | )

75 | });

76 |

77 | c.bench("set_betchmark", b);

78 | }

79 |

80 | fn get_benchmark(c: &mut Criterion) {

81 | let b = ParameterizedBenchmark::new(

82 | "tinkv-store",

83 | |b, i| {

84 | let tempdir = TempDir::new().unwrap();

85 | let mut store = Store::open(&tempdir.path()).unwrap();

86 | for key_i in 1..(1 << i) {

87 | store

88 | .set(format!("key_{}", key_i).as_bytes(), b"value")

89 | .unwrap();

90 | }

91 |

92 | let mut rng = SmallRng::from_seed([0; 16]);

93 | b.iter(|| {

94 | store

95 | .get(format!("key_{}", rng.gen_range(1, 1 << i)).as_bytes())

96 | .unwrap();

97 | })

98 | },

99 | vec![8, 12, 16, 20],

100 | )

101 | .with_function("sled_store", |b, i| {

102 | let tmpdir = TempDir::new().unwrap();

103 | let mut db = SledStore::open(&tmpdir.path());

104 | for key_i in 1..(1 << i) {

105 | db.set(format!("key_{}", key_i), "value".to_owned())

106 | .unwrap();

107 | }

108 |

109 | let mut rng = SmallRng::from_seed([0; 16]);

110 | b.iter(|| {

111 | db.get(format!("key_{}", rng.gen_range(1, 1 << i))).unwrap();

112 | })

113 | });

114 | c.bench("get_benchmark", b);

115 | }

116 |

117 | criterion_group!(benches, set_benchmark, get_benchmark);

118 | criterion_main!(benches);

119 |

--------------------------------------------------------------------------------

/src/segment/hint.rs:

--------------------------------------------------------------------------------

1 | //! Maintain hint files. Each compacted data file

2 | //! should bind with a hint file for faster loading.

3 | use crate::error::Result;

4 | use crate::util::{parse_file_id, FileWithBufWriter};

5 | use log::{error, trace};

6 | use serde::{Deserialize, Serialize};

7 | use std::fmt;

8 | use std::fs::{self, File};

9 | use std::io::prelude::*;

10 | use std::io::{BufReader, SeekFrom};

11 | use std::path::{Path, PathBuf};

12 |

13 | /// Entry in the hint file.

14 | #[derive(Debug, Serialize, Deserialize)]

15 | pub(crate) struct Entry {

16 | pub key: Vec<u8>,

17 | pub offset: u64,

18 | pub size: u64,

19 | }

20 |

21 | impl fmt::Display for Entry {

22 | fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

23 | write!(

24 | f,

25 | "HintEntry(key='{}', offset={}, size={})",

26 | String::from_utf8_lossy(self.key.as_ref()),

27 | self.offset,

28 | self.size,

29 | )

30 | }

31 | }

32 |

33 | /// A hint file persists key value indexes in a related data file.

34 | /// TinKV can rebuild keydir (in-memory index) much faster if hint file

35 | /// exists.

36 | #[derive(Debug)]

37 | pub struct HintFile {

38 | pub path: PathBuf,

39 | pub id: u64,

40 | entries_written: u64,

41 | writeable: bool,

42 | writer: Option<FileWithBufWriter>,

43 | reader: BufReader<File>,

44 | }

45 |

46 | impl HintFile {

47 | pub(crate) fn new(path: &Path, writeable: bool) -> Result<Self> {

48 | // File name must start with a valid file id.

49 | let file_id = parse_file_id(path).expect("file id not found in file path");

50 |

51 | let w = if writeable {

52 | let f = fs::OpenOptions::new()

53 | .create(true)

54 | .write(true)

55 | .append(true)

56 | .open(path)?;

57 |

58 | Some(FileWithBufWriter::from(f)?)

59 | } else {

60 | None

61 | };

62 |

63 | Ok(Self {

64 | path: path.to_path_buf(),

65 | id: file_id,

66 | entries_written: 0,

67 | writeable,

68 | writer: w,

69 | reader: BufReader::new(File::open(path)?),

70 | })

71 | }

72 |

73 | pub(crate) fn write(&mut self, key: &[u8], offset: u64, size: u64) -> Result<()> {

74 | let entry = Entry {

75 | key: key.into(),

76 | offset,

77 | size,

78 | };

79 | trace!("append {} to file {}", &entry, self.path.display());

80 |

81 | let w = &mut self.writer.as_mut().expect("hint file is not writeable");

82 | bincode::serialize_into(w, &entry)?;

83 | self.entries_written += 1;

84 |

85 | self.flush()?;

86 |

87 | Ok(())

88 | }

89 |

90 | /// Sync all pending writes to disk.

91 | pub(crate) fn sync(&mut self) -> Result<()> {

92 | self.flush()?;

93 | if self.writer.is_some() {

94 | self.writer.as_mut().unwrap().sync()?;

95 | }

96 | Ok(())

97 | }

98 |

99 | /// Flush buf writer.

100 | fn flush(&mut self) -> Result<()> {

101 | if self.writeable {

102 | self.writer.as_mut().unwrap().flush()?;

103 | }

104 | Ok(())

105 | }

106 |

107 | pub(crate) fn entry_iter(&mut self) -> EntryIter {

108 | EntryIter::new(self)

109 | }

110 | }

111 |

112 | impl Drop for HintFile {

113 | fn drop(&mut self) {

114 | if let Err(e) = self.sync() {

115 | error!(

116 | "failed to sync hint file: {}, got error: {}",

117 | self.path.display(),

118 | e

119 | );

120 | }

121 |

122 | if self.writeable

123 | && self.entries_written == 0

124 | && fs::remove_file(self.path.as_path()).is_ok()

125 | {

126 | trace!("hint file {} is empty, remove it.", self.path.display());

127 | }

128 | }

129 | }

130 |

131 | pub(crate) struct EntryIter<'a> {

132 | hint_file: &'a mut HintFile,

133 | offset: u64,

134 | }

135 |

136 | impl<'a> EntryIter<'a> {

137 | fn new(hint_file: &'a mut HintFile) -> Self {

138 | EntryIter {

139 | hint_file,

140 | offset: 0,

141 | }

142 | }

143 | }

144 |

145 | impl<'a> Iterator for EntryIter<'a> {

146 | type Item = Entry;

147 |

148 | fn next(&mut self) -> Option<Self::Item> {

149 | let reader = &mut self.hint_file.reader;

150 | reader.seek(SeekFrom::Start(self.offset)).unwrap();

151 | let entry: Entry = bincode::deserialize_from(reader).ok()?;

152 | self.offset = self.hint_file.reader.seek(SeekFrom::Current(0)).unwrap();

153 | trace!(

154 | "iter read {} from hint file {}",

155 | &entry,

156 | self.hint_file.path.display()

157 | );

158 | Some(entry)

159 | }

160 | }

161 |

--------------------------------------------------------------------------------
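
Note on the hint file above: a minimal sketch of the append-then-iterate pattern that `HintFile::write` and `EntryIter` follow, using only the standard library. The byte layout here (little-endian `u64` key length, key bytes, `u64` offset, `u64` size) is a hand-rolled stand-in for `bincode` and is not claimed to be byte-compatible with it. `encode_all`, `decode_all` and the other helper names are hypothetical. As in the listing, iteration stops at the first record that fails to decode.

```rust
use std::io::{self, Read, Write};

#[derive(Debug, PartialEq)]
pub struct Entry {
    pub key: Vec<u8>,
    pub offset: u64,
    pub size: u64,
}

/// Append one entry in the sketch's own layout (not bincode's).
fn write_entry<W: Write>(w: &mut W, e: &Entry) -> io::Result<()> {
    w.write_all(&(e.key.len() as u64).to_le_bytes())?;
    w.write_all(&e.key)?;
    w.write_all(&e.offset.to_le_bytes())?;
    w.write_all(&e.size.to_le_bytes())?;
    Ok(())
}

fn read_u64<R: Read>(r: &mut R) -> io::Result<u64> {
    let mut buf = [0u8; 8];
    r.read_exact(&mut buf)?;
    Ok(u64::from_le_bytes(buf))
}

/// Read one entry; fails on end of input or on a truncated record.
/// A real reader would also bound `key_len` before allocating.
fn read_entry<R: Read>(r: &mut R) -> io::Result<Entry> {
    let key_len = read_u64(r)? as usize;
    let mut key = vec![0u8; key_len];
    r.read_exact(&mut key)?;
    let offset = read_u64(r)?;
    let size = read_u64(r)?;
    Ok(Entry { key, offset, size })
}

/// Yields entries until the first read error, then ends the iteration.
pub struct EntryIter<R: Read> {
    reader: R,
}

impl<R: Read> Iterator for EntryIter<R> {
    type Item = Entry;

    fn next(&mut self) -> Option<Self::Item> {
        read_entry(&mut self.reader).ok()
    }
}

pub fn encode_all(entries: &[Entry]) -> Vec<u8> {
    let mut buf = Vec::new();
    for e in entries {
        write_entry(&mut buf, e).expect("writing to a Vec does not fail");
    }
    buf
}

pub fn decode_all(bytes: &[u8]) -> Vec<Entry> {
    EntryIter { reader: bytes }.collect()
}

fn main() {
    let entries = vec![
        Entry { key: b"alpha".to_vec(), offset: 0, size: 30 },
        Entry { key: b"b".to_vec(), offset: 30, size: 26 },
    ];
    let bytes = encode_all(&entries);
    for entry in decode_all(&bytes) {
        println!("{:?}", entry);
    }
}
```

Because a decode error simply ends the iteration, a partially written trailing record is dropped silently. That is convenient when rebuilding an index, but it also hides corruption.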

/src/bin/tinkv.rs:

--------------------------------------------------------------------------------

1 | //! TinKV command line app.

2 | use clap_verbosity_flag::Verbosity;

3 | use std::path::PathBuf;

4 | use std::process;

5 | use structopt::{self, StructOpt};

6 | use tinkv::{self, Store};

7 |

8 | #[derive(Debug, StructOpt)]

9 | enum SubCommand {

10 | /// Retrieve value of a key, and display the value.

11 | Get { key: String },

12 | /// Store a key value pair into datastore.

13 | Set { key: String, value: String },

14 | #[structopt(name = "del")]

15 | /// Delete a key value pair from datastore.

16 | Delete { key: String },

17 | /// List all keys in datastore.

18 | Keys,

19 | /// Perform a prefix scanning for keys.

20 | Scan { prefix: String },

21 | /// Compact data files in datastore and reclaim disk space.

22 | Compact,

23 | /// Display statistics of the datastore.

24 | Stats,

25 | }

26 |

27 | #[derive(Debug, StructOpt)]

28 | #[structopt(

29 | rename_all = "kebab-case",

30 | name = env!("CARGO_PKG_NAME"),

31 | version = env!("CARGO_PKG_VERSION"),

32 | author = env!("CARGO_PKG_AUTHORS"),

33 | about = env!("CARGO_PKG_DESCRIPTION"),

34 | )]

35 | struct Opt {

36 | #[structopt(flatten)]

37 | verbose: Verbosity,

38 | /// Path to tinkv datastore.

39 | #[structopt(parse(from_os_str))]

40 | path: PathBuf,

41 | #[structopt(subcommand)]

42 | cmd: SubCommand,

43 | }

44 |

45 | fn main() {

46 | let opt = Opt::from_args();

47 | if let Some(level) = opt.verbose.log_level() {

48 | std::env::set_var("RUST_LOG", format!("{}", level));

49 | }

50 |

51 | pretty_env_logger::init_timed();

52 | match dispatch(&opt) {

53 | Ok(_) => {

54 | process::exit(0);

55 | }

56 | Err(e) => {

57 | eprintln!("operation failed: {}", e);

58 | process::exit(1);

59 | }

60 | }

61 | }

62 |

63 | fn dispatch(opt: &Opt) -> tinkv::Result<()> {

64 | let mut store = Store::open(&opt.path)?;

65 |

66 | // dispatch subcommand handler.

67 | match &opt.cmd {

68 | SubCommand::Get { key } => {

69 | handle_get_command(&mut store, key.as_bytes())?;

70 | }

71 | SubCommand::Set { key, value } => {

72 | handle_set_command(&mut store, key.as_bytes(), value.as_bytes())?;

73 | }

74 | SubCommand::Delete { key } => {

75 | handle_delete_command(&mut store, key.as_bytes())?;

76 | }

77 | SubCommand::Compact => {

78 | handle_compact_command(&mut store)?;

79 | }

80 | SubCommand::Keys => {

81 | handle_keys_command(&mut store)?;

82 | }

83 | SubCommand::Scan { prefix } => {

84 | handle_scan_command(&mut store, prefix.as_bytes())?;

85 | }

86 | SubCommand::Stats => {

87 | handle_stats_command(&mut store)?;

88 | }

89 | }

90 | Ok(())

91 | }

92 |

93 | fn handle_set_command(store: &mut Store, key: &[u8], value: &[u8]) -> tinkv::Result<()> {

94 | store.set(key, value)?;

95 | Ok(())

96 | }

97 |

98 | fn handle_get_command(store: &mut Store, key: &[u8]) -> tinkv::Result<()> {

99 | let value = store.get(key)?;

100 | match value {

101 | None => {

102 | println!(

103 | "key '{}' is not found in datastore",

104 | String::from_utf8_lossy(key)

105 | );

106 | }

107 | Some(value) => {

108 | println!("{}", String::from_utf8_lossy(&value));

109 | }

110 | }

111 | Ok(())

112 | }

113 |

114 | fn handle_delete_command(store: &mut Store, key: &[u8]) -> tinkv::Result<()> {

115 | store.remove(key)?;

116 | Ok(())

117 | }

118 |

119 | fn handle_compact_command(store: &mut Store) -> tinkv::Result<()> {

120 | store.compact()?;

121 | Ok(())

122 | }

123 |

124 | fn handle_keys_command(store: &mut Store) -> tinkv::Result<()> {

125 | store.keys().for_each(|key| {

126 | println!("{}", String::from_utf8_lossy(key));

127 | });

128 | Ok(())

129 | }

130 |

131 | fn handle_scan_command(store: &mut Store, prefix: &[u8]) -> tinkv::Result<()> {

132 | // TODO: Optimize it, prefix scanning is too slow if there are too

133 | // many keys in datastore. Consider using other data structure like

134 | // `Trie`.

135 | store.keys().for_each(|key| {

136 | if key.starts_with(prefix) {

137 | println!("{}", String::from_utf8_lossy(key));

138 | }

139 | });

140 | Ok(())

141 | }

142 |

143 | fn handle_stats_command(store: &mut Store) -> tinkv::Result<()> {

144 | let stats = store.stats();

145 | println!(

146 | "size of stale entries = {}

147 | total stale entries = {}

148 | total active entries = {}

149 | total data files = {}

150 | size of all data files = {}",

151 | bytefmt::format(stats.size_of_stale_entries),

152 | stats.total_stale_entries,

153 | stats.total_active_entries,

154 | stats.total_data_files,

155 | bytefmt::format(stats.size_of_all_data_files),

156 | );

157 | Ok(())

158 | }

159 |

--------------------------------------------------------------------------------

/src/util/io.rs:

--------------------------------------------------------------------------------

1 | //! Some io helpers.

2 |

3 | use std::fs;

4 | use std::io::prelude::*;

5 | use std::io::{self, BufReader, BufWriter, SeekFrom};

6 | #[derive(Debug)]

7 | pub struct BufReaderWithOffset<R: Read + Seek> {

8 | reader: BufReader<R>,

9 | offset: u64,

10 | }

11 |

12 | impl<R: Read + Seek> BufReaderWithOffset<R> {

13 | pub fn new(mut r: R) -> io::Result<Self> {

14 | r.seek(SeekFrom::Current(0))?;

15 | Ok(Self {

16 | reader: BufReader::new(r),

17 | offset: 0,

18 | })

19 | }

20 |

21 | pub fn offset(&self) -> u64 {

22 | self.offset

23 | }

24 | }

25 |

26 | impl<R: Read + Seek> Read for BufReaderWithOffset<R> {

27 | fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {

28 | let len = self.reader.read(buf)?;

29 | self.offset += len as u64;

30 | Ok(len)

31 | }

32 | }

33 |

34 | impl<R: Read + Seek> Seek for BufReaderWithOffset<R> {

35 | fn seek(&mut self, offset: SeekFrom) -> io::Result<u64> {

36 | self.offset = self.reader.seek(offset)?;

37 | Ok(self.offset)

38 | }

39 | }

40 |

41 | #[derive(Debug)]

42 | pub struct BufWriterWithOffset<W: Write + Seek> {

43 | writer: BufWriter<W>,

44 | offset: u64,

45 | }

46 |

47 | impl<W: Write + Seek> BufWriterWithOffset<W> {

48 | pub fn new(mut w: W) -> io::Result<Self> {

49 | w.seek(SeekFrom::Current(0))?;

50 | Ok(Self {

51 | writer: BufWriter::new(w),

52 | offset: 0,

53 | })

54 | }

55 |

56 | pub fn offset(&self) -> u64 {

57 | self.offset

58 | }

59 | }

60 |

61 | impl<W: Write + Seek> Write for BufWriterWithOffset<W> {

62 | fn write(&mut self, buf: &[u8]) -> io::Result<usize> {

63 | let len = self.writer.write(buf)?;

64 | self.offset += len as u64;

65 | Ok(len)

66 | }

67 |

68 | fn flush(&mut self) -> io::Result<()> {

69 | self.writer.flush()

70 | }

71 | }

72 |

73 | impl<W: Write + Seek> Seek for BufWriterWithOffset<W> {

74 | fn seek(&mut self, pos: SeekFrom) -> io::Result<u64> {

75 | self.offset = self.writer.seek(pos)?;

76 | Ok(self.offset)

77 | }

78 | }

79 |

80 | /// A file wrapper around `File` and `BufWriterWithOffset`.

81 | /// We're using `BufWriterWithOffset` here for better writing performance.

82 | /// Also, we need to make sure `file.sync_all()` can be called manually to

83 | /// flush all pending writes to disk.

84 | #[derive(Debug)]

85 | pub struct FileWithBufWriter {

86 | inner: fs::File,

87 | bw: BufWriterWithOffset<fs::File>,

88 | }

89 |

90 | impl FileWithBufWriter {

91 | pub fn from(inner: fs::File) -> io::Result<Self> {

92 | let bw = BufWriterWithOffset::new(inner.try_clone()?)?;

93 |

94 | Ok(FileWithBufWriter { inner, bw })

95 | }

96 |

97 | pub fn inner(&self) -> &fs::File {

98 | &self.inner

99 | }

100 |

101 | pub fn inner_mut(&mut self) -> &mut fs::File {

102 | &mut self.inner

103 | }

104 |

105 | pub fn sync(&mut self) -> io::Result<()> {

106 | self.flush()?;

107 | self.inner.sync_all()

108 | }

109 |

110 | pub fn offset(&self) -> u64 {

111 | self.bw.offset()

112 | }

113 | }

114 |

115 | impl Drop for FileWithBufWriter {

116 | fn drop(&mut self) {

117 | // ignore sync errors.

118 | let _r = self.sync();

119 | }

120 | }

121 |

122 | impl Write for FileWithBufWriter {

123 | fn write(&mut self, buf: &[u8]) -> io::Result<usize> {

124 | self.bw.write(buf)

125 | }

126 |

127 | fn flush(&mut self) -> io::Result<()> {

128 | self.bw.flush()

129 | }

130 | }

131 |

132 | impl Seek for FileWithBufWriter {

133 | fn seek(&mut self, pos: SeekFrom) -> io::Result<u64> {

134 | self.bw.seek(pos)

135 | }

136 | }

137 |

138 | const CARRIAGE_RETURN: &[u8] = b"\r";

139 | const LINE_FEED: &[u8] = b"\n";

140 |

141 | /// Read byte lines from an instance of `BufRead`.

142 | ///

143 | /// Unlike `io::Lines`, it simply returns byte lines instead

144 | /// of string lines.

145 | ///

146 | /// Ref: https://github.com/whitfin/bytelines/blob/master/src/lib.rs

147 | #[derive(Debug)]

148 | pub struct ByteLineReader<B: BufRead> {

149 | reader: B,

150 | buf: Vec<u8>,

151 | }

152 |

153 | impl<B: BufRead> ByteLineReader<B> {

154 | /// Create a new `ByteLineReader` instance from given instance of `BufRead`

155 | pub fn new(reader: B) -> Self {

156 | Self {

157 | reader,

158 | buf: Vec::new(),

159 | }

160 | }

161 |

162 | pub fn next_line(&mut self) -> Option<io::Result<&[u8]>> {

163 | self.buf.clear();

164 | match self.reader.read_until(b'\n', &mut self.buf) {

165 | Ok(0) => None,

166 | Ok(mut n) => {

167 | if self.buf.ends_with(LINE_FEED) {

168 | self.buf.pop();

169 | n -= 1;

170 | if self.buf.ends_with(CARRIAGE_RETURN) {

171 | self.buf.pop();

172 | n -= 1;

173 | }

174 | }

175 | Some(Ok(&self.buf[..n]))

176 | }

177 | Err(e) => Some(Err(e)),

178 | }

179 | }

180 | }

181 |

182 | impl<B: BufRead> Read for ByteLineReader<B> {

183 | fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {

184 | self.reader.read(buf)

185 | }

186 | }

187 |

188 | impl<B: BufRead> BufRead for ByteLineReader<B> {

189 | fn fill_buf(&mut self) -> io::Result<&[u8]> {

190 | self.reader.fill_buf()

191 | }

192 |

193 | fn consume(&mut self, amt: usize) {

194 | self.reader.consume(amt);

195 | }

196 | }

197 |

--------------------------------------------------------------------------------

/src/segment/data.rs:

--------------------------------------------------------------------------------

1 | //! Maintain data files.

2 | use crate::error::{Result, TinkvError};

3 | use crate::util::{checksum, parse_file_id, BufReaderWithOffset, FileWithBufWriter};

4 | use serde::{Deserialize, Serialize};

5 |

6 | use log::{error, trace};

7 | use std::fmt;

8 | use std::fs::{self, File};

9 | use std::io::{copy, Read, Seek, SeekFrom, Write};

10 | use std::path::{Path, PathBuf};

11 |

12 | /// Data entry definition.

13 | /// It will be serialized and saved to data file.

14 | #[derive(Serialize, Deserialize, Debug)]

15 | struct InnerEntry {

16 | key: Vec<u8>,

17 | value: Vec<u8>,

18 | // crc32 checksum

19 | checksum: u32,

20 | }

21 |

22 | impl InnerEntry {

23 | /// New data entry with given key and value.

24 | /// Checksum will be updated internally.

25 | fn new(key: &[u8], value: &[u8]) -> Self {

26 | let mut ent = InnerEntry {

27 | key: key.into(),

28 | value: value.into(),

29 | checksum: 0,

30 | };

31 | ent.checksum = ent.fresh_checksum();

32 | ent

33 | }

34 |

35 | fn fresh_checksum(&self) -> u32 {

36 | checksum(&self.value)

37 | }

38 |

39 | /// Check data entry is corrupted or not.

40 | fn is_valid(&self) -> bool {

41 | self.checksum == self.fresh_checksum()

42 | }

43 | }

44 |

45 | impl fmt::Display for InnerEntry {

46 | fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

47 | write!(

48 | f,

49 | "DataInnerEntry(key='{}', checksum={})",

50 | String::from_utf8_lossy(self.key.as_ref()),

51 | self.checksum,

52 | )

53 | }

54 | }

55 | /// An entry wrapper with size and offset.

56 | #[derive(Debug)]

57 | pub(crate) struct Entry {

58 | inner: InnerEntry,

59 | // size of inner entry in data file.

60 | pub size: u64,

61 | // position of inner entry in data file.

62 | pub offset: u64,

63 | // related data file id.

64 | pub file_id: u64,

65 | }

66 |

67 | impl Entry {

68 | /// Create a new entry instance with size and offset.

69 | fn new(file_id: u64, inner: InnerEntry, size: u64, offset: u64) -> Self {

70 | Self {

71 | inner,

72 | size,

73 | offset,

74 | file_id,

75 | }

76 | }

77 |

78 | /// Check the inner data entry is corrupted or not.

79 | pub(crate) fn is_valid(&self) -> bool {

80 | self.inner.is_valid()

81 | }

82 |

83 | /// Return key of the inner entry.

84 | pub(crate) fn key(&self) -> &[u8] {

85 | &self.inner.key

86 | }

87 |

88 | /// Return value of the inner entry.

89 | pub(crate) fn value(&self) -> &[u8] {

90 | &self.inner.value

91 | }

92 | }

93 |

94 | impl fmt::Display for Entry {

95 | fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

96 | write!(

97 | f,

98 | "DataEntry(file_id={}, key='{}', offset={}, size={})",

99 | self.file_id,

100 | String::from_utf8_lossy(self.key().as_ref()),

101 | self.offset,

102 | self.size,

103 | )

104 | }

105 | }

106 |

107 | /// DataFile represents a data file.

108 | #[derive(Debug)]

109 | pub(crate) struct DataFile {

110 | pub path: PathBuf,

111 | /// Data file id (12 digital characters).

112 | pub id: u64,

113 | /// Only one data file can be writeable at any time.

114 | /// Mark current data file can be writeable or not.

115 | writeable: bool,

116 |

117 | /// File handle of data file for writing.

118 | writer: Option<FileWithBufWriter>,

119 |

120 | /// File handle of current data file for reading.

121 | reader: BufReaderWithOffset<File>,

122 | /// Data file size.

123 | pub size: u64,

124 | }

125 |

126 | impl DataFile {

127 | /// Create a new data file instance.

128 | /// It parses the file id from the file path and wraps an optional

129 | /// writer (only for a writeable segment file) and a reader.

130 | pub(crate) fn new(path: &Path, writeable: bool) -> Result<Self> {

131 | // Data file name must start with a valid file id.

132 | let file_id = parse_file_id(path).expect("file id not found in file path");

133 |

134 | let w = if writeable {

135 | let f = fs::OpenOptions::new()

136 | .create(true)

137 | .write(true)

138 | .append(true)

139 | .open(path)?;

140 | Some(FileWithBufWriter::from(f)?)

141 | } else {

142 | None

143 | };

144 |

145 | let file = fs::File::open(path)?;

146 | let size = file.metadata()?.len();

147 | let df = DataFile {

148 | path: path.to_path_buf(),

149 | id: file_id,

150 | writeable,

151 | reader: BufReaderWithOffset::new(file)?,

152 | writer: w,

153 | size,

154 | };

155 |

156 | Ok(df)

157 | }

158 |

159 | /// Save key-value pair to segment file.

160 | pub(crate) fn write(&mut self, key: &[u8], value: &[u8]) -> Result<Entry> {

161 | let inner = InnerEntry::new(key, value);

162 | trace!("append {} to segment file {}", &inner, self.path.display());

163 | // avoid immutable borrowing issue.

164 | let path = self.path.as_path();

165 | let encoded = bincode::serialize(&inner)?;

166 | let w = self

167 | .writer

168 | .as_mut()

169 | .ok_or_else(|| TinkvError::FileNotWriteable(path.to_path_buf()))?;

170 | let offset = w.offset();

171 | w.write_all(&encoded)?;

172 | w.flush()?;

173 |

174 | self.size = offset + encoded.len() as u64;

175 |

176 | let entry = Entry::new(self.id, inner, encoded.len() as u64, offset);

177 | trace!(

178 | "successfully append {} to data file {}",

179 | &entry,

180 | self.path.display()

181 | );

182 |

183 | Ok(entry)

184 | }

185 |

186 | /// Read key value in data file.

187 | pub(crate) fn read(&mut self, offset: u64) -> Result<Entry> {

188 | trace!(

189 | "read key value with offset {} in data file {}",

190 | offset,

191 | self.path.display()

192 | );

193 | // Note: we have to get a mutable reader here.

194 | let reader = &mut self.reader;

195 | reader.seek(SeekFrom::Start(offset))?;

196 | let inner: InnerEntry = bincode::deserialize_from(reader)?;

197 |

198 | let entry = Entry::new(self.id, inner, self.reader.offset() - offset, offset);

199 | trace!(

200 | "successfully read {} from data log file {}",

201 | &entry,

202 | self.path.display()

203 | );

204 | Ok(entry)

205 | }

206 |

207 | /// Copy `size` bytes from `src` data file.

208 | /// Return offset of the newly written entry

209 | pub(crate) fn copy_bytes_from(

210 | &mut self,

211 | src: &mut DataFile,

212 | offset: u64,

213 | size: u64,

214 | ) -> Result<u64> {

215 | let reader = &mut src.reader;

216 | if reader.offset() != offset {

217 | reader.seek(SeekFrom::Start(offset))?;

218 | }

219 |

220 | let mut r = reader.take(size);

221 | let w = self.writer.as_mut().expect("data file is not writeable");

222 | let offset = w.offset();

223 |

224 | let num_bytes = copy(&mut r, w)?;

225 | assert_eq!(num_bytes, size);

226 | self.size += num_bytes;

227 | Ok(offset)

228 | }

229 |

230 | /// Return an entry iterator.

231 | pub(crate) fn entry_iter(&self) -> EntryIter {

232 | // TODO: refactor entry iter.

233 | EntryIter {

234 | path: self.path.clone(),

235 | reader: fs::File::open(self.path.clone()).unwrap(),

236 | file_id: self.id,

237 | }

238 | }

239 |

240 | /// Flush all pending writes to disk.

241 | pub(crate) fn sync(&mut self) -> Result<()> {

242 | self.flush()?;

243 | if let Some(w) = self.writer.as_mut() {

244 | w.sync()?;

245 | }

246 | Ok(())

247 | }

248 |

249 | /// Flush buf writer.

250 | fn flush(&mut self) -> Result<()> {

251 | if self.writeable {

252 | self.writer.as_mut().unwrap().flush()?;

253 | }

254 | Ok(())

255 | }

256 | }

257 |

258 | impl Drop for DataFile {

259 | fn drop(&mut self) {

260 | if let Err(e) = self.sync() {

261 | error!(

262 | "failed to sync data file: {}, got error: {}",

263 | self.path.display(),

264 | e

265 | );

266 | }

267 |

268 | // auto clean up if file size is zero.

269 | if self.writeable && self.size == 0 && fs::remove_file(self.path.as_path()).is_ok() {

270 | trace!("data file '{}' is empty, remove it.", self.path.display());

271 | }

272 | }

273 | }

274 |

275 | /// An iterator over a data file, return data entries.

276 | #[derive(Debug)]

277 | pub(crate) struct EntryIter {

278 | path: PathBuf,

279 | reader: fs::File,

280 | file_id: u64,

281 | }

282 |

283 | impl Iterator for EntryIter {

284 | type Item = Entry;

285 |

286 | fn next(&mut self) -> Option<Self::Item> {

287 | let offset = self.reader.seek(SeekFrom::Current(0)).unwrap();

288 | let inner: InnerEntry = bincode::deserialize_from(&self.reader).ok()?;

289 | let new_offset = self.reader.seek(SeekFrom::Current(0)).unwrap();

290 |

291 | let entry = Entry::new(self.file_id, inner, new_offset - offset, offset);

292 |

293 | trace!(

294 | "iter read {} from data file {}",

295 | &entry,

296 | self.path.display()

297 | );

298 |

299 | Some(entry)

300 | }

301 | }

302 |

303 | #[cfg(test)]

304 | mod tests {

305 | use super::*;

306 |

307 | #[test]

308 | fn test_new_entry() {

309 | let ent = InnerEntry::new(&b"key".to_vec(), &b"value".to_vec());

310 | assert_eq!(ent.checksum, 494360628);

311 | }

312 |

313 | #[test]

314 | fn test_checksum_valid() {

315 | let ent = InnerEntry::new(&b"key".to_vec(), &b"value".to_vec());

316 | assert!(ent.is_valid());

317 | }

318 |

319 | #[test]

320 | fn test_checksum_invalid() {

321 | let mut ent = InnerEntry::new(&b"key".to_vec(), &b"value".to_vec());

322 | ent.value = b"value_changed".to_vec();

323 | assert!(!ent.is_valid());

324 | }

325 | }

326 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [](https://travis-ci.org/iFaceless/tinkv)

2 | [](https://crates.io/crates/tinkv)

3 | [](#license)

4 |

5 | #

6 |

7 | [TinKV](https://github.com/iFaceless/tinkv) is a simple and fast key-value storage engine written in Rust. Inspired by [basho/bitcask](https://github.com/basho/bitcask), written after attending the [Talent Plan courses](https://github.com/pingcap/talent-plan).

8 |

9 | **Notes**:

10 | - *Do not use it in production.*

11 | - *Operations like set/remove/compact are not thread-safe currently.*

12 |

13 | Happy hacking~

14 |

15 |

16 |

17 |

18 |

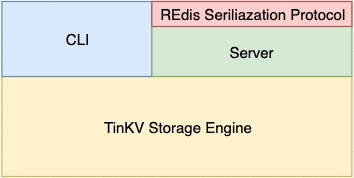

19 | # Features

20 |

21 | - Embeddable (use `tinkv` as a library);

22 | - Builtin CLI (`tinkv`);

23 | - Builtin Redis compatible server;

24 | - Predictable read/write performance.

25 |

26 | # Usage

27 | ## As a library

28 |

29 | ```shell

30 | $ cargo add tinkv

31 | ```

32 |

33 | Full example usage can be found in [examples/basic.rs](./examples/basic.rs).

34 |

35 | ```rust

36 | use tinkv::{self, Store};

37 |

38 | fn main() -> tinkv::Result<()> {

39 | pretty_env_logger::init();

40 | let mut store = Store::open("/path/to/tinkv")?;

41 | store.set("hello".as_bytes(), "tinkv".as_bytes())?;

42 |

43 | let value = store.get("hello".as_bytes())?;

44 | assert_eq!(value, Some("tinkv".as_bytes().to_vec()));

45 |

46 | store.remove("hello".as_bytes())?;

47 |

48 | let value_not_found = store.get("hello".as_bytes())?;

49 | assert_eq!(value_not_found, None);

50 |

51 | Ok(())

52 | }

53 | ```

54 |

55 | ### Open with custom options

56 |

57 | ```rust

58 | use tinkv::{self, Store};

59 |

60 | fn main() -> tinkv::Result<()> {

61 | let mut store = tinkv::OpenOptions::new()

62 | .max_data_file_size(1024 * 1024)

63 | .max_key_size(128)

64 | .max_value_size(128)

65 | .sync(true)

66 | .open(".tinkv")?;

67 | store.set("hello".as_bytes(), "world".as_bytes())?;

68 | Ok(())

69 | }

70 | ```

71 |

72 | ### APIs

73 | Public APIs of tinkv store are very easy to use:

74 | | API | Description |

75 | |--------------------------|---------------------------------------------------------------|

76 | |`Store::open(path)` | Open a new or existing datastore. The directory must be writeable and readable for tinkv store.|

77 | |`tinkv::OpenOptions()` | Open a new or existing datastore with custom options. |

78 | |`store.get(key)` | Get value by key from datastore.|

79 | |`store.set(key, value)` | Store a key value pair into datastore.|

80 | |`store.remove(key)` | Remove a key from datastore.|

81 | |`store.compact()` | Merge data files into a more compact form. Drop stale segments to release disk space. Produce hint files after compaction for faster startup.|

82 | |`store.keys()` | Return all the keys in database.|

83 | |`store.len()` | Return total number of keys in database.|

84 | |`store.for_each(f: Fn(key, value) -> Result)` | Iterate all keys in database and call function `f` for each entry.|

85 | |`store.stats()` | Get current statistics of database.|

86 | |`store.sync()` | Force any pending writes to disk.|

87 | |`store.close()` | Close datastore, sync all pending writes to disk.|

88 |

89 | ### Run examples

90 |

91 | ```shell

92 | $ RUST_LOG=trace cargo run --example basic

93 | ```

94 |

95 | `RUST_LOG` level can be one of [`trace`, `debug`, `info`, `error`].

96 |

97 |

98 | Example output:

99 |

100 | ```shell

101 | $ RUST_LOG=info cargo run --example basic

102 |

103 | 2020-06-18T10:20:03.497Z INFO tinkv::store > open store path: .tinkv

104 | 2020-06-18T10:20:04.853Z INFO tinkv::store > build keydir done, got 100001 keys. current stats: Stats { size_of_stale_entries: 0, total_stale_entries: 0, total_active_entries: 100001, total_data_files: 1, size_of_all_data_files: 10578168 }

105 | 200000 keys written in 9.98773 secs, 20024.57 keys/s

106 | initial: Stats { size_of_stale_entries: 21155900, total_stale_entries: 200000, total_active_entries: 100001, total_data_files: 2, size_of_all_data_files: 31733728 }

107 | key_1 => "value_1_1592475604853568000_hello_world"

108 | after set 1: Stats { size_of_stale_entries: 21155900, total_stale_entries: 200000, total_active_entries: 100002, total_data_files: 2, size_of_all_data_files: 31733774 }

109 | after set 2: Stats { size_of_stale_entries: 21155946, total_stale_entries: 200001, total_active_entries: 100002, total_data_files: 2, size_of_all_data_files: 31733822 }

110 | after set 3: Stats { size_of_stale_entries: 21155994, total_stale_entries: 200002, total_active_entries: 100002, total_data_files: 2, size_of_all_data_files: 31733870 }

111 | after remove: Stats { size_of_stale_entries: 21156107, total_stale_entries: 200003, total_active_entries: 100001, total_data_files: 2, size_of_all_data_files: 31733935 }

112 | 2020-06-18T10:20:14.841Z INFO tinkv::store > compact 2 data files

113 | after compaction: Stats { size_of_stale_entries: 0, total_stale_entries: 0, total_active_entries: 100001, total_data_files: 2, size_of_all_data_files: 10577828 }

114 | key_1 => "value_1_1592475604853568000_hello_world"

115 | ```

116 |

117 |

118 | ## CLI

119 |

120 | Install `tinkv` executable binaries.

121 |

122 | ```shell

123 | $ cargo install tinkv

124 | ```

125 |

126 | ```shell

127 | $ tinkv --help

128 | ...

129 | USAGE:

130 | tinkv [FLAGS]

131 |

132 | FLAGS:

133 | -h, --help Prints help information

134 | -q, --quiet Pass many times for less log output

135 | -V, --version Prints version information

136 | -v, --verbose Pass many times for more log output

137 |

138 | ARGS:

139 | Path to tinkv datastore

140 |

141 | SUBCOMMANDS:

142 | compact Compact data files in datastore and reclaim disk space

143 | del Delete a key value pair from datastore

144 | get Retrive value of a key, and display the value

145 | help Prints this message or the help of the given subcommand(s)

146 | keys List all keys in datastore

147 | scan Perform a prefix scanning for keys

148 | set Store a key value pair into datastore

149 | stats Display statistics of the datastore

150 | ```

151 |

152 | Example usages:

153 | ```shell

154 | $ tinkv /tmp/db set hello world

155 | $ tinkv /tmp/db get hello

156 | world

157 |

158 | # Change verbosity level (info).

159 | $ tinkv /tmp/db -vvv compact

160 | 2020-06-20T10:32:45.582Z INFO tinkv::store > open store path: tmp/db

161 | 2020-06-20T10:32:45.582Z INFO tinkv::store > build keydir from data file /tmp/db/000000000001.tinkv.data

162 | 2020-06-20T10:32:45.583Z INFO tinkv::store > build keydir from data file /tmp/db/000000000002.tinkv.data

163 | 2020-06-20T10:32:45.583Z INFO tinkv::store > build keydir done, got 1 keys. current stats: Stats { size_of_stale_entries:0, total_stale_entries: 0, total_active_entries: 1,total_data_files: 2, size_of_all_data_files: 60 }

164 | 2020-06-20T10:32:45.583Z INFO tinkv::store > there are 3 datafiles need to be compacted

165 | ```

166 |

167 | ## Client & Server

168 |

169 | [`tinkv-server`](./bin/../src/bin/tinkv-server.rs) is a Redis-compatible key/value store server. However, not all Redis commands are supported. The available commands are:

170 |

171 | - `get `

172 | - `mget [...]`

173 | - `set `

174 | - `mset [ ]`

175 | - `del `

176 | - `keys `

177 | - `ping []`

178 | - `exists `

179 | - `info []`

180 | - `command`

181 | - `dbsize`

182 | - `flushdb/flushall`

183 | - `compact`: extended command to trigger a compaction manually.

184 |

185 | Key/value pairs are persisted in log files under the directory `/usr/local/var/tinkv`. The default listening address of the server is `127.0.0.1:7379`, and you can connect to it with a Redis client.
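
Because the server speaks the Redis protocol, a client request is a RESP array of bulk strings. The sketch below shows how such a request could be encoded by hand; `encode_resp_command` is a hypothetical helper written for illustration and is not part of tinkv's API.

```rust
/// Encode a command as a RESP array of bulk strings, the form
/// Redis clients use when sending requests.
/// (Hypothetical helper for illustration; not part of tinkv.)
fn encode_resp_command(args: &[&[u8]]) -> Vec<u8> {
    let mut out = Vec::new();
    // Array header: '*' followed by the number of elements.
    out.extend_from_slice(format!("*{}\r\n", args.len()).as_bytes());
    for arg in args {
        // Bulk string: '$' + byte length, CRLF, payload, CRLF.
        out.extend_from_slice(format!("${}\r\n", arg.len()).as_bytes());
        out.extend_from_slice(arg);
        out.extend_from_slice(b"\r\n");
    }
    out
}
```

Writing the bytes returned by `encode_resp_command(&["set".as_bytes(), "name".as_bytes(), "tinkv".as_bytes()])` to a TCP connection on `127.0.0.1:7379` would, under these assumptions, be equivalent to typing `set name tinkv` in a Redis client.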

186 |

187 | ### Quick Start

188 |

189 | It's very easy to install `tinkv-server`:

190 |

191 | ```shell

192 | $ cargo install tinkv

193 | ```

194 |

195 | Start server with default config (set log level to `info` mode):

196 |

197 | ```shell

198 | $ tinkv-server -vv

199 | 2020-06-24T13:46:49.341Z INFO tinkv::store > open store path: /usr/local/var/tinkv

200 | 2020-06-24T13:46:49.343Z INFO tinkv::store > build keydir from data file /usr/local/var/tinkv/000000000001.tinkv.data

201 | 2020-06-24T13:46:49.343Z INFO tinkv::store > build keydir from data file /usr/local/var/tinkv/000000000002.tinkv.data

202 | 2020-06-24T13:46:49.343Z INFO tinkv::store > build keydir done, got 0 keys. current stats: Stats { size_of_stale_entries: 0,total_stale_entries: 0, total_active_entries: 0, total_data_files: 2, size_of_all_data_files: 0 }

203 | 2020-06-24T13:46:49.343Z INFO tinkv::server > TinKV server is listening at '127.0.0.1:7379'

204 | ```

205 |

206 | Communicate with `tinkv-server` by using `redis-cli`:

207 |

208 |


210 |

211 | ```shell

212 | $ redis-cli -p 7379

213 | 127.0.0.1:7379> ping

214 | PONG

215 | 127.0.0.1:7379> ping "hello, tinkv"

216 | "hello, tinkv"

217 | 127.0.0.1:7379> set name tinkv

218 | OK

219 | 127.0.0.1:7379> exists name

220 | (integer) 1

221 | 127.0.0.1:7379> get name tinkv

222 | (error) ERR wrong number of arguments for 'get' command

223 | 127.0.0.1:7379> get name

224 | "tinkv"

225 | 127.0.0.1:7379> command

226 | 1) "ping"

227 | 2) "get"

228 | 3) "set"

229 | 4) "del"

230 | 5) "dbsize"

231 | 6) "exists"

232 | 7) "compact"

233 | 8) "info"

234 | 9) "command"

235 | ...and more

236 | 127.0.0.1:7379> info

237 | # Server

238 | tinkv_version: 0.9.0

239 | os: Mac OS, 10.15.4, 64-bit

240 |

241 | # Stats

242 | size_of_stale_entries: 143

243 | size_of_stale_entries_human: 143 B

244 | total_stale_entries: 3

245 | total_active_entries: 1109

246 | total_data_files: 5

247 | size_of_all_data_files: 46813

248 | size_of_all_data_files_human: 46.81 KB

249 | 127.0.0.1:7379> notfound

250 | (error) ERR unknown command `notfound`

251 | 127.0.0.1:7379>

252 | ```

253 |

254 |

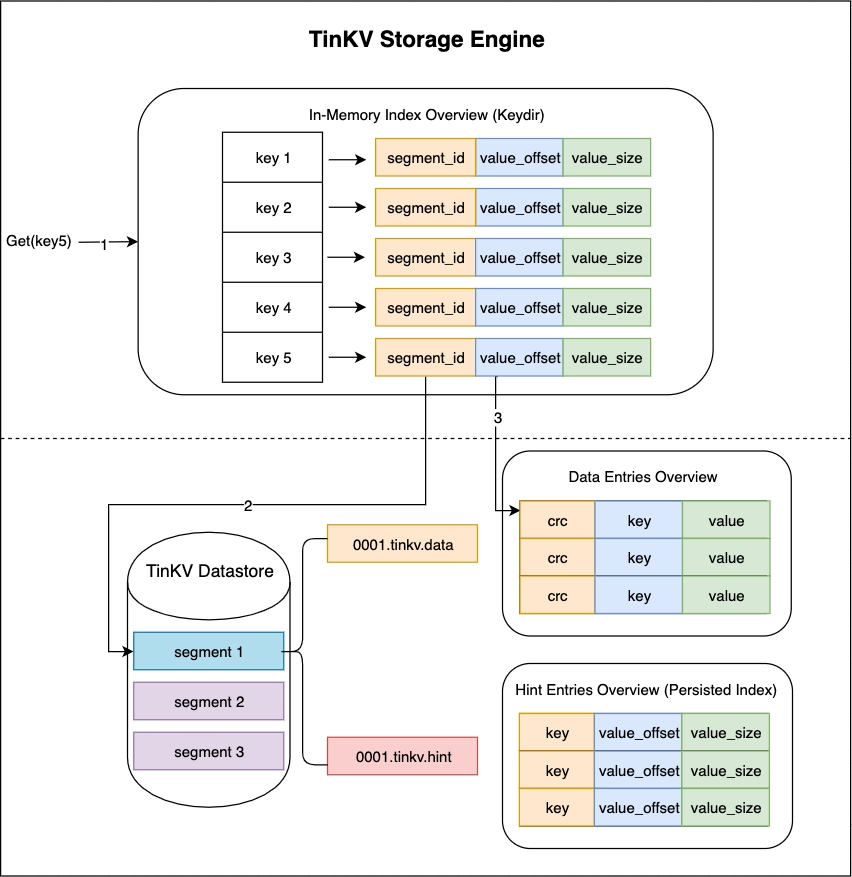

255 | # About Compaction

256 |

257 | The compaction process is triggered if `size_of_stale_entries >= config::COMPACTION_THRESHOLD` after each call to `set`/`remove`. The compaction steps are simple:

258 | 1. Freeze current active segment, and switch to another one.

259 | 2. Create a compaction segment file, then iterate all the entries in `keydir` (in-memory hash table), copy related data entries into compaction file and update `keydir`.

260 | 3. Remove all the stale segment files.
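
The steps above can be sketched with an in-memory model. This is only an illustration under stated assumptions: `KeyDir`, `Segments`, `compact` and `next_id` are hypothetical names, segments are plain vectors instead of files, and tinkv's real implementation copies bytes between data files instead.

```rust
use std::collections::HashMap;

/// Location of the live value of a key: (segment id, index in segment).
/// (Hypothetical stand-in for tinkv's in-memory `keydir`.)
type KeyDir = HashMap<Vec<u8>, (u64, usize)>;

/// In-memory stand-in for segment files: segment id -> (key, value) entries.
type Segments = HashMap<u64, Vec<(Vec<u8>, Vec<u8>)>>;

/// Merge all live entries into a new segment `next_id` and drop the rest.
/// Assumes `next_id` is not used by any existing segment.
fn compact(segments: &mut Segments, keydir: &mut KeyDir, next_id: u64) {
    // Step 1: every existing segment is treated as frozen (read-only).
    let stale_ids: Vec<u64> = segments.keys().copied().collect();

    // Step 2: copy each live entry into the compaction segment and
    // point the keydir at its new location.
    let mut merged = Vec::new();
    for loc in keydir.values_mut() {
        let (seg_id, idx) = *loc;
        let entry = segments[&seg_id][idx].clone();
        *loc = (next_id, merged.len());
        merged.push(entry);
    }

    // Step 3: remove the stale segments, keep only the compacted one.
    for id in stale_ids {
        segments.remove(&id);
    }
    segments.insert(next_id, merged);
}
```

Entries that are no longer referenced by the keydir (overwritten or removed keys) are never copied, which is where the disk space is reclaimed.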

261 |

262 | Hint files (for fast startup) of corresponding data files will be generated after each compaction.

263 |

264 | You can call the `store.compact()` method to trigger the compaction process if necessary.

265 |

266 | ```rust

267 | use tinkv::{self, Store};

268 |

269 | fn main() -> tinkv::Result<()> {

270 | pretty_env_logger::init();

271 | let mut store = Store::open("/path/to/tinkv")?;

272 | store.compact()?;

273 |

274 | Ok(())

275 | }

276 | ```

277 |

278 | # Structure of Data Directory

279 |

280 | ```shell

281 | .tinkv

282 | ├── 000000000001.tinkv.hint -- related index/hint file, for fast startup

283 | ├── 000000000001.tinkv.data -- immutable data file

284 | └── 000000000002.tinkv.data -- active data file

285 | ```
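
The file names above combine a zero-padded 12-digit file id with a suffix. A minimal sketch of mapping between ids and data file names follows; `data_file_name` and `file_id_from_path` are hypothetical helpers for illustration (the crate has its own `parse_file_id`, whose implementation is not shown here), and only the `.tinkv.data` suffix is taken from `src/config.rs`.

```rust
use std::path::Path;

/// Suffix of data files, as defined in `src/config.rs`.
const DATA_FILE_SUFFIX: &str = ".tinkv.data";

/// Build a data file name from a file id (hypothetical helper).
fn data_file_name(id: u64) -> String {
    // `{:012}` pads the id with leading zeros to 12 digits.
    format!("{:012}{}", id, DATA_FILE_SUFFIX)
}

/// Extract the numeric file id from a data file path (hypothetical helper).
/// Returns `None` for anything that is not `<12 digits>.tinkv.data`.
fn file_id_from_path(path: &Path) -> Option<u64> {
    let name = path.file_name()?.to_str()?;
    let digits = name.strip_suffix(DATA_FILE_SUFFIX)?;
    if digits.len() != 12 || !digits.bytes().all(|b| b.is_ascii_digit()) {
        return None;
    }
    digits.parse().ok()
}
```

Zero padding keeps lexicographic and numeric ordering of file names identical, so a sorted directory listing can be read as oldest to newest.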

286 |

287 | # Refs

288 | ## Projects

289 | I'm not familiar with Erlang, but I found some implementations in other languages worth learning from.

290 |

291 | 1. Go: [prologic/bitcask](https://github.com/prologic/bitcask)

292 | 2. Go: [prologic/bitraft](https://github.com/prologic/bitraft)

293 | 3. Python: [turicas/pybitcask](https://github.com/turicas/pybitcask)

294 | 4. Rust: [dragonquest/bitcask](https://github.com/dragonquest/bitcask)

295 |

296 | For another simple key-value database based on the Bitcask model, please refer to [xujiajun/nutsdb](https://github.com/xujiajun/nutsdb).

297 |

298 | ## Articles and more

299 |

300 | - [Implementing a Copyless Redis Protocol in Rust with Parsing Combinators](https://dpbriggs.ca/blog/Implementing-A-Copyless-Redis-Protocol-in-Rust-With-Parsing-Combinators)

301 | - [Expected type parameter, found struct](https://stackoverflow.com/questions/26049939/expected-type-parameter-found-struct)

302 | - [Help understanding how trait bounds work](https://users.rust-lang.org/t/help-understanding-how-trait-bounds-work/19253/3)

303 | - [Idiomatic way to take ownership of all items in a Vec?](https://users.rust-lang.org/t/idiomatic-way-to-take-ownership-of-all-items-in-a-vec-string/7811/12)

304 | - [Idiomatic callbacks in Rust](https://stackoverflow.com/questions/41081240/idiomatic-callbacks-in-rust)

305 | - [What are reasonable ways to store a callback in a struct?](https://users.rust-lang.org/t/what-are-reasonable-ways-to-store-a-callback-in-a-struct/5810)

306 | - [Things Rust doesn’t let you do](https://medium.com/@GolDDranks/things-rust-doesnt-let-you-do-draft-f596a3c740a5)

307 |

308 | # License

309 |

310 | Licensed under the [MIT license](./LICENSE).

311 |

--------------------------------------------------------------------------------

/src/server.rs:

--------------------------------------------------------------------------------

1 | //! TinKV server is a redis-compatible key value server.

2 |

3 | use crate::error::{Result, TinkvError};

4 |

5 | use crate::store::Store;

6 |

7 | use crate::resp::{deserialize_from_reader, serialize_to_writer, Value};

8 | use lazy_static::lazy_static;

9 | use log::{debug, error, info, trace};

10 |

11 | use crate::util::to_utf8_string;

12 | use std::convert::TryFrom;

13 | use std::fmt;

14 | use std::io::prelude::*;

15 | use std::io::{BufReader, BufWriter};

16 | use std::net::{TcpListener, TcpStream, ToSocketAddrs};

17 |

18 | lazy_static! {

19 | static ref COMMANDS: Vec<&'static str> = vec![

20 | "ping", "get", "mget", "set", "mset", "del", "dbsize", "exists", "keys", "flushdb",

21 | "flushall", "compact", "info", "command",

22 | ];

23 | }

24 |

25 | pub struct Server {

26 | store: Store,

27 | }

28 |

29 | impl Server {

30 | #[allow(dead_code)]

31 | pub fn new(store: Store) -> Self {

32 | Server { store }

33 | }

34 |

35 | pub fn run<A: ToSocketAddrs>(&mut self, addr: A) -> Result<()> {

36 | let addr = addr.to_socket_addrs()?.next().unwrap();

37 | info!("TinKV server is listening at '{}'", addr);

38 | let listener = TcpListener::bind(addr)?;

39 | for stream in listener.incoming() {

40 | match stream {

41 | Ok(stream) => {

42 | if let Err(e) = self.serve(stream) {

43 | error!("{}", e);

44 | }

45 | }

46 | Err(e) => error!("{}", e),

47 | }

48 | }

49 | Ok(())

50 | }

51 |

52 | fn serve(&mut self, stream: TcpStream) -> Result<()> {

53 | let peer_addr = stream.peer_addr()?;

54 | debug!("got connection from {}", &peer_addr);

55 | let reader = BufReader::new(&stream);

56 | let writer = BufWriter::new(&stream);

57 | let mut conn = Conn::new(writer);

58 |

59 | for value in deserialize_from_reader(reader) {

60 | self.handle_request(&mut conn, Request::try_from(value?)?)?;

61 | }

62 |

63 | debug!("connection disconnected from {}", &peer_addr);

64 |

65 | Ok(())

66 | }

67 |

68 | fn handle_request(&mut self, conn: &mut Conn, req: Request) -> Result<()> {

69 | trace!("handle {}", &req);

70 | let argv = req.argv();

71 |

72 | macro_rules! send {

73 | () => {

74 | conn.write_value(Value::new_null_bulk_string())?

75 | };

76 | ($value:expr) => {

77 | match $value {

78 | Err(TinkvError::RespCommon { name, msg }) => {

79 | let err = Value::new_error(&name, &msg);

80 | conn.write_value(err)?;

81 | }

82 | Err(TinkvError::RespWrongNumOfArgs(_)) => {

83 | let msg = format!("{}", $value.unwrap_err());

84 | let err = Value::new_error("ERR", &msg);

85 | conn.write_value(err)?;

86 | }

87 | Err(e) => return Err(e),

88 | Ok(v) => conn.write_value(v)?,

89 | }

90 | };

91 | }

92 |

93 | match req.name.as_ref() {

94 | "ping" => send!(self.handle_ping(&argv)),

95 | "get" => send!(self.handle_get(&argv)),

96 | "mget" => send!(self.handle_mget(&argv)),

97 | "set" => send!(self.handle_set(&argv)),

98 | "mset" => send!(self.handle_mset(&argv)),

99 | "del" => send!(self.handle_del(&argv)),

100 | "dbsize" => send!(self.handle_dbsize(&argv)),

101 | "exists" => send!(self.handle_exists(&argv)),

102 | "keys" => send!(self.handle_keys(&argv)),

103 | "flushall" | "flushdb" => send!(self.handle_flush(req.name.as_ref(), &argv)),

104 | "compact" => send!(self.handle_compact(&argv)),

105 | "info" => send!(self.handle_info(&argv)),

106 | "command" => send!(self.handle_command(&argv)),

107 | _ => {

108 | conn.write_value(Value::new_error(

109 | "ERR",

110 | &format!("unknown command `{}`", &req.name),

111 | ))?;

112 | }

113 | }

114 |

115 | conn.flush()?;

116 |

117 | Ok(())

118 | }

119 |

120 | fn handle_ping(&mut self, argv: &[&[u8]]) -> Result<Value> {

121 | match argv.len() {

122 | 0 => Ok(Value::new_simple_string("PONG")),

123 | 1 => Ok(Value::new_bulk_string(argv[0].to_vec())),

124 | _ => Err(TinkvError::resp_wrong_num_of_args("ping")),

125 | }

126 | }

127 |

128 | fn handle_get(&mut self, argv: &[&[u8]]) -> Result<Value> {

129 | if argv.len() != 1 {

130 | return Err(TinkvError::resp_wrong_num_of_args("get"));

131 | }

132 |

133 | Ok(self

134 | .store

135 | .get(argv[0])?

136 | .map(Value::new_bulk_string)

137 | .unwrap_or_else(Value::new_null_bulk_string))

138 | }

139 |

140 | fn handle_mget(&mut self, argv: &[&[u8]]) -> Result<Value> {

141 | if argv.is_empty() {

142 | return Err(TinkvError::resp_wrong_num_of_args("mget"));

143 | }

144 |

145 | let mut values = vec![];

146 | for arg in argv {

147 | let value = self

148 | .store

149 | .get(arg)?

150 | .map(Value::new_bulk_string)

151 | .unwrap_or_else(Value::new_null_bulk_string);

152 | values.push(value);

153 | }

154 |

155 | Ok(Value::new_array(values))

156 | }

157 |

158 | fn handle_set(&mut self, argv: &[&[u8]]) -> Result<Value> {

159 | if argv.len() < 2 {

160 | return Err(TinkvError::resp_wrong_num_of_args("set"));

161 | }

162 |

163 | match self.store.set(argv[0], argv[1]) {

164 | Ok(()) => Ok(Value::new_simple_string("OK")),

165 | Err(e) => Err(TinkvError::new_resp_common(

166 | "INTERNALERR",

167 | &format!("{}", e),

168 | )),

169 | }

170 | }

171 |

172 | fn handle_mset(&mut self, argv: &[&[u8]]) -> Result<Value> {

173 | if argv.is_empty() || argv.len() % 2 != 0 {

174 | return Err(TinkvError::resp_wrong_num_of_args("mset"));

175 | }

176 |

177 | let mut i = 0;

178 | loop {

179 | if i + 1 >= argv.len() {

180 | break;

181 | }

182 |

183 | if let Err(e) = self.store.set(argv[i], argv[i + 1]) {

184 | return Err(TinkvError::new_resp_common(

185 | "INTERNALERR",

186 | &format!("{}", e),

187 | ));

188 | }

189 |

190 | i += 2;

191 | }

192 |

193 | Ok(Value::new_simple_string("OK"))

194 | }

195 |

196 | fn handle_del(&mut self, argv: &[&[u8]]) -> Result<Value> {

197 | if argv.len() != 1 {

198 | return Err(TinkvError::resp_wrong_num_of_args("del"));

199 | }

200 |

201 | match self.store.remove(argv[0]) {

202 | Ok(()) => Ok(Value::new_simple_string("OK")),

203 | Err(e) => Err(TinkvError::new_resp_common(

204 | "INTERNALERR",

205 | &format!("{}", e),

206 | )),

207 | }

208 | }

209 |

210 | fn handle_dbsize(&mut self, argv: &[&[u8]]) -> Result<Value> {

211 | if !argv.is_empty() {

212 | return Err(TinkvError::resp_wrong_num_of_args("dbsize"));

213 | }

214 |

215 | Ok(Value::new_integer(self.store.len() as i64))

216 | }

217 |

218 | fn handle_exists(&mut self, argv: &[&[u8]]) -> Result<Value> {

219 | if argv.is_empty() {

220 | return Err(TinkvError::resp_wrong_num_of_args("exists"));

221 | }

222 |

223 | let mut exists = 0;

224 | for arg in argv {

225 | if self.store.contains_key(arg) {

226 | exists += 1;

227 | }

228 | }

229 |

230 | Ok(Value::new_integer(exists as i64))

231 | }

232 |

233 | fn handle_keys(&mut self, argv: &[&[u8]]) -> Result<Value> {

234 | let pattern = match argv.len() {

235 | 0 => glob::Pattern::new("*"),

236 | 1 => glob::Pattern::new(to_utf8_string(argv[0]).as_ref()),

237 | _ => return Err(TinkvError::resp_wrong_num_of_args("keys")),

238 | };

239 |

240 | let mut keys = vec![];

241 |

242 | let pattern = pattern.map_err(|e| TinkvError::new_resp_common("ERR", &format!("{}", e)))?;

243 | for key in self.store.keys() {

244 | if pattern.matches(to_utf8_string(key).as_ref()) {

245 | keys.push(Value::new_bulk_string(key.to_vec()));

246 | }

247 | }

248 |

249 | Ok(Value::new_array(keys))

250 | }