├── .gitignore

├── .readthedocs.yml

├── LICENSE

├── README.md

├── docs

├── Makefile

├── conf.py

├── environment.yml

├── firelight.rst

├── firelight.utils.rst

├── firelight.visualizers.rst

├── index.rst

├── introduction.rst

├── list_of_visualizers.rst

├── make.bat

└── requirements.txt

├── examples

├── README.rst

├── example_visualization.png

├── understanding

│ ├── README.rst

│ └── specfunction_example.py

└── usage

│ ├── README.rst

│ ├── example_config_0.yml

│ └── realistic_example.py

├── firelight

├── __init__.py

├── config_parsing.py

├── inferno_callback.py

├── utils

│ ├── __init__.py

│ ├── dim_utils.py

│ └── io_utils.py

└── visualizers

│ ├── __init__.py

│ ├── base.py

│ ├── colorization.py

│ ├── container_visualizers.py

│ └── visualizers.py

├── requirements.txt

└── setup.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__

2 | firelight.egg-info

--------------------------------------------------------------------------------

/.readthedocs.yml:

--------------------------------------------------------------------------------

1 | # .readthedocs.yml

2 | # Read the Docs configuration file

3 | # See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

4 |

5 | # Required

6 | version: 2

7 |

8 | # Build documentation in the docs/ directory with Sphinx

9 | sphinx:

10 | configuration: docs/conf.py

11 |

12 | # Optionally build your docs in additional formats such as PDF and ePub

13 | formats: all

14 |

15 | # conda:

16 | # environment: docs/environment.yml

17 |

18 | # Optionally set the version of Python and requirements required to build your docs

19 | python:

20 | version: 3.7

21 | install:

22 | - requirements: docs/requirements.txt

23 | - method: setuptools

24 | path: .

25 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

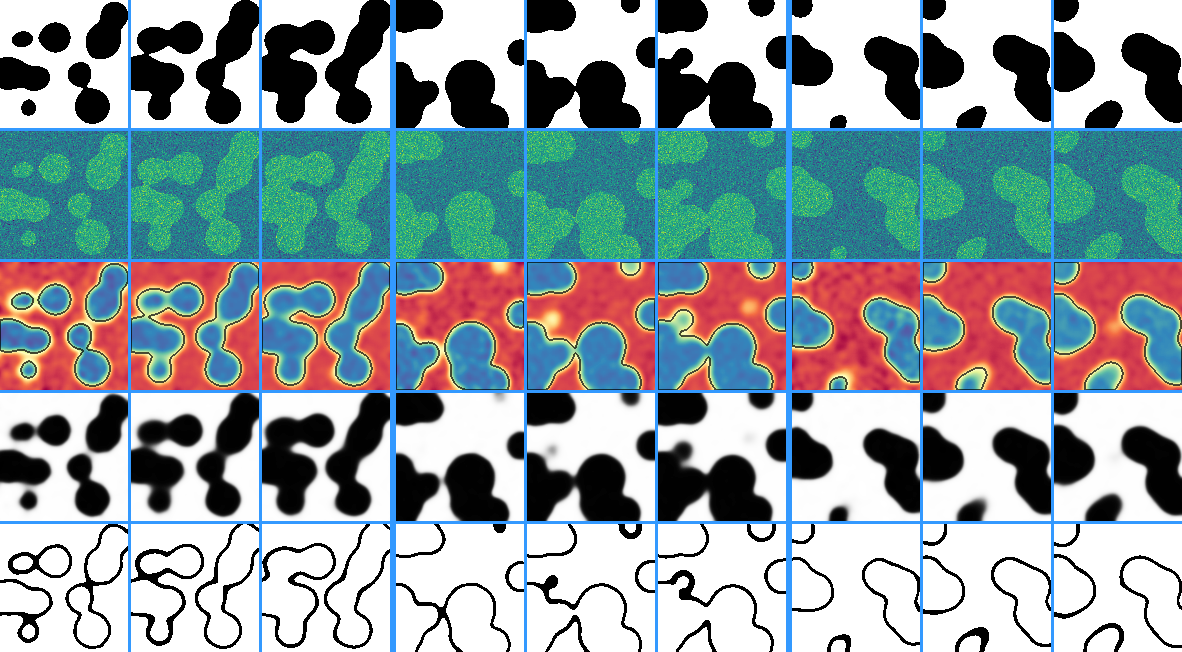

1 | # Firelight

2 |

3 | [](https://firelight.readthedocs.io/en/latest/?badge=latest)

4 | [](https://anaconda.org/conda-forge/firelight)

5 | [](https://badge.fury.io/py/firelight)

6 |

7 | Firelight is a visualization library for pytorch.

8 | Its core object is a **visualizer**, which can be called passing some states (such as `inputs`, `target`,

9 | `prediction`) returning a visualization of the data. What exactly that visualization shows, is specified in a yaml

10 | configuration file.

11 |

12 | Why you will like firelight initially:

13 | - Neat image grids, lining up inputs, targets and predictions,

14 | - Colorful images: Automatic scaling for RGB, matplotlib colormaps for grayscale data, randomly colored label images,

15 | - Many available visualizers.

16 |

17 | Why you will keep using firelight:

18 | - Everything in one config file,

19 | - Easily write your own visualizers,

20 | - Generality in dimensions: All visualizers usable with data of arbitrary dimension.

21 |

22 | ## Installation

23 |

24 | ### From source (to get the most recent version)

25 | On python 3.6+:

26 |

27 | ```bash

28 | # Clone the repository

29 | git clone https://github.com/inferno-pytorch/firelight

30 | cd firelight/

31 | # Install

32 | python setup.py install

33 | ```

34 | ### Using conda

35 |

36 | Firelight is available on conda-forge for python > 3.6 and all operating systems:

37 | ```bash

38 | conda install -c pytorch -c conda-forge firelight

39 | ```

40 |

41 | ### Using pip

42 |

43 | In an environment with [scikit-learn](https://scikit-learn.org/stable/install.html) installed:

44 | ```bash

45 | pip install firelight

46 | ```

47 |

48 | ## Example

49 |

50 | - Run the example `firelight/examples/example_data.py`

51 |

52 | Config file `example_config_0.yml`:

53 |

54 | ```yaml

55 | RowVisualizer: # stack the outputs of child visualizers as rows of an image grid

56 | input_mapping:

57 | global: [B: ':3', D: '0:9:3'] # Show only 3 samples in each batch ('B'), and some slices along depth ('D').

58 | prediction: [C: '0'] # Show only the first channel of the prediction

59 |

60 | pad_value: [0.2, 0.6, 1.0] # RGB color of separating lines

61 | pad_width: {B: 6, H: 0, W: 0, rest: 3} # Padding for batch ('B'), height ('H'), width ('W') and other dimensions.

62 |

63 | visualizers:

64 | # First row: Ground truth

65 | - IdentityVisualizer:

66 | input: 'target' # show the target

67 |

68 | # Second row: Raw input

69 | - IdentityVisualizer:

70 | input: ['input', C: '0'] # Show the first channel ('C') of the input.

71 | cmap: viridis # Name of a matplotlib colormap.

72 |

73 | # Third row: Prediction with segmentation boarders on top.

74 | - OverlayVisualizer:

75 | visualizers:

76 | - CrackedEdgeVisualizer: # Show borders of target segmentation

77 | input: 'target'

78 | width: 2

79 | opacity: 0.7 # Make output only partially opaque.

80 | - IdentityVisualizer: # prediction

81 | input: 'prediction'

82 | cmap: Spectral

83 |

84 | # Fourth row: Foreground probability, calculated by sigmoid on prediction

85 | - IdentityVisualizer:

86 | input_mapping: # the input to the visualizer can also be specified as a dict under the key 'input mapping'.

87 | tensor: ['prediction', pre: 'sigmoid'] # Apply sigmoid function from torch.nn.functional before visualize.

88 | value_range: [0, 1] # Scale such that 0 is white and 1 is black. If not specified, whole range is used.

89 |

90 | # Fifth row: Visualize where norm of prediction is smaller than 2

91 | - ThresholdVisualizer:

92 | input_mapping:

93 | tensor:

94 | NormVisualizer: # Use the output of NormVisualizer as the input to ThresholdVisualizer

95 | input: 'prediction'

96 | colorize: False

97 | threshold: 2

98 | mode: 'smaller'

99 | ```

100 |

101 | Python code:

102 |

103 | ```python

104 | from firelight import get_visualizer

105 | import matplotlib.pyplot as plt

106 |

107 | # Load the visualizer, passing the path to the config file. This happens only once, at the start of training.

108 | visualizer = get_visualizer('./configs/example_config_0.yml')

109 |

110 | # Get an example state dictionary, containing the input, target, prediction

111 | states = get_example_states()

112 |

113 | # Call the visualizer

114 | image_grid = visualizer(**states)

115 |

116 | # Log your image however you want

117 | plt.imsave('visualizations/example_visualization.jpg', image_grid.numpy())

118 | ```

119 |

120 | Resulting visualization:

121 |

122 |

123 |

124 | Many more visualizers are available. Have a look at [visualizers.py](/firelight/visualizers/visualizers.py ) and [container_visualizers.py](/firelight/visualizers/container_visualizers.py) or, for a more condensed list, the imports in [config_parsing.py](/firelight/config_parsing.py).

125 |

126 | ### With Inferno

127 | Firelight can be easily combined with a `TensorboardLogger` from [inferno](https://github.com/inferno-pytorch/inferno).

128 | Simply add an extra line at the start of your config specifying under which tag the visualizations should be logged, and

129 | add a callback to your trainer with `get_visualization_callback` in `firelight/inferno_callback.py`

130 |

131 | Config:

132 | ```yaml

133 | fancy_visualization: # This will be the tag in tensorboard

134 | RowVisualizer:

135 | ...

136 | ```

137 | Python:

138 | ```python

139 | from inferno.trainers.basic import Trainer

140 | from inferno.trainers.callbacks.logging.tensorboard import TensorboardLogger

141 | from firelight.inferno_callback import get_visualization_callback

142 |

143 | # Build trainer and logger

144 | trainer = Trainer(...)

145 | logger = TensorboardLogger(...)

146 | trainer.build_logger(logger, log_directory='path/to/logdir')

147 |

148 | # Register the visualization callback

149 | trainer.register_callback(

150 | get_visualization_callback(

151 | config='path/to/visualization/config'

152 | )

153 | )

154 | ```

155 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line, and also

5 | # from the environment for the first two.

6 | SPHINXOPTS ?=

7 | SPHINXBUILD ?= sphinx-build

8 | SOURCEDIR = .

9 | BUILDDIR = _build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help:

13 | @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

14 |

15 | clean:

16 | rm -rf $(BUILDDIR)/*

17 | rm -rf auto_examples/

18 |

19 | .PHONY: help Makefile

20 |

21 | # Catch-all target: route all unknown targets to Sphinx using the new

22 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

23 | %: Makefile

24 | @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

25 |

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

1 | # Configuration file for the Sphinx documentation builder.

2 | #

3 | # This file only contains a selection of the most common options. For a full

4 | # list see the documentation:

5 | # https://www.sphinx-doc.org/en/master/usage/configuration.html

6 |

7 | # -- Path setup --------------------------------------------------------------

8 |

9 | # If extensions (or modules to document with autodoc) are in another directory,

10 | # add these directories to sys.path here. If the directory is relative to the

11 | # documentation root, use os.path.abspath to make it absolute, like shown here.

12 | #

13 | import os

14 | import sys

15 | sys.path.insert(0, os.path.abspath('../'))

16 | sys.path.insert(0, os.path.abspath('../firelight/'))

17 |

18 | master_doc = 'index'

19 |

20 | # -- Project information -----------------------------------------------------

21 |

22 | project = 'firelight'

23 | copyright = '2019, Roman Remme'

24 | author = 'Roman Remme'

25 |

26 | # The full version, including alpha/beta/rc tags

27 | release = '0.1.0'

28 |

29 |

30 | # -- General configuration ---------------------------------------------------

31 |

32 | # Add any Sphinx extension module names here, as strings. They can be

33 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

34 | # ones.

35 | extensions = [

36 | 'sphinx.ext.autodoc',

37 | 'sphinx.ext.napoleon',

38 | 'sphinx.ext.intersphinx',

39 | 'sphinx.ext.doctest',

40 | 'sphinx.ext.viewcode',

41 | 'sphinx.ext.graphviz',

42 | 'sphinx.ext.inheritance_diagram',

43 | #'sphinx.ext.autosummary',

44 | 'sphinx_gallery.gen_gallery',

45 | 'sphinx_paramlinks',

46 | 'autodocsumm',

47 | 'sphinx_automodapi.automodapi',

48 | ]

49 |

50 | # autodoc_default_options = {

51 | # 'autosummary': True,

52 | # }

53 |

54 | napoleon_include_init_with_doc = True

55 | napoleon_include_special_with_doc = True

56 | napoleon_use_rtype = False

57 | #autosummary_generate = True

58 |

59 | # interphinx configuration

60 | intersphinx_mapping = {

61 | 'numpy': ('http://docs.scipy.org/doc/numpy/', None),

62 | 'python': ('https://docs.python.org/', None),

63 | 'torch': ('https://pytorch.org/docs/master/', None),

64 | 'sklearn': ('http://scikit-learn.org/stable',

65 | (None, './_intersphinx/sklearn-objects.inv')),

66 | 'inferno': ('http://inferno-pytorch.readthedocs.io/en/latest/', None)

67 | }

68 |

69 | # paths for sphinx gallery

70 | from sphinx_gallery.sorting import ExplicitOrder

71 | sphinx_gallery_conf = {

72 | 'examples_dir': '../examples',

73 | 'gallery_dirs': 'auto_examples',

74 | 'filename_pattern': '/*.py',

75 | 'reference_url': {

76 | # The module you locally document uses None

77 | 'sphinx_gallery': None,

78 | },

79 | 'subsection_order': ExplicitOrder(['../examples/usage',

80 | '../examples/understanding']),

81 | # binder will does not work with readthedocs, see https://github.com/sphinx-gallery/sphinx-gallery/pull/505.

82 | # 'binder': {

83 | # # Required keys

84 | # 'org': 'https://github.com',

85 | # 'repo': 'firelight',

86 | # 'branch': 'docs', # Can be any branch, tag, or commit hash. Use a branch that hosts your docs.

87 | # 'binderhub_url': 'https://mybinder.org', # Any URL of a binderhub deployment. Must be full URL (e.g. https://mybinder.org).

88 | # 'dependencies': 'requirements.txt',

89 | # # Optional keys

90 | # # 'filepath_prefix': 'docs/', # A prefix to prepend to any filepaths in Binder links.

91 | # 'notebooks_dir': 'binder', # Jupyter notebooks for Binder will be copied to this directory (relative to built documentation root).

92 | # 'use_jupyter_lab': False # Whether Binder links should start Jupyter Lab instead of the Jupyter Notebook interface.

93 | # }

94 | }

95 |

96 | doctest_global_setup = """

97 | import torch

98 | from firelight.utils.dim_utils import *

99 | from firelight.config_parsing import *

100 |

101 | from firelight.visualizers.base import *

102 | from firelight.visualizers.visualizers import *

103 | from firelight.visualizers.container_visualizers import *

104 | """

105 |

106 | # Add any paths that contain templates here, relative to this directory.

107 | templates_path = ['_templates']

108 |

109 | # List of patterns, relative to source directory, that match files and

110 | # directories to ignore when looking for source files.

111 | # This pattern also affects html_static_path and html_extra_path.

112 | exclude_patterns = []

113 |

114 |

115 | from unittest.mock import MagicMock

116 |

117 | class Mock(MagicMock):

118 | @classmethod

119 | def __getattr__(cls, name):

120 | return MagicMock()

121 |

122 | MOCK_MODULES = [

123 | 'inferno.trainers.callbacks.base',

124 | 'inferno.trainers.callbacks.logging.tensorboard',

125 | ]

126 | sys.modules.update((mod_name, Mock()) for mod_name in MOCK_MODULES)

127 |

128 | # -- Options for HTML output -------------------------------------------------

129 |

130 | # The theme to use for HTML and HTML Help pages. See the documentation for

131 | # a list of builtin themes.

132 | #

133 | html_theme = 'sphinx_rtd_theme'

134 |

135 | # Add any paths that contain custom static files (such as style sheets) here,

136 | # relative to this directory. They are copied after the builtin static files,

137 | # so a file named "default.css" will overwrite the builtin "default.css".

138 | html_static_path = ['_static']

139 |

--------------------------------------------------------------------------------

/docs/environment.yml:

--------------------------------------------------------------------------------

1 | channels:

2 | - conda-forge

3 | - defaults

4 | dependencies:

5 | - inferno=v0.4.0

6 | - python=3.7.0

7 | - pip:

8 | - sphinx==2.2.0

9 | - sphinx-gallery==0.4.0

10 | - sphinx-rtd-theme==0.4.3

11 |

12 |

--------------------------------------------------------------------------------

/docs/firelight.rst:

--------------------------------------------------------------------------------

1 | firelight package

2 | =================

3 |

4 | .. toctree::

5 | firelight.visualizers

6 | firelight.utils

7 |

8 | firelight.config\_parsing module

9 | --------------------------------

10 |

11 | .. automodule:: firelight.config_parsing

12 | :members:

13 | :undoc-members:

14 | :show-inheritance:

15 |

16 | firelight.inferno\_callback module

17 | ----------------------------------

18 |

19 | .. automodule:: firelight.inferno_callback

20 | :members:

21 | :undoc-members:

22 | :show-inheritance:

23 |

24 | ..

25 | Module contents

26 | ---------------

27 |

28 | .. automodule:: firelight

29 | :members:

30 | :undoc-members:

31 | :show-inheritance:

32 |

--------------------------------------------------------------------------------

/docs/firelight.utils.rst:

--------------------------------------------------------------------------------

1 | firelight.utils package

2 | =======================

3 |

4 | ..

5 | Submodules

6 | ----------

7 |

8 | firelight.utils.dim\_utils module

9 | ---------------------------------

10 |

11 | .. automodule:: firelight.utils.dim_utils

12 | :members:

13 | :undoc-members:

14 | :show-inheritance:

15 |

16 | firelight.utils.io\_utils module

17 | --------------------------------

18 |

19 | .. automodule:: firelight.utils.io_utils

20 | :members:

21 | :undoc-members:

22 | :show-inheritance:

23 |

24 |

25 | ..

26 | Module contents

27 | ---------------

28 |

29 | .. automodule:: firelight.utils

30 | :members:

31 | :undoc-members:

32 | :show-inheritance:

33 |

--------------------------------------------------------------------------------

/docs/firelight.visualizers.rst:

--------------------------------------------------------------------------------

1 | firelight.visualizers package

2 | =============================

3 |

4 | firelight.visualizers.base module

5 | ---------------------------------

6 |

7 | .. automodule:: firelight.visualizers.base

8 | :members:

9 | :undoc-members:

10 | :show-inheritance:

11 |

12 | firelight.visualizers.colorization module

13 | -----------------------------------------

14 |

15 | .. automodule:: firelight.visualizers.colorization

16 | :members:

17 | :undoc-members:

18 | :show-inheritance:

19 |

20 | firelight.visualizers.container\_visualizers module

21 | ---------------------------------------------------

22 |

23 | .. automodule:: firelight.visualizers.container_visualizers

24 | :members:

25 | :undoc-members:

26 | :show-inheritance:

27 |

28 | firelight.visualizers.visualizers module

29 | ----------------------------------------

30 |

31 | .. automodule:: firelight.visualizers.visualizers

32 | :members:

33 | :undoc-members:

34 | :show-inheritance:

35 |

36 | ..

37 | Module contents

38 | ---------------

39 |

40 | .. automodule:: firelight.visualizers

41 | :members:

42 | :undoc-members:

43 | :show-inheritance:

44 |

--------------------------------------------------------------------------------

/docs/index.rst:

--------------------------------------------------------------------------------

1 | .. firelight documentation master file, created by

2 | sphinx-quickstart on Tue Oct 29 13:16:38 2019.

3 | You can adapt this file completely to your liking, but it should at least

4 | contain the root `toctree` directive.

5 |

6 | Welcome to firelight's documentation!

7 | =====================================

8 |

9 | .. toctree::

10 | :maxdepth: 3

11 | :caption: Contents:

12 |

13 | introduction

14 | list_of_visualizers

15 | auto_examples/index

16 | firelight

17 |

18 |

19 | Indices and tables

20 | ==================

21 |

22 | * :ref:`genindex`

23 | * :ref:`modindex`

24 | * :ref:`search`

25 |

--------------------------------------------------------------------------------

/docs/introduction.rst:

--------------------------------------------------------------------------------

1 | Introduction

2 | ============

3 |

4 | Firelight is a package for the visualization of `pytorch `_ tensors as images.

5 | It uses a flexible way of handling tensor shapes, which allows visualization of data

6 | of arbitrary dimensionality (See :mod:`firelight.utils.dim_utils` for details).

7 |

8 | This documentation is work in progress, as is the package itself.

9 |

10 | For now, have a look at the `Examples `_,

11 | check out the currently available `visualizers `_

12 | or read the `docstrings `_.

--------------------------------------------------------------------------------

/docs/list_of_visualizers.rst:

--------------------------------------------------------------------------------

1 | List of Visualizers

2 | -------------------

3 |

4 | .. currentmodule:: firelight.visualizers.base

5 |

6 | The following non-container visualizers are currently available.

7 | They all derive from :class:`BaseVisualizer`.

8 |

9 | ..

10 | inheritance-diagram:: firelight.visualizers.visualizers

11 | :top-classes: firelight.visualizers.base.BaseVisualizer

12 | :parts: 1

13 |

14 | ..

15 | inheritance-diagram:: firelight.visualizers.container_visualizers

16 | :top-classes: firelight.visualizers.base.ContainerVisualizer

17 | :parts: 1

18 |

19 | .. automodsumm:: firelight.visualizers.visualizers

20 | :classes-only:

21 | :skip: PCA, TSNE, BaseVisualizer

22 |

23 | .. currentmodule:: firelight.visualizers.base

24 |

25 | These are the available visualizers combining multiple visualizations.

26 | Their base class is the :class:`ContainerVisualizer`.

27 |

28 | .. automodsumm:: firelight.visualizers.container_visualizers

29 | :classes-only:

30 | :skip: ContainerVisualizer

31 |

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=.

11 | set BUILDDIR=_build

12 |

13 | if "%1" == "" goto help

14 |

15 | %SPHINXBUILD% >NUL 2>NUL

16 | if errorlevel 9009 (

17 | echo.

18 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

19 | echo.installed, then set the SPHINXBUILD environment variable to point

20 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

21 | echo.may add the Sphinx directory to PATH.

22 | echo.

23 | echo.If you don't have Sphinx installed, grab it from

24 | echo.http://sphinx-doc.org/

25 | exit /b 1

26 | )

27 |

28 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

29 | goto end

30 |

31 | :help

32 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

33 |

34 | :end

35 | popd

36 |

--------------------------------------------------------------------------------

/docs/requirements.txt:

--------------------------------------------------------------------------------

1 | sphinx==2.2.0

2 | sphinx-rtd-theme==0.4.3

3 | sphinx-gallery==0.4.0

4 | sphinx-paramlinks==0.3.7

5 | autodocsumm==0.1.11

6 | sphinx-automodapi==0.12

7 |

--------------------------------------------------------------------------------

/examples/README.rst:

--------------------------------------------------------------------------------

1 | Examples

2 | ========

3 |

--------------------------------------------------------------------------------

/examples/example_visualization.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/inferno-pytorch/firelight/796328f93494248e6a4cf238ea36ac4eeb7fc9b8/examples/example_visualization.png

--------------------------------------------------------------------------------

/examples/understanding/README.rst:

--------------------------------------------------------------------------------

1 | Understanding Firelight

2 | -----------------------

3 |

--------------------------------------------------------------------------------

/examples/understanding/specfunction_example.py:

--------------------------------------------------------------------------------

1 | """

2 | SpecFunction Example

3 | ====================

4 |

5 | An example demonstrating the functionality of the :class:`SpecFunction` class.

6 | """

7 |

8 | import torch

9 | import matplotlib.pyplot as plt

10 | from firelight.utils.dim_utils import SpecFunction

11 |

12 | ##############################################################################

13 | # Let us define a function that takes in two arrays and masks one with the

14 | # other:

15 | #

16 |

17 |

18 | class MaskArray(SpecFunction):

19 | def __init__(self, **super_kwargs):

20 | super(MaskArray, self).__init__(

21 | in_specs={'mask': 'B', 'array': 'BC'},

22 | out_spec='BC',

23 | **super_kwargs

24 | )

25 |

26 | def internal(self, mask, array, value=0.0):

27 | # The shapes are

28 | # mask: (B)

29 | # array: (B, C)

30 | # as specified in the init.

31 |

32 | result = array.clone()

33 | result[mask == 0] = value

34 |

35 | # the result has shape (B, C), as specified in the init.

36 | return result

37 |

38 |

39 | ##############################################################################

40 | # We can now apply the function on inputs of arbitrary shape, such as images.

41 | # The reshaping involved gets taken care of automatically:

42 | #

43 |

44 | W, H = 20, 10

45 | inputs = {

46 | 'array': (torch.rand(H, W, 3), 'HWC'),

47 | 'mask': (torch.randn(H, W) > 0, 'HW'),

48 | 'value': 0,

49 | 'out_spec': 'HWC',

50 | }

51 |

52 | maskArrays = MaskArray()

53 | result = maskArrays(**inputs)

54 | print('output shape:', result.shape)

55 |

56 | plt.imshow(result)

57 |

--------------------------------------------------------------------------------

/examples/usage/README.rst:

--------------------------------------------------------------------------------

1 | Using Firelight

2 | ---------------

3 |

--------------------------------------------------------------------------------

/examples/usage/example_config_0.yml:

--------------------------------------------------------------------------------

1 | RowVisualizer: # stack the outputs of child visualizers as rows of an image grid

2 | input_mapping:

3 | global: [B: ':3', D: '0:9:3'] # Show only 3 samples in each batch ('B'), and some slices along depth ('D').

4 | prediction: [C: '0'] # Show only the first channel of the prediction

5 |

6 | pad_value: [0.2, 0.6, 1.0] # RGB color of separating lines

7 | pad_width: {B: 6, H: 0, W: 0, rest: 3} # Padding for batch ('B'), height ('H'), width ('W') and other dimensions.

8 |

9 | visualizers:

10 | # First row: Ground truth

11 | - IdentityVisualizer:

12 | input: 'target' # show the target

13 |

14 | # Second row: Raw input

15 | - IdentityVisualizer:

16 | input: ['input', C: '0'] # Show the first channel ('C') of the input.

17 | cmap: viridis # Name of a matplotlib colormap.

18 |

19 | # Third row: Prediction with segmentation boarders on top.

20 | - OverlayVisualizer:

21 | visualizers:

22 | - CrackedEdgeVisualizer: # Show borders of target segmentation

23 | input_mapping:

24 | segmentation: 'target'

25 | width: 2

26 | opacity: 0.7 # Make output only partially opaque.

27 | - IdentityVisualizer: # prediction

28 | input_mapping:

29 | tensor: 'prediction'

30 | cmap: Spectral

31 |

32 | # Fourth row: Foreground probability, calculated by sigmoid on prediction

33 | - IdentityVisualizer:

34 | input_mapping: # the input to the visualizer can also be specified as a dict under the key 'input mapping'.

35 | tensor: ['prediction', pre: 'sigmoid'] # Apply sigmoid function from torch.nn.functional before visualize.

36 | value_range: [0, 1] # Scale such that 0 is white and 1 is black. If not specified, whole range is used.

37 |

38 | # Fifth row: Visualize where norm of prediction is smaller than 2

39 | - ThresholdVisualizer:

40 | input_mapping:

41 | tensor:

42 | NormVisualizer: # Use the output of NormVisualizer as the input to ThresholdVisualizer

43 | input: 'prediction'

44 | colorize: False

45 | threshold: 2

46 | mode: 'smaller'

--------------------------------------------------------------------------------

/examples/usage/realistic_example.py:

--------------------------------------------------------------------------------

1 | """

2 | Realistic Example

3 | =================

4 |

5 | A close-to-real-world example of how to use firelight.

6 | """

7 |

8 | ##############################################################################

9 | # First of all, let us get some mock data to visualize.

10 | # We generate the following tensors:

11 | #

12 | # - :code:`input` of shape :math:`(B, D, H, W)`, some noisy raw data,

13 | # - :code:`target` of shape :math:`(B, D, H, W)`, the ground truth foreground

14 | # background segmentation,

15 | # - :code:`prediction` of shape :math:`(B, D, H, W)`, the predicted foreground

16 | # probability,

17 | # - :code:`embedding` of shape :math:`(B, D, C, H, W)`, a tensor with an

18 | # additional channel dimension, as for example intermediate activations of a

19 | # neural network.

20 | #

21 |

22 | import numpy as np

23 | import torch

24 | from skimage.data import binary_blobs

25 | from skimage.filters import gaussian

26 |

27 |

28 | def get_example_states():

29 | # generate some toy foreground/background segmentation

30 | batchsize = 5 # we will only visualize 3 of the 5samples

31 | size = 64

32 | target = np.stack([binary_blobs(length=size, n_dim=3, blob_size_fraction=0.25, volume_fraction=0.5, seed=i)

33 | for i in range(batchsize)], axis=0).astype(np.float32)

34 |

35 | # generate toy raw data as noisy target

36 | sigma = 0.5

37 | input = target + np.random.normal(loc=0, scale=sigma, size=target.shape)

38 |

39 | # compute mock prediction as gaussian smoothing of input data

40 | prediction = np.stack([gaussian(sample, sigma=3, truncate=2.0) for sample in input], axis=0)

41 | prediction = 10 * (prediction - 0.5)

42 |

43 | # compute mock embedding (if you need an image with channels for testing)

44 | embedding = np.random.randn(prediction.shape[0], 16, *(prediction.shape[1:]))

45 |

46 | # put input, target, prediction in dictionary, convert to torch.Tensor, add dimensionality labels ('specs')

47 | state_dict = {

48 | 'input': (torch.Tensor(input).float(), 'BDHW'), # Dimensions are B, D, H, W = Batch, Depth, Height, Width

49 | 'target': (torch.Tensor(target).float(), 'BDHW'),

50 | 'prediction': (torch.Tensor(prediction).float(), 'BDHW'),

51 | 'embedding': (torch.Tensor(embedding).float(), 'BCDHW'),

52 | }

53 | return state_dict

54 |

55 |

56 | # Get the example state dictionary, containing the input, target, prediction.

57 | states = get_example_states()

58 |

59 | for name, (tensor, spec) in states.items():

60 | print(f'{name}: shape {tensor.shape}, spec {spec}')

61 |

62 | ##############################################################################

63 | # The best way to construct a complex visualizer to show all the tensors in a

64 | # structured manner is to use a configuration file.

65 | #

66 | # We will use the following one:

67 | #

68 | # .. literalinclude:: ../../../examples/usage/example_config_0.yml

69 | # :language: yaml

70 | #

71 | # Lets load the file and construct the visualizer using :code:`get_visualizer`:

72 |

73 | from firelight import get_visualizer

74 | import matplotlib.pyplot as plt

75 |

76 | # Load the visualizer, passing the path to the config file. This happens only once, at the start of training.

77 | visualizer = get_visualizer('example_config_0.yml')

78 |

79 | ##############################################################################

80 | # Now we can finally apply it on out mock tensors to get the visualization

81 |

82 | # Call the visualizer.

83 | image_grid = visualizer(**states)

84 |

85 | # Log your image however you want.

86 | plt.figure(figsize=(10, 6))

87 | plt.imshow(image_grid.numpy())

88 |

--------------------------------------------------------------------------------

/firelight/__init__.py:

--------------------------------------------------------------------------------

1 | from .config_parsing import get_visualizer

2 |

3 | __version__ = '0.2.1'

4 |

--------------------------------------------------------------------------------

/firelight/config_parsing.py:

--------------------------------------------------------------------------------

1 | from .visualizers.base import BaseVisualizer, ContainerVisualizer

2 | from .utils.io_utils import yaml2dict

3 | from pydoc import locate

4 | import logging

5 | import sys

6 |

7 | # List of available visualizers (without container visualizers)

8 | from .visualizers.visualizers import \

9 | IdentityVisualizer, \

10 | PcaVisualizer, \

11 | MaskedPcaVisualizer, \

12 | TsneVisualizer, \

13 | UmapVisualizer, \

14 | SegmentationVisualizer, \

15 | InputVisualizer, \

16 | TargetVisualizer, \

17 | PredictionVisualizer, \

18 | MSEVisualizer, \

19 | RGBVisualizer, \

20 | MaskVisualizer, \

21 | ThresholdVisualizer, \

22 | ImageVisualizer, \

23 | NormVisualizer, \

24 | DiagonalSplitVisualizer, \

25 | CrackedEdgeVisualizer, \

26 | UpsamplingVisualizer, \

27 | SemanticVisualizer, \

28 | DifferenceVisualizer

29 |

30 | # List of available container visualizers (visualizers acting on outputs of child visualizers)

31 | from .visualizers.container_visualizers import \

32 | ImageGridVisualizer, \

33 | RowVisualizer, \

34 | ColumnVisualizer, \

35 | OverlayVisualizer, \

36 | RiffleVisualizer, \

37 | StackVisualizer, \

38 | AverageVisualizer

39 |

40 |

41 | # set up logging

42 | logging.basicConfig(format='[+][%(asctime)-15s][VISUALIZATION]'

43 | ' %(message)s',

44 | stream=sys.stdout,

45 | level=logging.INFO)

46 | parsing_logger = logging.getLogger(__name__)

47 |

48 |

49 | def get_single_key_value_pair(d):

50 | """

51 | Returns the key and value of a one element dictionary, checking that it actually has only one element

52 |

53 | Parameters

54 | ----------

55 | d : dict

56 |

57 | Returns

58 | -------

59 | tuple

60 |

61 | """

62 | assert isinstance(d, dict), f'{d}'

63 | assert len(d) == 1, f'{d}'

64 | return list(d.keys())[0], list(d.values())[0]

65 |

66 |

67 | def get_visualizer_class(name):

68 | """

69 | Parses the class of a visualizer from a String. If the name is not found in globals(), tries to import it.

70 |

71 | Parameters

72 | ----------

73 | name : str

74 | Name of a visualization class imported above, or dotted path to one (e.g. your custom visualizer in a different

75 | library).

76 |

77 | Returns

78 | -------

79 | type or None

80 |

81 | """

82 | if name in globals(): # visualizer is imported above

83 | return globals().get(name)

84 | else: # dotted path is given

85 | visualizer = locate(name)

86 | assert visualizer is not None, f'Could not find visualizer "{name}".'

87 | assert issubclass(visualizer, BaseVisualizer), f'"{visualizer}" is no visualizer'

88 |

89 |

90 | def get_visualizer(config, indentation=0):

91 | """

92 | Parses a yaml configuration file to construct a visualizer.

93 |

94 | Parameters

95 | ----------

96 | config : str or dict or BaseVisualizer

97 | Either path to yaml configuration file or dictionary (as constructed by loading such a file).

98 | If already visualizer, it is just returned.

99 | indentation : int, optional

100 | How far logging messages arising here should be indented.

101 | Returns

102 | -------

103 | BaseVisualizer

104 |

105 | """

106 | if isinstance(config, BaseVisualizer): # nothing to do here

107 | return config

108 | # parse config to dict (does nothing if already dict)

109 | config = yaml2dict(config)

110 | # name (or dotted path) and kwargs of visualizer have to be specified as key and value of one element dictionary

111 | name, kwargs = get_single_key_value_pair(config)

112 | # get the visualizer class from its name

113 | visualizer = get_visualizer_class(name)

114 | parsing_logger.info(f'Parsing {" "*indentation}{visualizer.__name__}')

115 | if issubclass(visualizer, ContainerVisualizer): # container visualizer: parse sub-visualizers first

116 | child_visualizer_config = kwargs['visualizers']

117 | assert isinstance(child_visualizer_config, (list, dict)), \

118 | f'{child_visualizer_config}, {type(child_visualizer_config)}'

119 | if isinstance(child_visualizer_config, dict): # if dict, convert do list

120 | child_visualizer_config = [{key: value} for key, value in child_visualizer_config.items()]

121 | child_visualizers = []

122 | for c in child_visualizer_config:

123 | v = get_visualizer(c, indentation + 1)

124 | assert isinstance(v, BaseVisualizer), f'Could not parse visualizer: {c}'

125 | child_visualizers.append(v)

126 | kwargs['visualizers'] = child_visualizers

127 |

128 | # TODO: add example with nested visualizers

129 | def parse_if_visualizer(config):

130 | if not (isinstance(config, dict) and len(config) == 1):

131 | return None

132 | # check if the key is the name of a visualizer

133 | try:

134 | get_visualizer_class(iter(config.items()).__next__()[0])

135 | except AssertionError:

136 | return None

137 | # parse the visualizer

138 | return get_visualizer(config, indentation+1)

139 |

140 | # check if any input in 'input_mapping' should be parsed as visualizer

141 | input_mapping = kwargs.get('input_mapping', {})

142 | for map_to, map_from in input_mapping.items():

143 | nested_visualizer = parse_if_visualizer(map_from)

144 | if nested_visualizer is not None:

145 | input_mapping[map_to] = nested_visualizer

146 |

147 | # check if 'input' should be parsed as visualizer

148 | if kwargs.get('input') is not None:

149 | nested_visualizer = parse_if_visualizer(kwargs.get('input'))

150 | if nested_visualizer is not None:

151 | kwargs['input'] = nested_visualizer

152 |

153 | return visualizer(**kwargs)

154 |

--------------------------------------------------------------------------------

/firelight/inferno_callback.py:

--------------------------------------------------------------------------------

1 | from inferno.trainers.callbacks.base import Callback

2 | from inferno.trainers.callbacks.logging.tensorboard import TensorboardLogger

3 | from .utils.io_utils import yaml2dict

4 | from .config_parsing import get_visualizer

5 | import torch

6 | import logging

7 | import sys

8 |

9 | # Set up logger

10 | logging.basicConfig(format='[+][%(asctime)-15s][VISUALIZATION]'

11 | ' %(message)s',

12 | stream=sys.stdout,

13 | level=logging.INFO)

14 | logger = logging.getLogger(__name__)

15 |

16 |

17 | def _remove_alpha(tensor, background_brightness=1):

18 | return torch.ones_like(tensor[..., :3]) * background_brightness * (1-tensor[..., 3:4]) + \

19 | tensor[..., :3] * tensor[..., 3:4]

20 |

21 |

22 | class VisualizationCallback(Callback):

23 | # Autodoc does not pick up VisualizationCallback, since Callback is mocked.

24 |

25 | VISUALIZATION_PHASES = ['training', 'validation']

26 | TRAINER_STATE_PREFIXES = ('training', 'validation')

27 |

28 | def __init__(self, logging_config, log_during='all'):

29 | super(VisualizationCallback, self).__init__()

30 | assert isinstance(logging_config, dict)

31 | self.logging_config = logging_config # dictionary containing the visualizers as values with their names as keys

32 |

33 | # parse phases during which to log the individual visualizers

34 | for i, name in enumerate(logging_config):

35 | phases = logging_config[name].get('log_during', log_during)

36 | if isinstance(phases, str):

37 | if phases == 'all':

38 | phases = self.VISUALIZATION_PHASES

39 | else:

40 | phases = [phases]

41 | assert isinstance(phases, (list, tuple)), f'{phases}, {type(phases)}'

42 | assert all(phase in self.VISUALIZATION_PHASES for phase in phases), \

43 | f'Some phase not recognized: {phases}. Valid phases: {self.VISUALIZATION_PHASES}'

44 | logging_config[name]['log_during'] = phases

45 |

46 | # parameters specifying logging iterations

47 | # self.logged_last = {'train': None, 'val': None}

48 |

49 | def get_trainer_states(self):

50 | current_pre = self.TRAINER_STATE_PREFIXES[0 if self.trainer.model.training else 1]

51 | ignore_pre = self.TRAINER_STATE_PREFIXES[1 if self.trainer.model.training else 0]

52 | result = {}

53 | for key in self.trainer._state:

54 | if key.startswith(ignore_pre):

55 | continue

56 | state = self.trainer.get_state(key)

57 | if key.startswith(current_pre):

58 | key = '_'.join(key.split('_')[1:]) # remove current prefix

59 | if isinstance(state, torch.Tensor):

60 | state = state.cpu().detach().clone().float() # logging is done on the cpu, all tensors are floats

61 | if isinstance(state, (tuple, list)) and all([isinstance(t, torch.Tensor) for t in state]):

62 | state = list(t.cpu().detach().clone().float() for t in state)

63 |

64 | result[key] = state

65 | return result

66 |

67 | def do_logging(self, phase, **_):

68 | assert isinstance(self.trainer.logger, TensorboardLogger)

69 | writer = self.trainer.logger.writer

70 | pre = 'training' if self.trainer.model.training else 'validation'

71 | for name, config in self.logging_config.items():

72 | if phase not in config['log_during']: # skip visualizer if logging not requested for this phase

73 | continue

74 | visualizer = config['visualizer']

75 | logger.info(f'Logging now: {name}')

76 | image = _remove_alpha(visualizer(**self.get_trainer_states())).permute(2, 0, 1) # to [Color, Height, Width]

77 | writer.add_image(tag=pre+'_'+name, img_tensor=image, global_step=self.trainer.iteration_count)

78 | logger.info(f'Logging finished')

79 |

80 | def end_of_training_iteration(self, **_):

81 | last_match_value = self.trainer.logger.log_images_every._last_match_value

82 | log_now = self.trainer.logger.log_images_every.match(

83 | iteration_count=self.trainer.iteration_count,

84 | epoch_count=self.trainer.epoch_count,

85 | persistent=False)

86 | self.trainer.logger.log_images_every._last_match_value = last_match_value

87 | if log_now:

88 | self.do_logging('training')

89 |

90 | def end_of_validation_run(self, **_):

91 | self.do_logging('validation')

92 |

93 |

94 | def get_visualization_callback(config):

95 | """

96 | Gets an :mod:`inferno` callback for logging of firelight visualizations.

97 |

98 | Uses the :class:`inferno.trainers.basic.Trainer` state dictionary as input for the visualizers.

99 |

100 | The logging frequency is taken from the trainer's

101 | :class:`inferno.trainers.callbacks.logging.tensorboard.TensorboardLogger`.

102 |

103 |

104 |

105 | Parameters

106 | ----------

107 | config : str or dict

108 | If :obj:`str`, will be converted to :obj:`dict` using `pyyaml

109 | `_.

110 |

111 | If :obj:`dict`, the keys are the tags under which the visualizations

112 | will be saved in Tensorboard, while the values are the configuration

113 | dictionaries to get the visualizers producing these visualizations,

114 | using :func:`firelight.config_parsing.get_visualizer`.

115 |

116 | Returns

117 | -------

118 | :class:`inferno.trainers.callbacks.base.Callback`

119 |

120 | Examples

121 | --------

122 | The structure of a configuration file could look like this:

123 |

124 | .. code:: yaml

125 |

126 | # visualize model predictions

127 | predictions:

128 | RowVisualizer:

129 | ...

130 |

131 | # visualize something else

132 | fancy_visualization:

133 | RowVisualizer:

134 | ...

135 |

136 | This configuration would produce images that are saved under the tags :code:`predictions` and

137 | :code:`fancy_visualization` in Tensorboard.

138 |

139 | """

140 | config = yaml2dict(config)

141 | logging_config = {}

142 | default_phases = config.pop('log_during', 'all')

143 | for name, kwargs in config.items():

144 | log_during = kwargs.pop('log_during', default_phases)

145 | visualizer = get_visualizer(kwargs)

146 | logging_config[name] = dict(visualizer=visualizer, log_during=log_during)

147 | callback = VisualizationCallback(logging_config)

148 | return callback

149 |

--------------------------------------------------------------------------------

/firelight/utils/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/inferno-pytorch/firelight/796328f93494248e6a4cf238ea36ac4eeb7fc9b8/firelight/utils/__init__.py

--------------------------------------------------------------------------------

/firelight/utils/dim_utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | from copy import copy

4 | from collections import OrderedDict

5 |

6 | # in this library, 'spec' always stands for a list of dimension names.

7 | # eg. ['B', 'C', 'H', 'W'] standing for [Batch, Channel, Height, Width]

8 |

9 |

10 | def join_specs(*specs):

11 | """

12 | Returns a list of dimension names which includes each dimension in any of the supplied specs exactly once, ordered

13 | by their occurrence in specs.

14 |

15 | Parameters

16 | ----------

17 | specs : list

18 | List of lists of dimension names to be joined

19 |

20 | Returns

21 | -------

22 | list

23 |

24 | Examples

25 | --------

26 |

27 | >>> join_specs(['B', 'C'], ['B', 'H', 'W'])

28 | ['B', 'C', 'H', 'W']

29 | >>> join_specs(['B', 'C'], ['H', 'B', 'W'])

30 | ['B', 'C', 'H', 'W']

31 |

32 | """

33 | if len(specs) != 2:

34 | return join_specs(specs[0], join_specs(*specs[1:]))

35 | spec1, spec2 = specs

36 | result = copy(spec1)

37 | for d in spec2:

38 | if d not in result:

39 | result.append(d)

40 | return result

41 |

42 |

43 | def extend_dim(tensor, in_spec, out_spec, return_spec=False):

44 | """

45 | Adds extra (length 1) dimensions to the input tensor such that it has all the dimensions present in out_spec.

46 |

47 | Parameters

48 | ----------

49 | tensor : torch.Tensor

50 | in_spec : list

51 | spec of the input tensor

52 | out_spec : list

53 | spec of the output tensor

54 | return_spec : bool, optional

55 | Weather the output should consist of a tuple containing the output tensor and the resulting spec, or only the

56 | former.

57 |

58 | Returns

59 | -------

60 | torch.Tensor or tuple

61 |

62 | Examples

63 | --------

64 |

65 | >>> tensor, out_spec = extend_dim(

66 | ... torch.empty(2, 3),

67 | ... ['A', 'B'], ['A', 'B', 'C', 'D'],

68 | ... return_spec=True

69 | ... )

70 | >>> print(tensor.shape)

71 | torch.Size([2, 3, 1, 1])

72 | >>> print(out_spec)

73 | ['A', 'B', 'C', 'D']

74 |

75 | """

76 | assert all(d in out_spec for d in in_spec)

77 | i = 0

78 | for d in out_spec:

79 | if d not in in_spec:

80 | tensor = tensor.unsqueeze(i)

81 | i += 1

82 | if return_spec:

83 | new_spec = out_spec + [d for d in in_spec if d not in out_spec]

84 | return tensor, new_spec

85 | else:

86 | return tensor

87 |

88 |

89 | def moving_permutation(length, origin, goal):

90 | """

91 | Returns a permutation moving the element at position origin to the position goal (in the format requested by

92 | torch.Tensor.permute)

93 |

94 | Parameters

95 | ----------

96 | length : int

97 | length of the sequence to be permuted

98 | origin : int

99 | position of the element to be moved

100 | goal : int

101 | position the element should end up after the permutation

102 |

103 | Returns

104 | -------

105 | :obj:`list` of :obj:`int`

106 |

107 | Examples

108 | --------

109 |

110 | >>> moving_permutation(length=5, origin=1, goal=3)

111 | [0, 2, 3, 1, 4]

112 | >>> moving_permutation(length=5, origin=3, goal=1)

113 | [0, 3, 1, 2, 4]

114 |

115 | """

116 | result = []

117 | for i in range(length):

118 | if i == goal:

119 | result.append(origin)

120 | elif (i < goal and i < origin) or (i > goal and i > origin):

121 | result.append(i)

122 | elif goal < i <= origin:

123 | result.append(i-1)

124 | elif origin <= i < goal:

125 | result.append(i+1)

126 | else:

127 | assert False

128 | return result

129 |

130 |

131 | def collapse_dim(tensor, to_collapse, collapse_into=None, spec=None, return_spec=False):

132 | """

133 | Reshapes the input tensor, collapsing one dimension into another. This is achieved by

134 |

135 | - first permuting the tensors dimensions such that the dimension to collapse is next to the one to collapse it into,

136 | - reshaping the tensor, making one dimension out of the to affected.

137 |

138 | Parameters

139 | ----------

140 | tensor : torch.Tensor

141 | to_collapse : int or str

142 | Dimension to be collapsed.

143 | collapse_into : int or str, optional

144 | Dimension into which the other will be collapsed.

145 | spec : list, optional

146 | Name of dimensions of input tensor. If not specified, will be taken to be range(len(tensor.shape())).

147 | return_spec : bool, optional

148 | Weather the output should consist of a tuple containing the output tensor and the resulting spec, or only the

149 | former.

150 |

151 | Returns

152 | -------

153 | torch.Tensor or tuple

154 |

155 | Examples

156 | --------

157 |

158 | >>> tensor = torch.Tensor([[1, 2, 3], [10, 20, 30]]).long()

159 | >>> collapse_dim(tensor, to_collapse=1, collapse_into=0)

160 | tensor([ 1, 2, 3, 10, 20, 30])

161 | >>> collapse_dim(tensor, to_collapse=0, collapse_into=1)

162 | tensor([ 1, 10, 2, 20, 3, 30])

163 |

164 | """

165 | spec = list(range(len(tensor.shape))) if spec is None else spec

166 | assert to_collapse in spec, f'{to_collapse}, {spec}'

167 | i_from = spec.index(to_collapse)

168 | if collapse_into is None:

169 | i_delete = i_from

170 | assert tensor.shape[i_delete] == 1, f'{to_collapse}, {tensor.shape[i_delete]}'

171 | tensor = tensor.squeeze(i_delete)

172 | else:

173 | assert collapse_into in spec, f'{collapse_into}, {spec}'

174 | i_to = spec.index(collapse_into)

175 | if i_to != i_from:

176 | i_to = i_to + 1 if i_from > i_to else i_to

177 | tensor = tensor.permute(moving_permutation(len(spec), i_from, i_to))

178 | new_shape = tensor.shape[:i_to-1] + (tensor.shape[i_to-1] * tensor.shape[i_to],) + tensor.shape[i_to+1:]

179 | tensor = tensor.contiguous().view(new_shape)

180 | else:

181 | i_from = -1 # suppress deletion of spec later

182 | if return_spec:

183 | new_spec = [spec[i] for i in range(len(spec)) if i is not i_from]

184 | return tensor, new_spec

185 | else:

186 | return tensor

187 |

188 |

189 | def convert_dim(tensor, in_spec, out_spec=None, collapsing_rules=None, uncollapsing_rules=None,

190 | return_spec=False, return_inverse_kwargs=False):

191 | """

192 | Convert the dimensionality of tensor from in_spec to out_spec.

193 |

194 | Parameters

195 | ----------

196 | tensor : torch.Tensor

197 | in_spec : list

198 | Name of dimensions of the input tensor.

199 | out_spec : list, optional

200 | Name of dimensions that the output tensor will have.

201 | collapsing_rules : :obj:`list` of :obj:`tuple`, optional

202 | List of two element tuples. The first dimension in a tuple will be collapsed into the second (dimensions given

203 | by name).

204 | uncollapsing_rules : :obj:`list` of :obj:`tuple`, optional

205 | List of three element tuples. The first element of each specifies the dimension to 'uncollapse' (=split into

206 | two). The second element specifies the size of the added dimension, and the third its name.

207 | return_spec : bool, optional

208 | Weather the output should consist of a tuple containing the output tensor and the resulting spec, or only the

209 | former.

210 | return_inverse_kwargs : bool, optional

211 | If true, a dictionary containing arguments to reverse the conversion (with this function) are added to the

212 | output tuple.

213 |

214 | Returns

215 | -------

216 | torch.Tensor or tuple

217 |

218 | Examples

219 | --------

220 |

221 | >>> tensor = torch.Tensor([[1, 2, 3], [10, 20, 30]]).long()

222 | >>> convert_dim(tensor, ['A', 'B'], ['B', 'A']) # doctest: +NORMALIZE_WHITESPACE

223 | tensor([[ 1, 10],

224 | [ 2, 20],

225 | [ 3, 30]])

226 | >>> convert_dim(tensor, ['A', 'B'], collapsing_rules=[('A', 'B')]) # doctest: +NORMALIZE_WHITESPACE

227 | tensor([ 1, 10, 2, 20, 3, 30])

228 | >>> convert_dim(tensor, ['A', 'B'], collapsing_rules=[('B', 'A')]) # doctest: +NORMALIZE_WHITESPACE

229 | tensor([ 1, 2, 3, 10, 20, 30])

230 | >>> convert_dim(tensor.flatten(), ['A'], ['A', 'B'], uncollapsing_rules=[('A', 3, 'B')]) # doctest: +NORMALIZE_WHITESPACE

231 | tensor([[ 1, 2, 3],

232 | [10, 20, 30]])

233 |

234 | """

235 | assert len(tensor.shape) == len(in_spec), f'{tensor.shape}, {in_spec}'

236 |

237 | to_collapse = [] if collapsing_rules is None else [rule[0] for rule in collapsing_rules]

238 | collapse_into = [] if collapsing_rules is None else [rule[1] for rule in collapsing_rules]

239 | uncollapsed_dims = []

240 |

241 | temp_spec = copy(in_spec)

242 | # uncollapse as specified

243 | if uncollapsing_rules is not None:

244 | for rule in uncollapsing_rules:

245 | if isinstance(rule, tuple):

246 | rule = {

247 | 'to_uncollapse': rule[0],

248 | 'uncollapsed_length': rule[1],

249 | 'uncollapse_into': rule[2]

250 | }

251 | uncollapsed_dims.append(rule['uncollapse_into'])

252 | tensor, temp_spec = uncollapse_dim(tensor, spec=temp_spec, **rule, return_spec=True)

253 |

254 | # construct out_spec if not given

255 | if out_spec is None:

256 | # print([d for d in in_spec if d not in to_collapse], collapse_into, uncollapsed_dims)

257 | out_spec = join_specs([d for d in in_spec if d not in to_collapse], collapse_into, uncollapsed_dims)

258 |

259 | # bring tensor's spec in same order as out_spec, with dims not present in out_spec at the end

260 | joined_spec = join_specs(out_spec, in_spec)

261 | order = list(np.argsort([joined_spec.index(d) for d in temp_spec]))

262 | tensor = tensor.permute(order)

263 | temp_spec = [temp_spec[i] for i in order]

264 |

265 | # unsqueeze to match out_spec

266 | tensor = extend_dim(tensor, temp_spec, joined_spec)

267 | temp_spec = joined_spec

268 |

269 | # apply dimension collapsing rules

270 | inverse_uncollapsing_rules = [] # needed if inverse is requested

271 | if collapsing_rules is not None:

272 | # if default to collapse into is specified, add appropriate rules at the end

273 | if 'rest' in to_collapse:

274 | ind = to_collapse.index('rest')

275 | collapse_rest_into = collapsing_rules.pop(ind)[1]

276 | for d in temp_spec:

277 | if d not in out_spec:

278 | collapsing_rules.append((d, collapse_rest_into))

279 | # do collapsing

280 | for rule in collapsing_rules:

281 | if rule[0] in temp_spec:

282 | inverse_uncollapsing_rules.append({

283 | 'to_uncollapse': rule[1],

284 | 'uncollapsed_length': tensor.shape[temp_spec.index(rule[0])],

285 | 'uncollapse_into': rule[0]

286 | })

287 | # print(f'{tensor.shape}, {temp_spec}, {out_spec}')

288 | tensor, temp_spec = collapse_dim(tensor, spec=temp_spec, to_collapse=rule[0], collapse_into=rule[1],

289 | return_spec=True)

290 |

291 | # drop trivial dims not in out_spec

292 | for d in reversed(temp_spec):

293 | if d not in out_spec:

294 | tensor, temp_spec = collapse_dim(tensor, to_collapse=d, spec=temp_spec, return_spec=True)

295 |

296 | assert all(d in out_spec for d in temp_spec), \

297 | f'{temp_spec}, {out_spec}: please provide appropriate collapsing rules'

298 | tensor = extend_dim(tensor, temp_spec, out_spec)

299 |

300 | result = [tensor]

301 | if return_spec:

302 | result.append(temp_spec)

303 | if return_inverse_kwargs:

304 | inverse_kwargs = {

305 | 'in_spec': out_spec,

306 | 'out_spec': in_spec,

307 | 'uncollapsing_rules': inverse_uncollapsing_rules[::-1]

308 | }

309 | result.append(inverse_kwargs)

310 | if len(result) == 1:

311 | return result[0]

312 | else:

313 | return result

314 |

315 |

316 | def uncollapse_dim(tensor, to_uncollapse, uncollapsed_length, uncollapse_into=None, spec=None, return_spec=False):

317 | """

318 | Splits a dimension in the input tensor into two, adding a dimension of specified length.

319 |

320 | Parameters

321 | ----------

322 | tensor : torch.Tensor

323 | to_uncollapse : str or int

324 | Dimension to be split.

325 | uncollapsed_length : int

326 | Length of the new dimension.

327 | uncollapse_into : str or int, optional

328 | Name of the new dimension.

329 | spec : list, optional

330 | Names or the dimensions of the input tensor

331 | return_spec : bool, optional

332 | Weather the output should consist of a tuple containing the output tensor and the resulting spec, or only the

333 | former.

334 |

335 | Returns

336 | -------

337 | torch.Tensor or tuple

338 |

339 | Examples

340 | --------

341 |

342 | >>> tensor = torch.Tensor([1, 2, 3, 10, 20, 30]).long()

343 | >>> uncollapse_dim(tensor, 0, 3, 1) # doctest: +NORMALIZE_WHITESPACE

344 | tensor([[ 1, 2, 3],

345 | [10, 20, 30]])

346 | """

347 | # puts the new dimension directly behind the old one

348 | spec = list(range(len(tensor.shape))) if spec is None else spec

349 | assert to_uncollapse in spec, f'{to_uncollapse}, {spec}'

350 | assert uncollapse_into not in spec, f'{uncollapse_into}, {spec}'

351 | assert isinstance(tensor, torch.Tensor), f'unexpected type: {type(tensor)}'

352 | i_from = spec.index(to_uncollapse)

353 | assert tensor.shape[i_from] % uncollapsed_length == 0, f'{tensor.shape[i_from]}, {uncollapsed_length}'

354 | new_shape = tensor.shape[:i_from] + \

355 | (tensor.shape[i_from]//uncollapsed_length, uncollapsed_length) + \

356 | tensor.shape[i_from + 1:]

357 | tensor = tensor.contiguous().view(new_shape)

358 | if return_spec:

359 | assert uncollapse_into is not None

360 | new_spec = copy(spec)

361 | new_spec.insert(i_from + 1, uncollapse_into)

362 | return tensor, new_spec

363 | else:

364 | return tensor

365 |

366 |

367 | def add_dim(tensor, length=1, new_dim=None, spec=None, return_spec=False):

368 | """

369 | Adds a single dimension of specified length (achieved by repeating the tensor) to the input tensor.

370 |

371 | Parameters

372 | ----------

373 | tensor : torch.Tensor

374 | length : int

375 | Length of the new dimension.

376 | new_dim : str, optional

377 | Name of the new dimension

378 | spec : list, optional

379 | Names of dimensions of the input tensor

380 | return_spec : bool, optional

381 | If true, a dictionary containing arguments to reverse the conversion (with this function) are added to the

382 | output tuple.

383 |

384 | Returns

385 | -------

386 | torch.Tensor or tuple

387 |

388 | """

389 | tensor = tensor[None].repeat([length] + [1] * len(tensor.shape))

390 | if return_spec:

391 | return tensor, [new_dim] + spec

392 | else:

393 | return tensor

394 |

395 |

396 | def equalize_specs(tensor_spec_pairs):

397 | """

398 | Manipulates a list of tensors such that their dimension names (including order of dimensions) match up.

399 |

400 | Parameters

401 | ----------

402 | tensor_spec_pairs : :obj:`list` of :obj:`tuple`

403 | List of two element tuples, each consisting of a tensor and a spec (=list of names of dimensions).

404 |

405 | Returns

406 | -------

407 | torch.Tensor

408 |