├── .gitignore

├── .idea

├── .gitignore

├── deployment.xml

├── fuzi.mingcha.iml

├── inspectionProfiles

│ └── profiles_settings.xml

├── misc.xml

├── modules.xml

└── vcs.xml

├── CITATION.cff

├── LICENSE

├── README.md

├── images

├── Background.png

├── Response_with_Case_Retrieval_Case1.png

├── Response_with_Legal_Search_Case1.png

├── Response_with_Legal_Search_Case2.png

├── Syllogistic_Inference_Judgment_Case1.png

├── Syllogistic_Inference_Judgment_Case2.png

├── Syllogistic_Inference_Judgment_Case3.png

├── Syllogistic_Inference_Judgment_Case4.png

├── Syllogistic_Inference_Judgment_Case5.png

├── cli_demo_case1.png

└── cli_demo_case2.png

└── src

├── README.md

├── README_old.md

├── Singularity安装与使用.md

├── cli_demo.py

├── es_task1

└── api.py

├── es_task2

└── api.py

├── pylucene_task1

├── api.py

└── csv_files

│ └── data_task1.csv

├── pylucene_task2

├── api.py

└── csv_files

│ └── data_task2.csv

└── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | .vscode

2 | __pycache__

3 | .idea

--------------------------------------------------------------------------------

/.idea/.gitignore:

--------------------------------------------------------------------------------

1 | # 默认忽略的文件

2 | /shelf/

3 | /workspace.xml

4 | # 基于编辑器的 HTTP 客户端请求

5 | /httpRequests/

6 | # Datasource local storage ignored files

7 | /dataSources/

8 | /dataSources.local.xml

9 |

--------------------------------------------------------------------------------

/.idea/deployment.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/.idea/fuzi.mingcha.iml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

8 |

--------------------------------------------------------------------------------

/.idea/inspectionProfiles/profiles_settings.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

6 |

--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

6 |

--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

7 |

8 |

--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

6 |

--------------------------------------------------------------------------------

/CITATION.cff:

--------------------------------------------------------------------------------

1 | cff-version: 1.2.0

2 | message: "If you use this software, please cite it as below."

3 | authors:

4 | - family-names: Wu

5 | given-names: Shiguang

6 | - family-names: Liu

7 | given-names: Zhongkun

8 | - family-names: Zhang

9 | given-names: Zhen

10 | - family-names: Chen

11 | given-names: Zheng

12 | - family-names: Deng

13 | given-names: Wentao

14 | - family-names: Zhang

15 | given-names: Wenhao

16 | - family-names: Yang

17 | given-names: Jiyuan

18 | - family-names: Yao

19 | given-names: Zhitao

20 | - family-names: Lyu

21 | given-names: Yougang

22 | - family-names: Xin

23 | given-names: Xin

24 | - family-names: Gao

25 | given-names: Shen

26 | - family-names: Ren

27 | given-names: Pengjie

28 | - family-names: Ren

29 | given-names: Zhaochun

30 | - family-names: Chen

31 | given-names: Zhumin

32 | title: "fuzi.mingcha"

33 | version: 2023

34 | identifiers:

35 | - type: url

36 | value: https://github.com/irlab-sdu/fuzi.mingcha

37 | date-released: 2023-09

38 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # 夫子•明察司法大模型

2 |

3 |

4 | [](https://huggingface.co/SDUIRLab/fuzi-mingcha-v1_0)

5 | [](https://huggingface.co/datasets/SDUIRLab/fuzi-mingcha-v1_0-data)

6 | [](https://huggingface.co/datasets/SDUIRLab/fuzi-mingcha-v1_0-pretrain-data)

7 | [](https://www.modelscope.cn/models/furyton/fuzi-mingcha-v1_0)

8 | [](https://www.modelscope.cn/datasets/furyton/fuzi-mingcha-v1_0-data)

9 | [](https://github.com/irlab-sdu/fuzi.mingcha/blob/main/LICENSE)

10 |

12 |

13 | ## News

14 |

15 | - [2024.10.25] 🔥 更新预训练数据集,详见 [HuggingFace](https://huggingface.co/datasets/SDUIRLab/fuzi-mingcha-v1_0-pretrain-data)。

16 |

17 | - [2024.9.19] 我们已将微调数据上传至 [HuggingFace/SDUIRLab](https://huggingface.co/datasets/SDUIRLab/fuzi-mingcha-v1_0-data) 和 [魔搭社区](https://www.modelscope.cn/datasets/furyton/fuzi-mingcha-v1_0-data),我们提供了 `dataset_info.json`,数据使用方法可以参考 [LLaMA-Factory](https://github.com/hiyouga/LLaMA-Factory/blob/main/README_zh.md#%E6%95%B0%E6%8D%AE%E5%87%86%E5%A4%87)。

18 |

19 | ## 模型简介

20 | 夫子•明察司法大模型是由山东大学、浪潮云、中国政法大学联合研发,以 [ChatGLM](https://github.com/THUDM/ChatGLM-6B) 为大模型底座,基于海量中文无监督司法语料(包括各类判决文书、法律法规等)与有监督司法微调数据(包括法律问答、类案检索)训练的中文司法大模型。该模型支持法条检索、案例分析、三段论推理判决以及司法对话等功能,旨在为用户提供全方位、高精准的法律咨询与解答服务。

21 |

22 | 夫子•明察司法大模型具备如下三大特色:

23 | - **基于法条检索回复** 夫子•明察大模型能够结合相关法条进行回复生成。对于用户的咨询,夫子•明察大模型基于生成式检索范式先初步引用相关法条,再检索外部知识库对所引法条进行校验与确认,最终结合这些法条进行问题分析与回复生成。这保证生成的回复能够基于与问题相关的法律依据,并根据这些依据提供深入的分析和建议,使回复具有高权威性、高可靠性与高可信性。

24 |

25 | - **基于案例检索回复** 夫子•明察大模型能够基于历史相似案例对输入案情进行分析。大模型能够生成与用户提供的案情相似的案情描述及判决结果,通过检索外部数据库得到真实的历史案例,并将这些相似的历史案例的信息用于辅助生成判决。生成的判决参考相关案例的法律依据,从而更加合理。用户可以对照相似案例,从而更好地理解潜在的法律风险。

26 |

27 | - **三段论推理判决** 司法三段论,是把三段论的逻辑推理应用于司法实践的一种思维方式和方法。类比于三段论的结构特征,司法三段论就是法官在司法过程中将法律规范作为大前提,以案件事实为小前提,最终得出判决结果的一种推导方法。针对具体案件,夫子•明察大模型系统能够自动分析案情,识别关键的事实和法律法规,生成一个逻辑严谨的三段论式判决预测。这个功能不仅提供了对案件可能结果的有力洞察,还有助于帮助用户更好地理解案件的法律依据和潜在风险。

28 |

29 | 我们已将夫子•明察的模型权重上传至 [HuggingFace/SDUIRLab](https://huggingface.co/SDUIRLab/fuzi-mingcha-v1_0) 和 [魔搭社区](https://www.modelscope.cn/models/furyton/fuzi-mingcha-v1_0),模型的使用方法见 [#模型部署](#模型部署)。

30 |

31 | ## 公开评测效果

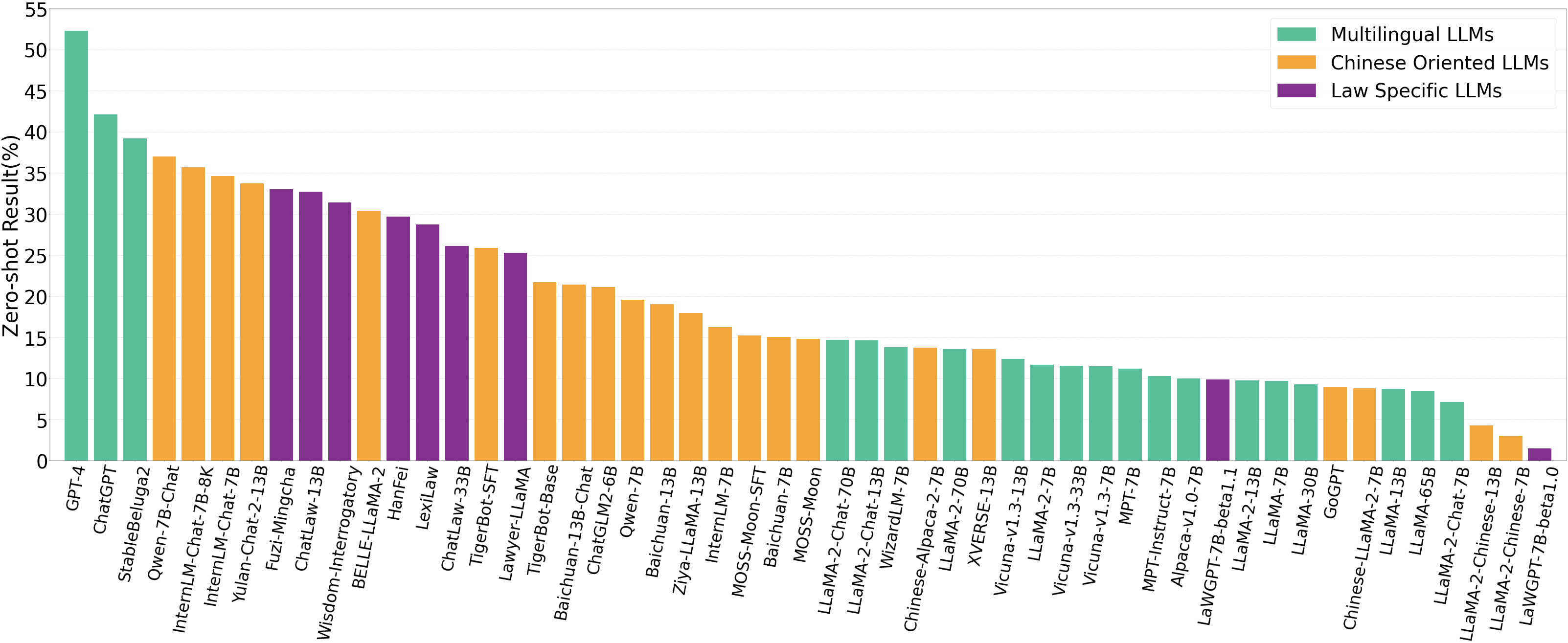

32 | 在 2023 年 9 月份由上海AI实验室联合南京大学推出的大语言模型司法能力评估体系[LawBench](https://github.com/open-compass/LawBench)中 (见下图),我们在法律专精模型 (Law Specific LLMs) 中 Zero-Shot 表现出色,取得了**第一名**,与未经法律专业知识训练的 ChatGLM 相比有了较大提升。

33 |

34 |

35 |

36 | ## 训练数据

37 | 夫子•明察司法大模型的训练数据可分为两大类别:中文无监督司法语料以及有监督司法微调数据。其中不仅涵盖法律法规、司法解释、判决文书等内容,同时还包括各类高质量司法任务数据集,例如法律问答、类案检索和三段论式法律判决。内容丰富、优质海量的训练数据,确保了对司法领域知识进行准确且全面的覆盖,为夫子•明察司法大模型提供坚实的知识基础。

38 |

39 |

40 | 以下为夫子·明察司法大模型增量预训练、指令微调过程中所使用数据的统计信息:

41 |

42 |

43 |

44 |

49 |

50 |

51 |

56 |

57 |

58 |

59 |

60 |

61 | 中文无监督司法语料

62 | 法律法规

63 | LawRefBook

64 | 3.22M

65 |

66 |

67 | 裁判文书

68 | 裁判文书网

69 | 23M

70 |

71 |

72 |

73 | 中文有监督司法微调数据

74 | 法条检索回复数据

75 | 自主构建

76 | 32k

77 |

78 |

79 | Lawyer LLaMA

80 | 11k

81 |

82 |

83 | lawGPT

84 | 35k

85 |

86 |

87 | 案例检索回复数据

88 | 自主构建

89 | 15k

90 |

91 |

92 | CAIL

93 | 10k

94 |

95 |

96 | 三段论推理判决数据

97 | 自主构建

98 | 11k

99 |

100 |

101 | 多轮法律对话数据

102 | Hanfei

103 | 40k

104 |

105 |

106 | 通用领域微调数据

107 | Alpaca指令微调数据

108 | Alpaca

109 | 100K

110 |

111 |

112 | Belle指令微调数据

113 | Belle

114 | 10k

115 |

116 |

117 | OL-CC指令微调数据

118 | 智源

119 | 10K

120 |

121 |

122 |

123 |

124 |

125 | 以下部分自主构建的数据样例:

126 |

127 | - 法条检索回复数据

128 | ```

129 | # 法条询问

130 | {

131 | "instruction": "",

132 | "input": "中华人民共和国义务教育法的第三十条是什么?",

133 | "output": "中华人民共和国义务教育法的第三十条规定如下\n教师应当取得国家规定的教师资格。\n国家建立统一的义务教育教师职务制度。教师职务分为初级职务、中级职务和高级职务。\n"

134 | },

135 | # 法条补全

136 | {

137 | "instruction": "请补充以下法条缺失部分",

138 | "input": "高等教育自学考试暂行条例第十七条规定:\n高等教育自学考试的命题由全国考委统筹安排,分别采取全国统一命题、区域命题、省级命题三种办法",

139 | "output": "逐步建立题库,实现必要的命题标准化。\n试题(包括副题)及参考答案、评分标准启用前属绝密材料。"

140 | },

141 | # 根据案情生成相关法条

142 | {

143 | "instruction": "请根据基本案情,给出适用的法条。",

144 | "input": "基本案情:\n广东省鹤山市人民检察院指控被告人李某甲于2014年7月7日9时许,在鹤山市宅梧镇双和公路宅梧收费站附近路段被民警抓获。民警当场从李某甲身上搜获搜获白色晶体38小包。经鉴定,从李某甲处缴获的白色晶体净重12.9克,检出甲基苯丙胺成分。被告人李某甲到案后如实供述自己的罪行,可以从轻处罚。建议对被告人判处有期徒刑六个月至一年六个月,并处罚金。提请法院依法惩处。上述事实,被告人李某甲在开庭审理过程中亦无异议,并有抓获被告人及破案的经过材料、被告人的供述、证人李X锋的证言、扣押物品清单、鉴定意见、辨认笔录、现场勘某足以认定。",

145 | "output": "适用法条:\n 《中华人民共和国刑法》第三百四十八条 【非法持有毒品罪】,李某甲非法持有甲基苯丙胺十克以上不满五十克,罪名成立,应判处三年以下有期徒刑,并处罚金。"

146 | },

147 | # 根据案情结合相关法条进行分析

148 | {

149 | "instruction": "请根据以下案情进行判决并结合相关法律进行分析",

150 | "input": "基本案情:\n通过A公司经营的旅游App预定境外客房,支付方式为“到店支付”,订单下单后即被从银行卡中扣除房款,后原告未入住。原告认为应当到店后付款,A公司先行违约,要求取消订单。A公司认为其已经在服务条款中就“到店支付”补充说明“部分酒店住宿可能会对您的银行卡预先收取全额预订费用”,不构成违约,拒绝退款。\n邬某将A公司起诉至法院,请求判令退还预扣的房款。\n",

151 | "output": "裁判结果:\n经审理认为,对“到店支付”的通常理解应为用户到酒店办理住宿时才会支付款项,未入住之前不需要支付。即使该条款后补充说明部分酒店会“预先收取全额预订费用”,但对这种例外情形应当进行特别提示和说明,如果只在内容复杂繁多的条款中规定,不足以起到提示的作用,A公司作为预定服务的提供者应当承担责任。\n最终,法院支持邬某退还房款的诉讼请求。\n典型意义:\n字经济、互联网产业飞速发展的大背景下,线上交易中企业基本都采用格式条款的方式与消费者建立契约关系。但是,在格式条款发挥其便捷、高效、积极作用的同时,因其本身具有的单方提供、内容固定的特质所带来的问题和风险,也不容忽视。\n法律明确赋予了格式条款提供者进行提示说明的义务,民法典第四百九十六条规定:“提供格式条款的一方未履行提示或者说明义务,致使对方没有注意或者理解与其有重大利害关系的条款的,对方可以主张该条款不成为合同的内容。\n”提供格式条款的企业应当基于公平、诚信原则,依法、合理制定格式条款的内容,并对于履行方式等与消费者有重大利害关系的条款,向消费者进行特别的提醒和说明,从而维护交易秩序,平衡双方利益,促进行业发展。本案的裁判进一步厘清了网络服务提供者作为提供格式条款一方的责任,引导互联网交易模式更加符合契约自由和契约正义的精神。\n"

152 | }

153 |

154 | ```

155 |

156 | - 案例检索回复数据

157 |

158 | ```

159 | {

160 | "instruction": "请根据以下输入生成相关的案例和判决结果",

161 | "input": "被指控的人在某地某时盗走了三辆电动车。他有精神障碍,但被认定具备完全刑事责任能力。",

162 | "output": "基本案情:\n 河南省驻马店市驿城区人民检察院指控: 1.2016年4月12日13时许,被告人曹某某到驻马店市驿城区金雀路西段开发区高中院内4号楼前,将被害人刘某停放的一辆白色“高仕”牌电动车盗走。经鉴定,被盗电动车价值1064元。 2.2016年4月12日23时许,被告人曹某某到驻马店市驿城区金雀路西段开发区高中院内4号楼前,将被害人付某某停放的一辆白色“爱玛”牌电动车盗走。经鉴定,被盗电动车价值1320元。 3.2016年4月13日12时许,被告人曹某某到驻马店市驿城区金雀路西段开发区高中院内4号楼前,将被害人拜某某停放的一辆红色“高仕”牌电动车盗走。经鉴定,被盗电动车价值1200元。 另查明,案发后,公安机关接被害人报警后,通过查看案发现场监控和走访群众,确定上述三起电动车被盗均系被告人曹某某实施。 还查明,经驻马店安康法医精神病司法鉴定所鉴定,被告人曹某某系酒精所致精神障碍(依赖综合征),具备完全刑事责任能力,有受审能力。 上述事实,被告人曹某某在开庭审理过程中亦无异议,并有被害人陈述、证人证言、鉴定意见、物证照片、视听资料、到案经过、户籍证明等证据证实,足以认定。 \n本院认为,被告人曹某某以非法占有为目的,多次采取秘密手段窃取他人财物,价值3584元,数额较大,其行为已构成盗窃罪。公诉机关对曹某某犯罪指控成立,予以支持。曹某某有劣迹,其当庭自愿认罪,量刑时均可酌予考虑。根据曹某某犯罪的事实、性质、情节以及对社会的危害程度,依照《中华人民共和国刑法》第二百六十四条和第十八条第四款之规定,判决如下:\n 被告人曹某某犯盗窃罪,判处拘役五个月,并处罚金人民币二千元(罚金限判决生效后十日内缴纳)。 (刑期从判决执行之日起计算。判决执行以前先行羁押的,羁押一日折抵刑期一日。即自2016年4月13日起至2016年9月12日止)。 如不服本判决,可在接到判决书的第二日起十日内,通过本院或者直接向驻马店市中级人民法院提出上诉。书面上诉的,应当提交上诉状正本一份,副本二份。"

163 | }

164 | ```

165 |

166 | - 三段论推理判决数据

167 |

168 | ```

169 | {

170 | "instruction": "请根据基本案情,利用三段论的推理方式得到判决结果,判决结果包括:1.罪名;\n2.刑期。",

171 | "input": "基本案情:\n广东省鹤山市人民检察院指控被告人李某甲于2014年7月7日9时许,在鹤山市宅梧镇双和公路宅梧收费站附近路段被民警抓获。民警当场从李某甲身上搜获搜获白色晶体38小包。经鉴定,从李某甲处缴获的白色晶体净重12.9克,检出甲基苯丙胺成分。被告人李某甲到案后如实供述自己的罪行,可以从轻处罚。建议对被告人判处有期徒刑六个月至一年六个月,并处罚金。提请法院依法惩处。上述事实,被告人李某甲在开庭审理过程中亦无异议,并有抓获被告人及破案的经过材料、被告人的供述、证人李X锋的证言、扣押物品清单、鉴定意见、辨认笔录、现场勘某足以认定。",

172 | "output": "犯罪要件:\n犯罪主体:\n 犯罪主体为被告人李某甲,构成要件为完全行为能力人。\n 犯罪客体:\n 犯罪客体为社会公共安全,包括禁毒法规所保护的社会关系。被告人李某甲非法持有、贩卖毒品,严重危害社会公共安全,破坏社会治安秩序。\n 犯罪主观要件:\n 犯罪主观方面为故意,李某甲明知所持有的白色晶体为甲基苯丙胺,仍然故意贩卖毒品。犯罪意图为非法牟利。\n 犯罪客观要件:\n 犯罪时间:2014年7月7日9时许。犯罪地点:广东省鹤山市宅梧镇双和公路宅梧收费站附近路段。犯罪行为:携带白色晶体38小包,净重12.9克,检出甲基苯丙胺成分。犯罪结果:被民警抓获。\n 适用法条:\n 《中华人民共和国刑法》第三百四十八条 【非法持有毒品罪】,李某甲非法持有甲基苯丙胺十克以上不满五十克,罪名成立,应判处三年以下有期徒刑,并处罚金。 \n 判决结果:\n 罪名:非法持有毒品罪刑期:三年以下有期徒刑,并处罚金。 "

173 | },

174 | ```

175 |

176 | ~~注:其中我们自主构建的数据集,如三段论推理判决数据集等,将会在近期以论文的形式公开,感谢您的关注和理解。~~

177 |

178 | 注 1:利用三段论推理来选择和评估当事人的论点是一种常见的做法。三段论中包含大前提、小前提和结论三个部分,应用到法律领域中时,大前提通常是由相关法条构成的法律依据,小前提通常时由犯罪要件构成的案情分析结果,结论通常是由最终适用的法条和判决结果构成。在实践中,三段论是法官广泛使用的法律推理的标准形式,以确保逻辑论点是合理和无可争辩的。

179 |

180 | 注 2:我们自主构建的数据集论文(三段推理判决数据等)已经发表在 EMNLP 2023 [1],详细的数据构建方法及数据集内容请参考[论文代码](https://github.com/dengwentao99/SLJA)。

181 |

182 | [1]. Wentao Deng, Jiahuan Pei, Keyi Kong, Zhe Chen, Furu Wei, Yujun Li, Zhaochun Ren, Zhumin Chen, and Pengjie Ren. 2023. [Syllogistic Reasoning for Legal Judgment Analysis](https://aclanthology.org/2023.emnlp-main.864). In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pages 13997–14009, Singapore. Association for Computational Linguistics.

183 |

184 | ## 效果展示

185 | 以下为夫子·明察三大特色的效果展示:

187 | 基于法条检索回复

193 |

194 |

195 |

196 | 基于案例检索回复

201 |

202 |

203 |

204 | 三段论推理判决

213 |

214 |

215 |

216 |

219 | 法律咨询

229 |

230 |

231 |

232 | 案例分析

253 |

254 | ## 模型部署

255 |

256 | 模型的部署和使用请参考 [src/cli_demo.py](src)

257 |

258 |

259 | ## 致谢

260 |

261 | 本项目基于或参考如下开源项目,在此对相关项目和开发人员表示感谢:

262 |

263 | - [Alpaca](https://github.com/tatsu-lab/stanford_alpaca)

264 | - [BELLE](https://github.com/LianjiaTech/BELLE)

265 | - [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B)

266 | - [ChatGLM-Efficient-Tuning](https://github.com/hiyouga/ChatGLM-Efficient-Tuning)

267 | - [Lawyer LLaMA](https://github.com/AndrewZhe/lawyer-llama)

268 | - [LaWGPT](https://github.com/pengxiao-song/LaWGPT)

269 | - [JEC-QA](https://jecqa.thunlp.org/)

270 | - [PKU Opendata](https://opendata.pku.edu.cn/dataset.xhtml?persistentId=doi:10.18170/DVN/OLO4G8)

271 | - [LawRefBook](https://github.com/RanKKI/LawRefBook)

272 | - [CAIL 2018-2021](https://github.com/china-ai-law-challenge)

273 | - [HanFei](https://github.com/siat-nlp/HanFei)

274 | - [BAAI](https://data.baai.ac.cn/details/OL-CC)

275 |

276 |

277 | ## 声明

278 |

279 | 本项目的内容仅供学术研究之用,不得用于商业或其他可能对社会造成危害的用途。

280 | 在涉及第三方代码的使用时,请切实遵守相关的开源协议。

281 | 本项目中大模型提供的法律问答、判决预测等功能仅供参考,不构成法律意见。

282 | 如果您需要法律援助等服务,请寻求专业的法律从业者的帮助。

283 |

284 | ## 协议

285 |

286 | 本仓库的代码依照 [Apache-2.0](LICENSE) 协议开源,我们对 ChatGLM-6B 模型的权重的使用遵循 [Model License](https://github.com/THUDM/ChatGLM-6B/blob/main/MODEL_LICENSE)。

287 |

288 | ## 引用

289 |

290 | 如果本项目有帮助到您的研究,请引用我们:

291 |

292 | ```

293 | @software{sdu_fuzi_mingcha,

294 | title = {{fuzi.mingcha}},

295 | author = {Wu, Shiguang and Liu, Zhongkun and Zhang, Zhen and Chen, Zheng and Deng, Wentao and Zhang, Wenhao and Yang, Jiyuan and Yao, Zhitao and Lyu, Yougang and Xin, Xin and Gao, Shen and Ren, Pengjie and Ren, Zhaochun and Chen, Zhumin},

296 | year = 2023,

297 | journal = {GitHub repository},

298 | publisher = {GitHub},

299 | howpublished = {\url{https://github.com/irlab-sdu/fuzi.mingcha}}

300 | }

301 | ```

302 |

303 | ```

304 | @inproceedings{deng-etal-2023-syllogistic,

305 | title = {Syllogistic Reasoning for Legal Judgment Analysis},

306 | author = {Deng, Wentao and Pei, Jiahuan and Kong, Keyi and Chen, Zhe and Wei, Furu and Li, Yujun and Ren, Zhaochun and Chen, Zhumin and Ren, Pengjie},

307 | year = 2023,

308 | month = dec,

309 | booktitle = {Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing},

310 | publisher = {Association for Computational Linguistics},

311 | address = {Singapore},

312 | pages = {13997--14009},

313 | doi = {10.18653/v1/2023.emnlp-main.864},

314 | url = {https://aclanthology.org/2023.emnlp-main.864},

315 | editor = {Bouamor, Houda and Pino, Juan and Bali, Kalika}

316 | }

317 | ```

318 |

319 |

--------------------------------------------------------------------------------

/images/Background.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Background.png

--------------------------------------------------------------------------------

/images/Response_with_Case_Retrieval_Case1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Response_with_Case_Retrieval_Case1.png

--------------------------------------------------------------------------------

/images/Response_with_Legal_Search_Case1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Response_with_Legal_Search_Case1.png

--------------------------------------------------------------------------------

/images/Response_with_Legal_Search_Case2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Response_with_Legal_Search_Case2.png

--------------------------------------------------------------------------------

/images/Syllogistic_Inference_Judgment_Case1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Syllogistic_Inference_Judgment_Case1.png

--------------------------------------------------------------------------------

/images/Syllogistic_Inference_Judgment_Case2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Syllogistic_Inference_Judgment_Case2.png

--------------------------------------------------------------------------------

/images/Syllogistic_Inference_Judgment_Case3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Syllogistic_Inference_Judgment_Case3.png

--------------------------------------------------------------------------------

/images/Syllogistic_Inference_Judgment_Case4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Syllogistic_Inference_Judgment_Case4.png

--------------------------------------------------------------------------------

/images/Syllogistic_Inference_Judgment_Case5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/Syllogistic_Inference_Judgment_Case5.png

--------------------------------------------------------------------------------

/images/cli_demo_case1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/cli_demo_case1.png

--------------------------------------------------------------------------------

/images/cli_demo_case2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/irlab-sdu/fuzi.mingcha/3cecd99f6b50da6624ee9781b26bd15b7b69eb65/images/cli_demo_case2.png

--------------------------------------------------------------------------------

/src/README.md:

--------------------------------------------------------------------------------

1 | ## 依赖库安装

2 |

3 | 1. 本项目的检索部分提供了两种检索方式(您可以选择其中实现)

4 |

5 | a. 使用 [PyLucene](https://lucene.apache.org/pylucene/index.html) 实现,其安装步骤请参考[官方说明](https://lucene.apache.org/pylucene/install.html)。

6 | Pylucene 安装过程较为繁琐,我们提供了 Pylucene 的 [Singularity](https://getsingularity.com/) 环境镜像,您可以参考 [Singularity 安装文档](./Singularity安装与使用.md) 安装 Singularity 并[下载镜像](https://pan.baidu.com/s/1PqUnX7YRNMt9co3RKUwExw)(提取码:jhhl)。然后运行以下代码即可完成部署

7 | ```shell

8 | cd "/src/pylucene_task1" && singularity exec -B "/src/pylucene_task1":/mnt "/xxx/pylucene_singularity.sif" python /mnt/api.py --port "port"

9 | ```

10 | 请将`"/src/pylucene_task1"`换成自己实际的目录,`"/xxx/pylucene_singularity.sif"`是下载得到镜像。

11 |

12 | b. 我们还提供了 [Elasticsearch](https://www.elastic.co/cn/elasticsearch) 的检索部署代码,需要提前安装好 ES

13 |

14 | 2. 夫子•明察的部署与 [ChatGLM](https://github.com/THUDM/ChatGLM-6B/tree/main#%E4%BD%BF%E7%94%A8%E6%96%B9%E5%BC%8F) 相同,在命令行下执行 ` pip install -r requirements.txt` 即可

15 |

16 | ## 检索模块部署

17 |

18 | 我们提供了两种检索系统部署方式 **pylucene** 和 **Elasticsearch**

19 | 1. **pylucene** 的部署方式。

20 |

21 | 如果您安装好 pylucene 的环境,您可以直接运行以下代码进行部署。或者您可以用上面“依赖库安装”中的部署方式。

22 |

23 | ```python

24 | python ./pylucene_task1/api.py --port "端口"

25 | python ./pylucene_task2/api.py --port "端口"

26 | ```

27 |

28 | 2. **ES** 部署方式

29 |

30 | ```python

31 | python ./es_task1/api.py --port "端口"

32 | python ./es_task2/api.py --port "端口"

33 | ```

34 |

35 | 注:

36 |

37 | 如果想更换检索数据集,可以参考我们的检索数据库格式自行进行修改: `./pylucene_task/csv_files/data_task.csv`。

38 | 您也可以简单的修改 `./pylucene_task/csv_files/api.py` 中的代码支持更多的数据类型。

39 | - [task1 的数据库](./pylucene_task1/csv_files/) (法律法规数据集) 来自于 [LawRefBook](https://github.com/RanKKI/LawRefBook)

40 |

41 | - [task2 的数据库](./pylucene_task2/csv_files/) (案例判决数据集) 主要来自于 CAIL,[LeCaRD](https://github.com/myx666/LeCaRD) 以及我们的预训练数据。由于数据库规模较大,我们选取了 1k 条案例作为示例。

42 |

43 | ## 命令行 Demo

44 |

45 |

46 |

47 |

48 | 运行仓库中 [cli_demo.py](cli_demo.py):

49 |

50 | ```python

51 | python cli_demo.py --url_lucene_task1 "法条检索对应部署的 pylucene 地址" --url_lucene_task2 "类案检索对应部署的 pylucene 地址"

52 | ```

53 |

--------------------------------------------------------------------------------

/src/README_old.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## 依赖库安装

4 |

5 | 1. 本项目的数据库检索部分使用 [PyLucene](https://lucene.apache.org/pylucene/index.html) 实现,其安装步骤请参考[官方说明](https://lucene.apache.org/pylucene/install.html)。

6 | Pylucene 安装过程较为繁琐,我们提供了 Pylucene 的 [Singularity](https://getsingularity.com/) 环境镜像,您可以参考 [Singularity 安装文档](./Singularity安装与使用.md) 安装 Singularity 并[下载镜像](https://pan.baidu.com/s/1PqUnX7YRNMt9co3RKUwExw)(提取码:jhhl)。然后运行以下代码即可完成部署

7 | ```shell

8 | cd "/src/pylucene_task1" && singularity exec -B "/src/pylucene_task1":/mnt "/xxx/pylucene_singularity.sif" python /mnt/api.py --port "port"

9 | ```

10 | 请将`"/src/pylucene_task1"`换成自己实际的目录,`"/xxx/pylucene_singularity.sif"`是下载得到镜像。

11 |

12 | 2. 夫子•明察的部署与 [ChatGLM](https://github.com/THUDM/ChatGLM-6B/tree/main#%E4%BD%BF%E7%94%A8%E6%96%B9%E5%BC%8F) 相同,在命令行下执行 ` pip install -r requirements.txt` 即可

13 |

14 | ## 检索模块部署

15 |

16 | 本项目检索模块使用 **pylucene** 进行构建,您也可以尝试使用其他检索方式,以下是 pylucene 的部署方式。

17 |

18 | 您可以直接运行以下代码进行部署

19 |

20 | ```python

21 | python ./pylucene_task/api.py --port "端口"

22 | ```

23 |

24 | 如果想更换检索数据集,可以参考我们的检索数据库格式自行进行修改: `./pylucene_task/csv_files/data_task.csv`

25 | 。您也可以简单的修改 `./pylucene_task/csv_files/api.py` 中的代码支持更多的数据类型。

26 |

27 | 注:

28 |

29 | - [task1 的数据库](./pylucene_task1/csv_files/) (法律法规数据集) 来自于 [LawRefBook](https://github.com/RanKKI/LawRefBook)

30 |

31 | - [task2 的数据库](./pylucene_task2/csv_files/) (案例判决数据集) 主要来自于 CAIL,[LeCaRD](https://github.com/myx666/LeCaRD) 以及我们的预训练数据。由于数据库规模较大,我们选取了 1k 条案例作为示例。

32 |

33 | ## 命令行 Demo

34 |

35 |

36 |

37 |

38 | 运行仓库中 [cli_demo.py](cli_demo.py):

39 |

40 | ```python

41 | python cli_demo.py --url_lucene_task1 "法条检索对应部署的 pylucene 地址" --url_lucene_task2 "类案检索对应部署的 pylucene 地址"

42 | ```

43 |

--------------------------------------------------------------------------------

/src/Singularity安装与使用.md:

--------------------------------------------------------------------------------

1 | singularity参考文档

2 | [https://sylabs.io/guides/3.8/admin-guide/admin_quickstart.html](https://sylabs.io/guides/3.8/admin-guide/admin_quickstart.html)

3 | [https://sylabs.io/guides/3.8/user-guide/introduction.html](https://sylabs.io/guides/3.8/user-guide/introduction.html)

4 | # 1、singularity安装

5 | 账号:root

6 | ## 1.1 go安装

7 | 由于singularity是用GO写的,所以需要先安装GO(实验室服务器**使用方式二,安装在/data目录下**)

8 |

9 | 1. 方式一 :使用yum安装go

10 | ```shell

11 | sudo yum install -y golang

12 | ```

13 |

14 | 2. **方式二:压缩包安装**

15 | ```shell

16 | wget https://go.dev/dl/go1.18.2.linux-amd64.tar.gz

17 | tar -C /data -xzf go1.18.2.linux-amd64.tar.gz #-C后的路径是将go安装的位置

18 | # 将go的路径添加到环境变量中(添加到~/.bashrc的最后一行)

19 | export PATH=/data/go/bin:$PATH

20 |

21 | source ~/.bashrc

22 | go version

23 | ```

24 | ## 1.2 singularity 安装

25 | **方式一:**(我们使用**方式一,安装在/data目录下**)

26 |

27 | 1. 下载相应的依赖和安装包

28 | ```shell

29 | # yum clean all && yum makecache

30 | sudo yum groupinstall -y 'Development Tools'

31 | sudo yum install -y epel-release

32 | # 注意如果已经根据上述步骤安装了golang,就不要再安装

33 | sudo yum install -y golang libseccomp-devel squashfs-tools cryptsetup

34 |

35 | # yum install -y rpm-build

36 | export VERSION=3.8.0

37 | wget https://github.com/hpcng/singularity/releases/download/v${VERSION}/singularity-${VERSION}.tar.gz

38 |

39 | ```

40 |

41 | 2. 编译singularity

42 | ```shell

43 | #若需要解压到自定义的位置,则在tar后添加 -C your_path

44 | sudo tar -C /home/aizoo-slurm/ -xzf singularity-${VERSION}.tar.gz

45 | cd /data/singularity-${VERSION}

46 | ```

47 | 默认情况下,Singularity将安装在/usr/local 目录层次结构中。--prefix使用该选项指定自定义目录 .

48 | 如果想安装多个版本的 Singularity、或者如果想在安装后轻松删除 Singularity,则--prefix非常有用。

49 | ```shell

50 | sudo ./mconfig --prefix=/data/singularity-3.8.0 && \

51 | sudo make -C ./builddir && \

52 | sudo make -C ./builddir install

53 | #等待一小会儿后,编译完毕

54 | #将装好的singularity添加到PATH中(~/.bashrc)

55 | export PATH=/data/singularity-3.8.0/bin:$PATH

56 |

57 | source ~/.bashrc

58 | singularity --version

59 | ```

60 | 方式二:

61 | 不建议使用以下方式安装。

62 | ```shell

63 | #这种方式会将Singularity安装在/usr/local目录层次结构中,比较分散,可能在后续的配置中出现问题

64 | #删除的时候并不方便,

65 | #使用rpm安装,安装时由于国内网络原因,无法连接GO服务器进行校对,会报超时错误,但是貌似没有影响。

66 | rpmbuild -tb singularity-${VERSION}.tar.gz

67 | rpm -ivh ~/rpmbuild/RPMS/x86_64/singularity-${VERSION}-1.el7.x86_64.rpm

68 | rm -rf ~/rpmbuild singularity-${VERSION}*.tar.gz

69 | singularity version

70 | ```

71 |

72 | # 2、singularity运行

73 | 运行示例:

74 | ```shell

75 | singularity exec -B /data_local:/mnt /image/base-conda2.sif python /data/test_code.py --batch_size=64

76 |

77 | # -B 后面需要接入要用到的路径,以便于singularity mount,默认会mount当前路径

78 | # 这里的/data_local:/mnt 是将本地的/data_local目录挂载到镜像中的/mnt目录(也就是在镜像中对/mnt目录的操作就是对本地/data_local的操作)

79 | # 若要挂载多个目录,则可以使用,连接 /data_localA:/mntA,/data_localB:/mntB

80 | #

81 | # 若我们不使用 : 进行映射,直接在-B后写目录 比如-B /dataA/dataB/dataC 那么在镜像内就会

82 | # 生成(覆盖)一个dataA目录dataA下有个目录是dataB,dataB下有个dataC

83 | # --dataA

84 | # --dataB

85 | # --dataC

86 | # --xxx.json

87 | # --bbb.txt

88 | # 使用/dataA/dataB/dataC/xxx.json 访问

89 | # 注意,只能在dataC目录下进行 读/写 操作,若要写个文件到/dataA/dataB/aaa.txt 会出错

90 |

91 | # /image/base-conda2.sif ---> singularity的镜像文件位置

92 | # /data/test_code.py ---> 需要执行的文件路径(执行文件中的log等输出 可以写到挂载的/data文件中)

93 | # --batch_size=64 ---> 用户自定义的python运行参数

94 | ```

--------------------------------------------------------------------------------

/src/cli_demo.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import json

3 | import os

4 |

5 | import requests

6 | from transformers import AutoTokenizer, AutoModel

7 |

8 | parser = argparse.ArgumentParser()

9 | parser.add_argument("--url_lucene_task1", required=True, help="法条检索对应部署的 pylucene 的地址")

10 | parser.add_argument("--url_lucene_task2", required=True, help="类案检索对应部署的 pylucene 的地址")

11 | args = parser.parse_args()

12 |

13 | print("正在加载模型")

14 |

15 | # SDUIRLab/fuzi-mingcha-v1_0 为参数存放的具体路径

16 |

17 | tokenizer = AutoTokenizer.from_pretrained("SDUIRLab/fuzi-mingcha-v1_0", trust_remote_code=True)

18 | model = AutoModel.from_pretrained("SDUIRLab/fuzi-mingcha-v1_0",

19 | trust_remote_code=True).half().cuda()

20 | model = model.eval()

21 |

22 | print("模型加载完毕")

23 |

24 |

25 | def print_hello():

26 | print(

27 | "欢迎使用 夫子·明察 司法大模型,首先请选择任务:\n键入 1 进入基于法条检索回复任务;\n键入 2 进入基于案例检索回复任务;\n键入 3 进入三段论推理判决任务;\n键入 4 进入司法对话任务;\n键入 "

28 | "stop 终止程序;\n进入任务后键入 home 退出当前任务")

29 |

30 |

31 | def print_hello_task(mod):

32 | task_description = [

33 | "欢迎使用 基于法条检索回复 任务,此任务中模型首先根据用户输入案情,模型生成相关法条;根据生成的相关法条检索真实法条;最后结合真实法条回答用户问题。\n您可以尝试输入以下内容:小李想开办一家个人独资企业,他需要准备哪些信息去进行登记注册?",

34 | "欢迎使用 基于案例检索回复 任务,此任务中模型首先根据用户输入案情,模型生成相关案例;根据生成的相关案例检索真实案例;最后结合真实案例回答用户问题。\n您可以尝试输入以下内容:被告人夏某在2007年至2010年期间,使用招商银行和广发银行的信用卡在北京纸老虎文化交流有限公司等地透支消费和取现。尽管经过银行多次催收,夏某仍欠下两家银行共计人民币26379.85元的本金。2011年3月15日,夏某因此被抓获,并在到案后坦白了自己的行为。目前,涉案的欠款已被还清。请问根据上述事实,该如何判罚夏某?",

35 | "欢迎使用 三段论推理判决 任务,此任务中模型利用三段论的推理方式生成判决结果。\n您可以尝试输入以下内容:被告人陈某伙同王某(已判刑)在邵东县界岭乡峰山村艾窑小学法经营“地下六合彩”,由陈某负责联系上家,王某1负责接单卖码及接受投注,并约定将收受投注10%的提成按三七分成,陈某占三,王某1占七。该地下六合彩利用香港“六合彩”开奖结果作为中奖号码,买1到49中间的一个或几个数字,赔率为1:42。在香港六合彩开奖的当天晚上点三十分前,停止卖号,将当期购买的清单报给姓赵的上家。开奖后从网上下载香港六合彩的中奖号码进行结算赔付,计算当天的中奖数额,将当期卖出的总收入的百分之十留给自己,用总收入的百分之九十减去中奖的钱,剩余的为付给上家的钱。期间,二人共同经营“地下六合彩”40余期,收受吕某、吕永玉、王某2、王某3等人的投注额约25万余元,两人共计非法获利4万余元。被告人陈某于2013年11月18日被抓获,后被取保候审,在取保期间,被告人陈某脱逃。2015年1月21公安机关对其网上追逃。2017年6月21日被告人陈某某自动到公安机关投案。上述事实,被告人陈某在开庭审理过程中亦无异议,并有证人王某1、吕某、吕永玉、王某3等人的证言,扣押决定书,扣押物品清单,文件清单,抓获经过,刑事判决书,户籍证明等证据证实,足以认定。",

36 | "欢迎使用 司法对话 任务,此任务中您可以与模型进行直接对话。"]

37 | print(task_description[mod - 1])

38 |

39 |

40 | def process_lucence_input(input):

41 | nr = ['(', ')', '[', ']', '{', '}', '/', '?', '!', '^', '*', '-', '+']

42 | for char in nr:

43 | input = input.replace(char, f"\\{char}")

44 | return input

45 |

46 |

47 | def chat(prompt, history=None):

48 | if history is None:

49 | history = []

50 | response, history = model.chat(tokenizer, prompt, history=history if history else [], max_length=4096, max_time=100,

51 | top_p=0.7, temperature=0.95)

52 | return response, history

53 |

54 |

55 | prompt1_task1 = "请根据以下问题生成相关法律法规: @用户输入@"

56 | prompt2_task1 = """请根据下面相关法条回答问题

57 | 相关法条:

58 | @检索得到的相关法条@

59 | 问题:

60 | @用户输入@"""

61 | prompt1_task2 = "请根据以下问题生成相关案例: "

62 | prompt2_task2 = """请根据下面相关案例回答问题

63 | 相关案例:

64 | @检索得到的相关案例@

65 | 问题:

66 | @用户输入@"""

67 | prompt_task3 = """请根据基本案情,利用三段论的推理方式得到判决结果,判决结果包括:1.罪名;2.刑期。

68 | 基本案情:@用户输入@"""

69 |

70 |

71 | def main():

72 | history = []

73 | mod = 0

74 | print_hello()

75 | while True:

76 | query = input("\n用户:")

77 | if not query.strip():

78 | print("输入不能为空")

79 | continue

80 | if query.strip() == "stop":

81 | break

82 | if query.strip() == "home":

83 | mod = 0

84 | history = []

85 | print_hello()

86 | continue

87 |

88 | if mod == 0: # 欢迎页

89 | if query in ["1", "2", "3", "4"]:

90 | mod = int(query)

91 | print_hello_task(mod)

92 | else:

93 | print("输入无效")

94 | continue

95 | elif mod == 1: # 基于法条检索回复

96 | # print(f"\n\n用户:{query}")

97 | generate_law, _ = chat(prompt1_task1.replace("@用户输入@", query))

98 | data = {"query": process_lucence_input(generate_law), "top_k": 3}

99 | response_retrieval = requests.post(args.url_lucene_task1, json=data)

100 | response_retrieval = json.loads(response_retrieval.content)

101 | docs = response_retrieval['docs']

102 | retrieval_law = ""

103 | for i, doc in enumerate(docs):

104 | retrieval_law = retrieval_law + f"第{i + 1}条:\n{doc}\n"

105 | response, _ = chat(

106 | prompt2_task1.replace("@检索得到的相关法条@", retrieval_law).replace("@用户输入@", query))

107 | print(f"\n\n夫子·明察·法条检索:\n{response}")

108 | elif mod == 2: # 基于案例检索回复

109 | # print(f"\n\n用户:{query}")

110 | generate_law, _ = chat(prompt1_task2.replace("@用户输入@", query))

111 | data = {"query": process_lucence_input(generate_law), "top_k": 1}

112 | response_retrieval = requests.post(args.url_lucene_task2, json=data)

113 | response_retrieval = json.loads(response_retrieval.content)

114 | docs = response_retrieval['docs']

115 | retrieval_law = ""

116 | max_len = 1000 # 为避免对模型的输入过长,限制检索案例的长度,只保留最后 1000 个 tokens

117 | for i, doc in enumerate(docs):

118 | retrieval_law = retrieval_law + f"第{i + 1}条:\n{doc[int(-max_len / len(docs)):]}\n"

119 | response, _ = chat(

120 | prompt2_task2.replace("@检索得到的相关案例@", retrieval_law).replace("@用户输入@", query))

121 | print(f"\n\n夫子·明察·类案检索:\n{response}")

122 | elif mod == 3: # 三段论推理判决

123 | # print(f"\n\n用户:{query}")

124 | response, _ = chat(prompt_task3.replace("@用户输入@", query))

125 | print(f"\n\n夫子·明察·三段论:\n{response}")

126 | else: # 司法对话

127 | # print(f"\n\n用户:{query}")

128 | response, history = chat(query, history)

129 | print(f"\n\n夫子·明察:\n{response}")

130 |

131 |

132 | if __name__ == "__main__":

133 | main()

134 |

--------------------------------------------------------------------------------

/src/es_task1/api.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | from fastapi import FastAPI, Request

3 | import uvicorn, json, datetime

4 | import sys, os

5 | import csv

6 | from elasticsearch import Elasticsearch

7 | from elasticsearch import helpers

8 | from tqdm import trange

9 |

10 | # --------------接收参数--------------------

11 | import argparse

12 | import random

13 |

14 | parser = argparse.ArgumentParser(description="服务调用方法:python XXX.py --port=xx")

15 | parser.add_argument("--port", default=None, type=int, help="服务端口")

16 | args = parser.parse_args()

17 |

18 |

19 | def get_port():

20 | pscmd = "netstat -ntl |grep -v Active| grep -v Proto|awk '{print $4}'|awk -F: '{print $NF}'"

21 | procs = os.popen(pscmd).read()

22 | procarr = procs.split("\n")

23 | tt = random.randint(15000, 20000)

24 | if tt not in procarr:

25 | return tt

26 | else:

27 | return get_port()

28 |

29 |

30 | # ----------------------------------

31 |

32 | app = FastAPI()

33 |

34 | # Elasticsearch 地址

35 | es_host = ""

36 | # 用户名和密码

37 | username = ""

38 | password = ""

39 | # 创建带有认证信息的 Elasticsearch 客户端

40 | es = Elasticsearch([es_host], http_auth=(username, password), request_timeout=60)

41 | file_name = "src/pylucene_task1/csv_files/data_task1.csv"

42 | index_name = "fuzi_fatiao"

43 | # 删除索引

44 | # response = es.indices.delete(index=index_name, ignore=[400, 404])

45 | # 打印结果

46 | # print(f"Index '{index_name}' deletion response:", response)

47 | # exit()

48 | data = []

49 | with open(file_name, "r", encoding="utf-8") as csvfile:

50 | reader = csv.reader(csvfile)

51 | headers = next(reader)

52 | id = 0

53 | for row in reader:

54 | if len(row) > 0:

55 | content = ""

56 | for i in range(len(headers)):

57 | content += row[i]

58 | data.append(content)

59 |

60 |

61 | def save_to_es(data_from, data_to):

62 | """

63 | 使用生成器批量写入数据到es数据库

64 | :param num:

65 | :return:

66 | """

67 | action = (

68 | {

69 | "_index": index_name,

70 | "_type": "_doc",

71 | "_id": i,

72 | "_source": {"content": data[i]},

73 | }

74 | for i in range(data_from, data_to)

75 | )

76 | helpers.bulk(es, action)

77 |

78 |

79 | def build_index():

80 | CREATE_BODY = {

81 | "settings": {"number_of_replicas": 0}, # 副本的个数

82 | "mappings": {

83 | "properties": {"content": {"type": "text", "analyzer": "ik_smart"}}

84 | },

85 | }

86 | es.indices.create(index=index_name, body=CREATE_BODY)

87 |

88 | data_size_one_time = 1000

89 | for begin_index in trange(0, len(data), data_size_one_time):

90 | save_to_es(begin_index, min(len(data), begin_index + data_size_one_time))

91 |

92 | es.indices.refresh(index=index_name)

93 |

94 |

95 | # 定义Lucene检索器,用输入语句查询Lucene索引

96 | def search_index(query_str):

97 | query_body = {

98 | "query": {

99 | "match": {

100 | "content": query_str

101 | }

102 | },

103 | "size": 10

104 | }

105 | res = es.search(index=index_name, body=query_body, request_timeout=60)

106 | return res

107 |

108 | @app.post("/")

109 | async def create_item(request: Request):

110 | json_post_raw = await request.json()

111 | json_post = json.dumps(json_post_raw)

112 | json_post_list = json.loads(json_post)

113 | query_str = json_post_list.get("query")

114 | top_k = json_post_list.get("top_k")

115 | results = search_index(query_str) # 检索

116 | docs = []

117 | for hit in results["hits"]["hits"]:

118 | docs.append(hit["_source"]["content"])

119 | now = datetime.datetime.now()

120 | time = now.strftime("%Y-%m-%d %H:%M:%S")

121 | answer = {"docs": docs[0 : min(top_k, len(docs))], "status": 200, "time": time}

122 | return answer

123 |

124 |

125 | if __name__ == "__main__":

126 | # build_index()

127 | print("web服务启动")

128 | if args.port:

129 | uvicorn.run(app, host="0.0.0.0", port=args.port)

130 | else:

131 | uvicorn.run(app, host="0.0.0.0", port=get_port())

--------------------------------------------------------------------------------

/src/es_task2/api.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | from fastapi import FastAPI, Request

3 | import uvicorn, json, datetime

4 | import sys, os

5 | import csv

6 | from elasticsearch import Elasticsearch

7 | from elasticsearch import helpers

8 | from tqdm import trange

9 |

10 | # --------------接收参数--------------------

11 | import argparse

12 | import random

13 |

14 | parser = argparse.ArgumentParser(

15 | description='服务调用方法:python XXX.py --port=xx')

16 | parser.add_argument('--port', default=None, type=int, help='服务端口')

17 | args = parser.parse_args()

18 |

19 |

20 | def get_port():

21 | pscmd = "netstat -ntl |grep -v Active| grep -v Proto|awk '{print $4}'|awk -F: '{print $NF}'"

22 | procs = os.popen(pscmd).read()

23 | procarr = procs.split("\n")

24 | tt = random.randint(15000, 20000)

25 | if tt not in procarr:

26 | return tt

27 | else:

28 | return get_port()

29 |

30 |

31 | # ----------------------------------

32 |

33 | app = FastAPI()

34 |

35 | # Elasticsearch 地址

36 | es_host = ""

37 | # 用户名和密码

38 | username = ""

39 | password = ""

40 | # 创建带有认证信息的 Elasticsearch 客户端

41 | es = Elasticsearch([es_host], http_auth=(username, password), request_timeout=60)

42 | file_name = "src/pylucene_task2/csv_files/data_task2.csv"

43 | index_name = "fuzi_anli"

44 | # 删除索引

45 | # response = es.indices.delete(index=index_name, ignore=[400, 404])

46 | # 打印结果

47 | # print(f"Index '{index_name}' deletion response:", response)

48 | # exit()

49 | data = []

50 | with open(file_name, 'r', encoding='utf-8') as csvfile:

51 | reader = csv.reader(csvfile)

52 | headers = next(reader)

53 | id = 0

54 | for row in reader:

55 | if len(row) > 0:

56 | content = ""

57 | for i in range(len(headers)):

58 | content += row[i]

59 | data.append(content)

60 | print(len(data))

61 | # exit()

62 | def save_to_es(data_from, data_to):

63 | """

64 | 使用生成器批量写入数据到es数据库

65 | :param num:

66 | :return:

67 | """

68 | action = (

69 | {

70 | "_index": index_name,

71 | "_type": "_doc",

72 | "_id": i,

73 | "_source": {

74 | "content": data[i]

75 | }

76 | } for i in range(data_from, data_to)

77 | )

78 | helpers.bulk(es, action)

79 |

80 |

81 | def build_index():

82 | CREATE_BODY = {

83 | "settings": {

84 | "number_of_replicas": 0 # 副本的个数

85 | },

86 | "mappings": {

87 | "properties": {

88 | "content": {

89 | "type": "text",

90 | "analyzer": "ik_smart"

91 | }

92 | }

93 | }

94 | }

95 | es.indices.create(index=index_name, body=CREATE_BODY)

96 |

97 | data_size_one_time = 1000

98 | for begin_index in trange(0, len(data), data_size_one_time):

99 | save_to_es(begin_index, min(len(data), begin_index + data_size_one_time))

100 |

101 | es.indices.refresh(index=index_name)

102 |

103 |

104 | # 定义Lucene检索器,用输入语句查询Lucene索引

105 | def search_index(query_str):

106 | query_body = {

107 | "query": {

108 | "match": {

109 | "content": query_str

110 | }

111 | },

112 | "size": 10

113 | }

114 | res = es.search(index=index_name, body=query_body, request_timeout=60)

115 | return res

116 |

117 |

118 | @app.post("/")

119 | async def create_item(request: Request):

120 | json_post_raw = await request.json()

121 | json_post = json.dumps(json_post_raw)

122 | json_post_list = json.loads(json_post)

123 | query_str = json_post_list.get("query")

124 | top_k = json_post_list.get("top_k")

125 | results = search_index(query_str) # 检索

126 | docs = []

127 | for hit in results['hits']['hits']:

128 | docs.append(hit["_source"]["content"])

129 | now = datetime.datetime.now()

130 | time = now.strftime("%Y-%m-%d %H:%M:%S")

131 | answer = {"docs": docs[0:min(top_k, len(docs))], "status": 200, "time": time}

132 | return answer

133 |

134 |

135 | if __name__ == "__main__":

136 | # build_index()

137 | print('web服务启动')

138 | if args.port:

139 | uvicorn.run(app, host='0.0.0.0', port=args.port)

140 | else:

141 | uvicorn.run(app, host='0.0.0.0', port=get_port())

142 |

--------------------------------------------------------------------------------

/src/pylucene_task1/api.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # --------------接收参数--------------------

3 | import argparse

4 | import csv

5 | import lucene

6 | import os

7 | import random

8 |

9 | import datetime

10 | import json

11 | import uvicorn

12 | from fastapi import FastAPI, Request

13 | from java.nio.file import Paths

14 | from org.apache.lucene.analysis.cn.smart import SmartChineseAnalyzer

15 | from org.apache.lucene.document import Document, Field, StringField, TextField

16 | from org.apache.lucene.index import IndexWriterConfig, IndexWriter, DirectoryReader

17 | from org.apache.lucene.queryparser.classic import QueryParser

18 | from org.apache.lucene.search import IndexSearcher, SortField

19 | from org.apache.lucene.store import FSDirectory

20 |

21 | parser = argparse.ArgumentParser(description="服务调用方法:python XXX.py --port=xx")

22 | parser.add_argument("--port", default=None, type=int, help="服务端口")

23 | args = parser.parse_args()

24 |

25 |

26 | def get_port():

27 | pscmd = "netstat -ntl |grep -v Active| grep -v Proto|awk '{print $4}'|awk -F: '{print $NF}'"

28 | procs = os.popen(pscmd).read()

29 | procarr = procs.split("\n")

30 | tt = random.randint(15000, 20000)

31 | if tt not in procarr:

32 | return tt

33 | else:

34 | return get_port()

35 |

36 |

37 | # ----------------------------------

38 |

39 |

40 | app = FastAPI()

41 |

42 | # 设置CSV文件夹路径和Lucene索引文件夹路径

43 | csv_folder_path = "./csv_files/"

44 | INDEX_DIR = "./lucene_index/"

45 |

46 | # 设定检索top k 条目数

47 | top_k = 3

48 |

49 |

50 | # 定义Lucene索引写入器,将CSV文件内容加入Lucene索引中

51 | def create_index():

52 | if os.path.exists(INDEX_DIR) and len(os.listdir(INDEX_DIR)) > 0:

53 | print("已有索引")

54 | return

55 | analyzer = SmartChineseAnalyzer()

56 | config = IndexWriterConfig(analyzer)

57 |

58 | index_dir = FSDirectory.open(Paths.get(INDEX_DIR))

59 | writer = IndexWriter(index_dir, config)

60 | for filename in os.listdir(csv_folder_path):

61 | if filename.endswith(".csv"):

62 | path = os.path.join(csv_folder_path, filename)

63 | with open(path, "r", encoding="utf-8") as csvfile:

64 | reader = csv.reader(csvfile)

65 | headers = next(reader)

66 | for row in reader:

67 | if len(row) > 0:

68 | content = ""

69 | for i in range(len(headers)):

70 | content += row[i]

71 | doc = Document()

72 | doc.add(Field("filename", filename, StringField.TYPE_STORED))

73 | doc.add(Field("content", content, TextField.TYPE_STORED))

74 | writer.addDocument(doc)

75 | writer.close()

76 |

77 |

78 | # 定义Lucene检索器,用输入语句查询Lucene索引

79 | def search_index(query_str):

80 | index_dir = FSDirectory.open(Paths.get(INDEX_DIR))

81 | reader = DirectoryReader.open(index_dir)

82 |

83 | # 定义检索器和排序器

84 | searcher = IndexSearcher(reader)

85 | sortField = SortField("score", SortField.Type.SCORE, True)

86 |

87 | # 设定QueryParser

88 | query_parser = QueryParser("content", SmartChineseAnalyzer())

89 | # query_parser.setDefaultOperator(QueryParserBase.Operator.AND)

90 | query = query_parser.parse(query_str)

91 |

92 | # 使用TopFieldCollector进行结果的排序和筛选

93 | # top_docs = TopFieldCollector.create(sortField, top_k, len(reader.maxDoc()))

94 | # top_docs = TopFieldCollector.create(sortField, top_k)

95 | # searcher.search(query, top_docs)

96 | # score_docs = top_docs.topDocs().scoreDocs

97 | score_docs = searcher.search(query, 50).scoreDocs

98 |

99 | result = []

100 |

101 | for scoredoc in score_docs:

102 | doc = searcher.doc(scoredoc.doc)

103 | filename = doc.get("filename")

104 | content = doc.get("content")

105 | result.append((scoredoc.score, filename, content))

106 |

107 | # result = list(set(result))

108 | return result

109 |

110 |

111 | @app.post("/")

112 | async def create_item(request: Request):

113 | json_post_raw = await request.json()

114 | json_post = json.dumps(json_post_raw)

115 | json_post_list = json.loads(json_post)

116 | query_str = json_post_list.get("query")

117 | top_k = json_post_list.get("top_k")

118 | results = search_index(query_str) # 检索

119 | docs = []

120 | for result in results:

121 | # print("score:", result[0], "filename:", result[1], "content:", result[2], '\n')

122 | docs.append(result[2])

123 | now = datetime.datetime.now()

124 | time = now.strftime("%Y-%m-%d %H:%M:%S")

125 | answer = {"docs": docs[0 : min(top_k, len(docs))], "status": 200, "time": time}

126 | return answer

127 |

128 |

129 | if __name__ == "__main__":

130 | lucene.initVM(vmargs=["-Djava.awt.headless=true"])

131 | # 在每次测试运行前先创建Lucene索引

132 | create_index()

133 |

134 | print("web服务启动")

135 | if args.port:

136 | uvicorn.run(app, host="0.0.0.0", port=args.port)

137 | else:

138 | uvicorn.run(app, host="0.0.0.0", port=get_port())

139 |

--------------------------------------------------------------------------------

/src/pylucene_task2/api.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # --------------接收参数--------------------

3 | import argparse

4 | import csv

5 | import lucene

6 | import os

7 | import random

8 |

9 | import datetime

10 | import json

11 | import uvicorn

12 | from fastapi import FastAPI, Request

13 | from java.nio.file import Paths

14 | from org.apache.lucene.analysis.cn.smart import SmartChineseAnalyzer

15 | from org.apache.lucene.document import Document, Field, StringField, TextField

16 | from org.apache.lucene.index import IndexWriterConfig, IndexWriter, DirectoryReader

17 | from org.apache.lucene.queryparser.classic import QueryParser

18 | from org.apache.lucene.search import IndexSearcher, SortField

19 | from org.apache.lucene.store import FSDirectory

20 |

21 | parser = argparse.ArgumentParser(description="服务调用方法:python XXX.py --port=xx")

22 | parser.add_argument("--port", default=None, type=int, help="服务端口")

23 | args = parser.parse_args()

24 |

25 |

26 | def get_port():

27 | pscmd = "netstat -ntl |grep -v Active| grep -v Proto|awk '{print $4}'|awk -F: '{print $NF}'"

28 | procs = os.popen(pscmd).read()

29 | procarr = procs.split("\n")

30 | tt = random.randint(15000, 20000)

31 | if tt not in procarr:

32 | return tt

33 | else:

34 | return get_port()

35 |

36 |

37 | # ----------------------------------

38 |

39 |

40 | app = FastAPI()

41 |

42 | # 设置CSV文件夹路径和Lucene索引文件夹路径

43 | csv_folder_path = "./csv_files/"

44 | INDEX_DIR = "./lucene_index/"

45 |

46 | # 设定检索top k 条目数

47 | top_k = 3

48 |

49 |

50 | # 定义Lucene索引写入器,将CSV文件内容加入Lucene索引中

51 | def create_index():

52 | if os.path.exists(INDEX_DIR) and len(os.listdir(INDEX_DIR)) > 0:

53 | print("已有索引")

54 | return

55 | analyzer = SmartChineseAnalyzer()

56 | config = IndexWriterConfig(analyzer)

57 |

58 | index_dir = FSDirectory.open(Paths.get(INDEX_DIR))

59 | writer = IndexWriter(index_dir, config)

60 | for filename in os.listdir(csv_folder_path):

61 | if filename.endswith(".csv"):

62 | path = os.path.join(csv_folder_path, filename)

63 | with open(path, "r", encoding="utf-8") as csvfile:

64 | reader = csv.reader(csvfile)

65 | headers = next(reader)

66 | for row in reader:

67 | if len(row) > 0:

68 | content = ""

69 | for i in range(len(headers)):

70 | content += row[i]

71 | doc = Document()

72 | doc.add(Field("filename", filename, StringField.TYPE_STORED))

73 | doc.add(Field("content", content, TextField.TYPE_STORED))

74 | writer.addDocument(doc)

75 | writer.close()

76 |

77 |

78 | # 定义Lucene检索器,用输入语句查询Lucene索引

79 | def search_index(query_str):

80 | index_dir = FSDirectory.open(Paths.get(INDEX_DIR))

81 | reader = DirectoryReader.open(index_dir)

82 |

83 | # 定义检索器和排序器

84 | searcher = IndexSearcher(reader)

85 | sortField = SortField("score", SortField.Type.SCORE, True)

86 |

87 | # 设定QueryParser

88 | query_parser = QueryParser("content", SmartChineseAnalyzer())

89 | # query_parser.setDefaultOperator(QueryParserBase.Operator.AND)

90 | query = query_parser.parse(query_str)

91 |

92 | # 使用TopFieldCollector进行结果的排序和筛选

93 | # top_docs = TopFieldCollector.create(sortField, top_k, len(reader.maxDoc()))

94 | # top_docs = TopFieldCollector.create(sortField, top_k)

95 | # searcher.search(query, top_docs)

96 | # score_docs = top_docs.topDocs().scoreDocs

97 | score_docs = searcher.search(query, 50).scoreDocs

98 |

99 | result = []

100 |

101 | for scoredoc in score_docs:

102 | doc = searcher.doc(scoredoc.doc)

103 | filename = doc.get("filename")

104 | content = doc.get("content")

105 | result.append((scoredoc.score, filename, content))

106 |

107 | # result = list(set(result))

108 | return result

109 |

110 |

111 | @app.post("/")

112 | async def create_item(request: Request):

113 | json_post_raw = await request.json()

114 | json_post = json.dumps(json_post_raw)

115 | json_post_list = json.loads(json_post)

116 | query_str = json_post_list.get("query")

117 | top_k = json_post_list.get("top_k")

118 | results = search_index(query_str) # 检索

119 | docs = []

120 | for result in results:

121 | # print("score:", result[0], "filename:", result[1], "content:", result[2], '\n')

122 | docs.append(result[2])

123 | now = datetime.datetime.now()

124 | time = now.strftime("%Y-%m-%d %H:%M:%S")

125 | answer = {"docs": docs[0 : min(top_k, len(docs))], "status": 200, "time": time}

126 | return answer

127 |

128 |

129 | if __name__ == "__main__":

130 | lucene.initVM(vmargs=["-Djava.awt.headless=true"])

131 | # 在每次测试运行前先创建Lucene索引

132 | create_index()

133 |

134 | print("web服务启动")

135 | if args.port:

136 | uvicorn.run(app, host="0.0.0.0", port=args.port)

137 | else:

138 | uvicorn.run(app, host="0.0.0.0", port=get_port())

139 |

--------------------------------------------------------------------------------

/src/requirements.txt:

--------------------------------------------------------------------------------

1 | torch==2.0.0

2 | transformers==4.30.0

3 | sentencepiece==0.1.98

4 | jieba

5 | rouge-chinese

6 | nltk

7 | gradio==3.28.0

8 | uvicorn==0.22.0

9 | pydantic==1.10.11

10 | fastapi==0.95.1

11 | sse-starlette

12 | matplotlib

13 | protobuf

14 | cpm-kernels

15 | elasticsearch

--------------------------------------------------------------------------------