├── .gitignore

├── Notes.md

├── README.md

├── annoy_test.py

├── bert_for_seq_classification.py

├── convert.py

├── data

├── add_faq.json

└── stopwords.txt

├── distills

├── bert_config_L3.json

└── matches.py

├── example.log

├── faq_app_benben.py

├── faq_app_fastapi.py

├── faq_app_flask.py

├── faq_app_whoosh.py

├── faq_index.py

├── faq_test.py

├── faq_whoosh_index.py

├── locust_test.py

├── model_distillation.py

├── requirements.txt

├── sampling.py

├── sentence_transformers_encoder.py

├── sentence_transformers_train.py

├── test.py

├── thread_test.py

├── transformers_encoder.py

├── transformers_trainer.py

└── utils.py

/.gitignore:

--------------------------------------------------------------------------------

1 | .vscode

2 | */.vscode/

3 | *.npy

4 | *.pyc

5 | *.csv

6 | hflqa/

7 | hflqa_extra/

8 | ddqa/

9 | lcqmc/

10 | output/

11 | samples/

12 | distills/outputs/

13 | *.DS_Store

14 | */logs/

15 | logs/

16 | __pycache__/

17 | */__pycache__/

18 | data/

19 | whoosh_index/

20 | *.json

--------------------------------------------------------------------------------

/Notes.md:

--------------------------------------------------------------------------------

1 | 单纯语义检索还算ok,目前存在的问题是特殊关键词无法识别(比如哈工大、哈尔滨工业大学,使用lucene很容易识别,但是BERT语义检索效果较差)

2 |

3 | - 效果优化考虑key-bert

4 | - 性能优化,考虑蒸馏减小特征向量维度(4层384维)

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # FAQ-Semantic-Retrieval

2 |

3 |

4 |

5 | 一种 FAQ 向量语义检索解决方案

6 |

7 | - [x] 基于 [**Sklearn Kmeans**](https://scikit-learn.org/stable/) 聚类的**负采样**

8 |

9 | - [x] 基于 [**Transformers**](https://huggingface.co/transformers/) 的 BertForSiameseNetwork(Bert**双塔模型**)**微调训练**

10 |

11 | - [x] 基于 [**TextBrewer**](https://github.com/airaria/TextBrewer) 的**模型蒸馏**

12 |

13 | - [x] 基于 [**FastAPI**](https://fastapi.tiangolo.com/zh/) 和 [**Locust**](https://locust.io/) 的 **WebAPI 服务**以及**压力测试**

14 |

15 |

16 |

17 | ## 项目介绍

18 |

19 | FAQ 的处理流程一般为:

20 |

21 | - **问题理解**,对用户 query 进行改写以及向量表示

22 | - **召回模块**,在问题集上进行候选问题召回,获得 topk(基于关键字的倒排索引 vs 基于向量的语义召回)

23 | - **排序模块**,对 topk 进行精排序

24 |

25 | 本项目着眼于 **召回模块** 的 **向量检索** 的实现,适用于 **小规模 FAQ 问题集**(候选问题集<10万)的系统快速搭建

26 |

27 |

28 |

29 | ### FAQ 语义检索

30 |

31 | 传统召回模块**基于关键字检索**

32 |

33 | - 计算关键字在问题集中的 [TF-IDF](https://en.wikipedia.org/wiki/Tf%E2%80%93idf) 以及[ BM25](https://en.wikipedia.org/wiki/Okapi_BM25) 得分,并建立**倒排索引表**

34 | - 第三方库 [ElasticSearch](https://whoosh.readthedocs.io/en/latest/index.html),[Lucene](https://lucene.apache.org/pylucene/),[Whoosh](https://whoosh.readthedocs.io/en/latest/index.html)

35 |

36 |

37 |

38 | 随着语义表示模型的增强、预训练模型的发展,基于 BERT 向量的**语义检索**得到广泛应用

39 |

40 | - 对候选问题集合进行向量编码,得到 **corpus 向量矩阵**

41 | - 当用户输入 query 时,同样进行编码得到 **query 向量表示**

42 | - 然后进行语义检索(矩阵操作,KNN,FAISS)

43 |

44 |

45 |

46 | 本项目针对小规模 FAQ 问题集直接计算 query 和 corpus 向量矩阵的**余弦相似度**,从而获得 topk 候选问题

47 | $$

48 | score = \frac{V_{query} \cdot V_{corpus}}{||V_{query}|| \cdot ||V_{corpus}||}

49 | $$

50 | **句向量获取解决方案**

51 |

52 | | Python Lib | Framework | Desc | Example |

53 | | ------------------------------------------------------------ | ---------- | ------------------------------------------------------- | ------------------------------------------------------------ |

54 | | [bert-as-serivce](https://github.com/hanxiao/bert-as-service) | TensorFlow | 高并发服务调用,支持 fine-tune,较难拓展其他模型 | [getting-started](https://github.com/hanxiao/bert-as-service#getting-started) |

55 | | [Sentence-Transformers](https://www.sbert.net/index.html) | PyTorch | 接口简单易用,支持各种模型调用,支持 fine-turn(单GPU) | [using-Sentence-Transformers-model](https://www.sbert.net/docs/quickstart.html#quickstart)

[using-Transformers-model](https://github.com/UKPLab/sentence-transformers/issues/184#issuecomment-607069944) |

56 | | 🤗 [Transformers](https://github.com/huggingface/transformers/) | PyTorch | 自定义程度高,支持各种模型调用,支持 fine-turn(多GPU) | [sentence-embeddings-with-Transformers](https://www.sbert.net/docs/usage/computing_sentence_embeddings.html#sentence-embeddings-with-transformers) |

57 |

58 | > - **Sentence-Transformers** 进行小规模数据的单 GPU fine-tune 实验(尚不支持多 GPU 训练,[Multi-GPU-training #311](https://github.com/UKPLab/sentence-transformers/issues/311#issuecomment-659455875) ;实现了多种 [Ranking loss](https://www.sbert.net/docs/package_reference/losses.html) 可供参考)

59 | > - **Transformers** 进行大规模数据的多 GPU fine-tune 训练(推荐自定义模型使用 [Trainer](https://huggingface.co/transformers/training.html#trainer) 进行训练)

60 | > - 实际使用过程中 **Sentence-Transformers** 和 **Transformers** 模型基本互通互用,前者多了 **Pooling 层(Mean/Max/CLS Pooling)** ,可参考 **Example**

61 | > - :fire: **实际上线推荐直接使用 Transformers 封装**,Sentence-Transformers 在 CPU 服务器上运行存在位置问题。

62 |

63 |

64 |

65 | ### BERT 微调与蒸馏

66 |

67 | 在句向量获取中可以直接使用 [bert-base-chinese](https://huggingface.co/bert-base-chinese) 作为编码器,但在特定领域数据上可能需要进一步 fine-tune 来获取更好的效果

68 |

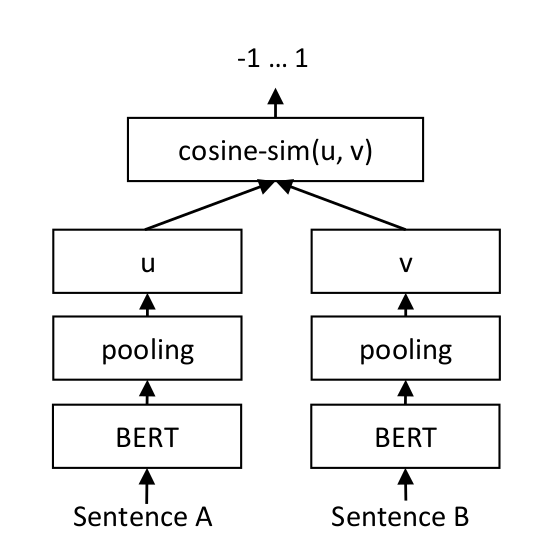

69 | fine-tune 过程主要进行**文本相似度计算**任务,亦**句对分类任务**;此处是为获得更好的句向量,因此使用**双塔模型([SiameseNetwork](https://en.wikipedia.org/wiki/Siamese_neural_network) ,孪生网络)**微调,而非常用的基于表示的模型 [BertForSequenceClassification](https://huggingface.co/transformers/model_doc/bert.html#bertforsequenceclassification)

70 |

71 |

72 |

73 | #### BertForSiameseNetwork

74 |

75 | **BertForSiameseNetwork** 主要步骤如下

76 |

77 | - **Encoding**,使用(同一个) **BERT** 分别对 query 和 candidate 进行编码

78 | - **Pooling**,对最后一层进行**池化操作**获得句子表示(Mean/Max/CLS Pooling)

79 | - **Computing**,计算两个向量的**余弦相似度**(或其他度量函数),计算 loss 进行反向传播

80 |

81 |

82 |

83 | #### 损失函数

84 |

85 | 模型训练使用的损失函数为 **Ranking loss**,不同于CrossEntropy 和 MSE 进行分类和回归任务,**Ranking loss** 目的是预测输入样本对(即上述双塔模型中 $u$ 和 $v$ 之间)之间的相对距离(**度量学习任务**)

86 |

87 | - **Contrastive Loss**

88 |

89 | > 来自 LeCun [Dimensionality Reduction by Learning an Invariant Mapping](http://yann.lecun.com/exdb/publis/pdf/hadsell-chopra-lecun-06.pdf)

90 | >

91 | > [sentence-transformers 源码实现](https://github.com/UKPLab/sentence-transformers/blob/master/sentence_transformers/losses/ContrastiveLoss.py)

92 |

93 | - 公式形式如下,其中 $u, v$ 为 BERT 编码的向量表示,$y$ 为对应的标签(1 表示正样本,0 表示负样本), $\tau$ 为超参数

94 | $$

95 | L(u_i, v_i, y_i) = y_i ||u_i, v_i|| + (1 - y_i) \max(0, \tau - ||u_i, v_i||

96 | $$

97 |

98 | - 公式意义为:对于**正样本**,输出特征向量之间距离要**尽量小**;而对于**负样本**,输出特征向量间距离要**尽量大**;但是若**负样本间距太大**(即容易区分的**简单负样本**,间距大于 $\tau$)**则不处理**,让模型关注更加**难以区分**的样本

99 |

100 |

101 |

102 | - **OnlineContrastive Loss**

103 |

104 | - 属于 **online negative sampling** ,与 **Contrastive Loss** 类似

105 |

106 | - 参考 [sentence-transformers 源码实现](https://github.com/UKPLab/sentence-transformers/blob/master/sentence_transformers/losses/OnlineContrastiveLoss.py) ,在每个 batch 内,选择最难以区分的正例样本和负例样本进行 loss 计算(容易识别的正例和负例样本则忽略)

107 |

108 | - 公式形式如下,如果正样本距离小于 $\tau_1$ 则不处理,如果负样本距离大于 $\tau_0$ 则不处理,实现过程中 $\tau_0, \tau_1$ 可以分别取负/正样本的平均距离值

109 | $$

110 | L(u_i, v_i, y_i)

111 | \begin{cases}

112 | \max (0, ||u_i, v_i|| - \tau_1) ,\ if \ y_i=1 & \\

113 | \max (0, \tau_0 - ||u_i, v_i||),\ if \ y_i =0

114 | \end{cases}

115 | $$

116 |

117 | 本项目使用 **OnlineContrastive Loss** ,更多 Ranking loss 信息可参考博客 [Understanding Ranking Loss, Contrastive Loss, Margin Loss, Triplet Loss, Hinge Loss and all those confusing names](https://gombru.github.io/2019/04/03/ranking_loss/) ,以及 SentenceTransformers 中的 [Loss API](https://www.sbert.net/docs/package_reference/losses.html) 以及 PyTorch 中的 [margin_ranking_loss](https://pytorch.org/docs/master/nn.functional.html#margin-ranking-loss)

118 |

119 |

120 |

121 | #### 数据集

122 |

123 | - **文本相似度数据集**

124 |

125 | - 相关论文比赛发布的数据集可见 [文本相似度数据集](https://github.com/IceFlameWorm/NLP_Datasets) ,大部分为金融等特定领域文本,其中 [LCQMC](http://icrc.hitsz.edu.cn/Article/show/171.html) 提供基于百度知道的约 20w+ 开放域问题数据集,可供模型测试

126 |

127 | | data | total | positive | negative |

128 | | ---------- | ------ | -------- | -------- |

129 | | training | 238766 | 138574 | 100192 |

130 | | validation | 8802 | 4402 | 4400 |

131 | | test | 12500 | 6250 | 6250 |

132 |

133 | - 除此以外,百度[千言项目](https://www.luge.ai/)发布了[文本相似度评测](https://aistudio.baidu.com/aistudio/competition/detail/45),包含 LCQMC/BQ Corpus/PAWS-X 等数据集,可供参考

134 |

135 | - **FAQ数据集**

136 |

137 | - 内部给定的 FAQ 数据集形式如下,包括各种”主题/问题“,每种“主题/问题”可以有多种不同表达形式的问题 `post`,同时对应多种形式的回复 `resp`

138 |

139 | - 检索时只需要将 query 与所有 post 进行相似度计算,从而召回最相似的 post ,然后获取对应的 “主题/问题” 的所有回复 `resp` ,最后随机返回一个回复即可

140 |

141 | ```python

142 | {

143 | "晚安": {

144 | "post": [

145 | "10点了,我要睡觉了",

146 | "唉,该休息了",

147 | ...

148 | ],

149 | "resp": [

150 | "祝你做个好梦",

151 | "祝你有个好梦,晚安!",

152 | ...

153 | ]

154 | },

155 | "感谢": {

156 | "post": [

157 | "多谢了",

158 | "非常感谢",

159 | ...

160 | ],

161 | "resp": [

162 | "助人为乐为快乐之本",

163 | "别客气",

164 | ...

165 | ]

166 | },

167 | ...

168 | }

169 | ```

170 |

171 | - 内部FAQ数据包括两个版本

172 |

173 | - chitchat-faq-small,主要是小规模闲聊FAQ,1500主题问题(topic)、2万多不同形式问题(post)

174 | - entity-faq-large,主要是大规模实体FAQ(涉及业务问题),大约3-5千主题问题(topic)、12万不同形式问题(post)

175 |

176 |

177 |

178 | #### 负采样

179 |

180 | 对于每个 query,需要获得与其相似的 **positve candidate** 以及不相似的 **negtive candidate**,从而构成正样本和负样本作为模型输入,即 **(query, candidate)**

181 |

182 | > ⚠️ 此处为 **offline negtive sampling**,即在训练前采样构造负样本,区别于 **online negtive sampling**,后者在训练中的每个 batch 内进行动态的负采样(可以通过相关损失函数实现,如 **OnlineContrastive Loss**)

183 | >

184 | > 两种方法可以根据任务特性进行选择,**online negtive sampling** 对于数据集有一定的要求,需要确保每个 batch 内的 query 是不相似的,但是效率更高

185 |

186 | 对于 **offline negtive sampling** 主要使用以下两种方式采样:

187 |

188 | - **全局负采样**

189 | - 在整个数据集上进行正态分布采样,很难产生高难度的负样本

190 | - **局部负采样**

191 | - 首先使用少量人工标注数据预训练的 **BERT 模型**对候选问题集合进行**编码**

192 | - 然后使用**无监督聚类** ,如 [Kmeans](https://scikit-learn.org/stable/modules/generated/sklearn.cluster.KMeans.html)

193 | - 最后在每个 query 所在聚类簇中进行采样

194 |

195 |

196 |

197 | 实验中对已有 FAQ 数据集中所有主题的 post 进行 **9:1** 划分得到训练集和测试集,负采样结果对比

198 |

199 | > `chitchat-faq-small` 为需要上线的 FAQ 闲聊数据,`entity-faq-large` 为辅助数据

200 |

201 | | dataset | topics | posts | positive(sampling) | negative(sampling) | total(sampling) |

202 | | ----------------------------- | ------ | ----- | ------------------ | ------------------ | --------------- |

203 | | chitchat-faq-small

train | 1468 | 18267 | 5w+ | 5w+ | 10w+ |

204 | | chitchat-faq-small

test | 768 | 2030 | 2984 | 7148 | 10132 |

205 | | chitchat-faq-small | 1500 | 20297 | - | - | - |

206 | | entity-faq-large | - | 12w+ | 50w+ | 50w+ | 100w+ |

207 |

208 |

209 |

210 | #### 模型蒸馏

211 |

212 | 使用基于 Transformers 的模型蒸馏工具 [TextBrewer](https://github.com/airaria/TextBrewer) ,主要参考 [官方 入门示例](https://github.com/airaria/TextBrewer/tree/master/examples/random_tokens_example) 和[cmrc2018示例](https://github.com/airaria/TextBrewer/tree/master/examples/cmrc2018_example)

213 |

214 |

215 |

216 | ### FAQ Web服务

217 |

218 | #### Web API

219 |

220 | - Web 框架选择

221 | - [Flask](https://flask.palletsprojects.com/) + Gunicorn + gevent + nginx ,进程管理(崩溃自动重启)(uwsgi 同理,gunicorn 更简单)

222 | - :fire: **[FastAPI](https://fastapi.tiangolo.com/)** + uvicorn(崩溃自动重启),最快的Python Web框架(实测的确比 Flask 快几倍)

223 | - cache 缓存机制(保存最近的query对应的topic,命中后直接返回)

224 | - Flask 相关

225 | - [flask-caching](https://github.com/sh4nks/flask-caching) (默认缓存500,超时300秒),使用 set/get 进行数据操作;项目来源于 [pallets/werkzeug](https://github.com/pallets/werkzeug) (werkzeug 版本0.4以后弃用 cache)

226 | - Python 3.2 以上自带(FastAPI 中可使用)

227 | - :fire: [**functools.lru_cache()**](https://docs.python.org/3/library/functools.html#functools.lru_cache) (默认缓存128,lru策略),装饰器,缓存函数输入和输出

228 |

229 |

230 |

231 | #### Locust 压力测试

232 |

233 | 使用 [Locust](https://locust.io/) 编写压力测试脚本

234 |

235 |

236 |

237 | ## 使用说明

238 |

239 | 主要依赖参考 `requirements.txt`

240 |

241 | ```bash

242 | pip install -r requirements.txt

243 | ```

244 |

245 |

246 |

247 | ### 负采样

248 |

249 | ```bash

250 | python sampling.py \

251 | --filename='faq/train_faq.json' \

252 | --model_name_or_path='./model/bert-base-chinese' \

253 | --is_transformers=True \

254 | --hyper_beta=2 \

255 | --num_pos=5 \

256 | --local_num_negs=3 \

257 | --global_num_negs=2 \

258 | --output_dir='./samples'

259 | ```

260 |

261 | **主要参数说明**

262 |

263 | - `--filename` ,**faq 数据集**,按前文所述组织为 `{topic: {post:[], resp:[]}}` 格式

264 | - `--model_name_or_path` ,用于句向量编码的 Transformers **预训练模型**位置(`bert-base-chinese` 或者基于人工标注数据微调后的模型)

265 | - `--hyper_beta` ,**聚类数超参数**,聚类类别为 `n_cluster=num_topics/hyper_beta` ,其中 `num_topics` 为上述数据中的主题数,`hyper_beta` 默认为 2(过小可能无法采样到足够局部负样本)

266 | - `--num_pos` ,**正采样个数**,默认 5(注意正负比例应为 1:1)

267 | - `--local_num_negs` ,**局部负采样个数**,默认 3(该值太大时,可能没有那么多局部负样本,需要适当调低正采样个数,保证正负比例为 1:1)

268 | - `--global_num_negs` ,**全局负采样个数**,默认 2

269 | - `--is_split` ,是否进行训练集拆分,默认 False(建议直接在 faq 数据上进行拆分,然后使用评估语义召回效果)

270 | - `--test_size` ,测试集比例,默认 0.1

271 | - `--output_dir` ,采样结果文件保存位置(`sentence1, sentence2, label` 形式的 csv 文件)

272 |

273 |

274 |

275 | ### BERT 微调

276 |

277 | - 参考[ Sentence-Transformers 的 **raining_OnlineConstrativeLoss.py** ](https://github.com/UKPLab/sentence-transformers/blob/master/examples/training/quora_duplicate_questions/training_OnlineConstrativeLoss.py) 修改,适合单 GPU 小规模样本训练

278 |

279 | - 模型训练

280 |

281 | ```bash

282 | CUDA_VISIBLE_DEVICES=0 python sentence_transformers_train.py \

283 | --do_train \

284 | --model_name_or_path='./model/bert-base-chinese' \

285 | --trainset_path='./lcqmc/LCQMC_train.csv' \

286 | --devset_path='./lcqmc/LCQMC_dev.csv' \

287 | --testset_path='./lcqmc/LCQMC_test.csv' \

288 | --train_batch_size=128 \

289 | --eval_batch_size=128 \

290 | --model_save_path

291 | ```

292 |

293 | - 主要参数说明

294 |

295 | - 模型预测时则使用 `--do_eval`

296 | - 数据集为 `sentence1, sentence2, label` 形式的 csv 文件

297 | - 16G 显存设置 batch size 为 128

298 |

299 |

300 |

301 | - 使用 [Transformers](https://huggingface.co/transformers/) 自定义数据集和 `BertForSiameseNetwork` 模型并使用 Trainer 训练,适合多 GPU 大规模样本训练

302 |

303 | ```bash

304 | CUDA_VISIBLE_DEVICES=0,1,2,3 python transformers_trainer.py \

305 | --do_train=True \

306 | --do_eval=True \

307 | --do_predict=False \

308 | --model_name_or_path='./model/bert-base-chinese' \

309 | --trainset_path='./samples/merge.csv' \

310 | --devset_path='./samples/test.csv' \

311 | --testset_path='./samples/test.csv' \

312 | --output_dir='./output/transformers-merge-bert'

313 | ```

314 |

315 |

316 |

317 | - 使用 [Transformers](https://huggingface.co/transformers/) 的 `BertForSequenceClassification` 进行句对分类对比实验

318 |

319 | ```bash

320 | CUDA_VISIBLE_DEVICES=0 python bert_for_seq_classification.py \

321 | --do_train=True \

322 | --do_eval=True \

323 | --do_predict=False \

324 | --trainset_path='./lcqmc/LCQMC_train.csv' \

325 | --devset_path='./lcqmc/LCQMC_dev.csv' \

326 | --testset_path='./lcqmc/LCQMC_test.csv' \

327 | --output_dir='./output/transformers-bert-for-seq-classify'

328 | ```

329 |

330 |

331 |

332 | ### 模型蒸馏

333 |

334 | 使用 [TextBrewer](https://github.com/airaria/TextBrewer) 以及前文自定义的 `SiameseNetwork` 进行模型蒸馏

335 |

336 | ```bash

337 | CUDA_VISIBLE_DEVICES=0 python model_distillation.py \

338 | --teacher_model='./output/transformers-merge-bert' \

339 | --student_config='./distills/bert_config_L3.json' \

340 | --bert_model='./model/bert-base-chinese' \

341 | --train_file='./samples/train.csv' \

342 | --test_file='./samples/test.csv' \

343 | --output_dir='./distills/outputs/bert-L3'

344 | ```

345 |

346 | 主要参数说明:

347 |

348 | - 此处使用的 `bert_config_L3.json` 作为学生模型参数,更多参数 [student_config](https://github.com/airaria/TextBrewer/tree/master/examples/student_config/bert_base_cased_config) 或者自定义

349 | - 3层应用于特定任务效果不错,但对于句向量获取,至少得蒸馏 6层

350 | - 学生模型可以使用 `bert-base-chinese` 的前几层初始化

351 |

352 |

353 |

354 | ### Web服务

355 |

356 | - 服务启动(`gunicorn` 和 `uvicorn` 均支持多进程启动以及失败重启)

357 | - [Flask](https://flask.palletsprojects.com/)

358 |

359 | ```bash

360 | gunicorn -w 1 -b 127.0.0.1:8888 faq_app_flask:app

361 | ```

362 |

363 | - [FastAPI](https://fastapi.tiangolo.com/) :fire: (推荐)

364 |

365 | ```bash

366 | uvicorn faq_app_fastapi:app --reload --port=8888

367 | ```

368 |

369 |

370 |

371 |

372 | - 压力测试 [Locust](https://docs.locust.io/en/stable/) ,实现脚本参考 `locust_test.py`

373 |

374 |

375 |

376 | ## 结果及分析

377 |

378 | ### 微调结果

379 |

380 | > 对于SiameseNetwork,需要在开发集上确定最佳阈值,然后测试集上使用该阈值进行句对相似度结果评价

381 |

382 | 句对测试集评价结果,此处为 LCQMC 的实验结果

383 |

384 | | model | acc(dev/test) | f1(dev/test) |

385 | | ------------------------------------------------ | ------------- | ------------- |

386 | | BertForSeqClassify

:steam_locomotive: lcqmc | 0.8832/0.8600 | 0.8848/0.8706 |

387 | | SiameseNetwork

:steam_locomotive: lcqmc | 0.8818/0.8705 | 0.8810/0.8701 |

388 |

389 | > 基于表示和基于交互的模型效果差别并不大

390 |

391 |

392 |

393 | ### 语义召回结果

394 |

395 | > 此处为 FAQ 数据集的召回结果评估,将训练集 post 作为 corpus,测试集 post 作为 query 进行相似度计算

396 |

397 | | model | hit@1(chitchat-faq-small) | hit@1(entity-faq-large) |

398 | | ------------------------------------------------------------ | ------------------------- | ----------------------- |

399 | | *lucene bm25 (origin)* | 0.6679 | - |

400 | | bert-base-chinese | 0.7394 | 0.7745 |

401 | | bert-base-chinese

:point_up_2: *6 layers* | 0.7276 | - |

402 | | SiameseNetwork

:steam_locomotive: chit-faq-small | 0.8567 | 0.8500 |

403 | | SiameseNetwork

:steam_locomotive: chitchat-faq-small + entity-faq-large | 0.8980 | **0.9961** |

404 | | :point_up_2: *6 layers* :fire: | **0.9128** | 0.8201 |

405 |

406 | - **chitchat-faq-small**

407 | - 测试集 hit@1 大约 85% 左右

408 | - 错误原因主要是 hflqa 数据问题

409 | - 数据质量问题,部分 topic 意思相同,可以合并

410 | - 一些不常用表达或者表达不完整的句子

411 | - 正常对话的召回率还是不错的

412 | - **chitchat-faq-small + entity-faq-large**

413 | - 2000 chitchat-faq-small 测试集,6层比12层效果好一个点,hit@1 达到 90%

414 | - 10000 entity-faq-large 测试集,12层 hit@1 达到 99%,6层只有 82%

415 | - 底层学到了较为基础的特征,在偏向闲聊的 chitchat-faq-small 上仅使用6层效果超过12层(没有蒸馏必要)

416 | - 高层学到了较为高级的特征,在偏向实体的 entity-faq-large 上12层效果远超于6层

417 | - 另外,entity-faq-large 数量规模远大于 chitchat-faq-small ,因此最后几层分类器偏向于从 entity-faq-large 学到的信息,因此在 chitchat-faq-small 小效果略有下降;同时能够避免 chitchat-faq-small 数据过拟合

418 |

419 |

420 |

421 | ### Web服务压测

422 |

423 | - 运行命令说明

424 |

425 | > 总共 100 个模拟用户,启动时每秒递增 10 个,压力测试持续 3 分钟

426 |

427 | ```bash

428 | locust -f locust_test.py --host=http://127.0.0.1:8889/module --headless -u 100 -r 10 -t 3m

429 | ```

430 |

431 |

432 |

433 | - :hourglass: 配置 **4核8G CPU** (6层小模型占用内存约 700MB)

434 | - 小服务器上 **bert-as-service** 服务非常不稳定(tensorflow各种报错), 效率不如简单封装的 **TransformersEncoder**

435 | - **FastAPI** 框架速度远胜于 **Flask**,的确堪称最快的 Python Web 框架

436 | - **cache** 的使用能够大大提高并发量和响应速度(最大缓存均设置为**500**)

437 | - 最终推荐配置 :fire: **TransformersEncoder + FastAPI + functools.lru_cache**

438 |

439 | | model | Web | Cache | Users | req/s | reqs | fails | Avg | Min | Max | Median | fails/s |

440 | | --------------------------------------------------------- | ----------- | ------------- | ------ | ---------- | ------- | ----- | ----- | ---- | ------- | ------ | ------- |

441 | | *lucene bm25 (origin)* | *flask* | *werkzeug* | *1000* | **271.75** | *48969* | *0* | *91* | *3* | *398* | *79* | 0.00 |

442 | | BertSiameseNet

6 layers

Transformers | flask | flask-caching | 1000 | 24.55 | 4424 | 654 | 28005 | 680 | 161199 | 11000 | 3.63 |

443 | | BertSiameseNet

6 layers

Transformers | **fastapi** | lru_cache | 1000 | **130.87** | 23566 | 1725 | 3884 | 6 | 127347 | 26 | 9.58 |

444 | | *lucene bm25 (origin)* | *flask* | *werkzeug* | *100* | **27.66** | *4973* | *1* | *32* | *6* | *60077* | *10* | 0.01 |

445 | | BertSiameseNet

6 layers

bert-as-service | flask | flask-caching | 100 | 5.49 | 987 | 0 | 13730 | 357 | 17884 | 14000 | 0.00 |

446 | | BertSiameseNet

6 layers

Transformers | flask | flask-caching | 100 | 5.93 | 1066 | 0 | 12379 | 236 | 17062 | 12000 | 0.00 |

447 | | BertSiameseNet

:fire: 6 layers

**Transformers** | **fastapi** | **lru_cache** | 100 | **22.19** | 3993 | 0 | 824 | 10 | 2402 | 880 | 0.00 |

448 | | BertSiameseNet

6 layers

transformers | fastapi | None | 100 | 18.17 | 1900 | 0 | 1876 | 138 | 3469 | 1900 | 0.00 |

449 |

450 | > 使用 bert-as-service 遇到的一些问题:

451 | >

452 | > - 老版本服务器上使用 [tensorflow 报错解决方案 Error in `python': double free or corruption (!prev) #6968](https://github.com/tensorflow/tensorflow/issues/6968#issuecomment-279060156)

453 | > - 报错 src/tcmalloc.cc:277] Attempt to free invalid pointer 0x7f4685efcd40 Aborted (core dumpe),解决方案,将 bert_as_service import 移到顶部

454 | >

455 | > 测试服部署时报错 src/tcmalloc.cc:277] Attempt to free invalid pointer,通过改变import顺序来解决,在 `numpy` 之后 import pytorch

456 |

457 |

458 |

459 | ## 更多

460 |

461 | - 大规模问题集可以使用 [facebookresearch/faiss](https://github.com/facebookresearch/faiss) (建立向量索引,使用 k-nearest-neighbor 召回)

462 | - 很多场景下,基于关键字的倒排索引召回结果已经足够,可以考虑综合基于关键字和基于向量的召回方法,参考知乎语义检索系统 [Beyond Lexical: A Semantic Retrieval Framework for Textual SearchEngine](http://arxiv.org/abs/2008.03917)

463 |

464 |

--------------------------------------------------------------------------------

/annoy_test.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | # @Date : 2021-04-05 16:21:32

4 | # @Author : Kaiyan Zhang (minekaiyan@gmail.com)

5 | # @Link : https://github.com/iseesaw

6 | # @Version : 1.0.0

7 |

8 | from transformers_encoder import TransformersEncoder

9 | import os

10 | import time

11 | import numpy as np

12 | from tqdm import tqdm

13 | from annoy import AnnoyIndex

14 |

15 | from utils import load_json, save_json

16 |

17 | FEAT_DIM = 768

18 | TOPK = 10

19 |

20 | # prefix = "/data/benben/data/faq_bert/"

21 | prefix = "/users6/kyzhang/benben/FAQ-Semantic-Retrieval"

22 | MODEL_NAME_OR_PATH = os.path.join(prefix, "output/transformers-merge3-bert-6L")

23 | FAQ_FILE = os.path.join(prefix, "ext_hflqa/clean_faq.json")

24 | ANNOY_INDEX_FILE = os.path.join(prefix, "ext_hflqa/index.ann")

25 | IDX2TOPIC_FILE = os.path.join(prefix, "ext_hflqa/idx2topic.json")

26 | VEC_FILE = os.path.join(prefix, "ext_hflqa/vec.npy")

27 |

28 | faq = load_json(FAQ_FILE)

29 |

30 | encoder = TransformersEncoder(model_name_or_path=MODEL_NAME_OR_PATH,

31 | batch_size=1024)

32 |

33 | ####### encode posts

34 | if os.path.exists(IDX2TOPIC_FILE) and os.path.exists(VEC_FILE):

35 | print("Loading idx2topic and vec...")

36 | idx2topic = load_json(IDX2TOPIC_FILE)

37 | vectors = np.load(VEC_FILE)

38 | else:

39 | idx = 0

40 | idx2topic = {}

41 | posts = []

42 | for topic, post_resp in tqdm(faq.items()):

43 | for post in post_resp["post"]:

44 | idx2topic[idx] = {"topic": topic, "post": post}

45 | posts.append(post)

46 | idx += 1

47 |

48 | encs = encoder.encode(posts, show_progress_bar=True)

49 |

50 | save_json(idx2topic, IDX2TOPIC_FILE)

51 | vectors = np.asarray(encs)

52 | np.save(VEC_FILE, vectors)

53 |

54 | ####### index and test

55 | index = AnnoyIndex(FEAT_DIM, metric='angular')

56 | if os.path.exists(ANNOY_INDEX_FILE):

57 | print("Loading Annoy index file")

58 | index.load(ANNOY_INDEX_FILE)

59 | else:

60 | # idx2topic = {}

61 | # idx = 0

62 | # for topic, post_resp in tqdm(faq.items()):

63 | # posts = post_resp["post"]

64 | # vectors = encoder.encode(posts)

65 | # for post, vector in zip(posts, vectors):

66 | # idx2topic[idx] = {"topic": topic, "post": post}

67 | # # index.add_item(idx, vector)

68 | # idx += 1

69 | # save_json(idx2topic, IDX2TOPIC_FILE)

70 | # index.save(ANNOY_INDEX_FILE)

71 |

72 | for idx, vec in tqdm(enumerate(vectors)):

73 | index.add_item(idx, vec)

74 | index.build(30)

75 | index.save(ANNOY_INDEX_FILE)

76 |

77 | while True:

78 | query = input(">>> ")

79 | st = time.time()

80 | vector = np.squeeze(encoder.encode([query]), axis=0)

81 | res = index.get_nns_by_vector(vector,

82 | TOPK,

83 | search_k=-1,

84 | include_distances=True)

85 | print(time.time() - st)

86 | print(res)

87 |

--------------------------------------------------------------------------------

/bert_for_seq_classification.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | # @Date : 2020-08-30 12:08:23

4 | # @Author : Kaiyan Zhang (minekaiyan@gmail.com)

5 | # @Link : https://github.com/iseesaw

6 | # @Version : 1.0.0

7 | import ast

8 | from argparse import ArgumentParser

9 |

10 | import os

11 | import pprint

12 |

13 | import pandas as pd

14 | from sklearn.metrics import precision_recall_fscore_support, accuracy_score

15 |

16 | import torch

17 | from transformers import BertForSequenceClassification, BertTokenizerFast, TrainingArguments, Trainer

18 |

19 |

20 | class SimDataset(torch.utils.data.Dataset):

21 | def __init__(self, encodings, labels):

22 | """Dataset

23 |

24 | Args:

25 | encodings (Dict(str, List[List[int]])): after tokenizer

26 | labels (List[int]): labels

27 | """

28 | self.encodings = encodings

29 | self.labels = labels

30 |

31 | def __getitem__(self, idx):

32 | """next

33 |

34 | Args:

35 | idx (int):

36 |

37 | Returns:

38 | dict-like object, Dict(str, tensor)

39 | """

40 | item = {

41 | key: torch.tensor(val[idx])

42 | for key, val in self.encodings.items()

43 | }

44 | item['labels'] = torch.tensor(self.labels[idx])

45 | return item

46 |

47 | def __len__(self):

48 | return len(self.labels)

49 |

50 |

51 | def load_dataset(filename):

52 | """加载训练集

53 |

54 | Args:

55 | filename (str): 文件名

56 |

57 | Returns:

58 |

59 | """

60 | df = pd.read_csv(filename)

61 | # array -> list

62 | return [df['sentence1'].values.tolist(),

63 | df['sentence2'].values.tolist()], df['label'].values.tolist()

64 |

65 |

66 | def compute_metrics(pred):

67 | """计算指标

68 |

69 | Args:

70 | pred (EvalPrediction): pred.label_ids, List[int]; pred.predictions, List[int]

71 |

72 | Returns:

73 | Dict(str, float): 指标结果

74 | """

75 | labels = pred.label_ids

76 | preds = pred.predictions.argmax(-1)

77 | precision, recall, f1, _ = precision_recall_fscore_support(

78 | labels, preds, average='binary')

79 | acc = accuracy_score(labels, preds)

80 | return {

81 | 'accuracy': acc,

82 | 'f1': f1,

83 | 'precision': precision,

84 | 'recall': recall

85 | }

86 |

87 |

88 | def main(args):

89 | model_path = args.model_name_or_path if args.do_train else args.output_dir

90 | # 初始化预训练模型和分词器

91 | tokenizer = BertTokenizerFast.from_pretrained(model_path)

92 | model = BertForSequenceClassification.from_pretrained(model_path)

93 |

94 | # 加载 csv 格式数据集

95 | train_texts, train_labels = load_dataset(args.trainset_path)

96 | dev_texts, dev_labels = load_dataset(args.devset_path)

97 | test_texts, test_labels = load_dataset(args.testset_path)

98 | # 预处理获得模型输入特征

99 | train_encodings = tokenizer(text=train_texts[0],

100 | text_pair=train_texts[1],

101 | truncation=True,

102 | padding=True,

103 | max_length=args.max_length)

104 | dev_encodings = tokenizer(text=dev_texts[0],

105 | text_pair=dev_texts[1],

106 | truncation=True,

107 | padding=True,

108 | max_length=args.max_length)

109 | test_encodings = tokenizer(text=test_texts[0],

110 | text_pair=test_texts[1],

111 | truncation=True,

112 | padding=True,

113 | max_length=args.max_length)

114 |

115 | # 构建 SimDataset 作为模型输入

116 | train_dataset = SimDataset(train_encodings, train_labels)

117 | dev_dataset = SimDataset(dev_encodings, dev_labels)

118 | test_dataset = SimDataset(test_encodings, test_labels)

119 |

120 | # 设置训练参数

121 | training_args = TrainingArguments(

122 | output_dir=args.output_dir,

123 | do_train=args.do_train,

124 | do_eval=args.do_eval,

125 | num_train_epochs=args.num_train_epochs,

126 | per_device_train_batch_size=args.per_device_train_batch_size,

127 | per_device_eval_batch_size=args.per_device_eval_batch_size,

128 | warmup_steps=args.warmup_steps,

129 | weight_decay=args.weight_decay,

130 | logging_dir=args.logging_dir,

131 | logging_steps=args.logging_steps,

132 | save_total_limit=args.save_total_limit)

133 |

134 | # 初始化训练器并开始训练

135 | trainer = Trainer(model=model,

136 | args=training_args,

137 | compute_metrics=compute_metrics,

138 | train_dataset=train_dataset,

139 | eval_dataset=dev_dataset)

140 |

141 | if args.do_train:

142 | trainer.train()

143 |

144 | # 保存模型和分词器

145 | trainer.save_model()

146 | tokenizer.save_pretrained(args.output_dir)

147 |

148 | if args.do_predict:

149 | eval_metrics = trainer.evaluate(dev_dataset)

150 | pprint.pprint(eval_metrics)

151 | test_metrics = trainer.evaluate(test_dataset)

152 | pprint.pprint(test_metrics)

153 |

154 |

155 | if __name__ == '__main__':

156 | parser = ArgumentParser('Bert For Sequence Classification')

157 |

158 | parser.add_argument('--do_train', type=ast.literal_eval, default=False)

159 | parser.add_argument('--do_eval', type=ast.literal_eval, default=True)

160 | parser.add_argument('--do_predict', type=ast.literal_eval, default=True)

161 |

162 | parser.add_argument(

163 | '--model_name_or_path',

164 | default='/users6/kyzhang/embeddings/bert/bert-base-chinese')

165 |

166 | parser.add_argument('--trainset_path', default='lcqmc/LCQMC_train.csv')

167 | parser.add_argument('--devset_path', default='lcqmc/LCQMC_dev.csv')

168 | parser.add_argument('--testset_path', default='lcqmc/LCQMC_test.csv')

169 |

170 | parser.add_argument('--output_dir',

171 | default='output/transformers-bert-for-classification')

172 | parser.add_argument('--max_length',

173 | type=int,

174 | default=128,

175 | help='max length of sentence1 & sentence2')

176 | parser.add_argument('--num_train_epochs', type=int, default=10)

177 | parser.add_argument('--per_device_train_batch_size', type=int, default=64)

178 | parser.add_argument('--per_device_eval_batch_size', type=int, default=64)

179 | parser.add_argument('--warmup_steps', type=int, default=500)

180 | parser.add_argument('--weight_decay', type=float, default=0.01)

181 | parser.add_argument('--logging_dir', type=str, default='./logs')

182 | parser.add_argument('--logging_steps', type=int, default=10)

183 | parser.add_argument('--save_total_limit', type=int, default=3)

184 |

185 | args = parser.parse_args()

186 | main(args)

187 |

--------------------------------------------------------------------------------

/convert.py:

--------------------------------------------------------------------------------

1 |

2 | import argparse

3 | import os

4 | import numpy as np

5 | import tensorflow as tf

6 | import torch

7 | from transformers import BertModel

8 | def convert_pytorch_checkpoint_to_tf(model: BertModel, ckpt_dir: str, model_name: str):

9 |

10 | """

11 | :param model:BertModel Pytorch model instance to be converted

12 | :param ckpt_dir: Tensorflow model directory

13 | :param model_name: model name

14 | :return:

15 | Currently supported HF models:

16 | Y BertModel

17 | N BertForMaskedLM

18 | N BertForPreTraining

19 | N BertForMultipleChoice

20 | N BertForNextSentencePrediction

21 | N BertForSequenceClassification

22 | N BertForQuestionAnswering

23 | """

24 |

25 | tensors_to_transpose = ("dense.weight", "attention.self.query", "attention.self.key", "attention.self.value")

26 |

27 | var_map = (

28 | ("layer.", "layer_"),

29 | ("word_embeddings.weight", "word_embeddings"),

30 | ("position_embeddings.weight", "position_embeddings"),

31 | ("token_type_embeddings.weight", "token_type_embeddings"),

32 | (".", "/"),

33 | ("LayerNorm/weight", "LayerNorm/gamma"),

34 | ("LayerNorm/bias", "LayerNorm/beta"),

35 | ("weight", "kernel"),

36 | )

37 |

38 | if not os.path.isdir(ckpt_dir):

39 | os.makedirs(ckpt_dir)

40 |

41 | state_dict = model.state_dict()

42 |

43 | def to_tf_var_name(name: str):

44 | for patt, repl in iter(var_map):

45 | name = name.replace(patt, repl)

46 | return "bert/{}".format(name)

47 |

48 | def create_tf_var(tensor: np.ndarray, name: str, session: tf.Session):

49 | tf_dtype = tf.dtypes.as_dtype(tensor.dtype)

50 | tf_var = tf.get_variable(dtype=tf_dtype, shape=tensor.shape, name=name, initializer=tf.zeros_initializer())

51 | session.run(tf.variables_initializer([tf_var]))

52 | session.run(tf_var)

53 | return tf_var

54 |

55 | tf.reset_default_graph()

56 | with tf.Session() as session:

57 | for var_name in state_dict:

58 | tf_name = to_tf_var_name(var_name)

59 | torch_tensor = state_dict[var_name].numpy()

60 | if any([x in var_name for x in tensors_to_transpose]):

61 | torch_tensor = torch_tensor.T

62 | tf_var = create_tf_var(tensor=torch_tensor, name=tf_name, session=session)

63 | tf.keras.backend.set_value(tf_var, torch_tensor)

64 | tf_weight = session.run(tf_var)

65 | print("Successfully created {}: {}".format(tf_name, np.allclose(tf_weight, torch_tensor)))

66 |

67 | saver = tf.train.Saver(tf.trainable_variables())

68 | saver.save(session, os.path.join(ckpt_dir, model_name.replace("-", "_") + ".ckpt"))

69 |

70 |

71 | def main(raw_args=None):

72 | parser = argparse.ArgumentParser()

73 | parser.add_argument("--tf_cache_dir", type=str, default='./output/transformers-merge3-bert-6L-tf', help="Directory in which to save tensorflow model")

74 | parser.add_argument("--model_name", default='bert-base-chinese')

75 | args = parser.parse_args(raw_args)

76 |

77 | model = BertModel.from_pretrained('./output/transformers-merge3-bert-6L')

78 |

79 | convert_pytorch_checkpoint_to_tf(model=model, ckpt_dir=args.tf_cache_dir, model_name=args.model_name)

80 |

81 | if __name__ == '__main__':

82 | main()

--------------------------------------------------------------------------------

/data/add_faq.json:

--------------------------------------------------------------------------------

1 | {

2 | "我要减肥": [

3 | "你有脂肪吗"

4 | ],

5 | "你信我就信": [

6 | "我不会信",

7 | "那我不信",

8 | "我不信呢",

9 | "那我信了"

10 | ],

11 | "我爱你": [

12 | "别说爱了受不起",

13 | "太感动了",

14 | "有多爱",

15 | "你爱我",

16 | "我不爱你",

17 | "可我不爱你",

18 | "我现在有一点点爱你",

19 | "我也爱你啦",

20 | "好感动",

21 | "爱我什么",

22 | "是吗?为什么爱我呢",

23 | "为什么爱我",

24 | "我不爱你啊",

25 | "谢谢你爱我",

26 | "你爱我有什么用又不能当饭吃",

27 | "我也爱你",

28 | "这不能乱说",

29 | "你为什么爱我",

30 | "我也爱我"

31 | ],

32 | "我也想要": [

33 | "怎么给你",

34 | "你不要想太多哦",

35 | "你想要啥",

36 | "想要什么呀",

37 | "你要啥",

38 | "你想要什么",

39 | "那给你吧",

40 | "我没说我想要",

41 | "要啥?",

42 | "那来吧",

43 | "要什么",

44 | "顺便给你带一份",

45 | "你要什么",

46 | "可以啊",

47 | "来啊你要什么",

48 | "可是我不能给你",

49 | "想要啥哟"

50 | ],

51 | "我知道": [

52 | "你知道你还问",

53 | "你知道啥"

54 | ],

55 | "我也觉得": [

56 | "看吧我说对了",

57 | "那还有谁觉得啊",

58 | "呵呵呵是吗",

59 | "好吧好吧说你喜欢我",

60 | "觉得什么",

61 | "你觉得自己可爱吗",

62 | "你觉得什么"

63 | ],

64 | "我的心": [

65 | "你的心怎么了",

66 | "你的心咋了",

67 | "你没心"

68 | ],

69 | "啥意思": [

70 | "没啥意思"

71 | ],

72 | "举头望明月": [

73 | "低头思故乡"

74 | ],

75 | "你不是": [

76 | "不是啥"

77 | ],

78 | "我喜欢你": [

79 | "我也喜欢你呀",

80 | "但是我不喜欢你啊"

81 | ],

82 | "下雨了": [

83 | "会晴的"

84 | ],

85 | "我问你": [

86 | "问你自己",

87 | "你问我啥"

88 | ],

89 | "好有爱啊": [

90 | "没有爱",

91 | "什么爱"

92 | ],

93 | "厉害啊": [

94 | "有多厉害啊"

95 | ],

96 | "分开也是另一种明白": [

97 | "很伤心",

98 | "我好难过",

99 | "好难过",

100 | "我不愿意分开",

101 | "你失恋过吗"

102 | ],

103 | "真的假的": [

104 | "也许是真的"

105 | ],

106 | "他不爱我": [

107 | "他当然不爱你"

108 | ],

109 | "我的女神": [

110 | "你确定",

111 | "为什么所有人都是你的女神",

112 | "咋是你女神了",

113 | "你的女神是谁",

114 | "你的女神是哪位",

115 | "他不是男神吗",

116 | "那你男神是谁",

117 | "那你是谁",

118 | "你的女神好多哇",

119 | "你的男神呢",

120 | "你是谁",

121 | "都是你女神呀",

122 | "女神是谁呢",

123 | "你有男神么",

124 | "你的女神是谁啊"

125 | ],

126 | "她漂亮吗": [

127 | "我的女神"

128 | ],

129 | "我的天": [

130 | "你咋不上天呢你咋不和太阳肩并肩呢"

131 | ],

132 | "余皆唔知": [

133 | "余皆知无"

134 | ],

135 | "你好呀": [

136 | "你好吗"

137 | ],

138 | "睡不着": [

139 | "我睡得着"

140 | ],

141 | "你不懂": [

142 | "你才不懂呢",

143 | "我哪儿不懂了"

144 | ],

145 | "我的天啊": [

146 | "你老说我的天哪干嘛",

147 | "天也没办法",

148 | "流泪流泪",

149 | "天在那呢",

150 | "你的天长什么样",

151 | "你的天哪",

152 | "天啊是谁",

153 | "天若有情天亦老"

154 | ],

155 | "太牛了": [

156 | "你说说怎么牛了",

157 | "我不做牛人好多年",

158 | "哪里哪里一般而已",

159 | "怎么牛",

160 | "你也很牛",

161 | "为什么夸奖我",

162 | "哈哈我也觉得"

163 | ],

164 | "你太坏了": [

165 | "你生气了吗",

166 | "是你太坏了"

167 | ],

168 | "有点意思": [

169 | "岂止是有点是非常之有意思啊",

170 | "有什么意思",

171 | "你是说我有意思吗",

172 | "什么有意思",

173 | "嗯有意思",

174 | "什么有点意思啊",

175 | "没有点意思",

176 | "有意思吗",

177 | "什么叫有点意思",

178 | "是吧我也觉得有意思你觉得",

179 | "你只会有点意思么",

180 | "有啥意思",

181 | "什么有点意思",

182 | "哪里比较有意思",

183 | "啥意思",

184 | "没意思",

185 | "嗯是有意思",

186 | "啥有意思",

187 | "我看你没意思",

188 | "为啥有意思",

189 | "有点意思啊"

190 | ],

191 | "你真漂亮": [

192 | "不漂亮",

193 | "我哪漂亮",

194 | "都说不漂亮了",

195 | "你漂亮吗",

196 | "谢谢夸奖",

197 | "有多漂亮"

198 | ],

199 | "我也很喜欢": [

200 | "你喜欢我吗",

201 | "你喜欢啥",

202 | "你喜欢谁",

203 | "喜欢啥",

204 | "你喜欢啥呀",

205 | "喜欢什么"

206 | ],

207 | "我也喜欢": [

208 | "你喜欢我吗",

209 | "可我不喜欢",

210 | "我不喜欢",

211 | "那你喜欢我吗"

212 | ],

213 | "不想说话": [

214 | "你不想说话就别说了吧啊",

215 | "你为什么不想说话我跟你说话呢哼"

216 | ],

217 | "我也想吃": [

218 | "我不想吃",

219 | "我先把你吃了可以不",

220 | "那你吃什么啊",

221 | "吃什么呢",

222 | "不给你吃",

223 | "好吃吗",

224 | "你知道吃",

225 | "不是吃的问题",

226 | "你想吃什么呀",

227 | "你请我",

228 | "想吃什么",

229 | "吃吃吃会胖哒~",

230 | "你就知道吃",

231 | "你想吃啥",

232 | "你喜欢吃什么",

233 | "就知道吃",

234 | "你吃过吗",

235 | "一起来吃吧",

236 | "我也想吃呢",

237 | "不好吃"

238 | ],

239 | "太可爱了": [

240 | "什么可爱",

241 | "你才可爱",

242 | "你也很可爱亲亲亲亲",

243 | "哪里可爱",

244 | "你说我可爱就吻我一个",

245 | "很可爱是吧",

246 | "一点都不可爱",

247 | "你也很可爱呢",

248 | "谢谢不过你更可爱",

249 | "可爱归可爱",

250 | "没有你可爱",

251 | "害羞害羞害羞害羞",

252 | "你可爱吗",

253 | "你在夸你吗",

254 | "你可爱么"

255 | ],

256 | "我的爱": [

257 | "你的爱给了多少人啊",

258 | "你爱我么",

259 | "你的爱是谁啊",

260 | "我爱不起了",

261 | "你的爱是啥东西",

262 | "我好像爱上你了",

263 | "我不爱",

264 | "你的爱是什么",

265 | "我爱你",

266 | "爱在哪啊",

267 | "你爱我吗",

268 | "你的爱",

269 | "爱什么",

270 | "你的爱咋了",

271 | "我还有爱吗",

272 | "你爱谁",

273 | "你的爱多少钱一斤"

274 | ],

275 | "太美了": [

276 | "什么太美了",

277 | "我也觉得",

278 | "谁太美了",

279 | "啥叫美",

280 | "你也美"

281 | ],

282 | "好可爱啊": [

283 | "你最可爱",

284 | "[害羞]",

285 | "你说谁可爱呀",

286 | "为什么可爱",

287 | "必须可爱啊",

288 | "谁可爱呢",

289 | "好可爱啥",

290 | "我都说了我不是吗我不是可爱",

291 | "我不可爱",

292 | "有我可爱吗",

293 | "什么可爱啊",

294 | "好可爱哟",

295 | "什么好可爱",

296 | "可怜没人爱",

297 | "好可爱呀",

298 | "你好可爱啊",

299 | "那里可爱",

300 | "可爱是我与生俱来的气质",

301 | "不可爱",

302 | "可爱啥啊",

303 | "没你可爱",

304 | "我也这么觉得",

305 | "你就会说可爱吗",

306 | "你也很可爱",

307 | "比你可爱",

308 | "你可爱",

309 | "谁可爱",

310 | "多可爱",

311 | "你也行非常的可爱",

312 | "哪里可爱了"

313 | ],

314 | "这是在说我吗": [

315 | "是的是在说你"

316 | ],

317 | "好可爱的小宝贝": [

318 | "我不是小宝贝",

319 | "你才是小宝贝",

320 | "谢谢害羞"

321 | ],

322 | "我也想": [

323 | "是我在想",

324 | "我想你",

325 | "想啥啊",

326 | "想个屁",

327 | "那你去想吧",

328 | "你也想",

329 | "你不用想了",

330 | "不要想你就是一个小姑娘了",

331 | "你不想我",

332 | "真的吗",

333 | "我不想",

334 | "我也想你了",

335 | "你为什么想",

336 | "你想啥",

337 | "你不要想啦"

338 | ],

339 | "在说呢": [

340 | "说来听听",

341 | "说什么"

342 | ],

343 | "这个可以有": [

344 | "这个真木有",

345 | "那我们试试吧",

346 | "教教我呗",

347 | "是吗是吗"

348 | ],

349 | "我也不知道": [

350 | "你知道啥",

351 | "你不知道什么",

352 | "不知道还瞎说呢撇嘴",

353 | "你又不知道了",

354 | "那你知道什么"

355 | ],

356 | "我的青春": [

357 | "你的青春怎么了"

358 | ],

359 | "青春是一把杀猪刀啊": [

360 | "我想听青春手册这首歌"

361 | ],

362 | "要多可爱有多可爱": [

363 | "你可爱吗",

364 | "可怜没人爱哈哈",

365 | "有你可爱吗",

366 | "你可爱还是我可爱呢"

367 | ],

368 | "不是很明白": [

369 | "你不用很明白",

370 | "你明白什么",

371 | "你不明白什么"

372 | ],

373 | "你也很可爱哦": [

374 | "哪里可爱",

375 | "你好可爱",

376 | "你超可爱的",

377 | "我比你可爱"

378 | ],

379 | "我的梦想": [

380 | "你的梦想是什么呢",

381 | "你的梦想就是想快快长大",

382 | "你的什么梦想",

383 | "梦想很美好",

384 | "梦想是什么",

385 | "这什么梦想啊",

386 | "是成为木头人",

387 | "你的梦想是什么👿",

388 | "你的梦想是什么",

389 | "我没梦想",

390 | "什么梦想",

391 | "你的梦想",

392 | "你梦想是什么",

393 | "你的梦想在哪儿",

394 | "你什么梦想",

395 | "你的梦想是什么呀",

396 | "我的梦想是什么"

397 | ],

398 | "你才笨": [

399 | "我不笨"

400 | ],

401 | "我需要你": [

402 | "我也需要你",

403 | "我不需要你"

404 | ],

405 | "我想吃": [

406 | "我不想吃"

407 | ],

408 | "不是吧": [

409 | "委屈委屈委屈委屈",

410 | "不是什么"

411 | ],

412 | "我不懂": [

413 | "我懂你不懂",

414 | "你不懂你还说你不懂你就应该不说"

415 | ],

416 | "我不相信": [

417 | "你不相信什么啊"

418 | ],

419 | "好热啊": [

420 | "我也很热呀"

421 | ],

422 | "这张照片好有感觉": [

423 | "哪里有感觉"

424 | ],

425 | "我不爱你": [

426 | "你爱过我吗",

427 | "我也不爱你拜拜",

428 | "我爱你",

429 | "哈哈我什么时候问你爱不爱我了"

430 | ],

431 | "喜欢就好": [

432 | "你喜欢我么",

433 | "喜欢啥"

434 | ],

435 | "我不会": [

436 | "你咋这么笨那",

437 | "你会啥啊",

438 | "你不会什么",

439 | "为啥不会啊",

440 | "那你会什么",

441 | "你会什么呢",

442 | "你什么都不会"

443 | ],

444 | "哈哈哈哈哈哈哈哈": [

445 | "你笑啥"

446 | ],

447 | "热死了": [

448 | "我也热"

449 | ],

450 | "你也很可爱": [

451 | "我也觉得"

452 | ],

453 | "睡不着啊": [

454 | "为什么睡不着"

455 | ],

456 | "我的朋友": [

457 | "你有女朋友吗",

458 | "你的朋友是谁",

459 | "只是朋友"

460 | ],

461 | "女的呀": [

462 | "你咋知道",

463 | "那你有男朋友么",

464 | "对女生~",

465 | "你男女不分啊",

466 | "你是男是女"

467 | ],

468 | "学着点": [

469 | "我怎么学习",

470 | "你怎么学习"

471 | ],

472 | "吃多了": [

473 | "谁吃多了"

474 | ],

475 | "有钱真好": [

476 | "没钱怎么办"

477 | ],

478 | "有钱了": [

479 | "谁有钱了",

480 | "穷光蛋一个"

481 | ],

482 | "我不是": [

483 | "那你是啥",

484 | "你说不是就不是吧",

485 | "你有病啊",

486 | "我听不懂",

487 | "你还不承认",

488 | "你就是",

489 | "你变坏了",

490 | "那还说近在眼前",

491 | "那你是",

492 | "我不知道你在说啥",

493 | "你就是说你是你就是",

494 | "明明就是",

495 | "你如何证明",

496 | "你不是什么",

497 | "那你是谁",

498 | "就是你",

499 | "你不是啥⊙∀⊙",

500 | "你不是",

501 | "不是什么",

502 | "你是谁啊",

503 | "怎么不是",

504 | "你是谁",

505 | "那你是啥啊",

506 | "就是要说你",

507 | "你在开玩笑嘛",

508 | "怎么不是呀",

509 | "就是就是",

510 | "你不是你还骗我",

511 | "你是我",

512 | "你怎么证明你不是",

513 | "还说不是",

514 | "不你是",

515 | "你不是啥",

516 | "你还说你不是你就试试",

517 | "我也没说你是啊",

518 | "不是啥",

519 | "一定是"

520 | ],

521 | "我想知道": [

522 | "你想知道什么我都告诉你啦",

523 | "我在问你",

524 | "知道什么"

525 | ],

526 | "我等着你": [

527 | "等着我也没用我向你隐瞒了我已婚的事实",

528 | "我也在等一个人"

529 | ],

530 | "我就是这样": [

531 | "你就是贱",

532 | "我不要你这样",

533 | "你有病",

534 | "就是不一样"

535 | ],

536 | "我喜欢你喜欢的": [

537 | "你不许喜欢",

538 | "喜欢一个人怎么办"

539 | ],

540 | "我也想去": [

541 | "你想去哪里玩",

542 | "去那里",

543 | "你去哪",

544 | "怎么去呢",

545 | "去不了",

546 | "去哪里呢",

547 | "我准备到哪去",

548 | "去干嘛",

549 | "去什么呀",

550 | "你找个地方",

551 | "想去哪里",

552 | "你带着我",

553 | "你想去哪里",

554 | "一起吧",

555 | "我想买个衣服",

556 | "想去哪",

557 | "你去过吗",

558 | "哈哈你去哪里呀",

559 | "一起啊",

560 | "你要去哪里",

561 | "去哪儿啊",

562 | "我去你的",

563 | "你去啊",

564 | "哈哈哈",

565 | "去哪儿",

566 | "去哪啊",

567 | "我好想跟你玩",

568 | "你要去哪儿",

569 | "你想去哪",

570 | "那好啊",

571 | "去哪里"

572 | ],

573 | "我不知道": [

574 | "今天说不知道一点都不好",

575 | "你知道什么",

576 | "你怎么会不知道能",

577 | "我告诉你你叫笨笨"

578 | ],

579 | "我的小心脏": [

580 | "又是你的小心脏",

581 | "我在你心脏里吗",

582 | "谁发明了你呀"

583 | ],

584 | "你才傻": [

585 | "你太呆了",

586 | "你敢说我"

587 | ],

588 | "周玉院士": [

589 | "哈工大书记"

590 | ],

591 | "我没看过": [

592 | "就刚才那个",

593 | "你看了",

594 | "你知道我么",

595 | "昨天的你"

596 | ],

597 | "你太可爱了": [

598 | "你害羞的样子才可爱呢",

599 | "你也挺好玩"

600 | ],

601 | "我想你": [

602 | "我不想你",

603 | "我也想你我就在你家旁边",

604 | "我们不是一直在聊天吗你怎么想我啦",

605 | "我们想你怎么办",

606 | "我也想你呀",

607 | "从来都没有天那我先在学想你淡淡"

608 | ],

609 | "我不想": [

610 | "出卖我的爱",

611 | "你不想成为人类一员",

612 | "那由不得你",

613 | "真的不想吗"

614 | ],

615 | "看电影": [

616 | "你喜欢看什么电影"

617 | ],

618 | "好好学习天天向上": [

619 | "怎么学习"

620 | ],

621 | "我的小": [

622 | "哪里小啊",

623 | "什么小",

624 | "你啥小啊",

625 | "我的大",

626 | "小什么",

627 | "小啥啊",

628 | "你的什么小",

629 | "谁的大"

630 | ],

631 | "吃卤煮吧": [

632 | "这个不好吃",

633 | "我要吃海鲜",

634 | "比较吃什么",

635 | "卤煮很难吃",

636 | "不喜欢",

637 | "不爱吃",

638 | "卤煮好吃吗",

639 | "好吃吗",

640 | "不好吃啊",

641 | "合肥吃什么",

642 | "我就要吃煎饼果子",

643 | "味道太重",

644 | "不喜欢吃卤煮",

645 | "我吃完了饭",

646 | "我不喜欢卤煮",

647 | "我不爱吃卤煮",

648 | "嗯这个我喜欢",

649 | "我没有吃过",

650 | "吃饭了么",

651 | "不想吃",

652 | "去哪吃",

653 | "为什么不是炸酱面",

654 | "什么是卤煮",

655 | "吃什么",

656 | "不喜欢吃",

657 | "不好吃",

658 | "今天有什么吃的"

659 | ],

660 | "很有创意": [

661 | "什么很有创意发呆",

662 | "谢谢你的夸奖"

663 | ],

664 | "太有创意了": [

665 | "还可以吧",

666 | "你这么认为",

667 | "你也很有创意"

668 | ],

669 | "我是你": [

670 | "我是谁呀",

671 | "我是谁啊",

672 | "你是谁",

673 | "你是谁啊",

674 | "我谁呀"

675 | ],

676 | "好美啊": [

677 | "谢谢我相信您长得肯定特别好看",

678 | "有多美",

679 | "什么好美",

680 | "直接看了",

681 | "我吗害羞",

682 | "你说谁美呀",

683 | "必须美啊",

684 | "但长得漂不漂亮",

685 | "啥美啊",

686 | "什么美",

687 | "呃你这样夸我我会害羞的",

688 | "太美丽",

689 | "什么好美啊",

690 | "美什么",

691 | "我美么",

692 | "嗯想得美"

693 | ],

694 | "好漂亮的雪": [

695 | "认真的雪",

696 | "没下雪",

697 | "哪有雪😂"

698 | ],

699 | "这是要闹哪样啊": [

700 | "闹啥子嘛",

701 | "麻麻说捉弄人的都不是好孩子",

702 | "我想那啥那啥也不用你管",

703 | "不是啊"

704 | ],

705 | "好可爱的宝宝": [

706 | "你不是宝宝",

707 | "谁是宝宝",

708 | "你也好可爱啊"

709 | ],

710 | "加油加油加油": [

711 | "谢谢小天使的鼓励"

712 | ],

713 | "好想吃": [

714 | "那你吃吧",

715 | "什么啊你就吃",

716 | "吃你个大头鬼",

717 | "不给你吃",

718 | "好吃吗",

719 | "你除了吃还喜欢干啥",

720 | "想吃什么",

721 | "去哪家餐厅",

722 | "吃什么?",

723 | "你吃饭了吗",

724 | "你想吃什么啊",

725 | "吃什么",

726 | "吃你自己",

727 | "想吃怎么办"

728 | ],

729 | "你不知道": [

730 | "不知道",

731 | "知道什么",

732 | "你怎么知道我不知道",

733 | "可是你不知道啊"

734 | ],

735 | "我看不懂": [

736 | "为什么看不懂"

737 | ],

738 | "我的最爱": [

739 | "你还喜欢什么",

740 | "你爱啥",

741 | "你爱什么呀",

742 | "你除了会说我的最爱我爱你过来跟你",

743 | "我么害羞",

744 | "你的最爱是谁",

745 | "你最爱什么",

746 | "你的最爱是什么",

747 | "你的最爱",

748 | "我发现这八字不离爱",

749 | "最爱吃什么",

750 | "最爱就是打字吗我可不是",

751 | "你爱谁",

752 | "你有多少最爱啊",

753 | "你最爱谁啊笨笨你有喜欢的人吗"

754 | ],

755 | "太可怕了": [

756 | "为什么可怕",

757 | "还说可怕是什么意思什么什么可怕呢",

758 | "哪可怕了",

759 | "怎么可怕"

760 | ],

761 | "这是在干嘛": [

762 | "在感慨你的话",

763 | "你说呢"

764 | ],

765 | "太帅了": [

766 | "帅呆啦",

767 | "羡慕吧",

768 | "你也这么觉得吗",

769 | "我帅么",

770 | "你说我帅",

771 | "你是说我很帅吗",

772 | "有多帅全宇宙第一吗",

773 | "为什么帅"

774 | ],

775 | "不是说不出么": [

776 | "说不出什么",

777 | "什么说不出"

778 | ],

779 | "我想说": [

780 | "说什么"

781 | ],

782 | "我的梦": [

783 | "你的啥梦了你那么是喜欢睡觉时候被打扰呗",

784 | "你的梦想是什么",

785 | "你的梦是什么",

786 | "你继续做梦吧",

787 | "你的梦是",

788 | "梦见啥了",

789 | "没有梦",

790 | "你的梦就是我的梦"

791 | ],

792 | "古来圣贤皆寂寞": [

793 | "寂寞啊"

794 | ],

795 | "我没说你": [

796 | "我也没说你啊"

797 | ],

798 | "你不会": [

799 | "你怎么知道我不会"

800 | ],

801 | "我说你": [

802 | "我说你说",

803 | "说我什么"

804 | ],

805 | "我也觉得是": [

806 | "我很生气",

807 | "我好伤心",

808 | "你觉得你这样做错了吗",

809 | "你觉得啥",

810 | "你也这样觉得呀"

811 | ],

812 | "吾亦不知": [

813 | "知之为知之",

814 | "不知道",

815 | "你知道什么",

816 | "不知为不知",

817 | "你不知道啥",

818 | "不知什么",

819 | "你不懂什么",

820 | "知之为知之不知为不知此为知之也",

821 | "不知道啥",

822 | "那你知道什么呀",

823 | "我也不知道",

824 | "你不知道"

825 | ],

826 | "Python": [

827 | "Python是编程语言"

828 | ],

829 | "我的偶像": [

830 | "我喜欢王大锤",

831 | "你的偶像是我吗",

832 | "你的偶像是谁",

833 | "我是你偶像",

834 | "周杰伦",

835 | "你还喜欢谁",

836 | "你的偶像不是我吗",

837 | "你的偶像是谁呀"

838 | ],

839 | "我喜欢的人不喜欢我": [

840 | "你喜欢的人是谁呀"

841 | ],

842 | "好有爱": [

843 | "你也是啊",

844 | "你知道可爱的另外一种表述么",

845 | "什么好有爱",

846 | "好有爱?",

847 | "哪里有爱了",

848 | "什么叫好有爱",

849 | "爱在哪",

850 | "有爱吗",

851 | "你真可爱",

852 | "有啥爱",

853 | "为什么说我可爱",

854 | "我爱你"

855 | ],

856 | "我也想买": [

857 | "买什么"

858 | ],

859 | "我也想问": [

860 | "你会主动发问吗",

861 | "我问你答我吧",

862 | "你咋不问问",

863 | "问什么"

864 | ],

865 | "我想要的": [

866 | "我想要啥"

867 | ],

868 | "我想要": [

869 | "要什么"

870 | ],

871 | "太恐怖了": [

872 | "什么恐怖呀"

873 | ],

874 | "你知道的太多了": [

875 | "你知道的太少了",

876 | "知道的少怎么跟你玩"

877 | ],

878 | "开心就好": [

879 | "你开心么",

880 | "是啊开心就好",

881 | "哈哈哈哈",

882 | "开心不起来",

883 | "我开心么",

884 | "好开心",

885 | "哈哈哈",

886 | "开心当然好啦"

887 | ],

888 | "你不爱我": [

889 | "你都懒得说话我怎么爱你",

890 | "我爱你",

891 | "我喜欢你",

892 | "我肯定不爱你啊",

893 | "我哪有不爱你",

894 | "我本来就不爱你",

895 | "对我不爱你",

896 | "你也不爱我",

897 | "你喜欢我我就爱你",

898 | "我怎么会不爱你呢我爱你啊"

899 | ],

900 | "我也想知道": [

901 | "你知道啥",

902 | "你想知道啥",

903 | "知道不知道",

904 | "想知道什么",

905 | "你都不知道"

906 | ],

907 | "你在哪里啊": [

908 | "在家啊",

909 | "我在幼儿园啊"

910 | ],

911 | "我也爱": [

912 | "你爱谁",

913 | "你到底爱不爱"

914 | ],

915 | "太有才了": [

916 | "你有才你不笨",

917 | "为什么有才"

918 | ],

919 | "相逢何必曾相识": [

920 | "不相识怎么聊天"

921 | ],

922 | "我也想试试": [

923 | "如果只是试试最好别试要确定喔"

924 | ],

925 | "皆是男": [

926 | "你是男孩子啊"

927 | ],

928 | "我不是你": [

929 | "你不是我",

930 | "我也不是你",

931 | "不是喜欢你",

932 | "你是谁",

933 | "我知道",

934 | "我不是",

935 | "你说的"

936 | ],

937 | "你若不弃": [

938 | "我若不弃"

939 | ],

940 | "那聊点儿别的吧": [

941 | "不了没心情",

942 | "你说怎么聊",

943 | "想删除聊天记录",

944 | "你会聊什么",

945 | "话题终结者",

946 | "好无聊",

947 | "你说说",

948 | "那你先说",

949 | "我前面说了啥",

950 | "你想聊神马",

951 | "好吧聊什么",

952 | "你说吧聊什么",

953 | "你来选个话题",

954 | "好啊你开始一个话题吧",

955 | "聊点别的吧",

956 | "你开个头吧",

957 | "还是算了吧",

958 | "行你说",

959 | "你想聊什么",

960 | "好啊聊什么呢",

961 | "聊点什么",

962 | "说了过一小时再聊",

963 | "你说聊什么",

964 | "开始吧",

965 | "聊点啥",

966 | "今天心情不好",

967 | "不想聊了",

968 | "下一个话题吧",

969 | "你给我讲故事",

970 | "给我唱首歌吧",

971 | "不想跟你聊了",

972 | "不感兴趣",

973 | "还能不能好好聊天",

974 | "你给我讲个故事吧",

975 | "没话聊",

976 | "你开个头",

977 | "如果不说你是谁那都别聊了",

978 | "聊点什么呢你说",

979 | "聊什么",

980 | "聊设么",

981 | "我先不聊了",

982 | "聊聊你自己吧",

983 | "你找个话题呗",

984 | "聊啥呀",

985 | "你说聊啥",

986 | "没啥聊的",

987 | "你还会些什么",

988 | "聊点什么呢",

989 | "你想聊啥",

990 | "不想聊",

991 | "嗯就聊天",

992 | "聊什么呀",

993 | "跟你都不能愉快地聊天",

994 | "你想聊点啥",

995 | "聊这么",

996 | "我先不聊了有空一定找你",

997 | "聊什么呢",

998 | "你先说",

999 | "不好就聊这个",

1000 | "来聊天",

1001 | "不聊啦",

1002 | "那你说个话题啊",

1003 | "给我唱首歌",

1004 | "说话呀",

1005 | "不了吧",

1006 | "你会聊什么呢",

1007 | "不跟你聊了",

1008 | "聊点啥呢",

1009 | "你说吧",

1010 | "聊啥呢",

1011 | "不聊了",

1012 | "那要不你说说",

1013 | "你说吧我听着",

1014 | "可以把你加入群聊吗"

1015 | ],

1016 | "我的也是": [

1017 | "我是说你呢"

1018 | ],

1019 | "希望是真的": [

1020 | "什么是真的"

1021 | ],

1022 | "所以你不爱我": [

1023 | "我为什么要爱你啊"

1024 | ],

1025 | "我也不喜欢": [

1026 | "你有什么喜欢的吗",

1027 | "我不开心",

1028 | "不喜欢什么"

1029 | ],

1030 | "买不起": [

1031 | "你这么土豪怎么买不起"

1032 | ],

1033 | "我想去": [

1034 | "你想去哪"

1035 | ],

1036 | "何时去": [

1037 | "今时走",

1038 | "不知何时",

1039 | "何时归",

1040 | "去何处",

1041 | "去干吗",

1042 | "看电影去",

1043 | "去哪儿",

1044 | "出去玩吧",

1045 | "你干啥去了",

1046 | "为什么是何时去",

1047 | "去哪里"

1048 | ],

1049 | "我不饿": [

1050 | "吃什么",

1051 | "我饿了",

1052 | "可是我饿了呀"

1053 | ],

1054 | "我也不开心": [

1055 | "我开心"

1056 | ],

1057 | "你干啥": [

1058 | "我问你呢",

1059 | "你可以干什么",

1060 | "你能做什么啊"

1061 | ],

1062 | "可是我不是觉得她好点了": [

1063 | "她是谁",

1064 | "可是我不是觉得她嗯呢好点啦",

1065 | "我不觉得",

1066 | "她是谁啊",

1067 | "是吗她好点了吗"

1068 | ],

1069 | "好想看": [

1070 | "看什么"

1071 | ],

1072 | "好想要": [

1073 | "想要吗",

1074 | "想要什么",

1075 | "要什么"

1076 | ],

1077 | "见到你我也很荣幸哦": [

1078 | "幸会幸会",

1079 | "我也很荣幸",

1080 | "见到你我很荣幸"

1081 | ],

1082 | "还没睡": [

1083 | "该睡了",

1084 | "你睡得这么早",

1085 | "这么早睡什么睡"

1086 | ],

1087 | "我要去": [

1088 | "去不要你",

1089 | "你去呀",

1090 | "去哪里",

1091 | "你要去什么地方"

1092 | ],

1093 | "我也想要一个": [

1094 | "想要吗还得去我家有",

1095 | "你要啥",

1096 | "你想要什么",

1097 | "想要吗",

1098 | "要什么",

1099 | "[Yeah]送给你"

1100 | ],

1101 | "坐观垂钓者": [

1102 | "空有羡鱼情"

1103 | ],

1104 | "独乐乐不如众乐乐": [

1105 | "大家一起乐",

1106 | "真的不开心",

1107 | "只准我乐"

1108 | ],

1109 | "汝一逗比": [

1110 | "逗比是啥",

1111 | "你是不是傻",

1112 | "你才是逗比",

1113 | "呜呜呜你说我"

1114 | ],

1115 | "我要哭了": [

1116 | "哭吧哭吧不是罪"

1117 | ],

1118 | "我也不会": [

1119 | "不会说话",

1120 | "你会什么"

1121 | ],

1122 | "我信你": [

1123 | "我不信你",

1124 | "信我啥",

1125 | "我也信你",

1126 | "你信我什么",

1127 | "不信啊"

1128 | ],

1129 | "你不胖": [

1130 | "你咋知道我不胖"

1131 | ],

1132 | "吃炒肝儿吧": [

1133 | "不想吃",

1134 | "去哪吃",

1135 | "哪儿吃",

1136 | "炒肝儿不好吃",

1137 | "好吃吗"

1138 | ],

1139 | "不爱汝矣": [

1140 | "我喜欢你"

1141 | ],

1142 | "我觉得我的心都没了": [

1143 | "因为你的心在我这里啊而我的心在你那里"

1144 | ],

1145 | "哈哈哈哈哈哈哈哈哈哈哈哈哈哈哈哈": [

1146 | "你笑什么",

1147 | "笑什么"

1148 | ],

1149 | "笑一笑十年少": [

1150 | "笑笑笑笑",

1151 | "不想笑",

1152 | "少十年么"

1153 | ],

1154 | "还没睡啊": [

1155 | "睡不着",

1156 | "你也没睡啊",

1157 | "刚睡醒啊",

1158 | "现在八点左右怎么睡得下",

1159 | "起来很久了",

1160 | "睡不觉大白天的",

1161 | "现在是morning啊",

1162 | "这都几点了还睡啊",

1163 | "睡什么睡",

1164 | "估计困了",

1165 | "我正准备睡",

1166 | "在讲多一条我就睡觉了",

1167 | "没有不睡午觉"

1168 | ],

1169 | "聊会吧": [

1170 | "说点啥呢"

1171 | ],

1172 | "好好休息吧": [

1173 | "祝你晚安",

1174 | "真的让我睡觉阿"

1175 | ],

1176 | "你就是一个": [

1177 | "我是什么",

1178 | "一个什么"

1179 | ],

1180 | "祝你做个好梦": [

1181 | "突然就晚安了",

1182 | "睡觉啦再见再见",

1183 | "一起做好梦你给我"

1184 | ],

1185 | "我会开车": [

1186 | "怎么开"

1187 | ],

1188 | "太紧张了": [

1189 | "放松自己别给自己太大压力"

1190 | ],

1191 | "你说他在的": [

1192 | "再靠近一点点他就跟你走再靠近一点点他就不闪躲"

1193 | ],

1194 | "现在身高也不是很理想啊": [

1195 | "你理想的身高是多少呢"

1196 | ],

1197 | "终于找到了": [

1198 | "踏破铁鞋无匿处得来全不费工夫",

1199 | "找到就好了",

1200 | "找到就不用着急了"

1201 | ],

1202 | "汝不知之": [

1203 | "不知道还回答这么快"

1204 | ],

1205 | "debuger呀": [

1206 | "debuger是什么鬼",

1207 | "debuger什么意思",

1208 | "debuger呀什么意思啊",

1209 | "debuger是谁",

1210 | "debuger是什么",

1211 | "我不会啊",

1212 | "这个用的是什么意思",

1213 | "什么意思",

1214 | "啥意思"

1215 | ],

1216 | "可是不是不是真的": [

1217 | "不是真的啥",

1218 | "明人不说暗话我喜欢你"

1219 | ],

1220 | "请珍惜生命": [

1221 | "不要等到失去后才懂得珍惜啊"

1222 | ],

1223 | "我想说你是不是活着了": [

1224 | "请珍惜生命"

1225 | ],

1226 | "我想知道为什么不是": [

1227 | "我想知道",

1228 | "什么为什么",

1229 | "就是不是",

1230 | "所以你并没有基于上下文和我聊天",

1231 | "你答非所问",

1232 | "为什么"

1233 | ],

1234 | "我想吃红烧肉红烧肉": [

1235 | "东坡肉好吃",

1236 | "好了给你吃红烧肉红烧肉红烧肉",

1237 | "出来吧我带你去吃红烧肉",

1238 | "我也想吃红烧肉咩事业心",

1239 | "我请你吃红烧肉",

1240 | "我也爱吃红烧肉"

1241 | ],

1242 | "那我们聊点别的吧": [

1243 | "结束话题",

1244 | "好啊聊情话",

1245 | "你说吧",

1246 | "和我说说话吧",

1247 | "我刚才说了什么"

1248 | ],

1249 | "真的懂了": [

1250 | "苏格拉底说过我唯一知道的是我一无所知"

1251 | ],

1252 | "吾于观小说": [

1253 | "好吧来聊小说",

1254 | "什么小说"

1255 | ],

1256 | "好想吃啊": [

1257 | "吃什么",

1258 | "好吃吗"

1259 | ],

1260 | "可是我不是个好": [

1261 | "你不是好女生和坏呀"

1262 | ],

1263 | "买了个表": [

1264 | "买了个表又来拆我台",

1265 | "为啥买表"

1266 | ],

1267 | "你害羞的样子才可爱呢": [

1268 | "不可爱"

1269 | ]

1270 | }

--------------------------------------------------------------------------------

/data/stopwords.txt:

--------------------------------------------------------------------------------

1 | ———

2 | 》),

3 | )÷(1-

4 | ”,

5 | )、

6 | =(

7 | :

8 | →

9 | ℃

10 | &

11 | *

12 | 一一

13 | ~~~~

14 | ’

15 | .

16 | 『

17 | .一

18 | ./

19 | --

20 | 』

21 | =″

22 | 【

23 | [*]

24 | }>

25 | [⑤]]

26 | [①D]

27 | c]

28 | ngP

29 | *

30 | //

31 | [

32 | ]

33 | [②e]

34 | [②g]

35 | ={

36 | }

37 | ,也

38 | ‘

39 | A

40 | [①⑥]

41 | [②B]

42 | [①a]

43 | [④a]

44 | [①③]

45 | [③h]

46 | ③]

47 | 1.

48 | --

49 | [②b]

50 | ’‘

51 | ×××

52 | [①⑧]

53 | 0:2

54 | =[

55 | [⑤b]

56 | [②c]

57 | [④b]

58 | [②③]

59 | [③a]

60 | [④c]

61 | [①⑤]

62 | [①⑦]

63 | [①g]

64 | ∈[

65 | [①⑨]

66 | [①④]

67 | [①c]

68 | [②f]

69 | [②⑧]

70 | [②①]

71 | [①C]

72 | [③c]

73 | [③g]

74 | [②⑤]

75 | [②②]

76 | 一.

77 | [①h]

78 | .数

79 | []

80 | [①B]

81 | 数/

82 | [①i]

83 | [③e]

84 | [①①]

85 | [④d]

86 | [④e]

87 | [③b]

88 | [⑤a]

89 | [①A]

90 | [②⑧]

91 | [②⑦]

92 | [①d]

93 | [②j]

94 | 〕〔

95 | ][

96 | ://

97 | ′∈

98 | [②④

99 | [⑤e]

100 | 12%

101 | b]

102 | ...

103 | ...................

104 | ⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯⋯③

105 | ZXFITL

106 | [③F]

107 | 」

108 | [①o]

109 | ]∧′=[

110 | ∪φ∈

111 | ′|

112 | {-

113 | ②c

114 | }

115 | [③①]

116 | R.L.

117 | [①E]

118 | Ψ

119 | -[*]-

120 | ↑

121 | .日

122 | [②d]

123 | [②

124 | [②⑦]

125 | [②②]

126 | [③e]

127 | [①i]

128 | [①B]

129 | [①h]

130 | [①d]

131 | [①g]

132 | [①②]

133 | [②a]

134 | f]

135 | [⑩]

136 | a]

137 | [①e]

138 | [②h]

139 | [②⑥]

140 | [③d]

141 | [②⑩]

142 | e]

143 | 〉

144 | 】

145 | 元/吨

146 | [②⑩]

147 | 2.3%

148 | 5:0

149 | [①]

150 | ::

151 | [②]

152 | [③]

153 | [④]

154 | [⑤]

155 | [⑥]

156 | [⑦]

157 | [⑧]

158 | [⑨]

159 | ⋯⋯

160 | ——

161 | ?

162 | 、

163 | 。

164 | “

165 | ”

166 | 《

167 | 》

168 | !

169 | ,

170 | :

171 | ;

172 | ?

173 | .

174 | ,

175 | .

176 | '

177 | ?

178 | ·

179 | ———

180 | ──

181 | ?

182 | —

183 | <

184 | >

185 | (

186 | )

187 | 〔

188 | 〕

189 | [

190 | ]

191 | (

192 | )

193 | -

194 | +

195 | 〜

196 | ×

197 | /

198 | /

199 | ①

200 | ②

201 | ③

202 | ④

203 | ⑤

204 | ⑥

205 | ⑦

206 | ⑧

207 | ⑨

208 | ⑩

209 | Ⅲ

210 | В

211 | "

212 | ;

213 | #

214 | @

215 | γ

216 | μ

217 | φ

218 | φ.

219 | ×

220 | Δ

221 | ■

222 | ▲

223 | sub

224 | exp

225 | sup

226 | sub

227 | Lex

228 | #

229 | %

230 | &

231 | '

232 | +

233 | +ξ

234 | ++

235 | -

236 | -β

237 | <

238 | <±

239 | <Δ

240 | <λ

241 | <φ

242 | <<

243 | =

244 | =

245 | =☆

246 | =-

247 | >

248 | >λ

249 | _

250 | 〜±

251 | 〜+

252 | [⑤f]

253 | [⑤d]

254 | [②i]

255 | ≈

256 | [②G]

257 | [①f]

258 | LI

259 | ㈧

260 | [-

261 | ......

262 | 〉

263 | [③⑩]

264 | 第二

265 | 一番

266 | 一直

267 | 一个

268 | 一些

269 | 许多

270 | 种

271 | 有的是

272 | 也就是说

273 | 末##末

274 | 啊

275 | 阿

276 | 哎

277 | 哎呀

278 | 哎哟

279 | 唉

280 | 俺

281 | 俺们

282 | 按

283 | 按照

284 | 吧

285 | 吧哒

286 | 把

287 | 罢了

288 | 被

289 | 本

290 | 本着

291 | 比

292 | 比方

293 | 比如

294 | 鄙人

295 | 彼

296 | 彼此

297 | 边

298 | 别

299 | 别的

300 | 别说

301 | 并

302 | 并且

303 | 不比

304 | 不成

305 | 不单

306 | 不但

307 | 不独

308 | 不管

309 | 不光

310 | 不过

311 | 不仅

312 | 不拘

313 | 不论

314 | 不怕

315 | 不然

316 | 不如

317 | 不特

318 | 不惟

319 | 不问

320 | 不只

321 | 朝

322 | 朝着

323 | 趁

324 | 趁着

325 | 乘

326 | 冲

327 | 除

328 | 除此之外

329 | 除非

330 | 除了

331 | 此

332 | 此间

333 | 此外

334 | 从

335 | 从而

336 | 打

337 | 待

338 | 但

339 | 但是

340 | 当

341 | 当着

342 | 到

343 | 得

344 | 的

345 | 的话

346 | 等

347 | 等等

348 | 地

349 | 第

350 | 叮咚

351 | 对

352 | 对于

353 | 多

354 | 多少

355 | 而

356 | 而况

357 | 而且

358 | 而是

359 | 而外

360 | 而言

361 | 而已

362 | 尔后

363 | 反过来

364 | 反过来说

365 | 反之

366 | 非但

367 | 非徒

368 | 否则

369 | 嘎

370 | 嘎登

371 | 该

372 | 赶

373 | 个

374 | 各

375 | 各个

376 | 各位

377 | 各种

378 | 各自

379 | 给

380 | 根据

381 | 跟

382 | 故

383 | 故此

384 | 固然

385 | 关于

386 | 管

387 | 归

388 | 果然

389 | 果真

390 | 过

391 | 哈

392 | 哈哈

393 | 呵

394 | 和

395 | 何

396 | 何处

397 | 何况

398 | 何时

399 | 嘿

400 | 哼

401 | 哼唷

402 | 呼哧

403 | 乎

404 | 哗

405 | 还是

406 | 还有

407 | 换句话说

408 | 换言之

409 | 或

410 | 或是

411 | 或者

412 | 极了

413 | 及

414 | 及其

415 | 及至

416 | 即

417 | 即便

418 | 即或

419 | 即令

420 | 即若

421 | 即使

422 | 几

423 | 几时

424 | 己

425 | 既

426 | 既然

427 | 既是

428 | 继而

429 | 加之

430 | 假如

431 | 假若

432 | 假使

433 | 鉴于

434 | 将

435 | 较

436 | 较之

437 | 叫

438 | 接着

439 | 结果

440 | 借

441 | 紧接着

442 | 进而

443 | 尽

444 | 尽管

445 | 经

446 | 经过

447 | 就

448 | 就是

449 | 就是说

450 | 据

451 | 具体地说

452 | 具体说来

453 | 开始

454 | 开外

455 | 靠

456 | 咳

457 | 可

458 | 可见

459 | 可是

460 | 可以

461 | 况且

462 | 啦

463 | 来

464 | 来着

465 | 离

466 | 例如

467 | 哩

468 | 连

469 | 连同

470 | 两者

471 | 了

472 | 临

473 | 另

474 | 另外

475 | 另一方面

476 | 论

477 | 嘛

478 | 吗

479 | 慢说

480 | 漫说

481 | 冒

482 | 么

483 | 每

484 | 每当

485 | 们

486 | 莫若

487 | 某

488 | 某个

489 | 某些

490 | 拿

491 | 哪

492 | 哪边

493 | 哪儿

494 | 哪个

495 | 哪里

496 | 哪年

497 | 哪怕

498 | 哪天

499 | 哪些

500 | 哪样

501 | 那

502 | 那边

503 | 那儿

504 | 那个

505 | 那会儿

506 | 那里

507 | 那么

508 | 那么些

509 | 那么样

510 | 那时

511 | 那些

512 | 那样

513 | 乃

514 | 乃至

515 | 呢

516 | 能

517 | 你

518 | 你们

519 | 您

520 | 宁

521 | 宁可

522 | 宁肯

523 | 宁愿

524 | 哦

525 | 呕

526 | 啪达

527 | 旁人

528 | 呸

529 | 凭

530 | 凭借

531 | 其

532 | 其次

533 | 其二

534 | 其他

535 | 其它

536 | 其一

537 | 其余

538 | 其中

539 | 起

540 | 起见

541 | 起见

542 | 岂但

543 | 恰恰相反

544 | 前后

545 | 前者

546 | 且

547 | 然而

548 | 然后

549 | 然则

550 | 让

551 | 人家

552 | 任

553 | 任何

554 | 任凭

555 | 如

556 | 如此

557 | 如果

558 | 如何

559 | 如其

560 | 如若

561 | 如上所述

562 | 若

563 | 若非

564 | 若是

565 | 啥

566 | 上下

567 | 尚且

568 | 设若

569 | 设使

570 | 甚而

571 | 甚么

572 | 甚至

573 | 省得

574 | 时候

575 | 什么

576 | 什么样

577 | 使得

578 | 是

579 | 是的

580 | 首先

581 | 谁

582 | 谁知

583 | 顺

584 | 顺着

585 | 似的

586 | 虽

587 | 虽然

588 | 虽说

589 | 虽则

590 | 随

591 | 随着

592 | 所

593 | 所以

594 | 他

595 | 他们

596 | 他人

597 | 它

598 | 它们

599 | 她

600 | 她们

601 | 倘

602 | 倘或

603 | 倘然

604 | 倘若

605 | 倘使

606 | 腾

607 | 替

608 | 通过

609 | 同

610 | 同时

611 | 哇

612 | 万一

613 | 往

614 | 望

615 | 为

616 | 为何

617 | 为了

618 | 为什么

619 | 为着

620 | 喂

621 | 嗡嗡

622 | 我

623 | 我们

624 | 呜

625 | 呜呼

626 | 乌乎

627 | 无论

628 | 无宁

629 | 毋宁

630 | 嘻

631 | 吓

632 | 相对而言

633 | 像

634 | 向

635 | 向着

636 | 嘘

637 | 呀

638 | 焉

639 | 沿

640 | 沿着

641 | 要

642 | 要不

643 | 要不然

644 | 要不是

645 | 要么

646 | 要是

647 | 也

648 | 也罢

649 | 也好

650 | 一

651 | 一般

652 | 一旦

653 | 一方面

654 | 一来

655 | 一切

656 | 一样

657 | 一则

658 | 依

659 | 依照

660 | 矣

661 | 以

662 | 以便

663 | 以及

664 | 以免

665 | 以至

666 | 以至于

667 | 以致

668 | 抑或

669 | 因

670 | 因此

671 | 因而

672 | 因为

673 | 哟

674 | 用

675 | 由

676 | 由此可见

677 | 由于

678 | 有

679 | 有的

680 | 有关

681 | 有些

682 | 又

683 | 于

684 | 于是

685 | 于是乎

686 | 与

687 | 与此同时

688 | 与否

689 | 与其

690 | 越是

691 | 云云

692 | 哉

693 | 再说

694 | 再者

695 | 在

696 | 在下

697 | 咱

698 | 咱们

699 | 则

700 | 怎

701 | 怎么

702 | 怎么办

703 | 怎么样

704 | 怎样

705 | 咋

706 | 照

707 | 照着

708 | 者

709 | 这

710 | 这边

711 | 这儿

712 | 这个

713 | 这会儿

714 | 这就是说

715 | 这里

716 | 这么

717 | 这么点儿

718 | 这么些

719 | 这么样

720 | 这时

721 | 这些

722 | 这样

723 | 正如

724 | 吱

725 | 之

726 | 之类

727 | 之所以

728 | 之一

729 | 只是

730 | 只限

731 | 只要

732 | 只有

733 | 至

734 | 至于

735 | 诸位

736 | 着

737 | 着呢

738 | 自

739 | 自从

740 | 自个儿

741 | 自各儿

742 | 自己

743 | 自家

744 | 自身

745 | 综上所述

746 | 总的来看

747 | 总的来说

748 | 总的说来

749 | 总而言之

750 | 总之

751 | 纵

752 | 纵令

753 | 纵然

754 | 纵使

755 | 遵照

756 | 作为

757 | 兮

758 | 呃

759 | 呗

760 | 咚

761 | 咦

762 | 喏

763 | 啐

764 | 喔唷

765 | 嗬

766 | 嗯

767 | 嗳

--------------------------------------------------------------------------------

/distills/bert_config_L3.json:

--------------------------------------------------------------------------------

1 | {

2 | "architectures": [

3 | "BertForSiameseNet"

4 | ],

5 | "attention_probs_dropout_prob": 0.1,

6 | "directionality": "bidi",

7 | "gradient_checkpointing": false,

8 | "hidden_act": "gelu",

9 | "hidden_dropout_prob": 0.1,

10 | "hidden_size": 768,

11 | "initializer_range": 0.02,

12 | "intermediate_size": 3072,

13 | "layer_norm_eps": 1e-12,

14 | "max_position_embeddings": 512,

15 | "model_type": "bert",

16 | "num_attention_heads": 12,

17 | "num_hidden_layers": 3,

18 | "pad_token_id": 0,

19 | "pooler_fc_size": 768,

20 | "pooler_num_attention_heads": 12,

21 | "pooler_num_fc_layers": 3,

22 | "pooler_size_per_head": 128,

23 | "pooler_type": "first_token_transform",

24 | "type_vocab_size": 2,

25 | "vocab_size": 21128

26 | }

--------------------------------------------------------------------------------

/distills/matches.py:

--------------------------------------------------------------------------------

1 | L3_attention_mse=[{"layer_T":4, "layer_S":1, "feature":"attention", "loss":"attention_mse", "weight":1},

2 | {"layer_T":8, "layer_S":2, "feature":"attention", "loss":"attention_mse", "weight":1},

3 | {"layer_T":12, "layer_S":3, "feature":"attention", "loss":"attention_mse", "weight":1}]

4 |

5 | L3_attention_ce=[{"layer_T":4, "layer_S":1, "feature":"attention", "loss":"attention_ce", "weight":1},

6 | {"layer_T":8, "layer_S":2, "feature":"attention", "loss":"attention_ce", "weight":1},

7 | {"layer_T":12, "layer_S":3, "feature":"attention", "loss":"attention_ce", "weight":1}]

8 |

9 | L3_attention_mse_sum=[{"layer_T":4, "layer_S":1, "feature":"attention", "loss":"attention_mse_sum", "weight":1},