├── .idea
│   ├── .name
│   ├── encodings.xml
│   ├── ivport-v2.iml
│   ├── misc.xml
│   ├── modules.xml
│   └── vcs.xml
├── IIC.py
├── README.md
├── init_ivport.py
├── ivport.py
├── picamera
│   ├── IIC.py
│   ├── VERSION
│   ├── __init__.py
│   ├── array.py
│   ├── bcm_host.py
│   ├── camera.py
│   ├── camera_1.10_ivport.py
│   ├── camera_1.12_backup.py
│   ├── camera_1.12_ivport.py
│   ├── color.py
│   ├── encoders.py
│   ├── exc.py
│   ├── mmal.py
│   ├── renderers.py
│   └── streams.py
├── test_ivport.py
└── test_ivport_quad.py

/.idea/.name:

--------------------------------------------------------------------------------

1 | ivport-v2

--------------------------------------------------------------------------------

/IIC.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #

3 | # This file is part of Ivport.

4 | # Copyright (C) 2015 Ivmech Mechatronics Ltd.

5 | #

6 | # Ivport is free software: you can redistribute it and/or modify

7 | # it under the terms of the GNU General Public License as published by

8 | # the Free Software Foundation, either version 3 of the License, or

9 | # (at your option) any later version.

10 | #

11 | # Ivport is distributed in the hope that it will be useful,

12 | # but WITHOUT ANY WARRANTY; without even the implied warranty of

13 | # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

14 | # GNU General Public License for more details.

15 | #

16 | # You should have received a copy of the GNU General Public License

17 | # along with this program. If not, see <http://www.gnu.org/licenses/>.

18 |

19 | #title :IIC.py

20 | #description :IIC module for ivport v2 camera multiplexer

21 | #author :Caner Durmusoglu

22 | #date :20160514

23 | #version :0.1

24 | #usage :

25 | #notes :

26 | #python_version :2.7

27 | #==============================================================================

28 |

29 | from datetime import datetime

30 |

31 | import smbus

32 |

33 | iic_address = (0x70)

34 | iic_register = (0x00)

35 |

36 | iic_bus0 = (0x01)

37 | iic_bus1 = (0x02)

38 | iic_bus2 = (0x04)

39 | iic_bus3 = (0x08)

40 |

41 | class IIC():

42 |     def __init__(self, twi=1, addr=iic_address, bus_enable=iic_bus0):

43 |         self._bus = smbus.SMBus(twi)

44 |         self._addr = addr

45 |         config = bus_enable

46 |         self._write(iic_register, config)

47 |

48 |     def _write(self, register, data):

49 |         self._bus.write_byte_data(self._addr, register, data)

50 |

51 |     def _read(self):

52 |         return self._bus.read_byte(self._addr)

53 |

54 |     def read_control_register(self):

55 |         value = self._read()

56 |         return value

57 |

58 |     def write_control_register(self, config):

59 |         self._write(iic_register, config)

60 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | IVPORT V2

2 | ---

3 |

4 | IVPORT V2 is compatible with the Raspberry Pi Camera Module V2, which uses the 8MP Sony IMX219 sensor.

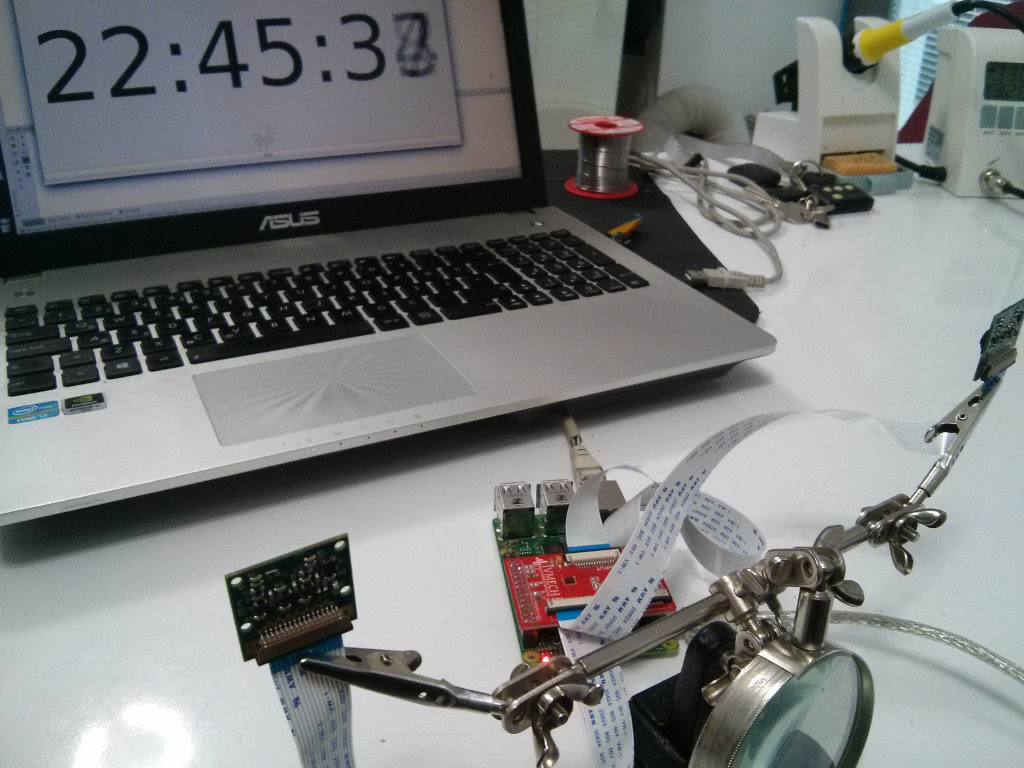

5 |

6 | IVPORT and IVPORT V2 are the first Raspberry Pi Camera Module multiplexers (fully compatible with the Raspberry Pi A, A+, B+, 2 and 3). They are designed to connect more than one camera module to the single CSI camera port on a Raspberry Pi. Multiplexing is controlled over GPIO with 3 pins for 4 camera modules, 5 pins for 8 camera modules, and 9 pins for **up to 16 camera modules**.

7 |

8 | IVPort is already used by ESA, MIT Lab, Spacetrex Lab, well-known company research centers, and numerous universities.

9 |

10 | Getting Started

11 | ---

12 |

13 | ### Order

14 |

15 | IVPORT V2 is available [here](http://www.ivmech.com/magaza/ivport-v2-p-107).

16 |

17 | ### Installation

18 |

19 | First, enable I2C from raspi-config (see [this guide](http://www.raspberrypi-spy.co.uk/2014/11/enabling-the-i2c-interface-on-the-raspberry-pi)).

20 |

21 | On older versions of Raspberry Pi OS, also enable the Camera Module from raspi-config.

22 |

23 | On Raspberry Pi OS based on Debian 11 (bullseye), enable Legacy Camera from raspi-config (see [this guide](https://www.youtube.com/watch?v=E7KPSc_Xr24)).

24 |

25 | ### Cloning the Repository

26 |

27 | ```shell

28 | git clone https://github.com/ivmech/ivport-v2.git

29 | ```

30 |

31 | ### Dependency Installation

32 |

33 | ```shell

34 | sudo apt-get install python3-smbus

35 | ```

36 | The bundled picamera module was forked from https://github.com/waveform80/picamera, with small edits for camera v2 and ivport support. You may need to uninstall any preinstalled picamera module on the device.

37 |

38 | ```shell

39 | sudo apt-get remove python-picamera

40 | sudo pip uninstall picamera

41 | ```

42 |

43 | ### Usage

44 |

45 | **init_ivport.py** must be run at every boot before accessing the camera.

46 |

47 | ```shell

48 | cd ivport-v2

49 | python init_ivport.py

50 | ```

51 |

52 | After the first run of **init_ivport.py**, reboot the Raspberry Pi.

53 |

54 | ```shell

55 | sudo reboot

56 | ```

57 |

58 | Then check whether the ivport and the camera are detected by the Raspberry Pi, using **i2cdetect -y 1** and **vcgencmd get_camera**.

59 |

60 | ```shell

61 | root@ivport:~/ivport-v2 $ i2cdetect -y 1

62 |      0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f

63 | 00:          -- -- -- -- -- -- -- -- -- -- -- -- --

64 | 10: 10 -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

65 | 20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

66 | 30: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

67 | 40: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

68 | 50: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

69 | 60: -- -- -- -- 64 -- -- -- -- -- -- -- -- -- -- --

70 | 70: 70 -- -- -- -- -- -- --

71 | ```

72 | You should see both **0x70** and **0x64**, the addresses of **ivport v2** and **camera module v2** respectively.
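
The address check can also be scripted. The following is a minimal sketch, assuming the text printed by `i2cdetect -y 1` has already been captured into a string; the helper name `detected_addresses` and the `EXPECTED` table are illustrative and not part of ivport.

```python
import re

# Expected devices according to the README: ivport v2 at 0x70, camera module v2 at 0x64.
EXPECTED = {0x70: "ivport v2", 0x64: "camera module v2"}


def detected_addresses(i2cdetect_output):
    """Return the set of I2C addresses that appear in `i2cdetect -y` output."""
    found = set()
    for line in i2cdetect_output.splitlines():
        # Data rows look like "60: -- -- -- -- 64 -- ..."; the column header has no "NN:" prefix.
        match = re.match(r"^\s*([0-9a-f]{2}):(.*)$", line)
        if not match:
            continue
        for cell in match.group(2).split():
            # A responding device is printed as its own two-digit hex address.
            if re.fullmatch(r"[0-9a-f]{2}", cell):
                found.add(int(cell, 16))
    return found


SAMPLE = """\
     0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
00:          -- -- -- -- -- -- -- -- -- -- -- -- --
10: 10 -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
60: -- -- -- -- 64 -- -- -- -- -- -- -- -- -- -- --
70: 70 -- -- -- -- -- -- --
"""

missing = [name for addr, name in EXPECTED.items()
           if addr not in detected_addresses(SAMPLE)]
print("missing devices:", missing or "none")
```

Cells such as `--` or `UU` are skipped, so only addresses that actually answered are reported.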

73 |

74 | If you don't see any addresses, please check the [IMPORTANT FIX](https://github.com/ivmech/ivport-v2/wiki/Important-Fix).

75 |

76 | ```shell

77 | root@ivport:~/ivport-v2 $ vcgencmd get_camera

78 | supported=1 detected=1

79 | ```

80 | Both **supported** and **detected** should be **1** before running the **test_ivport.py** script.
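
A script can apply the same check to the `vcgencmd get_camera` text. This is a small sketch under the assumption that the output consists of `key=value` fields as shown above; `parse_get_camera` and `camera_ready` are hypothetical helper names.

```python
def parse_get_camera(output):
    """Parse text such as 'supported=1 detected=1' into a dict of ints."""
    status = {}
    # Treat commas as separators too, in case extra fields follow on the same line.
    for field in output.replace(",", " ").split():
        key, sep, value = field.partition("=")
        if sep and value.isdigit():
            status[key] = int(value)
    return status


def camera_ready(output):
    """True only when both 'supported' and 'detected' are reported as 1."""
    status = parse_get_camera(output)
    return status.get("supported") == 1 and status.get("detected") == 1


print(camera_ready("supported=1 detected=1"))
print(camera_ready("supported=1 detected=0"))
```

On a Raspberry Pi the input string could come from `subprocess.check_output(["vcgencmd", "get_camera"])`, decoded to text.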

81 |

82 | The **test_ivport.py** script targets the **IVPORT DUAL V2**.

83 |

84 | ```python

85 | import os

86 | import ivport

87 | def capture(camera):

88 |     "Run a raspistill capture for the given camera"

89 |     cmd = "raspistill -t 10 -o still_CAM%d.jpg" % camera

90 |     os.system(cmd)

91 |

92 | iv = ivport.IVPort(ivport.TYPE_DUAL2)

93 | iv.camera_change(1)

94 | capture(1)

95 | iv.camera_change(2)

96 | capture(2)

97 | iv.close()

98 | ```
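
For reference, `camera_change` in this repository's ivport.py selects a camera by writing a one-hot byte to the multiplexer's I2C control register (V2 boards) and setting GPIO levels. The sketch below restates that mapping as plain data so it can be inspected without hardware; `control_byte` and `QUAD_GPIO_LEVELS` are illustrative names, not part of the module.

```python
def control_byte(camera):
    """One-hot value written to the control register for camera 1..4."""
    if camera not in (1, 2, 3, 4):
        raise ValueError("camera must be 1..4, got %r" % (camera,))
    return 1 << (camera - 1)


# (ePin, f1Pin, f2Pin) output levels that ivport.py sets per camera on a quad board.
QUAD_GPIO_LEVELS = {
    1: (False, False, True),
    2: (True, False, True),
    3: (False, True, False),
    4: (True, True, False),
}

for cam in sorted(QUAD_GPIO_LEVELS):
    print(cam, hex(control_byte(cam)), QUAD_GPIO_LEVELS[cam])
```

On a dual board only cameras 1 and 2 exist, and a single select pin is driven low for camera 1 and high for camera 2.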

99 | The **TYPE** and **JUMPER** settings are configured when initializing ivport.

100 | ```python

101 | ivport.IVPort(IVPORT_TYPE, IVPORT_JUMPER)

102 | ```
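
How the jumper argument resolves to GPIO pins follows from the `IVJP` table in ivport.py. The sketch below reproduces that computation standalone, using BOARD pin numbering as set by `gp.setmode(gp.BOARD)`; the function name `pins_for` is hypothetical.

```python
# Jumper letter -> pair of BOARD pins, copied from the IVJP table in ivport.py.
IVJP = {'A': (11, 12), 'B': (15, 16), 'C': (21, 22), 'D': (23, 24)}

# Dual boards take a jumper number 1..8 that indexes the sorted list of all pins.
_all_pins = sorted(pin for pair in IVJP.values() for pin in pair)
DIVJP = {i + 1: pin for i, pin in enumerate(_all_pins)}


def pins_for(is_dual, jumper):
    """Return the select pin(s) for a dual (numbered) or quad (lettered) jumper."""
    if is_dual:
        return (DIVJP[jumper],)
    return IVJP[jumper]


print(DIVJP)
print(pins_for(True, 1), pins_for(False, 'A'))
```

Note that ivport.py as listed here always uses jumper `'A'` for quad boards, regardless of the argument, and additionally drives BOARD pin 7 as the enable pin.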

103 | **RESOLUTION**, **FRAMERATE** and other settings can be configured when opening the camera.

104 | ```python

105 | iv = ivport.IVPort(ivport.TYPE_DUAL2)

106 | iv.camera_open(camera_v2=True, resolution=(640, 480), framerate=60)

107 | ```

108 | As noted above, **init_ivport.py** must be run at every boot before accessing the camera.

109 |

110 | ```shell

111 | cd ivport-v2

112 | python init_ivport.py

113 | ```

114 |

115 | Tests

116 | ------

117 |

118 | The **test_ivport.py** script can be used for testing.

119 | ```shell

120 | cd ivport-v2

121 | python test_ivport.py

122 | ```

123 |

124 | Wiki

125 | ------

126 |

127 | #### See the wiki pages [here](https://github.com/ivmech/ivport/wiki).

128 |

129 | Video

130 | -------

131 |

132 | IVPort can be used for stereo vision with a stereo camera.

133 |

134 | ### [Youtube video](https://www.youtube.com/watch?v=w4JZN7Y0d2o) of stereo recording with 2 camera modules

135 |

136 |

137 | ### IVPort was featured [@hackaday](http://hackaday.com/2014/12/19/multiplexing-pi-cameras/).

138 |

--------------------------------------------------------------------------------

/init_ivport.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 |

3 | import IIC

4 |

5 | if __name__ == "__main__":

6 |     iviic = IIC.IIC(addr=(0x70), bus_enable =(0x01))

7 |

--------------------------------------------------------------------------------

/ivport.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #

3 | # This file is part of Ivport.

4 | # Copyright (C) 2016 Ivmech Mechatronics Ltd.

5 | #

6 | # Ivport is free software: you can redistribute it and/or modify

7 | # it under the terms of the GNU General Public License as published by

8 | # the Free Software Foundation, either version 3 of the License, or

9 | # (at your option) any later version.

10 | #

11 | # Ivport is distributed in the hope that it will be useful,

12 | # but WITHOUT ANY WARRANTY; without even the implied warranty of

13 | # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

14 | # GNU General Public License for more details.

15 | #

16 | # You should have received a copy of the GNU General Public License

17 | # along with this program. If not, see <http://www.gnu.org/licenses/>.

18 |

19 | #title :ivport.py

20 | #description :ivport.py is module for capturing ivport camera multiplexer

21 | #author :Caner Durmusoglu

22 | #date :20160514

23 | #version :0.1

24 | #usage :import ivport

25 | #notes :

26 | #python_version :2.7

27 | #==============================================================================

28 |

29 | import sys

30 | import functools

31 |

32 | try:

33 |     import IIC

34 |     import RPi.GPIO as gp

35 |     gp.setwarnings(False)

36 |     gp.setmode(gp.BOARD)

37 | except Exception:

38 |     print("Could not load IIC.py or the RPi.GPIO module.")

39 |     print("install RPi.GPIO: sudo apt-get install python-rpi.gpio")

40 |     sys.exit(1)

41 |

42 | #try:

43 | # import picamera

44 | #except:

45 | # print("There are no picamera module or directory.")

46 | # sys.exit(0)

47 |

48 | TYPE_QUAD = 0

49 | TYPE_QUAD2 = 1

50 | TYPE_DUAL = 2

51 | TYPE_DUAL2 = 3

52 |

53 | class IVPort():

54 |     IVJP = {'A': (11, 12), 'C': (21, 22), 'B': (15, 16), 'D': (23, 24)}

55 |     pins = list(functools.reduce(lambda x,y: x+y, IVJP.values()))

56 |     pins.sort()

57 |     DIVJP = {i+1 : x for i,x in enumerate(pins)}

58 |     del(pins)

59 |

60 |     def __init__(self, iv_type=TYPE_DUAL2, iv_jumper=1):

61 |

62 |         self.fPin = self.f1Pin = self.f2Pin = self.ePin = 0

63 |         self.ivport_type = iv_type

64 |         self.is_camera_v2 = self.ivport_type in (TYPE_DUAL2, TYPE_QUAD2)

65 |         self.is_dual = self.ivport_type in (TYPE_DUAL2, TYPE_DUAL)

66 |         self.ivport_jumper = iv_jumper

67 |         if not self.is_dual: self.ivport_jumper = 'A'

68 |         self.camera = 1

69 |         self.is_opened = False

70 |

71 |         if self.is_camera_v2:

72 |             self.iviic = IIC.IIC(addr=(0x70), bus_enable =(0x01))

73 |

74 |         self.link_gpio()

75 |

76 |     def link_gpio(self):

77 |         if self.is_dual:

78 |             self.fPin = self.DIVJP[self.ivport_jumper]

79 |             gp.setup(self.fPin, gp.OUT)

80 |         else:

81 |             self.f1Pin, self.f2Pin = self.IVJP[self.ivport_jumper]

82 |             self.ePin = 7

83 |             gp.setup(self.f1Pin, gp.OUT)

84 |             gp.setup(self.f2Pin, gp.OUT)

85 |             gp.setup(self.ePin, gp.OUT)

86 |

87 |     # ivport camera change

88 |     def camera_change(self, camera=1):

89 |         if self.is_dual:

90 |             if camera == 1:

91 |                 if self.is_camera_v2: self.iviic.write_control_register((0x01))

92 |                 gp.output(self.fPin, False)

93 |             elif camera == 2:

94 |                 if self.is_camera_v2: self.iviic.write_control_register((0x02))

95 |                 gp.output(self.fPin, True)

96 |             else:

97 |                 print("Ivport type is DUAL.")

98 |                 print("There is no camera: %d" % camera)

99 |                 self.close()

100 |                 sys.exit(1)

101 |         else:

102 |             if camera == 1:

103 |                 if self.is_camera_v2: self.iviic.write_control_register((0x01))

104 |                 gp.output(self.ePin, False)

105 |                 gp.output(self.f1Pin, False)

106 |                 gp.output(self.f2Pin, True)

107 |             elif camera == 2:

108 |                 if self.is_camera_v2: self.iviic.write_control_register((0x02))

109 |                 gp.output(self.ePin, True)

110 |                 gp.output(self.f1Pin, False)

111 |                 gp.output(self.f2Pin, True)

112 |             elif camera == 3:

113 |                 if self.is_camera_v2: self.iviic.write_control_register((0x04))

114 |                 gp.output(self.ePin, False)

115 |                 gp.output(self.f1Pin, True)

116 |                 gp.output(self.f2Pin, False)

117 |             elif camera == 4:

118 |                 if self.is_camera_v2: self.iviic.write_control_register((0x08))

119 |                 gp.output(self.ePin, True)

120 |                 gp.output(self.f1Pin, True)

121 |                 gp.output(self.f2Pin, False)

122 |             else:

123 |                 print("Ivport type is QUAD.")

124 |                 print("Cluster feature hasn't been implemented yet.")

125 |                 print("There is no camera: %d" % camera)

126 |                 self.close()

127 |                 sys.exit(1)

128 |         self.camera = camera

129 |

130 |     # picamera initialize (Camera V2)

131 |     # capture_sequence and start_recording require "camera_v2=True"

132 |     # the standard capture function doesn't require "camera_v2=True"

133 |     def camera_open(self, camera_v2=False, resolution=None, framerate=None, grayscale=False):

134 |         if self.is_opened: return

135 |         import picamera  # imported here because the module-level import is commented out

136 |         self.picam = picamera.PiCamera(camera_v2=camera_v2, resolution=resolution, framerate=framerate)

137 |         if grayscale: self.picam.color_effects = (128, 128)

138 |         self.is_opened = True

139 |

140 |     # picamera capture

141 |     def camera_capture(self, filename, **options):

142 |         if self.is_opened:

143 |             self.picam.capture(filename + "_CAM" + str(self.camera) + '.jpg', **options)

144 |         else:

145 |             print("Camera is not opened.")

146 |

147 |     def camera_sequence(self, **options):

148 |         if self.is_opened:

149 |             self.picam.capture_sequence(**options)

150 |         else:

151 |             print("Camera is not opened.")

152 |

153 |     def close(self):

154 |         self.camera_change(1)

155 |         if self.is_opened: self.picam.close()

156 |

--------------------------------------------------------------------------------

/picamera/IIC.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #

3 | # This file is part of Ivport.

4 | # Copyright (C) 2015 Ivmech Mechatronics Ltd.

5 | #

6 | # Ivport is free software: you can redistribute it and/or modify

7 | # it under the terms of the GNU General Public License as published by

8 | # the Free Software Foundation, either version 3 of the License, or

9 | # (at your option) any later version.

10 | #

11 | # Ivport is distributed in the hope that it will be useful,

12 | # but WITHOUT ANY WARRANTY; without even the implied warranty of

13 | # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

14 | # GNU General Public License for more details.

15 | #

16 | # You should have received a copy of the GNU General Public License

17 | # along with this program. If not, see <http://www.gnu.org/licenses/>.

18 |

19 | #title :IIC.py

20 | #description :IIC module for ivport v2 camera multiplexer

21 | #author :Caner Durmusoglu

22 | #date :20160514

23 | #version :0.1

24 | #usage :

25 | #notes :

26 | #python_version :2.7

27 | #==============================================================================

28 |

29 | from datetime import datetime

30 |

31 | import smbus

32 |

33 | iic_address = (0x70)

34 | iic_register = (0x00)

35 |

36 | iic_bus0 = (0x01)

37 | iic_bus1 = (0x02)

38 | iic_bus2 = (0x04)

39 | iic_bus3 = (0x08)

40 |

41 | class IIC():

42 |     def __init__(self, twi=1, addr=iic_address, bus_enable=iic_bus0):

43 |         self._bus = smbus.SMBus(twi)

44 |         self._addr = addr

45 |         config = bus_enable

46 |         self._write(iic_register, config)

47 |

48 |     def _write(self, register, data):

49 |         self._bus.write_byte_data(self._addr, register, data)

50 |

51 |     def _read(self):

52 |         return self._bus.read_byte(self._addr)

53 |

54 |     def read_control_register(self):

55 |         value = self._read()

56 |         return value

57 |

58 |     def write_control_register(self, config):

59 |         self._write(iic_register, config)

60 |

--------------------------------------------------------------------------------

/picamera/VERSION:

--------------------------------------------------------------------------------

1 | 1.10

2 |

--------------------------------------------------------------------------------

/picamera/__init__.py:

--------------------------------------------------------------------------------

1 | # vim: set et sw=4 sts=4 fileencoding=utf-8:

2 | #

3 | # Python camera library for the Raspberry-Pi camera module

4 | # Copyright (c) 2013-2015 Dave Jones

5 | #

6 | # Redistribution and use in source and binary forms, with or without

7 | # modification, are permitted provided that the following conditions are met:

8 | #

9 | # * Redistributions of source code must retain the above copyright

10 | # notice, this list of conditions and the following disclaimer.

11 | # * Redistributions in binary form must reproduce the above copyright

12 | # notice, this list of conditions and the following disclaimer in the

13 | # documentation and/or other materials provided with the distribution.

14 | # * Neither the name of the copyright holder nor the

15 | # names of its contributors may be used to endorse or promote products

16 | # derived from this software without specific prior written permission.

17 | #

18 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

19 | # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

20 | # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

21 | # ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE

22 | # LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

23 | # CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

24 | # SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

25 | # INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

26 | # CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

27 | # ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

28 | # POSSIBILITY OF SUCH DAMAGE.

29 |

30 | """

31 | The picamera package consists of several modules which provide a pure Python

32 | interface to the Raspberry Pi's camera module. The package is only intended to

33 | run on a Raspberry Pi, and expects to be able to load the MMAL library

34 | (libmmal.so) upon import.

35 |

36 | The classes defined by most modules in this package are available directly from

37 | the :mod:`picamera` namespace. In other words, the following code is typically

38 | all that is required to access classes in the package::

39 |

40 |     import picamera

41 |

42 | The :mod:`picamera.array` module is an exception to this as it depends on the

43 | third-party `numpy`_ package (this avoids making numpy a mandatory dependency

44 | for picamera).

45 |

46 | .. _numpy: http://www.numpy.org/

47 |

48 |

49 | The following sections document the various modules available within the

50 | package:

51 |

52 | * :mod:`picamera.camera`

53 | * :mod:`picamera.encoders`

54 | * :mod:`picamera.streams`

55 | * :mod:`picamera.renderers`

56 | * :mod:`picamera.color`

57 | * :mod:`picamera.exc`

58 | * :mod:`picamera.array`

59 | """

60 |

61 | from __future__ import (

62 |     unicode_literals,

63 |     print_function,

64 |     division,

65 |     absolute_import,

66 |     )

67 |

68 | # Make Py2's str equivalent to Py3's

69 | str = type('')

70 |

71 | from picamera.exc import (

72 |     PiCameraWarning,

73 |     PiCameraError,

74 |     PiCameraRuntimeError,

75 |     PiCameraClosed,

76 |     PiCameraNotRecording,

77 |     PiCameraAlreadyRecording,

78 |     PiCameraValueError,

79 |     PiCameraMMALError,

80 |     mmal_check,

81 |     )

82 | from picamera.camera import PiCamera

83 | from picamera.encoders import (

84 |     PiVideoFrame,

85 |     PiVideoFrameType,

86 |     PiEncoder,

87 |     PiVideoEncoder,

88 |     PiImageEncoder,

89 |     PiRawMixin,

90 |     PiCookedVideoEncoder,

91 |     PiRawVideoEncoder,

92 |     PiOneImageEncoder,

93 |     PiMultiImageEncoder,

94 |     PiRawImageMixin,

95 |     PiCookedOneImageEncoder,

96 |     PiRawOneImageEncoder,

97 |     PiCookedMultiImageEncoder,

98 |     PiRawMultiImageEncoder,

99 |     )

100 | from picamera.renderers import (

101 |     PiRenderer,

102 |     PiOverlayRenderer,

103 |     PiPreviewRenderer,

104 |     PiNullSink,

105 |     )

106 | from picamera.streams import PiCameraCircularIO, CircularIO

107 | from picamera.color import Color, Red, Green, Blue, Hue, Lightness, Saturation

108 |

109 |

--------------------------------------------------------------------------------

/picamera/array.py:

--------------------------------------------------------------------------------

1 | # vim: set et sw=4 sts=4 fileencoding=utf-8:

2 | #

3 | # Python camera library for the Raspberry-Pi camera module

4 | # Copyright (c) 2013-2015 Dave Jones

5 | #

6 | # Redistribution and use in source and binary forms, with or without

7 | # modification, are permitted provided that the following conditions are met:

8 | #

9 | # * Redistributions of source code must retain the above copyright

10 | # notice, this list of conditions and the following disclaimer.

11 | # * Redistributions in binary form must reproduce the above copyright

12 | # notice, this list of conditions and the following disclaimer in the

13 | # documentation and/or other materials provided with the distribution.

14 | # * Neither the name of the copyright holder nor the

15 | # names of its contributors may be used to endorse or promote products

16 | # derived from this software without specific prior written permission.

17 | #

18 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

19 | # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

20 | # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

21 | # ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE

22 | # LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

23 | # CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

24 | # SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

25 | # INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

26 | # CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

27 | # ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

28 | # POSSIBILITY OF SUCH DAMAGE.

29 |

30 | """

31 | The :mod:`picamera.array` module provides a set of classes which aid in

32 | constructing n-dimensional `numpy`_ arrays from the camera output. In order to

33 | avoid adding a hard dependency on numpy to picamera, the module is not

34 | automatically imported by the main picamera package and must be explicitly

35 | imported.

36 |

37 | .. _numpy: http://www.numpy.org/

38 |

39 | The following classes are defined in the module:

40 |

41 |

42 | PiArrayOutput

43 | =============

44 |

45 | .. autoclass:: PiArrayOutput

46 |     :members:

47 |

48 |

49 | PiRGBArray

50 | ==========

51 |

52 | .. autoclass:: PiRGBArray

53 |

54 |

55 | PiYUVArray

56 | ==========

57 |

58 | .. autoclass:: PiYUVArray

59 |

60 |

61 | PiBayerArray

62 | ============

63 |

64 | .. autoclass:: PiBayerArray

65 |

66 |

67 | PiMotionArray

68 | =============

69 |

70 | .. autoclass:: PiMotionArray

71 |

72 |

73 | PiAnalysisOutput

74 | ================

75 |

76 | .. autoclass:: PiAnalysisOutput

77 |     :members:

78 |

79 |

80 | PiRGBAnalysis

81 | =============

82 |

83 | .. autoclass:: PiRGBAnalysis

84 |

85 |

86 | PiYUVAnalysis

87 | =============

88 |

89 | .. autoclass:: PiYUVAnalysis

90 |

91 |

92 | PiMotionAnalysis

93 | ================

94 |

95 | .. autoclass:: PiMotionAnalysis

96 | """

97 |

98 | from __future__ import (

99 |     unicode_literals,

100 |     print_function,

101 |     division,

102 |     absolute_import,

103 |     )

104 |

105 | # Make Py2's str and range equivalent to Py3's

106 | native_str = str

107 | str = type('')

108 | try:

109 |     range = xrange

110 | except NameError:

111 |     pass

112 |

113 | import io

114 | import warnings

115 |

116 | import numpy as np

117 | from numpy.lib.stride_tricks import as_strided

118 |

119 | from .exc import PiCameraValueError, PiCameraDeprecated

120 |

121 |

122 | motion_dtype = np.dtype([

123 |     (native_str('x'), np.int8),

124 |     (native_str('y'), np.int8),

125 |     (native_str('sad'), np.uint16),

126 |     ])

127 |

128 |

129 | def raw_resolution(resolution):

130 |     """

131 |     Round a (width, height) tuple up to the nearest multiple of 32 horizontally

132 |     and 16 vertically (as this is what the Pi's camera module does for

133 |     unencoded output).

134 |     """

135 |     width, height = resolution

136 |     fwidth = (width + 31) // 32 * 32

137 |     fheight = (height + 15) // 16 * 16

138 |     return fwidth, fheight

139 |

140 |

141 | def bytes_to_yuv(data, resolution):

142 |     """

143 |     Converts a bytes object containing YUV data to a `numpy`_ array.

144 |     """

145 |     width, height = resolution

146 |     fwidth, fheight = raw_resolution(resolution)

147 |     y_len = fwidth * fheight

148 |     uv_len = (fwidth // 2) * (fheight // 2)

149 |     if len(data) != (y_len + 2 * uv_len):

150 |         raise PiCameraValueError(

151 |             'Incorrect buffer length for resolution %dx%d' % (width, height))

152 |     # Separate out the Y, U, and V values from the array

153 |     a = np.frombuffer(data, dtype=np.uint8)

154 |     Y = a[:y_len]

155 |     U = a[y_len:-uv_len]

156 |     V = a[-uv_len:]

157 |     # Reshape the values into two dimensions, and double the size of the

158 |     # U and V values (which only have quarter resolution in YUV4:2:0)

159 |     Y = Y.reshape((fheight, fwidth))

160 |     U = U.reshape((fheight // 2, fwidth // 2)).repeat(2, axis=0).repeat(2, axis=1)

161 |     V = V.reshape((fheight // 2, fwidth // 2)).repeat(2, axis=0).repeat(2, axis=1)

162 |     # Stack the channels together and crop to the actual resolution

163 |     return np.dstack((Y, U, V))[:height, :width]

164 |

165 |

166 | def bytes_to_rgb(data, resolution):

167 |     """

168 |     Converts a bytes objects containing RGB/BGR data to a `numpy`_ array.

169 |     """

170 |     width, height = resolution

171 |     fwidth, fheight = raw_resolution(resolution)

172 |     if len(data) != (fwidth * fheight * 3):

173 |         raise PiCameraValueError(

174 |             'Incorrect buffer length for resolution %dx%d' % (width, height))

175 |     # Crop to the actual resolution

176 |     return np.frombuffer(data, dtype=np.uint8).\

177 |             reshape((fheight, fwidth, 3))[:height, :width, :]

178 |

179 |

180 | class PiArrayOutput(io.BytesIO):

181 |     """

182 |     Base class for capture arrays.

183 |

184 |     This class extends :class:`io.BytesIO` with a `numpy`_ array which is

185 |     intended to be filled when :meth:`~io.IOBase.flush` is called (i.e. at the

186 |     end of capture).

187 |

188 |     .. attribute:: array

189 |

190 |         After :meth:`~io.IOBase.flush` is called, this attribute contains the

191 |         frame's data as a multi-dimensional `numpy`_ array. This is typically

192 |         organized with the dimensions ``(rows, columns, plane)``. Hence, an

193 |         RGB image with dimensions *x* and *y* would produce an array with shape

194 |         ``(y, x, 3)``.

195 |     """

196 |

197 |     def __init__(self, camera, size=None):

198 |         super(PiArrayOutput, self).__init__()

199 |         self.camera = camera

200 |         self.size = size

201 |         self.array = None

202 |

203 |     def close(self):

204 |         super(PiArrayOutput, self).close()

205 |         self.array = None

206 |

207 |     def truncate(self, size=None):

208 |         """

209 |         Resize the stream to the given size in bytes (or the current position

210 |         if size is not specified). This resizing can extend or reduce the

211 |         current file size. The new file size is returned.

212 |

213 |         In prior versions of picamera, truncation also changed the position of

214 |         the stream (because prior versions of these stream classes were

215 |         non-seekable). This functionality is now deprecated; scripts should

216 |         use :meth:`~io.IOBase.seek` and :meth:`truncate` as one would with

217 |         regular :class:`~io.BytesIO` instances.

218 |         """

219 |         if size is not None:

220 |             warnings.warn(

221 |                 PiCameraDeprecated(

222 |                     'This method changes the position of the stream to the '

223 |                     'truncated length; this is deprecated functionality and '

224 |                     'you should not rely on it (seek before or after truncate '

225 |                     'to ensure position is consistent)'))

226 |         super(PiArrayOutput, self).truncate(size)

227 |         if size is not None:

228 |             self.seek(size)

229 |

230 |

231 | class PiRGBArray(PiArrayOutput):

232 |     """

233 |     Produces a 3-dimensional RGB array from an RGB capture.

234 |

235 |     This custom output class can be used to easily obtain a 3-dimensional numpy

236 |     array, organized (rows, columns, colors), from an unencoded RGB capture.

237 |     The array is accessed via the :attr:`~PiArrayOutput.array` attribute. For

238 |     example::

239 |

240 |         import picamera

241 |         import picamera.array

242 |

243 |         with picamera.PiCamera() as camera:

244 |             with picamera.array.PiRGBArray(camera) as output:

245 |                 camera.capture(output, 'rgb')

246 |                 print('Captured %dx%d image' % (

247 |                         output.array.shape[1], output.array.shape[0]))

248 |

249 |     You can re-use the output to produce multiple arrays by emptying it with

250 |     ``truncate(0)`` between captures::

251 |

252 |         import picamera

253 |         import picamera.array

254 |

255 |         with picamera.PiCamera() as camera:

256 |             with picamera.array.PiRGBArray(camera) as output:

257 |                 camera.resolution = (1280, 720)

258 |                 camera.capture(output, 'rgb')

259 |                 print('Captured %dx%d image' % (

260 |                         output.array.shape[1], output.array.shape[0]))

261 |                 output.truncate(0)

262 |                 camera.resolution = (640, 480)

263 |                 camera.capture(output, 'rgb')

264 |                 print('Captured %dx%d image' % (

265 |                         output.array.shape[1], output.array.shape[0]))

266 |

267 |     If you are using the GPU resizer when capturing (with the *resize*

268 |     parameter of the various :meth:`~picamera.camera.PiCamera.capture`

269 |     methods), specify the resized resolution as the optional *size* parameter

270 |     when constructing the array output::

271 |

272 |         import picamera

273 |         import picamera.array

274 |

275 |         with picamera.PiCamera() as camera:

276 |             camera.resolution = (1280, 720)

277 |             with picamera.array.PiRGBArray(camera, size=(640, 360)) as output:

278 |                 camera.capture(output, 'rgb', resize=(640, 360))

279 |                 print('Captured %dx%d image' % (

280 |                         output.array.shape[1], output.array.shape[0]))

281 |     """

282 |

283 |     def flush(self):

284 |         super(PiRGBArray, self).flush()

285 |         self.array = bytes_to_rgb(self.getvalue(), self.size or self.camera.resolution)

286 |

287 |

288 | class PiYUVArray(PiArrayOutput):

289 |     """

290 |     Produces 3-dimensional YUV & RGB arrays from a YUV capture.

291 |

292 |     This custom output class can be used to easily obtain a 3-dimensional numpy

293 |     array, organized (rows, columns, channel), from an unencoded YUV capture.

294 |     The array is accessed via the :attr:`~PiArrayOutput.array` attribute. For

295 |     example::

296 |

297 |         import picamera

298 |         import picamera.array

299 |

300 |         with picamera.PiCamera() as camera:

301 |             with picamera.array.PiYUVArray(camera) as output:

302 |                 camera.capture(output, 'yuv')

303 |                 print('Captured %dx%d image' % (

304 |                         output.array.shape[1], output.array.shape[0]))

305 |

306 |     The :attr:`rgb_array` attribute can be queried for the equivalent RGB

307 |     array (conversion is performed using the `ITU-R BT.601`_ matrix)::

308 |

309 |         import picamera

310 |         import picamera.array

311 |

312 |         with picamera.PiCamera() as camera:

313 |             with picamera.array.PiYUVArray(camera) as output:

314 |                 camera.resolution = (1280, 720)

315 |                 camera.capture(output, 'yuv')

316 |                 print(output.array.shape)

317 |                 print(output.rgb_array.shape)

318 |

319 |     If you are using the GPU resizer when capturing (with the *resize*

320 |     parameter of the various :meth:`~picamera.camera.PiCamera.capture`

321 |     methods), specify the resized resolution as the optional *size* parameter

322 |     when constructing the array output::

323 |

324 |         import picamera

325 |         import picamera.array

326 |

327 |         with picamera.PiCamera() as camera:

328 |             camera.resolution = (1280, 720)

329 |             with picamera.array.PiYUVArray(camera, size=(640, 360)) as output:

330 |                 camera.capture(output, 'yuv', resize=(640, 360))

331 |                 print('Captured %dx%d image' % (

332 |                         output.array.shape[1], output.array.shape[0]))

333 |

334 |     .. _ITU-R BT.601: http://en.wikipedia.org/wiki/YCbCr#ITU-R_BT.601_conversion

335 |     """

336 |

337 |     def __init__(self, camera, size=None):

338 |         super(PiYUVArray, self).__init__(camera, size)

339 |         self._rgb = None

340 |

341 |     def flush(self):

342 |         super(PiYUVArray, self).flush()

343 |         self.array = bytes_to_yuv(self.getvalue(), self.size or self.camera.resolution)

344 |

345 |     @property

346 | def rgb_array(self):

347 | if self._rgb is None:

348 | # Apply the standard biases

349 | YUV = self.array.astype(float) # Float copy, so the offsets below cannot wrap around in an unsigned byte array

350 | YUV[:, :, 0] = YUV[:, :, 0] - 16 # Offset Y by 16

351 | YUV[:, :, 1:] = YUV[:, :, 1:] - 128 # Offset UV by 128

352 | # YUV conversion matrix from ITU-R BT.601 version (SDTV)

353 | # Y U V

354 | M = np.array([[1.164, 0.000, 1.596], # R

355 | [1.164, -0.392, -0.813], # G

356 | [1.164, 2.017, 0.000]]) # B

357 | # Calculate the dot product with the matrix to produce RGB output,

358 | # clamp the results to byte range and convert to bytes

359 | self._rgb = YUV.dot(M.T).clip(0, 255).astype(np.uint8)

360 | return self._rgb

361 |

362 |

363 | class PiBayerArray(PiArrayOutput):

364 | """

365 | Produces a 3-dimensional RGB array from raw Bayer data.

366 |

367 | This custom output class is intended to be used with the

368 | :meth:`~picamera.camera.PiCamera.capture` method, with the *bayer*

369 | parameter set to ``True``, to include raw Bayer data in the JPEG output.

370 | The class strips out the raw data, constructing a 3-dimensional numpy array

371 | organized as (rows, columns, colors). The resulting data is accessed via

372 | the :attr:`~PiArrayOutput.array` attribute::

373 |

374 | import picamera

375 | import picamera.array

376 |

377 | with picamera.PiCamera() as camera:

378 | with picamera.array.PiBayerArray(camera) as output:

379 | camera.capture(output, 'jpeg', bayer=True)

380 | print(output.array.shape)

381 |

382 | Note that Bayer data is *always* full resolution, so the resulting array

383 | always has the shape (1944, 2592, 3); this also implies that the optional

384 | *size* parameter (for specifying a resizer resolution) is not available

385 | with this array class. As the sensor records 10-bit values, the array uses

386 | the unsigned 16-bit integer data type.

387 |

388 | By default, `de-mosaicing`_ is **not** performed; if the resulting array is

389 | viewed it will therefore appear dark and too green (due to the green bias

390 | in the `Bayer pattern`_). A trivial weighted-average demosaicing algorithm

391 | is provided in the :meth:`demosaic` method::

392 |

393 | import picamera

394 | import picamera.array

395 |

396 | with picamera.PiCamera() as camera:

397 | with picamera.array.PiBayerArray(camera) as output:

398 | camera.capture(output, 'jpeg', bayer=True)

399 | print(output.demosaic().shape)

400 |

401 | When viewed, the de-mosaiced data will look more normal but still be of

402 | considerably worse quality than the regular camera output (as none of the

403 | other usual post-processing steps like auto-exposure, white-balance,

404 | vignette compensation, and smoothing have been performed).

405 |

406 | .. _de-mosaicing: http://en.wikipedia.org/wiki/Demosaicing

407 | .. _Bayer pattern: http://en.wikipedia.org/wiki/Bayer_filter

408 | """

409 |

410 | def __init__(self, camera):

411 | super(PiBayerArray, self).__init__(camera, size=None)

412 | self._demo = None

413 |

414 | def flush(self):

415 | super(PiBayerArray, self).flush()

416 | self._demo = None

417 | data = self.getvalue()[-6404096:]

418 | if data[:4] != b'BRCM':

419 | raise PiCameraValueError('Unable to locate Bayer data at end of buffer')

420 | # Strip header

421 | data = data[32768:]

422 | # Reshape into 2D pixel values

423 | data = np.frombuffer(data, dtype=np.uint8).\

424 | reshape((1952, 3264))[:1944, :3240]

425 | # Unpack 10-bit values; every 5 bytes contains the high 8-bits of 4

426 | # values followed by the low 2-bits of 4 values packed into the fifth

427 | # byte

428 | data = data.astype(np.uint16) << 2

429 | for byte in range(4):

430 | data[:, byte::5] |= ((data[:, 4::5] >> ((4 - byte) * 2)) & 3)

431 | data = np.delete(data, np.s_[4::5], 1)

432 | # XXX Should test camera's vflip and hflip settings here and adjust

433 | self.array = np.zeros(data.shape + (3,), dtype=data.dtype)

434 | self.array[1::2, 0::2, 0] = data[1::2, 0::2] # Red

435 | self.array[0::2, 0::2, 1] = data[0::2, 0::2] # Green

436 | self.array[1::2, 1::2, 1] = data[1::2, 1::2] # Green

437 | self.array[0::2, 1::2, 2] = data[0::2, 1::2] # Blue

438 |

439 | def demosaic(self):

440 | if self._demo is None:

441 | # XXX Again, should take into account camera's vflip and hflip here

442 | # Construct representation of the bayer pattern

443 | bayer = np.zeros(self.array.shape, dtype=np.uint8)

444 | bayer[1::2, 0::2, 0] = 1 # Red

445 | bayer[0::2, 0::2, 1] = 1 # Green

446 | bayer[1::2, 1::2, 1] = 1 # Green

447 | bayer[0::2, 1::2, 2] = 1 # Blue

448 | # Allocate output array with same shape as data and set up some

449 | # constants to represent the weighted average window

450 | window = (3, 3)

451 | borders = (window[0] - 1, window[1] - 1)

452 | border = (borders[0] // 2, borders[1] // 2)

453 | # Pad out the data and the bayer pattern (np.pad is faster but

454 | # unavailable on the version of numpy shipped with Raspbian at the

455 | # time of writing)

456 | rgb = np.zeros((

457 | self.array.shape[0] + borders[0],

458 | self.array.shape[1] + borders[1],

459 | self.array.shape[2]), dtype=self.array.dtype)

460 | rgb[

461 | border[0]:rgb.shape[0] - border[0],

462 | border[1]:rgb.shape[1] - border[1],

463 | :] = self.array

464 | bayer_pad = np.zeros((

465 | self.array.shape[0] + borders[0],

466 | self.array.shape[1] + borders[1],

467 | self.array.shape[2]), dtype=bayer.dtype)

468 | bayer_pad[

469 | border[0]:bayer_pad.shape[0] - border[0],

470 | border[1]:bayer_pad.shape[1] - border[1],

471 | :] = bayer

472 | bayer = bayer_pad

473 | # For each plane in the RGB data, construct a view over the plane

474 | # of 3x3 matrices. Then do the same for the bayer array and use

475 | # Einstein summation to get the weighted average

476 | self._demo = np.empty(self.array.shape, dtype=self.array.dtype)

477 | for plane in range(3):

478 | p = rgb[..., plane]

479 | b = bayer[..., plane]

480 | pview = as_strided(p, shape=(

481 | p.shape[0] - borders[0],

482 | p.shape[1] - borders[1]) + window, strides=p.strides * 2)

483 | bview = as_strided(b, shape=(

484 | b.shape[0] - borders[0],

485 | b.shape[1] - borders[1]) + window, strides=b.strides * 2)

486 | psum = np.einsum('ijkl->ij', pview)

487 | bsum = np.einsum('ijkl->ij', bview)

488 | self._demo[..., plane] = psum // bsum

489 | return self._demo

490 |

491 |

492 | class PiMotionArray(PiArrayOutput):

493 | """

494 | Produces a 3-dimensional array of motion vectors from the H.264 encoder.

495 |

496 | This custom output class is intended to be used with the *motion_output*

497 | parameter of the :meth:`~picamera.camera.PiCamera.start_recording` method.

498 | Once recording has finished, the class generates a 3-dimensional numpy

499 | array organized as (frames, rows, columns) where ``rows`` and ``columns``

500 | are the number of rows and columns of `macro-blocks`_ (16x16 pixel blocks)

501 | in the original frames. There is always one extra column of macro-blocks

502 | present in motion vector data.

503 |

504 | The data-type of the :attr:`~PiArrayOutput.array` is an (x, y, sad)

505 | structure where ``x`` and ``y`` are signed 1-byte values, and ``sad`` is an

506 | unsigned 2-byte value representing the `sum of absolute differences`_ of

507 | the block. For example::

508 |

509 | import picamera

510 | import picamera.array

511 |

512 | with picamera.PiCamera() as camera:

513 | with picamera.array.PiMotionArray(camera) as output:

514 | camera.resolution = (640, 480)

515 | camera.start_recording(

516 | '/dev/null', format='h264', motion_output=output)

517 | camera.wait_recording(30)

518 | camera.stop_recording()

519 | print('Captured %d frames' % output.array.shape[0])

520 | print('Frames are %dx%d blocks big' % (

521 | output.array.shape[2], output.array.shape[1]))

522 |

523 | If you are using the GPU resizer with your recording, use the optional

524 | *size* parameter to specify the resizer's output resolution when

525 | constructing the array::

526 |

527 | import picamera

528 | import picamera.array

529 |

530 | with picamera.PiCamera() as camera:

531 | camera.resolution = (640, 480)

532 | with picamera.array.PiMotionArray(camera, size=(320, 240)) as output:

533 | camera.start_recording(

534 | '/dev/null', format='h264', motion_output=output,

535 | resize=(320, 240))

536 | camera.wait_recording(30)

537 | camera.stop_recording()

538 | print('Captured %d frames' % output.array.shape[0])

539 | print('Frames are %dx%d blocks big' % (

540 | output.array.shape[2], output.array.shape[1]))

541 |

542 | .. note::

543 |

544 | This class is not suitable for real-time analysis of motion vector

545 | data. See the :class:`PiMotionAnalysis` class instead.

546 |

547 | .. _macro-blocks: http://en.wikipedia.org/wiki/Macroblock

548 | .. _sum of absolute differences: http://en.wikipedia.org/wiki/Sum_of_absolute_differences

549 | """

550 |

551 | def flush(self):

552 | super(PiMotionArray, self).flush()

553 | width, height = self.size or self.camera.resolution

554 | cols = ((width + 15) // 16) + 1

555 | rows = (height + 15) // 16

556 | b = self.getvalue()

557 | frames = len(b) // (cols * rows * motion_dtype.itemsize)

558 | self.array = np.frombuffer(b, dtype=motion_dtype).reshape((frames, rows, cols))

559 |

560 |

561 | class PiAnalysisOutput(io.IOBase):

562 | """

563 | Base class for analysis outputs.

564 |

565 | This class extends :class:`io.IOBase` with a stub :meth:`analyse` method

566 | which will be called for each frame output. In this base implementation the

567 | method simply raises :exc:`NotImplementedError`.

568 | """

569 |

570 | def __init__(self, camera, size=None):

571 | super(PiAnalysisOutput, self).__init__()

572 | self.camera = camera

573 | self.size = size

574 |

575 | def writable(self):

576 | return True

577 |

578 | def write(self, b):

579 | return len(b)

580 |

581 | def analyse(self, array):

582 | """

583 | Stub method for users to override.

584 | """

585 | raise NotImplementedError

586 |

587 |

588 | class PiRGBAnalysis(PiAnalysisOutput):

589 | """

590 | Provides a basis for per-frame RGB analysis classes.

591 |

592 | This custom output class is intended to be used with the

593 | :meth:`~picamera.camera.PiCamera.start_recording` method when it is called

594 | with *format* set to ``'rgb'`` or ``'bgr'``. While recording is in

595 | progress, the :meth:`~PiAnalysisOutput.write` method converts incoming

596 | frame data into a numpy array and calls the stub

597 | :meth:`~PiAnalysisOutput.analyse` method with the resulting array (this

598 | deliberately raises :exc:`NotImplementedError` in this class; you must

599 | override it in your descendant class).

600 |

601 | .. warning::

602 |

603 | Because the :meth:`~PiAnalysisOutput.analyse` method will be running

604 | within the encoder's callback, it must be **fast**. Specifically, it

605 | needs to return before the next frame is produced. Therefore, if the

606 | camera is running at 30fps, analyse cannot take more than 1/30s or 33ms

607 | to execute (and should take considerably less given that this doesn't

608 | take into account encoding overhead). You may wish to adjust the

609 | framerate of the camera accordingly.

610 |

611 | The array passed to :meth:`~PiAnalysisOutput.analyse` is organized as

612 | (rows, columns, channel) where the channels 0, 1, and 2 are R, G, and B

613 | respectively (or B, G, R if *format* is ``'bgr'``).

614 | """

615 |

616 | def write(self, b):

617 | result = super(PiRGBAnalysis, self).write(b)

618 | self.analyse(bytes_to_rgb(b, self.size or self.camera.resolution))

619 | return result

620 |

621 |

622 | class PiYUVAnalysis(PiAnalysisOutput):

623 | """

624 | Provides a basis for per-frame YUV analysis classes.

625 |

626 | This custom output class is intended to be used with the

627 | :meth:`~picamera.camera.PiCamera.start_recording` method when it is called

628 | with *format* set to ``'yuv'``. While recording is in progress, the

629 | :meth:`~PiAnalysisOutput.write` method converts incoming frame data into a

630 | numpy array and calls the stub :meth:`~PiAnalysisOutput.analyse` method

631 | with the resulting array (this deliberately raises

632 | :exc:`NotImplementedError` in this class; you must override it in your

633 | descendant class).

634 |

635 | .. warning::

636 |

637 | Because the :meth:`~PiAnalysisOutput.analyse` method will be running

638 | within the encoder's callback, it must be **fast**. Specifically, it

639 | needs to return before the next frame is produced. Therefore, if the

640 | camera is running at 30fps, analyse cannot take more than 1/30s or 33ms

641 | to execute (and should take considerably less given that this doesn't

642 | take into account encoding overhead). You may wish to adjust the

643 | framerate of the camera accordingly.

644 |

645 | The array passed to :meth:`~PiAnalysisOutput.analyse` is organized as

646 | (rows, columns, channel) where the channel 0 is Y (luminance), while 1 and

647 | 2 are U and V (chrominance) respectively. The chrominance values normally

648 | have a quarter of the luminance resolution, but this class makes all

649 | channels equal resolution for ease of use.

650 | """

651 |

652 | def write(self, b):

653 | result = super(PiYUVAnalysis, self).write(b)

654 | self.analyse(bytes_to_yuv(b, self.size or self.camera.resolution))

655 | return result

656 |

657 |

658 | class PiMotionAnalysis(PiAnalysisOutput):

659 | """

660 | Provides a basis for real-time motion analysis classes.

661 |

662 | This custom output class is intended to be used with the *motion_output*

663 | parameter of the :meth:`~picamera.camera.PiCamera.start_recording` method.

664 | While recording is in progress, the write method converts incoming motion

665 | data into numpy arrays and calls the stub :meth:`~PiAnalysisOutput.analyse`

666 | method with the resulting array (which deliberately raises

667 | :exc:`NotImplementedError` in this class).

668 |

669 | .. warning::

670 |

671 | Because the :meth:`~PiAnalysisOutput.analyse` method will be running

672 | within the encoder's callback, it must be **fast**. Specifically, it

673 | needs to return before the next frame is produced. Therefore, if the

674 | camera is running at 30fps, analyse cannot take more than 1/30s or 33ms

675 | to execute (and should take considerably less given that this doesn't

676 | take into account encoding overhead). You may wish to adjust the

677 | framerate of the camera accordingly.

678 |

679 | The array passed to :meth:`~PiAnalysisOutput.analyse` is organized as

680 | (rows, columns) where ``rows`` and ``columns`` are the number of rows and

681 | columns of `macro-blocks`_ (16x16 pixel blocks) in the original frames.

682 | There is always one extra column of macro-blocks present in motion vector

683 | data.

684 |

685 | The data-type of the array is an (x, y, sad) structure where ``x`` and

686 | ``y`` are signed 1-byte values, and ``sad`` is an unsigned 2-byte value

687 | representing the `sum of absolute differences`_ of the block.

688 |

689 | An example of a crude motion detector is given below::

690 |

691 | import numpy as np

692 | import picamera

693 | import picamera.array

694 |

695 | class DetectMotion(picamera.array.PiMotionAnalysis):

696 | def analyse(self, a):

697 | a = np.sqrt(

698 | np.square(a['x'].astype(float)) +

699 | np.square(a['y'].astype(float))

700 | ).clip(0, 255).astype(np.uint8)

701 | # If there are more than 10 vectors with a magnitude greater

702 | # than 60, then say we've detected motion

703 | if (a > 60).sum() > 10:

704 | print('Motion detected!')

705 |

706 | with picamera.PiCamera() as camera:

707 | with DetectMotion(camera) as output:

708 | camera.resolution = (640, 480)

709 | camera.start_recording(

710 | '/dev/null', format='h264', motion_output=output)

711 | camera.wait_recording(30)

712 | camera.stop_recording()

713 |

714 | You can use the optional *size* parameter to specify the output resolution

715 | of the GPU resizer, if you are using the *resize* parameter of

716 | :meth:`~picamera.camera.PiCamera.start_recording`.

717 | """

718 |

719 | def __init__(self, camera, size=None):

720 | super(PiMotionAnalysis, self).__init__(camera, size)

721 | self.cols = None

722 | self.rows = None

723 |

724 | def write(self, b):

725 | result = super(PiMotionAnalysis, self).write(b)

726 | if self.cols is None:

727 | width, height = self.size or self.camera.resolution

728 | self.cols = ((width + 15) // 16) + 1

729 | self.rows = (height + 15) // 16

730 | self.analyse(

731 | np.frombuffer(b, dtype=motion_dtype).\

732 | reshape((self.rows, self.cols)))

733 | return result

734 |

735 |

--------------------------------------------------------------------------------
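The motion classes at the end of array.py derive their array shape from the frame resolution: one entry per 16x16 macro-block, plus one extra column. The sketch below restates that arithmetic in plain Python, without numpy. It is not part of picamera; `VECTOR`, `motion_grid` and `split_frames` are hypothetical names, and the little-endian byte order of the `sad` field is an assumption.

```python
import struct

# One motion vector as described in the docstrings: signed 1-byte x,
# signed 1-byte y, unsigned 2-byte SAD. Little-endian order is assumed.
VECTOR = struct.Struct('<bbH')
BLOCK = 16  # macro-blocks are 16x16 pixels


def motion_grid(width, height):
    """Return (rows, cols) of macro-blocks for a frame of the given size."""
    cols = (width + BLOCK - 1) // BLOCK + 1  # one extra column is always present
    rows = (height + BLOCK - 1) // BLOCK
    return rows, cols


def split_frames(buf, width, height):
    """Split a raw motion buffer into frames of rows x cols (x, y, sad) tuples."""
    rows, cols = motion_grid(width, height)
    frame_bytes = rows * cols * VECTOR.size
    frames = []
    # Any trailing partial frame is ignored, as with the integer division
    # used in PiMotionArray.flush
    for f in range(len(buf) // frame_bytes):
        chunk = buf[f * frame_bytes:(f + 1) * frame_bytes]
        vectors = list(VECTOR.iter_unpack(chunk))
        frames.append([vectors[r * cols:(r + 1) * cols] for r in range(rows)])
    return frames


print(motion_grid(640, 480))
```

For a 640x480 recording this gives 30 rows and 41 columns, so each frame of motion data occupies 30 * 41 * 4 bytes.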

/picamera/bcm_host.py:

--------------------------------------------------------------------------------

1 | # vim: set et sw=4 sts=4 fileencoding=utf-8:

2 | #

3 | # Python header conversion

4 | # Copyright (c) 2013-2015 Dave Jones

5 | #

6 | # Original headers

7 | # Copyright (c) 2012, Broadcom Europe Ltd

8 | # All rights reserved.

9 | #

10 | # Redistribution and use in source and binary forms, with or without

11 | # modification, are permitted provided that the following conditions are met:

12 | #

13 | # * Redistributions of source code must retain the above copyright

14 | # notice, this list of conditions and the following disclaimer.

15 | # * Redistributions in binary form must reproduce the above copyright

16 | # notice, this list of conditions and the following disclaimer in the

17 | # documentation and/or other materials provided with the distribution.

18 | # * Neither the name of the copyright holder nor the

19 | # names of its contributors may be used to endorse or promote products

20 | # derived from this software without specific prior written permission.

21 | #

22 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

23 | # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

24 | # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

25 | # ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE

26 | # LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

27 | # CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

28 | # SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

29 | # INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

30 | # CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

31 | # ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

32 | # POSSIBILITY OF SUCH DAMAGE.

33 |

34 | from __future__ import (

35 | unicode_literals,

36 | print_function,

37 | division,

38 | absolute_import,

39 | )

40 |

41 | # Make Py2's str equivalent to Py3's

42 | str = type('')

43 |

44 | import ctypes as ct

45 | import warnings

46 |

47 | _lib = ct.CDLL('libbcm_host.so')

48 |

49 | # bcm_host.h #################################################################

50 |

51 | bcm_host_init = _lib.bcm_host_init

52 | bcm_host_init.argtypes = []

53 | bcm_host_init.restype = None

54 |

55 | bcm_host_deinit = _lib.bcm_host_deinit

56 | bcm_host_deinit.argtypes = []

57 | bcm_host_deinit.restype = None

58 |

59 | graphics_get_display_size = _lib.graphics_get_display_size

60 | graphics_get_display_size.argtypes = [ct.c_uint16, ct.POINTER(ct.c_uint32), ct.POINTER(ct.c_uint32)]

61 | graphics_get_display_size.restype = ct.c_int32

62 |

63 |

--------------------------------------------------------------------------------
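The bcm_host.py listing above only declares ctypes prototypes. A caller still has to allocate the output integers and pass pointers to them. The sketch below shows one way that might look; `display_size` is a hypothetical helper, not part of picamera, and treating a negative return value as failure is an assumption about the underlying C function.

```python
import ctypes as ct


def display_size(lib, display=0):
    """Query a display's size through a bcm_host-style library handle.

    ``lib`` is assumed to expose graphics_get_display_size with the
    prototype declared above: (uint16, uint32*, uint32*) -> int32.
    """
    width = ct.c_uint32()
    height = ct.c_uint32()
    # Assumption: a negative return value signals failure
    status = lib.graphics_get_display_size(
        display, ct.pointer(width), ct.pointer(height))
    if status < 0:
        raise RuntimeError('unable to query size of display %d' % display)
    return width.value, height.value
```

On a Raspberry Pi this would presumably be called as `display_size(picamera.bcm_host)` after `bcm_host_init()`; that usage is untested here.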

/picamera/color.py:

--------------------------------------------------------------------------------

1 | # vim: set et sw=4 sts=4 fileencoding=utf-8:

2 | #

3 | # Python camera library for the Raspberry Pi camera module

4 | # Copyright (c) 2013-2015 Dave Jones

5 | #

6 | # Redistribution and use in source and binary forms, with or without

7 | # modification, are permitted provided that the following conditions are met:

8 | #

9 | # * Redistributions of source code must retain the above copyright

10 | # notice, this list of conditions and the following disclaimer.

11 | # * Redistributions in binary form must reproduce the above copyright

12 | # notice, this list of conditions and the following disclaimer in the

13 | # documentation and/or other materials provided with the distribution.

14 | # * Neither the name of the copyright holder nor the

15 | # names of its contributors may be used to endorse or promote products

16 | # derived from this software without specific prior written permission.

17 | #

18 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

19 | # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

20 | # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

21 | # ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE

22 | # LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

23 | # CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

24 | # SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

25 | # INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

26 | # CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

27 | # ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

28 | # POSSIBILITY OF SUCH DAMAGE.

29 |

30 | """

31 | The color module defines a class for representing a color, along with various

32 | ancillary classes which can be used to manipulate aspects of a color.

33 |

34 | .. note::

35 |

36 | All classes in this module are available from the :mod:`picamera` namespace

37 | without having to import :mod:`picamera.color` directly.

38 |

39 | The following classes are defined in the module:

40 |

41 |

42 | Color

43 | =====

44 |

45 | .. autoclass:: Color

46 | :members:

47 |

48 |

49 | Red

50 | ===

51 |

52 | .. autoclass:: Red

53 | :members:

54 |

55 |

56 | Green

57 | =====

58 |

59 | .. autoclass:: Green

60 | :members:

61 |

62 |

63 | Blue

64 | ====

65 |

66 | .. autoclass:: Blue

67 | :members:

68 |

69 |

70 | Hue

71 | ===

72 |

73 | .. autoclass:: Hue

74 | :members:

75 |

76 |

77 | Saturation

78 | ==========

79 |

80 | .. autoclass:: Saturation

81 | :members:

82 |

83 |

84 | Lightness

85 | =========

86 |

87 | .. autoclass:: Lightness

88 | :members:

89 |

90 | """

91 |

92 | from __future__ import (

93 | unicode_literals,

94 | print_function,

95 | division,

96 | absolute_import,

97 | )

98 |

99 | # Make Py2's str equivalent to Py3's

100 | str = type('')

101 |

102 |

103 | import colorsys

104 | from math import pi

105 | from collections import namedtuple

106 |

107 |

108 | # From the CSS Color Module Level 3 specification, section 4.3

109 | #

110 | NAMED_COLORS = {

111 | 'aliceblue': '#f0f8ff',

112 | 'antiquewhite': '#faebd7',

113 | 'aqua': '#00ffff',

114 | 'aquamarine': '#7fffd4',

115 | 'azure': '#f0ffff',

116 | 'beige': '#f5f5dc',

117 | 'bisque': '#ffe4c4',

118 | 'black': '#000000',

119 | 'blanchedalmond': '#ffebcd',

120 | 'blue': '#0000ff',

121 | 'blueviolet': '#8a2be2',

122 | 'brown': '#a52a2a',

123 | 'burlywood': '#deb887',

124 | 'cadetblue': '#5f9ea0',

125 | 'chartreuse': '#7fff00',

126 | 'chocolate': '#d2691e',

127 | 'coral': '#ff7f50',

128 | 'cornflowerblue': '#6495ed',

129 | 'cornsilk': '#fff8dc',

130 | 'crimson': '#dc143c',

131 | 'cyan': '#00ffff',

132 | 'darkblue': '#00008b',

133 | 'darkcyan': '#008b8b',

134 | 'darkgoldenrod': '#b8860b',

135 | 'darkgray': '#a9a9a9',

136 | 'darkgreen': '#006400',

137 | 'darkgrey': '#a9a9a9',

138 | 'darkkhaki': '#bdb76b',

139 | 'darkmagenta': '#8b008b',

140 | 'darkolivegreen': '#556b2f',

141 | 'darkorange': '#ff8c00',

142 | 'darkorchid': '#9932cc',

143 | 'darkred': '#8b0000',

144 | 'darksalmon': '#e9967a',

145 | 'darkseagreen': '#8fbc8f',

146 | 'darkslateblue': '#483d8b',

147 | 'darkslategray': '#2f4f4f',

148 | 'darkslategrey': '#2f4f4f',

149 | 'darkturquoise': '#00ced1',

150 | 'darkviolet': '#9400d3',

151 | 'deeppink': '#ff1493',

152 | 'deepskyblue': '#00bfff',

153 | 'dimgray': '#696969',

154 | 'dimgrey': '#696969',

155 | 'dodgerblue': '#1e90ff',

156 | 'firebrick': '#b22222',

157 | 'floralwhite': '#fffaf0',

158 | 'forestgreen': '#228b22',

159 | 'fuchsia': '#ff00ff',

160 | 'gainsboro': '#dcdcdc',

161 | 'ghostwhite': '#f8f8ff',

162 | 'gold': '#ffd700',

163 | 'goldenrod': '#daa520',

164 | 'gray': '#808080',

165 | 'green': '#008000',

166 | 'greenyellow': '#adff2f',

167 | 'grey': '#808080',

168 | 'honeydew': '#f0fff0',

169 | 'hotpink': '#ff69b4',

170 | 'indianred': '#cd5c5c',

171 | 'indigo': '#4b0082',

172 | 'ivory': '#fffff0',

173 | 'khaki': '#f0e68c',

174 | 'lavender': '#e6e6fa',

175 | 'lavenderblush': '#fff0f5',

176 | 'lawngreen': '#7cfc00',

177 | 'lemonchiffon': '#fffacd',

178 | 'lightblue': '#add8e6',

179 | 'lightcoral': '#f08080',

180 | 'lightcyan': '#e0ffff',

181 | 'lightgoldenrodyellow': '#fafad2',

182 | 'lightgray': '#d3d3d3',

183 | 'lightgreen': '#90ee90',

184 | 'lightgrey': '#d3d3d3',

185 | 'lightpink': '#ffb6c1',

186 | 'lightsalmon': '#ffa07a',

187 | 'lightseagreen': '#20b2aa',

188 | 'lightskyblue': '#87cefa',

189 | 'lightslategray': '#778899',

190 | 'lightslategrey': '#778899',

191 | 'lightsteelblue': '#b0c4de',

192 | 'lightyellow': '#ffffe0',

193 | 'lime': '#00ff00',

194 | 'limegreen': '#32cd32',

195 | 'linen': '#faf0e6',

196 | 'magenta': '#ff00ff',

197 | 'maroon': '#800000',

198 | 'mediumaquamarine': '#66cdaa',

199 | 'mediumblue': '#0000cd',

200 | 'mediumorchid': '#ba55d3',

201 | 'mediumpurple': '#9370db',

202 | 'mediumseagreen': '#3cb371',

203 | 'mediumslateblue': '#7b68ee',

204 | 'mediumspringgreen': '#00fa9a',

205 | 'mediumturquoise': '#48d1cc',

206 | 'mediumvioletred': '#c71585',

207 | 'midnightblue': '#191970',

208 | 'mintcream': '#f5fffa',

209 | 'mistyrose': '#ffe4e1',

210 | 'moccasin': '#ffe4b5',

211 | 'navajowhite': '#ffdead',

212 | 'navy': '#000080',

213 | 'oldlace': '#fdf5e6',

214 | 'olive': '#808000',

215 | 'olivedrab': '#6b8e23',

216 | 'orange': '#ffa500',

217 | 'orangered': '#ff4500',

218 | 'orchid': '#da70d6',

219 | 'palegoldenrod': '#eee8aa',

220 | 'palegreen': '#98fb98',

221 | 'paleturquoise': '#afeeee',

222 | 'palevioletred': '#db7093',

223 | 'papayawhip': '#ffefd5',

224 | 'peachpuff': '#ffdab9',

225 | 'peru': '#cd853f',

226 | 'pink': '#ffc0cb',

227 | 'plum': '#dda0dd',

228 | 'powderblue': '#b0e0e6',

229 | 'purple': '#800080',

230 | 'red': '#ff0000',

231 | 'rosybrown': '#bc8f8f',

232 | 'royalblue': '#4169e1',

233 | 'saddlebrown': '#8b4513',

234 | 'salmon': '#fa8072',

235 | 'sandybrown': '#f4a460',

236 | 'seagreen': '#2e8b57',

237 | 'seashell': '#fff5ee',

238 | 'sienna': '#a0522d',

239 | 'silver': '#c0c0c0',

240 | 'skyblue': '#87ceeb',

241 | 'slateblue': '#6a5acd',

242 | 'slategray': '#708090',

243 | 'slategrey': '#708090',

244 | 'snow': '#fffafa',

245 | 'springgreen': '#00ff7f',

246 | 'steelblue': '#4682b4',

247 | 'tan': '#d2b48c',

248 | 'teal': '#008080',

249 | 'thistle': '#d8bfd8',

250 | 'tomato': '#ff6347',

251 | 'turquoise': '#40e0d0',

252 | 'violet': '#ee82ee',

253 | 'wheat': '#f5deb3',

254 | 'white': '#ffffff',

255 | 'whitesmoke': '#f5f5f5',

256 | 'yellow': '#ffff00',

257 | 'yellowgreen': '#9acd32',

258 | }

259 |

260 |

261 | class Red(float):

262 | """

263 | Represents the red component of a :class:`Color` for use in

264 | transformations. Instances of this class can be constructed directly with a

265 | float value, or by querying the :attr:`Color.red` attribute. Addition,

266 | subtraction, and multiplication are supported with :class:`Color`

267 | instances. For example::

268 |

269 | >>> Color.from_rgb(0, 0, 0) + Red(0.5)

270 |

271 | >>> Color('#f00') - Color('#900').red

272 |

273 | >>> (Red(0.1) * Color('red')).red

274 | Red(0.1)

275 | """

276 |

277 | def __repr__(self):

278 | return "Red(%s)" % self

279 |

280 |

281 | class Green(float):

282 | """

283 | Represents the green component of a :class:`Color` for use in

284 | transformations. Instances of this class can be constructed directly with

285 | a float value, or by querying the :attr:`Color.green` attribute. Addition,

286 | subtraction, and multiplication are supported with :class:`Color`

287 | instances. For example::

288 |

289 | >>> Color(0, 0, 0) + Green(0.1)

290 |

291 | >>> Color.from_yuv(1, -0.4, -0.6) - Green(1)

292 |

293 | >>> (Green(0.5) * Color('white')).rgb

294 | (Red(1.0), Green(0.5), Blue(1.0))

295 | """

296 |

297 | def __repr__(self):

298 | return "Green(%s)" % self

299 |

300 |

301 | class Blue(float):

302 | """

303 | Represents the blue component of a :class:`Color` for use in

304 | transformations. Instances of this class can be constructed directly with

305 | a float value, or by querying the :attr:`Color.blue` attribute. Addition,

306 | subtraction, and multiplication are supported with :class:`Color`

307 | instances. For example::

308 |

309 | >>> Color(0, 0, 0) + Blue(0.2)

310 |

311 | >>> Color.from_hls(0.5, 0.5, 1.0) - Blue(1)

312 |

313 | >>> Blue(0.9) * Color('white')

314 |

315 | """

316 |

317 | def __repr__(self):

318 | return "Blue(%s)" % self

319 |

320 |

321 | class Hue(float):

322 | """

323 | Represents the hue of a :class:`Color` for use in transformations.

324 | Instances of this class can be constructed directly with a float value in

325 | the range [0.0, 1.0) representing an angle around the `HSL hue wheel`_. As

326 | this is a circular mapping, 0.0 and 1.0 effectively mean the same thing,

327 | i.e. out of range values will be normalized into the range [0.0, 1.0).

328 |

329 | The class can also be constructed with the keyword arguments ``deg`` or

330 | ``rad`` if you wish to specify the hue value in degrees or radians instead,

331 | respectively. Instances can also be constructed by querying the

332 | :attr:`Color.hue` attribute.

333 |

334 | Addition, subtraction, and multiplication are supported with :class:`Color`

335 | instances. For example::

336 |

337 | >>> Color(1, 0, 0).hls

338 | (0.0, 0.5, 1.0)

339 | >>> (Color(1, 0, 0) + Hue(deg=180)).hls

340 | (0.5, 0.5, 1.0)

341 |

342 | Note that whilst multiplication by a :class:`Hue` doesn't make much sense,

343 | it is still supported. However, the circular nature of a hue value can lead

344 | to surprising effects. In particular, since 1.0 is equivalent to 0.0 the

345 | following may be observed::

346 |

347 | >>> (Hue(1.0) * Color.from_hls(0.5, 0.5, 1.0)).hls

348 | (0.0, 0.5, 1.0)

349 |

350 | .. _HSL hue wheel: https://en.wikipedia.org/wiki/Hue

351 | """

352 |

353 |     def __new__(cls, n=None, deg=None, rad=None):

354 |         if n is not None:

355 |             return super(Hue, cls).__new__(cls, n % 1.0)

356 |         elif deg is not None:

357 |             return super(Hue, cls).__new__(cls, (deg / 360.0) % 1.0)

358 |         elif rad is not None:

359 |             return super(Hue, cls).__new__(cls, (rad / (2 * pi)) % 1.0)

360 |         else:

361 |             raise ValueError('You must specify a value, or deg or rad')

362 |

363 |     def __repr__(self):

364 |         return "Hue(deg=%s)" % self.deg

365 |

366 |     @property

367 |     def deg(self):

368 |         return self * 360.0

369 |

370 |     @property

371 |     def rad(self):

372 |         return self * 2 * pi
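
The wrap-around behaviour described in the `Hue` docstring comes entirely from the `% 1.0` in the constructor. The sketch below isolates that logic in a standalone class so it can be run without the rest of the module; `HueSketch` is a hypothetical name used only for this illustration.

```python
from math import pi


class HueSketch(float):
    """Hypothetical stand-in mirroring the Hue constructor logic."""

    def __new__(cls, n=None, deg=None, rad=None):
        if n is not None:
            turns = n
        elif deg is not None:
            turns = deg / 360.0
        elif rad is not None:
            turns = rad / (2 * pi)
        else:
            raise ValueError('You must specify a value, or deg or rad')
        # The modulo wraps values outside the range back into [0.0, 1.0)
        return super().__new__(cls, turns % 1.0)

    @property
    def deg(self):
        # Multiplying a float subclass by a float yields a plain float
        return self * 360.0


print(HueSketch(1.0) == 0.0)      # a full turn wraps to zero
print(HueSketch(deg=540).deg)     # one and a half turns
print(HueSketch(-0.25) == 0.75)   # negative values wrap upward
```

This is also why multiplying by `Hue(1.0)` zeroes the hue in the docstring example: the multiplier has already been normalized to 0.0 by the time it is used.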

373 |

374 |

375 | class Lightness(float):

376 | """

377 | Represents the lightness of a :class:`Color` for use in transformations.

378 | Instances of this class can be constructed directly with a float value, or

379 | by querying the :attr:`Color.lightness` attribute. Addition, subtraction,

380 | and multiplication are supported with :class:`Color` instances. For

381 | example::

382 |

383 | >>> Color(0, 0, 0) + Lightness(0.1)

384 |

385 | >>> Color.from_rgb_bytes(0x80, 0x80, 0) - Lightness(0.2)

386 |

387 | >>> Lightness(0.9) * Color('wheat')

388 |

389 | """

390 |

391 |     def __repr__(self):

392 |         return "Lightness(%s)" % float(self)  # float() avoids recursing into __repr__ on Python 3.8+

393 |

394 |

395 | class Saturation(float):

396 | """

397 | Represents the saturation of a :class:`Color` for use in transformations.

398 | Instances of this class can be constructed directly with a float value, or

399 | by querying the :attr:`Color.saturation` attribute. Addition, subtraction,

400 | and multiplication are supported with :class:`Color` instances. For

401 | example::

402 |

403 | >>> Color(0.9, 0.9, 0.6) + Saturation(0.1)

404 |

405 | >>> Color('red') - Saturation(1)

406 |

407 | >>> Saturation(0.5) * Color('wheat')

408 |

409 | """

410 |

411 |     def __repr__(self):

412 |         return "Saturation(%s)" % float(self)  # float() avoids recursing into __repr__ on Python 3.8+

413 |

414 |

415 |

416 | clamp_float = lambda v: max(0.0, min(1.0, v))

417 | clamp_bytes = lambda v: max(0, min(255, v))
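
As a quick illustration of what the two clamp helpers do, here they are written as ordinary functions (an equivalent form, chosen here only for readability) together with a few sample calls. The sample values are arbitrary.

```python
def clamp_float(v):
    """Restrict v to the closed range [0.0, 1.0]."""
    return max(0.0, min(1.0, v))


def clamp_bytes(v):
    """Restrict v to the closed range [0, 255]."""
    return max(0, min(255, v))


print(clamp_float(1.5), clamp_float(-0.2), clamp_float(0.3))
print(clamp_bytes(300), clamp_bytes(-5), clamp_bytes(128))
```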

418 |

419 | class Color(namedtuple('Color', ('red', 'green', 'blue'))):

420 | """

421 | The Color class is a tuple which represents a color as red, green, and

422 | blue components.

423 |

424 | The class has a flexible constructor which allows you to create an instance

425 | from a variety of color systems including `RGB`_, `Y'UV`_, `Y'IQ`_, `HLS`_,

426 | and `HSV`_. There are also explicit constructors for each of these systems

427 | to allow you to force the use of a system in your code. For example, an

428 | instance of :class:`Color` can be constructed in any of the following

429 | ways::

430 |

431 | >>> Color('#f00')

432 |

433 | >>> Color('green')

434 |

435 | >>> Color(0, 0, 1)

436 |

437 | >>> Color(hue=0, saturation=1, value=0.5)

438 |

439 | >>> Color(y=0.4, u=-0.05, v=0.615)

440 |

441 |

442 | The specific forms that the default constructor will accept are enumerated

443 | below:

444 |

445 | +------------------------------+------------------------------------------+

446 | | Style                        | Description                              |

447 | +==============================+==========================================+

448 | | Single positional parameter  | Equivalent to calling                    |

449 | |                              | :meth:`Color.from_string`.               |

450 | +------------------------------+------------------------------------------+

451 | | Three positional parameters  | Equivalent to calling                    |

452 | |                              | :meth:`Color.from_rgb` if all three      |

453 | |                              | parameters are between 0.0 and 1.0, or   |

454 | |                              | :meth:`Color.from_rgb_bytes` otherwise.  |

455 | +------------------------------+                                          |

456 | | Three named parameters,      |                                          |

457 | | "r", "g", "b"                |                                          |

458 | +------------------------------+                                          |

459 | | Three named parameters,      |                                          |

460 | | "red", "green", "blue"       |                                          |

461 | +------------------------------+------------------------------------------+

462 | | Three named parameters,      | Equivalent to calling                    |

463 | | "y", "u", "v"                | :meth:`Color.from_yuv` if "y" is between |

464 | |                              | 0.0 and 1.0, "u" is between -0.436 and   |

465 | |                              | 0.436, and "v" is between -0.615 and     |

466 | |                              | 0.615, or :meth:`Color.from_yuv_bytes`   |

467 | |                              | otherwise.                               |

468 | +------------------------------+------------------------------------------+

469 | | Three named parameters,      | Equivalent to calling                    |

470 | | "y", "i", "q"                | :meth:`Color.from_yiq`.                  |

471 | +------------------------------+------------------------------------------+