├── .gitignore

├── .travis.yml

├── README.md

├── akka-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── akka

│ ├── HelloWorldApp.scala

│ └── PingPongApp.scala

├── analysis-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── analysis

│ └── analysisApp.scala

├── breeze-demo

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── breeze

│ └── BreezeApp.scala

├── file-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── file

│ └── FileApp.scala

├── hive-json-demo

├── README.md

├── pom.xml

└── src

│ ├── main

│ ├── java

│ │ └── cn.thinkjoy.utils4s.hive.json

│ │ │ └── JSONSerDe.java

│ └── scala

│ │ └── cn

│ │ └── thinkjoy

│ │ └── utils4s

│ │ └── hive

│ │ └── json

│ │ └── App.scala

│ └── resources

│ └── create_table.sql

├── json4s-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── json4s

│ └── Json4sDemo.scala

├── lamma-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── lamma

│ └── BasicOper.scala

├── log-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ ├── resources

│ └── log4j.properties

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── log4s

│ ├── App.scala

│ ├── Logging.scala

│ └── LoggingTest.scala

├── manger-tools

├── python

│ └── es

│ │ ├── __init__.py

│ │ ├── check_index.py

│ │ ├── del_expired_index.py

│ │ ├── del_many_index.py

│ │ ├── delindex.py

│ │ ├── expired_index.xml

│ │ ├── index_list.xml

│ │ ├── logger.py

│ │ ├── mail.py

│ │ └── test.json

└── shell

│ ├── kafka-reassign-replica.sh

│ ├── manger.sh

│ └── start_daily.sh

├── nscala-time-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── nscala_time

│ └── BasicOper.scala

├── picture

├── covAndcon.png

├── datacube.jpg

└── spark_streaming_config.png

├── pom.xml

├── resources-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ ├── resources

│ ├── test.properties

│ └── test.xml

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── resources

│ └── ResourcesApp.scala

├── scala-demo

├── README.md

├── md

│ ├── 偏函数(PartialFunction)、偏应用函数(Partial Applied Function).md

│ ├── 函数参数传名调用、传值调用.md

│ └── 协变逆变上界下界.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ ├── S99

│ ├── P01.scala

│ ├── P02.scala

│ ├── P03.scala

│ ├── P04.scala

│ ├── P05.scala

│ ├── P06.scala

│ ├── P07.scala

│ ├── P08.scala

│ ├── P09.scala

│ ├── P10.scala

│ └── P11.scala

│ └── scala

│ ├── CaseClass.scala

│ ├── CovariantAndContravariant.scala

│ ├── EnumerationApp.scala

│ ├── ExtractorApp.scala

│ ├── FileSysCommandApp.scala

│ ├── FutureAndPromise.scala

│ ├── FutureApp.scala

│ ├── HighOrderFunction.scala

│ ├── MapApp.scala

│ ├── PatternMatching.scala

│ ├── TestApp.scala

│ └── TraitApp.scala

├── spark-analytics-demo

├── pom.xml

└── src

│ └── main

│ ├── resources

│ └── block_1.csv

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── spark

│ └── analytics

│ ├── DataCleaningApp.scala

│ ├── NAStatCounter.scala

│ └── StatsWithMissing.scala

├── spark-core-demo

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── spark

│ └── core

│ └── GroupByKeyAndReduceByKeyApp.scala

├── spark-dataframe-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ ├── resources

│ ├── a.json

│ ├── b.txt

│ └── hive-site.xml

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── spark

│ └── dataframe

│ ├── RollupApp.scala

│ ├── SparkDataFrameApp.scala

│ ├── SparkDataFrameUDFApp.scala

│ ├── SparkSQLSupport.scala

│ ├── UdfTestApp.scala

│ └── udf

│ ├── AccessLogParser.scala

│ ├── AccessLogRecord.scala

│ └── LogAnalytics.scala

├── spark-knowledge

├── README.md

├── images

│ ├── MapReduce-v3.png

│ ├── Spark-Heap-Usage.png

│ ├── Spark-Memory-Management-1.6.0.png

│ ├── data-frame.png

│ ├── goupByKey.001.jpg

│ ├── groupByKey.png

│ ├── kafka

│ │ └── system_components_on_white_v2.png

│ ├── rdd-dataframe-dataset

│ │ ├── filter-down.png

│ │ └── rdd-dataframe.png

│ ├── reduceByKey.png

│ ├── spark-streaming-kafka

│ │ ├── spark-kafka-direct-api.png

│ │ ├── spark-metadata-checkpointing.png

│ │ ├── spark-reliable-source-reliable-receiver.png

│ │ ├── spark-wal.png

│ │ └── spark-wall-at-least-once-delivery.png

│ ├── spark_sort_shuffle.png

│ ├── spark_tungsten_sort_shuffle.png

│ └── zepplin

│ │ ├── helium.png

│ │ └── z-manager-zeppelin.png

├── md

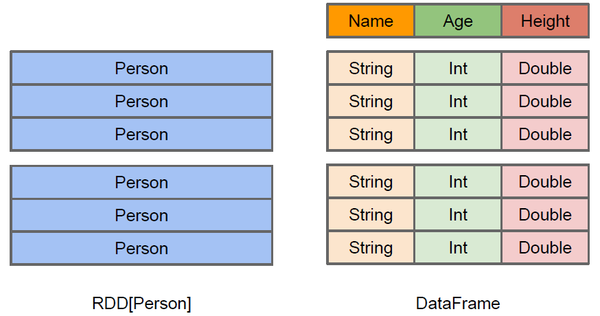

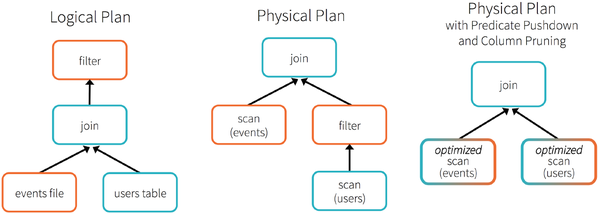

│ ├── RDD、DataFrame和DataSet的区别.md

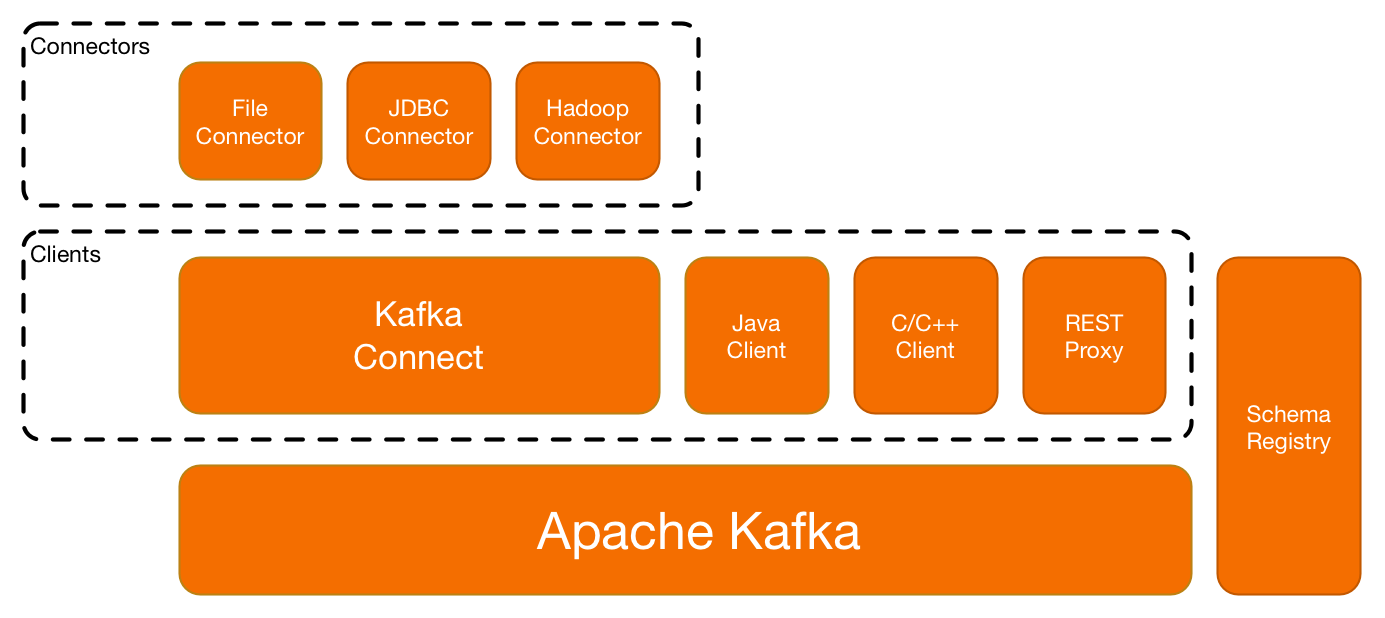

│ ├── confluent_platform2.0.md

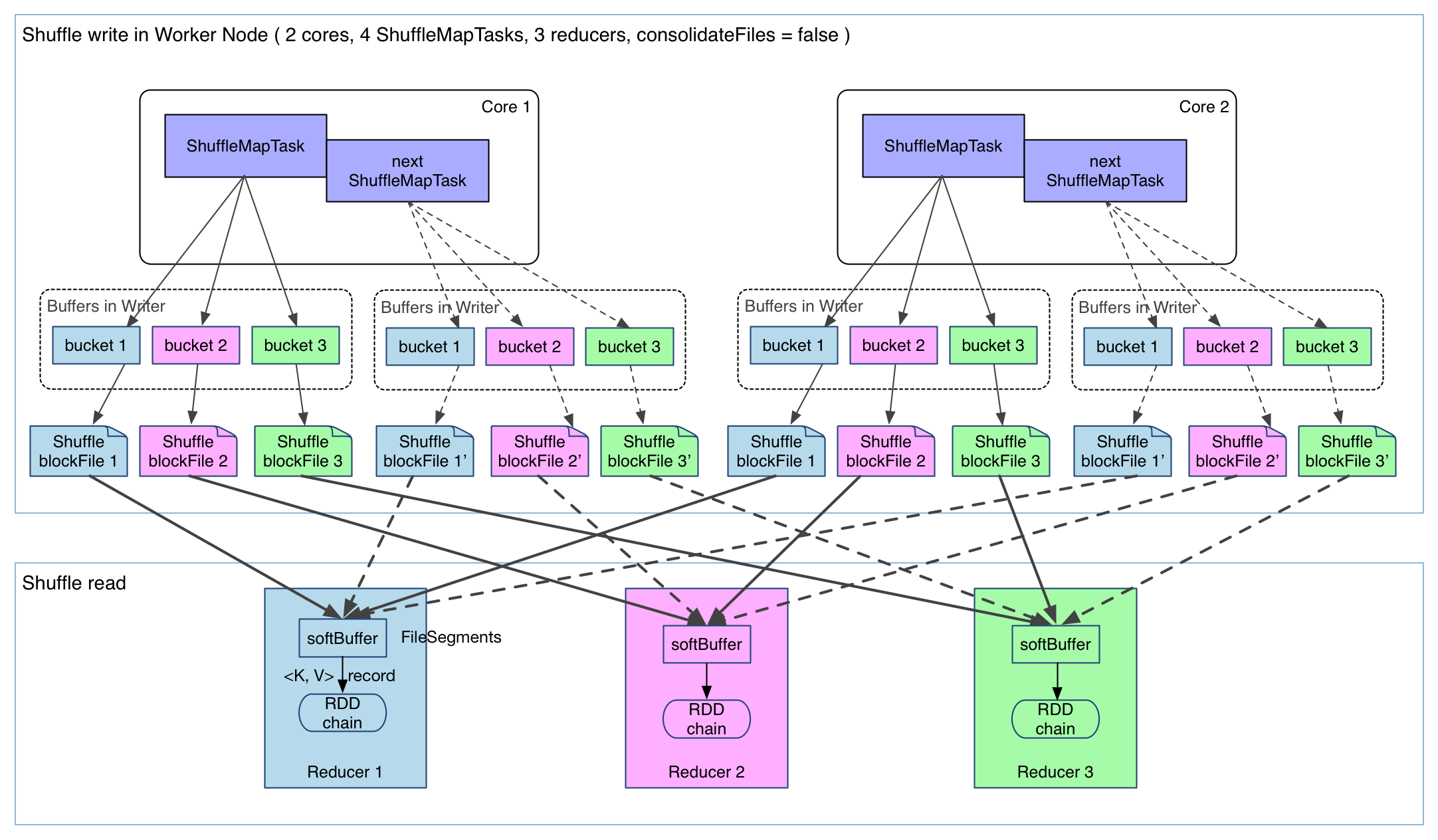

│ ├── hash-shuffle.md

│ ├── sort-shuffle.md

│ ├── spark-dataframe-parquet.md

│ ├── spark_sql选择parquet存储方式的五个原因.md

│ ├── spark_streaming使用kafka保证数据零丢失.md

│ ├── spark从关系数据库加载数据.md

│ ├── spark内存概述.md

│ ├── spark实践总结.md

│ ├── spark统一内存管理.md

│ ├── tungsten-sort-shuffle.md

│ ├── zeppelin搭建.md

│ ├── 使用spark进行数据挖掘--音乐推荐.md

│ └── 利用spark进行数据挖掘-数据清洗.md

└── resources

│ └── zeppelin

│ ├── interpreter.json

│ └── zeppelin-env.sh

├── spark-streaming-demo

├── README.md

├── md

│ ├── mapWithState.md

│ └── spark-streaming-kafka测试用例.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── sparkstreaming

│ ├── MapWithStateApp.scala

│ ├── SparkStreamingDataFrameDemo.scala

│ └── SparkStreamingDemo.scala

├── spark-timeseries-demo

├── README.md

├── data

│ └── ticker.tsv

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── spark

│ └── timeseries

│ └── TimeSeriesApp.scala

├── toc_gen.py

├── twitter-util-demo

├── README.md

├── pom.xml

└── src

│ └── main

│ └── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── twitter

│ └── util

│ └── core

│ └── TimeApp.scala

└── unittest-demo

├── README.md

├── pom.xml

└── src

├── main

└── scala

│ └── cn

│ └── thinkjoy

│ └── utils4s

│ └── unittest

│ └── App.scala

└── test

└── scala

└── cn

└── thinkjoy

└── utils4s

└── scala

├── StackSpec.scala

└── UnitSpec.scala

/.gitignore:

--------------------------------------------------------------------------------

1 | .idea

2 | demo.iml

3 | logs/*

4 | */*.iml

5 | */target

6 | target

7 | checkpoint

8 | derby.log

9 | metastore_db/

10 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: scala

2 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | utils4s

2 |

3 | 公众号:

4 |

5 |

6 | [](https://travis-ci.org/jacksu/utils4s)[](https://gitter.im/jacksu/utils4s?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge)

7 |

8 | * [utils4s](#id1)

9 | * [scala语法学习](#id2)

10 | * [common库](#id21)

11 | * [BigData库](#id22)

12 | * [Spark](#id221)

13 | * [Spark core](#id2211)

14 | * [Spark Streaming](#id2212)

15 | * [Spark SQL](#id2213)

16 | * [Spark 机器学习](#id2213)

17 | * [Spark Zeppelin](#id2214)

18 | * [Spark 其它](#id2215)

19 | * [ES](#id222)

20 | * [贡献代码步骤](#id23)

21 | * [贡献者](#id24)

22 |

23 | **Issues 中包含我们平时阅读的关于scala、spark好的文章,欢迎推荐**

24 |

25 | utils4s包含各种scala通用、好玩的工具库demo和使用文档,通过简单的代码演示和操作文档,各种库信手拈来。

26 |

27 | **同时欢迎大家贡献各种好玩的、经常使用的工具库。**

28 |

29 | [开源中国地址](http://git.oschina.net/jack.su/utils4s)

30 |

31 | QQ交流群 `432290475(已满),请加530066027`  或者点击上面gitter图标也可以参与讨论

32 |

33 | [作者博客专注大数据、分布式系统、机器学习,欢迎交流](http://www.jianshu.com/users/92a1227beb27/latest_articles)

34 |

35 | 微博:[**jacksu_**](http://weibo.com/jack4s)

36 |

37 |

或者点击上面gitter图标也可以参与讨论

32 |

33 | [作者博客专注大数据、分布式系统、机器学习,欢迎交流](http://www.jianshu.com/users/92a1227beb27/latest_articles)

34 |

35 | 微博:[**jacksu_**](http://weibo.com/jack4s)

36 |

37 | scala语法学习

38 |

39 | 说明:scala语法学习过程中,用例代码都放在scala-demo模块下。

40 |

41 | [利用IntelliJ IDEA与Maven开始你的Scala之旅](https://www.jianshu.com/p/ecc6eb298b8f)

42 |

43 | [快学scala电子书](http://vdisk.weibo.com/s/C7NmUN3g8gH46)(推荐入门级书)

44 |

45 | [scala理解的比较深](http://hongjiang.info/scala/)

46 |

47 | [scala99问题](http://aperiodic.net/phil/scala/s-99/)

48 |

49 | [scala初学者指南](https://windor.gitbooks.io/beginners-guide-to-scala/content/introduction.html)(这可不是初学者可以理解的欧,还是写过一些程序后再看)

50 |

51 | [scala初学者指南英文版](http://danielwestheide.com/scala/neophytes.html)

52 |

53 | [scala学习用例](scala-demo)

54 |

55 | [scala入门笔记](http://blog.djstudy.net/2016/01/24/scala-rumen-biji/)

56 |

57 | [Databricks风格](https://github.com/databricks/scala-style-guide)

58 |

59 | [scala/java 通过maven编译(Mixed Java/Scala Projects)](http://davidb.github.io/scala-maven-plugin/example_java.html)

60 |

61 | common库

62 |

63 | [日志操作](log-demo)([log4s](https://github.com/Log4s/log4s))

64 |

65 | [单元测试](unittest-demo)([scalatest](http://www.scalatest.org))

66 |

67 | [日期操作](lamma-demo)([lama](http://www.lamma.io/doc/quick_start))(注:只支持日期操作,不支持时间操作)

68 |

69 | [日期时间操作](nscala-time-demo)([nscala-time](https://github.com/nscala-time/nscala-time))(注:没有每月多少天,每月最后一天,以及每年多少天)

70 |

71 | [json解析](json4s-demo)([json4s](https://github.com/json4s/json4s))

72 |

73 | [resources下文件加载用例](resources-demo)

74 |

75 | [文件操作](file-demo)([better-files](https://github.com/pathikrit/better-files))

76 |

77 | [单位换算](analysis-demo)([squants](https://github.com/garyKeorkunian/squants))

78 |

79 | [线性代数和向量计算](breeze-demo)([breeze](https://github.com/scalanlp/breeze))

80 |

81 | [分布式并行实现库akka](akka-demo)([akka](http://akka.io))

82 |

83 | [Twitter工具库](twitter-util-demo)([twitter util](https://github.com/twitter/util))

84 |

85 | [日常脚本工具](manger-tools)

86 |

87 | BigData库

88 |

89 | Spark

90 |

91 | Spark core

92 | [spark远程调试源代码](http://hadoop1989.com/2016/02/01/Spark-Remote-Debug/)

93 |

94 | [spark介绍](http://litaotao.github.io/introduction-to-spark)

95 |

96 | [一个不错的spark学习互动课程](http://www.hubwiz.com/class/5449c691e564e50960f1b7a9)

97 |

98 | [spark 设计与实现](http://spark-internals.books.yourtion.com/index.html)

99 |

100 | [aliyun-spark-deploy-tool](https://github.com/aliyun/aliyun-spark-deploy-tool)---Spark on ECS

101 | Spark Streaming

102 |

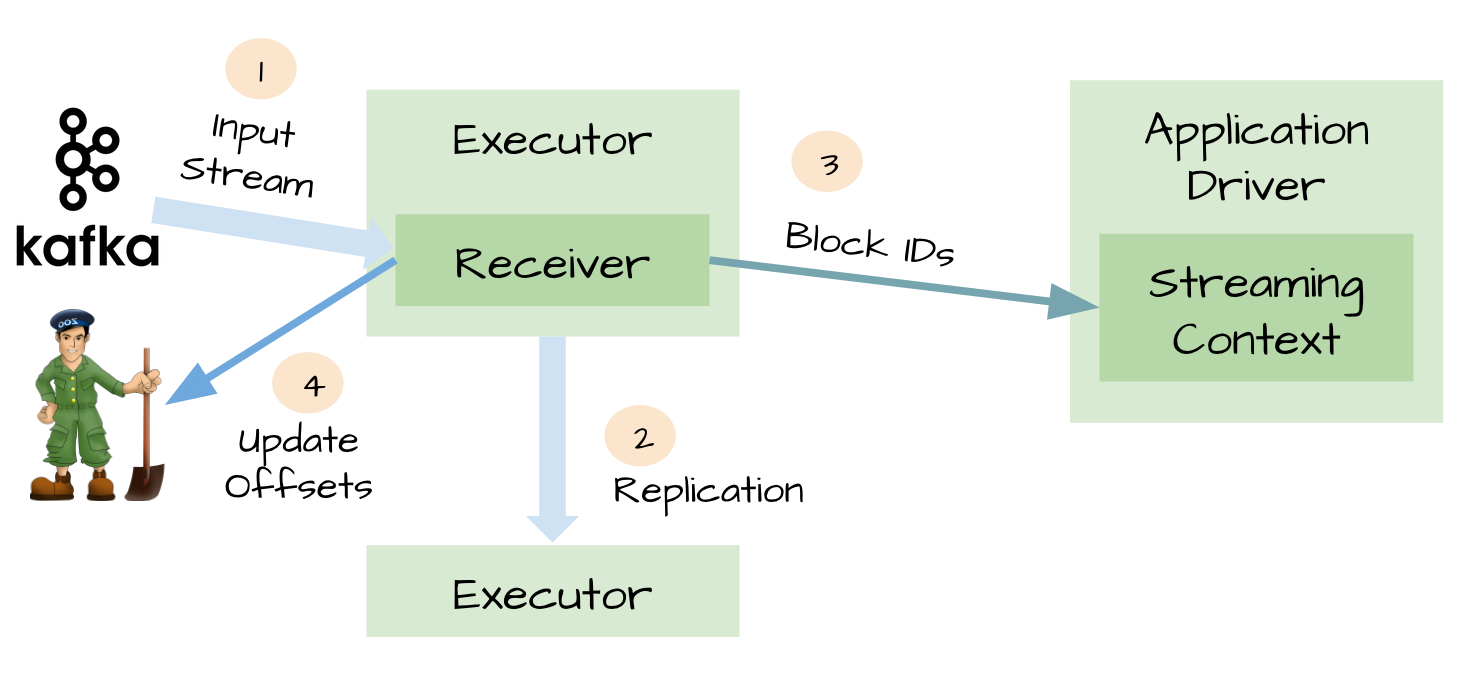

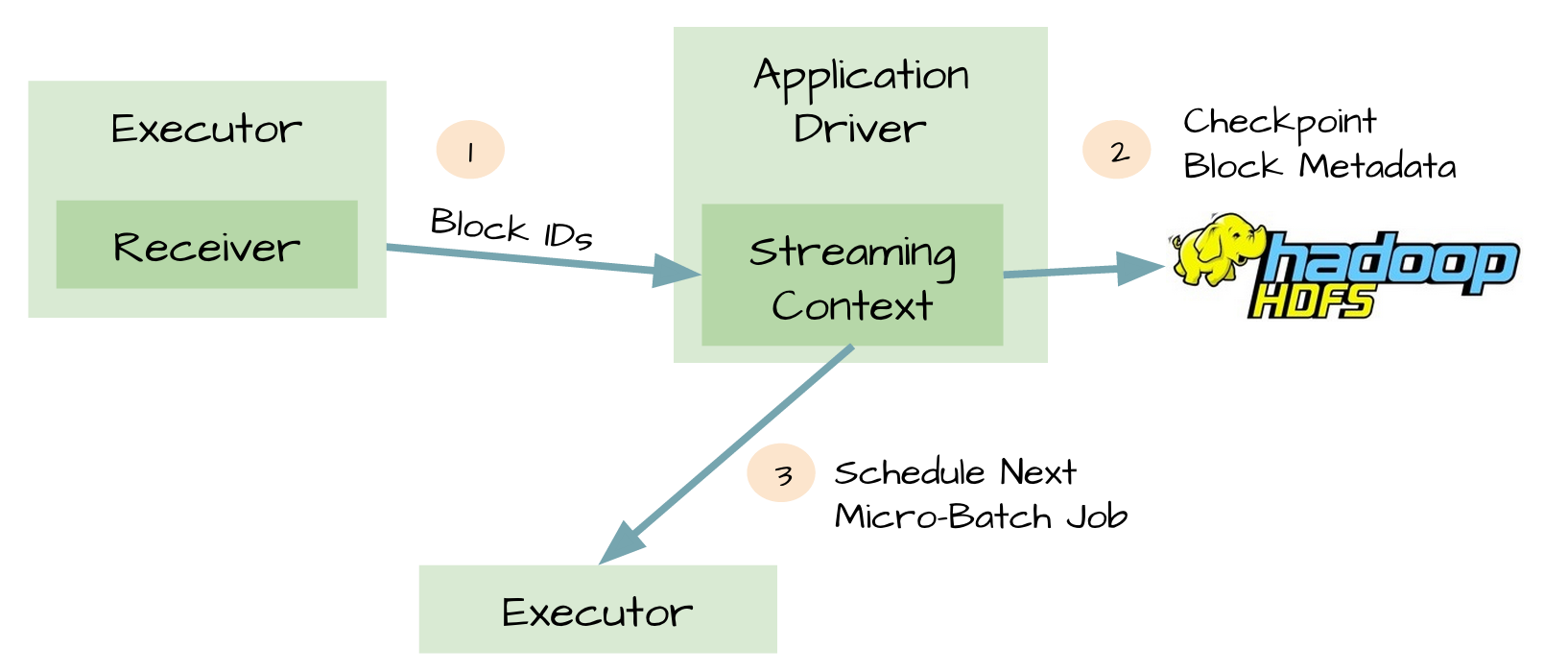

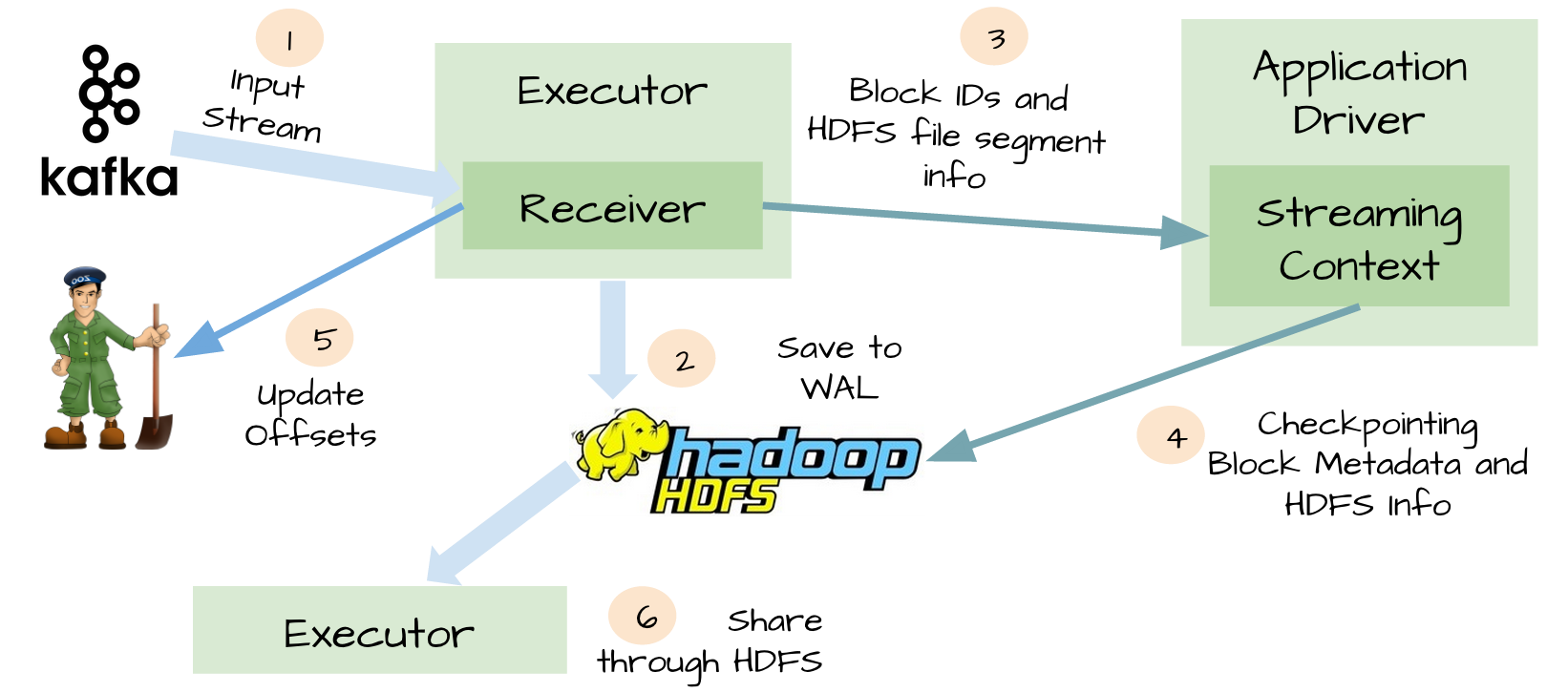

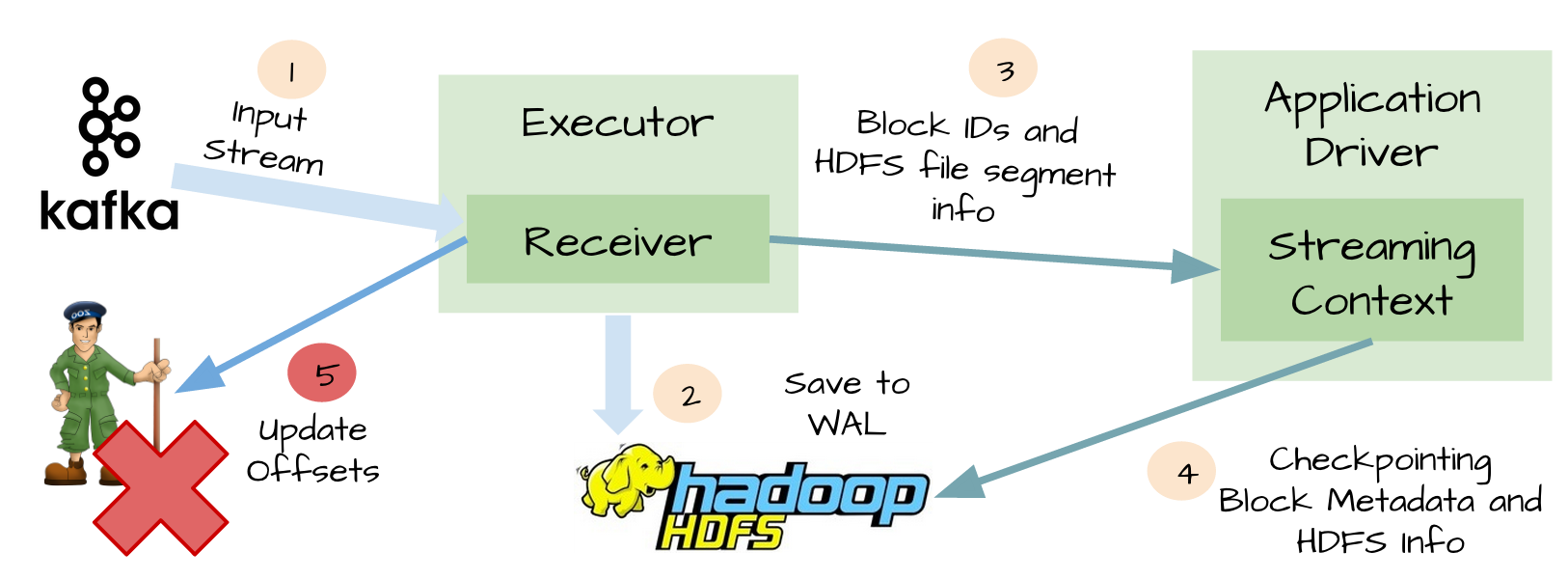

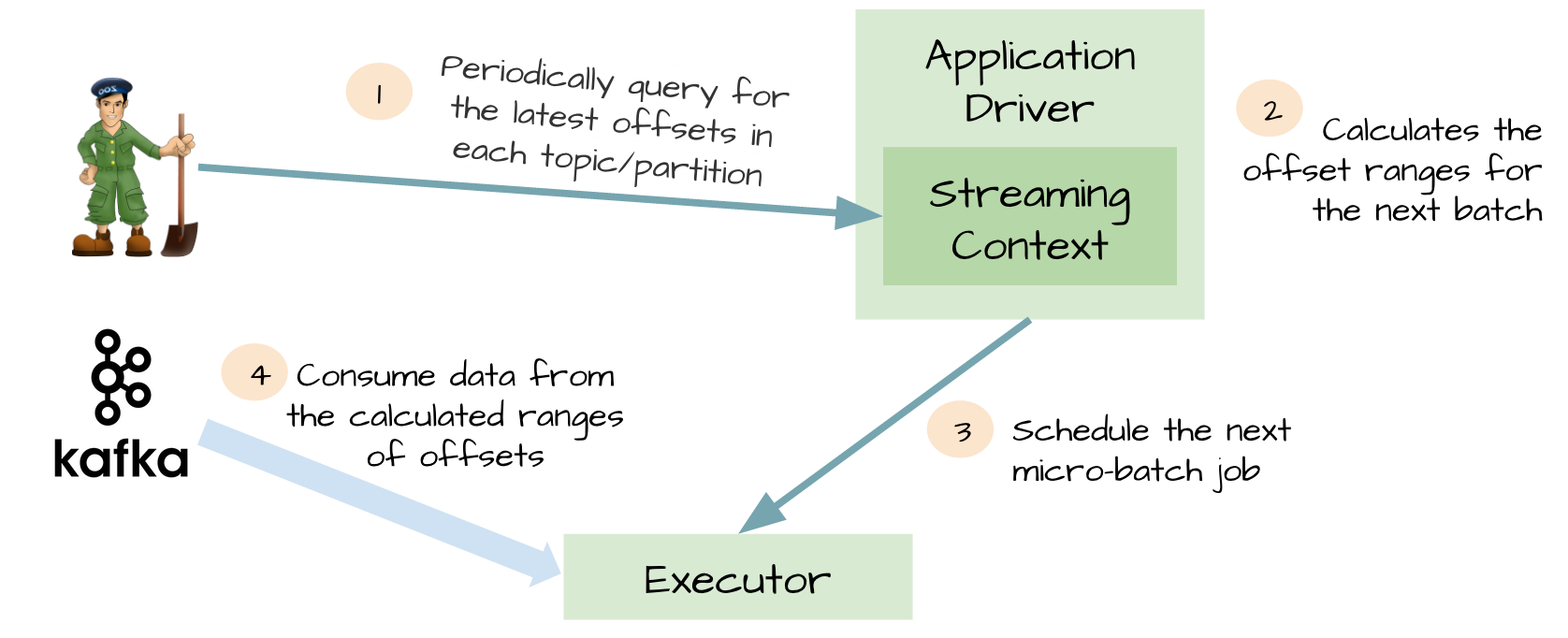

103 | [Spark Streaming使用Kafka保证数据零丢失](spark-knowledge/md/spark_streaming使用Kafka保证数据零丢失.md)

104 |

105 | [spark streaming测试用例](sparkstreaming-demo)

106 |

107 | [spark streaming源码解析](https://github.com/proflin/CoolplaySpark)

108 |

109 | [基于spark streaming的聚合分析(Sparkta)](https://github.com/Stratio/Sparkta)

110 |

111 | Spark SQL

112 |

113 | [spark DataFrame测试用例](spark-dataframe-demo)

114 |

115 | [Hive Json加载](hive-json-demo)

116 |

117 | [SparkSQL架构设计和代码分析](https://github.com/marsishandsome/SparkSQL-Internal)

118 |

119 | Spark 机器学习

120 |

121 | [spark机器学习源码解析](https://github.com/endymecy/spark-ml-source-analysis)

122 |

123 | [KeyStoneML](http://keystone-ml.org)

124 | KeystoneML is a software framework, written in Scala, from the UC Berkeley AMPLab designed to simplify the construction of large scale, end-to-end, machine learning pipelines with Apache Spark.

125 |

126 | [spark TS](spark-timeseries-demo)

127 |

128 | Spark zeppelin

129 |

130 | [**Z-Manager**](https://github.com/NFLabs/z-manager)--Simplify getting Zeppelin up and running

131 |

132 | [**zeppelin**](https://github.com/apache/incubator-zeppelin)--a web-based notebook that enables interactive data analytics. You can make beautiful data-driven, interactive and collaborative documents with SQL, Scala and more.

133 |

134 | [**helium**](http://s.apache.org/helium)--Brings Zeppelin to data analytics application platform

135 |

136 | Spark 其它

137 |

138 | [spark专题在简书](http://www.jianshu.com/collection/6157554bfdd9)

139 |

140 | [databricks spark知识库](https://aiyanbo.gitbooks.io/databricks-spark-knowledge-base-zh-cn/content/)

141 |

142 | [spark学习知识总结](spark-knowledge)

143 |

144 | [Spark library for doing exploratory data analysis in a scalable way](https://github.com/vicpara/exploratory-data-analysis/)

145 |

146 | [图处理(cassovary)](https://github.com/twitter/cassovary)

147 |

148 | [基于spark进行地理位置分析(gagellan)](https://github.com/harsha2010/magellan)

149 |

150 | [spark summit east 2016 ppt](http://vdisk.weibo.com/s/BP8uNBea_C2Af?from=page_100505_profile&wvr=6)

151 |

152 | ES

153 |

154 | [ES 非阻塞scala客户端](https://github.com/sksamuel/elastic4s)

155 |

156 | Beam

157 | [Apache Beam:下一代的数据处理标准](http://geek.csdn.net/news/detail/134167)

158 | 贡献代码步骤

159 | 1. 首先 fork 我的项目

160 | 2. 把 fork 过去的项目也就是你的项目 clone 到你的本地

161 | 3. 运行 git remote add jacksu git@github.com:jacksu/utils4s.git 把我的库添加为远端库

162 | 4. 运行 git pull jacksu master 拉取并合并到本地

163 | 5. coding

164 | 6. commit后push到自己的库( git push origin master )

165 | 7. 登陆Github在你首页可以看到一个 pull request 按钮,点击它,填写一些说明信息,然后提交即可。

166 | 1~3是初始化操作,执行一次即可。在coding前必须执行第4步同步我的库(这样避免冲突),然后执行5~7既可。

167 |

168 | 贡献者

169 | [jjcipher](https://github.com/jjcipher)

170 |

171 |

--------------------------------------------------------------------------------

/akka-demo/README.md:

--------------------------------------------------------------------------------

1 |

2 | ##参考

3 |

4 | [一个超简单的akka actor例子](http://colobu.com/2015/02/26/simple-scala-akka-actor-examples/)

5 |

6 | [akka 学习资料](https://github.com/hustnn/AkkaLearning)

--------------------------------------------------------------------------------

/akka-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.akka

11 | akka-demo

12 | 2008

13 |

14 |

15 | com.typesafe.akka

16 | akka-actor_${soft.scala.version}

17 | 2.3.14

18 |

19 |

20 |

21 |

22 |

--------------------------------------------------------------------------------

/akka-demo/src/main/scala/cn/thinkjoy/utils4s/akka/HelloWorldApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.akka

2 |

3 | import akka.actor.{Props, ActorSystem, Actor}

4 |

5 | /**

6 | * Created by jacksu on 15/12/26.

7 | */

8 |

9 | class HelloActor extends Actor{

10 | def receive = {

11 | case "hello" => println("您好!")

12 | case _ => println("您是?")

13 | }

14 | }

15 |

16 | object HelloWorldApp {

17 | def main(args: Array[String]) {

18 | val system = ActorSystem("HelloSystem")

19 | // 缺省的Actor构造函数

20 | val helloActor = system.actorOf(Props[HelloActor], name = "helloactor")

21 | helloActor ! "hello"

22 | helloActor ! "喂"

23 | }

24 | }

25 |

--------------------------------------------------------------------------------

/akka-demo/src/main/scala/cn/thinkjoy/utils4s/akka/PingPongApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.akka

2 |

3 | import akka.actor._

4 | /**

5 | * Created by jacksu on 15/12/26.

6 | */

7 |

8 | case object PingMessage

9 | case object PongMessage

10 | case object StartMessage

11 | case object StopMessage

12 |

13 | class Ping(pong: ActorRef) extends Actor {

14 | var count = 0

15 | def incrementAndPrint { count += 1; println("ping") }

16 | def receive = {

17 | case StartMessage =>

18 | incrementAndPrint

19 | pong ! PingMessage

20 | case PongMessage =>

21 | if (count > 9) {

22 | sender ! StopMessage

23 | println("ping stopped")

24 | context.stop(self)

25 | } else {

26 | incrementAndPrint

27 | sender ! PingMessage

28 | }

29 | }

30 | }

31 |

32 | class Pong extends Actor {

33 | def receive = {

34 | case PingMessage =>

35 | println(" pong")

36 | sender ! PongMessage

37 | case StopMessage =>

38 | println("pong stopped")

39 | context.stop(self)

40 | context.system.shutdown()

41 | }

42 | }

43 | object PingPongApp {

44 | def main(args: Array[String]) {

45 | val system = ActorSystem("PingPongSystem")

46 | val pong = system.actorOf(Props[Pong], name = "pong")

47 | val ping = system.actorOf(Props(new Ping(pong)), name = "ping")

48 | // start them going

49 | ping ! StartMessage

50 | }

51 | }

52 |

--------------------------------------------------------------------------------

/analysis-demo/README.md:

--------------------------------------------------------------------------------

1 | ##单位换算以及不同单位进行运算

2 |

3 | *已经两年没有更新*

4 |

5 | ###转换

6 |

7 | ```scala

8 | (Hours(2) + Days(1) + Seconds(1)).toSeconds //93601.0

9 | ```

10 | ###toString

11 |

12 | ```scala

13 | Days(1) toString time.Seconds //86400.0 s

14 | ```

15 |

16 | ###toTuple

17 |

18 | ```scala

19 | Days(1) toTuple time.Seconds //(86400.0,s)

20 | ```

21 |

22 | **测试不支持map**

23 |

24 | ##精度判断

25 |

26 | ```scala

27 | implicit val tolerance = Watts(.1) // implicit Power: 0.1 W

28 | val load = Kilowatts(2.0) // Power: 2.0 kW

29 | val reading = Kilowatts(1.9999) // Power: 1.9999 kW

30 |

31 | // uses implicit tolerance

32 | load =~ reading // true

33 | ```

34 |

35 | ###向量

36 | ```scala

37 | val vector: QuantityVector[Length] = QuantityVector(Kilometers(1.2), Kilometers(4.3), Kilometers(2.3))

38 | val magnitude: Length = vector.magnitude // returns the scalar value of the vector

39 | println(magnitude)

40 | val normalized = vector.normalize(Kilometers) // returns a corresponding vector scaled to 1 of the given unit

41 | println(normalized)

42 |

43 | val vector2: QuantityVector[Length] = QuantityVector(Kilometers(1.2), Kilometers(4.3), Kilometers(2.3))

44 | val vectorSum = vector + vector2 // returns the sum of two vectors

45 | println(vectorSum)

46 | val vectorDiff = vector - vector2 // return the difference of two vectors

47 | println(vectorDiff)

48 | val vectorScaled = vector * 5 // returns vector scaled 5 times

49 | println(vectorScaled)

50 | val vectorReduced = vector / 5 // returns vector reduced 5 time

51 | println(vectorReduced)

52 | val vectorDouble = vector / space.Meters(5) // returns vector reduced and converted to DoubleVector

53 | println(vectorDouble)

54 | val dotProduct = vector * vectorDouble // returns the Dot Product of vector and vectorDouble

55 | println(dotProduct)

56 |

57 | val crossProduct = vector crossProduct vectorDouble // currently only supported for 3-dimensional vectors

58 | println(crossProduct)

59 | ```

60 | result

61 |

62 | ```scala

63 | 5.021951811795888 km

64 | QuantityVector(ArrayBuffer(0.2389509188800581 km, 0.8562407926535415 km, 0.45798926118677796 km))

65 | QuantityVector(ArrayBuffer(2.4 km, 8.6 km, 4.6 km))

66 | QuantityVector(ArrayBuffer(0.0 km, 0.0 km, 0.0 km))

67 | QuantityVector(ArrayBuffer(6.0 km, 21.5 km, 11.5 km))

68 | QuantityVector(ArrayBuffer(0.24 km, 0.86 km, 0.45999999999999996 km))

69 | DoubleVector(ArrayBuffer(240.0, 860.0, 459.99999999999994))

70 | 5044.0 km

71 | QuantityVector(WrappedArray(0.0 km, 1.1368683772161603E-13 km, 0.0 km))

72 | ```

73 | ###Money and Price

74 |

75 | ###参考

76 | [squants](https://github.com/garyKeorkunian/squants)

--------------------------------------------------------------------------------

/analysis-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.analysis

11 | analysis-demo

12 | 2008

13 |

14 |

15 | 0.5.3

16 |

17 |

18 |

19 | com.squants

20 | squants_${soft.scala.version}

21 | ${squants.version}

22 |

23 |

24 |

25 |

26 |

--------------------------------------------------------------------------------

/analysis-demo/src/main/scala/cn/thinkjoy/utils4s/analysis/analysisApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.analysis

2 |

3 | import squants.energy.Power

4 | import squants.energy._

5 | import squants.space._

6 | import squants._

7 | import squants.time._

8 | import squants.market._

9 |

10 | /**

11 | * Created by jacksu on 15/11/16.

12 | */

13 |

14 | object analysisApp {

15 | def main(args: Array[String]) {

16 | val load1: Power = Kilowatts(12) // returns Power(12, Kilowatts) or 12 kW

17 | val load2: Power = Megawatts(0.023) // Power: 0.023 MW

18 | val sum = load1 + load2 // Power: 35 kW - unit on left side is preserved

19 | println("%06.2f".format(sum.toMegawatts))

20 | val ratio = Days(1) / Hours(3)

21 | println(ratio)

22 | val seconds = (Hours(2) + Days(1) + time.Seconds(1)).toSeconds

23 | println(seconds)

24 | println(Days(1).toSeconds)

25 |

26 | //toString

27 | println(Days(1) toString time.Seconds)

28 |

29 | //totuple

30 | println(Days(1) toTuple time.Seconds)

31 |

32 | //Approximations

33 | implicit val tolerance = Watts(.1) // implicit Power: 0.1 W

34 | val load = Kilowatts(2.0) // Power: 2.0 kW

35 | val reading = Kilowatts(1.9999)

36 | println(load =~ reading)

37 |

38 | //vectors

39 | val vector: QuantityVector[Length] = QuantityVector(Kilometers(1.2), Kilometers(4.3), Kilometers(2.3))

40 | val magnitude: Length = vector.magnitude // returns the scalar value of the vector

41 | println(magnitude)

42 | val normalized = vector.normalize(Kilometers) // returns a corresponding vector scaled to 1 of the given unit

43 | println(normalized)

44 |

45 | val vector2: QuantityVector[Length] = QuantityVector(Kilometers(1.2), Kilometers(4.3), Kilometers(2.3))

46 | val vectorSum = vector + vector2 // returns the sum of two vectors

47 | println(vectorSum)

48 | val vectorDiff = vector - vector2 // return the difference of two vectors

49 | println(vectorDiff)

50 | val vectorScaled = vector * 5 // returns vector scaled 5 times

51 | println(vectorScaled)

52 | val vectorReduced = vector / 5 // returns vector reduced 5 time

53 | println(vectorReduced)

54 | val vectorDouble = vector / space.Meters(5) // returns vector reduced and converted to DoubleVector

55 | println(vectorDouble)

56 | val dotProduct = vector * vectorDouble // returns the Dot Product of vector and vectorDouble

57 | println(dotProduct)

58 |

59 | val crossProduct = vector crossProduct vectorDouble // currently only supported for 3-dimensional vectors

60 | println(crossProduct)

61 |

62 | //money

63 | val tenBucks = USD(10)

64 | println(tenBucks)

65 | val tenyuan = CNY(10)

66 | println(tenyuan)

67 | val hongkong = HKD(10)

68 | println(hongkong)

69 |

70 | //price

71 | val energyPrice = USD(102.20) / MegawattHours(1)

72 | println(energyPrice)

73 | }

74 | }

75 |

--------------------------------------------------------------------------------

/breeze-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.breeze

11 | breeze-demo

12 | 2008

13 |

14 |

15 |

16 | org.scalanlp

17 | breeze_${soft.scala.version}

18 | 0.10

19 |

20 |

21 |

22 |

--------------------------------------------------------------------------------

/breeze-demo/src/main/scala/cn/thinkjoy/utils4s/breeze/BreezeApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.breeze

2 |

3 | //包含线性代数包(linear algebra)

4 | import breeze.linalg._

5 |

6 | /**

7 | * jacksu

8 | *

9 | */

10 | object BreezeApp {

11 | def main(args: Array[String]) {

12 | //=========两种矢量的区别,dense分配内存,sparse不分配=========

13 | //DenseVector(0.0, 0.0, 0.0, 0.0, 0.0)

14 | //底层是Array

15 | val x = DenseVector.zeros[Double](5)

16 | println(x)

17 |

18 | //SparseVector

19 | val y = SparseVector.zeros[Double](5)

20 | println(y)

21 |

22 | //===========操作对应的值===============

23 | //DenseVector(0.0, 2.0, 0.0, 0.0, 0.0)

24 | x(1)=2

25 | println(x)

26 |

27 | //SparseVector((1,2.0))

28 | y(1)=2

29 | println(y)

30 |

31 | //===========slice==========

32 | //DenseVector(0.5, 0.5)

33 | println(x(3 to 4):=.5)

34 | //DenseVector(0.0, 2.0, 0.0, 0.5, 0.5)

35 | println(x)

36 | println(x(1))

37 |

38 | //==========DenseMatrix===========

39 | /**

40 | * 0 0 0 0 0

41 | * 0 0 0 0 0

42 | * 0 0 0 0 0

43 | * 0 0 0 0 0

44 | * 0 0 0 0 0

45 | */

46 | val m=DenseMatrix.zeros[Int](5,5)

47 | println(m)

48 |

49 | /**

50 | * 向量是列式的

51 | * 0 0 0 0 1

52 | * 0 0 0 0 2

53 | * 0 0 0 0 3

54 | * 0 0 0 0 4

55 | * 0 0 0 0 5

56 | */

57 | m(::,4):=DenseVector(1,2,3,4,5)

58 | println(m)

59 | //5

60 | println(max(m))

61 | //15

62 | println(sum(m))

63 | //DenseVector(1.0, 1.5, 2.0)

64 | println(linspace(1,2,3))

65 |

66 | //==========对角线============

67 | /**

68 | * 1.0 0.0 0.0

69 | * 0.0 1.0 0.0

70 | * 0.0 0.0 1.0

71 | */

72 | println(DenseMatrix.eye[Double](3))

73 | /**

74 | * 1.0 0.0 0.0

75 | * 0.0 2.0 0.0

76 | * 0.0 0.0 3.0

77 | */

78 | println(diag(DenseVector(1.0,2.0,3.0)))

79 | }

80 | }

81 |

--------------------------------------------------------------------------------

/file-demo/README.md:

--------------------------------------------------------------------------------

1 | 文件基本操作

2 |

3 | **需要java8**

--------------------------------------------------------------------------------

/file-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.file

11 | file-demo

12 | 2008

13 |

14 | 2.13.0

15 |

16 |

17 |

18 |

19 | com.github.pathikrit

20 | better-files_${soft.scala.version}

21 | ${better.file.version}

22 | compile

23 |

24 |

25 |

26 |

--------------------------------------------------------------------------------

/file-demo/src/main/scala/cn/thinkjoy/utils4s/file/FileApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.file

2 |

3 | import better.files._

4 | import java.io.{File=>JFile}

5 |

6 | /**

7 | * Hello world!

8 | *

9 | */

10 | object FileApp{

11 | def main(args: Array[String]) {

12 | //TODO

13 | println("需要java8,需要继续跟")

14 | }

15 |

16 | }

17 |

--------------------------------------------------------------------------------

/hive-json-demo/README.md:

--------------------------------------------------------------------------------

1 |

2 | 实现json文件加载为hive表

3 |

4 | ##参考

5 | [Hive-JSON-Serde](https://github.com/rcongiu/Hive-JSON-Serde)

6 | [Serde](http://blog.csdn.net/xiao_jun_0820/article/details/38119123#)

7 |

--------------------------------------------------------------------------------

/hive-json-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.hive.json

11 | hive-json-demo

12 | 2008

13 |

14 |

15 |

16 | org.apache.hadoop

17 | hadoop-common

18 | 2.6.0

19 | compile

20 |

21 |

22 | org.apache.hive

23 | hive-serde

24 | 1.1.0

25 | compile

26 |

27 |

28 |

29 |

--------------------------------------------------------------------------------

/hive-json-demo/src/main/scala/cn/thinkjoy/utils4s/hive/json/App.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.hive.json

2 |

3 | /**

4 | * Hello world!

5 | *

6 | */

7 | object App {

8 | println( "Hello World!" )

9 | }

10 |

--------------------------------------------------------------------------------

/hive-json-demo/src/resources/create_table.sql:

--------------------------------------------------------------------------------

1 | /**

2 | create table weixiao_follower_info(

3 | requestTime BIGINT,

4 | requestParams STRUCT,

5 | requestUrl STRING)

6 | row format serde "com.besttone.hive.serde.JSONSerDe"

7 | WITH SERDEPROPERTIES(

8 | "input.invalid.ignore"="true",

9 | "requestTime"="$.requestTime",

10 | "requestParams.timestamp"="$.requestParams.timestamp",

11 | "requestParams.phone"="$.requestParams.phone",

12 | "requestParams.cardName"="$.requestParams.cardName",

13 | "requestParams.provinceCode"="$.requestParams.provinceCode",

14 | "requestParams.cityCode"="$.requestParams.cityCode",

15 | "requestUrl"="$.requestUrl");

16 | **/

17 |

18 | //暂时发现patition有问题

19 | CREATE EXTERNAL TABLE weixiao_follower_info(

20 | uid STRING,

21 | schoolCode STRING,

22 | attend STRING,

23 | app STRING,

24 | suite STRING,

25 | timestamp STRING)

26 | ROW FORMAT serde "cn.thinkjoy.utils4s.hive.json.JSONSerDe"

27 | WITH SERDEPROPERTIES(

28 | "input.invalid.ignore"="true",

29 | "uid"="$.uid",

30 | "schoolCode"="$.schoolCode",

31 | "attend."="$.attend",

32 | "app"="$.app",

33 | "suite"="$.suite",

34 | "timestamp"="$.timestamp");

35 |

36 | load data inpath '/tmp/weixiao_user_guanzhu_log/20151217/20/' INTO TABLE weixiao_follower_info partition(dt='20151217',hour='20')

37 | select * from weixiao_follower_info where cast(timestamp as bigint)>=unix_timestamp('2015121720','yyyyMMddHH')*1000;

--------------------------------------------------------------------------------

/json4s-demo/README.md:

--------------------------------------------------------------------------------

1 | #json4s

2 | json的各种形式的相互转化图如下:

3 |

4 |

5 | 其中的关键是AST,AST有如下的语法树:

6 | ```scala

7 | sealed abstract class JValue

8 | case object JNothing extends JValue // 'zero' for JValue

9 | case object JNull extends JValue

10 | case class JString(s: String) extends JValue

11 | case class JDouble(num: Double) extends JValue

12 | case class JDecimal(num: BigDecimal) extends JValue

13 | case class JInt(num: BigInt) extends JValue

14 | case class JBool(value: Boolean) extends JValue

15 | case class JObject(obj: List[JField]) extends JValue

16 | case class JArray(arr: List[JValue]) extends JValue

17 |

18 | type JField = (String, JValue)

19 | ```

20 |

21 | > * String -> AST

22 | ```scala

23 | val ast=parse(""" {"name":"test", "numbers" : [1, 2, 3, 4] } """)

24 | result: JObject(List((name,JString(test)), (numbers,JArray(List(JInt(1), JInt(2), JInt(3), JInt(4))))))

25 | ```

26 | > * Json DSL -> AST

27 | ```scala

28 | import org.json4s.JsonDSL._

29 | //DSL implicit AST

30 | val json2 = ("name" -> "joe") ~ ("age" -> Some(35))

31 | println(json2)

32 | result:JObject(List((name,JString(joe)), (age,JInt(35))))

33 | ```

34 | > * AST -> String

35 | ```scala

36 | val str=compact(render(json2))

37 | println(str)

38 | result:{"name":"joe","age":35}

39 | //pretty

40 | val pretty=pretty(render(json2))

41 | println(pretty)

42 | result:

43 | {

44 | "name" : "joe",

45 | "age" : 35

46 | }

47 | ```

48 |

49 | > * AST operation

50 | ```scala

51 | val json4 = parse( """

52 | { "name": "joe",

53 | "children": [

54 | {

55 | "name": "Mary",

56 | "age": 5

57 | },

58 | {

59 | "name": "Mazy",

60 | "age": 3

61 | }

62 | ]

63 | }

64 | """)

65 | //注意\和\\的区别

66 | //{"name":"joe","name":"Mary","name":"Mazy"}

67 | println(compact(render(json4 \\ "name")))

68 | //"joe"

69 | println(compact(render(json4 \ "name")))

70 | //[{"name":"Mary","age":5},{"name":"Mazy","age":3}]

71 | println(compact(render(json4 \\ "children")))

72 | //["Mary","Mazy"]

73 | println(compact(render(json4 \ "children" \ "name")))

74 | //{"name":"joe"}

75 | println(compact(render(json4 findField {

76 | case JField("name", _) => true

77 | case _ => false

78 | })))

79 | //{"name":"joe","name":"Mary","name":"Mazy"}

80 | println(compact(render(json4 filterField {

81 | case JField("name", _) => true

82 | case _ => false

83 | })))

84 | ```

85 |

86 | > * AST -> case class

87 | ```scala

88 | implicit val formats = DefaultFormats

89 | val json5 = parse("""{"first_name":"Mary"}""")

90 | case class Person(`firstName`: String)

91 | val json6=json5 transformField {

92 | case ("first_name", x) => ("firstName", x)

93 | }

94 | println(json6.extract[Person])

95 | println(json5.camelizeKeys.extract[Person])

96 | result:

97 | Person(Mary)

98 | Person(Mary)

99 | ```

100 |

101 | 参考:

102 | [json4s](https://github.com/json4s/json4s)

--------------------------------------------------------------------------------

/json4s-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.json4s

11 | json4s-demo

12 | 2008

13 |

14 |

15 |

16 | org.json4s

17 | json4s-jackson_${soft.scala.version}

18 | 3.3.0

19 |

20 |

21 |

--------------------------------------------------------------------------------

/json4s-demo/src/main/scala/cn/thinkjoy/utils4s/json4s/Json4sDemo.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.json4s

2 |

3 | import org.json4s._

4 | import org.json4s.jackson.JsonMethods._

5 |

6 |

7 | object Json4sDemo {

8 | def main(args: Array[String]) {

9 | //=========== 通过字符串解析为json AST ==============

10 | val json1 = """ {"name":"test", "numbers" : [1, 2, 3, 4] } """

11 | println(parse(json1))

12 |

13 | //============= 通过DSL解析为json AST ===========

14 | import org.json4s.JsonDSL._

15 | //DSL implicit AST

16 | val json2 = ("name" -> "joe") ~ ("age" -> Some(35))

17 | println(json2)

18 | println(render(json2))

19 |

20 | case class Winner(id: Long, numbers: List[Int])

21 | case class Lotto(id: Long, winningNumbers: List[Int], winners: List[Winner], drawDate: Option[java.util.Date])

22 | val winners = List(Winner(23, List(2, 45, 34, 23, 3, 5)), Winner(54, List(52, 3, 12, 11, 18, 22)))

23 | val lotto = Lotto(5, List(2, 45, 34, 23, 7, 5, 3), winners, None)

24 | val json3 =

25 | ("lotto" ->

26 | ("lotto-id" -> lotto.id) ~

27 | ("winning-numbers" -> lotto.winningNumbers) ~

28 | ("draw-date" -> lotto.drawDate.map(_.toString)) ~

29 | ("winners" ->

30 | lotto.winners.map { w =>

31 | (("winner-id" -> w.id) ~

32 | ("numbers" -> w.numbers))

33 | }))

34 | println(render(json3))

35 |

36 |

37 | //=================== 转化为String =============

38 | //println(compact(json1))

39 | println(compact(json2))

40 | //render用默认方式格式化空字符

41 | println(compact(render(json2)))

42 | println(compact(render(json3)))

43 |

44 | //println(pretty(json1))

45 | println(pretty(render(json2)))

46 | println(pretty(render(json3)))

47 |

48 |

49 | //=========== querying json ===============

50 | val json4 = parse( """

51 | { "name": "joe",

52 | "children": [

53 | {

54 | "name": "Mary",

55 | "age": 5

56 | },

57 | {

58 | "name": "Mazy",

59 | "age": 3

60 | }

61 | ]

62 | }

63 | """)

64 | // TODO name:"joe"

65 | val ages = for {

66 | JObject(child) <- json4

67 | JField("age", JInt(age)) <- child

68 | if age > 4

69 | } yield age

70 | val name = for{

71 | JString(name) <- json4

72 | } yield name

73 | println(ages)

74 | //List(joe, Mary, Mazy)

75 | println(name)

76 | //{"name":"joe","name":"Mary","name":"Mazy"}

77 | println(compact(render(json4 \\ "name")))

78 | //"joe"

79 | println(compact(render(json4 \ "name")))

80 | //[{"name":"Mary","age":5},{"name":"Mazy","age":3}]

81 | println(compact(render(json4 \\ "children")))

82 | //["Mary","Mazy"]

83 | println(compact(render(json4 \ "children" \ "name")))

84 | //{"name":"joe"}

85 | println(compact(render(json4 findField {

86 | case JField("name", _) => true

87 | case _ => false

88 | })))

89 | //{"name":"joe","name":"Mary","name":"Mazy"}

90 | println(compact(render(json4 filterField {

91 | case JField("name", _) => true

92 | case _ => false

93 | })))

94 |

95 | //============== extract value =================

96 | implicit val formats = DefaultFormats

97 | val json5 = parse("""{"first_name":"Mary"}""")

98 | case class Person(`firstName`: String)

99 | val json6=json5 transformField {

100 | case ("first_name", x) => ("firstName", x)

101 | }

102 | println(json6.extract[Person])

103 | println(json5.camelizeKeys.extract[Person])

104 |

105 | //================ xml 2 json ===================

106 | import org.json4s.Xml.{toJson, toXml}

107 | val xml =

108 |

109 |

110 | 1

111 | Harry

112 |

113 |

114 | 2

115 | David

116 |

117 |

118 |

119 | val json = toJson(xml)

120 | println(pretty(render(json)))

121 | println(pretty(render(json transformField {

122 | case ("id", JString(s)) => ("id", JInt(s.toInt))

123 | case ("user", x: JObject) => ("user", JArray(x :: Nil))

124 | })))

125 | //================ json 2 xml ===================

126 | println(toXml(json))

127 | }

128 | }

129 |

--------------------------------------------------------------------------------

/lamma-demo/README.md:

--------------------------------------------------------------------------------

1 | #lamma-demo

2 | 日期相关的操作全部具有,唯一的缺点就是没有时间的操作

--------------------------------------------------------------------------------

/lamma-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s

11 | lamma-demo

12 | 2008

13 |

14 |

15 |

16 | io.lamma

17 | lamma_${soft.scala.version}

18 | 2.2.3

19 |

20 |

21 |

22 |

--------------------------------------------------------------------------------

/lamma-demo/src/main/scala/cn/thinkjoy/utils4s/lamma/BasicOper.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.lamma

2 |

3 | import io.lamma._

4 |

5 | /**

6 | * test

7 | *

8 | */

9 | object BasicOper {

10 | def main(args: Array[String]): Unit = {

11 | //============== create date ===========

12 | println(Date(2014, 7, 7).toISOString) //2014-07-07

13 | println(Date("2014-07-7").toISOInt) //20140707

14 | println(Date.today())

15 |

16 | //============== compare two date ===========

17 | println(Date(2014, 7, 7) < Date(2014, 7, 8))

18 | println((2014, 7, 7) <(2014, 7, 8))

19 | println(Date("2014-07-7") > Date("2014-7-8"))

20 | println(Date("2014-07-10") - Date("2014-7-8"))

21 |

22 | // ========== manipulate dates =============

23 | println(Date(2014, 7, 7) + 1)

24 | println((2014, 7, 7) + 30)

25 | println(Date("2014-07-7") + 1)

26 | println(Date("2014-07-7") - 1)

27 | println(Date("2014-07-7") + (2 weeks))

28 | println(Date("2014-07-7") + (2 months))

29 | println(Date("2014-07-7") + (2 years))

30 |

31 | // ========== week related ops ============

32 | println(Date("2014-07-7").dayOfWeek) //MONDAY

33 | println(Date("2014-07-7").withDayOfWeek(Monday).toISOString) //这周的星期一 2014-07-07

34 | println(Date("2014-07-7").next(Monday))

35 | println(Date(2014, 7, 8).daysOfWeek(0)) //默认星期一是一周第一天

36 |

37 | // ========== month related ops ============

38 | println(Date("2014-07-7").maxDayOfMonth)

39 | println(Date("2014-07-7").lastDayOfMonth)

40 | println(Date("2014-07-7").firstDayOfMonth)

41 | println(Date("2014-07-7").sameWeekdaysOfMonth)

42 | println(Date("2014-07-7").dayOfMonth)

43 |

44 | // ========== year related ops ============

45 | println(Date("2014-07-7").maxDayOfYear)

46 | println(Date("2014-07-7").dayOfYear)

47 | }

48 | }

49 |

--------------------------------------------------------------------------------

/log-demo/README.md:

--------------------------------------------------------------------------------

1 | #log-demo

2 | log4s可以作为日志库,使用需要log4j.prperties作为配置文件

--------------------------------------------------------------------------------

/log-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 |

10 | 4.0.0

11 | cn.thinkjoy.utils4s.log4s

12 | log-demo

13 | pom

14 | 2008

15 |

16 |

17 |

18 |

19 | org.slf4j

20 | slf4j-log4j12

21 | 1.7.2

22 |

23 |

24 | org.log4s

25 | log4s_${soft.scala.version}

26 | 1.2.0

27 | compile

28 |

29 |

30 |

31 |

32 |

--------------------------------------------------------------------------------

/log-demo/src/main/resources/log4j.properties:

--------------------------------------------------------------------------------

1 | # This is the configuring for logging displayed in the Application Server

2 | log4j.rootCategory=INFO,stdout,file

3 |

4 | #standard

5 | log4j.appender.stdout=org.apache.log4j.ConsoleAppender

6 | log4j.appender.stdout.Target = System.out

7 | log4j.appender.stdout.layout = org.apache.log4j.PatternLayout

8 | log4j.appender.stdout.layout.ConversionPattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %p [%c] line:%L [%F][%M][%t] - %m%n

9 |

10 | #file configure

11 | log4j.appender.file=org.apache.log4j.DailyRollingFileAppender

12 | log4j.appender.file.encoding=UTF-8

13 | log4j.appender.file.Threshold = INFO

14 | log4j.appender.file.File=logs/log.log

15 | log4j.appender.file.layout=org.apache.log4j.PatternLayout

16 | log4j.appender.file.layout.ConversionPattern= %d{yyyy-MM-dd HH:mm:ss,SSS} %p line:%L [%F][%M] - %m%n

--------------------------------------------------------------------------------

/log-demo/src/main/scala/cn/thinkjoy/utils4s/log4s/App.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.log4s

2 |

3 | import org.log4s._

4 |

5 | /**

6 | * Hello world!

7 | *

8 | */

9 |

10 | object App {

11 |

12 | def main(args: Array[String]) {

13 | val test=new LoggingTest

14 | test.logPrint()

15 |

16 | val loggerName = this.getClass.getName

17 | val log=getLogger(loggerName)

18 | log.debug("debug log")

19 | log.info("info log")

20 | log.warn("warn log")

21 | log.error("error log")

22 |

23 | }

24 |

25 | }

26 |

--------------------------------------------------------------------------------

/log-demo/src/main/scala/cn/thinkjoy/utils4s/log4s/Logging.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.log4s

2 |

3 | import org.log4s._

4 |

5 | /**

6 | * Created by jacksu on 15/11/13.

7 | */

8 | trait Logging {

9 | private val clazz=this.getClass

10 | lazy val logger=getLogger(clazz)

11 | }

12 |

--------------------------------------------------------------------------------

/log-demo/src/main/scala/cn/thinkjoy/utils4s/log4s/LoggingTest.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.log4s

2 |

3 | import org.log4s._

4 |

5 | /**

6 | * Created by jacksu on 15/9/24.

7 | */

8 |

9 |

10 | class LoggingTest extends Logging{

11 | def logPrint(): Unit ={

12 | logger.debug("debug log")

13 | logger.info("info log")

14 | logger.warn("warn log")

15 | logger.error("error log")

16 | }

17 | }

18 |

--------------------------------------------------------------------------------

/manger-tools/python/es/__init__.py:

--------------------------------------------------------------------------------

1 | __author__ = 'jacksu'

2 |

--------------------------------------------------------------------------------

/manger-tools/python/es/check_index.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | __author__ = 'jacksu'

5 |

6 | import os

7 | import sys

8 | import xml.etree.ElementTree as ET

9 | from calendar import datetime

10 | import requests

11 | sys.path.append('.')

12 | import logger

13 | import mail

14 |

15 |

16 | if __name__ == '__main__':

17 | if len(sys.argv) != 2: # 参数判断

18 | print("example: " + sys.argv[0] + " index_list.conf")

19 | sys.exit(1)

20 | if not os.path.exists(sys.argv[1]): # 文件存在判断

21 | print("conf file does not exist")

22 | sys.exit(1)

23 |

24 | logger=logger.getLogger()

25 | logger.info("Start time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

26 | tree = ET.parse(sys.argv[1])

27 | root = tree.getroot()

28 | list = []

29 | for hosts in root.findall("host"):

30 | logger.debug(hosts)

31 | auth_flag = hosts.get("auth")

32 | logger.debug(auth_flag)

33 | if "true" == auth_flag:

34 | auth = (hosts.get("user"), hosts.get("password"))

35 | logger.info(auth)

36 | top_url = hosts.get("url")

37 | logger.info(top_url)

38 | for child in hosts.findall("index"):

39 | prefix = child.find("name").text

40 | period = child.find("period").text

41 | type = child.find("period").get("type")

42 | logger.debug(type)

43 | if "day" == type:

44 | suffix = (datetime.datetime.now() - datetime.timedelta(days=int(period))).strftime('%Y.%m.%d')

45 | elif "month" == type:

46 | suffix = datetime.datetime.now().strftime('%Y%m')

47 | index = prefix + suffix

48 | logger.debug(index)

49 | url = top_url + index

50 | if "true" == auth_flag:

51 | result = requests.head(url, auth=auth)

52 | else:

53 | result = requests.head(url)

54 | if result.status_code != 200:

55 | list.append(index)

56 | if 0 != len(list):

57 | logger.debug("send mail")

58 | mail.send_mail('xbsu@thinkjoy.cn', 'xbsu@thinkjoy.cn', 'ES 索引错误', str(list))

59 | logger.info("End time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

60 |

--------------------------------------------------------------------------------

/manger-tools/python/es/del_expired_index.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | __author__ = 'jacksu'

5 |

6 | import os

7 | import sys

8 | import xml.etree.ElementTree as ET

9 | from calendar import datetime

10 | import requests

11 | import logger

12 |

13 | if __name__ == '__main__':

14 | if len(sys.argv) != 2:

15 | print("example: " + sys.argv[0] + " expired_index.conf")

16 | sys.exit(1)

17 | if not os.path.exists(sys.argv[1]):

18 | print("conf file does not exist")

19 | sys.exit(1)

20 | logger = logger.getLogger()

21 | logger.info("Start time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

22 | tree = ET.parse(sys.argv[1])

23 | root = tree.getroot()

24 | # conn = httplib.HTTPConnection("http://es_admin:password@10.253.2.125:9200/")

25 | for host in root.findall("host"):

26 |

27 | top_url = host.get("url")

28 | logger.info(top_url)

29 | for index in host.findall("index"):

30 | prefix = index.find("name").text

31 | period = index.find("period").text

32 | suffix = (datetime.datetime.now() - datetime.timedelta(days=int(period))).strftime('%Y.%m.%d')

33 | index = prefix + "-" + suffix

34 | logger.debug(index)

35 | url = top_url + index

36 | if "true" == host.get("auth"):

37 | auth = (host.get("user"), host.get("password"))

38 | logger.info("auth: " + str(auth))

39 | result = requests.delete(url, auth=auth)

40 | else:

41 | result = requests.delete(url)

42 | logger.debug(result.json())

43 | logger.debug(result.status_code)

44 | logger.info("End time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

45 |

--------------------------------------------------------------------------------

/manger-tools/python/es/del_many_index.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | import datetime

5 | import sys

6 | sys.path.append('.')

7 | from delindex import delindex

8 |

9 | __author__ = 'jacksu'

10 |

11 |

12 | def str_2_date(str):

13 | return datetime.datetime.strptime(str, "%Y%m%d")

14 |

15 |

16 | def nextdate(str):

17 | return (datetime.datetime.strptime(str,'%Y%m%d') + datetime.timedelta(days=1)).strftime('%Y%m%d')

18 |

19 | def formatdate(str):

20 | return datetime.datetime.strptime(str, "%Y%m%d").strftime('%Y.%m.%d')

21 |

22 | if __name__ == '__main__':

23 | if len(sys.argv) != 4:

24 | print("example: " + sys.argv[0] + " index_prefix start_date end_date")

25 | sys.exit(1)

26 |

27 | prefix = sys.argv[1]

28 | begin = sys.argv[2]

29 | end = sys.argv[3]

30 |

31 | print("Start time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

32 |

33 | while str_2_date(begin) <= str_2_date(end):

34 | index = prefix + "-" + formatdate(begin)

35 | print(index)

36 | if not delindex(index):

37 | print("delete index error: " + index)

38 | begin = str(nextdate(begin))

39 | print("End time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

--------------------------------------------------------------------------------

/manger-tools/python/es/delindex.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | import sys

5 | import requests

6 | import datetime

7 |

8 | __author__ = 'jacksu'

9 |

10 |

11 | def delindex(index):

12 | auth = ("es_admin", "password")

13 | print(auth)

14 | top_url = "http://10.253.2.125:9200/"

15 | print(top_url)

16 | url = top_url + index

17 | result = requests.delete(url, auth=auth)

18 | if result.status_code != 200:

19 | return False

20 | return True

21 |

22 |

23 | if __name__ == '__main__':

24 | if len(sys.argv) != 2:

25 | print("example: " + sys.argv[0] + " index")

26 | sys.exit(1)

27 |

28 | index = sys.argv[1]

29 |

30 | print("Start time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

31 |

32 | if not delindex(index):

33 | print("delete index error: " + index)

34 | print("End time: " + datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'))

35 |

--------------------------------------------------------------------------------

/manger-tools/python/es/expired_index.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | logstash-qky-pro

5 | 15

6 |

7 |

8 |

9 |

10 | .marvel-

11 | 10

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/manger-tools/python/es/index_list.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | logstash-qky-pro-

5 | 1

6 |

7 |

8 | logstash-ucenter-oper-log-

9 | 1

10 |

11 |

12 | logstash-zhiliao_uc_access-

13 | 1

14 |

15 |

16 |

17 |

18 | yzt_errornotes_

19 | 0

20 |

21 |

22 |

23 |

--------------------------------------------------------------------------------

/manger-tools/python/es/logger.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | __author__ = 'jacksu'

5 |

6 | import logging

7 | import logging.handlers

8 |

9 |

10 | def getLogger():

11 | logging.basicConfig(level=logging.DEBUG,

12 | format='%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s',

13 | datefmt='%a, %d %b %Y %H:%M:%S',

14 | filemode='w')

15 | return logging.getLogger()

16 |

--------------------------------------------------------------------------------

/manger-tools/python/es/mail.py:

--------------------------------------------------------------------------------

1 | #! /usr/local/bin/python3

2 | # coding = utf-8

3 |

4 | import email

5 | import smtplib

6 | import email.mime.multipart

7 | import email.mime.text

8 |

9 | __author__ = 'jacksu'

10 |

11 |

12 |

13 |

14 | def send_mail(from_list, to_list, sub, content):

15 | msg = email.mime.multipart.MIMEMultipart()

16 | msg['from'] = from_list

17 | msg['to'] = to_list

18 | msg['subject'] = sub

19 | content = content

20 | txt = email.mime.text.MIMEText(content)

21 | msg.attach(txt)

22 |

23 | smtp = smtplib.SMTP('localhost')

24 | smtp.sendmail(from_list, to_list, str(msg))

25 | smtp.quit()

--------------------------------------------------------------------------------

/manger-tools/shell/kafka-reassign-replica.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | ###################

4 | #修改Kafka中数据的replica数

5 | # Created by jacksu on 16/2/26.

6 |

7 | if [ $# -ne 2 ]

8 | then

9 | echo "exampl: $0 zookeeperURL TOPIC [replicaNum]"

10 | exit 1

11 | fi

12 |

13 | ZKURL=$1

14 | TOPIC=$2

15 |

16 | if [ $# -gt 2 ]

17 | then

18 | REPNUM=$3

19 | else

20 | REPNUM=2

21 | fi

22 |

23 | echo "replica num:$REPNUM"

24 |

25 | export PATH=$PATH

26 | KAFKAPATH="/opt/kafka"

27 |

28 | PARTITIONS=$(${KAFKAPATH}/bin/kafka-topics.sh --zookeeper $ZKURL --topic $TOPIC --describe | grep PartitionCount | awk '{print $2}' | awk -F":" '{print $2}')

29 |

30 |

31 | REPLICA=$(seq -s, 0 `expr $REPNUM - 1`)

32 | PARTITIONS=$(expr $PARTITIONS - 2)

33 | FILE=partition-to-move.json

34 |

35 | ##输出头

36 | echo "{" > $FILE

37 | echo "\"partitions\":" >> $FILE

38 | echo "[" >> $FILE

39 |

40 | if [ $PARTITIONS -gt 0 ]

41 | then

42 | for i in `seq 0 $PARTITIONS`

43 | do

44 | echo "{\"topic\": \"$TOPIC\", \"partition\": $i,\"replicas\": [$REPLICA]}," >> $FILE

45 | done

46 | elif [ $PARTITIONS -eq 0 ]

47 | then

48 | echo "{\"topic\": \"$TOPIC\", \"partition\": 0,\"replicas\": [$REPLICA]}," >> $FILE

49 | fi

50 | PARTITIONS=$(expr $PARTITIONS + 1)

51 |

52 | ##输出尾

53 | echo "{\"topic\": \"$TOPIC\", \"partition\": $PARTITIONS,\"replicas\": [$REPLICA]}" >> $FILE

54 | echo "]" >> $FILE

55 | echo "}" >> $FILE

56 |

57 |

58 | $KAFKAPATH/bin/kafka-reassign-partitions.sh --zookeeper $ZKURL -reassignment-json-file $FILE -execute

59 |

--------------------------------------------------------------------------------

/manger-tools/shell/manger.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | ###################

4 | #主要用于程序启动和停止时,只需要修改函数start中COMMAND

5 | #COMMAND赋值为你要操作的程序即可

6 | # Created by jacksu on 16/1/15.

7 |

8 | BASE_NAME=`dirname $0`

9 | NAME=`basename $0 | awk -F '.' '{print $1}'`

10 |

11 | function print_usage(){

12 | echo "manger.sh [OPTION]"

13 | echo " --help|-h"

14 | echo " --daemon|-d 默认后台运行"

15 | echo " --logdir|-l 日志目录"

16 | echo " --conf 配置文件"

17 | echo " --workdir"

18 | }

19 |

20 | # Print an error message and exit

21 | function die() {

22 | echo -e "\nError: $@\n" 1>&2

23 | print_usage

24 | exit 1

25 | }

26 |

27 | for i in "$@"

28 | do

29 | case "$1" in

30 | start|stop|restart|status)

31 | ACTION="$1"

32 | ;;

33 | --workdir)

34 | WORK_DIR="$2"

35 | shift

36 | ;;

37 | --fwdir)

38 | FWDIR="$2"

39 | shift

40 | ;;

41 | --logdir)

42 | LOG_DIR="$2"

43 | shift

44 | ;;

45 | --jars)

46 | JARS="$2"

47 | shift

48 | ;;

49 | --conf)

50 | CONFIG_DIR="$2"

51 | shift

52 | ;;

53 | --jvmflags)

54 | JVM_FLAGS="$2"

55 | shift

56 | ;;

57 | --help|-h)

58 | print_usage

59 | exit 0

60 | ;;

61 | *)

62 | ;;

63 | esac

64 | shift

65 | done

66 |

67 | PID="$BASE_NAME/.${NAME}_pid"

68 |

69 | if [ -f "$PID" ]; then

70 | PID_VALUE=`cat $PID` > /dev/null 2>&1

71 | else

72 | PID_VALUE=""

73 | fi

74 |

75 | if [ ! -d "$LOG_DIR" ]; then

76 | mkdir "$LOG_DIR"

77 | fi

78 |

79 | function start(){

80 | echo "now is starting"

81 |

82 | #TODO 添加需要执行的命令

83 | COMMAND=""

84 | COMMAND+=""

85 |

86 | echo "Running command:"

87 | echo "$COMMAND"

88 | nohup $COMMAND & echo $! > $PID

89 | }

90 |

91 | function stop() {

92 | if [ -f "$PID" ]; then

93 | if kill -0 $PID_VALUE > /dev/null 2>&1; then

94 | echo 'now is stopping'

95 | kill $PID_VALUE

96 | sleep 1

97 | if kill -0 $PID_VALUE > /dev/null 2>&1; then

98 | echo "Did not stop gracefully, killing with kill -9"

99 | kill -9 $PID_VALUE

100 | fi

101 | else

102 | echo "Process $PID_VALUE is not running"

103 | fi

104 | else

105 | echo "No pid file found"

106 | fi

107 | }

108 |

109 | # Check the status of the process

110 | function status() {

111 | if [ -f "$PID" ]; then

112 | echo "Looking into file: $PID"

113 | if kill -0 $PID_VALUE > /dev/null 2>&1; then

114 | echo "The process is running with status: "

115 | ps -ef | grep -v grep | grep $PID_VALUE

116 | else

117 | echo "The process is not running"

118 | exit 1

119 | fi

120 | else

121 | echo "No pid file found"

122 | exit 1

123 | fi

124 | }

125 |

126 |

127 | case "$ACTION" in

128 | "start")

129 | start

130 | ;;

131 | "status")

132 | status

133 | ;;

134 | "restart")

135 | stop

136 | echo "Sleeping..."

137 | sleep 1

138 | start

139 | ;;

140 | "stop")

141 | stop

142 | ;;

143 | *)

144 | print_usage

145 | exit 1

146 | ;;

147 | esac

--------------------------------------------------------------------------------

/manger-tools/shell/start_daily.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Created by xbsu on 16/1/6.

4 |

5 | if [ $# -ne 2 ]

6 | then

7 | echo "exampl: $0 20160101 20160102"

8 | exit 1

9 | fi

10 |

11 | BEGIN_DATE=$1

12 | END_DATE=$2

13 |

14 | export PATH=$PATH

15 |

16 | DB_HOST=""

17 | DB_USER=""

18 | DB_PASS=""

19 | DB_DB=""

20 | MYSQL="mysql -u${DB_USER} -p${DB_PASS} -h${DB_HOST} -D${DB_DB} --skip-column-name -e"

21 |

22 |

23 | ##################main####################

24 | echo "======Start time `date`==========="

25 |

26 | while [ $BEGIN_DATE -le $END_DATE ]; do

27 | FORMAT_DATE=`date -d "$BEGIN_DATE" +"%Y-%m-%d"`

28 | echo "fromat date $FORMAT_DATE"

29 | ##TODO something

30 | SQL=""

31 | $MYSQL $SQL

32 | BEGIN_DATE=`date -d "$BEGIN_DATE UTC +1 day" +"%Y%m%d"`

33 | done

34 |

35 | echo "======End time `date`==========="

36 |

--------------------------------------------------------------------------------

/nscala-time-demo/README.md:

--------------------------------------------------------------------------------

1 | #nscala-time

2 |

3 | 有时间的操作,文档不全,不知道每月的最大一天是什么,暂时还不知道如何使用scala for操作日期段,如下使用。

4 |

5 | ```scala

6 | //不可以这样

7 | for(current<-DateTime.parse("2014-07-7") to DateTime.parse("2014-07-8")){

8 | println(current)

9 | }

10 | ```

11 |

12 |

13 | 谢谢jjcipher,补全demo

--------------------------------------------------------------------------------

/nscala-time-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 | 4.0.0

10 | cn.thinkjoy.utils4s.nscala-time

11 | nscala-time-demo

12 | pom

13 | 2008

14 |

15 |

16 |

17 | com.github.nscala-time

18 | nscala-time_${soft.scala.version}

19 | 2.2.0

20 |

21 |

22 |

23 |

--------------------------------------------------------------------------------

/nscala-time-demo/src/main/scala/cn/thinkjoy/utils4s/nscala_time/BasicOper.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.nscala_time

2 |

3 | import com.github.nscala_time.time._

4 | import com.github.nscala_time.time.Imports._

5 | import org.joda.time.PeriodType

6 |

7 | /**

8 | * Hello world!

9 | *

10 | */

11 | object BasicOper {

12 | def main(args: Array[String]) {

13 | //================= create date ===================

14 | println(DateTime.now())

15 | val yesterday = (DateTime.now() - 1.days).toString(StaticDateTimeFormat.forPattern("yyyy-MM-dd HH:mm:ss"))

16 | println(yesterday)

17 | println(DateTime.parse("2014-07-7"))

18 | println(DateTime.parse("20140707", DateTimeFormat.forPattern("yyyyMMdd")))

19 | println(DateTime.parse("20140707", DateTimeFormat.forPattern("yyyyMMdd")).toLocalDate)

20 | println(DateTime.parse("20140707", DateTimeFormat.forPattern("yyyyMMdd")).toLocalTime)

21 |

22 | //============== compare two date ===========

23 | println(DateTime.parse("2014-07-7") < DateTime.parse("2014-07-8"))

24 | //println((DateTime.parse("2014-07-9").toLocalDate - DateTime.parse("2014-07-8").toLocalDate))

25 |

26 |

27 | // Find the time difference between two dates

28 | val newYear2016 = new DateTime withDate(2016, 1, 1)

29 | val daysToYear2016 = (newYear2016 to DateTime.now toPeriod PeriodType.days).getDays // 到2016年一月ㄧ日還有幾天

30 |

31 | // ========== manipulate dates =============

32 | println(DateTime.parse("2014-07-7") + 1.days)

33 | println((DateTime.parse("2014-07-7") + 1.day).toLocalDate)

34 | println(DateTime.parse("2014-07-7") - 1.days)

35 | println(DateTime.parse("2014-07-7") + (2 weeks))

36 | println(DateTime.parse("2014-07-7") + (2 months))

37 | println(DateTime.parse("2014-07-7") + (2 years))

38 |

39 | // ========== manipulate times =============

40 | println(DateTime.now() + 1.hour)

41 | println(DateTime.now() + 1.hour + 1.minute + 2.seconds)

42 | println(DateTime.now().getHourOfDay)

43 | println(DateTime.now.getMinuteOfHour)

44 |

45 | // ========== week related ops =============

46 | println((DateTime.now()-1.days).getDayOfWeek)//星期一为第一天

47 | println(DateTime.now().withDayOfWeek(1).toLocalDate)//这周的星期一

48 | println((DateTime.now()+ 1.weeks).withDayOfWeek(1))//下周星期一

49 |

50 | // ========== month related ops =============

51 | println((DateTime.now()-1.days).getDayOfMonth)

52 | println(DateTime.now().getMonthOfYear)

53 | println(DateTime.now().plusMonths(1))

54 | println(DateTime.now().dayOfMonth().getMaximumValue()) // 這個月有多少天

55 |

56 | // ========== year related ops =============

57 | println((DateTime.now()-1.days).getDayOfYear)

58 | println(DateTime.now().dayOfYear().getMaximumValue()) // 今年有多少天

59 |

60 | }

61 | }

62 |

--------------------------------------------------------------------------------

/picture/covAndcon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jacksu/utils4s/dde9292943202b70e26d5162a96998a3a863a189/picture/covAndcon.png

--------------------------------------------------------------------------------

/picture/datacube.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jacksu/utils4s/dde9292943202b70e26d5162a96998a3a863a189/picture/datacube.jpg

--------------------------------------------------------------------------------

/picture/spark_streaming_config.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jacksu/utils4s/dde9292943202b70e26d5162a96998a3a863a189/picture/spark_streaming_config.png

--------------------------------------------------------------------------------

/pom.xml:

--------------------------------------------------------------------------------

1 |

2 |

5 | 4.0.0

6 |

7 | cn.thinkjoy.utils4s

8 | demo

9 | pom

10 | 1.0

11 |

12 |

13 | 2.11.7

14 | 2.11

15 |

16 |

17 |

18 |

19 | org.scala-lang

20 | scala-compiler

21 | ${scala.version}

22 | compile

23 |

24 |

25 | org.scalatest

26 | scalatest_${soft.scala.version}

27 | 2.1.5

28 | test

29 |

30 |

31 | org.scala-lang

32 | scala-xml

33 | 2.11.0-M4

34 |

35 |

36 |

37 |

38 | log-demo

39 | unittest-demo

40 | scala-demo

41 | lamma-demo

42 | nscala-time-demo

43 | json4s-demo

44 | spark-streaming-demo

45 | resources-demo

46 | file-demo

47 | analysis-demo

48 | twitter-util-demo

49 | spark-dataframe-demo

50 |

51 | breeze-demo

52 | hive-json-demo

53 | akka-demo

54 | spark-core-demo

55 | spark-analytics-demo

56 |

57 |

58 |

59 |

60 |

61 | org.scala-tools

62 | maven-scala-plugin

63 |

64 |

65 |

66 | compile

67 | testCompile

68 |

69 |

70 |

71 |

72 | ${scala.version}

73 |

74 | -target:jvm-1.7

75 |

76 |

77 |

78 |

79 | org.apache.maven.plugins

80 | maven-surefire-plugin

81 | 2.7

82 |

83 | true

84 |

85 |

86 |

87 | org.scalatest

88 | scalatest-maven-plugin

89 | 1.0

90 |

91 | ${project.build.directory}/surefire-reports

92 | .

93 | WDF TestSuite.txt

94 |

95 |

96 |

97 | test

98 |

99 | test

100 |

101 |

102 |

103 |

104 |

105 |

106 | maven-assembly-plugin

107 |

108 |

109 | jar-with-dependencies

110 |

111 |

112 |

113 |

114 | make-assembly

115 | package

116 |

117 | single

118 |

119 |

120 |

121 |

122 |

123 |

124 |

125 |

126 |

127 | org.scala-tools

128 | maven-scala-plugin

129 |

130 | ${scala.version}

131 |

132 |

133 |

134 |

135 |

--------------------------------------------------------------------------------

/resources-demo/README.md:

--------------------------------------------------------------------------------

1 | 通过加载properties和xml两种文件进行测试。

--------------------------------------------------------------------------------

/resources-demo/pom.xml:

--------------------------------------------------------------------------------

1 |

3 |

4 | demo

5 | cn.thinkjoy.utils4s

6 | 1.0

7 | ../pom.xml

8 |

9 |

10 | 4.0.0

11 | cn.thinkjoy.utils4s

12 | resources-demo

13 | 2008

14 |

15 |

16 | src/main/scala

17 | src/test/scala

18 |

19 |

20 |

--------------------------------------------------------------------------------

/resources-demo/src/main/resources/test.properties:

--------------------------------------------------------------------------------

1 | url.jack=https://github.com/jacksu

--------------------------------------------------------------------------------

/resources-demo/src/main/resources/test.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 | test

4 | https://github.com/jacksu

5 |

6 |

7 | test1

8 | https://github.com/jacksu

9 |

10 |

11 |

--------------------------------------------------------------------------------

/resources-demo/src/main/scala/cn/thinkjoy/utils4s/resources/ResourcesApp.scala:

--------------------------------------------------------------------------------

1 | package cn.thinkjoy.utils4s.resources

2 |

3 | import java.util.Properties

4 |

5 | import scala.io.Source

6 | import scala.xml.XML

7 |

8 | /**

9 | * Hello world!

10 | *

11 | */

12 | object ResourcesApp {

13 | def main(args: Array[String]): Unit = {

14 | val stream = getClass.getResourceAsStream("/test.properties")

15 | val prop=new Properties()

16 | prop.load(stream)

17 | println(prop.getProperty("url.jack"))

18 | //获取resources下面的文件

19 | val streamXml = getClass.getResourceAsStream("/test.xml")

20 | //val lines = Source.fromInputStream(streamXml).getLines.toList

21 | val xml=XML.load(streamXml)

22 | for (child <- xml \\ "collection" \\ "property"){

23 | println((child \\ "name").text)

24 | println((child \\ "url").text)

25 | }

26 |

27 | }

28 | }

29 |

--------------------------------------------------------------------------------