├── .nvmrc
├── .gitignore
├── example
│   ├── src
│   │   ├── yodel.mp3
│   │   ├── combineElementCreators.js
│   │   ├── simple.jsx
│   │   ├── index.html
│   │   ├── aggregation.jsx
│   │   └── withReact.jsx
│   ├── devServer.js
│   └── README.md
├── .travis.yml
├── docs
│   ├── images
│   │   └── complex-graph.png
│   ├── 006-api-reference.md
│   ├── 007-local-development.md
│   ├── 002-audio-parameters.md
│   ├── 005-interop-with-react.md
│   ├── 004-updating-audio-graphs.md
│   ├── 000-introduction.md
│   ├── 003-aggregations.md
│   └── 001-getting-started.md
├── .npmignore
├── jest.config.js
├── src
│   ├── isWaxComponent.js
│   ├── components
│   │   ├── Destination.js
│   │   ├── AudioGraph.js
│   │   ├── NoOp.js
│   │   ├── Aggregation.jsx
│   │   ├── Gain.js
│   │   ├── StereoPanner.js
│   │   ├── index.js
│   │   ├── ChannelMerger.js
│   │   ├── Oscillator.js
│   │   ├── AudioBufferSource.js
│   │   ├── asSourceNode.jsx
│   │   └── __tests__
│   │       ├── Gain.test.js
│   │       ├── StereoPanner.test.js
│   │       ├── Oscillator.test.js
│   │       ├── AudioBufferSource.test.js
│   │       └── asSourceNode.test.jsx
│   ├── index.js
│   ├── paramMutations
│   │   ├── createParamMutator.js
│   │   ├── index.js
│   │   ├── assignAudioParam.js
│   │   └── __tests__
│   │       ├── createParamMutator.test.js
│   │       └── assignAudioParam.test.js
│   ├── connectNodes.js
│   ├── renderAudioGraph.js
│   ├── __tests__
│   │   ├── helpers.js
│   │   ├── connectNodes.test.js
│   │   └── createAudioElement.test.js
│   └── createAudioElement.js
├── .editorconfig
├── .babelrc
├── .eslintrc.js
├── rollup.config.js
├── package.json
└── README.md

/.nvmrc:

--------------------------------------------------------------------------------

1 | v8.11.0

2 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | dist

2 | node_modules

3 | coverage

4 | .nyc_output

5 | .DS_Store

--------------------------------------------------------------------------------

/example/src/yodel.mp3:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jamesseanwright/wax/HEAD/example/src/yodel.mp3

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: node_js

2 | node_js:

3 | - "8"

4 | - "10"

5 |

6 | script: npm run test:ci

7 |

--------------------------------------------------------------------------------

/docs/images/complex-graph.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jamesseanwright/wax/HEAD/docs/images/complex-graph.png

--------------------------------------------------------------------------------

/docs/006-api-reference.md:

--------------------------------------------------------------------------------

1 | # API Reference

2 |

3 | Coming soon. I'm going to find a nice way of autogenerating this!

4 |

--------------------------------------------------------------------------------

/.npmignore:

--------------------------------------------------------------------------------

1 | src

2 | example

3 | .babelrc

4 | .eslintignore

5 | .eslintrc.js

6 | .nvmrc

7 | README.md

8 | rollup.config.js

9 | .travis.yml

10 |

--------------------------------------------------------------------------------

/jest.config.js:

--------------------------------------------------------------------------------

1 | module.exports = {

2 | testRegex: 'src\\/(.*\\/)*__tests__\\/.*\\.test\\.jsx?$',

3 | transform: {

4 | '.*\\.jsx?$': 'babel-jest',

5 | },

6 | };

7 |

--------------------------------------------------------------------------------

/src/isWaxComponent.js:

--------------------------------------------------------------------------------

1 | import * as components from './components';

2 |

3 | const componentsArray = Object.values(components);

4 | const isWaxComponent = Component => componentsArray.includes(Component);

5 |

6 | export default isWaxComponent;

7 |

--------------------------------------------------------------------------------

/src/components/Destination.js:

--------------------------------------------------------------------------------

1 | /* A convenience wrapper to expose

2 | * the audio context's destination

3 | * as a Web Audio X Component. */

4 |

5 | const Destination = ({ audioContext }) => audioContext.destination;

6 |

7 | export default Destination;

8 |

--------------------------------------------------------------------------------

/src/components/AudioGraph.js:

--------------------------------------------------------------------------------

1 | /* This does little right now,

2 | * but might be used in the future

3 | * to make optimisations and to

4 | * invoke other operations. */

5 |

6 | const AudioGraph = ({ children }) => children;

7 |

8 | export default AudioGraph;

9 |

--------------------------------------------------------------------------------

/src/components/NoOp.js:

--------------------------------------------------------------------------------

1 | /* An internal, workaround component

2 | * to inform the node connector that

3 | * no connection is required at this

4 | * point in the current subtree.

5 | * Used by Aggregation. */

6 |

7 | export const NO_OP = 'NO_OP';

8 |

9 | const NoOp = () => NO_OP;

10 |

11 | export default NoOp;

12 |

--------------------------------------------------------------------------------

/src/index.js:

--------------------------------------------------------------------------------

1 | export { default as createAudioElement } from './createAudioElement';

2 | export * from './renderAudioGraph';

3 | export { default as isWaxComponent } from './isWaxComponent';

4 | export * from './components';

5 | export * from './paramMutations';

6 | export { default as assignAudioParam } from './paramMutations/assignAudioParam';

7 |

--------------------------------------------------------------------------------

/src/components/Aggregation.jsx:

--------------------------------------------------------------------------------

1 | import createAudioElement from '../createAudioElement';

2 | import NoOp from './NoOp';

3 | import AudioGraph from './AudioGraph';

4 |

5 | const Aggregation = ({ children }) => (

6 | <AudioGraph>
7 | {children}
8 | <NoOp />
9 | </AudioGraph>

10 | );

11 |

12 | export default Aggregation;

13 |

--------------------------------------------------------------------------------

/.editorconfig:

--------------------------------------------------------------------------------

1 | root = true

2 |

3 | # Unix-style newlines with a newline ending every file

4 | [*]

5 | end_of_line = lf

6 | insert_final_newline = true

7 |

8 | # Matches multiple files with brace expansion notation

9 | # Set default charset

10 | [*.{js,jsx,html,scss}]

11 | charset = utf-8

12 | indent_style = space

13 | indent_size = 4

14 | trim_trailing_whitespace = true

15 |

--------------------------------------------------------------------------------

/.babelrc:

--------------------------------------------------------------------------------

1 | {

2 | "plugins": [

3 | "@babel/proposal-object-rest-spread",

4 | ["@babel/transform-react-jsx", {

5 | "pragma": "createAudioElement"

6 | }]

7 | ],

8 | "env": {

9 | "test": {

10 | "plugins": [

11 | "@babel/transform-modules-commonjs"

12 | ]

13 | }

14 | }

15 | }

16 |

--------------------------------------------------------------------------------

/src/components/Gain.js:

--------------------------------------------------------------------------------

1 | import assignAudioParam from '../paramMutations/assignAudioParam';

2 |

3 | export const createGain = assignParam =>

4 | ({

5 | audioContext,

6 | gain,

7 | node = audioContext.createGain(),

8 | }) => {

9 | assignParam(node.gain, gain, audioContext.currentTime);

10 | return node;

11 | };

12 |

13 | export default createGain(assignAudioParam);

14 |

--------------------------------------------------------------------------------

/src/components/StereoPanner.js:

--------------------------------------------------------------------------------

1 | import assignAudioParam from '../paramMutations/assignAudioParam';

2 |

3 | export const createStereoPanner = assignParam =>

4 | ({

5 | audioContext,

6 | pan,

7 | node = audioContext.createStereoPanner(),

8 | }) => {

9 | assignParam(node.pan, pan, audioContext.currentTime);

10 | return node;

11 | };

12 |

13 | export default createStereoPanner(assignAudioParam);

14 |

--------------------------------------------------------------------------------

/src/paramMutations/createParamMutator.js:

--------------------------------------------------------------------------------

1 | /* Since AudioParam methods follow a

2 | * consistent signature, this function

3 | * allows one to trivially create functions

4 | * that will be consumed by users and

5 | * components to schedule value changes. */

6 |

7 | const createParamMutator = name =>

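8 | (value, time, ...rest) =>
9 | (param, currentTime) => {
10 | param[name](value, currentTime + time, ...rest); // ...rest forwards trailing args, e.g. setTargetAtTime's timeConstant or setValueCurveAtTime's duration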

11 | };

12 |

13 | export default createParamMutator;

14 |

--------------------------------------------------------------------------------

/src/components/index.js:

--------------------------------------------------------------------------------

1 | export { default as Aggregation } from './Aggregation';

2 | export { default as AudioGraph } from './AudioGraph';

3 | export { default as AudioBufferSource } from './AudioBufferSource';

4 | export { default as ChannelMerger } from './ChannelMerger';

5 | export { default as Gain } from './Gain';

6 | export { default as Oscillator } from './Oscillator';

7 | export { default as StereoPanner } from './StereoPanner';

8 | export { default as Destination } from './Destination';

9 |

--------------------------------------------------------------------------------

/src/paramMutations/index.js:

--------------------------------------------------------------------------------

1 | import createParamMutator from './createParamMutator';

2 |

3 | export const setValueAtTime = createParamMutator('setValueAtTime');

4 | export const linearRampToValueAtTime = createParamMutator('linearRampToValueAtTime');

5 | export const exponentialRampToValueAtTime = createParamMutator('exponentialRampToValueAtTime');

6 | export const setTargetAtTime = createParamMutator('setTargetAtTime');

7 | export const setValueCurveAtTime = createParamMutator('setValueCurveAtTime');

8 |

--------------------------------------------------------------------------------

/example/src/combineElementCreators.js:

--------------------------------------------------------------------------------

1 | const getCreator = (map, Component) =>

2 | [...map.entries()]

3 | .find(([predicate]) => predicate(Component))[1];

4 |

5 | const combineElementCreators = (...creatorBindings) => {

6 | const map = new Map(creatorBindings);

7 |

8 | return (Component, props, ...children) => {

9 | const creator = getCreator(map, Component);

10 | return creator(Component, props, ...children);

11 | };

12 | };

13 |

14 | export default combineElementCreators;

15 |

--------------------------------------------------------------------------------
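`combineElementCreators` takes `[predicate, creator]` pairs and returns a single pragma function that dispatches each element to the first creator whose predicate accepts the component (a `Map` preserves insertion order, so earlier bindings win). A minimal, self-contained sketch of that dispatch follows; `Gain`, `Button`, `isWax`, `waxCreator` and `reactCreator` are hypothetical stand-ins, not the `withReact` example's real bindings. Unlike the file above, it throws a descriptive error when no predicate matches instead of failing on `undefined[1]`.

```javascript
// Same lookup as combineElementCreators.js, plus an explicit error
// when no predicate matches (the original would throw a TypeError).
const getCreator = (map, Component) => {
    const binding = [...map.entries()]
        .find(([predicate]) => predicate(Component));

    if (!binding) {
        throw new Error(`No element creator registered for ${Component.name}`);
    }

    return binding[1];
};

const combineElementCreators = (...creatorBindings) => {
    const map = new Map(creatorBindings);

    return (Component, props, ...children) =>
        getCreator(map, Component)(Component, props, ...children);
};

// Hypothetical components and creators, for illustration only.
const Gain = () => {};
const Button = () => {};
const isWax = Component => Component === Gain;

const waxCreator = (Component, props) => ({ kind: 'wax', name: Component.name, props });
const reactCreator = (Component, props) => ({ kind: 'react', name: Component.name, props });

// Order matters: the catch-all predicate goes last.
const createElement = combineElementCreators(
    [isWax, waxCreator],
    [() => true, reactCreator],
);

const gainElement = createElement(Gain, { gain: 0.2 });
const buttonElement = createElement(Button, null);
```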

/src/components/ChannelMerger.js:

--------------------------------------------------------------------------------

1 | const ChannelMerger = ({

2 | audioContext,

3 | inputs,

4 | children,

5 | node = audioContext.createChannelMerger(inputs),

6 | }) => {

7 | const [setupConnections] = children;

8 |

9 | const connectToChannel = (childNode, channel) => {

10 | // assumption here that all nodes have 1 output. Extra param?

11 | childNode.connect(node, 0, channel);

12 | };

13 |

14 | setupConnections(connectToChannel);

15 |

16 | return node;

17 | };

18 |

19 | export default ChannelMerger;

20 |

--------------------------------------------------------------------------------
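`ChannelMerger` expects its first child to be a function (a render prop) that receives `connectToChannel(childNode, channel)`; `createAudioElement` passes such function children through untouched because they lack the `isElementCreator` flag. A minimal sketch of that contract, calling the component directly with a hypothetical stub audio context and stub nodes that record their `connect(target, output, input)` calls instead of routing real audio:

```javascript
// Same logic as ChannelMerger.js above.
const ChannelMerger = ({
    audioContext,
    inputs,
    children,
    node = audioContext.createChannelMerger(inputs),
}) => {
    const [setupConnections] = children;

    const connectToChannel = (childNode, channel) => {
        // output 0 of the child feeds the merger's input `channel`
        childNode.connect(node, 0, channel);
    };

    setupConnections(connectToChannel);

    return node;
};

// Hypothetical stubs that log connections rather than route audio.
const connections = [];

const createStubNode = name => ({
    name,
    connect(target, output, input) {
        connections.push([name, target.name, output, input]);
    },
});

const audioContext = {
    createChannelMerger: inputs => ({ name: `merger(${inputs})` }),
};

const left = createStubNode('left');
const right = createStubNode('right');

const merger = ChannelMerger({
    audioContext,
    inputs: 2,
    // children arrive as an array; the render prop is its first entry
    children: [connect => {
        connect(left, 0);
        connect(right, 1);
    }],
});
```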

/src/connectNodes.js:

--------------------------------------------------------------------------------

1 | import { NO_OP } from './components/NoOp';

2 |

3 | const connectNodes = nodes =>

4 | nodes.reduce((sourceNode, targetNode) => {

5 | const source = Array.isArray(sourceNode)

6 | ? connectNodes(sourceNode)

7 | : sourceNode;

8 |

9 | const target = Array.isArray(targetNode)

10 | ? connectNodes(targetNode)

11 | : targetNode;

12 |

13 | if (source !== NO_OP && target !== NO_OP) {

14 | source.connect(target);

15 | }

16 |

17 | return target;

18 | });

19 |

20 | export default connectNodes;

21 |

--------------------------------------------------------------------------------
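`connectNodes` folds over the rendered node tree: each node is connected to the next, a nested array is connected recursively and then stands in as its last node, and a `NO_OP` on either side suppresses the connection. Because `Aggregation` appends `NoOp` to its children, an aggregated sub-chain is wired internally but not onward. A self-contained sketch using hypothetical stub nodes that log `source -> target`, with a tree shaped like two aggregations sharing one panner:

```javascript
// Same algorithm as connectNodes.js above.
const NO_OP = 'NO_OP';

const connectNodes = nodes =>
    nodes.reduce((sourceNode, targetNode) => {
        const source = Array.isArray(sourceNode)
            ? connectNodes(sourceNode)
            : sourceNode;

        const target = Array.isArray(targetNode)
            ? connectNodes(targetNode)
            : targetNode;

        if (source !== NO_OP && target !== NO_OP) {
            source.connect(target);
        }

        return target;
    });

// Hypothetical stub nodes that log "source -> target".
const log = [];
const stub = name => ({
    name,
    connect(target) {
        log.push(`${name} -> ${target.name}`);
    },
});

const osc = stub('osc');
const gain = stub('gain');
const panner = stub('panner');
const buffer = stub('buffer');
const destination = stub('destination');

// Each [[...chain], NO_OP] pair is what an Aggregation renders to;
// the shared panner is then connected to the destination once.
connectNodes([
    [[osc, gain, panner], NO_OP],
    [[buffer, panner], NO_OP],
    panner,
    destination,
]);
```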

/src/renderAudioGraph.js:

--------------------------------------------------------------------------------

1 | import connectNodes from './connectNodes';

2 |

3 | export const renderAudioGraph = (

4 | createGraphElement,

5 | context = new AudioContext(),

6 | ) => {

7 | const nodes = createGraphElement(context);

8 | connectNodes(nodes);

9 | return nodes;

10 | };

11 |

12 | export const renderPersistentAudioGraph = (

13 | createGraphElement,

14 | context = new AudioContext(),

15 | ) => {

16 | let nodes = renderAudioGraph(createGraphElement, context);

17 |

18 | return createNewGraphElement => {

19 | nodes = createNewGraphElement(context, nodes);

20 | };

21 | };

22 |

--------------------------------------------------------------------------------

/src/paramMutations/assignAudioParam.js:

--------------------------------------------------------------------------------

1 | const isMutation = value => typeof value === 'function';

2 |

3 | const isMutationSequence = value =>

4 | Array.isArray(value) && value.every(isMutation);

5 |

6 | const assignAudioParam = (param, value, currentTime) => {

7 | if (value === undefined || value === null) { // 0 is a valid value, e.g. gain={0}

8 | return;

9 | }

10 |

11 | if (isMutation(value)) {

12 | value(param, currentTime);

13 | } else if (isMutationSequence(value)) {

14 | value.forEach(paramMutation => paramMutation(param, currentTime));

15 | } else {

16 | param.value = value;

17 | }

18 | };

19 |

20 | export default assignAudioParam;

21 |

--------------------------------------------------------------------------------

/src/paramMutations/__tests__/createParamMutator.test.js:

--------------------------------------------------------------------------------

1 | import createParamMutator from '../createParamMutator';

2 |

3 | describe('createParamMutator', () => {

4 | it('should create a func for public use which returns an inner function to manipulate AudioParams', () => {

5 | const audioParam = { setValueAtTime: jest.fn() };

6 | const setValueAtTime = createParamMutator('setValueAtTime');

7 | const mutateAudioParam = setValueAtTime(300, 7);

8 |

9 | mutateAudioParam(audioParam, 3);

10 |

11 | expect(audioParam.setValueAtTime).toHaveBeenCalledTimes(1);

12 | expect(audioParam.setValueAtTime).toHaveBeenCalledWith(300, 10);

13 | });

14 | });

15 |

--------------------------------------------------------------------------------

/src/components/Oscillator.js:

--------------------------------------------------------------------------------

1 | import asSourceNode from './asSourceNode';

2 | import assignAudioParam from '../paramMutations/assignAudioParam';

3 |

4 | export const createOscillator = assignParam =>

5 | ({

6 | audioContext,

7 | detune = 0,

8 | frequency,

9 | type,

10 | onended,

11 | enqueue,

12 | node = audioContext.createOscillator(),

13 | }) => {

14 | assignParam(node.detune, detune, audioContext.currentTime);

15 | assignParam(node.frequency, frequency, audioContext.currentTime);

16 | node.type = type;

17 | node.onended = onended;

18 |

19 | enqueue(node);

20 |

21 | return node;

22 | };

23 |

24 | export default asSourceNode(

25 | createOscillator(assignAudioParam)

26 | );

27 |

--------------------------------------------------------------------------------

/example/src/simple.jsx:

--------------------------------------------------------------------------------

1 | import {

2 | createAudioElement,

3 | renderAudioGraph,

4 | AudioGraph,

5 | Oscillator,

6 | Gain,

7 | StereoPanner,

8 | Destination,

9 | setValueAtTime,

10 | exponentialRampToValueAtTime,

11 | } from 'wax-core';

12 |

13 | onAudioContextResumed(context => {

14 | renderAudioGraph(

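15 | <AudioGraph>
16 | <Oscillator
17 | frequency={[
18 | setValueAtTime(200, 0),
19 | exponentialRampToValueAtTime(800, 3),
20 | ]}
21 | />
22 | <Gain gain={0.2} />
23 | <StereoPanner pan={-0.2} />
24 | <Destination />
25 | </AudioGraph>,
26 | context,
27 | );
28 | });
29 |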

--------------------------------------------------------------------------------

/src/components/AudioBufferSource.js:

--------------------------------------------------------------------------------

1 | import asSourceNode from './asSourceNode';

2 | import assignAudioParam from '../paramMutations/assignAudioParam';

3 |

4 | export const createAudioBufferSource = assignParam =>

5 | ({

6 | audioContext,

7 | buffer,

8 | detune,

9 | loop = false,

10 | loopStart = 0,

11 | loopEnd = 0,

12 | playbackRate,

13 | enqueue,

14 | node = audioContext.createBufferSource(),

15 | }) => {

16 | node.buffer = buffer;

17 | node.loop = loop;

18 | node.loopStart = loopStart;

19 | node.loopEnd = loopEnd;

20 | assignParam(node.detune, detune, audioContext.currentTime);

21 | assignParam(node.playbackRate, playbackRate, audioContext.currentTime);

22 |

23 | enqueue(node);

24 |

25 | return node;

26 | };

27 |

28 | export default asSourceNode(

29 | createAudioBufferSource(

30 | assignAudioParam

31 | )

32 | );

33 |

--------------------------------------------------------------------------------

/src/__tests__/helpers.js:

--------------------------------------------------------------------------------

1 | export const createStubAudioContext = (currentTime = 0) => ({

2 | currentTime,

3 | createGain() {

4 | return {

5 | gain: {

6 | value: 0,

7 | },

8 | };

9 | },

10 | createStereoPanner() {

11 | return {

12 | pan: {

13 | value: 0,

14 | },

15 | };

16 | },

17 | createBufferSource() {

18 | return {

19 | detune: {

20 | value: 0,

21 | },

22 | playbackRate: {

23 | value: 0,

24 | },

25 | };

26 | },

27 | createOscillator() {

28 | return {

29 | detune: {

30 | value: 0,

31 | },

32 | frequency: {

33 | value: 0,

34 | },

35 | };

36 | }

37 | });

38 |

39 | export const createArrayWith = (length, creator) =>

40 | Array(length).fill(null).map(creator);

41 |

--------------------------------------------------------------------------------

/src/components/asSourceNode.jsx:

--------------------------------------------------------------------------------

1 | /* A higher-order component that

2 | * abstracts and centralises logic

3 | * for enqueuing the starting and

4 | * stopping of source nodes */

5 |

6 | import elementCreator from '../createAudioElement';

7 |

8 | const createEnqueuer = ({ audioContext, startTime = 0, endTime }) =>

9 | node => {

10 | if (node.isScheduled) {

11 | return;

12 | }

13 |

14 | node.start(audioContext.currentTime + startTime);

15 |

16 | if (endTime) {

17 | node.stop(audioContext.currentTime + endTime);

18 | }

19 |

20 | node.isScheduled = true;

21 | };

22 |

23 | /* thunk to create HOC with cAE

24 | * as injectable dependency */

25 | export const createAsSourceNode = createAudioElement =>

26 | Component =>

27 | props =>
28 | <Component
29 | {...props}
30 | enqueue={createEnqueuer(props)}
31 | />;

32 |

33 | export default createAsSourceNode(elementCreator);

34 |

--------------------------------------------------------------------------------
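The enqueuer created by `asSourceNode` schedules `start()` and an optional `stop()` using `startTime` and `endTime` as offsets in seconds from `audioContext.currentTime`, and marks the node so repeated renders do not schedule it again (the Web Audio API throws an `InvalidStateError` if `start()` is called twice on the same source node). A self-contained sketch with a hypothetical stub node and context; note that, because of the truthiness check, an `endTime` of `0` means "never stop".

```javascript
// Same scheduling logic as createEnqueuer in asSourceNode.jsx above.
const createEnqueuer = ({ audioContext, startTime = 0, endTime }) =>
    node => {
        if (node.isScheduled) {
            return; // a source node may only be started once
        }

        node.start(audioContext.currentTime + startTime);

        if (endTime) {
            node.stop(audioContext.currentTime + endTime);
        }

        node.isScheduled = true;
    };

// Hypothetical stub source node that records scheduling calls.
const calls = [];
const node = {
    start: time => calls.push(['start', time]),
    stop: time => calls.push(['stop', time]),
};

const audioContext = { currentTime: 10 };
const enqueue = createEnqueuer({ audioContext, startTime: 1, endTime: 3 });

enqueue(node);
enqueue(node); // ignored: the node is already scheduled
```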

/docs/007-local-development.md:

--------------------------------------------------------------------------------

1 | # Local Development

2 |

3 | To build Wax and to run the example apps locally, you'll first need to run these commands in your terminal:

4 |

5 | ```shell

6 | git clone https://github.com/jamesseanwright/wax.git # or fork and use SSH if submitting a PR

7 | cd wax

8 | npm i

9 | ```

10 |

11 | Then you can run one of the following scripts:

12 |

13 | * `npm run build` - builds the library and outputs it to the `dist` dir, ready for publishing

14 | * `npm run build-example` - builds the example app specified in the `ENTRY` environment variable, defaulting to `simple`

15 | * `npm run dev` - builds the library, then builds and runs the example app specified in the `ENTRY` environment variable, defaulting to `simple`

16 | * `npm test` - lints the source code (including `example`) and runs the unit tests. Append ` -- --watch` to enter Jest's watch mode

17 |

18 | For more information on the example apps, consult the [README in the `example` folder](https://github.com/jamesseanwright/wax/blob/master/example/README.md).

19 |

--------------------------------------------------------------------------------

/.eslintrc.js:

--------------------------------------------------------------------------------

1 | module.exports = {

2 | "globals": {

3 | "onAudioContextResumed": "readable",

4 | },

5 | "env": {

6 | "browser": true,

7 | "es6": true,

8 | "jest": true,

9 | "node": true,

10 | },

11 | "extends": "eslint:recommended",

12 | "parserOptions": {

13 | "ecmaFeatures": {

14 | "jsx": true

15 | },

16 | "ecmaVersion": 2018,

17 | "sourceType": "module"

18 | },

19 | "plugins": [

20 | "react"

21 | ],

22 | "settings": {

23 | "react": {

24 | "pragma": "createAudioElement"

25 | }

26 | },

27 | "rules": {

28 | "indent": [

29 | "error",

30 | 4

31 | ],

32 | "linebreak-style": [

33 | "error",

34 | "unix"

35 | ],

36 | "quotes": [

37 | "error",

38 | "single"

39 | ],

40 | "semi": [

41 | "error",

42 | "always"

43 | ],

44 | "react/jsx-uses-react": 1,

45 | "react/jsx-uses-vars": 1,

46 | }

47 | };

48 |

--------------------------------------------------------------------------------

/example/src/index.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | Wax Example

6 |

7 |

8 |

9 | Wax Example

10 | Turn your speakers up!

11 |

12 |

13 |

32 |

33 |

34 |

35 |

36 |

--------------------------------------------------------------------------------

/rollup.config.js:

--------------------------------------------------------------------------------

1 | /* TODO: move this and dependencies to

2 | * separate package in example directory?

3 | */

4 |

5 | import { resolve as resolvePath } from 'path';

6 | import alias from 'rollup-plugin-alias';

7 | import commonjs from 'rollup-plugin-commonjs';

8 | import resolve from 'rollup-plugin-node-resolve';

9 | import babel from 'rollup-plugin-babel';

10 |

11 | const entry = process.env.ENTRY || 'simple';

12 |

13 | export default {

14 | input: `example/src/${entry}.jsx`,

15 | output: {

16 | file: 'example/dist/index.js',

17 | format: 'iife',

18 | },

19 | plugins: [

20 | resolve(),

21 | commonjs(), // for React and ReactDOM

22 | alias({

23 | 'wax-core': resolvePath(__dirname, 'dist', 'index.js'),

24 | }),

25 | babel(

26 | entry === 'withReact'

27 | && {

28 | babelrc: false,

29 | presets: [

30 | ['@babel/react', {

31 | pragma: 'createElement',

32 | pragmaFrag: 'React.Fragment',

33 | }],

34 | ],

35 | plugins: ['transform-inline-environment-variables'],

36 | }

37 | ),

38 | ],

39 | };

40 |

--------------------------------------------------------------------------------

/example/devServer.js:

--------------------------------------------------------------------------------

1 | 'use strict';

2 |

3 | /* node-static unfortunately doesn't provide

4 | * the correct Content-Type header for non-HTML

5 | * files, breaking the decoding of yodel.mp3.

6 | * Thus, I'm overriding this via this abstraction */

7 |

8 | const PORT = 8080;

9 | const DEFAULT_MIME_TYPE = 'text/html';

10 |

11 | const http = require('http');

12 | const path = require('path');

13 | const url = require('url');

14 | const nodeStatic = require('node-static');

15 |

16 | const file = new nodeStatic.Server(

17 | path.join(__dirname, 'dist'),

18 | { cache: 0 },

19 | );

20 |

21 | const contentTypes = new Map([

22 | [/\.mp3$/i, 'audio/mpeg'], // no g flag: a global RegExp keeps lastIndex state between test() calls

23 | ]);

24 |

25 | const getContentType = req => {

26 | const { pathname } = url.parse(req.url);

27 |

28 | for (let [expression, mimeType] of contentTypes) {

29 | if (expression.test(pathname)) {

30 | return mimeType;

31 | }

32 | }

33 |

34 | return DEFAULT_MIME_TYPE;

35 | };

36 |

37 | const server = http.createServer((req, res) => {

38 | req.on('end', () => {

39 | res.setHeader('Content-Type', getContentType(req));

40 | file.serve(req, res);

41 | }).resume();

42 | });

43 |

44 | // eslint-disable-next-line no-console

45 | server.listen(PORT, () => console.log('Dev server listening on', PORT));

46 |

--------------------------------------------------------------------------------
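`getContentType` reuses the same RegExp objects for every request. A RegExp with the `g` flag is stateful: `test()` advances `lastIndex` after a match, so the next call on the same string starts past the end, fails, and resets. A standalone illustration using the hypothetical path `/yodel.mp3`:

```javascript
const path = '/yodel.mp3';

// With the g flag, lastIndex persists between calls,
// so results alternate for identical input.
const globalPattern = /.*\.mp3$/ig;
const globalResults = [
    globalPattern.test(path),
    globalPattern.test(path),
    globalPattern.test(path),
];

// Without it, every call starts matching from index 0.
const plainPattern = /\.mp3$/i;
const plainResults = [
    plainPattern.test(path),
    plainPattern.test(path),
    plainPattern.test(path),
];
```

For a lookup table of patterns like `contentTypes`, non-global expressions are therefore the safer choice.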

/src/components/__tests__/Gain.test.js:

--------------------------------------------------------------------------------

1 | import { createGain } from '../Gain';

2 | import { createStubAudioContext } from '../../__tests__/helpers';

3 |

4 | describe('Gain', () => {

5 | let Gain;

6 | let assignAudioParam;

7 |

8 | beforeEach(() => {

9 | assignAudioParam = jest.fn().mockImplementation((param, value) => param.value = value);

10 | Gain = createGain(assignAudioParam);

11 | });

12 |

13 | it('should create a GainNode, assign its gain, and return said GainNode', () => {

14 | const audioContext = createStubAudioContext();

15 | const gain = 0.4;

16 | const node = Gain({ audioContext, gain });

17 |

18 | expect(assignAudioParam).toHaveBeenCalledTimes(1);

19 | expect(assignAudioParam).toHaveBeenCalledWith(node.gain, gain, audioContext.currentTime);

20 | expect(node.gain.value).toEqual(gain);

21 | });

22 |

23 | it('should mutate an existing GainNode when provided', () => {

24 | const audioContext = createStubAudioContext();

25 | const node = audioContext.createGain();

26 | const gain = 0.7;

27 | const result = Gain({ audioContext, gain, node });

28 |

29 | expect(result).toBe(node);

30 | expect(assignAudioParam).toHaveBeenCalledTimes(1);

31 | expect(assignAudioParam).toHaveBeenCalledWith(node.gain, gain, audioContext.currentTime);

32 | expect(node.gain.value).toEqual(gain);

33 | });

34 | });

35 |

--------------------------------------------------------------------------------

/src/paramMutations/__tests__/assignAudioParam.test.js:

--------------------------------------------------------------------------------

1 | import assignAudioParam from '../assignAudioParam';

2 |

3 | describe('assignAudioParam', () => {

4 | it('should do nothing if a value is not provided', () => {

5 | const param = {};

6 | assignAudioParam(param, null, 1);

7 | expect(param).toEqual({});

8 | });

9 |

10 | it('should invoke the value with the param and current time when it`s a function', () => {

11 | const param = {};

12 | const value = jest.fn();

13 | const currentTime = 6;

14 |

15 | assignAudioParam(param, value, currentTime);

16 |

17 | expect(value).toHaveBeenCalledTimes(1);

18 | expect(value).toHaveBeenCalledWith(param, currentTime);

19 | });

20 |

21 | it('should invoke each value with the param and current time when it`s an array of functions', () => {

22 | const param = {};

23 | const value = [jest.fn(), jest.fn(), jest.fn()];

24 | const currentTime = 9;

25 |

26 | assignAudioParam(param, value, currentTime);

27 |

28 | value.forEach(mutator => {

29 | expect(mutator).toHaveBeenCalledTimes(1);

30 | expect(mutator).toHaveBeenCalledWith(param, currentTime);

31 | });

32 | });

33 |

34 | it('should assign the value to the param`s value property if it is not a function', () => {

35 | const param = {};

36 | const value = 5;

37 |

38 | assignAudioParam(param, value);

39 |

40 | expect(param.value).toEqual(value);

41 | });

42 | });

43 |

--------------------------------------------------------------------------------

/example/src/aggregation.jsx:

--------------------------------------------------------------------------------

1 | import {

2 | createAudioElement,

3 | renderAudioGraph,

4 | AudioGraph,

5 | Aggregation,

6 | AudioBufferSource,

7 | Oscillator,

8 | Gain,

9 | StereoPanner,

10 | Destination,

11 | setValueAtTime,

12 | exponentialRampToValueAtTime,

13 | } from 'wax-core';

14 |

15 | const fetchAsAudioBuffer = async (url, audioContext) => {

16 | const response = await fetch(url);

17 | const arrayBuffer = await response.arrayBuffer();

18 | return await audioContext.decodeAudioData(arrayBuffer);

19 | };

20 |

21 | onAudioContextResumed(async context => {

22 | const yodel = await fetchAsAudioBuffer('/yodel.mp3', context);

23 | const stereoPanner = ;

24 |

25 | renderAudioGraph(

26 |

27 |

28 |

36 |

37 | {stereoPanner}

38 |

39 |

40 |

43 |

44 | {stereoPanner}

45 |

46 | {stereoPanner}

47 |

48 | ,

49 | context,

50 | );

51 | });

52 |

--------------------------------------------------------------------------------

/src/components/__tests__/StereoPanner.test.js:

--------------------------------------------------------------------------------

1 | import { createStereoPanner } from '../StereoPanner';

2 | import { createStubAudioContext } from '../../__tests__/helpers';

3 |

4 | describe('StereoPanner', () => {

5 | let assignAudioParam;

6 | let StereoPanner;

7 |

8 | beforeEach(() => {

9 | assignAudioParam = jest.fn().mockImplementationOnce((param, value) => param.value = value);

10 | StereoPanner = createStereoPanner(assignAudioParam);

11 | });

12 |

13 | afterEach(() => {

14 | assignAudioParam.mockReset();

15 | });

16 |

17 | it('should create a StereoPannerNode, assign its pan, and return said StereoPannerNode', () => {

18 | const audioContext = createStubAudioContext();

19 | const pan = 0.4;

20 | const node = StereoPanner({ audioContext, pan });

21 |

22 | expect(assignAudioParam).toHaveBeenCalledTimes(1);

23 | expect(assignAudioParam).toHaveBeenCalledWith(node.pan, pan, audioContext.currentTime);

24 | expect(node.pan.value).toEqual(pan);

25 | });

26 |

27 | it('should mutate an existing StereoPannerNode when provided', () => {

28 | const audioContext = createStubAudioContext();

29 | const node = audioContext.createStereoPanner();

30 | const pan = 0.7;

31 | const result = StereoPanner({ audioContext, pan, node });

32 |

33 | expect(result).toBe(node);

34 | expect(assignAudioParam).toHaveBeenCalledTimes(1);

35 | expect(assignAudioParam).toHaveBeenCalledWith(node.pan, pan, audioContext.currentTime);

36 | expect(node.pan.value).toEqual(pan);

37 | });

38 | });

39 |

--------------------------------------------------------------------------------

/docs/002-audio-parameters.md:

--------------------------------------------------------------------------------

1 | # Manipulating Audio Parameters

2 |

3 | The [`AudioParam`](https://developer.mozilla.org/en-US/docs/Web/API/AudioParam) interface provides a means of changing audio properties, such as `OscillatorNode.prototype.frequency` and `GainNode.prototype.gain`, via direct values or scheduled events.

4 |

5 | Looking at our app, we can observe that it a few parameter changes will occur:

6 |

7 | ```jsx

8 |

16 |

17 |

18 | ```

19 |

20 | * ``'s frequency will immediately be set to 200 Hz, then ramped to 800 Hz over a duration of 3 seconds

21 |

22 | * ``'s gain will be a constant value of `0.2`

23 |

24 | * ``'s pan will be a constant value of `-0.2`

25 |

26 | With this in mind, Wax components support param changes with various props, whose values can be:

27 |

28 | * a single, constant value

29 | * a single parameter mutation e.g. `frequency={setValueAtTime(200, 0)}`

30 | * an array of parameter mutations (as above), which will be applied in order of declaration

31 |

32 | ## What Are Parameter Mutations?

33 |

34 | Parameter mutations are functions that conform to those exposed by the `AudioParam` interface; If an audio parameter supports it, then Wax will export a mutation for it! All of them are exported for consumption, but to list them for transparency:

35 |

36 | * `setValueAtTime`

37 | * `linearRampToValueAtTime`

38 | * `exponentialRampToValueAtTime`

39 | * `setTargetAtTime`

40 | * `setValueCurveAtTime`

41 |
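To make the three accepted prop shapes concrete, here is a minimal sketch of how a prop value might be applied to an `AudioParam`. It assumes that a mutation such as `setValueAtTime(200, 0)` returns a function that receives the param and the context's current time, and schedules its change relative to that time. `createMutation`, `applyParamValue` and `createStubParam` are hypothetical names for illustration only. Wax's real logic lives in `src/paramMutations` and may differ.

```javascript
// Hypothetical sketch: not Wax's implementation (see src/paramMutations).
// A mutation factory returns a function of (param, currentTime) that
// schedules its change relative to the context's current time.
const createMutation = method => (value, time) =>
  (param, currentTime) => param[method](value, currentTime + time);

const setValueAtTime = createMutation('setValueAtTime');
const exponentialRampToValueAtTime = createMutation('exponentialRampToValueAtTime');

// Applies any of the three accepted prop shapes to a param.
const applyParamValue = (param, value, currentTime) => {
  if (Array.isArray(value)) {
    // An array of mutations is applied in order of declaration.
    value.forEach(mutation => mutation(param, currentTime));
  } else if (typeof value === 'function') {
    // A single mutation.
    value(param, currentTime);
  } else {
    // A constant value is assigned directly.
    param.value = value;
  }
};

// Minimal stand-in for AudioParam that records scheduled calls.
const createStubParam = () => {
  const calls = [];
  return {
    value: 0,
    calls,
    setValueAtTime: (v, t) => calls.push(['setValueAtTime', v, t]),
    exponentialRampToValueAtTime: (v, t) => calls.push(['exponentialRampToValueAtTime', v, t]),
  };
};

// With a current time of 10, the ramp is scheduled to finish at 13.
const frequency = createStubParam();
applyParamValue(
  frequency,
  [setValueAtTime(200, 0), exponentialRampToValueAtTime(800, 3)],
  10,
);
console.log(frequency.calls);

const gain = createStubParam();
applyParamValue(gain, 0.2, 10);
console.log(gain.value); // 0.2
```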

--------------------------------------------------------------------------------

/src/createAudioElement.js:

--------------------------------------------------------------------------------

1 | /* I chose "cache" over "memoise" here,

2 | * as we don't cache by the inner

3 | * arguments. We just want to avoid

4 | * recomputing the node for a certain

5 | * creator func reference. */

6 | const cache = func => {

7 | let result;

8 |

9 | return (...args) => {

10 | if (!result) {

11 | result = func(...args);

12 | }

13 |

14 | return result;

15 | };

16 | };

17 |

18 | /* decoration is required to differentiate

19 | * between element creators and other function

20 | * children e.g. render props */

21 | const asCachedCreator = creator => {

22 | const cachedCreator = cache(creator);

23 | cachedCreator.isElementCreator = true;

24 | return cachedCreator;

25 | };

26 |

27 | const getNodeFromTree = tree =>

28 | !Array.isArray(tree)

29 | ? tree

30 | : undefined; // undefined lets the component's default `node` prop apply when destructuring

31 |

32 | const createAudioElement = (Component, props, ...children) =>

33 | asCachedCreator((audioContext, nodeTree = []) => {

34 | const mapResult = (result, i) =>

35 | result.isElementCreator

36 | ? result(audioContext, nodeTree[i])

37 | : result;

38 |

39 | /* we want to render children first so the nodes

40 | * can be directly consumed by their parents */

41 | const createChildren = children => children.map(mapResult);

42 | const existingNode = getNodeFromTree(nodeTree);

43 |

44 | return mapResult(

45 | Component({

46 | children: createChildren(children),

47 | audioContext,

48 | node: existingNode,

49 | ...props,

50 | })

51 | );

52 | });

53 |

54 | export default createAudioElement;

55 |

--------------------------------------------------------------------------------

/example/README.md:

--------------------------------------------------------------------------------

1 | # Example Apps

2 |

3 | The `src` directory contains three example Wax applications, each bootstrapped by the same HTML document (index.html):

4 |

5 | * `simple.jsx` - declares an oscillator, with a couple of scheduled frequency changes, whose gain and pan are altered

6 |

7 | * `aggregation.jsx` - demonstrates how one can use the `<Aggregation />` component to build more complex audio graphs

8 |

9 | * `withReact.jsx` - renders a slider element using React, which updates an `` whenever its value is changed. This calls `renderPersistentAudioGraph()`

10 |

11 | ## Running the Examples

12 |

13 | After following the setup guide in the [local development documentation](https://github.com/jamesseanwright/wax/blob/master/docs/007-local-development.md), run `npm run dev` from the root of the repository. You can specify the `ENTRY` environment variable to select which app to run; this is the name of the app **without** the `.jsx` extension, e.g. `ENTRY=withReact npm run dev`. If omitted, `simple` will be built and started.

14 |

15 | The example apps are [built using rollup](https://github.com/jamesseanwright/wax/blob/master/rollup.config.js).

16 |

17 | ## Mitigating Chrome's Autoplay Policy

18 |

19 | As of December 2018, Chrome instantiates `AudioContext`s in the `'suspended'` state, [requiring an explicit user interaction before they can be resumed](https://developers.google.com/web/updates/2017/09/autoplay-policy-changes#webaudio). To mitigate this, there is some [JavaScript in the HTML bootstrapper (index.html) which will create, resume, and forward a context to the current app](https://github.com/jamesseanwright/wax/blob/master/example/src/index.html#L14) via a callback. This will potentially become a requirement for other browsers as they adopt similar policies in the future.
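The bootstrapper pattern can be sketched roughly as follows. This is a hypothetical illustration, not the code in `index.html`. `createAudioContextGate` is an invented name, while `onAudioContextResumed` mirrors the callback used by `withReact.jsx`. The context factory and event target are injected so the logic can run outside a browser. It relies only on the standard `AudioContext.prototype.state` and `AudioContext.prototype.resume()` (which returns a promise).

```javascript
// Hypothetical sketch of the bootstrapper pattern; not example/src/index.html.
// `createContext` and `target` are injected so this can run outside a browser.
const createAudioContextGate = (createContext, target) => {
  const callbacks = [];
  let resumedContext = null;

  // Apps register here to receive the resumed context.
  const onAudioContextResumed = callback => {
    if (resumedContext) {
      callback(resumedContext); // already resumed: invoke immediately
    } else {
      callbacks.push(callback);
    }
  };

  // Create and resume the context inside the first user gesture.
  const handleGesture = async () => {
    const context = createContext();
    if (context.state === 'suspended') {
      await context.resume();
    }
    resumedContext = context;
    callbacks.forEach(callback => callback(context));
  };

  target.addEventListener('click', handleGesture, { once: true });
  return onAudioContextResumed;
};

// In a browser, one might write:
// const onAudioContextResumed = createAudioContextGate(() => new AudioContext(), document.body);
```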

20 |

--------------------------------------------------------------------------------

/example/src/withReact.jsx:

--------------------------------------------------------------------------------

1 | /** @jsx createElement */

2 |

3 | import React from 'react';

4 | import ReactDOM from 'react-dom';

5 |

6 | import {

7 | isWaxComponent,

8 | createAudioElement,

9 | renderPersistentAudioGraph,

10 | AudioGraph,

11 | Oscillator,

12 | Gain,

13 | Destination,

14 | } from 'wax-core';

15 |

16 | import combineElementCreators from './combineElementCreators';

17 |

18 | const createElement = combineElementCreators(

19 | [isWaxComponent, createAudioElement],

20 | [() => true, React.createElement],

21 | );

22 |

23 | class Slider extends React.Component {

24 | constructor(props) {

25 | super(props);

26 | this.onChange = this.onChange.bind(this);

27 | }

28 |

29 | componentDidMount() {

30 | const { children, min, audioContext } = this.props;

31 |

32 | this.updateAudioGraph = renderPersistentAudioGraph(

33 | children(min),

34 | audioContext,

35 | );

36 | }

37 |

38 | onChange({ target }) {

39 | this.updateAudioGraph(

40 | this.props.children(target.value),

41 | );

42 | }

43 |

44 | render() {

45 | return (

46 |

52 | );

53 | }

54 | }

55 |

56 | onAudioContextResumed(context => {

57 | ReactDOM.render(

58 |

63 | {value =>

64 |

65 |

69 |

70 |

71 |

72 | }

73 | ,

74 | document.querySelector('#react-target'),

75 | );

76 | });

77 |

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "wax-core",

3 | "version": "0.1.1",

4 | "description": "An experimental, JSX-compatible renderer for the Web Audio API",

5 | "main": "dist/index.js",

6 | "scripts": {

7 | "build": "babel src --out-dir dist",

8 | "build-example": "mkdir -p example/dist && rm -rf example/dist/* && rollup -c && bash -c 'cp -r example/src/*.{html,mp3} example/dist'",

9 | "dev": "npm run build && npm run build-example && node example/devServer",

10 | "test": "eslint --ext js --ext jsx src example/src && jest",

11 | "test:ci": "npm test -- --coverage --coverageReporters=text-lcov | coveralls"

12 | },

13 | "repository": {

14 | "type": "git",

15 | "url": "git+https://github.com/jamesseanwright/wax.git"

16 | },

17 | "keywords": [

18 | "web",

19 | "audio",

20 | "api",

21 | "components",

22 | "jsx",

23 | "react"

24 | ],

25 | "author": "James Wright ",

26 | "license": "ISC",

27 | "bugs": {

28 | "url": "https://github.com/jamesseanwright/wax/issues"

29 | },

30 | "homepage": "https://github.com/jamesseanwright/wax#readme",

31 | "devDependencies": {

32 | "@babel/cli": "7.0.0",

33 | "@babel/core": "7.0.0",

34 | "@babel/plugin-proposal-object-rest-spread": "7.0.0",

35 | "@babel/plugin-transform-modules-commonjs": "7.1.0",

36 | "@babel/plugin-transform-react-jsx": "7.0.0",

37 | "@babel/preset-react": "7.0.0",

38 | "babel-core": "7.0.0-bridge.0",

39 | "babel-jest": "23.6.0",

40 | "babel-plugin-transform-inline-environment-variables": "0.4.3",

41 | "coveralls": "3.0.2",

42 | "eslint": "5.5.0",

43 | "eslint-plugin-react": "7.11.1",

44 | "jest": "23.6.0",

45 | "node-static": "0.7.10",

46 | "nyc": "^13.0.1",

47 | "react": "16.5.0",

48 | "react-dom": "16.5.0",

49 | "remove": "^0.1.5",

50 | "rollup": "0.65.0",

51 | "rollup-plugin-alias": "1.4.0",

52 | "rollup-plugin-babel": "4.0.2",

53 | "rollup-plugin-commonjs": "9.1.6",

54 | "rollup-plugin-node-resolve": "3.3.0"

55 | }

56 | }

57 |

--------------------------------------------------------------------------------

/src/components/__tests__/Oscillator.test.js:

--------------------------------------------------------------------------------

1 | import { createOscillator } from '../Oscillator';

2 | import { createStubAudioContext } from '../../__tests__/helpers';

3 |

4 | describe('Oscillator', () => {

5 | let assignAudioParam;

6 | let Oscillator;

7 |

8 | beforeEach(() => {

9 | assignAudioParam = jest.fn().mockImplementation((param, value) => param.value = value);

10 | Oscillator = createOscillator(assignAudioParam);

11 | });

12 |

13 | it('should create an OscillatorNode, assign its props, and return said OscillatorNode', () => {

14 | const audioContext = createStubAudioContext();

15 | const detune = 7;

16 | const frequency = 300;

17 | const type = 'square';

18 | const onended = jest.fn();

19 | const enqueue = jest.fn();

20 |

21 | const node = Oscillator({

22 | audioContext,

23 | detune,

24 | frequency,

25 | type,

26 | onended,

27 | enqueue,

28 | });

29 |

30 | expect(assignAudioParam).toHaveBeenCalledTimes(2);

31 | expect(assignAudioParam).toHaveBeenCalledWith(node.detune, detune, audioContext.currentTime);

32 | expect(assignAudioParam).toHaveBeenCalledWith(node.frequency, frequency, audioContext.currentTime);

33 | expect(enqueue).toHaveBeenCalledTimes(1);

34 | expect(enqueue).toHaveBeenCalledWith(node);

35 | expect(node.frequency.value).toEqual(frequency);

36 | expect(node.detune.value).toEqual(detune);

37 | expect(node.onended).toBe(onended);

38 | });

39 |

40 | it('should mutate an existing OscillatorNode when provided', () => {

41 | const audioContext = createStubAudioContext();

42 | const node = audioContext.createOscillator();

43 | const frequency = 20;

44 | const enqueue = jest.fn();

45 |

46 | const result = Oscillator({

47 | audioContext,

48 | frequency,

49 | enqueue,

50 | node,

51 | });

52 |

53 | expect(result).toBe(node);

54 | expect(result.frequency.value).toEqual(20);

55 | });

56 | });

57 |

--------------------------------------------------------------------------------

/docs/005-interop-with-react.md:

--------------------------------------------------------------------------------

1 | # Interop with React

2 |

3 | In the prior chapter, we learned how to update an existing audio graph whenever an `HTMLInputElement` fires a `change` event. Can we handle the visual UI with React while supporting JSX-declared audio graphs with Wax?

4 |

5 | The problem is that we need to be able to use `React.createElement` and `createAudioElement` at the same time. What if we could compose a single pragma that can select whether to use the former or the latter at runtime? The [`withReact` example app](https://github.com/jamesseanwright/wax/blob/master/example/src/withReact.jsx) has a solution:

6 |

7 | ```js

8 | /** @jsx createElement */

9 |

10 | import {

11 | isWaxComponent,

12 | ...

13 | } from 'wax-core';

14 |

15 | import combineElementCreators from './combineElementCreators';

16 |

17 | const createElement = combineElementCreators(

18 | [isWaxComponent, createAudioElement],

19 | [() => true, React.createElement],

20 | );

21 | ```

22 |

23 | `combineElementCreators` is a function that takes a mapping between predicates and pragmas, and returns a new pragma to be targeted by our transpiler. In our example, if an element belongs to Wax (determined using the exposed `isWaxComponent` binding), then the `createAudioElement` pragma will be invoked; otherwise, we'll default to `React.createElement`. `combineElementCreators` isn't provided by Wax but can be implemented with a few lines of code:

24 |

25 | ```js

26 | const getCreator = (map, Component) =>

27 | [...map.entries()]

28 | .find(([predicate]) => predicate(Component))[1];

29 |

30 | const combineElementCreators = (...creatorBindings) => {

31 | const map = new Map(creatorBindings);

32 |

33 | return (Component, props, ...children) => {

34 | const creator = getCreator(map, Component);

35 | return creator(Component, props, ...children);

36 | };

37 | };

38 | ```
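The following variant shows the same dispatch in isolation. It adds one change: a descriptive error when no predicate matches, because the version above would throw a `TypeError` when reading `[1]` of `undefined`. Wax's `isWaxComponent`, `createAudioElement` and `React.createElement` are replaced with hypothetical stubs (`isWaxStub`, `createAudioElementStub`, `createReactElementStub`) so the snippet runs on its own. Because a `Map` preserves insertion order, the first matching predicate wins, which is why the catch-all `() => true` must come last.

```javascript
// Variant of combineElementCreators that reports unmatched components.
const combineElementCreators = (...creatorBindings) => {
  const bindings = new Map(creatorBindings); // preserves declaration order

  return (Component, props, ...children) => {
    const entry = [...bindings.entries()]
      .find(([predicate]) => predicate(Component));

    if (!entry) {
      throw new Error(`No element creator matches ${String(Component)}`);
    }

    const [, creator] = entry;
    return creator(Component, props, ...children);
  };
};

// Hypothetical stand-ins for isWaxComponent, createAudioElement and React.createElement.
const waxComponents = new Set();
const isWaxStub = Component => waxComponents.has(Component);
const createAudioElementStub = (Component, props, ...children) =>
  ({ kind: 'wax', Component, props, children });
const createReactElementStub = (Component, props, ...children) =>
  ({ kind: 'react', Component, props, children });

const Oscillator = () => null;
waxComponents.add(Oscillator);

const createElement = combineElementCreators(
  [isWaxStub, createAudioElementStub],
  [() => true, createReactElementStub], // catch-all goes last
);

console.log(createElement(Oscillator, { frequency: 440 }).kind); // 'wax'
console.log(createElement('input', { type: 'range' }).kind); // 'react'
```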

39 |

40 | We can then instruct Babel to target this pragma in the usual way:

41 |

42 | ```json

43 | {

44 | "presets": [

45 | ["@babel/react", {

46 | "pragma": "createElement"

47 | }]

48 | ]

49 | }

50 | ```

51 |

52 | The aforementioned `withReact` example demonstrates how `ReactDOM.render` and `renderPersistentAudioGraph` can be used across a single app.

53 |

--------------------------------------------------------------------------------

/src/components/__tests__/AudioBufferSource.test.js:

--------------------------------------------------------------------------------

1 |

2 | import { createAudioBufferSource } from '../AudioBufferSource';

3 | import { createStubAudioContext } from '../../__tests__/helpers';

4 |

5 | describe('AudioBufferSource', () => {

6 | let AudioBufferSource;

7 | let assignAudioParam;

8 |

9 | beforeEach(() => {

10 | assignAudioParam = jest.fn().mockImplementationOnce((param, value) => param.value = value);

11 | AudioBufferSource = createAudioBufferSource(assignAudioParam);

12 | });

13 |

14 | it('should create an AudioBufferSourceNode, assign its props, enqueue, and return said AudioBufferSourceNode', () => {

15 | const audioContext = createStubAudioContext();

16 | const buffer = {};

17 | const loop = true;

18 | const loopStart = 1;

19 | const loopEnd = 3;

20 | const detune = 1;

21 | const playbackRate = 1;

22 | const enqueue = jest.fn();

23 |

24 | const node = AudioBufferSource({

25 | audioContext,

26 | buffer,

27 | loop,

28 | loopStart,

29 | loopEnd,

30 | detune,

31 | playbackRate,

32 | enqueue,

33 | });

34 |

35 | expect(node.buffer).toBe(buffer);

36 | expect(node.loop).toEqual(true);

37 | expect(node.loopStart).toEqual(1);

38 | expect(node.loopEnd).toEqual(3);

39 |

40 | expect(assignAudioParam).toHaveBeenCalledTimes(2);

41 | expect(assignAudioParam).toHaveBeenCalledWith(node.detune, detune, audioContext.currentTime);

42 | expect(assignAudioParam).toHaveBeenCalledWith(node.playbackRate, playbackRate, audioContext.currentTime);

43 | expect(enqueue).toHaveBeenCalledTimes(1);

44 | expect(enqueue).toHaveBeenCalledWith(node);

45 | });

46 |

47 | it('should respect and mutate an existing node if provided', () => {

48 | const audioContext = createStubAudioContext();

49 | const node = audioContext.createBufferSource();

50 | const buffer = {};

51 |

52 | const result = AudioBufferSource({

53 | audioContext,

54 | buffer,

55 | node,

56 | enqueue: jest.fn(),

57 | });

58 |

59 | expect(node).toBe(result);

60 | expect(node.buffer).toBe(buffer);

61 | });

62 | });

63 |

--------------------------------------------------------------------------------

/src/__tests__/connectNodes.test.js:

--------------------------------------------------------------------------------

1 | import { NO_OP } from '../components/NoOp';

2 | import connectNodes from '../connectNodes';

3 | import { createArrayWith } from './helpers';

4 |

5 | const createStubAudioNode = () => ({

6 | connect: jest.fn(),

7 | });

8 |

9 | /* we concat item[item.length - 1] if item

10 | * is an array to match the reducing nature

11 | * of the connectNodes function. */

12 | const flatten = array => array.reduce(

13 | (arr, item) => (

14 | arr.concat(Array.isArray(item) ? item[item.length - 1] : item)

15 | ), []);

16 |

17 | describe('connectNodes', () => {

18 | it('should sequentially connect an array of audio nodes and return the last', () => {

19 | const nodes = createArrayWith(5, createStubAudioNode);

20 | const result = connectNodes(nodes);

21 |

22 | expect(result).toBe(nodes[nodes.length - 1]);

23 |

24 | nodes.reduce((previousNode, currentNode) => {

25 | expect(previousNode.connect).toHaveBeenCalledTimes(1);

26 | expect(previousNode.connect).toHaveBeenCalledWith(currentNode);

27 | return currentNode;

28 | });

29 | });

30 |

31 | it('should not connect NO_OP nodes', () => {

32 | const noOpIndex = 3;

33 |

34 | const nodes = createArrayWith(

35 | 5,

36 | (_, i) => i === noOpIndex ? NO_OP : createStubAudioNode(),

37 | );

38 |

39 | connectNodes(nodes);

40 |

41 | nodes.reduce((previousNode, currentNode) => {

42 | if (currentNode === NO_OP) {

43 | expect(previousNode.connect).not.toHaveBeenCalled();

44 | } else if (previousNode !== NO_OP) {

45 | expect(previousNode.connect).toHaveBeenCalledTimes(1);

46 | expect(previousNode.connect).toHaveBeenCalledWith(currentNode);

47 | }

48 |

49 | return currentNode;

50 | });

51 | });

52 |

53 | it('should reduce multidimensional arrays of AudioNodes', () => {

54 | const nodes = [

55 | ...createArrayWith(3, createStubAudioNode),

56 | createArrayWith(4, createStubAudioNode),

57 | ...createArrayWith(2, createStubAudioNode),

58 | ];

59 |

60 | connectNodes(nodes);

61 |

62 | flatten(nodes).reduce((previousNode, currentNode) => {

63 | expect(previousNode.connect).toHaveBeenCalledTimes(1);

64 | expect(previousNode.connect).toHaveBeenCalledWith(currentNode);

65 | return currentNode;

66 | });

67 | });

68 | });

69 |

--------------------------------------------------------------------------------

/docs/004-updating-audio-graphs.md:

--------------------------------------------------------------------------------

1 | # Updating Rendered `<AudioGraph />`s

2 |

3 | Thus far, we have been rendering static audio graphs with the `renderAudioGraph` function. Say we now want to update a graph that creates an oscillator, whose frequency is dictated by a slider (`<input type="range" />`):

4 |

5 | ```js

6 | import {

7 | renderAudioGraph,

8 | ...

9 | } from 'wax-core';

10 |

11 | const slider = document.body.querySelector('#slider');

12 |

13 | slider.addEventListener('change', ({ target }) => {

14 | renderAudioGraph(

15 |

16 |

17 |

18 | );

19 | });

20 | ```

21 |

22 | The problem with this approach is that we'll be creating a new audio node for each element whenever the slider's value changes! While `AudioNode`s are cheap to create, the above code will result in many frequencies being played at once; try at your own peril, but I can assure you that it sounds horrible.

23 |

24 | To update a tree that already exists, one can replace `renderAudioGraph` with `renderPersistentAudioGraph`:

25 |

26 | ```js

27 | import {

28 | renderPersistentAudioGraph,

29 | ...

30 | } from 'wax-core';

31 |

32 | const slider = document.body.querySelector('#slider');

33 | const initialValue = 40;

34 |

35 | slider.value = initialValue;

36 |

37 | const createAudioGraph = value => (

38 |

39 |

40 |

41 | );

42 |

43 | const updateAudioGraph = renderPersistentAudioGraph(createAudioGraph(initialValue));

44 |

45 | slider.addEventListener('change', ({ target }) => {

46 | // element creators cache their first result, so build a fresh tree

47 | updateAudioGraph(createAudioGraph(Number(target.value)));

49 | ```

50 |

51 | By invoking the `updateAudioGraph` function returned by calling `renderPersistentAudioGraph`, we can update our existing tree of audio elements to reflect the latest property values; this internally reinvokes component logic across the tree, but against the already-created nodes. It's analogous to React's [reconciliation](https://reactjs.org/docs/reconciliation.html) algorithm, albeit infinitely less sophisticated.
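"Against the already-created nodes" works because Wax's components accept an optional `node` prop. This is what the "should mutate an existing ... when provided" tests exercise. The sketch below uses a hypothetical, simplified `Gain` and a stub context. On the first render no node exists, so one is created; on an update, the previous node is passed back in and mutated rather than replaced. Real Wax components assign params through `assignAudioParam` rather than writing `.value` directly.

```javascript
// Hypothetical, simplified Gain component: a node is created only when
// no existing `node` prop is supplied (default parameters apply on undefined).
const Gain = ({ audioContext, gain, node = audioContext.createGain() }) => {
  node.gain.value = gain; // Wax itself goes through assignAudioParam here
  return node;
};

// Stub context that counts how many nodes it has created.
const createStubContext = () => {
  const context = {
    created: 0,
    createGain: () => {
      context.created += 1;
      return { gain: { value: 1 } };
    },
  };
  return context;
};

const audioContext = createStubContext();

// First render: no existing node, so one is created.
const node = Gain({ audioContext, gain: 0.2 });

// Update: the existing node is passed back in and mutated in place.
const updated = Gain({ audioContext, gain: 0.8, node });

console.log(updated === node); // true
console.log(node.gain.value); // 0.8
console.log(audioContext.created); // 1
```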

52 |

53 | ## A Note on "Reconciliation"

54 |

55 | At present, Wax will not diff element trees between renders to determine if nodes have been added or removed; it assumes that their structures are identical, and that only respective properties have changed. This is certainly a big limitation and will be addressed properly if this project evolves from its experimental stage; for the time being, conditionally specifying elements will not work:

56 |

57 | ```js

58 |

59 | {/* This won't work... yet. */}

60 | {makeNoise && }

61 |

62 | ```
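One way to see why conditional elements break: in `createAudioElement`, each child creator receives `nodeTree[i]`, meaning the node at the same index from the previous render. The illustration below is not Wax code. It assumes the previous render's nodes are held in an array and uses strings in place of nodes. Dropping the first child shifts every later child onto the wrong node.

```javascript
// Illustration only: children are paired with previous nodes by index,
// mirroring `nodeTree[i]` in createAudioElement.
const previousNodes = ['oscillatorNode', 'gainNode', 'destinationNode'];

// The next render omits the oscillator (e.g. makeNoise became false).
const nextChildren = ['Gain', 'Destination'];

const pairings = nextChildren.map((child, i) => [child, previousNodes[i]]);

// Gain is paired with the old oscillator node, and Destination with the old gain node.
console.log(pairings);
```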

63 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Wax

2 |

3 | [](https://travis-ci.org/jamesseanwright/wax) [](https://coveralls.io/github/jamesseanwright/wax?branch=master) [](https://www.npmjs.com/package/wax-core)

4 |

5 | An experimental, JSX-compatible renderer for the Web Audio API. I wrote Wax for my [Manchester Web Meetup](https://www.meetup.com/Manchester-Web-Meetup) talk, [_Manipulating the Web Audio API with JSX and Custom Renderers_](https://www.youtube.com/watch?v=IeuuBKBb4Wg).

6 |

7 | While it has decent test coverage and is stable, I still deem this to be a work-in-progress. **Use in production at your own risk!**

8 |

9 | ```jsx

10 | /** @jsx createAudioElement */

11 |

12 | import {

13 | createAudioElement,

14 | renderAudioGraph,

15 | AudioGraph,

16 | Oscillator,

17 | Gain,

18 | StereoPanner,

19 | Destination,

20 | setValueAtTime,

21 | exponentialRampToValueAtTime,

22 | } from 'wax-core';

23 |

24 | renderAudioGraph(

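25 | <AudioGraph>

26 | <Oscillator

27 | frequency={[

28 | setValueAtTime(200, 0),

29 | exponentialRampToValueAtTime(800, 3)

30 | ]}

31 | type="square"

32 | endTime={3}

33 | />

34 | <Gain gain={0.2} />

35 | <StereoPanner pan={-0.2} />

36 | <Destination />

37 | </AudioGraph>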

38 | );

39 | ```

40 |

41 | ## Example Apps

42 |

43 | Consult the [example](https://github.com/jamesseanwright/wax/tree/master/example) directory for a few small example apps that use Wax. The included [`README`](https://github.com/jamesseanwright/wax/blob/master/example/README.md) summarises them and details how they can be built and run.

44 |

45 | ## Documentation

46 |

47 | * [Introduction](https://github.com/jamesseanwright/wax/blob/master/docs/000-introduction.md)

48 | * [Getting Started](https://github.com/jamesseanwright/wax/blob/master/docs/001-getting-started.md)

49 | * [Manipulating Audio Parameters](https://github.com/jamesseanwright/wax/blob/master/docs/002-audio-parameters.md)

50 | * [Building Complex Graphs with `<Aggregation />`s](https://github.com/jamesseanwright/wax/blob/master/docs/003-aggregations.md)

51 | * [Updating Rendered `<AudioGraph />`s](https://github.com/jamesseanwright/wax/blob/master/docs/004-updating-audio-graphs.md)

52 | * [Interop with React](https://github.com/jamesseanwright/wax/blob/master/docs/005-interop-with-react.md)

53 | * [API Reference](https://github.com/jamesseanwright/wax/blob/master/docs/006-api-reference.md)

54 | * [Local Development](https://github.com/jamesseanwright/wax/blob/master/docs/007-local-development.md)

55 |

--------------------------------------------------------------------------------

/docs/000-introduction.md:

--------------------------------------------------------------------------------

1 | # Introduction

2 |

3 | [Web Audio](https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API) is an exciting capability that allows developers to generate and manipulate sound in real-time (and to [render it for later use](https://developer.mozilla.org/en-US/docs/Web/API/OfflineAudioContext)), requiring nothing beyond JavaScript and a built-in browser API. Its audio graph model is conceptually logical, but writing imperative connection code can prove tedious, especially for larger graphs:

4 |

5 | ```js

6 | oscillator.connect(gain);

7 | gain.connect(stereoPanner);

8 | bufferSource.connect(stereoPanner);

9 | stereoPanner.connect(context.destination);

10 | ```

11 |

12 | [There are ways of mitigating this "fatigue"](https://github.com/learnable-content/web-audio-api-mini-course/blob/lesson1.3/complete/index.js#L66), but what if we could declare our audio graph, and its components, as a tree of elements using [JSX](https://reactjs.org/docs/introducing-jsx.html)? Can we thus avoid directly specifying this connection code? Wax is an attempt at answering these questions.

13 |

14 | Take the example found in the main README:

15 |

16 | ```jsx

17 | renderAudioGraph(

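18 | <AudioGraph>

19 | <Oscillator

20 | frequency={[

21 | setValueAtTime(200, 0),

22 | exponentialRampToValueAtTime(800, 3)

23 | ]}

24 | type="square"

25 | endTime={3}

26 | />

27 | <Gain gain={0.2} />

28 | <StereoPanner pan={-0.2} />

29 | <Destination />

30 | </AudioGraph>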

31 | );

32 | ```

33 |

34 | This is analogous to:

35 |

36 | ```js

37 | const context = new AudioContext();

38 | const oscillator = context.createOscillator();

39 | const gain = context.createGain();

40 | const stereoPanner = context.createStereoPanner();

41 | const getTime = time => context.currentTime + time;

42 |

43 | oscillator.type = 'square';

44 | oscillator.frequency.setValueAtTime(200, context.currentTime);

45 | oscillator.frequency.exponentialRampToValueAtTime(800, getTime(3));

46 | gain.gain.value = 0.2;

47 | stereoPanner.pan.value = -0.2;

48 |

49 | oscillator.connect(gain);

50 | gain.connect(stereoPanner);

51 | stereoPanner.connect(context.destination);

52 |

53 | oscillator.start();

54 | oscillator.stop(getTime(3));

55 | ```

56 |

57 | As React abstracts manual, imperative DOM operations, Wax abstracts manual, imperative Web Audio operations.

58 |

59 | But how does Wax connect these nodes? The children of the root `<AudioGraph />` element **will be connected to one another in the order in which they're declared**. In our case:

60 |

61 | 1. `<Oscillator />` will be rendered and connected to the rendered `<Gain />`

62 | 2. `<Gain />` will be connected to `<StereoPanner />`

63 | 3. `<StereoPanner />` will be connected to `<Destination />` (`Destination` is a convenience component to consistently handle connections to the audio context's `destination` node)

64 |
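The order-of-declaration rule can be pictured as a simple reduction over the rendered nodes. The sketch below is modelled on the behaviour that `src/__tests__/connectNodes.test.js` checks (each node connects to the next, and the last node is returned). It is not Wax's source, and `connectInOrder` and `createStubNode` are hypothetical names. Stub nodes stand in for real `AudioNode`s and record what they were connected to.

```javascript
// Stub node that records the names of the nodes it connects to.
const createStubNode = name => ({
  name,
  connections: [],
  connect(target) {
    this.connections.push(target.name);
  },
});

// Connects each node to the next, in declaration order, and returns the last.
const connectInOrder = nodes =>
  nodes.reduce((previous, current) => {
    previous.connect(current);
    return current;
  });

const [oscillator, gain, stereoPanner, destination] =
  ['oscillator', 'gain', 'stereoPanner', 'destination'].map(createStubNode);

const last = connectInOrder([oscillator, gain, stereoPanner, destination]);

console.log(oscillator.connections); // [ 'gain' ]
console.log(gain.connections); // [ 'stereoPanner' ]
console.log(stereoPanner.connections); // [ 'destination' ]
console.log(last === destination); // true
```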

--------------------------------------------------------------------------------

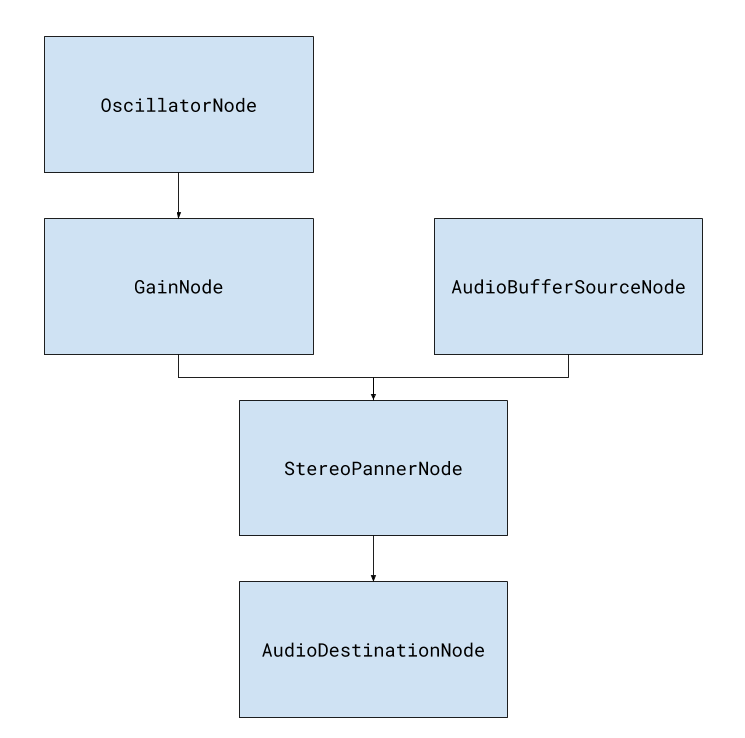

/docs/003-aggregations.md:

--------------------------------------------------------------------------------

1 | # Building Complex Graphs with `<Aggregation />`s

2 |

3 | Thus far, we have built a simple, linear audio graph. What if we want to build more complex graphs in which multiple sources connect to common nodes? In Wax, we can achieve this with the `<Aggregation />` component.

4 |

5 | Say we wish to build the following graph:

6 |

7 | ![Complex audio graph](images/complex-graph.png)

8 |

9 | The Web Audio API is built for this, demonstrating how the audio node model is a pragmatic fit:

10 |

11 | ```js

12 | // Node instantiation code assumed

13 | oscillator.connect(gain);

14 | gain.connect(stereoPanner);

15 | bufferSource.connect(stereoPanner);

16 | stereoPanner.connect(context.destination);

17 | ```

18 |

19 | Great! But how can we achieve this with Wax? With the `<Aggregation />` component, that's how:

20 |

21 | ```js

22 | import {

23 | Aggregation,

24 | ...

25 | } from 'wax-core';

26 |

27 | // [...]

28 |

29 | const yodel = await fetchAsAudioBuffer('/yodel.mp3', audioContext);

30 | const stereoPanner = ;

31 |

32 | renderAudioGraph(

33 |

34 |

35 |

43 |

44 | {stereoPanner}

45 |

46 |

47 |

50 | {stereoPanner}

51 |

52 | {stereoPanner}

53 |

54 | ,

55 | audioContext,

56 | );

57 | ```

58 |

59 | You can think of an aggregation as a nestable audio graph; it will connect its children sequentially. When the root `<AudioGraph />` is rendered, any inner `<Aggregation />` elements will be respected, avoiding double rendering and connection issues.

60 |

61 | Typically, you'll declare a shared element in a single place, to which the other children of an aggregation can connect; said shared element can then be specified again in the main audio graph to ensure it is ultimately connected, directly or indirectly, to the destination node.

62 |

63 | Let's clarify this within the above example. We declare a single `<StereoPanner />` element, which will create a single `StereoPannerNode` to which both a `GainNode` and an `AudioBufferSourceNode` will connect. Outside of the two `<Aggregation />` elements, we specify the same element instance again in the root audio graph, so that it will be connected to `audioContext.destination`. Just as React lets us reuse an element by referencing its name within curly braces, Wax lets us do the same; to summarise, these shareable elements can be used within inner aggregations and the root audio graph to generate more complex sounds.

64 |
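Why does reusing `{stereoPanner}` yield one shared node rather than three? `createAudioElement` wraps every element creator in a `cache` helper, so the creator computes its node on the first call and returns that same node on every later call. The snippet below copies that helper from `src/createAudioElement.js`. The `stereoPanner` creator and `fakeContext` are hypothetical stand-ins for `<StereoPanner />` and a real `AudioContext`.

```javascript
// Copied from src/createAudioElement.js: the wrapped function runs once,
// and every later call returns the first result.
const cache = func => {
  let result;

  return (...args) => {
    if (!result) {
      result = func(...args);
    }

    return result;
  };
};

// Hypothetical element creator standing in for <StereoPanner />.
let nodesCreated = 0;
const stereoPanner = cache(audioContext => {
  nodesCreated += 1;
  return { id: nodesCreated, context: audioContext };
});

const fakeContext = {};
const fromFirstAggregation = stereoPanner(fakeContext);
const fromSecondAggregation = stereoPanner(fakeContext);
const fromRootGraph = stereoPanner(fakeContext);

console.log(fromFirstAggregation === fromSecondAggregation); // true
console.log(fromSecondAggregation === fromRootGraph); // true
console.log(nodesCreated); // 1
```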

--------------------------------------------------------------------------------

/src/components/__tests__/asSourceNode.test.jsx:

--------------------------------------------------------------------------------

1 | import { createAsSourceNode } from '../asSourceNode';

2 | import { createStubAudioContext } from '../../__tests__/helpers';

3 |

4 | const createStubSourceNode = () => ({

5 | start: jest.fn(),

6 | stop: jest.fn(),

7 | });

8 |

9 | describe('asSourceNode HOC', () => {

10 | // used by both asSourceNode and JSX below

11 | const createAudioElement = jest.fn().mockImplementation(

12 | (Component, props, ...children) =>

13 | Component({

14 | children,

15 | ...props,

16 | })

17 | );

18 |

19 | let asSourceNode;

20 |

21 | beforeEach(() => {

22 | asSourceNode = createAsSourceNode(createAudioElement);

23 | });

24 |

25 | it('should create a new component that proxies incoming props and provides an enqueue prop', () => {

26 | const MyComponent = props => ({ props }); // TODO: common, reusable pattern across tests?

27 | const SourceComponent = asSourceNode(MyComponent);

28 | const sourceElement = <SourceComponent foo="bar" />;

29 |

30 | expect(sourceElement.props.foo).toEqual('bar');

31 | expect(sourceElement.props.enqueue).toBeDefined();

32 | });

33 |

34 | it('should schedule playback of the source node based upon the startTime prop and the current time of the context', () => {

35 | const audioContext = createStubAudioContext(3);

36 | const audioNode = createStubSourceNode();

37 | const MyComponent = ({ enqueue, node }) => enqueue(node);

38 | const SourceComponent = asSourceNode(MyComponent);

39 |

40 | ;

45 |

46 | expect(audioNode.start).toHaveBeenCalledTimes(1);

47 | expect(audioNode.start).toHaveBeenCalledWith(4);

48 | expect(audioNode.stop).not.toHaveBeenCalled();

49 | });

50 |

51 | it('should schedule the stopping of the source node when endTime is provided', () => {

52 | const audioContext = createStubAudioContext(3);

53 | const audioNode = createStubSourceNode();

54 | const MyComponent = ({ enqueue, node }) => enqueue(node);

55 | const SourceComponent = asSourceNode(MyComponent);

56 |

57 | ;

63 |

64 | expect(audioNode.start).toHaveBeenCalledTimes(1);

65 | expect(audioNode.start).toHaveBeenCalledWith(4);

66 | expect(audioNode.stop).toHaveBeenCalledTimes(1);

67 | expect(audioNode.stop).toHaveBeenCalledWith(8);

68 | });

69 |

70 | it('should not schedule node playback or stopping when it has already been scheduled', () => {

71 | const audioContext = createStubAudioContext(3);

72 | const audioNode = createStubSourceNode();

73 | const MyComponent = ({ enqueue, node }) => enqueue(node);

74 | const SourceComponent = asSourceNode(MyComponent);

75 |

76 | audioNode.isScheduled = true;

77 |

78 | ;

84 |

85 | expect(audioNode.start).not.toHaveBeenCalled();

86 | expect(audioNode.stop).not.toHaveBeenCalled();

87 | });

88 | });

89 |

--------------------------------------------------------------------------------

/docs/001-getting-started.md:

--------------------------------------------------------------------------------

1 | # Getting Started

2 |

3 | The entirety of Wax is available in a single package from npm, named `wax-core`. Install it into your project with:

4 |

5 | ```shell

6 | npm i --save wax-core

7 | ```

8 |

9 | Create a single entry point, `simple.jsx`, and replicate the following imports and audio graph.

10 |

11 | ```js

12 | import {

13 | createAudioElement,

14 | renderAudioGraph,

15 | AudioGraph,

16 | Oscillator,

17 | Gain,

18 | StereoPanner,

19 | Destination,

20 | setValueAtTime,

21 | exponentialRampToValueAtTime,

22 | } from 'wax-core';

23 |

24 | renderAudioGraph(

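25 | <AudioGraph>

26 | <Oscillator

27 | frequency={[

28 | setValueAtTime(200, 0),

29 | exponentialRampToValueAtTime(800, 3)

30 | ]}

31 | type="square"

32 | endTime={3}

33 | />

34 | <Gain gain={0.2} />

35 | <StereoPanner pan={-0.2} />

36 | <Destination />

37 | </AudioGraph>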

38 | );

39 | ```

40 |

41 | While `<AudioGraph />` does nothing special at present, it may manipulate its children in future versions of Wax. Please ensure you always specify an `AudioGraph` as the root element of the tree.

42 |

43 | But how do we actually build this? How can we instruct a transpiler that these JSX constructs should specifically target Wax? Firstly, let's look at the first binding we import from `wax-core`:

44 |

45 | ```js

46 | import {

47 | createAudioElement,

48 | ...

49 | } from 'wax-core';

50 | ```

51 |

52 | Why are we importing this if we aren't calling it anywhere? Oh, but we are; when our JSX is transpiled, it'll resolve to invocations of `createAudioElement`. It is the Wax equivalent of `React.createElement`, and follows the exact same signature!

53 |

54 | ```js

55 | renderAudioGraph(

56 | createAudioElement(

57 | AudioGraph,

58 | null,

59 | createAudioElement(

60 | Oscillator,

61 | {

62 | frequency: [

63 | setValueAtTime(200, 0),

64 | exponentialRampToValueAtTime(800, 3)

65 | ],

66 | type: 'square',

67 | endTime: 3,

68 | },

69 | ),

70 | createAudioElement(Gain, { gain: 0.2 }),

71 | createAudioElement(StereoPanner, { pan: -0.2 }),

72 | createAudioElement(Destination, null),

73 | )

74 | );

75 | ```

76 |

77 | To achieve this transformation, we can use [Babel](https://babeljs.io) and the [`transform-react-jsx`](https://babeljs.io/docs/en/babel-plugin-transform-react-jsx) plugin; the latter exposes a `pragma` option that we can configure to transform JSX to `createAudioElement` calls:

78 |

79 | ```json

80 | {

81 | "plugins": [

82 | ["@babel/transform-react-jsx", {

83 | "pragma": "createAudioElement"

84 | }]

85 | ]

86 | }

87 | ```

88 |

89 | Despite the name, this plugin performs general JSX transformations, defaulting to `React.createElement`. You do not need React to use Wax!
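Because the pragma is just a function with the `(type, props, ...children)` signature, its behaviour is easy to model. The sketch below is a hypothetical, much-simplified pragma; `toyCreateElement`, `FakeGain` and `FakeGraph` are invented names, and this is not Wax's implementation. It only mimics what Wax's own `createAudioElement` tests describe: calling the pragma returns a deferred "creator" (flagged with `isElementCreator`), and components run only once that creator receives an audio context. It deliberately ignores the reconciliation of existing nodes that the real tests also cover.

```javascript
// HYPOTHETICAL toy pragma for illustration only; it is NOT Wax's source.
// It accepts the same (type, props, ...children) arguments that Babel emits
// and, like Wax's createAudioElement, returns a deferred "creator".
const toyCreateElement = (Component, props, ...children) => {
    const creator = audioContext => {
        // Build children first so the parent receives finished nodes.
        const builtChildren = children.map(child =>
            typeof child === 'function' && child.isElementCreator
                ? child(audioContext)
                : child
        );

        const result = Component({
            children: builtChildren,
            audioContext,
            ...props, // spreading null (what Babel emits for no props) is a no-op
        });

        // A component may return another creator; if so, build that too.
        return typeof result === 'function' && result.isElementCreator
            ? result(audioContext)
            : result;
    };

    creator.isElementCreator = true; // flag used above to recognise creators
    return creator;
};

// Stand-in components that return plain objects rather than AudioNodes.
const FakeGain = ({ audioContext, gain }) => ({ type: 'gain', gain, ctx: audioContext.id });
const FakeGraph = ({ children }) => ({ type: 'graph', children });

// What <FakeGraph><FakeGain gain={0.2} /></FakeGraph> compiles to under this pragma.
const tree = toyCreateElement(
    FakeGraph,
    null,
    toyCreateElement(FakeGain, { gain: 0.2 })
)({ id: 'ctx' }); // the "audio context" here is just a placeholder object

console.log(JSON.stringify(tree));
// {"type":"graph","children":[{"type":"gain","gain":0.2,"ctx":"ctx"}]}
```

One plausible benefit of deferring construction like this is that a whole tree can be described before any audio context is available; nothing is instantiated until the outermost creator is called.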

90 |

91 | To create a bundle containing Wax and our app's code, we'll need a build tool **that supports ES Modules**. For the example apps, we use [Rollup](https://rollupjs.org/) and [`rollup-plugin-babel`](https://github.com/rollup/rollup-plugin-babel) to apply the JSX transformation ([config](https://github.com/jamesseanwright/wax/blob/master/rollup.config.js)).
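If you would rather start from scratch than copy the linked config, a minimal `rollup.config.js` might look like the following. This is a sketch under assumptions, not the repository's configuration: it assumes `rollup-plugin-babel` and `rollup-plugin-node-resolve` are installed, that your Babel config contains the `pragma` setting shown above, and that the entry and output paths (`src/simple.jsx`, `dist/simple.js`) are placeholders to adjust.

```javascript
// rollup.config.js: a minimal sketch, NOT the repository's actual config.
// Assumes rollup-plugin-babel and rollup-plugin-node-resolve are installed,
// and that Babel picks up a config containing the createAudioElement pragma.
import babel from 'rollup-plugin-babel';
import resolve from 'rollup-plugin-node-resolve';

export default {
    input: 'src/simple.jsx', // placeholder entry point
    output: {
        file: 'dist/simple.js', // placeholder output path
        format: 'iife', // a self-executing bundle suitable for a <script> element
    },
    plugins: [
        resolve(), // lets Rollup locate 'wax-core' inside node_modules
        babel({ exclude: 'node_modules/**' }), // runs the JSX transform on our own code only
    ],
};
```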

92 |

93 | Once we have our bundle, we can load it into an HTML document using a `<script>` element:

94 |

95 | ```html

96 | <script src="path/to/your-bundle.js"></script>

97 | ```

98 |

99 | ## `createAudioElement` and ESLint

100 |

101 | If you are using ESLint to analyse your code, you may receive this error:

102 |

103 | ```

104 | 'createAudioElement' is defined but never used.

105 | ```

106 |

107 | This is because `eslint-plugin-react` assumes the JSX pragma is `React.createElement`, so nothing marks `createAudioElement` as used. To suppress this error, set the pragma explicitly in the `settings` property of your ESLint config:

108 |

109 | ```json

110 | {

111 | "settings": {

112 | "react": {

113 | "pragma": "createAudioElement"

114 | }

115 | }

116 | }

117 | ```

118 |

119 | Alternatively, you can add an `@jsx` pragma comment at the beginning of each module; both Babel's JSX transform and `eslint-plugin-react` honour it:

120 |

121 | ```js

122 | /** @jsx createAudioElement */

123 | ```

124 |

--------------------------------------------------------------------------------

/src/__tests__/createAudioElement.test.js:

--------------------------------------------------------------------------------

1 | import { createArrayWith } from './helpers';

2 | import createAudioElement from '../createAudioElement';

3 |

4 | const createElementCreator = node => {

5 | const creator = jest.fn().mockReturnValue(node);

6 | creator.isElementCreator = true;

7 | return creator;

8 | };

9 |

10 | const createAudioGraph = nodeTree =>

11 | nodeTree.map(node =>

12 | Array.isArray(node)

13 | ? createElementCreator(createAudioGraph(node))

14 | : createElementCreator(node)

15 | );

16 |

17 |

18 | const assertNestedAudioGraph = (graph, nodeTree, audioContext) => {

19 | graph.forEach((creator, i) => {

20 | if (Array.isArray(creator)) {

21 | assertNestedAudioGraph(creator, nodeTree[i], audioContext);

22 | } else {

23 | expect(creator).toHaveBeenCalledTimes(1);

24 | expect(creator).toHaveBeenCalledWith(audioContext, nodeTree[i]);

25 | }

26 | });

27 | };

28 |

29 | describe('createAudioElement', () => {

30 | it('should conform to the JSX pragma signature and return a creator function', () => {

31 | const node = {};

32 | const audioContext = {};

33 | const Component = jest.fn().mockReturnValue(node);

34 | const props = { foo: 'bar', bar: 'baz' };

35 | const children = [{}, {}, {}];

36 | const creator = createAudioElement(Component, props, ...children);

37 | const result = creator(audioContext);

38 |

39 | expect(result).toBe(node);

40 | expect(Component).toHaveBeenCalledTimes(1);

41 | expect(Component).toHaveBeenCalledWith({

42 | children,

43 | audioContext,

44 | ...props,

45 | });

46 | });

47 |

48 | it('should render a creator when it is returned from a component', () => {

49 | const innerNode = {};

50 | const innerCreator = createElementCreator(innerNode);

51 | const audioContext = {};

52 | const Component = jest.fn().mockReturnValue(innerCreator);

53 | const creator = createAudioElement(Component, {});

54 | const result = creator(audioContext);

55 |

56 | expect(result).toBe(innerNode);

57 | expect(innerCreator).toHaveBeenCalledTimes(1);

58 | expect(innerCreator).toHaveBeenCalledWith(audioContext, undefined);

59 | });

60 |

61 | it('should invoke child creators when setting the parent`s `children` prop', () => {

62 | const children = createArrayWith(10, (_, id) => {

63 | const node = { id };

64 | const creator = createElementCreator(node);

65 | return { node, creator };

66 | });

67 |

68 | const audioContext = {};

69 | const Component = jest.fn().mockReturnValue({});

70 |

71 | const creator = createAudioElement(

72 | Component,

73 | {},

74 | ...children.map(({ creator }) => creator),

75 | );

76 |

77 | creator(audioContext);

78 |

79 | expect(Component).toHaveBeenCalledWith({

80 | audioContext,

81 | children: children.map(({ node }) => node),

82 | });

83 | });

84 |

85 | it('should reconcile existing nodes for the graph from the provided array', () => {

86 | const nodeTree = [

87 | { id: 0 },

88 | { id: 1 },

89 | [

90 | { id: 2 },

91 | { id: 3 },

92 | [

93 | { id: 4 },

94 | { id: 5 },

95 | ],

96 | { id: 6 },