├── README.md
├── config.py
├── custom
│   ├── cuda
│   │   ├── nms.cu
│   │   └── vision.h
│   ├── nms.h
│   └── vision.cpp
├── dist
│   ├── detectron3d-0.1-py3.8-linux-x86_64.egg
│   └── detectron3d-0.1-py3.9-linux-x86_64.egg
├── lib
│   ├── __init__.py
│   ├── __pycache__
│   │   ├── __init__.cpython-36.pyc
│   │   ├── __init__.cpython-37.pyc
│   │   ├── __init__.cpython-38.pyc
│   │   ├── __init__.cpython-39.pyc
│   │   ├── ap_helper.cpython-37.pyc
│   │   ├── ap_helper.cpython-38.pyc
│   │   ├── dataloader.cpython-36.pyc
│   │   ├── dataloader.cpython-37.pyc
│   │   ├── dataloader.cpython-38.pyc
│   │   ├── dataloader.cpython-39.pyc
│   │   ├── dataset.cpython-36.pyc
│   │   ├── dataset.cpython-37.pyc
│   │   ├── dataset.cpython-38.pyc
│   │   ├── dataset.cpython-39.pyc
│   │   ├── detection_ap.cpython-38.pyc
│   │   ├── detection_ap.cpython-39.pyc
│   │   ├── detection_utils.cpython-37.pyc
│   │   ├── detection_utils.cpython-38.pyc
│   │   ├── detection_utils.cpython-39.pyc
│   │   ├── evaluation.cpython-36.pyc
│   │   ├── evaluation.cpython-37.pyc
│   │   ├── instance_ap.cpython-38.pyc
│   │   ├── instance_ap.cpython-39.pyc
│   │   ├── layers.cpython-36.pyc
│   │   ├── layers.cpython-37.pyc
│   │   ├── layers.cpython-38.pyc
│   │   ├── layers.cpython-39.pyc
│   │   ├── loss.cpython-36.pyc
│   │   ├── loss.cpython-37.pyc
│   │   ├── loss.cpython-38.pyc
│   │   ├── loss.cpython-39.pyc
│   │   ├── math_functions.cpython-36.pyc
│   │   ├── math_functions.cpython-37.pyc
│   │   ├── pc_utils.cpython-36.pyc
│   │   ├── pc_utils.cpython-37.pyc
│   │   ├── pc_utils.cpython-38.pyc
│   │   ├── pc_utils.cpython-39.pyc
│   │   ├── scannet_instance_helper.cpython-38.pyc
│   │   ├── solvers.cpython-36.pyc
│   │   ├── solvers.cpython-37.pyc
│   │   ├── solvers.cpython-38.pyc
│   │   ├── solvers.cpython-39.pyc
│   │   ├── test.cpython-36.pyc
│   │   ├── test.cpython-37.pyc
│   │   ├── test.cpython-38.pyc
│   │   ├── test.cpython-39.pyc
│   │   ├── train.cpython-36.pyc
│   │   ├── train.cpython-37.pyc
│   │   ├── train.cpython-38.pyc
│   │   ├── train.cpython-39.pyc
│   │   ├── transforms.cpython-36.pyc
│   │   ├── transforms.cpython-37.pyc
│   │   ├── transforms.cpython-38.pyc
│   │   ├── transforms.cpython-39.pyc
│   │   ├── utils.cpython-36.pyc
│   │   ├── utils.cpython-37.pyc
│   │   ├── utils.cpython-38.pyc
│   │   ├── utils.cpython-39.pyc
│   │   ├── voxelizer.cpython-36.pyc
│   │   ├── voxelizer.cpython-37.pyc
│   │   ├── voxelizer.cpython-38.pyc
│   │   └── voxelizer.cpython-39.pyc
│   ├── dataloader.py
│   ├── dataset.py
│   ├── datasets
│   │   ├── README.md
│   │   ├── __init__.py
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-36.pyc
│   │   │   ├── __init__.cpython-37.pyc
│   │   │   ├── __init__.cpython-38.pyc
│   │   │   ├── __init__.cpython-39.pyc
│   │   │   ├── jrdb.cpython-38.pyc
│   │   │   ├── jrdb.cpython-39.pyc
│   │   │   ├── modelnet.cpython-36.pyc
│   │   │   ├── scannet.cpython-36.pyc
│   │   │   ├── scannet.cpython-37.pyc
│   │   │   ├── scannet.cpython-38.pyc
│   │   │   ├── scannet.cpython-39.pyc
│   │   │   ├── semantics3d.cpython-36.pyc
│   │   │   ├── shapenetseg.cpython-36.pyc
│   │   │   ├── stanford.cpython-36.pyc
│   │   │   ├── stanford3d.cpython-38.pyc
│   │   │   ├── stanford3d.cpython-39.pyc
│   │   │   ├── sunrgbd.cpython-38.pyc
│   │   │   ├── sunrgbd.cpython-39.pyc
│   │   │   ├── synthia.cpython-36.pyc
│   │   │   ├── synthia.cpython-37.pyc
│   │   │   ├── synthia.cpython-38.pyc
│   │   │   ├── synthia.cpython-39.pyc
│   │   │   └── varcity3d.cpython-36.pyc
│   │   ├── jrdb.py
│   │   ├── preprocessing
│   │   │   ├── __pycache__
│   │   │   │   ├── scannet_inst.cpython-36.pyc
│   │   │   │   ├── scannet_instance.cpython-36.pyc
│   │   │   │   ├── stanford_3d.cpython-36.pyc
│   │   │   │   └── synthia_instance.cpython-36.pyc
│   │   │   ├── jrdb.py
│   │   │   ├── scannet_instance.py
│   │   │   ├── stanford3d.py
│   │   │   ├── sunrgbd.py
│   │   │   ├── synthia_instance.py
│   │   │   └── votenet.py
│   │   ├── scannet.py
│   │   ├── stanford3d.py
│   │   ├── sunrgbd.py
│   │   └── synthia.py
│   ├── detection_ap.py
│   ├── detection_utils.py
│   ├── evaluation.py
│   ├── instance_ap.py
│   ├── layers.py
│   ├── loss.py
│   ├── math_functions.py
│   ├── pc_utils.py
│   ├── pipelines
│   │   ├── __init__.py
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-37.pyc
│   │   │   ├── __init__.cpython-38.pyc
│   │   │   ├── __init__.cpython-39.pyc
│   │   │   ├── base.cpython-37.pyc
│   │   │   ├── base.cpython-38.pyc
│   │   │   ├── base.cpython-39.pyc
│   │   │   ├── detection.cpython-37.pyc
│   │   │   ├── detection.cpython-38.pyc
│   │   │   ├── detection.cpython-39.pyc
│   │   │   ├── instance.cpython-38.pyc
│   │   │   ├── panoptic_segmentation.cpython-37.pyc
│   │   │   ├── rotation.cpython-38.pyc
│   │   │   ├── segmentation.cpython-37.pyc
│   │   │   ├── segmentation.cpython-38.pyc
│   │   │   └── upsnet.cpython-37.pyc
│   │   ├── base.py
│   │   ├── detection.py
│   │   ├── instance.py
│   │   └── segmentation.py
│   ├── solvers.py
│   ├── test.py
│   ├── train.py
│   ├── transforms.py
│   ├── utils.py
│   ├── utils.py.orig
│   ├── vis.py
│   ├── voxelization
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-36.pyc
│   │   │   └── voxelizer.cpython-36.pyc
│   │   ├── build
│   │   │   └── temp.linux-x86_64-3.6
│   │   │       └── voxelizer_gpu.o
│   │   ├── voxelizer_cuda.o
│   │   ├── voxelizer_cuda_link.o
│   │   └── voxelizer_gpu.cpython-36m-x86_64-linux-gnu.so
│   └── voxelizer.py
├── main.py
├── models
│   ├── __init__.py
│   ├── __pycache__
│   │   ├── __init__.cpython-36.pyc
│   │   ├── __init__.cpython-37.pyc
│   │   ├── __init__.cpython-38.pyc
│   │   ├── __init__.cpython-39.pyc
│   │   ├── conditional_random_fields.cpython-36.pyc
│   │   ├── conditional_random_fields.cpython-37.pyc
│   │   ├── detection.cpython-37.pyc
│   │   ├── detection.cpython-38.pyc
│   │   ├── detection.cpython-39.pyc
│   │   ├── fcn.cpython-36.pyc
│   │   ├── fcn.cpython-37.pyc
│   │   ├── fcn.cpython-38.pyc
│   │   ├── fcn.cpython-39.pyc
│   │   ├── fpn.cpython-37.pyc
│   │   ├── instance.cpython-38.pyc
│   │   ├── model.cpython-36.pyc
│   │   ├── model.cpython-37.pyc
│   │   ├── model.cpython-38.pyc
│   │   ├── model.cpython-39.pyc
│   │   ├── pointnet.cpython-37.pyc
│   │   ├── pointnet.cpython-38.pyc
│   │   ├── res16unet.cpython-36.pyc
│   │   ├── res16unet.cpython-37.pyc
│   │   ├── res16unet.cpython-38.pyc
│   │   ├── res16unet.cpython-39.pyc
│   │   ├── resfcnet.cpython-36.pyc
│   │   ├── resfcnet.cpython-37.pyc
│   │   ├── resfcnet.cpython-38.pyc
│   │   ├── resfcnet.cpython-39.pyc
│   │   ├── resfuncunet.cpython-36.pyc
│   │   ├── resfuncunet.cpython-37.pyc
│   │   ├── resfuncunet.cpython-38.pyc
│   │   ├── resfuncunet.cpython-39.pyc
│   │   ├── resnet.cpython-36.pyc
│   │   ├── resnet.cpython-37.pyc
│   │   ├── resnet.cpython-38.pyc
│   │   ├── resnet.cpython-39.pyc
│   │   ├── resnet_dense.cpython-38.pyc
│   │   ├── resunet.cpython-36.pyc
│   │   ├── resunet.cpython-37.pyc
│   │   ├── resunet.cpython-38.pyc
│   │   ├── resunet.cpython-39.pyc
│   │   ├── rpn.cpython-37.pyc
│   │   ├── segmentation.cpython-37.pyc
│   │   ├── segmentation.cpython-38.pyc
│   │   ├── senet.cpython-36.pyc
│   │   ├── senet.cpython-37.pyc
│   │   ├── senet.cpython-38.pyc
│   │   ├── senet.cpython-39.pyc
│   │   ├── simplenet.cpython-36.pyc
│   │   ├── simplenet.cpython-37.pyc
│   │   ├── simplenet.cpython-38.pyc
│   │   ├── simplenet.cpython-39.pyc
│   │   ├── unet.cpython-36.pyc
│   │   ├── unet.cpython-37.pyc
│   │   ├── unet.cpython-38.pyc
│   │   ├── unet.cpython-39.pyc
│   │   ├── wrapper.cpython-36.pyc
│   │   └── wrapper.cpython-37.pyc
│   ├── detection.py
│   ├── fcn.py
│   ├── instance.py
│   ├── model.py
│   ├── modules
│   │   ├── __init__.py
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-36.pyc
│   │   │   ├── __init__.cpython-37.pyc
│   │   │   ├── __init__.cpython-38.pyc
│   │   │   ├── __init__.cpython-39.pyc
│   │   │   ├── common.cpython-36.pyc
│   │   │   ├── common.cpython-37.pyc
│   │   │   ├── common.cpython-38.pyc
│   │   │   ├── common.cpython-39.pyc
│   │   │   ├── resnet_block.cpython-36.pyc
│   │   │   ├── resnet_block.cpython-37.pyc
│   │   │   ├── resnet_block.cpython-38.pyc
│   │   │   ├── resnet_block.cpython-39.pyc
│   │   │   ├── senet_block.cpython-36.pyc
│   │   │   ├── senet_block.cpython-37.pyc
│   │   │   ├── senet_block.cpython-38.pyc
│   │   │   └── senet_block.cpython-39.pyc
│   │   ├── common.py
│   │   ├── resnet_block.py
│   │   └── senet_block.py
│   ├── pointnet.py
│   ├── res16unet.py
│   ├── resfcnet.py
│   ├── resfuncunet.py
│   ├── resnet.py
│   ├── resunet.py
│   ├── segmentation.py
│   ├── senet.py
│   ├── simplenet.py
│   └── unet.py
├── requirements.txt
├── resume.sh
├── run.sh
├── scripts
│   ├── bonet_eval.py
│   ├── draw_scannet_perclassAP.py
│   ├── draw_stanford_perclassAP.py
│   ├── find_optimal_anchor_params.py
│   ├── gibson.py
│   ├── stanford_full.py
│   ├── test_detection_hyperparam_genscript.py
│   ├── test_detection_hyperparam_parseresult.py
│   ├── test_instance_hyperparam_genscript.py
│   ├── test_instance_hyperparam_parseresult.py
│   ├── visualize_scannet.py
│   └── visualize_stanford.py
└── setup.py

/README.md:

--------------------------------------------------------------------------------

1 | # Generative Sparse Detection Networks for 3D Single-shot Object Detection

2 |

3 | This is a repository for "Generative Sparse Detection Networks for 3D Single-shot Object Detection", ECCV 2020 Spotlight.

4 |

5 | ## Installation

6 |

7 | ```

8 | pip install -r requirements.txt

9 | python setup.py install

10 | ```

11 |

12 | ## Links

13 |

14 | * [Website](https://jgwak.com/publications/gsdn/)

15 | * [Full Paper (PDF, 8.4MB)](https://arxiv.org/pdf/2006.12356.pdf)

16 | * [Video (Spotlight, 10 mins)](https://www.youtube.com/watch?v=g8UqlJZVnFo)

17 | * [Video (Summary, 3 mins)](https://www.youtube.com/watch?v=9ohxok_0eTc)

18 | * [Slides (PDF, 2.4MB)](https://jgwak.com/publications/gsdn//misc/slides.pdf)

19 | * [Poster (PDF, 1.6MB)](https://jgwak.com/publications/gsdn//misc/poster.pdf)

20 | * [Bibtex](https://jgwak.com/bibtex/gwak2020generative.bib)

21 |

22 | ## Abstract

23 |

24 |

25 | 3D object detection has been widely studied due to its potential applicability to many promising areas such as robotics and augmented reality. Yet, the sparse nature of the 3D data poses unique challenges to this task. Most notably, the observable surface of the 3D point clouds is disjoint from the center of the instance to ground the bounding box prediction on. To this end, we propose Generative Sparse Detection Network (GSDN), a fully-convolutional single-shot sparse detection network that efficiently generates the support for object proposals. The key component of our model is a generative sparse tensor decoder, which uses a series of transposed convolutions and pruning layers to expand the support of sparse tensors while discarding unlikely object centers to maintain minimal runtime and memory footprint. GSDN can process unprecedentedly large-scale inputs with a single fully-convolutional feed-forward pass, thus does not require the heuristic post-processing stage that stitches results from sliding windows as other previous methods have. We validate our approach on three 3D indoor datasets including the large-scale 3D indoor reconstruction dataset where our method outperforms the state-of-the-art methods by a relative improvement of 7.14% while being 3.78 times faster than the best prior work.

26 |

27 | ## Proposed Method

28 |

29 | ### Overview

30 |

31 |

32 |

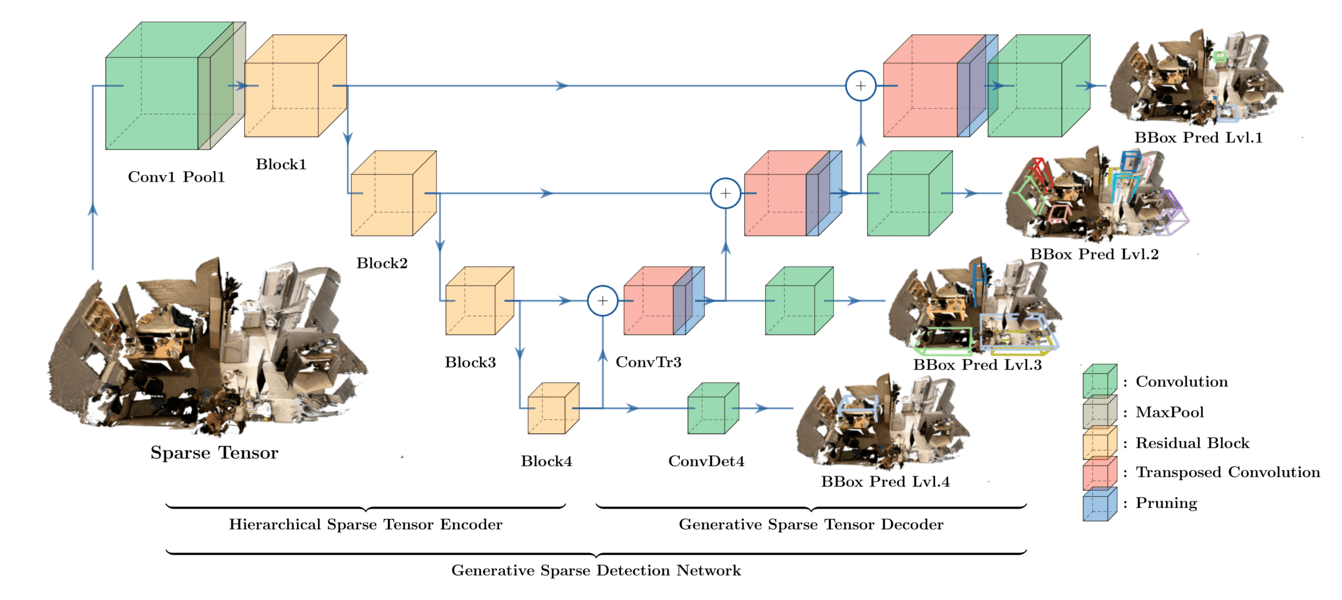

33 | We propose Generative Sparse Detection Network (GSDN), a fully-convolutional single-shot sparse detection network that efficiently generates the support for object proposals. Our model is composed of the following two components.

34 |

35 | * **Hierarchical Sparse Tensor Encoder**: Efficiently encodes a large-scale 3D scene at high resolution using _Sparse Convolution_. It encodes a pyramid of features at different resolutions to detect objects at heavily varying scales.

36 | * **Generative Sparse Tensor Decoder**: _Generates_ and _prunes_ new coordinates to support anchor box centers. More details in the following subsection.
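
To make the "pyramid at different resolutions" idea concrete, here is a minimal sketch of how the set of occupied voxel coordinates shrinks as the stride doubles. It is an illustration only, not the repository's encoder: the function name `coordinate_pyramid`, the choice of four levels, and the stride-2 downsampling per level are assumptions made for this example, and no features are computed.

```python
def coordinate_pyramid(coords, num_levels=4):
    """Return the occupied voxel sets at strides 1, 2, 4, ... (hypothetical helper)."""
    finest = set(coords)
    levels = [finest]
    for level in range(1, num_levels):
        stride = 2 ** level
        # Snap every occupied voxel to the coarser grid; duplicates collapse in the set.
        levels.append({tuple(c // stride * stride for c in xyz) for xyz in finest})
    return levels


# A flat 8 x 8 surface patch: only the observed surface is stored, not the 8^3 volume.
surface = [(x, y, 0) for x in range(8) for y in range(8)]
levels = coordinate_pyramid(surface)
sizes = [len(level) for level in levels]
print(sizes)
```

Because only occupied voxels are kept, the cost of each level follows the surface area of the scan rather than the volume of its bounding box.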

37 |

38 | ### Generative Sparse Tensor Decoder

39 |

40 |

41 |

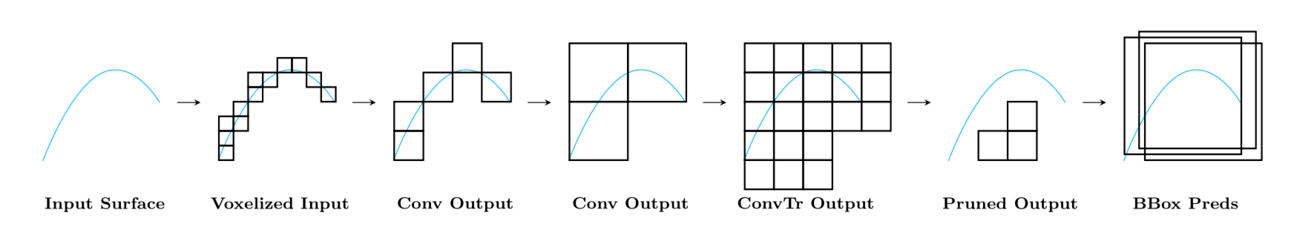

42 | One of the key challenges of 3D object detection is that the observable surface may be disjoint from the center of the instance on which we want to ground the bounding box detection. We first resolve this issue by generating new coordinates using transposed convolution. However, transposed convolution grows the number of coordinates cubically in sparse 3D point clouds. For better efficiency, we propose to maintain sparsity by learning to prune unnecessary generated coordinates.
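
The generate-then-prune step can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the repository's decoder: `transpose_expand`, `prune`, `toy_score`, and `hypothetical_center` are names invented here, the expansion assumes a kernel size of 2 with stride 2 (so each voxel spawns 2 x 2 x 2 children), and the hand-written score stands in for the learned pruning classifier.

```python
import math
from itertools import product


def transpose_expand(coords, stride):
    """Each voxel at `stride` generates 2 x 2 x 2 children at stride // 2."""
    half = stride // 2
    offsets = list(product((0, half), repeat=3))
    return {(x + dx, y + dy, z + dz)
            for (x, y, z) in coords
            for (dx, dy, dz) in offsets}


def prune(coords, score_fn, threshold):
    """Keep only the coordinates whose score exceeds the threshold."""
    return {c for c in coords if score_fn(c) > threshold}


# Stand-in for the learned classifier: score decays with distance to an assumed center.
hypothetical_center = (4, 4, 4)


def toy_score(coord):
    return math.exp(-math.dist(coord, hypothetical_center) / 4.0)


coarse = {(0, 0, 0), (8, 0, 0)}                # two voxels at stride 8
generated = transpose_expand(coarse, stride=8)  # 8 children per voxel
kept = prune(generated, toy_score, threshold=0.3)
print(len(coarse), len(generated), len(kept))
```

Without pruning, every decoder level would multiply the coordinate count by up to eight; pruning after each expansion keeps the working set close to the number of plausible object centers.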

43 |

44 | ### Results

45 |

46 | #### ScanNet

47 |

48 |

49 |

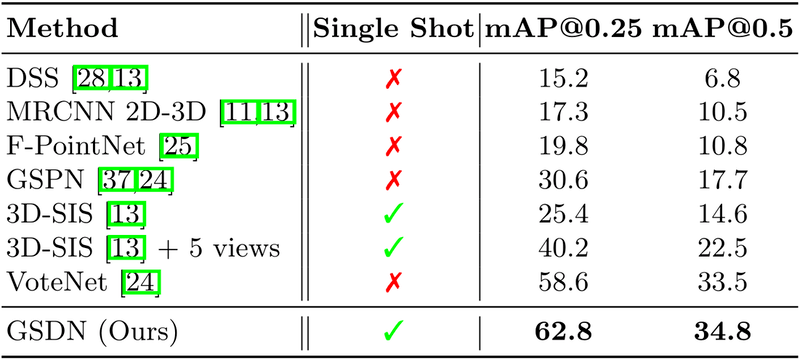

50 | To briefly summarize the results, our method

51 |

52 | * Outperforms previous state-of-the-art by **4.2 mAP@0.25**

53 | * While being **3.7x faster** (and runtime grows **sublinearly** with the volume)

54 | * With a **minimal memory footprint** (**6x** more efficient than the dense counterpart)

55 |

56 | #### S3DIS

57 |

58 |

59 |

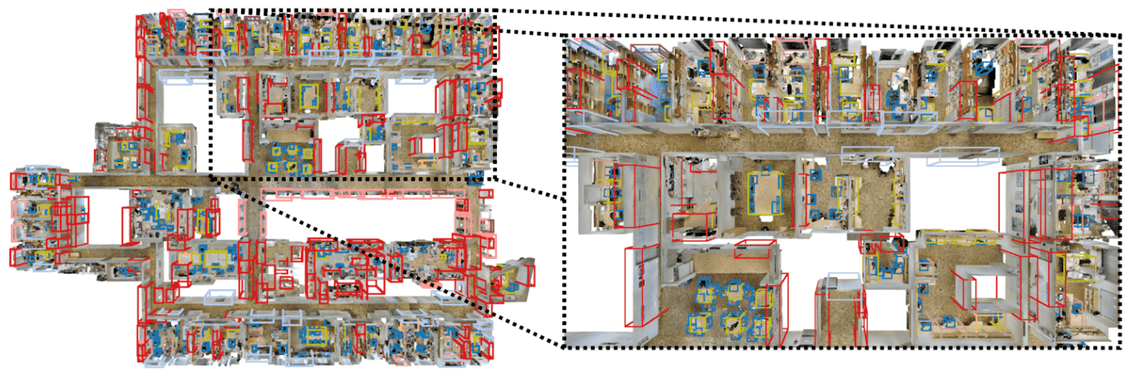

60 | Similarly, our method outperforms a baseline method on the S3DIS dataset. Additionally, we evaluate GSDN on the entire building 5 of the S3DIS dataset. Our proposed model can process the whole building (78M points, 13,984 m³, 53 rooms) in a _single fully convolutional feed-forward pass_, using only 5 GB of GPU memory to detect 573 instances of 3D objects.

61 |

62 | #### Gibson

63 |

64 |

65 |

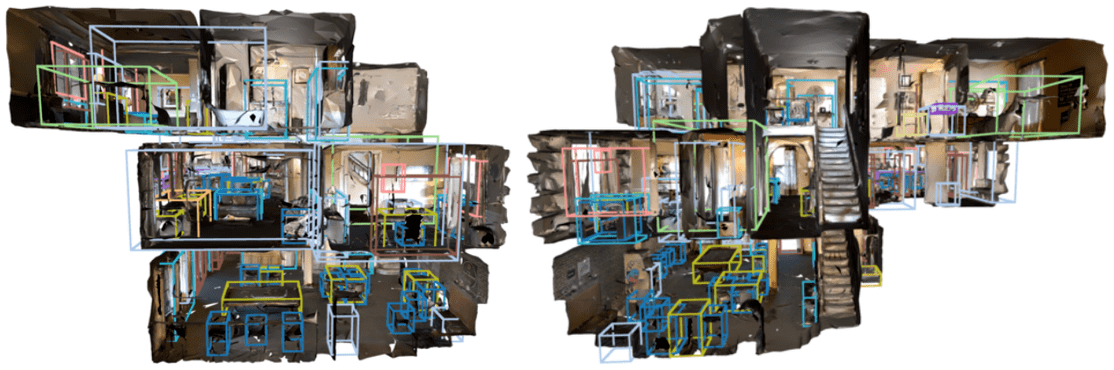

66 | We evaluate our model on the Gibson dataset as well. Our model, trained on single rooms of the ScanNet dataset, generalizes to multi-story buildings without any ad-hoc pre-processing or post-processing.

67 |

68 | ## Citing this work

69 |

70 | If you find our work helpful, please cite it with the following bibtex.

71 |

72 | ```

73 | @inproceedings{gwak2020gsdn,

74 | title={Generative Sparse Detection Networks for 3D Single-shot Object Detection},

75 | author={Gwak, JunYoung and Choy, Christopher B and Savarese, Silvio},

76 | booktitle={European conference on computer vision},

77 | year={2020}

78 | }

79 | ```

80 |

--------------------------------------------------------------------------------

/custom/cuda/nms.cu:

--------------------------------------------------------------------------------

1 | // Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.

2 | // Modified and redistributed by JunYoung Gwak

3 | #include <ATen/ATen.h>

4 | #include <ATen/cuda/CUDAContext.h>

5 |

6 | #include <THC/THC.h>

7 | #include <THC/THCDeviceUtils.cuh>

8 |

9 | #include <vector>

10 | #include <iostream>

11 |

12 | int const threadsPerBlock = sizeof(unsigned long long) * 8;

13 |

14 | __device__ inline float devIoU(float const * const a, float const * const b) {

15 | float xmin = max(a[0], b[0]), xmax = min(a[3], b[3]);

16 | float ymin = max(a[1], b[1]), ymax = min(a[4], b[4]);

17 | float zmin = max(a[2], b[2]), zmax = min(a[5], b[5]);

18 | float xsize = max(xmax - xmin, 0.f), ysize = max(ymax - ymin, 0.f);

19 | float zsize = max(zmax - zmin, 0.f);

20 | float interS = xsize * ysize * zsize;

21 | float Sa = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2]);

22 | float Sb = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2]);

23 | return interS / (Sa + Sb - interS);

24 | }

25 |

26 | __global__ void nms_kernel(const int n_boxes, const float nms_overlap_thresh,

27 | const float *dev_boxes, unsigned long long *dev_mask) {

28 | const int row_start = blockIdx.y;

29 | const int col_start = blockIdx.x;

30 |

31 | // if (row_start > col_start) return;

32 |

33 | const int row_size =

34 | min(n_boxes - row_start * threadsPerBlock, threadsPerBlock);

35 | const int col_size =

36 | min(n_boxes - col_start * threadsPerBlock, threadsPerBlock);

37 |

38 | __shared__ float block_boxes[threadsPerBlock * 7];

39 | if (threadIdx.x < col_size) {

40 | block_boxes[threadIdx.x * 7 + 0] =

41 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 0];

42 | block_boxes[threadIdx.x * 7 + 1] =

43 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 1];

44 | block_boxes[threadIdx.x * 7 + 2] =

45 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 2];

46 | block_boxes[threadIdx.x * 7 + 3] =

47 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 3];

48 | block_boxes[threadIdx.x * 7 + 4] =

49 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 4];

50 | block_boxes[threadIdx.x * 7 + 5] =

51 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 5];

52 | block_boxes[threadIdx.x * 7 + 6] =

53 | dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 7 + 6];

54 | }

55 | __syncthreads();

56 |

57 | if (threadIdx.x < row_size) {

58 | const int cur_box_idx = threadsPerBlock * row_start + threadIdx.x;

59 | const float *cur_box = dev_boxes + cur_box_idx * 7;

60 | int i = 0;

61 | unsigned long long t = 0;

62 | int start = 0;

63 | if (row_start == col_start) {

64 | start = threadIdx.x + 1;

65 | }

66 | for (i = start; i < col_size; i++) {

67 | if (devIoU(cur_box, block_boxes + i * 7) > nms_overlap_thresh) {

68 | t |= 1ULL << i;

69 | }

70 | }

71 | const int col_blocks = THCCeilDiv(n_boxes, threadsPerBlock);

72 | dev_mask[cur_box_idx * col_blocks + col_start] = t;

73 | }

74 | }

75 |

76 | // boxes is a N x 7 tensor

77 | at::Tensor nms_cuda(const at::Tensor boxes, float nms_overlap_thresh) {

78 | using scalar_t = float;

79 | AT_ASSERTM(boxes.type().is_cuda(), "boxes must be a CUDA tensor");

80 | auto scores = boxes.select(1, 6);

81 | auto order_t = std::get<1>(scores.sort(0, /* descending=*/true));

82 | auto boxes_sorted = boxes.index_select(0, order_t);

83 |

84 | int boxes_num = boxes.size(0);

85 |

86 | const int col_blocks = THCCeilDiv(boxes_num, threadsPerBlock);

87 |

88 | scalar_t* boxes_dev = boxes_sorted.data<scalar_t>();

89 |

90 | THCState *state = at::globalContext().lazyInitCUDA(); // TODO replace with getTHCState

91 |

92 | unsigned long long* mask_dev = NULL;

93 | //THCudaCheck(THCudaMalloc(state, (void**) &mask_dev,

94 | // boxes_num * col_blocks * sizeof(unsigned long long)));

95 |

96 | mask_dev = (unsigned long long*) THCudaMalloc(state, boxes_num * col_blocks * sizeof(unsigned long long));

97 |

98 | dim3 blocks(THCCeilDiv(boxes_num, threadsPerBlock),

99 | THCCeilDiv(boxes_num, threadsPerBlock));

100 | dim3 threads(threadsPerBlock);

101 | nms_kernel<<<blocks, threads>>>(boxes_num,

102 | nms_overlap_thresh,

103 | boxes_dev,

104 | mask_dev);

105 |

106 | std::vector<unsigned long long> mask_host(boxes_num * col_blocks);

107 | THCudaCheck(cudaMemcpy(&mask_host[0],

108 | mask_dev,

109 | sizeof(unsigned long long) * boxes_num * col_blocks,

110 | cudaMemcpyDeviceToHost));

111 |

112 | std::vector<unsigned long long> remv(col_blocks);

113 | memset(&remv[0], 0, sizeof(unsigned long long) * col_blocks);

114 | std::vector<int64_t> remi(boxes_num);

115 | memset(&remi[0], -1, sizeof(unsigned long long) * boxes_num);

116 |

117 | at::Tensor keep = at::empty({boxes_num}, boxes.options().dtype(at::kLong).device(at::kCPU));

118 | int64_t* keep_out = keep.data<int64_t>();

119 |

120 | for (int i = 0; i < boxes_num; i++) {

121 | int nblock = i / threadsPerBlock;

122 | int inblock = i % threadsPerBlock;

123 |

124 | if (!(remv[nblock] & (1ULL << inblock))) {

125 | keep_out[i] = i;

126 | unsigned long long *p = &mask_host[0] + i * col_blocks;

127 | for (int j = nblock; j < col_blocks; j++) {

128 | unsigned long long is_new_overlap = p[j] & ~remv[j];

129 | int start_thread;

130 | if (j == nblock) {

131 | start_thread = inblock + 1;

132 | } else {

133 | start_thread = 0;

134 | }

135 | for (int k = start_thread; k < threadsPerBlock; k++) {

136 | if(is_new_overlap & (1ULL << k)) {

137 | remi[j * threadsPerBlock + k] = i;

138 | }

139 | }

140 | remv[j] |= p[j];

141 | }

142 | } else {

143 | keep_out[i] = remi[i];

144 | }

145 | }

146 |

147 | THCudaFree(state, mask_dev);

148 | return order_t.index({keep.to(order_t.device(), keep.scalar_type())});

149 | }

150 |

--------------------------------------------------------------------------------
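
For readers who want to check the kernel's behavior without a GPU, the following is a slow CPU reference sketch in plain Python of what `/custom/cuda/nms.cu` appears to compute. It is our reading of the code above, not part of the repository: the names `iou_3d` and `nms_3d` are invented here, boxes are assumed to be axis-aligned `(xmin, ymin, zmin, xmax, ymax, zmax)` tuples, and scores are passed separately instead of as the seventh column. As in the host loop above, the result has one entry per box in descending score order, holding the original index of the box that represents it (itself if kept, otherwise the first kept box that suppressed it).

```python
def iou_3d(a, b):
    """IoU of two axis-aligned 3D boxes (xmin, ymin, zmin, xmax, ymax, zmax)."""
    inter = 1.0
    for axis in range(3):
        lo = max(a[axis], b[axis])
        hi = min(a[axis + 3], b[axis + 3])
        inter *= max(hi - lo, 0.0)  # zero if the boxes do not overlap on this axis
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return inter / (vol_a + vol_b - inter)


def nms_3d(boxes, scores, thresh):
    """Greedy NMS; returns the representative original index for each sorted box."""
    order = sorted(range(len(boxes)), key=lambda i: -scores[i])  # descending, stable
    rep = [-1] * len(order)  # -1 means "not yet kept or suppressed"
    for i in range(len(order)):
        if rep[i] != -1:
            continue  # already suppressed by a higher-scoring box
        rep[i] = i    # box i is kept and represents itself
        for j in range(i + 1, len(order)):
            if rep[j] == -1 and iou_3d(boxes[order[i]], boxes[order[j]]) > thresh:
                rep[j] = i
    return [order[r] for r in rep]


boxes = [
    (0.1, 0.0, 0.0, 1.1, 1.0, 1.0),  # overlaps heavily with the next box
    (0.0, 0.0, 0.0, 1.0, 1.0, 1.0),  # highest score
    (5.0, 5.0, 5.0, 6.0, 6.0, 6.0),  # isolated box
]
scores = [0.8, 0.9, 0.7]
representatives = nms_3d(boxes, scores, thresh=0.5)
kept = sorted(set(representatives))
print(representatives, kept)
```

The CUDA version reaches the same decisions by computing all pairwise overlaps in parallel and packing them into 64-bit masks, which the host loop then scans once in score order.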

/custom/cuda/vision.h:

--------------------------------------------------------------------------------

1 | // Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.

2 | #pragma once

3 | #include <torch/extension.h>

4 |

5 |

6 | at::Tensor nms_cuda(const at::Tensor boxes, float nms_overlap_thresh);

7 |

--------------------------------------------------------------------------------

/custom/nms.h:

--------------------------------------------------------------------------------

1 | // Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.

2 | #pragma once

3 |

4 | #ifdef WITH_CUDA

5 | #include "cuda/vision.h"

6 | #endif

7 |

8 |

9 | at::Tensor nms(const at::Tensor& dets,

10 | const at::Tensor& scores,

11 | const float threshold) {

12 |

13 | if (dets.type().is_cuda()) {

14 | #ifdef WITH_CUDA

15 | // TODO raise error if not compiled with CUDA

16 | if (dets.numel() == 0)

17 | return at::empty({0}, dets.options().dtype(at::kLong).device(at::kCPU));

18 | auto b = at::cat({dets, scores.unsqueeze(1)}, 1);

19 | return nms_cuda(b, threshold);

20 | #else

21 | AT_ERROR("Not compiled with GPU support");

22 | #endif

23 | } else {

24 | AT_ERROR("Doesn't support CPU");

25 | }

26 | }

27 |

--------------------------------------------------------------------------------

/custom/vision.cpp:

--------------------------------------------------------------------------------

1 | // Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved. MIT License.

2 | // Modified and redistributed by JunYoung Gwak

3 | #include "nms.h"

4 |

5 | PYBIND11_MODULE(TORCH_EXTENSION_NAME, m) {

6 | m.def("nms", &nms, "non-maximum suppression");

7 | }

8 |

--------------------------------------------------------------------------------

/dist/detectron3d-0.1-py3.8-linux-x86_64.egg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/dist/detectron3d-0.1-py3.8-linux-x86_64.egg

--------------------------------------------------------------------------------

/dist/detectron3d-0.1-py3.9-linux-x86_64.egg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/dist/detectron3d-0.1-py3.9-linux-x86_64.egg

--------------------------------------------------------------------------------

/lib/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__init__.py

--------------------------------------------------------------------------------

/lib/__pycache__/__init__.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/__init__.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/__init__.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/__init__.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/__init__.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/__init__.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/__init__.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/__init__.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/ap_helper.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/ap_helper.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/ap_helper.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/ap_helper.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataloader.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataloader.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataloader.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataloader.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataloader.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataloader.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataloader.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataloader.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataset.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataset.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataset.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataset.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataset.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataset.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/dataset.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/dataset.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/detection_ap.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/detection_ap.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/detection_ap.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/detection_ap.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/detection_utils.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/detection_utils.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/detection_utils.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/detection_utils.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/detection_utils.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/detection_utils.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/evaluation.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/evaluation.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/evaluation.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/evaluation.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/instance_ap.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/instance_ap.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/instance_ap.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/instance_ap.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/layers.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/layers.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/layers.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/layers.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/layers.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/layers.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/layers.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/layers.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/loss.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/loss.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/loss.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/loss.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/loss.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/loss.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/loss.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/loss.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/math_functions.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/math_functions.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/math_functions.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/math_functions.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/pc_utils.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/pc_utils.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/pc_utils.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/pc_utils.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/pc_utils.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/pc_utils.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/pc_utils.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/pc_utils.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/scannet_instance_helper.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/scannet_instance_helper.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/solvers.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/solvers.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/solvers.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/solvers.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/solvers.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/solvers.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/solvers.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/solvers.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/test.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/test.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/test.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/test.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/test.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/test.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/test.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/test.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/train.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/train.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/train.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/train.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/train.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/train.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/train.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/train.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/transforms.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/transforms.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/transforms.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/transforms.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/transforms.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/transforms.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/transforms.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/transforms.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/utils.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/utils.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/utils.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/utils.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/utils.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/utils.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/utils.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/utils.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/voxelizer.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/voxelizer.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/voxelizer.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/voxelizer.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/voxelizer.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/voxelizer.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/__pycache__/voxelizer.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/__pycache__/voxelizer.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/dataloader.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch.utils.data.sampler import Sampler

3 |

4 |

5 | class InfSampler(Sampler):

6 | """Samples dataset indices indefinitely; each pass is shuffled only if `shuffle` is set.

7 | Arguments:

8 | data_source (Dataset): dataset to sample from

9 | """

10 |

11 | def __init__(self, data_source, shuffle=False):

12 | self.data_source = data_source

13 | self.shuffle = shuffle

14 | self.reset_permutation()

15 |

16 | def reset_permutation(self):

17 | perm = torch.arange(len(self.data_source))  # tensor, so .tolist() works without shuffling

18 | if self.shuffle:

19 | perm = torch.randperm(len(self.data_source))

20 | self._perm = perm.tolist()

21 |

22 | def __iter__(self):

23 | return self

24 |

25 | def __next__(self):

26 | if len(self._perm) == 0:

27 | self.reset_permutation()

28 | return self._perm.pop()

29 |

30 | def __len__(self):

31 | return len(self.data_source)

32 |

33 | next = __next__ # Python 2 compatibility

34 |
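
The sampler above never raises StopIteration: once its index list is empty it builds a new one and keeps going. A minimal sketch of that refill-on-exhaustion pattern follows. It is a torch-free stand-in, so `InfiniteIndexSampler`, its `seed` argument, and the use of `random.shuffle` in place of `torch.randperm` are assumptions made for illustration, not part of the repository.

```python
import itertools
import random


class InfiniteIndexSampler:
    """Hypothetical stand-in for InfSampler that uses only the standard library."""

    def __init__(self, num_items, shuffle=False, seed=0):
        self.num_items = num_items
        self.shuffle = shuffle
        self._rng = random.Random(seed)  # assumed: a seeded RNG for repeatability
        self._reset()

    def _reset(self):
        # Build one full pass over the indices, optionally shuffled.
        perm = list(range(self.num_items))
        if self.shuffle:
            self._rng.shuffle(perm)
        self._perm = perm

    def __iter__(self):
        return self

    def __next__(self):
        # Refill instead of stopping, so iteration never ends.
        if not self._perm:
            self._reset()
        return self._perm.pop()

    def __len__(self):
        return self.num_items


# Draw more indices than the dataset holds; the sampler wraps around.
sampler = InfiniteIndexSampler(4)
drawn = list(itertools.islice(sampler, 10))
```

Because indices are taken with `pop()`, an unshuffled pass is consumed from the last index to the first.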

--------------------------------------------------------------------------------

/lib/datasets/__init__.py:

--------------------------------------------------------------------------------

1 | from .scannet import ScannetDataset, Scannet3cmDataset, ScannetVoteNetDataset, \

2 | ScannetVoteNet3cmDataset, ScannetAlignedDataset, ScannetVoteNetRGBDataset, \

3 | ScannetVoteNetRGB3cmDataset, ScannetVoteNetRGB25mmDataset

4 | from .synthia import SynthiaDataset

5 | from .sunrgbd import SUNRGBDDataset

6 | from .stanford3d import Stanford3DDataset, Stanford3DSubsampleDataset, \

7 | Stanford3DMovableObjectsDatasets, Stanford3DMovableObjects3cmDatasets

8 | from .jrdb import JRDataset, JRDataset50, JRDataset30, JRDataset15

9 |

10 | DATASETS = [

11 | ScannetDataset, Scannet3cmDataset, ScannetVoteNetDataset, ScannetVoteNet3cmDataset,

12 | ScannetVoteNetRGBDataset, ScannetAlignedDataset, SynthiaDataset, SUNRGBDDataset,

13 | Stanford3DDataset, Stanford3DSubsampleDataset, Stanford3DMovableObjectsDatasets,

14 | ScannetVoteNetRGB3cmDataset, ScannetVoteNetRGB25mmDataset, Stanford3DMovableObjects3cmDatasets,

15 | JRDataset, JRDataset50, JRDataset30, JRDataset15

16 | ]

17 |

18 |

19 | def load_dataset(name):

20 | '''Returns the dataset class registered under the given name, or None if the name is unknown.

21 | '''

22 | # Find the dataset class from its name

23 | mdict = {dataset.__name__: dataset for dataset in DATASETS}

24 | if name not in mdict:

25 | print('Invalid dataset name. Options are:')

26 | # Display a list of valid dataset names

27 | for dataset in DATASETS:

28 | print('\t* {}'.format(dataset.__name__))

29 | return None

30 | DatasetClass = mdict[name]

31 |

32 | return DatasetClass

33 |
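
load_dataset maps a class name to a class and returns None on a miss, which leaves the caller to check for None. One possible variant raises instead, so that a typo fails at the lookup site. This is a sketch under assumptions: `ToyDatasetA`, `ToyDatasetB`, `REGISTRY`, and `lookup_dataset` are hypothetical names that do not exist in the repository.

```python
class ToyDatasetA:
    """Hypothetical placeholder for a real dataset class."""


class ToyDatasetB:
    """Hypothetical placeholder for a real dataset class."""


# Assumed registry, playing the role of DATASETS above.
REGISTRY = [ToyDatasetA, ToyDatasetB]


def lookup_dataset(name, registry=REGISTRY):
    """Return the class whose __name__ equals `name`, or raise ValueError."""
    by_name = {cls.__name__: cls for cls in registry}
    try:
        return by_name[name]
    except KeyError:
        options = ', '.join(sorted(by_name))
        raise ValueError(
            f'Unknown dataset {name!r}. Options are: {options}') from None


# The lookup returns the class itself; the caller instantiates it.
dataset_cls = lookup_dataset('ToyDatasetA')
dataset = dataset_cls()
```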

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/__init__.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/__init__.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/__init__.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/__init__.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/__init__.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/__init__.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/__init__.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/__init__.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/jrdb.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/jrdb.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/jrdb.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/jrdb.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/modelnet.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/modelnet.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/scannet.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/scannet.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/scannet.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/scannet.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/scannet.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/scannet.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/scannet.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/scannet.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/semantics3d.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/semantics3d.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/shapenetseg.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/shapenetseg.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/stanford.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/stanford.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/stanford3d.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/stanford3d.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/stanford3d.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/stanford3d.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/sunrgbd.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/sunrgbd.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/sunrgbd.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/sunrgbd.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/synthia.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/synthia.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/synthia.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/synthia.cpython-37.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/synthia.cpython-38.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/synthia.cpython-38.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/synthia.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/synthia.cpython-39.pyc

--------------------------------------------------------------------------------

/lib/datasets/__pycache__/varcity3d.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/__pycache__/varcity3d.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/jrdb.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import os

3 |

4 | import numpy as np

5 |

6 | from lib.dataset import SparseVoxelizationDataset, DatasetPhase, str2datasetphase_type

7 | from lib.utils import read_txt

8 |

9 |

10 | CLASS_LABELS = ('pedestrian', )

11 |

12 |

13 | class JRDataset(SparseVoxelizationDataset):

14 |

15 | IS_ROTATION_BBOX = True

16 | HAS_GT_BBOX = True

17 |

18 | # Voxelization arguments

19 | CLIP_BOUND = None

20 | VOXEL_SIZE = 0.2

21 | NUM_IN_CHANNEL = 4

22 |

23 | # Augmentation arguments

24 | ROTATION_AUGMENTATION_BOUND = ((-np.pi / 64, np.pi / 64), (-np.pi / 64, np.pi / 64),

25 | (-np.pi / 64, np.pi / 64))

26 | TRANSLATION_AUGMENTATION_RATIO_BOUND = ((-0.2, 0.2), (-0.2, 0.2), (0, 0))

27 |

28 | ROTATION_AXIS = 'z'

29 | LOCFEAT_IDX = 2

30 | NUM_LABELS = 1

31 | INSTANCE_LABELS = list(range(1))

32 |

33 | DATA_PATH_FILE = {

34 | DatasetPhase.Train: 'train.txt',

35 | DatasetPhase.Val: 'val.txt',

36 | DatasetPhase.Test: 'test.txt'

37 | }

38 |

39 | def __init__(self,

40 | config,

41 | input_transform=None,

42 | target_transform=None,

43 | augment_data=True,

44 | cache=False,

45 | phase=DatasetPhase.Train):

46 | if isinstance(phase, str):

47 | phase = str2datasetphase_type(phase)

48 | data_root = config.jrdb_path

49 | data_paths = read_txt(os.path.join(data_root, self.DATA_PATH_FILE[phase]))

50 | logging.info('Loading {}: {}'.format(self.__class__.__name__, self.DATA_PATH_FILE[phase]))

51 | super().__init__(

52 | data_paths,

53 | data_root=data_root,

54 | input_transform=input_transform,

55 | target_transform=target_transform,

56 | ignore_label=config.ignore_label,

57 | return_transformation=config.return_transformation,

58 | augment_data=augment_data,

59 | config=config)

60 |

61 | def load_datafile(self, index):

62 | datum = np.load(self.data_root / (self.data_paths[index] + '.npz'))

63 | pointcloud, bboxes = datum['pc'], datum['bbox']

64 | return pointcloud, bboxes, None

65 |

66 | def convert_mat2cfl(self, mat):

67 | # Generally, xyz, rgb, label

68 | return mat[:, :3], mat[:, 3:], None

69 |

70 |

71 | class JRDataset50(JRDataset):

72 | VOXEL_SIZE = 0.5

73 |

74 |

75 | class JRDataset30(JRDataset):

76 | VOXEL_SIZE = 0.3

77 |

78 |

79 | class JRDataset15(JRDataset):

80 | VOXEL_SIZE = 0.15

81 |
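
JRDataset50, JRDataset30 and JRDataset15 differ from JRDataset only in the VOXEL_SIZE class attribute. The sketch below shows how such an override could change the voxel a point falls into. The voxelization code itself is not part of this listing, so the floor-division rule and the names `ToyVoxelDataset`, `ToyVoxelDataset50` and `voxel_index` are assumptions for illustration.

```python
import math


class ToyVoxelDataset:
    """Hypothetical base class; only the class-attribute override is the point."""

    VOXEL_SIZE = 0.2

    def voxel_index(self, point):
        # Assumed quantization rule: floor of coordinate / voxel size per axis.
        return tuple(math.floor(c / self.VOXEL_SIZE) for c in point)


class ToyVoxelDataset50(ToyVoxelDataset):
    # Subclasses change the resolution by overriding a single attribute.
    VOXEL_SIZE = 0.5


point = (1.1, -0.3, 0.26)
fine = ToyVoxelDataset().voxel_index(point)      # (5, -2, 1)
coarse = ToyVoxelDataset50().voxel_index(point)  # (2, -1, 0)
```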

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/__pycache__/scannet_inst.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/preprocessing/__pycache__/scannet_inst.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/__pycache__/scannet_instance.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/preprocessing/__pycache__/scannet_instance.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/__pycache__/stanford_3d.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/preprocessing/__pycache__/stanford_3d.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/__pycache__/synthia_instance.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/jgwak/GSDN/507fb2e0db4b9bfc9c1b8f80f0db41d9095656cb/lib/datasets/preprocessing/__pycache__/synthia_instance.cpython-36.pyc

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/jrdb.py:

--------------------------------------------------------------------------------

1 | import collections

2 | import os

3 | import glob

4 | import json

5 | import yaml

6 |

7 | import numpy as np

8 | import open3d as o3d

9 | import tqdm

10 | from PIL import Image

11 |

12 |

13 | DATASET_TRAIN_PATH = '/scr/jgwak/Datasets/jrdb/dataset'

14 | DATASET_TEST_PATH = '/scr/jgwak/Datasets/jrdb/dataset'

15 | IN_IMG_STITCHED_PATH = 'images/image_stitched/%s/%s.jpg'

16 | IN_PTC_LOWER_PATH = 'pointclouds/lower_velodyne/%s/%s.pcd'

17 | IN_PTC_UPPER_PATH = 'pointclouds/upper_velodyne/%s/%s.pcd'

18 | IN_LABELS_3D = 'labels/labels_3d/*.json'

19 | IN_CALIBRATION_F = 'calibration/defaults.yaml'

20 |

21 | OUT_D = '/scr/jgwak/Datasets/jrdb_d15_n15'

22 | DIST_T = 15

23 | NUM_PTS_T = 15

24 |

25 |

26 | def get_calibration(input_dir):

27 | with open(os.path.join(input_dir, IN_CALIBRATION_F)) as f:

28 | return yaml.safe_load(f)['calibrated']

29 |

30 |

31 | def get_full_file_list(input_dir):

32 | def _filepath2filelist(path):

33 | return set(tuple(os.path.splitext(f)[0].split(os.sep)[-2:])

34 | for f in glob.glob(os.path.join(input_dir, path % ('*', '*'))))

35 |

36 | def _label2filelist(path, key='labels'):

37 | seq_dicts = []

38 | for json_f in glob.glob(os.path.join(input_dir, path)):

39 | with open(json_f) as f:

40 | labels = json.load(f)

41 | seq_name = os.path.basename(os.path.splitext(json_f)[0])

42 | seq_dicts.append({(seq_name, os.path.splitext(file_name)[0]): label

43 | for file_name, label in labels[key].items()})

44 | return dict(collections.ChainMap(*seq_dicts))

45 |

46 | imgs = _filepath2filelist(IN_IMG_STITCHED_PATH)

47 | lower_ptcs = _filepath2filelist(IN_PTC_LOWER_PATH)

48 | upper_ptcs = _filepath2filelist(IN_PTC_UPPER_PATH)

49 | labels_3d = _label2filelist(IN_LABELS_3D)

50 | filelist = set.intersection(imgs, lower_ptcs, upper_ptcs, labels_3d.keys())

51 |

52 | return {f: labels_3d[f] for f in sorted(filelist)}

53 |

54 |

55 | def _load_stitched_image(input_dir, seq_name, file_name):

56 | img_path = os.path.join(input_dir, IN_IMG_STITCHED_PATH % (seq_name, file_name))

57 | return Image.open(img_path)

58 |

59 |

60 | def process_3d(input_dir, calib, seq_name, file_name, labels_3d, out_f):

61 |

62 | def _load_pointcloud(path, calib_key):

63 | ptc = np.asarray(

64 | o3d.io.read_point_cloud(os.path.join(input_dir, path % (seq_name, file_name))).points)

65 | ptc -= np.expand_dims(np.array(calib[calib_key]['translation']), 0)

66 | theta = -float(calib[calib_key]['rotation'][-1])

67 | rotation_matrix = np.array(

68 | ((np.cos(theta), np.sin(theta)), (-np.sin(theta), np.cos(theta))))

69 | ptc[:, :2] = np.squeeze(

70 | np.matmul(rotation_matrix, np.expand_dims(ptc[:, :2], 2)))

71 | return ptc

72 |

73 | lower_ptc = _load_pointcloud(IN_PTC_LOWER_PATH, 'lidar_lower_to_rgb')

74 | upper_ptc = _load_pointcloud(IN_PTC_UPPER_PATH, 'lidar_upper_to_rgb')

75 | ptc = np.vstack((upper_ptc, lower_ptc))

76 |

77 | image = _load_stitched_image(input_dir, seq_name, file_name)

78 | ptc_rect = ptc[:, [1, 2, 0]]

79 | ptc_rect[:, :2] *= -1

80 | horizontal_theta = np.arctan(ptc_rect[:, 0] / ptc_rect[:, 2])

81 | horizontal_theta += (ptc_rect[:, 2] < 0) * np.pi

82 | horizontal_percent = horizontal_theta / (2 * np.pi)

83 | x = ((horizontal_percent * image.size[0]) + 1880) % image.size[0]

84 | y = (485.78 * (ptc_rect[:, 1] / ((1 / np.cos(horizontal_theta)) *

85 | ptc_rect[:, 2]))) + (0.4375 * image.size[1])

86 | y_inrange = np.logical_and(0 <= y, y < image.size[1])

87 | rgb = np.array(image)[np.floor(y[y_inrange]).astype(int),

88 | np.floor(x[y_inrange]).astype(int)]

89 | ptc = np.vstack(

90 | (np.hstack((ptc[y_inrange], rgb)),

91 | np.hstack((ptc[~y_inrange], np.zeros(((~y_inrange).sum(), 3))))))

92 |

93 | bboxes = []

94 | for label_3d in labels_3d:

95 | if label_3d['attributes']['distance'] > DIST_T:

96 | continue

97 | if label_3d['attributes']['num_points'] < NUM_PTS_T:

98 | continue

99 | rotation_z = (-label_3d['box']['rot_z']

100 | if label_3d['box']['rot_z'] < np.pi

101 | else 2 * np.pi - label_3d['box']['rot_z'])

102 | box = np.array(

103 | (label_3d['box']['cx'], label_3d['box']['cy'],

104 | label_3d['box']['cz'], label_3d['box']['l'],

105 | label_3d['box']['w'], label_3d['box']['h'], rotation_z, 0))

106 | bboxes.append(np.concatenate((box[:3] - box[3:6], box[:3] + box[3:6], box[6:])))

107 | bboxes = np.vstack(bboxes) if bboxes else np.zeros((0, 8))  # np.vstack raises on an empty list

108 | np.savez_compressed(out_f, pc=ptc, bbox=bboxes)

109 |

110 |

111 | def main():

112 | os.mkdir(OUT_D)

113 | os.mkdir(os.path.join(OUT_D, 'train'))

114 | file_list_train = get_full_file_list(DATASET_TRAIN_PATH)

115 | calib_train = get_calibration(DATASET_TRAIN_PATH)

116 | train_seqs = []

117 | for seq_name, file_name in tqdm.tqdm(file_list_train):

118 | train_seq_name = os.path.join('train', f'{seq_name}--{file_name}.npy')

119 | labels_3d = file_list_train[(seq_name, file_name)]

120 | train_seqs.append(train_seq_name)

121 | out_f = os.path.join(OUT_D, train_seq_name)

122 | process_3d(DATASET_TRAIN_PATH, calib_train, seq_name, file_name, labels_3d, out_f)

123 | with open(os.path.join(OUT_D, 'train.txt'), 'w') as f:

124 | f.writelines([l + '\n' for l in train_seqs])

125 |

126 | os.mkdir(os.path.join(OUT_D, 'test'))

127 | file_list_test = get_full_file_list(DATASET_TEST_PATH)

128 | calib_test = get_calibration(DATASET_TEST_PATH)

129 | test_seqs = []

130 | for seq_name, file_name in tqdm.tqdm(file_list_test):

131 | test_seq_name = os.path.join('test', f'{seq_name}--{file_name}.npy')

132 | labels_3d = file_list_test[(seq_name, file_name)]

133 | test_seqs.append(test_seq_name)

134 | out_f = os.path.join(OUT_D, test_seq_name)

135 | process_3d(DATASET_TEST_PATH, calib_test, seq_name, file_name, labels_3d, out_f)

136 | with open(os.path.join(OUT_D, 'test.txt'), 'w') as f:

137 | f.writelines([l + '\n' for l in test_seqs])

138 |

139 |

140 | if __name__ == '__main__':

141 | main()

142 |
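
In process_3d, _load_pointcloud negates the calibration yaw and applies the matrix ((cos, sin), (-sin, cos)) to the x and y columns. Together these amount to a counter-clockwise rotation about z by the calibration angle. A minimal single-point sketch of that step follows. `rotate_xy` and its argument names are hypothetical, and the translation that precedes the rotation in the original is left out.

```python
import math


def rotate_xy(x, y, calib_rotation_z):
    """Rotate (x, y) the way _load_pointcloud rotates each point's x/y columns."""
    theta = -calib_rotation_z  # the original negates the calibration yaw
    c, s = math.cos(theta), math.sin(theta)
    # Rows of the matrix ((cos, sin), (-sin, cos)) applied to the column (x, y).
    return c * x + s * y, -s * x + c * y


# A quarter-turn calibration angle maps the x axis onto the y axis.
rotated = rotate_xy(1.0, 0.0, math.pi / 2)  # approximately (0.0, 1.0)
```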

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/scannet_instance.py:

--------------------------------------------------------------------------------

1 | import json

2 | import random

3 | from pathlib import Path

4 |

5 | import numpy as np

6 | from plyfile import PlyData, PlyElement

7 |

8 | from lib.pc_utils import read_plyfile

9 |

10 | SCANNET_RAW_PATH = Path('/cvgl2/u/jgwak/Datasets/scannet_raw')

11 | SCANNET_OUT_PATH = Path('/scr/jgwak/Datasets/scannet_inst')

12 | TRAIN_DEST = 'train'

13 | TEST_DEST = 'test'

14 | SUBSETS = {TRAIN_DEST: 'scans', TEST_DEST: 'scans_test'}

15 | POINTCLOUD_FILE = '_vh_clean_2.ply'

16 | CROP_SIZE = 6.

17 | TRAIN_SPLIT = 0.8

18 | BUGS = {

19 |     'train/scene0270_00.ply': 50,

20 |     'train/scene0270_02.ply': 50,

21 |     'train/scene0384_00.ply': 149,

22 | }

23 |

24 |

25 | # TODO: Modify lib.pc_utils.save_point_cloud to take npy_types as input.

26 | def save_point_cloud(points_3d, filename):

27 |   assert points_3d.ndim == 2

28 |   assert points_3d.shape[1] == 8

29 |   python_types = (float, float, float, int, int, int, int, int)

30 |   npy_types = [('x', 'f4'), ('y', 'f4'), ('z', 'f4'), ('red', 'u1'), ('green', 'u1'),

31 |                ('blue', 'u1'), ('label_class', 'u1'), ('label_instance', 'u2')]

32 |   # Format into NumPy structured array

33 |   vertices = []

34 |   for row_idx in range(points_3d.shape[0]):

35 |     cur_point = points_3d[row_idx]

36 |     vertices.append(tuple(dtype(point) for dtype, point in zip(python_types, cur_point)))

37 |   vertices_array = np.array(vertices, dtype=npy_types)

38 |   el = PlyElement.describe(vertices_array, 'vertex')

39 |

40 |   # Write

41 |   PlyData([el]).write(filename)

42 |

43 |

44 | # Preprocess data.

45 | for out_path, in_path in SUBSETS.items():

46 |   phase_out_path = SCANNET_OUT_PATH / out_path

47 |   phase_out_path.mkdir(parents=True, exist_ok=True)

48 |   for f in (SCANNET_RAW_PATH / in_path).glob('*/*' + POINTCLOUD_FILE):

49 |     # Load pointcloud file.

50 |     out_f = phase_out_path / (f.name[:-len(POINTCLOUD_FILE)] + f.suffix)

51 |     pointcloud = read_plyfile(f)

52 |     num_points = pointcloud.shape[0]

53 |     # Make sure alpha value is meaningless.

54 |     assert np.unique(pointcloud[:, -1]).size == 1

55 |

56 |     # Load label.

57 |     segment_f = f.with_suffix('.0.010000.segs.json')

58 |     segment_group_f = (f.parent / f.name[:-len(POINTCLOUD_FILE)]).with_suffix('.aggregation.json')

59 |     semantic_f = f.parent / (f.stem + '.labels' + f.suffix)

60 |     if semantic_f.is_file():

61 |       # Load semantic label.

62 |       semantic_label = read_plyfile(semantic_f)

63 |       # Sanity check that the pointcloud and its label have the same vertices.

64 |       assert num_points == semantic_label.shape[0]

65 |       assert np.allclose(pointcloud[:, :3], semantic_label[:, :3])

66 |       semantic_label = semantic_label[:, -1]

67 |       # Load instance label.

68 |       with open(segment_f) as json_f:

69 |         segment = np.array(json.load(json_f)['segIndices'])

70 |       with open(segment_group_f) as json_f:

71 |         segment_groups = json.load(json_f)['segGroups']

72 |       assert segment.size == num_points

73 |       inst_idx = np.zeros(num_points)

74 |       for group_idx, segment_group in enumerate(segment_groups):

75 |         for segment_idx in segment_group['segments']:

76 |           inst_idx[segment == segment_idx] = group_idx + 1

77 |     else:  # Label may not exist in test case.

78 |       semantic_label = np.zeros(num_points)

79 |       inst_idx = np.zeros(num_points)

80 |     pointcloud_label = np.hstack((pointcloud[:, :6], semantic_label[:, None], inst_idx[:, None]))

81 |     save_point_cloud(pointcloud_label, out_f)

82 |

83 |

84 | # Split trainval data to train/val according to scene.

85 | trainval_files = [f.name for f in (SCANNET_OUT_PATH / TRAIN_DEST).glob('*.ply')]

86 | trainval_scenes = list(set(f.split('_')[0] for f in trainval_files))

87 | random.shuffle(trainval_scenes)  # NOTE: unseeded, so the train/val split changes between runs.

88 | num_train = int(len(trainval_scenes) * TRAIN_SPLIT)

89 | train_scenes = trainval_scenes[:num_train]

90 | val_scenes = trainval_scenes[num_train:]

91 |

92 | # Collect file lists for all phases.

93 | train_files = [f'{TRAIN_DEST}/{f}' for f in trainval_files if any(s in f for s in train_scenes)]

94 | val_files = [f'{TRAIN_DEST}/{f}' for f in trainval_files if any(s in f for s in val_scenes)]

95 | test_files = [f'{TEST_DEST}/{f.name}' for f in (SCANNET_OUT_PATH / TEST_DEST).glob('*.ply')]

96 |

97 | # Data sanity check.

98 | assert not set(train_files).intersection(val_files)

99 | assert all((SCANNET_OUT_PATH / f).is_file() for f in train_files)

100 | assert all((SCANNET_OUT_PATH / f).is_file() for f in val_files)

101 | assert all((SCANNET_OUT_PATH / f).is_file() for f in test_files)

102 |

103 | # Write file lists for all phases.

104 | with open(SCANNET_OUT_PATH / 'train.txt', 'w') as f:

105 |   f.writelines([name + '\n' for name in train_files])

106 | with open(SCANNET_OUT_PATH / 'val.txt', 'w') as f:

107 |   f.writelines([name + '\n' for name in val_files])

108 | with open(SCANNET_OUT_PATH / 'test.txt', 'w') as f:

109 |   f.writelines([name + '\n' for name in test_files])

110 |

111 | # Fix bug in the data.

112 | for ply_file, bug_index in BUGS.items():

113 |   ply_path = SCANNET_OUT_PATH / ply_file

114 |   pointcloud = read_plyfile(ply_path)

115 |   bug_mask = pointcloud[:, -2] == bug_index

116 |   print(f'Fixing {ply_file} bugged label {bug_index} x {bug_mask.sum()}')

117 |   pointcloud[bug_mask, -2] = 0

118 |   save_point_cloud(pointcloud, ply_path)

119 |

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/stanford3d.py:

--------------------------------------------------------------------------------

1 | import os

2 | import glob

3 | import sys

4 |

5 | import tqdm

6 | import numpy as np

7 |

8 | CLASSES = [

9 |     'ceiling', 'floor', 'wall', 'beam', 'column', 'window', 'door', 'table', 'chair', 'sofa',

10 |     'bookcase', 'board', 'clutter'

11 | ]

12 | STANFORD_3D_PATH = '/scr/jgwak/Datasets/Stanford3dDataset_v1.2/Area_%d/*'

13 | OUT_DIR = 'stanford3d'

14 | CROP_SIZE = 5

15 | MIN_POINTS = 10000

16 | PREVOXELIZE_SIZE = 0.02

17 |

18 |

19 | def read_pointcloud(filename):

20 |   with open(filename) as f:

21 |     ptc = np.array([line.rstrip().split() for line in f.readlines()])

22 |   return ptc.astype(np.float32)

23 |

24 |

25 | def subsample(ptc):

26 |   voxel_coords = np.floor(ptc[:, :3] / PREVOXELIZE_SIZE).astype(int)

27 |   _, unique_idxs = np.unique(voxel_coords, axis=0, return_index=True)

28 |   return ptc[unique_idxs]

29 |

30 |

31 | i = int(sys.argv[-1])

32 | print(f'Processing Area {i}')

33 | for room_path in tqdm.tqdm(glob.glob(STANFORD_3D_PATH % i)):

34 |   if room_path.endswith('.txt'):

35 |     continue

36 |   room_name = room_path.split(os.sep)[-1]

37 |   num_ptc = 0

38 |   sem_labels = []

39 |   inst_labels = []

40 |   xyzrgb = []

41 |   for j, instance_path in enumerate(glob.glob(f'{room_path}/Annotations/*')):

42 |     instance_ptc = read_pointcloud(instance_path)

43 |     instance_name = os.path.splitext(instance_path.split(os.sep)[-1])[0]

44 |     instance_class = '_'.join(instance_name.split('_')[:-1])

45 |     instance_idx = j

46 |     try:

47 |       class_idx = CLASSES.index(instance_class)

48 |     except ValueError:

49 |       if instance_class != 'stairs':

50 |         raise

51 |       print(f'Marking unknown class {instance_class} as ignore label.')

52 |       class_idx = -1

53 |       instance_idx = -1

54 |     sem_labels.append(np.ones((instance_ptc.shape[0]), dtype=int) * class_idx)

55 |     inst_labels.append(np.ones((instance_ptc.shape[0]), dtype=int) * instance_idx)

56 |     xyzrgb.append(instance_ptc)

57 |     num_ptc += instance_ptc.shape[0]

58 |   all_ptc = np.hstack((np.vstack(xyzrgb), np.concatenate(sem_labels)[:, None],

59 |                        np.concatenate(inst_labels)[:, None]))

60 |   all_xyz = all_ptc[:, :3]

61 |   all_xyz_min = all_xyz.min(0)

62 |   room_size = all_xyz.max(0) - all_xyz_min

63 |

64 |   if i != 5 and np.any(room_size > CROP_SIZE):  # Save Area5 as-is.

65 |     k = 0

66 |     steps = (np.floor(room_size / CROP_SIZE) * 2).astype(int) + 1

67 |     for dx in range(steps[0]):

68 |       for dy in range(steps[1]):

69 |         for dz in range(steps[2]):

70 |           crop_idx = np.array([dx, dy, dz])

71 |           crop_min = crop_idx * CROP_SIZE / 2 + all_xyz_min

72 |           crop_max = crop_min + CROP_SIZE

73 |           crop_mask = np.all(np.hstack((crop_min < all_xyz, all_xyz < crop_max)), 1)

74 |           if np.sum(crop_mask) < MIN_POINTS:

75 |             continue

76 |           crop_xyz = all_xyz[crop_mask]

77 |           size_full = (crop_xyz.max(0) - crop_xyz.min(0)) > CROP_SIZE / 2

78 |           init_dim = np.array([dx, dy, dz]) == 0

79 |           if not np.all(np.logical_or(size_full, init_dim)):

80 |             continue

81 |           np.savez_compressed(f'{OUT_DIR}/Area{i}_{room_name}_{k}', subsample(all_ptc[crop_mask]))

82 |           k += 1

83 |   else:

84 |     np.savez_compressed(f'{OUT_DIR}/Area{i}_{room_name}_0', subsample(all_ptc))

85 |

--------------------------------------------------------------------------------

/lib/datasets/preprocessing/sunrgbd.py:

--------------------------------------------------------------------------------

1 | import glob

2 | import random

3 | import os

4 | import numpy as np

5 |

6 | import tqdm

7 |