├── .gitignore
├── .swiftpm
│   └── xcode
│       └── package.xcworkspace
│           └── xcshareddata
│               └── IDEWorkspaceChecks.plist
├── LICENSE
├── Package.swift
├── README.md
├── Sources
│   └── SemanticImage
│       ├── SemanticImage.swift
│       ├── segmentation.mlmodelc
│       │   ├── analytics
│       │   │   └── coremldata.bin
│       │   ├── coremldata.bin
│       │   ├── metadata.json
│       │   ├── model.espresso.net
│       │   ├── model.espresso.shape
│       │   ├── model.espresso.weights
│       │   ├── model
│       │   │   └── coremldata.bin
│       │   └── neural_network_optionals
│       │       └── coremldata.bin
│       └── segmentation.swift
└── Tests
    └── SemanticImageTests
        └── SemanticImageTests.swift

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | /.build

3 | /Packages

4 | /*.xcodeproj

5 | xcuserdata/

6 | DerivedData/

7 | .swiftpm/xcode/package.xcworkspace/contents.xcworkspacedata

8 |

--------------------------------------------------------------------------------

/.swiftpm/xcode/package.xcworkspace/xcshareddata/IDEWorkspaceChecks.plist:

--------------------------------------------------------------------------------

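1 | <?xml version="1.0" encoding="UTF-8"?>

2 | <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

3 | <plist version="1.0">

4 | <dict>

5 | <key>IDEDidComputeMac32BitWarning</key>

6 | <true/>

7 | </dict>

8 | </plist>

9 |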

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 MLBoy

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Package.swift:

--------------------------------------------------------------------------------

1 | // swift-tools-version:5.5

2 | // The swift-tools-version declares the minimum version of Swift required to build this package.

3 |

4 | import PackageDescription

5 |

6 | let package = Package(

7 | name: "SemanticImage",

8 | platforms: [

9 | .iOS(.v14)

10 | ],

11 | products: [

12 | // Products define the executables and libraries a package produces, and make them visible to other packages.

13 | .library(

14 | name: "SemanticImage",

15 | targets: ["SemanticImage"]),

16 | ],

17 | dependencies: [

18 | // Dependencies declare other packages that this package depends on.

19 | // .package(url: /* package url */, from: "1.0.0"),

20 | ],

21 | targets: [

22 | // Targets are the basic building blocks of a package. A target can define a module or a test suite.

23 | // Targets can depend on other targets in this package, and on products in packages this package depends on.

24 | .target(

25 | name: "SemanticImage",

26 | dependencies: [],

27 | resources: [.process("segmentation.mlmodelc")]),

28 | .testTarget(

29 | name: "SemanticImageTests",

30 | dependencies: ["SemanticImage"]),

31 | ]

32 | )

33 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # SemanticImage

2 |

3 | A collection of easy-to-use image / video filters.

4 |

5 | # How to use

6 |

7 | ### Setting Up

8 |

9 | 1. Add SemanticImage to your project as a Swift package with Swift Package Manager.

10 | Or just drag SemanticImage.swift into your project.

11 |

12 | 2. Import and initialize SemanticImage.

13 |

14 | ```swift

15 | import SemanticImage

16 | ```

17 |

18 | ```swift

19 | let semanticImage = SemanticImage()

20 | ```

21 |

22 | **Requires iOS 14 or above**

23 |

24 | # Filter Collection

25 |

26 | ## Image

27 |

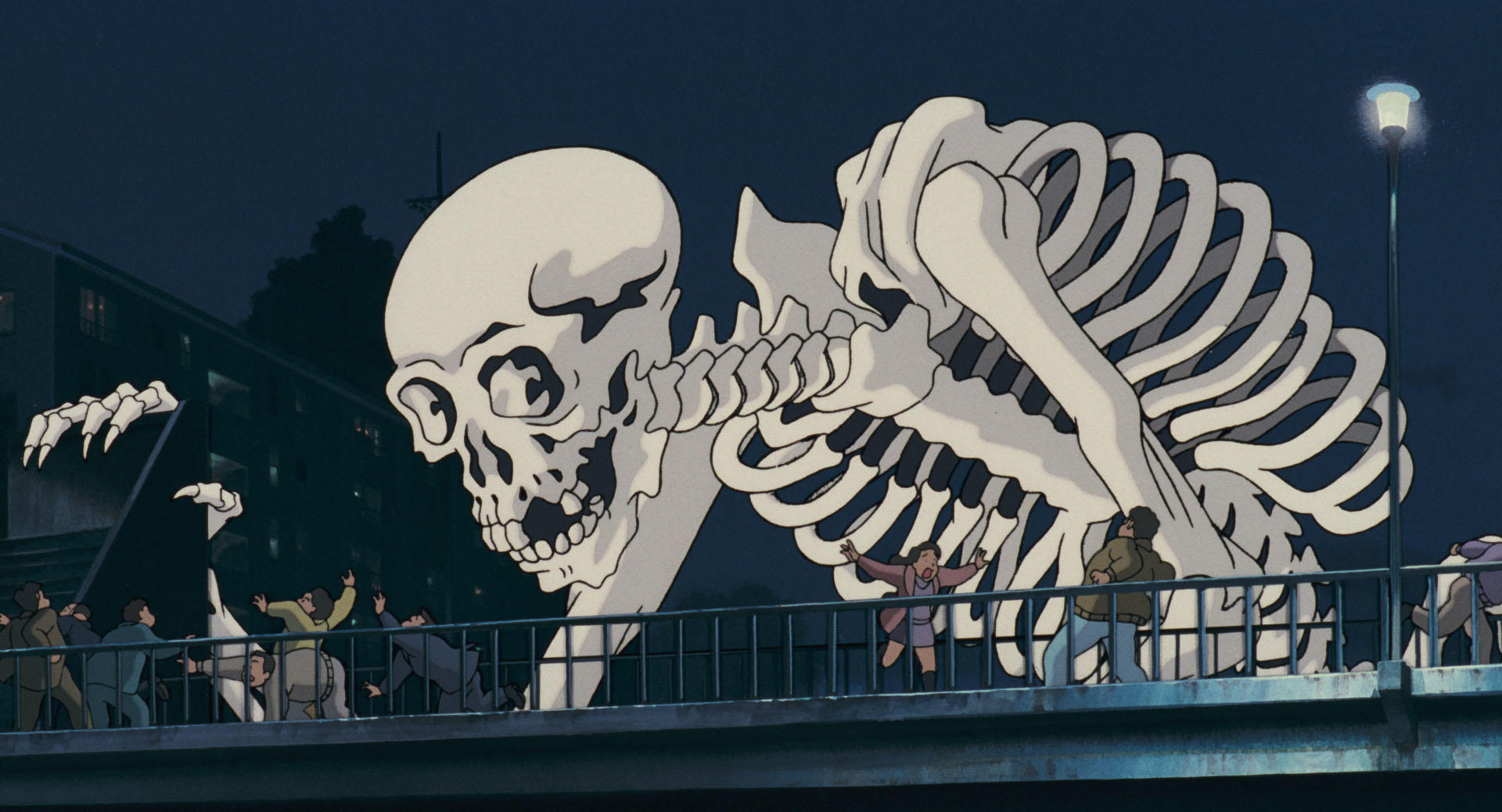

28 | ### Get Person Mask

29 |

30 |

31 |

32 |

33 | ```swift

34 | let maskImage:UIImage? = semanticImage.personMaskImage(uiImage: yourUIImage)

35 | ```

36 |

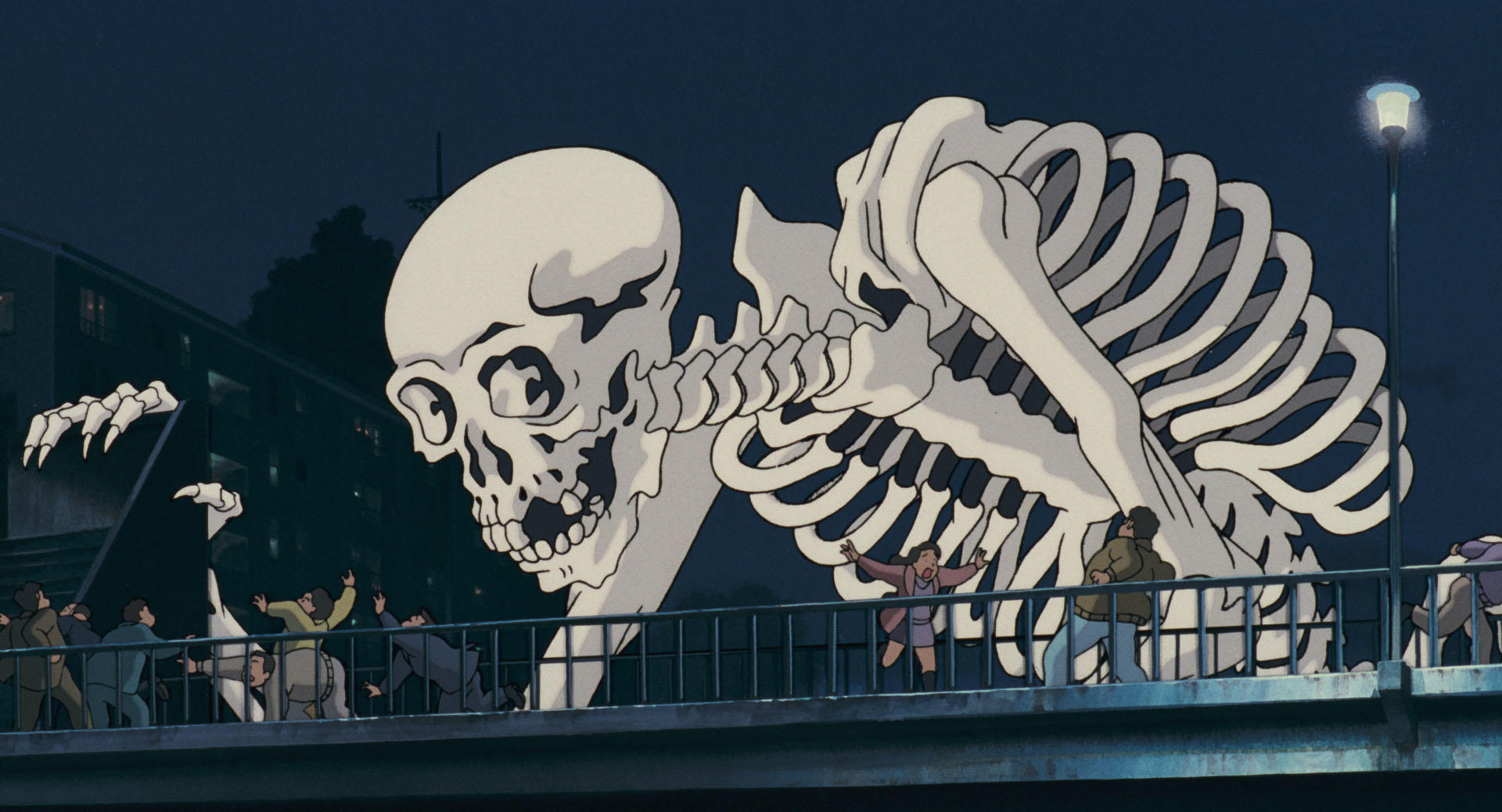

37 | ### Swap the background of a person

38 |

39 |

40 |

41 | ```swift

42 | let swappedImage:UIImage? = semanticImage.swapBackgroundOfPerson(personUIImage: yourUIImage, backgroundUIImage: yourBackgroundUIImage)

43 | ```
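
Judging from `swapBackgroundOfPerson` in `SemanticImage.swift`, the background is scaled until it covers the person image (aspect fill) and is then center-cropped to the person image's size. The sketch below reproduces only that sizing arithmetic. `aspectFillScale` and `centeredCropRect` are hypothetical helper names used for illustration; they are not part of the library.

```swift
import Foundation

/// Scale factor that makes `background` cover `target` completely (aspect fill).
/// Hypothetical helper for illustration; not part of SemanticImage.
func aspectFillScale(target: CGSize, background: CGSize) -> CGFloat {
    let widthScale = target.width / background.width
    let heightScale = target.height / background.height
    // The larger ratio guarantees that both dimensions are at least as large as the target.
    return max(widthScale, heightScale)
}

/// Rect of size `target`, centered inside an image of size `scaled`.
/// Hypothetical helper for illustration; not part of SemanticImage.
func centeredCropRect(target: CGSize, scaled: CGSize) -> CGRect {
    return CGRect(x: (scaled.width - target.width) / 2,
                  y: (scaled.height - target.height) / 2,
                  width: target.width,
                  height: target.height)
}

// Example sizes are made up for the demonstration.
let person = CGSize(width: 1000, height: 1500)
let background = CGSize(width: 4000, height: 3000)
let scale = aspectFillScale(target: person, background: background)
let scaledBG = CGSize(width: background.width * scale, height: background.height * scale)
let crop = centeredCropRect(target: person, scaled: scaledBG)
print(scale, crop)
```

A practical consequence is that a background with a very different aspect ratio loses its left and right (or top and bottom) margins, so the important part of the background should sit near its center.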

44 |

45 | ### Blur the background of a person

46 |

47 |

48 |

49 | ```swift

50 | let blurredPersonImage:UIImage? = semanticImage.personBlur(uiImage: yourUIImage, intensity: 50)

51 | // Blur intensity: 0~100

52 | ```
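
In the source shown in this repository, `personBlur` passes `intensity` straight through as the Gaussian blur radius and does not appear to clamp it, so a caller that takes the value from a slider or other user input may want to keep it inside the documented 0~100 range first. A minimal sketch, assuming a hypothetical helper named `clampedBlurIntensity` that is not part of the library:

```swift
/// Clamps a blur intensity to the 0...100 range documented in the README.
/// Hypothetical helper for illustration; not part of SemanticImage.
func clampedBlurIntensity(_ value: Float) -> Float {
    return min(max(value, 0), 100)
}

print(clampedBlurIntensity(-5))   // a value below the range
print(clampedBlurIntensity(42))   // a value inside the range
print(clampedBlurIntensity(250))  // a value above the range
```

The clamped value can then be passed as the `intensity` argument of `personBlur`.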

53 |

54 | ### Get a prominent object mask

55 |

56 |

57 |

58 | ```swift

59 | let prominentMaskImage:UIImage? = semanticImage.saliencyMask(uiImage:image)

60 | ```

61 |

62 | ### Swap the background of the prominent object

63 |

64 |

65 |

66 | ```swift

67 | let backgroundSwapImage:UIImage? = semanticImage.saliencyBlend(objectUIImage: image, backgroundUIImage: bgImage)

68 | ```

69 |

70 | ### Crop a face rectangle

71 |

72 |

73 |

74 | ```swift

75 | let faceImage:UIImage? = semanticImage.faceRectangle(uiImage: image)

76 | ```
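
The returned crop is larger than the detected face: `faceRectangle` in `SemanticImage.swift` doubles the detected bounding box around its center and then keeps the result inside the image. The sketch below mirrors those steps on plain `CGRect` values. The function name `expandedFaceRect` and the example numbers are assumptions made for illustration.

```swift
import Foundation

/// Doubles `face` around its center, then keeps the result inside `bounds`.
/// Mirrors the clamping steps in `faceRectangle`; the name is hypothetical.
/// Like the source, it assumes `bounds` starts at the origin (0, 0).
func expandedFaceRect(_ face: CGRect, in bounds: CGRect) -> CGRect {
    var rect = CGRect(x: face.minX - face.width * 0.5,
                      y: face.minY - face.height * 0.5,
                      width: face.width * 2,
                      height: face.height * 2)
    // Move the origin back inside the image if it went negative.
    if rect.minX < 0 { rect.origin.x = 0 }
    if rect.minY < 0 { rect.origin.y = 0 }
    // Trim the size if the rect runs past the maximum x or y edge.
    if rect.maxX > bounds.maxX { rect.size.width = bounds.width - rect.origin.x }
    if rect.maxY > bounds.maxY { rect.size.height = bounds.height - rect.origin.y }
    return rect
}

let imageBounds = CGRect(x: 0, y: 0, width: 1000, height: 800)
let detectedFace = CGRect(x: 50, y: 600, width: 200, height: 200)
let expanded = expandedFaceRect(detectedFace, in: imageBounds)
print(expanded)
```

Note that a negative origin is shifted to zero rather than trimmed, so a face near the left or lower edge ends up off-center in the crop.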

77 |

78 | ### Crop a body rectangle

79 |

80 |

81 |

82 | ```swift

83 | let bodyImage:UIImage? = semanticImage.humanRectangle(uiImage: image)

84 | ```

85 |

86 | ### Crop face rectangles

87 |

88 |

89 |

90 | ```swift

91 | let faceImages:[UIImage] = semanticImage.faceRectangles(uiImage: image)

92 | ```

93 |

94 | ### Crop body rectangles

95 |

96 |

97 |

98 | ```swift

99 | let bodyImages:[UIImage] = semanticImage.humanRectangles(uiImage: image)

100 | ```

101 |

102 | ### Crop an animal (Cat/Dog) rectangle

103 |

104 |

105 |

106 | ```swift

107 | let animalImage:UIImage? = semanticImage.animalRectangle(uiImage: image)

108 | ```

109 |

110 | ### Crop multiple animal (Cat/Dog) rectangles

111 |

112 |

113 |

114 | ```swift

115 | let animalImages:[UIImage] = semanticImage.animalRectangles(uiImage: image)

116 | ```

117 |

118 | ### Crop and warp document

119 |

120 |

121 |

122 | ```swift

123 | let documentImage:UIImage? = semanticImage.getDocumentImage(image: image)

124 | ```
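
Vision reports the detected rectangle's corners in normalized coordinates (0 to 1). In `getDocumentImage`, each corner's y component is flipped (`1 - y`) and the point is then scaled to pixel coordinates before the perspective correction is applied. The sketch below shows those two steps on a single point. `flippedImagePoint` is a hypothetical name; the source performs the scaling step with Vision's `VNImagePointForNormalizedPoint`, which is assumed here to multiply the point by the image width and height.

```swift
import Foundation

/// Flips a normalized point vertically and scales it to pixel coordinates,
/// following the two steps applied to each corner in `getDocumentImage`.
/// Hypothetical helper for illustration; not part of SemanticImage.
func flippedImagePoint(_ normalized: CGPoint, imageWidth: Int, imageHeight: Int) -> CGPoint {
    // Step 1: flip the vertical axis of the normalized point.
    let flipped = CGPoint(x: normalized.x, y: 1 - normalized.y)
    // Step 2: scale from the 0...1 range to pixel coordinates.
    return CGPoint(x: flipped.x * CGFloat(imageWidth),
                   y: flipped.y * CGFloat(imageHeight))
}

// Example values are made up for the demonstration.
let corner = flippedImagePoint(CGPoint(x: 0.25, y: 0.75), imageWidth: 800, imageHeight: 600)
print(corner)
```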

125 |

126 | ## Video

127 |

128 | ### Apply CIFilter to Video

129 |

130 |

131 |

132 | ```swift

133 | guard let ciFilter = CIFilter(name: "CIEdgeWork", parameters: [kCIInputRadiusKey:3.0]) else { return }

134 | semanticImage.ciFilterVideo(videoURL: url, ciFilter: ciFilter, { err, processedURL in

135 | // Handle processedURL in here.

136 | })

137 | // This process takes about the same time as the video playback time.

138 | ```

139 |

140 | ### Add a virtual background to a person video

141 |

142 |

143 |

144 | ```swift

145 | semanticImage.swapBackgroundOfPersonVideo(videoURL: url, backgroundUIImage: uiImage, { err, processedURL in

146 | // Handle processedURL in here.

147 | })

148 | // This process takes about the same time as the video playback time.

149 | ```

150 |

151 | ### Add a virtual background to a salient object video

152 |

153 |

154 |

155 | ```swift

156 | semanticImage.swapBGOfSalientObjectVideo(videoURL: url, backgroundUIImage: uiImage, { err, processedURL in

157 | // Handle processedURL in here.

158 | })

159 | // This process takes about the same time as the video playback time.

160 | ```

161 |

162 | ### Process video

163 |

164 |

165 |

166 | ```swift

167 | semanticImage.applyProcessingOnVideo(videoURL: url, { ciImage in

168 | // Write the processing of ciImage (i.e. video frame) here.

169 | return newImage

170 | }, { err, editedURL in

171 | // The processed video URL is returned

172 | })

173 | ```

174 |

175 | # Author

176 |

177 | Daisuke Majima

178 |

179 | Freelance iOS programmer from Japan.

180 |

181 | PROFILES:

182 |

183 | WORKS:

184 |

185 | BLOGS: Medium

186 |

187 | CONTACTS: rockyshikoku@gmail.com

188 |

--------------------------------------------------------------------------------

/Sources/SemanticImage/SemanticImage.swift:

--------------------------------------------------------------------------------

1 | import Foundation

2 | import Vision

3 | import UIKit

4 | import AVKit

5 |

6 | public class SemanticImage {

7 |

8 | public init() {

9 | }

10 |

11 | @available(iOS 15.0, *)

12 | lazy var personSegmentationRequest = VNGeneratePersonSegmentationRequest()

13 | lazy var faceRectangleRequest = VNDetectFaceRectanglesRequest()

14 | lazy var humanRectanglesRequest:VNDetectHumanRectanglesRequest = {

15 | let request = VNDetectHumanRectanglesRequest()

16 | if #available(iOS 15.0, *) {

17 | request.upperBodyOnly = false

18 | }

19 | return request

20 | }()

21 | lazy var animalRequest = VNRecognizeAnimalsRequest()

22 |

23 | lazy var segmentationRequest:VNCoreMLRequest? = {

24 | guard let url = Bundle.module.url(forResource: "segmentation", withExtension: "mlmodelc"),

25 | let mlModel = try? MLModel(contentsOf: url, configuration: MLModelConfiguration()),

26 | let model = try? VNCoreMLModel(for: mlModel) else { return nil } // Return nil instead of crashing if the bundled model is missing or fails to load.

27 | let request = VNCoreMLRequest(model: model)

28 | request.imageCropAndScaleOption = .scaleFill

29 | return request

30 | }()

31 | lazy var rectangleRequest = VNDetectRectanglesRequest()

32 |

33 | let ciContext = CIContext()

34 |

35 | public func getDocumentImage(image:UIImage) -> UIImage? {

36 | let newImage = getCorrectOrientationUIImage(uiImage:image)

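37 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed. Please try with another image."); return nil }

38 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

39 | do { try handler.perform([rectangleRequest]) } catch { print("Vision error \(error)"); return nil }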

40 | guard let result = rectangleRequest.results?.first else { return nil }

41 |

42 | let topLeft = CGPoint(x: result.topLeft.x, y: 1-result.topLeft.y)

43 | let topRight = CGPoint(x: result.topRight.x, y: 1-result.topRight.y)

44 | let bottomLeft = CGPoint(x: result.bottomLeft.x, y: 1-result.bottomLeft.y)

45 | let bottomRight = CGPoint(x: result.bottomRight.x, y: 1-result.bottomRight.y)

46 |

47 |

48 | let deNormalizedTopLeft = VNImagePointForNormalizedPoint(topLeft, Int(ciImage.extent.width), Int(ciImage.extent.height))

49 | let deNormalizedTopRight = VNImagePointForNormalizedPoint(topRight, Int(ciImage.extent.width), Int(ciImage.extent.height))

50 | let deNormalizedBottomLeft = VNImagePointForNormalizedPoint(bottomLeft, Int(ciImage.extent.width), Int(ciImage.extent.height))

51 | let deNormalizedBottomRight = VNImagePointForNormalizedPoint(bottomRight, Int(ciImage.extent.width), Int(ciImage.extent.height))

52 |

53 | let croppedImage = getCroppedImage(image: ciImage, topL: deNormalizedTopLeft, topR: deNormalizedTopRight, botL: deNormalizedBottomLeft, botR: deNormalizedBottomRight)

54 | guard let safeCGImage = ciContext.createCGImage(croppedImage, from: croppedImage.extent) else { print("Image processing failed. Please try with another image."); return nil }

55 | let croppedUIImage = UIImage(cgImage: safeCGImage)

56 | return croppedUIImage

57 | }

58 |

59 | private func getCroppedImage(image: CIImage, topL: CGPoint, topR: CGPoint, botL: CGPoint, botR: CGPoint) -> CIImage {

60 | let rectCoords = NSMutableDictionary(capacity: 4)

61 |

62 | rectCoords["inputTopLeft"] = topL.toVector(image: image)

63 | rectCoords["inputTopRight"] = topR.toVector(image: image)

64 | rectCoords["inputBottomLeft"] = botL.toVector(image: image)

65 | rectCoords["inputBottomRight"] = botR.toVector(image: image)

66 |

67 | guard let coords = rectCoords as? [String : Any] else {

68 | return image

69 | }

70 | return image.applyingFilter("CIPerspectiveCorrection", parameters: coords)

71 | }

72 |

73 | // MARK: Segmentation

74 |

75 | public func personMaskImage(uiImage:UIImage) -> UIImage? {

76 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

77 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return nil }

78 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

79 | do {

80 | if #available(iOS 15.0, *) {

81 | try handler.perform([personSegmentationRequest])

82 | guard let result = personSegmentationRequest.results?.first

83 | else { print("Image processing failed.Please try with another image.") ; return nil }

84 | let maskCIImage = CIImage(cvPixelBuffer: result.pixelBuffer)

85 | let scaledMask = maskCIImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

86 |

87 | guard let safeCGImage = ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed.Please try with another image.") ; return nil }

88 | let maskUIImage = UIImage(cgImage: safeCGImage)

89 | return maskUIImage

90 | } else {

91 | guard let segmentationRequest = segmentationRequest else {

92 | print("This func can't be used in this OS version."); return nil

93 | }

94 | try handler.perform([segmentationRequest])

95 | guard let result = segmentationRequest.results?.first as? VNPixelBufferObservation

96 | else { print("Image processing failed.Please try with another image.") ; return nil }

97 | let maskCIImage = CIImage(cvPixelBuffer: result.pixelBuffer)

98 | let scaledMask = maskCIImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

99 |

100 | guard let safeCGImage = ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed.Please try with another image.") ; return nil }

101 | let maskUIImage = UIImage(cgImage: safeCGImage)

102 | return maskUIImage

103 | }

104 | } catch let error {

105 | print("Vision error \(error)")

106 | return nil

107 | }

108 | }

109 |

110 |

111 | public func swapBackgroundOfPerson(personUIImage: UIImage, backgroundUIImage: UIImage) -> UIImage? {

112 | let newPersonUIImage = getCorrectOrientationUIImage(uiImage:personUIImage)

113 | let newBackgroundUIImage = getCorrectOrientationUIImage(uiImage:backgroundUIImage)

114 |

115 | guard let personCIImage = CIImage(image: newPersonUIImage),

116 | let backgroundCIImage = CIImage(image: newBackgroundUIImage),

117 | let maskUIImage = personMaskImage(uiImage: newPersonUIImage),

118 | let maskCIImage = CIImage(image: maskUIImage) else {

119 | return nil }

120 |

121 | let backgroundImageSize = backgroundCIImage.extent

122 | let originalSize = personCIImage.extent

123 | var scale:CGFloat = 1

124 | let widthScale = originalSize.width / backgroundImageSize.width

125 | let heightScale = originalSize.height / backgroundImageSize.height

126 | if widthScale > heightScale {

127 | scale = personCIImage.extent.width / backgroundImageSize.width

128 | } else {

129 | scale = personCIImage.extent.height / backgroundImageSize.height

130 | }

131 |

132 | let scaledBG = backgroundCIImage.resize(as: CGSize(width: backgroundCIImage.extent.width*scale, height: backgroundCIImage.extent.height*scale))

133 | let BGCenter = CGPoint(x: scaledBG.extent.width/2, y: scaledBG.extent.height/2)

134 | let originalExtent = personCIImage.extent

135 | let cropRect = CGRect(x: BGCenter.x-(originalExtent.width/2), y: BGCenter.y-(originalExtent.height/2), width: originalExtent.width, height: originalExtent.height)

136 | let croppedBG = scaledBG.cropped(to: cropRect)

137 | let translate = CGAffineTransform(translationX: -croppedBG.extent.minX, y: -croppedBG.extent.minY)

138 | let translatedBG = croppedBG.transformed(by: translate)

139 | guard let blended = CIFilter(name: "CIBlendWithMask", parameters: [

140 | kCIInputImageKey: personCIImage,

141 | kCIInputBackgroundImageKey:translatedBG,

142 | kCIInputMaskImageKey:maskCIImage])?.outputImage else { return nil }

143 | guard let safeCGImage = ciContext.createCGImage(blended, from: blended.extent) else { print("Image processing failed.Please try with another image.") ; return nil }

144 | let blendedUIImage = UIImage(cgImage: safeCGImage)

145 | return blendedUIImage

146 | }

147 |

148 | public func personBlur(uiImage:UIImage, intensity:Float) -> UIImage?{

149 | let newUIImage = getCorrectOrientationUIImage(uiImage:uiImage)

150 | guard let originalCIImage = CIImage(image: newUIImage),

151 | let maskUIImage = personMaskImage(uiImage: newUIImage),

152 | let maskCIImage = CIImage(image: maskUIImage) else { print("Image processing failed.Please try with another image."); return nil }

153 | let safeCropSize = CGRect(x: 0, y: 0, width: originalCIImage.extent.width * 0.999, height: originalCIImage.extent.height * 0.999)

154 | guard let blurBGCIImage = CIFilter(name: "CIGaussianBlur", parameters: [kCIInputImageKey:originalCIImage,

155 | kCIInputRadiusKey:intensity])?.outputImage?.cropped(to: safeCropSize).resize(as: originalCIImage.extent.size) else { return nil }

156 | guard let blendedCIImage = CIFilter(name: "CIBlendWithMask", parameters: [

157 | kCIInputImageKey: originalCIImage,

158 | kCIInputBackgroundImageKey:blurBGCIImage,

159 | kCIInputMaskImageKey:maskCIImage])?.outputImage,

160 | let safeCGImage = ciContext.createCGImage(blendedCIImage, from: blendedCIImage.extent)else { print("Image processing failed.Please try with another image."); return nil }

161 |

162 | let final = UIImage(cgImage: safeCGImage)

163 | return final

164 | }

165 |

166 | // MARK: Saliency

167 |

168 | public func saliencyMask(uiImage:UIImage) -> UIImage? {

169 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

170 | guard let ciImage = CIImage(image: newImage),

171 | let request = segmentationRequest else { print("Image processing failed.Please try with another image."); return nil }

172 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

173 | do {

174 | try handler.perform([request])

175 | guard let result = request.results?.first as? VNPixelBufferObservation

176 | else { print("Image processing failed.Please try with another image.") ; return nil }

177 | let maskCIImage = CIImage(cvPixelBuffer: result.pixelBuffer)

178 | let scaledMask = maskCIImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

179 |

180 | guard let safeCGImage = ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed.Please try with another image.") ; return nil }

181 | let maskUIImage = UIImage(cgImage: safeCGImage)

182 | return maskUIImage

183 |

184 | } catch let error {

185 | print("Vision error \(error)")

186 | return nil

187 | }

188 | }

189 |

190 | public func saliencyBlend(objectUIImage:UIImage, backgroundUIImage: UIImage) -> UIImage? {

191 | let newSaliencyUIImage = getCorrectOrientationUIImage(uiImage:objectUIImage)

192 | let newBackgroundUIImage = getCorrectOrientationUIImage(uiImage:backgroundUIImage)

193 |

194 | guard let personCIImage = CIImage(image: newSaliencyUIImage),

195 | let backgroundCIImage = CIImage(image: newBackgroundUIImage),

196 | let maskUIImage = saliencyMask(uiImage: newSaliencyUIImage),

197 | let maskCIImage = CIImage(image: maskUIImage) else {

198 | return nil }

199 |

200 | let backgroundImageSize = backgroundCIImage.extent

201 | let originalSize = personCIImage.extent

202 | var scale:CGFloat = 1

203 | let widthScale = originalSize.width / backgroundImageSize.width

204 | let heightScale = originalSize.height / backgroundImageSize.height

205 | if widthScale > heightScale {

206 | scale = personCIImage.extent.width / backgroundImageSize.width

207 | } else {

208 | scale = personCIImage.extent.height / backgroundImageSize.height

209 | }

210 |

211 | let scaledBG = backgroundCIImage.resize(as: CGSize(width: backgroundCIImage.extent.width*scale, height: backgroundCIImage.extent.height*scale))

212 | let BGCenter = CGPoint(x: scaledBG.extent.width/2, y: scaledBG.extent.height/2)

213 | let originalExtent = personCIImage.extent

214 | let cropRect = CGRect(x: BGCenter.x-(originalExtent.width/2), y: BGCenter.y-(originalExtent.height/2), width: originalExtent.width, height: originalExtent.height)

215 | let croppedBG = scaledBG.cropped(to: cropRect)

216 | let translate = CGAffineTransform(translationX: -croppedBG.extent.minX, y: -croppedBG.extent.minY)

217 | let translatedBG = croppedBG.transformed(by: translate)

218 | print(translatedBG.extent)

219 | guard let blended = CIFilter(name: "CIBlendWithMask", parameters: [

220 | kCIInputImageKey: personCIImage,

221 | kCIInputBackgroundImageKey:translatedBG,

222 | kCIInputMaskImageKey:maskCIImage])?.outputImage,

223 | let safeCGImage = ciContext.createCGImage(blended, from: blended.extent) else { print("Image processing failed.Please try with another image."); return nil }

224 | let blendedUIImage = UIImage(cgImage: safeCGImage)

225 | return blendedUIImage

226 | }

227 |

228 | // MARK: Rectangle

229 |

230 |

231 | public func faceRectangle(uiImage:UIImage) -> UIImage? {

232 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

233 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return nil }

234 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

235 | do {

236 | try handler.perform([faceRectangleRequest])

237 | guard let result = faceRectangleRequest.results?.first else { print("Image processing failed.Please try with another image."); return nil }

238 | let boundingBox = result.boundingBox

239 | let faceRect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

240 | var doubleScaleRect = CGRect(x: faceRect.minX - faceRect.width * 0.5, y: faceRect.minY - faceRect.height * 0.5, width: faceRect.width * 2, height: faceRect.height * 2)

241 | if doubleScaleRect.minX < 0 {

242 | doubleScaleRect.origin.x = 0

243 | }

244 |

245 | if doubleScaleRect.minY < 0 {

246 | doubleScaleRect.origin.y = 0

247 | }

248 | if doubleScaleRect.maxX > ciImage.extent.maxX {

249 | doubleScaleRect = CGRect(x: doubleScaleRect.origin.x, y: doubleScaleRect.origin.y, width: ciImage.extent.width - doubleScaleRect.origin.x, height: doubleScaleRect.height)

250 | }

251 | if doubleScaleRect.maxY > ciImage.extent.maxY {

252 | doubleScaleRect = CGRect(x: doubleScaleRect.origin.x, y: doubleScaleRect.origin.y, width: doubleScaleRect.width, height: ciImage.extent.height - doubleScaleRect.origin.y)

253 | }

254 |

255 | let faceImage = ciImage.cropped(to: doubleScaleRect)

256 | guard let final = ciContext.createCGImage(faceImage, from: faceImage.extent) else { print("Image processing failed.Please try with another image."); return nil }

257 | let finalUiimage = UIImage(cgImage: final)

258 | return finalUiimage

259 | } catch let error {

260 | print("Vision error \(error)")

261 | return nil

262 | }

263 | }

264 |

265 | public func faceRectangles(uiImage:UIImage) -> [UIImage] {

266 | var faceUIImages:[UIImage] = []

267 | let semaphore = DispatchSemaphore(value: 0)

268 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

269 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return [] }

270 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

271 | do {

272 | try handler.perform([faceRectangleRequest])

273 | guard let results = faceRectangleRequest.results else { print("Image processing failed.Please try with another image."); return [] }

274 | guard !results.isEmpty else { print("Image processing failed.Please try with another image."); return [] }

275 | for result in results {

276 | let boundingBox = result.boundingBox

277 | let faceRect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

278 | var doubleScaleRect = CGRect(x: faceRect.minX - faceRect.width * 0.5, y: faceRect.minY - faceRect.height * 0.5, width: faceRect.width * 2, height: faceRect.height * 2)

279 | if doubleScaleRect.minX < 0 {

280 | doubleScaleRect.origin.x = 0

281 | }

282 |

283 | if doubleScaleRect.minY < 0 {

284 | doubleScaleRect.origin.y = 0

285 | }

286 | if doubleScaleRect.maxX > ciImage.extent.maxX {

287 | doubleScaleRect = CGRect(x: doubleScaleRect.origin.x, y: doubleScaleRect.origin.y, width: ciImage.extent.width - doubleScaleRect.origin.x, height: doubleScaleRect.height)

288 | }

289 | if doubleScaleRect.maxY > ciImage.extent.maxY {

290 | doubleScaleRect = CGRect(x: doubleScaleRect.origin.x, y: doubleScaleRect.origin.y, width: doubleScaleRect.width, height: ciImage.extent.height - doubleScaleRect.origin.y)

291 | }

292 |

293 | let faceImage = ciImage.cropped(to: doubleScaleRect)

294 | guard let final = ciContext.createCGImage(faceImage, from: faceImage.extent) else { print("Image processing failed.Please try with another image."); return [] }

295 | let finalUiimage = UIImage(cgImage: final)

296 | faceUIImages.append(finalUiimage)

297 | if faceUIImages.count == results.count {

298 | semaphore.signal()

299 | }

300 | }

301 | semaphore.wait()

302 | return faceUIImages

303 | } catch let error {

304 | print("Vision error \(error)")

305 | return []

306 | }

307 | }

308 |

309 | public func humanRectangle(uiImage:UIImage) -> UIImage? {

310 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

311 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return nil }

312 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

313 | do {

314 | try handler.perform([humanRectanglesRequest])

315 | guard let result = humanRectanglesRequest.results?.first else { print("Image processing failed.Please try with another image."); return nil }

316 | let boundingBox = result.boundingBox

317 | let humanRect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

318 | let humanImage = ciImage.cropped(to: humanRect)

319 | guard let final = ciContext.createCGImage(humanImage, from: humanImage.extent) else { print("Image processing failed.Please try with another image."); return nil }

320 | let finalUiimage = UIImage(cgImage: final)

321 | return finalUiimage

322 | } catch let error {

323 | print("Vision error \(error)")

324 | return nil

325 | }

326 | }

327 |

328 | public func humanRectangles(uiImage:UIImage) -> [UIImage] {

329 | var bodyUIImages:[UIImage] = []

330 | let semaphore = DispatchSemaphore(value: 0)

331 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

332 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return [] }

333 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

334 | do {

335 | try handler.perform([humanRectanglesRequest])

336 | guard let results = humanRectanglesRequest.results else { print("Image processing failed.Please try with another image."); return [] }

337 | guard !results.isEmpty else { print("Image processing failed.Please try with another image."); return [] }

338 |

339 | for result in results {

340 | let boundingBox = result.boundingBox

341 | let humanRect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

342 | let humanImage = ciImage.cropped(to: humanRect)

343 | guard let final = ciContext.createCGImage(humanImage, from: humanImage.extent) else { print("Image processing failed.Please try with another image."); return [] }

344 | let finalUiimage = UIImage(cgImage: final)

345 | bodyUIImages.append(finalUiimage)

346 | if bodyUIImages.count == results.count {

347 | semaphore.signal()

348 | }

349 | }

350 | semaphore.wait()

351 | return bodyUIImages

352 | } catch let error {

353 | print("Vision error \(error)")

354 | return []

355 | }

356 | }

357 |

358 | public func animalRectangle(uiImage:UIImage) -> UIImage?{

359 |

360 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

361 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed.Please try with another image."); return nil }

362 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

363 | do {

364 | try handler.perform([animalRequest])

365 | guard let result = animalRequest.results?.first else { print("Image processing failed. Please try with another image."); return nil }

366 | let boundingBox = result.boundingBox

367 | let rect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

368 | let croppedImage = ciImage.cropped(to: rect)

369 | guard let final = ciContext.createCGImage(croppedImage, from: croppedImage.extent) else { print("Image processing failed. Please try with another image."); return nil }

370 | let finalUiimage = UIImage(cgImage: final)

371 | return finalUiimage

372 | } catch let error {

373 | print("Vision error \(error)")

374 | return nil

375 | }

376 | }

377 |

378 | public func animalRectangles(uiImage:UIImage) -> [UIImage] {

379 | var animalUIImages:[UIImage] = []

380 | let semaphore = DispatchSemaphore(value: 0)

381 | let newImage = getCorrectOrientationUIImage(uiImage:uiImage)

382 | guard let ciImage = CIImage(image: newImage) else { print("Image processing failed. Please try with another image."); return [] }

383 | let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])

384 | do {

385 | try handler.perform([animalRequest])

386 | guard let results = animalRequest.results else { print("Image processing failed. Please try with another image."); return [] }

387 | guard !results.isEmpty else { print("Image processing failed. Please try with another image."); return [] }

388 |

389 | for result in results {

390 | let boundingBox = result.boundingBox

391 | let rect = VNImageRectForNormalizedRect((boundingBox),Int(ciImage.extent.size.width), Int(ciImage.extent.size.height))

392 | let croppedImage = ciImage.cropped(to: rect)

393 | guard let final = ciContext.createCGImage(croppedImage, from: croppedImage.extent) else { print("Image processing failed. Please try with another image."); return [] }

394 | let finalUiimage = UIImage(cgImage: final)

395 | animalUIImages.append(finalUiimage)

396 | if animalUIImages.count == results.count {

397 | semaphore.signal()

398 | }

399 | }

400 | semaphore.wait()

401 | return animalUIImages

402 | } catch let error {

403 | print("Vision error \(error)")

404 | return []

405 | }

406 | }

407 |

408 | public func swapBGOfSalientObjectVideo(videoURL:URL, backgroundUIImage: UIImage, _ completion: ((_ err: NSError?, _ filteredVideoURL: URL?) -> Void)?) {

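409 | guard let bgCIImage = CIImage(image: backgroundUIImage) else { print("Background image could not be converted to CIImage."); completion?(NSError(domain: "SemanticImage", code: 999, userInfo: [NSLocalizedDescriptionKey: "Invalid background image"]), nil); return }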

410 | applyProcessingOnVideo(videoURL: videoURL, { ciImage in

411 | let personCIImage = ciImage

412 | let backgroundCIImage = bgCIImage

413 | var maskCIImage:CIImage

414 | let handler = VNImageRequestHandler(ciImage: personCIImage, options: [:])

415 | do {

416 | guard let segmentationRequest = self.segmentationRequest else {

417 | print("This func can't be used in this OS version."); return nil

418 | }

419 | try handler.perform([segmentationRequest])

420 | guard let result = segmentationRequest.results?.first as? VNPixelBufferObservation

421 | else { print("Image processing failed. Please try with another image.") ; return nil }

422 | let maskImage = CIImage(cvPixelBuffer: result.pixelBuffer)

423 | let scaledMask = maskImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

424 |

425 | guard let safeCGImage = self.ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed. Please try with another image.") ; return nil }

426 | maskCIImage = CIImage(cgImage: safeCGImage)

427 | } catch let error {

428 | print("Vision error \(error)")

429 | return ciImage

430 | }

431 |

432 | let backgroundImageSize = backgroundCIImage.extent

433 | let originalSize = personCIImage.extent

434 | var scale:CGFloat = 1

435 | let widthScale = originalSize.width / backgroundImageSize.width

436 | let heightScale = originalSize.height / backgroundImageSize.height

437 | if widthScale > heightScale {

438 | scale = personCIImage.extent.width / backgroundImageSize.width

439 | } else {

440 | scale = personCIImage.extent.height / backgroundImageSize.height

441 | }

442 |

443 | let scaledBG = backgroundCIImage.resize(as: CGSize(width: backgroundCIImage.extent.width*scale, height: backgroundCIImage.extent.height*scale))

444 | let BGCenter = CGPoint(x: scaledBG.extent.width/2, y: scaledBG.extent.height/2)

445 | let originalExtent = personCIImage.extent

446 | let cropRect = CGRect(x: BGCenter.x-(originalExtent.width/2), y: BGCenter.y-(originalExtent.height/2), width: originalExtent.width, height: originalExtent.height)

447 | let croppedBG = scaledBG.cropped(to: cropRect)

448 | let translate = CGAffineTransform(translationX: -croppedBG.extent.minX, y: -croppedBG.extent.minY)

449 | let traslatedBG = croppedBG.transformed(by: translate)

450 | guard let blended = CIFilter(name: "CIBlendWithMask", parameters: [

451 | kCIInputImageKey: personCIImage,

452 | kCIInputBackgroundImageKey:traslatedBG,

453 | kCIInputMaskImageKey:maskCIImage])?.outputImage,

454 | let safeCGImage = self.ciContext.createCGImage(blended, from: blended.extent) else {return ciImage}

455 | let outCIImage = CIImage(cgImage: safeCGImage)

456 | return outCIImage

457 | } , { err, processedVideoURL in

458 | guard err == nil else { print(err?.localizedDescription as Any); completion?(err, nil); return } // report the failure to the caller instead of swallowing it

459 | completion?(err,processedVideoURL)

460 | })

461 | }

462 |

463 | public func swapBackgroundOfPersonVideo(videoURL:URL, backgroundUIImage: UIImage, _ completion: ((_ err: NSError?, _ filteredVideoURL: URL?) -> Void)?) {

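464 | guard let bgCIImage = CIImage(image: backgroundUIImage) else { print("Background image could not be converted to CIImage."); completion?(NSError(domain: "SemanticImage", code: 999, userInfo: [NSLocalizedDescriptionKey: "Invalid background image"]), nil); return }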

465 | applyProcessingOnVideo(videoURL: videoURL, { ciImage in

466 | let personCIImage = ciImage

467 | let backgroundCIImage = bgCIImage

468 | var maskCIImage:CIImage

469 | let handler = VNImageRequestHandler(ciImage: personCIImage, options: [:])

470 | do {

471 | if #available(iOS 15.0, *) {

472 | try handler.perform([self.personSegmentationRequest])

473 | guard let result = self.personSegmentationRequest.results?.first

474 | else { print("Image processing failed. Please try with another image.") ; return nil }

475 | let maskImage = CIImage(cvPixelBuffer: result.pixelBuffer)

476 | let scaledMask = maskImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

477 |

478 | guard let safeCGImage = self.ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed. Please try with another image.") ; return nil }

479 | maskCIImage = CIImage(cgImage: safeCGImage)

480 | } else {

481 | guard let segmentationRequest = self.segmentationRequest else {

482 | print("This func can't be used in this OS version."); return nil

483 | }

484 | try handler.perform([segmentationRequest])

485 | guard let result = segmentationRequest.results?.first as? VNPixelBufferObservation

486 | else { print("Image processing failed. Please try with another image.") ; return nil }

487 | let maskImage = CIImage(cvPixelBuffer: result.pixelBuffer)

488 | let scaledMask = maskImage.resize(as: CGSize(width: ciImage.extent.width, height: ciImage.extent.height))

489 |

490 | guard let safeCGImage = self.ciContext.createCGImage(scaledMask, from: scaledMask.extent) else { print("Image processing failed. Please try with another image.") ; return nil }

491 | maskCIImage = CIImage(cgImage: safeCGImage)

492 | }

493 | } catch let error {

494 | print("Vision error \(error)")

495 | return ciImage

496 | }

497 |

498 | let backgroundImageSize = backgroundCIImage.extent

499 | let originalSize = personCIImage.extent

500 | var scale:CGFloat = 1

501 | let widthScale = originalSize.width / backgroundImageSize.width

502 | let heightScale = originalSize.height / backgroundImageSize.height

503 | if widthScale > heightScale {

504 | scale = personCIImage.extent.width / backgroundImageSize.width

505 | } else {

506 | scale = personCIImage.extent.height / backgroundImageSize.height

507 | }

508 |

509 | let scaledBG = backgroundCIImage.resize(as: CGSize(width: backgroundCIImage.extent.width*scale, height: backgroundCIImage.extent.height*scale))

510 | let BGCenter = CGPoint(x: scaledBG.extent.width/2, y: scaledBG.extent.height/2)

511 | let originalExtent = personCIImage.extent

512 | let cropRect = CGRect(x: BGCenter.x-(originalExtent.width/2), y: BGCenter.y-(originalExtent.height/2), width: originalExtent.width, height: originalExtent.height)

513 | let croppedBG = scaledBG.cropped(to: cropRect)

514 | let translate = CGAffineTransform(translationX: -croppedBG.extent.minX, y: -croppedBG.extent.minY)

515 | let traslatedBG = croppedBG.transformed(by: translate)

516 | guard let blended = CIFilter(name: "CIBlendWithMask", parameters: [

517 | kCIInputImageKey: personCIImage,

518 | kCIInputBackgroundImageKey:traslatedBG,

519 | kCIInputMaskImageKey:maskCIImage])?.outputImage,

520 | let safeCGImage = self.ciContext.createCGImage(blended, from: blended.extent) else {return ciImage}

521 | let outCIImage = CIImage(cgImage: safeCGImage)

522 | return outCIImage

523 | } , { err, processedVideoURL in

524 | guard err == nil else { print(err?.localizedDescription as Any); completion?(err, nil); return } // report the failure to the caller instead of swallowing it

525 | completion?(err,processedVideoURL)

526 | })

527 | }

528 |

529 | public func ciFilterVideo(videoURL:URL, ciFilter: CIFilter, _ completion: ((_ err: NSError?, _ filteredVideoURL: URL?) -> Void)?) {

530 | applyProcessingOnVideo(videoURL: videoURL, { ciImage in

531 | ciFilter.setValue(ciImage, forKey: kCIInputImageKey)

532 | let outCIImage = ciFilter.outputImage

533 | return outCIImage

534 | } , { err, processedVideoURL in

535 | guard err == nil else { print(err?.localizedDescription as Any); completion?(err, nil); return } // report the failure to the caller instead of swallowing it

536 | completion?(err,processedVideoURL)

537 | })

538 | }

539 |

540 | public func applyProcessingOnVideo(videoURL:URL, _ processingFunction: @escaping ((CIImage) -> CIImage?), _ completion: ((_ err: NSError?, _ processedVideoURL: URL?) -> Void)?) {

541 | var frame:Int = 0

542 | var isFrameRotated = false

543 | let asset = AVURLAsset(url: videoURL)

544 | let duration = asset.duration.value

545 | let frameRate = asset.preferredRate

546 | let totalFrame = frameRate * Float(duration)

547 | let err: NSError = NSError.init(domain: "SemanticImage", code: 999, userInfo: [NSLocalizedDescriptionKey: "Video Processing Failed"])

548 | guard let writingDestinationUrl: URL = try? FileManager.default.url(for: .documentDirectory, in: .userDomainMask, appropriateFor: nil, create: true).appendingPathComponent("\(Date())" + ".mp4") else { print("Could not create the output URL in the documents directory."); completion?(err, nil); return }

549 |

550 | // setup

551 |

552 | guard let reader: AVAssetReader = try? AVAssetReader.init(asset: asset) else {

553 | completion?(err, nil)

554 | return

555 | }

556 | guard let writer: AVAssetWriter = try? AVAssetWriter(outputURL: writingDestinationUrl, fileType: AVFileType.mp4) else { // container type matches the ".mp4" extension of the output URL

557 | completion?(err, nil)

558 | return

559 | }

560 |

561 | // setup finish closure

562 |

563 | var audioFinished: Bool = false

564 | var videoFinished: Bool = false

565 | let writtingFinished: (() -> Void) = {

566 | if audioFinished == true && videoFinished == true {

567 | writer.finishWriting {

568 | completion?(nil, writingDestinationUrl)

569 | }

570 | reader.cancelReading()

571 | }

572 | }

573 |

574 | // prepare video reader

575 |

576 | let readerVideoOutput: AVAssetReaderTrackOutput = AVAssetReaderTrackOutput(

577 | track: asset.tracks(withMediaType: AVMediaType.video)[0],

578 | outputSettings: [

579 | kCVPixelBufferPixelFormatTypeKey as String: Int(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange),

580 | ]

581 | )

582 |

583 | reader.add(readerVideoOutput)

584 |

585 | // prepare audio reader

586 |

587 | var readerAudioOutput: AVAssetReaderTrackOutput!

588 | if asset.tracks(withMediaType: AVMediaType.audio).count <= 0 {

589 | audioFinished = true

590 | } else {

591 | readerAudioOutput = AVAssetReaderTrackOutput.init(

592 | track: asset.tracks(withMediaType: AVMediaType.audio)[0],

593 | outputSettings: [

594 | AVSampleRateKey: 44100,

595 | AVFormatIDKey: kAudioFormatLinearPCM,

596 | ]

597 | )

598 | if reader.canAdd(readerAudioOutput) {

599 | reader.add(readerAudioOutput)

600 | } else {

601 | print("Cannot add audio output reader")

602 | audioFinished = true

603 | }

604 | }

605 |

606 | // prepare video input

607 |

608 | let transform = asset.tracks(withMediaType: AVMediaType.video)[0].preferredTransform

609 | let radians = atan2(transform.b, transform.a)

610 | let degrees = (radians * 180.0) / .pi

611 |

612 | var writerVideoInput: AVAssetWriterInput

613 | switch degrees {

614 | case 90:

615 | let rotateTransform = CGAffineTransform(rotationAngle: 0)

616 | writerVideoInput = AVAssetWriterInput.init(

617 | mediaType: AVMediaType.video,

618 | outputSettings: [

619 | AVVideoCodecKey: AVVideoCodecType.h264,

620 | AVVideoWidthKey: asset.tracks(withMediaType: AVMediaType.video)[0].naturalSize.height,

621 | AVVideoHeightKey: asset.tracks(withMediaType: AVMediaType.video)[0].naturalSize.width,

622 | AVVideoCompressionPropertiesKey: [

623 | AVVideoAverageBitRateKey: asset.tracks(withMediaType: AVMediaType.video)[0].estimatedDataRate,

624 | ],

625 | ]

626 | )

627 | writerVideoInput.expectsMediaDataInRealTime = false

628 |

629 | isFrameRotated = true

630 | writerVideoInput.transform = rotateTransform

631 | default:

632 | writerVideoInput = AVAssetWriterInput.init(

633 | mediaType: AVMediaType.video,

634 | outputSettings: [

635 | AVVideoCodecKey: AVVideoCodecType.h264,

636 | AVVideoWidthKey: asset.tracks(withMediaType: AVMediaType.video)[0].naturalSize.width,

637 | AVVideoHeightKey: asset.tracks(withMediaType: AVMediaType.video)[0].naturalSize.height,

638 | AVVideoCompressionPropertiesKey: [

639 | AVVideoAverageBitRateKey: asset.tracks(withMediaType: AVMediaType.video)[0].estimatedDataRate,

640 | ],

641 | ]

642 | )

643 | writerVideoInput.expectsMediaDataInRealTime = false

644 | isFrameRotated = false

645 | writerVideoInput.transform = asset.tracks(withMediaType: AVMediaType.video)[0].preferredTransform

646 | }

647 | let pixelBufferAdaptor = AVAssetWriterInputPixelBufferAdaptor(assetWriterInput: writerVideoInput, sourcePixelBufferAttributes: [kCVPixelBufferPixelFormatTypeKey as String: Int(kCVPixelFormatType_32BGRA)])

648 |

649 | writer.add(writerVideoInput)

650 |

651 |

652 | // prepare writer input for audio

653 |

654 | var writerAudioInput: AVAssetWriterInput! = nil

655 | if asset.tracks(withMediaType: AVMediaType.audio).count > 0 {

656 | let formatDesc: [Any] = asset.tracks(withMediaType: AVMediaType.audio)[0].formatDescriptions

657 | var channels: UInt32 = 1

658 | var sampleRate: Float64 = 44100.000000

659 | for i in 0 ..< formatDesc.count {

660 | guard let bobTheDesc: UnsafePointer<AudioStreamBasicDescription> = CMAudioFormatDescriptionGetStreamBasicDescription(formatDesc[i] as! CMAudioFormatDescription) else {

661 | continue

662 | }

663 | channels = bobTheDesc.pointee.mChannelsPerFrame

664 | sampleRate = bobTheDesc.pointee.mSampleRate

665 | break

666 | }

667 | writerAudioInput = AVAssetWriterInput.init(

668 | mediaType: AVMediaType.audio,

669 | outputSettings: [

670 | AVFormatIDKey: kAudioFormatMPEG4AAC,

671 | AVNumberOfChannelsKey: channels,

672 | AVSampleRateKey: sampleRate,

673 | AVEncoderBitRateKey: 128000,

674 | ]

675 | )

676 | writerAudioInput.expectsMediaDataInRealTime = false // offline transcoding from a file, same as the video input

677 | writer.add(writerAudioInput)

678 | }

679 |

680 |

681 | // write

682 |

683 | let videoQueue = DispatchQueue.init(label: "videoQueue")

684 | let audioQueue = DispatchQueue.init(label: "audioQueue")

685 | writer.startWriting()

686 | reader.startReading()

687 | writer.startSession(atSourceTime: CMTime.zero)

688 |

689 | // write video

690 |

691 | writerVideoInput.requestMediaDataWhenReady(on: videoQueue) {

692 | while writerVideoInput.isReadyForMoreMediaData {

693 | autoreleasepool {

694 | if let buffer = readerVideoOutput.copyNextSampleBuffer(),let pixelBuffer = CMSampleBufferGetImageBuffer(buffer) {

695 | frame += 1

696 | var ciImage = CIImage(cvPixelBuffer: pixelBuffer)

697 | if isFrameRotated {

698 | ciImage = ciImage.oriented(CGImagePropertyOrientation.right)

699 | }

700 | guard let outCIImage = processingFunction(ciImage) else { print("Video Processing Failed") ; return }

701 |

702 | let presentationTime = CMSampleBufferGetOutputPresentationTimeStamp(buffer)

703 | var pixelBufferOut: CVPixelBuffer?

704 | CVPixelBufferPoolCreatePixelBuffer(kCFAllocatorDefault, pixelBufferAdaptor.pixelBufferPool!, &pixelBufferOut)

705 | self.ciContext.render(outCIImage, to: pixelBufferOut!)

706 | pixelBufferAdaptor.append(pixelBufferOut!, withPresentationTime: presentationTime)

707 |

708 | // if frame % 100 == 0 {

709 | // print("\(frame) / \(totalFrame) frames were processed..")

710 | // }

711 | } else {

712 | writerVideoInput.markAsFinished()

713 | DispatchQueue.main.async {

714 | videoFinished = true

715 | writtingFinished()

716 | }

717 | }

718 | }

719 | }

720 | }

721 | if writerAudioInput != nil {

722 | writerAudioInput.requestMediaDataWhenReady(on: audioQueue) {

723 | while writerAudioInput.isReadyForMoreMediaData {

724 | autoreleasepool {

725 | let buffer = readerAudioOutput.copyNextSampleBuffer()

726 | if buffer != nil {

727 | writerAudioInput.append(buffer!)

728 | } else {

729 | writerAudioInput.markAsFinished()

730 | DispatchQueue.main.async {

731 | audioFinished = true

732 | writtingFinished()

733 | }

734 | }

735 | }

736 | }

737 | }

738 | }

739 | }

740 |

741 | func scaleMaskImage(maskCIImage:CIImage, originalCIImage:CIImage) -> CIImage {

742 | let scaledMaskCIImage = maskCIImage.resize(as: originalCIImage.extent.size)

743 | return scaledMaskCIImage

744 | }

745 |

746 | public func getCorrectOrientationUIImage(uiImage:UIImage) -> UIImage {

747 | var newImage = UIImage()

748 | switch uiImage.imageOrientation.rawValue {

749 | case 1:

750 | guard let orientedCIImage = CIImage(image: uiImage)?.oriented(CGImagePropertyOrientation.down),

751 | let cgImage = ciContext.createCGImage(orientedCIImage, from: orientedCIImage.extent) else { return uiImage}

752 |

753 | newImage = UIImage(cgImage: cgImage)

754 | case 3:

755 | guard let orientedCIImage = CIImage(image: uiImage)?.oriented(CGImagePropertyOrientation.right),

756 | let cgImage = ciContext.createCGImage(orientedCIImage, from: orientedCIImage.extent) else { return uiImage}

757 | newImage = UIImage(cgImage: cgImage)

758 | default:

759 | newImage = uiImage

760 | }

761 | return newImage

762 | }

763 | }

764 |

765 | extension CIImage {

766 | func resize(as size: CGSize) -> CIImage {

767 | let selfSize = extent.size

768 | let transform = CGAffineTransform(scaleX: size.width / selfSize.width, y: size.height / selfSize.height)

769 | return transformed(by: transform)

770 | }

771 | }

772 |

773 | extension CGPoint {

774 | func toVector(image: CIImage) -> CIVector {

775 | return CIVector(x: x, y: image.extent.height-y)

776 | }

777 | }

778 |

779 |

--------------------------------------------------------------------------------

/Sources/SemanticImage/segmentation.mlmodelc/analytics/coremldata.bin:

--------------------------------------------------------------------------------

1 | [binary file; readable fields only] NeuralNetworkModelDetails: modelDimension = 490; nnModelNetHash = /xlM/hdQw07Zd+eEBEWY2BxiK0hdwys/9K9FbBk1usI=; nnModelShapeHash = W8QpQ7zUHpd/a/UmUUH3ZxSbwUItzbVf43f5qBpyxmM=; nnModelWeightsHash = OybqTwX2VJdtMO9qXbIBoTDY8iQGa28/8C5qZEomiJw=. SpecificationDetails: modelHash = 81qVHbt2fYQ0/q+kkKKLsEycvNvKzlm1Z7JG76PKlVE=; modelName = segmentation

--------------------------------------------------------------------------------

/Sources/SemanticImage/segmentation.mlmodelc/coremldata.bin:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/john-rocky/SemanticImage/9343f6cee235c5c8a7e35dd3a0b8762eb9b62b42/Sources/SemanticImage/segmentation.mlmodelc/coremldata.bin

--------------------------------------------------------------------------------

/Sources/SemanticImage/segmentation.mlmodelc/metadata.json:

--------------------------------------------------------------------------------

1 | [

2 | {

3 | "metadataOutputVersion" : "3.0",

4 | "storagePrecision" : "Float32",

5 | "outputSchema" : [

6 | {

7 | "height" : "320",

8 | "colorspace" : "Grayscale",

9 | "isOptional" : "0",

10 | "width" : "320",

11 | "isColor" : "0",

12 | "formattedType" : "Image (Grayscale 320 × 320)",

13 | "hasSizeFlexibility" : "0",

14 | "type" : "Image",

15 | "shortDescription" : "",

16 | "name" : "out_p0"

17 | },

18 | {

19 | "height" : "320",

20 | "colorspace" : "Grayscale",

21 | "isOptional" : "0",

22 | "width" : "320",

23 | "isColor" : "0",

24 | "formattedType" : "Image (Grayscale 320 × 320)",

25 | "hasSizeFlexibility" : "0",

26 | "type" : "Image",

27 | "shortDescription" : "",

28 | "name" : "out_p1"

29 | },

30 | {

31 | "height" : "320",

32 | "colorspace" : "Grayscale",

33 | "isOptional" : "0",

34 | "width" : "320",

35 | "isColor" : "0",

36 | "formattedType" : "Image (Grayscale 320 × 320)",

37 | "hasSizeFlexibility" : "0",

38 | "type" : "Image",

39 | "shortDescription" : "",

40 | "name" : "out_p2"

41 | },

42 | {

43 | "height" : "320",

44 | "colorspace" : "Grayscale",

45 | "isOptional" : "0",

46 | "width" : "320",

47 | "isColor" : "0",

48 | "formattedType" : "Image (Grayscale 320 × 320)",

49 | "hasSizeFlexibility" : "0",

50 | "type" : "Image",

51 | "shortDescription" : "",

52 | "name" : "out_p3"

53 | },

54 | {

55 | "height" : "320",

56 | "colorspace" : "Grayscale",

57 | "isOptional" : "0",

58 | "width" : "320",

59 | "isColor" : "0",

60 | "formattedType" : "Image (Grayscale 320 × 320)",

61 | "hasSizeFlexibility" : "0",

62 | "type" : "Image",

63 | "shortDescription" : "",

64 | "name" : "out_p4"

65 | },

66 | {

67 | "height" : "320",

68 | "colorspace" : "Grayscale",

69 | "isOptional" : "0",

70 | "width" : "320",

71 | "isColor" : "0",

72 | "formattedType" : "Image (Grayscale 320 × 320)",

73 | "hasSizeFlexibility" : "0",

74 | "type" : "Image",

75 | "shortDescription" : "",

76 | "name" : "out_p5"

77 | },

78 | {

79 | "height" : "320",

80 | "colorspace" : "Grayscale",

81 | "isOptional" : "0",

82 | "width" : "320",

83 | "isColor" : "0",

84 | "formattedType" : "Image (Grayscale 320 × 320)",

85 | "hasSizeFlexibility" : "0",

86 | "type" : "Image",

87 | "shortDescription" : "",

88 | "name" : "out_p6"

89 | }

90 | ],

91 | "modelParameters" : [

92 |

93 | ],

94 | "specificationVersion" : 5,

95 | "computePrecision" : "Float16",

96 | "isUpdatable" : "0",

97 | "availability" : {

98 | "macOS" : "11.0",

99 | "tvOS" : "14.0",

100 | "watchOS" : "7.0",

101 | "iOS" : "14.0",

102 | "macCatalyst" : "14.0"

103 | },

104 | "neuralNetworkLayerTypeHistogram" : {

105 | "Concat" : 51,

106 | "ActivationLinear" : 7,

107 | "ActivationReLU" : 112,

108 | "UpsampleBiLinear" : 38,

109 | "Add" : 11,

110 | "BatchNorm" : 112,

111 | "Convolution" : 119,

112 | "ActivationSigmoid" : 7,

113 | "PoolingMax" : 33

114 | },

115 | "modelType" : {

116 | "name" : "MLModelType_neuralNetwork"

117 | },

118 | "userDefinedMetadata" : {

119 | "com.github.apple.coremltools.version" : "4.1",

120 | "com.github.apple.coremltools.source" : "torch==1.8.1+cu101"

121 | },

122 | "generatedClassName" : "segmentation",

123 | "inputSchema" : [

124 | {

125 | "height" : "320",

126 | "colorspace" : "RGB",

127 | "isOptional" : "0",

128 | "width" : "320",

129 | "isColor" : "1",

130 | "formattedType" : "Image (Color 320 × 320)",

131 | "hasSizeFlexibility" : "0",

132 | "type" : "Image",

133 | "shortDescription" : "",

134 | "name" : "in_0"

135 | }

136 | ],

137 | "method" : "predict"

138 | }

139 | ]

--------------------------------------------------------------------------------

/Sources/SemanticImage/segmentation.mlmodelc/model.espresso.shape:

--------------------------------------------------------------------------------

1 | {

2 | "layer_shapes" : {

3 | "input.95" : {

4 | "k" : 32,

5 | "w" : 160,

6 | "n" : 1,

7 | "_rank" : 4,

8 | "h" : 160

9 | },

10 | "input.354" : {

11 | "k" : 64,

12 | "w" : 320,

13 | "n" : 1,

14 | "_rank" : 4,

15 | "h" : 320

16 | },

17 | "input.41" : {

18 | "k" : 16,

19 | "w" : 40,

20 | "n" : 1,

21 | "_rank" : 4,

22 | "h" : 40

23 | },

24 | "input.369" : {

25 | "k" : 16,

26 | "w" : 40,

27 | "n" : 1,

28 | "_rank" : 4,

29 | "h" : 40

30 | },

31 | "input.383" : {

32 | "k" : 16,

33 | "w" : 10,

34 | "n" : 1,

35 | "_rank" : 4,

36 | "h" : 10

37 | },

38 | "input.403" : {

39 | "k" : 64,

40 | "w" : 320,

41 | "n" : 1,

42 | "_rank" : 4,

43 | "h" : 320

44 | },

45 | "tar" : {

46 | "k" : 1,

47 | "w" : 320,

48 | "n" : 1,

49 | "_rank" : 4,

50 | "h" : 320

51 | },

52 | "input.16" : {

53 | "k" : 16,

54 | "w" : 40,

55 | "n" : 1,

56 | "_rank" : 4,

57 | "h" : 40

58 | },

59 | "hx4.4" : {

60 | "k" : 16,

61 | "w" : 20,

62 | "n" : 1,

63 | "_rank" : 4,

64 | "h" : 20

65 | },

66 | "input.208" : {

67 | "k" : 32,

68 | "w" : 10,

69 | "n" : 1,

70 | "_rank" : 4,

71 | "h" : 10

72 | },

73 | "hx5dup.4" : {

74 | "k" : 16,

75 | "w" : 20,

76 | "n" : 1,

77 | "_rank" : 4,

78 | "h" : 20

79 | },

80 | "input.251" : {

81 | "k" : 16,

82 | "w" : 10,

83 | "n" : 1,

84 | "_rank" : 4,

85 | "h" : 10

86 | },

87 | "input.46" : {

88 | "k" : 32,

89 | "w" : 160,

90 | "n" : 1,

91 | "_rank" : 4,

92 | "h" : 160

93 | },

94 | "input.280" : {

95 | "k" : 16,

96 | "w" : 20,

97 | "n" : 1,

98 | "_rank" : 4,

99 | "h" : 20

100 | },

101 | "hx6dup.1" : {

102 | "k" : 16,

103 | "w" : 20,

104 | "n" : 1,

105 | "_rank" : 4,

106 | "h" : 20

107 | },

108 | "out_p6" : {

109 | "k" : 1,

110 | "w" : 320,

111 | "n" : 1,

112 | "_rank" : 4,

113 | "h" : 320

114 | },

115 | "input.76" : {

116 | "k" : 16,

117 | "w" : 10,

118 | "n" : 1,

119 | "_rank" : 4,

120 | "h" : 10

121 | },

122 | "hx6dup" : {

123 | "k" : 16,

124 | "w" : 20,

125 | "n" : 1,

126 | "_rank" : 4,

127 | "h" : 20

128 | },

129 | "input.373" : {

130 | "k" : 16,

131 | "w" : 20,

132 | "n" : 1,

133 | "_rank" : 4,

134 | "h" : 20

135 | },

136 | "input.105" : {

137 | "k" : 16,

138 | "w" : 80,

139 | "n" : 1,

140 | "_rank" : 4,

141 | "h" : 80

142 | },

143 | "input.388" : {

144 | "k" : 32,

145 | "w" : 40,

146 | "n" : 1,

147 | "_rank" : 4,

148 | "h" : 40

149 | },

150 | "input.8" : {

151 | "k" : 16,

152 | "w" : 160,

153 | "n" : 1,

154 | "_rank" : 4,

155 | "h" : 160

156 | },

157 | "input.408" : {

158 | "k" : 1,

159 | "w" : 10,

160 | "n" : 1,

161 | "_rank" : 4,

162 | "h" : 10

163 | },

164 | "hx2dup.1" : {

165 | "k" : 16,

166 | "w" : 320,

167 | "n" : 1,

168 | "_rank" : 4,

169 | "h" : 320

170 | },

171 | "input.227" : {

172 | "k" : 16,

173 | "w" : 20,

174 | "n" : 1,

175 | "_rank" : 4,

176 | "h" : 20

177 | },

178 | "hx5.1" : {

179 | "k" : 16,

180 | "w" : 10,

181 | "n" : 1,

182 | "_rank" : 4,

183 | "h" : 10

184 | },

185 | "input.27" : {

186 | "k" : 16,

187 | "w" : 10,

188 | "n" : 1,

189 | "_rank" : 4,

190 | "h" : 10

191 | },

192 | "hx1d.2" : {

193 | "k" : 64,

194 | "w" : 160,

195 | "n" : 1,

196 | "_rank" : 4,

197 | "h" : 160

198 | },

199 | "input.82" : {

200 | "k" : 16,

201 | "w" : 10,

202 | "n" : 1,

203 | "_rank" : 4,

204 | "h" : 10

205 | },

206 | "input.305" : {

207 | "k" : 64,

208 | "w" : 80,

209 | "n" : 1,

210 | "_rank" : 4,

211 | "h" : 80

212 | },

213 | "hx2dup.6" : {

214 | "k" : 16,

215 | "w" : 80,

216 | "n" : 1,

217 | "_rank" : 4,

218 | "h" : 80

219 | },

220 | "input.57" : {

221 | "k" : 64,

222 | "w" : 160,

223 | "n" : 1,

224 | "_rank" : 4,

225 | "h" : 160

226 | },

227 | "input.334" : {

228 | "k" : 16,

229 | "w" : 10,

230 | "n" : 1,

231 | "_rank" : 4,

232 | "h" : 10

233 | },

234 | "hx1d.10" : {

235 | "k" : 64,

236 | "w" : 160,

237 | "n" : 1,

238 | "_rank" : 4,

239 | "h" : 160

240 | },

241 | "input.392" : {

242 | "k" : 32,

243 | "w" : 80,

244 | "n" : 1,

245 | "_rank" : 4,

246 | "h" : 80

247 | },

248 | "input.124" : {

249 | "k" : 32,

250 | "w" : 20,

251 | "n" : 1,

252 | "_rank" : 4,

253 | "h" : 20

254 | },

255 | "input.139" : {

256 | "k" : 64,

257 | "w" : 40,

258 | "n" : 1,

259 | "_rank" : 4,

260 | "h" : 40

261 | },

262 | "input.87" : {

263 | "k" : 32,

264 | "w" : 40,

265 | "n" : 1,

266 | "_rank" : 4,

267 | "h" : 40

268 | },

269 | "hx3d" : {

270 | "k" : 16,

271 | "w" : 20,

272 | "n" : 1,

273 | "_rank" : 4,

274 | "h" : 20

275 | },

276 | "input.33" : {

277 | "k" : 16,

278 | "w" : 10,

279 | "n" : 1,

280 | "_rank" : 4,

281 | "h" : 10

282 | },

283 | "hx3dup.3" : {

284 | "k" : 16,

285 | "w" : 40,

286 | "n" : 1,

287 | "_rank" : 4,

288 | "h" : 40

289 | },

290 | "input.168" : {

291 | "k" : 64,

292 | "w" : 20,

293 | "n" : 1,

294 | "_rank" : 4,

295 | "h" : 20

296 | },

297 | "input.153" : {

298 | "k" : 32,

299 | "w" : 10,

300 | "n" : 1,

301 | "_rank" : 4,

302 | "h" : 10

303 | },

304 | "input" : {

305 | "k" : 1,

306 | "w" : 320,

307 | "n" : 1,

308 | "_rank" : 4,

309 | "h" : 320

310 | },

311 | "input.202" : {

312 | "k" : 16,

313 | "w" : 10,

314 | "n" : 1,

315 | "_rank" : 4,

316 | "h" : 10

317 | },

318 | "input.246" : {

319 | "k" : 16,

320 | "w" : 40,

321 | "n" : 1,

322 | "_rank" : 4,

323 | "h" : 40

324 | },

325 | "input.260" : {

326 | "k" : 16,

327 | "w" : 10,

328 | "n" : 1,

329 | "_rank" : 4,

330 | "h" : 10

331 | },

332 | "input.275" : {

333 | "k" : 16,

334 | "w" : 80,

335 | "n" : 1,

336 | "_rank" : 4,

337 | "h" : 80

338 | },

339 | "hx3dup.8" : {

340 | "k" : 16,

341 | "w" : 80,

342 | "n" : 1,

343 | "_rank" : 4,

344 | "h" : 80

345 | },

346 | "input.38" : {

347 | "k" : 32,

348 | "w" : 40,

349 | "n" : 1,

350 | "_rank" : 4,

351 | "h" : 40

352 | },

353 | "input.324" : {

354 | "k" : 16,

355 | "w" : 20,

356 | "n" : 1,

357 | "_rank" : 4,

358 | "h" : 20

359 | },

360 | "input.339" : {

361 | "k" : 32,

362 | "w" : 40,

363 | "n" : 1,

364 | "_rank" : 4,

365 | "h" : 40

366 | },

367 | "input.114" : {

368 | "k" : 16,

369 | "w" : 10,

370 | "n" : 1,

371 | "_rank" : 4,

372 | "h" : 10

373 | },

374 | "hx4dup.5" : {

375 | "k" : 16,

376 | "w" : 20,

377 | "n" : 1,

378 | "_rank" : 4,

379 | "h" : 20

380 | },

381 | "input.68" : {

382 | "k" : 16,

383 | "w" : 40,

384 | "n" : 1,

385 | "_rank" : 4,

386 | "h" : 40

387 | },

388 | "input.143" : {

389 | "k" : 16,

390 | "w" : 20,

391 | "n" : 1,

392 | "_rank" : 4,

393 | "h" : 20

394 | },

395 | "hx1d.3" : {

396 | "k" : 64,

397 | "w" : 80,

398 | "n" : 1,

399 | "_rank" : 4,

400 | "h" : 80

401 | },

402 | "hx2d" : {

403 | "k" : 16,

404 | "w" : 20,

405 | "n" : 1,

406 | "_rank" : 4,

407 | "h" : 20

408 | },

409 | "input.221" : {

410 | "k" : 16,

411 | "w" : 20,

412 | "n" : 1,

413 | "_rank" : 4,

414 | "h" : 20

415 | },

416 | "hx5dup.2" : {

417 | "k" : 16,

418 | "w" : 20,

419 | "n" : 1,

420 | "_rank" : 4,

421 | "h" : 20

422 | },

423 | "input.98" : {

424 | "k" : 64,

425 | "w" : 160,

426 | "n" : 1,

427 | "_rank" : 4,

428 | "h" : 160

429 | },

430 | "input.236" : {

431 | "k" : 32,

432 | "w" : 20,

433 | "n" : 1,

434 | "_rank" : 4,

435 | "h" : 20

436 | },

437 | "input.250" : {

438 | "k" : 16,

439 | "w" : 20,

440 | "n" : 1,

441 | "_rank" : 4,

442 | "h" : 20

443 | },

444 | "input.265" : {

445 | "k" : 32,

446 | "w" : 40,

447 | "n" : 1,

448 | "_rank" : 4,

449 | "h" : 40

450 | },

451 | "input.294" : {

452 | "k" : 32,

453 | "w" : 20,

454 | "n" : 1,

455 | "_rank" : 4,

456 | "h" : 20

457 | },

458 | "input.19" : {

459 | "k" : 16,

460 | "w" : 40,

461 | "n" : 1,

462 | "_rank" : 4,

463 | "h" : 40

464 | },

465 | "input.343" : {

466 | "k" : 32,

467 | "w" : 80,

468 | "n" : 1,

469 | "_rank" : 4,

470 | "h" : 80

471 | },

472 | "input.20" : {

473 | "k" : 16,

474 | "w" : 20,

475 | "n" : 1,

476 | "_rank" : 4,

477 | "h" : 20

478 | },

479 | "input.358" : {

480 | "k" : 16,

481 | "w" : 160,

482 | "n" : 1,

483 | "_rank" : 4,

484 | "h" : 160

485 | },

486 | "input.387" : {

487 | "k" : 16,

488 | "w" : 20,

489 | "n" : 1,

490 | "_rank" : 4,

491 | "h" : 20

492 | },

493 | "input.4" : {

494 | "k" : 64,

495 | "w" : 320,

496 | "n" : 1,

497 | "_rank" : 4,

498 | "h" : 320

499 | },

500 | "input.49" : {

501 | "k" : 16,

502 | "w" : 160,

503 | "n" : 1,

504 | "_rank" : 4,

505 | "h" : 160

506 | },

507 | "input.407" : {

508 | "k" : 1,

509 | "w" : 20,

510 | "n" : 1,

511 | "_rank" : 4,

512 | "h" : 20

513 | },

514 | "input.50" : {

515 | "k" : 32,

516 | "w" : 320,

517 | "n" : 1,

518 | "_rank" : 4,

519 | "h" : 320

520 | },

521 | "input.177" : {

522 | "k" : 16,

523 | "w" : 20,

524 | "n" : 1,

525 | "_rank" : 4,

526 | "h" : 20

527 | },

528 | "input.211" : {

529 | "k" : 32,

530 | "w" : 10,

531 | "n" : 1,

532 | "_rank" : 4,

533 | "h" : 10

534 | },

535 | "input.79" : {

536 | "k" : 32,

537 | "w" : 10,

538 | "n" : 1,

539 | "_rank" : 4,

540 | "h" : 10

541 | },

542 | "input.240" : {

543 | "k" : 128,

544 | "w" : 40,

545 | "n" : 1,

546 | "_rank" : 4,

547 | "h" : 40

548 | },

549 | "hx4dup" : {

550 | "k" : 16,

551 | "w" : 80,

552 | "n" : 1,

553 | "_rank" : 4,

554 | "h" : 80

555 | },

556 | "hx1d" : {

557 | "k" : 64,

558 | "w" : 320,

559 | "n" : 1,

560 | "_rank" : 4,

561 | "h" : 320

562 | },

563 | "input.284" : {

564 | "k" : 16,

565 | "w" : 10,

566 | "n" : 1,

567 | "_rank" : 4,

568 | "h" : 10

569 | },

570 | "hx2dup.4" : {

571 | "k" : 16,

572 | "w" : 40,

573 | "n" : 1,

574 | "_rank" : 4,

575 | "h" : 40

576 | },

577 | "hx1d.4" : {

578 | "k" : 64,

579 | "w" : 40,

580 | "n" : 1,

581 | "_rank" : 4,

582 | "h" : 40

583 | },

584 | "input.362" : {

585 | "k" : 16,

586 | "w" : 80,

587 | "n" : 1,

588 | "_rank" : 4,

589 | "h" : 80

590 | },

591 | "input.377" : {

592 | "k" : 16,

593 | "w" : 10,

594 | "n" : 1,

595 | "_rank" : 4,

596 | "h" : 10

597 | },

598 | "input.391" : {

599 | "k" : 16,

600 | "w" : 40,

601 | "n" : 1,

602 | "_rank" : 4,

603 | "h" : 40

604 | },

605 | "input.123" : {

606 | "k" : 16,

607 | "w" : 10,

608 | "n" : 1,

609 | "_rank" : 4,

610 | "h" : 10

611 | },

612 | "input.109" : {

613 | "k" : 16,

614 | "w" : 40,

615 | "n" : 1,

616 | "_rank" : 4,

617 | "h" : 40

618 | },

619 | "hx3dup.1" : {

620 | "k" : 16,

621 | "w" : 160,

622 | "n" : 1,

623 | "_rank" : 4,

624 | "h" : 160

625 | },

626 | "hx6.1" : {

627 | "k" : 16,

628 | "w" : 10,

629 | "n" : 1,

630 | "_rank" : 4,

631 | "h" : 10

632 | },

633 | "out_a0" : {

634 | "k" : 1,

635 | "w" : 320,

636 | "n" : 1,

637 | "_rank" : 4,

638 | "h" : 320

639 | },

640 | "input.196" : {

641 | "k" : 16,

642 | "w" : 10,

643 | "n" : 1,

644 | "_rank" : 4,

645 | "h" : 10

646 | },

647 | "input.230" : {

648 | "k" : 32,

649 | "w" : 20,

650 | "n" : 1,

651 | "_rank" : 4,

652 | "h" : 20

653 | },

654 | "input.61" : {

655 | "k" : 16,

656 | "w" : 80,

657 | "n" : 1,

658 | "_rank" : 4,

659 | "h" : 80

660 | },

661 | "hx3dup.6" : {

662 | "k" : 16,

663 | "w" : 40,

664 | "n" : 1,

665 | "_rank" : 4,

666 | "h" : 40

667 | },

668 | "input.309" : {

669 | "k" : 64,

670 | "w" : 160,

671 | "n" : 1,

672 | "_rank" : 4,

673 | "h" : 160

674 | },

675 | "input.91" : {

676 | "k" : 32,

677 | "w" : 80,

678 | "n" : 1,

679 | "_rank" : 4,

680 | "h" : 80

681 | },

682 | "input.338" : {

683 | "k" : 16,

684 | "w" : 20,

685 | "n" : 1,

686 | "_rank" : 4,

687 | "h" : 20

688 | },

689 | "input.113" : {

690 | "k" : 16,

691 | "w" : 20,

692 | "n" : 1,

693 | "_rank" : 4,

694 | "h" : 20

695 | },

696 | "hx4dup.3" : {

697 | "k" : 16,

698 | "w" : 20,

699 | "n" : 1,

700 | "_rank" : 4,

701 | "h" : 20

702 | },

703 | "hx2d.1" : {

704 | "k" : 16,

705 | "w" : 20,

706 | "n" : 1,

707 | "_rank" : 4,

708 | "h" : 20

709 | },

710 | "input.396" : {

711 | "k" : 32,

712 | "w" : 160,

713 | "n" : 1,

714 | "_rank" : 4,

715 | "h" : 160

716 | },

717 | "input.142" : {

718 | "k" : 16,

719 | "w" : 40,

720 | "n" : 1,

721 | "_rank" : 4,

722 | "h" : 40

723 | },

724 | "input.128" : {

725 | "k" : 32,

726 | "w" : 40,

727 | "n" : 1,

728 | "_rank" : 4,

729 | "h" : 40

730 | },