├── Dockerfile
├── LICENSE
├── README.md
├── examples
│   ├── cox.pdf
│   └── london.pdf
└── paperify.sh

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM debian:latest

2 |

3 | # Build with:

4 | # docker build --tag jstrieb/paperify:latest .

5 |

6 | RUN apt-get update && \

7 |     apt-get install --no-install-recommends --yes \

8 |         pandoc \

9 |         curl ca-certificates \

10 |         jq \

11 |         python3 \

12 |         imagemagick \

13 |         texlive texlive-publishers texlive-science lmodern texlive-latex-extra

14 |

15 | COPY paperify.sh /usr/local/bin/paperify

16 | RUN chmod +x /usr/local/bin/paperify

17 |

18 | WORKDIR /root/

19 | ENTRYPOINT ["paperify"]

20 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 Jacob Strieb

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Paperify

2 |

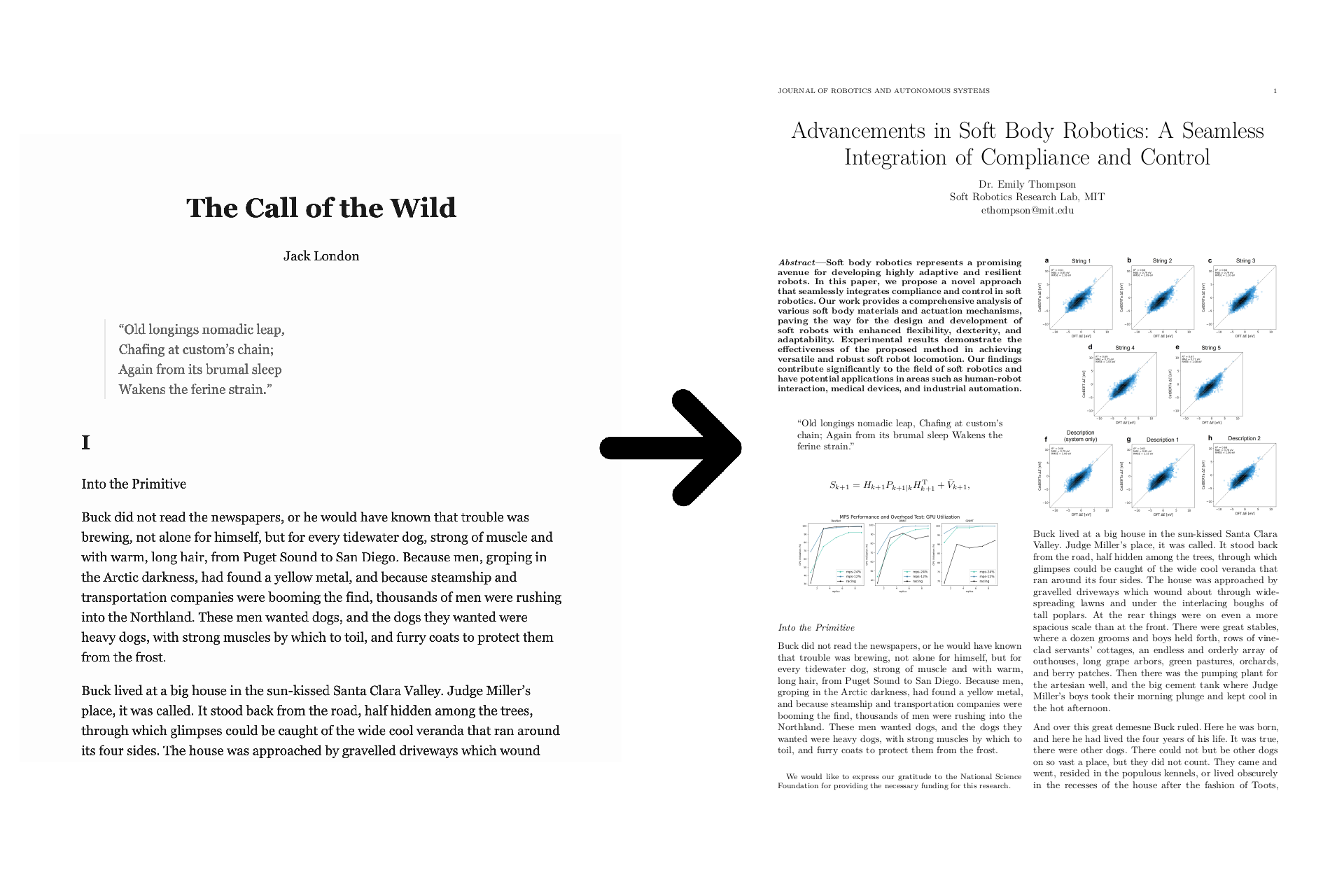

3 | Paperify transforms any document, web page, or ebook into a research paper.

4 |

5 | The text of the generated paper is the same as the text of the original

6 | document, but figures and equations from real papers are interspersed

7 | throughout.

8 |

9 | A paper title and abstract are added (optionally generated by ChatGPT, if you

10 | provide an API key), and the entire paper is compiled with the IEEE $\LaTeX$

11 | template for added realism.

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 | # Install

21 |

22 | First, install the dependencies (or [use Docker](#docker)):

23 |

24 | - curl

25 | - Python 3

26 | - Pandoc

27 | - jq

28 | - LaTeX (via TeXLive)

29 | - ImageMagick (optional)

30 |

31 | For example, on Debian-based systems (_e.g._, Debian, Ubuntu, Kali, WSL):

32 |

33 | ``` bash

34 | sudo apt update

35 | sudo apt install --no-install-recommends \

36 |   pandoc \

37 |   curl ca-certificates \

38 |   jq \

39 |   python3 \

40 |   imagemagick \

41 |   texlive texlive-publishers texlive-science lmodern texlive-latex-extra

42 | ```
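
Before running the script, it can help to confirm that the dependencies listed above are actually on `PATH`. The following is a minimal sketch and not part of Paperify: `missing_deps` is a hypothetical helper, and `pdflatex` is an assumed stand-in for "LaTeX (via TeXLive)" that may differ on your system.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of paperify.sh): print each named command
# that cannot be found on PATH, one per line.
missing_deps() {
  local cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf '%s\n' "$cmd"
    fi
  done
}

# Required tools from the dependency list; pdflatex is an assumption about
# which executable the TeX Live packages provide.
missing="$(missing_deps curl python3 pandoc jq pdflatex)"
if [ -n "$missing" ]; then
  printf 'Missing required dependencies:\n%s\n' "$missing" >&2
fi
```

If nothing is printed, every required command was found. ImageMagick is listed as optional, so it is deliberately left out of the check.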

43 |

44 | Then, clone the repo (or download the script directly), make it executable, and run it.

45 |

46 | ``` bash

47 | curl -L https://github.com/jstrieb/paperify/raw/master/paperify.sh \

48 |   | sudo tee /usr/local/bin/paperify

49 | sudo chmod +x /usr/local/bin/paperify

50 |

51 | paperify -h

52 | ```

53 |

54 |

55 | # Examples

56 |

57 | - [`examples/cox.pdf`](examples/cox.pdf)

58 |

59 | Convert [Russ Cox's transcript of Doug McIlroy's talk on the history of Bell

60 | Labs](https://research.swtch.com/bell-labs) into a paper saved to the `/tmp/`

61 | directory as `article.pdf`.

62 |

63 | ```

64 | paperify \

65 |   --from-format html \

66 |   "https://research.swtch.com/bell-labs" \

67 |   /tmp/article.pdf

68 | ```

69 |

70 | - [`examples/london.pdf`](examples/london.pdf)

71 |

72 | Download figures and equations from the 1000 latest computer science papers

73 | on `arXiv.org`. Intersperse the figures and equations into Jack London's

74 | _Call of the Wild_ with a higher-than-default equation frequency. Use ChatGPT

75 | to generate a paper title, author, abstract, and metadata for an imaginary

76 | paper on soft body robotics. Save the file in the current directory as

77 | `london.pdf`.

78 |

79 | ```

80 | paperify \

81 |   --arxiv-category cs \

82 |   --num-papers 1000 \

83 |   --equation-frequency 18 \

84 |   --chatgpt-token "sk-[REDACTED]" \

85 |   --chatgpt-topic "soft body robotics" \

86 |   "https://standardebooks.org/ebooks/jack-london/the-call-of-the-wild/downloads/jack-london_the-call-of-the-wild.epub" \

87 |   london.pdf

88 | ```

89 |

90 | ## Docker

91 |

92 | Alternatively, run Paperify from within a Docker container. To run the first

93 | example from within Docker and build to `./build/cox.pdf`:

94 |

95 | ``` bash

96 | docker run \

97 |   --rm \

98 |   -it \

99 |   --volume "$(pwd)/build":/root/build \

100 |   jstrieb/paperify \

101 |   --from-format html \

102 |   "https://research.swtch.com/bell-labs" \

103 |   build/cox.pdf

104 | ```
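
The `docker run` invocation above can be wrapped in a small shell function so that only the Paperify arguments change between runs. This is a sketch under stated assumptions: `paperify_docker` and the `DRY_RUN` variable are hypothetical names, not part of the repository. The function creates `build/` before running, since Docker typically creates a missing bind-mount directory owned by root. To use a locally built image instead of a pulled one, the Dockerfile's own header comment gives the build command, repeated in the comment below.

```bash
#!/usr/bin/env bash
# Optional: build the image locally first (command taken from the Dockerfile):
#   docker build --tag jstrieb/paperify:latest .

# Hypothetical wrapper (not part of the repo): run Paperify in Docker with
# ./build on the host mounted at /root/build in the container.
# Set DRY_RUN=1 to print the docker command instead of executing it.
paperify_docker() {
  local out_dir="build"
  # Prepend the docker invocation to the caller's Paperify arguments.
  set -- docker run --rm -it \
    --volume "$(pwd)/$out_dir":/root/build \
    jstrieb/paperify "$@"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
    return 0
  fi
  # Create the output directory up front so the current user owns it.
  mkdir -p "$out_dir"
  "$@"
}
```

For example, `paperify_docker --from-format html "https://research.swtch.com/bell-labs" build/cox.pdf` runs the same command as the block above.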

105 |

106 |

107 | # Usage

108 |

109 | ```

110 | usage: paperify [OPTIONS]