├── .gitignore

├── LICENSE

├── README.md

├── clipboard.py

├── icon.png

├── imgurpython

├── __init__.py

├── client.py

├── helpers

│ ├── __init__.py

│ ├── error.py

│ └── format.py

└── imgur

│ ├── __init__.py

│ └── models

│ ├── __init__.py

│ ├── account.py

│ ├── account_settings.py

│ ├── album.py

│ ├── comment.py

│ ├── conversation.py

│ ├── custom_gallery.py

│ ├── gallery_album.py

│ ├── gallery_image.py

│ ├── image.py

│ ├── message.py

│ ├── notification.py

│ ├── tag.py

│ └── tag_vote.py

├── info.plist

├── oss2

├── __init__.py

├── api.py

├── auth.py

├── compat.py

├── crypto.py

├── defaults.py

├── exceptions.py

├── http.py

├── iterators.py

├── models.py

├── resumable.py

├── task_queue.py

├── utils.py

└── xml_utils.py

├── qcloud_cos

├── __init__.py

├── cos_auth.py

├── cos_client.py

├── cos_comm.py

├── cos_exception.py

├── cos_threadpool.py

├── demo.py

├── streambody.py

└── xml2dict.py

├── requirements.txt

├── util.py

├── wntc.py

└── 程序示意图.eddx

/.gitignore:

--------------------------------------------------------------------------------

1 | .idea

2 | *.pyc

3 | *.swp

4 | .DB_store

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

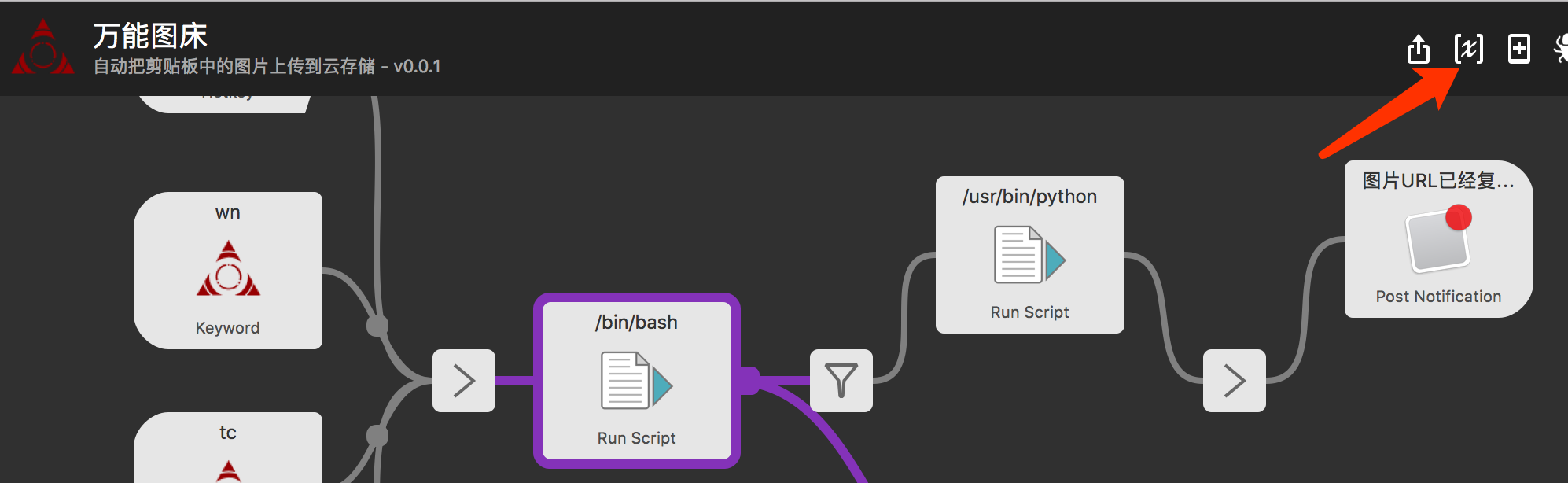

1 | # 万能图床

2 |

3 | 这是一个方便的剪贴板图片上传实用工具,且同时可上传至多个云(已实现同时上传至腾讯云和阿里云)

4 | 图片上传到图床之后,会自动把上传返回的链接放置到系统剪切版上,整个过程只需要两步:

5 |

6 | 1. 截图/复制本地图片/复制网络图片链接

7 | 2. 快捷键 `cmd + opt + p` 进行上传 (或者用调用alfred 输入关键字wn 或 tc 或你自定义的关键字 )

8 |

9 |

10 | 上传完成之后,返回的图片链接自动放入到系统剪切版中,可以直接使用`cmd + V` 使用。

11 |

12 |

13 | ----

14 | ## 支持列表

15 | - [X] 阿里云oss

16 | - [x] 腾讯云cos

17 | - [x] imgur

18 | - [ ] 七牛云

19 | - [ ] 坚果云

20 |

21 |

22 | ## 运行环境

23 |

24 | - macOs 10.13.6

25 | - alfred v3.6.2 开通PowerPack

26 | - python 2.7 mac系统默认

27 | - python依赖库

28 | - PyObjC

29 | - cos-python-sdk-v5

30 | - oss2

31 | - requests

32 |

33 |

34 | ## 配置说明

35 |

36 |

37 | |name|说明|

38 | |--|--|

39 | |debug|是否开启debug模式(会弹出多余信息)|

40 | |keyword|自定义关键字启动万能图床|

41 | |favor_yun|如果配置了多个云,配置该项会将该项的url拷贝到剪贴板里|

42 | |cos_bucket_name|腾讯云存储桶名称|

43 | |cos_is_cdn|是否使用cdn链接,前提是你开通了cdn|

44 | |cos_cdn_domain|开通了cdn的域名 如cossh.myqcloud.com|

45 | |cos_region|域名中的地域信息。枚举值参见 可用地域 文档,如:ap-beijing, ap-hongkong, eu-frankfurt 等|

46 | |cos_secret_id|开发者拥有的项目身份识别 ID,用以身份认证|

47 | |cos_secret_key|开发者拥有的项目身份密钥|

48 | |oss.AccessKeyId|开发者拥有的项目身份识别 ID,用以身份认证|

49 | |oss.AccessKeySecret|开发者拥有的项目身份密钥|

50 | |oss.bucket_name||

51 | |oss.endpoint||

52 | |imgur_use|是否使用imgur(可选)因为需要翻墙速度慢大部分人默认可关闭 true/false|

53 | |imgur_client_id||

54 | |imgur_client_secret||

55 | |imgur_access_token||

56 | |imgur_refresh_token||

57 | |imgur_album|可选|

58 | |porxyconf|如:http://127.0.0.1:58555 代理设置 imgur可能需要翻墙|

59 |

60 | #### 腾讯云

61 |

62 | https://cloud.tencent.com/document/product/436/7751

63 | #### 阿里云

64 |

65 | https://help.aliyun.com/document_detail/52834.html?spm=a2c4g.11186623.6.677.84qFxY

66 |

67 | ---

68 |

69 |

70 | ## 特性

71 | . 极速截图转图片链接

72 | 2. 极速本地图片转图片链接

73 | 3. 极速网络图片转自定义图片链接

74 | - 直接将图片粘贴为markdown支持的图片链接

75 | - 自动图片上传,失败通知栏通知

76 | - 方便的图片上传工具

77 |

78 | ## 使用

79 |

80 | 首先请确认依赖库安装成功;然后导入Alfred工作流;

81 |

82 | #### 通过截图上传

83 |

84 | 使用任意截图工具截图之后,,按下 `cmd + opt + p` ,再在任意编辑器里面你需要插入markdown格式图片的地方,按下cmd + V即可!

85 |

86 | #### 通过本地图片上传

87 |

88 | 如果你已经有一张图片了,希望上传到图床得到一个链接;

89 | 直接复制本地图片,然后按下 `cmd + opt + p`就能得到图床的链接!

90 |

91 | ## TODO

92 | - 选中任何文件即可上传到云上

93 | - 增加 七牛云、坚果云等

94 |

95 | ## 版本

96 | ###v1.1

97 | - 增加imgur支持

98 | - 增加cos的cdn域名自定义

99 | ###v1.0

100 | - 增加腾讯云cos

101 | ###v0.1

102 | - 可以使用阿里云oss

103 |

104 | ## 鸣谢

105 | - https://github.com/Imgur/imgurpython

--------------------------------------------------------------------------------

/clipboard.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import os

3 | import tempfile

4 | import imghdr

5 | import shutil

6 |

7 | from AppKit import NSPasteboard, NSPasteboardTypePNG, \

8 | NSPasteboardTypeTIFF, NSPasteboardTypeString, \

9 | NSFilenamesPboardType

10 |

11 | # image_file, need_format, need_compress

12 | NONE_IMG = (None, False, None)

13 |

14 |

15 | def _convert_to_png(from_path, to_path):

16 | # convert it to png file

17 | os.system('sips -s format png %s --out %s' % (from_path, to_path))

18 |

19 |

20 | def get_paste_img_file():

21 | ''' get a img file from clipboard;

22 | the return object is a `tempfile.NamedTemporaryFile`

23 | you can use the name field to access the file path.

24 | the tmp file will be delete as soon as possible(when gc happened or close explicitly)

25 | you can not just return a path, must hold the reference'''

26 |

27 | pb = NSPasteboard.generalPasteboard()

28 | data_type = pb.types()

29 |

30 | supported_image_format = (NSPasteboardTypePNG, NSPasteboardTypeTIFF)

31 | if NSFilenamesPboardType in data_type:

32 | # file in clipboard

33 | img_path = pb.propertyListForType_(NSFilenamesPboardType)[0]

34 | img_type = imghdr.what(img_path)

35 |

36 | if not img_type:

37 | # not image file

38 | return NONE_IMG

39 |

40 | if img_type not in ('png', 'jpeg', 'gif'):

41 | # now only support png & jpg & gif

42 | return NONE_IMG

43 |

44 | is_gif = img_type == 'gif'

45 | _file = tempfile.NamedTemporaryFile(suffix=img_type)

46 | tmp_clipboard_img_file = tempfile.NamedTemporaryFile()

47 | shutil.copy(img_path, tmp_clipboard_img_file.name)

48 | if not is_gif:

49 | _convert_to_png(tmp_clipboard_img_file.name, _file.name)

50 | else:

51 | shutil.copy(tmp_clipboard_img_file.name, _file.name)

52 | tmp_clipboard_img_file.close()

53 | return _file, False, 'gif' if is_gif else 'png'

54 |

55 | if NSPasteboardTypeString in data_type:

56 | # make this be first, because plain text may be TIFF format?

57 | # string todo, recognise url of png & jpg

58 | return NONE_IMG

59 |

60 | if any(filter(lambda f: f in data_type, supported_image_format)):

61 | # do not care which format it is, we convert it to png finally

62 | # system screen shotcut is png, QQ is tiff

63 | tmp_clipboard_img_file = tempfile.NamedTemporaryFile()

64 | print tmp_clipboard_img_file.name

65 | png_file = tempfile.NamedTemporaryFile(suffix='png')

66 | for fmt in supported_image_format:

67 | data = pb.dataForType_(fmt)

68 | if data: break

69 | ret = data.writeToFile_atomically_(tmp_clipboard_img_file.name, False)

70 | if not ret: return NONE_IMG

71 |

72 | _convert_to_png(tmp_clipboard_img_file.name, png_file.name)

73 | # close the file explicitly

74 | tmp_clipboard_img_file.close()

75 | return png_file, True, 'png'

76 |

77 |

78 | if __name__ == '__main__':

79 | get_paste_img_file()

--------------------------------------------------------------------------------

/icon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/juforg/wntc.alfredworkflow/9f1ddbd83727a9e972fd26fd63486065021a7ae2/icon.png

--------------------------------------------------------------------------------

/imgurpython/__init__.py:

--------------------------------------------------------------------------------

1 | from .client import ImgurClient

--------------------------------------------------------------------------------

/imgurpython/helpers/__init__.py:

--------------------------------------------------------------------------------

1 | from ..imgur.models.comment import Comment

2 | from ..imgur.models.notification import Notification

3 | from ..imgur.models.gallery_album import GalleryAlbum

4 | from ..imgur.models.gallery_image import GalleryImage

--------------------------------------------------------------------------------

/imgurpython/helpers/error.py:

--------------------------------------------------------------------------------

1 | class ImgurClientError(Exception):

2 | def __init__(self, error_message, status_code=None):

3 | self.status_code = status_code

4 | self.error_message = error_message

5 |

6 | def __str__(self):

7 | if self.status_code:

8 | return "(%s) %s" % (self.status_code, self.error_message)

9 | else:

10 | return self.error_message

11 |

12 |

13 | class ImgurClientRateLimitError(Exception):

14 | def __str__(self):

15 | return 'Rate-limit exceeded!'

16 |

--------------------------------------------------------------------------------

/imgurpython/helpers/format.py:

--------------------------------------------------------------------------------

1 | from ..helpers import Comment

2 | from ..helpers import GalleryAlbum

3 | from ..helpers import GalleryImage

4 | from ..helpers import Notification

5 |

6 |

7 | def build_comment_tree(children):

8 | children_objects = []

9 | for child in children:

10 | to_insert = Comment(child)

11 | to_insert.children = build_comment_tree(to_insert.children)

12 | children_objects.append(to_insert)

13 |

14 | return children_objects

15 |

16 |

17 | def format_comment_tree(response):

18 | if isinstance(response, list):

19 | result = []

20 | for comment in response:

21 | formatted = Comment(comment)

22 | formatted.children = build_comment_tree(comment['children'])

23 | result.append(formatted)

24 | else:

25 | result = Comment(response)

26 | result.children = build_comment_tree(response['children'])

27 |

28 | return result

29 |

30 |

31 | def build_gallery_images_and_albums(response):

32 | if isinstance(response, list):

33 | result = []

34 | for item in response:

35 | if item['is_album']:

36 | result.append(GalleryAlbum(item))

37 | else:

38 | result.append(GalleryImage(item))

39 | else:

40 | if response['is_album']:

41 | result = GalleryAlbum(response)

42 | else:

43 | result = GalleryImage(response)

44 |

45 | return result

46 |

47 |

48 | def build_notifications(response):

49 | result = {

50 | 'replies': [],

51 | 'messages': [Notification(

52 | item['id'],

53 | item['account_id'],

54 | item['viewed'],

55 | item['content']

56 | ) for item in response['messages']]

57 | }

58 |

59 | for item in response['replies']:

60 | notification = Notification(

61 | item['id'],

62 | item['account_id'],

63 | item['viewed'],

64 | item['content']

65 | )

66 | notification.content = format_comment_tree(item['content'])

67 | result['replies'].append(notification)

68 |

69 | return result

70 |

71 |

72 | def build_notification(item):

73 | notification = Notification(

74 | item['id'],

75 | item['account_id'],

76 | item['viewed'],

77 | item['content']

78 | )

79 |

80 | if 'comment' in notification.content:

81 | notification.content = format_comment_tree(item['content'])

82 |

83 | return notification

84 |

--------------------------------------------------------------------------------

/imgurpython/imgur/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/juforg/wntc.alfredworkflow/9f1ddbd83727a9e972fd26fd63486065021a7ae2/imgurpython/imgur/__init__.py

--------------------------------------------------------------------------------

/imgurpython/imgur/models/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/juforg/wntc.alfredworkflow/9f1ddbd83727a9e972fd26fd63486065021a7ae2/imgurpython/imgur/models/__init__.py

--------------------------------------------------------------------------------

/imgurpython/imgur/models/account.py:

--------------------------------------------------------------------------------

1 | class Account(object):

2 |

3 | def __init__(self, account_id, url, bio, reputation, created, pro_expiration):

4 | self.id = account_id

5 | self.url = url

6 | self.bio = bio

7 | self.reputation = reputation

8 | self.created = created

9 | self.pro_expiration = pro_expiration

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/account_settings.py:

--------------------------------------------------------------------------------

1 | class AccountSettings(object):

2 |

3 | def __init__(self, email, high_quality, public_images, album_privacy, pro_expiration, accepted_gallery_terms,

4 | active_emails, messaging_enabled, blocked_users):

5 | self.email = email

6 | self.high_quality = high_quality

7 | self.public_images = public_images

8 | self.album_privacy = album_privacy

9 | self.pro_expiration = pro_expiration

10 | self.accepted_gallery_terms = accepted_gallery_terms

11 | self.active_emails = active_emails

12 | self.messaging_enabled = messaging_enabled

13 | self.blocked_users = blocked_users

14 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/album.py:

--------------------------------------------------------------------------------

1 | class Album(object):

2 |

3 | # See documentation at https://api.imgur.com/ for available fields

4 | def __init__(self, *initial_data, **kwargs):

5 | for dictionary in initial_data:

6 | for key in dictionary:

7 | setattr(self, key, dictionary[key])

8 | for key in kwargs:

9 | setattr(self, key, kwargs[key])

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/comment.py:

--------------------------------------------------------------------------------

1 | class Comment(object):

2 |

3 | # See documentation at https://api.imgur.com/ for available fields

4 | def __init__(self, *initial_data, **kwargs):

5 | for dictionary in initial_data:

6 | for key in dictionary:

7 | setattr(self, key, dictionary[key])

8 | for key in kwargs:

9 | setattr(self, key, kwargs[key])

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/conversation.py:

--------------------------------------------------------------------------------

1 | from .message import Message

2 |

3 | class Conversation(object):

4 |

5 | def __init__(self, conversation_id, last_message_preview, datetime, with_account_id, with_account, message_count, messages=None,

6 | done=None, page=None):

7 | self.id = conversation_id

8 | self.last_message_preview = last_message_preview

9 | self.datetime = datetime

10 | self.with_account_id = with_account_id

11 | self.with_account = with_account

12 | self.message_count = message_count

13 | self.page = page

14 | self.done = done

15 |

16 | if messages:

17 | self.messages = [Message(

18 | message['id'],

19 | message['from'],

20 | message['account_id'],

21 | message['sender_id'],

22 | message['body'],

23 | message['conversation_id'],

24 | message['datetime'],

25 | ) for message in messages]

26 | else:

27 | self.messages = None

28 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/custom_gallery.py:

--------------------------------------------------------------------------------

1 | from .gallery_album import GalleryAlbum

2 | from .gallery_image import GalleryImage

3 |

4 |

5 | class CustomGallery(object):

6 |

7 | def __init__(self, custom_gallery_id, name, datetime, account_url, link, tags, item_count=None, items=None):

8 | self.id = custom_gallery_id

9 | self.name = name

10 | self.datetime = datetime

11 | self.account_url = account_url

12 | self.link = link

13 | self.tags = tags

14 | self.item_count = item_count

15 | self.items = [GalleryAlbum(item) if item['is_album'] else GalleryImage(item) for item in items] \

16 | if items else None

17 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/gallery_album.py:

--------------------------------------------------------------------------------

1 | class GalleryAlbum(object):

2 |

3 | # See documentation at https://api.imgur.com/ for available fields

4 | def __init__(self, *initial_data, **kwargs):

5 | for dictionary in initial_data:

6 | for key in dictionary:

7 | setattr(self, key, dictionary[key])

8 | for key in kwargs:

9 | setattr(self, key, kwargs[key])

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/gallery_image.py:

--------------------------------------------------------------------------------

1 | class GalleryImage(object):

2 |

3 | # See documentation at https://api.imgur.com/ for available fields

4 | def __init__(self, *initial_data, **kwargs):

5 | for dictionary in initial_data:

6 | for key in dictionary:

7 | setattr(self, key, dictionary[key])

8 | for key in kwargs:

9 | setattr(self, key, kwargs[key])

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/image.py:

--------------------------------------------------------------------------------

1 | class Image(object):

2 |

3 | # See documentation at https://api.imgur.com/ for available fields

4 | def __init__(self, *initial_data, **kwargs):

5 | for dictionary in initial_data:

6 | for key in dictionary:

7 | setattr(self, key, dictionary[key])

8 | for key in kwargs:

9 | setattr(self, key, kwargs[key])

10 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/message.py:

--------------------------------------------------------------------------------

1 | class Message(object):

2 |

3 | def __init__(self, message_id, from_user, account_id, sender_id, body, conversation_id, datetime):

4 | self.id = message_id

5 | self.from_user = from_user

6 | self.account_id = account_id

7 | self.sender_id = sender_id

8 | self.body = body

9 | self.conversation_id = conversation_id

10 | self.datetime = datetime

11 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/notification.py:

--------------------------------------------------------------------------------

1 | class Notification(object):

2 |

3 | def __init__(self, notification_id, account_id, viewed, content):

4 | self.id = notification_id

5 | self.account_id = account_id

6 | self.viewed = viewed

7 | self.content = content

8 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/tag.py:

--------------------------------------------------------------------------------

1 | from .gallery_album import GalleryAlbum

2 | from .gallery_image import GalleryImage

3 |

4 |

5 | class Tag(object):

6 |

7 | def __init__(self, name, followers, total_items, following, items):

8 | self.name = name

9 | self.followers = followers

10 | self.total_items = total_items

11 | self.following = following

12 | self.items = [GalleryAlbum(item) if item['is_album'] else GalleryImage(item) for item in items] \

13 | if items else None

14 |

--------------------------------------------------------------------------------

/imgurpython/imgur/models/tag_vote.py:

--------------------------------------------------------------------------------

1 | class TagVote(object):

2 |

3 | def __init__(self, ups, downs, name, author):

4 | self.ups = ups

5 | self.downs = downs

6 | self.name = name

7 | self.author = author

8 |

--------------------------------------------------------------------------------

/info.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | bundleid

6 | vip.appcity.workflow.wntc

7 | category

8 | Tools

9 | connections

10 |

11 | 1B8C5911-370C-4CC5-9D1A-AC88FB9BB559

12 |

13 |

14 | destinationuid

15 | 81BB8436-F1AC-4BF0-8C55-48EDB89350E1

16 | modifiers

17 | 0

18 | modifiersubtext

19 |

20 | vitoclose

21 |

22 |

23 |

24 | 2AD8D366-9A61-4E49-B504-A38B2270A457

25 |

26 |

27 | destinationuid

28 | 998E6417-75A5-4F99-AF80-4465E34811E7

29 | modifiers

30 | 0

31 | modifiersubtext

32 |

33 | vitoclose

34 |

35 |

36 |

37 | 635B6602-02AB-4983-BD28-9246A16767DE

38 |

39 |

40 | destinationuid

41 | 1B8C5911-370C-4CC5-9D1A-AC88FB9BB559

42 | modifiers

43 | 0

44 | modifiersubtext

45 |

46 | vitoclose

47 |

48 |

49 |

50 | 81BB8436-F1AC-4BF0-8C55-48EDB89350E1

51 |

52 |

53 | destinationuid

54 | 5C371D2A-F080-4EAC-A91C-B9735DAAFC91

55 | modifiers

56 | 0

57 | modifiersubtext

58 |

59 | vitoclose

60 |

61 |

62 |

63 | 998E6417-75A5-4F99-AF80-4465E34811E7

64 |

65 |

66 | destinationuid

67 | E117BAD6-E9A8-4553-B6B7-8E87A4BB8644

68 | modifiers

69 | 0

70 | modifiersubtext

71 |

72 | vitoclose

73 |

74 |

75 |

76 | A2F9CEE3-9D3C-4E97-A5ED-DF3291610B7C

77 |

78 |

79 | destinationuid

80 | 998E6417-75A5-4F99-AF80-4465E34811E7

81 | modifiers

82 | 0

83 | modifiersubtext

84 |

85 | vitoclose

86 |

87 |

88 |

89 | DE2E602F-313F-4D10-849E-78A4B46F5EAD

90 |

91 |

92 | destinationuid

93 | 998E6417-75A5-4F99-AF80-4465E34811E7

94 | modifiers

95 | 0

96 | modifiersubtext

97 |

98 | vitoclose

99 |

100 |

101 |

102 | E117BAD6-E9A8-4553-B6B7-8E87A4BB8644

103 |

104 |

105 | destinationuid

106 | 635B6602-02AB-4983-BD28-9246A16767DE

107 | modifiers

108 | 0

109 | modifiersubtext

110 |

111 | vitoclose

112 |

113 |

114 |

115 | destinationuid

116 | 8A455598-2D3E-4F17-B286-D7A58AB04A3C

117 | modifiers

118 | 0

119 | modifiersubtext

120 |

121 | vitoclose

122 |

123 |

124 |

125 | EA346044-4CD8-4752-8DE1-73CEFFDBFAAA

126 |

127 |

128 | destinationuid

129 | 998E6417-75A5-4F99-AF80-4465E34811E7

130 | modifiers

131 | 0

132 | modifiersubtext

133 |

134 | vitoclose

135 |

136 |

137 |

138 |

139 | createdby

140 | juforg

141 | description

142 | 自动把剪贴板中的图片上传到云存储

143 | disabled

144 |

145 | name

146 | 万能图床

147 | objects

148 |

149 |

150 | config

151 |

152 | action

153 | 0

154 | argument

155 | 0

156 | focusedappvariable

157 |

158 | focusedappvariablename

159 |

160 | hotkey

161 | 35

162 | hotmod

163 | 1572864

164 | hotstring

165 | P

166 | leftcursor

167 |

168 | modsmode

169 | 0

170 | relatedAppsMode

171 | 0

172 |

173 | type

174 | alfred.workflow.trigger.hotkey

175 | uid

176 | 2AD8D366-9A61-4E49-B504-A38B2270A457

177 | version

178 | 2

179 |

180 |

181 | config

182 |

183 | lastpathcomponent

184 |

185 | onlyshowifquerypopulated

186 |

187 | removeextension

188 |

189 | text

190 | {query}

191 | title

192 | 图片URL已经复制到剪贴板

193 |

194 | type

195 | alfred.workflow.output.notification

196 | uid

197 | 5C371D2A-F080-4EAC-A91C-B9735DAAFC91

198 | version

199 | 1

200 |

201 |

202 | config

203 |

204 | concurrently

205 |

206 | escaping

207 | 68

208 | script

209 | import sys

210 | import re

211 | query = """

212 | {query}

213 | """

214 | l = re.findall('(?<=\().+?(?=\))',query,re.I)

215 | if l.__len__() >0:

216 | sys.stdout.write(l[0])

217 | scriptargtype

218 | 0

219 | scriptfile

220 |

221 | type

222 | 3

223 |

224 | type

225 | alfred.workflow.action.script

226 | uid

227 | 1B8C5911-370C-4CC5-9D1A-AC88FB9BB559

228 | version

229 | 2

230 |

231 |

232 | config

233 |

234 | argumenttype

235 | 1

236 | keyword

237 | wn

238 | subtext

239 | 自动上传剪贴板图片

240 | text

241 | 万能图床

242 | withspace

243 |

244 |

245 | type

246 | alfred.workflow.input.keyword

247 | uid

248 | DE2E602F-313F-4D10-849E-78A4B46F5EAD

249 | version

250 | 1

251 |

252 |

253 | config

254 |

255 | concurrently

256 |

257 | escaping

258 | 68

259 | script

260 | python wntc.py "{query}"

261 | scriptargtype

262 | 0

263 | scriptfile

264 |

265 | type

266 | 0

267 |

268 | type

269 | alfred.workflow.action.script

270 | uid

271 | E117BAD6-E9A8-4553-B6B7-8E87A4BB8644

272 | version

273 | 2

274 |

275 |

276 | config

277 |

278 | inputstring

279 | {query}

280 | matchcasesensitive

281 |

282 | matchmode

283 | 2

284 | matchstring

285 | !\[.*\]\((.+)\)

286 |

287 | type

288 | alfred.workflow.utility.filter

289 | uid

290 | 635B6602-02AB-4983-BD28-9246A16767DE

291 | version

292 | 1

293 |

294 |

295 | config

296 |

297 | argument

298 | {query}

299 | variables

300 |

301 |

302 | type

303 | alfred.workflow.utility.argument

304 | uid

305 | 81BB8436-F1AC-4BF0-8C55-48EDB89350E1

306 | version

307 | 1

308 |

309 |

310 | config

311 |

312 | argument

313 | {query}

314 | variables

315 |

316 | vardate

317 | {date:short}

318 | vartime

319 | {time}

320 | yuncode

321 | {query}

322 |

323 |

324 | type

325 | alfred.workflow.utility.argument

326 | uid

327 | 998E6417-75A5-4F99-AF80-4465E34811E7

328 | version

329 | 1

330 |

331 |

332 | config

333 |

334 | argumenttype

335 | 1

336 | keyword

337 | tc

338 | subtext

339 | 自动上传剪贴板图片

340 | text

341 | 万能图床

342 | withspace

343 |

344 |

345 | type

346 | alfred.workflow.input.keyword

347 | uid

348 | A2F9CEE3-9D3C-4E97-A5ED-DF3291610B7C

349 | version

350 | 1

351 |

352 |

353 | config

354 |

355 | argument

356 | '{query}', {allvars}

357 | cleardebuggertext

358 |

359 | processoutputs

360 |

361 |

362 | type

363 | alfred.workflow.utility.debug

364 | uid

365 | 8A455598-2D3E-4F17-B286-D7A58AB04A3C

366 | version

367 | 1

368 |

369 |

370 | config

371 |

372 | argumenttype

373 | 0

374 | keyword

375 | {var:keyword}

376 | subtext

377 | 本workflow configuration设置的关键字

378 | text

379 | 万能图床

380 | withspace

381 |

382 |

383 | type

384 | alfred.workflow.input.keyword

385 | uid

386 | EA346044-4CD8-4752-8DE1-73CEFFDBFAAA

387 | version

388 | 1

389 |

390 |

391 | readme

392 | 无论用哪个工具截图后,在�剪贴板中都有这个图片的二进制信息,把这个二进制信息自动上传到各大图床平台上

393 | 目前支持的云有 阿里云(oss)腾讯云(cos)

394 |

395 | |debug|是否开启debug模式(会弹出多余信息)|

396 | |keyword|自定义关键字启动万能图床|

397 | |favor_yun|如果配置了多个云,配置该项会将该项的url拷贝到剪贴板里,可选配置:oss,cos,imgur|

398 | |cos_bucket_name|腾讯云存储桶名称|

399 | |cos_is_cdn|是否使用cdn链接,前提是你开通了cdn|

400 | |cos_region|域名中的地域信息。枚举值参见 可用地域 文档,如:ap-beijing, ap-hongkong, eu-frankfurt 等|

401 | |cos_secret_id|开发者拥有的项目身份识别 ID,用以身份认证|

402 | |cos_secret_key|开发者拥有的项目身份密钥|

403 | |oss.AccessKeyId|开发者拥有的项目身份识别 ID,用以身份认证|

404 | |oss.AccessKeySecret|开发者拥有的项目身份密钥|

405 | |oss.bucket_name||

406 | |oss.endpoint||

407 | |imgur_use|是否使用imgur(可选)因为需要翻墙速度慢大部分人默认可关闭 true/false|

408 | |imgur_client_id||

409 | |imgur_client_secret||

410 | |imgur_access_token||

411 | |imgur_refresh_token||

412 | |imgur_album|可选|

413 | |porxyconf|如:http://127.0.0.1:58555 代理设置 imgur可能需要翻墙|

414 | uidata

415 |

416 | 1B8C5911-370C-4CC5-9D1A-AC88FB9BB559

417 |

418 | xpos

419 | 600

420 | ypos

421 | 220

422 |

423 | 2AD8D366-9A61-4E49-B504-A38B2270A457

424 |

425 | xpos

426 | 90

427 | ypos

428 | 80

429 |

430 | 5C371D2A-F080-4EAC-A91C-B9735DAAFC91

431 |

432 | xpos

433 | 860

434 | ypos

435 | 210

436 |

437 | 635B6602-02AB-4983-BD28-9246A16767DE

438 |

439 | xpos

440 | 520

441 | ypos

442 | 330

443 |

444 | 81BB8436-F1AC-4BF0-8C55-48EDB89350E1

445 |

446 | xpos

447 | 770

448 | ypos

449 | 330

450 |

451 | 8A455598-2D3E-4F17-B286-D7A58AB04A3C

452 |

453 | xpos

454 | 660

455 | ypos

456 | 500

457 |

458 | 998E6417-75A5-4F99-AF80-4465E34811E7

459 |

460 | xpos

461 | 280

462 | ypos

463 | 330

464 |

465 | A2F9CEE3-9D3C-4E97-A5ED-DF3291610B7C

466 |

467 | xpos

468 | 90

469 | ypos

470 | 370

471 |

472 | DE2E602F-313F-4D10-849E-78A4B46F5EAD

473 |

474 | xpos

475 | 90

476 | ypos

477 | 230

478 |

479 | E117BAD6-E9A8-4553-B6B7-8E87A4BB8644

480 |

481 | xpos

482 | 350

483 | ypos

484 | 300

485 |

486 | EA346044-4CD8-4752-8DE1-73CEFFDBFAAA

487 |

488 | note

489 | 自定义关键字

490 | 自己在右上角[X] 中配置的keyword 触发本工作流

491 | xpos

492 | 90

493 | ypos

494 | 500

495 |

496 |

497 | variables

498 |

499 | cos_bucket_name

500 | wntc-1251220317

501 | cos_cdn_domain

502 | cossh.myqcloud.com

503 | cos_is_cdn

504 | true

505 | cos_region

506 | ap-shanghai

507 | cos_secret_id

508 | AKIDurgMrBPF9vgFdpcyytdExX3S0ZBc3uNt

509 | cos_secret_key

510 | gFnEUaUCicQ6as0GtcDctz1sqfcOIBxc

511 | debug

512 | false

513 | favor_yun

514 | cos

515 | imgur_access_token

516 | 9ac4a950705753af07916e0e090a6db8af6229ae

517 | imgur_client_id

518 | 4006e10bc9bfa9d

519 | imgur_client_secret

520 | 9cc6f213b55cf5678b999e4c0fcf1f9f8788de11

521 | imgur_refresh_token

522 | 8e1a904b3c6857935522266a8143a65cb87b958a

523 | imgur_use

524 | false

525 | keyword

526 | wntc

527 | oss.AccessKeyId

528 | a0yPloym0g6sXsyC

529 | oss.AccessKeySecret

530 | cVm1WlvFNueSSEsIg9qQ3ORzQw6wwa

531 | oss.bucket_name

532 | wntc

533 | oss.endpoint

534 | oss-cn-shanghai.aliyuncs.com

535 | porxyconf

536 | http://127.0.0.1:58555

537 |

538 | variablesdontexport

539 |

540 | imgur_client_id

541 | oss.AccessKeyId

542 | cos_bucket_name

543 | imgur_client_secret

544 | imgur_refresh_token

545 | oss.AccessKeySecret

546 | cos_secret_id

547 | cos_secret_key

548 | imgur_access_token

549 |

550 | version

551 | 0.0.1

552 | webaddress

553 | http://appcity.vip

554 |

555 |

556 |

--------------------------------------------------------------------------------

/oss2/__init__.py:

--------------------------------------------------------------------------------

1 | __version__ = '2.5.0'

2 |

3 | from . import models, exceptions

4 |

5 | from .api import Service, Bucket, CryptoBucket

6 | from .auth import Auth, AuthV2, AnonymousAuth, StsAuth, AUTH_VERSION_1, AUTH_VERSION_2, make_auth

7 | from .http import Session, CaseInsensitiveDict

8 |

9 |

10 | from .iterators import (BucketIterator, ObjectIterator,

11 | MultipartUploadIterator, ObjectUploadIterator,

12 | PartIterator, LiveChannelIterator)

13 |

14 |

15 | from .resumable import resumable_upload, resumable_download, ResumableStore, ResumableDownloadStore, determine_part_size

16 | from .resumable import make_upload_store, make_download_store

17 |

18 |

19 | from .compat import to_bytes, to_string, to_unicode, urlparse, urlquote, urlunquote

20 |

21 | from .utils import SizedFileAdapter, make_progress_adapter

22 | from .utils import content_type_by_name, is_valid_bucket_name

23 | from .utils import http_date, http_to_unixtime, iso8601_to_unixtime, date_to_iso8601, iso8601_to_date

24 |

25 |

26 | from .models import BUCKET_ACL_PRIVATE, BUCKET_ACL_PUBLIC_READ, BUCKET_ACL_PUBLIC_READ_WRITE

27 | from .models import OBJECT_ACL_DEFAULT, OBJECT_ACL_PRIVATE, OBJECT_ACL_PUBLIC_READ, OBJECT_ACL_PUBLIC_READ_WRITE

28 | from .models import BUCKET_STORAGE_CLASS_STANDARD, BUCKET_STORAGE_CLASS_IA, BUCKET_STORAGE_CLASS_ARCHIVE

29 |

30 | from .crypto import LocalRsaProvider, AliKMSProvider

31 |

--------------------------------------------------------------------------------

/oss2/auth.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import hmac

4 | import hashlib

5 | import time

6 |

7 | from . import utils

8 | from .compat import urlquote, to_bytes

9 |

10 | from .defaults import get_logger

11 | import logging

12 |

13 | AUTH_VERSION_1 = 'v1'

14 | AUTH_VERSION_2 = 'v2'

15 |

16 |

17 | def make_auth(access_key_id, access_key_secret, auth_version=AUTH_VERSION_1):

18 | if auth_version == AUTH_VERSION_2:

19 | return AuthV2(access_key_id.strip(), access_key_secret.strip())

20 | else:

21 | return Auth(access_key_id.strip(), access_key_secret.strip())

22 |

23 |

24 | class AuthBase(object):

25 | """用于保存用户AccessKeyId、AccessKeySecret,以及计算签名的对象。"""

26 | def __init__(self, access_key_id, access_key_secret):

27 | self.id = access_key_id.strip()

28 | self.secret = access_key_secret.strip()

29 |

30 | def _sign_rtmp_url(self, url, bucket_name, channel_name, playlist_name, expires, params):

31 | expiration_time = int(time.time()) + expires

32 |

33 | canonicalized_resource = "/%s/%s" % (bucket_name, channel_name)

34 | canonicalized_params = []

35 |

36 | if params:

37 | items = params.items()

38 | for k, v in items:

39 | if k != "OSSAccessKeyId" and k != "Signature" and k != "Expires" and k != "SecurityToken":

40 | canonicalized_params.append((k, v))

41 |

42 | canonicalized_params.sort(key=lambda e: e[0])

43 | canon_params_str = ''

44 | for k, v in canonicalized_params:

45 | canon_params_str += '%s:%s\n' % (k, v)

46 |

47 | p = params if params else {}

48 | string_to_sign = str(expiration_time) + "\n" + canon_params_str + canonicalized_resource

49 | get_logger().debug('string_to_sign={0}'.format(string_to_sign))

50 |

51 | h = hmac.new(to_bytes(self.secret), to_bytes(string_to_sign), hashlib.sha1)

52 | signature = utils.b64encode_as_string(h.digest())

53 |

54 | p['OSSAccessKeyId'] = self.id

55 | p['Expires'] = str(expiration_time)

56 | p['Signature'] = signature

57 |

58 | return url + '?' + '&'.join(_param_to_quoted_query(k, v) for k, v in p.items())

59 |

60 |

61 | class Auth(AuthBase):

62 | """签名版本1"""

63 | _subresource_key_set = frozenset(

64 | ['response-content-type', 'response-content-language',

65 | 'response-cache-control', 'logging', 'response-content-encoding',

66 | 'acl', 'uploadId', 'uploads', 'partNumber', 'group', 'link',

67 | 'delete', 'website', 'location', 'objectInfo', 'objectMeta',

68 | 'response-expires', 'response-content-disposition', 'cors', 'lifecycle',

69 | 'restore', 'qos', 'referer', 'stat', 'bucketInfo', 'append', 'position', 'security-token',

70 | 'live', 'comp', 'status', 'vod', 'startTime', 'endTime', 'x-oss-process',

71 | 'symlink', 'callback', 'callback-var']

72 | )

73 |

74 | def _sign_request(self, req, bucket_name, key):

75 | req.headers['date'] = utils.http_date()

76 |

77 | signature = self.__make_signature(req, bucket_name, key)

78 | req.headers['authorization'] = "OSS {0}:{1}".format(self.id, signature)

79 |

80 | def _sign_url(self, req, bucket_name, key, expires):

81 | expiration_time = int(time.time()) + expires

82 |

83 | req.headers['date'] = str(expiration_time)

84 | signature = self.__make_signature(req, bucket_name, key)

85 |

86 | req.params['OSSAccessKeyId'] = self.id

87 | req.params['Expires'] = str(expiration_time)

88 | req.params['Signature'] = signature

89 |

90 | return req.url + '?' + '&'.join(_param_to_quoted_query(k, v) for k, v in req.params.items())

91 |

92 | def __make_signature(self, req, bucket_name, key):

93 | string_to_sign = self.__get_string_to_sign(req, bucket_name, key)

94 |

95 | get_logger().debug('string_to_sign={0}'.format(string_to_sign))

96 |

97 | h = hmac.new(to_bytes(self.secret), to_bytes(string_to_sign), hashlib.sha1)

98 | return utils.b64encode_as_string(h.digest())

99 |

100 | def __get_string_to_sign(self, req, bucket_name, key):

101 | resource_string = self.__get_resource_string(req, bucket_name, key)

102 | headers_string = self.__get_headers_string(req)

103 |

104 | content_md5 = req.headers.get('content-md5', '')

105 | content_type = req.headers.get('content-type', '')

106 | date = req.headers.get('date', '')

107 | return '\n'.join([req.method,

108 | content_md5,

109 | content_type,

110 | date,

111 | headers_string + resource_string])

112 |

113 | def __get_headers_string(self, req):

114 | headers = req.headers

115 | canon_headers = []

116 | for k, v in headers.items():

117 | lower_key = k.lower()

118 | if lower_key.startswith('x-oss-'):

119 | canon_headers.append((lower_key, v))

120 |

121 | canon_headers.sort(key=lambda x: x[0])

122 |

123 | if canon_headers:

124 | return '\n'.join(k + ':' + v for k, v in canon_headers) + '\n'

125 | else:

126 | return ''

127 |

128 | def __get_resource_string(self, req, bucket_name, key):

129 | if not bucket_name:

130 | return '/'

131 | else:

132 | return '/{0}/{1}{2}'.format(bucket_name, key, self.__get_subresource_string(req.params))

133 |

134 | def __get_subresource_string(self, params):

135 | if not params:

136 | return ''

137 |

138 | subresource_params = []

139 | for key, value in params.items():

140 | if key in self._subresource_key_set:

141 | subresource_params.append((key, value))

142 |

143 | subresource_params.sort(key=lambda e: e[0])

144 |

145 | if subresource_params:

146 | return '?' + '&'.join(self.__param_to_query(k, v) for k, v in subresource_params)

147 | else:

148 | return ''

149 |

150 | def __param_to_query(self, k, v):

151 | if v:

152 | return k + '=' + v

153 | else:

154 | return k

155 |

156 |

157 | class AnonymousAuth(object):

158 | """用于匿名访问。

159 |

160 | .. note::

161 | 匿名用户只能读取public-read的Bucket,或只能读取、写入public-read-write的Bucket。

162 | 不能进行Service、Bucket相关的操作,也不能罗列文件等。

163 | """

164 | def _sign_request(self, req, bucket_name, key):

165 | pass

166 |

167 | def _sign_url(self, req, bucket_name, key, expires):

168 | return req.url + '?' + '&'.join(_param_to_quoted_query(k, v) for k, v in req.params.items())

169 |

170 | def _sign_rtmp_url(self, url, bucket_name, channel_name, playlist_name, expires, params):

171 | return url + '?' + '&'.join(_param_to_quoted_query(k, v) for k, v in params.items())

172 |

173 |

174 | class StsAuth(object):

175 | """用于STS临时凭证访问。可以通过官方STS客户端获得临时密钥(AccessKeyId、AccessKeySecret)以及临时安全令牌(SecurityToken)。

176 |

177 | 注意到临时凭证会在一段时间后过期,在此之前需要重新获取临时凭证,并更新 :class:`Bucket ` 的 `auth` 成员变量为新

178 | 的 `StsAuth` 实例。

179 |

180 | :param str access_key_id: 临时AccessKeyId

181 | :param str access_key_secret: 临时AccessKeySecret

182 | :param str security_token: 临时安全令牌(SecurityToken)

183 | :param str auth_version: 需要生成auth的版本,默认为AUTH_VERSION_1(v1)

184 | """

185 | def __init__(self, access_key_id, access_key_secret, security_token, auth_version=AUTH_VERSION_1):

186 | self.__auth = make_auth(access_key_id, access_key_secret, auth_version)

187 | self.__security_token = security_token

188 |

189 | def _sign_request(self, req, bucket_name, key):

190 | req.headers['x-oss-security-token'] = self.__security_token

191 | self.__auth._sign_request(req, bucket_name, key)

192 |

193 | def _sign_url(self, req, bucket_name, key, expires):

194 | req.params['security-token'] = self.__security_token

195 | return self.__auth._sign_url(req, bucket_name, key, expires)

196 |

197 | def _sign_rtmp_url(self, url, bucket_name, channel_name, playlist_name, expires, params):

198 | params['security-token'] = self.__security_token

199 | return self.__auth._sign_rtmp_url(url, bucket_name, channel_name, playlist_name, expires, params)

200 |

201 |

202 | def _param_to_quoted_query(k, v):

203 | if v:

204 | return urlquote(k, '') + '=' + urlquote(v, '')

205 | else:

206 | return urlquote(k, '')

207 |

208 |

209 | def v2_uri_encode(raw_text):

210 | raw_text = to_bytes(raw_text)

211 |

212 | res = ''

213 | for b in raw_text:

214 | if isinstance(b, int):

215 | c = chr(b)

216 | else:

217 | c = b

218 |

219 | if (c >= 'A' and c <= 'Z') or (c >= 'a' and c <= 'z')\

220 | or (c >= '0' and c <= '9') or c in ['_', '-', '~', '.']:

221 | res += c

222 | else:

223 | res += "%{0:02X}".format(ord(c))

224 |

225 | return res

226 |

227 |

228 | _DEFAULT_ADDITIONAL_HEADERS = set(['range',

229 | 'if-modified-since'])

230 |

231 |

232 | class AuthV2(AuthBase):

233 | """签名版本2,与版本1的区别在:

234 | 1. 使用SHA256算法,具有更高的安全性

235 | 2. 参数计算包含所有的HTTP查询参数

236 | """

237 | def _sign_request(self, req, bucket_name, key, in_additional_headers=None):

238 | """把authorization放入req的header里面

239 |

240 | :param req: authorization信息将会加入到这个请求的header里面

241 | :type req: oss2.http.Request

242 |

243 | :param bucket_name: bucket名称

244 | :param key: OSS文件名

245 | :param in_additional_headers: 加入签名计算的额外header列表

246 | """

247 | if in_additional_headers is None:

248 | in_additional_headers = _DEFAULT_ADDITIONAL_HEADERS

249 |

250 | additional_headers = self.__get_additional_headers(req, in_additional_headers)

251 |

252 | req.headers['date'] = utils.http_date()

253 |

254 | signature = self.__make_signature(req, bucket_name, key, additional_headers)

255 |

256 | if additional_headers:

257 | req.headers['authorization'] = "OSS2 AccessKeyId:{0},AdditionalHeaders:{1},Signature:{2}"\

258 | .format(self.id, ';'.join(additional_headers), signature)

259 | else:

260 | req.headers['authorization'] = "OSS2 AccessKeyId:{0},Signature:{1}".format(self.id, signature)

261 |

262 | def _sign_url(self, req, bucket_name, key, expires, in_additional_headers=None):

263 | """返回一个签过名的URL

264 |

265 | :param req: 需要签名的请求

266 | :type req: oss2.http.Request

267 |

268 | :param bucket_name: bucket名称

269 | :param key: OSS文件名

270 | :param int expires: 返回的url将在`expires`秒后过期.

271 | :param in_additional_headers: 加入签名计算的额外header列表

272 |

273 | :return: a signed URL

274 | """

275 |

276 | if in_additional_headers is None:

277 | in_additional_headers = set()

278 |

279 | additional_headers = self.__get_additional_headers(req, in_additional_headers)

280 |

281 | expiration_time = int(time.time()) + expires

282 |

283 | req.headers['date'] = str(expiration_time) # re-use __make_signature by setting the 'date' header

284 |

285 | req.params['x-oss-signature-version'] = 'OSS2'

286 | req.params['x-oss-expires'] = str(expiration_time)

287 | req.params['x-oss-access-key-id'] = self.id

288 |

289 | signature = self.__make_signature(req, bucket_name, key, additional_headers)

290 |

291 | req.params['x-oss-signature'] = signature

292 |

293 | return req.url + '?' + '&'.join(_param_to_quoted_query(k, v) for k, v in req.params.items())

294 |

295 | def __make_signature(self, req, bucket_name, key, additional_headers):

296 | string_to_sign = self.__get_string_to_sign(req, bucket_name, key, additional_headers)

297 |

298 | logging.info('string_to_sign={0}'.format(string_to_sign))

299 |

300 | h = hmac.new(to_bytes(self.secret), to_bytes(string_to_sign), hashlib.sha256)

301 | return utils.b64encode_as_string(h.digest())

302 |

303 | def __get_additional_headers(self, req, in_additional_headers):

304 | # we add a header into additional_headers only if it is already in req's headers.

305 |

306 | additional_headers = set(h.lower() for h in in_additional_headers)

307 | keys_in_header = set(k.lower() for k in req.headers.keys())

308 |

309 | return additional_headers & keys_in_header

310 |

311 | def __get_string_to_sign(self, req, bucket_name, key, additional_header_list):

312 | verb = req.method

313 | content_md5 = req.headers.get('content-md5', '')

314 | content_type = req.headers.get('content-type', '')

315 | date = req.headers.get('date', '')

316 |

317 | canonicalized_oss_headers = self.__get_canonicalized_oss_headers(req, additional_header_list)

318 | additional_headers = ';'.join(sorted(additional_header_list))

319 | canonicalized_resource = self.__get_resource_string(req, bucket_name, key)

320 |

321 | return verb + '\n' +\

322 | content_md5 + '\n' +\

323 | content_type + '\n' +\

324 | date + '\n' +\

325 | canonicalized_oss_headers +\

326 | additional_headers + '\n' +\

327 | canonicalized_resource

328 |

329 | def __get_resource_string(self, req, bucket_name, key):

330 | if bucket_name:

331 | encoded_uri = v2_uri_encode('/' + bucket_name + '/' + key)

332 | else:

333 | encoded_uri = v2_uri_encode('/')

334 |

335 | logging.info('encoded_uri={0} key={1}'.format(encoded_uri, key))

336 |

337 | return encoded_uri + self.__get_canonalized_query_string(req)

338 |

339 | def __get_canonalized_query_string(self, req):

340 | encoded_params = {}

341 | for param, value in req.params.items():

342 | encoded_params[v2_uri_encode(param)] = v2_uri_encode(value)

343 |

344 | if not encoded_params:

345 | return ''

346 |

347 | sorted_params = sorted(encoded_params.items(), key=lambda e: e[0])

348 | return '?' + '&'.join(self.__param_to_query(k, v) for k, v in sorted_params)

349 |

350 | def __param_to_query(self, k, v):

351 | if v:

352 | return k + '=' + v

353 | else:

354 | return k

355 |

356 | def __get_canonicalized_oss_headers(self, req, additional_headers):

357 | """

358 | :param additional_headers: 小写的headers列表, 并且这些headers都不以'x-oss-'为前缀.

359 | """

360 | canon_headers = []

361 |

362 | for k, v in req.headers.items():

363 | lower_key = k.lower()

364 | if lower_key.startswith('x-oss-') or lower_key in additional_headers:

365 | canon_headers.append((lower_key, v))

366 |

367 | canon_headers.sort(key=lambda x: x[0])

368 |

369 | return ''.join(v[0] + ':' + v[1] + '\n' for v in canon_headers)

370 |

--------------------------------------------------------------------------------

/oss2/compat.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | """

4 | 兼容Python版本

5 | """

6 |

7 | import sys

8 |

9 | is_py2 = (sys.version_info[0] == 2)

10 | is_py3 = (sys.version_info[0] == 3)

11 | is_py33 = (sys.version_info[0] == 3 and sys.version_info[1] == 3)

12 |

13 |

14 | try:

15 | import simplejson as json

16 | except (ImportError, SyntaxError):

17 | import json

18 |

19 |

20 | if is_py2:

21 | from urllib import quote as urlquote, unquote as urlunquote

22 | from urlparse import urlparse

23 |

24 |

25 | def to_bytes(data):

26 | """若输入为unicode, 则转为utf-8编码的bytes;其他则原样返回。"""

27 | if isinstance(data, unicode):

28 | return data.encode('utf-8')

29 | else:

30 | return data

31 |

32 | def to_string(data):

33 | """把输入转换为str对象"""

34 | return to_bytes(data)

35 |

36 | def to_unicode(data):

37 | """把输入转换为unicode,要求输入是unicode或者utf-8编码的bytes。"""

38 | if isinstance(data, bytes):

39 | return data.decode('utf-8')

40 | else:

41 | return data

42 |

43 | def stringify(input):

44 | if isinstance(input, dict):

45 | return dict([(stringify(key), stringify(value)) for key,value in input.iteritems()])

46 | elif isinstance(input, list):

47 | return [stringify(element) for element in input]

48 | elif isinstance(input, unicode):

49 | return input.encode('utf-8')

50 | else:

51 | return input

52 |

53 | builtin_str = str

54 | bytes = str

55 | str = unicode

56 |

57 |

58 | elif is_py3:

59 | from urllib.parse import quote as urlquote, unquote as urlunquote

60 | from urllib.parse import urlparse

61 |

62 | def to_bytes(data):

63 | """若输入为str(即unicode),则转为utf-8编码的bytes;其他则原样返回"""

64 | if isinstance(data, str):

65 | return data.encode(encoding='utf-8')

66 | else:

67 | return data

68 |

69 | def to_string(data):

70 | """若输入为bytes,则认为是utf-8编码,并返回str"""

71 | if isinstance(data, bytes):

72 | return data.decode('utf-8')

73 | else:

74 | return data

75 |

76 | def to_unicode(data):

77 | """把输入转换为unicode,要求输入是unicode或者utf-8编码的bytes。"""

78 | return to_string(data)

79 |

80 | def stringify(input):

81 | return input

82 |

83 | builtin_str = str

84 | bytes = bytes

85 | str = str

--------------------------------------------------------------------------------

/oss2/crypto.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | """

4 | oss2.encryption

5 | ~~~~~~~~~~~~~~

6 |

7 | 该模块包含了客户端加解密相关的函数和类。

8 | """

9 | import json

10 | from functools import partial

11 |

12 | from oss2.utils import b64decode_from_string, b64encode_as_string

13 | from . import utils

14 | from .compat import to_string, to_bytes, to_unicode

15 | from .exceptions import OssError, ClientError, OpenApiFormatError, OpenApiServerError

16 |

17 | from Crypto.Cipher import PKCS1_OAEP

18 | from Crypto.PublicKey import RSA

19 | from requests.structures import CaseInsensitiveDict

20 |

21 | from aliyunsdkcore import client

22 | from aliyunsdkcore.acs_exception.exceptions import ServerException, ClientException

23 | from aliyunsdkcore.http import protocol_type, format_type, method_type

24 | from aliyunsdkkms.request.v20160120 import ListKeysRequest, GenerateDataKeyRequest, DecryptRequest, EncryptRequest

25 |

26 | import os

27 |

28 |

29 | class BaseCryptoProvider(object):

30 | """CryptoProvider 基类,提供基础的数据加密解密adapter

31 |

32 | """

33 | def __init__(self, cipher):

34 | self.plain_key = None

35 | self.plain_start = None

36 | self.cipher = cipher

37 |

38 | def make_encrypt_adapter(self, stream, key, start):

39 | return utils.make_cipher_adapter(stream, partial(self.cipher.encrypt, self.cipher(key, start)))

40 |

41 | def make_decrypt_adapter(self, stream, key, start):

42 | return utils.make_cipher_adapter(stream, partial(self.cipher.decrypt, self.cipher(key, start)))

43 |

44 |

45 | _LOCAL_RSA_TMP_DIR = '.oss-local-rsa'

46 |

47 |

48 | class LocalRsaProvider(BaseCryptoProvider):

49 | """使用本地RSA加密数据密钥。

50 |

51 | :param str dir: 本地RSA公钥私钥存储路径

52 | :param str key: 本地RSA公钥私钥名称前缀

53 | :param str passphrase: 本地RSA公钥私钥密码

54 | :param class cipher: 数据加密,默认aes256,用户可自行实现对称加密算法,需符合AESCipher注释规则

55 | """

56 |

57 | PUB_KEY_FILE = '.public_key.pem'

58 | PRIV_KEY_FILE = '.private_key.pem'

59 |

60 | def __init__(self, dir=None, key='', passphrase=None, cipher=utils.AESCipher):

61 | super(LocalRsaProvider, self).__init__(cipher=cipher)

62 | self.dir = dir or os.path.join(os.path.expanduser('~'), _LOCAL_RSA_TMP_DIR)

63 |

64 | utils.makedir_p(self.dir)

65 |

66 | priv_key_full_path = os.path.join(self.dir, key + self.PRIV_KEY_FILE)

67 | pub_key_full_path = os.path.join(self.dir, key + self.PUB_KEY_FILE)

68 | try:

69 | if os.path.exists(priv_key_full_path) and os.path.exists(pub_key_full_path):

70 | with open(priv_key_full_path, 'rb') as f:

71 | self.__decrypt_obj = PKCS1_OAEP.new(RSA.importKey(f.read(), passphrase=passphrase))

72 |

73 | with open(pub_key_full_path, 'rb') as f:

74 | self.__encrypt_obj = PKCS1_OAEP.new(RSA.importKey(f.read(), passphrase=passphrase))

75 |

76 | else:

77 | private_key = RSA.generate(2048)

78 | public_key = private_key.publickey()

79 |

80 | self.__encrypt_obj = PKCS1_OAEP.new(public_key)

81 | self.__decrypt_obj = PKCS1_OAEP.new(private_key)

82 |

83 | with open(priv_key_full_path, 'wb') as f:

84 | f.write(private_key.exportKey(passphrase=passphrase))

85 |

86 | with open(pub_key_full_path, 'wb') as f:

87 | f.write(public_key.exportKey(passphrase=passphrase))

88 | except (ValueError, TypeError, IndexError) as e:

89 | raise ClientError(str(e))

90 |

91 | def build_header(self, headers=None):

92 | if not isinstance(headers, CaseInsensitiveDict):

93 | headers = CaseInsensitiveDict(headers)

94 |

95 | if 'content-md5' in headers:

96 | headers['x-oss-meta-unencrypted-content-md5'] = headers['content-md5']

97 | del headers['content-md5']

98 |

99 | if 'content-length' in headers:

100 | headers['x-oss-meta-unencrypted-content-length'] = headers['content-length']

101 | del headers['content-length']

102 |

103 | headers['x-oss-meta-oss-crypto-key'] = b64encode_as_string(self.__encrypt_obj.encrypt(self.plain_key))

104 | headers['x-oss-meta-oss-crypto-start'] = b64encode_as_string(self.__encrypt_obj.encrypt(to_bytes(str(self.plain_start))))

105 | headers['x-oss-meta-oss-cek-alg'] = self.cipher.ALGORITHM

106 | headers['x-oss-meta-oss-wrap-alg'] = 'rsa'

107 |

108 | self.plain_key = None

109 | self.plain_start = None

110 |

111 | return headers

112 |

113 | def get_key(self):

114 | self.plain_key = self.cipher.get_key()

115 | return self.plain_key

116 |

117 | def get_start(self):

118 | self.plain_start = self.cipher.get_start()

119 | return self.plain_start

120 |

121 | def decrypt_oss_meta_data(self, headers, key, conv=lambda x:x):

122 | try:

123 | return conv(self.__decrypt_obj.decrypt(utils.b64decode_from_string(headers[key])))

124 | except:

125 | return None

126 |

127 |

128 | class AliKMSProvider(BaseCryptoProvider):

129 | """使用aliyun kms服务加密数据密钥。kms的详细说明参见

130 | https://help.aliyun.com/product/28933.html?spm=a2c4g.11186623.3.1.jlYT4v

131 | 此接口在py3.3下暂时不可用,详见

132 | https://github.com/aliyun/aliyun-openapi-python-sdk/issues/61

133 |

134 | :param str access_key_id: 可以访问kms密钥服务的access_key_id

135 | :param str access_key_secret: 可以访问kms密钥服务的access_key_secret

136 | :param str region: kms密钥服务地区

137 | :param str cmkey: 用户主密钥

138 | :param str sts_token: security token,如果使用的是临时AK需提供

139 | :param str passphrase: kms密钥服务密码

140 | :param class cipher: 数据加密,默认aes256,当前仅支持默认实现

141 | """

142 | def __init__(self, access_key_id, access_key_secret, region, cmkey, sts_token = None, passphrase=None, cipher=utils.AESCipher):

143 |

144 | if not issubclass(cipher, utils.AESCipher):

145 | raise ClientError('AliKMSProvider only support AES256 cipher')

146 |

147 | super(AliKMSProvider, self).__init__(cipher=cipher)

148 | self.cmkey = cmkey

149 | self.sts_token = sts_token

150 | self.context = '{"x-passphrase":"' + passphrase + '"}' if passphrase else ''

151 | self.clt = client.AcsClient(access_key_id, access_key_secret, region)

152 |

153 | self.encrypted_key = None

154 |

155 | def build_header(self, headers=None):

156 | if not isinstance(headers, CaseInsensitiveDict):

157 | headers = CaseInsensitiveDict(headers)

158 | if 'content-md5' in headers:

159 | headers['x-oss-meta-unencrypted-content-md5'] = headers['content-md5']

160 | del headers['content-md5']

161 |

162 | if 'content-length' in headers:

163 | headers['x-oss-meta-unencrypted-content-length'] = headers['content-length']

164 | del headers['content-length']

165 |

166 | headers['x-oss-meta-oss-crypto-key'] = self.encrypted_key

167 | headers['x-oss-meta-oss-crypto-start'] = self.__encrypt_data(to_bytes(str(self.plain_start)))

168 | headers['x-oss-meta-oss-cek-alg'] = self.cipher.ALGORITHM

169 | headers['x-oss-meta-oss-wrap-alg'] = 'kms'

170 |

171 | self.encrypted_key = None

172 | self.plain_start = None

173 |

174 | return headers

175 |

176 | def get_key(self):

177 | plain_key, self.encrypted_key = self.__generate_data_key()

178 | return plain_key

179 |

180 | def get_start(self):

181 | self.plain_start = utils.random_counter()

182 | return self.plain_start

183 |

184 | def __generate_data_key(self):

185 | req = GenerateDataKeyRequest.GenerateDataKeyRequest()

186 |

187 | req.set_accept_format(format_type.JSON)

188 | req.set_method(method_type.POST)

189 |

190 | req.set_KeyId(self.cmkey)

191 | req.set_KeySpec('AES_256')

192 | req.set_NumberOfBytes(32)

193 | req.set_EncryptionContext(self.context)

194 | if self.sts_token:

195 | req.set_STSToken(self.sts_token)

196 |

197 | resp = self.__do(req)

198 |

199 | return b64decode_from_string(resp['Plaintext']), resp['CiphertextBlob']

200 |

201 | def __encrypt_data(self, data):

202 | req = EncryptRequest.EncryptRequest()

203 |

204 | req.set_accept_format(format_type.JSON)

205 | req.set_method(method_type.POST)

206 | req.set_KeyId(self.cmkey)

207 | req.set_Plaintext(data)

208 | req.set_EncryptionContext(self.context)

209 | if self.sts_token:

210 | req.set_STSToken(self.sts_token)

211 |

212 | resp = self.__do(req)

213 |

214 | return resp['CiphertextBlob']

215 |

216 | def __decrypt_data(self, data):

217 | req = DecryptRequest.DecryptRequest()

218 |

219 | req.set_accept_format(format_type.JSON)

220 | req.set_method(method_type.POST)

221 | req.set_CiphertextBlob(data)

222 | req.set_EncryptionContext(self.context)

223 | if self.sts_token:

224 | req.set_STSToken(self.sts_token)

225 |

226 | resp = self.__do(req)

227 | return resp['Plaintext']

228 |

229 | def __do(self, req):

230 |

231 | try:

232 | body = self.clt.do_action_with_exception(req)

233 |

234 | return json.loads(to_unicode(body))

235 | except ServerException as e:

236 | raise OpenApiServerError(e.http_status, e.request_id, e.message, e.error_code)

237 | except ClientException as e:

238 | raise ClientError(e.message)

239 | except (ValueError, TypeError) as e:

240 | raise OpenApiFormatError('Json Error: ' + str(e))

241 |

242 | def decrypt_oss_meta_data(self, headers, key, conv=lambda x: x):

243 | try:

244 | if key.lower() == 'x-oss-meta-oss-crypto-key'.lower():

245 | return conv(b64decode_from_string(self.__decrypt_data(headers[key])))

246 | else:

247 | return conv(self.__decrypt_data(headers[key]))

248 | except OssError as e:

249 | raise e

250 | except:

251 | return None

--------------------------------------------------------------------------------

/oss2/defaults.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | """

4 | oss2.defaults

5 | ~~~~~~~~~~~~~

6 |

7 | 全局缺省变量。

8 |

9 | """

10 |

11 | import logging

12 |

13 |

14 | def get(value, default_value):

15 | if value is None:

16 | return default_value

17 | else:

18 | return value

19 |

20 |

21 | #: 连接超时时间

22 | connect_timeout = 60

23 |

24 | #: 缺省重试次数

25 | request_retries = 3

26 |

27 | #: 对于某些接口,上传数据长度大于或等于该值时,就采用分片上传。

28 | multipart_threshold = 10 * 1024 * 1024

29 |

30 | #: 分片上传缺省线程数

31 | multipart_num_threads = 1

32 |

33 | #: 缺省分片大小