
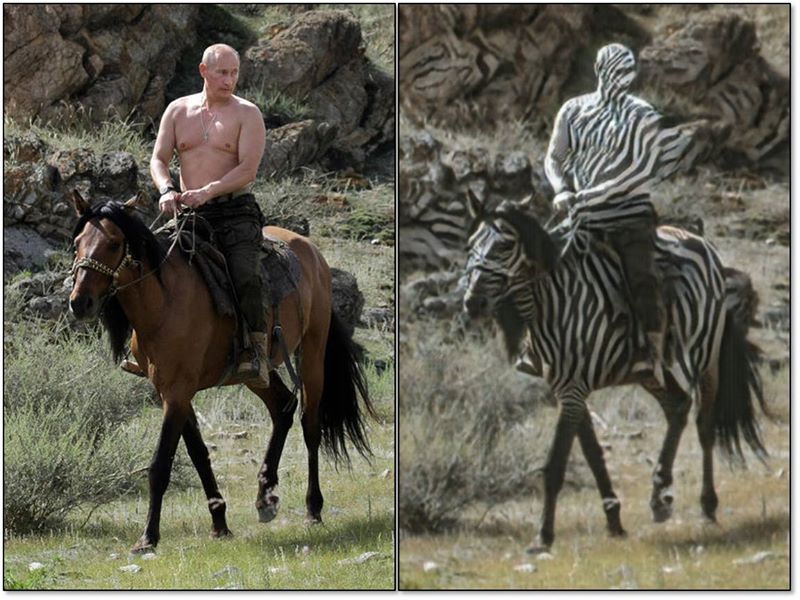

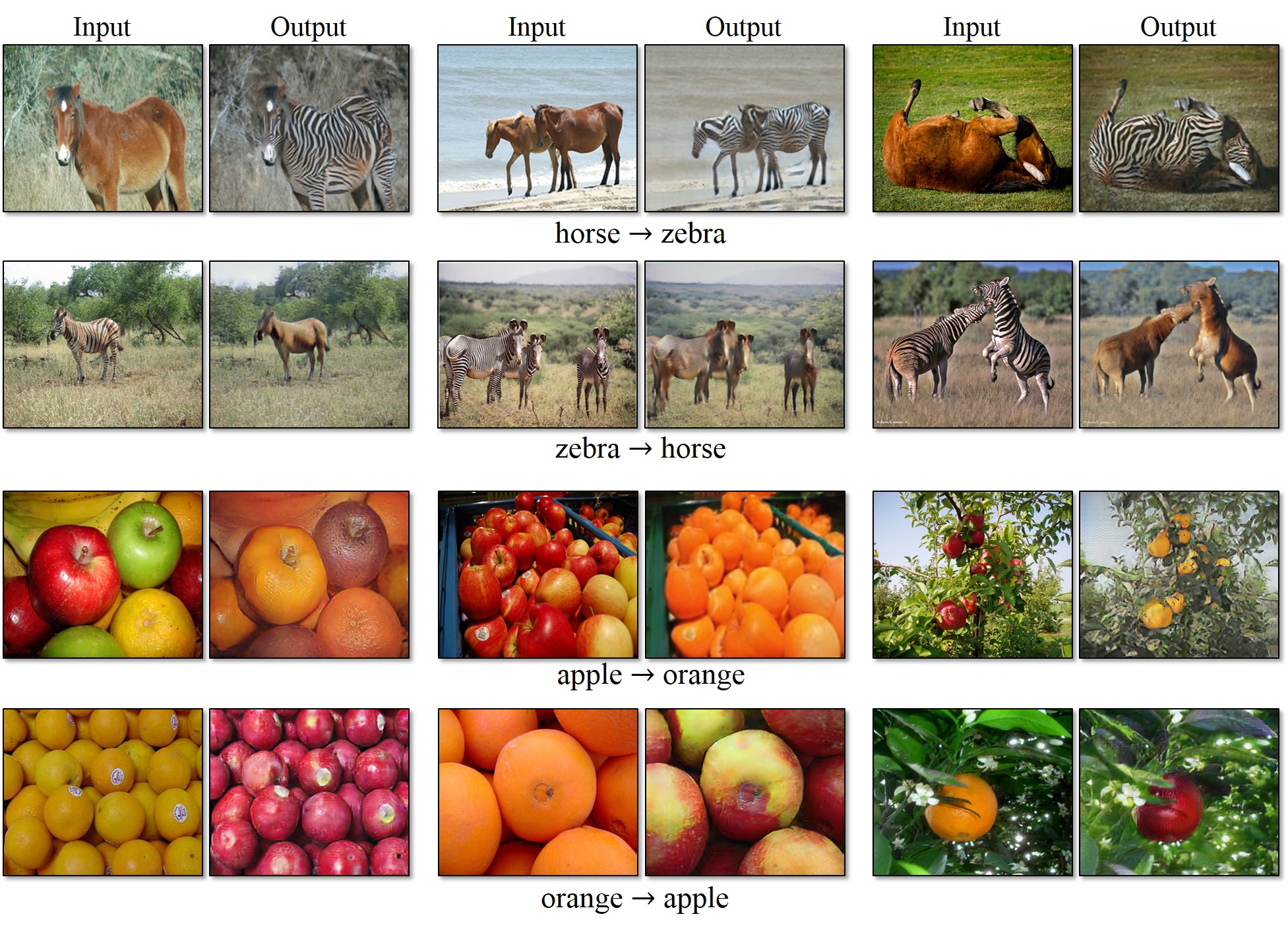

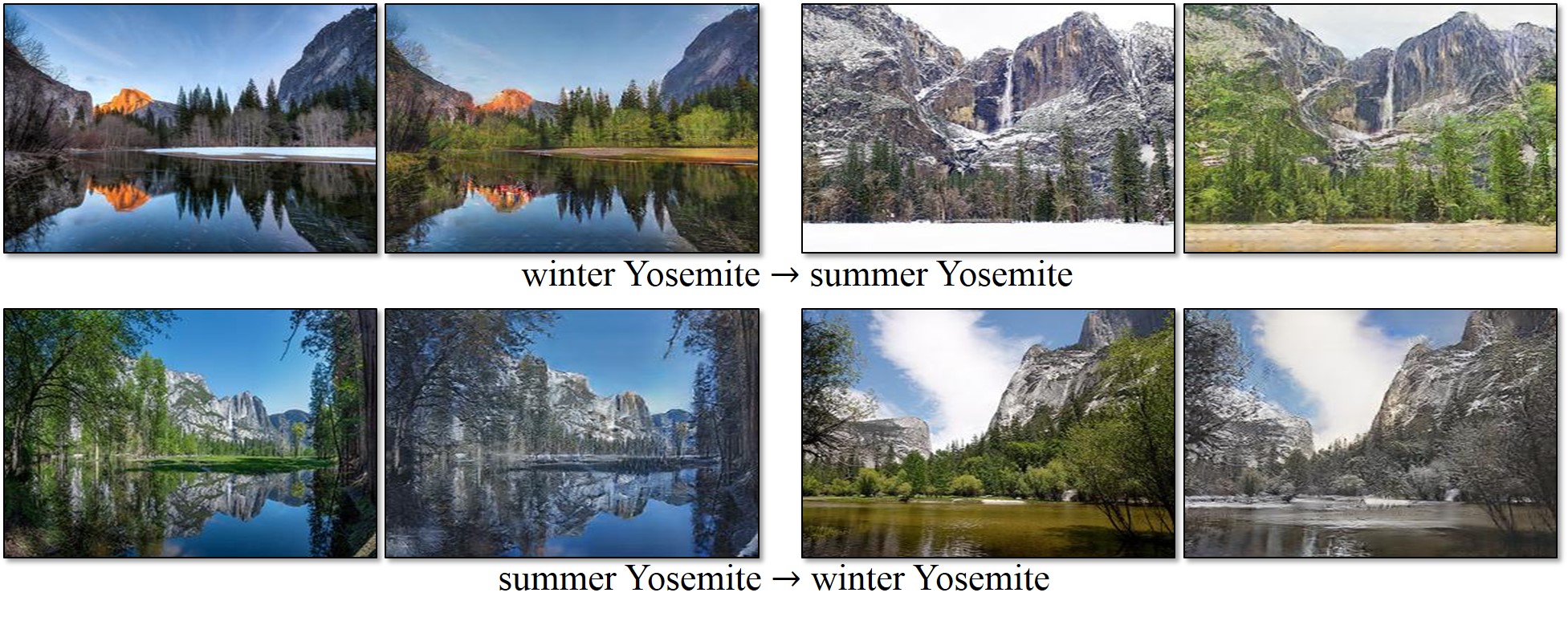

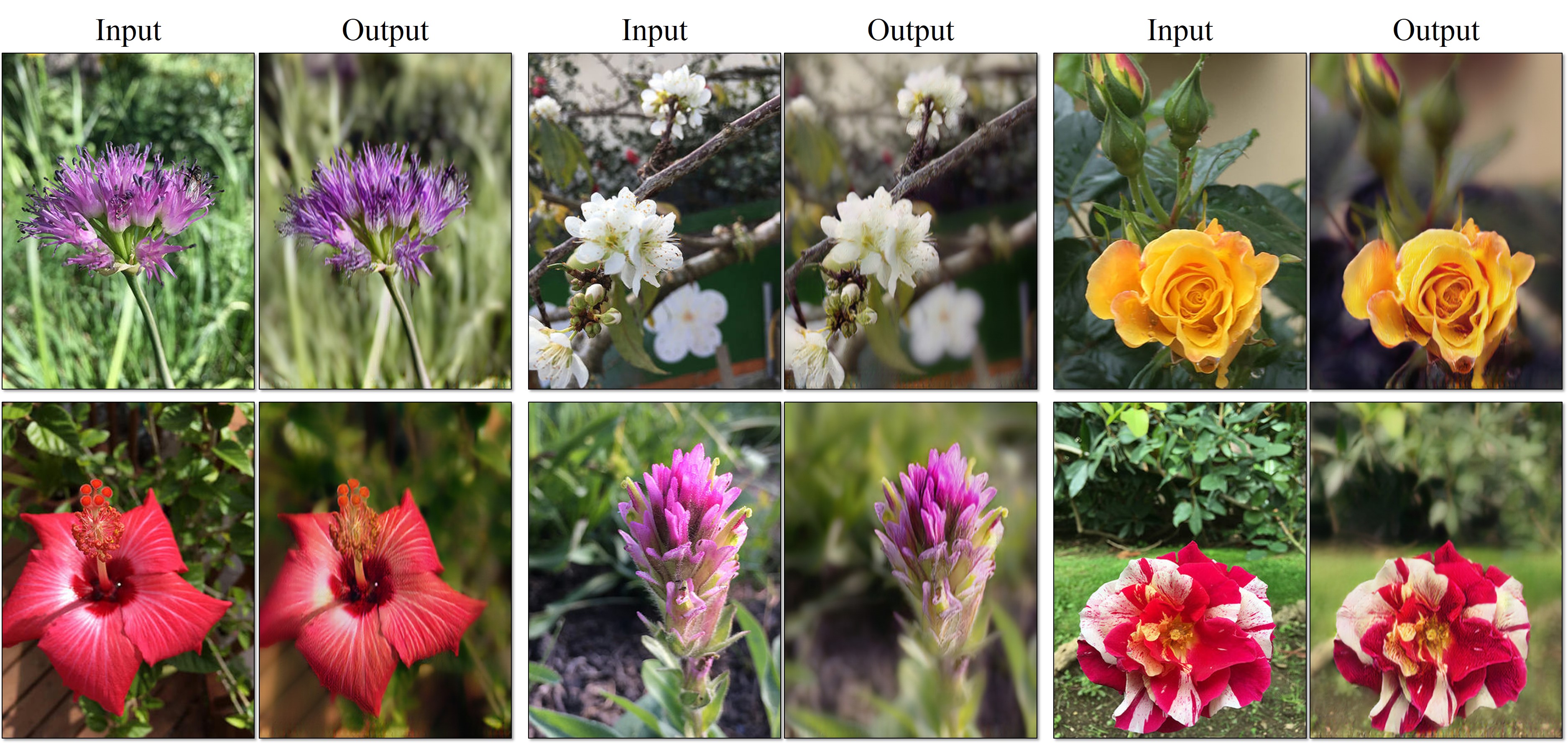

Our model does not work well when the test image is rather different from the images on which the model was trained, as is the case in the figure to the left (we trained on horses and zebras without riders, but test here on a horse with a rider). See additional typical failure cases [here](https://junyanz.github.io/CycleGAN/images/failures.jpg). On translation tasks that involve color and texture changes, like many of those reported above, the method often succeeds. We have also explored tasks that require geometric changes, with little success. For example, on the task of `dog<->cat` transfiguration, the learned translation degenerates into making minimal changes to the input. We also observe a lingering gap between the results achievable with paired training data and those achieved by our unpaired method. In some cases, this gap may be very hard, or even impossible, to close: for example, our method sometimes permutes the labels for tree and building in the output of the cityscapes photos->labels task.


## Citation

If you use this code for your research, please cite our [paper](https://junyanz.github.io/CycleGAN/):


```
@inproceedings{CycleGAN2017,
  title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},
  author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},
  booktitle={Computer Vision (ICCV), 2017 IEEE International Conference on},
  year={2017}
}
```


## Related Projects


**[contrastive-unpaired-translation](https://github.com/taesungp/contrastive-unpaired-translation) (CUT)**

**[pix2pix-Torch](https://github.com/phillipi/pix2pix) | [pix2pixHD](https://github.com/NVIDIA/pix2pixHD) | [BicycleGAN](https://github.com/junyanz/BicycleGAN) | [vid2vid](https://tcwang0509.github.io/vid2vid/) | [SPADE/GauGAN](https://github.com/NVlabs/SPADE)**