├── .github
│   └── workflows
│       └── python-app.yml
├── .gitignore
├── 3d-plot.py
├── LICENSE
├── README.md
├── color_gray.py
├── color_sepia.py
├── color_swap.py
├── docs
│   ├── _config.yml
│   ├── color.md
│   ├── face_crop.md
│   ├── face_detection.md
│   ├── filter.md
│   ├── index.md
│   ├── photo_cat.md
│   ├── photo_exif_data_print.md
│   ├── pillow_numpy.md
│   ├── resize.md
│   ├── sift.md
│   └── watershed.md
├── extract_color.py
├── face_crop-all.sh
├── face_crop.py
├── face_crop_raspi.py
├── face_crop_simple-all.sh
├── face_crop_simple.py
├── face_detection.py
├── face_detection_camera.py
├── filter_3by3.py
├── measure_color_average.py
├── photo_cat.py
├── photo_date_print.py
├── photo_exif_date_print.py
├── pillow_numpy_basic.py
├── resize-all.sh
├── resize.py
├── sift.py
├── tests
│   ├── __init__.py
│   └── test_extract_color.py
└── watershed.py

/.github/workflows/python-app.yml:

--------------------------------------------------------------------------------

# This workflow will install Python dependencies, run tests and lint with a single version of Python
# For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

name: Python application

on:
  push:
    branches: [ master ]
  pull_request:
    branches: [ master ]

jobs:
  build:

    runs-on: ubuntu-latest

    steps:
    - uses: actions/checkout@v2
    - name: Set up Python 3.9
      uses: actions/setup-python@v2
      with:
        python-version: 3.9
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install flake8 pytest opencv-python
        if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
    - name: Lint with flake8
      run: |
        # stop the build if there are Python syntax errors or undefined names
        flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
        # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide
        flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics
    - name: Test with pytest
      run: |
        pytest

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
env/
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
*.egg-info/
.installed.cfg
*.egg

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*,cover
.hypothesis/

# Translations
*.mo
*.pot

# Django stuff:
*.log

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# IPython Notebook
.ipynb_checkpoints

# For Mac
.DS_Store

--------------------------------------------------------------------------------

/3d-plot.py:

--------------------------------------------------------------------------------

# -*- coding: utf-8 -*-
from matplotlib import pyplot
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401 (registers the 3d projection on older Matplotlib)
from numpy import genfromtxt

# read csv file (column 0: Blue, column 1: Green, column 2: Red, as plotted below)
d = genfromtxt("test.csv", delimiter=",")

# make graph
fig = pyplot.figure()
ax = fig.add_subplot(111, projection="3d")

# setting graph
ax.set_xlabel("Green")
ax.set_ylabel("Blue")
ax.set_zlabel("Red")

# plot
ax.plot(d[:, 1], d[:, 0], d[:, 2], "o", color="#ff0000", ms=4, mew=0.5)

pyplot.show()

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

The MIT License (MIT)

Copyright (c) 2016 karaage

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

# python-image-processing
Useful and simple image processing Python scripts

# Requirements
- Mac OS X or Linux
- Python 3 (recommended)
- Python 2

# Preparation
## Library
- PIL (Pillow)
- OpenCV 2
- numpy
- matplotlib
- scipy

Execute the following commands to install the libraries:
```sh
$ pip install pillow
$ pip install opencv-python
$ pip install numpy
$ pip install matplotlib
$ pip install scipy
```

## Install (Clone) software
Execute the following command:
```sh
$ git clone https://github.com/karaage0703/python-image-processing.git
```

# Usage and Samples
See the GitHub Pages site:

[github pages for image processing](https://karaage0703.github.io/python-image-processing)

# License
This software is released under the MIT License; see LICENSE.

# Authors
- karaage0703
- [sugyan](https://github.com/sugyan/face-collector) (face_crop.py)

--------------------------------------------------------------------------------

/color_gray.py:

--------------------------------------------------------------------------------

# -*- coding: utf-8 -*-
import sys

import cv2
import numpy as np


# gray scale conversion function: average of the B, G and R channels
def color_gray(src):
    img_bgr = cv2.split(src)
    # sum in uint16 so that uint8 values do not wrap around at 256, then average
    gray = ((img_bgr[0].astype(np.uint16) + img_bgr[1] + img_bgr[2]) // 3).astype(np.uint8)
    dst = cv2.merge((gray, gray, gray))

    return dst


if __name__ == '__main__':
    param = sys.argv
    if len(param) != 2:
        print("Usage: $ python " + param[0] + " sample.jpg")
        sys.exit(1)

    # open image file (cv2.imread returns None instead of raising on failure)
    input_img = cv2.imread(param[1])
    if input_img is None:
        print('failed to load %s' % param[1])
        sys.exit(1)

    # convert to gray scale
    output_img = color_gray(input_img)

    cv2.imwrite("gray_" + param[1], output_img)

--------------------------------------------------------------------------------

/color_sepia.py:

--------------------------------------------------------------------------------

# -*- coding: utf-8 -*-
import sys

import cv2
import numpy as np


# sepia color conversion function
def color_sepia(src):
    # A: gray scale intensity of each pixel
    gray = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY).astype(np.float64)
    # R=A, G=0.8xA, B=0.55xA (OpenCV stores channels in B, G, R order)
    dst = cv2.merge((gray * 0.55, gray * 0.8, gray * 1.0))

    # every factor is <= 1.0, so the values stay within 0..255
    return dst.astype(np.uint8)


if __name__ == '__main__':
    param = sys.argv
    if len(param) != 2:
        print("Usage: $ python " + param[0] + " sample.jpg")
        sys.exit(1)

    # open image file (cv2.imread returns None instead of raising on failure)
    input_img = cv2.imread(param[1])
    if input_img is None:
        print('failed to load %s' % param[1])
        sys.exit(1)

    # convert to sepia tone
    output_img = color_sepia(input_img)

    cv2.imwrite("sepia_" + param[1], output_img)

--------------------------------------------------------------------------------

/color_swap.py:

--------------------------------------------------------------------------------

# -*- coding: utf-8 -*-
import sys

import cv2


# color swap function: reverse the channel order (BGR <-> RGB)
def color_swap(src):
    img_bgr = cv2.split(src)
    dst = cv2.merge((img_bgr[2], img_bgr[1], img_bgr[0]))  # from BGR to RGB

    return dst


if __name__ == '__main__':
    param = sys.argv
    if len(param) != 2:
        print("Usage: $ python " + param[0] + " sample.jpg")
        sys.exit(1)

    # open image file (cv2.imread returns None instead of raising on failure)
    input_img = cv2.imread(param[1])
    if input_img is None:
        print('failed to load %s' % param[1])
        sys.exit(1)

    # swap color channels
    output_img = color_swap(input_img)

    cv2.imwrite("swap_" + param[1], output_img)

--------------------------------------------------------------------------------

/docs/_config.yml:

--------------------------------------------------------------------------------

theme: jekyll-theme-cayman

--------------------------------------------------------------------------------

/docs/color.md:

--------------------------------------------------------------------------------

[index](./index.md)

## color_swap.py

### description
Swap the color channel order between RGB and BGR.

### preparation
Prepare a target photo.

### usage
```sh
$ python color_swap.py sample.jpg
```
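The channel swap in `color_swap()` (split, then merge in reverse order) can also be written as a NumPy slice that reverses the last axis. A minimal sketch, assuming the image is an `H x W x 3` uint8 array such as the one returned by `cv2.imread`; `swap_channels` is a hypothetical name used only for illustration:

```python
import numpy as np


def swap_channels(img):
    """Reverse the channel order (BGR <-> RGB) of an H x W x 3 image."""
    # [..., ::-1] reverses the last (channel) axis; copy() makes the result
    # contiguous in memory, which some OpenCV functions require
    return img[..., ::-1].copy()


# a single pure-blue pixel in BGR order
bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
print(swap_channels(bgr)[0, 0].tolist())  # [0, 0, 255]
```

OpenCV also provides the same conversion directly as `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)`.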


### original image

### processed image

## color_gray.py

### description
Gray scale converter.

### preparation
Prepare a target photo.

### usage
```sh
$ python color_gray.py sample.jpg
```
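Averaging three uint8 channels needs care: NumPy uint8 addition wraps around at 256, so `200 + 200` becomes `144`. A minimal sketch of an overflow-safe channel average, assuming a uint8 `H x W x 3` image; `average_gray` is a hypothetical helper. Note that this is a plain average, whereas `cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)` uses the weighted luminance 0.299 R + 0.587 G + 0.114 B.

```python
import numpy as np


def average_gray(img):
    """Return a 3-channel gray image whose value is the mean of B, G and R."""
    # sum in a wider integer type so e.g. 200 + 200 + 200 does not wrap at 256
    total = img.astype(np.uint16).sum(axis=2)
    gray = (total // 3).astype(np.uint8)
    # repeat the gray channel three times so the result is still H x W x 3
    return np.dstack((gray, gray, gray))


pixel = np.array([[[200, 200, 200]]], dtype=np.uint8)
print(average_gray(pixel)[0, 0].tolist())  # [200, 200, 200]
```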


### original image

### processed image

## color_sepia.py

### description
Sepia color converter.

### preparation
Prepare a target photo.

### usage
```sh
$ python color_sepia.py sample.jpg
```
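The comment in `color_sepia.py` describes sepia toning as R = A, G = 0.8 A, B = 0.55 A for a pixel intensity A. A minimal NumPy sketch of that mapping, assuming A is the plain average of the B, G and R channels (an assumption; the script may compute A differently); `sepia` is a hypothetical name:

```python
import numpy as np


def sepia(img):
    """Map each pixel's intensity A to (B, G, R) = (0.55 A, 0.8 A, A)."""
    # A: mean of the three channels, computed in float to avoid uint8 overflow
    a = img.astype(np.float64).mean(axis=2)
    # OpenCV images are stored as B, G, R, so the factors are listed in that order
    factors = np.array([0.55, 0.8, 1.0])
    out = a[..., np.newaxis] * factors
    # every factor is <= 1, so rounded values stay within 0..255
    return np.rint(out).astype(np.uint8)


gray_pixel = np.array([[[100, 100, 100]]], dtype=np.uint8)
print(sepia(gray_pixel)[0, 0].tolist())  # [55, 80, 100]
```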


### original image

### processed image

## extract_color.py

### description
Extract a specified color from a photo, selected by a hue range (`h_min`, `h_max`) and saturation/value thresholds (`s_th`, `v_th`).

### preparation
Prepare a target photo.

### usage
```sh
$ python extract_color.py sample.jpg h_min h_max s_th v_th
```

Example:
```sh
$ python extract_color.py 0002.jpg 10 40 10 50
```
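The source of `extract_color.py` is not reproduced on this page, so the following is only a sketch of a typical HSV color mask. It assumes a pixel is kept when `h_min <= H <= h_max`, `S >= s_th` and `V >= v_th`, and that the input is already in HSV (for example from `cv2.cvtColor(img, cv2.COLOR_BGR2HSV)`, where H ranges over 0..179 for 8-bit images); `hsv_mask` is a hypothetical helper:

```python
import numpy as np


def hsv_mask(hsv, h_min, h_max, s_th, v_th):
    """Return a uint8 mask (255 = keep) for pixels inside the HSV thresholds."""
    h, s, v = hsv[..., 0], hsv[..., 1], hsv[..., 2]
    # assumed semantics: hue inside [h_min, h_max], saturation and value at least the thresholds
    keep = (h >= h_min) & (h <= h_max) & (s >= s_th) & (v >= v_th)
    return np.where(keep, 255, 0).astype(np.uint8)


# two HSV pixels, checked against the example thresholds 10 40 10 50
hsv = np.array([[[20, 100, 200], [90, 100, 200]]], dtype=np.uint8)
print(hsv_mask(hsv, 10, 40, 10, 50).tolist())  # [[255, 0]]
```

Such a mask can then be applied to the original image, for example with `cv2.bitwise_and(img, img, mask=mask)`.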


### original image

### processed image

## References
- Interface 2017/05
- http://venuschjp.blogspot.jp/2015/02/pythonopencv.html
- https://www.blog.umentu.work/python-opencv3で画素のrgb値を入れ替える/

--------------------------------------------------------------------------------

/docs/face_crop.md:

--------------------------------------------------------------------------------

## face_crop.py
[index](./index.md)

### preparation

### usage
Execute the following command:
```sh
$ python face_crop.py sample.jpg
```

To convert all files in the same directory as this script, execute the following command:
```sh
$ ./face_crop-all.sh
```
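Once a detector has located a face, cropping it is plain array slicing. OpenCV's `CascadeClassifier.detectMultiScale` returns each face as an `(x, y, w, h)` rectangle. The sketch below is not the logic of `face_crop.py`; it assumes a hypothetical `margin` ratio that enlarges the box on every side and clamps it to the image bounds, and `crop_face` is a hypothetical name:

```python
import numpy as np


def crop_face(img, rect, margin=0.2):
    """Crop rect = (x, y, w, h) from img, enlarged by `margin` on each side."""
    x, y, w, h = rect
    dx, dy = int(w * margin), int(h * margin)
    # clamp the enlarged box so it never leaves the image
    top = max(y - dy, 0)
    left = max(x - dx, 0)
    bottom = min(y + h + dy, img.shape[0])
    right = min(x + w + dx, img.shape[1])
    return img[top:bottom, left:right]


img = np.zeros((100, 100, 3), dtype=np.uint8)
print(crop_face(img, (10, 10, 50, 50)).shape)  # (70, 70, 3)
```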


### original image

### processed image

# Reference
- http://memo.sugyan.com/entry/20151203/1449137219

--------------------------------------------------------------------------------

/docs/face_detection.md:

--------------------------------------------------------------------------------

## face_detection.py
[index](./index.md)

### preparation
Search for `haarcascade_frontalface_alt.xml`:
```sh
$ sudo find / -name "haarcascade_frontalface_alt.xml"
```

and copy the file to this repository's directory.

If you cannot find the file, download it from the web (it is distributed in the `data/haarcascades` directory of the OpenCV repository).

### usage
```sh
$ python face_detection.py sample.jpg
```

### original image

### processed image

## face_detection_camera.py

### caution
A camera is required.

### preparation
Search for `haarcascade_frontalface_alt.xml`:
```sh
$ sudo find / -name "haarcascade_frontalface_alt.xml"
```
and copy the file to this repository's directory.

If you cannot find the file, download it from the web.

### command
```sh
$ python face_detection_camera.py
```

# Reference

--------------------------------------------------------------------------------

/docs/filter.md:

--------------------------------------------------------------------------------

[index](./index.md)

## filter_3by3.py

### description
Image processing with a 3x3 filter.
This script applies a Laplacian filter.

### preparation
Prepare a target photo.

### usage
```sh
$ python filter_3by3.py sample.jpg
```
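A 3x3 filter replaces each pixel with a weighted sum of its 3x3 neighbourhood. The sketch below assumes the common 4-neighbour Laplacian kernel `[[0, 1, 0], [1, -4, 1], [0, 1, 0]]` (the kernel in `filter_3by3.py` may differ), a single-channel image, and edge-replicated borders; `filter3x3` is a hypothetical name:

```python
import numpy as np

# assumed kernel: 4-neighbour Laplacian
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=np.float64)


def filter3x3(gray, kernel=LAPLACIAN):
    """Apply a 3x3 kernel to a 2-D image (correlation, edge-replicated borders)."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    h, w = gray.shape
    out = np.zeros((h, w), dtype=np.float64)
    # add each kernel weight times the correspondingly shifted image;
    # for a symmetric kernel like the Laplacian this equals convolution
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


flat = np.full((4, 4), 7)
print(filter3x3(flat).max())  # 0.0 (a constant image has no edges)
```

The result contains negative values, so it usually needs taking the absolute value or shifting before being saved as an 8-bit image.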


### original image

### processed image

## References
- Interface 2017/05

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

[home](https://github.com/karaage0703/python-image-processing)

# python-image-processing-sample

- [resize](./resize.md)
- [photo synthesis](./photo_cat.md)
- [print the date on a photo using EXIF data](./photo_exif_data_print.md)
- [face detection](./face_detection.md)
- [face crop](./face_crop.md)
- [sift](./sift.md)
- [pillow and numpy basic](./pillow_numpy.md)
- [color](./color.md)
- [filter](./filter.md)
- [watershed](./watershed.md)

--------------------------------------------------------------------------------

/docs/photo_cat.md:

--------------------------------------------------------------------------------

[index](./index.md)

## photo_cat.py

### description
Synthesize one photo from four photos.

### preparation
Prepare four image files named `image-1.jpg`, `image-2.jpg`, `image-3.jpg` and `image-4.jpg`.

### command
```sh
$ python photo_cat.py
```
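Combining four photos into one can be done by tiling the image arrays. A minimal sketch, assuming a 2x2 layout (`image-1` top-left, `image-2` top-right, `image-3` bottom-left, `image-4` bottom-right) and four images of identical size; the layout used by `photo_cat.py` may differ, and `tile_2x2` is a hypothetical name:

```python
import numpy as np


def tile_2x2(img1, img2, img3, img4):
    """Place four equally sized H x W x C images in a 2 x 2 grid."""
    top = np.hstack((img1, img2))     # side by side
    bottom = np.hstack((img3, img4))
    return np.vstack((top, bottom))   # top row above bottom row


tiles = [np.full((2, 3, 3), i, dtype=np.uint8) for i in range(1, 5)]
grid = tile_2x2(*tiles)
print(grid.shape)  # (4, 6, 3)
```

With real files, each tile would come from `cv2.imread("image-1.jpg")` and so on; images of different sizes would first need resizing to a common size.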


### original image

### processed image

## Reference

--------------------------------------------------------------------------------

/docs/photo_exif_data_print.md:

--------------------------------------------------------------------------------

## photo_exif_date_print.py
[index](./index.md)

### description
Print the date on a photo using its EXIF data.

### preparation
Find where the font file is located on your PC:
```sh
$ sudo find / -name "Arial Black.ttf"
```

Example on a Mac:
```
/Library/Fonts/Arial Black.ttf
```

Copy the font file into this directory:
```sh
$ cp "/Library/Fonts/Arial Black.ttf" ./
```

### usage
```sh
$ python photo_exif_date_print.py sample.jpg
```

To process many files, create the following script named `photo-exif-date-print.sh`:
```sh
#!/bin/bash
for f in *.jpg
do
    python photo_exif_date_print.py "$f"
done
```

Then execute the following commands:
```sh
$ chmod 755 photo-exif-date-print.sh
$ ./photo-exif-date-print.sh
```
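EXIF stores date/time values (for example the `DateTimeOriginal` tag) as strings of the form `YYYY:MM:DD HH:MM:SS`. A minimal sketch of turning such a string into printable text, assuming a `YYYY/MM/DD` output format (the format used by `photo_exif_date_print.py` is not shown here); `format_exif_date` is a hypothetical helper:

```python
from datetime import datetime


def format_exif_date(exif_value, out_format="%Y/%m/%d"):
    """Convert an EXIF date string such as '2017:05:21 10:30:00' for printing."""
    # some readers leave a trailing NUL or space on EXIF strings; strip it defensively
    cleaned = exif_value.strip("\x00 ")
    # EXIF uses colons in the date part as well as in the time part
    taken = datetime.strptime(cleaned, "%Y:%m:%d %H:%M:%S")
    return taken.strftime(out_format)


print(format_exif_date("2017:05:21 10:30:00"))  # 2017/05/21
```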


### original image

### processed image

## Reference

--------------------------------------------------------------------------------

/docs/pillow_numpy.md:

--------------------------------------------------------------------------------

[index](./index.md)

## pillow_numpy_basic.py

### description
A basic image processing sample using Pillow and NumPy (the script does not apply any processing).

### preparation
Prepare a target photo.

### usage
```sh
$ python pillow_numpy_basic.py sample.jpg
```
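The basic Pillow and NumPy pattern is: open an image with Pillow, convert it to a NumPy array, work on the array, and convert it back. A minimal sketch of that round trip, using an in-memory image instead of `sample.jpg` so it runs without files; `roundtrip` is a hypothetical name:

```python
import numpy as np
from PIL import Image


def roundtrip(pil_img):
    """Convert a Pillow image to a NumPy array and back without changing it."""
    arr = np.asarray(pil_img)  # shape (height, width, 3), dtype uint8 for RGB
    # ... processing on `arr` would go here ...
    return Image.fromarray(arr)


src = Image.new("RGB", (4, 2), color=(10, 20, 30))  # size is (width, height)
dst = roundtrip(src)
print(dst.size, np.asarray(dst).shape)  # (4, 2) (2, 4, 3)
```

With real files, `Image.open("sample.jpg")` and `dst.save(...)` take the place of `Image.new`. Saving as JPEG recompresses the data, so the output file is usually not byte-identical to the input even though no processing is applied.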


### original image
Any image is OK.

### processed image
Same as the original.

## References
- http://qiita.com/uosansatox/items/4fa34e1d8d95d8783536
- http://d.hatena.ne.jp/white_wheels/20100322/p1

--------------------------------------------------------------------------------

/docs/resize.md:

--------------------------------------------------------------------------------

[index](./index.md)

## resize.py

### description
Resize a photo.

### preparation
Prepare a target photo.

### usage
```sh
$ python resize.py sample.jpg width_ratio height_ratio
```

Example:
```sh
$ python resize.py sample.jpg 10 10
```
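The exact meaning of `width_ratio` and `height_ratio` is not spelled out on this page. Assuming they are percentages of the original size (so `10 10` would shrink the photo to 10% in each direction), the target size can be computed as below; `target_size` is a hypothetical helper. The result would then be passed to a resize call such as `cv2.resize(img, (new_w, new_h))`, which takes the size as (width, height).

```python
def target_size(width, height, width_ratio, height_ratio):
    """Return (new_width, new_height) after scaling by percentages.

    Assumption: the ratios are percentages of the original size.
    """
    # round to the nearest pixel and never go below 1 pixel
    new_w = max(1, round(width * width_ratio / 100))
    new_h = max(1, round(height * height_ratio / 100))
    return new_w, new_h


print(target_size(640, 480, 10, 10))  # (64, 48)
```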


### original image

### processed image

## resize-all.sh

### description
Resize all photos in the directory.

### preparation
Prepare the target photos in the directory.

### usage
```sh
$ ./resize-all.sh
```

## References
- http://tatabox.hatenablog.com/entry/2013/07/15/164015

--------------------------------------------------------------------------------

/docs/sift.md:

--------------------------------------------------------------------------------

[index](./index.md)

## sift.py

### description
Test SIFT (Scale-Invariant Feature Transform) on two images.

### preparation
Prepare two image files.

### command
```sh
$ python sift.py sample.jpg sample2.jpg
```
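After SIFT computes descriptors for the keypoints of both images, matches are commonly filtered with Lowe's ratio test: a match is kept only when its nearest neighbour is clearly closer than the second nearest. A minimal NumPy sketch of brute-force matching plus the ratio test, assuming the descriptor arrays are already computed (for example with `cv2.SIFT_create().detectAndCompute(gray, None)` in OpenCV 4.4 and later) and a ratio of 0.75, a commonly used value. `ratio_test_matches` is a hypothetical name and not necessarily what `sift.py` does:

```python
import numpy as np


def ratio_test_matches(desc1, desc2, ratio=0.75):
    """Return (i, j) pairs where desc1[i] best matches desc2[j] and passes the ratio test.

    desc2 must contain at least two descriptors.
    """
    # pairwise Euclidean distances, shape (len(desc1), len(desc2))
    diff = desc1[:, np.newaxis, :].astype(np.float64) - desc2[np.newaxis, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    matches = []
    for i, row in enumerate(dist):
        # indices of the nearest and second-nearest descriptors in desc2
        best, second = np.argsort(row)[:2]
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches


d1 = np.array([[0.0, 0.0], [5.0, 5.0]])
d2 = np.array([[0.1, 0.0], [5.0, 5.2], [5.0, 4.8]])
# d1[1] is equally close to d2[1] and d2[2], so it is rejected as ambiguous
print(ratio_test_matches(d1, d2))  # [(0, 0)]
```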

15 |

16 | ### original image

17 |

26 |

27 | ## resize-all.sh

28 |

29 | ### description

30 | resize all photo in directory

31 |

32 | ### preparation

33 | prepare target photos in directory

34 |

35 | ### usage

36 | ```sh

37 | $ ./resize-all.sh

38 | ```

39 |

40 | ## References

41 | - http://tatabox.hatenablog.com/entry/2013/07/15/164015

42 |

--------------------------------------------------------------------------------

/docs/sift.md:

--------------------------------------------------------------------------------

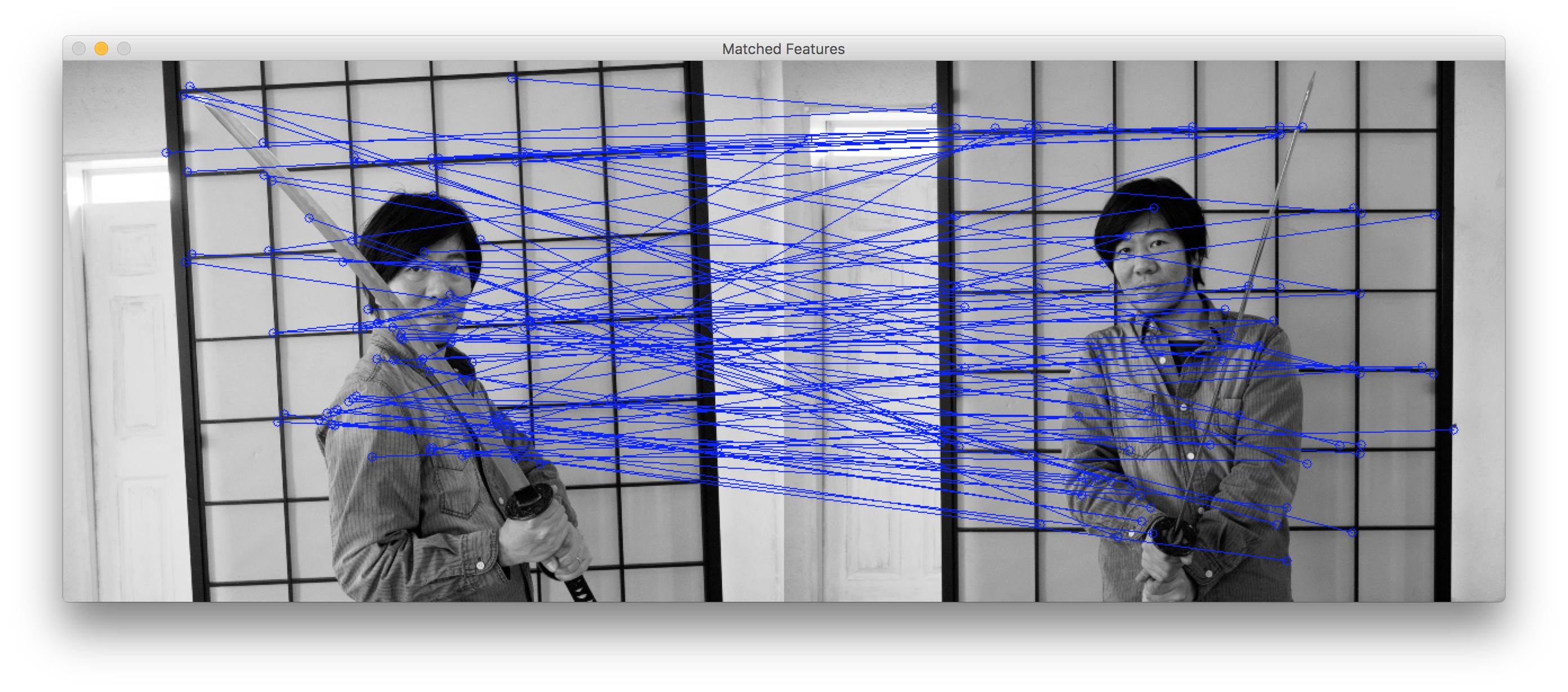

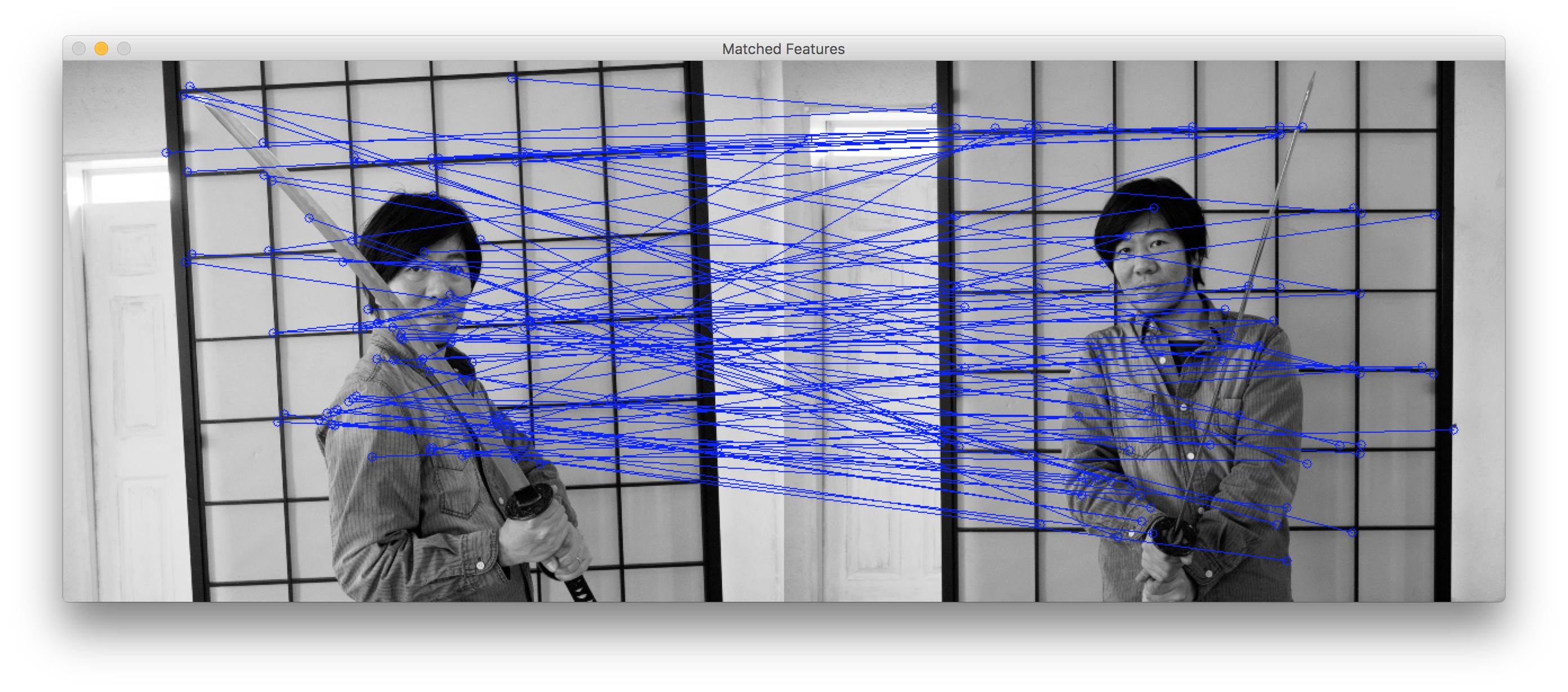

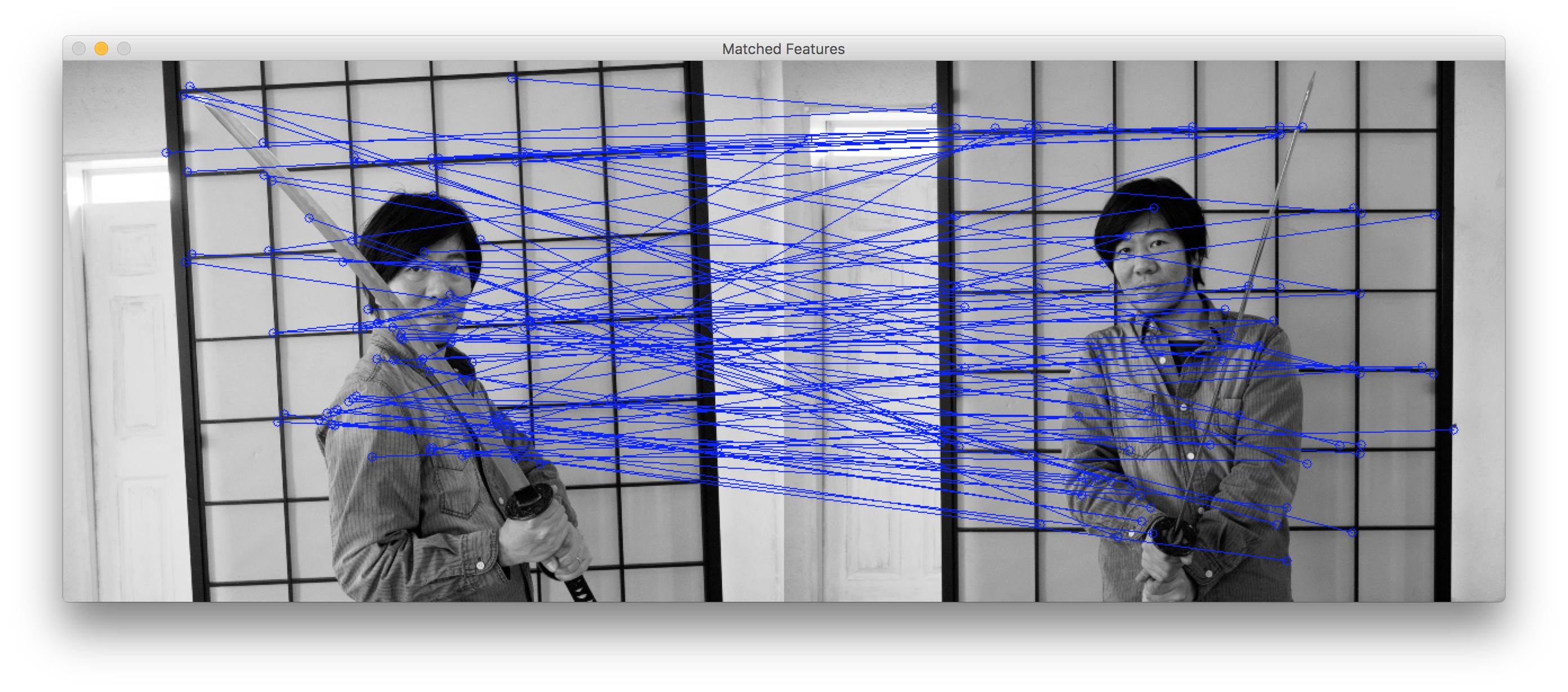

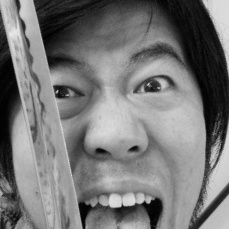

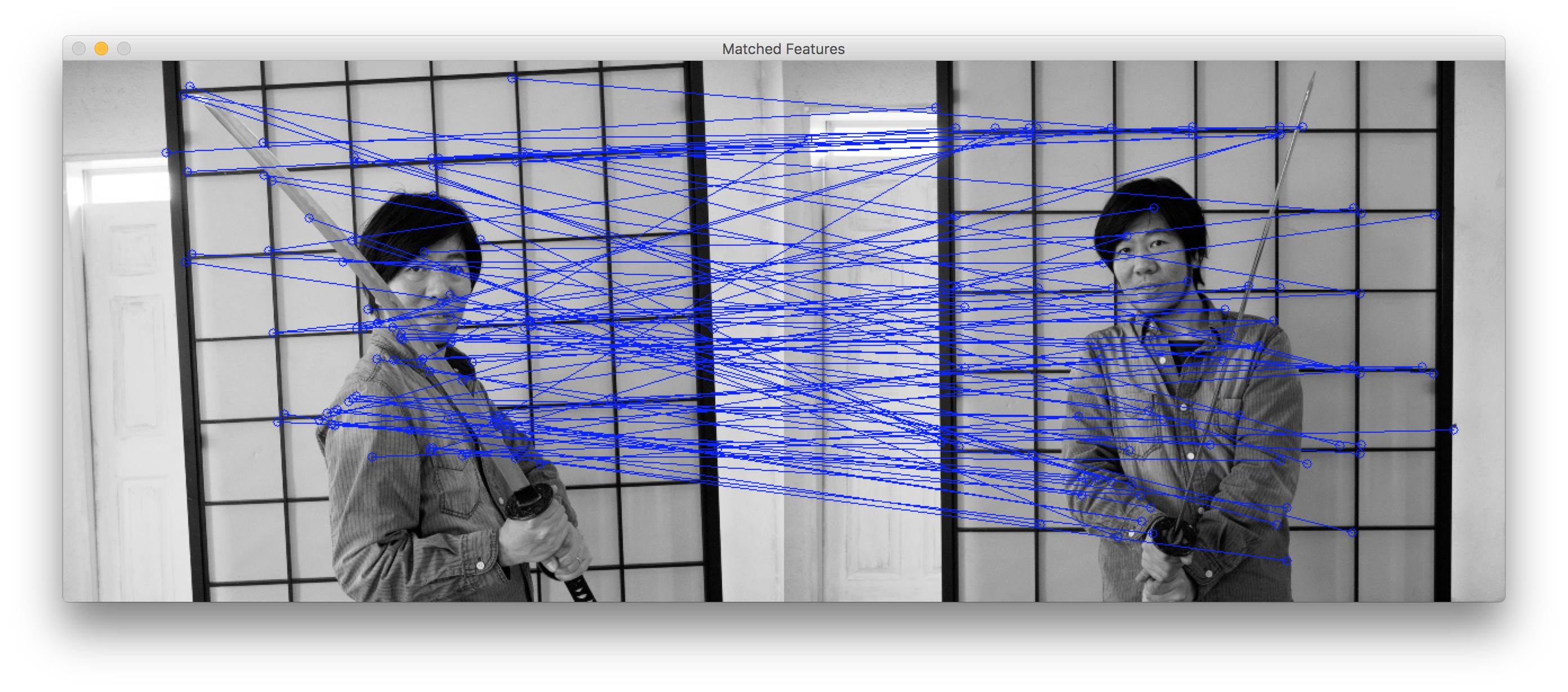

1 | [index](./index.md)

2 |

3 | ## shift.py

4 |

5 | ### description

6 | Test SIFT

7 |

8 | ### preparation

9 | prepare 2 image files

10 |

11 | ### command

12 | ```sh

13 | $ python shift.py sample.jpg sample2.jpg

14 | ```

15 |

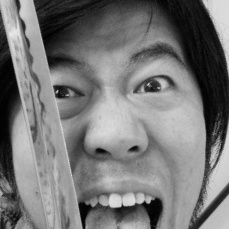

16 | ### original image

18 |

19 |

20 |

21 | ### processed image

22 |

23 |

24 | ## References

25 | - http://toaru-gi.hatenablog.com/entry/2014/10/27/040843

26 |
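sift.py keeps the first 100 matches in whatever order the FlannBased matcher returns them. A common refinement, not used in the script, is Lowe's ratio test: a match is kept only when the nearest descriptor is clearly closer than the second nearest. Below is a minimal brute-force NumPy sketch. It assumes the descriptors are the float arrays that `detectAndCompute` returns. The name `ratio_test_matches` and the 0.75 default are illustrative choices, not part of this repository.

```python
import numpy as np


def ratio_test_matches(des1, des2, ratio=0.75):
    """Brute-force L2 matching filtered by Lowe's ratio test (illustrative sketch).

    des1: (N, D) query descriptors; des2: (M, D) train descriptors, M >= 2.
    Returns (query_idx, train_idx, distance) tuples sorted by distance.
    """
    des1 = np.asarray(des1, dtype=np.float64)
    des2 = np.asarray(des2, dtype=np.float64)
    if len(des2) < 2:
        raise ValueError("the ratio test needs at least two train descriptors")
    # pairwise Euclidean distances via |a|^2 + |b|^2 - 2ab, shape (N, M)
    sq1 = np.sum(des1 ** 2, axis=1)[:, None]
    sq2 = np.sum(des2 ** 2, axis=1)[None, :]
    dists = np.sqrt(np.maximum(sq1 + sq2 - 2.0 * des1 @ des2.T, 0.0))
    # the two closest train descriptors for every query descriptor
    nearest_two = np.argsort(dists, axis=1)[:, :2]
    matches = []
    for q, (best, second) in enumerate(nearest_two):
        d_best, d_second = dists[q, best], dists[q, second]
        # accept only if the best match clearly beats the runner-up
        if d_best < ratio * d_second:
            matches.append((q, int(best), float(d_best)))
    return sorted(matches, key=lambda m: m[2])
```

The returned indices point into `kp` and `kp2` the same way `queryIdx` and `trainIdx` do. Brute force costs O(N·M) memory and time. For thousands of keypoints, getting the two nearest neighbours from `cv2.BFMatcher().knnMatch(des, des2, k=2)` or a FLANN matcher is the more practical route.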

--------------------------------------------------------------------------------

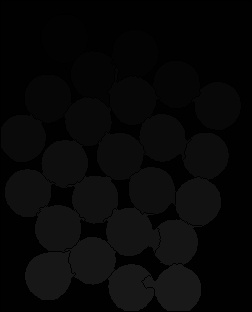

/docs/watershed.md:

--------------------------------------------------------------------------------

1 | [index](./index.md)

2 |

3 | ## watershed.py

4 |

5 | ### description

6 | Image segmented by watershed algorithm

7 |

8 | ### preparation

9 | Download sample photo.

10 | Execute following command:

11 |

12 | ```sh

13 | $ wget https://docs.opencv.org/3.1.0/water_coins.jpg

14 | ```

15 |

16 | ### usage

17 | ```sh

18 | $ python watershed.py water_coins.jpg

19 | ```

20 |

21 | ### original image

22 |

23 |

24 | ## References

25 | - http://toaru-gi.hatenablog.com/entry/2014/10/27/040843

26 |

--------------------------------------------------------------------------------

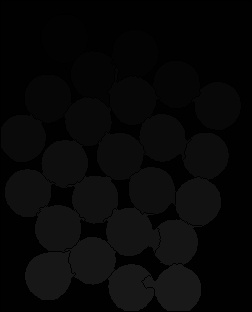

/docs/watershed.md:

--------------------------------------------------------------------------------

1 | [index](./index.md)

2 |

3 | ## watershed.py

4 |

5 | ### description

6 | Segment an image (for example, touching coins) with the watershed algorithm

7 |

8 | ### preparation

9 | Download the sample photo.

10 | Run the following command:

11 |

12 | ```sh

13 | $ wget https://docs.opencv.org/3.1.0/water_coins.jpg

14 | ```

15 |

16 | ### usage

17 | ```sh

18 | $ python watershed.py water_coins.jpg

19 | ```

20 |

21 | ### original image

22 |

23 |

24 | ### processed image

25 | #### segmented image

26 |

27 |

28 | #### mask image

29 |

30 |

31 | ## References

32 | - https://qiita.com/ysdyt/items/5972c9520acf6a094d90

33 |

--------------------------------------------------------------------------------

/extract_color.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | import numpy as np

5 |

6 | # extract color function

7 | def extract_color(src, h_th_low, h_th_up, s_th, v_th):

8 | hsv = cv2.cvtColor(src, cv2.COLOR_BGR2HSV)

9 | h, s, v = cv2.split(hsv)

10 | if h_th_low > h_th_up:

11 | ret, h_dst_1 = cv2.threshold(h, h_th_low, 255, cv2.THRESH_BINARY)

12 | ret, h_dst_2 = cv2.threshold(h, h_th_up, 255, cv2.THRESH_BINARY_INV)

13 |

14 | dst = cv2.bitwise_or(h_dst_1, h_dst_2)

15 | else:

16 | ret, dst = cv2.threshold(h, h_th_low, 255, cv2.THRESH_TOZERO)

17 | ret, dst = cv2.threshold(dst, h_th_up, 255, cv2.THRESH_TOZERO_INV)

18 | ret, dst = cv2.threshold(dst, 0, 255, cv2.THRESH_BINARY)

19 |

20 | ret, s_dst = cv2.threshold(s, s_th, 255, cv2.THRESH_BINARY)

21 | ret, v_dst = cv2.threshold(v, v_th, 255, cv2.THRESH_BINARY)

22 | dst = cv2.bitwise_and(dst, s_dst)

23 | dst = cv2.bitwise_and(dst, v_dst)

24 | return dst

25 |

26 | if __name__ == '__main__':

27 | param = sys.argv

28 | if (len(param) != 6):

29 | print("Usage: $ python " + param[0] + " sample.jpg h_min h_max s_th v_th")

30 | quit()

31 | # open image file

32 | try:

33 | input_img = cv2.imread(param[1])

34 | except Exception:

35 | print('failed to load %s' % param[1])

36 | quit()

37 |

38 | if input_img is None:

39 | print('failed to load %s' % param[1])

40 | quit()

41 |

42 | # parameter setting

43 | h_min = int(param[2])

44 | h_max = int(param[3])

45 | s_th = int(param[4])

46 | v_th = int(param[5])

47 | # make the mask with extract_color()

48 | msk_img = extract_color(input_img, h_min, h_max, s_th, v_th)

49 | # mask processing

50 | output_img = cv2.bitwise_and(input_img, input_img, mask = msk_img)

51 |

52 | cv2.imwrite("extract_" + param[1], output_img)

53 |
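The branch on `h_th_low > h_th_up` is what lets extract_color.py select hue ranges that wrap around 0, such as reds. The exact boundary rules come from three different `cv2.threshold` modes and are easy to miss. Below is a NumPy-only restatement that spells them out.

It assumes OpenCV's 8-bit HSV ranges (H 0..179, S and V 0..255) and `h_low >= 0`. `hsv_mask` is a hypothetical helper meant for reading and testing the rule, not a replacement for the script.

```python
import numpy as np


def hsv_mask(hsv, h_low, h_up, s_th, v_th):
    """Restate extract_color()'s thresholds on an OpenCV 8-bit HSV image (sketch).

    hsv: uint8 array of shape (H, W, 3), as from cv2.cvtColor(..., COLOR_BGR2HSV).
    Assumes h_low >= 0. Returns a uint8 mask of 0/255 values.
    """
    h = hsv[..., 0].astype(np.int32)
    s = hsv[..., 1].astype(np.int32)
    v = hsv[..., 2].astype(np.int32)
    if h_low > h_up:
        # wrapped range (THRESH_BINARY OR THRESH_BINARY_INV): h > low or h <= up
        hue_ok = (h > h_low) | (h <= h_up)
    else:
        # plain range (TOZERO then TOZERO_INV): lower bound exclusive, upper inclusive
        hue_ok = (h > h_low) & (h <= h_up)
    # saturation and value must both be strictly above their thresholds
    mask = hue_ok & (s > s_th) & (v > v_th)
    return np.where(mask, 255, 0).astype(np.uint8)
```

For red, which straddles H = 0, pass `h_low > h_up` (for example `170 10`), the same way as on extract_color.py's command line.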

--------------------------------------------------------------------------------

/face_crop-all.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | echo face crop processing...

4 | for f in *.JPG

5 | do

6 | python face_crop.py $f

7 | done

8 |

9 | for f in *.jpg

10 | do

11 | python face_crop.py $f

12 | done

13 |

14 | for f in *.JPEG

15 | do

16 | python face_crop.py $f

17 | done

18 |

19 | for f in *.jpeg

20 | do

21 | python face_crop.py $f

22 | done

23 |

24 | echo done

25 |

--------------------------------------------------------------------------------

/face_crop.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import numpy as np

4 | import sys

5 | import math

6 | from math import sin, cos

7 | from os import path

8 |

9 | cascades_dir = path.normpath(path.join(cv2.__file__, '..', '..', '..', '..', 'share', 'OpenCV', 'haarcascades'))

10 | max_size = 720

11 |

12 | def detect(img, filename):

13 | cascade_f = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_frontalface_alt2.xml'))

14 | cascade_e = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_eye.xml'))

15 | # downscale the image if it is larger than max_size

16 | rows, cols, _ = img.shape

17 | if max(rows, cols) > max_size:

18 | l = max(rows, cols)

19 | img = cv2.resize(img, (cols * max_size // l, rows * max_size // l))  # cv2.resize needs integer sizes

20 | rows, cols, _ = img.shape

21 | # create gray image for rotate

22 | gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

23 | hypot = int(math.ceil(math.hypot(rows, cols)))

24 | frame = np.zeros((hypot, hypot), np.uint8)

25 | frame[(hypot - rows) // 2:(hypot + rows) // 2, (hypot - cols) // 2:(hypot + cols) // 2] = gray  # center gray in the square frame (slice bounds must be ints)

26 |

27 | def translate(coord, deg):

28 | x, y = coord

29 | rad = math.radians(deg)

30 | return {

31 | 'x': ( cos(rad) * x + sin(rad) * y - hypot * 0.5 * cos(rad) - hypot * 0.5 * sin(rad) + hypot * 0.5 - (hypot - cols) * 0.5) / float(cols) * 100.0,

32 | 'y': (- sin(rad) * x + cos(rad) * y + hypot * 0.5 * sin(rad) - hypot * 0.5 * cos(rad) + hypot * 0.5 - (hypot - rows) * 0.5) / float(rows) * 100.0,

33 | }

34 |

35 | # filename numbering

36 | numb = 0

37 | for deg in range(-48, 49, 6):

38 | M = cv2.getRotationMatrix2D((hypot * 0.5, hypot * 0.5), deg, 1.0)

39 | rotated = cv2.warpAffine(frame, M, (hypot, hypot))

40 | faces = cascade_f.detectMultiScale(rotated, 1.08, 2)

41 | print(deg, len(faces))

42 | for face in faces:

43 | x, y, w, h = face

44 | # eyes in face?

45 | y_offset = int(h * 0.1)

46 | roi = rotated[y + y_offset: y + h, x: x + w]

47 | eyes = cascade_e.detectMultiScale(roi, 1.05)

48 | eyes = [e for e in eyes if (e[0] > w / 2 or e[0] + e[2] < w / 2) and e[1] + e[3] < h / 2]

49 | if len(eyes) == 2 and abs(eyes[0][0] - eyes[1][0]) > w / 4:

50 | score = math.atan2(abs(eyes[1][1] - eyes[0][1]), abs(eyes[1][0] - eyes[0][0]))

51 | if eyes[0][1] == eyes[1][1]:

52 | score = 0.0

53 | if score < 0.15:

54 | print("face_pos=" + str(face))

55 | print("score=" + str(score))

56 | print("numb=" + str(numb))

57 | # cv2.rectangle(rotated, (x, y), (x+w, y+h), (255,255,255), thickness=2)

58 | # crop image

59 | output_img = rotated[y:y+h, x:x+w]

60 | # output file

61 | cv2.imwrite(str("{0:02d}".format(numb)) + "_face_" + filename, output_img)

62 | numb += 1

63 |

64 |

65 | if __name__ == '__main__':

66 | param = sys.argv

67 | if (len(param) != 2):

68 | print ("Usage: $ python " + param[0] + " sample.jpg")

69 | quit()

70 |

71 | # open image file

72 | try:

73 | input_img = cv2.imread(param[1])

74 | except Exception:

75 | print('failed to load %s' % param[1])

76 | quit()

77 |

78 | if input_img is None:

79 | print('failed to load %s' % param[1])

80 | quit()

81 |

82 | detect(input_img, param[1])  # saves each accepted face as NN_face_<filename>; returns None

83 |
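The eye check inside `detect()` decides whether a rotated face candidate is kept. Pulled out into a plain function, it can be tested without OpenCV or images.

`is_level_eye_pair` is a hypothetical name. The 0.15 rad default mirrors the `score < 0.15` constant in the script. Eye boxes are assumed to be relative to the region passed to `detectMultiScale`, as in the script. It uses a list comprehension because `filter()` returns an iterator in Python 3, which has no `len()`.

```python
import math


def is_level_eye_pair(eyes, w, h, max_tilt=0.15):
    """Sketch of face_crop.py's eye test for one face of size w x h.

    eyes: iterable of (ex, ey, ew, eh) boxes relative to the searched region.
    Returns (accepted, tilt); tilt is the eye-line angle in radians, or None.
    """
    # keep boxes entirely on one side of the vertical centre line
    # that also end in the upper half of the face
    candidates = [e for e in eyes
                  if (e[0] > w / 2 or e[0] + e[2] < w / 2) and e[1] + e[3] < h / 2]
    if len(candidates) != 2:
        return False, None
    (x0, y0, _, _), (x1, y1, _, _) = candidates
    # the two eyes must be far enough apart horizontally
    if abs(x0 - x1) <= w / 4:
        return False, None
    # angle of the line joining the two eye boxes (0 when they are level)
    tilt = math.atan2(abs(y1 - y0), abs(x1 - x0))
    return tilt < max_tilt, tilt
```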

--------------------------------------------------------------------------------

/face_crop_raspi.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | from os import path

5 | import math

6 | from math import sin, cos

7 |

8 | # cascades_dir = path.normpath(path.join(cv2.__file__, '..', '..', '..', '..', 'share', 'OpenCV', 'haarcascades'))

9 | cascades_dir ='/usr/share/opencv/haarcascades'

10 |

11 | color = (255, 255, 255) # color of rectangle for face detection

12 |

13 | def face_detect(file):

14 | image = cv2.imread(file)

15 | image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

16 | cascade_f = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_frontalface_alt2.xml'))

17 | cascade_e = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_eye.xml'))

18 |

19 | # facerect = cascade_f.detectMultiScale(image_gray, scaleFactor=1.08, minNeighbors=1, minSize=(50, 50))

20 | facerect = cascade_f.detectMultiScale(image_gray, scaleFactor=1.08, minNeighbors=1, minSize=(200, 200))

21 |

22 | print("face rectangle")

23 | print(facerect)

24 |

25 | # test_image = image

26 |

27 | if len(facerect) > 0:

28 | # filename numbering

29 | numb = 0

30 | for rect in facerect:

31 | x, y, w, h = rect

32 | # eyes in face?

33 | roi = image_gray[y: y + h, x: x + w]

34 | # cv2.rectangle(test_image,(x,y),(x+w,y+h),(255,255,255),2)

35 | eyes = cascade_e.detectMultiScale(roi, scaleFactor=1.05, minSize=(20,20))

36 | # eyes = filter(lambda e: (e[0] > w / 2 or e[0] + e[2] < w / 2) and e[1] + e[3] < h / 2, eyes)

37 | # for (ex,ey,ew,eh) in eyes:

38 | # print("rectangle")

39 | # cv2.rectangle(test_image,(x+ex,y+ey),(x+ex+ew,y+ey+eh),(0,255,0),2)

40 | if len(eyes) > 1:

41 | # if len(eyes) == 2 and abs(eyes[0][0] - eyes[1][0]) > w / 4:

42 | image_face = image[y:y+h, x:x+w]

43 | cv2.imwrite(str("{0:02d}".format(numb)) + "_face_" + file, image_face)

44 | numb += 1

45 |

46 | # cv2.imwrite("00debug_"+file, test_image)

47 |

48 | if __name__ == '__main__':

49 | param = sys.argv

50 | if (len(param) != 2):

51 | print ("Usage: $ python " + param[0] + " sample.jpg")

52 | quit()

53 |

54 | face_detect(param[1])

55 |

--------------------------------------------------------------------------------

/face_crop_simple-all.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | cmd=face_crop_simple.py

4 | echo face crop processing...

5 | for f in *.jpg *.jpeg *.JPG *.JPEG *.png *.PNG

6 | do

7 | if [ -f $f ]; then

8 | echo python $cmd $f

9 | python $cmd $f

10 | fi

11 | done

12 |

13 | echo done

14 |

--------------------------------------------------------------------------------

/face_crop_simple.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | import os

5 | from os import path

6 | import math

7 | from math import sin, cos

8 |

9 | cascades_dir = path.normpath(path.join(cv2.__file__, '..', '..', '..', '..', 'share', 'OpenCV', 'haarcascades'))

10 |

11 | color = (255, 255, 255) # color of rectangle for face detection

12 |

13 | def face_detect(file):

14 | image = cv2.imread(file)

15 | image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

16 | cascade_f = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_frontalface_alt2.xml'))

17 | cascade_e = cv2.CascadeClassifier(path.join(cascades_dir, 'haarcascade_eye.xml'))

18 |

19 | facerect = cascade_f.detectMultiScale(image_gray, scaleFactor=1.08, minNeighbors=1, minSize=(50, 50))

20 |

21 | # print("face rectangle")

22 | # print(facerect)

23 | if not os.path.exists("face_images"):

24 | os.mkdir("face_images")

25 |

26 | base = os.path.splitext(os.path.basename(file))[0] + "_"

27 |

28 | if len(facerect) > 0:

29 | # filename numbering

30 | numb = 0

31 | for rect in facerect:

32 | x, y, w, h = rect

33 | # eyes in face?

34 | y_offset = int(h * 0.1)

35 | eye_area = image_gray[y + y_offset: y + h, x: x + w]

36 | eyes = cascade_e.detectMultiScale(eye_area, 1.05)

37 | eyes = [e for e in eyes if (e[0] > w / 2 or e[0] + e[2] < w / 2) and e[1] + e[3] < h / 2]

38 | # print(len(eyes))

39 | if len(eyes) > 0:

40 | image_face = image[y:y+h, x:x+w]

41 | cv2.imwrite("face_images/" + base + str("{0:02d}".format(numb)) + ".jpg", image_face)

42 | numb += 1

43 |

44 | if __name__ == '__main__':

45 | param = sys.argv

46 | if (len(param) != 2):

47 | print ("Usage: $ python " + param[0] + " sample.jpg")

48 | quit()

49 |

50 | face_detect(param[1])

51 |

--------------------------------------------------------------------------------

/face_detection.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | cascade_path = "./haarcascade_frontalface_alt.xml"

5 |

6 | color = (255, 255, 255) # color of rectangle for face detection

7 |

8 | def face_detect(file):

9 | image = cv2.imread(file)

10 | image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

11 | cascade = cv2.CascadeClassifier(cascade_path)

12 | facerect = cascade.detectMultiScale(image_gray, scaleFactor=1.1, minNeighbors=1, minSize=(1, 1))

13 |

14 | print("face rectangle")

15 | print(facerect)

16 |

17 | if len(facerect) > 0:

18 | for rect in facerect:

19 | cv2.rectangle(image, tuple(rect[0:2]),tuple(rect[0:2]+rect[2:4]), color, thickness=2)

20 |

21 | return image

22 |

23 | if __name__ == '__main__':

24 | param = sys.argv

25 | if (len(param) != 2):

26 | print ("Usage: $ python " + param[0] + " sample.jpg")

27 | quit()

28 |

29 | output_img = face_detect(param[1])

30 | cv2.imwrite('facedetect_' + param[1], output_img)

31 |

--------------------------------------------------------------------------------

/face_detection_camera.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | import cv2

4 | cascade_path = "./haarcascade_frontalface_alt.xml"

5 | color = (255, 255, 255) # color of rectangle for face detection

6 |

7 | cam = cv2.VideoCapture(0)

8 | count=0

9 |

10 | while True:

11 | ret, capture = cam.read()

12 | if not ret:

13 | print('error')

14 | break

15 | count += 1

16 | if count > 1:

17 | image = capture.copy()

18 | image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

19 | cascade = cv2.CascadeClassifier(cascade_path)

20 | facerect = cascade.detectMultiScale(image_gray, scaleFactor=1.1, minNeighbors=1, minSize=(1, 1))

21 |

22 | if len(facerect) > 0:

23 | for rect in facerect:

24 | cv2.rectangle(image, tuple(rect[0:2]),tuple(rect[0:2]+rect[2:4]), color, thickness=2)

25 |

26 | count=0

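27 | cv2.imshow('face detector', image)

28 | # waitKey() lets the window redraw; press q to leave the loop

29 | if cv2.waitKey(1) & 0xFF == ord('q'):

30 | break

31 |

32 | cam.release()

33 | cv2.destroyAllWindows()

34 |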

--------------------------------------------------------------------------------

/filter_3by3.py:

--------------------------------------------------------------------------------

1 | from PIL import Image

2 | import numpy as np

3 | import sys

4 |

5 | # filter = [0, 0, 0, 0, 1, 0, 0, 0, 0]

6 | # filter = [0, 1, 0, 1, -4, 1, 0, 1, 0]

7 | # filter = [0, 1, 0, 0, -2, 0, 0, 1, 0]

8 | # filter = [0, 0, 0, 1, -2, 1, 0, 0, 0]

9 | filter = [0, 1, 0, 1, -4, 1, 0, 1, 0]

10 | # filter = [1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0]

11 |

12 | def image_process(src):

13 | width, height = src.size

14 | dst = Image.new('RGB', (width, height))

15 |

16 | img_pixels = np.array([[src.getpixel((x,y)) for y in range(height)] for x in range(width)])

17 | color = np.zeros((len(filter), 3))

18 |

19 | for y in range(1, height-1):

20 | for x in range(1, width-1):

21 | color[0] = img_pixels[x-1][y-1]

22 | color[1] = img_pixels[x-1][y]

23 | color[2] = img_pixels[x-1][y+1]

24 | color[3] = img_pixels[x][y-1]

25 | color[4] = img_pixels[x][y]

26 | color[5] = img_pixels[x][y+1]

27 | color[6] = img_pixels[x+1][y-1]

28 | color[7] = img_pixels[x+1][y]

29 | color[8] = img_pixels[x+1][y+1]

30 |

31 | sum_color = np.zeros(3)

32 | for num in range(len(filter)):

33 | sum_color += color[num] * filter[num]

34 |

35 | r,g,b = map(int, (sum_color))

36 | r = min([r, 255])

37 | r = max([r, 0])

38 | g = min([g, 255])

39 | g = max([g, 0])

40 | b = min([b, 255])

41 | b = max([b, 0])

42 |

43 | dst.putpixel((x,y), (r,g,b))

44 |

45 | return dst

46 |

47 | if __name__ == '__main__':

48 | param = sys.argv

49 | if (len(param) != 2):

50 | print("Usage: $ python " + param[0] + " sample.jpg")

51 | quit()

52 |

53 | # open image file

54 | try:

55 | input_img = Image.open(param[1]).convert('RGB')  # image_process() expects 3-channel pixels

56 | except Exception:

57 | print('failed to load %s' % param[1])

58 | quit()

59 |

60 | if input_img is None:

61 | print('failed to load %s' % param[1])

62 | quit()

63 |

64 | output_img = image_process(input_img)

65 | output_img.save("filtered_" + param[1])

66 | output_img.show()

67 |
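filter_3by3.py visits every pixel with `getpixel`/`putpixel`, which is slow on photos. The same 3x3 weighted sum can be computed with NumPy slicing. This sketch has the following assumptions:

- The image is already a NumPy array, for example `np.asarray(img.convert('RGB'))` with shape (height, width, 3).
- The kernel is a 3x3 nested list indexed `kernel[dy + 1][dx + 1]`.

The script's flat `filter` list uses the transposed layout. For an asymmetric entry such as `[0, 1, 0, 0, -2, 0, 0, 1, 0]`, pass `np.reshape(filter, (3, 3)).T` to keep the same orientation. For the symmetric kernels in the list, the transpose makes no difference. Like the script, the sketch leaves the one-pixel border black and clamps results to 0..255. `filter_3x3` is a hypothetical name.

```python
import numpy as np


def filter_3x3(img, kernel):
    """Vectorised version of the 3x3 filter loop (sketch).

    img: uint8 array, shape (H, W) or (H, W, C).
    kernel: 3x3 weights indexed kernel[dy + 1][dx + 1].
    The one-pixel border stays 0, as in filter_3by3.py.
    """
    src = np.asarray(img, dtype=np.float64)
    k = np.asarray(kernel, dtype=np.float64)
    h, w = src.shape[:2]
    out = np.zeros_like(src)
    if h < 3 or w < 3:
        return out.astype(np.uint8)
    acc = np.zeros_like(src[1:-1, 1:-1])
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            # neighbour at offset (dy, dx) for every interior pixel at once
            acc += k[dy + 1, dx + 1] * src[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
    # truncate toward zero, then clamp, like int() followed by min/max
    out[1:-1, 1:-1] = np.clip(np.trunc(acc), 0, 255)
    return out.astype(np.uint8)
```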

--------------------------------------------------------------------------------

/measure_color_average.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | import numpy as np

5 |

6 | if __name__ == '__main__':

7 | param = sys.argv

8 | if (len(param) != 3):

9 | print("Usage: $ python " + param[0] + " sample.jpg rgb|hsv")

10 | quit()

11 |

12 | # open image file

13 | bgr_img = cv2.imread(param[1])

14 | hsv_img = cv2.cvtColor(bgr_img, cv2.COLOR_BGR2HSV)

15 |

16 | average_bgr = [0,0,0]

17 | average_hsv = [0,0,0]

18 |

19 | # measure average of RGB color

20 | for i in range(3):

21 | extract_img = bgr_img[:,:,i]

22 | extract_img = extract_img[extract_img>0]

23 | average_bgr[i] = np.average(extract_img)

24 |

25 | for i in range(3):

26 | extract_img = hsv_img[:,:,i]

27 | extract_img = extract_img[extract_img>0]

28 | average_hsv[i] = np.average(extract_img)

29 |

30 | if param[2] == "rgb":

31 | # print as R,G,B (OpenCV stores channels in BGR order)

32 | print(str(average_bgr[2])+","+str(average_bgr[1])+","+str(average_bgr[0]))

33 | elif param[2] == "hsv":

34 | # print as H,S,V

35 | print(str(average_hsv[0])+","+str(average_hsv[1])+","+str(average_hsv[2]))

36 | else:

37 | print("Invalid option. Please select rgb or hsv.")

38 |
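The script averages each channel over its non-zero entries only, presumably so it can run on masked images where excluded pixels are black, such as extract_color.py output. Two details are worth knowing:

- The zero test is per channel, not per pixel.
- Hue 0 (pure red) is also dropped from the H average.

Below is a NumPy sketch of the same rule with the empty case handled explicitly; `np.average` of an empty selection would yield `nan`. `nonzero_channel_means` is a hypothetical helper name.

```python
import numpy as np


def nonzero_channel_means(img):
    """Per-channel mean ignoring zero entries, as in measure_color_average.py (sketch).

    Each channel is filtered independently, so a pixel (0, 40, 90) still
    counts toward channels 1 and 2. Returns None for an all-zero channel.
    """
    arr = np.asarray(img)
    means = []
    for i in range(arr.shape[2]):
        values = arr[:, :, i]
        values = values[values > 0]  # drop zeros in this channel only
        means.append(float(values.mean()) if values.size else None)
    return means
```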

--------------------------------------------------------------------------------

/photo_cat.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 |

4 | img1 = cv2.imread('image-1.jpg')

5 | img2 = cv2.imread('image-2.jpg')

6 | img3 = cv2.imread('image-3.jpg')

7 | img4 = cv2.imread('image-4.jpg')

8 |

9 | img5 = cv2.vconcat([img1, img2])

10 | img6 = cv2.vconcat([img3, img4])

11 | img7 = cv2.hconcat([img5, img6])

12 | cv2.imwrite('output.jpg', img7)

13 |
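photo_cat.py builds a 2x2 grid: image-1 above image-2 in the left column, and image-3 above image-4 in the right column. `cv2.vconcat` needs equal widths and `cv2.hconcat` needs equal heights, so the four photos usually have to be resized to matching sizes first.

Below is the same layout in plain NumPy. It assumes every input is an array with the same dtype and channel count. `tile_2x2` is a hypothetical name.

```python
import numpy as np


def tile_2x2(top_left, bottom_left, top_right, bottom_right):
    """Same layout as photo_cat.py: stack each column, then join the columns (sketch)."""
    left = np.vstack([top_left, bottom_left])      # like cv2.vconcat([img1, img2])
    right = np.vstack([top_right, bottom_right])   # like cv2.vconcat([img3, img4])
    if left.shape[0] != right.shape[0]:
        raise ValueError("both columns must have the same total height")
    return np.hstack([left, right])                # like cv2.hconcat([img5, img6])
```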

--------------------------------------------------------------------------------

/photo_date_print.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import numpy as np

3 | from PIL import Image, ImageDraw, ImageFont

4 | import sys

5 |

6 | font_color = (255, 255, 255)

7 |

8 |

9 | def print_date(file, date):

10 | base_img_cv2 = cv2.imread(file)

11 |

12 | base_img = Image.open(file).convert('RGBA')

13 | txt = Image.new('RGB', base_img.size, (0, 0, 0))

14 | draw = ImageDraw.Draw(txt)

15 | fnt = ImageFont.truetype('./NotoSansJP-Regular.ttf',

16 | size=(int)((base_img.size[0]+base_img.size[1])/100))

17 |

18 | bbox = draw.textbbox((0, 0), date, font=fnt)

19 | text_width, text_height = bbox[2] - bbox[0], bbox[3] - bbox[1]

20 |

21 | draw.text(((base_img.size[0]*0.95 - text_width),

22 | (base_img.size[1]*0.95 - text_height)),

23 | date, font=fnt, fill=font_color)

24 |

25 | txt_array = np.array(txt)

26 |

27 | output_img = cv2.addWeighted(base_img_cv2, 1.0, txt_array, 1.0, 0)

28 | return output_img

29 |

30 |

31 | if __name__ == '__main__':

32 | param = sys.argv

33 | if (len(param) != 3):

34 | print("Usage: $ python " + param[0] + " sample.jpg date_text")

35 | quit()

36 |

37 | output_img = print_date(param[1], param[2])

38 | cv2.imwrite(param[2].replace(':', '_').replace(' ', '_') + "_" + param[1], output_img)

39 |

--------------------------------------------------------------------------------

/photo_exif_date_print.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 | import numpy as np

5 | from PIL import Image, ImageDraw, ImageFont

6 | from PIL.ExifTags import TAGS, GPSTAGS

7 |

8 | font_color = (255, 255, 255)

9 |

10 | def get_exif(file,field):

11 | img = Image.open(file)

12 | exif = img._getexif()

13 |

14 | exif_data = []

15 | for id, value in exif.items():

16 | if TAGS.get(id) == field:

17 | tag = TAGS.get(id, id),value

18 | exif_data.extend(tag)

19 |

20 | return exif_data

21 |

22 |

23 | def get_exif_of_image(file):

24 | im = Image.open(file)

25 |

26 | try:

27 | exif = im._getexif()

28 | except AttributeError:

29 | return {}

30 |

31 | exif_table = {}

32 | for tag_id, value in exif.items():

33 | tag = TAGS.get(tag_id, tag_id)

34 | exif_table[tag] = value

35 |

36 | return exif_table

37 |

38 |

39 | def get_date_of_image(file):

40 | exif_table = get_exif_of_image(file)

41 | return exif_table.get("DateTimeOriginal")

42 |

43 |

44 | def put_date(file, date):

45 | base_img_cv2 = cv2.imread(file)

46 |

47 | base_img = Image.open(file).convert('RGBA')

48 | txt = Image.new('RGB', base_img.size, (0, 0, 0))

49 | draw = ImageDraw.Draw(txt)

50 | fnt = ImageFont.truetype('./Arial Black.ttf', size=(int)((base_img.size[0]+base_img.size[1])/100))

51 |

52 | bbox = draw.textbbox((0, 0), date, font=fnt)  # textsize() was removed in Pillow 10

53 | textw, texth = bbox[2] - bbox[0], bbox[3] - bbox[1]

54 | draw.text(((base_img.size[0]*0.95 - textw) , (base_img.size[1]*0.95 - texth)),

55 | date, font=fnt, fill=font_color)

56 |

57 | txt_array = np.array(txt)

58 |

59 | output_img = cv2.addWeighted(base_img_cv2, 1.0, txt_array, 1.0, 0)

60 | return output_img

61 |

62 |

63 | if __name__ == '__main__':

64 | param = sys.argv

65 | if (len(param) != 2):

66 | print ("Usage: $ python " + param[0] + " sample.jpg")

67 | quit()

68 |

69 | try:

70 | date = get_date_of_image(param[1])

71 | output_img = put_date(param[1], date)

72 | cv2.imwrite(date.replace(':','_').replace(' ','_') + "_" + param[1], output_img)

73 | except:

74 | base_img_cv2 = cv2.imread(param[1])

75 | cv2.imwrite("nodate_" + param[1], base_img_cv2)

76 |
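EXIF `DateTimeOriginal` is a text value of the form `YYYY:MM:DD HH:MM:SS`. The script turns it into a filename prefix with two `replace()` calls and relies on a bare `except:` to fall back to `nodate_`.

The sketch below validates the value first, so a missing, malformed, or placeholder date is handled explicitly instead of through an exception. It produces the same `YYYY_MM_DD_HH_MM_SS` form as the script. `exif_date_prefix` is a hypothetical helper.

```python
from datetime import datetime


def exif_date_prefix(date_text):
    """Turn an EXIF DateTimeOriginal string into a filename-safe prefix (sketch).

    Returns None when the value is missing or not "YYYY:MM:DD HH:MM:SS".
    """
    if not date_text:
        return None
    try:
        # strip trailing NULs and whitespace that some writers leave behind
        taken = datetime.strptime(date_text.strip("\x00").strip(), "%Y:%m:%d %H:%M:%S")
    except ValueError:
        return None
    return taken.strftime("%Y_%m_%d_%H_%M_%S")
```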

--------------------------------------------------------------------------------

/pillow_numpy_basic.py:

--------------------------------------------------------------------------------

1 | from PIL import Image

2 | import numpy as np

3 | import sys

4 |

5 | def image_process(src):

6 | width, height = src.size

7 | dst = Image.new('RGB', (width, height))

8 |

9 | img_pixels = np.array([[src.getpixel((x,y)) for y in range(height)] for x in range(width)])

10 |

11 | for y in range(height):

12 | for x in range(width):

13 | r,g,b = img_pixels[x][y]

14 | dst.putpixel((x,y), (r,g,b))

15 |

16 | return dst

17 |

18 | if __name__ == '__main__':

19 | param = sys.argv

20 | if (len(param) != 2):

21 | print ("Usage: $ python " + param[0] + " sample.jpg")

22 | quit()

23 |

24 | # open image file

25 | try:

26 | input_img = Image.open(param[1]).convert('RGB')  # image_process() unpacks r,g,b per pixel

27 | except Exception:

28 | print('failed to load %s' % param[1])

29 | quit()

30 |

31 | if input_img is None:

32 | print('failed to load %s' % param[1])

33 | quit()

34 |

35 | output_img = image_process(input_img)

36 | output_img.save("process_" + param[1])

37 | output_img.show()

38 |

--------------------------------------------------------------------------------

/resize-all.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | WIDTH_RATIO=10

4 | HEIGHT_RATIO=10

5 |

6 | echo resize processing...

7 | for f in *.JPG

8 | do

9 | echo python resize.py $f $WIDTH_RATIO $HEIGHT_RATIO

10 | python resize.py $f $WIDTH_RATIO $HEIGHT_RATIO

11 | done

12 | echo done

13 |

--------------------------------------------------------------------------------

/resize.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import cv2

3 | import sys

4 |

5 |

6 | def resize(src, w_ratio, h_ratio):

7 | """Resize image

8 |

9 | Args:

10 | src (numpy.ndarray): Image

11 | w_ratio (int): Width ratio

12 | h_ratio (int): Height ratio

13 |

14 | Returns:

15 | numpy.ndarray: Image

16 | """

17 |

18 | height = src.shape[0]

19 | width = src.shape[1]

20 | dst = cv2.resize(src, ((int)(width/100*w_ratio), (int)(height/100*h_ratio)))

21 | return dst

22 |

23 |

24 | if __name__ == '__main__':

25 | param = sys.argv

26 | if(len(param) != 4):

27 | print("Usage: $ python " + param[0] + " sample.jpg width_ratio height_ratio")

28 | quit()

29 |

30 | # open image file

31 | try:

32 | input_img = cv2.imread(param[1])

33 | except Exception as e:

34 | print(e)

35 | quit()

36 |

37 | if input_img is None:

38 | print('failed to load %s' % param[1])

39 | quit()

40 |

41 | w_ratio = int(param[2])

42 | h_ratio = int(param[3])

43 |

44 | output_img = resize(input_img, w_ratio, h_ratio)

45 | cv2.imwrite(param[1], output_img)

46 |
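resize.py treats both ratios as percentages of the original size and writes the result back over the input file, so keep a copy of the originals before running it or resize-all.sh.

The target size can also be computed with integer arithmetic instead of `int(width/100*w_ratio)`. The sketch below does that and clamps each side to at least one pixel, since `cv2.resize` cannot produce a 0-pixel dimension. `scaled_size` is a hypothetical helper; its result is in the `(width, height)` order that `cv2.resize` expects.

```python
def scaled_size(width, height, w_ratio, h_ratio):
    """Target (width, height) for percentage ratios (sketch).

    Integer arithmetic avoids float rounding surprises; each side is at least 1.
    """
    if w_ratio <= 0 or h_ratio <= 0:
        raise ValueError("ratios must be positive percentages")
    new_w = max(1, width * w_ratio // 100)
    new_h = max(1, height * h_ratio // 100)
    return new_w, new_h
```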

--------------------------------------------------------------------------------

/sift.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import sys

3 | import numpy as np

4 |

5 | def drawMatches(img1, kp1, img2, kp2, matches):

6 | rows1 = img1.shape[0]

7 | cols1 = img1.shape[1]

8 | rows2 = img2.shape[0]

9 | cols2 = img2.shape[1]

10 |

11 | out = np.zeros((max([rows1,rows2]),cols1+cols2,3), dtype='uint8')

12 |

13 | out[:rows1,:cols1,:] = np.dstack([img1, img1, img1])

14 |

15 | out[:rows2,cols1:cols1+cols2,:] = np.dstack([img2, img2, img2])

16 |

17 | for mat in matches:

18 |

19 | img1_idx = mat.queryIdx

20 | img2_idx = mat.trainIdx

21 |

22 | (x1,y1) = kp1[img1_idx].pt

23 | (x2,y2) = kp2[img2_idx].pt

24 |

25 | cv2.circle(out, (int(x1),int(y1)), 4, (255, 0, 0), 1)

26 | cv2.circle(out, (int(x2)+cols1,int(y2)), 4, (255, 0, 0), 1)

27 |

28 | cv2.line(out, (int(x1),int(y1)), (int(x2)+cols1,int(y2)), (255, 0, 0), 1)

29 |

30 | cv2.imshow('Matched Features', out)

31 | cv2.waitKey(0)

32 | cv2.destroyAllWindows()

33 |

34 | if __name__ == '__main__':

35 | param = sys.argv

36 | if (len(param) != 3):

37 | print("Usage: $ python " + param[0] + " sample1.jpg sample2.jpg")

38 | quit()

39 |

40 | # open image file

41 | try:

42 | input_img1 = cv2.imread(param[1])

43 | except Exception:

44 | print('failed to load %s' % param[1])

45 | quit()

46 |

47 | if input_img1 is None:

48 | print('failed to load %s' % param[1])

49 | quit()

50 |

51 | try:

52 | input_img2 = cv2.imread(param[2])

53 | except Exception:

54 | print('failed to load %s' % param[2])

55 | quit()

56 |

57 | if input_img2 is None:

58 | print('failed to load %s' % param[2])

59 | quit()

60 |

61 | gray= cv2.cvtColor(input_img1,cv2.COLOR_BGR2GRAY)

62 | gray2= cv2.cvtColor(input_img2,cv2.COLOR_BGR2GRAY)

63 |

64 | sift = cv2.SIFT_create()  # OpenCV >= 4.4; older contrib builds use cv2.xfeatures2d.SIFT_create()

65 |

66 | kp ,des = sift.detectAndCompute(gray, None)

67 | kp2 ,des2 = sift.detectAndCompute(gray2, None)

68 |

69 | matcher = cv2.DescriptorMatcher_create("FlannBased")

70 | matches = matcher.match(des,des2)

71 |

72 | drawMatches(gray, kp, gray2, kp2, sorted(matches, key=lambda m: m.distance)[:100])  # show the 100 closest matches

73 |

74 |

--------------------------------------------------------------------------------

/tests/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/karaage0703/python-image-processing/0df536fb82883ee160217859f85340d68ff51270/tests/__init__.py

--------------------------------------------------------------------------------

/tests/test_extract_color.py:

--------------------------------------------------------------------------------

1 | import pytest

2 | from extract_color import extract_color

3 | import numpy as np

4 |

5 | import cv2

6 |

7 |

8 | # Helper that creates a solid-color dummy image for testing

9 | def create_dummy_image(width, height, color):

10 | image = np.zeros((height, width, 3), dtype=np.uint8)

11 | image[:] = color

12 | return image

13 |

14 |

15 | # Test case 1: h_th_low is lower than h_th_up

16 | def test_extract_color_low_high():

17 | # Create the image (here, a blue image)

18 | src = create_dummy_image(100, 100, (255, 0, 0))

19 | h_th_low = 50

20 | h_th_up = 100

21 | s_th = 50

22 | v_th = 50

23 |

24 | # Run extract_color

25 | result = extract_color(src, h_th_low, h_th_up, s_th, v_th)

26 |

27 | # Compute the expected result

28 | expected = np.zeros((100, 100), dtype=np.uint8)

29 | # Blue has H = 120 in OpenCV's HSV, so the result should be black (all pixels 0)

30 |

31 | # Assert the result

32 | assert np.array_equal(result, expected), "The extract_color function did not perform as expected with low < high."

33 |

34 | # Test case 2: h_th_low is higher than h_th_up

35 | def test_extract_color_high_low():

36 | # Create the image (here, a green image)

37 | src = create_dummy_image(100, 100, (0, 255, 0))

38 | h_th_low = 70

39 | h_th_up = 50

40 | s_th = 50

41 | v_th = 50

42 |

43 | # Run extract_color

44 | result = extract_color(src, h_th_low, h_th_up, s_th, v_th)

45 |

46 | # Compute the expected result

47 | expected = np.zeros((100, 100), dtype=np.uint8)

48 |

49 | # Assert the result

50 | assert np.array_equal(result, expected), "The extract_color function did not perform as expected with high > low."

51 |

52 |

53 | # Test case 3: check the s_th and v_th thresholds

54 | def test_extract_color_sv_threshold():

55 | # Create a low-saturation image (neutral gray: S = 0, V = 128)

56 | src = create_dummy_image(100, 100, (128, 128, 128))

57 | h_th_low = 20

58 | h_th_up = 30

59 | s_th = 100  # set a high saturation threshold

60 | v_th = 100  # set a low value (brightness) threshold

61 |

62 | # Run extract_color

63 | result = extract_color(src, h_th_low, h_th_up, s_th, v_th)

64 |

65 | # Compute the expected result

66 | expected = np.zeros((100, 100), dtype=np.uint8)  # S = 0 does not exceed s_th, so every pixel should be excluded

67 |

68 | # Assert the result

69 | assert np.array_equal(result, expected), "The s_th and v_th thresholds did not filter out the pixels correctly."

70 |

71 |

72 | # Test case 5: the source image is None

73 | def test_extract_color_with_none_image():

74 | with pytest.raises(cv2.error):

75 | extract_color(None, 50, 100, 50, 100)

76 |

--------------------------------------------------------------------------------

/watershed.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-
2 | import os
3 | import sys
4 |
5 | import cv2
6 | import numpy as np
7 | # from matplotlib import pyplot as plt
8 |
9 |
10 | def watershed(src):
11 |     """Segment a BGR image with marker-based watershed.
12 |
13 |     Returns the int32 marker array (-1 marks boundaries) and src with the
14 |     boundaries painted blue. Note that src is modified in place.
15 |     """
16 |     # Convert the BGR image to grayscale
17 |     gray = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)
18 |
19 |     # Binarize with Otsu's method; with THRESH_BINARY_INV, pixels darker than the threshold become 255
20 |     thresh, bin_img = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)
21 |     # print(thresh)  # threshold chosen by Otsu's method
22 |
23 |     # Remove noise with a morphological opening
24 |     kernel = np.ones((3, 3), np.uint8)
25 |     opening = cv2.morphologyEx(bin_img, cv2.MORPH_OPEN, kernel, iterations=2)
26 |
27 |     # Sure background area: dilate the foreground; everything outside it is background
28 |     sure_bg = cv2.dilate(opening, kernel, iterations=3)
29 |
30 |     # Sure foreground area: pixels farther than 70% of the maximum distance from the background
31 |     dist_transform = cv2.distanceTransform(opening, cv2.DIST_L2, 5)
32 |     ret, sure_fg = cv2.threshold(dist_transform, 0.7 * dist_transform.max(), 255, cv2.THRESH_BINARY)
33 |
34 |     # Unknown region: neither sure background nor sure foreground
35 |     sure_fg = np.uint8(sure_fg)
36 |     unknown = cv2.subtract(sure_bg, sure_fg)
37 |
38 |     # Label each sure-foreground blob (the background gets label 0)
39 |     ret, markers = cv2.connectedComponents(sure_fg)
40 |
41 |     # Add one to all labels so that the sure background is 1 instead of 0
42 |     markers = markers + 1
43 |
44 |     # Mark the unknown region with 0 so that watershed decides it
45 |     markers[unknown == 255] = 0
46 |
47 |     # Apply watershed; boundary pixels are labeled -1
48 |     markers = cv2.watershed(src, markers)
49 |     src[markers == -1] = [255, 0, 0]  # blue in BGR
50 |
51 |     # Check the markers visually (requires the matplotlib import above)
52 |     # plt.imshow(markers)
53 |     # plt.show()
54 |
55 |     # Check the label counts
56 |     # print(np.unique(markers, return_counts=True))
57 |
58 |     return markers, src
59 |
60 |
61 | if __name__ == '__main__':
62 |     param = sys.argv
63 |     if len(param) != 2:
64 |         print("Usage: $ python " + param[0] + " sample.jpg")
65 |         sys.exit(1)
66 |
67 |     # Open the image file; cv2.imread returns None (it does not raise) on failure
68 |     input_img = cv2.imread(param[1])
69 |     if input_img is None:
70 |         print('failed to load %s' % param[1])
71 |         sys.exit(1)
72 |
73 |     markers, img = watershed(input_img)
74 |     # Write the results to the current directory, prefixing the input file name
75 |     name = os.path.basename(param[1])
76 |     cv2.imwrite("watershed_markers_" + name, markers)
77 |     cv2.imwrite("watershed_image_" + name, img)
78 |

--------------------------------------------------------------------------------
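In `watershed()`, `cv2.threshold` is called with `THRESH_OTSU`, so the threshold argument `0` is ignored and OpenCV chooses the threshold itself. A minimal NumPy sketch of the criterion behind Otsu's method: try every threshold `t` and keep the one that maximizes the between-class variance of the two groups `<= t` and `> t`. The name `otsu_threshold` is hypothetical, and this illustrates the criterion rather than OpenCV's implementation, so tie-breaking on flat histograms may differ.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the threshold t (0-255) that maximizes the between-class variance."""
    levels = np.arange(256)
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)          # weight of the class with values <= t
    mu = np.cumsum(prob * levels)    # first moment of that class
    mu_total = mu[-1]                # mean intensity of the whole image
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / denom
    sigma_b2[denom == 0] = 0.0       # one class is empty: not a real split
    return int(np.argmax(sigma_b2))  # first t reaching the maximum


# Usage: dark objects (10) on a bright background (200)
img = np.full((4, 4), 200, dtype=np.uint8)
img[1:3, 1:3] = 10
t = otsu_threshold(img)
# Same convention as THRESH_BINARY_INV: pixels <= t become 255
bin_img = np.where(img <= t, 255, 0).astype(np.uint8)
```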

30 |

31 | ## References

32 | - https://qiita.com/ysdyt/items/5972c9520acf6a094d90

33 |

--------------------------------------------------------------------------------
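`watershed.py` writes the raw `markers` array with `cv2.imwrite`. Its values are small integer labels (1 for the sure background, 2 and up for each object, -1 on boundaries), so the saved image tends to look almost black. As a minimal sketch, assuming a viewable picture is wanted instead of the matplotlib check that is commented out in `watershed()`, the labels can be mapped to random colors with NumPy. The name `colorize_markers` and the color choices (black background, white boundaries) are hypothetical.

```python
import numpy as np


def colorize_markers(markers, seed=0):
    """Map a watershed label array (int32, -1 = boundary) to a BGR uint8 image."""
    markers = np.asarray(markers)
    n_labels = max(int(markers.max()) + 1, 2)
    rng = np.random.default_rng(seed)
    # One random color per label; two labels may occasionally get similar colors
    palette = rng.integers(0, 256, size=(n_labels, 3)).astype(np.uint8)
    palette[1] = 0                            # label 1 = sure background -> black
    out = palette[np.clip(markers, 0, None)]  # -1 is clipped to 0 for the lookup
    out[markers == -1] = 255                  # boundaries -> white
    return out


# Usage with a tiny hand-made label array: background 1, one object 2, boundary -1
markers = np.array([[1, 1, -1, 2],
                    [1, -1, 2, 2]], dtype=np.int32)
vis = colorize_markers(markers)
```

In `watershed.py` the result could be passed to `cv2.imwrite` in place of `markers`; a fixed `seed` keeps the colors stable between runs.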

/extract_color.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-
2 | import cv2
3 | import sys
4 | import numpy as np
5 |
6 | # Return a binary mask (255 = match) of the pixels inside the given HSV thresholds
7 | def extract_color(src, h_th_low, h_th_up, s_th, v_th):
8 |     hsv = cv2.cvtColor(src, cv2.COLOR_BGR2HSV)
9 |     h, s, v = cv2.split(hsv)
10 |     if h_th_low > h_th_up:
11 |         ret, h_dst_1 = cv2.threshold(h, h_th_low, 255, cv2.THRESH_BINARY)
12 |         ret, h_dst_2 = cv2.threshold(h, h_th_up, 255, cv2.THRESH_BINARY_INV)
13 |         # The hue range wraps around (e.g. red): keep h > h_th_low OR h <= h_th_up
14 |         dst = cv2.bitwise_or(h_dst_1, h_dst_2)
15 |     else:  # keep h_th_low < h <= h_th_up
16 |         ret, dst = cv2.threshold(h, h_th_low, 255, cv2.THRESH_TOZERO)
17 |         ret, dst = cv2.threshold(dst, h_th_up, 255, cv2.THRESH_TOZERO_INV)
18 |         ret, dst = cv2.threshold(dst, 0, 255, cv2.THRESH_BINARY)
19 |     # Also require saturation > s_th and value > v_th
20 |     ret, s_dst = cv2.threshold(s, s_th, 255, cv2.THRESH_BINARY)
21 |     ret, v_dst = cv2.threshold(v, v_th, 255, cv2.THRESH_BINARY)
22 |     dst = cv2.bitwise_and(dst, s_dst)
23 |     dst = cv2.bitwise_and(dst, v_dst)
24 |     return dst
25 |
26 | if __name__ == '__main__':
27 |     param = sys.argv
28 |     if len(param) != 6:
29 |         print("Usage: $ python " + param[0] + " sample.jpg h_min h_max s_th v_th")
30 |         sys.exit(1)
31 |     # Open the image file
32 |     try:
33 |         input_img = cv2.imread(param[1])
34 |     except Exception:
35 |         print('failed to load %s' % param[1])
36 |         sys.exit(1)
37 |
38 |     if input_img is None:
39 |         print('failed to load %s' % param[1])
40 |         sys.exit(1)
41 |
42 |     # Parameter settings
43 |     h_min = int(param[2])
44 |     h_max = int(param[3])
45 |     s_th = int(param[4])
46 |     v_th = int(param[5])
47 |     # Build the mask with extract_color
48 |     msk_img = extract_color(input_img, h_min, h_max, s_th, v_th)
49 |     # Apply the mask: keep only the extracted pixels
50 |     output_img = cv2.bitwise_and(input_img, input_img, mask=msk_img)
51 |