├── .gitignore
├── .pre-commit-config.yaml
├── CHANGELOG.md
├── LICENSE
├── README.md
├── assests
│   ├── AutoDeploy_architecture.png
│   ├── developer.md
│   └── src.png
├── autodeploy
│   ├── __init__.py
│   ├── __version__.py
│   ├── _backend
│   │   ├── __init__.py
│   │   ├── _database.py
│   │   ├── _heartbeat.py
│   │   ├── _rmq
│   │   │   ├── _client.py
│   │   │   └── _server.py
│   │   └── redis
│   │       └── redis_db.py
│   ├── _schema
│   │   ├── __init__.py
│   │   ├── _schema.py
│   │   └── _security.py
│   ├── api.py
│   ├── base
│   │   ├── __init__.py
│   │   ├── base_deploy.py
│   │   ├── base_infere.py
│   │   ├── base_loader.py
│   │   ├── base_metric.py
│   │   └── base_moniter_driver.py
│   ├── config
│   │   ├── __init__.py
│   │   ├── config.py
│   │   └── logging.conf
│   ├── database
│   │   ├── __init__.py
│   │   ├── _database.py
│   │   └── _models.py
│   ├── dependencies
│   │   ├── __init__.py
│   │   └── _dependency.py
│   ├── handlers
│   │   ├── __init__.py
│   │   └── _handlers.py
│   ├── loader
│   │   ├── __init__.py
│   │   ├── _loaders.py
│   │   └── _model_loader.py
│   ├── logger
│   │   ├── __init__.py
│   │   └── logger.py
│   ├── monitor.py
│   ├── monitor
│   │   ├── __init__.py
│   │   ├── _drift_detection.py
│   │   ├── _monitor.py
│   │   └── _prometheus.py
│   ├── predict.py
│   ├── predict
│   │   ├── __init__.py
│   │   ├── _infere.py
│   │   └── builder.py
│   ├── register
│   │   ├── __init__.py
│   │   └── register.py
│   ├── routers
│   │   ├── __init__.py
│   │   ├── _api.py
│   │   ├── _model.py
│   │   ├── _predict.py
│   │   └── _security.py
│   ├── security
│   │   └── scheme.py
│   ├── service
│   │   └── _infere.py
│   ├── testing
│   │   └── load_test.py
│   └── utils
│       ├── registry.py
│       └── utils.py
├── bin
│   ├── autodeploy_start.sh
│   ├── build.sh
│   ├── load_test.sh
│   ├── monitor_start.sh
│   ├── predict_start.sh
│   └── start.sh
├── configs
│   ├── classification
│   │   └── config.yaml
│   ├── iris
│   │   └── config.yaml
│   └── prometheus.yml
├── dashboard
│   └── dashboard.json
├── docker
│   ├── Dockerfile
│   ├── MonitorDockerfile
│   ├── PredictDockerfile
│   ├── PrometheusDockerfile
│   └── docker-compose.yml
├── k8s
│   ├── autodeploy-deployment.yaml
│   ├── autodeploy-service.yaml
│   ├── default-networkpolicy.yaml
│   ├── grafana-deployment.yaml
│   ├── grafana-service.yaml
│   ├── horizontal-scale.yaml
│   ├── metric-server.yaml
│   ├── monitor-deployment.yaml
│   ├── monitor-service.yaml
│   ├── prediction-deployment.yaml
│   ├── prediction-service.yaml
│   ├── prometheus-deployment.yaml
│   ├── prometheus-service.yaml
│   ├── rabbitmq-deployment.yaml
│   ├── rabbitmq-service.yaml
│   ├── redis-deployment.yaml
│   └── redis-service.yaml
├── notebooks
│   ├── autodeploy_classification_inference.ipynb
│   ├── classification_autodeploy.ipynb
│   ├── convert to onnx.ipynb
│   ├── horse_1.jpg
│   ├── test_auto_deploy.ipynb
│   └── zebra_1.jpg
├── pyproject.toml
└── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

131 | *.pkl

132 |

133 | *.db

134 | .vim

135 |

136 | .DS_Store

137 | *.swp

138 | *.npy

139 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # See https://pre-commit.com for more information

2 | # See https://pre-commit.com/hooks.html for more hooks

3 | repos:
4 |   - repo: https://github.com/pre-commit/pre-commit-hooks
5 |     rev: v2.2.1
6 |     hooks:
7 |       - id: trailing-whitespace
8 |       - id: end-of-file-fixer
9 |       - id: check-yaml
10 |       - id: check-added-large-files
11 |       - id: check-symlinks
12 |
13 |   - repo: https://github.com/pre-commit/mirrors-autopep8
14 |     rev: v1.4.4
15 |     hooks:
16 |       - id: autopep8
17 |   - repo: https://github.com/commitizen-tools/commitizen
18 |     rev: master
19 |     hooks:
20 |       - id: commitizen
21 |         stages: [commit-msg]

22 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | ## 2.0.0 (2021-09-30)

2 |

3 | ### Feat

4 |

5 | - **kubernetes**: Added k8s configuration files

6 |

7 | ### Refactor

8 |

9 | - **load_test.sh**: removed load_test.sh from root

10 |

11 | ## 1.0.0 (2021-09-26)

12 |

13 | ### Refactor

14 |

15 | - **monitor-service**: refactored monitor service

16 | - **autodeploy**: refactored api monitor and prediction service with internal config addition

17 | - **backend-redis**: using internal config for redis configuration

18 |

19 | ### Feat

20 |

21 | - **_rmq-client-and-server**: use internal config for rmq configuration

22 |

23 | ## v0.1.1b (2021-09-25)

24 |

25 | ## 0.3beta (2021-09-18)

26 |

27 | ## 0.2beta (2021-09-12)

28 |

29 | ## 0.1beta (2021-09-09)

30 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 NeuralLink

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | AutoDeploy

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

18 |

19 |

20 | A one liner :

21 | For the DevOps nerds, AutoDeploy allows configuration based MLOps.

22 |

23 | For the rest :

24 | So you're a data scientist and have the greatest model on planet Earth to classify dogs and cats! :) What next? It's a steep learning curve from building your model to getting it to production: MLOps, Docker, Kubernetes, asynchronous processing, Prometheus, logging, monitoring, versioning, etc. There is much more to do right before you get there. The immediate next thoughts and tasks are:

25 |

26 | - How do you get it out to your consumer to use as a service?
27 | - How do you monitor its use?
28 | - How do you test your model once deployed? And it can get trickier once you have multiple versions of your model. How do you perform
29 | A/B testing?
30 | - Can I configure custom metrics and monitor them?
31 | - What if my data distribution changes in production - how can I monitor data drift?
32 | - My models use different frameworks. Am I covered?

33 | ... and many more.

34 |

35 | # Architecture

36 |

37 |

38 |

39 | What if you could configure just a single file and get up and running with a single command? **That is what AutoDeploy is!**

40 |

41 | Read our [documentation](https://github.com/kartik4949/AutoDeploy/wiki) to learn how to get set up and start serving your models.

42 |

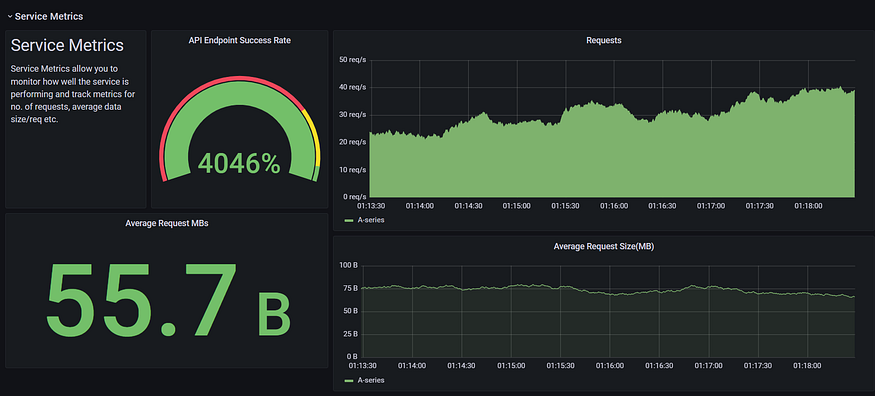

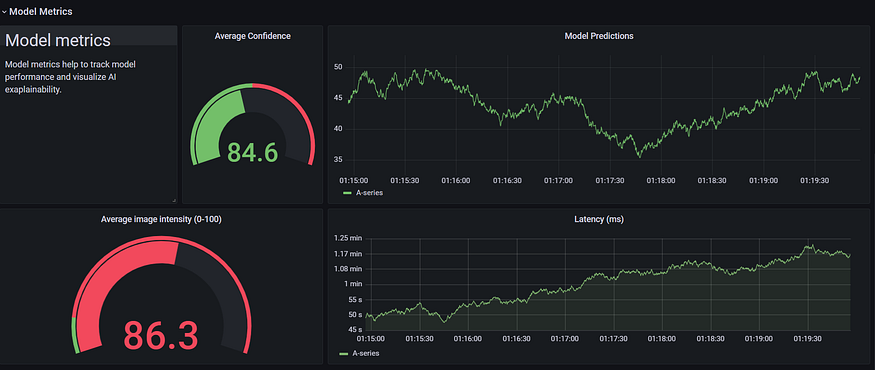

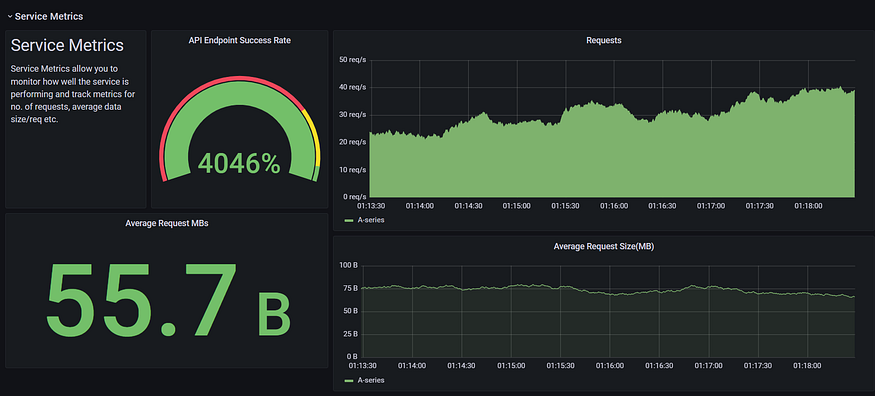

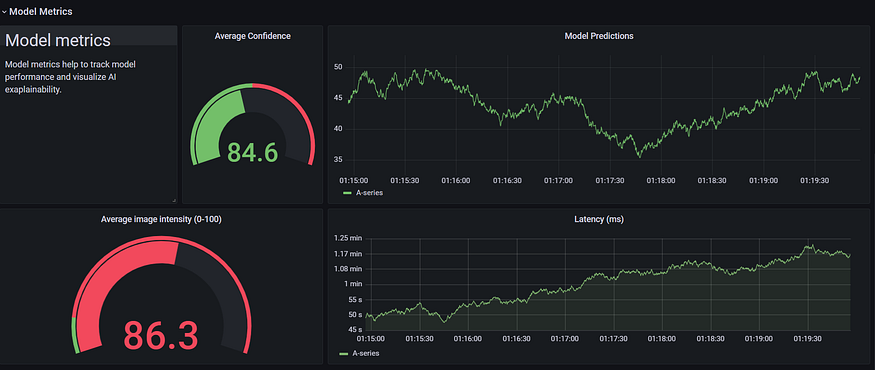

43 | # AutoDeploy monitoring dashboard

44 |

45 |

46 |

47 |

48 | and many more...

49 |

50 | # Feature Support.

51 |

52 | - [x] Single Configuration file support.

53 | - [x] Enterprise deployment architecture.

54 | - [x] Logging.

55 | - [x] Grafana Dashboards.

56 | - [x] Dynamic Database.

57 | - [x] Data Drift Monitoring.

58 | - [x] Async Model Monitoring.

59 | - [x] Network traffic monitoring.

60 | - [x] Realtime traffic simulation.

61 | - [x] Autoscaling of services.

62 | - [x] Kubernetes.

63 | - [x] Preprocess configuration.

64 | - [x] Postprocess configuration.

65 | - [x] Custom metrics configuration.

66 |

67 | ## Prerequisites

68 | - Install docker
69 |   - For Ubuntu (and Linux distros) - [Install Docker on Ubuntu](https://docs.docker.com/engine/install/ubuntu/#installation-methods)
70 |   - For Windows - [Install Docker on Windows](https://docs.docker.com/desktop/windows/install/)
71 |   - For Mac -
72 |
73 | - Install docker-compose
74 |   - For Ubuntu (and Linux distros) - [Install docker-compose on Linux](https://docs.docker.com/compose/install/)
75 |   - For Windows and Mac

76 |

77 | ## Steps

78 | - Clone the repo : https://github.com/kartik4949/AutoDeploy
79 | - Download a sample model and dependencies
80 |   - Run the command in a terminal from the AutoDeploy folder ``` wget https://github.com/kartik4949/AutoDeploy/files/7134516/model_dependencies.zip ```
81 |   - Extract the zip folder to get a **model_dependencies** folder
82 | - Have your model ready
83 |   - Pickle file for scikit-learn
84 |   - ONNX model for TensorFlow, PyTorch, MXNet, etc. [How to convert to ONNX model](https://github.com/onnx/tutorials)
85 | - Create the model [dependencies](https://github.com/kartik4949/AutoDeploy/wiki/Setup-Model-Dependencies)
86 | - Copy the dependencies over to a **model_dependencies** folder
87 | - Setup [configuration](https://google.com)
88 | - Steps for Docker deployment
89 |   - Build your docker image
90 |     - ```bash build.sh -r path/to/model/requirements.txt -c path/to/model/config.yaml```
91 |   - Start your containers
92 |     - ```bash start.sh -f path/to/config/file/in/autodeploy```
93 | - Steps for Kubernetes
94 |   - Build your docker image
95 |     - ```bash build.sh -r path/to/model/requirements.txt -c path/to/model/config.yaml```
96 |   - Apply the Kubernetes manifests in the `k8s` folder
97 |     - ``` kubectl apply -f k8s ```
98 |   - Print all pods
99 |     - ``` kubectl get pod ```
100 |   - Port forwarding of api and grafana service
101 |     - ``` kubectl port-forward autodeploy-pod-name 8000:8000 ```
102 |     - ``` kubectl port-forward grafana-pod-name 3000:3000 ```

103 |

104 | ## Example (Docker deployment) - Iris Model Detection (Sci-Kit Learn).

105 | - Clone repo.

106 | - Dump your iris sklearn model via pickle, let's say `custom_model.pkl`.
107 | - Make a dir model_dependencies inside AutoDeploy.
108 | - Move `custom_model.pkl` to model_dependencies.
109 | - Create or import a reference `iris_reference.npy` file for data drift monitoring.
110 |   - Note: `iris_reference.npy` is a numpy reference array used to detect drift in incoming data.
111 |   - This reference data usually has shape `(n, *shape_of_input)`, e.g. for iris data: `np.zeros((100, 4))`.
112 |   - Shape (100, 4) means we are using 100 data points as the reference for incoming input requests.
113 |
114 | - Move `iris_reference.npy` to model_dependencies folder.
115 | - Refer to the config file below, make changes in configs/iris/config.yaml and save it.
116 | - Lastly, make an empty reqs.txt file inside the model_dependencies folder.

117 | ```

118 | model:
119 |   model_type: 'sklearn'
120 |   model_path: 'custom_model.pkl' # Our model pickle file.
121 |   model_file_type: 'pickle'
122 |   version: '1.0.0'
123 |   model_name: 'sklearn iris detection model.'
124 |   endpoint: 'predict'
125 |   protected: 0
126 |   input_type: 'structured'
127 |   server:
128 |     name: 'autodeploy'
129 |     port: 8000
130 | dependency:
131 |   path: '/app/model_dependencies'
132 | input_schema:
133 |   petal_length: 'float'
134 |   petal_width: 'float'
135 |   sepal_length: 'float'
136 |   sepal_width: 'float'
137 | out_schema:
138 |   out: 'int'
139 |   probablity: 'float'
140 |   status: 'int'
141 | monitor:
142 |   server:
143 |     name: 'rabbitmq'
144 |     port: 5672
145 |   data_drift:
146 |     name: 'KSDrift'
147 |     reference_data: 'iris_reference.npy'
148 |     type: 'info'
149 |   metrics:
150 |     average_per_day:
151 |       type: 'info'

152 | ```

153 | - run ``` bash build.sh -r model_dependencies/reqs.txt -c configs/iris/config.yaml```

154 | - run ``` bash start.sh -f configs/iris/config.yaml ```

155 |

156 | Tada!! your model is deployed.
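The `data_drift` entry in the config above names `KSDrift` and points at `iris_reference.npy`. The name suggests a Kolmogorov-Smirnov comparison between the reference rows and incoming requests. The sketch below shows that idea for a single feature in plain Python; `ks_statistic` is a hypothetical helper written for illustration, not AutoDeploy's drift detector, and the sample values are made up.

```python
from bisect import bisect_right


def ks_statistic(reference, incoming):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, inc = sorted(reference), sorted(incoming)

    def ecdf(sample, x):
        # Fraction of `sample` that is <= x (sample must be sorted).
        return bisect_right(sample, x) / len(sample)

    # The gap can only change at observed values, so checking those is enough.
    return max(abs(ecdf(ref, x) - ecdf(inc, x)) for x in ref + inc)


# Illustrative petal_length values (hypothetical data, not from the repo).
reference = [1.4, 1.5, 4.7, 5.1, 5.9]
same = list(reference)
shifted = [x + 10.0 for x in reference]

print(ks_statistic(reference, same))     # identical samples: no gap
print(ks_statistic(reference, shifted))  # disjoint samples: maximal gap
```

A statistic near 0 means the incoming values look like the reference; a value near 1 means the two distributions barely overlap. A real detector would also turn the statistic into a p-value and handle several features at once.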

157 |

158 | ## Example (Docker deployment) - Classification Detection

159 |

160 | - Clone repo.

161 | - Convert the model to Onnx file `model.onnx`.

162 | - Make a dir model_dependencies inside AutoDeploy.

163 | - Move `model.onnx` to model_dependencies.

164 | - Create or import a reference `classification_reference.npy` file for data drift monitoring.

165 | - Move `classification_reference.npy` to model_dependencies folder.

166 | - Refer to the config file below, make changes in configs/classification/config.yaml and save it.

167 |

168 | ```

169 | model:
170 |   model_type: 'onnx'
171 |   model_path: 'horse_zebra.onnx'
172 |   model_file_type: 'onnx'
173 |   version: '1.0.0'
174 |   model_name: 'computer vision classification model.'
175 |   endpoint: 'predict'
176 |   protected: 0
177 |   input_type: 'serialized'
178 |   input_shape: [224, 224, 3]
179 |   server:
180 |     name: 'autodeploy'
181 |     port: 8000
182 | preprocess: 'custom_preprocess_classification'
183 | input_schema:
184 |   input: 'string'
185 | out_schema:
186 |   out: 'int'
187 |   probablity: 'float'
188 |   status: 'int'
189 | dependency:
190 |   path: '/app/model_dependencies'
191 | monitor:
192 |   server:
193 |     name: 'rabbitmq'
194 |     port: 5672
195 |   data_drift:
196 |     name: 'KSDrift'
197 |     reference_data: 'structured_ref.npy'
198 |     type: 'info'
199 |   custom_metrics: 'image_brightness'
200 |   metrics:
201 |     average_per_day:
202 |       type: 'info'

203 |

204 | ```

205 | - Make a reqs.txt file inside model_dependencies folder.

206 | - reqs.txt

207 | ```

208 | pillow

209 | ```

210 |

211 | - Make preprocess.py

212 | ```

213 | import cv2
214 | import numpy as np
215 |
216 | from register import PREPROCESS
217 |
218 | @PREPROCESS.register_module(name='custom_preprocess')
219 | def iris_pre_processing(input):
220 |     return input
221 |
222 | @PREPROCESS.register_module(name='custom_preprocess_classification')
223 | def custom_preprocess_fxn(input):
224 |     _channels = 3
225 |     _input_shape = (224, 224)
226 |     _channels_first = 1
227 |     input = cv2.resize(
228 |         input[0], dsize=_input_shape, interpolation=cv2.INTER_CUBIC)
229 |     if _channels_first:
230 |         input = np.reshape(input, (_channels, *_input_shape))
231 |     else:
232 |         input = np.reshape(input, (*_input_shape, _channels))
233 |     return np.asarray(input, np.float32)

234 |

235 | ```

236 | - Make postprocess.py

237 |

238 | ```

239 | from register import POSTPROCESS
240 |
241 | @POSTPROCESS.register_module(name='custom_postprocess')
242 | def custom_postprocess_fxn(output):
243 |     out_class, out_prob = output[0], output[1]
244 |     output = {'out': out_class,
245 |               'probablity': out_prob,
246 |               'status': 200}
247 |     return output

248 |

249 | ```

250 | - Make custom_metrics.py. We will define a custom metric that exposes `image_brightness`.

251 | ```

252 | import numpy as np
253 | from PIL import Image
254 | from register import METRICS
255 |
256 |
257 | @METRICS.register_module(name='image_brightness')
258 | def calculate_brightness(image):
259 |     image = Image.fromarray(np.asarray(image[0][0], dtype='uint8'))
260 |     greyscale_image = image.convert('L')
261 |     histogram = greyscale_image.histogram()
262 |     pixels = sum(histogram)
263 |     brightness = scale = len(histogram)
264 |
265 |     for index in range(0, scale):
266 |         ratio = histogram[index] / pixels
267 |         brightness += ratio * (-scale + index)
268 |
269 |     return 1.0 if brightness == 255 else brightness / scale

270 |

271 | ```

272 | - run ``` bash build.sh -r model_dependencies/reqs.txt -c configs/classification/config.yaml ```

273 | - run ``` bash start.sh -f configs/classification/config.yaml ```

274 | - To monitor the custom metric `image_brightness`: go to Grafana and add a panel to the dashboard with image_brightness as the metric.

275 |

276 |

277 | ## After deployment steps

278 | ### Model Endpoint

279 | - http://address:port/endpoint is your model endpoint e.g http://localhost:8000/predict
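As a rough illustration of calling that endpoint from a client, the sketch below builds a JSON request for the iris example using only the standard library. It assumes the endpoint accepts a POST whose JSON body carries the `input_schema` fields, which the README does not state explicitly; `build_predict_request` and the feature values are hypothetical.

```python
import json
from urllib import request

# Assumed values: host and port from the example config, path from `endpoint`.
ENDPOINT = "http://localhost:8000/predict"


def build_predict_request(features: dict) -> request.Request:
    """Build (but do not send) a JSON POST request for the model endpoint."""
    body = json.dumps(features).encode("utf-8")
    return request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Field names follow `input_schema` in the iris config; the numbers are made up.
payload = {
    "petal_length": 1.4,
    "petal_width": 0.2,
    "sepal_length": 5.1,
    "sepal_width": 3.5,
}
req = build_predict_request(payload)
print(req.get_method(), req.full_url)

# Against a running deployment the request could be sent with:
#   response = request.urlopen(req).read()
```

If the deployment behaves as assumed, the response body should follow `out_schema` (`out`, `probablity`, `status`).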

280 |

281 | ### Grafana

282 | - Open http://address:3000
283 | - Username and password are both `admin`.
284 | - Go to add data source.
285 | - Select the first option, Prometheus.
286 | - Add http://prometheus:9090 as the source URL.
287 | - Click save and test at the bottom.
288 | - Go to dashboards and click import JSON file.
289 | - Upload dashboard/dashboard.json, available in the repository.
290 | - Now you have your dashboard ready!! Feel free to add more panels with queries.

291 |

292 | ## Preprocess

293 | - Add preprocess.py in model_dependencies folder

294 | - Import the `PREPROCESS` registry from the `register` module to register your preprocess functions.

295 | ```

296 | from register import PREPROCESS

297 | ```

298 | - decorate your preprocess function with `@PREPROCESS.register_module(name='custom_preprocess')`

299 | ```

300 | @PREPROCESS.register_module(name='custom_preprocess')
301 | def function(input):
302 |     # process input
303 |     input = process(input)
304 |     return input

305 | ```

306 | - Remember that the name `custom_preprocess` is what we refer to in the config file, so add this to your config file.

307 | ```

308 | preprocess: custom_preprocess

309 | ```
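The decorator-plus-config-name mechanism described above can be pictured as a small name-to-function registry. The sketch below is an assumption about the general pattern, not the code in `register/register.py`; the `Registry` class, its `get` method, and `scale_inputs` are hypothetical names introduced for illustration.

```python
class Registry:
    """Hypothetical stand-in for the object behind `PREPROCESS`."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self, name):
        """Return a decorator that stores the function under `name`."""
        def decorator(fn):
            if name in self._modules:
                raise KeyError(f"{name!r} is already registered in {self.name}")
            self._modules[name] = fn
            return fn  # the function itself stays usable as-is
        return decorator

    def get(self, name):
        """Look up a registered function by the name used in the config."""
        return self._modules[name]


PREPROCESS = Registry("preprocess")


@PREPROCESS.register_module(name="custom_preprocess")
def scale_inputs(values):
    # Toy preprocessing step: rescale every value.
    return [v / 10.0 for v in values]


# The string would come from `preprocess: custom_preprocess` in the config.
preprocess_fn = PREPROCESS.get("custom_preprocess")
print(preprocess_fn([10, 20]))
```

Keying the lookup on a string is what lets the config file select a function without importing it by hand.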

310 |

311 | ## Postprocess

312 | - Same as preprocess

313 | - Just remember that the schema of the output from the postprocess method should be the same as the one defined in the config file

314 | - i.e

315 | ```

316 | out_schema:
317 |   out: 'int'
318 |   probablity: 'float'
319 |   status: 'int'

320 | ```
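One way to catch a mismatch between a postprocess function and `out_schema` before deploying is a small local check. This is a sketch under assumptions: `check_output` is a hypothetical helper, and the mapping from the schema's type names (`'int'`, `'float'`, `'string'`) to Python types is a guess at how they are interpreted.

```python
# Schema copied from the config fragment above (including its key spelling).
OUT_SCHEMA = {"out": "int", "probablity": "float", "status": "int"}

# Assumed mapping from config type names to Python types.
TYPES = {"int": int, "float": float, "string": str}


def check_output(output: dict, schema: dict = OUT_SCHEMA) -> list:
    """Return a sorted list of problems; an empty list means the output fits."""
    problems = []
    for key in schema.keys() - output.keys():
        problems.append(f"missing key: {key}")
    for key in output.keys() - schema.keys():
        problems.append(f"unexpected key: {key}")
    for key in schema.keys() & output.keys():
        expected = TYPES[schema[key]]
        if not isinstance(output[key], expected):
            actual = type(output[key]).__name__
            problems.append(f"{key}: expected {schema[key]}, got {actual}")
    return sorted(problems)


print(check_output({"out": 1, "probablity": 0.93, "status": 200}))
print(check_output({"out": 1, "status": "200"}))
```

Running such a check on one sample output of the postprocess function is cheaper than discovering the mismatch after the containers are built.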

321 |

322 | ## Custom Metrics

323 | - Import the `METRICS` registry with `from register import METRICS`.
324 | - Register your function with the `METRICS` decorator, similar to preprocess.

325 | - Example 1 : Simple single metric

326 | ```

327 | import numpy as np
328 | from PIL import Image
329 | from register import METRICS
330 |
331 |
332 | @METRICS.register_module(name='image_brightness')
333 | def calculate_brightness(image):
334 |     image = Image.fromarray(np.asarray(image[0][0], dtype='uint8'))
335 |     greyscale_image = image.convert('L')
336 |     histogram = greyscale_image.histogram()
337 |     pixels = sum(histogram)
338 |     brightness = scale = len(histogram)
339 |
340 |     for index in range(0, scale):
341 |         ratio = histogram[index] / pixels
342 |         brightness += ratio * (-scale + index)
343 |
344 |     return 1.0 if brightness == 255 else brightness / scale

345 |

346 | ```

347 | - We will use `image_brightness` in config file to expose this metric function.

348 | ```

349 | monitor:
350 |   server:
351 |     name: 'rabbitmq'
352 |     port: 5672
353 |   data_drift:
354 |     name: 'KSDrift'
355 |     reference_data: 'structured_ref.npy'
356 |     type: 'info'
357 |   custom_metrics: 'image_brightness'
358 |   metrics:
359 |     average_per_day:
360 |       type: 'info'

361 | ```

362 | - Example 2: Advanced setup with multiple metric functions

363 |

364 | ```

365 | import numpy as np
366 | from PIL import Image
367 | from register import METRICS
368 |
369 |
370 | @METRICS.register_module(name='metric1')
371 | def calculate_brightness(image):
372 |     return 1
373 |
374 | @METRICS.register_module(name='metric2')
375 | def metric2(image):
376 |     return 2

377 |

378 | ```

379 | - config looks like

380 | ```

381 | monitor:
382 |   server:
383 |     name: 'rabbitmq'
384 |     port: 5672
385 |   data_drift:
386 |     name: 'KSDrift'
387 |     reference_data: 'structured_ref.npy'
388 |     type: 'info'
389 |   custom_metrics: ['metric1', 'metric2']
390 |   metrics:
391 |     average_per_day:
392 |       type: 'info'

393 | ```
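To see what the `image_brightness` metric from Example 1 actually reports, the arithmetic of `calculate_brightness` can be replayed on a ready-made histogram without Pillow. Because the per-level ratios sum to 1, the loop reduces to the mean grey level, which is then divided by the number of levels. `brightness_from_histogram` and `mean_level` are hypothetical helper names, and the tiny histograms are made-up inputs.

```python
def brightness_from_histogram(histogram):
    """Same arithmetic as `calculate_brightness`, applied to a histogram."""
    pixels = sum(histogram)
    brightness = scale = len(histogram)
    for index in range(scale):
        ratio = histogram[index] / pixels
        brightness += ratio * (-scale + index)
    return 1.0 if brightness == 255 else brightness / scale


def mean_level(histogram):
    """Mean grey level, computed directly for comparison."""
    return sum(level * count for level, count in enumerate(histogram)) / sum(histogram)


# 256-bin histograms for three 4-pixel images (illustrative data).
black = [4] + [0] * 255            # every pixel at level 0
white = [0] * 255 + [4]            # every pixel at level 255
mixed = [2] + [0] * 254 + [2]      # half black, half white

for name, hist in (("black", black), ("white", white), ("mixed", mixed)):
    print(name, brightness_from_histogram(hist), mean_level(hist) / len(hist))
```

The special case in the last line of the function is why an all-white image reports exactly `1.0` rather than `255 / 256`.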

394 |

395 |

396 | ## License

397 |

398 | Distributed under the MIT License. See `LICENSE` for more information.

399 |

400 |

401 | ## Contact

402 |

403 | Kartik Sharma - kartik4949@gmail.com

404 | Nilav Ghosh - nilavghosh@gmail.com

405 |

--------------------------------------------------------------------------------

/assests/AutoDeploy_architecture.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kartik4949/AutoDeploy/af7b3b32954a574307849ababb05fa2f4a80f52e/assests/AutoDeploy_architecture.png

--------------------------------------------------------------------------------

/assests/developer.md:

--------------------------------------------------------------------------------

1 | # formatter information:

2 |

3 | ## autopep8 command

4 | autopep8 --global-config ~/.config/pep8 --in-place --aggressive --recursive --indent-size=2 .

5 |

6 | ## pep8 file

7 | ```

8 | [pep8]

9 | count = False

10 | ignore = E226,E302,E41

11 | max-line-length = 89

12 | statistics = True

13 | ```

14 |

--------------------------------------------------------------------------------

/assests/src.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kartik4949/AutoDeploy/af7b3b32954a574307849ababb05fa2f4a80f52e/assests/src.png

--------------------------------------------------------------------------------

/autodeploy/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kartik4949/AutoDeploy/af7b3b32954a574307849ababb05fa2f4a80f52e/autodeploy/__init__.py

--------------------------------------------------------------------------------

/autodeploy/__version__.py:

--------------------------------------------------------------------------------

1 | version = '2.0.0'

2 |

--------------------------------------------------------------------------------

/autodeploy/_backend/__init__.py:

--------------------------------------------------------------------------------

1 | from ._rmq._client import RabbitMQClient

2 | from ._rmq._server import RabbitMQConsume

3 | from ._database import Database

4 | from .redis.redis_db import RedisDB

5 |

--------------------------------------------------------------------------------

/autodeploy/_backend/_database.py:

--------------------------------------------------------------------------------

1 | ''' A simple database class utility. '''

2 | from database import _database as database, _models as models

3 | from logger import AppLogger

4 |

5 | applogger = AppLogger(__name__)

6 | logger = applogger.get_logger()

7 |

8 |

9 | class Database:
10 |     '''
11 |     A database class that creates and stores incoming requests
12 |     in a user-defined database with the given schema.
13 |     Args:
14 |         config (Config): A configuration object which contains configuration
15 |             for the deployment.
16 |
17 |     Attributes:
18 |         config (Config): internal configuration object for configurations.
19 |
20 |     Example:
21 |         >> with Database(config) as db:
22 |         >> .... db.store_request(item)
23 |
24 |     '''
25 |
26 |     def __init__(self, config) -> None:
27 |         self.config = config
28 |         self.db = None
29 |
30 |     @classmethod
31 |     def bind(cls):
32 |         models.Base.metadata.create_all(bind=database.engine)
33 |
34 |     def setup(self):
35 |         # create database engine and bind all.
36 |         self.bind()
37 |         self.db = database.SessionLocal()
38 |
39 |     def close(self):
40 |         self.db.close()
41 |
42 |     def store_request(self, db_item):
43 |         ''' add, commit and refresh `db_item`, then return it. '''
44 |         try:
45 |             self.db.add(db_item)
46 |             self.db.commit()
47 |             self.db.refresh(db_item)
48 |         except Exception as exc:
49 |             logger.error(
50 |                 'Some error occurred while storing request in database.')
51 |             raise Exception(
52 |                 'Some error occurred while storing request in database.') from exc
53 |         return db_item

54 |

--------------------------------------------------------------------------------
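The `Database` docstring shows `with Database(config) as db:`, while the class as listed exposes `setup()` and `close()` and no `__enter__`/`__exit__`. One possible way to get the `with` usage without touching the class is a small `contextlib` wrapper. This is only a sketch: `open_database` is a hypothetical helper, and `FakeDatabase` is a stand-in with the same method names so the example runs without SQLAlchemy.

```python
from contextlib import contextmanager


class FakeDatabase:
    """Hypothetical stand-in mirroring Database's setup/close/store_request."""

    def __init__(self, config):
        self.config = config
        self.db = None
        self.events = []

    def setup(self):
        self.db = object()          # pretend session
        self.events.append("setup")

    def close(self):
        self.db = None
        self.events.append("close")

    def store_request(self, item):
        self.events.append(("store", item))
        return item


@contextmanager
def open_database(database):
    """Call setup() on entry and always call close() on exit."""
    database.setup()
    try:
        yield database
    finally:
        database.close()


fake = FakeDatabase(config={})
with open_database(fake) as db:
    db.store_request("request-1")
print(fake.events)
```

The `finally` clause is the point of the wrapper: the session is closed even when storing a request raises.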

/autodeploy/_backend/_heartbeat.py:

--------------------------------------------------------------------------------

1 | ''' a mixin class for heartbeat to rabbitmq server. '''
2 | from time import sleep
3 | import threading
4 |
5 | # send a heartbeat signal to rabbitmq every 5 secs.
6 | HEART_BEAT = 5
7 |
8 | class HeartBeatMixin:
9 |     @staticmethod
10 |     def _beat(connection):
11 |         while True:
12 |             # TODO: remove hardcode
13 |             sleep(HEART_BEAT)
14 |             connection.process_data_events()
15 |
16 |     def beat(self):
17 |         ''' process_data_events periodically.
18 |         TODO: hackish way, think other way.
19 |         '''
20 |         heartbeat = threading.Thread(
21 |             target=self._beat, args=(self.connection,), daemon=True)
22 |         heartbeat.start()

23 |

--------------------------------------------------------------------------------
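The heartbeat mixin boils down to a daemon thread that sleeps and then pokes the connection. The sketch below reproduces that pattern against a fake connection so it can be run without RabbitMQ; `FakeConnection` and `start_heartbeat` are hypothetical names, and the interval is shortened for the demo.

```python
import threading
import time


class FakeConnection:
    """Hypothetical stand-in that counts process_data_events() calls."""

    def __init__(self):
        self.calls = 0
        self.enough = threading.Event()

    def process_data_events(self):
        self.calls += 1
        if self.calls >= 3:
            self.enough.set()


def start_heartbeat(connection, interval):
    """Start a daemon thread that services the connection every `interval` s."""
    def _beat():
        while True:
            time.sleep(interval)
            connection.process_data_events()

    thread = threading.Thread(target=_beat, daemon=True)
    thread.start()
    return thread


conn = FakeConnection()
thread = start_heartbeat(conn, interval=0.01)
reached = conn.enough.wait(timeout=2.0)   # wait until three beats were seen
print(reached, thread.daemon)
```

Because the thread is a daemon it does not keep the process alive at shutdown. With a real pika connection, note that the heartbeat thread and the publishing thread would share one connection object, which is presumably what the "hackish way" TODO refers to.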

/autodeploy/_backend/_rmq/_client.py:

--------------------------------------------------------------------------------

1 | ''' a rabbitmq client class. '''

2 | import json

3 | import asyncio

4 | from contextlib import suppress

5 | import uuid

6 | from typing import Dict

7 |

8 | import pika

9 |

10 | from _backend._heartbeat import HeartBeatMixin

11 | from logger import AppLogger

12 |

13 | logger = AppLogger(__name__).get_logger()

14 |

15 |

16 | class RabbitMQClient(HeartBeatMixin):
17 |     def __init__(self, config):
18 |         self.host = config.RABBITMQ_HOST
19 |         self.port = config.RABBITMQ_PORT
20 |         self.retries = config.RETRIES
21 |
22 |     def connect(self):
23 |         ''' a simple function to get connection to rmq '''
24 |         # connect to RabbitMQ Server.
25 |         self.connection = pika.BlockingConnection(
26 |             pika.ConnectionParameters(host=self.host, port=self.port))
27 |
28 |         self.channel = self.connection.channel()
29 |
30 |         result = self.channel.queue_declare(queue='monitor', exclusive=False)
31 |         self.callback_queue = result.method.queue
32 |         self.channel.basic_qos(prefetch_count=1)
33 |
34 |     def setupRabbitMq(self, ):
35 |         ''' a simple setup for rabbitmq server connection
36 |         and queue connection.
37 |         '''
38 |         self.connect()
39 |         self.beat()
40 |
41 |     def _publish(self, body: Dict):
42 |         self.channel.basic_publish(
43 |             exchange='',
44 |             routing_key='monitor',
45 |             properties=pika.BasicProperties(
46 |                 reply_to=self.callback_queue,
47 |             ),
48 |             body=json.dumps(dict(body)))
49 |
50 |     def publish_rbmq(self, body: Dict):
51 |         try:
52 |             self._publish(body)
53 |         except Exception:
54 |             logger.error('Error while publish!!, restarting connection.')
55 |             for i in range(self.retries):
56 |                 try:
57 |                     self._publish(body)
58 |                 except Exception:
59 |                     continue
60 |                 else:
61 |                     logger.debug(f'published success after {i + 1} retries')
62 |                     return
63 |
64 |             # reached only when every retry failed.
65 |             logger.critical('cannot publish after retries!!')

66 |

--------------------------------------------------------------------------------
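The retry logic in `publish_rbmq` can be exercised in isolation with a publisher that fails a fixed number of times. The sketch below is a simplified variant, not the client's code: `publish_with_retries` and `FlakyPublisher` are hypothetical, and unlike the original it raises once the retries are exhausted instead of only logging.

```python
import logging

logger = logging.getLogger("retry_sketch")


def publish_with_retries(publish, body, retries):
    """Call publish(body); on failure retry up to `retries` more times.

    Returns the number of retries that were needed (0 = first try worked).
    """
    last_exc = None
    for attempt in range(retries + 1):
        try:
            publish(body)
        except Exception as exc:  # broad on purpose: any publish failure retries
            last_exc = exc
            logger.warning("publish attempt %d failed: %s", attempt + 1, exc)
        else:
            return attempt
    raise RuntimeError("cannot publish after retries") from last_exc


class FlakyPublisher:
    """Hypothetical publisher that fails its first `failures` calls."""

    def __init__(self, failures):
        self.failures = failures
        self.sent = []

    def __call__(self, body):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("broker unavailable")
        self.sent.append(body)


flaky = FlakyPublisher(failures=2)
used = publish_with_retries(flaky, {"id": 1}, retries=3)
print(used, flaky.sent)
```

Raising at the end is a design choice for the sketch: it makes a lost message visible to the caller, whereas the client above logs at critical level and carries on.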

/autodeploy/_backend/_rmq/_server.py:

--------------------------------------------------------------------------------

1 | ''' a simple rabbitmq server/consumer. '''

2 | import pika

3 |

4 | from logger import AppLogger

5 | from _backend._heartbeat import HeartBeatMixin

6 |

7 | logger = AppLogger(__name__).get_logger()

8 |

9 | class RabbitMQConsume(HeartBeatMixin):
10 |     def __init__(self, config) -> None:
11 |         self.host = config.RABBITMQ_HOST
12 |         self.port = config.RABBITMQ_PORT
13 |         self.retries = config.RETRIES
14 |         self.queue = config.RABBITMQ_QUEUE
15 |         self.connection = None
16 |
17 |     def setupRabbitMQ(self, callback):
18 |         try:
19 |             self.connection = pika.BlockingConnection(
20 |                 pika.ConnectionParameters(self.host, port=self.port))
21 |         except Exception as exc:
22 |             logger.critical(
23 |                 'Error occurred while creating connection in rabbitmq')
24 |             raise Exception(
25 |                 'Error occurred while creating connection in rabbitmq') from exc
26 |         channel = self.connection.channel()
27 |
28 |         channel.queue_declare(queue=self.queue)
29 |         self.beat()
30 |
31 |         channel.basic_consume(
32 |             queue=self.queue, on_message_callback=callback, auto_ack=True)
33 |         self.channel = channel

34 |

--------------------------------------------------------------------------------

/autodeploy/_backend/redis/redis_db.py:

--------------------------------------------------------------------------------

1 | from uuid import uuid4

2 | import struct

3 |

4 | import redis

5 | import numpy as np

6 |

7 |

8 | class RedisDB:

9 | def __init__(self, config) -> None:

10 | self.config = config

11 | self.redis = redis.Redis(host = self.config.REDIS_SERVER, port = self.config.REDIS_PORT, db=0)

12 |

13 | def encode(self, input, shape):

14 | bytes = input.tobytes()

15 | _encoder = 'I'*len(shape)

16 | shape = struct.pack('>' + _encoder, *shape)

17 | return shape + bytes

18 |

19 | def pull(self, id, dtype, ndim):

20 | input_encoded = self.redis.get(id)

21 | shape = struct.unpack('>' + ('I'*ndim), input_encoded[:ndim*4])

22 | a = np.frombuffer(input_encoded[ndim*4:], dtype=dtype).reshape(shape)

23 | return a

24 |

25 | def push(self, input, dtype, shape):

26 | input_hash = str(uuid4())

27 | input = np.asarray(input, dtype=dtype)

28 | encoded_input = self.encode(input, shape=shape)

29 | self.redis.set(input_hash, encoded_input)

30 | return input_hash

31 |

32 | def pop(self):

33 | raise NotImplementedError('Not implemented yet!')

34 |
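A minimal sketch of the byte layout that `encode` and `pull` above appear to rely on: a big-endian `uint32` per dimension as a shape header, followed by the raw element bytes. To stay runnable without NumPy or a Redis server, this version uses the standard-library `array` module for float32 data; the function names `encode_blob` and `decode_blob` are hypothetical.

```python
import struct
from array import array


def encode_blob(values, shape):
    """Prefix a flat float32 payload with a big-endian uint32 shape header."""
    header = struct.pack('>' + 'I' * len(shape), *shape)
    # array('f') stores 4-byte floats in native byte order.
    payload = array('f', values).tobytes()
    return header + payload


def decode_blob(blob, ndim):
    """Split the header (4 bytes per dimension) from the float32 payload."""
    shape = struct.unpack('>' + 'I' * ndim, blob[:ndim * 4])
    values = array('f')
    values.frombytes(blob[ndim * 4:])
    return shape, list(values)


blob = encode_blob([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], (2, 3))
shape, values = decode_blob(blob, ndim=2)
```

Neither the number of dimensions nor the dtype is stored in the blob, so the reader has to know both in advance, which matches the `pull(id, dtype, ndim)` signature above.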

--------------------------------------------------------------------------------

/autodeploy/_schema/__init__.py:

--------------------------------------------------------------------------------

1 | from ._schema import UserIn, UserOut, create_model

2 |

--------------------------------------------------------------------------------

/autodeploy/_schema/_schema.py:

--------------------------------------------------------------------------------

1 | ''' user input and output schema models. '''

2 | from pydantic import create_model

3 | from utils import utils

4 |

5 |

6 | """ Simple user input schema. """

7 |

8 |

9 | class UserIn:

10 | '''

11 | `UserIn` pydantic model

12 | supports dynamic model attributes creation

13 | defined by user in configuration file.

14 |

15 | Args:

16 | UserInputSchema (pydantic.BaseModel): pydantic model.

17 |

18 | '''

19 |

20 | def __init__(self, config, *args, **kwargs):

21 | self._model_attr = utils.annotator(dict(config.input_schema))

22 | self.UserInputSchema = create_model(

23 | 'UserInputSchema', **self._model_attr)

24 |

25 |

26 | class UserOut:

27 | '''

28 |

29 | `UserOut` pydantic model

30 | supports dynamic model attributes creation

31 | defined by user in configuration file.

32 |

33 | Args:

34 | UserOutputSchema (pydantic.BaseModel): pydantic model.

35 | '''

36 |

37 | def __init__(self, config, *args, **kwargs):

38 | self._model_attr = utils.annotator(dict(config.out_schema))

39 | self.UserOutputSchema = create_model(

40 | 'UserOutputSchema', **self._model_attr)

41 |

--------------------------------------------------------------------------------

/autodeploy/_schema/_security.py:

--------------------------------------------------------------------------------

1 | """ Simple security user schema. """

2 | from typing import Optional

3 |

4 | from pydantic import BaseModel

5 |

6 |

7 | class User(BaseModel):

8 | username: str

9 | email: Optional[str] = None

10 | full_name: Optional[str] = None

11 | disabled: Optional[bool] = None

12 |

13 | class UserInDB(User):

14 | hashed_password: str

15 |

--------------------------------------------------------------------------------

/autodeploy/api.py:

--------------------------------------------------------------------------------

1 | """ A simple deploy service utility."""

2 | import traceback

3 | import argparse

4 | import requests

5 | import os

6 | import json

7 | from typing import List, Dict, Tuple, Any

8 | from datetime import datetime

9 |

10 | import uvicorn

11 | import numpy as np

12 | from prometheus_fastapi_instrumentator import Instrumentator

13 | from fastapi import Depends, FastAPI, Request, HTTPException

14 |

15 | from config.config import Config, InternalConfig

16 | from utils import utils

17 | from logger import AppLogger

18 | from routers import AutoDeployRouter

19 | from routers import api_router

20 | from routers import ModelDetailRouter

21 | from routers import model_detail_router

22 | from routers import auth_router

23 | from base import BaseDriverService

24 |

25 |

26 | # ArgumentParser to get commandline args.

27 | parser = argparse.ArgumentParser()

28 | parser.add_argument("-o", "--mode", default='debug', type=str,

29 | help="mode for running deployment; mode can be PRODUCTION or DEBUG")

30 | args = parser.parse_args()

31 |

32 | # __main__ (root) logger instance construction.

33 | applogger = AppLogger(__name__)

34 | logger = applogger.get_logger()

35 |

36 |

37 | def set_middleware(app):

38 | @app.middleware('http')

39 | async def log_incoming_requests(request: Request, call_next):

40 | '''

41 | Middleware to log incoming requests to server.

42 |

43 | Args:

44 | request (Request): incoming request payload.

45 | call_next: function that executes the request.

46 | Returns:

47 | response (Response): response from the executing function.

48 | '''

49 | logger.info(f'incoming payload to server. {request}')

50 | response = await call_next(request)

51 | return response

52 |

53 |

54 | class APIDriver(BaseDriverService):

55 | '''

56 | APIDriver class for creating the deploy driver, which sets up the environment,

57 | registers routers with `app` and executes the server.

58 |

59 | This class is the main driver class responsible for creating

60 | and setting up the environment.

61 | Args:

62 | config (str): a config path.

63 |

64 | Note:

65 | `setup` method should get called before `register_routers`.

66 |

67 | '''

68 |

69 | def __init__(self, config_path) -> None:

70 | # user config for configuring model deployment.

71 | self.user_config = Config(config_path).get_config()

72 | self.internal_config = InternalConfig()

73 |

74 | def setup(self, app) -> None:

75 | '''

76 | Main setup function responsible for setting up

77 | the environment for model deployment.

78 | Sets up prediction and model routers.

79 |

80 | Sets up the Prometheus instrumentator.

81 | '''

82 | # print config

83 | logger.info(self.user_config)

84 |

85 | if isinstance(self.user_config.model.model_path, list):

86 | logger.info('Multi model deployment started...')

87 |

88 | # expose prometheus data to /metrics

89 | Instrumentator().instrument(app).expose(app)

90 | _schemas = self._setup_schema()

91 |

92 | apirouter = AutoDeployRouter(self.user_config, self.internal_config)

93 | apirouter.setup(_schemas)

94 | apirouter.register_router()

95 |

96 | modeldetailrouter = ModelDetailRouter(self.user_config)

97 | modeldetailrouter.register_router()

98 | set_middleware(app)

99 |

100 | # setup exception handlers

101 | self.handler_setup(app)

102 |

103 | def register_routers(self, app):

104 | '''

105 | a helper function to register routers in the app.

106 | '''

107 | self._app_include([api_router, model_detail_router, auth_router], app)

108 |

109 | def run(self, app):

110 | '''

111 | The main executing function which runs the uvicorn server

112 | with the app instance and user configuration.

113 | '''

114 | # run uvicorn server.

115 | uvicorn.run(app, port=self.internal_config.API_PORT, host="0.0.0.0")

116 |

117 |

118 | def main():

119 | # create fastapi application

120 | app = FastAPI()

121 | deploydriver = APIDriver(os.environ['CONFIG'])

122 | deploydriver.setup(app)

123 | deploydriver.register_routers(app)

124 | deploydriver.run(app)

125 |

126 |

127 | if __name__ == "__main__":

128 | main()

129 |

--------------------------------------------------------------------------------

/autodeploy/base/__init__.py:

--------------------------------------------------------------------------------

1 | from .base_infere import BaseInfere

2 | from .base_deploy import BaseDriverService

3 | from .base_loader import BaseLoader

4 | from .base_moniter_driver import BaseMonitorService

5 | from .base_metric import BaseMetric

6 |

--------------------------------------------------------------------------------

/autodeploy/base/base_deploy.py:

--------------------------------------------------------------------------------

1 | ''' Base class for deploy driver service. '''

2 | from abc import ABC, abstractmethod

3 | from typing import List, Dict, Tuple, Any

4 |

5 | from handlers import Handler, ModelException

6 | from _schema import UserIn, UserOut

7 |

8 |

9 | class BaseDriverService(ABC):

10 | def __init__(self, *args, **kwargs):

11 | ...

12 |

13 | def _setup_schema(self) -> Tuple[Any, Any]:

14 | '''

15 | a function to setup input and output schema for

16 | server.

17 |

18 | '''

19 | # create input and output schema for model endpoint api.

20 | output_model_schema = UserOut(

21 | self.user_config)

22 | input_model_schema = UserIn(

23 | self.user_config)

24 | return (input_model_schema, output_model_schema)

25 |

26 | @staticmethod

27 | def handler_setup(app):

28 | '''

29 | a simple helper function to override handlers

30 | '''

31 | # create exception handlers for fastapi.

32 | handler = Handler()

33 | handler.overide_handlers(app)

34 | handler.create_handlers(app)

35 |

36 | def _app_include(self, routers, app):

37 | '''

38 | a simple helper function to register routers

39 | with the fastapi `app`.

40 |

41 | '''

42 | for route in routers:

43 | app.include_router(route)

44 |

45 | @abstractmethod

46 | def setup(self, *args, **kwargs):

47 | '''

48 | abstractmethod for setup to be implemented in child class

49 | for setup of deploy driver.

50 |

51 | '''

52 | raise NotImplementedError('setup function is not implemented.')

53 |

--------------------------------------------------------------------------------

/autodeploy/base/base_infere.py:

--------------------------------------------------------------------------------

1 | ''' Base class for inference service. '''

2 | from abc import ABC, abstractmethod

3 |

4 |

5 | class BaseInfere(ABC):

6 | def __init__(self, *args, **kwargs):

7 | ...

8 |

9 | @abstractmethod

10 | def infere(self, *args, **kwargs):

11 | '''

12 | infere function which inferes the input

13 | data based on the model loaded.

14 | Needs to be overridden in child class with

15 | custom implementation.

16 |

17 | '''

18 | raise NotImplementedError('Infere function is not implemented.')

19 |

--------------------------------------------------------------------------------

/autodeploy/base/base_loader.py:

--------------------------------------------------------------------------------

1 | ''' Base class for loaders. '''

2 | from abc import ABC, abstractmethod

3 |

4 |

5 | class BaseLoader(ABC):

6 | def __init__(self, *args, **kwargs):

7 | ...

8 |

9 | @abstractmethod

10 | def load(self, *args, **kwargs):

11 | '''

12 | abstractmethod for loading model file.

13 |

14 | '''

15 | raise NotImplementedError('load function is not implemented.')

16 |
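A minimal sketch of how an abstract base such as `BaseLoader` above behaves at instantiation time. The subclasses `IncompleteLoader` and `BytesLoader` are hypothetical and exist only for illustration.

```python
from abc import ABC, abstractmethod


class BaseLoader(ABC):
    @abstractmethod
    def load(self, *args, **kwargs):
        raise NotImplementedError('load function is not implemented.')


class IncompleteLoader(BaseLoader):
    """Does not override `load`, so it cannot be instantiated."""


class BytesLoader(BaseLoader):
    """Trivial concrete loader that returns the payload it was given."""

    def __init__(self, payload):
        self.payload = payload

    def load(self):
        return self.payload


try:
    IncompleteLoader()
    instantiated = True
except TypeError:
    # ABC refuses to build a class that still has abstract methods.
    instantiated = False

loaded = BytesLoader(b'model-bytes').load()
```

Because `ABC` rejects the incomplete subclass when it is constructed, the `raise NotImplementedError` in the base method is only reached if a subclass calls `super().load()` explicitly.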

--------------------------------------------------------------------------------

/autodeploy/base/base_metric.py:

--------------------------------------------------------------------------------

1 | """ simple model data drift detection monitoring utilities. """

2 | from abc import ABC, abstractmethod

3 | from typing import Dict

4 |

5 | import numpy as np

6 |

7 | from config import Config

8 |

9 |

10 | class BaseMetric(ABC):

11 |

12 | def __init__(self, config: Config, *args, **kwargs) -> None:

13 | self.config = config

14 |

15 | @abstractmethod

16 | def get_change(self, x: np.ndarray) -> Dict:

17 | '''

18 |

19 | '''

20 | raise NotImplementedError('get_change function is not implemented.')

21 |

--------------------------------------------------------------------------------

/autodeploy/base/base_moniter_driver.py:

--------------------------------------------------------------------------------

1 | ''' Base class for monitor driver service. '''

2 | from abc import ABC, abstractmethod

3 | from typing import List, Dict, Tuple, Any

4 |

5 | from handlers import Handler, ModelException

6 | from _schema import UserIn, UserOut

7 |

8 |

9 | class BaseMonitorService(ABC):

10 | def __init__(self, *args, **kwargs):

11 | ...

12 |

13 | @abstractmethod

14 | def setup(self, *args, **kwargs):

15 | '''

16 | abstractmethod for setup to be implemented in child class

17 | for setup of monitor driver.

18 |

19 | '''

20 | raise NotImplementedError('setup function is not implemented.')

21 |

22 | def _setup_schema(self) -> Tuple[Any, Any]:

23 | '''

24 | a function to setup input and output schema for

25 | server.

26 |

27 | '''

28 | # create input and output schema for model endpoint api.

29 | input_model_schema = UserIn(

30 | self.user_config)

31 | output_model_schema = UserOut(

32 | self.user_config)

33 | return (input_model_schema, output_model_schema)

34 |

35 | @staticmethod

36 | def handler_setup(app):

37 | '''

38 | a simple helper function to override handlers

39 | '''

40 | # create exception handlers for fastapi.

41 | handler = Handler()

42 | handler.overide_handlers(app)

43 | handler.create_handlers(app)

44 |

45 | def _app_include(self, routers, app):

46 | '''

47 | a simple helper function to register routers

48 | with the fastapi `app`.

49 |

50 | '''

51 | for route in routers:

52 | app.include_router(route)

53 |

54 | @abstractmethod

55 | def _load_monitor_algorithm(self, *args, **kwargs):

56 | '''

57 | abstractmethod for loading monitor algorithm which needs

58 | to be overridden in child class.

59 | '''

60 |

61 | raise NotImplementedError('load monitor function is not implemented.')

62 |

--------------------------------------------------------------------------------

/autodeploy/config/__init__.py:

--------------------------------------------------------------------------------

1 | from .config import Config

2 | from .config import InternalConfig

3 |

--------------------------------------------------------------------------------

/autodeploy/config/config.py:

--------------------------------------------------------------------------------

1 | """ A simple configuration class. """

2 | from typing import Dict

3 | from os import path

4 | import copy

5 | import yaml

6 |

7 |

8 | class AttrDict(dict):

9 | """ Dictionary subclass whose entries can be accessed by attributes (as well

10 | as normally).

11 | """

12 |

13 | def __init__(self, *args, **kwargs):

14 | super(AttrDict, self).__init__(*args, **kwargs)

15 | self.__dict__ = self

16 |

17 | @classmethod

18 | def from_nested_dicts(cls, data):

19 | """ Construct nested AttrDicts from nested dictionaries.

20 | Args:

21 | data (dict): a dictionary data.

22 | """

23 | if not isinstance(data, dict):

24 | return data

25 | else:

26 | try:

27 | return cls({key: cls.from_nested_dicts(data[key]) for key in data})

28 | except KeyError as ke:

29 | raise KeyError('key not found in data while loading config.')

30 |

31 |

32 | class Config(AttrDict):

33 | """ A Configuration Class.

34 | Args:

35 | config_file (str): a configuration file.

36 | """

37 |

38 | def __init__(self, config_file):

39 | super().__init__()

40 | self.config_file = config_file

41 | self.config = self._parse_from_yaml()

42 |

43 | def as_dict(self):

44 | """Returns a dict representation."""

45 | config_dict = {}

46 | for k, v in self.__dict__.items():

47 | if isinstance(v, Config):

48 | config_dict[k] = v.as_dict()

49 | else:

50 | config_dict[k] = copy.deepcopy(v)

51 | return config_dict

52 |

53 | def __repr__(self):

54 | return repr(self.as_dict())

55 |

56 | def __str__(self):

57 | print("Configurations:\n")

58 | try:

59 | return yaml.dump(self.as_dict(), indent=4)

60 | except TypeError:

61 | return str(self.as_dict())

62 |

63 | def _parse_from_yaml(self) -> Dict:

64 | """Parses a yaml file and returns a dictionary."""

65 | config_path = path.join(path.dirname(path.abspath(__file__)), self.config_file)

66 | try:

67 | with open(config_path, "r") as f:

68 | config_dict = yaml.load(f, Loader=yaml.FullLoader)

69 | return config_dict

70 | except FileNotFoundError as fnfe:

71 | raise FileNotFoundError('configuration file not found.')

72 | except Exception as exc:

73 | raise Exception('Error while loading config file.')

74 |

75 | def get_config(self):

76 | return AttrDict.from_nested_dicts(self.config)

77 |

78 | class InternalConfig():

79 | """ An Internal Configuration Class.

80 | """

81 |

82 | # model backend redis

83 | REDIS_SERVER = 'redis'

84 | REDIS_PORT = 6379

85 |

86 | # api endpoint

87 | API_PORT = 8000

88 | API_NAME = 'AutoDeploy'

89 |

90 | # Monitor endpoint

91 | MONITOR_PORT = 8001

92 |

93 | # Rabbitmq

94 |

95 | RETRIES = 3

96 | RABBITMQ_PORT = 5672

97 | RABBITMQ_HOST = 'rabbitmq'

98 | RABBITMQ_QUEUE = 'monitor'

99 |

100 |

101 | # model prediction service

102 | PREDICT_PORT = 8009

103 | PREDICT_ENDPOINT = 'model_predict'

104 | PREDICT_URL = 'prediction'

105 | PREDICT_INPUT_DTYPE = 'float32'

106 |
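A minimal sketch of how `AttrDict.from_nested_dicts` above turns a parsed YAML mapping into an object with attribute access. The keys and values in `raw` are illustrative assumptions, not taken from any shipped config file.

```python
class AttrDict(dict):
    """Dictionary whose entries are also reachable as attributes."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Share storage between the mapping and the instance namespace.
        self.__dict__ = self

    @classmethod
    def from_nested_dicts(cls, data):
        """Recursively wrap nested dicts; leave every other value untouched."""
        if not isinstance(data, dict):
            return data
        return cls({key: cls.from_nested_dicts(value)
                    for key, value in data.items()})


# Hypothetical config content, as yaml.load might return it.
raw = {'model': {'model_type': 'onnx', 'model_path': ['a.onnx', 'b.onnx']}}
cfg = AttrDict.from_nested_dicts(raw)
```

Only `dict` values are converted, so dictionaries nested inside a list keep plain item access.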

--------------------------------------------------------------------------------

/autodeploy/config/logging.conf:

--------------------------------------------------------------------------------

1 | [loggers]

2 | keys=root, monitor

3 |

4 | [handlers]

5 | keys=StreamHandler,FileHandler

6 |

7 | [formatters]

8 | keys=normalFormatter,detailedFormatter

9 |

10 | [logger_root]

11 | level=INFO

12 | handlers=FileHandler, StreamHandler

13 |

14 | [logger_monitor]

15 | level=INFO

16 | handlers=FileHandler, StreamHandler

17 | qualname=monitor

18 | propagate=0

19 |

20 | [handler_StreamHandler]

21 | class=StreamHandler

22 | level=DEBUG

23 | formatter=normalFormatter

24 | args=(sys.stdout,)

25 |

26 | [handler_FileHandler]

27 | class=FileHandler

28 | level=INFO

29 | formatter=detailedFormatter

30 | args=("app.log",)

31 |

32 | [formatter_normalFormatter]

33 | format=%(asctime)s loglevel=%(levelname)-6s logger=%(name)s %(funcName)s() L%(lineno)-4d %(message)s

34 |

35 | [formatter_detailedFormatter]

36 | format=%(asctime)s loglevel=%(levelname)-6s logger=%(name)s %(funcName)s() L%(lineno)-4d %(message)s call_trace=%(pathname)s L%(lineno)-4d

37 |

--------------------------------------------------------------------------------

/autodeploy/database/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kartik4949/AutoDeploy/af7b3b32954a574307849ababb05fa2f4a80f52e/autodeploy/database/__init__.py

--------------------------------------------------------------------------------

/autodeploy/database/_database.py:

--------------------------------------------------------------------------------

1 | from sqlalchemy import create_engine

2 | from sqlalchemy.ext.declarative import declarative_base

3 | from sqlalchemy.orm import sessionmaker

4 |

5 | ''' SQL database url path. '''

6 | SQLALCHEMY_DATABASE_URL = "sqlite:///./data_drifts.db"

7 |

8 | engine = create_engine(

9 | SQLALCHEMY_DATABASE_URL, connect_args={"check_same_thread": False}

10 | )

11 | SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)

12 |

13 | Base = declarative_base()

14 |

--------------------------------------------------------------------------------

/autodeploy/database/_models.py:

--------------------------------------------------------------------------------

1 | ''' a simple pydantic and sqlalchemy models utilities. '''

2 | from os import path, environ

3 |

4 | from sqlalchemy import Boolean, Column, Integer, String, Float, BLOB

5 |

6 | from pydantic import BaseModel

7 | from pydantic.fields import ModelField

8 | from pydantic import create_model

9 |

10 | from typing import Any, Dict, Optional

11 |

12 | from database._database import Base

13 | from utils import utils

14 | from config import Config

15 |

16 | SQLTYPE_MAPPER = {

17 | 'float': Float,

18 | 'string': String,

19 | 'int': Integer,

20 | 'bool': Boolean}

21 | config_path = path.join(path.dirname(path.abspath(__file__)),

22 | environ['CONFIG'])

23 |

24 | config = Config(config_path).get_config()

25 |

26 |

27 | def set_dynamic_inputs(cls):

28 | ''' a decorator to set dynamic model attributes. '''

29 | for k, v in dict(config.input_schema).items():

30 | if config.model.input_type == 'serialized':

31 | if v == 'string':

32 | setattr(cls, k, Column(BLOB))

33 | continue

34 |

35 | setattr(cls, k, Column(SQLTYPE_MAPPER[v]))

36 | return cls

37 |

38 |

39 | @set_dynamic_inputs

40 | class Requests(Base):

41 | '''

42 | Requests SQLAlchemy model, extended with columns from input_schema.

43 | '''

44 | __tablename__ = "requests"

45 |

46 | id = Column(Integer, primary_key=True, index=True)

47 | time_stamp = Column(String)

48 | prediction = Column(Integer)

49 | is_drift = Column(Boolean, default=True)

50 |

--------------------------------------------------------------------------------

/autodeploy/dependencies/__init__.py:

--------------------------------------------------------------------------------

1 | from ._dependency import LoadDependency

2 |

--------------------------------------------------------------------------------

/autodeploy/dependencies/_dependency.py:

--------------------------------------------------------------------------------

1 | ''' a simple dependency class '''

2 | import os

3 | import json

4 | import sys

5 | import glob

6 | import importlib

7 |

8 | from config import Config

9 | from register import PREPROCESS, POSTPROCESS, METRICS

10 | from logger import AppLogger

11 |

12 | logger = AppLogger(__name__).get_logger()

13 |

14 |

15 | class LoadDependency:

16 | '''

17 | a dependency class which creates

18 | dependency on predict endpoints.

19 |

20 | Args:

21 | config (str): configuration file.

22 |

23 | '''

24 |

25 | def __init__(self, config):

26 | self.config = config

27 | self.preprocess_fxn_name = config.get('preprocess', None)

28 | self.postprocess_fxn_name = config.get('postprocess', None)

29 |

30 | @staticmethod

31 | def convert_python_path(file):

32 | # TODO: check os.

33 | file = file.split('.')[-2]

34 | file = file.split('/')[-1]

35 | return file

36 |

37 | @property

38 | def postprocess_fxn(self):

39 | _fxns = list(POSTPROCESS.module_dict.values())

40 | if self.postprocess_fxn_name:

41 | try:

42 | return POSTPROCESS.module_dict[self.postprocess_fxn_name]

43 | except KeyError as ke:

44 | raise KeyError(

45 | f'{self.postprocess_fxn_name} not found in {POSTPROCESS.keys()} keys')

46 | if _fxns:

47 | return _fxns[0]

48 | return None

49 |

50 | @property

51 | def preprocess_fxn(self):

52 | _fxns = list(PREPROCESS.module_dict.values())

53 | if self.preprocess_fxn_name:

54 | try:

55 | return PREPROCESS.module_dict[self.preprocess_fxn_name]

56 | except KeyError as ke:

57 | raise KeyError(

58 | f'{self.preprocess_fxn_name} not found in {PREPROCESS.keys()} keys')

59 | if _fxns:

60 | return _fxns[0]

61 | return None

62 |

63 | def import_dependencies(self):

64 | # import to invoke the register

65 | try:

66 | path = self.config.dependency.path

67 | sys.path.append(path)

68 | _py_files = glob.glob(os.path.join(path, '*.py'))

69 |

70 | for file in _py_files:

71 | file = self.convert_python_path(file)

72 | importlib.import_module(file)

73 | except ImportError as ie:

74 | logger.error('could not import dependency from given path.')

75 | raise ImportError('could not import dependency from given path.')

76 |
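A minimal sketch of one way to address the `TODO: check os.` note in `convert_python_path` above: deriving the module name with `pathlib` instead of splitting on `.` and `/`. The function name `module_name_from_path` is hypothetical, and the original splitting logic is left as it is in the listing.

```python
from pathlib import Path, PureWindowsPath


def module_name_from_path(file_path):
    """Return the importable module name for a `.py` file path.

    `Path` follows the separator rules of the host operating system.
    """
    return Path(file_path).stem


posix_name = module_name_from_path('/deps/custom_fn.py')

# PureWindowsPath parses backslash-separated paths on any host OS.
windows_name = PureWindowsPath(r'C:\deps\custom_fn.py').stem
```

Splitting on `/` alone leaves the directory part attached when the path uses backslashes, which is the case `pathlib` handles here.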

--------------------------------------------------------------------------------

/autodeploy/handlers/__init__.py:

--------------------------------------------------------------------------------

1 | from ._handlers import ModelException

2 | from ._handlers import Handler

3 |

--------------------------------------------------------------------------------

/autodeploy/handlers/_handlers.py:

--------------------------------------------------------------------------------

1 | ''' Simple exception handlers. '''

2 | from fastapi import Request, status

3 | from fastapi.encoders import jsonable_encoder

4 | from fastapi.exceptions import RequestValidationError, StarletteHTTPException

5 | from fastapi.responses import JSONResponse, PlainTextResponse

6 |

7 |

8 | class ModelException(Exception):

9 | ''' Custom ModelException class

10 | Args:

11 | name (str): name of the exception.

12 | '''

13 |

14 | def __init__(self, name: str):

15 | self.name = name

16 |

17 |

18 | async def model_exception_handler(request: Request, exception: ModelException):

19 | return JSONResponse(status_code=500, content={"message": "model failure."})

20 |

21 |

22 | async def validation_exception_handler(request: Request, exc: RequestValidationError):

23 | return JSONResponse(

24 | status_code=status.HTTP_422_UNPROCESSABLE_ENTITY,

25 | content=jsonable_encoder({"detail": exc.errors(), "body": exc.body}),

26 | )

27 |

28 |

29 | async def http_exception_handler(request, exc):

30 | return PlainTextResponse(str(exc.detail), status_code=exc.status_code)

31 |

32 |

33 | class Handler:

34 | ''' Setup Handler class to setup

35 | and bind custom and overridden exception handlers to fastapi

36 | application.

37 |

38 | '''

39 |

40 | def overide_handlers(self, app):

41 | ''' A helper function to override default fastapi handlers.

42 | Args:

43 | app: fastapi application.

44 | '''

45 | _exc_app_request = app.exception_handler(RequestValidationError)

46 | _exc_app_starlette = app.exception_handler(StarletteHTTPException)

47 |

48 | _validation_exception_handler = _exc_app_request(

49 | validation_exception_handler)

50 | _starlette_exception_handler = _exc_app_starlette(

51 | http_exception_handler)

52 |

53 | def create_handlers(self, app):

54 | ''' A function to bind handlers to fastapi application.

55 | Args:

56 | app: fastapi application.

57 | '''

58 | _exc_app_model = app.exception_handler(ModelException)

59 | _exce_model_exception_handler = _exc_app_model(model_exception_handler)

60 |

--------------------------------------------------------------------------------

/autodeploy/loader/__init__.py:

--------------------------------------------------------------------------------

1 | from ._model_loader import ModelLoader

2 |

--------------------------------------------------------------------------------

/autodeploy/loader/_loaders.py:

--------------------------------------------------------------------------------

1 | ''' A loader class utilities. '''

2 | from os import path

3 |

4 | from logger import AppLogger

5 | from base import BaseLoader

6 |

7 | logger = AppLogger(__name__).get_logger()

8 |

9 |

10 | class PickleLoader(BaseLoader):

11 | ''' a simple PickleLoader class.

12 | class which loads pickle model file.

13 | Args:

14 | model_path (str): model file path.

15 | multi_model (bool): multi model flag.

16 | '''

17 |

18 | def __init__(self, model_path, multi_model=False):

19 | self.model_path = model_path

20 | self.multi_model = multi_model

21 |

22 | def load(self):

23 | ''' a helper function to load model_path file. '''

24 | import pickle

25 | # TODO: do handling

26 | try:

27 | if not self.multi_model:

28 | self.model_path = [self.model_path]

29 | models = []

30 | for model in self.model_path:

31 | model_path = path.join(path.dirname(path.abspath(__file__)), model)

32 | with open(model_path, 'rb') as reader:

33 | models.append(pickle.load(reader))

34 | return models

35 | except FileNotFoundError as fnfe:

36 | logger.error('model file not found...')

37 | raise FileNotFoundError('model file not found ...')

38 |

39 | class OnnxLoader(BaseLoader):

40 | ''' a simple OnnxLoader class.

41 | class which loads onnx model file.

42 | Args:

43 | model_path (str): model file path.

44 | multi_model (bool): multi model flag.

45 | '''

46 |

47 | def __init__(self, model_path, multi_model=False):

48 | self.model_path = model_path

49 | self.multi_model = multi_model

50 |

51 | def model_assert(self, model_name):

52 | ''' a helper function to assert model file name. '''

53 | if not model_name.endswith('.onnx'):

54 | logger.error(

55 | f'OnnxLoader save model extension is not .onnx but {model_name}')

56 | raise Exception(

57 | f'OnnxLoader save model extension is not .onnx but {model_name}')

58 |

59 | def load(self):

60 | ''' a function to load onnx model file. '''

61 | import onnxruntime as ort

62 |

63 | try:

64 | if not self.multi_model:

65 | self.model_path = [self.model_path]

66 | models = []

67 | for model in self.model_path:

68 | self.model_assert(model)

69 | model_path = path.join(path.dirname(path.abspath(__file__)), model)

70 | # onnx model load.

71 | sess = ort.InferenceSession(model_path)

72 | models.append(sess)

73 | return models

74 | except FileNotFoundError as fnfe:

75 | logger.error('model file not found...')

76 | raise FileNotFoundError('model file not found ...')

77 |

--------------------------------------------------------------------------------

/autodeploy/loader/_model_loader.py:

--------------------------------------------------------------------------------

1 | ''' A simple Model Loader Class. '''

2 | from loader._loaders import *

3 | from logger import AppLogger

4 |

5 | ALLOWED_MODEL_TYPES = ['pickle', 'hdf5', 'joblib', 'onnx']

6 |

7 | logger = AppLogger(__name__).get_logger()

8 |

9 |

10 | class ModelLoader:

11 | ''' a driver class ModelLoader to setup and load

12 | model file based on file type.

13 | Args:

14 | model_path (str): model file path.

15 | model_type (str): model file type.

16 | '''

17 |

18 | def __init__(self, model_path, model_type):

19 | self.model_path = model_path

20 | self.multi_model = False

21 | if isinstance(self.model_path, list):

22 | self.multi_model = True

23 | self.model_type = model_type

24 |

25 | def load(self):

26 | ''' a loading function which loads model file

27 | based on file type. '''

28 | logger.info('model loading started')

29 | if self.model_type in ALLOWED_MODEL_TYPES:

30 | if self.model_type == 'pickle':

31 | loader = PickleLoader(

32 | self.model_path, multi_model=self.multi_model)

33 | return loader.load()

34 |

35 | elif self.model_type == 'onnx':

36 | loader = OnnxLoader(

37 | self.model_path, multi_model=self.multi_model)

38 | return loader.load()

39 |

40 | else:

41 | logger.error('model type is not allowed')

42 | raise ValueError('Model type is not supported yet!!!')

43 | logger.info('model loaded successfully!')

44 |

--------------------------------------------------------------------------------

/autodeploy/logger/__init__.py:

--------------------------------------------------------------------------------

1 | from .logger import AppLogger

2 |

--------------------------------------------------------------------------------

/autodeploy/logger/logger.py:

--------------------------------------------------------------------------------

1 | """ A simple app logger utility. """

2 | import logging.config  # binds `logging` and loads the `config` submodule used below

3 | from os import path

4 |

5 | class AppLogger:

6 | ''' a simple logger class.

7 | logger class which takes configuration file and

8 | sets up the logging module.

9 |

10 | Args:

11 | file_name (str): name of file logger called from i.e `__name__`

12 |

13 | '''

14 |

15 | def __init__(self, __file_name) -> None:

16 | # Create a custom logger

17 | config_path = path.join(path.dirname(path.abspath(__file__)),

18 | '../config/logging.conf')

19 | logging.config.fileConfig(config_path, disable_existing_loggers=False)

20 | self.__file_name = __file_name

21 |

22 | def get_logger(self):

23 | '''

24 | a helper function to get logger with file name.

25 |

26 | '''

27 | # get the logger named after the calling module

28 | logger = logging.getLogger(self.__file_name)

29 | return logger

30 |

--------------------------------------------------------------------------------

/autodeploy/monitor.py:

--------------------------------------------------------------------------------

1 | ''' A monitoring utility micro service. '''

2 | import json

3 | import os

4 | import sys

5 | from typing import Any, List, Dict, Optional, Union

6 | import functools

7 |

8 | import pika

9 | import uvicorn

10 | import numpy as np

11 | from fastapi import FastAPI

12 | from sqlalchemy.orm import Session

13 | from prometheus_client import start_http_server

14 |

15 | from base import BaseMonitorService

16 | from config import Config, InternalConfig

17 | from monitor import Monitor

18 | from database import _models as models

19 | from logger import AppLogger

20 | from monitor import PrometheusModelMetric

21 | from monitor import drift_detection_algorithms

22 | from _backend import RabbitMQConsume, Database

23 |

24 | applogger = AppLogger(__name__)

25 | logger = applogger.get_logger()

26 |

27 | ''' A simple Monitor Driver class. '''

28 |

29 |

30 | class MonitorDriver(RabbitMQConsume, BaseMonitorService, Database):

31 | '''

32 | A simple Monitor Driver class for creating monitoring model

33 | and listening to the rabbitmq queue, i.e. Monitor.

34 |

35 | Ref: [https://www.rabbitmq.com/tutorials/tutorial-one-python.html]

36 | RabbitMQ is a message broker: it accepts and forwards messages.

37 | You can think about it as a post office: when you put the mail

38 | that you want posting in a post box, you can be sure that Mr.

39 | or Ms. Mailperson will eventually deliver the mail to your

40 | recipient. In this analogy, RabbitMQ is a post box, a post

41 | office and a postman.

42 |

43 |

44 | Protocol:

45 | AMQP - The Advanced Message Queuing Protocol (AMQP) is an open

46 | standard for passing business messages between applications or

47 | organizations. It connects systems, feeds business processes

48 | with the information they need and reliably transmits onward the

49 | instructions that achieve their goals.

50 |

51 | Ref: [https://pika.readthedocs.io/en/stable/]

52 | Pika:

53 | Pika is a pure-Python implementation of the AMQP 0-9-1 protocol

54 | that tries to stay fairly independent of the underlying network

55 | support library.

56 |

57 | Attributes:

58 | config (Config): parsed user configuration loaded from the config file.

59 | host (str): name of host to connect with rabbitmq server.

60 | queue (str): name of queue to connect to for consuming message.

61 |

62 | '''

63 |

64 | def __init__(self, config) -> None:

65 | self.config = Config(config).get_config()

66 | self.internal_config = InternalConfig()

67 | super().__init__(self.internal_config)

68 | self.queue = self.internal_config.RABBITMQ_QUEUE

69 | self.drift_detection = None

70 | self.model_metric_port = self.internal_config.MONITOR_PORT

71 | self.database = Database(config)

72 |

73 | def _get_array(self, body: Dict) -> List:

74 | '''

75 |

76 | Internal helper `_get_array` that converts the request body

77 | into the input array used for model monitoring.

78 |

79 | Args:

80 | body (Dict): a body request incoming to monitor service

81 | from rabbitmq.

82 |

83 | '''

84 | input_schema = self.config.input_schema

85 | input = []

86 |

87 | # TODO: do it better

88 | try:

89 | for k in input_schema.keys():

90 | if self.config.model.input_type == 'serialized' and k == 'input':

91 | input.append(json.loads(body[k]))

92 | else:

93 | input.append(body[k])

94 | except KeyError as ke:

95 | logger.error(f'{k} key not found')

96 | raise KeyError(f'{k} key not found') from ke

97 |

98 | return [input]

99 |

100 | def _convert_str_to_blob(self, body):

101 | _body = {}

102 | for k, v in body.items():

103 | if isinstance(v, str):

104 | _body[k] = bytes(v, 'utf-8')

105 | else:

106 | _body[k] = v

107 | return _body

108 |

109 | def _callback(self, ch: Any, method: Any,

110 | properties: Any, body: bytes) -> None:

111 | '''

112 | a simple callback function attached for post processing on

113 | incoming message body.

114 |

115 | '''

116 |

117 | try:

118 | body = json.loads(body)

119 | except json.JSONDecodeError:

120 | logger.error('error while loading json object.')

121 | raise  # re-raise the original error; JSONDecodeError needs (msg, doc, pos) to construct

122 |

123 | input = self._get_array(body)

124 | input = np.asarray(input)

125 | output = body['prediction']

126 | if self.drift_detection:

127 | drift_status = self.drift_detection.get_change(input)

128 | self.prometheus_metric.set_drift_status(drift_status)

129 |

130 | # modify data drift status

131 | body['is_drift'] = drift_status['data']['is_drift']

132 |