107 |

108 | Do the same for the `src_reviews` and `src_hosts` models.

109 |

110 | **`src_reviews.sql`**

111 |

112 | ```sql

113 | -- File path: models/src/src_reviews.sql

114 | WITH raw_reviews AS (

115 | SELECT *

116 | FROM AIRBNB.RAW.RAW_REVIEWS

117 | )

118 |

119 | SELECT

120 | listing_id,

121 | date AS review_date,

122 | reviewer_name,

123 | comments AS review_text,

124 | sentiment AS review_sentiment

125 | FROM raw_reviews

126 | ```

127 |

128 | **`src_hosts.sql`**

129 |

130 | ```sql

131 | -- File path: models/src/src_hosts.sql

132 | WITH raw_hosts AS (

133 | SELECT *

134 | FROM AIRBNB.RAW.RAW_HOSTS

135 | )

136 |

137 | SELECT

138 | id AS host_id,

139 | name AS host_name,

140 | is_superhost,

141 | created_at,

142 | updated_at

143 | FROM raw_hosts

144 | ```

145 |

146 | Then, run `dbt run` to materialise the new models in dbt and Snowflake.

147 |

149 | In Snowflake, a **DEV** schema is created to hold all the dbt materialisations. All the newly created models appear in its **Views** folder.

150 |

152 | ## 2) Create Dim Models

153 |

154 | Create a `models/dim` folder for the dim models, which are cleansed versions of the models in `src` (the staging layer). This keeps the models well organized.

155 |

156 | **`dim_listings_cleansed.sql`**

157 |

158 | ```sql

159 | -- File path: models/dim/dim_listings_cleansed.sql

160 | WITH src_listings AS (

161 | SELECT *

162 | FROM {{ref("src_listings")}}

163 | )

164 |

165 | SELECT

166 | listing_id,

167 | listing_name,

168 | room_type,

169 | CASE

170 | WHEN minimum_nights = 0 THEN 1

171 | ELSE minimum_nights

172 | END AS minimum_nights, -- ensure that the minimum stay is 1 night

173 | host_id,

174 | LTRIM(price_str,'$')::NUMBER(10,2) AS price_per_night, -- remove "$" and cast into number with 2 decimals

175 | created_at,

176 | updated_at

177 | FROM src_listings

178 | ```

179 |

180 | **`dim_hosts_cleansed.sql`**

181 |

182 | ```sql

183 | -- File path: models/dim/dim_hosts_cleansed.sql

184 | WITH src_hosts AS (

185 | SELECT *

186 | FROM {{ref("src_hosts")}}

187 | )

188 |

189 | SELECT

190 | host_id,

191 | NVL(host_name,'Anonymous') AS host_name,

192 | is_superhost,

193 | created_at,

194 | updated_at

195 | FROM src_hosts

196 | ```

197 |

198 | This is how the folder structure should look now:

199 |

375 | A dbt snapshot automatically adds the columns `dbt_scd_id`, `dbt_updated_at`, `dbt_valid_from` and `dbt_valid_to`. In the first snapshot, `dbt_valid_to` is `null` because this column records when a row was superseded by a newer version, and no change has been captured yet.

376 |

377 |

379 | Let's say I change the record with id=3176 and run `dbt snapshot` again. The original row's `dbt_valid_to` is now populated with a timestamp, and a new row for id 3176 appears with a null `dbt_valid_to`.
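
To inspect the history that the snapshot keeps for this record, a query along the following lines could be run in a Snowflake worksheet. This is only a sketch: the table name `SCD_RAW_LISTINGS` and the `DEV` schema are assumptions for illustration (use the name and target schema your snapshot was configured with), and it assumes the snapshotted table keeps the `id` column. The `dbt_valid_from` and `dbt_valid_to` columns are the ones dbt adds automatically.

```sql
-- Hypothetical snapshot table name and schema; replace with your own.
SELECT
    id,
    dbt_valid_from,
    dbt_valid_to
FROM AIRBNB.DEV.SCD_RAW_LISTINGS
WHERE id = 3176
ORDER BY dbt_valid_from;
```

After the second `dbt snapshot`, this should return two rows for the listing: the superseded version with `dbt_valid_to` filled in, and the current version with `dbt_valid_to` still null.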

380 |

381 |

383 | ***

384 |

385 | ### Tests

386 |

387 | Creating singular tests

388 |

389 | ```sql

390 | -- tests/dim_listings_minimum_nights.sql

391 | SELECT *

392 | FROM {{ref ('dim_listings_cleansed')}}

393 | WHERE minimum_nights < 1

394 | LIMIT 10

395 | ```

396 |

397 | Instead of writing one-off singular tests, I can also convert them into a custom generic test, like the one below, so that I can reuse it on other columns and models.

398 |

399 | ```sql

400 | -- macros/positive_value.sql

401 | -- A generic test that fails when column_name is less than 1

402 |

403 | {% test positive_value(model, column_name) %}

404 |

405 | SELECT * FROM {{ model }}

406 | WHERE {{ column_name }} < 1

407 |

408 | {% endtest %}

409 | ```

410 |

411 | To apply it, I add the `positive_value` test to `schema.yml` under the `minimum_nights` column.
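
For reference, the relevant part of `models/schema.yml` in this repository looks roughly like the excerpt below. It is trimmed to the one column that uses the custom test; the other columns and their tests are left out for brevity.

```yaml
# models/schema.yml (excerpt)
version: 2

models:
  - name: dim_listings_cleansed
    columns:
      - name: minimum_nights
        tests:
          - positive_value   # custom generic test defined in macros/positive_value.sql
```

dbt passes the model and the column name to the test as the `model` and `column_name` arguments, so the same test can be attached to any other numeric column in the same way.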

412 |

413 |

415 | ***

416 |

417 | ### dbt Packages

418 |

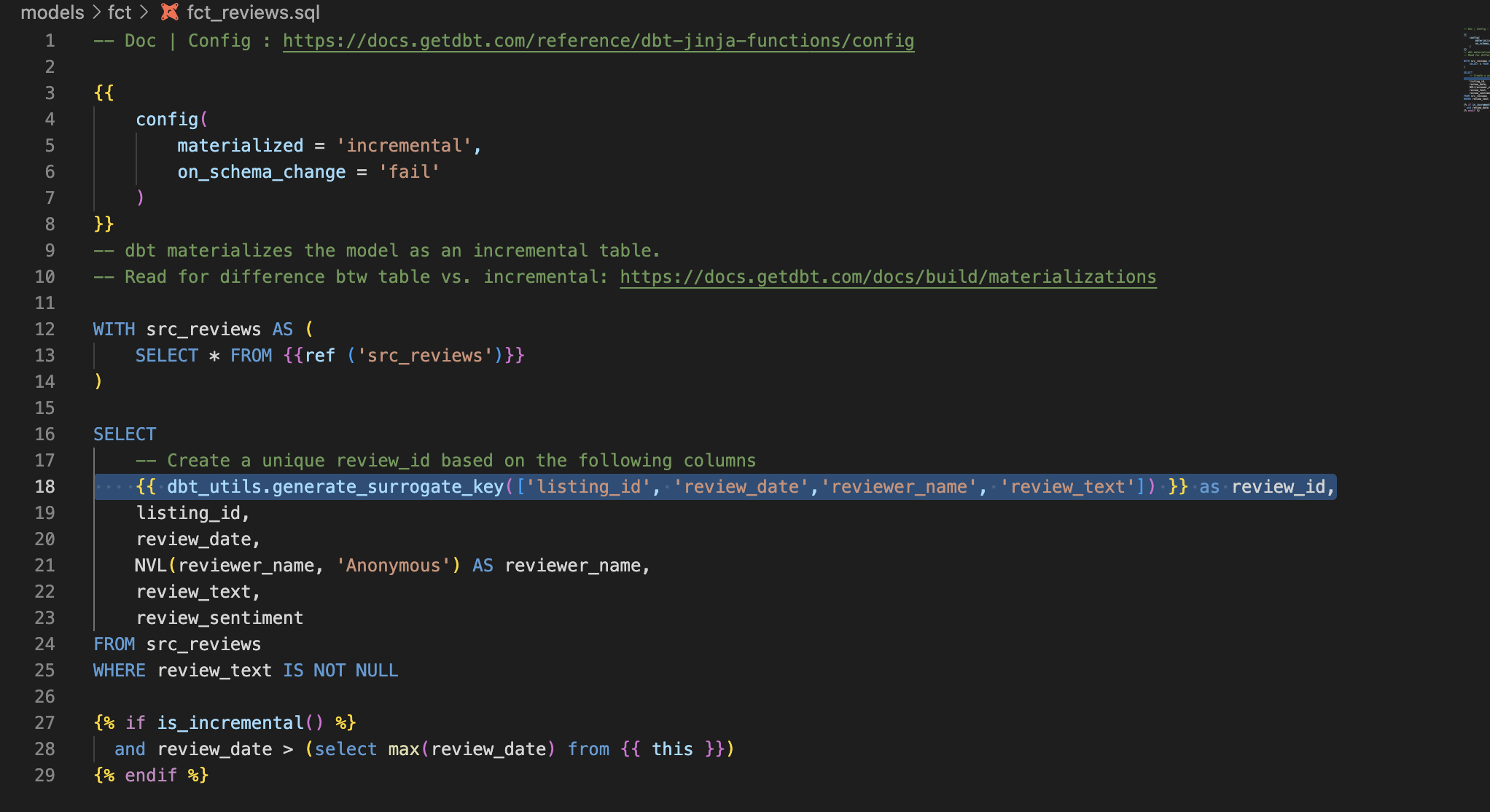

419 | I'm using the `dbt_utils` package ([doc](https://hub.getdbt.com/dbt-labs/dbt_utils/latest/)), specifically the `generate_surrogate_key` macro ([doc](https://github.com/dbt-labs/dbt-utils/tree/1.0.0/#generate_surrogate_key-source)), which generates a unique ID from the specified columns.

420 |

421 |

423 |

424 | I created a `packages.yml` file with the following package and ran `dbt deps` to install the package.

425 |

426 | ```yml

427 | # packages.yml

428 | packages:

429 |   - package: dbt-labs/dbt_utils

430 |     version: 1.0.0

431 | ```

432 |

433 |

435 | Then, I use this macro in `fct_reviews.sql` to create a unique `review_id` from the `listing_id`, `review_date`, `reviewer_name`, and `review_text` fields.
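
The call can be sketched as follows. This is a trimmed-down version of `models/fct/fct_reviews.sql` from this repository: the incremental config, the `NVL` on `reviewer_name`, and the incremental filter are omitted here to keep the focus on the surrogate key.

```sql
-- models/fct/fct_reviews.sql (simplified excerpt)
WITH src_reviews AS (
    SELECT * FROM {{ ref('src_reviews') }}
)

SELECT
    -- Hash the four columns into a single unique review_id
    {{ dbt_utils.generate_surrogate_key(['listing_id', 'review_date', 'reviewer_name', 'review_text']) }} AS review_id,
    listing_id,
    review_date,
    reviewer_name,
    review_text,
    review_sentiment
FROM src_reviews
WHERE review_text IS NOT NULL
```

The macro takes a list of column names, so adding or removing a column from the key only requires editing that list.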

436 |

437 |

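439 | As `fct_reviews.sql` is an incremental model, I ran `dbt run --full-refresh --select fct_reviews` instead of `dbt run` because the new `review_id` column changes the model's schema: with `on_schema_change = 'fail'` configured, a regular incremental run would fail, whereas a full refresh rebuilds the table from scratch.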
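
How a full refresh differs from a regular run can be seen in the model's incremental logic. The sketch below is a reduced version of `models/fct/fct_reviews.sql` from this repository, with the column list shortened for brevity.

```sql
{{
  config(
    materialized = 'incremental',
    on_schema_change = 'fail'
  )
}}

WITH src_reviews AS (
    SELECT * FROM {{ ref('src_reviews') }}
)

SELECT
    listing_id,
    review_date,
    review_text
FROM src_reviews
WHERE review_text IS NOT NULL
{% if is_incremental() %}
  -- On incremental runs, only load reviews newer than those already in the target table
  AND review_date > (SELECT MAX(review_date) FROM {{ this }})
{% endif %}
```

`is_incremental()` evaluates to false when the target table does not exist yet or when `--full-refresh` is passed, so in those cases the date filter is skipped and the whole table is rebuilt with the current column set.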

440 |

441 | ***

442 |

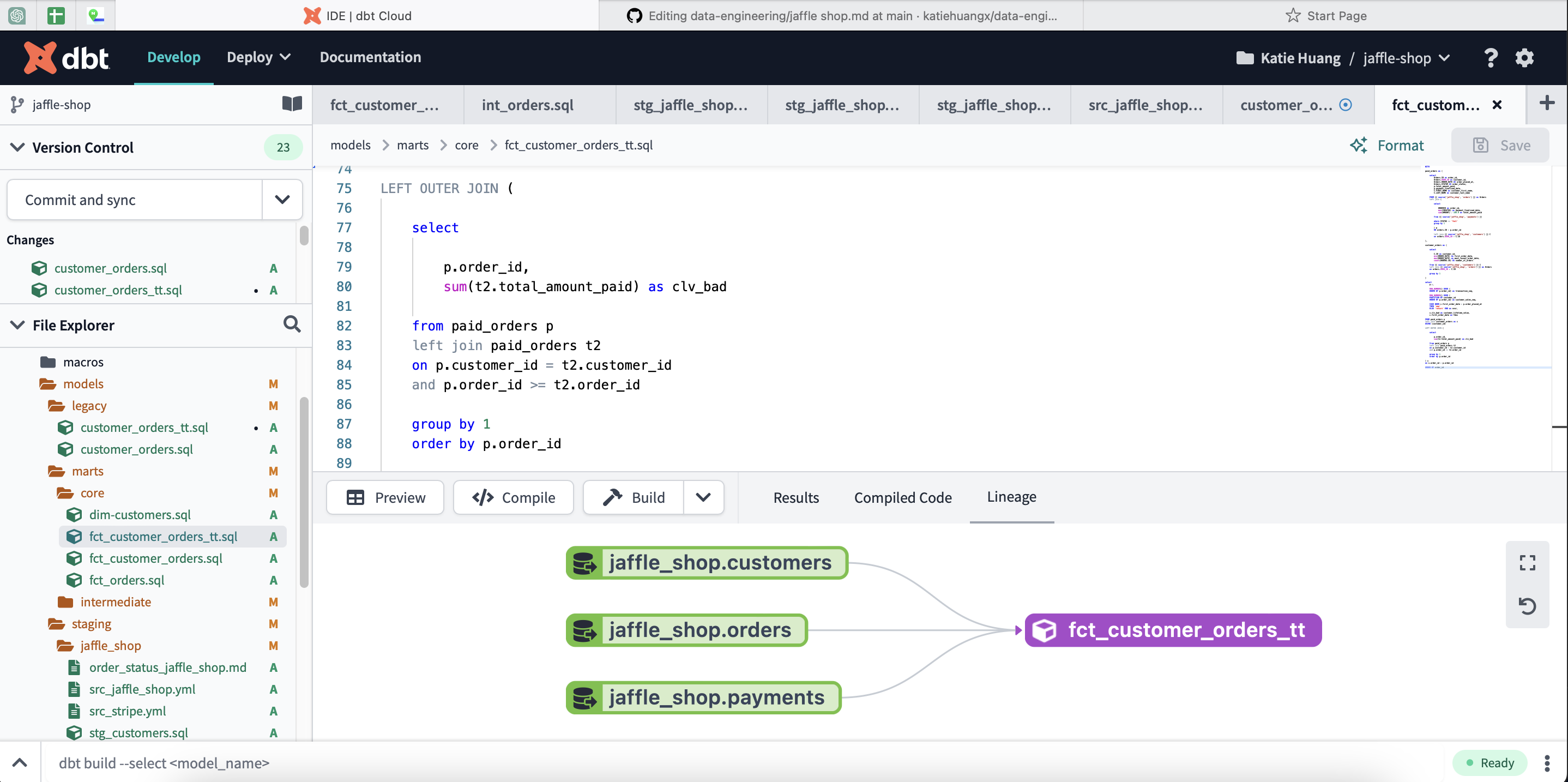

443 | ### Documentation

444 |

445 | I ran `dbt docs serve` to open the generated documentation as a website.

446 |

447 |

--------------------------------------------------------------------------------

/Airbnb Project/analyses/full_moon_no_sleep.sql:

--------------------------------------------------------------------------------

1 | WITH mart_fullmoon_reviews AS (

2 | SELECT * FROM {{ ref('mart_fullmoon_reviews') }}

3 | )

4 |

5 | SELECT

6 | is_full_moon,

7 | review_sentiment,

8 | COUNT(*) AS reviews

9 | FROM mart_fullmoon_reviews

10 | GROUP BY

11 | is_full_moon,

12 | review_sentiment

13 | ORDER BY

14 | is_full_moon,

15 | review_sentiment

--------------------------------------------------------------------------------

/Airbnb Project/assets/input_schema.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/katiehuangx/data-engineering/aace791c2df1ba2899624d7d51f3d5606e90d79d/Airbnb Project/assets/input_schema.png

--------------------------------------------------------------------------------

/Airbnb Project/dbt_project.yml:

--------------------------------------------------------------------------------

1 |

2 | # Name your project! Project names should contain only lowercase characters

3 | # and underscores. A good package name should reflect your organization's

4 | # name or the intended use of these models

5 | name: 'dbtlearn'

6 | version: '1.0.0'

7 | config-version: 2

8 |

9 | # This setting configures which "profile" dbt uses for this project.

10 | profile: 'dbtlearn'

11 |

12 | # These configurations specify where dbt should look for different types of files.

13 | # The `model-paths` config, for example, states that models in this project can be

14 | # found in the "models/" directory. You probably won't need to change these!

15 | model-paths: ["models"]

16 | analysis-paths: ["analyses"]

17 | test-paths: ["tests"]

18 | seed-paths: ["seeds"]

19 | macro-paths: ["macros"]

20 | snapshot-paths: ["snapshots"]

21 | asset-paths: ["assets"]

22 |

23 | target-path: "target" # directory which will store compiled SQL files

24 | clean-targets: # directories to be removed by `dbt clean`

25 | - "target"

26 | - "dbt_packages"

27 |

28 |

29 | # Configuring models

30 | # Full documentation: https://docs.getdbt.com/docs/configuring-models

31 |

32 | # In this example config, we tell dbt to build all models in the example/

33 | # directory as views. These settings can be overridden in the individual model

34 | # files using the `{{ config(...) }}` macro.

35 | models:

36 |   dbtlearn:

37 |     # Use the built-in `materialized` config so that models are materialised as views (not tables) by default

38 |     +materialized: view # Models in /dbtlearn are materialised as views

39 |     +post-hook:

40 |       - "GRANT SELECT ON {{ this }} TO ROLE REPORTER"

41 |     dim:

42 |       +materialized: table # Models in /dim are materialised as tables

43 |     src:

44 |       +materialized: ephemeral # Models in /src are materialised as ephemerals

--------------------------------------------------------------------------------

/Airbnb Project/environment-setup.md:

--------------------------------------------------------------------------------

1 | # Introduction and Environment Setup

2 |

3 | ## Snowflake user creation

4 |

5 | Copy these SQL statements into a Snowflake Worksheet, select all and execute them (i.e. pressing the play button).

6 |

7 | ```sql

8 | -- Use an admin role

9 | USE ROLE ACCOUNTADMIN;

10 |

11 | -- Create the `transform` role

12 | CREATE ROLE IF NOT EXISTS transform;

13 | GRANT ROLE TRANSFORM TO ROLE ACCOUNTADMIN;

14 |

15 | -- Create the default warehouse if necessary

16 | CREATE WAREHOUSE IF NOT EXISTS COMPUTE_WH;

17 | GRANT OPERATE ON WAREHOUSE COMPUTE_WH TO ROLE TRANSFORM;

18 |

19 | -- Create the `dbt` user and assign to role

20 | CREATE USER IF NOT EXISTS dbt

21 | PASSWORD='dbtPassword123'

22 | LOGIN_NAME='dbt'

23 | MUST_CHANGE_PASSWORD=FALSE

24 | DEFAULT_WAREHOUSE='COMPUTE_WH'

25 | DEFAULT_ROLE='transform'

26 | DEFAULT_NAMESPACE='AIRBNB.RAW'

27 | COMMENT='DBT user used for data transformation';

28 | GRANT ROLE transform to USER dbt;

29 |

30 | -- Create our database and schemas

31 | CREATE DATABASE IF NOT EXISTS AIRBNB;

32 | CREATE SCHEMA IF NOT EXISTS AIRBNB.RAW;

33 |

34 | -- Set up permissions to role `transform`

35 | GRANT ALL ON WAREHOUSE COMPUTE_WH TO ROLE transform;

36 | GRANT ALL ON DATABASE AIRBNB to ROLE transform;

37 | GRANT ALL ON ALL SCHEMAS IN DATABASE AIRBNB to ROLE transform;

38 | GRANT ALL ON FUTURE SCHEMAS IN DATABASE AIRBNB to ROLE transform;

39 | GRANT ALL ON ALL TABLES IN SCHEMA AIRBNB.RAW to ROLE transform;

40 | GRANT ALL ON FUTURE TABLES IN SCHEMA AIRBNB.RAW to ROLE transform;

41 | ```

42 |

43 | ***

44 |

45 | ## Snowflake data import

46 |

47 | Copy these SQL statements into a Snowflake Worksheet, select all and execute them (i.e. pressing the play button).

48 |

49 | ```sql

50 | -- Set up the defaults

51 | USE WAREHOUSE COMPUTE_WH;

52 | USE DATABASE airbnb;

53 | USE SCHEMA RAW;

54 |

55 | -- Create our three tables and import the data from S3

56 | CREATE OR REPLACE TABLE raw_listings

57 | (id integer,

58 | listing_url string,

59 | name string,

60 | room_type string,

61 | minimum_nights integer,

62 | host_id integer,

63 | price string,

64 | created_at datetime,

65 | updated_at datetime);

66 |

67 | COPY INTO raw_listings (id,

68 | listing_url,

69 | name,

70 | room_type,

71 | minimum_nights,

72 | host_id,

73 | price,

74 | created_at,

75 | updated_at)

76 | from 's3://dbtlearn/listings.csv'

77 | FILE_FORMAT = (type = 'CSV' skip_header = 1

78 | FIELD_OPTIONALLY_ENCLOSED_BY = '"');

79 |

80 |

81 | CREATE OR REPLACE TABLE raw_reviews

82 | (listing_id integer,

83 | date datetime,

84 | reviewer_name string,

85 | comments string,

86 | sentiment string);

87 |

88 | COPY INTO raw_reviews (listing_id, date, reviewer_name, comments, sentiment)

89 | from 's3://dbtlearn/reviews.csv'

90 | FILE_FORMAT = (type = 'CSV' skip_header = 1

91 | FIELD_OPTIONALLY_ENCLOSED_BY = '"');

92 |

93 |

94 | CREATE OR REPLACE TABLE raw_hosts

95 | (id integer,

96 | name string,

97 | is_superhost string,

98 | created_at datetime,

99 | updated_at datetime);

100 |

101 | COPY INTO raw_hosts (id, name, is_superhost, created_at, updated_at)

102 | from 's3://dbtlearn/hosts.csv'

103 | FILE_FORMAT = (type = 'CSV' skip_header = 1

104 | FIELD_OPTIONALLY_ENCLOSED_BY = '"');

105 | ```

106 |

107 |

--------------------------------------------------------------------------------

/Airbnb Project/macros/no_nulls_in_columns.sql:

--------------------------------------------------------------------------------

1 | -- Doc | adapter.get_columns_in_relation: https://docs.getdbt.com/reference/dbt-jinja-functions/adapter#get_columns_in_relation

2 | -- adapter.get_columns_in_relation returns every column in the model; the loop below checks each one for NULL values.

3 |

4 | {% macro no_nulls_in_columns (model)%}

5 |

6 | SELECT *

7 | FROM {{ model }}

8 | WHERE

9 | {% for col in adapter.get_columns_in_relation(model) -%}

10 | -- Flag rows where this column is NULL; the trailing OR chains to the next column's check

11 | {{ col.column }} IS NULL OR

12 | {% endfor %}

13 | FALSE -- Closes the trailing OR left by the last loop iteration

14 | {% endmacro %}

--------------------------------------------------------------------------------

/Airbnb Project/macros/positive_value.sql:

--------------------------------------------------------------------------------

1 | -- A generic test that fails when column_name is less than 1

2 |

3 | {% test positive_value(model, column_name) %}

4 |

5 | SELECT * FROM {{ model }}

6 | WHERE {{ column_name }} < 1

7 |

8 | {% endtest %}

--------------------------------------------------------------------------------

/Airbnb Project/models/dashboard.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 |

3 | exposures:

4 | - name: Executive Dashboard

5 | type: dashboard

6 | maturity: low

7 | url: https://09837540.us1a.app.preset.io/superset/dashboard/p/xQrpBKxae3K/

8 | description: Executive Dashboard about Airbnb listings and hosts

9 |

10 |

11 | depends_on:

12 | - ref('dim_listings_w_hosts')

13 | - ref('mart_fullmoon_reviews')

14 |

15 | owner:

16 | name: Katie Huang

17 | email: xieminee@gmail.com

--------------------------------------------------------------------------------

/Airbnb Project/models/dim/dim_hosts_cleansed.sql:

--------------------------------------------------------------------------------

1 | {{

2 | config(

3 | materialized = 'view'

4 | )

5 | }}

6 |

7 | WITH src_hosts AS (

8 | SELECT * FROM {{ref ('src_hosts')}}

9 | )

10 |

11 | SELECT

12 | host_id,

13 | NVL(host_name, 'Anonymous') AS host_name,

14 | is_superhost,

15 | created_at,

16 | updated_at

17 | FROM src_hosts

--------------------------------------------------------------------------------

/Airbnb Project/models/dim/dim_listings_cleansed.sql:

--------------------------------------------------------------------------------

1 | {{

2 | config(

3 | materialized = 'view'

4 | )

5 | }}

6 |

7 |

8 | WITH src_listings AS (

9 | SELECT * FROM {{ ref ('src_listings')}}

10 | )

11 |

12 | SELECT

13 | listing_id,

14 | listing_name,

15 | room_type,

16 | CASE

17 | WHEN minimum_nights = 0 THEN 1 -- 0 night = 1 night, so we assign the value of 1 to indicate 1 night

18 | ELSE minimum_nights

19 | END AS minimum_nights,

20 | host_id,

21 | REPLACE( -- Parse the string value into numerical form

22 | price_str, '$' -- With no replacement argument, REPLACE removes every '$' from price_str

23 | ) :: NUMBER (10, 2 -- Cast the string to a number with 2 decimal places

24 | ) AS price,

25 | created_at,

26 | updated_at

27 |

28 | FROM src_listings

--------------------------------------------------------------------------------

/Airbnb Project/models/dim/dim_listings_w_hosts.sql:

--------------------------------------------------------------------------------

1 | WITH

2 | listings AS (

3 | SELECT * FROM {{ref ('dim_listings_cleansed')}}

4 | ),

5 | hosts AS (

6 | SELECT * FROM {{ref ('dim_hosts_cleansed')}}

7 | )

8 |

9 | SELECT

10 | listings.listing_id,

11 | listings.listing_name,

12 | listings.room_type,

13 | listings.minimum_nights,

14 | listings.price,

15 | listings.host_id,

16 | hosts.host_name,

17 | hosts.is_superhost AS host_is_superhost,

18 | listings.created_at,

19 | GREATEST(listings.updated_at, hosts.updated_at) AS updated_at -- Keep most recent updated_at

20 | FROM listings

21 | LEFT JOIN hosts

22 | ON listings.host_id = hosts.host_id

23 |

--------------------------------------------------------------------------------

/Airbnb Project/models/docs.md:

--------------------------------------------------------------------------------

1 | {% docs dim_listing_cleansed__minimum_nights %}

2 | Minimum number of nights required to rent this property.

3 |

4 | Keep in mind that old listings might have `minimum_nights` set to `0` in the source tables.

5 | Our cleansing algorithm updates this to `1`.

6 |

7 | {% enddocs %}

--------------------------------------------------------------------------------

/Airbnb Project/models/fct/fct_reviews.sql:

--------------------------------------------------------------------------------

1 | -- Doc | Config : https://docs.getdbt.com/reference/dbt-jinja-functions/config

2 |

3 | {{

4 | config(

5 | materialized = 'incremental',

6 | on_schema_change = 'fail'

7 | )

8 | }}

9 | -- dbt materializes the model as an incremental table.

10 | -- Read for difference btw table vs. incremental: https://docs.getdbt.com/docs/build/materializations

11 |

12 | WITH src_reviews AS (

13 | SELECT * FROM {{ref ('src_reviews')}}

14 | )

15 |

16 | SELECT

17 | -- Create a unique review_id based on the following columns

18 | {{ dbt_utils.generate_surrogate_key(['listing_id', 'review_date','reviewer_name', 'review_text']) }} as review_id,

19 | listing_id,

20 | review_date,

21 | NVL(reviewer_name, 'Anonymous') AS reviewer_name,

22 | review_text,

23 | review_sentiment

24 | FROM src_reviews

25 | WHERE review_text IS NOT NULL

26 |

27 | {% if is_incremental() %}

28 | and review_date > (select max(review_date) from {{ this }})

29 | {% endif %}

--------------------------------------------------------------------------------

/Airbnb Project/models/mart/mart_fullmoon_reviews.sql:

--------------------------------------------------------------------------------

1 | {{

2 | config(

3 | materialized = 'table'

4 | )

5 | }}

6 |

7 | WITH fct_reviews AS (

8 |

9 | SELECT * FROM {{ref ('fct_reviews')}}

10 |

11 | ),

12 | full_moon_dates AS (

13 |

14 | SELECT * FROM {{ref ('seed_full_moon_dates')}}

15 | )

16 |

17 | SELECT

18 | reviews.*,

19 | CASE

20 | WHEN fullmoon.full_moon_date IS NULL THEN 'not full moon'

21 | ELSE 'full moon' END AS is_full_moon

22 | FROM fct_reviews AS reviews

23 | LEFT JOIN full_moon_dates AS fullmoon

24 | ON (TO_DATE(reviews.review_date) = DATEADD(DAY, 1, fullmoon.full_moon_date))

25 |

--------------------------------------------------------------------------------

/Airbnb Project/models/overview.md:

--------------------------------------------------------------------------------

1 | {% docs __overview__ %}

2 |

3 | # Airbnb pipeline

4 |

5 | Hey, welcome to our Airbnb pipeline documentation!

6 |

7 | Here is the schema of our input data:

8 |

9 |

10 | {% enddocs %}

--------------------------------------------------------------------------------

/Airbnb Project/models/schema.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 |

3 | models:

4 | # dim_listings_cleansed model

5 | - name: dim_listings_cleansed

6 | description: Cleansed table containing Airbnb listings

7 | columns:

8 |

9 | - name: listing_id

10 | description: Primary key for the listing

11 | tests: # Built-in generic tests

12 | - unique

13 | - not_null

14 |

15 | - name: host_id

16 | description: Foreign key referencing host_id in dim_hosts_cleansed

17 | tests:

18 | - not_null

19 | - relationships:

20 | to: ref('dim_hosts_cleansed')

21 | field: host_id

22 | # Ensure referential integrity.

23 | # Ensures that host_id in dim_listings_cleansed table exists as host_id in dim_hosts_cleansed table

24 |

25 | - name: room_type

26 | description: Type of the apartment / room

27 | tests:

28 | - accepted_values:

29 | values: ['Entire home/apt',

30 | 'Private room',

31 | 'Shared room',

32 | 'Hotel room']

33 |

34 | - name: minimum_nights

35 | description: '{{ doc("dim_listing_cleansed__minimum_nights") }}'

36 | tests:

37 | - positive_value

38 |

39 |

40 | # dim_hosts_cleansed model

41 | - name: dim_hosts_cleansed

42 | description: Cleansed table containing Airbnb hosts

43 | columns:

44 |

45 | - name: host_id

46 | description: Primary key for the hosts

47 | tests:

48 | - not_null

49 | - unique

50 |

51 | - name: host_name

52 | description: Name of the host

53 | tests:

54 | - not_null

55 |

56 | - name: is_superhost

57 | description: Defines whether the host is a superhost

58 | tests:

59 | - accepted_values:

60 | values: ['t', 'f']

61 | # dim_listings_w_hosts model

62 | - name: dim_listings_w_hosts

63 | description: Cleansed table containing Airbnb listings and hosts

64 | tests:

65 | - dbt_expectations.expect_table_row_count_to_equal_other_table:

66 | compare_model: source('airbnb', 'listings') # Take note of the indent

67 |

68 | columns:

69 | - name: price

70 | tests:

71 | # Doc: https://github.com/calogica/dbt-expectations/tree/0.8.2/#expect_column_values_to_be_of_type

72 |

73 | # Test ensures that price column is always number type

74 | - dbt_expectations.expect_column_values_to_be_of_type:

75 | column_type: number # Refer to datawarehouse definition of column

76 |

77 | # Test ensures that the 99th percentile of prices is between $50 and $500

78 | - dbt_expectations.expect_column_quantile_values_to_be_between:

79 | quantile: .99

80 | min_value: 50

81 | max_value: 500

82 |

83 | # Test warns when the max price exceeds $5000

84 | - dbt_expectations.expect_column_max_to_be_between:

85 | max_value: 5000

86 | config:

87 | severity: warn

88 |

--------------------------------------------------------------------------------

/Airbnb Project/models/sources.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 |

3 | sources:

4 | - name: airbnb

5 | schema: raw

6 | tables:

7 | - name: listings

8 | identifier: raw_listings

9 | columns:

10 | - name: room_type

11 | tests:

12 | # Test to ensure that the COUNT(DISTINCT room_type) is always 4

13 | - dbt_expectations.expect_column_distinct_count_to_equal:

14 | value: 4

15 | - name: price

16 | tests:

17 | - dbt_expectations.expect_column_values_to_match_regex:

18 | regex: "^\\\\$[0-9][0-9\\\\.]+$"

19 |

20 | - name: hosts

21 | identifier: raw_hosts

22 |

23 | - name: reviews

24 | identifier: raw_reviews

25 | loaded_at_field: date # Specify the original date name

26 | # Doc | Freshness: https://docs.getdbt.com/reference/resource-properties/freshness

27 | # A freshness block is used to define the acceptable amount of time between the most recent record and now, for a

28 | # table to be considered "fresh".

29 | freshness:

30 | warn_after: {count: 1, period: hour}

31 | error_after: {count: 24, period: hour}

--------------------------------------------------------------------------------

/Airbnb Project/models/src/src_hosts.sql:

--------------------------------------------------------------------------------

1 | WITH raw_hosts AS (

2 | SELECT * FROM {{source ('airbnb', 'hosts')}}

3 | )

4 |

5 | SELECT

6 | id AS host_id,

7 | name AS host_name,

8 | is_superhost,

9 | created_at,

10 | updated_at

11 | FROM raw_hosts

--------------------------------------------------------------------------------

/Airbnb Project/models/src/src_listings.sql:

--------------------------------------------------------------------------------

1 | WITH raw_listings AS (

2 | SELECT * FROM {{source ('airbnb', 'listings')}}

3 | )

4 |

5 | SELECT

6 | id AS listing_id,

7 | name AS listing_name,

8 | listing_url,

9 | room_type,

10 | minimum_nights,

11 | host_id,

12 | price AS price_str,

13 | created_at,

14 | updated_at

15 | FROM

16 | raw_listings

--------------------------------------------------------------------------------

/Airbnb Project/models/src/src_reviews.sql:

--------------------------------------------------------------------------------

1 | WITH raw_reviews AS (

2 | SELECT * FROM {{source ('airbnb', 'reviews')}}

3 | )

4 |

5 | SELECT

6 | listing_id,

7 | date AS review_date,

8 | reviewer_name,

9 | comments AS review_text,

10 | sentiment AS review_sentiment

11 | FROM raw_reviews

--------------------------------------------------------------------------------

/Airbnb Project/packages.yml:

--------------------------------------------------------------------------------

1 | # Doc | dbt Hub: https://hub.getdbt.com

2 | # dbt utils: https://hub.getdbt.com/dbt-labs/dbt_utils/latest/

3 | # great expectations: https://hub.getdbt.com/calogica/dbt_expectations/latest/ | https://github.com/calogica/dbt-expectations

4 |

5 | packages:

6 |   - package: dbt-labs/dbt_utils

7 |     version: 1.0.0

8 |

9 |   - package: calogica/dbt_expectations

10 |     version: [">=0.8.0", "<0.9.0"]

--------------------------------------------------------------------------------

/Airbnb Project/seeds/seed_full_moon_dates.csv:

--------------------------------------------------------------------------------

1 | full_moon_date

2 | 2009-01-11

3 | 2009-02-09

4 | 2009-03-11

5 | 2009-04-09

6 | 2009-05-09

7 | 2009-06-07

8 | 2009-07-07

9 | 2009-08-06

10 | 2009-09-04

11 | 2009-10-04

12 | 2009-11-02

13 | 2009-12-02

14 | 2009-12-31

15 | 2010-01-30

16 | 2010-02-28

17 | 2010-03-30

18 | 2010-04-28

19 | 2010-05-28

20 | 2010-06-26

21 | 2010-07-26

22 | 2010-08-24

23 | 2010-09-23

24 | 2010-10-23

25 | 2010-11-21

26 | 2010-12-21

27 | 2011-01-19

28 | 2011-02-18

29 | 2011-03-19

30 | 2011-04-18

31 | 2011-05-17

32 | 2011-06-15

33 | 2011-07-15

34 | 2011-08-13

35 | 2011-09-12

36 | 2011-10-12

37 | 2011-11-10

38 | 2011-12-10

39 | 2012-01-09

40 | 2012-02-07

41 | 2012-03-08

42 | 2012-04-06

43 | 2012-05-06

44 | 2012-06-04

45 | 2012-07-03

46 | 2012-08-02

47 | 2012-08-31

48 | 2012-09-30

49 | 2012-10-29

50 | 2012-11-28

51 | 2012-12-28

52 | 2013-01-27

53 | 2013-02-25

54 | 2013-03-27

55 | 2013-04-25

56 | 2013-05-25

57 | 2013-06-23

58 | 2013-07-22

59 | 2013-08-21

60 | 2013-09-19

61 | 2013-10-19

62 | 2013-11-17

63 | 2013-12-17

64 | 2014-01-16

65 | 2014-02-15

66 | 2014-03-16

67 | 2014-04-15

68 | 2014-05-14

69 | 2014-06-13

70 | 2014-07-12

71 | 2014-08-10

72 | 2014-09-09

73 | 2014-10-08

74 | 2014-11-06

75 | 2014-12-06

76 | 2015-01-05

77 | 2015-02-04

78 | 2015-03-05

79 | 2015-04-04

80 | 2015-05-04

81 | 2015-06-02

82 | 2015-07-02

83 | 2015-07-31

84 | 2015-08-29

85 | 2015-09-28

86 | 2015-10-27

87 | 2015-11-25

88 | 2015-12-25

89 | 2016-01-24

90 | 2016-02-22

91 | 2016-03-23

92 | 2016-04-22

93 | 2016-05-21

94 | 2016-06-20

95 | 2016-07-20

96 | 2016-08-18

97 | 2016-09-16

98 | 2016-10-16

99 | 2016-11-14

100 | 2016-12-14

101 | 2017-01-12

102 | 2017-02-11

103 | 2017-03-12

104 | 2017-04-11

105 | 2017-05-10

106 | 2017-06-09

107 | 2017-07-09

108 | 2017-08-07

109 | 2017-09-06

110 | 2017-10-05

111 | 2017-11-04

112 | 2017-12-03

113 | 2018-01-02

114 | 2018-01-31

115 | 2018-03-02

116 | 2018-03-31

117 | 2018-04-30

118 | 2018-05-29

119 | 2018-06-28

120 | 2018-07-27

121 | 2018-08-26

122 | 2018-09-25

123 | 2018-10-24

124 | 2018-11-23

125 | 2018-12-22

126 | 2019-01-21

127 | 2019-02-19

128 | 2019-03-21

129 | 2019-04-19

130 | 2019-05-18

131 | 2019-06-17

132 | 2019-07-16

133 | 2019-08-15

134 | 2019-09-14

135 | 2019-10-13

136 | 2019-11-12

137 | 2019-12-12

138 | 2020-01-10

139 | 2020-02-09

140 | 2020-03-09

141 | 2020-04-08

142 | 2020-05-07

143 | 2020-06-05

144 | 2020-07-05

145 | 2020-08-03

146 | 2020-09-02

147 | 2020-10-01

148 | 2020-10-31

149 | 2020-11-30

150 | 2020-12-30

151 | 2021-01-28

152 | 2021-02-27

153 | 2021-03-28

154 | 2021-04-27

155 | 2021-05-26

156 | 2021-06-24

157 | 2021-07-24

158 | 2021-08-22

159 | 2021-09-21

160 | 2021-10-20

161 | 2021-11-19

162 | 2021-12-19

163 | 2022-01-18

164 | 2022-02-16

165 | 2022-03-18

166 | 2022-04-16

167 | 2022-05-16

168 | 2022-06-14

169 | 2022-07-13

170 | 2022-08-12

171 | 2022-09-10

172 | 2022-10-09

173 | 2022-11-08

174 | 2022-12-08

175 | 2023-01-07

176 | 2023-02-05

177 | 2023-03-07

178 | 2023-04-06

179 | 2023-05-05

180 | 2023-06-04

181 | 2023-07-03

182 | 2023-08-01

183 | 2023-08-31

184 | 2023-09-29

185 | 2023-10-28

186 | 2023-11-27

187 | 2023-12-27

188 | 2024-01-25

189 | 2024-02-24

190 | 2024-03-25

191 | 2024-04-24

192 | 2024-05-23

193 | 2024-06-22

194 | 2024-07-21

195 | 2024-08-19

196 | 2024-09-18

197 | 2024-10-17

198 | 2024-11-15

199 | 2024-12-15

200 | 2025-01-13

201 | 2025-02-12

202 | 2025-03-14

203 | 2025-04-13

204 | 2025-05-12

205 | 2025-06-11

206 | 2025-07-10

207 | 2025-08-09

208 | 2025-09-07

209 | 2025-10-07

210 | 2025-11-05

211 | 2025-12-05

212 | 2026-01-03

213 | 2026-02-01

214 | 2026-03-03

215 | 2026-04-02

216 | 2026-05-01

217 | 2026-05-31

218 | 2026-06-30

219 | 2026-07-29

220 | 2026-08-28

221 | 2026-09-26

222 | 2026-10-26

223 | 2026-11-24

224 | 2026-12-24

225 | 2027-01-22

226 | 2027-02-21

227 | 2027-03-22

228 | 2027-04-21

229 | 2027-05-20

230 | 2027-06-19

231 | 2027-07-18

232 | 2027-08-17

233 | 2027-09-16

234 | 2027-10-15

235 | 2027-11-14

236 | 2027-12-13

237 | 2028-01-12

238 | 2028-02-10

239 | 2028-03-11

240 | 2028-04-09

241 | 2028-05-08

242 | 2028-06-07

243 | 2028-07-06

244 | 2028-08-05

245 | 2028-09-04

246 | 2028-10-03

247 | 2028-11-02

248 | 2028-12-02

249 | 2028-12-31

250 | 2029-01-30

251 | 2029-02-28

252 | 2029-03-30

253 | 2029-04-28

254 | 2029-05-27

255 | 2029-06-26

256 | 2029-07-25

257 | 2029-08-24

258 | 2029-09-22

259 | 2029-10-22

260 | 2029-11-21

261 | 2029-12-20

262 | 2030-01-19

263 | 2030-02-18

264 | 2030-03-19

265 | 2030-04-18

266 | 2030-05-17

267 | 2030-06-15

268 | 2030-07-15

269 | 2030-08-13

270 | 2030-09-11

271 | 2030-10-11

272 | 2030-11-10

273 | 2030-12-09

274 |

--------------------------------------------------------------------------------

/Airbnb Project/snapshots/scd_raw_listings.sql:

--------------------------------------------------------------------------------

1 | {% snapshot scd_raw_listings %}

2 |

3 | {{

4 | config(

5 | target_schema="dev",

6 | unique_key="id",

7 | strategy="timestamp",

8 | updated_at="updated_at",

9 | invalidate_hard_deletes=True

10 | )

11 | }}

12 |

13 | -- Best practice: keep the snapshot query a plain SELECT * from the source

14 | -- Doc | Snapshots: https://docs.getdbt.com/docs/build/snapshots

15 |

16 | SELECT * FROM {{source ('airbnb', 'listings')}}

17 |

18 | {% endsnapshot %}
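
The snapshot config above uses the `timestamp` strategy keyed on `id`, with `invalidate_hard_deletes=True`. As a rough illustration of what that produces, here is a simplified Python simulation of a type-2 slowly changing dimension merge. It is a sketch under stated assumptions, not dbt's actual implementation: the function name `apply_snapshot` and the in-memory list of dicts are hypothetical, and only the `dbt_valid_from` / `dbt_valid_to` columns are modelled.

```python
from datetime import datetime


def apply_snapshot(snapshot, source_rows, now):
    """Simplified type-2 SCD merge (hypothetical helper, not dbt code).

    snapshot:    list of dicts that already carry dbt_valid_from / dbt_valid_to
    source_rows: current rows of the source table, each with `id` and `updated_at`
    now:         timestamp used to close rows that disappeared from the source
    """
    # Rows that are currently valid, indexed by the unique key.
    current = {r["id"]: r for r in snapshot if r["dbt_valid_to"] is None}
    source_ids = set()

    for row in source_rows:
        source_ids.add(row["id"])
        live = current.get(row["id"])
        if live is not None and row["updated_at"] <= live["updated_at"]:
            continue  # no newer version: keep the existing record open
        if live is not None:
            # Close the old version at the moment the new one became valid.
            live["dbt_valid_to"] = row["updated_at"]
        snapshot.append(
            {**row, "dbt_valid_from": row["updated_at"], "dbt_valid_to": None}
        )

    # Mimic invalidate_hard_deletes: close records whose key left the source.
    for key, live in current.items():
        if key not in source_ids:
            live["dbt_valid_to"] = now

    return snapshot
```

Calling it twice with a changed `updated_at` for the same `id` leaves two rows in the snapshot: the older one closed with a `dbt_valid_to`, the newer one still open.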

--------------------------------------------------------------------------------

/Airbnb Project/target/assets/input_schema.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/katiehuangx/data-engineering/aace791c2df1ba2899624d7d51f3d5606e90d79d/Airbnb Project/target/assets/input_schema.png

--------------------------------------------------------------------------------

/Airbnb Project/target/catalog.json:

--------------------------------------------------------------------------------

1 | {"metadata": {"dbt_schema_version": "https://schemas.getdbt.com/dbt/catalog/v1.json", "dbt_version": "1.4.3", "generated_at": "2023-03-07T08:55:13.990601Z", "invocation_id": "f154e3a2-cc49-45ce-affe-fa7b412907db", "env": {}}, "nodes": {"model.dbtlearn.fct_reviews": {"metadata": {"type": "BASE TABLE", "schema": "DEV", "name": "FCT_REVIEWS", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"REVIEW_ID": {"type": "TEXT", "index": 1, "name": "REVIEW_ID", "comment": null}, "LISTING_ID": {"type": "NUMBER", "index": 2, "name": "LISTING_ID", "comment": null}, "REVIEW_DATE": {"type": "TIMESTAMP_NTZ", "index": 3, "name": "REVIEW_DATE", "comment": null}, "REVIEWER_NAME": {"type": "TEXT", "index": 4, "name": "REVIEWER_NAME", "comment": null}, "REVIEW_TEXT": {"type": "TEXT", "index": 5, "name": "REVIEW_TEXT", "comment": null}, "REVIEW_SENTIMENT": {"type": "TEXT", "index": 6, "name": "REVIEW_SENTIMENT", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-03-02 05:29UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 47417344.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 409697.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "model.dbtlearn.fct_reviews"}, "seed.dbtlearn.seed_full_moon_dates": {"metadata": {"type": "BASE TABLE", "schema": "DEV", "name": "SEED_FULL_MOON_DATES", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"FULL_MOON_DATE": {"type": "DATE", "index": 1, "name": "FULL_MOON_DATE", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", 
"label": "Last Modified", "value": "2023-02-27 08:20UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 1536.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 272.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "seed.dbtlearn.seed_full_moon_dates"}, "model.dbtlearn.dim_hosts_cleansed": {"metadata": {"type": "VIEW", "schema": "DEV", "name": "DIM_HOSTS_CLEANSED", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"HOST_ID": {"type": "NUMBER", "index": 1, "name": "HOST_ID", "comment": null}, "HOST_NAME": {"type": "TEXT", "index": 2, "name": "HOST_NAME", "comment": null}, "IS_SUPERHOST": {"type": "TEXT", "index": 3, "name": "IS_SUPERHOST", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 4, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 5, "name": "UPDATED_AT", "comment": null}}, "stats": {"has_stats": {"id": "has_stats", "label": "Has Stats?", "value": false, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "model.dbtlearn.dim_hosts_cleansed"}, "model.dbtlearn.dim_listings_w_hosts": {"metadata": {"type": "BASE TABLE", "schema": "DEV", "name": "DIM_LISTINGS_W_HOSTS", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"LISTING_ID": {"type": "NUMBER", "index": 1, "name": "LISTING_ID", "comment": null}, "LISTING_NAME": {"type": "TEXT", "index": 2, "name": "LISTING_NAME", "comment": null}, "ROOM_TYPE": {"type": "TEXT", "index": 3, "name": "ROOM_TYPE", "comment": null}, "MINIMUM_NIGHTS": {"type": "NUMBER", "index": 
4, "name": "MINIMUM_NIGHTS", "comment": null}, "PRICE": {"type": "NUMBER", "index": 5, "name": "PRICE", "comment": null}, "HOST_ID": {"type": "NUMBER", "index": 6, "name": "HOST_ID", "comment": null}, "HOST_NAME": {"type": "TEXT", "index": 7, "name": "HOST_NAME", "comment": null}, "HOST_IS_SUPERHOST": {"type": "TEXT", "index": 8, "name": "HOST_IS_SUPERHOST", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 9, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 10, "name": "UPDATED_AT", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-03-07 07:45UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 866304.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 17499.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "model.dbtlearn.dim_listings_w_hosts"}, "model.dbtlearn.mart_fullmoon_reviews": {"metadata": {"type": "BASE TABLE", "schema": "DEV", "name": "MART_FULLMOON_REVIEWS", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"REVIEW_ID": {"type": "TEXT", "index": 1, "name": "REVIEW_ID", "comment": null}, "LISTING_ID": {"type": "NUMBER", "index": 2, "name": "LISTING_ID", "comment": null}, "REVIEW_DATE": {"type": "TIMESTAMP_NTZ", "index": 3, "name": "REVIEW_DATE", "comment": null}, "REVIEWER_NAME": {"type": "TEXT", "index": 4, "name": "REVIEWER_NAME", "comment": null}, "REVIEW_TEXT": {"type": "TEXT", "index": 5, "name": "REVIEW_TEXT", "comment": null}, "REVIEW_SENTIMENT": {"type": "TEXT", "index": 6, "name": "REVIEW_SENTIMENT", 
"comment": null}, "IS_FULL_MOON": {"type": "TEXT", "index": 7, "name": "IS_FULL_MOON", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-03-07 07:45UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 51119104.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 409697.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "model.dbtlearn.mart_fullmoon_reviews"}, "snapshot.dbtlearn.scd_raw_listings": {"metadata": {"type": "BASE TABLE", "schema": "DEV", "name": "SCD_RAW_LISTINGS", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"ID": {"type": "NUMBER", "index": 1, "name": "ID", "comment": null}, "LISTING_URL": {"type": "TEXT", "index": 2, "name": "LISTING_URL", "comment": null}, "NAME": {"type": "TEXT", "index": 3, "name": "NAME", "comment": null}, "ROOM_TYPE": {"type": "TEXT", "index": 4, "name": "ROOM_TYPE", "comment": null}, "MINIMUM_NIGHTS": {"type": "NUMBER", "index": 5, "name": "MINIMUM_NIGHTS", "comment": null}, "HOST_ID": {"type": "NUMBER", "index": 6, "name": "HOST_ID", "comment": null}, "PRICE": {"type": "TEXT", "index": 7, "name": "PRICE", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 8, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 9, "name": "UPDATED_AT", "comment": null}, "DBT_SCD_ID": {"type": "TEXT", "index": 10, "name": "DBT_SCD_ID", "comment": null}, "DBT_UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 11, "name": "DBT_UPDATED_AT", "comment": null}, "DBT_VALID_FROM": {"type": "TIMESTAMP_NTZ", "index": 12, 
"name": "DBT_VALID_FROM", "comment": null}, "DBT_VALID_TO": {"type": "TIMESTAMP_NTZ", "index": 13, "name": "DBT_VALID_TO", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-02-28 07:17UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 1713152.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 17500.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "snapshot.dbtlearn.scd_raw_listings"}, "model.dbtlearn.dim_listings_cleansed": {"metadata": {"type": "VIEW", "schema": "DEV", "name": "DIM_LISTINGS_CLEANSED", "database": "AIRBNB", "comment": null, "owner": "TRANSFORM"}, "columns": {"LISTING_ID": {"type": "NUMBER", "index": 1, "name": "LISTING_ID", "comment": null}, "LISTING_NAME": {"type": "TEXT", "index": 2, "name": "LISTING_NAME", "comment": null}, "ROOM_TYPE": {"type": "TEXT", "index": 3, "name": "ROOM_TYPE", "comment": null}, "MINIMUM_NIGHTS": {"type": "NUMBER", "index": 4, "name": "MINIMUM_NIGHTS", "comment": null}, "HOST_ID": {"type": "NUMBER", "index": 5, "name": "HOST_ID", "comment": null}, "PRICE": {"type": "NUMBER", "index": 6, "name": "PRICE", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 7, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 8, "name": "UPDATED_AT", "comment": null}}, "stats": {"has_stats": {"id": "has_stats", "label": "Has Stats?", "value": false, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "model.dbtlearn.dim_listings_cleansed"}}, "sources": 
{"source.dbtlearn.airbnb.reviews": {"metadata": {"type": "BASE TABLE", "schema": "RAW", "name": "RAW_REVIEWS", "database": "AIRBNB", "comment": null, "owner": "ACCOUNTADMIN"}, "columns": {"LISTING_ID": {"type": "NUMBER", "index": 1, "name": "LISTING_ID", "comment": null}, "DATE": {"type": "TIMESTAMP_NTZ", "index": 2, "name": "DATE", "comment": null}, "REVIEWER_NAME": {"type": "TEXT", "index": 3, "name": "REVIEWER_NAME", "comment": null}, "COMMENTS": {"type": "TEXT", "index": 4, "name": "COMMENTS", "comment": null}, "SENTIMENT": {"type": "TEXT", "index": 5, "name": "SENTIMENT", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-02-27 01:28UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 38968320.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 410284.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "source.dbtlearn.airbnb.reviews"}, "source.dbtlearn.airbnb.listings": {"metadata": {"type": "BASE TABLE", "schema": "RAW", "name": "RAW_LISTINGS", "database": "AIRBNB", "comment": null, "owner": "ACCOUNTADMIN"}, "columns": {"ID": {"type": "NUMBER", "index": 1, "name": "ID", "comment": null}, "LISTING_URL": {"type": "TEXT", "index": 2, "name": "LISTING_URL", "comment": null}, "NAME": {"type": "TEXT", "index": 3, "name": "NAME", "comment": null}, "ROOM_TYPE": {"type": "TEXT", "index": 4, "name": "ROOM_TYPE", "comment": null}, "MINIMUM_NIGHTS": {"type": "NUMBER", "index": 5, "name": "MINIMUM_NIGHTS", "comment": null}, "HOST_ID": {"type": "NUMBER", "index": 6, "name": "HOST_ID", "comment": null}, "PRICE": {"type": 
"TEXT", "index": 7, "name": "PRICE", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 8, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 9, "name": "UPDATED_AT", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-02-28 07:15UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 927744.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 17499.0, "include": true, "description": "An approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "source.dbtlearn.airbnb.listings"}, "source.dbtlearn.airbnb.hosts": {"metadata": {"type": "BASE TABLE", "schema": "RAW", "name": "RAW_HOSTS", "database": "AIRBNB", "comment": null, "owner": "ACCOUNTADMIN"}, "columns": {"ID": {"type": "NUMBER", "index": 1, "name": "ID", "comment": null}, "NAME": {"type": "TEXT", "index": 2, "name": "NAME", "comment": null}, "IS_SUPERHOST": {"type": "TEXT", "index": 3, "name": "IS_SUPERHOST", "comment": null}, "CREATED_AT": {"type": "TIMESTAMP_NTZ", "index": 4, "name": "CREATED_AT", "comment": null}, "UPDATED_AT": {"type": "TIMESTAMP_NTZ", "index": 5, "name": "UPDATED_AT", "comment": null}}, "stats": {"last_modified": {"id": "last_modified", "label": "Last Modified", "value": "2023-02-27 01:28UTC", "include": true, "description": "The timestamp for last update/change"}, "bytes": {"id": "bytes", "label": "Approximate Size", "value": 338432.0, "include": true, "description": "Approximate size of the table as reported by Snowflake"}, "row_count": {"id": "row_count", "label": "Row Count", "value": 14111.0, "include": true, "description": "An 
approximate count of rows in this table"}, "has_stats": {"id": "has_stats", "label": "Has Stats?", "value": true, "include": false, "description": "Indicates whether there are statistics for this table"}}, "unique_id": "source.dbtlearn.airbnb.hosts"}}, "errors": null}

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/analyses/full_moon_no_sleep.sql:

--------------------------------------------------------------------------------

1 | WITH mart_fullmoon_reviews AS (

2 | SELECT * FROM airbnb.dev.mart_fullmoon_reviews

3 | )

4 |

5 | SELECT

6 | is_full_moon,

7 | review_sentiment,

8 | COUNT(*) AS reviews

9 | FROM mart_fullmoon_reviews

10 | GROUP BY

11 | is_full_moon,

12 | review_sentiment

13 | ORDER BY

14 | is_full_moon,

15 | review_sentiment

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/dim/dim_hosts_cleansed.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 | WITH __dbt__cte__src_hosts as (

4 | WITH raw_hosts AS (

5 | SELECT * FROM airbnb.raw.raw_hosts

6 | )

7 |

8 | SELECT

9 | id AS host_id,

10 | name AS host_name,

11 | is_superhost,

12 | created_at,

13 | updated_at

14 | FROM raw_hosts

15 | ),src_hosts AS (

16 | SELECT * FROM __dbt__cte__src_hosts

17 | )

18 |

19 | SELECT

20 | host_id,

21 | NVL(host_name, 'Anonymous') AS host_name,

22 | is_superhost,

23 | created_at,

24 | updated_at

25 | FROM src_hosts

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/dim/dim_listings_cleansed.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | WITH __dbt__cte__src_listings as (

5 | WITH raw_listings AS (

6 | SELECT * FROM airbnb.raw.raw_listings

7 | )

8 |

9 | SELECT

10 | id AS listing_id,

11 | name AS listing_name,

12 | listing_url,

13 | room_type,

14 | minimum_nights,

15 | host_id,

16 | price AS price_str,

17 | created_at,

18 | updated_at

19 | FROM

20 | raw_listings

21 | ),src_listings AS (

22 | SELECT * FROM __dbt__cte__src_listings

23 | )

24 |

25 | SELECT

26 | listing_id,

27 | listing_name,

28 | room_type,

29 | CASE

30 | WHEN minimum_nights = 0 THEN 1 -- Treat a minimum of 0 nights as 1 night

31 | ELSE minimum_nights

32 | END AS minimum_nights,

33 | host_id,

34 | REPLACE( -- Parse string value into numerical form

35 | price_str, '$' -- Remove '$' from price_str (REPLACE with no replacement argument deletes the match)

36 | ) :: NUMBER (10, 2 -- Convert string type to numerical with 2 decimal places

37 | ) AS price,

38 | created_at,

39 | updated_at

40 |

41 | FROM src_listings

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/dim/dim_listings_w_hosts.sql:

--------------------------------------------------------------------------------

1 | WITH

2 | listings AS (

3 | SELECT * FROM airbnb.dev.dim_listings_cleansed

4 | ),

5 | hosts AS (

6 | SELECT * FROM airbnb.dev.dim_hosts_cleansed

7 | )

8 |

9 | SELECT

10 | listings.listing_id,

11 | listings.listing_name,

12 | listings.room_type,

13 | listings.minimum_nights,

14 | listings.price,

15 | listings.host_id,

16 | hosts.host_name,

17 | hosts.is_superhost AS host_is_superhost,

18 | listings.created_at,

19 | GREATEST(listings.updated_at, hosts.updated_at) AS updated_at -- Keep most recent updated_at

20 | FROM listings

21 | LEFT JOIN hosts

22 | ON listings.host_id = hosts.host_id

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/fct/fct_reviews.sql:

--------------------------------------------------------------------------------

1 | -- Doc | Config : https://docs.getdbt.com/reference/dbt-jinja-functions/config

2 |

3 |

4 | -- dbt materializes the model as an incremental table.

5 | -- For the difference between table and incremental materializations, see: https://docs.getdbt.com/docs/build/materializations

6 |

7 | WITH __dbt__cte__src_reviews as (

8 | WITH raw_reviews AS (

9 | SELECT * FROM airbnb.raw.raw_reviews

10 | )

11 |

12 | SELECT

13 | listing_id,

14 | date AS review_date,

15 | reviewer_name,

16 | comments AS review_text,

17 | sentiment AS review_sentiment

18 | FROM raw_reviews

19 | ),src_reviews AS (

20 | SELECT * FROM __dbt__cte__src_reviews

21 | )

22 |

23 | SELECT

24 | -- Create a unique review_id based on the following columns

25 |

26 |

27 | md5(cast(coalesce(cast(listing_id as TEXT), '_dbt_utils_surrogate_key_null_') || '-' || coalesce(cast(review_date as TEXT), '_dbt_utils_surrogate_key_null_') || '-' || coalesce(cast(reviewer_name as TEXT), '_dbt_utils_surrogate_key_null_') || '-' || coalesce(cast(review_text as TEXT), '_dbt_utils_surrogate_key_null_') as TEXT)) as review_id,

28 | listing_id,

29 | review_date,

30 | NVL(reviewer_name, 'Anonymous') AS reviewer_name,

31 | review_text,

32 | review_sentiment

33 | FROM src_reviews

34 | WHERE review_text IS NOT NULL

35 |

36 |

37 | and review_date > (select max(review_date) from airbnb.dev.fct_reviews)

38 |
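
The compiled `review_id` expression above replaces each NULL field with a fixed placeholder, joins the fields with `-`, and takes the MD5 of the result. A minimal Python sketch of the same idea follows. The function name `surrogate_key` is hypothetical, and `str()` only stands in for Snowflake's `CAST(... AS TEXT)`, so hashes of timestamps or numbers may not match what Snowflake produces.

```python
import hashlib

# Placeholder taken from the compiled SQL above.
NULL_TOKEN = "_dbt_utils_surrogate_key_null_"


def surrogate_key(*fields):
    """Hash the fields into one key, treating None like SQL NULL."""
    # str() approximates CAST(... AS TEXT); this is an assumption.
    parts = [NULL_TOKEN if f is None else str(f) for f in fields]
    return hashlib.md5("-".join(parts).encode("utf-8")).hexdigest()
```

Because NULL is mapped to a placeholder and not to an empty string, a row with a missing `reviewer_name` gets a different key from a row whose `reviewer_name` is `''`.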

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/mart/mart_fullmoon_reviews.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 | WITH fct_reviews AS (

4 |

5 | SELECT * FROM airbnb.dev.fct_reviews

6 |

7 | ),

8 | full_moon_dates AS (

9 |

10 | SELECT * FROM airbnb.dev.seed_full_moon_dates

11 | )

12 |

13 | SELECT

14 | reviews.*,

15 | CASE

16 | WHEN fullmoon.full_moon_date IS NULL THEN 'not full moon'

17 | ELSE 'full moon' END AS is_full_moon

18 | FROM fct_reviews AS reviews

19 | LEFT JOIN full_moon_dates AS fullmoon

20 | ON (TO_DATE(reviews.review_date) = DATEADD(DAY, 1, fullmoon.full_moon_date))
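
The join condition above tags a review as `full moon` when its date is the day after a date in the seed, and `not full moon` otherwise. The same rule can be sketched in Python as below. The function name `label_full_moon` is hypothetical, and the seed is assumed to be available as a collection of `date` objects.

```python
from datetime import date, datetime, timedelta


def label_full_moon(review_date, full_moon_dates):
    """Return 'full moon' if the review falls on the day after a full moon."""
    # Mirror TO_DATE(review_date): drop the time part of a timestamp.
    day = review_date.date() if isinstance(review_date, datetime) else review_date
    # Mirror DATEADD(DAY, 1, full_moon_date).
    days_after = {d + timedelta(days=1) for d in full_moon_dates}
    return "full moon" if day in days_after else "not full moon"
```

With the seed date 2011-07-15, a review timestamped on 2011-07-16 is labelled `full moon`, while one on 2011-07-15 itself is labelled `not full moon`.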

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/accepted_values_dim_hosts_cleansed_is_superhost__t__f.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | with all_values as (

6 |

7 | select

8 | is_superhost as value_field,

9 | count(*) as n_records

10 |

11 | from airbnb.dev.dim_hosts_cleansed

12 | group by is_superhost

13 |

14 | )

15 |

16 | select *

17 | from all_values

18 | where value_field not in (

19 | 't','f'

20 | )

21 |

22 |

23 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/accepted_values_dim_listings_c_1ca6148a08c62a5218f2a162f9d2a9a6.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | with all_values as (

6 |

7 | select

8 | room_type as value_field,

9 | count(*) as n_records

10 |

11 | from airbnb.dev.dim_listings_cleansed

12 | group by room_type

13 |

14 | )

15 |

16 | select *

17 | from all_values

18 | where value_field not in (

19 | 'Entire home/apt','Private room','Shared room','Hotel room'

20 | )

21 |

22 |

23 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/dbt_expectations_expect_column_07e7a515218ef6e3a17e164c642c7d18.sql:

--------------------------------------------------------------------------------

1 | with relation_columns as (

2 |

3 |

4 | select

5 | cast('LISTING_ID' as TEXT) as relation_column,

6 | cast('NUMBER' as TEXT) as relation_column_type

7 | union all

8 |

9 | select

10 | cast('LISTING_NAME' as TEXT) as relation_column,

11 | cast('VARCHAR' as TEXT) as relation_column_type

12 | union all

13 |

14 | select

15 | cast('ROOM_TYPE' as TEXT) as relation_column,

16 | cast('VARCHAR' as TEXT) as relation_column_type

17 | union all

18 |

19 | select

20 | cast('MINIMUM_NIGHTS' as TEXT) as relation_column,

21 | cast('NUMBER' as TEXT) as relation_column_type

22 | union all

23 |

24 | select

25 | cast('PRICE' as TEXT) as relation_column,

26 | cast('NUMBER' as TEXT) as relation_column_type

27 | union all

28 |

29 | select

30 | cast('HOST_ID' as TEXT) as relation_column,

31 | cast('NUMBER' as TEXT) as relation_column_type

32 | union all

33 |

34 | select

35 | cast('HOST_NAME' as TEXT) as relation_column,

36 | cast('VARCHAR' as TEXT) as relation_column_type

37 | union all

38 |

39 | select

40 | cast('HOST_IS_SUPERHOST' as TEXT) as relation_column,

41 | cast('VARCHAR' as TEXT) as relation_column_type

42 | union all

43 |

44 | select

45 | cast('CREATED_AT' as TEXT) as relation_column,

46 | cast('TIMESTAMP_NTZ' as TEXT) as relation_column_type

47 | union all

48 |

49 | select

50 | cast('UPDATED_AT' as TEXT) as relation_column,

51 | cast('TIMESTAMP_NTZ' as TEXT) as relation_column_type

52 |

53 |

54 | ),

55 | test_data as (

56 |

57 | select

58 | *

59 | from

60 | relation_columns

61 | where

62 | relation_column = 'PRICE'

63 | and

64 | relation_column_type not in ('NUMBER')

65 |

66 | )

67 | select *

68 | from test_data

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/dbt_expectations_expect_column_39596d790161761077ff1592b68943f6.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | with grouped_expression as (

8 | select

9 |

10 |

11 |

12 |

13 | ( 1=1 and percentile_cont(0.99) within group (order by price) >= 50 and percentile_cont(0.99) within group (order by price) <= 500

14 | )

15 | as expression

16 |

17 |

18 | from airbnb.dev.dim_listings_w_hosts

19 |

20 |

21 | ),

22 | validation_errors as (

23 |

24 | select

25 | *

26 | from

27 | grouped_expression

28 | where

29 | not(expression = true)

30 |

31 | )

32 |

33 | select *

34 | from validation_errors

35 |

36 |

37 |

38 |

39 |

40 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/dbt_expectations_expect_column_8e138814a11b6202811546795bffca5d.sql:

--------------------------------------------------------------------------------

1 | with relation_columns as (

2 |

3 |

4 | select

5 | cast('LISTING_ID' as TEXT) as relation_column,

6 | cast('NUMBER' as TEXT) as relation_column_type

7 | union all

8 |

9 | select

10 | cast('LISTING_NAME' as TEXT) as relation_column,

11 | cast('VARCHAR' as TEXT) as relation_column_type

12 | union all

13 |

14 | select

15 | cast('ROOM_TYPE' as TEXT) as relation_column,

16 | cast('VARCHAR' as TEXT) as relation_column_type

17 | union all

18 |

19 | select

20 | cast('MINIMUM_NIGHTS' as TEXT) as relation_column,

21 | cast('NUMBER' as TEXT) as relation_column_type

22 | union all

23 |

24 | select

25 | cast('PRICE' as TEXT) as relation_column,

26 | cast('NUMBER' as TEXT) as relation_column_type

27 | union all

28 |

29 | select

30 | cast('HOST_ID' as TEXT) as relation_column,

31 | cast('NUMBER' as TEXT) as relation_column_type

32 | union all

33 |

34 | select

35 | cast('HOST_NAME' as TEXT) as relation_column,

36 | cast('VARCHAR' as TEXT) as relation_column_type

37 | union all

38 |

39 | select

40 | cast('HOST_IS_SUPERHOST' as TEXT) as relation_column,

41 | cast('VARCHAR' as TEXT) as relation_column_type

42 | union all

43 |

44 | select

45 | cast('CREATED_AT' as TEXT) as relation_column,

46 | cast('TIMESTAMP_NTZ' as TEXT) as relation_column_type

47 | union all

48 |

49 | select

50 | cast('UPDATED_AT' as TEXT) as relation_column,

51 | cast('TIMESTAMP_NTZ' as TEXT) as relation_column_type

52 |

53 |

54 | ),

55 | test_data as (

56 |

57 | select

58 | *

59 | from

60 | relation_columns

61 | where

62 | relation_column = 'PRICE'

63 | and

64 | relation_column_type not in ('NUMBER(10,2)')

65 |

66 | )

67 | select *

68 | from test_data

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/dbt_expectations_expect_column_c59e300e0dddb335c4211147100ac1c6.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | with grouped_expression as (

7 | select

8 |

9 |

10 |

11 |

12 | ( 1=1 and max(price) <= 5000

13 | )

14 | as expression

15 |

16 |

17 | from airbnb.dev.dim_listings_w_hosts

18 |

19 |

20 | ),

21 | validation_errors as (

22 |

23 | select

24 | *

25 | from

26 | grouped_expression

27 | where

28 | not(expression = true)

29 |

30 | )

31 |

32 | select *

33 | from validation_errors

34 |

35 |

36 |

37 |

38 |

39 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/dbt_expectations_expect_table__fbda7436ebe2ffe341acf0622c76d629.sql:

--------------------------------------------------------------------------------

1 |

2 | with a as (

3 |

4 | select

5 |

6 | count(*) as expression

7 | from

8 | airbnb.dev.dim_listings_w_hosts

9 |

10 |

11 | ),

12 | b as (

13 |

14 | select

15 |

16 | count(*) * 1 as expression

17 | from

18 | airbnb.raw.raw_listings

19 |

20 |

21 | ),

22 | final as (

23 |

24 | select

25 |

26 | a.expression,

27 | b.expression as compare_expression,

28 | abs(coalesce(a.expression, 0) - coalesce(b.expression, 0)) as expression_difference,

29 | abs(coalesce(a.expression, 0) - coalesce(b.expression, 0))/

30 | nullif(a.expression * 1.0, 0) as expression_difference_percent

31 | from

32 |

33 | a cross join b

34 |

35 | )

36 | -- DEBUG:

37 | -- select * from final

38 | select

39 | *

40 | from final

41 | where

42 |

43 | expression_difference > 0.0

44 |

45 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/not_null_dim_hosts_cleansed_host_id.sql:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | select host_id

8 | from airbnb.dev.dim_hosts_cleansed

9 | where host_id is null

10 |

11 |

12 |

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/not_null_dim_hosts_cleansed_host_name.sql:

--------------------------------------------------------------------------------

select host_name
from airbnb.dev.dim_hosts_cleansed
where host_name is null

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/not_null_dim_listings_cleansed_host_id.sql:

--------------------------------------------------------------------------------

select host_id
from airbnb.dev.dim_listings_cleansed
where host_id is null

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/not_null_dim_listings_cleansed_listing_id.sql:

--------------------------------------------------------------------------------

select listing_id
from airbnb.dev.dim_listings_cleansed
where listing_id is null

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/positive_value_dim_listings_cleansed_minimum_nights.sql:

--------------------------------------------------------------------------------

SELECT * FROM airbnb.dev.dim_listings_cleansed
WHERE minimum_nights < 1

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/relationships_dim_listings_cle_05e2397b186a7b9306fc747b3cc4ef83.sql:

--------------------------------------------------------------------------------

with child as (
    select host_id as from_field
    from airbnb.dev.dim_listings_cleansed
    where host_id is not null
),

parent as (
    select host_id as to_field
    from airbnb.dev.dim_hosts_cleansed
)

select
    from_field

from child
left join parent
    on child.from_field = parent.to_field

where parent.to_field is null

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/unique_dim_hosts_cleansed_host_id.sql:

--------------------------------------------------------------------------------

select
    host_id as unique_field,
    count(*) as n_records

from airbnb.dev.dim_hosts_cleansed
where host_id is not null
group by host_id
having count(*) > 1

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/schema.yml/unique_dim_listings_cleansed_listing_id.sql:

--------------------------------------------------------------------------------

select
    listing_id as unique_field,
    count(*) as n_records

from airbnb.dev.dim_listings_cleansed
where listing_id is not null
group by listing_id
having count(*) > 1

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/sources.yml/dbt_expectations_source_expect_a60b59a84fbc4577a11df360c50013bb.sql:

--------------------------------------------------------------------------------

with grouped_expression as (
    select
        regexp_instr(price, '^\\$[0-9][0-9\\.]+$', 1, 1) > 0
        as expression
    from airbnb.raw.raw_listings
),
validation_errors as (
    select
        *
    from
        grouped_expression
    where
        not(expression = true)
)

select *
from validation_errors

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/sources.yml/dbt_expectations_source_expect_d9770018e28873e7be74335902d9e4e5.sql:

--------------------------------------------------------------------------------

with grouped_expression as (
    select
        count(distinct room_type) = 4
        as expression
    from airbnb.raw.raw_listings
),
validation_errors as (
    select
        *
    from
        grouped_expression
    where
        not(expression = true)
)

select *
from validation_errors

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/src/src_hosts.sql:

--------------------------------------------------------------------------------

WITH raw_hosts AS (
    SELECT * FROM airbnb.raw.raw_hosts
)

SELECT
    id AS host_id,
    name AS host_name,
    is_superhost,
    created_at,
    updated_at
FROM raw_hosts

--------------------------------------------------------------------------------

/Airbnb Project/target/compiled/dbtlearn/models/src/src_listings.sql:

--------------------------------------------------------------------------------

1 | WITH raw_listings AS (