├── ESRNN

├── utils

│ ├── __init__.py

│ ├── config.py

│ ├── losses.py

│ ├── data.py

│ ├── ESRNN.py

│ └── DRNN.py

├── __init__.py

├── tests

│ └── test_esrnn.py

├── utils_visualization.py

├── m4_run.py

├── utils_configs.py

├── m4_data.py

├── utils_evaluation.py

├── ESRNNensemble.py

└── ESRNN.py

├── requirements.txt

├── .github

├── images

│ ├── metrics.png

│ ├── x_test.png

│ ├── x_train.png

│ ├── y_test.png

│ ├── y_train.png

│ ├── test-data-example.png

│ └── train-data-example.png

└── workflows

│ └── pythonpackage.yml

├── .gitignore

├── setup.py

├── LICENSE

└── README.md

/ESRNN/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/ESRNN/__init__.py:

--------------------------------------------------------------------------------

1 | from ESRNN.ESRNN import *

2 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy>=1.16.1

2 | pandas>=0.25.2

3 | torch>=1.3.1

4 |

--------------------------------------------------------------------------------

/.github/images/metrics.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/metrics.png

--------------------------------------------------------------------------------

/.github/images/x_test.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/x_test.png

--------------------------------------------------------------------------------

/.github/images/x_train.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/x_train.png

--------------------------------------------------------------------------------

/.github/images/y_test.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/y_test.png

--------------------------------------------------------------------------------

/.github/images/y_train.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/y_train.png

--------------------------------------------------------------------------------

/.github/images/test-data-example.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/test-data-example.png

--------------------------------------------------------------------------------

/.github/images/train-data-example.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kdgutier/esrnn_torch/HEAD/.github/images/train-data-example.png

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *.DS_Store

3 | *.vscode

4 | *.ipynb_checkpoints

5 | data/

6 | !results/

7 | results/*

8 | literature/*

9 | plots/

10 | statistics/

11 | dynet/

12 | theory/

13 | *.so

14 | *.dll

15 | *.exe

16 | *.c

17 | build/

18 | ESRNN/hyperpar_tunning_m4.py

19 | logs/*

20 | configs/*

21 | setup.sh

22 | server_results.sh

23 | test.py

24 |

25 | # Setuptools distribution folder.

26 | /dist/

27 |

28 | # Python egg metadata, regenerated from source files by setuptools.

29 | /*.egg-info

30 | /*.egg

31 | ESRNN/r

32 |

--------------------------------------------------------------------------------

/.github/workflows/pythonpackage.yml:

--------------------------------------------------------------------------------

1 | # This workflow will install Python dependencies, run tests and lint with a variety of Python versions

2 | # For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

3 |

4 | name: Python package

5 |

6 | on:

7 | push:

8 | branches: [ master, pip ]

9 | pull_request:

10 | branches: [ master ]

11 |

12 | jobs:

13 | build:

14 |

15 | runs-on: ubuntu-latest

16 | strategy:

17 | matrix:

18 | python-version: [3.6, 3.7, 3.8]

19 |

20 | steps:

21 | - uses: actions/checkout@v2

22 | - name: Set up Python ${{ matrix.python-version }}

23 | uses: actions/setup-python@v1

24 | with:

25 | python-version: ${{ matrix.python-version }}

26 | - name: Install dependencies

27 | run: |

28 | python -m pip install --upgrade pip

29 | pip install -r requirements.txt

30 | - name: Test with pytest

31 | run: |

32 | pip install pytest

33 | pytest -s

34 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | import setuptools

2 |

3 | with open("README.md", "r") as fh:

4 | long_description = fh.read()

5 |

6 | setuptools.setup(

7 | name="ESRNN",

8 | version="0.1.3",

9 | author="Kin Gutierrez, Cristian Challu, Federico Garza",

10 | author_email="kdgutier@cs.cmu.edu, cchallu@andrew.cmu.edu, fede.garza.ramirez@gmail.com",

11 | description="Pytorch implementation of the ESRNN",

12 | long_description=long_description,

13 | long_description_content_type="text/markdown",

14 | url="https://github.com/kdgutier/esrnn_torch",

15 | packages=setuptools.find_packages(),

16 | classifiers=[

17 | "Programming Language :: Python :: 3",

18 | "License :: OSI Approved :: MIT License",

19 | "Operating System :: OS Independent",

20 | ],

21 | python_requires='>=3.6',

22 | install_requires =[

23 | "numpy>=1.16.1",

24 | "pandas>=0.25.2",

25 | "torch>=1.3.1"

26 | ],

27 | entry_points='''

28 | [console_scripts]

29 | m4_run=ESRNN.m4_run:cli

30 | '''

31 | )

32 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 Kin Gutierrez and Cristian Challu

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/ESRNN/utils/config.py:

--------------------------------------------------------------------------------

1 | class ModelConfig(object):

2 | def __init__(self, max_epochs, batch_size, batch_size_test, freq_of_test,

3 | learning_rate, lr_scheduler_step_size, lr_decay,

4 | per_series_lr_multip, gradient_eps, gradient_clipping_threshold,

5 | rnn_weight_decay,

6 | noise_std,

7 | level_variability_penalty,

8 | testing_percentile, training_percentile, ensemble,

9 | cell_type,

10 | state_hsize, dilations, add_nl_layer, seasonality, input_size, output_size,

11 | frequency, max_periods, random_seed, device, root_dir):

12 |

13 | # Train Parameters

14 | self.max_epochs = max_epochs

15 | self.batch_size = batch_size

16 | self.batch_size_test = batch_size_test

17 | self.freq_of_test = freq_of_test

18 | self.learning_rate = learning_rate

19 | self.lr_scheduler_step_size = lr_scheduler_step_size

20 | self.lr_decay = lr_decay

21 | self.per_series_lr_multip = per_series_lr_multip

22 | self.gradient_eps = gradient_eps

23 | self.gradient_clipping_threshold = gradient_clipping_threshold

24 | self.rnn_weight_decay = rnn_weight_decay

25 | self.noise_std = noise_std

26 | self.level_variability_penalty = level_variability_penalty

27 | self.testing_percentile = testing_percentile

28 | self.training_percentile = training_percentile

29 | self.ensemble = ensemble

30 | self.device = device

31 |

32 | # Model Parameters

33 | self.cell_type = cell_type

34 | self.state_hsize = state_hsize

35 | self.dilations = dilations

36 | self.add_nl_layer = add_nl_layer

37 | self.random_seed = random_seed

38 |

39 | # Data Parameters

40 | self.seasonality = seasonality

41 | if len(seasonality)>0:

42 | self.naive_seasonality = seasonality[0]

43 | else:

44 | self.naive_seasonality = 1

45 | self.input_size = input_size

46 | self.input_size_i = self.input_size

47 | self.output_size = output_size

48 | self.output_size_i = self.output_size

49 | self.frequency = frequency

50 | self.min_series_length = self.input_size_i + self.output_size_i

51 | self.max_series_length = (max_periods * self.input_size) + self.min_series_length

52 | self.max_periods = max_periods

53 | self.root_dir = root_dir

--------------------------------------------------------------------------------

/ESRNN/tests/test_esrnn.py:

--------------------------------------------------------------------------------

1 | #Testing ESRNN

2 | import runpy

3 | import os

4 |

5 | print('\n')

6 | print(10*'='+'TEST ESRNN'+10*'=')

7 | print('\n')

8 |

9 | def test_esrnn_hourly():

10 | if not os.path.exists('./data'):

11 | os.mkdir('./data')

12 |

13 | print('\n')

14 | print(10*'='+'HOURLY'+10*'=')

15 | print('\n')

16 |

17 | exec_str = 'python -m ESRNN.m4_run --dataset Hourly '

18 | exec_str += '--results_directory ./data --gpu_id 0 '

19 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

20 | results = os.system(exec_str)

21 |

22 | if results==0:

23 | print('Test completed')

24 | else:

25 | raise Exception('Something went wrong')

26 |

27 | def test_esrnn_weekly():

28 | if not os.path.exists('./data'):

29 | os.mkdir('./data')

30 |

31 | print('\n')

32 | print(10*'='+'WEEKLY'+10*'=')

33 | print('\n')

34 |

35 | exec_str = 'python -m ESRNN.m4_run --dataset Weekly '

36 | exec_str += '--results_directory ./data --gpu_id 0 '

37 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

38 | results = os.system(exec_str)

39 |

40 | if results==0:

41 | print('Test completed')

42 | else:

43 | raise Exception('Something went wrong')

44 |

45 |

46 | def test_esrnn_daily():

47 | if not os.path.exists('./data'):

48 | os.mkdir('./data')

49 |

50 | print('\n')

51 | print(10*'='+'DAILY'+10*'=')

52 | print('\n')

53 |

54 | exec_str = 'python -m ESRNN.m4_run --dataset Daily '

55 | exec_str += '--results_directory ./data --gpu_id 0 '

56 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

57 | results = os.system(exec_str)

58 |

59 | if results==0:

60 | print('Test completed')

61 | else:

62 | raise Exception('Something went wrong')

63 |

64 |

65 | def test_esrnn_monthly():

66 | if not os.path.exists('./data'):

67 | os.mkdir('./data')

68 |

69 |

70 | print('\n')

71 | print(10*'='+'MONTHLY'+10*'=')

72 | print('\n')

73 |

74 | exec_str = 'python -m ESRNN.m4_run --dataset Monthly '

75 | exec_str += '--results_directory ./data --gpu_id 0 '

76 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

77 | results = os.system(exec_str)

78 |

79 | if results==0:

80 | print('Test completed')

81 | else:

82 | raise Exception('Something went wrong')

83 |

84 |

85 | def test_esrnn_quarterly():

86 | if not os.path.exists('./data'):

87 | os.mkdir('./data')

88 |

89 | print('\n')

90 | print(10*'='+'QUARTERLY'+10*'=')

91 | print('\n')

92 |

93 | exec_str = 'python -m ESRNN.m4_run --dataset Quarterly '

94 | exec_str += '--results_directory ./data --gpu_id 0 '

95 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

96 | results = os.system(exec_str)

97 |

98 | if results==0:

99 | print('Test completed')

100 | else:

101 | raise Exception('Something went wrong')

102 |

103 |

104 | def test_esrnn_yearly():

105 | if not os.path.exists('./data'):

106 | os.mkdir('./data')

107 |

108 | print('\n')

109 | print(10*'='+'YEARLY'+10*'=')

110 | print('\n')

111 |

112 | exec_str = 'python -m ESRNN.m4_run --dataset Yearly '

113 | exec_str += '--results_directory ./data --gpu_id 0 '

114 | exec_str += '--use_cpu 1 --num_obs 100 --test 1'

115 | results = os.system(exec_str)

116 |

117 | if results==0:

118 | print('Test completed')

119 | else:

120 | raise Exception('Something went wrong')

121 |

--------------------------------------------------------------------------------

/ESRNN/utils/losses.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 |

4 | class PinballLoss(nn.Module):

5 | """ Pinball Loss

6 | Computes the pinball loss between y and y_hat.

7 |

8 | Parameters

9 | ----------

10 | y: tensor

11 | actual values in torch tensor.

12 | y_hat: tensor (same shape as y)

13 | predicted values in torch tensor.

14 | tau: float, between 0 and 1

15 | the slope of the pinball loss, in the context of

16 | quantile regression, the value of tau determines the

17 | conditional quantile level.

18 |

19 | Returns

20 | ----------

21 | pinball_loss:

22 | average accuracy for the predicted quantile

23 | """

24 | def __init__(self, tau=0.5):

25 | super(PinballLoss, self).__init__()

26 | self.tau = tau

27 |

28 | def forward(self, y, y_hat):

29 | delta_y = torch.sub(y, y_hat)

30 | pinball = torch.max(torch.mul(self.tau, delta_y), torch.mul((self.tau-1), delta_y))

31 | pinball = pinball.mean()

32 | return pinball

33 |

34 | class LevelVariabilityLoss(nn.Module):

35 | """ Level Variability Loss

36 | Computes the variability penalty for the level.

37 |

38 | Parameters

39 | ----------

40 | levels: tensor with shape (batch, n_time)

41 | levels obtained from exponential smoothing component of ESRNN

42 | level_variability_penalty: float

43 | this parameter controls the strength of the penalization

44 | to the wigglines of the level vector, induces smoothness

45 | in the output

46 |

47 | Returns

48 | ----------

49 | level_var_loss:

50 | wiggliness loss for the level vector

51 | """

52 | def __init__(self, level_variability_penalty):

53 | super(LevelVariabilityLoss, self).__init__()

54 | self.level_variability_penalty = level_variability_penalty

55 |

56 | def forward(self, levels):

57 | assert levels.shape[1] > 2

58 | level_prev = torch.log(levels[:, :-1])

59 | level_next = torch.log(levels[:, 1:])

60 | log_diff_of_levels = torch.sub(level_prev, level_next)

61 |

62 | log_diff_prev = log_diff_of_levels[:, :-1]

63 | log_diff_next = log_diff_of_levels[:, 1:]

64 | diff = torch.sub(log_diff_prev, log_diff_next)

65 | level_var_loss = diff**2

66 | level_var_loss = level_var_loss.mean() * self.level_variability_penalty

67 | return level_var_loss

68 |

69 | class StateLoss(nn.Module):

70 | pass

71 |

72 | class SmylLoss(nn.Module):

73 | """Computes the Smyl Loss that combines level variability with

74 | with Pinball loss.

75 | windows_y: tensor of actual values,

76 | shape (n_windows, batch_size, window_size).

77 | windows_y_hat: tensor of predicted values,

78 | shape (n_windows, batch_size, window_size).

79 | levels: levels obtained from exponential smoothing component of ESRNN.

80 | tensor with shape (batch, n_time).

81 | return: smyl_loss.

82 | """

83 | def __init__(self, tau, level_variability_penalty=0.0):

84 | super(SmylLoss, self).__init__()

85 | self.pinball_loss = PinballLoss(tau)

86 | self.level_variability_loss = LevelVariabilityLoss(level_variability_penalty)

87 |

88 | def forward(self, windows_y, windows_y_hat, levels):

89 | smyl_loss = self.pinball_loss(windows_y, windows_y_hat)

90 | if self.level_variability_loss.level_variability_penalty>0:

91 | log_diff_of_levels = self.level_variability_loss(levels)

92 | smyl_loss += log_diff_of_levels

93 | return smyl_loss

94 |

95 |

96 | class DisaggregatedPinballLoss(nn.Module):

97 | """ Pinball Loss

98 | Computes the pinball loss between y and y_hat.

99 |

100 | Parameters

101 | ----------

102 | y: tensor

103 | actual values in torch tensor.

104 | y_hat: tensor (same shape as y)

105 | predicted values in torch tensor.

106 | tau: float, between 0 and 1

107 | the slope of the pinball loss, in the context of

108 | quantile regression, the value of tau determines the

109 | conditional quantile level.

110 |

111 | Returns

112 | ----------

113 | pinball_loss:

114 | average accuracy for the predicted quantile

115 | """

116 | def __init__(self, tau=0.5):

117 | super(DisaggregatedPinballLoss, self).__init__()

118 | self.tau = tau

119 |

120 | def forward(self, y, y_hat):

121 | delta_y = torch.sub(y, y_hat)

122 | pinball = torch.max(torch.mul(self.tau, delta_y), torch.mul((self.tau-1), delta_y))

123 | pinball = pinball.mean(axis=0).mean(axis=1)

124 | return pinball

--------------------------------------------------------------------------------

/ESRNN/utils_visualization.py:

--------------------------------------------------------------------------------

1 | import math

2 | import numpy as np

3 | import pandas as pd

4 | import matplotlib.pyplot as plt

5 | plt.style.use('ggplot')

6 |

7 | import seaborn as sns

8 | from itertools import product

9 | import random

10 |

11 |

12 | def plot_prediction(y, y_hat):

13 | """

14 | y: pandas df

15 | panel with columns unique_id, ds, y

16 | y_hat: pandas df

17 | panel with columns unique_id, ds, y_hat

18 | """

19 | pd.plotting.register_matplotlib_converters()

20 |

21 | plt.plot(y.ds, y.y, label = 'y')

22 | plt.plot(y_hat.ds, y_hat.y_hat, label='y_hat')

23 | plt.legend(loc='upper left')

24 | plt.show()

25 |

26 | def plot_grid_prediction(y, y_hat, plot_random=True, unique_ids=None, save_file_name = None):

27 | """

28 | y: pandas df

29 | panel with columns unique_id, ds, y

30 | y_hat: pandas df

31 | panel with columns unique_id, ds, y_hat

32 | plot_random: bool

33 | if unique_ids will be sampled

34 | unique_ids: list

35 | unique_ids to plot

36 | save_file_name: str

37 | file name to save plot

38 | """

39 | pd.plotting.register_matplotlib_converters()

40 |

41 | fig, axes = plt.subplots(2, 4, figsize = (24,8))

42 |

43 | if not unique_ids:

44 | unique_ids = y['unique_id'].unique()

45 |

46 | assert len(unique_ids) >= 8, "Must provide at least 8 ts"

47 |

48 | if plot_random:

49 | unique_ids = random.sample(set(unique_ids), k=8)

50 |

51 | for i, (idx, idy) in enumerate(product(range(2), range(4))):

52 | y_uid = y[y.unique_id == unique_ids[i]]

53 | y_uid_hat = y_hat[y_hat.unique_id == unique_ids[i]]

54 |

55 | axes[idx, idy].plot(y_uid.ds, y_uid.y, label = 'y')

56 | axes[idx, idy].plot(y_uid_hat.ds, y_uid_hat.y_hat, label='y_hat')

57 | axes[idx, idy].set_title(unique_ids[i])

58 | axes[idx, idy].legend(loc='upper left')

59 |

60 | plt.show()

61 |

62 | if save_file_name:

63 | fig.savefig(save_file_name, bbox_inches='tight', pad_inches=0)

64 |

65 |

66 | def plot_distributions(distributions_dict, fig_title=None, xlabel=None):

67 | n_distributions = len(distributions_dict.keys())

68 | fig, ax = plt.subplots(1, figsize=(7, 5.5))

69 | plt.subplots_adjust(wspace=0.35)

70 |

71 | n_colors = len(distributions_dict.keys())

72 | colors = sns.color_palette("hls", n_colors)

73 |

74 | for idx, dist_name in enumerate(distributions_dict.keys()):

75 | train_dist_plot = sns.kdeplot(distributions_dict[dist_name],

76 | bw='silverman',

77 | label=dist_name,

78 | color=colors[idx])

79 | if xlabel is not None:

80 | ax.set_xlabel(xlabel, fontsize=14)

81 | ax.set_ylabel('Density', fontsize=14)

82 | ax.set_title(fig_title, fontsize=15.5)

83 | ax.grid(True)

84 | ax.legend(loc='center left', bbox_to_anchor=(1, 0.5))

85 |

86 | fig.tight_layout()

87 | if fig_title is not None:

88 | fig_title = fig_title.replace(' ', '_')

89 | plot_file = "./results/plots/{}_distributions.png".format(fig_title)

90 | plt.savefig(plot_file, bbox_inches = "tight", dpi=300)

91 | plt.show()

92 |

93 | def plot_cat_distributions(df, cat, var):

94 | unique_cats = df[cat].unique()

95 | cat_dict = {}

96 | for c in unique_cats:

97 | cat_dict[c] = df[df[cat]==c][var].values

98 |

99 | plot_distributions(cat_dict, xlabel=var)

100 |

101 | def plot_single_cat_distributions(distributions_dict, ax, fig_title=None, xlabel=None):

102 | n_distributions = len(distributions_dict.keys())

103 |

104 | n_colors = len(distributions_dict.keys())

105 | colors = sns.color_palette("hls", n_colors)

106 |

107 | for idx, dist_name in enumerate(distributions_dict.keys()):

108 | train_dist_plot = sns.distplot(distributions_dict[dist_name],

109 | #bw='silverman',

110 | #kde=False,

111 | rug=True,

112 | label=dist_name,

113 | color=colors[idx],

114 | ax=ax)

115 | if xlabel is not None:

116 | ax.set_xlabel(xlabel, fontsize=14)

117 | ax.set_ylabel('Density', fontsize=14)

118 | ax.set_title(fig_title, fontsize=15.5)

119 | ax.grid(True)

120 | ax.legend(loc='center left', bbox_to_anchor=(1, 0.5))

121 |

122 | def plot_grid_cat_distributions(df, cats, var):

123 | cols = int(np.ceil(len(cats)/2))

124 | fig, axs = plt.subplots(2, cols, figsize=(4*cols, 5.5))

125 | plt.subplots_adjust(wspace=0.95)

126 | plt.subplots_adjust(hspace=0.5)

127 |

128 | for idx, cat in enumerate(cats):

129 | unique_cats = df[cat].unique()

130 | cat_dict = {}

131 | for c in unique_cats:

132 | values = df[df[cat]==c][var].values

133 | values = values[~np.isnan(values)]

134 | if len(values)>0:

135 | cat_dict[c] = values

136 |

137 | row = int(np.round((idx/len(cats))+0.001, 0))

138 | col = idx % cols

139 | plot_single_cat_distributions(cat_dict, axs[row, col],

140 | fig_title=cat, xlabel=var)

141 |

142 | min_owa = math.floor(df.min_owa.min() * 1000) / 1000

143 | suptitle = var + ': ' + str(min_owa)

144 | fig.suptitle(suptitle, fontsize=18)

145 | plt.show()

146 |

--------------------------------------------------------------------------------

/ESRNN/utils/data.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 |

4 |

5 | class Batch():

6 | def __init__(self, mc, y, last_ds, categories, idxs):

7 | # Parse Model config

8 | exogenous_size = mc.exogenous_size

9 | device = mc.device

10 |

11 | # y: time series values

12 | n = len(y)

13 | y = np.float32(y)

14 | self.idxs = torch.LongTensor(idxs).to(device)

15 | self.y = y

16 | if (self.y.shape[1] > mc.max_series_length):

17 | y = y[:, -mc.max_series_length:]

18 | self.y = torch.tensor(y).float()

19 |

20 | # last_ds: last time for prediction purposes

21 | self.last_ds = last_ds

22 |

23 | # categories: exogenous categoric data

24 | if exogenous_size >0:

25 | self.categories = np.zeros((len(idxs), exogenous_size))

26 | cols_idx = np.array([mc.category_to_idx[category] for category in categories])

27 | rows_idx = np.array(range(len(cols_idx)))

28 | self.categories[rows_idx, cols_idx] = 1

29 | self.categories = torch.from_numpy(self.categories).float()

30 |

31 | self.y = self.y.to(device)

32 | self.categories = self.categories.to(device)

33 |

34 |

35 | class Iterator(object):

36 | """ Time Series Iterator.

37 |

38 | Parameters

39 | ----------

40 | mc: ModelConfig object

41 | ModelConfig object with inherited hyperparameters:

42 | batch_size, and exogenous_size, from the ESRNN

43 | initialization.

44 | X: array, shape (n_unique_id, 3)

45 | Panel array with unique_id, last date stamp and

46 | exogenous variable.

47 | y: array, shape (n_unique_id, n_time)

48 | Panel array in wide format with unique_id, last

49 | date stamp and time series values.

50 | Returns

51 | ----------

52 | self : object

53 | Iterator method get_batch() returns a batch of time

54 | series objects defined by the Batch class.

55 | """

56 | def __init__(self, mc, X, y, weights=None):

57 | if weights is not None:

58 | assert len(weights)==len(X)

59 | train_ids = np.where(weights==1)[0]

60 | self.X = X[train_ids,:]

61 | self.y = y[train_ids,:]

62 | else:

63 | self.X = X

64 | self.y = y

65 | assert len(X)==len(y)

66 |

67 | # Parse Model config

68 | self.mc = mc

69 | self.batch_size = mc.batch_size

70 |

71 | self.unique_idxs = np.unique(self.X[:, 0])

72 | assert len(self.unique_idxs)==len(self.X)

73 | self.n_series = len(self.unique_idxs)

74 |

75 | #assert self.batch_size <= self.n_series

76 |

77 | # Initialize batch iterator

78 | self.b = 0

79 | self.n_batches = int(np.ceil(self.n_series / self.batch_size))

80 | shuffle = list(range(self.n_series))

81 | self.sort_key = {'unique_id': [self.unique_idxs[i] for i in shuffle],

82 | 'sort_key': shuffle}

83 |

84 | def update_batch_size(self, new_batch_size):

85 | self.batch_size = new_batch_size

86 | assert self.batch_size <= self.n_series

87 | self.n_batches = int(np.ceil(self.n_series / self.batch_size))

88 |

89 | def shuffle_dataset(self, random_seed=1):

90 | """Return the examples in the dataset in order, or shuffled."""

91 | # Random Seed

92 | np.random.seed(random_seed)

93 | self.random_seed = random_seed

94 | shuffle = np.random.choice(self.n_series, self.n_series, replace=False)

95 | self.X = self.X[shuffle]

96 | self.y = self.y[shuffle]

97 |

98 | old_sort_key = self.sort_key['sort_key']

99 | old_unique_idxs = self.sort_key['unique_id']

100 | self.sort_key = {'unique_id': [old_unique_idxs[i] for i in shuffle],

101 | 'sort_key': [old_sort_key[i] for i in shuffle]}

102 |

103 | def get_trim_batch(self, unique_id):

104 | if unique_id==None:

105 | # Compute the indexes of the minibatch.

106 | first = (self.b * self.batch_size)

107 | last = min((first + self.batch_size), self.n_series)

108 | else:

109 | # Obtain unique_id index

110 | assert unique_id in self.sort_key['unique_id'], "unique_id, not fitted"

111 | first = self.sort_key['unique_id'].index(unique_id)

112 | last = first+1

113 |

114 | # Extract values for batch

115 | unique_idxs = self.sort_key['unique_id'][first:last]

116 | batch_idxs = self.sort_key['sort_key'][first:last]

117 |

118 | batch_y = self.y[first:last]

119 | batch_categories = self.X[first:last, 1]

120 | batch_last_ds = self.X[first:last, 2]

121 |

122 | len_series = np.count_nonzero(~np.isnan(batch_y), axis=1)

123 | min_len = min(len_series)

124 | last_numeric = (~np.isnan(batch_y)).cumsum(1).argmax(1)+1

125 |

126 | # Trimming to match min_len

127 | y_b = np.zeros((batch_y.shape[0], min_len))

128 | for i in range(batch_y.shape[0]):

129 | y_b[i] = batch_y[i,(last_numeric[i]-min_len):last_numeric[i]]

130 | batch_y = y_b

131 |

132 | assert not np.isnan(batch_y).any(), \

133 | "clean np.nan's from unique_idxs: {}".format(unique_idxs)

134 | assert batch_y.shape[0]==len(batch_idxs)==len(batch_last_ds)==len(batch_categories)

135 | assert batch_y.shape[1]>=1

136 |

137 | # Feed to Batch

138 | batch = Batch(mc=self.mc, y=batch_y, last_ds=batch_last_ds,

139 | categories=batch_categories, idxs=batch_idxs)

140 | self.b = (self.b + 1) % self.n_batches

141 | return batch

142 |

143 | def get_batch(self, unique_id=None):

144 | return self.get_trim_batch(unique_id)

145 |

146 | def __len__(self):

147 | return self.n_batches

148 |

149 | def __iter__(self):

150 | pass

151 |

--------------------------------------------------------------------------------

/ESRNN/m4_run.py:

--------------------------------------------------------------------------------

1 | import os

2 | import argparse

3 | import itertools

4 | import ast

5 | import pickle

6 | import time

7 |

8 | import os

9 | import numpy as np

10 | import pandas as pd

11 |

12 | from ESRNN.m4_data import prepare_m4_data

13 | from ESRNN.utils_evaluation import evaluate_prediction_owa

14 | from ESRNN.utils_configs import get_config

15 |

16 | from ESRNN import ESRNN

17 |

18 | import torch

19 |

20 | def main(args):

21 | config = get_config(args.dataset)

22 | if config['data_parameters']['frequency'] == 'Y':

23 | config['data_parameters']['frequency'] = None

24 |

25 | #Setting needed parameters

26 | os.environ['CUDA_VISIBLE_DEVICES'] = str(args.gpu_id)

27 |

28 | if args.num_obs:

29 | num_obs = args.num_obs

30 | else:

31 | num_obs = 100000

32 |

33 | if args.use_cpu == 1:

34 | config['device'] = 'cpu'

35 | else:

36 | assert torch.cuda.is_available(), 'No cuda devices detected. You can try using CPU instead.'

37 |

38 | #Reading data

39 | print('Reading data')

40 | X_train_df, y_train_df, X_test_df, y_test_df = prepare_m4_data(dataset_name=args.dataset,

41 | directory=args.results_directory,

42 | num_obs=num_obs)

43 |

44 | # Instantiate model

45 | model = ESRNN(max_epochs=config['train_parameters']['max_epochs'],

46 | batch_size=config['train_parameters']['batch_size'],

47 | freq_of_test=config['train_parameters']['freq_of_test'],

48 | learning_rate=float(config['train_parameters']['learning_rate']),

49 | lr_scheduler_step_size=config['train_parameters']['lr_scheduler_step_size'],

50 | lr_decay=config['train_parameters']['lr_decay'],

51 | per_series_lr_multip=config['train_parameters']['per_series_lr_multip'],

52 | gradient_clipping_threshold=config['train_parameters']['gradient_clipping_threshold'],

53 | rnn_weight_decay=config['train_parameters']['rnn_weight_decay'],

54 | noise_std=config['train_parameters']['noise_std'],

55 | level_variability_penalty=config['train_parameters']['level_variability_penalty'],

56 | testing_percentile=config['train_parameters']['testing_percentile'],

57 | training_percentile=config['train_parameters']['training_percentile'],

58 | ensemble=config['train_parameters']['ensemble'],

59 | max_periods=config['data_parameters']['max_periods'],

60 | seasonality=config['data_parameters']['seasonality'],

61 | input_size=config['data_parameters']['input_size'],

62 | output_size=config['data_parameters']['output_size'],

63 | frequency=config['data_parameters']['frequency'],

64 | cell_type=config['model_parameters']['cell_type'],

65 | state_hsize=config['model_parameters']['state_hsize'],

66 | dilations=config['model_parameters']['dilations'],

67 | add_nl_layer=config['model_parameters']['add_nl_layer'],

68 | random_seed=config['model_parameters']['random_seed'],

69 | device=config['device'])

70 |

71 | if args.test == 1:

72 | model = ESRNN(max_epochs=1,

73 | batch_size=20,

74 | seasonality=config['data_parameters']['seasonality'],

75 | input_size=config['data_parameters']['input_size'],

76 | output_size=config['data_parameters']['output_size'],

77 | frequency=config['data_parameters']['frequency'],

78 | device=config['device'])

79 |

80 | # Fit model

81 | # If y_test_df is provided the model will evaluate predictions on this set every freq_test epochs

82 | model.fit(X_train_df, y_train_df, X_test_df, y_test_df)

83 |

84 | # Predict on test set

85 | print('\nForecasting')

86 | y_hat_df = model.predict(X_test_df)

87 |

88 | # Evaluate predictions

89 | print(15*'=', ' Final evaluation ', 14*'=')

90 | seasonality = config['data_parameters']['seasonality']

91 | if not seasonality:

92 | seasonality = 1

93 | else:

94 | seasonality = seasonality[0]

95 |

96 | final_owa, final_mase, final_smape = evaluate_prediction_owa(y_hat_df, y_train_df,

97 | X_test_df, y_test_df,

98 | naive2_seasonality=seasonality)

99 |

100 | if __name__ == '__main__':

101 | parser = argparse.ArgumentParser(description='Replicate M4 results for the ESRNN model')

102 | parser.add_argument("--dataset", required=True, type=str,

103 | choices=['Yearly', 'Quarterly', 'Monthly', 'Weekly', 'Hourly', 'Daily'],

104 | help="set of M4 time series to be tested")

105 | parser.add_argument("--results_directory", required=True, type=str,

106 | help="directory where M4 data will be downloaded")

107 | parser.add_argument("--gpu_id", required=False, type=int,

108 | help="an integer that specify which GPU will be used")

109 | parser.add_argument("--use_cpu", required=False, type=int,

110 | help="1 to use CPU instead of GPU (uses GPU by default)")

111 | parser.add_argument("--num_obs", required=False, type=int,

112 | help="number of M4 time series to be tested (uses all data by default)")

113 | parser.add_argument("--test", required=False, type=int,

114 | help="run fast for tests (no test by default)")

115 | args = parser.parse_args()

116 |

117 | main(args)

118 |

--------------------------------------------------------------------------------

/ESRNN/utils_configs.py:

--------------------------------------------------------------------------------

1 | def get_config(dataset_name):

2 | """

3 | Returns dict config

4 |

5 | Parameters

6 | ----------

7 | dataset_name: str

8 | """

9 | allowed_dataset_names = ('Yearly', 'Monthly', 'Weekly', 'Hourly', 'Quarterly', 'Daily')

10 | if dataset_name not in allowed_dataset_names:

11 | raise ValueError(f'kind must be one of {allowed_kinds}')

12 |

13 | if dataset_name == 'Yearly':

14 | return YEARLY

15 | elif dataset_name == 'Monthly':

16 | return MONTHLY

17 | elif dataset_name == 'Weekly':

18 | return WEEKLY

19 | elif dataset_name == 'Hourly':

20 | return HOURLY

21 | elif dataset_name == 'Quarterly':

22 | return QUARTERLY

23 | elif dataset_name == 'Daily':

24 | return DAILY

25 |

26 | YEARLY = {

27 | 'device': 'cuda',

28 | 'train_parameters': {

29 | 'max_epochs': 25,

30 | 'batch_size': 4,

31 | 'freq_of_test': 5,

32 | 'learning_rate': '1e-4',

33 | 'lr_scheduler_step_size': 10,

34 | 'lr_decay': 0.1,

35 | 'per_series_lr_multip': 0.8,

36 | 'gradient_clipping_threshold': 50,

37 | 'rnn_weight_decay': 0,

38 | 'noise_std': 0.001,

39 | 'level_variability_penalty': 100,

40 | 'testing_percentile': 50,

41 | 'training_percentile': 50,

42 | 'ensemble': False

43 | },

44 | 'data_parameters': {

45 | 'max_periods': 25,

46 | 'seasonality': [],

47 | 'input_size': 4,

48 | 'output_size': 6,

49 | 'frequency': 'Y'

50 | },

51 | 'model_parameters': {

52 | 'cell_type': 'LSTM',

53 | 'state_hsize': 40,

54 | 'dilations': [[1], [6]],

55 | 'add_nl_layer': False,

56 | 'random_seed': 117982

57 | }

58 | }

59 |

60 | MONTHLY = {

61 | 'device': 'cuda',

62 | 'train_parameters': {

63 | 'max_epochs': 15,

64 | 'batch_size': 64,

65 | 'freq_of_test': 4,

66 | 'learning_rate': '7e-4',

67 | 'lr_scheduler_step_size': 12,

68 | 'lr_decay': 0.2,

69 | 'per_series_lr_multip': 0.5,

70 | 'gradient_clipping_threshold': 20,

71 | 'rnn_weight_decay': 0,

72 | 'noise_std': 0.001,

73 | 'level_variability_penalty': 50,

74 | 'testing_percentile': 50,

75 | 'training_percentile': 45,

76 | 'ensemble': False

77 | },

78 | 'data_parameters': {

79 | 'max_periods': 36,

80 | 'seasonality': [12],

81 | 'input_size': 12,

82 | 'output_size': 18,

83 | 'frequency': 'M'

84 | },

85 | 'model_parameters': {

86 | 'cell_type': 'LSTM',

87 | 'state_hsize': 50,

88 | 'dilations': [[1, 3, 6, 12]],

89 | 'add_nl_layer': False,

90 | 'random_seed': 1

91 | }

92 | }

93 |

94 |

95 | WEEKLY = {

96 | 'device': 'cuda',

97 | 'train_parameters': {

98 | 'max_epochs': 50,

99 | 'batch_size': 32,

100 | 'freq_of_test': 10,

101 | 'learning_rate': '1e-2',

102 | 'lr_scheduler_step_size': 10,

103 | 'lr_decay': 0.5,

104 | 'per_series_lr_multip': 1.0,

105 | 'gradient_clipping_threshold': 20,

106 | 'rnn_weight_decay': 0,

107 | 'noise_std': 0.001,

108 | 'level_variability_penalty': 100,

109 | 'testing_percentile': 50,

110 | 'training_percentile': 50,

111 | 'ensemble': True

112 | },

113 | 'data_parameters': {

114 | 'max_periods': 31,

115 | 'seasonality': [],

116 | 'input_size': 10,

117 | 'output_size': 13,

118 | 'frequency': 'W'

119 | },

120 | 'model_parameters': {

121 | 'cell_type': 'ResLSTM',

122 | 'state_hsize': 40,

123 | 'dilations': [[1, 52]],

124 | 'add_nl_layer': False,

125 | 'random_seed': 2

126 | }

127 | }

128 |

129 | HOURLY = {

130 | 'device': 'cuda',

131 | 'train_parameters': {

132 | 'max_epochs': 20,

133 | 'batch_size': 32,

134 | 'freq_of_test': 5,

135 | 'learning_rate': '1e-2',

136 | 'lr_scheduler_step_size': 7,

137 | 'lr_decay': 0.5,

138 | 'per_series_lr_multip': 1.0,

139 | 'gradient_clipping_threshold': 50,

140 | 'rnn_weight_decay': 0,

141 | 'noise_std': 0.001,

142 | 'level_variability_penalty': 30,

143 | 'testing_percentile': 50,

144 | 'training_percentile': 50,

145 | 'ensemble': True

146 | },

147 | 'data_parameters': {

148 | 'max_periods': 371,

149 | 'seasonality': [24, 168],

150 | 'input_size': 24,

151 | 'output_size': 48,

152 | 'frequency': 'H'

153 | },

154 | 'model_parameters': {

155 | 'cell_type': 'LSTM',

156 | 'state_hsize': 40,

157 | 'dilations': [[1, 4, 24, 168]],

158 | 'add_nl_layer': False,

159 | 'random_seed': 1

160 | }

161 | }

162 |

163 | QUARTERLY = {

164 | 'device': 'cuda',

165 | 'train_parameters': {

166 | 'max_epochs': 30,

167 | 'batch_size': 16,

168 | 'freq_of_test': 5,

169 | 'learning_rate': '5e-4',

170 | 'lr_scheduler_step_size': 10,

171 | 'lr_decay': 0.5,

172 | 'per_series_lr_multip': 1.0,

173 | 'gradient_clipping_threshold': 20,

174 | 'rnn_weight_decay': 0,

175 | 'noise_std': 0.001,

176 | 'level_variability_penalty': 100,

177 | 'testing_percentile': 50,

178 | 'training_percentile': 50,

179 | 'ensemble': False

180 | },

181 | 'data_parameters': {

182 | 'max_periods': 20,

183 | 'seasonality': [4],

184 | 'input_size': 4,

185 | 'output_size': 8,

186 | 'frequency': 'Q'

187 | },

188 | 'model_parameters': {

189 | 'cell_type': 'LSTM',

190 | 'state_hsize': 40,

191 | 'dilations': [[1, 2, 4, 8]],

192 | 'add_nl_layer': False,

193 | 'random_seed': 3

194 | }

195 | }

196 |

197 | DAILY = {

198 | 'device': 'cuda',

199 | 'train_parameters': {

200 | 'max_epochs': 20,

201 | 'batch_size': 64,

202 | 'freq_of_test': 2,

203 | 'learning_rate': '1e-2',

204 | 'lr_scheduler_step_size': 4,

205 | 'lr_decay': 0.3333,

206 | 'per_series_lr_multip': 0.5,

207 | 'gradient_clipping_threshold': 50,

208 | 'rnn_weight_decay': 0,

209 | 'noise_std': 0.0001,

210 | 'level_variability_penalty': 100,

211 | 'testing_percentile': 50,

212 | 'training_percentile': 65,

213 | 'ensemble': False

214 | },

215 | 'data_parameters': {

216 | 'max_periods': 15,

217 | 'seasonality': [7],

218 | 'input_size': 7,

219 | 'output_size': 14,

220 | 'frequency': 'D'

221 | },

222 | 'model_parameters': {

223 | 'n_models': 5,

224 | 'n_top': 4,

225 | 'cell_type':

226 | 'LSTM',

227 | 'state_hsize': 40,

228 | 'dilations': [[1, 7, 28]],

229 | 'add_nl_layer': True,

230 | 'random_seed': 1

231 | }

232 | }

233 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [](https://github.com/kdgutier/esrnn_torch/tree/master)

2 | [](https://pypi.python.org/pypi/ESRNN/)

3 | [](https://pepy.tech/project/esrnn)

4 | [](https://www.python.org/downloads/release/python-360+/)

5 | [](https://github.com/kdgutier/esrnn_torch/blob/master/LICENSE)

6 |

7 |

8 | # Pytorch Implementation of the ES-RNN

9 | Pytorch implementation of the ES-RNN algorithm proposed by Smyl, winning submission of the M4 Forecasting Competition. The class wraps fit and predict methods to facilitate interaction with Machine Learning pipelines along with evaluation and data wrangling utility. Developed by [Autonlab](https://www.autonlab.org/)’s members at Carnegie Mellon University.

10 |

11 | ## Installation Prerequisites

12 | * numpy>=1.16.1

13 | * pandas>=0.25.2

14 | * pytorch>=1.3.1

15 |

16 | ## Installation

17 |

18 | This code is a work in progress, any contributions or issues are welcome on

19 | GitHub at: https://github.com/kdgutier/esrnn_torch

20 |

21 | You can install the *released version* of `ESRNN` from the [Python package index](https://pypi.org) with:

22 |

23 | ```python

24 | pip install ESRNN

25 | ```

26 |

27 | ## Usage

28 |

29 | ### Input data

30 |

31 | The fit method receives `X_df`, `y_df` training pandas dataframes in long format. Optionally `X_test_df` and `y_test_df` to compute out of sample performance.

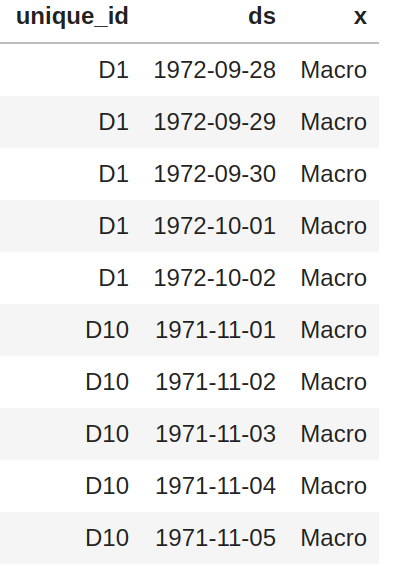

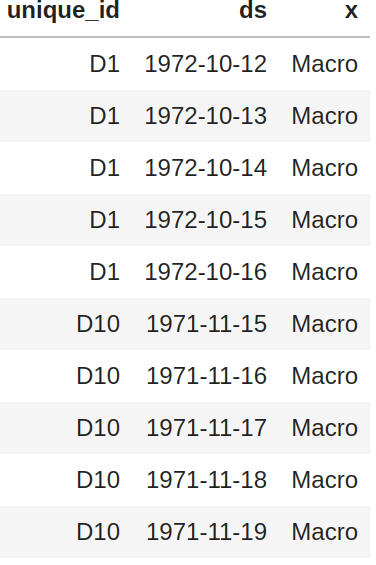

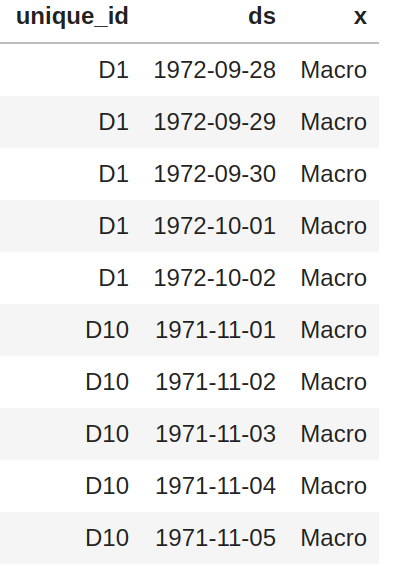

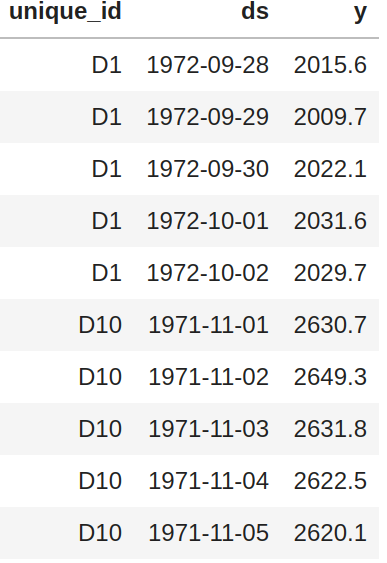

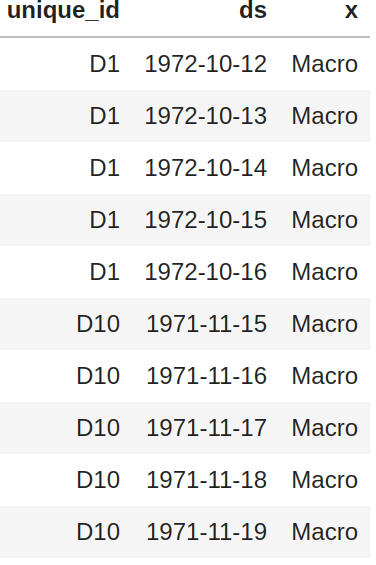

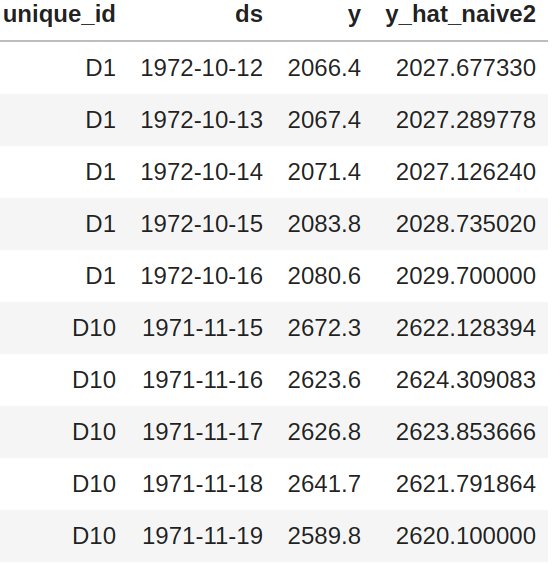

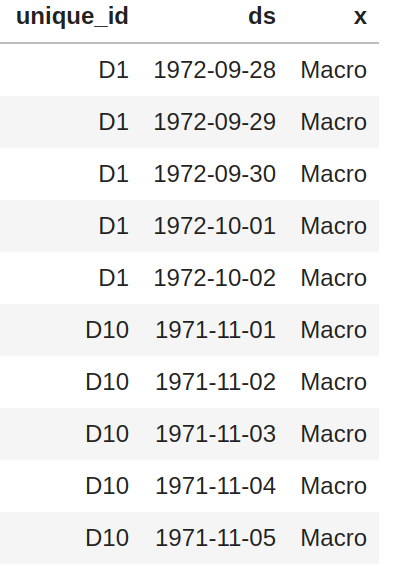

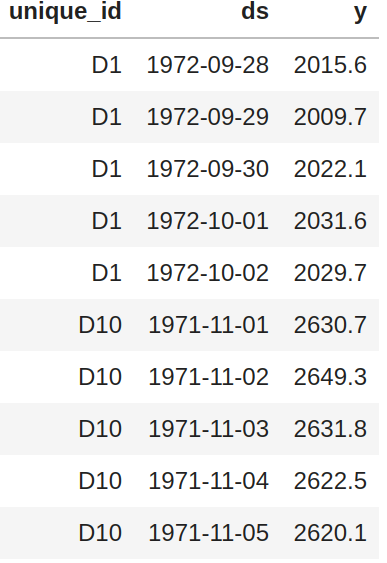

32 | - `X_df` must contain the columns `['unique_id', 'ds', 'x']`

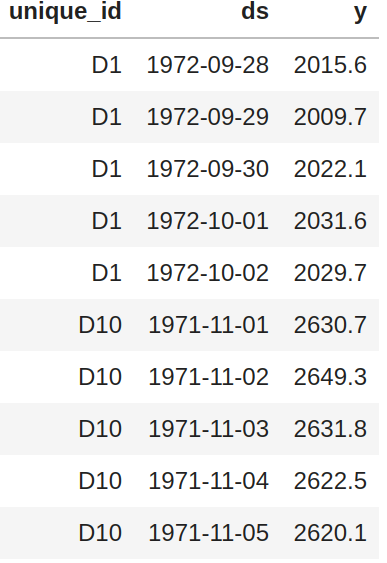

33 | - `y_df` must contain the columns `['unique_id', 'ds', 'y']`

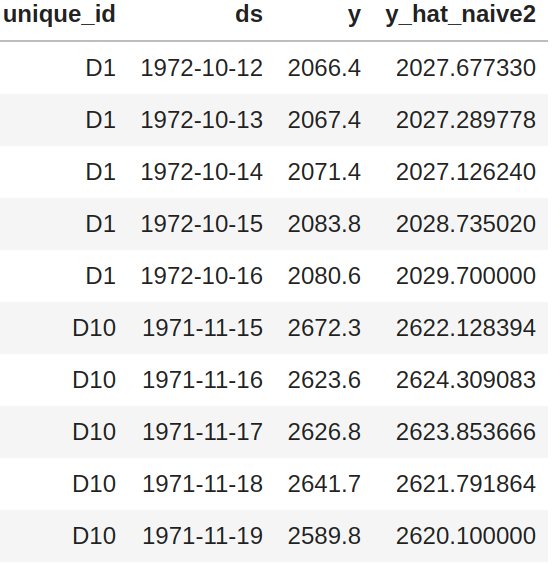

34 | - `X_test_df` must contain the columns `['unique_id', 'ds', 'x']`

35 | - `y_test_df` must contain the columns `['unique_id', 'ds', 'y']` and a benchmark model to compare against (default `'y_hat_naive2'`).

36 |

37 | For all the above:

38 | - The column `'unique_id'` is a time series identifier, the column `'ds'` stands for the datetime.

39 | - Column `'x'` is an exogenous categorical feature.

40 | - Column `'y'` is the target variable.

41 | - Column `'y'` **does not allow negative values** and the first entry for all series must be **grater than 0**.

42 |

43 | The `X` and `y` dataframes must contain the same values for `'unique_id'`, `'ds'` columns and be **balanced**, ie.no *gaps* between dates for the frequency.

44 |

45 |

46 |

47 |

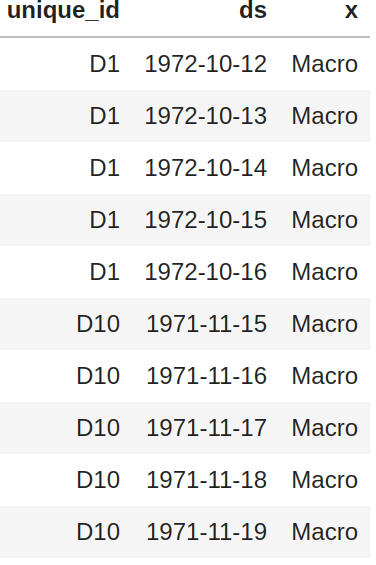

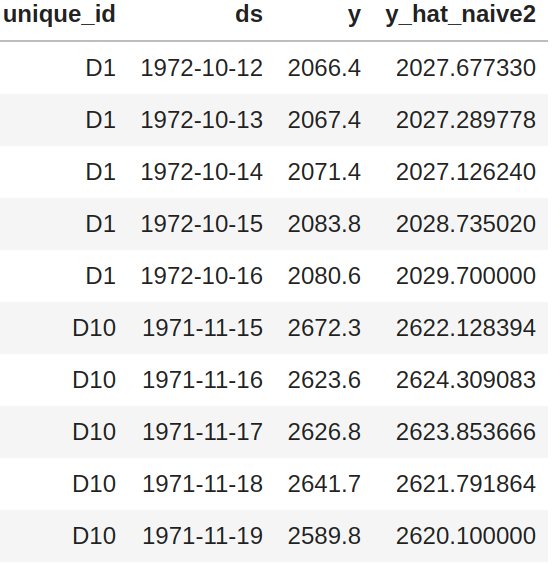

48 | |`X_df`|`y_df` |`X_test_df`| `y_test_df`|

49 | |:-----------:|:-----------:|:-----------:|:-----------:|

50 | | |

|  |

|  |

|  |

51 |

52 |

53 |

54 |

55 | ### M4 example

56 |

57 |

58 | ```python

59 | from ESRNN.m4_data import prepare_m4_data

60 | from ESRNN.utils_evaluation import evaluate_prediction_owa

61 |

62 | from ESRNN import ESRNN

63 |

64 | X_train_df, y_train_df, X_test_df, y_test_df = prepare_m4_data(dataset_name='Yearly',

65 | directory = './data',

66 | num_obs=1000)

67 |

68 | # Instantiate model

69 | model = ESRNN(max_epochs=25, freq_of_test=5, batch_size=4, learning_rate=1e-4,

70 | per_series_lr_multip=0.8, lr_scheduler_step_size=10,

71 | lr_decay=0.1, gradient_clipping_threshold=50,

72 | rnn_weight_decay=0.0, level_variability_penalty=100,

73 | testing_percentile=50, training_percentile=50,

74 | ensemble=False, max_periods=25, seasonality=[],

75 | input_size=4, output_size=6,

76 | cell_type='LSTM', state_hsize=40,

77 | dilations=[[1], [6]], add_nl_layer=False,

78 | random_seed=1, device='cpu')

79 |

80 | # Fit model

81 | # If y_test_df is provided the model

82 | # will evaluate predictions on

83 | # this set every freq_test epochs

84 | model.fit(X_train_df, y_train_df, X_test_df, y_test_df)

85 |

86 | # Predict on test set

87 | y_hat_df = model.predict(X_test_df)

88 |

89 | # Evaluate predictions

90 | final_owa, final_mase, final_smape = evaluate_prediction_owa(y_hat_df, y_train_df,

91 | X_test_df, y_test_df,

92 | naive2_seasonality=1)

93 | ```

94 | ## Overall Weighted Average

95 |

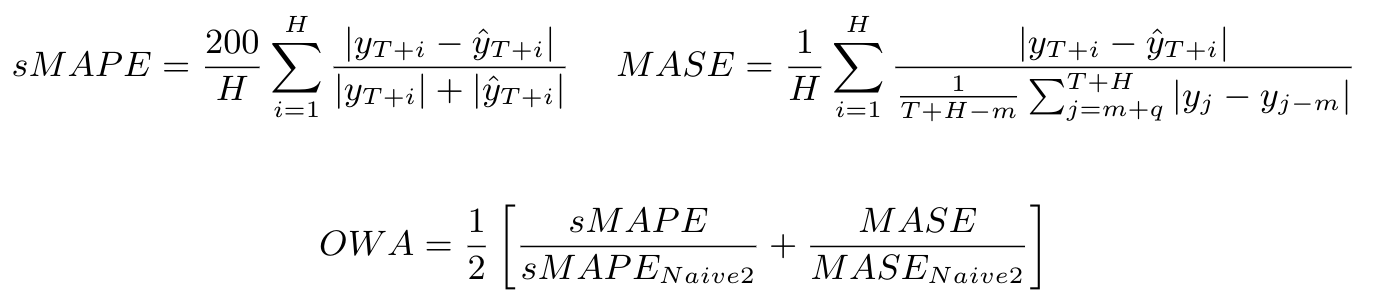

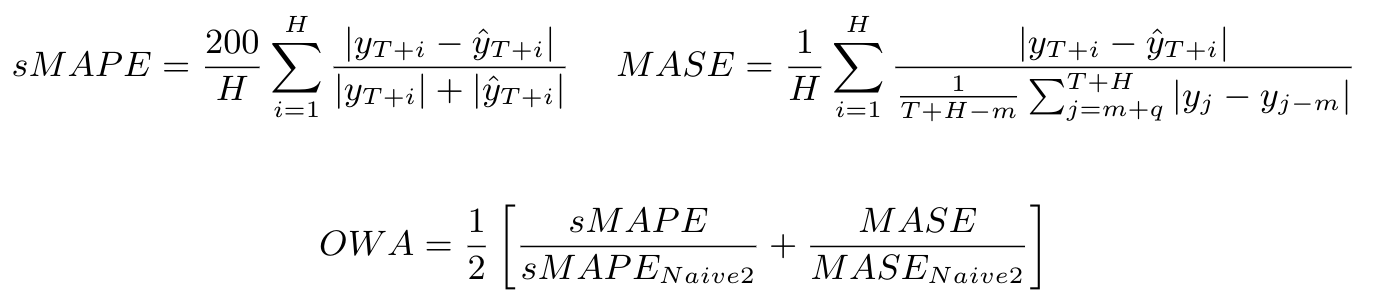

96 | A metric that is useful for quantifying the aggregate error of a specific model for various time series is the Overall Weighted Average (OWA) proposed for the M4 competition. This metric is calculated by obtaining the average of the symmetric mean absolute percentage error (sMAPE) and the mean absolute scaled error (MASE) for all the time series of the model and also calculating it for the Naive2 predictions. Both sMAPE and MASE are scale independent. These measurements are calculated as follows:

97 |

98 |

99 |

100 |

101 |

102 | ## Current Results

103 | Here we used the model directly to compare to the original implementation. It is worth noticing that these results do not include the ensemble methods mentioned in the [ESRNN paper](https://www.sciencedirect.com/science/article/pii/S0169207019301153).

|

51 |

52 |

53 |

54 |

55 | ### M4 example

56 |

57 |

58 | ```python

59 | from ESRNN.m4_data import prepare_m4_data

60 | from ESRNN.utils_evaluation import evaluate_prediction_owa

61 |

62 | from ESRNN import ESRNN

63 |

64 | X_train_df, y_train_df, X_test_df, y_test_df = prepare_m4_data(dataset_name='Yearly',

65 | directory = './data',

66 | num_obs=1000)

67 |

68 | # Instantiate model

69 | model = ESRNN(max_epochs=25, freq_of_test=5, batch_size=4, learning_rate=1e-4,

70 | per_series_lr_multip=0.8, lr_scheduler_step_size=10,

71 | lr_decay=0.1, gradient_clipping_threshold=50,

72 | rnn_weight_decay=0.0, level_variability_penalty=100,

73 | testing_percentile=50, training_percentile=50,

74 | ensemble=False, max_periods=25, seasonality=[],

75 | input_size=4, output_size=6,

76 | cell_type='LSTM', state_hsize=40,

77 | dilations=[[1], [6]], add_nl_layer=False,

78 | random_seed=1, device='cpu')

79 |

80 | # Fit model

81 | # If y_test_df is provided the model

82 | # will evaluate predictions on

83 | # this set every freq_test epochs

84 | model.fit(X_train_df, y_train_df, X_test_df, y_test_df)

85 |

86 | # Predict on test set

87 | y_hat_df = model.predict(X_test_df)

88 |

89 | # Evaluate predictions

90 | final_owa, final_mase, final_smape = evaluate_prediction_owa(y_hat_df, y_train_df,

91 | X_test_df, y_test_df,

92 | naive2_seasonality=1)

93 | ```

94 | ## Overall Weighted Average

95 |

96 | A metric that is useful for quantifying the aggregate error of a specific model for various time series is the Overall Weighted Average (OWA) proposed for the M4 competition. This metric is calculated by obtaining the average of the symmetric mean absolute percentage error (sMAPE) and the mean absolute scaled error (MASE) for all the time series of the model and also calculating it for the Naive2 predictions. Both sMAPE and MASE are scale independent. These measurements are calculated as follows:

97 |

98 |

99 |

100 |

101 |

102 | ## Current Results

103 | Here we used the model directly to compare to the original implementation. It is worth noticing that these results do not include the ensemble methods mentioned in the [ESRNN paper](https://www.sciencedirect.com/science/article/pii/S0169207019301153).

104 | [Results of the M4 competition](https://www.researchgate.net/publication/325901666_The_M4_Competition_Results_findings_conclusion_and_way_forward).

105 |

106 |

107 | | DATASET | OUR OWA | M4 OWA (Smyl) |

108 | |-----------|:---------:|:--------:|

109 | | Yearly | 0.785 | 0.778 |

110 | | Quarterly | 0.879 | 0.847 |

111 | | Monthly | 0.872 | 0.836 |

112 | | Hourly | 0.615 | 0.920 |

113 | | Weekly | 0.952 | 0.920 |

114 | | Daily | 0.968 | 0.920 |

115 |

116 |

117 | ## Replicating M4 results

118 |

119 |

120 | Replicating the M4 results is as easy as running the following line of code (for each frequency) after installing the package via pip:

121 |

122 | ```console

123 | python -m ESRNN.m4_run --dataset 'Yearly' --results_directory '/some/path' \

124 | --gpu_id 0 --use_cpu 0

125 | ```

126 |

127 | Use `--help` to get the description of each argument:

128 |

129 | ```console

130 | python -m ESRNN.m4_run --help

131 | ```

132 |

133 | ## Authors

134 | This repository was developed with joint efforts from AutonLab researchers at Carnegie Mellon University and Orax data scientists.

135 | * **Kin Gutierrez** - [kdgutier](https://github.com/kdgutier)

136 | * **Cristian Challu** - [cristianchallu](https://github.com/cristianchallu)

137 | * **Federico Garza** - [FedericoGarza](https://github.com/FedericoGarza) - [mail](fede.garza.ramirez@gmail.com)

138 | * **Max Mergenthaler** - [mergenthaler](https://github.com/mergenthaler)

139 |

140 | ## License

141 | This project is licensed under the MIT License - see the [LICENSE](https://github.com/kdgutier/esrnn_torch/blob/master/LICENSE) file for details.

142 |

143 |

144 | ## REFERENCES

145 | 1. [A hybrid method of exponential smoothing and recurrent neural networks for time series forecasting](https://www.sciencedirect.com/science/article/pii/S0169207019301153)

146 | 2. [The M4 Competition: Results, findings, conclusion and way forward](https://www.researchgate.net/publication/325901666_The_M4_Competition_Results_findings_conclusion_and_way_forward)

147 | 3. [M4 Competition Data](https://github.com/M4Competition/M4-methods/tree/master/Dataset)

148 | 4. [Dilated Recurrent Neural Networks](https://papers.nips.cc/paper/6613-dilated-recurrent-neural-networks.pdf)

149 | 5. [Residual LSTM: Design of a Deep Recurrent Architecture for Distant Speech Recognition](https://arxiv.org/abs/1701.03360)

150 | 6. [A Dual-Stage Attention-Based recurrent neural network for time series prediction](https://arxiv.org/abs/1704.02971)

151 |

--------------------------------------------------------------------------------

/ESRNN/m4_data.py:

--------------------------------------------------------------------------------

1 | import os

2 | from six.moves import urllib

3 | import subprocess

4 |

5 | import numpy as np

6 | import pandas as pd

7 |

8 | from ESRNN.utils_evaluation import Naive2

9 |

10 |

11 | seas_dict = {'Hourly': {'seasonality': 24, 'input_size': 24,

12 | 'output_size': 48, 'freq': 'H'},

13 | 'Daily': {'seasonality': 7, 'input_size': 7,

14 | 'output_size': 14, 'freq': 'D'},

15 | 'Weekly': {'seasonality': 52, 'input_size': 52,

16 | 'output_size': 13, 'freq': 'W'},

17 | 'Monthly': {'seasonality': 12, 'input_size': 12,

18 | 'output_size':18, 'freq': 'M'},

19 | 'Quarterly': {'seasonality': 4, 'input_size': 4,

20 | 'output_size': 8, 'freq': 'Q'},

21 | 'Yearly': {'seasonality': 1, 'input_size': 4,

22 | 'output_size': 6, 'freq': 'D'}}

23 |

24 | SOURCE_URL = 'https://raw.githubusercontent.com/Mcompetitions/M4-methods/master/Dataset/'

25 |

26 |

27 | def maybe_download(filename, directory):

28 | """

29 | Download the data from M4's website, unless it's already here.

30 |

31 | Parameters

32 | ----------

33 | filename: str

34 | Filename of M4 data with format /Type/Frequency.csv. Example: /Test/Daily-train.csv

35 | directory: str

36 | Custom directory where data will be downloaded.

37 | """

38 | data_directory = directory + "/m4"

39 | train_directory = data_directory + "/Train/"

40 | test_directory = data_directory + "/Test/"

41 |

42 | if not os.path.exists(data_directory):

43 | os.mkdir(data_directory)

44 | if not os.path.exists(train_directory):

45 | os.mkdir(train_directory)

46 | if not os.path.exists(test_directory):

47 | os.mkdir(test_directory)

48 |

49 | filepath = os.path.join(data_directory, filename)

50 | if not os.path.exists(filepath):

51 | filepath, _ = urllib.request.urlretrieve(SOURCE_URL + filename, filepath)

52 | size = os.path.getsize(filepath)

53 | print('Successfully downloaded', filename, size, 'bytes.')

54 | return filepath

55 |

56 | def m4_parser(dataset_name, directory, num_obs=1000000):

57 | """

58 | Transform M4 data into a panel.

59 |

60 | Parameters

61 | ----------

62 | dataset_name: str

63 | Frequency of the data. Example: 'Yearly'.

64 | directory: str

65 | Custom directory where data will be saved.

66 | num_obs: int

67 | Number of time series to return.

68 | """

69 | data_directory = directory + "/m4"

70 | train_directory = data_directory + "/Train/"

71 | test_directory = data_directory + "/Test/"

72 | freq = seas_dict[dataset_name]['freq']

73 |

74 | m4_info = pd.read_csv(data_directory+'/M4-info.csv', usecols=['M4id','category'])

75 | m4_info = m4_info[m4_info['M4id'].str.startswith(dataset_name[0])].reset_index(drop=True)

76 |

77 | # Train data

78 | train_path='{}{}-train.csv'.format(train_directory, dataset_name)

79 |

80 | train_df = pd.read_csv(train_path, nrows=num_obs)

81 | train_df = train_df.rename(columns={'V1':'unique_id'})

82 |

83 | train_df = pd.wide_to_long(train_df, stubnames=["V"], i="unique_id", j="ds").reset_index()

84 | train_df = train_df.rename(columns={'V':'y'})

85 | train_df = train_df.dropna()

86 | train_df['split'] = 'train'

87 | train_df['ds'] = train_df['ds']-1

88 | # Get len of series per unique_id

89 | len_series = train_df.groupby('unique_id').agg({'ds': 'max'}).reset_index()

90 | len_series.columns = ['unique_id', 'len_serie']

91 |

92 | # Test data

93 | test_path='{}{}-test.csv'.format(test_directory, dataset_name)

94 |

95 | test_df = pd.read_csv(test_path, nrows=num_obs)

96 | test_df = test_df.rename(columns={'V1':'unique_id'})

97 |

98 | test_df = pd.wide_to_long(test_df, stubnames=["V"], i="unique_id", j="ds").reset_index()

99 | test_df = test_df.rename(columns={'V':'y'})

100 | test_df = test_df.dropna()

101 | test_df['split'] = 'test'

102 | test_df = test_df.merge(len_series, on='unique_id')

103 | test_df['ds'] = test_df['ds'] + test_df['len_serie'] - 1

104 | test_df = test_df[['unique_id','ds','y','split']]

105 |

106 | df = pd.concat((train_df,test_df))

107 | df = df.sort_values(by=['unique_id', 'ds']).reset_index(drop=True)

108 |

109 | # Create column with dates with freq of dataset

110 | len_series = df.groupby('unique_id').agg({'ds': 'max'}).reset_index()

111 | dates = []

112 | for i in range(len(len_series)):

113 | len_serie = len_series.iloc[i,1]

114 | ranges = pd.date_range(start='1970/01/01', periods=len_serie, freq=freq)

115 | dates += list(ranges)

116 | df.loc[:,'ds'] = dates

117 |

118 | df = df.merge(m4_info, left_on=['unique_id'], right_on=['M4id'])

119 | df.drop(columns=['M4id'], inplace=True)

120 | df = df.rename(columns={'category': 'x'})

121 |

122 | X_train_df = df[df['split']=='train'].filter(items=['unique_id', 'ds', 'x'])

123 | y_train_df = df[df['split']=='train'].filter(items=['unique_id', 'ds', 'y'])

124 | X_test_df = df[df['split']=='test'].filter(items=['unique_id', 'ds', 'x'])

125 | y_test_df = df[df['split']=='test'].filter(items=['unique_id', 'ds', 'y'])

126 |

127 | X_train_df = X_train_df.reset_index(drop=True)

128 | y_train_df = y_train_df.reset_index(drop=True)

129 | X_test_df = X_test_df.reset_index(drop=True)

130 | y_test_df = y_test_df.reset_index(drop=True)

131 |

132 | return X_train_df, y_train_df, X_test_df, y_test_df

133 |

134 | def naive2_predictions(dataset_name, directory, num_obs, y_train_df = None, y_test_df = None):

135 | """

136 | Computes Naive2 predictions.

137 |

138 | Parameters

139 | ----------

140 | dataset_name: str

141 | Frequency of the data. Example: 'Yearly'.

142 | directory: str

143 | Custom directory where data will be saved.

144 | num_obs: int

145 | Number of time series to return.

146 | y_train_df: DataFrame

147 | Y train set returned by m4_parser

148 | y_test_df: DataFrame

149 | Y test set returned by m4_parser

150 | """

151 | # Read train and test data

152 | if (y_train_df is None) or (y_test_df is None):

153 | _, y_train_df, _, y_test_df = m4_parser(dataset_name, directory, num_obs)

154 |

155 | seasonality = seas_dict[dataset_name]['seasonality']

156 | input_size = seas_dict[dataset_name]['input_size']

157 | output_size = seas_dict[dataset_name]['output_size']

158 | freq = seas_dict[dataset_name]['freq']

159 |

160 | print('Preparing {} dataset'.format(dataset_name))

161 | print('Preparing Naive2 {} dataset predictions'.format(dataset_name))

162 |

163 | # Naive2

164 | y_naive2_df = pd.DataFrame(columns=['unique_id', 'ds', 'y_hat'])

165 |

166 | # Sort X by unique_id for faster loop

167 | y_train_df = y_train_df.sort_values(by=['unique_id', 'ds'])

168 | # List of uniques ids

169 | unique_ids = y_train_df['unique_id'].unique()

170 | # Panel of fitted models

171 | for unique_id in unique_ids:

172 | # Fast filter X and y by id.

173 | top_row = np.asscalar(y_train_df['unique_id'].searchsorted(unique_id, 'left'))

174 | bottom_row = np.asscalar(y_train_df['unique_id'].searchsorted(unique_id, 'right'))

175 | y_id = y_train_df[top_row:bottom_row]

176 |

177 | y_naive2 = pd.DataFrame(columns=['unique_id', 'ds', 'y_hat'])

178 | y_naive2['ds'] = pd.date_range(start=y_id.ds.max(),

179 | periods=output_size+1, freq=freq)[1:]

180 | y_naive2['unique_id'] = unique_id

181 | y_naive2['y_hat'] = Naive2(seasonality).fit(y_id.y.to_numpy()).predict(output_size)

182 | y_naive2_df = y_naive2_df.append(y_naive2)

183 |

184 | y_naive2_df = y_test_df.merge(y_naive2_df, on=['unique_id', 'ds'], how='left')

185 | y_naive2_df.rename(columns={'y_hat': 'y_hat_naive2'}, inplace=True)

186 |

187 | results_dir = directory + '/results'

188 | naive2_file = results_dir + '/{}-naive2predictions_{}.csv'.format(dataset_name, num_obs)

189 | y_naive2_df.to_csv(naive2_file, encoding='utf-8', index=None)

190 |

191 | return y_naive2_df

192 |

193 | def prepare_m4_data(dataset_name, directory, num_obs):

194 | """

195 | Pipeline that obtains M4 times series, tranforms it and gets naive2 predictions.

196 |

197 | Parameters

198 | ----------

199 | dataset_name: str

200 | Frequency of the data. Example: 'Yearly'.

201 | directory: str

202 | Custom directory where data will be saved.

203 | num_obs: int

204 | Number of time series to return.

205 | py_predictions: bool

206 | whether use python or r predictions

207 | """

208 | m4info_filename = maybe_download('M4-info.csv', directory)

209 |

210 | dailytrain_filename = maybe_download('Train/Daily-train.csv', directory)

211 | hourlytrain_filename = maybe_download('Train/Hourly-train.csv', directory)

212 | monthlytrain_filename = maybe_download('Train/Monthly-train.csv', directory)

213 | quarterlytrain_filename = maybe_download('Train/Quarterly-train.csv', directory)

214 | weeklytrain_filename = maybe_download('Train/Weekly-train.csv', directory)

215 | yearlytrain_filename = maybe_download('Train/Yearly-train.csv', directory)

216 |

217 | dailytest_filename = maybe_download('Test/Daily-test.csv', directory)

218 | hourlytest_filename = maybe_download('Test/Hourly-test.csv', directory)

219 | monthlytest_filename = maybe_download('Test/Monthly-test.csv', directory)

220 | quarterlytest_filename = maybe_download('Test/Quarterly-test.csv', directory)

221 | weeklytest_filename = maybe_download('Test/Weekly-test.csv', directory)

222 | yearlytest_filename = maybe_download('Test/Yearly-test.csv', directory)

223 | print('\n')

224 |

225 | X_train_df, y_train_df, X_test_df, y_test_df = m4_parser(dataset_name, directory, num_obs)

226 |

227 | results_dir = directory + '/results'

228 | if not os.path.exists(results_dir):

229 | os.mkdir(results_dir)

230 |

231 | naive2_file = results_dir + '/{}-naive2predictions_{}.csv'

232 | naive2_file = naive2_file.format(dataset_name, num_obs)

233 |

234 | if not os.path.exists(naive2_file):

235 | y_naive2_df = naive2_predictions(dataset_name, directory, num_obs, y_train_df, y_test_df)

236 | else:

237 | y_naive2_df = pd.read_csv(naive2_file)

238 | y_naive2_df['ds'] = pd.to_datetime(y_naive2_df['ds'])

239 |

240 | return X_train_df, y_train_df, X_test_df, y_naive2_df

241 |

--------------------------------------------------------------------------------

/ESRNN/utils/ESRNN.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | from ESRNN.utils.DRNN import DRNN

4 | import numpy as np

5 |

6 | #import torch.jit as jit

7 |

8 |

9 | class _ES(nn.Module):

10 | def __init__(self, mc):

11 | super(_ES, self).__init__()

12 | self.mc = mc

13 | self.n_series = self.mc.n_series

14 | self.output_size = self.mc.output_size

15 | assert len(self.mc.seasonality) in [0, 1, 2]

16 |

17 | def gaussian_noise(self, input_data, std=0.2):

18 | size = input_data.size()

19 | noise = torch.autograd.Variable(input_data.data.new(size).normal_(0, std))

20 | return input_data + noise

21 |

22 | #@jit.script_method

23 | def compute_levels_seasons(self, y, idxs):

24 | pass

25 |

26 | def normalize(self, y, level, seasonalities):

27 | pass

28 |

29 | def predict(self, trend, levels, seasonalities):

30 | pass

31 |

32 | def forward(self, ts_object):

33 | # parse mc

34 | input_size = self.mc.input_size

35 | output_size = self.mc.output_size

36 | exogenous_size = self.mc.exogenous_size

37 | noise_std = self.mc.noise_std

38 | seasonality = self.mc.seasonality

39 | batch_size = len(ts_object.idxs)

40 |

41 | # Parse ts_object

42 | y = ts_object.y

43 | idxs = ts_object.idxs

44 | n_series, n_time = y.shape

45 | if self.training:

46 | windows_end = n_time-input_size-output_size+1

47 | windows_range = range(windows_end)

48 | else:

49 | windows_start = n_time-input_size-output_size+1

50 | windows_end = n_time-input_size+1

51 |

52 | windows_range = range(windows_start, windows_end)

53 | n_windows = len(windows_range)

54 | assert n_windows>0

55 |

56 | # Initialize windows, levels and seasonalities

57 | levels, seasonalities = self.compute_levels_seasons(y, idxs)

58 | windows_y_hat = torch.zeros((n_windows, batch_size, input_size+exogenous_size),

59 | device=self.mc.device)

60 | windows_y = torch.zeros((n_windows, batch_size, output_size),

61 | device=self.mc.device)

62 |

63 | for i, window in enumerate(windows_range):

64 | # Windows yhat

65 | y_hat_start = window

66 | y_hat_end = input_size + window

67 |

68 | # Y_hat deseasonalization and normalization

69 | window_y_hat = self.normalize(y=y[:, y_hat_start:y_hat_end],

70 | level=levels[:, [y_hat_end-1]],

71 | seasonalities=seasonalities,

72 | start=y_hat_start, end=y_hat_end)

73 |

74 | if self.training:

75 | window_y_hat = self.gaussian_noise(window_y_hat, std=noise_std)

76 |

77 | # Concatenate categories

78 | if exogenous_size>0:

79 | window_y_hat = torch.cat((window_y_hat, ts_object.categories), 1)

80 |

81 | windows_y_hat[i, :, :] += window_y_hat

82 |

83 | # Windows y (for loss during train)

84 | if self.training:

85 | y_start = y_hat_end

86 | y_end = y_start+output_size

87 | # Y deseasonalization and normalization

88 | window_y = self.normalize(y=y[:, y_start:y_end],

89 | level=levels[:, [y_start]],

90 | seasonalities=seasonalities,

91 | start=y_start, end=y_end)

92 | windows_y[i, :, :] += window_y

93 |

94 | return windows_y_hat, windows_y, levels, seasonalities

95 |

96 | class _ESM(_ES):

97 | def __init__(self, mc):

98 | super(_ESM, self).__init__(mc)

99 | # Level and Seasonality Smoothing parameters

100 | # 1 level, S seasonalities, S init_seas

101 | embeds_size = 1 + len(self.mc.seasonality) + sum(self.mc.seasonality)

102 | init_embeds = torch.ones((self.n_series, embeds_size)) * 0.5

103 | self.embeds = nn.Embedding(self.n_series, embeds_size)

104 | self.embeds.weight.data.copy_(init_embeds)

105 | self.register_buffer('seasonality', torch.LongTensor(self.mc.seasonality))

106 |

107 | #@jit.script_method

108 | def compute_levels_seasons(self, y, idxs):

109 | """

110 | Computes levels and seasons

111 | """

112 | # Lookup parameters per serie

113 | #seasonality = self.seasonality

114 | embeds = self.embeds(idxs)

115 | lev_sms = torch.sigmoid(embeds[:, 0])

116 |

117 | # Initialize seasonalities

118 | seas_prod = torch.ones(len(y[:,0])).to(y.device)

119 | #seasonalities1 = torch.jit.annotate(List[Tensor], [])

120 | #seasonalities2 = torch.jit.annotate(List[Tensor], [])

121 | seasonalities1 = []

122 | seasonalities2 = []

123 | seas_sms1 = torch.ones(1).to(y.device)

124 | seas_sms2 = torch.ones(1).to(y.device)

125 |

126 | if len(self.seasonality)>0:

127 | seas_sms1 = torch.sigmoid(embeds[:, 1])

128 | init_seas1 = torch.exp(embeds[:, 2:(2+self.seasonality[0])]).unbind(1)

129 | assert len(init_seas1) == self.seasonality[0]

130 |

131 | for i in range(len(init_seas1)):

132 | seasonalities1 += [init_seas1[i]]

133 | seasonalities1 += [init_seas1[0]]

134 | seas_prod = seas_prod * init_seas1[0]

135 |

136 | if len(self.seasonality)==2:

137 | seas_sms2 = torch.sigmoid(embeds[:, 2+self.seasonality[0]])

138 | init_seas2 = torch.exp(embeds[:, 3+self.seasonality[0]:]).unbind(1)

139 | assert len(init_seas2) == self.seasonality[1]

140 |

141 | for i in range(len(init_seas2)):

142 | seasonalities2 += [init_seas2[i]]

143 | seasonalities2 += [init_seas2[0]]

144 | seas_prod = seas_prod * init_seas2[0]

145 |

146 | # Initialize levels

147 | #levels = torch.jit.annotate(List[Tensor], [])

148 | levels = []

149 | levels += [y[:,0]/seas_prod]

150 |

151 | # Recursive seasonalities and levels

152 | ys = y.unbind(1)

153 | n_time = len(ys)

154 | for t in range(1, n_time):

155 |

156 | seas_prod_t = torch.ones(len(y[:,t])).to(y.device)

157 | if len(self.seasonality)>0:

158 | seas_prod_t = seas_prod_t * seasonalities1[t]

159 | if len(self.seasonality)==2:

160 | seas_prod_t = seas_prod_t * seasonalities2[t]

161 |

162 | newlev = lev_sms * (ys[t] / seas_prod_t) + (1-lev_sms) * levels[t-1]

163 | levels += [newlev]

164 |

165 | if len(self.seasonality)==1:

166 | newseason1 = seas_sms1 * (ys[t] / newlev) + (1-seas_sms1) * seasonalities1[t]

167 | seasonalities1 += [newseason1]

168 |

169 | if len(self.seasonality)==2:

170 | newseason1 = seas_sms1 * (ys[t] / (newlev * seasonalities2[t])) + \

171 | (1-seas_sms1) * seasonalities1[t]

172 | seasonalities1 += [newseason1]

173 | newseason2 = seas_sms2 * (ys[t] / (newlev * seasonalities1[t])) + \

174 | (1-seas_sms2) * seasonalities2[t]

175 | seasonalities2 += [newseason2]

176 |

177 | levels = torch.stack(levels).transpose(1,0)

178 |

179 | #seasonalities = torch.jit.annotate(List[Tensor], [])

180 | seasonalities = []

181 |

182 | if len(self.seasonality)>0:

183 | seasonalities += [torch.stack(seasonalities1).transpose(1,0)]

184 |

185 | if len(self.seasonality)==2:

186 | seasonalities += [torch.stack(seasonalities2).transpose(1,0)]

187 |

188 | return levels, seasonalities

189 |

190 | def normalize(self, y, level, seasonalities, start, end):

191 | # Deseasonalization and normalization

192 | y_n = y / level

193 | for s in range(len(self.seasonality)):

194 | y_n /= seasonalities[s][:, start:end]

195 | y_n = torch.log(y_n)

196 | return y_n

197 |

198 | def predict(self, trend, levels, seasonalities):

199 | output_size = self.mc.output_size

200 | seasonality = self.mc.seasonality

201 | n_time = levels.shape[1]

202 |

203 | # Denormalize

204 | trend = torch.exp(trend)

205 |

206 | # Completion of seasonalities if prediction horizon is larger than seasonality

207 | # Naive2 like prediction, to avoid recursive forecasting

208 | for s in range(len(seasonality)):

209 | if output_size > seasonality[s]:

210 | repetitions = int(np.ceil(output_size/seasonality[s]))-1

211 | last_season = seasonalities[s][:, -seasonality[s]:]

212 | extra_seasonality = last_season.repeat((1, repetitions))

213 | seasonalities[s] = torch.cat((seasonalities[s], extra_seasonality), 1)

214 |

215 | # Deseasonalization and normalization (inverse)

216 | y_hat = trend * levels[:,[n_time-1]]

217 | for s in range(len(seasonality)):

218 | y_hat *= seasonalities[s][:, n_time:(n_time+output_size)]

219 |

220 | return y_hat

221 |

222 | class _RNN(nn.Module):

223 | def __init__(self, mc):

224 | super(_RNN, self).__init__()

225 | self.mc = mc

226 | self.layers = len(mc.dilations)

227 |

228 | layers = []

229 | for grp_num in range(len(mc.dilations)):

230 | if grp_num == 0:

231 | input_size = mc.input_size + mc.exogenous_size

232 | else:

233 | input_size = mc.state_hsize

234 | layer = DRNN(input_size,

235 | mc.state_hsize,

236 | n_layers=len(mc.dilations[grp_num]),

237 | dilations=mc.dilations[grp_num],

238 | cell_type=mc.cell_type)

239 | layers.append(layer)

240 |

241 | self.rnn_stack = nn.Sequential(*layers)

242 |

243 | if self.mc.add_nl_layer:

244 | self.MLPW = nn.Linear(mc.state_hsize, mc.state_hsize)

245 |

246 | self.adapterW = nn.Linear(mc.state_hsize, mc.output_size)

247 |

248 | def forward(self, input_data):

249 | for layer_num in range(len(self.rnn_stack)):

250 | residual = input_data

251 | output, _ = self.rnn_stack[layer_num](input_data)

252 | if layer_num > 0:

253 | output += residual

254 | input_data = output

255 |

256 | if self.mc.add_nl_layer:

257 | input_data = self.MLPW(input_data)

258 | input_data = torch.tanh(input_data)

259 |

260 | input_data = self.adapterW(input_data)

261 | return input_data

262 |

263 |

264 | class _ESRNN(nn.Module):

265 | def __init__(self, mc):

266 | super(_ESRNN, self).__init__()

267 | self.mc = mc

268 | self.es = _ESM(mc).to(self.mc.device)

269 | self.rnn = _RNN(mc).to(self.mc.device)

270 |

271 | def forward(self, ts_object):

272 | # ES Forward

273 | windows_y_hat, windows_y, levels, seasonalities = self.es(ts_object)

274 |

275 | # RNN Forward

276 | windows_y_hat = self.rnn(windows_y_hat)

277 |

278 | return windows_y, windows_y_hat, levels

279 |

280 | def predict(self, ts_object):

281 | # ES Forward

282 | windows_y_hat, _, levels, seasonalities = self.es(ts_object)

283 |

284 | # RNN Forward

285 | windows_y_hat = self.rnn(windows_y_hat)

286 | trend = windows_y_hat[-1,:,:] # Last observation prediction

287 |

288 | y_hat = self.es.predict(trend, levels, seasonalities)

289 | return y_hat

290 |