├── .gitignore
├── go.mod
├── examples
│   └── sum.go
├── .github
│   └── workflows
│       ├── reviewdog.yml
│       ├── go.yml
│       └── codeql-analysis.yml
├── LICENSE
├── mapreduce_fuzz_test.go
├── readme-cn.md
├── go.sum
├── readme.md
├── mapreduce.go
└── mapreduce_test.go

/.gitignore:

--------------------------------------------------------------------------------

# Binaries for programs and plugins
*.exe
*.exe~
*.dll
*.so
*.dylib

# dev files
.idea

# For test
**/testdata
*.test

# Output of the go coverage tool, specifically when used with LiteIDE
*.out

# Dependency directories (remove the comment below to include it)
# vendor/

--------------------------------------------------------------------------------

/go.mod:

--------------------------------------------------------------------------------

module github.com/kevwan/mapreduce/v2

go 1.18

require (
	github.com/stretchr/testify v1.7.0
	go.uber.org/goleak v1.1.12
)

require (
	github.com/davecgh/go-spew v1.1.1 // indirect
	github.com/kr/pretty v0.2.1 // indirect
	github.com/pmezard/go-difflib v1.0.0 // indirect
	gopkg.in/yaml.v3 v3.0.0-20210107192922-496545a6307b // indirect
)

--------------------------------------------------------------------------------

/examples/sum.go:

--------------------------------------------------------------------------------

package main

import (
	"fmt"
	"log"

	"github.com/kevwan/mapreduce/v2"
)

func main() {
	val, err := mapreduce.MapReduce(func(source chan<- int) {
		// generator: emit the numbers 0..9
		for i := 0; i < 10; i++ {
			source <- i
		}
	}, func(item int, writer mapreduce.Writer[int], cancel func(error)) {
		// mapper: square each item
		writer.Write(item * item)
	}, func(pipe <-chan int, writer mapreduce.Writer[int], cancel func(error)) {
		// reducer: sum all squares and write the final result
		var sum int
		for i := range pipe {
			sum += i
		}
		writer.Write(sum)
	})
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println("result:", val)
}

--------------------------------------------------------------------------------

/.github/workflows/reviewdog.yml:

--------------------------------------------------------------------------------

name: reviewdog
on: [pull_request]
jobs:
  staticcheck:
    name: runner / staticcheck
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-go@v3
        with:
          go-version: "1.18"
      - uses: reviewdog/action-staticcheck@v1
        with:
          github_token: ${{ secrets.github_token }}
          # Change reviewdog reporter if you need [github-pr-check,github-check,github-pr-review].
          reporter: github-pr-review
          # Report all results.
          filter_mode: nofilter
          # Exit with 1 when it finds at least one finding.
          fail_on_error: true
          # Set staticcheck flags
          staticcheck_flags: -checks=inherit,-SA1019,-SA1029,-SA5008

--------------------------------------------------------------------------------

/.github/workflows/go.yml:

--------------------------------------------------------------------------------

name: Go

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  build:
    name: Build
    runs-on: ubuntu-latest
    steps:

    - name: Set up Go 1.x
      uses: actions/setup-go@v2
      with:
        go-version: ^1.18
      id: go

    - name: Check out code into the Go module directory
      uses: actions/checkout@v2

    - name: Get dependencies
      run: |
        go get -v -t -d ./...

    - name: Lint
      run: |
        go vet -stdmethods=false $(go list ./...)
        go install mvdan.cc/gofumpt@latest
        # Fail the step (not just print a hint) when files are not gofumpt-formatted.
        test -z "$(gofumpt -s -l -extra .)" || { echo "Please run 'gofumpt -l -w -extra .'"; exit 1; }

    - name: Test
      run: go test -race -coverprofile=coverage.txt -covermode=atomic ./...

    - name: Codecov
      uses: codecov/codecov-action@v2

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2021 Kevin Wan

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/mapreduce_fuzz_test.go:

--------------------------------------------------------------------------------

//go:build go1.18
// +build go1.18

package mapreduce

import (
	"fmt"
	"math/rand"
	"runtime"
	"strings"
	"testing"
	"time"

	"github.com/stretchr/testify/assert"
	"go.uber.org/goleak"
)

func FuzzMapReduce(f *testing.F) {
	rand.Seed(time.Now().UnixNano())

	f.Add(int64(10), runtime.NumCPU())
	f.Fuzz(func(t *testing.T, n int64, workers int) {
		n = n%5000 + 5000
		genPanic := rand.Intn(100) == 0
		mapperPanic := rand.Intn(100) == 0
		reducerPanic := rand.Intn(100) == 0
		genIdx := rand.Int63n(n)
		mapperIdx := rand.Int63n(n)
		reducerIdx := rand.Int63n(n)
		squareSum := (n - 1) * n * (2*n - 1) / 6

		fn := func() (int64, error) {
			defer goleak.VerifyNone(t, goleak.IgnoreCurrent())

			return MapReduce(func(source chan<- int64) {
				for i := int64(0); i < n; i++ {
					source <- i
					if genPanic && i == genIdx {
						panic("foo")
					}
				}
			}, func(v int64, writer Writer[int64], cancel func(error)) {
				if mapperPanic && v == mapperIdx {
					panic("bar")
				}
				writer.Write(v * v)
			}, func(pipe <-chan int64, writer Writer[int64], cancel func(error)) {
				var idx int64
				var total int64
				for v := range pipe {
					if reducerPanic && idx == reducerIdx {
						panic("baz")
					}
					total += v
					idx++
				}
				writer.Write(total)
			}, WithWorkers(workers%50+runtime.NumCPU()))
		}

		if genPanic || mapperPanic || reducerPanic {
			var buf strings.Builder
			buf.WriteString(fmt.Sprintf("n: %d", n))
			buf.WriteString(fmt.Sprintf(", genPanic: %t", genPanic))
			buf.WriteString(fmt.Sprintf(", mapperPanic: %t", mapperPanic))
			buf.WriteString(fmt.Sprintf(", reducerPanic: %t", reducerPanic))
			buf.WriteString(fmt.Sprintf(", genIdx: %d", genIdx))
			buf.WriteString(fmt.Sprintf(", mapperIdx: %d", mapperIdx))
			buf.WriteString(fmt.Sprintf(", reducerIdx: %d", reducerIdx))
			assert.Panicsf(t, func() { fn() }, buf.String())
		} else {
			val, err := fn()
			assert.Nil(t, err)
			assert.Equal(t, squareSum, val)
		}
	})
}

--------------------------------------------------------------------------------

/.github/workflows/codeql-analysis.yml:

--------------------------------------------------------------------------------

# For most projects, this workflow file will not need changing; you simply need
# to commit it to your repository.
#
# You may wish to alter this file to override the set of languages analyzed,
# or to provide custom queries or build logic.
#
# ******** NOTE ********
# We have attempted to detect the languages in your repository. Please check
# the `language` matrix defined below to confirm you have the correct set of
# supported CodeQL languages.
#
name: "CodeQL"

on:
  push:
    branches: [ main ]
  pull_request:
    # The branches below must be a subset of the branches above
    branches: [ main ]
  schedule:
    - cron: '18 19 * * 6'

jobs:
  analyze:
    name: Analyze
    runs-on: ubuntu-latest

    strategy:
      fail-fast: false
      matrix:
        language: [ 'go' ]
        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python' ]
        # Learn more:
        # https://docs.github.com/en/free-pro-team@latest/github/finding-security-vulnerabilities-and-errors-in-your-code/configuring-code-scanning#changing-the-languages-that-are-analyzed

    steps:
    - name: Checkout repository
      uses: actions/checkout@v2

    # Initializes the CodeQL tools for scanning.
    - name: Initialize CodeQL
      uses: github/codeql-action/init@v1
      with:
        languages: ${{ matrix.language }}
        # If you wish to specify custom queries, you can do so here or in a config file.
        # By default, queries listed here will override any specified in a config file.
        # Prefix the list here with "+" to use these queries and those in the config file.
        # queries: ./path/to/local/query, your-org/your-repo/queries@main

    # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
    # If this step fails, then you should remove it and run the build manually (see below)
    - name: Autobuild
      uses: github/codeql-action/autobuild@v1

    # ℹ️ Command-line programs to run using the OS shell.
    # 📚 https://git.io/JvXDl

    # ✏️ If the Autobuild fails above, remove it and uncomment the following three lines
    # and modify them (or add more) to build your code if your project
    # uses a compiled language

    #- run: |
    #   make bootstrap
    #   make release

    - name: Perform CodeQL Analysis
      uses: github/codeql-action/analyze@v1

--------------------------------------------------------------------------------

/readme-cn.md:

--------------------------------------------------------------------------------

# mapreduce

[English](readme.md) | Simplified Chinese


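## Why this project exists

`mapreduce` is actually part of [go-zero](https://github.com/zeromicro/go-zero), but some users asked whether they could use `mapreduce` on its own, without pulling in `go-zero` as a dependency. After some thought, I decided to provide it as a standalone package. Still, I strongly recommend `go-zero`, because it really does provide many great features.

## Why MapReduce is needed

In real business scenarios, we often need to fetch attributes from different RPC services and assemble them into a complex object.

For example, querying product details involves:

1. Product service: query product attributes
2. Inventory service: query inventory attributes
3. Pricing service: query price attributes
4. Marketing service: query marketing attributes

With serial calls, the response time grows linearly with the number of RPC calls, so to improve performance we usually make the calls in parallel instead.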

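A minimal sketch of turning the four serial calls into parallel ones with `sync.WaitGroup` alone. `ProductDetail` and the `fetch*` functions are hypothetical stand-ins for real RPC clients, not part of the `mapreduce` API; with real network calls, total latency would be roughly that of the slowest call rather than the sum of all four.

```go
package main

import (
	"fmt"
	"sync"
)

// ProductDetail is a hypothetical aggregate assembled from several services.
type ProductDetail struct {
	Name      string
	Stock     int
	Price     float64
	Promotion string
}

// Hypothetical stand-ins for the RPC calls to the four services.
func fetchProduct(id int) string { return fmt.Sprintf("product-%d", id) }
func fetchStock(id int) int { return id * 10 }
func fetchPrice(id int) float64 { return float64(id) * 1.5 }
func fetchPromotion(id int) string { return "none" }

// queryDetail issues all four calls at once and waits for every one of them.
func queryDetail(id int) ProductDetail {
	var (
		detail ProductDetail
		wg     sync.WaitGroup
	)
	wg.Add(4)
	// Each goroutine writes a different field, so no mutex is needed.
	go func() { defer wg.Done(); detail.Name = fetchProduct(id) }()
	go func() { defer wg.Done(); detail.Stock = fetchStock(id) }()
	go func() { defer wg.Done(); detail.Price = fetchPrice(id) }()
	go func() { defer wg.Done(); detail.Promotion = fetchPromotion(id) }()
	wg.Wait()
	return detail
}

func main() {
	fmt.Printf("%+v\n", queryDetail(42))
}
```

Error handling is deliberately left out of this sketch: every call is assumed to succeed.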
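
A `WaitGroup` is enough for simple scenarios, but what if we also need to validate, transform, and aggregate the data returned by the RPC calls? At that point `WaitGroup` starts to fall short. Go's standard library has no such tool (Java provides `CompletableFuture`), so the go-zero author implemented `mapReduce`, an in-process concurrent tool for batch data processing based on the MapReduce architecture.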

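
## Design ideas

Let's outline some example business scenarios for such a concurrency tool:

1. Querying product details: call multiple services concurrently to assemble the product attributes, and stop immediately if any call fails.
2. Automatically recommending coupons on the product details page: verify coupons concurrently, automatically drop those that fail verification, and return all remaining coupons.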

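The coupon scenario boils down to "verify concurrently, drop failures". In this stdlib-only sketch, a hypothetical `Coupon` type and `verify` check stand in for real RPC calls; each verdict goes into its own slice slot, so no locking is needed and the original order is preserved. With `mapreduce`, the equivalent would presumably be a mapper that only calls `writer.Write` for coupons that pass verification; this sketch does not use the library.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// Coupon is a hypothetical coupon record.
type Coupon struct {
	ID      string
	Expired bool
}

// verify is a hypothetical stand-in for a per-coupon RPC check.
func verify(c Coupon) bool {
	return !c.Expired && !strings.HasPrefix(c.ID, "bad-")
}

// validCoupons verifies all coupons concurrently, drops the ones that fail,
// and returns the rest in their original order.
func validCoupons(coupons []Coupon) []Coupon {
	ok := make([]bool, len(coupons)) // ok[i] is written only by goroutine i
	var wg sync.WaitGroup
	for i, c := range coupons {
		wg.Add(1)
		go func(i int, c Coupon) {
			defer wg.Done()
			ok[i] = verify(c)
		}(i, c)
	}
	wg.Wait()

	var valid []Coupon
	for i, c := range coupons {
		if ok[i] {
			valid = append(valid, c)
		}
	}
	return valid
}

func main() {
	coupons := []Coupon{{ID: "c1"}, {ID: "c2", Expired: true}, {ID: "bad-c3"}, {ID: "c4"}}
	for _, c := range validCoupons(coupons) {
		fmt.Println(c.ID) // c1, then c4
	}
}
```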
36 |

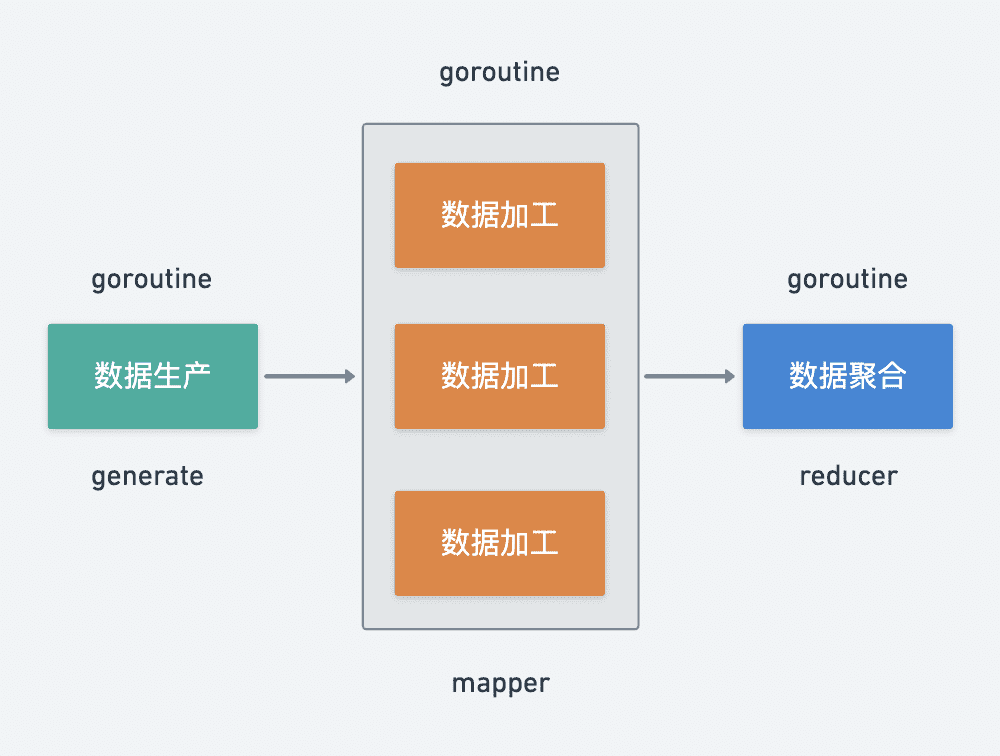

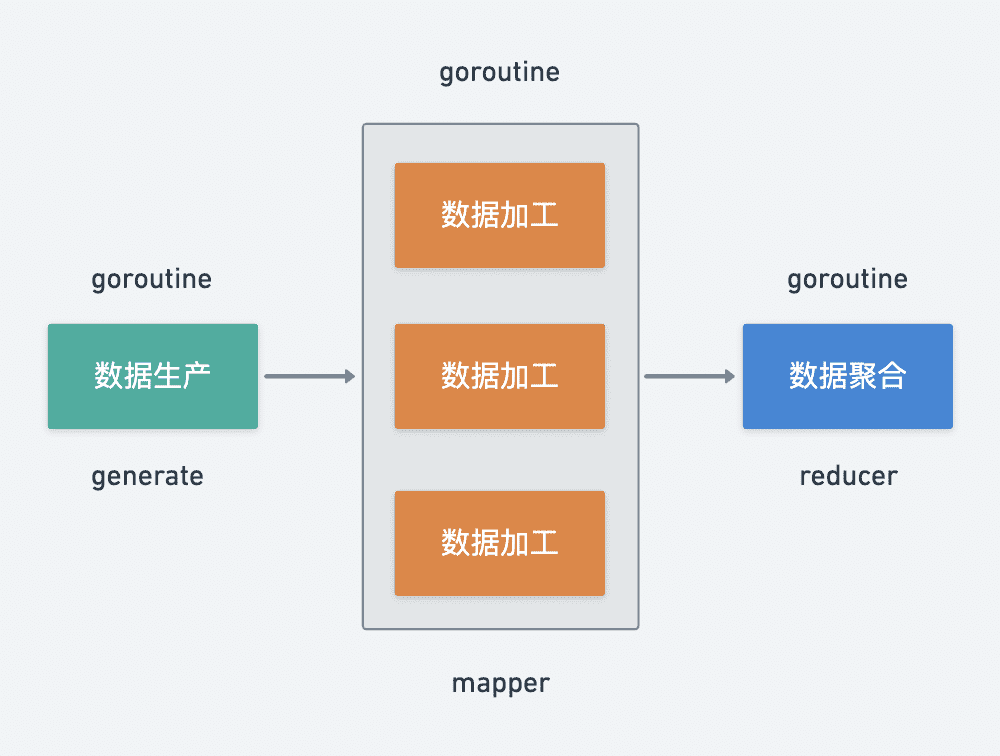

37 | 以上实际都是在进行对输入数据进行处理最后输出清洗后的数据,针对数据处理有个非常经典的异步模式:生产者消费者模式。于是我们可以抽象一下数据批处理的生命周期,大致可以分为三个阶段:

38 |

39 |  40 |

41 | 1. 数据生产 generate

42 | 2. 数据加工 mapper

43 | 3. 数据聚合 reducer

44 |

45 | 其中数据生产是不可或缺的阶段,数据加工、数据聚合是可选阶段,数据生产与加工支持并发调用,数据聚合基本属于纯内存操作单协程即可。

46 |

47 | 再来思考一下不同阶段之间数据应该如何流转,既然不同阶段的数据处理都是由不同 goroutine 执行的,那么很自然的可以考虑采用 channel 来实现 goroutine 之间的通信。

48 |

49 |

40 |

41 | 1. 数据生产 generate

42 | 2. 数据加工 mapper

43 | 3. 数据聚合 reducer

44 |

45 | 其中数据生产是不可或缺的阶段,数据加工、数据聚合是可选阶段,数据生产与加工支持并发调用,数据聚合基本属于纯内存操作单协程即可。

46 |

47 | 再来思考一下不同阶段之间数据应该如何流转,既然不同阶段的数据处理都是由不同 goroutine 执行的,那么很自然的可以考虑采用 channel 来实现 goroutine 之间的通信。

48 |

49 |  50 |

51 |

52 | 如何实现随时终止流程呢?

53 |

54 | `goroutine` 中监听一个全局的结束 `channel` 和调用方提供的 `ctx` 就行。

55 |

56 | ## 版本选择

57 |

58 | - `v1`(默认)- 非泛型版本

59 | - `v2`(泛型版)- 泛型版本,需要 Go 版本 >= 1.18

60 |

61 | ## 简单示例

62 |

63 | 并行求平方和(不要嫌弃示例简单,只是模拟并发)

64 |

65 | ```go

66 | package main

67 |

68 | import (

69 | "fmt"

70 | "log"

71 |

72 | "github.com/kevwan/mapreduce/v2"

73 | )

74 |

75 | func main() {

76 | val, err := mapreduce.MapReduce(func(source chan<- int) {

77 | // generator

78 | for i := 0; i < 10; i++ {

79 | source <- i

80 | }

81 | }, func(i int, writer mapreduce.Writer[int], cancel func(error)) {

82 | // mapper

83 | writer.Write(i * i)

84 | }, func(pipe <-chan int, writer mapreduce.Writer[int], cancel func(error)) {

85 | // reducer

86 | var sum int

87 | for i := range pipe {

88 | sum += i

89 | }

90 | writer.Write(sum)

91 | })

92 | if err != nil {

93 | log.Fatal(err)

94 | }

95 | fmt.Println("result:", val)

96 | }

97 | ```

98 |

99 | 更多示例:[https://github.com/zeromicro/zero-examples/tree/main/mapreduce](https://github.com/zeromicro/zero-examples/tree/main/mapreduce)

100 |

101 | ## 强烈推荐!

102 |

103 | go-zero: [https://github.com/zeromicro/go-zero](https://github.com/zeromicro/go-zero)

104 |

105 | ## 欢迎 star!⭐

106 |

107 | 如果你正在使用或者觉得这个项目对你有帮助,请 **star** 支持,感谢!

108 |

--------------------------------------------------------------------------------

/go.sum:

--------------------------------------------------------------------------------

github.com/davecgh/go-spew v1.1.0/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=
github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c=
github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=
github.com/kevwan/mapreduce/v2 v2.0.1 h1:i+elkbdsdj1IK85dY+hVtYNdWVMx+O/q0TRr55r+mRI=
github.com/kevwan/mapreduce/v2 v2.0.1/go.mod h1:CRVNCu3oR6NcBIXGxzLjhYjMtNXlEwI5jASt5JZaBLk=
github.com/kr/pretty v0.1.0/go.mod h1:dAy3ld7l9f0ibDNOQOHHMYYIIbhfbHSm3C4ZsoJORNo=
github.com/kr/pretty v0.2.1 h1:Fmg33tUaq4/8ym9TJN1x7sLJnHVwhP33CNkpYV/7rwI=
github.com/kr/pretty v0.2.1/go.mod h1:ipq/a2n7PKx3OHsz4KJII5eveXtPO4qwEXGdVfWzfnI=
github.com/kr/pty v1.1.1/go.mod h1:pFQYn66WHrOpPYNljwOMqo10TkYh1fy3cYio2l3bCsQ=
github.com/kr/text v0.1.0/go.mod h1:4Jbv+DJW3UT/LiOwJeYQe1efqtUx/iVham/4vfdArNI=
github.com/kr/text v0.2.0 h1:5Nx0Ya0ZqY2ygV366QzturHI13Jq95ApcVaJBhpS+AY=
github.com/pmezard/go-difflib v1.0.0 h1:4DBwDE0NGyQoBHbLQYPwSUPoCMWR5BEzIk/f1lZbAQM=
github.com/pmezard/go-difflib v1.0.0/go.mod h1:iKH77koFhYxTK1pcRnkKkqfTogsbg7gZNVY4sRDYZ/4=
github.com/stretchr/objx v0.1.0/go.mod h1:HFkY916IF+rwdDfMAkV7OtwuqBVzrE8GR6GFx+wExME=
github.com/stretchr/testify v1.7.0 h1:nwc3DEeHmmLAfoZucVR881uASk0Mfjw8xYJ99tb5CcY=
github.com/stretchr/testify v1.7.0/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=
github.com/yuin/goldmark v1.3.5/go.mod h1:mwnBkeHKe2W/ZEtQ+71ViKU8L12m81fl3OWwC1Zlc8k=
go.uber.org/goleak v1.1.12 h1:gZAh5/EyT/HQwlpkCy6wTpqfH9H8Lz8zbm3dZh+OyzA=
go.uber.org/goleak v1.1.12/go.mod h1:cwTWslyiVhfpKIDGSZEM2HlOvcqm+tG4zioyIeLoqMQ=
golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=
golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=
golang.org/x/lint v0.0.0-20190930215403-16217165b5de h1:5hukYrvBGR8/eNkX5mdUezrA6JiaEZDtJb9Ei+1LlBs=
golang.org/x/lint v0.0.0-20190930215403-16217165b5de/go.mod h1:6SW0HCj/g11FgYtHlgUYUwCkIfeOF89ocIRzGO/8vkc=
golang.org/x/mod v0.4.2/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=
golang.org/x/net v0.0.0-20190311183353-d8887717615a/go.mod h1:t9HGtf8HONx5eT2rtn7q6eTqICYqUVnKs3thJo3Qplg=
golang.org/x/net v0.0.0-20190404232315-eb5bcb51f2a3/go.mod h1:t9HGtf8HONx5eT2rtn7q6eTqICYqUVnKs3thJo3Qplg=
golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=
golang.org/x/net v0.0.0-20210405180319-a5a99cb37ef4/go.mod h1:p54w0d4576C0XHj96bSt6lcn1PtDYWL6XObtHCRCNQM=
golang.org/x/sync v0.0.0-20190423024810-112230192c58/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
golang.org/x/sync v0.0.0-20210220032951-036812b2e83c/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
golang.org/x/sys v0.0.0-20190215142949-d0b11bdaac8a/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=
golang.org/x/sys v0.0.0-20190412213103-97732733099d/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.0.0-20201119102817-f84b799fce68/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.0.0-20210330210617-4fbd30eecc44/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.0.0-20210510120138-977fb7262007/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=
golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=
golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=
golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=
golang.org/x/tools v0.0.0-20190311212946-11955173bddd/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=
golang.org/x/tools v0.0.0-20191119224855-298f0cb1881e/go.mod h1:b+2E5dAYhXwXZwtnZ6UAqBI28+e2cm9otk0dWdXHAEo=
golang.org/x/tools v0.1.5 h1:ouewzE6p+/VEB31YYnTbEJdi8pFqKp4P4n85vwo3DHA=
golang.org/x/tools v0.1.5/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=
golang.org/x/xerrors v0.0.0-20190717185122-a985d3407aa7/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=
golang.org/x/xerrors v0.0.0-20191011141410-1b5146add898/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=
golang.org/x/xerrors v0.0.0-20200804184101-5ec99f83aff1/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=
gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=
gopkg.in/check.v1 v1.0.0-20180628173108-788fd7840127/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=
gopkg.in/check.v1 v1.0.0-20201130134442-10cb98267c6c h1:Hei/4ADfdWqJk1ZMxUNpqntNwaWcugrBjAiHlqqRiVk=
gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=
gopkg.in/yaml.v3 v3.0.0-20210107192922-496545a6307b h1:h8qDotaEPuJATrMmW04NCwg7v22aHH28wwpauUhK9Oo=
gopkg.in/yaml.v3 v3.0.0-20210107192922-496545a6307b/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

--------------------------------------------------------------------------------


/readme.md:

--------------------------------------------------------------------------------

# mapreduce

English | [Simplified Chinese](readme-cn.md)


## Why we have this repo

`mapreduce` is part of [go-zero](https://github.com/zeromicro/go-zero), but a few people asked whether `mapreduce` could be used on its own, so it is also published as a standalone module. That said, I recommend using `go-zero`, which offers many more features.

## Why MapReduce is needed

In real-world business scenarios, we often need to fetch attributes from several RPC services and assemble them into a complex object.

For example, querying product details involves:

1. Product service: query product attributes
2. Inventory service: query inventory attributes
3. Price service: query price attributes
4. Marketing service: query marketing attributes

If these calls are made serially, the response time grows linearly with the number of RPC calls, so we generally make them in parallel to reduce response time.

A `WaitGroup` is enough for simple scenarios, but what if we also need to validate, transform, and aggregate the data returned by the RPC calls? Go's standard library has no such tool (Java provides `CompletableFuture`), so we implemented an in-process, concurrent batch data processing tool based on the MapReduce architecture.

## Design ideas

Let's outline the likely business scenarios for such a concurrency tool:

1. Querying product details: call multiple services concurrently to assemble the product attributes, and stop immediately if any call fails.
2. Automatically recommending coupons on the product details page: verify coupons concurrently, drop any that fail verification, and return all the rest.

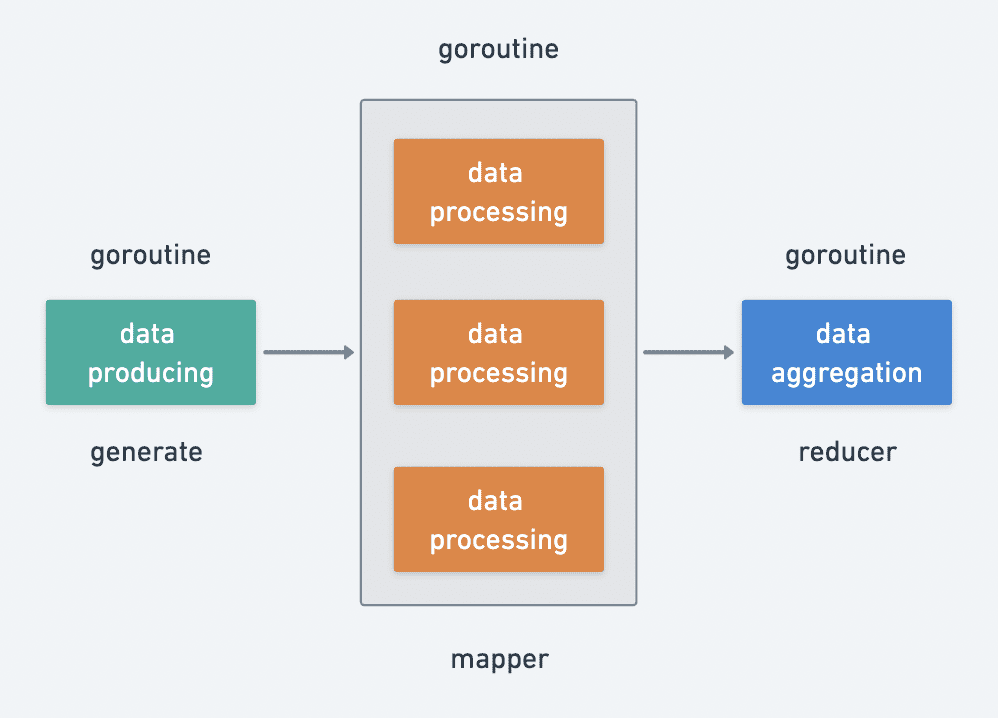

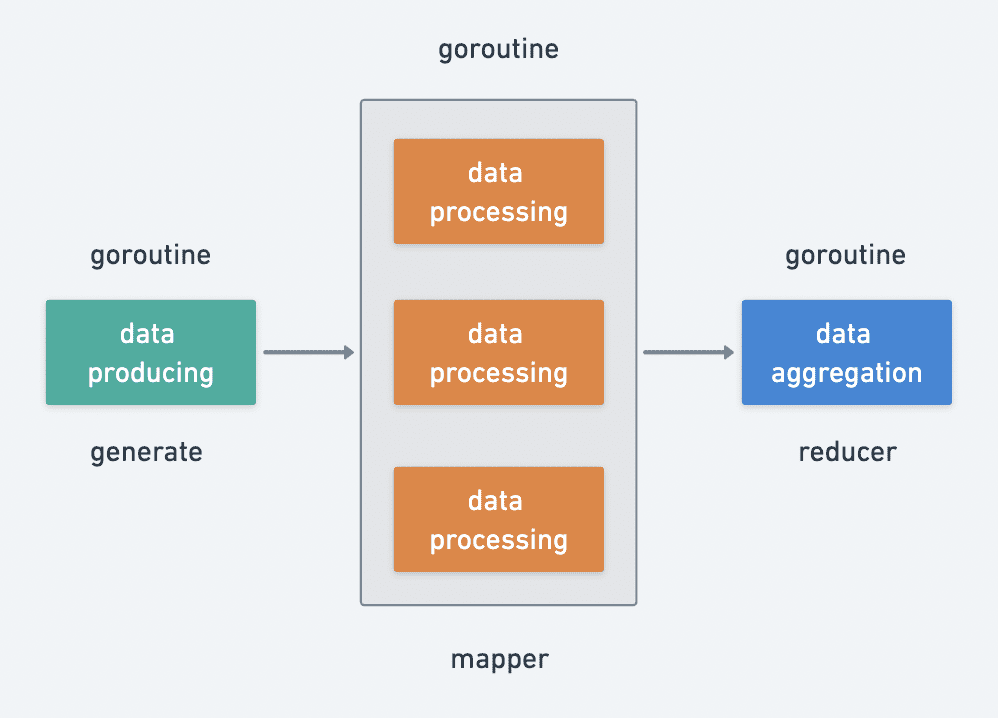

39 | The above is actually processing the input data and finally outputting the cleaned data. There is a very classic asynchronous pattern for data processing: the producer-consumer pattern. So we can abstract the life cycle of data batch processing, which can be roughly divided into three phases.

40 |

41 |

2 |

3 | # mapreduce

4 |

5 | English | [简体中文](readme-cn.md)

6 |

7 | [](https://github.com/kevwan/mapreduce/actions)

8 | [](https://codecov.io/gh/kevwan/mapreduce)

9 | [](https://goreportcard.com/report/github.com/kevwan/mapreduce)

10 | [](https://github.com/kevwan/mapreduce)

11 | [](https://opensource.org/licenses/MIT)

12 |

13 | ## Why we have this repo

14 |

15 | `mapreduce` is part of [go-zero](https://github.com/zeromicro/go-zero), but a few people asked if mapreduce can be used separately. But I recommend you to use `go-zero` for many more features.

16 |

17 | ## Why MapReduce is needed

18 |

19 | In practical business scenarios we often need to get the corresponding properties from different rpc services to assemble complex objects.

20 |

21 | For example, to query product details.

22 |

23 | 1. product service - query product attributes

24 | 2. inventory service - query inventory properties

25 | 3. price service - query price attributes

26 | 4. marketing service - query marketing properties

27 |

28 | If it is a serial call, the response time will increase linearly with the number of rpc calls, so we will generally change serial to parallel to optimize response time.

29 |

30 | Simple scenarios using `WaitGroup` can also meet the needs, but what if we need to check the data returned by the rpc call, data processing, data aggregation? The official go library does not have such a tool (CompleteFuture is provided in java), so we implemented an in-process data batching MapReduce concurrent tool based on the MapReduce architecture.

31 |

32 | ## Design ideas

33 |

34 | Let's sort out the possible business scenarios for the concurrency tool:

35 |

36 | 1. querying product details: supporting concurrent calls to multiple services to combine product attributes, and supporting call errors that can be ended immediately.

37 | 2. automatic recommendation of user card coupons on product details page: support concurrently verifying card coupons, automatically rejecting them if they fail, and returning all of them.

38 |

39 | The above is actually processing the input data and finally outputting the cleaned data. There is a very classic asynchronous pattern for data processing: the producer-consumer pattern. So we can abstract the life cycle of data batch processing, which can be roughly divided into three phases.

40 |

41 |  42 |

43 | 1. data production generate

44 | 2. data processing mapper

45 | 3. data aggregation reducer

46 |

47 | Data producing is an indispensable stage, data processing and data aggregation are optional stages, data producing and processing support concurrent calls, data aggregation is basically a pure memory operation, so a single concurrent process can do it.

48 |

49 | Since different stages of data processing are performed by different goroutines, it is natural to consider the use of channel to achieve communication between goroutines.

50 |

51 |

52 |

53 | How can I terminate the process at any time?

54 |

55 | It's simple: the worker goroutines also receive from a `done` channel and from the given context's `Done()` channel, and return as soon as either one is closed.

56 |

57 | ## Choose the right version

58 |

59 | - `v1` (default) - non-generic version

60 | - `v2` (generics) - generic version, needs Go version >= 1.18

61 |

62 | ## A simple example

63 |

64 | Calculate the sum of the squares of 0 through 9 concurrently.

65 |

66 | ```go

67 | package main

68 |

69 | import (

70 | "fmt"

71 | "log"

72 |

73 | "github.com/kevwan/mapreduce/v2"

74 | )

75 |

76 | func main() {

77 | val, err := mapreduce.MapReduce(func(source chan<- int) {

78 | // generator

79 | for i := 0; i < 10; i++ {

80 | source <- i

81 | }

82 | }, func(i int, writer mapreduce.Writer[int], cancel func(error)) {

83 | // mapper

84 | writer.Write(i * i)

85 | }, func(pipe <-chan int, writer mapreduce.Writer[int], cancel func(error)) {

86 | // reducer

87 | var sum int

88 | for i := range pipe {

89 | sum += i

90 | }

91 | writer.Write(sum)

92 | })

93 | if err != nil {

94 | log.Fatal(err)

95 | }

96 | fmt.Println("result:", val)

97 | }

98 | ```
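As a check on the expected output: the generator emits 0 through 9, each mapper squares its item, and the reducer sums the squares, so the program should print `result: 285`. Because addition is commutative, the nondeterministic order in which mapper outputs reach the reducer does not change the result.

$$\sum_{i=0}^{9} i^2 = \frac{9 \cdot 10 \cdot 19}{6} = 285$$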

99 |

100 | More examples: [https://github.com/zeromicro/zero-examples/tree/main/mapreduce](https://github.com/zeromicro/zero-examples/tree/main/mapreduce)

101 |

102 | ## References

103 |

104 | go-zero: [https://github.com/zeromicro/go-zero](https://github.com/zeromicro/go-zero)

105 |

106 | ## Give a Star! ⭐

107 |

108 | If you like or are using this project to learn or start your solution, please give it a star. Thanks!

109 |

--------------------------------------------------------------------------------

/mapreduce.go:

--------------------------------------------------------------------------------

1 | package mapreduce

2 |

3 | import (

4 | "context"

5 | "errors"

6 | "sync"

7 | "sync/atomic"

8 | )

9 |

10 | const (

11 | defaultWorkers = 16

12 | minWorkers = 1

13 | )

14 |

15 | var (

16 | // ErrCancelWithNil is an error that mapreduce was cancelled with nil.

17 | ErrCancelWithNil = errors.New("mapreduce cancelled with nil")

18 | // ErrReduceNoOutput is an error that reduce did not output a value.

19 | ErrReduceNoOutput = errors.New("reduce not writing value")

20 | )

21 |

22 | type (

23 | // ForEachFunc is used to do element processing, but no output.

24 | ForEachFunc[T any] func(item T)

25 | // GenerateFunc is used to let callers send elements into source.

26 | GenerateFunc[T any] func(source chan<- T)

27 | // MapFunc is used to do element processing and write the output to writer.

28 | MapFunc[T, U any] func(item T, writer Writer[U])

29 | // MapperFunc is used to do element processing and write the output to writer,

30 | // use cancel func to cancel the processing.

31 | MapperFunc[T, U any] func(item T, writer Writer[U], cancel func(error))

32 | // ReducerFunc is used to reduce all the mapping output and write to writer,

33 | // use cancel func to cancel the processing.

34 | ReducerFunc[U, V any] func(pipe <-chan U, writer Writer[V], cancel func(error))

35 | // VoidReducerFunc is used to reduce all the mapping output, but no output.

36 | // Use cancel func to cancel the processing.

37 | VoidReducerFunc[U any] func(pipe <-chan U, cancel func(error))

38 | // Option defines the method to customize the mapreduce.

39 | Option func(opts *mapReduceOptions)

40 |

41 | mapperContext[T, U any] struct {

42 | ctx context.Context

43 | mapper MapFunc[T, U]

44 | source <-chan T

45 | panicChan *onceChan

46 | collector chan<- U

47 | doneChan <-chan struct{}

48 | workers int

49 | }

50 |

51 | mapReduceOptions struct {

52 | ctx context.Context

53 | workers int

54 | }

55 |

56 | // Writer interface wraps Write method.

57 | Writer[T any] interface {

58 | Write(v T)

59 | }

60 | )

61 |

62 | // Finish runs fns in parallel and cancels on the first error, which it returns.

63 | func Finish(fns ...func() error) error {

64 | if len(fns) == 0 {

65 | return nil

66 | }

67 |

68 | return MapReduceVoid(func(source chan<- func() error) {

69 | for _, fn := range fns {

70 | source <- fn

71 | }

72 | }, func(fn func() error, writer Writer[any], cancel func(error)) {

73 | if err := fn(); err != nil {

74 | cancel(err)

75 | }

76 | }, func(pipe <-chan any, cancel func(error)) {

77 | }, WithWorkers(len(fns)))

78 | }

79 |

80 | // FinishVoid runs fns in parallel.

81 | func FinishVoid(fns ...func()) {

82 | if len(fns) == 0 {

83 | return

84 | }

85 |

86 | ForEach(func(source chan<- func()) {

87 | for _, fn := range fns {

88 | source <- fn

89 | }

90 | }, func(fn func()) {

91 | fn()

92 | }, WithWorkers(len(fns)))

93 | }

94 |

95 | // ForEach maps all elements from the given generate func, but produces no output.

96 | func ForEach[T any](generate GenerateFunc[T], mapper ForEachFunc[T], opts ...Option) {

97 | options := buildOptions(opts...)

98 | panicChan := &onceChan{channel: make(chan any)}

99 | source := buildSource(generate, panicChan)

100 | collector := make(chan any)

101 | done := make(chan struct{})

102 |

103 | go executeMappers(mapperContext[T, any]{

104 | ctx: options.ctx,

105 | mapper: func(item T, _ Writer[any]) {

106 | mapper(item)

107 | },

108 | source: source,

109 | panicChan: panicChan,

110 | collector: collector,

111 | doneChan: done,

112 | workers: options.workers,

113 | })

114 |

115 | for {

116 | select {

117 | case v := <-panicChan.channel:

118 | panic(v)

119 | case _, ok := <-collector:

120 | if !ok {

121 | return

122 | }

123 | }

124 | }

125 | }

126 |

127 | // MapReduce maps all elements generated from given generate func,

128 | // and reduces the output elements with given reducer.

129 | func MapReduce[T, U, V any](generate GenerateFunc[T], mapper MapperFunc[T, U], reducer ReducerFunc[U, V],

130 | opts ...Option) (V, error) {

131 | panicChan := &onceChan{channel: make(chan any)}

132 | source := buildSource(generate, panicChan)

133 | return mapReduceWithPanicChan(source, panicChan, mapper, reducer, opts...)

134 | }

135 |

136 | // MapReduceChan maps all elements from source, and reduces the output elements with the given reducer.

137 | func MapReduceChan[T, U, V any](source <-chan T, mapper MapperFunc[T, U], reducer ReducerFunc[U, V],

138 | opts ...Option) (V, error) {

139 | panicChan := &onceChan{channel: make(chan any)}

140 | return mapReduceWithPanicChan(source, panicChan, mapper, reducer, opts...)

141 | }

142 |

143 | // mapReduceWithPanicChan maps all elements from source, and reduces the output elements with the given reducer.

144 | func mapReduceWithPanicChan[T, U, V any](source <-chan T, panicChan *onceChan, mapper MapperFunc[T, U],

145 | reducer ReducerFunc[U, V], opts ...Option) (val V, err error) {

146 | options := buildOptions(opts...)

147 | // output is used to write the final result

148 | output := make(chan V)

149 | defer func() {

150 | // the reducer can write only once; panic if it writes more

151 | for range output {

152 | panic("more than one element written in reducer")

153 | }

154 | }()

155 |

156 | // collector is used to collect data from mapper, and consume in reducer

157 | collector := make(chan U, options.workers)

158 | // if done is closed, all mappers and reducer should stop processing

159 | done := make(chan struct{})

160 | writer := newGuardedWriter(options.ctx, output, done)

161 | var closeOnce sync.Once

162 | // use atomic.Value to avoid data race

163 | var retErr atomic.Value

164 | finish := func() {

165 | closeOnce.Do(func() {

166 | close(done)

167 | close(output)

168 | })

169 | }

170 | cancel := once(func(err error) {

171 | if err != nil {

172 | retErr.Store(err)

173 | } else {

174 | retErr.Store(ErrCancelWithNil)

175 | }

176 |

177 | drain(source)

178 | finish()

179 | })

180 |

181 | go func() {

182 | defer func() {

183 | drain(collector)

184 | if r := recover(); r != nil {

185 | panicChan.write(r)

186 | }

187 | finish()

188 | }()

189 |

190 | reducer(collector, writer, cancel)

191 | }()

192 |

193 | go executeMappers(mapperContext[T, U]{

194 | ctx: options.ctx,

195 | mapper: func(item T, w Writer[U]) {

196 | mapper(item, w, cancel)

197 | },

198 | source: source,

199 | panicChan: panicChan,

200 | collector: collector,

201 | doneChan: done,

202 | workers: options.workers,

203 | })

204 |

205 | select {

206 | case <-options.ctx.Done():

207 | cancel(context.DeadlineExceeded)

208 | err = context.DeadlineExceeded

209 | case v := <-panicChan.channel:

210 | // drain output here, otherwise the for loop in the deferred func would panic

211 | drain(output)

212 | panic(v)

213 | case v, ok := <-output:

214 | if e := retErr.Load(); e != nil {

215 | err = e.(error)

216 | } else if ok {

217 | val = v

218 | } else {

219 | err = ErrReduceNoOutput

220 | }

221 | }

222 |

223 | return

224 | }

225 |

226 | // MapReduceVoid maps all elements generated from the given generate func,

227 | // and reduces the output elements with the given reducer.

228 | func MapReduceVoid[T, U any](generate GenerateFunc[T], mapper MapperFunc[T, U],

229 | reducer VoidReducerFunc[U], opts ...Option) error {

230 | _, err := MapReduce(generate, mapper, func(input <-chan U, writer Writer[any], cancel func(error)) {

231 | reducer(input, cancel)

232 | }, opts...)

233 | if errors.Is(err, ErrReduceNoOutput) {

234 | return nil

235 | }

236 |

237 | return err

238 | }

239 |

240 | // WithContext customizes a mapreduce processing to use the given ctx.

241 | func WithContext(ctx context.Context) Option {

242 | return func(opts *mapReduceOptions) {

243 | opts.ctx = ctx

244 | }

245 | }

246 |

247 | // WithWorkers customizes a mapreduce processing to use the given number of workers.

248 | func WithWorkers(workers int) Option {

249 | return func(opts *mapReduceOptions) {

250 | if workers < minWorkers {

251 | opts.workers = minWorkers

252 | } else {

253 | opts.workers = workers

254 | }

255 | }

256 | }

257 |

258 | func buildOptions(opts ...Option) *mapReduceOptions {

259 | options := newOptions()

260 | for _, opt := range opts {

261 | opt(options)

262 | }

263 |

264 | return options

265 | }

266 |

267 | func buildSource[T any](generate GenerateFunc[T], panicChan *onceChan) chan T {

268 | source := make(chan T)

269 | go func() {

270 | defer func() {

271 | if r := recover(); r != nil {

272 | panicChan.write(r)

273 | }

274 | close(source)

275 | }()

276 |

277 | generate(source)

278 | }()

279 |

280 | return source

281 | }

282 |

283 | // drain drains the channel.

284 | func drain[T any](channel <-chan T) {

285 | // drain the channel

286 | for range channel {

287 | }

288 | }

289 |

290 | func executeMappers[T, U any](mCtx mapperContext[T, U]) {

291 | var wg sync.WaitGroup

292 | defer func() {

293 | wg.Wait()

294 | close(mCtx.collector)

295 | drain(mCtx.source)

296 | }()

297 |

298 | var failed int32

299 | pool := make(chan struct{}, mCtx.workers)

300 | writer := newGuardedWriter(mCtx.ctx, mCtx.collector, mCtx.doneChan)

301 | for atomic.LoadInt32(&failed) == 0 {

302 | select {

303 | case <-mCtx.ctx.Done():

304 | return

305 | case <-mCtx.doneChan:

306 | return

307 | case pool <- struct{}{}:

308 | item, ok := <-mCtx.source

309 | if !ok {

310 | <-pool

311 | return

312 | }

313 |

314 | wg.Add(1)

315 | go func() {

316 | defer func() {

317 | if r := recover(); r != nil {

318 | atomic.AddInt32(&failed, 1)

319 | mCtx.panicChan.write(r)

320 | }

321 | wg.Done()

322 | <-pool

323 | }()

324 |

325 | mCtx.mapper(item, writer)

326 | }()

327 | }

328 | }

329 | }

330 |

331 | func newOptions() *mapReduceOptions {

332 | return &mapReduceOptions{

333 | ctx: context.Background(),

334 | workers: defaultWorkers,

335 | }

336 | }

337 |

338 | func once(fn func(error)) func(error) {

339 | once := new(sync.Once)

340 | return func(err error) {

341 | once.Do(func() {

342 | fn(err)

343 | })

344 | }

345 | }

346 |

347 | type guardedWriter[T any] struct {

348 | ctx context.Context

349 | channel chan<- T

350 | done <-chan struct{}

351 | }

352 |

353 | func newGuardedWriter[T any](ctx context.Context, channel chan<- T, done <-chan struct{}) guardedWriter[T] {

354 | return guardedWriter[T]{

355 | ctx: ctx,

356 | channel: channel,

357 | done: done,

358 | }

359 | }

360 |

361 | func (gw guardedWriter[T]) Write(v T) {

362 | select {

363 | case <-gw.ctx.Done():

364 | return

365 | case <-gw.done:

366 | return

367 | default:

368 | gw.channel <- v

369 | }

370 | }

371 |

372 | type onceChan struct {

373 | channel chan any

374 | wrote int32

375 | }

376 |

377 | func (oc *onceChan) write(val any) {

378 | if atomic.CompareAndSwapInt32(&oc.wrote, 0, 1) {

379 | oc.channel <- val

380 | }

381 | }

382 |

--------------------------------------------------------------------------------

/mapreduce_test.go:

--------------------------------------------------------------------------------

1 | package mapreduce

2 |

3 | import (

4 | "context"

5 | "errors"

6 | "io"

7 | "log"

8 | "runtime"

9 | "sync/atomic"

10 | "testing"

11 | "time"

12 |

13 | "github.com/stretchr/testify/assert"

14 | "go.uber.org/goleak"

15 | )

16 |

17 | var errDummy = errors.New("dummy")

18 |

19 | func init() {

20 | log.SetOutput(io.Discard)

21 | }

22 |

23 | func TestFinish(t *testing.T) {

24 | defer goleak.VerifyNone(t)

25 |

26 | var total uint32

27 | err := Finish(func() error {

28 | atomic.AddUint32(&total, 2)

29 | return nil

30 | }, func() error {

31 | atomic.AddUint32(&total, 3)

32 | return nil

33 | }, func() error {

34 | atomic.AddUint32(&total, 5)

35 | return nil

36 | })

37 |

38 | assert.Equal(t, uint32(10), atomic.LoadUint32(&total))

39 | assert.Nil(t, err)

40 | }

41 |

42 | func TestFinishNone(t *testing.T) {

43 | defer goleak.VerifyNone(t)

44 |

45 | assert.Nil(t, Finish())

46 | }

47 |

48 | func TestFinishVoidNone(t *testing.T) {

49 | defer goleak.VerifyNone(t)

50 |

51 | FinishVoid()

52 | }

53 |

54 | func TestFinishErr(t *testing.T) {

55 | defer goleak.VerifyNone(t)

56 |

57 | var total uint32

58 | err := Finish(func() error {

59 | atomic.AddUint32(&total, 2)

60 | return nil

61 | }, func() error {

62 | atomic.AddUint32(&total, 3)

63 | return errDummy

64 | }, func() error {

65 | atomic.AddUint32(&total, 5)

66 | return nil

67 | })

68 |

69 | assert.Equal(t, errDummy, err)

70 | }

71 |

72 | func TestFinishVoid(t *testing.T) {

73 | defer goleak.VerifyNone(t)

74 |

75 | var total uint32

76 | FinishVoid(func() {

77 | atomic.AddUint32(&total, 2)

78 | }, func() {

79 | atomic.AddUint32(&total, 3)

80 | }, func() {

81 | atomic.AddUint32(&total, 5)

82 | })

83 |

84 | assert.Equal(t, uint32(10), atomic.LoadUint32(&total))

85 | }

86 |

87 | func TestForEach(t *testing.T) {

88 | const tasks = 1000

89 |

90 | t.Run("all", func(t *testing.T) {

91 | defer goleak.VerifyNone(t)

92 |

93 | var count uint32

94 | ForEach(func(source chan<- int) {

95 | for i := 0; i < tasks; i++ {

96 | source <- i

97 | }

98 | }, func(item int) {

99 | atomic.AddUint32(&count, 1)

100 | }, WithWorkers(-1))

101 |

102 | assert.Equal(t, tasks, int(count))

103 | })

104 |

105 | t.Run("odd", func(t *testing.T) {

106 | defer goleak.VerifyNone(t)

107 |

108 | var count uint32

109 | ForEach(func(source chan<- int) {

110 | for i := 0; i < tasks; i++ {

111 | source <- i

112 | }

113 | }, func(item int) {

114 | if item%2 == 0 {

115 | atomic.AddUint32(&count, 1)

116 | }

117 | })

118 |

119 | assert.Equal(t, tasks/2, int(count))

120 | })

121 |

122 | t.Run("panic", func(t *testing.T) {

123 | defer goleak.VerifyNone(t)

124 |

125 | assert.PanicsWithValue(t, "foo", func() {

126 | ForEach(func(source chan<- int) {

127 | for i := 0; i < tasks; i++ {

128 | source <- i

129 | }

130 | }, func(item int) {

131 | panic("foo")

132 | })

133 | })

134 | })

135 | }

136 |

137 | func TestGeneratePanic(t *testing.T) {

138 | defer goleak.VerifyNone(t)

139 |

140 | t.Run("all", func(t *testing.T) {

141 | assert.PanicsWithValue(t, "foo", func() {

142 | ForEach(func(source chan<- int) {

143 | panic("foo")

144 | }, func(item int) {

145 | })

146 | })

147 | })

148 | }

149 |

150 | func TestMapperPanic(t *testing.T) {

151 | defer goleak.VerifyNone(t)

152 |

153 | const tasks = 1000

154 | var run int32

155 | t.Run("all", func(t *testing.T) {

156 | assert.PanicsWithValue(t, "foo", func() {

157 | _, _ = MapReduce(func(source chan<- int) {

158 | for i := 0; i < tasks; i++ {

159 | source <- i

160 | }

161 | }, func(item int, writer Writer[int], cancel func(error)) {

162 | atomic.AddInt32(&run, 1)

163 | panic("foo")

164 | }, func(pipe <-chan int, writer Writer[int], cancel func(error)) {

165 | })

166 | })

167 | assert.True(t, atomic.LoadInt32(&run) < tasks/2)

168 | })

169 | }

170 |

171 | func TestMapReduce(t *testing.T) {

172 | defer goleak.VerifyNone(t)

173 |

174 | tests := []struct {

175 | name string

176 | mapper MapperFunc[int, int]

177 | reducer ReducerFunc[int, int]

178 | expectErr error

179 | expectValue int

180 | }{

181 | {

182 | name: "simple",

183 | expectErr: nil,

184 | expectValue: 30,

185 | },

186 | {

187 | name: "cancel with error",

188 | mapper: func(v int, writer Writer[int], cancel func(error)) {

189 | if v%3 == 0 {

190 | cancel(errDummy)

191 | }

192 | writer.Write(v * v)

193 | },

194 | expectErr: errDummy,

195 | },

196 | {

197 | name: "cancel with nil",

198 | mapper: func(v int, writer Writer[int], cancel func(error)) {

199 | if v%3 == 0 {

200 | cancel(nil)

201 | }

202 | writer.Write(v * v)

203 | },

204 | expectErr: ErrCancelWithNil,

205 | },

206 | {

207 | name: "cancel with more",

208 | reducer: func(pipe <-chan int, writer Writer[int], cancel func(error)) {

209 | var result int

210 | for item := range pipe {

211 | result += item

212 | if result > 10 {

213 | cancel(errDummy)

214 | }

215 | }

216 | writer.Write(result)

217 | },

218 | expectErr: errDummy,

219 | },

220 | }

221 |

222 | t.Run("MapReduce", func(t *testing.T) {

223 | for _, test := range tests {

224 | t.Run(test.name, func(t *testing.T) {

225 | if test.mapper == nil {

226 | test.mapper = func(v int, writer Writer[int], cancel func(error)) {

227 | writer.Write(v * v)

228 | }

229 | }

230 | if test.reducer == nil {

231 | test.reducer = func(pipe <-chan int, writer Writer[int], cancel func(error)) {

232 | var result int

233 | for item := range pipe {

234 | result += item

235 | }

236 | writer.Write(result)

237 | }

238 | }

239 | value, err := MapReduce(func(source chan<- int) {

240 | for i := 1; i < 5; i++ {

241 | source <- i

242 | }

243 | }, test.mapper, test.reducer, WithWorkers(runtime.NumCPU()))

244 |

245 | assert.Equal(t, test.expectErr, err)

246 | assert.Equal(t, test.expectValue, value)

247 | })

248 | }

249 | })

250 |

251 | t.Run("MapReduceChan", func(t *testing.T) {

252 | for _, test := range tests {

253 | t.Run(test.name, func(t *testing.T) {

254 | if test.mapper == nil {

255 | test.mapper = func(v int, writer Writer[int], cancel func(error)) {

256 | writer.Write(v * v)

257 | }

258 | }

259 | if test.reducer == nil {

260 | test.reducer = func(pipe <-chan int, writer Writer[int], cancel func(error)) {

261 | var result int

262 | for item := range pipe {

263 | result += item

264 | }

265 | writer.Write(result)

266 | }

267 | }

268 |

269 | source := make(chan int)

270 | go func() {

271 | for i := 1; i < 5; i++ {

272 | source <- i

273 | }

274 | close(source)

275 | }()

276 |

277 | value, err := MapReduceChan(source, test.mapper, test.reducer, WithWorkers(-1))

278 | assert.Equal(t, test.expectErr, err)

279 | assert.Equal(t, test.expectValue, value)

280 | })

281 | }

282 | })

283 | }

284 |

285 | func TestMapReduceWithReducerWriteMoreThanOnce(t *testing.T) {

286 | defer goleak.VerifyNone(t)

287 |

288 | assert.Panics(t, func() {

289 | MapReduce(func(source chan<- int) {

290 | for i := 0; i < 10; i++ {

291 | source <- i

292 | }

293 | }, func(item int, writer Writer[int], cancel func(error)) {

294 | writer.Write(item)

295 | }, func(pipe <-chan int, writer Writer[string], cancel func(error)) {

296 | drain(pipe)

297 | writer.Write("one")

298 | writer.Write("two")

299 | })

300 | })

301 | }

302 |

303 | func TestMapReduceVoid(t *testing.T) {

304 | defer goleak.VerifyNone(t)

305 |

306 | var value uint32

307 | tests := []struct {

308 | name string

309 | mapper MapperFunc[int, int]

310 | reducer VoidReducerFunc[int]

311 | expectValue uint32

312 | expectErr error

313 | }{

314 | {

315 | name: "simple",

316 | expectValue: 30,

317 | expectErr: nil,

318 | },

319 | {

320 | name: "cancel with error",

321 | mapper: func(v int, writer Writer[int], cancel func(error)) {

322 | if v%3 == 0 {

323 | cancel(errDummy)

324 | }

325 | writer.Write(v * v)

326 | },

327 | expectErr: errDummy,

328 | },

329 | {

330 | name: "cancel with nil",

331 | mapper: func(v int, writer Writer[int], cancel func(error)) {

332 | if v%3 == 0 {

333 | cancel(nil)

334 | }

335 | writer.Write(v * v)

336 | },

337 | expectErr: ErrCancelWithNil,

338 | },

339 | {

340 | name: "cancel with more",

341 | reducer: func(pipe <-chan int, cancel func(error)) {

342 | for item := range pipe {

343 | result := atomic.AddUint32(&value, uint32(item))

344 | if result > 10 {

345 | cancel(errDummy)

346 | }

347 | }

348 | },

349 | expectErr: errDummy,

350 | },

351 | }

352 |

353 | for _, test := range tests {

354 | t.Run(test.name, func(t *testing.T) {

355 | atomic.StoreUint32(&value, 0)

356 |

357 | if test.mapper == nil {

358 | test.mapper = func(v int, writer Writer[int], cancel func(error)) {

359 | writer.Write(v * v)

360 | }

361 | }

362 | if test.reducer == nil {

363 | test.reducer = func(pipe <-chan int, cancel func(error)) {

364 | for item := range pipe {

365 | atomic.AddUint32(&value, uint32(item))

366 | }

367 | }

368 | }

369 | err := MapReduceVoid(func(source chan<- int) {

370 | for i := 1; i < 5; i++ {

371 | source <- i

372 | }

373 | }, test.mapper, test.reducer)

374 |

375 | assert.Equal(t, test.expectErr, err)

376 | if err == nil {

377 | assert.Equal(t, test.expectValue, atomic.LoadUint32(&value))

378 | }

379 | })

380 | }

381 | }

382 |

383 | func TestMapReduceVoidWithDelay(t *testing.T) {

384 | defer goleak.VerifyNone(t)

385 |

386 | var result []int

387 | err := MapReduceVoid(func(source chan<- int) {

388 | source <- 0

389 | source <- 1

390 | }, func(i int, writer Writer[int], cancel func(error)) {

391 | if i == 0 {

392 | time.Sleep(time.Millisecond * 50)

393 | }

394 | writer.Write(i)

395 | }, func(pipe <-chan int, cancel func(error)) {

396 | for item := range pipe {

397 | i := item

398 | result = append(result, i)

399 | }

400 | })

401 | assert.Nil(t, err)

402 | assert.Equal(t, 2, len(result))

403 | assert.Equal(t, 1, result[0])

404 | assert.Equal(t, 0, result[1])

405 | }

406 |

407 | func TestMapReducePanic(t *testing.T) {

408 | defer goleak.VerifyNone(t)

409 |

410 | assert.Panics(t, func() {

411 | _, _ = MapReduce(func(source chan<- int) {

412 | source <- 0

413 | source <- 1

414 | }, func(i int, writer Writer[int], cancel func(error)) {

415 | writer.Write(i)

416 | }, func(pipe <-chan int, writer Writer[int], cancel func(error)) {

417 | for range pipe {

418 | panic("panic")

419 | }

420 | })

421 | })

422 | }

423 |

424 | func TestMapReducePanicOnce(t *testing.T) {

425 | defer goleak.VerifyNone(t)

426 |

427 | assert.Panics(t, func() {

428 | _, _ = MapReduce(func(source chan<- int) {

429 | for i := 0; i < 100; i++ {

430 | source <- i

431 | }

432 | }, func(i int, writer Writer[int], cancel func(error)) {

433 | if i == 0 {

434 | panic("foo")

435 | }

436 | writer.Write(i)

437 | }, func(pipe <-chan int, writer Writer[int], cancel func(error)) {

438 | for range pipe {

439 | panic("bar")

440 | }

441 | })

442 | })

443 | }

444 |

445 | func TestMapReducePanicBothMapperAndReducer(t *testing.T) {

446 | defer goleak.VerifyNone(t)

447 |

448 | assert.Panics(t, func() {

449 | _, _ = MapReduce(func(source chan<- int) {

450 | source <- 0

451 | source <- 1

452 | }, func(item int, writer Writer[int], cancel func(error)) {

453 | panic("foo")

454 | }, func(pipe <-chan int, writer Writer[int], cancel func(error)) {

455 | panic("bar")

456 | })

457 | })

458 | }

459 |

460 | func TestMapReduceVoidCancel(t *testing.T) {

461 | defer goleak.VerifyNone(t)

462 |

463 | var result []int

464 | err := MapReduceVoid(func(source chan<- int) {

465 | source <- 0

466 | source <- 1

467 | }, func(i int, writer Writer[int], cancel func(error)) {

468 | if i == 1 {

469 | cancel(errors.New("anything"))

470 | }

471 | writer.Write(i)

472 | }, func(pipe <-chan int, cancel func(error)) {

473 | for item := range pipe {

474 | i := item

475 | result = append(result, i)

476 | }

477 | })

478 | assert.NotNil(t, err)

479 | assert.Equal(t, "anything", err.Error())

480 | }

481 |

482 | func TestMapReduceVoidCancelWithRemains(t *testing.T) {

483 | defer goleak.VerifyNone(t)

484 |

485 | var done int32

486 | var result []int

487 | err := MapReduceVoid(func(source chan<- int) {

488 | for i := 0; i < defaultWorkers*2; i++ {

489 | source <- i

490 | }

491 | atomic.AddInt32(&done, 1)

492 | }, func(i int, writer Writer[int], cancel func(error)) {

493 | if i == defaultWorkers/2 {

494 | cancel(errors.New("anything"))

495 | }

496 | writer.Write(i)

497 | }, func(pipe <-chan int, cancel func(error)) {

498 | for item := range pipe {

499 | result = append(result, item)

500 | }

501 | })

502 | assert.NotNil(t, err)

503 | assert.Equal(t, "anything", err.Error())

504 | assert.Equal(t, int32(1), done)

505 | }

506 |

507 | func TestMapReduceWithoutReducerWrite(t *testing.T) {

508 | defer goleak.VerifyNone(t)

509 |

510 | uids := []int{1, 2, 3}

511 | res, err := MapReduce(func(source chan<- int) {

512 | for _, uid := range uids {

513 | source <- uid

514 | }

515 | }, func(item int, writer Writer[int], cancel func(error)) {

516 | writer.Write(item)

517 | }, func(pipe <-chan int, writer Writer[int], cancel func(error)) {

518 | drain(pipe)

519 | // not calling writer.Write(...), should not panic

520 | })

521 | assert.Equal(t, ErrReduceNoOutput, err)

522 | assert.Equal(t, 0, res)

523 | }

524 |

525 | func TestMapReduceVoidPanicInReducer(t *testing.T) {

526 | defer goleak.VerifyNone(t)

527 |

528 | const message = "foo"

529 | assert.Panics(t, func() {

530 | var done int32

531 | _ = MapReduceVoid(func(source chan<- int) {

532 | for i := 0; i < defaultWorkers*2; i++ {

533 | source <- i

534 | }

535 | atomic.AddInt32(&done, 1)

536 | }, func(i int, writer Writer[int], cancel func(error)) {

537 | writer.Write(i)

538 | }, func(pipe <-chan int, cancel func(error)) {

539 | panic(message)

540 | }, WithWorkers(1))

541 | })

542 | }

543 |

544 | func TestForEachWithContext(t *testing.T) {

545 | defer goleak.VerifyNone(t)

546 |

547 | var done int32

548 | ctx, cancel := context.WithCancel(context.Background())

549 | ForEach(func(source chan<- int) {

550 | for i := 0; i < defaultWorkers*2; i++ {

551 | source <- i

552 | }

553 | atomic.AddInt32(&done, 1)

554 | }, func(i int) {

555 | if i == defaultWorkers/2 {

556 | cancel()

557 | }

558 | }, WithContext(ctx))

559 | }

560 |

561 | func TestMapReduceWithContext(t *testing.T) {

562 | defer goleak.VerifyNone(t)

563 |

564 | var done int32

565 | var result []int

566 | ctx, cancel := context.WithCancel(context.Background())

567 | err := MapReduceVoid(func(source chan<- int) {

568 | for i := 0; i < defaultWorkers*2; i++ {

569 | source <- i

570 | }

571 | atomic.AddInt32(&done, 1)

572 | }, func(i int, writer Writer[int], c func(error)) {

573 | if i == defaultWorkers/2 {

574 | cancel()

575 | }

576 | writer.Write(i)

577 | }, func(pipe <-chan int, cancel func(error)) {

578 | for item := range pipe {

579 | i := item

580 | result = append(result, i)

581 | }

582 | }, WithContext(ctx))

583 | assert.NotNil(t, err)

584 | assert.Equal(t, context.DeadlineExceeded, err)

585 | }

586 |

587 | func BenchmarkMapReduce(b *testing.B) {

588 | b.ReportAllocs()

589 |

590 | mapper := func(v int64, writer Writer[int64], cancel func(error)) {

591 | writer.Write(v * v)

592 | }

593 | reducer := func(input <-chan int64, writer Writer[int64], cancel func(error)) {

594 | var result int64

595 | for v := range input {

596 | result += v

597 | }

598 | writer.Write(result)

599 | }

600 |

601 | for i := 0; i < b.N; i++ {

602 | MapReduce(func(input chan<- int64) {

603 | for j := 0; j < 2; j++ {

604 | input <- int64(j)

605 | }

606 | }, mapper, reducer)

607 | }

608 | }

609 |

--------------------------------------------------------------------------------

52 |

53 | How can I terminate the process at any time?

54 |

55 | It's simple, just receive from a channel or the given context in the goroutine.

56 |

57 | ## Choose the right version

58 |

59 | - `v1` (default) - non-generic version

60 | - `v2` (generics) - generic version, needs Go version >= 1.18

61 |

62 | ## A simple example

63 |

64 | Calculate the sum of squares, simulating the concurrency.

65 |

66 | ```go

67 | package main

68 |

69 | import (

70 | "fmt"

71 | "log"

72 |

73 | "github.com/kevwan/mapreduce/v2"

74 | )

75 |

76 | func main() {

77 | val, err := mapreduce.MapReduce(func(source chan<- int) {

78 | // generator

79 | for i := 0; i < 10; i++ {

80 | source <- i

81 | }

82 | }, func(i int, writer mapreduce.Writer[int], cancel func(error)) {

83 | // mapper

84 | writer.Write(i * i)

85 | }, func(pipe <-chan int, writer mapreduce.Writer[int], cancel func(error)) {

86 | // reducer

87 | var sum int

88 | for i := range pipe {

89 | sum += i

90 | }

91 | writer.Write(sum)

92 | })

93 | if err != nil {

94 | log.Fatal(err)

95 | }

96 | fmt.Println("result:", val)

97 | }

98 | ```

99 |

100 | More examples: [https://github.com/zeromicro/zero-examples/tree/main/mapreduce](https://github.com/zeromicro/zero-examples/tree/main/mapreduce)

101 |

102 | ## References

103 |

104 | go-zero: [https://github.com/zeromicro/go-zero](https://github.com/zeromicro/go-zero)

105 |

106 | ## Give a Star! ⭐

107 |

108 | If you like or are using this project to learn or start your solution, please give it a star. Thanks!

109 |

--------------------------------------------------------------------------------

/mapreduce.go:

--------------------------------------------------------------------------------

1 | package mapreduce

2 |

3 | import (

4 | "context"

5 | "errors"

6 | "sync"

7 | "sync/atomic"

8 | )

9 |

10 | const (

11 | defaultWorkers = 16

12 | minWorkers = 1

13 | )

14 |

15 | var (

16 | // ErrCancelWithNil is an error that mapreduce was cancelled with nil.

17 | ErrCancelWithNil = errors.New("mapreduce cancelled with nil")

18 | // ErrReduceNoOutput is an error that reduce did not output a value.

19 | ErrReduceNoOutput = errors.New("reduce not writing value")

20 | )

21 |

22 | type (

23 | // ForEachFunc is used to do element processing, but no output.

24 | ForEachFunc[T any] func(item T)

25 | // GenerateFunc is used to let callers send elements into source.

26 | GenerateFunc[T any] func(source chan<- T)

27 | // MapFunc is used to do element processing and write the output to writer.

28 | MapFunc[T, U any] func(item T, writer Writer[U])

29 | // MapperFunc processes an element and writes the output to writer.

30 | // Use the cancel func to cancel the processing.

31 | MapperFunc[T, U any] func(item T, writer Writer[U], cancel func(error))

32 | // ReducerFunc reduces all the mapping output and writes the result to writer.

33 | // Use the cancel func to cancel the processing.

34 | ReducerFunc[U, V any] func(pipe <-chan U, writer Writer[V], cancel func(error))

35 | // VoidReducerFunc reduces all the mapping output without producing any output.

36 | // Use the cancel func to cancel the processing.

37 | VoidReducerFunc[U any] func(pipe <-chan U, cancel func(error))

38 | // Option is a function that customizes the mapreduce options.

39 | Option func(opts *mapReduceOptions)

40 |

41 | mapperContext[T, U any] struct {

42 | ctx context.Context

43 | mapper MapFunc[T, U]

44 | source <-chan T

45 | panicChan *onceChan

46 | collector chan<- U

47 | doneChan <-chan struct{}

48 | workers int

49 | }

50 |

51 | mapReduceOptions struct {

52 | ctx context.Context

53 | workers int

54 | }

55 |

56 | // Writer interface wraps Write method.

57 | Writer[T any] interface {

58 | Write(v T)

59 | }

60 | )

61 |

62 | // Finish runs fns in parallel and is cancelled if any of them returns an error.

63 | func Finish(fns ...func() error) error {

64 | if len(fns) == 0 {

65 | return nil

66 | }

67 |

68 | return MapReduceVoid(func(source chan<- func() error) {

69 | for _, fn := range fns {

70 | source <- fn

71 | }

72 | }, func(fn func() error, writer Writer[any], cancel func(error)) {

73 | if err := fn(); err != nil {

74 | cancel(err)

75 | }

76 | }, func(pipe <-chan any, cancel func(error)) {

77 | }, WithWorkers(len(fns)))

78 | }

79 |

80 | // FinishVoid runs fns in parallel.

81 | func FinishVoid(fns ...func()) {

82 | if len(fns) == 0 {

83 | return

84 | }

85 |

86 | ForEach(func(source chan<- func()) {

87 | for _, fn := range fns {

88 | source <- fn

89 | }

90 | }, func(fn func()) {

91 | fn()

92 | }, WithWorkers(len(fns)))

93 | }

94 |

95 | // ForEach maps all elements from the given generate func, without producing output.

96 | func ForEach[T any](generate GenerateFunc[T], mapper ForEachFunc[T], opts ...Option) {

97 | options := buildOptions(opts...)

98 | panicChan := &onceChan{channel: make(chan any)}

99 | source := buildSource(generate, panicChan)

100 | collector := make(chan any)

101 | done := make(chan struct{})

102 |

103 | go executeMappers(mapperContext[T, any]{

104 | ctx: options.ctx,

105 | mapper: func(item T, _ Writer[any]) {

106 | mapper(item)

107 | },

108 | source: source,

109 | panicChan: panicChan,

110 | collector: collector,

111 | doneChan: done,

112 | workers: options.workers,

113 | })

114 |

115 | for {

116 | select {

117 | case v := <-panicChan.channel:

118 | panic(v)

119 | case _, ok := <-collector:

120 | if !ok {

121 | return

122 | }

123 | }

124 | }

125 | }

126 |

127 | // MapReduce maps all elements generated from the given generate func,

128 | // and reduces the output elements with the given reducer.

129 | func MapReduce[T, U, V any](generate GenerateFunc[T], mapper MapperFunc[T, U], reducer ReducerFunc[U, V],

130 | opts ...Option) (V, error) {

131 | panicChan := &onceChan{channel: make(chan any)}

132 | source := buildSource(generate, panicChan)

133 | return mapReduceWithPanicChan(source, panicChan, mapper, reducer, opts...)

134 | }

135 |

136 | // MapReduceChan maps all elements from source, and reduces the output elements with the given reducer.

137 | func MapReduceChan[T, U, V any](source <-chan T, mapper MapperFunc[T, U], reducer ReducerFunc[U, V],

138 | opts ...Option) (V, error) {

139 | panicChan := &onceChan{channel: make(chan any)}

140 | return mapReduceWithPanicChan(source, panicChan, mapper, reducer, opts...)

141 | }

142 |

143 | // mapReduceWithPanicChan maps all elements from source, and reduces the output elements with the given reducer.

144 | func mapReduceWithPanicChan[T, U, V any](source <-chan T, panicChan *onceChan, mapper MapperFunc[T, U],

145 | reducer ReducerFunc[U, V], opts ...Option) (val V, err error) {

146 | options := buildOptions(opts...)

147 | // output is used to write the final result

148 | output := make(chan V)

149 | defer func() {

150 | // the reducer can write only once; panic if it writes more

151 | for range output {

152 | panic("more than one element written in reducer")

153 | }

154 | }()

155 |

156 | // collector collects data from the mappers for the reducer to consume

157 | collector := make(chan U, options.workers)

158 | // once done is closed, all mappers and the reducer should stop processing

159 | done := make(chan struct{})

160 | writer := newGuardedWriter(options.ctx, output, done)

161 | var closeOnce sync.Once

162 | // use atomic.Value to avoid data race

163 | var retErr atomic.Value

164 | finish := func() {

165 | closeOnce.Do(func() {

166 | close(done)

167 | close(output)

168 | })

169 | }

170 | cancel := once(func(err error) {

171 | if err != nil {

172 | retErr.Store(err)

173 | } else {

174 | retErr.Store(ErrCancelWithNil)