├── go.mod

├── .gitignore

├── routinegroup_test.go

├── .github

│   └── workflows

│       ├── reviewdog.yml

│       ├── go.yml

│       └── codeql-analysis.yml

├── routinegroup.go

├── go.sum

├── ring.go

├── LICENSE

├── ring_test.go

├── stream.go

├── stream_test.go

├── readme-cn.md

└── readme.md

/go.mod:

--------------------------------------------------------------------------------

1 | module github.com/kevwan/stream

2 |

3 | go 1.17

4 |

5 | require github.com/stretchr/testify v1.7.0

6 |

7 | require (

8 | github.com/davecgh/go-spew v1.1.0 // indirect

9 | github.com/pmezard/go-difflib v1.0.0 // indirect

10 | gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c // indirect

11 | )

12 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Binaries for programs and plugins

2 | *.exe

3 | *.exe~

4 | *.dll

5 | *.so

6 | *.dylib

7 |

8 | # Test binary, built with `go test -c`

9 | *.test

10 |

11 | # Output of the go coverage tool, specifically when used with LiteIDE

12 | *.out

13 |

14 | # dev files

15 | .idea

16 |

17 | # Dependency directories (remove the comment below to include it)

18 | # vendor/

19 |

--------------------------------------------------------------------------------

/routinegroup_test.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import (

4 | "sync/atomic"

5 | "testing"

6 |

7 | "github.com/stretchr/testify/assert"

8 | )

9 |

10 | func TestRoutineGroupRun(t *testing.T) {

11 | var count int32

12 | group := NewRoutineGroup()

13 | for i := 0; i < 3; i++ {

14 | group.Run(func() {

15 | atomic.AddInt32(&count, 1)

16 | })

17 | }

18 |

19 | group.Wait()

20 |

21 | assert.Equal(t, int32(3), count)

22 | }

23 |

--------------------------------------------------------------------------------

/.github/workflows/reviewdog.yml:

--------------------------------------------------------------------------------

1 | name: reviewdog

2 | on: [pull_request]

3 | jobs:

4 | staticcheck:

5 | name: runner / staticcheck

6 | runs-on: ubuntu-latest

7 | steps:

8 | - uses: actions/checkout@v2

9 | - uses: reviewdog/action-staticcheck@v1

10 | with:

11 | github_token: ${{ secrets.github_token }}

12 | # Change reviewdog reporter if you need [github-pr-check,github-check,github-pr-review].

13 | reporter: github-pr-review

14 | # Report all results.

15 | filter_mode: nofilter

16 | # Exit with 1 when it finds at least one finding.

17 | fail_on_error: true

18 | # Set staticcheck flags

19 | staticcheck_flags: -checks=inherit,-SA1019,-SA1029,-SA5008

20 |

--------------------------------------------------------------------------------

/routinegroup.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import "sync"

4 |

5 | // A RoutineGroup is used to group goroutines together and wait for all of them to be done.

6 | type RoutineGroup struct {

7 | waitGroup sync.WaitGroup

8 | }

9 |

10 | // NewRoutineGroup returns a RoutineGroup.

11 | func NewRoutineGroup() *RoutineGroup {

12 | return new(RoutineGroup)

13 | }

14 |

15 | // Run runs the given fn in RoutineGroup.

16 | // Don't reference variables from the enclosing scope inside fn,

17 | // because they can be changed by other goroutines.

18 | func (g *RoutineGroup) Run(fn func()) {

19 | g.waitGroup.Add(1)

20 |

21 | go func() {

22 | defer g.waitGroup.Done()

23 | fn()

24 | }()

25 | }

26 |

27 | // Wait waits for all running functions to be done.

28 | func (g *RoutineGroup) Wait() {

29 | g.waitGroup.Wait()

30 | }

31 |

--------------------------------------------------------------------------------
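A minimal usage sketch for RoutineGroup, written as a standalone program. The type is copied from routinegroup.go above so the example compiles without the module; `sumSquares` is a hypothetical helper, not part of the package. It copies the loop variable before handing the closure to Run, in line with the warning on Run about variables from the enclosing scope.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// RoutineGroup mirrors the type in routinegroup.go so this sketch builds on its own.
type RoutineGroup struct {
	waitGroup sync.WaitGroup
}

// NewRoutineGroup returns a RoutineGroup.
func NewRoutineGroup() *RoutineGroup { return new(RoutineGroup) }

// Run starts fn in a new goroutine tracked by the group.
func (g *RoutineGroup) Run(fn func()) {
	g.waitGroup.Add(1)
	go func() {
		defer g.waitGroup.Done()
		fn()
	}()
}

// Wait blocks until every function passed to Run has returned.
func (g *RoutineGroup) Wait() { g.waitGroup.Wait() }

// sumSquares is a hypothetical helper: it squares each input in its own
// goroutine and accumulates the results atomically.
func sumSquares(nums []int64) int64 {
	var total int64
	group := NewRoutineGroup()
	for _, n := range nums {
		n := n // copy the loop variable so each closure sees its own value
		group.Run(func() {
			atomic.AddInt64(&total, n*n)
		})
	}
	group.Wait()
	return total
}

func main() {
	fmt.Println(sumSquares([]int64{1, 2, 3})) // 1 + 4 + 9
}
```

The shared total is updated with sync/atomic because the closures run concurrently; a plain `total += n*n` would be a data race.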

/.github/workflows/go.yml:

--------------------------------------------------------------------------------

1 | name: Go

2 |

3 | on:

4 | push:

5 | branches: [ main ]

6 | pull_request:

7 | branches: [ main ]

8 |

9 | jobs:

10 | build:

11 | name: Build

12 | runs-on: ubuntu-latest

13 | steps:

14 |

15 | - name: Set up Go 1.x

16 | uses: actions/setup-go@v2

17 | with:

18 | go-version: ^1.14

19 | id: go

20 |

21 | - name: Check out code into the Go module directory

22 | uses: actions/checkout@v2

23 |

24 | - name: Get dependencies

25 | run: |

26 | go get -v -t -d ./...

27 |

28 | - name: Lint

29 | run: |

30 | go vet -stdmethods=false $(go list ./...)

31 | go install mvdan.cc/gofumpt@latest

32 | test -z "$(gofumpt -s -l -extra .)" || echo "Please run 'gofumpt -l -w -extra .'"

33 |

34 | - name: Test

35 | run: go test -race -coverprofile=coverage.txt -covermode=atomic ./...

36 |

37 | - name: Codecov

38 | uses: codecov/codecov-action@v2

39 |

--------------------------------------------------------------------------------

/go.sum:

--------------------------------------------------------------------------------

1 | github.com/davecgh/go-spew v1.1.0 h1:ZDRjVQ15GmhC3fiQ8ni8+OwkZQO4DARzQgrnXU1Liz8=

2 | github.com/davecgh/go-spew v1.1.0/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

3 | github.com/pmezard/go-difflib v1.0.0 h1:4DBwDE0NGyQoBHbLQYPwSUPoCMWR5BEzIk/f1lZbAQM=

4 | github.com/pmezard/go-difflib v1.0.0/go.mod h1:iKH77koFhYxTK1pcRnkKkqfTogsbg7gZNVY4sRDYZ/4=

5 | github.com/stretchr/objx v0.1.0/go.mod h1:HFkY916IF+rwdDfMAkV7OtwuqBVzrE8GR6GFx+wExME=

6 | github.com/stretchr/testify v1.7.0 h1:nwc3DEeHmmLAfoZucVR881uASk0Mfjw8xYJ99tb5CcY=

7 | github.com/stretchr/testify v1.7.0/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

8 | gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405 h1:yhCVgyC4o1eVCa2tZl7eS0r+SDo693bJlVdllGtEeKM=

9 | gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=

10 | gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c h1:dUUwHk2QECo/6vqA44rthZ8ie2QXMNeKRTHCNY2nXvo=

11 | gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

12 |

--------------------------------------------------------------------------------

/ring.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import "sync"

4 |

5 | // A Ring can be used as a fixed-size ring buffer.

6 | type Ring struct {

7 | elements []interface{}

8 | index int

9 | lock sync.Mutex

10 | }

11 |

12 | // NewRing returns a Ring object with the given size n.

13 | func NewRing(n int) *Ring {

14 | if n < 1 {

15 | panic("n should be greater than 0")

16 | }

17 |

18 | return &Ring{

19 | elements: make([]interface{}, n),

20 | }

21 | }

22 |

23 | // Add adds v into r.

24 | func (r *Ring) Add(v interface{}) {

25 | r.lock.Lock()

26 | defer r.lock.Unlock()

27 |

28 | r.elements[r.index%len(r.elements)] = v

29 | r.index++

30 | }

31 |

32 | // Take takes all items from r.

33 | func (r *Ring) Take() []interface{} {

34 | r.lock.Lock()

35 | defer r.lock.Unlock()

36 |

37 | var size int

38 | var start int

39 | if r.index > len(r.elements) {

40 | size = len(r.elements)

41 | start = r.index % len(r.elements)

42 | } else {

43 | size = r.index

44 | }

45 |

46 | elements := make([]interface{}, size)

47 | for i := 0; i < size; i++ {

48 | elements[i] = r.elements[(start+i)%len(r.elements)]

49 | }

50 |

51 | return elements

52 | }

53 |

--------------------------------------------------------------------------------
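The index arithmetic in Add and Take can be easier to follow on a small case. The sketch below is a simplified stand-in for Ring, assuming int elements and no mutex; `ring`, `newRing` and `lastN` are hypothetical names used only for illustration. It shows that Take returns the most recent n items in insertion order once the ring has wrapped, and all items when fewer than n were added.

```go
package main

import "fmt"

// ring is a simplified, non-thread-safe stand-in for Ring in ring.go.
type ring struct {
	elements []int
	index    int // total number of items ever added
}

func newRing(n int) *ring { return &ring{elements: make([]int, n)} }

// add overwrites the oldest slot once the ring is full.
func (r *ring) add(v int) {
	r.elements[r.index%len(r.elements)] = v
	r.index++
}

// take returns the stored items, oldest first.
func (r *ring) take() []int {
	size, start := r.index, 0
	if r.index > len(r.elements) {
		// The ring has wrapped: the oldest surviving item sits at index % len.
		size = len(r.elements)
		start = r.index % len(r.elements)
	}
	out := make([]int, size)
	for i := range out {
		out[i] = r.elements[(start+i)%len(r.elements)]
	}
	return out
}

// lastN feeds items through a ring of size n and returns what survives.
func lastN(n int, items ...int) []int {
	r := newRing(n)
	for _, it := range items {
		r.add(it)
	}
	return r.take()
}

func main() {
	fmt.Println(lastN(3, 1, 2, 3, 4, 5)) // [3 4 5]
	fmt.Println(lastN(3, 1, 2))          // [1 2]
}
```

This is the same behavior Stream.Tail relies on when it pushes every item through a Ring and then emits the result of Take.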

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2022 Kevin Wan

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/ring_test.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import (

4 | "sync"

5 | "testing"

6 |

7 | "github.com/stretchr/testify/assert"

8 | )

9 |

10 | func TestNewRing(t *testing.T) {

11 | assert.Panics(t, func() {

12 | NewRing(0)

13 | })

14 | }

15 |

16 | func TestRingLess(t *testing.T) {

17 | ring := NewRing(5)

18 | for i := 0; i < 3; i++ {

19 | ring.Add(i)

20 | }

21 | elements := ring.Take()

22 | assert.ElementsMatch(t, []interface{}{0, 1, 2}, elements)

23 | }

24 |

25 | func TestRingMore(t *testing.T) {

26 | ring := NewRing(5)

27 | for i := 0; i < 11; i++ {

28 | ring.Add(i)

29 | }

30 | elements := ring.Take()

31 | assert.ElementsMatch(t, []interface{}{6, 7, 8, 9, 10}, elements)

32 | }

33 |

34 | func TestRingAdd(t *testing.T) {

35 | ring := NewRing(5051)

36 | wg := sync.WaitGroup{}

37 | for i := 1; i <= 100; i++ {

38 | wg.Add(1)

39 | go func(i int) {

40 | defer wg.Done()

41 | for j := 1; j <= i; j++ {

42 | ring.Add(i)

43 | }

44 | }(i)

45 | }

46 | wg.Wait()

47 | assert.Equal(t, 5050, len(ring.Take()))

48 | }

49 |

50 | func BenchmarkRingAdd(b *testing.B) {

51 | ring := NewRing(500)

52 | b.RunParallel(func(pb *testing.PB) {

53 | for pb.Next() {

54 | for i := 0; i < b.N; i++ {

55 | ring.Add(i)

56 | }

57 | }

58 | })

59 | }

60 |

--------------------------------------------------------------------------------

/.github/workflows/codeql-analysis.yml:

--------------------------------------------------------------------------------

1 | # For most projects, this workflow file will not need changing; you simply need

2 | # to commit it to your repository.

3 | #

4 | # You may wish to alter this file to override the set of languages analyzed,

5 | # or to provide custom queries or build logic.

6 | #

7 | # ******** NOTE ********

8 | # We have attempted to detect the languages in your repository. Please check

9 | # the `language` matrix defined below to confirm you have the correct set of

10 | # supported CodeQL languages.

11 | #

12 | name: "CodeQL"

13 |

14 | on:

15 | push:

16 | branches: [ main ]

17 | pull_request:

18 | # The branches below must be a subset of the branches above

19 | branches: [ main ]

20 | schedule:

21 | - cron: '18 19 * * 6'

22 |

23 | jobs:

24 | analyze:

25 | name: Analyze

26 | runs-on: ubuntu-latest

27 |

28 | strategy:

29 | fail-fast: false

30 | matrix:

31 | language: [ 'go' ]

32 | # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python' ]

33 | # Learn more:

34 | # https://docs.github.com/en/free-pro-team@latest/github/finding-security-vulnerabilities-and-errors-in-your-code/configuring-code-scanning#changing-the-languages-that-are-analyzed

35 |

36 | steps:

37 | - name: Checkout repository

38 | uses: actions/checkout@v2

39 |

40 | # Initializes the CodeQL tools for scanning.

41 | - name: Initialize CodeQL

42 | uses: github/codeql-action/init@v1

43 | with:

44 | languages: ${{ matrix.language }}

45 | # If you wish to specify custom queries, you can do so here or in a config file.

46 | # By default, queries listed here will override any specified in a config file.

47 | # Prefix the list here with "+" to use these queries and those in the config file.

48 | # queries: ./path/to/local/query, your-org/your-repo/queries@main

49 |

50 | # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).

51 | # If this step fails, then you should remove it and run the build manually (see below)

52 | - name: Autobuild

53 | uses: github/codeql-action/autobuild@v1

54 |

55 | # ℹ️ Command-line programs to run using the OS shell.

56 | # 📚 https://git.io/JvXDl

57 |

58 | # ✏️ If the Autobuild fails above, remove it and uncomment the following three lines

59 | # and modify them (or add more) to build your code if your project

60 | # uses a compiled language

61 |

62 | #- run: |

63 | # make bootstrap

64 | # make release

65 |

66 | - name: Perform CodeQL Analysis

67 | uses: github/codeql-action/analyze@v1

68 |

--------------------------------------------------------------------------------

/stream.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import (

4 | "sort"

5 | "sync"

6 | )

7 |

8 | const (

9 | defaultWorkers = 16

10 | minWorkers = 1

11 | )

12 |

13 | type (

14 | rxOptions struct {

15 | unlimitedWorkers bool

16 | workers int

17 | }

18 |

19 | // FilterFunc defines the method to filter a Stream.

20 | FilterFunc func(item interface{}) bool

21 | // ForAllFunc defines the method to handle all elements in a Stream.

22 | ForAllFunc func(pipe <-chan interface{})

23 | // ForEachFunc defines the method to handle each element in a Stream.

24 | ForEachFunc func(item interface{})

25 | // GenerateFunc defines the method to send elements into a Stream.

26 | GenerateFunc func(source chan<- interface{})

27 | // KeyFunc defines the method to generate keys for the elements in a Stream.

28 | KeyFunc func(item interface{}) interface{}

29 | // LessFunc defines the method to compare the elements in a Stream.

30 | LessFunc func(a, b interface{}) bool

31 | // MapFunc defines the method to map each element to another object in a Stream.

32 | MapFunc func(item interface{}) interface{}

33 | // Option defines the method to customize a Stream.

34 | Option func(opts *rxOptions)

35 | // ParallelFunc defines the method to handle elements in parallel.

36 | ParallelFunc func(item interface{})

37 | // ReduceFunc defines the method to reduce all the elements in a Stream.

38 | ReduceFunc func(pipe <-chan interface{}) (interface{}, error)

39 | // WalkFunc defines the method to walk through all the elements in a Stream.

40 | WalkFunc func(item interface{}, pipe chan<- interface{})

41 |

42 | // A Stream is a stream that can be used to do stream processing.

43 | Stream struct {

44 | source <-chan interface{}

45 | }

46 | )

47 |

48 | // Concat returns a concatenated Stream.

49 | func Concat(s Stream, others ...Stream) Stream {

50 | return s.Concat(others...)

51 | }

52 |

53 | // From constructs a Stream from the given GenerateFunc.

54 | func From(generate GenerateFunc) Stream {

55 | source := make(chan interface{})

56 |

57 | go func() {

58 | defer close(source)

59 | generate(source)

60 | }()

61 |

62 | return Range(source)

63 | }

64 |

65 | // Just converts the given arbitrary items to a Stream.

66 | func Just(items ...interface{}) Stream {

67 | source := make(chan interface{}, len(items))

68 | for _, item := range items {

69 | source <- item

70 | }

71 | close(source)

72 |

73 | return Range(source)

74 | }

75 |

76 | // Range converts the given channel to a Stream.

77 | func Range(source <-chan interface{}) Stream {

78 | return Stream{

79 | source: source,

80 | }

81 | }

82 |

83 | // AllMach returns whether all elements of this stream match the provided predicate.

84 | // May not evaluate the predicate on all elements if not necessary for determining the result.

85 | // If the stream is empty then true is returned and the predicate is not evaluated.

86 | func (s Stream) AllMach(predicate func(item interface{}) bool) bool {

87 | for item := range s.source {

88 | if !predicate(item) {

89 | // make sure the upstream goroutine doesn't block, and the current func returns fast.

90 | go drain(s.source)

91 | return false

92 | }

93 | }

94 |

95 | return true

96 | }

97 |

98 | // AnyMach returns whether any elements of this stream match the provided predicate.

99 | // May not evaluate the predicate on all elements if not necessary for determining the result.

100 | // If the stream is empty then false is returned and the predicate is not evaluated.

101 | func (s Stream) AnyMach(predicate func(item interface{}) bool) bool {

102 | for item := range s.source {

103 | if predicate(item) {

104 | // make sure the upstream goroutine doesn't block, and the current func returns fast.

105 | go drain(s.source)

106 | return true

107 | }

108 | }

109 |

110 | return false

111 | }

112 |

113 | // Buffer buffers the items into a queue with size n.

114 | // It can balance the producer and the consumer if their processing throughput doesn't match.

115 | func (s Stream) Buffer(n int) Stream {

116 | if n < 0 {

117 | n = 0

118 | }

119 |

120 | source := make(chan interface{}, n)

121 | go func() {

122 | for item := range s.source {

123 | source <- item

124 | }

125 | close(source)

126 | }()

127 |

128 | return Range(source)

129 | }

130 |

131 | // Concat returns a Stream that concatenates this stream with the other streams.

132 | func (s Stream) Concat(others ...Stream) Stream {

133 | source := make(chan interface{})

134 |

135 | go func() {

136 | group := NewRoutineGroup()

137 | group.Run(func() {

138 | for item := range s.source {

139 | source <- item

140 | }

141 | })

142 |

143 | for _, each := range others {

144 | each := each

145 | group.Run(func() {

146 | for item := range each.source {

147 | source <- item

148 | }

149 | })

150 | }

151 |

152 | group.Wait()

153 | close(source)

154 | }()

155 |

156 | return Range(source)

157 | }

158 |

159 | // Count counts the number of elements in the result.

160 | func (s Stream) Count() (count int) {

161 | for range s.source {

162 | count++

163 | }

164 | return

165 | }

166 |

167 | // Distinct removes the duplicated items based on the given KeyFunc.

168 | func (s Stream) Distinct(fn KeyFunc) Stream {

169 | source := make(chan interface{})

170 |

171 | go func() {

172 | defer close(source)

173 |

174 | keys := make(map[interface{}]struct{})

175 | for item := range s.source {

176 | key := fn(item)

177 | if _, ok := keys[key]; !ok {

178 | source <- item

179 | keys[key] = struct{}{}

180 | }

181 | }

182 | }()

183 |

184 | return Range(source)

185 | }

186 |

187 | // Done waits for all upstream operations to be done.

188 | func (s Stream) Done() {

189 | drain(s.source)

190 | }

191 |

192 | // Filter filters the items by the given FilterFunc.

193 | func (s Stream) Filter(fn FilterFunc, opts ...Option) Stream {

194 | return s.Walk(func(item interface{}, pipe chan<- interface{}) {

195 | if fn(item) {

196 | pipe <- item

197 | }

198 | }, opts...)

199 | }

200 |

201 | // First returns the first item, nil if no items.

202 | func (s Stream) First() interface{} {

203 | for item := range s.source {

204 | // make sure the upstream goroutine doesn't block, and the current func returns fast.

205 | go drain(s.source)

206 | return item

207 | }

208 |

209 | return nil

210 | }

211 |

212 | // ForAll handles the streaming elements from the source; it is a terminal operation, so no later streams follow.

213 | func (s Stream) ForAll(fn ForAllFunc) {

214 | fn(s.source)

215 | // avoid goroutine leak on fn not consuming all items.

216 | go drain(s.source)

217 | }

218 |

219 | // ForEach seals the Stream with the ForEachFunc on each item, no successive operations.

220 | func (s Stream) ForEach(fn ForEachFunc) {

221 | for item := range s.source {

222 | fn(item)

223 | }

224 | }

225 |

226 | // Group groups the elements into different groups based on their keys.

227 | func (s Stream) Group(fn KeyFunc) Stream {

228 | groups := make(map[interface{}][]interface{})

229 | for item := range s.source {

230 | key := fn(item)

231 | groups[key] = append(groups[key], item)

232 | }

233 |

234 | source := make(chan interface{})

235 | go func() {

236 | for _, group := range groups {

237 | source <- group

238 | }

239 | close(source)

240 | }()

241 |

242 | return Range(source)

243 | }

244 |

245 | // Head returns the first n elements in s.

246 | func (s Stream) Head(n int64) Stream {

247 | if n < 1 {

248 | panic("n must be greater than 0")

249 | }

250 |

251 | source := make(chan interface{})

252 |

253 | go func() {

254 | for item := range s.source {

255 | n--

256 | if n >= 0 {

257 | source <- item

258 | }

259 | if n == 0 {

260 | // let the successive method go ASAP even if we have more items to skip

261 | close(source)

262 | // We drain instead of just breaking out of the loop: if we broke here,

263 | // the upstream goroutine would block forever on its next send,

264 | // which would cause a goroutine leak.

265 | drain(s.source)

266 | }

267 | }

268 | // not enough items in s.source, but we still need to let the successive method go ASAP.

269 | if n > 0 {

270 | close(source)

271 | }

272 | }()

273 |

274 | return Range(source)

275 | }

276 |

277 | // Last returns the last item, or nil if no items.

278 | func (s Stream) Last() (item interface{}) {

279 | for item = range s.source {

280 | }

281 | return

282 | }

283 |

284 | // Map converts each item to another corresponding item, which means it's a 1:1 model.

285 | func (s Stream) Map(fn MapFunc, opts ...Option) Stream {

286 | return s.Walk(func(item interface{}, pipe chan<- interface{}) {

287 | pipe <- fn(item)

288 | }, opts...)

289 | }

290 |

291 | // Merge merges all the items into a slice and generates a new stream.

292 | func (s Stream) Merge() Stream {

293 | var items []interface{}

294 | for item := range s.source {

295 | items = append(items, item)

296 | }

297 |

298 | source := make(chan interface{}, 1)

299 | source <- items

300 | close(source)

301 |

302 | return Range(source)

303 | }

304 |

305 | // NoneMatch returns whether all elements of this stream don't match the provided predicate.

306 | // May not evaluate the predicate on all elements if not necessary for determining the result.

307 | // If the stream is empty then true is returned and the predicate is not evaluated.

308 | func (s Stream) NoneMatch(predicate func(item interface{}) bool) bool {

309 | for item := range s.source {

310 | if predicate(item) {

311 | // make sure the upstream goroutine doesn't block, and the current func returns fast.

312 | go drain(s.source)

313 | return false

314 | }

315 | }

316 |

317 | return true

318 | }

319 |

320 | // Parallel applies the given ParallelFunc to each item concurrently with the given number of workers.

321 | func (s Stream) Parallel(fn ParallelFunc, opts ...Option) {

322 | s.Walk(func(item interface{}, pipe chan<- interface{}) {

323 | fn(item)

324 | }, opts...).Done()

325 | }

326 |

327 | // Reduce is a utility method to let the caller deal with the underlying channel.

328 | func (s Stream) Reduce(fn ReduceFunc) (interface{}, error) {

329 | return fn(s.source)

330 | }

331 |

332 | // Reverse reverses the elements in the stream.

333 | func (s Stream) Reverse() Stream {

334 | var items []interface{}

335 | for item := range s.source {

336 | items = append(items, item)

337 | }

338 | // reverse, official method

339 | for i := len(items)/2 - 1; i >= 0; i-- {

340 | opp := len(items) - 1 - i

341 | items[i], items[opp] = items[opp], items[i]

342 | }

343 |

344 | return Just(items...)

345 | }

346 |

347 | // Skip returns a Stream that skips the first n elements.

348 | func (s Stream) Skip(n int64) Stream {

349 | if n < 0 {

350 | panic("n must not be negative")

351 | }

352 | if n == 0 {

353 | return s

354 | }

355 |

356 | source := make(chan interface{})

357 |

358 | go func() {

359 | for item := range s.source {

360 | n--

361 | if n >= 0 {

362 | continue

363 | } else {

364 | source <- item

365 | }

366 | }

367 | close(source)

368 | }()

369 |

370 | return Range(source)

371 | }

372 |

373 | // Sort sorts the items from the underlying source.

374 | func (s Stream) Sort(less LessFunc) Stream {

375 | var items []interface{}

376 | for item := range s.source {

377 | items = append(items, item)

378 | }

379 | sort.Slice(items, func(i, j int) bool {

380 | return less(items[i], items[j])

381 | })

382 |

383 | return Just(items...)

384 | }

385 |

386 | // Split splits the elements into chunks of size up to n;

387 | // the last chunk may hold fewer than n elements.

388 | func (s Stream) Split(n int) Stream {

389 | if n < 1 {

390 | panic("n should be greater than 0")

391 | }

392 |

393 | source := make(chan interface{})

394 | go func() {

395 | var chunk []interface{}

396 | for item := range s.source {

397 | chunk = append(chunk, item)

398 | if len(chunk) == n {

399 | source <- chunk

400 | chunk = nil

401 | }

402 | }

403 | if chunk != nil {

404 | source <- chunk

405 | }

406 | close(source)

407 | }()

408 |

409 | return Range(source)

410 | }

411 |

412 | // Tail returns the last n elements in s.

413 | func (s Stream) Tail(n int64) Stream {

414 | if n < 1 {

415 | panic("n should be greater than 0")

416 | }

417 |

418 | source := make(chan interface{})

419 |

420 | go func() {

421 | ring := NewRing(int(n))

422 | for item := range s.source {

423 | ring.Add(item)

424 | }

425 | for _, item := range ring.Take() {

426 | source <- item

427 | }

428 | close(source)

429 | }()

430 |

431 | return Range(source)

432 | }

433 |

434 | // Walk lets the caller handle each item; the caller may write zero, one or more items based on the given item.

435 | func (s Stream) Walk(fn WalkFunc, opts ...Option) Stream {

436 | option := buildOptions(opts...)

437 | if option.unlimitedWorkers {

438 | return s.walkUnlimited(fn, option)

439 | }

440 |

441 | return s.walkLimited(fn, option)

442 | }

443 |

444 | func (s Stream) walkLimited(fn WalkFunc, option *rxOptions) Stream {

445 | pipe := make(chan interface{}, option.workers)

446 |

447 | go func() {

448 | var wg sync.WaitGroup

449 | pool := make(chan struct{}, option.workers)

450 |

451 | for item := range s.source {

452 | // important, used in another goroutine

453 | val := item

454 | pool <- struct{}{}

455 | wg.Add(1)

456 |

457 | go func() {

458 | defer func() {

459 | wg.Done()

460 | <-pool

461 | }()

462 |

463 | fn(val, pipe)

464 | }()

465 | }

466 |

467 | wg.Wait()

468 | close(pipe)

469 | }()

470 |

471 | return Range(pipe)

472 | }

473 |

474 | func (s Stream) walkUnlimited(fn WalkFunc, option *rxOptions) Stream {

475 | pipe := make(chan interface{}, option.workers)

476 |

477 | go func() {

478 | var wg sync.WaitGroup

479 |

480 | for item := range s.source {

481 | // important, used in another goroutine

482 | val := item

483 | wg.Add(1)

484 | go func() {

485 | defer wg.Done()

486 | fn(val, pipe)

487 | }()

488 | }

489 |

490 | wg.Wait()

491 | close(pipe)

492 | }()

493 |

494 | return Range(pipe)

495 | }

496 |

497 | // UnlimitedWorkers lets the caller use as many workers as the tasks.

498 | func UnlimitedWorkers() Option {

499 | return func(opts *rxOptions) {

500 | opts.unlimitedWorkers = true

501 | }

502 | }

503 |

504 | // WithWorkers lets the caller customize the concurrent workers.

505 | func WithWorkers(workers int) Option {

506 | return func(opts *rxOptions) {

507 | if workers < minWorkers {

508 | opts.workers = minWorkers

509 | } else {

510 | opts.workers = workers

511 | }

512 | }

513 | }

514 |

515 | // buildOptions returns a rxOptions with given customizations.

516 | func buildOptions(opts ...Option) *rxOptions {

517 | options := newOptions()

518 | for _, opt := range opts {

519 | opt(options)

520 | }

521 |

522 | return options

523 | }

524 |

525 | // drain drains the given channel.

526 | func drain(channel <-chan interface{}) {

527 | for range channel {

528 | }

529 | }

530 |

531 | // newOptions returns a default rxOptions.

532 | func newOptions() *rxOptions {

533 | return &rxOptions{

534 | workers: defaultWorkers,

535 | }

536 | }

537 |

--------------------------------------------------------------------------------
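The bounded-concurrency pattern in walkLimited (a buffered channel used as a counting semaphore, plus a WaitGroup to know when to close the output) can be sketched in isolation. The program below is an illustration under assumptions, not the package's API: `mapLimited` and `sumDoubled` are hypothetical helpers, and items are plain ints rather than interface{}.

```go
package main

import (
	"fmt"
	"sync"
)

// mapLimited applies fn to every item from in, running at most `workers`
// calls at the same time, and returns a channel of results.
func mapLimited(in <-chan int, workers int, fn func(int) int) <-chan int {
	out := make(chan int, workers)

	go func() {
		var wg sync.WaitGroup
		pool := make(chan struct{}, workers) // counting semaphore

		for item := range in {
			val := item        // per-iteration copy used by the goroutine below
			pool <- struct{}{} // blocks while `workers` calls are in flight
			wg.Add(1)

			go func() {
				defer func() {
					wg.Done()
					<-pool // release the slot
				}()
				out <- fn(val)
			}()
		}

		// Close the output only after every worker has finished sending.
		wg.Wait()
		close(out)
	}()

	return out
}

// sumDoubled doubles each item with a bounded number of workers and sums the results.
// Summing keeps the result independent of the order in which workers finish.
func sumDoubled(items []int, workers int) int {
	in := make(chan int, len(items))
	for _, it := range items {
		in <- it
	}
	close(in)

	sum := 0
	for v := range mapLimited(in, workers, func(x int) int { return 2 * x }) {
		sum += v
	}
	return sum
}

func main() {
	fmt.Println(sumDoubled([]int{1, 2, 3, 4}, 2)) // 20
}
```

Because workers finish in an arbitrary order, the output order of a pattern like this is not guaranteed to match the input order; the same applies to Map and Filter above, which are built on Walk.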

/stream_test.go:

--------------------------------------------------------------------------------

1 | package stream

2 |

3 | import (

4 | "io/ioutil"

5 | "log"

6 | "math/rand"

7 | "reflect"

8 | "runtime"

9 | "sort"

10 | "sync"

11 | "sync/atomic"

12 | "testing"

13 | "time"

14 |

15 | "github.com/stretchr/testify/assert"

16 | )

17 |

18 | func TestBuffer(t *testing.T) {

19 | runCheckedTest(t, func(t *testing.T) {

20 | const N = 5

21 | var count int32

22 | var wait sync.WaitGroup

23 | wait.Add(1)

24 | From(func(source chan<- interface{}) {

25 | ticker := time.NewTicker(10 * time.Millisecond)

26 | defer ticker.Stop()

27 |

28 | for i := 0; i < 2*N; i++ {

29 | select {

30 | case source <- i:

31 | atomic.AddInt32(&count, 1)

32 | case <-ticker.C:

33 | wait.Done()

34 | return

35 | }

36 | }

37 | }).Buffer(N).ForAll(func(pipe <-chan interface{}) {

38 | wait.Wait()

39 | // N+1 because, besides the N buffered items, one more item is taken from the source and is waiting to be sent into the channel

40 | assert.Equal(t, int32(N+1), atomic.LoadInt32(&count))

41 | })

42 | })

43 | }

44 |

45 | func TestBufferNegative(t *testing.T) {

46 | runCheckedTest(t, func(t *testing.T) {

47 | var result int

48 | Just(1, 2, 3, 4).Buffer(-1).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

49 | for item := range pipe {

50 | result += item.(int)

51 | }

52 | return result, nil

53 | })

54 | assert.Equal(t, 10, result)

55 | })

56 | }

57 |

58 | func TestCount(t *testing.T) {

59 | runCheckedTest(t, func(t *testing.T) {

60 | tests := []struct {

61 | name string

62 | elements []interface{}

63 | }{

64 | {

65 | name: "no elements with nil",

66 | },

67 | {

68 | name: "no elements",

69 | elements: []interface{}{},

70 | },

71 | {

72 | name: "1 element",

73 | elements: []interface{}{1},

74 | },

75 | {

76 | name: "multiple elements",

77 | elements: []interface{}{1, 2, 3},

78 | },

79 | }

80 |

81 | for _, test := range tests {

82 | t.Run(test.name, func(t *testing.T) {

83 | val := Just(test.elements...).Count()

84 | assert.Equal(t, len(test.elements), val)

85 | })

86 | }

87 | })

88 | }

89 |

90 | func TestDone(t *testing.T) {

91 | runCheckedTest(t, func(t *testing.T) {

92 | var count int32

93 | Just(1, 2, 3).Walk(func(item interface{}, pipe chan<- interface{}) {

94 | time.Sleep(time.Millisecond * 100)

95 | atomic.AddInt32(&count, int32(item.(int)))

96 | }).Done()

97 | assert.Equal(t, int32(6), count)

98 | })

99 | }

100 |

101 | func TestJust(t *testing.T) {

102 | runCheckedTest(t, func(t *testing.T) {

103 | var result int

104 | Just(1, 2, 3, 4).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

105 | for item := range pipe {

106 | result += item.(int)

107 | }

108 | return result, nil

109 | })

110 | assert.Equal(t, 10, result)

111 | })

112 | }

113 |

114 | func TestDistinct(t *testing.T) {

115 | runCheckedTest(t, func(t *testing.T) {

116 | var result int

117 | Just(4, 1, 3, 2, 3, 4).Distinct(func(item interface{}) interface{} {

118 | return item

119 | }).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

120 | for item := range pipe {

121 | result += item.(int)

122 | }

123 | return result, nil

124 | })

125 | assert.Equal(t, 10, result)

126 | })

127 | }

128 |

129 | func TestFilter(t *testing.T) {

130 | runCheckedTest(t, func(t *testing.T) {

131 | var result int

132 | Just(1, 2, 3, 4).Filter(func(item interface{}) bool {

133 | return item.(int)%2 == 0

134 | }).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

135 | for item := range pipe {

136 | result += item.(int)

137 | }

138 | return result, nil

139 | })

140 | assert.Equal(t, 6, result)

141 | })

142 | }

143 |

144 | func TestFirst(t *testing.T) {

145 | runCheckedTest(t, func(t *testing.T) {

146 | assert.Nil(t, Just().First())

147 | assert.Equal(t, "foo", Just("foo").First())

148 | assert.Equal(t, "foo", Just("foo", "bar").First())

149 | })

150 | }

151 |

152 | func TestForAll(t *testing.T) {

153 | runCheckedTest(t, func(t *testing.T) {

154 | var result int

155 | Just(1, 2, 3, 4).Filter(func(item interface{}) bool {

156 | return item.(int)%2 == 0

157 | }).ForAll(func(pipe <-chan interface{}) {

158 | for item := range pipe {

159 | result += item.(int)

160 | }

161 | })

162 | assert.Equal(t, 6, result)

163 | })

164 | }

165 |

166 | func TestGroup(t *testing.T) {

167 | runCheckedTest(t, func(t *testing.T) {

168 | var groups [][]int

169 | Just(10, 11, 20, 21).Group(func(item interface{}) interface{} {

170 | v := item.(int)

171 | return v / 10

172 | }).ForEach(func(item interface{}) {

173 | v := item.([]interface{})

174 | var group []int

175 | for _, each := range v {

176 | group = append(group, each.(int))

177 | }

178 | groups = append(groups, group)

179 | })

180 |

181 | assert.Equal(t, 2, len(groups))

182 | for _, group := range groups {

183 | assert.Equal(t, 2, len(group))

184 | assert.True(t, group[0]/10 == group[1]/10)

185 | }

186 | })

187 | }

188 |

189 | func TestHead(t *testing.T) {

190 | runCheckedTest(t, func(t *testing.T) {

191 | var result int

192 | Just(1, 2, 3, 4).Head(2).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

193 | for item := range pipe {

194 | result += item.(int)

195 | }

196 | return result, nil

197 | })

198 | assert.Equal(t, 3, result)

199 | })

200 | }

201 |

202 | func TestHeadZero(t *testing.T) {

203 | runCheckedTest(t, func(t *testing.T) {

204 | assert.Panics(t, func() {

205 | Just(1, 2, 3, 4).Head(0).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

206 | return nil, nil

207 | })

208 | })

209 | })

210 | }

211 |

212 | func TestHeadMore(t *testing.T) {

213 | runCheckedTest(t, func(t *testing.T) {

214 | var result int

215 | Just(1, 2, 3, 4).Head(6).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

216 | for item := range pipe {

217 | result += item.(int)

218 | }

219 | return result, nil

220 | })

221 | assert.Equal(t, 10, result)

222 | })

223 | }

224 |

225 | func TestLast(t *testing.T) {

226 | runCheckedTest(t, func(t *testing.T) {

227 | goroutines := runtime.NumGoroutine()

228 | assert.Nil(t, Just().Last())

229 | assert.Equal(t, "foo", Just("foo").Last())

230 | assert.Equal(t, "bar", Just("foo", "bar").Last())

231 | // let scheduler schedule first

232 | runtime.Gosched()

233 | assert.Equal(t, goroutines, runtime.NumGoroutine())

234 | })

235 | }

236 |

237 | func TestMap(t *testing.T) {

238 | runCheckedTest(t, func(t *testing.T) {

239 | log.SetOutput(ioutil.Discard)

240 |

241 | tests := []struct {

242 | name string

243 | mapper MapFunc

244 | expect int

245 | }{

246 | {

247 | name: "map with square",

248 | mapper: func(item interface{}) interface{} {

249 | v := item.(int)

250 | return v * v

251 | },

252 | expect: 30,

253 | },

254 | {

255 | name: "map ignore half",

256 | mapper: func(item interface{}) interface{} {

257 | v := item.(int)

258 | if v%2 == 0 {

259 | return 0

260 | }

261 | return v * v

262 | },

263 | expect: 10,

264 | },

265 | }

266 |

267 | // Map(...) works even WithWorkers(0)

268 | for i, test := range tests {

269 | t.Run(test.name, func(t *testing.T) {

270 | var result int

271 | var workers int

272 | if i%2 == 0 {

273 | workers = 0

274 | } else {

275 | workers = runtime.NumCPU()

276 | }

277 | From(func(source chan<- interface{}) {

278 | for i := 1; i < 5; i++ {

279 | source <- i

280 | }

281 | }).Map(test.mapper, WithWorkers(workers)).Reduce(

282 | func(pipe <-chan interface{}) (interface{}, error) {

283 | for item := range pipe {

284 | result += item.(int)

285 | }

286 | return result, nil

287 | })

288 |

289 | assert.Equal(t, test.expect, result)

290 | })

291 | }

292 | })

293 | }

294 |

295 | func TestMerge(t *testing.T) {

296 | runCheckedTest(t, func(t *testing.T) {

297 | Just(1, 2, 3, 4).Merge().ForEach(func(item interface{}) {

298 | assert.ElementsMatch(t, []interface{}{1, 2, 3, 4}, item.([]interface{}))

299 | })

300 | })

301 | }

302 |

303 | func TestParallelJust(t *testing.T) {

304 | runCheckedTest(t, func(t *testing.T) {

305 | var count int32

306 | Just(1, 2, 3).Parallel(func(item interface{}) {

307 | time.Sleep(time.Millisecond * 100)

308 | atomic.AddInt32(&count, int32(item.(int)))

309 | }, UnlimitedWorkers())

310 | assert.Equal(t, int32(6), count)

311 | })

312 | }

313 |

314 | func TestReverse(t *testing.T) {

315 | runCheckedTest(t, func(t *testing.T) {

316 | Just(1, 2, 3, 4).Reverse().Merge().ForEach(func(item interface{}) {

317 | assert.ElementsMatch(t, []interface{}{4, 3, 2, 1}, item.([]interface{}))

318 | })

319 | })

320 | }

321 |

322 | func TestSort(t *testing.T) {

323 | runCheckedTest(t, func(t *testing.T) {

324 | var prev int

325 | Just(5, 3, 7, 1, 9, 6, 4, 8, 2).Sort(func(a, b interface{}) bool {

326 | return a.(int) < b.(int)

327 | }).ForEach(func(item interface{}) {

328 | next := item.(int)

329 | assert.True(t, prev < next)

330 | prev = next

331 | })

332 | })

333 | }

334 |

335 | func TestSplit(t *testing.T) {

336 | runCheckedTest(t, func(t *testing.T) {

337 | assert.Panics(t, func() {

338 | Just(1, 2, 3, 4, 5, 6, 7, 8, 9, 10).Split(0).Done()

339 | })

340 | var chunks [][]interface{}

341 | Just(1, 2, 3, 4, 5, 6, 7, 8, 9, 10).Split(4).ForEach(func(item interface{}) {

342 | chunk := item.([]interface{})

343 | chunks = append(chunks, chunk)

344 | })

345 | assert.EqualValues(t, [][]interface{}{

346 | {1, 2, 3, 4},

347 | {5, 6, 7, 8},

348 | {9, 10},

349 | }, chunks)

350 | })

351 | }

352 |

353 | func TestTail(t *testing.T) {

354 | runCheckedTest(t, func(t *testing.T) {

355 | var result int

356 | Just(1, 2, 3, 4).Tail(2).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

357 | for item := range pipe {

358 | result += item.(int)

359 | }

360 | return result, nil

361 | })

362 | assert.Equal(t, 7, result)

363 | })

364 | }

365 |

366 | func TestTailZero(t *testing.T) {

367 | runCheckedTest(t, func(t *testing.T) {

368 | assert.Panics(t, func() {

369 | Just(1, 2, 3, 4).Tail(0).Reduce(func(pipe <-chan interface{}) (interface{}, error) {

370 | return nil, nil

371 | })

372 | })

373 | })

374 | }

375 |

376 | func TestWalk(t *testing.T) {

377 | runCheckedTest(t, func(t *testing.T) {

378 | var result int

379 | Just(1, 2, 3, 4, 5).Walk(func(item interface{}, pipe chan<- interface{}) {

380 | if item.(int)%2 != 0 {

381 | pipe <- item

382 | }

383 | }, UnlimitedWorkers()).ForEach(func(item interface{}) {

384 | result += item.(int)

385 | })

386 | assert.Equal(t, 9, result)

387 | })

388 | }

389 |

390 | func TestStream_AnyMach(t *testing.T) {

391 | runCheckedTest(t, func(t *testing.T) {

392 | assetEqual(t, false, Just(1, 2, 3).AnyMach(func(item interface{}) bool {

393 | return item.(int) == 4

394 | }))

395 | assetEqual(t, false, Just(1, 2, 3).AnyMach(func(item interface{}) bool {

396 | return item.(int) == 0

397 | }))

398 | assetEqual(t, true, Just(1, 2, 3).AnyMach(func(item interface{}) bool {

399 | return item.(int) == 2

400 | }))

401 | assetEqual(t, true, Just(1, 2, 3).AnyMach(func(item interface{}) bool {

402 | return item.(int) == 2

403 | }))

404 | })

405 | }

406 |

407 | func TestStream_AllMach(t *testing.T) {

408 | runCheckedTest(t, func(t *testing.T) {

409 | assetEqual(

410 | t, true, Just(1, 2, 3).AllMach(func(item interface{}) bool {

411 | return true

412 | }),

413 | )

414 | assetEqual(

415 | t, false, Just(1, 2, 3).AllMach(func(item interface{}) bool {

416 | return false

417 | }),

418 | )

419 | assetEqual(

420 | t, false, Just(1, 2, 3).AllMach(func(item interface{}) bool {

421 | return item.(int) == 1

422 | }),

423 | )

424 | })

425 | }

426 |

427 | func TestStream_NoneMatch(t *testing.T) {

428 | runCheckedTest(t, func(t *testing.T) {

429 | assetEqual(

430 | t, true, Just(1, 2, 3).NoneMatch(func(item interface{}) bool {

431 | return false

432 | }),

433 | )

434 | assetEqual(

435 | t, false, Just(1, 2, 3).NoneMatch(func(item interface{}) bool {

436 | return true

437 | }),

438 | )

439 | assetEqual(

440 | t, true, Just(1, 2, 3).NoneMatch(func(item interface{}) bool {

441 | return item.(int) == 4

442 | }),

443 | )

444 | })

445 | }

446 |

447 | func TestConcat(t *testing.T) {

448 | runCheckedTest(t, func(t *testing.T) {

449 | a1 := []interface{}{1, 2, 3}

450 | a2 := []interface{}{4, 5, 6}

451 | s1 := Just(a1...)

452 | s2 := Just(a2...)

453 | stream := Concat(s1, s2)

454 | var items []interface{}

455 | for item := range stream.source {

456 | items = append(items, item)

457 | }

458 | sort.Slice(items, func(i, j int) bool {

459 | return items[i].(int) < items[j].(int)

460 | })

461 | ints := make([]interface{}, 0)

462 | ints = append(ints, a1...)

463 | ints = append(ints, a2...)

464 | assetEqual(t, ints, items)

465 | })

466 | }

467 |

468 | func TestStream_Skip(t *testing.T) {

469 | runCheckedTest(t, func(t *testing.T) {

470 | assetEqual(t, 3, Just(1, 2, 3, 4).Skip(1).Count())

471 | assetEqual(t, 1, Just(1, 2, 3, 4).Skip(3).Count())

472 | assetEqual(t, 4, Just(1, 2, 3, 4).Skip(0).Count())

473 | equal(t, Just(1, 2, 3, 4).Skip(3), []interface{}{4})

474 | assert.Panics(t, func() {

475 | Just(1, 2, 3, 4).Skip(-1)

476 | })

477 | })

478 | }

479 |

480 | func TestStream_Concat(t *testing.T) {

481 | runCheckedTest(t, func(t *testing.T) {

482 | stream := Just(1).Concat(Just(2), Just(3))

483 | var items []interface{}

484 | for item := range stream.source {

485 | items = append(items, item)

486 | }

487 | sort.Slice(items, func(i, j int) bool {

488 | return items[i].(int) < items[j].(int)

489 | })

490 | assetEqual(t, []interface{}{1, 2, 3}, items)

491 |

492 | just := Just(1)

493 | equal(t, just.Concat(just), []interface{}{1})

494 | })

495 | }

496 |

497 | func BenchmarkParallelMapReduce(b *testing.B) {

498 | b.ReportAllocs()

499 |

500 | mapper := func(v interface{}) interface{} {

501 | return v.(int64) * v.(int64)

502 | }

503 | reducer := func(input <-chan interface{}) (interface{}, error) {

504 | var result int64

505 | for v := range input {

506 | result += v.(int64)

507 | }

508 | return result, nil

509 | }

510 | b.ResetTimer()

511 | From(func(input chan<- interface{}) {

512 | b.RunParallel(func(pb *testing.PB) {

513 | for pb.Next() {

514 | input <- int64(rand.Int())

515 | }

516 | })

517 | }).Map(mapper).Reduce(reducer)

518 | }

519 |

520 | func BenchmarkMapReduce(b *testing.B) {

521 | b.ReportAllocs()

522 |

523 | mapper := func(v interface{}) interface{} {

524 | return v.(int64) * v.(int64)

525 | }

526 | reducer := func(input <-chan interface{}) (interface{}, error) {

527 | var result int64

528 | for v := range input {

529 | result += v.(int64)

530 | }

531 | return result, nil

532 | }

533 | b.ResetTimer()

534 | From(func(input chan<- interface{}) {

535 | for i := 0; i < b.N; i++ {

536 | input <- int64(rand.Int())

537 | }

538 | }).Map(mapper).Reduce(reducer)

539 | }

540 |

541 | func assetEqual(t *testing.T, except, data interface{}) {

542 | if !reflect.DeepEqual(except, data) {

543 | t.Errorf(" %v, want %v", data, except)

544 | }

545 | }

546 |

547 | func equal(t *testing.T, stream Stream, data []interface{}) {

548 | items := make([]interface{}, 0)

549 | for item := range stream.source {

550 | items = append(items, item)

551 | }

552 | if !reflect.DeepEqual(items, data) {

553 | t.Errorf(" %v, want %v", items, data)

554 | }

555 | }

556 |

557 | func runCheckedTest(t *testing.T, fn func(t *testing.T)) {

558 | goroutines := runtime.NumGoroutine()

559 | fn(t)

560 | // let scheduler schedule first

561 | time.Sleep(time.Millisecond)

562 | assert.True(t, runtime.NumGoroutine() <= goroutines)

563 | }

564 |

--------------------------------------------------------------------------------

/readme-cn.md:

--------------------------------------------------------------------------------

1 | # stream

2 |

3 | [English](readme.md) | 简体中文

4 |

5 | [](https://github.com/kevwan/stream/actions)

6 | [](https://codecov.io/gh/kevwan/stream)

7 | [](https://goreportcard.com/report/github.com/kevwan/stream)

8 | [](https://github.com/kevwan/stream)

9 | [](https://opensource.org/licenses/MIT)

10 |

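11 | ## Why this project exists

12 |

13 | `stream` is actually part of [go-zero](https://github.com/zeromicro/go-zero), where it is called `fx`. Some users asked whether `fx` could be used on its own without pulling in the `go-zero` dependency, so after some consideration I decided to publish it as a standalone package. That said, I strongly recommend using `go-zero`, because it offers many other useful features.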

14 |

15 |  16 |

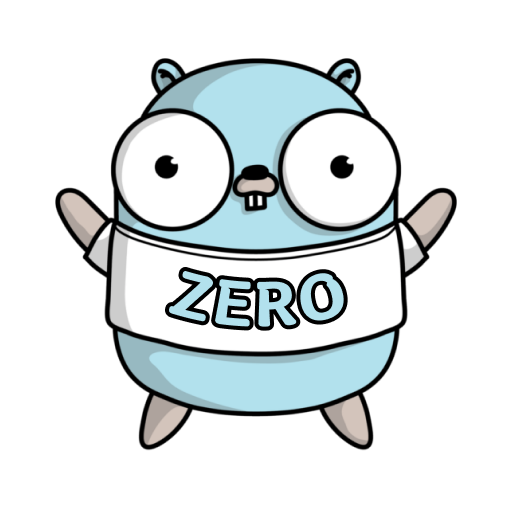

17 | ## 什么是流处理

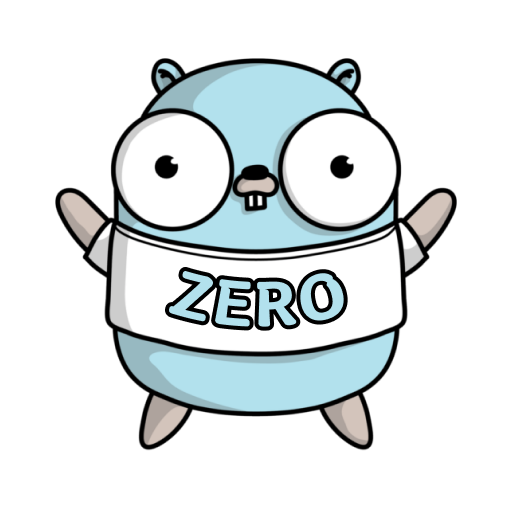

18 |

19 | 如果有 java 使用经验的同学一定会对 java8 的 Stream 赞不绝口,极大的提高了们对于集合类型数据的处理能力。

20 |

21 | ```java

22 | int sum = widgets.stream()

23 | .filter(w -> w.getColor() == RED)

24 | .mapToInt(w -> w.getWeight())

25 | .sum();

26 | ```

27 |

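28 | Stream lets us process data with chained calls in a functional programming style. The data appears to flow continuously through a pipeline, being transformed at each step and finally aggregated. The idea behind Stream is to abstract the processing flow into a data stream, where each operation returns a new stream for the next step to use.

29 |

30 | ## Defining the Stream features

31 |

32 | Before writing any code, the most important step is to think the requirements through. Let's take the author's point of view and reason about how the whole component is built. We set the underlying implementation aside for now and start by defining the features of stream from scratch.

33 |

34 | The Stream workflow is essentially a producer-consumer model, and the whole process closely resembles a factory production line. Let's first define the Stream life cycle:

35 |

36 | 1. Creation stage / data acquisition (raw materials)

37 | 2. Processing stage / intermediate operations (assembly line)

38 | 3. Aggregation stage / terminal operations (final product)

39 |

40 | The API is defined around these three life-cycle stages:

41 |

42 | #### Creation stage

43 |

44 | This stage creates the abstract stream object; think of these functions as constructors.

45 |

46 | Three ways of constructing a stream are supported: from a slice, from a channel, and from a function.

47 |

48 | Note that the functions in this stage are ordinary exported functions, not methods bound to the Stream object.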

49 |

50 | ```Go

51 | // Create a stream from variadic arguments

52 | func Just(items ...interface{}) Stream

53 |

54 | // Create a stream from a channel

55 | func Range(source <-chan interface{}) Stream

56 |

57 | // Create a stream from a generator function

58 | func From(generate GenerateFunc) Stream

59 |

60 | // Concatenate streams

61 | func Concat(s Stream, others ...Stream) Stream

62 | ```

63 |

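64 | #### Processing stage

65 |

66 | The operations in the processing stage usually correspond to business logic, such as mapping, filtering, deduplication, and sorting.

67 |

68 | The APIs in this stage are methods bound to the Stream object.

69 |

70 | Based on common business scenarios, they are defined as follows: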

71 |

72 | ```Go

73 | // Remove duplicate items

74 | Distinct(keyFunc KeyFunc) Stream

75 | // Filter items by a condition

76 | Filter(filterFunc FilterFunc, opts ...Option) Stream

77 | // Group items

78 | Group(fn KeyFunc) Stream

79 | // Return the first n items

80 | Head(n int64) Stream

81 | // Return the last n items

82 | Tail(n int64) Stream

83 | // Transform items

84 | Map(fn MapFunc, opts ...Option) Stream

85 | // Merge all items into one slice and emit it as a new stream

86 | Merge() Stream

87 | // Reverse the items

88 | Reverse() Stream

89 | // Sort the items

90 | Sort(fn LessFunc) Stream

91 | // Apply a function to each item

92 | Walk(fn WalkFunc, opts ...Option) Stream

93 | // Concatenate other streams

94 | Concat(streams ...Stream) Stream

95 | ```

96 |

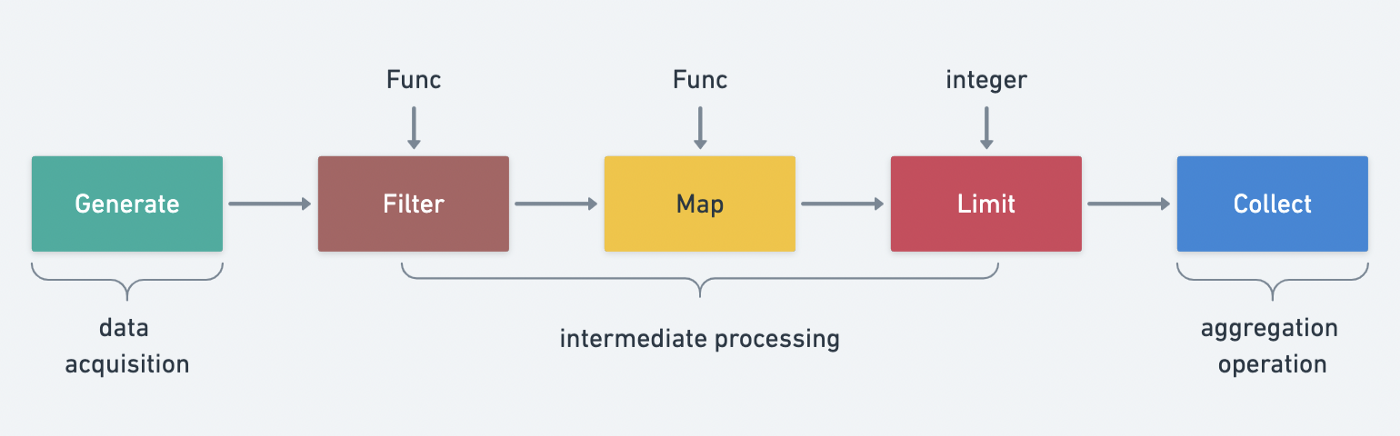

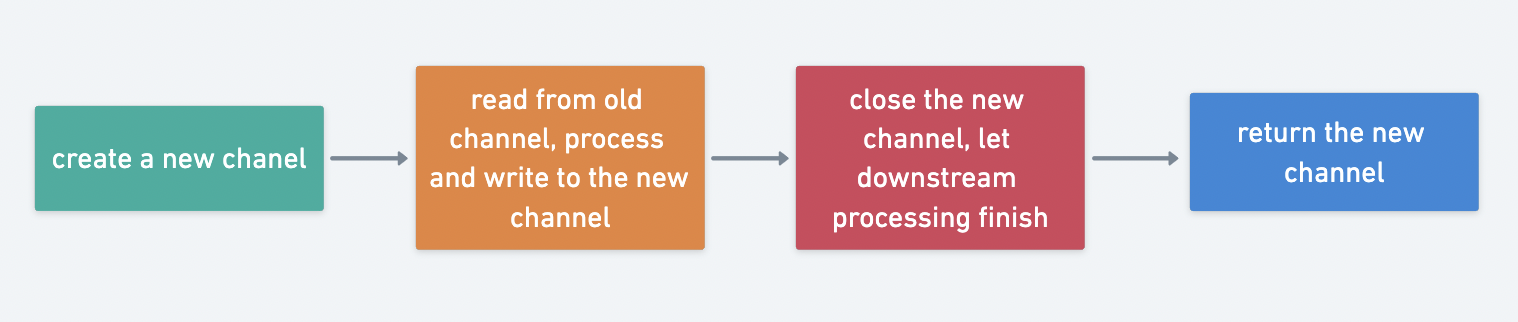

97 | 加工阶段的处理逻辑都会返回一个新的 Stream 对象,这里有个基本的实现范式

98 |

99 |

16 |

17 | ## 什么是流处理

18 |

19 | 如果有 java 使用经验的同学一定会对 java8 的 Stream 赞不绝口,极大的提高了们对于集合类型数据的处理能力。

20 |

21 | ```java

22 | int sum = widgets.stream()

23 | .filter(w -> w.getColor() == RED)

24 | .mapToInt(w -> w.getWeight())

25 | .sum();

26 | ```

27 |

28 | Stream 能让我们支持链式调用和函数编程的风格来实现数据的处理,看起来数据像是在流水线一样不断的实时流转加工,最终被汇总。Stream 的实现思想就是将数据处理流程抽象成了一个数据流,每次加工后返回一个新的流供使用。

29 |

30 | ## Stream 功能定义

31 |

32 | 动手写代码之前,先想清楚,把需求理清楚是最重要的一步,我们尝试代入作者的视角来思考整个组件的实现流程。首先把底层实现的逻辑放一下 ,先尝试从零开始进行功能定义 stream 功能。

33 |

34 | Stream 的工作流程其实也属于生产消费者模型,整个流程跟工厂中的生产流程非常相似,尝试先定义一下 Stream 的生命周期:

35 |

36 | 1. 创建阶段/数据获取(原料)

37 | 2. 加工阶段/中间处理(流水线加工)

38 | 3. 汇总阶段/终结操作(最终产品)

39 |

40 | 下面围绕 stream 的三个生命周期开始定义 API:

41 |

42 | #### 创建阶段

43 |

44 | 为了创建出数据流 stream 这一抽象对象,可以理解为构造器。

45 |

46 | 我们支持三种方式构造 stream,分别是:切片转换,channel 转换,函数式转换。

47 |

48 | 注意这个阶段的方法都是普通的公开方法,并不绑定 Stream 对象。

49 |

50 | ```Go

51 | // 通过可变参数模式创建 stream

52 | func Just(items ...interface{}) Stream

53 |

54 | // 通过 channel 创建 stream

55 | func Range(source <-chan interface{}) Stream

56 |

57 | // 通过函数创建 stream

58 | func From(generate GenerateFunc) Stream

59 |

60 | // 拼接 stream

61 | func Concat(s Stream, others ...Stream) Stream

62 | ```

63 |

64 | #### 加工阶段

65 |

66 | 加工阶段需要进行的操作往往对应了我们的业务逻辑,比如:转换,过滤,去重,排序等等。

67 |

68 | 这个阶段的 API 属于 method 需要绑定到 Stream 对象上。

69 |

70 | 结合常用的业务场景进行如下定义:

71 |

72 | ```Go

73 | // 去除重复item

74 | Distinct(keyFunc KeyFunc) Stream

75 | // 按条件过滤item

76 | Filter(filterFunc FilterFunc, opts ...Option) Stream

77 | // 分组

78 | Group(fn KeyFunc) Stream

79 | // 返回前n个元素

80 | Head(n int64) Stream

81 | // 返回后n个元素

82 | Tail(n int64) Stream

83 | // 转换对象

84 | Map(fn MapFunc, opts ...Option) Stream

85 | // 合并item到slice生成新的stream

86 | Merge() Stream

87 | // 反转

88 | Reverse() Stream

89 | // 排序

90 | Sort(fn LessFunc) Stream

91 | // 作用在每个item上

92 | Walk(fn WalkFunc, opts ...Option) Stream

93 | // 聚合其他Stream

94 | Concat(streams ...Stream) Stream

95 | ```

96 |

97 | 加工阶段的处理逻辑都会返回一个新的 Stream 对象,这里有个基本的实现范式

98 |

99 |  100 |

101 | #### Aggregation stage

102 |

103 | The aggregation stage produces the result we actually want, for example checking for matches, counting, or iterating.

104 |

105 | ```Go

106 | // Check whether all items match

107 | AllMatch(fn PredicateFunc) bool

108 | // Check whether at least one item matches

109 | AnyMatch(fn PredicateFunc) bool

110 | // Check that no item matches

111 | NoneMatch(fn PredicateFunc) bool

112 | // Count the items

113 | Count() int

114 | // Drain the stream

115 | Done()

116 | // Run an operation over all items

117 | ForAll(fn ForAllFunc)

118 | // Run an operation on each item

119 | ForEach(fn ForEachFunc)

120 | ```

121 |

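122 | With the requirements and boundaries sorted out, we have a clearer picture of the Stream we are about to implement. In my view, a real architect grasps requirements and their future evolution with great precision, which is only possible through thinking deeply about the requirements and seeing the essence behind them. Replaying the construction of a project from the author's point of view and learning the author's way of thinking is where the greatest value of studying open source projects lies.

123 |

124 | Now let's try to define the complete Stream interface and the related function types.

125 |

126 | > An interface is more than a template. Its power of abstraction lets us lay out the overall framework of a project without getting lost in details from the start, and lets us express our thinking quickly and concisely. Make a habit of thinking top-down and observing the whole system from a macro perspective; diving into details too early makes it easy to lose direction.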

127 |

128 | ```Go

129 | rxOptions struct {

130 | unlimitedWorkers bool

131 | workers int

132 | }

133 | Option func(opts *rxOptions)

134 | // Key generator

135 | // item - an element in the stream

136 | KeyFunc func(item interface{}) interface{}

137 | // Filter function

138 | FilterFunc func(item interface{}) bool

139 | // Item transformation function

140 | MapFunc func(item interface{}) interface{}

141 | // Comparison function

142 | LessFunc func(a, b interface{}) bool

143 | // Walk function

144 | WalkFunc func(item interface{}, pip chan<- interface{})

145 | // Predicate function

146 | PredicateFunc func(item interface{}) bool

147 | // Runs an operation over all items

148 | ForAllFunc func(pip <-chan interface{})

149 | // Runs an operation on each item

150 | ForEachFunc func(item interface{})

151 | // Runs an operation on each item concurrently

152 | ParallelFunc func(item interface{})

153 | // Runs an aggregation over all items

154 | ReduceFunc func(pip <-chan interface{}) (interface{}, error)

155 | // Item generator; it writes items into source

156 | GenerateFunc func(source chan<- interface{})

157 |

158 | Stream interface {

159 | // Remove duplicate items

160 | Distinct(keyFunc KeyFunc) Stream

161 | // Filter items by a condition

162 | Filter(filterFunc FilterFunc, opts ...Option) Stream

163 | // Group items

164 | Group(fn KeyFunc) Stream

165 | // Return the first n items

166 | Head(n int64) Stream

167 | // Return the last n items

168 | Tail(n int64) Stream

169 | // Get the first item

170 | First() interface{}

171 | // Get the last item

172 | Last() interface{}

173 | // Transform items

174 | Map(fn MapFunc, opts ...Option) Stream

175 | // Merge all items into one slice and emit it as a new stream

176 | Merge() Stream

177 | // Reverse the items

178 | Reverse() Stream

179 | // Sort the items

180 | Sort(fn LessFunc) Stream

181 | // Apply a function to each item

182 | Walk(fn WalkFunc, opts ...Option) Stream

183 | // Concatenate other streams

184 | Concat(streams ...Stream) Stream

185 | // Check whether all items match

186 | AllMatch(fn PredicateFunc) bool

187 | // Check whether at least one item matches

188 | AnyMatch(fn PredicateFunc) bool

189 | // Check that no item matches

190 | NoneMatch(fn PredicateFunc) bool

191 | // Count the items

192 | Count() int

193 | // Drain the stream

194 | Done()

195 | // Run an operation over all items

196 | ForAll(fn ForAllFunc)

197 | // Run an operation on each item

198 | ForEach(fn ForEachFunc)

199 | }

200 | ```

201 |

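202 | The `channel()` method returns the Stream's underlying channel. Because the implementation is written against the interface type, it exposes this unexported method so the channel can be read.

203 |

204 | ```Go

205 | // Returns the internal channel that holds the data; internal method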

206 | channel() chan interface{}

207 | ```

208 |

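209 | ## Implementation approach

210 |

211 | With the features defined, a few engineering questions need to be considered next.

212 |

213 | ### How to implement chained calls

214 |

215 | Chained calls can be achieved with the builder pattern commonly used for object construction. Stream works on the same principle: every call creates a new Stream and returns it to the caller.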

216 |

217 | ```Go

218 | // Remove duplicate items

219 | Distinct(keyFunc KeyFunc) Stream

220 | // Filter items by a condition

221 | Filter(filterFunc FilterFunc, opts ...Option) Stream

222 | ```

223 |

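224 | ### How to implement pipeline processing

225 |

226 | The pipeline can be understood as the container that holds the data inside a Stream. In Go we can use a channel as the data pipe, so that chaining several Stream operations runs in an **asynchronous, non-blocking** way.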

227 |
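
The channel-as-pipeline idea can be sketched without the library. The following is a minimal illustration only, not the package's code: `generate`, `square`, and `sum` are hypothetical stage names. Each stage owns a goroutine, reads from the upstream channel, and closes its own output when it is done.

```Go
package main

import "fmt"

// generate emits the given numbers on a channel and closes it when done.
func generate(nums ...int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for _, n := range nums {
			out <- n
		}
	}()
	return out
}

// square reads from in, squares each value, and forwards it downstream.
func square(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for n := range in {
			out <- n * n
		}
	}()
	return out
}

// sum is the terminal stage: it drains the channel and adds everything up.
func sum(in <-chan int) int {
	total := 0
	for n := range in {
		total += n
	}
	return total
}

func main() {
	// The stages run concurrently; only sum blocks the caller.
	fmt.Println(sum(square(generate(1, 2, 3, 4))))
}
```

Because every stage returns a fresh channel, stages compose by plain function nesting, which is the same property the chained Stream methods rely on.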

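228 | ### How to support parallel processing

229 |

230 | Processing data essentially means processing the items in a channel, so parallel processing is simply a matter of consuming the channel in parallel. Goroutines together with `sync.WaitGroup` make this easy to implement.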

231 |
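
As a rough sketch of that idea (not the library's implementation), the hypothetical `parallelSum` below starts one goroutine per item read from the channel and uses a `sync.WaitGroup` to wait for all of them before returning.

```Go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// parallelSum applies fn to every item from in, one goroutine per item,
// and returns the sum of the results once all goroutines have finished.
func parallelSum(in <-chan int, fn func(int) int) int64 {
	var total int64
	var wg sync.WaitGroup
	for n := range in {
		wg.Add(1)
		go func(v int) { // v is a per-goroutine copy of the loop variable
			defer wg.Done()
			atomic.AddInt64(&total, int64(fn(v)))
		}(n)
	}
	wg.Wait() // every Done happens before Wait returns
	return total
}

func main() {
	in := make(chan int, 3)
	for _, n := range []int{1, 2, 3} {
		in <- n
	}
	close(in)
	fmt.Println(parallelSum(in, func(v int) int { return v * v }))
}
```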

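232 | ## go-zero implementation

233 |

234 | `core/fx/stream.go`

235 |

236 | The Stream implementation in go-zero does not define an interface, but that does not matter: the underlying logic is the same.

237 |

238 | To implement the Stream interface we define an internal implementation type whose `source` field is a channel that plays the role of the pipeline.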

239 |

240 | ```Go

241 | Stream struct {

242 | source <-chan interface{}

243 | }

244 | ```

245 |

246 | ### Creation API

247 |

248 | #### Range: create from a channel

249 |

250 | Create a stream from a channel.

251 |

252 | ```Go

253 | func Range(source <-chan interface{}) Stream {

254 | return Stream{

255 | source: source,

256 | }

257 | }

258 | ```

259 |

260 | #### Just: create from variadic arguments

261 |

262 | Create a stream from variadic arguments. Closing the channel promptly once writing is finished is a good habit.

263 |

264 | ```Go

265 | func Just(items ...interface{}) Stream {

266 | source := make(chan interface{}, len(items))

267 | for _, item := range items {

268 | source <- item

269 | }

270 | close(source)

271 | return Range(source)

272 | }

273 | ```

274 |

275 | #### From: create from a function

276 |

277 | Create a Stream from a function.

278 |

279 | ```Go

280 | func From(generate GenerateFunc) Stream {

281 | source := make(chan interface{})

282 | threading.GoSafe(func() {

283 | defer close(source)

284 | generate(source)

285 | })

286 | return Range(source)

287 | }

288 | ```

289 |

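290 | Because this calls a function passed in from outside, its execution is beyond our control, so runtime panics must be recovered to keep them from propagating upward and crashing the application.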

291 |

292 | ```Go

293 | func Recover(cleanups ...func()) {

294 | for _, cleanup := range cleanups {

295 | cleanup()

296 | }

297 | if r := recover(); r != nil {

298 | logx.ErrorStack(r)

299 | }

300 | }

301 |

302 | func RunSafe(fn func()) {

303 | defer rescue.Recover()

304 | fn()

305 | }

306 |

307 | func GoSafe(fn func()) {

308 | go RunSafe(fn)

309 | }

310 | ```

311 |
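
The effect of this recovery wrapper can be tried in isolation. The sketch below is an assumption-laden stand-in rather than go-zero's code: `runSafe` is a hypothetical helper that prints the recovered value instead of calling `logx.ErrorStack`, and it reports whether a panic was caught.

```Go
package main

import "fmt"

// runSafe runs fn and turns a panic into a printed message instead of a crash.
// It reports whether a panic was recovered.
func runSafe(fn func()) (recovered bool) {
	defer func() {
		// recover only works when called directly inside a deferred function.
		if r := recover(); r != nil {
			recovered = true
			fmt.Println("recovered:", r)
		}
	}()
	fn()
	return false
}

func main() {
	done := make(chan bool)
	go func() {
		// Without runSafe, this panic would terminate the whole program.
		done <- runSafe(func() { panic("boom") })
	}()
	fmt.Println("panic recovered:", <-done)
}
```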

312 | #### Concat: concatenate streams

313 |

314 | Concatenates other Streams into a new Stream by calling the internal `Concat` method; the source of the `Concat` method is analyzed later.

315 |

316 | ```Go

317 | func Concat(s Stream, others ...Stream) Stream {

318 | return s.Concat(others...)

319 | }

320 | ```

321 |

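322 | ### Processing API

323 |

324 | #### Distinct: deduplicate

325 |

326 | Because the argument is a function, `KeyFunc func(item interface{}) interface{}`, deduplication can be customized for the business scenario. Under the hood, the results returned by KeyFunc are stored as map keys to detect duplicates.

327 |

328 | Function parameters are very powerful and greatly improve flexibility.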

329 |

330 | ```Go

331 | func (s Stream) Distinct(keyFunc KeyFunc) Stream {

332 | source := make(chan interface{})

333 | threading.GoSafe(func() {

334 | // closing the channel when done is a good habit

335 | defer close(source)

336 | keys := make(map[interface{}]lang.PlaceholderType)

337 | for item := range s.source {

338 | // custom deduplication logic

339 | key := keyFunc(item)

340 | // if the key has not been seen yet, write the item to the new channel

341 | if _, ok := keys[key]; !ok {

342 | source <- item

343 | keys[key] = lang.Placeholder

344 | }

345 | }

346 | })

347 | return Range(source)

348 | }

349 | ```

350 |

351 | Usage example:

352 |

353 | ```Go

354 | // 1 2 3 4 5

355 | Just(1, 2, 3, 3, 4, 5, 5).Distinct(func(item interface{}) interface{} {

356 | return item

357 | }).ForEach(func(item interface{}) {

358 | t.Log(item)

359 | })

360 |

361 | // 1 2 3 4

362 | Just(1, 2, 3, 3, 4, 5, 5).Distinct(func(item interface{}) interface{} {

363 | uid := item.(int)

364 | // items greater than 3 all map to the same key, so only the first of them is kept

365 | if uid > 3 {

366 | return 4

367 | }

368 | return item

369 | }).ForEach(func(item interface{}) {

370 | t.Log(item)

371 | })

372 | ```

373 |
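
For readers who want to run the key-based deduplication without the library, here is a self-contained sketch under stated assumptions: `distinctBy`, `just`, and `collect` are hypothetical helpers written for this example, and the keys returned by `keyOf` must be comparable so they can be used as map keys.

```Go
package main

import "fmt"

// distinctBy forwards only the first item seen for each key.
// keyOf plays the role that KeyFunc plays in the text.
func distinctBy(in <-chan interface{}, keyOf func(interface{}) interface{}) <-chan interface{} {
	out := make(chan interface{})
	go func() {
		defer close(out)
		seen := make(map[interface{}]struct{}) // empty struct values take no space
		for item := range in {
			k := keyOf(item)
			if _, ok := seen[k]; !ok {
				seen[k] = struct{}{}
				out <- item
			}
		}
	}()
	return out
}

// just loads the values into a closed, buffered channel.
func just(items ...interface{}) <-chan interface{} {
	ch := make(chan interface{}, len(items))
	for _, item := range items {
		ch <- item
	}
	close(ch)
	return ch
}

// collect drains a channel into a slice.
func collect(in <-chan interface{}) []interface{} {
	var items []interface{}
	for item := range in {
		items = append(items, item)
	}
	return items
}

func main() {
	got := collect(distinctBy(just(1, 2, 3, 3, 4, 5, 5), func(item interface{}) interface{} {
		if item.(int) > 3 {
			return 4 // every value above 3 shares one key
		}
		return item
	}))
	fmt.Println(got)
}
```

A single goroutine does the filtering here, so the surviving items keep their original order.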

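374 | #### Filter

375 |

376 | The filtering logic is abstracted into a FilterFunc that is applied to each item; the boolean it returns decides whether the item is written to the new channel. The actual work is delegated to the Walk method.

377 |

378 | The Option parameter carries two settings:

379 |

380 | 1. unlimitedWorkers: do not limit the number of goroutines

381 | 2. workers: limit the number of goroutines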

382 |

383 | ```Go

384 | FilterFunc func(item interface{}) bool

385 |

386 | func (s Stream) Filter(filterFunc FilterFunc, opts ...Option) Stream {

387 | return s.Walk(func(item interface{}, pip chan<- interface{}) {

388 | if filterFunc(item) {

389 | pip <- item

390 | }

391 | }, opts...)

392 | }

393 | ```

394 |

395 | Usage example:

396 |

397 | ```Go

398 | func TestInternalStream_Filter(t *testing.T) {

399 | // keep the even numbers 2, 4

400 | channel := Just(1, 2, 3, 4, 5).Filter(func(item interface{}) bool {

401 | return item.(int)%2 == 0

402 | }).channel()

403 | for item := range channel {

404 | t.Log(item)

405 | }

406 | }

407 | ```

408 |

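409 | #### Walk: apply to each item

410 |

411 | Literally, walk means to go on foot; here it means running WalkFunc once for every item and writing the results into a new Stream.

412 |

413 | Note that because goroutines read and write the data asynchronously, the order of the items in the new Stream's channel is not guaranteed.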

414 |

415 | ```Go

416 | // item: an element in the stream

417 | // pipe: write the item to pipe if it qualifies

418 | WalkFunc func(item interface{}, pipe chan<- interface{})

419 |

420 | func (s Stream) Walk(fn WalkFunc, opts ...Option) Stream {

421 | option := buildOptions(opts...)

422 | if option.unlimitedWorkers {

423 | return s.walkUnLimited(fn, option)

424 | }

425 | return s.walkLimited(fn, option)

426 | }

427 |

428 | func (s Stream) walkUnLimited(fn WalkFunc, option *rxOptions) Stream {

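429 | // create a buffered channel

430 | // the default capacity is 16; writes block once more than 16 items are pending

431 | pipe := make(chan interface{}, defaultWorkers)

432 | go func() {

433 | var wg sync.WaitGroup

434 |

435 | for item := range s.source {

436 | // every item in s.source must be read

437 | // this is also why a channel should be closed once writing is finished:

438 | // if it is never closed, this goroutine may block forever and leak

439 | // important: copy item into val; using the loop variable directly in another goroutine is a classic concurrency pitfall

440 | val := item

441 | wg.Add(1)

442 | // run the function in safe mode

443 | threading.GoSafe(func() {

444 | defer wg.Done()

445 | fn(val, pipe)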

446 | })

447 | }

448 | wg.Wait()

449 | close(pipe)

450 | }()

451 |

452 | // return the new Stream

453 | return Range(pipe)

454 | }

455 |

456 | func (s Stream) walkLimited(fn WalkFunc, option *rxOptions) Stream {

457 | pipe := make(chan interface{}, option.workers)

458 | go func() {

459 | var wg sync.WaitGroup

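460 | // limits the number of goroutines

461 | pool := make(chan lang.PlaceholderType, option.workers)

462 |

463 | for item := range s.source {

464 | // important: copy item into val; using the loop variable directly in another goroutine is a classic concurrency pitfall

465 | val := item

466 | // blocks once the goroutine limit is reached

467 | pool <- lang.Placeholder

468 | // this is also why a channel should be closed once writing is finished:

469 | // if it is never closed, this goroutine may block forever and leak

470 | wg.Add(1)

471 |

472 | // run the function in safe mode

473 | threading.GoSafe(func() {

474 | defer func() {

475 | wg.Done()

476 | // when done, receive from pool once to free a goroutine slot

477 | <-pool

478 | }()

479 | fn(val, pipe)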

480 | })

481 | }

482 | wg.Wait()

483 | close(pipe)

484 | }()

485 | return Range(pipe)

486 | }

487 | ```

488 |

489 | Usage example:

490 |

491 | The order of the returned items is not guaranteed.

492 |

493 | ```Go

494 | func Test_Stream_Walk(t *testing.T) {

495 | // may return, for example, 300,100,200

496 | Just(1, 2, 3).Walk(func(item interface{}, pip chan<- interface{}) {

497 | pip <- item.(int) * 100

498 | }, WithWorkers(3)).ForEach(func(item interface{}) {

499 | t.Log(item)

500 | })

501 | }

502 | ```

503 |
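
The worker limit in `walkLimited` comes from using a buffered channel as a counting semaphore. The sketch below isolates that technique; it is an illustration under assumptions, not the library's code. The hypothetical `walkLimited` here works on `int` items, sums the results of `fn`, and additionally records the peak number of goroutines running at once so the limit can be observed.

```Go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// walkLimited applies fn to every item from in with at most `workers`
// goroutines running at a time. It returns the sum of the results and the
// highest concurrency that was observed.
func walkLimited(in <-chan int, workers int, fn func(int) int) (sum int64, peak int32) {
	if workers < 1 {
		panic("workers must be greater than 0")
	}
	var wg sync.WaitGroup
	var running int32
	pool := make(chan struct{}, workers) // buffered channel used as a semaphore

	for item := range in {
		pool <- struct{}{} // blocks while `workers` goroutines are still running
		wg.Add(1)
		go func(v int) {
			defer func() {
				atomic.AddInt32(&running, -1)
				<-pool // release the slot
				wg.Done()
			}()
			cur := atomic.AddInt32(&running, 1)
			for { // raise peak to cur if cur is larger
				old := atomic.LoadInt32(&peak)
				if cur <= old || atomic.CompareAndSwapInt32(&peak, old, cur) {
					break
				}
			}
			atomic.AddInt64(&sum, int64(fn(v)))
		}(item)
	}
	wg.Wait()
	return sum, peak
}

func main() {
	in := make(chan int, 10)
	for i := 1; i <= 10; i++ {
		in <- i
	}
	close(in)
	sum, peak := walkLimited(in, 2, func(v int) int { return v * 2 })
	fmt.Println(sum, peak <= 2)
}
```

A slot is taken before each goroutine starts and released only after its work is done, so the number of running goroutines can never exceed the channel's capacity.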

504 | #### Group

505 |

506 | Items are matched by key and collected into a map.

507 |

508 | ```Go

509 | KeyFunc func(item interface{}) interface{}

510 |

511 | func (s Stream) Group(fn KeyFunc) Stream {

512 | groups := make(map[interface{}][]interface{})

513 | for item := range s.source {

514 | key := fn(item)

515 | groups[key] = append(groups[key], item)

516 | }

517 | source := make(chan interface{})

518 | go func() {

519 | for _, group := range groups {

520 | source <- group

521 | }

522 | close(source)

523 | }()

524 | return Range(source)

525 | }

526 | ```

527 |

528 | #### Head: take the first n items

529 |

530 | If n is greater than the actual number of items, all items are returned.

531 |

532 | ```Go

533 | func (s Stream) Head(n int64) Stream {

534 | if n < 1 {

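535 | panic("n must be greater than 0")

536 | }

537 | source := make(chan interface{})

538 | go func() {

539 | for item := range s.source {

540 | n--

541 | // n may be larger than the length of s.source, so check that it is still >= 0

542 | if n >= 0 {

543 | source <- item

544 | }

545 | // let successive method go ASAP even we have more items to skip

546 | // why we don't just break the loop, because if break,

547 | // this former goroutine will block forever, which will cause goroutine leak.

548 | // n == 0 means source has received all n items and can be closed

549 | // why not break out of the loop now that source is complete?

550 | // the author's reason is to prevent a goroutine leak:

551 | // each operation produces a new Stream and nothing else will read the old one, so the loop keeps draining it

552 | if n == 0 {

553 | close(source)

554 | // no break here: keep looping so the remaining items are drained

555 | }

556 | }

557 | // reaching this point with n > 0 means n is larger than the actual length of s.source

558 | // the new source still has to be closed explicitly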

559 | if n > 0 {

560 | close(source)

561 | }

562 | }()

563 | return Range(source)

564 | }

565 | ```

566 |

567 | Usage example:

568 |

569 | ```Go

570 | // returns 1,2

571 | func TestInternalStream_Head(t *testing.T) {

572 | channel := Just(1, 2, 3, 4, 5).Head(2).channel()

573 | for item := range channel {

574 | t.Log(item)

575 | }

576 | }

577 | ```

578 |
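
The goroutine-leak concern mentioned in the Head comments can be made visible with a small experiment. This is a sketch under assumptions, not the library's code: `produce` and `head` are hypothetical helpers working on `int` channels. The producer writes to an unbuffered channel, so it can only finish if something keeps reading after the first n items.

```Go
package main

import "fmt"

// produce sends 1..count on an unbuffered channel and closes done once
// every value has been accepted by a reader.
func produce(count int) (<-chan int, <-chan struct{}) {
	src := make(chan int)
	done := make(chan struct{})
	go func() {
		defer close(done)
		defer close(src) // runs first: deferred calls execute in reverse order
		for i := 1; i <= count; i++ {
			src <- i
		}
	}()
	return src, done
}

// head forwards the first n values and closes out right away, but keeps
// draining in so that the upstream sender is never left blocked.
func head(in <-chan int, n int) <-chan int {
	if n < 1 {
		panic("n must be greater than 0")
	}
	out := make(chan int)
	go func() {
		for v := range in {
			n--
			if n >= 0 {
				out <- v
			}
			if n == 0 {
				close(out) // downstream can proceed; the loop keeps draining
			}
		}
		if n > 0 {
			close(out) // fewer than n items arrived
		}
	}()
	return out
}

func main() {
	src, done := produce(5)
	for v := range head(src, 2) {
		fmt.Println(v)
	}
	<-done // returns only because head kept reading from src
	fmt.Println("producer finished")
}
```

If the loop in `head` stopped reading after closing `out`, the producer would stay blocked on its third send and `<-done` would never return.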

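579 | #### Tail: take the last n items

580 |

581 | This one is interesting. To make sure we end up with the last n items, it uses a ring slice, the Ring data structure. Let's look at how Ring is implemented first.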

582 |

583 | ```Go

584 | // Ring is a fixed-size circular slice

585 | type Ring struct {

586 | elements []interface{}

587 | index int

588 | lock sync.Mutex

589 | }

590 |

591 | func NewRing(n int) *Ring {

592 | if n < 1 {

593 | panic("n should be greater than 0")

594 | }

595 | return &Ring{

596 | elements: make([]interface{}, n),

597 | }

598 | }

599 |

600 | // Add appends an element

601 | func (r *Ring) Add(v interface{}) {

602 | r.lock.Lock()

603 | defer r.lock.Unlock()

604 | // write the element at the current slot

605 | // the modulo makes the writes wrap around

606 | r.elements[r.index%len(r.elements)] = v

607 | // advance to the next write position

608 | r.index++

609 | }

610 |

611 | // Take returns all elements

612 | // in the same order they were written

613 | func (r *Ring) Take() []interface{} {

614 | r.lock.Lock()

615 | defer r.lock.Unlock()

616 |

617 | var size int

618 | var start int

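619 | // when writes have wrapped around,

620 | // the start position is found with a modulo, because we want to read in write order

621 | if r.index > len(r.elements) {

622 | size = len(r.elements)

623 | // after wrapping, the current write position index holds the oldest element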

624 | start = r.index % len(r.elements)

625 | } else {

626 | size = r.index

627 | }

628 | elements := make([]interface{}, size)

629 | for i := 0; i < size; i++ {

630 | // the modulo reads around the ring, preserving write order

631 | elements[i] = r.elements[(start+i)%len(r.elements)]

632 | }

633 |

634 | return elements

635 | }

636 | ```

637 |

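638 | To summarize the advantages of a ring slice:

639 |

640 | - It rolls over automatically, with new data replacing the oldest

641 | - It saves memory

642 |

643 | Once a ring slice of fixed capacity is full, old data keeps being overwritten by new data. This property makes it suitable for reading the last n items of a channel.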

644 |

645 | ```Go

646 | func (s Stream) Tail(n int64) Stream {

647 | if n < 1 {

648 | panic("n must be greater than 0")

649 | }

650 | source := make(chan interface{})

651 | go func() {

652 | ring := collection.NewRing(int(n))

653 | // read every item; if there are more than n, the ring overwrites old data with new data,

654 | // which guarantees that what remains is the last n items

655 | for item := range s.source {

656 | ring.Add(item)

657 | }

658 | for _, item := range ring.Take() {

659 | source <- item

660 | }

661 | close(source)

662 | }()

663 | return Range(source)

664 | }

665 | ```

666 |

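667 | So why not simply use a slice sized to the whole source?

668 |

669 | The answer is memory. The length of the stream is not known in advance, and a ring only ever needs n slots no matter how many items pass through, which is the general advantage of ring-shaped data structures.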

670 |
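
To make the memory argument concrete, here is a minimal sketch that keeps the last n values of a channel in a fixed buffer of n slots. It is an illustration, not the library's `Ring`: the hypothetical `lastN` works on `int` values and omits the mutex because only one goroutine touches the buffer.

```Go
package main

import "fmt"

// lastN returns the final n values received from in, in arrival order,
// while holding at most n values in memory at any time.
func lastN(in <-chan int, n int) []int {
	if n < 1 {
		panic("n must be greater than 0")
	}
	buf := make([]int, n)
	count := 0
	for v := range in {
		buf[count%n] = v // once the buffer is full this overwrites the oldest slot
		count++
	}
	size, start := count, 0
	if count > n {
		// the next write position is where the oldest surviving value sits
		size, start = n, count%n
	}
	out := make([]int, size)
	for i := range out {
		out[i] = buf[(start+i)%n]
	}
	return out
}

func main() {
	in := make(chan int, 5)
	for i := 1; i <= 5; i++ {
		in <- i
	}
	close(in)
	fmt.Println(lastN(in, 2))
}
```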

671 | Usage example:

672 |

673 | ```Go

674 | func TestInternalStream_Tail(t *testing.T) {

675 | // 4,5

676 | channel := Just(1, 2, 3, 4, 5).Tail(2).channel()

677 | for item := range channel {

678 | t.Log(item)

679 | }

680 | // 1,2,3,4,5

681 | channel2 := Just(1, 2, 3, 4, 5).Tail(6).channel()

682 | for item := range channel2 {

683 | t.Log(item)

684 | }

685 | }

686 | ```

687 |

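688 | #### Map: transform items

689 |

690 | Transforms each item. The work is done in goroutines, so note that the output channel is not guaranteed to preserve the original order.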

691 |

692 | ```Go

693 | MapFunc func(item interface{}) interface{}

694 | func (s Stream) Map(fn MapFunc, opts ...Option) Stream {

695 | return s.Walk(func(item interface{}, pip chan<- interface{}) {

696 | pip <- fn(item)

697 | }, opts...)

698 | }

699 | ```

700 |

701 | Usage example:

702 |

703 | ```Go

704 | func TestInternalStream_Map(t *testing.T) {

705 | channel := Just(1, 2, 3, 4, 5, 2, 2, 2, 2, 2, 2).Map(func(item interface{}) interface{} {

706 | return item.(int) * 10

707 | }).channel()

708 | for item := range channel {

709 | t.Log(item)

710 | }

711 | }

712 | ```

713 |

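714 | #### Merge

715 |

716 | The implementation is straightforward: it collects every element into one slice and emits that slice as the single element of a new Stream.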

717 |

718 | ```Go

719 | func (s Stream) Merge() Stream {

720 | var items []interface{}

721 | for item := range s.source {

722 | items = append(items, item)

723 | }

724 | source := make(chan interface{}, 1)

725 | source <- items

726 | close(source)

727 | return Range(source)

728 | }

729 | ```

730 |

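731 | #### Reverse

732 |

733 | Reverses the elements in the channel. The algorithm is:

734 |

735 | - Find the middle position

736 |

737 | - Swap elements pairwise, working outward from the middle toward both ends

738 |

739 | Why are the elements from s.source collected into a slice? A slice grows automatically, so wouldn't an array be better?

740 |

741 | An array cannot be used here because the number of elements is not known in advance: writes to source usually happen asynchronously in a goroutine, so the contents of each Stream's channel may still be changing. The pipeline metaphor really does describe how a Stream works.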

742 |

743 | ```Go

744 | func (s Stream) Reverse() Stream {

745 | var items []interface{}

746 | for item := range s.source {

747 | items = append(items, item)

748 | }

749 | for i := len(items)/2 - 1; i >= 0; i-- {

750 | opp := len(items) - 1 - i

751 | items[i], items[opp] = items[opp], items[i]

752 | }

753 | return Just(items...)

754 | }

755 | ```

756 |

757 | Usage example:

758 |

759 | ```Go

760 | func TestInternalStream_Reverse(t *testing.T) {

761 | channel := Just(1, 2, 3, 4, 5).Reverse().channel()

762 | for item := range channel {

763 | t.Log(item)

764 | }

765 | }

766 | ```

767 |

768 | #### Sort

769 |

770 | Internally this calls sort.Slice from the standard library's sort package; pass in a comparison function to define the ordering.

771 |

772 | ```Go

773 | func (s Stream) Sort(less LessFunc) Stream {

774 | var items []interface{}

775 | for item := range s.source {

776 | items = append(items, item)

777 | }

778 |

779 | sort.Slice(items, func(i, j int) bool {

780 | return less(items[i], items[j])

781 | })

782 | return Just(items...)

783 | }

784 | ```

785 |

786 | Usage example:

787 |

788 | ```Go

789 | // 5,4,3,2,1

790 | func TestInternalStream_Sort(t *testing.T) {

791 | channel := Just(1, 2, 3, 4, 5).Sort(func(a, b interface{}) bool {

792 | return a.(int) > b.(int)

793 | }).channel()

794 | for item := range channel {

795 | t.Log(item)

796 | }

797 | }

798 | ```

799 |

800 | #### Concatenation: Concat

801 |

802 | ```Go

803 | func (s Stream) Concat(steams ...Stream) Stream {

804 | // Create a new unbuffered channel

805 | source := make(chan interface{})

806 | go func() {

807 | // Create a RoutineGroup, which works like a WaitGroup

808 | group := threading.NewRoutineGroup()

809 | // Read from the original channel asynchronously

810 | group.Run(func() {

811 | for item := range s.source {

812 | source <- item

813 | }

814 | })

815 | // Read asynchronously from the channel of each Stream being concatenated

816 | for _, stream := range steams {