├── .gitignore
├── config
│   ├── slaves
│   ├── .DS_Store
│   ├── start-hadoop.sh
│   ├── ssh_config
│   ├── mapred-site.xml
│   ├── core-site.xml
│   ├── yarn-site.xml
│   ├── hdfs-site.xml
│   ├── run-wordcount.sh
│   └── hadoop-env.sh
├── hadoop-cluster-docker.png
├── resize-cluster.sh
├── start-container.sh
├── Dockerfile
├── README.md
└── LICENSE

/.gitignore:
--------------------------------------------------------------------------------
.DS_Store
--------------------------------------------------------------------------------

/config/slaves:
--------------------------------------------------------------------------------
hadoop-slave1
hadoop-slave2
hadoop-slave3
hadoop-slave4
--------------------------------------------------------------------------------

/config/.DS_Store:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/kiwenlau/hadoop-cluster-docker/HEAD/config/.DS_Store
--------------------------------------------------------------------------------

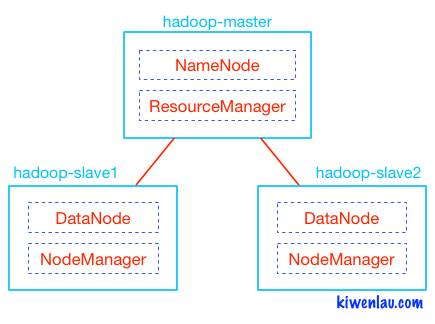

/hadoop-cluster-docker.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/kiwenlau/hadoop-cluster-docker/HEAD/hadoop-cluster-docker.png
--------------------------------------------------------------------------------

/config/start-hadoop.sh:
--------------------------------------------------------------------------------
#!/bin/bash

echo -e "\n"

# start the HDFS daemons (NameNode, SecondaryNameNode, and the DataNodes listed in the slaves file)
$HADOOP_HOME/sbin/start-dfs.sh

echo -e "\n"

# start the YARN daemons (ResourceManager, and the NodeManagers listed in the slaves file)
$HADOOP_HOME/sbin/start-yarn.sh

echo -e "\n"
--------------------------------------------------------------------------------
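After `start-hadoop.sh` finishes, one way to confirm that the master daemons came up is to inspect the output of `jps`, which ships with the JDK the Dockerfile installs. The helper below, `check_master_daemons`, is hypothetical and not part of the repository; the expected daemon list (NameNode, SecondaryNameNode and ResourceManager on hadoop-master) is an assumption based on Hadoop 2.x defaults, where `start-dfs.sh` also starts the SecondaryNameNode on the local host.

```bash
#!/bin/bash

# Hypothetical helper: read `jps` output ("PID ClassName" per line) on stdin
# and report any expected master daemon that is missing.
# The daemon list is an assumption based on Hadoop 2.x defaults.
check_master_daemons() {
    local jps_output daemon missing=0
    jps_output=$(cat)
    for daemon in NameNode SecondaryNameNode ResourceManager; do
        # Require a space right before the name so that "NameNode"
        # does not match the "SecondaryNameNode" line.
        if ! printf '%s\n' "$jps_output" | grep -q " ${daemon}\$"; then
            echo "missing: $daemon" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

On the master this would be used as `jps | check_master_daemons`. DataNodes and NodeManagers run on the slave containers, so they are deliberately not checked here.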

/config/ssh_config:
--------------------------------------------------------------------------------
Host localhost
  StrictHostKeyChecking no

Host 0.0.0.0
  StrictHostKeyChecking no

Host hadoop-*
  StrictHostKeyChecking no
  UserKnownHostsFile=/dev/null
--------------------------------------------------------------------------------
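These `Host` patterns decide which targets skip the host-key prompt. That matters because `start-dfs.sh` and `start-yarn.sh` ssh non-interactively into every name in the slaves file. The sketch below approximates the matching with shell `case` globs in a hypothetical helper, `skips_host_key_check`. ssh's own matcher also supports `!` negation, which this sketch ignores.

```bash
#!/bin/bash

# Hypothetical helper: approximate which host names match the Host patterns
# in config/ssh_config (and therefore skip host-key checking), using shell
# glob matching. ssh's '!' negation is not modeled here.
skips_host_key_check() {
    case "$1" in
        localhost|0.0.0.0|hadoop-*) return 0 ;;
        *) return 1 ;;
    esac
}
```

Any container name produced by `start-container.sh` (hadoop-master, hadoop-slave1, ...) falls under `hadoop-*`. `UserKnownHostsFile=/dev/null` additionally keeps recreated containers' new host keys from clashing with stale known_hosts entries.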

/config/mapred-site.xml:
--------------------------------------------------------------------------------
<?xml version="1.0"?>
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
--------------------------------------------------------------------------------
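All four `*-site.xml` files share the same `<configuration>` / `<property>` layout: one `<name>`, one `<value>` and an optional `<description>` per property. Here is a small generator sketch built on two hypothetical helpers, `hadoop_property` and `hadoop_config`. Values are written verbatim and are not XML-escaped.

```bash
#!/bin/bash

# Hypothetical helper: print one Hadoop <property> element.
# Arguments: name, value, optional description. No XML escaping is done.
hadoop_property() {
    local name=$1 value=$2 description=${3:-}
    printf '    <property>\n'
    printf '        <name>%s</name>\n' "$name"
    printf '        <value>%s</value>\n' "$value"
    if [ -n "$description" ]; then
        printf '        <description>%s</description>\n' "$description"
    fi
    printf '    </property>\n'
}

# Hypothetical helper: wrap the properties read from stdin in a
# <configuration> document.
hadoop_config() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    cat
    printf '</configuration>\n'
}
```

For example, `hadoop_property mapreduce.framework.name yarn | hadoop_config > mapred-site.xml` would produce a file with the same content as the one above.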

/config/core-site.xml:
--------------------------------------------------------------------------------
<?xml version="1.0"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop-master:9000/</value>
    </property>
</configuration>
--------------------------------------------------------------------------------
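`fs.defaultFS` is the default file system URI. With it set to `hdfs://hadoop-master:9000/`, clients contact the NameNode RPC endpoint on host `hadoop-master`, port 9000. Relative paths such as `input` in `run-wordcount.sh` resolve under the user's HDFS home directory (`/user/root` when running as root). `start-container.sh` does not publish port 9000, so only containers on the `hadoop` network can reach it.

The hypothetical helper below splits such a URI with parameter expansion. It assumes an explicit port and is not a general URI parser.

```bash
#!/bin/bash

# Hypothetical helper: split an hdfs://host:port/ URI into "host port".
# Assumes the hdfs:// scheme and an explicit port; no validation is done.
parse_default_fs() {
    local uri=$1 rest
    rest=${uri#hdfs://}   # drop the scheme -> hadoop-master:9000/
    rest=${rest%%/*}      # drop any path   -> hadoop-master:9000
    printf '%s %s\n' "${rest%%:*}" "${rest##*:}"
}
```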

/config/yarn-site.xml:
--------------------------------------------------------------------------------
<?xml version="1.0"?>
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop-master</value>
    </property>
</configuration>
--------------------------------------------------------------------------------
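`yarn.nodemanager.aux-services` takes a comma-separated list of service names. Each service's handler class is configured under a key derived from its name, as the `mapreduce_shuffle` / `ShuffleHandler` pair above shows. The hypothetical helper below derives those keys; `another_service` in the test is a made-up name.

```bash
#!/bin/bash

# Hypothetical helper: for a comma-separated aux-services value, print the
# property key that configures each service's handler class.
aux_service_class_keys() {
    local services=$1 svc
    local IFS=','    # split the unquoted $services on commas only
    for svc in $services; do
        printf 'yarn.nodemanager.aux-services.%s.class\n' "$svc"
    done
}
```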

/resize-cluster.sh:
--------------------------------------------------------------------------------
#!/bin/bash

# N is the number of nodes in the hadoop cluster (1 master + N-1 slaves)
N=$1

if [ $# = 0 ]
then
    echo "Please specify the node number of hadoop cluster!"
    exit 1
fi

# regenerate the slaves file: hadoop-slave1 ... hadoop-slave(N-1)
i=1
rm -f config/slaves
while [ $i -lt $N ]
do
    echo "hadoop-slave$i" >> config/slaves
    ((i++))
done

echo ""

echo -e "\nbuild docker hadoop image\n"

# rebuild kiwenlau/hadoop image
sudo docker build -t kiwenlau/hadoop:1.0 .

echo ""
--------------------------------------------------------------------------------
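`resize-cluster.sh` deletes `config/slaves` before checking that the loop will write anything. As a result, `resize-cluster.sh 1` leaves no slaves file, and the Dockerfile's `mv /tmp/slaves ...` step then fails. Below is a sketch of a validating generator, the hypothetical `gen_slaves`. It assumes, as `start-container.sh` does, that N counts the master plus N-1 slaves.

```bash
#!/bin/bash

# Hypothetical helper: print the slaves file for an N-node cluster
# (hadoop-slave1 ... hadoop-slave(N-1)), rejecting non-integers and N < 2.
gen_slaves() {
    local n=$1 i
    case "$n" in
        ''|*[!0-9]*) echo "node number must be an integer" >&2; return 1 ;;
    esac
    if [ "$n" -lt 2 ]; then
        echo "node number must be greater than 1" >&2
        return 1
    fi
    for (( i = 1; i < n; i++ )); do
        echo "hadoop-slave$i"
    done
}
```

Writing to a temporary file first, as in `gen_slaves "$N" > config/slaves.tmp && mv config/slaves.tmp config/slaves`, replaces the slaves file only when validation succeeds.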

/config/hdfs-site.xml:
--------------------------------------------------------------------------------
<?xml version="1.0"?>
<configuration>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///root/hdfs/namenode</value>
        <description>NameNode directory for namespace and transaction logs storage.</description>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///root/hdfs/datanode</value>
        <description>DataNode directory</description>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
</configuration>
--------------------------------------------------------------------------------
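`dfs.replication` is fixed at 2, while the number of DataNodes depends on the cluster size. With the default 3 nodes there are 2 slaves, so 2 DataNodes match the replication factor. A 2-node cluster has only one DataNode, so blocks would typically be reported as under-replicated.

Below is a sketch of a pre-flight check, the hypothetical `check_replication`. It assumes that each slave runs exactly one DataNode and that the master runs none, which follows from `start-dfs.sh` starting DataNodes only on the hosts in the slaves file.

```bash
#!/bin/bash

# Hypothetical helper: fail when dfs.replication exceeds the number of
# DataNodes. Assumes N counts master + slaves and only slaves run DataNodes.
check_replication() {
    local nodes=$1 replication=$2
    local datanodes=$(( nodes - 1 ))
    if [ "$replication" -gt "$datanodes" ]; then
        echo "warning: dfs.replication=$replication but only $datanodes DataNode(s)" >&2
        return 1
    fi
    return 0
}
```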

/config/run-wordcount.sh:
--------------------------------------------------------------------------------
#!/bin/bash

# test the hadoop cluster by running wordcount

# create input files
mkdir -p input
echo "Hello Docker" >input/file2.txt
echo "Hello Hadoop" >input/file1.txt

# create input directory on HDFS
hadoop fs -mkdir -p input

# copy the input files to HDFS
hdfs dfs -put ./input/* input

# run wordcount
hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/sources/hadoop-mapreduce-examples-2.7.2-sources.jar org.apache.hadoop.examples.WordCount input output

# print the input files
echo -e "\ninput file1.txt:"
hdfs dfs -cat input/file1.txt

echo -e "\ninput file2.txt:"
hdfs dfs -cat input/file2.txt

# print the output of wordcount
echo -e "\nwordcount output:"
hdfs dfs -cat output/part-r-00000
--------------------------------------------------------------------------------
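To know what `part-r-00000` should contain before the cluster is running, a rough local equivalent of WordCount can be computed with standard text tools. The function `local_wordcount` below is hypothetical. It assumes whitespace tokenization (WordCount's tokenizer splits on whitespace) and byte-order sorting (`LC_ALL=C`) to approximate the key order of the reducer output, which is `word<TAB>count` per line.

```bash
#!/bin/bash

# Hypothetical helper: count words across all files in a local directory and
# print "word<TAB>count" lines sorted by word in byte order.
local_wordcount() {
    cat "$1"/* \
        | tr -s '[:space:]' '\n' \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Running `local_wordcount input` on the master, right after the script has created the two input files, should list the same three words and counts as the HDFS output.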

/start-container.sh:
--------------------------------------------------------------------------------
#!/bin/bash

# the default node number is 3
N=${1:-3}

# start hadoop master container
sudo docker rm -f hadoop-master &> /dev/null
echo "start hadoop-master container..."
sudo docker run -itd \
                --net=hadoop \
                -p 50070:50070 \
                -p 8088:8088 \
                --name hadoop-master \
                --hostname hadoop-master \
                kiwenlau/hadoop:1.0 &> /dev/null

# start hadoop slave container
i=1
while [ $i -lt $N ]
do
    sudo docker rm -f hadoop-slave$i &> /dev/null
    echo "start hadoop-slave$i container..."
    sudo docker run -itd \
                    --net=hadoop \
                    --name hadoop-slave$i \
                    --hostname hadoop-slave$i \
                    kiwenlau/hadoop:1.0 &> /dev/null
    i=$(( $i + 1 ))
done

# get into hadoop master container
sudo docker exec -it hadoop-master bash
--------------------------------------------------------------------------------
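On the user-defined `hadoop` bridge network, Docker's embedded DNS resolves containers by name. The container names must therefore match the entries in the slaves file baked into the image. The hypothetical dry-run helper `plan_containers` prints the `docker run` commands this script would issue, without `sudo` and without output redirection, so the names can be compared with the slaves file before anything is started.

```bash
#!/bin/bash

# Hypothetical dry-run helper: print (not execute) the docker run commands
# start-container.sh issues for an N-node cluster (default 3).
plan_containers() {
    local n=${1:-3} i
    echo "docker run -itd --net=hadoop -p 50070:50070 -p 8088:8088 --name hadoop-master --hostname hadoop-master kiwenlau/hadoop:1.0"
    for (( i = 1; i < n; i++ )); do
        echo "docker run -itd --net=hadoop --name hadoop-slave$i --hostname hadoop-slave$i kiwenlau/hadoop:1.0"
    done
}
```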

/Dockerfile:
--------------------------------------------------------------------------------
FROM ubuntu:14.04

MAINTAINER KiwenLau

WORKDIR /root

# install openssh-server, openjdk and wget
RUN apt-get update && apt-get install -y openssh-server openjdk-7-jdk wget

# install hadoop 2.7.2
RUN wget https://github.com/kiwenlau/compile-hadoop/releases/download/2.7.2/hadoop-2.7.2.tar.gz && \
    tar -xzvf hadoop-2.7.2.tar.gz && \
    mv hadoop-2.7.2 /usr/local/hadoop && \
    rm hadoop-2.7.2.tar.gz

# set environment variables
ENV JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64
ENV HADOOP_HOME=/usr/local/hadoop
ENV PATH=$PATH:/usr/local/hadoop/bin:/usr/local/hadoop/sbin

# passwordless ssh: generate a key pair with an empty passphrase and authorize it
RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

RUN mkdir -p ~/hdfs/namenode && \
    mkdir -p ~/hdfs/datanode && \
    mkdir $HADOOP_HOME/logs

COPY config/* /tmp/

RUN mv /tmp/ssh_config ~/.ssh/config && \
    mv /tmp/hadoop-env.sh /usr/local/hadoop/etc/hadoop/hadoop-env.sh && \
    mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
    mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
    mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
    mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
    mv /tmp/slaves $HADOOP_HOME/etc/hadoop/slaves && \
    mv /tmp/start-hadoop.sh ~/start-hadoop.sh && \
    mv /tmp/run-wordcount.sh ~/run-wordcount.sh

RUN chmod +x ~/start-hadoop.sh && \
    chmod +x ~/run-wordcount.sh && \
    chmod +x $HADOOP_HOME/sbin/start-dfs.sh && \
    chmod +x $HADOOP_HOME/sbin/start-yarn.sh

# format namenode
RUN /usr/local/hadoop/bin/hdfs namenode -format

CMD [ "sh", "-c", "service ssh start; bash"]
--------------------------------------------------------------------------------
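`COPY config/* /tmp/` followed by a chain of `mv` commands means the build fails part-way if any expected file is missing from `config/`, for example a slaves file removed by `resize-cluster.sh`. Below is a sketch of a pre-build check, the hypothetical `check_config_dir`. Its file list is copied from the `mv` lines above and would need updating if the Dockerfile changes.

```bash
#!/bin/bash

# Hypothetical pre-build check: verify that every file the Dockerfile moves
# out of /tmp exists in the config directory (default: ./config).
check_config_dir() {
    local dir=${1:-config} f missing=0
    for f in ssh_config hadoop-env.sh hdfs-site.xml core-site.xml \
             mapred-site.xml yarn-site.xml slaves start-hadoop.sh run-wordcount.sh; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_config_dir && sudo docker build -t kiwenlau/hadoop:1.0 .` would build only when the directory is complete.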

/README.md:
--------------------------------------------------------------------------------
## Run Hadoop Cluster within Docker Containers

- Blog: [Run Hadoop Cluster in Docker Update](http://kiwenlau.com/2016/06/26/hadoop-cluster-docker-update-english/)
- Blog (Chinese): [Building a Hadoop Cluster Based on Docker: Upgraded Version](http://kiwenlau.com/2016/06/12/160612-hadoop-cluster-docker-update/)

### 3 Nodes Hadoop Cluster

##### 1. pull docker image

```
sudo docker pull kiwenlau/hadoop:1.0
```

##### 2. clone github repository

```
git clone https://github.com/kiwenlau/hadoop-cluster-docker
```

##### 3. create hadoop network

```
sudo docker network create --driver=bridge hadoop
```

##### 4. start container

```
cd hadoop-cluster-docker
sudo ./start-container.sh
```

**output:**

```
start hadoop-master container...
start hadoop-slave1 container...
start hadoop-slave2 container...
root@hadoop-master:~#
```
- this starts 3 containers: 1 master and 2 slaves
- you will land in the /root directory of the hadoop-master container

##### 5. start hadoop

```
./start-hadoop.sh
```

##### 6. run wordcount

```
./run-wordcount.sh
```

**output:**

```
input file1.txt:
Hello Hadoop

input file2.txt:
Hello Docker

wordcount output:
Docker 1
Hadoop 1
Hello 2
```

### Arbitrary size Hadoop cluster

##### 1. pull docker images and clone github repository

Do steps 1~3 of the 3 Nodes Hadoop Cluster section (pull the image, clone the repository and create the hadoop network).

##### 2. rebuild docker image

```
sudo ./resize-cluster.sh 5
```
- the parameter is the total number of nodes (1 master plus the slaves) and must be greater than 1: 2, 3, ...
- this script only rebuilds the hadoop image with a different **slaves** file, which specifies the names of all slave nodes

##### 3. start container

```
sudo ./start-container.sh 5
```
- use the same parameter as in step 2

##### 4. run hadoop cluster

Do steps 5~6 of the 3 Nodes Hadoop Cluster section.
--------------------------------------------------------------------------------

/config/hadoop-env.sh:
--------------------------------------------------------------------------------
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are
# optional. When running a distributed configuration it is best to
# set JAVA_HOME in this file, so that it is correctly defined on
# remote nodes.

# The java implementation to use.
export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

# The jsvc implementation to use. Jsvc is required to run secure datanodes
# that bind to privileged ports to provide authentication of data transfer
# protocol. Jsvc is not required if SASL is configured for authentication of
# data transfer protocol using non-privileged ports.
#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.
for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do
  if [ "$HADOOP_CLASSPATH" ]; then
    export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f
  else
    export HADOOP_CLASSPATH=$f
  fi
done

# The maximum amount of heap to use, in MB. Default is 1000.
#export HADOOP_HEAPSIZE=
#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra Java runtime options. Empty by default.
export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified
export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"
export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"
export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)
export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"
#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.
# This **MUST** be uncommented to enable secure HDFS if using privileged ports
# to provide authentication of data transfer protocol. This **MUST NOT** be
# defined if SASL is configured for authentication of data transfer protocol
# using non-privileged ports.
export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.
#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

# Where log files are stored in the secure data environment.
export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###
# HDFS Mover specific parameters
###
# Specify the JVM options to be used when starting the HDFS Mover.
# These options will be appended to the options specified as HADOOP_OPTS
# and therefore may override any similar flags set in HADOOP_OPTS
#
# export HADOOP_MOVER_OPTS=""

###
# Advanced Users Only!
###

# The directory where pid files are stored. /tmp by default.
# NOTE: this should be set to a directory that can only be written to by
# the user that will run the hadoop daemons. Otherwise there is the
# potential for a symlink attack.
export HADOOP_PID_DIR=${HADOOP_PID_DIR}
export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.
export HADOOP_IDENT_STRING=$USER
--------------------------------------------------------------------------------
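The capacity-scheduler loop in `hadoop-env.sh` appends each jar to a colon-separated `HADOOP_CLASSPATH`. When the glob matches nothing, bash passes the literal pattern through the loop, so a non-existent path ends up on the classpath. Below is a more compact sketch of the same idea using `${var:+...}`, written as a hypothetical helper, `join_classpath`. It skips unmatched patterns with an existence test.

```bash
#!/bin/bash

# Hypothetical helper: append every existing path argument to the current
# HADOOP_CLASSPATH (if any), colon-separated, and print the result.
# The -e test drops the literal pattern left behind by a non-matching glob.
join_classpath() {
    local cp=${HADOOP_CLASSPATH:-} f
    for f in "$@"; do
        [ -e "$f" ] || continue
        cp=${cp:+$cp:}$f
    done
    printf '%s\n' "$cp"
}
```

It would be used as `export HADOOP_CLASSPATH=$(join_classpath "$HADOOP_HOME"/contrib/capacity-scheduler/*.jar)`.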

/LICENSE:
--------------------------------------------------------------------------------
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "{}"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright {yyyy} {name of copyright owner}

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
--------------------------------------------------------------------------------