
## Overview

There are tons of Python FFmpeg wrappers out there, but they seem to lack complex filter support. `ffmpeg-python` works well for both simple and complex signal graphs.

## Quickstart

Flip a video horizontally:
```python
import ffmpeg
stream = ffmpeg.input('input.mp4')
stream = ffmpeg.hflip(stream)
stream = ffmpeg.output(stream, 'output.mp4')
ffmpeg.run(stream)
```

Or if you prefer a fluent interface:
```python
import ffmpeg
(
    ffmpeg
    .input('input.mp4')
    .hflip()
    .output('output.mp4')
    .run()
)
```

## [API reference](https://kkroening.github.io/ffmpeg-python/)

## Complex filter graphs
FFmpeg is extremely powerful, but its command-line interface gets complicated quickly, especially when working with signal graphs or doing anything beyond the trivial.

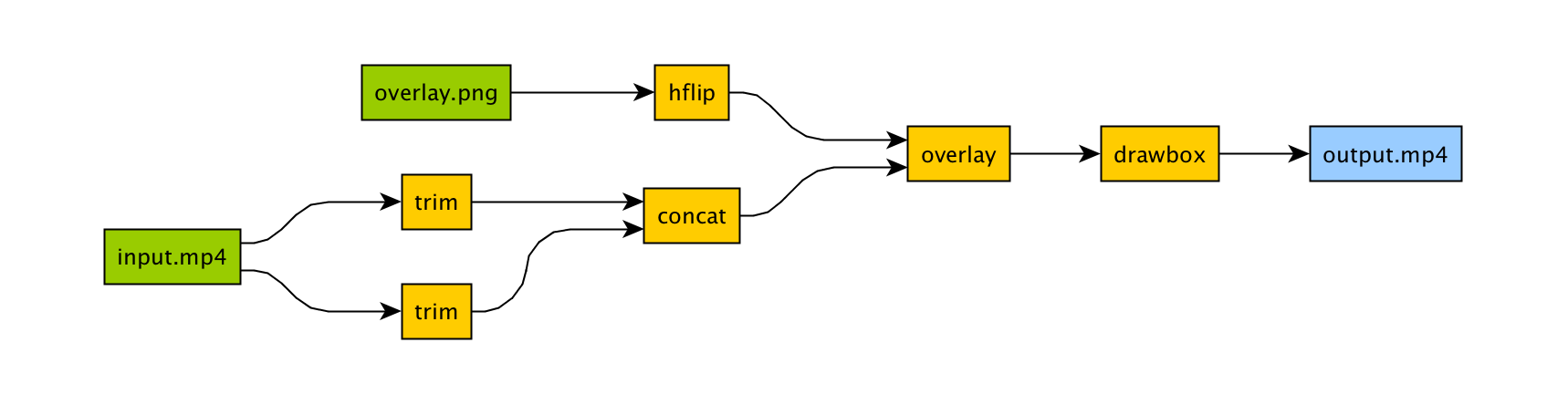

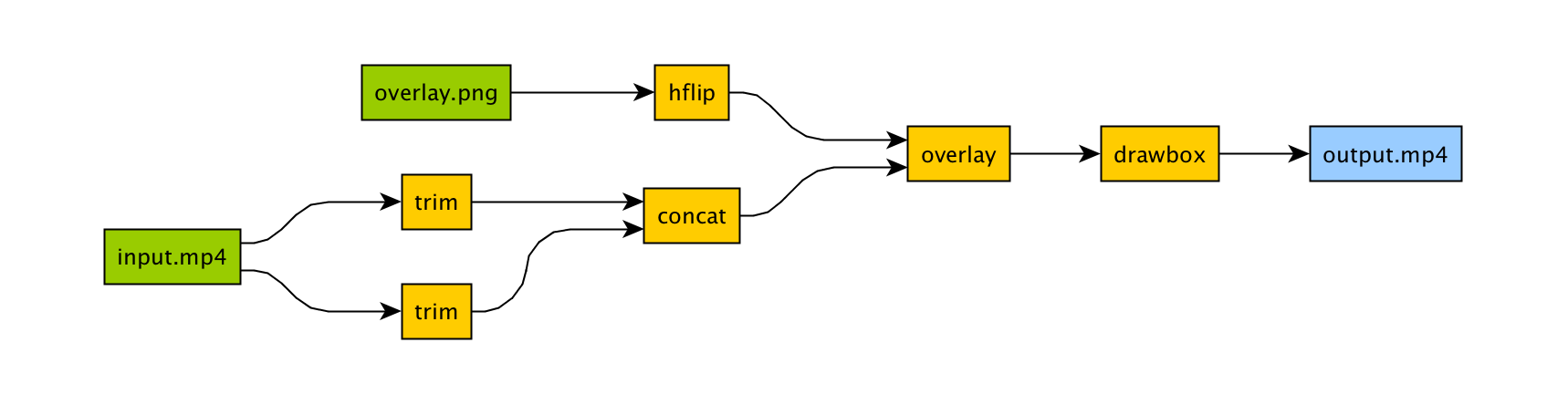

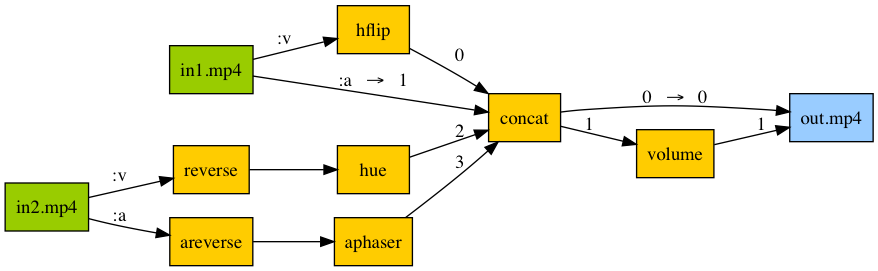

Take for example a signal graph that looks like this:

The corresponding command-line arguments are pretty gnarly:
```bash
ffmpeg -i input.mp4 -i overlay.png -filter_complex "[0]trim=start_frame=10:end_frame=20[v0];\
    [0]trim=start_frame=30:end_frame=40[v1];[v0][v1]concat=n=2[v2];[1]hflip[v3];\
    [v2][v3]overlay=eof_action=repeat[v4];[v4]drawbox=50:50:120:120:red:t=5[v5]" \
    -map '[v5]' output.mp4
```

Maybe this looks great to you, but if you're not an FFmpeg command-line expert, it probably looks alien.

If you're like me and find Python to be powerful and readable, it's easier with `ffmpeg-python`:
```python
import ffmpeg

in_file = ffmpeg.input('input.mp4')
overlay_file = ffmpeg.input('overlay.png')
(
    ffmpeg
    .concat(
        in_file.trim(start_frame=10, end_frame=20),
        in_file.trim(start_frame=30, end_frame=40),
    )
    .overlay(overlay_file.hflip())
    .drawbox(50, 50, 120, 120, color='red', thickness=5)
    .output('output.mp4')
    .run()
)
```

`ffmpeg-python` takes care of running `ffmpeg` with the command-line arguments that correspond to the filter diagram above, while you work in familiar Python terms.


Real-world signal graphs can get a heck of a lot more complex, but `ffmpeg-python` handles arbitrarily large signal graphs, as long as they are directed and acyclic.
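To make "the command-line arguments that correspond to the diagram" concrete, here is a minimal, hypothetical sketch of how a directed acyclic filter graph can be flattened into a `-filter_complex` string. The `Input`/`Filter` classes and `compile_filter_complex` are illustrative assumptions, not `ffmpeg-python`'s internals. The sketch visits nodes depth-first, emits upstream filters before the filters that consume them, gives each filter output a `[vN]` label, and reproduces the filter string from the command line above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Input:
    """An input file, referenced by its position among the -i arguments."""
    index: int


@dataclass(eq=False)
class Filter:
    """A filter node (hypothetical): name, upstream nodes, option string."""
    name: str
    inputs: list = field(default_factory=list)
    options: str = ""


def compile_filter_complex(output):
    """Return (filter_complex_string, final_label) for the graph ending at `output`.

    Assumes each filter output is consumed at most once; in FFmpeg, reusing a
    filter's output needs an explicit `split`, which this sketch does not add.
    """
    chains = []  # one "[in...]name=opts[out]" entry per filter, in dependency order
    labels = {}  # id(filter node) -> output label, so a node reached twice is emitted once

    def visit(node):
        if isinstance(node, Input):
            return f"[{node.index}]"
        if id(node) in labels:
            return labels[id(node)]
        # Emit all upstream filters first (depth-first, left to right).
        in_labels = "".join(visit(upstream) for upstream in node.inputs)
        out_label = f"[v{len(chains)}]"
        spec = f"{node.name}={node.options}" if node.options else node.name
        chains.append(f"{in_labels}{spec}{out_label}")
        labels[id(node)] = out_label
        return out_label

    final_label = visit(output)
    return ";".join(chains), final_label


# The graph from the example above.
video, overlay = Input(0), Input(1)
part1 = Filter("trim", [video], "start_frame=10:end_frame=20")
part2 = Filter("trim", [video], "start_frame=30:end_frame=40")
joined = Filter("concat", [part1, part2], "n=2")
flipped = Filter("hflip", [overlay])
combined = Filter("overlay", [joined, flipped], "eof_action=repeat")
boxed = Filter("drawbox", [combined], "50:50:120:120:red:t=5")

graph, label = compile_filter_complex(boxed)
print(graph)
print(label)  # [v5]
```

The returned string would go after `-filter_complex` and the final label after `-map`; a real wrapper also has to handle escaping, stream selectors, and multiple outputs, which this sketch leaves out.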

## Installation

### Installing `ffmpeg-python`

The latest version of `ffmpeg-python` can be acquired via a typical pip install:

```bash
pip install ffmpeg-python
```

Or the source can be cloned and installed locally:
```bash
git clone git@github.com:kkroening/ffmpeg-python.git
pip install -e ./ffmpeg-python
```

> **Note**: `ffmpeg-python` makes no attempt to download or install FFmpeg. It is merely a pure-Python wrapper, whereas installing FFmpeg is platform- and environment-specific and is therefore the responsibility of the user, as described below.

### Installing FFmpeg

Before using `ffmpeg-python`, FFmpeg must be installed and accessible via the `$PATH` environment variable.

There are a variety of ways to install FFmpeg, such as the [official download links](https://ffmpeg.org/download.html) or your package manager of choice (e.g. `sudo apt install ffmpeg` on Debian/Ubuntu, `brew install ffmpeg` on macOS, etc.).

Regardless of how FFmpeg is installed, you can check whether your environment path is set correctly by running the `ffmpeg` command from a terminal. If it is, version information should appear, as in the following example (truncated for brevity):

```
$ ffmpeg
ffmpeg version 4.2.4-1ubuntu0.1 Copyright (c) 2000-2020 the FFmpeg developers
built with gcc 9 (Ubuntu 9.3.0-10ubuntu2)
```

> **Note**: The version information displayed may vary from one system to another, but if a message such as `ffmpeg: command not found` appears instead, FFmpeg is not properly installed.
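The same check can be scripted before calling into `ffmpeg-python`. This is a small sketch using only the standard library (`shutil.which` and `subprocess.run`); the function name `ffmpeg_version` is hypothetical. It returns the first line of `ffmpeg -version` output, or `None` when no such executable is found on `PATH`.

```python
import shutil
import subprocess


def ffmpeg_version(executable="ffmpeg"):
    """Return the first line of `<executable> -version`, or None if it is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        return None  # the scripted equivalent of "ffmpeg: command not found"
    result = subprocess.run(
        [path, "-version"],
        capture_output=True,
        text=True,
        check=False,
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


print(ffmpeg_version() or "FFmpeg not found on PATH")
```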

## [Examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples)

When in doubt, take a look at the [examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples) to see if there's something close to whatever you're trying to do.

Here are a few:

- [Convert video to numpy array](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#convert-video-to-numpy-array)
- [Generate thumbnail for video](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#generate-thumbnail-for-video)
- [Read raw PCM audio via pipe](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#convert-sound-to-raw-pcm-audio)
- [JupyterLab/Notebook stream editor](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#jupyter-stream-editor)


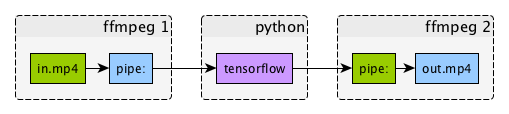

- [Tensorflow/DeepDream streaming](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#tensorflow-streaming)


See the [Examples README](https://github.com/kkroening/ffmpeg-python/tree/master/examples) for additional examples.

## Custom Filters

Don't see the filter you're looking for? While `ffmpeg-python` includes shorthand notation for some of the most commonly used filters (such as `concat`), any filter can be referenced via the `.filter` operator:
```python
import ffmpeg

stream = ffmpeg.input('dummy.mp4')
stream = ffmpeg.filter(stream, 'fps', fps=25, round='up')
stream = ffmpeg.output(stream, 'dummy2.mp4')
ffmpeg.run(stream)
```

Or fluently:
```python
(
    ffmpeg
    .input('dummy.mp4')
    .filter('fps', fps=25, round='up')
    .output('dummy2.mp4')
    .run()
)
```
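Each `.filter(...)` call ultimately has to become a filter specification in FFmpeg's `name=arg1:arg2:key=value` syntax. The helper below is a hypothetical sketch of that mapping, not `ffmpeg-python`'s own code: positional arguments come first, then keyword options, all joined with `:`. It deliberately skips the escaping FFmpeg requires for values containing characters such as `:`, `,`, `[` or quotes.

```python
def format_filter(name, *args, **kwargs):
    """Build an FFmpeg filter spec such as 'fps=fps=25:round=up' (no escaping)."""
    parts = [str(arg) for arg in args]
    parts += [f"{key}={value}" for key, value in kwargs.items()]
    return f"{name}={':'.join(parts)}" if parts else name


print(format_filter("fps", fps=25, round="up"))                 # fps=fps=25:round=up
print(format_filter("drawbox", 50, 50, 120, 120, "red", t=5))   # drawbox=50:50:120:120:red:t=5
print(format_filter("hflip"))                                   # hflip
```

The `drawbox` line matches the corresponding segment of the `-filter_complex` string in the command-line example earlier.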

**Special option names:**

Arguments with special names such as `-qscale:v` (variable bitrate) or `-b:v` (video bitrate) are not valid Python identifiers, so they can't be written as plain keyword arguments. Instead, pass them by unpacking a keyword-args dictionary:
```python
(
    ffmpeg
    .input('in.mp4')
    .output('out.mp4', **{'qscale:v': 3})
    .run()
)
```
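As a rough illustration of how keyword arguments end up on the command line, here is a sketch under assumed conventions rather than the library's source. Each key becomes a `-key` flag followed by its value, and a value of `None` is assumed to produce a bare flag. The mono-to-stereo example later passes `shortest=None`, which is presumably meant to yield `-shortest`. The helper name `kwargs_to_args` is hypothetical.

```python
def kwargs_to_args(**kwargs):
    """Turn keyword options into FFmpeg-style arguments (illustrative sketch)."""
    args = []
    for key, value in kwargs.items():
        args.append(f"-{key}")
        if value is not None:  # None means "flag without a value", e.g. -shortest
            args.append(str(value))
    return args


print(kwargs_to_args(**{"qscale:v": 3}))                # ['-qscale:v', '3']
print(kwargs_to_args(**{"b:v": "1M"}, shortest=None))  # ['-b:v', '1M', '-shortest']
```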

**Multiple inputs:**

Filters that take multiple input streams can be used by passing the input streams as a list to `ffmpeg.filter`:
```python
main = ffmpeg.input('main.mp4')
logo = ffmpeg.input('logo.png')
(
    ffmpeg
    .filter([main, logo], 'overlay', 10, 10)
    .output('out.mp4')
    .run()
)
```

**Multiple outputs:**

Filters that produce multiple outputs can be used with `.filter_multi_output`:
```python
split = (
    ffmpeg
    .input('in.mp4')
    .filter_multi_output('split')  # or `.split()`
)
(
    ffmpeg
    .concat(split[0], split[1].filter('reverse'))
    .output('out.mp4')
    .run()
)
```
(In this particular case, `.split()` is the equivalent shorthand, but the general approach works for other multi-output filters.)

**String expressions:**

Expressions to be interpreted by FFmpeg can be passed as string parameters and can reference any of FFmpeg's special variable names:
```python
(
    ffmpeg
    .input('in.mp4')
    .filter('crop', 'in_w-2*10', 'in_h-2*20')
    .output('out.mp4')
    .run()
)
```
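To see what those expressions do: FFmpeg's `crop` filter takes the output width and height as its first two options, and when `x`/`y` are omitted it centers the crop window. So `in_w-2*10` and `in_h-2*20` trim 10 pixels from the left and right edges and 20 from the top and bottom. The two helpers below are hypothetical: one builds such expressions from margins, and the other computes the frame size they evaluate to for a given input.

```python
def crop_margins(margin_x, margin_y):
    """Return (w, h) crop expressions that remove the given margin on each side."""
    return f"in_w-2*{margin_x}", f"in_h-2*{margin_y}"


def cropped_size(in_w, in_h, margin_x, margin_y):
    """Frame size the expressions above evaluate to for an in_w x in_h input."""
    return in_w - 2 * margin_x, in_h - 2 * margin_y


print(crop_margins(10, 20))              # ('in_w-2*10', 'in_h-2*20')
print(cropped_size(1920, 1080, 10, 20))  # (1900, 1040)
```

With the library, the expressions would be passed positionally, e.g. `.filter('crop', *crop_margins(10, 20))`.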


One of the best things you can do to help make `ffmpeg-python` better is to answer [open questions](https://github.com/kkroening/ffmpeg-python/labels/question) in the issue tracker. The questions that are answered will be tagged and incorporated into the documentation, examples, and other learning resources.

If you notice things that could be better in the documentation or overall development experience, please say so in the [issue tracker](https://github.com/kkroening/ffmpeg-python/issues). And of course, feel free to report any bugs or submit feature requests.

Pull requests are welcome as well, but it wouldn't hurt to touch base in the issue tracker or hop on the [Matrix chat channel](https://riot.im/app/#/room/#ffmpeg-python:matrix.org) first.

Anyone who fixes any of the [open bugs](https://github.com/kkroening/ffmpeg-python/labels/bug) or implements [requested enhancements](https://github.com/kkroening/ffmpeg-python/labels/enhancement) is a hero, but changes should include passing tests.

### Running tests

```bash
git clone git@github.com:kkroening/ffmpeg-python.git
cd ffmpeg-python
virtualenv venv
. venv/bin/activate  # (macOS / Linux)
venv\Scripts\activate  # (Windows)
pip install -e .[dev]
pytest
```


## Generate thumbnail for video

```python
(
    ffmpeg
    .input(in_filename, ss=time)
    .filter('scale', width, -1)
    .output(out_filename, vframes=1)
    .run()
)
```
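The snippet assumes `time` (in seconds) is already known. One common choice, sketched here as an assumption rather than part of the original example, is a point partway into the video based on the container duration. `ffmpeg.probe` returns `ffprobe`'s JSON output as a dictionary, with the duration stored as a string under `format`. The hypothetical helper below only reads that dictionary, so it can be tried with a hand-written stand-in.

```python
def thumbnail_time(probe, fraction=0.1):
    """Pick a timestamp `fraction` of the way into the video, in seconds.

    `probe` is assumed to look like the dict returned by ffmpeg.probe(),
    i.e. ffprobe's JSON with format.duration stored as a string.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    duration = float(probe["format"]["duration"])
    return duration * fraction


# Hand-written stand-in for ffmpeg.probe(in_filename):
fake_probe = {"format": {"duration": "120.000000"}}
print(thumbnail_time(fake_probe))
print(thumbnail_time(fake_probe, 0.5))  # 60.0
```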

## [Convert video to numpy array](https://github.com/kkroening/ffmpeg-python/blob/master/examples/ffmpeg-numpy.ipynb)

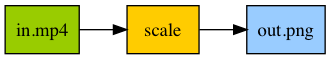


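```python
import ffmpeg
import numpy as np

# Look up the frame size so the raw bytes can be reshaped
probe = ffmpeg.probe('in.mp4')
video_stream = next(s for s in probe['streams'] if s['codec_type'] == 'video')
width = int(video_stream['width'])
height = int(video_stream['height'])

out, _ = (
    ffmpeg
    .input('in.mp4')
    .output('pipe:', format='rawvideo', pix_fmt='rgb24')
    .run(capture_stdout=True)
)
video = (
    np
    .frombuffer(out, np.uint8)
    .reshape([-1, height, width, 3])
)
```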
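The reshape works because `rgb24` output is a flat byte stream with exactly `width * height * 3` bytes per frame, so `-1` lets NumPy infer the frame count. The same bookkeeping, written with the standard library only as a hypothetical sketch, makes the arithmetic explicit and rejects a truncated buffer.

```python
def split_rgb24_frames(buffer, width, height):
    """Split a raw rgb24 byte buffer into per-frame bytes objects."""
    frame_size = width * height * 3  # 3 bytes (R, G, B) per pixel
    if len(buffer) % frame_size:
        raise ValueError(
            f"buffer length {len(buffer)} is not a multiple of frame size {frame_size}"
        )
    return [buffer[i:i + frame_size] for i in range(0, len(buffer), frame_size)]


# Two synthetic 4x2 frames: the first all zeros, the second all 255.
raw = bytes(4 * 2 * 3) + bytes([255]) * (4 * 2 * 3)
frames = split_rgb24_frames(raw, width=4, height=2)
print(len(frames), len(frames[0]))  # 2 24
```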

## [Read single video frame as jpeg through pipe](https://github.com/kkroening/ffmpeg-python/blob/master/examples/read_frame_as_jpeg.py#L16)


```python
out, _ = (
    ffmpeg
    .input(in_filename)
    .filter('select', 'gte(n,{})'.format(frame_num))
    .output('pipe:', vframes=1, format='image2', vcodec='mjpeg')
    .run(capture_stdout=True)
)
```
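Because `out` holds encoded image bytes rather than a file, it can be worth sanity-checking before use. This minimal sketch relies only on the fact that a well-formed JPEG starts with the SOI marker `FF D8` and ends with the EOI marker `FF D9`. The helper names `is_probably_jpeg` and `save_jpeg` are hypothetical.

```python
def is_probably_jpeg(data):
    """Cheap check: JPEG data starts with SOI (FF D8) and ends with EOI (FF D9)."""
    return len(data) >= 4 and data[:2] == b"\xff\xd8" and data[-2:] == b"\xff\xd9"


def save_jpeg(data, path):
    """Write piped JPEG bytes to `path` after the sanity check."""
    if not is_probably_jpeg(data):
        raise ValueError("output does not look like a JPEG; check FFmpeg's stderr")
    with open(path, "wb") as f:
        f.write(data)


print(is_probably_jpeg(b"\xff\xd8...\xff\xd9"))  # True
print(is_probably_jpeg(b""))                    # False
```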

## [Convert sound to raw PCM audio](https://github.com/kkroening/ffmpeg-python/blob/master/examples/transcribe.py#L23)


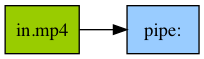

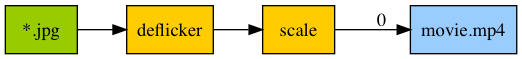


```python
out, _ = (
    ffmpeg
    .input(in_filename, **input_kwargs)
    .output('-', format='s16le', acodec='pcm_s16le', ac=1, ar='16k')
    .overwrite_output()
    .run(capture_stdout=True)
)
```
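With `format='s16le'`, `ac=1` and `ar='16k'`, `out` contains signed 16-bit little-endian samples, one channel, 16,000 samples per second. The standard-library sketch below (the helper name is hypothetical) turns those bytes into integers and a duration. It uses `struct` with an explicit `<` so the result does not depend on the host's byte order.

```python
import struct

SAMPLE_RATE = 16_000   # matches ar='16k' above
BYTES_PER_SAMPLE = 2   # s16le: 16-bit signed, little-endian; mono (ac=1)


def decode_s16le_mono(data):
    """Return (samples, duration_seconds) for raw mono s16le PCM bytes."""
    if len(data) % BYTES_PER_SAMPLE:
        raise ValueError("odd number of bytes; the PCM stream looks truncated")
    count = len(data) // BYTES_PER_SAMPLE
    samples = struct.unpack(f"<{count}h", data)  # '<' forces little-endian
    return list(samples), count / SAMPLE_RATE


samples, duration = decode_s16le_mono(b"\x00\x00\xff\x7f\x00\x80")
print(samples, duration)  # [0, 32767, -32768] 0.0001875
```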

## Assemble video from sequence of frames


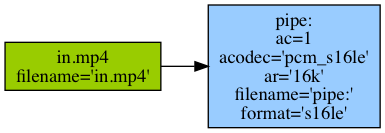


```python
(
    ffmpeg
    .input('/path/to/jpegs/*.jpg', pattern_type='glob', framerate=25)
    .output('movie.mp4')
    .run()
)
```
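Before encoding, it can help to confirm what the glob will pick up. This sketch uses Python's `pathlib` and is independent of FFmpeg's own glob matching. It assumes FFmpeg also takes the matches in sorted name order, which is why zero-padded names such as `frame_0002.jpg` are safer than `frame2.jpg` (otherwise `frame10` sorts before `frame2`). It lists the frames and estimates the clip length at the given `framerate`. The helper name `describe_frames` is hypothetical.

```python
from pathlib import Path


def describe_frames(directory, pattern="*.jpg", framerate=25):
    """Return (sorted frame paths, expected duration in seconds) for a frame directory."""
    # Lexicographic order: zero-pad frame numbers so frame10 doesn't precede frame2.
    frames = sorted(Path(directory).glob(pattern))
    return frames, len(frames) / framerate


frames, seconds = describe_frames("/path/to/jpegs", framerate=25)
print(f"{len(frames)} frames, about {seconds:.2f} s of video")
```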

With additional filtering:


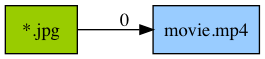


```python
(
    ffmpeg
    .input('/path/to/jpegs/*.jpg', pattern_type='glob', framerate=25)
    .filter('deflicker', mode='pm', size=10)
    .filter('scale', size='hd1080', force_original_aspect_ratio='increase')
    .output('movie.mp4', crf=20, preset='slower', movflags='faststart', pix_fmt='yuv420p')
    .view(filename='filter_graph')  # renders the filter graph; requires the graphviz package
    .run()
)
```

## Audio/video pipeline


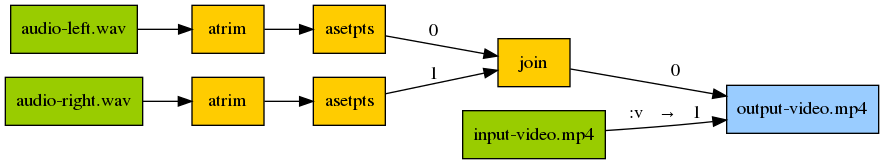


```python
in1 = ffmpeg.input('in1.mp4')
in2 = ffmpeg.input('in2.mp4')
v1 = in1.video.hflip()
a1 = in1.audio
v2 = in2.video.filter('reverse').filter('hue', s=0)
a2 = in2.audio.filter('areverse').filter('aphaser')
joined = ffmpeg.concat(v1, a1, v2, a2, v=1, a=1).node
v3 = joined[0]
a3 = joined[1].filter('volume', 0.8)
out = ffmpeg.output(v3, a3, 'out.mp4')
out.run()
```
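The argument order in `ffmpeg.concat(v1, a1, v2, a2, v=1, a=1)` matters. FFmpeg's `concat` filter expects its inputs grouped by segment, and each segment supplies its video stream(s) followed by its audio stream(s). A tiny hypothetical helper makes that ordering explicit when there are many segments; plain strings stand in for streams here.

```python
def concat_inputs(segments):
    """Flatten [(video, audio), ...] into the per-segment order concat expects."""
    ordered = []
    for video, audio in segments:
        ordered.extend([video, audio])  # v=1, a=1: video first, then audio
    return ordered


print(concat_inputs([("v1", "a1"), ("v2", "a2")]))  # ['v1', 'a1', 'v2', 'a2']
```

With real streams, this would be used as `ffmpeg.concat(*concat_inputs(pairs), v=1, a=1)`.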

## Mono to stereo with offsets and video


```python
audio_left = (
    ffmpeg
    .input('audio-left.wav')
    .filter('atrim', start=5)
    .filter('asetpts', 'PTS-STARTPTS')
)

audio_right = (
    ffmpeg
    .input('audio-right.wav')
    .filter('atrim', start=10)
    .filter('asetpts', 'PTS-STARTPTS')
)

input_video = ffmpeg.input('input-video.mp4')

(
    ffmpeg
    .filter((audio_left, audio_right), 'join', inputs=2, channel_layout='stereo')
    .output(input_video.video, 'output-video.mp4', shortest=None, vcodec='copy')
    .overwrite_output()
    .run()
)
```

## [Jupyter Frame Viewer](https://github.com/kkroening/ffmpeg-python/blob/master/examples/ffmpeg-numpy.ipynb)


## [Jupyter Stream Editor](https://github.com/kkroening/ffmpeg-python/blob/master/examples/ffmpeg-numpy.ipynb)


## Tensorflow streaming

- Decode input video with FFmpeg
- Process video with TensorFlow using the "deep dream" example
- Encode output video with FFmpeg

```python
process1 = (
    ffmpeg
    .input(in_filename)
    .output('pipe:', format='rawvideo', pix_fmt='rgb24', vframes=8)
    .run_async(pipe_stdout=True)
)

process2 = (
    ffmpeg
    .input('pipe:', format='rawvideo', pix_fmt='rgb24', s='{}x{}'.format(width, height))
    .output(out_filename, pix_fmt='yuv420p')
    .overwrite_output()
    .run_async(pipe_stdin=True)
)

while True:
    in_bytes = process1.stdout.read(width * height * 3)
    if not in_bytes:
        break
    in_frame = (
        np
        .frombuffer(in_bytes, np.uint8)
        .reshape([height, width, 3])
    )

    # See examples/tensorflow_stream.py:
    out_frame = deep_dream.process_frame(in_frame)

    process2.stdin.write(
        out_frame
        .astype(np.uint8)
        .tobytes()
    )

process2.stdin.close()
process1.wait()
process2.wait()
```
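The read loop relies on `process1.stdout.read(n)` returning either a whole frame or nothing once the stream ends. Below is a hypothetical generator version of that loop, shown against an in-memory `io.BytesIO` stand-in for the pipe. It also raises an error on a partial final frame instead of handing it to `reshape`.

```python
import io


def iter_frames(stream, frame_size):
    """Yield fixed-size frames from a binary stream until EOF.

    Raises ValueError if the stream ends partway through a frame.
    """
    while True:
        chunk = stream.read(frame_size)
        if not chunk:
            return  # clean end of stream
        if len(chunk) < frame_size:
            raise ValueError(f"truncated frame: got {len(chunk)} of {frame_size} bytes")
        yield chunk


width, height = 4, 2                           # illustrative dimensions
frame_size = width * height * 3                # rgb24
fake_pipe = io.BytesIO(bytes(frame_size * 3))  # stands in for process1.stdout
print(sum(1 for _ in iter_frames(fake_pipe, frame_size)))  # 3
```

In the loop above, `for in_bytes in iter_frames(process1.stdout, width * height * 3):` could replace the `while True` read and the `if not in_bytes` check.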
