
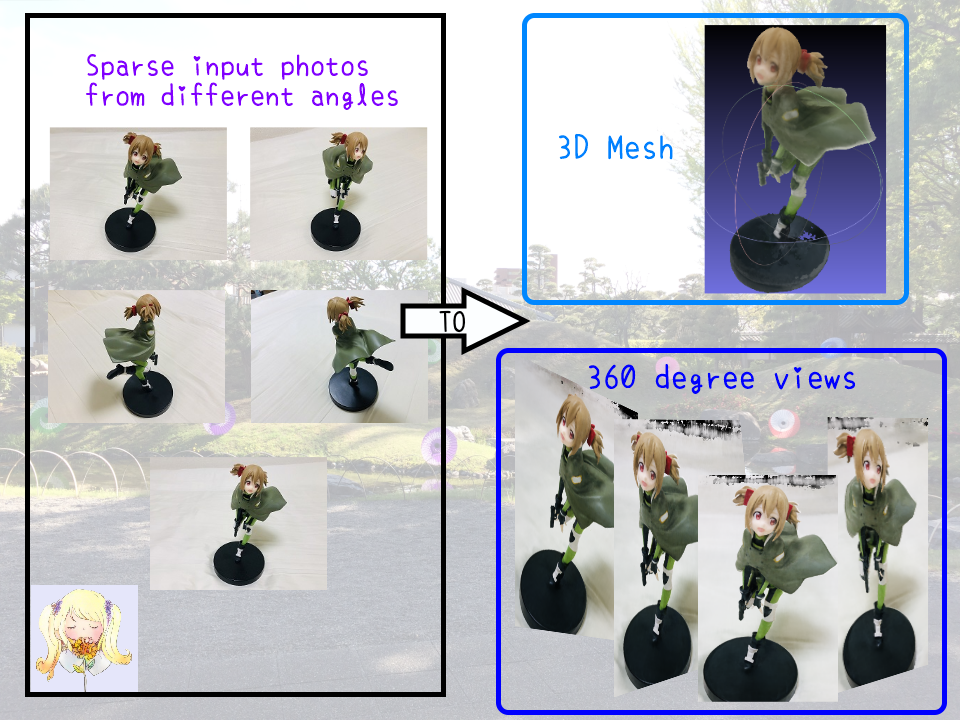

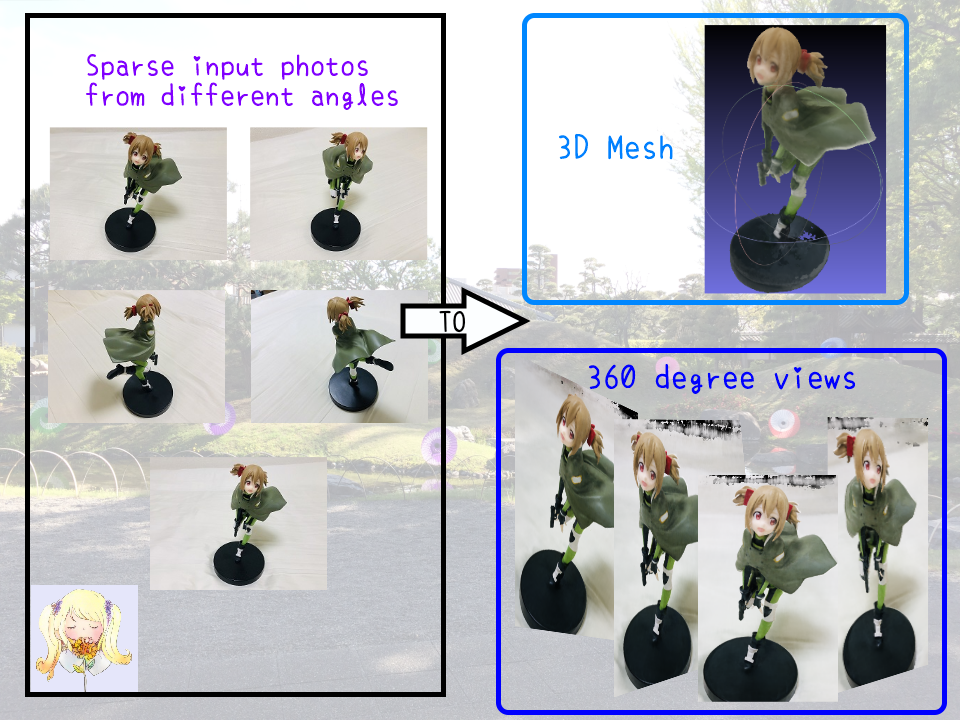

### Tutorial videos


# :computer: Installation

## Hardware

* OS: Ubuntu 18.04
* NVIDIA GPU with **CUDA>=10.1** (tested with 1 RTX2080Ti)

## Software

* Clone this repo by `git clone --recursive https://github.com/kwea123/nerf_pl`
* Python>=3.6 (installation via [anaconda](https://www.anaconda.com/distribution/) is recommended; use `conda create -n nerf_pl python=3.6` to create a conda environment and activate it with `conda activate nerf_pl`)
* Python libraries
    * Install core requirements by `pip install -r requirements.txt`
    * Install `torchsearchsorted` by `cd torchsearchsorted` then `pip install .`

# :key: Training

Please see each subsection for training on different datasets. Available training datasets:

* [Blender](#blender) (Realistic Synthetic 360)
* [LLFF](#llff) (Real Forward-Facing)
* [Your own data](#your-own-data) (Forward-Facing/360 inward-facing)

## Blender


by projecting the vertices onto this input image:


You'll notice the face appears on the mantle. Why is that? It is because of **occlusion**.

From the input image view, that spurious part of the mantle is actually occluded (blocked) by the face, so in reality we **shouldn't** assign color to it. The above process nevertheless assigns it the same color as the face, because those vertices are projected onto the face (in pixel coordinates) as well!

So the problem becomes: how do we correctly infer occlusion information, to know which vertices shouldn't be assigned colors? I tried two methods, the first of which turned out not to work well:

1. Use depth information

The first intuitive way is to leverage the vertices' depths (which are obtained when projecting the vertices onto the image plane): if two (or more) vertices are projected onto the **same** pixel coordinates, then only the nearest vertex is assigned color, and the rest remain untouched. However, this method won't work, since no two vertices will be projected onto the exact same location! As mentioned earlier, the pixel coordinates are floating-point numbers, so it is practically impossible for them to be exactly equal. If we round the coordinates to integers (which I tried as well), the method works, but it still left a lot of misclassified (occluded/non-occluded) vertices in my experiments.

2. Leverage NeRF model

A smarter way I found to infer occlusion is to use the NeRF model itself. Recall that a NeRF model can estimate the opacity (or density) along a ray path (the following figure c):

We can leverage that information to tell whether a vertex is occluded. More concretely, we form rays originating from the camera origin and ending at the vertices, and compute the total opacity along these rays. If a vertex is not occluded, the opacity will be small; otherwise, the value will be large, meaning that something lies between the vertex and the camera.
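The opacity test described above can be sketched in a few lines. This is a minimal illustration and not the repo's implementation: it assumes the densities `sigmas` have already been queried from a trained NeRF at sample points between the camera and the vertex, and it uses the standard volume rendering relation `1 - exp(-sum(sigma_i * delta_i))`. The helper names and the `threshold` value are hypothetical.

```python
import math


def accumulated_opacity(sigmas, deltas):
    """Opacity accumulated along one ray: 1 - exp(-sum(sigma_i * delta_i)).

    sigmas: densities at the samples between the camera and the vertex
            (assumed to stop just before the vertex itself)
    deltas: distance covered by each sample along the ray
    """
    optical_depth = sum(s * d for s, d in zip(sigmas, deltas))
    return 1.0 - math.exp(-optical_depth)


def is_occluded(sigmas, deltas, threshold=0.5):
    """Hypothetical decision rule: a large accumulated opacity means occluded."""
    return accumulated_opacity(sigmas, deltas) > threshold


# A ray crossing empty space vs. a ray crossing something dense.
free_ray = accumulated_opacity([0.0, 0.0, 0.0], [0.1, 0.1, 0.1])
blocked_ray = accumulated_opacity([0.0, 50.0, 50.0], [0.1, 0.1, 0.1])
```

The threshold is a tuning choice; vertices whose ray exceeds it would simply keep their default color for this view.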

After applying this method, this is what we get (by projecting the vertices onto the input view as above):


The spurious face on the mantle disappears, and the colored pixels are almost exactly the ones we can observe in the image. By default all vertices are set to black, so a black vertex means it is occluded in this view; it will be assigned a color when we switch to other views.

# Finally...

This is the final result:


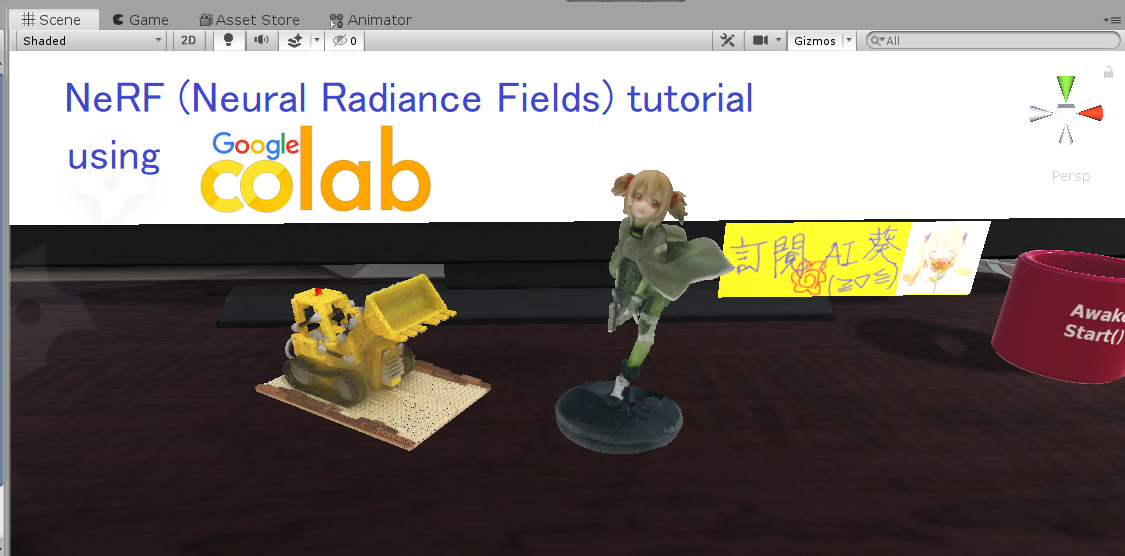

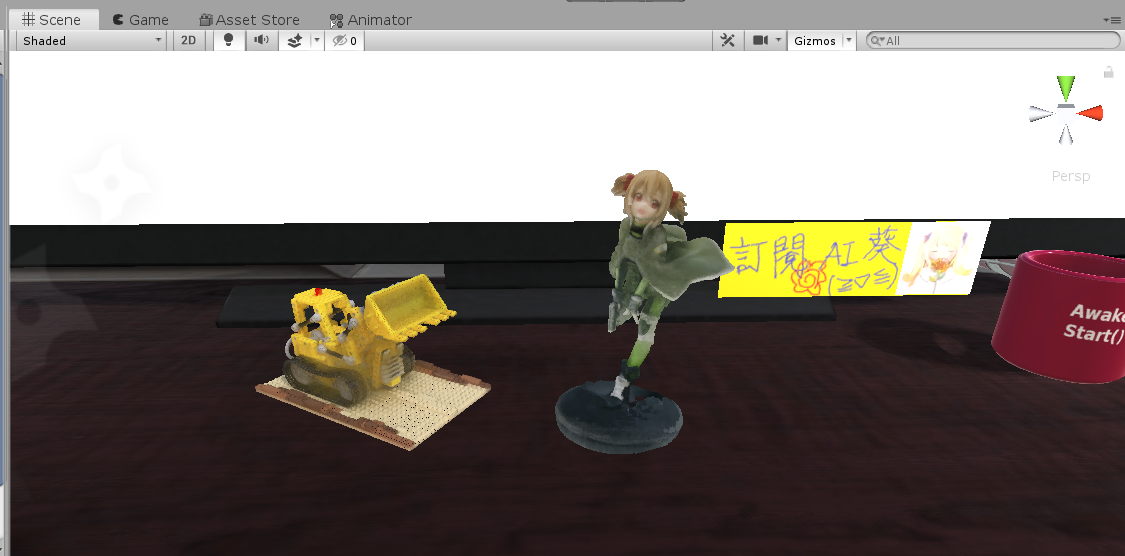

We can then export this `.ply` file to any other format and embed it in other programs, as I did in Unity:
(I use [this plugin](https://github.com/kwea123/Pcx) to import the `.ply` file into Unity, and made some modifications so that it can also read mesh triangles, not only the points.)

A MeshCollider can be attached to the meshes so that they can interact with other objects. You can see [this video](https://youtu.be/I2M0xhnrBos) for a demo.

## Further reading
The author suggested [another way](https://github.com/bmild/nerf/issues/44#issuecomment-622961303) to extract color. In my experiments it didn't turn out well, but the idea is reasonable and interesting. You can also test this by setting `--use_vertex_normal`.

--------------------------------------------------------------------------------

/datasets/__init__.py:

--------------------------------------------------------------------------------

from .blender import BlenderDataset
from .llff import LLFFDataset

dataset_dict = {'blender': BlenderDataset,
                'llff': LLFFDataset}

--------------------------------------------------------------------------------

/datasets/blender.py:

--------------------------------------------------------------------------------

import torch
from torch.utils.data import Dataset
import json
import numpy as np
import os
from PIL import Image
from torchvision import transforms as T

from .ray_utils import *

class BlenderDataset(Dataset):
    def __init__(self, root_dir, split='train', img_wh=(800, 800)):
        self.root_dir = root_dir
        self.split = split
        assert img_wh[0] == img_wh[1], 'image width must equal image height!'
        self.img_wh = img_wh
        self.define_transforms()

        self.read_meta()
        self.white_back = True

    def read_meta(self):
        with open(os.path.join(self.root_dir,
                               f"transforms_{self.split}.json"), 'r') as f:
            self.meta = json.load(f)

        w, h = self.img_wh
        self.focal = 0.5*800/np.tan(0.5*self.meta['camera_angle_x']) # original focal length
                                                                     # when W=800

        self.focal *= self.img_wh[0]/800 # modify focal length to match size self.img_wh

        # bounds, common for all scenes
        self.near = 2.0
        self.far = 6.0
        self.bounds = np.array([self.near, self.far])

        # ray directions for all pixels, same for all images (same H, W, focal)
        self.directions = \
            get_ray_directions(h, w, self.focal) # (h, w, 3)

        if self.split == 'train': # create buffer of all rays and rgb data
            self.image_paths = []
            self.poses = []
            self.all_rays = []
            self.all_rgbs = []
            for frame in self.meta['frames']:
                pose = np.array(frame['transform_matrix'])[:3, :4]
                self.poses += [pose]
                c2w = torch.FloatTensor(pose)

                image_path = os.path.join(self.root_dir, f"{frame['file_path']}.png")
                self.image_paths += [image_path]
                img = Image.open(image_path)
                img = img.resize(self.img_wh, Image.LANCZOS)
                img = self.transform(img) # (4, h, w)
                img = img.view(4, -1).permute(1, 0) # (h*w, 4) RGBA
                img = img[:, :3]*img[:, -1:] + (1-img[:, -1:]) # blend A to RGB (white background)
                self.all_rgbs += [img]

                rays_o, rays_d = get_rays(self.directions, c2w) # both (h*w, 3)

                self.all_rays += [torch.cat([rays_o, rays_d,
                                             self.near*torch.ones_like(rays_o[:, :1]),
                                             self.far*torch.ones_like(rays_o[:, :1])],
                                             1)] # (h*w, 8)

            self.all_rays = torch.cat(self.all_rays, 0) # (len(self.meta['frames'])*h*w, 8)
            self.all_rgbs = torch.cat(self.all_rgbs, 0) # (len(self.meta['frames'])*h*w, 3)

    def define_transforms(self):
        self.transform = T.ToTensor()

    def __len__(self):
        if self.split == 'train':
            return len(self.all_rays)
        if self.split == 'val':
            return 8 # only validate 8 images (to support <=8 gpus)
        return len(self.meta['frames'])

    def __getitem__(self, idx):
        if self.split == 'train': # use data in the buffers
            sample = {'rays': self.all_rays[idx],
                      'rgbs': self.all_rgbs[idx]}

        else: # create data for each image separately
            frame = self.meta['frames'][idx]
            c2w = torch.FloatTensor(frame['transform_matrix'])[:3, :4]

            img = Image.open(os.path.join(self.root_dir, f"{frame['file_path']}.png"))
            img = img.resize(self.img_wh, Image.LANCZOS)
            img = self.transform(img) # (4, H, W)
            valid_mask = (img[-1]>0).flatten() # (H*W) valid color area
            img = img.view(4, -1).permute(1, 0) # (H*W, 4) RGBA
            img = img[:, :3]*img[:, -1:] + (1-img[:, -1:]) # blend A to RGB (white background)

            rays_o, rays_d = get_rays(self.directions, c2w)

            rays = torch.cat([rays_o, rays_d,
                              self.near*torch.ones_like(rays_o[:, :1]),
                              self.far*torch.ones_like(rays_o[:, :1])],
                              1) # (H*W, 8)

            sample = {'rays': rays,
                      'rgbs': img,
                      'c2w': c2w,
                      'valid_mask': valid_mask}

        return sample
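Two small computations in `BlenderDataset` are easy to check by hand: the focal length derived from `camera_angle_x`, and the alpha blend onto a white background. The following is a pure-Python sketch of both for a single value and a single pixel; the helper names `focal_from_fov` and `blend_on_white` are hypothetical and do not exist in the repo.

```python
import math


def focal_from_fov(camera_angle_x, width):
    """Focal length in pixels for a horizontal field of view given in radians."""
    return 0.5 * width / math.tan(0.5 * camera_angle_x)


def blend_on_white(rgb, alpha):
    """Composite one RGBA pixel (values in [0, 1]) onto a white background."""
    return tuple(c * alpha + (1.0 - alpha) for c in rgb)


# Computing the focal length at W=800 and rescaling it, as read_meta does,
# gives the same value as computing it directly at the target width.
focal_800 = focal_from_fov(0.69, 800)
focal_400 = focal_800 * 400 / 800

transparent_pixel = blend_on_white((0.2, 0.4, 0.6), 0.0)  # becomes white
opaque_pixel = blend_on_white((0.2, 0.4, 0.6), 1.0)       # keeps its color
```

The field-of-view value `0.69` is only an illustrative number, not one read from a dataset file.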

--------------------------------------------------------------------------------

/datasets/depth_utils.py:

--------------------------------------------------------------------------------

import numpy as np
import re
import sys

def read_pfm(filename):
    file = open(filename, 'rb')
    color = None
    width = None
    height = None
    scale = None
    endian = None

    header = file.readline().decode('utf-8').rstrip()
    if header == 'PF':
        color = True
    elif header == 'Pf':
        color = False
    else:
        raise Exception('Not a PFM file.')

    dim_match = re.match(r'^(\d+)\s(\d+)\s$', file.readline().decode('utf-8'))
    if dim_match:
        width, height = map(int, dim_match.groups())
    else:
        raise Exception('Malformed PFM header.')

    scale = float(file.readline().rstrip())
    if scale < 0:  # little-endian
        endian = '<'
        scale = -scale
    else:
        endian = '>'  # big-endian

    data = np.fromfile(file, endian + 'f')
    shape = (height, width, 3) if color else (height, width)

    data = np.reshape(data, shape)
    data = np.flipud(data)
    file.close()
    return data, scale


def save_pfm(filename, image, scale=1):
    file = open(filename, "wb")
    color = None

    image = np.flipud(image)

    if image.dtype.name != 'float32':
        raise Exception('Image dtype must be float32.')

    if len(image.shape) == 3 and image.shape[2] == 3:  # color image
        color = True
    elif len(image.shape) == 2 or len(image.shape) == 3 and image.shape[2] == 1:  # greyscale
        color = False
    else:
        raise Exception('Image must have H x W x 3, H x W x 1 or H x W dimensions.')

    file.write('PF\n'.encode('utf-8') if color else 'Pf\n'.encode('utf-8'))
    file.write('{} {}\n'.format(image.shape[1], image.shape[0]).encode('utf-8'))

    endian = image.dtype.byteorder

    if endian == '<' or endian == '=' and sys.byteorder == 'little':
        scale = -scale

    file.write(('%f\n' % scale).encode('utf-8'))

    image.tofile(file)
    file.close()
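The PFM layout that `read_pfm` and `save_pfm` rely on (a three-line text header, then raw floats stored bottom row first, with a negative scale marking little-endian data) can be illustrated without NumPy. The sketch below is a simplified, greyscale-only round trip over an in-memory buffer; `write_pfm_gray` and `read_pfm_gray` are hypothetical names and are not part of the repo.

```python
import io
import struct


def write_pfm_gray(stream, rows, scale=1.0):
    """Write a greyscale image (list of rows of floats) as little-endian PFM."""
    height, width = len(rows), len(rows[0])
    stream.write(b"Pf\n")
    stream.write(f"{width} {height}\n".encode("utf-8"))
    stream.write(f"{-scale:f}\n".encode("utf-8"))  # negative scale = little-endian
    for row in reversed(rows):  # PFM stores the bottom row first
        stream.write(struct.pack(f"<{width}f", *row))


def read_pfm_gray(stream):
    """Read back a greyscale PFM; returns (rows, scale) with the top row first."""
    if stream.readline().decode("utf-8").rstrip() != "Pf":
        raise ValueError("Not a greyscale PFM file.")
    width, height = map(int, stream.readline().decode("utf-8").split())
    scale = float(stream.readline().decode("utf-8").rstrip())
    endian = "<" if scale < 0 else ">"
    rows = [list(struct.unpack(f"{endian}{width}f", stream.read(4 * width)))
            for _ in range(height)]
    rows.reverse()  # undo the bottom-to-top storage order
    return rows, abs(scale)


buffer = io.BytesIO()
write_pfm_gray(buffer, [[1.0, 2.0], [3.0, 4.5]])
buffer.seek(0)
rows, scale = read_pfm_gray(buffer)
```

The sample values are chosen to be exactly representable as 32-bit floats, so the round trip returns them unchanged.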

--------------------------------------------------------------------------------

/datasets/llff.py:

--------------------------------------------------------------------------------

import torch
from torch.utils.data import Dataset
import glob
import numpy as np
import os
from PIL import Image
from torchvision import transforms as T

from .ray_utils import *


def normalize(v):
    """Normalize a vector."""
    return v/np.linalg.norm(v)


def average_poses(poses):
    """
    Calculate the average pose, which is then used to center all poses
    using @center_poses. Its computation is as follows:
    1. Compute the center: the average of pose centers.
    2. Compute the z axis: the normalized average z axis.
    3. Compute axis y': the average y axis.
    4. Compute x' = y' cross product z, then normalize it as the x axis.
    5. Compute the y axis: z cross product x.

    Note that at step 3, we cannot directly use y' as y axis since it's
    not necessarily orthogonal to z axis. We need to pass from x to y.

    Inputs:
        poses: (N_images, 3, 4)

    Outputs:
        pose_avg: (3, 4) the average pose
    """
    # 1. Compute the center
    center = poses[..., 3].mean(0) # (3)

    # 2. Compute the z axis
    z = normalize(poses[..., 2].mean(0)) # (3)

    # 3. Compute axis y' (no need to normalize as it's not the final output)
    y_ = poses[..., 1].mean(0) # (3)

    # 4. Compute the x axis
    x = normalize(np.cross(y_, z)) # (3)

    # 5. Compute the y axis (as z and x are normalized, y is already of norm 1)
    y = np.cross(z, x) # (3)

    pose_avg = np.stack([x, y, z, center], 1) # (3, 4)

    return pose_avg

def center_poses(poses):
    """
    Center the poses so that we can use NDC.
    See https://github.com/bmild/nerf/issues/34

    Inputs:
        poses: (N_images, 3, 4)

    Outputs:
        poses_centered: (N_images, 3, 4) the centered poses
        pose_avg_inv: (4, 4) the inverse of the homogeneous average pose
    """

    pose_avg = average_poses(poses) # (3, 4)
    pose_avg_homo = np.eye(4)
    pose_avg_homo[:3] = pose_avg # convert to homogeneous coordinate for faster computation
                                 # by simply adding 0, 0, 0, 1 as the last row
    last_row = np.tile(np.array([0, 0, 0, 1]), (len(poses), 1, 1)) # (N_images, 1, 4)
    poses_homo = \
        np.concatenate([poses, last_row], 1) # (N_images, 4, 4) homogeneous coordinate

    poses_centered = np.linalg.inv(pose_avg_homo) @ poses_homo # (N_images, 4, 4)
    poses_centered = poses_centered[:, :3] # (N_images, 3, 4)

    return poses_centered, np.linalg.inv(pose_avg_homo)

def create_spiral_poses(radii, focus_depth, n_poses=120):
    """
    Computes poses that follow a spiral path for rendering purpose.
    See https://github.com/Fyusion/LLFF/issues/19
    In particular, the path looks like:
    https://tinyurl.com/ybgtfns3

    Inputs:
        radii: (3) radii of the spiral for each axis
        focus_depth: float, the depth that the spiral poses look at
        n_poses: int, number of poses to create along the path

    Outputs:
        poses_spiral: (n_poses, 3, 4) the poses in the spiral path
    """

    poses_spiral = []
    for t in np.linspace(0, 4*np.pi, n_poses+1)[:-1]: # rotate 4pi (2 rounds)
        # the parametric function of the spiral (see the interactive web)
        center = np.array([np.cos(t), -np.sin(t), -np.sin(0.5*t)]) * radii

        # the viewing z axis is the vector pointing from the @focus_depth plane
        # to @center
        z = normalize(center - np.array([0, 0, -focus_depth]))

        # compute other axes as in @average_poses
        y_ = np.array([0, 1, 0]) # (3)
        x = normalize(np.cross(y_, z)) # (3)
        y = np.cross(z, x) # (3)

        poses_spiral += [np.stack([x, y, z, center], 1)] # (3, 4)

    return np.stack(poses_spiral, 0) # (n_poses, 3, 4)

def create_spheric_poses(radius, n_poses=120):
    """
    Create circular poses around z axis.
    Inputs:
        radius: the (negative) height and the radius of the circle.

    Outputs:
        spheric_poses: (n_poses, 3, 4) the poses in the circular path
    """
    def spheric_pose(theta, phi, radius):
        trans_t = lambda t : np.array([
            [1,0,0,0],
            [0,1,0,-0.9*t],
            [0,0,1,t],
            [0,0,0,1],
        ])

        rot_phi = lambda phi : np.array([
            [1,0,0,0],
            [0,np.cos(phi),-np.sin(phi),0],
            [0,np.sin(phi), np.cos(phi),0],
            [0,0,0,1],
        ])

        rot_theta = lambda th : np.array([
            [np.cos(th),0,-np.sin(th),0],
            [0,1,0,0],
            [np.sin(th),0, np.cos(th),0],
            [0,0,0,1],
        ])

        c2w = rot_theta(theta) @ rot_phi(phi) @ trans_t(radius)
        c2w = np.array([[-1,0,0,0],[0,0,1,0],[0,1,0,0],[0,0,0,1]]) @ c2w
        return c2w[:3]

    spheric_poses = []
    for th in np.linspace(0, 2*np.pi, n_poses+1)[:-1]:
        spheric_poses += [spheric_pose(th, -np.pi/5, radius)] # 36 degree view downwards
    return np.stack(spheric_poses, 0)

class LLFFDataset(Dataset):
    def __init__(self, root_dir, split='train', img_wh=(504, 378), spheric_poses=False, val_num=1):
        """
        spheric_poses: whether the images are taken in a spheric inward-facing manner
                       default: False (forward-facing)
        val_num: number of val images (used for multigpu training, validate same image for all gpus)
        """
        self.root_dir = root_dir
        self.split = split
        self.img_wh = img_wh
        self.spheric_poses = spheric_poses
        self.val_num = max(1, val_num) # at least 1
        self.define_transforms()

        self.read_meta()
        self.white_back = False

    def read_meta(self):
        poses_bounds = np.load(os.path.join(self.root_dir,
                                            'poses_bounds.npy')) # (N_images, 17)
        self.image_paths = sorted(glob.glob(os.path.join(self.root_dir, 'images/*')))
                            # load full resolution image then resize
        if self.split in ['train', 'val']:
            assert len(poses_bounds) == len(self.image_paths), \
                'Mismatch between number of images and number of poses! Please rerun COLMAP!'

        poses = poses_bounds[:, :15].reshape(-1, 3, 5) # (N_images, 3, 5)
        self.bounds = poses_bounds[:, -2:] # (N_images, 2)

        # Step 1: rescale focal length according to training resolution
        H, W, self.focal = poses[0, :, -1] # original intrinsics, same for all images
        assert H*self.img_wh[0] == W*self.img_wh[1], \
            f'You must set @img_wh to have the same aspect ratio as ({W}, {H}) !'

        self.focal *= self.img_wh[0]/W

        # Step 2: correct poses
        # Original poses has rotation in form "down right back", change to "right up back"
        # See https://github.com/bmild/nerf/issues/34
        poses = np.concatenate([poses[..., 1:2], -poses[..., :1], poses[..., 2:4]], -1)
                # (N_images, 3, 4) exclude H, W, focal
        self.poses, self.pose_avg = center_poses(poses)
        distances_from_center = np.linalg.norm(self.poses[..., 3], axis=1)
        val_idx = np.argmin(distances_from_center) # choose val image as the closest to
                                                   # center image

        # Step 3: correct scale so that the nearest depth is at a little more than 1.0
        # See https://github.com/bmild/nerf/issues/34
        near_original = self.bounds.min()
        scale_factor = near_original*0.75 # 0.75 is the default parameter
                                          # the nearest depth is at 1/0.75=1.33
        self.bounds /= scale_factor
        self.poses[..., 3] /= scale_factor

        # ray directions for all pixels, same for all images (same H, W, focal)
        self.directions = \
            get_ray_directions(self.img_wh[1], self.img_wh[0], self.focal) # (H, W, 3)

        if self.split == 'train': # create buffer of all rays and rgb data
                                  # train on all images except the val image
                                  # (the one closest to the center, see val_idx)
            self.all_rays = []
            self.all_rgbs = []
            for i, image_path in enumerate(self.image_paths):
                if i == val_idx: # exclude the val image
                    continue
                c2w = torch.FloatTensor(self.poses[i])

                img = Image.open(image_path).convert('RGB')
                assert img.size[1]*self.img_wh[0] == img.size[0]*self.img_wh[1], \
                    f'''{image_path} has different aspect ratio than img_wh,
                        please check your data!'''
                img = img.resize(self.img_wh, Image.LANCZOS)
                img = self.transform(img) # (3, h, w)
                img = img.view(3, -1).permute(1, 0) # (h*w, 3) RGB
                self.all_rgbs += [img]

                rays_o, rays_d = get_rays(self.directions, c2w) # both (h*w, 3)
                if not self.spheric_poses:
                    near, far = 0, 1
                    rays_o, rays_d = get_ndc_rays(self.img_wh[1], self.img_wh[0],
                                                  self.focal, 1.0, rays_o, rays_d)
                                     # near plane is always at 1.0
                                     # near and far in NDC are always 0 and 1
                                     # See https://github.com/bmild/nerf/issues/34
                else:
                    near = self.bounds.min()
                    far = min(8 * near, self.bounds.max()) # focus on central object only

                self.all_rays += [torch.cat([rays_o, rays_d,
                                             near*torch.ones_like(rays_o[:, :1]),
                                             far*torch.ones_like(rays_o[:, :1])],
                                             1)] # (h*w, 8)

            self.all_rays = torch.cat(self.all_rays, 0) # ((N_images-1)*h*w, 8)
            self.all_rgbs = torch.cat(self.all_rgbs, 0) # ((N_images-1)*h*w, 3)

        elif self.split == 'val':
            print('val image is', self.image_paths[val_idx])
            self.c2w_val = self.poses[val_idx]
            self.image_path_val = self.image_paths[val_idx]

        else: # for testing, create a parametric rendering path
            if self.split.endswith('train'): # test on training set
                self.poses_test = self.poses
            elif not self.spheric_poses:
                focus_depth = 3.5 # hardcoded, this is numerically close to the formula
                                  # given in the original repo. Mathematically if near=1
                                  # and far=infinity, then this number will converge to 4
                radii = np.percentile(np.abs(self.poses[..., 3]), 90, axis=0)
                self.poses_test = create_spiral_poses(radii, focus_depth)
            else:
                radius = 1.1 * self.bounds.min()
                self.poses_test = create_spheric_poses(radius)

    def define_transforms(self):
        self.transform = T.ToTensor()

    def __len__(self):
        if self.split == 'train':
            return len(self.all_rays)
        if self.split == 'val':
            return self.val_num
        return len(self.poses_test)

    def __getitem__(self, idx):
        if self.split == 'train': # use data in the buffers
            sample = {'rays': self.all_rays[idx],
                      'rgbs': self.all_rgbs[idx]}

        else:
            if self.split == 'val':
                c2w = torch.FloatTensor(self.c2w_val)
            else:
                c2w = torch.FloatTensor(self.poses_test[idx])

            rays_o, rays_d = get_rays(self.directions, c2w)
            if not self.spheric_poses:
                near, far = 0, 1
                rays_o, rays_d = get_ndc_rays(self.img_wh[1], self.img_wh[0],
                                              self.focal, 1.0, rays_o, rays_d)
            else:
                near = self.bounds.min()
                far = min(8 * near, self.bounds.max())

            rays = torch.cat([rays_o, rays_d,
                              near*torch.ones_like(rays_o[:, :1]),
                              far*torch.ones_like(rays_o[:, :1])],
                              1) # (h*w, 8)

            sample = {'rays': rays,
                      'c2w': c2w}

            if self.split == 'val':
                img = Image.open(self.image_path_val).convert('RGB')
                img = img.resize(self.img_wh, Image.LANCZOS)
                img = self.transform(img) # (3, h, w)
                img = img.view(3, -1).permute(1, 0) # (h*w, 3)
                sample['rgbs'] = img

        return sample
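The axis construction used in `average_poses` (steps 2 to 5 of its docstring) can be checked in isolation. The sketch below is a pure-Python illustration on two made-up averaged axes rather than real pose data; `cross`, `dot`, and `orthonormal_axes` are hypothetical helpers, not functions from the repo.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def orthonormal_axes(z_mean, y_mean):
    """Build an orthonormal (x, y, z) frame from an averaged z and y' axis."""
    z = normalize(z_mean)              # step 2: normalized average z
    x = normalize(cross(y_mean, z))    # step 4: x = y' cross z, normalized
    y = cross(z, x)                    # step 5: y = z cross x, already unit length
    return x, y, z


# y' is deliberately not orthogonal to z here, as the docstring warns can happen.
x, y, z = orthonormal_axes((0.0, 0.1, 1.0), (0.0, 1.0, 0.2))
```

Going through `x` is what makes the final `y` orthogonal to `z` even though the averaged `y'` was not.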

--------------------------------------------------------------------------------

/datasets/ray_utils.py:

--------------------------------------------------------------------------------

import torch
from kornia import create_meshgrid


def get_ray_directions(H, W, focal):
    """
    Get ray directions for all pixels in camera coordinate.
    Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/
               ray-tracing-generating-camera-rays/standard-coordinate-systems

    Inputs:
        H, W, focal: image height, width and focal length

    Outputs:
        directions: (H, W, 3), the direction of the rays in camera coordinate
    """
    grid = create_meshgrid(H, W, normalized_coordinates=False)[0]
    i, j = grid.unbind(-1)
    # the direction here is without +0.5 pixel centering as calibration is not so accurate
    # see https://github.com/bmild/nerf/issues/24
    directions = \
        torch.stack([(i-W/2)/focal, -(j-H/2)/focal, -torch.ones_like(i)], -1) # (H, W, 3)

    return directions


def get_rays(directions, c2w):
    """
    Get ray origin and normalized directions in world coordinate for all pixels in one image.
    Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/
               ray-tracing-generating-camera-rays/standard-coordinate-systems

    Inputs:
        directions: (H, W, 3) precomputed ray directions in camera coordinate
        c2w: (3, 4) transformation matrix from camera coordinate to world coordinate

    Outputs:
        rays_o: (H*W, 3), the origin of the rays in world coordinate
        rays_d: (H*W, 3), the normalized direction of the rays in world coordinate
    """
    # Rotate ray directions from camera coordinate to the world coordinate
    rays_d = directions @ c2w[:, :3].T # (H, W, 3)
    rays_d = rays_d / torch.norm(rays_d, dim=-1, keepdim=True)
    # The origin of all rays is the camera origin in world coordinate
    rays_o = c2w[:, 3].expand(rays_d.shape) # (H, W, 3)

    rays_d = rays_d.view(-1, 3)
    rays_o = rays_o.view(-1, 3)

    return rays_o, rays_d


def get_ndc_rays(H, W, focal, near, rays_o, rays_d):
    """
    Transform rays from world coordinate to NDC.
    NDC: Space such that the canvas is a cube with sides [-1, 1] in each axis.
    For detailed derivation, please see:
    http://www.songho.ca/opengl/gl_projectionmatrix.html
    https://github.com/bmild/nerf/files/4451808/ndc_derivation.pdf

    In practice, use NDC "if and only if" the scene is unbounded (has a large depth).
    See https://github.com/bmild/nerf/issues/18

    Inputs:
        H, W, focal: image height, width and focal length
        near: (N_rays) or float, the depths of the near plane
        rays_o: (N_rays, 3), the origin of the rays in world coordinate
        rays_d: (N_rays, 3), the direction of the rays in world coordinate

    Outputs:
        rays_o: (N_rays, 3), the origin of the rays in NDC
        rays_d: (N_rays, 3), the direction of the rays in NDC
    """
    # Shift ray origins to near plane
    t = -(near + rays_o[...,2]) / rays_d[...,2]
    rays_o = rays_o + t[...,None] * rays_d

    # Store some intermediate homogeneous results
    ox_oz = rays_o[...,0] / rays_o[...,2]
    oy_oz = rays_o[...,1] / rays_o[...,2]

    # Projection
    o0 = -1./(W/(2.*focal)) * ox_oz
    o1 = -1./(H/(2.*focal)) * oy_oz
    o2 = 1. + 2. * near / rays_o[...,2]

    d0 = -1./(W/(2.*focal)) * (rays_d[...,0]/rays_d[...,2] - ox_oz)
    d1 = -1./(H/(2.*focal)) * (rays_d[...,1]/rays_d[...,2] - oy_oz)
    d2 = 1 - o2

    rays_o = torch.stack([o0, o1, o2], -1) # (B, 3)
    rays_d = torch.stack([d0, d1, d2], -1) # (B, 3)

    return rays_o, rays_d
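To see what the formulas in `get_ray_directions` and `get_ndc_rays` do to one ray, they can be transcribed for a single pixel in plain Python. This is a sketch for checking the arithmetic by hand, not a replacement for the batched torch code; `pixel_direction` and `ndc_ray` are hypothetical names, and the image size and focal length are illustrative values.

```python
def pixel_direction(i, j, H, W, focal):
    """Camera-space ray direction for pixel column i, row j (no +0.5 centering)."""
    return ((i - W / 2) / focal, -(j - H / 2) / focal, -1.0)


def ndc_ray(H, W, focal, near, o, d):
    """Single-ray version of the NDC transform; o and d are 3-tuples."""
    # Shift the ray origin onto the near plane z = -near
    t = -(near + o[2]) / d[2]
    o = tuple(oc + t * dc for oc, dc in zip(o, d))

    ox_oz, oy_oz = o[0] / o[2], o[1] / o[2]
    sx, sy = -2.0 * focal / W, -2.0 * focal / H  # same as -1/(W/(2*focal)), -1/(H/(2*focal))

    o_ndc = (sx * ox_oz, sy * oy_oz, 1.0 + 2.0 * near / o[2])
    d_ndc = (sx * (d[0] / d[2] - ox_oz),
             sy * (d[1] / d[2] - oy_oz),
             1.0 - o_ndc[2])
    return o_ndc, d_ndc


H, W, focal = 378, 504, 400.0
direction = pixel_direction(0, 0, H, W, focal)  # top-left pixel
o_ndc, d_ndc = ndc_ray(H, W, focal, 1.0, (0.0, 0.0, 0.0), direction)
```

For a camera at the origin, the top-left pixel lands on the corner `(-1, 1, -1)` of the NDC cube and its direction becomes parallel to the z axis, so the ray parameter runs from 0 at the near plane to 1 at infinity.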

--------------------------------------------------------------------------------

/docs/.gitignore:

--------------------------------------------------------------------------------

_site
.sass-cache
.jekyll-cache
.jekyll-metadata
vendor

--------------------------------------------------------------------------------

/docs/Gemfile:

--------------------------------------------------------------------------------

source 'https://rubygems.org'
gem 'github-pages', group: :jekyll_plugins

--------------------------------------------------------------------------------

/docs/Gemfile.lock:

--------------------------------------------------------------------------------

GEM
  remote: https://rubygems.org/
  specs:
    activesupport (>= 6.0.3.1)
      concurrent-ruby (~> 1.0, >= 1.0.2)
      i18n (>= 0.7, < 2)
      minitest (~> 5.1)
      tzinfo (~> 1.1)
      zeitwerk (~> 2.2, >= 2.2.2)
    addressable (2.7.0)
      public_suffix (>= 2.0.2, < 5.0)
    coffee-script (2.4.1)
      coffee-script-source
      execjs
    coffee-script-source (1.11.1)
    colorator (1.1.0)
    commonmarker (0.17.13)
      ruby-enum (~> 0.5)
    concurrent-ruby (1.1.6)
    dnsruby (1.61.3)
      addressable (~> 2.5)
    em-websocket (0.5.1)
      eventmachine (>= 0.12.9)
      http_parser.rb (~> 0.6.0)
    ethon (0.12.0)
      ffi (>= 1.3.0)
    eventmachine (1.2.7)
    execjs (2.7.0)
    faraday (1.0.1)
      multipart-post (>= 1.2, < 3)
    ffi (1.12.2)
    forwardable-extended (2.6.0)
    gemoji (3.0.1)
    github-pages (204)
      github-pages-health-check (= 1.16.1)
      jekyll (= 3.8.5)
      jekyll-avatar (= 0.7.0)
      jekyll-coffeescript (= 1.1.1)
      jekyll-commonmark-ghpages (= 0.1.6)
      jekyll-default-layout (= 0.1.4)
      jekyll-feed (= 0.13.0)
      jekyll-gist (= 1.5.0)
      jekyll-github-metadata (= 2.13.0)
      jekyll-mentions (= 1.5.1)
      jekyll-optional-front-matter (= 0.3.2)
      jekyll-paginate (= 1.1.0)
      jekyll-readme-index (= 0.3.0)
      jekyll-redirect-from (= 0.15.0)
      jekyll-relative-links (= 0.6.1)
      jekyll-remote-theme (= 0.4.1)
      jekyll-sass-converter (= 1.5.2)
      jekyll-seo-tag (= 2.6.1)
      jekyll-sitemap (= 1.4.0)
      jekyll-swiss (= 1.0.0)
      jekyll-theme-architect (= 0.1.1)
      jekyll-theme-cayman (= 0.1.1)
      jekyll-theme-dinky (= 0.1.1)
      jekyll-theme-hacker (= 0.1.1)
      jekyll-theme-leap-day (= 0.1.1)
      jekyll-theme-merlot (= 0.1.1)
      jekyll-theme-midnight (= 0.1.1)
      jekyll-theme-minimal (= 0.1.1)
      jekyll-theme-modernist (= 0.1.1)
      jekyll-theme-primer (= 0.5.4)
      jekyll-theme-slate (= 0.1.1)
      jekyll-theme-tactile (= 0.1.1)
      jekyll-theme-time-machine (= 0.1.1)
      jekyll-titles-from-headings (= 0.5.3)
      jemoji (= 0.11.1)
      kramdown (>= 2.3.0)
      liquid (= 4.0.3)
      mercenary (~> 0.3)
      minima (= 2.5.1)
      nokogiri (>= 1.11.0.rc4, < 2.0)
      rouge (= 3.13.0)
      terminal-table (~> 1.4)
    github-pages-health-check (1.16.1)
      addressable (~> 2.3)
      dnsruby (~> 1.60)
      octokit (~> 4.0)
      public_suffix (~> 3.0)
      typhoeus (~> 1.3)
    html-pipeline (2.12.3)
      activesupport (>= 2)
      nokogiri (>= 1.4)
    http_parser.rb (0.6.0)
    i18n (0.9.5)
      concurrent-ruby (~> 1.0)
    jekyll (3.8.5)
      addressable (~> 2.4)
      colorator (~> 1.0)

92 | em-websocket (~> 0.5)

93 | i18n (~> 0.7)

94 | jekyll-sass-converter (~> 1.0)

95 | jekyll-watch (~> 2.0)

96 | kramdown (~> 1.14)

97 | liquid (~> 4.0)

98 | mercenary (~> 0.3.3)

99 | pathutil (~> 0.9)

100 | rouge (>= 1.7, < 4)

101 | safe_yaml (~> 1.0)

102 | jekyll-avatar (0.7.0)

103 | jekyll (>= 3.0, < 5.0)

104 | jekyll-coffeescript (1.1.1)

105 | coffee-script (~> 2.2)

106 | coffee-script-source (~> 1.11.1)

107 | jekyll-commonmark (1.3.1)

108 | commonmarker (~> 0.14)

109 | jekyll (>= 3.7, < 5.0)

110 | jekyll-commonmark-ghpages (0.1.6)

111 | commonmarker (~> 0.17.6)

112 | jekyll-commonmark (~> 1.2)

113 | rouge (>= 2.0, < 4.0)

114 | jekyll-default-layout (0.1.4)

115 | jekyll (~> 3.0)

116 | jekyll-feed (0.13.0)

117 | jekyll (>= 3.7, < 5.0)

118 | jekyll-gist (1.5.0)

119 | octokit (~> 4.2)

120 | jekyll-github-metadata (2.13.0)

121 | jekyll (>= 3.4, < 5.0)

122 | octokit (~> 4.0, != 4.4.0)

123 | jekyll-mentions (1.5.1)

124 | html-pipeline (~> 2.3)

125 | jekyll (>= 3.7, < 5.0)

126 | jekyll-optional-front-matter (0.3.2)

127 | jekyll (>= 3.0, < 5.0)

128 | jekyll-paginate (1.1.0)

129 | jekyll-readme-index (0.3.0)

130 | jekyll (>= 3.0, < 5.0)

131 | jekyll-redirect-from (0.15.0)

132 | jekyll (>= 3.3, < 5.0)

133 | jekyll-relative-links (0.6.1)

134 | jekyll (>= 3.3, < 5.0)

135 | jekyll-remote-theme (0.4.1)

136 | addressable (~> 2.0)

137 | jekyll (>= 3.5, < 5.0)

138 | rubyzip (>= 1.3.0)

139 | jekyll-sass-converter (1.5.2)

140 | sass (~> 3.4)

141 | jekyll-seo-tag (2.6.1)

142 | jekyll (>= 3.3, < 5.0)

143 | jekyll-sitemap (1.4.0)

144 | jekyll (>= 3.7, < 5.0)

145 | jekyll-swiss (1.0.0)

146 | jekyll-theme-architect (0.1.1)

147 | jekyll (~> 3.5)

148 | jekyll-seo-tag (~> 2.0)

149 | jekyll-theme-cayman (0.1.1)

150 | jekyll (~> 3.5)

151 | jekyll-seo-tag (~> 2.0)

152 | jekyll-theme-dinky (0.1.1)

153 | jekyll (~> 3.5)

154 | jekyll-seo-tag (~> 2.0)

155 | jekyll-theme-hacker (0.1.1)

156 | jekyll (~> 3.5)

157 | jekyll-seo-tag (~> 2.0)

158 | jekyll-theme-leap-day (0.1.1)

159 | jekyll (~> 3.5)

160 | jekyll-seo-tag (~> 2.0)

161 | jekyll-theme-merlot (0.1.1)

162 | jekyll (~> 3.5)

163 | jekyll-seo-tag (~> 2.0)

164 | jekyll-theme-midnight (0.1.1)

165 | jekyll (~> 3.5)

166 | jekyll-seo-tag (~> 2.0)

167 | jekyll-theme-minimal (0.1.1)

168 | jekyll (~> 3.5)

169 | jekyll-seo-tag (~> 2.0)

170 | jekyll-theme-modernist (0.1.1)

171 | jekyll (~> 3.5)

172 | jekyll-seo-tag (~> 2.0)

173 | jekyll-theme-primer (0.5.4)

174 | jekyll (> 3.5, < 5.0)

175 | jekyll-github-metadata (~> 2.9)

176 | jekyll-seo-tag (~> 2.0)

177 | jekyll-theme-slate (0.1.1)

178 | jekyll (~> 3.5)

179 | jekyll-seo-tag (~> 2.0)

180 | jekyll-theme-tactile (0.1.1)

181 | jekyll (~> 3.5)

182 | jekyll-seo-tag (~> 2.0)

183 | jekyll-theme-time-machine (0.1.1)

184 | jekyll (~> 3.5)

185 | jekyll-seo-tag (~> 2.0)

186 | jekyll-titles-from-headings (0.5.3)

187 | jekyll (>= 3.3, < 5.0)

188 | jekyll-watch (2.2.1)

189 | listen (~> 3.0)

190 | jemoji (0.11.1)

191 | gemoji (~> 3.0)

192 | html-pipeline (~> 2.2)

193 | jekyll (>= 3.0, < 5.0)

194 | kramdown (>= 2.3.0)

195 | liquid (4.0.3)

196 | listen (3.2.1)

197 | rb-fsevent (~> 0.10, >= 0.10.3)

198 | rb-inotify (~> 0.9, >= 0.9.10)

199 | mercenary (0.3.6)

200 | mini_portile2 (2.4.0)

201 | minima (2.5.1)

202 | jekyll (>= 3.5, < 5.0)

203 | jekyll-feed (~> 0.9)

204 | jekyll-seo-tag (~> 2.1)

205 | minitest (5.14.1)

206 | multipart-post (2.1.1)

207 | nokogiri (1.10.9)

208 | mini_portile2 (~> 2.4.0)

209 | octokit (4.18.0)

210 | faraday (>= 0.9)

211 | sawyer (~> 0.8.0, >= 0.5.3)

212 | pathutil (0.16.2)

213 | forwardable-extended (~> 2.6)

214 | public_suffix (3.1.1)

215 | rb-fsevent (0.10.4)

216 | rb-inotify (0.10.1)

217 | ffi (~> 1.0)

218 | rouge (3.13.0)

219 | ruby-enum (0.8.0)

220 | i18n

221 | rubyzip (2.3.0)

222 | safe_yaml (1.0.5)

223 | sass (3.7.4)

224 | sass-listen (~> 4.0.0)

225 | sass-listen (4.0.0)

226 | rb-fsevent (~> 0.9, >= 0.9.4)

227 | rb-inotify (~> 0.9, >= 0.9.7)

228 | sawyer (0.8.2)

229 | addressable (>= 2.3.5)

230 | faraday (> 0.8, < 2.0)

231 | terminal-table (1.8.0)

232 | unicode-display_width (~> 1.1, >= 1.1.1)

233 | thread_safe (0.3.6)

234 | typhoeus (1.4.0)

235 | ethon (>= 0.9.0)

236 | tzinfo (1.2.7)

237 | thread_safe (~> 0.1)

238 | unicode-display_width (1.7.0)

239 | zeitwerk (2.3.0)

240 |

241 | PLATFORMS

242 | ruby

243 |

244 | DEPENDENCIES

245 | github-pages

246 |

247 | BUNDLED WITH

248 | 2.1.4

249 |

--------------------------------------------------------------------------------

/docs/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-slate

2 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 | # Author of this implementation

11 |

57 |

58 | We can then export this `.ply` file to any other format and embed it in other programs, as I did in Unity:

59 | (I use [this plugin](https://github.com/kwea123/Pcx) to import the `.ply` file into Unity, and modified it so that it can also read mesh triangles, not only points.)
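
As an illustration of exporting to another format, here is a minimal sketch that converts an ASCII `.ply` mesh to Wavefront OBJ text using only the standard library. It is not part of this repo: the function name `ply_ascii_to_obj` is hypothetical, and it assumes an ASCII PLY whose `vertex` element comes before its `face` element. A binary `.ply` would need a real PLY reader instead, and vertex colors are dropped in this sketch.

```python
def ply_ascii_to_obj(ply_text):
    """Convert an ASCII PLY string (vertices + polygon faces) to OBJ text."""
    lines = [line.strip() for line in ply_text.strip().splitlines()]
    if lines[0] != "ply" or not lines[1].startswith("format ascii"):
        raise ValueError("Only ASCII PLY is handled in this sketch.")

    header_end = lines.index("end_header")
    counts, vertex_props, current = {}, [], None
    for line in lines[2:header_end]:
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "element":
            current = parts[1]
            counts[current] = int(parts[2])
        elif parts[0] == "property" and current == "vertex":
            vertex_props.append(parts[-1])  # property name is the last token

    # Column positions of the coordinates within each vertex line.
    ix, iy, iz = (vertex_props.index(k) for k in ("x", "y", "z"))
    n_v, n_f = counts.get("vertex", 0), counts.get("face", 0)
    body = lines[header_end + 1:]

    out = []
    for line in body[:n_v]:
        vals = line.split()
        out.append(f"v {vals[ix]} {vals[iy]} {vals[iz]}")
    for line in body[n_v:n_v + n_f]:
        idx = [int(i) for i in line.split()]
        # The first number is the vertex count; OBJ indices are 1-based.
        out.append("f " + " ".join(str(i + 1) for i in idx[1:1 + idx[0]]))
    return "\n".join(out) + "\n"


example_ply = """ply
format ascii 1.0
element vertex 3
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
element face 1
property list uchar int vertex_indices
end_header
0 0 0 255 0 0
1 0 0 0 255 0
0 1 0 0 0 255
3 0 1 2
"""
obj_text = ply_ascii_to_obj(example_ply)
```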

60 |

61 |

62 |

63 | A mesh collider can be attached to the meshes so that they can interact with other objects. See [this video](https://youtu.be/I2M0xhnrBos) for a demo.

64 |

65 | ## Further reading

66 | The author suggested [another way](https://github.com/bmild/nerf/issues/44#issuecomment-622961303) to extract color. In my experiments it did not give good results, but the idea is reasonable and interesting. You can test it by setting `--use_vertex_normal`.

67 |

--------------------------------------------------------------------------------

/datasets/__init__.py:

--------------------------------------------------------------------------------

1 | from .blender import BlenderDataset

2 | from .llff import LLFFDataset

3 |

4 | dataset_dict = {'blender': BlenderDataset,

5 | 'llff': LLFFDataset}

--------------------------------------------------------------------------------

/datasets/blender.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch.utils.data import Dataset

3 | import json

4 | import numpy as np

5 | import os

6 | from PIL import Image

7 | from torchvision import transforms as T

8 |

9 | from .ray_utils import *

10 |

11 | class BlenderDataset(Dataset):

12 | def __init__(self, root_dir, split='train', img_wh=(800, 800)):

13 | self.root_dir = root_dir

14 | self.split = split

15 | assert img_wh[0] == img_wh[1], 'image width must equal image height!'

16 | self.img_wh = img_wh

17 | self.define_transforms()

18 |

19 | self.read_meta()

20 | self.white_back = True

21 |

22 | def read_meta(self):

23 | with open(os.path.join(self.root_dir,

24 | f"transforms_{self.split}.json"), 'r') as f:

25 | self.meta = json.load(f)

26 |

27 | w, h = self.img_wh

28 | self.focal = 0.5*800/np.tan(0.5*self.meta['camera_angle_x']) # original focal length

29 | # when W=800

30 |

31 | self.focal *= self.img_wh[0]/800 # modify focal length to match size self.img_wh

32 |

33 | # bounds, common for all scenes

34 | self.near = 2.0

35 | self.far = 6.0

36 | self.bounds = np.array([self.near, self.far])

37 |

38 | # ray directions for all pixels, same for all images (same H, W, focal)

39 | self.directions = \

40 | get_ray_directions(h, w, self.focal) # (h, w, 3)

41 |

42 | if self.split == 'train': # create buffer of all rays and rgb data

43 | self.image_paths = []

44 | self.poses = []

45 | self.all_rays = []

46 | self.all_rgbs = []

47 | for frame in self.meta['frames']:

48 | pose = np.array(frame['transform_matrix'])[:3, :4]

49 | self.poses += [pose]

50 | c2w = torch.FloatTensor(pose)

51 |

52 | image_path = os.path.join(self.root_dir, f"{frame['file_path']}.png")

53 | self.image_paths += [image_path]

54 | img = Image.open(image_path)

55 | img = img.resize(self.img_wh, Image.LANCZOS)

56 | img = self.transform(img) # (4, h, w)

57 | img = img.view(4, -1).permute(1, 0) # (h*w, 4) RGBA

58 | img = img[:, :3]*img[:, -1:] + (1-img[:, -1:]) # blend A to RGB

59 | self.all_rgbs += [img]

60 |

61 | rays_o, rays_d = get_rays(self.directions, c2w) # both (h*w, 3)

62 |

63 | self.all_rays += [torch.cat([rays_o, rays_d,

64 | self.near*torch.ones_like(rays_o[:, :1]),

65 | self.far*torch.ones_like(rays_o[:, :1])],

66 | 1)] # (h*w, 8)

67 |

68 | self.all_rays = torch.cat(self.all_rays, 0) # (len(self.meta['frames'])*h*w, 8)

69 | self.all_rgbs = torch.cat(self.all_rgbs, 0) # (len(self.meta['frames'])*h*w, 3)

70 |

71 | def define_transforms(self):

72 | self.transform = T.ToTensor()

73 |

74 | def __len__(self):

75 | if self.split == 'train':

76 | return len(self.all_rays)

77 | if self.split == 'val':

78 | return 8 # only validate 8 images (to support <=8 gpus)

79 | return len(self.meta['frames'])

80 |

81 | def __getitem__(self, idx):

82 | if self.split == 'train': # use data in the buffers

83 | sample = {'rays': self.all_rays[idx],

84 | 'rgbs': self.all_rgbs[idx]}

85 |

86 | else: # create data for each image separately

87 | frame = self.meta['frames'][idx]

88 | c2w = torch.FloatTensor(frame['transform_matrix'])[:3, :4]

89 |

90 | img = Image.open(os.path.join(self.root_dir, f"{frame['file_path']}.png"))

91 | img = img.resize(self.img_wh, Image.LANCZOS)

92 | img = self.transform(img) # (4, H, W)

93 | valid_mask = (img[-1]>0).flatten() # (H*W) valid color area

94 | img = img.view(4, -1).permute(1, 0) # (H*W, 4) RGBA

95 | img = img[:, :3]*img[:, -1:] + (1-img[:, -1:]) # blend A to RGB

96 |

97 | rays_o, rays_d = get_rays(self.directions, c2w)

98 |

99 | rays = torch.cat([rays_o, rays_d,

100 | self.near*torch.ones_like(rays_o[:, :1]),

101 | self.far*torch.ones_like(rays_o[:, :1])],

102 | 1) # (H*W, 8)

103 |

104 | sample = {'rays': rays,

105 | 'rgbs': img,

106 | 'c2w': c2w,

107 | 'valid_mask': valid_mask}

108 |

109 | return sample
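
The two numeric steps in `read_meta` above (deriving the focal length from `camera_angle_x`, and blending the alpha channel onto a white background) can be checked in isolation. The following is a minimal sketch in plain Python; the helper names `focal_from_fov` and `blend_on_white` are hypothetical and do not exist in this repo.

```python
import math


def focal_from_fov(camera_angle_x, width):
    """Pinhole focal length in pixels for a horizontal field of view (radians)."""
    return 0.5 * width / math.tan(0.5 * camera_angle_x)


def blend_on_white(rgba):
    """Composite one RGBA pixel (floats in [0, 1]) onto a white background."""
    r, g, b, a = rgba
    return tuple(c * a + (1.0 - a) for c in (r, g, b))


# A 90 degree field of view at W=800, then rescaled to W=400 as the dataset does.
focal_800 = focal_from_fov(math.pi / 2, 800)
focal_400 = focal_800 * 400 / 800

# Fully transparent pixels become white; fully opaque pixels keep their color.
transparent = blend_on_white((0.2, 0.4, 0.6, 0.0))
opaque = blend_on_white((0.2, 0.4, 0.6, 1.0))
```

Rescaling the focal length by `img_wh[0]/800` gives the same value as recomputing it at the new width, which is why the dataset can resize images without touching `camera_angle_x`.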

--------------------------------------------------------------------------------

/datasets/depth_utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import re

3 | import sys

4 |

5 | def read_pfm(filename):

6 | file = open(filename, 'rb')

7 | color = None

8 | width = None

9 | height = None

10 | scale = None

11 | endian = None

12 |

13 | header = file.readline().decode('utf-8').rstrip()

14 | if header == 'PF':

15 | color = True

16 | elif header == 'Pf':

17 | color = False

18 | else:

19 | raise Exception('Not a PFM file.')

20 |

21 | dim_match = re.match(r'^(\d+)\s(\d+)\s$', file.readline().decode('utf-8'))

22 | if dim_match:

23 | width, height = map(int, dim_match.groups())

24 | else:

25 | raise Exception('Malformed PFM header.')

26 |

27 | scale = float(file.readline().rstrip())

28 | if scale < 0: # little-endian

29 | endian = '<'

30 | scale = -scale

31 | else:

32 | endian = '>' # big-endian

33 |

34 | data = np.fromfile(file, endian + 'f')

35 | shape = (height, width, 3) if color else (height, width)

36 |

37 | data = np.reshape(data, shape)

38 | data = np.flipud(data)

39 | file.close()

40 | return data, scale

41 |

42 |

43 | def save_pfm(filename, image, scale=1):

44 | file = open(filename, "wb")

45 | color = None

46 |

47 | image = np.flipud(image)

48 |

49 | if image.dtype.name != 'float32':

50 | raise Exception('Image dtype must be float32.')

51 |

52 | if len(image.shape) == 3 and image.shape[2] == 3: # color image

53 | color = True

54 | elif len(image.shape) == 2 or len(image.shape) == 3 and image.shape[2] == 1: # greyscale

55 | color = False

56 | else:

57 | raise Exception('Image must have H x W x 3, H x W x 1 or H x W dimensions.')

58 |

59 | file.write('PF\n'.encode('utf-8') if color else 'Pf\n'.encode('utf-8'))

60 | file.write('{} {}\n'.format(image.shape[1], image.shape[0]).encode('utf-8'))

61 |

62 | endian = image.dtype.byteorder

63 |

64 | if endian == '<' or endian == '=' and sys.byteorder == 'little':

65 | scale = -scale

66 |

67 | file.write(('%f\n' % scale).encode('utf-8'))

68 |

69 | image.tofile(file)

70 | file.close()
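
The PFM layout handled by `read_pfm` and `save_pfm` above (a `PF`/`Pf` tag, a `width height` line, a scale whose sign encodes endianness, then rows stored bottom to top) can be illustrated without NumPy. This is a minimal in-memory sketch for grayscale images only; `write_pfm_gray` and `read_pfm_gray` are hypothetical names, not functions of this repo, and the sketch assumes a nonzero scale.

```python
import io
import struct


def write_pfm_gray(rows, scale=1.0):
    """Encode a grayscale image (list of rows, top row first) as little-endian PFM bytes."""
    height, width = len(rows), len(rows[0])
    # A negative scale marks little-endian data.
    header = f"Pf\n{width} {height}\n{-abs(scale):f}\n".encode("utf-8")
    # PFM stores the bottom row first, hence reversed().
    body = b"".join(struct.pack(f"<{width}f", *row) for row in reversed(rows))
    return header + body


def read_pfm_gray(data):
    """Decode grayscale PFM bytes into (rows with top row first, scale)."""
    stream = io.BytesIO(data)
    if stream.readline().decode("utf-8").rstrip() != "Pf":
        raise ValueError("Not a grayscale PFM file.")
    width, height = map(int, stream.readline().decode("utf-8").split())
    scale = float(stream.readline().decode("utf-8").rstrip())
    endian = "<" if scale < 0 else ">"
    rows = [list(struct.unpack(f"{endian}{width}f", stream.read(4 * width)))
            for _ in range(height)]
    rows.reverse()  # undo the bottom-to-top storage order
    return rows, abs(scale)


image = [[0.0, 0.5, 1.0],
         [2.0, 4.0, 8.0]]
decoded, decoded_scale = read_pfm_gray(write_pfm_gray(image))
```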

--------------------------------------------------------------------------------

/datasets/llff.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch.utils.data import Dataset

3 | import glob

4 | import numpy as np

5 | import os

6 | from PIL import Image

7 | from torchvision import transforms as T

8 |

9 | from .ray_utils import *

10 |

11 |

12 | def normalize(v):

13 | """Normalize a vector."""

14 | return v/np.linalg.norm(v)

15 |

16 |

17 | def average_poses(poses):

18 | """

19 | Calculate the average pose, which is then used to center all poses

20 | using @center_poses. Its computation is as follows:

21 | 1. Compute the center: the average of pose centers.

22 | 2. Compute the z axis: the normalized average z axis.

23 | 3. Compute axis y': the average y axis.

24 | 4. Compute x' = y' cross product z, then normalize it as the x axis.

25 | 5. Compute the y axis: z cross product x.

26 |

27 | Note that at step 3, we cannot directly use y' as y axis since it's

28 | not necessarily orthogonal to z axis. We need to pass from x to y.

29 |

30 | Inputs:

31 | poses: (N_images, 3, 4)

32 |

33 | Outputs:

34 | pose_avg: (3, 4) the average pose

35 | """

36 | # 1. Compute the center

37 | center = poses[..., 3].mean(0) # (3)

38 |

39 | # 2. Compute the z axis

40 | z = normalize(poses[..., 2].mean(0)) # (3)

41 |

42 | # 3. Compute axis y' (no need to normalize as it's not the final output)

43 | y_ = poses[..., 1].mean(0) # (3)

44 |

45 | # 4. Compute the x axis

46 | x = normalize(np.cross(y_, z)) # (3)

47 |

48 | # 5. Compute the y axis (as z and x are normalized, y is already of norm 1)

49 | y = np.cross(z, x) # (3)

50 |

51 | pose_avg = np.stack([x, y, z, center], 1) # (3, 4)

52 |

53 | return pose_avg

54 |

55 |

56 | def center_poses(poses):

57 | """

58 | Center the poses so that we can use NDC.

59 | See https://github.com/bmild/nerf/issues/34

60 |

61 | Inputs:

62 | poses: (N_images, 3, 4)

63 |

64 | Outputs:

65 | poses_centered: (N_images, 3, 4) the centered poses

66 | pose_avg_inv: (4, 4) the inverse of the homogeneous average pose

67 | """

68 |

69 | pose_avg = average_poses(poses) # (3, 4)

70 | pose_avg_homo = np.eye(4)

71 | pose_avg_homo[:3] = pose_avg # convert to homogeneous coordinate for faster computation

72 | # by simply adding 0, 0, 0, 1 as the last row

73 | last_row = np.tile(np.array([0, 0, 0, 1]), (len(poses), 1, 1)) # (N_images, 1, 4)

74 | poses_homo = \

75 | np.concatenate([poses, last_row], 1) # (N_images, 4, 4) homogeneous coordinate

76 |

77 | poses_centered = np.linalg.inv(pose_avg_homo) @ poses_homo # (N_images, 4, 4)

78 | poses_centered = poses_centered[:, :3] # (N_images, 3, 4)

79 |

80 | return poses_centered, np.linalg.inv(pose_avg_homo)

81 |

82 |

83 | def create_spiral_poses(radii, focus_depth, n_poses=120):

84 | """

85 | Computes poses that follow a spiral path for rendering purpose.

86 | See https://github.com/Fyusion/LLFF/issues/19

87 | In particular, the path looks like:

88 | https://tinyurl.com/ybgtfns3

89 |

90 | Inputs:

91 | radii: (3) radii of the spiral for each axis

92 | focus_depth: float, the depth that the spiral poses look at

93 | n_poses: int, number of poses to create along the path

94 |

95 | Outputs:

96 | poses_spiral: (n_poses, 3, 4) the poses in the spiral path

97 | """

98 |

99 | poses_spiral = []

100 | for t in np.linspace(0, 4*np.pi, n_poses+1)[:-1]: # rotate 4pi (2 rounds)

101 | # the parametric function of the spiral (see the interactive web)

102 | center = np.array([np.cos(t), -np.sin(t), -np.sin(0.5*t)]) * radii

103 |

104 | # the viewing z axis is the vector pointing from the @focus_depth plane

105 | # to @center

106 | z = normalize(center - np.array([0, 0, -focus_depth]))

107 |

108 | # compute other axes as in @average_poses

109 | y_ = np.array([0, 1, 0]) # (3)

110 | x = normalize(np.cross(y_, z)) # (3)

111 | y = np.cross(z, x) # (3)

112 |

113 | poses_spiral += [np.stack([x, y, z, center], 1)] # (3, 4)

114 |

115 | return np.stack(poses_spiral, 0) # (n_poses, 3, 4)

116 |

117 |

118 | def create_spheric_poses(radius, n_poses=120):

119 | """

120 | Create circular poses around z axis.

121 | Inputs:

122 | radius: the (negative) height and the radius of the circle.

123 |

124 | Outputs:

125 | spheric_poses: (n_poses, 3, 4) the poses in the circular path

126 | """

127 | def spheric_pose(theta, phi, radius):

128 | trans_t = lambda t : np.array([

129 | [1,0,0,0],

130 | [0,1,0,-0.9*t],

131 | [0,0,1,t],

132 | [0,0,0,1],

133 | ])

134 |

135 | rot_phi = lambda phi : np.array([

136 | [1,0,0,0],

137 | [0,np.cos(phi),-np.sin(phi),0],

138 | [0,np.sin(phi), np.cos(phi),0],

139 | [0,0,0,1],

140 | ])

141 |

142 | rot_theta = lambda th : np.array([

143 | [np.cos(th),0,-np.sin(th),0],

144 | [0,1,0,0],

145 | [np.sin(th),0, np.cos(th),0],

146 | [0,0,0,1],

147 | ])

148 |

149 | c2w = rot_theta(theta) @ rot_phi(phi) @ trans_t(radius)

150 | c2w = np.array([[-1,0,0,0],[0,0,1,0],[0,1,0,0],[0,0,0,1]]) @ c2w

151 | return c2w[:3]

152 |

153 | spheric_poses = []

154 | for th in np.linspace(0, 2*np.pi, n_poses+1)[:-1]:

155 | spheric_poses += [spheric_pose(th, -np.pi/5, radius)] # 36 degree view downwards

156 | return np.stack(spheric_poses, 0)

157 |

158 |

159 | class LLFFDataset(Dataset):

160 | def __init__(self, root_dir, split='train', img_wh=(504, 378), spheric_poses=False, val_num=1):

161 | """

162 | spheric_poses: whether the images are taken in a spheric inward-facing manner

163 | default: False (forward-facing)

164 | val_num: number of val images (used for multigpu training, validate same image for all gpus)

165 | """

166 | self.root_dir = root_dir

167 | self.split = split

168 | self.img_wh = img_wh

169 | self.spheric_poses = spheric_poses

170 | self.val_num = max(1, val_num) # at least 1

171 | self.define_transforms()

172 |

173 | self.read_meta()

174 | self.white_back = False

175 |

176 | def read_meta(self):

177 | poses_bounds = np.load(os.path.join(self.root_dir,

178 | 'poses_bounds.npy')) # (N_images, 17)

179 | self.image_paths = sorted(glob.glob(os.path.join(self.root_dir, 'images/*')))

180 | # load full resolution image then resize

181 | if self.split in ['train', 'val']:

182 | assert len(poses_bounds) == len(self.image_paths), \

183 | 'Mismatch between number of images and number of poses! Please rerun COLMAP!'

184 |

185 | poses = poses_bounds[:, :15].reshape(-1, 3, 5) # (N_images, 3, 5)

186 | self.bounds = poses_bounds[:, -2:] # (N_images, 2)

187 |

188 | # Step 1: rescale focal length according to training resolution

189 | H, W, self.focal = poses[0, :, -1] # original intrinsics, same for all images

190 | assert H*self.img_wh[0] == W*self.img_wh[1], \

191 | f'You must set @img_wh to have the same aspect ratio as ({W}, {H}) !'

192 |

193 | self.focal *= self.img_wh[0]/W

194 |

195 | # Step 2: correct poses

196 | # Original poses have rotation in the form "down right back", change to "right up back"

197 | # See https://github.com/bmild/nerf/issues/34

198 | poses = np.concatenate([poses[..., 1:2], -poses[..., :1], poses[..., 2:4]], -1)

199 | # (N_images, 3, 4) exclude H, W, focal

200 | self.poses, self.pose_avg = center_poses(poses)

201 | distances_from_center = np.linalg.norm(self.poses[..., 3], axis=1)

202 | val_idx = np.argmin(distances_from_center) # choose val image as the closest to

203 | # center image

204 |

205 | # Step 3: correct scale so that the nearest depth is at a little more than 1.0

206 | # See https://github.com/bmild/nerf/issues/34

207 | near_original = self.bounds.min()

208 | scale_factor = near_original*0.75 # 0.75 is the default parameter

209 | # the nearest depth is at 1/0.75=1.33

210 | self.bounds /= scale_factor

211 | self.poses[..., 3] /= scale_factor

212 |

213 | # ray directions for all pixels, same for all images (same H, W, focal)

214 | self.directions = \

215 | get_ray_directions(self.img_wh[1], self.img_wh[0], self.focal) # (H, W, 3)

216 |

217 | if self.split == 'train': # create buffer of all rays and rgb data

218 | # train on all images except the val image (the one closest to the center)

219 | self.all_rays = []

220 | self.all_rgbs = []

221 | for i, image_path in enumerate(self.image_paths):

222 | if i == val_idx: # exclude the val image

223 | continue

224 | c2w = torch.FloatTensor(self.poses[i])

225 |

226 | img = Image.open(image_path).convert('RGB')

227 | assert img.size[1]*self.img_wh[0] == img.size[0]*self.img_wh[1], \

228 | f'''{image_path} has different aspect ratio than img_wh,

229 | please check your data!'''

230 | img = img.resize(self.img_wh, Image.LANCZOS)

231 | img = self.transform(img) # (3, h, w)

232 | img = img.view(3, -1).permute(1, 0) # (h*w, 3) RGB

233 | self.all_rgbs += [img]

234 |

235 | rays_o, rays_d = get_rays(self.directions, c2w) # both (h*w, 3)

236 | if not self.spheric_poses:

237 | near, far = 0, 1

238 | rays_o, rays_d = get_ndc_rays(self.img_wh[1], self.img_wh[0],

239 | self.focal, 1.0, rays_o, rays_d)

240 | # near plane is always at 1.0

241 | # near and far in NDC are always 0 and 1

242 | # See https://github.com/bmild/nerf/issues/34

243 | else:

244 | near = self.bounds.min()

245 | far = min(8 * near, self.bounds.max()) # focus on central object only

246 |

247 | self.all_rays += [torch.cat([rays_o, rays_d,

248 | near*torch.ones_like(rays_o[:, :1]),

249 | far*torch.ones_like(rays_o[:, :1])],

250 | 1)] # (h*w, 8)

251 |

252 | self.all_rays = torch.cat(self.all_rays, 0) # ((N_images-1)*h*w, 8)

253 | self.all_rgbs = torch.cat(self.all_rgbs, 0) # ((N_images-1)*h*w, 3)

254 |

255 | elif self.split == 'val':

256 | print('val image is', self.image_paths[val_idx])

257 | self.c2w_val = self.poses[val_idx]

258 | self.image_path_val = self.image_paths[val_idx]

259 |

260 | else: # for testing, create a parametric rendering path

261 | if self.split.endswith('train'): # test on training set

262 | self.poses_test = self.poses

263 | elif not self.spheric_poses:

264 | focus_depth = 3.5 # hardcoded, this is numerically close to the formula

265 | # given in the original repo. Mathematically if near=1

266 | # and far=infinity, then this number will converge to 4

267 | radii = np.percentile(np.abs(self.poses[..., 3]), 90, axis=0)

268 | self.poses_test = create_spiral_poses(radii, focus_depth)

269 | else:

270 | radius = 1.1 * self.bounds.min()

271 | self.poses_test = create_spheric_poses(radius)

272 |

273 | def define_transforms(self):

274 | self.transform = T.ToTensor()

275 |

276 | def __len__(self):

277 | if self.split == 'train':

278 | return len(self.all_rays)

279 | if self.split == 'val':

280 | return self.val_num

281 | return len(self.poses_test)

282 |

283 | def __getitem__(self, idx):

284 | if self.split == 'train': # use data in the buffers

285 | sample = {'rays': self.all_rays[idx],

286 | 'rgbs': self.all_rgbs[idx]}

287 |

288 | else:

289 | if self.split == 'val':

290 | c2w = torch.FloatTensor(self.c2w_val)

291 | else:

292 | c2w = torch.FloatTensor(self.poses_test[idx])

293 |

294 | rays_o, rays_d = get_rays(self.directions, c2w)

295 | if not self.spheric_poses:

296 | near, far = 0, 1

297 | rays_o, rays_d = get_ndc_rays(self.img_wh[1], self.img_wh[0],

298 | self.focal, 1.0, rays_o, rays_d)

299 | else:

300 | near = self.bounds.min()

301 | far = min(8 * near, self.bounds.max())

302 |

303 | rays = torch.cat([rays_o, rays_d,

304 | near*torch.ones_like(rays_o[:, :1]),

305 | far*torch.ones_like(rays_o[:, :1])],

306 | 1) # (h*w, 8)

307 |

308 | sample = {'rays': rays,

309 | 'c2w': c2w}

310 |

311 | if self.split == 'val':

312 | img = Image.open(self.image_path_val).convert('RGB')

313 | img = img.resize(self.img_wh, Image.LANCZOS)

314 | img = self.transform(img) # (3, h, w)

315 | img = img.view(3, -1).permute(1, 0) # (h*w, 3)

316 | sample['rgbs'] = img

317 |

318 | return sample

319 |
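
Steps 2 to 5 of `average_poses` above rebuild an orthonormal frame from the averaged axes, because the averaged y axis is in general not orthogonal to the averaged z axis. The following is a minimal sketch of that construction in plain Python (no NumPy); `average_axes` and the small vector helpers are hypothetical names, and the input axes are made-up values for illustration.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mean(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def average_axes(y_axes, z_axes):
    """Return an orthonormal (x, y, z) frame from per-camera y and z axes."""
    z = normalize(mean(z_axes))   # step 2: normalized average z axis
    y_ = mean(y_axes)             # step 3: average y axis (not orthogonal to z)
    x = normalize(cross(y_, z))   # step 4: x is orthogonal to both y' and z
    y = cross(z, x)               # step 5: unit length since z and x are unit and orthogonal
    return x, y, z


# Two cameras tilted slightly in different directions (illustrative values).
z_axes = [normalize([0.1, 0.0, 1.0]), normalize([-0.1, 0.2, 1.0])]
y_axes = [[0.0, 1.0, 0.0], normalize([0.1, 1.0, 0.0])]
x, y, z = average_axes(y_axes, z_axes)
```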

--------------------------------------------------------------------------------

/datasets/ray_utils.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from kornia import create_meshgrid

3 |

4 |

5 | def get_ray_directions(H, W, focal):

6 | """

7 | Get ray directions for all pixels in camera coordinate.

8 | Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/

9 | ray-tracing-generating-camera-rays/standard-coordinate-systems

10 |

11 | Inputs:

12 | H, W, focal: image height, width and focal length

13 |

14 | Outputs:

15 | directions: (H, W, 3), the direction of the rays in camera coordinate

16 | """

17 | grid = create_meshgrid(H, W, normalized_coordinates=False)[0]

18 | i, j = grid.unbind(-1)

19 | # the direction here is without +0.5 pixel centering as calibration is not so accurate

20 | # see https://github.com/bmild/nerf/issues/24

21 | directions = \

22 | torch.stack([(i-W/2)/focal, -(j-H/2)/focal, -torch.ones_like(i)], -1) # (H, W, 3)

23 |

24 | return directions

25 |

26 |

27 | def get_rays(directions, c2w):

28 | """

29 | Get ray origin and normalized directions in world coordinate for all pixels in one image.

30 | Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/

31 | ray-tracing-generating-camera-rays/standard-coordinate-systems

32 |

33 | Inputs:

34 | directions: (H, W, 3) precomputed ray directions in camera coordinate

35 | c2w: (3, 4) transformation matrix from camera coordinate to world coordinate

36 |

37 | Outputs:

38 | rays_o: (H*W, 3), the origin of the rays in world coordinate

39 | rays_d: (H*W, 3), the normalized direction of the rays in world coordinate

40 | """

41 | # Rotate ray directions from camera coordinate to the world coordinate

42 | rays_d = directions @ c2w[:, :3].T # (H, W, 3)

43 | rays_d = rays_d / torch.norm(rays_d, dim=-1, keepdim=True)

44 | # The origin of all rays is the camera origin in world coordinate

45 | rays_o = c2w[:, 3].expand(rays_d.shape) # (H, W, 3)

46 |

47 | rays_d = rays_d.view(-1, 3)

48 | rays_o = rays_o.view(-1, 3)

49 |

50 | return rays_o, rays_d

51 |

52 |

53 | def get_ndc_rays(H, W, focal, near, rays_o, rays_d):

54 | """

55 | Transform rays from world coordinate to NDC.

56 | NDC: Space such that the canvas is a cube with sides [-1, 1] in each axis.

57 | For detailed derivation, please see:

58 | http://www.songho.ca/opengl/gl_projectionmatrix.html

59 | https://github.com/bmild/nerf/files/4451808/ndc_derivation.pdf

60 |

61 | In practice, use NDC "if and only if" the scene is unbounded (has a large depth).

62 | See https://github.com/bmild/nerf/issues/18

63 |

64 | Inputs:

65 | H, W, focal: image height, width and focal length

66 | near: (N_rays) or float, the depths of the near plane

67 | rays_o: (N_rays, 3), the origin of the rays in world coordinate

68 | rays_d: (N_rays, 3), the direction of the rays in world coordinate

69 |

70 | Outputs:

71 | rays_o: (N_rays, 3), the origin of the rays in NDC

72 | rays_d: (N_rays, 3), the direction of the rays in NDC

73 | """

74 | # Shift ray origins to near plane

75 | t = -(near + rays_o[...,2]) / rays_d[...,2]

76 | rays_o = rays_o + t[...,None] * rays_d

77 |

78 | # Store some intermediate homogeneous results

79 | ox_oz = rays_o[...,0] / rays_o[...,2]

80 | oy_oz = rays_o[...,1] / rays_o[...,2]

81 |

82 | # Projection

83 | o0 = -1./(W/(2.*focal)) * ox_oz

84 | o1 = -1./(H/(2.*focal)) * oy_oz

85 | o2 = 1. + 2. * near / rays_o[...,2]

86 |

87 | d0 = -1./(W/(2.*focal)) * (rays_d[...,0]/rays_d[...,2] - ox_oz)

88 | d1 = -1./(H/(2.*focal)) * (rays_d[...,1]/rays_d[...,2] - oy_oz)

89 | d2 = 1 - o2

90 |

91 | rays_o = torch.stack([o0, o1, o2], -1) # (B, 3)

92 | rays_d = torch.stack([d0, d1, d2], -1) # (B, 3)

93 |

94 | return rays_o, rays_d
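
To see what `get_ndc_rays` does to a single ray, the same arithmetic can be written with plain Python floats. This is a minimal sketch, not the repo's implementation: `ndc_ray` is a hypothetical name, and the image size, focal length, and ray are made-up values. It shows that the shifted origin lands on the near plane at NDC depth -1 and that the NDC direction has a z component of 2, so the ray parameter range [0, 1] covers NDC depths from -1 to 1.

```python
def ndc_ray(H, W, focal, near, o, d):
    """Scalar version of the NDC ray transform for one ray (o, d are 3-element lists)."""
    # Shift the ray origin onto the near plane z = -near.
    t = -(near + o[2]) / d[2]
    o = [o[i] + t * d[i] for i in range(3)]

    ox_oz = o[0] / o[2]
    oy_oz = o[1] / o[2]

    # Projection; -1/(W/(2*focal)) is written here as -2*focal/W.
    o_ndc = [-2.0 * focal / W * ox_oz,
             -2.0 * focal / H * oy_oz,
             1.0 + 2.0 * near / o[2]]
    d_ndc = [-2.0 * focal / W * (d[0] / d[2] - ox_oz),
             -2.0 * focal / H * (d[1] / d[2] - oy_oz),
             1.0 - o_ndc[2]]
    return o_ndc, d_ndc


# Illustrative values: a 504x378 image, focal length 400, near plane at 1.0.
o_ndc, d_ndc = ndc_ray(378, 504, 400.0, 1.0,
                       o=[0.2, 0.0, 0.5], d=[0.1, -0.2, -1.0])
z_at_start = o_ndc[2]            # NDC depth at ray parameter 0
z_at_end = o_ndc[2] + d_ndc[2]   # NDC depth at ray parameter 1
```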

--------------------------------------------------------------------------------

/docs/.gitignore:

--------------------------------------------------------------------------------

1 | _site

2 | .sass-cache

3 | .jekyll-cache

4 | .jekyll-metadata

5 | vendor

6 |

--------------------------------------------------------------------------------

/docs/Gemfile:

--------------------------------------------------------------------------------

1 | source 'https://rubygems.org'

2 | gem 'github-pages', group: :jekyll_plugins

3 |

--------------------------------------------------------------------------------

/docs/Gemfile.lock:

--------------------------------------------------------------------------------

GEM
  remote: https://rubygems.org/
  specs:
    activesupport (>= 6.0.3.1)
      concurrent-ruby (~> 1.0, >= 1.0.2)
      i18n (>= 0.7, < 2)
      minitest (~> 5.1)
      tzinfo (~> 1.1)
      zeitwerk (~> 2.2, >= 2.2.2)
    addressable (2.7.0)
      public_suffix (>= 2.0.2, < 5.0)
    coffee-script (2.4.1)
      coffee-script-source
      execjs
    coffee-script-source (1.11.1)
    colorator (1.1.0)
    commonmarker (0.17.13)
      ruby-enum (~> 0.5)
    concurrent-ruby (1.1.6)
    dnsruby (1.61.3)
      addressable (~> 2.5)
    em-websocket (0.5.1)
      eventmachine (>= 0.12.9)
      http_parser.rb (~> 0.6.0)
    ethon (0.12.0)
      ffi (>= 1.3.0)
    eventmachine (1.2.7)
    execjs (2.7.0)
    faraday (1.0.1)
      multipart-post (>= 1.2, < 3)
    ffi (1.12.2)
    forwardable-extended (2.6.0)
    gemoji (3.0.1)
    github-pages (204)
      github-pages-health-check (= 1.16.1)
      jekyll (= 3.8.5)
      jekyll-avatar (= 0.7.0)
      jekyll-coffeescript (= 1.1.1)
      jekyll-commonmark-ghpages (= 0.1.6)
      jekyll-default-layout (= 0.1.4)
      jekyll-feed (= 0.13.0)
      jekyll-gist (= 1.5.0)
      jekyll-github-metadata (= 2.13.0)
      jekyll-mentions (= 1.5.1)
      jekyll-optional-front-matter (= 0.3.2)
      jekyll-paginate (= 1.1.0)
      jekyll-readme-index (= 0.3.0)
      jekyll-redirect-from (= 0.15.0)
      jekyll-relative-links (= 0.6.1)
      jekyll-remote-theme (= 0.4.1)
      jekyll-sass-converter (= 1.5.2)
      jekyll-seo-tag (= 2.6.1)
      jekyll-sitemap (= 1.4.0)
      jekyll-swiss (= 1.0.0)
      jekyll-theme-architect (= 0.1.1)
      jekyll-theme-cayman (= 0.1.1)
      jekyll-theme-dinky (= 0.1.1)
      jekyll-theme-hacker (= 0.1.1)
      jekyll-theme-leap-day (= 0.1.1)
      jekyll-theme-merlot (= 0.1.1)
      jekyll-theme-midnight (= 0.1.1)
      jekyll-theme-minimal (= 0.1.1)
      jekyll-theme-modernist (= 0.1.1)
      jekyll-theme-primer (= 0.5.4)
      jekyll-theme-slate (= 0.1.1)
      jekyll-theme-tactile (= 0.1.1)
      jekyll-theme-time-machine (= 0.1.1)
      jekyll-titles-from-headings (= 0.5.3)
      jemoji (= 0.11.1)
      kramdown (>= 2.3.0)
      liquid (= 4.0.3)
      mercenary (~> 0.3)
      minima (= 2.5.1)
      nokogiri (>= 1.11.0.rc4, < 2.0)
      rouge (= 3.13.0)
      terminal-table (~> 1.4)
    github-pages-health-check (1.16.1)
      addressable (~> 2.3)
      dnsruby (~> 1.60)
      octokit (~> 4.0)
      public_suffix (~> 3.0)
      typhoeus (~> 1.3)
    html-pipeline (2.12.3)
      activesupport (>= 2)
      nokogiri (>= 1.4)
    http_parser.rb (0.6.0)
    i18n (0.9.5)
      concurrent-ruby (~> 1.0)
    jekyll (3.8.5)
      addressable (~> 2.4)
      colorator (~> 1.0)
      em-websocket (~> 0.5)
      i18n (~> 0.7)
      jekyll-sass-converter (~> 1.0)
      jekyll-watch (~> 2.0)
      kramdown (~> 1.14)
      liquid (~> 4.0)
      mercenary (~> 0.3.3)
      pathutil (~> 0.9)
      rouge (>= 1.7, < 4)
      safe_yaml (~> 1.0)
    jekyll-avatar (0.7.0)
      jekyll (>= 3.0, < 5.0)
    jekyll-coffeescript (1.1.1)
      coffee-script (~> 2.2)
      coffee-script-source (~> 1.11.1)
    jekyll-commonmark (1.3.1)
      commonmarker (~> 0.14)
      jekyll (>= 3.7, < 5.0)
    jekyll-commonmark-ghpages (0.1.6)
      commonmarker (~> 0.17.6)
      jekyll-commonmark (~> 1.2)
      rouge (>= 2.0, < 4.0)
    jekyll-default-layout (0.1.4)
      jekyll (~> 3.0)
    jekyll-feed (0.13.0)
      jekyll (>= 3.7, < 5.0)
    jekyll-gist (1.5.0)
      octokit (~> 4.2)
    jekyll-github-metadata (2.13.0)
      jekyll (>= 3.4, < 5.0)
      octokit (~> 4.0, != 4.4.0)
    jekyll-mentions (1.5.1)
      html-pipeline (~> 2.3)
      jekyll (>= 3.7, < 5.0)
    jekyll-optional-front-matter (0.3.2)
      jekyll (>= 3.0, < 5.0)
    jekyll-paginate (1.1.0)
    jekyll-readme-index (0.3.0)
      jekyll (>= 3.0, < 5.0)
    jekyll-redirect-from (0.15.0)
      jekyll (>= 3.3, < 5.0)
    jekyll-relative-links (0.6.1)
      jekyll (>= 3.3, < 5.0)
    jekyll-remote-theme (0.4.1)
      addressable (~> 2.0)
      jekyll (>= 3.5, < 5.0)
      rubyzip (>= 1.3.0)
    jekyll-sass-converter (1.5.2)
      sass (~> 3.4)
    jekyll-seo-tag (2.6.1)
      jekyll (>= 3.3, < 5.0)
    jekyll-sitemap (1.4.0)
      jekyll (>= 3.7, < 5.0)
    jekyll-swiss (1.0.0)
    jekyll-theme-architect (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-cayman (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-dinky (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-hacker (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-leap-day (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-merlot (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-midnight (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-minimal (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-modernist (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-primer (0.5.4)
      jekyll (> 3.5, < 5.0)
      jekyll-github-metadata (~> 2.9)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-slate (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-tactile (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-time-machine (0.1.1)
      jekyll (~> 3.5)
      jekyll-seo-tag (~> 2.0)
    jekyll-titles-from-headings (0.5.3)
      jekyll (>= 3.3, < 5.0)
    jekyll-watch (2.2.1)
      listen (~> 3.0)
    jemoji (0.11.1)
      gemoji (~> 3.0)
      html-pipeline (~> 2.2)
      jekyll (>= 3.0, < 5.0)
    kramdown (>= 2.3.0)
    liquid (4.0.3)
    listen (3.2.1)
      rb-fsevent (~> 0.10, >= 0.10.3)
      rb-inotify (~> 0.9, >= 0.9.10)
    mercenary (0.3.6)
    mini_portile2 (2.4.0)
    minima (2.5.1)
      jekyll (>= 3.5, < 5.0)
      jekyll-feed (~> 0.9)
      jekyll-seo-tag (~> 2.1)
    minitest (5.14.1)
    multipart-post (2.1.1)
    nokogiri (1.10.9)
      mini_portile2 (~> 2.4.0)
    octokit (4.18.0)
      faraday (>= 0.9)
      sawyer (~> 0.8.0, >= 0.5.3)
    pathutil (0.16.2)
      forwardable-extended (~> 2.6)
    public_suffix (3.1.1)
    rb-fsevent (0.10.4)
    rb-inotify (0.10.1)
      ffi (~> 1.0)
    rouge (3.13.0)
    ruby-enum (0.8.0)
      i18n
    rubyzip (2.3.0)
    safe_yaml (1.0.5)
    sass (3.7.4)
      sass-listen (~> 4.0.0)
    sass-listen (4.0.0)
      rb-fsevent (~> 0.9, >= 0.9.4)
      rb-inotify (~> 0.9, >= 0.9.7)
    sawyer (0.8.2)
      addressable (>= 2.3.5)
      faraday (> 0.8, < 2.0)