├── tests

├── __init__.py

├── conftest.py

├── test_io.py

├── test_decomposition.py

├── test_kernels.py

├── test_rbf.py

└── test_core.py

├── examples

├── lid-driven-cavity

│ ├── eq-snapshot.png

│ ├── results-re-450.png

│ ├── results-re-50.png

│ ├── generatePODdata.sim

│ ├── eq-snapshot-matrix.png

│ └── lid-driven-cavity.ipynb

├── heat-conduction

│ ├── 2d-heat.py

│ └── design-optimization.py

└── shape-optimization

│ └── optimize_shape.py

├── .github

├── codecov.yml

└── workflows

│ ├── docs.yml

│ ├── tests.yml

│ └── publish.yml

├── docs

├── api

│ └── index.md

├── examples.md

├── getting-started.md

├── user-guide

│ ├── inference.md

│ ├── io.md

│ ├── autodiff.md

│ └── training.md

└── index.md

├── pyproject.toml

├── pod_rbf

├── __init__.py

├── types.py

├── kernels.py

├── shape_optimization.py

├── decomposition.py

├── io.py

├── core.py

└── rbf.py

├── mkdocs.yml

├── .gitignore

├── CLAUDE.md

├── README.md

└── LICENSE

/tests/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/examples/lid-driven-cavity/eq-snapshot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kylebeggs/POD-RBF/HEAD/examples/lid-driven-cavity/eq-snapshot.png

--------------------------------------------------------------------------------

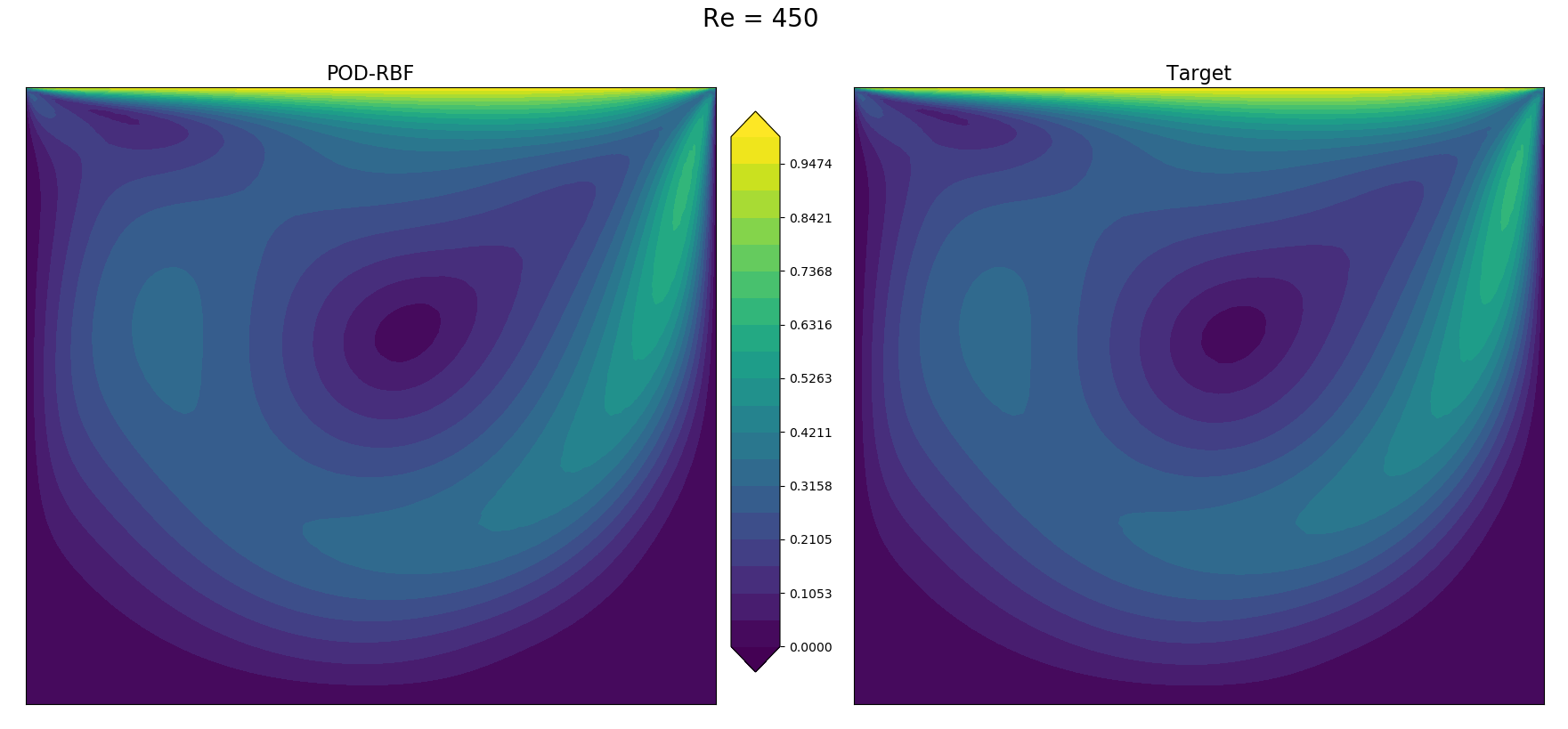

/examples/lid-driven-cavity/results-re-450.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kylebeggs/POD-RBF/HEAD/examples/lid-driven-cavity/results-re-450.png

--------------------------------------------------------------------------------

/examples/lid-driven-cavity/results-re-50.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kylebeggs/POD-RBF/HEAD/examples/lid-driven-cavity/results-re-50.png

--------------------------------------------------------------------------------

/examples/lid-driven-cavity/generatePODdata.sim:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kylebeggs/POD-RBF/HEAD/examples/lid-driven-cavity/generatePODdata.sim

--------------------------------------------------------------------------------

/examples/lid-driven-cavity/eq-snapshot-matrix.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/kylebeggs/POD-RBF/HEAD/examples/lid-driven-cavity/eq-snapshot-matrix.png

--------------------------------------------------------------------------------

/tests/conftest.py:

--------------------------------------------------------------------------------

1 | """Pytest configuration and shared fixtures."""

2 |

3 | import jax

4 |

5 | # Ensure float64 is enabled for all tests

6 | jax.config.update("jax_enable_x64", True)

7 |

--------------------------------------------------------------------------------

/.github/codecov.yml:

--------------------------------------------------------------------------------

1 | coverage:

2 | status:

3 | project:

4 | default:

5 | target: auto

6 | threshold: 1%

7 | patch:

8 | default:

9 | target: auto

10 | threshold: 1%

11 |

12 | comment:

13 | layout: "reach,diff,flags,files,footer"

14 | behavior: default

15 | require_changes: false

16 |

17 | ignore:

18 | - "tests/"

19 | - "examples/"

20 | - "setup.py"

21 |

--------------------------------------------------------------------------------

/.github/workflows/docs.yml:

--------------------------------------------------------------------------------

1 | name: docs

2 |

3 | on:

4 | push:

5 | branches: [master]

6 | workflow_dispatch:

7 |

8 | permissions:

9 | contents: write

10 |

11 | jobs:

12 | deploy:

13 | runs-on: ubuntu-latest

14 | steps:

15 | - uses: actions/checkout@v4

16 |

17 | - uses: actions/setup-python@v5

18 | with:

19 | python-version: "3.12"

20 |

21 | - name: Install dependencies

22 | run: |

23 | pip install mkdocs-material mkdocstrings[python]

24 | pip install -e .

25 |

26 | - name: Build and deploy docs

27 | run: mkdocs gh-deploy --force

28 |

--------------------------------------------------------------------------------

/.github/workflows/tests.yml:

--------------------------------------------------------------------------------

1 | name: Tests

2 |

3 | on:

4 | push:

5 | branches: [master]

6 | pull_request:

7 | branches: [master]

8 |

9 | jobs:

10 | test:

11 | runs-on: ubuntu-latest

12 | strategy:

13 | fail-fast: false

14 | matrix:

15 | python-version: ["3.10", "3.11", "3.12"]

16 |

17 | steps:

18 | - name: Checkout code

19 | uses: actions/checkout@v4

20 |

21 | - name: Set up Python ${{ matrix.python-version }}

22 | uses: actions/setup-python@v5

23 | with:

24 | python-version: ${{ matrix.python-version }}

25 | cache: 'pip'

26 | cache-dependency-path: 'pyproject.toml'

27 |

28 | - name: Install JAX (CPU)

29 | run: |

30 | pip install --upgrade pip

31 | pip install "jax[cpu]>=0.4.0"

32 |

33 | - name: Install package with dev dependencies

34 | run: pip install -e ".[dev]"

35 |

36 | - name: Run tests with coverage

37 | run: pytest tests/ -v --cov=pod_rbf --cov-report=xml --cov-report=term

38 |

39 | - name: Upload coverage to Codecov

40 | uses: codecov/codecov-action@v4

41 | with:

42 | file: ./coverage.xml

43 | flags: unittests

44 | name: codecov-umbrella

45 | fail_ci_if_error: false

46 | env:

47 | CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}

48 |

--------------------------------------------------------------------------------

/docs/api/index.md:

--------------------------------------------------------------------------------

1 | # API Reference

2 |

3 | ## Core Functions

4 |

5 | ### train

6 |

7 | ::: pod_rbf.train

8 | options:

9 | show_root_heading: true

10 | show_source: false

11 |

12 | ### inference

13 |

14 | ::: pod_rbf.inference

15 | options:

16 | show_root_heading: true

17 | show_source: false

18 |

19 | ### inference_single

20 |

21 | ::: pod_rbf.inference_single

22 | options:

23 | show_root_heading: true

24 | show_source: false

25 |

26 | ## I/O Functions

27 |

28 | ### build_snapshot_matrix

29 |

30 | ::: pod_rbf.build_snapshot_matrix

31 | options:

32 | show_root_heading: true

33 | show_source: false

34 |

35 | ### save_model

36 |

37 | ::: pod_rbf.save_model

38 | options:

39 | show_root_heading: true

40 | show_source: false

41 |

42 | ### load_model

43 |

44 | ::: pod_rbf.load_model

45 | options:

46 | show_root_heading: true

47 | show_source: false

48 |

49 | ## Types

50 |

51 | ### TrainConfig

52 |

53 | ::: pod_rbf.TrainConfig

54 | options:

55 | show_root_heading: true

56 | show_source: false

57 |

58 | ### ModelState

59 |

60 | ::: pod_rbf.ModelState

61 | options:

62 | show_root_heading: true

63 | show_source: false

64 |

65 | ### TrainResult

66 |

67 | ::: pod_rbf.TrainResult

68 | options:

69 | show_root_heading: true

70 | show_source: false

71 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = ["setuptools>=61.0", "setuptools-scm>=8.0"]

3 | build-backend = "setuptools.build_meta"

4 |

5 | [project]

6 | name = "pod_rbf"

7 | dynamic = ["version"]

8 | authors = [{name = "Kyle Beggs", email = "beggskw@gmail.com"}]

9 | description = "JAX-based POD-RBF for autodiff-enabled reduced order modeling."

10 | readme = "README.md"

11 | license = {text = "MIT"}

12 | requires-python = ">=3.10"

13 | classifiers = [

14 | "Programming Language :: Python :: 3",

15 | "Programming Language :: Python :: 3.10",

16 | "Programming Language :: Python :: 3.11",

17 | "Programming Language :: Python :: 3.12",

18 | "License :: OSI Approved :: MIT License",

19 | "Operating System :: OS Independent",

20 | ]

21 | dependencies = [

22 | "jax>=0.4.0",

23 | "jaxlib>=0.4.0",

24 | "numpy",

25 | "tqdm",

26 | ]

27 |

28 | [project.optional-dependencies]

29 | dev = ["pytest>=7.0", "pytest-cov"]

30 | docs = [

31 | "mkdocs-material>=9.5",

32 | "mkdocstrings[python]>=0.24",

33 | ]

34 |

35 | [project.urls]

36 | Homepage = "https://github.com/kylebeggs/POD-RBF"

37 | Repository = "https://github.com/kylebeggs/POD-RBF"

38 | Documentation = "https://kylebeggs.github.io/POD-RBF"

39 |

40 | [tool.setuptools_scm]

41 |

42 | [tool.uv.sources]

43 | pod-rbf = { workspace = true }

44 |

45 | [dependency-groups]

46 | dev = [

47 | "pod-rbf",

48 | ]

49 |

--------------------------------------------------------------------------------

/pod_rbf/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | POD-RBF: Proper Orthogonal Decomposition - Radial Basis Function Network.

3 |

4 | A JAX-based implementation enabling autodifferentiation for:

5 | - Gradient optimization

6 | - Sensitivity analysis

7 | - Inverse problems

8 |

9 | Usage

10 | -----

11 | >>> import pod_rbf

12 | >>> import jax.numpy as jnp

13 | >>>

14 | >>> # Train model

15 | >>> result = pod_rbf.train(snapshot, params)

16 | >>>

17 | >>> # Inference

18 | >>> pred = pod_rbf.inference_single(result.state, jnp.array(450.0))

19 | >>>

20 | >>> # Autodiff

21 | >>> import jax

22 | >>> grad_fn = jax.grad(lambda p: jnp.sum(pod_rbf.inference_single(result.state, p)**2))

23 | >>> gradient = grad_fn(jnp.array(450.0))

24 | """

25 |

26 | import jax

27 |

28 | # Enable float64 for numerical stability (SVD, condition numbers)

29 | jax.config.update("jax_enable_x64", True)

30 |

31 | from .core import inference, inference_single, train

32 | from .io import build_snapshot_matrix, load_model, save_model

33 | from .types import ModelState, TrainConfig, TrainResult

34 |

35 | try:

36 | from importlib.metadata import version

37 |

38 | __version__ = version("pod_rbf")

39 | except Exception:

40 | __version__ = "unknown"

41 |

42 | __all__ = [

43 | # Core functions

44 | "train",

45 | "inference",

46 | "inference_single",

47 | # Types

48 | "ModelState",

49 | "TrainConfig",

50 | "TrainResult",

51 | # I/O

52 | "build_snapshot_matrix",

53 | "save_model",

54 | "load_model",

55 | ]

56 |

--------------------------------------------------------------------------------

/mkdocs.yml:

--------------------------------------------------------------------------------

1 | site_name: POD-RBF

2 | site_description: JAX-based POD-RBF for autodiff-enabled reduced order modeling

3 | site_url: https://kylebeggs.github.io/POD-RBF

4 | repo_url: https://github.com/kylebeggs/POD-RBF

5 | repo_name: kylebeggs/POD-RBF

6 |

7 | theme:

8 | name: material

9 | palette:

10 | - media: "(prefers-color-scheme: light)"

11 | scheme: default

12 | primary: indigo

13 | toggle:

14 | icon: material/brightness-7

15 | name: Switch to dark mode

16 | - media: "(prefers-color-scheme: dark)"

17 | scheme: slate

18 | primary: indigo

19 | toggle:

20 | icon: material/brightness-4

21 | name: Switch to light mode

22 | features:

23 | - navigation.instant

24 | - navigation.sections

25 | - navigation.top

26 | - search.highlight

27 | - content.code.copy

28 |

29 | plugins:

30 | - search

31 | - mkdocstrings:

32 | handlers:

33 | python:

34 | options:

35 | show_source: true

36 | show_root_heading: true

37 |

38 | nav:

39 | - Home: index.md

40 | - Getting Started: getting-started.md

41 | - User Guide:

42 | - Training Models: user-guide/training.md

43 | - Inference: user-guide/inference.md

44 | - Autodifferentiation: user-guide/autodiff.md

45 | - Saving & Loading: user-guide/io.md

46 | - API Reference: api/index.md

47 | - Examples: examples.md

48 |

49 | markdown_extensions:

50 | - pymdownx.highlight:

51 | anchor_linenums: true

52 | - pymdownx.superfences

53 | - admonition

54 | - pymdownx.details

55 |

--------------------------------------------------------------------------------

/.github/workflows/publish.yml:

--------------------------------------------------------------------------------

1 | name: Publish to PyPI

2 |

3 | on:

4 | push:

5 | tags:

6 | - 'v*'

7 |

8 | jobs:

9 | build:

10 | name: Build distribution

11 | runs-on: ubuntu-latest

12 | steps:

13 | - uses: actions/checkout@v4

14 | with:

15 | persist-credentials: false

16 | fetch-depth: 0 # Required for setuptools-scm to get version from tags

17 |

18 | - name: Set up Python

19 | uses: actions/setup-python@v5

20 | with:

21 | python-version: "3.12"

22 |

23 | - name: Install build tools

24 | run: python -m pip install build --user

25 |

26 | - name: Build wheel and sdist

27 | run: python -m build

28 |

29 | - name: Upload artifacts

30 | uses: actions/upload-artifact@v4

31 | with:

32 | name: python-package-distributions

33 | path: dist/

34 |

35 | publish-to-pypi:

36 | name: Publish to PyPI

37 | needs: build

38 | runs-on: ubuntu-latest

39 | environment:

40 | name: pypi

41 | url: https://pypi.org/p/pod_rbf

42 | permissions:

43 | id-token: write

44 | steps:

45 | - name: Download artifacts

46 | uses: actions/download-artifact@v4

47 | with:

48 | name: python-package-distributions

49 | path: dist/

50 |

51 | - name: Publish to PyPI

52 | uses: pypa/gh-action-pypi-publish@release/v1

53 |

54 | create-github-release:

55 | name: Create GitHub Release

56 | needs: publish-to-pypi

57 | runs-on: ubuntu-latest

58 | permissions:

59 | contents: write

60 | steps:

61 | - name: Checkout code

62 | uses: actions/checkout@v4

63 |

64 | - name: Create Release

65 | env:

66 | GH_TOKEN: ${{ github.token }}

67 | run: |

68 | gh release create ${{ github.ref_name }} \

69 | --title "Release ${{ github.ref_name }}" \

70 | --generate-notes

71 |

--------------------------------------------------------------------------------

/pod_rbf/types.py:

--------------------------------------------------------------------------------

1 | """

2 | Data structures for POD-RBF.

3 |

4 | All types are NamedTuples for JAX pytree compatibility.

5 | """

6 |

7 | from typing import NamedTuple

8 |

9 | from jax import Array

10 |

11 |

12 | class TrainConfig(NamedTuple):

13 | """Immutable training configuration."""

14 |

15 | energy_threshold: float = 0.99

16 | mem_limit_gb: float = 16.0

17 | cond_range: tuple[float, float] = (1e11, 1e12)

18 | max_bisection_iters: int = 50

19 | c_low_init: float = 0.011

20 | c_high_init: float = 1.0

21 | c_high_step: float = 0.01

22 | c_high_search_iters: int = 200

23 | poly_degree: int = 2 # Polynomial augmentation degree (0=none, 1=linear, 2=quadratic)

24 | kernel: str = "imq" # RBF kernel type: 'imq', 'gaussian', 'polyharmonic_spline'

25 | kernel_order: int = 3 # For polyharmonic splines only (order of r^k)

26 |

27 |

28 | class ModelState(NamedTuple):

29 | """Immutable trained model state - a valid JAX pytree."""

30 |

31 | basis: Array # Truncated POD basis (n_samples, n_modes)

32 | weights: Array # RBF network weights (n_modes, n_train_points)

33 | shape_factor: float | None # Optimized RBF shape parameter (None for PHS)

34 | train_params: Array # Training parameters (n_params, n_train_points)

35 | params_range: Array # Parameter ranges for normalization (n_params,)

36 | truncated_energy: float # Energy retained after truncation

37 | cumul_energy: Array # Cumulative energy per mode

38 | poly_coeffs: Array | None # Polynomial coefficients (n_modes, n_poly) or None

39 | poly_degree: int # Polynomial degree used (0=none)

40 | kernel: str # Kernel type used for training

41 | kernel_order: int # PHS order (ignored for other kernels)

42 |

43 |

44 | class TrainResult(NamedTuple):

45 | """Result from training, includes diagnostics."""

46 |

47 | state: ModelState

48 | n_modes: int

49 | used_eig_decomp: bool # True if eigendecomposition was used

50 |

--------------------------------------------------------------------------------

/docs/examples.md:

--------------------------------------------------------------------------------

1 | # Examples

2 |

3 | ## Jupyter Notebooks

4 |

5 | Explore these example notebooks to see POD-RBF in action:

6 |

7 | ### Lid-Driven Cavity

8 |

9 | A complete walkthrough using CFD data from a 2D lid-driven cavity simulation at various Reynolds numbers.

10 |

11 | [:octicons-mark-github-16: View on GitHub](https://github.com/kylebeggs/POD-RBF/tree/master/examples/lid-driven-cavity){ .md-button }

12 |

13 | **What you'll learn:**

14 |

15 | - Building a snapshot matrix from CSV files

16 | - Training a single-parameter model

17 | - Visualizing predictions vs. ground truth

18 |

19 | ### Multi-Parameter Example

20 |

21 | Training a model with two input parameters.

22 |

23 | [:octicons-mark-github-16: View on GitHub](https://github.com/kylebeggs/POD-RBF/blob/master/examples/2-parameters.ipynb){ .md-button }

24 |

25 | **What you'll learn:**

26 |

27 | - Setting up multi-parameter training data

28 | - Inference with multiple parameters

29 | - Parameter space exploration

30 |

31 | ### Heat Conduction

32 |

33 | A simple heat conduction problem on a unit square.

34 |

35 | [:octicons-mark-github-16: View on GitHub](https://github.com/kylebeggs/POD-RBF/tree/master/examples/heat-conduction){ .md-button }

36 |

37 | **What you'll learn:**

38 |

39 | - Basic POD-RBF workflow

40 | - Working with thermal simulation data

41 |

42 | ### Shape Parameter Optimization

43 |

44 | Exploring RBF shape parameter selection.

45 |

46 | [:octicons-mark-github-16: View on GitHub](https://github.com/kylebeggs/POD-RBF/tree/master/examples/shape-optimization){ .md-button }

47 |

48 | **What you'll learn:**

49 |

50 | - How shape parameters affect interpolation

51 | - Automatic vs. manual shape parameter selection

52 |

53 | ## Running the Examples

54 |

55 | Clone the repository and install the package:

56 |

57 | ```bash

58 | git clone https://github.com/kylebeggs/POD-RBF.git

59 | cd POD-RBF

60 | pip install -e .

61 | ```

62 |

63 | Then open the Jupyter notebooks in the `examples/` directory:

64 |

65 | ```bash

66 | jupyter notebook examples/

67 | ```

68 |

--------------------------------------------------------------------------------

/docs/getting-started.md:

--------------------------------------------------------------------------------

1 | # Getting Started

2 |

3 | ## Installation

4 |

5 | Install POD-RBF using pip:

6 |

7 | ```bash

8 | pip install pod-rbf

9 | ```

10 |

11 | Or using uv:

12 |

13 | ```bash

14 | uv add pod-rbf

15 | ```

16 |

17 | ## Basic Workflow

18 |

19 | POD-RBF follows a simple three-step workflow:

20 |

21 | 1. **Build a snapshot matrix** - Collect solution data at different parameter values

22 | 2. **Train the model** - Compute POD basis and RBF interpolation weights

23 | 3. **Inference** - Predict solutions at new parameter values

24 |

25 | ## Minimal Example

26 |

27 | ```python

28 | import pod_rbf

29 | import jax.numpy as jnp

30 | import numpy as np

31 |

32 | # 1. Define training parameters

33 | params = np.array([1, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000])

34 |

35 | # 2. Build snapshot matrix from CSV files

36 | # Each CSV file contains one solution snapshot

37 | snapshot = pod_rbf.build_snapshot_matrix("path/to/data/")

38 |

39 | # 3. Train the model

40 | result = pod_rbf.train(snapshot, params)

41 |

42 | # 4. Predict at a new parameter value

43 | prediction = pod_rbf.inference_single(result.state, jnp.array(450.0))

44 | ```

45 |

46 | ## Understanding the Snapshot Matrix

47 |

48 | The snapshot matrix `X` has shape `(n_samples, n_snapshots)`:

49 |

50 | - Each **column** is one solution snapshot at a specific parameter value

51 | - Each **row** corresponds to a spatial location or degree of freedom

52 | - `n_samples` is the number of points in your solution (e.g., mesh nodes)

53 | - `n_snapshots` is the number of parameter values you trained on

54 |

55 | For example, if you solve a problem on a 400-node mesh at 10 different parameter values, your snapshot matrix is `(400, 10)`.

56 |

57 | !!! note "Parameter Ordering"

58 | The order of columns in the snapshot matrix must match the order of your parameter array. If column 5 contains the solution at Re=500, then `params[4]` must equal 500.

59 |

60 | ## What's Next?

61 |

62 | - [Training Models](user-guide/training.md) - Learn about training configuration options

63 | - [Inference](user-guide/inference.md) - Single-point and batch predictions

64 | - [Autodifferentiation](user-guide/autodiff.md) - Use JAX for gradients and optimization

65 |

--------------------------------------------------------------------------------

/docs/user-guide/inference.md:

--------------------------------------------------------------------------------

1 | # Inference

2 |

3 | After training, use the model to predict solutions at new parameter values.

4 |

5 | ## Single-Point Inference

6 |

7 | For predicting at a single parameter value:

8 |

9 | ```python

10 | import pod_rbf

11 | import jax.numpy as jnp

12 |

13 | # Train the model

14 | result = pod_rbf.train(snapshot, params)

15 |

16 | # Predict at a new parameter

17 | prediction = pod_rbf.inference_single(result.state, jnp.array(450.0))

18 | ```

19 |

20 | The output shape is `(n_samples,)` - the same as one column of your snapshot matrix.

21 |

22 | ## Batch Inference

23 |

24 | For predicting at multiple parameter values simultaneously:

25 |

26 | ```python

27 | # Predict at multiple parameters

28 | new_params = jnp.array([350.0, 450.0, 550.0])

29 | predictions = pod_rbf.inference(result.state, new_params)

30 | ```

31 |

32 | The output shape is `(n_samples, n_points)` where `n_points` is the number of parameter values.

33 |

34 | ## Multi-Parameter Inference

35 |

36 | For models trained with multiple parameters:

37 |

38 | ```python

39 | # Single point with 2 parameters

40 | param = jnp.array([450.0, 0.15]) # [Re, Ma]

41 | prediction = pod_rbf.inference_single(result.state, param)

42 |

43 | # Batch with 2 parameters

44 | params = jnp.array([

45 | [350.0, 0.1],

46 | [450.0, 0.15],

47 | [550.0, 0.2],

48 | ])

49 | predictions = pod_rbf.inference(result.state, params)

50 | ```

51 |

52 | ## Using a Saved Model

53 |

54 | Load a previously saved model and use it for inference:

55 |

56 | ```python

57 | state = pod_rbf.load_model("model.pkl")

58 | prediction = pod_rbf.inference_single(state, jnp.array(450.0))

59 | ```

60 |

61 | ## Performance Tips

62 |

63 | 1. **Use batch inference** when predicting at multiple parameter values - it's more efficient than calling `inference_single` in a loop.

64 |

65 | 2. **JIT compilation** - The inference functions are JAX-compatible and can be JIT-compiled for faster repeated calls:

66 |

67 | ```python

68 | import jax

69 |

70 | inference_jit = jax.jit(lambda p: pod_rbf.inference_single(state, p))

71 | prediction = inference_jit(jnp.array(450.0))

72 | ```

73 |

74 | 3. **GPU acceleration** - If JAX is configured with GPU support, inference will automatically use the GPU.

75 |

--------------------------------------------------------------------------------

/docs/user-guide/io.md:

--------------------------------------------------------------------------------

1 | # Saving & Loading

2 |

3 | ## Loading Snapshot Data

4 |

5 | ### From CSV Files

6 |

7 | Load snapshots from a directory of CSV files:

8 |

9 | ```python

10 | import pod_rbf

11 |

12 | snapshot = pod_rbf.build_snapshot_matrix("path/to/data/")

13 | ```

14 |

15 | By default, this:

16 |

17 | - Loads all CSV files from the directory in alphanumeric order

18 | - Skips the first row (header)

19 | - Uses the first column

20 |

21 | Customize with optional parameters:

22 |

23 | ```python

24 | snapshot = pod_rbf.build_snapshot_matrix(

25 | "path/to/data/",

26 | skiprows=1, # Skip first N rows (default: 1 for header)

27 | usecols=0, # Column index to use (default: 0)

28 | verbose=True, # Show progress bar (default: True)

29 | )

30 | ```

31 |

32 | ### From NumPy Arrays

33 |

34 | If your data is already in memory:

35 |

36 | ```python

37 | import numpy as np

38 |

39 | # Combine individual solutions into a snapshot matrix

40 | # Each column is one snapshot

41 | snapshot = np.column_stack([sol1, sol2, sol3, sol4, sol5])

42 | ```

43 |

44 | ## Saving Models

45 |

46 | Save a trained model to disk:

47 |

48 | ```python

49 | import pod_rbf

50 |

51 | result = pod_rbf.train(snapshot, params)

52 | pod_rbf.save_model("model.pkl", result.state)

53 | ```

54 |

55 | The model is saved as a pickle file containing the `ModelState` NamedTuple.

56 |

57 | ## Loading Models

58 |

59 | Load a previously saved model:

60 |

61 | ```python

62 | state = pod_rbf.load_model("model.pkl")

63 |

64 | # Use for inference

65 | prediction = pod_rbf.inference_single(state, jnp.array(450.0))

66 | ```

67 |

68 | ## Model State Contents

69 |

70 | The saved `ModelState` contains everything needed for inference:

71 |

72 | | Field | Description |

73 | |-------|-------------|

74 | | `basis` | Truncated POD basis matrix |

75 | | `weights` | RBF interpolation weights |

76 | | `shape_factor` | Optimized RBF shape parameter |

77 | | `train_params` | Training parameter values |

78 | | `params_range` | Parameter ranges for normalization |

79 | | `truncated_energy` | Energy retained after truncation |

80 | | `cumul_energy` | Cumulative energy per mode |

81 | | `poly_coeffs` | Polynomial coefficients (if used) |

82 | | `poly_degree` | Polynomial degree used |

83 | | `kernel` | Kernel type used |

84 | | `kernel_order` | PHS order (for polyharmonic splines) |

85 |

86 | ## File Format Notes

87 |

88 | - Models are saved using Python's `pickle` module

89 | - Files are portable across machines with the same Python/JAX versions

90 | - File size depends on the number of modes and training points

91 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | # POD-RBF

2 |

3 | [](https://github.com/kylebeggs/POD-RBF/actions/workflows/tests.yml)

4 | [](https://codecov.io/gh/kylebeggs/POD-RBF)

5 | [](https://www.python.org/downloads/)

6 |

7 | A Python package for building Reduced Order Models (ROMs) from high-dimensional data using Proper Orthogonal Decomposition combined with Radial Basis Function interpolation.

8 |

9 |

10 |

11 | ## Features

12 |

13 | - **JAX-based** - Enables autodifferentiation for gradient optimization, sensitivity analysis, and inverse problems

14 | - **Shape parameter optimization** - Automatic tuning of RBF shape parameters

15 | - **Memory-aware algorithms** - Switches between eigenvalue decomposition and SVD based on memory requirements

16 |

17 | ## Quick Install

18 |

19 | ```bash

20 | pip install pod-rbf

21 | ```

22 |

23 | ## Quick Example

24 |

25 | ```python

26 | import pod_rbf

27 | import jax.numpy as jnp

28 | import numpy as np

29 |

30 | # Define training parameters

31 | Re = np.array([1, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000])

32 |

33 | # Build snapshot matrix from CSV files

34 | train_snapshot = pod_rbf.build_snapshot_matrix("data/train/")

35 |

36 | # Train the model

37 | result = pod_rbf.train(train_snapshot, Re)

38 |

39 | # Inference on unseen parameter

40 | sol = pod_rbf.inference_single(result.state, jnp.array(450.0))

41 | ```

42 |

43 | ## Next Steps

44 |

45 | - [Getting Started](getting-started.md) - Installation and first steps

46 | - [User Guide](user-guide/training.md) - Detailed usage instructions

47 | - [API Reference](api/index.md) - Complete API documentation

48 | - [Examples](examples.md) - Jupyter notebook examples

49 |

50 | ## References

51 |

52 | This implementation is based on the following papers:

53 |

54 | 1. [Solving inverse heat conduction problems using trained POD-RBF network inverse method](https://www.tandfonline.com/doi/full/10.1080/17415970701198290) - Ostrowski, Bialecki, Kassab (2008)

55 | 2. [RBF-trained POD-accelerated CFD analysis of wind loads on PV systems](https://www.emerald.com/insight/content/doi/10.1108/HFF-03-2016-0083/full/html) - Huayamave et al. (2017)

56 | 3. [Real-Time Thermomechanical Modeling of PV Cell Fabrication via a POD-Trained RBF Interpolation Network](https://www.techscience.com/CMES/v122n3/38374) - Das et al. (2020)

57 |

--------------------------------------------------------------------------------

/examples/lid-driven-cavity/lid-driven-cavity.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {},

7 | "outputs": [],

8 | "source": "import numpy as np\nimport matplotlib.pyplot as plt\nimport pod_rbf\n\nprint(\"using version: {}\".format(pod_rbf.__version__))\n\nRe = np.linspace(0, 1000, num=11)\nRe[0] = 1\n\ncoords_path = \"data/train/re-0001.csv\"\nx, y = np.loadtxt(\n coords_path,\n delimiter=\",\",\n skiprows=1,\n usecols=(1, 2),\n unpack=True,\n)\n\n# make snapshot matrix from csv files\ntrain_snapshot = pod_rbf.build_snapshot_matrix(\"data/train\")"

9 | },

10 | {

11 | "cell_type": "code",

12 | "execution_count": null,

13 | "metadata": {},

14 | "outputs": [],

15 | "source": "import jax.numpy as jnp\n\n# load validation\nval = np.loadtxt(\n \"data/validation/re-0450.csv\",\n # \"data/validation/re-0050.csv\",\n delimiter=\",\",\n skiprows=1,\n usecols=(0),\n unpack=True,\n)\n\n# train the model\nconfig = pod_rbf.TrainConfig(energy_threshold=0.9, poly_degree=2) # poly_degree: 0=none, 1=linear, 2=quadratic\nresult = pod_rbf.train(train_snapshot, Re, config)\nstate = result.state\nprint(\"Energy kept after truncating = {}%\".format(state.truncated_energy))\n\n# plot the energy decay\nplt.plot(state.cumul_energy)\n\n\n# inference the model on an unseen parameter\nsol = pod_rbf.inference_single(state, jnp.array(450.0))\n\n# calculate and plot the difference between inference and actual\ndiff = np.nan_to_num(np.abs(sol - val))\nprint(\"Average Percent Error = {}\".format(np.mean(diff)))\n\n# plot the inferenced solution\nfig, (ax1, ax2) = plt.subplots(1, 2, figsize=(22, 9))\nax1.set_title(\"POD-RBF\", fontsize=40)\ncntr1 = ax1.tricontourf(\n x, y, sol, levels=np.linspace(0, 1, num=20), cmap=\"viridis\", extend=\"both\"\n)\nax1.set_xticks([])\nax1.set_yticks([])\n# plot the actual solution\nax2.set_title(\"Target\", fontsize=40)\ncntr2 = ax2.tricontourf(\n x, y, val, levels=np.linspace(0, 1, num=20), cmap=\"viridis\", extend=\"both\"\n)\ncbar_ax = fig.add_axes([0.485, 0.15, 0.025, 0.7])\nfig.colorbar(cntr2, cax=cbar_ax)\nax2.set_xticks([])\nax2.set_yticks([])\n# fig.tight_layout()\n\nfig2, ax = plt.subplots(1, 1, figsize=(12, 9))\ncntr = ax.tricontourf(x, y, diff, cmap=\"viridis\", extend=\"both\")\nfig2.colorbar(cntr)\nplt.show()"

16 | }

17 | ],

18 | "metadata": {

19 | "interpreter": {

20 | "hash": "31f2aee4e71d21fbe5cf8b01ff0e069b9275f58929596ceb00d14d90e3e16cd6"

21 | },

22 | "kernelspec": {

23 | "display_name": "Python 3.8.5 64-bit",

24 | "name": "python3"

25 | },

26 | "language_info": {

27 | "name": "python",

28 | "version": ""

29 | },

30 | "metadata": {

31 | "interpreter": {

32 | "hash": "31f2aee4e71d21fbe5cf8b01ff0e069b9275f58929596ceb00d14d90e3e16cd6"

33 | }

34 | },

35 | "orig_nbformat": 2

36 | },

37 | "nbformat": 4,

38 | "nbformat_minor": 2

39 | }

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # VS Code

2 | *.code-workspace

3 | .vscode

4 |

5 | # Byte-compiled / optimized / DLL files

6 | __pycache__/

7 | *.py[cod]

8 | *$py.class

9 |

10 | # C extensions

11 | *.so

12 |

13 | # Distribution / packaging

14 | .Python

15 | build/

16 | develop-eggs/

17 | dist/

18 | downloads/

19 | eggs/

20 | .eggs/

21 | lib/

22 | lib64/

23 | parts/

24 | sdist/

25 | var/

26 | wheels/

27 | share/python-wheels/

28 | *.egg-info/

29 | .installed.cfg

30 | *.egg

31 | MANIFEST

32 |

33 | # PyInstaller

34 | # Usually these files are written by a python script from a template

35 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

36 | *.manifest

37 | *.spec

38 |

39 | # Installer logs

40 | pip-log.txt

41 | pip-delete-this-directory.txt

42 |

43 | # Unit test / coverage reports

44 | htmlcov/

45 | .tox/

46 | .nox/

47 | .coverage

48 | .coverage.*

49 | .cache

50 | nosetests.xml

51 | coverage.xml

52 | *.cover

53 | *.py,cover

54 | .hypothesis/

55 | .pytest_cache/

56 | cover/

57 |

58 | # Translations

59 | *.mo

60 | *.pot

61 |

62 | # Django stuff:

63 | *.log

64 | local_settings.py

65 | db.sqlite3

66 | db.sqlite3-journal

67 |

68 | # Flask stuff:

69 | instance/

70 | .webassets-cache

71 |

72 | # Scrapy stuff:

73 | .scrapy

74 |

75 | # Sphinx documentation

76 | docs/_build/

77 |

78 | # PyBuilder

79 | .pybuilder/

80 | target/

81 |

82 | # Jupyter Notebook

83 | .ipynb_checkpoints

84 |

85 | # IPython

86 | profile_default/

87 | ipython_config.py

88 |

89 | # pyenv

90 | # For a library or package, you might want to ignore these files since the code is

91 | # intended to run in multiple environments; otherwise, check them in:

92 | # .python-version

93 |

94 | # pipenv

95 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

96 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

97 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

98 | # install all needed dependencies.

99 | #Pipfile.lock

100 |

101 | # uv

102 | # For libraries, uv.lock should not be committed as it locks dependencies for development only.

103 | # Library users will resolve dependencies based on pyproject.toml.

104 | uv.lock

105 |

106 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

107 | __pypackages__/

108 |

109 | # Celery stuff

110 | celerybeat-schedule

111 | celerybeat.pid

112 |

113 | # SageMath parsed files

114 | *.sage.py

115 |

116 | # Environments

117 | .env

118 | .venv

119 | env/

120 | venv/

121 | ENV/

122 | env.bak/

123 | venv.bak/

124 |

125 | # Spyder project settings

126 | .spyderproject

127 | .spyproject

128 |

129 | # Rope project settings

130 | .ropeproject

131 |

132 | # mkdocs documentation

133 | /site

134 |

135 | # mypy

136 | .mypy_cache/

137 | .dmypy.json

138 | dmypy.json

139 |

140 | # Pyre type checker

141 | .pyre/

142 |

143 | # pytype static type analyzer

144 | .pytype/

145 |

146 | # Cython debug symbols

147 | cython_debug/

148 |

--------------------------------------------------------------------------------

/examples/heat-conduction/2d-heat.py:

--------------------------------------------------------------------------------

1 | import sys, os

2 | import time

3 | import numpy as np

4 | import matplotlib.pyplot as plt

5 |

6 |

7 | def buildSnapshotMatrix(params, num_points):

8 | """

9 | Assemble the snapshot matrix

10 | """

11 | print("making the snapshot matrix... ", end="")

12 | start = time.time()

13 |

14 | # evaluate the analytical solution

15 | num_terms = 50

16 | L = 1

17 | T_L = params[0, :]

18 |

19 | n = np.arange(0, num_terms)

20 | # calculate lambdas

21 | lambs = np.pi * (2 * n + 1) / (2 * L)

22 |

23 | # define points

24 | x = np.linspace(0, L, num=num_points)

25 | X, Y = np.meshgrid(x, x, indexing="xy")

26 |

27 | snapshot = np.zeros((num_points ** 2, len(T_L)))

28 | for i in range(len(T_L)):

29 | # calculate constants

30 | C = (

31 | 8

32 | * T_L[i]

33 | * (2 * (-1) ** n / (lambs * L) - 1)

34 | / ((lambs * L) ** 2 * np.cosh(lambs * L))

35 | )

36 | T = np.zeros_like(X)

37 | for j in range(0, num_terms):

38 | T = T + C[j] * np.cosh(lambs[j] * X) * np.cos(lambs[j] * Y)

39 | snapshot[:, i] = T.flatten()

40 |

41 | print("took {:3.3f} sec".format(time.time() - start))

42 |

43 | return snapshot

44 |

45 |

46 | if __name__ == "__main__":

47 |

48 | import jax

49 | import jax.numpy as jnp

50 | import pod_rbf

51 |

52 | jax.config.update('jax_default_device', jax.devices('cpu')[0]) # Change to 'gpu' or 'tpu' for accelerators

53 |

54 | T_L = np.linspace(1, 100, num=11)

55 | T_L = np.expand_dims(T_L, axis=0)

56 | T_L_test = 55.0

57 | num_points = 41

58 |

59 | # make snapshot matrix

60 | snapshot = buildSnapshotMatrix(T_L, num_points)

61 |

62 | # calculate 'test' solution

63 | # evaluate the analytical solution

64 | num_terms = 50

65 | L = 1

66 | n = np.arange(0, num_terms)

67 | # calculate lambdas

68 | lambs = np.pi * (2 * n + 1) / (2 * L)

69 | # define points

70 | x = np.linspace(0, L, num=num_points)

71 | X, Y = np.meshgrid(x, x, indexing="xy")

72 | # calculate constants

73 | C = (

74 | 8

75 | * T_L_test

76 | * (2 * (-1) ** n / (lambs * L) - 1)

77 | / ((lambs * L) ** 2 * np.cosh(lambs * L))

78 | )

79 | T_test = np.zeros_like(X)

80 | for n in range(0, num_terms):

81 | T_test = T_test + C[n] * np.cosh(lambs[n] * X) * np.cos(lambs[n] * Y)

82 |

83 | # train the POD-RBF model

84 | config = pod_rbf.TrainConfig(energy_threshold=0.5, poly_degree=2)

85 | result = pod_rbf.train(snapshot, T_L, config)

86 | state = result.state

87 |

88 | # inference the trained model

89 | sol = pod_rbf.inference_single(state, jnp.array(T_L_test))

90 |

91 | print("Energy kept after truncating = {}%".format(state.truncated_energy))

92 | print("Cumulative Energy = {}%".format(state.cumul_energy))

93 |

94 | fig = plt.figure(figsize=(12, 9))

95 | c = plt.pcolormesh(T_test, cmap="magma")

96 | fig.colorbar(c)

97 |

98 | fig = plt.figure(figsize=(12, 9))

99 | c = plt.pcolormesh(sol.reshape((num_points, num_points)), cmap="magma")

100 | fig.colorbar(c)

101 |

102 | fig = plt.figure(figsize=(12, 9))

103 | diff = np.abs(sol.reshape((num_points, num_points)) - T_test) / T_test * 100

104 | c = plt.pcolormesh(diff, cmap="magma")

105 | fig.colorbar(c)

106 |

107 | plt.show()

108 |

--------------------------------------------------------------------------------

/docs/user-guide/autodiff.md:

--------------------------------------------------------------------------------

1 | # Autodifferentiation

2 |

3 | POD-RBF is built on JAX, enabling automatic differentiation through the inference functions. This is useful for optimization, sensitivity analysis, and inverse problems.

4 |

5 | ## Computing Gradients

6 |

7 | Use `jax.grad` to compute gradients with respect to parameters:

8 |

9 | ```python

10 | import jax

11 | import jax.numpy as jnp

12 | import pod_rbf

13 |

14 | # Train model

15 | result = pod_rbf.train(snapshot, params)

16 | state = result.state

17 |

18 | # Define an objective function

19 | def objective(param):

20 | prediction = pod_rbf.inference_single(state, param)

21 | return jnp.sum(prediction ** 2)

22 |

23 | # Compute gradient

24 | grad_fn = jax.grad(objective)

25 | gradient = grad_fn(jnp.array(450.0))

26 | ```

27 |

28 | ## Optimization Example

29 |

30 | Find the parameter value that minimizes a cost function:

31 |

32 | ```python

33 | import jax

34 | import jax.numpy as jnp

35 | from jax import grad

36 |

37 | def cost_function(param, target):

38 | prediction = pod_rbf.inference_single(state, param)

39 | return jnp.mean((prediction - target) ** 2)

40 |

41 | # Gradient descent

42 | param = jnp.array(500.0) # Initial guess

43 | learning_rate = 10.0

44 |

45 | for i in range(100):

46 | grad_val = grad(cost_function)(param, target_solution)

47 | param = param - learning_rate * grad_val

48 |

49 | print(f"Optimal parameter: {param}")

50 | ```

51 |

52 | ## Inverse Problems

53 |

54 | For inverse problems where you want to find the parameter that produced an observed solution:

55 |

56 | ```python

57 | import jax

58 | import jax.numpy as jnp

59 | from jax.scipy.optimize import minimize

60 |

61 | def inverse_objective(param):

62 | prediction = pod_rbf.inference_single(state, param)

63 | return jnp.sum((prediction - observed_solution) ** 2)

64 |

65 | # Use BFGS optimization

66 | result = minimize(

67 | inverse_objective,

68 | x0=jnp.array(500.0),

69 | method="BFGS",

70 | )

71 |

72 | recovered_param = result.x

73 | ```

74 |

75 | ## Sensitivity Analysis

76 |

77 | Compute how sensitive the solution is to parameter changes:

78 |

79 | ```python

80 | import jax

81 | import jax.numpy as jnp

82 |

83 | # Jacobian: how each output point changes with the parameter

84 | jacobian_fn = jax.jacobian(

85 | lambda p: pod_rbf.inference_single(state, p)

86 | )

87 | sensitivity = jacobian_fn(jnp.array(450.0))

88 |

89 | # sensitivity shape: (n_samples,) for single parameter

90 | # Positive values indicate the solution increases with the parameter

91 | ```

92 |

93 | ## Multi-Parameter Gradients

94 |

95 | For models with multiple parameters:

96 |

97 | ```python

98 | def objective(params):

99 | # params: [Re, Ma]

100 | prediction = pod_rbf.inference_single(state, params)

101 | return jnp.sum(prediction ** 2)

102 |

103 | # Gradient with respect to all parameters

104 | grad_fn = jax.grad(objective)

105 | gradients = grad_fn(jnp.array([450.0, 0.15]))

106 | # gradients shape: (2,) - one gradient per parameter

107 | ```

108 |

109 | ## JIT Compilation

110 |

111 | For performance, JIT-compile your gradient functions:

112 |

113 | ```python

114 | @jax.jit

115 | def compute_gradient(param):

116 | return jax.grad(objective)(param)

117 |

118 | # First call compiles; subsequent calls are fast

119 | gradient = compute_gradient(jnp.array(450.0))

120 | ```

121 |

122 | ## Higher-Order Derivatives

123 |

124 | JAX supports higher-order derivatives:

125 |

126 | ```python

127 | # Second derivative (Hessian for scalar output)

128 | hessian_fn = jax.hessian(objective)

129 | hessian = hessian_fn(jnp.array(450.0))

130 | ```

131 |

--------------------------------------------------------------------------------

/CLAUDE.md:

--------------------------------------------------------------------------------

1 | # CLAUDE.md

2 |

3 | This file provides guidance to Claude Code (claude.ai/code) when working with code in this repository.

4 |

5 | ## Project Overview

6 |

7 | POD-RBF is a JAX-based Python library for building Reduced Order Models (ROMs) using Proper Orthogonal Decomposition combined with Radial Basis Function interpolation. It enables autodifferentiation for gradient optimization, sensitivity analysis, and inverse problems.

8 |

9 | ## Development Commands

10 |

11 | ```bash

12 | # Install for development

13 | pip install -e ".[dev]"

14 |

15 | # Run tests

16 | pytest tests/ -v

17 |

18 | # Run single test file

19 | pytest tests/test_core.py -v

20 |

21 | # Run specific test

22 | pytest tests/test_core.py::TestGradients::test_inverse_problem -v

23 | ```

24 |

25 | ## Architecture

26 |

27 | ### Module Structure

28 |

29 | ```

30 | pod_rbf/

31 | __init__.py # Public API, enables float64

32 | types.py # ModelState, TrainConfig, TrainResult (NamedTuples)

33 | core.py # train(), inference(), inference_single()

34 | rbf.py # build_collocation_matrix(), build_inference_matrix()

35 | decomposition.py # compute_pod_basis_svd(), compute_pod_basis_eig()

36 | shape_optimization.py # find_optimal_shape_param() (fixed-iteration bisection)

37 | io.py # build_snapshot_matrix(), save_model(), load_model()

38 | ```

39 |

40 | ### Key Types

41 |

42 | ```python

43 | class ModelState(NamedTuple):

44 | basis: Array # (n_samples, n_modes)

45 | weights: Array # (n_modes, n_train_points)

46 | shape_factor: float

47 | train_params: Array # (n_params, n_train_points)

48 | params_range: Array # (n_params,)

49 | truncated_energy: float

50 | cumul_energy: Array

51 |

52 | class TrainConfig(NamedTuple):

53 | energy_threshold: float = 0.99

54 | mem_limit_gb: float = 16.0

55 | cond_range: tuple = (1e11, 1e12)

56 | max_bisection_iters: int = 50

57 | ```

58 |

59 | ### API

60 |

61 | ```python

62 | import pod_rbf

63 | import jax

64 | import jax.numpy as jnp

65 |

66 | # Train with default config (energy_threshold=0.99)

67 | result = pod_rbf.train(snapshot, params)

68 | state = result.state

69 |

70 | # Train with custom config

71 | config = pod_rbf.TrainConfig(energy_threshold=0.9)

72 | result = pod_rbf.train(snapshot, params, config)

73 |

74 | # Inference (single point)

75 | pred = pod_rbf.inference_single(state, jnp.array(450.0))

76 |

77 | # Inference (batch)

78 | preds = pod_rbf.inference(state, jnp.array([400.0, 450.0, 500.0]))

79 |

80 | # Autodiff

81 | grad_fn = jax.grad(lambda p: jnp.sum(pod_rbf.inference_single(state, p)**2))

82 | gradient = grad_fn(jnp.array(450.0))

83 |

84 | # I/O

85 | snapshot = pod_rbf.build_snapshot_matrix("data/train/") # load CSVs from directory

86 | pod_rbf.save_model("model.pkl", state)

87 | state = pod_rbf.load_model("model.pkl")

88 | ```

89 |

90 | ### Data Shape Conventions

91 |

92 | - **Snapshot matrix**: `(n_samples, n_snapshots)` - each column is one parameter's solution

93 | - **Parameters**: 1D `(n_snapshots,)` or 2D `(n_params, n_snapshots)`

94 | - **Inference output**: `(n_samples,)` for single, `(n_samples, n_points)` for batch

95 |

96 | ### Key Algorithms

97 |

98 | 1. **POD truncation**: Keeps modes until cumulative energy exceeds `energy_threshold`

99 | 2. **RBF kernel**: Hardy Inverse Multi-Quadrics: `1/√(r²/c² + 1)`

100 | 3. **Shape optimization**: Fixed-iteration bisection (50 iters) for condition number in [10^11, 10^12]

101 |

102 | ## Dependencies

103 |

104 | - `jax>=0.4.0`, `jaxlib>=0.4.0` - Autodiff and JIT compilation

105 | - `numpy` - File I/O operations

106 | - `tqdm` - Progress bars

107 | - Python ≥ 3.10

108 |

--------------------------------------------------------------------------------

/docs/user-guide/training.md:

--------------------------------------------------------------------------------

1 | # Training Models

2 |

3 | ## Building the Snapshot Matrix

4 |

5 | The snapshot matrix contains your training data. Each column is a solution snapshot at a specific parameter value.

6 |

7 | ### From CSV Files

8 |

9 | If your snapshots are stored as individual CSV files in a directory:

10 |

11 | ```python

12 | import pod_rbf

13 |

14 | snapshot = pod_rbf.build_snapshot_matrix("path/to/data/")

15 | ```

16 |

17 | Files are loaded in alphanumeric order. The function expects one value per row in each CSV file.

18 |

19 | !!! tip "File Organization"

20 | Keep all training snapshots in a dedicated directory. Files are sorted alphanumerically, so use consistent naming like `snapshot_001.csv`, `snapshot_002.csv`, etc.

21 |

22 | ### From Arrays

23 |

24 | If you already have your data in memory:

25 |

26 | ```python

27 | import numpy as np

28 |

29 | # Shape: (n_samples, n_snapshots)

30 | snapshot = np.column_stack([solution1, solution2, solution3, ...])

31 | ```

32 |

33 | ## Training

34 |

35 | ### Basic Training

36 |

37 | ```python

38 | import pod_rbf

39 | import numpy as np

40 |

41 | params = np.array([100, 200, 300, 400, 500])

42 | result = pod_rbf.train(snapshot, params)

43 | ```

44 |

45 | The `result` object contains:

46 |

47 | - `result.state` - The trained model state (use this for inference)

48 | - `result.n_modes` - Number of POD modes retained

49 | - `result.used_eig_decomp` - Whether eigendecomposition was used (vs SVD)

50 |

51 | ### Training Configuration

52 |

53 | Customize training with `TrainConfig`:

54 |

55 | ```python

56 | from pod_rbf import TrainConfig

57 |

58 | config = TrainConfig(

59 | energy_threshold=0.99, # Keep modes until 99% energy retained

60 | kernel="imq", # RBF kernel: 'imq', 'gaussian', 'polyharmonic_spline'

61 | poly_degree=2, # Polynomial augmentation: 0=none, 1=linear, 2=quadratic

62 | )

63 |

64 | result = pod_rbf.train(snapshot, params, config)

65 | ```

66 |

67 | ### Configuration Options

68 |

69 | | Parameter | Default | Description |

70 | |-----------|---------|-------------|

71 | | `energy_threshold` | 0.99 | POD truncation threshold (0-1) |

72 | | `kernel` | `"imq"` | RBF kernel type |

73 | | `poly_degree` | 2 | Polynomial augmentation degree |

74 | | `mem_limit_gb` | 16.0 | Memory limit for algorithm selection |

75 | | `cond_range` | (1e11, 1e12) | Target condition number range |

76 | | `max_bisection_iters` | 50 | Max iterations for shape optimization |

77 |

78 | ### Kernel Options

79 |

80 | POD-RBF supports three RBF kernels:

81 |

82 | - **`imq`** (Inverse Multi-Quadrics) - Default, good general-purpose choice

83 | - **`gaussian`** - Smoother interpolation, requires careful shape parameter tuning

84 | - **`polyharmonic_spline`** - No shape parameter needed, use with `kernel_order`

85 |

86 | ```python

87 | # Using polyharmonic splines (no shape parameter optimization)

88 | config = TrainConfig(kernel="polyharmonic_spline", kernel_order=3)

89 | result = pod_rbf.train(snapshot, params, config)

90 | ```

91 |

92 | ## Multi-Parameter Training

93 |

94 | For problems with multiple parameters:

95 |

96 | ```python

97 | import numpy as np

98 |

99 | # Parameters shape: (n_params, n_snapshots)

100 | params = np.array([

101 | [100, 200, 300, 400], # Parameter 1 (e.g., Reynolds number)

102 | [0.1, 0.1, 0.2, 0.2], # Parameter 2 (e.g., Mach number)

103 | ])

104 |

105 | result = pod_rbf.train(snapshot, params)

106 | ```

107 |

108 | See the [2-parameter example](https://github.com/kylebeggs/POD-RBF/blob/master/examples/2-parameters.ipynb) for a complete walkthrough.

109 |

110 | ## Manual Shape Parameter

111 |

112 | If you want to specify the RBF shape parameter instead of using automatic optimization:

113 |

114 | ```python

115 | result = pod_rbf.train(snapshot, params, shape_factor=0.5)

116 | ```

117 |

118 | ## Understanding POD Truncation

119 |

120 | POD extracts the dominant modes from your snapshot data. The `energy_threshold` controls how many modes are kept:

121 |

122 | - `0.99` (default) - Keep modes until 99% of total energy is captured

123 | - Higher values retain more modes (more accurate, slower inference)

124 | - Lower values retain fewer modes (faster inference, may lose accuracy)

125 |

126 | After training, check how much energy was retained:

127 |

128 | ```python

129 | print(f"Modes retained: {result.n_modes}")

130 | print(f"Energy retained: {result.state.truncated_energy:.4f}")

131 | ```

132 |

--------------------------------------------------------------------------------

/pod_rbf/kernels.py:

--------------------------------------------------------------------------------

1 | """

2 | RBF kernel functions and dispatcher.

3 |

4 | Supports multiple kernel types:

5 | - Inverse Multi-Quadrics (IMQ): phi(r) = 1 / sqrt(r²/c² + 1)

6 | - Gaussian: phi(r) = exp(-r²/c²)

7 | - Polyharmonic Splines (PHS): phi(r) = r^k or r^k*log(r)

8 | """

9 |

10 | from enum import Enum

11 |

12 | import jax.numpy as jnp

13 | from jax import Array

14 |

15 |

16 | class KernelType(str, Enum):

17 | """RBF kernel types (internal use only)."""

18 |

19 | IMQ = "imq"

20 | GAUSSIAN = "gaussian"

21 | POLYHARMONIC_SPLINE = "polyharmonic_spline"

22 |

23 |

24 | def kernel_imq(r2: Array, shape_factor: float) -> Array:

25 | """

26 | Inverse Multiquadrics kernel.

27 |

28 | phi(r) = 1 / sqrt(r²/c² + 1)

29 |

30 | Parameters

31 | ----------

32 | r2 : Array

33 | Squared distances between points.

34 | shape_factor : float

35 | Shape parameter c.

36 |

37 | Returns

38 | -------

39 | Array

40 | Kernel values, same shape as r2.

41 | """

42 | return 1.0 / jnp.sqrt(r2 / (shape_factor**2) + 1.0)

43 |

44 |

45 | def kernel_gaussian(r2: Array, shape_factor: float) -> Array:

46 | """

47 | Gaussian kernel.

48 |

49 | phi(r) = exp(-r²/c²)

50 |

51 | Parameters

52 | ----------

53 | r2 : Array

54 | Squared distances between points.

55 | shape_factor : float

56 | Shape parameter c.

57 |

58 | Returns

59 | -------

60 | Array

61 | Kernel values, same shape as r2.

62 | """

63 | return jnp.exp(-r2 / (shape_factor**2))

64 |

65 |

66 | def kernel_polyharmonic_spline(r2: Array, order: int) -> Array:

67 | """

68 | Polyharmonic spline kernel.

69 |

70 | - Odd order k: phi(r) = r^k

71 | - Even order k: phi(r) = r^k * log(r)

72 |

73 | Parameters

74 | ----------

75 | r2 : Array

76 | Squared distances between points.

77 | order : int

78 | Polynomial order (typically 1-5).

79 | Odd: r, r³, r⁵

80 | Even: r²log(r), r⁴log(r)

81 |

82 | Returns

83 | -------

84 | Array

85 | Kernel values, same shape as r2.

86 |

87 | Notes

88 | -----

89 | For even orders, handles r=0 case to avoid log(0) singularity.

90 | Uses r2-based formulation to avoid gradient singularity from sqrt at r2=0.

91 | """

92 | if order % 2 == 1:

93 | # Odd order: r^k = (r2)^(k/2)

94 | # Using power of r2 directly avoids sqrt gradient singularity at r2=0

95 | return jnp.power(r2, order / 2.0)

96 | else:

97 | # Even order: r^k * log(r) = (r2)^(k/2) * log(sqrt(r2))

98 | # = (r2)^(k/2) * (1/2) * log(r2)

99 | # Handle r2=0 case: set to 0 when r2 < threshold

100 | return jnp.where(

101 | r2 > 1e-30,

102 | jnp.power(r2, order / 2.0) * 0.5 * jnp.log(r2),

103 | 0.0,

104 | )

105 |

106 |

107 | def apply_kernel(

108 | r2: Array,

109 | kernel: str,

110 | shape_factor: float | None,

111 | kernel_order: int,

112 | ) -> Array:

113 | """

114 | Apply RBF kernel to distance matrix.

115 |

116 | Dispatcher function that selects and applies the appropriate kernel

117 | based on the kernel type string.

118 |

119 | Parameters

120 | ----------

121 | r2 : Array

122 | Squared distances between points.

123 | kernel : str

124 | Kernel type: 'imq', 'gaussian', or 'polyharmonic_spline'.

125 | shape_factor : float | None

126 | Shape parameter for IMQ and Gaussian kernels.

127 | Ignored for polyharmonic splines.

128 | kernel_order : int

129 | Order for polyharmonic splines.

130 | Ignored for other kernels.

131 |

132 | Returns

133 | -------

134 | Array

135 | Kernel values applied to distance matrix.

136 |

137 | Raises

138 | ------

139 | ValueError

140 | If kernel type is not recognized.

141 | """

142 | kernel_type = KernelType(kernel)

143 |

144 | if kernel_type == KernelType.IMQ:

145 | return kernel_imq(r2, shape_factor)

146 | elif kernel_type == KernelType.GAUSSIAN:

147 | return kernel_gaussian(r2, shape_factor)

148 | elif kernel_type == KernelType.POLYHARMONIC_SPLINE:

149 | return kernel_polyharmonic_spline(r2, kernel_order)

150 | else:

151 | raise ValueError(f"Unknown kernel type: {kernel}")

152 |

153 |

154 | # Kernel-specific defaults for shape parameter optimization

155 | KERNEL_SHAPE_DEFAULTS = {

156 | "imq": {"c_low": 0.011, "c_high": 1.0, "c_step": 0.01},

157 | "gaussian": {"c_low": 0.1, "c_high": 10.0, "c_step": 0.1},

158 | }

159 |

--------------------------------------------------------------------------------

/pod_rbf/shape_optimization.py:

--------------------------------------------------------------------------------

1 | """

2 | Shape parameter optimization for RBF interpolation.

3 |

4 | Uses fixed-iteration bisection to find optimal shape parameter c such that

5 | the collocation matrix condition number falls within a target range.

6 | """

7 |

8 | import jax

9 | import jax.numpy as jnp

10 | from jax import Array

11 |

12 | from .kernels import KERNEL_SHAPE_DEFAULTS

13 | from .rbf import build_collocation_matrix

14 |

15 |

16 | def find_optimal_shape_param(

17 | train_params: Array,

18 | params_range: Array,

19 | kernel: str = "imq",

20 | kernel_order: int = 3,

21 | cond_range: tuple[float, float] = (1e11, 1e12),

22 | max_iters: int = 50,

23 | c_low_init: float | None = None,

24 | c_high_init: float | None = None,

25 | c_high_step: float | None = None,

26 | c_high_search_iters: int = 200,

27 | ) -> float | None:

28 | """

29 | Find optimal RBF shape parameter via fixed-iteration bisection.

30 |

31 | Target: condition number in [cond_range[0], cond_range[1]].

32 |

33 | Parameters

34 | ----------

35 | train_params : Array

36 | Training parameters, shape (n_params, n_train_points).

37 | params_range : Array

38 | Range of each parameter for normalization, shape (n_params,).

39 | kernel : str, optional

40 | Kernel type: 'imq', 'gaussian', or 'polyharmonic_spline'.

41 | Default is 'imq'.

42 | kernel_order : int, optional

43 | Order for polyharmonic splines (default 3).

44 | Ignored for other kernels.

45 | cond_range : tuple

46 | Target condition number range (lower, upper).

47 | max_iters : int

48 | Maximum bisection iterations.

49 | c_low_init : float | None, optional

50 | Initial lower bound for shape parameter.

51 | If None, uses kernel-specific default.

52 | c_high_init : float | None, optional

53 | Initial upper bound for shape parameter.

54 | If None, uses kernel-specific default.

55 | c_high_step : float | None, optional

56 | Step size for expanding upper bound search.

57 | If None, uses kernel-specific default.

58 | c_high_search_iters : int

59 | Maximum iterations for upper bound search.

60 |

61 | Returns

62 | -------

63 | float | None

64 | Optimal shape parameter.

65 | Returns None for kernels that don't use shape parameters (e.g., PHS).

66 | """

67 | # PHS doesn't use shape parameters

68 | if kernel == "polyharmonic_spline":

69 | return None

70 |

71 | # Use kernel-specific defaults if not provided

72 | defaults = KERNEL_SHAPE_DEFAULTS.get(kernel, KERNEL_SHAPE_DEFAULTS["imq"])

73 | c_low_init = c_low_init or defaults["c_low"]

74 | c_high_init = c_high_init or defaults["c_high"]

75 | c_high_step = c_high_step or defaults["c_step"]

76 |

77 | cond_low, cond_high = cond_range

78 |

79 | # Step 1: Find upper bound where cond >= cond_low

80 | def search_c_high_iter(i: int, carry: tuple) -> tuple:

81 | c_high, found = carry

82 | C = build_collocation_matrix(

83 | train_params, params_range, kernel, c_high, kernel_order

84 | )

85 | cond = jnp.linalg.cond(C)

86 | should_continue = (~found) & (cond < cond_low)

87 | new_c_high = jnp.where(should_continue, c_high + c_high_step, c_high)

88 | new_found = found | (cond >= cond_low)

89 | return (new_c_high, new_found)

90 |

91 | c_high, _ = jax.lax.fori_loop(

92 | 0, c_high_search_iters, search_c_high_iter, (c_high_init, False)

93 | )

94 |

95 | # Step 2: Bisection to find optimal c in range

96 | def bisection_iter(i: int, carry: tuple) -> tuple:

97 | c_low_bound, c_high_bound, optim_c, found = carry

98 |

99 | mid_c = (c_low_bound + c_high_bound) / 2.0

100 | C = build_collocation_matrix(

101 | train_params, params_range, kernel, mid_c, kernel_order

102 | )

103 | cond = jnp.linalg.cond(C)

104 |

105 | # Check if condition number is in target range

106 | in_range = (cond >= cond_low) & (cond <= cond_high)

107 | below_range = cond < cond_low

108 |

109 | # Update bounds based on condition number (only if not yet found)

110 | new_c_low = jnp.where(below_range & ~found, mid_c, c_low_bound)

111 | new_c_high = jnp.where((~below_range) & (~in_range) & ~found, mid_c, c_high_bound)

112 | new_optim_c = jnp.where(in_range & ~found, mid_c, optim_c)

113 | new_found = found | in_range

114 |

115 | return (new_c_low, new_c_high, new_optim_c, new_found)

116 |

117 | initial_guess = (c_low_init + c_high) / 2.0

118 | _, _, optim_c, _ = jax.lax.fori_loop(

119 | 0, max_iters, bisection_iter, (c_low_init, c_high, initial_guess, False)

120 | )

121 |

122 | return optim_c

123 |

--------------------------------------------------------------------------------

/pod_rbf/decomposition.py:

--------------------------------------------------------------------------------

1 | """

2 | POD basis computation via SVD or eigendecomposition.

3 | """

4 |

5 | import jax

6 | import jax.numpy as jnp

7 | from jax import Array

8 |

9 |

10 | def compute_pod_basis_svd(

11 | snapshot: Array,

12 | energy_threshold: float,

13 | ) -> tuple[Array, Array, float]:

14 | """

15 | Compute truncated POD basis via SVD.

16 |

17 | Use for smaller datasets (< mem_limit_gb).

18 |

19 | Parameters

20 | ----------

21 | snapshot : Array

22 | Snapshot matrix, shape (n_samples, n_snapshots).

23 | energy_threshold : float

24 | Minimum fraction of total energy to retain (0 < threshold <= 1).

25 |

26 | Returns

27 | -------

28 | basis : Array

29 | Truncated POD basis, shape (n_samples, n_modes).

30 | cumul_energy : Array

31 | Cumulative energy fraction per mode.

32 | truncated_energy : float

33 | Actual energy fraction retained.

34 | """

35 | U, S, _ = jnp.linalg.svd(snapshot, full_matrices=False)

36 |

37 | cumul_energy = jnp.cumsum(S) / jnp.sum(S)

38 |

39 | # Handle energy_threshold >= 1 (keep all modes)

40 | keep_all = energy_threshold >= 1.0

41 | # Find first index where cumul_energy > threshold

42 | mask = cumul_energy > energy_threshold

43 | trunc_id = jnp.where(

44 | keep_all,

45 | len(S) - 1,

46 | jnp.where(jnp.any(mask), jnp.argmax(mask), len(S) - 1),

47 | )

48 |

49 | truncated_energy = cumul_energy[trunc_id]

50 |

51 | # Dynamic slice to get truncated basis

52 | basis = jax.lax.dynamic_slice(U, (0, 0), (U.shape[0], trunc_id + 1))

53 |

54 | return basis, cumul_energy, truncated_energy

55 |

56 |

57 | def compute_pod_basis_eig(

58 | snapshot: Array,

59 | energy_threshold: float,

60 | ) -> tuple[Array, Array, float]:

61 | """

62 | Compute truncated POD basis via eigendecomposition.

63 |

64 | More memory-efficient for large datasets (>= mem_limit_gb).

65 | Computes (n_snapshots x n_snapshots) covariance instead of full SVD.

66 |

67 | Parameters

68 | ----------

69 | snapshot : Array

70 | Snapshot matrix, shape (n_samples, n_snapshots).

71 | energy_threshold : float

72 | Minimum fraction of total energy to retain (0 < threshold <= 1).

73 |

74 | Returns

75 | -------

76 | basis : Array

77 | Truncated POD basis, shape (n_samples, n_modes).

78 | cumul_energy : Array

79 | Cumulative energy fraction per mode.

80 | truncated_energy : float

81 | Actual energy fraction retained.

82 | """

83 | # Covariance matrix (n_snapshots x n_snapshots)

84 | cov = snapshot.T @ snapshot

85 | eig_vals, eig_vecs = jnp.linalg.eigh(cov)

86 |

87 | # eigh returns ascending order, reverse to descending

88 | eig_vals = jnp.abs(eig_vals[::-1])

89 | eig_vecs = eig_vecs[:, ::-1]

90 |

91 | cumul_energy = jnp.cumsum(eig_vals) / jnp.sum(eig_vals)

92 |

93 | # Handle energy_threshold >= 1 (keep all modes)

94 | keep_all = energy_threshold >= 1.0

95 | mask = cumul_energy > energy_threshold

96 | trunc_id = jnp.where(

97 | keep_all,

98 | len(eig_vals) - 1,

99 | jnp.where(jnp.any(mask), jnp.argmax(mask), len(eig_vals) - 1),

100 | )

101 |

102 | truncated_energy = cumul_energy[trunc_id]

103 |

104 | # Truncate eigenvalues and eigenvectors

105 | eig_vals_trunc = jax.lax.dynamic_slice(eig_vals, (0,), (trunc_id + 1,))

106 | eig_vecs_trunc = jax.lax.dynamic_slice(

107 | eig_vecs, (0, 0), (eig_vecs.shape[0], trunc_id + 1)

108 | )

109 |

110 | # Compute POD basis from eigenvectors

111 | basis = (snapshot @ eig_vecs_trunc) / jnp.sqrt(eig_vals_trunc)

112 |

113 | return basis, cumul_energy, truncated_energy

114 |

115 |

116 | def compute_pod_basis(

117 | snapshot: Array,

118 | energy_threshold: float,

119 | use_eig: bool = False,

120 | ) -> tuple[Array, Array, float]:

121 | """

122 | Compute truncated POD basis.

123 |

124 | Dispatches to SVD or eigendecomposition based on use_eig flag.

125 | The flag should be determined BEFORE JIT compilation based on memory.

126 |

127 | Parameters

128 | ----------

129 | snapshot : Array

130 | Snapshot matrix, shape (n_samples, n_snapshots).

131 | energy_threshold : float

132 | Minimum fraction of total energy to retain (0 < threshold <= 1).

133 | use_eig : bool

134 | If True, use eigendecomposition (memory efficient for large data).

135 | If False, use SVD (faster for smaller data).

136 |

137 | Returns

138 | -------

139 | basis : Array

140 | Truncated POD basis, shape (n_samples, n_modes).

141 | cumul_energy : Array

142 | Cumulative energy fraction per mode.

143 | truncated_energy : float

144 | Actual energy fraction retained.

145 | """

146 | if use_eig:

147 | return compute_pod_basis_eig(snapshot, energy_threshold)

148 | return compute_pod_basis_svd(snapshot, energy_threshold)

149 |

--------------------------------------------------------------------------------

/pod_rbf/io.py:

--------------------------------------------------------------------------------

1 | """

2 | File I/O utilities for POD-RBF.

3 |

4 | Uses NumPy for file operations (not differentiable).

5 | """

6 |

7 | import os

8 | import pickle

9 |

10 | import jax.numpy as jnp

11 | import numpy as np

12 | from tqdm import tqdm

13 |

14 | from .types import ModelState

15 |

16 |

17 | def build_snapshot_matrix(

18 | dirpath: str,

19 | skiprows: int = 1,

20 | usecols: int | tuple[int, ...] = 0,

21 | verbose: bool = True,

22 | ) -> np.ndarray:

23 | """

24 | Load snapshot matrix from CSV files in directory.

25 |

26 | Files are loaded in alphanumeric order. Ensure parameter array

27 | matches this ordering.

28 |

29 | Parameters

30 | ----------

31 | dirpath : str

32 | Directory containing CSV files.

33 | skiprows : int

34 | Number of header rows to skip in each file.

35 | usecols : int or tuple

36 | Column(s) to read from each file.

37 | verbose : bool

38 | Show progress bar.

39 |

40 | Returns

41 | -------

42 | np.ndarray

43 | Snapshot matrix, shape (n_samples, n_snapshots).

44 | Returns NumPy array - convert to JAX as needed.

45 | """

46 | files = sorted(

47 | [

48 | f

49 | for f in os.listdir(dirpath)

50 | if os.path.isfile(os.path.join(dirpath, f)) and f.endswith(".csv")

51 | ]

52 | )

53 |

54 | if not files:

55 | raise ValueError(f"No CSV files found in {dirpath}")

56 |

57 | # Get dimensions from first file

58 | first_data = np.loadtxt(

59 | os.path.join(dirpath, files[0]),

60 | delimiter=",",

61 | skiprows=skiprows,

62 | usecols=usecols,

63 | )

64 | n_samples = len(first_data) if first_data.ndim > 0 else 1

65 | n_snapshots = len(files)

66 |

67 | snapshot = np.zeros((n_samples, n_snapshots))

68 |

69 | iterator = tqdm(files, desc="Loading snapshots") if verbose else files

70 | for i, f in enumerate(iterator):

71 | data = np.loadtxt(

72 | os.path.join(dirpath, f),

73 | delimiter=",",

74 | skiprows=skiprows,

75 | usecols=usecols,

76 | )

77 | data_len = len(data) if data.ndim > 0 else 1

78 | assert data_len == n_samples, f"Inconsistent samples in {f}: got {data_len}, expected {n_samples}"

79 | snapshot[:, i] = data

80 |

81 | return snapshot

82 |

83 |

84 | def save_model(filename: str, state: ModelState) -> None:

85 | """

86 | Save model state to file.

87 |

88 | Parameters

89 | ----------

90 | filename : str

91 | Output filename.

92 | state : ModelState

93 | Trained model state.

94 | """

95 | # Convert JAX arrays to NumPy for pickling

96 | state_dict = {

97 | "basis": np.asarray(state.basis),

98 | "weights": np.asarray(state.weights),

99 | "shape_factor": float(state.shape_factor) if state.shape_factor is not None else None,

100 | "train_params": np.asarray(state.train_params),

101 | "params_range": np.asarray(state.params_range),

102 | "truncated_energy": float(state.truncated_energy),

103 | "cumul_energy": np.asarray(state.cumul_energy),