├── README.md
├── Readme.txt
├── code
│   ├── EDA13.py
│   ├── EDA16-fourWeek.py
│   ├── EDA16-fourWeek_rightTime.py
│   ├── EDA16-threeWeek.py
│   ├── EDA16-threeWeek_rightTime.py
│   ├── EDA16-twoWeek.py
│   ├── cat_model
│   │   └── para.py
│   ├── df_train_test.py
│   ├── gen_result.py
│   ├── gen_result2.py
│   ├── lgb_model
│   │   ├── lgb_train1.py
│   │   ├── lgb_train2.py
│   │   └── lgb_train3.py
│   ├── run.sh
│   ├── sbb2_train1.py
│   ├── sbb2_train2.py
│   ├── sbb2_train3.py
│   ├── sbb4_train1.py
│   ├── sbb4_train2 .py
│   ├── sbb4_train3.py
│   ├── sbb_train1.py
│   ├── sbb_train2.py
│   ├── sbb_train3.py
│   └── xgb_model
│       ├── xgb_train1.py
│       ├── xgb_train2.py
│       └── xgb_train3.py
└── picture
    ├── huachuang.PNG
    └── time_series.PNG

/README.md:

--------------------------------------------------------------------------------

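1 | # JD JDATA Algorithm Competition 2019: Predicting User Purchases from Shops within a Category

2 |

3 | ## Competition Overview

4 | Competition page: [JDATA2019: Predicting User Purchases from Shops within a Category](https://jdata.jd.com/html/detail.html?id=8)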

5 |

6 |

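7 | ### Task

8 | This competition provides data from users, merchants, products, and other sources, including content information about merchants and products, review information, and the rich interactions users have with them. Participating teams use data mining and machine learning to build a model that predicts which users will purchase from the relevant categories in a merchant's shop, and output the matched user, shop, and category results, giving precision marketing a high-quality target audience. Teams are also encouraged to uncover the meaning hidden in the data and to offer intelligent, mutually beneficial solutions for the merchants and users of the e-commerce platform.

9 | In other words: for every user that appears in the training set, the model must predict that user's intent to purchase from `a given shop` under `a given target category` within the next 7 days.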

10 |

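11 | ### Data

12 | 1. Training data

13 | Behavior, review, and user data from `2018-02-01` to `2018-04-15` for the users in user set U acting on a subset of the products in product set S.

14 | 2. Prediction data

15 | Predict which categories and shops the users in U purchase from between `2018-04-16` and `2018-04-22`; a user purchases from a given shop under a category at most once.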

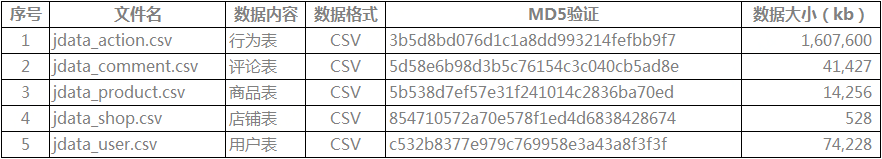

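16 | 3. Data table descriptions

17 |

18 | ### Evaluation

19 | (1) Whether the user purchases in a category between `2018-04-16` and `2018-04-22`. The submission file contains only the users and categories predicted to place an order (users and categories predicted not to order need not appear). During evaluation, duplicate "user-category" pairs in the submission are deduplicated; a correct prediction is assigned label=1 by the evaluation algorithm and an incorrect one label=0.

20 | (2) If the user purchases in the category, the shop under that category must also be predicted; a correct shop prediction is assigned pred=1 by the evaluation algorithm and an incorrect one pred=0.

21 | A submitted result file is scored with the formula `score=0.4F11+0.6F12`. The F1 value here is defined as:

22 |

23 | where Precise is the precision and Recall is the recall; F11 is the F1 value for label=1 or 0, and F12 is the F1 value for pred=1 or 0.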
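The deduplication and flagging steps described above can be sketched as follows. This is a minimal illustration, not the official evaluator: the function names are hypothetical, keeping the first row of each duplicated user-category pair is an assumption, and the F1 definition itself is not reproduced here, so `final_score` takes F11 and F12 as already-computed inputs.

```python
def mark_submission(submitted, truth):
    """Deduplicate user-category pairs and attach label/pred flags.

    submitted: iterable of (user_id, cate, shop_id) rows from a result file.
    truth: set of (user_id, cate, shop_id) purchases in the target week.
    Assumption: the first row of each duplicated pair is the one kept.
    """
    truth_pairs = {(u, c) for u, c, _ in truth}
    seen, rows = set(), []
    for u, c, s in submitted:
        if (u, c) in seen:  # drop repeated user-category pairs
            continue
        seen.add((u, c))
        label = int((u, c) in truth_pairs)  # category-level hit
        pred = int((u, c, s) in truth)      # shop-level hit
        rows.append((u, c, s, label, pred))
    return rows


def final_score(f11, f12):
    """Weighted combination stated in the README."""
    return 0.4 * f11 + 0.6 * f12


rows = mark_submission(
    [(1, 10, 100), (1, 10, 101), (2, 20, 200), (3, 30, 300)],
    {(1, 10, 100), (2, 20, 201)},
)
```

Because the shop-level term carries the larger weight (0.6), a correct category with a wrong shop earns only part of the credit.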

24 |

25 | ## Approach

26 | First, this competition is a typical class-imbalance problem: the ratio of purchase to non-purchase samples is roughly 1:100.
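One common way to handle this kind of imbalance in gradient-boosted trees is to up-weight the positive class. The sketch below only computes the raw negative-to-positive ratio; the helper name is hypothetical. The scripts in this repository pass a fixed `'scale_pos_weight': 25` to LightGBM rather than the raw ratio, so treat the computed value as a starting point for tuning, not as the authors' setting.

```python
def negative_positive_ratio(labels):
    """Return (#negatives / #positives) for a sequence of 0/1 labels."""
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0:
        raise ValueError("no positive samples")
    return neg / pos


# Toy labels with the 1:100 ratio mentioned in the README.
labels = [1] + [0] * 100
ratio = negative_positive_ratio(labels)
```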

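27 | Sliding time window + LightGBM + XGBoost + CatBoost

28 | Because type==5 (add-to-cart actions) only appears in the single week from April 8 to April 15, that data is incomplete, so all type==5 rows are removed when building both the training and test sets;

29 | Following the sliding-window method, one week is used as the prediction (label) period; users from the preceding 1/2/3 weeks form the training part, and features are extracted from 2/4/6 weeks of interactions between users and shops or products.

30 | The figure below sketches the case where users from 3 weeks form the training part and features are extracted from 6 weeks of interactions between users and shops or products.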
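The window arithmetic for the 3-week / 6-week case can be sketched as below. The function name and the half-open `[start, end)` convention are assumptions made for illustration; the label week starting on 2018-04-09 matches the dates used in the EDA16 scripts.

```python
from datetime import date, timedelta


def sliding_windows(label_start, candidate_weeks, feature_weeks, label_days=7):
    """Return half-open [start, end) date ranges for one training sample set."""
    label_end = label_start + timedelta(days=label_days)
    return {
        # week whose purchases provide the labels
        "label": (label_start, label_end),
        # users/shops/categories active here become candidate rows
        "candidates": (label_start - timedelta(weeks=candidate_weeks), label_start),
        # interactions in this longer range feed feature extraction
        "features": (label_start - timedelta(weeks=feature_weeks), label_start),
    }


windows = sliding_windows(date(2018, 4, 9), candidate_weeks=3, feature_weeks=6)
```

Shifting `label_start` forward by one week to 2018-04-16 gives the corresponding windows for the test set.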

31 |

32 |

33 | * Leaderboard A (online): 0.0614, Rank 7

34 | * Leaderboard B (online): 0.0605, Rank 16

35 |

36 | ## Environment

37 | run.sh

38 |

39 | ## References

40 | * Official guidance: [Zhihu: Unofficial Q&A thread for the 3rd JData big data competition](https://zhuanlan.zhihu.com/p/64503113)

41 | * Reference code: [[Modeling primer] JDATA3: Predicting user purchases from shops within a category](https://mp.weixin.qq.com/s?__biz=Mzg2MTEwNDQxNQ==&mid=2247483702&idx=1&sn=df621247b4790471063ddbeb15ad81c3&chksm=ce1d7146f96af85001e47999cb447d86820b082570c39de0c4ddc18dcba0b233697d5ef2e0ae&mpshare=1&scene=23&srcid=#rd)

42 | * Sliding-window introduction: [The "sliding window method" in data mining competitions](https://blog.csdn.net/oXiaoBuDianEr123/article/details/79309022)

43 |

--------------------------------------------------------------------------------

/Readme.txt:

--------------------------------------------------------------------------------

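1 | Training

2 | EDA13 aggregates the per-week action statistics into the features df_train and df_test; the results are written to output

3 | Files starting with EDA16 use the first feature set and train with different sliding windows and different time ranges; the results are written to output

4 | Files starting with sbb use the second feature set and train with different windows over the same time range; the results are written to feature

5 |

6 | Prediction

7 | Run run.sh to get one result CSV per feature set; the CSVs from the different feature sets and sliding windows are merged by voting to produce the final submission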
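A voting merge over several result files can be sketched as follows. This is an illustration only: `gen_result.py` is not shown here, so the function name, the in-memory lists standing in for the CSVs, and the `min_votes` threshold are all assumptions.

```python
from collections import Counter


def vote(result_sets, min_votes):
    """Keep (user_id, cate, shop_id) triples predicted by at least min_votes models."""
    counts = Counter()
    for rows in result_sets:
        counts.update(set(rows))  # each result file votes at most once per triple
    return sorted(t for t, n in counts.items() if n >= min_votes)


# Toy stand-ins for three result CSVs.
res_a = [(1, 10, 100), (2, 20, 200)]
res_b = [(1, 10, 100), (3, 30, 300)]
res_c = [(1, 10, 100), (2, 20, 200)]
merged = vote([res_a, res_b, res_c], min_votes=2)
```

Raising `min_votes` trades recall for precision, which matters here because the score is built from F1-style terms.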

8 |

9 | How to run:

10 | Put the raw data in data and run run.sh; the final result is written to submit

11 |

--------------------------------------------------------------------------------

/code/EDA13.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | from sklearn.model_selection import train_test_split

3 | import numpy as np

4 | from tqdm import tqdm

5 | import lightgbm as lgb

6 | from joblib import dump

7 |

8 |

9 | df_action=pd.read_csv("../data/jdata_action.csv")

10 | df_product=pd.read_csv("../data/jdata_product.csv")

11 |

12 | df_action=pd.merge(df_action,df_product,how='left',on='sku_id')

13 | df_action=df_action.groupby(['user_id','shop_id','cate'], as_index=False).sum()

14 |

15 | df_action=df_action[['user_id','shop_id','cate']]

16 | df_action_head=df_action.copy()

17 |

18 | df_action=pd.read_csv("../data/jdata_action.csv")

19 |

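20 | def makeActionData(startDate,endDate):

21 |     df=df_action[(df_action['action_time']>startDate)&(df_action['action_time']<…

[… the remainder of /code/EDA13.py and lines 1-22 of the following file, /code/EDA16-fourWeek.py, are missing …]

23 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09') \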

24 | & (jdata_data['action_time']<='2018-04-15') \

25 | & (jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

26 | train_buy['label'] = 1

27 | # Candidate set, '2018-03-12' to '2018-04-08': (user, cate, shop) triples with any action in the last four weeks

28 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-12') \

29 | & (jdata_data['action_time']<='2018-04-08')][['user_id','cate','shop_id']].drop_duplicates()

30 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

31 |

32 |

33 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

34 |

35 | def mapper(x):  # registration time string -> years before 2018 (None if missing)

36 |     if pd.notna(x):

37 |         year=int(x[:4])

38 |         return 2018-year

39 |

40 |

41 | df_user['user_reg_tm']=df_user['user_reg_tm'].apply(lambda x:mapper(x))

42 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].apply(lambda x:mapper(x))

43 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].fillna(df_shop['shop_reg_tm'].mean())

44 | df_user['age']=df_user['age'].fillna(df_user['age'].mean())

45 | df_comment=pd.read_csv('../data/jdata_comment.csv')

46 | df_comment=df_comment.groupby(['sku_id'],as_index=False).sum()

47 | df_product=pd.read_csv('../data/jdata_product.csv')

48 | df_product_comment=pd.merge(df_product,df_comment,on='sku_id',how='left')

49 | df_product_comment=df_product_comment.fillna(0)

50 | df_product_comment=df_product_comment.groupby(['shop_id'],as_index=False).sum()

51 | df_product_comment=df_product_comment.drop(['sku_id','brand','cate'],axis=1)

52 | df_shop_product_comment=pd.merge(df_shop,df_product_comment,how='left',on='shop_id')

53 |

54 |

55 | train_set=pd.merge(train_set,df_user,how='left',on='user_id')

56 | train_set=pd.merge(train_set,df_shop_product_comment,on='shop_id',how='left')

57 |

58 | test_set = jdata_data[(jdata_data['action_time']>='2018-03-19') \

59 | & (jdata_data['action_time']<='2018-04-15')][['user_id','cate','shop_id']].drop_duplicates()

60 |

61 | test_set = test_set.merge(df_test,on=['user_id','cate','shop_id'],how='left')

62 |

63 | del df_train

64 | del df_test

65 |

66 | test_set=pd.merge(test_set,df_user,how='left',on='user_id')

67 | test_set=pd.merge(test_set,df_shop_product_comment,on='shop_id',how='left')

68 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

69 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

70 |

71 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so pred_prob can be assigned later without SettingWithCopyWarning

72 | train_head=train_set[['user_id','cate','shop_id']]

73 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

74 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

75 |

76 | # Data preparation

77 | X_train = train_set.drop(['label'],axis=1).values

78 | y_train = train_set['label'].values

79 | X_test = test_set.values

80 |

81 | del test_set

82 | del train_set

83 |

84 | # Model utilities

85 | class SBBTree():

86 |     """Stacking,Bootstrap,Bagging----SBBTree"""

87 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):

88 |         """

89 |         Initializes the SBBTree.

90 |         Args:

91 |             params : lgb params.

92 |             stacking_num : k-fold stacking.

93 |             bagging_num : bootstrap num.

94 |             bagging_test_size : bootstrap sample rate.

95 |             num_boost_round : boost num.

96 |             early_stopping_rounds : early_stopping_rounds.

97 |         """

98 |         self.params = params

99 |         self.stacking_num = stacking_num

100 |         self.bagging_num = bagging_num

101 |         self.bagging_test_size = bagging_test_size

102 |         self.num_boost_round = num_boost_round

103 |         self.early_stopping_rounds = early_stopping_rounds

104 |

105 |         self.model = lgb

106 |         self.stacking_model = []

107 |         self.bagging_model = []

108 |

109 |     def fit(self, X, y):

110 |         """ fit model. """

111 |         if self.stacking_num > 1:

112 |             layer_train = np.zeros((X.shape[0], 2))

113 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)

114 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):

115 |                 X_train = X[train_index]

116 |                 y_train = y[train_index]

117 |                 X_test = X[test_index]

118 |                 y_test = y[test_index]

119 |

120 |                 lgb_train = lgb.Dataset(X_train, y_train)

121 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

122 |

123 |                 gbm = lgb.train(self.params,

124 |                             lgb_train,

125 |                             num_boost_round=self.num_boost_round,

126 |                             valid_sets=lgb_eval,

127 |                             early_stopping_rounds=self.early_stopping_rounds,

128 |                             verbose_eval=300)

129 |

130 |                 self.stacking_model.append(gbm)

131 |

132 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)

133 |                 layer_train[test_index, 1] = pred_y

134 |

135 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))

136 |         else:

137 |             pass

138 |         for bn in range(self.bagging_num):

139 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)

140 |

141 |             lgb_train = lgb.Dataset(X_train, y_train)

142 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

143 |

144 |             gbm = lgb.train(self.params,

145 |                         lgb_train,

146 |                         num_boost_round=self.num_boost_round,

147 |                         valid_sets=lgb_eval,

148 |                         early_stopping_rounds=self.early_stopping_rounds,

149 |                         verbose_eval=300)

150 |

151 |             self.bagging_model.append(gbm)

152 |

153 |     def predict(self, X_pred):

154 |         """ predict test data. """

155 |         if self.stacking_num > 1:

156 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))

157 |             for sn,gbm in enumerate(self.stacking_model):

158 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

159 |                 test_pred[:, sn] = pred

160 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))

161 |         else:

162 |             pass

163 |         for bn,gbm in enumerate(self.bagging_model):

164 |             pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

165 |             if bn == 0:

166 |                 pred_out=pred

167 |             else:

168 |                 pred_out+=pred

169 |         return pred_out/self.bagging_num

170 |

171 | # Model parameters

172 | params = {

173 | 'boosting_type': 'gbdt',

174 | 'objective': 'binary',

175 | 'metric': 'auc',

176 | 'learning_rate': 0.01,

177 | 'num_leaves': 2 ** 5 - 1,

178 | 'min_child_samples': 100,

179 | 'max_bin': 100,

180 | 'subsample': .7,

181 | 'subsample_freq': 1,

182 | 'colsample_bytree': 0.7,

183 | 'min_child_weight': 0,

184 | 'scale_pos_weight': 25,

185 | 'seed': 2018,

186 | 'nthread': 16,

187 | 'verbose': 0,

188 | }

189 |

190 | # Train the model and predict

191 | model = SBBTree(params=params,\

192 | stacking_num=5,\

193 | bagging_num=5,\

194 | bagging_test_size=0.33,\

195 | num_boost_round=10000,\

196 | early_stopping_rounds=200)

197 | model.fit(X_train, y_train)

198 | y_predict = model.predict(X_test)

199 | #y_train_predict = model.predict(X_train)

200 |

201 |

202 | test_head['pred_prob'] = y_predict

203 | test_head.to_csv('../output/EDA16-fourWeek.csv',index=False)

204 |

205 |

206 | fourOld = test_head[test_head['pred_prob'] >= 0.60][['user_id', 'cate', 'shop_id']]

207 | fourOld.to_csv('../output/res_fourWeekOld60.csv', index=False)

208 |

--------------------------------------------------------------------------------

/code/EDA16-fourWeek_rightTime.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | import datetime

4 | import lightgbm as lgb

5 | from sklearn.metrics import f1_score

6 | from sklearn.model_selection import train_test_split

7 | from sklearn.model_selection import KFold

8 | from sklearn.model_selection import StratifiedKFold

9 | pd.set_option('display.max_columns', None)

10 |

11 | df_train=pd.read_csv('../output/df_train.csv')

12 | df_test=pd.read_csv('../output/df_test.csv')

13 | df_user=pd.read_csv('../data/jdata_user.csv')

14 | df_comment=pd.read_csv('../data/jdata_comment.csv')

15 | df_shop=pd.read_csv('../data/jdata_shop.csv')

16 |

17 | # 1) Action data (jdata_action)

18 | jdata_action = pd.read_csv('../data/jdata_action.csv')

19 | # 3) Product data (jdata_product)

20 | jdata_product = pd.read_csv('../data/jdata_product.csv')

21 | jdata_data = jdata_action.merge(jdata_product,on=['sku_id'])

22 |

23 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09') \

24 | & (jdata_data['action_time']<'2018-04-16') \

25 | & (jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

26 | train_buy['label'] = 1

27 | # Candidate set, '2018-03-12' to '2018-04-08': (user, cate, shop) triples with any action in the last four weeks

28 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-12') \

29 | & (jdata_data['action_time']<'2018-04-09')][['user_id','cate','shop_id']].drop_duplicates()

30 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

31 |

32 |

33 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

34 |

35 | def mapper(x):  # registration time string -> years before 2018 (None if missing)

36 |     if pd.notna(x):

37 |         year=int(x[:4])

38 |         return 2018-year

39 |

40 |

41 | df_user['user_reg_tm']=df_user['user_reg_tm'].apply(lambda x:mapper(x))

42 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].apply(lambda x:mapper(x))

43 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].fillna(df_shop['shop_reg_tm'].mean())

44 | df_user['age']=df_user['age'].fillna(df_user['age'].mean())

45 | df_comment=pd.read_csv('../data/jdata_comment.csv')

46 | df_comment=df_comment.groupby(['sku_id'],as_index=False).sum()

47 | df_product=pd.read_csv('../data/jdata_product.csv')

48 | df_product_comment=pd.merge(df_product,df_comment,on='sku_id',how='left')

49 | df_product_comment=df_product_comment.fillna(0)

50 | df_product_comment=df_product_comment.groupby(['shop_id'],as_index=False).sum()

51 | df_product_comment=df_product_comment.drop(['sku_id','brand','cate'],axis=1)

52 | df_shop_product_comment=pd.merge(df_shop,df_product_comment,how='left',on='shop_id')

53 |

54 |

55 | train_set=pd.merge(train_set,df_user,how='left',on='user_id')

56 | train_set=pd.merge(train_set,df_shop_product_comment,on='shop_id',how='left')

57 |

58 | test_set = jdata_data[(jdata_data['action_time']>='2018-03-19') \

59 | & (jdata_data['action_time']<'2018-04-16')][['user_id','cate','shop_id']].drop_duplicates()

60 |

61 | test_set = test_set.merge(df_test,on=['user_id','cate','shop_id'],how='left')

62 |

63 | del df_train

64 | del df_test

65 |

66 | test_set=pd.merge(test_set,df_user,how='left',on='user_id')

67 | test_set=pd.merge(test_set,df_shop_product_comment,on='shop_id',how='left')

68 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

69 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

70 |

71 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so pred_prob can be assigned later without SettingWithCopyWarning

72 | train_head=train_set[['user_id','cate','shop_id']]

73 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

74 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

75 |

76 | # Data preparation

77 | X_train = train_set.drop(['label'],axis=1).values

78 | y_train = train_set['label'].values

79 | X_test = test_set.values

80 |

81 | del test_set

82 | del train_set

83 |

84 | # Model utilities

85 | class SBBTree():

86 |     """Stacking,Bootstrap,Bagging----SBBTree"""

87 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):

88 |         """

89 |         Initializes the SBBTree.

90 |         Args:

91 |             params : lgb params.

92 |             stacking_num : k-fold stacking.

93 |             bagging_num : bootstrap num.

94 |             bagging_test_size : bootstrap sample rate.

95 |             num_boost_round : boost num.

96 |             early_stopping_rounds : early_stopping_rounds.

97 |         """

98 |         self.params = params

99 |         self.stacking_num = stacking_num

100 |         self.bagging_num = bagging_num

101 |         self.bagging_test_size = bagging_test_size

102 |         self.num_boost_round = num_boost_round

103 |         self.early_stopping_rounds = early_stopping_rounds

104 |

105 |         self.model = lgb

106 |         self.stacking_model = []

107 |         self.bagging_model = []

108 |

109 |     def fit(self, X, y):

110 |         """ fit model. """

111 |         if self.stacking_num > 1:

112 |             layer_train = np.zeros((X.shape[0], 2))

113 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)

114 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):

115 |                 X_train = X[train_index]

116 |                 y_train = y[train_index]

117 |                 X_test = X[test_index]

118 |                 y_test = y[test_index]

119 |

120 |                 lgb_train = lgb.Dataset(X_train, y_train)

121 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

122 |

123 |                 gbm = lgb.train(self.params,

124 |                             lgb_train,

125 |                             num_boost_round=self.num_boost_round,

126 |                             valid_sets=lgb_eval,

127 |                             early_stopping_rounds=self.early_stopping_rounds,

128 |                             verbose_eval=300)

129 |

130 |                 self.stacking_model.append(gbm)

131 |

132 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)

133 |                 layer_train[test_index, 1] = pred_y

134 |

135 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))

136 |         else:

137 |             pass

138 |         for bn in range(self.bagging_num):

139 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)

140 |

141 |             lgb_train = lgb.Dataset(X_train, y_train)

142 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

143 |

144 |             gbm = lgb.train(self.params,

145 |                         lgb_train,

146 |                         num_boost_round=self.num_boost_round,

147 |                         valid_sets=lgb_eval,

148 |                         early_stopping_rounds=self.early_stopping_rounds,

149 |                         verbose_eval=300)

150 |

151 |             self.bagging_model.append(gbm)

152 |

153 |     def predict(self, X_pred):

154 |         """ predict test data. """

155 |         if self.stacking_num > 1:

156 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))

157 |             for sn,gbm in enumerate(self.stacking_model):

158 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

159 |                 test_pred[:, sn] = pred

160 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))

161 |         else:

162 |             pass

163 |         for bn,gbm in enumerate(self.bagging_model):

164 |             pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

165 |             if bn == 0:

166 |                 pred_out=pred

167 |             else:

168 |                 pred_out+=pred

169 |         return pred_out/self.bagging_num

170 |

171 | # Model parameters

172 | params = {

173 | 'boosting_type': 'gbdt',

174 | 'objective': 'binary',

175 | 'metric': 'auc',

176 | 'learning_rate': 0.01,

177 | 'num_leaves': 2 ** 5 - 1,

178 | 'min_child_samples': 100,

179 | 'max_bin': 100,

180 | 'subsample': .7,

181 | 'subsample_freq': 1,

182 | 'colsample_bytree': 0.7,

183 | 'min_child_weight': 0,

184 | 'scale_pos_weight': 25,

185 | 'seed': 2018,

186 | 'nthread': 16,

187 | 'verbose': 0,

188 | }

189 |

190 | # Train the model and predict

191 | model = SBBTree(params=params,\

192 | stacking_num=5,\

193 | bagging_num=5,\

194 | bagging_test_size=0.33,\

195 | num_boost_round=10000,\

196 | early_stopping_rounds=200)

197 | model.fit(X_train, y_train)

198 | y_predict = model.predict(X_test)

199 | #y_train_predict = model.predict(X_train)

200 |

201 |

202 | test_head['pred_prob'] = y_predict

203 | test_head.to_csv('../output/EDA16-fourWeek_rightTime.csv',index=False)

204 |

205 |

206 | fourNew = test_head[test_head['pred_prob'] >= 0.675][['user_id', 'cate', 'shop_id']]

207 | fourNew.to_csv('../output/res_fourWeekNew675.csv', index=False)

208 |

--------------------------------------------------------------------------------
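The `_rightTime` scripts differ from their counterparts only in the upper bound of each date filter (`<'2018-04-16'` instead of `<='2018-04-15'`). The sketch below shows why that matters, assuming `action_time` is stored as a `'YYYY-MM-DD HH:MM:SS'` string (an assumption about the raw CSV); the helper name is hypothetical.

```python
def in_window(ts, start, end, inclusive_end):
    """Lexicographic range check on timestamp strings."""
    if inclusive_end:
        return start <= ts <= end
    return start <= ts < end


ts = '2018-04-15 10:30:00'  # an action on the last day of the label week

# Inclusive bound on a bare date: the timestamp sorts after '2018-04-15',
# so every action on April 15 with a time component is dropped.
old_rule = in_window(ts, '2018-04-09', '2018-04-15', inclusive_end=True)

# Half-open bound on the next day keeps the whole of April 15.
new_rule = in_window(ts, '2018-04-09', '2018-04-16', inclusive_end=False)
```

Under this assumption the inclusive form silently shortens each window by almost a day, which is presumably what the `rightTime` variants correct.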

/code/EDA16-threeWeek.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | import datetime

4 | import lightgbm as lgb

5 | from sklearn.metrics import f1_score

6 | from sklearn.model_selection import train_test_split

7 | from sklearn.model_selection import KFold

8 | from sklearn.model_selection import StratifiedKFold

9 | pd.set_option('display.max_columns', None)

10 |

11 | df_train=pd.read_csv('../output/df_train.csv')

12 | df_test=pd.read_csv('../output/df_test.csv')

13 | df_user=pd.read_csv('../data/jdata_user.csv')

14 | df_comment=pd.read_csv('../data/jdata_comment.csv')

15 | df_shop=pd.read_csv('../data/jdata_shop.csv')

16 |

17 | # 1) Action data (jdata_action)

18 | jdata_action = pd.read_csv('../data/jdata_action.csv')

19 | # 3) Product data (jdata_product)

20 | jdata_product = pd.read_csv('../data/jdata_product.csv')

21 | jdata_data = jdata_action.merge(jdata_product,on=['sku_id'])

22 |

23 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09') \

24 | & (jdata_data['action_time']<='2018-04-15') \

25 | & (jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

26 | train_buy['label'] = 1

27 | # Candidate set, '2018-03-19' to '2018-04-08': (user, cate, shop) triples with any action in the last three weeks

28 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-19') \

29 | & (jdata_data['action_time']<='2018-04-08')][['user_id','cate','shop_id']].drop_duplicates()

30 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

31 |

32 |

33 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

34 |

35 | def mapper(x):  # registration time string -> years before 2018 (None if missing)

36 |     if pd.notna(x):

37 |         year=int(x[:4])

38 |         return 2018-year

39 |

40 |

41 | df_user['user_reg_tm']=df_user['user_reg_tm'].apply(lambda x:mapper(x))

42 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].apply(lambda x:mapper(x))

43 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].fillna(df_shop['shop_reg_tm'].mean())

44 | df_user['age']=df_user['age'].fillna(df_user['age'].mean())

45 | df_comment=pd.read_csv('../data/jdata_comment.csv')

46 | df_comment=df_comment.groupby(['sku_id'],as_index=False).sum()

47 | df_product=pd.read_csv('../data/jdata_product.csv')

48 | df_product_comment=pd.merge(df_product,df_comment,on='sku_id',how='left')

49 | df_product_comment=df_product_comment.fillna(0)

50 | df_product_comment=df_product_comment.groupby(['shop_id'],as_index=False).sum()

51 | df_product_comment=df_product_comment.drop(['sku_id','brand','cate'],axis=1)

52 | df_shop_product_comment=pd.merge(df_shop,df_product_comment,how='left',on='shop_id')

53 |

54 |

55 | train_set=pd.merge(train_set,df_user,how='left',on='user_id')

56 | train_set=pd.merge(train_set,df_shop_product_comment,on='shop_id',how='left')

57 |

58 | test_set = jdata_data[(jdata_data['action_time']>='2018-03-26') \

59 | & (jdata_data['action_time']<='2018-04-15')][['user_id','cate','shop_id']].drop_duplicates()

60 |

61 | test_set = test_set.merge(df_test,on=['user_id','cate','shop_id'],how='left')

62 |

63 | del df_train

64 | del df_test

65 |

66 | test_set=pd.merge(test_set,df_user,how='left',on='user_id')

67 | test_set=pd.merge(test_set,df_shop_product_comment,on='shop_id',how='left')

68 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

69 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

70 |

71 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so pred_prob can be assigned later without SettingWithCopyWarning

72 | train_head=train_set[['user_id','cate','shop_id']]

73 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

74 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

75 |

76 | # Data preparation

77 | X_train = train_set.drop(['label'],axis=1).values

78 | y_train = train_set['label'].values

79 | X_test = test_set.values

80 |

81 | del test_set

82 | del train_set

83 |

84 | # Model utilities

85 | class SBBTree():

86 |     """Stacking,Bootstrap,Bagging----SBBTree"""

87 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):

88 |         """

89 |         Initializes the SBBTree.

90 |         Args:

91 |             params : lgb params.

92 |             stacking_num : k-fold stacking.

93 |             bagging_num : bootstrap num.

94 |             bagging_test_size : bootstrap sample rate.

95 |             num_boost_round : boost num.

96 |             early_stopping_rounds : early_stopping_rounds.

97 |         """

98 |         self.params = params

99 |         self.stacking_num = stacking_num

100 |         self.bagging_num = bagging_num

101 |         self.bagging_test_size = bagging_test_size

102 |         self.num_boost_round = num_boost_round

103 |         self.early_stopping_rounds = early_stopping_rounds

104 |

105 |         self.model = lgb

106 |         self.stacking_model = []

107 |         self.bagging_model = []

108 |

109 |     def fit(self, X, y):

110 |         """ fit model. """

111 |         if self.stacking_num > 1:

112 |             layer_train = np.zeros((X.shape[0], 2))

113 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)

114 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):

115 |                 X_train = X[train_index]

116 |                 y_train = y[train_index]

117 |                 X_test = X[test_index]

118 |                 y_test = y[test_index]

119 |

120 |                 lgb_train = lgb.Dataset(X_train, y_train)

121 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

122 |

123 |                 gbm = lgb.train(self.params,

124 |                             lgb_train,

125 |                             num_boost_round=self.num_boost_round,

126 |                             valid_sets=lgb_eval,

127 |                             early_stopping_rounds=self.early_stopping_rounds,

128 |                             verbose_eval=300)

129 |

130 |                 self.stacking_model.append(gbm)

131 |

132 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)

133 |                 layer_train[test_index, 1] = pred_y

134 |

135 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))

136 |         else:

137 |             pass

138 |         for bn in range(self.bagging_num):

139 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)

140 |

141 |             lgb_train = lgb.Dataset(X_train, y_train)

142 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

143 |

144 |             gbm = lgb.train(self.params,

145 |                         lgb_train,

146 |                         num_boost_round=self.num_boost_round,

147 |                         valid_sets=lgb_eval,

148 |                         early_stopping_rounds=self.early_stopping_rounds,

149 |                         verbose_eval=300)

150 |

151 |             self.bagging_model.append(gbm)

152 |

153 |     def predict(self, X_pred):

154 |         """ predict test data. """

155 |         if self.stacking_num > 1:

156 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))

157 |             for sn,gbm in enumerate(self.stacking_model):

158 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

159 |                 test_pred[:, sn] = pred

160 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))

161 |         else:

162 |             pass

163 |         for bn,gbm in enumerate(self.bagging_model):

164 |             pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

165 |             if bn == 0:

166 |                 pred_out=pred

167 |             else:

168 |                 pred_out+=pred

169 |         return pred_out/self.bagging_num

170 |

171 | # Model parameters

172 | params = {

173 | 'boosting_type': 'gbdt',

174 | 'objective': 'binary',

175 | 'metric': 'auc',

176 | 'learning_rate': 0.01,

177 | 'num_leaves': 2 ** 5 - 1,

178 | 'min_child_samples': 100,

179 | 'max_bin': 100,

180 | 'subsample': .7,

181 | 'subsample_freq': 1,

182 | 'colsample_bytree': 0.7,

183 | 'min_child_weight': 0,

184 | 'scale_pos_weight': 25,

185 | 'seed': 2018,

186 | 'nthread': 16,

187 | 'verbose': 0,

188 | }

189 |

190 | # Train the model and predict

191 | model = SBBTree(params=params,\

192 | stacking_num=5,\

193 | bagging_num=5,\

194 | bagging_test_size=0.33,\

195 | num_boost_round=10000,\

196 | early_stopping_rounds=200)

197 | model.fit(X_train, y_train)

198 | y_predict = model.predict(X_test)

199 | #y_train_predict = model.predict(X_train)

200 |

201 |

202 | test_head['pred_prob'] = y_predict

203 | test_head.to_csv('../output/EDA16-threeWeek.csv',index=False)

204 |

205 |

206 | threeOld = test_head[test_head['pred_prob'] >= 0.595][['user_id', 'cate', 'shop_id']]

207 | threeOld.to_csv('../output/res_threeWeekOld595.csv', index=False)

208 |

--------------------------------------------------------------------------------

/code/EDA16-threeWeek_rightTime.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | import datetime

4 | import lightgbm as lgb

5 | from sklearn.metrics import f1_score

6 | from sklearn.model_selection import train_test_split

7 | from sklearn.model_selection import KFold

8 | from sklearn.model_selection import StratifiedKFold

9 | pd.set_option('display.max_columns', None)

10 |

11 | df_train=pd.read_csv('../output/df_train.csv')

12 | df_test=pd.read_csv('../output/df_test.csv')

13 | df_user=pd.read_csv('../data/jdata_user.csv')

14 | df_comment=pd.read_csv('../data/jdata_comment.csv')

15 | df_shop=pd.read_csv('../data/jdata_shop.csv')

16 |

17 | # 1) Action data (jdata_action)

18 | jdata_action = pd.read_csv('../data/jdata_action.csv')

19 | # 3) Product data (jdata_product)

20 | jdata_product = pd.read_csv('../data/jdata_product.csv')

21 | jdata_data = jdata_action.merge(jdata_product,on=['sku_id'])

22 |

23 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09') \

24 | & (jdata_data['action_time']<'2018-04-16') \

25 | & (jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

26 | train_buy['label'] = 1

27 | # Candidate set, '2018-03-19' to '2018-04-08': (user, cate, shop) triples with any action in the last three weeks

28 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-19') \

29 | & (jdata_data['action_time']<'2018-04-09')][['user_id','cate','shop_id']].drop_duplicates()

30 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

31 |

32 |

33 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

34 |

35 | def mapper(x):  # registration time string -> years before 2018 (None if missing)

36 |     if pd.notna(x):

37 |         year=int(x[:4])

38 |         return 2018-year

39 |

40 |

41 | df_user['user_reg_tm']=df_user['user_reg_tm'].apply(lambda x:mapper(x))

42 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].apply(lambda x:mapper(x))

43 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].fillna(df_shop['shop_reg_tm'].mean())

44 | df_user['age']=df_user['age'].fillna(df_user['age'].mean())

45 | df_comment=pd.read_csv('../data/jdata_comment.csv')

46 | df_comment=df_comment.groupby(['sku_id'],as_index=False).sum()

47 | df_product=pd.read_csv('../data/jdata_product.csv')

48 | df_product_comment=pd.merge(df_product,df_comment,on='sku_id',how='left')

49 | df_product_comment=df_product_comment.fillna(0)

50 | df_product_comment=df_product_comment.groupby(['shop_id'],as_index=False).sum()

51 | df_product_comment=df_product_comment.drop(['sku_id','brand','cate'],axis=1)

52 | df_shop_product_comment=pd.merge(df_shop,df_product_comment,how='left',on='shop_id')

53 |

54 |

55 | train_set=pd.merge(train_set,df_user,how='left',on='user_id')

56 | train_set=pd.merge(train_set,df_shop_product_comment,on='shop_id',how='left')

57 |

58 | test_set = jdata_data[(jdata_data['action_time']>='2018-03-26') \

59 | & (jdata_data['action_time']<'2018-04-16')][['user_id','cate','shop_id']].drop_duplicates()

60 |

61 | test_set = test_set.merge(df_test,on=['user_id','cate','shop_id'],how='left')

62 |

63 | del df_train

64 | del df_test

65 |

66 | test_set=pd.merge(test_set,df_user,how='left',on='user_id')

67 | test_set=pd.merge(test_set,df_shop_product_comment,on='shop_id',how='left')

68 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

69 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

70 |

71 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so pred_prob can be assigned later without SettingWithCopyWarning

72 | train_head=train_set[['user_id','cate','shop_id']]

73 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

74 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

75 |

76 | # Data preparation

77 | X_train = train_set.drop(['label'],axis=1).values

78 | y_train = train_set['label'].values

79 | X_test = test_set.values

80 |

81 | del test_set

82 | del train_set

83 |

84 | # Model utilities

85 | class SBBTree():

86 |     """Stacking,Bootstrap,Bagging----SBBTree"""

87 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):

88 |         """

89 |         Initializes the SBBTree.

90 |         Args:

91 |             params : lgb params.

92 |             stacking_num : k-fold stacking.

93 |             bagging_num : bootstrap num.

94 |             bagging_test_size : bootstrap sample rate.

95 |             num_boost_round : boost num.

96 |             early_stopping_rounds : early_stopping_rounds.

97 |         """

98 |         self.params = params

99 |         self.stacking_num = stacking_num

100 |         self.bagging_num = bagging_num

101 |         self.bagging_test_size = bagging_test_size

102 |         self.num_boost_round = num_boost_round

103 |         self.early_stopping_rounds = early_stopping_rounds

104 |

105 |         self.model = lgb

106 |         self.stacking_model = []

107 |         self.bagging_model = []

108 |

109 |     def fit(self, X, y):

110 |         """ fit model. """

111 |         if self.stacking_num > 1:

112 |             layer_train = np.zeros((X.shape[0], 2))

113 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)

114 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):

115 |                 X_train = X[train_index]

116 |                 y_train = y[train_index]

117 |                 X_test = X[test_index]

118 |                 y_test = y[test_index]

119 |

120 |                 lgb_train = lgb.Dataset(X_train, y_train)

121 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

122 |

123 |                 gbm = lgb.train(self.params,

124 |                             lgb_train,

125 |                             num_boost_round=self.num_boost_round,

126 |                             valid_sets=lgb_eval,

127 |                             early_stopping_rounds=self.early_stopping_rounds,

128 |                             verbose_eval=300)

129 |

130 |                 self.stacking_model.append(gbm)

131 |

132 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)

133 |                 layer_train[test_index, 1] = pred_y

134 |

135 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))

136 |         else:

137 |             pass

138 |         for bn in range(self.bagging_num):

139 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)

140 |

141 |             lgb_train = lgb.Dataset(X_train, y_train)

142 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

143 |

144 |             gbm = lgb.train(self.params,

145 |                         lgb_train,

146 |                         num_boost_round=self.num_boost_round,

147 |                         valid_sets=lgb_eval,

148 |                         early_stopping_rounds=self.early_stopping_rounds,

149 |                         verbose_eval=300)

150 |

151 |             self.bagging_model.append(gbm)

152 |

153 |     def predict(self, X_pred):

154 |         """ predict test data. """

155 |         if self.stacking_num > 1:

156 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))

157 |             for sn,gbm in enumerate(self.stacking_model):

158 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

159 |                 test_pred[:, sn] = pred

160 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))

160 | X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))

161 | else:

162 | pass

163 | for bn,gbm in enumerate(self.bagging_model):

164 | pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

165 | if bn == 0:

166 | pred_out=pred

167 | else:

168 | pred_out+=pred

169 | return pred_out/self.bagging_num

170 |

171 | # Model parameters

172 | params = {

173 | 'boosting_type': 'gbdt',

174 | 'objective': 'binary',

175 | 'metric': 'auc',

176 | 'learning_rate': 0.01,

177 | 'num_leaves': 2 ** 5 - 1,

178 | 'min_child_samples': 100,

179 | 'max_bin': 100,

180 | 'subsample': .7,

181 | 'subsample_freq': 1,

182 | 'colsample_bytree': 0.7,

183 | 'min_child_weight': 0,

184 | 'scale_pos_weight': 25,

185 | 'seed': 2018,

186 | 'nthread': 16,

187 | 'verbose': 0,

188 | }

189 |

190 | # Train the model and predict

191 | model = SBBTree(params=params,\

192 | stacking_num=5,\

193 | bagging_num=5,\

194 | bagging_test_size=0.33,\

195 | num_boost_round=10000,\

196 | early_stopping_rounds=200)

197 | model.fit(X_train, y_train)

198 | y_predict = model.predict(X_test)

199 | #y_train_predict = model.predict(X_train)

200 |

201 |

202 | test_head['pred_prob'] = y_predict

203 | test_head.to_csv('../output/EDA16-threeWeek_rightTime.csv',index=False)

204 |

205 | threeNew = test_head[test_head['pred_prob'] >= 0.65][['user_id', 'cate', 'shop_id']]

206 | threeNew.to_csv('../output/res_threeWeekNew65.csv', index=False)

207 |
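
The stacking stage of `SBBTree.fit` in the script above adds one extra feature column: for each training row, the prediction of a fold model that never saw that row. At prediction time the fold models are averaged instead. The sketch below isolates that idea without LightGBM. It is an illustration under assumptions, not the script's implementation: `MeanModel`, `k_fold_indices`, `fit_stacking_layer` and `stacking_feature_for_new_rows` are hypothetical names, and a plain shuffled K-fold stands in for the `StratifiedKFold` the script uses.

```python
import random


def k_fold_indices(n_rows, n_splits, seed=1):
    """Shuffle row indices and deal them into n_splits disjoint folds."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    return [idx[i::n_splits] for i in range(n_splits)]


class MeanModel:
    """Hypothetical stand-in for a LightGBM booster: predicts the training label mean."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


def fit_stacking_layer(X, y, n_splits=5):
    """Return (fold_models, oof); oof[i] comes from the model that never saw row i."""
    oof = [0.0] * len(X)
    models = []
    for held_out in k_fold_indices(len(X), n_splits):
        held = set(held_out)
        train_idx = [i for i in range(len(X)) if i not in held]
        model = MeanModel().fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        models.append(model)
        for i, p in zip(held_out, model.predict([X[i] for i in held_out])):
            oof[i] = p
    return models, oof


def stacking_feature_for_new_rows(models, X_new):
    """At predict time no row is held out, so average the fold models."""
    per_model = [m.predict(X_new) for m in models]
    return [sum(col) / len(models) for col in zip(*per_model)]


X = [[float(i)] for i in range(10)]
y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
models, oof = fit_stacking_layer(X, y, n_splits=5)
X_aug = [row + [p] for row, p in zip(X, oof)]  # original features + stacking column
new_feature = stacking_feature_for_new_rows(models, [[3.5], [8.5]])
```

Because every training row gets its stacking feature from a model that did not train on it, the bagging stage that follows sees a feature whose quality resembles what it will see on the test rows.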

--------------------------------------------------------------------------------

/code/EDA16-twoWeek.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | import datetime

4 | import lightgbm as lgb

5 | from sklearn.metrics import f1_score

6 | from sklearn.model_selection import train_test_split

7 | from sklearn.model_selection import KFold

8 | from sklearn.model_selection import StratifiedKFold

9 | pd.set_option('display.max_columns', None)

10 |

11 | df_train=pd.read_csv('../output/df_train.csv')

12 | df_test=pd.read_csv('../output/df_test.csv')

13 | df_user=pd.read_csv('../data/jdata_user.csv')

14 | df_comment=pd.read_csv('../data/jdata_comment.csv')

15 | df_shop=pd.read_csv('../data/jdata_shop.csv')

16 |

17 | # 1) Action data (jdata_action)

18 | jdata_action = pd.read_csv('../data/jdata_action.csv')

19 | # 3) Product data (jdata_product)

20 | jdata_product = pd.read_csv('../data/jdata_product.csv')

21 | jdata_data = jdata_action.merge(jdata_product,on=['sku_id'])

22 |

23 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09') \

24 | & (jdata_data['action_time']<='2018-04-15') \

25 | & (jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

26 | train_buy['label'] = 1

27 | # Candidate set: (user, category, shop) triples with any action in the last two weeks, '2018-03-26' to '2018-04-08'

28 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-26') \

29 | & (jdata_data['action_time']<='2018-04-08')][['user_id','cate','shop_id']].drop_duplicates()

30 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

31 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

32 | def mapper(x):  # years between the registration year and 2018; returns None for NaN

33 |     if x is not np.nan:

34 |         year=int(x[:4])

35 |         return 2018-year

36 |

37 | df_user['user_reg_tm']=df_user['user_reg_tm'].apply(lambda x:mapper(x))

38 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].apply(lambda x:mapper(x))

39 | df_shop['shop_reg_tm']=df_shop['shop_reg_tm'].fillna(df_shop['shop_reg_tm'].mean())

40 | df_user['age']=df_user['age'].fillna(df_user['age'].mean())

41 | df_comment=pd.read_csv('../data/jdata_comment.csv')

42 | df_comment=df_comment.groupby(['sku_id'],as_index=False).sum()

43 | df_product=pd.read_csv('../data/jdata_product.csv')

44 | df_product_comment=pd.merge(df_product,df_comment,on='sku_id',how='left')

45 | df_product_comment=df_product_comment.fillna(0)

46 | df_product_comment=df_product_comment.groupby(['shop_id'],as_index=False).sum()

47 | df_product_comment=df_product_comment.drop(['sku_id','brand','cate'],axis=1)

48 | df_shop_product_comment=pd.merge(df_shop,df_product_comment,how='left',on='shop_id')

49 |

50 | train_set=pd.merge(train_set,df_user,how='left',on='user_id')

51 | train_set=pd.merge(train_set,df_shop_product_comment,on='shop_id',how='left')

52 | test_set = jdata_data[(jdata_data['action_time']>='2018-04-02') \

53 | & (jdata_data['action_time']<='2018-04-15')][['user_id','cate','shop_id']].drop_duplicates()

54 | test_set = test_set.merge(df_test,on=['user_id','cate','shop_id'],how='left')

55 |

56 | del df_train

57 | del df_test

58 | test_set=pd.merge(test_set,df_user,how='left',on='user_id')

59 | test_set=pd.merge(test_set,df_shop_product_comment,on='shop_id',how='left')

60 | test_set=test_set.sort_values('user_id')

61 | train_set=train_set.sort_values('user_id')

62 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

63 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

64 |

65 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so adding pred_prob later does not write into a slice of test_set

66 | train_head=train_set[['user_id','cate','shop_id']]

67 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

68 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

69 |

70 | # Data preparation

71 | X_train = train_set.drop(['label'],axis=1).values

72 | y_train = train_set['label'].values

73 | X_test = test_set.values

74 | del test_set

75 | del train_set

76 |

77 | # Model utilities

78 | class SBBTree():

79 |     """Stacking, Bootstrap, Bagging: SBBTree."""

80 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):

81 |         """

82 |         Initializes the SBBTree.

83 |         Args:

84 |             params : LightGBM parameters.

85 |             stacking_num : number of stratified folds in the stacking stage (skipped when <= 1).

86 |             bagging_num : number of bagging rounds.

87 |             bagging_test_size : fraction of rows held out for validation in each bagging round.

88 |             num_boost_round : maximum number of boosting rounds.

89 |             early_stopping_rounds : stop once the validation metric has not improved for this many rounds.

90 |         """

91 |         self.params = params

92 |         self.stacking_num = stacking_num

93 |         self.bagging_num = bagging_num

94 |         self.bagging_test_size = bagging_test_size

95 |         self.num_boost_round = num_boost_round

96 |         self.early_stopping_rounds = early_stopping_rounds

97 |

98 |         self.model = lgb

99 |         self.stacking_model = []

100 |         self.bagging_model = []

101 |

102 |     def fit(self, X, y):

103 |         """ fit model. """

104 |         if self.stacking_num > 1:

105 |             layer_train = np.zeros((X.shape[0], 2))  # only column 1 is used: the out-of-fold prediction

106 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)

107 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):

108 |                 X_train = X[train_index]

109 |                 y_train = y[train_index]

110 |                 X_test = X[test_index]

111 |                 y_test = y[test_index]

112 |

113 |                 lgb_train = lgb.Dataset(X_train, y_train)

114 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

115 |

116 |                 gbm = lgb.train(self.params,  # early_stopping_rounds/verbose_eval are LightGBM < 4.0 keywords; 4.x expects callbacks

117 |                                 lgb_train,

118 |                                 num_boost_round=self.num_boost_round,

119 |                                 valid_sets=lgb_eval,

120 |                                 early_stopping_rounds=self.early_stopping_rounds,

121 |                                 verbose_eval=300)

122 |

123 |                 self.stacking_model.append(gbm)

124 |

125 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)

126 |                 layer_train[test_index, 1] = pred_y

127 |

128 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))  # append the out-of-fold prediction as a new feature

129 |         else:

130 |             pass

131 |         for bn in range(self.bagging_num):

132 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)

133 |

134 |             lgb_train = lgb.Dataset(X_train, y_train)

135 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

136 |

137 |             gbm = lgb.train(self.params,

138 |                             lgb_train,

139 |                             num_boost_round=self.num_boost_round,  # was hard-coded to 10000

140 |                             valid_sets=lgb_eval,

141 |                             early_stopping_rounds=self.early_stopping_rounds,  # was hard-coded to 200

142 |                             verbose_eval=300)

143 |

144 |             self.bagging_model.append(gbm)

145 |

146 |     def predict(self, X_pred):

147 |         """ predict test data. """

148 |         if self.stacking_num > 1:

149 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))

150 |             for sn,gbm in enumerate(self.stacking_model):

151 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

152 |                 test_pred[:, sn] = pred

153 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))  # mean of the fold models becomes the stacking feature

154 |         else:

155 |             pass

156 |         for bn,gbm in enumerate(self.bagging_model):

157 |             pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)

158 |             if bn == 0:

159 |                 pred_out=pred

160 |             else:

161 |                 pred_out+=pred

162 |         return pred_out/self.bagging_num

163 |

164 | # Model parameters

165 | params = {

166 | 'boosting_type': 'gbdt',

167 | 'objective': 'binary',

168 | 'metric': 'auc',

169 | 'learning_rate': 0.01,

170 | 'num_leaves': 2 ** 5 - 1,

171 | 'min_child_samples': 100,

172 | 'max_bin': 100,

173 | 'subsample': .7,

174 | 'subsample_freq': 1,

175 | 'colsample_bytree': 0.7,

176 | 'min_child_weight': 0,

177 | 'scale_pos_weight': 25,

178 | 'seed': 2018,

179 | 'nthread': 16,

180 | 'verbose': 0,

181 | }

182 |

183 | # Train the model and predict

184 | model = SBBTree(params=params,\

185 | stacking_num=5,\

186 | bagging_num=5,\

187 | bagging_test_size=0.33,\

188 | num_boost_round=10000,\

189 | early_stopping_rounds=200)

190 | model.fit(X_train, y_train)

191 | y_predict = model.predict(X_test)

192 | y_train_predict = model.predict(X_train)

193 |

194 | test_head['pred_prob'] = y_predict

195 |

196 |

197 | test_head.to_csv('../output/EDA16-twoWeek.csv', index=False)

198 |

199 | twoOld = test_head[test_head['pred_prob'] >= 0.5205][['user_id', 'cate', 'shop_id']]

200 | twoOld.to_csv('../output/res_twoWeekOld5205.csv', index=False)

201 |

202 |

203 |

204 |

205 |
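
The script above selects its label week and candidate window by comparing `action_time` strings against date literals. The sketch below shows how the two windows relate and why a half-open upper bound (`< '2018-04-16'`, as in the `rightTime` variants) behaves differently from `<= '2018-04-15'`. It assumes `action_time` is stored as a `YYYY-MM-DD HH:MM:SS` string, which the dump does not confirm; `sliding_windows` and `in_window` are hypothetical helper names.

```python
from datetime import date, timedelta


def sliding_windows(label_start, candidate_weeks):
    """Return half-open [start, end) ISO date strings for the candidate and label windows."""
    start = date.fromisoformat(label_start)
    cand_start = start - timedelta(weeks=candidate_weeks)
    label_end = start + timedelta(weeks=1)
    return {
        "candidate": (cand_start.isoformat(), start.isoformat()),
        "label": (start.isoformat(), label_end.isoformat()),
    }


def in_window(timestamp, window):
    """Lexicographic comparison works because ISO timestamps sort chronologically."""
    start, end = window
    return start <= timestamp < end


w = sliding_windows("2018-04-09", candidate_weeks=2)

# A purchase late on the last day of the label week (assumed timestamp format).
late_action = "2018-04-15 10:30:00"
kept_by_closed_bound = late_action <= "2018-04-15"      # longer string sorts after its prefix
kept_by_half_open = in_window(late_action, w["label"])  # upper bound is the next day
```

Under that assumption, a closed bound written as a bare date silently drops almost all of the final day, while the half-open form keeps it.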

--------------------------------------------------------------------------------

/code/cat_model/para.py:

--------------------------------------------------------------------------------

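1 | import catboost as ctb  # needed for ctb.CatBoostRegressor below; the parameters were shared by another competitor (thanks!)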

2 | ctb_params = {

3 | 'n_estimators': 10000,

4 | 'learning_rate': 0.02,

5 | 'random_seed': 4590,

6 | 'reg_lambda': 0.08,

7 | 'subsample': 0.7,

8 | 'bootstrap_type': 'Bernoulli',

9 | 'boosting_type': 'Plain',

10 | 'one_hot_max_size': 10,

11 | 'rsm': 0.5,

12 | 'leaf_estimation_iterations': 5,

13 | 'use_best_model': True,

14 | 'max_depth': 6,

15 | 'verbose': -1,

16 | 'thread_count': 4

17 | }

18 | ctb_model = ctb.CatBoostRegressor(**ctb_params)

19 |

--------------------------------------------------------------------------------

/code/df_train_test.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | from sklearn.model_selection import train_test_split

3 | import numpy as np

4 | from tqdm import tqdm

5 | import lightgbm as lgb

6 | from joblib import dump

7 | import time

8 |

9 | time_0 = time.process_time()

10 | print('>> Start loading data')

11 | df_action=pd.read_csv("./jdata_action.csv")

12 | df_product=pd.read_csv("./jdata_product.csv")

13 |

14 | df_action=pd.merge(df_action,df_product,how='left',on='sku_id')

15 | df_action=df_action.groupby(['user_id','shop_id','cate'], as_index=False).sum()

16 | time_1 = time.process_time()

17 | print('<< Data loading finished! Elapsed:', time_1 - time_0, 's')

18 |

19 | df_action=df_action[['user_id','shop_id','cate']]

20 | df_action_head=df_action.copy()

21 |

22 | df_action=pd.read_csv("./jdata_action.csv")

23 |

24 | def makeActionData(startDate,endDate):

25 | df=df_action[(df_action['action_time']>startDate)&(df_action['action_time']=best_u]))

15 | sbb3_3[sbb3_3['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_3.csv',index=False)

16 |

17 | # sbb3_2['pred_prob'] = y_predict

18 | best_u = 0.602

19 | # Apply the threshold; the commented line below counts the rows that pass it

20 | # print('sbb3_2 best_len',len(sbb3_2[sbb3_2['pred_prob']>=best_u]))

21 | sbb3_2[sbb3_2['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_2.csv',index=False)

22 | # sbb3_1['pred_prob'] = y_predict

23 | best_u = 0.521

24 | # Apply the threshold; the commented line below counts the rows that pass it

25 | # print('sbb3_1 best_len',len(sbb3_1[sbb3_1['pred_prob']>=best_u]))

26 | sbb3_1[sbb3_1['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_1.csv',index=False)

27 |

28 |

29 | n_3_593 = pd.read_csv('../output/res_threeWeekNew65.csv')

30 | n_4_590 = pd.read_csv('../output/res_fourWeekNew675.csv')

31 | o_2_573 = pd.read_csv('../output/res_twoWeekOld5205.csv')

32 | o_3_583 = pd.read_csv('../output/res_threeWeekOld595.csv')

33 | o_4_578 = pd.read_csv('../output/res_fourWeekOld60.csv')

34 | sbb_1 = pd.read_csv('../output/sbb3_1.csv')

35 | sbb_2 = pd.read_csv('../output/sbb3_2.csv')

36 | sbb_3 = pd.read_csv('../output/sbb3_3.csv')

37 |

38 |

39 |

40 | all_item = pd.concat([n_3_593,n_4_590,o_2_573,o_3_583,o_4_578,sbb_1,sbb_2,sbb_3],axis=0)

41 | all_item = all_item.drop_duplicates()

42 |

43 |

44 | n_3_593['label1'] = 1

45 | n_4_590['label2'] = 1

46 | o_2_573['label3'] = 1

47 | o_3_583['label4'] = 1

48 | o_4_578['label5'] = 1

49 | sbb_1['label6'] = 1

50 | sbb_2['label7'] = 1

51 | sbb_3['label8'] = 1

52 |

53 |

54 |

55 | all_item = all_item.merge(n_3_593,on=['user_id','cate','shop_id'],how='left')

56 | all_item = all_item.merge(n_4_590,on=['user_id','cate','shop_id'],how='left')

57 | all_item = all_item.merge(o_2_573,on=['user_id','cate','shop_id'],how='left')

58 | all_item = all_item.merge(o_3_583,on=['user_id','cate','shop_id'],how='left')

59 | all_item = all_item.merge(o_4_578,on=['user_id','cate','shop_id'],how='left')

60 | all_item = all_item.merge(sbb_1,on=['user_id','cate','shop_id'],how='left')

61 | all_item = all_item.merge(sbb_2,on=['user_id','cate','shop_id'],how='left')

62 | all_item = all_item.merge(sbb_3,on=['user_id','cate','shop_id'],how='left')

63 |

64 |

65 | all_item = all_item.fillna(0)

66 |

67 |

68 | all_item['sum'] = all_item['label1']+all_item['label2']+all_item['label3']+all_item['label4']+all_item['label5']+all_item['label6']+all_item['label7']+all_item['label8']

69 |

70 | all_item[all_item['sum']>=2][['user_id',

71 | 'cate','shop_id']].to_csv('../submit/8_model_2.csv',index=False)

72 |

73 |

74 |
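
The script above ends with a vote: each model's thresholded predictions are merged onto the union of all `(user_id, cate, shop_id)` triples, the `labelN` flags are summed, and triples with `sum >= 2` are kept. The same rule can be sketched with the standard library alone. `vote` and the toy triples are hypothetical, and the sketch assumes each model lists a triple at most once (duplicates are dropped before counting).

```python
from collections import Counter


def vote(prediction_lists, min_votes):
    """Keep the triples predicted by at least min_votes of the models."""
    counts = Counter()
    for preds in prediction_lists:
        counts.update(set(preds))  # each model votes at most once per triple
    return sorted(t for t, c in counts.items() if c >= min_votes)


# Toy (user_id, cate, shop_id) predictions from three hypothetical models.
model_a = [(1, 10, 100), (2, 20, 200)]
model_b = [(1, 10, 100), (3, 30, 300)]
model_c = [(2, 20, 200), (1, 10, 100), (1, 10, 100)]

kept = vote([model_a, model_b, model_c], min_votes=2)
```

Raising `min_votes` trades recall for precision: with eight models, `min_votes=2` as in the script is a fairly permissive setting.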

--------------------------------------------------------------------------------

/code/gen_result2.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | ### Same features: intersect the predictions; different features: vote

4 | sbb4_3 = pd.read_csv('../feature/4_sbb_get_3_test.csv')

5 | sbb4_2 = pd.read_csv('../feature/4_sbb_get_2_test.csv')

6 | sbb4_1 = pd.read_csv('../feature/4_sbb_get_1_test.csv')

7 |

8 | from tqdm import tqdm

9 | # sbb4_1['pred_prob'] = y_predict

10 | best_u = 0.662

11 | # Apply the threshold; the commented line below counts the rows that pass it

12 | # print('sbb4_1 best_len',len(sbb4_1[sbb4_1['pred_prob']>=best_u]))

13 | sbb4_1[sbb4_1['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb4_1.csv',index=False)

14 |

15 | from tqdm import tqdm

16 | # sbb4_2['pred_prob'] = y_predict

17 | best_u = 0.500

18 | # Apply the threshold; the commented line below counts the rows that pass it

19 | # print('sbb4_2 best_len',len(sbb4_2[sbb4_2['pred_prob']>=best_u]))

20 | sbb4_2[sbb4_2['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb4_2.csv',index=False)

21 |

22 | from tqdm import tqdm

23 | # sbb4_3['pred_prob'] = y_predict

24 | best_u = 0.685

25 | # Apply the threshold; the commented line below counts the rows that pass it

26 | # print('sbb4_3 best_len',len(sbb4_3[sbb4_3['pred_prob']>=best_u]))

27 | sbb4_3[sbb4_3['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb4_3.csv',index=False)

28 |

29 | sbb3_3 = pd.read_csv('../feature/3_sbb_get_3_test.csv')

30 | sbb3_2 = pd.read_csv('../feature/3_sbb_get_2_test.csv')

31 | sbb3_1 = pd.read_csv('../feature/3_sbb_get_1_test.csv')

32 |

33 | from tqdm import tqdm

34 | # sbb3_3['pred_prob'] = y_predict

35 | best_u = 0.686

36 | # Apply the threshold; the commented line below counts the rows that pass it

37 | # print('sbb3_3 best_len',len(sbb3_3[sbb3_3['pred_prob']>=best_u]))

38 | sbb3_3[sbb3_3['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_3.csv',index=False)

39 |

40 | from tqdm import tqdm

41 | # sbb3_2['pred_prob'] = y_predict

42 | best_u = 0.602

43 | # Apply the threshold; the commented line below counts the rows that pass it

44 | # print('sbb3_2 best_len',len(sbb3_2[sbb3_2['pred_prob']>=best_u]))

45 | sbb3_2[sbb3_2['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_2.csv',index=False)

46 |

47 | from tqdm import tqdm

48 | # sbb3_1['pred_prob'] = y_predict

49 | best_u = 0.521

50 | # Apply the threshold; the commented line below counts the rows that pass it

51 | # print('sbb3_1 best_len',len(sbb3_1[sbb3_1['pred_prob']>=best_u]))

52 | sbb3_1[sbb3_1['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb3_1.csv',index=False)

53 |

54 | sbb2_3 = pd.read_csv('../feature/2_sbb_get_3_test.csv')

55 | sbb2_2 = pd.read_csv('../feature/2_sbb_get_2_test.csv')

56 | sbb2_1 = pd.read_csv('../feature/2_sbb_get_1_test.csv')

57 |

58 | from tqdm import tqdm

59 | # sbb2_1['pred_prob'] = y_predict

60 | best_u = 0.495

61 | # Apply the threshold; the commented line below counts the rows that pass it

62 | # print('sbb2_1 best_len',len(sbb2_1[sbb2_1['pred_prob']>=best_u]))

63 | sbb2_1[sbb2_1['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb2_1.csv',index=False)

64 |

65 | from tqdm import tqdm

66 | # sbb2_2['pred_prob'] = y_predict

67 | best_u = 0.310

68 | # Apply the threshold; the commented line below counts the rows that pass it

69 | # print('sbb2_2 best_len',len(sbb2_2[sbb2_2['pred_prob']>=best_u]))

70 | sbb2_2[sbb2_2['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb2_2.csv',index=False)

71 |

72 | from tqdm import tqdm

73 | # sbb2_3['pred_prob'] = y_predict

74 | best_u = 0.480

75 | # Apply the threshold; the commented line below counts the rows that pass it

76 | # print('sbb2_3 best_len',len(sbb2_3[sbb2_3['pred_prob']>=best_u]))

77 | sbb2_3[sbb2_3['pred_prob']>=best_u][['user_id','cate','shop_id']].to_csv('../output/sbb2_3.csv',index=False)

78 |

79 | ## Same features: intersect the predictions

80 | ## Different features: vote

81 | sbb21 = pd.read_csv('../output/sbb2_1.csv')

82 | sbb31 = pd.read_csv('../output/sbb3_1.csv')

83 | sbb41 = pd.read_csv('../output/sbb4_1.csv')

84 | all_data = pd.concat([sbb21,sbb31,sbb41],axis=0).drop_duplicates()

85 |

86 | sbb21['label2']=1

87 | sbb31['label3']=1

88 | sbb41['label4']=1

89 |

90 | all_data = all_data.merge(sbb21,on=['user_id','cate','shop_id'],how='left')

91 | all_data = all_data.merge(sbb31,on=['user_id','cate','shop_id'],how='left')

92 | all_data = all_data.merge(sbb41,on=['user_id','cate','shop_id'],how='left')

93 | all_data= all_data.fillna(0)

94 | all_data['sum'] = all_data['label2']+all_data['label3']+all_data['label4']

95 |

96 | all_data['sum'].value_counts()

97 |

98 | all_data[all_data['sum']>=3][['user_id','cate','shop_id']].to_csv('../output/sbb*1_u3.csv',index=False)

99 |

100 | sbb22 = pd.read_csv('../output/sbb2_2.csv')

101 | sbb32 = pd.read_csv('../output/sbb3_2.csv')

102 | sbb42 = pd.read_csv('../output/sbb4_2.csv')

103 | all_data = pd.concat([sbb22,sbb32,sbb42],axis=0).drop_duplicates()

104 |

105 | sbb22['label2']=1

106 | sbb32['label3']=1

107 | sbb42['label4']=1

108 |

109 | all_data = all_data.merge(sbb22,on=['user_id','cate','shop_id'],how='left')

110 | all_data = all_data.merge(sbb32,on=['user_id','cate','shop_id'],how='left')

111 | all_data = all_data.merge(sbb42,on=['user_id','cate','shop_id'],how='left')

112 | all_data= all_data.fillna(0)

113 | all_data['sum'] = all_data['label2']+all_data['label3']+all_data['label4']

114 |

115 | all_data['sum'].value_counts()

116 | all_data[all_data['sum']>=3][['user_id','cate','shop_id']].to_csv('../output/sbb*2_u3.csv',index=False)

117 |

118 | sbb23 = pd.read_csv('../output/sbb2_3.csv')

119 | sbb33 = pd.read_csv('../output/sbb3_3.csv')

120 | sbb43 = pd.read_csv('../output/sbb4_3.csv')

121 | all_data = pd.concat([sbb23,sbb33,sbb43],axis=0).drop_duplicates()

122 |

123 | sbb23['label2']=1

124 | sbb33['label3']=1

125 | sbb43['label4']=1

126 |

127 | all_data = all_data.merge(sbb23,on=['user_id','cate','shop_id'],how='left')

128 | all_data = all_data.merge(sbb33,on=['user_id','cate','shop_id'],how='left')

129 | all_data = all_data.merge(sbb43,on=['user_id','cate','shop_id'],how='left')

130 | all_data= all_data.fillna(0)

131 | all_data['sum'] = all_data['label2']+all_data['label3']+all_data['label4']

132 |

133 | all_data['sum'].value_counts()

134 | all_data[all_data['sum']>=3][['user_id','cate','shop_id']].to_csv('../output/sbb*3_u3.csv',index=False)

135 |

136 | sbb1_vote = pd.read_csv('../output/sbb*1_u3.csv')

137 | sbb2_vote = pd.read_csv('../output/sbb*2_u3.csv')

138 | sbb3_vote = pd.read_csv('../output/sbb*3_u3.csv')

139 | a_result = pd.read_csv('../submit/8_model_2.csv')

140 | all_data = pd.concat([sbb1_vote,sbb2_vote,sbb3_vote,a_result],axis=0).drop_duplicates()

141 |

142 |

143 | sbb1_vote['label2']=1

144 | sbb2_vote['label3']=1

145 | sbb3_vote['label4']=1

146 | a_result['label5'] = 1

147 |

148 | all_data = all_data.merge(sbb1_vote,on=['user_id','cate','shop_id'],how='left')

149 | all_data = all_data.merge(sbb2_vote,on=['user_id','cate','shop_id'],how='left')

150 | all_data = all_data.merge(sbb3_vote,on=['user_id','cate','shop_id'],how='left')

151 | all_data = all_data.merge(a_result,on=['user_id','cate','shop_id'],how='left')

152 | all_data= all_data.fillna(0)

153 | all_data['sum'] = all_data['label2']+all_data['label3']+all_data['label4']+all_data['label5']

154 |

155 | all_data['sum'].value_counts()

156 |

157 | all_data[all_data['sum']>=2][['user_id','cate','shop_id']].to_csv('../submit/b_final.csv',index=False)
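
The script above works in two stages. For each model variant it keeps only the triples that all three window sizes agree on (`sum >= 3` over three lists, which is a set intersection), then it votes across the three intersections plus the earlier `8_model_2.csv` result and keeps triples with at least two votes. A compact sketch of that flow follows. `intersect`, `at_least` and the toy data are hypothetical, and each list is assumed to be free of duplicates.

```python
from collections import Counter


def intersect(groups):
    """Triples present in every list; equivalent to sum >= len(groups)."""
    return set.intersection(*[set(g) for g in groups])


def at_least(groups, min_votes):
    """Triples present in at least min_votes of the groups."""
    counts = Counter(t for g in groups for t in set(g))
    return {t for t, c in counts.items() if c >= min_votes}


# Toy (user_id, cate, shop_id) triples.
a, b, c = (1, 10, 100), (2, 20, 200), (3, 30, 300)

# Stage 1: one intersection per model variant, across the 2-, 3- and 4-week lists.
variant_1 = intersect([[a, b], [a, b, c], [a, b]])
variant_2 = intersect([[a], [a, c], [a, b]])
variant_3 = intersect([[b, c], [b], [b, c]])

# Stage 2: vote across the intersections and the earlier ensemble result.
earlier_vote = {a, c}  # stands in for the contents of 8_model_2.csv
final = at_least([variant_1, variant_2, variant_3, earlier_vote], min_votes=2)
```

Intersecting first makes each stage-1 group conservative, so the looser two-vote rule in stage 2 mainly decides between groups that are already high-precision.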

--------------------------------------------------------------------------------

/code/lgb_model/lgb_train1.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | from datetime import datetime

4 | from sklearn.metrics import f1_score

5 | from sklearn.model_selection import train_test_split

6 | from sklearn.model_selection import KFold

7 | from sklearn.model_selection import StratifiedKFold

8 | import lightgbm as lgb

9 | pd.set_option('display.max_columns', None)

10 | import time  # used by the load-time report further down

11 | time_0 = time.process_time()

12 | ## Reduce memory usage after loading a file, following the approach 鱼佬 (Yulao) shared for the Tencent competition

13 | def reduce_mem_usage(df, verbose=True):

14 |     numerics = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']

15 |     start_mem = df.memory_usage().sum() / 1024**2

16 |     for col in df.columns:

17 |         col_type = df[col].dtypes

18 |         if col_type in numerics:

19 |             c_min = df[col].min()

20 |             c_max = df[col].max()

21 |             if str(col_type)[:3] == 'int':

22 |                 if c_min > np.iinfo(np.int8).min and c_max < np.iinfo(np.int8).max:

23 |                     df[col] = df[col].astype(np.int8)

24 |                 elif c_min > np.iinfo(np.int16).min and c_max < np.iinfo(np.int16).max:

25 |                     df[col] = df[col].astype(np.int16)

26 |                 elif c_min > np.iinfo(np.int32).min and c_max < np.iinfo(np.int32).max:

27 |                     df[col] = df[col].astype(np.int32)

28 |                 elif c_min > np.iinfo(np.int64).min and c_max < np.iinfo(np.int64).max:

29 |                     df[col] = df[col].astype(np.int64)

30 |             else:

31 |                 if c_min > np.finfo(np.float16).min and c_max < np.finfo(np.float16).max:

32 |                     df[col] = df[col].astype(np.float16)

33 |                 elif c_min > np.finfo(np.float32).min and c_max < np.finfo(np.float32).max:

34 |                     df[col] = df[col].astype(np.float32)

35 |                 else:

36 |                     df[col] = df[col].astype(np.float64)

37 |     end_mem = df.memory_usage().sum() / 1024**2

38 |     if verbose:

39 |         print('Mem. usage decreased to {:5.2f} Mb ({:.1f}% reduction)'.format(end_mem, 100 * (start_mem - end_mem) / start_mem))

40 |     return df

41 |

42 | df_train = reduce_mem_usage(pd.read_csv('./df_train.csv'))

43 | df_test = reduce_mem_usage(pd.read_csv('./df_test.csv'))

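44 | ## Optionally load several feature files here and merge them; if df_train changes, note in the output file name which feature files were used

45 | ### Feature flag: 1 = one-week features; 12 = one-week plus two-week; 123 = one-, two- and three-week; 2 = two-week only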

46 |

47 | df_user=reduce_mem_usage(pd.read_csv('./jdata_user.csv'))

48 | df_comment=reduce_mem_usage(pd.read_csv('./jdata_comment.csv'))

49 | df_shop=reduce_mem_usage(pd.read_csv('./jdata_shop.csv'))

50 |

51 | # 1) Action data (jdata_action)

52 | jdata_action = reduce_mem_usage(pd.read_csv('./jdata_action.csv'))

53 |

54 | # 3) Product data (jdata_product)

55 | jdata_product = reduce_mem_usage(pd.read_csv('./jdata_product.csv'))

56 |

57 | jdata_data = jdata_action.merge(jdata_product,on=['sku_id'])

58 | time_1 = time.process_time()

59 | print('<< Data loading finished! Elapsed:', time_1 - time_0, 's')

60 |

61 |

62 | label_flag = 1

63 | train_buy = jdata_data[(jdata_data['action_time']>='2018-04-09')&(jdata_data['action_time']<'2018-04-15')&(jdata_data['type']==2)][['user_id','cate','shop_id']].drop_duplicates()

64 | train_buy['label'] = 1

65 | # Candidate set: (user, category, shop) triples with any action in the last three weeks, '2018-03-19' to '2018-04-08'

66 | win_size = 3  # 2 for a two-week action window, 3 for three weeks

67 | train_set = jdata_data[(jdata_data['action_time']>='2018-03-19')&(jdata_data['action_time']<'2018-04-09')][['user_id','cate','shop_id']].drop_duplicates()

68 | train_set = train_set.merge(train_buy,on=['user_id','cate','shop_id'],how='left').fillna(0)

69 |

70 | train_set = train_set.merge(df_train,on=['user_id','cate','shop_id'],how='left')

71 |

72 |

73 |

74 | def mapper_year(x):

75 |     if x is not np.nan:

76 |         year = int(x[:4])

77 |         return 2018 - year

78 |

79 |

80 | def mapper_month(x):

81 |     if x is not np.nan:

82 |         year = int(x[:4])

83 |         month = int(x[5:7])

84 |         return (2018 - year) * 12 + month

85 |

86 |

87 | def mapper_day(x):

88 |     if x is not np.nan:

89 |         year = int(x[:4])

90 |         month = int(x[5:7])

91 |         day = int(x[8:10])

92 |         return (2018 - year) * 365 + month * 30 + day

93 |

94 |

95 | df_user['user_reg_year'] = df_user['user_reg_tm'].apply(lambda x: mapper_year(x))

96 | df_user['user_reg_month'] = df_user['user_reg_tm'].apply(lambda x: mapper_month(x))

97 | df_user['user_reg_day'] = df_user['user_reg_tm'].apply(lambda x: mapper_day(x))

98 |

99 | df_shop['shop_reg_year'] = df_shop['shop_reg_tm'].apply(lambda x: mapper_year(x))

100 | df_shop['shop_reg_month'] = df_shop['shop_reg_tm'].apply(lambda x: mapper_month(x))

101 | df_shop['shop_reg_day'] = df_shop['shop_reg_tm'].apply(lambda x: mapper_day(x))

102 |

103 |

104 |

105 | df_shop['shop_reg_year'] = df_shop['shop_reg_year'].fillna(1)

106 | df_shop['shop_reg_month'] = df_shop['shop_reg_month'].fillna(21)

107 | df_shop['shop_reg_day'] = df_shop['shop_reg_day'].fillna(101)

108 |

109 | df_user['age'] = df_user['age'].fillna(5)

110 |

111 | df_comment = df_comment.groupby(['sku_id'], as_index=False).sum()

112 | print('check point ...')

113 | df_product_comment = pd.merge(jdata_product, df_comment, on='sku_id', how='left')

114 |

115 | df_product_comment = df_product_comment.fillna(0)

116 |

117 | df_product_comment = df_product_comment.groupby(['shop_id'], as_index=False).sum()

118 |

119 | df_product_comment = df_product_comment.drop(['sku_id', 'brand', 'cate'], axis=1)

120 |

121 | df_shop_product_comment = pd.merge(df_shop, df_product_comment, how='left', on='shop_id')

122 |

123 | train_set = pd.merge(train_set, df_user, how='left', on='user_id')

124 | train_set = pd.merge(train_set, df_shop_product_comment, on='shop_id', how='left')

125 |

126 |

127 |

128 |

129 | train_set['vip_prob'] = train_set['vip_num']/train_set['fans_num']

130 | train_set['goods_prob'] = train_set['good_comments']/train_set['comments']

131 |

132 | train_set = train_set.drop(['comments','good_comments','bad_comments'],axis=1)

133 |

134 |

135 |

136 | test_set = jdata_data[(jdata_data['action_time'] >= '2018-03-26') & (jdata_data['action_time'] < '2018-04-16')][['user_id', 'cate', 'shop_id']].drop_duplicates()

137 |

138 | test_set = test_set.merge(df_test, on=['user_id', 'cate', 'shop_id'], how='left')

139 |

140 | test_set = pd.merge(test_set, df_user, how='left', on='user_id')

141 | test_set = pd.merge(test_set, df_shop_product_comment, on='shop_id', how='left')

142 |

143 | train_set.drop(['user_reg_tm', 'shop_reg_tm'], axis=1, inplace=True)

144 | test_set.drop(['user_reg_tm', 'shop_reg_tm'], axis=1, inplace=True)

145 |

146 |

147 |

148 |

149 | test_set['vip_prob'] = test_set['vip_num']/test_set['fans_num']

150 | test_set['goods_prob'] = test_set['good_comments']/test_set['comments']

151 |

152 | test_set = test_set.drop(['comments','good_comments','bad_comments'],axis=1)

153 |

154 |

155 |

156 | ### Keep six weeks of features for training: 02-26 to 04-09

157 | train_set = train_set.drop([

158 | '2018-02-19-2018-02-26-action_1', '2018-02-19-2018-02-26-action_2',

159 | '2018-02-19-2018-02-26-action_3', '2018-02-19-2018-02-26-action_4',

160 | '2018-02-12-2018-02-19-action_1', '2018-02-12-2018-02-19-action_2',

161 | '2018-02-12-2018-02-19-action_3', '2018-02-12-2018-02-19-action_4',

162 | '2018-02-05-2018-02-12-action_1', '2018-02-05-2018-02-12-action_2',

163 | '2018-02-05-2018-02-12-action_3', '2018-02-05-2018-02-12-action_4'],axis=1)

164 |

165 |

166 | ### Keep six weeks of features for testing: 03-05 to 04-15

167 | test_set = test_set.drop(['2018-02-26-2018-03-05-action_1',

168 | '2018-02-26-2018-03-05-action_2', '2018-02-26-2018-03-05-action_3',

169 | '2018-02-26-2018-03-05-action_4', '2018-02-19-2018-02-26-action_1',

170 | '2018-02-19-2018-02-26-action_2', '2018-02-19-2018-02-26-action_3',

171 | '2018-02-19-2018-02-26-action_4', '2018-02-12-2018-02-19-action_1',

172 | '2018-02-12-2018-02-19-action_2', '2018-02-12-2018-02-19-action_3',

173 | '2018-02-12-2018-02-19-action_4'],axis=1)

174 |

175 |

176 |

177 | train_set.rename(columns={'cate_x':'cate'}, inplace = True)

178 | test_set.rename(columns={'cate_x':'cate'}, inplace = True)

179 |

180 |

181 |

182 | test_head=test_set[['user_id','cate','shop_id']].copy()  # copy so later column assignments do not write into a slice of test_set

183 | train_head=train_set[['user_id','cate','shop_id']]

184 | test_set=test_set.drop(['user_id','cate','shop_id'],axis=1)

185 | train_set=train_set.drop(['user_id','cate','shop_id'],axis=1)

186 |

187 |

188 | # Data preparation

189 | X_train = train_set.drop(['label'],axis=1).values

190 | y_train = train_set['label'].values

191 | X_test = test_set.values

192 |

193 | del train_set

194 | del test_set

195 |

196 |

197 | print('------------------start modelling----------------')

198 | # Model utility
199 | class SBBTree():
200 |     """Stacking, Bootstrap, Bagging----SBBTree"""
201 |     def __init__(self, params, stacking_num, bagging_num, bagging_test_size, num_boost_round, early_stopping_rounds):
202 |         """
203 |         Initializes the SBBTree.
204 |         Args:
205 |           params : lgb params.
206 |           stacking_num : k_fold stacking.
207 |           bagging_num : bootstrap num.
208 |           bagging_test_size : bootstrap sample rate.
209 |           num_boost_round : boost num.
210 |           early_stopping_rounds : early_stopping_rounds.
211 |         """
212 |         self.params = params
213 |         self.stacking_num = stacking_num
214 |         self.bagging_num = bagging_num
215 |         self.bagging_test_size = bagging_test_size
216 |         self.num_boost_round = num_boost_round
217 |         self.early_stopping_rounds = early_stopping_rounds
218 |
219 |         self.model = lgb
220 |         self.stacking_model = []
221 |         self.bagging_model = []
222 |
223 |     def fit(self, X, y):
224 |         """ fit model. """
225 |         if self.stacking_num > 1:
226 |             layer_train = np.zeros((X.shape[0], 2))
227 |             self.SK = StratifiedKFold(n_splits=self.stacking_num, shuffle=True, random_state=1)
228 |             for k,(train_index, test_index) in enumerate(self.SK.split(X, y)):
229 |                 X_train = X[train_index]
230 |                 y_train = y[train_index]
231 |                 X_test = X[test_index]
232 |                 y_test = y[test_index]
233 |
234 |                 lgb_train = lgb.Dataset(X_train, y_train)
235 |                 lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)
236 |
237 |                 gbm = lgb.train(self.params,
238 |                                 lgb_train,
239 |                                 num_boost_round=self.num_boost_round,
240 |                                 valid_sets=lgb_eval,
241 |                                 early_stopping_rounds=self.early_stopping_rounds)
242 |
243 |                 self.stacking_model.append(gbm)
244 |
245 |                 pred_y = gbm.predict(X_test, num_iteration=gbm.best_iteration)
246 |                 layer_train[test_index, 1] = pred_y
247 |
248 |             X = np.hstack((X, layer_train[:,1].reshape((-1,1))))
249 |         else:
250 |             pass
251 |         for bn in range(self.bagging_num):
252 |             X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.bagging_test_size, random_state=bn)
253 |
254 |             lgb_train = lgb.Dataset(X_train, y_train)
255 |             lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)
256 |
257 |             gbm = lgb.train(self.params,
258 |                             lgb_train,
259 |                             num_boost_round=self.num_boost_round,
260 |                             valid_sets=lgb_eval,
261 |                             early_stopping_rounds=self.early_stopping_rounds)
262 |
263 |             self.bagging_model.append(gbm)
264 |
265 |     def predict(self, X_pred):
266 |         """ predict test data. """
267 |         if self.stacking_num > 1:
268 |             test_pred = np.zeros((X_pred.shape[0], self.stacking_num))
269 |             for sn,gbm in enumerate(self.stacking_model):
270 |                 pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)
271 |                 test_pred[:, sn] = pred
272 |             X_pred = np.hstack((X_pred, test_pred.mean(axis=1).reshape((-1,1))))
273 |         else:
274 |             pass
275 |         for bn,gbm in enumerate(self.bagging_model):
276 |             pred = gbm.predict(X_pred, num_iteration=gbm.best_iteration)
277 |             if bn == 0:
278 |                 pred_out=pred
279 |             else:
280 |                 pred_out+=pred
281 |         return pred_out/self.bagging_num

282 |

283 |

284 | # Model parameters
285 | params = {
286 |     'boosting_type': 'gbdt',
287 |     'objective': 'binary',
288 |     'metric': 'auc',
289 |     'learning_rate': 0.01,
290 |     'num_leaves': 2 ** 5 - 1,
291 |     'min_child_samples': 100,
292 |     'max_bin': 100,
293 |     'subsample': 0.7,
294 |     'subsample_freq': 1,
295 |     'colsample_bytree': 0.7,
296 |     'min_child_weight': 0,
297 |     'scale_pos_weight': 25,
298 |     'seed': 42,
299 |     'nthread': 20,
300 |     'verbose': 0,
301 | }
302 | # Use the model
303 | model = SBBTree(params=params,
304 |                 stacking_num=5,
305 |                 bagging_num=5,
306 |                 bagging_test_size=0.33,
307 |                 num_boost_round=10000,
308 |                 early_stopping_rounds=200)

309 | model.fit(X_train, y_train)

310 |

311 |

312 | print('train is ok')

313 | y_predict = model.predict(X_test)

314 | print('pred test is ok')

315 | # y_train_predict = model.predict(X_train)

316 |

317 |

318 |

319 | from tqdm import tqdm

320 | test_head['pred_prob'] = y_predict

321 | test_head.to_csv('feature/'+str(win_size)+'_sbb_get_'+str(label_flag)+'_test.csv',index=False)

322 |
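
The stacking stage of `SBBTree.fit` and `SBBTree.predict` above can be hard to follow from the listing alone, so here is a minimal sketch of the same out-of-fold idea in plain Python. It is an illustration under stated assumptions, not the repository's code: `MeanModel`, `kfold_indices`, `fit_stacking` and `augment_for_predict` are hypothetical names, the toy model merely stands in for `lgb.train`, and the folds are shuffled but not stratified (the script itself uses `StratifiedKFold`).

```python
import random
from statistics import mean


def kfold_indices(n, k, seed=1):
    """Shuffle the row indices 0..n-1 and deal them into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


class MeanModel:
    """Toy stand-in for a boosted tree: always predicts the training label mean."""

    def fit(self, X, y):
        self.value = mean(y)
        return self

    def predict(self, X):
        return [self.value] * len(X)


def fit_stacking(X, y, k, seed=1):
    """Train one model per fold and append its out-of-fold prediction as a feature.

    Each row's extra feature comes from the model that did NOT see that row,
    which mirrors `layer_train[test_index, 1] = pred_y` in the listing.
    """
    oof = [0.0] * len(X)
    models = []
    for held_out in kfold_indices(len(X), k, seed):
        held = set(held_out)
        train_idx = [i for i in range(len(X)) if i not in held]
        model = MeanModel().fit([X[i] for i in train_idx],
                                [y[i] for i in train_idx])
        models.append(model)
        for i, p in zip(held_out, model.predict([X[i] for i in held_out])):
            oof[i] = p
    X_aug = [row + [o] for row, o in zip(X, oof)]  # new lists, X is not mutated
    return models, X_aug


def augment_for_predict(models, X_new):
    """For unseen rows, the extra feature is the average over all fold models."""
    per_model = [m.predict(X_new) for m in models]
    avg = [mean(col) for col in zip(*per_model)]
    return [row + [a] for row, a in zip(X_new, avg)]


if __name__ == "__main__":
    X = [[float(i)] for i in range(10)]
    y = [0.0, 1.0] * 5
    models, X_aug = fit_stacking(X, y, k=5)
    print(len(models), len(X_aug[0]))
```

In the script, the augmented matrix is then passed to the bagging stage, where several models are trained on different random splits and their predictions are averaged.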

--------------------------------------------------------------------------------

/code/lgb_model/lgb_train2.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | from datetime import datetime

4 | from sklearn.metrics import f1_score

5 | from sklearn.model_selection import train_test_split

6 | from sklearn.model_selection import KFold

7 | from sklearn.model_selection import StratifiedKFold

8 | import lightgbm as lgb

9 | pd.set_option('display.max_columns', None)

10 |

11 |

12 | ## Reduce memory usage when reading files; adapted from 鱼佬's Tencent competition code
13 | def reduce_mem_usage(df, verbose=True):
14 |     numerics = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']
15 |     start_mem = df.memory_usage().sum() / 1024**2
16 |     for col in df.columns:
17 |         col_type = df[col].dtypes
18 |         if col_type in numerics:
19 |             c_min = df[col].min()
20 |             c_max = df[col].max()
21 |             if str(col_type)[:3] == 'int':
22 |                 if c_min > np.iinfo(np.int8).min and c_max < np.iinfo(np.int8).max:
23 |                     df[col] = df[col].astype(np.int8)
24 |                 elif c_min > np.iinfo(np.int16).min and c_max < np.iinfo(np.int16).max:
25 |                     df[col] = df[col].astype(np.int16)
26 |                 elif c_min > np.iinfo(np.int32).min and c_max < np.iinfo(np.int32).max:
27 |                     df[col] = df[col].astype(np.int32)
28 |                 elif c_min > np.iinfo(np.int64).min and c_max < np.iinfo(np.int64).max:
29 |                     df[col] = df[col].astype(np.int64)
30 |             else:
31 |                 if c_min > np.finfo(np.float16).min and c_max < np.finfo(np.float16).max:
32 |                     df[col] = df[col].astype(np.float16)
33 |                 elif c_min > np.finfo(np.float32).min and c_max < np.finfo(np.float32).max:
34 |                     df[col] = df[col].astype(np.float32)
35 |                 else:
36 |                     df[col] = df[col].astype(np.float64)
37 |     end_mem = df.memory_usage().sum() / 1024**2
38 |     if verbose:
39 |         print('Mem. usage decreased to {:5.2f} Mb ({:.1f}% reduction)'.format(end_mem, 100 * (start_mem - end_mem) / start_mem))
40 |     return df

41 |

42 | df_train = reduce_mem_usage(pd.read_csv('./df_train.csv'))

43 | df_test = reduce_mem_usage(pd.read_csv('./df_test.csv'))

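44 | ## Optionally load several feature files here and merge them; if df_train changes, note in the output file name which feature files were used
45 | ### Set the feature flag: 1 for one-week features only, 12 when two-week features are added, 123 when three-week features are added as well, 2 for two-week features only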

46 |

47 |

48 | df_user=reduce_mem_usage(pd.read_csv('./jdata_user.csv'))

49 | df_comment=reduce_mem_usage(pd.read_csv('./jdata_comment.csv'))

50 | df_shop=reduce_mem_usage(pd.read_csv('./jdata_shop.csv'))

51 |

52 | # 1) Action data (jdata_action)

53 | jdata_action = reduce_mem_usage(pd.read_csv('./jdata_action.csv'))

54 |

55 | # 3) Product data (jdata_product)