
--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | EZfaces

2 | Easily create your own face recognition system in Python using Eigenfaces

3 |

4 |

5 | # Description

6 |

7 | [License: GPL v3](https://www.gnu.org/licenses/gpl-3.0)

8 |

9 | A tool for face recognition in Python. It implements the eigenfaces method from [Turk and Pentland's paper](https://sites.cs.ucsb.edu/~mturk/Papers/mturk-CVPR91.pdf). The notation follows my PDF notes [here](https://github.com/0xLeo/journal/tree/master/computer-vision/pca_eigenfaces/pdf). The initial dataset is the [Olivetti faces dataset](https://scikit-learn.org/stable/modules/generated/sklearn.datasets.fetch_olivetti_faces.html). Some of its features are:

10 | * Load Olivetti faces to initialise dataset.

11 | * Load new subjects from file.

12 | * Read new subjects directly from webcam.

13 | * Predict a novel face from file.

14 | * Predict a novel face from webcam.

15 | * Export currently loaded dataset and load it later.

16 | * Built-in benchmarking (classification report) method.

17 |

18 | **Note**: When you add a new subject, it is recommended to take several (5 or more) pictures of their face from slightly different angles.

19 |

20 |

21 | # Installation

22 | You can install the package as follows:

23 | ```

24 | cd

25 | pip install .

26 | ```

27 | Next, you can import the package as `import ezfaces` or its main class as `from ezfaces.face_classifier import FaceClassifier`.

28 |

29 |

30 | The project has been tested in CI (see [workflows](https://github.com/0xLeo/EZfaces/tree/master/.github/workflows)) with Python 3.7 and 3.8 and the following dependencies installed; newer versions are expected to work as well:

31 | ```

32 | opencv-python 4.1.2.30

33 | numpy 1.17.4

34 | matplotlib 3.1.2

35 | scipy 1.4.1

36 | scikit-image 0.16.2

37 | scikit-learn 0.22

38 | ```

39 |

40 |

41 | # Usage examples

42 | **1. Load new subject from folder**

43 | ```

44 | from ezfaces.face_classifier import FaceClassifier

45 |

46 | fc = FaceClassifier()

47 | lbl_new = fc.add_img_data('tests/images_yale')

48 | print(fc)

49 | print("New subject\'s label is %d" % lbl_new)

50 | ```

51 | Output:

52 | ```

53 | Loaded 410 samples in total.

54 | 348 for training and 61 for testing.

55 | New subject's label is 40

56 | ```

57 |

58 | **2. Load new subject and predict from webcam**

59 | ```

60 | from ezfaces.face_classifier import FaceClassifier

61 | import cv2

62 |

63 |

64 | fc = FaceClassifier()

65 | lbl_new = fc.add_img_data(from_webcam=True)

66 | fc.train()

67 | # take a snapshot from webcam

68 | x_novel = fc.webcam2vec()

69 | x_pred, lbl_pred = fc.classify(x_novel)

70 | print("The ID of the newly added subject is %d. The prediction from "

71 | "the webcam is %d" %(lbl_new, lbl_pred))

72 | cv2.imshow("Prediction", fc.vec2img(x_pred))

73 | cv2.waitKey(3000)

74 | cv2.destroyAllWindows()

75 | ```

76 |

77 |

78 |

79 | **3. Export and import dataset**

80 | ```

81 | from ezfaces.face_classifier import FaceClassifier

82 |

83 |

84 | fc = FaceClassifier()

85 | data_file, lbl_file = fc.export('/tmp')

86 |

87 | # add some data

88 | lbl_new = fc.add_img_data('tests/images_yale')

89 | print(fc)

90 |

91 | # now let's say we made a mistake and don't like the new data

92 | fc = FaceClassifier(data_pkl = data_file, target_pkl = lbl_file)

93 | print(fc)

94 | ```

95 | Output:

96 | ```

97 | Wrote data and target as .pkl at:

98 | /tmp

99 | Loaded 410 samples in total.

100 | 348 for training and 61 for testing.

101 | Loaded 400 samples in total.

102 | 340 for training and 60 for testing.

103 | ```
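
The two counts printed by `print(fc)` do not always add up to the total (348 + 61 = 409 for 410 samples). Below is a minimal sketch of why, assuming the counts are formatted as in `FaceClassifier.__str__`, where `ratio*M` and `(1-ratio)*M` are passed through `%d`, which truncates. `split_counts` is a hypothetical helper used only for illustration, not part of the package. The real split in `_divide_dataset` is drawn at random per sample, so the printed numbers are only nominal.

```python
def split_counts(total, ratio=0.85):
    """Nominal (train, test) counts as printed via %d, which truncates floats."""
    n_train = int(ratio * total)        # 0.85 * 410 is about 348.5 -> 348
    n_test = int((1 - ratio) * total)   # 0.15 * 410 is about 61.5  -> 61
    return n_train, n_test


print(split_counts(410))
print(split_counts(400))
```

Rounding instead of truncating would not help either, since the actual number of training samples varies from run to run.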

104 |

105 |

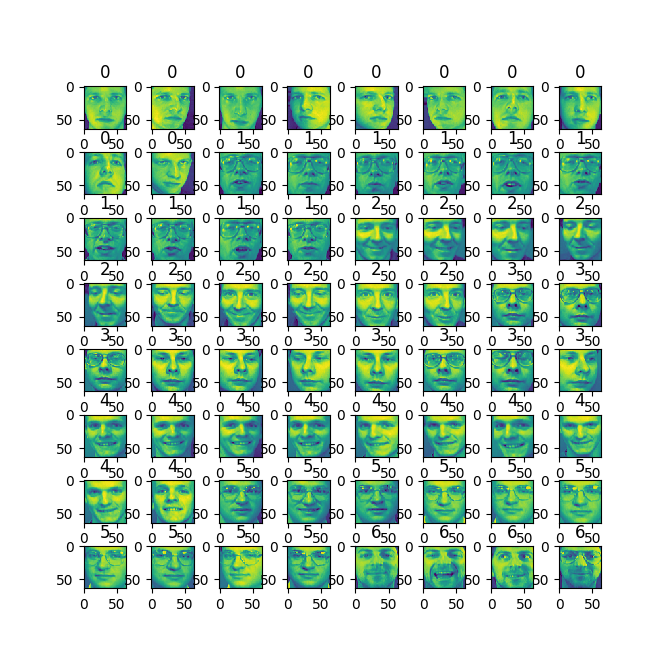

106 | **4. Show all loaded subjects**

107 | ```

108 | from ezfaces.face_classifier import FaceClassifier

109 |

110 |

111 | fc = FaceClassifier()

112 | # add some data

113 | lbl_new = fc.add_img_data('tests/images_yale')

114 | fc.show_album()

115 | ```

116 | It will show the subjects in 8\*8 image grids as follows:

117 |

118 |
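
The paging behind `show_album` can be sketched without any plotting. The sketch assumes the same chunking as `FaceClassifier.grouper`: groups of 64, with the last group padded with `None`, which `show_album` then draws as a "blank" tile. `paginate` is a hypothetical stand-alone name used only for illustration.

```python
import itertools as it


def paginate(items, page_size=64, fillvalue=None):
    """Split items into fixed-size pages, padding the last page with fillvalue."""
    # the same iterator repeated page_size times, so zip_longest consumes
    # page_size consecutive items per output tuple
    iters = [iter(items)] * page_size
    return list(it.zip_longest(*iters, fillvalue=fillvalue))


pages = paginate(range(410))  # e.g. 400 Olivetti samples plus 10 new images
n_blank = sum(x is None for x in pages[-1])
print(len(pages), n_blank)
```

With 410 samples this gives six full pages and a seventh that is only partly filled.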

--------------------------------------------------------------------------------

/assets/demo_webcam.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/assets/demo_webcam.gif

--------------------------------------------------------------------------------

/assets/show_album.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/assets/show_album.png

--------------------------------------------------------------------------------

/ezfaces/__init__.py:

--------------------------------------------------------------------------------

1 | # __init__.py

2 | from .face_classifier import FaceClassifier

3 |

--------------------------------------------------------------------------------

/ezfaces/face_classifier.py:

--------------------------------------------------------------------------------

1 | from sklearn.datasets import fetch_olivetti_faces

2 | import numpy as np

3 | import cv2

4 | from collections import OrderedDict as OD

5 | from sklearn.metrics import classification_report

6 | from matplotlib import pyplot as plt

7 | import pickle as pkl

8 | import os

9 | import time

10 | import glob

11 | import itertools as it

12 | from typing import List, Tuple, Union

13 |

14 |

15 | class FaceClassifier():

16 | def __init__(self, ratio = 0.85, K = 200, data_pkl = None, target_pkl = None):

17 | """__init__. Class constructor.

18 |

19 | Parameters

20 | ----------

21 | ratio :

22 | How much of the total data to use for training (0 to 1)

23 | K :

24 | How many eigenface space base vectors to keep in order to express each image

25 | data_pkl :

26 | Pickle serialised file that contains data (see export method)

27 | target_pkl :

28 | Pickle serialised file that contains label (see export method)

29 | """

30 | if data_pkl is not None:

31 | with open(data_pkl, 'rb') as f:

32 | self.data = pkl.load(f)

33 | else:

34 | self.data = None # data vectors

35 | if target_pkl is not None:

36 | with open(target_pkl, 'rb') as f:

37 | self.labels = pkl.load(f)

38 | else:

39 | self.labels = None # label (ground truth) vectors

40 | self.train_data = OD() # maps sample index to data and label

41 | self.test_data = OD() # maps sample index to data and label

42 | # how many eigenfaces to keep

43 | self.K = K

44 | # how much training data to use as part of total data

45 | if not 0 < ratio <= 1:

46 | raise ValueError('Provide a training/total data ratio greater than 0 and at most 1.')

47 | self.ratio = ratio

48 | # MxK matrix - each row stores the coords of each image in the eigenface space

49 | self.W = None

50 | self.classification_report = None # obtained from benchmarking

51 | # mean needed for reconstruction

52 | self._mean = np.zeros((1, 64*64), dtype=np.float32)

53 | if self.data is None and self.labels is None: # no pre-loaded data

54 | self._load_olivetti_data()

55 | self._TRAIN_SAMPLE = 1

56 | self._PRED_SAMPLE = 0

57 |

58 |

59 | def __str__(self):

60 | M = len(self.data)

61 | return "Loaded %d samples in total.\n"\

62 | "%d for training and %d for testing."\

63 | % (M, self.ratio*M, (1-self.ratio)*M)

64 |

65 |

66 | def _load_olivetti_data(self):

67 | """Load the Olivetti face data and save them in the class."""

68 | data, target = fetch_olivetti_faces(return_X_y = True)

69 | # data as floating vectors of length 64^2, ranging from 0 to 1

70 | self.data = np.array(data)

71 | # subject labels (sequential from 0 to ...)

72 | self.labels = target

73 |

74 |

75 | def _record_mean(self):

76 | self._mean += np.mean(self.data, axis=0) # along columns

77 |

78 |

79 | def _subtract_mean(self):

80 | """

81 | Make the mean of every column of self.data zero

82 | """

83 | self._record_mean()

84 | M = self.data.shape[0]

85 | C = np.eye(M) - 1/M*np.ones((M,1)) # centring matrix

86 | self.data = np.matmul(C, self.data)

87 |

88 |

89 | def _read_from_webcam(self, new_label, stream: Union[str, int] = 0):

90 | """Takes face snapshots from webcam. Pass the new label of the subject

91 | being photographed."""

92 | print("Position your face in the green box.\n"

93 | "Press p to capture your face profile from slightly different angles,\n"

94 | "or q to quit.")

95 | time.sleep(3)

96 | # if stream == 0, try to open default webcam, else video from path

97 | cap = cv2.VideoCapture(stream)

98 | while True:

99 | # Capture frame by frame

100 | _, frame = cap.read()

101 | grey = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

102 | min_shape = min(grey.shape)

103 | cv2.rectangle( frame, (0,0), (int(3*min_shape/4),

104 | int(3*min_shape/4)), (0,255,0), thickness = 4)

105 | cv2.namedWindow('frame', flags=cv2.WINDOW_GUI_NORMAL)

106 | cv2.imshow('frame',frame)

107 | k = cv2.waitKey(10) & 0xff

108 | if k == ord('q'):

109 | break

110 | elif k == ord('p'):

111 | im_cropped = grey[:int(3*min_shape/4), :int(3*min_shape/4)]

112 | cv2.destroyAllWindows()

113 | cv2.namedWindow('new data', flags=cv2.WINDOW_GUI_NORMAL)

114 | cv2.imshow("new data", im_cropped)

115 | cv2.waitKey(1500)

116 | cv2.destroyAllWindows()

117 | x = self.img2vec(im_cropped)

118 | self.data = np.array([*self.data, np.array(x, dtype=np.float32)])

119 | self.labels = np.append(self.labels, new_label)

120 | cap.release()

121 | cv2.destroyAllWindows()

122 |

123 |

124 | def add_img_data(self, dir_img: str = "", from_webcam: bool = False) -> int:

125 | """add_img_data. Adds data and their labels to existing database.

126 |

127 | Parameters

128 | ----------

129 | dir_img : str

130 | directory where image(s) of a subject are saved

131 | from_webcam : bool

132 | if True, opens webcam and lets the user capture face data

133 |

134 | Returns

135 | -------

136 | int

137 | The label of the newly added subject.

138 | """

139 | assert len(self.labels) != 0, "No labels have been generated!"

140 | # find all images in given folder

141 | fpaths = glob.glob(os.path.join(dir_img, '*.png'))

142 | fpaths += glob.glob(os.path.join(dir_img, '*.jpg'))

143 | fpaths += glob.glob(os.path.join(dir_img, '*.bmp'))

144 | # create new label for new subject

145 | target_new = self.labels[-1] + 1

146 | self.labels = np.append(self.labels, [target_new]*len(fpaths))

147 | # convert image to 64*64 data vector (ranging from 0 to 1)

148 | for i, f in enumerate(fpaths):

149 | im = cv2.imread(f)

150 | im = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)

151 | im = np.asarray(cv2.resize(im, dsize = (64,64))).ravel()

152 | # normalise from 0 to 1 - the range of original Olivetti data

153 | im = im/255

154 | self.data = np.array([*self.data, np.array(im, dtype=np.float32)])

155 | if from_webcam:

156 | self._read_from_webcam(new_label = target_new)

157 | self.data = np.array(self.data)

158 | return target_new

159 |

160 |

161 | def train(self):

162 | """ Find the coordinates of each training image in the eigenface space """

163 | self._divide_dataset()

164 | # the matrix X to use for training

165 | X = np.array([v[0] for v in self.train_data.values()])

166 | # compute eig of the small MxM matrix X*X^T first instead of the N^2xN^2 covariance, since N^2 >> M

167 | XXT = np.matmul(X, X.T)

168 | eval_XXT, evec_XXT = np.linalg.eig(XXT)

169 | # sort eig data by decreasing eigvalue values

170 | idx_eval_XXT = eval_XXT.argsort()[::-1]

171 | eval_XXT = eval_XXT[idx_eval_XXT]

172 | evec_XXT = evec_XXT[:, idx_eval_XXT] # np.linalg.eig returns eigenvectors as columns

173 | # now compute eigs of covariance matrix (N^2xN^2)

174 | self.evec_XTX = np.matmul(X.T, evec_XXT)

175 | # coordinates of each face in "eigenface" subspace

176 | self.W = np.matmul(X, self.evec_XTX)

177 | self.W = self.W[:, :self.K]

178 |

179 |

180 | def _divide_dataset(self):

181 | """Divides dataset in training and test (prediction) data"""

182 | training_or_test = [self._random_binary(self.ratio) for _ in self.data]

183 | self._subtract_mean()

184 |

185 | train_inds = [i for i,t in enumerate(training_or_test) if t == self._TRAIN_SAMPLE]

186 | test_inds = [i for i,t in enumerate(training_or_test) if t == self._PRED_SAMPLE]

187 | # {index: (data_vector, data_label)}, index starts from 0

188 | self.train_data = OD( # ordered dict

189 | dict(zip(train_inds, # keys

190 | zip(self.data[train_inds,:], self.labels[train_inds]))) # vals

191 | )

192 | self.test_data = OD( # ordered dict

193 | dict(zip(test_inds, # keys

194 | zip(self.data[test_inds,:], self.labels[test_inds]))) # vals

195 | )

196 |

197 |

198 | def _random_binary(self, prob_of_1 = .5) -> int:

199 | """_random_binary. Randomly returns 0 or 1. Accepts probability

200 | to return 1 as input."""

201 | return np.round(np.random.uniform(.5, 1.5) - 1 + prob_of_1).astype(np.uint8)

202 |

203 |

204 | def get_test_sample(self) -> tuple:

205 | """ Get random test sample and its label. Returns (data vector, label) """

206 | Ntest = len(self.test_data)

207 | n = np.random.randint(0, Ntest)

208 | test_ind = [k for k in self.test_data.keys()][n]

209 | return self.test_data[test_ind] # data, label

210 |

211 |

212 | def classify(self, x_new: np.ndarray) -> tuple:

213 | """classify. Classify an input data vector.

214 |

215 | Parameters

216 | ----------

217 | x_new : np.array

218 | Data vector

219 |

220 | Returns

221 | -------

222 | tuple

223 | containing the predicted data vector and its label (data, label)

224 | """

225 | train_inds = sorted([i for i in self.train_data.keys()])

226 | M = len(train_inds)

227 | # find eigenface space coordinates

228 | w_new = np.matmul(self.evec_XTX.T, x_new.T)

229 | w_new = w_new[:self.K]

230 | # distance to every training sample; exact matches (distance 0) are set to inf

231 | dists = [np.linalg.norm(w_new - self.W[i,:])

232 | if (np.linalg.norm(w_new - self.W[i,:]) > 0.0) else

233 | np.inf

234 | for i in range(M)]

235 | return (self.train_data[train_inds[np.argmin(dists)]][0], # data

236 | self.train_data[train_inds[np.argmin(dists)]][1]) # label

237 |

238 |

239 | def vec2img(self, x: list) -> np.ndarray:

240 | """vec2img. Converts a 1D data vector stored in the class to image.

241 |

242 | Parameters

243 | ----------

244 | x : list

245 | 0 mean float vector of length 64^2

246 |

247 | Returns

248 | -------

249 | np.ndarray

250 | the input vector as a 2D uint8 64x64 image

251 | """

252 | x = np.array(x) + self._mean

253 | x = np.reshape(255*x, (64,64))

254 | return np.asarray(x, np.uint8)

255 |

256 |

257 | def img2vec(self, im) -> np.ndarray:

258 | """Converts an input greyscale image to a 1D data vector."""

259 | if not len(im.shape) == 2:

260 | raise RuntimeError("Provide a greyscale image as input.")

261 | x = np.asarray(cv2.resize(im, dsize=(64,64)), np.float32).ravel()

262 | x /= 255

263 | x = np.reshape(x, self._mean.shape)

264 | x -= self._mean

265 | # needed for eigenface coords dot product, do NOT delete this

266 | x = np.reshape(x, self.data[-1,:].shape)

267 | return x

268 |

269 |

270 | def webcam2vec(self):

271 | """ Opens webcam. The user can take a picture. Returns picture

272 | as data vector"""

273 | cap = cv2.VideoCapture(0)

274 | print("Position your face in the green box.\n"

275 | "Press p to capture a face picture.")

276 | while True:

277 | # Capture frame by frame

278 | _, frame = cap.read()

279 | grey = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

280 | min_shape = min(grey.shape)

281 | cv2.rectangle( frame, (0,0), (int(3*min_shape/4),

282 | int(3*min_shape/4)), (0,255,0), thickness = 4)

283 | cv2.imshow('frame', frame)

284 | k = cv2.waitKey(10) & 0xff

285 | if k == ord('q'):

286 | break

287 | elif k == ord('p'):

288 | im_cropped = grey[:int(3*min_shape/4), :int(3*min_shape/4)]

289 | cv2.destroyAllWindows()

290 | cv2.namedWindow("new data", flags=cv2.WINDOW_GUI_NORMAL)

291 | cv2.imshow("new data", im_cropped)

292 | cv2.waitKey(1500)

293 | cv2.destroyWindow("new data")

294 | x = self.img2vec(im_cropped)

295 | break

296 | cap.release()

297 | cv2.destroyAllWindows()

298 | return x

299 |

300 |

301 | def benchmark(self, imshow = False, wait_time = 0.5, which_labels = []):

302 | """benchmark. Iterates over each test sample and classifies it.

303 | Generates a classification report with all the classification metrics.

304 |

305 | Parameters

306 | ----------

307 | imshow : bool

308 | If True, show the actual vs predicted image, each for some time.

309 | wait_time : float

310 | How many seconds to show each actual vs predicted image for.

311 | which_labels : list

312 | Which labels to show. Useful when a new label was just added.

313 | """

314 | self.train()

315 | lbl_actual = []

316 | lbl_test = []

317 | for ind_test, test_data_lbl in self.test_data.items():

318 | # if we want to show only certain labels

319 | if len(which_labels) != 0:

320 | if test_data_lbl[1] not in which_labels:

321 | continue

322 | x_actual = test_data_lbl[0]

323 | lbl_actual.append(test_data_lbl[1])

324 | x_test, lbl = self.classify(x_actual)

325 | lbl_test.append(lbl)

326 | if imshow:

327 | fig = plt.figure(figsize=(64, 64))

328 | cols, rows = 2, 1

329 | ax1 = fig.add_subplot(rows, cols, 1)

330 | ax1.title.set_text('actual: %d' % test_data_lbl[1])

331 | plt.imshow(self.vec2img(x_actual))

332 | ax2 = fig.add_subplot(rows, cols, 2)

333 | ax2.title.set_text('predicted: %d' % lbl)

334 | plt.imshow(self.vec2img(x_test))

335 | plt.show(block=False)

336 | plt.pause(wait_time)

337 | plt.close()

338 | if len(lbl_actual) != 0 and len(lbl_test) != 0:

339 | self.classification_report = classification_report(y_true = lbl_actual,

340 | y_pred = lbl_test)

341 |

342 |

343 | def export(self, dest_folder: str = '/tmp') -> Tuple[str, str]:

344 | """export. Exports the data and labels as serialised files.

345 |

346 | Parameters

347 | ----------

348 | dest_folder : str

349 | Folder where data.pkl and labels.pkl are written.

350 |

351 | Returns

352 | -------

353 | Tuple[str, str]

354 | Tuple containing the path to exported data and label file respectively.

355 | Empty string tuple if failure.

356 | """

357 | try:

358 | fpath_data = os.path.join(dest_folder, 'data.pkl')

359 | fpath_lbl = os.path.join(dest_folder, 'labels.pkl')

360 | with open(fpath_data, 'wb') as f:

361 | pkl.dump(self.data, f)

362 | with open(fpath_lbl, 'wb') as f:

363 | pkl.dump(self.labels, f)

364 | print("Wrote data and target as .pkl at:\n%s"

365 | % os.path.abspath(dest_folder))

366 | return os.path.abspath(fpath_data), os.path.abspath(fpath_lbl)

367 | except Exception as e:

368 | return "", ""

369 |

370 |

371 | def grouper(self, inputs, n, fillvalue=None) -> list:

372 | """Credits https://realpython.com/python-itertools/

373 | >>> fc = FaceClassifier()

374 | >>> nums = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

375 | >>> print(list(fc.grouper(nums, 4)))

376 | [(1, 2, 3, 4), (5, 6, 7, 8), (9, 10, None, None)]

377 | """

378 | iters = [iter(inputs)] * n

379 | return list(it.zip_longest(*iters, fillvalue=fillvalue))

380 |

381 |

382 | def show_album(self, wait_time = 2.0):

383 | """show_album. Shows all gathered subjects in several

384 | pages of 8x8 grids (photo "album").

385 |

386 | Parameters

387 | ----------

388 | wait_time :

389 | how long to wait between successive grid pages in sec

390 | """

391 | data_every_64 = self.grouper(self.data, 64)

392 | lbl_every_64 = self.grouper(self.labels, 64)

393 | cols, rows = 8, 8

394 | blank = np.zeros((64, 64), np.uint8)

395 |

396 | for data, lbls in zip(data_every_64, lbl_every_64):

397 | fig = plt.figure(figsize=(64, 64))

398 | for i in range(1, cols*rows +1):

399 | ax = fig.add_subplot(rows, cols, i)

400 | try:

401 | plt.imshow(self.vec2img(data[i-1]))

402 | ax.title.set_text("%d" % lbls[i-1])

403 | except Exception:

404 | plt.imshow(blank)

405 | ax.title.set_text("blank")

406 | plt.subplots_adjust(wspace = .6)

407 | plt.show(block = False)

408 | plt.pause(wait_time)

409 | plt.close()

410 |
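
The `train` method above relies on the small-matrix trick from the eigenfaces method: if `v` is an eigenvector of the M x M matrix `X X^T` with eigenvalue `lam`, then `X^T v` is an eigenvector of the much larger `X^T X` with the same eigenvalue. Below is a minimal stand-alone sketch on random data, not the package's code. It swaps in `np.linalg.eigh` (suited to symmetric matrices) where the module calls `np.linalg.eig`, and the sizes `M`, `D`, `K` and all variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
M, D = 6, 50                      # M samples, D pixels per sample (D >> M)
X = rng.normal(size=(M, D))
X -= X.mean(axis=0)               # centre every column, as _subtract_mean does

# eigendecomposition of the small M x M matrix X X^T
evals, evecs = np.linalg.eigh(X @ X.T)
order = np.argsort(evals)[::-1]   # decreasing eigenvalue
evals = evals[order]
evecs = evecs[:, order]           # eigenvectors are columns, so reorder columns

# map to eigenvectors of the D x D matrix X^T X (unnormalised)
U = X.T @ evecs                   # shape (D, M)

# check (X^T X) u_i = lam_i u_i for every column i
residual = (X.T @ X) @ U - U * evals
max_residual = np.abs(residual).max()

# coordinates of each sample in the space of the K leading eigenfaces
K = 3
W = X @ U[:, :K]                  # shape (M, K)
print(max_residual, W.shape)
```

The point of the trick is cost: the decomposition runs on an M x M matrix rather than a D x D one, which matters when D = 64*64 and M is a few hundred.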

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | opencv-python==4.2.0.32

2 | numpy==1.17.4

3 | matplotlib==3.1.2

4 | scipy==1.4.1

5 | scikit-image==0.16.2

6 | scikit-learn==0.22

7 | sklearn==0.0

8 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | # setup.cfg

2 | [metadata]

3 | description-file = README.md

4 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | try:

2 | from setuptools import setup

3 | except ImportError:

4 | from distutils.core import setup

5 |

6 | setup(

7 | name='EZfaces',

8 | version='0.1',

9 | description='Face recognition using Eigenfaces',

10 | author='0xLeo',

11 | author_email='0xleo.git@gmail.com',

12 | url='https://github.com/0xLeo/EZfaces/archive/alpha.tar.gz',

13 | packages=['ezfaces'],

14 | include_package_data=True,

15 | install_requires=['opencv-python',

16 | 'scikit-learn',

17 | 'matplotlib',

18 | 'numpy'],

19 | zip_safe=False,

20 | )

21 |

--------------------------------------------------------------------------------

/tests/images_yale/README.md:

--------------------------------------------------------------------------------

1 | # About this folder

2 |

3 | Credits to the Yale face database, from which these images were obtained: http://vision.ucsd.edu/content/yale-face-database.

4 |

--------------------------------------------------------------------------------

/tests/images_yale/subject03_centerlight.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_centerlight.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_glasses.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_glasses.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_happy.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_happy.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_leftlight.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_leftlight.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_noglasses.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_noglasses.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_normal.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_normal.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_sad.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_sad.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_sleepy.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_sleepy.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_surprised.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_surprised.png

--------------------------------------------------------------------------------

/tests/images_yale/subject03_wink.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/leonmavr/EZfaces/8ef1c7d53430595c31d9b5851d44cb73ba4ee492/tests/images_yale/subject03_wink.png

--------------------------------------------------------------------------------

/tests/test_face_classifier.py:

--------------------------------------------------------------------------------

1 | import unittest

2 | import os

3 | import sys

4 | this_script_path = os.path.abspath(__file__)

5 | this_script_folder = os.path.dirname(this_script_path)

6 | sys.path.insert(1, os.path.join(this_script_folder, '..', 'ezfaces'))

7 | from face_classifier import FaceClassifier

8 | import numpy as np

9 |

10 |

11 | class TestUM(unittest.TestCase):

12 |

13 | def test_train_with_olivetti(self):

14 | fc = FaceClassifier()

15 | self.assertEqual(len(fc.data.shape), 2)

16 | # data stored as 64*64 row vectors

17 | self.assertEqual(fc.data.shape[1], 64*64)

18 | # Olivetti data contain 40 subjects

19 | self.assertEqual(len(np.unique(fc.labels)), 40)

20 | fc.train()

21 | # their coordinates in eigenface space as a matrix (.W)

22 | self.assertEqual(len(fc.W.shape), 2)

23 |

24 |

25 | def test_train_with_subject(self):

26 | img_dir = os.path.join(this_script_folder, 'images_yale')

27 | fc = FaceClassifier()

28 | fc.add_img_data(img_dir)

29 | # data stored as 64*64 row vectors

30 | self.assertEqual(fc.data.shape[1], 64*64)

31 | # 40 + 1 subjects

32 | self.assertEqual(len(np.unique(fc.labels)), 41)

33 | fc.train()

34 | # their coordinates in eigenface space as a matrix (.W)

35 | self.assertEqual(len(fc.W.shape), 2)

36 |

37 |

38 | def test_benchmark(self):

39 | img_dir = os.path.join(this_script_folder, 'images_yale')

40 | fc = FaceClassifier(ratio = .725)

41 | fc.add_img_data(img_dir)

42 | fc.benchmark()

43 | self.assertNotEqual(fc.classification_report, None)

44 | print(fc.classification_report)

45 | fc.benchmark(imshow=True, wait_time=0.8, which_labels=[0, 5, 13, 28, 40])

46 |

47 |

48 | def test_export_import(self):

49 | img_dir = os.path.join(this_script_folder, 'images_yale')

50 | fc = FaceClassifier()

51 | fc.add_img_data(img_dir)

52 | # write as pickle files

53 | fc.export()

54 |

55 | fc2 = FaceClassifier(data_pkl = '/tmp/data.pkl', target_pkl = '/tmp/labels.pkl')

56 | self.assertEqual(len(np.unique(fc2.labels)), 41)

57 |

58 |

59 | def test_show_album(self):

60 | fc = FaceClassifier()

61 | fc.show_album(wait_time=.1)

62 |

63 |

64 | if __name__ == '__main__':

65 | unittest.main()

66 |
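
The tests above do not check the random train/test split. Below is a sketch of such a check, written against a stand-alone re-implementation of the shift-and-round idea in `_random_binary`. It uses the standard `random` module instead of NumPy, an assumption made so that it runs without the dataset. The name `random_binary`, the seed and the tolerance are illustrative.

```python
import random


def random_binary(prob_of_1=0.5, rng=random):
    """Return 1 with probability prob_of_1, else 0."""
    # uniform(0.5, 1.5) - 1 lies in [-0.5, 0.5]; after adding prob_of_1,
    # rounding yields 1 whenever the shifted value is above 0.5
    return round(rng.uniform(0.5, 1.5) - 1 + prob_of_1)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
flags = [random_binary(0.85, rng) for _ in range(10_000)]
train_fraction = sum(flags) / len(flags)
print(train_fraction)
```

Because each sample is assigned independently, the training share only approaches `ratio` on average, so a check like this needs a tolerance rather than an exact count.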

--------------------------------------------------------------------------------