├── .gitignore
├── src
│   └── main
│       ├── resources
│       │   └── package.xml
│       └── java
│           └── io
│               └── openmessaging
│                   ├── MessageQueue.java
│                   ├── FixedLengthDramManager.java
│                   ├── LocalTest.java
│                   ├── Context.java
│                   ├── OffsetAndLen.java
│                   ├── ArrayMap.java
│                   ├── Constants.java
│                   ├── DefaultMessageQueueImplBak.java
│                   ├── NativeMemoryByteBuffer.java
│                   ├── DefaultMessageQueueImpl.java
│                   ├── GroupTopicManager.java
│                   ├── Util.java
│                   ├── ThreadGroupManager.java
│                   ├── AepManager.java
│                   ├── AepBenchmark.java
│                   └── Main.java
├── pom.xml
├── RACE_README.md
└── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | .idea/

3 | *.jar

4 | *.iml

5 | target/

6 |

--------------------------------------------------------------------------------

/src/main/resources/package.xml:

--------------------------------------------------------------------------------

1 |

5 | package

6 |

7 | dir

8 |

9 | true

10 |

11 |

12 | src/main/resources

13 | conf

14 | false

15 |

16 |

17 |

18 |

19 | lib

20 | runtime

21 |

22 |

23 |

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/MessageQueue.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.Map;

5 |

6 |

7 | public abstract class MessageQueue {

8 | /**

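9 | * Appends one message.

10 | * The returned long is the offset used to read this message back from this topic + queueId.

11 | * Offsets must increase strictly within each topic + queueId: the first message gets offset 0, the second 1, the third 2, and the ten-thousandth 9999.

12 | * @param topic the topic name; there are 100 topics in total

13 | * @param queueId the id of the queue within the topic; at most 10,000 per topic

14 | * @param data the message payload, randomly generated during evaluation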

15 | */

16 | public abstract long append(String topic, int queueId, ByteBuffer data);

17 |

18 | /**

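19 | * Reads a range of messages.

20 | * Each key in the returned Map is the message's position within the Map, starting at 0; each value is the corresponding data that was written. When the read reaches the end and there is no new data, null must be returned.

21 | * @param topic the topic name

22 | * @param queueId the id of the queue within the topic

23 | * @param offset the offset returned when the message was appended

24 | * @param fetchNum number of messages to read, at most 100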

25 | */

26 | public abstract Map<Integer, ByteBuffer> getRange(String topic, int queueId, long offset, int fetchNum);

27 | }

28 |

--------------------------------------------------------------------------------
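The contract above (offsets that start at 0 and increase by one within each topic + queueId, and result keys that restart at 0 whatever offset was requested) can be illustrated with a small in-memory sketch. It is not the repository's implementation: the class name `InMemoryQueueSketch` is hypothetical, and past the end it returns an empty map, as the README examples and the bundled demo do, rather than null.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory sketch of the MessageQueue contract; not the repository's implementation.
public class InMemoryQueueSketch {
    // topic -> queueId -> payloads in append order, so list index == offset
    private final Map<String, Map<Integer, List<ByteBuffer>>> store = new ConcurrentHashMap<>();

    public long append(String topic, int queueId, ByteBuffer data) {
        List<ByteBuffer> queue = store
                .computeIfAbsent(topic, t -> new ConcurrentHashMap<>())
                .computeIfAbsent(queueId, q -> new ArrayList<>());
        ByteBuffer copy = ByteBuffer.allocate(data.remaining());
        copy.put(data.duplicate()); // copy without moving the caller's position
        copy.flip();
        synchronized (queue) {
            queue.add(copy);
            return queue.size() - 1; // first message -> 0, second -> 1, ...
        }
    }

    public Map<Integer, ByteBuffer> getRange(String topic, int queueId, long offset, int fetchNum) {
        Map<Integer, ByteBuffer> result = new HashMap<>();
        Map<Integer, List<ByteBuffer>> queues = store.get(topic);
        List<ByteBuffer> queue = queues == null ? null : queues.get(queueId);
        if (queue == null) {
            return result; // empty map, as in the bundled demo
        }
        synchronized (queue) {
            for (int i = 0; i < fetchNum && offset + i < queue.size(); i++) {
                // result keys restart at 0 whatever offset was requested
                result.put(i, queue.get((int) (offset + i)).duplicate());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        InMemoryQueueSketch mq = new InMemoryQueueSketch();
        ByteBuffer data = ByteBuffer.wrap("2021".getBytes(StandardCharsets.US_ASCII));
        System.out.println(mq.append("a", 1001, data)); // 0
        System.out.println(mq.append("b", 1001, data)); // 0
        System.out.println(mq.append("a", 1000, data)); // 0
        System.out.println(mq.append("b", 1001, data)); // 1
        System.out.println(mq.getRange("a", 1000, 1, 2).size()); // 0
        System.out.println(mq.getRange("b", 1001, 0, 2).size()); // 2
        System.out.println(mq.getRange("b", 1001, 1, 2).size()); // 1
    }
}
```

The `main` method replays the README's write and read examples. Locking each queue's list keeps offset assignment and insertion atomic, which is the one property the contract really depends on.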

/src/main/java/io/openmessaging/FixedLengthDramManager.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 |

5 | /**

6 | * @author jingfeng.xjf

7 | * @date 2021/9/29

8 | *

9 | * Fixed-length DRAM cache. In principle it could be reused, but this version does not reuse it.

10 | */

11 | public class FixedLengthDramManager {

12 |

13 | private final ByteBuffer buffer;

14 |

15 | public FixedLengthDramManager(int capacity) {

16 | this.buffer = ByteBuffer.allocate(capacity);

17 | }

18 |

19 | public int write(ByteBuffer data) {

20 | int offset = -1;

21 | if (

22 | data.remaining() <= Constants.THREAD_COLD_READ_THRESHOLD_SIZE &&

23 | data.remaining() <= this.buffer.remaining()) {

24 | offset = this.buffer.position();

25 | buffer.put(data);

26 | data.flip(); // after a full put(), flip() restores data's original window only if it started at position 0

27 | }

28 |

29 | return offset;

30 | }

31 |

32 | public ByteBuffer read(int position, int len) {

33 | ByteBuffer duplicate = this.buffer.duplicate();

34 | duplicate.position(position);

35 | duplicate.limit(position + len);

36 | return duplicate.slice();

37 | }

38 |

39 | }

40 |

--------------------------------------------------------------------------------
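FixedLengthDramManager is a bump-pointer arena: `write` copies a message in only if it is no larger than the cold-read threshold and still fits, returning its start position or -1, and `read` returns a zero-copy view. The stand-alone sketch below reproduces that idea under hypothetical names (`BumpArenaSketch`, with the threshold passed to the constructor instead of read from `Constants.THREAD_COLD_READ_THRESHOLD_SIZE`). It reads the source through `duplicate()` so the caller's buffer is never moved, instead of restoring it with `flip()`.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-alone version of the bump-pointer idea in FixedLengthDramManager.
public class BumpArenaSketch {
    private final ByteBuffer arena;
    private final int threshold; // stands in for Constants.THREAD_COLD_READ_THRESHOLD_SIZE

    public BumpArenaSketch(int capacity, int threshold) {
        this.arena = ByteBuffer.allocate(capacity);
        this.threshold = threshold;
    }

    /** Appends data; returns its start position, or -1 if it is over the threshold or does not fit. */
    public int write(ByteBuffer data) {
        int len = data.remaining();
        if (len > threshold || len > arena.remaining()) {
            return -1;
        }
        int start = arena.position();
        arena.put(data.duplicate()); // the duplicate is consumed, the caller's buffer is not
        return start;
    }

    /** Returns a zero-copy view of bytes [position, position + len). */
    public ByteBuffer read(int position, int len) {
        ByteBuffer view = arena.duplicate();
        view.position(position);
        view.limit(position + len);
        return view.slice();
    }

    static String ascii(ByteBuffer b) {
        return StandardCharsets.US_ASCII.decode(b.duplicate()).toString();
    }

    public static void main(String[] args) {
        BumpArenaSketch arena = new BumpArenaSketch(16, 8);
        int a = arena.write(ByteBuffer.wrap("hello".getBytes(StandardCharsets.US_ASCII)));
        int b = arena.write(ByteBuffer.wrap("world!".getBytes(StandardCharsets.US_ASCII)));
        int c = arena.write(ByteBuffer.wrap("too-long!".getBytes(StandardCharsets.US_ASCII))); // 9 > 8
        int d = arena.write(ByteBuffer.wrap("abcdefg".getBytes(StandardCharsets.US_ASCII)));   // 7 > 5 left
        System.out.println(a + " " + b + " " + c + " " + d); // 0 5 -1 -1
        System.out.println(ascii(arena.read(b, 6)));          // world!
    }
}
```

Because positions only ever grow, an entry never moves once written, which is what makes the returned int safe to store in an index entry.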

/src/main/java/io/openmessaging/LocalTest.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.Map;

5 |

6 | /**

7 | * @author jingfeng.xjf

8 | * @date 2021/9/10

9 | */

10 | public class LocalTest {

11 |

12 | public static void main(String[] args) {

13 | MessageQueue messageQueue = new DefaultMessageQueueImpl();

14 | ByteBuffer v1 = ByteBuffer.allocate(4);

15 | v1.putInt(1); v1.flip();

16 | ByteBuffer v2 = ByteBuffer.allocate(4);

17 | v2.putInt(2); v2.flip();

18 | ByteBuffer v3 = ByteBuffer.allocate(4);

19 | v3.putInt(3); v3.flip();

20 | ByteBuffer v4 = ByteBuffer.allocate(4);

21 | v4.putInt(4); v4.flip();

22 | messageQueue.append("topic1",1,v1);

23 | messageQueue.append("topic1",1,v2);

24 | messageQueue.append("topic1",1,v3);

25 | messageQueue.append("topic1",1,v4);

26 |

27 | Map<Integer, ByteBuffer> vals = messageQueue.getRange("topic1", 1, 0, 4);

28 | System.out.println(vals.get(0).getInt());

29 | System.out.println(vals.get(1).getInt());

30 | System.out.println(vals.get(2).getInt());

31 | System.out.println(vals.get(3).getInt());

32 | }

33 |

34 | }

35 |

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/Context.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.util.concurrent.atomic.AtomicLong;

4 |

5 | /**

6 | * @author jingfeng.xjf

7 | * @date 2021/9/22

8 | *

9 | * Most operations in this contest are thread-bound, so Context holds the per-thread context

10 | */

11 | public class Context {

12 |

13 | /**

14 | * Counters used to compute the hit rate of each storage medium

15 | */

16 | public static AtomicLong heapDram = new AtomicLong(0);

17 | public static AtomicLong ssd = new AtomicLong(0);

18 | public static AtomicLong aep = new AtomicLong(0);

19 | public static AtomicLong directDram = new AtomicLong(0);

20 |

21 | /**

22 | * Split all threads into Constants.GROUPS groups

23 | */

24 | public static ThreadGroupManager[] threadGroupManagers = new ThreadGroupManager[Constants.GROUPS];

25 |

26 | /**

27 | * Topic-level operations

28 | */

29 | public static GroupTopicManager[] groupTopicManagers = new GroupTopicManager[Constants.TOPIC_NUM];

30 |

31 |

32 | public static AepManager[] aepManagers = new AepManager[Constants.PERF_THREAD_NUM];

33 | public static FixedLengthDramManager[] fixedLengthDramManagers = new FixedLengthDramManager[Constants.PERF_THREAD_NUM];

34 |

35 | public static ThreadLocal<ThreadGroupManager> threadGroupManager = new ThreadLocal<>();

36 | public static ThreadLocal<AepManager> threadAepManager = ThreadLocal.withInitial(() -> aepManagers[(int) (Thread.currentThread().getId() % Constants.PERF_THREAD_NUM)]);

37 | public static ThreadLocal<FixedLengthDramManager> threadFixedLengthDramManager = ThreadLocal.withInitial(() -> fixedLengthDramManagers[(int) (Thread.currentThread().getId() % Constants.PERF_THREAD_NUM)]);

38 |

39 | }

40 |

--------------------------------------------------------------------------------
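Context binds each thread to one of `PERF_THREAD_NUM` preallocated managers with `ThreadLocal.withInitial`, choosing the slot as the thread id modulo the pool size. The sketch below isolates that pattern with hypothetical names (`ThreadSlotSketch`, a pool of 4). It counts initialisations per slot to show that the initializer runs exactly once per thread; which slots get shared depends entirely on the thread ids the JVM hands out, so in the real code two evaluator threads whose ids collide modulo the pool would share one manager.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of Context's pattern: each thread is bound once to one of POOL shared slots.
public class ThreadSlotSketch {
    static final int POOL = 4;
    static final AtomicInteger[] threadsPerSlot = new AtomicInteger[POOL];

    static {
        for (int i = 0; i < POOL; i++) {
            threadsPerSlot[i] = new AtomicInteger();
        }
    }

    // The initializer runs once per thread, on that thread's first get().
    static final ThreadLocal<Integer> slot = ThreadLocal.withInitial(() -> {
        int s = (int) (Thread.currentThread().getId() % POOL);
        threadsPerSlot[s].incrementAndGet();
        return s;
    });

    /** Starts n threads that each call slot.get() twice; returns how many initializations happened. */
    static int runWorkers(int n) {
        for (AtomicInteger c : threadsPerSlot) {
            c.set(0);
        }
        Thread[] workers = new Thread[n];
        for (int i = 0; i < n; i++) {
            workers[i] = new Thread(() -> {
                slot.get();
                slot.get(); // second call reuses the cached value
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return -1;
            }
        }
        int total = 0;
        for (AtomicInteger c : threadsPerSlot) {
            total += c.get();
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(runWorkers(8)); // 8: one initialization per thread
        for (int i = 0; i < POOL; i++) {
            System.out.println("slot " + i + ": " + threadsPerSlot[i].get() + " thread(s)");
        }
    }
}
```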

/src/main/java/io/openmessaging/OffsetAndLen.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | /**

4 | * @author jingfeng.xjf

5 | * @date 2021/9/10

6 | *

7 | * Multi-level index: where one message is stored on each tier (SSD, AEP, DRAM)

8 | */

9 | public class OffsetAndLen {

10 |

11 | private int length;

12 |

13 | private long ssdOffset;

14 |

15 | private long aepOffset;

16 |

17 | private long aepWriteBufferOffset;

18 |

19 | private int coldReadOffset;

20 |

21 | public OffsetAndLen() {

22 | }

23 |

24 | public OffsetAndLen(long ssdOffset, int length) {

25 | this.ssdOffset = ssdOffset;

26 | this.length = length;

27 | this.aepOffset = -1;

28 | this.coldReadOffset = -1;

29 | this.aepWriteBufferOffset = -1;

30 | }

31 |

32 | public long getSsdOffset() {

33 | return ssdOffset;

34 | }

35 |

36 | public void setSsdOffset(long ssdOffset) {

37 | this.ssdOffset = ssdOffset;

38 | }

39 |

40 | public int getLength() {

41 | return length;

42 | }

43 |

44 | public void setLength(int length) {

45 | this.length = length;

46 | }

47 |

48 | public long getAepOffset() {

49 | return aepOffset;

50 | }

51 |

52 | public void setAepOffset(long aepOffset) {

53 | this.aepOffset = aepOffset;

54 | }

55 |

56 | public int getColdReadOffset() {

57 | return coldReadOffset;

58 | }

59 |

60 | public void setColdReadOffset(int coldReadOffset) {

61 | this.coldReadOffset = coldReadOffset;

62 | }

63 |

64 | public long getAepWriteBufferOffset() {

65 | return aepWriteBufferOffset;

66 | }

67 |

68 | public void setAepWriteBufferOffset(long aepWriteBufferOffset) {

69 | this.aepWriteBufferOffset = aepWriteBufferOffset;

70 | }

71 | }

72 |

--------------------------------------------------------------------------------
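OffsetAndLen records where one message lives on each tier, with -1 meaning "not stored there": the two-argument constructor always sets the SSD offset and initialises the AEP, write-buffer and cold-read offsets to -1. The read path that consumes these entries lives in AepManager, which is not part of this listing, so the lookup order below (DRAM write buffer, then DRAM cold cache, then AEP, then SSD) is an assumption chosen to try the fastest medium first. `TierLookupSketch`, `Tier` and `pickTier` are hypothetical names.

```java
// Hypothetical tier selection over an OffsetAndLen-like entry; the priority order is an assumption.
public class TierLookupSketch {
    enum Tier { DRAM_WRITE_BUFFER, DRAM_COLD_CACHE, AEP, SSD }

    // Minimal stand-in for OffsetAndLen: -1 means "not present on this tier".
    static final class Entry {
        final long ssdOffset;
        long aepOffset = -1;
        long aepWriteBufferOffset = -1;
        int coldReadOffset = -1;

        Entry(long ssdOffset) {
            this.ssdOffset = ssdOffset;
        }
    }

    // Fastest medium first; SSD is the fallback because every entry has an SSD offset.
    static Tier pickTier(Entry e) {
        if (e.aepWriteBufferOffset != -1) return Tier.DRAM_WRITE_BUFFER;
        if (e.coldReadOffset != -1) return Tier.DRAM_COLD_CACHE;
        if (e.aepOffset != -1) return Tier.AEP;
        return Tier.SSD;
    }

    public static void main(String[] args) {
        Entry e = new Entry(4096);
        System.out.println(pickTier(e)); // SSD
        e.aepOffset = 0;
        System.out.println(pickTier(e)); // AEP
        e.coldReadOffset = 128;
        System.out.println(pickTier(e)); // DRAM_COLD_CACHE
    }
}
```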

/src/main/java/io/openmessaging/ArrayMap.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.Collection;

5 | import java.util.Map;

6 | import java.util.Set;

7 |

8 | /**

9 | * @author jingfeng.xjf

10 | * @date 2021/9/16

11 | *

12 | * Array-backed replacement for HashMap (keys are the dense indices 0..capacity-1)

13 | */

14 | public class ArrayMap implements Map<Integer, ByteBuffer> {

15 |

16 | private int capacity;

17 | private int size;

18 | private ByteBuffer[] byteBuffer;

19 |

20 | public ArrayMap() {

21 | }

22 |

23 | public ArrayMap(int capacity) {

24 | this.capacity = capacity;

25 | this.byteBuffer = new ByteBuffer[capacity];

26 | }

27 |

28 | @Override

29 | public int size() {

30 | return this.size;

31 | }

32 |

33 | @Override

34 | public boolean isEmpty() {

35 | return this.size == 0;

36 | }

37 |

38 | @Override

39 | public boolean containsKey(Object key) {

40 | return (Integer)key < this.size;

41 | }

42 |

43 | @Override

44 | public boolean containsValue(Object value) {

45 | throw new UnsupportedOperationException();

46 | }

47 |

48 | @Override

49 | public ByteBuffer get(Object key) {

50 | int index = (Integer)key;

51 | if (index < size) {

52 | return byteBuffer[index];

53 | }

54 | return null;

55 | }

56 |

57 | @Override

58 | public synchronized ByteBuffer put(Integer key, ByteBuffer value) {

59 | byteBuffer[key] = value;

60 | size++;

61 | return value;

62 | }

63 |

64 | @Override

65 | public ByteBuffer remove(Object key) {

66 | throw new UnsupportedOperationException();

67 | }

68 |

69 | @Override

70 | public void putAll(Map<? extends Integer, ? extends ByteBuffer> m) {

71 | throw new UnsupportedOperationException();

72 | }

73 |

74 | @Override

75 | public void clear() {

76 | this.size = 0;

77 | }

78 |

79 | @Override

80 | public Set<Integer> keySet() {

81 | throw new UnsupportedOperationException();

82 | }

83 |

84 | @Override

85 | public Collection<ByteBuffer> values() {

86 | throw new UnsupportedOperationException();

87 | }

88 |

89 | @Override

90 | public Set<Map.Entry<Integer, ByteBuffer>> entrySet() {

91 | throw new UnsupportedOperationException();

92 | }

93 | }

94 |

--------------------------------------------------------------------------------
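ArrayMap works because getRange keys are always the dense indices 0..fetchNum-1, so a plain array replaces hashing, and GroupTopicManager reuses one instance per thread, clearing it on every call. The hypothetical variant below extends `java.util.AbstractMap`, which gives it iteration through `entrySet()` (left unsupported in ArrayMap) and the rest of the Map API for free. It tracks the highest key written rather than counting puts, and it nulls out slots on `clear()` so old buffers are not kept reachable.

```java
import java.nio.ByteBuffer;
import java.util.AbstractMap;
import java.util.AbstractSet;
import java.util.Arrays;
import java.util.Iterator;
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.Set;

// Hypothetical array-backed map for dense keys 0..capacity-1, built on AbstractMap.
public class DenseIndexMapSketch extends AbstractMap<Integer, ByteBuffer> {
    private final ByteBuffer[] slots;
    private int size; // keys 0..size-1 are visible; size = highest key written + 1

    public DenseIndexMapSketch(int capacity) {
        this.slots = new ByteBuffer[capacity];
    }

    @Override
    public ByteBuffer put(Integer key, ByteBuffer value) {
        ByteBuffer old = slots[key];
        slots[key] = value;
        size = Math.max(size, key + 1);
        return old;
    }

    @Override
    public ByteBuffer get(Object key) {
        if (!(key instanceof Integer)) {
            return null;
        }
        int i = (Integer) key;
        return i >= 0 && i < size ? slots[i] : null;
    }

    @Override
    public int size() {
        return size;
    }

    @Override
    public void clear() {
        Arrays.fill(slots, 0, size, null); // drop references so old buffers are not pinned
        size = 0;
    }

    @Override
    public Set<Map.Entry<Integer, ByteBuffer>> entrySet() {
        return new AbstractSet<Map.Entry<Integer, ByteBuffer>>() {
            @Override
            public int size() {
                return DenseIndexMapSketch.this.size;
            }

            @Override
            public Iterator<Map.Entry<Integer, ByteBuffer>> iterator() {
                return new Iterator<Map.Entry<Integer, ByteBuffer>>() {
                    private int next = 0;

                    @Override
                    public boolean hasNext() {
                        return next < DenseIndexMapSketch.this.size;
                    }

                    @Override
                    public Map.Entry<Integer, ByteBuffer> next() {
                        if (!hasNext()) {
                            throw new NoSuchElementException();
                        }
                        int i = next++;
                        return new AbstractMap.SimpleImmutableEntry<>(i, slots[i]);
                    }
                };
            }
        };
    }
}
```

As with ArrayMap, a thread-local instance that is reused across calls means a caller must finish with one result before its next getRange call on the same thread clears it.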

/src/main/java/io/openmessaging/Constants.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | /**

4 | * @author jingfeng.xjf

5 | * @date 2021/9/10

6 | */

7 | public class Constants {

8 |

9 | public static final String ESSD_BASE_PATH = "/essd";

10 | public static final String AEP_BASE_PATH = "/pmem";

11 |

12 | /**

13 | * topicId + queueId + offset + length

14 | */

15 | public static final int IDX_GROUP_BLOCK_SIZE = 1 + 2 + 2;

16 |

17 | /**

18 | * Each data payload is 100 B to 17 KiB

19 | */

20 | public static final int MAX_ONE_DATA_SIZE = 17 * 1024;

21 |

22 | /**

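23 | * The evaluator creates 10 to 50 threads: 40 in the performance run and fewer than 40 in the correctness run. Threads are split into GROUPS groups.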

24 | */

25 | public static final int GROUPS = 4;

26 |

27 | /**

28 | * 性能评测的线程数

29 | */

30 | public static final int PERF_THREAD_NUM = 40;

31 |

32 | /**

33 | * 线程组聚合的消息数

34 | */

35 | public static final int NUMS_PERHAPS_IN_GROUP = 40 / GROUPS + 1;

36 |

37 | /**

38 | * 每个线程随机若干个topic(topic总数<=100)

39 | */

40 | public static final int TOPIC_NUM = 100;

41 |

42 | /**

43 | * Each topic has N queueIds (1 <= N <= 5,000)

44 | */

45 | public static final int QUEUE_NUM = 5000;

46 |

47 | /**

48 | * Max getRange fetchNum: the spec says 100, but the observed maximum is 30, so 30 is used to save memory

49 | */

50 | public static final int MAX_FETCH_NUM = 30;

51 |

52 | /**

53 | * Switch for the correctness-phase check, used for debugging

54 | */

55 | public static final boolean INTERRUPT_INCORRECT_PHASE = false;

56 |

57 | /**

58 | * Per-thread AEP sliding-window cache, used for hot reads

59 | */

60 | public static final long THREAD_AEP_HOT_CACHE_WINDOW_SIZE = 1598 * 1024 * 1024;

61 |

62 | /**

63 | * AEP pre-allocation size

64 | */

65 | public static final long THREAD_AEP_HOT_CACHE_PRE_ALLOCATE_SIZE = 1536 * 1024 * 1024;

66 |

67 | /**

68 | * Per-thread DRAM write buffer, used to cache the hottest data

69 | * about 50.6 MiB (50.6 * 1024 = 51814 KiB)

70 | */

71 | public static final int THREAD_AEP_WRITE_BUFFER_SIZE = 51814 * 1024;

72 |

73 | /**

74 | * Per-thread fixed-length DRAM cache for cold reads

75 | */

76 | public static final int THREAD_COLD_READ_BUFFER_SIZE = 80 * 1024 * 1024;

77 |

78 | /**

79 | * Size threshold for deciding whether a cold message should be cached

80 | */

81 | public static final int THREAD_COLD_READ_THRESHOLD_SIZE = 8 * 1024;

82 |

83 | /**

84 | * Estimated number of messages per thread

85 | */

86 | public static final int THREAD_MSG_NUM = 385000;

87 |

88 | /**

89 | * Pre-allocated SSD file size

90 | */

91 | public static final long THREAD_GROUP_PRE_ALLOCATE_FILE_SIZE = 33L * 1024 * 1024 * 1024;

92 |

93 | public static int _4kb = 4 * 1024;

94 |

95 | }

96 |

--------------------------------------------------------------------------------
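The sizes above can be sanity-checked with a little arithmetic. The sketch copies the constants and multiplies the per-thread buffers by `PERF_THREAD_NUM`, matching how DefaultMessageQueueImpl creates one AepManager (write buffer plus hot-cache window) and one FixedLengthDramManager (cold-read buffer) per performance thread. Multiplying the SSD file size by `GROUPS` assumes one pre-allocated file per thread group; that layout lives in ThreadGroupManager, which is not shown. The sketch also confirms that the int-typed `1598 * 1024 * 1024` product fits before it is widened to long.

```java
import java.util.Locale;

// Memory-budget arithmetic for the values in Constants (copied here so the sketch is self-contained).
public class BudgetSketch {
    static final int PERF_THREAD_NUM = 40;
    static final int GROUPS = 4;
    static final long THREAD_AEP_HOT_CACHE_WINDOW_SIZE = 1598 * 1024 * 1024; // int product, widened to long
    static final int THREAD_AEP_WRITE_BUFFER_SIZE = 51814 * 1024;
    static final int THREAD_COLD_READ_BUFFER_SIZE = 80 * 1024 * 1024;
    static final long THREAD_GROUP_PRE_ALLOCATE_FILE_SIZE = 33L * 1024 * 1024 * 1024;
    static final double GIB = 1024.0 * 1024 * 1024;

    // One write buffer and one cold-read cache per performance thread
    static long dramBytes() {
        return PERF_THREAD_NUM * ((long) THREAD_AEP_WRITE_BUFFER_SIZE + THREAD_COLD_READ_BUFFER_SIZE);
    }

    // One AEP hot-cache window per performance thread
    static long aepBytes() {
        return PERF_THREAD_NUM * THREAD_AEP_HOT_CACHE_WINDOW_SIZE;
    }

    // Assumption: one pre-allocated SSD file per thread group
    static long ssdBytes() {
        return GROUPS * THREAD_GROUP_PRE_ALLOCATE_FILE_SIZE;
    }

    public static void main(String[] args) {
        // 1598 MiB still fits in an int, so the int multiplication above does not overflow
        System.out.println(1598L * 1024 * 1024 <= Integer.MAX_VALUE); // true
        System.out.printf(Locale.ROOT, "DRAM buffers: %.2f GiB%n", dramBytes() / GIB); // 5.10
        System.out.printf(Locale.ROOT, "AEP windows:  %.2f GiB%n", aepBytes() / GIB);  // 62.42
        System.out.printf(Locale.ROOT, "SSD files:    %.0f GiB%n", ssdBytes() / GIB);  // 132
    }
}
```

Against the test machine described in RACE_README.md (8 GB RAM, 126 GB Optane, 400 GB ESSD), about 5.1 GiB of DRAM buffers leaves little headroom for the JVM itself, while the AEP and SSD reservations stay well under their device sizes. Note that a window of 2048 MiB or more written the same way would overflow; the `33L` literal is what keeps the SSD product in long arithmetic.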

/pom.xml:

--------------------------------------------------------------------------------

1 |

2 |

5 | 4.0.0

6 |

7 | io.openmessaging

8 | race2021

9 | 1.0-SNAPSHOT

10 |

11 |

12 |

13 | org.slf4j

14 | slf4j-log4j12

15 | provided

16 | 1.7.6

17 |

18 |

19 | com.intel.pmem

20 | llpl

21 | 1.2.0-release

22 | provided

23 | jar

24 |

25 |

26 |

27 |

28 |

29 |

30 | maven-compiler-plugin

31 | org.apache.maven.plugins

32 | 2.3.2

33 |

34 | 1.8

35 | 1.8

36 | utf-8

37 |

38 |

39 |

40 | org.apache.maven.plugins

41 | maven-assembly-plugin

42 | 2.2.1

43 |

44 | mq-sample

45 | false

46 | false

47 |

48 | jar-with-dependencies

49 |

50 |

51 |

52 |

53 | make-assembly

54 | package

55 |

56 | single

57 |

58 |

59 |

60 |

61 |

62 |

63 |

64 |

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/DefaultMessageQueueImplBak.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.HashMap;

5 | import java.util.Map;

6 | import java.util.concurrent.ConcurrentHashMap;

7 |

8 | /**

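9 | * A simple in-memory implementation that helps contestants understand the problem.

10 | * When submitting, keep the package and class names unchanged and replace the method bodies with your own implementation.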

11 | */

12 | public class DefaultMessageQueueImplBak extends MessageQueue {

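13 | // topic -> (queueId -> next offset)

14 | ConcurrentHashMap<String, Map<Integer, Long>> appendOffset = new ConcurrentHashMap<>();

15 | // topic -> (queueId -> (offset -> data))

16 | ConcurrentHashMap<String, Map<Integer, Map<Long, ByteBuffer>>> appendData = new ConcurrentHashMap<>();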

17 |

18 | // getOrPutDefault: if the key is absent, insert defaultValue and return it

19 | private <K, V> V getOrPutDefault(Map<K, V> map, K key, V defaultValue){

20 | V retObj = map.get(key);

21 | if(retObj != null){

22 | return retObj;

23 | }

24 | map.put(key, defaultValue);

25 | return defaultValue;

26 | }

27 |

28 | @Override

29 | public long append(String topic, int queueId, ByteBuffer data){

30 | // Get the current max offset for this topic-queueId

31 | Map<Integer, Long> topicOffset = getOrPutDefault(appendOffset, topic, new HashMap<>());

32 | long offset = topicOffset.getOrDefault(queueId, 0L);

33 | // Advance the max offset

34 | topicOffset.put(queueId, offset+1);

35 |

36 | Map<Integer, Map<Long, ByteBuffer>> map1 = getOrPutDefault(appendData, topic, new HashMap<>());

37 | Map<Long, ByteBuffer> map2 = getOrPutDefault(map1, queueId, new HashMap<>());

38 | // Store a copy of the payload held in data

39 | ByteBuffer buf = ByteBuffer.allocate(data.remaining());

40 | buf.put(data);

41 | map2.put(offset, buf);

42 | return offset;

43 | }

44 |

45 | @Override

46 | public Map<Integer, ByteBuffer> getRange(String topic, int queueId, long offset, int fetchNum) {

47 | Map<Integer, ByteBuffer> ret = new HashMap<>();

48 | for(int i = 0; i < fetchNum; i++){

49 | Map<Integer, Map<Long, ByteBuffer>> map1 = appendData.get(topic);

50 | if(map1 == null){

51 | break;

52 | }

53 | Map<Long, ByteBuffer> m2 = map1.get(queueId);

54 | if(m2 == null){

55 | break;

56 | }

57 | ByteBuffer buf = m2.get(offset+i);

58 | if(buf != null){

59 | // Before returning, make sure the buffer's remaining region is exactly the stored payload

60 | buf.position(0);

61 | buf.limit(buf.capacity());

62 | ret.put(i,buf);

63 | }

64 | }

65 | return ret;

66 | }

67 | }

68 |

--------------------------------------------------------------------------------
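The bundled demo keeps per-topic state in plain HashMaps and uses a check-then-put helper, so if two threads ever appended to the same topic at the same time they could race and hand out the same offset. A thread-safe version of just the offset bookkeeping can be sketched with `ConcurrentHashMap.computeIfAbsent` and one `AtomicLong` per queue; the class name is hypothetical and payload storage is left out.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical thread-safe replacement for the demo's offset bookkeeping only (payload storage omitted).
public class OffsetAllocatorSketch {
    // topic -> (queueId -> next offset to hand out)
    private final Map<String, Map<Integer, AtomicLong>> next = new ConcurrentHashMap<>();

    /** Returns 0, 1, 2, ... for successive calls with the same topic and queueId, even across threads. */
    public long nextOffset(String topic, int queueId) {
        return next.computeIfAbsent(topic, t -> new ConcurrentHashMap<>())
                .computeIfAbsent(queueId, q -> new AtomicLong())
                .getAndIncrement();
    }

    public static void main(String[] args) throws InterruptedException {
        OffsetAllocatorSketch alloc = new OffsetAllocatorSketch();
        Set<Long> seen = ConcurrentHashMap.newKeySet();
        Thread[] writers = new Thread[4];
        for (int i = 0; i < writers.length; i++) {
            writers[i] = new Thread(() -> {
                for (int k = 0; k < 1000; k++) {
                    seen.add(alloc.nextOffset("topic1", 7));
                }
            });
            writers[i].start();
        }
        for (Thread t : writers) {
            t.join();
        }
        // 4000 distinct offsets: 0..3999, each handed out exactly once
        System.out.println(seen.size());
    }
}
```

`computeIfAbsent` on a ConcurrentHashMap creates each inner map and counter at most once, and `getAndIncrement` makes the read-and-advance a single atomic step, which is exactly what the demo's get-then-put sequence lacks.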

/src/main/java/io/openmessaging/NativeMemoryByteBuffer.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 |

5 | import sun.nio.ch.DirectBuffer;

6 |

7 | /**

8 | * @author jingfeng.xjf

9 | * @date 2021/10/11

10 | *

11 | * A ByteBuffer-like wrapper built on Unsafe; it can be used in place of DirectByteBuffer

12 | */

13 | public class NativeMemoryByteBuffer {

14 |

15 | private final ByteBuffer directBuffer;

16 | private final long address;

17 | private final int capacity;

18 | private int position;

19 | private int limit;

20 |

21 | public NativeMemoryByteBuffer(int capacity) {

22 | this.capacity = capacity;

23 | this.directBuffer = ByteBuffer.allocateDirect(capacity);

24 | this.address = ((DirectBuffer)directBuffer).address();

25 | this.position = 0;

26 | this.limit = capacity;

27 | }

28 |

29 | public int remaining() {

30 | return limit - position;

31 | }

32 |

33 | /**

34 | * Note: does not modify heapBuffer's position or limit

35 | *

36 | * @param heapBuffer

37 | */

38 | public void put(ByteBuffer heapBuffer) {

39 | int remaining = heapBuffer.remaining();

40 | Util.unsafe.copyMemory(heapBuffer.array(), sun.misc.Unsafe.ARRAY_BYTE_BASE_OFFSET + heapBuffer.arrayOffset() + heapBuffer.position(), null, address + position, remaining);

41 | position += remaining;

42 | }

43 |

44 | public void copyTo(ByteBuffer heapBuffer) {

45 | Util.unsafe.copyMemory(null, address + position, heapBuffer.array(), sun.misc.Unsafe.ARRAY_BYTE_BASE_OFFSET + heapBuffer.arrayOffset(), limit - position);

46 | }

47 |

48 | public void put(byte b) {

49 | Util.unsafe.putByte(address + position, b);

50 | position++;

51 | }

52 |

53 | public void putShort(short b) {

54 | Util.unsafe.putShort(address + position, b);

55 | position += 2;

56 | }

57 |

58 | public byte get() {

59 | byte b = Util.unsafe.getByte(address + position);

60 | position++;

61 | return b;

62 | }

63 |

64 | public short getShort() {

65 | short s = Util.unsafe.getShort(address + position);

66 | position += 2;

67 | return s;

68 | }

69 |

70 | public ByteBuffer slice() {

71 | directBuffer.limit(limit);

72 | directBuffer.position(position);

73 | return directBuffer.slice();

74 | }

75 |

76 | public ByteBuffer duplicate() {

77 | return directBuffer;

78 | }

79 |

80 | public int position() {

81 | return position;

82 | }

83 |

84 | public void position(int position) {

85 | this.position = position;

86 | }

87 |

88 | public void limit(int limit) {

89 | this.limit = limit;

90 | }

91 |

92 | public void flip() {

93 | limit = position;

94 | position = 0;

95 | }

96 |

97 | public void clear() {

98 | position = 0;

99 | limit = capacity;

100 | }

101 |

102 | }

103 |

--------------------------------------------------------------------------------
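NativeMemoryByteBuffer moves bytes between a heap array and native memory with `Unsafe.copyMemory`, which takes a base object plus a byte offset. For a `byte[]` that offset is the array's base offset; 16 is the typical value on a 64-bit HotSpot JVM with compressed class pointers, but `Unsafe.ARRAY_BYTE_BASE_OFFSET` states it portably, and a heap buffer's `arrayOffset()` and `position()` must be added when it does not start at index 0. The sketch below shows that round trip with memory from `Unsafe.allocateMemory` instead of a DirectByteBuffer, which avoids the JDK-internal `sun.nio.ch.DirectBuffer` cast; it is an illustration, not a drop-in replacement.

```java
import java.lang.reflect.Field;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import sun.misc.Unsafe;

// Hypothetical heap <-> native round trip with Unsafe.copyMemory and portable array offsets.
public class UnsafeCopySketch {
    static final Unsafe UNSAFE = loadUnsafe();

    private static Unsafe loadUnsafe() {
        try {
            Field f = Unsafe.class.getDeclaredField("theUnsafe");
            f.setAccessible(true);
            return (Unsafe) f.get(null);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Copies heap.remaining() bytes starting at heap.position() to address; heap itself is not modified. */
    static int copyToNative(ByteBuffer heap, long address) {
        int len = heap.remaining();
        long src = Unsafe.ARRAY_BYTE_BASE_OFFSET + heap.arrayOffset() + heap.position();
        UNSAFE.copyMemory(heap.array(), src, null, address, len);
        return len;
    }

    /** Copies len bytes from address into dst at dst.position(), then advances dst. */
    static void copyFromNative(long address, ByteBuffer dst, int len) {
        long dstOff = Unsafe.ARRAY_BYTE_BASE_OFFSET + dst.arrayOffset() + dst.position();
        UNSAFE.copyMemory(null, address, dst.array(), dstOff, len);
        dst.position(dst.position() + len);
    }

    public static void main(String[] args) {
        long address = UNSAFE.allocateMemory(64);
        try {
            ByteBuffer src = ByteBuffer.wrap("xxhello".getBytes(StandardCharsets.US_ASCII));
            src.position(2); // skip "xx": the copy must start at position, not at index 0
            int n = copyToNative(src, address);
            ByteBuffer dst = ByteBuffer.allocate(16);
            copyFromNative(address, dst, n);
            dst.flip();
            System.out.println(StandardCharsets.US_ASCII.decode(dst)); // hello
        } finally {
            UNSAFE.freeMemory(address); // native memory is not garbage collected
        }
    }
}
```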

/RACE_README.md:

--------------------------------------------------------------------------------

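1 | > Before you start:

2 | > 1. Read the following carefully before you begin coding.

3 |

4 | ## 1. Problem description

5 | Apache RocketMQ is a distributed messaging middleware that has carried trillions of messages during every Double 11 (Singles' Day) and gives business teams a stable, reliable messaging service with high performance and low latency. Reading and writing real-time data and reading historical data are both common storage access patterns, and they happen at the same moment, so optimizing this mixed read/write workload can greatly improve the stability of the storage system. Meanwhile, Intel® Optane™ persistent memory, a distinctive standalone storage device, narrows the gap between conventional memory and storage and may provide the lever for another leap in RocketMQ's performance.

6 | ## 2. Task

7 | Implement a single-node storage engine that provides the following interfaces to simulate message storage: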

8 |

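9 | Write interface

10 |

11 | - long append(String topic, int queueId, ByteBuffer data)

12 | - Writes a variable-length message to one queue under a topic; the returned offsets must be ordered

13 |

14 | Example: write messages in the following order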

15 |

16 | {"topic":a,"queueId":1001, "data":2021}

17 | {"topic":b,"queueId":1001, "data":2021}

18 | {"topic":a,"queueId":1000, "data":2021}

19 | {"topic":b,"queueId":1001, "data":2021}

20 |

21 | The return values are:

22 |

23 | 0

24 | 0

25 | 0

26 | 1

27 |

28 | Read interface

29 |

30 | - Map<Integer, ByteBuffer> getRange(String topic, int queueId, long offset, int fetchNum)

31 | - In the returned Map, the key is the message's sequential position within the Map, starting from 0

32 |

33 | Example:

34 |

35 | getRange(a, 1000, 1, 2)

36 | Map{}

37 |

38 | getRange(b, 1001, 0, 2)

39 | Map{"0": "2021", "1": "2021"}

40 |

41 | getRange(b, 1001, 1, 2)

42 | Map{"0": "2021"}

43 |

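44 | The evaluation environment provides 128 GB of Optane persistent memory; contestants are encouraged to use it to improve performance.

45 |

46 | ## 3. Language restriction

47 | Java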

48 |

49 |

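50 | ## 4. Program goal

51 |

52 | Read the two classes MessageStore and DefaultMessageStoreImpl in the demo project carefully.

53 |

54 | Your coding goal is to implement DefaultMessageStoreImpl.

55 |

56 | Note:

57 | Print logs directly to standard output. The evaluator collects the console output so you can troubleshoot, but do not log heavily: a single evaluation may produce at most 100 MB of output, and anything beyond that is truncated.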

58 |

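59 | ## 5. Test environment

60 |

61 | 1. An ECS instance with 4 cores and 8 GB of RAM, a 400 GB ESSD PL1 cloud disk (throughput up to 350 MiB/s), and 126 GB of Optane™ persistent memory.

62 |

63 | 2. The Java language level is restricted to Java 8.

64 |

65 | 3. The llpl library may be used; it is preinstalled in the environment, so declare it with provided scope.

66 |

67 | 4. The log4j2 logging library may be used; it is preinstalled in the environment, so declare it with provided scope.

68 |

69 | 5. JNI and other third-party libraries are not allowed.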

70 |

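71 | ## 6. Performance evaluation

72 | The evaluator creates 20 to 40 threads. Each thread picks a random set of topics (at most 100 topics in total), each topic has N queueIds (1 <= N <= 10,000), and the threads call append continuously. The evaluator guarantees that threads write similar amounts of data (topics are not guaranteed to be balanced). Each data payload has a random size between 100 B and 17 KiB (pseudo-random), the data is nearly incompressible, and 150 GiB is written in total.

73 |

74 | While the same write pressure continues, 50% of the queues, chosen at random, are read starting from their current maximum offset, and the remaining queues are read from their minimum offset (that is, from the beginning). After another 100 GiB in total has been written, all writes stop; reads continue until no data is left, and then stop.

75 |

76 | The score is the elapsed time of the whole process. The timeout is 1800 seconds; a run that exceeds it is forcibly terminated.

77 |

78 | The evaluation data will be replaced when the final ranking begins.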

79 |

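80 | ## 7. Correctness evaluation

81 | Write a number of messages.

82 |

83 | **Restart the ECS instance and wipe the data on the Optane device**.

84 |

85 | Read the messages back; they must be exactly equal to the data written earlier.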

86 |

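87 | ## 8. Ranking and evaluation rules

88 | Submissions that pass the correctness evaluation are ranked by performance evaluation time, from shortest to longest.

89 |

90 | Top-ranked code will be reviewed; if substantial copying of other people's code is found, the ranking will be lowered accordingly.

91 |

92 | Every message must be stored exactly as sent; it may not be compressed or faked.

93 |

94 | The program may not be optimized for patterns in the data; every optimization must hold for general random data.

95 |

96 | If cheating is found, such as hacking the evaluator or bypassing required evaluation logic, the submission is void and the contestant is disqualified.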

97 |

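98 | ## 9. How to participate

99 | Find the "Cloud Native Programming Challenge" (云原生编程挑战赛) on Alibaba Tianchi and register.

100 |

101 | Register an account on code.aliyun.com, create a repository, and add the official contest account cloudnative-contest as a project member with Reporter permission.

102 |

103 | Fork or copy the code of the mqrace2021 repository into your own repository and implement your logic. Your code must be placed in the designated package; otherwise it will not be included in the evaluation.

104 |

105 | Submit your repository's git URL through the Tianchi submission entry and wait for the evaluation results.

106 |

107 | Save your log files promptly; they are kept for only one day.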

--------------------------------------------------------------------------------
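Two consequences of the rules above can be worked out directly. Because the correctness check restarts the machine and wipes the Optane device, the data has to be persisted on the ESSD, so if all 150 GiB + 100 GiB reach the disk, its 350 MiB/s ceiling alone puts a floor on the run time. The 100 B to 17 KiB payload range also gives the average message size. The sketch below assumes sizes are uniformly distributed over that range and that the disk sustains its stated peak, so it is a back-of-the-envelope bound, not a measurement.

```java
import java.util.Locale;

// Back-of-the-envelope bounds derived from the README's workload description.
public class WorkloadBoundSketch {
    static final double GIB = 1024.0 * 1024 * 1024;

    /** Seconds needed to push totalGiB through a disk at mibPerSec, ignoring every other cost. */
    static double minSeconds(double totalGiB, double mibPerSec) {
        return totalGiB * 1024 / mibPerSec;
    }

    /** Mean of a size drawn uniformly from [minBytes, maxBytes]. */
    static double meanSize(int minBytes, int maxBytes) {
        return (minBytes + maxBytes) / 2.0;
    }

    public static void main(String[] args) {
        double totalGiB = 150 + 100;                  // written in the two phases
        double seconds = minSeconds(totalGiB, 350);   // ESSD PL1 peak throughput
        double mean = meanSize(100, 17 * 1024);       // 100 B to 17 KiB
        double messages = totalGiB * GIB / mean;
        System.out.printf(Locale.ROOT, "ESSD floor: %.0f s of the 1800 s limit%n", seconds); // 731
        System.out.printf(Locale.ROOT, "mean size %.0f B, about %.1f million messages%n",
                mean, messages / 1e6);                // 8754, 30.7
    }
}
```

Under these assumptions roughly 40% of the time budget is spent just on sequential disk writes, which is why the reads have to be served mostly from the Optane and DRAM tiers rather than from the same disk.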

/src/main/java/io/openmessaging/DefaultMessageQueueImpl.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.Date;

5 | import java.util.Map;

6 | import java.util.concurrent.Executors;

7 | import java.util.concurrent.ScheduledExecutorService;

8 | import java.util.concurrent.TimeUnit;

9 | import java.util.concurrent.atomic.AtomicInteger;

10 | import java.util.concurrent.locks.Condition;

11 | import java.util.concurrent.locks.Lock;

12 | import java.util.concurrent.locks.ReentrantLock;

13 |

14 | /**

15 | * Main entry point of the program

16 | */

17 | public class DefaultMessageQueueImpl extends MessageQueue {

18 |

19 | private final Lock lock = new ReentrantLock();

20 | private final Condition condition = lock.newCondition();

21 |

22 | private final ThreadLocal<Boolean> firstAppend = ThreadLocal.withInitial(() -> true);

23 |

24 | private final AtomicInteger threadNum = new AtomicInteger(0);

25 |

26 | private final AtomicInteger messageNum = new AtomicInteger(0);

27 |

28 | public DefaultMessageQueueImpl() {

29 |

30 | Util.printParams();

31 |

32 | for (int i = 0; i < Constants.TOPIC_NUM; i++) {

33 | Context.groupTopicManagers[i] = new GroupTopicManager(i);

34 | }

35 |

36 | for (int i = 0; i < Constants.PERF_THREAD_NUM; i++) {

37 | Context.aepManagers[i] = new AepManager("hot_" + i,

38 | Constants.THREAD_AEP_WRITE_BUFFER_SIZE,

39 | Constants.THREAD_AEP_HOT_CACHE_WINDOW_SIZE);

40 | Context.fixedLengthDramManagers[i] = new FixedLengthDramManager(

41 | Constants.THREAD_COLD_READ_BUFFER_SIZE);

42 | }

43 |

44 | for (int i = 0; i < Constants.GROUPS; i++) {

45 | Context.threadGroupManagers[i] = new ThreadGroupManager(i);

46 | }

47 |

48 | ScheduledExecutorService scheduledExecutorService = Executors.newSingleThreadScheduledExecutor();

49 | scheduledExecutorService.scheduleAtFixedRate(Util::analysisMemory, 60, 10, TimeUnit.SECONDS);

50 |

51 | // Report cache hit rates on shutdown

52 | Runtime.getRuntime().addShutdownHook(new Thread(this::analysisHitCache));

53 |

54 | // Safety net: force the process to exit if it hangs

55 | scheduledExecutorService.schedule(() -> {

56 | System.out.println("timeout killed");

57 | System.exit(0);

58 | }, 445, TimeUnit.SECONDS);

59 |

60 | }

61 |

62 | @Override

63 | public long append(String topic, int queueId, ByteBuffer data) {

64 | // messageNum.incrementAndGet();

65 | // Count the total number of threads (runs once per thread, on its first append)

66 | if (firstAppend.get()) {

67 | initThread();

68 | }

69 | int topicNo = Util.parseInt(topic);

70 |

71 | // Append through this thread's group manager, which returns the next offset for this topic-queueId

72 | return Context.threadGroupManager.get().append(topicNo, queueId, data);

73 | }

74 |

75 | @Override

76 | public Map<Integer, ByteBuffer> getRange(String topic, int queueId, long offset, int fetchNum) {

77 | int topicNo = Util.parseInt(topic);

78 | return Context.groupTopicManagers[topicNo].getRange(queueId, (int)offset, fetchNum);

79 | }

80 |

81 | private void initThread() {

82 | lock.lock();

83 | try {

84 | if (threadNum.incrementAndGet() != Constants.PERF_THREAD_NUM) {

85 | try {

86 | boolean await = condition.await(3, TimeUnit.SECONDS);

87 | if (!await && Constants.INTERRUPT_INCORRECT_PHASE) {

88 | throw new RuntimeException("INTERRUPT_INCORRECT_PHASE");

89 | }

90 | } catch (InterruptedException ignored) {

91 | }

92 | } else {

93 | condition.signalAll();

94 | }

95 | } finally {

96 | lock.unlock();

97 | }

98 | int groupNum;

99 | if (threadNum.get() == Constants.PERF_THREAD_NUM) {

100 | groupNum = Constants.GROUPS;

101 | } else {

102 | groupNum = threadNum.get();

103 | }

104 | firstAppend.set(false);

105 | int bucketNo = (int)(Thread.currentThread().getId() % groupNum);

106 | Context.threadGroupManager.set(Context.threadGroupManagers[bucketNo]);

107 | Context.threadGroupManagers[bucketNo].setTotal(threadNum.get() / groupNum);

108 |

109 | }

110 |

111 | private void analysisHitCache() {

112 | long totalSize = 0;

113 | System.out.println(new Date() + " totalNum: " + messageNum.get() + " " + Util.byte2M(totalSize) + "M");

114 | System.out.println("=== message num: "

115 | + Context.heapDram.get() + " "

116 | + Context.ssd.get() + " "

117 | + Context.aep.get() + " "

118 | + Context.directDram.get()

119 | );

120 | }

121 |

122 | }

--------------------------------------------------------------------------------
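`initThread` is a one-shot rendezvous: on its first append, each thread increments a counter under a lock and waits on a Condition until `PERF_THREAD_NUM` threads have arrived or 3 seconds pass, then picks a group by thread id. The sketch below isolates that barrier under hypothetical names. It differs from the original in one deliberate way: it waits in a loop on the arrival count, because `Condition.awaitNanos` may return early on a spurious wake-up, and it reports how many arrivals it saw.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch of the first-append rendezvous in initThread.
public class ArrivalBarrierSketch {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition allArrived = lock.newCondition();
    private final int expected;
    private int arrived;

    public ArrivalBarrierSketch(int expected) {
        this.expected = expected;
    }

    /** Registers the caller, waits until `expected` threads arrived or the timeout elapsed, returns arrivals seen. */
    public int arriveAndAwait(long timeout, TimeUnit unit) throws InterruptedException {
        lock.lock();
        try {
            arrived++;
            if (arrived >= expected) {
                allArrived.signalAll(); // last arrival releases everyone
                return arrived;
            }
            long nanos = unit.toNanos(timeout);
            while (arrived < expected && nanos > 0) {
                nanos = allArrived.awaitNanos(nanos); // loop guards against spurious wake-ups
            }
            return arrived;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        ArrivalBarrierSketch barrier = new ArrivalBarrierSketch(3);
        int groups = 2;
        Thread[] workers = new Thread[3];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                try {
                    int seen = barrier.arriveAndAwait(3, TimeUnit.SECONDS);
                    long group = Thread.currentThread().getId() % groups; // same bucketing idea as initThread
                    System.out.println(Thread.currentThread().getName() + " saw " + seen + ", group " + group);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
    }
}
```

When fewer threads show up than expected, as in the correctness run, every waiter simply times out and proceeds with the smaller count, which is the behaviour initThread relies on to tell the two phases apart.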

/src/main/java/io/openmessaging/GroupTopicManager.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.nio.channels.FileChannel;

5 | import java.util.ArrayList;

6 | import java.util.List;

7 | import java.util.Map;

8 | import java.util.concurrent.Semaphore;

9 | import java.util.concurrent.TimeUnit;

10 |

11 | /**

12 | * @author jingfeng.xjf

13 | * @date 2021/9/15

14 | *

15 | * Topic manager.

16 | * One ThreadGroupManager logically manages m threads.

17 | * One thread holds n GroupTopicManagers.

18 | * Each GroupTopicManager corresponds to exactly one ThreadGroupManager.

19 | */

20 | public class GroupTopicManager {

21 |

22 | static ThreadLocal<ArrayMap> mapCache = ThreadLocal.withInitial(

23 | () -> new ArrayMap(Constants.MAX_FETCH_NUM));

24 | static ThreadLocal<List<ByteBuffer>> retBuffers = ThreadLocal.withInitial(() -> {

25 | List<ByteBuffer> buffers = new ArrayList<>();

26 | for (int i = 0; i < Constants.MAX_FETCH_NUM; i++) {

27 | buffers.add(ByteBuffer.allocateDirect(Constants.MAX_ONE_DATA_SIZE));

28 | }

29 | return buffers;

30 | });

31 | /**

32 | * 1 hot-read queue

33 | * 0 unknown

34 | * -1 cold-read queue

35 | */

36 | // public int[] queueCategory;

37 |

38 | public int topicNo;

39 | private final List<OffsetAndLen>[] queue2index2Offset;

40 | private FileChannel dataFileChannel;

41 | private final Semaphore[] semaphores;

42 |

43 | public GroupTopicManager(int topicNo) {

44 | this.topicNo = topicNo;

45 | // this.queueCategory = new int[Constants.QUEUE_NUM];

46 | queue2index2Offset = new ArrayList[Constants.QUEUE_NUM];

47 | for (int i = 0; i < Constants.QUEUE_NUM; i++) {

48 | queue2index2Offset[i] = new ArrayList<>();

49 | }

50 | semaphores = new Semaphore[Constants.MAX_FETCH_NUM];

51 | for (int i = 0; i < Constants.MAX_FETCH_NUM; i++) {

52 | semaphores[i] = new Semaphore(0);

53 | }

54 | }

55 |

56 | public long append(int queueId, long ssdPosition, long aepPosition, long writeBufferPosition, int coldCachePosition,

57 | int len, FileChannel dataFileChannel) {

58 | try {

59 | // Record the index entry

60 | List<OffsetAndLen> index2Offset = queue2index2Offset[queueId];

61 | long curOffset = index2Offset.size();

62 | OffsetAndLen offsetAndLen = Context.threadAepManager.get().offerOffsetAndLen();

63 | offsetAndLen.setSsdOffset(ssdPosition);

64 | offsetAndLen.setAepOffset(aepPosition);

65 | offsetAndLen.setColdReadOffset(coldCachePosition);

66 | offsetAndLen.setAepWriteBufferOffset(writeBufferPosition);

67 | offsetAndLen.setLength(len);

68 | index2Offset.add(offsetAndLen);

69 | if (this.dataFileChannel == null) {

70 | this.dataFileChannel = dataFileChannel;

71 | }

72 | return curOffset;

73 | } catch (Exception e) {

74 | throw new RuntimeException(e);

75 | }

76 | }

77 |

78 | public Map<Integer, ByteBuffer> getRange(int queueId, int offset, int fetchNum) {

79 | // if (queueCategory[queueId] == 0) {

80 | // queueCategory[queueId] = offset == 0 ? -1 : 1;

81 | // }

82 | try {

83 | ArrayMap ret = mapCache.get();

84 | ret.clear();

85 | AepManager aepManager = Context.threadAepManager.get();

86 |

87 | List<OffsetAndLen> offsetAndLens = queue2index2Offset[queueId];

88 | if (offsetAndLens == null) {

89 | return ret;

90 | }

91 |

92 | int size = offsetAndLens.size();

93 |

94 | for (int i = 0; i < fetchNum && i + offset < size; i++) {

95 | ByteBuffer retBuffer = retBuffers.get().get(i);

96 | retBuffer.clear();

97 | OffsetAndLen offsetAndLen = offsetAndLens.get(i + offset);

98 | retBuffer.limit(offsetAndLen.getLength());

99 | aepManager.read(offsetAndLen, i, retBuffer, ret, this.dataFileChannel, semaphores[i]);

100 | }

101 | for (int i = 0; i < fetchNum && i + offset < size; i++) {

102 | semaphores[i].tryAcquire(1, 3, TimeUnit.SECONDS);

103 | }

104 | return ret;

105 | } catch (Exception e) {

106 | e.printStackTrace();

107 | throw new RuntimeException(e);

108 | }

109 | }

110 |

111 | public void addIndex(int queueId, OffsetAndLen offsetAndLen, FileChannel dataFileChannel) {

112 | if (this.dataFileChannel == null) {

113 | this.dataFileChannel = dataFileChannel;

114 | }

115 | List<OffsetAndLen> offsetAndLens = queue2index2Offset[queueId];

116 | offsetAndLens.add(offsetAndLen);

117 | }

118 | }

119 |

--------------------------------------------------------------------------------
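`GroupTopicManager.getRange` fans reads out through `AepManager.read`, giving each result slot its own Semaphore, and then waits on every slot with `tryAcquire(1, 3, TimeUnit.SECONDS)`. AepManager is not part of this listing, so the sketch below assumes that reads complete asynchronously on some executor and release the slot's permit when done. `FanOutReadSketch`, its fixed thread pool, and the `IntFunction` read callback are hypothetical. Unlike the original, it checks the value `tryAcquire` returns, since a slot that timed out would otherwise leave a missing entry in the result.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.IntFunction;

// Hypothetical fan-out/fan-in read with one Semaphore per result slot.
public class FanOutReadSketch {
    private final ExecutorService pool = Executors.newFixedThreadPool(4);

    /** Runs read.apply(i) for i in [0, n) in parallel and waits for every slot; throws if any slot times out. */
    public Map<Integer, String> readAll(int n, IntFunction<String> read, long timeoutMs) throws InterruptedException {
        Map<Integer, String> result = new ConcurrentHashMap<>();
        Semaphore[] done = new Semaphore[n];
        for (int i = 0; i < n; i++) {
            final int slot = i;
            done[slot] = new Semaphore(0);
            pool.execute(() -> {
                try {
                    result.put(slot, read.apply(slot));
                } finally {
                    done[slot].release(); // signal even if the read threw, so the caller never waits in vain
                }
            });
        }
        for (int i = 0; i < n; i++) {
            if (!done[i].tryAcquire(1, timeoutMs, TimeUnit.MILLISECONDS)) {
                throw new IllegalStateException("read " + i + " timed out");
            }
        }
        return result;
    }

    public void shutdown() {
        pool.shutdown();
    }

    public static void main(String[] args) throws InterruptedException {
        FanOutReadSketch reader = new FanOutReadSketch();
        try {
            Map<Integer, String> r = reader.readAll(5, i -> "msg-" + i, 1000);
            System.out.println(r.size() + " " + r.get(4)); // 5 msg-4
        } finally {
            reader.shutdown();
        }
    }
}
```

One permit per slot keeps the waiting logic simple and lets the caller name the slot that timed out; a single Semaphore acquired `n` times would work too but loses that detail.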

/src/main/java/io/openmessaging/Util.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.io.IOException;

4 | import java.lang.management.BufferPoolMXBean;

5 | import java.lang.management.ManagementFactory;

6 | import java.lang.reflect.Field;

7 | import java.nio.ByteBuffer;

8 | import java.nio.channels.FileChannel;

9 |

10 | import sun.misc.JavaNioAccess;

11 | import sun.misc.SharedSecrets;

12 | import sun.misc.Unsafe;

13 | import sun.misc.VM;

14 |

15 | /**

16 | * @author jingfeng.xjf

17 | * @date 2021/9/17

18 | */

19 | public class Util {

20 |

21 | public static int parseInt(String input) {

22 | switch (input.length()) {

23 | case 7: {

24 | return (input.charAt(5) - '0') * 10 + (input.charAt(6) - '0');

25 | }

26 | case 6: {

27 | return input.charAt(5) - '0';

28 | }

29 |

30 | }

31 | throw new RuntimeException("unknown topic " + input);

32 | }

33 |

34 | public static final Unsafe unsafe = getUnsafe();

35 |

36 | private static sun.misc.Unsafe getUnsafe() {

37 | try {

38 | Field field = Unsafe.class.getDeclaredField("theUnsafe");

39 | field.setAccessible(true);

40 | return (Unsafe)field.get(null);

41 | } catch (Exception e) {

42 | throw new RuntimeException(e);

43 | }

44 | }

45 |

46 | public static BufferPoolMXBean getDirectBufferPoolMBean() {

47 | return ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)

48 | .stream()

49 | .filter(e -> e.getName().equals("direct"))

50 | .findFirst().get();

51 | }

52 |

53 | public static void preAllocateFile(FileChannel fileChannel, long threadGroupPerhapsFileSize) {

54 | int bufferSize = 4 * 1024;

55 | ByteBuffer byteBuffer = ByteBuffer.allocateDirect(bufferSize);

56 | for (int i = 0; i < bufferSize; i++) {

57 | byteBuffer.put((byte)-1);

58 | }

59 | byteBuffer.flip();

60 | long loopTimes = threadGroupPerhapsFileSize / bufferSize;

61 | for (long i = 0; i < loopTimes; i++) {

62 | try {

63 | fileChannel.write(byteBuffer);

64 | } catch (IOException e) {

65 | e.printStackTrace();

66 | }

67 | byteBuffer.flip();

68 | }

69 | try {

70 | fileChannel.force(true);

71 | fileChannel.position(0);

72 | } catch (IOException e) {

73 | e.printStackTrace();

74 | }

75 | }

76 |

77 | public JavaNioAccess.BufferPool getNioBufferPool() {

78 | return SharedSecrets.getJavaNioAccess().getDirectBufferPool();

79 | }

80 |

81 | /**

82 | * -XX:MaxDirectMemorySize=60M

83 | */

84 | public void testGetMaxDirectMemory() {

85 | System.out.println(Runtime.getRuntime().maxMemory() / 1024.0 / 1024.0);

86 | System.out.println(VM.maxDirectMemory() / 1024.0 / 1024.0);

87 | System.out.println(getDirectBufferPoolMBean().getTotalCapacity() / 1024.0 / 1024.0);

88 | System.out.println(getNioBufferPool().getTotalCapacity() / 1024.0 / 1024.0);

89 | }

90 |

91 | public static void analysisMemory() {

92 | System.out.printf("totalMemory: %sM freeMemory: %sM usedMemory: %sM directMemoryUsed: %sM\n",

93 | byte2M(Runtime.getRuntime().totalMemory()), byte2M(Runtime.getRuntime().freeMemory()),

94 | byte2M(Runtime.getRuntime().totalMemory() - Runtime.getRuntime().freeMemory()),

95 | byte2M(Util.getDirectBufferPoolMBean().getMemoryUsed()));

96 | }

97 |

98 | public static void analysisMemoryOnce() {

99 | System.out.printf("==specialPrint totalMemory: %sM freeMemory: %sM usedMemory: %sM directMemoryUsed: %sM\n",

100 | byte2M(Runtime.getRuntime().totalMemory()), byte2M(Runtime.getRuntime().freeMemory()),

101 | byte2M(Runtime.getRuntime().totalMemory() - Runtime.getRuntime().freeMemory()),

102 | byte2M(Util.getDirectBufferPoolMBean().getMemoryUsed()));

103 | }

104 |

105 | public static void printParams() {

106 | System.out.println("Constants.GROUPS=" + Constants.GROUPS);

107 | System.out.println(

108 | "Constants.THREAD_COLD_READ_BUFFER_SIZE=" + Util.byte2M(Constants.THREAD_COLD_READ_BUFFER_SIZE) + "M");

109 | System.out.println("Constants.THREAD_AEP_SIZE=" + Util.byte2M(Constants.THREAD_AEP_HOT_CACHE_WINDOW_SIZE) + "M");

110 | System.out.println(

111 | "Constants.THREAD_HOT_WRITE_BUFFER_SIZE=" + Util.byte2M(Constants.THREAD_AEP_WRITE_BUFFER_SIZE) + "M");

112 | System.out.println(

113 | "Constants.THREAD_COLD_READ_THRESHOLD_SIZE=" + Util.byte2KB(Constants.THREAD_COLD_READ_THRESHOLD_SIZE) + "KB");

114 | }

115 |

116 | public static long byte2M(long x) {

117 | return x / 1024 / 1024;

118 | }

119 |

120 | public static long byte2KB(long x) {

121 | return x / 1024;

122 | }

123 |

124 | }

125 |

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/ThreadGroupManager.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.io.File;

4 | import java.nio.ByteBuffer;

5 | import java.nio.channels.FileChannel;

6 | import java.nio.file.StandardOpenOption;

7 | import java.util.Date;

8 | import java.util.concurrent.TimeUnit;

9 | import java.util.concurrent.locks.Condition;

10 | import java.util.concurrent.locks.Lock;

11 | import java.util.concurrent.locks.ReentrantLock;

12 |

13 | /**

14 | * @author jingfeng.xjf

15 | * @date 2021/9/15

16 | *

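17 | * Manager that aggregates data written by multiple threads.

18 | *

19 | * Core idea: split the 40 threads into 4 groups of 10; after a group's writes are aggregated, the last thread to arrive performs a single force for all of them, and they all return together.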

20 | */

21 | public class ThreadGroupManager {

22 |

23 | private final Lock lock;

24 | private final Condition condition;

25 |

26 | private final NativeMemoryByteBuffer writeBuffer;

27 | public FileChannel fileChannel;

28 | private volatile int count = 0;

29 | private volatile int total = 0;

30 |

31 | public ThreadGroupManager(int groupNo) {

32 | this.lock = new ReentrantLock();

33 | this.condition = this.lock.newCondition();

34 | this.writeBuffer = new NativeMemoryByteBuffer(Constants.MAX_ONE_DATA_SIZE * Constants.NUMS_PERHAPS_IN_GROUP);

35 | try {

36 | String dataFilePath = Constants.ESSD_BASE_PATH + "/" + groupNo;

37 | File dataFile = new File(dataFilePath);

38 | if (!dataFile.exists()) {

39 | dataFile.createNewFile();

40 | }

41 | this.fileChannel = FileChannel.open(dataFile.toPath(), StandardOpenOption.READ,

42 | StandardOpenOption.WRITE);

43 |

44 | // recover

45 | if (this.fileChannel.size() > 0) {

46 | ByteBuffer idxWriteBuffer = ByteBuffer.allocate(Constants.IDX_GROUP_BLOCK_SIZE);

47 | long offset = 0;

48 | while (offset < this.fileChannel.size()) {

49 | idxWriteBuffer.clear();

50 | fileChannel.read(idxWriteBuffer, offset);

51 | idxWriteBuffer.flip();

52 | int topicId = idxWriteBuffer.get();

53 | if (topicId < 0 || topicId > 100) {

54 | break;

55 | }

56 | // Note: when the buffer is written via Unsafe (native byte order), the bytes must be reversed here

57 | int queueId = Short.reverseBytes(idxWriteBuffer.getShort());

58 | if (queueId < 0 || queueId > 5000) {

59 | break;

60 | }

61 | int len = Short.reverseBytes(idxWriteBuffer.getShort());

62 | if (len <= 0 || len > Constants.MAX_ONE_DATA_SIZE) {

63 | break;

64 | }

65 | Context.groupTopicManagers[topicId].addIndex(queueId,

66 | new OffsetAndLen(offset + Constants.IDX_GROUP_BLOCK_SIZE, len),

67 | this.fileChannel);

68 | offset += Constants.IDX_GROUP_BLOCK_SIZE + len;

69 | }

70 | } else {

71 | if (groupNo < 4) {

72 | Util.preAllocateFile(this.fileChannel, Constants.THREAD_GROUP_PRE_ALLOCATE_FILE_SIZE);

73 | }

74 | }

75 | } catch (Exception e) {

76 | e.printStackTrace();

77 | }

78 | }

79 |

80 | public void setTotal(int total) {

81 | this.total = total;

82 | }

83 |

84 | public long append(int topicNo, int queueId, ByteBuffer data) {

85 | AepManager aepManager = Context.threadAepManager.get();

86 | FixedLengthDramManager fixedLengthDramManager = Context.threadFixedLengthDramManager.get();

87 |

88 | int len = data.remaining();

89 |

90 | long aepPosition = -1;

91 | long writeBufferPosition = -1;

92 |

93 | // Prefer the DRAM cache

94 | int coldCachePosition = fixedLengthDramManager.write(data);

95 | if (coldCachePosition == -1) {

96 | // Otherwise use the AEP cache

97 | aepPosition = aepManager.write(data);

98 | writeBufferPosition = aepManager.getWriteBufferPosition() - len;

99 | }

100 |

101 | long ssdPosition = -1;

102 |

103 | lock.lock();

104 | try {

105 | count++;

106 | ssdPosition = fileChannel.position() + writeBuffer.position() + Constants.IDX_GROUP_BLOCK_SIZE;

107 |

108 | writeBuffer.put((byte)topicNo);

109 | writeBuffer.putShort((short)queueId);

110 | writeBuffer.putShort((short)len);

111 | writeBuffer.put(data);

112 |

113 | if (count != total) {

114 | condition.await(10, TimeUnit.SECONDS);

115 | } else {

116 | // The last thread to arrive triggers the force (flush to disk)

117 | force();

118 | count = 0;

119 | // Wake up the other threads so they can return

120 | condition.signalAll();

121 | }

122 | } catch (Exception e) {

123 | e.printStackTrace();

124 | } finally {

125 | lock.unlock();

126 | }

127 | // Needs to return the length of the queue

128 | return Context.groupTopicManagers[topicNo].append(queueId, ssdPosition, aepPosition, writeBufferPosition,

129 | coldCachePosition, len, fileChannel);

130 | }

131 |

132 | private void force() {

133 | // Pad to a 4 KB boundary

134 | if (total > 5) {

135 | int position = writeBuffer.position();

136 | int mod = position % Constants._4kb;

137 | if (mod != 0) {

138 | writeBuffer.position(position + Constants._4kb - mod);

139 | }

140 | }

141 |

142 | writeBuffer.flip();

143 | try {

144 | fileChannel.write(writeBuffer.slice());

145 | fileChannel.force(false);

146 | } catch (Exception e) {

147 | e.printStackTrace();

148 | }

149 | writeBuffer.clear();

150 | }

151 |

152 | }

153 |

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/AepManager.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.io.File;

4 | import java.io.IOException;

5 | import java.nio.ByteBuffer;

6 | import java.nio.channels.FileChannel;

7 | import java.nio.file.StandardOpenOption;

8 | import java.util.concurrent.ExecutorService;

9 | import java.util.concurrent.Executors;

10 | import java.util.concurrent.Semaphore;

11 |

12 | /**

13 | * @author jingfeng.xjf

14 | * @date 2021/9/22

15 | *

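16 | * AEP manager.

17 | * Core idea of AepManager: manage the cache as a sliding window.

18 | * writeBuffer serves two purposes:

19 | * 1. a write buffer that batches data before it is flushed to AEP

20 | * 2. a DRAM cache for hot data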

21 | */

22 | public class AepManager {

23 |

24 | private final FileChannel fileChannel;

25 | /**

26 | * Size of the AEP write buffer / DRAM hot-read cache

27 | */

28 | private final int writeBufferSize;

29 | /**

30 | * AEP write buffer / DRAM hot-read cache

31 | */

32 | private final NativeMemoryByteBuffer writeBuffer;

33 | /**

34 | * Size of the AEP sliding window

35 | */

36 | private final long windowSize;

37 | /**

38 | * Index objects needed at runtime, allocated up front

39 | */

40 | private final OffsetAndLen[] offsetAndLens;

41 | private int offsetAndLenIndex = 0;

42 | /**

43 | * During the getRange phase, SSD reads can be issued concurrently

44 | */

45 | private final ExecutorService ssdReadExecutorService;

46 | /**

47 | * Logical length of the AEP

48 | */

49 | public long globalPosition;

50 | /**

51 | * Logical length of the DRAM hot-read cache

52 | */

53 | public long writeBufferPosition;

54 |

55 |

56 | public AepManager(String id, int writeBufferSize, long aepWindowSize) {

57 | try {

58 | this.offsetAndLens = new OffsetAndLen[Constants.THREAD_MSG_NUM];

59 | for (int i = 0; i < Constants.THREAD_MSG_NUM; i++) {

60 | this.offsetAndLens[i] = new OffsetAndLen();

61 | }

62 | this.windowSize = aepWindowSize;

63 | this.globalPosition = 0;

64 | this.writeBufferPosition = 0;

65 | this.writeBufferSize = writeBufferSize;

66 | this.writeBuffer = new NativeMemoryByteBuffer(writeBufferSize);

67 | String path = Constants.AEP_BASE_PATH + "/" + id;

68 | File file = new File(path);

69 | if (!file.exists()) {

70 | file.createNewFile();

71 | }

72 | this.fileChannel = FileChannel.open(file.toPath(), StandardOpenOption.READ,

73 | StandardOpenOption.WRITE);

74 |

75 | // Pre-allocate

76 | Util.preAllocateFile(this.fileChannel, Constants.THREAD_AEP_HOT_CACHE_PRE_ALLOCATE_SIZE);

77 |

78 | ssdReadExecutorService = Executors.newFixedThreadPool(8);

79 | } catch (Exception e) {

80 | throw new RuntimeException(e);

81 | }

82 | }

83 |

84 | public long write(ByteBuffer data) {

85 | try {

86 | // Size of the data to write

87 | int len = data.remaining();

88 | // Physical write pointer in the file

89 | long windowPosition = this.globalPosition % this.windowSize;

90 | // Write pointer in memory

91 | long bufferWritePosition = this.writeBuffer.position();

92 | // Write into the buffer

93 | if (len <= this.writeBuffer.remaining()) {

94 | // Handle a buffer block that would wrap past the end of the current AEP row

95 | if (windowPosition + bufferWritePosition + len <= this.windowSize) {

96 | // Common case: write straight into the buffer; logical offset = file logical write pointer + buffer write position

97 | this.writeBuffer.put(data);

98 | this.writeBufferPosition += len;

99 | return globalPosition + bufferWritePosition;

100 | } else {

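101 | // Rare case: after this write the buffer block would wrap, so flush the existing buffer first and continue on a new row. Logical offset = file logical write pointer (the buffer offset is necessarily 0)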

102 | this.writeBufferPosition += this.writeBuffer.remaining() + len;

103 |

104 | this.writeBuffer.flip();

105 | this.fileChannel.write(this.writeBuffer.slice(), windowPosition);

106 | this.globalPosition += this.windowSize - windowPosition;

107 | this.writeBuffer.clear();

108 | this.writeBuffer.put(data);

109 | return globalPosition;

110 | }

111 | } else { // Not enough room in the buffer: flush it first, then write into the buffer

112 | this.writeBufferPosition += this.writeBuffer.remaining() + len;

113 | // Flush the buffer block

114 | this.writeBuffer.flip();

115 | int size = this.fileChannel.write(this.writeBuffer.slice(), windowPosition);

116 | this.globalPosition += size;

117 | this.writeBuffer.clear();

118 |

119 | this.writeBuffer.put(data);

120 | windowPosition = this.globalPosition % this.windowSize;

121 | if (windowPosition + len <= this.windowSize) {

122 | // Common case: the new buffer block does not wrap. Logical offset = file logical write pointer (the buffer was just flushed, so its offset is 0)

123 | return this.globalPosition;

124 | } else {

125 | // Rare case: the first record in the new buffer block already exceeds what is left of this row, so wrap by adjusting the logical offset

126 | this.globalPosition += this.windowSize - windowPosition;

127 | return this.globalPosition;

128 | }

129 | }

130 | } catch (Exception e) {

131 | throw new RuntimeException(e);

132 | }

133 | }

134 |

135 | /**

136 | * Returning both aepPosition and writeBufferPosition at once is awkward, so this separate getter is a compromise

137 | */

138 | public long getWriteBufferPosition() {

139 | return writeBufferPosition;

140 | }

141 |

142 | public ByteBuffer readWriteBuffer(long position, int len) {

143 | int bufferPosition = (int) (position % this.writeBufferSize);

144 | ByteBuffer duplicate = this.writeBuffer.duplicate();

145 | duplicate.limit(bufferPosition + len);

146 | duplicate.position(bufferPosition);

147 | return duplicate.slice();

148 | }

149 |

150 | public OffsetAndLen offerOffsetAndLen() {

151 | return this.offsetAndLens[offsetAndLenIndex++];

152 | }

153 |

154 | public void read(OffsetAndLen offsetAndLen, int i, ByteBuffer reUseBuffer, ArrayMap ret,

155 | FileChannel dataFileChannel, Semaphore semaphore) throws Exception {

156 |

157 | // aep write cache

158 | long bufferStartPosition = Math.max(0, this.writeBufferPosition - this.writeBufferSize);

159 | if (offsetAndLen.getAepWriteBufferOffset() >= bufferStartPosition) {

160 | ByteBuffer duplicate = this.readWriteBuffer(offsetAndLen.getAepWriteBufferOffset(),

161 | offsetAndLen.getLength());

162 | ret.put(i, duplicate);

163 | semaphore.release(1);

164 | // Context.directDram.incrementAndGet();

165 | return;

166 | }

167 |

168 | // cold read cache

169 | if (offsetAndLen.getColdReadOffset() != -1) {

170 | FixedLengthDramManager fixedLengthDramManager = Context.threadFixedLengthDramManager.get();

171 | ByteBuffer coldCache = fixedLengthDramManager.read(offsetAndLen.getColdReadOffset(),

172 | offsetAndLen.getLength());

173 | ret.put(i, coldCache);

174 | semaphore.release(1);

175 | // Context.heapDram.incrementAndGet();

176 | return;

177 | }

178 |

179 | // aep

180 | long aepStartPosition = Math.max(0, this.globalPosition - this.windowSize);

181 | if (offsetAndLen.getAepOffset() >= aepStartPosition) {

182 | long physicalPosition = offsetAndLen.getAepOffset() % this.windowSize;

183 | ssdReadExecutorService.execute(() -> {

184 | try {

185 | this.fileChannel.read(reUseBuffer, physicalPosition);

186 | } catch (IOException e) {

187 | e.printStackTrace();

188 | }

189 | reUseBuffer.flip();

190 | ret.put(i, reUseBuffer);

191 | semaphore.release(1);

192 | });

193 | // Context.aep.incrementAndGet();

194 | return;

195 | }

196 |

197 | // Cache miss: fall back to reading from SSD

198 | ssdReadExecutorService.execute(() -> {

199 | try {

200 | dataFileChannel.read(reUseBuffer, offsetAndLen.getSsdOffset());

201 | } catch (IOException e) {

202 | e.printStackTrace();

203 | }

204 | reUseBuffer.flip();

205 | ret.put(i, reUseBuffer);

206 | semaphore.release(1);

207 | });

208 | // Context.ssd.incrementAndGet();

209 | }

210 |

211 | }

212 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Preface

2 |

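3 | People are like this: when young, they resent being young; when old, they resent being old. Even signing up for a contest comes with second thoughts: what if work gets too busy, what about my weekends? Adult willfulness often comes down to a single moment, and I simply wanted to go through an exhilarating performance challenge. So here I am at the Cloud Native Challenge, Kirito and his WeChat official account in tow.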

4 |

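5 | A few minutes after reading the few hundred words of the problem statement, an idea came to me. I thought it would be the opening move; it turned out to be the final one. Near the end I found a scheme with a higher cache hit rate, but by then the evaluation no longer returned logs. Among countless possible designs I missed the biggest ear of wheat, but over a month-long contest I did pick a good one, kept my eyes on the road ahead without looking back, and with some luck finished first in the internal round.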

6 |

7 |

8 |

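9 | Storage-engine contests have traditionally revolved around two storage media, SSD and DRAM. Before that pair could even get past their seven-year itch, Intel introduced a third: AEP (an implementation of PMem). AEP blurs the once clear division of labor between SSD and DRAM, since it can be used either like DRAM or like an SSD. The keyword "hot and cold storage" in the problem statement foreshadowed the turbulent contest to come and gave AEP its rightful place.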

10 |

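11 | This was not my first encounter with AEP; I had met it in the ADB contest. When this contest began, the only impression I retained was that it is "fast", fast relative to SSD, by one or more orders of magnitude for both reads and writes. Beyond that I could only reason about it by analogy with SSD, and understanding AEP's characteristics and how to use it was clearly going to be one of the deciding factors.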

12 |

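13 | I used to prefer asking; now I prefer trying. One keyboard and one late night later, I had a glimpse of AEP's secrets: multi-threaded reads and writes are essential; read and write speeds approach DRAM's, though on closer inspection writes are slower than reads; in overall throughput DRAM is slightly ahead of AEP, but both are far, far faster than SSD. My ear of wheat began to take shape: the first priority is to lower the SSD hit rate; on top of that, raise the DRAM hit rate, with AEP acting as the balance. Early on there is no need to worry much about the ratio of AEP hits to DRAM hits.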

14 |

15 | ## The Journey

16 |

17 | Everything is hard at the start, hard in the middle, and hard at the end.

18 |

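19 | Like most participants, I got stuck at the very first step: getting a score at all. Forget AEP for now and shelve the cache design; this problem was set by the RocketMQ team and has lots of queues, so start with RocketMQ's classic approach: "in phase one, bind threads to partition files and append sequentially, keeping the simple index both in memory and in a file; in phase two, read randomly". Two hours later I duly got the message "correctness check failed". Compared with last year, the number of participating teams had not dropped much, but the number of teams with a score had dropped sharply; most were stuck at the correctness check. Asking the problem setters, I learned that this year's correctness check involved a real "power loss", so PageCache could not be relied upon and `FileChannel#force()` had to step in. That earned me my first score: 1200 s, barely inside the timeout.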

20 |

21 | > For how to use force so that data survives a power loss, see the article I wrote early in the contest: https://www.cnkirito.moe/filechannel_force/

22 |

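23 | The joy of a successful optimization fades in moments, but the thrill of a first score lasts forever. It felt like waking up to find it is Saturday, that you can sleep until noon, and that there is still a Sunday after it. With a baseline in hand, I could start optimizing; it was only the beginning, with plenty of time and plenty of hope.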

24 |

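25 | The power-loss requirement severely constrains the SSD write path: every message must be forced, which makes the PageCache nearly useless. PageCache had never suffered such an indignity: "Fine, shall I just leave, then?"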

26 |

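27 | As the contest moved on (by all of one day), I started working on merged writes. In the traditional approach, write aggregation uses DRAM as a write buffer and batches consecutive messages from the same thread to reduce write amplification; others, MongoDB among them, merge data from multiple threads in the same batch. Given the problem constraints, merging writes across threads and forcing them together is effectively the only viable approach. Unity is strength: in testing, having n threads aggregate their data, force once, and return together significantly cut the total number of forces, eased write amplification, and improved the score. What is the right n? In theory, if n is too large there are too few actual IO threads; if n is too small there are too many forces. For parameter tuning, machine learning is the best tool and benchmarking the next best; after several rounds of benchmarks, 4 groups x 10 threads worked best, and 4 IO threads matching the 4 CPU cores makes perfect sense.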

28 |

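29 | Merged writes paid off: the score dropped to 700 to 800 s. Without AEP the contest would have ended there; AEP's arrival turned the whole thing into an all-out grind.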

30 |

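31 | Midway through, a friend in a WeChat group asked me: "Have you got AEP working?" Everyone goes through this stage: you see a mountain and want to know what lies beyond it. I wanted to tell him that once over the mountain he might find nothing special, and looking back, this side would seem better. But I knew he would not listen; with his personality, how could he be satisfied without trying for himself? My tricks hardly mattered: he ended up using AEP and felt a jump in speed like going from a steam train to high-speed rail. With 125 GB of total data and 60 GB of AEP, even statically keeping the last 60 GB of data guarantees that all hot reads hit AEP. The SSD may feel hurt by the distance you deliberately keep, but your score will not.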

32 |

33 | Even such an undesigned AEP cache, thanks to AEP's speed and large capacity, can score 600+ s.

34 |

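35 | A score is always a bit better than yesterday's and a bit worse than tomorrow's, but sustaining that improvement takes relentless effort. 600 s was clearly not enough to reach the finals, and an AEP cache holding fixed data looked a little clumsy, like finding a rough diamond that will never dazzle without cutting. The AEP scheme had to be improved! Because hot data exists, part of what is written gets consumed shortly afterwards, so the cache has to move in step with the workload: write -> consume -> write -> consume. I raced through simulations of the evaluation program in my head, searching concepts from operating systems and computer networking again and again, and finally settled on a familiar one: the TCP sliding window. Let AEP hold the hottest 60 GB so that every hot read hits; testing also showed that cold reads soon become hot reads. The moment the idea connected with a design, the code flow became obvious. Three and a half hours later I submitted the AEP sliding-window scheme. Nothing beats getting Accepted on the first try; admiring my own coding skill, I complacently stopped optimizing, and the score stayed at 504 s.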

36 |

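37 | At 00:08 on October 8, eight minutes after the clock tower struck midnight, I opened the leaderboard with a glass of water in hand and saw plenty of scores around 500 s. Regret, panic and anxiety rushed in at once, and the water grew colder with every sip. I had had seven days to optimize my scheme, but I had not; I had been waiting for a miracle, hoping everyone would forget the contest, give up optimizing and leave the leaderboard frozen as it was on October 1. It did not happen. I poured out my worries to my wife and was comforted: in her eyes I am always the best. "Of course," I echoed uneasily... From the following evening on, the world had one fewer World of Tanks (WOT) player and one more coder buried in IDEA chasing peak performance.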

38 |

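39 | For a long time I kept driving down the SSD hit rate; every small reduction bought a few seconds. At some point scores of 450 s began showing up on the leaderboard. At first this did not alarm me, because a hack could easily reach 300 s, and my own initial estimate of the limit was only about 470 s. Yes, that must be a hack, I told myself over and over. But what if it wasn't?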

40 |

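41 | Those who reach too eagerly for the stars often forget the flowers at their feet. I reread the problem statement looking for anything I had missed; reviewed my code again and again, restructuring it and polishing details; and searched my old blog posts for optimizations I might have passed by. For the next few nights I had the same dream, replaying my past optimization experience: 4 KB alignment, file pre-allocation, cache-line padding... Suddenly I realized I had not yet tried all of the lessons I had written up. Timing in this contest starts at the first write, so participants are free to pre-allocate in the constructor, for example creating objects with new and allocating memory ahead of time, to cut runtime cost. The most useful of these optimizations were SSD file pre-allocation and 4 KB padding. Before the first append, fill the files with zeros to get blank files totaling slightly more than 125 GB; when writing, pad any trailing partial block up to 4 KB. Even though extra data is written, speed still improves: the effective write throughput reached 310 MB/s, whereas previously force had kept the write bottleneck stuck at 275 MB/s. Better to push one step further than to hold back: once the scheme worked, the score settled at 440 s.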

42 |

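43 | Two weeks before the internal round ended, I had another bold idea: since the evaluation only spot-checks the results of getRange calls that hit SSD, wouldn't reading through mmap effectively become lazy loading? The idea does not generalize to production, but in a contest it was a sharp weapon. It cut the score to 412 s, and it also cut me: the judges disallowed it! Good grief, I had wasted two precious weeks on a scheme that could never pass. Heaven knows the evaluation was spot-checking; I just thought mmap reads would be faster :)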

44 |

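45 | I don't know when it started, but everything seems to come with a date: saury expires, canned meat expires, and the contest expired on October 26. Unfinished optimizations, ideas I had sketched, and the regret of not completing the redesign all lost their meaning at that moment. It had been a long time since I had entered a contest, and a long time since I had racked my brain over a design like this. And so the contest came, on its own terms, to a full stop.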

46 |

47 | ## Final Solution

48 |

49 | ### SSD Write Path

50 |

51 |

52 |

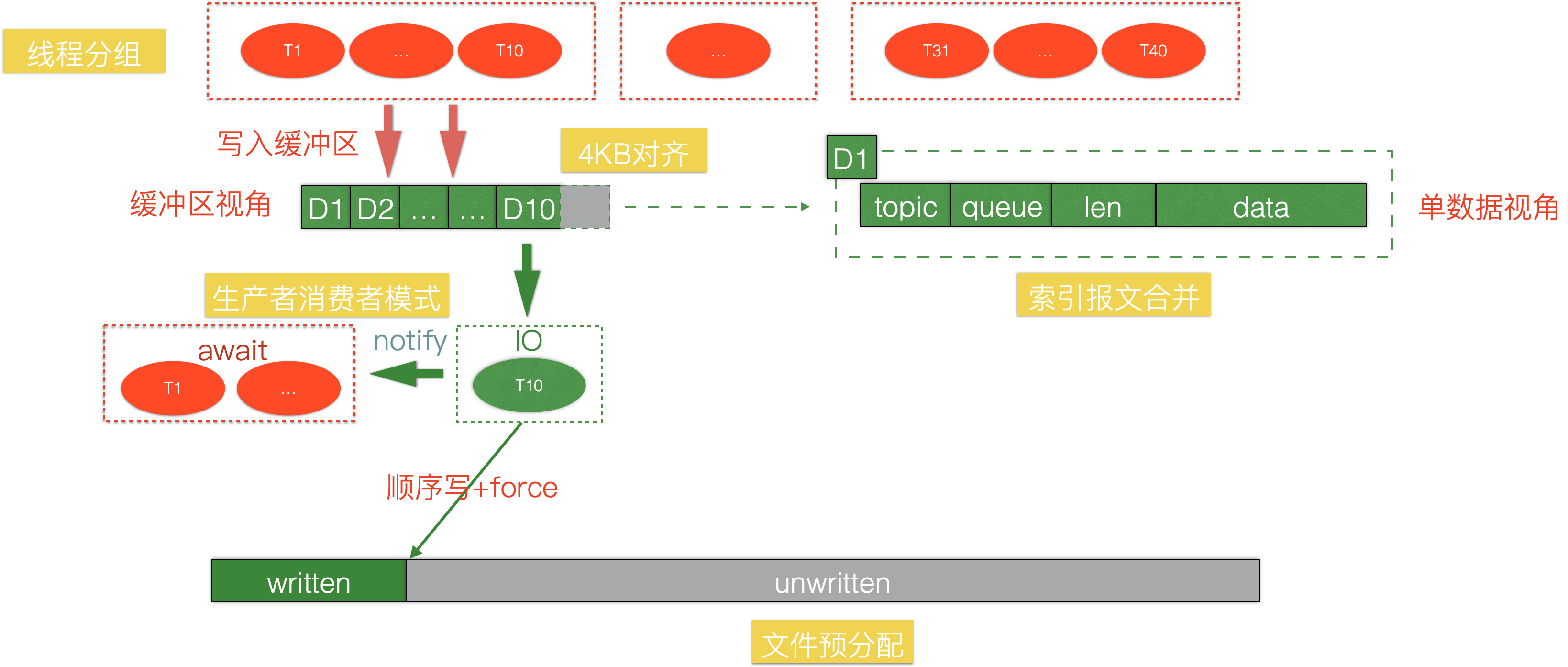

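53 | The cache architecture is what wins; the SSD write path is the fundamentals, and I believe most top contestants used the architecture shown above. The performance evaluation always runs 40 threads; I split them into 4 groups of 10 and merged their IO. Why 4 groups was covered in the journey section: I went with what the benchmarks said. The first nine threads of a group to finish writing into the buffer call await and block; the tenth thread to arrive performs the IO, flushes to disk, and then notifies the others to return their results, over and over. It is a classic producer-consumer pattern.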

54 |

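55 | Since the recover phase does not count toward the score in this contest, I persisted the index together with the data to reduce force overhead, which avoids maintaining a separate index file. In my design each index entry records three fields, topic, queue and length, in a fixed 5 bytes (1 byte topic, 2 bytes queueId, 2 bytes length). That is negligible next to messages of 100 B to 17 KB, and the gain from merging them is clear.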
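A minimal sketch of that inline index, assuming the 1-byte topic / 2-byte queueId / 2-byte length layout written by `ThreadGroupManager#append`. `RecordHeader` is a hypothetical name, and it uses `ByteBuffer`'s default big-endian order, whereas the repository writes through `NativeMemoryByteBuffer` (Unsafe, native order), which is why its recovery code calls `Short.reverseBytes`. The scan stops at the first header that fails validation, the same way recovery treats pre-allocated filler bytes.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: the 5-byte header stored in front of every message. */
final class RecordHeader {
    static final int SIZE = 5; // 1 byte topic + 2 bytes queueId + 2 bytes length

    /** Appends header + payload at buf's position; returns where the payload starts. */
    static int append(ByteBuffer buf, int topic, int queueId, ByteBuffer data) {
        buf.put((byte) topic);
        buf.putShort((short) queueId);
        buf.putShort((short) data.remaining());
        int payloadOffset = buf.position();
        buf.put(data);
        return payloadOffset;
    }

    /**
     * Recovery-style scan over [0, limit): returns {topic, queueId, payloadOffset, len}
     * entries and stops at the first header that is not plausible (filler or garbage).
     */
    static List<int[]> scan(ByteBuffer file, int maxTopic, int maxLen) {
        List<int[]> entries = new ArrayList<>();
        int offset = 0;
        while (offset + SIZE <= file.limit()) {
            int topic = file.get(offset);          // absolute gets: position is ignored
            int queueId = file.getShort(offset + 1);
            int len = file.getShort(offset + 3);
            if (topic < 0 || topic >= maxTopic || queueId < 0 || len <= 0 || len > maxLen
                    || offset + SIZE + len > file.limit()) {
                break;
            }
            entries.add(new int[] {topic, queueId, offset + SIZE, len});
            offset += SIZE + len;
        }
        return entries;
    }
}
```

Filling unused space with `(byte) -1`, as `Util.preAllocateFile` does, makes the first filler byte decode as topic -1, so the scan ends cleanly there.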

56 |

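57 | I used the Lock + Condition provided by JUC (java.util.concurrent) to implement wait/notify, first because that is how I am used to controlling concurrency, and second because reusing one of the append threads as the IO thread that flushes to disk involves fewer thread switches than other concurrency schemes. In practice CPU was plentiful in this contest and never the bottleneck, so this pattern's advantage did not fully show.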
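The Lock + Condition group commit might be sketched as follows. This is an illustration under assumptions, not the repository's code: `GroupCommitter` and the `flush` callback are hypothetical, the callback stands in for `FileChannel#write` plus `force`, and a generation counter replaces the 10-second timed `await` used in `ThreadGroupManager` so that a spurious wakeup cannot release a thread before its batch is flushed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Consumer;

/** Hypothetical sketch: groupSize threads buffer records; the last to arrive flushes for all. */
final class GroupCommitter {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition flushed = lock.newCondition();
    private final int groupSize;
    private final Consumer<List<byte[]>> flush; // stand-in for write + force
    private final List<byte[]> pending = new ArrayList<>();
    private long generation = 0;                // incremented after every flush

    GroupCommitter(int groupSize, Consumer<List<byte[]>> flush) {
        this.groupSize = groupSize;
        this.flush = flush;
    }

    /** Blocks until the batch containing this record has been flushed. */
    void append(byte[] record) throws InterruptedException {
        lock.lock();
        try {
            long myGeneration = generation;
            pending.add(record);
            if (pending.size() == groupSize) {
                flush.accept(new ArrayList<>(pending)); // the last arrival does the IO
                pending.clear();
                generation++;
                flushed.signalAll();                    // release the waiting producers
            } else {
                while (generation == myGeneration) {    // loop guards against spurious wakeups
                    flushed.await();
                }
            }
        } finally {
            lock.unlock();
        }
    }
}
```

As in the original design, the flush runs while holding the lock. A group that never fills (fewer than `groupSize` callers) would block forever here; the repository's timed `await` is one way around that.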

58 |

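59 | 4 KB alignment is a classic SSD optimization. It does not pay off in every performance contest, but never forget to try it. The lesson is to aggregate data in a write buffer whose size is a multiple of 4 KB and flush it as a whole, avoiding read/write amplification. This contest is a bit unusual: because message sizes are randomly distributed, the data aggregated from 10 threads is usually not a multiple of 4 KB. That does not stop us from padding. It looks like writing extra meaningless bytes, but it actually speeds up writes, especially when forcing.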

60 |

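61 | I once discussed 4 KB padding with @chender and we tried to work out why it helps; the conclusions here are not necessarily 100% correct. 4 KB is the smallest read/write unit of the SSD, which is a matter of hardware. Without padding, consider this sequence: write 9 KB, force, write 9 KB, force. That amounts to forcing 9 + 3 + 3 + 9 + 3 = 27 KB, because the 3 KB where the two writes overlap is counted again during the second force. With padding, the forces total exactly 9 + 3 + 9 + 3 = 24 KB, so the overall amount forced drops. Another possible reason is that, without padding, sequential writing is partly broken: the first write actually occupies 12 KB, yet the second write starts not at 12 KB but at 9 KB. Either way, in benchmarks 4 KB alignment gained more than 15 s.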
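To make the padding concrete, here is a small sketch (hypothetical `PageAlign` helper, 4 KB pages assumed) that rounds a batch up to the next 4 KB boundary and counts how often a batch starts inside the page where the previous batch ended, that is, how often one page is written by two consecutive forces. It only counts pages; it makes no claim about what the SSD firmware actually does.

```java
/** Hypothetical helpers illustrating 4 KB padding of batched writes. */
final class PageAlign {
    static final int PAGE = 4 * 1024;

    /** Rounds n up to the next multiple of PAGE (n itself if already aligned). */
    static long alignUp(long n) {
        return (n + PAGE - 1) / PAGE * PAGE;
    }

    /** First and last page index touched by the byte range [offset, offset + len), len > 0. */
    static long[] pageRange(long offset, long len) {
        return new long[] {offset / PAGE, (offset + len - 1) / PAGE};
    }

    /** Lays batches out back to back and counts pages shared by consecutive batches. */
    static int sharedPages(long[] batchSizes, boolean pad) {
        long offset = 0;
        long lastPageOfPrevious = -1;
        int shared = 0;
        for (long size : batchSizes) {
            long[] range = pageRange(offset, size);
            if (range[0] == lastPageOfPrevious) {
                shared++; // this batch begins in the page the previous force already wrote
            }
            lastPageOfPrevious = range[1];
            offset += pad ? alignUp(size) : size; // padding moves the next batch to a page boundary
        }
        return shared;
    }
}
```

For the two 9 KB batches in the example, `sharedPages(new long[] {9216, 9216}, false)` reports one shared page, and with `pad = true` it reports none, because the second batch starts at 12 KB.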

62 |

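63 | Another decisive optimization in the write phase is file pre-allocation. C has fallocate, but Java offers no equivalent call short of JNI. The workaround exploits the fact that timing starts at the first append: fill the whole file on the SSD with zero bytes beforehand. After pre-allocation, writes with force run as fast as writes without it, nearly saturating the 320 MB/s IO bandwidth. I have shared this optimization on my blog before; I don't know whether other contestants saw it and used it, but missing it would be a real pity, because it makes a solution a good 50 s faster.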

64 |

65 | ### Cache Architecture

66 |

67 |

68 |

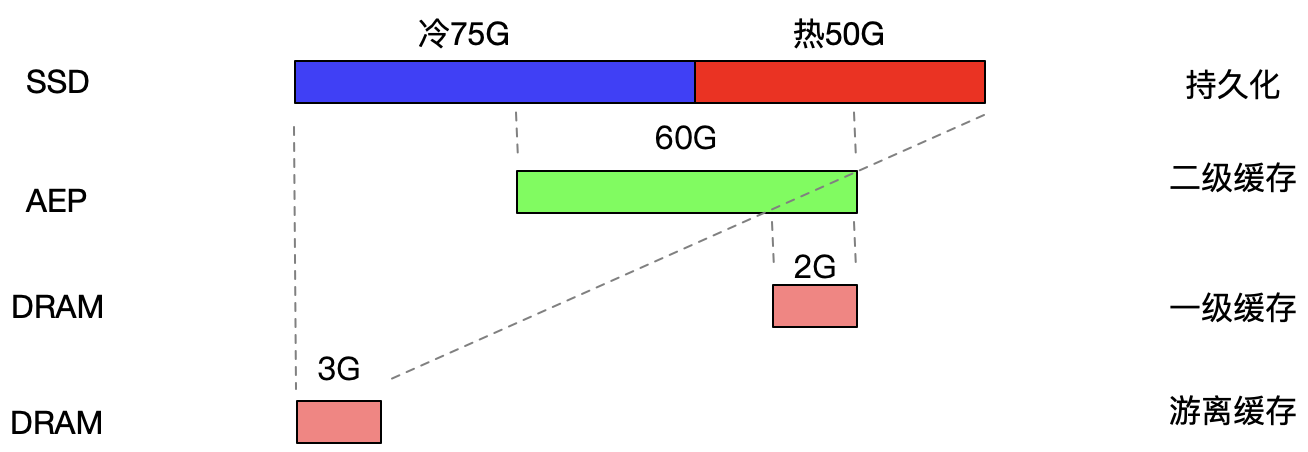

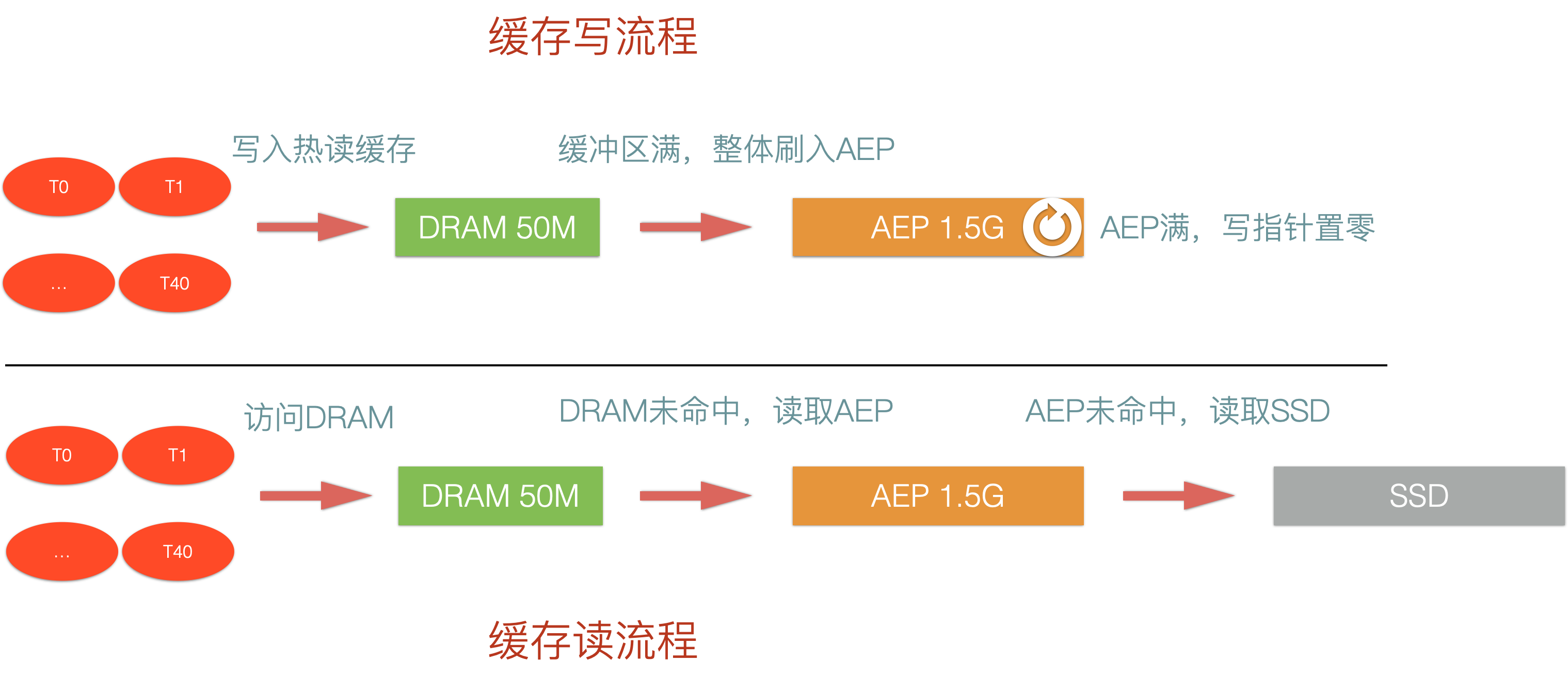

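69 | The figure above shows the overall cache architecture. The design is a multi-level cache built around a sliding window: AEP always caches the newest 60 GB of data, so hot data never hits SSD. The 2 GB of off-heap DRAM works closely with AEP and serves two purposes: first, as AEP's write buffer, avoiding AEP write amplification; second, as a DRAM cache for hot data, so the very hottest data is guaranteed to hit DRAM directly without touching AEP. The remaining 3 GB of heap memory can cache data that the sliding window has overwritten; this DRAM also carries reference counts so that its slots can be reused.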
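The lookup order of this multi-level cache can be sketched as a pure function. It mirrors the order of checks in `AepManager#read` (DRAM hot buffer, heap cold cache, AEP window, SSD), but `TierSelector` and its parameters are hypothetical simplifications: offsets are logical byte positions, -1 means "never stored in that tier", and a sliding tier still holds a record only if its offset falls within the newest `bufferSize` (or `aepWindow`) logical bytes.

```java
/** Hypothetical sketch of read-path tier selection in a sliding-window cache. */
final class TierSelector {
    enum Tier { DRAM_HOT, HEAP_COLD, AEP, SSD }

    static Tier select(long bufferOffset,   // logical offset in the DRAM hot buffer, or -1
                       long coldOffset,     // slot in the heap cold cache, or -1
                       long aepOffset,      // logical AEP offset, or -1
                       long bufferWritten,  // logical bytes written through the DRAM buffer so far
                       long bufferSize,     // DRAM hot buffer capacity
                       long aepWritten,     // logical bytes written to AEP so far
                       long aepWindow) {    // AEP window size
        // Only the newest bufferSize logical bytes are still intact in the DRAM buffer.
        if (bufferOffset >= 0 && bufferOffset >= Math.max(0, bufferWritten - bufferSize)) {
            return Tier.DRAM_HOT;
        }
        if (coldOffset != -1) {
            return Tier.HEAP_COLD;
        }
        // Only the newest aepWindow logical bytes have not been overwritten on AEP.
        if (aepOffset >= 0 && aepOffset >= Math.max(0, aepWritten - aepWindow)) {
            return Tier.AEP;
        }
        return Tier.SSD; // always-correct fallback
    }
}
```

Because every check is a comparison against a running logical counter, deciding where a message lives costs a few arithmetic operations and no lookup structure.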

70 |

71 |

72 |

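73 | In the implementation I split the 60 GB evenly across 40 threads, so each thread owns 1.5 GB of AEP cache and 50 MB of DRAM cache (40 x 50 MB = 2 GB). Note that the SSD and AEP write paths differ in my design: because of force, SSD writes are merged across threads, whereas AEP is a cache whose contents may be lost, so there is no need to merge; each thread simply maintains its own AEP and DRAM cache.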

74 |

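75 | Besides its 1.5 GB of AEP, each thread is allocated 50 MB of DRAM. This DRAM is always written first and, likewise, read first when the data is there. 50 MB is clearly not much space, so when it fills up, the whole 50 MB is flushed to AEP. Using a ByteBuffer to manage the DRAM also lets me exploit its logical clear() (which only resets the indices and leaves the bytes in place), turning the DRAM into a ring buffer just like AEP. I consider this one of the cleverer parts of my design.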
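A sketch of the logical-clear trick, assuming a heap `ByteBuffer` and a callback standing in for the AEP flush (`HotWriteBuffer` is a hypothetical name). `clear()` only resets position and limit, so after a flush the bytes beyond the new write position still belong to the previous generation and stay readable until they are overwritten. As in `AepManager`, a record never straddles two generations: when it does not fit, the unused tail is skipped in logical space.

```java
import java.nio.ByteBuffer;
import java.util.function.Consumer;

/** Hypothetical sketch: a DRAM write buffer that also serves reads of its newest bytes. */
final class HotWriteBuffer {
    private final ByteBuffer buf;
    private final Consumer<ByteBuffer> flushToSlowTier; // stand-in for the AEP write
    private long written = 0; // logical end = generation * capacity + position

    HotWriteBuffer(int capacity, Consumer<ByteBuffer> flushToSlowTier) {
        this.buf = ByteBuffer.allocate(capacity);
        this.flushToSlowTier = flushToSlowTier;
    }

    /** Appends data (at most one capacity long) and returns its logical position. */
    long append(byte[] data) {
        if (data.length > buf.remaining()) {
            written += buf.remaining();          // skip this generation's unused tail
            buf.flip();
            flushToSlowTier.accept(buf.slice()); // callback must consume it before returning
            buf.clear();                         // logical clear: old bytes stay in memory
        }
        long logical = written;
        buf.put(data);
        written += data.length;
        return logical;
    }

    /** Returns a copy of the bytes if they are still in DRAM, otherwise null. */
    byte[] read(long logical, int len) {
        if (logical < Math.max(0, written - buf.capacity())) {
            return null;                         // overwritten: fall back to AEP or SSD
        }
        byte[] out = new byte[len];
        int physical = (int) (logical % buf.capacity());
        for (int i = 0; i < len; i++) {
            out[i] = buf.get(physical + i);      // absolute get ignores position
        }
        return out;
    }
}
```

In the usage below, the 6-byte record written at logical 2 survives the flush because the next generation has only overwritten physical bytes 0 and 1 so far.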

76 |

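77 | And of course you can always trust the SSD: it is the last line of defense. However bad the cache design, every read must ultimately be able to fall back to SSD for you to get a score at all, and everyone knows it.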

78 |

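79 | The AEP sliding window is actually not complicated to implement; see the source code linked at the end, so I won't go into detail here.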
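For the gist without opening the repository, here is a sketch of sliding-window addressing under simplifying assumptions: a `byte[]` stands in for a thread's pre-allocated AEP file, `SlidingWindow` is a hypothetical name, and each write is one already-batched block. A block that would run past the end of the window is not split; the logical position jumps to the start of the next "row", similar to the wrap handling in `AepManager#write`.

```java
/** Hypothetical sketch of an AEP-style sliding window addressed by logical position. */
final class SlidingWindow {
    private final byte[] region; // stand-in for the pre-allocated AEP file
    private long global = 0;     // logical bytes consumed so far, including skipped tails

    SlidingWindow(int windowSize) {
        this.region = new byte[windowSize];
    }

    /** Writes a block (at most windowSize bytes) and returns its logical offset. */
    long write(byte[] block) {
        long window = region.length;
        long physical = global % window;
        if (physical + block.length > window) {
            global += window - physical; // skip to the next row so the block starts at 0
            physical = 0;
        }
        long logical = global;
        System.arraycopy(block, 0, region, (int) physical, block.length);
        global += block.length;
        return logical;
    }

    /** A block is intact until something is written at logical + windowSize or later. */
    boolean isResident(long logical) {
        return logical >= Math.max(0, global - region.length);
    }

    byte[] read(long logical, int len) {
        if (!isResident(logical)) {
            throw new IllegalStateException("evicted from the window; read from SSD instead");
        }
        byte[] out = new byte[len];
        System.arraycopy(region, (int) (logical % region.length), out, 0, len);
        return out;
    }
}
```

With a 10-byte window and three 4-byte blocks, the third block does not fit in the remaining 2 bytes, so it lands at logical 10 (physical 0) and evicts only the first block.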

80 |

81 | ## Code-Level Optimizations

82 |

83 | ### File Pre-allocation

84 |

85 | ```java

86 | public static void preAllocateFile(FileChannel fileChannel, long threadGroupPerhapsFileSize) {

87 | int bufferSize = 4 * 1024;

88 | ByteBuffer byteBuffer = ByteBuffer.allocateDirect(bufferSize);

89 | for (int i = 0; i < bufferSize; i++) {

90 | byteBuffer.put((byte)0);

91 | }

92 | byteBuffer.flip();

93 | long loopTimes = threadGroupPerhapsFileSize / bufferSize;

94 | for (long i = 0; i < loopTimes; i++) {

95 | try {

96 | fileChannel.write(byteBuffer);

97 | } catch (IOException e) {

98 | e.printStackTrace();

99 | }

100 | byteBuffer.flip();

101 | }

102 | try {

103 | fileChannel.force(true);

104 | fileChannel.position(0);

105 | } catch (IOException e) {

106 | e.printStackTrace();

107 | }

108 | }

109 | ```

110 |

111 | A simple, practical trick: quick to implement once you think of it, but easy to overlook.

112 |

113 | ### 4 KB Alignment

114 |

115 | ```java

116 | private void force() throws IOException {

117 | // Pad to a 4 KB boundary

118 | int position = writeBuffer.position();

119 | int mod = position % Constants._4kb;

120 | if (mod != 0) {

121 | writeBuffer.position(position + Constants._4kb - mod);

122 | }

123 |

124 | writeBuffer.flip();

125 | fileChannel.write(writeBuffer);

126 | fileChannel.force(false);

127 | writeBuffer.clear();

128 | }

129 | ```

130 |

131 | Same as above.

132 |

133 | ### Unsafe

134 |

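135 | Honestly, Unsafe did not seem to help much, since run-to-run jitter was large in the later stages, but my best score was indeed produced with Unsafe, so I list it here in case it helps next time. See `io.openmessaging.NativeMemoryByteBuffer`.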

136 |

137 | Recommended reading: [Some Tips on Using Unsafe](https://www.cnkirito.moe/unsafe/) (in Chinese)

138 |

139 | ### ArrayMap

140 |

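141 | You can reimplement Map on top of an array: this contest uses only a few Map APIs anyway, and an array-based implementation cuts HashMap's overhead. Even the best general-purpose implementation pales next to a plain array, at least in a contest.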
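A sketch of the idea, assuming getRange keys are the dense integers 0..fetchNum-1. `DenseIntMap` is hypothetical, and the repository's `io.openmessaging.ArrayMap` may be built differently. Extending `AbstractMap` keeps it a valid `java.util.Map`: `get` and `put` are plain array accesses, while `entrySet()` is built on demand because the hot path does not need it.

```java
import java.util.AbstractMap;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.Objects;
import java.util.Set;

/** Hypothetical sketch: a Map whose keys are the dense ints 0..capacity-1. */
final class DenseIntMap<V> extends AbstractMap<Integer, V> {
    private final Object[] values;
    private int size = 0;

    DenseIntMap(int capacity) {
        this.values = new Object[capacity];
    }

    @Override
    public V put(Integer key, V value) {
        Objects.requireNonNull(value, "null values are not supported");
        @SuppressWarnings("unchecked")
        V old = (V) values[key];   // out-of-range keys throw ArrayIndexOutOfBoundsException
        if (old == null) {
            size++;
        }
        values[key] = value;
        return old;
    }

    @Override
    @SuppressWarnings("unchecked")
    public V get(Object key) {
        if (!(key instanceof Integer)) {
            return null;
        }
        int k = (Integer) key;
        return k >= 0 && k < values.length ? (V) values[k] : null;
    }

    @Override
    public boolean containsKey(Object key) {
        return get(key) != null;
    }

    @Override
    public int size() {
        return size;
    }

    @Override
    public void clear() {          // lets one instance be reused across calls
        Arrays.fill(values, null);
        size = 0;
    }

    @Override
    @SuppressWarnings("unchecked")
    public Set<Entry<Integer, V>> entrySet() {
        Set<Entry<Integer, V>> entries = new LinkedHashSet<>();
        for (int i = 0; i < values.length; i++) {
            if (values[i] != null) {
                entries.add(new SimpleImmutableEntry<>(i, (V) values[i]));
            }
        }
        return entries;
    }
}
```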

142 |

143 | See `io.openmessaging.ArrayMap`.

144 |

145 | ### Pre-initialization

146 |

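147 | Make full use of the fact that time spent before the first append is not counted. Besides pre-allocating files, create the objects needed at runtime and allocate the DRAM space in the constructor. Every little bit counts; scores are scraped together bit by bit.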
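The pattern is what `AepManager` does with its `offsetAndLens` array; a generic sketch with hypothetical names (`PreallocatedPool`, `Slot`) looks like this. It is not thread-safe, so it assumes one pool per thread, and it falls back to a normal allocation if the up-front estimate turns out too small.

```java
/** Hypothetical sketch: objects for the hot path are built in the (untimed) constructor. */
final class PreallocatedPool {
    static final class Slot {   // stand-in for an index entry such as OffsetAndLen
        long offset;
        int length;
    }

    private final Slot[] slots;
    private int next = 0;

    PreallocatedPool(int expectedCount) {
        slots = new Slot[expectedCount];
        for (int i = 0; i < expectedCount; i++) {
            slots[i] = new Slot(); // allocation cost paid before timing starts
        }
    }

    /** Hands out the next pre-built slot, or allocates one if the pool is exhausted. */
    Slot take(long offset, int length) {
        Slot s = next < slots.length ? slots[next++] : new Slot();
        s.offset = offset;
        s.length = length;
        return s;
    }
}
```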

148 |

149 | ### Concurrent getRange

150 |

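151 | In the read phase fetchNum is at most 100. Serial access is fine when reads hit the cache, but 100 serial SSD IOs would be terrible. In testing, going concurrent only on SSD hits performed about the same as going concurrent for every read, whether it hits memory, AEP or SSD; either way, a concurrent getRange beats a serial one.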
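A sketch of a concurrent getRange, assuming a fixed thread pool and a per-index read function (`ParallelRange` and `readOne` are hypothetical). As in `AepManager#read`, each task releases a `Semaphore` permit when it finishes; the caller acquires `fetchNum` permits, and the `Semaphore` memory-consistency guarantee (actions before `release()` happen-before actions after a successful `acquire()`) means it sees every stored result.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.function.IntFunction;

/** Hypothetical sketch: issue all reads of one getRange call in parallel. */
final class ParallelRange {
    private final ExecutorService pool;

    ParallelRange(int threads) {
        this.pool = Executors.newFixedThreadPool(threads);
    }

    <T> AtomicReferenceArray<T> fetch(int fetchNum, IntFunction<T> readOne) throws InterruptedException {
        AtomicReferenceArray<T> results = new AtomicReferenceArray<>(fetchNum);
        Semaphore done = new Semaphore(0);
        for (int i = 0; i < fetchNum; i++) {
            final int idx = i;
            pool.execute(() -> {
                try {
                    results.set(idx, readOne.apply(idx)); // e.g. a positional FileChannel read
                } finally {
                    done.release();                        // release even if the read fails
                }
            });
        }
        done.acquire(fetchNum); // returns once every task has released its permit
        return results;
    }

    void shutdown() {
        pool.shutdown();
    }
}
```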

152 |

153 | ### ThreadLocal Reuse

154 |

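155 | One thing you must check in any performance contest is whether the code creates objects with new or allocates memory dynamically at runtime. Doing so is a cardinal sin; cache all of it in ThreadLocal instead. This problem has several key numbers, 100 topics and 40 threads, and with a moment's carelessness you may design per topic something that should be per thread. For example, both the write buffer and the read buffer reused during getRange should be per-thread data. ThreadLocal makes per-thread resources easy to manage, and since there are fewer threads than topics, being clear about which resources are per thread and which are per topic avoids wasting resources.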
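A sketch of per-thread buffer reuse with `ThreadLocal.withInitial`. The sizes are assumptions for illustration (messages of at most 17 KB, fetchNum of at most 100), and `ThreadBuffers` is a hypothetical name. One buffer per result slot is used because all buffers returned by a single getRange call must stay valid together.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch: read buffers owned by the calling thread, allocated once. */
final class ThreadBuffers {
    static final int MAX_MESSAGE_SIZE = 17 * 1024; // assumed upper bound on one message
    static final int MAX_FETCH = 100;              // assumed upper bound on fetchNum

    // Created lazily on each thread's first call, then reused for the thread's lifetime.
    private static final ThreadLocal<ByteBuffer[]> READ_BUFFERS = ThreadLocal.withInitial(() -> {
        ByteBuffer[] bufs = new ByteBuffer[MAX_FETCH];
        for (int i = 0; i < MAX_FETCH; i++) {
            bufs[i] = ByteBuffer.allocateDirect(MAX_MESSAGE_SIZE);
        }
        return bufs;
    });

    /** Returns this thread's i-th buffer, cleared and limited to len bytes. */
    static ByteBuffer readBuffer(int i, int len) {
        ByteBuffer buf = READ_BUFFERS.get()[i];
        buf.clear();
        buf.limit(len);
        return buf;
    }
}
```

The same thread gets the same buffers on its next call, so results must be consumed before that thread issues another getRange.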

156 |

157 | ### Merged Writes

158 |

159 | See the source: `io.openmessaging.ThreadGroupManager#append`.

160 |

161 | ### AEP Sliding Window

162 |

163 | See the source: `io.openmessaging.AepManager`.

164 |

165 | ## Summary and Reflections

166 |

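167 | In a word, the sliding-window cache is the core of my solution. With careful tuning it earned me first place in the internal round, but as I said at the start, it is not the scheme with the highest cache hit rate. Its first obvious problem is that the off-heap DRAM and AEP may cache the same data; a scheme in which DRAM and AEP do not overlap would certainly achieve a higher hit rate. The second problem follows from the first: in this scheme the heap DRAM cannot be used very efficiently, which is why I only mentioned the heap design in passing and did not detail the reference-counting logic.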

168 |

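169 | In the second half of the contest I did try designing a scheme in which DRAM and AEP do not overlap and space is allocated and reclaimed dynamically. Cache utilization really was higher, but it meant throwing away all the fine-tuning of the sliding-window scheme! Competing in my spare time, with limited time and energy, I eventually gave it up.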

170 |

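171 | This is just like technical debt in project work: put up with it and you have a system that works well enough, though you know it could be better after a refactor; or you can choose to refactor and endure the pain of tearing skin from flesh.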

172 |

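173 | Where the prize is great, the grinders gather. The internal round was fine, but the external round was truly cutthroat. Every performance contest like this ends with everyone frantically squeezing out details: when someone else out-grinds you, it is exhausting; when you find an optimization and out-grind others, it feels great. How true to life.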

174 |

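175 | Finally, what I gained: this contest gave me a fairly deep understanding of AEP, a new concept for me, and a better feel for storage design and file IO. Contests like this are fun once in a while: writing project code day after day gets tiring, and building a small project like this is quite satisfying. If there is a next one, readers are welcome to join the grind.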

176 |

177 | ## Source Code

178 |

179 | https://github.com/lexburner/aliyun-cloudnative-race-mq-2021.git

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/AepBenchmark.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.io.File;

4 | import java.io.IOException;

5 | import java.io.RandomAccessFile;

6 | import java.nio.ByteBuffer;

7 | import java.nio.channels.FileChannel;

8 | import java.nio.file.StandardOpenOption;

9 | import java.util.concurrent.CountDownLatch;

10 |

11 | /**

12 | * @author jingfeng.xjf

13 | * @date 2021/9/18

14 | */

15 | public class AepBenchmark {

16 |

17 | public void testAepWriteIops()

18 | throws Exception {

19 | int threadNum = 40;

20 | String workDir = "/pmem";

21 | long totalSize = 125L * 1024 * 1024 * 1024;

22 | int bufferSize = 100 * 1024 * 1024;

23 |

24 | CountDownLatch countDownLatch = new CountDownLatch(threadNum);

25 | long start = System.currentTimeMillis();

26 | for (int i = 0; i < threadNum; i++) {

27 | final int threadNo = i;

28 | new Thread(() -> {

29 | try {

30 | File file = new File(workDir + "/daofeng_" + threadNo);

31 | RandomAccessFile rw = new RandomAccessFile(file, "rw");

32 | FileChannel fileChannel = rw.getChannel();

33 | ByteBuffer byteBuffer = ByteBuffer.allocate(bufferSize);

34 | for (int j = 0; j < bufferSize; j++) {

35 | byteBuffer.put((byte)1);

36 | }

37 | long loopTime = totalSize / bufferSize / threadNum;

38 | long maxFileLength = 60L * 1024 * 1024 * 1024 / 40 - 1024 * 1024;

39 | long position = 0;

40 | for (int t = 0; t < loopTime; t++) {

41 | byteBuffer.flip();

42 | fileChannel.write(byteBuffer, position % maxFileLength);

43 | position += bufferSize;

44 | }

45 | countDownLatch.countDown();

46 | } catch (Exception e) {

47 | e.printStackTrace();

48 | }

49 | }).start();

50 | }

51 | countDownLatch.await();

52 | System.out.println(

53 | "threadNum " + threadNum + " write " + totalSize + " bufferSize " + bufferSize + " cost " + (

54 | System.currentTimeMillis()

55 | - start) + " ms");

56 | }

57 |

58 | public void testRead2() throws Exception {

59 | int bufferSize = 200 * 1024 * 1024;

60 | File file = new File("/essd");

61 | FileChannel fileChannel = new RandomAccessFile(file, "rw").getChannel();

62 | ByteBuffer byteBuffer = ByteBuffer.allocate(bufferSize);

63 | fileChannel.read(byteBuffer);

64 | }

65 |

66 | public void testRead3() throws Exception {

67 | File file = new File("/essd");

68 | FileChannel fileChannel = new RandomAccessFile(file, "rw").getChannel();

69 | ByteBuffer byteBuffer = ByteBuffer.allocate(50 * 1024 * 1024);

70 |

71 | ByteBuffer directByteBuffer = ByteBuffer.allocateDirect(4 * 1024);

72 | for (int i = 0; i < 12800; i++) {

73 | directByteBuffer.clear();

74 | fileChannel.read(directByteBuffer, i * 4 * 1024);

75 | directByteBuffer.flip();

76 | byteBuffer.put(directByteBuffer);

77 | }

78 | }

79 |

80 | public void testAepReadIops()

81 | throws Exception {

82 | int threadNum = 40;

83 | String workDir = "/pmem";

84 | long totalSize = 125L * 1024 * 1024 * 1024;

85 | int bufferSize = 13 * 1024;

86 |

87 | CountDownLatch countDownLatch = new CountDownLatch(threadNum);

88 | long start = System.currentTimeMillis();

89 | for (int i = 0; i < threadNum; i++) {

90 | final int threadNo = i;

91 | new Thread(() -> {

92 | try {

93 | File file = new File(workDir + "/daofeng_" + threadNo);

94 | RandomAccessFile rw = new RandomAccessFile(file, "rw");

95 | FileChannel fileChannel = rw.getChannel();

96 | ByteBuffer byteBuffer = ByteBuffer.allocate(bufferSize);

97 | long loopTime = totalSize / bufferSize / threadNum;

98 | long maxFileLength = 60L * 1024 * 1024 * 1024 / 40 - 1024 * 1024 * 2;

99 | long position = 0;

100 | for (int t = 0; t < loopTime; t++) {

101 | byteBuffer.clear();

102 | fileChannel.read(byteBuffer, position % maxFileLength);

103 | position += bufferSize;

104 | }

105 | countDownLatch.countDown();

106 | } catch (Exception e) {

107 | e.printStackTrace();

108 | }

109 | }).start();

110 | }

111 | countDownLatch.await();

112 | System.out.println(

113 | "threadNum " + threadNum + " read " + totalSize + " bufferSize " + bufferSize + " cost " + (

114 | System.currentTimeMillis()

115 | - start) + " ms");

116 | }

117 |

118 | public void testWrite(String workDir, long totalSize, final int threadNum, final int bufferSize,

119 | final int testRound)

120 | throws Exception {

121 | CountDownLatch countDownLatch = new CountDownLatch(threadNum);

122 | long start = System.currentTimeMillis();

123 | for (int i = 0; i < threadNum; i++) {

124 | final int threadNo = i;

125 | new Thread(() -> {

126 | try {

127 | File file = new File(workDir + "/" + testRound + "_" + threadNo);

128 | RandomAccessFile rw = new RandomAccessFile(file, "rw");

129 | FileChannel fileChannel = rw.getChannel();

130 | ByteBuffer byteBuffer = ByteBuffer.allocate(bufferSize);

131 | for (int j = 0; j < bufferSize; j++) {

132 | byteBuffer.put((byte)1);

133 | }

134 | long loopTime = totalSize / bufferSize / threadNum;

135 | for (int t = 0; t < loopTime; t++) {

136 | byteBuffer.flip();

137 | fileChannel.write(byteBuffer);

138 | }

139 | countDownLatch.countDown();

140 | } catch (Exception e) {

141 | e.printStackTrace();

142 | }

143 | }).start();

144 | }

145 | countDownLatch.await();

146 | System.out.println(

147 | "threadNum " + threadNum + " write " + totalSize + " bufferSize " + bufferSize + " cost " + (

148 | System.currentTimeMillis()

149 | - start) + " ms");

150 |

151 | }

152 |

153 | public void testWriteEssd() throws Exception {

154 | CountDownLatch countDownLatch = new CountDownLatch(40);

155 |

156 | FileChannel[] fileChannels = new FileChannel[40];

157 | for (int i = 0; i < 40; i++) {

158 | String dataFilePath = "/essd/" + i;

159 | File dataFile = new File(dataFilePath);

160 | dataFile.createNewFile();

161 | fileChannels[i] = FileChannel.open(dataFile.toPath(), StandardOpenOption.READ,

162 | StandardOpenOption.WRITE);

163 | }

164 |

165 | long start = System.currentTimeMillis();

166 | for (int i = 0; i < 40; i++) {

167 | final int threadNo = i;

168 | new Thread(() -> {

169 | ByteBuffer buffer = ByteBuffer.allocateDirect(4 * 1024);

170 | for (int j = 0; j < 4 * 1024; j++) {

171 | buffer.put((byte)1);

172 | }

173 | buffer.flip();

174 |

175 | for (int t = 0; t < 384 * 1024; t++) {

176 | try {

177 | fileChannels[threadNo].write(buffer);

178 | buffer.flip();

179 | } catch (IOException e) {

180 | e.printStackTrace();

181 | }

182 | }

183 | countDownLatch.countDown();

184 | }).start();

185 | }

186 | countDownLatch.await();

187 | System.out.println("ssd write 40 * 384 * 4 * 1024 * 1024 cost " + (System.currentTimeMillis() - start) + " ms");

188 | }

189 |

190 | public void testWriteAep() throws Exception {

191 | CountDownLatch countDownLatch = new CountDownLatch(40);

192 |

193 | FileChannel[] fileChannels = new FileChannel[40];

194 | for (int i = 0; i < 40; i++) {

195 | String dataFilePath = "/pmem/" + i;

196 | File dataFile = new File(dataFilePath);

197 | dataFile.createNewFile();

198 | fileChannels[i] = FileChannel.open(dataFile.toPath(), StandardOpenOption.READ,

199 | StandardOpenOption.WRITE);

200 | }

201 |

202 | long start = System.currentTimeMillis();

203 | for (int i = 0; i < 40; i++) {

204 | final int threadNo = i;

205 | new Thread(() -> {

206 | ByteBuffer buffer = ByteBuffer.allocateDirect(4 * 1024);

207 | for (int j = 0; j < 4 * 1024; j++) {

208 | buffer.put((byte)1);

209 | }

210 | buffer.flip();

211 |

212 | for (int t = 0; t < 384 * 1024; t++) {

213 | try {

214 | fileChannels[threadNo].write(buffer);

215 | buffer.flip();

216 | } catch (IOException e) {

217 | e.printStackTrace();

218 | }

219 | }

220 | countDownLatch.countDown();

221 | }).start();

222 | }

223 | countDownLatch.await();

224 | System.out.println("aep write 40 * 384 * 4 * 1024 * 1024 cost " + (System.currentTimeMillis() - start) + " ms");

225 | }

226 |

227 | public void testWriteDram(ByteBuffer[] byteBuffers) throws InterruptedException {

228 | CountDownLatch countDownLatch = new CountDownLatch(40);

229 | long start = System.currentTimeMillis();

230 | for (int i = 0; i < 40; i++) {

231 | final int threadNo = i;

232 | new Thread(() -> {

233 | ByteBuffer buffer = byteBuffers[threadNo];

234 | for (int t = 0; t < 24; t++) {

235 | buffer.clear();

236 | for (int j = 0; j < 64 * 1024 * 1024; j++) {

237 | buffer.put((byte)1);

238 | }

239 | }

240 | countDownLatch.countDown();

241 | }).start();

242 | }

243 | countDownLatch.await();

244 | System.out.println("dram write 40 * 24 * 64 * 1024 * 1024 cost " + (System.currentTimeMillis() - start) + " ms");

245 | }

246 |

247 | public void test() {

248 | AepBenchmark aepBenchmark = new AepBenchmark();

249 | ByteBuffer[] byteBuffers = new ByteBuffer[40];

250 | for (int i = 0; i < 40; i++) {

251 | byteBuffers[i] = ByteBuffer.allocate(64 * 1024 * 1024);

252 | }

253 | try {

254 | long start = System.currentTimeMillis();

255 | CountDownLatch countDownLatch = new CountDownLatch(3);

256 | new Thread(() -> {

257 | try {

258 | aepBenchmark.testWriteAep();

259 | } catch (Exception e) {

260 | e.printStackTrace();

261 | }

262 | countDownLatch.countDown();

263 | }).start();

264 |

265 | new Thread(() -> {

266 | try {

267 | aepBenchmark.testWriteEssd();

268 | } catch (Exception e) {

269 | e.printStackTrace();

270 | }

271 | countDownLatch.countDown();

272 | }).start();

273 |

274 | new Thread(() -> {

275 | try {

276 | aepBenchmark.testWriteDram(byteBuffers);

277 | } catch (Exception e) {

278 | e.printStackTrace();

279 | }

280 | countDownLatch.countDown();

281 | }).start();

282 |

283 | countDownLatch.await();

284 | System.out.println("=== total cost: " + (System.currentTimeMillis() - start) + " ms");

285 |

286 | } catch (Exception e) {

287 | e.printStackTrace();

288 | }

289 | System.exit(0);

290 | }

291 |

292 | public static void main(String[] args) throws Exception {

293 |

294 | }

295 |

296 | }

297 |
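testWriteEssd and testWriteAep above differ only in the target directory (/essd versus /pmem). Each starts 40 threads that perform 384 × 1024 writes of a 4 KiB buffer, which is 1.5 GiB per thread and 60 GiB in total; testWriteDram writes 40 × 24 × 64 MiB, also 60 GiB, so the three timings cover the same volume. The following is a hypothetical refactoring sketch (the class ParallelWriteBench and its run method are not part of this repository) that factors the shared loop into one parameterized method. It assumes one file per thread named by thread index, as in the original, and adds three safeguards the original lacks: the latch is counted down in a finally block, each write loops until the buffer is drained because the WritableByteChannel contract permits a write to transfer fewer bytes than remain, and the channels are closed after all threads finish.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical helper (not in the original repo): N threads, one file each, sequential writes. */
public class ParallelWriteBench {

    /**
     * Starts {@code threads} writers; writer i appends {@code writesPerThread} copies of a
     * {@code blockSize}-byte buffer to the file dir/i. Returns the elapsed time in milliseconds.
     */
    public static long run(Path dir, int threads, int blockSize, long writesPerThread)
            throws IOException, InterruptedException {
        FileChannel[] channels = new FileChannel[threads];
        for (int i = 0; i < threads; i++) {
            channels[i] = FileChannel.open(dir.resolve(String.valueOf(i)),
                    StandardOpenOption.CREATE, StandardOpenOption.WRITE);
        }
        CountDownLatch done = new CountDownLatch(threads);
        AtomicReference<IOException> failure = new AtomicReference<>();
        long start = System.nanoTime();
        for (int i = 0; i < threads; i++) {
            FileChannel ch = channels[i];
            new Thread(() -> {
                ByteBuffer buf = ByteBuffer.allocateDirect(blockSize);
                while (buf.hasRemaining()) {
                    buf.put((byte) 1);                // fill the block, as the original does
                }
                try {
                    for (long t = 0; t < writesPerThread; t++) {
                        buf.rewind();                 // position = 0, limit stays blockSize
                        while (buf.hasRemaining()) {  // defensive: a write may be partial
                            ch.write(buf);
                        }
                    }
                } catch (IOException e) {
                    failure.compareAndSet(null, e);   // remember the first failure only
                } finally {
                    done.countDown();                 // never leave the caller blocked
                }
            }).start();
        }
        done.await();
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        for (FileChannel ch : channels) {
            ch.close();
        }
        if (failure.get() != null) {
            throw failure.get();
        }
        return elapsedMs;
    }

    public static void main(String[] args) throws Exception {
        Path dir = Files.createTempDirectory("bench");
        long ms = run(dir, 4, 4 * 1024, 256);  // 4 threads x 256 x 4 KiB = 4 MiB in total
        System.out.println("wrote 4 MiB in " + ms + " ms");
    }
}
```

Under these assumptions, run(Paths.get("/essd"), 40, 4 * 1024, 384 * 1024) would reproduce the shape of testWriteEssd. The sketch times with System.nanoTime, which is intended for elapsed-time measurement, rather than System.currentTimeMillis.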

--------------------------------------------------------------------------------

/src/main/java/io/openmessaging/Main.java:

--------------------------------------------------------------------------------

1 | package io.openmessaging;

2 |

3 | import java.io.File;

4 | import java.io.IOException;

5 | import java.io.RandomAccessFile;

6 | import java.lang.reflect.Field;

7 | import java.nio.ByteBuffer;

8 | import java.nio.MappedByteBuffer;

9 | import java.nio.channels.FileChannel;

10 | import java.nio.channels.FileChannel.MapMode;

11 | import java.nio.file.StandardOpenOption;

12 | import java.util.ArrayList;

13 | import java.util.List;

14 | import java.util.concurrent.ConcurrentLinkedDeque;

15 | import java.util.concurrent.CountDownLatch;

16 | import java.util.concurrent.Semaphore;

17 | import java.util.concurrent.ThreadLocalRandom;

18 | import java.util.concurrent.TimeUnit;

19 | import java.util.concurrent.locks.Condition;

20 | import java.util.concurrent.locks.Lock;

21 | import java.util.concurrent.locks.ReentrantLock;

22 |

23 | import sun.misc.VM;

24 | import sun.nio.ch.DirectBuffer;

25 |

26 | /**

27 | * @author jingfeng.xjf

28 | * @date 2021/9/15

29 | */

30 | public class Main {

31 |

32 | public static void main(String[] args) throws Exception {

33 | testMmap();