├── __init__.py

├── LLNL-CODE-802426

├── export

├── scripts_for_testing

├── demo_very-simple_fast-cam.py

├── demo_simple_fast-cam.py

├── nif_fast-cam.py

└── demo_fast-cam.py

├── images

├── snake.jpg

├── spider.png

├── IMG_1382.jpg

├── IMG_2470.jpg

├── IMG_2730.jpg

├── cat_dog.png

├── collies.JPG

├── dd_tree.jpg

├── elephant.png

├── water-bird.JPEG

├── multiple_dogs.jpg

├── 00.00230.03456.105.png

├── 00.00384.02880.105.png

├── 01.13104.08462.000.png

├── 02.08404.03657.090.png

├── 05.11065.01451.105.png

├── 07.06436.09583.135.png

├── 09.08931.05768.075.png

├── 11.02691.07335.030.png

├── 15.11213.10090.030.png

├── ILSVRC2012_val_00049169.JPEG

├── ILSVRC2012_val_00049273.JPEG

├── ILSVRC2012_val_00049702.JPEG

├── ILSVRC2012_val_00049929.JPEG

├── ILSVRC2012_val_00049931.JPEG

├── ILSVRC2012_val_00049937.JPEG

├── ILSVRC2012_val_00049965.JPEG

└── ILSVRC2012_val_00049934.224x224.png

├── mdimg

├── option.jpg

├── SMOE-v-STD.jpg

├── roar_kar.png

├── LOVI_Layers_web.jpg

├── histogram_values.jpg

├── ResNet_w_Salmaps_2.jpg

├── layer_map_examples_2.jpg

├── many_fastcam_examples.jpg

└── fast-cam.ILSVRC2012_val_00049934.jpg

├── example_outputs

├── IMG_1382_CAM_PP.jpg

├── IMG_2470_CAM_PP.jpg

├── IMG_2730_CAM_PP.jpg

├── collies_CAM_PP.jpg

├── water-bird_CAM_PP.jpg

├── multiple_dogs_CAM_PP.jpg

├── ILSVRC2012_val_00049169_CAM_PP.jpg

├── ILSVRC2012_val_00049273_CAM_PP.jpg

├── ILSVRC2012_val_00049702_CAM_PP.jpg

├── ILSVRC2012_val_00049929_CAM_PP.jpg

├── ILSVRC2012_val_00049931_CAM_PP.jpg

├── ILSVRC2012_val_00049937_CAM_PP.jpg

├── ILSVRC2012_val_00049965_CAM_PP.jpg

└── ILSVRC2012_val_00049934.224x224_CAM_PP.jpg

├── requirements.txt

├── .github

└── ISSUE_TEMPLATE

│ ├── custom.md

│ ├── feature_request.md

│ └── bug_report.md

├── LICENSE

├── .gitignore

├── CODE_OF_CONDUCT.md

├── conditional.py

├── README.md

├── scripts

└── create_many_comparison.py

├── mask.py

├── draw.py

├── bidicam.py

├── norm.py

├── resnet.py

├── misc.py

└── maps.py

/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/LLNL-CODE-802426:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/export/scripts_for_testing:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/images/snake.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/snake.jpg

--------------------------------------------------------------------------------

/images/spider.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/spider.png

--------------------------------------------------------------------------------

/mdimg/option.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/option.jpg

--------------------------------------------------------------------------------

/images/IMG_1382.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/IMG_1382.jpg

--------------------------------------------------------------------------------

/images/IMG_2470.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/IMG_2470.jpg

--------------------------------------------------------------------------------

/images/IMG_2730.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/IMG_2730.jpg

--------------------------------------------------------------------------------

/images/cat_dog.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/cat_dog.png

--------------------------------------------------------------------------------

/images/collies.JPG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/collies.JPG

--------------------------------------------------------------------------------

/images/dd_tree.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/dd_tree.jpg

--------------------------------------------------------------------------------

/images/elephant.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/elephant.png

--------------------------------------------------------------------------------

/mdimg/SMOE-v-STD.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/SMOE-v-STD.jpg

--------------------------------------------------------------------------------

/mdimg/roar_kar.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/roar_kar.png

--------------------------------------------------------------------------------

/images/water-bird.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/water-bird.JPEG

--------------------------------------------------------------------------------

/images/multiple_dogs.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/multiple_dogs.jpg

--------------------------------------------------------------------------------

/mdimg/LOVI_Layers_web.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/LOVI_Layers_web.jpg

--------------------------------------------------------------------------------

/mdimg/histogram_values.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/histogram_values.jpg

--------------------------------------------------------------------------------

/images/00.00230.03456.105.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/00.00230.03456.105.png

--------------------------------------------------------------------------------

/images/00.00384.02880.105.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/00.00384.02880.105.png

--------------------------------------------------------------------------------

/images/01.13104.08462.000.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/01.13104.08462.000.png

--------------------------------------------------------------------------------

/images/02.08404.03657.090.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/02.08404.03657.090.png

--------------------------------------------------------------------------------

/images/05.11065.01451.105.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/05.11065.01451.105.png

--------------------------------------------------------------------------------

/images/07.06436.09583.135.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/07.06436.09583.135.png

--------------------------------------------------------------------------------

/images/09.08931.05768.075.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/09.08931.05768.075.png

--------------------------------------------------------------------------------

/images/11.02691.07335.030.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/11.02691.07335.030.png

--------------------------------------------------------------------------------

/images/15.11213.10090.030.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/15.11213.10090.030.png

--------------------------------------------------------------------------------

/mdimg/ResNet_w_Salmaps_2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/ResNet_w_Salmaps_2.jpg

--------------------------------------------------------------------------------

/mdimg/layer_map_examples_2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/layer_map_examples_2.jpg

--------------------------------------------------------------------------------

/mdimg/many_fastcam_examples.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/many_fastcam_examples.jpg

--------------------------------------------------------------------------------

/example_outputs/IMG_1382_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/IMG_1382_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/IMG_2470_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/IMG_2470_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/IMG_2730_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/IMG_2730_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/collies_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/collies_CAM_PP.jpg

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049169.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049169.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049273.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049273.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049702.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049702.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049929.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049929.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049931.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049931.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049937.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049937.JPEG

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049965.JPEG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049965.JPEG

--------------------------------------------------------------------------------

/example_outputs/water-bird_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/water-bird_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/multiple_dogs_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/multiple_dogs_CAM_PP.jpg

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy

2 | jupyterlab

3 | notebook

4 | torch

5 | torchvision

6 | opencv-python-nonfree

7 | pytorch_gradcam

8 |

--------------------------------------------------------------------------------

/images/ILSVRC2012_val_00049934.224x224.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/images/ILSVRC2012_val_00049934.224x224.png

--------------------------------------------------------------------------------

/mdimg/fast-cam.ILSVRC2012_val_00049934.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/mdimg/fast-cam.ILSVRC2012_val_00049934.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049169_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049169_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049273_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049273_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049702_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049702_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049929_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049929_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049931_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049931_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049937_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049937_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049965_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049965_CAM_PP.jpg

--------------------------------------------------------------------------------

/example_outputs/ILSVRC2012_val_00049934.224x224_CAM_PP.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/llnl/fastcam/HEAD/example_outputs/ILSVRC2012_val_00049934.224x224_CAM_PP.jpg

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/custom.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Custom issue template

3 | about: Describe this issue template's purpose here.

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 |

11 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Desktop (please complete the following information):**

27 | - OS: [e.g. iOS]

28 | - Browser [e.g. chrome, safari]

29 | - Version [e.g. 22]

30 |

31 | **Smartphone (please complete the following information):**

32 | - Device: [e.g. iPhone6]

33 | - OS: [e.g. iOS8.1]

34 | - Browser [e.g. stock browser, safari]

35 | - Version [e.g. 22]

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2020, Lawrence Livermore National Laboratory

4 | All rights reserved.

5 |

6 | Redistribution and use in source and binary forms, with or without

7 | modification, are permitted provided that the following conditions are met:

8 |

9 | 1. Redistributions of source code must retain the above copyright notice, this

10 | list of conditions and the following disclaimer.

11 |

12 | 2. Redistributions in binary form must reproduce the above copyright notice,

13 | this list of conditions and the following disclaimer in the documentation

14 | and/or other materials provided with the distribution.

15 |

16 | 3. Neither the name of the copyright holder nor the names of its

17 | contributors may be used to endorse or promote products derived from

18 | this software without specific prior written permission.

19 |

20 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

21 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

22 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

23 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

24 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

25 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

26 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

27 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

28 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

29 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

30 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Contributor Covenant Code of Conduct

2 |

3 | ## Our Pledge

4 |

5 | In the interest of fostering an open and welcoming environment, we as

6 | contributors and maintainers pledge to making participation in our project and

7 | our community a harassment-free experience for everyone, regardless of age, body

8 | size, disability, ethnicity, sex characteristics, gender identity and expression,

9 | level of experience, education, socio-economic status, nationality, personal

10 | appearance, race, religion, or sexual identity and orientation.

11 |

12 | ## Our Standards

13 |

14 | Examples of behavior that contributes to creating a positive environment

15 | include:

16 |

17 | * Using welcoming and inclusive language

18 | * Being respectful of differing viewpoints and experiences

19 | * Gracefully accepting constructive criticism

20 | * Focusing on what is best for the community

21 | * Showing empathy towards other community members

22 |

23 | Examples of unacceptable behavior by participants include:

24 |

25 | * The use of sexualized language or imagery and unwelcome sexual attention or

26 | advances

27 | * Trolling, insulting/derogatory comments, and personal or political attacks

28 | * Public or private harassment

29 | * Publishing others' private information, such as a physical or electronic

30 | address, without explicit permission

31 | * Other conduct which could reasonably be considered inappropriate in a

32 | professional setting

33 |

34 | ## Our Responsibilities

35 |

36 | Project maintainers are responsible for clarifying the standards of acceptable

37 | behavior and are expected to take appropriate and fair corrective action in

38 | response to any instances of unacceptable behavior.

39 |

40 | Project maintainers have the right and responsibility to remove, edit, or

41 | reject comments, commits, code, wiki edits, issues, and other contributions

42 | that are not aligned to this Code of Conduct, or to ban temporarily or

43 | permanently any contributor for other behaviors that they deem inappropriate,

44 | threatening, offensive, or harmful.

45 |

46 | ## Scope

47 |

48 | This Code of Conduct applies both within project spaces and in public spaces

49 | when an individual is representing the project or its community. Examples of

50 | representing a project or community include using an official project e-mail

51 | address, posting via an official social media account, or acting as an appointed

52 | representative at an online or offline event. Representation of a project may be

53 | further defined and clarified by project maintainers.

54 |

55 | ## Enforcement

56 |

57 | Instances of abusive, harassing, or otherwise unacceptable behavior may be

58 | reported by contacting the project team at github-admin@llnl.gov. All

59 | complaints will be reviewed and investigated and will result in a response that

60 | is deemed necessary and appropriate to the circumstances. The project team is

61 | obligated to maintain confidentiality with regard to the reporter of an incident.

62 | Further details of specific enforcement policies may be posted separately.

63 |

64 | Project maintainers who do not follow or enforce the Code of Conduct in good

65 | faith may face temporary or permanent repercussions as determined by other

66 | members of the project's leadership.

67 |

68 | ## Attribution

69 |

70 | This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,

71 | available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

72 |

73 | [homepage]: https://www.contributor-covenant.org

74 |

75 | For answers to common questions about this code of conduct, see

76 | https://www.contributor-covenant.org/faq

77 |

--------------------------------------------------------------------------------

/conditional.py:

--------------------------------------------------------------------------------

1 | '''

2 | BSD 3-Clause License

3 |

4 | Copyright (c) 2020, Lawrence Livermore National Laboratory

5 | All rights reserved.

6 |

7 | Redistribution and use in source and binary forms, with or without

8 | modification, are permitted provided that the following conditions are met:

9 |

10 | 1. Redistributions of source code must retain the above copyright notice, this

11 | list of conditions and the following disclaimer.

12 |

13 | 2. Redistributions in binary form must reproduce the above copyright notice,

14 | this list of conditions and the following disclaimer in the documentation

15 | and/or other materials provided with the distribution.

16 |

17 | 3. Neither the name of the copyright holder nor the names of its

18 | contributors may be used to endorse or promote products derived from

19 | this software without specific prior written permission.

20 |

21 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

22 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

23 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

24 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

25 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

26 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

27 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

28 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

29 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

30 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

31 | '''

32 | '''

33 | https://github.com/LLNL/fastcam

34 |

35 | A toolkit for efficent computation of saliency maps for explainable

36 | AI attribution.

37 |

38 | This work was performed under the auspices of the U.S. Department of Energy

39 | by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344

40 | and was supported by the LLNL-LDRD Program under Project 18-ERD-021 and

41 | Project 17-SI-003.

42 |

43 | Software released as LLNL-CODE-802426.

44 |

45 | See also: https://arxiv.org/abs/1911.11293

46 | '''

47 | import torch

48 | import torch.nn as nn

49 | import torch.nn.functional as F

50 |

51 | try:

52 | from . import maps

53 | except ImportError:

54 | import maps

55 |

56 |

57 |

58 | class ConditionalSaliencyMaps(maps.CombineSaliencyMaps):

59 | r'''

60 | This will combine saliency maps into a single weighted saliency map.

61 |

62 | Input is a list of 3D tensors or various sizes.

63 | Output is a 3D tensor of size output_size

64 |

65 | num_maps specifies how many maps we will combine

66 | weights is an optional list of weights for each layer e.g. [1, 2, 3, 4, 5]

67 | '''

68 |

69 | def __init__(self, **kwargs):

70 |

71 | super(ConditionalSaliencyMaps, self).__init__(**kwargs)

72 |

73 | def forward(self, xmap, ymaps, reverse=False):

74 |

75 | r'''

76 | Input shapes are something like [64,7,7] i.e. [batch size x layer_height x layer_width]

77 | Output shape is something like [64,224,244] i.e. [batch size x image_height x image_width]

78 | '''

79 |

80 | assert(isinstance(xmap,list))

81 | assert(len(xmap) == self.map_num)

82 | assert(len(xmap[0].size()) == 3)

83 |

84 | bn = xmap[0].size()[0]

85 | cm = torch.zeros((bn, 1, self.output_size[0], self.output_size[1]), dtype=xmap[0].dtype, device=xmap[0].device)

86 | ww = []

87 |

88 | r'''

89 | Now get each saliency map and resize it. Then store it and also create a combined saliency map.

90 | '''

91 | for i in range(len(xmap)):

92 | assert(torch.is_tensor(xmap[i]))

93 | wsz = xmap[i].size()

94 | wx = xmap[i].reshape(wsz[0], 1, wsz[1], wsz[2]) + 0.0000001

95 | w = torch.zeros_like(wx)

96 |

97 | if reverse:

98 | for j in range(len(ymaps)):

99 | wy = ymaps[j][i].reshape(wsz[0], 1, wsz[1], wsz[2]) + 0.0000001

100 |

101 | w -= wx*torch.log2(wx/wy)

102 | else:

103 | for j in range(len(ymaps)):

104 | wy = ymaps[j][i].reshape(wsz[0], 1, wsz[1], wsz[2]) + 0.0000001

105 |

106 | w -= wy*torch.log2(wy/wx)

107 |

108 | w = torch.clamp(w,0.0000001,1)

109 | w = nn.functional.interpolate(w, size=self.output_size, mode=self.resize_mode, align_corners=False)

110 |

111 | ww.append(w)

112 | cm += (w * self.weights[i])

113 |

114 | cm = cm / self.weight_sum

115 | cm = cm.reshape(bn, self.output_size[0], self.output_size[1])

116 |

117 | ww = torch.stack(ww,dim=1)

118 | ww = ww.reshape(bn, self.map_num, self.output_size[0], self.output_size[1])

119 |

120 | return cm, ww

121 |

122 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Efficient Saliency and FastCAM

2 |

3 | FastCAM creates a saliency map using SMOE Scale saliency maps as described in our paper on

4 | [ArXiv:1911.11293](https://arxiv.org/abs/1911.11293). We obtain a highly significant speed-up by replacing

5 | the Guided Backprop component typically used alongside GradCAM with our SMOE Scale saliency map.

6 | Additionally, the expected accuracy of the saliency map is increased slightly. Thus, **FastCAM is three orders of magnitude faster and a little bit more accurate than GradCAM+Guided Backprop with SmoothGrad.**

7 |

8 |

9 |

10 | ## Performance

11 |

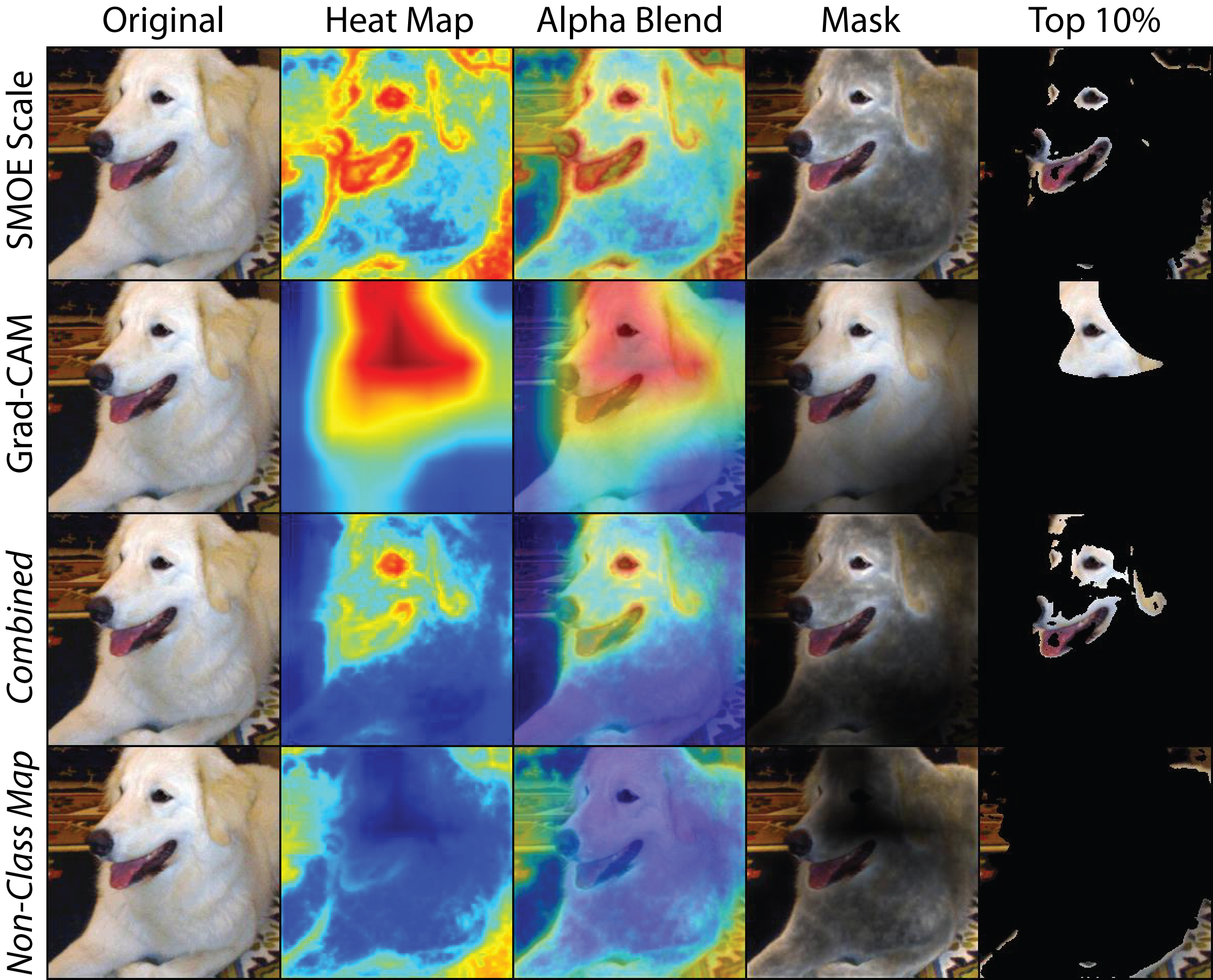

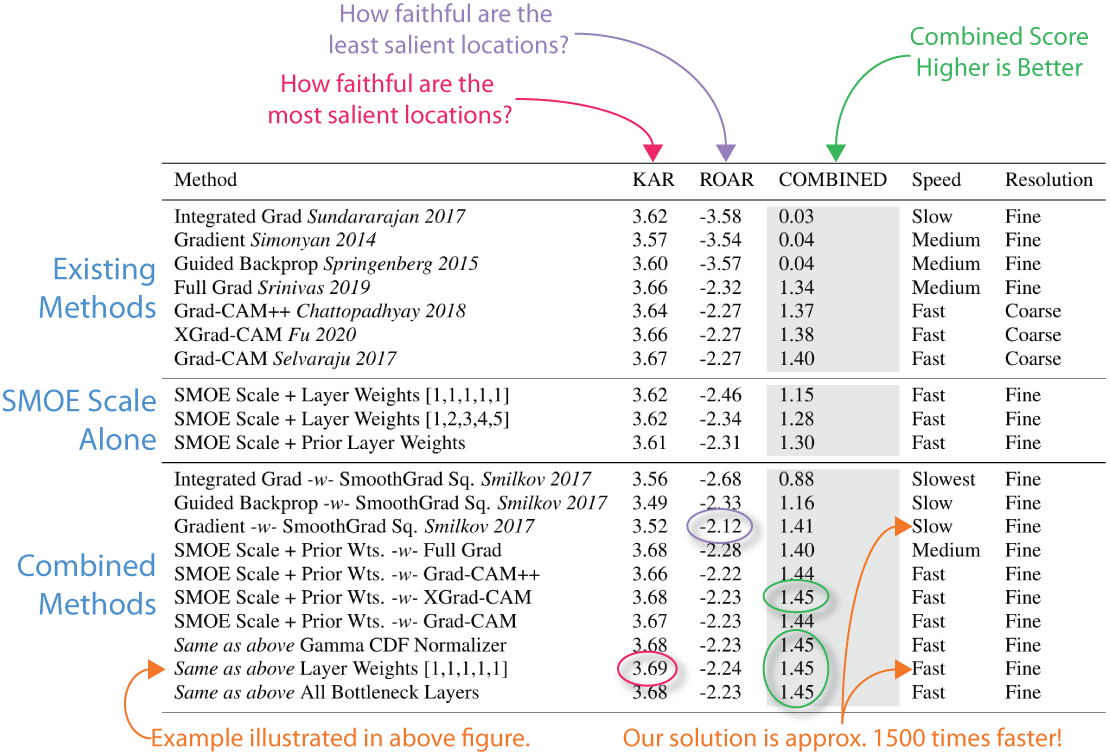

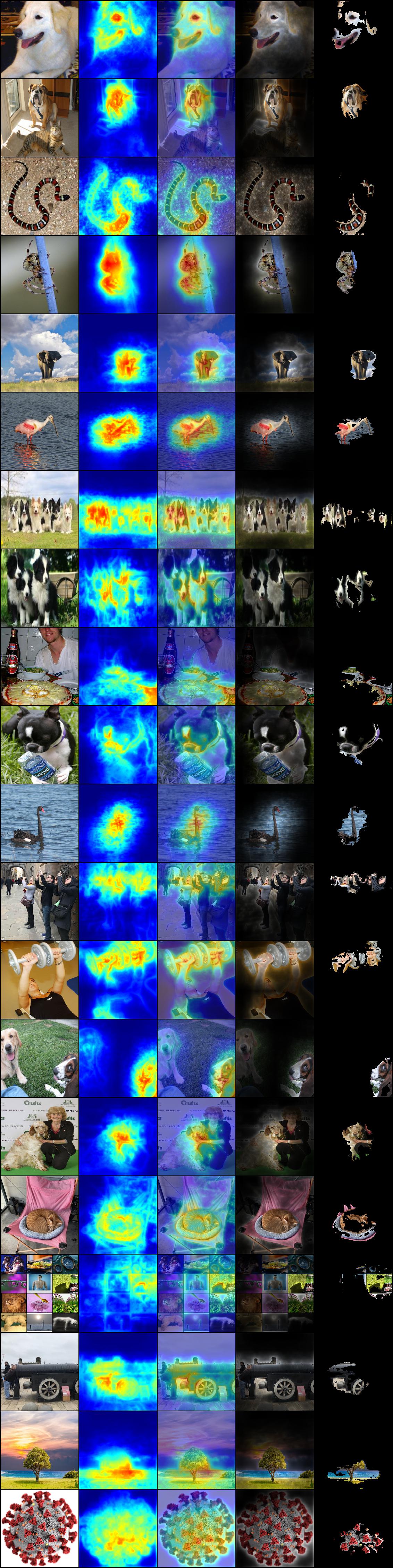

12 | FastCAM is not only fast, but it is more accurate than most methods. The following is a list of [ROAR/KAR](https://arxiv.org/abs/1806.10758) ImageNet scores for different methods along with notes about performance. In gray is the total ROAR/KAR score. Higher is better. The last line is the score for the combined map you see here.

13 |

14 |

15 |

16 | ## How Does it Work?

17 |

18 |

19 |

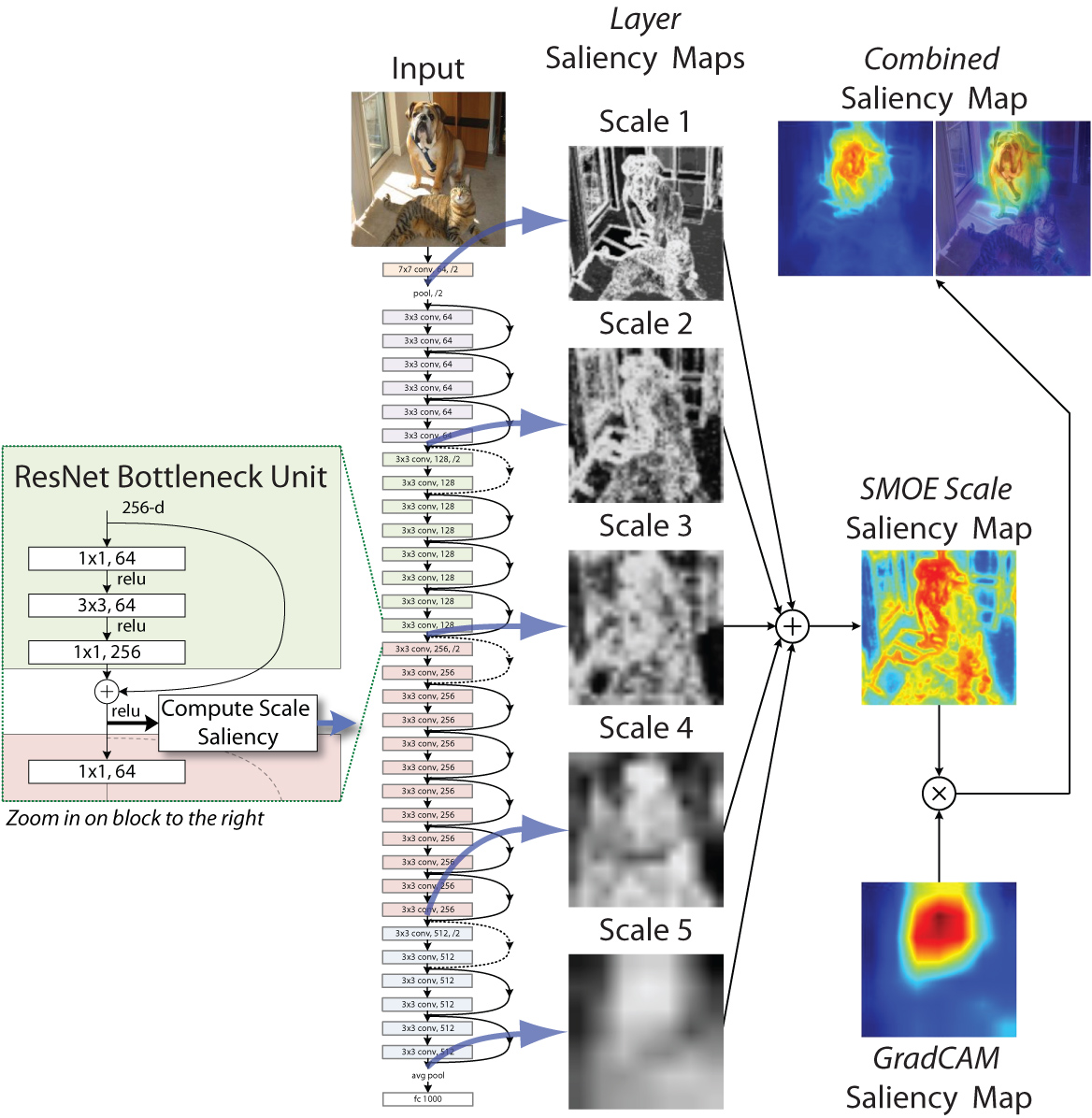

20 | We compute the saliency map by computing the *conditional entropy* between the mean activation in a layer and the individual values. This gives us the **SMOE Scale** for a given layer. We apply this to the layer at the end of each spatial scale in the network and then take a weighted average. Finally, we combine it with GradCAM by multiplying the combined SMOE Scale saliency map with the GradCAM saliency map.

21 |

22 |

23 | ## Installation

24 |

25 | The FastCAM package runs on **Python 3.x**. The package should run on **Python 2.x**. However, since

26 | the end of product life for 2.x has been announced, we will not actively support it going forward.

27 | All extra requirements are available through *pip* installation. On *IBM Power* based architecture,

28 | some packages may have to be hand installed, but it's totally doable. We have tested on Linux, MacOS and Windows.

29 | Let us know if you have any issues.

30 |

31 | The primary functionality is demonstrated using a **Jupyter notebook**. By following it, you should be

32 | able to see how to use FastCAM on your own deep network.

33 |

34 | ### Required Packages

35 |

36 | When you run the installation, these packages should automatically install for you.

37 |

38 | numpy

39 | jupyterlab

40 | notebook

41 | torch

42 | torchvision

43 | opencv-python

44 | pytorch_gradcam

45 |

46 | ### Install and Run the Demo!

47 |

48 |

49 |

50 | This will run our [Jupyter Notebook](https://github.com/LLNL/fastcam/blob/master/demo_fast-cam.ipynb) on your local computer.

51 |

52 | **Optionally** if you don't care how it runs and just want to run it, use our [simplified notebook](https://github.com/LLNL/fastcam/blob/master/demo_simple_fast-cam.ipynb).

53 |

54 | **Double Optionally** if you just want to run it and really really really don't care about how it works, use our [notebook for the exceptionally impatient](https://github.com/LLNL/fastcam/blob/master/demo_very-simple_fast-cam.ipynb).

55 |

56 | **Experimentally** we have a [PyTorch Captum framework version of FastCAM](https://github.com/LLNL/fastcam/blob/master/demo-captum.ipynb).

57 |

58 | These are our recommended installation steps:

59 |

60 | git clone git@github.com:LLNL/fastcam.git

61 |

62 | or

63 |

64 | git clone https://github.com/LLNL/fastcam.git

65 |

66 | then do:

67 |

68 | cd fastcam

69 | python3 -m venv venv3

70 | source venv3/bin/activate

71 | pip install -r requirements.txt

72 |

73 | Next you will need to start the jupyter notebook:

74 |

75 | jupyter notebook

76 |

77 | It should start the jupyter web service and create a notebook instance in your browser. You can then click on

78 |

79 | demo_fast-cam.ipynb

80 |

81 | To run the notebook, click on the double arrow (fast forward) button at the top of the web page.

82 |

83 |

84 |

85 | ### Installation Notes

86 |

87 | **1. You don't need a GPU**

88 |

89 | Because FastCAM is ... well ... fast, you can install and run the demo on a five-year-old MacBook without GPU support. You just need to make sure you have enough RAM to run a forward pass of ResNet 50.

90 |

91 | **2. Pillow Version Issue**

92 |

93 | If you get:

94 |

95 | cannot import name ‘PILLOW_VERSION’

96 |

97 | This is a known weird issue between Pillow and Torchvision, install an older version as such:

98 |

99 | pip install pillow=6.2.1

100 |

101 | **3. PyTorch GradCAM Path Issue**

102 |

103 | The library does not seem to set the python path for you. You may have to set it manually. For example in Bash,

104 | we can set it as such:

105 |

106 | export PYTHONPATH=$PYTHONPATH:/path/to/my/python/lib/python3.7/site-packages/

107 |

108 | If you want to know where that is, try:

109 |

110 | which python

111 |

112 | you will see:

113 |

114 | /path/to/my/python/bin/python

115 |

116 | ## Many More Examples

117 |

118 |

119 |

120 | ## Contact

121 |

122 | Questions, concerns and friendly banter can be addressed to:

123 |

124 | T. Nathan Mundhenk [mundhenk1@llnl.gov](mundhenk1@llnl.gov)

125 |

126 | ## License

127 |

128 | FastCAM is distributed under the [BSD 3-Clause License](https://github.com/LLNL/fastcam/blob/master/LICENSE).

129 |

130 | LLNL-CODE-802426

131 |

132 |

133 |

134 |

--------------------------------------------------------------------------------

/export/demo_very-simple_fast-cam.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # coding: utf-8

3 |

4 | # # DEMO: Running FastCAM for the Exceptionally Impatient

5 |

6 | # ### Import Libs

7 |

8 | # In[1]:

9 |

10 |

11 | import os

12 | from IPython.display import Image

13 |

14 |

15 | # Lets load the **PyTorch** Stuff.

16 |

17 | # In[2]:

18 |

19 |

20 | import torch

21 | import torch.nn.functional as F

22 | from torchvision.utils import make_grid, save_image

23 |

24 | import warnings

25 | warnings.filterwarnings('ignore')

26 |

27 |

28 | # Now we import the saliency libs for **this package**.

29 |

30 | # In[3]:

31 |

32 |

33 | import maps

34 | import misc

35 | import mask

36 | import norm

37 | import resnet

38 |

39 |

40 | # ### Set Adjustable Parameters

41 | # This is where we can set some parameters like the image name and the layer weights.

42 |

43 | # In[4]:

44 |

45 |

46 | input_image_name = "ILSVRC2012_val_00049934.224x224.png" # Our input image to process

47 | output_dir = 'outputs' # Where to save our output images

48 | input_dir = 'images' # Where to load our inputs from

49 | in_height = 224 # Size to scale input image to

50 | in_width = 224 # Size to scale input image to

51 |

52 |

53 | # Now we set up what layers we want to sample from and what weights to give each layer. We specify the layer block name within ResNet were we will pull the forward SMOE Scale results from. The results will be from the end of each layer block.

54 |

55 | # In[5]:

56 |

57 |

58 | weights = [1.0, 1.0, 1.0, 1.0, 1.0] # Equal Weights work best

59 | # when using with GradCAM

60 | layers = ['relu','layer1','layer2','layer3','layer4']

61 |

62 |

63 | # **OPTIONAL:** We can auto compute which layers to run over by setting them to *None*. **This has not yet been quantitatively tested on ROAR/KARR.**

64 |

65 | # In[6]:

66 |

67 |

68 | #weights = None

69 | #layers = None

70 |

71 |

72 | # ### Set Up Loading and Saving File Names

73 | # Lets touch up where to save output and what name to use for output files.

74 |

75 | # In[7]:

76 |

77 |

78 | save_prefix = os.path.split(os.path.splitext(input_image_name)[0])[-1] # Chop the file extension and path

79 | load_image_name = os.path.join(input_dir, input_image_name)

80 |

81 | os.makedirs(output_dir, exist_ok=True)

82 |

83 |

84 | # Take a look at the input image ...

85 | # Good Doggy!

86 |

87 | # In[8]:

88 |

89 |

90 | Image(filename=load_image_name)

91 |

92 |

93 | # ### Set Up Usual PyTorch Network Stuff

94 | # Go ahead and create a standard PyTorch device. It can use the CPU if no GPU is present. This demo works pretty well on just CPU.

95 |

96 | # In[9]:

97 |

98 |

99 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

100 |

101 |

102 | # Now we will create a model. Here we have a slightly customized ResNet that will only propagate backwards the last few layers. The customization is just a wrapper around the stock ResNet that comes with PyTorch. SMOE Scale computation does not need any gradients and GradCAM variants only need the last few layers. This will really speed things up, but don't try to train this network. You can train the regular ResNet if you need to do that. Since this network is just a wrapper, it will load any standard PyTorch ResNet training weights.

103 |

104 | # In[10]:

105 |

106 |

107 | model = resnet.resnet50(pretrained=True)

108 | model = model.to(device)

109 |

110 |

111 | # ### Load Images

112 | # Lets load in our image into standard torch tensors. We will do a simple resize on it.

113 |

114 | # In[11]:

115 |

116 |

117 | in_tensor = misc.LoadImageToTensor(load_image_name, device)

118 | in_tensor = F.interpolate(in_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

119 |

120 |

121 | # For illustration purposes, Lets also load a non-normalized version of the input.

122 |

123 | # In[12]:

124 |

125 |

126 | raw_tensor = misc.LoadImageToTensor(load_image_name, device, norm=False)

127 | raw_tensor = F.interpolate(raw_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

128 |

129 |

130 | # ### Set Up Saliency Objects

131 |

132 | # Choose our layer normalization method.

133 |

134 | # In[13]:

135 |

136 |

137 | #norm_method = norm.GaussNorm2D

138 | norm_method = norm.GammaNorm2D # A little more accurate, but much slower

139 |

140 |

141 | # We create an object to create the saliency map given the model and layers we have selected.

142 |

143 | # In[14]:

144 |

145 |

146 | getSalmap = maps.SaliencyModel(model, layers, output_size=[in_height,in_width], weights=weights,

147 | norm_method=norm_method)

148 |

149 |

150 | # Now we set up our masking object to create a nice mask image of the %10 most salient locations. You will see the results below when it is run.

151 |

152 | # In[15]:

153 |

154 |

155 | getMask = mask.SaliencyMaskDropout(keep_percent = 0.1, scale_map=False)

156 |

157 |

158 | # ### Run Saliency

159 | # Now lets run our input tensor image through the net and get the 2D saliency map back.

160 |

161 | # In[16]:

162 |

163 |

164 | cam_map,_,_ = getSalmap(in_tensor)

165 |

166 |

167 | # ### Visualize It

168 | # Take the images and create a nice tiled image to look at. This will created a tiled image of:

169 | #

170 | # (1) The input image.

171 | # (2) The saliency map.

172 | # (3) The saliency map overlaid on the input image.

173 | # (4) The raw image enhanced with the most salient locations.

174 | # (5) The top 10% most salient locations.

175 |

176 | # In[17]:

177 |

178 |

179 | images = misc.TileOutput(raw_tensor, cam_map, getMask)

180 |

181 |

182 | # We now put all the images into a nice grid for display.

183 |

184 | # In[18]:

185 |

186 |

187 | images = make_grid(torch.cat(images,0), nrow=5)

188 |

189 |

190 | # ... save and look at it.

191 |

192 | # In[19]:

193 |

194 |

195 | output_name = "{}.FASTCAM.jpg".format(save_prefix)

196 | output_path = os.path.join(output_dir, output_name)

197 |

198 | save_image(images, output_path)

199 | Image(filename=output_path)

200 |

201 |

202 | # This image should look **exactly** like the one on the README.md on Github minus the text.

203 |

204 | # In[ ]:

205 |

206 |

207 |

208 |

209 |

--------------------------------------------------------------------------------

/scripts/create_many_comparison.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # coding: utf-8

3 |

4 | import os

5 | import torch

6 | from torchvision import models

7 |

8 | # Lets load things we need for **Grad-CAM**

9 | from torchvision.utils import make_grid, save_image

10 | import torch.nn.functional as F

11 |

12 | #from gradcam.utils import visualize_cam

13 | from gradcam import GradCAM

14 |

15 | # The GradCAM kit throws a warning we don't need to see for this demo.

16 | import warnings

17 | warnings.filterwarnings('ignore')

18 |

19 | # Now we import the code for **this package**.

20 | import maps

21 | import misc

22 | import mask

23 | import norm

24 | import resnet

25 |

26 | # This is where we can set some parameters like the image name and the layer weights.

27 | files = [ "ILSVRC2012_val_00049169.JPEG",

28 | "ILSVRC2012_val_00049273.JPEG",

29 | "ILSVRC2012_val_00049702.JPEG",

30 | "ILSVRC2012_val_00049929.JPEG",

31 | "ILSVRC2012_val_00049931.JPEG",

32 | "ILSVRC2012_val_00049937.JPEG",

33 | "ILSVRC2012_val_00049965.JPEG",

34 | "ILSVRC2012_val_00049934.224x224.png",

35 | "IMG_1382.jpg",

36 | "IMG_2470.jpg",

37 | "IMG_2730.jpg",

38 | "Nate_Face.png",

39 | "brant.png",

40 | "cat_dog.png",

41 | "collies.JPG",

42 | "dd_tree.jpg",

43 | "elephant.png",

44 | "multiple_dogs.jpg",

45 | "sanity.jpg",

46 | "snake.jpg",

47 | "spider.png",

48 | "swan_image.png",

49 | "water-bird.JPEG"]

50 |

51 |

52 | # Lets set up where to save output and what name to use.

53 | output_dir = 'outputs' # Where to save our output images

54 | input_dir = 'images' # Where to load our inputs from

55 |

56 | weights = [1.0, 1.0, 1.0, 1.0, 1.0] # Equal Weights work best

57 | # when using with GradCAM

58 |

59 | #weights = [0.18, 0.15, 0.37, 0.4, 0.72] # Our saliency layer weights

60 | # From paper:

61 | # https://arxiv.org/abs/1911.11293

62 |

63 | in_height = 224 # Size to scale input image to

64 | in_width = 224 # Size to scale input image to

65 |

66 | # Choose how we want to normalize each map.

67 | #norm_method = norm.GaussNorm2D

68 | norm_method = norm.GammaNorm2D # A little more accurate, but much slower

69 |

70 | # You will need to pick out which layers to process. Here we grab the end of each group of layers by scale.

71 | layers = ['relu','layer1','layer2','layer3','layer4']

72 |

73 | # Now we create a model in PyTorch and send it to our device.

74 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

75 | model = models.resnet50(pretrained=True)

76 | model = model.to(device)

77 |

78 | many_image = []

79 |

80 |

81 | for f in files:

82 | model.eval()

83 |

84 | print("Image {}".format(f))

85 |

86 | input_image_name = f # Our input image to process

87 |

88 | save_prefix = os.path.split(os.path.splitext(input_image_name)[0])[-1] # Chop the file extension and path

89 | load_image_name = os.path.join(input_dir, input_image_name)

90 |

91 | os.makedirs(output_dir, exist_ok=True)

92 |

93 | # Lets load in our image. We will do a simple resize on it.

94 | in_tensor = misc.LoadImageToTensor(load_image_name, device)

95 | in_tensor = F.interpolate(in_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

96 |

97 | # Now, lets get the Grad-CAM++ saliency map only.

98 | resnet_gradcam = GradCAM.from_config(model_type='resnet', arch=model, layer_name='layer4')

99 | cam_map, logit = resnet_gradcam(in_tensor)

100 |

101 | # Create our saliency map object. We hand it our Torch model and names for the layers we want to tap.

102 | get_salmap = maps.SaliencyModel(model, layers, output_size=[in_height,in_width], weights=weights,

103 | norm_method=norm_method)

104 |

105 |

106 | # Get Forward sal map

107 | csmap,smaps,_ = get_salmap(in_tensor)

108 |

109 |

110 | # Let's get our original input image back. We will just use this one for visualization.

111 | raw_tensor = misc.LoadImageToTensor(load_image_name, device, norm=False)

112 | raw_tensor = F.interpolate(raw_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

113 |

114 |

115 | # We create an object to get back the mask of the saliency map

116 | getMask = mask.SaliencyMaskDropout(keep_percent = 0.1, scale_map=False)

117 |

118 |

119 | # Now we will create illustrations of the combined saliency map.

120 | images = []

121 | images = misc.TileOutput(raw_tensor,csmap,getMask,images)

122 |

123 | # Let's double check and make sure it's picking the correct class

124 | too_logit = logit.max(1)

125 | print("Network Class Output: {} : Value {} ".format(too_logit[1][0],too_logit[0][0]))

126 |

127 |

128 | # Now visualize the results

129 | images = misc.TileOutput(raw_tensor, cam_map.squeeze(0), getMask, images)

130 |

131 |

132 | # ### Combined CAM and SMOE Scale Maps

133 | # Now we combine the Grad-CAM map and the SMOE Scale saliency maps in the same way we would combine Grad-CAM with Guided Backprop.

134 | fastcam_map = csmap*cam_map

135 |

136 |

137 | # Now let's visualize the combined saliency map from SMOE Scale and GradCAM++.

138 | images = misc.TileOutput(raw_tensor, fastcam_map.squeeze(0), getMask, images)

139 |

140 |

141 | # ### Get Non-class map

142 | # Now we combine the Grad-CAM map and the SMOE Scale saliency maps but create a map of the **non-class** objects. These are salient locations that the network found interesting, but are not part of the object class.

143 | nonclass_map = csmap*(1.0 - cam_map)

144 |

145 |

146 | # Now let's visualize the combined non-class saliency map from SMOE Scale and GradCAM++.

147 | images = misc.TileOutput(raw_tensor, nonclass_map.squeeze(0), getMask, images)

148 |

149 | many_image = misc.TileMaps(raw_tensor, csmap, cam_map.squeeze(0), fastcam_map.squeeze(0), many_image)

150 |

151 |

152 | # ### Visualize this....

153 | # We now put all the images into a nice grid for display.

154 | images = make_grid(torch.cat(images,0), nrow=5)

155 |

156 | # ... save and look at it.

157 | output_name = "{}.CAM.jpg".format(save_prefix)

158 | output_path = os.path.join(output_dir, output_name)

159 |

160 | save_image(images, output_path)

161 |

162 | del in_tensor

163 | del raw_tensor

164 |

165 | many_image = make_grid(torch.cat(many_image,0), nrow=4)

166 | output_name = "many.CAM.jpg".format(save_prefix)

167 | output_path = os.path.join(output_dir, output_name)

168 |

169 | save_image(many_image, output_path)

170 |

--------------------------------------------------------------------------------

/export/demo_simple_fast-cam.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # coding: utf-8

3 |

4 | # # DEMO: Running FastCAM using SMOE Scale Saliency Maps

5 |

6 | # ## Initial code setup

7 |

8 | # In[1]:

9 |

10 |

11 | import os

12 | import torch

13 | from torchvision import models

14 | from IPython.display import Image

15 |

16 |

17 | # Lets load things we need for **Grad-CAM**

18 |

19 | # In[2]:

20 |

21 |

22 | from torchvision.utils import make_grid, save_image

23 | import torch.nn.functional as F

24 |

25 | #from gradcam.utils import visualize_cam

26 | from gradcam import GradCAMpp, GradCAM

27 |

28 | # The GradCAM kit throws a warning we don't need to see for this demo.

29 | import warnings

30 | warnings.filterwarnings('ignore')

31 |

32 |

33 | # Now we import the code for **this package**.

34 |

35 | # In[3]:

36 |

37 |

38 | import maps

39 | import misc

40 | import mask

41 | import norm

42 | import resnet

43 |

44 |

45 | # This is where we can set some parameters like the image name and the layer weights.

46 |

47 | # In[4]:

48 |

49 |

50 | input_image_name = "ILSVRC2012_val_00049934.224x224.png" # Our input image to process

51 | output_dir = 'outputs' # Where to save our output images

52 | input_dir = 'images' # Where to load our inputs from

53 |

54 | weights = [1.0, 1.0, 1.0, 1.0, 1.0] # Equal Weights work best

55 | # when using with GradCAM

56 |

57 | #weights = [0.18, 0.15, 0.37, 0.4, 0.72] # Our saliency layer weights

58 | # From paper:

59 | # https://arxiv.org/abs/1911.11293

60 |

61 | in_height = 224 # Size to scale input image to

62 | in_width = 224 # Size to scale input image to

63 |

64 |

65 | # Lets set up where to save output and what name to use.

66 |

67 | # In[5]:

68 |

69 |

70 | save_prefix = os.path.split(os.path.splitext(input_image_name)[0])[-1] # Chop the file extension and path

71 | load_image_name = os.path.join(input_dir, input_image_name)

72 |

73 | os.makedirs(output_dir, exist_ok=True)

74 |

75 |

76 | # Good Doggy!

77 |

78 | # In[6]:

79 |

80 |

81 | Image(filename=load_image_name)

82 |

83 |

84 | # Now we create a model in PyTorch and send it to our device.

85 |

86 | # In[7]:

87 |

88 |

89 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

90 | model = models.resnet50(pretrained=True)

91 | model = model.to(device)

92 |

93 |

94 | # You will need to pick out which layers to process. Here we grab the end of each group of layers by scale.

95 |

96 | # In[8]:

97 |

98 |

99 | layers = ['relu','layer1','layer2','layer3','layer4']

100 |

101 | # It will auto select each bottle neck layer if we instead set to None.

102 | #layers = None

103 |

104 |

105 | # Choose how we want to normalize each map.

106 |

107 | # In[9]:

108 |

109 |

110 | #norm_method = norm.GaussNorm2D

111 | norm_method = norm.GammaNorm2D # A little more accurate, but much slower

112 |

113 |

114 | # Lets load in our image. We will do a simple resize on it.

115 |

116 | # In[10]:

117 |

118 |

119 | in_tensor = misc.LoadImageToTensor(load_image_name, device)

120 | in_tensor = F.interpolate(in_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

121 |

122 |

123 | # Create our saliency map object. We hand it our Torch model and names for the layers we want to tap.

124 |

125 | # In[11]:

126 |

127 |

128 | get_salmap = maps.SaliencyModel(model, layers, output_size=[in_height,in_width], weights=weights,

129 | norm_method=norm_method)

130 |

131 |

132 | # Lets got ahead and run the network and get back the saliency map

133 |

134 | # In[12]:

135 |

136 |

137 | csmap,smaps,_ = get_salmap(in_tensor)

138 |

139 |

140 | # ## Run With Grad-CAM or Grad-CAM++

141 |

142 | # Let's go ahead and push our network model into the Grad-CAM library.

143 | #

144 | # **NOTE** much of this code is borrowed from the Pytorch GradCAM package.

145 |

146 | # In[13]:

147 |

148 |

149 | resnet_gradcampp4 = GradCAMpp.from_config(model_type='resnet', arch=model, layer_name='layer4')

150 |

151 |

152 | # Let's get our original input image back. We will just use this one for visualization.

153 |

154 | # In[14]:

155 |

156 |

157 | raw_tensor = misc.LoadImageToTensor(load_image_name, device, norm=False)

158 | raw_tensor = F.interpolate(raw_tensor, size=(in_height, in_width), mode='bilinear', align_corners=False)

159 |

160 |

161 | # We create an object to get back the mask of the saliency map

162 |

163 | # In[15]:

164 |

165 |

166 | getMask = mask.SaliencyMaskDropout(keep_percent = 0.1, scale_map=False)

167 |

168 |

169 | # Now we will create illustrations of the combined saliency map.

170 |

171 | # In[16]:

172 |

173 |

174 | images = misc.TileOutput(raw_tensor,csmap,getMask)

175 |

176 |

177 | # Now, lets get the Grad-CAM++ saliency map only.

178 |

179 | # In[17]:

180 |

181 |

182 | cam_map, logit = resnet_gradcampp4(in_tensor)

183 |

184 |

185 | # Let's double check and make sure it's picking the correct class

186 |

187 | # In[18]:

188 |

189 |

190 | too_logit = logit.max(1)

191 | print("Network Class Output: {} : Value {} ".format(too_logit[1][0],too_logit[0][0]))

192 |

193 |

194 | # Now visualize the results

195 |

196 | # In[19]:

197 |

198 |

199 | images = misc.TileOutput(raw_tensor, cam_map.squeeze(0), getMask, images)

200 |

201 |

202 | # ### Combined CAM and SMOE Scale Maps

203 | # Now we combine the Grad-CAM map and the SMOE Scale saliency maps in the same way we would combine Grad-CAM with Guided Backprop.

204 |

205 | # In[20]:

206 |

207 |

208 | fastcam_map = csmap*cam_map

209 |

210 |

211 | # Now let's visualize the combined saliency map from SMOE Scale and GradCAM++.

212 |

213 | # In[21]:

214 |

215 |

216 | images = misc.TileOutput(raw_tensor, fastcam_map.squeeze(0), getMask, images)

217 |

218 |

219 | # ### Get Non-class map

220 | # Now we combine the Grad-CAM map and the SMOE Scale saliency maps but create a map of the **non-class** objects. These are salient locations that the network found interesting, but are not part of the object class.

221 |

222 | # In[22]:

223 |

224 |

225 | nonclass_map = csmap*(1.0 - cam_map)

226 |

227 |

228 | # Now let's visualize the combined non-class saliency map from SMOE Scale and GradCAM++.

229 |

230 | # In[23]:

231 |

232 |

233 | images = misc.TileOutput(raw_tensor, nonclass_map.squeeze(0), getMask, images)

234 |

235 |

236 | # ### Visualize this....

237 | # We now put all the images into a nice grid for display.

238 |

239 | # In[24]:

240 |

241 |

242 | images = make_grid(torch.cat(images,0), nrow=5)

243 |

244 |

245 | # ... save and look at it.

246 |

247 | # In[25]:

248 |

249 |

250 | output_name = "{}.CAM_PP.jpg".format(save_prefix)

251 | output_path = os.path.join(output_dir, output_name)

252 |

253 | save_image(images, output_path)

254 | Image(filename=output_path)

255 |

256 |

257 | # The top row is the SMOE Scale based saliency map. The second row is GradCAM++ only. Next we have the FastCAM output from combining the two. The last row is the non-class map showing salient regions that are not associated with the output class.

258 | #

259 | # This image should look **exactly** like the one on the README.md on Github minus the text.

260 |

261 | # In[ ]:

262 |

263 |

264 |

265 |

266 |

267 | # In[ ]:

268 |

269 |

270 |

271 |

272 |

--------------------------------------------------------------------------------

/mask.py:

--------------------------------------------------------------------------------

1 | '''

2 | BSD 3-Clause License

3 |

4 | Copyright (c) 2020, Lawrence Livermore National Laboratory

5 | All rights reserved.

6 |

7 | Redistribution and use in source and binary forms, with or without

8 | modification, are permitted provided that the following conditions are met:

9 |

10 | 1. Redistributions of source code must retain the above copyright notice, this

11 | list of conditions and the following disclaimer.

12 |

13 | 2. Redistributions in binary form must reproduce the above copyright notice,

14 | this list of conditions and the following disclaimer in the documentation

15 | and/or other materials provided with the distribution.

16 |

17 | 3. Neither the name of the copyright holder nor the names of its

18 | contributors may be used to endorse or promote products derived from

19 | this software without specific prior written permission.

20 |

21 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

22 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

23 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

24 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

25 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

26 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

27 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

28 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

29 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

30 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

31 | '''

32 | '''

33 | https://github.com/LLNL/fastcam

34 |

35 | A toolkit for efficent computation of saliency maps for explainable

36 | AI attribution.

37 |

38 | This work was performed under the auspices of the U.S. Department of Energy

39 | by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344

40 | and was supported by the LLNL-LDRD Program under Project 18-ERD-021 and

41 | Project 17-SI-003.

42 |

43 | Software released as LLNL-CODE-802426.

44 |

45 | See also: https://arxiv.org/abs/1911.11293

46 | '''

47 | import torch

48 | import torch.nn as nn

49 | import torch.nn.functional as F

50 | import torch.autograd

51 |

52 | # *******************************************************************************************************************

53 | class DropMap(torch.autograd.Function):

54 | r'''

55 | When we created this, the torch.gt function did not seem to propagate gradients.

56 | It might now, but we have not checked. This autograd function provides that.

57 | '''

58 |

59 | @staticmethod

60 | def forward(ctx, p_map, k):

61 |

62 | drop_map_byte = torch.gt(p_map,k)

63 | drop_map = torch.as_tensor(drop_map_byte, dtype=p_map.dtype, device=p_map.device)

64 | ctx.save_for_backward(drop_map)

65 | return drop_map

66 |

67 | @staticmethod

68 | def backward(ctx, grad_output):

69 |

70 | drop_map = ctx.saved_tensors

71 | g_pmap = grad_output * drop_map[0]

72 |

73 | r'''

74 | Just return empty since we don't have use for this gradient.

75 | '''

76 | sz = g_pmap.size()

77 | g_k = torch.empty((sz[0],1), dtype=g_pmap.dtype, device=g_pmap.device)

78 |

79 | return g_pmap, g_k

80 |

81 | # *******************************************************************************************************************

82 | class SaliencyMaskDropout(nn.Module):

83 | r'''

84 | This will mask out an input tensor that can have arbitrary channels. It can also return the

85 | binary mask it created from the saliency map. If it is used inline in a network, scale_map

86 | should be set to True.

87 |

88 | Parameters

89 |

90 | keep_percent: A scalar from 0 to 1. This represents what percent of the image to keep.

91 | return_layer_only: Tells us to just return the masked tensor only. Useful for putting layer into an nn.sequental.

92 | scale_map: Scale the output like we would a dropout layer?

93 |

94 | Will return

95 |

96 | (1) The maksed tensor.

97 | (2) The mask by itself.

98 | '''

99 |

100 | def __init__(self, keep_percent = 0.1, return_layer_only=False, scale_map=True):

101 |

102 | super(SaliencyMaskDropout, self).__init__()

103 |

104 | assert isinstance(keep_percent,float), "keep_percent should be a floating point value from 0 to 1"

105 | assert keep_percent > 0, "keep_percent should be a floating point value from 0 to 1"

106 | assert keep_percent <= 1.0, "keep_percent should be a floating point value from 0 to 1"

107 |

108 | self.keep_percent = keep_percent

109 | if scale_map:

110 | self.scale = 1.0/keep_percent

111 | else:

112 | self.scale = 1.0

113 | self.drop_percent = 1.0-self.keep_percent

114 | self.return_layer_only = return_layer_only

115 |

116 | def forward(self, x, sal_map):

117 |

118 | assert torch.is_tensor(x), "Input x should be a Torch Tensor"

119 | assert torch.is_tensor(sal_map), "Input sal_map should be a Torch Tensor"

120 |

121 | sal_map_size = sal_map.size()

122 | x_size = x.size()

123 |

124 | assert len(x.size()) == 4, "Input x should have 4 dimensions [batch size x chans x height x width]"

125 | assert len(sal_map.size()) == 3, "Input sal_map should be 3D [batch size x height x width]"

126 |

127 | assert x_size[0] == sal_map_size[0], "x and sal_map should have same batch size"

128 | assert x_size[2] == sal_map_size[1], "x and sal_map should have same height"

129 | assert x_size[3] == sal_map_size[2], "x and sal_map should have same width"

130 |

131 | sal_map = sal_map.reshape(sal_map_size[0], sal_map_size[1]*sal_map_size[2])

132 |

133 | r'''

134 | Using basically the same method we would to find the median, we find what value is

135 | at n% in each saliency map.

136 | '''

137 | num_samples = int((sal_map_size[1]*sal_map_size[2])*self.drop_percent)

138 | s = torch.sort(sal_map, dim=1)[0]

139 |

140 | r'''

141 | Here we can check that the saliency map has valid values between 0 to 1 since we

142 | have sorted the image. It's cheap now.

143 | '''

144 | assert s[:,0] >= 0.0, "Saliency map should contain values within the range of 0 to 1"

145 | assert s[:,-1] <= 1.0, "Saliency map should contain values within the range of 0 to 1"

146 |

147 | r'''

148 | Get the kth value for each image in the batch.

149 | '''

150 | k = s[:,num_samples]

151 | k = k.reshape(sal_map_size[0], 1)

152 |

153 | r'''

154 | We will create the saliency mask but we use torch.autograd so that we can optionally

155 | propagate the gradients backwards through the mask. k is assumed to be a dead-end, so

156 | no gradients go to it.

157 | '''

158 | drop_map = DropMap.apply(sal_map, k)

159 |

160 | drop_map = drop_map.reshape(sal_map_size[0], 1, sal_map_size[1]*sal_map_size[2])

161 | x = x.reshape(x_size[0], x_size[1], x_size[2]*x_size[3])

162 |

163 | r'''

164 | Multiply the input by the mask, but optionally scale it like we would a dropout layer.

165 | '''

166 | x = x*drop_map*self.scale

167 |

168 | x = x.reshape(x_size[0], x_size[1], x_size[2], x_size[3])

169 |

170 | if self.return_layer_only:

171 | return x

172 | else:

173 | return x, drop_map.reshape(sal_map_size[0], sal_map_size[1], sal_map_size[2])

174 |

175 |

176 |

--------------------------------------------------------------------------------

/export/nif_fast-cam.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # coding: utf-8

3 |

4 | # # DEMO: Running FastCAM for the Exceptionally Impatient

5 |

6 | # ### Import Libs

7 |

8 | # In[1]:

9 |

10 |

11 | import os

12 | from IPython.display import Image

13 | import cv2

14 | import numpy as np

15 |

16 |

17 | # Lets load the **PyTorch** Stuff.

18 |

19 | # In[2]:

20 |

21 |

22 | import torch

23 | import torch.nn.functional as F

24 | from torchvision.utils import make_grid, save_image

25 |

26 | import warnings

27 | warnings.filterwarnings('ignore')

28 |

29 |

30 | # Now we import the saliency libs for **this package**.

31 |

32 | # In[3]:

33 |

34 |

35 | import maps

36 | import misc

37 | import mask

38 | import norm

39 | import draw

40 | import resnet

41 |

42 |

43 | # ### Set Adjustable Parameters

44 | # This is where we can set some parameters like the image name and the layer weights.

45 |

46 | # In[4]:

47 |

48 |

49 | input_image_names = ["OMF2_715165_45972761_POST_160_267_EXIT_RB_191025_072939.jpg",

50 | "OMF2_715165_45723294_POST_144_306_EXIT_RB_191024_174606.jpg",

51 | "OMF2_715165_47391944_POST_85_273_EXIT_RB_191024_203453.jpg",

52 | "OMF2_715165_48284471_POST_362_243_EXIT_RB_191024_204748.jpg",

53 | "OMF2_715165_48286090_POST_105_340_EXIT_RB_191024_220021.jpg",

54 | "OMF2_715165_49772491_POST_142_332_EXIT_RB_191025_075343.jpg",

55 | "OMF2_715165_48738510_POST_82_283_EXIT_RB_191024_220953.jpg",

56 | "OMF2_715165_48286273_POST_67_288_INPUT_RB_191024_194155.jpg"

57 | ]

58 |

59 |

60 | # In[5]:

61 |

62 |

63 | input_image_name = input_image_names[0] # Pick which image you want from the list

64 | output_dir = 'outputs' # Where to save our output images

65 | input_dir = 'images' # Where to load our inputs from

66 | # Assumes input image size 1392x1040

67 | in_height = 524 # Size to scale input image to

68 | in_width = 696 # Size to scale input image to

69 | in_patch = 480

70 | view_height = 1040

71 | view_width = 1392

72 | view_patch = 952

73 |

74 |

75 | # Now we set up what layers we want to sample from and what weights to give each layer. We specify the layer block name within ResNet were we will pull the forward SMOE Scale results from. The results will be from the end of each layer block.

76 |

77 | # In[6]:

78 |

79 |

80 | weights = [0.18, 0.15, 0.37, 0.4, 0.72] # Our saliency layer weights

81 | # From paper:

82 | # https://arxiv.org/abs/1911.11293

83 | layers = ['relu','layer1','layer2','layer3','layer4']

84 |

85 |

86 | # **OPTIONAL:** We can auto compute which layers to run over by setting them to *None*. **This has not yet been quantitatively tested on ROAR/KARR.**

87 |

88 | # In[7]:

89 |

90 |

91 | #weights = None

92 | #layers = None

93 |

94 |

95 | # ### Set Up Loading and Saving File Names

96 | # Lets touch up where to save output and what name to use for output files.

97 |

98 | # In[8]:

99 |