├── tests

├── __init__.py

├── test_common.py

├── test_lie_group.py

├── test_manifold.py

├── test_pca.py

├── test_backend_tensorflow.py

├── test_template.py

├── test_connection.py

├── test_visualization.py

├── helper.py

├── test_examples.py

├── test_matrices_space.py

├── test_backend_numpy.py

├── test_general_linear_group.py

├── test_stiefel.py

├── test_minkowski_space.py

└── test_spd_matrices_space.py

├── geomstats

├── backend

│ ├── common.py

│ ├── tensorflow_testing.py

│ ├── numpy_testing.py

│ ├── pytorch_testing.py

│ ├── numpy_random.py

│ ├── pytorch_random.py

│ ├── tensorflow_random.py

│ ├── __init__.py

│ ├── pytorch_linalg.py

│ ├── numpy_linalg.py

│ ├── tensorflow_linalg.py

│ ├── tensorflow.py

│ ├── numpy.py

│ └── pytorch.py

├── learning

│ ├── __init__.py

│ ├── _template.py

│ ├── mean_shift.py

│ └── pca.py

├── __init__.py

├── __about__.py

├── embedded_manifold.py

├── manifold.py

├── tests.py

├── matrices_space.py

├── euclidean_space.py

├── general_linear_group.py

├── minkowski_space.py

├── lie_group.py

├── spd_matrices_space.py

└── connection.py

├── .gitignore

├── docs

├── troubleshooting.rst

├── requirements.doc.txt

├── changelog.rst

├── install.rst

├── index.rst

├── Makefile

├── conf.py

├── tutorials.rst

└── api-reference.rst

├── examples

├── imgs

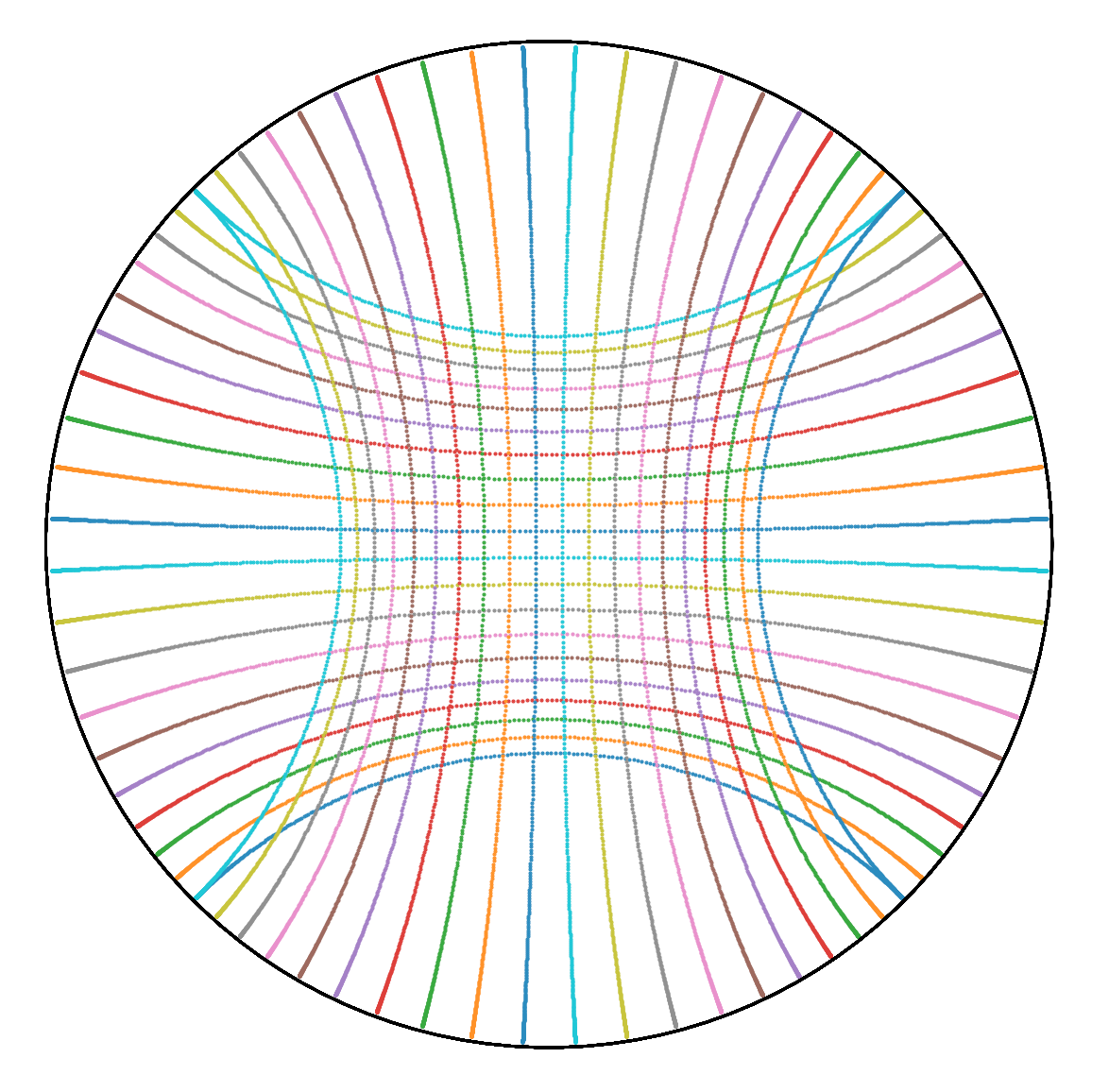

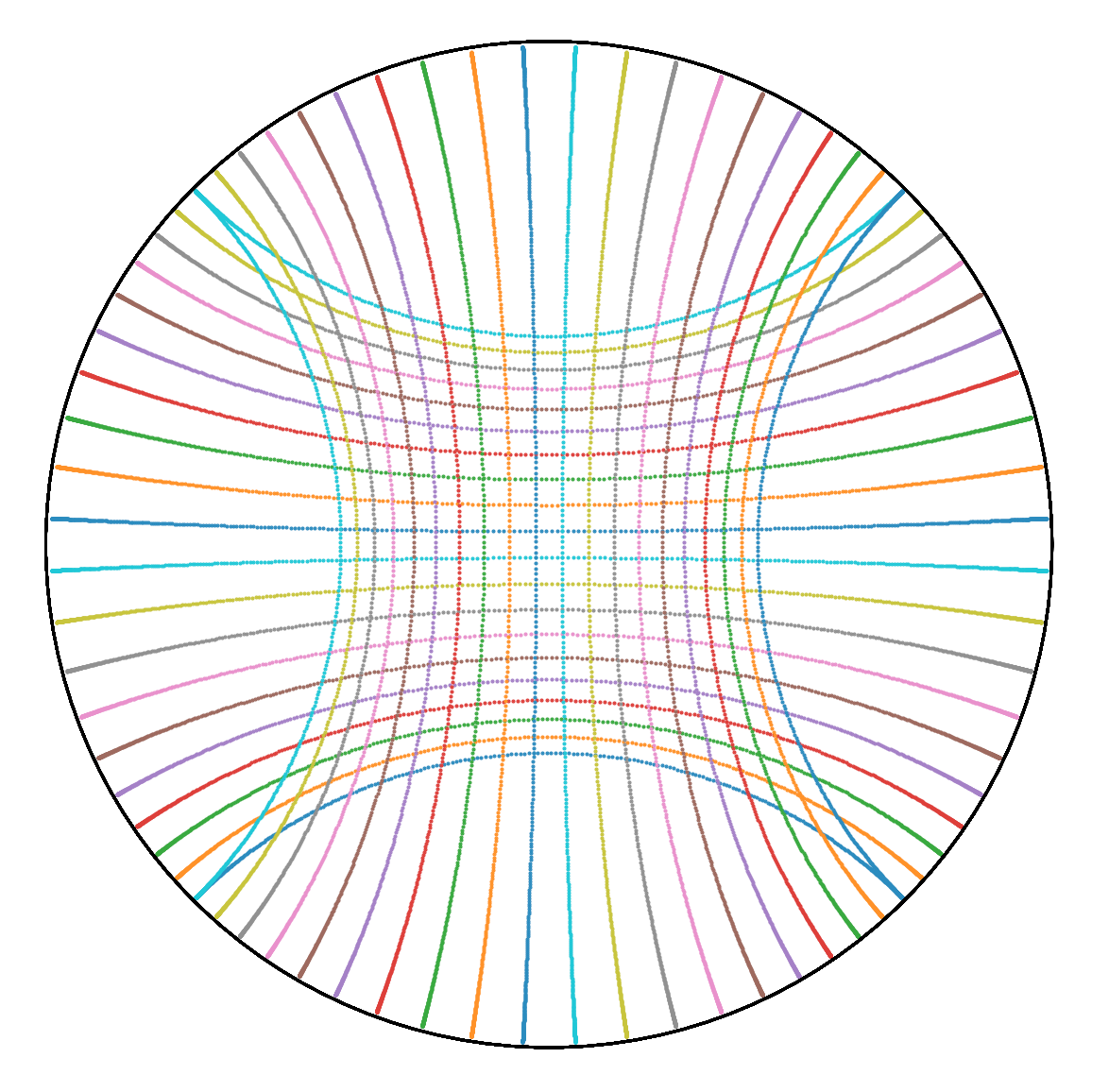

│ ├── h2_grid.png

│ ├── gradient_descent.gif

│ └── gradient_descent.png

├── plot_geodesics_so3.py

├── plot_geodesics_se3.py

├── plot_geodesics_s2.py

├── plot_quantization_s1.py

├── plot_quantization_s2.py

├── plot_square_h2_poincare_disk.py

├── plot_square_h2_poincare_half_plane.py

├── plot_grid_h2.py

├── plot_square_h2_klein_disk.py

├── tangent_pca_so3.py

├── plot_geodesics_h2.py

├── loss_and_gradient_so3.py

├── gradient_descent_s2.py

└── loss_and_gradient_se3.py

├── requirements.txt

├── .travis.yml

├── setup.py

├── LICENSE.md

├── README.md

└── CONTRIBUTING.md

/tests/__init__.py:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/geomstats/backend/common.py:

--------------------------------------------------------------------------------

import numpy as np

pi = np.pi

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

build
dist
*.mp4
*egg-info
*.egg
*.pyc
*.png

--------------------------------------------------------------------------------

/docs/troubleshooting.rst:

--------------------------------------------------------------------------------

Troubleshooting
===============

Troubleshooting.

--------------------------------------------------------------------------------

/examples/imgs/h2_grid.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lmcinnes/geomstats/master/examples/imgs/h2_grid.png

--------------------------------------------------------------------------------

/examples/imgs/gradient_descent.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lmcinnes/geomstats/master/examples/imgs/gradient_descent.gif

--------------------------------------------------------------------------------

/examples/imgs/gradient_descent.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lmcinnes/geomstats/master/examples/imgs/gradient_descent.png

--------------------------------------------------------------------------------

/docs/requirements.doc.txt:

--------------------------------------------------------------------------------

numpydoc==0.8
sphinx
dask_sphinx_theme>=1.1.0
sphinx-click
toolz
cloudpickle
pandas>=0.19.0
distributed

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

autograd
codecov
coverage
h5py==2.8.0
matplotlib
nose2
numpy>=1.14.1
scikit-learn
scipy
tensorflow>=1.12
torch==0.4.0

--------------------------------------------------------------------------------

/geomstats/backend/tensorflow_testing.py:

--------------------------------------------------------------------------------

"""Tensorflow based testing backend."""

import numpy as np


def assert_allclose(*args, **kwargs):
    return np.testing.assert_allclose(*args, **kwargs)

--------------------------------------------------------------------------------

/geomstats/backend/numpy_testing.py:

--------------------------------------------------------------------------------

"""Numpy based testing backend."""

import numpy as np


def assert_allclose(*args, **kwargs):
    return np.testing.assert_allclose(*args, **kwargs)

--------------------------------------------------------------------------------

/geomstats/backend/pytorch_testing.py:

--------------------------------------------------------------------------------

"""Pytorch based testing backend."""

import torch


def assert_allclose(*args, **kwargs):
    return torch.testing.assert_allclose(*args, **kwargs)

--------------------------------------------------------------------------------

/docs/changelog.rst:

--------------------------------------------------------------------------------

Changelog
=========

**0.1.7**

* Bugfixes
* Default arguments on the API
* Better command line argument parsing

**0.1.6**

* Cleaner API.

--------------------------------------------------------------------------------

/geomstats/learning/__init__.py:

--------------------------------------------------------------------------------

from ._template import TemplateEstimator
from ._template import TemplateClassifier
from ._template import TemplateTransformer

__all__ = ['TemplateEstimator', 'TemplateClassifier', 'TemplateTransformer']

--------------------------------------------------------------------------------

/docs/install.rst:

--------------------------------------------------------------------------------

Install Geomstats
=================

You can install geomstats with ``pip3`` as follows::

    pip3 install geomstats

Choose your backend by setting the environment variable ``GEOMSTATS_BACKEND`` to ``numpy``, ``tensorflow`` or ``pytorch``; if the variable is not set, the numpy backend is used::

    export GEOMSTATS_BACKEND=numpy

--------------------------------------------------------------------------------

/geomstats/backend/numpy_random.py:

--------------------------------------------------------------------------------

"""Numpy based random backend."""

import numpy as np


def rand(*args, **kwargs):
    return np.random.rand(*args, **kwargs)


def randint(*args, **kwargs):
    return np.random.randint(*args, **kwargs)


def seed(*args, **kwargs):
    return np.random.seed(*args, **kwargs)


def normal(*args, **kwargs):
    return np.random.normal(*args, **kwargs)

--------------------------------------------------------------------------------

/geomstats/__init__.py:

--------------------------------------------------------------------------------

from .__about__ import __version__

import geomstats.manifold
import geomstats.euclidean_space
import geomstats.hyperbolic_space
import geomstats.hypersphere
import geomstats.invariant_metric
import geomstats.lie_group
import geomstats.minkowski_space
import geomstats.spd_matrices_space
import geomstats.special_euclidean_group
import geomstats.special_orthogonal_group
import geomstats.riemannian_metric

--------------------------------------------------------------------------------

/geomstats/backend/pytorch_random.py:

--------------------------------------------------------------------------------

"""Torch based random backend."""

import torch


def rand(*args, **kwargs):
    return torch.rand(*args, **kwargs)


def randint(*args, **kwargs):
    return torch.randint(*args, **kwargs)


def seed(*args, **kwargs):
    return torch.manual_seed(*args, **kwargs)


def normal(loc=0.0, scale=1.0, size=(1, 1)):
    # Use loc and scale, as the numpy and tensorflow backends do.
    return torch.normal(loc * torch.ones(size), scale * torch.ones(size))

--------------------------------------------------------------------------------
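The `loc` and `scale` arguments of `normal` follow the location-scale rule: a draw from N(loc, scale^2) is `loc + scale * z` with `z` standard normal. A backend-free sketch of that rule using only Python's `random` module; the helper `scaled_normal` is hypothetical and not part of geomstats:

```python
import random
import statistics


def scaled_normal(loc=0.0, scale=1.0, size=1, rng=None):
    """Draw `size` samples from N(loc, scale**2) by shifting and scaling
    standard normal draws (hypothetical helper, not a geomstats API)."""
    rng = rng if rng is not None else random.Random()
    # Each sample is loc + scale * z, with z ~ N(0, 1).
    return [loc + scale * rng.gauss(0.0, 1.0) for _ in range(size)]


samples = scaled_normal(loc=3.0, scale=2.0, size=10000, rng=random.Random(0))
print(round(statistics.mean(samples), 1), round(statistics.stdev(samples), 1))
```

Passing `scale=0.0` returns `loc` for every sample, which is a quick way to check that a backend is not silently ignoring its arguments.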

/geomstats/__about__.py:

--------------------------------------------------------------------------------

# Remove -dev before releasing
__version__ = '1.15'

from itertools import chain

install_requires = [
    'autograd',
    'h5py==2.8.0',
    'matplotlib',
    'numpy>=1.14.1',
    'scipy',
]

extras_require = {
    'test': ['codecov', 'coverage', 'nose2'],
    'tf': ['tensorflow>=1.12'],
    'torch': ['torch==0.4.0'],
}
extras_require['all'] = list(chain(*extras_require.values()))

--------------------------------------------------------------------------------
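`extras_require['all']` is built by concatenating the requirement lists of every other extra, so `pip install geomstats[all]` pulls in the test, TensorFlow and PyTorch dependencies together. The snippet below re-runs that construction in isolation (it copies the values above and does not import geomstats):

```python
from itertools import chain

extras_require = {
    'test': ['codecov', 'coverage', 'nose2'],
    'tf': ['tensorflow>=1.12'],
    'torch': ['torch==0.4.0'],
}
# values() is evaluated before the assignment, so 'all' never contains itself.
extras_require['all'] = list(chain(*extras_require.values()))
print(extras_require['all'])
```

On Python 3.7+ dictionaries keep insertion order, so the list follows the key order; on older interpreters such as the 3.5 targeted in `.travis.yml` the order may differ, but the set of requirements is the same.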

/tests/test_common.py:

--------------------------------------------------------------------------------

import pytest

from sklearn.utils.estimator_checks import check_estimator

from geomstats.learning._template import TemplateEstimator
from geomstats.learning._template import TemplateClassifier
from geomstats.learning._template import TemplateTransformer


@pytest.mark.parametrize(
    "Estimator", [TemplateEstimator, TemplateTransformer, TemplateClassifier]
)
def test_all_estimators(Estimator):
    return check_estimator(Estimator)

--------------------------------------------------------------------------------

/docs/index.rst:

--------------------------------------------------------------------------------

Geomstats
=========

*Geomstats provides code for computations and statistics on manifolds with geometric structures.*

**Quick install**

.. code-block:: bash

   pip3 install geomstats
   export GEOMSTATS_BACKEND=numpy

.. toctree::
   :maxdepth: 1
   :caption: Getting Started

   install.rst
   api-reference.rst
   contributing.rst
   tutorials.rst
   changelog.rst

.. toctree::
   :maxdepth: 1
   :caption: Support

   troubleshooting.rst

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

sudo: false
dist: trusty
cache: pip
language: python
python:
  - "3.5"
  - "3.5-dev"
  - "3.6"
  - "3.6-dev"

install:
  - pip install --upgrade pip setuptools wheel
  - pip install -q -r requirements.txt --only-binary=numpy,scipy
script:
  - nose2 --with-coverage --verbose
env:
  - GEOMSTATS_BACKEND=numpy
  - GEOMSTATS_BACKEND=pytorch
  - GEOMSTATS_BACKEND=tensorflow

after_success:
  - bash <(curl -s https://codecov.io/bash) -c -F $GEOMSTATS_BACKEND

--------------------------------------------------------------------------------

/tests/test_lie_group.py:

--------------------------------------------------------------------------------

"""
Unit tests for Lie groups.
"""

import geomstats.tests

from geomstats.lie_group import LieGroup


class TestLieGroupMethods(geomstats.tests.TestCase):
    _multiprocess_can_split_ = True

    dimension = 4
    group = LieGroup(dimension=dimension)

    def test_dimension(self):
        result = self.group.dimension
        expected = self.dimension

        self.assertAllClose(result, expected)


if __name__ == '__main__':
    geomstats.tests.main()

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

# Minimal makefile for Sphinx documentation
#

# You can set these variables from the command line.
SPHINXOPTS =
SPHINXBUILD = sphinx-build
SOURCEDIR = .
BUILDDIR = build

# Put it first so that "make" without argument is like "make help".
help:
	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

.PHONY: help Makefile

# Catch-all target: route all unknown targets to Sphinx using the new
# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).
%: Makefile
	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

import os
import runpy
from setuptools import setup, find_packages

base_dir = os.path.dirname(os.path.abspath(__file__))
about = runpy.run_path(os.path.join(base_dir, 'geomstats', '__about__.py'))

setup(name='geomstats',
      version=about['__version__'],
      install_requires=about['install_requires'],
      extras_require=about['extras_require'],
      description='Geometric statistics on manifolds',
      url='http://github.com/geomstats/geomstats',
      author='Nina Miolane',
      author_email='ninamio78@gmail.com',
      license='MIT',
      packages=find_packages(),
      zip_safe=False)

--------------------------------------------------------------------------------
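`setup.py` reads the version and requirements with `runpy.run_path`, which executes `geomstats/__about__.py` as a standalone script and returns its global namespace as a dictionary. Because the package's `__init__.py` is never imported, the metadata is available before numpy, autograd and the other dependencies are installed. A self-contained sketch of the same mechanism, using a temporary stand-in file whose contents are an abridged copy of `__about__.py`:

```python
import os
import runpy
import tempfile

# Abridged stand-in for geomstats/__about__.py.
ABOUT_SOURCE = (
    "__version__ = '1.15'\n"
    "install_requires = ['autograd', 'numpy>=1.14.1']\n"
)

with tempfile.TemporaryDirectory() as tmp_dir:
    about_path = os.path.join(tmp_dir, '__about__.py')
    with open(about_path, 'w') as f:
        f.write(ABOUT_SOURCE)
    # run_path executes the file and returns its module globals as a dict.
    about = runpy.run_path(about_path)

print(about['__version__'], about['install_requires'])
```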

/geomstats/backend/tensorflow_random.py:

--------------------------------------------------------------------------------

"""Tensorflow based random backend."""

import tensorflow as tf


def randint(low, high=None, size=None):
    if size is None:
        size = (1,)
    maxval = high
    minval = low
    if high is None:
        # Match numpy.random.randint: a single argument samples from
        # [0, low). tf.random_uniform already excludes maxval.
        maxval = low
        minval = 0
    return tf.random_uniform(
        shape=size,
        minval=minval,
        maxval=maxval, dtype=tf.int32, seed=None, name=None)


def rand(*args):
    return tf.random_uniform(shape=args)


def seed(*args):
    return tf.set_random_seed(*args)


def normal(loc=0.0, scale=1.0, size=(1, 1)):
    return tf.random_normal(mean=loc, stddev=scale, shape=size)

--------------------------------------------------------------------------------
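`numpy.random.randint(low, high=None)` draws integers from the half-open interval `[low, high)`, or `[0, low)` when `high` is omitted, and `tf.random_uniform` with an integer dtype likewise samples from `[minval, maxval)`. The translation between the two conventions therefore needs no `- 1` adjustment. A stdlib-only sketch of the mapping; `numpy_style_bounds` and this `randint` are hypothetical helpers, not geomstats functions:

```python
import random


def numpy_style_bounds(low, high=None):
    """Return (minval, maxval) for a half-open sampler, following the
    numpy.random.randint convention (hypothetical helper)."""
    if high is None:
        return 0, low
    return low, high


def randint(low, high=None, size=1, rng=random):
    """Sample `size` integers from [minval, maxval)."""
    minval, maxval = numpy_style_bounds(low, high)
    # randrange excludes its stop value, like tf.random_uniform's maxval.
    return [rng.randrange(minval, maxval) for _ in range(size)]


draws = randint(3, size=1000, rng=random.Random(0))
```

With a `low - 1` upper bound, `randint(3)` could never return 2, and `randint(1)` would request the empty interval `[0, 0)`.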

/tests/test_manifold.py:

--------------------------------------------------------------------------------

"""
Unit tests for manifolds.
"""

import geomstats.backend as gs
import geomstats.tests

from geomstats.manifold import Manifold


class TestManifoldMethods(geomstats.tests.TestCase):
    _multiprocess_can_split_ = True

    def setUp(self):
        self.dimension = 4
        self.manifold = Manifold(self.dimension)

    def test_dimension(self):
        result = self.manifold.dimension
        expected = self.dimension
        self.assertAllClose(result, expected)

    def test_belongs(self):
        point = gs.array([1., 2., 3.])
        self.assertRaises(NotImplementedError,
                          lambda: self.manifold.belongs(point))

    def test_regularize(self):
        point = gs.array([1., 2., 3.])
        result = self.manifold.regularize(point)
        expected = point
        self.assertAllClose(result, expected)


if __name__ == '__main__':
    geomstats.tests.main()

--------------------------------------------------------------------------------

/LICENSE.md:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2018 Nina Miolane

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/geomstats/backend/__init__.py:

--------------------------------------------------------------------------------

import os
import sys

_default_backend = 'numpy'
if 'GEOMSTATS_BACKEND' in os.environ:
    _backend = os.environ['GEOMSTATS_BACKEND']
else:
    _backend = _default_backend

_BACKEND = _backend

from .common import *  # NOQA

if _BACKEND == 'numpy':
    sys.stderr.write('Using numpy backend\n')
    from .numpy import *  # NOQA
    from . import numpy_linalg as linalg
    from . import numpy_random as random
    from . import numpy_testing as testing
elif _BACKEND == 'pytorch':
    sys.stderr.write('Using pytorch backend\n')
    from .pytorch import *  # NOQA
    from . import pytorch_linalg as linalg  # NOQA
    from . import pytorch_random as random  # NOQA
    from . import pytorch_testing as testing  # NOQA
elif _BACKEND == 'tensorflow':
    sys.stderr.write('Using tensorflow backend\n')
    from .tensorflow import *  # NOQA
    from . import tensorflow_linalg as linalg  # NOQA
    from . import tensorflow_random as random  # NOQA
    from . import tensorflow_testing as testing  # NOQA


def backend():
    return _BACKEND

--------------------------------------------------------------------------------
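`geomstats.backend` picks its implementation once, at import time, from the `GEOMSTATS_BACKEND` environment variable, defaulting to numpy; this is why the variable must be set before the first import, and why `test_backend_tensorflow.py` reloads the module after changing it. An unrecognised value matches none of the branches, so only the names from `common.py` get imported. A minimal sketch of the same selection logic that rejects unknown names early; `resolve_backend` and `SUPPORTED_BACKENDS` are hypothetical, not part of geomstats:

```python
import os

SUPPORTED_BACKENDS = ('numpy', 'pytorch', 'tensorflow')
DEFAULT_BACKEND = 'numpy'


def resolve_backend(environ=None):
    """Return the backend name requested through GEOMSTATS_BACKEND,
    falling back to numpy when the variable is unset."""
    environ = os.environ if environ is None else environ
    backend = environ.get('GEOMSTATS_BACKEND', DEFAULT_BACKEND)
    if backend not in SUPPORTED_BACKENDS:
        raise ValueError(
            'Unknown GEOMSTATS_BACKEND {!r}; expected one of {}.'.format(
                backend, ', '.join(SUPPORTED_BACKENDS)))
    return backend


print(resolve_backend({}))
print(resolve_backend({'GEOMSTATS_BACKEND': 'pytorch'}))
```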

/examples/plot_geodesics_so3.py:

--------------------------------------------------------------------------------

"""
Plot a geodesic of SO(3) equipped
with its bi-invariant metric.
"""

import matplotlib.pyplot as plt
import numpy as np
import os

import geomstats.visualization as visualization

from geomstats.special_orthogonal_group import SpecialOrthogonalGroup

SO3_GROUP = SpecialOrthogonalGroup(n=3)
METRIC = SO3_GROUP.bi_invariant_metric


def main():
    initial_point = SO3_GROUP.identity
    initial_tangent_vec = [0.5, 0.5, 0.8]
    geodesic = METRIC.geodesic(initial_point=initial_point,
                               initial_tangent_vec=initial_tangent_vec)

    n_steps = 10
    t = np.linspace(0, 1, n_steps)

    points = geodesic(t)
    visualization.plot(points, space='SO3_GROUP')
    plt.show()


if __name__ == "__main__":
    # geomstats falls back to numpy when GEOMSTATS_BACKEND is unset.
    if os.environ.get('GEOMSTATS_BACKEND', 'numpy') == 'tensorflow':
        print('Examples with visualizations are only implemented '
              'with the numpy backend.\n'
              'To change backend, write: '
              'export GEOMSTATS_BACKEND=numpy')
    else:
        main()

--------------------------------------------------------------------------------
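For a bi-invariant metric, the geodesic of SO(3) through the identity is the one-parameter subgroup `t -> expm(t * hat(v))`, where `hat(v)` is the skew-symmetric matrix of the rotation vector `v`. Assuming `initial_tangent_vec` is such a rotation vector (as its three components suggest), the points can be sketched with numpy and Rodrigues' formula `R = I + sin(theta) K + (1 - cos(theta)) K^2`, with `theta = |t v|` and `K` the skew matrix of the unit axis. This is an illustration of the underlying math, not the geomstats implementation; `hat`, `rotation_from_vector` and `geodesic_from_identity` are hypothetical names:

```python
import numpy as np


def hat(v):
    """Skew-symmetric matrix such that hat(v) @ w == np.cross(v, w)."""
    x, y, z = v
    return np.array([[0., -z, y],
                     [z, 0., -x],
                     [-y, x, 0.]])


def rotation_from_vector(v):
    """Rodrigues' formula: matrix exponential of hat(v) in SO(3)."""
    v = np.asarray(v, dtype=float)
    theta = np.linalg.norm(v)
    if theta < 1e-12:
        return np.eye(3)
    k = hat(v / theta)
    return np.eye(3) + np.sin(theta) * k + (1. - np.cos(theta)) * k @ k


def geodesic_from_identity(tangent_vec, t):
    """Stack of rotations expm(t_i * hat(tangent_vec)), one per time t_i."""
    tangent_vec = np.asarray(tangent_vec, dtype=float)
    return np.stack([rotation_from_vector(ti * tangent_vec) for ti in t])


points = geodesic_from_identity([0.5, 0.5, 0.8], np.linspace(0, 1, 10))
```

Every point rotates about the same axis `v`, which is why `R @ v == v` holds along the whole curve.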

/examples/plot_geodesics_se3.py:

--------------------------------------------------------------------------------

"""
Plot a geodesic of SE(3) equipped
with its left-invariant canonical metric.
"""

import matplotlib.pyplot as plt
import numpy as np
import os

import geomstats.visualization as visualization

from geomstats.special_euclidean_group import SpecialEuclideanGroup

SE3_GROUP = SpecialEuclideanGroup(n=3)
METRIC = SE3_GROUP.left_canonical_metric


def main():
    initial_point = SE3_GROUP.identity
    initial_tangent_vec = [1.8, 0.2, 0.3, 3., 3., 1.]
    geodesic = METRIC.geodesic(initial_point=initial_point,
                               initial_tangent_vec=initial_tangent_vec)

    n_steps = 40
    t = np.linspace(-3, 3, n_steps)

    points = geodesic(t)

    visualization.plot(points, space='SE3_GROUP')
    plt.show()


if __name__ == "__main__":
    # geomstats falls back to numpy when GEOMSTATS_BACKEND is unset.
    if os.environ.get('GEOMSTATS_BACKEND', 'numpy') == 'tensorflow':
        print('Examples with visualizations are only implemented '
              'with the numpy backend.\n'
              'To change backend, write: '
              'export GEOMSTATS_BACKEND=numpy')
    else:
        main()

--------------------------------------------------------------------------------

/tests/test_pca.py:

--------------------------------------------------------------------------------

import pytest
import numpy as np

from sklearn.utils.testing import assert_allclose

from geomstats.special_orthogonal_group import SpecialOrthogonalGroup

from geomstats.learning.pca import TangentPCA


SO3_GROUP = SpecialOrthogonalGroup(n=3)
METRIC = SO3_GROUP.bi_invariant_metric
N_SAMPLES = 10
N_COMPONENTS = 2


@pytest.fixture
def data():
    data = SO3_GROUP.random_uniform(n_samples=N_SAMPLES)
    return data


def test_tangent_pca_error(data):
    X = data
    trans = TangentPCA(n_components=N_COMPONENTS)
    trans.fit(X)
    with pytest.raises(ValueError, match="Shape of input is different"):
        X_diff_size = np.ones((10, X.shape[1] + 1))
        trans.transform(X_diff_size)


def test_tangent_pca(data):
    X = data
    trans = TangentPCA(n_components=N_COMPONENTS)
    assert trans.demo_param == 'demo'

    trans.fit(X)
    assert trans.n_features_ == X.shape[1]

    X_trans = trans.transform(X)
    assert_allclose(X_trans, np.sqrt(X))

    X_trans = trans.fit_transform(X)
    assert_allclose(X_trans, np.sqrt(X))

--------------------------------------------------------------------------------

/geomstats/embedded_manifold.py:

--------------------------------------------------------------------------------

"""
Manifold embedded in another manifold.
"""

import math

from geomstats.manifold import Manifold


class EmbeddedManifold(Manifold):
    """
    Class for manifolds embedded in another manifold.
    """

    def __init__(self, dimension, embedding_manifold):
        assert isinstance(dimension, int) or dimension == math.inf
        assert dimension > 0
        super(EmbeddedManifold, self).__init__(
            dimension=dimension)
        self.embedding_manifold = embedding_manifold

    def intrinsic_to_extrinsic_coords(self, point_intrinsic):
        raise NotImplementedError(
            'intrinsic_to_extrinsic_coords is not implemented.')

    def extrinsic_to_intrinsic_coords(self, point_extrinsic):
        raise NotImplementedError(
            'extrinsic_to_intrinsic_coords is not implemented.')

    def projection(self, point):
        raise NotImplementedError(
            'projection is not implemented.')

    def projection_to_tangent_space(self, vector, base_point):
        raise NotImplementedError(
            'projection_to_tangent_space is not implemented.')

--------------------------------------------------------------------------------

/examples/plot_geodesics_s2.py:

--------------------------------------------------------------------------------

"""
Plot a geodesic on the sphere S2.
"""

import matplotlib.pyplot as plt
import numpy as np
import os

import geomstats.visualization as visualization

from geomstats.hypersphere import Hypersphere

SPHERE2 = Hypersphere(dimension=2)
METRIC = SPHERE2.metric


def main():
    initial_point = [1., 0., 0.]
    initial_tangent_vec = SPHERE2.projection_to_tangent_space(
        vector=[1., 2., 0.8],
        base_point=initial_point)
    geodesic = METRIC.geodesic(initial_point=initial_point,
                               initial_tangent_vec=initial_tangent_vec)

    n_steps = 10
    t = np.linspace(0, 1, n_steps)

    points = geodesic(t)
    visualization.plot(points, space='S2')
    plt.show()


if __name__ == "__main__":
    # geomstats falls back to numpy when GEOMSTATS_BACKEND is unset.
    if os.environ.get('GEOMSTATS_BACKEND', 'numpy') == 'tensorflow':
        print('Examples with visualizations are only implemented '
              'with the numpy backend.\n'
              'To change backend, write: '
              'export GEOMSTATS_BACKEND=numpy')
    else:
        main()

--------------------------------------------------------------------------------
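On the unit sphere S2 embedded in R^3, projecting a vector `v` onto the tangent space at a unit-norm base point `x` removes its normal component, `v - <v, x> x`, and the geodesic with initial point `x` and initial velocity `u` is `cos(t|u|) x + sin(t|u|) u / |u|`. These standard formulas illustrate what `projection_to_tangent_space` and `geodesic` compute for the values in the example above; the plain-Python sketch below uses hypothetical helper names and is not the geomstats code:

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def project_to_tangent(vector, base_point):
    """Remove the component of `vector` along the unit `base_point`."""
    coef = dot(vector, base_point)
    return [v - coef * x for v, x in zip(vector, base_point)]


def sphere_geodesic(initial_point, initial_tangent_vec, t):
    """Great-circle point reached at time t from the initial point."""
    speed = math.sqrt(dot(initial_tangent_vec, initial_tangent_vec))
    if speed == 0.0:
        return list(initial_point)
    angle = t * speed
    return [math.cos(angle) * x + math.sin(angle) * u / speed
            for x, u in zip(initial_point, initial_tangent_vec)]


initial_point = [1., 0., 0.]
tangent_vec = project_to_tangent([1., 2., 0.8], initial_point)
print(tangent_vec)  # [0.0, 2.0, 0.8]
```

Because the tangent vector is orthogonal to the base point, every point returned by `sphere_geodesic` stays on the unit sphere.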

/geomstats/backend/pytorch_linalg.py:

--------------------------------------------------------------------------------

"""Pytorch based linear algebra backend."""

import numpy as np
import scipy.linalg
import torch


def expm(x):
    np_expm = np.vectorize(
        scipy.linalg.expm, signature='(n,m)->(n,m)')(x)
    return torch.from_numpy(np_expm)


def inv(*args, **kwargs):
    return torch.from_numpy(np.linalg.inv(*args, **kwargs))


def eigvalsh(*args, **kwargs):
    return torch.from_numpy(np.linalg.eigvalsh(*args, **kwargs))


def eigh(*args, **kwargs):
    eigs = np.linalg.eigh(*args, **kwargs)
    return torch.from_numpy(eigs[0]), torch.from_numpy(eigs[1])


def svd(*args, **kwargs):
    svds = np.linalg.svd(*args, **kwargs)
    return (torch.from_numpy(svds[0]),
            torch.from_numpy(svds[1]),
            torch.from_numpy(svds[2]))


def det(*args, **kwargs):
    return torch.from_numpy(np.linalg.det(*args, **kwargs))


def norm(x, ord=2, axis=None, keepdims=False):
    if axis is None:
        return torch.norm(x, p=ord)
    return torch.norm(x, p=ord, dim=axis, keepdim=keepdims)


def qr(*args, **kwargs):
    # np.linalg.qr returns a (q, r) pair: convert each factor separately.
    q, r = np.linalg.qr(*args, **kwargs)
    return torch.from_numpy(q), torch.from_numpy(r)

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

project = 'Geomstats'
copyright = '2019, Geomstats, Inc.'
author = 'Geomstats Team'

version = '0.1'
# The full version, including alpha/beta/rc tags
release = '0.1'


extensions = [
    'sphinx.ext.autodoc',
    'sphinx.ext.doctest',
    'sphinx.ext.coverage',
    'sphinx.ext.mathjax',
    'sphinx.ext.viewcode',
    'sphinx.ext.githubpages',
]

templates_path = ['templates']

source_suffix = '.rst'

master_doc = 'index'

language = None

exclude_patterns = ['build', 'Thumbs.db', '.DS_Store']

pygments_style = None

html_theme = 'sphinx_rtd_theme'

html_static_path = ['static']

htmlhelp_basename = 'geomstatsdoc'

latex_elements = {
}


latex_documents = [
    (master_doc, 'geomstats.tex', 'geomstats Documentation',
     'Geomstats Team', 'manual'),
]

man_pages = [
    (master_doc, 'geomstats', 'geomstats Documentation',
     [author], 1)
]

texinfo_documents = [
    (master_doc, 'geomstats', 'geomstats Documentation',
     author, 'geomstats', 'Geometric statistics on manifolds.',
     'Miscellaneous'),
]

epub_title = project
epub_exclude_files = ['search.html']

--------------------------------------------------------------------------------

/geomstats/manifold.py:

--------------------------------------------------------------------------------

"""
Manifold, i.e. a topological space that locally resembles
Euclidean space near each point.
"""

import math


class Manifold(object):
    """
    Class for manifolds.
    """

    def __init__(self, dimension):

        assert isinstance(dimension, int) or dimension == math.inf
        assert dimension > 0

        self.dimension = dimension

    def belongs(self, point, point_type=None):
        """
        Evaluate if a point belongs to the manifold.

        Parameters
        ----------
        point : array-like, shape=[n_samples, dimension]
            Input points.

        Returns
        -------
        belongs : array-like, shape=[n_samples, 1]
        """
        raise NotImplementedError('belongs is not implemented.')

    def regularize(self, point, point_type=None):
        """
        Regularize a point to the canonical representation
        chosen for the manifold.

        Parameters
        ----------
        point : array-like, shape=[n_samples, dimension]
            Input points.

        Returns
        -------
        regularized_point : array-like, shape=[n_samples, dimension]
        """
        regularized_point = point
        return regularized_point

--------------------------------------------------------------------------------
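`Manifold` acts as an abstract base: subclasses are expected to override `belongs`, which raises `NotImplementedError` here, and may override `regularize`, whose default returns the point unchanged. The sketch below shows that pattern with a hypothetical `RealSpace` subclass of a local stand-in for `Manifold`, so that it runs without geomstats; the finiteness check and the `[n_samples, 1]` boolean output follow the docstring above but are otherwise assumptions:

```python
import math

import numpy as np


class Manifold(object):
    """Local stand-in mirroring geomstats.manifold.Manifold."""

    def __init__(self, dimension):
        assert isinstance(dimension, int) or dimension == math.inf
        assert dimension > 0
        self.dimension = dimension

    def belongs(self, point, point_type=None):
        raise NotImplementedError('belongs is not implemented.')

    def regularize(self, point, point_type=None):
        return point


class RealSpace(Manifold):
    """Hypothetical subclass: every finite vector of R^dimension belongs."""

    def belongs(self, point, point_type=None):
        # Promote a single point to shape [1, dimension].
        point = np.atleast_2d(point)
        has_right_dim = point.shape[-1] == self.dimension
        finite = np.all(np.isfinite(point), axis=-1, keepdims=True)
        # Boolean array of shape [n_samples, 1].
        return np.logical_and(finite, has_right_dim)


space = RealSpace(dimension=3)
result = space.belongs([[1., 2., 3.], [0., np.inf, 1.]])
print(result)
```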

/geomstats/backend/numpy_linalg.py:

--------------------------------------------------------------------------------

"""Numpy based linear algebra backend."""

import numpy as np
import scipy.linalg


def expm(x):
    return np.vectorize(
        scipy.linalg.expm, signature='(n,m)->(n,m)')(x)


def logm(x):
    return np.vectorize(
        scipy.linalg.logm, signature='(n,m)->(n,m)')(x)


def sqrtm(x):
    return np.vectorize(
        scipy.linalg.sqrtm, signature='(n,m)->(n,m)')(x)


def det(*args, **kwargs):
    return np.linalg.det(*args, **kwargs)


def norm(*args, **kwargs):
    return np.linalg.norm(*args, **kwargs)


def inv(*args, **kwargs):
    return np.linalg.inv(*args, **kwargs)


def matrix_rank(*args, **kwargs):
    return np.linalg.matrix_rank(*args, **kwargs)


def eigvalsh(*args, **kwargs):
    return np.linalg.eigvalsh(*args, **kwargs)


def svd(*args, **kwargs):
    return np.linalg.svd(*args, **kwargs)


def eigh(*args, **kwargs):
    return np.linalg.eigh(*args, **kwargs)


def eig(*args, **kwargs):
    return np.linalg.eig(*args, **kwargs)


def exp(*args, **kwargs):
    return np.exp(*args, **kwargs)


def qr(*args, **kwargs):
    return np.vectorize(
        np.linalg.qr,
        signature='(n,m)->(n,k),(k,m)',
        excluded=['mode'])(*args, **kwargs)

--------------------------------------------------------------------------------
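`expm`, `logm` and `sqrtm` lift single-matrix SciPy routines to stacks of matrices with `np.vectorize(..., signature='(n,m)->(n,m)')`: the signature declares that each call consumes and returns one 2-D core, and numpy loops over any leading batch axes. The same mechanism, shown with a plain matrix-squaring function (a stand-in for the SciPy routines) so that only numpy is required:

```python
import numpy as np

# '(n,m)->(n,m)' declares a matrix-in, matrix-out core; leading axes are looped.
batched_square = np.vectorize(lambda m: m @ m, signature='(n,m)->(n,m)')

stack = np.stack([np.eye(2),
                  2. * np.eye(2),
                  np.array([[0., 1.], [1., 0.]])])
squares = batched_square(stack)  # shape (3, 2, 2)
```

`np.vectorize` is a convenience wrapper around a Python-level loop rather than a compiled kernel, so it buys batching semantics, not speed.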

/examples/plot_quantization_s1.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot the result of optimal quantization of the uniform distribution

3 | on the circle.

4 | """

5 |

6 | import matplotlib.pyplot as plt

7 | import os

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.hypersphere import Hypersphere

12 |

13 | CIRCLE = Hypersphere(dimension=1)

14 | METRIC = CIRCLE.metric

15 | N_POINTS = 1000

16 | N_CENTERS = 5

17 | N_REPETITIONS = 20

18 | TOLERANCE = 1e-6

19 |

20 |

21 | def main():

22 | points = CIRCLE.random_uniform(n_samples=N_POINTS, bound=None)

23 |

24 | centers, weights, clusters, n_iterations = METRIC.optimal_quantization(

25 | points=points, n_centers=N_CENTERS,

26 | n_repetitions=N_REPETITIONS, tolerance=TOLERANCE

27 | )

28 |

29 | plt.figure(0)

30 | visualization.plot(points=centers, space='S1', color='red')

31 | plt.show()

32 |

33 | plt.figure(1)

34 | ax = plt.axes()

35 | circle = visualization.Circle()

36 | circle.draw(ax=ax)

37 | for i in range(N_CENTERS):

38 | circle.draw_points(ax=ax, points=clusters[i])

39 | plt.show()

40 |

41 |

42 | if __name__ == "__main__":

43 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

44 | print('Examples with visualizations are only implemented '

45 | 'with numpy backend.\n'

46 | 'To change backend, write: '

47 | 'export GEOMSTATS_BACKEND=numpy')

48 | else:

49 | main()

50 |
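The script unpacks centers, weights, clusters and an iteration count from `METRIC.optimal_quantization`. The sketch below illustrates the underlying idea on S1 with a plain Lloyd-style k-means on angles. It assigns points by arc-length distance and updates each center with the circular (extrinsic) mean. This is an illustrative assumption and is not necessarily the algorithm geomstats implements. `circle_kmeans` is a hypothetical helper.

```python
import numpy as np


def circle_kmeans(angles, n_centers, n_iter=100):
    """Lloyd-style k-means for angles (radians) on the unit circle S1."""
    # Deterministic start: evenly spaced centers on the circle.
    centers = np.linspace(-np.pi, np.pi, n_centers, endpoint=False)
    for _ in range(n_iter):
        # Signed arc-length difference, wrapped into (-pi, pi].
        diff = np.angle(np.exp(1j * (angles[:, None] - centers[None, :])))
        labels = np.argmin(np.abs(diff), axis=1)
        for k in range(n_centers):
            members = angles[labels == k]
            if members.size:
                # Extrinsic (circular) mean, projected back onto the circle.
                centers[k] = np.angle(np.mean(np.exp(1j * members)))
    return centers, labels


rng = np.random.default_rng(0)
points = rng.uniform(-np.pi, np.pi, size=1000)  # uniform on the circle
centers, labels = circle_kmeans(points, n_centers=5)
```

The circular mean is a cheap stand-in for the intrinsic Frechet mean. The two means are close when each cluster spans a short arc.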

--------------------------------------------------------------------------------

/tests/test_backend_tensorflow.py:

--------------------------------------------------------------------------------

1 | """

2 | Unit tests for tensorflow backend.

3 | """

4 |

5 | import importlib

6 | import os

7 | import tensorflow as tf

8 |

9 | import geomstats.backend as gs

10 |

11 |

12 | class TestBackendTensorFlow(tf.test.TestCase):

13 | _multiprocess_can_split_ = True

14 |

15 | @classmethod

16 | def setUpClass(cls):

17 | cls.initial_backend = os.environ['GEOMSTATS_BACKEND']

18 | os.environ['GEOMSTATS_BACKEND'] = 'tensorflow'

19 | os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

20 | importlib.reload(gs)

21 |

22 | @classmethod

23 | def tearDownClass(cls):

24 | os.environ['GEOMSTATS_BACKEND'] = cls.initial_backend

25 | importlib.reload(gs)

26 |

27 | def test_vstack(self):

28 | with self.test_session():

29 | tensor_1 = tf.convert_to_tensor([[1., 2., 3.], [4., 5., 6.]])

30 | tensor_2 = tf.convert_to_tensor([[7., 8., 9.]])

31 |

32 | result = gs.vstack([tensor_1, tensor_2])

33 | expected = tf.convert_to_tensor([

34 | [1., 2., 3.],

35 | [4., 5., 6.],

36 | [7., 8., 9.]])

37 | self.assertAllClose(result, expected)

38 |

39 | def test_tensor_addition(self):

40 | with self.test_session():

41 | tensor_1 = gs.ones((1, 1))

42 | tensor_2 = gs.ones((0, 1))

43 |

44 | result = tensor_1 + tensor_2

45 |

46 |

47 | if __name__ == '__main__':

48 | tf.test.main()

49 |
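`test_tensor_addition` adds a (1, 1) tensor to a (0, 1) tensor but makes no assertion about the result. Under numpy-style broadcasting, which TensorFlow documents that it follows, a size-1 axis stretches to match a size-0 axis. The sum is therefore an empty (0, 1) array. This sketch checks only the numpy side of that rule.

```python
import numpy as np

tensor_1 = np.ones((1, 1))
tensor_2 = np.ones((0, 1))

# Axis 0: sizes 1 and 0 broadcast to 0; axis 1: sizes 1 and 1 stay 1.
result = tensor_1 + tensor_2
print(result.shape)  # (0, 1)
```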

--------------------------------------------------------------------------------

/examples/plot_quantization_s2.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot the result of optimal quantization of the von Mises-Fisher distribution

3 | on the sphere.

4 | """

5 |

6 | import matplotlib.pyplot as plt

7 | import os

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.hypersphere import Hypersphere

12 |

13 | SPHERE2 = Hypersphere(dimension=2)

14 | METRIC = SPHERE2.metric

15 | N_POINTS = 1000

16 | N_CENTERS = 4

17 | N_REPETITIONS = 20

18 | KAPPA = 10

19 |

20 |

21 | def main():

22 | points = SPHERE2.random_von_mises_fisher(kappa=KAPPA, n_samples=N_POINTS)

23 |

24 | centers, weights, clusters, n_steps = METRIC.optimal_quantization(

25 | points=points, n_centers=N_CENTERS,

26 | n_repetitions=N_REPETITIONS

27 | )

28 |

29 | plt.figure(0)

30 | ax = plt.subplot(111, projection="3d")

31 | visualization.plot(points=centers, ax=ax, space='S2', c='r')

32 | plt.show()

33 |

34 | plt.figure(1)

35 | ax = plt.subplot(111, projection="3d")

36 | sphere = visualization.Sphere()

37 | sphere.draw(ax=ax)

38 | for i in range(N_CENTERS):

39 | sphere.draw_points(ax=ax, points=clusters[i])

40 | plt.show()

41 |

42 |

43 | if __name__ == "__main__":

44 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

45 | print('Examples with visualizations are only implemented '

46 | 'with numpy backend.\n'

47 | 'To change backend, write: '

48 | 'export GEOMSTATS_BACKEND=numpy')

49 | else:

50 | main()

51 |
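The script samples its data with `SPHERE2.random_von_mises_fisher(kappa=KAPPA, ...)`. For reference, here is a numpy sketch of exact sampling from the von Mises-Fisher distribution on S2. It assumes the mean direction is (0, 0, 1), because the mean direction geomstats uses is not shown here. On S2 the component w = <x, mu> has density proportional to exp(kappa * w) on [-1, 1]. That density can be inverted in closed form, and the angle around the mean direction is uniform. `sample_vmf_s2` is a hypothetical helper.

```python
import numpy as np


def sample_vmf_s2(kappa, n_samples, rng):
    """Sample the von Mises-Fisher distribution on S2 with mean direction (0, 0, 1)."""
    u = rng.random(n_samples)
    # Inverse CDF of w = <x, mu>, whose density on [-1, 1] is
    # proportional to exp(kappa * w).
    w = 1.0 + np.log(u + (1.0 - u) * np.exp(-2.0 * kappa)) / kappa
    # The angle around the mean direction is uniform.
    phi = rng.uniform(0.0, 2.0 * np.pi, n_samples)
    radius = np.sqrt(np.clip(1.0 - w ** 2, 0.0, None))
    return np.stack([radius * np.cos(phi), radius * np.sin(phi), w], axis=1)


rng = np.random.default_rng(0)
points = sample_vmf_s2(kappa=10, n_samples=1000, rng=rng)
```

For kappa = 10 the expected value of w is coth(10) - 1/10, about 0.9, so the samples concentrate near the north pole.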

--------------------------------------------------------------------------------

/docs/tutorials.rst:

--------------------------------------------------------------------------------

1 | Tutorials

2 | =========

3 |

4 | **Choosing the backend.**

5 |

6 | Set the environment variable ``GEOMSTATS_BACKEND`` to ``numpy``, ``tensorflow`` or ``pytorch``. Examples with visualizations only run with the numpy backend.

7 |

8 | .. code-block:: bash

9 |

10 | export GEOMSTATS_BACKEND=numpy

11 |

12 | **A first python example.**

13 |

14 | This example shows how to compute a geodesic on the Lie group SE(3), which is the group of rotations and translations in 3D.

15 |

16 | .. code-block:: python

17 |

18 | """

19 | Plot a geodesic of SE(3) equipped

20 | with its left-invariant canonical metric.

21 | """

22 |

23 | import matplotlib.pyplot as plt

24 | import numpy as np

25 | import os

26 |

27 | import geomstats.visualization as visualization

28 |

29 | from geomstats.special_euclidean_group import SpecialEuclideanGroup

30 |

31 | SE3_GROUP = SpecialEuclideanGroup(n=3)

32 | METRIC = SE3_GROUP.left_canonical_metric

33 |

34 | initial_point = SE3_GROUP.identity

35 | initial_tangent_vec = [1.8, 0.2, 0.3, 3., 3., 1.]

36 | geodesic = METRIC.geodesic(initial_point=initial_point,

37 | initial_tangent_vec=initial_tangent_vec)

38 |

39 | n_steps = 40

40 | t = np.linspace(-3, 3, n_steps)

41 |

42 | points = geodesic(t)

43 |

44 | visualization.plot(points, space='SE3_GROUP')

45 | plt.show()

46 |

47 | **More examples.**

48 |

49 | More examples are available in the ``examples`` directory of the geomstats repository. Run them from the command line, for example:

50 |

51 | .. code-block:: bash

52 |

53 | python3 examples/plot_grid_h2.py

54 |

--------------------------------------------------------------------------------

/examples/plot_square_h2_poincare_disk.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot a square on H2 with Poincare Disk visualization.

3 | """

4 |

5 | import matplotlib.pyplot as plt

6 | import numpy as np

7 | import os

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.hyperbolic_space import HyperbolicSpace

12 |

13 | H2 = HyperbolicSpace(dimension=2)

14 | METRIC = H2.metric

15 |

16 | SQUARE_SIZE = 50

17 |

18 |

19 | def main():

20 | top = SQUARE_SIZE / 2.0

21 | bot = - SQUARE_SIZE / 2.0

22 | left = - SQUARE_SIZE / 2.0

23 | right = SQUARE_SIZE / 2.0

24 | corners_int = [(bot, left), (bot, right), (top, right), (top, left)]

25 | corners_ext = H2.intrinsic_to_extrinsic_coords(corners_int)

26 | n_steps = 20

27 | ax = plt.gca()

28 | for i, src in enumerate(corners_ext):

29 | dst_id = (i+1) % len(corners_ext)

30 | dst = corners_ext[dst_id]

31 | tangent_vec = METRIC.log(point=dst, base_point=src)

32 | geodesic = METRIC.geodesic(initial_point=src,

33 | initial_tangent_vec=tangent_vec)

34 | t = np.linspace(0, 1, n_steps)

35 | edge_points = geodesic(t)

36 |

37 | visualization.plot(

38 | edge_points,

39 | ax=ax,

40 | space='H2_poincare_disk',

41 | marker='.',

42 | color='black')

43 |

44 | plt.show()

45 |

46 |

47 | if __name__ == "__main__":

48 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

49 | print('Examples with visualizations are only implemented '

50 | 'with numpy backend.\n'

51 | 'To change backend, write: '

52 | 'export GEOMSTATS_BACKEND=numpy')

53 | else:

54 | main()

55 |

--------------------------------------------------------------------------------

/examples/plot_square_h2_poincare_half_plane.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot a square on H2 with Poincare half-plane visualization.

3 | """

4 |

5 | import matplotlib.pyplot as plt

6 | import numpy as np

7 | import os

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.hyperbolic_space import HyperbolicSpace

12 |

13 | H2 = HyperbolicSpace(dimension=2)

14 | METRIC = H2.metric

15 |

16 | SQUARE_SIZE = 50

17 |

18 |

19 | def main():

20 | top = SQUARE_SIZE / 2.0

21 | bot = - SQUARE_SIZE / 2.0

22 | left = - SQUARE_SIZE / 2.0

23 | right = SQUARE_SIZE / 2.0

24 | corners_int = [(bot, left), (bot, right), (top, right), (top, left)]

25 | corners_ext = H2.intrinsic_to_extrinsic_coords(corners_int)

26 | n_steps = 20

27 | ax = plt.gca()

28 | for i, src in enumerate(corners_ext):

29 | dst_id = (i+1) % len(corners_ext)

30 | dst = corners_ext[dst_id]

31 | tangent_vec = METRIC.log(point=dst, base_point=src)

32 | geodesic = METRIC.geodesic(initial_point=src,

33 | initial_tangent_vec=tangent_vec)

34 | t = np.linspace(0, 1, n_steps)

35 | edge_points = geodesic(t)

36 |

37 | visualization.plot(

38 | edge_points,

39 | ax=ax,

40 | space='H2_poincare_half_plane',

41 | marker='.',

42 | color='black')

43 |

44 | plt.show()

45 |

46 |

47 | if __name__ == "__main__":

48 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

49 | print('Examples with visualizations are only implemented '

50 | 'with numpy backend.\n'

51 | 'To change backend, write: '

52 | 'export GEOMSTATS_BACKEND=numpy')

53 | else:

54 | main()

55 |
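The three square scripts draw the same square in different models of the hyperbolic plane. The sketch below shows what moving between those views involves. It uses the standard maps from the hyperboloid model {x : -x0^2 + x1^2 + x2^2 = -1, x0 > 0} to the Poincare disk, and from the disk to the upper half-plane via the Cayley transform. The point `[np.sqrt(2), 1., 0.]` in plot_geodesics_h2.py satisfies this hyperboloid equation. The sketch assumes that `intrinsic_to_extrinsic_coords` lifts (x1, x2) with x0 = sqrt(1 + x1^2 + x2^2). `visualization` may use a different, equivalent half-plane map. All helper names are hypothetical.

```python
import numpy as np


def intrinsic_to_hyperboloid(coords):
    """Lift (x1, x2) to the sheet -x0^2 + x1^2 + x2^2 = -1 with x0 > 0."""
    coords = np.atleast_2d(np.asarray(coords, dtype=float))
    x0 = np.sqrt(1.0 + np.sum(coords ** 2, axis=1, keepdims=True))
    return np.hstack([x0, coords])


def hyperboloid_to_poincare_disk(points):
    """Stereographic projection from (-1, 0, 0): (x1, x2) / (1 + x0)."""
    return points[:, 1:] / (1.0 + points[:, :1])


def poincare_disk_to_half_plane(disk_points):
    """Cayley transform i (1 + z) / (1 - z), mapping the unit disk to Im > 0."""
    z = disk_points[:, 0] + 1j * disk_points[:, 1]
    w = 1j * (1.0 + z) / (1.0 - z)
    return np.stack([w.real, w.imag], axis=1)


# Same corners as SQUARE_SIZE = 50 in the scripts.
corners = [(-25., -25.), (-25., 25.), (25., 25.), (25., -25.)]
hyperboloid = intrinsic_to_hyperboloid(corners)
disk = hyperboloid_to_poincare_disk(hyperboloid)
half_plane = poincare_disk_to_half_plane(disk)
```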

--------------------------------------------------------------------------------

/examples/plot_grid_h2.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot a grid on H2

3 | with Poincare Disk visualization.

4 | """

5 |

6 | import matplotlib.pyplot as plt

7 | import numpy as np

8 | import os

9 |

10 | import geomstats.visualization as visualization

11 |

12 | from geomstats.hyperbolic_space import HyperbolicSpace

13 |

14 | H2 = HyperbolicSpace(dimension=2)

15 | METRIC = H2.metric

16 |

17 |

18 | def main(left=-128,

19 | right=128,

20 | bottom=-128,

21 | top=128,

22 | grid_size=32,

23 | n_steps=512):

24 | starts = []

25 | ends = []

26 | for p in np.linspace(left, right, grid_size):

27 | starts.append(np.array([top, p]))

28 | ends.append(np.array([bottom, p]))

29 | for p in np.linspace(top, bottom, grid_size):

30 | starts.append(np.array([p, left]))

31 | ends.append(np.array([p, right]))

32 | starts = [H2.intrinsic_to_extrinsic_coords(s) for s in starts]

33 | ends = [H2.intrinsic_to_extrinsic_coords(e) for e in ends]

34 | ax = plt.gca()

35 | for start, end in zip(starts, ends):

36 | geodesic = METRIC.geodesic(initial_point=start,

37 | end_point=end)

38 |

39 | t = np.linspace(0, 1, n_steps)

40 | points_to_plot = geodesic(t)

41 | visualization.plot(

42 | points_to_plot, ax=ax, space='H2_poincare_disk', marker='.', s=1)

43 | plt.show()

44 |

45 |

46 | if __name__ == "__main__":

47 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

48 | print('Examples with visualizations are only implemented '

49 | 'with numpy backend.\n'

50 | 'To change backend, write: '

51 | 'export GEOMSTATS_BACKEND=numpy')

52 | else:

53 | main()

54 |

--------------------------------------------------------------------------------

/examples/plot_square_h2_klein_disk.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot a square on H2 with Klein disk visualization.

3 | """

4 |

5 | import matplotlib.pyplot as plt

6 | import numpy as np

7 | import os

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.hyperbolic_space import HyperbolicSpace

12 |

13 | H2 = HyperbolicSpace(dimension=2)

14 | METRIC = H2.metric

15 |

16 | SQUARE_SIZE = 50

17 |

18 |

19 | def main():

20 | top = SQUARE_SIZE / 2.0

21 | bot = - SQUARE_SIZE / 2.0

22 | left = - SQUARE_SIZE / 2.0

23 | right = SQUARE_SIZE / 2.0

24 | corners_int = [(bot, left), (bot, right), (top, right), (top, left)]

25 | corners_ext = H2.intrinsic_to_extrinsic_coords(corners_int)

26 | n_steps = 20

27 | ax = plt.gca()

28 | for i, src in enumerate(corners_ext):

29 | dst_id = (i+1) % len(corners_ext)

30 | dst = corners_ext[dst_id]

31 | tangent_vec = METRIC.log(point=dst, base_point=src)

32 | geodesic = METRIC.geodesic(initial_point=src,

33 | initial_tangent_vec=tangent_vec)

34 | t = np.linspace(0, 1, n_steps)

35 | edge_points = geodesic(t)

36 |

37 | visualization.plot(edge_points,

38 | ax=ax,

39 | space='H2_klein_disk',

40 | marker='.',

41 | color='black')

42 | plt.show()

43 |

44 |

45 | if __name__ == "__main__":

46 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

47 | print('Examples with visualizations are only implemented '

48 | 'with numpy backend.\n'

49 | 'To change backend, write: '

50 | 'export GEOMSTATS_BACKEND=numpy')

51 | else:

52 | main()

53 |
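In the Klein disk model, hyperbolic geodesics are straight chords, so the edges of the square should appear as straight segments. The sketch below checks this with numpy only. It uses the standard hyperboloid formulas for the Riemannian log and exp maps, then projects to the Klein disk with (x1, x2) / x0. `minkowski_inner`, `log_map`, `exp_map` and `lift` are hypothetical helpers, not the library's `METRIC.log` and `METRIC.exp`. `lift` assumes the same intrinsic-to-hyperboloid convention x0 = sqrt(1 + x1^2 + x2^2).

```python
import numpy as np


def minkowski_inner(u, v):
    """Minkowski inner product -u0 v0 + u1 v1 + u2 v2 (over the last axis)."""
    return -u[..., 0] * v[..., 0] + np.sum(u[..., 1:] * v[..., 1:], axis=-1)


def log_map(point, base_point):
    """Tangent vector at base_point towards point, with length = distance."""
    inner = minkowski_inner(base_point, point)
    dist = np.arccosh(max(-inner, 1.0))
    if dist == 0.0:
        return np.zeros_like(base_point)
    direction = point + inner * base_point  # Minkowski-orthogonal to base_point
    return dist * direction / np.sqrt(minkowski_inner(direction, direction))


def exp_map(tangent_vec, base_point):
    """Point reached at time 1 along the geodesic with initial velocity tangent_vec."""
    norm = np.sqrt(max(minkowski_inner(tangent_vec, tangent_vec), 0.0))
    if norm < 1e-12:
        return base_point
    return np.cosh(norm) * base_point + np.sinh(norm) * tangent_vec / norm


def lift(x1, x2):
    return np.array([np.sqrt(1.0 + x1 ** 2 + x2 ** 2), x1, x2])


# One edge of the square: from (bot, left) to (bot, right).
src, dst = lift(-25., -25.), lift(-25., 25.)
tangent_vec = log_map(dst, src)
edge = np.array([exp_map(t * tangent_vec, src) for t in np.linspace(0., 1., 20)])
klein = edge[:, 1:] / edge[:, :1]  # Klein disk coordinates
```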

--------------------------------------------------------------------------------

/examples/tangent_pca_so3.py:

--------------------------------------------------------------------------------

1 | """

2 | Compute the mean of a data set of 3D rotations.

3 | Perform tangent PCA at the mean.

4 | """

5 |

6 | import matplotlib.pyplot as plt

7 | import numpy as np

8 |

9 | import geomstats.visualization as visualization

10 |

11 | from geomstats.learning.pca import TangentPCA

12 | from geomstats.special_orthogonal_group import SpecialOrthogonalGroup

13 |

14 | SO3_GROUP = SpecialOrthogonalGroup(n=3)

15 | METRIC = SO3_GROUP.bi_invariant_metric

16 |

17 | N_SAMPLES = 10

18 | N_COMPONENTS = 2

19 |

20 |

21 | def main():

22 | fig = plt.figure(figsize=(15, 5))

23 |

24 | data = SO3_GROUP.random_uniform(n_samples=N_SAMPLES)

25 | mean = METRIC.mean(data)

26 |

27 | tpca = TangentPCA(metric=METRIC, n_components=N_COMPONENTS)

28 | tpca = tpca.fit(data, base_point=mean)

29 | tangent_projected_data = tpca.transform(data)

30 | print(

31 | 'Coordinates of the Log of the first 5 data points at the mean, '

32 | 'projected on the principal components:')

33 | print(tangent_projected_data[:5])

34 |

35 | ax_var = fig.add_subplot(121)

36 | xticks = np.arange(1, N_COMPONENTS+1, 1)

37 | ax_var.xaxis.set_ticks(xticks)

38 | ax_var.set_title('Explained variance')

39 | ax_var.set_xlabel('Number of Principal Components')

40 | ax_var.set_ylim((0, 1))

41 | ax_var.plot(xticks, tpca.explained_variance_ratio_)

42 |

43 | ax = fig.add_subplot(122, projection="3d")

44 | plt.setp(ax, xlabel="X", ylabel="Y", zlabel="Z")

45 |

46 | ax.set_title('Data in SO3 (black) and Frechet mean (color)')

47 | visualization.plot(data, ax, space='SO3_GROUP', color='black')

48 | visualization.plot(mean, ax, space='SO3_GROUP', linewidth=3)

49 | ax.set_xlim((-2, 2))

50 | ax.set_ylim((-2, 2))

51 | ax.set_zlim((-2, 2))

52 | plt.show()

53 |

54 |

55 | if __name__ == "__main__":

56 | main()

57 |
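`TangentPCA` performs principal component analysis on data mapped to the tangent space at `base_point`. Below is a numpy-only sketch of that idea under two assumptions. First, the tangent vectors are already computed; here random 3D vectors stand in for Riemannian logs at the mean, such as rotation vectors. Second, the PCA is the usual SVD of centered data. `tangent_pca` is a hypothetical helper, not the geomstats implementation.

```python
import numpy as np


def tangent_pca(tangent_vecs, n_components):
    """PCA of tangent vectors (one per row) via SVD of the centered data."""
    centered = tangent_vecs - tangent_vecs.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values ** 2 / (len(tangent_vecs) - 1)
    components = vt[:n_components]  # principal directions, one per row
    explained_variance_ratio = variances[:n_components] / variances.sum()
    projected = centered @ components.T  # coordinates on the components
    return components, explained_variance_ratio, projected


rng = np.random.default_rng(0)
logs_at_mean = rng.normal(scale=[1.0, 0.5, 0.1], size=(10, 3))
components, ratio, projected = tangent_pca(logs_at_mean, n_components=2)
```

When the base point is the Frechet mean, the logs already average to roughly zero, so the centering step changes little.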

--------------------------------------------------------------------------------

/geomstats/backend/tensorflow_linalg.py:

--------------------------------------------------------------------------------

1 | """Tensorflow based linear algebra backend."""

2 |

3 | import tensorflow as tf

4 |

5 | from geomstats.backend.tensorflow import to_ndarray

6 |

7 |

8 | def sqrtm(sym_mat):

9 | sym_mat = to_ndarray(sym_mat, to_ndim=3)

10 |

11 | [eigenvalues, vectors] = tf.linalg.eigh(sym_mat)

12 |

13 | sqrt_eigenvalues = tf.sqrt(eigenvalues)

14 |

15 | aux = tf.einsum('ijk,ik->ijk', vectors, sqrt_eigenvalues)

16 | sqrt_mat = tf.einsum('ijk,ilk->ijl', aux, vectors)

17 |

18 | sqrt_mat = to_ndarray(sqrt_mat, to_ndim=3)

19 | return sqrt_mat

20 |

21 |

22 | def expm(x):

23 | return tf.linalg.expm(x)

24 |

25 |

26 | def logm(x):

27 | x = tf.cast(x, tf.complex64)

28 | logm = tf.linalg.logm(x)

29 | logm = tf.cast(logm, tf.float32)

30 | return logm

31 |

32 |

33 | def det(x):

34 | return tf.linalg.det(x)

35 |

36 |

37 | def eigh(x):

38 | return tf.linalg.eigh(x)

39 |

40 |

41 | def eig(x):

42 | return tf.linalg.eig(x)

43 |

44 |

45 | def svd(x):

46 | s, u, v = tf.svd(x, full_matrices=True)

47 | return u, s, tf.transpose(v, perm=(0, 2, 1))

48 |

49 |

50 | def norm(x, axis=None):

51 | return tf.linalg.norm(x, axis=axis)

52 |

53 |

54 | def inv(x):

55 | return tf.linalg.inv(x)

56 |

57 |

58 | def matrix_rank(x):

59 | # Rank = number of singular values above numpy's default tolerance.

60 | s = tf.linalg.svd(x, compute_uv=False)

61 | max_dim = tf.cast(tf.reduce_max(tf.shape(x)[-2:]), s.dtype)

62 | tol = tf.reduce_max(s, axis=-1, keepdims=True) * max_dim * 1.1920929e-07 # float32 eps

63 | return tf.reduce_sum(tf.cast(s > tol, tf.int32), axis=-1)

64 |

65 |

66 | def eigvalsh(x):

67 | return tf.linalg.eigvalsh(x)

68 |

69 |

70 | def qr(*args, mode='reduced'):

71 | def qr_aux(x, mode):

72 | if mode == 'complete':

73 | aux = tf.linalg.qr(x, full_matrices=True)

74 | else:

75 | aux = tf.linalg.qr(x)

76 |

77 | return (aux.q, aux.r)

78 |

79 | qr = tf.map_fn(

80 | lambda x: qr_aux(x, mode),

81 | *args,

82 | dtype=(tf.float32, tf.float32))

83 |

84 | return qr

85 |
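`sqrtm` computes the square root of symmetric matrices as V diag(sqrt(lambda)) V^T from an eigendecomposition, using two einsums. A numpy transcription of the same computation makes it easy to check that the root squares back to the input. `sqrtm_spd` is a hypothetical name. Like the TensorFlow version, it expects a 3D stack of shape (n_matrices, n, n).

```python
import numpy as np


def sqrtm_spd(sym_mats):
    """Square root of a stack (n_matrices, n, n) of symmetric positive definite matrices."""
    eigenvalues, vectors = np.linalg.eigh(sym_mats)
    # Scale column k of each eigenvector matrix by sqrt(eigenvalue k) ...
    aux = np.einsum('ijk,ik->ijk', vectors, np.sqrt(eigenvalues))
    # ... then multiply by the transposed eigenvectors: V diag(sqrt(w)) V^T.
    return np.einsum('ijk,ilk->ijl', aux, vectors)


rng = np.random.default_rng(0)
a = rng.normal(size=(5, 3, 3))
spd = a @ np.transpose(a, (0, 2, 1)) + 3.0 * np.eye(3)  # SPD by construction
root = sqrtm_spd(spd)
```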

--------------------------------------------------------------------------------

/tests/test_template.py:

--------------------------------------------------------------------------------

1 | import pytest

2 | import numpy as np

3 |

4 | from sklearn.datasets import load_iris

5 | from sklearn.utils.testing import assert_array_equal

6 | from sklearn.utils.testing import assert_allclose

7 |

8 | from geomstats.learning._template import TemplateEstimator

9 | from geomstats.learning._template import TemplateTransformer

10 | from geomstats.learning._template import TemplateClassifier

11 |

12 |

13 | @pytest.fixture

14 | def data():

15 | return load_iris(return_X_y=True)

16 |

17 |

18 | def test_template_estimator(data):

19 | est = TemplateEstimator()

20 | assert est.demo_param == 'demo_param'

21 |

22 | est.fit(*data)

23 | assert hasattr(est, 'is_fitted_')

24 |

25 | X = data[0]

26 | y_pred = est.predict(X)

27 | assert_array_equal(y_pred, np.ones(X.shape[0], dtype=np.int64))

28 |

29 |

30 | def test_template_transformer_error(data):

31 | X, y = data

32 | trans = TemplateTransformer()

33 | trans.fit(X)

34 | with pytest.raises(ValueError, match="Shape of input is different"):

35 | X_diff_size = np.ones((10, X.shape[1] + 1))

36 | trans.transform(X_diff_size)

37 |

38 |

39 | def test_template_transformer(data):

40 | X, y = data

41 | trans = TemplateTransformer()

42 | assert trans.demo_param == 'demo'

43 |

44 | trans.fit(X)

45 | assert trans.n_features_ == X.shape[1]

46 |

47 | X_trans = trans.transform(X)

48 | assert_allclose(X_trans, np.sqrt(X))

49 |

50 | X_trans = trans.fit_transform(X)

51 | assert_allclose(X_trans, np.sqrt(X))

52 |

53 |

54 | def test_template_classifier(data):

55 | X, y = data

56 | clf = TemplateClassifier()

57 | assert clf.demo_param == 'demo'

58 |

59 | clf.fit(X, y)

60 | assert hasattr(clf, 'classes_')

61 | assert hasattr(clf, 'X_')

62 | assert hasattr(clf, 'y_')

63 |

64 | y_pred = clf.predict(X)

65 | assert y_pred.shape == (X.shape[0],)

66 |

--------------------------------------------------------------------------------

/tests/test_connection.py:

--------------------------------------------------------------------------------

1 | """

2 | Unit tests for the affine connections.

3 | """

4 |

5 | import geomstats.backend as gs

6 | import geomstats.tests

7 |

8 | from geomstats.connection import LeviCivitaConnection

9 | from geomstats.euclidean_space import EuclideanMetric

10 |

11 |

12 | class TestConnectionMethods(geomstats.tests.TestCase):

13 | _multiprocess_can_split_ = True

14 |

15 | def setUp(self):

16 | self.dimension = 4

17 | self.metric = EuclideanMetric(dimension=self.dimension)

18 | self.connection = LeviCivitaConnection(self.metric)

19 |

20 | def test_metric_matrix(self):

21 | base_point = gs.array([0., 1., 0., 0.])

22 |

23 | result = self.connection.metric_matrix(base_point)

24 | expected = gs.array([gs.eye(self.dimension)])

25 |

26 | with self.session():

27 | self.assertAllClose(result, expected)

28 |

29 | def test_cometric_matrix(self):

30 | base_point = gs.array([0., 1., 0., 0.])

31 |

32 | result = self.connection.cometric_matrix(base_point)

33 | expected = gs.array([gs.eye(self.dimension)])

34 |

35 | with self.session():

36 | self.assertAllClose(result, expected)

37 |

38 | @geomstats.tests.np_only

39 | def test_metric_derivative(self):

40 | base_point = gs.array([0., 1., 0., 0.])

41 |

42 | result = self.connection.metric_derivative(base_point)

43 | expected = gs.zeros((1,) + (self.dimension, ) * 3)

44 |

45 | gs.testing.assert_allclose(result, expected)

46 |

47 | @geomstats.tests.np_only

48 | def test_christoffel_symbols(self):

49 | base_point = gs.array([0., 1., 0., 0.])

50 |

51 | result = self.connection.christoffel_symbols(base_point)

52 | expected = gs.zeros((1,) + (self.dimension, ) * 3)

53 |

54 | gs.testing.assert_allclose(result, expected)

55 |

56 |

57 | if __name__ == '__main__':

58 | geomstats.tests.main()

59 |
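The tests expect zero Christoffel symbols because the Euclidean metric matrix is constant. For a metric that varies with the point, the symbols follow from Gamma^k_ij = 1/2 g^{kl} (d_i g_jl + d_j g_il - d_l g_ij). The sketch below evaluates that formula with central finite differences. `christoffel_symbols` is a hypothetical helper that orders indices as [k, i, j]. It is not `LeviCivitaConnection.christoffel_symbols`, whose output has a leading batch axis and whose index order may differ. The sketch is checked on the Euclidean case and on polar coordinates, where Gamma^r_thetatheta = -r and Gamma^theta_rtheta = 1/r.

```python
import numpy as np


def christoffel_symbols(metric_matrix, point, eps=1e-5):
    """Return gamma[k, i, j] = 1/2 g^{kl} (d_i g_jl + d_j g_il - d_l g_ij) at point."""
    point = np.asarray(point, dtype=float)
    dim = point.size
    # dg[c, i, j] = d g_ij / d x^c, by central finite differences.
    dg = np.empty((dim, dim, dim))
    for coord in range(dim):
        step = np.zeros(dim)
        step[coord] = eps
        dg[coord] = (metric_matrix(point + step)
                     - metric_matrix(point - step)) / (2 * eps)
    cometric = np.linalg.inv(metric_matrix(point))
    # bracket[l, i, j] = d_i g_jl + d_j g_il - d_l g_ij
    bracket = (np.einsum('ijl->lij', dg)
               + np.einsum('jil->lij', dg)
               - dg)
    return 0.5 * np.einsum('kl,lij->kij', cometric, bracket)


def euclidean_metric_matrix(point):
    return np.eye(4)


def polar_metric_matrix(point):
    # Flat plane in polar coordinates (r, theta): ds^2 = dr^2 + r^2 dtheta^2.
    r = point[0]
    return np.diag([1.0, r ** 2])


gamma = christoffel_symbols(polar_metric_matrix, [2.0, 0.3])
# gamma[0, 1, 1] is close to -r = -2.0 and gamma[1, 0, 1] to 1 / r = 0.5
```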

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Geomstats

2 | [Build status](https://travis-ci.org/geomstats/geomstats) Coverage: [numpy](https://codecov.io/gh/geomstats/geomstats), [tensorflow](https://codecov.io/gh/geomstats/geomstats), [pytorch](https://codecov.io/gh/geomstats/geomstats)

3 |

4 |

5 | Computations and statistics on manifolds with geometric structures.

6 |

7 | - To get started with `geomstats`, see the [examples directory](https://github.com/geomstats/geomstats/examples).

8 | - For more in-depth applications of `geomstats`, see the [applications repository](https://github.com/geomstats/applications/).

9 | - The documentation of `geomstats` is available on the [documentation website](https://geomstats.github.io/).

10 | - If you use `geomstats`, please cite our [paper](https://arxiv.org/abs/1805.08308).

11 |

12 |

13 |

14 |

15 | ## Installation

16 |

17 | OS X & Linux:

18 |

19 | ```

20 | pip3 install geomstats

21 | ```

22 |

23 | ## Running tests

24 |

25 | ```

26 | pip3 install nose2

27 | nose2

28 | ```

29 |

30 | ## Getting started

31 |

32 | Define your backend by setting the environment variable `GEOMSTATS_BACKEND` to `numpy`, `tensorflow`, or `pytorch`:

33 |

34 | ```

35 | export GEOMSTATS_BACKEND=numpy

36 | ```

37 |

38 | Then, run example scripts:

39 |

40 | ```

41 | python3 examples/plot_grid_h2.py

42 | ```

43 |

44 | ## Contributing

45 |

46 | See our [CONTRIBUTING.md][link_contributing] file!

47 |

48 | ## Authors & Contributors

49 |

50 | * Alice Le Brigant

51 | * Claire Donnat

52 | * Oleg Kachan

53 | * Benjamin Hou

54 | * Johan Mathe

55 | * Nina Miolane

56 | * Xavier Pennec

57 |

58 | ## Acknowledgements

59 |

60 | This work is partially supported by the National Science Foundation, grant NSF DMS RTG 1501767.

61 |

62 | [link_contributing]: https://github.com/geomstats/geomstats/CONTRIBUTING.md

63 |

--------------------------------------------------------------------------------

/tests/test_visualization.py:

--------------------------------------------------------------------------------

1 | """

2 | Unit tests for visualization.

3 | """

4 |

5 | import matplotlib

6 | matplotlib.use('Agg') # NOQA

7 | import matplotlib.pyplot as plt

8 |

9 | import geomstats.tests

10 | import geomstats.visualization as visualization

11 |

12 | from geomstats.hyperbolic_space import HyperbolicSpace

13 | from geomstats.hypersphere import Hypersphere

14 | from geomstats.special_euclidean_group import SpecialEuclideanGroup

15 | from geomstats.special_orthogonal_group import SpecialOrthogonalGroup

16 |

17 |

18 | class TestVisualizationMethods(geomstats.tests.TestCase):

19 | _multiprocess_can_split_ = True

20 |

21 | def setUp(self):

22 | self.n_samples = 10

23 | self.SO3_GROUP = SpecialOrthogonalGroup(n=3)

24 | self.SE3_GROUP = SpecialEuclideanGroup(n=3)

25 | self.S1 = Hypersphere(dimension=1)

26 | self.S2 = Hypersphere(dimension=2)

27 | self.H2 = HyperbolicSpace(dimension=2)

28 |

29 | plt.figure()

30 |

31 | @geomstats.tests.np_only

32 | def test_plot_points_so3(self):

33 | points = self.SO3_GROUP.random_uniform(self.n_samples)

34 | visualization.plot(points, space='SO3_GROUP')

35 |

36 | @geomstats.tests.np_only

37 | def test_plot_points_se3(self):

38 | points = self.SE3_GROUP.random_uniform(self.n_samples)

39 | visualization.plot(points, space='SE3_GROUP')

40 |

41 | @geomstats.tests.np_only

42 | def test_plot_points_s1(self):

43 | points = self.S1.random_uniform(self.n_samples)

44 | visualization.plot(points, space='S1')

45 |

46 | @geomstats.tests.np_only

47 | def test_plot_points_s2(self):

48 | points = self.S2.random_uniform(self.n_samples)

49 | visualization.plot(points, space='S2')

50 |

51 | @geomstats.tests.np_only

52 | def test_plot_points_h2_poincare_disk(self):

53 | points = self.H2.random_uniform(self.n_samples)

54 | visualization.plot(points, space='H2_poincare_disk')

55 |

56 | @geomstats.tests.np_only

57 | def test_plot_points_h2_poincare_half_plane(self):

58 | points = self.H2.random_uniform(self.n_samples)

59 | visualization.plot(points, space='H2_poincare_half_plane')

60 |

61 | @geomstats.tests.np_only

62 | def test_plot_points_h2_klein_disk(self):

63 | points = self.H2.random_uniform(self.n_samples)

64 | visualization.plot(points, space='H2_klein_disk')

65 |

66 |

67 | if __name__ == '__main__':

68 | geomstats.tests.main()

69 |

--------------------------------------------------------------------------------

/geomstats/tests.py:

--------------------------------------------------------------------------------

1 | """

2 | Testing class for geomstats.

3 |

4 | The TestCase class below abstracts away the backend type.

5 | """

6 |

7 | import os

8 | import tensorflow as tf

9 | import unittest

10 |

11 | import geomstats.backend as gs

12 |

13 |

14 | def pytorch_backend():

15 | return os.environ['GEOMSTATS_BACKEND'] == 'pytorch'

16 |

17 |

18 | def tf_backend():

19 | return os.environ['GEOMSTATS_BACKEND'] == 'tensorflow'

20 |

21 |

22 | def np_backend():

23 | return os.environ['GEOMSTATS_BACKEND'] == 'numpy'

24 |

25 |

26 | test_class = unittest.TestCase

27 | if tf_backend():

28 | test_class = tf.test.TestCase

29 |

30 |

31 | def np_only(test_item):

32 | """Decorator to filter tests for numpy only."""

33 | if not np_backend():

34 | test_item.__unittest_skip__ = True

35 | test_item.__unittest_skip_why__ = (

36 | 'Test for numpy backend only.')

37 | return test_item

38 |

39 |

40 | def np_and_tf_only(test_item):

41 | """Decorator to filter tests for numpy and tensorflow only."""

42 | if not (np_backend() or tf_backend()):

43 | test_item.__unittest_skip__ = True

44 | test_item.__unittest_skip_why__ = (

45 | 'Test for numpy and tensorflow backends only.')

46 | return test_item

47 |

48 |

49 | def np_and_pytorch_only(test_item):

50 | """Decorator to filter tests for numpy and pytorch only."""

51 | if not (np_backend() or pytorch_backend()):

52 | test_item.__unittest_skip__ = True

53 | test_item.__unittest_skip_why__ = (

54 | 'Test for numpy and pytorch backends only.')

55 | return test_item

56 |

57 |

58 | class DummySession():

59 | def __enter__(self):

60 | pass

61 |

62 | def __exit__(self, a, b, c):

63 | pass

64 |

65 |

66 | class TestCase(test_class):

67 |

68 | def assertAllClose(self, a, b, rtol=1e-6, atol=1e-6):

69 | if tf_backend():

70 | return super().assertAllClose(a, b, rtol=rtol, atol=atol)

71 | return self.assertTrue(gs.allclose(a, b, rtol=rtol, atol=atol))

72 |

73 | def session(self):

74 | if tf_backend():

75 | return super().test_session()

76 | return DummySession()

77 |

78 | def assertShapeEqual(self, a, b):

79 | if tf_backend():

80 | return super().assertShapeEqual(a, b)

81 | super().assertEqual(a.shape, b.shape)

82 |

83 | @classmethod

84 | def setUpClass(cls):

85 | if tf_backend():

86 | os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

87 |
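The decorators above set `__unittest_skip__` and `__unittest_skip_why__`. `unittest` checks these attributes before running a test method, and they are the same attributes that `unittest.skip` sets. Below is a generalized sketch that accepts any set of backends. It reads the variable with `os.environ.get`, so an unset variable skips the test instead of raising `KeyError`. `only_backends` is a hypothetical name, not part of geomstats.

```python
import io
import os
import unittest


def only_backends(*backends):
    """Skip the decorated test unless GEOMSTATS_BACKEND is one of `backends`."""
    def decorator(test_item):
        # Read at decoration time, like np_only; an unset variable means "skip".
        current = os.environ.get('GEOMSTATS_BACKEND')
        if current not in backends:
            test_item.__unittest_skip__ = True
            test_item.__unittest_skip_why__ = (
                'Test for {} backend(s) only.'.format(', '.join(backends)))
        return test_item
    return decorator


# Demonstration: pretend the pytorch backend is selected.
os.environ['GEOMSTATS_BACKEND'] = 'pytorch'


class ExampleTest(unittest.TestCase):

    @only_backends('numpy', 'tensorflow')
    def test_numpy_or_tensorflow(self):
        self.fail('This test should have been skipped.')

    @only_backends('pytorch')
    def test_pytorch(self):
        self.assertTrue(True)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTest)
result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
```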

--------------------------------------------------------------------------------

/examples/plot_geodesics_h2.py:

--------------------------------------------------------------------------------

1 | """

2 | Plot a geodesic on the Hyperbolic space H2,

3 | with Poincare Disk visualization.

4 | """

5 |

6 | import matplotlib.pyplot as plt

7 | import numpy as np

8 | import os

9 |

10 | import geomstats.visualization as visualization

11 |

12 | from geomstats.hyperbolic_space import HyperbolicSpace

13 |

14 | H2 = HyperbolicSpace(dimension=2)

15 | METRIC = H2.metric

16 |

17 |

18 | def plot_geodesic_between_two_points(initial_point,

19 | end_point,

20 | n_steps=10,

21 | ax=None):

22 | assert H2.belongs(initial_point)

23 | assert H2.belongs(end_point)

24 |

25 | geodesic = METRIC.geodesic(initial_point=initial_point,

26 | end_point=end_point)

27 |

28 | t = np.linspace(0, 1, n_steps)

29 | points = geodesic(t)

30 | visualization.plot(points, ax=ax, space='H2_poincare_disk')

31 |

32 |

33 | def plot_geodesic_with_initial_tangent_vector(initial_point,

34 | initial_tangent_vec,

35 | n_steps=10,

36 | ax=None):

37 | assert H2.belongs(initial_point)

38 | geodesic = METRIC.geodesic(initial_point=initial_point,

39 | initial_tangent_vec=initial_tangent_vec)

40 |

41 | t = np.linspace(0, 1, n_steps)

42 |

43 | points = geodesic(t)

44 | visualization.plot(points, ax=ax, space='H2_poincare_disk')

45 |

46 |

47 | def main():

48 | initial_point = [np.sqrt(2), 1., 0.]

49 | end_point = H2.intrinsic_to_extrinsic_coords([1.5, 1.5])

50 | initial_tangent_vec = H2.projection_to_tangent_space(

51 | vector=[3.5, 0.6, 0.8],

52 | base_point=initial_point)

53 |

54 | ax = plt.gca()

55 | plot_geodesic_between_two_points(initial_point,

56 | end_point,

57 | ax=ax)

58 | plot_geodesic_with_initial_tangent_vector(initial_point,

59 | initial_tangent_vec,

60 | ax=ax)

61 | plt.show()

62 |

63 |

64 | if __name__ == "__main__":

65 | if os.environ['GEOMSTATS_BACKEND'] == 'tensorflow':

66 | print('Examples with visualizations are only implemented '

67 | 'with numpy backend.\n'

68 | 'To change backend, write: '

69 | 'export GEOMSTATS_BACKEND=numpy')

70 | else:

71 | main()

72 |
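Both plotting functions build a geodesic in the hyperboloid model of H2, where points satisfy -x0^2 + x1^2 + x2^2 = -1, as `[np.sqrt(2), 1., 0.]` does. Below is a numpy sketch of the standard closed forms, assuming this model and writing <., .> for the Minkowski inner product. Projection onto the tangent space at p is v + <p, v> p. The geodesic is t -> cosh(t|v|) p + sinh(t|v|) v / |v|. The helper names mirror the geomstats calls, but they are hypothetical reimplementations, not the library's code.

```python
import numpy as np


def minkowski_inner(u, v):
    """Minkowski inner product -u0 v0 + u1 v1 + u2 v2."""
    return -u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def projection_to_tangent_space(vector, base_point):
    """Remove the component of vector along base_point (uses <p, p> = -1)."""
    return vector + minkowski_inner(base_point, vector) * base_point


def geodesic(initial_point, initial_tangent_vec):
    """Return t -> exp_p(t v) on the hyperboloid, evaluated on an array of t."""
    norm = np.sqrt(minkowski_inner(initial_tangent_vec, initial_tangent_vec))

    def path(t):
        t = np.asarray(t, dtype=float)[:, None]
        return (np.cosh(t * norm) * initial_point
                + np.sinh(t * norm) * initial_tangent_vec / norm)
    return path


initial_point = np.array([np.sqrt(2), 1., 0.])
initial_tangent_vec = projection_to_tangent_space(
    np.array([3.5, 0.6, 0.8]), initial_point)
points = geodesic(initial_point, initial_tangent_vec)(np.linspace(0, 1, 10))
```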

--------------------------------------------------------------------------------

/tests/helper.py:

--------------------------------------------------------------------------------

"""
Helper functions for unit tests.
"""

import geomstats.backend as gs


def to_scalar(expected):
    expected = gs.to_ndarray(expected, to_ndim=1)
    expected = gs.to_ndarray(expected, to_ndim=2, axis=-1)
    return expected


def to_vector(expected):
    expected = gs.to_ndarray(expected, to_ndim=2)
    return expected


def to_matrix(expected):
    expected = gs.to_ndarray(expected, to_ndim=3)
    return expected


def left_log_then_exp_from_identity(metric, point):
    aux = metric.left_log_from_identity(point=point)
    result = metric.left_exp_from_identity(tangent_vec=aux)
    return result


def left_exp_then_log_from_identity(metric, tangent_vec):
    aux = metric.left_exp_from_identity(tangent_vec=tangent_vec)
    result = metric.left_log_from_identity(point=aux)
    return result


def log_then_exp_from_identity(metric, point):
    aux = metric.log_from_identity(point=point)
    result = metric.exp_from_identity(tangent_vec=aux)
    return result


def exp_then_log_from_identity(metric, tangent_vec):
    aux = metric.exp_from_identity(tangent_vec=tangent_vec)
    result = metric.log_from_identity(point=aux)
    return result


def log_then_exp(metric, point, base_point):
    aux = metric.log(point=point,
                     base_point=base_point)
    result = metric.exp(tangent_vec=aux,
                        base_point=base_point)
    return result


def exp_then_log(metric, tangent_vec, base_point):
    aux = metric.exp(tangent_vec=tangent_vec,
                     base_point=base_point)
    result = metric.log(point=aux,
                        base_point=base_point)
    return result


def group_log_then_exp_from_identity(group, point):
    aux = group.group_log_from_identity(point=point)
    result = group.group_exp_from_identity(tangent_vec=aux)
    return result


def group_exp_then_log_from_identity(group, tangent_vec):
    aux = group.group_exp_from_identity(tangent_vec=tangent_vec)
    result = group.group_log_from_identity(point=aux)
    return result


def group_log_then_exp(group, point, base_point):
    aux = group.group_log(point=point,
                          base_point=base_point)
    result = group.group_exp(tangent_vec=aux,
                             base_point=base_point)
    return result


def group_exp_then_log(group, tangent_vec, base_point):
    aux = group.group_exp(tangent_vec=tangent_vec,
                          base_point=base_point)
    result = group.group_log(point=aux,
                             base_point=base_point)
    return result
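The round-trip helpers above check that `log` and `exp` (and their group and left-invariant variants) invert each other. The `to_scalar`, `to_vector` and `to_matrix` helpers bring expected values to batched shapes before comparison. The sketch below shows both ideas with plain NumPy and rests on a few assumptions:

- `to_ndarray` is a stand-in that we assume mirrors `gs.to_ndarray`. It adds one axis at `axis` when the input is exactly one dimension short of `to_ndim`, and otherwise returns the input unchanged.
- `FlatMetric` is a hypothetical Euclidean metric written for illustration. It is not a geomstats class.

```python
import numpy as np


def to_ndarray(x, to_ndim, axis=0):
    # Assumed stand-in for gs.to_ndarray; the real backend function may differ.
    x = np.asarray(x)
    if x.ndim == to_ndim - 1:
        x = np.expand_dims(x, axis=axis)
    return x


def to_scalar(expected):
    expected = to_ndarray(expected, to_ndim=1)
    return to_ndarray(expected, to_ndim=2, axis=-1)


def to_vector(expected):
    return to_ndarray(expected, to_ndim=2)


def to_matrix(expected):
    return to_ndarray(expected, to_ndim=3)


class FlatMetric:
    """Hypothetical Euclidean metric: exp(v, p) = p + v, log(q, p) = q - p."""

    def exp(self, tangent_vec, base_point):
        return np.asarray(base_point) + np.asarray(tangent_vec)

    def log(self, point, base_point):
        return np.asarray(point) - np.asarray(base_point)


def log_then_exp(metric, point, base_point):
    aux = metric.log(point=point, base_point=base_point)
    return metric.exp(tangent_vec=aux, base_point=base_point)


def exp_then_log(metric, tangent_vec, base_point):
    aux = metric.exp(tangent_vec=tangent_vec, base_point=base_point)
    return metric.log(point=aux, base_point=base_point)


metric = FlatMetric()
base_point = to_vector([1.0, 2.0, 3.0])
point = to_vector([0.5, -1.0, 4.0])
tangent_vec = to_vector([0.1, 0.2, 0.3])

# Each round trip should return its input up to floating-point error.
assert np.allclose(log_then_exp(metric, point, base_point), point)
assert np.allclose(exp_then_log(metric, tangent_vec, base_point), tangent_vec)

# Shapes after batching: scalar -> (1, 1), vector -> (1, dim), matrix -> (1, n, n).
print(to_scalar(3.0).shape, to_vector([1., 2.]).shape, to_matrix(np.eye(2)).shape)
# (1, 1) (1, 2) (1, 2, 2)
```

In the geomstats tests, `metric` would be a real metric or group object rather than this flat stand-in. The same pattern then checks numerical invertibility near the cut locus or the identity.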

--------------------------------------------------------------------------------

/docs/api-reference.rst:

--------------------------------------------------------------------------------

*************
API reference
*************

.. automodule:: geomstats
   :members:

The "manifold" module
---------------------

.. automodule:: geomstats.manifold
   :members:

The "connection" module
-----------------------

.. automodule:: geomstats.connection
   :members:

The "riemannian_metric" module
------------------------------

.. automodule:: geomstats.riemannian_metric
   :members:

Spaces of constant curvature
=============================

The "embedded_manifold" module
------------------------------

.. automodule:: geomstats.embedded_manifold
   :members:

The "euclidean_space" module
----------------------------

.. automodule:: geomstats.euclidean_space
   :members:

The "minkowski_space" module
----------------------------

.. automodule:: geomstats.minkowski_space
   :members:

The "hypersphere" module
------------------------

.. automodule:: geomstats.hypersphere
   :members:

The "hyperbolic_space" module
-----------------------------

.. automodule:: geomstats.hyperbolic_space
   :members:

Lie groups
==========

The "lie_group" module
----------------------

.. automodule:: geomstats.lie_group
   :members:

The "matrices_space" module
---------------------------

.. automodule:: geomstats.matrices_space
   :members:

The "general_linear_group" module
---------------------------------

.. automodule:: geomstats.general_linear_group
   :members:

The "invariant_metric" module
-----------------------------

.. automodule:: geomstats.invariant_metric
   :members:

The "special_euclidean_group" module
------------------------------------
