├── .gitignore
├── .gitmodules
├── LICENSE
├── README.md
├── S3DIS.md
├── S3DIS_fix.diff
├── Semantic3D.md
├── learning
│   ├── __init__.py
│   ├── custom_dataset.py
│   ├── ecc
│   │   ├── GraphConvInfo.py
│   │   ├── GraphConvModule.py
│   │   ├── GraphPoolInfo.py
│   │   ├── GraphPoolModule.py
│   │   ├── __init__.py
│   │   ├── cuda_kernels.py
│   │   ├── test_GraphConvModule.py
│   │   ├── test_GraphPoolModule.py
│   │   └── utils.py
│   ├── evaluate.py
│   ├── graphnet.py
│   ├── main.py
│   ├── metrics.py
│   ├── modules.py
│   ├── pointnet.py
│   ├── s3dis_dataset.py
│   ├── sema3d_dataset.py
│   ├── spg.py
│   └── vkitti_dataset.py
├── partition
│   ├── __init__.py
│   ├── graphs.py
│   ├── partition.py
│   ├── ply_c
│   │   ├── CMakeLists.txt
│   │   ├── FindNumPy.cmake
│   │   ├── __init__.py
│   │   ├── connected_components.cpp
│   │   ├── ply_c.cpp
│   │   └── random_subgraph.cpp
│   ├── provider.py
│   ├── visualize.py
│   └── write_Semantic3d.py
├── supervized_partition
│   ├── __init__.py
│   ├── evaluate_partition.py
│   ├── folderhierarchy.py
│   ├── generate_partition.py
│   ├── graph_processing.py
│   ├── losses.py
│   └── supervized_partition.py
└── vKITTI3D.md

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *DS_Store*

3 | *.vscode

4 | *.so

5 | partition/ply_c/build

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "partition/cut-pursuit"]

2 | path = partition/cut-pursuit

3 | url = ../cut-pursuit.git

4 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2018 Loic Landrieu

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 | # Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs

3 |

4 | ## ⚠️ This repo is no longer maintained! Please check out our brand new [*SuperPoint Transformer*](https://github.com/drprojects/superpoint_transformer), which does everything better! ⚠️

5 |

6 |

7 |

8 | This is the official PyTorch implementation of the papers:

9 |

10 | [*Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs*](http://arxiv.org/abs/1711.09869)

11 |

12 | by Loic Landrieu and Martin Simonovsky (CVPR2018),

13 |

14 | and

15 |

16 | [*Point Cloud Oversegmentation with Graph-Structured Deep Metric Learning*](https://arxiv.org/pdf/1904.02113)

17 |

18 | by Loic Landrieu and Mohamed Boussaha (CVPR2019).

19 |

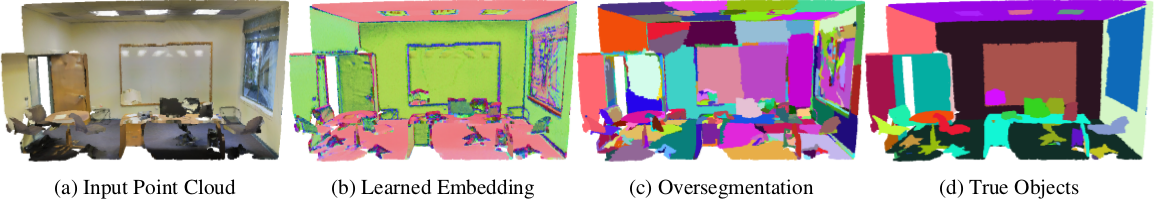

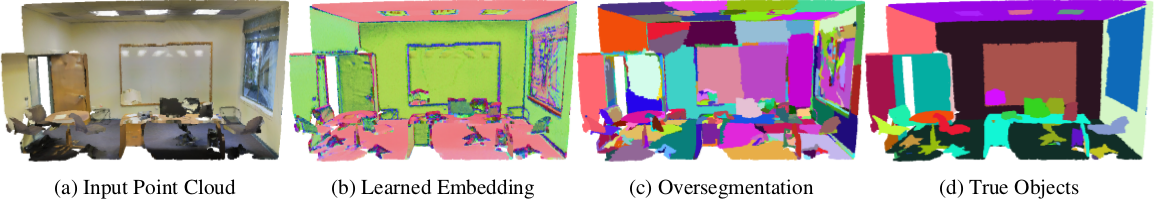

20 |  21 |

22 |

21 |

22 |  23 |

24 | ## Code structure

25 | * `./partition/*` - Partition code (geometric partitioning and superpoint graph construction using handcrafted features)

26 | * `./supervized_partition/*` - Supervised partition code (partitioning with learned features)

27 | * `./learning/*` - Learning code (superpoint embedding and contextual segmentation).

28 |

29 | For the stable branch with only SPG, switch to [release](https://github.com/loicland/superpoint_graph/tree/release).

30 |

31 | ## Disclaimer

32 | Our partition method is inherently stochastic. Hence, even with the trained weights we provide, the results you obtain may differ slightly from those presented in the paper.

33 |

34 | ## Requirements

35 | *0.* Download the current version of the repository. We recommend using the `--recurse-submodules` option to make sure the [cut pursuit](https://github.com/loicland/cut-pursuit) module used in `/partition` is downloaded in the process. If you did not use the following command, please refer to point 4:

36 | ```

37 | git clone --recurse-submodules https://github.com/loicland/superpoint_graph

38 | ```

39 |

40 | *1.* Install [PyTorch](https://pytorch.org) and [torchnet](https://github.com/pytorch/tnt).

41 | ```

42 | pip install git+https://github.com/pytorch/tnt.git@master

43 | ```

44 |

45 | *2.* Install additional Python packages:

46 | ```

47 | pip install future igraph tqdm transforms3d pynvrtc fastrlock cupy h5py scikit-learn plyfile scipy pandas

48 | ```

49 |

50 | *3.* Install Boost (1.63.0 or newer) and Eigen3 with Conda:

51 | ```

52 | conda install -c anaconda boost; conda install -c omnia eigen3; conda install eigen; conda install -c r libiconv

53 | ```

54 |

55 | *4.* Make sure that cut pursuit was downloaded. Otherwise, clone [this repository](https://github.com/loicland/cut-pursuit) or add it as a submodule in `/partition`:

56 | ```

57 | cd partition

58 | git submodule init

59 | git submodule update --remote cut-pursuit

60 | ```

61 |

62 | *5.* Compile the ```libply_c``` and ```libcp``` libraries:

63 | ```

64 | CONDAENV=YOUR_CONDA_ENVIRONMENT_LOCATION

65 | cd partition/ply_c

66 | cmake . -DPYTHON_LIBRARY=$CONDAENV/lib/libpython3.6m.so -DPYTHON_INCLUDE_DIR=$CONDAENV/include/python3.6m -DBOOST_INCLUDEDIR=$CONDAENV/include -DEIGEN3_INCLUDE_DIR=$CONDAENV/include/eigen3

67 | make

68 | cd ..

69 | cd cut-pursuit

70 | mkdir build

71 | cd build

72 | cmake .. -DPYTHON_LIBRARY=$CONDAENV/lib/libpython3.6m.so -DPYTHON_INCLUDE_DIR=$CONDAENV/include/python3.6m -DBOOST_INCLUDEDIR=$CONDAENV/include -DEIGEN3_INCLUDE_DIR=$CONDAENV/include/eigen3

73 | make

74 | ```

75 | *6.* (optional) Install [PyTorch Geometric](https://github.com/rusty1s/pytorch_geometric).

76 |

77 | The code was tested on Ubuntu 14 and 16 with Python 3.5 to 3.8 and PyTorch 0.2 to 1.3.

78 |

79 | ### Troubleshooting

80 |

81 | Common sources of errors and how to fix them:

82 | - `$CONDAENV` is not defined: define it, or replace `$CONDAENV` with the absolute path of your conda environment (find it with ```locate anaconda```)

83 | - anaconda uses a Python version other than 3.6m: adapt the command accordingly. Find which Python version conda uses with ```locate anaconda3/lib/libpython```

84 | - you are using Boost 1.62 or older: update it

85 | - cut pursuit was not downloaded: manually clone it into the ```partition``` folder, or add it as a submodule as described in point 4 of the requirements.

86 | - error in make: `'numpy/ndarrayobject.h' file not found`: set symbolic link to python site-package with `sudo ln -s $CONDAENV/lib/python3.7/site-packages/numpy/core/include/numpy $CONDAENV/include/numpy`
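Several of these errors come from hard-coding `python3.6m` paths in the CMake commands of point 5. As a hedged helper that is not part of this repository, the standard-library `sysconfig` module can report the library and header paths of whichever interpreter runs it. The function names `cmake_python_paths` and `numpy_include_dir` are hypothetical. On some builds `LDLIBRARY` names a static `.a` library rather than a `.so`, so check the printed path before using it.

```python
import os
import sysconfig


def cmake_python_paths():
    """Hypothetical helper: library and header paths of the running Python,
    for the -DPYTHON_LIBRARY / -DPYTHON_INCLUDE_DIR flags of point 5."""
    libdir = sysconfig.get_config_var("LIBDIR") or ""
    # e.g. 'libpython3.8.so' on a shared build; may be a static '.a' elsewhere
    ldlibrary = sysconfig.get_config_var("LDLIBRARY") or ""
    return {
        "PYTHON_LIBRARY": os.path.join(libdir, ldlibrary),
        "PYTHON_INCLUDE_DIR": sysconfig.get_paths()["include"],
    }


def numpy_include_dir():
    """Directory holding 'numpy/ndarrayobject.h', or None if NumPy is missing."""
    try:
        import numpy
    except ImportError:
        return None
    return numpy.get_include()


if __name__ == "__main__":
    flags = " ".join("-D{}={}".format(k, v) for k, v in cmake_python_paths().items())
    print("cmake . " + flags)
    print("numpy headers:", numpy_include_dir())
```

Run it with the Python of the conda environment you compile against. The Boost and Eigen flags still come from `$CONDAENV`.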

87 |

88 |

89 | ## Running the code

90 |

91 | To run our code or retrain from scratch on different datasets, see the corresponding readme files.

92 | Currently supported datasets are as follows:

93 |

94 | | Dataset | handcrafted partition | learned partition |

95 | | ---------- | --------------------- | ------------------|

96 | | S3DIS | yes | yes |

97 | | Semantic3D | yes | to come soon |

98 | | vKITTI3D | no | yes |

99 | | ScanNet | to come soon | to come soon |

100 |

101 | To use PyTorch Geometric graph convolutions instead of our own, use the option `--use_pyg 1` in `./learning/main.py`. Their implementation is more stable and just as fast. Otherwise, use `--use_pyg 0`.

102 |

103 | #### Evaluation

104 |

105 | To quantitatively evaluate a trained model (S3DIS and vKITTI3D only), use:

106 | ```

107 | python learning/evaluate.py --dataset s3dis --odir results/s3dis/best --cvfold 123456

108 | ```
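`evaluate.py` reports scores aggregated over the folds listed in `--cvfold`. The sketch below shows one common way to compute such cross-fold scores. It assumes the per-fold results are available as confusion matrices and is not a transcription of `learning/evaluate.py`. The approach sums the matrices and then derives overall accuracy, mean per-class accuracy and mean IoU. The name `scores_from_confusion` is hypothetical.

```python
import numpy as np


def scores_from_confusion(confusions):
    """Sum per-fold confusion matrices (rows = ground truth, columns =
    prediction) and return (overall accuracy, mean accuracy, mean IoU)."""
    cm = np.sum(confusions, axis=0).astype(np.float64)
    tp = np.diag(cm)
    gt_count = cm.sum(axis=1)       # points per ground-truth class
    pred_count = cm.sum(axis=0)     # points per predicted class
    oa = tp.sum() / cm.sum()
    present = gt_count > 0          # skip classes absent from every fold
    macc = np.mean(tp[present] / gt_count[present])
    union = gt_count + pred_count - tp
    miou = np.mean(tp[present] / union[present])
    return oa, macc, miou


# two toy 2-class folds
cms = [np.array([[8, 2], [1, 9]]), np.array([[5, 0], [3, 2]])]
oa, macc, miou = scores_from_confusion(cms)
print(round(oa, 3), round(macc, 3), round(miou, 3))
```

Summing confusion matrices before dividing weights each fold by its number of points. This differs from averaging the per-fold scores.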

109 |

110 | To visualize the results and all intermediary steps, use the visualize function in partition (for S3DIS, vKITTI3D, and Semantic3D). For example:

111 | ```

112 | python partition/visualize.py --dataset s3dis --ROOT_PATH $S3DIS_DIR --res_file results/s3dis/pretrained/cv1/predictions_test --file_path Area_1/conferenceRoom_1 --output_type igfpres

113 | ```

114 |

115 | ```output_type``` is defined as follows:

116 | - ```'i'``` = input rgb point cloud

117 | - ```'g'``` = ground truth (if available), with the predefined class to color mapping

118 | - ```'f'``` = geometric feature with color code: red = linearity, green = planarity, blue = verticality

119 | - ```'p'``` = partition, with a random color for each superpoint

120 | - ```'r'``` = result cloud, with the predefined class to color mapping

121 | - ```'e'``` = error cloud, with green/red hue for correct/faulty prediction

122 | - ```'s'``` = superedge structure of the superpoint (toggle wireframe on meshlab to view it)
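The `'f'` output colours points by handcrafted geometric features. As a hedged illustration, the sketch below computes linearity, planarity and scattering from the sorted eigenvalues λ1 ≥ λ2 ≥ λ3 of a neighbourhood's covariance matrix. These are the standard dimensionality features. It also computes a verticality value from the eigenvectors, using one possible definition: the z share of the eigenvalue-weighted absolute eigenvectors. The neighbourhood choice and exact formulas in `partition/ply_c` may differ. `geometric_features` is a hypothetical name.

```python
import numpy as np


def geometric_features(neighbors):
    """Features of one point's neighbourhood given as a (k, 3) array.

    Returns (linearity, planarity, scattering, verticality)."""
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    eigval, eigvec = np.linalg.eigh(cov)         # ascending eigenvalues
    eigval = np.clip(eigval[::-1], 1e-12, None)  # l1 >= l2 >= l3, avoid /0
    eigvec = eigvec[:, ::-1]                     # column j matches eigval[j]
    l1, l2, l3 = eigval
    linearity = (l1 - l2) / l1
    planarity = (l2 - l3) / l1
    scattering = l3 / l1
    # assumed verticality: z share of the eigenvalue-weighted |eigenvectors|
    unary = (np.abs(eigvec) * eigval).sum(axis=1)
    verticality = unary[2] / np.linalg.norm(unary)
    return linearity, planarity, scattering, verticality


# a flat horizontal 10 x 10 grid should be planar and not vertical
xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
plane = np.stack([xs.ravel(), ys.ravel(), np.zeros(100)], axis=1)
print(geometric_features(plane))
```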

123 |

124 | Add the option ```--upsample 1``` if you want the prediction file to be on the original, unpruned data (this can be slow).

125 |

126 | # Other data sets

127 |

128 | You can apply SPG on your own data set with minimal changes:

129 | - adapt references to ```custom_dataset``` in ```/partition/partition.py```

130 | - you will need to create the function ```read_custom_format``` in ```/partition/provider.py``` which outputs xyz and rgb values, as well as semantic labels if available (already implemented for ply and las files)

131 | - adapt the template file ```/learning/custom_dataset.py``` to your architecture and design choices

132 | - adapt references to ```custom_dataset``` in ```/learning/main.py```

133 | - add your data set colormap to ```get_color_from_label``` in ```/partition/provider.py```

134 | - adapt line 212 of `learning/spg.py` to reflect the missing or extra point features

135 | - change ```--model_config``` to ```gru_10,f_K``` with ```K``` as the number of classes in your dataset, or ```gru_10_0,f_K``` to use matrix edge filters instead of vectors (only use matrices when your data set is quite large, and with many different point clouds, like S3DIS).
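Below is a minimal sketch of a `read_custom_format` reader. It assumes, hypothetically, a whitespace-separated text file with one point per line laid out as `x y z r g b [label]`, similar to the S3DIS `.txt` files. The return convention used here (xyz, rgb, and labels or `None`) is also an assumption. Match whatever `partition/partition.py` expects in your adaptation.

```python
import os
import tempfile

import numpy as np


def read_custom_format(filename):
    """Hypothetical reader: one point per line, 'x y z r g b [label]'."""
    data = np.loadtxt(filename, ndmin=2)
    if data.shape[1] not in (6, 7):
        raise ValueError("expected 6 or 7 columns, got %d" % data.shape[1])
    xyz = np.ascontiguousarray(data[:, 0:3], dtype=np.float32)
    rgb = np.ascontiguousarray(data[:, 3:6], dtype=np.uint8)
    labels = data[:, 6].astype(np.uint8) if data.shape[1] == 7 else None
    return xyz, rgb, labels


# usage: write a two-point labelled file and read it back
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write("0.0 1.0 2.0 255 0 0 3\n1.5 2.5 3.5 0 128 255 1\n")
xyz, rgb, labels = read_custom_format(tmp.name)
os.remove(tmp.name)
print(xyz.shape, rgb.dtype, labels)
```

For large clouds, a binary format (the readme mentions ply and las) will load much faster than parsing text.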

136 |

137 | # Datasets without RGB

138 | If your data does not have RGB values, you can easily use SPG. You will need to follow the instructions in ```partition/partition.py``` regarding the pruning.

139 | You will need to adapt the ```/learning/custom_dataset.py``` file so that it does not refer to RGB values.

140 | You should absolutely not use a model pretrained with RGB values. Instead, retrain a model from scratch using the ```--pc_attribs xyzelpsv``` option to remove RGB from the shape embedding input.

141 |
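In `learning/custom_dataset.py`, `get_info` sets `node_feats` to `len(args.pc_attribs)`, so each letter of `--pc_attribs` is one input channel. The letters `e`, `l`, `p`, `s` and `v` correspond to elevation, linearity, planarity, scattering and verticality, as stacked in that file. The meaning given below for `X`, `Y`, `Z` (a normalized position) is an assumption. The helpers `describe_pc_attribs` and `uses_rgb` are hypothetical. In every command in these readmes, `--ptn_nfeat_stn` happens to equal the letter count (14, 11 and 10).

```python
ATTRIB_NAMES = {
    "x": "x coordinate", "y": "y coordinate", "z": "z coordinate",
    "r": "red", "g": "green", "b": "blue",
    "e": "elevation", "l": "linearity", "p": "planarity",
    "s": "scattering", "v": "verticality",
    # assumption: position normalized to the scene/room extent
    "X": "normalized x", "Y": "normalized y", "Z": "normalized z",
}


def describe_pc_attribs(pc_attribs):
    """Hypothetical helper: list the channels selected by a --pc_attribs string."""
    unknown = [c for c in pc_attribs if c not in ATTRIB_NAMES]
    if unknown:
        raise ValueError("unknown attribute letters: %s" % "".join(unknown))
    return [ATTRIB_NAMES[c] for c in pc_attribs]


def uses_rgb(pc_attribs):
    """True if any colour channel is requested (invalid for data without RGB)."""
    return any(c in "rgb" for c in pc_attribs)


print(len(describe_pc_attribs("xyzelpsv")), uses_rgb("xyzelpsv"))
```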

142 | # Citation

143 | If you use the semantic segmentation module (code in `/learning`), please cite:

144 | *Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs*, Loic Landrieu and Martin Simonovsky, CVPR, 2018.

145 |

146 | If you use the learned partition module (code in `/supervized_partition`), please cite:

147 | *Point Cloud Oversegmentation with Graph-Structured Deep Metric Learning*, Loic Landrieu and Mohamed Boussaha, CVPR, 2019.

148 |

149 | To refer to the handcrafted partition (code in `/partition`) step specifically, refer to:

150 | *Weakly Supervised Segmentation-Aided Classification of Urban Scenes from 3D LiDAR Point Clouds*, Stéphane Guinard and Loic Landrieu. ISPRS Workshop, 2017.

151 |

152 | To refer to the L0-cut pursuit algorithm (code in `github.com/loicland/cut-pursuit`) specifically, refer to:

153 | *Cut Pursuit: Fast Algorithms to Learn Piecewise Constant Functions on General Weighted Graphs*, Loic Landrieu and Guillaume Obozinski, SIAM Journal on Imaging Sciences, 2017.

154 |

155 | To refer to pytorch geometric implementation, see their bibtex in [their repo](https://github.com/rusty1s/pytorch_geometric).

156 |

157 |

158 |

--------------------------------------------------------------------------------

/S3DIS.md:

--------------------------------------------------------------------------------

1 | # S3DIS

2 |

3 | Download [S3DIS Dataset](http://buildingparser.stanford.edu/dataset.html) and extract `Stanford3dDataset_v1.2_Aligned_Version.zip` to `$S3DIS_DIR/data`, where `$S3DIS_DIR` is the dataset directory.

4 |

5 | To fix some issues with the dataset as reported in issue [#29](https://github.com/loicland/superpoint_graph/issues/29), apply the patch `S3DIS_fix.diff` with:

6 | ```

7 | cp S3DIS_fix.diff $S3DIS_DIR/data; cd $S3DIS_DIR/data; git apply S3DIS_fix.diff; rm S3DIS_fix.diff; cd -

8 | ```
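The patch repairs two lines that contain a stray byte and renames one misnamed file. To check a local copy for similar problems before partitioning, you could use a small scanner like the hypothetical `find_bad_lines` / `scan_tree` below. This is a sketch, not part of this repository. It reports every non-empty line of a `.txt` file that does not parse as six numbers, the `x y z r g b` layout shown in the diff.

```python
import os
import tempfile


def find_bad_lines(path, expected_cols=6):
    """Return (line_number, text) for non-empty lines that are not
    `expected_cols` whitespace-separated numbers."""
    bad = []
    with open(path, "rb") as f:  # read bytes so corrupted characters cannot crash decoding
        for lineno, raw in enumerate(f, start=1):
            text = raw.decode("ascii", errors="replace")
            fields = text.split()
            if not fields:
                continue
            try:
                values = [float(x) for x in fields]
            except ValueError:
                values = None
            if values is None or len(values) != expected_cols:
                bad.append((lineno, text.rstrip("\r\n")))
    return bad


def scan_tree(root):
    """Apply find_bad_lines to every .txt file below `root`."""
    report = {}
    for dirpath, _, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(".txt"):
                path = os.path.join(dirpath, name)
                bad = find_bad_lines(path)
                if bad:
                    report[path] = bad
    return report


# usage on a toy file whose second line holds a stray byte
with tempfile.TemporaryDirectory() as root:
    with open(os.path.join(root, "hallway_2.txt"), "wb") as f:
        f.write(b"19.276 -9.160 1.684 139 130 99\n19.302 -9.1\xff0 1.785 146 137 106\n")
    report = scan_tree(root)
print(report)
```

Scanning all of S3DIS this way is slow but only needs to run once.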

9 | Define $S3DIS_DIR as the location of the folder containing `/data`

10 |

11 | ## SPG with Handcrafted Partition

12 |

13 | To compute the partition with handcrafted features run:

14 | ```

15 | python partition/partition.py --dataset s3dis --ROOT_PATH $S3DIS_DIR --voxel_width 0.03 --reg_strength 0.03

16 | ```
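`--voxel_width` sets the size of a voxel-grid pruning step applied before the partition. The sketch below illustrates the general technique: average the points that fall in each cubic voxel of side `voxel_width`. It is an assumption-based illustration, not the C++ code in `partition/ply_c`, which may aggregate colours and labels differently. `voxel_subsample` is a hypothetical name.

```python
import numpy as np


def voxel_subsample(xyz, rgb, voxel_width):
    """Average xyz and rgb of all points sharing a voxel of side voxel_width."""
    keys = np.floor(xyz / voxel_width).astype(np.int64)
    # voxel id of every point (voxels sorted lexicographically by key)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # some NumPy 2.x releases return a 2-D inverse
    n_vox = inverse.max() + 1
    counts = np.bincount(inverse, minlength=n_vox).astype(np.float64)
    xyz_out = np.zeros((n_vox, 3))
    rgb_out = np.zeros((n_vox, 3))
    np.add.at(xyz_out, inverse, xyz)   # unbuffered sum per voxel
    np.add.at(rgb_out, inverse, rgb)
    return xyz_out / counts[:, None], rgb_out / counts[:, None]


xyz = np.array([[0.01, 0.01, 0.0], [0.02, 0.02, 0.0], [0.5, 0.5, 0.5]])
rgb = np.array([[255, 0, 0], [0, 0, 255], [10, 10, 10]], dtype=np.float64)
sub_xyz, sub_rgb = voxel_subsample(xyz, rgb, voxel_width=0.03)
print(sub_xyz, sub_rgb)
```

Larger widths remove more points, which is why the Semantic3D readme suggests increasing `voxel_width` when memory is short.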

17 |

18 | Then, reorganize point clouds into superpoints by:

19 | ```

20 | python learning/s3dis_dataset.py --S3DIS_PATH $S3DIS_DIR

21 | ```

22 |

23 | To train from scratch on all 6 folds on the handcrafted partition, run:

24 | ```

25 | for FOLD in 1 2 3 4 5 6; do \

26 | CUDA_VISIBLE_DEVICES=0 python learning/main.py --dataset s3dis --S3DIS_PATH $S3DIS_DIR --cvfold $FOLD --epochs 350 \

27 | --lr_steps '[275,320]' --test_nth_epoch 50 --model_config 'gru_10_0,f_13' --ptn_nfeat_stn 14 \

28 | --pc_attribs xyzrgbelpsvXYZ --odir "results/s3dis/best/cv${FOLD}" --nworkers 4; \

29 | done

30 | ```
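The loop above trains one network per fold, from `--cvfold 1` to `--cvfold 6`. The split can be sketched as follows, assuming the usual S3DIS protocol in which fold *k* tests on `Area_k` and trains on the other five areas. `s3dis_fold_split` is a hypothetical helper, not the code in `learning/s3dis_dataset.py`.

```python
def s3dis_fold_split(cvfold, n_areas=6):
    """Return (train_areas, test_areas) for a 1-based fold index."""
    if not 1 <= cvfold <= n_areas:
        raise ValueError("cvfold must be in 1..%d" % n_areas)
    test = ["Area_%d" % cvfold]
    train = ["Area_%d" % a for a in range(1, n_areas + 1) if a != cvfold]
    return train, test


for fold in range(1, 7):
    train, test = s3dis_fold_split(fold)
    print(fold, test, len(train))
```

Under this protocol each area is tested exactly once across the six runs.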

31 |

32 | Our trained networks can be downloaded [here](http://imagine.enpc.fr/~simonovm/largescale/models_s3dis.zip). Unzip the archive (but not the `model.pth.tar` files themselves) and place its contents in the code folder `results/s3dis/pretrained/`.

33 |

34 | To test these networks on the full test set, run:

35 | ```

36 | for FOLD in 1 2 3 4 5 6; do \

37 | CUDA_VISIBLE_DEVICES=0 python learning/main.py --dataset s3dis --S3DIS_PATH $S3DIS_DIR --cvfold $FOLD --epochs -1 --lr_steps '[275,320]' \

38 | --test_nth_epoch 50 --model_config 'gru_10_0,f_13' --ptn_nfeat_stn 14 --nworkers 2 --pc_attribs xyzrgbelpsvXYZ --odir "results/s3dis/pretrained/cv${FOLD}" --resume RESUME; \

39 | done

40 | ```
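`--model_config` is a comma-separated list of layers. The main README states that `gru_10` uses vector edge filters, `gru_10_0` uses matrix filters, and `f_K` is a final layer with `K` outputs. Based only on that, a hypothetical parser could read the string as below. The actual parsing lives in `learning/graphnet.py` and may accept more options. Reading the number after `gru_` as the number of GRU iterations is an assumption.

```python
def parse_model_config(config):
    """Hypothetical parser for strings such as 'gru_10_0,f_13'."""
    layers = []
    for token in config.split(","):
        parts = token.strip().split("_")
        if parts[0] == "gru":
            iterations = int(parts[1])  # assumed meaning of the first number
            # per the README, a trailing '_0' selects matrix edge filters
            vector = len(parts) < 3 or parts[2] != "0"
            layers.append({"type": "gru", "iterations": iterations,
                           "edge_filter": "vector" if vector else "matrix"})
        elif parts[0] == "f":
            layers.append({"type": "fc", "out_features": int(parts[1])})
        else:
            raise ValueError("unsupported layer token: %r" % token)
    return layers


print(parse_model_config("gru_10_0,f_13"))
```

For S3DIS the final layer has 13 outputs (`f_13`), one per class. Semantic3D uses `f_8`.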

41 |

42 | ## SSP+SPG: SPG with learned partition

43 |

44 | To learn the partition from scratch run:

45 | ```

46 | python supervized_partition/graph_processing.py --ROOT_PATH $S3DIS_DIR --dataset s3dis --voxel_width 0.03; \

47 |

48 | for FOLD in 1 2 3 4 5 6; do \

49 | python ./supervized_partition/supervized_partition.py --ROOT_PATH $S3DIS_DIR --cvfold $FOLD \

50 | --odir results_partition/s3dis/best --epochs 50 --reg_strength 0.1 --spatial_emb 0.2 \

51 | --global_feat eXYrgb --CP_cutoff 25; \

52 | done

53 | ```

54 | Or download our trained weights [here](http://recherche.ign.fr/llandrieu/SPG/S3DIS/pretrained.zip) into the folder `results_partition/s3dis/pretrained`, unzip them, and run the following code:

55 |

56 | ```

57 | for FOLD in 1 2 3 4 5 6; do \

58 | python ./supervized_partition/supervized_partition.py --ROOT_PATH $S3DIS_DIR --cvfold $FOLD --epochs -1 \

59 | --odir results_partition/s3dis/pretrained --reg_strength 0.1 --spatial_emb 0.2 --global_feat eXYrgb \

60 | --CP_cutoff 25 --resume RESUME; \

61 | done

62 | ```

63 |

64 | To evaluate the quality of the partition, run:

65 | ```

66 | python supervized_partition/evaluate_partition.py --dataset s3dis --folder pretrained --cvfold 123456

67 | ```
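Partition quality is usually judged by how well superpoints respect the semantic labels. One common measure is achievable segmentation accuracy, also called oracle overall accuracy. It labels every superpoint with the majority ground-truth class of its points and measures the resulting point accuracy. The sketch below assumes this is comparable to one of the scores reported by `evaluate_partition.py`, which may compute more. `oracle_accuracy` is a hypothetical name.

```python
import numpy as np


def oracle_accuracy(components, labels, n_classes):
    """Fraction of points correctly labelled when each superpoint takes the
    majority ground-truth label of its points.

    components: (N,) superpoint id per point; labels: (N,) class id per point."""
    n_sp = components.max() + 1
    hist = np.zeros((n_sp, n_classes), dtype=np.int64)
    np.add.at(hist, (components, labels), 1)   # per-superpoint label histogram
    return hist.max(axis=1).sum() / len(labels)


comp = np.array([0, 0, 0, 1, 1, 2])
lab = np.array([1, 1, 2, 0, 0, 2])
print(oracle_accuracy(comp, lab, 3))
```

A trivial over-segmentation with one point per superpoint scores 1.0. Read this measure together with the number of superpoints.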

68 |

69 | Then, reorganize point clouds into superpoints with:

70 | ```

71 | python learning/s3dis_dataset.py --S3DIS_PATH $S3DIS_DIR --supervized_partition 1 --plane_model_elevation 1

72 | ```

73 |

74 | Then to learn the SPG models from scratch, run:

75 | ```

76 | for FOLD in 1 2 3 4 5 6; do \

77 | CUDA_VISIBLE_DEVICES=0 python ./learning/main.py --dataset s3dis --S3DIS_PATH $S3DIS_DIR --batch_size 5 \

78 | --cvfold $FOLD --epochs 250 --lr_steps '[150,200]' --model_config "gru_10_0,f_13" --ptn_nfeat_stn 10 \

79 | --nworkers 2 --spg_augm_order 5 --pc_attribs xyzXYZrgbe --spg_augm_hardcutoff 768 --ptn_minpts 50 \

80 | --use_val_set 1 --odir results/s3dis/best/cv$FOLD; \

81 | done;

82 | ```

83 |

84 | Or use our [trained weights](http://recherche.ign.fr/llandrieu/SPG/S3DIS/pretrained_SSP.zip) with `--epochs -1` and `--resume RESUME`:

85 | ```

86 | for FOLD in 1 2 3 4 5 6; do \

87 | CUDA_VISIBLE_DEVICES=0 python ./learning/main.py --dataset s3dis --S3DIS_PATH $S3DIS_DIR --batch_size 5 \

88 | --cvfold $FOLD --epochs -1 --lr_steps '[150,200]' --model_config "gru_10_0,f_13" --ptn_nfeat_stn 10 \

89 | --nworkers 2 --spg_augm_order 5 --pc_attribs xyzXYZrgbe --spg_augm_hardcutoff 768 --ptn_minpts 50 \

90 | --use_val_set 1 --odir results/s3dis/pretrained_SSP/cv$FOLD --resume RESUME; \

91 | done;

92 | ```

93 | Note that these weights are specifically adapted to the pretrained model for the learned partition. Any change to the partition might decrease their performance.

94 |

--------------------------------------------------------------------------------

/S3DIS_fix.diff:

--------------------------------------------------------------------------------

1 | diff --git a/Area_3/hallway_2/hallway_2.txt b/Area_3/hallway_2/hallway_2.txt

2 | index 02f32b8..870566e 100644

3 | --- a/Area_3/hallway_2/hallway_2.txt

4 | +++ b/Area_3/hallway_2/hallway_2.txt

5 | @@ -926334,7 +926334,7 @@

6 | 19.237 -9.161 1.561 141 131 96

7 | 19.248 -9.160 1.768 136 129 103

8 | 19.276 -9.160 1.684 139 130 99

9 | -19.302 -9.1�0 1.785 146 137 106

10 | +19.302 -9.1 0 1.785 146 137 106

11 | 19.242 -9.160 1.790 146 134 108

12 | 19.271 -9.160 1.679 140 129 99

13 | 19.278 -9.160 1.761 133 123 98

14 | diff --git a/Area_5/hallway_6/Annotations/ceiling_1.txt b/Area_5/hallway_6/Annotations/ceiling_1.txt

15 | index 62e563d..3a9087b 100644

16 | --- a/Area_5/hallway_6/Annotations/ceiling_1.txt

17 | +++ b/Area_5/hallway_6/Annotations/ceiling_1.txt

18 | @@ -180386,7 +180386,7 @@

19 | 22.383 6.858 3.050 155 155 165

20 | 22.275 6.643 3.048 192 194 191

21 | 22.359 6.835 3.050 152 152 162

22 | -22.350 6.692 3.048 185�187 182

23 | +22.350 6.692 3.048 185 187 182

24 | 22.314 6.638 3.048 170 171 175

25 | 22.481 6.818 3.049 149 149 159

26 | 22.328 6.673 3.048 190 195 191

27 | diff --git a/Area_6/copyRoom_1/copy_Room_1.txt b/Area_6/copyRoom_1/copyRoom_1.txt

28 | similarity index 100%

29 | rename from Area_6/copyRoom_1/copy_Room_1.txt

30 | rename to Area_6/copyRoom_1/copyRoom_1.txt

31 |

--------------------------------------------------------------------------------

/Semantic3D.md:

--------------------------------------------------------------------------------

1 | # Semantic3D

2 |

3 | Download all point clouds and labels from [Semantic3D Dataset](http://www.semantic3d.net/) and place the extracted training files in `$SEMA3D_DIR/data/train`, the reduced test files in `$SEMA3D_DIR/data/test_reduced`, and the full test files in `$SEMA3D_DIR/data/test_full`, where `$SEMA3D_DIR` is the dataset directory. The label files of the training clouds must be placed in the same directory as the .txt files.

4 |

5 | ## Handcrafted Partition

6 |

7 | To compute the partition with handcrafted features run:

8 | ```

9 | python partition/partition.py --dataset sema3d --ROOT_PATH $SEMA3D_DIR --voxel_width 0.05 --reg_strength 0.8 --ver_batch 5000000

10 | ```

11 | It is recommended that you have at least 24GB of RAM to run this code. Otherwise, increase the ```voxel_width``` parameter to increase pruning.

12 |

13 | Then, reorganize point clouds into superpoints by:

14 | ```

15 | python learning/sema3d_dataset.py --SEMA3D_PATH $SEMA3D_DIR

16 | ```

17 |

18 | To train on the whole publicly available data and test on the reduced test set, run:

19 | ```

20 | CUDA_VISIBLE_DEVICES=0 python learning/main.py --dataset sema3d --SEMA3D_PATH $SEMA3D_DIR --db_test_name testred --db_train_name trainval \

21 | --epochs 500 --lr_steps '[350, 400, 450]' --test_nth_epoch 100 --model_config 'gru_10,f_8' --ptn_nfeat_stn 11 \

22 | --nworkers 2 --pc_attrib xyzrgbelpsv --odir "results/sema3d/trainval_best"

23 | ```

24 | The trained network can be downloaded [here](http://imagine.enpc.fr/~simonovm/largescale/model_sema3d_trainval.pth.tar) and loaded with the `--resume` argument. Rename the file to ```model.pth.tar``` (do not try to unzip it!) and place it in the directory ```results/sema3d/trainval_best```.

25 |

26 | To test this network on the full test set, run:

27 | ```

28 | CUDA_VISIBLE_DEVICES=0 python learning/main.py --dataset sema3d --SEMA3D_PATH $SEMA3D_DIR --db_test_name testfull --db_train_name trainval \

29 | --epochs -1 --lr_steps '[350, 400, 450]' --test_nth_epoch 100 --model_config 'gru_10,f_8' --ptn_nfeat_stn 11 \

30 | --nworkers 2 --pc_attrib xyzrgbelpsv --odir "results/sema3d/trainval_best" --resume RESUME

31 | ```

32 | We validated our configuration on a custom split of 11 and 4 clouds. The network is trained as such:

33 | ```

34 | CUDA_VISIBLE_DEVICES=0 python learning/main.py --dataset sema3d --SEMA3D_PATH $SEMA3D_DIR --epochs 450 --lr_steps '[350, 400]' --test_nth_epoch 100 \

35 | --model_config 'gru_10,f_8' --pc_attrib xyzrgbelpsv --ptn_nfeat_stn 11 --nworkers 2 --odir "results/sema3d/best"

36 | ```

37 |

38 | #### Learned Partition

39 |

40 | Not yet available.

41 |

42 | #### Visualization

43 |

44 | To upsample the prediction to the unpruned data and write the .labels files for the reduced test set, run (quite slow):

45 | ```

46 | python partition/write_Semantic3d.py --SEMA3D_PATH $SEMA3D_DIR --odir "results/sema3d/trainval_best" --db_test_name testred

47 | ```
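This step transfers predictions from the pruned cloud back to every original point. A common way to do this is nearest-neighbour label transfer. That is assumed here rather than taken from `write_Semantic3d.py`. The sketch uses brute-force NumPy distances in chunks so it needs no extra dependency. Real Semantic3D clouds call for a KD-tree such as `scipy.spatial.cKDTree`. The file is then written with one integer label per line, the format of Semantic3D `.labels` files. Semantic3D numbers its classes 1 to 8 and reserves 0 for unlabelled points. The `offset=1` therefore assumes 0-based predictions. Both function names are hypothetical.

```python
import os
import tempfile

import numpy as np


def nearest_labels(xyz_full, xyz_pruned, labels_pruned, chunk=4096):
    """Give each full-resolution point the label of its nearest pruned point."""
    out = np.empty(len(xyz_full), dtype=labels_pruned.dtype)
    for start in range(0, len(xyz_full), chunk):
        block = xyz_full[start:start + chunk]
        # squared distances between this block and all pruned points
        d2 = ((block[:, None, :] - xyz_pruned[None, :, :]) ** 2).sum(axis=2)
        out[start:start + chunk] = labels_pruned[d2.argmin(axis=1)]
    return out


def write_labels(path, labels, offset=1):
    """Write one integer per line, shifting 0-based predictions by `offset`."""
    np.savetxt(path, labels.astype(np.int64) + offset, fmt="%d")


full = np.array([[0.0, 0.0, 0.0], [0.9, 0.0, 0.0], [2.1, 0.0, 0.0]])
pruned = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
pred = np.array([3, 5])
labels = nearest_labels(full, pruned, pred)
with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "cloud.labels")
    write_labels(path, labels)
    with open(path) as f:
        written = f.read().split()
print(labels, written)
```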

48 |

49 | To visualize the results and intermediary steps (on the subsampled graph), use the visualize function in partition. For example:

50 | ```

51 | python partition/visualize.py --dataset sema3d --ROOT_PATH $SEMA3D_DIR --res_file 'results/sema3d/trainval_best/prediction_testred' --file_path 'test_reduced/MarketplaceFeldkirch_Station4' --output_type ifprs

52 | ```

53 | Avoid ```--upsample 1```, as it can take a very long time on the largest clouds.

54 |

--------------------------------------------------------------------------------

/learning/__init__.py:

--------------------------------------------------------------------------------

1 | import os,sys

2 |

3 | DIR_PATH = os.path.dirname(os.path.realpath(__file__))

4 | sys.path.insert(0, DIR_PATH)

--------------------------------------------------------------------------------

/learning/custom_dataset.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3
2 | # -*- coding: utf-8 -*-
3 | """
4 | Created on Tue Mar 20 16:16:14 2018
5 |
6 | @author: landrieuloic
7 | """"""
8 | Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs
9 | http://arxiv.org/abs/1711.09869
10 | 2017 Loic Landrieu, Martin Simonovsky
11 | Template file for processing custom datasets
12 | """
13 | from __future__ import division
14 | from __future__ import print_function
15 | from builtins import range
16 |
17 | import random
18 | import numpy as np
19 | import os
20 | import functools
21 | import torch
22 | import torchnet as tnt
23 | import h5py
24 | import spg
25 |
26 |
27 | def get_datasets(args, test_seed_offset=0):
28 |     """build training and testing set"""
29 |
30 |     #for a simple train/test organization; os.listdir already returns the '.h5' extension
31 |     trainset = ['train/' + f for f in os.listdir(args.CUSTOM_SET_PATH + '/superpoint_graphs/train') if f.endswith('.h5')]
32 |     testset = ['test/' + f for f in os.listdir(args.CUSTOM_SET_PATH + '/superpoint_graphs/test') if f.endswith('.h5')]
33 |
34 |     # Load superpoints graphs
35 |     testlist, trainlist = [], []
36 |     for n in trainset:
37 |         trainlist.append(spg.spg_reader(args, args.CUSTOM_SET_PATH + '/superpoint_graphs/' + n, True))
38 |     for n in testset:
39 |         testlist.append(spg.spg_reader(args, args.CUSTOM_SET_PATH + '/superpoint_graphs/' + n, True))
40 |
41 |     # Normalize edge features (scaler stays None when they are not rescaled)
42 |     scaler = None
43 |     if args.spg_attribs01:
44 |         trainlist, testlist, validlist, scaler = spg.scaler01(trainlist, testlist)
45 |
46 |     return tnt.dataset.ListDataset([spg.spg_to_igraph(*tlist) for tlist in trainlist],
47 |                                    functools.partial(spg.loader, train=True, args=args, db_path=args.CUSTOM_SET_PATH)), \
48 |            tnt.dataset.ListDataset([spg.spg_to_igraph(*tlist) for tlist in testlist],
49 |                                    functools.partial(spg.loader, train=False, args=args, db_path=args.CUSTOM_SET_PATH, test_seed_offset=test_seed_offset)), \
50 |            scaler
51 |
52 | def get_info(args):
53 |     edge_feats = 0
54 |     for attrib in args.edge_attribs.split(','):
55 |         a = attrib.split('/')[0]
56 |         if a in ['delta_avg', 'delta_std', 'xyz']:
57 |             edge_feats += 3
58 |         else:
59 |             edge_feats += 1
60 |
61 |     return {
62 |         'node_feats': 11 if args.pc_attribs=='' else len(args.pc_attribs),
63 |         'edge_feats': edge_feats,
64 |         'classes': 10, #CHANGE TO YOUR NUMBER OF CLASS
65 |         'inv_class_map': {0:'class_A', 1:'class_B'}, #etc...
66 |     }
67 |
68 | def preprocess_pointclouds(CUSTOM_SET_PATH):
69 |     """ Preprocesses data by splitting them by components and normalizing."""
70 |
71 |     # folder names must match those used in get_datasets (adapt to your own split)
72 |     for n in ['train', 'test']:
73 |         pathP = '{}/parsed/{}/'.format(CUSTOM_SET_PATH, n)
74 |         pathD = '{}/features/{}/'.format(CUSTOM_SET_PATH, n)
75 |         pathC = '{}/superpoint_graphs/{}/'.format(CUSTOM_SET_PATH, n)
76 |         if not os.path.exists(pathP):
77 |             os.makedirs(pathP)
78 |         random.seed(0)
79 |
80 |         for file in os.listdir(pathC):
81 |             print(file)
82 |             if file.endswith(".h5"):
83 |                 with h5py.File(pathD + file, 'r') as f:
84 |                     xyz = f['xyz'][:]
85 |                     rgb = f['rgb'][:].astype(np.float64)  # np.float was removed in NumPy 1.24
86 |                     elpsv = np.stack([f['xyz'][:,2][:], f['linearity'][:], f['planarity'][:], f['scattering'][:], f['verticality'][:]], axis=1)
87 |
88 |                 # rescale to [-0.5,0.5]; keep xyz
89 |                 #warning - to use the trained model, make sure the elevation is comparable
90 |                 #to the set they were trained on
91 |                 #i.e. ~0 for roads and ~0.2-0.3 for buildings for sema3d
92 |                 # and -0.5 for floor and 0.5 for ceiling for s3dis
93 |                 elpsv[:,0] /= 100 # (rough guess) #adapt
94 |                 elpsv[:,1:] -= 0.5
95 |                 rgb = rgb/255.0 - 0.5
96 |
97 |                 P = np.concatenate([xyz, rgb, elpsv], axis=1)
98 |
99 |                 with h5py.File(pathC + file, 'r') as f, h5py.File(pathP + file, 'w') as hf:
100 |                     numc = len(f['components'].keys())
101 |                     for c in range(numc):
102 |                         idx = f['components/{:d}'.format(c)][:].flatten()
103 |                         if idx.size > 10000: # trim extra large segments, just for speed-up of loading time
104 |                             ii = random.sample(range(idx.size), k=10000)
105 |                             idx = idx[ii]
106 |
107 |                         hf.create_dataset(name='{:d}'.format(c), data=P[idx,...])
108 |
109 | if __name__ == "__main__":
110 |     import argparse
111 |     parser = argparse.ArgumentParser(description='Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs')
112 |     parser.add_argument('--CUSTOM_SET_PATH', default='datasets/custom_set')
113 |     args = parser.parse_args()
114 |     preprocess_pointclouds(args.CUSTOM_SET_PATH)
115 |

--------------------------------------------------------------------------------

/learning/ecc/GraphConvInfo.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import igraph

12 | import torch

13 | from collections import defaultdict

14 | import numpy as np

15 |

16 | class GraphConvInfo(object):

17 | """ Holds information about the structure of graph(s) in a vectorized form useful to `GraphConvModule`.

18 |

19 | We assume that the node feature tensor (given to `GraphConvModule` as input) is ordered by igraph vertex id, e.g. the fifth row corresponds to vertex with id=4. Batch processing is realized by concatenating all graphs into a large graph of disconnected components (and all node feature tensors into a large tensor).

20 |

21 | The class requires problem-specific `edge_feat_func` function, which receives dict of edge attributes and returns Tensor of edge features and LongTensor of inverse indices if edge compaction was performed (less unique edge features than edges so some may be reused).

22 | """

23 |

24 | def __init__(self, *args, **kwargs):

25 | self._idxn = None #indices into input tensor of convolution (node features)

26 | self._idxe = None #indices into edge features tensor (or None if it would be linear, i.e. no compaction)

27 | self._degrees = None #in-degrees of output nodes (slices _idxn and _idxe)

28 | self._degrees_gpu = None

29 | self._edgefeats = None #edge features tensor (to be processed by feature-generating network)

30 | if len(args)>0 or len(kwargs)>0:

31 | self.set_batch(*args, **kwargs)

32 |

33 | def set_batch(self, graphs, edge_feat_func):

34 | """ Creates a representation of a given batch of graphs.

35 |

36 | Parameters:

37 | graphs: single graph or a list/tuple of graphs.

38 | edge_feat_func: see class description.

39 | """

40 |

41 | graphs = graphs if isinstance(graphs,(list,tuple)) else [graphs]

42 | p = 0

43 | idxn = []

44 | degrees = []

45 | edge_indexes = []

46 | edgeattrs = defaultdict(list)

47 |

48 | for G in graphs:

49 | E = np.array(G.get_edgelist())

50 | idx = E[:,1].argsort() # sort by target

51 |

52 | idxn.append(p + E[idx,0])

53 | edgeseq = G.es[idx.tolist()]

54 | for a in G.es.attributes():

55 | edgeattrs[a] += edgeseq.get_attribute_values(a)

56 | degrees += G.indegree(G.vs, loops=True)

57 | edge_indexes.append(np.asarray(p + E[idx]))

58 | p += G.vcount()

59 |

60 | self._edgefeats, self._idxe = edge_feat_func(edgeattrs)

61 |

62 | self._idxn = torch.LongTensor(np.concatenate(idxn))

63 | if self._idxe is not None:

64 | assert self._idxe.numel() == self._idxn.numel()

65 |

66 | self._degrees = torch.LongTensor(degrees)

67 | self._degrees_gpu = None

68 |

69 | self._edge_indexes = torch.LongTensor(np.concatenate(edge_indexes).T)

70 |

71 | def cuda(self):

72 | self._idxn = self._idxn.cuda()

73 | if self._idxe is not None: self._idxe = self._idxe.cuda()

74 | self._degrees_gpu = self._degrees.cuda()

75 | self._edgefeats = self._edgefeats.cuda()

76 | self._edge_indexes = self._edge_indexes.cuda()

77 |

78 | def get_buffers(self):

79 | """ Provides data to `GraphConvModule`.

80 | """

81 | return self._idxn, self._idxe, self._degrees, self._degrees_gpu, self._edgefeats

82 |

83 | def get_pyg_buffers(self):

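84 | """ Provides the edge index (2 x #edges LongTensor: row 0 holds source nodes, row 1 target nodes, with batch offsets applied) in the layout used by PyTorch Geometric layers.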

85 | """

86 | return self._edge_indexes
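The indexing built by `set_batch` can be illustrated without igraph. The sketch below is a minimal approximation under stated assumptions. Each graph is given as a vertex count plus an array of `(source, target)` edges instead of an igraph object, and the helper name `batch_edge_lists` is hypothetical, not part of this repository. It follows the same steps:

- sort each graph's edges by target;
- shift vertex ids by the number of vertices in earlier graphs (`p`);
- record in-degrees, so that consecutive slices of `idxn` hold the incoming edges of each node.

A stable sort is used only to make the output deterministic.

```python
import numpy as np


def batch_edge_lists(graphs):
    """Hypothetical sketch of the indexing done by GraphConvInfo.set_batch.

    graphs: list of (num_vertices, edges) pairs, where edges is an int array of
    shape (E, 2) with (source, target) vertex ids local to that graph.
    Returns (idxn, degrees, edge_index) for the batch seen as one disconnected graph.
    """
    idxn, degrees, edge_index = [], [], []
    offset = 0  # plays the role of `p`: number of vertices in previous graphs
    for num_vertices, edges in graphs:
        edges = np.asarray(edges, dtype=np.int64).reshape(-1, 2)
        order = np.argsort(edges[:, 1], kind="stable")  # sort edges by target vertex
        sorted_edges = edges[order] + offset
        idxn.append(sorted_edges[:, 0])  # source of every edge, grouped by target
        # in-degree of every vertex in vertex-id order (self-loops counted, like loops=True)
        degrees.append(np.bincount(edges[:, 1], minlength=num_vertices))
        edge_index.append(sorted_edges)
        offset += num_vertices
    idxn = np.concatenate(idxn)
    degrees = np.concatenate(degrees)
    edge_index = np.concatenate(edge_index).T  # shape (2, E): row 0 sources, row 1 targets
    return idxn, degrees, edge_index


# Two toy graphs: 3 vertices with edges 0->1, 2->1, 1->0, and 2 vertices with edge 0->1.
idxn, degrees, edge_index = batch_edge_lists([(3, [[0, 1], [2, 1], [1, 0]]), (2, [[0, 1]])])
print(idxn.tolist())        # [1, 0, 2, 3]
print(degrees.tolist())     # [1, 2, 0, 0, 1]
print(edge_index.tolist())  # [[1, 0, 2, 3], [0, 1, 1, 4]]
```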

--------------------------------------------------------------------------------

/learning/ecc/GraphConvModule.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import torch

12 | import torch.nn as nn

13 | from torch.autograd import Variable, Function

14 | from .GraphConvInfo import GraphConvInfo

15 | from . import cuda_kernels

16 | from . import utils

17 |

18 |

19 | class GraphConvFunction(Function):

20 | """Computes operations for each edge and averages the results over respective nodes.

21 | The operation is either matrix-vector multiplication (for 3D weight tensors) or element-wise

22 | vector-vector multiplication (for 2D weight tensors). The evaluation is computed in blocks of

23 | size `edge_mem_limit` to reduce peak memory load. See `GraphConvInfo` for info on `idxn, idxe, degs`.

24 | """

25 | def init(self, in_channels, out_channels, idxn, idxe, degs, degs_gpu, edge_mem_limit=1e20):

26 | self._in_channels = in_channels

27 | self._out_channels = out_channels

28 | self._idxn = idxn

29 | self._idxe = idxe

30 | self._degs = degs

31 | self._degs_gpu = degs_gpu

32 | self._shards = utils.get_edge_shards(degs, edge_mem_limit)

33 |

34 | def _multiply(ctx, a, b, out, f_a=None, f_b=None):

35 | """Performs operation on edge weights and node signal"""

36 | if ctx._full_weight_mat:

37 | # weights are full in_channels x out_channels matrices -> mm

38 | torch.bmm(f_a(a) if f_a else a, f_b(b) if f_b else b, out=out)

39 | else:

40 | # weights represent diagonal matrices -> mul

41 | torch.mul(a, b.expand_as(a), out=out)

42 |

43 | @staticmethod

44 | def forward(ctx, input, weights, in_channels, out_channels, idxn, idxe, degs, degs_gpu, edge_mem_limit=1e20):

45 |

46 | ctx.save_for_backward(input, weights)

47 | ctx._in_channels = in_channels

48 | ctx._out_channels = out_channels

49 | ctx._idxn = idxn

50 | ctx._idxe = idxe

51 | ctx._degs = degs

52 | ctx._degs_gpu = degs_gpu

53 | ctx._shards = utils.get_edge_shards(degs, edge_mem_limit)

54 |

55 | ctx._full_weight_mat = weights.dim() == 3

56 | assert ctx._full_weight_mat or (

57 | in_channels == out_channels and weights.size(1) == in_channels)

58 |

59 | output = input.new(degs.numel(), out_channels)

60 |

61 | # loop over blocks of output nodes

62 | startd, starte = 0, 0

63 | for numd, nume in ctx._shards:

64 |

65 | # select sequence of matching pairs of node and edge weights

66 | sel_input = torch.index_select(input, 0, idxn.narrow(0, starte, nume))

67 |

68 | if ctx._idxe is not None:

69 | sel_weights = torch.index_select(weights, 0, idxe.narrow(0, starte, nume))

70 | else:

71 | sel_weights = weights.narrow(0, starte, nume)

72 |

73 | # compute matrix-vector products

74 | products = input.new()

75 | GraphConvFunction._multiply(ctx, sel_input, sel_weights, products, lambda a: a.unsqueeze(1))

76 |

77 | # average over nodes

78 | if ctx._idxn.is_cuda:

79 | cuda_kernels.conv_aggregate_fw(output.narrow(0, startd, numd), products.view(-1, ctx._out_channels),

80 | ctx._degs_gpu.narrow(0, startd, numd))

81 | else:

82 | k = 0

83 | for i in range(startd, startd + numd):

84 | if ctx._degs[i] > 0:

85 | torch.mean(products.narrow(0, k, ctx._degs[i]), 0, out=output[i])

86 | else:

87 | output[i].fill_(0)

88 | k = k + ctx._degs[i]

89 |

90 | startd += numd

91 | starte += nume

92 | del sel_input, sel_weights, products

93 |

94 | return output

95 |

96 | @staticmethod

97 | def backward(ctx, grad_output):

98 | input, weights = ctx.saved_tensors

99 |

100 | grad_input = input.new(input.size()).fill_(0)

101 | grad_weights = weights.new(weights.size())

102 | if ctx._idxe is not None: grad_weights.fill_(0)

103 |

104 | # loop over blocks of output nodes

105 | startd, starte = 0, 0

106 | for numd, nume in ctx._shards:

107 |

108 | grad_products, tmp = input.new(nume, ctx._out_channels), input.new()

109 |

110 | if ctx._idxn.is_cuda:

111 | cuda_kernels.conv_aggregate_bw(grad_products, grad_output.narrow(0, startd, numd),

112 | ctx._degs_gpu.narrow(0, startd, numd))

113 | else:

114 | k = 0

115 | for i in range(startd, startd + numd):

116 | if ctx._degs[i] > 0:

117 | torch.div(grad_output[i], ctx._degs[i], out=grad_products[k])

118 | if ctx._degs[i] > 1:

119 | grad_products.narrow(0, k + 1, ctx._degs[i] - 1).copy_(

120 | grad_products[k].expand(ctx._degs[i] - 1, 1, ctx._out_channels).squeeze(1))

121 | k = k + ctx._degs[i]

122 |

123 | # grad wrt weights

124 | sel_input = torch.index_select(input, 0, ctx._idxn.narrow(0, starte, nume))

125 |

126 | if ctx._idxe is not None:

127 | GraphConvFunction._multiply(ctx, sel_input, grad_products, tmp,

128 | lambda a: a.unsqueeze(1).transpose_(2, 1),

129 | lambda b: b.unsqueeze(1))

130 | grad_weights.index_add_(0, ctx._idxe.narrow(0, starte, nume), tmp)

131 | else:

132 | GraphConvFunction._multiply(ctx, sel_input, grad_products, grad_weights.narrow(0, starte, nume),

133 | lambda a: a.unsqueeze(1).transpose_(2, 1), lambda b: b.unsqueeze(1))

134 |

135 | # grad wrt input

136 | if ctx._idxe is not None:

137 | torch.index_select(weights, 0, ctx._idxe.narrow(0, starte, nume), out=tmp)

138 | GraphConvFunction._multiply(ctx, grad_products, tmp, sel_input, lambda a: a.unsqueeze(1),

139 | lambda b: b.transpose_(2, 1))

140 | del tmp

141 | else:

142 | GraphConvFunction._multiply(ctx, grad_products, weights.narrow(0, starte, nume), sel_input,

143 | lambda a: a.unsqueeze(1),

144 | lambda b: b.transpose_(2, 1))

145 |

146 | grad_input.index_add_(0, ctx._idxn.narrow(0, starte, nume), sel_input)

147 |

148 | startd += numd

149 | starte += nume

150 | del grad_products, sel_input

151 |

152 | return grad_input, grad_weights, None, None, None, None, None, None, None

153 |

154 |

155 |

156 | class GraphConvModule(nn.Module):

157 | """ Computes graph convolution using filter weights obtained from a filter generating network (`filter_net`).

158 | The input should be a 2D tensor of size (# nodes, `in_channels`). Multiple graphs can be concatenated in the same tensor (minibatch).

159 |

160 | Parameters:

161 | in_channels: number of input channels

162 | out_channels: number of output channels

163 | filter_net: filter-generating network transforming a 2D tensor (# edges, # edge features) to (# edges, in_channels*out_channels) or (# edges, in_channels)

164 | gc_info: GraphConvInfo object containing graph(s) structure information; can also be set with the `set_info()` method.

165 | edge_mem_limit: block size (number of evaluated edges in parallel) for convolution evaluation, a low value reduces peak memory.

166 | """

167 |

168 | def __init__(self, in_channels, out_channels, filter_net, gc_info=None, edge_mem_limit=1e20):

169 | super(GraphConvModule, self).__init__()

170 |

171 | self._in_channels = in_channels

172 | self._out_channels = out_channels

173 | self._fnet = filter_net

174 | self._edge_mem_limit = edge_mem_limit

175 |

176 | self.set_info(gc_info)

177 |

178 | def set_info(self, gc_info):

179 | self._gci = gc_info

180 |

181 | def forward(self, input):

182 | # get graph structure information tensors

183 | idxn, idxe, degs, degs_gpu, edgefeats = self._gci.get_buffers()

184 | edgefeats = Variable(edgefeats, requires_grad=False)

185 |

186 | # evaluate and reshape filter weights

187 | weights = self._fnet(edgefeats)

188 | assert input.dim()==2 and weights.dim()==2 and (weights.size(1) == self._in_channels*self._out_channels or

189 | (self._in_channels == self._out_channels and weights.size(1) == self._in_channels))

190 | if weights.size(1) == self._in_channels*self._out_channels:

191 | weights = weights.view(-1, self._in_channels, self._out_channels)

192 |

193 | return GraphConvFunction.apply(input, weights, self._in_channels, self._out_channels, idxn, idxe, degs, degs_gpu, self._edge_mem_limit)

194 |

195 |

196 |

197 |

198 |

199 |

200 | class GraphConvModulePureAutograd(nn.Module):

201 | """

202 | Autograd-only equivalent of `GraphConvModule` + `GraphConvFunction`. Unfortunately, autograd needs to store intermediate products, which makes the module work only for very small graphs. The module is kept for didactic purposes only.

203 | """

204 |

205 | def __init__(self, in_channels, out_channels, filter_net, gc_info=None):

206 | super(GraphConvModulePureAutograd, self).__init__()

207 |

208 | self._in_channels = in_channels

209 | self._out_channels = out_channels

210 | self._fnet = filter_net

211 |

212 | self.set_info(gc_info)

213 |

214 | def set_info(self, gc_info):

215 | self._gci = gc_info

216 |

217 | def forward(self, input):

218 | # get graph structure information tensors

219 | idxn, idxe, degs, _, edgefeats = self._gci.get_buffers() # get_buffers() also returns degs_gpu, unused here

220 | idxn = Variable(idxn, requires_grad=False)

221 | edgefeats = Variable(edgefeats, requires_grad=False)

222 |

223 | # evaluate and reshape filter weights

224 | weights = self._fnet(edgefeats)

225 | assert input.dim()==2 and weights.dim()==2 and weights.size(1) == self._in_channels*self._out_channels

226 | weights = weights.view(-1, self._in_channels, self._out_channels)

227 |

228 | # select sequence of matching pairs of node and edge weights

229 | if idxe is not None:

230 | idxe = Variable(idxe, requires_grad=False)

231 | weights = torch.index_select(weights, 0, idxe)

232 |

233 | sel_input = torch.index_select(input, 0, idxn)

234 |

235 | # compute matrix-vector products

236 | products = torch.bmm(sel_input.view(-1,1,self._in_channels), weights)

237 |

238 | output = Variable(input.data.new(len(degs), self._out_channels))

239 |

240 | # average over nodes

241 | k = 0

242 | for i in range(len(degs)):

243 | if degs[i]>0:

244 | output.index_copy_(0, Variable(torch.Tensor([i]).type_as(idxn.data)), torch.mean(products.narrow(0,k,degs[i]), 0).view(1,-1))

245 | else:

246 | output.index_fill_(0, Variable(torch.Tensor([i]).type_as(idxn.data)), 0)

247 | k = k + degs[i]

248 |

249 | return output

250 |

251 |
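To make the arithmetic of `GraphConvFunction.forward` concrete, here is a dense NumPy reference under simplifying assumptions. It has no sharding by `edge_mem_limit` and no CUDA path, and it takes plain arrays instead of `GraphConvInfo` buffers. The function name `ecc_conv_reference` is hypothetical.

Each edge multiplies its source node's feature row by its own weight, which is either a full `in_channels x out_channels` matrix or a diagonal stored as a vector. Every output node then averages the products of its incoming edges. Nodes with zero in-degree are set to zero, as in the CPU branch above.

```python
import numpy as np


def ecc_conv_reference(x, weights, idxn, degs, idxe=None):
    """Hypothetical dense NumPy reference of the forward pass of GraphConvFunction.

    x:       (num_input_nodes, C_in) node features
    weights: (num_weights, C_in, C_out) full matrices, or (num_weights, C) diagonal weights
    idxn:    (E,) source node of each edge, edges stored contiguously per output node
    degs:    (num_output_nodes,) number of incoming edges of each output node
    idxe:    optional (E,) index into `weights` (edge compaction); None = one weight per edge
    """
    x, weights = np.asarray(x, dtype=float), np.asarray(weights, dtype=float)
    idxn, degs = np.asarray(idxn), np.asarray(degs)
    sel_weights = weights if idxe is None else weights[np.asarray(idxe)]
    sel_input = x[idxn]  # one input feature row per edge
    if sel_weights.ndim == 3:
        # full weight matrix per edge: row vector times matrix
        products = np.einsum("ec,eco->eo", sel_input, sel_weights)
    else:
        # diagonal weights: element-wise product (requires C_in == C_out)
        products = sel_input * sel_weights
    # output node that each edge belongs to (edges are grouped by output node)
    target = np.repeat(np.arange(len(degs)), degs)
    output = np.zeros((len(degs), products.shape[1]))
    np.add.at(output, target, products)
    # mean over incoming edges; zero-degree nodes keep a zero row
    output /= np.maximum(degs, 1)[:, None]
    return output


x = [[1.0, 2.0], [3.0, 4.0]]
print(ecc_conv_reference(x, np.ones((3, 2, 1)), [0, 1, 0], [2, 0, 1]).tolist())
# [[5.0], [0.0], [3.0]]
```

Such a reference is mainly useful on tiny inputs, for comparing against the sharded implementation.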

--------------------------------------------------------------------------------

/learning/ecc/GraphPoolInfo.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import torch

12 |

13 |

14 | class GraphPoolInfo(object):

15 | """ Holds information about pooling in a vectorized form useful to `GraphPoolModule`.

16 |

17 | We assume that the node feature tensor (given to `GraphPoolModule` as input) is ordered by igraph vertex id, e.g. the fifth row corresponds to vertex with id=4. Batch processing is realized by concatenating all graphs into a large graph of disconnected components (and all node feature tensors into a large tensor).

18 | """

19 |

20 | def __init__(self, *args, **kwargs):

21 | self._idxn = None #indices into input tensor of pooling (node features)

22 | self._degrees = None #in-degrees of output nodes (slices _idxn)

23 | self._degrees_gpu = None

24 | if len(args)>0 or len(kwargs)>0:

25 | self.set_batch(*args, **kwargs)

26 |

27 | def set_batch(self, poolmaps, graphs_from, graphs_to):

28 | """ Creates a representation of a given batch of graph poolings.

29 |

30 | Parameters:

31 | poolmaps: dict(s) mapping vertex id in coarsened graph to a list of vertex ids in input graph (defines pooling)

32 | graphs_from: input graph(s)

33 | graphs_to: coarsened graph(s)

34 | """

35 |

36 | poolmaps = poolmaps if isinstance(poolmaps,(list,tuple)) else [poolmaps]

37 | graphs_from = graphs_from if isinstance(graphs_from,(list,tuple)) else [graphs_from]

38 | graphs_to = graphs_to if isinstance(graphs_to,(list,tuple)) else [graphs_to]

39 |

40 | idxn = []

41 | degrees = []

42 | p = 0

43 |

44 | for poolmap, G_from, G_to in zip(poolmaps, graphs_from, graphs_to):

45 | for v in range(G_to.vcount()):

46 | nlist = poolmap.get(v, [])

47 | idxn.extend([n+p for n in nlist])

48 | degrees.append(len(nlist))

49 | p += G_from.vcount()

50 |

51 | self._idxn = torch.LongTensor(idxn)

52 | self._degrees = torch.LongTensor(degrees)

53 | self._degrees_gpu = None

54 |

55 | def cuda(self):

56 | self._idxn = self._idxn.cuda()

57 | self._degrees_gpu = self._degrees.cuda()

58 |

59 | def get_buffers(self):

60 | """ Provides data to `GraphPoolModule`.

61 | """

62 | return self._idxn, self._degrees, self._degrees_gpu

63 |
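The offset bookkeeping in `GraphPoolInfo.set_batch` can be sketched in plain Python. This sketch makes two assumptions. First, graphs are represented only by their vertex counts, whereas the original calls `vcount()` on igraph objects. Second, `batch_pool_maps` is a hypothetical name. Fine-graph vertex ids are shifted by the number of fine vertices in earlier graphs, and coarse vertices missing from a pooling map get degree 0.

```python
def batch_pool_maps(poolmaps, sizes_from, sizes_to):
    """Hypothetical sketch of GraphPoolInfo.set_batch using plain vertex counts.

    poolmaps:   list of dicts {coarse vertex id: [fine vertex ids]}, one per graph
    sizes_from: number of vertices of each fine (input) graph
    sizes_to:   number of vertices of each coarse (output) graph
    Returns (idxn, degrees) as flat Python lists.
    """
    idxn, degrees = [], []
    offset = 0  # fine vertices already placed in the batch
    for poolmap, n_from, n_to in zip(poolmaps, sizes_from, sizes_to):
        for v in range(n_to):
            members = poolmap.get(v, [])  # coarse vertex without an entry pools nothing
            idxn.extend(n + offset for n in members)
            degrees.append(len(members))
        offset += n_from
    return idxn, degrees


idxn, degrees = batch_pool_maps([{0: [0, 1], 1: [2]}, {0: [1, 0]}], [3, 2], [2, 2])
print(idxn)     # [0, 1, 2, 4, 3]
print(degrees)  # [2, 1, 2, 0]
```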

--------------------------------------------------------------------------------

/learning/ecc/GraphPoolModule.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import torch

12 | import torch.nn as nn

13 | from torch.autograd import Variable, Function

14 | from .GraphPoolInfo import GraphPoolInfo

15 | from . import cuda_kernels

16 | from . import utils

17 |

18 | class GraphPoolFunction(Function):

19 | """ Computes node feature aggregation for each node of the coarsened graph. The evaluation is computed in blocks of size `edge_mem_limit` to reduce peak memory load. See `GraphPoolInfo` for info on `idxn, degs`.

20 | """

21 |

22 | AGGR_MEAN = 0

23 | AGGR_MAX = 1

24 |

25 | def __init__(self, idxn, degs, degs_gpu, aggr, edge_mem_limit=1e20):

26 | super(GraphPoolFunction, self).__init__()

27 | self._idxn = idxn

28 | self._degs = degs

29 | self._degs_gpu = degs_gpu

30 | self._aggr = aggr

31 | self._shards = utils.get_edge_shards(degs, edge_mem_limit)

32 |

33 | def forward(self, input):

34 | output = input.new(self._degs.numel(), input.size(1))

35 | if self._aggr==GraphPoolFunction.AGGR_MAX:

36 | self._max_indices = self._idxn.new(self._degs.numel(), input.size(1)).fill_(-1)

37 |

38 | self._input_size = input.size()

39 |

40 | # loop over blocks of output nodes

41 | startd, starte = 0, 0

42 | for numd, nume in self._shards:

43 |

44 | sel_input = torch.index_select(input, 0, self._idxn.narrow(0,starte,nume))

45 |

46 | # aggregate over nodes

47 | if self._idxn.is_cuda:

48 | if self._aggr==GraphPoolFunction.AGGR_MEAN:

49 | cuda_kernels.avgpool_fw(output.narrow(0,startd,numd), sel_input, self._degs_gpu.narrow(0,startd,numd))

50 | elif self._aggr==GraphPoolFunction.AGGR_MAX:

51 | cuda_kernels.maxpool_fw(output.narrow(0,startd,numd), self._max_indices.narrow(0,startd,numd), sel_input, self._degs_gpu.narrow(0,startd,numd))

52 | else:

53 | k = 0

54 | for i in range(startd, startd+numd):

55 | if self._degs[i]>0:

56 | if self._aggr==GraphPoolFunction.AGGR_MEAN:

57 | torch.mean(sel_input.narrow(0,k,self._degs[i]), 0, out=output[i])

58 | elif self._aggr==GraphPoolFunction.AGGR_MAX:

59 | torch.max(sel_input.narrow(0,k,self._degs[i]), 0, out=(output[i], self._max_indices[i]))

60 | else:

61 | output[i].fill_(0)

62 | k = k + self._degs[i]

63 |

64 | startd += numd

65 | starte += nume

66 | del sel_input

67 |

68 | return output

69 |

70 |

71 | def backward(self, grad_output):

72 | grad_input = grad_output.new(self._input_size).fill_(0)

73 |

74 | # loop over blocks of output nodes

75 | startd, starte = 0, 0

76 | for numd, nume in self._shards:

77 |

78 | grad_sel_input = grad_output.new(nume, grad_output.size(1))

79 |

80 | # grad wrt input

81 | if self._idxn.is_cuda:

82 | if self._aggr==GraphPoolFunction.AGGR_MEAN:

83 | cuda_kernels.avgpool_bw(grad_input, self._idxn.narrow(0,starte,nume), grad_output.narrow(0,startd,numd), self._degs_gpu.narrow(0,startd,numd))

84 | elif self._aggr==GraphPoolFunction.AGGR_MAX:

85 | cuda_kernels.maxpool_bw(grad_input, self._idxn.narrow(0,starte,nume), self._max_indices.narrow(0,startd,numd), grad_output.narrow(0,startd,numd), self._degs_gpu.narrow(0,startd,numd))

86 | else:

87 | k = 0

88 | for i in range(startd, startd+numd):

89 | if self._degs[i]>0:

90 | if self._aggr==GraphPoolFunction.AGGR_MEAN:

91 | torch.div(grad_output[i], self._degs[i], out=grad_sel_input[k])

92 | if self._degs[i]>1:

93 | grad_sel_input.narrow(0, k+1, self._degs[i]-1).copy_( grad_sel_input[k].expand(self._degs[i]-1,1,grad_output.size(1)) )

94 | elif self._aggr==GraphPoolFunction.AGGR_MAX:

95 | grad_sel_input.narrow(0, k, self._degs[i]).fill_(0).scatter_(0, self._max_indices[i].view(1,-1), grad_output[i].view(1,-1))

96 | k = k + self._degs[i]

97 |

98 | grad_input.index_add_(0, self._idxn.narrow(0,starte,nume), grad_sel_input)

99 |

100 | startd += numd

101 | starte += nume

102 | del grad_sel_input

103 |

104 | return grad_input

105 |

106 |

107 |

108 | class GraphPoolModule(nn.Module):

109 | """ Performs graph pooling.

110 | The input should be a 2D tensor of size (# nodes, `in_channels`). Multiple graphs can be concatenated in the same tensor (minibatch).

111 |

112 | Parameters:

113 | aggr: aggregation type (GraphPoolFunction.AGGR_MEAN, GraphPoolFunction.AGGR_MAX)

114 | gp_info: GraphPoolInfo object containing node mapping information; can also be set with the `set_info()` method.

115 | edge_mem_limit: block size (number of evaluated edges in parallel), a low value reduces peak memory.

116 | """

117 |

118 | def __init__(self, aggr, gp_info=None, edge_mem_limit=1e20):

119 | super(GraphPoolModule, self).__init__()

120 |

121 | self._aggr = aggr

122 | self._edge_mem_limit = edge_mem_limit

123 | self.set_info(gp_info)

124 |

125 | def set_info(self, gp_info):

126 | self._gpi = gp_info

127 |

128 | def forward(self, input):

129 | idxn, degs, degs_gpu = self._gpi.get_buffers()

130 | return GraphPoolFunction(idxn, degs, degs_gpu, self._aggr, self._edge_mem_limit)(input)

131 |

132 |

133 | class GraphAvgPoolModule(GraphPoolModule):

134 | def __init__(self, gp_info=None, edge_mem_limit=1e20):

135 | super(GraphAvgPoolModule, self).__init__(GraphPoolFunction.AGGR_MEAN, gp_info, edge_mem_limit)

136 |

137 | class GraphMaxPoolModule(GraphPoolModule):

138 | def __init__(self, gp_info=None, edge_mem_limit=1e20):

139 | super(GraphMaxPoolModule, self).__init__(GraphPoolFunction.AGGR_MAX, gp_info, edge_mem_limit)
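A NumPy reference for the CPU branch of `GraphPoolFunction.forward` may help when reading the CUDA kernels. It is a sketch that assumes plain arrays for `idxn` and `degs` and ignores sharding. The name `graph_pool_reference` is hypothetical.

For max pooling, the stored indices are positions inside each output node's slice of `idxn`, not global row ids. This is why the backward pass looks them up through `idxn` again.

```python
import numpy as np


def graph_pool_reference(x, idxn, degs, aggr="mean"):
    """Hypothetical NumPy reference of GraphPoolFunction.forward (CPU path, no sharding).

    Returns (output, max_indices). For max pooling, max_indices[i, c] is the position,
    within node i's slice of idxn, of the input row that won channel c; it stays -1
    when node i has no members (its output row then stays zero).
    """
    if aggr not in ("mean", "max"):
        raise ValueError("aggr must be 'mean' or 'max'")
    x = np.asarray(x, dtype=float)
    idxn, degs = np.asarray(idxn), np.asarray(degs)
    sel_input = x[idxn]  # member rows, grouped by output node
    output = np.zeros((len(degs), x.shape[1]))
    max_indices = np.full((len(degs), x.shape[1]), -1, dtype=np.int64)
    start = 0
    for i, d in enumerate(degs):
        if d > 0:
            block = sel_input[start:start + d]
            if aggr == "mean":
                output[i] = block.mean(axis=0)
            else:
                max_indices[i] = block.argmax(axis=0)  # first maximum wins on ties
                output[i] = block.max(axis=0)
        start += d
    return output, max_indices


out, idx = graph_pool_reference([[1, 5], [4, 2], [3, 3]], [0, 1, 2], [2, 0, 1], aggr="max")
print(out.tolist())  # [[4.0, 5.0], [0.0, 0.0], [3.0, 3.0]]
print(idx.tolist())  # [[1, 0], [-1, -1], [0, 0]]
```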

--------------------------------------------------------------------------------

/learning/ecc/__init__.py:

--------------------------------------------------------------------------------

1 | from .GraphConvInfo import GraphConvInfo

2 | from .GraphConvModule import GraphConvModule, GraphConvFunction

3 |

4 | from .GraphPoolInfo import GraphPoolInfo

5 | from .GraphPoolModule import GraphAvgPoolModule, GraphMaxPoolModule

6 |

7 | from .utils import *

8 |

--------------------------------------------------------------------------------

/learning/ecc/cuda_kernels.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import torch

12 | try:

13 | import cupy.cuda

14 | from pynvrtc.compiler import Program

15 | except:

16 | pass

17 | from collections import namedtuple

18 | import numpy as np

19 |

20 | CUDA_NUM_THREADS = 1024

21 |

22 | def GET_BLOCKS(N):

23 | return (N + CUDA_NUM_THREADS - 1) // CUDA_NUM_THREADS

24 |

25 | modules = {}

26 |

27 | def get_dtype(t):

28 | if isinstance(t, torch.cuda.FloatTensor):

29 | return 'float'

30 | elif isinstance(t, torch.cuda.DoubleTensor):

31 | return 'double'

32 |

33 | def get_kernel_func(kname, ksrc, dtype):

34 | if kname+dtype not in modules:

35 | ksrc = ksrc.replace('DTYPE', dtype)

36 | #prog = Program(ksrc.encode('utf-8'), (kname+dtype+'.cu').encode('utf-8'))

37 | #uncomment the line above and comment the line below if it causes the following error: AttributeError: 'Program' object has no attribute '_program'

38 | prog = Program(ksrc, kname+dtype+'.cu')

39 | ptx = prog.compile()

40 | log = prog._interface.nvrtcGetProgramLog(prog._program)

41 | if len(log.strip()) > 0: print(log)

42 | module = cupy.cuda.function.Module()

43 | module.load(bytes(ptx.encode()))

44 | modules[kname+dtype] = module

45 | else:

46 | module = modules[kname+dtype]

47 |

48 | Stream = namedtuple('Stream', ['ptr'])

49 | s = Stream(ptr=torch.cuda.current_stream().cuda_stream)

50 |

51 | return module.get_function(kname), s

52 |

53 | ####

54 |

55 | def conv_aggregate_fw_kernel_v2(**kwargs):

56 | kernel = r'''

57 | extern "C"

58 | __global__ void conv_aggregate_fw_kernel_v2(DTYPE* dest, const DTYPE* src, const long long* lengths, const long long* cslengths, int width, int N, int dest_stridex, int src_stridex, int blockDimy) {

59 |

60 | int x = blockIdx.x * blockDim.x + threadIdx.x; //one thread per feature channel, runs over all nodes

61 | if (x >= width) return;

62 |

63 | int i = blockIdx.y * blockDimy;

64 | int imax = min(N, i + blockDimy);

65 | dest += dest_stridex * i + x;

66 | src += src_stridex * (cslengths[i] - lengths[i]) + x;

67 |

68 | for (; i 0) {

71 | DTYPE sum = 0;

72 | for (int j=0; j= width) return;

95 |

96 | int i = blockIdx.y * blockDimy;

97 | int imax = min(N, i + blockDimy);

98 | dest += dest_stridex * (cslengths[i] - lengths[i]) + x;

99 | src += src_stridex * i + x;

100 |

101 | for (; i 0) {

104 | DTYPE val = *src / len;

105 | for (int j=0; j= width) return;

150 |

151 | for (int i=0; i<N; ++i) {

152 | if (lengths[i] > 0) {

153 | long long src_step = lengths[i] * src_stridex;

154 | long long bestjj = -1;

155 | DTYPE best = -1e10;

156 |

157 | for (long long j = x, jj=0; j < src_step; j += src_stridex, ++jj) {

158 | if (src[j] > best) {

159 | best = src[j];

160 | bestjj = jj;

161 | }

162 | }

163 |

164 | dest[x] = best;

165 | indices[x] = bestjj;

166 |

167 | src += src_step;

168 | }

169 | else {

170 | dest[x] = 0;

171 | indices[x] = -1;

172 | }

173 |

174 | dest += dest_stridex;

175 | indices += dest_stridex;

176 | }

177 | }

178 | '''

179 | return kernel

180 |

181 | def maxpool_bw_kernel(**kwargs):

182 | kernel = r'''

183 | //also directly scatters results by dest_indices (saves one sparse intermediate buffer)

184 | extern "C"

185 | __global__ void maxpool_bw_kernel(DTYPE* dest, const long long* dest_indices, const long long* max_indices, const DTYPE* src, const long long* lengths, int width, int N, int dest_stridex, int src_stridex) {

186 |

187 | int x = blockIdx.x * blockDim.x + threadIdx.x; //one thread per feature channel, runs over all points

188 | if (x >= width) return;

189 |

190 | for (int i=0; i<N; ++i) {

191 | if (lengths[i] > 0) {

192 |

193 | long long destidx = dest_indices[max_indices[x]];

194 | dest[x + destidx * dest_stridex] += src[x]; //no need for atomicAdd: each thread writes only to its own feature channel

195 |

196 | dest_indices += lengths[i];

197 | }

198 |

199 | src += src_stridex;

200 | max_indices += src_stridex;

201 | }

202 | }

203 | '''

204 | return kernel

205 |

206 |

207 | def maxpool_fw(dest, indices, src, degs):

208 | n = degs.numel()

209 | w = src.size(1)

210 | assert n == dest.size(0) and w == dest.size(1)

211 | assert type(src)==type(dest) and isinstance(degs, torch.cuda.LongTensor) and isinstance(indices, torch.cuda.LongTensor)

212 |

213 | function, stream = get_kernel_func('maxpool_fw_kernel', maxpool_fw_kernel(), get_dtype(src))

214 | function(args=[dest.data_ptr(), indices.data_ptr(), src.data_ptr(), degs.data_ptr(), np.int32(w), np.int32(n), np.int32(dest.stride(0)), np.int32(src.stride(0))],

215 | block=(CUDA_NUM_THREADS,1,1), grid=(GET_BLOCKS(w),1,1), stream=stream)

216 |

217 | def maxpool_bw(dest, idxn, indices, src, degs):

218 | n = degs.numel()

219 | w = src.size(1)

220 | assert n == src.size(0) and w == dest.size(1)

221 | assert type(src)==type(dest) and isinstance(degs, torch.cuda.LongTensor) and isinstance(indices, torch.cuda.LongTensor) and isinstance(idxn, torch.cuda.LongTensor)

222 |

223 | function, stream = get_kernel_func('maxpool_bw_kernel', maxpool_bw_kernel(), get_dtype(src))

224 | function(args=[dest.data_ptr(), idxn.data_ptr(), indices.data_ptr(), src.data_ptr(), degs.data_ptr(), np.int32(w), np.int32(n), np.int32(dest.stride(0)), np.int32(src.stride(0))],

225 | block=(CUDA_NUM_THREADS,1,1), grid=(GET_BLOCKS(w),1,1), stream=stream)

226 |

227 |

228 |

229 | def avgpool_bw_kernel(**kwargs):

230 | kernel = r'''

231 | //also directly scatters results by dest_indices (saves one intermediate buffer)

232 | extern "C"

233 | __global__ void avgpool_bw_kernel(DTYPE* dest, const long long* dest_indices, const DTYPE* src, const long long* lengths, int width, int N, int dest_stridex, int src_stridex) {

234 |

235 | int x = blockIdx.x * blockDim.x + threadIdx.x; //one thread per feature channel, runs over all points

236 | if (x >= width) return;

237 |

238 | for (int i=0; i<N; ++i) {

239 | if (lengths[i] > 0) {

240 |

241 | DTYPE val = src[x] / lengths[i];

242 |

243 | for (int j = 0; j < lengths[i]; ++j) {

244 | long long destidx = dest_indices[j];

245 | dest[x + destidx * dest_stridex] += val; //no need for atomicAdd: each thread writes only to its own feature channel

246 | }

247 |

248 | dest_indices += lengths[i];

249 | }

250 |

251 | src += src_stridex;

252 | }

253 | }

254 | '''

255 | return kernel

256 |

257 |

258 | def avgpool_fw(dest, src, degs):

259 | conv_aggregate_fw(dest, src, degs)

260 |

261 | def avgpool_bw(dest, idxn, src, degs):

262 | n = degs.numel()

263 | w = src.size(1)

264 | assert n == src.size(0) and w == dest.size(1)

265 | assert type(src)==type(dest) and isinstance(degs, torch.cuda.LongTensor) and isinstance(idxn, torch.cuda.LongTensor)

266 |

267 | function, stream = get_kernel_func('avgpool_bw_kernel', avgpool_bw_kernel(), get_dtype(src))

268 | function(args=[dest.data_ptr(), idxn.data_ptr(), src.data_ptr(), degs.data_ptr(), np.int32(w), np.int32(n), np.int32(dest.stride(0)), np.int32(src.stride(0))],

269 | block=(CUDA_NUM_THREADS,1,1), grid=(GET_BLOCKS(w),1,1), stream=stream)

270 |

--------------------------------------------------------------------------------

/learning/ecc/test_GraphConvModule.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import unittest

12 | import numpy as np

13 | import torch

14 | import torch.nn as nn

15 | from torch.autograd import Variable, gradcheck

16 |

17 | from .GraphConvModule import *

18 | from .GraphConvInfo import GraphConvInfo

19 |

20 |

21 | class TestGraphConvModule(unittest.TestCase):

22 |

23 | def test_gradcheck(self):

24 |

25 | torch.set_default_tensor_type('torch.DoubleTensor') #necessary for proper numerical gradient

26 |

27 | for cuda in range(0, 2 if torch.cuda.is_available() else 1):

28 | # without idxe

29 | n,e,in_channels, out_channels = 20,50,10, 15

30 | input = torch.randn(n,in_channels)

31 | weights = torch.randn(e,in_channels,out_channels)

32 | idxn = torch.from_numpy(np.random.randint(n,size=e))

33 | idxe = None

34 | degs = torch.LongTensor([5, 0, 15, 20, 10]) #strided conv

35 | degs_gpu = degs

36 | edge_mem_limit = 30 # some nodes will be combined, some not

37 | if cuda:

38 | input = input.cuda(); weights = weights.cuda(); idxn = idxn.cuda(); degs_gpu = degs_gpu.cuda()

39 |

40 | func = lambda inp, w: GraphConvFunction.apply(inp, w, in_channels, out_channels, idxn, idxe, degs, degs_gpu, edge_mem_limit)

41 | data = (Variable(input, requires_grad=True), Variable(weights, requires_grad=True))

42 |

43 | ok = gradcheck(func, data)

44 | self.assertTrue(ok)

45 |

46 | # with idxe

47 | weights = torch.randn(30,in_channels,out_channels)

48 | idxe = torch.from_numpy(np.random.randint(30,size=e))

49 | if cuda:

50 | weights = weights.cuda(); idxe = idxe.cuda()

51 |

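52 | func = lambda inp, w: GraphConvFunction.apply(inp, w, in_channels, out_channels, idxn, idxe, degs, degs_gpu, edge_mem_limit)

53 | data = (Variable(input, requires_grad=True), Variable(weights, requires_grad=True)) # rebuild with the compacted weights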

54 | ok = gradcheck(func, data)

55 | self.assertTrue(ok)

56 |

57 | torch.set_default_tensor_type('torch.FloatTensor')

58 |

59 | def test_batch_splitting(self):

60 |

61 | n,e,in_channels, out_channels = 20,50,10, 15

62 | input = torch.randn(n,in_channels)

63 | weights = torch.randn(e,in_channels,out_channels)

64 | idxn = torch.from_numpy(np.random.randint(n,size=e))

65 | idxe = None

66 | degs = torch.LongTensor([5, 0, 15, 20, 10]) #strided conv

67 |

68 | func = lambda inp, w: GraphConvFunction.apply(inp, w, in_channels, out_channels, idxn, idxe, degs, degs, 1e10)

69 | data = (Variable(input, requires_grad=True), Variable(weights, requires_grad=True))

70 | output1 = func(*data)

71 |

72 | func = lambda inp, w: GraphConvFunction.apply(inp, w, in_channels, out_channels, idxn, idxe, degs, degs, 1)

73 | output2 = func(*data)

74 |

75 | self.assertLess((output1-output2).norm().item(), 1e-6)

76 |

77 |

78 |

79 | if __name__ == '__main__':

80 | unittest.main()
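`torch.autograd.gradcheck` compares the analytic gradient of a function with a finite-difference estimate. The same idea can be sketched in NumPy for the mean-aggregated edge-conditioned convolution. The sketch assumes one full weight matrix per edge and no edge compaction, and all function names below are hypothetical.

The analytic part follows the steps of `GraphConvFunction.backward`:

- each edge receives its output node's gradient divided by that node's degree;
- the weight gradient is an outer product with the source features;
- the input gradient is accumulated over edges by source node.

```python
import numpy as np


def ecc_forward(x, w, idxn, degs):
    """Mean over incoming edges of x[source] @ W_edge (one full weight matrix per edge)."""
    target = np.repeat(np.arange(len(degs)), degs)
    products = np.einsum("ec,eco->eo", x[idxn], w)
    out = np.zeros((len(degs), w.shape[2]))
    np.add.at(out, target, products)
    return out / np.maximum(degs, 1)[:, None]


def ecc_backward(x, w, idxn, degs, grad_out):
    """Analytic gradients of <grad_out, ecc_forward(x, w)> with respect to x and w."""
    target = np.repeat(np.arange(len(degs)), degs)
    grad_products = grad_out[target] / degs[target][:, None]  # mean -> divide by degree
    grad_w = np.einsum("ec,eo->eco", x[idxn], grad_products)  # outer product per edge
    grad_x = np.zeros_like(x)
    np.add.at(grad_x, idxn, np.einsum("eo,eco->ec", grad_products, w))  # W_e @ grad, summed by source
    return grad_x, grad_w


def numeric_grad(f, a, eps=1e-6):
    """Central finite differences of the scalar function f() with respect to array a (in place)."""
    g = np.zeros_like(a)
    for k in np.ndindex(a.shape):
        old = a[k]
        a[k] = old + eps
        plus = f()
        a[k] = old - eps
        minus = f()
        a[k] = old
        g[k] = (plus - minus) / (2 * eps)
    return g


rng = np.random.default_rng(0)
degs = np.array([2, 0, 3])  # node 1 has no incoming edge
idxn = rng.integers(0, 4, size=degs.sum())
x = rng.standard_normal((4, 3))
w = rng.standard_normal((degs.sum(), 3, 2))
grad_out = rng.standard_normal((len(degs), 2))

loss = lambda: float((ecc_forward(x, w, idxn, degs) * grad_out).sum())
gx, gw = ecc_backward(x, w, idxn, degs, grad_out)
print(np.allclose(gx, numeric_grad(loss, x), atol=1e-6))  # True
print(np.allclose(gw, numeric_grad(loss, w), atol=1e-6))  # True
```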

--------------------------------------------------------------------------------

/learning/ecc/test_GraphPoolModule.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import unittest

12 | import numpy as np

13 | import torch

14 | from torch.autograd import Variable, gradcheck

15 |

16 | from .GraphPoolModule import *

17 | from .GraphPoolInfo import GraphPoolInfo

18 |

19 |

20 | class TestGraphPoolModule(unittest.TestCase):

21 |

22 | def test_gradcheck(self):

23 |

24 | torch.set_default_tensor_type('torch.DoubleTensor') #necessary for proper numerical gradient

25 |

26 | for cuda in range(0, 2 if torch.cuda.is_available() else 1):

27 | for aggr in range(0,2):

28 | n,in_channels = 20,10

29 | input = torch.randn(n,in_channels)

30 | idxn = torch.from_numpy(np.random.permutation(n))

31 | degs = torch.LongTensor([2, 0, 3, 10, 5])

32 | degs_gpu = degs

33 | edge_mem_limit = 30 # some nodes will be combined, some not

34 | if cuda:

35 | input = input.cuda(); idxn = idxn.cuda(); degs_gpu = degs_gpu.cuda()

36 |

37 | func = GraphPoolFunction(idxn, degs, degs_gpu, aggr=aggr, edge_mem_limit=edge_mem_limit)

38 | data = (Variable(input, requires_grad=True),)

39 |

40 | ok = gradcheck(func, data)

41 | self.assertTrue(ok)

42 |

43 | torch.set_default_tensor_type('torch.FloatTensor')

44 |

45 | def test_batch_splitting(self):

46 | n,in_channels = 20,10

47 | input = torch.randn(n,in_channels)

48 | idxn = torch.from_numpy(np.random.permutation(n))

49 | degs = torch.LongTensor([2, 0, 3, 10, 5])

50 |

51 | func = GraphPoolFunction(idxn, degs, degs, aggr=GraphPoolFunction.AGGR_MAX, edge_mem_limit=1e10)

52 | data = (Variable(input, requires_grad=True),)

53 | output1 = func(*data)

54 |

55 | func = GraphPoolFunction(idxn, degs, degs, aggr=GraphPoolFunction.AGGR_MAX, edge_mem_limit=1)

56 | output2 = func(*data)

57 |

58 | self.assertLess((output1-output2).norm(), 1e-6)

59 |

60 | if __name__ == '__main__':

61 | unittest.main()
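The gradcheck test above compares analytic and numerical gradients of `GraphPoolFunction`, whose implementation (GraphPoolModule.py) is not part of this excerpt. As a minimal sketch, assuming that output node i reduces (max or mean) the input rows selected by the i-th consecutive block of `degs[i]` entries of `idxn`, and that degree-0 output nodes are zero, the same kind of check can be run on a hypothetical pure-PyTorch reference, `graph_pool_reference`. It is an illustration of the pooling semantics under those assumptions, not the ECC kernel.

```python
import torch
from torch.autograd import gradcheck


def graph_pool_reference(x, idxn, degs, aggr="max"):
    """Hypothetical reference pooling (not the ECC implementation).

    Assumes output node i aggregates x[idxn[k]] for k in the i-th consecutive
    block of length degs[i]; output nodes with degree 0 are left at zero.
    """
    gathered = x.index_select(0, idxn)
    outputs = []
    start = 0
    for d in degs.tolist():
        if d == 0:
            outputs.append(x.new_zeros(x.size(1)))
        else:
            block = gathered[start:start + d]
            outputs.append(block.max(dim=0)[0] if aggr == "max" else block.mean(dim=0))
        start += d
    return torch.stack(outputs)


# Double precision keeps finite-difference gradients accurate enough for gradcheck.
n, in_channels = 20, 10
x = torch.randn(n, in_channels, dtype=torch.float64, requires_grad=True)
idxn = torch.randperm(n)
degs = torch.tensor([2, 0, 3, 10, 5])  # sums to n, as in the test above
for aggr in ("max", "mean"):
    assert gradcheck(lambda inp: graph_pool_reference(inp, idxn, degs, aggr), (x,))
```

The float64 requirement mirrors the test's own comment that the double tensor type is "necessary for proper numerical gradient": in float32, finite-difference errors can exceed gradcheck's tolerances.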

--------------------------------------------------------------------------------

/learning/ecc/utils.py:

--------------------------------------------------------------------------------

1 | """

2 | Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

3 | https://github.com/mys007/ecc

4 | https://arxiv.org/abs/1704.02901

5 | 2017 Martin Simonovsky

6 | """

7 | from __future__ import division

8 | from __future__ import print_function

9 | from builtins import range

10 |

11 | import random

12 | import numpy as np

13 | import torch

14 |

15 | import ecc

16 |

17 | def graph_info_collate_classification(batch, edge_func):

18 | """ Collates a list of dataset samples into a single batch. We assume that all samples have the same number of resolutions.

19 |

20 | Each sample is a tuple of the following elements:

21 | features: 2D Tensor of node features

22 | classes: LongTensor of class ids

23 | graphs: list of graphs, each for one resolution

24 | pooldata: list of triplets, each for one resolution: (pooling map, finer graph, coarser graph)

25 | """

26 | features, classes, graphs, pooldata = list(zip(*batch))

27 | graphs_by_layer = list(zip(*graphs))

28 | pooldata_by_layer = list(zip(*pooldata))

29 |

30 | features = torch.cat([torch.from_numpy(f) for f in features])

31 | if features.dim()==1: features = features.view(-1,1)

32 |

33 | classes = torch.LongTensor(classes)

34 |

35 | GIs, PIs = [], []

36 | for graphs in graphs_by_layer:

37 | GIs.append( ecc.GraphConvInfo(graphs, edge_func) )

38 | for pooldata in pooldata_by_layer:

39 | PIs.append( ecc.GraphPoolInfo(*zip(*pooldata)) )

40 |

41 | return features, classes, GIs, PIs

42 |

43 |
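`graph_info_collate_classification` relies on two `zip(*...)` transposes: one from per-sample tuples to per-field tuples, and a second from per-sample resolution lists to per-resolution tuples. The toy sketch below, with strings standing in for the (hypothetical) graph and pooling-map objects, shows how the data is regrouped before it reaches `GraphConvInfo` and `GraphPoolInfo`.

```python
import numpy as np

# Two toy samples, each with two resolutions; strings stand in for graph objects.
batch = [
    (np.ones((3, 4)), 0, ["gA0", "gA1"],
     [("mapA0", "fineA0", "coarseA0"), ("mapA1", "fineA1", "coarseA1")]),
    (np.zeros((2, 4)), 1, ["gB0", "gB1"],
     [("mapB0", "fineB0", "coarseB0"), ("mapB1", "fineB1", "coarseB1")]),
]

# First transpose: one tuple per field, each holding that field for every sample.
features, classes, graphs, pooldata = list(zip(*batch))
# Second transpose: one tuple per resolution, each holding that level for every sample.
graphs_by_layer = list(zip(*graphs))
pooldata_by_layer = list(zip(*pooldata))

# Node features of all samples are stacked along the node axis.
stacked = np.concatenate(features)

# Within one resolution, zip(*...) turns per-sample triplets into
# (all pooling maps, all finer graphs, all coarser graphs), which is the
# argument layout the collate function unpacks into GraphPoolInfo.
maps, finer, coarser = zip(*pooldata_by_layer[0])

print(stacked.shape)    # (5, 4)
print(graphs_by_layer)  # [('gA0', 'gB0'), ('gA1', 'gB1')]
```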

44 | def unique_rows(data):

45 | """ Filters unique rows from a 2D np array and also returns inverse indices. Used for edge feature compaction. """

46 | # https://stackoverflow.com/questions/16970982/find-unique-rows-in-numpy-array

47 | uniq, indices = np.unique(data.view(data.dtype.descr * data.shape[1]), return_inverse=True)

48 | return uniq.view(data.dtype).reshape(-1, data.shape[1]), indices

49 |
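`unique_rows` compacts edge features by viewing each row as a single structured element so that `np.unique` can compare whole rows. Since NumPy 1.13, `np.unique` also accepts `axis=0`, which gives the same compaction more directly. The sketch below, with the hypothetical name `unique_rows_axis`, shows that alternative and checks that indexing the unique rows with the inverse indices reconstructs the input.

```python
import numpy as np


def unique_rows_axis(data):
    """Alternative sketch to unique_rows using np.unique(axis=0).

    Returns the unique rows and, for every input row, the index of its unique
    row, so that uniq[inverse] reconstructs data.
    """
    uniq, inverse = np.unique(data, axis=0, return_inverse=True)
    # Flatten defensively: the shape of `inverse` has varied across NumPy versions.
    return uniq, inverse.reshape(-1)


# Four edges but only two distinct feature vectors.
edges = np.array([[1.0, 2.0], [0.5, 3.0], [1.0, 2.0], [0.5, 3.0]])
uniq, inverse = unique_rows_axis(edges)
assert np.array_equal(uniq[inverse], edges)
```

Both versions sort rows lexicographically, so here the compact table holds `[0.5, 3.0]` before `[1.0, 2.0]` and each edge keeps only an index into it.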

50 | def one_hot_discretization(feat, clip_min, clip_max, upweight):

51 | indices = (np.clip(np.round(feat), clip_min, clip_max) - clip_min).astype(int).reshape((-1,)) # shift so that clip_min maps to column 0

52 | onehot = np.zeros((feat.shape[0], clip_max - clip_min + 1))

53 | onehot[np.arange(onehot.shape[0]), indices] = onehot.shape[1] if upweight else 1

54 | return onehot

55 |
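`one_hot_discretization` rounds a scalar feature, clips it to `[clip_min, clip_max]` and encodes it over `clip_max - clip_min + 1` bins. A self-contained sketch follows; it assumes `feat` holds one value per row and that column j stands for the integer `clip_min + j`, so the clipped values are shifted by `clip_min` before they are used as column indices (without the shift, a negative `clip_min` would index columns from the end).

```python
import numpy as np


def one_hot_discretization(feat, clip_min, clip_max, upweight):
    """Rounds, clips to [clip_min, clip_max] and one-hot encodes one value per row.

    Column j corresponds to the integer clip_min + j. With upweight=True the
    active entry is set to the number of bins instead of 1.
    """
    values = np.clip(np.round(feat), clip_min, clip_max).astype(int).reshape((-1,))
    indices = values - clip_min  # shift so that clip_min maps to column 0
    onehot = np.zeros((feat.shape[0], clip_max - clip_min + 1))
    onehot[np.arange(onehot.shape[0]), indices] = onehot.shape[1] if upweight else 1
    return onehot


# -3.2 clips to -2 (column 0), -0.4 rounds to 0 (column 2),
# 1.6 rounds to 2 and 7.0 clips to 2 (both column 4).
feat = np.array([[-3.2], [-0.4], [1.6], [7.0]])
encoded = one_hot_discretization(feat, -2, 2, upweight=False)
print(encoded.argmax(axis=1))  # [0 2 4 4]
```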

56 | def get_edge_shards(degs, edge_mem_limit):

57 | """ Splits iteration over nodes into shards, approximately limited by `edge_mem_limit` edges per shard.

58 | Returns a list of pairs indicating how many output nodes and edges to process in each shard."""

59 | d = degs if isinstance(degs, np.ndarray) else degs.numpy()

60 | cs = np.cumsum(d)

61 | cse = cs // edge_mem_limit

62 | _, cse_i, cse_c = np.unique(cse, return_index=True, return_counts=True)

63 |

64 | shards = []

65 | for b in range(len(cse_i)):

66 | numd = cse_c[b]

67 | nume = (cs[-1] if b==len(cse_i)-1 else cs[cse_i[b+1]-1]) - cs[cse_i[b]] + d[cse_i[b]]

68 | shards.append( (int(numd), int(nume)) )

69 | return shards

70 |
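`get_edge_shards` groups consecutive output nodes by `cumsum(degs) // edge_mem_limit`, so a shard stays near the edge budget, but a single node with many edges still forms a shard on its own even when it exceeds the limit (hence "approximately"). The sketch below restates the function so it runs standalone, prints the shards for the degree vector used in the pooling tests, and then checks, with a hypothetical per-node sum standing in for a graph operation, that processing shard by shard matches processing everything at once, which is the property `test_batch_splitting` exercises.

```python
import numpy as np


def get_edge_shards(degs, edge_mem_limit):
    # Same logic as the listing above, restated so this sketch is self-contained.
    d = np.asarray(degs)
    cs = np.cumsum(d)
    cse = cs // edge_mem_limit
    _, cse_i, cse_c = np.unique(cse, return_index=True, return_counts=True)
    shards = []
    for b in range(len(cse_i)):
        numd = cse_c[b]
        nume = (cs[-1] if b == len(cse_i) - 1 else cs[cse_i[b + 1] - 1]) - cs[cse_i[b]] + d[cse_i[b]]
        shards.append((int(numd), int(nume)))
    return shards


def per_node_sums(vals, degs):
    # Hypothetical stand-in for a graph op: sum the edge values of each node.
    out, start = [], 0
    for d in degs:
        out.append(vals[start:start + d].sum())
        start += d
    return np.array(out)


degs = [2, 0, 3, 10, 5]
for limit in (30, 10, 1):
    print(limit, get_edge_shards(degs, limit))
# 30 [(5, 20)]
# 10 [(3, 5), (1, 10), (1, 5)]
# 1 [(2, 2), (1, 3), (1, 10), (1, 5)]

# Shard-by-shard evaluation must reproduce the unsharded result.
edge_vals = np.arange(sum(degs), dtype=float)
full = per_node_sums(edge_vals, degs)
parts, node_off, edge_off = [], 0, 0
for numd, nume in get_edge_shards(degs, 1):
    parts.append(per_node_sums(edge_vals[edge_off:edge_off + nume], degs[node_off:node_off + numd]))
    node_off += numd
    edge_off += nume
assert np.array_equal(np.concatenate(parts), full)
```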

--------------------------------------------------------------------------------

/learning/evaluate.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 | """

4 | Created on Thu Jul 4 09:22:55 2019

5 |

6 | @author: landrieuloic

7 | """

8 |

9 | """

10 | Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs

11 | http://arxiv.org/abs/1711.09869

12 | 2017 Loic Landrieu, Martin Simonovsky

13 | """

14 | import argparse

15 | import numpy as np

16 | import sys

17 | sys.path.append("./learning")

18 | from metrics import *

19 |

20 | parser = argparse.ArgumentParser(description='Evaluation function for S3DIS and vKITTI')

21 |

22 | parser.add_argument('--odir', default='./results/s3dis/best', help='Directory containing the per-fold results (cv<fold>/pointwise_cm.npy)')

23 | parser.add_argument('--dataset', default='s3dis', help='Dataset name: s3dis or vkitti')

24 | parser.add_argument('--cvfold', default='123456', help='Folds to evaluate, as a string of fold indices (e.g. 123456)')

25 |

26 | args = parser.parse_args()

27 |

28 |

29 |

30 | if args.dataset == 's3dis':

31 | n_labels = 13

32 | inv_class_map = {0:'ceiling', 1:'floor', 2:'wall', 3:'column', 4:'beam', 5:'window', 6:'door', 7:'table', 8:'chair', 9:'bookcase', 10:'sofa', 11:'board', 12:'clutter'}

33 | base_name = args.odir+'/cv'

34 | elif args.dataset == 'vkitti':

35 | n_labels = 13

36 | inv_class_map = {0:'Terrain', 1:'Tree', 2:'Vegetation', 3:'Building', 4:'Road', 5:'GuardRail', 6:'TrafficSign', 7:'TrafficLight', 8:'Pole', 9:'Misc', 10:'Truck', 11:'Car', 12:'Van'}

37 | base_name = args.odir+'/cv'

38 | else: raise ValueError("Unknown dataset '%s': expected 's3dis' or 'vkitti'" % args.dataset)

39 | C = ConfusionMatrix(n_labels)

40 | C.confusion_matrix=np.zeros((n_labels, n_labels))

41 |

42 |

43 | for i_fold in range(len(args.cvfold)):

44 | fold = int(args.cvfold[i_fold])

45 | cm = ConfusionMatrix(n_labels)

46 | cm.confusion_matrix=np.load(base_name+str(fold) +'/pointwise_cm.npy')

47 | print("Fold %d : \t OA = %3.2f \t mA = %3.2f \t mIoU = %3.2f" % (fold, \

48 | 100 * ConfusionMatrix.get_overall_accuracy(cm) \

49 | , 100 * ConfusionMatrix.get_mean_class_accuracy(cm) \

50 | , 100 * ConfusionMatrix.get_average_intersection_union(cm)

51 | ))

52 | C.confusion_matrix += cm.confusion_matrix

53 |

54 | print("\nOverall accuracy : %3.2f %%" % (100 * (ConfusionMatrix.get_overall_accuracy(C))))

55 | print("Mean accuracy : %3.2f %%" % (100 * (ConfusionMatrix.get_mean_class_accuracy(C))))

56 | print("Mean IoU : %3.2f %%\n" % (100 * (ConfusionMatrix.get_average_intersection_union(C))))

57 | print(" Class : IoU")

58 | for c in range(0,n_labels):

59 | print (" %12s : %6.2f %% \t %.1e points" %(inv_class_map[c],100*ConfusionMatrix.get_intersection_union_per_class(C)[c], ConfusionMatrix.count_gt(C,c)))

60 |
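`evaluate.py` pools folds by summing their confusion matrices (`C.confusion_matrix += cm.confusion_matrix`) and scoring the sum once, so each fold counts in proportion to its number of points rather than equally. The sketch below, with a hypothetical `summarize` helper, recomputes the printed metrics under the assumption that `cm[i, j]` counts points of ground-truth class i predicted as class j (metrics.py is not part of this excerpt), and shows on two made-up folds how pooled and fold-averaged overall accuracy differ.

```python
import numpy as np


def summarize(cm):
    """Overall accuracy, mean class accuracy, mean IoU and per-class IoU.

    Assumes rows are ground truth and columns are predictions. Classes whose
    denominator is zero give NaN and are skipped by nanmean (an assumption
    about how absent classes are handled).
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    gt = cm.sum(axis=1)    # points per ground-truth class
    pred = cm.sum(axis=0)  # points per predicted class
    oa = tp.sum() / cm.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        acc = tp / gt
        iou = tp / (gt + pred - tp)
    return oa, np.nanmean(acc), np.nanmean(iou), iou


# Two hypothetical folds of very different sizes (20 and 100 points).
fold_a = np.array([[9, 1], [1, 9]])
fold_b = np.array([[1, 1], [0, 98]])

pooled = summarize(fold_a + fold_b)  # sum first, then score, as evaluate.py does
averaged_oa = np.mean([summarize(fold_a)[0], summarize(fold_b)[0]])
print(pooled[0])     # 0.975
print(averaged_oa)   # about 0.945: here the small fold weighs as much as the large one
```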

--------------------------------------------------------------------------------

/learning/graphnet.py:

--------------------------------------------------------------------------------

1 | """

2 | Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs

3 | http://arxiv.org/abs/1711.09869

4 | 2017 Loic Landrieu, Martin Simonovsky

5 | """

6 | from __future__ import division

7 | from __future__ import print_function

8 | from builtins import range

9 |

10 | import torch

11 | import torch.nn as nn

12 | import torch.nn.init as init

13 | from learning import ecc

14 | from learning.modules import RNNGraphConvModule, ECC_CRFModule, GRUCellEx, LSTMCellEx

15 |

16 |

17 | def create_fnet(widths, orthoinit, llbias, bnidx=-1):

18 | """ Creates the feature-generating network, a multi-layer perceptron.

19 | Parameters: