├── .gitattributes

├── .gitignore

├── .idea

├── vcs.xml

└── workspace.xml

├── README.md

├── demo.py

├── demo_simpler.py

├── experiments

├── 1.mp4

├── cfgs

│ ├── fssd_lite_mobilenetv1_train_coco.yml

│ ├── fssd_lite_mobilenetv1_train_voc.yml

│ ├── fssd_lite_mobilenetv2_train_coco.yml

│ ├── fssd_lite_mobilenetv2_train_voc.yml

│ ├── fssd_resnet50_train_coco.yml

│ ├── fssd_vgg16_train_coco.yml

│ ├── fssd_vgg16_train_voc.yml

│ ├── rfb_lite_mobilenetv1_train_coco.yml

│ ├── rfb_lite_mobilenetv1_train_voc.yml

│ ├── rfb_lite_mobilenetv2_train_coco.yml

│ ├── rfb_lite_mobilenetv2_train_voc.yml

│ ├── rfb_resnet50_train_coco.yml

│ ├── rfb_resnet50_train_voc.yml

│ ├── rfb_vgg16_train_coco.yml

│ ├── rfb_vgg16_train_voc.yml

│ ├── ssd_lite_mobilenetv1_train_coco.yml

│ ├── ssd_lite_mobilenetv1_train_voc.yml

│ ├── ssd_lite_mobilenetv2_train_coco.yml

│ ├── ssd_lite_mobilenetv2_train_voc.yml

│ ├── ssd_resnet50_train_coco.yml

│ ├── ssd_resnet50_train_voc.yml

│ ├── ssd_vgg16_train_coco.yml

│ ├── ssd_vgg16_train_voc.yml

│ ├── tests

│ │ ├── fssd_darknet19_coco.yml

│ │ ├── fssd_darknet53_coco.yml

│ │ ├── fssd_darknet53_voc.yml

│ │ ├── fssd_resnet50_train_voc.yml

│ │ ├── rfb_darknet19_coco.yml

│ │ ├── rfb_darknet53_coco.yml

│ │ ├── rfb_darknet53_voc.yml

│ │ ├── ssd_darknet19_coco.yml

│ │ ├── ssd_darknet53_coco.yml

│ │ ├── ssd_darknet53_voc.yml

│ │ ├── ssd_resnet101_train_coco.yml

│ │ ├── test.yml

│ │ ├── yolo_v2_mobilenetv1_coco.yml

│ │ ├── yolo_v2_mobilenetv1_voc.yml

│ │ ├── yolo_v2_mobilenetv2_coco.yml

│ │ ├── yolo_v2_mobilenetv2_voc.yml

│ │ ├── yolo_v3_darknet53_coco.yml

│ │ ├── yolo_v3_darknet53_voc.yml

│ │ ├── yolo_v3_mobilenetv1_coco.yml

│ │ ├── yolo_v3_mobilenetv1_voc.yml

│ │ ├── yolo_v3_mobilenetv2_coco.yml

│ │ └── yolo_v3_mobilenetv2_voc.yml

│ ├── yolo_v2_darknet19_coco.yml

│ ├── yolo_v2_darknet19_voc.yml

│ ├── yolo_v2_mobilenetv1_coco.yml

│ ├── yolo_v2_mobilenetv1_voc.yml

│ ├── yolo_v2_mobilenetv2_coco.yml

│ ├── yolo_v2_mobilenetv2_voc.yml

│ ├── yolo_v3_darknet53_coco.yml

│ ├── yolo_v3_darknet53_voc.yml

│ ├── yolo_v3_mobilenetv1_coco.yml

│ ├── yolo_v3_mobilenetv1_voc.yml

│ ├── yolo_v3_mobilenetv2_coco.yml

│ └── yolo_v3_mobilenetv2_voc.yml

└── person.jpg

├── lib

├── __init__.py

├── dataset

│ ├── __init__.py

│ ├── coco.py

│ ├── dataset_factory.py

│ ├── voc.py

│ └── voc_eval.py

├── layers

│ ├── __init__.py

│ ├── functions

│ │ ├── __init__.py

│ │ ├── detection.py

│ │ └── prior_box.py

│ └── modules

│ │ ├── __init__.py

│ │ ├── focal_loss.py

│ │ ├── l2norm.py

│ │ └── multibox_loss.py

├── modeling

│ ├── __init__.py

│ ├── model_builder.py

│ ├── nets

│ │ ├── __init__.py

│ │ ├── darknet.py

│ │ ├── mobilenet.py

│ │ ├── resnet.py

│ │ └── vgg.py

│ └── ssds

│ │ ├── __init__.py

│ │ ├── fssd.py

│ │ ├── fssd_lite.py

│ │ ├── retina.py

│ │ ├── rfb.py

│ │ ├── rfb_lite.py

│ │ ├── ssd.py

│ │ ├── ssd_lite.py

│ │ └── yolo.py

├── ssds.py

├── ssds_train.py

└── utils

│ ├── __init__.py

│ ├── box_utils.py

│ ├── build

│ └── temp.linux-x86_64-3.6

│ │ ├── nms

│ │ ├── cpu_nms.o

│ │ ├── gpu_nms.o

│ │ └── nms_kernel.o

│ │ └── pycocotools

│ │ ├── _mask.o

│ │ └── maskApi.o

│ ├── config_parse.py

│ ├── dark2pth.py

│ ├── data_augment.py

│ ├── data_augment_test.py

│ ├── eval_utils.py

│ ├── fp16_utils.py

│ ├── nms

│ ├── .gitignore

│ ├── __init__.py

│ ├── _ext

│ │ ├── __init__.py

│ │ └── nms

│ │ │ └── __init__.py

│ ├── build.py

│ ├── make.sh

│ ├── nms_gpu.py

│ ├── nms_kernel.cu

│ ├── nms_wrapper.py

│ └── src

│ │ ├── nms_cuda.c

│ │ ├── nms_cuda.h

│ │ ├── nms_cuda_kernel.cu

│ │ ├── nms_cuda_kernel.cu.o

│ │ └── nms_cuda_kernel.h

│ ├── pycocotools

│ ├── __init__.py

│ ├── _mask.c

│ ├── _mask.cpython-36m-x86_64-linux-gnu.so

│ ├── _mask.pyx

│ ├── coco.py

│ ├── cocoeval.py

│ └── mask.py

│ ├── timer.py

│ └── visualize_utils.py

├── requirements.txt

├── setup.py

├── test.py

├── time_benchmark.sh

└── train.py

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Auto detect text files and perform LF normalization

2 | * text=auto

3 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .vscode/

2 | weights/

3 | data/

4 | data

5 | experiments/models/

6 | run.sh

7 | __pycache__

8 | *.pyc

9 | log*

10 | .idea/

11 | saved_model/

12 |

13 | vendor/

14 |

--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # SSDs

2 |

3 | This repo collects object detection methods that aim at **single-shot, real-time** inference, so **speed** is the main concern. It currently supports several base networks for object detection, such as MobileNet V2, ResNet, and VGG, along with SSD variants such as FSSD, RFBNet, and Retina, and even YOLO.

4 |

5 | If you have a faster object detection method, you are welcome to discuss merging it into the master branch with me.

6 |

7 |

8 |

9 |

10 | # Note

11 |

12 | Work is still in progress. I will post results and pretrained models after training on some datasets, along with an out-of-the-box inference demo.

13 |

14 | [updates]:

15 |

16 | 2018.11.06: After training `fssd_mobilenetv2`, the inference code returns no detections. I am still debugging where this logic error comes from.

17 |

18 |

19 |

20 | # Train

21 |

22 | All settings for the base network and the SSD variant live in `./experiments/cfgs/*.yml`. Edit one to match your environment and start training.

23 |

24 | ```

25 | python3 train.py --cfg=./experiments/cfgs/rfb_lite_mobilenetv2_train_voc.yml

26 | ```

27 |

28 | You can train on COCO first and then switch to your custom dataset. If your COCO data is in /path/to/coco, link it into `./data/` so that it appears under `./data`. The same applies to VOC data.

29 |

30 |

31 |

32 |

33 |

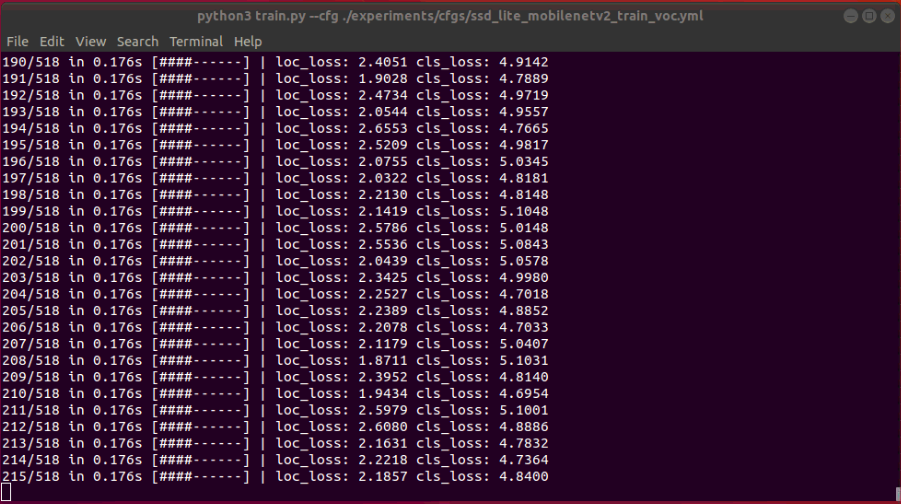

34 | This is what training looks like. I will upload some trained models afterwards.

35 |

36 |

37 |

38 | ## Predict

39 |

40 | To predict on a single image, see `demo_simpler.py`. It is still under testing; I will upload sample images once prediction works.

41 |

42 |

43 |

44 |

45 |

46 | ## Copyright

47 |

48 | This version is maintained by me and ported to the newest PyTorch version, along with some pretrained models and a speed benchmark. If you have questions, or want to discuss *Computer Vision*, you can contact me via **wechat**: `jintianiloveu`.

49 |

50 | Some useful links and related repos:

51 |

52 | 1. https://github.com/ShuangXieIrene/ssds.pytorch

--------------------------------------------------------------------------------
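The README's advice to link an existing dataset into `./data` can be sketched in Python. This is a minimal sketch, not part of the repo: `link_dataset` is a hypothetical helper, and the target names `COCO` and `VOCdevkit` are taken from the `DATASET_DIR` values in the configs under `experiments/cfgs`.

```python
import os


def link_dataset(source_dir, data_root="./data", name="COCO"):
    """Symlink an existing dataset directory into data_root/name.

    Hypothetical helper (not part of this repo). Returns the link path.
    """
    source_dir = os.path.abspath(source_dir)
    if not os.path.isdir(source_dir):
        raise FileNotFoundError(source_dir)
    os.makedirs(data_root, exist_ok=True)
    link_path = os.path.join(data_root, name)
    # Leave an existing link or directory untouched instead of overwriting it.
    if not os.path.lexists(link_path):
        os.symlink(source_dir, link_path, target_is_directory=True)
    return link_path


if __name__ == "__main__":
    # Demonstrate on throwaway directories so no real data is touched.
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "coco_download")
        os.makedirs(src)
        link = link_dataset(src, data_root=os.path.join(tmp, "data"), name="COCO")
        print(link, "->", os.path.realpath(link))
```

For VOC, the same call with `name="VOCdevkit"` would match the `DATASET_DIR: './data/VOCdevkit'` entries in the VOC configs.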

/demo.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | import sys

3 | import os

4 | import argparse

5 | import numpy as np

6 | import cv2

7 |

8 | from lib.ssds import ObjectDetector

9 | from lib.utils.config_parse import cfg_from_file

10 |

11 | VOC_CLASSES = ( 'aeroplane', 'bicycle', 'bird', 'boat',

12 | 'bottle', 'bus', 'car', 'cat', 'chair',

13 | 'cow', 'diningtable', 'dog', 'horse',

14 | 'motorbike', 'person', 'pottedplant',

15 | 'sheep', 'sofa', 'train', 'tvmonitor')

16 |

17 | def parse_args():

18 | """

19 | Parse input arguments

20 | """

21 | parser = argparse.ArgumentParser(description='Demo a ssds.pytorch network')

22 | parser.add_argument('--cfg', dest='confg_file',

23 | help='the address of optional config file', default=None, type=str, required=True)

24 | parser.add_argument('--demo', dest='demo_file',

25 | help='the address of the demo file', default=None, type=str, required=True)

26 | parser.add_argument('-t', '--type', dest='type',

27 | help='the type of the demo file, could be "image", "video", "camera" or "time", default is "image"', default='image', type=str)

28 | parser.add_argument('-d', '--display', dest='display',

29 | help='whether display the detection result, default is True', default=True, type=lambda s: s.lower() in ('1', 'true', 'yes'))

30 | parser.add_argument('-s', '--save', dest='save',

31 | help='whether write the detection result, default is False', default=False, type=lambda s: s.lower() in ('1', 'true', 'yes'))

32 |

33 | if len(sys.argv) == 1:

34 | parser.print_help()

35 | sys.exit(1)

36 |

37 | args = parser.parse_args()

38 | return args

39 |

40 |

41 | COLORS = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]

42 | FONT = cv2.FONT_HERSHEY_SIMPLEX

43 |

44 | def demo(args, image_path):

45 | # 1. load the configure file

46 | cfg_from_file(args.confg_file)

47 |

48 | # 2. load detector based on the configure file

49 | object_detector = ObjectDetector()

50 |

51 | # 3. load image

52 | image = cv2.imread(image_path)

53 |

54 | # 4. detect

55 | _labels, _scores, _coords = object_detector.predict(image)

56 |

57 | # 5. draw bounding box on the image

58 | for labels, scores, coords in zip(_labels, _scores, _coords):

59 | cv2.rectangle(image, (int(coords[0]), int(coords[1])), (int(coords[2]), int(coords[3])), COLORS[labels % 3], 2)

60 | cv2.putText(image, '{label}: {score:.3f}'.format(label=VOC_CLASSES[labels], score=scores), (int(coords[0]), int(coords[1])), FONT, 0.5, COLORS[labels % 3], 2)

61 |

62 | # 6. visualize result

63 | if args.display is True:

64 | cv2.imshow('result', image)

65 | cv2.waitKey(0)

66 |

67 | # 7. write result

68 | if args.save is True:

69 | path, _ = os.path.splitext(image_path)

70 | cv2.imwrite(path + '_result.jpg', image)

71 |

72 |

73 | def demo_live(args, video_path):

74 | # 1. load the configure file

75 | cfg_from_file(args.confg_file)

76 |

77 | # 2. load detector based on the configure file

78 | object_detector = ObjectDetector()

79 |

80 | # 3. load video

81 | video = cv2.VideoCapture(video_path)

82 |

83 | index = -1

84 | while(video.isOpened()):

85 | index = index + 1

86 | sys.stdout.write('Process image: {} \r'.format(index))

87 | sys.stdout.flush()

88 |

89 | # 4. read image

90 | flag, image = video.read()

91 | if not flag:

92 | print("Can not read image in Frame : {}".format(index))

93 | break

94 |

95 | # 5. detect

96 | _labels, _scores, _coords = object_detector.predict(image)

97 |

98 | # 6. draw bounding box on the image

99 | for labels, scores, coords in zip(_labels, _scores, _coords):

100 | cv2.rectangle(image, (int(coords[0]), int(coords[1])), (int(coords[2]), int(coords[3])), COLORS[labels % 3], 2)

101 | cv2.putText(image, '{label}: {score:.3f}'.format(label=VOC_CLASSES[labels], score=scores), (int(coords[0]), int(coords[1])), FONT, 0.5, COLORS[labels % 3], 2)

102 |

103 | # 7. visualize result

104 | if args.display is True:

105 | cv2.imshow('result', image)

106 | cv2.waitKey(33)

107 |

108 | # 8. write result

109 | if args.save is True:

110 | path, _ = os.path.splitext(video_path)

111 | path = path + '_result'

112 | if not os.path.exists(path):

113 | os.mkdir(path)

114 | cv2.imwrite(path + '/{}.jpg'.format(index), image)

115 |

116 |

117 | def time_benchmark(args, image_path):

118 | # 1. load the configure file

119 | cfg_from_file(args.confg_file)

120 |

121 | # 2. load detector based on the configure file

122 | object_detector = ObjectDetector()

123 |

124 | # 3. load image

125 | image = cv2.imread(image_path)

126 |

127 | # 4. time test

128 | warmup = 20

129 | time_iter = 100

130 | print('Warmup the detector...')

131 | _t = list()

132 | for i in range(warmup+time_iter):

133 | _, _, _, (total_time, preprocess_time, net_forward_time, detect_time, output_time) \

134 | = object_detector.predict(image, check_time=True)

135 | if i >= warmup:  # keep exactly time_iter timed samples

136 | _t.append([total_time, preprocess_time, net_forward_time, detect_time, output_time])

137 | if i % 20 == 0:

138 | print('In {}/{}, total time: {} \n preprocess: {} \n net_forward: {} \n detect: {} \n output: {}'.format(

139 | i-warmup, time_iter, total_time, preprocess_time, net_forward_time, detect_time, output_time

140 | ))

141 | total_time, preprocess_time, net_forward_time, detect_time, output_time = np.sum(_t, axis=0)/time_iter

142 | print('In average, total time: {} \n preprocess: {} \n net_forward: {} \n detect: {} \n output: {}'.format(

143 | total_time, preprocess_time, net_forward_time, detect_time, output_time

144 | ))

145 |

146 |

147 | if __name__ == '__main__':

148 | args = parse_args()

149 | if args.type == 'image':

150 | demo(args, args.demo_file)

151 | elif args.type == 'video':

152 | demo_live(args, args.demo_file)

153 | elif args.type == 'camera':

154 | demo_live(args, int(args.demo_file))

155 | elif args.type == 'time':

156 | time_benchmark(args, args.demo_file)

157 | else:

158 | raise AssertionError('type is not correct')

159 |

--------------------------------------------------------------------------------
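`time_benchmark` in `demo.py` runs a number of warm-up iterations before it averages timings. The same pattern can be shown in isolation. The sketch below is an illustration only: `benchmark` and its parameters are hypothetical names, and it times an arbitrary callable with `time.perf_counter` rather than using the detector's own timers.

```python
import time


def benchmark(fn, warmup=20, iters=100):
    """Mean wall-clock seconds per call of fn.

    Runs `warmup` untimed calls first, then averages `iters` timed calls.
    """
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return sum(samples) / len(samples)


if __name__ == "__main__":
    mean_s = benchmark(lambda: sum(range(10000)), warmup=5, iters=50)
    print("mean time per call: {:.6f} s".format(mean_s))
```

Dividing by the number of samples actually collected, rather than by a separate constant, keeps the average correct even if the loop bounds change.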

/demo_simpler.py:

--------------------------------------------------------------------------------

1 | """

2 | inference on trained models

3 |

4 | using only a simple config file

5 |

6 | """

7 | import sys

8 | import os

9 | import numpy as np

10 | import cv2

11 |

12 | from lib.ssds import ObjectDetector

13 | from lib.utils.config_parse import cfg_from_file

14 | import argparse

15 |

16 | img_f = 'experiments/person.jpg'

17 |

18 |

19 | def parse_args():

20 | parser = argparse.ArgumentParser(description='Demo a ssds.pytorch network')

21 | parser.add_argument('--cfg', default='experiments/cfgs/fssd_lite_mobilenetv2_train_voc.yml',

22 | help='the address of optional config file')

23 | args = parser.parse_args()

24 | return args

25 |

26 |

27 | def predict():

28 | args = parse_args()

29 |

30 | cfg_from_file(args.cfg)

31 |

32 | detector = ObjectDetector()

33 |

34 | img = cv2.imread(img_f)

35 |

36 | _labels, _scores, _coords = detector.predict(img)

37 | print('labels: {}\nscores: {}\ncoords: {}'.format(_labels, _scores, _coords))

38 |

39 |

40 | if __name__ == '__main__':

41 | predict()

--------------------------------------------------------------------------------

/experiments/1.mp4:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lucasjinreal/ssds_pytorch/00c69f1aa909d0eee3af8560a5d18e190d3718fc/experiments/1.mp4

--------------------------------------------------------------------------------

/experiments/cfgs/fssd_lite_mobilenetv1_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[5, 11, 13], [256, 512, 1024]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 200

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 97

26 |

27 | TEST:

28 | BATCH_SIZE: 48

29 | TEST_SCOPE: [196, 200]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_mobilenet_v1_coco'

49 | LOG_DIR: './experiments/models/fssd_mobilenet_v1_coco'

50 | RESUME_CHECKPOINT: './weights/fssd_lite/mobilenet_v1_fssd_lite_coco_24.2.pth'

51 | PHASE: ['test']

52 |

--------------------------------------------------------------------------------
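To get a feel for how `STEPS` and `ASPECT_RATIOS` interact, the sketch below estimates how many prior boxes a 300x300 config like the one above would produce. It rests on two assumptions that this listing does not confirm: each feature map has side `ceil(image_size / step)`, and each listed aspect ratio contributes two boxes per cell (ratio 1 adds the base square plus one larger square, any other ratio adds itself and its reciprocal), as in the common SSD convention. The actual `lib/layers/functions/prior_box.py` may differ, and `count_priors` is a hypothetical name.

```python
import math


def count_priors(image_size, steps, aspect_ratios):
    """Estimate the total number of prior boxes (assumptions in the text above)."""
    total = 0
    for (step_h, step_w), ratios in zip(steps, aspect_ratios):
        # Assumed feature-map size: one cell per `step` pixels, rounded up.
        fm_h = math.ceil(image_size[0] / step_h)
        fm_w = math.ceil(image_size[1] / step_w)
        # Assumed convention: two boxes per listed aspect ratio.
        boxes_per_cell = 2 * len(ratios)
        total += fm_h * fm_w * boxes_per_cell
    return total


if __name__ == "__main__":
    # Values copied from the MODEL section of the config above.
    steps = [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]
    ratios = [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]
    print(count_priors([300, 300], steps, ratios))
```

Under these assumptions the finest feature map (step 8) dominates the count, which is one reason the configs that start at step 16 are lighter.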

/experiments/cfgs/fssd_lite_mobilenetv1_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[[5, 11, 13], [256, 512, 1024]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 300

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 100

26 |

27 | TEST:

28 | BATCH_SIZE: 48

29 | TEST_SCOPE: [285, 300]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'voc'

43 | DATASET_DIR: './data/VOCdevkit'

44 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

45 | TEST_SETS: [['2007', 'test']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_mobilenet_v1_voc'

49 | LOG_DIR: './experiments/models/fssd_mobilenet_v1_voc'

50 | RESUME_CHECKPOINT: './weights/fssd_lite/mobilenet_v1_fssd_lite_voc_78.4.pth'

51 | PHASE: ['test']

52 |

--------------------------------------------------------------------------------
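The `LR_SCHEDULER` block pairs `SCHEDULER: SGDR` with `WARM_UP_EPOCHS`. How the repo's trainer combines them is not shown in this listing, so the following is only a sketch of one common reading: a linear warm-up to `LEARNING_RATE`, followed by a single cosine decay over the remaining epochs. `lr_at_epoch` is a hypothetical function, and full SGDR also restarts the cosine cycle, which this sketch omits. The defaults mirror the values in the config above.

```python
import math


def lr_at_epoch(epoch, base_lr=0.001, warmup_epochs=100, max_epochs=300):
    """Learning rate for a 0-based epoch: linear warm-up, then cosine decay."""
    if epoch < warmup_epochs:
        # Linear ramp that reaches base_lr on the last warm-up epoch.
        return base_lr * (epoch + 1) / warmup_epochs
    span = max(1, max_epochs - warmup_epochs)
    progress = min(1.0, (epoch - warmup_epochs) / span)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for epoch in (0, 50, 99, 100, 200, 299):
        print(epoch, "{:.6f}".format(lr_at_epoch(epoch)))
```

With `WARM_UP_EPOCHS: 0`, as in some of the RFB configs, the warm-up branch is never taken and the schedule is a plain cosine decay from the first epoch.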

/experiments/cfgs/fssd_lite_mobilenetv2_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[6, 13, 17], [32, 96, 320]],

7 | [['', 'S', 'S', 'S', '', ''], [256, 256, 256, 256, 128, 128]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 200

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 4

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 100

26 |

27 | TEST:

28 | BATCH_SIZE: 48

29 | TEST_SCOPE: [188, 200]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_mobilenet_v2_coco'

49 | LOG_DIR: './experiments/models/fssd_mobilenet_v2_coco'

50 | RESUME_CHECKPOINT: './saved_model/fssd_mobilenet_v2_coco/fssd_lite_mobilenet_v2_voc_epoch_290.pth'

51 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/fssd_lite_mobilenetv2_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[[6, 13, 17], [32, 96, 320]],

7 | [['', 'S', 'S', 'S', '', ''], [256, 256, 256, 256, 128, 128]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 300

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 100

26 |

27 | TEST:

28 | BATCH_SIZE: 48

29 | TEST_SCOPE: [288, 300]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'voc'

43 | DATASET_DIR: './data/VOCdevkit'

44 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

45 | TEST_SETS: [['2007', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_mobilenet_v2_voc'

49 | LOG_DIR: './experiments/models/fssd_mobilenet_v2_voc'

50 |

51 | RESUME_CHECKPOINT: './weights/fssd_lite/mobilenet_v2_fssd_lite_voc_76.7.pth'

52 | PHASE: ['train']

53 |

--------------------------------------------------------------------------------

/experiments/cfgs/fssd_resnet50_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[10, 16, 'S'], [512, 1024, 512]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 100

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 28

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 10

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [90, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_resnet50_coco'

49 | LOG_DIR: './experiments/models/fssd_resnet50_coco'

50 | RESUME_CHECKPOINT: './weights/fssd/resnet50_fssd_coco_27.2.pth'

51 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/fssd_vgg16_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[22, 34, 'S'], [512, 1024, 512]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 100

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 28

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 30

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [90, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_vgg16_coco'

49 | LOG_DIR: './experiments/models/fssd_vgg16_coco'

50 | RESUME_CHECKPOINT: './weights/fssd/vgg16_fssd_coco_24.5.pth'

51 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/fssd_vgg16_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[[22, 34, 'S'], [512, 1024, 512]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 30

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf,transforms,pyramids'

17 | RESUME_SCOPE: 'base,norm,extras,loc,conf,transforms,pyramids'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.004

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [27, 30]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'test']]

45 |

46 | EXP_DIR: './experiments/models/fssd_vgg16_voc'

47 | LOG_DIR: './experiments/models/fssd_vgg16_voc'

48 | RESUME_CHECKPOINT: './weights/fssd/vgg16_fssd_voc_77.8.pth'

49 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_lite_mobilenetv1_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[11, 13, 'RBF', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 51

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 25

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | # TEST_SCOPE: [45, 50]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_mobilenet_v1_coco'

48 | LOG_DIR: './experiments/models/rfb_mobilenet_v1_coco'

49 | RESUME_CHECKPOINT: './weights/rfb_lite/mobilenet_v1_rfb_lite_coco_19.1.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_lite_mobilenetv1_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[11, 13, 'RBF', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 51

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 25

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 |

29 | MATCHER:

30 | MATCHED_THRESHOLD: 0.5

31 | UNMATCHED_THRESHOLD: 0.5

32 | NEGPOS_RATIO: 3

33 |

34 | POST_PROCESS:

35 | SCORE_THRESHOLD: 0.01

36 | IOU_THRESHOLD: 0.6

37 | MAX_DETECTIONS: 100

38 |

39 | DATASET:

40 | DATASET: 'voc'

41 | DATASET_DIR: './data/VOCdevkit'

42 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

43 | TEST_SETS: [['2007', 'test']]

44 |

45 | EXP_DIR: './experiments/models/rfb_mobilenet_v1_voc'

46 | LOG_DIR: './experiments/models/rfb_mobilenet_v1_voc'

47 | RESUME_CHECKPOINT: './weights/rfb_lite/mobilenet_v1_rfb_lite_voc_73.7.pth'

48 | PHASE: ['test']

49 |

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_lite_mobilenetv2_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[13, 17, 'RBF', 'S', 'S', 'S'], [96, 320, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 50

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.002

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 0

25 |

26 | TEST:

27 | BATCH_SIZE: 48

28 | TEST_SCOPE: [45, 50]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_mobilenet_v2_coco'

48 | LOG_DIR: './experiments/models/rfb_mobilenet_v2_coco'

49 | RESUME_CHECKPOINT: './weights/rfb_lite/mobilenet_v2_rfb_lite_coco_18.5.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_lite_mobilenetv2_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[13, 17, 'RBF', 'S', 'S', 'S'], [96, 320, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 300

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 0

25 | TEST:

26 | BATCH_SIZE: 48

27 | TEST_SCOPE: [270, 300]

28 |

29 | MATCHER:

30 | MATCHED_THRESHOLD: 0.5

31 | UNMATCHED_THRESHOLD: 0.5

32 | NEGPOS_RATIO: 3

33 |

34 | POST_PROCESS:

35 | SCORE_THRESHOLD: 0.01

36 | IOU_THRESHOLD: 0.6

37 | MAX_DETECTIONS: 100

38 |

39 | DATASET:

40 | DATASET: 'voc'

41 | DATASET_DIR: './data/VOCdevkit'

42 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

43 | TEST_SETS: [['2007', 'test']]

44 |

45 | EXP_DIR: './experiments/models/rfb_mobilenet_v2_voc'

46 | LOG_DIR: './experiments/models/rfb_mobilenet_v2_voc'

47 | RESUME_CHECKPOINT: './weights/rfb_lite/mobilenet_v2_rfb_lite_voc_73.4.pth'

48 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_resnet50_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[10, 16, 'RBF', 'RBF', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 28

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 30

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [93, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_resnet50_coco'

48 | LOG_DIR: './experiments/models/rfb_resnet50_coco'

49 | RESUME_CHECKPOINT: './weights/rfb/resnet50_rfb_coco_26.5.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_resnet50_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[10, 16, 'RBF', 'RBF', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 50

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [90, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'test']]

45 |

46 | EXP_DIR: './experiments/models/rfb_resnet50_voc'

47 | LOG_DIR: './experiments/models/rfb_resnet50_voc'

48 | RESUME_CHECKPOINT: './weights/rfb/resnet50_rfb_voc_81.2.pth'

49 | PHASE: ['test']

50 |

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_vgg16_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[22, 34, 'RBF', 'RBF', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 24

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 60

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | # TEST_SCOPE: [95, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_vgg16_coco'

48 | LOG_DIR: './experiments/models/rfb_vgg16_coco'

49 | RESUME_CHECKPOINT: './weights/rfb/vgg16_rfb_coco_25.5.pth'

50 | PHASE: ['test']

51 |

--------------------------------------------------------------------------------

/experiments/cfgs/rfb_vgg16_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[22, 34, 'RBF', 'RBF', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 24

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 60

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | # TEST_SCOPE: [95, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'test']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_vgg16_voc'

48 | LOG_DIR: './experiments/models/rfb_vgg16_voc'

49 | RESUME_CHECKPOINT: './weights/rfb/vgg16_rfb_voc_80.5.pth'

50 | PHASE: ['test']

51 |

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_lite_mobilenetv1_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[11, 13, 'S', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 0

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [90, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_mobilenet_v1_coco'

48 | LOG_DIR: './experiments/models/ssd_mobilenet_v1_coco'

49 | RESUME_CHECKPOINT: './weights/ssd_lite/mobilenet_v1_ssd_lite_coco_18.8.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_lite_mobilenetv1_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd_lite

3 | NETS: mobilenet_v1

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[11, 13, 'S', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 300

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 100

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [285, 300]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'test']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_mobilenet_v1_voc'

48 | LOG_DIR: './experiments/models/ssd_mobilenet_v1_voc'

49 | RESUME_CHECKPOINT: './weights/ssd_lite/mobilenet_v1_ssd_lite_voc_72.7.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_lite_mobilenetv2_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[13, 17, 'S', 'S', 'S', 'S'], [96, 320, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 200

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 95

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [196, 200]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_mobilenet_v2_coco'

48 | LOG_DIR: './experiments/models/ssd_mobilenet_v2_coco'

49 | RESUME_CHECKPOINT: './weights/ssd_lite/mobilenet_v2_ssd_lite_coco_18.5.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_lite_mobilenetv2_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd_lite

3 | NETS: mobilenet_v2

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[13, 17, 'S', 'S', 'S', 'S'], [96, 320, 512, 256, 256, 128]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 300

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 100

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [285, 300]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_mobilenet_v2_voc'

48 | LOG_DIR: './experiments/models/ssd_mobilenet_v2_voc'

49 | RESUME_CHECKPOINT: './weights/ssd_lite/mobilenet_v2_ssd_lite_voc_73.2.pth'

50 | PHASE: ['train']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_resnet50_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[10, 16, 'S', 'S', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 200

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 10

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [190, 200]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_resnet50_coco'

48 | LOG_DIR: './experiments/models/ssd_resnet50_coco'

49 | RESUME_CHECKPOINT: './weights/ssd/resnet50_ssd_coco_25.1.pth'

50 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_resnet50_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[10, 16, 'S', 'S', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 200

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 50

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [190, 200]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'voc'

42 | DATASET_DIR: './data/VOCdevkit'

43 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

44 | TEST_SETS: [['2007', 'test']]

45 |

46 | EXP_DIR: './experiments/models/ssd_resnet50_voc'

47 | LOG_DIR: './experiments/models/ssd_resnet50_voc'

48 | RESUME_CHECKPOINT: './weights/ssd/resnet50_ssd_voc_79.7.pth'

49 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_vgg16_train_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[22, 34, 'S', 'S', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 60

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 5

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [55, 60]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/ssd_vgg16_coco'

48 | LOG_DIR: './experiments/models/ssd_vgg16_coco'

49 | RESUME_CHECKPOINT: './weights/ssd/vgg16_ssd_coco_24.4.pth'

50 | PHASE: ['test']

51 |

--------------------------------------------------------------------------------

/experiments/cfgs/ssd_vgg16_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: ssd

3 | NETS: vgg16

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[22, 34, 'S', 'S', '', ''], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

8 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 2

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 4

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.004

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 |

25 | TEST:

26 | BATCH_SIZE: 64

27 |

28 | MATCHER:

29 | MATCHED_THRESHOLD: 0.5

30 | UNMATCHED_THRESHOLD: 0.5

31 | NEGPOS_RATIO: 3

32 |

33 | POST_PROCESS:

34 | SCORE_THRESHOLD: 0.01

35 | IOU_THRESHOLD: 0.6

36 | MAX_DETECTIONS: 100

37 |

38 | DATASET:

39 | DATASET: 'voc'

40 | DATASET_DIR: './data/VOCdevkit'

41 | # TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

42 | TRAIN_SETS: [['2007', 'trainval']]

43 | TEST_SETS: [['2007', 'test']]

44 |

45 | EXP_DIR: './experiments/models/ssd_vgg16_voc'

46 | LOG_DIR: './experiments/models/ssd_vgg16_voc'

47 | RESUME_CHECKPOINT: './weights/ssd/vgg16_reducedfc.pth'

48 | PHASE: ['train']
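
Note on the anchor-related MODEL keys: in the SSD and RFB configs listed here, `STEPS`, `ASPECT_RATIOS` and both halves of `FEATURE_LAYER` each have six entries, while `SIZES` has seven (one more than `STEPS`). The sketch below checks that pattern on an already-parsed `MODEL` mapping. It is only an illustration: `check_anchor_config` is a hypothetical helper, not part of this repository, and the repository's own loader may validate these keys differently or not at all. The FSSD configs nest `FEATURE_LAYER` one level deeper and are not covered.

```python
def check_anchor_config(model):
    """Return a list of problems found in a flat SSD/RFB-style MODEL mapping.

    Hypothetical helper: the length rules are inferred from the configs in
    this listing, not taken from the repository's code.
    """
    problems = []
    sources, channels = model["FEATURE_LAYER"]
    n = len(model["STEPS"])
    if len(sources) != len(channels):
        problems.append("FEATURE_LAYER halves differ in length")
    if len(sources) != n:
        problems.append("FEATURE_LAYER and STEPS differ in length")
    if len(model["ASPECT_RATIOS"]) != n:
        problems.append("ASPECT_RATIOS and STEPS differ in length")
    if len(model["SIZES"]) != n + 1:
        problems.append("SIZES should have one more entry than STEPS")
    return problems


# Values copied from ssd_vgg16_train_voc.yml above.
model = {
    "FEATURE_LAYER": [[22, 34, "S", "S", "", ""], [512, 1024, 512, 256, 256, 256]],
    "STEPS": [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]],
    "SIZES": [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]],
    "ASPECT_RATIOS": [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]],
}
print(check_anchor_config(model))
```

An empty list means the four keys follow the observed pattern.
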

--------------------------------------------------------------------------------

/experiments/cfgs/tests/fssd_darknet19_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: darknet_19

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[8, 12, 16], [256, 512, 1024]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 60

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 0

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [91, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_darknet_19_coco'

49 | LOG_DIR: './experiments/models/fssd_darknet_19_coco'

50 | RESUME_CHECKPOINT: './weights/yolo/darknet19_yolo_v2_coco_21.6.pth'

51 | PHASE: ['train']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/fssd_darknet53_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: darknet_53

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[[14, 23, 28], [256, 512, 1024]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 100

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 16

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 60

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [100, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'coco'

43 | DATASET_DIR: './data/COCO'

44 | TRAIN_SETS: [['2017', 'train']]

45 | TEST_SETS: [['2017', 'val']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_darknet_53_coco'

49 | LOG_DIR: './experiments/models/fssd_darknet_53_coco'

50 | RESUME_CHECKPOINT: './weights/yolo/darknet53_yolo_v3_coco_27.3.pth'

51 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/fssd_darknet53_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: darknet_53

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[[14, 23, 28], [256, 512, 1024]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 100

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 16

16 | TRAINABLE_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.001

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 60

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [91, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'voc'

43 | DATASET_DIR: './data/VOCdevkit'

44 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

45 | TEST_SETS: [['2007', 'test']]

46 | PROB: 0.6

47 |

48 | EXP_DIR: './experiments/models/fssd_darknet_53_voc'

49 | LOG_DIR: './experiments/models/fssd_darknet_53_voc'

50 | RESUME_CHECKPOINT: './experiments/models/fssd_darknet_53_coco/fssd_darknet_53_coco_epoch_98.pth'

51 | PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/fssd_resnet50_train_voc.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: fssd

3 | NETS: resnet_50

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 21

6 | FEATURE_LAYER: [[[10, 16, 'S'], [512, 1024, 512]],

7 | [['', 'S', 'S', 'S', '', ''], [512, 512, 256, 256, 256, 256]]]

8 | STEPS: [[8, 8], [16, 16], [32, 32], [64, 64], [100, 100], [300, 300]]

9 | SIZES: [[30, 30], [60, 60], [111, 111], [162, 162], [213, 213], [264, 264], [315, 315]]

10 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

11 |

12 | TRAIN:

13 | MAX_EPOCHS: 50

14 | CHECKPOINTS_EPOCHS: 1

15 | BATCH_SIZE: 32

16 | TRAINABLE_SCOPE: 'norm,extras,transforms,pyramids,loc,conf'

17 | RESUME_SCOPE: 'base,norm,extras,transforms,pyramids,loc,conf'

18 | OPTIMIZER:

19 | OPTIMIZER: sgd

20 | LEARNING_RATE: 0.004

21 | MOMENTUM: 0.9

22 | WEIGHT_DECAY: 0.0001

23 | LR_SCHEDULER:

24 | SCHEDULER: SGDR

25 | WARM_UP_EPOCHS: 20

26 |

27 | TEST:

28 | BATCH_SIZE: 64

29 | TEST_SCOPE: [90, 100]

30 |

31 | MATCHER:

32 | MATCHED_THRESHOLD: 0.5

33 | UNMATCHED_THRESHOLD: 0.5

34 | NEGPOS_RATIO: 3

35 |

36 | POST_PROCESS:

37 | SCORE_THRESHOLD: 0.01

38 | IOU_THRESHOLD: 0.6

39 | MAX_DETECTIONS: 100

40 |

41 | DATASET:

42 | DATASET: 'voc'

43 | DATASET_DIR: './data/VOCdevkit'

44 | TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]

45 | TEST_SETS: [['2007', 'test']]

46 |

47 | EXP_DIR: './experiments/models/fssd_resnet50_voc'

48 | LOG_DIR: './experiments/models/fssd_resnet50_voc'

49 | RESUME_CHECKPOINT: './weights/fssd/resnet50_fssd_coco_27.2.pth'

50 | PHASE: ['train']

51 |
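
Note on `TEST_SCOPE` and `MAX_EPOCHS`: several configs in this listing pair a `TEST_SCOPE` with a smaller `MAX_EPOCHS` (the file above has `MAX_EPOCHS: 50` and `TEST_SCOPE: [90, 100]`). Assuming `TEST_SCOPE` is a range of checkpoint epochs to evaluate, which this listing does not confirm, a quick check on a parsed config could look like the sketch below. `epochs_out_of_range` is a hypothetical helper, and the mismatch may be deliberate, for example when evaluating checkpoints from an earlier, longer run.

```python
def epochs_out_of_range(cfg):
    """Return the TEST_SCOPE bounds that exceed TRAIN.MAX_EPOCHS.

    Hypothetical helper; assumes TEST_SCOPE holds epoch numbers.
    A config without TEST_SCOPE yields an empty list.
    """
    max_epochs = cfg["TRAIN"]["MAX_EPOCHS"]
    scope = cfg["TEST"].get("TEST_SCOPE")
    if scope is None:
        return []
    return [epoch for epoch in scope if epoch > max_epochs]


# Values copied from tests/fssd_resnet50_train_voc.yml above.
cfg = {
    "TRAIN": {"MAX_EPOCHS": 50},
    "TEST": {"BATCH_SIZE": 64, "TEST_SCOPE": [90, 100]},
}
print(epochs_out_of_range(cfg))
```
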

--------------------------------------------------------------------------------

/experiments/cfgs/tests/rfb_darknet19_coco.yml:

--------------------------------------------------------------------------------

1 | MODEL:

2 | SSDS: rfb

3 | NETS: darknet_19

4 | IMAGE_SIZE: [300, 300]

5 | NUM_CLASSES: 81

6 | FEATURE_LAYER: [[12, 16, 'RBF', 'RBF', 'S', 'S'], [512, 1024, 512, 256, 256, 256]]

7 | STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]

8 | SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]

9 | ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

10 |

11 | TRAIN:

12 | MAX_EPOCHS: 100

13 | CHECKPOINTS_EPOCHS: 1

14 | BATCH_SIZE: 32

15 | TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'

16 | RESUME_SCOPE: 'base,norm,extras,loc,conf'

17 | OPTIMIZER:

18 | OPTIMIZER: sgd

19 | LEARNING_RATE: 0.001

20 | MOMENTUM: 0.9

21 | WEIGHT_DECAY: 0.0001

22 | LR_SCHEDULER:

23 | SCHEDULER: SGDR

24 | WARM_UP_EPOCHS: 60

25 |

26 | TEST:

27 | BATCH_SIZE: 64

28 | TEST_SCOPE: [91, 100]

29 |

30 | MATCHER:

31 | MATCHED_THRESHOLD: 0.5

32 | UNMATCHED_THRESHOLD: 0.5

33 | NEGPOS_RATIO: 3

34 |

35 | POST_PROCESS:

36 | SCORE_THRESHOLD: 0.01

37 | IOU_THRESHOLD: 0.6

38 | MAX_DETECTIONS: 100

39 |

40 | DATASET:

41 | DATASET: 'coco'

42 | DATASET_DIR: './data/COCO'

43 | TRAIN_SETS: [['2017', 'train']]

44 | TEST_SETS: [['2017', 'val']]

45 | PROB: 0.6

46 |

47 | EXP_DIR: './experiments/models/rfb_darknet_19_coco'

48 | LOG_DIR: './experiments/models/rfb_darknet_19_coco'

49 | RESUME_CHECKPOINT: './weights/yolo/darknet19_yolo_v2_coco_21.6.pth'

50 | PHASE: ['train']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/rfb_darknet53_coco.yml:

--------------------------------------------------------------------------------

MODEL:
  SSDS: rfb
  NETS: darknet_53
  IMAGE_SIZE: [300, 300]
  NUM_CLASSES: 81
  FEATURE_LAYER: [[23, 28, 'RBF', 'RBF', 'S', 'S'], [512, 1024, 512, 256, 256, 256]]
  STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]
  SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]
  ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

TRAIN:
  MAX_EPOCHS: 100
  CHECKPOINTS_EPOCHS: 1
  BATCH_SIZE: 16
  TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'
  RESUME_SCOPE: 'base,norm,extras,loc,conf'
  OPTIMIZER:
    OPTIMIZER: sgd
    LEARNING_RATE: 0.001
    MOMENTUM: 0.9
    WEIGHT_DECAY: 0.0001
  LR_SCHEDULER:
    SCHEDULER: SGDR
    WARM_UP_EPOCHS: 55

TEST:
  BATCH_SIZE: 64
  TEST_SCOPE: [96, 100]

MATCHER:
  MATCHED_THRESHOLD: 0.5
  UNMATCHED_THRESHOLD: 0.5
  NEGPOS_RATIO: 3

POST_PROCESS:
  SCORE_THRESHOLD: 0.01
  IOU_THRESHOLD: 0.6
  MAX_DETECTIONS: 100

DATASET:
  DATASET: 'coco'
  DATASET_DIR: './data/COCO'
  TRAIN_SETS: [['2017', 'train']]
  TEST_SETS: [['2017', 'val']]
  PROB: 0.6

EXP_DIR: './experiments/models/rfb_darknet_53_coco'
LOG_DIR: './experiments/models/rfb_darknet_53_coco'
RESUME_CHECKPOINT: './weights/yolo/darknet53_yolo_v3_coco_27.3.pth'
PHASE: ['test']

--------------------------------------------------------------------------------
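The MODEL blocks in these configs keep several per-layer lists in step with each other. As a rough sanity check (a sketch only, not part of the repository), the snippet below copies the MODEL values from rfb_darknet53_coco.yml into a plain dict and compares the list lengths. The helper name `check_model_lists` is hypothetical, and the rule that SIZES carries one more entry than the number of feature layers is an assumption read off the configs listed here, not a documented requirement.

```python
# Values copied from the MODEL block of rfb_darknet53_coco.yml.
MODEL = {
    "FEATURE_LAYER": [[23, 28, "RBF", "RBF", "S", "S"],
                      [512, 1024, 512, 256, 256, 256]],
    "STEPS": [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]],
    "SIZES": [[45, 45], [90, 90], [135, 135], [180, 180],
              [225, 225], [270, 270], [315, 315]],
    "ASPECT_RATIOS": [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]],
}


def check_model_lists(model):
    """Hypothetical helper: return a list of problems (empty if consistent)."""
    problems = []
    layers, channels = model["FEATURE_LAYER"]
    n = len(layers)
    # Each feature layer needs a matching channel count.
    if len(channels) != n:
        problems.append(f"FEATURE_LAYER: {n} layers but {len(channels)} channel entries")
    # STEPS and ASPECT_RATIOS carry one entry per feature layer.
    for key in ("STEPS", "ASPECT_RATIOS"):
        if len(model[key]) != n:
            problems.append(f"{key}: expected {n} entries, found {len(model[key])}")
    # Assumption (observed in these configs): SIZES has one extra entry.
    if len(model["SIZES"]) != n + 1:
        problems.append(f"SIZES: expected {n + 1} entries, found {len(model['SIZES'])}")
    return problems


print(check_model_lists(MODEL))  # prints []
```

The same check can be pointed at any of the other MODEL blocks by swapping in their values; a non-empty result lists which key is out of step.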

/experiments/cfgs/tests/rfb_darknet53_voc.yml:

--------------------------------------------------------------------------------

MODEL:
  SSDS: rfb
  NETS: darknet_53
  IMAGE_SIZE: [300, 300]
  NUM_CLASSES: 21
  FEATURE_LAYER: [[23, 28, 'RBF', 'RBF', 'S', 'S'], [512, 1024, 512, 256, 256, 256]]
  STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]
  SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]
  ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

TRAIN:
  MAX_EPOCHS: 100
  CHECKPOINTS_EPOCHS: 1
  BATCH_SIZE: 32
  TRAINABLE_SCOPE: 'base,norm,extras,loc,conf'
  RESUME_SCOPE: 'base,norm,extras,loc,conf'
  OPTIMIZER:
    OPTIMIZER: sgd
    LEARNING_RATE: 0.001
    MOMENTUM: 0.9
    WEIGHT_DECAY: 0.0001
  LR_SCHEDULER:
    SCHEDULER: SGDR
    WARM_UP_EPOCHS: 55

TEST:
  BATCH_SIZE: 64
  TEST_SCOPE: [91, 100]

MATCHER:
  MATCHED_THRESHOLD: 0.5
  UNMATCHED_THRESHOLD: 0.5
  NEGPOS_RATIO: 3

POST_PROCESS:
  SCORE_THRESHOLD: 0.01
  IOU_THRESHOLD: 0.6
  MAX_DETECTIONS: 100

DATASET:
  DATASET: 'voc'
  DATASET_DIR: './data/VOCdevkit'
  TRAIN_SETS: [['2007', 'trainval'], ['2012', 'trainval']]
  TEST_SETS: [['2007', 'test']]
  PROB: 0.6

EXP_DIR: './experiments/models/rfb_darknet_53_voc'
LOG_DIR: './experiments/models/rfb_darknet_53_voc'
RESUME_CHECKPOINT: './experiments/models/rfb_darknet_53_coco/rfb_darknet_53_coco_epoch_100.pth'
PHASE: ['test']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/ssd_darknet19_coco.yml:

--------------------------------------------------------------------------------

MODEL:
  SSDS: ssd
  NETS: darknet_19
  IMAGE_SIZE: [300, 300]
  NUM_CLASSES: 81
  FEATURE_LAYER: [[12, 16, 'S', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 256]]
  STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]
  SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]
  ASPECT_RATIOS: [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2], [1, 2]]

TRAIN:
  MAX_EPOCHS: 60
  CHECKPOINTS_EPOCHS: 1
  BATCH_SIZE: 32
  TRAINABLE_SCOPE: 'norm,extras,loc,conf'
  RESUME_SCOPE: 'base,norm,extras,loc,conf'
  OPTIMIZER:
    OPTIMIZER: sgd
    LEARNING_RATE: 0.001
    MOMENTUM: 0.9
    WEIGHT_DECAY: 0.0001
  LR_SCHEDULER:
    SCHEDULER: SGDR
    WARM_UP_EPOCHS: 0

TEST:
  BATCH_SIZE: 64
  TEST_SCOPE: [91, 100]

MATCHER:
  MATCHED_THRESHOLD: 0.5
  UNMATCHED_THRESHOLD: 0.5
  NEGPOS_RATIO: 3

POST_PROCESS:
  SCORE_THRESHOLD: 0.01
  IOU_THRESHOLD: 0.6
  MAX_DETECTIONS: 100

DATASET:
  DATASET: 'coco'
  DATASET_DIR: './data/COCO'
  TRAIN_SETS: [['2017', 'train']]
  TEST_SETS: [['2017', 'val']]
  PROB: 0.6

EXP_DIR: './experiments/models/ssd_darknet_19_coco'
LOG_DIR: './experiments/models/ssd_darknet_19_coco'
RESUME_CHECKPOINT: './weights/yolo/darknet19_yolo_v2_coco_21.6.pth'
PHASE: ['train']

--------------------------------------------------------------------------------

/experiments/cfgs/tests/ssd_darknet53_coco.yml:

--------------------------------------------------------------------------------

MODEL:
  SSDS: ssd
  NETS: darknet_53
  IMAGE_SIZE: [300, 300]
  NUM_CLASSES: 81
  FEATURE_LAYER: [[23, 28, 'S', 'S', 'S', 'S'], [512, 1024, 512, 256, 256, 256]]
  STEPS: [[16, 16], [32, 32], [64, 64], [100, 100], [150, 150], [300, 300]]
  SIZES: [[45, 45], [90, 90], [135, 135], [180, 180], [225, 225], [270, 270], [315, 315]]