├── .github

└── workflows

│ └── main.yml

├── .gitignore

├── LICENSE

├── README.md

├── efficientnet_pytorch

├── __init__.py

├── model.py

└── utils.py

├── examples

├── imagenet

│ ├── README.md

│ ├── data

│ │ └── README.md

│ └── main.py

└── simple

│ ├── check.ipynb

│ ├── example.ipynb

│ ├── img.jpg

│ ├── img2.jpg

│ └── labels_map.txt

├── hubconf.py

├── setup.py

├── sotabench.py

├── sotabench_setup.sh

├── tests

└── test_model.py

└── tf_to_pytorch

├── README.md

├── convert_tf_to_pt

├── download.sh

├── load_tf_weights.py

├── load_tf_weights_tf1.py

├── original_tf

│ ├── __init__.py

│ ├── efficientnet_builder.py

│ ├── efficientnet_model.py

│ ├── eval_ckpt_main.py

│ ├── eval_ckpt_main_tf1.py

│ ├── preprocessing.py

│ └── utils.py

├── rename.sh

└── run.sh

└── pretrained_tensorflow

└── download.sh

/.github/workflows/main.yml:

--------------------------------------------------------------------------------

1 | name: Workflow

2 |

3 | on:

4 | push:

5 | branches:

6 | - master

7 |

8 | jobs:

9 | pypi-job:

10 | runs-on: ubuntu-latest

11 | steps:

12 | - uses: actions/checkout@v2

13 | - name: Install twine

14 | run: pip install twine

15 | - name: Build package

16 | run: python setup.py sdist

17 | - name: Publish a Python distribution to PyPI

18 | uses: pypa/gh-action-pypi-publish@release/v1

19 | with:

20 | user: __token__

21 | password: ${{ secrets.PYPI_API_TOKEN }}

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Custom

2 | tmp

3 | *.pkl

4 |

5 | # Byte-compiled / optimized / DLL files

6 | __pycache__/

7 | *.py[cod]

8 | *$py.class

9 |

10 | # C extensions

11 | *.so

12 |

13 | # Distribution / packaging

14 | .Python

15 | build/

16 | develop-eggs/

17 | dist/

18 | downloads/

19 | eggs/

20 | .eggs/

21 | lib/

22 | lib64/

23 | parts/

24 | sdist/

25 | var/

26 | wheels/

27 | *.egg-info/

28 | .installed.cfg

29 | *.egg

30 | MANIFEST

31 |

32 | # PyInstaller

33 | # Usually these files are written by a python script from a template

34 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

35 | *.manifest

36 | *.spec

37 |

38 | # Installer logs

39 | pip-log.txt

40 | pip-delete-this-directory.txt

41 |

42 | # Unit test / coverage reports

43 | htmlcov/

44 | .tox/

45 | .coverage

46 | .coverage.*

47 | .cache

48 | nosetests.xml

49 | coverage.xml

50 | *.cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 |

63 | # Flask stuff:

64 | instance/

65 | .webassets-cache

66 |

67 | # Scrapy stuff:

68 | .scrapy

69 |

70 | # Sphinx documentation

71 | docs/_build/

72 |

73 | # PyBuilder

74 | target/

75 |

76 | # Jupyter Notebook

77 | .ipynb_checkpoints

78 |

79 | # pyenv

80 | .python-version

81 |

82 | # celery beat schedule file

83 | celerybeat-schedule

84 |

85 | # SageMath parsed files

86 | *.sage.py

87 |

88 | # Environments

89 | .env

90 | .venv

91 | env/

92 | venv/

93 | ENV/

94 | env.bak/

95 | venv.bak/

96 |

97 | # Spyder project settings

98 | .spyderproject

99 | .spyproject

100 |

101 | # Rope project settings

102 | .ropeproject

103 |

104 | # mkdocs documentation

105 | /site

106 |

107 | # mypy

108 | .mypy_cache/

109 | .DS_STORE

110 |

111 | # PyCharm

112 | .idea*

113 | *.xml

114 |

115 | # Custom

116 | tensorflow/

117 | example/test*

118 | *.pth*

119 | examples/imagenet/data/

120 | !examples/imagenet/data/README.md

121 | tmp

122 | tf_to_pytorch/pretrained_tensorflow

123 | !tf_to_pytorch/pretrained_tensorflow/download.sh

124 | examples/imagenet/run.sh

125 |

126 |

127 |

128 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 |

2 | Apache License

3 | Version 2.0, January 2004

4 | http://www.apache.org/licenses/

5 |

6 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

7 |

8 | 1. Definitions.

9 |

10 | "License" shall mean the terms and conditions for use, reproduction,

11 | and distribution as defined by Sections 1 through 9 of this document.

12 |

13 | "Licensor" shall mean the copyright owner or entity authorized by

14 | the copyright owner that is granting the License.

15 |

16 | "Legal Entity" shall mean the union of the acting entity and all

17 | other entities that control, are controlled by, or are under common

18 | control with that entity. For the purposes of this definition,

19 | "control" means (i) the power, direct or indirect, to cause the

20 | direction or management of such entity, whether by contract or

21 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

22 | outstanding shares, or (iii) beneficial ownership of such entity.

23 |

24 | "You" (or "Your") shall mean an individual or Legal Entity

25 | exercising permissions granted by this License.

26 |

27 | "Source" form shall mean the preferred form for making modifications,

28 | including but not limited to software source code, documentation

29 | source, and configuration files.

30 |

31 | "Object" form shall mean any form resulting from mechanical

32 | transformation or translation of a Source form, including but

33 | not limited to compiled object code, generated documentation,

34 | and conversions to other media types.

35 |

36 | "Work" shall mean the work of authorship, whether in Source or

37 | Object form, made available under the License, as indicated by a

38 | copyright notice that is included in or attached to the work

39 | (an example is provided in the Appendix below).

40 |

41 | "Derivative Works" shall mean any work, whether in Source or Object

42 | form, that is based on (or derived from) the Work and for which the

43 | editorial revisions, annotations, elaborations, or other modifications

44 | represent, as a whole, an original work of authorship. For the purposes

45 | of this License, Derivative Works shall not include works that remain

46 | separable from, or merely link (or bind by name) to the interfaces of,

47 | the Work and Derivative Works thereof.

48 |

49 | "Contribution" shall mean any work of authorship, including

50 | the original version of the Work and any modifications or additions

51 | to that Work or Derivative Works thereof, that is intentionally

52 | submitted to Licensor for inclusion in the Work by the copyright owner

53 | or by an individual or Legal Entity authorized to submit on behalf of

54 | the copyright owner. For the purposes of this definition, "submitted"

55 | means any form of electronic, verbal, or written communication sent

56 | to the Licensor or its representatives, including but not limited to

57 | communication on electronic mailing lists, source code control systems,

58 | and issue tracking systems that are managed by, or on behalf of, the

59 | Licensor for the purpose of discussing and improving the Work, but

60 | excluding communication that is conspicuously marked or otherwise

61 | designated in writing by the copyright owner as "Not a Contribution."

62 |

63 | "Contributor" shall mean Licensor and any individual or Legal Entity

64 | on behalf of whom a Contribution has been received by Licensor and

65 | subsequently incorporated within the Work.

66 |

67 | 2. Grant of Copyright License. Subject to the terms and conditions of

68 | this License, each Contributor hereby grants to You a perpetual,

69 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

70 | copyright license to reproduce, prepare Derivative Works of,

71 | publicly display, publicly perform, sublicense, and distribute the

72 | Work and such Derivative Works in Source or Object form.

73 |

74 | 3. Grant of Patent License. Subject to the terms and conditions of

75 | this License, each Contributor hereby grants to You a perpetual,

76 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

77 | (except as stated in this section) patent license to make, have made,

78 | use, offer to sell, sell, import, and otherwise transfer the Work,

79 | where such license applies only to those patent claims licensable

80 | by such Contributor that are necessarily infringed by their

81 | Contribution(s) alone or by combination of their Contribution(s)

82 | with the Work to which such Contribution(s) was submitted. If You

83 | institute patent litigation against any entity (including a

84 | cross-claim or counterclaim in a lawsuit) alleging that the Work

85 | or a Contribution incorporated within the Work constitutes direct

86 | or contributory patent infringement, then any patent licenses

87 | granted to You under this License for that Work shall terminate

88 | as of the date such litigation is filed.

89 |

90 | 4. Redistribution. You may reproduce and distribute copies of the

91 | Work or Derivative Works thereof in any medium, with or without

92 | modifications, and in Source or Object form, provided that You

93 | meet the following conditions:

94 |

95 | (a) You must give any other recipients of the Work or

96 | Derivative Works a copy of this License; and

97 |

98 | (b) You must cause any modified files to carry prominent notices

99 | stating that You changed the files; and

100 |

101 | (c) You must retain, in the Source form of any Derivative Works

102 | that You distribute, all copyright, patent, trademark, and

103 | attribution notices from the Source form of the Work,

104 | excluding those notices that do not pertain to any part of

105 | the Derivative Works; and

106 |

107 | (d) If the Work includes a "NOTICE" text file as part of its

108 | distribution, then any Derivative Works that You distribute must

109 | include a readable copy of the attribution notices contained

110 | within such NOTICE file, excluding those notices that do not

111 | pertain to any part of the Derivative Works, in at least one

112 | of the following places: within a NOTICE text file distributed

113 | as part of the Derivative Works; within the Source form or

114 | documentation, if provided along with the Derivative Works; or,

115 | within a display generated by the Derivative Works, if and

116 | wherever such third-party notices normally appear. The contents

117 | of the NOTICE file are for informational purposes only and

118 | do not modify the License. You may add Your own attribution

119 | notices within Derivative Works that You distribute, alongside

120 | or as an addendum to the NOTICE text from the Work, provided

121 | that such additional attribution notices cannot be construed

122 | as modifying the License.

123 |

124 | You may add Your own copyright statement to Your modifications and

125 | may provide additional or different license terms and conditions

126 | for use, reproduction, or distribution of Your modifications, or

127 | for any such Derivative Works as a whole, provided Your use,

128 | reproduction, and distribution of the Work otherwise complies with

129 | the conditions stated in this License.

130 |

131 | 5. Submission of Contributions. Unless You explicitly state otherwise,

132 | any Contribution intentionally submitted for inclusion in the Work

133 | by You to the Licensor shall be under the terms and conditions of

134 | this License, without any additional terms or conditions.

135 | Notwithstanding the above, nothing herein shall supersede or modify

136 | the terms of any separate license agreement you may have executed

137 | with Licensor regarding such Contributions.

138 |

139 | 6. Trademarks. This License does not grant permission to use the trade

140 | names, trademarks, service marks, or product names of the Licensor,

141 | except as required for reasonable and customary use in describing the

142 | origin of the Work and reproducing the content of the NOTICE file.

143 |

144 | 7. Disclaimer of Warranty. Unless required by applicable law or

145 | agreed to in writing, Licensor provides the Work (and each

146 | Contributor provides its Contributions) on an "AS IS" BASIS,

147 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

148 | implied, including, without limitation, any warranties or conditions

149 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

150 | PARTICULAR PURPOSE. You are solely responsible for determining the

151 | appropriateness of using or redistributing the Work and assume any

152 | risks associated with Your exercise of permissions under this License.

153 |

154 | 8. Limitation of Liability. In no event and under no legal theory,

155 | whether in tort (including negligence), contract, or otherwise,

156 | unless required by applicable law (such as deliberate and grossly

157 | negligent acts) or agreed to in writing, shall any Contributor be

158 | liable to You for damages, including any direct, indirect, special,

159 | incidental, or consequential damages of any character arising as a

160 | result of this License or out of the use or inability to use the

161 | Work (including but not limited to damages for loss of goodwill,

162 | work stoppage, computer failure or malfunction, or any and all

163 | other commercial damages or losses), even if such Contributor

164 | has been advised of the possibility of such damages.

165 |

166 | 9. Accepting Warranty or Additional Liability. While redistributing

167 | the Work or Derivative Works thereof, You may choose to offer,

168 | and charge a fee for, acceptance of support, warranty, indemnity,

169 | or other liability obligations and/or rights consistent with this

170 | License. However, in accepting such obligations, You may act only

171 | on Your own behalf and on Your sole responsibility, not on behalf

172 | of any other Contributor, and only if You agree to indemnify,

173 | defend, and hold each Contributor harmless for any liability

174 | incurred by, or claims asserted against, such Contributor by reason

175 | of your accepting any such warranty or additional liability.

176 |

177 | END OF TERMS AND CONDITIONS

178 |

179 | APPENDIX: How to apply the Apache License to your work.

180 |

181 | To apply the Apache License to your work, attach the following

182 | boilerplate notice, with the fields enclosed by brackets "[]"

183 | replaced with your own identifying information. (Don't include

184 | the brackets!) The text should be enclosed in the appropriate

185 | comment syntax for the file format. We also recommend that a

186 | file or class name and description of purpose be included on the

187 | same "printed page" as the copyright notice for easier

188 | identification within third-party archives.

189 |

190 | Copyright [yyyy] [name of copyright owner]

191 |

192 | Licensed under the Apache License, Version 2.0 (the "License");

193 | you may not use this file except in compliance with the License.

194 | You may obtain a copy of the License at

195 |

196 | http://www.apache.org/licenses/LICENSE-2.0

197 |

198 | Unless required by applicable law or agreed to in writing, software

199 | distributed under the License is distributed on an "AS IS" BASIS,

200 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

201 | See the License for the specific language governing permissions and

202 | limitations under the License.

203 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # EfficientNet PyTorch

2 |

3 | ### Quickstart

4 |

5 | Install with `pip install efficientnet_pytorch` and load a pretrained EfficientNet with:

6 | ```python

7 | from efficientnet_pytorch import EfficientNet

8 | model = EfficientNet.from_pretrained('efficientnet-b0')

9 | ```

10 |

11 | ### Updates

12 |

13 | #### Update (April 2, 2021)

14 |

15 | The [EfficientNetV2 paper](https://arxiv.org/abs/2104.00298) has been released! I am working on implementing it as you read this :)

16 |

17 | About EfficientNetV2:

18 | > EfficientNetV2 is a new family of convolutional networks that have faster training speed and better parameter efficiency than previous models. To develop this family of models, we use a combination of training-aware neural architecture search and scaling, to jointly optimize training speed and parameter efficiency. The models were searched from the search space enriched with new ops such as Fused-MBConv.

19 |

20 | Here is a comparison:

21 | >  22 |

23 |

24 | #### Update (Aug 25, 2020)

25 |

26 | This update adds:

27 | * A new `include_top` (default: `True`) option ([#208](https://github.com/lukemelas/EfficientNet-PyTorch/pull/208))

28 | * Continuous testing with [sotabench](https://sotabench.com/)

29 | * Code quality improvements and fixes ([#215](https://github.com/lukemelas/EfficientNet-PyTorch/pull/215) [#223](https://github.com/lukemelas/EfficientNet-PyTorch/pull/223))

30 |

31 | #### Update (May 14, 2020)

32 |

33 | This update adds comprehensive comments and documentation (thanks to @workingcoder).

34 |

35 | #### Update (January 23, 2020)

36 |

37 | This update adds a new category of pre-trained model based on adversarial training, called _advprop_. It is important to note that the preprocessing required for the advprop pretrained models is slightly different from normal ImageNet preprocessing. As a result, by default, advprop models are not used. To load a model with advprop, use:

38 | ```python

39 | model = EfficientNet.from_pretrained("efficientnet-b0", advprop=True)

40 | ```

41 | There is also a new, large `efficientnet-b8` pretrained model that is only available in advprop form. When using these models, replace ImageNet preprocessing code as follows:

42 | ```python

43 | if advprop: # for models using advprop pretrained weights

44 | normalize = transforms.Lambda(lambda img: img * 2.0 - 1.0)

45 | else:

46 | normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

47 | std=[0.229, 0.224, 0.225])

48 | ```

49 | This update also addresses multiple other issues ([#115](https://github.com/lukemelas/EfficientNet-PyTorch/issues/115), [#128](https://github.com/lukemelas/EfficientNet-PyTorch/issues/128)).

50 |

51 | #### Update (October 15, 2019)

52 |

53 | This update allows you to choose whether to use a memory-efficient Swish activation. The memory-efficient version is chosen by default, but it cannot be used when exporting using PyTorch JIT. For this purpose, we have also included a standard (export-friendly) swish activation function. To switch to the export-friendly version, simply call `model.set_swish(memory_efficient=False)` after loading your desired model. This update addresses issues [#88](https://github.com/lukemelas/EfficientNet-PyTorch/pull/88) and [#89](https://github.com/lukemelas/EfficientNet-PyTorch/pull/89).

54 |

55 | #### Update (October 12, 2019)

56 |

57 | This update makes the Swish activation function more memory-efficient. It also addresses pull requests [#72](https://github.com/lukemelas/EfficientNet-PyTorch/pull/72), [#73](https://github.com/lukemelas/EfficientNet-PyTorch/pull/73), [#85](https://github.com/lukemelas/EfficientNet-PyTorch/pull/85), and [#86](https://github.com/lukemelas/EfficientNet-PyTorch/pull/86). Thanks to the authors of all the pull requests!

58 |

59 | #### Update (July 31, 2019)

60 |

61 | _Upgrade the pip package with_ `pip install --upgrade efficientnet-pytorch`

62 |

63 | The B6 and B7 models are now available. Additionally, _all_ pretrained models have been updated to use AutoAugment preprocessing, which translates to better performance across the board. Usage is the same as before:

64 | ```python

65 | from efficientnet_pytorch import EfficientNet

66 | model = EfficientNet.from_pretrained('efficientnet-b7')

67 | ```

68 |

69 | #### Update (June 29, 2019)

70 |

71 | This update adds easy model exporting ([#20](https://github.com/lukemelas/EfficientNet-PyTorch/issues/20)) and feature extraction ([#38](https://github.com/lukemelas/EfficientNet-PyTorch/issues/38)).

72 |

73 | * [Example: Export to ONNX](#example-export)

74 | * [Example: Extract features](#example-feature-extraction)

75 | * Also: fixed a CUDA/CPU bug ([#32](https://github.com/lukemelas/EfficientNet-PyTorch/issues/32))

76 |

77 | It is also now incredibly simple to load a pretrained model with a new number of classes for transfer learning:

78 | ```python

79 | model = EfficientNet.from_pretrained('efficientnet-b1', num_classes=23)

80 | ```

81 |

82 |

83 | #### Update (June 23, 2019)

84 |

85 | The B4 and B5 models are now available. Their usage is identical to the other models:

86 | ```python

87 | from efficientnet_pytorch import EfficientNet

88 | model = EfficientNet.from_pretrained('efficientnet-b4')

89 | ```

90 |

91 | ### Overview

92 | This repository contains an op-for-op PyTorch reimplementation of [EfficientNet](https://arxiv.org/abs/1905.11946), along with pre-trained models and examples.

93 |

94 | The goal of this implementation is to be simple, highly extensible, and easy to integrate into your own projects. This implementation is a work in progress -- new features are currently being implemented.

95 |

96 | At the moment, you can easily:

97 | * Load pretrained EfficientNet models

98 | * Use EfficientNet models for classification or feature extraction

99 | * Evaluate EfficientNet models on ImageNet or your own images

100 |

101 | _Upcoming features_: In the next few days, you will be able to:

102 | * Train new models from scratch on ImageNet with a simple command

103 | * Quickly finetune an EfficientNet on your own dataset

104 | * Export EfficientNet models for production

105 |

106 | ### Table of contents

107 | 1. [About EfficientNet](#about-efficientnet)

108 | 2. [About EfficientNet-PyTorch](#about-efficientnet-pytorch)

109 | 3. [Installation](#installation)

110 | 4. [Usage](#usage)

111 | * [Load pretrained models](#loading-pretrained-models)

112 | * [Example: Classify](#example-classification)

113 | * [Example: Extract features](#example-feature-extraction)

114 | * [Example: Export to ONNX](#example-export)

115 | 6. [Contributing](#contributing)

116 |

117 | ### About EfficientNet

118 |

119 | If you're new to EfficientNets, here is an explanation straight from the official TensorFlow implementation:

120 |

121 | EfficientNets are a family of image classification models, which achieve state-of-the-art accuracy, yet being an order-of-magnitude smaller and faster than previous models. We develop EfficientNets based on AutoML and Compound Scaling. In particular, we first use [AutoML Mobile framework](https://ai.googleblog.com/2018/08/mnasnet-towards-automating-design-of.html) to develop a mobile-size baseline network, named as EfficientNet-B0; Then, we use the compound scaling method to scale up this baseline to obtain EfficientNet-B1 to B7.

122 |

123 |

22 |

23 |

24 | #### Update (Aug 25, 2020)

25 |

26 | This update adds:

27 | * A new `include_top` (default: `True`) option ([#208](https://github.com/lukemelas/EfficientNet-PyTorch/pull/208))

28 | * Continuous testing with [sotabench](https://sotabench.com/)

29 | * Code quality improvements and fixes ([#215](https://github.com/lukemelas/EfficientNet-PyTorch/pull/215) [#223](https://github.com/lukemelas/EfficientNet-PyTorch/pull/223))

30 |

31 | #### Update (May 14, 2020)

32 |

33 | This update adds comprehensive comments and documentation (thanks to @workingcoder).

34 |

35 | #### Update (January 23, 2020)

36 |

37 | This update adds a new category of pre-trained model based on adversarial training, called _advprop_. It is important to note that the preprocessing required for the advprop pretrained models is slightly different from normal ImageNet preprocessing. As a result, by default, advprop models are not used. To load a model with advprop, use:

38 | ```python

39 | model = EfficientNet.from_pretrained("efficientnet-b0", advprop=True)

40 | ```

41 | There is also a new, large `efficientnet-b8` pretrained model that is only available in advprop form. When using these models, replace ImageNet preprocessing code as follows:

42 | ```python

43 | if advprop: # for models using advprop pretrained weights

44 | normalize = transforms.Lambda(lambda img: img * 2.0 - 1.0)

45 | else:

46 | normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

47 | std=[0.229, 0.224, 0.225])

48 | ```

49 | This update also addresses multiple other issues ([#115](https://github.com/lukemelas/EfficientNet-PyTorch/issues/115), [#128](https://github.com/lukemelas/EfficientNet-PyTorch/issues/128)).

50 |

51 | #### Update (October 15, 2019)

52 |

53 | This update allows you to choose whether to use a memory-efficient Swish activation. The memory-efficient version is chosen by default, but it cannot be used when exporting using PyTorch JIT. For this purpose, we have also included a standard (export-friendly) swish activation function. To switch to the export-friendly version, simply call `model.set_swish(memory_efficient=False)` after loading your desired model. This update addresses issues [#88](https://github.com/lukemelas/EfficientNet-PyTorch/pull/88) and [#89](https://github.com/lukemelas/EfficientNet-PyTorch/pull/89).

54 |

55 | #### Update (October 12, 2019)

56 |

57 | This update makes the Swish activation function more memory-efficient. It also addresses pull requests [#72](https://github.com/lukemelas/EfficientNet-PyTorch/pull/72), [#73](https://github.com/lukemelas/EfficientNet-PyTorch/pull/73), [#85](https://github.com/lukemelas/EfficientNet-PyTorch/pull/85), and [#86](https://github.com/lukemelas/EfficientNet-PyTorch/pull/86). Thanks to the authors of all the pull requests!

58 |

59 | #### Update (July 31, 2019)

60 |

61 | _Upgrade the pip package with_ `pip install --upgrade efficientnet-pytorch`

62 |

63 | The B6 and B7 models are now available. Additionally, _all_ pretrained models have been updated to use AutoAugment preprocessing, which translates to better performance across the board. Usage is the same as before:

64 | ```python

65 | from efficientnet_pytorch import EfficientNet

66 | model = EfficientNet.from_pretrained('efficientnet-b7')

67 | ```

68 |

69 | #### Update (June 29, 2019)

70 |

71 | This update adds easy model exporting ([#20](https://github.com/lukemelas/EfficientNet-PyTorch/issues/20)) and feature extraction ([#38](https://github.com/lukemelas/EfficientNet-PyTorch/issues/38)).

72 |

73 | * [Example: Export to ONNX](#example-export)

74 | * [Example: Extract features](#example-feature-extraction)

75 | * Also: fixed a CUDA/CPU bug ([#32](https://github.com/lukemelas/EfficientNet-PyTorch/issues/32))

76 |

77 | It is also now incredibly simple to load a pretrained model with a new number of classes for transfer learning:

78 | ```python

79 | model = EfficientNet.from_pretrained('efficientnet-b1', num_classes=23)

80 | ```

81 |

82 |

83 | #### Update (June 23, 2019)

84 |

85 | The B4 and B5 models are now available. Their usage is identical to the other models:

86 | ```python

87 | from efficientnet_pytorch import EfficientNet

88 | model = EfficientNet.from_pretrained('efficientnet-b4')

89 | ```

90 |

91 | ### Overview

92 | This repository contains an op-for-op PyTorch reimplementation of [EfficientNet](https://arxiv.org/abs/1905.11946), along with pre-trained models and examples.

93 |

94 | The goal of this implementation is to be simple, highly extensible, and easy to integrate into your own projects. This implementation is a work in progress -- new features are currently being implemented.

95 |

96 | At the moment, you can easily:

97 | * Load pretrained EfficientNet models

98 | * Use EfficientNet models for classification or feature extraction

99 | * Evaluate EfficientNet models on ImageNet or your own images

100 |

101 | _Upcoming features_: In the next few days, you will be able to:

102 | * Train new models from scratch on ImageNet with a simple command

103 | * Quickly finetune an EfficientNet on your own dataset

104 | * Export EfficientNet models for production

105 |

106 | ### Table of contents

107 | 1. [About EfficientNet](#about-efficientnet)

108 | 2. [About EfficientNet-PyTorch](#about-efficientnet-pytorch)

109 | 3. [Installation](#installation)

110 | 4. [Usage](#usage)

111 | * [Load pretrained models](#loading-pretrained-models)

112 | * [Example: Classify](#example-classification)

113 | * [Example: Extract features](#example-feature-extraction)

114 | * [Example: Export to ONNX](#example-export)

115 | 6. [Contributing](#contributing)

116 |

117 | ### About EfficientNet

118 |

119 | If you're new to EfficientNets, here is an explanation straight from the official TensorFlow implementation:

120 |

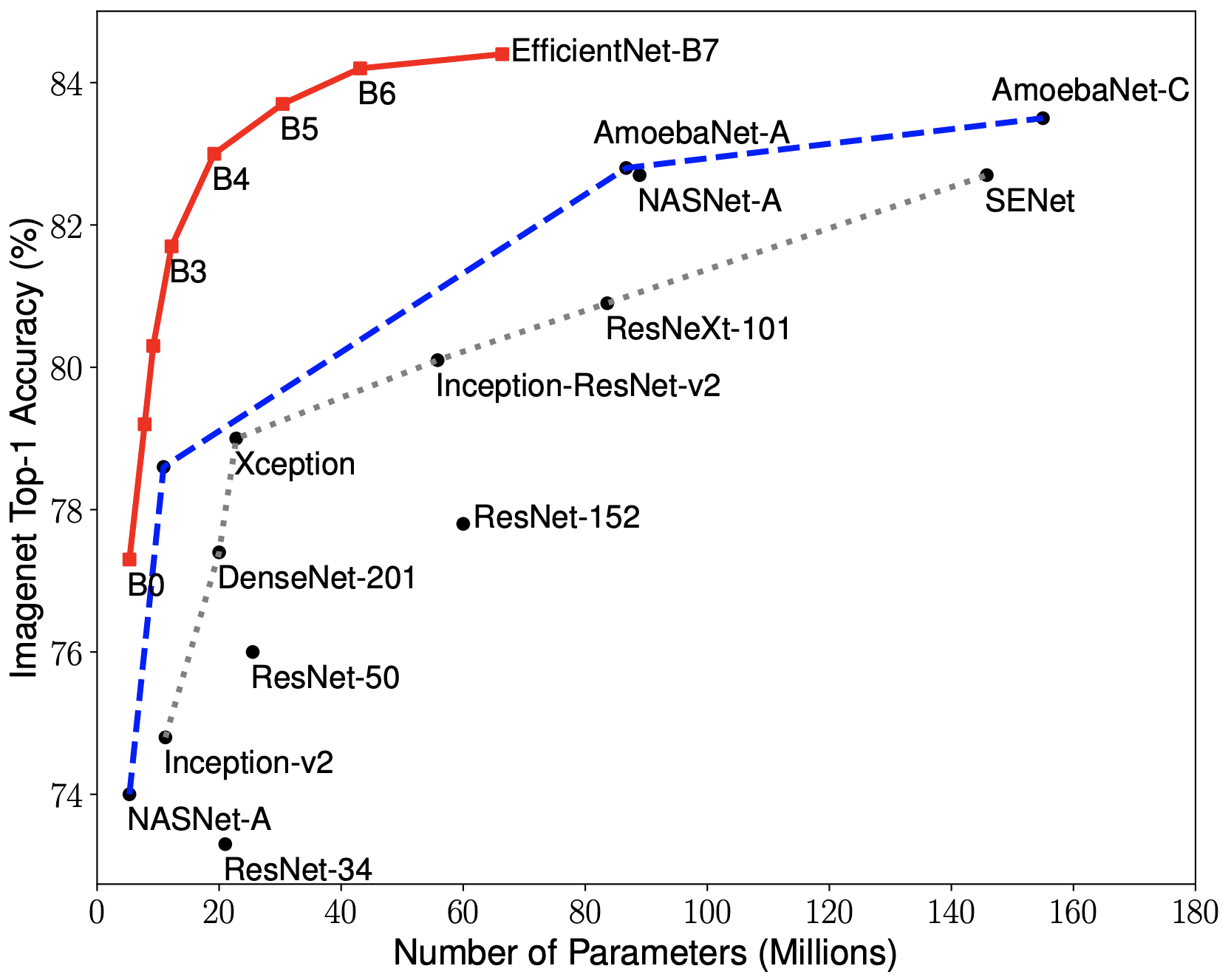

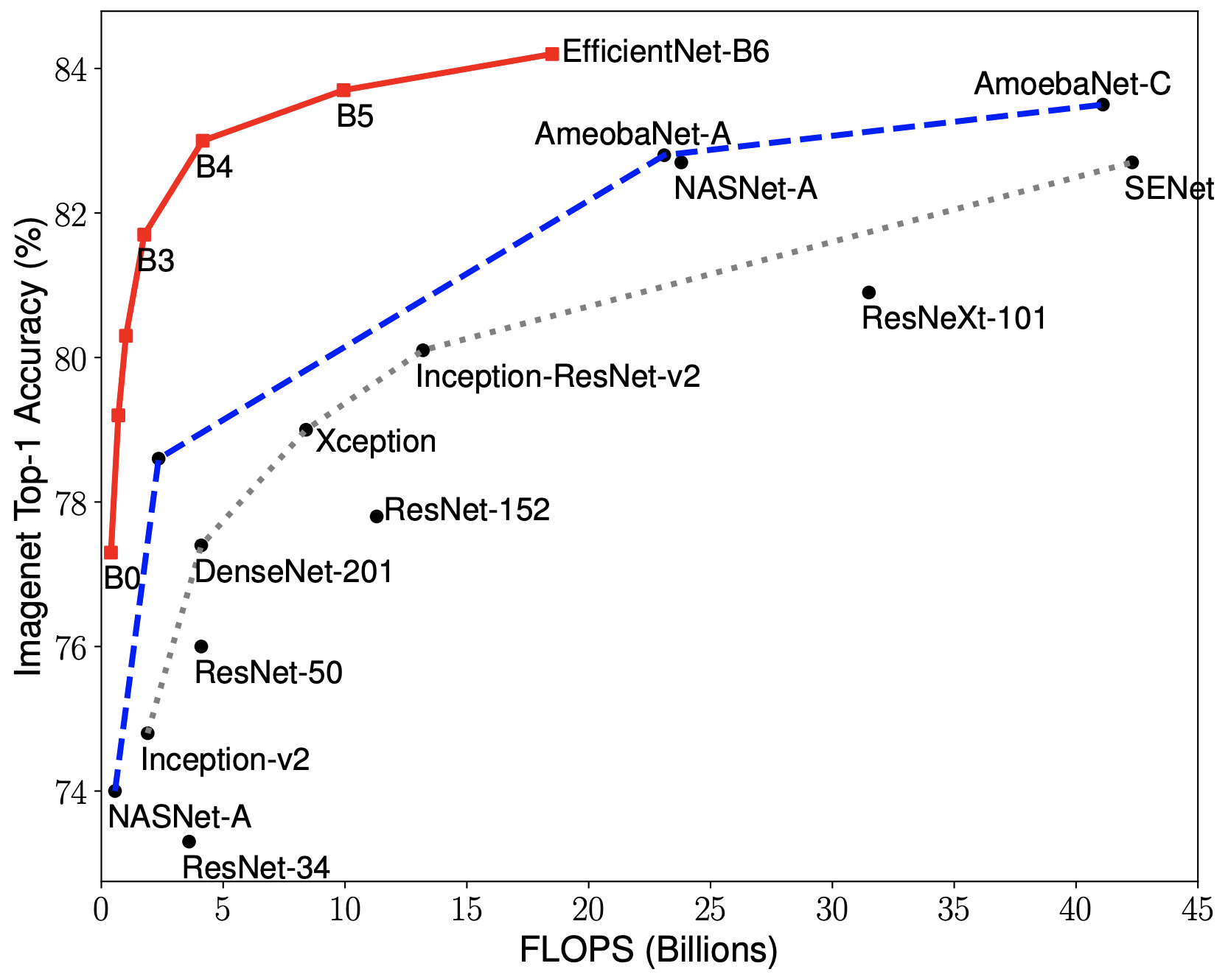

121 | EfficientNets are a family of image classification models, which achieve state-of-the-art accuracy, yet being an order-of-magnitude smaller and faster than previous models. We develop EfficientNets based on AutoML and Compound Scaling. In particular, we first use [AutoML Mobile framework](https://ai.googleblog.com/2018/08/mnasnet-towards-automating-design-of.html) to develop a mobile-size baseline network, named as EfficientNet-B0; Then, we use the compound scaling method to scale up this baseline to obtain EfficientNet-B1 to B7.

122 |

123 |

124 |

125 |

126 |  127 |

127 | |

128 |

129 |  130 |

130 | |

131 |

132 |

133 |

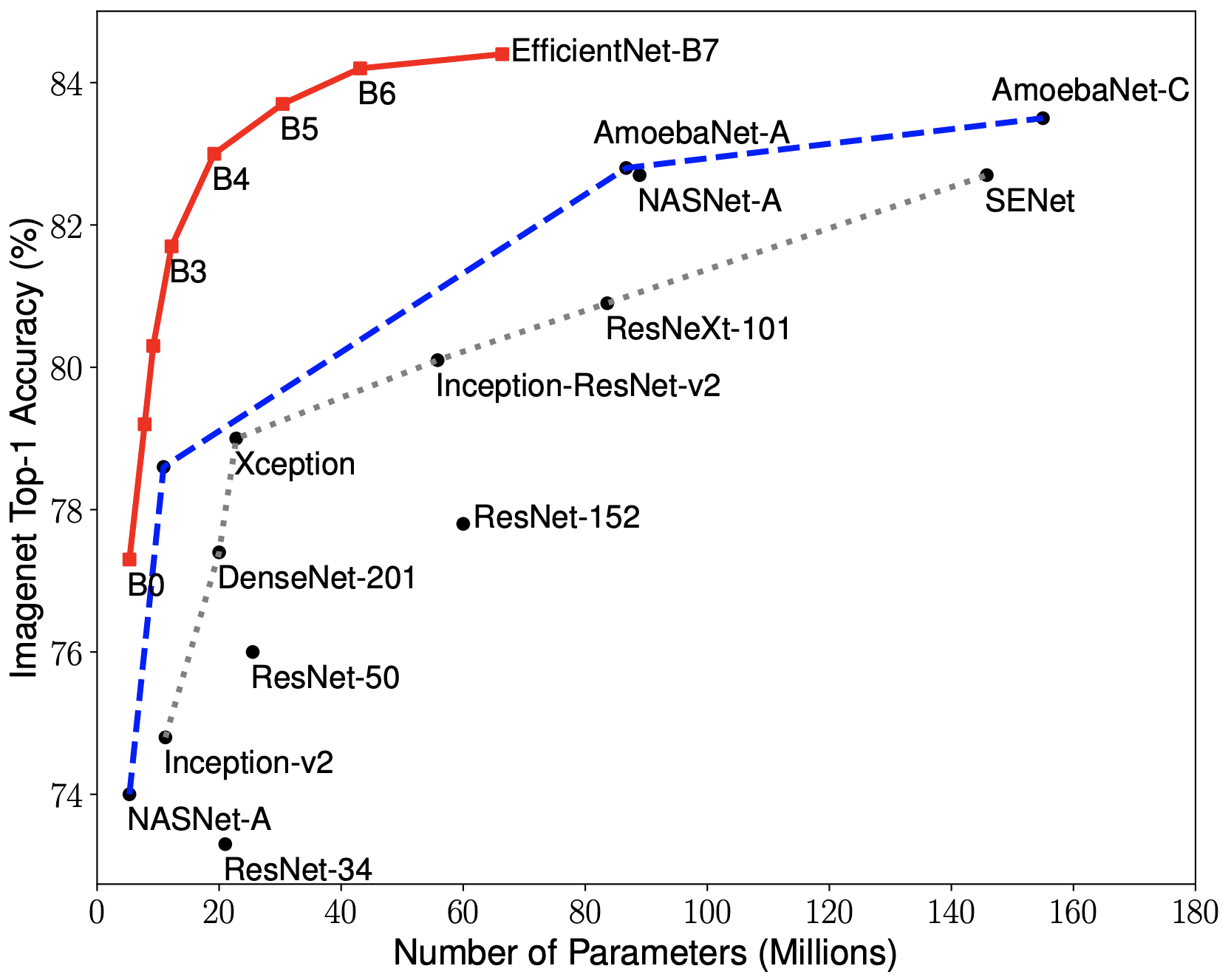

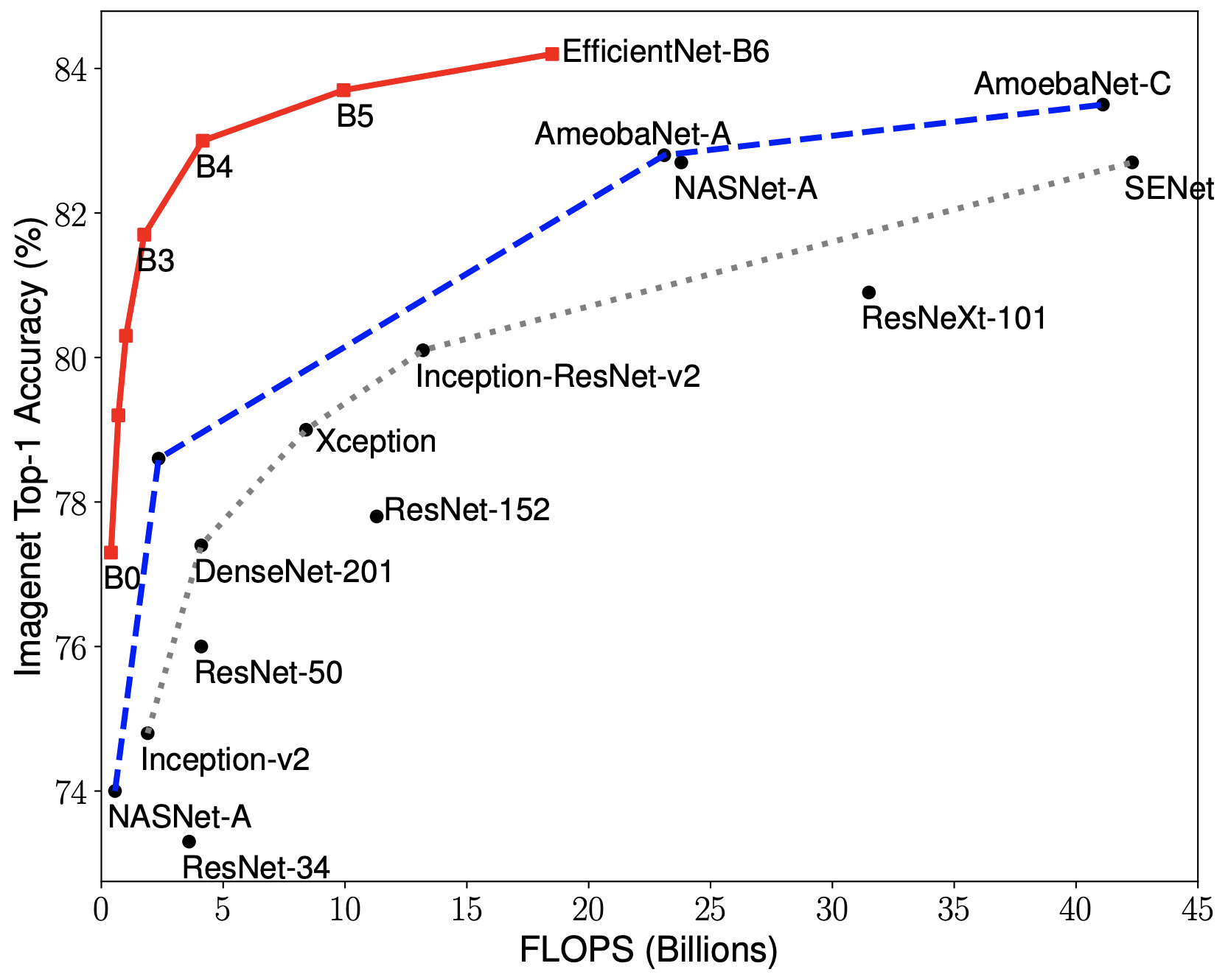

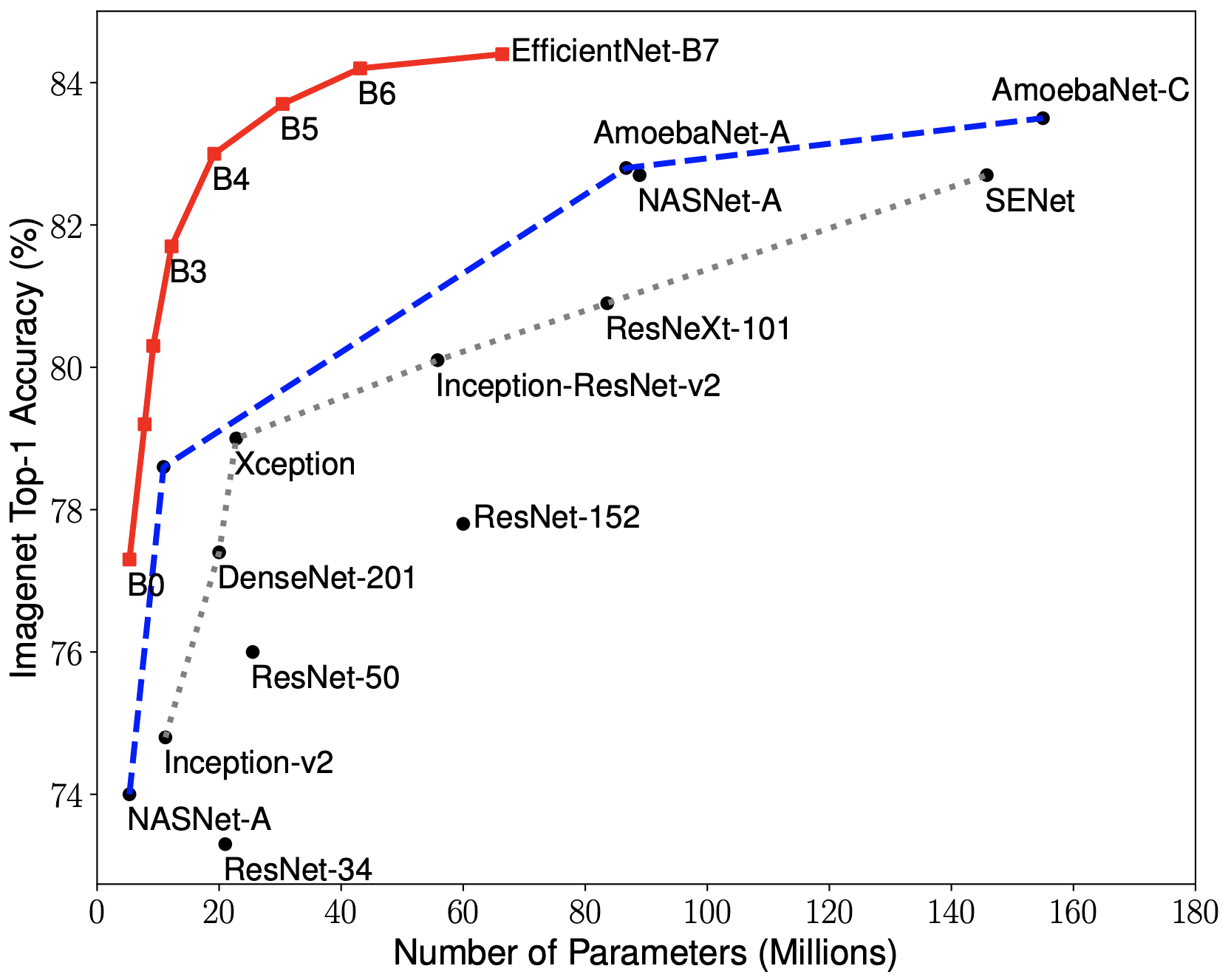

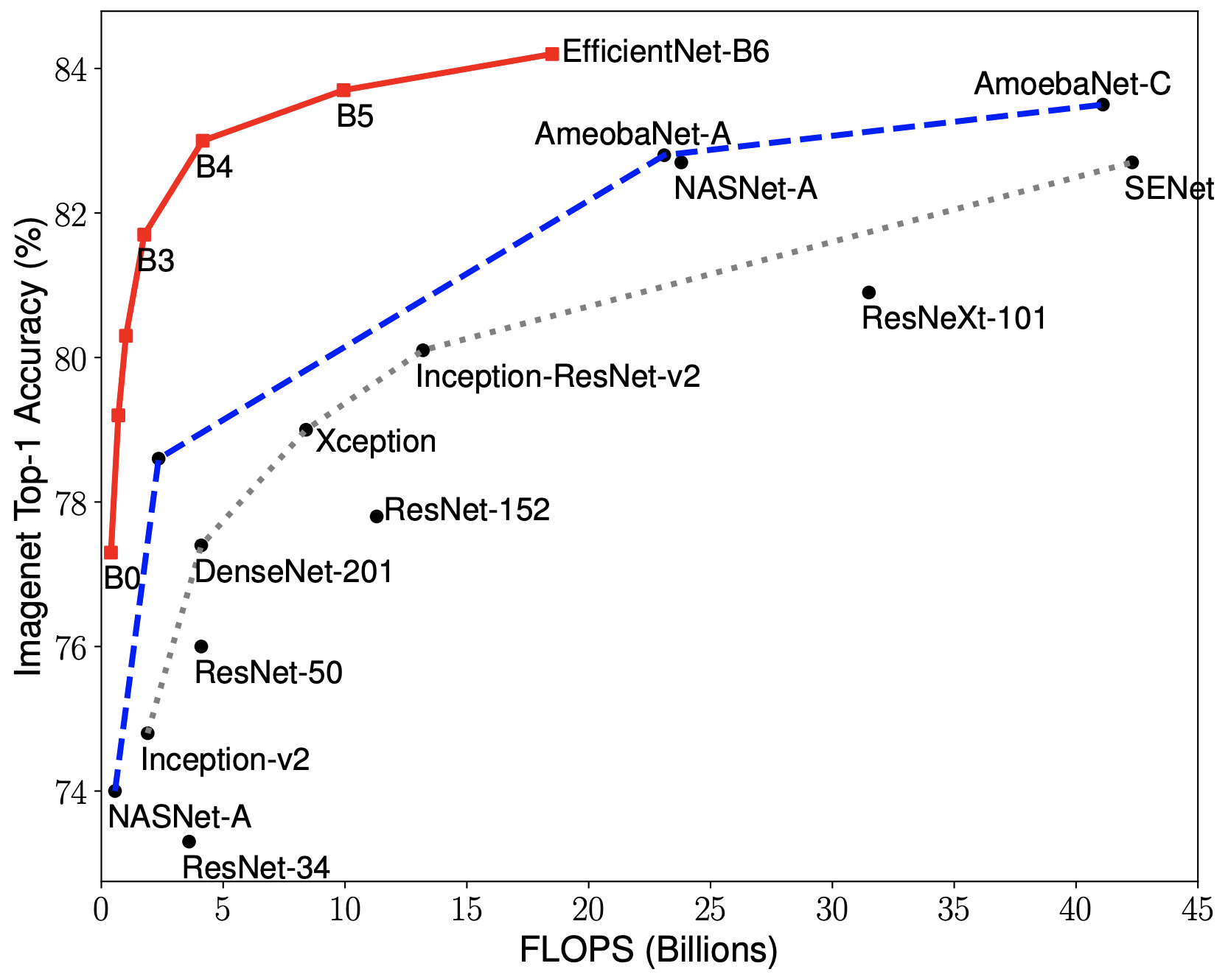

134 | EfficientNets achieve state-of-the-art accuracy on ImageNet with an order of magnitude better efficiency:

135 |

136 |

137 | * In high-accuracy regime, our EfficientNet-B7 achieves state-of-the-art 84.4% top-1 / 97.1% top-5 accuracy on ImageNet with 66M parameters and 37B FLOPS, being 8.4x smaller and 6.1x faster on CPU inference than previous best [Gpipe](https://arxiv.org/abs/1811.06965).

138 |

139 | * In middle-accuracy regime, our EfficientNet-B1 is 7.6x smaller and 5.7x faster on CPU inference than [ResNet-152](https://arxiv.org/abs/1512.03385), with similar ImageNet accuracy.

140 |

141 | * Compared with the widely used [ResNet-50](https://arxiv.org/abs/1512.03385), our EfficientNet-B4 improves the top-1 accuracy from 76.3% of ResNet-50 to 82.6% (+6.3%), under similar FLOPS constraint.

142 |

143 | ### About EfficientNet PyTorch

144 |

145 | EfficientNet PyTorch is a PyTorch re-implementation of EfficientNet. It is consistent with the [original TensorFlow implementation](https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet), such that it is easy to load weights from a TensorFlow checkpoint. At the same time, we aim to make our PyTorch implementation as simple, flexible, and extensible as possible.

146 |

147 | If you have any feature requests or questions, feel free to leave them as GitHub issues!

148 |

149 | ### Installation

150 |

151 | Install via pip:

152 | ```bash

153 | pip install efficientnet_pytorch

154 | ```

155 |

156 | Or install from source:

157 | ```bash

158 | git clone https://github.com/lukemelas/EfficientNet-PyTorch

159 | cd EfficientNet-Pytorch

160 | pip install -e .

161 | ```

162 |

163 | ### Usage

164 |

165 | #### Loading pretrained models

166 |

167 | Load an EfficientNet:

168 | ```python

169 | from efficientnet_pytorch import EfficientNet

170 | model = EfficientNet.from_name('efficientnet-b0')

171 | ```

172 |

173 | Load a pretrained EfficientNet:

174 | ```python

175 | from efficientnet_pytorch import EfficientNet

176 | model = EfficientNet.from_pretrained('efficientnet-b0')

177 | ```

178 |

179 | Details about the models are below:

180 |

181 | | *Name* |*# Params*|*Top-1 Acc.*|*Pretrained?*|

182 | |:-----------------:|:--------:|:----------:|:-----------:|

183 | | `efficientnet-b0` | 5.3M | 76.3 | ✓ |

184 | | `efficientnet-b1` | 7.8M | 78.8 | ✓ |

185 | | `efficientnet-b2` | 9.2M | 79.8 | ✓ |

186 | | `efficientnet-b3` | 12M | 81.1 | ✓ |

187 | | `efficientnet-b4` | 19M | 82.6 | ✓ |

188 | | `efficientnet-b5` | 30M | 83.3 | ✓ |

189 | | `efficientnet-b6` | 43M | 84.0 | ✓ |

190 | | `efficientnet-b7` | 66M | 84.4 | ✓ |

191 |

192 |

193 | #### Example: Classification

194 |

195 | Below is a simple, complete example. It may also be found as a jupyter notebook in `examples/simple` or as a [Colab Notebook](https://colab.research.google.com/drive/1Jw28xZ1NJq4Cja4jLe6tJ6_F5lCzElb4).

196 |

197 | We assume that in your current directory, there is a `img.jpg` file and a `labels_map.txt` file (ImageNet class names). These are both included in `examples/simple`.

198 |

199 | ```python

200 | import json

201 | from PIL import Image

202 | import torch

203 | from torchvision import transforms

204 |

205 | from efficientnet_pytorch import EfficientNet

206 | model = EfficientNet.from_pretrained('efficientnet-b0')

207 |

208 | # Preprocess image

209 | tfms = transforms.Compose([transforms.Resize(224), transforms.ToTensor(),

210 | transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),])

211 | img = tfms(Image.open('img.jpg')).unsqueeze(0)

212 | print(img.shape) # torch.Size([1, 3, 224, 224])

213 |

214 | # Load ImageNet class names

215 | labels_map = json.load(open('labels_map.txt'))

216 | labels_map = [labels_map[str(i)] for i in range(1000)]

217 |

218 | # Classify

219 | model.eval()

220 | with torch.no_grad():

221 | outputs = model(img)

222 |

223 | # Print predictions

224 | print('-----')

225 | for idx in torch.topk(outputs, k=5).indices.squeeze(0).tolist():

226 | prob = torch.softmax(outputs, dim=1)[0, idx].item()

227 | print('{label:<75} ({p:.2f}%)'.format(label=labels_map[idx], p=prob*100))

228 | ```

229 |

230 | #### Example: Feature Extraction

231 |

232 | You can easily extract features with `model.extract_features`:

233 | ```python

234 | from efficientnet_pytorch import EfficientNet

235 | model = EfficientNet.from_pretrained('efficientnet-b0')

236 |

237 | # ... image preprocessing as in the classification example ...

238 | print(img.shape) # torch.Size([1, 3, 224, 224])

239 |

240 | features = model.extract_features(img)

241 | print(features.shape) # torch.Size([1, 1280, 7, 7])

242 | ```

243 |

244 | #### Example: Export to ONNX

245 |

246 | Exporting to ONNX for deploying to production is now simple:

247 | ```python

248 | import torch

249 | from efficientnet_pytorch import EfficientNet

250 |

251 | model = EfficientNet.from_pretrained('efficientnet-b1')

252 | dummy_input = torch.randn(10, 3, 240, 240)

253 |

254 | model.set_swish(memory_efficient=False)

255 | torch.onnx.export(model, dummy_input, "test-b1.onnx", verbose=True)

256 | ```

257 |

258 | [Here](https://colab.research.google.com/drive/1rOAEXeXHaA8uo3aG2YcFDHItlRJMV0VP) is a Colab example.

259 |

260 |

261 | #### ImageNet

262 |

263 | See `examples/imagenet` for details about evaluating on ImageNet.

264 |

265 | ### Contributing

266 |

267 | If you find a bug, create a GitHub issue, or even better, submit a pull request. Similarly, if you have questions, simply post them as GitHub issues.

268 |

269 | I look forward to seeing what the community does with these models!

270 |

--------------------------------------------------------------------------------

/efficientnet_pytorch/__init__.py:

--------------------------------------------------------------------------------

1 | __version__ = "0.7.1"

2 | from .model import EfficientNet, VALID_MODELS

3 | from .utils import (

4 | GlobalParams,

5 | BlockArgs,

6 | BlockDecoder,

7 | efficientnet,

8 | get_model_params,

9 | )

10 |

--------------------------------------------------------------------------------

/efficientnet_pytorch/model.py:

--------------------------------------------------------------------------------

1 | """model.py - Model and module class for EfficientNet.

2 | They are built to mirror those in the official TensorFlow implementation.

3 | """

4 |

5 | # Author: lukemelas (github username)

6 | # Github repo: https://github.com/lukemelas/EfficientNet-PyTorch

7 | # With adjustments and added comments by workingcoder (github username).

8 |

9 | import torch

10 | from torch import nn

11 | from torch.nn import functional as F

12 | from .utils import (

13 | round_filters,

14 | round_repeats,

15 | drop_connect,

16 | get_same_padding_conv2d,

17 | get_model_params,

18 | efficientnet_params,

19 | load_pretrained_weights,

20 | Swish,

21 | MemoryEfficientSwish,

22 | calculate_output_image_size

23 | )

24 |

25 |

26 | VALID_MODELS = (

27 | 'efficientnet-b0', 'efficientnet-b1', 'efficientnet-b2', 'efficientnet-b3',

28 | 'efficientnet-b4', 'efficientnet-b5', 'efficientnet-b6', 'efficientnet-b7',

29 | 'efficientnet-b8',

30 |

31 | # Support the construction of 'efficientnet-l2' without pretrained weights

32 | 'efficientnet-l2'

33 | )

34 |

35 |

36 | class MBConvBlock(nn.Module):

37 | """Mobile Inverted Residual Bottleneck Block.

38 |

39 | Args:

40 | block_args (namedtuple): BlockArgs, defined in utils.py.

41 | global_params (namedtuple): GlobalParam, defined in utils.py.

42 | image_size (tuple or list): [image_height, image_width].

43 |

44 | References:

45 | [1] https://arxiv.org/abs/1704.04861 (MobileNet v1)

46 | [2] https://arxiv.org/abs/1801.04381 (MobileNet v2)

47 | [3] https://arxiv.org/abs/1905.02244 (MobileNet v3)

48 | """

49 |

50 | def __init__(self, block_args, global_params, image_size=None):

51 | super().__init__()

52 | self._block_args = block_args

53 | self._bn_mom = 1 - global_params.batch_norm_momentum # pytorch's difference from tensorflow

54 | self._bn_eps = global_params.batch_norm_epsilon

55 | self.has_se = (self._block_args.se_ratio is not None) and (0 < self._block_args.se_ratio <= 1)

56 | self.id_skip = block_args.id_skip # whether to use skip connection and drop connect

57 |

58 | # Expansion phase (Inverted Bottleneck)

59 | inp = self._block_args.input_filters # number of input channels

60 | oup = self._block_args.input_filters * self._block_args.expand_ratio # number of output channels

61 | if self._block_args.expand_ratio != 1:

62 | Conv2d = get_same_padding_conv2d(image_size=image_size)

63 | self._expand_conv = Conv2d(in_channels=inp, out_channels=oup, kernel_size=1, bias=False)

64 | self._bn0 = nn.BatchNorm2d(num_features=oup, momentum=self._bn_mom, eps=self._bn_eps)

65 | # image_size = calculate_output_image_size(image_size, 1) <-- this wouldn't modify image_size

66 |

67 | # Depthwise convolution phase

68 | k = self._block_args.kernel_size

69 | s = self._block_args.stride

70 | Conv2d = get_same_padding_conv2d(image_size=image_size)

71 | self._depthwise_conv = Conv2d(

72 | in_channels=oup, out_channels=oup, groups=oup, # groups makes it depthwise

73 | kernel_size=k, stride=s, bias=False)

74 | self._bn1 = nn.BatchNorm2d(num_features=oup, momentum=self._bn_mom, eps=self._bn_eps)

75 | image_size = calculate_output_image_size(image_size, s)

76 |

77 | # Squeeze and Excitation layer, if desired

78 | if self.has_se:

79 | Conv2d = get_same_padding_conv2d(image_size=(1, 1))

80 | num_squeezed_channels = max(1, int(self._block_args.input_filters * self._block_args.se_ratio))

81 | self._se_reduce = Conv2d(in_channels=oup, out_channels=num_squeezed_channels, kernel_size=1)

82 | self._se_expand = Conv2d(in_channels=num_squeezed_channels, out_channels=oup, kernel_size=1)

83 |

84 | # Pointwise convolution phase

85 | final_oup = self._block_args.output_filters

86 | Conv2d = get_same_padding_conv2d(image_size=image_size)

87 | self._project_conv = Conv2d(in_channels=oup, out_channels=final_oup, kernel_size=1, bias=False)

88 | self._bn2 = nn.BatchNorm2d(num_features=final_oup, momentum=self._bn_mom, eps=self._bn_eps)

89 | self._swish = MemoryEfficientSwish()

90 |

91 | def forward(self, inputs, drop_connect_rate=None):

92 | """MBConvBlock's forward function.

93 |

94 | Args:

95 | inputs (tensor): Input tensor.

96 | drop_connect_rate (bool): Drop connect rate (float, between 0 and 1).

97 |

98 | Returns:

99 | Output of this block after processing.

100 | """

101 |

102 | # Expansion and Depthwise Convolution

103 | x = inputs

104 | if self._block_args.expand_ratio != 1:

105 | x = self._expand_conv(inputs)

106 | x = self._bn0(x)

107 | x = self._swish(x)

108 |

109 | x = self._depthwise_conv(x)

110 | x = self._bn1(x)

111 | x = self._swish(x)

112 |

113 | # Squeeze and Excitation

114 | if self.has_se:

115 | x_squeezed = F.adaptive_avg_pool2d(x, 1)

116 | x_squeezed = self._se_reduce(x_squeezed)

117 | x_squeezed = self._swish(x_squeezed)

118 | x_squeezed = self._se_expand(x_squeezed)

119 | x = torch.sigmoid(x_squeezed) * x

120 |

121 | # Pointwise Convolution

122 | x = self._project_conv(x)

123 | x = self._bn2(x)

124 |

125 | # Skip connection and drop connect

126 | input_filters, output_filters = self._block_args.input_filters, self._block_args.output_filters

127 | if self.id_skip and self._block_args.stride == 1 and input_filters == output_filters:

128 | # The combination of skip connection and drop connect brings about stochastic depth.

129 | if drop_connect_rate:

130 | x = drop_connect(x, p=drop_connect_rate, training=self.training)

131 | x = x + inputs # skip connection

132 | return x

133 |

134 | def set_swish(self, memory_efficient=True):

135 | """Sets swish function as memory efficient (for training) or standard (for export).

136 |

137 | Args:

138 | memory_efficient (bool): Whether to use memory-efficient version of swish.

139 | """

140 | self._swish = MemoryEfficientSwish() if memory_efficient else Swish()

141 |

142 |

143 | class EfficientNet(nn.Module):

144 | """EfficientNet model.

145 | Most easily loaded with the .from_name or .from_pretrained methods.

146 |

147 | Args:

148 | blocks_args (list[namedtuple]): A list of BlockArgs to construct blocks.

149 | global_params (namedtuple): A set of GlobalParams shared between blocks.

150 |

151 | References:

152 | [1] https://arxiv.org/abs/1905.11946 (EfficientNet)

153 |

154 | Example:

155 | >>> import torch

156 | >>> from efficientnet.model import EfficientNet

157 | >>> inputs = torch.rand(1, 3, 224, 224)

158 | >>> model = EfficientNet.from_pretrained('efficientnet-b0')

159 | >>> model.eval()

160 | >>> outputs = model(inputs)

161 | """

162 |

163 | def __init__(self, blocks_args=None, global_params=None):

164 | super().__init__()

165 | assert isinstance(blocks_args, list), 'blocks_args should be a list'

166 | assert len(blocks_args) > 0, 'block args must be greater than 0'

167 | self._global_params = global_params

168 | self._blocks_args = blocks_args

169 |

170 | # Batch norm parameters

171 | bn_mom = 1 - self._global_params.batch_norm_momentum

172 | bn_eps = self._global_params.batch_norm_epsilon

173 |

174 | # Get stem static or dynamic convolution depending on image size

175 | image_size = global_params.image_size

176 | Conv2d = get_same_padding_conv2d(image_size=image_size)

177 |

178 | # Stem

179 | in_channels = 3 # rgb

180 | out_channels = round_filters(32, self._global_params) # number of output channels

181 | self._conv_stem = Conv2d(in_channels, out_channels, kernel_size=3, stride=2, bias=False)

182 | self._bn0 = nn.BatchNorm2d(num_features=out_channels, momentum=bn_mom, eps=bn_eps)

183 | image_size = calculate_output_image_size(image_size, 2)

184 |

185 | # Build blocks

186 | self._blocks = nn.ModuleList([])

187 | for block_args in self._blocks_args:

188 |

189 | # Update block input and output filters based on depth multiplier.

190 | block_args = block_args._replace(

191 | input_filters=round_filters(block_args.input_filters, self._global_params),

192 | output_filters=round_filters(block_args.output_filters, self._global_params),

193 | num_repeat=round_repeats(block_args.num_repeat, self._global_params)

194 | )

195 |

196 | # The first block needs to take care of stride and filter size increase.

197 | self._blocks.append(MBConvBlock(block_args, self._global_params, image_size=image_size))

198 | image_size = calculate_output_image_size(image_size, block_args.stride)

199 | if block_args.num_repeat > 1: # modify block_args to keep same output size

200 | block_args = block_args._replace(input_filters=block_args.output_filters, stride=1)

201 | for _ in range(block_args.num_repeat - 1):

202 | self._blocks.append(MBConvBlock(block_args, self._global_params, image_size=image_size))

203 | # image_size = calculate_output_image_size(image_size, block_args.stride) # stride = 1

204 |

205 | # Head

206 | in_channels = block_args.output_filters # output of final block

207 | out_channels = round_filters(1280, self._global_params)

208 | Conv2d = get_same_padding_conv2d(image_size=image_size)

209 | self._conv_head = Conv2d(in_channels, out_channels, kernel_size=1, bias=False)

210 | self._bn1 = nn.BatchNorm2d(num_features=out_channels, momentum=bn_mom, eps=bn_eps)

211 |

212 | # Final linear layer

213 | self._avg_pooling = nn.AdaptiveAvgPool2d(1)

214 | if self._global_params.include_top:

215 | self._dropout = nn.Dropout(self._global_params.dropout_rate)

216 | self._fc = nn.Linear(out_channels, self._global_params.num_classes)

217 |

218 | # set activation to memory efficient swish by default

219 | self._swish = MemoryEfficientSwish()

220 |

221 | def set_swish(self, memory_efficient=True):

222 | """Sets swish function as memory efficient (for training) or standard (for export).

223 |

224 | Args:

225 | memory_efficient (bool): Whether to use memory-efficient version of swish.

226 | """

227 | self._swish = MemoryEfficientSwish() if memory_efficient else Swish()

228 | for block in self._blocks:

229 | block.set_swish(memory_efficient)

230 |

231 | def extract_endpoints(self, inputs):

232 | """Use convolution layer to extract features

233 | from reduction levels i in [1, 2, 3, 4, 5].

234 |

235 | Args:

236 | inputs (tensor): Input tensor.

237 |

238 | Returns:

239 | Dictionary of last intermediate features

240 | with reduction levels i in [1, 2, 3, 4, 5].

241 | Example:

242 | >>> import torch

243 | >>> from efficientnet.model import EfficientNet

244 | >>> inputs = torch.rand(1, 3, 224, 224)

245 | >>> model = EfficientNet.from_pretrained('efficientnet-b0')

246 | >>> endpoints = model.extract_endpoints(inputs)

247 | >>> print(endpoints['reduction_1'].shape) # torch.Size([1, 16, 112, 112])

248 | >>> print(endpoints['reduction_2'].shape) # torch.Size([1, 24, 56, 56])

249 | >>> print(endpoints['reduction_3'].shape) # torch.Size([1, 40, 28, 28])

250 | >>> print(endpoints['reduction_4'].shape) # torch.Size([1, 112, 14, 14])

251 | >>> print(endpoints['reduction_5'].shape) # torch.Size([1, 320, 7, 7])

252 | >>> print(endpoints['reduction_6'].shape) # torch.Size([1, 1280, 7, 7])

253 | """

254 | endpoints = dict()

255 |

256 | # Stem

257 | x = self._swish(self._bn0(self._conv_stem(inputs)))

258 | prev_x = x

259 |

260 | # Blocks

261 | for idx, block in enumerate(self._blocks):

262 | drop_connect_rate = self._global_params.drop_connect_rate

263 | if drop_connect_rate:

264 | drop_connect_rate *= float(idx) / len(self._blocks) # scale drop connect_rate

265 | x = block(x, drop_connect_rate=drop_connect_rate)

266 | if prev_x.size(2) > x.size(2):

267 | endpoints['reduction_{}'.format(len(endpoints) + 1)] = prev_x

268 | elif idx == len(self._blocks) - 1:

269 | endpoints['reduction_{}'.format(len(endpoints) + 1)] = x

270 | prev_x = x

271 |

272 | # Head

273 | x = self._swish(self._bn1(self._conv_head(x)))

274 | endpoints['reduction_{}'.format(len(endpoints) + 1)] = x

275 |

276 | return endpoints

277 |

278 | def extract_features(self, inputs):

279 | """use convolution layer to extract feature .

280 |

281 | Args:

282 | inputs (tensor): Input tensor.

283 |

284 | Returns:

285 | Output of the final convolution

286 | layer in the efficientnet model.

287 | """

288 | # Stem

289 | x = self._swish(self._bn0(self._conv_stem(inputs)))

290 |

291 | # Blocks

292 | for idx, block in enumerate(self._blocks):

293 | drop_connect_rate = self._global_params.drop_connect_rate

294 | if drop_connect_rate:

295 | drop_connect_rate *= float(idx) / len(self._blocks) # scale drop connect_rate

296 | x = block(x, drop_connect_rate=drop_connect_rate)

297 |

298 | # Head

299 | x = self._swish(self._bn1(self._conv_head(x)))

300 |

301 | return x

302 |

303 | def forward(self, inputs):

304 | """EfficientNet's forward function.

305 | Calls extract_features to extract features, applies final linear layer, and returns logits.

306 |

307 | Args:

308 | inputs (tensor): Input tensor.

309 |

310 | Returns:

311 | Output of this model after processing.

312 | """

313 | # Convolution layers

314 | x = self.extract_features(inputs)

315 | # Pooling and final linear layer

316 | x = self._avg_pooling(x)

317 | if self._global_params.include_top:

318 | x = x.flatten(start_dim=1)

319 | x = self._dropout(x)

320 | x = self._fc(x)

321 | return x

322 |

323 | @classmethod

324 | def from_name(cls, model_name, in_channels=3, **override_params):

325 | """Create an efficientnet model according to name.

326 |

327 | Args:

328 | model_name (str): Name for efficientnet.

329 | in_channels (int): Input data's channel number.

330 | override_params (other key word params):

331 | Params to override model's global_params.

332 | Optional key:

333 | 'width_coefficient', 'depth_coefficient',

334 | 'image_size', 'dropout_rate',

335 | 'num_classes', 'batch_norm_momentum',

336 | 'batch_norm_epsilon', 'drop_connect_rate',

337 | 'depth_divisor', 'min_depth'

338 |

339 | Returns:

340 | An efficientnet model.

341 | """

342 | cls._check_model_name_is_valid(model_name)

343 | blocks_args, global_params = get_model_params(model_name, override_params)

344 | model = cls(blocks_args, global_params)

345 | model._change_in_channels(in_channels)

346 | return model

347 |

348 | @classmethod

349 | def from_pretrained(cls, model_name, weights_path=None, advprop=False,

350 | in_channels=3, num_classes=1000, **override_params):

351 | """Create an efficientnet model according to name.

352 |

353 | Args:

354 | model_name (str): Name for efficientnet.

355 | weights_path (None or str):

356 | str: path to pretrained weights file on the local disk.

357 | None: use pretrained weights downloaded from the Internet.

358 | advprop (bool):

359 | Whether to load pretrained weights

360 | trained with advprop (valid when weights_path is None).

361 | in_channels (int): Input data's channel number.

362 | num_classes (int):

363 | Number of categories for classification.

364 | It controls the output size for final linear layer.

365 | override_params (other key word params):

366 | Params to override model's global_params.

367 | Optional key:

368 | 'width_coefficient', 'depth_coefficient',

369 | 'image_size', 'dropout_rate',

370 | 'batch_norm_momentum',

371 | 'batch_norm_epsilon', 'drop_connect_rate',

372 | 'depth_divisor', 'min_depth'

373 |

374 | Returns:

375 | A pretrained efficientnet model.

376 | """

377 | model = cls.from_name(model_name, num_classes=num_classes, **override_params)

378 | load_pretrained_weights(model, model_name, weights_path=weights_path,

379 | load_fc=(num_classes == 1000), advprop=advprop)

380 | model._change_in_channels(in_channels)

381 | return model

382 |

383 | @classmethod

384 | def get_image_size(cls, model_name):

385 | """Get the input image size for a given efficientnet model.

386 |

387 | Args:

388 | model_name (str): Name for efficientnet.

389 |

390 | Returns:

391 | Input image size (resolution).

392 | """

393 | cls._check_model_name_is_valid(model_name)

394 | _, _, res, _ = efficientnet_params(model_name)

395 | return res

396 |

397 | @classmethod

398 | def _check_model_name_is_valid(cls, model_name):

399 | """Validates model name.

400 |

401 | Args:

402 | model_name (str): Name for efficientnet.

403 |

404 | Returns:

405 | bool: Is a valid name or not.

406 | """

407 | if model_name not in VALID_MODELS:

408 | raise ValueError('model_name should be one of: ' + ', '.join(VALID_MODELS))

409 |

410 | def _change_in_channels(self, in_channels):

411 | """Adjust model's first convolution layer to in_channels, if in_channels not equals 3.

412 |

413 | Args:

414 | in_channels (int): Input data's channel number.

415 | """

416 | if in_channels != 3:

417 | Conv2d = get_same_padding_conv2d(image_size=self._global_params.image_size)

418 | out_channels = round_filters(32, self._global_params)

419 | self._conv_stem = Conv2d(in_channels, out_channels, kernel_size=3, stride=2, bias=False)

420 |

--------------------------------------------------------------------------------

/efficientnet_pytorch/utils.py:

--------------------------------------------------------------------------------

1 | """utils.py - Helper functions for building the model and for loading model parameters.

2 | These helper functions are built to mirror those in the official TensorFlow implementation.

3 | """

4 |

5 | # Author: lukemelas (github username)

6 | # Github repo: https://github.com/lukemelas/EfficientNet-PyTorch

7 | # With adjustments and added comments by workingcoder (github username).

8 |

9 | import re

10 | import math

11 | import collections

12 | from functools import partial

13 | import torch

14 | from torch import nn

15 | from torch.nn import functional as F

16 | from torch.utils import model_zoo

17 |

18 |

19 | ################################################################################

20 | # Help functions for model architecture

21 | ################################################################################

22 |

23 | # GlobalParams and BlockArgs: Two namedtuples

24 | # Swish and MemoryEfficientSwish: Two implementations of the method

25 | # round_filters and round_repeats:

26 | # Functions to calculate params for scaling model width and depth ! ! !

27 | # get_width_and_height_from_size and calculate_output_image_size

28 | # drop_connect: A structural design

29 | # get_same_padding_conv2d:

30 | # Conv2dDynamicSamePadding

31 | # Conv2dStaticSamePadding

32 | # get_same_padding_maxPool2d:

33 | # MaxPool2dDynamicSamePadding

34 | # MaxPool2dStaticSamePadding

35 | # It's an additional function, not used in EfficientNet,

36 | # but can be used in other model (such as EfficientDet).

37 |

38 | # Parameters for the entire model (stem, all blocks, and head)

39 | GlobalParams = collections.namedtuple('GlobalParams', [

40 | 'width_coefficient', 'depth_coefficient', 'image_size', 'dropout_rate',

41 | 'num_classes', 'batch_norm_momentum', 'batch_norm_epsilon',

42 | 'drop_connect_rate', 'depth_divisor', 'min_depth', 'include_top'])

43 |

44 | # Parameters for an individual model block

45 | BlockArgs = collections.namedtuple('BlockArgs', [

46 | 'num_repeat', 'kernel_size', 'stride', 'expand_ratio',

47 | 'input_filters', 'output_filters', 'se_ratio', 'id_skip'])

48 |

49 | # Set GlobalParams and BlockArgs's defaults

50 | GlobalParams.__new__.__defaults__ = (None,) * len(GlobalParams._fields)

51 | BlockArgs.__new__.__defaults__ = (None,) * len(BlockArgs._fields)

52 |

53 | # Swish activation function

54 | if hasattr(nn, 'SiLU'):

55 | Swish = nn.SiLU

56 | else:

57 | # For compatibility with old PyTorch versions

58 | class Swish(nn.Module):

59 | def forward(self, x):

60 | return x * torch.sigmoid(x)

61 |

62 |

63 | # A memory-efficient implementation of Swish function

64 | class SwishImplementation(torch.autograd.Function):

65 | @staticmethod

66 | def forward(ctx, i):

67 | result = i * torch.sigmoid(i)

68 | ctx.save_for_backward(i)

69 | return result

70 |

71 | @staticmethod

72 | def backward(ctx, grad_output):

73 | i = ctx.saved_tensors[0]

74 | sigmoid_i = torch.sigmoid(i)

75 | return grad_output * (sigmoid_i * (1 + i * (1 - sigmoid_i)))

76 |

77 |

78 | class MemoryEfficientSwish(nn.Module):

79 | def forward(self, x):

80 | return SwishImplementation.apply(x)

81 |

82 |

83 | def round_filters(filters, global_params):

84 | """Calculate and round number of filters based on width multiplier.

85 | Use width_coefficient, depth_divisor and min_depth of global_params.

86 |

87 | Args:

88 | filters (int): Filters number to be calculated.

89 | global_params (namedtuple): Global params of the model.

90 |

91 | Returns:

92 | new_filters: New filters number after calculating.

93 | """

94 | multiplier = global_params.width_coefficient

95 | if not multiplier:

96 | return filters

97 | # TODO: modify the params names.

98 | # maybe the names (width_divisor,min_width)

99 | # are more suitable than (depth_divisor,min_depth).

100 | divisor = global_params.depth_divisor

101 | min_depth = global_params.min_depth

102 | filters *= multiplier

103 | min_depth = min_depth or divisor # pay attention to this line when using min_depth

104 | # follow the formula transferred from official TensorFlow implementation

105 | new_filters = max(min_depth, int(filters + divisor / 2) // divisor * divisor)

106 | if new_filters < 0.9 * filters: # prevent rounding by more than 10%

107 | new_filters += divisor

108 | return int(new_filters)

109 |

110 |

111 | def round_repeats(repeats, global_params):

112 | """Calculate module's repeat number of a block based on depth multiplier.

113 | Use depth_coefficient of global_params.

114 |

115 | Args:

116 | repeats (int): num_repeat to be calculated.

117 | global_params (namedtuple): Global params of the model.

118 |

119 | Returns:

120 | new repeat: New repeat number after calculating.

121 | """

122 | multiplier = global_params.depth_coefficient

123 | if not multiplier:

124 | return repeats

125 | # follow the formula transferred from official TensorFlow implementation

126 | return int(math.ceil(multiplier * repeats))

127 |

128 |

129 | def drop_connect(inputs, p, training):

130 | """Drop connect.

131 |

132 | Args:

133 | input (tensor: BCWH): Input of this structure.

134 | p (float: 0.0~1.0): Probability of drop connection.

135 | training (bool): The running mode.

136 |

137 | Returns:

138 | output: Output after drop connection.

139 | """

140 | assert 0 <= p <= 1, 'p must be in range of [0,1]'

141 |

142 | if not training:

143 | return inputs

144 |

145 | batch_size = inputs.shape[0]

146 | keep_prob = 1 - p

147 |

148 | # generate binary_tensor mask according to probability (p for 0, 1-p for 1)

149 | random_tensor = keep_prob

150 | random_tensor += torch.rand([batch_size, 1, 1, 1], dtype=inputs.dtype, device=inputs.device)

151 | binary_tensor = torch.floor(random_tensor)

152 |

153 | output = inputs / keep_prob * binary_tensor

154 | return output

155 |

156 |

157 | def get_width_and_height_from_size(x):

158 | """Obtain height and width from x.

159 |

160 | Args:

161 | x (int, tuple or list): Data size.

162 |

163 | Returns:

164 | size: A tuple or list (H,W).

165 | """

166 | if isinstance(x, int):

167 | return x, x

168 | if isinstance(x, list) or isinstance(x, tuple):

169 | return x

170 | else:

171 | raise TypeError()

172 |

173 |

174 | def calculate_output_image_size(input_image_size, stride):

175 | """Calculates the output image size when using Conv2dSamePadding with a stride.

176 | Necessary for static padding. Thanks to mannatsingh for pointing this out.

177 |

178 | Args:

179 | input_image_size (int, tuple or list): Size of input image.

180 | stride (int, tuple or list): Conv2d operation's stride.

181 |

182 | Returns:

183 | output_image_size: A list [H,W].

184 | """

185 | if input_image_size is None:

186 | return None

187 | image_height, image_width = get_width_and_height_from_size(input_image_size)

188 | stride = stride if isinstance(stride, int) else stride[0]

189 | image_height = int(math.ceil(image_height / stride))

190 | image_width = int(math.ceil(image_width / stride))

191 | return [image_height, image_width]

192 |

193 |

194 | # Note:

195 | # The following 'SamePadding' functions make output size equal ceil(input size/stride).

196 | # Only when stride equals 1, can the output size be the same as input size.

197 | # Don't be confused by their function names ! ! !

198 |

199 | def get_same_padding_conv2d(image_size=None):

200 | """Chooses static padding if you have specified an image size, and dynamic padding otherwise.

201 | Static padding is necessary for ONNX exporting of models.

202 |

203 | Args:

204 | image_size (int or tuple): Size of the image.

205 |

206 | Returns:

207 | Conv2dDynamicSamePadding or Conv2dStaticSamePadding.

208 | """

209 | if image_size is None:

210 | return Conv2dDynamicSamePadding

211 | else:

212 | return partial(Conv2dStaticSamePadding, image_size=image_size)

213 |

214 |

215 | class Conv2dDynamicSamePadding(nn.Conv2d):

216 | """2D Convolutions like TensorFlow, for a dynamic image size.

217 | The padding is operated in forward function by calculating dynamically.

218 | """

219 |

220 | # Tips for 'SAME' mode padding.

221 | # Given the following:

222 | # i: width or height

223 | # s: stride

224 | # k: kernel size

225 | # d: dilation

226 | # p: padding

227 | # Output after Conv2d:

228 | # o = floor((i+p-((k-1)*d+1))/s+1)

229 | # If o equals i, i = floor((i+p-((k-1)*d+1))/s+1),

230 | # => p = (i-1)*s+((k-1)*d+1)-i

231 |

232 | def __init__(self, in_channels, out_channels, kernel_size, stride=1, dilation=1, groups=1, bias=True):

233 | super().__init__(in_channels, out_channels, kernel_size, stride, 0, dilation, groups, bias)

234 | self.stride = self.stride if len(self.stride) == 2 else [self.stride[0]] * 2

235 |

236 | def forward(self, x):

237 | ih, iw = x.size()[-2:]

238 | kh, kw = self.weight.size()[-2:]

239 | sh, sw = self.stride

240 | oh, ow = math.ceil(ih / sh), math.ceil(iw / sw) # change the output size according to stride ! ! !

241 | pad_h = max((oh - 1) * self.stride[0] + (kh - 1) * self.dilation[0] + 1 - ih, 0)

242 | pad_w = max((ow - 1) * self.stride[1] + (kw - 1) * self.dilation[1] + 1 - iw, 0)

243 | if pad_h > 0 or pad_w > 0:

244 | x = F.pad(x, [pad_w // 2, pad_w - pad_w // 2, pad_h // 2, pad_h - pad_h // 2])

245 | return F.conv2d(x, self.weight, self.bias, self.stride, self.padding, self.dilation, self.groups)

246 |

247 |

248 | class Conv2dStaticSamePadding(nn.Conv2d):

249 | """2D Convolutions like TensorFlow's 'SAME' mode, with the given input image size.

250 | The padding mudule is calculated in construction function, then used in forward.

251 | """

252 |

253 | # With the same calculation as Conv2dDynamicSamePadding

254 |

255 | def __init__(self, in_channels, out_channels, kernel_size, stride=1, image_size=None, **kwargs):

256 | super().__init__(in_channels, out_channels, kernel_size, stride, **kwargs)

257 | self.stride = self.stride if len(self.stride) == 2 else [self.stride[0]] * 2

258 |

259 | # Calculate padding based on image size and save it

260 | assert image_size is not None

261 | ih, iw = (image_size, image_size) if isinstance(image_size, int) else image_size

262 | kh, kw = self.weight.size()[-2:]

263 | sh, sw = self.stride

264 | oh, ow = math.ceil(ih / sh), math.ceil(iw / sw)

265 | pad_h = max((oh - 1) * self.stride[0] + (kh - 1) * self.dilation[0] + 1 - ih, 0)

266 | pad_w = max((ow - 1) * self.stride[1] + (kw - 1) * self.dilation[1] + 1 - iw, 0)

267 | if pad_h > 0 or pad_w > 0:

268 | self.static_padding = nn.ZeroPad2d((pad_w // 2, pad_w - pad_w // 2,

269 | pad_h // 2, pad_h - pad_h // 2))

270 | else:

271 | self.static_padding = nn.Identity()

272 |

273 | def forward(self, x):

274 | x = self.static_padding(x)

275 | x = F.conv2d(x, self.weight, self.bias, self.stride, self.padding, self.dilation, self.groups)

276 | return x

277 |

278 |

279 | def get_same_padding_maxPool2d(image_size=None):

280 | """Chooses static padding if you have specified an image size, and dynamic padding otherwise.

281 | Static padding is necessary for ONNX exporting of models.

282 |

283 | Args:

284 | image_size (int or tuple): Size of the image.

285 |

286 | Returns:

287 | MaxPool2dDynamicSamePadding or MaxPool2dStaticSamePadding.

288 | """

289 | if image_size is None:

290 | return MaxPool2dDynamicSamePadding

291 | else:

292 | return partial(MaxPool2dStaticSamePadding, image_size=image_size)

293 |

294 |

295 | class MaxPool2dDynamicSamePadding(nn.MaxPool2d):

296 | """2D MaxPooling like TensorFlow's 'SAME' mode, with a dynamic image size.

297 | The padding is operated in forward function by calculating dynamically.

298 | """

299 |

300 | def __init__(self, kernel_size, stride, padding=0, dilation=1, return_indices=False, ceil_mode=False):

301 | super().__init__(kernel_size, stride, padding, dilation, return_indices, ceil_mode)

302 | self.stride = [self.stride] * 2 if isinstance(self.stride, int) else self.stride

303 | self.kernel_size = [self.kernel_size] * 2 if isinstance(self.kernel_size, int) else self.kernel_size

304 | self.dilation = [self.dilation] * 2 if isinstance(self.dilation, int) else self.dilation

305 |

306 | def forward(self, x):

307 | ih, iw = x.size()[-2:]

308 | kh, kw = self.kernel_size

309 | sh, sw = self.stride

310 | oh, ow = math.ceil(ih / sh), math.ceil(iw / sw)

311 | pad_h = max((oh - 1) * self.stride[0] + (kh - 1) * self.dilation[0] + 1 - ih, 0)

312 | pad_w = max((ow - 1) * self.stride[1] + (kw - 1) * self.dilation[1] + 1 - iw, 0)

313 | if pad_h > 0 or pad_w > 0:

314 | x = F.pad(x, [pad_w // 2, pad_w - pad_w // 2, pad_h // 2, pad_h - pad_h // 2])

315 | return F.max_pool2d(x, self.kernel_size, self.stride, self.padding,

316 | self.dilation, self.ceil_mode, self.return_indices)

317 |

318 |

319 | class MaxPool2dStaticSamePadding(nn.MaxPool2d):

320 | """2D MaxPooling like TensorFlow's 'SAME' mode, with the given input image size.

321 | The padding mudule is calculated in construction function, then used in forward.

322 | """

323 |

324 | def __init__(self, kernel_size, stride, image_size=None, **kwargs):

325 | super().__init__(kernel_size, stride, **kwargs)

326 | self.stride = [self.stride] * 2 if isinstance(self.stride, int) else self.stride

327 | self.kernel_size = [self.kernel_size] * 2 if isinstance(self.kernel_size, int) else self.kernel_size

328 | self.dilation = [self.dilation] * 2 if isinstance(self.dilation, int) else self.dilation

329 |

330 | # Calculate padding based on image size and save it

331 | assert image_size is not None

332 | ih, iw = (image_size, image_size) if isinstance(image_size, int) else image_size

333 | kh, kw = self.kernel_size

334 | sh, sw = self.stride

335 | oh, ow = math.ceil(ih / sh), math.ceil(iw / sw)

336 | pad_h = max((oh - 1) * self.stride[0] + (kh - 1) * self.dilation[0] + 1 - ih, 0)

337 | pad_w = max((ow - 1) * self.stride[1] + (kw - 1) * self.dilation[1] + 1 - iw, 0)

338 | if pad_h > 0 or pad_w > 0:

339 | self.static_padding = nn.ZeroPad2d((pad_w // 2, pad_w - pad_w // 2, pad_h // 2, pad_h - pad_h // 2))

340 | else:

341 | self.static_padding = nn.Identity()

342 |

343 | def forward(self, x):

344 | x = self.static_padding(x)

345 | x = F.max_pool2d(x, self.kernel_size, self.stride, self.padding,

346 | self.dilation, self.ceil_mode, self.return_indices)

347 | return x

348 |

349 |

350 | ################################################################################

351 | # Helper functions for loading model params

352 | ################################################################################

353 |

354 | # BlockDecoder: A Class for encoding and decoding BlockArgs

355 | # efficientnet_params: A function to query compound coefficient

356 | # get_model_params and efficientnet:

357 | # Functions to get BlockArgs and GlobalParams for efficientnet

358 | # url_map and url_map_advprop: Dicts of url_map for pretrained weights

359 | # load_pretrained_weights: A function to load pretrained weights

360 |

361 | class BlockDecoder(object):

362 | """Block Decoder for readability,

363 | straight from the official TensorFlow repository.

364 | """

365 |

366 | @staticmethod

367 | def _decode_block_string(block_string):

368 | """Get a block through a string notation of arguments.

369 |

370 | Args:

371 | block_string (str): A string notation of arguments.

372 | Examples: 'r1_k3_s11_e1_i32_o16_se0.25_noskip'.

373 |

374 | Returns:

375 | BlockArgs: The namedtuple defined at the top of this file.

376 | """

377 | assert isinstance(block_string, str)

378 |

379 | ops = block_string.split('_')

380 | options = {}

381 | for op in ops:

382 | splits = re.split(r'(\d.*)', op)

383 | if len(splits) >= 2:

384 | key, value = splits[:2]

385 | options[key] = value

386 |

387 | # Check stride

388 | assert (('s' in options and len(options['s']) == 1) or

389 | (len(options['s']) == 2 and options['s'][0] == options['s'][1]))

390 |

391 | return BlockArgs(

392 | num_repeat=int(options['r']),

393 | kernel_size=int(options['k']),

394 | stride=[int(options['s'][0])],

395 | expand_ratio=int(options['e']),

396 | input_filters=int(options['i']),

397 | output_filters=int(options['o']),

398 | se_ratio=float(options['se']) if 'se' in options else None,

399 | id_skip=('noskip' not in block_string))

400 |

401 | @staticmethod

402 | def _encode_block_string(block):

403 | """Encode a block to a string.

404 |

405 | Args:

406 | block (namedtuple): A BlockArgs type argument.

407 |

408 | Returns:

409 | block_string: A String form of BlockArgs.

410 | """

411 | args = [

412 | 'r%d' % block.num_repeat,

413 | 'k%d' % block.kernel_size,

414 | 's%d%d' % (block.strides[0], block.strides[1]),

415 | 'e%s' % block.expand_ratio,

416 | 'i%d' % block.input_filters,

417 | 'o%d' % block.output_filters

418 | ]

419 | if 0 < block.se_ratio <= 1:

420 | args.append('se%s' % block.se_ratio)

421 | if block.id_skip is False:

422 | args.append('noskip')

423 | return '_'.join(args)

424 |

425 | @staticmethod

426 | def decode(string_list):

427 | """Decode a list of string notations to specify blocks inside the network.

428 |

429 | Args:

430 | string_list (list[str]): A list of strings, each string is a notation of block.

431 |

432 | Returns:

433 | blocks_args: A list of BlockArgs namedtuples of block args.

434 | """

435 | assert isinstance(string_list, list)

436 | blocks_args = []

437 | for block_string in string_list:

438 | blocks_args.append(BlockDecoder._decode_block_string(block_string))

439 | return blocks_args

440 |

441 | @staticmethod

442 | def encode(blocks_args):

443 | """Encode a list of BlockArgs to a list of strings.

444 |

445 | Args:

446 | blocks_args (list[namedtuples]): A list of BlockArgs namedtuples of block args.

447 |

448 | Returns:

449 | block_strings: A list of strings, each string is a notation of block.

450 | """