├── KNN
│   ├── requirements.txt
│   ├── README.md
│   ├── KNN.py
│   └── datasets
│       └── datingTestSet2.txt
├── 决策树
│   ├── requirements.txt
│   ├── datasets
│   │   └── lenses.txt
│   ├── README.md
│   ├── treePlotter.py
│   └── decision-tree.py
├── 朴素贝叶斯
│   ├── requirements.txt
│   ├── datasets
│   │   └── email
│   │       ├── ham
│   │       │   ├── 24.txt
│   │       │   ├── 16.txt
│   │       │   ├── 25.txt
│   │       │   ├── 10.txt
│   │       │   ├── 6.txt
│   │       │   ├── 5.txt
│   │       │   ├── 7.txt
│   │       │   ├── 11.txt
│   │       │   ├── 9.txt
│   │       │   ├── 1.txt
│   │       │   ├── 18.txt
│   │       │   ├── 19.txt
│   │       │   ├── 4.txt
│   │       │   ├── 13.txt
│   │       │   ├── 14.txt
│   │       │   ├── 20.txt
│   │       │   ├── 12.txt
│   │       │   ├── 2.txt
│   │       │   ├── 21.txt
│   │       │   ├── 22.txt
│   │       │   ├── 3.txt
│   │       │   ├── 17.txt
│   │       │   ├── 15.txt
│   │       │   ├── 23.txt
│   │       │   └── 8.txt
│   │       └── spam
│   │           ├── 17.txt
│   │           ├── 7.txt
│   │           ├── 9.txt
│   │           ├── 12.txt
│   │           ├── 14.txt
│   │           ├── 10.txt
│   │           ├── 21.txt
│   │           ├── 4.txt
│   │           ├── 1.txt
│   │           ├── 5.txt
│   │           ├── 13.txt
│   │           ├── 18.txt
│   │           ├── 6.txt
│   │           ├── 25.txt
│   │           ├── 2.txt
│   │           ├── 15.txt
│   │           ├── 16.txt
│   │           ├── 23.txt
│   │           ├── 24.txt
│   │           ├── 8.txt
│   │           ├── 20.txt
│   │           ├── 22.txt
│   │           ├── 19.txt
│   │           ├── 11.txt
│   │           └── 3.txt
│   ├── README.md
│   └── 朴素贝叶斯.py
├── 基于单层决策树的AdaBoost算法
│   ├── requirements.txt
│   ├── README.md
│   ├── datasets
│   │   └── testSet.txt
│   └── 基于单层决策树的AdaBoost.py
├── K-mean
│   ├── requirements.txt
│   ├── README.md
│   ├── data.txt
│   ├── K-mean.py
│   └── 二分K-mean.py
├── PCA
│   ├── requirements.txt
│   ├── README.md
│   ├── PCA.py
│   └── data.txt
├── LwLineReg
│   ├── requirements.txt
│   ├── README.md
│   ├── LwLineReg.py
│   └── datasets
│       └── ex0.txt
├── ROC曲线和AUC面积
│   ├── requirements.txt
│   ├── README.md
│   ├── ROC-AUC.py
│   └── datasets
│       └── testSet.txt
├── SVM支持向量机
│   ├── requirements.txt
│   ├── .idea
│   │   ├── vcs.xml
│   │   ├── misc.xml
│   │   ├── modules.xml
│   │   ├── SVM支持向量机.iml
│   │   └── workspace.xml
│   ├── README.md
│   ├── datasets
│   │   └── testSet.txt
│   └── SVM.py
├── LineRegression
│   ├── requirements.txt
│   ├── README.md
│   ├── LineRegression.py
│   └── datasets
│       └── ex0.txt
├── Logistic回归算法
│   ├── requirements.txt
│   ├── README.md
│   ├── datasets
│   │   └── logisticdata.txt
│   └── Logistic回归.py
├── ridge-regression
│   ├── requirements.txt
│   ├── README.md
│   ├── ridge-regression.py
│   └── datasets
│       └── ex0.txt
├── forward-regression
│   ├── requirements.txt
│   ├── README.md
│   └── forward-regression.py
├── GeneratorGIF-and-SeparateGIF
│   ├── requirements.txt
│   ├── SeparateGif.py
│   ├── README.md
│   └── GeneratorGif.py
├── 微信读书答题辅助
│   ├── answer.jpg
│   ├── requirements.txt
│   ├── README.md
│   └── question-answer.py
├── Building-NN-model-with-numpy
│   ├── output_32_0.png
│   ├── output_68_1.png
│   ├── output_70_1.png
│   ├── output_88_1.png
│   ├── README.md
│   ├── combat1.py
│   ├── combat1-SGD.py
│   └── combat2-onehidden.py
├── 携程旅游评价信息爬取
│   ├── README.md
│   └── traveldata.py
└── README.md
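The 朴素贝叶斯 (naive Bayes) example ships an email corpus split into `datasets/email/ham` and `datasets/email/spam`, with 25 numbered `.txt` files in each. A minimal loader for that layout might look like the sketch below. It is not taken from `朴素贝叶斯.py`; the helper names `tokenize` and `load_email_dataset`, the tokenization rule (split on non-word characters, lowercase, drop tokens of two characters or fewer), and the choice to ignore undecodable bytes are all assumptions.

```python
import re
from pathlib import Path


def tokenize(text):
    """Split on runs of non-word characters, lowercase, keep tokens longer than 2 chars (assumed rule)."""
    return [tok.lower() for tok in re.split(r"\W+", text) if len(tok) > 2]


def load_email_dataset(root):
    """Load ham/ and spam/ under `root`; return (token_lists, labels) with 1 = spam, 0 = ham."""
    docs, labels = [], []
    for label, folder in ((0, "ham"), (1, "spam")):
        # Files are assumed to be named 1.txt ... 25.txt, so sort numerically, not lexically.
        paths = sorted(Path(root, folder).glob("*.txt"), key=lambda p: int(p.stem))
        for path in paths:
            # Some files may not be valid UTF-8 (assumption); undecodable bytes are dropped.
            text = path.read_text(encoding="utf-8", errors="ignore")
            docs.append(tokenize(text))
            labels.append(label)
    return docs, labels
```

Run from the repository root, `load_email_dataset("朴素贝叶斯/datasets/email")` would return one token list per email plus a parallel list of 0/1 labels, which is the usual input shape for building a vocabulary and word-count vectors.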

/KNN/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 |

--------------------------------------------------------------------------------

/决策树/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 |

--------------------------------------------------------------------------------

/朴素贝叶斯/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 |

--------------------------------------------------------------------------------

/基于单层决策树的AdaBoost算法/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 |

--------------------------------------------------------------------------------

/K-mean/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/PCA/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/LwLineReg/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/ROC曲线和AUC面积/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/SVM支持向量机/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/LineRegression/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/Logistic回归算法/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/ridge-regression/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/24.txt:

--------------------------------------------------------------------------------

1 | Ok I will be there by 10:00 at the latest.

--------------------------------------------------------------------------------

/forward-regression/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | matplotlib==3.1.1

3 |

--------------------------------------------------------------------------------

/GeneratorGIF-and-SeparateGIF/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.16.5

2 | imageio==2.6.0

3 | pillow==6.2.0

--------------------------------------------------------------------------------

/微信读书答题辅助/answer.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/微信读书答题辅助/answer.jpg

--------------------------------------------------------------------------------

/微信读书答题辅助/requirements.txt:

--------------------------------------------------------------------------------

1 | beautifulsoup4==4.8.0

2 | urllib3==1.24.2

3 | pillow==6.2.0

4 | requests==2.22.0

5 |

6 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/16.txt:

--------------------------------------------------------------------------------

1 | yeah I am ready. I may not be here because Jar Jar has plane tickets to Germany for me.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/25.txt:

--------------------------------------------------------------------------------

1 | That is cold. Is there going to be a retirement party?

2 | Are the leaves changing color?

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/10.txt:

--------------------------------------------------------------------------------

1 | Ryan Whybrew commented on your status.

2 |

3 | Ryan wrote:

4 | "turd ferguson or butt horn."

5 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/6.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/朴素贝叶斯/datasets/email/ham/6.txt

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/17.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/朴素贝叶斯/datasets/email/spam/17.txt

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/5.txt:

--------------------------------------------------------------------------------

1 | There was a guy at the gas station who told me that if I knew Mandarin

2 | and Python I could get a job with the FBI.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/7.txt:

--------------------------------------------------------------------------------

1 | Zach Hamm commented on your status.

2 |

3 | Zach wrote:

4 | "doggy style - enough said, thank you & good night"

5 |

6 |

7 |

--------------------------------------------------------------------------------

/Building-NN-model-with-numpy/output_32_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/Building-NN-model-with-numpy/output_32_0.png

--------------------------------------------------------------------------------

/Building-NN-model-with-numpy/output_68_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/Building-NN-model-with-numpy/output_68_1.png

--------------------------------------------------------------------------------

/Building-NN-model-with-numpy/output_70_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/Building-NN-model-with-numpy/output_70_1.png

--------------------------------------------------------------------------------

/Building-NN-model-with-numpy/output_88_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/lzx1019056432/Be-Friendly-To-New-People/HEAD/Building-NN-model-with-numpy/output_88_1.png

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/11.txt:

--------------------------------------------------------------------------------

1 | Arvind Thirumalai commented on your status.

2 |

3 | Arvind wrote:

4 | ""you know""

5 |

6 |

7 | Reply to this email to comment on this status.

8 |

9 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/9.txt:

--------------------------------------------------------------------------------

1 | Hi Peter,

2 |

3 | These are the only good scenic ones and it's too bad there was a girl's back in one of them. Just try to enjoy the blue sky : ))

4 |

5 | D

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/1.txt:

--------------------------------------------------------------------------------

1 | Hi Peter,

2 |

3 | With Jose out of town, do you want to

4 | meet once in a while to keep things

5 | going and do some interesting stuff?

6 |

7 | Let me know

8 | Eugene

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/18.txt:

--------------------------------------------------------------------------------

1 | Hi Peter,

2 |

3 | Sure thing. Sounds good. Let me know what time would be good for you.

4 | I will come prepared with some ideas and we can go from there.

5 |

6 | Regards,

7 |

8 | -Vivek.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/7.txt:

--------------------------------------------------------------------------------

1 | Bargains Here! Buy Phentermin 37.5 mg (K-25)

2 |

3 | Buy Genuine Phentermin at Low Cost

4 | VISA Accepted

5 | 30 - $130.50

6 | 60 - $219.00

7 | 90 - $292.50

8 | 120 - $366.00

9 | 180 - $513.00

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/9.txt:

--------------------------------------------------------------------------------

1 | Bargains Here! Buy Phentermin 37.5 mg (K-25)

2 |

3 | Buy Genuine Phentermin at Low Cost

4 | VISA Accepted

5 | 30 - $130.50

6 | 60 - $219.00

7 | 90 - $292.50

8 | 120 - $366.00

9 | 180 - $513.00

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/19.txt:

--------------------------------------------------------------------------------

1 | LinkedIn

2 |

3 | Julius O requested to add you as a connection on LinkedIn:

4 |

5 | Hi Peter.

6 |

7 | Looking forward to the book!

8 |

9 |

10 | Accept View invitation from Julius O

11 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/12.txt:

--------------------------------------------------------------------------------

1 | Buy Ambiem (Zolpidem) 5mg/10mg @ $2.39/- pill

2 |

3 | 30 pills x 5 mg - $129.00

4 | 60 pills x 5 mg - $199.20

5 | 180 pills x 5 mg - $430.20

6 | 30 pills x 10 mg - $ 138.00

7 | 120 pills x 10 mg - $ 322.80

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/4.txt:

--------------------------------------------------------------------------------

1 | Yo. I've been working on my running website. I'm using jquery and the jqplot plugin. I'm not too far away from having a prototype to launch.

2 |

3 | You used jqplot right? If not, I think you would like it.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/13.txt:

--------------------------------------------------------------------------------

1 | Jay Stepp commented on your status.

2 |

3 | Jay wrote:

4 | ""to the" ???"

5 |

6 |

7 | Reply to this email to comment on this status.

8 |

9 | To see the comment thread, follow the link below:

10 |

11 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/14.txt:

--------------------------------------------------------------------------------

1 | LinkedIn

2 |

3 | Kerry Haloney requested to add you as a connection on LinkedIn:

4 |

5 | Peter,

6 |

7 | I'd like to add you to my professional network on LinkedIn.

8 |

9 | - Kerry Haloney

10 |

11 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/20.txt:

--------------------------------------------------------------------------------

1 | I've thought about this and think it's possible. We should get another

2 | lunch. I have a car now and could come pick you up this time. Does

3 | this wednesday work? 11:50?

4 |

5 | Can I have a signed copy of you book?

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/12.txt:

--------------------------------------------------------------------------------

1 | Thanks Peter.

2 |

3 | I'll definitely check in on this. How is your book

4 | going? I heard chapter 1 came in and it was in

5 | good shape. ;-)

6 |

7 | I hope you are doing well.

8 |

9 | Cheers,

10 |

11 | Troy

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/14.txt:

--------------------------------------------------------------------------------

1 | BuyVIAGRA 25mg, 50mg, 100mg,

2 | BrandViagra, FemaleViagra from $1.15 per pill

3 |

4 |

5 | ViagraNoPrescription needed - from Certified Canadian Pharmacy

6 |

7 | Buy Here... We accept VISA, AMEX, E-Check... Worldwide Delivery

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/10.txt:

--------------------------------------------------------------------------------

1 | OrderCializViagra Online & Save 75-90%

2 |

3 | 0nline Pharmacy NoPrescription required

4 | Buy Canadian Drugs at Wholesale Prices and Save 75-90%

5 | FDA-Approved drugs + Superb Quality Drugs only!

6 | Accept all major credit cards

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/2.txt:

--------------------------------------------------------------------------------

1 | Yay to you both doing fine!

2 |

3 | I'm working on an MBA in Design Strategy at CCA (top art school.) It's a new program focusing on more of a right-brained creative and strategic approach to management. I'm an 1/8 of the way done today!

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/21.txt:

--------------------------------------------------------------------------------

1 | Percocet 10/625 mg withoutPrescription 30 tabs - $225!

2 | Percocet, a narcotic analgesic, is used to treat moderate to moderately SeverePain

3 | Top Quality, EXPRESS Shipping, 100% Safe & Discreet & Private.

4 | Buy Cheap Percocet Online

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/4.txt:

--------------------------------------------------------------------------------

1 | Percocet 10/625 mg withoutPrescription 30 tabs - $225!

2 | Percocet, a narcotic analgesic, is used to treat moderate to moderately SeverePain

3 | Top Quality, EXPRESS Shipping, 100% Safe & Discreet & Private.

4 | Buy Cheap Percocet Online

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/1.txt:

--------------------------------------------------------------------------------

1 | --- Codeine 15mg -- 30 for $203.70 -- VISA Only!!! --

2 |

3 | -- Codeine (Methylmorphine) is a narcotic (opioid) pain reliever

4 | -- We have 15mg & 30mg pills -- 30/15mg for $203.70 - 60/15mg for $385.80 - 90/15mg for $562.50 -- VISA Only!!! ---

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/5.txt:

--------------------------------------------------------------------------------

1 | --- Codeine 15mg -- 30 for $203.70 -- VISA Only!!! --

2 |

3 | -- Codeine (Methylmorphine) is a narcotic (opioid) pain reliever

4 | -- We have 15mg & 30mg pills -- 30/15mg for $203.70 - 60/15mg for $385.80 - 90/15mg for $562.50 -- VISA Only!!! ---

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/21.txt:

--------------------------------------------------------------------------------

1 | we saw this on the way to the coast...thought u might like it

2 |

3 | hangzhou is huge, one day wasn't enough, but we got a glimpse...

4 |

5 | we went inside the china pavilion at expo, it is pretty interesting,

6 | each province has an exhibit...

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/13.txt:

--------------------------------------------------------------------------------

1 | OrderCializViagra Online & Save 75-90%

2 |

3 | 0nline Pharmacy NoPrescription required

4 | Buy Canadian Drugs at Wholesale Prices and Save 75-90%

5 | FDA-Approved drugs + Superb Quality Drugs only!

6 | Accept all major credit cards

7 | Order Today! From $1.38

8 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/18.txt:

--------------------------------------------------------------------------------

1 | Codeine (the most competitive price on NET!)

2 |

3 | Codeine (WILSON) 30mg x 30 $156.00

4 | Codeine (WILSON) 30mg x 60 $291.00 (+4 FreeViagra pills)

5 | Codeine (WILSON) 30mg x 90 $396.00 (+4 FreeViagra pills)

6 | Codeine (WILSON) 30mg x 120 $492.00 (+10 FreeViagra pills)

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/6.txt:

--------------------------------------------------------------------------------

1 | OEM Adobe & Microsoft softwares

2 | Fast order and download

3 |

4 | Microsoft Office Professional Plus 2007/2010 $129

5 | Microsoft Windows 7 Ultimate $119

6 | Adobe Photoshop CS5 Extended

7 | Adobe Acrobat 9 Pro Extended

8 | Windows XP Professional & thousand more titles

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/25.txt:

--------------------------------------------------------------------------------

1 | Experience with BiggerPenis Today! Grow 3-inches more

2 |

3 | The Safest & Most Effective Methods Of_PenisEn1argement.

4 | Save your time and money!

5 | BetterErections with effective Ma1eEnhancement products.

6 |

7 | #1 Ma1eEnhancement Supplement. Trusted by Millions. Buy Today!

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/2.txt:

--------------------------------------------------------------------------------

1 | Hydrocodone/Vicodin ES/Brand Watson

2 |

3 | Vicodin ES - 7.5/750 mg: 30 - $195 / 120 $570

4 | Brand Watson - 7.5/750 mg: 30 - $195 / 120 $570

5 | Brand Watson - 10/325 mg: 30 - $199 / 120 - $588

6 | NoPrescription Required

7 | FREE Express FedEx (3-5 days Delivery) for over $200 order

8 | Major Credit Cards + E-CHECK

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/22.txt:

--------------------------------------------------------------------------------

1 | Hi Hommies,

2 |

3 | Just got a phone call from the roofer, they will come and spaying the foaming today. it will be dusty. pls close all the doors and windows.

4 | Could you help me to close my bathroom window, cat window and the sliding door behind the TV?

5 | I don't know how can those 2 cats survive......

6 |

7 | Sorry for any inconvenience!

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/15.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/16.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/23.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/24.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/8.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/3.txt:

--------------------------------------------------------------------------------

1 | WHat is going on there?

2 | I talked to John on email. We talked about some computer stuff that's it.

3 |

4 | I went bike riding in the rain, it was not that cold.

5 |

6 | We went to the museum in SF yesterday it was $3 to get in and they had

7 | free food. At the same time was a SF Giants game, when we got done we

8 | had to take the train with all the Giants fans, they are 1/2 drunk.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/20.txt:

--------------------------------------------------------------------------------

1 | Get Up to 75% OFF at Online WatchesStore

2 |

3 | Discount Watches for All Famous Brands

4 |

5 | * Watches: aRolexBvlgari, Dior, Hermes, Oris, Cartier, AP and more brands

6 | * Louis Vuitton Bags & Wallets

7 | * Gucci Bags

8 | * Tiffany & Co Jewerly

9 |

10 | Enjoy a full 1 year WARRANTY

11 | Shipment via reputable courier: FEDEX, UPS, DHL and EMS Speedpost

12 | You will 100% recieve your order

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/22.txt:

--------------------------------------------------------------------------------

1 | Get Up to 75% OFF at Online WatchesStore

2 |

3 | Discount Watches for All Famous Brands

4 |

5 | * Watches: aRolexBvlgari, Dior, Hermes, Oris, Cartier, AP and more brands

6 | * Louis Vuitton Bags & Wallets

7 | * Gucci Bags

8 | * Tiffany & Co Jewerly

9 |

10 | Enjoy a full 1 year WARRANTY

11 | Shipment via reputable courier: FEDEX, UPS, DHL and EMS Speedpost

12 | You will 100% recieve your order

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/19.txt:

--------------------------------------------------------------------------------

1 | Get Up to 75% OFF at Online WatchesStore

2 |

3 | Discount Watches for All Famous Brands

4 |

5 | * Watches: aRolexBvlgari, Dior, Hermes, Oris, Cartier, AP and more brands

6 | * Louis Vuitton Bags & Wallets

7 | * Gucci Bags

8 | * Tiffany & Co Jewerly

9 |

10 | Enjoy a full 1 year WARRANTY

11 | Shipment via reputable courier: FEDEX, UPS, DHL and EMS Speedpost

12 | You will 100% recieve your order

13 | Save Up to 75% OFF Quality Watches

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/11.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

12 | The proven NaturalPenisEnhancement that works!

13 | 100% MoneyBack Guaranteeed

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/spam/3.txt:

--------------------------------------------------------------------------------

1 | You Have Everything To Gain!

2 |

3 | Incredib1e gains in length of 3-4 inches to yourPenis, PERMANANTLY

4 |

5 | Amazing increase in thickness of yourPenis, up to 30%

6 | BetterEjacu1ation control

7 | Experience Rock-HardErecetions

8 | Explosive, intenseOrgasns

9 | Increase volume ofEjacu1ate

10 | Doctor designed and endorsed

11 | 100% herbal, 100% Natural, 100% Safe

12 | The proven NaturalPenisEnhancement that works!

13 | 100% MoneyBack Guaranteeed

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/17.txt:

--------------------------------------------------------------------------------

1 | Benoit Mandelbrot 1924-2010

2 |

3 | Benoit Mandelbrot 1924-2010

4 |

5 | Wilmott Team

6 |

7 | Benoit Mandelbrot, the mathematician, the father of fractal mathematics, and advocate of more sophisticated modelling in quantitative finance, died on 14th October 2010 aged 85.

8 |

9 | Wilmott magazine has often featured Mandelbrot, his ideas, and the work of others inspired by his fundamental insights.

10 |

11 | You must be logged on to view these articles from past issues of Wilmott Magazine.

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/15.txt:

--------------------------------------------------------------------------------

1 | Hi Peter,

2 |

3 | The hotels are the ones that rent out the tent. They are all lined up on the hotel grounds : )) So much for being one with nature, more like being one with a couple dozen tour groups and nature.

4 | I have about 100M of pictures from that trip. I can go through them and get you jpgs of my favorite scenic pictures.

5 |

6 | Where are you and Jocelyn now? New York? Will you come to Tokyo for Chinese New Year? Perhaps to see the two of you then. I will go to Thailand for winter holiday to see my mom : )

7 |

8 | Take care,

9 | D

10 |

--------------------------------------------------------------------------------

/KNN/README.md:

--------------------------------------------------------------------------------

1 | ## KNN (K-Nearest Neighbors) code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd Be-Friendly-To-New-People/KNN

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd KNN

33 | ```

34 |

35 | Run the KNN algorithm:

36 |

37 | ```

38 | python KNN.py

39 | ```

40 |

41 |

42 | ### Technical blog

43 |

44 | * [K-Nearest Neighbors (KNN): principles and hands-on code](https://blog.csdn.net/lzx159951/article/details/106138634)

45 |

46 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/23.txt:

--------------------------------------------------------------------------------

1 |

2 | SciFinance now automatically generates GPU-enabled pricing & risk model source code that runs up to 50-300x faster than serial code using a new NVIDIA Fermi-class Tesla 20-Series GPU.

3 |

4 | SciFinance?is a derivatives pricing and risk model development tool that automatically generates C/C++ and GPU-enabled source code from concise, high-level model specifications. No parallel computing or CUDA programming expertise is required.

5 |

6 | SciFinance's automatic, GPU-enabled Monte Carlo pricing model source code generation capabilities have been significantly extended in the latest release. This includes:

7 |

8 |

--------------------------------------------------------------------------------

/朴素贝叶斯/datasets/email/ham/8.txt:

--------------------------------------------------------------------------------

1 | This e-mail was sent from a notification-only address that cannot accept incoming e-mail. Please do not reply to this message.

2 |

3 | Thank you for your online reservation. The store you selected has located the item you requested and has placed it on hold in your name. Please note that all items are held for 1 day. Please note store prices may differ from those online.

4 |

5 | If you have questions or need assistance with your reservation, please contact the store at the phone number listed below. You can also access store information, such as store hours and location, on the web at http://www.borders.com/online/store/StoreDetailView_98.

--------------------------------------------------------------------------------

/携程旅游评价信息爬取/README.md:

--------------------------------------------------------------------------------

1 | ## Ctrip Travel Review Scraper

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | requests

11 |

12 | json

13 |

14 | time

15 |

16 | ### Download

17 |

18 | ```

19 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

20 | ```

21 |

22 | ### Usage

23 |

24 | From the repository root, enter:

25 |

26 | ```

27 | cd 携程旅游评价信息爬取

28 | ```

29 |

30 | Run

31 |

32 | ```

33 | python traveldata.py

34 | ```

35 |

36 | ## Screenshots

37 |

38 |

39 |

40 | ### Blog post

41 |

42 | * [Blog post on scraping review content from Ctrip](https://blog.csdn.net/lzx159951/article/details/106476263)

43 |

44 |

--------------------------------------------------------------------------------

/朴素贝叶斯/README.md:

--------------------------------------------------------------------------------

1 | ## Naive Bayes Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 |

13 | ### Download

14 |

15 | ```

16 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

17 | ```

18 |

19 | ### Install dependencies

20 |

21 | ```

22 | cd 朴素贝叶斯

23 | pip install -r requirements.txt

24 | ```

25 | ### Files

26 | * datasets contains the data files: inside it, ham holds non-spam emails and spam holds spam emails

27 | * 朴素贝叶斯.py contains the main Naive Bayes algorithm
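A common way to classify ham versus spam is multinomial Naive Bayes over word counts with Laplace smoothing, scored in log space so that long emails do not underflow. The sketch below shows that variant on toy strings; the tokenizer, the function names and the example messages are assumptions, not code taken from 朴素贝叶斯.py.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-cased alphabetic words longer than two letters (an assumed, simple tokenizer)."""
    return [w.lower() for w in re.findall(r"[A-Za-z]+", text) if len(w) > 2]


def train(docs, labels):
    """Count word occurrences per class and documents per class."""
    word_counts = {c: Counter() for c in set(labels)}
    class_counts = Counter(labels)
    for doc, c in zip(docs, labels):
        word_counts[c].update(tokenize(doc))
    vocab = set().union(*word_counts.values())
    return word_counts, class_counts, vocab


def classify(text, word_counts, class_counts, vocab):
    """Return the class with the highest log posterior (Laplace smoothing, alpha = 1)."""
    n_docs = sum(class_counts.values())
    best, best_score = None, -math.inf
    for c, wc in word_counts.items():
        denom = sum(wc.values()) + len(vocab)
        score = math.log(class_counts[c] / n_docs)  # log prior
        for w in tokenize(text):
            if w in vocab:  # words never seen in training are ignored
                score += math.log((wc[w] + 1) / denom)
        if score > best_score:
            best, best_score = c, score
    return best


if __name__ == "__main__":
    docs = ["Cheap meds, buy now", "Meeting notes for the project",
            "Buy cheap watches now", "Project schedule and meeting"]
    labels = ["spam", "ham", "spam", "ham"]
    model = train(docs, labels)
    print(classify("buy cheap meds", *model))
```

With the real dataset, each file under ham and spam would be read as one document with its folder name as the label.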

28 |

29 |

30 | ### Usage

31 |

32 | From the repository root, enter:

33 |

34 | ```

35 | cd 朴素贝叶斯

36 | ```

37 |

38 | Run the Naive Bayes algorithm

39 |

40 | ```

41 | python 朴素贝叶斯.py

42 | ```

43 |

44 |

45 |

46 | ### Blog post

47 |

48 | * [Naive Bayes technical blog post](https://blog.csdn.net/lzx159951/article/details/106211654)

49 |

50 |

--------------------------------------------------------------------------------

/SVM支持向量机/README.md:

--------------------------------------------------------------------------------

1 | ## SVM (Support Vector Machine) Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd SVM支持向量机

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd SVM支持向量机

33 | ```

34 |

35 | Run the SVM algorithm

36 |

37 | ```

38 | python SVM.py

39 | ```

40 |

41 | ### Results

42 |

43 |

44 |

45 |

46 |

47 | ### Blog post

48 |

49 | * [After a month, I finally cracked SVM (support vector machines), with a source code walkthrough](https://blog.csdn.net/lzx159951/article/details/106692871)

50 |

51 |

--------------------------------------------------------------------------------

/Logistic回归算法/README.md:

--------------------------------------------------------------------------------

1 | ## Logistic Regression Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd Logistic回归算法

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd Logistic回归算法

33 | ```

34 |

35 | Run the logistic regression algorithm

36 |

37 | ```

38 | python Logistic回归.py

39 | ```

40 |

41 | ### Training process plots

42 |

43 |

44 |

45 |

46 |

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Logistic regression classification: principle analysis and practice](https://blog.csdn.net/lzx159951/article/details/106251640)
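One common textbook way to fit logistic regression is batch gradient ascent on the log-likelihood, whose gradient is Xᵀ(y − p). The sketch below illustrates that approach under stated assumptions (labels are 0/1 and X already contains a column of ones for the intercept); it is not the code in Logistic回归.py, and `sigmoid`, `grad_ascent`, `predict`, `alpha` and `n_iter` are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grad_ascent(X, y, alpha=0.01, n_iter=500):
    """Fit logistic-regression weights by full-batch gradient ascent on the log-likelihood."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        error = y - sigmoid(X @ w)   # label minus predicted probability
        w += alpha * (X.T @ error)   # the log-likelihood gradient is X^T (y - p)
    return w


def predict(X, w):
    """Class 1 when the predicted probability is at least 0.5, else class 0."""
    return (sigmoid(X @ w) >= 0.5).astype(int)


if __name__ == "__main__":
    x = np.array([-2.0, -1.0, 1.0, 2.0])
    X = np.column_stack([np.ones_like(x), x])  # column of ones for the intercept
    y = np.array([0, 0, 1, 1])
    w = grad_ascent(X, y)
    print(w, predict(X, w))
```

On separable data like this toy example the weights keep growing with more iterations; the fixed `n_iter` simply stops the ascent.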

53 |

54 |

--------------------------------------------------------------------------------

/K-mean/README.md:

--------------------------------------------------------------------------------

1 | ## K-means and Bisecting K-means Clustering Algorithms

2 | Used mainly to group unlabeled data into clusters.

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd K-mean

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd K-mean

33 | ```

34 |

35 | Run the K-means algorithm

36 |

37 | ```

38 | python K-mean.py

39 | ```

40 |

41 | Run the bisecting K-means algorithm

42 |

43 | ```

44 | python 二分K-mean.py

45 | ```

46 |

47 |

48 |

49 | ### Related blog posts

50 |

51 | * [K-means algorithm: principles and hands-on code](https://blog.csdn.net/lzx159951/article/details/105763911)

52 | * [Bisecting K-means algorithm: principles and hands-on code](https://blog.csdn.net/lzx159951/article/details/105764762)
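For orientation, the standard k-means loop (Lloyd's algorithm) alternates two steps: assign every point to its nearest centroid, then move each centroid to the mean of its points. The sketch below is a minimal NumPy version, not the repository's K-mean.py; the function name `kmeans`, the random initialization from data points and the stopping rule are assumptions.

```python
import numpy as np


def kmeans(data, k, n_iter=100, seed=0):
    """Plain k-means: alternate assignment and centroid-update steps."""
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct data points chosen at random
    centroids = data[rng.choice(len(data), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assignment step: squared Euclidean distance from every point to every centroid
        dists = ((data[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: mean of each cluster (an empty cluster keeps its old centroid)
        new_centroids = np.array([
            data[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # centroids stopped moving: converged
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    pts = np.array([[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [5.1, 4.9]])
    centroids, labels = kmeans(pts, k=2)
    print(centroids, labels)
```

Bisecting k-means reuses this routine with k=2, repeatedly splitting whichever cluster most reduces the total squared error.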

--------------------------------------------------------------------------------

/forward-regression/README.md:

--------------------------------------------------------------------------------

1 | ## Forward Stagewise Linear Regression Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd forward-regression

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd forward-regression

33 | ```

34 |

35 | Run the algorithm

36 |

37 | ```

38 | python forward-regression.py

39 | ```

40 |

41 | ### Result plots

42 |

43 |

44 |

45 |

46 |

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Forward stagewise linear regression algorithm](https://blog.csdn.net/lzx159951/article/details/107206857)
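Forward stagewise regression builds the weights greedily: every iteration tries nudging each single weight by ±eps and keeps the one change that lowers the squared error most. The sketch below follows the form popularized by *Machine Learning in Action*; it is not forward-regression.py, `stagewise`, `eps` and `n_iter` are hypothetical names and defaults, and the features are assumed to be standardized beforehand.

```python
import numpy as np


def stagewise(X, y, eps=0.01, n_iter=300):
    """Forward stagewise regression: each iteration moves one weight by +/-eps."""
    n_features = X.shape[1]
    w = np.zeros(n_features)
    history = np.zeros((n_iter, n_features))  # weights after every iteration
    for it in range(n_iter):
        best_err, best_w = np.inf, w
        # Try every single-coordinate step and keep the one with the lowest squared error
        for j in range(n_features):
            for sign in (-1.0, 1.0):
                w_try = w.copy()
                w_try[j] += sign * eps
                err = np.sum((y - X @ w_try) ** 2)
                if err < best_err:
                    best_err, best_w = err, w_try
        w = best_w
        history[it] = w
    return w, history


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((100, 3))
    y = X @ np.array([1.0, -0.5, 0.0])
    w, history = stagewise(X, y)
    print(np.round(w, 2))
```

Plotting `history` column by column shows how each weight grows from zero, which is what the result plots in this folder are typically used for.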

53 |

54 |

--------------------------------------------------------------------------------

/ridge-regression/README.md:

--------------------------------------------------------------------------------

1 | ## Source Code: Improving Linear Regression with Ridge Regression

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd ridge-regression

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd ridge-regression

33 | ```

34 |

35 | Run the algorithm

36 |

37 | ```

38 | python ridge-regression.py

39 | ```

40 |

41 | ### Result plots

42 |

43 |

44 |

45 |

46 |

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Ridge regression: principles and hands-on code](https://blog.csdn.net/lzx159951/article/details/107097993)
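Ridge regression adds an L2 penalty λ‖w‖² to ordinary least squares, which gives the closed form w = (XᵀX + λI)⁻¹Xᵀy; the λI term also keeps XᵀX invertible when features are collinear. A minimal NumPy sketch, assuming centered data with no separate intercept; `ridge` and `lam` are hypothetical names, not taken from ridge-regression.py.

```python
import numpy as np


def ridge(X, y, lam=1.0):
    """Closed-form ridge regression: solve (X^T X + lam*I) w = X^T y."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    # Solving the linear system is more stable than forming the inverse explicitly
    return np.linalg.solve(A, X.T @ y)


if __name__ == "__main__":
    X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    y = np.array([1.0, 2.0, 3.0])
    print(ridge(X, y, lam=0.0))   # lam = 0 reduces to plain least squares
    print(ridge(X, y, lam=10.0))  # a larger lam shrinks the weights towards zero
```

Sweeping `lam` over a log-spaced range and plotting the weights gives the usual ridge trace.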

53 |

54 |

--------------------------------------------------------------------------------

/ROC曲线和AUC面积/README.md:

--------------------------------------------------------------------------------

1 | ## ROC Curve: Hands-on Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd ROC曲线和AUC面积

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd ROC曲线和AUC面积

33 | ```

34 |

35 | Run the algorithm

36 |

37 | ```

38 | python ROC-AUC.py

39 | ```

40 |

41 | ### Results

42 |

43 |

44 |

45 |

46 |

47 | ### Blog post

48 |

49 | * [ROC curves and AUC made easy](https://blog.csdn.net/lzx159951/article/details/106877102)

50 |

51 |

--------------------------------------------------------------------------------

/LwLineReg/README.md:

--------------------------------------------------------------------------------

1 | ## Locally Weighted Linear Regression Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd LwLineReg

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd LwLineReg

33 | ```

34 |

35 | Run the locally weighted linear regression algorithm

36 |

37 | ```

38 | python LwLineReg.py

39 | ```

40 |

41 | ### Fit results

42 |

43 |

44 |

45 |

46 |

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Locally weighted linear regression: principle analysis and hands-on code](https://blog.csdn.net/lzx159951/article/details/106946473)
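Locally weighted linear regression fits a separate weighted least-squares line for every query point, weighting each training point with a Gaussian kernel w_i = exp(−‖x_i − x‖² / (2k²)), so small k follows the data closely and large k approaches ordinary linear regression. A minimal sketch, assuming X already contains a column of ones for the intercept; `lwlr` and `k` are hypothetical names here, not necessarily those in LwLineReg.py.

```python
import numpy as np


def lwlr(x_query, X, y, k=1.0):
    """Predict y at x_query with a Gaussian-weighted least-squares fit."""
    diffs = X - x_query
    # One weight per training point: 1 at the query, decaying with distance
    weights = np.exp(-np.sum(diffs ** 2, axis=1) / (2.0 * k ** 2))
    W = np.diag(weights)
    # Weighted normal equations: (X^T W X) theta = X^T W y
    theta = np.linalg.solve(X.T @ W @ X, X.T @ W @ y)
    return x_query @ theta


if __name__ == "__main__":
    x = np.linspace(0, 1, 50)
    X = np.column_stack([np.ones_like(x), x])  # intercept column plus the feature
    y = np.sin(2 * np.pi * x)
    print(lwlr(X[10], X, y, k=0.03), y[10])
```

Every prediction solves its own linear system, so the cost grows with the size of the training set for each query.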

53 |

54 |

--------------------------------------------------------------------------------

/LineRegression/README.md:

--------------------------------------------------------------------------------

1 | ## Linear Regression Source Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd LineRegression

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd LineRegression

33 | ```

34 |

35 | Run the linear regression algorithm

36 |

37 | ```

38 | python LineRegression.py

39 | ```

40 |

41 | ### Fit plot

42 |

43 |

44 |

45 |

46 |

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Fitting data with linear regression: principle analysis and source code walkthrough](https://blog.csdn.net/lzx159951/article/details/106941802)

53 |

54 |

--------------------------------------------------------------------------------

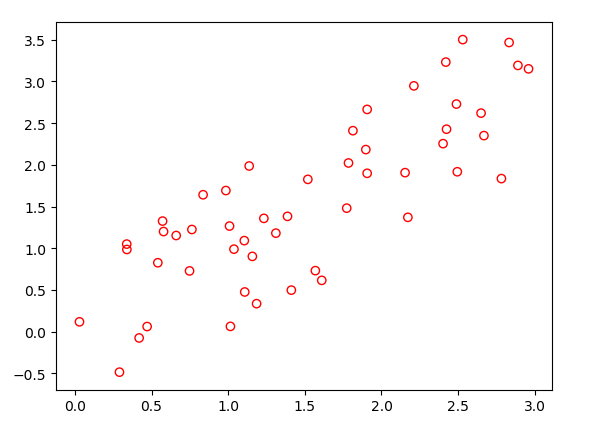

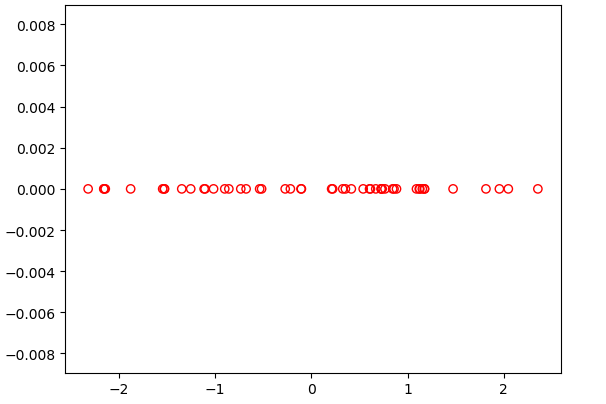

/PCA/README.md:

--------------------------------------------------------------------------------

1 | ## PCA (Principal Component Analysis) Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd PCA

24 | pip install -r requirements.txt

25 | ```

26 |

27 | ### Usage

28 |

29 | From the repository root, enter:

30 |

31 | ```

32 | cd PCA

33 | ```

34 |

35 | Run the PCA algorithm

36 |

37 | ```

38 | python PCA.py

39 | ```

40 |

41 | ### Results

42 | Original data:

43 |

44 |

45 |

46 | Data after dimensionality reduction:

47 |

48 |

49 |

50 |

51 |

52 |

53 | ### Blog post

54 |

55 | * [PCA (principal components analysis): study notes and hands-on source code walkthrough](https://blog.csdn.net/lzx159951/article/details/105912705)

56 |

57 |

--------------------------------------------------------------------------------

/决策树/datasets/lenses.txt:

--------------------------------------------------------------------------------

1 | young myope no reduced no lenses

2 | young myope no normal soft

3 | young myope yes reduced no lenses

4 | young myope yes normal hard

5 | young hyper no reduced no lenses

6 | young hyper no normal soft

7 | young hyper yes reduced no lenses

8 | young hyper yes normal hard

9 | pre myope no reduced no lenses

10 | pre myope no normal soft

11 | pre myope yes reduced no lenses

12 | pre myope yes normal hard

13 | pre hyper no reduced no lenses

14 | pre hyper no normal soft

15 | pre hyper yes reduced no lenses

16 | pre hyper yes normal no lenses

17 | presbyopic myope no reduced no lenses

18 | presbyopic myope no normal no lenses

19 | presbyopic myope yes reduced no lenses

20 | presbyopic myope yes normal hard

21 | presbyopic hyper no reduced no lenses

22 | presbyopic hyper no normal soft

23 | presbyopic hyper yes reduced no lenses

24 | presbyopic hyper yes normal no lenses

--------------------------------------------------------------------------------

/微信读书答题辅助/README.md:

--------------------------------------------------------------------------------

1 | ## WeChat Reading Quiz Assistant (Python script)

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | * urllib

11 | * Pillow

12 | * BeautifulSoup4

13 | * base64

14 | * requests

15 | * time

16 | * re

17 |

18 |

19 | ### Download

20 |

21 | ```

22 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

23 | ```

24 |

25 | ### Install dependencies

26 |

27 | ```

28 | cd 微信读书答题辅助

29 | pip install -r requirements.txt

30 | ```

31 |

32 | ### Usage

33 |

34 | From the repository root, enter:

35 |

36 | ```

37 | cd 微信读书答题辅助

38 | ```

39 |

40 | Run the program

41 |

42 | ```

43 | python question-answer.py

44 | ```

45 |

46 | ### Results

47 | Answering a quiz:

48 |

49 |

50 |

51 |

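52 | ### Notes

53 |

54 | 1. Read the technical documentation before use

55 | 2. If you don't want to change the screenshot coordinates, place the MuMu emulator window in the top-left corner of the screen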

56 |

57 |

58 | ### Blog post

59 |

60 | * [Building a semi-automatic quiz-answering tool in Python from scratch](https://blog.csdn.net/lzx159951/article/details/106062579)

61 |

62 |

--------------------------------------------------------------------------------

/Building-NN-model-with-numpy/README.md:

--------------------------------------------------------------------------------

1 | > # This project is an example from Baidu's deep learning course: building a neural network with numpy

2 |

3 |

4 |

5 | ## About the project

6 |

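7 | 1. This project comes from an example in Baidu's deep learning course. Course link: [Baidu Architects Teach Deep Learning Hands-On](https://aistudio.baidu.com/aistudio/education/group/info/888)

8 | 2. The lesson is a notebook document of about 13,000 Chinese characters with detailed figures and code explanations; anyone with basic Python and numpy knowledge should be able to follow it.

9 | 3. The reference.md file in the project is the course document, and the outputxx.png images are the figures from the document inference.md

10 | 4. Because GitHub does not render math formulas well, you can read the document on the blog instead: [blog post](https://blog.csdn.net/lzx159951/article/details/105071799)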

11 |

12 |

13 |

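14 | ## Running the project

15 |

16 | 1. Install Python 3.7

17 | 2. Install numpy 1.16 or later

18 | 3. PyCharm is the recommended editor

19 | 4. The data folder contains the data; combat1.py is the neural network trained with gradient descent, and combat1-SGD.py is the improved version trained with stochastic gradient descent

20 | 5. combat2-onehidden.py is a hand-written three-layer neural network model; the accompanying blog post is: [A hand-written 3-layer neural network in pure Python and numpy, packed with practical detail](https://blog.csdn.net/lzx159951/article/details/105099166)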
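The difference between a full-batch gradient descent loop and a stochastic (mini-batch) one can be seen on the simplest possible model. The sketch below uses a linear model with a mean-squared-error loss; it is an illustration, not the course code in combat1.py or combat1-SGD.py, and the model, the batch size, the learning rate and all function names are assumptions.

```python
import numpy as np


def mse_grad(X, y, w, b):
    """Gradients of 0.5 * mean((Xw + b - y)^2) with respect to w and b."""
    err = X @ w + b - y
    return X.T @ err / len(y), err.mean()


def train_gd(X, y, lr=0.1, epochs=200):
    # Full-batch gradient descent: one update per pass over all samples
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        gw, gb = mse_grad(X, y, w, b)
        w -= lr * gw
        b -= lr * gb
    return w, b


def train_sgd(X, y, lr=0.1, epochs=200, batch_size=10, seed=0):
    # Mini-batch SGD: shuffle every epoch, then update once per mini-batch
    rng = np.random.default_rng(seed)
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        order = rng.permutation(len(y))
        for start in range(0, len(y), batch_size):
            idx = order[start:start + batch_size]
            gw, gb = mse_grad(X[idx], y[idx], w, b)
            w -= lr * gw
            b -= lr * gb
    return w, b


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.standard_normal((200, 3))
    y = X @ np.array([2.0, -1.0, 0.5]) + 3.0
    print(train_gd(X, y))
    print(train_sgd(X, y))
```

SGD makes many cheap, noisy updates per epoch instead of one exact one, which is why it usually scales better to large datasets.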

21 |

22 |

23 |

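24 | ## A few words

25 |

26 | I have to say, this **hands-on tutorial for building a neural network** is the best one I have seen, bar none. If you read it all the way through, I'm sure you will get a lot out of it. Also, there are a few small exercises at the bottom of the document that are worth thinking about 🙂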

27 |

28 |

--------------------------------------------------------------------------------

/基于单层决策树的AdaBoost算法/README.md:

--------------------------------------------------------------------------------

1 | ## AdaBoost with Decision Stumps (Single-Level Decision Trees)

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | ### Download

13 |

14 | ```

15 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

16 | ```

17 |

18 | ### Install dependencies

19 |

20 | ```

21 | cd 基于单层决策树的AdaBoost算法

22 | pip install -r requirements.txt

23 | ```

24 | ### Files

25 | * datasets contains the data files

26 | * 基于单层决策树的AdaBoost.py is the main AdaBoost script

27 |

28 |

29 | ### Usage

30 |

31 | From the repository root, enter:

32 |

33 | ```

34 | cd 基于单层决策树的AdaBoost算法

35 | ```

36 |

37 | Run the AdaBoost algorithm

38 |

39 | ```

40 | python 基于单层决策树的AdaBoost.py

41 | ```

42 |

43 | ## Learned classifier (best decision stumps):

44 | [{'dim': 0, 'thresh': 4.5301945, 'ineq': 'lt', 'alpha': 2.1847239262335107},

45 | {'dim': 0, 'thresh': 5.5848406, 'ineq': 'lt', 'alpha': 2.528122902674154},

46 | {'dim': 1, 'thresh': -1.8740486000000005, 'ineq': 'gt', 'alpha': 1.0220510876172635}]
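A new point is classified with these stumps by letting each stump vote ±1 and summing the votes weighted by `alpha`; the sign of the sum is the predicted class. The sketch below assumes the stump convention used in *Machine Learning in Action* (with 'lt', samples whose feature value is <= thresh get −1 and all others +1; 'gt' is the mirror image). Check 基于单层决策树的AdaBoost.py before relying on that convention; `stump_classify` and `ada_classify` are hypothetical names.

```python
import numpy as np

# The three stumps listed above, copied from the README output
stumps = [
    {'dim': 0, 'thresh': 4.5301945, 'ineq': 'lt', 'alpha': 2.1847239262335107},
    {'dim': 0, 'thresh': 5.5848406, 'ineq': 'lt', 'alpha': 2.528122902674154},
    {'dim': 1, 'thresh': -1.8740486000000005, 'ineq': 'gt', 'alpha': 1.0220510876172635},
]


def stump_classify(data, dim, thresh, ineq):
    """One decision stump: +1 by default, -1 on the side selected by `ineq` (assumed convention)."""
    pred = np.ones(data.shape[0])
    if ineq == 'lt':
        pred[data[:, dim] <= thresh] = -1.0
    else:
        pred[data[:, dim] > thresh] = -1.0
    return pred


def ada_classify(data, stumps):
    """Alpha-weighted vote of all stumps; the sign of the sum is the predicted class."""
    data = np.atleast_2d(np.asarray(data, dtype=float))
    agg = np.zeros(data.shape[0])
    for s in stumps:
        agg += s['alpha'] * stump_classify(data, s['dim'], s['thresh'], s['ineq'])
    return np.sign(agg)


if __name__ == "__main__":
    print(ada_classify([[3.0, 0.0], [7.0, 0.0]], stumps))
```

Stumps with larger `alpha` had lower weighted error during training, so they carry more weight in the vote.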

47 |

48 |

49 |

50 | ### Blog post

51 |

52 | * [Decision trees: hands-on code](https://blog.csdn.net/lzx159951/article/details/106809994)

53 |

54 |

--------------------------------------------------------------------------------

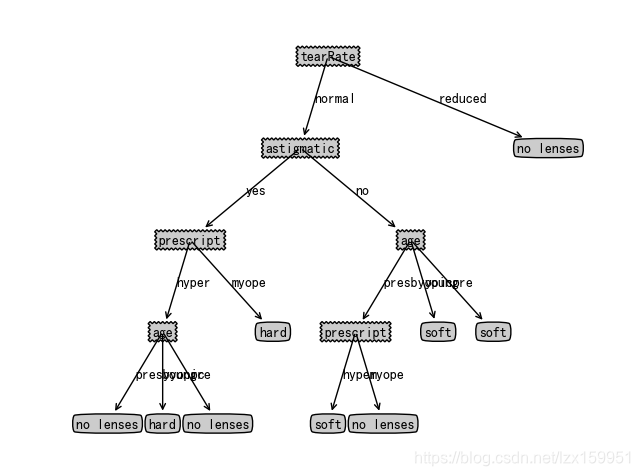

/决策树/README.md:

--------------------------------------------------------------------------------

1 | ## Decision Tree Code

2 |

3 |

4 | ### Environment

5 |

6 | python3.7

7 |

8 | ### Python libraries

9 |

10 | numpy

11 |

12 | matplotlib

13 |

14 | ### Download

15 |

16 | ```

17 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

18 | ```

19 |

20 | ### Install dependencies

21 |

22 | ```

23 | cd 决策树

24 | pip install -r requirements.txt

25 | ```

26 | ### Files

27 | * datasets contains the data files

28 | * decision-tree.py is the main decision tree script

29 | * treePlotter.py draws the decision tree with matplotlib

30 |

31 | ### Usage

32 |

33 | From the repository root, enter:

34 |

35 | ```

36 | cd 决策树

37 | ```

38 |

39 | Run the decision tree algorithm

40 |

41 | ```

42 | python decision-tree.py

43 | ```

44 |

45 | ## Decision tree format

46 |

47 | The decision tree is produced as a nested dictionary (a dict of dicts):

48 |

49 | ```

50 | {'tearRate': {'reduced': 'no lenses', 'normal': {'astigmatic': {'no': {'age': {'presbyopic': {'prescript': {'hyper': 'soft', 'myope': 'no lenses'}}, 'young': 'soft', 'pre': 'soft'}}, 'yes': {'prescript': {'hyper': {'age': {'presbyopic': 'no lenses', 'young': 'hard', 'pre': 'no lenses'}}, 'myope': 'hard'}}}}}}

51 | ```
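Classifying a sample with this nested dictionary is a walk from the root: the single key of each inner dict is a feature name, the sample's value for that feature selects the branch, and a plain string is a leaf holding the class label. A minimal recursive sketch; the function name `classify` and the dict-based sample format are assumptions, and the feature names are the ones in the tree above (the same order as the columns of lenses.txt).

```python
# The tree printed above, reformatted for readability
tree = {'tearRate': {
    'reduced': 'no lenses',
    'normal': {'astigmatic': {
        'no': {'age': {
            'presbyopic': {'prescript': {'hyper': 'soft', 'myope': 'no lenses'}},
            'young': 'soft',
            'pre': 'soft'}},
        'yes': {'prescript': {
            'hyper': {'age': {'presbyopic': 'no lenses', 'young': 'hard', 'pre': 'no lenses'}},
            'myope': 'hard'}}}}}}


def classify(tree, sample):
    """Walk the nested dict until a leaf (a class label string) is reached."""
    if not isinstance(tree, dict):
        return tree  # leaf node: the predicted class
    feature = next(iter(tree))                # each internal node has one key: the feature name
    branch = tree[feature][sample[feature]]   # follow the branch for this sample's value
    return classify(branch, sample)


if __name__ == "__main__":
    sample = {'age': 'young', 'prescript': 'myope', 'astigmatic': 'yes', 'tearRate': 'normal'}
    print(classify(tree, sample))
```

A feature value that never appeared in training raises a `KeyError`; a production version would fall back to a default class.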

52 |

53 | Graphical representation of the decision tree:

54 |

55 |

56 |

57 | ### Blog post

58 |

59 | * [Decision trees: hands-on code](https://blog.csdn.net/lzx159951/article/details/106172243)

60 |

61 |

--------------------------------------------------------------------------------

/GeneratorGIF-and-SeparateGIF/SeparateGif.py:

--------------------------------------------------------------------------------

import os
import sys
import argparse

import numpy as np
import imageio
'''
Author: @梁先森
CSDN blog: https://blog.csdn.net/lzx159951
GitHub:
Purpose: split a GIF into individual frame images
'''

parser = argparse.ArgumentParser()
parser.add_argument("-i", type=str, default="image", help="Please input GIF picture")
parser.add_argument("-o", "-output", default="outputimage", type=str, help="the name of output image")
args = parser.parse_args()

try:
    imagelist = imageio.mimread(args.i)
    print("GIF file read successfully")
except Exception as e:
    # Without frames there is nothing to split, so stop here
    sys.exit("Failed to read the GIF file: {}".format(e))

# Drop a known image extension from the base name; every frame is saved as PNG
base = str(args.o)
if base.lower().endswith(('.jpg', '.jpeg', '.png')):
    base = os.path.splitext(base)[0]

try:
    os.makedirs('output', exist_ok=True)
    for num, frame in enumerate(imagelist):
        imagearray = np.array(frame)
        # Frames are numbered 0, 1, 2, ... after the base name
        output = os.path.join('output', base + str(num) + '.png')
        imageio.imwrite(output, imagearray)
    print("GIF split successfully")
except Exception as e:
    print("Failed to split the GIF: {}".format(e))

--------------------------------------------------------------------------------

/GeneratorGIF-and-SeparateGIF/README.md:

--------------------------------------------------------------------------------

1 | ## GeneratorGIF-and-SeparateGIF

2 | This project generates GIF images and splits GIF images into individual frames.

3 |

4 |

5 |

6 | Sample generated GIF

7 |

8 | ### 依赖环境

9 |

10 | python 3.7

11 |

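12 | ### Python libraries

13 |

14 | numpy--image array operations

15 |

16 | pillow--image operations

17 |

18 | imageio--image reading and writing, including GIFs

19 |

20 | os--file path operations

21 |

22 | argparse--command-line argument parsing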

23 |

24 | ### Download

25 |

26 | ```

27 | git clone https://github.com/lzx1019056432/Be-Friendly-To-New-People.git

28 | ```

29 |

30 | ### Install dependencies

31 |

32 | ```

33 | pip install -r requirements.txt

34 | ```

35 |

36 | ### Usage

37 |

38 | From the repository root, enter:

39 |

40 | ```

41 | cd GeneratorGIF-and-SeparateGIF

42 | ```

43 |

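44 | This is my first time organizing resources on GitHub, so if anything is done poorly, I would welcome your suggestions. My original goal was to collect good introductory machine learning and AI examples with detailed documentation, which lowers the barrier to entry and saves beginners time hunting for material. If you would like to help improve this project, you can contact me by email at 1019056432@qq.com. I look forward to doing something meaningful together.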

45 |

46 | * Generate a GIF

47 |

48 | ```

49 | python GeneratorGif.py -i image -o outputimage -d 0.5

50 | ```

51 |

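52 | -i must be followed by the folder that contains the images

53 |

54 | -o is the name of the output GIF

55 |

56 | -d is the GIF playback speed, i.e. the delay between consecutive frames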

57 |

58 | * Split a GIF into frames

59 |

60 | ```

61 | python SeparateGif.py -i image.gif -o outputimage

62 | ```

63 |

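64 | -i is followed by the GIF to split

65 |

66 | -o is the base name of the output images; frames are told apart by an increasing number appended to the name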

67 |

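68 | ### Notes

69 |

70 | 1. Put the images to combine into one folder, then place that folder in the GeneratorGIF-and-SeparateGIF directory.

71 | 2. You can also add the project directory to your system PATH environment variable so the tool can be used from anywhere.

72 | 3. If you have any questions, feel free to send me a private message on CSDN; I will reply as soon as I can.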

--------------------------------------------------------------------------------

/GeneratorGIF-and-SeparateGIF/GeneratorGif.py:

--------------------------------------------------------------------------------

import os
import argparse

import numpy as np
import imageio
from PIL import Image
'''
Author: @梁先森
CSDN blog: https://blog.csdn.net/lzx159951
Purpose: combine a folder of images into a GIF (splitting a GIF is done by SeparateGif.py)
'''
parser = argparse.ArgumentParser()
parser.add_argument("-i", type=str, default="image", help="Please input the file folder path of the picture")
parser.add_argument("-o", "-output", default="outputimage", type=str, help="the name of output image")
parser.add_argument("-d", default=0.5, type=float, help="Picture playback interval")

args = parser.parse_args()
listimage = []  # frames as numpy arrays
listname = []   # image file names
try:
    if not os.path.exists(args.i):
        print("The folder or image path you entered does not exist!")
    else:
        # Read from the folder given by -i (not a hard-coded 'image' folder);
        # sort the names so the frame order does not depend on the file system
        for imagename in sorted(os.listdir(args.i)):
            if imagename.lower().endswith(('.jpg', '.jpeg', '.png')):
                listname.append(imagename)
        print("Finished reading image names")
        for imagename in listname:
            im = Image.open(os.path.join(args.i, imagename))
            listimage.append(np.array(im))
        # Create the output folder inside the input folder
        outdir = os.path.join(args.i, 'output')
        os.makedirs(outdir, exist_ok=True)
        if str(args.o).lower().endswith('.gif'):
            filename = os.path.join(outdir, args.o)
        else:
            filename = os.path.join(outdir, args.o + '.gif')
        print("Generating GIF......")
        imageio.mimsave(filename, listimage, 'GIF', duration=args.d)
        print("GIF written, thanks for using this tool")
except Exception as e:
    print("Image conversion failed: {}".format(e))

--------------------------------------------------------------------------------

/SVM支持向量机/.idea/workspace.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/PCA/PCA.py:

--------------------------------------------------------------------------------

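# -*- coding:utf-8 -*-
'''
PCA (principal component analysis)
Steps:
1. Arrange the raw data by columns into an n-row, m-column matrix X (n attributes, m samples)
2. Zero-mean each row of X, i.e. subtract the mean of that row
3. Compute the covariance matrix C = (1/m) X X^T
4. Compute the eigenvalues of C and their eigenvectors
5. Stack the eigenvectors as rows, top to bottom in order of decreasing eigenvalue, and take the first K rows as the matrix P
6. Y = PX is the data reduced to K dimensions

Note:
In this code each row is a sample and each column an attribute, the transpose of the
layout above, so the projection is written Y = XP, with the eigenvectors as the columns of P.
np.cov also divides by m-1 instead of m; that rescales the eigenvalues but leaves the
eigenvectors unchanged.
'''
import numpy as np
import matplotlib.pyplot as plt

# Load the data and return it as a matrix (one sample per row)
def loadDateSet(filename, delim='\t'):
    with open(filename, 'r') as f:
        # Skip blank lines, e.g. an empty line at the end of the file
        stringarr = [line.strip().split(delim) for line in f.readlines() if line.strip()]
        datarr = [list(map(float, line)) for line in stringarr]
    return np.mat(datarr)

def pca(dataMat, topNfeat=999):
    meanVals = np.mean(dataMat, axis=0)  # mean of each attribute (column)
    meanRemoved = dataMat - meanVals  # zero-mean the data
    covMat = np.cov(meanRemoved, rowvar=False)  # covariance matrix; rowvar=False: attributes in columns, samples in rows
    eigVals, eigVects = np.linalg.eig(np.mat(covMat))  # eigenvalues and eigenvectors of the covariance matrix
    # print("eigenvalues:", eigVals)
    # print("eigenvectors:", eigVects)
    eigValInd = np.argsort(eigVals)  # indices that sort the eigenvalues in ascending order
    # print("sorted eigenvalue indices:", eigValInd)
    # Keep the indices of the K largest eigenvalues
    eigValInd = eigValInd[:-(topNfeat + 1):-1]  # backwards slice: the K largest, in descending order (K=1 below)
    # print("eigValInd:", eigValInd)
    redEigVects = eigVects[:, eigValInd]  # eigenvectors for those eigenvalues
    # print("redEigVects", redEigVects)
    # Y = XP
    lowDDataMat = meanRemoved * redEigVects  # project the zero-mean data onto the eigenvectors: Y, the reduced data
    # redEigVects.T is the transpose of redEigVects.
    # The eigenvectors are orthonormal, so P^T P = I. If all of them are kept, P P^T = I and X P P^T = X exactly;
    # with only K kept, X P P^T is the closest rank-K approximation of X rather than X itself.
    reconMat = (lowDDataMat * redEigVects.T) + meanVals  # map back to the original space
    return lowDDataMat, reconMat

if __name__ == '__main__':
    datamat = loadDateSet('data.txt')
    lowDDataMat, reconmat = pca(datamat, 1)
    print("Reconstructed data:\n", reconmat)
    print("Reduced data:\n", lowDDataMat)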
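As a cross-check on `pca()`, the same projection can be computed from the singular value decomposition of the mean-centered data: its right singular vectors are eigenvectors of the covariance matrix, already sorted by decreasing singular value. The sketch below uses plain NumPy arrays instead of `np.mat`; `pca_svd` is a hypothetical name, and because the sign of each principal direction is arbitrary, results should be compared up to sign.

```python
import numpy as np


def pca_svd(data, k):
    """Project the rows of `data` onto the top-k principal components via SVD."""
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are the principal directions, sorted by decreasing singular value
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k].T                # shape (n_features, k)
    low_d = centered @ components        # reduced data, Y = XP
    recon = low_d @ components.T + mean  # back-projection into the original space
    return low_d, recon


if __name__ == "__main__":
    pts = np.array([[1.0, 1.2], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9]])
    low_d, recon = pca_svd(pts, 1)
    print(low_d.ravel())
    print(recon)
```

The SVD route never forms the covariance matrix, which avoids squaring the condition number of the data.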

--------------------------------------------------------------------------------

/LineRegression/LineRegression.py:

--------------------------------------------------------------------------------

# -*- coding:utf-8 -*-
'''
Based on "Machine Learning in Action" (《机器学习实战》)
Solves the problem with ordinary least squares

'''

import numpy as np
import matplotlib.pyplot as plt
def LoadData(filename):
    dataMat = []
    labelMat = []
    with open(filename) as f:
        # Read the first line only to count the features (the last column is the label)
        numFeat = len(f.readline().split('\t')) - 1
        f.seek(0)  # rewind; without this the first data row would be skipped
        for line in f.readlines():
            lineArr = []
            curLine = line.strip().split('\t')
            for i in range(numFeat):
                lineArr.append(float(curLine[i]))
            dataMat.append(lineArr)
            labelMat.append(float(curLine[-1]))
    return dataMat, labelMat
def standRegres(xArr, yArr):
    xMat = np.mat(xArr); yMat = np.mat(yArr).T  # np.mat(yArr) is a (1, n) row vector, so transpose it to (n, 1)
    print("yMat", yMat.shape)  # (n, 1)
    xTx = xMat.T * xMat
    if np.linalg.det(xTx) == 0.0:
        print("xTx is singular and cannot be inverted")
        return
    print("xtx.shape", xTx.shape)  # shape: (2, 2)
    print("xTx.I", xTx.I)  # inverse of the matrix
    print("xMat.T", (xMat.T).shape)
    ws = xTx.I * (xMat.T * yMat)  # (xMat.T*yMat) has shape (2, 1)
    return ws

def showdata(xArr, yMat, ws):
    fig = plt.figure()
    ax = fig.add_subplot(111)
    xCopy = xArr.copy()
    xCopy.sort(0)  # sort by x so the fitted line is drawn from left to right
    yHat = xCopy * ws
    ax.scatter(xArr[:, 1].flatten().tolist(), yMat.T[:, 0].flatten().tolist(), s=20, alpha=0.5)
    ax.plot(xCopy[:, 1], yHat)
    plt.show()
def pearsoncor(yHat, yMat):
    result = np.corrcoef(yHat.T, yMat)  # correlation coefficient: the closer to 1, the better the predictions match
    print("pearson-result:", result)


if __name__ == '__main__':
    xArr, yArr = LoadData('datasets/ex0.txt')
    print(xArr)
    ws = standRegres(xArr, yArr)
    print("ws:", ws)  # fitted weights w
    xMat = np.mat(xArr)
    yMat = np.mat(yArr)
    yHat = xMat * ws  # predicted values
    showdata(xMat, yMat, ws)  # plot the data and the fitted line
    pearsoncor(yHat, yMat)  # correlation analysis
    print("yArr[0]", yArr[0])
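`standRegres` inverts XᵀX explicitly and gives up only when its determinant is exactly zero, which near-singular matrices rarely hit. A common alternative is `np.linalg.lstsq`, which solves the least-squares problem directly (SVD-based) and also handles rank-deficient X. The sketch below compares the two on synthetic data that stand in for ex0.txt; the data, the coefficients and the function names are assumptions.

```python
import numpy as np


def normal_equation(X, y):
    """w = (X^T X)^{-1} X^T y, computed with solve() instead of an explicit inverse."""
    return np.linalg.solve(X.T @ X, X.T @ y)


def lstsq_fit(X, y):
    """Least-squares weights via NumPy's SVD-based solver."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(0, 1, 50)
    X = np.column_stack([np.ones_like(x), x])  # first column of ones = intercept term
    y = 3.0 + 1.7 * x + rng.normal(0, 0.05, 50)
    print(normal_equation(X, y), lstsq_fit(X, y))
```

On well-conditioned data both give the same weights; `lstsq` is the safer default when features may be collinear.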

--------------------------------------------------------------------------------

/PCA/data.txt:

--------------------------------------------------------------------------------

1 | 1.0089380119044606 1.2656678128352157

2 | 1.3112528199132147 1.1807446761302725

3 | 2.424508865890327 2.42623813185137

4 | 1.0147525857585666 0.06368262131662794

5 | 1.1578567759128777 0.9024219166888485

6 | 0.5722916129482798 1.3253794224645534

7 | 0.2908871361168006 -0.48463308082989065

8 | 1.1053724904864857 1.0911672155623187

9 | 2.782643175630093 1.8344774809236917

10 | 1.1371327811239178 1.9855014728748523

11 | 2.9595202573961683 3.1493568304265667

12 | 0.03001377099248237 0.1184138168692801

13 | 1.6105626276842764 0.6151813024295321

14 | 2.40192019049226 2.2532532683638085

15 | 1.0377638875699935 0.9891452647051595

16 | 1.9068506322033905 2.6633954432208444

17 | 2.8902965567858003 3.190505116006065

18 | 1.387127300556284 1.3822077284377672

19 | 2.1723405537093683 1.3703297004736115

20 | 1.1082474794834063 0.476093034988174

21 | 2.832791781368075 3.465027202868262

22 | 1.2329543292136673 1.3580114948781103

23 | 0.3383435782654256 1.0496994597605154

24 | 2.6686732678308283 2.3496745526652143

25 | 2.154185375024965 1.905677081834225

26 | 1.4121985566741515 0.4981343973255359

27 | 0.9851509398902834 1.6913204917122577

28 | 1.568840906349229 0.7314958832099767

29 | 2.2116472577644415 2.9455247453019044

30 | 2.494869052708789 1.9164818029038488

31 | 1.8139006367300752 2.408621604326571

32 | 1.773479929898689 1.4804511173353605

33 | 0.7635093683671226 1.2246723892862486

34 | 0.41923787374006094 -0.07487849240816102

35 | 0.5411773173213034 0.8263056748482267

36 | 0.8364437773383608 1.6408482378736724

37 | 2.4892980267068867 2.727527588160629

38 | 0.7481168620570725 0.72830031282616

39 | 0.3392311809900582 0.9855980245973939

40 | 0.5786230011762762 1.2000957680538957

41 | 1.9067969026591851 1.897918445903974

42 | 0.6608553944889943 1.1524725820678174

43 | 1.7850248331657892 2.0217406020350204

44 | 2.6498704423269475 2.6190156209335624

45 | 1.1854548209066529 0.3359742053709607

46 | 0.4713755383568643 0.06201732243202529

47 | 1.8982073515313658 2.1822442745683635

48 | 2.419962189930445 3.230103406395937

49 | 1.5197960108303117 1.8251570655193818

50 | 2.5304157468853683 3.499657694315162

51 |

--------------------------------------------------------------------------------

/K-mean/data.txt:

--------------------------------------------------------------------------------

1 | 1.0089380119044606 1.2656678128352157

2 | 1.3112528199132147 1.1807446761302725

3 | 2.424508865890327 2.42623813185137

4 | 1.0147525857585666 0.06368262131662794

5 | 1.1578567759128777 0.9024219166888485

6 | 0.5722916129482798 1.3253794224645534

7 | 0.2908871361168006 -0.48463308082989065

8 | 1.1053724904864857 1.0911672155623187

9 | 2.782643175630093 1.8344774809236917

10 | 1.1371327811239178 1.9855014728748523

11 | 2.9595202573961683 3.1493568304265667

12 | 0.03001377099248237 0.1184138168692801

13 | 1.6105626276842764 0.6151813024295321

14 | 2.40192019049226 2.2532532683638085

15 | 1.0377638875699935 0.9891452647051595

16 | 1.9068506322033905 2.6633954432208444

17 | 2.8902965567858003 3.190505116006065

18 | 1.387127300556284 1.3822077284377672

19 | 2.1723405537093683 1.3703297004736115

20 | 1.1082474794834063 0.476093034988174

21 | 2.832791781368075 3.465027202868262

22 | 1.2329543292136673 1.3580114948781103

23 | 0.3383435782654256 1.0496994597605154

24 | 2.6686732678308283 2.3496745526652143

25 | 2.154185375024965 1.905677081834225

26 | 1.4121985566741515 0.4981343973255359

27 | 0.9851509398902834 1.6913204917122577

28 | 1.568840906349229 0.7314958832099767

29 | 2.2116472577644415 2.9455247453019044

30 | 2.494869052708789 1.9164818029038488

31 | 1.8139006367300752 2.408621604326571

32 | 1.773479929898689 1.4804511173353605

33 | 0.7635093683671226 1.2246723892862486

34 | 0.41923787374006094 -0.07487849240816102

35 | 0.5411773173213034 0.8263056748482267

36 | 0.8364437773383608 1.6408482378736724

37 | 2.4892980267068867 2.727527588160629

38 | 0.7481168620570725 0.72830031282616

39 | 0.3392311809900582 0.9855980245973939

40 | 0.5786230011762762 1.2000957680538957

41 | 1.9067969026591851 1.897918445903974

42 | 0.6608553944889943 1.1524725820678174

43 | 1.7850248331657892 2.0217406020350204

44 | 2.6498704423269475 2.6190156209335624

45 | 1.1854548209066529 0.3359742053709607

46 | 0.4713755383568643 0.06201732243202529

47 | 1.8982073515313658 2.1822442745683635

48 | 2.419962189930445 3.230103406395937

49 | 1.5197960108303117 1.8251570655193818

50 | 2.5304157468853683 3.499657694315162

51 |

--------------------------------------------------------------------------------

/ROC曲线和AUC面积/ROC-AUC.py:

--------------------------------------------------------------------------------

1 | # -*- coding:utf-8 -*-

2 | import numpy as np

3 | import matplotlib.pyplot as plt

4 | def loadSimpData():

5 | dataMat = []

6 | labelMat = []

7 | data = open('datasets/testSet.txt')

8 | for dataline in data.readlines():

9 | linedata = dataline.split('\t')

10 | dataMat.append([float(linedata[0]),float(linedata[1])])

11 | labelMat.append(float(linedata[2].replace('\n','')))

12 | return dataMat,labelMat

13 |

def plotROC(predStrengths, classLabels):
    cur = (0.0, 0.0)  # start at the origin: nothing is predicted positive yet
    ySum = 0.0        # sum of the curve heights at every x step (used for the AUC)
    numPosClas = np.sum(np.array(classLabels) == 1.0)
    yStep = 1 / float(numPosClas)                     # TPR increment per positive sample
    xStep = 1 / float(len(classLabels) - numPosClas)  # FPR increment per negative sample
    print("ystep,xstep", yStep, xStep)
    # Visit the samples from the highest score to the lowest
    sortedIndicies = np.argsort(predStrengths)[::-1]
    fig = plt.figure()
    fig.clf()
    ax = fig.add_subplot(111)
    for index in sortedIndicies:
        if classLabels[index] == 1:
            addX = 0
            addY = yStep
        else:
            addX = xStep
            addY = 0
            ySum += cur[1]  # rectangle of width xStep at the current TPR
        ax.plot([cur[0], cur[0] + addX], [cur[1], cur[1] + addY], c='r')
        cur = (cur[0] + addX, cur[1] + addY)
    ax.plot([0, 1], [0, 1], 'b--')
    plt.xlabel('False Positive Rate')
    plt.ylabel('True Positive Rate')
    plt.title('Roc curve for AdaBoost Horse Colic Detection System')
    ax.axis([0, 1, 0, 1])
    plt.show()
    print('the Area Under the Curve is :', ySum * xStep)

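# Cross-check for the step-sum AUC printed by plotROC (a sketch, not part of the
# original script). The ROC AUC also equals the probability that a randomly
# chosen positive sample scores higher than a randomly chosen negative one,
# counting ties as one half (the Mann-Whitney U statistic divided by P*N).
# For distinct scores this matches ySum*xStep from plotROC exactly.
# aucByRank is a hypothetical helper name; like plotROC it treats label 1.0 as
# positive and every other label as negative, and it assumes at least one of each.
import numpy as np  # already imported at the top; repeated so the snippet stands alone


def aucByRank(predStrengths, classLabels):
    scores = np.asarray(predStrengths, dtype=float)
    labels = np.asarray(classLabels, dtype=float)
    pos = scores[labels == 1.0]
    neg = scores[labels != 1.0]
    # Compare every positive score against every negative score at once
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / float(len(pos) * len(neg))

# Usage, e.g. in the __main__ block: print(aucByRank(predStrengths, trainclasslabels))
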

if __name__ == '__main__':
    dataMat, classLabels = loadSimpData()
    trainclasslabels = np.array(classLabels[:80])
    # Synthetic, strictly increasing scores: exactly one score per label
    predStrengths = np.arange(1, len(trainclasslabels) + 1) / 10.0
    plotROC(predStrengths, trainclasslabels)


--------------------------------------------------------------------------------

/LwLineReg/LwLineReg.py:

--------------------------------------------------------------------------------

# -*- coding:utf-8 -*-

'''
Locally weighted linear regression (LWLR)

'''
import numpy as np
import matplotlib.pyplot as plt


def LoadData(filename):
    dataMat = []
    labelMat = []
    with open(filename) as f:
        # Reading the first line to count the features consumes it,
        # so rewind with seek(0); otherwise the first sample would be skipped
        numFeat = len(f.readline().split('\t')) - 1
        f.seek(0)
        for line in f.readlines():
            lineArr = []
            curLine = line.strip().split('\t')
            for i in range(numFeat):
                lineArr.append(float(curLine[i]))
            dataMat.append(lineArr)
            labelMat.append(float(curLine[-1]))
    return dataMat, labelMat


# Locally weighted linear regression
def lwlr(testPoint, xArr, yArr, k=0.1):
    xMat = np.mat(xArr); yMat = np.mat(yArr).T
    m = np.shape(xMat)[0]  # number of samples
    # A square matrix is used so that every training sample gets its own weight
    weights = np.mat(np.eye(m))  # m x m identity: ones on the diagonal, zeros elsewhere
    for j in range(m):
        diffMat = testPoint - xMat[j, :]
        # Gaussian kernel: the weight decays exponentially with squared distance
        weights[j, j] = np.exp(diffMat * diffMat.T / (-2.0 * k ** 2))
    xTx = xMat.T * (weights * xMat)
    if np.linalg.det(xTx) == 0.0:  # a zero determinant means the matrix is not invertible
        print("this matrix is singular, cannot do inverse")
        return
    ws = xTx.I * (xMat.T * (weights * yMat))
    return testPoint * ws


def lwlrTest(testArr, xArr, yArr, k=1.0):
    # Call lwlr once per test point; every prediction fits its own weighted model
    m = np.shape(testArr)[0]
    yHat = np.zeros(m)
    for i in range(m):
        yHat[i] = lwlr(testArr[i], xArr, yArr, k)
    return yHat

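# A vectorized sketch of the same locally weighted regression (an illustration,
# not the original author's code). It uses the same kernel as lwlr,
#     w_j = exp(-||x_test - x_j||^2 / (2 * k^2)),
# but computes all weights at once with NumPy broadcasting and never builds the
# m x m diagonal matrix. The weighted normal equations (X^T W X) ws = X^T W y are
# solved with np.linalg.solve instead of an explicit inverse, which is usually
# more numerically stable. lwlrVectorized is a hypothetical name; it assumes
# xArr is m x n (include a constant column if an intercept is wanted) and yArr
# has length m.
import numpy as np  # already imported at the top; repeated so the snippet stands alone


def lwlrVectorized(testPoint, xArr, yArr, k=0.1):
    X = np.asarray(xArr, dtype=float)
    y = np.asarray(yArr, dtype=float)
    x0 = np.asarray(testPoint, dtype=float).ravel()
    # Squared Euclidean distance from the test point to every training sample
    sqDist = np.sum((X - x0) ** 2, axis=1)
    w = np.exp(sqDist / (-2.0 * k ** 2))
    # X^T W X and X^T W y, scaling rows by w instead of multiplying by diag(w)
    xTwx = X.T.dot(X * w[:, None])
    xTwy = X.T.dot(w * y)
    # Typically raises numpy.linalg.LinAlgError when xTwx is singular
    ws = np.linalg.solve(xTwx, xTwy)
    return x0.dot(ws)
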

# Plot the fitted curve against the data
def lwlshow(xArr, yMat, yHat, k):
    fig = plt.figure()
    ax = fig.add_subplot(111)
    xCopy = np.array(xArr)  # np.array makes a copy, so plain lists work too
    # Sort by column 1 so the fitted line is drawn from left to right
    srtInd = xCopy[:, 1].argsort(0)
    print("xSort", xCopy[srtInd])
    ax.plot(xCopy[srtInd][:, 1], yHat[srtInd])
    yMat = np.array(yMat)
    ax.scatter(xCopy[srtInd][:, 1].tolist(), yMat[srtInd], s=10, alpha=0.7)