├── Mini project 3

├── usersdb.db

├── kindpng_1372491.png

├── kindpng_170301.png

├── __pycache__

│ ├── mydatabase.cpython-39.pyc

│ └── ui_main_window.cpython-39.pyc

├── mydatabase.py

├── Readme.md

├── webcam.ui

├── log in.ui

├── new user.ui

├── main.ui

├── main.py

└── user edit.ui

├── assignment-23

├── img

│ ├── lips.png

│ ├── emoji1.png

│ ├── emoji2.png

│ └── sunglasses.png

├── Readme.md

└── Main.py

├── assignment-25

├── input

│ ├── me.jpg

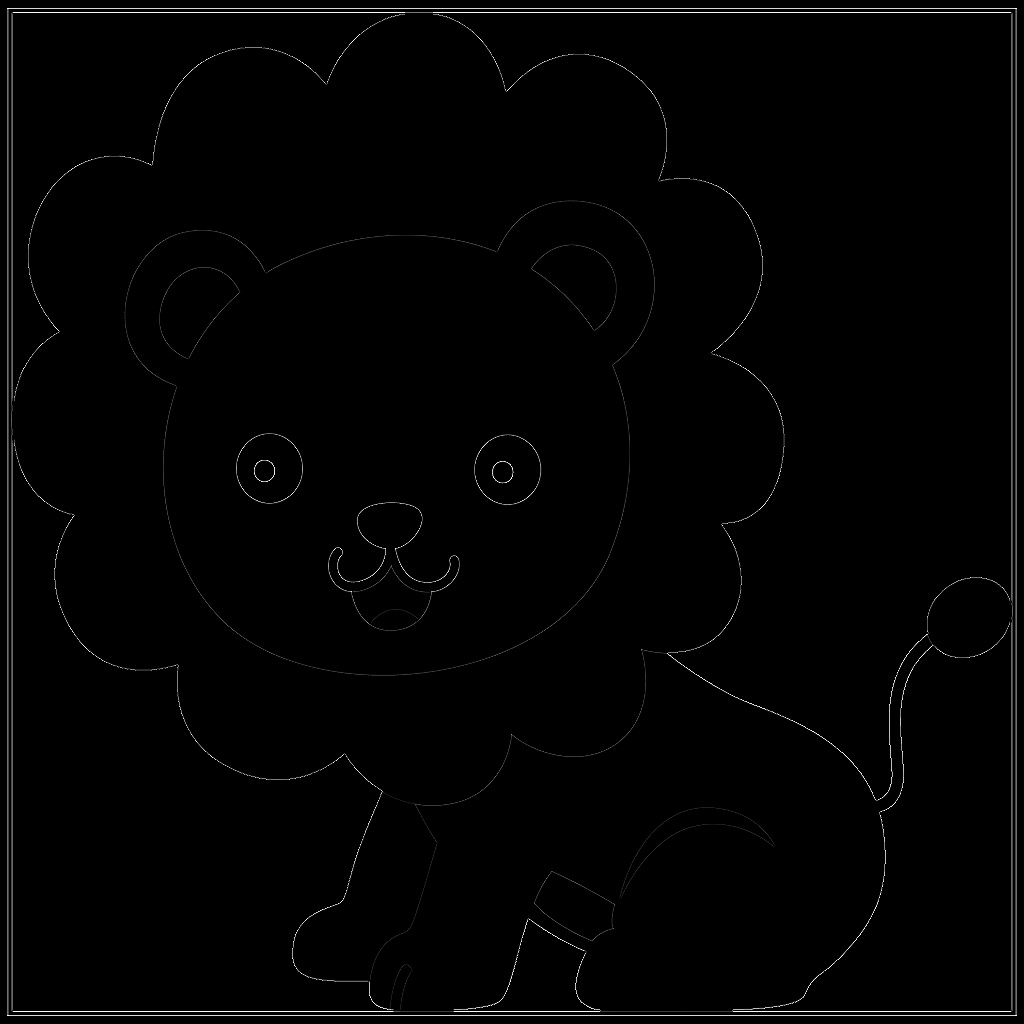

│ ├── lion.png

│ ├── building.tif

│ ├── flower_input.jpg

│ ├── 3ShadesOfGray.mp4

│ └── flower_output.jpg

├── output

│ ├── result_me.jpg

│ ├── result_lion.jpg

│ ├── result_lion2.jpg

│ ├── Portrate_Flower.png

│ └── result_building.jpg

├── lion.py

├── lion2.py

├── building.py

├── ConvolutionFunc.py

├── Readme.md

├── Portrateflower.py

└── ColorSensing.py

├── assignment-27

├── Input

│ ├── 0.jpg

│ ├── 1.jpg

│ ├── 10.jpg

│ ├── 11.png

│ ├── 12.png

│ ├── 2.jpg

│ ├── 3.jpg

│ ├── puma.jpg

│ └── jLw8Rbf.jpg

├── Object Detection Ex

│ ├── Input

│ │ ├── coins.jpg

│ │ ├── puma.jpg

│ │ ├── wolf.jpg

│ │ ├── pinguine2.jpg

│ │ └── pinguine3.jpg

│ └── Readme.md

├── Readme.md

└── Find Contour Method.ipynb

├── assignment-24

├── input

│ ├── image.jpg

│ └── img.jpg

├── output

│ └── result2.jpg

├── weights

│ ├── RFB-320.tflite

│ ├── coor_2d106.tflite

│ ├── iris_localization.tflite

│ └── head_pose_object_points.npy

├── requirements.txt

├── __pycache__

│ └── TFLiteFaceDetector.cpython-39.pyc

├── SolvePnPHeadPoseEstimation.py

├── vtuber_link_start.py

├── TFLiteFaceDetector.py

├── TFLiteIrisLocalization.py

├── WebcamTFLiteFaceAlignment.py

└── MyTFLiteFaceAlignment.py

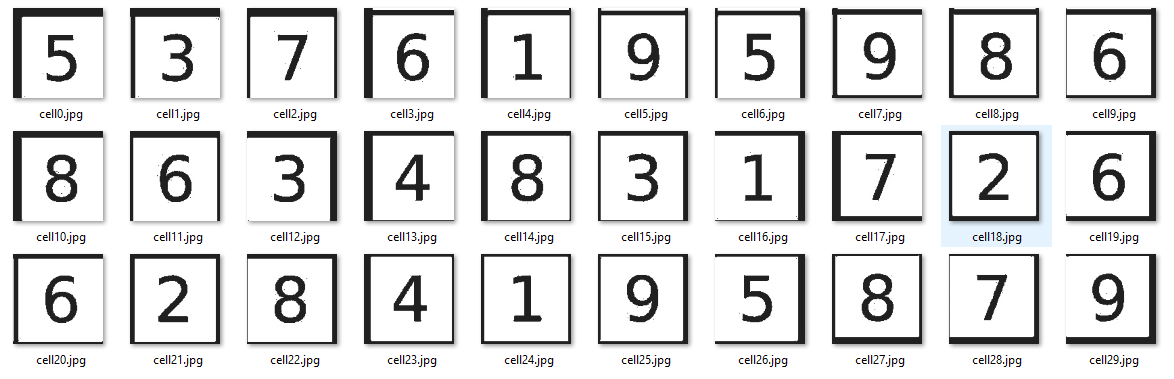

├── assignment-26-1

├── Input

│ ├── 1.png

│ ├── 2.png

│ ├── 3.tif

│ └── sudoku.tif

├── Output

│ ├── cell0.jpg

│ ├── cell1.jpg

│ ├── cell10.jpg

│ ├── cell11.jpg

│ ├── cell12.jpg

│ ├── cell13.jpg

│ ├── cell14.jpg

│ ├── cell15.jpg

│ ├── cell16.jpg

│ ├── cell17.jpg

│ ├── cell18.jpg

│ ├── cell19.jpg

│ ├── cell2.jpg

│ ├── cell20.jpg

│ ├── cell21.jpg

│ ├── cell22.jpg

│ ├── cell23.jpg

│ ├── cell24.jpg

│ ├── cell25.jpg

│ ├── cell26.jpg

│ ├── cell27.jpg

│ ├── cell28.jpg

│ ├── cell29.jpg

│ ├── cell3.jpg

│ ├── cell4.jpg

│ ├── cell5.jpg

│ ├── cell6.jpg

│ ├── cell7.jpg

│ ├── cell8.jpg

│ ├── cell9.jpg

│ └── final.png

├── Readme.md

├── ColorSensing.py

├── Increase Contrast.ipynb

└── test.ipynb

├── assignment-29

├── Input

│ ├── rubix.png

│ ├── Carrot.jpg

│ └── color picker.ui

├── Output

│ ├── Rubix.png

│ ├── Green Carrot.jpg

│ └── Microsoft Logo.png

├── ColorSensing.py

├── Readme.md

├── Color Picker.py

└── Microsoft Logo.ipynb

├── assignment-30

├── input

│ ├── sky.jpg

│ └── SuperMan.jpg

├── Skin detector .py

└── Readme.md

├── assignment-28

├── Input

│ ├── sudoku1.jpg

│ ├── sudoku2.jpg

│ └── sudoku3.png

├── Output

│ ├── sudoku4.jpg

│ ├── sudoku5.jpg

│ └── sudoku6.jpg

├── time warp scan

│ ├── Readme.md

│ └── time warp scan.py

├── Readme.md

├── Sudoku.py

└── Webcam Sudoku Detector.py

└── assignment-26-2

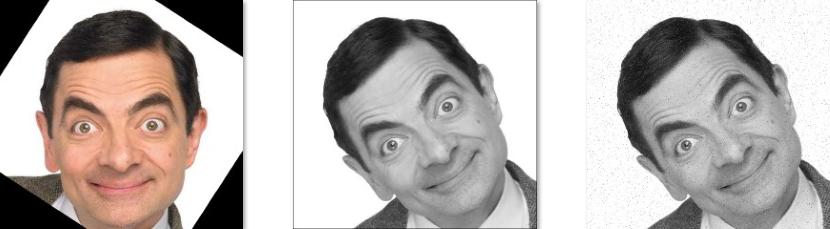

├── Mr Bin

├── input

│ └── 1.jpg

└── output

│ ├── rotated_img.jpg

│ ├── salt_pepper_img.jpg

│ └── img_without_noise.jpg

├── snowy gif

├── output

│ └── img116.jpg

└── input

│ └── village-scaled.jpg

├── numbers sepration

├── input

│ └── mnist.png

├── output

│ └── cell0.jpg

└── numbers sepration.ipynb

└── Readme.md

/Mini project 3/usersdb.db:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/Mini project 3/usersdb.db

--------------------------------------------------------------------------------

/assignment-23/img/lips.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-23/img/lips.png

--------------------------------------------------------------------------------

/assignment-25/input/me.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/me.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/0.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/0.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/1.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/1.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/10.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/10.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/11.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/11.png

--------------------------------------------------------------------------------

/assignment-27/Input/12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/12.png

--------------------------------------------------------------------------------

/assignment-27/Input/2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/2.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/3.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/3.jpg

--------------------------------------------------------------------------------

/assignment-23/img/emoji1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-23/img/emoji1.png

--------------------------------------------------------------------------------

/assignment-23/img/emoji2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-23/img/emoji2.png

--------------------------------------------------------------------------------

/assignment-24/input/image.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/input/image.jpg

--------------------------------------------------------------------------------

/assignment-24/input/img.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/input/img.jpg

--------------------------------------------------------------------------------

/assignment-25/input/lion.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/lion.png

--------------------------------------------------------------------------------

/assignment-26-1/Input/1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Input/1.png

--------------------------------------------------------------------------------

/assignment-26-1/Input/2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Input/2.png

--------------------------------------------------------------------------------

/assignment-26-1/Input/3.tif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Input/3.tif

--------------------------------------------------------------------------------

/assignment-27/Input/puma.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/puma.jpg

--------------------------------------------------------------------------------

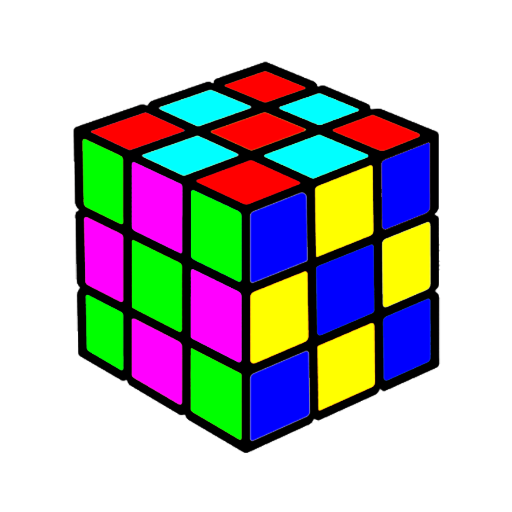

/assignment-29/Input/rubix.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-29/Input/rubix.png

--------------------------------------------------------------------------------

/assignment-30/input/sky.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-30/input/sky.jpg

--------------------------------------------------------------------------------

/assignment-27/Input/jLw8Rbf.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Input/jLw8Rbf.jpg

--------------------------------------------------------------------------------

/assignment-28/Input/sudoku1.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Input/sudoku1.jpg

--------------------------------------------------------------------------------

/assignment-28/Input/sudoku2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Input/sudoku2.jpg

--------------------------------------------------------------------------------

/assignment-28/Input/sudoku3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Input/sudoku3.png

--------------------------------------------------------------------------------

/assignment-29/Input/Carrot.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-29/Input/Carrot.jpg

--------------------------------------------------------------------------------

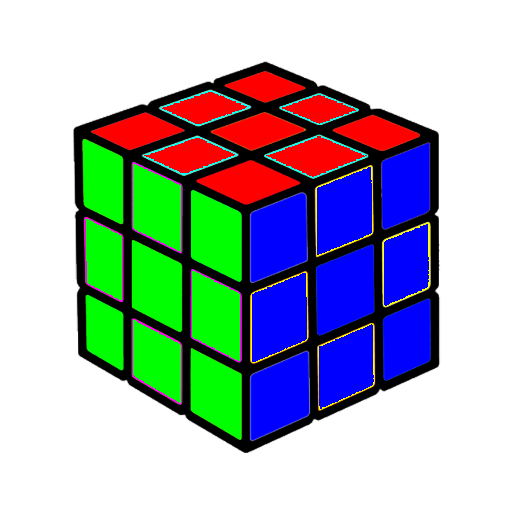

/assignment-29/Output/Rubix.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-29/Output/Rubix.png

--------------------------------------------------------------------------------

/Mini project 3/kindpng_1372491.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/Mini project 3/kindpng_1372491.png

--------------------------------------------------------------------------------

/Mini project 3/kindpng_170301.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/Mini project 3/kindpng_170301.png

--------------------------------------------------------------------------------

/assignment-23/img/sunglasses.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-23/img/sunglasses.png

--------------------------------------------------------------------------------

/assignment-24/output/result2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/output/result2.jpg

--------------------------------------------------------------------------------

/assignment-25/input/building.tif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/building.tif

--------------------------------------------------------------------------------

/assignment-25/output/result_me.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/output/result_me.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Input/sudoku.tif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Input/sudoku.tif

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell0.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell0.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell1.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell1.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell10.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell10.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell11.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell11.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell12.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell12.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell13.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell13.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell14.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell14.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell15.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell15.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell16.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell16.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell17.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell17.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell18.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell18.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell19.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell19.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell2.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell20.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell20.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell21.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell21.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell22.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell22.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell23.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell23.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell24.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell24.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell25.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell25.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell26.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell26.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell27.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell27.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell28.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell28.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell29.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell29.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell3.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell3.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell4.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell4.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell5.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell5.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell6.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell6.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell7.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell7.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell8.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell8.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/cell9.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/cell9.jpg

--------------------------------------------------------------------------------

/assignment-26-1/Output/final.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-1/Output/final.png

--------------------------------------------------------------------------------

/assignment-26-2/Mr Bin/input/1.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/Mr Bin/input/1.jpg

--------------------------------------------------------------------------------

/assignment-28/Output/sudoku4.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Output/sudoku4.jpg

--------------------------------------------------------------------------------

/assignment-28/Output/sudoku5.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Output/sudoku5.jpg

--------------------------------------------------------------------------------

/assignment-28/Output/sudoku6.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-28/Output/sudoku6.jpg

--------------------------------------------------------------------------------

/assignment-30/input/SuperMan.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-30/input/SuperMan.jpg

--------------------------------------------------------------------------------

/assignment-24/weights/RFB-320.tflite:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/weights/RFB-320.tflite

--------------------------------------------------------------------------------

/assignment-25/input/flower_input.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/flower_input.jpg

--------------------------------------------------------------------------------

/assignment-25/output/result_lion.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/output/result_lion.jpg

--------------------------------------------------------------------------------

/assignment-24/weights/coor_2d106.tflite:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/weights/coor_2d106.tflite

--------------------------------------------------------------------------------

/assignment-25/input/3ShadesOfGray.mp4:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/3ShadesOfGray.mp4

--------------------------------------------------------------------------------

/assignment-25/input/flower_output.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/input/flower_output.jpg

--------------------------------------------------------------------------------

/assignment-25/output/result_lion2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/output/result_lion2.jpg

--------------------------------------------------------------------------------

/assignment-29/Output/Green Carrot.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-29/Output/Green Carrot.jpg

--------------------------------------------------------------------------------

/assignment-29/Output/Microsoft Logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-29/Output/Microsoft Logo.png

--------------------------------------------------------------------------------

/assignment-25/output/Portrate_Flower.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/output/Portrate_Flower.png

--------------------------------------------------------------------------------

/assignment-25/output/result_building.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-25/output/result_building.jpg

--------------------------------------------------------------------------------

/assignment-26-2/snowy gif/output/img116.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/snowy gif/output/img116.jpg

--------------------------------------------------------------------------------

/assignment-24/weights/iris_localization.tflite:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/weights/iris_localization.tflite

--------------------------------------------------------------------------------

/assignment-26-2/Mr Bin/output/rotated_img.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/Mr Bin/output/rotated_img.jpg

--------------------------------------------------------------------------------

/assignment-24/weights/head_pose_object_points.npy:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/weights/head_pose_object_points.npy

--------------------------------------------------------------------------------

/assignment-26-2/Mr Bin/output/salt_pepper_img.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/Mr Bin/output/salt_pepper_img.jpg

--------------------------------------------------------------------------------

/assignment-26-2/numbers sepration/input/mnist.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/numbers sepration/input/mnist.png

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Input/coins.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Object Detection Ex/Input/coins.jpg

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Input/puma.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Object Detection Ex/Input/puma.jpg

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Input/wolf.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Object Detection Ex/Input/wolf.jpg

--------------------------------------------------------------------------------

/assignment-24/requirements.txt:

--------------------------------------------------------------------------------

1 | tensorflow >= 2.3

2 | opencv-python

3 | bidict == 0.21.2

4 | websocket-client==0.57.0

5 | python-socketio == 5.0.4

6 | python-engineio == 4.0.0

--------------------------------------------------------------------------------

/assignment-26-2/Mr Bin/output/img_without_noise.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/Mr Bin/output/img_without_noise.jpg

--------------------------------------------------------------------------------

/assignment-26-2/numbers sepration/output/cell0.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/numbers sepration/output/cell0.jpg

--------------------------------------------------------------------------------

/assignment-26-2/snowy gif/input/village-scaled.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-26-2/snowy gif/input/village-scaled.jpg

--------------------------------------------------------------------------------

/Mini project 3/__pycache__/mydatabase.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/Mini project 3/__pycache__/mydatabase.cpython-39.pyc

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Input/pinguine2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Object Detection Ex/Input/pinguine2.jpg

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Input/pinguine3.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-27/Object Detection Ex/Input/pinguine3.jpg

--------------------------------------------------------------------------------

/Mini project 3/__pycache__/ui_main_window.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/Mini project 3/__pycache__/ui_main_window.cpython-39.pyc

--------------------------------------------------------------------------------

/assignment-24/__pycache__/TFLiteFaceDetector.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mahdisesmaeelian/Python-ImageProcessing/HEAD/assignment-24/__pycache__/TFLiteFaceDetector.cpython-39.pyc

--------------------------------------------------------------------------------

/assignment-26-1/Readme.md:

--------------------------------------------------------------------------------

1 | ##

2 | ##

3 |

--------------------------------------------------------------------------------

/assignment-27/Object Detection Ex/Readme.md:

--------------------------------------------------------------------------------

1 | ## Exercises in detecting objects in images with OpenCV

2 | ##

3 | ##

4 | ##

5 |

--------------------------------------------------------------------------------

/assignment-28/time warp scan/Readme.md:

--------------------------------------------------------------------------------

1 | ## Time warp scan filter

2 |

3 | This project grabs a column of pixels whose height is the height of the video

4 | and whose width is a small value (1 pixel). The pixels are stored as an image so that they can be drawn on top of the capture display.

5 |

6 |

7 |
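The idea can be tried without a camera. The sketch below is a minimal illustration, assuming synthetic frames in place of webcam input; it follows the accompanying script, which freezes one row per captured frame. The helper name `time_warp_scan` is hypothetical and not part of the repository.

```python
import numpy as np


def time_warp_scan(frames):
    """Build an image whose row y is taken from frame number y.

    `frames` is an iterable of equally sized HxWx3 arrays
    (an assumption standing in for webcam frames).
    """
    frozen_rows = []
    out = None
    for y, frame in enumerate(frames):
        if y >= frame.shape[0]:
            break  # every row has already been frozen
        # freeze row y of the current frame
        frozen_rows.append(frame[y].copy())
        # paint all frozen rows over the top of the current frame
        out = frame.copy()
        out[: y + 1] = np.array(frozen_rows)
    return out


# Synthetic "video": frame t is filled with the value t.
frames = [np.full((4, 5, 3), t, dtype=np.uint8) for t in range(4)]
result = time_warp_scan(frames)
```

Because frame `t` is uniformly `t`, each row of `result` shows the time at which it was frozen.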

--------------------------------------------------------------------------------

/assignment-25/lion.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import cv2

3 |

4 | img = cv2.imread("input/lion.png")

5 | img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

6 |

7 | result = np.zeros(img.shape)

8 |

9 | mask = np.array([[0 , -1 , 0],

10 | [-1 , 4 , -1],

11 | [0 , -1 , 0]])

12 |

13 | rows , cols = img.shape

14 |

15 | for i in range (1,rows-1):

16 | for j in range(1,cols-1):

17 | small_img = img[i-1:i+2, j-1:j+2]

18 | result[i,j] =np.sum(small_img * mask)

19 |

20 | cv2.imwrite("result_lion.jpg",result)

--------------------------------------------------------------------------------

/assignment-25/lion2.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import cv2

3 |

4 | img = cv2.imread("input/lion.png")

5 | img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

6 |

7 | result = np.zeros(img.shape)

8 |

9 | mask = np.array([[-1 , -1 , -1],

10 | [0 , 0 , 0],

11 | [1 , 1 , 1]])

12 |

13 | rows , cols = img.shape

14 |

15 | for i in range (1,rows-1):

16 | for j in range(1,cols-1):

17 | small_img = img[i-1:i+2, j-1:j+2]

18 | result[i,j] =np.sum(small_img * mask)

19 |

20 | cv2.imwrite("result_lion2.jpg",result)

--------------------------------------------------------------------------------

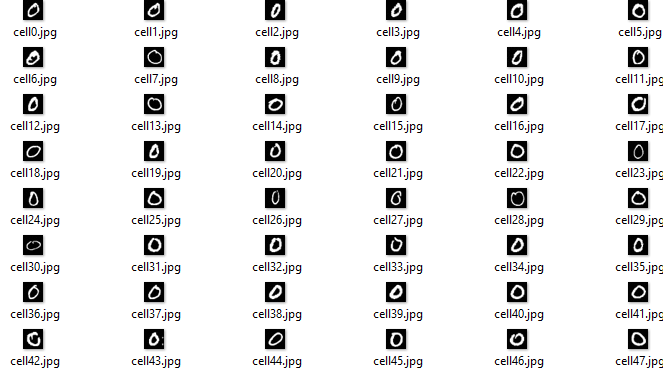

/assignment-26-2/Readme.md:

--------------------------------------------------------------------------------

1 | ## Separate the numbers in an image

2 | ##

3 | ## Process an image by adding noise and removing it, and changing face alignment

4 | ##

5 | ## Creating snowfall gif

6 | ##

7 |

8 | https://user-images.githubusercontent.com/88204357/145231592-41682bef-de86-4629-9c2f-c153c317e16f.mp4

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

/assignment-25/building.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import cv2

3 |

4 | img = cv2.imread("input/building.tif")

5 | img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

6 |

7 | result = np.zeros(img.shape)

8 |

9 | mask = np.array([[-1 , 0 , 1],

10 | [-1 , 0 , 1],

11 | [-1 , 0 , 1]])

12 |

13 | rows , cols = img.shape

14 |

15 | for i in range (1,rows-1):

16 | for j in range(1,cols-1):

17 | small_img = img[i-1:i+2, j-1:j+2]

18 | result[i,j] =np.sum(small_img * mask)

19 |

20 | cv2.imwrite("result_building.jpg",result)

--------------------------------------------------------------------------------

/assignment-25/ConvolutionFunc.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import cv2

3 |

4 | img = cv2.imread("input/me.jpg")

5 | img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

6 |

7 | result = np.zeros(img.shape)

8 |

9 | def Canvolution(a):

10 | b = a//2

11 | mask = np.ones((a,a)) / (a*a)

12 |

13 | rows , cols = img.shape

14 |

15 | for i in range (b,rows-b):

16 | for j in range(b,cols-b):

17 | small_img = img[i-b:i+(b+1), j-b:j+(b+1)]

18 | result[i,j] =np.sum(small_img * mask)

19 |

20 | Canvolution(15)

21 |

22 | cv2.imwrite("result_me.jpg",result)

23 |

--------------------------------------------------------------------------------

/assignment-25/Readme.md:

--------------------------------------------------------------------------------

1 | ##

2 | ##

3 | ##

4 | ##

5 | ##

6 |

7 | https://user-images.githubusercontent.com/88204357/143685303-ab48b87a-c6db-4bdc-86c4-e542da768a9f.mp4

8 |

--------------------------------------------------------------------------------

/assignment-30/Skin detector .py:

--------------------------------------------------------------------------------

import numpy as np
import cv2

# HSV bounds treated as skin (OpenCV hue runs from 0 to 179)
min_HSV = np.array([0, 48, 80], dtype="uint8")
max_HSV = np.array([20, 255, 255], dtype="uint8")

video_cap = cv2.VideoCapture(0)

while True:
    ret, frame = video_cap.read()
    if not ret:  # stop when no frame can be read
        break

    frame_HSV = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)
    Mask = cv2.inRange(frame_HSV, min_HSV, max_HSV)

    # soften the mask edges, then keep only the masked pixels
    skinMask = cv2.GaussianBlur(Mask, (3, 3), 0)
    output = cv2.bitwise_and(frame, frame, mask=skinMask)

    cv2.imshow("Camera", output)

    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

video_cap.release()
cv2.destroyAllWindows()

--------------------------------------------------------------------------------

/assignment-30/Readme.md:

--------------------------------------------------------------------------------

1 | ## Change Superman's background using the blue-screen technique:

2 |

3 |

4 |

5 |

6 | ## Camera skin detector using OpenCV and an HSV color range:

7 |

8 |

--------------------------------------------------------------------------------

/assignment-25/Portrateflower.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import cv2

3 |

4 | img = cv2.imread('input/flower_input.jpg', 0)

5 |

6 | threshold = 180

7 | result = np.zeros(img.shape)

8 | mask = np.ones((15,15)) / 225

9 | rows , cols = img.shape

10 |

11 | for i in range (7,rows-7):

12 | for j in range(7,cols-7):

13 | small_img = img[i-7:i+8,j-7:j+8]

14 | result[i,j] = np.sum(small_img * mask)

15 |

16 | for i in range(rows):

17 | for j in range(cols):

18 | if img[i,j] <= threshold:

19 | img[i,j] = result[i,j]

20 |

21 | cv2.imwrite("Portrate_Flower.png", img)

22 | cv2.waitKey()

--------------------------------------------------------------------------------

/assignment-28/time warp scan/time warp scan.py:

--------------------------------------------------------------------------------

import numpy as np
import cv2

list_frames = []  # rows frozen so far, one per captured frame
y = 1

cam = cv2.VideoCapture(0)

while True:
    ret, frame = cam.read()
    if not ret:
        break

    rows, cols, _ = frame.shape

    # freeze row y-1 of the current frame, then paint all frozen rows on top
    list_frames.append(frame[y-1])
    frame[:y, :] = np.array(list_frames)

    # draw the scan line just below the frozen area
    cv2.line(frame, (0, y), (cols, y), (238, 0, 0), 1)
    y += 1

    cv2.imshow("Camera", frame)

    # poll the keyboard once per frame; a second waitKey call could swallow the key press
    if cv2.waitKey(1) == ord('q'):
        break

    if y == rows:
        break

cam.release()
cv2.imwrite("Time warp scan.jpg", frame)

--------------------------------------------------------------------------------

/Mini project 3/mydatabase.py:

--------------------------------------------------------------------------------

import sqlite3

mydb = sqlite3.connect("usersdb.db")
myCursor = mydb.cursor()

# with open("face_images/user.jpg","rb") as f:
#     data = f.read()

# def InsertImg():
#     myCursor.execute(f'INSERT INTO Users(photo) VALUES {data}')

def Add(name, lastname, nationalcode, birthdate):
    # "?" placeholders let sqlite3 quote the values, which avoids SQL injection
    myCursor.execute(
        'INSERT INTO Users(Name, LastName, nationalCode, birthdate) VALUES(?, ?, ?, ?)',
        (name, lastname, nationalcode, birthdate))
    mydb.commit()

def Edit(name, lastname, nationalcode1, birthdate, id):
    myCursor.execute(
        'UPDATE Users SET Name = ?, LastName = ?, nationalCode = ?, birthdate = ? WHERE Id = ?',
        (name, lastname, nationalcode1, birthdate, id))
    mydb.commit()

def GetAll():
    myCursor.execute("SELECT * FROM Users")
    result = myCursor.fetchall()
    return result
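As a hedged illustration of why bound parameters are the safer way to write these queries, the sketch below runs the same insert and select against an in-memory database. The table schema and the helper names `make_db`, `add_user` and `get_all` are assumptions; the real `usersdb.db` layout is not shown in the repository listing.

```python
import sqlite3


def make_db():
    """Create an in-memory database with an assumed Users schema."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE Users("
        "Id INTEGER PRIMARY KEY, Name TEXT, LastName TEXT, "
        "nationalCode TEXT, birthdate TEXT)"
    )
    return db


def add_user(db, name, lastname, nationalcode, birthdate):
    # "?" placeholders: sqlite3 binds the values, so quotes in the data are harmless
    db.execute(
        "INSERT INTO Users(Name, LastName, nationalCode, birthdate) "
        "VALUES(?, ?, ?, ?)",
        (name, lastname, nationalcode, birthdate),
    )
    db.commit()


def get_all(db):
    return db.execute("SELECT * FROM Users").fetchall()


db = make_db()
# A name containing double quotes would break a query built with an f-string.
add_user(db, 'Ada "The Countess"', "Lovelace", "0000000000", "1815-12-10")
rows = get_all(db)
```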

--------------------------------------------------------------------------------

/assignment-29/ColorSensing.py:

--------------------------------------------------------------------------------

import numpy as np
import cv2

video_cap = cv2.VideoCapture(0)

while True:
    ret, frame = video_cap.read()

    # check the read first: frame is None when no image was captured
    if not ret:
        break

    # view of the sampling square; the outline drawn on frame below is carried along
    myrec = frame[180:300, 270:390]

    # blur the whole frame with a 35x35 box filter
    kernel = np.ones((35, 35), np.float32) / 1225
    dst = cv2.filter2D(frame, -1, kernel)

    cv2.rectangle(frame, (270, 180), (390, 300), (0, 0, 0), 2)
    dst[180:300, 270:390] = myrec  # keep the sampling square sharp

    # classify by mean intensity; an average of exactly 0 counts as black
    average = np.average(myrec)
    if average <= 60:
        cv2.putText(dst, "Black", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    elif average <= 120:
        cv2.putText(dst, "Gray", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    else:
        cv2.putText(dst, "White", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)

    cv2.imshow('Camera', dst)
    cv2.waitKey(1)

--------------------------------------------------------------------------------

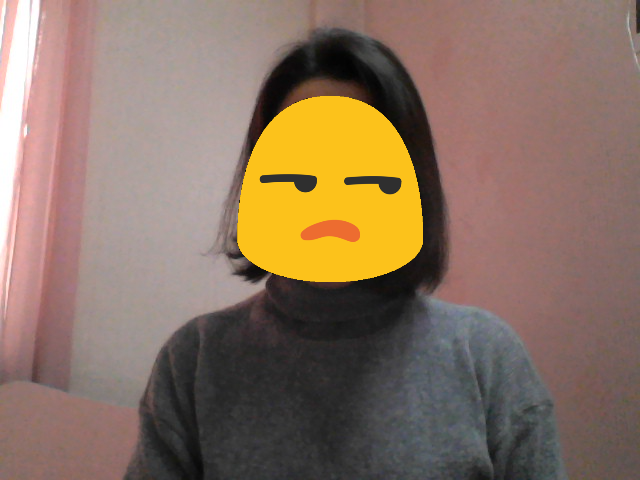

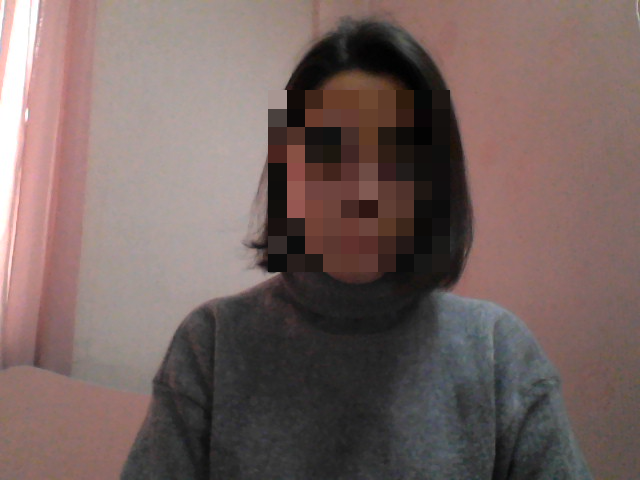

/assignment-23/Readme.md:

--------------------------------------------------------------------------------

1 | ## Image Processing

2 |

3 |

4 | Open the camera and apply effects to faces:

5 |

6 |

7 | Press 1 on the keyboard to place this emoji on your face (face detection):

8 | ##

9 |

10 | Press 2 to show this effect on your eyes and mouth (uses the eye and mouth detection XML files):

11 | ##

12 |

13 | Press 3 to blur your face:

14 | ##

15 |

16 | Press 4 to flip the image vertically:

17 | ##

18 |

--------------------------------------------------------------------------------

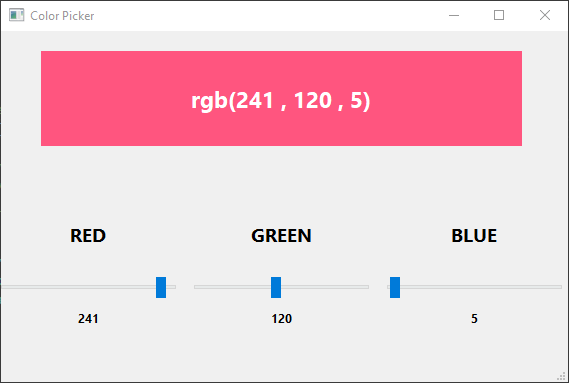

/assignment-29/Readme.md:

--------------------------------------------------------------------------------

1 | ## Recolor the carrot by changing the R value:

2 |

3 |

4 |

5 | ## Design the Microsoft logo and add a special font to it:

6 |

7 |

8 | ## Solve the Rubik's cube with the np.where function:

9 |

10 |

11 |

12 | ## Create a color picker box using OpenCV + Qt

13 |

14 |

--------------------------------------------------------------------------------

/assignment-25/ColorSensing.py:

--------------------------------------------------------------------------------

import numpy as np
import cv2

video_cap = cv2.VideoCapture(0)

while True:
    ret, frame = video_cap.read()

    # check the read first: frame is None when no image was captured
    if not ret:
        break

    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # view of the sampling square; the outline drawn on frame below is carried along
    myrec = frame[180:300, 270:390]

    # blur the whole frame with a 35x35 box filter
    kernel = np.ones((35, 35), np.float32) / 1225
    dst = cv2.filter2D(frame, -1, kernel)

    cv2.rectangle(frame, (270, 180), (390, 300), (0, 0, 0), 2)
    dst[180:300, 270:390] = myrec  # keep the sampling square sharp
    color_detect_area = dst[180:300, 270:390]

    # classify by mean intensity; an average of exactly 0 counts as black
    average = np.average(color_detect_area)
    if average <= 70:
        cv2.putText(dst, "Black", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    elif average <= 120:
        cv2.putText(dst, "Gray", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    else:
        cv2.putText(dst, "White", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)

    cv2.imshow('Camera', dst)
    cv2.waitKey(1)

--------------------------------------------------------------------------------

/assignment-26-1/ColorSensing.py:

--------------------------------------------------------------------------------

import numpy as np
import cv2

video_cap = cv2.VideoCapture(0)

# gain (contrast) and offset (brightness) applied to the sampling square
alpha = 1.5
beta = 0

while True:
    ret, frame = video_cap.read()

    # check the read first: frame is None when no image was captured
    if not ret:
        break

    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # view of the sampling square; the outline drawn on frame below is carried along
    myrec = frame[180:300, 270:390]

    # blur the whole frame with a 35x35 box filter
    kernel = np.ones((35, 35), np.float32) / 1225
    dst = cv2.filter2D(frame, -1, kernel)

    cv2.rectangle(frame, (270, 180), (390, 300), (0, 0, 0), 2)
    dst[180:300, 270:390] = myrec
    # raise the contrast of the sharp square before classifying it
    color_detect_area = cv2.convertScaleAbs(dst[180:300, 270:390], alpha=alpha, beta=beta)
    dst[180:300, 270:390] = color_detect_area

    # classify by mean intensity; an average of exactly 0 counts as black
    average = np.average(color_detect_area)
    if average <= 60:
        cv2.putText(dst, "Black", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    elif average <= 120:
        cv2.putText(dst, "Gray", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)
    else:
        cv2.putText(dst, "White", (25, 50), cv2.FONT_HERSHEY_PLAIN, 3, (0, 0, 0), 3)

    cv2.imshow('Camera', dst)
    cv2.waitKey(1)

--------------------------------------------------------------------------------

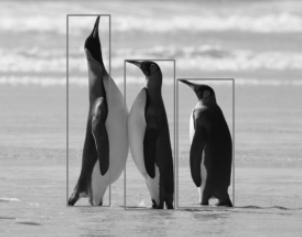

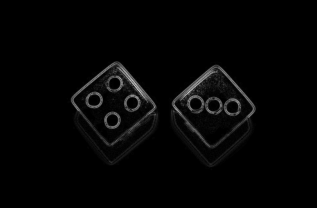

/assignment-27/Readme.md:

--------------------------------------------------------------------------------

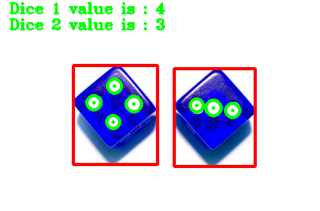

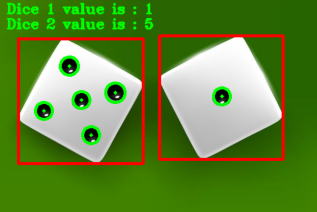

1 | ## Find-contour method written from scratch to detect an object:

2 |

3 |

4 | ## Dice dot detection using the OpenCV library with the findContours, Laplacian and HoughCircles methods:

5 |

6 |

7 |

8 | ##

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

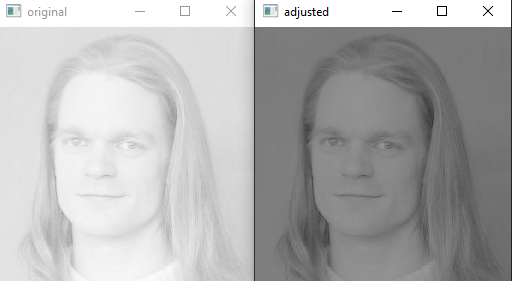

/assignment-26-1/Increase Contrast.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 1,

6 | "id": "e60ef6e3-97d8-4270-8dd9-abb84d1dfd73",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "import cv2\n",

11 | "\n",

12 | "image = cv2.imread('input/1.png')\n",

13 | "image = cv2.cvtColor(image,cv2.COLOR_BGR2GRAY)\n",

14 | "\n",

15 | "alpha = 1\n",

16 | "beta = -100\n",

17 | "\n",

18 | "adjusted = cv2.convertScaleAbs(image, alpha=alpha, beta=beta)\n",

19 | "\n",

20 | "cv2.imshow('original', image)\n",

21 | "cv2.imshow('adjusted', adjusted)\n",

22 | "cv2.waitKey()"

23 | ]

24 | }

25 | ],

26 | "metadata": {

27 | "kernelspec": {

28 | "display_name": "Python 3 (ipykernel)",

29 | "language": "python",

30 | "name": "python3"

31 | },

32 | "language_info": {

33 | "codemirror_mode": {

34 | "name": "ipython",

35 | "version": 3

36 | },

37 | "file_extension": ".py",

38 | "mimetype": "text/x-python",

39 | "name": "python",

40 | "nbconvert_exporter": "python",

41 | "pygments_lexer": "ipython3",

42 | "version": "3.9.0"

43 | }

44 | },

45 | "nbformat": 4,

46 | "nbformat_minor": 5

47 | }

48 |

--------------------------------------------------------------------------------

/Mini project 3/Readme.md:

--------------------------------------------------------------------------------

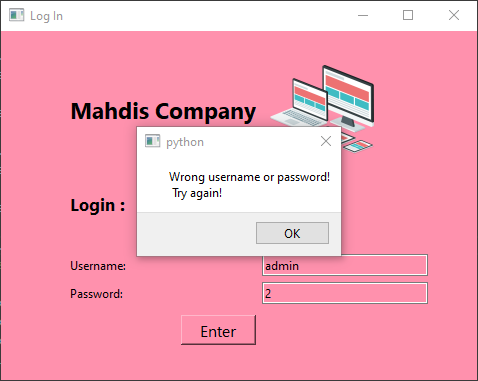

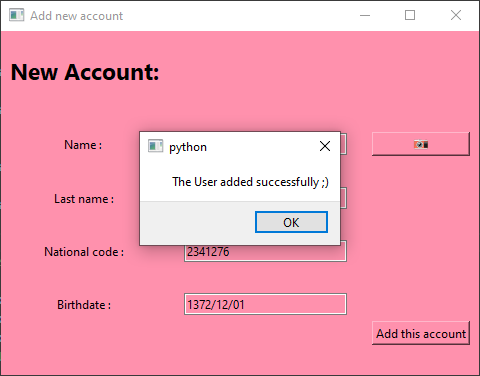

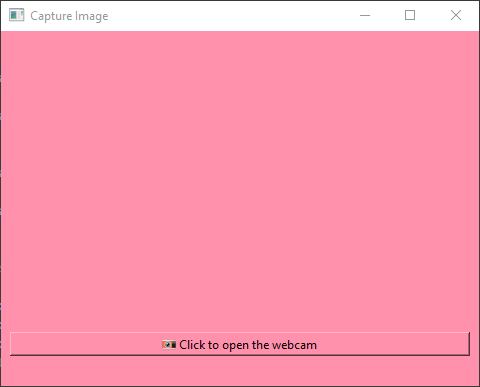

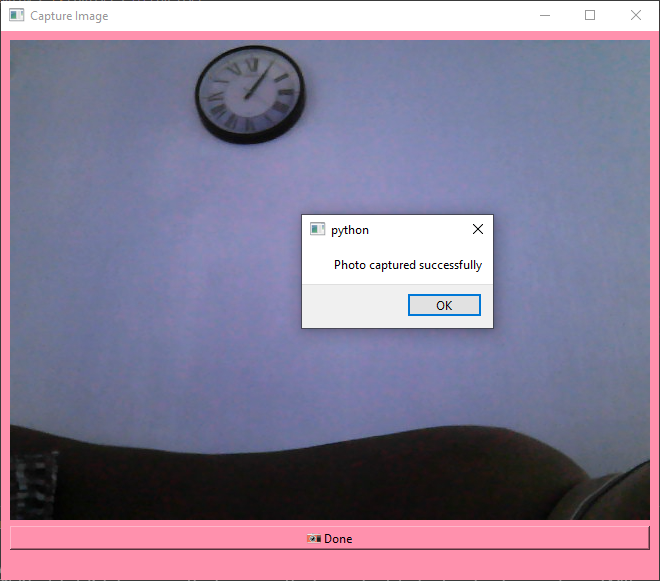

1 | ## Company users management

2 | ♦ Log in with the correct username and password:

3 |

4 |

5 |

6 |

7 |

8 |

9 |

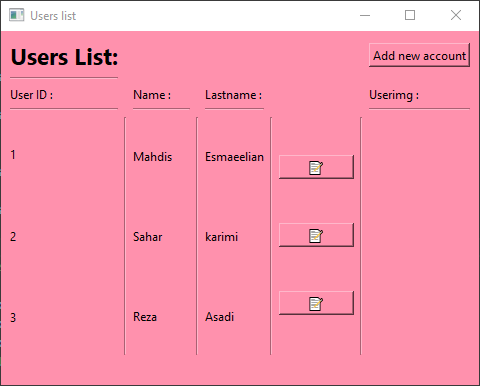

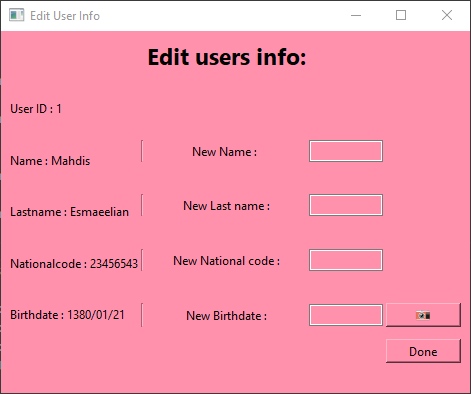

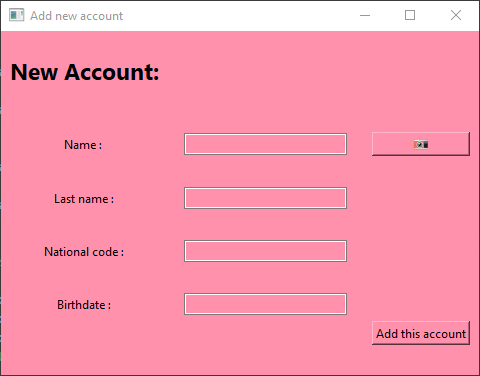

10 | ♦ Show the user list, with options to modify a user or add a new one:

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 |

26 |

27 |

28 |

29 |

--------------------------------------------------------------------------------

/assignment-28/Readme.md:

--------------------------------------------------------------------------------

1 | ## Sudoku detector using opencv

2 |

3 | The following code is a command-line Python program that takes the input image path and the output file name from the user and performs these steps:

4 |

5 | ◾Convert the BGR image to grayscale

6 |

7 | ◾Apply GaussianBlur to reduce noise

8 |

9 |

10 |

11 | ◾Adaptive threshold to obtain binary image

12 |

13 |

14 |

15 |

16 | ◾Find contours and filter for largest contour

17 |

18 | ◾Find shape with 4 points by approxPolyDP

19 |

20 | ◾Draw contours around the sudoku table

21 |

22 |

23 |

24 |

25 | ◾Crop the table and save it as a jpg image

26 |

27 |

28 |

29 |

30 | ## Webcam Sudoku Detector.py

31 |

32 |

33 | This Python project can recognize a Sudoku table in a live webcam feed.

34 |

35 | By pressing the s button, the sudoku image is cropped and saved.

36 |

37 |

38 |

39 |

40 |

--------------------------------------------------------------------------------

/assignment-28/Sudoku.py:

--------------------------------------------------------------------------------

import argparse
import cv2
import imutils
from imutils.perspective import four_point_transform

parser = argparse.ArgumentParser(description="Sudoku Detector version 1.0")
parser.add_argument("--input", type=str, help="path of your input image")
parser.add_argument("--filter_size", type=int, help="size of GaussianBlur mask", default=7)
parser.add_argument("--output", type=str, help="path of your output image")
args = parser.parse_args()

img = cv2.imread(args.input)
if img is None:
    raise SystemExit(f"Could not read input image: {args.input}")
gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

blurred_img = cv2.GaussianBlur(gray_img, (args.filter_size, args.filter_size), 3)

thresh = cv2.adaptiveThreshold(blurred_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)

# named cnts so the list does not shadow the imutils.contours module name
cnts = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cnts = imutils.grab_contours(cnts)
cnts = sorted(cnts, key=cv2.contourArea, reverse=True)

sudoku_contour = None

# the largest contour that simplifies to 4 points is taken as the table
for contour in cnts:
    epsilon = 0.02 * cv2.arcLength(contour, True)
    approx = cv2.approxPolyDP(contour, epsilon, True)

    if len(approx) == 4:
        sudoku_contour = approx
        break

if sudoku_contour is None:
    print("Not found")
else:
    cv2.drawContours(img, [sudoku_contour], -1, (0, 255, 0), 8)
    puzzle = four_point_transform(img, sudoku_contour.reshape(4, 2))
    cv2.imshow("Puzzle", puzzle)
    cv2.imwrite(args.output, puzzle)
    cv2.waitKey(0)  # keep the window open until a key is pressed

--------------------------------------------------------------------------------

/assignment-28/Webcam Sudoku Detector.py:

--------------------------------------------------------------------------------

import cv2
import imutils
from imutils.perspective import four_point_transform

cam = cv2.VideoCapture(0)

while True:
    ret, frame = cam.read()
    if not ret:
        break

    gray_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    blurred_img = cv2.GaussianBlur(gray_frame, (3, 3), 3)

    thresh = cv2.adaptiveThreshold(blurred_img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)

    # named cnts so the list does not shadow the imutils.contours module name
    cnts = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
    cnts = sorted(cnts, key=cv2.contourArea, reverse=True)

    sudoku_contour = None

    for contour in cnts:
        epsilon = 0.12 * cv2.arcLength(contour, True)
        approx = cv2.approxPolyDP(contour, epsilon, True)

        if len(approx) == 4:
            sudoku_contour = approx
            break

    if sudoku_contour is None:
        print("Not found")
    else:
        cv2.drawContours(frame, [sudoku_contour], -1, (0, 255, 0), 8)

    cv2.imshow("Camera", frame)

    # read the keyboard once per frame; two waitKey calls would each consume a key press
    key = cv2.waitKey(1) & 0xFF

    if key == ord('s'):
        if sudoku_contour is None:
            print("Not found")
        else:
            puzzle = four_point_transform(frame, sudoku_contour.reshape(4, 2))
            cv2.imwrite("Detected_Sudoku.jpg", puzzle)

    if key == ord('q'):
        break

cam.release()
cv2.destroyAllWindows()

--------------------------------------------------------------------------------

/assignment-29/Color Picker.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from functools import partial

3 | from PySide6.QtWidgets import *

4 | from PySide6.QtUiTools import *

5 | from PySide6.QtCore import *

6 |

7 | loader = QUiLoader()

8 | app = QApplication(sys.argv)

9 | ui = loader.load("Input/color picker.ui", None)

10 | ui.setWindowTitle("Color Picker")

11 |

12 | rcode = ui.redSlider.value()

13 | gcode = ui.greenSlider.value()

14 | bcode = ui.blueSlider.value()

15 |

16 | def red_slider_changed(_,rcode):

17 | ui.lableR.setText(f"{rcode}")

18 | rcode = ui.redSlider.value()

19 | gcode = ui.greenSlider.value()

20 | bcode = ui.blueSlider.value()

21 | ui.labelColor.setText(f"rgb({rcode} , {gcode} , {bcode})")

22 | ui.labelColor.setStyleSheet(f"background-color: rgb({rcode},{gcode},{bcode});")

23 |

24 | def green_slider_changed(_,gcode):

25 | ui.lableG.setText(f"{gcode}")

26 | rcode = ui.redSlider.value()

27 | gcode = ui.greenSlider.value()

28 | bcode = ui.blueSlider.value()

29 | ui.labelColor.setText(f"rgb({rcode} , {gcode} , {bcode})")

30 | ui.labelColor.setStyleSheet(f"background-color: rgb({rcode},{gcode},{bcode});")

31 |

32 | def blue_slider_changed(_,bcode):

33 | ui.lableB.setText(f"{bcode}")

34 | rcode = ui.redSlider.value()

35 | gcode = ui.greenSlider.value()

36 | bcode = ui.blueSlider.value()

37 | ui.labelColor.setText(f"rgb({rcode} , {gcode} , {bcode})")

38 | ui.labelColor.setStyleSheet(f"background-color: rgb({rcode},{gcode},{bcode});")

39 |

40 |

41 | ui.redSlider.valueChanged.connect(partial(red_slider_changed,rcode))

42 | ui.greenSlider.valueChanged.connect(partial(green_slider_changed,gcode))

43 | ui.blueSlider.valueChanged.connect(partial(blue_slider_changed,bcode))

44 |

45 | ui.show()

46 | app.exec_()

47 |

--------------------------------------------------------------------------------

/Mini project 3/webcam.ui:

--------------------------------------------------------------------------------

[Qt Designer XML for the webcam window; the markup was lost in extraction. Surviving values: class and window title MainWindow; geometry 0, 0, 478, 355; style sheet background-color: rgb(255, 145, 173); layout size constraint QLayout::SetNoConstraint; button text "Click to open the webcam"; icon path C:/Users/User/Downloads/—Pngtree—photo camera_4732850.png]

--------------------------------------------------------------------------------

/assignment-26-2/numbers sepration/numbers sepration.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 14,

6 | "id": "2360fbea-e355-4299-87d3-9f2e5d5ada42",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "import numpy as np\n",

11 | "import cv2\n",

12 | "import matplotlib.pyplot as plt"

13 | ]

14 | },

15 | {

16 | "cell_type": "code",

17 | "execution_count": 15,

18 | "id": "e8a5738d-6ca1-4ecf-ba59-0c3cc8dc09d0",

19 | "metadata": {},

20 | "outputs": [],

21 | "source": [

22 | "image = cv2.imread('input/mnist.png')\n",

23 | "image = cv2.cvtColor(image,cv2.COLOR_BGR2GRAY)"

24 | ]

25 | },

26 | {

27 | "cell_type": "code",

28 | "execution_count": 17,

29 | "id": "c9caa432-21ef-4512-b3ae-74a1764feafe",

30 | "metadata": {},

31 | "outputs": [],

32 | "source": [

33 | "width, height = image.shape\n",

34 | "width_cell = width // 50\n",

35 | "height_cell = height // 100"

36 | ]

37 | },

38 | {

39 | "cell_type": "code",

40 | "execution_count": 18,

41 | "id": "407d5143-08b6-412c-8a73-a528efa19cf4",

42 | "metadata": {},

43 | "outputs": [],

44 | "source": [

45 | "counter = 0\n",

46 | "\n",

47 | "for i in range(0, width, width_cell):\n",

48 | " for j in range(0, height, height_cell):\n",

49 | " single_cell = image[i:i+width_cell, j:j+height_cell]\n",

50 | " if single_cell.shape == (width_cell, height_cell):\n",

51 | " cv2.imwrite(f\"output/cell{counter}.jpg\",single_cell)\n",

52 | " counter += 1"

53 | ]

54 | }

55 | ],

56 | "metadata": {

57 | "kernelspec": {

58 | "display_name": "Python 3 (ipykernel)",

59 | "language": "python",

60 | "name": "python3"

61 | },

62 | "language_info": {

63 | "codemirror_mode": {

64 | "name": "ipython",

65 | "version": 3

66 | },

67 | "file_extension": ".py",

68 | "mimetype": "text/x-python",

69 | "name": "python",

70 | "nbconvert_exporter": "python",

71 | "pygments_lexer": "ipython3",

72 | "version": "3.9.0"

73 | }

74 | },

75 | "nbformat": 4,

76 | "nbformat_minor": 5

77 | }

78 |
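The cell-splitting loop in this notebook can be expressed as a small reusable function. The sketch below is an illustration on a synthetic array (an assumption standing in for `input/mnist.png`), and the helper name `split_grid` is hypothetical. It names the axes as NumPy reports them: `image.shape` is `(height, width)`, so rows come first.

```python
import numpy as np


def split_grid(image, n_rows, n_cols):
    """Cut a 2-D image into n_rows x n_cols equally sized cells, row by row."""
    height, width = image.shape[:2]
    cell_h, cell_w = height // n_rows, width // n_cols
    cells = []
    for r in range(n_rows):
        for c in range(n_cols):
            cells.append(image[r * cell_h:(r + 1) * cell_h,
                               c * cell_w:(c + 1) * cell_w])
    return cells


# Synthetic 100x200 "sheet" whose pixel values encode their own position
image = np.arange(100 * 200, dtype=np.uint16).reshape(100, 200)
cells = split_grid(image, n_rows=5, n_cols=10)
```

Each entry of `cells` could then be written out with `cv2.imwrite`, as the notebook does for its `output/cell{counter}.jpg` files.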

--------------------------------------------------------------------------------

/assignment-24/SolvePnPHeadPoseEstimation.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import numpy as np

3 | import sys

4 |

5 |

6 | class HeadPoseEstimator:

7 |

8 | def __init__(self, filepath, W, H) -> None:

9 | # camera matrix

10 | matrix = np.array([[W, 0, W/2.0],

11 | [0, W, H/2.0],

12 | [0, 0, 1]])

13 |

14 | # load pre-defined 3d object points and mapping indexes

15 | obj, index = np.load(filepath, allow_pickle=True)

16 | obj = obj.T

17 |

18 | def solve_pnp_wrapper(obj, index, matrix):

19 | def solve_pnp(shape):

20 | return cv2.solvePnP(obj, shape[index], matrix, None)

21 | return solve_pnp

22 |

23 | self._solve_pnp = solve_pnp_wrapper(obj, index, matrix)

24 |

25 | def get_head_pose(self, shape):

26 | if len(shape) != 106:

27 | raise RuntimeError('Unsupported shape format')

28 |

29 | _, rotation_vec, translation_vec = self._solve_pnp(shape)

30 |

31 | rotation_mat = cv2.Rodrigues(rotation_vec)[0]

32 | pose_mat = cv2.hconcat((rotation_mat, translation_vec))

33 | euler_angle = cv2.decomposeProjectionMatrix(pose_mat)[-1]

34 |

35 | return euler_angle

36 |

37 | @staticmethod

38 | def draw_axis(img, euler_angle, center, size=80, thickness=3,

39 | angle_const=np.pi/180, copy=False):

40 | if copy:

41 | img = img.copy()

42 |

43 | euler_angle *= angle_const

44 | sin_pitch, sin_yaw, sin_roll = np.sin(euler_angle)

45 | cos_pitch, cos_yaw, cos_roll = np.cos(euler_angle)

46 |

47 | axis = np.array([

48 | [cos_yaw * cos_roll,

49 | cos_pitch * sin_roll + cos_roll * sin_pitch * sin_yaw],

50 | [-cos_yaw * sin_roll,

51 | cos_pitch * cos_roll - sin_pitch * sin_yaw * sin_roll],

52 | [sin_yaw,

53 | -cos_yaw * sin_pitch]

54 | ])

55 |

56 | axis *= size

57 | axis += center

58 |

59 | axis = axis.astype(int)

60 | tp_center = tuple(center.astype(int))

61 |

62 | cv2.line(img, tp_center, tuple(axis[0]), (0, 0, 255), thickness)

63 | cv2.line(img, tp_center, tuple(axis[1]), (0, 255, 0), thickness)

64 | cv2.line(img, tp_center, tuple(axis[2]), (255, 0, 0), thickness)

65 |

66 | return img

67 |

68 |

69 | def main(filename):

70 |

71 | from TFLiteFaceDetector import UltraLightFaceDetecion

72 | from TFLiteFaceAlignment import CoordinateAlignmentModel

73 |

74 | cap = cv2.VideoCapture(filename)

75 |

76 | fd = UltraLightFaceDetecion("weights/RFB-320.tflite",

77 | conf_threshold=0.95)

78 | fa = CoordinateAlignmentModel("weights/coor_2d106.tflite")

79 | hp = HeadPoseEstimator("weights/head_pose_object_points.npy",

80 | cap.get(3), cap.get(4))

81 |

82 | color = (125, 255, 125)

83 |

84 | while True:

85 | ret, frame = cap.read()

86 |

87 | if not ret:

88 | break

89 |

90 | bboxes, _ = fd.inference(frame)

91 |

92 | for pred in fa.get_landmarks(frame, bboxes):

93 | for p in np.round(pred).astype(int):

94 | cv2.circle(frame, tuple(p), 1, color, 1, cv2.LINE_AA)

95 | face_center = np.mean(pred, axis=0)

96 | euler_angle = hp.get_head_pose(pred).flatten()

97 | print(*euler_angle)

98 | hp.draw_axis(frame, euler_angle, face_center)

99 |

100 | cv2.imshow("result", frame)

101 | if cv2.waitKey(0) == ord('q'):

102 | break

103 |

104 |

105 | if __name__ == '__main__':

106 | main(sys.argv[1])

107 |

--------------------------------------------------------------------------------

/assignment-23/Main.py:

--------------------------------------------------------------------------------

1 | import cv2

2 |

3 | blurred = False

4 | rotated = False

5 | pixelate = False

6 | EmojiOnFace = False

7 | SunglassesMustache = False

8 |

9 | face_detector = cv2.CascadeClassifier("src/haarcascade_frontalface_default.xml")

10 | eyes_detector = cv2.CascadeClassifier('src/frontalEyes35x16.xml')

11 | mouth_detector = cv2.CascadeClassifier('src/mouth.xml')

12 |

13 | sticker1 = cv2.imread("img/emoji1.png")

14 | lip = cv2.imread('img/lips.png')

15 | sunglasses = cv2.imread('img/sunglasses.png')

16 |

17 | video_cap = cv2.VideoCapture(0)

18 |

19 | while True:

20 |

21 | ret,frame = video_cap.read()

22 | if not ret:

23 | break

24 |

25 | faces = face_detector.detectMultiScale(frame, scaleFactor=1.3, minNeighbors=5)

26 |

27 | for (x, y, w, h) in faces:

28 |

29 | face_pos = frame[y:y+h, x:x+w]

30 | eyes = eyes_detector.detectMultiScale(face_pos, scaleFactor=1.2, minNeighbors=5)

31 | mouth = mouth_detector.detectMultiScale(face_pos, scaleFactor=1.3, minNeighbors=50)

32 |

33 | if EmojiOnFace:

34 |

35 | sticker = cv2.resize(sticker1, (w,h))

36 | img2gray = cv2.cvtColor(sticker,cv2.COLOR_BGR2GRAY)

37 |

38 | _,mask= cv2.threshold(img2gray,10,255,cv2.THRESH_BINARY)

39 | mask_inv = cv2.bitwise_not(mask)

40 |

41 | background1 = cv2.bitwise_and(face_pos,face_pos,mask = mask_inv)

42 | mask_sticker = cv2.bitwise_and(sticker, sticker, mask=mask)

43 |

44 | finalsticker =cv2.add(mask_sticker ,background1)

45 | frame[y:y+h, x:x+w] = finalsticker  # column slice must use the width w, not h

46 |

47 | if SunglassesMustache:

48 | for (ex, ey, ew, eh) in eyes:

49 |

50 | sunglasses_resize = cv2.resize(sunglasses, (ew,eh))

51 | sunglasses2gray = cv2.cvtColor(sunglasses_resize,cv2.COLOR_BGR2GRAY)

52 |

53 | _,mask= cv2.threshold(sunglasses2gray,10,255,cv2.THRESH_BINARY)

54 | mask_inv = cv2.bitwise_not(mask)

55 |

56 | eyes_pos = cv2.bitwise_and(face_pos[ey:ey+eh, ex:ex+ew],face_pos[ey:ey+eh, ex:ex+ew],mask = mask)

57 | mask_glasses = cv2.bitwise_and(sunglasses_resize, sunglasses_resize, mask=mask_inv)

58 |

59 | finalsticker =cv2.add(mask_glasses ,eyes_pos)

60 | face_pos[ey:ey+eh, ex:ex+ew] = finalsticker

61 |

62 | for (mx, my, mw, mh) in mouth:

63 |

64 | mouth_resize = cv2.resize(lip, (mw,mh))

65 | mouth2gray = cv2.cvtColor(mouth_resize,cv2.COLOR_BGR2GRAY)

66 |

67 | _,mask = cv2.threshold(mouth2gray,10,255,cv2.THRESH_BINARY)

68 | mask_inv = cv2.bitwise_not(mask)

69 |

70 | nose_pos = cv2.bitwise_and(face_pos[my:my+mh, mx:mx+mw],face_pos[my:my+mh, mx:mx+mw],mask = mask_inv)

71 | mask_mouth = cv2.bitwise_and(mouth_resize, mouth_resize, mask=mask)

72 |

73 | finalmouth =cv2.add(mask_mouth ,nose_pos)

74 | face_pos[my:my+mh, mx:mx+mw] = finalmouth

75 |

76 | if blurred:

77 | frame[y:y+h, x:x+w] = cv2.blur(frame[y:y+h, x:x+w],(30,30))

78 |

79 | if pixelate:

80 | for (x, y, w, h) in faces:

81 | square = cv2.resize(frame[y:y+h,x:x+w], (10,10))

82 | output = cv2.resize(square, (w, h), interpolation=cv2.INTER_NEAREST)

83 | frame[y:y+h, x:x+w] = output

84 |

85 | if rotated:

86 | frame[y:y+h, x:x+w] = cv2.rotate(frame[y:y+h, x:x+w], cv2.ROTATE_180)

87 |

88 |

89 | key = cv2.waitKey(1)

90 |

91 | if key == ord('1'):

92 | EmojiOnFace = not EmojiOnFace

93 |

94 | if key == ord('2'):

95 | SunglassesMustache = not SunglassesMustache

96 |

97 | if key == ord('3'):

98 | blurred = not blurred

99 |

100 | if key == ord('4'):

101 | pixelate = not pixelate

102 |

103 | if key == ord('5'):

104 | rotated = not rotated

105 |

106 | cv2.imshow('Camera',(frame))

107 | # no second cv2.waitKey here: it would swallow key presses meant for the toggles above

108 |
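The sticker overlay in `Main.py` above follows one pattern throughout: threshold the sticker to get a mask, keep the face pixels where the sticker is dark, and take the sticker pixels everywhere else. A minimal NumPy-only sketch of that compositing step is given below. The function name `overlay_sticker` is hypothetical, and the plain channel mean stands in for `cv2.cvtColor(..., cv2.COLOR_BGR2GRAY)`, which uses a weighted sum, so the mask can differ slightly near the threshold.

```python
import numpy as np


def overlay_sticker(region, sticker, threshold=10):
    """Paste the non-dark pixels of `sticker` onto a copy of `region`.

    Both arrays are assumed to be uint8 images of identical shape (H, W, 3).
    """
    # Approximate grayscale: plain mean over the colour channels (an assumption;
    # the original code uses OpenCV's weighted BGR-to-gray conversion).
    gray = sticker.mean(axis=2)

    # True where the sticker has visible content, False on its dark background.
    mask = gray > threshold

    out = region.copy()
    out[mask] = sticker[mask]
    return out


if __name__ == "__main__":
    face = np.full((2, 2, 3), 50, dtype=np.uint8)
    emoji = np.zeros((2, 2, 3), dtype=np.uint8)
    emoji[0, 0] = (200, 200, 200)
    print(overlay_sticker(face, emoji))
```

Boolean indexing replaces the `bitwise_and` / `bitwise_not` / `add` sequence; the result is the same whenever the mask is strictly binary, as it is after `cv2.threshold` with `THRESH_BINARY`.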

--------------------------------------------------------------------------------

/assignment-24/vtuber_link_start.py:

--------------------------------------------------------------------------------

1 | # coding: utf-8

2 |

3 | import numpy as np

4 | import service

5 | import cv2

6 | import sys

7 | import socketio

8 |

9 | from threading import Thread

10 | from queue import Queue

11 |

12 |

13 | cap = cv2.VideoCapture(sys.argv[1])

14 |

15 | fd = service.UltraLightFaceDetecion("weights/RFB-320.tflite",

16 | conf_threshold=0.98)

17 | fa = service.CoordinateAlignmentModel("weights/coor_2d106.tflite")

18 | hp = service.HeadPoseEstimator("weights/head_pose_object_points.npy",

19 | cap.get(3), cap.get(4))

20 | gs = service.IrisLocalizationModel("weights/iris_localization.tflite")

21 |

22 | QUEUE_BUFFER_SIZE = 18

23 |

24 | box_queue = Queue(maxsize=QUEUE_BUFFER_SIZE)

25 | landmark_queue = Queue(maxsize=QUEUE_BUFFER_SIZE)

26 | iris_queue = Queue(maxsize=QUEUE_BUFFER_SIZE)

27 | upstream_queue = Queue(maxsize=QUEUE_BUFFER_SIZE)

28 |

29 | # ======================================================

30 |

31 | def face_detection():

32 | while True:

33 | ret, frame = cap.read()

34 |

35 | if not ret:

36 | break

37 |

38 | face_boxes, _ = fd.inference(frame)

39 | box_queue.put((frame, face_boxes))

40 |

41 |

42 | def face_alignment():

43 | while True:

44 | frame, boxes = box_queue.get()

45 | landmarks = fa.get_landmarks(frame, boxes)

46 | landmark_queue.put((frame, landmarks))

47 |

48 |

49 | def iris_localization(YAW_THD=45):

50 | sio = socketio.Client()

51 |

52 | sio.connect("http://127.0.0.1:6789", namespaces='/kizuna')

53 |

54 | while True:

55 | frame, preds = landmark_queue.get()

56 |

57 | for landmarks in preds:

58 | # calculate head pose

59 | euler_angle = hp.get_head_pose(landmarks).flatten()

60 | pitch, yaw, roll = euler_angle

61 |

62 | eye_starts = landmarks[[35, 89]]

63 | eye_ends = landmarks[[39, 93]]

64 | eye_centers = landmarks[[34, 88]]

65 | eye_lengths = (eye_ends - eye_starts)[:, 0]

66 |

67 | pupils = eye_centers.copy()

68 |

69 | if yaw > -YAW_THD:

70 | iris_left = gs.get_mesh(frame, eye_lengths[0], eye_centers[0])

71 | pupils[0] = iris_left[0]

72 |

73 | if yaw < YAW_THD:

74 | iris_right = gs.get_mesh(frame, eye_lengths[1], eye_centers[1])

75 | pupils[1] = iris_right[0]

76 |

77 | poi = eye_starts, eye_ends, pupils, eye_centers

78 |

79 | theta, pha, _ = gs.calculate_3d_gaze(poi)

80 | mouth_open_percent = (

81 | landmarks[60, 1] - landmarks[62, 1]) / (landmarks[53, 1] - landmarks[71, 1])

82 | left_eye_status = (

83 | landmarks[33, 1] - landmarks[40, 1]) / eye_lengths[0]

84 | right_eye_status = (

85 | landmarks[87, 1] - landmarks[94, 1]) / eye_lengths[1]

86 | result_string = {'euler': (pitch, -yaw, -roll),

87 | 'eye': (theta.mean(), pha.mean()),

88 | 'mouth': mouth_open_percent,

89 | 'blink': (left_eye_status, right_eye_status)}

90 | sio.emit('result_data', result_string, namespace='/kizuna')

91 | upstream_queue.put((frame, landmarks, euler_angle))

92 | break

93 |

94 |

95 | def draw(color=(125, 255, 0), thickness=2):

96 | while True:

97 | frame, landmarks, euler_angle = upstream_queue.get()

98 |

99 | for p in np.round(landmarks).astype(int):

100 | cv2.circle(frame, tuple(p), 1, color, thickness, cv2.LINE_AA)

101 |

102 | face_center = np.mean(landmarks, axis=0)

103 | hp.draw_axis(frame, euler_angle, face_center)

104 |

105 | frame = cv2.resize(frame, (960, 720))

106 |