├── .gitignore

├── .travis.yml

├── CONTRIBUTING.md

├── LICENSE

├── MANIFEST.in

├── README.md

├── benchmark

├── __init__.py

├── table_perf.py

└── text_perf.py

├── binder

└── environment.yml

├── citation.bib

├── doc

├── blog_post.md

├── conf.py

├── images

│ ├── image_from_paper.png

│ ├── images.png

│ ├── lime.png

│ ├── multiclass.png

│ ├── tabular.png

│ ├── twoclass.png

│ └── video_screenshot.png

├── index.rst

├── lime.rst

└── notebooks

│ ├── Latin Hypercube Sampling.ipynb

│ ├── Lime - basic usage, two class case.ipynb

│ ├── Lime - multiclass.ipynb

│ ├── Lime with Recurrent Neural Networks.ipynb

│ ├── Submodular Pick examples.ipynb

│ ├── Tutorial - Faces and GradBoost.ipynb

│ ├── Tutorial - Image Classification Keras.ipynb

│ ├── Tutorial - MNIST and RF.ipynb

│ ├── Tutorial - continuous and categorical features.ipynb

│ ├── Tutorial - images - Pytorch.ipynb

│ ├── Tutorial - images.ipynb

│ ├── Tutorial_H2O_continuous_and_cat.ipynb

│ ├── Using lime for regression.ipynb

│ └── data

│ ├── adult.csv

│ ├── cat_mouse.jpg

│ ├── co2_data.csv

│ ├── dogs.png

│ ├── imagenet_class_index.json

│ └── mushroom_data.csv

├── lime

├── __init__.py

├── bundle.js

├── bundle.js.map

├── discretize.py

├── exceptions.py

├── explanation.py

├── js

│ ├── bar_chart.js

│ ├── explanation.js

│ ├── main.js

│ ├── predict_proba.js

│ └── predicted_value.js

├── lime_base.py

├── lime_image.py

├── lime_tabular.py

├── lime_text.py

├── package.json

├── style.css

├── submodular_pick.py

├── test_table.html

├── tests

│ ├── __init__.py

│ ├── test_discretize.py

│ ├── test_generic_utils.py

│ ├── test_lime_tabular.py

│ ├── test_lime_text.py

│ └── test_scikit_image.py

├── utils

│ ├── __init__.py

│ └── generic_utils.py

├── webpack.config.js

└── wrappers

│ ├── __init__.py

│ └── scikit_image.py

├── setup.cfg

└── setup.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Compiled python modules.

2 | *.pyc

3 |

4 | # Setuptools distribution folder.

5 | /dist/

6 |

7 | /lime/node_modules

8 |

9 | # Python egg metadata, regenerated from source files by setuptools.

10 | /*.egg-info

11 |

12 | # Unit test / coverage reports

13 | .cache

14 |

15 | # Created by https://www.gitignore.io/api/pycharm

16 |

17 | ### PyCharm ###

18 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and Webstorm

19 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

20 |

21 | # User-specific stuff:

22 | .idea/workspace.xml

23 | .idea/tasks.xml

24 | .idea/dictionaries

25 | .idea/vcs.xml

26 | .idea/jsLibraryMappings.xml

27 |

28 | # Sensitive or high-churn files:

29 | .idea/dataSources.ids

30 | .idea/dataSources.xml

31 | .idea/dataSources.local.xml

32 | .idea/sqlDataSources.xml

33 | .idea/dynamic.xml

34 | .idea/uiDesigner.xml

35 |

36 | # Gradle:

37 | .idea/gradle.xml

38 | .idea/libraries

39 |

40 | # Mongo Explorer plugin:

41 | .idea/mongoSettings.xml

42 |

43 | ## File-based project format:

44 | *.iws

45 |

46 | ## Plugin-specific files:

47 |

48 | # IntelliJ

49 | /out/

50 |

51 | # mpeltonen/sbt-idea plugin

52 | .idea_modules/

53 |

54 | # JIRA plugin

55 | atlassian-ide-plugin.xml

56 |

57 | # Crashlytics plugin (for Android Studio and IntelliJ)

58 | com_crashlytics_export_strings.xml

59 | crashlytics.properties

60 | crashlytics-build.properties

61 | fabric.properties

62 |

63 | ### PyCharm Patch ###

64 | # Comment Reason: https://github.com/joeblau/gitignore.io/issues/186#issuecomment-215987721

65 |

66 | # *.iml

67 | # modules.xml

68 | # .idea/misc.xml

69 | # *.ipr

70 |

71 | # Pycharm

72 | .idea

73 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | dist: xenial

2 | sudo: false

3 | language: python

4 | cache: pip

5 | python:

6 | - "3.6"

7 | - "3.7"

8 | # command to install dependencies

9 | install:

10 | - python -m pip install -U pip

11 | - python -m pip install -e .[dev]

12 | # command to run tests

13 | script:

14 | - pytest lime

15 | - flake8 lime

16 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | ## Contributing

2 | I am delighted when people want to contribute to LIME. Here are a few things to keep in mind before sending in a pull request:

3 | * We are now using flake8 as a style guide enforcer (I plan on adding eslint for javascript soon). Make sure your code passes the default flake8 execution.

4 | * There must be a really good reason to change the external interfaces - I want to avoid breaking previous code as much as possible.

5 | * If you are adding a new feature, please let me know the use case and the rationale behind how you did it (unless it's obvious)

6 |

7 | If you want to contribute but don't know where to start, take a look at the [issues page](https://github.com/marcotcr/lime/issues), or at the list below.

8 |

9 | # Roadmap

10 | Here are a few high level features I want to incorporate in LIME. If you want to work incrementally in any of these, feel free to start a branch.

11 |

12 | 1. Creating meaningful tests that we can run before merging things. Right now I run the example notebooks and the few tests we have.

13 | 2. Creating a wrapper that computes explanations for a particular dataset, and suggests instances for the user to look at (similar to what we did in [the paper](http://arxiv.org/abs/1602.04938))

14 | 3. Making LIME work with images in a reasonable time. The explanations we used in the paper took a few minutes, which is too slow.

15 | 4. Thinking through what is needed to use LIME in regression problems. An obvious problem is that features with different scales make it really hard to interpret.

16 | 5. Figuring out better alternatives to discretizing the data for tabular data. Discretizing is definitely more interpretable, but we may just want to treat features as continuous.

17 | 6. Figuring out better ways to sample around a data point for tabular data. One example is sampling columns from the training set assuming independence, or some form of conditional sampling.

18 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2016, Marco Tulio Correia Ribeiro

2 | All rights reserved.

3 |

4 | Redistribution and use in source and binary forms, with or without

5 | modification, are permitted provided that the following conditions are met:

6 |

7 | * Redistributions of source code must retain the above copyright notice, this

8 | list of conditions and the following disclaimer.

9 |

10 | * Redistributions in binary form must reproduce the above copyright notice,

11 | this list of conditions and the following disclaimer in the documentation

12 | and/or other materials provided with the distribution.

13 |

14 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

15 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

16 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

17 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

18 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

19 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

20 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

21 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

22 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

23 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

24 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include lime/*.js

2 | include LICENSE

3 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # lime

2 |

3 | [](https://travis-ci.org/marcotcr/lime)

4 | [](https://mybinder.org/v2/gh/marcotcr/lime/master)

5 |

6 | This project is about explaining what machine learning classifiers (or models) are doing.

7 | At the moment, we support explaining individual predictions for text classifiers or classifiers that act on tables (numpy arrays of numerical or categorical data) or images, with a package called lime (short for local interpretable model-agnostic explanations).

8 | Lime is based on the work presented in [this paper](https://arxiv.org/abs/1602.04938) ([bibtex here for citation](https://github.com/marcotcr/lime/blob/master/citation.bib)). Here is a link to the promo video:

9 |

10 |  11 |

12 | Our plan is to add more packages that help users understand and interact meaningfully with machine learning.

13 |

14 | Lime is able to explain any black box classifier, with two or more classes. All we require is that the classifier implements a function that takes in raw text or a numpy array and outputs a probability for each class. Support for scikit-learn classifiers is built-in.

15 |

16 | ## Installation

17 |

18 | The lime package is on [PyPI](https://pypi.python.org/pypi/lime). Simply run:

19 |

20 | ```sh

21 | pip install lime

22 | ```

23 |

24 | Or clone the repository and run:

25 |

26 | ```sh

27 | pip install .

28 | ```

29 |

30 | We dropped python2 support in `0.2.0`, `0.1.1.37` was the last version before that.

31 |

32 | ## Screenshots

33 |

34 | Below are some screenshots of lime explanations. These are generated in html, and can be easily produced and embedded in ipython notebooks. We also support visualizations using matplotlib, although they don't look as nice as these ones.

35 |

36 | #### Two class case, text

37 |

38 | Negative (blue) words indicate atheism, while positive (orange) words indicate christian. The way to interpret the weights by applying them to the prediction probabilities. For example, if we remove the words Host and NNTP from the document, we expect the classifier to predict atheism with probability 0.58 - 0.14 - 0.11 = 0.31.

39 |

40 |

41 |

42 | #### Multiclass case

43 |

44 |

45 |

46 | #### Tabular data

47 |

48 |

49 |

50 | #### Images (explaining prediction of 'Cat' in pros and cons)

51 |

52 |

11 |

12 | Our plan is to add more packages that help users understand and interact meaningfully with machine learning.

13 |

14 | Lime is able to explain any black box classifier, with two or more classes. All we require is that the classifier implements a function that takes in raw text or a numpy array and outputs a probability for each class. Support for scikit-learn classifiers is built-in.

15 |

16 | ## Installation

17 |

18 | The lime package is on [PyPI](https://pypi.python.org/pypi/lime). Simply run:

19 |

20 | ```sh

21 | pip install lime

22 | ```

23 |

24 | Or clone the repository and run:

25 |

26 | ```sh

27 | pip install .

28 | ```

29 |

30 | We dropped python2 support in `0.2.0`, `0.1.1.37` was the last version before that.

31 |

32 | ## Screenshots

33 |

34 | Below are some screenshots of lime explanations. These are generated in html, and can be easily produced and embedded in ipython notebooks. We also support visualizations using matplotlib, although they don't look as nice as these ones.

35 |

36 | #### Two class case, text

37 |

38 | Negative (blue) words indicate atheism, while positive (orange) words indicate christian. The way to interpret the weights by applying them to the prediction probabilities. For example, if we remove the words Host and NNTP from the document, we expect the classifier to predict atheism with probability 0.58 - 0.14 - 0.11 = 0.31.

39 |

40 |

41 |

42 | #### Multiclass case

43 |

44 |

45 |

46 | #### Tabular data

47 |

48 |

49 |

50 | #### Images (explaining prediction of 'Cat' in pros and cons)

51 |

52 |  53 |

54 | ## Tutorials and API

55 |

56 | For example usage for text classifiers, take a look at the following two tutorials (generated from ipython notebooks):

57 |

58 | - [Basic usage, two class. We explain random forest classifiers.](https://marcotcr.github.io/lime/tutorials/Lime%20-%20basic%20usage%2C%20two%20class%20case.html)

59 | - [Multiclass case](https://marcotcr.github.io/lime/tutorials/Lime%20-%20multiclass.html)

60 |

61 | For classifiers that use numerical or categorical data, take a look at the following tutorial (this is newer, so please let me know if you find something wrong):

62 |

63 | - [Tabular data](https://marcotcr.github.io/lime/tutorials/Tutorial%20-%20continuous%20and%20categorical%20features.html)

64 | - [Tabular data with H2O models](https://marcotcr.github.io/lime/tutorials/Tutorial_H2O_continuous_and_cat.html)

65 | - [Latin Hypercube Sampling](doc/notebooks/Latin%20Hypercube%20Sampling.ipynb)

66 |

67 | For image classifiers:

68 |

69 | - [Images - basic](https://marcotcr.github.io/lime/tutorials/Tutorial%20-%20images.html)

70 | - [Images - Faces](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20Faces%20and%20GradBoost.ipynb)

71 | - [Images with Keras](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20Image%20Classification%20Keras.ipynb)

72 | - [MNIST with random forests](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20MNIST%20and%20RF.ipynb)

73 | - [Images with PyTorch](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20images%20-%20Pytorch.ipynb)

74 |

75 | For regression:

76 |

77 | - [Simple regression](https://marcotcr.github.io/lime/tutorials/Using%2Blime%2Bfor%2Bregression.html)

78 |

79 | Submodular Pick:

80 |

81 | - [Submodular Pick](https://github.com/marcotcr/lime/tree/master/doc/notebooks/Submodular%20Pick%20examples.ipynb)

82 |

83 | The raw (non-html) notebooks for these tutorials are available [here](https://github.com/marcotcr/lime/tree/master/doc/notebooks).

84 |

85 | The API reference is available [here](https://lime-ml.readthedocs.io/en/latest/).

86 |

87 | ## What are explanations?

88 |

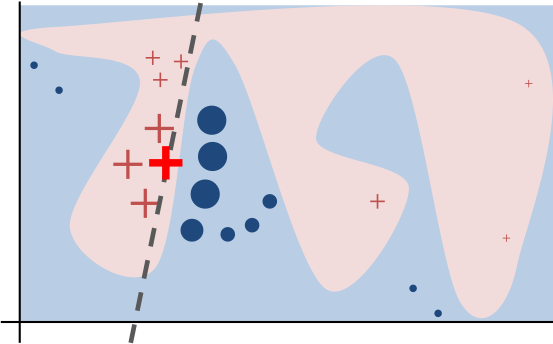

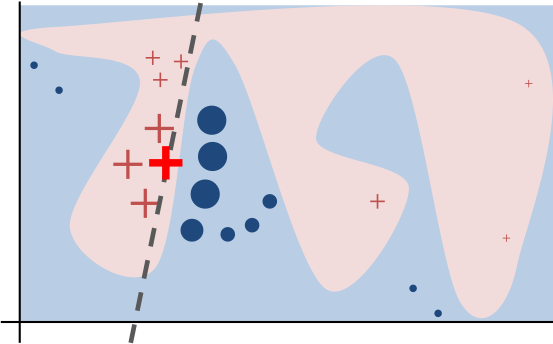

89 | Intuitively, an explanation is a local linear approximation of the model's behaviour.

90 | While the model may be very complex globally, it is easier to approximate it around the vicinity of a particular instance.

91 | While treating the model as a black box, we perturb the instance we want to explain and learn a sparse linear model around it, as an explanation.

92 | The figure below illustrates the intuition for this procedure. The model's decision function is represented by the blue/pink background, and is clearly nonlinear.

93 | The bright red cross is the instance being explained (let's call it X).

94 | We sample instances around X, and weight them according to their proximity to X (weight here is indicated by size).

95 | We then learn a linear model (dashed line) that approximates the model well in the vicinity of X, but not necessarily globally. For more information, [read our paper](https://arxiv.org/abs/1602.04938), or take a look at [this blog post](https://www.oreilly.com/learning/introduction-to-local-interpretable-model-agnostic-explanations-lime).

96 |

97 |

53 |

54 | ## Tutorials and API

55 |

56 | For example usage for text classifiers, take a look at the following two tutorials (generated from ipython notebooks):

57 |

58 | - [Basic usage, two class. We explain random forest classifiers.](https://marcotcr.github.io/lime/tutorials/Lime%20-%20basic%20usage%2C%20two%20class%20case.html)

59 | - [Multiclass case](https://marcotcr.github.io/lime/tutorials/Lime%20-%20multiclass.html)

60 |

61 | For classifiers that use numerical or categorical data, take a look at the following tutorial (this is newer, so please let me know if you find something wrong):

62 |

63 | - [Tabular data](https://marcotcr.github.io/lime/tutorials/Tutorial%20-%20continuous%20and%20categorical%20features.html)

64 | - [Tabular data with H2O models](https://marcotcr.github.io/lime/tutorials/Tutorial_H2O_continuous_and_cat.html)

65 | - [Latin Hypercube Sampling](doc/notebooks/Latin%20Hypercube%20Sampling.ipynb)

66 |

67 | For image classifiers:

68 |

69 | - [Images - basic](https://marcotcr.github.io/lime/tutorials/Tutorial%20-%20images.html)

70 | - [Images - Faces](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20Faces%20and%20GradBoost.ipynb)

71 | - [Images with Keras](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20Image%20Classification%20Keras.ipynb)

72 | - [MNIST with random forests](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20MNIST%20and%20RF.ipynb)

73 | - [Images with PyTorch](https://github.com/marcotcr/lime/blob/master/doc/notebooks/Tutorial%20-%20images%20-%20Pytorch.ipynb)

74 |

75 | For regression:

76 |

77 | - [Simple regression](https://marcotcr.github.io/lime/tutorials/Using%2Blime%2Bfor%2Bregression.html)

78 |

79 | Submodular Pick:

80 |

81 | - [Submodular Pick](https://github.com/marcotcr/lime/tree/master/doc/notebooks/Submodular%20Pick%20examples.ipynb)

82 |

83 | The raw (non-html) notebooks for these tutorials are available [here](https://github.com/marcotcr/lime/tree/master/doc/notebooks).

84 |

85 | The API reference is available [here](https://lime-ml.readthedocs.io/en/latest/).

86 |

87 | ## What are explanations?

88 |

89 | Intuitively, an explanation is a local linear approximation of the model's behaviour.

90 | While the model may be very complex globally, it is easier to approximate it around the vicinity of a particular instance.

91 | While treating the model as a black box, we perturb the instance we want to explain and learn a sparse linear model around it, as an explanation.

92 | The figure below illustrates the intuition for this procedure. The model's decision function is represented by the blue/pink background, and is clearly nonlinear.

93 | The bright red cross is the instance being explained (let's call it X).

94 | We sample instances around X, and weight them according to their proximity to X (weight here is indicated by size).

95 | We then learn a linear model (dashed line) that approximates the model well in the vicinity of X, but not necessarily globally. For more information, [read our paper](https://arxiv.org/abs/1602.04938), or take a look at [this blog post](https://www.oreilly.com/learning/introduction-to-local-interpretable-model-agnostic-explanations-lime).

96 |

97 |  98 |

99 | ## Contributing

100 |

101 | Please read [this](CONTRIBUTING.md).

102 |

--------------------------------------------------------------------------------

/benchmark/__init__.py:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/benchmark/table_perf.py:

--------------------------------------------------------------------------------

1 | """

2 | A helper script for evaluating performance of changes to the tabular explainer, in this case different

3 | implementations and methods for distance calculation.

4 | """

5 |

6 | import time

7 | from sklearn.ensemble import RandomForestClassifier

8 | from sklearn.datasets import make_classification

9 | from lime.lime_tabular import LimeTabularExplainer

10 |

11 |

12 | def interpret_data(X, y, func):

13 | explainer = LimeTabularExplainer(X, discretize_continuous=False, kernel_width=3)

14 | times, scores = [], []

15 | for r_idx in range(100):

16 | start_time = time.time()

17 | explanation = explainer.explain_instance(X[r_idx, :], func)

18 | times.append(time.time() - start_time)

19 | scores.append(explanation.score)

20 | print('...')

21 |

22 | return times, scores

23 |

24 |

25 | if __name__ == '__main__':

26 | X_raw, y_raw = make_classification(n_classes=2, n_features=1000, n_samples=1000)

27 | clf = RandomForestClassifier()

28 | clf.fit(X_raw, y_raw)

29 | y_hat = clf.predict_proba(X_raw)

30 |

31 | times, scores = interpret_data(X_raw, y_hat, clf.predict_proba)

32 | print('%9.4fs %9.4fs %9.4fs' % (min(times), sum(times) / len(times), max(times)))

33 | print('%9.4f %9.4f% 9.4f' % (min(scores), sum(scores) / len(scores), max(scores)))

--------------------------------------------------------------------------------

/benchmark/text_perf.py:

--------------------------------------------------------------------------------

1 | import time

2 | import sklearn

3 | import sklearn.ensemble

4 | import sklearn.metrics

5 | from sklearn.datasets import fetch_20newsgroups

6 | from sklearn.pipeline import make_pipeline

7 | from lime.lime_text import LimeTextExplainer

8 |

9 |

10 | def interpret_data(X, y, func, class_names):

11 | explainer = LimeTextExplainer(class_names=class_names)

12 | times, scores = [], []

13 | for r_idx in range(10):

14 | start_time = time.time()

15 | exp = explainer.explain_instance(newsgroups_test.data[r_idx], func, num_features=6)

16 | times.append(time.time() - start_time)

17 | scores.append(exp.score)

18 | print('...')

19 |

20 | return times, scores

21 |

22 | if __name__ == '__main__':

23 | categories = ['alt.atheism', 'soc.religion.christian']

24 | newsgroups_train = fetch_20newsgroups(subset='train', categories=categories)

25 | newsgroups_test = fetch_20newsgroups(subset='test', categories=categories)

26 | class_names = ['atheism', 'christian']

27 |

28 | vectorizer = sklearn.feature_extraction.text.TfidfVectorizer(lowercase=False)

29 | train_vectors = vectorizer.fit_transform(newsgroups_train.data)

30 | test_vectors = vectorizer.transform(newsgroups_test.data)

31 | rf = sklearn.ensemble.RandomForestClassifier(n_estimators=500)

32 | rf.fit(train_vectors, newsgroups_train.target)

33 | pred = rf.predict(test_vectors)

34 | sklearn.metrics.f1_score(newsgroups_test.target, pred, average='binary')

35 | c = make_pipeline(vectorizer, rf)

36 |

37 | interpret_data(train_vectors, newsgroups_train.target, c.predict_proba, class_names)

--------------------------------------------------------------------------------

/binder/environment.yml:

--------------------------------------------------------------------------------

1 |

2 | name: lime-dev

3 | channels:

4 | - conda-forge

5 | dependencies:

6 | - python=3.7.*

7 | # lime install dependencies

8 | - matplotlib

9 | - numpy

10 | - scipy

11 | - scikit-learn

12 | - scikit-image

13 | - pyDOE2

14 | # for testing

15 | - flake8

16 | - pytest

17 | # for examples

18 | - jupyter

19 | - pandas

20 | - keras

21 | - pytorch::pytorch-cpu

22 | - tensorflow

23 | - h2oai::h2o

24 | - py-xgboost

25 | - pip:

26 | # lime source code

27 | - -e ..

28 |

--------------------------------------------------------------------------------

/citation.bib:

--------------------------------------------------------------------------------

1 | @inproceedings{lime,

2 | author = {Marco Tulio Ribeiro and

3 | Sameer Singh and

4 | Carlos Guestrin},

5 | title = {"Why Should {I} Trust You?": Explaining the Predictions of Any Classifier},

6 | booktitle = {Proceedings of the 22nd {ACM} {SIGKDD} International Conference on

7 | Knowledge Discovery and Data Mining, San Francisco, CA, USA, August

8 | 13-17, 2016},

9 | pages = {1135--1144},

10 | year = {2016},

11 | }

12 |

--------------------------------------------------------------------------------

/doc/blog_post.md:

--------------------------------------------------------------------------------

1 | # LIME - Local Interpretable Model-Agnostic Explanations

2 | In this post, we'll talk about the method for explaining the predictions of any classifier described in [this paper](http://arxiv.org/pdf/1602.04938v1.pdf), and implemented in [this open source package](https://github.com/marcotcr/lime).

3 | # Motivation: why do we want to understand predictions?

4 | Machine learning is a buzzword these days. With computers beating professionals in games like [Go](https://deepmind.com/alpha-go.html), many people have started asking if machines would also make for better [drivers](https://www.google.com/selfdrivingcar/), or even doctors.

5 |

6 | Many of the state of the art machine learning models are functionally black boxes, as it is nearly impossible to get a feeling for its inner workings. This brings us to a question of trust: do I trust that a certain prediction from the model is correct? Or do I even trust that the model is making reasonable predictions in general?

7 | While the stakes are low in a Go game, they are much higher if a computer is replacing my doctor, or deciding if I am a suspect of terrorism ([Person of Interest](http://www.imdb.com/title/tt1839578/), anyone?). Perhaps more commonly, if a company is replacing some system with one based on machine learning, it has to trust that the machine learning model will behave reasonably well.

8 |

9 | It seems intuitive that explaining the rationale behind individual predictions would make us better positioned to trust or mistrust the prediction, or the classifier as a whole. Even if we can't necesseraly understand how the model behaves on all cases, it may be possible (and indeed it is in most cases) to understand how it behaves in particular cases.

10 |

11 | Finally, a word on accuracy. If you have had experience with machine learning, I bet you are thinking something along the lines of: "of course I know my model is going to perform well in the real world, I have really high cross validation accuracy! Why do I need to understand it's predictions when I know it gets it right 99% of the time?". As anyone who has used machine learning in the real world (not only in a static dataset) can attest, accuracy on cross validation can be very misleading. Sometimes data that shouldn't be available leaks into the training data accidentaly. Sometimes the way you gather data introduces correlations that will not exist in the real world, which the model exploits. Many other tricky problems can give us a false understanding of performance, even in [doing A/B tests](http://www.exp-platform.com/documents/puzzlingoutcomesincontrolledexperiments.pdf). I am not saying you shouldn't measure accuracy, but simply that it should not be your only metric for assessing trust.

12 |

13 | # Lime: A couple of examples.

14 | First, we give an example from text classification. The famous [20 newsgroups dataset](http://qwone.com/~jason/20Newsgroups/) is a benchmark in the field, and has been used to compare different models in several papers. We take two classes that are suposedly harder to distinguish, due to the fact that they share many words: Christianity and Atheism. Training a random forest with 500 trees, we get a test set accuracy of 92.4%, which is surprisingly high. If accuracy was our only measure of trust, we would definitely trust this algorithm.

15 |

16 | Below is an explanation for an arbitrary instance in the test set, generated using [the lime package](https://github.com/marcotcr/lime).

17 |

18 | This is a case where the classifier predicts the instance correctly, but for the wrong reasons. A little further exploration shows us that the word "Posting" (part of the email header) appears in 21.6% of the examples in the training set, only two times in the class 'Christianity'. This is repeated on the test set, where it appears in almost 20% of the examples, only twice in 'Christianity'. This kind of quirk in the dataset makes the problem much easier than it is in the real world, where this classifier would **not** be able to distinguish between christianity and atheism documents. This is hard to see just by looking at accuracy or raw data, but easy once explanations are provided. Such insights become common once you understand what models are actually doing, leading to models that generalize much better.

19 |

20 | Note further how interpretable the explanations are: they correspond to a very sparse linear model (with only 6 features). Even though the underlying classifier is a complicated random forest, in the neighborhood of this example it behaves roughly as a linear model. Sure nenough, if we remove the words "Host" and "NNTP" from the example, the "atheism" prediction probability becomes close to 0.57 - 0.14 - 0.12 = 0.31.

21 |

22 | Below is an image from our paper, where we explain Google's [Inception neural network](https://github.com/google/inception) on some arbitary images. In this case, we keep as explanations the parts of the image that are most positive towards a certain class. In this case, the classifier predicts Electric Guitar even though the image contains an acoustic guitar. The explanation reveals why it would confuse the two: the fretboard is very similar. Getting explanations for image classifiers is something that is not yet available in the lime package, but we are working on it.

23 |

24 |

25 | # Lime: how we get explanations

26 | Lime is short for Local Interpretable Model-Agnostic Explanations. Each part of the name reflects something that we desire in explanations. **Local** refers to local fidelity - i.e., we want the explanation to really reflect the behaviour of the classifier "around" the instance being predicted. This explanation is useless unless it is **interpretable** - that is, unless a human can make sense of it. Lime is able to explain any model without needing to 'peak' into it, so it is **model-agnostic**. We now give a high level overview of how lime works. For more details, check out our [pre-print](http://arxiv.org/pdf/1602.04938v1.pdf).

27 |

28 | First, a word about **interpretability**. Some classifiers use representations that are not intuitive to users at all (e.g. word embeddings). Lime explains those classifiers in terms of interpretable representations (words), even if that is not the representation actually used by the classifier. Further, lime takes human limitations into account: i.e. the explanations are not too long. Right now, our package supports explanations that are sparse linear models (as presented before), although we are working on other representations.

29 |

30 | In order to be **model-agnostic**, lime can't peak into the model. In order to figure out what parts of the interpretable input are contributing to the prediction, we perturb the input around its neighborhood and see how the model's predictions behave. We then weight these perturbed data points by their proximity to the original example, and learn an interpretable model on those and the associated predictions. For example, if we are trying to explain the prediction for the sentence "I hate this movie", we will perturb the sentence and get predictions on sentences such as "I hate movie", "I this movie", "I movie", "I hate", etc. Even if the original classifier takes many more words into account globally, it is reasonable to expect that around this example only the word "hate" will be relevant. Note that if the classifier uses some uninterpretable representation such as word embeddings, this still works: we just represent the perturbed sentences with word embeddings, and the explanation will still be in terms of words such as "hate" or "movie".

31 |

32 | An illustration of this process is given below. The original model's decision function is represented by the blue/pink background, and is clearly nonlinear.

33 | The bright red cross is the instance being explained (let's call it X).

34 | We sample perturbed instances around X, and weight them according to their proximity to X (weight here is represented by size). We get original model's prediction on these perturbed instances, and then learn a linear model (dashed line) that approximates the model well in the vicinity of X. Note that the explanation in this case is not faithful globally, but it is faithful locally around X.

35 |

36 |

37 | # Conclusion

38 | I hope I've convinced you that understanding individual predictions from classifiers is an important problem. Having explanations lets you make an informed decision about how much you trust the prediction or the model as a whole, and provides insights that can be used to improve the model.

39 |

40 | If you're interested in going more in-depth into how lime works, and the kinds of experiments we did to validate the usefulness of such explanations, [here is a link to our pre-print paper](http://arxiv.org/pdf/1602.04938v1.pdf).

41 |

42 | If you are interested in trying lime for text classifiers, make sure you check out our [python package](https://github.com/marcotcr/lime/). Installation is as simple as typing:

43 | ```pip install lime```

44 | The package is very easy to use. It is particulary easy to explain scikit-learn classifiers. In the github page we also link to a few tutorials, such as [this one](http://marcotcr.github.io/lime/tutorials/Lime%20-%20basic%20usage%2C%20two%20class%20case.html), with examples from scikit-learn.

45 |

46 |

47 |

--------------------------------------------------------------------------------

/doc/conf.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | #

3 | # lime documentation build configuration file, created by

4 | # sphinx-quickstart on Fri Mar 18 16:20:40 2016.

5 | #

6 | # This file is execfile()d with the current directory set to its

7 | # containing dir.

8 | #

9 | # Note that not all possible configuration values are present in this

10 | # autogenerated file.

11 | #

12 | # All configuration values have a default; values that are commented out

13 | # serve to show the default.

14 |

15 | import sys

16 | import os

17 |

18 | # If extensions (or modules to document with autodoc) are in another directory,

19 | # add these directories to sys.path here. If the directory is relative to the

20 | # documentation root, use os.path.abspath to make it absolute, like shown here.

21 | #sys.path.insert(0, os.path.abspath('.'))

22 | curr_path = os.path.dirname(os.path.abspath(os.path.expanduser(__file__)))

23 | libpath = os.path.join(curr_path, '../')

24 | sys.path.insert(0, libpath)

25 | sys.path.insert(0, curr_path)

26 |

27 | import mock

28 | MOCK_MODULES = ['numpy', 'scipy', 'scipy.sparse', 'scipy.special',

29 | 'scipy.stats', 'scipy.stats.distributions', 'sklearn', 'sklearn.preprocessing',

30 | 'sklearn.linear_model', 'matplotlib',

31 | 'sklearn.datasets', 'sklearn.ensemble', 'sklearn.cross_validation',

32 | 'sklearn.feature_extraction', 'sklearn.feature_extraction.text',

33 | 'sklearn.metrics', 'sklearn.naive_bayes', 'sklearn.pipeline',

34 | 'sklearn.utils', 'pyDOE2',]

35 | # for mod_name in MOCK_MODULES:

36 | # sys.modules[mod_name] = mock.Mock()

37 |

38 | import scipy

39 | import scipy.stats

40 | import scipy.stats.distributions

41 | import lime

42 | import lime.lime_text

43 |

44 | import lime.lime_tabular

45 | import lime.explanation

46 | import lime.lime_base

47 | import lime.submodular_pick

48 |

49 | # -- General configuration ------------------------------------------------

50 |

51 | # If your documentation needs a minimal Sphinx version, state it here.

52 | #needs_sphinx = '1.0'

53 |

54 | # Add any Sphinx extension module names here, as strings. They can be

55 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

56 | # ones.

57 | extensions = [

58 | 'sphinx.ext.autodoc',

59 | 'sphinx.ext.mathjax',

60 | 'sphinx.ext.napoleon',

61 | ]

62 |

63 | # Add any paths that contain templates here, relative to this directory.

64 | templates_path = ['_templates']

65 |

66 | # The suffix(es) of source filenames.

67 | # You can specify multiple suffix as a list of string:

68 | # source_suffix = ['.rst', '.md']

69 | source_suffix = '.rst'

70 |

71 | # The encoding of source files.

72 | #source_encoding = 'utf-8-sig'

73 |

74 | # The master toctree document.

75 | master_doc = 'index'

76 |

77 | # General information about the project.

78 | project = u'lime'

79 | copyright = u'2016, Marco Tulio Ribeiro'

80 | author = u'Marco Tulio Ribeiro'

81 |

82 | # The version info for the project you're documenting, acts as replacement for

83 | # |version| and |release|, also used in various other places throughout the

84 | # built documents.

85 | #

86 | # The short X.Y version.

87 | version = u'0.1'

88 | # The full version, including alpha/beta/rc tags.

89 | release = u'0.1'

90 |

91 | # The language for content autogenerated by Sphinx. Refer to documentation

92 | # for a list of supported languages.

93 | #

94 | # This is also used if you do content translation via gettext catalogs.

95 | # Usually you set "language" from the command line for these cases.

96 | language = None

97 |

98 | # There are two options for replacing |today|: either, you set today to some

99 | # non-false value, then it is used:

100 | #today = ''

101 | # Else, today_fmt is used as the format for a strftime call.

102 | #today_fmt = '%B %d, %Y'

103 |

104 | # List of patterns, relative to source directory, that match files and

105 | # directories to ignore when looking for source files.

106 | exclude_patterns = ['_build']

107 |

108 | # The reST default role (used for this markup: `text`) to use for all

109 | # documents.

110 | #default_role = None

111 |

112 | # If true, '()' will be appended to :func: etc. cross-reference text.

113 | #add_function_parentheses = True

114 |

115 | # If true, the current module name will be prepended to all description

116 | # unit titles (such as .. function::).

117 | #add_module_names = True

118 |

119 | # If true, sectionauthor and moduleauthor directives will be shown in the

120 | # output. They are ignored by default.

121 | #show_authors = False

122 |

123 | # The name of the Pygments (syntax highlighting) style to use.

124 | pygments_style = 'sphinx'

125 |

126 | # A list of ignored prefixes for module index sorting.

127 | #modindex_common_prefix = []

128 |

129 | # If true, keep warnings as "system message" paragraphs in the built documents.

130 | #keep_warnings = False

131 |

132 | # If true, `todo` and `todoList` produce output, else they produce nothing.

133 | todo_include_todos = False

134 |

135 |

136 | # -- Options for HTML output ----------------------------------------------

137 |

138 | # The theme to use for HTML and HTML Help pages. See the documentation for

139 | # a list of builtin themes.

140 | html_theme = 'default'

141 |

142 | # Theme options are theme-specific and customize the look and feel of a theme

143 | # further. For a list of options available for each theme, see the

144 | # documentation.

145 | #html_theme_options = {}

146 |

147 | # Add any paths that contain custom themes here, relative to this directory.

148 | #html_theme_path = []

149 |

150 | # The name for this set of Sphinx documents. If None, it defaults to

151 | # " v documentation".

152 | #html_title = None

153 |

154 | # A shorter title for the navigation bar. Default is the same as html_title.

155 | #html_short_title = None

156 |

157 | # The name of an image file (relative to this directory) to place at the top

158 | # of the sidebar.

159 | #html_logo = None

160 |

161 | # The name of an image file (relative to this directory) to use as a favicon of

162 | # the docs. This file should be a Windows icon file (.ico) being 16x16 or 32x32

163 | # pixels large.

164 | #html_favicon = None

165 |

166 | # Add any paths that contain custom static files (such as style sheets) here,

167 | # relative to this directory. They are copied after the builtin static files,

168 | # so a file named "default.css" will overwrite the builtin "default.css".

169 | html_static_path = ['_static']

170 |

171 | # Add any extra paths that contain custom files (such as robots.txt or

172 | # .htaccess) here, relative to this directory. These files are copied

173 | # directly to the root of the documentation.

174 | #html_extra_path = []

175 |

176 | # If not '', a 'Last updated on:' timestamp is inserted at every page bottom,

177 | # using the given strftime format.

178 | #html_last_updated_fmt = '%b %d, %Y'

179 |

180 | # If true, SmartyPants will be used to convert quotes and dashes to

181 | # typographically correct entities.

182 | #html_use_smartypants = True

183 |

184 | # Custom sidebar templates, maps document names to template names.

185 | #html_sidebars = {}

186 |

187 | # Additional templates that should be rendered to pages, maps page names to

188 | # template names.

189 | #html_additional_pages = {}

190 |

191 | # If false, no module index is generated.

192 | #html_domain_indices = True

193 |

194 | # If false, no index is generated.

195 | #html_use_index = True

196 |

197 | # If true, the index is split into individual pages for each letter.

198 | #html_split_index = False

199 |

200 | # If true, links to the reST sources are added to the pages.

201 | #html_show_sourcelink = True

202 |

203 | # If true, "Created using Sphinx" is shown in the HTML footer. Default is True.

204 | #html_show_sphinx = True

205 |

206 | # If true, "(C) Copyright ..." is shown in the HTML footer. Default is True.

207 | #html_show_copyright = True

208 |

209 | # If true, an OpenSearch description file will be output, and all pages will

210 | # contain a tag referring to it. The value of this option must be the

211 | # base URL from which the finished HTML is served.

212 | #html_use_opensearch = ''

213 |

214 | # This is the file name suffix for HTML files (e.g. ".xhtml").

215 | #html_file_suffix = None

216 |

217 | # Language to be used for generating the HTML full-text search index.

218 | # Sphinx supports the following languages:

219 | # 'da', 'de', 'en', 'es', 'fi', 'fr', 'hu', 'it', 'ja'

220 | # 'nl', 'no', 'pt', 'ro', 'ru', 'sv', 'tr'

221 | #html_search_language = 'en'

222 |

223 | # A dictionary with options for the search language support, empty by default.

224 | # Now only 'ja' uses this config value

225 | #html_search_options = {'type': 'default'}

226 |

227 | # The name of a javascript file (relative to the configuration directory) that

228 | # implements a search results scorer. If empty, the default will be used.

229 | #html_search_scorer = 'scorer.js'

230 |

231 | # Output file base name for HTML help builder.

232 | htmlhelp_basename = 'limedoc'

233 |

234 | # -- Options for LaTeX output ---------------------------------------------

235 |

236 | latex_elements = {

237 | # The paper size ('letterpaper' or 'a4paper').

238 | #'papersize': 'letterpaper',

239 |

240 | # The font size ('10pt', '11pt' or '12pt').

241 | #'pointsize': '10pt',

242 |

243 | # Additional stuff for the LaTeX preamble.

244 | #'preamble': '',

245 |

246 | # Latex figure (float) alignment

247 | #'figure_align': 'htbp',

248 | }

249 |

250 | # Grouping the document tree into LaTeX files. List of tuples

251 | # (source start file, target name, title,

252 | # author, documentclass [howto, manual, or own class]).

253 | latex_documents = [

254 | (master_doc, 'lime.tex', u'lime Documentation',

255 | u'Marco Tulio Ribeiro', 'manual'),

256 | ]

257 |

258 | # The name of an image file (relative to this directory) to place at the top of

259 | # the title page.

260 | #latex_logo = None

261 |

262 | # For "manual" documents, if this is true, then toplevel headings are parts,

263 | # not chapters.

264 | #latex_use_parts = False

265 |

266 | # If true, show page references after internal links.

267 | #latex_show_pagerefs = False

268 |

269 | # If true, show URL addresses after external links.

270 | #latex_show_urls = False

271 |

272 | # Documents to append as an appendix to all manuals.

273 | #latex_appendices = []

274 |

275 | # If false, no module index is generated.

276 | #latex_domain_indices = True

277 |

278 |

279 | # -- Options for manual page output ---------------------------------------

280 |

281 | # One entry per manual page. List of tuples

282 | # (source start file, name, description, authors, manual section).

283 | man_pages = [

284 | (master_doc, 'lime', u'lime Documentation',

285 | [author], 1)

286 | ]

287 |

288 | # If true, show URL addresses after external links.

289 | #man_show_urls = False

290 |

291 |

292 | # -- Options for Texinfo output -------------------------------------------

293 |

294 | # Grouping the document tree into Texinfo files. List of tuples

295 | # (source start file, target name, title, author,

296 | # dir menu entry, description, category)

297 | texinfo_documents = [

298 | (master_doc, 'lime', u'lime Documentation',

299 | author, 'lime', 'One line description of project.',

300 | 'Miscellaneous'),

301 | ]

302 |

303 | autoclass_content = 'both'

304 | # Documents to append as an appendix to all manuals.

305 | #texinfo_appendices = []

306 |

307 | # If false, no module index is generated.

308 | #texinfo_domain_indices = True

309 |

310 | # How to display URL addresses: 'footnote', 'no', or 'inline'.

311 | #texinfo_show_urls = 'footnote'

312 |

313 | # If true, do not generate a @detailmenu in the "Top" node's menu.

314 | #texinfo_no_detailmenu = False

315 |

--------------------------------------------------------------------------------

/doc/images/image_from_paper.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/image_from_paper.png

--------------------------------------------------------------------------------

/doc/images/images.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/images.png

--------------------------------------------------------------------------------

/doc/images/lime.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/lime.png

--------------------------------------------------------------------------------

/doc/images/multiclass.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/multiclass.png

--------------------------------------------------------------------------------

/doc/images/tabular.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/tabular.png

--------------------------------------------------------------------------------

/doc/images/twoclass.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/twoclass.png

--------------------------------------------------------------------------------

/doc/images/video_screenshot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/images/video_screenshot.png

--------------------------------------------------------------------------------

/doc/index.rst:

--------------------------------------------------------------------------------

1 | .. lime documentation master file, created by

2 | sphinx-quickstart on Fri Mar 18 16:20:40 2016.

3 | You can adapt this file completely to your liking, but it should at least

4 | contain the root `toctree` directive.

5 |

6 | Local Interpretable Model-Agnostic Explanations (lime)

7 | ================================

8 | In this page, you can find the Python API reference for the lime package (local interpretable model-agnostic explanations).

9 | For tutorials and more information, visit `the github page `_.

10 |

11 |

12 | .. toctree::

13 | :maxdepth: 2

14 |

15 | lime

16 |

17 |

18 |

19 | Indices and tables

20 | ==================

21 |

22 | * :ref:`genindex`

23 | * :ref:`modindex`

24 | * :ref:`search`

25 |

26 |

--------------------------------------------------------------------------------

/doc/lime.rst:

--------------------------------------------------------------------------------

1 | lime package

2 | ============

3 |

4 | Subpackages

5 | -----------

6 |

7 | .. toctree::

8 |

9 | lime.tests

10 |

11 | Submodules

12 | ----------

13 |

14 | lime\.discretize module

15 | -----------------------

16 |

17 | .. automodule:: lime.discretize

18 | :members:

19 | :undoc-members:

20 | :show-inheritance:

21 |

22 | lime\.exceptions module

23 | -----------------------

24 |

25 | .. automodule:: lime.exceptions

26 | :members:

27 | :undoc-members:

28 | :show-inheritance:

29 |

30 | lime\.explanation module

31 | ------------------------

32 |

33 | .. automodule:: lime.explanation

34 | :members:

35 | :undoc-members:

36 | :show-inheritance:

37 |

38 | lime\.lime\_base module

39 | -----------------------

40 |

41 | .. automodule:: lime.lime_base

42 | :members:

43 | :undoc-members:

44 | :show-inheritance:

45 |

46 | lime\.lime\_image module

47 | ------------------------

48 |

49 | .. automodule:: lime.lime_image

50 | :members:

51 | :undoc-members:

52 | :show-inheritance:

53 |

54 | lime\.lime\_tabular module

55 | --------------------------

56 |

57 | .. automodule:: lime.lime_tabular

58 | :members:

59 | :undoc-members:

60 | :show-inheritance:

61 |

62 | lime\.lime\_text module

63 | -----------------------

64 |

65 | .. automodule:: lime.lime_text

66 | :members:

67 | :undoc-members:

68 | :show-inheritance:

69 |

70 |

71 | lime\.submodular\_pick module

72 | -----------------------

73 |

74 | .. automodule:: lime.submodular_pick

75 | :members:

76 | :undoc-members:

77 | :show-inheritance:

78 |

79 |

80 | Module contents

81 | ---------------

82 |

83 | .. automodule:: lime

84 | :members:

85 | :undoc-members:

86 | :show-inheritance:

87 |

--------------------------------------------------------------------------------

/doc/notebooks/data/cat_mouse.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/notebooks/data/cat_mouse.jpg

--------------------------------------------------------------------------------

/doc/notebooks/data/dogs.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/marcotcr/lime/fd7eb2e6f760619c29fca0187c07b82157601b32/doc/notebooks/data/dogs.png

--------------------------------------------------------------------------------

/doc/notebooks/data/imagenet_class_index.json:

--------------------------------------------------------------------------------