├── .github
│   └── workflows
│       ├── docs.yml
│       └── qc.yml
├── .gitignore
├── .travis.yml
├── CONTRIBUTING.md
├── LICENSE.txt
├── README.md
├── deprecated-class-historical-taxonomy.owl
├── docs
│   ├── cite.md
│   ├── contributing.md
│   ├── history.md
│   ├── index.md
│   ├── modeling
│   │   ├── Home.md
│   │   ├── Householder.md
│   │   ├── Housing-unit-and-Household.md
│   │   ├── How-are-household-and-housing-unit-related?.md
│   │   ├── Insurance.md
│   │   ├── Modeling-Discharge-and-Discharge-Status.md
│   │   ├── OMRSE-Language-and-Language-Individuals.md
│   │   ├── OMRSE-Overview.md
│   │   ├── PCORNet-Smoking-Status.md
│   │   ├── Race-And-Ethnicity.md
│   │   ├── Representing-Languages-as-codified-by--ISO-639.md
│   │   ├── Typology-of-Health-Care-Facilities.md
│   │   └── What-is-the-relation-between-a-role-and-the-organization-that-creates-sanctions-confers-it.md
│   ├── odk-workflows
│   │   ├── ContinuousIntegration.md
│   │   ├── EditorsWorkflow.md
│   │   ├── ManageDocumentation.md
│   │   ├── ReleaseWorkflow.md
│   │   ├── RepoManagement.md
│   │   ├── RepositoryFileStructure.md
│   │   ├── SettingUpDockerForODK.md
│   │   ├── UpdateImports.md
│   │   ├── components.md
│   │   └── index.md
│   ├── omrse-doc-process
│   │   └── EditingOMRSEDocumentation.md
│   └── templates
│       └── dosdp.md
├── issue_template.md
├── mkdocs.yaml
├── omrse-base.json
├── omrse-base.obo
├── omrse-base.owl
├── omrse-full.json
├── omrse-full.obo
├── omrse-full.owl
├── omrse.json
├── omrse.obo
├── omrse.owl
└── src
    ├── ontology
    │   ├── Makefile
    │   ├── README-editors.md
    │   ├── archive
    │   │   ├── README.md
    │   │   ├── omrse-edit-external.ofn
    │   │   ├── release-diff-build.txt
    │   │   ├── release-diff-merged.md
    │   │   └── release-diff-merged.txt
    │   ├── catalog-v001.xml
    │   ├── components
    │   │   └── language
    │   │       └── language-individuals.owl
    │   ├── imports
    │   │   ├── apollo_sv_import.owl
    │   │   ├── apollo_sv_terms.txt
    │   │   ├── bfo_import.owl
    │   │   ├── bfo_terms.txt
    │   │   ├── d-acts_import.owl
    │   │   ├── d-acts_terms.txt
    │   │   ├── external_import.owl
    │   │   ├── geo_import.owl
    │   │   ├── geo_terms.txt
    │   │   ├── go_import.owl
    │   │   ├── go_terms.txt
    │   │   ├── iao_import.owl
    │   │   ├── iao_terms.txt
    │   │   ├── mf_import.owl
    │   │   ├── mf_terms.txt
    │   │   ├── ncbitaxon_import.owl
    │   │   ├── ncbitaxon_terms.txt
    │   │   ├── oae_import.owl
    │   │   ├── oae_terms.txt
    │   │   ├── obi_import.owl
    │   │   ├── obi_terms.txt
    │   │   ├── obib_import.owl
    │   │   ├── obib_terms.txt
    │   │   ├── ogms_import.owl
    │   │   ├── ogms_terms.txt
    │   │   ├── omo_import.owl
    │   │   ├── omo_terms.txt
    │   │   ├── oostt_import.owl
    │   │   ├── oostt_terms.txt
    │   │   ├── pco_import.owl
    │   │   ├── pco_terms.txt
    │   │   ├── pno_import.owl
    │   │   ├── pno_terms.txt
    │   │   ├── ro_import.owl
    │   │   └── ro_terms.txt
    │   ├── omrse-edit.owl
    │   ├── omrse-idranges.owl
    │   ├── omrse-odk.yaml
    │   ├── omrse.Makefile
    │   ├── profile.txt
    │   ├── run.bat
    │   └── run.sh
    ├── scripts
    │   ├── find-highest-obo-id.sh
    │   ├── summarize-table.py
    │   ├── update_repo.sh
    │   └── validate_id_ranges.sc
    ├── sparql
    │   ├── README.md
    │   ├── basic-report.sparql
    │   ├── class-count-by-prefix.sparql
    │   ├── def-lacks-xref-violation.sparql
    │   ├── edges.sparql
    │   ├── equivalent-classes-violation.sparql
    │   ├── inject-subset-declaration.ru
    │   ├── inject-synonymtype-declaration.ru
    │   ├── iri-range-violation.sparql
    │   ├── label-with-iri-violation.sparql
    │   ├── labels.sparql
    │   ├── multiple-replaced_by-violation.sparql
    │   ├── nolabels-violation.sparql
    │   ├── obi_terms.sparql
    │   ├── obsolete-violation.sparql
    │   ├── obsoletes.sparql
    │   ├── omrse_terms.sparql
    │   ├── owldef-self-reference-violation.sparql
    │   ├── owldef-violation.sparql
    │   ├── postprocess-module.ru
    │   ├── preprocess-module.ru
    │   ├── redundant-subClassOf-violation.sparql
    │   ├── simple-seed.sparql
    │   ├── subsets-labeled.sparql
    │   ├── synonyms.sparql
    │   ├── terms.sparql
    │   ├── triple-table.sparql
    │   └── xrefs.sparql
    └── templates
        └── external_import.tsv

/.github/workflows/docs.yml:

--------------------------------------------------------------------------------

1 | # Basic ODK workflow

2 | name: Docs

3 |

4 | # Controls when the action will run.

5 | on:

6 | # Allows you to run this workflow manually from the Actions tab

7 | workflow_dispatch:

8 | push:

9 | branches:

10 | - main

11 |

12 | # A workflow run is made up of one or more jobs that can run sequentially or in parallel

13 | jobs:

14 | build:

15 | name: Deploy docs

16 | runs-on: ubuntu-latest

17 | steps:

18 | - name: Checkout main

19 | uses: actions/checkout@v3

20 |

21 | - name: Deploy docs

22 | uses: mhausenblas/mkdocs-deploy-gh-pages@master

23 | # Or use mhausenblas/mkdocs-deploy-gh-pages@nomaterial to build without the mkdocs-material theme

24 | env:

25 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

26 | CONFIG_FILE: mkdocs.yaml

27 |

28 |

--------------------------------------------------------------------------------

/.github/workflows/qc.yml:

--------------------------------------------------------------------------------

1 | # Basic ODK workflow

2 |

3 | name: CI

4 |

5 | # Controls when the action will run.

6 | on:

7 | # Triggers the workflow on push or pull request events but only for the main branch

8 | push:

9 | branches: [ main ]

10 | pull_request:

11 | branches: [ main ]

12 |

13 | # Allows you to run this workflow manually from the Actions tab

14 | workflow_dispatch:

15 |

16 | # A workflow run is made up of one or more jobs that can run sequentially or in parallel

17 | jobs:

18 | # This workflow contains a single job called "ontology_qc"

19 | ontology_qc:

20 | # The type of runner that the job will run on

21 | runs-on: ubuntu-latest

22 | container: obolibrary/odkfull:v1.5.4

23 |

24 | # Steps represent a sequence of tasks that will be executed as part of the job

25 | steps:

26 | # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it

27 | - uses: actions/checkout@v3

28 |

29 | - name: Run ontology QC checks

30 | env:

31 | DEFAULT_BRANCH: main

32 | run: cd src/ontology && make ROBOT_ENV='ROBOT_JAVA_ARGS=-Xmx6G' test IMP=false PAT=false MIR=false

33 |

34 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | semantic.cache

3 | bin/

4 |

5 | *.tmp

6 | *.tmp.obo

7 | *.tmp.owl

8 | *.tmp.json

9 |

10 | src/ontology/mirror

11 | src/ontology/mirror/*

12 | src/ontology/omrse.owl

13 | src/ontology/omrse.obo

14 | src/ontology/omrse.json

15 | src/ontology/omrse-base.*

16 | src/ontology/omrse-basic.*

17 | src/ontology/omrse-full.*

18 | src/ontology/omrse-simple.*

19 | src/ontology/omrse-simple-non-classified.*

20 |

21 | src/ontology/seed.txt

22 | src/ontology/dosdp-tools.log

23 | src/ontology/ed_definitions_merged.owl

24 | src/ontology/ontologyterms.txt

25 | src/ontology/simple_seed.txt

26 | src/ontology/patterns

27 | src/ontology/merged-omrse-edit.owl

28 | src/ontology/reports

29 | src/ontology/omrse-edit.properties

30 | reports/

31 |

32 | src/ontology/target/

33 | src/ontology/tmp/*

34 | !src/ontology/tmp/README.md

35 |

36 | src/ontology/imports/*_terms_combined.txt

37 |

38 | src/patterns/data/**/*.ofn

39 | src/patterns/data/**/*.txt

40 | src/patterns/pattern_owl_seed.txt

41 | src/patterns/all_pattern_terms.txt

42 | src/scripts/pull_changes_from_master.sh

43 | src/_patterns

44 | src/_sparql

45 | .cogs

46 | site

47 | pull-request.md

48 | omrse/catalog-v001.xml

49 | omrse/language/catalog-v001.xml

50 |

51 | .idea/

52 | *.py

53 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | ## REMEMBER TO SET UP YOUR GIT REPO FOR TRAVIS

2 | ## Go to: https://travis-ci.org/matentzn for details

3 | sudo: required

4 |

5 | services:

6 | - docker

7 |

8 | before_install:

9 | - docker pull obolibrary/odkfull

10 |

11 | # command to run tests

12 | script: cd src/ontology && sh run.sh make test

13 |

14 | #after_success:

15 | # coveralls

16 |

17 | # whitelist

18 | branches:

19 | only:

20 | - master

21 | - test-travis

22 |

23 | ### Add your own lists here

24 | ### See https://github.com/INCATools/ontology-development-kit/issues/35

25 | notifications:

26 | email:

27 | - obo-ci-reports-all@groups.io

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | ## Before you write a new request, please consider the following:

2 |

3 | - **Does the term already exist?** Before suggesting a new ontology term, check whether it already exists, either as a primary term or as a synonym. You can search using [OLS](http://www.ebi.ac.uk/ols/ontologies/omrse).

4 |

5 | ## Guidelines for creating GitHub tickets with contributions to the ontology:

6 |

7 | 1. **Write a detailed request:** Please be specific and include as many details as necessary, providing background information and, if possible, suggesting a solution. The editors will be better equipped to address your suggestions if you offer details regarding *'what is wrong'*, *'why'*, and *'how to fix it'*.

8 |

9 | 2. **Provide examples and references:** Please include PMIDs for new term requests, and for other types of requests also include screenshots or URLs illustrating the current ontology structure.

10 |

11 | 3. **For new term requests:** Be sure to provide suggestions for label (name), definition, references, position in hierarchy, etc.

12 |

13 | 4. **For updates to relationships:** Provide details of the current axioms, why you think they are wrong or not sufficient, and what exactly should be added or removed.

14 |

15 | On behalf of the Ontology of Medically Related Social Entities editorial team, thanks!

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [](http://robot.obolibrary.org/)

2 |

3 |

4 |

5 | # OMRSE -- The Ontology for Modeling and Representation of Social Entities

6 |

7 | Shield: [![CC BY 4.0][cc-by-shield]][cc-by]

8 |

9 | This work is licensed under a

10 | [Creative Commons Attribution 4.0 International License][cc-by].

11 |

12 | [![CC BY 4.0][cc-by-image]][cc-by]

13 |

14 | [cc-by]: http://creativecommons.org/licenses/by/4.0/

15 | [cc-by-image]: https://i.creativecommons.org/l/by/4.0/88x31.png

16 | [cc-by-shield]: https://img.shields.io/badge/License-CC%20BY%204.0-lightgrey.svg

17 |

18 | The Ontology for Modeling and Representation of Social Entities (OMRSE) is an OBO Foundry ontology that represents the various entities that arise from human social interactions, such as social acts, social roles, social groups, and organizations. For more information on the social entities represented in OMRSE, please visit our [wiki page](https://mcwdsi.github.io/OMRSE/modeling/Home/) or the list of publications below. OMRSE is designed to be a mid-level ontology that bridges the gap between BFO, which it reuses for its top-level hierarchy, and more specific domain or application ontologies. For this reason, we are always open to working with ontology developers who want to build interoperability between their projects and OMRSE. Projects we currently collaborate with, or that reuse OMRSE classes, are listed below.

19 |

20 | OMRSE Google Group: http://groups.google.com/group/omrse-discuss (click the "Apply for Membership" link to join).

21 |

22 | Monthly meetings occur on the first Wednesday of each month at 11:00 ET / 10:00 CT / 8:00 PT and last one hour.

23 |

24 | Agenda: https://docs.google.com/document/d/1UUD-53SaioJO7btrs8ie3yjFYJIF6HV3RUU3A7A_w2Y/edit#

25 |

26 | ## Previously known as the Ontology of Medically Related Social Entities

27 |

28 | ## Projects and ontologies that use OMRSE

29 |

30 | [Apollo Structured Vocabulary](https://github.com/ApolloDev)

31 |

32 | [OBIB](https://github.com/biobanking/biobanking): Ontology for Biobanking

33 |

34 | [OccO](https://github.com/Occupation-Ontology/OccO): Occupation Ontology

35 |

36 | [OntoNeo](https://ontoneo.com/): Obstetric and Neonatal Ontology

37 |

38 | [Oral Health and Disease Ontology](https://github.com/wdduncan/ohd-ontology)

39 |

40 | [OOSTT](https://github.com/OOSTT/): Ontology of Organizational Structures of Trauma centers and Trauma systems

41 |

42 | ## Preparing a new OMRSE release

43 |

44 | See [documentation](docs/odk-workflows/ReleaseWorkflow.md).

45 |

46 | ## List of Publications

47 |

48 | Dowland SC, Smith B, Diller MA, Landgrebe J, Hogan WR. Ontology of language, with applications to demographic data. Applied Ontology. 2023;18:239-62. http://doi.org/10.3233/AO-230049

49 |

50 | Diller M, Hogan WR. An Ontological Representation of Money with a View Toward Economic Determinants of Health. Proceedings of the International Conference on Biological and Biomedical Ontology (ICBO) 2022;1613:0073. Link: https://icbo-conference.github.io/icbo2022/papers/ICBO-2022_paper_8682.pdf

51 |

52 | Dowland SC, Hogan WR. Using Ontologies to Enhance Data on Intimate Partner Violence. Proceedings of the International Conference on Biological and Biomedical Ontology (ICBO). Link: https://icbo-conference.github.io/icbo2022/papers/ICBO-2022_paper_6874.pdf

53 |

54 | Hicks A, Hanna J, Welch D, Brochhausen M, Hogan WR. The ontology of medically related social entities: recent developments. Journal of Biomedical Semantics. 2016 Dec;7(1):1-4. Link: https://jbiomedsem.biomedcentral.com/articles/10.1186/s13326-016-0087-8

55 |

56 | Ceusters W, Hogan WR. An ontological analysis of diagnostic assertions in electronic healthcare records. Homo. 2015;1:2. Link: http://icbo2015.fc.ul.pt/regular2.pdf

57 |

58 | Hogan WR, Garimalla S, Tariq SA. Representing the Reality Underlying Demographic Data. In: ICBO 2011 Jul 26. Link: http://ceur-ws.org/Vol-833/paper20.pdf

59 |

60 |

--------------------------------------------------------------------------------

/deprecated-class-historical-taxonomy.owl:

--------------------------------------------------------------------------------

28 | has participant

36 | has active participant

56 | material entity

64 | social act

72 | human social role

81 | obsolete OMB ethnic identity datum

82 | true

91 | obsolete socio-legal human social role

92 | true

101 | obsolete racial identity datum

102 | true

111 | obsolete ethnic identity datum

112 | true

121 | obsolete hispanic or latino identity datum

122 | true

131 | obsolete not hispanic or latino identity datum

132 | true

141 | obsolete identity datum

142 | true

151 | obsolete gender identity datum

152 | true

161 | obsolete female gender identity datum

162 | true

171 | obsolete male gender identity datum

172 | true

181 | obsolete self-identity data item

182 | true

191 | obsolete medical advice

192 | true

200 | obsolete questions asking process

201 | true

210 | obsolete material information bearer of question text plus answer set

211 | true

220 | obsolete identity question asking process

221 | true

230 | obsolete ethnic identity question asking process

231 | true

240 | obsolete race identity question asking process

241 | true

249 | answer to identity question

258 | obsolete American Indian or Alaska Native identity datum

259 | true

268 | obsolete Asian identity datum

269 | true

278 | obsolete black or African American identity datum

279 | true

288 | obsolete Native Hawaiian or other Pacific Islander identity datum

289 | true

298 | obsolete white identity datum

299 | true

308 | obsolete OMB racial identity datum

309 | true

317 | obsolete communication

318 | true

327 | obsolete expression of preferred language

328 | true

337 | obsolete non-binary gender identity datum

338 | true

352 | is-aggregate-of

--------------------------------------------------------------------------------

/docs/cite.md:

--------------------------------------------------------------------------------

1 | # How to cite OMRSE

2 |

--------------------------------------------------------------------------------

/docs/contributing.md:

--------------------------------------------------------------------------------

1 | # How to contribute to OMRSE

2 |

--------------------------------------------------------------------------------

/docs/history.md:

--------------------------------------------------------------------------------

1 | # A brief history of OMRSE

2 |

3 | The following page gives an overview of the history of OMRSE.

4 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | # OMRSE Ontology Documentation

2 |

3 | [//]: # "This file is meant to be edited by the ontology maintainer."

4 |

5 | Welcome to the OMRSE documentation!

6 |

7 | You can find descriptions of the standard ontology engineering workflows [here](odk-workflows/index.md).

8 |

--------------------------------------------------------------------------------

/docs/modeling/Home.md:

--------------------------------------------------------------------------------

1 | # Welcome to the OMRSE wiki!

2 |

3 | Read an [overview](OMRSE-Overview.md) of the OMRSE ontology

4 |

5 | ## Specific modeling decisions:

6 | - [Healthcare facilities](Typology-of-Health-Care-Facilities.md)

7 | - [Hospital discharge & discharge status](Modeling-Discharge-and-Discharge-Status.md)

8 | - [Householder](Householder.md)

9 | - [Housing unit & household](Housing-unit-and-Household.md)

10 | - [Insurance, especially health insurance](Insurance.md)

11 | - [Language](OMRSE-Language-and-Language-Individuals.md)

12 | - [Language as codified by ISO-639](Representing-Languages-as-codified-by--ISO-639.md)

13 | - [Race and ethnicity](Race-And-Ethnicity.md)

14 | - [Relation between a role and the organization that creates it](What-is-the-relation-between-a-role-and-the-organization-that-creates-sanctions-confers-it.md)

15 | - [Smoking status](PCORNet-Smoking-Status.md)

16 |

--------------------------------------------------------------------------------

/docs/modeling/Householder.md:

--------------------------------------------------------------------------------

1 | # US Census Householder

2 |

3 | US Census

4 | "The householder refers to the person (or one of the people) in whose name the housing unit is owned or rented (maintained) or, if there is no such person, any adult member, excluding roomers, boarders, or paid employees. If the house is owned or rented jointly by a married couple, the householder may be either the husband or the wife. The person designated as the householder is the "reference person" to whom the relationship of all other household members, if any, is recorded.

5 |

6 | "The number of householders is equal to the number of households. Also, the number of family householders is equal to the number of families.

7 |

8 | "Head versus householder. Beginning with the 1980 CPS, the Bureau of the Census discontinued the use of the terms "head of household" and "head of family." Instead, the terms "householder" and "family householder" are used. Recent social changes have resulted in greater sharing of household responsibilities among the adult members and, therefore, have made the term "head" increasingly inappropriate in the analysis of household and family data. Specifically, beginning in 1980, the Census Bureau discontinued its longtime practice of always classifying the husband as the reference person (head) when he and his wife are living together."

9 | http://www.census.gov/cps/about/cpsdef.html

10 |

11 | * Sufficient condition - being the only person who maintains (owns or rents) the housing unit in their name.

12 |

13 | * Necessary conditions: adult, member of the household, not paying another member of the household to live there; not a paid employee of the household.

14 |

15 | Notes: There is one householder per household. The householder role is individuated by households. Can one person be the householder of many households? (Look at Utah census data.) The householder also plays the role of US Census reference person.

16 |

17 | Proposed definition:

18 | A US Census householder role is a human social role that inheres in a Homo sapiens and is realized by that person being a member of a household and either owning or renting the housing unit in which that household resides and being designated as the householder. If there is only one member of the household who owns or rents the housing unit, that person is designated the householder by default.
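The conditions and the default-designation rule above can be sketched in code. This is only an illustrative reading, not part of OMRSE: the `Person` fields and the function names are hypothetical, and "not paying another member of the household to live there" is approximated by a single `is_roomer_or_boarder` flag.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Person:
    """Hypothetical record of the facts the householder conditions refer to."""
    name: str
    is_adult: bool
    is_household_member: bool
    maintains_unit: bool  # owns or rents the housing unit in their name
    is_roomer_or_boarder: bool = False  # pays another member to live there
    is_paid_employee: bool = False


def meets_necessary_conditions(p: Person) -> bool:
    """Adult household member who is neither a roomer/boarder nor a paid employee."""
    return (
        p.is_adult
        and p.is_household_member
        and not p.is_roomer_or_boarder
        and not p.is_paid_employee
    )


def default_householder(members: List[Person]) -> Optional[Person]:
    """Return the householder by default when exactly one eligible member
    maintains the unit; otherwise return None, since a designation is needed."""
    maintainers = [
        p for p in members if p.maintains_unit and meets_necessary_conditions(p)
    ]
    return maintainers[0] if len(maintainers) == 1 else None
```

When two members jointly own or rent the unit, the function returns `None`, reflecting that the definition then depends on who is designated rather than on ownership alone.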

19 |

20 | # US Census Reference Person

21 |

22 | "The reference person is the person to whom the relationship of other people in the household is recorded. The household reference person is the person listed as the householder (see definition of "Householder"). The subfamily reference person is either the single parent or the husband/wife in a married-couple situation."

23 |

24 | Do we need to model "US Census reference person"?

--------------------------------------------------------------------------------

/docs/modeling/Housing-unit-and-Household.md:

--------------------------------------------------------------------------------

1 | ## Use Cases

2 |

3 | ### Representing US Census Housing Units for Exposome Research

4 |

5 | We currently need to integrate American Community Survey (ACS) data for Florida with other environmental datasets in a graph database to support exposome research. These data capture measures of aggregates of households within a particular region in the US (e.g., Census tracts and counties).

6 |

7 | ### Representing Synthetic Ecosystem for the Modeling Infectious Disease Agent Study

8 |

9 | We previously had a use case to represent households and housing units in the Modeling Infectious Disease Agent Study (MIDAS) Informatics Services Group (ISG). Specifically, we represented synthetic ecosystem datasets, which are datasets that are derived from so-called "micro-samples" of actual census data. To build agent-based epidemic simulators, researchers will often take samples of census data and expand them back up to the size of the entire population according to statistical algorithms that ensure the re-created overall population dataset matches the actual population in terms of race, gender, ethnicity, geographical distribution, and so on.

10 |

11 | For modelers, finding a synthetic ecosystem that meets their needs in terms of geography and the other entities represented (such as households, schools, and workplaces) is a key issue. Therefore, we built the Ontology-based Catalog for Infectious Disease Epidemiology (OBC.ide) to help modelers and analysts find synthetic ecosystems and other information resources.

12 |

13 | We wish to transform some existing synthetic ecosystem data into RDF and need key ontology classes for it. Two of these classes are housing unit and household.

14 |

15 | ## Background Information

16 | Because the data for our use cases are based on US Census data, we propose initially to use definitions from the US Census:

17 |

18 | **household**: The Census defines it as ...all the people who occupy a housing unit. Source: http://www.census.gov/cps/about/cpsdef.html.

19 |

20 | **housing unit**: The Census says A house, an apartment or other group of rooms, or a single room, is regarded as a housing unit when it is occupied or intended for occupancy as separate living quarters; that is, when the occupants do not live with any other persons in the structure and there is direct access from the outside or through a common hall.

21 |

22 | Per OMRSE, the entire apartment building would be an instance of **architectural structure**. Each apartment unit in it is therefore not itself an architectural structure but a part of one. However, in the case of a detached, single-family home, the housing unit is indeed an architectural structure.

23 |

24 | So at minimum, a housing unit is a part of an architectural structure (the reflexivity of part of handles the single-family home case).

25 |

26 | The Census criterion of direct access from outside or through a hall common to other housing units is a good one. Also we note that mobile homes are sufficiently attached to the ground when serving as a housing unit to meet the definition of 'architectural structure'.

27 |

28 | One key issue to decide is how to treat houseboats and recreational vehicles. They clearly are not architectural structures (they lack a connection to the ground), but they serve a particular housing function.

29 |

30 | Here is how the US Census addresses the issue: Both occupied and vacant housing units are included in the housing unit inventory, except that recreational vehicles, boats, vans, tents, railroad cars, and the like are included only if they are occupied as someone's usual place of residence. Vacant mobile homes are included provided they are intended for occupancy on the site where they stand. Vacant mobile homes on dealer's sales lots, at the factory, or in storage yards are excluded from the housing unit inventory. Source: https://www.census.gov/popest/about/terms/housing.html.

31 |

32 | So a houseboat or a recreational vehicle is a housing unit only if it is someone's "usual place of residence". A mobile home is a housing unit only if it is an architectural structure.
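The inclusion rule just described can be written as a small decision function. This is a simplified sketch under stated assumptions, not Census Bureau logic: the `Kind` categories and parameter names are hypothetical, building units are assumed to already qualify as separate living quarters, and a mobile home is treated as a housing unit only when it is sited for occupancy, following the reading given above.

```python
from enum import Enum


class Kind(Enum):
    """Hypothetical coarse categories of candidate housing units."""
    BUILDING_UNIT = "house, apartment, or room(s) used as separate living quarters"
    MOBILE_HOME = "mobile home"
    VEHICLE_OR_TENT = "recreational vehicle, boat, van, tent, or railroad car"


def counts_as_housing_unit(
    kind: Kind,
    usual_residence: bool = False,
    sited_for_occupancy: bool = False,
) -> bool:
    """Apply the simplified reading of the Census housing unit inventory rule."""
    if kind is Kind.VEHICLE_OR_TENT:
        # Included only if occupied as someone's usual place of residence.
        return usual_residence
    if kind is Kind.MOBILE_HOME:
        # Included only if sited where it is intended to be occupied,
        # which excludes dealer lots, factories, and storage yards.
        return sited_for_occupancy
    # Occupied and vacant building units are both included.
    return True
```

For example, `counts_as_housing_unit(Kind.VEHICLE_OR_TENT)` is false for an unoccupied houseboat, while passing `usual_residence=True` makes it a housing unit.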

33 |

34 | ## Definitions

35 | - **housing unit**: A material entity that has as parts one or more sites large enough to contain humans, has as part one or more material entities that separate it from other sites, and bears a residence function.

36 |

37 | - **household**: A human or collection of humans that occupies a housing unit by storing their possessions there and habitually sleeping there, thereby participating in the realization of that housing unit's residence function.

38 |

39 | This definition is intended to cover vehicular residences, architectural residences, natural geological formation residences, and other types of material entities that can serve as housing for someone.

40 |

41 | - **residence function**: A function that inheres in a material entity and, if realized, is realized by protecting persons and their possessions from weather and by some person or group of persons habitually sleeping in at least one site that is contained by that material entity.

42 |

43 | For the U.S. Census, architectural structures that bear a residence function, and vehicles that are realizing a residence function, are housing units. Also, the U.S. Census says a mobile home does not bear a residence function until it becomes an architectural structure (that is, until it is suitably sited and anchored to the ground).

44 |

45 | Similarly, tents are not housing units unless they are realizing a residence function. A tent does not have as "firm [a] connection between its foundation and the ground", so we would exclude tents from architectural structures. They don't fit the category of vehicle or natural formation either, but may still be important to include to cover folks who are unhoused and live on the streets.

46 |

47 |

48 | To make it easier to relate a person or household to the housing unit they live in, we created the object property **lives in** (OMRSE:00000260). This object property relates a household to another material entity.

49 |

50 | - **lives in**: A relation between a household and a material entity that the household stores their possessions in and sleeps in habitually.

51 |

--------------------------------------------------------------------------------

/docs/modeling/How-are-household-and-housing-unit-related?.md:

--------------------------------------------------------------------------------

1 | The members of the household are participants of a process that realizes the residence function. Whether they or the housing unit itself is the agent of the process is an interesting question.

2 |

3 | So if we had particulars as follows:

4 |

5 | hh1 - household #1

6 | hu1 - housing unit #1

7 | rf1 - residence function #1

8 | p1 - process #1

9 |

10 | hh1 'is participant of' p1

11 | p1 'realizes' rf1

12 | rf1 'inheres in' hu1

13 |

14 | One issue is that this means there is no connection between a household and the housing unit until at least one member of the household sleeps there. Based on my (Bill Hogan) own experience with moving to Gainesville last year, I'm down with it.
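The chain of particulars above can be followed mechanically. The sketch below stores the three assertions as plain tuples and walks from a household to its housing unit; the identifiers come from the example, while the helper names (`objects`, `housing_units_of`) are hypothetical and no RDF library is assumed.

```python
# The three assertions from the example, as (subject, relation, object) tuples.
TRIPLES = {
    ("hh1", "is participant of", "p1"),
    ("p1", "realizes", "rf1"),
    ("rf1", "inheres in", "hu1"),
}


def objects(triples, subject, relation):
    """Return every object linked to `subject` by `relation`."""
    return {o for s, r, o in triples if s == subject and r == relation}


def housing_units_of(triples, household):
    """Follow household -> process -> residence function -> housing unit."""
    units = set()
    for process in objects(triples, household, "is participant of"):
        for function in objects(triples, process, "realizes"):
            units |= objects(triples, function, "inheres in")
    return units
```

If the process assertions are removed, `housing_units_of` returns an empty set, which is the issue noted above: a household has no link to a housing unit until a realizing process exists.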

--------------------------------------------------------------------------------

/docs/modeling/Insurance.md:

--------------------------------------------------------------------------------

1 | This page discusses questions relevant to modeling insurance and insurance policies in OMRSE.

2 |

3 | In Clarke v Clarke, (1993) 84 BCLR 2d 98, the BC Supreme Court accepted this definition (of life or disability insurance):

4 | "A contract by which one party undertakes, in consideration for a payment (called a premium), to secure the other against pecuniary loss, by payment of a sum of money in the event of the death or disablement of a person."(http://www.duhaime.org/LegalDictionary/I/Insurance.aspx, accessed July 20, 2015)

5 |

6 | And

7 |

8 | "A contract whereby, for specified consideration, one party undertakes to compensate the other for a loss relating to a particular subject as a result of the occurrence of designated hazards. … A contract is considered to be insurance if it distributes risk among a large number of persons through an enterprise that is engaged primarily in the business of insurance. Warranties or service contracts for merchandise, for example, do not constitute insurance. They are not issued by insurance companies, and the risk distribution in the transaction is incidental to the purchase of the merchandise. Warranties and service contracts are thus exempt from strict insurance laws and regulations.” (http://legal-dictionary.thefreedictionary.com/insurance, accessed July 20, 2015)

9 |

10 | "A contract (an insurance contract) whereby one person, the insurer, promises and undertakes, in exchange for consideration of a set or assessed amount of money (called a "premium"), to make a payment to either the insured or a third-party if a specified event occurs, also known as "occurrences"." (http://www.duhaime.org/LegalDictionary/I/Insurance.aspx, accessed July 20, 2015)

11 |

12 | From Couch on Insurance, 3rd Edition: "while a policy of insurance, other than life or accident insurance, is basically a contract of indemnity, not all contracts of indemnity are insurance contracts; rather, an insurance contract is one type of indemnity contract.”

13 |

14 | 1. An **insurance policy** is a **contract**. More specifically, it's a type of **indemnity contract**.

15 | * What's the relationship between a contract and a document act? An insurance policy is a contract that is a document that has as parts action, conditional, plan, and objective specifications. It is the specified output of a document act.

16 | 2. It is distinguished from other indemnity contracts by distributing the risk among a group of persons through an organization.

17 | 3. Insurance policies are the specified output of a document act. (Is this right?)

18 | 4. That document act has as participants (1) a group of persons (the **insured parties**) and (2) the organization that issues the plan. (From 2) The organization and the **primary insured persons on the policy** are parties to a legal agreement (an insurance policy).

19 | 5. An insurance company is an organization and bearer_of some **payor_role** that is realized by making a payment to the insured or a third party once (in the case of health insurance) health services are provided to the insured. The payor role (in this case, not generally) is the concretization of a socio-legal generically dependent continuant that is the specified output of some document act and inheres in an organization that is party to an insurance policy. The payor role is the subject of the action specification that is a part of the insurance policy as is the payment.

20 | 6. The enrollment date is the day that the payor and insured roles came into existence. Or perhaps the SLGDCs that the roles concretize. (Note that the insured role is not generically dependent since one cannot transfer one's insurance benefits to another person.)

21 | 7. An insured party role is the subject of a conditional specification that is a part of some insurance policy and is the specified output of the document act that also has the insurance policy as specified output.

22 | 8. An enrollment start date is a date that contains the left boundary of the existence of the insured party role.

23 | 9. A date is a temporal interval that has a scalar measurement datum whose value is equivalent to one day.

24 | 10. An enrollment in an insurance policy period is a temporal interval during which an organism is the bearer of an insured party role. The enrollment in an insurance policy period is also a part of the temporal interval occupied by the life of that organism.

25 |

26 | ## New Terms

27 | * **contract** - Superclass: Document and (is_specified_output_of some 'document act')

28 | * **indemnity contract** - Superclass: contract and ('has part' some 'action specification') and ('has part' some 'objective specification') and ('has part' some 'plan specification') and ('has part' some 'conditional specification')

29 | * **insurance policy** - Superclass: 'indemnity contract' and is_specified_output_of some ('document act' and (has_agent some 'insurance company') and (has_agent some ('collection of humans' and 'has member' only (bearer_of some 'policy holder role'))))

30 | ['and has_specified_output some 'insured party role'' should modify 'document act', but this is not possible while declaration is defined as having a SLGDC as specified output since roles are not generically dependent.]

31 | * **insured party role** - Superclass: 'role in human social processes' and (inverse 'is about' some ('conditional specification' and 'part of' some 'insurance policy')) and (is_specified_output_of some ('document act' and (has_specified_output some 'insurance policy')))

32 | * **insurance company** - Superclass: organization and

33 | ('bearer of' some ('payer role' and (concretizes some ('socio-legal generically dependent continuant' and is_specified_output_of some 'document act')))) and ('bearer of' some 'party to an insurance policy')

34 | and (inverse 'is about' some ('action specification' and 'part of' some 'insurance policy'))

35 | * **policy holder role** - Superclass: 'insured party role' and ('inheres in' some ('bearer of' some 'party to an insurance policy'))

36 | * **payer role** - Superclass: 'role in human social processes'

37 | * **party to an insurance policy** - Superclass: 'party to a legal agreement'

38 | * **enrollment in an insurance policy period** - Subclass: 'temporal interval' and (inverse(exists at) ('insured party role' and 'inheres in' (some organism))) and ('part of' some ('temporal interval' and 'is temporal location of' [life of an organism]))

39 | * **enrollment start date** - date and 'is occupied by' some ('history part' and 'has left process boundary' some ('process boundary' and ('part of' some 'enrollment in an insurance policy period')))

40 | * **enrollment end date** - date and 'is occupied by' some ('history part' and 'has right process boundary' some ('process boundary' and ('part of' some 'enrollment in an insurance policy period')))

41 | * **date** - 'temporal interval' and inverse 'is duration of' some ('measurement datum' and 'has value specification' some ('scalar value specification' and 'has value' '1' and 'has measurement unit label' 'day'))
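
The enrollment terms above can be illustrated with a minimal Python sketch. It is not part of OMRSE: the class `EnrollmentPeriod` and its methods are hypothetical names chosen for illustration, and the sketch assumes that start and end dates are whole calendar days, in line with the proposed definition of **date**.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class EnrollmentPeriod:
    """Hypothetical stand-in for 'enrollment in an insurance policy period'."""

    start: date  # enrollment start date: the day containing the left boundary
    end: date    # enrollment end date: the day containing the right boundary

    def covers(self, day: date) -> bool:
        """True if the insured party role exists on the given day."""
        return self.start <= day <= self.end

    def within_life(self, birth: date, death: Optional[date] = None) -> bool:
        """True if the period lies inside the interval occupied by the organism's life."""
        return birth <= self.start and (death is None or self.end <= death)
```

For example, `EnrollmentPeriod(date(2015, 1, 1), date(2015, 12, 31)).covers(date(2015, 7, 20))` evaluates to `True`.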

42 |

43 | ## PCORNet Enrollment

44 | "ENROLLMENT Domain Description: Enrollment is a concept that defines a period of time during which all medically-attended events are expected to be observed. This concept is often insurance-based, but other methods of defining enrollment are possible."

45 |

46 | "The ENROLLMENT table contains one record per unique combination of PATID, ENR_START_DATE, and BASIS."

47 | * What are "medically-attended events"?

48 | * The enrollment dates specify a period of complete data capture. This is a different notion from enrollment in an insurance plan although enrollment in an insurance plan can be the "basis" of the complete data capture. Also, notice that the purpose of enrollment dates is to support a closed-world assumption. ("The ENROLLMENT table provides an important analytic basis for identifying periods during which medical care should be observed, for calculating person-time, and for inferring the meaning of unobserved care (ie, if care is not observed, it likely did not happen).")
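
The closed-world reading described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `care_status`, the three return labels, and the representation of enrollment periods as `(start, end)` pairs are hypothetical and are not taken from the PCORNet specification.

```python
from datetime import date
from typing import Iterable, Set, Tuple


def care_status(
    day: date,
    enrollments: Iterable[Tuple[date, date]],
    observed_days: Set[date],
) -> str:
    """Interpret a day with respect to periods of complete data capture."""
    if day in observed_days:
        return "observed"
    # Absence of a record only licenses an inference inside an enrollment period.
    enrolled = any(start <= day <= end for start, end in enrollments)
    return "likely did not happen" if enrolled else "unknown"
```

Outside any enrollment period the sketch returns `"unknown"`, which is the open-world default that the enrollment dates are meant to override.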

49 |

50 |

--------------------------------------------------------------------------------

/docs/modeling/Modeling-Discharge-and-Discharge-Status.md:

--------------------------------------------------------------------------------

1 | # Discharge as Document Act

2 | A discharge is a document act that involves a document that concretizes a directive information entity. That directive information entity has as parts a plan specification, an action specification, and an objective specification. Usually the objective specification is about where the patient will go upon discharge, e.g., home, a rehab facility, etc.

3 |

4 | Discharges have as agents humans who are the bearer of a health care provider role.

--------------------------------------------------------------------------------

/docs/modeling/OMRSE-Language-and-Language-Individuals.md:

--------------------------------------------------------------------------------

1 | # OMRSE Language Modules

2 |

3 | We created omrse-language.owl to represent 'language' and 'preferred language content entity', among other language-related classes.

4 |

5 | # OMRSE Language Individuals

6 |

7 | We represent individual languages as OWL individuals in language-individuals.owl. This file was programmatically generated using the Python 3 rdflib (v4.2.2) library. The information contained in the annotations for each language individual was pulled directly from the table in the ["List of ISO 639-2 codes" Wikipedia page](https://en.wikipedia.org/wiki/List_of_ISO_639-2_codes), although not exhaustively. In particular, we excluded any languages from that table that were categorized in the "type" column as "Collective," "Special," or "Local."

8 |

9 | The rdfs:label of each language individual comes from the ISO 639-2 language name (i.e., the English name(s) of the language). In some instances, there are multiple names listed, in which case we used the first name listed as the rdfs:label and created alternative term annotations for each additional name.
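
The filtering and labeling rules described above might look roughly like the following Python sketch. It is not the script that generated language-individuals.owl: the function names are hypothetical, and the assumption that multiple names are separated by a semicolon should be checked against the source table.

```python
from typing import List, Tuple

# Types from the "type" column that were excluded from OMRSE Language.
EXCLUDED_TYPES = {"Collective", "Special", "Local"}


def is_included(row_type: str) -> bool:
    """True if a table row of this type should become a language individual."""
    return row_type.strip() not in EXCLUDED_TYPES


def split_names(names: str, sep: str = ";") -> Tuple[str, List[str]]:
    """Return (rdfs:label, alternative terms) from a cell listing one or more names."""
    parts = [p.strip() for p in names.split(sep) if p.strip()]
    if not parts:
        raise ValueError("no language name given")
    # The first name becomes the label; any others become alternative terms.
    return parts[0], parts[1:]
```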

10 |

11 | ## ISO 639-1 annotation

12 |

13 | The first part of the ISO 639 standard--ISO 639-1--consists of two-letter codes that are derived from the native name of each language covered. In total, there are 184 ISO 639-1 codes, which amounts to less than half of the languages that are represented in OMRSE Language. Therefore, we only include ISO 639-1 annotations for those languages that have been assigned one.

14 |

15 | ## ISO 639-2(T/B) annotations

16 |

17 | The second part of the ISO 639 standard--ISO 639-2--contains three-letter language codes for the names of each language. This standard consists of two sets of codes--the bibliographic set (ISO 639-2/B) and the terminological set (ISO 639-2/T). The difference between the two is that the three-letter codes in the bibliographic set were derived from the English name of the language, while the three-letter codes in the terminological set were derived from the native name. In total, however, there are only ~20 languages in ISO 639-2 whose three-letter ISO 639-2/T codes differ from the ISO 639-2/B. Each language individual contains both an ISO 639-2/T and an ISO 639-2/B annotation.

18 |

19 | ## Native Name annotation

20 |

21 | Where available, we created separate alternative term annotations for the native name(s) of a language using the native names listed in the aforementioned Wikipedia table. If the language was assigned a two-letter ISO 639-1 code, we assigned it to the language tag of each such annotation. If no ISO 639-1 code was available, we left the language tag blank.
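
As a rough illustration of the language-tag rule, the sketch below renders a native name as a Turtle-style literal, tagged only when an ISO 639-1 code exists. The helper `native_name_literal` is hypothetical and is not the code used to build the file.

```python
from typing import Optional


def native_name_literal(native_name: str, iso639_1: Optional[str] = None) -> str:
    """Render a native name as a Turtle literal, language-tagged when possible."""
    # Escape backslashes first, then double quotes, so the literal stays valid.
    escaped = native_name.replace("\\", "\\\\").replace('"', '\\"')
    literal = f'"{escaped}"'
    # Without an ISO 639-1 code, the language tag is left off entirely.
    return f"{literal}@{iso639_1}" if iso639_1 else literal
```

Calling `native_name_literal("Deutsch", "de")` returns `"Deutsch"@de`, while omitting the second argument returns the plain literal `"Deutsch"`.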

--------------------------------------------------------------------------------

/docs/modeling/OMRSE-Overview.md:

--------------------------------------------------------------------------------

1 | Welcome to the OMRSE wiki!

2 |

3 | The Ontology for Modeling and Representation of Social Entities or OMRSE (previously known as the Ontology of Medically Related Social Entities) is an ontology that represents various roles, processes, and dependent entities that are the product of interactions among humans (although we haven't ruled out social interactions of other species). It began by representing various roles required for demographics, including those required to represent gender and marital status.

4 |

5 | We welcome all contributions to OMRSE!

6 |

7 | The permanent URL to the latest release version of OMRSE is http://purl.obolibrary.org/obo/omrse.owl

8 |

9 |

--------------------------------------------------------------------------------

/docs/modeling/PCORNet-Smoking-Status.md:

--------------------------------------------------------------------------------

1 | # PCORNet VITAL Table Specification

2 |

3 | * 01 = Current every day smoker

4 | * 02 = Current some day smoker

5 | * 03 = Former smoker

6 | * 04 = Never smoker

7 | * 05 = Smoker, current status unknown

8 | * 06 = Unknown if ever smoked

9 | * 07 = Heavy tobacco smoker

10 | * 08 = Light tobacco smoker

11 | * NI = No information

12 | * UN = Unknown

13 | * OT = Other

14 |

15 |

16 | "This field is new to v3.0. Indicator for any form of tobacco that is smoked. Per Meaningful Use guidance, “…smoking status includes any form of tobacco that is smoked, but not all tobacco use.” “’Light smoker’ is interpreted to mean less than 10 cigarettes per day, or an equivalent (but less concretely defined) quantity of cigar or pipe smoke. ‘Heavy smoker’ is interpreted to mean greater than 10 cigarettes per day or an equivalent (but less concretely defined) quantity of cigar or pipe smoke.” “…we understand that a “current every day smoker” or “current some day smoker” is an individual who has smoked at least 100 cigarettes during his/her lifetime and still regularly smokes every day or periodically, yet consistently; a “former smoker” would be an individual who has smoked at least 100 cigarettes during his/her lifetime but does not currently smoke; and a “never smoker” would be an individual who has not smoked 100 or more cigarettes during his/her lifetime.” "
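
The code list and the quoted 100-cigarette thresholds can be sketched in Python as follows. This is an illustrative reading of the quoted guidance, not an official algorithm: the function `smoking_code` and its `current_frequency` parameter are hypothetical, and the light/heavy codes (07, 08) are left out of the decision logic because the quoted text does not say how to treat exactly 10 cigarettes per day.

```python
from typing import Optional

SMOKING_CODES = {
    "01": "Current every day smoker",
    "02": "Current some day smoker",
    "03": "Former smoker",
    "04": "Never smoker",
    "05": "Smoker, current status unknown",
    "06": "Unknown if ever smoked",
    "07": "Heavy tobacco smoker",
    "08": "Light tobacco smoker",
    "NI": "No information",
    "UN": "Unknown",
    "OT": "Other",
}


def smoking_code(lifetime_cigarettes: int, current_frequency: Optional[str]) -> str:
    """Map a smoking history to code 01-04 using the 100-cigarette threshold.

    current_frequency is "every day", "some days", or None (not currently smoking).
    """
    if lifetime_cigarettes < 100:
        return "04"  # has not smoked 100 or more cigarettes
    if current_frequency is None:
        return "03"  # at least 100 cigarettes, does not currently smoke
    if current_frequency == "every day":
        return "01"
    if current_frequency == "some days":
        return "02"
    raise ValueError(f"unrecognized frequency: {current_frequency!r}")
```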

17 |

18 | ## Questions

19 | 1. Is a former smoker a smoker?

--------------------------------------------------------------------------------

/docs/modeling/Race-And-Ethnicity.md:

--------------------------------------------------------------------------------

1 | # Race And Ethnicity in the US Census

2 |

3 | Resources

4 |

5 | **OMB**

6 | https://www.whitehouse.gov/omb/fedreg_race-ethnicity

7 | https://www.whitehouse.gov/sites/default/files/omb/assets/information_and_regulatory_affairs/re_app-a-update.pdf

8 |

9 | **History of the Census and Other Race and Ethnicity Classification Schemes**

10 | http://www.pewsocialtrends.org/2010/01/21/race-and-the-census-the-%E2%80%9Cnegro%E2%80%9D-controversy/

11 |

12 | # Option #1 for modeling race data gathered by the US Census

13 |

14 | ## OMB Categories to Date

15 |

16 | * American Indian or Alaska Native. A person having origins in any of the original peoples

17 | of North and South America (including Central America), and who maintains tribal

18 | affiliation or community attachment.

19 | * Asian. A person having origins in any of the original peoples of the Far East, Southeast

20 | Asia, or the Indian subcontinent including, for example, Cambodia, China, India, Japan,

21 | Korea, Malaysia, Pakistan, the Philippine Islands, Thailand, and Vietnam.

22 | * Black or African American. A person having origins in any of the black racial groups of

23 | Africa. Terms such as “Haitian” or “Negro” can be used in addition to “Black or African

24 | American.”

25 | * Native Hawaiian or Other Pacific Islander. A person having origins in any of the original

26 | peoples of Hawaii, Guam, Samoa, or other Pacific Islands.

27 | * White. A person having origins in any of the original peoples of Europe, the Middle East, or

28 | North Africa.

29 |

30 | ## How to model self-identity claims about race and ethnicity

31 |

32 | * Self-identity claims about race and ethnicity as they appear in the US Census are Information Content Entities that are intended to be a truthful statement. They are part of a 'documented identity'. A documented identity is "the aggregate of all data items about an entity. Notice that a documented identity is not itself a document since a document is intended to be understood as a whole and data items about an individual are usually scattered across different documents."

33 |

34 | * **Racial identities** are part of documented identities and are about a person. They have as parts some specified output of a **racial identification process**. **Racial identification processes** are social acts and planned processes. A special case of a **racial identification process** is a US Census Racial identity process, which is also part of a US Census Survey and has specified output (at present) some OMB racial identification.

35 |

36 | * Racial identities are self-identified when they are about the same person who is the agent of the racial identification process. This cannot be represented in OWL/DL but can be captured by a SPARQL query.
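
The self-identification condition amounts to comparing two individuals for identity, which is the kind of check a query can make and an OWL class restriction cannot. A minimal Python sketch of that comparison follows; the record type and field names are hypothetical and merely stand in for the data a query would bind.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RacialIdentification:
    """Hypothetical record linking an identification process to its output."""

    agent: str  # identifier of the person who is the agent of the process
    about: str  # identifier of the person the resulting racial identity is about


def is_self_identified(record: RacialIdentification) -> bool:
    """True when the identity is about the same person who made the claim."""
    return record.agent == record.about
```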

37 |

38 | ### New classes for OMRSE

39 | * **racial identity** - Superclass: 'information content entity' and ('is about' some 'Homo sapiens') and ('part of' some 'documented identity') and ('has part' some (is_specified_output_of some 'racial identification process'))

40 | * **racial identification process** - Superclass: 'social act' and 'planned process' and ('has specified output' some ('part of' some 'racial identity'))

41 | * **ethnic identity** - Superclass: 'information content entity' and ('is about' some 'Homo sapiens') and ('part of' some 'documented identity') and ('has part' some (is_specified_output_of some 'ethnic identification process'))

42 | * **ethnic identification process** - Superclass: 'social act' and 'planned process' and ('has specified output' some ('part of' some 'ethnic identity'))

--------------------------------------------------------------------------------

/docs/modeling/Representing-Languages-as-codified-by--ISO-639.md:

--------------------------------------------------------------------------------

1 | # Languages and ISO-639

2 | ## Background

3 | The need for languages as a class arises from our work representing demographic information in Continuity of Care Documents (CCDs). Preferred language is an element in the CCD, and the header has meta-data indicating the language of the CCD. More details are in the following slide deck (slides 10-17): http://ncor.buffalo.edu/2015/CTSO/Hogan.pptx

4 |

5 | In these slides we propose introducing directive information entities (DIEs) for languages, e.g., 'English Directive Information Entity'. These DIEs might be concretized by languages considered as dispositions. Note that the slides refer to MF_00000022, but this has been removed from the Mental Functioning Ontology. https://github.com/jannahastings/mental-functioning-ontology/commit/6a1231b8d973c59cb6189a6d6750632c70fdcf95

6 |

7 | ## Spoken versus written language and language as a directive information entity

8 |

9 | The Mental Functioning Ontology (MF) has a class with the label 'language' that is a subclass of disposition, but this refers to human spoken language. ISO-639 was initially developed to describe literature, so we may consider creating a new class to align with ISO language codes. If we do not represent ISO codes for language in terms of MF's class for language, language DIEs could still be concretized by MFs.

10 |

11 | ## Ontological considerations for modeling language

12 | (1) ‘Language’ is ambiguous between

13 | (a) the capacity for communicating with language (disposition)

14 | (b) a specific language such as English (which we propose modeling as a directive information entity)

15 | 'Person A speaks English' entails that Person A has a disposition to communicate with language (any language) and also that they have a disposition to communicate with speech, written language, or other signs that concretize an English DIE.

16 |

17 | We could then model the language of a document in terms of the language DIE, but the details here still need to be worked out. Are written documents and spoken pieces of language concretizations of ICEs that are the outputs of processes that realize the language disposition?

18 |

19 | (2) Is ‘English DIE’ an individual or a class?

20 | (a) How should we model dialects?

21 |

22 | ## Some proposed necessary conditions for preferred languages

23 | ### A Patient Whose Preferred Language is English

24 | Subclass Of: member of the population that is a bearer of a language disposition that concretizes an/the English DIE.

25 |

26 | ### Person 2 speaks English

27 | ‘English DIE’ ‘is concretized by’ some (language and ‘inheres in at all times’ some population and (‘has continuant part at all times’ (‘Person 2’ ‘is a’ (human being))))

28 |

29 |

--------------------------------------------------------------------------------

/docs/modeling/Typology-of-Health-Care-Facilities.md:

--------------------------------------------------------------------------------

1 | # General Strategy for Modeling Health Care Facilities

2 |

3 | A facility is defined as "an architectural structure that is the bearer of some function."

4 |

5 | 'hospital facility' has the following DL restriction: facility and (('is owned by' some 'hospital organization') or ('is administrated by' some 'hospital organization')) and (bearer_of some 'hospital function')

6 |

7 | This definition ties the facility to an organization in addition to a function.

8 | Question: Do we need to tie an organization to each of the types of health care facilities? At a minimum we could specify that they are owned or administered by health care provider organizations.

9 |

10 |

11 | ## Types of Health Care Facilities From PCORNet CDM DISCHARGE_STATUS

12 | * Urgent care

13 | * Ambulatory surgery

14 | * Hospice

15 | * Emergency department

16 | * Physician office

17 | * Outpatient clinic

18 | * Overnight dialysis

19 | * Rehabilitation

20 | * Skilled nursing

21 | * Residential

22 | * Nursing Home

23 |

24 | ## Differentiation of Facilities

25 | Facilities are material entities that are differentiated according to the functions they bear and the organizations that own or administer them.

26 |

27 | ## Proposed Natural Language Definitions for Functions

28 | **Urgent care function** - "A function inhering in a material entity that is realized by the material entity being the site at which outpatient healthcare is provided for illness or injury that requires immediate care but does not require a visit to an emergency department."

29 |

30 | **Ambulatory surgery function** - "A function inhering in a material entity that is realized by the material entity being the site at which outpatient surgical care is provided to a patient population."

31 |

32 | **Hospice function** - "A function inhering in a material entity that is realized by the material entity being the site at which inpatient palliative healthcare is provided to a patient population with a terminal prognosis."

33 |

34 | **Emergency department function** - "A function inhering in a material entity that is realized by the material entity being the site at which emergency medicine and treatment of acute illness and injury is provided to a patient population."

35 |

36 | **Physician office function** - in progress

37 |

38 | **Outpatient clinic function** - "A function inhering in a material entity that is realized by the material entity being the site at which medical care is provided to a patient population and in which the patients receiving the medical care each stay for less than 24 hours."

39 |

40 | **Overnight dialysis clinic function** - "A function inhering in a material entity that is realized by the material entity being the site at which hemodialysis is administered to a patient population at night or when the patient habitually sleeps."

41 |

42 | **Rehabilitation facility function** - in progress

43 |

44 | **Skilled nursing facility function** - in progress

45 |

46 | **Residential facility function** - in progress

47 |

48 | **Nursing home function** - in progress

49 |

50 | ## Criteria of Differentiation of Functions

51 |

52 | **Outpatient, Inpatient, ER** - in progress

53 |

54 | **Type of Care** - in progress

55 |

56 | **Temporary or Permanent Living Arrangement** - in progress

57 |

58 | ## Other relevant terms

59 |

60 | **Skilled Nursing** - in progress

61 |

62 | **Custodial Care** - in progress

63 |

--------------------------------------------------------------------------------

/docs/modeling/What-is-the-relation-between-a-role-and-the-organization-that-creates-sanctions-confers-it.md:

--------------------------------------------------------------------------------

1 | Per BFO, roles inhere in continuants and are realized by processes.

2 |

3 | However, we often need to connect a continuant that is the bearer of a role to the organization, government, etc. that confers the role.

4 |

5 | For example, how does one relate an employee to her employer organization?

6 |

7 | First, we note that BFO2 currently posits that no relational roles exist, so employer/employee are not connected by jointly bearing a single role:

8 |

9 | "Hypothesis: There are no relational roles. In other words, each role is the role of exactly one bearer."

10 |

11 | ### Option #1: The connection is through participation in a role-conferring process

12 |

13 | One possibility is that both the bestower of the role, and the recipient of the role participate in a process whereby the role is conferred to the recipient, and after which the recipient is the bearer of the role:

14 |

15 | Consider the following particulars:

16 |

17 | o1: organization #1

18 |

19 | p1: person #1

20 |

21 | r1: p1's role as employee of o1

22 |

23 | h1: hiring process of p1 by o1

24 |

25 | Then, we say that:

26 |

27 | o1 'is participant of' h1 (note, it's really certain other employees of o1 that participate)

28 |

29 | p1 'is participant of' h1

30 |

31 | r1 'begins to exist during' h1

32 |

33 | The key issue is that not all roles are conferred by such processes.

34 |

35 | ### Option #2: The connection is through socio-legal generically dependent continuants (SLGDCs) and the declarations for which the SLGDC is a specified output.

36 |

37 | **Definition of SLGDC** - Socio-legal generically dependent continuants are generically dependent continuants that come into existence through declarations and are concretized as roles.

38 |

39 | **Definition of 'declaration'** - A social act that brings about, transfers or revokes a socio-legal generically dependent continuant. Declarations do not depend on words spoken or written, but sometimes are merely actions, for instance the signing of a document.

40 |

41 | **Class restriction of 'declaration'** - (('legally revokes' some 'socio-legal generically dependent continuant') or ('legally transfers' some 'socio-legal generically dependent continuant') or (has_specified_output some 'socio-legal generically dependent continuant')) and (realizes some 'declaration performer role') and (has_agent some

42 | (('Homo sapiens' or organization or 'collection of humans' or 'aggregate of organizations') and (bearer_of some 'declaration performer role')))

43 |

44 | **The gist** - Some roles are concretizations of SLGDCs. An SLGDC is created by a declaration that involves some agent (the bearer of a declaration performer role) and a declaration target (the bearer of the role that concretizes the SLGDC).

45 |

46 | An employee role is a concretization of an SLGDC. An employee is the bearer of a role that concretizes an SLGDC that is the specified output of a declaration that had the employer as agent.

47 |

48 | SLGDCs can be distinguished according to a couple of criteria: the type of entity that is the agent (e.g., human or organization) and the type of entity that is the declaration target (e.g., human or organization). Roles that concretize SLGDCs can be distinguished in the same way.

49 |

50 | In the case of employee/employer scenarios, the declaration target (or the bearer of the employee role that concretizes an SLGDC) is a human and the agent is an organization.

51 |

52 | With this in mind I suggest that we have the following hierarchy in OMRSE:

53 |

54 | * 'role in human social processes'

55 | * * 'organism social role'

56 | * * * 'human social role'

57 | * * * * 'socio-legal human social role' (is_concretization_of some ('socio-legal generically dependent continuant'))

58 | * * * * * 'human role within an organization' (is_concretization_of some ('socio-legal generically dependent continuant' and is_specified_output_of some (declaration and has_agent some (organization or 'aggregate of organizations'))))

59 | * * * * * * 'student role'

60 | * * * * * * 'employee role'

61 |

--------------------------------------------------------------------------------

/docs/odk-workflows/ContinuousIntegration.md:

--------------------------------------------------------------------------------

1 | # Introduction to Continuous Integration Workflows with ODK

2 |

3 | Historically, most repos have been using Travis CI for continuous integration testing and building, but due to

4 | runtime restrictions, we recently switched a lot of our repos to GitHub Actions. You can set up your repo with CI by adding

5 | this to your configuration file (src/ontology/omrse-odk.yaml):

6 |

7 | ```

8 | ci:

9 | - github_actions

10 | ```

11 |

12 | When [updating your repo](RepoManagement.md), you will notice a new file being added: `.github/workflows/qc.yml`.

13 |

14 | This file contains your CI logic, so if you need to change, or add anything, this is the place!

15 |

16 | Alternatively, if your repo is in GitLab instead of GitHub, you can set up your repo with GitLab CI by adding

17 | this to your configuration file (src/ontology/omrse-odk.yaml):

18 |

19 | ```

20 | ci:

21 | - gitlab-ci

22 | ```

23 |

24 | This will add a file called `.gitlab-ci.yml` in the root of your repo.

25 |

26 |

--------------------------------------------------------------------------------

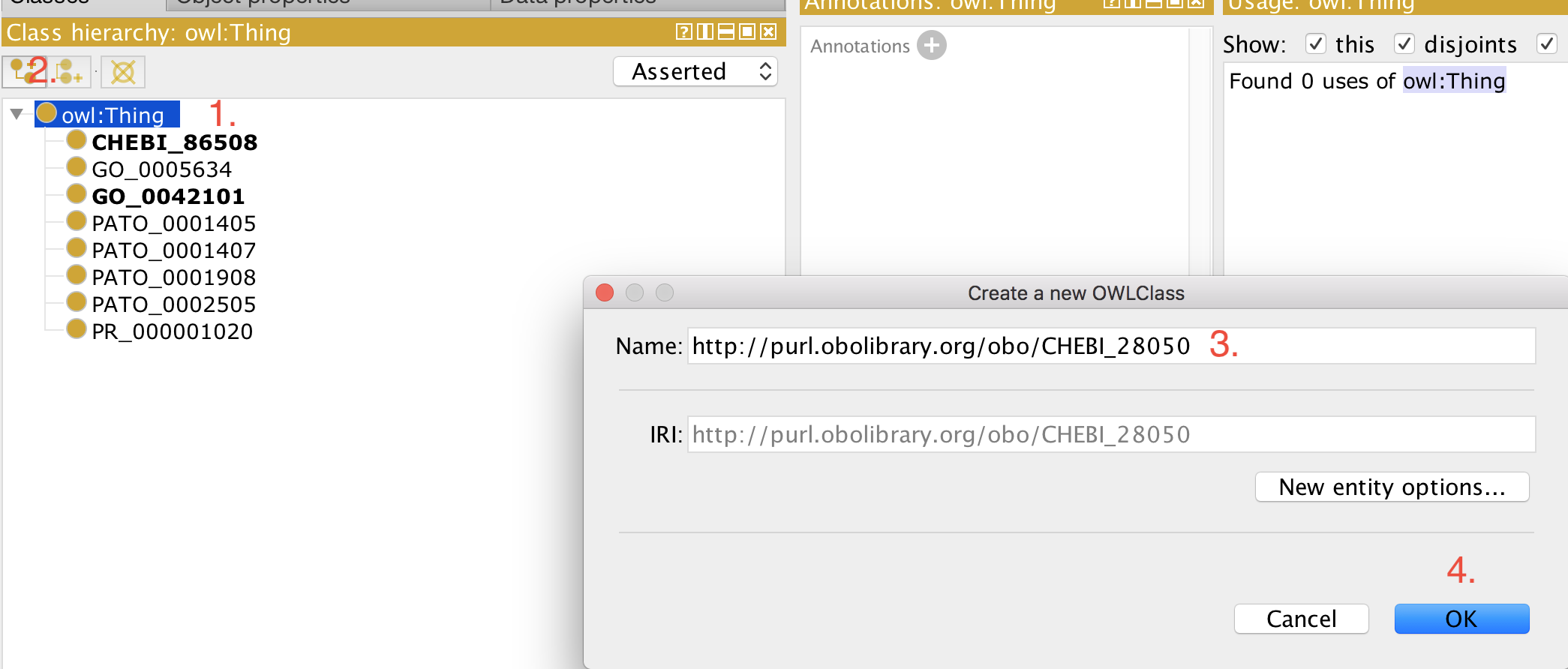

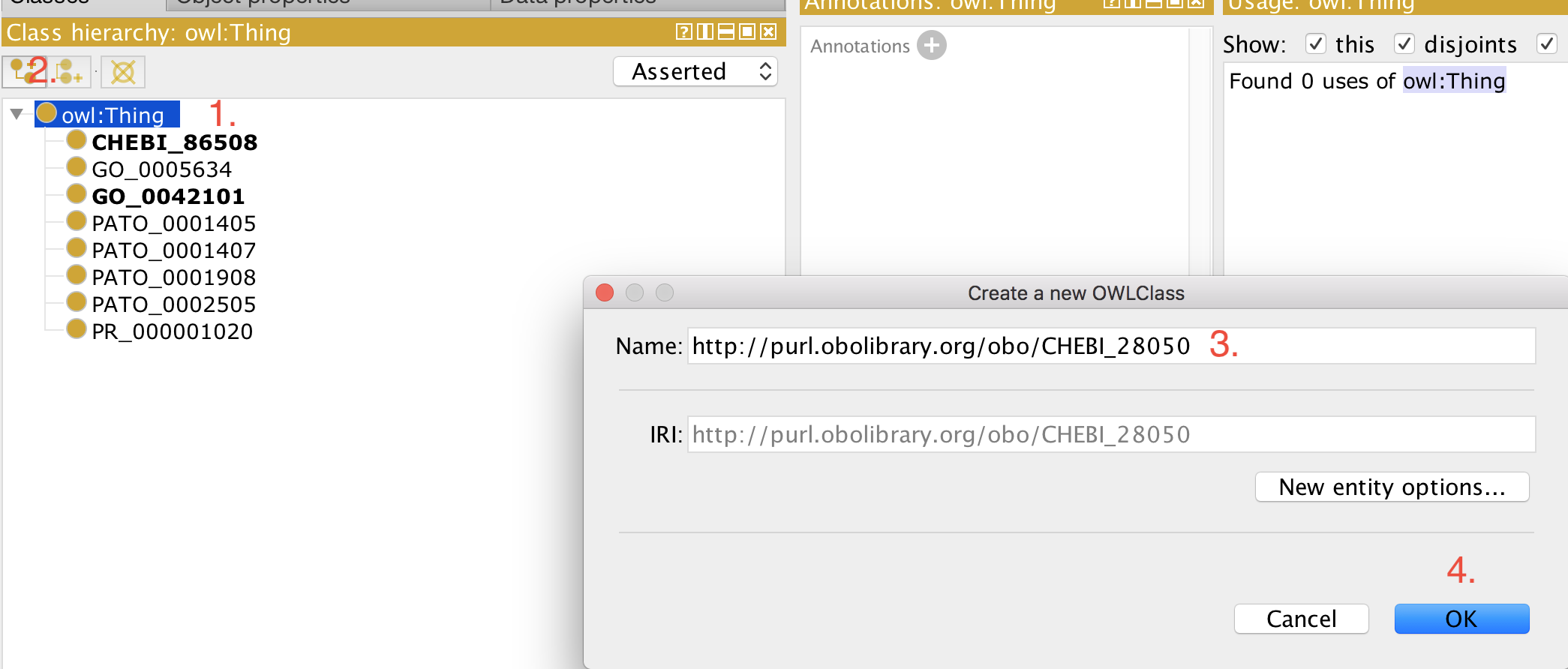

/docs/odk-workflows/EditorsWorkflow.md:

--------------------------------------------------------------------------------

1 | # Editors Workflow

2 |

3 | The editors workflow is one of the formal [workflows](index.md) to ensure that the ontology is developed correctly according to ontology engineering principles. There are a few different editors workflows:

4 |

5 | 1. Local editing workflow: Editing the ontology in your local environment by hand, using tools such as Protégé, ROBOT templates or DOSDP patterns.

6 | 2. Completely automated data pipeline (GitHub Actions)

7 | 3. DROID workflow

8 |

9 | This document only covers the first editing workflow, but more will be added in the future.

10 |

11 | ### Local editing workflow

12 |

13 | Workflow requirements:

14 |

15 | - git

16 | - github

17 | - docker

18 | - editing tool of choice, e.g. Protégé, your favourite text editor, etc

19 |

20 | #### 1. _Create issue_

21 | Ensure that there is a ticket on your issue tracker that describes the change you are about to make. While this seems optional, this is a very important part of the social contract of building an ontology - no change to the ontology should be performed without a good ticket, describing the motivation and nature of the intended change.

22 |

23 | #### 2. _Update main branch_

24 | In your local environment (e.g. your laptop), make sure you are on the `main` (prev. `master`) branch and ensure that you have all the upstream changes, for example:

25 |

26 | ```

27 | git checkout main

28 | git pull

29 | ```

30 |

31 | #### 3. _Create feature branch_

32 | Create a new branch. Per convention, we try to use meaningful branch names such as:

33 | - issue23removeprocess (where issue 23 is the related issue on GitHub)

34 | - issue26addcontributor

35 | - release20210101 (for releases)
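
The naming convention above is easy to apply by hand, but a small helper can keep branch names consistent. The following Python sketch is purely illustrative: `branch_name` is a hypothetical function, not part of the ODK tooling.

```python
import re


def branch_name(issue_number: int, summary: str) -> str:
    """Build a branch name such as 'issue23removeprocess' from an issue and a short summary."""
    # Lowercase the summary and drop everything except letters and digits.
    slug = re.sub(r"[^a-z0-9]+", "", summary.lower())
    return f"issue{issue_number}{slug}"
```

For instance, `branch_name(23, "Remove process")` yields `issue23removeprocess`.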

36 |

37 | On your command line, this looks like this:

38 |

39 | ```

40 | git checkout -b issue23removeprocess

41 | ```

42 |

43 | #### 4. _Perform edit_

44 | Using your editor of choice, perform the intended edit. For example:

45 |

46 | _Protégé_

47 |

48 | 1. Open `src/ontology/omrse-edit.owl` in Protégé

49 | 2. Make the change

50 | 3. Save the file

51 |

52 | _TextEdit_

53 |

54 | 1. Open `src/ontology/omrse-edit.owl` in TextEdit (or Sublime, Atom, Vim, Nano)

55 | 2. Make the change

56 | 3. Save the file

57 |

58 | Consider the following when making the edit.

59 |

60 | 1. According to our development philosophy, the only places that should be manually edited are:

61 | - `src/ontology/omrse-edit.owl`

62 | - Any ROBOT templates you chose to use (the TSV files only)

63 | - Any DOSDP data tables you chose to use (the TSV files, and potentially the associated patterns)

64 | - components (anything in `src/ontology/components`), see [here](RepositoryFileStructure.md).

65 | 2. Imports should not be edited (any edits will be flushed out with the next update). However, refreshing imports is a potentially breaking change - and is discussed [elsewhere](UpdateImports.md).

66 | 3. Changes should usually be small. Adding or changing 1 term is great. Adding or changing 10 related terms is ok. Adding or changing 100 or more terms at once should be considered very carefully.

67 |

68 | #### 4. _Check the Git diff_

69 | This step is very important. Rather than simply trusting your change had the intended effect, we should always use a git diff as a first pass for sanity checking.

70 |

71 | In our experience, having a visual git client like [GitHub Desktop](https://desktop.github.com/) or [sourcetree](https://www.sourcetreeapp.com/) is really helpful for this part. In case you prefer the command line:

72 |

73 | ```

74 | git status

75 | git diff

76 | ```
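For larger edits, the full diff can be hard to read in one go. As a sketch, the hypothetical helper below (the name `review_edit` is ours, not an ODK command) first prints a per-file summary and then the diff for a single file only, defaulting to the edit file named above.

```shell
# Hypothetical helper: summarise uncommitted changes, then show the diff for
# one file only (defaults to the ontology edit file).
review_edit() {
  file="${1:-src/ontology/omrse-edit.owl}"
  git diff --stat          # one line per changed file with insertion/deletion counts
  git diff -- "$file"      # full diff restricted to the given path
}

# Usage, from the repository root:
#   review_edit
#   review_edit src/ontology/components/language/language-individuals.owl
```

If the summary lists files you did not intend to change, sort that out before moving on to quality control.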

77 | #### 5. Quality control

78 | Now it's time to run your quality control checks. This can either happen locally ([5a](#5a-local-testing)) or through your continuous integration system ([7/5b](#75b-continuous-integration-testing)).

79 |

80 | #### 5a. Local testing

81 | If you choose to run your tests locally:

82 |

83 | ```

84 | sh run.sh make IMP=false test

85 | ```

86 | This will run the whole set of configured ODK tests on the ontology, including your change. If you have a complex DOSDP pattern pipeline you may want to add `PAT=false` to skip the potentially lengthy process of rebuilding the patterns.

87 |

88 | ```

89 | sh run.sh make IMP=false PAT=false test

90 | ```

91 |

92 | #### 6. Pull request

93 |

94 | When you are happy with the changes, you commit your changes to your feature branch, push them upstream (to GitHub) and create a pull request. For example:

95 |

96 | ```

97 | git add NAMEOFCHANGEDFILES

98 | git commit -m "Added biological process term #12"

99 | git push -u origin issue23removeprocess

100 | ```

101 |

102 | Then you go to your project on GitHub, and create a new pull request from the branch, for example: https://github.com/INCATools/ontology-development-kit/pulls

103 |

104 | There is a lot of great advice on how to write pull requests, but at the very least you should:

105 | - mention the tickets affected: `see #23` to link to a related ticket, or `fixes #23` if, by merging this pull request, the ticket is fixed. Tickets in the latter case will be closed automatically by GitHub when the pull request is merged.

106 | - summarise the changes in a few sentences. Consider the reviewer: what would they want to know right away.

107 | - If the diff is large, provide instructions on how to review the pull request best (sometimes, there are many changed files, but only one important change).

108 |

109 | #### 7/5b. Continuous Integration Testing

110 | If you didn't run any local quality control checks (see [5a](#5a-local-testing)), you should have Continuous Integration (CI) set up, for example:

111 | - Travis

112 | - GitHub Actions

113 |

114 | More on how to set this up [here](ContinuousIntegration.md). Once the pull request is created, the CI will automatically trigger. If all is fine, it will show up green, otherwise red.

115 |

116 | #### 8. Community review

117 | Once all the automatic tests have passed, it is important to put a second set of eyes on the pull request. Ontologies are inherently social, in that they represent some kind of community consensus on how a domain is organised conceptually. This may sound like highbrow talk, but it is very important that, as an ontology editor, you have your work validated by the community you are trying to serve (e.g. your colleagues, other contributors etc.). In our experience, it is hard to get more than one review on a pull request - two is great. You can set up GitHub branch protection to actually require a review before a pull request can be merged! We recommend this.

118 |

119 | This step may seem daunting to some hopelessly under-resourced ontologies, but we recommend putting it high up on your list of priorities - train a colleague, reach out!

120 |

121 | #### 9. Merge and cleanup

122 | When the QC is green and the reviews are in (approvals), it is time to merge the pull request. After the pull request is merged, remember to delete the branch as well (this option will show up as a big button right after you have merged the pull request). If you have not done so, close all the associated tickets fixed by the pull request.
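The button on GitHub only deletes the remote branch; your local copy stays around. A minimal sketch of the local cleanup follows. The helper name `cleanup_branch` is hypothetical, and it assumes your remote is called `origin` and that `main` tracks it.

```shell
# Hypothetical helper: run after the pull request has been merged on GitHub.
cleanup_branch() {
  branch="$1"
  git checkout main &&         # leave the feature branch
  git pull &&                  # bring in the merged pull request
  git branch -d "$branch" &&   # delete the local branch; -d refuses if it is not fully merged
  git fetch --prune            # drop remote-tracking refs for branches deleted upstream
}

# Usage:
#   cleanup_branch issue23removeprocess
```

Note that `git branch -d` may refuse after a squash or rebase merge, because the original commits are then not part of `main`. In that case, double check that the pull request really was merged before forcing the deletion with `git branch -D`.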

123 |

124 | #### 10. Changelog (Optional)

125 | It is sometimes difficult to keep track of changes made to an ontology. Some ontology teams opt to document changes in a changelog (simply a text file in your repository) so that when release day comes, you know everything you have changed. This is advisable at least for major changes (such as a new release system, a new pattern or template etc.).

126 |

--------------------------------------------------------------------------------

/docs/odk-workflows/ManageDocumentation.md:

--------------------------------------------------------------------------------

1 | # Updating the Documentation

2 |

3 | The documentation for OMRSE is managed in two places (relative to the repository root):

4 |

5 | 1. The `docs` directory contains all the files that pertain to the content of the documentation (more below)

6 | 2. The `mkdocs.yaml` file contains the documentation config, in particular its navigation bar and theme.

7 |

8 | The documentation is hosted using GitHub pages, on a special branch of the repository (called `gh-pages`). It is important that this branch is never deleted - it contains all the files GitHub pages needs to render and deploy the site. It is also important to note that _the gh-pages branch should never be edited manually_. All changes to the docs happen inside the `docs` directory on the `main` branch.

9 |

10 | ## Editing the docs

11 |

12 | ### Changing content

13 | All the documentation is contained in the `docs` directory, and is managed in _Markdown_. Markdown is a very simple and convenient way to produce text documents with formatting instructions, and is very easy to learn - it is also used, for example, in GitHub issues. This is a normal editing workflow:

14 |

15 | 1. Open the `.md` file you want to change in an editor of choice (a simple text editor is often best). _IMPORTANT_: Do not edit any files in the `docs/odk-workflows/` directory. These files are managed by the ODK system and will be overwritten when the repository is upgraded! If you wish to change these files, make an issue on the [ODK issue tracker](https://github.com/INCATools/ontology-development-kit/issues).

16 | 2. Perform the edit and save the file

17 | 3. Commit the file to a branch, and create a pull request as usual.

18 | 4. If your development team likes your changes, merge the docs into the `main` branch.

19 | 5. Deploy the documentation (see below)

20 |

21 | ## Deploy the documentation

22 |

23 | The documentation is _not_ automatically updated from the Markdown, and needs to be deployed deliberately. To do this, perform the following steps:

24 |

25 | 1. In your terminal, navigate to the edit directory of your ontology, e.g.:

26 | ```

27 | cd omrse/src/ontology

28 | ```

29 | 2. Now you are ready to build the docs as follows:

30 | ```

31 | sh run.sh make update_docs

32 | ```

33 | [MkDocs](https://www.mkdocs.org/) now sets off to build the site from the Markdown pages. You will be asked to:

34 | - Enter your username

35 | - Enter your password (see [here](https://docs.github.com/en/github/authenticating-to-github/creating-a-personal-access-token) for using GitHub access tokens instead)

36 | _IMPORTANT_: GitHub stopped accepting account passwords for Git operations in August 2021. If your password is rejected, use a [personal access token](https://docs.github.com/en/github/authenticating-to-github/creating-a-personal-access-token) in its place.

37 |

38 | If everything was successful, you will see a message similar to this one:

39 |

40 | ```

41 | INFO - Your documentation should shortly be available at: https://mcwdsi.github.io/OMRSE/

42 | ```

43 | 3. Just to double check, you can now navigate to your documentation pages (usually https://mcwdsi.github.io/OMRSE/).

44 | Just make sure you give GitHub 2-5 minutes to build the pages!

45 |

46 |

47 |

48 |

--------------------------------------------------------------------------------

/docs/odk-workflows/ReleaseWorkflow.md:

--------------------------------------------------------------------------------

1 | # The release workflow

2 | The release workflow recommended by the ODK is based on GitHub releases and works as follows:

3 |

4 | 1. Run a release with the ODK

5 | 2. Review the release

6 | 3. Merge to main branch

7 | 4. Create a GitHub release

8 |