├── securityhub-collector
│   ├── src
│   │   ├── __init__.py
│   │   └── securityhub_collector.py
│   ├── sam
│   │   ├── requirements.txt
│   │   ├── sam_package.sh
│   │   ├── create_layer.sh
│   │   ├── packaged.yaml
│   │   ├── template.yaml
│   │   └── event.json
│   └── README.md
├── securityhub-forwarder
│   ├── src
│   │   ├── __init__.py
│   │   ├── utils.py
│   │   └── securityhub_forwarder.py
│   ├── sam
│   │   ├── requirements.txt
│   │   ├── sam_package.sh
│   │   ├── template.yaml
│   │   └── packaged.yaml
│   ├── test
│   │   ├── fixtures.json
│   │   └── test_securityhub_connector.py
│   └── README.md
├── sumologic-app-utils
│   ├── src
│   │   ├── __init__.py
│   │   ├── main.py
│   │   └── sumologic.py
│   ├── README.md
│   ├── sumo_app_utils.yaml
│   ├── packaged_sumo_app_utils.yaml
│   └── deploy.sh
├── loggroup-lambda-connector
│   ├── test
│   │   ├── __init__.py
│   │   ├── requirements.txt
│   │   └── loggroup-lambda-cft.json
│   ├── package.json
│   ├── sam
│   │   ├── sam_package.sh
│   │   ├── packaged.yaml
│   │   └── template.yaml
│   ├── Readme.md
│   └── src
│       └── loggroup-lambda-connector.js
├── cloudwatchlogs-with-dlq
│   ├── requirements.txt
│   ├── sumo-dlq-function-utils
│   │   ├── lib
│   │   │   ├── mainindex.js
│   │   │   ├── dlqutils.js
│   │   │   ├── utils.js
│   │   │   └── sumologsclient.js
│   │   └── package.json
│   ├── package.json
│   ├── DLQProcessor.js
│   ├── Readme.md
│   ├── vpcutils.js
│   └── cloudwatchlogs_lambda.js
├── LICENSE.txt
├── kinesisfirehose-processor
│   ├── package.json
│   ├── kinesisfirehose-processor.js
│   ├── Readme.md
│   ├── kinesisfirehose-lambda-cft.json
│   └── test-kinesisfirehose-lambda-cft.json
├── cloudwatchevents
│   ├── guardduty
│   │   ├── packaged.yaml
│   │   ├── template.yaml
│   │   ├── event.json
│   │   ├── README.md
│   │   └── cloudwatchevents.json
│   ├── package.json
│   ├── guarddutybenchmark
│   │   ├── README.md
│   │   ├── template_v2.yaml
│   │   └── packaged_v2.yaml
│   ├── README.md
│   └── src
│       └── cloudwatchevents.js
├── CHANGELOG.md
├── .travis.yml
├── s3
│   ├── README.md
│   └── node.js
│       └── s3.js
├── inspector
│   ├── Readme.md
│   └── python
│       └── inspector.py
├── cloudwatchlogs
│   ├── README.md
│   └── cloudwatchlogs_lambda.js
├── cloudtrail_s3
│   ├── cloudtrail_s3_to_sumo.js
│   └── README.md
├── deploy_function.py
├── kinesis
│   └── README.md
└── README.md

/securityhub-collector/src/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/securityhub-forwarder/src/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/sumologic-app-utils/src/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/test/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/securityhub-collector/sam/requirements.txt:

--------------------------------------------------------------------------------

1 | boto3==1.9.60

2 |

--------------------------------------------------------------------------------

/securityhub-forwarder/sam/requirements.txt:

--------------------------------------------------------------------------------

1 | boto3==1.9.66

2 |

--------------------------------------------------------------------------------

/cloudwatchlogs-with-dlq/requirements.txt:

--------------------------------------------------------------------------------

1 | requests==2.20.0

2 | boto3==1.5.1

3 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/test/requirements.txt:

--------------------------------------------------------------------------------

1 | requests==2.20.0

2 | boto3==1.5.1

3 |

--------------------------------------------------------------------------------

/cloudwatchlogs-with-dlq/sumo-dlq-function-utils/lib/mainindex.js:

--------------------------------------------------------------------------------

1 | var SumoLogsClient = require('./sumologsclient.js').SumoLogsClient;

2 | var DLQUtils = require('./dlqutils.js');

3 | var Utils = require('./utils.js');

4 |

5 | module.exports = {

6 | SumoLogsClient: SumoLogsClient,

7 | DLQUtils: DLQUtils,

8 | Utils: Utils

9 | };

10 |

--------------------------------------------------------------------------------

/cloudwatchlogs-with-dlq/sumo-dlq-function-utils/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "sumo-dlq-function-utils",

3 | "version": "0.0.1",

4 | "description": "This is a utility package for Sumo Logic Inc.",

5 | "license": "Apache",

6 | "files": ["./lib"],

7 | "main": "./lib/mainindex",

8 | "dependencies": {

9 | "aws-sdk": "^2.160.0"

10 | },

11 | "devDependencies": {},

12 | "author": "Himanshu Pal"

13 | }

14 |

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

1 | Copyright 2015, Sumo Logic Inc. All Rights Reserved.

2 |

3 | Licensed under the Apache License, Version 2.0 (the "License");

4 | you may not use this file except in compliance with the License.

5 | You may obtain a copy of the License at

6 |

7 | http://www.apache.org/licenses/LICENSE-2.0

8 |

9 | Unless required by applicable law or agreed to in writing, software

10 | distributed under the License is distributed on an "AS IS" BASIS,

11 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | See the License for the specific language governing permissions and

13 | limitations under the License.

14 |

--------------------------------------------------------------------------------

/kinesisfirehose-processor/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "kinesisfirehose-processor",

3 | "version": "1.0.0",

4 | "description": "Lambda Function for transforming incoming data from kinesis firehose",

5 | "main": "kinesisfirehose-processor.js",

6 | "dependencies": {},

7 | "devDependencies": {},

8 | "scripts": {

9 | "test": "echo \"Error: no test specified\" && exit 1",

10 | "build": "rm -f kinesisfirehose-processor.zip && zip -r kinesisfirehose-processor.zip kinesisfirehose-processor.js package.json"

11 | },

12 | "keywords": [

13 | "AWS",

14 | "Kinesis Firehose"

15 | ],

16 | "author": "Himanshu Pal",

17 | "license": "Apache-2.0"

18 | }

19 |

--------------------------------------------------------------------------------

/cloudwatchlogs-with-dlq/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "dlq_processor",

3 | "version": "1.0.0",

4 | "description": "Lambda function for processing messages from CloudWatch with Dead Letter Queue Support",

5 | "main": "DLQProcessor.js",

6 | "dependencies": {

7 | "jmespath": "^0.15.0",

8 | "lodash.find": "^4.6.0"

9 | },

10 | "devDependencies": {},

11 | "scripts": {

12 | "test": "node -e \"require('./test').test()\"",

13 | "build": "rm -f cloudwatchlogs-with-dlq.zip && npm install && zip -r cloudwatchlogs-with-dlq.zip DLQProcessor.js cloudwatchlogs_lambda.js vpcutils.js package.json sumo-dlq-function-utils/ node_modules/",

14 | "prod_deploy": "python -c 'from test_cwl_lambda import prod_deploy;prod_deploy()'"

15 | },

16 | "author": "Himanshu Pal",

17 | "license": "Apache-2.0"

18 | }

19 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "loggroup-lambda-connector",

3 | "version": "1.0.0",

4 | "description": "Lambda Function for automatic subscription of any Sumo Logic lambda function with loggroups matching an input pattern.",

5 | "main": "loggroup-lambda-connector.js",

6 | "dependencies": {

7 | "aws-sdk": "^2.160.0"

8 | },

9 | "devDependencies": {},

10 | "scripts": {

11 | "test": "echo \"Error: no test specified\" && exit 1",

12 | "build": "echo `pwd` && rm -f test/loggroup-lambda-connector.zip && zip -r test/loggroup-lambda-connector.zip src/loggroup-lambda-connector.js package.json",

13 | "prod_deploy": "python -c 'from test.test_loggroup_lambda_connector import prod_deploy;prod_deploy()'"

14 | },

15 | "keywords": [

16 | "AWS"

17 | ],

18 | "author": "Himanshu Pal",

19 | "license": "Apache-2.0"

20 | }

21 |

--------------------------------------------------------------------------------

/sumologic-app-utils/README.md:

--------------------------------------------------------------------------------

1 | # sumologic-app-utils

2 |

3 | This Lambda function is used for creating custom resources. This application is used in conjunction with other SAM applications, such as sumologic-guardduty-benchmark, to automate the creation and deletion of Sumo Logic resources.

4 |

5 |

6 | Made with ❤️ by Sumo Logic. Available on the [AWS Serverless Application Repository](https://aws.amazon.com/serverless)

7 |

8 |

9 |

10 | ## Setup

11 |

12 | 1. Deploying the SAM Application

13 | 1. Go to https://serverlessrepo.aws.amazon.com/applications.

14 | 2. Search for sumologic-app-utils.

15 | 3. Click on the sumologic-app-utils application, and then click Deploy.

16 | 4. Click Deploy.

17 |

18 |

19 | ## License

20 |

21 | Apache License 2.0 (Apache-2.0)

22 |

23 |

24 | ## Support

25 | Requests & issues should be filed on GitHub: https://github.com/SumoLogic/sumologic-aws-lambda/issues

26 |

27 |

--------------------------------------------------------------------------------
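The README above describes a Lambda function that backs CloudFormation custom resources. The repository's `main.py` is not reproduced in this section, so the following is only a minimal sketch of the response body that a Lambda-backed custom resource sends back to CloudFormation. The helper name `build_cfn_response`, the sample event values, and the `COLLECTOR_ID` output key are hypothetical and chosen for illustration; only the response field names follow the CloudFormation custom resource response format.

```python
import json


def build_cfn_response(event, status, physical_resource_id, data=None, reason=""):
    """Build the JSON body a custom resource PUTs to event["ResponseURL"].

    Hypothetical helper for illustration; not taken from the repository's main.py.
    """
    if status not in ("SUCCESS", "FAILED"):
        raise ValueError("status must be SUCCESS or FAILED")
    body = {
        "Status": status,
        "Reason": reason or "See CloudWatch Logs for details",
        "PhysicalResourceId": physical_resource_id,
        # The next three values are echoed back unchanged from the request.
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }
    return json.dumps(body)


# Made-up request event; all values are placeholders.
sample_event = {
    "RequestType": "Create",
    "ResponseURL": "https://example.invalid/presigned-url",
    "StackId": "arn:aws:cloudformation:us-east-1:123456789012:stack/demo/guid",
    "RequestId": "unique-request-id",
    "LogicalResourceId": "SumoCollector",
    "ResourceProperties": {"CollectorName": "demo"},
}

response_body = build_cfn_response(
    sample_event, "SUCCESS", "demo-collector", {"COLLECTOR_ID": "42"}
)
print(response_body)
```

In a real handler this body would be sent with an HTTP PUT to the pre-signed `ResponseURL`; the sketch stops short of the network call so that it runs offline.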

/sumologic-app-utils/sumo_app_utils.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Transform: 'AWS::Serverless-2016-10-31'
3 | Description: >
4 |   This solution consists of a lambda function which gets triggered when CF stack is deployed. This is used for creating sumologic resources like collector, source and app folders.
5 |
6 | Globals:
7 |   Function:
8 |     Timeout: 300
9 |
10 | Resources:
11 |   SumoAppUtilsFunction:
12 |     Type: 'AWS::Serverless::Function'
13 |     Properties:
14 |       Handler: main.handler
15 |       Runtime: python3.7
16 |       CodeUri: s3://appdevstore/sumo_app_utils.zip
17 |       MemorySize: 128
18 |       Timeout: 300
19 |
20 | Outputs:
21 |   SumoAppUtilsFunction:
22 |     Description: "SumoAppUtils Function ARN"
23 |     Value: !GetAtt SumoAppUtilsFunction.Arn
24 |     Export:
25 |       Name : !Sub "${AWS::StackName}-SumoAppUtilsFunction"

26 |

--------------------------------------------------------------------------------

/sumologic-app-utils/packaged_sumo_app_utils.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Description: 'This solution consists of a lambda function which gets triggered
3 |   when CF stack is deployed. This is used for creating sumologic resources like collector,
4 |   source and app folders.
5 |
6 |   '
7 | Globals:
8 |   Function:
9 |     Timeout: 300
10 | Outputs:
11 |   SumoAppUtilsFunction:
12 |     Description: SumoAppUtils Function ARN
13 |     Export:
14 |       Name:
15 |         Fn::Sub: ${AWS::StackName}-SumoAppUtilsFunction
16 |     Value:
17 |       Fn::GetAtt:
18 |       - SumoAppUtilsFunction
19 |       - Arn
20 | Resources:
21 |   SumoAppUtilsFunction:
22 |     Properties:
23 |       CodeUri: s3://appdevstore/sumo_app_utils.zip
24 |       Handler: main.handler
25 |       MemorySize: 128
26 |       Runtime: python3.7
27 |       Timeout: 300
28 |     Type: AWS::Serverless::Function
29 | Transform: AWS::Serverless-2016-10-31

30 |

--------------------------------------------------------------------------------

/securityhub-forwarder/sam/sam_package.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [ "$AWS_PROFILE" == "prod" ]

4 | then

5 | SAM_S3_BUCKET="appdevstore"

6 | AWS_REGION="us-east-1"

7 | else

8 | SAM_S3_BUCKET="cf-templates-5d0x5unchag-us-east-2"

9 | AWS_REGION="us-east-2"

10 | fi

11 |

12 | sam package --template-file template.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file packaged.yaml

13 |

14 | sam deploy --template-file packaged.yaml --stack-name testingsecurityhubforwarder --capabilities CAPABILITY_IAM --region $AWS_REGION

15 | #aws cloudformation describe-stack-events --stack-name testingsecurityhublambda --region $AWS_REGION

16 | #aws cloudformation get-template --stack-name testingsecurityhublambda --region $AWS_REGION

17 | # aws serverlessrepo create-application-version --region us-east-1 --application-id arn:aws:serverlessrepo:us-east-1:$AWS_ACCOUNT_ID:applications/sumologic-securityhub-forwarder --semantic-version 1.0.1 --template-body file://packaged.yaml

18 |

--------------------------------------------------------------------------------

/securityhub-collector/sam/sam_package.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [ "$AWS_PROFILE" == "prod" ]

4 | then

5 | SAM_S3_BUCKET="appdevstore"

6 | AWS_REGION="us-east-1"

7 | else

8 | SAM_S3_BUCKET="cf-templates-5d0x5unchag-us-east-2"

9 | AWS_REGION="us-east-2"

10 | fi

11 | sam package --template-file template.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file packaged.yaml

12 |

13 | sam deploy --template-file packaged.yaml --stack-name testingsecurityhubcollector --capabilities CAPABILITY_IAM --region $AWS_REGION --parameter-overrides S3SourceBucketName=securityhubfindings

14 | #aws cloudformation describe-stack-events --stack-name testingsecurityhublambda --region $AWS_REGION

15 | #aws cloudformation get-template --stack-name testingsecurityhublambda --region $AWS_REGION

16 | # aws serverlessrepo create-application-version --region us-east-1 --application-id arn:aws:serverlessrepo:us-east-1:$AWS_ACCOUNT_ID:applications/sumologic-securityhub-connector --semantic-version 1.0.1 --template-body file://packaged.yaml

17 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/sam/sam_package.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [ "$AWS_PROFILE" == "prod" ]

4 | then

5 | SAM_S3_BUCKET="appdevstore"

6 | AWS_REGION="us-east-1"

7 | else

8 | SAM_S3_BUCKET="cf-templates-5d0x5unchag-us-east-2"

9 | AWS_REGION="us-east-2"

10 | fi

11 | sam package --template-file template.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file packaged.yaml

12 |

13 | sam deploy --template-file packaged.yaml --stack-name testingloggrpconnector --capabilities CAPABILITY_IAM --region $AWS_REGION --parameter-overrides LambdaARN="arn:aws:lambda:us-east-1:956882708938:function:AccessVPCResourcesLambda"

14 | #aws cloudformation describe-stack-events --stack-name testingloggrpconnector --region $AWS_REGION

15 | #aws cloudformation get-template --stack-name testingloggrpconnector --region $AWS_REGION

16 | # aws serverlessrepo create-application-version --region us-east-1 --application-id arn:aws:serverlessrepo:us-east-1:$AWS_ACCOUNT_ID:applications/sumologic-securityhub-connector --semantic-version 1.0.1 --template-body file://packaged.yaml

17 |

--------------------------------------------------------------------------------

/cloudwatchevents/guardduty/packaged.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Description: 'This function is invoked by AWS CloudWatch events in response to state
3 |   change in your AWS resources which matches an event target definition. The event
4 |   payload received is then forwarded to Sumo Logic HTTP source endpoint.
5 |
6 |   '
7 | Globals:
8 |   Function:
9 |     Timeout: 300
10 | Outputs:
11 |   CloudWatchEventFunction:
12 |     Description: CloudWatchEvent Processor Function ARN
13 |     Value:
14 |       Fn::GetAtt:
15 |       - CloudWatchEventFunction
16 |       - Arn
17 | Parameters:
18 |   SumoEndpointUrl:
19 |     Type: String
20 | Resources:
21 |   CloudWatchEventFunction:
22 |     Properties:
23 |       CodeUri: s3://appdevstore/e62e525a25bb080e521d8bf64909ea41
24 |       Environment:
25 |         Variables:
26 |           SUMO_ENDPOINT:
27 |             Ref: SumoEndpointUrl
28 |       Events:
29 |         CloudWatchEventTrigger:
30 |           Properties:
31 |             Pattern:
32 |               source:
33 |               - aws.guardduty
34 |           Type: CloudWatchEvent
35 |       Handler: cloudwatchevents.handler
36 |       Runtime: nodejs8.10
37 |     Type: AWS::Serverless::Function
38 | Transform: AWS::Serverless-2016-10-31

39 |

--------------------------------------------------------------------------------

/cloudwatchevents/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "cloudwatchevents-processor",

3 | "version": "1.0.0",

4 | "description": "AWS Lambda function to collect CloudWatch events and post them to SumoLogic.",

5 | "main": "src/cloudwatchevents.js",

6 | "repository": "https://github.com/SumoLogic/sumologic-aws-lambda/tree/master/cloudwatchevents",

7 | "author": "Himanshu Pal",

8 | "license": "Apache-2.0",

9 | "dependencies": {},

10 | "scripts": {

11 | "test": "cd guardduty && sam local invoke CloudWatchEventFunction -e event.json",

12 | "build_guardduty": "cd guardduty && sam package --template-file template.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file packaged.yaml",

13 | "deploy_guardduty": "cd guardduty && sam deploy --template-file packaged.yaml --stack-name testingguarddutylambda --capabilities CAPABILITY_IAM --parameter-overrides SumoEndpointUrl=$SUMO_ENDPOINT",

14 | "view_deploy_logs": "aws cloudformation describe-stack-events --stack-name testingguarddutylambda",

15 | "build_zip": "rm -f guardduty.zip && cd src && zip ../guardduty.zip cloudwatchevents.js && cd ..",

16 | "build_temp": "aws cloudformation get-template --stack-name testingguarddutylambda --region $AWS_REGION"

17 | },

18 | "keywords": [

19 | "lambda",

20 | "cloudwatch-events"

21 | ]

22 | }

23 |

24 |

25 |

--------------------------------------------------------------------------------

/securityhub-forwarder/test/fixtures.json:

--------------------------------------------------------------------------------

1 | {

2 | "Types": "Software and Configuration Checks/Industry and Regulatory Standards/HIPAA Controls",

3 | "Description": "This search gives top 10 resources which are accessed in last 15 minutes",

4 | "GeneratorID": "InsertFindingsScheduledSearch",

5 | "Severity": 30,

6 | "SourceUrl":"https://service.sumologic.com/ui/#/search/RmC8kAUGZbXrkj2rOFmUxmHtzINUgfJnFplh3QWY",

7 | "ComplianceStatus": "FAILED",

8 | "Rows": "[{\"Timeslice\":1545042427000,\"finding_time\":\"1545042427000\",\"item_name\":\"A nice dashboard.png\",\"title\":\"Vulnerability: Apple iTunes m3u Playlist File Title Parsing Buffer Overflow Vulnerability(34886) found on 207.235.176.3\",\"resource_id\":\"10.178.11.43\",\"resource_type\":\"Other\"},{\"Timeslice\":\"1545042427000\",\"finding_time\":\"1545042427000\",\"item_name\":\"Screen Shot 2014-07-30 at 11.39.29 PM.png\",\"title\":\"PCI Req 01: Traffic to Cardholder Environment: Direct external traffic to secure port on 10.178.11.43\",\"resource_id\":\"10.178.11.42\",\"resource_type\":\"AwsEc2Instance\"},{\"Timeslice\":\"1545042427000\",\"finding_time\":\"1545042427000\",\"item_name\":\"10388049_589057504526630_2031213996_n.jpg\",\"title\":\"Test Check Success for 207.235.176.5\",\"resource_id\":\"10.178.11.41\",\"resource_type\":\"Other\"}]"

9 | }

10 |

--------------------------------------------------------------------------------
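In the fixture above, `Rows` is not a JSON array but a string that itself contains JSON, so a consumer has to decode it a second time. The following is a minimal sketch of that two-step decode; `parse_rows` is a hypothetical helper (the forwarder's own parsing code is not shown in this section), and the payload is a trimmed-down stand-in for the fixture.

```python
import json

# Trimmed-down stand-in for fixtures.json: "Rows" holds a JSON-encoded string.
payload_text = json.dumps({
    "GeneratorID": "InsertFindingsScheduledSearch",
    "Severity": 30,
    "Rows": json.dumps([
        {"finding_time": "1545042427000", "resource_id": "10.178.11.43", "resource_type": "Other"},
        {"finding_time": "1545042427000", "resource_id": "10.178.11.42", "resource_type": "AwsEc2Instance"},
    ]),
})


def parse_rows(text):
    """Decode the outer payload, then decode "Rows" again if it is still a string."""
    payload = json.loads(text)
    rows = payload["Rows"]
    if isinstance(rows, str):
        rows = json.loads(rows)
    return rows


rows = parse_rows(payload_text)
resource_ids = [row["resource_id"] for row in rows]
print(resource_ids)
```

Note that the fixture mixes types within `Rows` (the first `Timeslice` is a number, the others are strings), so code that compares or converts these values may want to normalize them first.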

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | # CHANGELOG for sumologic-aws-lambda functions

2 |

3 | This file lists changes made in each version of function repo

4 |

5 | ## 1.2.2:

6 | July 11, 2016:

7 | * Merged [PR#12](https://github.com/SumoLogic/sumologic-aws-lambda/pull/12).

8 |

9 | ## 1.2.1:

10 | Apr 05, 2016

11 | * Merged [PR#7](https://github.com/SumoLogic/sumologic-aws-lambda/pull/7).

12 |

13 | ## 1.2.0:

14 | Feb 29, 2016

15 | * Merged [PR#4](https://github.com/SumoLogic/sumologic-aws-lambda/pull/4), add LICENSE file, and a function for reading from S3.

16 |

17 | ## 1.1.1:

18 | Feb 22, 2016

19 | * Merged [PR#3](https://github.com/SumoLogic/sumologic-aws-lambda/pull/3), add strict equality comparison operator in the Lambda function for Lambda logs

20 |

21 | ## 1.1.0:

22 | Feb 3, 2016

23 | * Merged [PR#2](https://github.com/SumoLogic/sumologic-aws-lambda/pull/2), add a function for reading from AWS Kinesis

24 |

25 | ## 1.0.0:

26 | Jan 25, 2016

27 | * Initial release with 2 functions for reading from AWS CloudWatch Logs (VPC and Lambda function logs)

28 |

29 |

30 | - - -

31 | Check the [Markdown Syntax Guide](http://daringfireball.net/projects/markdown/syntax) for help with Markdown.

32 |

33 | The [GitHub Flavored Markdown page](http://github.github.com/github-flavored-markdown/) describes the differences between Markdown on GitHub and standard Markdown.

34 |

--------------------------------------------------------------------------------

/securityhub-collector/sam/create_layer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [ ! -f securityhub_deps.zip ]; then

4 | echo "creating zip file"

5 | mkdir python

6 | cd python

7 | pip install -r ../requirements.txt -t ./

8 | zip -r ../securityhub_deps.zip .

9 | cd ..

10 | fi

11 |

12 | declare -a regions=("us-east-2" "us-east-1" "us-west-1" "us-west-2" "ap-south-1" "ap-northeast-2" "ap-southeast-1" "ap-southeast-2" "ap-northeast-1" "ca-central-1" "eu-central-1" "eu-west-1" "eu-west-2" "eu-west-3" "sa-east-1")

13 |

14 | for i in "${regions[@]}"

15 | do

16 | echo "Deploying layer in $i"

17 | bucket_name="appdevzipfiles-$i"

18 | aws s3 cp securityhub_deps.zip s3://$bucket_name/ --region $i

19 |

20 | aws lambda publish-layer-version --layer-name securityhub_deps --description "contains securityhub solution dependencies" --license-info "MIT" --content S3Bucket=$bucket_name,S3Key=securityhub_deps.zip --compatible-runtimes python3.7 python3.6 --region $i

21 |

22 | aws lambda add-layer-version-permission --layer-name securityhub_deps --statement-id securityhub-deps --version-number 1 --principal '*' --action lambda:GetLayerVersion --region $i

23 | done

24 |

25 | # aws lambda remove-layer-version-permission --layer-name securityhub_deps --version-number 1 --statement-id securityhub-deps --region us-east-1

26 | # aws lambda get-layer-version-policy --layer-name securityhub_deps --region us-east-1

27 |

--------------------------------------------------------------------------------

/cloudwatchlogs-with-dlq/sumo-dlq-function-utils/lib/dlqutils.js:

--------------------------------------------------------------------------------

1 | var AWS = require("aws-sdk");
2 |
3 | function Messages(env) {
4 |     this.sqs = new AWS.SQS({region: env.AWS_REGION});
5 |     this.env = env;
6 | }
7 |
8 | Messages.prototype.receiveMessages = function (messageCount, callback) {
9 |     var params = {
10 |         QueueUrl: this.env.TASK_QUEUE_URL,
11 |         MaxNumberOfMessages: messageCount
12 |     };
13 |     this.sqs.receiveMessage(params, callback);
14 | };
15 |
16 | Messages.prototype.deleteMessage = function (receiptHandle, callback) {
17 |     this.sqs.deleteMessage({
18 |         ReceiptHandle: receiptHandle,
19 |         QueueUrl: this.env.TASK_QUEUE_URL
20 |     }, callback);
21 | };
22 |
23 | function invokeLambdas(awsRegion, numOfWorkers, functionName, payload, context) {
24 |
25 |     for (var i = 0; i < numOfWorkers; i++) {
26 |         var lambda = new AWS.Lambda({
27 |             region: awsRegion
28 |         });
29 |         lambda.invoke({
30 |             InvocationType: 'Event',
31 |             FunctionName: functionName,
32 |             Payload: payload
33 |         }, function(err, data) {
34 |             if (err) {
35 |                 context.fail(err);
36 |             } else {
37 |                 context.succeed('success');
38 |             }
39 |         });
40 |     }
41 | }
42 |
43 | module.exports = {
44 |     Messages: Messages,
45 |     invokeLambdas: invokeLambdas
46 | };

47 |

--------------------------------------------------------------------------------
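The `Messages` helper above wraps two SQS calls: receive a batch from the task queue, then delete each message by its receipt handle. As a rough illustration of that receive-then-delete cycle, here is a sketch in Python. It assumes a client object with the same `receive_message` and `delete_message` keyword arguments as the boto3 SQS client; `drain_once` and `FakeSqs` are hypothetical names, and the fake client exists only so the sketch runs without AWS credentials.

```python
def drain_once(sqs, queue_url, handle_message, max_messages=10):
    """Receive one batch, process each body, and delete what was processed."""
    response = sqs.receive_message(QueueUrl=queue_url, MaxNumberOfMessages=max_messages)
    processed = 0
    # The "Messages" key is absent when the queue returns nothing.
    for message in response.get("Messages", []):
        handle_message(message["Body"])
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        processed += 1
    return processed


class FakeSqs:
    """In-memory stand-in for an SQS client (illustration only)."""

    def __init__(self, bodies):
        self.pending = {"rh-%d" % i: body for i, body in enumerate(bodies)}

    def receive_message(self, QueueUrl, MaxNumberOfMessages):
        items = list(self.pending.items())[:MaxNumberOfMessages]
        return {"Messages": [{"ReceiptHandle": rh, "Body": body} for rh, body in items]}

    def delete_message(self, QueueUrl, ReceiptHandle):
        del self.pending[ReceiptHandle]


seen = []
fake = FakeSqs(["a", "b", "c"])
count = drain_once(fake, "https://example.invalid/queue", seen.append, max_messages=2)
print(count, seen, fake.pending)
```

Deleting only after `handle_message` returns means a message whose handler raises stays on the queue and can be retried later.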

/cloudwatchevents/guardduty/template.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Transform: AWS::Serverless-2016-10-31
3 | Description: >
4 |   This function is invoked by AWS CloudWatch events in response to state change in your AWS resources which matches an event target definition. The event payload received is then forwarded to Sumo Logic HTTP source endpoint.
5 |
6 | # More info about Globals: https://github.com/awslabs/serverless-application-model/blob/master/docs/globals.rst
7 | Globals:
8 |   Function:
9 |     Timeout: 300
10 |
11 | Parameters:
12 |   SumoEndpointUrl:
13 |     Type: String
14 |
15 | Resources:
16 |
17 |   CloudWatchEventFunction:
18 |     Type: AWS::Serverless::Function # More info about Function Resource: https://github.com/awslabs/serverless-application-model/blob/master/versions/2016-10-31.md#awsserverlessfunction
19 |     Properties:
20 |       CodeUri: ../src/
21 |       Handler: cloudwatchevents.handler
22 |       Runtime: nodejs8.10
23 |       Environment:
24 |         Variables:
25 |           SUMO_ENDPOINT: !Ref SumoEndpointUrl
26 |       Events:
27 |         CloudWatchEventTrigger:
28 |           Type: CloudWatchEvent
29 |           Properties:
30 |             Pattern:
31 |               source:
32 |                 - aws.guardduty
33 | Outputs:
34 |
35 |   CloudWatchEventFunction:
36 |     Description: "CloudWatchEvent Processor Function ARN"
37 |     Value: !GetAtt CloudWatchEventFunction.Arn

38 |

--------------------------------------------------------------------------------
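The template above triggers the function only for events whose `source` is `aws.guardduty`. To make that filtering rule concrete, the sketch below models a deliberately simplified version of event pattern matching in Python: each pattern key must exist in the event, and the event's value must be one of the listed values. `matches_pattern` is a hypothetical helper; the real matching is done by AWS and supports more operators than this exact-value case.

```python
def matches_pattern(event, pattern):
    """Simplified matcher: exact-value lists, with nested dicts handled recursively."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern, e.g. {"detail": {"eventName": [...]}}.
            if not isinstance(actual, dict) or not matches_pattern(actual, expected):
                return False
        elif actual not in expected:
            return False
    return True


# Same pattern as the Events section of the template.
GUARDDUTY_PATTERN = {"source": ["aws.guardduty"]}

guardduty_event = {
    "source": "aws.guardduty",
    "detail-type": "AWS API Call via CloudTrail",
    "region": "us-east-2",
}
other_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
}

print(matches_pattern(guardduty_event, GUARDDUTY_PATTERN))
print(matches_pattern(other_event, GUARDDUTY_PATTERN))
```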

/securityhub-collector/sam/packaged.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Transform: AWS::Serverless-2016-10-31
3 | Description: 'This solution consists of a lambda function which gets triggered
4 |   by CloudWatch events with findings as payload which are then ingested to Sumo Logic
5 |   via S3 source
6 |
7 |   '
8 | Globals:
9 |   Function:
10 |     Timeout: 300
11 | Parameters:
12 |   S3SourceBucketName:
13 |     Type: String
14 | Resources:
15 |   SecurityHubCollectorFunction:
16 |     Type: AWS::Serverless::Function
17 |     Properties:
18 |       Handler: securityhub_collector.lambda_handler
19 |       Runtime: python3.7
20 |       CodeUri: s3://appdevstore/3821fd9c5288ebaca71e4ea0b26629ab
21 |       MemorySize: 128
22 |       Timeout: 300
23 |       Policies:
24 |       - Statement:
25 |         - Sid: SecurityHubS3PutObjectPolicy
26 |           Effect: Allow
27 |           Action:
28 |           - s3:PutObject
29 |           Resource:
30 |           - Fn::Sub: arn:aws:s3:::${S3SourceBucketName}
31 |           - Fn::Sub: arn:aws:s3:::${S3SourceBucketName}/*
32 |       Environment:
33 |         Variables:
34 |           S3_LOG_BUCKET:
35 |             Ref: S3SourceBucketName
36 |       Events:
37 |         CloudWatchEventTrigger:
38 |           Type: CloudWatchEvent
39 |           Properties:
40 |             Pattern:
41 |               source:
42 |               - aws.securityhub
43 | Outputs:
44 |   SecurityHubCollectorFunction:
45 |     Description: SecurityHubCollector Function ARN
46 |     Value:
47 |       Fn::GetAtt:
48 |       - SecurityHubCollectorFunction
49 |       - Arn

50 |

--------------------------------------------------------------------------------

/securityhub-collector/sam/template.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'
2 | Transform: 'AWS::Serverless-2016-10-31'
3 | Description: >
4 |   This solution consists of a lambda function which gets triggered by CloudWatch events with findings as payload which are then ingested to Sumo Logic via S3 source
5 |
6 | Globals:
7 |   Function:
8 |     Timeout: 300
9 |
10 | Parameters:
11 |   S3SourceBucketName:
12 |     Type: String
13 |
14 | Resources:
15 |
16 |   SecurityHubCollectorFunction:
17 |     Type: 'AWS::Serverless::Function'
18 |     Properties:
19 |       Handler: securityhub_collector.lambda_handler
20 |       Runtime: python3.7
21 |       CodeUri: ../src/
22 |       MemorySize: 128
23 |       Timeout: 300
24 |       Policies:
25 |         - Statement:
26 |             - Sid: SecurityHubS3PutObjectPolicy
27 |               Effect: Allow
28 |               Action:
29 |                 - "s3:PutObject"
30 |               Resource:
31 |                 - !Sub 'arn:aws:s3:::${S3SourceBucketName}'
32 |                 - !Sub 'arn:aws:s3:::${S3SourceBucketName}/*'
33 |
34 |       Environment:
35 |         Variables:
36 |           S3_LOG_BUCKET: !Ref S3SourceBucketName
37 |
38 |       Events:
39 |         CloudWatchEventTrigger:
40 |           Type: CloudWatchEvent
41 |           Properties:
42 |             Pattern:
43 |               source:
44 |                 - aws.securityhub
45 |
46 | Outputs:
47 |   SecurityHubCollectorFunction:
48 |     Description: "SecurityHubCollector Function ARN"
49 |     Value: !GetAtt SecurityHubCollectorFunction.Arn

50 |

--------------------------------------------------------------------------------

/cloudwatchevents/guardduty/event.json:

--------------------------------------------------------------------------------

1 | {

2 | "version": "0",

3 | "id": "f81b1e52-fff2-e312-7b6f-66e0a353fee4",

4 | "detail-type": "AWS API Call via CloudTrail",

5 | "source": "aws.guardduty",

6 | "account": "456227676011",

7 | "time": "2018-10-03T00:53:52Z",

8 | "region": "us-east-2",

9 | "resources": [],

10 | "detail": {

11 | "eventVersion": "1.05",

12 | "userIdentity": {

13 | "type": "IAMUser",

14 | "principalId": "AIDAIFSYASDF6O2ZUR4M",

15 | "arn": "arn:aws:iam::45234274376011:user/HELLOWORLD",

16 | "accountId": "4562123123011",

17 | "accessKeyId": "ASIA123123123MU7V",

18 | "userName": "hello",

19 | "sessionContext": {

20 | "attributes": {

21 | "mfaAuthenticated": "true",

22 | "creationDate": "2018-10-02T23:20:50Z"

23 | }

24 | },

25 | "invokedBy": "signin.amazonaws.com"

26 | },

27 | "eventTime": "2018-10-03T00:53:52Z",

28 | "eventSource": "guardduty.amazonaws.com",

29 | "eventName": "CreateSampleFindings",

30 | "awsRegion": "us-east-2",

31 | "sourceIPAddress": "122.177.239.147",

32 | "userAgent": "signin.amazonaws.com",

33 | "requestParameters": {

34 | "detectorId": "d0b31b9d4905e74c121212b12e79f"

35 | },

36 | "responseElements": null,

37 | "requestID": "cbd191aa-1234-11e8-a342-399aed033d0d",

38 | "eventID": "1329e405-1234-4289-adb7-612b503622a5",

39 | "readOnly": false,

40 | "eventType": "AwsApiCall"

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

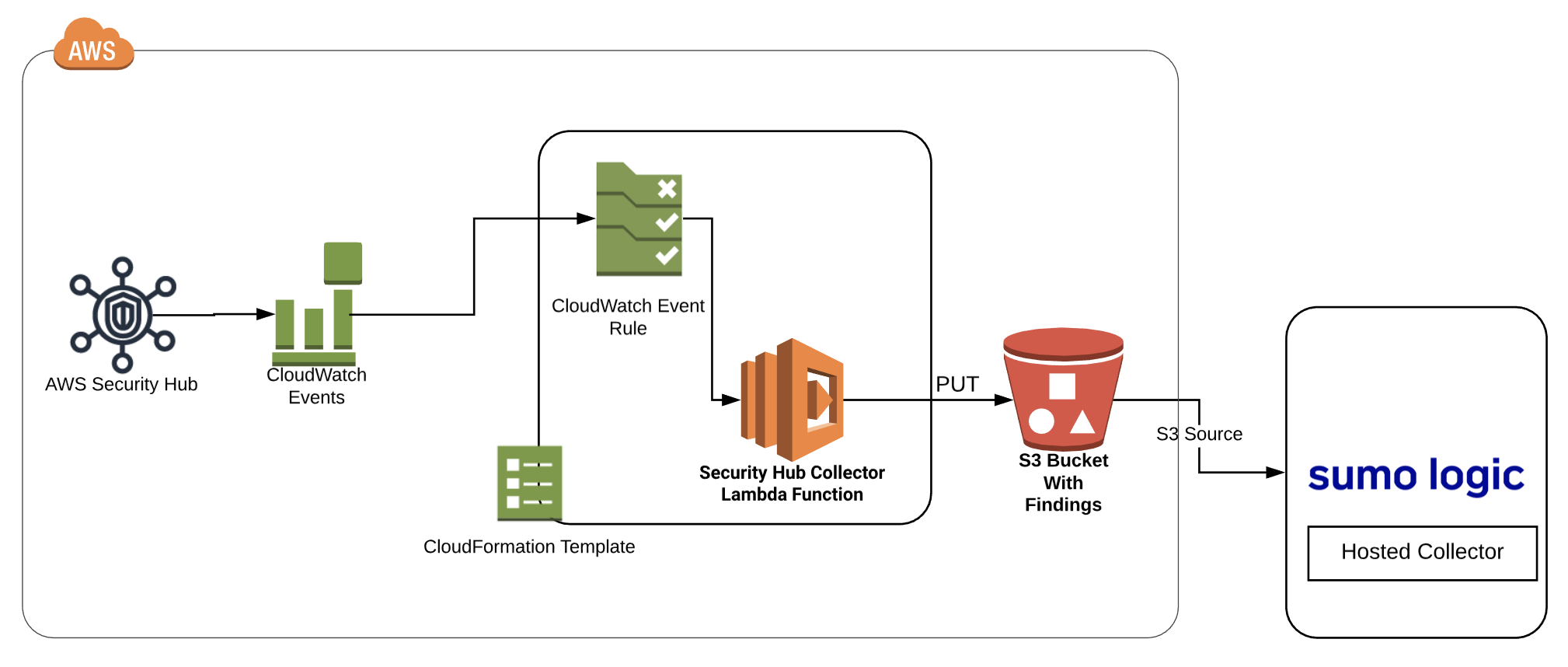

/securityhub-collector/README.md:

--------------------------------------------------------------------------------

1 | # sumologic-securityhub-collector

2 |

3 | This solution consists of a Lambda function which gets triggered by CloudWatch events with findings as payload, which are then ingested to Sumo Logic via an S3 source.

4 |

5 |

6 | Made with ❤️ by Sumo Logic. Available on the [AWS Serverless Application Repository](https://aws.amazon.com/serverless)

7 |

8 |

9 |

10 | ## Setup

11 |

12 |

13 | 1. Configure a [Hosted Collector](https://help.sumologic.com/03Send-Data/Hosted-Collectors/Configure-a-Hosted-Collector) and an [AWS S3 Source](https://help.sumologic.com/03Send-Data/Sources/02Sources-for-Hosted-Collectors/Amazon-Web-Services/AWS-S3-Source#AWS_Sources) to Sumo Logic, and in Advanced Options for Logs, under Timestamp Format, click Specify a format and enter the following:

14 | Specify Format as yyyy-MM-dd'T'HH:mm:ss.SSS'Z'

15 | Specify Timestamp locator as .*"UpdatedAt":"(.*)".*

16 |

17 | 2. Deploying the SAM Application

18 | 1. Open a browser window and enter the following URL: https://serverlessrepo.aws.amazon.com/applications

19 | 2. In the Serverless Application Repository, search for sumologic.

20 | 3. Select Show apps that create custom IAM roles or resource policies check box.

21 | 4. Click the sumologic-securityhub-collector link, and then click Deploy.

22 | 5. In the Configure application parameters panel, enter the name of the S3 bucket configured while creating AWS S3 source.

23 | Click Deploy.

24 |

25 |

26 | ## License

27 |

28 | Apache License 2.0 (Apache-2.0)

29 |

30 |

31 | ## Support

32 | Requests & issues should be filed on GitHub: https://github.com/SumoLogic/sumologic-aws-lambda/issues

33 |

34 |

--------------------------------------------------------------------------------

/securityhub-forwarder/src/utils.py:

--------------------------------------------------------------------------------

import time
from functools import wraps


def fixed_sleep(fixed_wait_time):
    # Delay strategy: always wait the same number of seconds.
    def handler():
        return fixed_wait_time
    return handler


def incrementing_sleep(wait_time_inc, start_wait_time=3):
    # Delay strategy: start_wait_time, then grow by wait_time_inc per attempt.
    attempt = 1

    def handler():
        nonlocal attempt
        print("generating time", attempt)
        result = start_wait_time + (attempt-1)*wait_time_inc
        attempt += 1
        return result
    return handler


def exponential_sleep(multiplier):
    # Delay strategy: multiplier * 2, multiplier * 4, multiplier * 8, ...
    attempt = 1

    def handler():
        nonlocal attempt
        exp = 2 ** attempt
        result = multiplier * exp
        attempt += 1
        return result
    return handler


def retry_if_exception_of_type(retryable_types):
    def _retry_if_exception_these_types(exception):
        return isinstance(exception, retryable_types)
    return _retry_if_exception_these_types


def retry(ExceptionToCheck=(Exception,), max_retries=4,
          logger=None, handler_type=exponential_sleep, *hdlrargs, **hdlrkwargs):
    # hdlrargs/hdlrkwargs are passed to handler_type to build the delay strategy.

    def deco_retry(f):

        @wraps(f)
        def f_retry(*args, **kwargs):
            delay_handler = handler_type(*hdlrargs, **hdlrkwargs)
            retries_left, wait_time = max_retries, delay_handler()
            while retries_left > 1:
                try:
                    return f(*args, **kwargs)
                except ExceptionToCheck as e:
                    msg = "%s, Retrying in %d seconds..." % (str(e), wait_time)
                    if logger:
                        logger.warning(msg)
                    else:
                        print(msg)
                    time.sleep(wait_time)
                    retries_left -= 1
                    wait_time = delay_handler()
            # Final attempt: any exception raised here propagates to the caller.
            return f(*args, **kwargs)

        return f_retry

    return deco_retry

--------------------------------------------------------------------------------
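The retry helper above can be exercised with a condensed, self-contained sketch. This is not the module itself: `retry` is reimplemented here in reduced form, and the `sleep` parameter and the `flaky_import` function are hypothetical additions so the backoff delays can be recorded instead of actually waited for.

```python
import time
from functools import wraps


def exponential_sleep(multiplier):
    """Return a callable yielding multiplier * 2**attempt on each call."""
    attempt = 1

    def handler():
        nonlocal attempt
        result = multiplier * (2 ** attempt)
        attempt += 1
        return result
    return handler


def retry(exceptions=(Exception,), max_retries=4, multiplier=1, sleep=time.sleep):
    """Condensed variant of the decorator; `sleep` is injectable for testing."""
    def deco(f):
        @wraps(f)
        def wrapper(*args, **kwargs):
            next_delay = exponential_sleep(multiplier)
            for _ in range(max_retries - 1):
                try:
                    return f(*args, **kwargs)
                except exceptions:
                    sleep(next_delay())
            # Final attempt: exceptions propagate to the caller.
            return f(*args, **kwargs)
        return wrapper
    return deco


calls = []
delays = []


@retry(exceptions=(ConnectionError,), max_retries=4, multiplier=1, sleep=delays.append)
def flaky_import():
    # Hypothetical operation that fails twice before succeeding.
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("transient failure")
    return "imported"


result = flaky_import()
print(result, len(calls), delays)  # imported 3 [2, 4]
```

With `max_retries=4` the function is attempted at most four times in total, and the waits double after each failure.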

/.travis.yml:

--------------------------------------------------------------------------------

1 | sudo: required

2 | dist: trusty

3 | language: node_js

4 | python:

5 | - '2.7'

6 | jobs:

7 | include:

8 | - stage: Node 8

9 | node_js: '8.10'

10 | env: FUNCTION_DIR=cloudwatchlogs-with-dlq TEST_DIR=cloudwatchlogs-with-dlq TEST_FILE=test_cwl_lambda.py NODE_VERSION="8.10"

11 | - stage: Node 8

12 | node_js: '8.10'

13 | env: FUNCTION_DIR=loggroup-lambda-connector TEST_DIR=loggroup-lambda-connector/test TEST_FILE=test_loggroup_lambda_connector.py NODE_VERSION="8.10"

14 | before_install:

15 | - sudo apt-get install python-pip

16 | - cd $TEST_DIR && sudo pip install -r requirements.txt && cd -

17 | script:

18 | - cd $FUNCTION_DIR && npm run build && cd -

19 | - cd $TEST_DIR && python $TEST_FILE && cd -

20 | env:

21 | global:

22 | - secure: iGATK/X1PfH8FCJlUpBbJ//mQ57QBQT0jETyDDH2r+GxZxXPnFS46ugPGsWTX0IDDEGjXE+/P6wnm0ORo7aa3yp7DZnfWFDyrgFchgUo1p3unt5sQcHg/9mitkQF7lHFlnAqM1D69AEGP5WU63m/9xQoj0BVYCVb2eUEqatV1AU8lpvRAkFc2juumy1ba4skoDFLOtrsaO2k+SCCBfMKq2SOJkcPnfMZGTOT7niaNnNAZSDYDovAlMYaLDOR10EMUAyklnmmAADyDxNRwSSAG8JKJMmfxSqIe4+s7xjqztjtkApWmEAjevDLpc62v1TWe0O2zCxnb4E4EBN6A31R1XJha4i/IKSeVL99J9X8aV1wIb9feV85qmZmlxVL2EU7/CcFGTdKeAak3qQWHZ1C6X32TBB6x5C0qASSC/x5pwDDJIOyeZr0Z93+dDhnBLNmzz8sA3h7AyzQZfhTqG/f4/SOgxTf7aF13X8BKuoM8BaGfXjy0keaVb0xbTjtDvi9F21EymWPdwNlQHsKca+EcTT2KE3mwFNrHAsTeNhGzMbrzmbZzvNHlmIwjB1C5l9h4GpUkxNb/mqi9SBTx9YfDIgz0bDOds1T92tGIAcBaHfJLTjc2JIgxwgdL13X3cL8GJBiFFwiJqiKJCSz4SxhWqsrrbsGSQBUqN5UoSsyKco=

23 | - secure: pmgNH6sLnwPadB/m4e/DtV/NbblVCa84N56Q45vpDkdP7fSIt8YShkvElrxFNJBWXaPyG/uE2gnIrXREtN/7xox+xv2ej+Gsv+cLYwBIIs1oGgHVlm/JG4OBLhuSn23w/DK/RuuHWWjDJ2DsDaXlXgbPTU01EJC2kpM9YnsmeifDnq/HSNPVx8k6bBKhzED7atf8v8yy8XYAkpL3viNwm3B98xU/AvEcgrNwG0XYQexCBTm9nJTQ2q3sBFQfuvQXFNuQoQWuN0wmSlhFuAnGsm0nugk7YJ8HZTsw1X1OUW61J3c9p0BHKL69nWHoYvSkyzl/9kls3QxYhLumF2DepBSbw/+iKMkxNzd4s7DDKGMqM7Y/9omxj3djrGxn8qGpn7GKNyZJR5EqLS+KY9E7xQ6ql1COdUA1W6aTEzLeEelti4abHEoA7a5sEhRSC/rmR0v+PP3sKc2FJjDOB9/eBVG/8V05EgN3Ji7KEu5vsrvIzu1Ng4a7BUyM06gw1vF92H/uOOBGGh25H8LLIZTpB9z//brZ9RtrzSA585KyJPFFW8JdMl34CE+nz8DhGwXSCDBQz/HMh0h1RJ0+8nJkIuxi96yOPH73c1tngUTnhm7OZh7yyNCr1RLT7yS552stnR0WqSv8gSxWK1+Apmzi6P5s5oqraDhEW9CeQe/qkzI=

24 |

--------------------------------------------------------------------------------

/kinesisfirehose-processor/kinesisfirehose-processor.js:

--------------------------------------------------------------------------------

1 | function encodebase64(data) {

2 | return Buffer.from(data, 'utf8').toString('base64');

3 | }

4 |

5 | function decodebase64(data) {

6 | return Buffer.from(data, 'base64').toString('utf8');

7 | }

8 |

9 | function addDelimitertoJSON(data, delimiter) {

10 | delimiter = typeof delimiter === 'undefined' ? '\n' : delimiter;

11 | let resultdata = decodebase64(data);

12 | resultdata = resultdata + delimiter;

13 | resultdata = encodebase64(resultdata);

14 | return resultdata;

15 | }

16 |

17 | function convertToLine(data) {

18 | // converts json object to a single line ({k1:v1,k2:v2} to k1=v1 k2=v2)

19 | const entryObj = JSON.parse(decodebase64(data));

20 | var resultdata = "";

21 | for (var key in entryObj) {

22 | if (entryObj.hasOwnProperty(key)) {

23 | resultdata += key + "=" + entryObj[key] + " ";

24 | }

25 | }

26 | resultdata = resultdata.trim() + "\n";

27 | resultdata = encodebase64(resultdata);

28 | return resultdata;

29 | }

30 | exports.handler = (event, context, callback) => {

31 | console.log("invoking transformation lambda");

32 | let success = 0;

33 | let failure = 0;

34 |

35 | const output = event.records.map( function (record) {

36 | try {

37 | // let resultdata = convertToLine(record.data);

38 | let resultdata = addDelimitertoJSON(record.data);

39 | success++;

40 | return {

41 | recordId: record.recordId,

42 | result: 'Ok',

43 | data: resultdata

44 | };

45 | } catch(error) {

46 | console.log("Error in record transformation", error);

47 | failure++;

48 | return {

49 | recordId: record.recordId,

50 | result: 'ProcessingFailed',

51 | data: record.data,

52 | };

53 | }

54 | });

55 | console.log(`Processing completed.Total records ${output.length}. Success ${success} Failed ${failure}`);

56 | callback(null, { records: output });

57 | };

58 |

--------------------------------------------------------------------------------
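For readers more comfortable with Python, the transformation performed by the handler above can be sketched as follows. This is an illustrative port, not part of the repository: the function names are hypothetical, and unlike the JavaScript version it validates the base64 input strictly so that a malformed record is reported as `ProcessingFailed`.

```python
import base64
import binascii


def add_delimiter(data_b64, delimiter="\n"):
    """Decode a base64 record, append the delimiter, and re-encode it."""
    text = base64.b64decode(data_b64, validate=True).decode("utf-8")
    return base64.b64encode((text + delimiter).encode("utf-8")).decode("ascii")


def transform(event):
    """Return one result entry per input record, keeping the record IDs."""
    output = []
    for record in event["records"]:
        try:
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": add_delimiter(record["data"]),
            })
        except (binascii.Error, UnicodeDecodeError):
            # Return the untouched payload and flag the record as failed.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}


sample_event = {
    "records": [
        {"recordId": "1", "data": base64.b64encode(b'{"k1": "v1"}').decode("ascii")},
        {"recordId": "2", "data": "not base64!"},
    ]
}
result = transform(sample_event)
for entry in result["records"]:
    print(entry["recordId"], entry["result"])
```

Every input record yields exactly one output entry with the same `recordId`, as the handler above does for each element of `event.records`.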

/securityhub-collector/src/securityhub_collector.py:

--------------------------------------------------------------------------------

import json
import os
import logging
import sys
sys.path.insert(0, '/opt')  # layer packages are in opt directory
import boto3
from collections import defaultdict


BUCKET_NAME = os.getenv("S3_LOG_BUCKET")
BUCKET_REGION = os.getenv("AWS_REGION")
s3cli = boto3.client('s3', region_name=BUCKET_REGION)


logger = logging.getLogger()
logger.setLevel(logging.INFO)


def post_to_s3(findings, filename, silent=False):
    # Each finding becomes one JSON document; documents are separated by a blank line.
    findings_data = "\n\n".join([json.dumps(data) for data in findings])
    is_success = False
    try:
        response = s3cli.put_object(Body=findings_data, Bucket=BUCKET_NAME, Key=filename)
        is_success = True
        logger.info("Saved %d findings to s3 %s status_code: %s" % (len(findings), filename, response["ResponseMetadata"].get("HTTPStatusCode")))
    except Exception as e:
        logger.error("Failed to post findings to S3: %s" % str(e))
        if not silent:
            raise e

    return is_success


def send_findings(findings, context):

    count = 0
    if len(findings) > 0:
        # Group findings by product so that each product gets its own S3 object.
        finding_buckets = defaultdict(list)
        for f in findings:
            finding_buckets[f['ProductArn']].append(f)
            count += 1

        for product_arn, finding_list in finding_buckets.items():
            filename = "%s-%s" % (product_arn, context.aws_request_id)
            post_to_s3(finding_list, filename)

    logger.info("Finished Sending NumFindings: %d" % (count))


def lambda_handler(event, context):
    logger.info("Invoking SecurityHubCollector source %s region %s" % (event['source'], event['region']))
    findings = event['detail'].get('findings', [])
    send_findings(findings, context)


if __name__ == '__main__':

    # Local test run against the sample event shipped with the SAM template.
    with open('../sam/event.json') as event_file:
        event = json.load(event_file)
    BUCKET_NAME = "securityhubfindings"

    class context:
        aws_request_id = "testid12323"

    lambda_handler(event, context)

--------------------------------------------------------------------------------
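The grouping step in `send_findings` can be tried without AWS access. The sketch below is a simplified illustration under stated assumptions: `build_objects` is a hypothetical helper that returns the object keys and bodies instead of calling `put_object`, and the ARNs and IDs are made-up sample values.

```python
import json
from collections import defaultdict


def group_by_product(findings):
    """Bucket findings by their ProductArn, preserving input order."""
    buckets = defaultdict(list)
    for finding in findings:
        buckets[finding["ProductArn"]].append(finding)
    return buckets


def build_objects(findings, request_id):
    """Return {object_key: body} with one object per product ARN."""
    objects = {}
    for product_arn, items in group_by_product(findings).items():
        key = "%s-%s" % (product_arn, request_id)
        objects[key] = "\n\n".join(json.dumps(item) for item in items)
    return objects


# Made-up sample findings for two different products.
arn_a = "arn:aws:securityhub:us-east-2:111122223333:product/example/a"
arn_b = "arn:aws:securityhub:us-east-2:111122223333:product/example/b"
findings = [
    {"ProductArn": arn_a, "Id": "f1"},
    {"ProductArn": arn_a, "Id": "f2"},
    {"ProductArn": arn_b, "Id": "f3"},
]
objects = build_objects(findings, "req-123")
for key, body in objects.items():
    print(key, len(body.split("\n\n")))
```

Because the key combines the product ARN with the request ID, one invocation writes at most one object per product.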

/sumologic-app-utils/deploy.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [ "$AWS_PROFILE" == "prod" ]

4 | then

5 | SAM_S3_BUCKET="appdevstore"

6 | AWS_REGION="us-east-1"

7 | else

8 | SAM_S3_BUCKET="cf-templates-5d0x5unchag-us-east-2"

9 | AWS_REGION="us-east-2"

10 | fi

11 |

12 | rm -f src/external/*.pyc

13 | rm -f src/*.pyc

14 | rm -f sumo_app_utils.zip

15 |

16 | if [ ! -f sumo_app_utils.zip ]; then

17 | echo "creating zip file"

18 | mkdir python

19 | cd python

20 | pip install crhelper -t .

21 | pip install requests -t .

22 | cp -v ../src/*.py .

23 | zip -r ../sumo_app_utils.zip .

24 | cd ..

25 | rm -r python

26 | fi

27 |

28 | aws s3 cp sumo_app_utils.zip s3://$SAM_S3_BUCKET/ --region $AWS_REGION

29 |

30 | sam package --template-file sumo_app_utils.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file packaged_sumo_app_utils.yaml

31 |

32 | sam deploy --template-file packaged_sumo_app_utils.yaml --stack-name testingsumoapputils --capabilities CAPABILITY_IAM --region $AWS_REGION

33 |

34 | # Before testing below command one needs to publish new version of sumo_app_utils and change version in template

35 | sam package --template-file /Users/hpal/git/sumologic-aws-lambda/cloudwatchevents/guarddutybenchmark/template_v2.yaml --s3-bucket $SAM_S3_BUCKET --output-template-file /Users/hpal/git/sumologic-aws-lambda/cloudwatchevents/guarddutybenchmark/packaged_v2.yaml

36 |

37 | sam deploy --template-file /Users/hpal/git/sumologic-aws-lambda/cloudwatchevents/guarddutybenchmark/packaged_v2.yaml --stack-name guarddutysamdemo --capabilities CAPABILITY_IAM CAPABILITY_AUTO_EXPAND --region $AWS_REGION --parameter-overrides SumoAccessID=$SUMO_ACCESS_ID SumoAccessKey=$SUMO_ACCESS_KEY SumoDeployment=$SUMO_DEPLOYMENT RemoveSumoResourcesOnDeleteStack="true"

38 |

39 | #aws cloudformation describe-stack-events --stack-name testingsecurityhublambda --region $AWS_REGION

40 | #aws cloudformation get-template --stack-name testingsecurityhublambda --region $AWS_REGION

41 | # aws serverlessrepo create-application-version --region us-east-1 --application-id arn:aws:serverlessrepo:us-east-1:$AWS_ACCOUNT_ID:applications/sumologic-securityhub-connector --semantic-version 1.0.1 --template-body file://packaged.yaml

42 |

--------------------------------------------------------------------------------

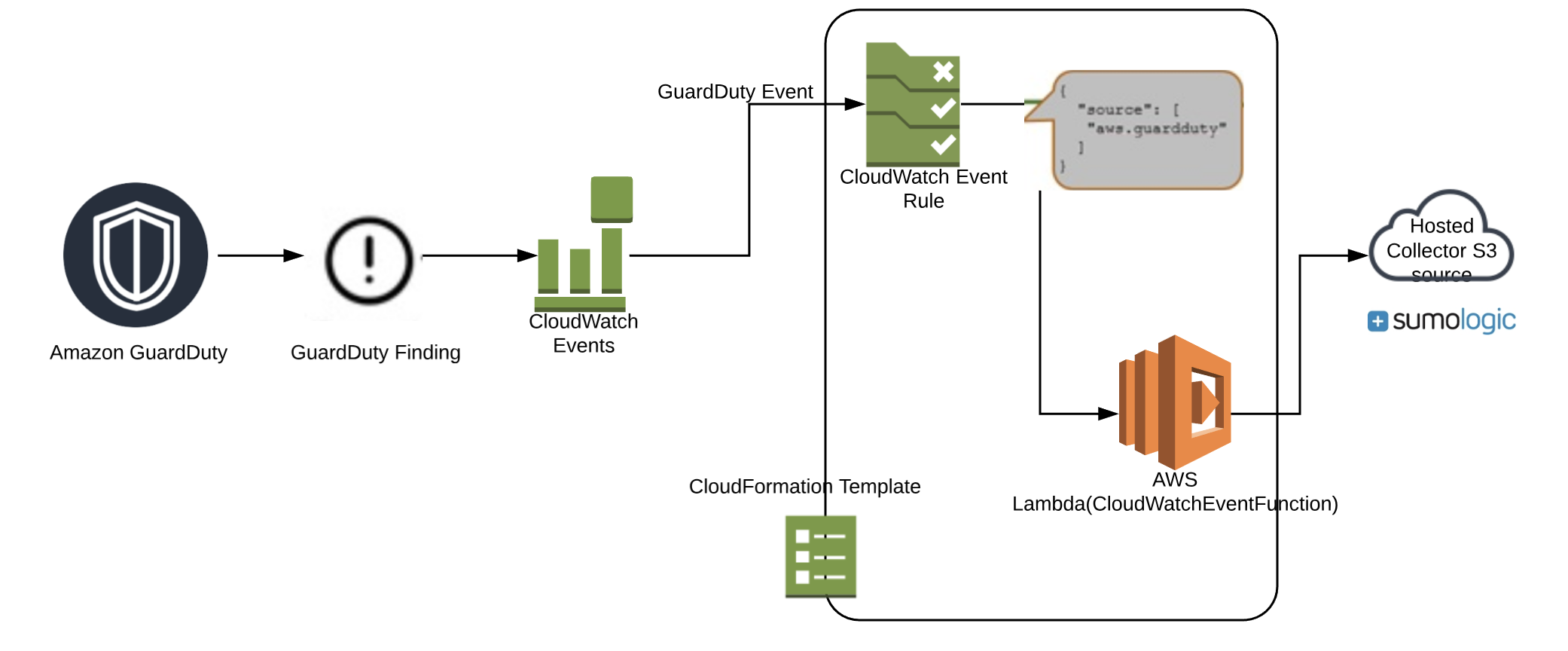

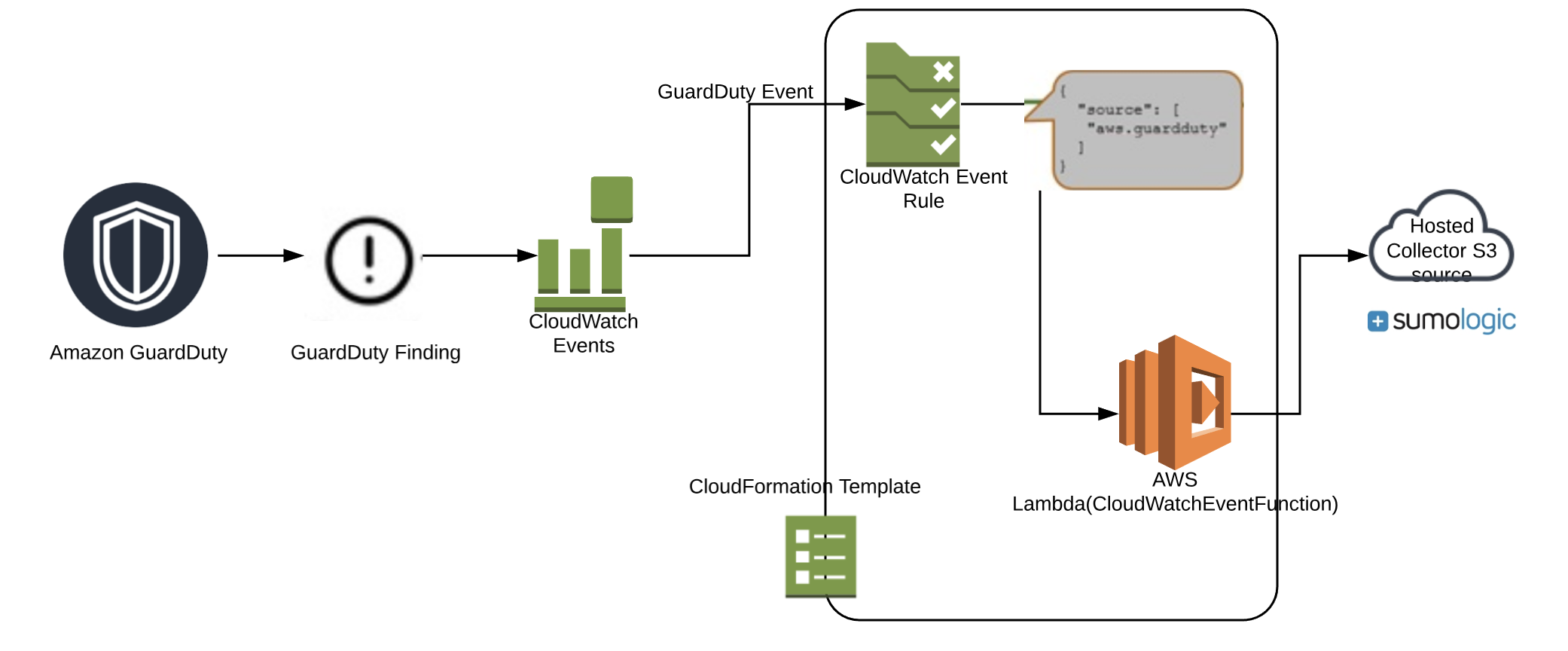

/cloudwatchevents/guardduty/README.md:

--------------------------------------------------------------------------------

1 | # sumologic-guardduty-events-processor

2 |

3 | This solution creates resources for processing and sending Amazon GuardDuty events to Sumo Logic.

4 |

5 |

6 | Made with ❤️ by Sumo Logic AppDev Team. Available on the [AWS Serverless Application Repository](https://aws.amazon.com/serverless)

7 |

8 |

9 |

10 | ## Setup

11 | 1. First create an HTTP collector endpoint within Sumo Logic. You will need the endpoint URL for the Lambda function later.

12 | 2. Go to https://serverlessrepo.aws.amazon.com/applications.

13 | 3. Search for sumologic-guardduty-events-processor and click Deploy.

14 | 4. In the Configure application parameters panel, paste the HTTP collector endpoint configured in step 1.

15 | 5. Click Deploy.

16 |

17 | ## Lambda Environment Variables

18 | The following AWS Lambda environment variables are supported:

19 |

20 | SUMO_ENDPOINT (REQUIRED) - Sumo Logic HTTP Collector endpoint URL.

21 | SOURCE_CATEGORY_OVERRIDE (OPTIONAL) - Overrides the _sourceCategory metadata field within Sumo Logic. If none is given, the field is not overridden.

22 | SOURCE_HOST_OVERRIDE (OPTIONAL) - Overrides the _sourceHost metadata field within Sumo Logic. If none is given, the field is not overridden.

23 | SOURCE_NAME_OVERRIDE (OPTIONAL) - Overrides the _sourceName metadata field within Sumo Logic. If none is given, the field is not overridden.

24 |

25 | ## Excluding Outer Event Fields

26 |

27 | By default, a CloudWatch Event has a format similar to this:

28 | ```

29 | {

30 | "version":"0",

31 | "id":"0123456d-7e46-ecb4-f5a2-e59cec50b100",

32 | "detail-type":"AWS API Call via CloudTrail",

33 | "source":"aws.logs",

34 | "account":"012345678908",

35 | "time":"2017-11-06T23:36:59Z",

36 | "region":"us-east-1",

37 | "resources":[ ],

38 | "detail":{ … }

39 | }

40 | ```

41 | This event will be sent as-is to Sumo Logic. If you just want to send the detail key instead, set the removeOuterFields variable to true.
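The effect of that setting can be illustrated with a short Python sketch. The function in this repository is written in JavaScript, so the helper name `select_payload`, its `remove_outer_fields` argument, and the sample `detail` content below are assumptions made for illustration only.

```python
def select_payload(event, remove_outer_fields=False):
    """Return the whole event, or only its "detail" key when requested."""
    if remove_outer_fields:
        return event["detail"]
    return event


# Sample event with a made-up detail body.
event = {
    "version": "0",
    "source": "aws.guardduty",
    "region": "us-east-1",
    "resources": [],
    "detail": {"severity": 5},
}

print(select_payload(event))                            # full event, envelope included
print(select_payload(event, remove_outer_fields=True))  # only the detail object
```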

42 |

43 |

44 | ## License

45 |

46 | Apache License 2.0 (Apache-2.0)

47 |

48 |

49 | ## Support

50 | Requests & issues should be filed on GitHub: https://github.com/SumoLogic/sumologic-aws-lambda/issues

51 |

52 |

--------------------------------------------------------------------------------

/cloudwatchevents/guarddutybenchmark/README.md:

--------------------------------------------------------------------------------

1 | # sumologic-guardduty-benchmark

2 |

3 | This solution installs the GuardDuty Benchmark App, creates collectors/sources in the Sumo Logic platform, and deploys the Lambda function in your AWS account using the configuration provided when the SAM application is deployed.

4 |

5 |

6 | Made with ❤️ by Sumo Logic AppDev Team. Available on the [AWS Serverless Application Repository](https://aws.amazon.com/serverless)

7 |

8 |

9 |

10 | ## Setup

11 | 1. Generate an access key from the Sumo Logic console as per the [docs](https://help.sumologic.com/Manage/Security/Access-Keys#Create_an_access_key).

12 |

13 | 2. Go to https://serverlessrepo.aws.amazon.com/applications.

14 | 3. Search for sumologic-guardduty-benchmark and click Deploy.

15 | 4. In the Configure application parameters panel, enter the following parameters:

16 | * Access ID (Required): Sumo Logic Access ID generated in Step 1

17 | * Access Key (Required): Sumo Logic Access Key generated in Step 1

18 | * Deployment Name (Required): Deployment name (environment name in lower case as per [docs](https://help.sumologic.com/APIs/General-API-Information/Sumo-Logic-Endpoints-and-Firewall-Security))

19 | * Collector Name: Enter the name of the Hosted Collector which will be created in Sumo Logic.

20 | * Source Name: Enter the name of the HTTP Source which will be created within the collector.

21 | * Source Category Name: Enter the name of the Source Category which will be used for writing search queries.

22 | 5. Click Deploy.

23 |

24 |

25 | ## Excluding Outer Event Fields

26 |

27 | By default, a CloudWatch Event has a format similar to this:

28 | ```

29 | {

30 | "version":"0",

31 | "id":"0123456d-7e46-ecb4-f5a2-e59cec50b100",

32 | "detail-type":"AWS API Call via CloudTrail",

33 | "source":"aws.logs",

34 | "account":"012345678908",

35 | "time":"2017-11-06T23:36:59Z",

36 | "region":"us-east-1",

37 | "resources":[ ],

38 | "detail":{ … }

39 | }

40 | ```

41 | This event will be sent as-is to Sumo Logic. If you just want to send the detail key instead, set the removeOuterFields variable to true.

42 |

43 |

44 | ## License

45 |

46 | Apache License 2.0 (Apache-2.0)

47 |

48 |

49 | ## Support

50 | Requests & issues should be filed on GitHub: https://github.com/SumoLogic/sumologic-aws-lambda/issues

51 |

52 |

--------------------------------------------------------------------------------

/s3/README.md:

--------------------------------------------------------------------------------

1 | # Warning: This Lambda Function has been deprecated

2 | We recommend using the [S3 Event Notifications Integration](https://help.sumologic.com/Send-Data/Sources/02Sources-for-Hosted-Collectors/Amazon_Web_Services/AWS_S3_Source#S3_Event_Notifications_Integration) instead.

3 |

4 | S3 to Sumo Logic

5 | ===========================================

6 |

7 | Files

8 | -----

9 | * *node.js/s3.js*: Node.js function that reads files from an S3 bucket and sends them to a Sumo Logic hosted HTTP collector. Files in the source bucket can be gzipped or in cleartext, but should contain only text. The function receives S3 notifications when new files are uploaded to the source S3 bucket, reads these files (unzipping them if the file names end with `gz`), and finally sends the data to the target Sumo endpoint.
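The "unzip if the name ends with `gz`" rule described above can be sketched in Python. The actual function is the Node.js file listed here; `read_log_text` and the sample keys are hypothetical names used only to show the branching.

```python
import gzip


def read_log_text(key, body):
    """Return the object body as text, decompressing it when the key ends with "gz"."""
    if key.endswith("gz"):
        body = gzip.decompress(body)
    return body.decode("utf-8")


plain = b"line one\nline two\n"
print(read_log_text("logs/app.log", plain))
print(read_log_text("logs/app.log.gz", gzip.compress(plain)))
```

Both calls print the same two lines, since the compressed object is transparently expanded before decoding.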

10 |

11 | ## Lambda Setup

12 | For the Sumo collector configuration, do not enable multiline processing or

13 | one message per request -- the idea is to send as many messages in one request

14 | as possible to Sumo and let Sumo break them apart as needed.

15 |

16 | In the AWS console, use a code entry type of 'Edit code inline' and paste in the

17 | code (double-check the hostname and path as per your collector setup).

18 |

19 | In the configuration, specify index.handler as the Handler. Specify a Role that has

20 | sufficient privileges to read from the *source* bucket and invoke a Lambda

21 | function. One can use the AWSLambdaBasicExecution and AWSS3ReadOnlyAccess policies, although it is *strongly* recommended to customize them to restrict access to the relevant resources in production:

22 |

23 |

24 | {

25 | "Version": "2012-10-17",

26 | "Statement": [

27 | {

28 | "Effect": "Allow",

29 | "Action": [

30 | "logs:CreateLogGroup",

31 | "logs:CreateLogStream",

32 | "logs:PutLogEvents"

33 | ],

34 | "Resource": "arn:aws:logs:*:*:*"

35 | }

36 | ]

37 | }

38 |

39 |

40 | AND

41 |

42 |

43 | {

44 | "Version": "2012-10-17",

45 | "Statement": [

46 | {

47 | "Effect": "Allow",

48 | "Action": [

49 | "s3:Get*",

50 | "s3:List*"

51 | ],

52 | "Resource": "*"

53 | }

54 | ]

55 | }

56 |

57 |

58 | Once the function is created, you can tie it to the source S3 bucket. From the S3 Management console, select the bucket, go to its Properties, select Events and add a Notification. From there, provide a name for the notification, select *ObjectCreated (All)* as the Events, and select *Lambda* as the *Send To* option. Finally, select the Lambda function created above and Save.

59 |

60 |

61 |

--------------------------------------------------------------------------------

/sumologic-app-utils/src/main.py:

--------------------------------------------------------------------------------

from crhelper import CfnResource
from api import ResourceFactory

helper = CfnResource(json_logging=False, log_level='DEBUG')


def get_resource(event):
    # "Custom::<Name>" -> "<Name>"; the factory maps the short name to a resource class.
    resource_type = event.get("ResourceType").split("::")[-1]
    resource_class = ResourceFactory.get_resource(resource_type)
    props = event.get("ResourceProperties")
    resource = resource_class(props["SumoAccessID"], props["SumoAccessKey"], props["SumoDeployment"])
    params = resource.extract_params(event)
    params["remove_on_delete_stack"] = props.get("RemoveOnDeleteStack") == 'true'
    print(params)
    return resource, resource_type, params


@helper.create
def create(event, context):
    # Test with failure cases should not get stuck in progress
    # Optionally return an ID that will be used for the resource PhysicalResourceId,
    # if None is returned an ID will be generated. If a poll_create function is defined
    # return value is placed into the poll event as event['CrHelperData']['PhysicalResourceId']
    resource, resource_type, params = get_resource(event)
    data, resource_id = resource.create(**params)
    print(data)
    print(resource_id)
    helper.Data.update(data)
    helper.Status = "SUCCESS"
    print("Created %s" % resource_type)
    return "%s/%s" % (event.get('LogicalResourceId', ''), resource_id)


@helper.update
def update(event, context):
    # If the update resulted in a new resource being created, return an id for the new resource.
    # CloudFormation will send a delete event with the old id when stack update completes
    resource, resource_type, params = get_resource(event)
    data, resource_id = resource.create(**params)
    print(data)
    print(resource_id)
    helper.Data.update(data)
    helper.Status = "SUCCESS"
    print("Updated %s" % resource_type)
    return "%s/%s" % (event.get('LogicalResourceId', ''), resource_id)


@helper.delete
def delete(event, context):
    # Delete never returns anything. Should not fail if the underlying resources are already deleted. Desired state.
    # A physical ID without "/" was not produced by create/update, so there is nothing to remove.
    if "/" not in event.get('PhysicalResourceId', ""):
        print("%s resource_id not found" % event.get('PhysicalResourceId'))
        return
    resource, resource_type, params = get_resource(event)
    resource.delete(**params)
    helper.Status = "SUCCESS"
    print("Deleted %s" % resource_type)


def handler(event, context):
    helper(event, context)

--------------------------------------------------------------------------------
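The physical resource ID convention used by the handlers above (`<LogicalResourceId>/<resource_id>`, with delete skipped when no `/` is present) can be checked in isolation. The sketch below leaves out `crhelper` and the Sumo Logic API entirely; the helper names and the sample event values such as `Custom::HTTPSource` are hypothetical.

```python
def resource_type_of(event):
    """Strip the namespace from a type such as "Custom::HTTPSource"."""
    return event["ResourceType"].split("::")[-1]


def physical_resource_id(event, resource_id):
    """Build the ID returned to CloudFormation after create or update."""
    return "%s/%s" % (event.get("LogicalResourceId", ""), resource_id)


def has_backing_resource(event):
    """Only IDs produced by physical_resource_id contain a "/"."""
    return "/" in event.get("PhysicalResourceId", "")


# Hypothetical create event followed by the matching delete event.
create_event = {"ResourceType": "Custom::HTTPSource", "LogicalResourceId": "SumoHTTPSource"}
new_id = physical_resource_id(create_event, 12345)
delete_event = dict(create_event, PhysicalResourceId=new_id)
failed_create_event = dict(create_event, PhysicalResourceId="generated-by-cloudformation")

print(resource_type_of(create_event), new_id)
print(has_backing_resource(delete_event), has_backing_resource(failed_create_event))
```

The second delete event stands for a resource whose creation never completed: its ID lacks the separator, so the delete handler returns without calling the API.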

/inspector/Readme.md:

--------------------------------------------------------------------------------

1 | # SumoLogic Lambda Function for Amazon Inspector

2 |

3 | This function receives the records published to an SNS topic by Amazon Inspector. It looks up an Inspector object based on its ARN and type, then adds extra context to the final messages, which are compressed and sent to a Sumo Logic HTTP source endpoint.
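The final "compress and send" step can be sketched as below. This is an assumption-laden illustration rather than the code in `inspector.py`: the endpoint URL is a placeholder, the message content is made up, `build_request` is a hypothetical helper, and the use of a `Content-Encoding: gzip` header is assumed. The request is only constructed, not sent.

```python
import gzip
import json
import urllib.request


def build_request(endpoint_url, messages):
    """Build a POST request whose body is the gzipped, newline-delimited JSON messages."""
    body = "\n".join(json.dumps(m) for m in messages).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=gzip.compress(body),
        headers={"Content-Encoding": "gzip"},
        method="POST",
    )


# Placeholder endpoint and a made-up enriched message.
request = build_request(
    "https://collectors.example.com/receiver/v1/http/TOKEN",
    [{"event": "FINDING_REPORTED", "context": {"severity": "High"}}],
)
print(request.get_method(), len(request.data), "compressed bytes")
```

Passing the request to `urllib.request.urlopen` would perform the upload; that call is left out so the sketch runs without network access.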

4 |

5 | ## Lambda Setup ([docs](https://help.sumologic.com/Send-Data/Applications-and-Other-Data-Sources/Amazon-Inspector-App/01-Collect-Data-for-Amazon-Inspector))

6 |

7 | ### Create an Amazon SNS Topic

8 | 1. Login to the Amazon Console.

9 | 2. Go to Application Integration > Simple Notification Service (SNS).

10 | 3. On the SNS Dashboard, select Create topic.

11 | 4. Enter a Topic name and a Display name, and click Create topic.

12 | 5. To assign the following policy to this topic, select the topic, then under Advanced view, click Actions/Edit topic policy.

13 | 6. Replace the existing text with the following:

14 | ```

15 | {

16 | "Version": "2008-10-17",

17 | "Id": "inspector-sns-publish-policy",

18 | "Statement": [

19 | {

20 | "Sid": "inspector-sns-publish-statement",

21 | "Effect": "Allow",

22 | "Principal": {

23 | "Service": "inspector.amazonaws.com"

24 | },

25 | "Action": "SNS:Publish",

26 | "Resource": "arn:aws:sns:*"

27 | }

28 | ]

29 | }

30 | ```

31 | 7. Click Update policy.

32 |

33 | ### Configure Amazon Inspector

34 | 1. In the Amazon Console, go to Security, Identity & Compliance > Inspector.

35 | 2. Select each assessment template you want to monitor.

36 | 3. Expand each row and find the section called SNS topics.

37 | 4. Click the Edit icon and select the SNS topic you created in the previous section.

38 | 5. Click Save.

39 |

40 | ### Create a Role

41 | In the Amazon Console, go to Security, Identity & Compliance > IAM.

42 | Create a new role called Lambda-Inspector.

43 |

44 | ### Create a Lambda Function

45 | 1. In the Amazon Console, go to Compute > Lambda.

46 | 2. Create a new function.

47 | 3. On the Select blueprint page, select a Blank function.

48 | 4. Select the SNS topic you created in Create an Amazon SNS Topic as the trigger.

49 | 5. Click Next.

50 | 6. On the Configure function page, enter a name for the function.

51 | 7. Go to https://github.com/SumoLogic/sumologic-aws-lambda/blob/master/inspector/python/inspector.py and copy and paste the sumologic-aws-lambda code into the field.

52 | 8. Edit the code to enter the URL of the Sumo Logic endpoint that will receive data from the HTTP Source.

53 | 9. Scroll down and configure the rest of the settings as follows:

54 | Memory (MB). 128.

55 | Timeout. 5 min.

56 | VPC. No VPC.

57 | 10. Click Next.

58 | 11. Click Create function.

59 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/sam/packaged.yaml:

--------------------------------------------------------------------------------

AWSTemplateFormatVersion: '2010-09-09'
Description: '"Lambda Function for automatic subscription of any Sumo Logic lambda
  function with loggroups matching an input pattern."

  '
Globals:
  Function:
    MemorySize: 128
    Timeout: 300
Outputs:
  SumoLogGroupLambdaConnector:
    Description: SumoLogGroupLambdaConnector Function ARN
    Value:
      Fn::GetAtt:
      - SumoLogGroupLambdaConnector
      - Arn
Parameters:
  LambdaARN:
    Default: arn:aws:lambda:us-east-1:123456789000:function:TestLambda
    Description: Enter ARN for target lambda function
    Type: String
  LogGroupPattern:
    Default: Test
    Description: Enter regex for matching logGroups
    Type: String
  UseExistingLogs:
    AllowedValues:
    - 'true'
    - 'false'
    Default: 'false'
    Description: Select true for subscribing existing logs
    Type: String
Resources:
  SumoCWLambdaInvokePermission:
    Properties:
      Action: lambda:InvokeFunction
      FunctionName:
        Ref: LambdaARN
      Principal:
        Fn::Sub: logs.${AWS::Region}.amazonaws.com
      SourceAccount:
        Ref: AWS::AccountId
      SourceArn:
        Fn::Sub: arn:aws:logs:${AWS::Region}:${AWS::AccountId}:log-group:*:*
    Type: AWS::Lambda::Permission
  SumoLogGroupLambdaConnector:
    Properties:
      CodeUri: s3://appdevstore/6bef113d950a9923b446dd438116f2a1
      Environment:
        Variables:
          LAMBDA_ARN:
            Ref: LambdaARN
          LOG_GROUP_PATTERN:
            Ref: LogGroupPattern
          USE_EXISTING_LOG_GROUPS:
            Ref: UseExistingLogs
      Events:
        LambdaTrigger:
          Properties:
            Pattern:
              detail:
                eventName:
                - CreateLogGroup
                eventSource:
                - logs.amazonaws.com
              source:
              - aws.logs
          Type: CloudWatchEvent
      Handler: loggroup-lambda-connector.handler
      Policies:
      - Statement:
        - Action:
          - logs:DescribeLogGroups
          - logs:DescribeLogStreams
          - logs:PutSubscriptionFilter
          Effect: Allow
          Resource:
          - Fn::Sub: arn:aws:logs:${AWS::Region}:${AWS::AccountId}:log-group:*
          Sid: ReadWriteFilterPolicy
      Runtime: nodejs8.10
    Type: AWS::Serverless::Function
Transform: AWS::Serverless-2016-10-31

--------------------------------------------------------------------------------
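The `LogGroupPattern` parameter in the template above is described as a regex for matching log groups. A rough Python sketch of such filtering follows; the connector itself is a Node.js function whose matching code is not shown here, so the helper name, the use of search (substring) semantics, and the sample log group names are all assumptions.

```python
import re


def matching_log_groups(names, pattern):
    """Return the log group names in which the pattern is found anywhere."""
    regex = re.compile(pattern)
    return [name for name in names if regex.search(name)]


# Made-up log group names; "Test" is the template's default pattern.
log_groups = ["/aws/lambda/TestFunction", "/aws/lambda/prod-api", "TestGroup"]
print(matching_log_groups(log_groups, "Test"))
```

Under these assumptions, only the first and last names would be subscribed to the target Lambda function.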

/securityhub-collector/sam/event.json:

--------------------------------------------------------------------------------

1 | {

2 | "account": "956882702234",

3 | "detail": {

4 | "findings": [

5 | {

6 | "AwsAccountId": "956882702234",

7 | "Compliance": {

8 | "Status": "FAILED"

9 | },

10 | "CreatedAt": "2019-04-18T14:51:55.000000Z",

11 | "Description": "This search gives top 10 resources which are accessed in last 15 minutes",

12 | "FirstObservedAt": "2019-04-18T14:51:55.000000Z",

13 | "GeneratorId": "InsertFindingsScheduledSearch",

14 | "Id": "sumologic:us-east-2:956882702234:InsertFindingsScheduledSearch/finding/eb083fb9-03aa-4840-af0e-eb3ae4adebe9",

15 | "ProductArn": "arn:aws:securityhub:us-east-2:956882702234:product/sumologicinc/sumologic-mda",

16 | "ProductFields": {

17 | "aws/securityhub/CompanyName": "Sumo Logic",

18 | "aws/securityhub/FindingId": "arn:aws:securityhub:us-east-2:956882702234:product/sumologicinc/sumologic-mda/sumologic:us-east-2:956882702234:InsertFindingsScheduledSearch/finding/eb083fb9-03aa-4840-af0e-eb3ae4adebe9",

19 | "aws/securityhub/ProductName": "Machine Data Analytics",

20 | "aws/securityhub/SeverityLabel": "LOW"

21 | },

22 | "RecordState": "ACTIVE",

23 | "Resources": [

24 | {

25 | "Id": "10.178.11.43",

26 | "Type": "Other"

27 | }

28 | ],

29 | "SchemaVersion": "2018-10-08",

30 | "Severity": {

31 | "Normalized": 30

32 | },

33 | "SourceUrl": "https://service.sumologic.com/ui/#/search/RmC8kAUGZbXrkj2rOFmUxmHtzINUgfJnFplh3QWY",

34 | "Title": "Vulnerability: Apple iTunes m3u Playlist File Title Parsing Buffer Overflow Vulnerability(34886) found on 207.235.176.3",

35 | "Types": [

36 | "Software and Configuration Checks/Industry and Regulatory Standards/HIPAA Controls"

37 | ],

38 | "UpdatedAt": "2019-04-18T14:51:55.000000Z",

39 | "WorkflowState": "NEW",

40 | "approximateArrivalTimestamp": 1555599782.881,

41 | "updatedAt": "2019-04-18T14:51:55.000000Z"

42 | }

43 | ]

44 | },

45 | "detail-type": "Security Hub Findings",

46 | "id": "f06f61e9-b099-8321-e446-5a20583bd791",

47 | "region": "us-east-2",

48 | "resources": [

49 | "arn:aws:securityhub:us-east-2:956882702234:product/sumologicinc/sumologic-mda/sumologic:us-east-2:956882702234:InsertFindingsScheduledSearch/finding/eb083fb9-03aa-4840-af0e-eb3ae4adebe9"

50 | ],

51 | "source": "aws.securityhub",

52 | "time": "2019-04-18T15:03:04Z",

53 | "version": "0"

54 | }

55 |

--------------------------------------------------------------------------------

/securityhub-forwarder/sam/template.yaml:

--------------------------------------------------------------------------------

AWSTemplateFormatVersion: '2010-09-09'
Transform: 'AWS::Serverless-2016-10-31'
Description: >
    This function is invoked by Sumo Logic(via Scheduled Search) through API Gateway. The event payload received is then forwarded to AWS Security Hub.

Resources:
  SecurityHubForwarderApiGateway:
    Type: AWS::Serverless::Api
    Properties:
      StageName: prod
      EndpointConfiguration: EDGE
      DefinitionBody:
        swagger: "2.0"
        info:
          title:
            Ref: AWS::StackName
          description: API endpoint for invoking SecurityHubForwarderFunction
          version: 1.0.0
        securityDefinitions:
          sigv4:
            type: "apiKey"
            name: "Authorization"
            in: "header"
            x-amazon-apigateway-authtype: "awsSigv4"
        paths:
          /findings:
            post:
              consumes:
                - "application/json"
              produces:
                - "application/json"
              responses: {}
              security:
                - sigv4: []
              x-amazon-apigateway-integration:
                type: "aws_proxy"
                uri:
                  Fn::Sub: arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${SecurityHubForwarderFunction.Arn}/invocations
                passthroughBehavior: "when_no_match"
                httpMethod: "POST"
                requestParameters:
                  integration.request.header.X-Amz-Invocation-Type: "'RequestResponse'"

  SecurityHubForwarderFunction:
    Type: 'AWS::Serverless::Function'
    Properties:
      Handler: securityhub_forwarder.lambda_handler
      Runtime: python3.7
      Layers:
        - !Sub 'arn:aws:lambda:${AWS::Region}:956882708938:layer:securityhub_deps:1'
      CodeUri: ../src/
      MemorySize: 128
      Timeout: 300
      Policies:
        - Statement:
          - Sid: SecurityHubImportFindingsPolicy
            Effect: Allow
            Action:
              - securityhub:BatchImportFindings
            Resource: 'arn:aws:securityhub:*:*:*'
      Events:
        Api1:
          Type: Api
          Properties:
            Path: '/findings'
            Method: POST
            RestApiId:
              Ref: SecurityHubForwarderApiGateway

Outputs:

  SecurityHubForwarderFunction:
    Description: "SecurityHubForwarder Function ARN"
    Value: !GetAtt SecurityHubForwarderFunction.Arn
  SecurityHubForwarderApiUrl:
    Description: URL of your API endpoint
    Value: !Sub "https://${SecurityHubForwarderApiGateway}.execute-api.${AWS::Region}.amazonaws.com/prod/findings"

--------------------------------------------------------------------------------

/securityhub-forwarder/sam/packaged.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'

2 | Description: 'This function is invoked by Sumo Logic (via Scheduled Search) through

3 | API Gateway. The event payload received is then forwarded to AWS Security Hub.

4 |

5 | '

6 | Outputs:

7 | SecurityHubForwarderApiUrl:

8 | Description: URL of your API endpoint

9 | Value:

10 | Fn::Sub: https://${SecurityHubForwarderApiGateway}.execute-api.${AWS::Region}.amazonaws.com/prod/findings

11 | SecurityHubForwarderFunction:

12 | Description: SecurityHubForwarder Function ARN

13 | Value:

14 | Fn::GetAtt:

15 | - SecurityHubForwarderFunction

16 | - Arn

17 | Resources:

18 | SecurityHubForwarderApiGateway:

19 | Properties:

20 | DefinitionBody:

21 | info:

22 | description: API endpoint for invoking SecurityHubForwarderFunction

23 | title:

24 | Ref: AWS::StackName

25 | version: 1.0.0

26 | paths:

27 | /findings:

28 | post:

29 | consumes:

30 | - application/json

31 | produces:

32 | - application/json

33 | responses: {}

34 | security:

35 | - sigv4: []

36 | x-amazon-apigateway-integration:

37 | httpMethod: POST

38 | passthroughBehavior: when_no_match

39 | requestParameters:

40 | integration.request.header.X-Amz-Invocation-Type: '''RequestResponse'''

41 | type: aws_proxy

42 | uri:

43 | Fn::Sub: arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${SecurityHubForwarderFunction.Arn}/invocations

44 | securityDefinitions:

45 | sigv4:

46 | in: header

47 | name: Authorization

48 | type: apiKey

49 | x-amazon-apigateway-authtype: awsSigv4

50 | swagger: '2.0'

51 | EndpointConfiguration: EDGE

52 | StageName: prod

53 | Type: AWS::Serverless::Api

54 | SecurityHubForwarderFunction:

55 | Properties:

56 | CodeUri: s3://appdevstore/98ee274ed4543bd1e1344fec701211df

57 | Events:

58 | Api1:

59 | Properties:

60 | Method: POST

61 | Path: /findings

62 | RestApiId:

63 | Ref: SecurityHubForwarderApiGateway

64 | Type: Api

65 | Handler: securityhub_forwarder.lambda_handler

66 | Layers:

67 | - Fn::Sub: arn:aws:lambda:${AWS::Region}:956882708938:layer:securityhub_deps:1

68 | MemorySize: 128

69 | Policies:

70 | - Statement:

71 | - Action:

72 | - securityhub:BatchImportFindings

73 | Effect: Allow

74 | Resource: arn:aws:securityhub:*:*:*

75 | Sid: SecurityHubImportFindingsPolicy

76 | Runtime: python3.7

77 | Timeout: 300

78 | Type: AWS::Serverless::Function

79 | Transform: AWS::Serverless-2016-10-31

80 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/sam/template.yaml:

--------------------------------------------------------------------------------

1 | AWSTemplateFormatVersion: '2010-09-09'

2 | Transform: AWS::Serverless-2016-10-31

3 | Description: >

4 | Lambda function that automatically subscribes log groups matching an input pattern to a Sumo Logic Lambda function.

5 |

6 | # More info about Globals: https://github.com/awslabs/serverless-application-model/blob/master/docs/globals.rst

7 | Globals:

8 | Function:

9 | Timeout: 300

10 | MemorySize: 128

11 |

12 | Parameters:

13 | LambdaARN:

14 | Type: String

15 | Default: "arn:aws:lambda:us-east-1:123456789000:function:TestLambda"

16 | Description: "Enter the ARN of the target Lambda function"

17 |

18 | LogGroupPattern:

19 | Type: String

20 | Default: "Test"

21 | Description: "Enter a regex for matching log group names"

22 |

23 | UseExistingLogs:

24 | Type: String

25 | Default: "false"

26 | AllowedValues: ["true", "false"]

27 | Description: "Select true to also subscribe existing log groups"

28 |

29 | Resources:

30 |

31 | SumoLogGroupLambdaConnector:

32 | Type: AWS::Serverless::Function # More info about Function Resource: https://github.com/awslabs/serverless-application-model/blob/master/versions/2016-10-31.md#awsserverlessfunction

33 | Properties:

34 | CodeUri: ../src/

35 | Handler: "loggroup-lambda-connector.handler"

36 | Runtime: nodejs8.10

37 | Environment:

38 | Variables:

39 | LAMBDA_ARN: !Ref "LambdaARN"

40 | LOG_GROUP_PATTERN: !Ref "LogGroupPattern"

41 | USE_EXISTING_LOG_GROUPS: !Ref "UseExistingLogs"

42 | Policies:

43 | - Statement:

44 | - Sid: ReadWriteFilterPolicy

45 | Effect: Allow

46 | Action:

47 | - logs:DescribeLogGroups

48 | - logs:DescribeLogStreams

49 | - logs:PutSubscriptionFilter

50 | Resource:

51 | - !Sub 'arn:aws:logs:${AWS::Region}:${AWS::AccountId}:log-group:*'

52 | Events:

53 | LambdaTrigger:

54 | Type: CloudWatchEvent

55 | Properties:

56 | Pattern:

57 | source:

58 | - aws.logs

59 | detail:

60 | eventSource:

61 | - logs.amazonaws.com

62 | eventName:

63 | - CreateLogGroup

64 | SumoCWLambdaInvokePermission:

65 | Type: AWS::Lambda::Permission

66 | Properties:

67 | Action: lambda:InvokeFunction

68 | FunctionName: !Ref "LambdaARN"

69 | Principal: !Sub 'logs.${AWS::Region}.amazonaws.com'

70 | SourceAccount: !Ref AWS::AccountId

71 | SourceArn: !Sub 'arn:aws:logs:${AWS::Region}:${AWS::AccountId}:log-group:*:*'

72 |

73 | Outputs:

74 |

75 | SumoLogGroupLambdaConnector:

76 | Description: "SumoLogGroupLambdaConnector Function ARN"

77 | Value: !GetAtt SumoLogGroupLambdaConnector.Arn

78 |

--------------------------------------------------------------------------------

/loggroup-lambda-connector/Readme.md:

--------------------------------------------------------------------------------

1 | # SumoLogic LogGroup Connector

2 | This Lambda function automatically subscribes newly created and existing CloudWatch log groups to a target Lambda function.
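
As a rough illustration of the matching step, the sketch below filters a list of log group names against a regex such as the one supplied in the `LogGroupPattern` parameter. The `selectLogGroups` helper and the sample names are hypothetical, and plain case-sensitive `RegExp` matching is an assumption; the connector's actual logic lives in `src/loggroup-lambda-connector.js` and may differ.

```javascript
// Hypothetical helper, not part of the connector source.
// Returns the log group names that match the given regex pattern.
function selectLogGroups(logGroupNames, pattern) {
  // Assumption: case-sensitive, unanchored match.
  const regex = new RegExp(pattern);
  return logGroupNames.filter((name) => regex.test(name));
}

// Sample names are made up for illustration.
const names = ["/aws/lambda/TestFunction", "/aws/lambda/Billing", "TestGroup"];
console.log(selectLogGroups(names, "Test"));
```

Only the names selected this way would be candidates for a subscription filter pointing at the target Lambda function.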

3 |

4 | Made with ❤️ by Sumo Logic. Available on the [AWS Serverless Application Repository](https://aws.amazon.com/serverless)

5 |

6 | ### Deploying the SAM Application