├── .git-blame-ignore-revs

├── .github

├── ISSUE_TEMPLATE

│ ├── bug_report.md

│ ├── documentation.md

│ └── feature_request.md

└── workflows

│ ├── pytests.yml

│ └── test_and_deploy.yml

├── .gitignore

├── .pre-commit-config.yaml

├── LICENSE

├── README.md

├── docs

├── README.md

├── __init__.py

├── buyers-guide.md

├── calibration-guide.md

├── data-schema.md

├── development-guide.md

├── images

│ ├── HSV_legend.png

│ ├── JCh_Color_legend.png

│ ├── JCh_legend.png

│ ├── acq_finished.png

│ ├── acquire_buttons.png

│ ├── acquisition_settings.png

│ ├── advanced.png

│ ├── cap_bg.png

│ ├── cli_structure.png

│ ├── comms_video_screenshot.png

│ ├── connect_to_mm.png

│ ├── create_group.png

│ ├── create_group_voltage.png

│ ├── create_preset.png

│ ├── create_preset_voltage.png

│ ├── general_reconstruction_settings.png

│ ├── ideal_plot.png

│ ├── modulation.png

│ ├── no-overlay.png

│ ├── overlay-demo.png

│ ├── overlay.png

│ ├── phase_reconstruction_settings.png

│ ├── poincare_swing.svg

│ ├── recOrder_Fig1_Overview.png

│ ├── recOrder_plugin_logo.png

│ ├── reconstruction_birefriengence.png

│ ├── reconstruction_data.png

│ ├── reconstruction_data_info.png

│ ├── reconstruction_models.png

│ ├── reconstruction_queue.png

│ ├── run_calib.png

│ └── run_port.png

├── microscope-installation-guide.md

├── napari-plugin-guide.md

├── reconstruction-guide.md

└── software-installation-guide.md

├── examples

├── README.md

├── birefringence-and-phase.yml

├── birefringence.yml

├── fluorescence.yml

└── phase.yml

├── pyproject.toml

├── recOrder

├── __init__.py

├── acq

│ ├── __init__.py

│ ├── acq_functions.py

│ └── acquisition_workers.py

├── calib

│ ├── Calibration.py

│ ├── Optimization.py

│ ├── __init__.py

│ └── calibration_workers.py

├── cli

│ ├── apply_inverse_models.py

│ ├── apply_inverse_transfer_function.py

│ ├── compute_transfer_function.py

│ ├── gui_widget.py

│ ├── jobs_mgmt.py

│ ├── main.py

│ ├── monitor.py

│ ├── option_eat_all.py

│ ├── parsing.py

│ ├── printing.py

│ ├── reconstruct.py

│ ├── settings.py

│ └── utils.py

├── io

│ ├── __init__.py

│ ├── _reader.py

│ ├── core_functions.py

│ ├── metadata_reader.py

│ ├── utils.py

│ └── visualization.py

├── napari.yaml

├── plugin

│ ├── __init__.py

│ ├── gui.py

│ ├── gui.ui

│ ├── main_widget.py

│ └── tab_recon.py

├── scripts

│ ├── __init__.py

│ ├── launch_napari.py

│ ├── repeat-cal-acq-rec.py

│ ├── repeat-calibration.py

│ ├── samples.py

│ └── simulate_zarr_acq.py

└── tests

│ ├── acq_tests

│ └── test_acq.py

│ ├── calibration_tests

│ └── test_calibration.py

│ ├── cli_tests

│ ├── test_cli.py

│ ├── test_compute_tf.py

│ ├── test_reconstruct.py

│ └── test_settings.py

│ ├── conftest.py

│ ├── mmcore_tests

│ └── test_core_func.py

│ ├── util_tests

│ ├── test_create_empty.py

│ ├── test_io.py

│ └── test_overlays.py

│ └── widget_tests

│ ├── test_dock_widget.py

│ └── test_sample_contributions.py

├── setup.cfg

├── setup.py

└── tox.ini

/.git-blame-ignore-revs:

--------------------------------------------------------------------------------

1 | # .git-blame-ignore-revs

2 | # created as described in: https://docs.github.com/en/repositories/working-with-files/using-files/viewing-a-file#ignore-commits-in-the-blame-view

3 | # black-format all `.py` files except recorder_ui.py

4 | 82f6df5ed34460374ce7c0fdca089d8caa570b9f

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: "[BUG]"

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Summary and expected behavior**

11 |

12 | **Code for reproduction (using python script or command line interface)**

13 | ```

14 | # paste your code here

15 |

16 | ```

17 |

18 | **Screenshots or steps for reproduction (using napari GUI)**

19 |

20 | **Include relevant logs which are created next to the output dir, name of the dataset, yaml file(s) if encountering reconstruction errors.**

21 |

22 | **Expected behavior**

23 | A clear and concise description of what you expected to happen.

24 |

25 | **Environment:**

26 |

27 | Operating system:

28 | Python version:

29 | Python environment (command line, IDE, Jupyter notebook, etc):

30 | Micro-Manager/pycromanager version:

31 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/documentation.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Documentation

3 | about: Help us improve documentation

4 | title: "[DOC]"

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Suggested improvement**

11 |

12 | **Optional: Pull request with better documentation**

13 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: "[FEATURE]"

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Problem**

11 |

12 | **Proposed solution**

13 |

14 |

15 | **Alternatives you have considered, if any**

16 |

17 | **Additional context**

18 | Note relevant experimental conditions or datasets

19 |

--------------------------------------------------------------------------------

/.github/workflows/pytests.yml:

--------------------------------------------------------------------------------

1 | # This workflow will install Python dependencies, run tests and lint with a single version of Python

2 | # For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

3 |

4 | name: pytests

5 |

6 | on: [push]

7 |

8 | jobs:

9 | build:

10 | runs-on: ubuntu-latest

11 | strategy:

12 | matrix:

13 | python-version: ["3.10", "3.11"]

14 |

15 | steps:

16 | - name: Checkout repo

17 | uses: actions/checkout@v2

18 |

19 | - name: Set up Python ${{ matrix.python-version }}

20 | uses: actions/setup-python@v1

21 | with:

22 | python-version: ${{ matrix.python-version }}

23 |

24 | - name: Install dependencies

25 | run: |

26 | python -m pip install --upgrade pip

27 | pip install ".[all,dev]"

28 |

29 | # - name: Lint with flake8

30 | # run: |

31 | # pip install flake8

32 | # # stop the build if there are Python syntax errors or undefined names

33 | # flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

34 | # # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

35 | # flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

36 |

37 | - name: Test with pytest

38 | run: |

39 | pytest -v

40 | pytest --cov=./ --cov-report=xml

41 |

42 | # - name: Upload coverage to Codecov

43 | # uses: codecov/codecov-action@v1

44 | # with:

45 | # flags: unittest

46 | # name: codecov-umbrella

47 | # fail_ci_if_error: true

48 |

--------------------------------------------------------------------------------

/.github/workflows/test_and_deploy.yml:

--------------------------------------------------------------------------------

1 | # Modified from cookiecutter-napari-plugin

2 | # This workflow will upload a Python Package using Twine when a release is created

3 | # For more information see: https://help.github.com/en/actions/language-and-framework-guides/using-python-with-github-actions#publishing-to-package-registries

4 | name: test-and-deploy

5 |

6 | on:

7 | push:

8 | branches:

9 | - main

10 | tags:

11 | - "*"

12 | pull_request:

13 | branches:

14 | - "*"

15 | workflow_dispatch:

16 |

17 | jobs:

18 | test:

19 | name: ${{ matrix.platform }} py${{ matrix.python-version }}

20 | runs-on: ${{ matrix.platform }}

21 | strategy:

22 | matrix:

23 | platform: [ubuntu-latest, windows-latest, macos-latest]

24 | python-version: ["3.10", "3.11"]

25 |

26 | steps:

27 | - uses: actions/checkout@v3

28 |

29 | - name: Set up Python ${{ matrix.python-version }}

30 | uses: actions/setup-python@v4

31 | with:

32 | python-version: ${{ matrix.python-version }}

33 |

34 | # these libraries enable testing on Qt on linux

35 | - uses: tlambert03/setup-qt-libs@v1

36 |

37 | # strategy borrowed from vispy for installing opengl libs on windows

38 | - name: Install Windows OpenGL

39 | if: runner.os == 'Windows'

40 | run: |

41 | git clone --depth 1 https://github.com/pyvista/gl-ci-helpers.git

42 | powershell gl-ci-helpers/appveyor/install_opengl.ps1

43 |

44 | # note: if you need dependencies from conda, consider using

45 | # setup-miniconda: https://github.com/conda-incubator/setup-miniconda

46 | # and

47 | # tox-conda: https://github.com/tox-dev/tox-conda

48 | - name: Install dependencies

49 | run: |

50 | python -m pip install --upgrade pip

51 | python -m pip install setuptools tox tox-gh-actions

52 |

53 | # https://github.com/napari/cookiecutter-napari-plugin/commit/cb9a8c152b68473e8beabf44e7ab11fc46483b5d

54 | - name: Test

55 | uses: aganders3/headless-gui@v1

56 | with:

57 | run: python -m tox

58 |

59 | - name: Coverage

60 | uses: codecov/codecov-action@v3

61 |

62 | deploy:

63 | # this will run when you have tagged a commit with a version number

64 | # and requires that you have put your twine API key in your

65 | # github secrets (see readme for details)

66 | needs: [test]

67 | runs-on: ubuntu-latest

68 | if: contains(github.ref, 'tags')

69 | steps:

70 | - uses: actions/checkout@v2

71 | - name: Set up Python

72 | uses: actions/setup-python@v2

73 | with:

74 | python-version: "3.x"

75 | - name: Install dependencies

76 | run: |

77 | python -m pip install --upgrade pip

78 | pip install -U setuptools setuptools_scm wheel twine build

79 |

80 | # skip build and publish for now

81 | # - name: Build and publish

82 | # env:

83 | # TWINE_USERNAME: __token__

84 | # TWINE_PASSWORD: ${{ secrets.TWINE_API_KEY }}

85 | # run: |

86 | # git tag

87 | # python -m build .

88 | # twine upload --repository testpypi dist/* # Commented until API key is on github

89 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Binary image files.

2 | *.tif[f]

3 | *.jp[e]g

4 | *.zar[r]

5 | *.json

6 |

7 | # pycharm IDE

8 | .idea

9 | .DS_Store

10 |

11 | # Byte-compiled / optimized / DLL files

12 | __pycache__/

13 | *.py[cod]

14 | *$py.class

15 |

16 | # C extensions

17 | *.so

18 |

19 | # Distribution / packaging

20 | .Python

21 | build/

22 | develop-eggs/

23 | dist/

24 | downloads/

25 | eggs/

26 | .eggs/

27 | lib/

28 | lib64/

29 | parts/

30 | sdist/

31 | var/

32 | wheels/

33 | pip-wheel-metadata/

34 | share/python-wheels/

35 | *.egg-info/

36 | .installed.cfg

37 | *.egg

38 | MANIFEST

39 |

40 | # PyInstaller

41 | # Usually these files are written by a python script from a template

42 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

43 | *.manifest

44 | *.spec

45 |

46 | # Installer logs

47 | pip-log.txt

48 | pip-delete-this-directory.txt

49 |

50 | # Unit test / coverage reports

51 | htmlcov/

52 | .tox/

53 | .nox/

54 | .coverage

55 | .coverage.*

56 | .cache

57 | nosetests.xml

58 | coverage.xml

59 | *.cover

60 | *.py,cover

61 | .hypothesis/

62 | .pytest_cache/

63 | pytest_temp/

64 |

65 | # Translations

66 | *.mo

67 | *.pot

68 |

69 | # Django stuff:

70 | *.log

71 | local_settings.py

72 | db.sqlite3

73 | db.sqlite3-journal

74 |

75 | # Flask stuff:

76 | instance/

77 | .webassets-cache

78 |

79 | # Scrapy stuff:

80 | .scrapy

81 |

82 | # Sphinx documentation

83 | docs/_build/

84 |

85 | # PyBuilder

86 | target/

87 |

88 | # Jupyter Notebook

89 | .ipynb_checkpoints

90 |

91 | # IPython

92 | profile_default/

93 | ipython_config.py

94 |

95 | # pyenv

96 | .python-version

97 |

98 | # pipenv

99 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

100 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

101 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

102 | # install all needed dependencies.

103 | #Pipfile.lock

104 |

105 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

106 | __pypackages__/

107 |

108 | # Celery stuff

109 | celerybeat-schedule

110 | celerybeat.pid

111 |

112 | # SageMath parsed files

113 | *.sage.py

114 |

115 | # Environments

116 | .env

117 | .venv

118 | env/

119 | venv/

120 | ENV/

121 | env.bak/

122 | venv.bak/

123 |

124 | # Spyder project settings

125 | .spyderproject

126 | .spyproject

127 |

128 | # Rope project settings

129 | .ropeproject

130 |

131 | # mkdocs documentation

132 | /site

133 |

134 | # mypy

135 | .mypy_cache/

136 | .dmypy.json

137 | dmypy.json

138 |

139 | # Pyre type checker

140 | .pyre/

141 |

142 | .DS_Store

143 |

144 | # written by setuptools_scm

145 | */_version.py

146 | recOrder/_version.py

147 | *.autosave

148 |

149 | # example data

150 | /examples/data_temp/*

151 | /logs/*

152 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | repos:

2 | - repo: https://github.com/python/black

3 | rev: 21.6b0

4 | hooks:

5 | - id: black

6 | language_version: python3

7 | pass_filenames: false

8 | args: [.]

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2020, Chan Zuckerberg Biohub

4 |

5 | Redistribution and use in source and binary forms, with or without

6 | modification, are permitted provided that the following conditions are met:

7 |

8 | 1. Redistributions of source code must retain the above copyright notice, this

9 | list of conditions and the following disclaimer.

10 |

11 | 2. Redistributions in binary form must reproduce the above copyright notice,

12 | this list of conditions and the following disclaimer in the documentation

13 | and/or other materials provided with the distribution.

14 |

15 | 3. Neither the name of the copyright holder nor the names of its

16 | contributors may be used to endorse or promote products derived from

17 | this software without specific prior written permission.

18 |

19 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

20 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

21 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

22 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

23 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

24 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

25 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

26 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

27 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

28 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

29 |

30 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # recOrder

2 | [](https://pypi.org/project/recOrder-napari)

3 | [](https://pypistats.org/packages/recOrder-napari)

4 | [](https://pepy.tech/project/recOrder-napari)

5 | [](https://github.com/mehta-lab/recOrder/graphs/contributors)

6 |

7 |

8 |

9 |

10 |

11 |

12 | `recOrder` is a collection of computational imaging methods. It currently provides QLIPP (quantitative label-free imaging with phase and polarization), phase from defocus, and fluorescence deconvolution.

13 |

14 | [](https://www.youtube.com/watch?v=JEZAaPeZhck)

15 |

16 | Acquisition, calibration, background correction, reconstruction, and applications of QLIPP are described in the following [eLife paper](https://elifesciences.org/articles/55502):

17 |

18 | ```bibtex

19 | Syuan-Ming Guo, Li-Hao Yeh, Jenny Folkesson, Ivan E Ivanov, Anitha P Krishnan, Matthew G Keefe, Ezzat Hashemi, David Shin, Bryant B Chhun, Nathan H Cho, Manuel D Leonetti, May H Han, Tomasz J Nowakowski, Shalin B Mehta, "Revealing architectural order with quantitative label-free imaging and deep learning," eLife 2020;9:e55502 DOI: 10.7554/eLife.55502 (2020).

20 | ```

21 |

22 | These are the kinds of data you can acquire with `recOrder` and QLIPP:

23 |

24 | https://user-images.githubusercontent.com/9554101/271128301-cc71da57-df6f-401b-a955-796750a96d88.mov

25 |

26 | https://user-images.githubusercontent.com/9554101/271128510-aa2180af-607f-4c0c-912c-c18dc4f29432.mp4

27 |

28 | ## What do I need to use `recOrder`?

29 | `recOrder` is to be used alongside a conventional widefield microscope. For QLIPP, the microscope must be fitted with an analyzer and a universal polarizer:

30 |

31 | https://user-images.githubusercontent.com/9554101/273073475-70afb05a-1eb7-4019-9c42-af3e07bef723.mp4

32 |

33 | For phase-from-defocus or fluorescence deconvolution methods, the universal polarizer is optional.

34 |

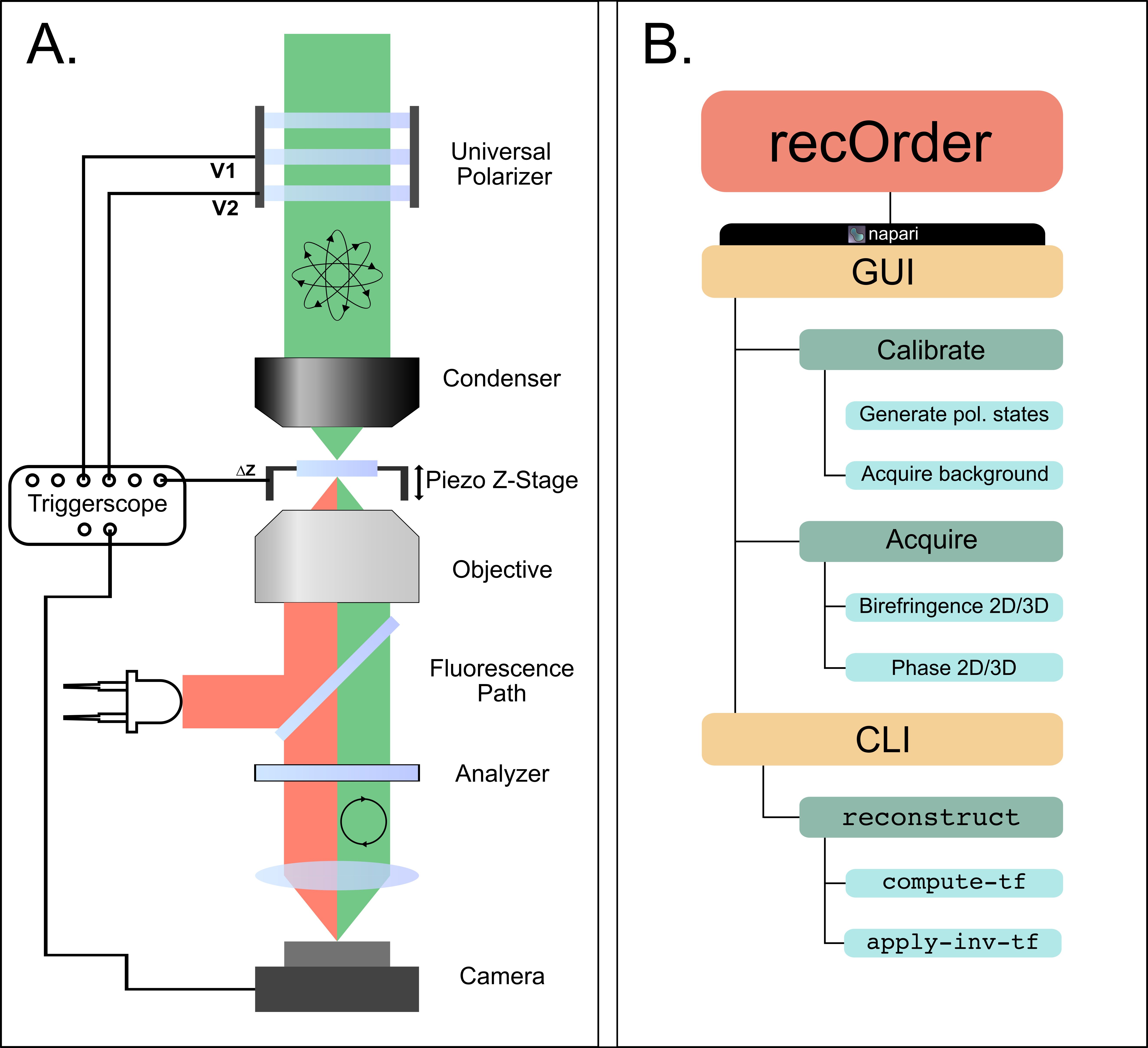

35 | The overall structure of `recOrder` is shown in Panel B, highlighting the structure of the graphical user interface (GUI) through a napari plugin and the command-line interface (CLI) that allows users to perform reconstructions.

36 |

37 |

38 |

39 |

40 |

41 | ## Software Quick Start

42 |

43 | (Optional but recommended) install [anaconda](https://www.anaconda.com/products/distribution) and create a virtual environment:

44 |

45 | ```sh

46 | conda create -y -n recOrder python=3.10

47 | conda activate recOrder

48 | ```

49 |

50 | Install `recOrder-napari` with acquisition dependencies

51 | (napari with PyQt6 and pycro-manager):

52 |

53 | ```sh

54 | pip install "recOrder-napari[all]"

55 | ```

56 |

57 | Install `recOrder-napari` without napari, QtBindings (PyQt/PySide) and pycro-manager dependencies:

58 |

59 | ```sh

60 | pip install recOrder-napari

61 | ```

62 |

63 | Open `napari` with `recOrder-napari`:

64 |

65 | ```sh

66 | napari -w recOrder-napari

67 | ```

68 |

69 | For more help, see [`recOrder`'s documentation](https://github.com/mehta-lab/recOrder/tree/main/docs). To install `recOrder`

70 | on a microscope, see the [microscope installation guide](https://github.com/mehta-lab/recOrder/blob/main/docs/microscope-installation-guide.md).

71 |

72 | ## Dataset

73 |

74 | [Slides](https://doi.org/10.5281/zenodo.5135889) and a [dataset](https://doi.org/10.5281/zenodo.5178487) shared during a workshop on QLIPP and recOrder can be found on Zenodo and through the napari plugin's sample contributions (`File > Open Sample > recOrder-napari` in napari).

75 |

76 | [](https://doi.org/10.5281/zenodo.5178487)

77 | [](https://doi.org/10.5281/zenodo.5135889)

--------------------------------------------------------------------------------

/docs/README.md:

--------------------------------------------------------------------------------

1 | # Welcome to `recOrder`'s documentation

2 |

3 | **I want to buy hardware for a polarized-light installation:** start with the [buyers guide](./buyers-guide.md).

4 |

5 | **I would like to install `recOrder` on my microscope:** start with the [microscope installation guide](./microscope-installation-guide.md).

6 |

7 | **I would like to use the `napari plugin`:** start with the [plugin guide](./napari-plugin-guide.md).

8 |

9 | **I would like to reconstruct existing data:** start with the [reconstruction guide](./reconstruction-guide.md) and consult the [data schema](./data-schema.md) for `recOrder`'s format.

10 |

11 | **I would like to set up a development environment and test `recOrder`**: start with the [development guide](./development-guide.md).

12 |

13 | **I would like to understand `recOrder`'s calibration routine**: read the [calibration guide](./calibration-guide.md).

14 |

15 | **I noticed an error in the documentation or code:** [open an issue](https://github.com/mehta-lab/recOrder/issues/new/choose) or [send us an email](mailto:shalin.mehta@czbiohub.org,talon.chandler@czbiohub.org). We appreciate your help!

--------------------------------------------------------------------------------

/docs/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/__init__.py

--------------------------------------------------------------------------------

/docs/buyers-guide.md:

--------------------------------------------------------------------------------

1 | # Buyer's Guide

2 |

3 | ## Quantitative phase imaging:

4 |

5 | You can use a transmitted light source (LED or a lamp) and a condenser commonly available on almost all microscopes. In addition to the transmitted light imaging path, you will need a motorized stage for acquiring through-focus image stacks.

6 |

7 | ## Quantitative polarization imaging (PolScope):

8 |

9 | The following list of components assumes that you already have a transmitted light source (LED or a lamp) and a condenser.

10 |

11 | Buyers have two options:

12 | 1. buy a complete hardware kit from the OpenPolScope project, or

13 | 2. assemble your own kit piece by piece.

14 |

15 | ### Buy a kit from the OpenPolScope project

16 |

17 | - Read about the [OpenPolScope Hardware Kit](https://openpolscope.org/pages/OPS_Hardware.htm).

18 | - Complete the [OpenPolScope information request form](https://openpolscope.org/pages/Info_Request_Form.htm).

19 |

20 | ### Buy individual components

21 |

22 | The components are listed in the order in which they process light. See the build video here to see how to assemble these components on your microscope.

23 |

24 | https://github.com/user-attachments/assets/a0a8bffb-bf81-4401-9ace-3b4955436b57

25 |

26 | | Part | Approximate Price | Notes |

27 | |--------------------------|-------------------|-----------------------------|

28 | | Illumination filter | $200 | We suggest [a Thorlabs CWL = 530 nm, FWHM = 10 nm bandpass filter](https://www.thorlabs.com/thorproduct.cfm?partnumber=FBH530-10).|

29 | | Circular polarizer | $350 | We suggest [a Thorlabs 532 nm, left-hand circular polarizer](https://www.thorlabs.com/thorproduct.cfm?partnumber=CP1L532).|

30 | | Liquid crystal compensator | $6,000 | Meadowlark Optics LVR-42x52mm-VIS-ASSY or LVR-50x60mm-VIS-POL-ASSY. Although near-variants are listed in the [Meadowlark catalog](https://www.meadowlark.com/product/liquid-crystal-variable-retarder/), this is a custom part with two liquid crystals in a custom housing. [Contact Meadowlark](https://www.meadowlark.com/contact-us/) for a quote.|

31 | | Liquid crystal control electronics | $2,000 | [Meadowlark Optics D5020-20V](https://www.meadowlark.com/product/liquid-crystal-digital-interface-controller/). Choose the high-voltage 20V version.|

32 | | Liquid crystal adapter | $25-$500 | A 3D printed part that aligns the liquid crystal compensator in a microscope stand's illumination path. Check for your stand among the [OpenPolScope `.stl` files](https://github.com/amitabhverma/Microscope-LC-adapters/tree/main/stl_files) or [contact us](mailto:compmicro@czbiohub.org) for more options.|

33 | | Circular analyzer (opposite handedness) | $350 | We suggest [a Thorlabs 532 nm, right-hand circular polarizer](https://www.thorlabs.com/thorproduct.cfm?partnumber=CP1R532).|

34 |

35 | If you need help selecting or assembling the components, please start an issue on this GitHub repository or contact us at compmicro@czbiohub.org.

36 |

37 | ## Quantitative phase and polarization imaging (QLIPP):

38 |

39 | Combining the Z-stage and the PolScope components listed above enables joint phase and polarization imaging with `recOrder`.

--------------------------------------------------------------------------------

/docs/calibration-guide.md:

--------------------------------------------------------------------------------

1 | # Calibration guide

2 | This guide describes `recOrder`'s calibration routine with details about its goals, parameters, and evaluation metrics.

3 |

4 | ## Why calibrate?

5 |

6 | `recOrder` sends commands via Micro-Manager (or a TriggerScope) to apply voltages to the liquid crystals which modify the polarization of the light that illuminates the sample. `recOrder` could apply a fixed set of voltages so the user would never have to worry about these details, but this approach leads to extremely poor performance because

7 |

8 | - the sample, the sample holder, lenses, dichroics, and other optical elements introduce small changes in polarization, and

9 | - the liquid crystals' voltage response drifts over time.

10 |

11 | Therefore, recalibrating the liquid crystals regularly (definitely between imaging sessions, often between different samples) is essential for acquiring optimal images.

12 |

13 | ## Finding the extinction state

14 |

15 | Every calibration starts with a routine that finds the **extinction state**: the polarization state (and corresponding voltages) that minimizes the intensity that reaches the camera. If the analyzer is a right-hand-circular polarizer, then the extinction state is the set of voltages that correspond to left-hand-circular light in the sample.

16 |

17 | ## Setting a goal for the remaining states: swing

18 |

19 | After finding the circular extinction state, the calibration routine finds the remaining states. The **swing** parameter sets the target ellipticity of the remaining states and is best understood using [the Poincare sphere](https://en.wikipedia.org/wiki/Unpolarized_light#Poincar%C3%A9_sphere), a diagram that organizes all pure polarization states onto the surface of a sphere.

20 |

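21 | [Figure: Poincare spheres showing the target polarization states for swing values of 0.25 (left) and 0.125 (right)]

22 |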

23 | On the Poincare sphere, the extinction state corresponds to the north pole, and the swing value corresponds to the targeted line of [colatitude](https://en.m.wikipedia.org/wiki/File:Spherical_Coordinates_%28Colatitude,_Longitude%29.svg) for the remaining states. For example, a swing value of 0.25 (above left) sets the targeted polarization states to the states on the equator: a set of linear polarization states. Similarly, a swing value of 0.125 (above right) sets the targeted polarization states to the states on the line of colatitude 45 degrees ( $\pi$/4 radians) away from the north pole: a set of elliptical polarization states.

24 |

25 | The Poincare sphere is also useful for calculating the ratio of intensities measured before and after an analyzer illuminated with a polarized beam. First, find the point on the Poincare sphere that corresponds to the analyzer; in our case we have a right-circular analyzer corresponding to the south pole. Next, find the point that corresponds to the polarization state of the light incident on the analyzer; this could be any arbitrary point on the Poincare sphere. To find the ratio of intensities before and after the analyzer $I/I_0$, find the great-circle angle between the two points on the Poincare sphere, $\alpha$, and calculate $I/I_0 = \cos^2(\alpha/2)$. As expected, points that are close together transmit perfectly ( $\alpha = 0$ implies $I/I_0 = 1$), while antipodal points lead to extinction ( $\alpha = \pi$ implies $I/I_0 = 0$).
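The geometric rule above can be sketched in a few lines of Python. This is a minimal illustration, not code from `recOrder`: the function names are hypothetical, and the linear mapping from swing to colatitude (`2 * pi * swing`) is inferred from the two examples in this guide (swing 0.25 at the equator, swing 0.125 at 45 degrees from the north pole).

```python
import math


def swing_to_colatitude(swing):
    """Colatitude in radians, measured from the north pole (extinction state).

    Assumption: colatitude = 2*pi*swing, consistent with swing 0.25 -> equator.
    """
    return 2 * math.pi * swing


def transmitted_fraction(alpha):
    """I/I_0 for a great-circle angle alpha between the state and the analyzer."""
    return math.cos(alpha / 2) ** 2


def fraction_for_swing(swing):
    """I/I_0 behind a right-circular analyzer located at the south pole."""
    # The analyzer is antipodal to the extinction state, so the great-circle
    # angle to the analyzer is pi minus the colatitude.
    alpha = math.pi - swing_to_colatitude(swing)
    return transmitted_fraction(alpha)


if __name__ == "__main__":
    for swing in (0.0, 0.125, 0.25, 0.5):
        print(f"swing={swing:<5} I/I_0={fraction_for_swing(swing):.4f}")
```

A swing of 0 reproduces the extinction state (no transmission through an ideal analyzer), and a swing of 0.25 places the state on the equator, where half of the light is transmitted.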

26 |

27 | This geometric construction illustrates that all non-extinction polarization states have the same intensity after the analyzer because they live on the same line of colatitude and have the same great-circle angle to the south pole (the analyzer). We use this fact to help us find our non-extinction states.

28 |

29 | Practically, we find our first non-extinction state immediately using the liquid crystal manufacturer's measurements from the factory. In other words, we apply a fixed voltage offset to the extinction-state voltages to find the first non-extinction state, and this requires no iteration or optimization. To find the remaining non-extinction states, we keep the polarization orientation fixed and search through neighboring states with different ellipticity to find states that transmit the same intensity as the first non-extinction state.
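The search for an equal-intensity state can be illustrated with a toy model. The sketch below is an assumption-laden illustration rather than `recOrder`'s actual routine: the real calibration adjusts liquid crystal voltages and reads camera intensities, whereas here `simulated_intensity` is a hypothetical stand-in that depends only on colatitude, and plain bisection is swapped in for whatever optimizer the package uses.

```python
import math


def simulated_intensity(theta, i_mod=1000.0, i_ext=50.0):
    """Toy measurement: intensity behind the analyzer at colatitude theta.

    Hypothetical model; i_mod and i_ext are made-up numbers for illustration.
    """
    return i_mod * math.sin(theta / 2) ** 2 + i_ext


def find_matching_colatitude(measure, target, lo=0.0, hi=math.pi, tol=1e-9):
    """Bisect for the colatitude whose measured intensity equals target.

    Assumes measure() increases monotonically on [lo, hi].
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if measure(mid) < target:
            lo = mid  # too dark: move away from the extinction state
        else:
            hi = mid  # too bright: move toward the extinction state
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


swing = 0.125
# Intensity of the first non-extinction state serves as the reference.
reference = simulated_intensity(2 * math.pi * swing)
theta = find_matching_colatitude(simulated_intensity, reference)
print(f"matched colatitude: {theta:.6f} rad")
```

In this toy model the search recovers the colatitude of the reference state itself; on a microscope, each orientation has a different voltage-to-ellipticity mapping, which is why a search is needed for each remaining state.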

30 |

31 | ## Evaluating a calibration: extinction ratio

32 |

33 | At the end of a calibration we report the **extinction ratio**, the ratio of the largest and smallest intensities that the imaging system can transmit above background. This metric measures the quality of the entire optical path including the liquid crystals and their calibrated states, and all depolarization, scattering, or absorption caused by optical elements in the light path will reduce the extinction ratio.

34 |

35 | ## Calculating extinction ratio from measured intensities (advanced topic)

36 |

37 | To calculate the extinction ratio, we could optimize the liquid crystal voltages to maximize measured intensity then calculate the ratio of that result with the earlier extinction intensity, but this approach requires a time-consuming optimization and it does not characterize the quality of the calibrated states of the liquid crystals.

38 |

39 | Instead, we estimate the extinction ratio from the intensities we measure during the calibration process. Specifically, we measure the black-level intensity $I_{\text{bl}}$, the extinction intensity $I_{\text{ext}}$, and the intensity under the first elliptical state $I_{\text{ellip}}(S)$ where $S$ is the swing. We proceed to algebraically express the extinction ratio in terms of these three quantities.

40 |

41 | We can decompose $I_{\text{ellip}}(S)$ into a constant term $I_{\text{ellip}}(0) = I_{\text{ext}}$, and a modulation term given by

42 |

43 | $$I_{\text{ellip}}(S) = I_{\text{mod}}\sin^2(\pi S) + I_{\text{ext}},\qquad\qquad (1)$$

44 | where $I_{\text{mod}}$ is the modulation depth, and the $\sin^2(\pi S)$ term can be understood using the Poincare sphere (the intensity behind the circular analyzer is proportional to $\cos^2(\alpha/2)$ and for a given swing we have $\alpha = \pi - 2\pi S$ so $\cos^2(\frac{\pi - 2\pi S}{2}) = \sin^2(\pi S)$ ).

45 |

46 | Next, we decompose $I_{\text{ext}}$ into the sum of two terms, the black level intensity and a leakage intensity $I_{\text{leak}}$

47 | $$I_{\text{ext}} = I_{\text{bl}} + I_{\text{leak}}.\qquad\qquad (2)$$
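Equations (1) and (2) can be inverted to recover the modulation depth and leakage from the three measured intensities. The sketch below is a hedged illustration with hypothetical function names, not `recOrder`'s implementation. The ratio in `extinction_ratio_estimate` is an assumption based on the definition given earlier (largest over smallest intensity above the black level), taking the largest as $I_{\text{mod}} + I_{\text{leak}}$ (Eq. (1) at $S = 0.5$, minus the black level) and the smallest as $I_{\text{leak}}$.

```python
import math


def decompose_intensities(i_bl, i_ext, i_ellip, swing):
    """Return (i_mod, i_leak) from the black-level, extinction, and
    first-elliptical-state intensities measured at the given swing."""
    i_leak = i_ext - i_bl  # Eq. (2) solved for the leakage
    i_mod = (i_ellip - i_ext) / math.sin(math.pi * swing) ** 2  # Eq. (1) solved for I_mod
    return i_mod, i_leak


def extinction_ratio_estimate(i_bl, i_ext, i_ellip, swing):
    """Assumed estimate: (largest - black level) / (smallest - black level)."""
    i_mod, i_leak = decompose_intensities(i_bl, i_ext, i_ellip, swing)
    return (i_mod + i_leak) / i_leak


# Made-up example values in camera counts.
i_mod, i_leak = decompose_intensities(i_bl=100.0, i_ext=110.0, i_ellip=610.0, swing=0.25)
ratio = extinction_ratio_estimate(i_bl=100.0, i_ext=110.0, i_ellip=610.0, swing=0.25)
print(f"I_mod={i_mod:.1f}, I_leak={i_leak:.1f}, ratio={ratio:.1f}")
```

The estimate is undefined when the extinction intensity equals the black level (zero leakage) or when the swing is 0, so a real routine would need to guard against both cases.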

48 |

49 | The following diagram clarifies our definitions and shows how the measured $I_{\text{ellip}}(S)$ depends on the swing (green line).

50 |

51 |

22 |

23 | On the Poincare sphere, the extinction state corresponds to the north pole, and the swing value corresponds to the targeted line of [colatitude](https://en.m.wikipedia.org/wiki/File:Spherical_Coordinates_%28Colatitude,_Longitude%29.svg) for the remaining states. For example, a swing value of 0.25 (above left) sets the targeted polarization states to the states on the equator: a set of linear polarization states. Similarly, a swing value of 0.125 (above right) sets the targeted polarization states to the states on the line of colatitude 45 degrees ( $\pi$/4 radians) away from the north pole: a set of elliptical polarization states.

24 |

25 | The Poincare sphere is also useful for calculating the ratio of intensities measured before and after an analyzer illuminated with a polarized beam. First, find the point on the Poincare sphere that corresponds to the analyzer; in our case we have a right-circular analyzer corresponding to the south pole. Next, find the point that corresponds to the polarization state of the light incident on the analyzer; this could be any arbitrary point on the Poincare sphere. To find the ratio of intensities before and after the analyzer $I/I_0$, find the great-circle angle between the two points on the Poincare sphere, $\alpha$, and calculate $I/I_0 = \cos^2(\alpha/2)$. As expected, points that are close together transmit perfectly ( $\alpha = 0$ implies $I/I_0 = 1$), while antipodal points lead to extinction ( $\alpha = \pi$ implies $I/I_0 = 0$).

26 |
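The analyzer rule above can be checked numerically. The following is a minimal sketch, not part of `recOrder`: the function names are hypothetical, and it assumes that the colatitude of a swing state is $2\pi S$ measured from the north pole, which is consistent with the two examples given earlier (swing 0.25 on the equator, swing 0.125 at 45 degrees).

```python
import math


def transmitted_fraction(alpha: float) -> float:
    """Return I/I_0 for a great-circle angle alpha (radians) between
    the incident state and the analyzer on the Poincare sphere."""
    return math.cos(alpha / 2) ** 2


def angle_to_analyzer(swing: float) -> float:
    """Great-circle angle between a swing state and the south-pole analyzer.

    Assumption: the state's colatitude from the north pole is 2*pi*swing.
    """
    colatitude = 2 * math.pi * swing
    return math.pi - colatitude


# Print the transmitted fraction for the extinction state and two swing values.
for swing in (0.0, 0.125, 0.25):
    print(swing, transmitted_fraction(angle_to_analyzer(swing)))
```

Under this assumption the transmitted fraction equals $\sin^2(\pi S)$, so the extinction state (swing 0) transmits essentially nothing and the equatorial linear states (swing 0.25) transmit half of the incident intensity.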

27 | This geometric construction illustrates that all non-extinction polarization states have the same intensity after the analyzer because they live on the same line of colatitude and have the same great-circle angle to the south pole (the analyzer). We use this fact to help us find our non-extinction states.

28 |

29 | Practically, we find our first non-extinction state immediately using the liquid crystal manufacturer's measurements from the factory. In other words, we apply a fixed voltage offset to the extinction-state voltages to find the first non-extinction state, and this requires no iteration or optimization. To find the remaining non-extinction states, we keep the polarization orientation fixed and search through neighboring states with different ellipticity to find states that transmit the same intensity as the first non-extinction state.

30 |

31 | ## Evaluating a calibration: extinction ratio

32 |

33 | At the end of a calibration we report the **extinction ratio**, the ratio of the largest and smallest intensities that the imaging system can transmit above background. This metric measures the quality of the entire optical path including the liquid crystals and their calibrated states, and all depolarization, scattering, or absorption caused by optical elements in the light path will reduce the extinction ratio.

34 |

35 | ## Calculating extinction ratio from measured intensities (advanced topic)

36 |

37 | To calculate the extinction ratio, we could optimize the liquid crystal voltages to maximize the measured intensity and then calculate the ratio of that result to the earlier extinction intensity, but this approach requires a time-consuming optimization and it does not characterize the quality of the calibrated states of the liquid crystals.

38 |

39 | Instead, we estimate the extinction ratio from the intensities we measure during the calibration process. Specifically, we measure the black-level intensity $I_{\text{bl}}$, the extinction intensity $I_{\text{ext}}$, and the intensity under the first elliptical state $I_{\text{ellip}}(S)$ where $S$ is the swing. We proceed to algebraically express the extinction ratio in terms of these three quantities.

40 |

41 | We can decompose $I_{\text{ellip}}(S)$ into a constant term $I_{\text{ellip}}(0) = I_{\text{ext}}$, and a modulation term given by

42 |

43 | $$I_{\text{ellip}}(S) = I_{\text{mod}}\sin^2(\pi S) + I_{\text{ext}},\qquad\qquad (1)$$

44 | where $I_{\text{mod}}$ is the modulation depth, and the $\sin^2(\pi S)$ term can be understood using the Poincare sphere (the intensity behind the circular analyzer is proportional to $\cos^2(\alpha/2)$ and for a given swing we have $\alpha = \pi - 2\pi S$ so $\cos^2(\frac{\pi - 2\pi S}{2}) = \sin^2(\pi S)$ ).

45 |

46 | Next, we decompose $I_{\text{ext}}$ into the sum of two terms, the black level intensity and a leakage intensity $I_{\text{leak}}$

47 | $$I_{\text{ext}} = I_{\text{bl}} + I_{\text{leak}}.\qquad\qquad (2)$$

48 |

49 | The following diagram clarifies our definitions and shows how the measured $I_{\text{ellip}}(S)$ depends on the swing (green line).

50 |

51 |

52 |

53 | The extinction ratio is the ratio of the largest and smallest intensities that the imaging system can transmit above background, which is most easily expressed in terms of $I_{\text{mod}}$ and $I_{\text{leak}}$

54 | $$\text{Extinction Ratio} = \frac{I_{\text{mod}} + I_{\text{leak}}}{I_{\text{leak}}}.\qquad\qquad (3)$$

55 |

56 | Substituting Eqs. (1) and (2) into Eq. (3) gives the extinction ratio in terms of the measured intensities

57 | $$\text{Extinction Ratio} = \frac{1}{\sin^2(\pi S)}\frac{I_{\text{ellip}}(S) - I_{\text{ext}}}{I_{\text{ext}} - I_{\text{bl}}} + 1.$$

58 |
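As a worked illustration of the final expression, here is a minimal Python sketch. The function name and argument names are hypothetical and are not taken from the `recOrder` source; the arithmetic follows Eqs. (1) to (3) directly.

```python
import math


def extinction_ratio(i_ellip: float, i_ext: float, i_bl: float, swing: float) -> float:
    """Estimate the extinction ratio from three measured intensities.

    i_ellip: intensity under the first elliptical state, I_ellip(S)
    i_ext:   intensity under the extinction state, I_ext
    i_bl:    black-level intensity, I_bl
    swing:   swing S used for the elliptical states
    """
    i_leak = i_ext - i_bl  # Eq. (2)
    if i_leak <= 0:
        raise ValueError("extinction intensity must exceed the black level")
    sin2 = math.sin(math.pi * swing) ** 2
    if sin2 == 0:
        raise ValueError("swing must not be an integer")
    i_mod = (i_ellip - i_ext) / sin2  # Eq. (1) solved for I_mod
    return i_mod / i_leak + 1  # Eq. (3)


# Synthetic example: I_bl = 100, I_leak = 10, I_mod = 1000, S = 0.1
swing = 0.1
i_ext = 100 + 10
i_ellip = 1000 * math.sin(math.pi * swing) ** 2 + i_ext
print(extinction_ratio(i_ellip, i_ext, 100, swing))
```

With these synthetic values the estimate recovers $(I_{\text{mod}} + I_{\text{leak}})/I_{\text{leak}} = 1010/10 = 101$, up to floating-point rounding.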

59 | ## Summary: `recOrder`'s step-by-step calibration procedure

60 | 1. Close the shutter, measure the black level, then reopen the shutter.

61 | 2. Find the extinction state by finding voltages that minimize the intensity that reaches the camera.

62 | 3. Use the swing value to immediately find the first elliptical state, and record the intensity on the camera.

63 | 4. For each remaining elliptical state, keep the polarization orientation fixed and optimize the voltages to match the intensity of the first elliptical state.

64 | 5. Store the voltages and calculate the extinction ratio.
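
Step 4 can be pictured as a one-dimensional search for a voltage that reproduces a target intensity. The sketch below is only an illustration under stated assumptions: it uses plain bisection rather than `recOrder`'s actual optimizer (which this guide does not describe), `measure` is a hypothetical stand-in for reading the camera at a given voltage, and the intensity is assumed to vary monotonically over the bracketing interval.

```python
from typing import Callable


def match_intensity(
    measure: Callable[[float], float],
    target: float,
    lo: float,
    hi: float,
    tol: float = 1e-3,
    max_iter: int = 60,
) -> float:
    """Find a voltage in [lo, hi] where measure(voltage) is within tol of target.

    Assumes measure(voltage) - target changes sign exactly once on [lo, hi].
    """
    f_lo = measure(lo) - target
    f_hi = measure(hi) - target
    if f_lo * f_hi > 0:
        raise ValueError("target intensity is not bracketed by [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = measure(mid) - target
        if abs(f_mid) <= tol:
            return mid
        if f_lo * f_mid <= 0:
            hi = mid  # the crossing lies in the lower half
        else:
            lo, f_lo = mid, f_mid  # the crossing lies in the upper half
    return 0.5 * (lo + hi)


# Toy camera model (hypothetical): intensity rises linearly with voltage.
def toy_camera(voltage: float) -> float:
    return 100.0 + 50.0 * voltage


print(match_intensity(toy_camera, target=175.0, lo=0.0, hi=5.0))
```

In this toy model the matching voltage is 1.5; on a real system each call to `measure` would correspond to setting the liquid crystal voltage and reading the camera.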

--------------------------------------------------------------------------------

/docs/data-schema.md:

--------------------------------------------------------------------------------

1 | # Data schema

2 |

3 | This document defines the standard for data acquired with `recOrder`.

4 |

5 | ## Raw directory organization

6 |

7 | Currently, we structure raw data in the following hierarchy:

8 |

9 | ```text

10 | working_directory/ # commonly YYYY_MM_DD_exp_name, but not enforced

11 | ├── polarization_calibration_0.txt

12 | │ ...

13 | ├── polarization_calibration_<i>.txt # i calibration repeats

14 | │

15 | ├── bg_0

16 | │ ...

17 | ├── bg_<j> # j background repeats

18 | │ ├── background.zarr

19 | │ ├── polarization_calibration.txt # copied into each bg folder

20 | │ ├── reconstruction.zarr

21 | │ ├── reconstruction_settings.yml # for use with `recorder reconstruct`

22 | │ └── transfer_function.zarr # for use with `recorder apply-inv-tf`

23 | │

24 | ├── _snap_0

25 | ├── _snap_1

26 | │ ├── raw_data.zarr

27 | │ ├── reconstruction.zarr

28 | │ ├── reconstruction_settings.yml

29 | │ └── transfer_function.zarr

30 | │ ...

31 | ├── _snap_<k> # k repeats with the first acquisition name

32 | │ ├── raw_data.zarr

33 | │ ├── reconstruction.zarr

34 | │ ├── reconstruction_settings.yml

35 | │ └── transfer_function.zarr

36 | │ ...

37 | │

38 | ├── _snap_0 # l different acquisition names

39 | │ ...

40 | ├── _snap_<m> # m repeats for this acquisition name

41 | ├── raw_data.zarr

42 | ├── reconstruction.zarr

43 | ├── reconstruction_settings.yml

44 | └── transfer_function.zarr

45 | ```

46 |

47 | Each `.zarr` store follows the [OME-NGFF v0.4](https://ngff.openmicroscopy.org/0.4/) specification in HCS layout with a single field of view.
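
To make the hierarchy concrete, here is a minimal sketch that summarizes a working directory laid out as above. It uses only the Python standard library; the function name and the returned dictionary layout are hypothetical and not part of `recOrder`. Entries reported as missing are not necessarily errors, since the reconstruction outputs only exist after a reconstruction has been run.

```python
from pathlib import Path

# File and store names expected inside each acquisition folder, per the tree above.
EXPECTED_ACQ_ENTRIES = (
    "raw_data.zarr",
    "reconstruction.zarr",
    "reconstruction_settings.yml",
    "transfer_function.zarr",
)


def summarize_working_directory(root) -> dict:
    """List calibration files, background folders, and acquisition folders.

    For each acquisition folder (matched by '*_snap_*'), report which of the
    expected entries are not present.
    """
    root = Path(root)
    summary = {
        "calibrations": sorted(p.name for p in root.glob("polarization_calibration_*.txt")),
        "backgrounds": sorted(p.name for p in root.glob("bg_*") if p.is_dir()),
        "acquisitions": {},
    }
    for acq in sorted(root.glob("*_snap_*")):
        if acq.is_dir():
            missing = [name for name in EXPECTED_ACQ_ENTRIES if not (acq / name).exists()]
            summary["acquisitions"][acq.name] = missing
    return summary
```

Calling `summarize_working_directory("path/to/working_directory")` returns the three groups as plain Python lists and dictionaries, which can be printed or checked before starting a reconstruction.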

--------------------------------------------------------------------------------

/docs/development-guide.md:

--------------------------------------------------------------------------------

1 | # `recOrder` development guide

2 |

3 | ## Install `recOrder` for development

4 |

5 | 1. Install [conda](https://github.com/conda-forge/miniforge) and create a virtual environment:

6 |

7 | ```sh

8 | conda create -y -n recOrder python=3.10

9 | conda activate recOrder

10 | ```

11 |

12 | 2. Clone the `recOrder` repository:

13 |

14 | ```sh

15 | git clone https://github.com/mehta-lab/recOrder.git

16 | ```

17 |

18 | 3. Install `recOrder` in editable mode with development dependencies:

19 |

20 | ```sh

21 | cd recOrder

22 | pip install -e ".[all,dev]"

23 | ```

24 |

25 | 4. Optionally, for the co-development of [`waveorder`](https://github.com/mehta-lab/waveorder) and `recOrder`:

26 |

27 | > Note that `pip` will raise an 'error' complaining that the dependency of `recOrder-napari` has been broken if you do the following.

28 | > This does not affect the installation, but can be suppressed by removing [this line](https://github.com/mehta-lab/recOrder/blob/5bc9314a9bacf6f4e235eaffb06c297cf20e4b65/setup.cfg#L40) before installing `waveorder`.

29 | > (Just remember to revert the change afterwards!)

30 | > We expect a nicer behavior to be possible once we release a stable version of `waveorder`.

31 |

32 | ```sh

33 | cd # where you want to clone the repo

34 | git clone https://github.com/mehta-lab/waveorder.git

35 | pip install ./waveorder -e ".[dev]"

36 | ```

37 |

38 | > Importing from a local and/or editable package can cause issues with static code analyzers due to the absence of the source code from the paths they expect.

39 | > VS Code users can refer to [this guide](https://github.com/microsoft/pylance-release/blob/main/TROUBLESHOOTING.md#common-questions-and-issues) to resolve typing warnings.

40 |

41 | ## Set up a development environment

42 |

43 | ### Code linting

44 |

45 | We are not currently specifying a code linter as most modern Python code editors already have their own. If not, add a plugin to your editor to help catch bugs pre-commit!

46 |

47 | ### Code formatting

48 |

49 | We use `black` to format Python code, and a specific version is installed as a development dependency. Use the `black` in the `recOrder` virtual environment, either from the command line or the editor of your choice.

50 |

51 | > *VS Code users*: Install the [Black Formatter](https://marketplace.visualstudio.com/items?itemName=ms-python.black-formatter) plugin. Press `^/⌘ ⇧ P` and type 'format document with...', choose the Black Formatter and start formatting!

52 |

53 | ### Docstring style

54 |

55 | The [NumPy style](https://numpydoc.readthedocs.io/en/latest/format.html) docstrings are used in `recOrder`.

56 |

57 | > *VS Code users*: [this popular plugin](https://marketplace.visualstudio.com/items?itemName=njpwerner.autodocstring) helps auto-generate most popular docstring styles (including `numpydoc`).

58 |

59 | ## Run automated tests

60 |

61 | From within the `recOrder` directory run:

62 |

63 | ```sh

64 | pytest

65 | ```

66 |

67 | Running `pytest` for the first time will download ~50 MB of test data from Zenodo, and subsequent runs will reuse the downloaded data.

68 |

69 | ## Run manual tests

70 |

71 | Although many of `recOrder`'s tests are automated, many features require manual testing. The following is a summary of features that need to be tested manually before release:

72 |

73 | * Install a compatible version of Micro-Manager and check that `recOrder` can connect.

74 | * Perform calibrations with and without an ROI; with and without a shutter configured in Micro-Manager; in 4- and 5-state modes; and in MM-Voltage, MM-Retardance, and DAC modes (if the TriggerScope is available).

75 | * Test "Load Calibration" and "Calculate Extinction" buttons.

76 | * Test "Capture Background" button.

77 | * Test the "Acquire Birefringence" button on a background FOV. Does a background-corrected background acquisition give random orientations?

78 | * Test the four "Acquire" buttons with varied combinations of 2D/3D, background correction settings, "Phase from BF" checkbox, and regularization parameters.

79 | * Use the data you collected to test "Offline" mode reconstructions with varied combinations of parameters.

80 |

81 | ## GUI development

82 |

83 | We use `Qt Creator` for large parts of `recOrder`'s GUI. To modify the GUI, install `Qt Creator` from [its website](https://www.qt.io/product/development-tools) or with `brew install --cask qt-creator`.

84 |

85 | Open `./recOrder/plugin/gui.ui` in `Qt Creator` and make your changes.

86 |

87 | Next, convert the `.ui` to a `.py` file with:

88 |

89 | ```sh

90 | pyuic5 -x gui.ui -o gui.py

91 | ```

92 |

93 | Note: `pyuic5` is installed alongside `PyQt5`, so you can expect to find it installed in your `recOrder` conda environment.

94 |

95 | Finally, change the `gui.py` file to import `qtpy` instead of `PyQt5` to adhere to [napari plugin best practices](https://napari.org/stable/plugins/best_practices.html#don-t-include-pyside2-or-pyqt5-in-your-plugin-s-dependencies).

96 | On macOS, you can modify the file in place with:

97 |

98 | ```sh

99 | sed -i '' 's/from PyQt5/from qtpy/g' gui.py

100 | ```

101 |

102 | > This form is specific to BSD `sed`; with GNU `sed`, omit the `''`.

103 |
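If `sed` is not available (for example on Windows), the same substitution can be done in Python. This is a minimal sketch using only the standard library; the function name is hypothetical and it performs the same plain-text replacement of `from PyQt5` with `from qtpy`.

```python
from pathlib import Path


def use_qtpy_imports(path) -> int:
    """Rewrite 'from PyQt5' imports as 'from qtpy' in place.

    Returns the number of occurrences that were replaced.
    """
    path = Path(path)
    text = path.read_text(encoding="utf-8")
    count = text.count("from PyQt5")
    if count:
        # Only touch the file when there is something to change.
        path.write_text(text.replace("from PyQt5", "from qtpy"), encoding="utf-8")
    return count
```

Calling `use_qtpy_imports("gui.py")` from the directory that contains the generated file has the same effect as the `sed` command above.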

104 | Note: although much of the GUI is specified in the generated `recOrder_ui.py` file, the `main_widget.py` file makes extensive modifications to the GUI.

105 |

106 | ## Make `git blame` ignore formatting commits

107 |

108 | **Note:** `git --version` must be `>=2.23` to use this feature.

109 |

110 | If you would like `git blame` to ignore formatting commits, run this line:

111 |

112 | ```sh

113 | git config --global blame.ignoreRevsFile .git-blame-ignore-revs

114 | ```

115 |

116 | The `.git-blame-ignore-revs` file contains a list of commit hashes corresponding to formatting commits.

117 | If you make a formatting commit, please add the commit's hash to this file.

118 |

119 | ## Pre-release checklist

120 | - merge `README.md` figures to `main`, then update the links to point to these uploaded figures. We do not upload figures to PyPI, so without this step the README figure will not appear on PyPI or napari-hub.

121 | - update version numbers and links in [the microscope dependency guide](./microscope-installation-guide.md).

122 |

--------------------------------------------------------------------------------

/docs/images/HSV_legend.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/HSV_legend.png

--------------------------------------------------------------------------------

/docs/images/JCh_Color_legend.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/JCh_Color_legend.png

--------------------------------------------------------------------------------

/docs/images/JCh_legend.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/JCh_legend.png

--------------------------------------------------------------------------------

/docs/images/acq_finished.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/acq_finished.png

--------------------------------------------------------------------------------

/docs/images/acquire_buttons.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/acquire_buttons.png

--------------------------------------------------------------------------------

/docs/images/acquisition_settings.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/acquisition_settings.png

--------------------------------------------------------------------------------

/docs/images/advanced.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/advanced.png

--------------------------------------------------------------------------------

/docs/images/cap_bg.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/cap_bg.png

--------------------------------------------------------------------------------

/docs/images/cli_structure.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/cli_structure.png

--------------------------------------------------------------------------------

/docs/images/comms_video_screenshot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/comms_video_screenshot.png

--------------------------------------------------------------------------------

/docs/images/connect_to_mm.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/connect_to_mm.png

--------------------------------------------------------------------------------

/docs/images/create_group.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/create_group.png

--------------------------------------------------------------------------------

/docs/images/create_group_voltage.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/create_group_voltage.png

--------------------------------------------------------------------------------

/docs/images/create_preset.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/create_preset.png

--------------------------------------------------------------------------------

/docs/images/create_preset_voltage.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/create_preset_voltage.png

--------------------------------------------------------------------------------

/docs/images/general_reconstruction_settings.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/general_reconstruction_settings.png

--------------------------------------------------------------------------------

/docs/images/ideal_plot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/ideal_plot.png

--------------------------------------------------------------------------------

/docs/images/modulation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/modulation.png

--------------------------------------------------------------------------------

/docs/images/no-overlay.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/no-overlay.png

--------------------------------------------------------------------------------

/docs/images/overlay-demo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/overlay-demo.png

--------------------------------------------------------------------------------

/docs/images/overlay.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/overlay.png

--------------------------------------------------------------------------------

/docs/images/phase_reconstruction_settings.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/phase_reconstruction_settings.png

--------------------------------------------------------------------------------

/docs/images/recOrder_Fig1_Overview.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/recOrder_Fig1_Overview.png

--------------------------------------------------------------------------------

/docs/images/recOrder_plugin_logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/recOrder_plugin_logo.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_birefriengence.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/reconstruction_birefriengence.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_data.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/reconstruction_data.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_data_info.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/reconstruction_data_info.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_models.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/reconstruction_models.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_queue.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/reconstruction_queue.png

--------------------------------------------------------------------------------

/docs/images/run_calib.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/run_calib.png

--------------------------------------------------------------------------------

/docs/images/run_port.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mehta-lab/recOrder/c4fed09c0ce0d0fa259951c5940d4ca4f5ef4f5d/docs/images/run_port.png

--------------------------------------------------------------------------------

/docs/microscope-installation-guide.md:

--------------------------------------------------------------------------------

1 | # Microscope Installation Guide

2 |

3 | This guide will walk through a complete recOrder installation consisting of:

4 | 1. Checking pre-requisites for compatibility.

5 | 2. Installing Meadowlark DS5020 and liquid crystals.

6 | 3. Installing and launching the latest stable version of `recOrder` via `pip`.

7 | 4. Installing a compatible version of Micro-Manager and LC device drivers.

8 | 5. Connecting `recOrder` to Micro-Manager via a `pycromanager` connection.

9 |

10 | ## Compatibility Summary

11 | Before you start you will need to confirm that your system is compatible with the following software:

12 |

13 | | Software | Version |

14 | | :--- | :--- |

15 | | `recOrder` | 0.4.0 |

16 | | OS | Windows 10 |

17 | | Micro-Manager version | [2023-04-26 (160 MB)](https://download.micro-manager.org/nightly/2.0/Windows/MMSetup_64bit_2.0.1_20230426.exe) |

18 | | Meadowlark drivers | [USB driver (70 kB)](https://github.com/mehta-lab/recOrder/releases/download/0.4.0/usbdrvd.dll) |

19 | | Meadowlark PC software version | 1.08 |

20 | | Meadowlark controller firmware version | >=1.04 |

21 |

22 | ## Install Meadowlark DS5020 and liquid crystals

23 |

24 | Start by installing the Meadowlark DS5020 and liquid crystals using the software on the USB stick provided by Meadowlark. You will need to install the USB drivers and CellDrive5000.

25 |

26 | **Check your installation versions** by opening CellDrive5000 and double clicking the Meadowlark Optics logo. Confirm that **"PC software version = 1.08" and "Controller firmware version >= 1.04".**

27 |

28 | If you need to change your PC software version, follow these steps:

29 | - From "Add and remove programs", remove CellDrive5000 and "National Instruments Software".

30 | - From "Device manager", open the "Meadowlark Optics" group, right click `mlousb`, click "Uninstall device", check "Delete the driver software for this device", and click "Uninstall". Uninstall `Meadowlark Optics D5020 LC Driver` following the same steps.

31 | - Using the USB stick provided by Meadowlark, reinstall the USB drivers and CellDrive5000.

32 |

33 | ## Install recOrder software

34 |

35 | (Optional but recommended) install [anaconda](https://www.anaconda.com/products/distribution) and create a virtual environment

36 | ```

37 | conda create -y -n recOrder python=3.10

38 | conda activate recOrder

39 | ```

40 |

41 | Install `recOrder` with acquisition dependencies (napari and pycro-manager):

42 | ```

43 | pip install recOrder-napari[all]

44 | ```

45 | Check your installation:

46 | ```

47 | napari -w recOrder-napari

48 | ```

49 | This command should launch napari with the recOrder plugin (it may take 15 seconds on a fresh installation).

50 |

51 | ## Install and configure Micro-Manager

52 |

53 | Download and install [`Micro-Manager 2.0` nightly build `20230426` (~150 MB link).](https://download.micro-manager.org/nightly/2.0/Windows/MMSetup_64bit_2.0.1_20230426.exe)

54 |

55 | **Note:** We have tested recOrder with `20230426`, but most features will work with newer builds. We recommend testing a minimal installation with `20230426` before testing with a different nightly build or additional device drivers.

56 |

57 | Before launching Micro-Manager, download the [USB driver](https://github.com/mehta-lab/recOrder/releases/download/0.4.0rc0/usbdrvd.dll) and place this file into your Micro-Manager folder (likely `C:\Program Files\Micro-Manager` or similar).

58 |

59 | Launch Micro-Manager, open `Devices > Hardware Configuration Wizard...`, and add the `MeadowlarkLC` device to your configuration. Confirm your installation by opening `Devices > Device Property Browser...` and confirming that `MeadowlarkLC` properties appear.

60 |

61 | **Upgrading users:** you will need to reinstall the Meadowlark device to your Micro-Manager configuration file, because the device driver's name has changed from `MeadowlarkLcOpenSource` to `MeadowlarkLC`.

62 |

63 | ### Option 1 (recommended): Voltage-mode calibration installation

64 | Create a new channel group and add the `MeadowlarkLC-Voltage (V) LC-A` and `MeadowlarkLC-Voltage (V) LC-B` properties.

65 |

66 |

67 |

68 | Add 5 presets to this group named `State0`, `State1`, `State2`, `State3`, and `State4`. You can set random voltages to add these presets, and `recOrder` will calibrate and set these voltages later.

69 |

70 |

71 |

72 | ### Option 2 (soon deprecated): retardance mode calibration installation

73 |

74 | Create a new channel group and add the property `MeadowlarkLC-String send to -`.

75 |

76 |

77 |

78 | Add 5 presets to this group named `State0`, `State1`, `State2`, `State3`, and `State4` and set the corresponding preset values to `state0`, `state1`, `state2`, `state3`, `state4` in the `MeadowlarkLC-String send to -` property.

79 |

80 |

81 |

82 | ### (Optional) Enable "Phase From BF" acquisition

83 |

84 | If you would like to reconstruct phase from brightfield, add a Micro-Manager preset with brightfield properties (e.g. moving the polarization analyzer out of the light path) and give the preset a name that contains one of the following case-insensitive keywords:

85 |

86 | `["bf", "brightfield", "bright", "labelfree", "label-free", "lf", "label", "phase", "ph"]`

87 |

88 | In `recOrder` you can select this preset using the `Acquisition Settings > BF Channel` dropdown menu.

89 |
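The keyword rule for brightfield presets can be sketched as follows. This is an illustration only: the function name is hypothetical, and it assumes that "contains" means a case-insensitive substring match, which may differ in detail from what `recOrder` does internally.

```python
# Keywords listed in this guide for brightfield presets.
BF_KEYWORDS = [
    "bf", "brightfield", "bright", "labelfree",
    "label-free", "lf", "label", "phase", "ph",
]


def looks_like_bf_preset(preset_name: str) -> bool:
    """Return True if the preset name contains any brightfield keyword,
    ignoring case (substring match, an assumption of this sketch)."""
    lowered = preset_name.lower()
    return any(keyword in lowered for keyword in BF_KEYWORDS)


for name in ("BF-10x", "Label-Free", "State0", "GFP"):
    print(name, looks_like_bf_preset(name))
```

Because short keywords such as `ph` and `lf` match anywhere in the name under this assumption, it is safest to give non-brightfield presets names that avoid these letter pairs.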

90 | ### Enable port access

91 |

92 | Finally, enable port access so that Micro-Manager can communicate with recOrder through the `pycromanager` bridge. To do so, open Micro-Manager, navigate to `Tools > Options`, and check the box that says `Run server on port 4827`.

93 |

94 |

95 |

96 | ## Connect `recOrder` to Micro-Manager

97 |

98 | From the `recOrder` window, click `Switch to Online`. If you see `Success`, your installation is complete and you can [proceed to the napari plugin guide](./napari-plugin-guide.md).

99 |

100 | If you see `Failed`, check that Micro-Manager is open and that you've enabled `Run server on port 4827`. If the connection continues to fail, report an issue with your stack trace for support.

101 |
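When troubleshooting a `Failed` connection, it can help to confirm that something is listening on port 4827 before involving `recOrder` at all. The following is a minimal sketch using only Python's standard `socket` module; the function name is hypothetical, and a successful TCP connection only shows that the port is open, not that the `pycromanager` bridge is fully functional.

```python
import socket


def port_is_open(host: str = "localhost", port: int = 4827, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

If `port_is_open()` returns `False` while Micro-Manager is running, recheck the `Run server on port 4827` option and restart Micro-Manager.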

--------------------------------------------------------------------------------

/docs/napari-plugin-guide.md:

--------------------------------------------------------------------------------

1 | # Napari Plugin Guide

2 | This guide summarizes a complete `recOrder` workflow.

3 |

4 | ## Launch `recOrder`

5 | Activate the `recOrder` environment

6 | ```

7 | conda activate recOrder

8 | ```

9 |

10 | Launch `napari` with `recOrder`

11 | ```

12 | napari -w recOrder-napari

13 | ```

14 | ## Connect to Micro-Manager

15 | Click “Connect to MM”. If the connection succeeds, proceed to calibration. If not, revisit the [microscope installation guide](./microscope-installation-guide.md).

16 |

17 |

18 |

19 | For polarization imaging, start with the **Calibration** tab. For phase-from-brightfield imaging, you can skip the calibration and go to the **Acquisition / Reconstruction** tab.

20 |

21 | ## Calibration tab

22 | The first step in the acquisition process is to calibrate the liquid crystals and measure a background. In the `recOrder` plugin you will see the following options for controlling the calibration:

23 |

24 |

25 |

26 |

27 | ### Prepare for a calibration

28 | Place your sample on the stage, focus on the surface of the coverslip/well, navigate to **an empty FOV**, then align the light source into **Kohler illumination** [following these steps](https://www.microscopyu.com/tutorials/kohler).

29 |

30 | ### Choose calibration parameters

31 | Browse for and choose a **Directory** where your calibration and background images will be saved.

32 |

33 | Choose a **Swing** based on the anisotropy of your sample. We recommend

34 |

35 | * Tissue Imaging: `swing = 0.1 - 0.05`

36 | * Live or fixed cells: `swing = 0.05 - 0.03`

37 |

38 | We recommend starting with a swing of **0.1** for tissue samples and **0.05** for cells then reducing the swing to measure smaller structures. See the [calibration guide](./calibration-guide.md) for more information about this parameter and the calibration process.

39 |

40 | Choose an **Illumination Scheme** to decide how many polarization states you will calibrate and use. We recommend starting with the *4-State (Ext, 0, 60, 120)* scheme as it requires one fewer illumination state than the *5-State* scheme.

41 |

42 | **Calibration Mode** is set automatically, so the default value is a good place to start. Different modes allow calibrations with voltages, retardances, or hardware sequencing.

43 |

44 | The **Config Group** is set automatically to the Micro-Manager configuration group that contains the `State*` presets. You can modify this option if you have multiple configuration groups with these presets.

45 |

46 | ### Run the calibration

47 | Start a calibration with **Run Calibration**.

48 |

49 | The progress bar will show the progress of calibration, and it should take less than 2 minutes on most systems.

50 |

51 | The plot shows the intensities over time during calibration. One way to diagnose an in-progress calibration is to watch the intensity plot. An ideal plot will look similar to the following:

52 |

53 |

54 |

55 | Once finished, you will get a calibration assessment and an extinction value. The extinction value gives you a metric for calibration quality: the higher the extinction, the cleaner the light path and the greater the sensitivity of QLIPP.

56 |

57 | * **Extinction 0 - 50**: Very poor. The alignment of the universal compensator may be off or the sample chamber may be highly birefringent.

58 |

59 | * **Extinction 50 - 100**: Okay. May be acceptable for tissue imaging and strongly anisotropic structures, but most likely not suitable for cell imaging.

60 |

61 | * **Extinction 100 - 200**: Good. These are the typical values we get on our microscopes.

62 |

63 | * **Extinction 200+**: Excellent. Indicates a very well-aligned and clean light path and high sensitivity of the system.

64 |
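
As a rough illustration, the ranges above can be written as a small lookup. This is only a sketch: `assess_extinction` is a hypothetical helper, not part of `recOrder`, and because the listed ranges share their endpoints (50, 100, 200), it assumes a boundary value belongs to the higher band.

```python
def assess_extinction(extinction: float) -> str:
    """Map an extinction value to the qualitative ranges in this guide.

    Hypothetical helper for illustration only. Boundary values (50, 100,
    200) are assigned to the higher band, which is an assumption.
    """
    if extinction < 0:
        raise ValueError("extinction must be non-negative")
    if extinction < 50:
        return "very poor"
    if extinction < 100:
        return "okay"
    if extinction < 200:
        return "good"
    return "excellent"


# Example: a value in the range typical of the authors' microscopes.
print(assess_extinction(150))
```
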

65 | For a deeper discussion of the calibration procedure, swing, and the extinction ratio, see the [calibration guide](./calibration-guide.md).

66 |

67 | ### Optional: Load Calibration

68 | The **Load Calibration** button allows earlier calibrations to be reused. Select a *polarization_calibration.txt* file and Micro-Manager's presets will be updated with these settings. `recOrder` will also collect a few images to update the extinction ratio to reflect the current condition of the light path. Once this short acquisition has finished, the user can acquire data as normal.

69 |

70 | This feature is useful if Micro-Manager and/or `recOrder` crashes. If the sample and imaging setup haven't changed, it is safe to reuse a calibration. Otherwise, if the sample or the microscope changes, we recommend performing a new calibration.

71 |

72 | ### Optional: Calculate Extinction

73 | The **Calculate Extinction** button acquires a few images and recalculates the extinction value.

74 |

75 | This feature is useful for checking if a new region of your sample requires a recalibration. If the sample or background varies as you move around the sample, the extinction will drop and you should recalibrate and acquire background images as close to the area you will be imaging as possible.

76 |

77 | ### Capture Background

78 |

79 | The **Capture Background** button will acquire several images (we recommend 5) under each of the calibrated polarization states, average them, save them to the specified **Background Folder Name** within the main **Directory**, then display the result in napari layers.

80 |

81 |

82 |

83 | It is normal to see background retardance and orientation. We will use these background images to correct the data we collect in our acquisitions of the sample.

84 |

85 | ### Advanced Tab

86 | The advanced tab gives the user a log output which can be useful for debugging. The “debugging” log level provides verbose output. Look here for any hints as to what may have gone wrong during calibration or acquisition.

87 |

88 | ## Acquisition / Reconstruction Tab

89 | This acquisition tab is designed to acquire and reconstruct single volumes of both phase and birefringence measurements to allow the user to test their calibration and background. We recommend this tab for quick testing and the Micro-Manager MDA acquisition for high-throughput data collection.

90 |

91 | ### Acquire Buttons

92 |

93 |

94 | The **Retardance + Orientation**, **Phase From BF**, and **Retardance + Orientation + Phase** buttons set off Micro-Manager acquisitions that use the upcoming acquisition settings. After the acquisition is complete, these routines will set off `recOrder` reconstructions that estimate the named parameters.

95 |

96 | The **STOP** button will end the acquisition as soon as possible, though Micro-Manager acquisitions cannot always be interrupted.

97 |

98 | ### Acquisition Settings

99 |

100 |

101 | The **Acquisition Mode** sets the target dimensions for the reconstruction. Perhaps surprisingly, all 2D reconstructions require 3D data except for **Retardance + Orientation** in **2D Acquisition Mode**. The following table summarizes the data that will be acquired when an acquisition button is pressed in **2D** and **3D** acquisition modes:

102 |

103 | | **Acquisition** \ Acquisition Mode | 2D mode | 3D mode |

104 | | :--- | :--- | :--- |

105 | | **Retardance + Orientation** | CYX data | CZYX data |

106 | | **Phase From BF** | ZYX data | ZYX data |

107 | | **Retardance + Orientation + Phase** | CZYX data | CZYX data |

108 |
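
The table above can also be read as a lookup from (acquisition button, acquisition mode) to the dimensions of the acquired data. The sketch below simply restates the table; `ACQUIRED_DIMS` and `needs_z_stack` are hypothetical names used for illustration and are not part of `recOrder`.

```python
# Restates the table: (acquisition button, acquisition mode) -> data axes.
ACQUIRED_DIMS = {
    ("Retardance + Orientation", "2D"): "CYX",
    ("Retardance + Orientation", "3D"): "CZYX",
    ("Phase From BF", "2D"): "ZYX",
    ("Phase From BF", "3D"): "ZYX",
    ("Retardance + Orientation + Phase", "2D"): "CZYX",
    ("Retardance + Orientation + Phase", "3D"): "CZYX",
}


def needs_z_stack(acquisition: str, mode: str) -> bool:
    """Return True when the acquired data includes a Z axis."""
    return "Z" in ACQUIRED_DIMS[(acquisition, mode)]


# Only one combination is acquired without a z-stack.
no_stack = [key for key in ACQUIRED_DIMS if not needs_z_stack(*key)]
print(no_stack)
```
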

109 | Unless a **Retardance + Orientation** reconstruction in **2D Acquisition Mode** is requested, `recOrder` uses Micro-Manager's z-stage to acquire 3D data. **Z Start**, **Z End**, and **Z Step** are stage settings for acquiring an image volume, relative to the current position of the stage. Values are in the stage's default units, typically in micrometers.

110 |

111 | For example, to image a 20 μm thick cell, the user would focus in the middle of the cell, then choose:

112 |

113 | * **Z Start** = -12

114 | * **Z End** = 12

115 | * **Z Step** = 0.25

116 |
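
To get a feel for how many slices these settings produce, the relative stage positions can be enumerated as below. This is a minimal sketch, assuming that **Z End** is included in the stack when it falls on a step; `relative_z_positions` is a hypothetical helper, not a `recOrder` or Micro-Manager function.

```python
import math


def relative_z_positions(z_start: float, z_end: float, z_step: float) -> list[float]:
    """List stage offsets from z_start to z_end (inclusive) in z_step increments.

    Values are relative to the current stage position, in the stage's units.
    Including the end point is an assumption made for this illustration.
    """
    if z_step <= 0:
        raise ValueError("z_step must be positive")
    if z_end < z_start:
        raise ValueError("z_end must not be smaller than z_start")
    # Small tolerance guards against floating-point round-off in the division.
    n_steps = math.floor((z_end - z_start) / z_step + 1e-9)
    return [z_start + i * z_step for i in range(n_steps + 1)]


positions = relative_z_positions(-12, 12, 0.25)
print(len(positions), positions[0], positions[-1])
```

With the example settings, the stack extends 2 units beyond each edge of a 20 μm thick cell that is centered on the focal plane.
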

117 | For phase reconstruction, the stack should have about two depths-of-focus above and below the edges of the sample because the reconstruction algorithm uses defocus information to more accurately reconstruct phase.

118 |

119 | ### General Reconstruction Settings

120 |

121 |

122 | The **Save Directory** and **Save Name** are where the acquired data (`/_snap_/raw_data.zarr`) and reconstructions (`/_snap_/reconstruction.zarr`) will be saved.

123 |

124 | The **Background Correction** menu has several options (each with mouseover explanations):

125 | * **None**: No background correction is performed.

126 | * **Measured**: Corrects sample images with a background image acquired at an empty field of view, loaded from **Background Path**, by default the most recent background acquisition.

127 | * **Estimated**: Estimates the sample background by fitting a 2D surface to the sample images. Works well when structures are spatially distributed across the field of view and a clear background is unavailable.

128 | * **Measured + Estimated**: Applies a **Measured** background correction then an **Estimated** background correction. Use to remove residual background after the sample retardance is corrected with measured background.

129 |

130 | The remaining parameters are used by the reconstructions:

131 |

132 | * **GPU ID**: Not implemented

133 | * **Wavelength (nm)**: illumination wavelength

134 | * **Objective NA**: numerical aperture of the objective, typically found next to magnification

135 | * **Condenser NA**: numerical aperture of the condenser

136 | * **Camera Pixel Size (um)**: pixel size of the camera in micrometers (e.g. 6.5 μm)

137 | * **RI of Obj. Media**: refractive index of the objective media, typical values are 1.0 (air), 1.3 (water), 1.473 (glycerol), or 1.512 (oil)

138 | * **Magnification**: magnification of the objective

139 | * **Rotate Orientation (90 deg)**: rotates "Orientation" reconstructions by +90 degrees clockwise and saves the result, most useful when a known-orientation sample is available

140 | * **Flip Orientation**: flips "Orientation" reconstructions about napari's horizontal axis before saving the result

141 | * **Invert Phase Contrast**: inverts the phase reconstruction's contrast by flipping the positive and negative directions of the stage during the reconstruction, and saves the result

142 |

143 | ### Phase Reconstruction Settings

144 |

145 |

146 | These parameters are used only by phase reconstructions:

147 |

148 | * **Z Padding**: The number of slices to pad on either end of the stack, necessary if the sample is not fully out of focus on either end of the stack

149 | * **Regularizer**: Choose "Tikhonov"; the "TV" regularizer is not implemented

150 | * **Strength**: The Tikhonov regularization strength; values that are too small/large will result in reconstructions that are too noisy/smooth

151 |
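
The effect of the regularization strength can be illustrated with a generic one-dimensional Tikhonov-regularized deconvolution. This is only a toy sketch using NumPy, not the `recOrder` phase reconstruction: the function `tikhonov_deconvolve`, the blur kernel, and the test signal are all assumptions chosen for illustration.

```python
import numpy as np


def tikhonov_deconvolve(measured, kernel, strength):
    """Generic 1D Tikhonov-regularized deconvolution in the Fourier domain.

    Toy example only. Computes conj(H) * Y / (|H|^2 + strength), where H and Y
    are the Fourier transforms of the kernel and the measurement.
    """
    if strength <= 0:
        raise ValueError("strength must be positive")
    H = np.fft.fft(kernel, n=measured.size)
    Y = np.fft.fft(measured)
    X = np.conj(H) * Y / (np.abs(H) ** 2 + strength)
    return np.real(np.fft.ifft(X))


# Synthetic data: a box signal, circularly blurred, with a little noise added.
rng = np.random.default_rng(0)
truth = np.zeros(64)
truth[20:30] = 1.0
kernel = np.array([0.25, 0.5, 0.25])
blurred = np.real(np.fft.ifft(np.fft.fft(truth) * np.fft.fft(kernel, n=truth.size)))
noisy = blurred + 0.01 * rng.standard_normal(truth.size)

weak = tikhonov_deconvolve(noisy, kernel, 1e-6)   # small strength: noise is amplified
strong = tikhonov_deconvolve(noisy, kernel, 1.0)  # large strength: heavily damped
```

In this toy model, a larger strength damps every spatial frequency more, most of all where the kernel response is weak, which is why an overly large value produces overly smooth results and an overly small one lets noise through.
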

152 | The acquired data will then be displayed in napari layers. Note that phase reconstruction is more computationally expensive and may take several minutes depending on your system.

153 |