├── awesome

├── image-cbr.md

├── entity-linking.md

├── health-data.md

├── opendata-gbif.md

├── chinese-word-segmentation.md

├── fenci.md

├── sparse-representation-cv.md

├── dataset.md

├── query-intent.md

├── manifold-learning.md

├── reverse-proxy-load-balancer.md

├── stanford-cs224w.md

├── rdb-rdf.md

├── recurrent-neural-networks.md

├── crawler.md

├── machine-learning-reading.md

├── phonetic_algorithm.md

├── ocr-tools.md

├── learn-big-data.md

├── multiclass-boosting.md

├── piecewise-linear-regression.md

├── bayesian-network-python.md

├── outlier-text-mining.md

├── computer-vision-dataset.md

├── question-answer.md

├── mlss.md

├── semanticweb-dl

├── imbalanced-data-classification.md

├── influential-user-social-network.md

├── deep-learning-introduction.md

├── chinese-word-similarity.md

├── multitask-learning.md

├── speech-recognition.md

├── test-recent.md

├── machine-learning-guide.md

└── nlp.md

└── README.md

/awesome/image-cbr.md:

--------------------------------------------------------------------------------

1 | http://www.openimaj.org/

2 |

3 | http://www.openimaj.org/tutorial-pdf.pdf

4 |

5 | https://code.google.com/p/lire/

6 |

7 | http://demo-itec.uni-klu.ac.at/liredemo/

8 |

9 | http://www.phash.org/

10 |

11 | http://www.phash.org/docs/pubs/thesis_zauner.pdf

12 |

--------------------------------------------------------------------------------

/awesome/entity-linking.md:

--------------------------------------------------------------------------------

1 | # reading lists

2 | http://nlp.cs.rpi.edu/kbp/2014/elreading.html Entity linking paper reading list, by Heng Ji.

3 |

4 | # tutorial

5 | http://nlp.cs.rpi.edu/paper/wikificationtutorial.pdf ACL 2014 wikification tutorial by Dan Roth (UIUC), Heng Ji (RPI), Ming-Wei Chang (MSR), and Taylor Cassidy (ARL, IBM)

6 |

--------------------------------------------------------------------------------

/awesome/health-data.md:

--------------------------------------------------------------------------------

1 | Health statistics from international organizations

2 |

3 | http://t.cn/8FDT5pG

4 |

5 | http://t.cn/RPSIhDv

6 |

7 | http://t.cn/RPSIhDZ

8 |

9 | http://t.cn/RPSIhDP

10 |

11 | US health statistics are scattered across various agencies

12 |

13 | http://t.cn/RPSIhDh

14 |

15 | http://t.cn/RPSIhDz

16 |

17 | http://t.cn/RPSIhD7

18 |

19 | Health statistics for China

20 |

21 | http://t.cn/zYK9zeF

22 |

23 | A University of Chicago page that collects some health statistics

24 |

25 | http://t.cn/RPSIhDw

26 |

--------------------------------------------------------------------------------

/awesome/opendata-gbif.md:

--------------------------------------------------------------------------------

1 | http://www.gbif.org/mendeley/usecases research papers

2 |

3 | http://www.gbif.org/newsroom/uses showcases using aggregated data

4 |

5 | http://imsgbif.gbif.org/CMS_ORC/?doc_id=2613&download=1 2014 overview

6 |

7 | http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0066559 research paper on grey squirrel

8 |

9 | http://www.gbif.org/ homepage

10 |

11 |

--------------------------------------------------------------------------------

/awesome/chinese-word-segmentation.md:

--------------------------------------------------------------------------------

1 |

2 | ## Overview

3 | http://www.zhihu.com/question/19929473

4 |

5 | ## Topics

6 |

7 | http://www.52nlp.cn/%E4%B8%AD%E6%96%87%E5%88%86%E8%AF%8D%E5%85%A5%E9%97%A8%E4%B9%8B%E8%B5%84%E6%BA%90 Chinese word segmentation

8 |

9 | http://www.google.com/patents/US20100306139 Google patent, Chinese name recognition

10 |

11 | https://github.com/fxsjy/jieba Chinese word segmentation

12 |

13 | http://nlp.stanford.edu/software/CRF-NER.shtml stanford named entity recognition

14 |

--------------------------------------------------------------------------------

/awesome/fenci.md:

--------------------------------------------------------------------------------

1 | Ansj Chinese word segmentation (Java)

2 |

3 | http://t.cn/zWDqIRw

4 |

5 | jieba word segmentation (Python)

6 |

7 | http://t.cn/zlfOaMU

8 |

9 | C++ version of the "jieba" Chinese word segmenter

10 |

11 | http://t.cn/RPICG0o

12 |

13 | Technical articles:

14 |

15 | Basics (either one of these two is enough):

16 |

17 | http://t.cn/RPICqae

18 |

19 | http://t.cn/zHm2KHK

20 |

21 | Common algorithms
22 | CRF

23 |

24 | http://t.cn/RPIC5fy

25 |

26 | HMM

27 |

28 | http://t.cn/zOec8CW

29 |

30 | Data structures

31 |

32 | Trie

33 |

34 | http://t.cn/RPIC5mA

35 |

36 | Double-array trie

37 |

38 | http://t.cn/ar6lK9

39 |

--------------------------------------------------------------------------------

/awesome/sparse-representation-cv.md:

--------------------------------------------------------------------------------

1 | http://www.eecs.berkeley.edu/~yang/software/l1benchmark/index.html sparse Optimization

2 |

3 | http://perception.csl.illinois.edu/matrix-rank/ Low-Rank Representation

4 |

5 | http://www.eecs.berkeley.edu/%7Eyang/courses/ECCV2012/ECCV12-lecture1.pdf Introduction to Sparse Representation and Low-Rank Representation

6 |

7 | http://www.eecs.berkeley.edu/%7Eyang/courses/ECCV2012/ECCV12-lecture2.pdf Variations of Sparse Optimization and Their Numerical Implementation

8 |

9 | http://www.eecs.berkeley.edu/%7Eyang/courses/ECCV2012/ECCV12-lecture3.pdf Finding and Harnessing Low-Dimensional Structure of High-Dimensional Data

10 |

11 | http://www.eecs.berkeley.edu/~yang/ Allen Y. Yang

12 |

13 | http://www.columbia.edu/~jw2966/ John Wright

14 |

15 | http://yima.csl.illinois.edu/ Yi Ma

16 |

--------------------------------------------------------------------------------

/awesome/dataset.md:

--------------------------------------------------------------------------------

1 | ## dataset catalogs

2 |

3 | https://snap.stanford.edu/data/ Stanford Large Network Dataset Collection

4 |

5 | http://www.rdatamining.com/resources/data Free Datasets for R

6 |

7 | http://aws.amazon.com/publicdatasets/

8 |

9 | http://catalog.data.gov/dataset

10 |

11 | http://data.worldbank.org/

12 |

13 | http://www.infochimps.com/datasets/

14 |

15 | http://ckan.org/instances/#

16 |

17 | http://archive.ics.uci.edu/ml/datasets.html

18 |

19 | http://www.kdnuggets.com/datasets/index.html

20 |

21 |

22 | ## individual datasets

23 | https://developers.google.com/freebase/data freebase

24 |

25 | https://archive.org/details/stackexchange stack overflow

26 |

27 | http://commoncrawl.org/data/accessing-the-data/ common crawl

28 |

29 | http://km.aifb.kit.edu/projects/btc-2012/ billion triple challenge (including dbpedia, dblp, tumbler ...)

30 |

--------------------------------------------------------------------------------

/awesome/query-intent.md:

--------------------------------------------------------------------------------

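1 | http://www.cnblogs.com/yangxudong/p/3750358.html Query intent analysis: a complete machine learning walkthrough (study notes on the scikit-learn library)

2 |

3 | http://www.tao-sou.com/740.html The 15 types of Taobao search queries (from a sample of 500 queries) and a comparison with Baidu and Google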

4 |

5 | http://searchnewscentral.com/20110531166/Technical/query-classification-understanding-user-intent.html Query classification; understanding user intent

6 |

7 | http://dl.acm.org/citation.cfm?id=1351372 Determining the informational, navigational, and transactional intent of Web queries

8 |

9 | http://dl.acm.org/citation.cfm?id=1507510 Survey and evaluation of query intent detection methods

10 |

11 | http://www.slideshare.net/daniel.gayo/survey-and-evaluation-of-query-intent-detection-methods

12 |

13 | http://www.ijarce.com/downloads/may-2014/IJARCE-13201416.pdf Survey and Analysis for User Intention Refined Internet Image Search

14 |

15 | http://gesterling.wordpress.com/2010/03/03/local-queries-vs-local-intent/ Local Queries vs. ‘Local Intent’

16 |

--------------------------------------------------------------------------------

/awesome/manifold-learning.md:

--------------------------------------------------------------------------------

1 | Discussion and progress: issue 26 https://github.com/memect/hao/issues/26

2 |

3 | ## Introduction

4 |

5 | http://blog.sina.com.cn/s/blog_eccca60e0101h1d6.html @cmdyz Manifold Learning

6 |

7 | http://blog.pluskid.org/?p=533 A brief discussion of manifold learning

8 |

9 | http://blog.csdn.net/chl033/article/details/6107042 A survey of manifold learning

10 |

11 | http://colah.github.io/posts/2014-03-NN-Manifolds-Topology/ Neural Networks, Manifolds, and Topology

12 |

13 | ## Tutorial

14 |

15 | http://www.cad.zju.edu.cn/reports/%C1%F7%D0%CE%D1%A7%CF%B0.pdf Xiaofei He (何晓飞), Manifold Learning

16 |

17 | https://www.cs.cmu.edu/~efros/courses/AP06/presentations/ThompsonDimensionalityReduction.pdf

18 |

19 | http://mlsp2012.conwiz.dk/fileadmin/lectures/mlsp2012_raich.pdf MLSP2012 Tutorial: Manifold Learning: Modeling and Algorithms

20 |

21 | ## Additional Tutorials

22 |

23 | http://www2.imm.dtu.dk/projects/manifold/Syllabus.html Summer School on Manifold Learning in Image and Signal Analysis

24 |

25 | ## Implementation

26 |

27 | http://scikit-learn.org/stable/modules/manifold.html

28 |

29 | Who else follows this topic: @王斌_ICTIR @丕子

30 |

31 |

--------------------------------------------------------------------------------

/awesome/reverse-proxy-load-balancer.md:

--------------------------------------------------------------------------------

1 | # Solutions for improving web page response time: DNS A records, reverse proxies, and load balancing

2 |

3 | contributors @mahak, BUPTGuo , 情非得已小屋, 新世界_玉兔 , 52cs

4 |

5 | discussion: https://github.com/memect/hao/issues/48

6 |

7 | keywords:

8 | DNS A-Record,

9 | load balancer,

10 | reverse proxy,

11 |

12 |

13 | ## Solutions
14 | http://webmasters.stackexchange.com/questions/10927/using-multiple-a-records-for-my-domain-do-web-browsers-ever-try-more-than-one The simplest option is a DNS setting: configure multiple "A" records under one domain name, so that the domain maps to several IP addresses, and the name server and the browser together pick one of those IPs to connect to

15 |

16 | http://yijiu.blog.51cto.com/433846/1408443 Reverse proxying and load balancing with Nginx

17 |

18 | http://fournines.wordpress.com/2011/12/02/improving-page-speed-cdn-vs-squid-varnish-nginx/ Improving page speed: CDN vs Squid/Varnish/nginx/mod_proxy

19 |

20 | http://en.wikipedia.org/wiki/Reverse_proxy

21 |

22 |

23 | http://en.wikipedia.org/wiki/Load_balancing_%28computing%29#Load_balancer_features

24 |

25 |

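26 | ## Discussion
27 | @52cs A domain name can apparently be bound to only one IP, so how can all these servers be found through the domain name?

28 |

29 | mahak: A domain's A records can hold several IPs that are rotated (round robin). Once a request reaches one of those IPs, it may land on a load-balancing device; the balancing policy can be tuned to the application, for example whether to keep session persistence.

30 |

31 | BUPTGuo: Load balancing? (Aug 3, 17:17)

32 |

33 | 好东西传送门: [Help wanted] Everyone is welcome to answer here http://t.cn/RPi5Prc A quiet aside: it is probably done through a load balancer or reverse proxy //@龙星计划: Please explain (Aug 3, 17:51)

34 |

35 | 情非得已小屋: Load balancing + reverse proxy (Aug 3, 19:24)

36 |

37 | 新世界_玉兔: DNS provides load balancing (Aug 4, 16:05)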

38 |
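The rotation over several A records mentioned in the discussion can be sketched in a few lines of Python. This only illustrates the round-robin idea and is not how any particular DNS server or load balancer is implemented; the addresses are hypothetical (taken from the 192.0.2.0/24 documentation range) and `round_robin` is a name introduced here for illustration.

```python
from itertools import cycle

# Hypothetical A records for one domain name (documentation-only addresses).
A_RECORDS = ["192.0.2.10", "192.0.2.11", "192.0.2.12"]


def round_robin(addresses):
    """Return a function that hands out the addresses in rotation."""
    rotation = cycle(addresses)
    return lambda: next(rotation)


next_ip = round_robin(A_RECORDS)
picked = [next_ip() for _ in range(5)]
print(picked)
```

Each call returns the next address and wraps around after the last one, so successive requests are spread evenly over the servers. Session persistence, health checks, and weighting are deliberately left out.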

39 |

40 |

--------------------------------------------------------------------------------

/awesome/stanford-cs224w.md:

--------------------------------------------------------------------------------

1 | http://web.stanford.edu/class/cs224w/

2 |

3 |

4 | # class notes

5 | http://web.stanford.edu/class/cs224w/slides/01-intro.pdf

6 |

7 | http://web.stanford.edu/class/cs224w/slides/02-gnp.pdf

8 |

9 | http://web.stanford.edu/class/cs224w/slides/03-smallworld.pdf

10 |

11 | http://web.stanford.edu/class/cs224w/slides/04-navigation.pdf

12 |

13 | http://web.stanford.edu/class/cs224w/slides/05-evals.pdf

14 |

15 | http://web.stanford.edu/class/cs224w/slides/06-signed.pdf

16 |

17 | http://web.stanford.edu/class/cs224w/slides/07-cascading.pdf

18 |

19 | http://web.stanford.edu/class/cs224w/slides/08-cascades.pdf

20 |

21 | http://web.stanford.edu/class/cs224w/slides/09-influence.pdf

22 |

23 | http://web.stanford.edu/class/cs224w/slides/10-outbreak.pdf

24 |

25 | http://web.stanford.edu/class/cs224w/slides/11-powerlaws.pdf

26 |

27 | http://web.stanford.edu/class/cs224w/slides/12-evolution.pdf

28 |

29 | http://web.stanford.edu/class/cs224w/slides/13-pagerank.pdf

30 |

31 | http://web.stanford.edu/class/cs224w/slides/14-kronecker.pdf

32 |

33 | http://web.stanford.edu/class/cs224w/slides/15-weakties.pdf

34 |

35 | http://web.stanford.edu/class/cs224w/slides/16-spectral.pdf

36 |

37 | http://web.stanford.edu/class/cs224w/slides/17-overlapping.pdf

38 |

39 | http://web.stanford.edu/class/cs224w/slides/19-memes.pdf

40 |

41 | http://web.stanford.edu/class/cs224w/slides/20-review.pdf

42 |

--------------------------------------------------------------------------------

/awesome/rdb-rdf.md:

--------------------------------------------------------------------------------

1 | # Relational Databases to RDF (RDB2RDF)

2 | Abstract: [Classic collection] How to map relational database data to the Semantic Web's RDF representation and support the SPARQL query language.

3 |

4 | editor(s): [吴伟](https://github.com/wwumit), [好东西传送门](https://github.com/haoawesome)

5 |

6 |

7 | # Overview

8 | http://www.csee.umbc.edu/courses/graduate/691/spring14/01/notes/20_rdbs/20r2r.pdf Short story - RDB and RDF 1, Tim Finin's class notes - [CMSC 491/691 Special Topics: A Web of Data]( http://www.csee.umbc.edu/courses/graduate/691/spring14/01/)

9 |

10 | http://www.slideshare.net/juansequeda/rdb2-rdf-tutorial-iswc2013 Long story - the Relational Databases to RDF (RDB2RDF) Tutorial at the 2013 International Semantic Web Conference (ISWC2013)

11 |

12 | http://www.w3.org/2001/sw/wiki/RDB2RDF

13 |

14 |

15 | # W3C Recommendations

16 |

17 | http://www.w3.org/TR/r2rml/ R2RML, W3C Recommendation 2012

18 |

19 | http://www.w3.org/TR/rdb-direct-mapping/ Direct Mapping, W3C Recommendation 2012
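As a rough illustration of the direct-mapping idea (each row becomes a subject, each column a predicate, each non-NULL cell a literal), here is a minimal Python sketch. It is a simplification and does not conform to the W3C Direct Mapping recommendation: there is no datatype handling, no foreign keys, no percent-encoding, and no literal escaping. The base IRI, the table, the row, and the helper name `row_to_triples` are all hypothetical.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def row_to_triples(base_iri, table, key_column, row):
    """Map one relational row (a dict) to N-Triples-style strings."""
    subject = f"<{base_iri}{table}/{key_column}={row[key_column]}>"
    # One triple states that the row is an instance of its table.
    triples = [f"{subject} <{RDF_TYPE}> <{base_iri}{table}> ."]
    for column, value in row.items():
        if value is None:
            continue  # NULL cells produce no triple
        predicate = f"<{base_iri}{table}#{column}>"
        triples.append(f'{subject} {predicate} "{value}" .')
    return triples


# Hypothetical row from a hypothetical "People" table.
triples = row_to_triples(
    "http://example.org/db/", "People", "ID",
    {"ID": 7, "fname": "Bob", "addr": None},
)
for triple in triples:
    print(triple)
```

R2RML covers the cases this sketch ignores by letting the mapping author choose the subject templates, predicates, and datatypes explicitly.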

20 |

21 | # Tools

22 |

23 | ## Academic Research

24 |

25 | https://github.com/nkons/r2rml-parser

26 |

27 | https://github.com/antidot/db2triples

28 |

29 | http://d2rq.org/

30 |

31 | http://www.capsenta.com/

32 |

33 | http://www.dblab.ntua.gr/~bikakis/SPARQL-RW.html

34 |

35 | http://www.dblab.ntua.gr/~bikakis/SPARQL2XQuery.html

36 |

37 | ## Commercial Tools

38 | http://virtuoso.openlinksw.com/dataspace/doc/dav/wiki/Main/VirtR2RML OpenLink Virtuoso

39 |

40 | http://docs.oracle.com/database/121/RDFRM/sem_relational_views.htm Oracle database

41 |

42 |

--------------------------------------------------------------------------------

/awesome/recurrent-neural-networks.md:

--------------------------------------------------------------------------------

1 | contributors: @ICT_朱亚东 @维尔茨

2 |

3 | card list: http://bigdata.memect.com/?tag=rnn

4 |

5 | https://github.com/memect/hao/blob/master/awesome/recurrent-neural-networks.md

6 |

7 | ## Learning resources
8 | http://en.wikipedia.org/wiki/Recurrent_neural_network background

9 |

10 | http://minds.jacobs-university.de/sites/default/files/uploads/papers/ESNTutorialRev.pdf (recommended by @ICT_朱亚东, short tutorial) H. Jaeger (2002): Tutorial on training recurrent neural networks, covering BPPT,RTRL, EKF and the "echo state network" approach. GMD Report 159, German National Research Center for Information Technology, 2002 (48 pp.)

11 |

12 | http://www.cs.toronto.edu/~graves/preprint.pdf (endorsed by @维尔茨, textbook) Supervised Sequence Labelling with Recurrent Neural Networks. Textbook, Studies in Computational Intelligence, Springer, 2012.

13 |

14 | http://www.idsia.ch/~juergen/rnn.html (resource list) over 60 RNN papers by Jürgen Schmidhuber's group at IDSIA

15 |

16 | ## Experts

17 |

18 | http://www.cs.toronto.edu/~graves/ Alex Graves

19 |

20 | http://www.idsia.ch/~juergen/ Jürgen Schmidhuber

21 |

22 | http://research.microsoft.com/en-us/projects/rnn/ Microsoft RNN group

23 |

24 |

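25 | ## Related discussion
26 | ### @维尔茨 RNN label sequence: https://github.com/memect/hao/issues/41
27 | Q: @维尔茨 Are there any papers on recurrent neural networks for segmented sequence labeling? I want to use an RNN to label the sequence itself rather than the sequence members

28 |

29 | A: Alex Graves at the University of Toronto has written a monograph on this problem. For research based on recurrent neural networks (RNN): @ICT_朱亚东 recommends Herbert Jaeger's short tutorial (40-odd pages). Prof. Jürgen Schmidhuber has collected more than 60 related papers, and Microsoft Research uses RNNs for natural language processing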

30 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # 好东西传送门

2 | [http://www.weibo.com/haoawesome](http://www.weibo.com/haoawesome)

3 | * [Introduction](README.md#introduction) : [Question answering service](README.md#question-answering-service), [Subscription service](README.md#subscription-service), [License](README.md#license)

4 | * [Archive of questions and links](README.md#archive-of-questions-and-links)

5 | * [Notices and announcements](README.md#通知与声明)

6 |

7 |

8 | ## Introduction

9 | *好东西传送门* supports knowledge sharing on Weibo: it gathers helpful people and good resources from Weibo, helps you solve problems quickly, and curates specialist knowledge for you

10 | * [Suggestions are welcome](https://github.com/memect/hao/issues/new)

11 |

12 | ### Question answering service

13 | 1. Weibo users: [visit Weibo](http://www.weibo.com/haoawesome/)

14 | * Post a question on Weibo and mention @好东西传送门

15 | * Send a private message to 好东西传送门

16 |

17 | 2. GitHub users:

18 | * [Ask a question](https://github.com/memect/hao/issues/new)

19 | * [Track Q&A progress](https://github.com/memect/hao/issues) You are welcome to pick up questions that have no answer yet

20 |

21 | ### Subscription service

22 | 1. Subscribe to the WeChat official account 好东西传送门 (it sends some of the 好东西传送门 recommendations and <机器学习日报>)

23 |

24 |

25 |

26 | 2. [Subscribe to 好东西周报](http://haoweekly.memect.com/) (a mailing list with the weekly collection of Q&A and recommended resources, sent roughly every Friday)

27 |

28 | ### License

29 |

30 | Content license for this site: [Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License](http://creativecommons.org/licenses/by-nc-sa/4.0/)

31 |

32 |

33 |

34 |

35 | ## Archive of questions and links

36 |

37 | For the latest content see 好东西周报 [http://haoweekly.memect.com/](http://haoweekly.memect.com/) , updated weekly

38 |

39 | For content before 2014-11 see the [archive](https://github.com/memect/hao/blob/master/archive-2014.md)

40 |

--------------------------------------------------------------------------------

/awesome/crawler.md:

--------------------------------------------------------------------------------

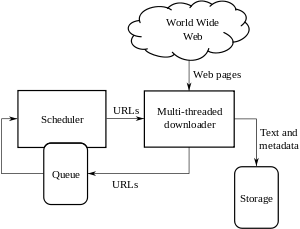

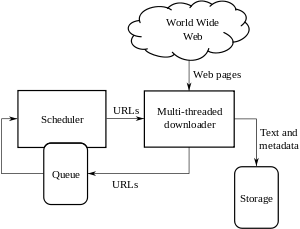

1 | # 网络爬虫(Web crawler)资料

2 |

3 | ## 概念

4 | http://en.wikipedia.org/wiki/Web_crawler A Web crawler is an Internet bot that systematically browses the World Wide Web, typically for the purpose of Web indexing. A Web crawler may also be called a Web spider,[1] an ant, an automatic indexer,[2] or (in the FOAF software context) a Web scutter.

5 |

6 | http://zh.wikipedia.org/zh-cn/%E7%B6%B2%E8%B7%AF%E8%9C%98%E8%9B%9B 网络蜘蛛(Web spider)也叫网络爬虫(Web crawler)[1],蚂蚁(ant),自动检索工具(automatic indexer),或者(在FOAF软件概念中)网络疾走(WEB scutter),是一种“自动化浏览网络”的程序,或者说是一种网络机器人。它们被广泛用于互联网搜索引擎或其他类似网站,以获取或更新这些网站的内容和检索方式。它们可以自动采集所有其能够访问到的页面内容,以供搜索引擎做进一步处理(分检整理下载的页面),而使得用户能更快的检索到他们需要的信息。

7 |

8 | ## 基本爬虫框架和最简单的例子

9 |

10 |

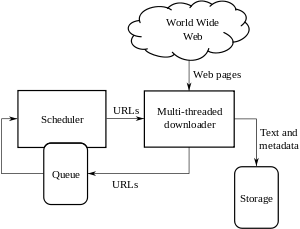

11 | * URL列表(queue): 一个数据表包含一组URL。需要初始化,每次循环后加入未访问过的URL。要有去重机制。 高级一些还要避免爬虫陷阱。

12 | * 调度器(scheduler):选择queue里的URL,以设定的频率,顺序或并发地调用下载模块。最简单实现就是for循环

13 | * 注意遵循[爬虫机器人须知 Robots.txt](http://en.wikipedia.org/wiki/Robots_exclusion_standard)。

14 | * 下载器(downloader):给定一个URL,下载URL的网页内容(content) 以及相关元数据(http header),写到下载数据storage中。一般都有HTTP客户端开源实现

15 | * 链接提取器(link extractors): 解析网页文本内容,提取URL,最后写到queue里。 可以任选字符串匹配,正则表达式,网页解析器(html/xml parser)等工具实现。

16 | * 下载数据存储(storage):,同时保存网页内容(文本、图片...)和下载时的相关元数据(URL,下载时间, 文件大小, 服务器端最后更新时间...)

17 |

18 | 下面是两个非常简单的可执行代码样例

19 | * https://cs.nyu.edu/courses/fall02/G22.3033-008/WebCrawler.java - java

20 | * https://github.com/kezakez/python-web-crawler - python

21 |

22 |

23 | ## 进阶讲义

24 | * http://www.slideshare.net/denshe/icwe13-tutorial-webcrawling

25 |

26 | ## 开源工具

27 | * http://java-source.net/open-source/crawlers Open Source Crawlers in Java

28 | * http://en.wikipedia.org/wiki/Web_crawler#Open-source_crawlers Open-source crawlers

29 |

--------------------------------------------------------------------------------

/awesome/machine-learning-reading.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## readings recommend by michael jordan

4 |

5 | source: http://www.reddit.com/r/MachineLearning/comments/2fxi6v/ama_michael_i_jordan/ckdqzph

6 |

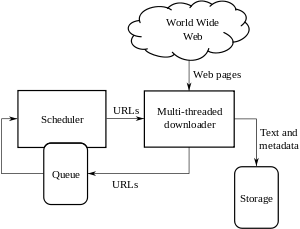

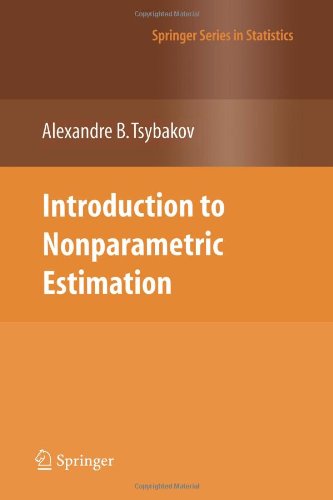

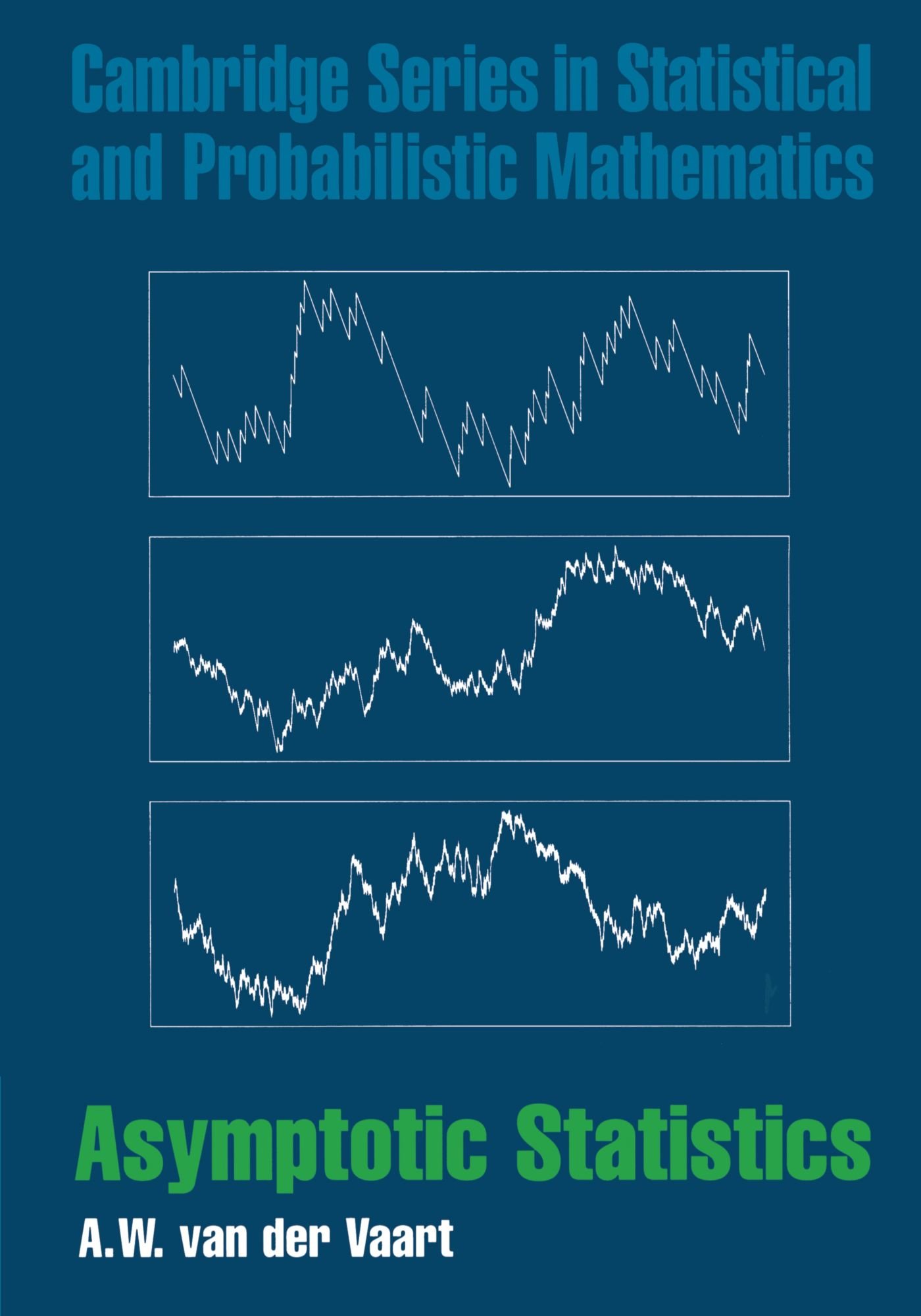

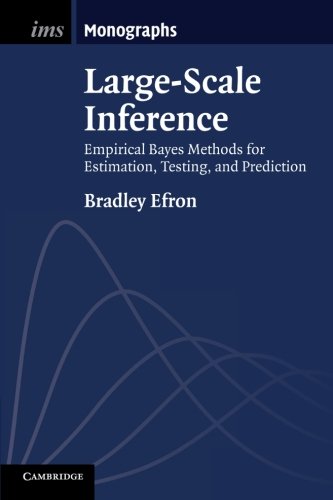

7 | "I now tend to add some books that dig still further into foundational topics. In particular, I recommend A. Tsybakov's book "Introduction to Nonparametric Estimation" as a very readable source for the tools for obtaining lower bounds on estimators, and Y. Nesterov's very readable "Introductory Lectures on Convex Optimization" as a way to start to understand lower bounds in optimization. I also recommend A. van der Vaart's "Asymptotic Statistics", a book that we often teach from at Berkeley, as a book that shows how many ideas in inference (M estimation---which includes maximum likelihood and empirical risk minimization---the bootstrap, semiparametrics, etc) repose on top of empirical process theory. I'd also include B. Efron's "Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction", as a thought-provoking book"

8 |

9 |

10 | http://www.amazon.com/Introduction-Nonparametric-Estimation-Springer-Statistics/dp/1441927093

11 | Introduction to Nonparametric Estimation

12 |

13 |

32 |

33 |

34 |

35 | ## 问答与传送档案

36 |

37 | 最新的内容请看好东西周报 [http://haoweekly.memect.com/](http://haoweekly.memect.com/) , 每周更新

38 |

39 | 2014-11以前的内容看 [存档](https://github.com/memect/hao/blob/master/archive-2014.md)

40 |

--------------------------------------------------------------------------------

/awesome/crawler.md:

--------------------------------------------------------------------------------

1 | # Web crawler resources

2 |

3 | ## Concepts

4 | http://en.wikipedia.org/wiki/Web_crawler A Web crawler is an Internet bot that systematically browses the World Wide Web, typically for the purpose of Web indexing. A Web crawler may also be called a Web spider,[1] an ant, an automatic indexer,[2] or (in the FOAF software context) a Web scutter.

5 |

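6 | http://zh.wikipedia.org/zh-cn/%E7%B6%B2%E8%B7%AF%E8%9C%98%E8%9B%9B A web spider, also called a web crawler [1], ant, automatic indexer, or (in the FOAF software context) web scutter, is a program that "browses the web automatically", in other words a kind of web robot. Such programs are widely used by Internet search engines and similar sites to fetch or update the content and index of those sites. They can automatically collect every page they are able to access so that the search engine can process it further (sorting and organizing the downloaded pages), which lets users find the information they need more quickly.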

7 |

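8 | ## A basic crawler framework and the simplest examples

9 |

10 |

11 | * URL list (queue): a table holding a set of URLs. It must be initialized, and after each loop iteration the URLs not yet visited are added. A de-duplication mechanism is required. More advanced crawlers also need to avoid crawler traps.

12 | * Scheduler: picks URLs from the queue and calls the download module sequentially or concurrently at a configured rate. The simplest implementation is a for loop

13 | * Be sure to follow [Robots.txt, the rules for crawler bots](http://en.wikipedia.org/wiki/Robots_exclusion_standard).

14 | * Downloader: given a URL, downloads the page content and the related metadata (HTTP headers) and writes them to the download storage. Open-source HTTP client implementations are generally available

15 | * Link extractors: parse the page text, extract URLs, and finally write them to the queue. They can be implemented with string matching, regular expressions, HTML/XML parsers, or similar tools.

16 | * Download storage: stores both the page content (text, images...) and the metadata recorded at download time (URL, download time, file size, server-side last-modified time...)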

17 |
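The components above can be tied together in a short Python sketch. It is a minimal illustration under stated assumptions, not a production crawler: the downloader is passed in as a `fetch` function so the example runs without network access, the "web" is a hypothetical in-memory dictionary, and robots.txt handling and crawler-trap avoidance are left out. The names `crawl`, `LinkExtractor`, and `FAKE_WEB` are introduced here for illustration.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
import time


class LinkExtractor(HTMLParser):
    """Link extractor: collects the href values of <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=10, delay=0.0):
    """Sequential scheduler; `fetch(url)` is the downloader and returns HTML text."""
    queue = deque(seeds)   # URL list
    seen = set(seeds)      # de-duplication
    storage = {}           # url -> page content plus download metadata
    while queue and len(storage) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        storage[url] = {"content": html, "fetched_at": time.time()}
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if link not in seen:
                seen.add(link)
                queue.append(link)
        time.sleep(delay)  # crude rate limit between downloads
    return storage


# Hypothetical in-memory "web" so the sketch runs offline.
FAKE_WEB = {
    "http://example.org/": '<a href="/a">a</a> <a href="/b">b</a>',
    "http://example.org/a": '<a href="/">home</a>',
    "http://example.org/b": "",
}
pages = crawl(["http://example.org/"], lambda url: FAKE_WEB.get(url, ""))
print(sorted(pages))
```

Passing the downloader as a parameter keeps the scheduler independent of any HTTP client; a real run would supply a function built on an HTTP library and check robots.txt before each fetch.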

18 | Below are two very simple runnable code samples

19 | * https://cs.nyu.edu/courses/fall02/G22.3033-008/WebCrawler.java - java

20 | * https://github.com/kezakez/python-web-crawler - python

21 |

22 |

23 | ## Advanced lecture notes

24 | * http://www.slideshare.net/denshe/icwe13-tutorial-webcrawling

25 |

26 | ## Open-source tools

27 | * http://java-source.net/open-source/crawlers Open Source Crawlers in Java

28 | * http://en.wikipedia.org/wiki/Web_crawler#Open-source_crawlers Open-source crawlers

29 |

--------------------------------------------------------------------------------

/awesome/machine-learning-reading.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Readings recommended by Michael Jordan

4 |

5 | source: http://www.reddit.com/r/MachineLearning/comments/2fxi6v/ama_michael_i_jordan/ckdqzph

6 |

7 | "I now tend to add some books that dig still further into foundational topics. In particular, I recommend A. Tsybakov's book "Introduction to Nonparametric Estimation" as a very readable source for the tools for obtaining lower bounds on estimators, and Y. Nesterov's very readable "Introductory Lectures on Convex Optimization" as a way to start to understand lower bounds in optimization. I also recommend A. van der Vaart's "Asymptotic Statistics", a book that we often teach from at Berkeley, as a book that shows how many ideas in inference (M estimation---which includes maximum likelihood and empirical risk minimization---the bootstrap, semiparametrics, etc) repose on top of empirical process theory. I'd also include B. Efron's "Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction", as a thought-provoking book"

8 |

9 |

10 | http://www.amazon.com/Introduction-Nonparametric-Estimation-Springer-Statistics/dp/1441927093

11 | Introduction to Nonparametric Estimation

12 |

13 |

14 |

15 | http://www.amazon.com/Introductory-Lectures-Convex-Optimization-Applied/dp/1402075537

16 | Introductory Lectures on Convex Optimization

17 |

18 |

19 |

20 |

21 | http://www.amazon.com/Asymptotic-Statistics-Statistical-Probabilistic-Mathematics/dp/0521784506

22 | Asymptotic Statistics

23 |

24 |

25 |

26 | http://www.amazon.com/Large-Scale-Inference-Estimation-Prediction-Mathematical/dp/110761967X

27 | Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction

28 |

29 |

30 |

31 |

--------------------------------------------------------------------------------

/awesome/phonetic_algorithm.md:

--------------------------------------------------------------------------------

1 | # Phonetic similarity algorithms and code

2 | Discussion: https://github.com/memect/hao/issues/164

3 |

4 |

5 | ## Concepts

6 | [Phonetic algorithm](http://en.wikipedia.org/wiki/Phonetic_algorithm) A phonetic algorithm is an algorithm for indexing of words by their pronunciation.

7 |

8 | Related keywords:

9 | phonetic similarity

10 | acoustic similarity / confusability

11 |

12 |

13 | ## Algorithms and open-source code

14 |

15 |

16 |

17 | algorithms

18 | * Soundex

19 | * Daitch–Mokotoff Soundex

20 | * Kölner Phonetik

21 | * Metaphone

22 | * Double Metaphone

23 | * New York State Identification and Intelligence System

24 | * Match Rating Approach (MRA)

25 | * Caverphone

26 |
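To make "indexing words by their pronunciation" concrete, here is a minimal Python sketch of American Soundex, the first algorithm in the list above. It follows the commonly described rules (keep the first letter, encode the remaining consonants as digits, collapse adjacent equal codes, let H and W be transparent, pad to four characters); it is written for illustration and is not taken from any of the libraries listed in this file. The names `CODES` and `soundex` are introduced here.

```python
# Consonant groups and their Soundex digits; vowels, H, W and Y have no code.
CODES = {}
for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in letters:
        CODES[letter] = digit


def soundex(word):
    """Return the four-character American Soundex code of `word`."""
    letters = [c for c in word.upper() if "A" <= c <= "Z"]
    if not letters:
        return ""
    result = letters[0]
    previous = CODES.get(letters[0], "")
    for c in letters[1:]:
        code = CODES.get(c, "")
        if code and code != previous:
            result += code
        if c not in "HW":  # H and W do not separate equal codes
            previous = code
    return (result + "000")[:4]


print(soundex("Robert"), soundex("Rupert"), soundex("Ashcraft"))
```

Names that sound alike, such as "Robert" and "Rupert", receive the same code, which is what lets an index group them together.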

27 | open source code

28 | * https://github.com/elasticsearch/elasticsearch-analysis-phonetic/ -- java

29 | * https://github.com/maros/Text-Phonetic -- perl

30 | * https://github.com/dotcypress/phonetics -- go

31 | * https://github.com/lukelex/soundcord -- ruby

32 | * https://github.com/Simmetrics/simmetrics -- java

33 | * https://github.com/oubiwann/metaphone - https://pypi.python.org/pypi/Metaphone/0.4 --python

34 | * https://bitbucket.org/yougov/fuzzy - https://pypi.python.org/pypi/Fuzzy/1.0 --python

35 | * https://github.com/sunlightlabs/jellyfish - https://pypi.python.org/pypi/jellyfish/0.3.2 -- python

36 |

37 | source: wikipedia, github

38 |

39 | ## Related papers

40 |

41 | http://saffron.insight-centre.org/acl/topic/phonetic_similarity/ list of related papers

42 |

43 | https://homes.cs.washington.edu/~bhixon/papers/phonemic_similarity_metrics_Interspeech_2011.pdf Phonemic Similarity Metrics to Compare Pronunciation Methods (2011)

44 |

45 | http://webdocs.cs.ualberta.ca/~kondrak/papers/lingdist.pdf Evaluation of Several Phonetic Similarity Algorithms on the Task of Cognate Identification (2006)

46 |

47 | http://webdocs.cs.ualberta.ca/~kondrak/papers/chum.pdf Phonetic alignment and similarity (2003)

48 |

49 | http://www.aclweb.org/anthology/C69-5701 The Measurement of Phonetic Similarity (1967)

50 |

51 | http://www.aclweb.org/anthology/P/P06/P06-1125.pdf A Phonetic-Based Approach to Chinese Chat Text Normalization (a method for Chinese)

52 | Phonetic similarity: algorithms and open-source code

53 |

54 |

--------------------------------------------------------------------------------

/awesome/ocr-tools.md:

--------------------------------------------------------------------------------

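1 | Geek Yang's OCR toolbox: Tesseract is currently the most widely used free, open-source OCR tool (backed by Google). Commercial products include ABBYY FineReader and Adobe; Chinese vendors include 文通 (Wintone) and 汉王 (Hanwang). The current hot topic is porting OCR to smartphones to open up new input channels: iOS has Tesseract-based implementations, and Android has the Qualcomm Vuforia API.

2 |

3 | Recognition quality depends mainly on parameter tuning, with two key points: different languages need different initial settings; and colored or gradient backgrounds sharply reduce recognition accuracy, so the image should first be converted to black-and-white or grayscale (OpenCV is worth trying). Two articles are recommended: one is an introduction to Tesseract (2007), the other reports the problems Tesseract runs into when processing color images.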

4 |
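The preprocessing step described above, converting a color image to grayscale and then to black and white before OCR, can be sketched in plain Python. This is only an illustration of the idea on a tiny hypothetical image represented as nested lists; it uses the common luminance weights 0.299/0.587/0.114 and a fixed threshold of 128, both of which are assumptions here rather than settings recommended by Tesseract. In practice an image library such as OpenCV would do this on real image arrays.

```python
def to_grayscale(pixels):
    """Convert rows of (R, G, B) tuples to rows of 0-255 luminance values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in pixels]


def binarize(gray, threshold=128):
    """Map each luminance value to 0 (black) or 255 (white)."""
    return [[255 if value >= threshold else 0 for value in row] for row in gray]


# Hypothetical 2x2 image: dark "text" pixels on a light colored background.
image = [[(250, 240, 200), (20, 20, 60)],
         [(30, 10, 10), (200, 255, 220)]]
black_and_white = binarize(to_grayscale(image))
print(black_and_white)
```

A fixed threshold works only when the background is fairly uniform; gradient backgrounds usually call for an adaptive threshold instead.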

5 | Resource card stream: http://hao.memect.com/?tag=ocr-tools

6 |

7 |

8 |

9 |

10 | # Top Reading - Market Survey

11 | https://tesseract-ocr.googlecode.com/files/TesseractOSCON.pdf Tesseract features and key issues (2007)

12 |

13 | http://www.assistivetechnology.vcu.edu/files/2013/09/pxc3882784.pdf Optical Character Recognition by Open Source OCR

14 | Tool Tesseract: A Case Study (2012)

15 |

16 | http://lifehacker.com/5624781/five-best-text-recognition-tools

17 |

18 | http://www.zhihu.com/question/19593313

19 |

20 | http://www.perfectgeeks.com/list/top-best-free-ocr-software/13

21 |

22 | http://lib.psnc.pl/Content/358/PSNC_Tesseract-FineReader-report.pdf Report on the comparison of Tesseract and

23 | ABBYY FineReader OCR engines (2012)

24 |

25 |

26 | # best OCR tools

27 | https://code.google.com/p/tesseract-ocr/ the most widely used open-source OCR software. Apache 2.0 license. It has been improved extensively by Google

28 |

29 | http://finereader.abbyy.com/ one of the best commercial products

30 |

31 | http://www.wintone.com.cn/en/ one of the best commercial products for Chinese

32 |

33 | # Tesseract in action and Q/A

34 | http://benschmidt.org/dighist13/?page_id=129

35 |

36 | http://stackoverflow.com/questions/13511102/ios-tesseract-ocr-image-preperation

37 |

38 | http://stackoverflow.com/questions/9480013/image-processing-to-improve-tesseract-ocr-accuracy?rq=1

39 |

40 | http://www.sk-spell.sk.cx/tesseract-ocr-parameters-in-302-version

41 |

42 |

43 |

44 | # Tesseract related applications

45 | https://github.com/gali8/Tesseract-OCR-iOS

46 |

47 | https://github.com/rmtheis/android-ocr

48 |

49 | https://github.com/rmtheis/tess-two

50 |

51 |

52 | # misc

53 |

54 | https://developer.vuforia.com/resources/sample-apps/text-recognition

55 | https://www.youtube.com/watch?v=KLqFQ2u52iU

56 |

57 | http://blog.ayoungprogrammer.com/2013/01/equation-ocr-part-1-using-contours-to.html

58 |

--------------------------------------------------------------------------------

/awesome/learn-big-data.md:

--------------------------------------------------------------------------------

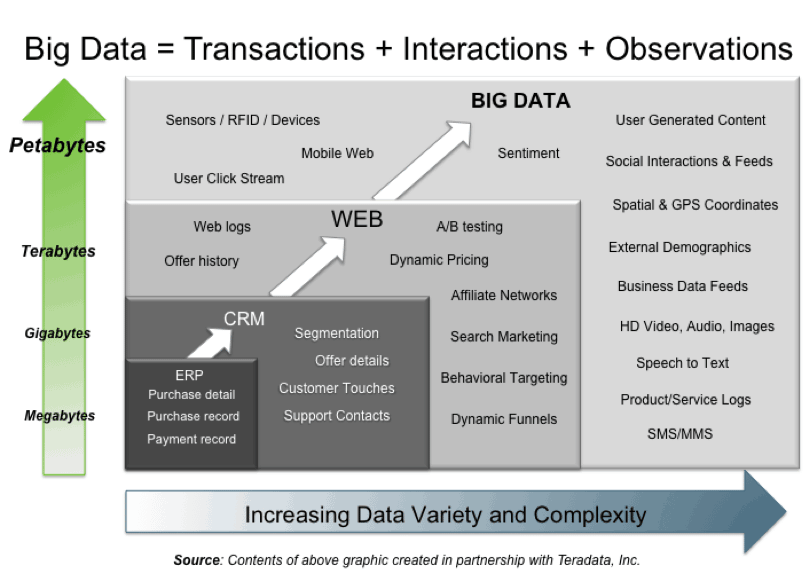

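1 | # Big data applications and technology - a collection of introductory resources

2 |

3 | Big data is a very broad concept that covers statistics, data science, machine learning, data mining, distributed databases, distributed computing, cloud storage, information visualization, and many other fields.

4 | A more detailed list of fields can be found on GitHub in [Awesome Big Data](https://github.com/onurakpolat/awesome-bigdata)

5 |

6 | Individuals and small and medium-sized businesses learning big data can start by studying some big data application cases, then practice on the data and business they already have (large or small).

7 | Note that adopting big data technology blindly can easily waste learning time and can also bring a lot of unnecessary operating cost.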

8 |

9 |

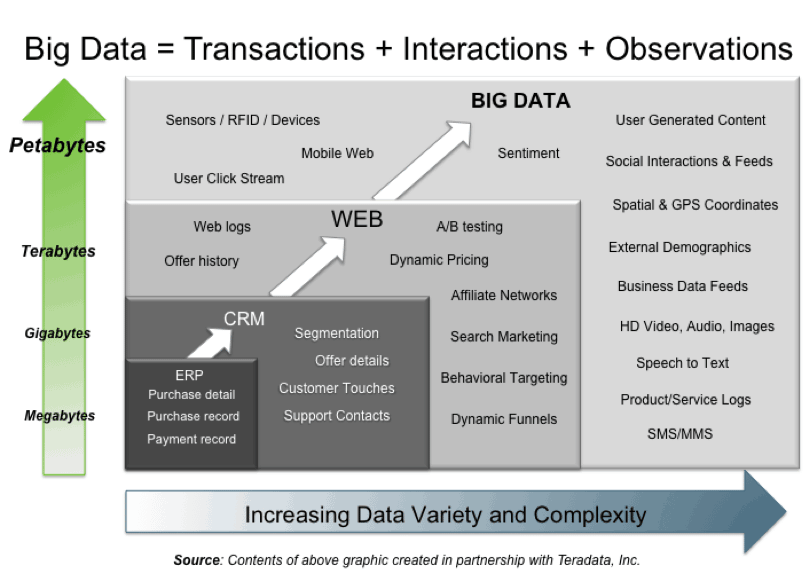

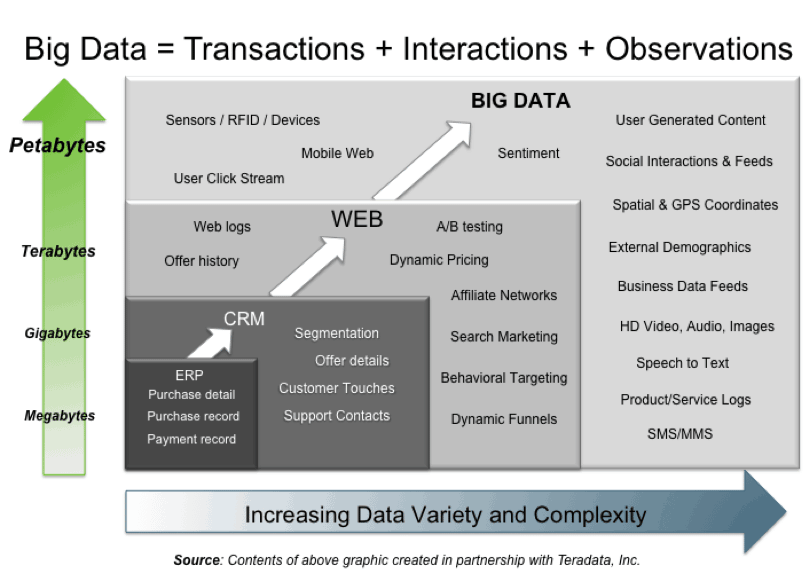

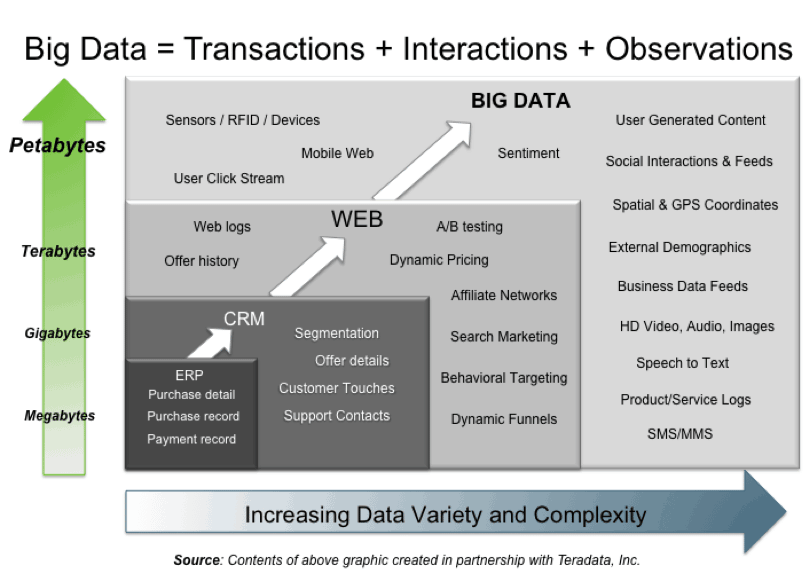

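10 | ## Big data applications - what counts as big data

11 |

12 | As a product manager, you need to understand the basic concepts and characteristics of big data and then find where they connect to your own business processes. Also read plenty of big data case studies; since applications at this scale are likely to appear only in Fortune 500 companies, small and medium-sized businesses should learn from them flexibly rather than copy the technical framework.

13 |

14 | http://www.planet-data.eu/sites/default/files/presentations/Big_Data_Tutorial_part4.pdf This big data lecture (2012, 41 pages) brings together many analytical charts about big data and also lists quite a few key technology use cases.

15 |

16 | http://hortonworks.com/blog/7-key-drivers-for-the-big-data-market/ This article summarizes the core concepts learned at the Goldman Sachs cloud computing conference.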

17 |

18 |

19 |

20 | ## 大数据技术 - 简版进阶方案

21 |

22 | 要想成为数据科学家, 通常可以选修网上相关课程,如coursera和小象学院.

23 | 这里我们面向Excel为基础的中小企业初学者设计一个简版进阶方案.

24 |

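25 | Level 0: Excel spreadsheets -- simple data analysis and charts

26 |

27 | Level 1: relational databases and SQL, e.g. Access and MySQL -- use database queries to aggregate large numbers of business records

28 |

29 | Level 2: a basic programming language, e.g. Python/R or Java -- automate the data processing workflow with programs

30 |

31 | Level 3: access the database from programs, e.g. via ORM, ODBC, JDBC -- further increase the degree of automation

32 |

33 | Level 4: get to know one NoSQL database, e.g. redis, mongodb, neo4j, elasticsearch -- pick whichever fits the business need; a traditional relational database may well be fast enough.

34 |

35 | Level 5: pick up some basics of data analysis (including machine learning / data mining), such as linear regression, polynomial fitting, logistic regression, KNN (k-nearest neighbors), decision trees, and naive Bayes. Python, R, and Java all have ready-made implementations.

36 |

37 | Level 6: if you need an extreme amount of compute or storage, learn a cloud computing platform, such as Amazon EC2 and S3, Google Compute Engine, or Microsoft Azure

38 |

39 | Level 7: if you need to process an extreme amount of data, learn the principles of distributed computing with Hadoop and MapReduce, then use a ready-made implementation such as Amazon Elastic MapReduce (Amazon EMR)

40 |

41 | Level 8: if you need to run data analysis on an extreme amount of data, learn spark, mahout, or any SQL-on-Hadoop engine.

42 |

43 | Congratulations: at this point you can hold forth in any "big data group".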
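
Levels 1 and 3 of the plan above (aggregating business records with SQL, then driving the database from a program) can be sketched in a few lines. This is only an illustration under stated assumptions: it uses Python's built-in `sqlite3` module rather than Access or MySQL, and the `sales` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical sales records (region, amount); not taken from the original text.
ROWS = [("north", 120.0), ("south", 80.0), ("north", 30.0),
        ("south", 20.0), ("east", 50.0)]


def total_by_region(rows):
    """Load rows into an in-memory SQLite table and aggregate them with SQL."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
        # Parameterized inserts keep the data separate from the SQL text.
        conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(amount) FROM sales "
            "GROUP BY region ORDER BY region"
        )
        return cursor.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for region, total in total_by_region(ROWS):
        print(region, total)
```

The same `GROUP BY` query would run largely unchanged against MySQL through a different driver; only the connection code would differ.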

44 |

45 |

46 | ## Beginner reference books

47 |

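48 | (English) Big Data Glossary: an introductory guide to big data, mainly covering big data processing technologies and tools, including NoSQL databases, various MapReduce systems, storage, servers, data-cleaning tools, NLP libraries and toolkits, machine learning toolkits, data visualization toolkits, public data cleaning, serialization guides, and more. A bit old (2011), but highly recommended. A free PDF is available: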

49 | http://download.bigbata.com/ebook/oreilly/books/Big_Data_Glossary.pdf

50 |

51 | (English) Big Data For Dummies; a free PDF is available at http://it-ebooks.info/book/2082/

52 |

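53 | "The Big Data Era, from Getting Started to a Full Understanding" (大数据时代从入门到全面理解) http://book.douban.com/review/6131027/ Good for learning some basic big data concepts. However, the author's views are somewhat one-sided; there are many attention-grabbing anecdotes, but they are not tied closely enough to the technology.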

54 |

55 | ## Learning resources for data scientists

56 |

57 | http://www.douban.com/note/247983915/ Assorted resources for data scientists

58 |

59 | http://www.aboutyun.com/thread-7569-1-1.html Big data primer: an introduction to various big data technologies

60 |

61 | https://class.coursera.org/datasci-001 Coursera open course: Introduction to Data Science

62 |

63 |

64 | ## Application case-study resources

65 |

66 | http://www.ibm.com/big-data/us/en/big-data-and-analytics/case-studies.html Some big data analytics case studies from IBM

67 |

68 | http://www.sas.com/resources/asset/Big-Data-in-Big-Companies.pdf Big data case studies from SAS

69 |

70 | http://www.teradata.com/big-data/use-cases/ Big data use cases from Teradata

71 |

72 |

73 |

74 |

--------------------------------------------------------------------------------

/awesome/multiclass-boosting.md:

--------------------------------------------------------------------------------

1 | # Awesome Multi-class Boosting Resources

2 |

3 | abstract: classic papers, slides and overviews, plus Github code.

4 |

5 |

6 |

7 | (image source http://www.svcl.ucsd.edu/projects/)

8 |

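9 | abstract (translated from Chinese): Q: @图像视觉研究 Can anyone recommend classic material on multi-class boosting? A: We found several classic papers, a few slide decks and videos, and some toolkits. The institutions involved include MIT, UCSD, Stanford, and umich. Software is available in C++ and Python (scikit-learn), plus several open-source projects on GitHub. [resource cards](http://bigdata.memect.com/?tag=MultiClassBoosting)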

10 |

11 | https://github.com/memect/hao/blob/master/awesome/multiclass-boosting.md

12 |

13 | # overview

14 | http://www.svcl.ucsd.edu/projects/mcboost/

15 |

16 | http://classes.soe.ucsc.edu/cmps242/Fall09/proj/Mario_Rodriguez_Multiclass_Boosting_talk.pdf Multi-class boosting (slides), Mario Rodriguez, 2009

17 |

18 | http://cmp.felk.cvut.cz/~sochmj1/adaboost_talk.pdf presentation summarizing AdaBoost

19 |

20 |

21 | # people

22 |

23 | http://dept.stat.lsa.umich.edu/~jizhu/ check his contribution on SAMME
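
For readers who want to see what SAMME changes relative to two-class AdaBoost, here is a minimal sketch of its learner weight and sample reweighting step, following the formula in the Multi-class AdaBoost paper listed below. The function names are hypothetical and the weak learner itself is left out.

```python
import math


def samme_alpha(weighted_error, n_classes):
    """Weight of one weak learner in SAMME.

    The extra log(K - 1) term keeps alpha positive whenever the learner
    beats random guessing among K classes (error below (K - 1) / K).
    """
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 < weighted_error < 1.0:
        raise ValueError("weighted_error must be strictly between 0 and 1")
    return (math.log((1.0 - weighted_error) / weighted_error)
            + math.log(n_classes - 1.0))


def reweight(weights, misclassified, alpha):
    """Scale misclassified samples by exp(alpha), then renormalize to sum to 1."""
    scaled = [w * math.exp(alpha) if miss else w
              for w, miss in zip(weights, misclassified)]
    total = sum(scaled)
    return [w / total for w in scaled]
```

With two classes the extra term vanishes, so a learner with 60% error gets a negative weight; with three classes the same learner is still better than chance and gets a positive one.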

24 |

25 |

26 | # video lectures

27 |

28 | https://www.youtube.com/watch?v=L6BlpGnCYVg "A Theory of Multiclass Boosting", Rob Schapire, Partha Niyogi Memorial Conference: Computer Science

29 |

30 | http://techtalks.tv/talks/multiclass-boosting-with-hinge-loss-based-on-output-coding/54338/ Multiclass Boosting with Hinge Loss based on Output Coding, Tianshi Gao; Daphne Koller, ICML 2011

31 |

32 | # classical paper

33 | http://web.mit.edu/torralba/www/cvpr2004.pdf Sharing features: efficient boosting procedures for multiclass object detection, Antonio Torralba, Kevin P. Murphy, William T. Freeman, CVPR 2004

34 |

35 |

36 | http://dept.stat.lsa.umich.edu/~jizhu/pubs/Zhu-SII09.pdf Multi-class AdaBoost, Ji Zhu, Hui Zou, Saharon Rosset and Trevor Hastie, Statistics and Its Interface, 2009

37 |

38 | http://www.cs.princeton.edu/~imukherj/nips10.pdf A Theory of Multiclass Boosting, Indraneel Mukherjee, Robert E. Schapire, NIPS 2010

39 |

40 | http://papers.nips.cc/paper/4450-multiclass-boosting-theory-and-algorithms.pdf Multiclass Boosting: Theory and Algorithms, Mohammad J. Saberian, Nuno Vasconcelos, NIPS, 2011

41 |

42 |

43 | # tools

44 | http://www.multiboost.org/ a fast C++ implementation of multi-class/multi-label/multi-task boosting algorithms. It is based on AdaBoost.MH but also implements popular cascade classifiers and FilterBoost along with a batch of common multi-class base learners (stumps, trees, products, Haar filters).

45 |

46 | http://scikit-learn.org/stable/auto_examples/ensemble/plot_adaboost_multiclass.html

47 |

48 | https://github.com/cshen/fast-multiboost-cw

49 |

50 | https://github.com/pengsun/AOSOLogitBoost

51 |

52 | https://github.com/circlingthesun/omclboost

53 |

--------------------------------------------------------------------------------

/awesome/piecewise-linear-regression.md:

--------------------------------------------------------------------------------

1 | # Piecewise Linear Models: Resources and Software (Introductory)

2 |

3 | * contributors: @视觉动物晴木明川 @heavenfireray @禅系一之花

4 | * keywords: piecewise linear model (分段线性模型), Piecewise linear regression, Segmented linear regression

5 | * license: Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License

6 | * cardbox: http://bigdata.memect.com/?tag=piecewiselinearregression

7 | * discussion: https://github.com/memect/hao/issues/70

8 |

9 | https://github.com/memect/hao/blob/master/awesome/piecewise-linear-regression.md

10 |

11 |

12 | ## Tutorials

13 |

14 | https://onlinecourses.science.psu.edu/stat501/node/77 Piecewise linear regression models

15 |

16 | http://www.fs.fed.us/rm/pubs/rmrs_gtr189.pdf A Tutorial on the Piecewise Regression Approach Applied to Bedload Transport Data, Sandra E. Ryan, Laurie S. Porth

17 | * @禅系一之花: I like this guide

18 |

19 | http://www.ee.ucla.edu/ee236a/lectures/pwl.pdf UCLA, (2013) Lecture 2 Piecewise-linear optimization

20 | * Added a more theory-oriented set of tutorial slides from UCLA

21 |

22 | ## Statistical software (all of these support piecewise fitting)

23 |

24 | http://people.ucalgary.ca/~aniknafs/index_files/TR%2094%202011.pdf RapidMiner (has a free edition and a sizable user base)

25 |

26 | http://mathematica.stackexchange.com/questions/45745/fitting-piecewise-functions Mathematica

27 |

28 | * http://forums.wolfram.com/student-support/topics/22308 "piecewise linear fit"

29 | * "Mathematica Navigator: Mathematics, Statistics and Graphics" page 516

30 | * http://dsp.stackexchange.com/questions/1227/fit-piecewise-linear-data

31 | * http://coen.boisestate.edu/bknowlton/files/2011/12/Mathematica-Tutorial-Megan-Frary.pdf Mathematica Tutorial

32 |

33 | http://mobiusfunction.wordpress.com/2012/06/26/piece-wise-linear-regression-from-two-dimensional-data-multiple-break-points/ matlab

34 |

35 | http://stats.stackexchange.com/questions/18468/how-to-do-piecewise-linear-regression-with-multiple-unknown-knots

36 | matlab

37 |

38 | http://www.ats.ucla.edu/stat/sas/faq/nlin_optimal_knots.htm SAS

39 |

40 | http://climateecology.wordpress.com/2012/08/19/r-for-ecologists-putting-together-a-piecewise-regression/ R

41 | * "Piecewise or segmented regression for when your data has two different linear patterns. Again, comments here are good" source: https://twitter.com/statsforbios/status/378163948740026368

42 |

43 | https://github.com/scikit-learn/scikit-learn/blob/master/doc/modules/linear_model.rst python

44 |

45 |

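46 | ## Comments from users

47 | @视觉动物晴木明川: Piecewise linearity is the essence of many nonlinear methods! //@机器学习那些事儿: I had assumed the piecewise idea behind the MLR you mentioned last time was based on LR-based AdaBoost; it looks like I need to study your paper properly. //@heavenfireray: Here is a fun one: on the diamond-shaped data I used earlier to demonstrate piecewise linearity, LR-based AdaBoost cannot get above 55% accuracy (a piecewise linear model reaches over 99%).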

48 | http://weibo.com/1718403260/ADrUnChqt
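
To make the idea of a piecewise linear fit concrete, here is a minimal sketch of regression with a single unknown breakpoint. It is not the MLR model discussed in the comment above: it assumes a continuous fit of the form `y = a + b*x + c*max(0, x - knot)`, tries each candidate knot, and keeps the one with the smallest squared error. All function names and the toy data are hypothetical.

```python
def solve(matrix, rhs):
    """Solve a small square linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    m = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_with_knot(xs, ys, knot):
    """Least-squares fit of a + b*x + c*max(0, x - knot) via the normal equations."""
    feats = [[1.0, x, max(0.0, x - knot)] for x in xs]
    ata = [[sum(f[i] * f[j] for f in feats) for j in range(3)] for i in range(3)]
    aty = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(3)]
    coef = solve(ata, aty)
    sse = sum((sum(c * v for c, v in zip(coef, f)) - y) ** 2
              for f, y in zip(feats, ys))
    return coef, sse


def fit_piecewise(xs, ys, candidate_knots):
    """Return (knot, coefficients, sse) for the best candidate knot.

    Candidate knots must lie strictly inside the range of xs; otherwise the
    hinge feature is all zeros and the system is singular.
    """
    best = None
    for knot in candidate_knots:
        coef, sse = fit_with_knot(xs, ys, knot)
        if best is None or sse < best[2]:
            best = (knot, coef, sse)
    return best


# Toy data: slope 1 up to x = 4, slope 3 afterwards.
XS = [float(i) for i in range(11)]
YS = [x + 2.0 * max(0.0, x - 4.0) for x in XS]
KNOTS = [float(k) for k in range(1, 10)]

if __name__ == "__main__":
    print(fit_piecewise(XS, YS, KNOTS))
```

A grid search over knots is the simplest choice; the tools listed above estimate breakpoints with more refined methods.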

49 |

50 | http://arxiv.org/abs/1401.6413 Online Piecewise Linear Regression via Infinite Depth Context Trees N. Denizcan Vanli, Muhammed O. Sayin, Suleyman S. Kozat

51 |

52 |

53 | ## Related reading

54 | http://www.eccf.ukim.edu.mk/ArticleContents/JCEBI/03%20Miodrag%20Lovric,%20Marina%20Milanovic%20and%20Milan%20Stamenkovic.pdf Time series analysis

55 |

56 |

57 |

--------------------------------------------------------------------------------

/awesome/bayesian-network-python.md:

--------------------------------------------------------------------------------

1 | # Bayesian networks and a hands-on introduction to probabilistic programming in Python

2 | contributors: @西瓜大丸子汤 @王威廉 @不确定的世界2012 @Rebecca1020

3 |

4 |

5 | ## [1. Introductory lecture slides on Bayesian networks](http://bigdata.memect.com/?tag=hao71)

6 |

7 | http://www.cs.cmu.edu/~epxing/Class/10708/lectures/lecture2-BNrepresentation.pdf Directed Graphical Models: Bayesian Networks

8 | * Recommended by 王威廉

9 |

10 | http://www.ee.columbia.edu/~vittorio/Lecture12.pdf Inference and Learning in Bayesian Networks

11 |

12 | http://courses.cs.washington.edu/courses/cse515/09sp/slides/bnets.pdf Bayesian networks

13 |

14 |

15 | ## [2. Hands-on introduction with Python](http://python.memect.com/?tag=hao71)

16 |

17 | [Bayesian Methods for Hackers](http://python.memect.com/?p=6737) 6000+ star book on github

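18 | * Recommended by 西瓜大丸子汤

19 | * 小猴机器人 recommends a Chinese-language introduction written by 张天雷, "概率编程语言与贝叶斯方法实践" (Probabilistic Programming Languages and Bayesian Methods in Practice) http://www.infoq.com/cn/news/2014/07/programming-language-bayes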

20 |

21 |

22 | [Frequentists and Bayesians series](http://python.memect.com/?tag=fb-series) four blogs

23 |

24 | [PyMC tutorial](http://python.memect.com/?p=8536) pretty short
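
Before moving to PyMC, it can help to see exact inference on a tiny Bayesian network done by hand. The sketch below uses the textbook rain / sprinkler / wet-grass network with illustrative probabilities (all numbers are assumptions chosen for the example, not data from the resources above) and computes P(rain | grass is wet) by summing the joint distribution. It uses no probabilistic programming library.

```python
from itertools import product

# Illustrative conditional probability tables (assumed values).
P_RAIN = 0.2
P_SPRINKLER = {True: 0.01, False: 0.4}      # P(sprinkler on | rain)
P_WET = {                                   # P(wet | sprinkler, rain)
    (False, False): 0.0,
    (False, True): 0.8,
    (True, False): 0.9,
    (True, True): 0.99,
}


def bernoulli(p_true, value):
    """Probability of a boolean value given P(value is True)."""
    return p_true if value else 1.0 - p_true


def joint(rain, sprinkler, wet):
    """Joint probability, factorized along the edges of the network."""
    return (bernoulli(P_RAIN, rain)
            * bernoulli(P_SPRINKLER[rain], sprinkler)
            * bernoulli(P_WET[(sprinkler, rain)], wet))


def prob_rain_given_wet():
    """P(rain | wet) by enumeration: sum out the sprinkler, then normalize."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True)
                   for r, s in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(round(prob_rain_given_wet(), 4))
```

Enumeration is exponential in the number of variables, which is why the books and tutorials in this section turn to sampling methods such as MCMC for larger models.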

25 |

26 |

27 | ----

28 |

29 | ## Supplementary material

30 | ### Hands-on introduction with R

31 |

32 | http://site.douban.com/182577/widget/notes/12817482/note/273585095/ Bayesian network in R (贝叶斯网的R实现)

33 |

34 |

35 | ### Further study

36 | http://bayes.cs.ucla.edu/BOOK-2K/index.html Causality: Models, Reasoning, and Inference

37 | * A book by Judea Pearl http://en.wikipedia.org/wiki/Judea_Pearl

38 |

39 | http://www.biostat.jhsph.edu/~cfrangak/papers/preffects.pdf Principal Stratification in Causal Inference - Biostatistics (2002)

40 | * Don Rubin

41 | * Recommended by Rebecca1020

42 |

43 | http://www.cs.cmu.edu/~epxing/Class/10708/lecture.html Probabilistic Graphical Models by Eric Xing(CMU)

44 | * Recommended by 王威廉

45 |

46 |

47 | http://web4.cs.ucl.ac.uk/staff/D.Barber/pmwiki/pmwiki.php?n=Brml.HomePage Bayesian Reasoning and Machine Learning by David Barber

48 | * Recommended by @诸神善待民科组 (easier to read than Koller's PGM; a plus is that it has many figures)

49 |

50 | ### Related Weibo posts

51 |

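52 | @王威廉: Eric Xing of CMU's Machine Learning Department has been teaching Probabilistic Graphical Models for 10 years now, and this semester appears to be the first time the videos have been put online: http://t.cn/zTh9OqO This semester's course has only just begun.

53 | January 23, 15:21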

54 | http://weibo.com/1657470871/AtrlldqAU

55 |

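56 | @西瓜大丸子汤: Let me recommend another book I have been reading recently, Probabilistic Programming and Bayesian Methods for Hackers, a hands-on treatment of Bayesian methods that uses Python to explain various probabilistic inference methods, with code to back it up. Built on the PyMC package, it dissects concepts and applications such as MCMC, the law of large numbers, and financial analysis. It already has 5000 stars on GitHub.

57 | July 8, 20:06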

58 | http://weibo.com/1932835417/BcKj0k0Wx

59 |

60 |

61 |

62 | @不确定的世界2012: [Bayesian network in R (1): gRain (1)] #This article mainly introduces some R tools for working with Bayesian networks. A Bayesian network, also called a belief network or probabilistic directed acyclic graphical m... http://t.cn/zToro0U

63 | 2013-7-2 19:52

64 | http://weibo.com/1768506843/zEfzDsln9

65 |

66 |

67 |

68 |

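69 | @Rebecca1020: Causal inference in the USA is split into two major schools. Statistics cannot, in essence, do causal inference on its own, so to obtain any inference you have to add assumptions, and different assumptions produced two different schools. In the west, centered on Berkeley, Jordan and colleagues work on Bayesian networks, using directed graphs to represent causal relationships. In the east, Rubin proposed principal stratification in '03 and uses it as the main assumption for statistical inference.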

70 | 2013-4-11 02:44

71 | http://weibo.com/1669820502/zrFNJv8DI

72 |

73 | 张天雷 provides a Chinese-language introduction, "概率编程语言与贝叶斯方法实践" //@小猴机器人: Come on, give us a Chinese introduction, http://t.cn/RPwbEPz

74 | http://www.weibo.com/5220650532/BmkyPihT4

75 |

--------------------------------------------------------------------------------

/awesome/outlier-text-mining.md:

--------------------------------------------------------------------------------

1 | # Outlier Detection in Text Mining

2 |

3 | contributor: 郭惠礼 , 许扬逸Dijkstra , phunter_lau , ai_东沂

4 |

5 | card list: http://bigdata.memect.com/?tag=outlierdetectionandtextmining

6 |

7 | https://github.com/memect/hao/blob/master/awesome/outlier-text-mining.md

8 |

9 | keywords:

10 | outlier detection,

11 | anomaly detection,

12 | text mining

13 |

14 | ## Outlier detection survey

15 | http://info.mapr.com/resources_anewlook_anomalydetection_ty.html.html?aliId=7992403

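16 | @郭惠礼: Just finished a book, Practical Machine Learning: A New Look At Anomaly Detection. http://t.cn/RPJX4YT A free book on practical machine learning. It is organized around anomaly detection and the t-digest algorithm and does not go into very deep material. Fairly simple, and suitable for beginners who are new to ML.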

17 |

18 | http://arxiv.org/abs/1009.6119 A Comprehensive Survey of Data Mining-based Fraud Detection Research, Clifton Phua, Vincent Lee, Kate Smith, Ross Gayler 2010

19 |

20 | http://www.kdnuggets.com/2014/05/book-outlier-detection-temporal-data.html Outlier Detection for Temporal Data (Book)

21 |

22 | http://en.wikipedia.org/wiki/Anomaly_detection

23 |

24 | http://www.siam.org/meetings/sdm10/tutorial3.pdf Outlier Detection Techniques - SIAM

25 |

26 | http://www.slideshare.net/HouwLiong/chapter-12-outlier

27 |

28 |

29 | ## Outlier/anomaly detection in Text mining

30 |

31 | http://nlp.shef.ac.uk/Completed_PhD_Projects/guthrie.pdf David Guthrie, Unsupervised Detection of Anomalous Text

32 | A PhD thesis from the University of Sheffield, UK

33 |

34 | http://link.springer.com/chapter/10.1007%2F978-1-4614-6396-2_7 Chapter 7 of Aggarwal's book Outlier Analysis: Outlier Detection in Categorical, Text and Mixed Attribute Data

35 |

36 |

37 | http://www.amazon.com/Survey-Text-Mining-Clustering-Classification/dp/1848000456 Survey of Text Mining: Clustering, Classification, and Retrieval, Second Edition, Michael W. Berry and Malu Castellanos, Editors, 2007 (see Part IV, Anomaly Detection) https://perso.uclouvain.be/vincent.blondel/publications/08-textmining.pdf

38 |

39 | http://www.mdpi.com/1999-4893/5/4/469 Contextual Anomaly Detection in Text Data 2012

40 |

41 |

42 | ## Text mining (focus on topic models)

43 | http://www.itee.uq.edu.au/dke/filething/get/855/text-mining-ChengXiangZhai.pdf Statistical Methods for Mining Big Text Data, ChengXiang Zhai 2014

44 |

45 | http://cs.gmu.edu/~carlotta/publications/AlsumaitL_onlineLDA.pdf On-Line LDA: Adaptive Topic Models for Mining Text Streams with Applications to Topic Detection and Tracking, Loulwah AlSumait, Daniel Barbará, Carlotta Domeniconi

46 |

47 |

48 | ## Related comments

49 |

50 |

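51 | @phunter_lau: The technique used there is just outlier detection, followed by a join across the tables where the outliers sit and a brute-force search; this is a common approach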

52 | http://weibo.com/1770891687/B5Gs7xdqQ

53 |

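54 | phunter_lau: Note that the following passage is not a standard method and has little theoretical basis, so it should not mislead anyone:

55 | "phunter_lau: That works too, and for disconnected cases you can add connections at random, e.g. 'you definitely sneaked a look at that girl', and carry on analyzing, with unexpected results"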

56 |

57 |

58 |

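59 | 许扬逸Dijkstra: You can also try it on antispam and multidimensional outlier detection

60 | @计兮 [Naive Bayes in financial data mining] by @数说工作室网站: This article introduces the naive Bayes model used in financial data mining, for your reference. Original link → http://t.cn/RPzhx7S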

61 | http://www.weibo.com/1642083541/Be9vDxvyw

62 |

63 |

64 | ai_东沂: Let me add some material I found earlier: a PhD thesis from the University of Sheffield, UK, http://nlp.shef.ac.uk/Completed_PhD_Projects/guthrie.pdf

65 | Chapter 7 of Aggarwal's book Outlier Analysis, Outlier Detection in Categorical, Text and Mixed Attribute Data, http://link.springer.com/chapter/10.1007%2F978-1-4614-6396-2_7

66 |

--------------------------------------------------------------------------------

/awesome/computer-vision-dataset.md:

--------------------------------------------------------------------------------

1 | # An incomplete roundup of computer vision datasets

2 | contributors: @丕子 @邹宇华 @李岩ICT人脸识别 @网路冷眼 @王威廉 @金连文 @数据堂 zhubenfulovepoem@cnblog

3 |

4 | created: 2014-09-24

5 |

6 | keywords: computer vision, dataset

7 |

8 | discussion: https://github.com/memect/hao/issues/222

9 |

10 |

11 | ## Classic / popular computer vision datasets

12 | * http://yann.lecun.com/exdb/mnist/ The MNIST database of handwritten digits, available from this page, has a training set of 60,000 examples, and a test set of 10,000 examples. Collected by Yann LeCun, Corinna Cortes, Christopher J.C. Burges

13 | * http://www.cs.toronto.edu/~kriz/cifar.html cifar10 The CIFAR-10 and CIFAR-100 are labeled subsets of the 80 million tiny images dataset. They were collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton.

14 | * http://en.wikipedia.org/wiki/Caltech_101 Caltech 101 is a data set of digital images created in September 2003, compiled by Fei-Fei Li, Marco Andreetto, Marc'Aurelio Ranzato and Pietro Perona at the California Institute of Technology. It is intended to facilitate computer vision research and techniques. It is most applicable to techniques involving recognition, classification, and categorization.

15 | * http://www.image-net.org/ ImageNet is an image database organized according to the WordNet hierarchy (currently only the nouns), in which each node of the hierarchy is depicted by hundreds and thousands of images. Competitions at CVPR in recent years have used this dataset for evaluation.

16 | * http://yahoolabs.tumblr.com/post/89783581601/one-hundred-million-creative-commons-flickr-images-for Recommended by @网路冷眼: [Yahoo Labs releases 100 million Flickr images and videos for research use] One Hundred Million Creative Commons Flickr Images for Research

17 | * http://sourceforge.net/projects/oirds/ Overhead Imagery Research Data Set (OIRDS) - an annotated data library & tools to aid in the development of computer vision algorithms

18 |

19 | ## Computer vision datasets: directories

20 | * http://riemenschneider.hayko.at/vision/dataset/ Recommended by @邹宇华: a fairly new computer vision dataset site, Yet Another Computer Vision Index To Datasets (YACVID), with 200+ datasets

21 | * http://www.computervisiononline.com/datasets Recommended by @丕子 and Richard Szeliski; over a hundred datasets

22 | * http://www.cvpapers.com/datasets.html Over a hundred datasets

23 | * http://datasets.visionbib.com/ Recommended by Richard Szeliski; categorized

24 | * http://homepages.inf.ed.ac.uk/rbf/CVonline/ Recommended by @李岩ICT人脸识别 and Richard Szeliski; categorized

25 | * http://blog.csdn.net/zhubenfulovepoem/article/details/7191794 Compiled by [zhubenfulovepoem](http://my.csdn.net/zhubenfulovepoem) (cnblog) from Computer Vision: Algorithms and Applications by Richard Szeliski

26 |

27 | * http://vision.ucsd.edu/datasetsAll UCSD datasets

28 | * http://www-cvr.ai.uiuc.edu/ponce_grp/data/ UIUC Datasets

29 | * http://www.vcipl.okstate.edu/otcbvs/bench/ OTCBVS Datasets

30 | * http://www.nicta.com.au/research/projects/AutoMap/computer_vision_datasets Recommended by @数据堂: NICTA Pedestrian Dataset (from National ICT Australia); paper: http://www.nicta.com.au/pub?doc=1245

31 | * http://clickdamage.com/sourcecode/cv_datasets.php Dozens of datasets, categorized

32 | * http://www.iapr-tc11.org/mediawiki/index.php/Datasets_List Recommended by @金连文: the official IAPR TC11 site hosts many datasets related to document processing, e.g. online and offline handwriting data, text, and document images of natural scenes

33 | * http://en.wikipedia.org/wiki/Category:Datasets_in_computer_vision Wikipedia's list, covering a few classic datasets

34 | * http://webscope.sandbox.yahoo.com/catalog.php?datatype=i Recommended by @王威廉

35 |

36 |

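37 | ## Computer vision datasets: face recognition: directories

38 | * http://www.face-rec.org/databases/ Dozens of datasets

39 | * http://en.wikipedia.org/wiki/Comparison_of_facial_image_datasets A comparison list of 11 datasets

40 |

41 | ## Basic strategy

42 | A common approach is to look through relevant papers or competitions and follow the trail to the datasets; sometimes you also need to contact the original authors. ICCV and CVPR should both offer some leads.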

43 |

--------------------------------------------------------------------------------

/awesome/question-answer.md:

--------------------------------------------------------------------------------

1 | # A roundup of question answering systems

2 |

3 |

4 | ## Intelligent personal assistants

5 | * [Amazon Evi](http://www.evi.com/) (launched in 2012) "best selling mobile app that can answer questions about local knowledge"

6 | ** formerly [True Knowledge](http://en.wikipedia.org/wiki/Evi_(software)) (launched in 2007), "a natural answering question answering system", acquired by Amazon in 2012

7 | * [Google Now](http://www.google.com/landing/now/) (launched in 2012) "an intelligent personal assistant developed by Google"

8 | * [Apple Siri](https://www.apple.com/ios/siri/) (launched in 2011) "an intelligent personal assistant and knowledge navigator which works as an application for Apple Inc.'s iOS."

9 | ** Siri iOS app (by Siri Inc.) (launched in 2009), founded in 2007, acquired by Apple in 2010

10 | * [Microsoft Cortana](http://www.windowsphone.com/en-us/how-to/wp8/cortana/meet-cortana) "an intelligent personal assistant on Windows Phone 8.1"

11 | * [Samsung S Voice](http://www.samsung.com/global/galaxys3/svoice.html) (launched in 2012) "an intelligent personal assistant and knowledge navigator which is only available as a built-in application for the Samsung Galaxy"

12 |

13 |

14 | * [Jelly](http://en.wikipedia.org/wiki/Jelly_%28app%29) "an app (currently available on iOS and Android) that serves as a Q&A platform, created by a company of the same name led by Biz Stone, one of Twitter's co-founders. " ," it encourages people to use photos to ask questions"

15 | * [Viv](http://viv.ai/) (launching in 2014) "a global platform that enables developers to plug into and create an intelligent, conversational interface to anything."

16 | * [出门问问](http://chumenwenwen.com/)

17 |

18 | * [Project CALO](http://en.wikipedia.org/wiki/CALO) (2003-2008) funded by the Defense Advanced Research Projects Agency (DARPA) under its Personalized Assistant that Learns (PAL) program

19 | * [Vlingo](http://en.wikipedia.org/wiki/Vlingo) acquired by Nuance in December 2011

20 | * [Voice Mate](http://en.wikipedia.org/wiki/Voice_Mate) LG

21 |

22 |

23 | ## Automatic question answering systems:

24 | * [IBM Watson](http://www.ibm.com/smarterplanet/us/en/ibmwatson/) (launched in 2013)

25 | ** [IBM DeepQA (watson)](https://www.research.ibm.com/deepqa/deepqa.shtml) (launched in 2011) "A first stop along the way is the Jeopardy! Challenge..."

26 | * [Wolfram alpha](http://www.wolframalpha.com/) "which was released on May 15, 2009"

27 | * [Project Aristo](http://www.allenai.org/TemplateGeneric.aspx?contentId=8) current project at Allen Institute for Artificial Intelligence (AI2)

28 | ** [Project Halo](http://www.allenai.org/TemplateGeneric.aspx?contentId=9) past project

29 |

30 |

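31 | ## Chatbots and the Turing test:

32 | * [小Q (Tencent chatbot)](http://qrobot.qq.com/) "QQ Robot (QQ机器人) is the collective name for the AI chatbots that Tencent has released over time" (2013)

33 | * [微软小冰 (Microsoft XiaoIce)](http://www.msxiaoice.com/v2/DesktopLanding) "Microsoft XiaoIce is a leading cross-platform AI bot" (2014)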

34 | * [Eugene Goostman](http://en.wikipedia.org/wiki/Eugene_Goostman) "portrayed as a 13-year-old Ukrainian boy" (2001-)

35 | * [Cleverbot](http://en.wikipedia.org/wiki/Cleverbot) "a web application that uses an artificial intelligence algorithm to have conversations with humans"

36 | * [ELIZA](http://en.wikipedia.org/wiki/ELIZA) "a computer program and an early example of primitive natural language processing" (1966)

37 |

38 | ## Human-powered Q&A sites:

39 | * ask.com

40 | * https://answers.yahoo.com/

41 | * http://answers.com

42 | ** http://wiki.answers.com/

43 | * stackoverflow

44 | * reddit

45 | * quora

46 | * Formspring qa based social network

47 | * 知乎 (Zhihu)

48 | * 百度知道 (Baidu Zhidao)

49 | * 百度微问答 (Baidu micro Q&A)

50 | * http://segmentfault.com/

51 | * 天涯 (Tianya) http://wenda.tianya.cn/

52 | For more, see Wikipedia: http://en.wikipedia.org/wiki/List_of_question-and-answer_websites

53 |

54 |

--------------------------------------------------------------------------------

/awesome/mlss.md:

--------------------------------------------------------------------------------

1 | # MLSS Machine Learning Summer Schools

2 | (forked from http://www.mlss.cc/) adding more links to the list

3 |

4 | ## highlights

5 | * Especially recommended: all the slides from the 2009 UK MLSS [download as ZIP, 51M](http://mlg.eng.cam.ac.uk/mlss09/mlss_slides.zip), recommended by @bigiceberg: "the 2009 UK MLSS is the most classic of them"

6 |

7 | ## Future (8)

8 | * MLSS Spain (Fernando Perez-Cruz), late spring 2016 (tentative)

9 | * MLSS London (tentative)

10 | * MLSS Tübingen, summer 2017 (tentative)

11 | * MLSS Africa (very tentative)

12 | * MLSS Kyoto (Marco Cuturi, Masashi Sugiyama, Akihiro Yamamoto), August 31 - September 11 (tentative), 2015

13 | * MLSS Tübingen (Michael Hirsch, Philipp Hennig, Bernhard Schölkopf), July 13-24, 2015

14 | * MLSS Sydney (Edwin Bonilla, Yang Wang, Bob Williamson), 16 - 25 February, 2015 http://www.nicta.com.au/research/machine_learning/mlss2015

15 | * MLSS Austin (Peter Stone, Pradeep Ravikumar), January 7-16, 2015 http://www.cs.utexas.edu/mlss/

16 |

17 | ## Past (25)

18 | * MLSS China, Beijing (Stephen Gould, Hang Li, Zhi-Hua Zhou), June 15-21, 2014, colocated with ICML http://lamda.nju.edu.cn/conf/mlss2014/

19 | * MLSS Pittsburgh (Alex Smola & Zico Kolter), July 6-18, 2014 http://mlss2014.com/

20 | * MLSS Iceland (Sami Kaski), April 26 - May 4, 2014 (colocated with AISTATS) http://mlss2014.hiit.fi/

21 | * MLSS Tübingen, Germany, 26 August - 07 September 2013 http://mlss.tuebingen.mpg.de

22 | * MLSS Kyoto, August 27 - September 7, 2012 http://www.i.kyoto-u.ac.jp/mlss12/

23 | * MLSS Santa Cruz, July 9-20, 2012 http://mlss.soe.ucsc.edu/home

24 | * MLSS La Palma, Canary Islands, April 11-19, 2012 (followed by AISTATS) http://mlss2012.tsc.uc3m.es/

25 | * MLSS France, September 4 - 17, 2011 http://mlss11.bordeaux.inria.fr/

26 | * MLSS @Purdue, June 13 - 24, 2011 http://learning.stat.purdue.edu/wiki/mlss/start

27 | * MLSS Singapore, June 13 - 17, 2011 http://bigbird.comp.nus.edu.sg/pmwiki/farm/mlss/

28 | * MLSS Canberra, Australia, September 27 - October 6, 2010 http://canberra10.mlss.cc

29 | * MLSS Sardinia, May 6 - May 12, 2010 http://www.sardegnaricerche.it/index.php?xsl=370&s=139254&v=2&c=3841 [video lecture](http://videolectures.net/mlss2010_sardinia/)

30 | * MLSS Cambridge, UK, August 29 - September 10, 2009 http://mlg.eng.cam.ac.uk/mlss09

31 | * MLSS Canberra, Australia, January 26 - February 6, 2009 http://ssll.cecs.anu.edu.au/

32 | * MLSS Isle de Re, France, September 1-15, 2008 [archive](https://web.archive.org/web/20080329172541/http://mlss08.futurs.inria.fr/) [announcement](http://eventseer.net/e/7178/)

33 | * MLSS Kioloa, Australia, March 3 - 14, 2008 http://kioloa08.mlss.cc

34 | * MLSS Tübingen, Germany, August 20 - August 31, 2007 http://videolectures.net/mlss07_tuebingen/

35 | * MLSS Taipei, Taiwan, July 24 - August 2, 2006 http://www.iis.sinica.edu.tw/MLSS2006/

36 | * MLSS Canberra, Australia, February 6-17, 2006 http://canberra06.mlss.cc/

37 | * MLSS Chicago, USA, May 16-27, 2005 [archive](https://web.archive.org/web/20080314055344/http://chicago05.mlss.cc/) [announcement](http://linguistlist.org/LL/fyi/fyi-details.cfm?submissionid=49210)

38 | * MLSS Canberra, Australia, January 23 - February 5, 2005 [archive](https://web.archive.org/web/20060105025204/http://canberra05.mlss.cc/)

39 | * MLSS Berder, France, September 12-25, 2004 [archive](https://web.archive.org/web/20080406175615/http://www.kyb.tuebingen.mpg.de/mlss04/)

40 | * MLSS Tübingen, Germany, August 4-16, 2003 [archive](https://web.archive.org/web/20080409113424/http://www.kyb.tuebingen.mpg.de/mlss04/mlss03/)

41 | * MLSS Canberra, Australia, February 2-14, 2003 [archive](https://web.archive.org/web/20030607005801/http://mlg.anu.edu.au/summer2003/)

42 | * MLSS Canberra, Australia, February 11-22, 2002 [archive](https://web.archive.org/web/20030607063738/http://mlg.anu.edu.au/summer2002/)

43 |

--------------------------------------------------------------------------------

/awesome/semanticweb-dl:

--------------------------------------------------------------------------------

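1 | 徐涵W3China 2014-11-21 08:00

2 | "Dr. 黄智生 on the Semantic Web and Web 3.0": years later, this interview from five years ago still largely holds up. @好东西传送门 http://t.cn/RzA6G69

3 | 好东西传送门 reposted on 2014-11-21 10:10

4 | Now that knowledge graphs are widely known, revisiting this interview is well worthwhile.

5 | Gary南京 reposted on 2014-11-22 06:57

6 | When talking about the Semantic Web, it is best not to overstate the role of OWL and description logics: ontologies are not the same thing as description logics, realizing the Semantic Web does not necessarily require description logics, and much of description logic is useless on the Web

7 | 任远AI reposted on 2014-11-22 07:04

8 | OWL is still very valuable as a logical exploration: it provides an (approximate) upper bound on expressive power under which computability, completeness, and soundness are all guaranteed.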

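9 | 徐涵W3China reposted on 2014-11-22 08:05

10 | A logic expert talks about description logics!

11 | 昊奋 reposted on 2014-11-22 08:48

12 | At any given time there are technologies that fit the current trend and deserve heavy promotion. At least for these few years, any technology based on description logics and OWL knowledge representation can only stay within research, but it may well regain attention a few years from now. "Deep semantic" might be a good name for it, and it should follow the same path as the move from shallow learning to deep learning.

13 | Gary南京 reposted on 2014-11-22 10:22

14 | OWL and description logics are just one of many reasoning and representation methods and really are not that important. They have been hot in recent years only because academia hyped them, publishing many papers of little use. Their real practicality is very poor, and once something lacks practicality it gets abandoned. That is why description logics have cooled down over the past two years: reasoning detached from practice will not have any impact

15 | 昊奋 reposted on 2014-11-22 10:37

16 | Such a reflection and conclusion is all the more commendable coming from a logic and reasoning expert like Professor Qi (漆教授), and it is also more convincing than those of us who do not work on logic holding forth on the subject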

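17 | Gary南京 reposted on 2014-11-22 10:48

18 | Haha, @昊奋 also has a fairly deep understanding of reasoning. The reason I say all this is that I do not consider myself a description logic person. I will not confine myself to any one school; anything interesting is worth doing. In fact, what is really useful at present is still the early work on production rules and semantic networks

19 | 昊奋 reposted on 2014-11-22 11:05

20 | Reply to @Gary南京: this open-minded attitude deserves praise

21 | 任远AI reposted on 2014-11-22 12:04

22 | I agree there should be no bias between schools. However, the practicality of research results is hard to predict. Semantic networks did not have much impact in their early days either, and only in the last couple of years have they started to play a role in industrial applications such as knowledge graphs. So it is very hard to say what prospects logical methods will have in the future.

23 | 任远AI reposted on 2014-11-22 12:09

24 | If Professor Qi could elaborate further on the core reasons why description logics are impractical, and on the characteristics that reasoning technology grounded in practice should have, that would be a very valuable topic!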

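25 | 昊奋 reposted on 2014-11-22 12:37

26 | This is a good suggestion, though a hard one to answer well; if it were made clear, it would have a big impact on both industry and academia

27 | Gary南京 reposted on 2014-11-22 13:21

28 | A comprehensive analysis of this is hard, and I am still thinking it through. But at a workshop in Huddersfield early this year that gathered some of the leading people in OWL reasoning, the discussion of whether OWL is useful in large companies revealed that very few companies actually use it. The parts of OWL that are relatively useful are really just DL-Lite and EL, and even the impact of these two is limited. My statement that OWL is impractical is based on that discussion

29 | Gary南京 reposted on 2014-11-22 13:26

30 | Also, note that logic people always feel their own work is very useful, but in fact logic today only has power on top of a knowledge base, and how many knowledge bases are there that description logics can actually use? If the knowledge acquisition bottleneck cannot be broken, logic is just armchair theorizing. This is the main reason KR lags behind ML and NLP, and very few people in the KR community truly realize it

31 | 任远AI reposted on 2014-11-22 16:23

32 | Reply to @Gary南京: Personally I think the starting point of description logic research was quite ambitious: trying to find a decidable maximal "union" of all kinds of conceptual models and thereby solve the integration of heterogeneous knowledge. But the engineering and cognitive cost of realizing the union is too high. What can be done at present is really only the "intersection" of the models, which is also why lighter DLs tend to be used more. Complex DLs are usable only in very specific domains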

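33 | Gary南京 reposted on 2014-11-22 16:46

34 | Reply to @任远AI: Whether description logics are useful does not really need debating, because they certainly are. But on the Web, knowledge representation is diverse, and description logics are just one form among many and need not be overstated. That is my view: before 2008 they were overstated, which created a bubble, and by now it has more or less burst

35 | Gary南京 reposted on 2014-11-22 16:52

36 | If you look at what description logic people are working on now, you will find that the so-called OWL 2 does not have much impact; most people are working on DL-Lite, EL, and OWL 2 RL, and that is actually right: very often, the simpler something is, the more practical it is. I have always felt that OWL 2 is of little use. It was dreamed up by researchers, and things are not necessarily like that in applications. Only what is rooted in real life has vitality

37 | 任远AI reposted on 2014-11-22 16:55

38 | KR and knowledge acquisition should be mutually dependent. But knowledge acquisition currently has a bottleneck and the KR people cannot wait, so they can only imagine scenarios to do research on. In the future, Linked Data and WikiData may give KR a more solid foundation.

39 | Gary南京 reposted on 2014-11-22 16:59

40 | On the surface it looks as if the KR people could not wait, but in essence the people doing KR do not think about problems in an application-driven way. They only think from the theoretical side, which easily drifts away from practice. I think that to do KR really well you need to understand applications rather than theorize on paper. People in the KR community today are too rigid in their thinking, clinging to their own small patch without innovating, and in the end many groups will die out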

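41 | 任远AI reposted on 2014-11-22 17:02

42 | I agree with this. In fact, when Ian and Franz worked on DL in the early days, it was still based on ontologies such as Galen and SNOMED and still close to practice. It is just that logic people naturally like intricate, complex things and like to explore theoretical possibilities. This is something of a gene of the KR field...

43 | 任远AI reposted on 2014-11-22 17:15

44 | I think the main thing is that the logic community and engineering thinking are fundamentally incompatible. People in ML or NLP can say that, for a particular application, they improved some classic model and raised accuracy by n%. That kind of paper is very hard to publish in KR: you must argue that your improvement is not ad hoc, that it generalizes, that it is optimal in some sense. That forces you onto the theoretical path.

45 | 任远AI reposted on 2014-11-22 17:29

46 | Reply to @Gary南京: Hahaha, I feel exactly the same. There is nothing wrong with doing theory, doing proofs, or doing complex things. But theory for theory's sake, proofs for proofs' sake, and complexity for complexity's sake are unnecessary. Sometimes you see papers with framework after framework and theorem after theorem, proving a pile of very abstruse things that get your blood pumping, and in the end the part you can actually use is tiny. I won't say who [doge][doge][doge]

47 | 昊奋 reposted on 2014-11-22 17:54

48 | KR only solves the problems of knowledge representation and knowledge modeling; there are still problems such as knowledge acquisition. So to succeed, it must be open, embrace other fields, target concrete problems, and build things in a down-to-earth way. ML and NLP also won people over by actually building things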

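49 | 昊奋 reposted on 2014-11-22 17:55

50 | That already makes it quite obvious who it is, [嘻嘻]

51 | 昊奋 reposted on 2014-11-22 18:01

52 | Generally you first need to determine whether you are doing ontology editing, ontology population, or ontology learning. For editing, you can use protégé or various wiki-based ontology editors. For population, ontology-based learning algorithms such as NELL can be used, and what gets generated are instances. For the last case, MPI's PATTY and similar systems are a useful reference; these can learn new ontology patterns

53 | Gary南京 reposted on 2014-11-22 18:03

54 | Reply to @昊奋: Yes, ML and NLP also have plenty of filler papers that are basically of little use; they became hot only because they are backed by applications

55 | 任远AI reposted on 2014-11-22 18:14

56 | Manual ontology editing is hard to scale; the large ontologies all took more than ten years of effort to build. Perhaps in the future ontologies will have to be generated with methods such as automatic translation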

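57 | 昊奋 reposted on 2014-11-22 18:17

58 | So when editing an ontology, you need to use search or other means to find existing related ontologies and reuse them.

59 | 昊奋 reposted on 2014-11-22 18:32

60 | Reply to @anklebreaker11: To build a domain ontology, first check whether a related ontology already exists, or whether you can extract a subset from a general-purpose ontology or knowledge base. After that, use NELL-like methods to further expand the instance knowledge.

61 | 昊奋 reposted on 2014-11-22 18:48

62 | Reply to @anklebreaker11: The medical domain is rather complex, but you can start by looking into LODD (linked open drug data) and the ontologies involved in linked life science, such as snomed-ct. In addition, many ontologies include Chinese labels. Of course, if traditional Chinese medicine is involved, you may need to rely more on Chinese-language material, especially books in classical medical Chinese, for open extraction and the like.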

63 |

64 |

65 |

--------------------------------------------------------------------------------

/awesome/imbalanced-data-classification.md:

--------------------------------------------------------------------------------

1 | # Imbalanced data classification

2 |

3 | contributors: AixinSG, 刘知远THU , xierqi , eacl_newsmth

4 |

5 | https://github.com/memect/hao/blob/master/awesome/imbalanced-data-classification.md

6 |

7 | card list: http://bigdata.memect.com/?tag=imbalanceddataclassification

8 |

9 | discussion: https://github.com/memect/hao/issues/47

10 |

11 | keywords:

12 | Positive only,

13 | Imbalanced data,

14 | classification,

15 |

16 |

17 | ## readings

18 |

19 | ### survey

20 | http://www.cs.cmu.edu/~qyj/IR-Lab/ImbalancedSummary.html Yanjun Qi, A Brief Literature Review of Class Imbalanced Problem

21 | (2004)

22 |

23 | ### classic

24 | http://homes.cs.washington.edu/~pedrod/papers/kdd99.pdf (recommended by @xierqi) Domingos, MetaCost: A General Method for Making Classifiers Cost-Sensitive, KDD 1999

25 |

26 | https://www.jair.org/media/953/live-953-2037-jair.pdf SMOTE: Synthetic Minority Over-sampling Technique (2002) JAIR

27 |

28 |

29 | http://cseweb.ucsd.edu/~elkan/posonly.pdf Learning Classifiers from Only Positive and Unlabeled Data (2008)

30 |

31 | http://www.ele.uri.edu/faculty/he/PDFfiles/ImbalancedLearning.pdf Haibo He, Edwardo A. Garcia . (2009). Learning from Imbalanced Data. IEEE Transactions on Knowledge and Data Engineering, 21(9), 1263-1284.

32 |

33 | http://www.computer.org/csdl/proceedings/icnc/2008/3304/04/3304d192-abs.html Guo, X., Yin, Y., Dong, C., Yang, G., & Zhou, G. (2008). On the Class Imbalance Problem. 2008 Fourth International Conference on Natural Computation (pp. 192-201).

34 |

35 |

36 |

37 | ### current

38 | http://www.aclweb.org/anthology/P/P13/P13-2141.pdf (recommended by @eacl_newsmth) Towards Accurate Distant Supervision for Relational Facts Extraction, ACL 2013

39 |

40 | http://link.springer.com/article/10.1007/s10618-012-0295-5 Training and assessing classification rules with imbalanced data (2014) Data Mining and Knowledge Discovery

41 |

42 | http://www.aaai.org/ocs/index.php/AAAI/AAAI13/paper/viewFile/6353/6827 An Effective Approach for Imbalanced Classification: Unevenly Balanced Bagging (2013) AAAI

43 |

44 |

45 |

46 |

47 | ### further readings

48 | http://stackoverflow.com/questions/12877153/tools-for-multiclass-imbalanced-classification-in-statistical-packages

49 |

50 |

51 | ## tools

52 |

53 | http://www.nltk.org/_modules/nltk/classify/positivenaivebayes.html nltk

54 |

55 | http://weka.wikispaces.com/MetaCost Weka

56 |

57 | http://tokestermw.github.io/posts/imbalanced-datasets-random-forests/ smote

58 |

59 | https://github.com/fmfn/UnbalancedDataset based on SMOTE

60 |

61 | ## datasets

62 |

63 | http://pages.cs.wisc.edu/~dpage/kddcup2001/ Prediction of Molecular Bioactivity for Drug Design -- Binding to Thrombin

64 |

65 | http://code.google.com/p/imbalanced-data-sampling/ Imbalanced Data Sampling Using Sample Subset Optimization

66 |

67 | #### dataset list

68 | https://archive.ics.uci.edu/ml/datasets.html?format=&task=cla&att=&area=&numAtt=&numIns=&type=&sort=nameUp&view=table UCI dataset repo, classification category

69 |

70 | http://www.inf.ed.ac.uk/teaching/courses/dme/html/datasets0405.html dataset list

71 |

72 |

73 | ## discussion

74 | ### @eastone01 Datasets for imbalanced data classification https://github.com/memect/hao/issues/47

75 |

76 | Are there any synthetic two-dimensional toy datasets for the imbalanced data classification task?
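Where no ready-made toy set is at hand, one can be generated. The following is a minimal sketch, not a standard benchmark: the function name `make_toy_imbalanced`, the 950/50 class split, and the blob centres and spreads are all illustrative assumptions.

```python
import random

def make_toy_imbalanced(n_majority=950, n_minority=50, seed=0):
    """Return (points, labels): two 2-D Gaussian blobs with a rare positive class."""
    rng = random.Random(seed)
    points, labels = [], []
    # Majority (negative) class: a broad blob centred at the origin.
    for _ in range(n_majority):
        points.append((rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)))
        labels.append(0)
    # Minority (positive) class: a tighter blob that partly overlaps the majority.
    for _ in range(n_minority):
        points.append((rng.gauss(1.5, 0.5), rng.gauss(1.5, 0.5)))
        labels.append(1)
    return points, labels

points, labels = make_toy_imbalanced()
```

Changing `n_minority` varies the imbalance ratio, and moving the minority centre varies how much the classes overlap.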

77 |

78 | AixinSG: In my understanding, undersampling has limited effect overall.

79 |

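80 | 刘知远THU: For imbalanced data classification, how should one handle the case where there are a great many labeled positives, almost no labeled negatives, and a large amount of unlabeled data? This is very common in relation extraction. For now, the only option is to randomly sample from the unlabeled data and treat the samples as negatives.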

81 |

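82 | xierqi: I surveyed this area for a while. About 90% of the work is sampling, and the biggest problem is that the evaluation methods do not fit real-world scenarios. I personally recommend Domingos's MetaCost, which is very practical: just set the costs from experience. http://t.cn/RPiexE9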
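The decision rule at the heart of cost-sensitive approaches such as MetaCost can be sketched as follows: given class-probability estimates and a hand-set cost matrix, predict the class with the lowest expected cost. This is only that one step, not the full MetaCost procedure (which also estimates the probabilities by bagging, relabels the training set, and retrains). The function name and the cost values are hypothetical.

```python
def min_expected_cost_class(probs, cost):
    """Pick the class i that minimises sum_j probs[j] * cost[i][j].

    probs[j]   : estimated P(true class = j | x)
    cost[i][j] : cost of predicting class i when the true class is j
    """
    n_classes = len(probs)
    expected = [
        sum(probs[j] * cost[i][j] for j in range(n_classes))
        for i in range(n_classes)
    ]
    return min(range(n_classes), key=expected.__getitem__)

# Hypothetical costs set "from experience": missing a positive
# (predict 0, truth 1) is ten times worse than a false alarm.
COST = [[0.0, 10.0],
        [1.0, 0.0]]

rare_but_costly = min_expected_cost_class([0.8, 0.2], COST)
clearly_negative = min_expected_cost_class([0.95, 0.05], COST)
```

With these costs, an example with only a 20% estimated chance of being positive is still assigned to the positive class, which is how the cost matrix compensates for the imbalance.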

83 |

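84 | eacl_newsmth: In relation extraction, are positives really that plentiful? And there are no negatives? My impression is that in many cases positives are limited while negatives are abundant (of course, you could argue that negatives are hard to define)...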

85 |

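86 | 刘知远THU: Reply to @eacl_newsmth: For example, a knowledge graph can supply many positives, but negatives have to be generated by randomly replacing an entity in a positive, which can easily label examples that are actually correct as negatives.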
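The negative-generation scheme described here can be sketched as below. The function name and the retry limit are assumptions made for illustration. Filtering against the known positives removes only the false negatives the graph already contains; a corrupted triple that is true but missing from the graph still gets through, which is the problem raised in the discussion.

```python
import random

def corrupt_triples(positives, entities, seed=0, max_tries=100):
    """For each (head, relation, tail), swap the head or the tail for a random entity.

    Candidates already present in `positives` are rejected. A triple is
    skipped if no acceptable candidate is found within `max_tries` draws.
    """
    rng = random.Random(seed)
    known = set(positives)
    negatives = []
    for head, rel, tail in positives:
        for _ in range(max_tries):
            e = rng.choice(entities)
            # Corrupt the head or the tail with equal probability.
            cand = (e, rel, tail) if rng.random() < 0.5 else (head, rel, e)
            if cand not in known:
                negatives.append(cand)
                break
    return negatives

# Hypothetical miniature knowledge graph.
triples = [("Beijing", "capital_of", "China"),
           ("Paris", "capital_of", "France"),
           ("Tokyo", "capital_of", "Japan")]
entities = ["Beijing", "Paris", "Tokyo", "China", "France", "Japan"]
negatives = corrupt_triples(triples, entities)
```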

87 |

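88 | eacl_newsmth: Reply to @刘知远THU: Right, I guessed you would bring up this example, which is why I added that it depends on how you define negatives, haha. I struggled with this for a long time too, and eventually concluded that positives are still the scarce ones. Besides, in many cases can you really guarantee that the positives are correct?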

89 |

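90 | 刘知远THU: Reply to @eacl_newsmth: Positives are basically correct, for example those from Freebase, but negatives have a large impact on performance. :) At this year's AAAI there is a paper from MSRA on the TransH model that proposes a trick for selecting negatives, and it works remarkably well.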
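The discussion does not spell the trick out. On the assumption that it refers to the Bernoulli sampling scheme described in the TransH paper, the idea is to choose between corrupting the head and the tail per relation, replacing the head with probability tph / (tph + hpt), where tph is the average number of tails per head and hpt the average number of heads per tail. A sketch under that assumption, with a hypothetical function name and toy data:

```python
from collections import defaultdict

def head_replace_prob(triples):
    """Per relation, return tph / (tph + hpt), the probability of corrupting the head."""
    tails_of = defaultdict(set)   # (relation, head) -> distinct tails
    heads_of = defaultdict(set)   # (relation, tail) -> distinct heads
    for h, r, t in triples:
        tails_of[(r, h)].add(t)
        heads_of[(r, t)].add(h)
    tph_counts = defaultdict(list)
    hpt_counts = defaultdict(list)
    for (r, _head), tails in tails_of.items():
        tph_counts[r].append(len(tails))
    for (r, _tail), heads in heads_of.items():
        hpt_counts[r].append(len(heads))
    probs = {}
    for r in tph_counts:
        tph = sum(tph_counts[r]) / len(tph_counts[r])
        hpt = sum(hpt_counts[r]) / len(hpt_counts[r])
        probs[r] = tph / (tph + hpt)
    return probs

# Hypothetical many-to-one relation: three people born in the same city.
toy = [("a", "born_in", "X"), ("b", "born_in", "X"), ("c", "born_in", "X")]
probs = head_replace_prob(toy)
```

For this many-to-one relation the head is replaced only a quarter of the time: a random head is relatively likely to yield a true triple, so corrupting the tail is the safer way to obtain a genuine negative.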

91 |

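92 | eacl_newsmth: Reply to @刘知远THU: Right, the instances in the KB are indeed correct, but the samples retrieved from massive document collections on the basis of those instances are not necessarily correct. Judging from current work, much of the work that focuses on negatives does bring some improvement. Last year a student at the linguistics institute (语言所) used properties of the "relations" to select better training samples, which also improved performance. But for this particular problem, the reliability of the positives cannot be sidestepped.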

93 |

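94 | 刘知远THU: Reply to @eacl_newsmth: Could you tell me the title of the paper you mentioned? What I am working on now is not extracting relations from text but knowledge graph completion, which is somewhat like link prediction on a graph, except that the links to be predicted are relations of different types.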

95 |

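96 | eacl_newsmth: Reply to @刘知远THU: http://t.cn/RPX75A3 Right, I watched a talk by a young colleague from your group, and it seemed closely related to Sebastian's earlier work. Or was it just the way he presented it? When will you be back in Beijing? We could have a proper discussion.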

97 |

98 |

--------------------------------------------------------------------------------

/awesome/influential-user-social-network.md:

--------------------------------------------------------------------------------

1 | # Influential User Identification in Online Social Networks

2 |

3 | contributors: @唐小sin @善良的右行

4 |

5 | discussion: https://github.com/memect/hao/issues/89

6 |

7 | keywords:

8 | opinion leader (意见领袖),

9 | user influence,

10 | influential spreaders ,

11 | influential user ,

12 | twitter ,

13 |

14 | # Highlights from the Weibo discussion

15 |

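16 | 善良的右行: @好东西传送门 These papers are a bit old, though their citation counts go without saying. The essence of the problem seems to be mining important nodes. Just a newbie chiming in; not sure whether I have this right. (today 14:45)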

17 |

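18 | 好东西传送门: Finding important nodes has always been a key problem in social network research. Interest peaked around 2007-2010, when social media was booming, and by 2014 there was already a survey on influential user identification. Because the algorithms in this line of research are not difficult, while the data is large and hard to obtain, the research frontier has gradually shifted from academia to industry and startup applications. http://t.cn/RPQfWRW (52 minutes ago)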

19 |

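20 | 唐小sin: That is indeed the case. The social influence area now needs a really good problem to solve; so much has been done that it feels hard to find a way in.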

21 |

22 |

23 |

24 | 唐小sin: Any paper on influence is worth picking up and reading. As for opinion leaders, you might take a look at TwitterRank. (today 15:13)

25 |

26 | 好东西传送门: Reply to @唐小sin: This paper is very good; it also compares against TunkRank and Topic-sensitive PageRank (TSPR). (44 minutes ago)
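For orientation, the topic-sensitive PageRank idea mentioned here can be sketched as a power iteration whose random restarts follow a topic-specific teleport vector rather than a uniform one. This is a generic illustration, not the TwitterRank algorithm from the paper; the function name, the damping factor, and the three-account graph are assumptions.

```python
def personalized_pagerank(out_links, teleport, damping=0.85, iters=100):
    """Power iteration for PageRank with a non-uniform teleport vector.

    out_links[u] : nodes that u links to (e.g. the accounts u follows)
    teleport[u]  : topic-specific restart probability; values sum to 1
    """
    nodes = list(teleport)
    rank = dict(teleport)
    for _ in range(iters):
        nxt = {u: (1.0 - damping) * teleport[u] for u in nodes}
        for u in nodes:
            targets = out_links.get(u, [])
            if targets:
                share = damping * rank[u] / len(targets)
                for v in targets:
                    nxt[v] += share
            else:
                # Dangling node: spread its mass according to the teleport vector.
                for v in nodes:
                    nxt[v] += damping * rank[u] * teleport[v]
        rank = nxt
    return rank

# Hypothetical follower graph: a and b follow c, and c follows a.
follows = {"a": ["c"], "b": ["c"], "c": ["a"]}
uniform = {"a": 1 / 3, "b": 1 / 3, "c": 1 / 3}
rank = personalized_pagerank(follows, uniform)
```

Influence flows from a follower to the account it follows, so `c`, which is followed by both other accounts, receives the highest score. Replacing `uniform` with a vector concentrated on accounts active in one topic gives a topic-specific ranking.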

27 |

28 |

29 | 善良的右行: @好东西传送门 I'm embarrassed, I am a newbie too, but of course I am happy to share: Identification of influential spreaders in complex networks; Leaders in Social Networks, the Delicious Case; Absence of influential spreaders in rumor dynamics. All great papers by great researchers.

30 |

31 |

32 | @好东西传送门: Reply to @善良的右行: These recommended papers are all very good; the first one has nearly 400 citations. Without knowing the field, who would have thought of the keyword "influential spreaders"?

33 |

34 |

35 |

36 |

37 | # readings

38 |

39 | ## industry

40 | http://mashable.com/2014/02/25/socialrank-brands/ SocialRank Tool Helps Brands Find Most Valuable Followers (2014)

41 |

42 | http://www.smallbusinesssem.com/find-interesting-influential-twitter-users/3974/ Quick Way to Find Interesting & Influential Twitter Users (2011)

43 |

44 | ## readings

45 |

46 | ### influential user/spreader identification/ranking

47 | http://link.springer.com/chapter/10.1007/978-3-319-01778-5_37 Survey of Influential User Identification Techniques in Online Social Networks (2014) Advances in Intelligent Systems and Computing

48 |

49 | http://dl.acm.org/citation.cfm?id=1835935 Yu Wang, Gao Cong, Guojie Song, and Kunqing Xie. 2010. Community-based greedy algorithm for mining top-K influential nodes in mobile social networks. In Proceedings of the 16th ACM SIGKDD international conference on Knowledge discovery and data mining (KDD '10)

50 |

51 | http://ink.library.smu.edu.sg/cgi/viewcontent.cgi?article=1503&context=sis_research Twitterrank: Finding Topic-Sensitive Influential Twitterers 2010

52 | recommended by @唐小sin

53 |

54 | http://www.anderson.ucla.edu/faculty/anand.bodapati/Determining-Influential-Users.pdf Determining Influential Users in Internet Social Networks

55 |

56 | http://polymer.bu.edu/hes/articles/kghlmsm10.pdf Identification of influential spreaders in complex networks

57 | recommended by @善良的右行

58 |

59 | http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0021202 Lü L, Zhang Y-C, Yeung CH, Zhou T (2011) Leaders in Social Networks, the Delicious Case. PLoS ONE 6(6)

60 | recommended by @善良的右行

61 |

62 | http://arxiv.org/pdf/1112.2239.pdf Absence of influential spreaders in rumor dynamics

63 | recommended by @善良的右行

64 |

65 | ### measure influence

66 |

67 | http://blog.datalicious.com/awesome-new-research-measuring-twitter-user-influence-from-meeyoung-cha-max-planck-institute/ Awesome new research: Measuring twitter user influence from Meeyoung Cha, Max Planck Institute (2010) read the original paper below

68 |

69 | http://www.aaai.org/ocs/index.php/ICWSM/ICWSM10/paper/viewFile/1538/1826 Measuring User Influence in Twitter: The Million Follower Fallacy

70 |

71 | http://dl.acm.org/citation.cfm?id=2480726

72 | Mario Cataldi, Nupur Mittal, and Marie-Aude Aufaure. 2013. Estimating domain-based user influence in social networks. In Proceedings of the 28th Annual ACM Symposium on Applied Computing (SAC '13).

73 |

74 | http://www.cse.ust.hk/~qnature/pdf/globecom13.pdf Analyzing the Influential People in Sina Weibo

75 | Dataset (2013)

76 |

77 | http://dl.acm.org/citation.cfm?id=1935845

78 | Eytan Bakshy, Jake M. Hofman, Winter A. Mason, and Duncan J. Watts. 2011. Everyone's an influencer: quantifying influence on twitter. In Proceedings of the fourth ACM international conference on Web search and data mining (WSDM '11)

79 |

80 |

81 |

82 | ## related

83 | http://en.wikipedia.org/wiki/Opinion_leadership

84 |

85 | http://dl.acm.org/citation.cfm?id=2503797

86 | Adrien Guille, Hakim Hacid, Cecile Favre, and Djamel A. Zighed. 2013. Information diffusion in online social networks: a survey. SIGMOD Rec. 42, 2 (July 2013), 17-28.

87 |

88 | http://dl.acm.org/citation.cfm?id=2601412 Charu Aggarwal and Karthik Subbian. 2014. Evolutionary Network Analysis: A Survey. ACM Comput. Surv. 47, 1, Article 10 (May 2014), 36 pages.

89 |

90 |

91 |

--------------------------------------------------------------------------------

/awesome/deep-learning-introduction.md:

--------------------------------------------------------------------------------

1 | ## Introductory and survey materials on deep learning

2 |

3 | contributors: @自觉自愿来看老婆微博 @邓侃 @星空下的巫师

4 |

5 | created: 2014-09-16

6 |

7 |

8 | ## Getting started for beginners