├── .github
│   └── ISSUE_TEMPLATE
│       ├── bug_report.md
│       └── share-streaming-insights.md
├── LICENSE
├── README.md
├── backend
│   ├── cs
│   │   ├── .gitignore
│   │   ├── README.md
│   │   └── memgraph-streaming
│   │       ├── Program.cs
│   │       └── memgraph-streaming.csproj
│   ├── go
│   │   ├── .gitignore
│   │   ├── README.md
│   │   └── app.go
│   ├── java
│   │   ├── .gitignore
│   │   ├── README.md
│   │   └── memgraph-streaming
│   │       ├── pom.xml
│   │       └── src
│   │           ├── main
│   │           │   └── java
│   │           │       └── memgraph
│   │           │           └── App.java
│   │           └── test
│   │               └── java
│   │                   └── memgraph
│   │                       └── AppTest.java
│   ├── node
│   │   ├── .eslintrc.js
│   │   ├── .gitignore
│   │   ├── README.md
│   │   ├── package-lock.json
│   │   ├── package.json
│   │   └── src
│   │       └── index.js
│   ├── python
│   │   ├── .gitignore
│   │   ├── README.md
│   │   ├── app.py
│   │   └── requirements.txt
│   └── rust
│       ├── .gitignore
│       ├── Cargo.lock
│       ├── Cargo.toml
│       ├── README.md
│       └── src
│           └── main.rs
├── kafka
│   ├── .gitignore
│   ├── README.md
│   ├── producer
│   │   ├── input.csv
│   │   ├── requirements.txt
│   │   ├── static_producer.py
│   │   └── stream_producer.py
│   └── run.sh
└── memgraph
    ├── README.md
    ├── queries
    │   ├── create_constraint.cypher
    │   ├── create_index.cypher
    │   ├── create_node_trigger.cypher
    │   ├── create_stream.cypher
    │   ├── create_update_neighbors_trigger.cypher
    │   ├── drop_constraint.cypher
    │   ├── drop_data.cypher
    │   ├── drop_index.cypher
    │   ├── drop_node_trigger.cypher
    │   ├── drop_stream.cypher
    │   ├── drop_update_neighbors_trigger.cypher
    │   ├── show_constraints.cypher
    │   ├── show_indexes.cypher
    │   ├── show_streams.cypher
    │   ├── show_triggers.cypher
    │   └── start_stream.cypher
    ├── query_modules
    │   └── kafka.py
    └── run.sh

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Desktop (please complete the following information):**

27 | - OS: [e.g. iOS]

28 | - Browser [e.g. chrome, safari]

29 | - Version [e.g. 22]

30 |

31 | **Smartphone (please complete the following information):**

32 | - Device: [e.g. iPhone6]

33 | - OS: [e.g. iOS8.1]

34 | - Browser [e.g. stock browser, safari]

35 | - Version [e.g. 22]

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/share-streaming-insights.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Share streaming insights

3 | about: Tell us the requirements of your graph streaming app

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **What's your backend programming language?**

11 | E.g. Python

12 |

13 | **What is the throughput of messages coming into the graph? In other words, how many messages per second, hour, or day are coming into the system?**

14 | E.g. 100/s

15 |

16 | **What messaging queue are you using?**

17 | E.g. NATS

18 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 |

2 | Apache License

3 | Version 2.0, January 2004

4 | http://www.apache.org/licenses/

5 |

6 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

7 |

8 | 1. Definitions.

9 |

10 | "License" shall mean the terms and conditions for use, reproduction,

11 | and distribution as defined by Sections 1 through 9 of this document.

12 |

13 | "Licensor" shall mean the copyright owner or entity authorized by

14 | the copyright owner that is granting the License.

15 |

16 | "Legal Entity" shall mean the union of the acting entity and all

17 | other entities that control, are controlled by, or are under common

18 | control with that entity. For the purposes of this definition,

19 | "control" means (i) the power, direct or indirect, to cause the

20 | direction or management of such entity, whether by contract or

21 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

22 | outstanding shares, or (iii) beneficial ownership of such entity.

23 |

24 | "You" (or "Your") shall mean an individual or Legal Entity

25 | exercising permissions granted by this License.

26 |

27 | "Source" form shall mean the preferred form for making modifications,

28 | including but not limited to software source code, documentation

29 | source, and configuration files.

30 |

31 | "Object" form shall mean any form resulting from mechanical

32 | transformation or translation of a Source form, including but

33 | not limited to compiled object code, generated documentation,

34 | and conversions to other media types.

35 |

36 | "Work" shall mean the work of authorship, whether in Source or

37 | Object form, made available under the License, as indicated by a

38 | copyright notice that is included in or attached to the work

39 | (an example is provided in the Appendix below).

40 |

41 | "Derivative Works" shall mean any work, whether in Source or Object

42 | form, that is based on (or derived from) the Work and for which the

43 | editorial revisions, annotations, elaborations, or other modifications

44 | represent, as a whole, an original work of authorship. For the purposes

45 | of this License, Derivative Works shall not include works that remain

46 | separable from, or merely link (or bind by name) to the interfaces of,

47 | the Work and Derivative Works thereof.

48 |

49 | "Contribution" shall mean any work of authorship, including

50 | the original version of the Work and any modifications or additions

51 | to that Work or Derivative Works thereof, that is intentionally

52 | submitted to Licensor for inclusion in the Work by the copyright owner

53 | or by an individual or Legal Entity authorized to submit on behalf of

54 | the copyright owner. For the purposes of this definition, "submitted"

55 | means any form of electronic, verbal, or written communication sent

56 | to the Licensor or its representatives, including but not limited to

57 | communication on electronic mailing lists, source code control systems,

58 | and issue tracking systems that are managed by, or on behalf of, the

59 | Licensor for the purpose of discussing and improving the Work, but

60 | excluding communication that is conspicuously marked or otherwise

61 | designated in writing by the copyright owner as "Not a Contribution."

62 |

63 | "Contributor" shall mean Licensor and any individual or Legal Entity

64 | on behalf of whom a Contribution has been received by Licensor and

65 | subsequently incorporated within the Work.

66 |

67 | 2. Grant of Copyright License. Subject to the terms and conditions of

68 | this License, each Contributor hereby grants to You a perpetual,

69 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

70 | copyright license to reproduce, prepare Derivative Works of,

71 | publicly display, publicly perform, sublicense, and distribute the

72 | Work and such Derivative Works in Source or Object form.

73 |

74 | 3. Grant of Patent License. Subject to the terms and conditions of

75 | this License, each Contributor hereby grants to You a perpetual,

76 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

77 | (except as stated in this section) patent license to make, have made,

78 | use, offer to sell, sell, import, and otherwise transfer the Work,

79 | where such license applies only to those patent claims licensable

80 | by such Contributor that are necessarily infringed by their

81 | Contribution(s) alone or by combination of their Contribution(s)

82 | with the Work to which such Contribution(s) was submitted. If You

83 | institute patent litigation against any entity (including a

84 | cross-claim or counterclaim in a lawsuit) alleging that the Work

85 | or a Contribution incorporated within the Work constitutes direct

86 | or contributory patent infringement, then any patent licenses

87 | granted to You under this License for that Work shall terminate

88 | as of the date such litigation is filed.

89 |

90 | 4. Redistribution. You may reproduce and distribute copies of the

91 | Work or Derivative Works thereof in any medium, with or without

92 | modifications, and in Source or Object form, provided that You

93 | meet the following conditions:

94 |

95 | (a) You must give any other recipients of the Work or

96 | Derivative Works a copy of this License; and

97 |

98 | (b) You must cause any modified files to carry prominent notices

99 | stating that You changed the files; and

100 |

101 | (c) You must retain, in the Source form of any Derivative Works

102 | that You distribute, all copyright, patent, trademark, and

103 | attribution notices from the Source form of the Work,

104 | excluding those notices that do not pertain to any part of

105 | the Derivative Works; and

106 |

107 | (d) If the Work includes a "NOTICE" text file as part of its

108 | distribution, then any Derivative Works that You distribute must

109 | include a readable copy of the attribution notices contained

110 | within such NOTICE file, excluding those notices that do not

111 | pertain to any part of the Derivative Works, in at least one

112 | of the following places: within a NOTICE text file distributed

113 | as part of the Derivative Works; within the Source form or

114 | documentation, if provided along with the Derivative Works; or,

115 | within a display generated by the Derivative Works, if and

116 | wherever such third-party notices normally appear. The contents

117 | of the NOTICE file are for informational purposes only and

118 | do not modify the License. You may add Your own attribution

119 | notices within Derivative Works that You distribute, alongside

120 | or as an addendum to the NOTICE text from the Work, provided

121 | that such additional attribution notices cannot be construed

122 | as modifying the License.

123 |

124 | You may add Your own copyright statement to Your modifications and

125 | may provide additional or different license terms and conditions

126 | for use, reproduction, or distribution of Your modifications, or

127 | for any such Derivative Works as a whole, provided Your use,

128 | reproduction, and distribution of the Work otherwise complies with

129 | the conditions stated in this License.

130 |

131 | 5. Submission of Contributions. Unless You explicitly state otherwise,

132 | any Contribution intentionally submitted for inclusion in the Work

133 | by You to the Licensor shall be under the terms and conditions of

134 | this License, without any additional terms or conditions.

135 | Notwithstanding the above, nothing herein shall supersede or modify

136 | the terms of any separate license agreement you may have executed

137 | with Licensor regarding such Contributions.

138 |

139 | 6. Trademarks. This License does not grant permission to use the trade

140 | names, trademarks, service marks, or product names of the Licensor,

141 | except as required for reasonable and customary use in describing the

142 | origin of the Work and reproducing the content of the NOTICE file.

143 |

144 | 7. Disclaimer of Warranty. Unless required by applicable law or

145 | agreed to in writing, Licensor provides the Work (and each

146 | Contributor provides its Contributions) on an "AS IS" BASIS,

147 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

148 | implied, including, without limitation, any warranties or conditions

149 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

150 | PARTICULAR PURPOSE. You are solely responsible for determining the

151 | appropriateness of using or redistributing the Work and assume any

152 | risks associated with Your exercise of permissions under this License.

153 |

154 | 8. Limitation of Liability. In no event and under no legal theory,

155 | whether in tort (including negligence), contract, or otherwise,

156 | unless required by applicable law (such as deliberate and grossly

157 | negligent acts) or agreed to in writing, shall any Contributor be

158 | liable to You for damages, including any direct, indirect, special,

159 | incidental, or consequential damages of any character arising as a

160 | result of this License or out of the use or inability to use the

161 | Work (including but not limited to damages for loss of goodwill,

162 | work stoppage, computer failure or malfunction, or any and all

163 | other commercial damages or losses), even if such Contributor

164 | has been advised of the possibility of such damages.

165 |

166 | 9. Accepting Warranty or Additional Liability. While redistributing

167 | the Work or Derivative Works thereof, You may choose to offer,

168 | and charge a fee for, acceptance of support, warranty, indemnity,

169 | or other liability obligations and/or rights consistent with this

170 | License. However, in accepting such obligations, You may act only

171 | on Your own behalf and on Your sole responsibility, not on behalf

172 | of any other Contributor, and only if You agree to indemnify,

173 | defend, and hold each Contributor harmless for any liability

174 | incurred by, or claims asserted against, such Contributor by reason

175 | of your accepting any such warranty or additional liability.

176 |

177 | END OF TERMS AND CONDITIONS

178 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Example Streaming App 🚀🚀

2 |

3 |

4 |

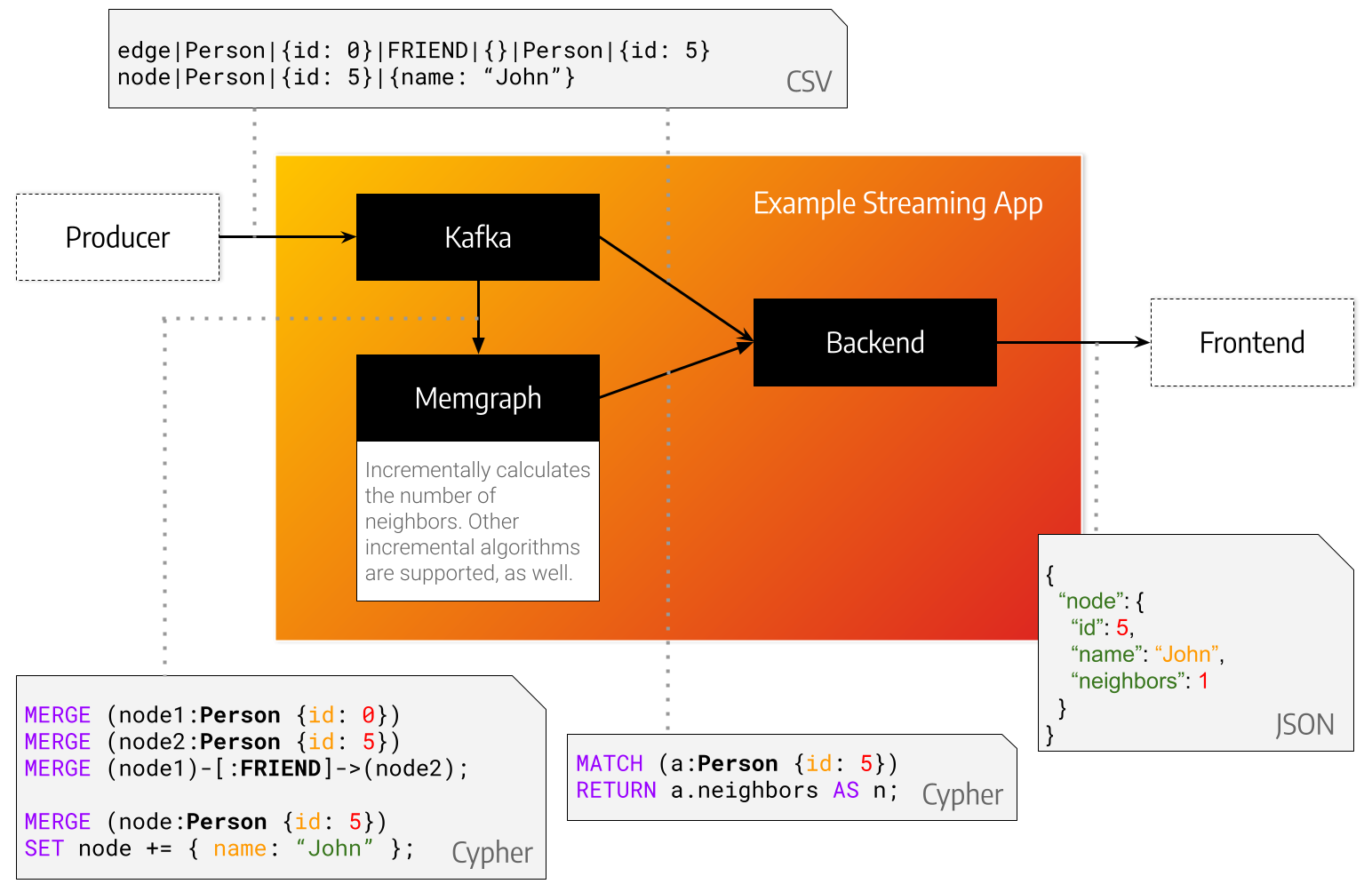

15 | This repository serves as a point of reference when developing a streaming application with [Memgraph](https://memgraph.com) and a message broker such as [Kafka](https://kafka.apache.org).

16 |

17 |

18 |

19 | *KafkaProducer* represents the source of your data.

20 | That can be transactions, queries, metadata or something different entirely.

21 | In this minimal example we propose using a [special string format](./kafka) that is easy to parse.

22 | The data is sent from the *KafkaProducer* to *Kafka* under a topic aptly named *topic*.

23 | The *Backend* implements a *KafkaConsumer*.

24 | It takes data from *Kafka*, consumes it, but also queries *Memgraph* for graph analysis, feature extraction or storage.

25 |

26 | ## Installation

27 | Install [Kafka](./kafka) and [Memgraph](./memgraph) using the instructions in the directories of the same name.

28 | Then choose a programming language from the list of supported languages and follow the instructions given there.

29 |

30 | ### List of supported programming languages

31 | - [c#](./backend/cs)

32 | - [go](./backend/go)

33 | - [java](./backend/java)

34 | - [node](./backend/node)

35 | - [python](./backend/python)

36 | - [rust](./backend/rust)

37 |

38 | ## How does it work *exactly*?

39 | ### KafkaProducer

40 | The *KafkaProducer* in [./kafka/producer](./kafka/producer) creates nodes with a label *Person* that are connected with edges of type *CONNECTED_WITH*.

41 | In this repository we provide a static producer that reads entries from a file and a stream producer that produces entries every *X* seconds.

42 |

43 | ### Backend

44 | The *backend* takes one message at a time from Kafka, parses it as a line with a CSV parser, converts it into an `openCypher` query and sends it to Memgraph.

45 | After storing a node in Memgraph, the backend asks Memgraph how many adjacent nodes it has and prints the result to the terminal.

46 |

47 | ### Memgraph

48 | You can think of Memgraph as two separate components: a storage engine and an algorithm execution engine.

49 | First we create a [trigger](./memgraph/queries) (see `create_node_trigger.cypher` and `create_update_neighbors_trigger.cypher` in that directory): an algorithm that will be run every time a node is inserted.

50 | This algorithm calculates and updates the number of neighbors of each affected node after every query is executed.

51 |

--------------------------------------------------------------------------------
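The trigger behaviour described in the README's Memgraph section can be pictured with a small in-memory model. This is only a sketch in plain Python, not Memgraph's implementation and not the contents of the `.cypher` files: the `ToyGraph` class and its method names are hypothetical, and it assumes undirected adjacency. It shows the idea of recomputing a stored `neighbors` value for just the nodes touched by each write.

```python
from collections import defaultdict


class ToyGraph:
    """Hypothetical in-memory stand-in for a graph store with an on-write hook."""

    def __init__(self):
        self.adjacency = defaultdict(set)  # node id -> set of adjacent node ids
        self.neighbors = {}                # node id -> stored neighbor count

    def add_node(self, node_id):
        self.adjacency[node_id]            # touching the key creates an empty set
        self._update_neighbors([node_id])

    def add_edge(self, a, b):
        self.adjacency[a].add(b)
        self.adjacency[b].add(a)
        self._update_neighbors([a, b])

    def _update_neighbors(self, affected):
        # Plays the role of the trigger: recompute the count for affected nodes only.
        for node_id in affected:
            self.neighbors[node_id] = len(self.adjacency[node_id])


graph = ToyGraph()
graph.add_node(1)
graph.add_node(2)
graph.add_edge(1, 2)
print(graph.neighbors)  # {1: 1, 2: 1}
```

Updating only the affected nodes keeps the cost of each write proportional to the size of the change rather than to the size of the graph.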

/backend/cs/.gitignore:

--------------------------------------------------------------------------------

1 | bin/

2 | obj/

3 |

--------------------------------------------------------------------------------

/backend/cs/README.md:

--------------------------------------------------------------------------------

1 | ## How it works

2 | 1. A [kafka](https://kafka.apache.org) consumer is started and messages are accepted in a [special format](../../kafka).

3 | 2. A memgraph client connects to [Memgraph](https://memgraph.com/) on port 7687.

4 | 3. The consumer script parses the messages and inserts their data into Memgraph using [Cypher](https://opencypher.org/) via the [Bolt protocol](https://en.wikipedia.org/wiki/Bolt_\(network_protocol\)).

5 |

6 | ## How to run

7 |

8 | 1. Install the Neo4j driver from NuGet: `dotnet add package Neo4j.Driver.Simple`

9 | 2. Install the Kafka client from NuGet: `dotnet add package Confluent.Kafka`

10 | 3. Run kafka on port 9092, [instructions](../../kafka)

11 | 4. Run memgraph on port 7687, [instructions](../../memgraph)

12 | 5. Run the app with `dotnet run`

13 | 6. Run a producer, [instructions](../../kafka/producer)

14 |

--------------------------------------------------------------------------------

/backend/cs/memgraph-streaming/Program.cs:

--------------------------------------------------------------------------------

1 | using System;

2 | using Neo4j.Driver;

3 | using Confluent.Kafka;

4 |

5 | namespace memgraph_streaming

6 | {

7 | class Program

8 | {

9 | static void Main(string[] args)

10 | {

11 | using var driver = GraphDatabase.Driver("bolt://localhost:7687", AuthTokens.None);

12 | using var session = driver.Session();

13 |

14 | var config = new ConsumerConfig

15 | {

16 | BootstrapServers = "localhost:9092",

17 | GroupId = "consumers",

18 | };

19 | using var consumer = new ConsumerBuilder<Ignore, string>(config).Build();

20 | consumer.Subscribe("topic");

21 | try {

22 | while (true)

23 | {

24 | var message = consumer.Consume().Message.Value;

25 | System.Console.WriteLine("received message: " + message);

26 | var arr = message.Split("|");

27 | if (arr[0] == "node") {

28 | var neighbors = session.WriteTransaction(tx =>

29 | {

30 | return tx.Run(string.Format("MATCH (node:{0} {1}) RETURN node.neighbors AS neighbors", arr[1], arr[2])).Peek();

31 | });

32 | if (neighbors != null) {

33 | Console.WriteLine(string.Format("Node (node:{0} {1}) has {2} neighbors.", arr[1], arr[2], neighbors.Values["neighbors"]));

34 | } else {

35 | Console.WriteLine("Neighbors number is null. Triggers are not defined or not yet executed.");

36 | }

37 | }

38 | }

39 | }

40 | finally

41 | {

42 | // this has to be called despite the using statement, supposedly

43 | consumer.Close();

44 | };

45 | }

46 | }

47 | }

48 |

--------------------------------------------------------------------------------
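The consumer loop above splits each message on `|` and, for messages whose first field is `node`, builds a lookup query from the label and the property map. A minimal Python sketch of that step follows. The function name `build_neighbors_query` and the sample message are assumptions made for illustration; the actual message format is documented in `./kafka`.

```python
def build_neighbors_query(message):
    """Return the lookup query for a 'node' message, or None for anything else."""
    parts = message.split("|")
    if len(parts) < 3 or parts[0] != "node":
        return None
    label, properties = parts[1], parts[2]
    return f"MATCH (node:{label} {properties}) RETURN node.neighbors AS neighbors"


# Hypothetical sample message, assumed to follow the node|<label>|<properties> shape.
print(build_neighbors_query("node|Person|{id: 1}"))
```

Returning `None` for other message types mirrors the `if (arr[0] == "node")` check: such messages are simply skipped by the lookup.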

/backend/cs/memgraph-streaming/memgraph-streaming.csproj:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | <OutputType>Exe</OutputType>

5 | <TargetFramework>net5.0</TargetFramework>

6 | <RootNamespace>memgraph_streaming</RootNamespace>

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/backend/go/.gitignore:

--------------------------------------------------------------------------------

1 | go.mod

2 | go.sum

3 |

--------------------------------------------------------------------------------

/backend/go/README.md:

--------------------------------------------------------------------------------

1 | ## How it works

2 | 1. A [kafka](https://kafka.apache.org) consumer is started and messages are accepted in a [special format](../../kafka).

3 | 2. A memgraph client connects to [Memgraph](https://memgraph.com/) on port 7687.

4 | 3. The consumer script parses the messages and inserts their data into Memgraph using [Cypher](https://opencypher.org/) via the [Bolt protocol](https://en.wikipedia.org/wiki/Bolt_\(network_protocol\)).

5 |

6 | ## How to run

7 |

8 | 1. Create a Go module: `go mod init memgraph.com/streaming-app`

9 | 2. Install dependencies: `go mod tidy`

10 | 3. Run kafka on port 9092, [instructions](../../kafka)

11 | 4. Run memgraph on port 7687, [instructions](../../memgraph)

12 | 5. Run the app with `go run app.go`

13 | 6. Run a producer, [instructions](../../kafka/producer)

14 |

--------------------------------------------------------------------------------

/backend/go/app.go:

--------------------------------------------------------------------------------

1 | package main

2 |

3 | import (

4 | "context"

5 | "fmt"

6 | "strings"

7 |

8 | "github.com/neo4j/neo4j-go-driver/v4/neo4j"

9 | "github.com/segmentio/kafka-go"

10 | )

11 |

12 | func main() {

13 | db_auth := neo4j.BasicAuth("", "", "")

14 | driver, err := neo4j.NewDriver("bolt://localhost:7687", db_auth)

15 | if err != nil {

16 | panic(err)

17 | }

18 | defer driver.Close()

19 |

20 | kafkaReader := kafka.NewReader(kafka.ReaderConfig{

21 | Brokers: []string{"localhost:9092"},

22 | Topic: "topic",

23 | MinBytes: 0,

24 | MaxBytes: 10e6,

25 | })

26 | defer kafkaReader.Close()

27 | for {

28 | kafkaMessage, err := kafkaReader.ReadMessage(context.Background())

29 | if err != nil {

30 | fmt.Println("nothing to read...")

31 | break

32 | }

33 | message := string(kafkaMessage.Value)

34 | arr := strings.Split(message, "|")

35 |

36 | if arr[0] == "node" {

37 | result, err := runCypherCommand(

38 | driver,

39 | fmt.Sprintf("MATCH (node:%s %s) RETURN node.neighbors", arr[1], arr[2]),

40 | )

41 | if err != nil {

42 | panic(err)

43 | }

44 | fmt.Printf("Node (node:%s %s) has %v neighbors.\n", arr[1], arr[2], result)

45 | }

46 | }

47 | }

48 |

49 | func runCypherCommand(driver neo4j.Driver, cypherCommand string) (interface{}, error) {

50 | session := driver.NewSession(neo4j.SessionConfig{})

51 | defer session.Close()

52 | result, err := session.WriteTransaction(func(tx neo4j.Transaction) (interface{}, error) {

53 | result, err := tx.Run(cypherCommand, map[string]interface{}{})

54 | if err != nil {

55 | return nil, err

56 | }

57 | if result.Next() {

58 | return result.Record().Values[0], nil

59 | }

60 |

61 | return nil, result.Err()

62 | })

63 | return result, err

64 | }

65 |

--------------------------------------------------------------------------------

/backend/java/.gitignore:

--------------------------------------------------------------------------------

1 | target/

2 | pom.xml.tag

3 | pom.xml.releaseBackup

4 | pom.xml.versionsBackup

5 | pom.xml.next

6 | release.properties

7 | dependency-reduced-pom.xml

8 | buildNumber.properties

9 | .mvn/timing.properties

10 | # https://github.com/takari/maven-wrapper#usage-without-binary-jar

11 | .mvn/wrapper/maven-wrapper.jar

12 | .settings/

13 | .classpath

14 | .project

15 |

--------------------------------------------------------------------------------

/backend/java/README.md:

--------------------------------------------------------------------------------

1 | ## How it works

2 | 1. A [kafka](https://kafka.apache.org) consumer is started and messages are accepted in a [special format](../../kafka).

3 | 2. A memgraph client connects to [Memgraph](https://memgraph.com/) on port 7687.

4 | 3. The consumer script parses the messages and inserts their data into Memgraph using [Cypher](https://opencypher.org/) via the [Bolt protocol](https://en.wikipedia.org/wiki/Bolt_\(network_protocol\)).

5 |

6 | ## How to run

7 |

8 | 1. Install Java and Maven

9 | 2. Change into the project directory or open it in a Java IDE

10 | 3. Compile the project within an IDE or with `mvn compile`

11 | 4. Run kafka on port 9092, [instructions](../../kafka)

12 | 5. Run memgraph on port 7687, [instructions](../../memgraph)

13 | 6. Start the application within an IDE or with `mvn exec:java -Dexec.mainClass=memgraph.App`

14 | 7. Run a producer, [instructions](../../kafka/producer)

15 |

--------------------------------------------------------------------------------

/backend/java/memgraph-streaming/pom.xml:

--------------------------------------------------------------------------------

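1 | <?xml version="1.0" encoding="UTF-8"?>
2 |
3 | <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
4 |   xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
5 |   <modelVersion>4.0.0</modelVersion>
6 |
7 |   <groupId>memgraph</groupId>
8 |   <artifactId>memgraph-streaming</artifactId>
9 |   <version>1.0-SNAPSHOT</version>
10 |
11 |   <name>memgraph-streaming</name>
12 |   <url>https://memgraph.com</url>
13 |
14 |   <properties>
15 |     <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
16 |     <maven.compiler.source>11</maven.compiler.source>
17 |     <maven.compiler.target>11</maven.compiler.target>
18 |   </properties>
19 |
20 |   <dependencies>
21 |     <dependency>
22 |       <groupId>junit</groupId>
23 |       <artifactId>junit</artifactId>
24 |       <version>4.11</version>
25 |       <scope>test</scope>
26 |     </dependency>
27 |     <dependency>
28 |       <groupId>org.neo4j.driver</groupId>
29 |       <artifactId>neo4j-java-driver</artifactId>
30 |       <version>4.1.1</version>
31 |     </dependency>
32 |     <dependency>
33 |       <groupId>org.apache.kafka</groupId>
34 |       <artifactId>kafka-clients</artifactId>
35 |       <version>2.8.0</version>
36 |     </dependency>
37 |   </dependencies>
38 |
39 |   <build>
40 |     <pluginManagement>
41 |       <plugins>
42 |         <plugin>
43 |           <artifactId>maven-clean-plugin</artifactId>
44 |           <version>3.1.0</version>
45 |         </plugin>
46 |         <plugin>
47 |           <artifactId>maven-resources-plugin</artifactId>
48 |           <version>3.0.2</version>
49 |         </plugin>
50 |         <plugin>
51 |           <artifactId>maven-compiler-plugin</artifactId>
52 |           <version>3.8.0</version>
53 |         </plugin>
54 |         <plugin>
55 |           <artifactId>maven-surefire-plugin</artifactId>
56 |           <version>2.22.1</version>
57 |         </plugin>
58 |         <plugin>
59 |           <artifactId>maven-jar-plugin</artifactId>
60 |           <version>3.0.2</version>
61 |         </plugin>
62 |         <plugin>
63 |           <artifactId>maven-install-plugin</artifactId>
64 |           <version>2.5.2</version>
65 |         </plugin>
66 |         <plugin>
67 |           <artifactId>maven-deploy-plugin</artifactId>
68 |           <version>2.8.2</version>
69 |         </plugin>
70 |         <plugin>
71 |           <artifactId>maven-site-plugin</artifactId>
72 |           <version>3.7.1</version>
73 |         </plugin>
74 |         <plugin>
75 |           <artifactId>maven-project-info-reports-plugin</artifactId>
76 |           <version>3.0.0</version>
77 |         </plugin>
78 |       </plugins>
79 |     </pluginManagement>
80 |   </build>
81 | </project>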

82 |

--------------------------------------------------------------------------------

/backend/java/memgraph-streaming/src/main/java/memgraph/App.java:

--------------------------------------------------------------------------------

1 | package memgraph;

2 |

3 | import java.time.Duration;

4 | import java.util.Arrays;

5 | import java.util.Properties;

6 | import org.apache.kafka.clients.consumer.ConsumerRecords;

7 | import org.apache.kafka.clients.consumer.KafkaConsumer;

8 | import org.apache.kafka.common.serialization.LongDeserializer;

9 | import org.apache.kafka.common.serialization.StringDeserializer;

10 | import org.neo4j.driver.Driver;

11 | import org.neo4j.driver.GraphDatabase;

12 | import org.neo4j.driver.Result;

13 | import org.neo4j.driver.Session;

14 | import org.neo4j.driver.Transaction;

15 | import org.neo4j.driver.TransactionWork;

16 |

17 | public class App {

18 | public static void main(String[] args) throws Exception {

19 |

20 | try (Driver driver = GraphDatabase.driver("bolt://localhost:7687");

21 | Session session = driver.session();

22 | KafkaConsumer<Long, String> consumer = getKafkaConsumer()) {

23 | consumer.subscribe(Arrays.asList("topic"));

24 | while (true) {

25 | ConsumerRecords<Long, String> records =

26 | consumer.poll(Duration.ofMillis(100));

27 |

28 | if (records.count() > 0) {

29 | records.forEach(record -> {

30 | String[] command = record.value().split("\\|");

31 | session.writeTransaction(new TransactionWork<String>() {

32 | @Override

33 | public String execute(Transaction tx) {

34 | switch (command[0]) {

35 | case "node":

36 | Result result = tx.run(String.format(

37 | "MATCH (node:%s %s) RETURN node.neighbors AS neighbors",

38 | command[1], command[2]));

39 | System.out.printf("Node (node:%s %s) has %d neighbors.\n",

40 | command[1], command[2],

41 | result.single().get(0).asInt());

42 | break;

43 | }

44 | System.out.printf("%s\n", record.value());

45 | return null;

46 | }

47 | });

48 | });

49 | }

50 | }

51 | }

52 | }

53 |

54 | public static KafkaConsumer<Long, String> getKafkaConsumer() {

55 | Properties props = new Properties();

56 | props.put("bootstrap.servers", "localhost:9092");

57 | props.put("key.deserializer", LongDeserializer.class.getName());

58 | props.put("value.deserializer", StringDeserializer.class.getName());

59 | props.put("group.id", "MemgraphStreaming");

60 | return new KafkaConsumer<>(props);

61 | }

62 | }

63 |

--------------------------------------------------------------------------------
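The backends above place the label from the message directly into the query text with string formatting. One possible precaution, sketched here in Python, is to check the label before formatting. The helper `checked_label` and the rule that labels are plain identifiers are assumptions for illustration; neither comes from this repository or from the message format in `./kafka`.

```python
import re

# Assumption: labels are plain identifiers (letters, digits, underscores).
LABEL_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def checked_label(label):
    """Return the label unchanged if it is a plain identifier, else raise ValueError."""
    if LABEL_PATTERN.fullmatch(label) is None:
        raise ValueError(f"unexpected label: {label!r}")
    return label


print(checked_label("Person"))  # Person
```

A label such as `Person) DETACH DELETE (n` would be rejected with a `ValueError` instead of reaching the database as part of the query.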

/backend/java/memgraph-streaming/src/test/java/memgraph/AppTest.java:

--------------------------------------------------------------------------------

1 | package memgraph;

2 |

3 | import static org.junit.Assert.assertTrue;

4 |

5 | import org.junit.Test;

6 |

7 | /**

8 | * Unit test for simple App.

9 | */

10 | public class AppTest {

11 | /**

12 | * Rigorous Test :-)

13 | */

14 | @Test

15 | public void shouldAnswerWithTrue() {

16 | assertTrue(true);

17 | }

18 | }

19 |

--------------------------------------------------------------------------------

/backend/node/.eslintrc.js:

--------------------------------------------------------------------------------

1 | module.exports = {

2 | 'root': true,

3 | 'env': {

4 | 'es6': true,

5 | 'node': true,

6 | },

7 | 'extends': [

8 | 'eslint:recommended',

9 | 'google',

10 | 'plugin:prettier/recommended',

11 | 'plugin:jest/recommended',

12 | ],

13 | 'globals': {

14 | 'Atomics': 'readonly',

15 | 'SharedArrayBuffer': 'readonly',

16 | },

17 | 'plugins': [

18 | 'jest',

19 | 'prettier',

20 | ],

21 | 'parserOptions': {

22 | 'ecmaVersion': 8

23 | },

24 | 'rules': {

25 | 'prettier/prettier': [

26 | 'error',

27 | {

28 | 'singleQuote': true,

29 | 'trailingComma': 'all',

30 | 'printWidth': 120,

31 | },

32 | ],

33 | 'no-console': ['warn', { allow: ['warn'] }],

34 | 'max-len': ['error', {'code': 120, 'ignoreUrls': true, 'ignoreStrings': true}],

35 | 'eqeqeq': 'warn',

36 | 'new-cap': 'off',

37 | 'require-jsdoc': 'off',

38 | 'valid-jsdoc': 'off',

39 | 'lines-between-class-members': ['error', 'always', { exceptAfterSingleLine: true }],

40 | 'padding-line-between-statements': [

41 | 'error',

42 | { 'blankLine': 'always', 'prev': ['function'], 'next':['function'] },

43 | { 'blankLine': 'always', 'prev': 'multiline-block-like', 'next':'multiline-block-like' },

44 | { 'blankLine': 'always', 'prev': '*', 'next':'export' },

45 | { 'blankLine': 'always', 'prev': 'import', 'next':['const', 'let', 'var','class','block-like'] },

46 | ],

47 | 'brace-style': ['error', '1tbs'],

48 | 'curly': ['error', 'all'],

49 | 'jest/no-standalone-expect': 'off',

50 | 'jest/expect-expect': 'off',

51 | 'jest/no-commented-out-tests': 'off',

52 | 'jest/no-done-callback': 'off',

53 | },

54 | };

55 |

--------------------------------------------------------------------------------

/backend/node/.gitignore:

--------------------------------------------------------------------------------

1 | node_modules/

2 |

--------------------------------------------------------------------------------

/backend/node/README.md:

--------------------------------------------------------------------------------

1 | # Node.js Minimal Streaming App

2 |

3 | ## How it works

4 |

5 | 1. A [kafka](https://kafka.apache.org) consumer is started and messages are

6 | accepted in a [special format](../../kafka).

7 | 2. A memgraph client connects to [Memgraph](https://memgraph.com/) on port

8 | 7687.

9 | 3. The consumer script parses the messages and inserts their data into

10 | Memgraph using [Cypher](https://opencypher.org/) via the [Bolt

11 | protocol](https://en.wikipedia.org/wiki/Bolt_\(network_protocol\)).

12 |

13 | ## How to run

14 |

15 | 1. Install dependencies `npm install`

16 | 2. Run kafka on port 9092, [instructions](../../kafka)

17 | 3. Run memgraph on port 7687, [instructions](../../memgraph)

18 | 4. Run the app with `npm run backend`

19 | 5. Run a producer, [instructions](../../kafka/producer)

20 |

--------------------------------------------------------------------------------

/backend/node/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "memgraph-streaming-app",

3 | "version": "0.1.0",

4 | "description": "",

5 | "main": "index.js",

6 | "scripts": {

7 | "backend": "node src/index.js",

8 | "eslintjs": "npx eslint -c .eslintrc.js './{src,test}/**/*.js'",

9 | "eslintjs:fix": "npx eslint -c .eslintrc.js --fix './{src,test}/**/*.js'",

10 | "test": "npx jest"

11 | },

12 | "author": "",

13 | "license": "Apache-2.0",

14 | "devDependencies": {

15 | "eslint": "^7.29.0",

16 | "eslint-config-google": "^0.14.0",

17 | "eslint-config-prettier": "^8.3.0",

18 | "eslint-plugin-jest": "^24.3.6",

19 | "eslint-plugin-prettier": "^3.4.0",

20 | "jest": "^27.0.5",

21 | "prettier": "^2.3.1"

22 | },

23 | "dependencies": {

24 | "express": "^4.17.1",

25 | "kafka-node": "^5.0.0",

26 | "neo4j-driver": "^4.3.1"

27 | }

28 | }

29 |

--------------------------------------------------------------------------------

/backend/node/src/index.js:

--------------------------------------------------------------------------------

1 | /**

2 | * This is a generic Kafka Consumer + an example express app.

3 | */

4 | const express = require('express');

5 | const app = express();

6 | const port = 3000;

7 |

8 | const kafka = require('kafka-node');

9 | let kafkaCounter = 0;

10 |

11 | const memgraph = require('neo4j-driver');

12 | const driver = memgraph.driver('bolt://localhost:7687', memgraph.auth.basic('', ''));

13 | process.on('SIGINT', async () => {

14 | await driver.close();

15 | process.exit(0);

16 | });

17 |

18 | function createConsumer(onData) {

19 | return new Promise((resolve, reject) => {

20 | const client = new kafka.KafkaClient({ kafkaHost: 'localhost:9092' });

21 | const consumer = new kafka.Consumer(client, [{ topic: 'topic' }]);

22 | consumer.on('message', onData);

23 | resolve(consumer);

24 | });

25 | }

26 |

27 | /**

28 | * Adds catch to the wrapped function.

29 | * Convenient to use when the error just has to be console logged.

30 | */

31 | const consoleErrorWrap =

32 | (fn) =>

33 | (...args) =>

34 | fn(...args).catch((err) => {

35 | console.error(`${err}`);

36 | });

37 |

38 | async function runConsumer() {

39 | await createConsumer(

40 | consoleErrorWrap(async ({ key, value, partition, offset }) => {

41 | console.log(`Consumed record with: \

42 | \n - key ${key} \

43 | \n - value ${value} \

44 | \n - partition ${partition} \

45 | \n - offset ${offset}. \

46 | \nUpdated total count to ${++kafkaCounter}`);

47 | }),

48 | );

49 | }

50 |

51 | runConsumer().catch((err) => {

52 | console.error(`Something went wrong: ${err}`);

53 | process.exit(1);

54 | });

55 |

56 | /**

57 | * Forwards rejections from the wrapped async handler to Express's error

58 | * handling by calling `next` (the third callback argument, `args[2]`).

59 | */

60 | const expressErrorWrap =

61 | (fn) =>

62 | (...args) =>

63 | fn(...args).catch(args[2]);

64 |

65 | app.get(

66 | '/',

67 | expressErrorWrap(async (req, res) => {

68 | const session = driver.session();

69 | const allNodes = await session.run(`MATCH (n) RETURN n;`);

70 | const allNodesStr = allNodes.records

71 | .map((r) => {

72 | const node = r.get('n');

73 | return node.properties['id'] + ': ' + node.properties['neighbors'];

74 | })

75 | .join(', ');

76 | res.send(`Hello streaming data sources! \

77 | I've received ${kafkaCounter} Kafka messages so far :D \

78 | All neighbors count: ${allNodesStr}`);

79 | }),

80 | );

81 |

82 | app.listen(port, () => {

83 | console.log(`Minimal streaming app listening at http://localhost:${port}`);

84 | console.log('Ready to roll!');

85 | });

86 |

--------------------------------------------------------------------------------

/backend/python/.gitignore:

--------------------------------------------------------------------------------

1 | info.log

2 |

--------------------------------------------------------------------------------

/backend/python/README.md:

--------------------------------------------------------------------------------

1 | ## How it works

2 | 1. A [Kafka](https://kafka.apache.org) consumer is started and accepts messages in a [special format](../../kafka).

3 | 2. A Memgraph client connects to [Memgraph](https://memgraph.com/) on port 7687.

4 | 3. The consumer script parses each message and, for `node` messages, queries Memgraph for the node's neighbor count using [Cypher](https://opencypher.org/) via the [Bolt protocol](https://en.wikipedia.org/wiki/Bolt_\(network_protocol\)).
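The parsing in step 3 can be sketched without a running Kafka or Memgraph instance. This is a minimal illustration, assuming the pipe-delimited `command|label|unique_fields|fields` layout that `app.py` unpacks; `NodeMessage`, `parse_node_message`, and `neighbors_query` are hypothetical names, and the sample values are made up.

```python
import csv
from typing import NamedTuple


class NodeMessage(NamedTuple):
    """Fields of a `node` message (hypothetical container for this sketch)."""

    label: str
    unique_fields: str
    fields: str


def parse_node_message(message: str) -> NodeMessage:
    """Split a pipe-delimited message and check that it is a `node` command."""
    command, *payload = next(csv.reader([message], delimiter="|"))
    if command != "node":
        raise ValueError(f"Command `{command}` is not a node command.")
    # Unpacking raises ValueError if the payload does not have three parts.
    label, unique_fields, fields = payload
    return NodeMessage(label, unique_fields, fields)


def neighbors_query(node: NodeMessage) -> str:
    """Build the Cypher query that reads the node's `neighbors` property."""
    return f"MATCH (a:{node.label} {node.unique_fields}) RETURN a.neighbors AS n"


node = parse_node_message("node|Person|{id: 1}|{age: 30}")
print(neighbors_query(node))
```

The query string is built by interpolating message fields, just as `app.py` does, so it is only suitable for trusted input.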

5 |

6 | ## How to run

7 |

8 | 1. Install requirements with `pip install -r requirements.txt`

9 | 2. Run Kafka on port 9092 (see the [instructions](../../kafka))

10 | 3. Run Memgraph on port 7687 (see the [instructions](../../memgraph))

11 | 4. Run the app with `python app.py`

12 | 5. Run a producer (see the [instructions](../../kafka/producer))
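For a quick manual check of the last step, a message in the pipe-delimited layout that `app.py` parses could be built as shown here. This is only a sketch: `encode_message` is a hypothetical helper, the sample values are invented, and the authoritative message format is the one described in the [kafka](../../kafka) directory.

```python
import csv
import io


def encode_message(command: str, *fields: str) -> bytes:
    """Join the command and its fields with `|` and encode the result as UTF-8."""
    buffer = io.StringIO()
    csv.writer(buffer, delimiter="|").writerow([command, *fields])
    # csv.writer appends a line terminator; the consumer expects a bare record.
    return buffer.getvalue().rstrip("\r\n").encode("utf-8")


payload = encode_message("node", "Person", "{id: 1}", "{age: 30}")
print(payload)
```

The resulting bytes could then be passed as the `value` argument of kafka-python's `KafkaProducer.send` for the topic named `topic`, which is the topic `app.py` subscribes to.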

13 |

--------------------------------------------------------------------------------

/backend/python/app.py:

--------------------------------------------------------------------------------

1 | """A generic Kafka consumer with example Python code showing how to query

2 | Memgraph.

3 | """

4 | import logging

5 | import csv

6 |

7 | from gqlalchemy import Memgraph

8 | from kafka import KafkaConsumer

9 |

10 |

11 | def process(message: str, db: Memgraph):

12 | """Parses a message and, for `node` commands, prints the neighbor count."""

13 | logging.info(f"Received `{message}`")

14 | payload = next(csv.reader([message], delimiter="|"))

15 | command, *payload = payload

16 |

17 | if command == "node":

18 | label, unique_fields, fields = payload

19 | neighbors = next(

20 | db.execute_and_fetch(

21 | f"match (a:{label} {unique_fields}) return a.neighbors as n"

22 | )

23 | )["n"]

24 | if neighbors is None:

25 | print(

26 | "The neighbors variable isn't set. "

27 | "Memgraph triggers are probably not set up properly."

28 | )

29 | else:

30 | print(f"(node:{label} {unique_fields}) has {neighbors} neighbors.")

31 | elif command == "edge":

32 | pass

33 | else:

34 | raise ValueError(f"Command `{command}` not recognized.")

35 |

36 |

37 | if __name__ == "__main__":

38 | logging.basicConfig(

39 | level=logging.INFO,

40 | format="%(levelname)s: %(asctime)s %(message)s",

41 | )

42 |

43 | db = Memgraph(host="localhost", port=7687)

44 | db.drop_database()

45 |

46 | consumer = KafkaConsumer("topic", bootstrap_servers=["localhost:9092"])

47 | try:

48 | for message in consumer:

49 | message = message.value.decode("utf-8")

50 | try:

51 | process(message, db)

52 | except Exception as error:

53 | logging.error(f"`{message}`, {repr(error)}")

54 | continue

55 | except KeyboardInterrupt:

56 | pass

57 |

--------------------------------------------------------------------------------

/backend/python/requirements.txt:

--------------------------------------------------------------------------------

1 | kafka-python

2 | gqlalchemy

3 |

--------------------------------------------------------------------------------

/backend/rust/.gitignore:

--------------------------------------------------------------------------------

1 | target/

2 | tarpaulin-report.html

3 |

--------------------------------------------------------------------------------

/backend/rust/Cargo.lock:

--------------------------------------------------------------------------------

1 | # This file is automatically @generated by Cargo.

2 | # It is not intended for manual editing.

3 | version = 3

4 |

5 | [[package]]

6 | name = "aho-corasick"

7 | version = "0.7.18"

8 | source = "registry+https://github.com/rust-lang/crates.io-index"

9 | checksum = "1e37cfd5e7657ada45f742d6e99ca5788580b5c529dc78faf11ece6dc702656f"

10 | dependencies = [

11 | "memchr",

12 | ]

13 |

14 | [[package]]

15 | name = "ansi_term"

16 | version = "0.11.0"

17 | source = "registry+https://github.com/rust-lang/crates.io-index"

18 | checksum = "ee49baf6cb617b853aa8d93bf420db2383fab46d314482ca2803b40d5fde979b"

19 | dependencies = [

20 | "winapi",

21 | ]

22 |

23 | [[package]]

24 | name = "atty"

25 | version = "0.2.14"

26 | source = "registry+https://github.com/rust-lang/crates.io-index"

27 | checksum = "d9b39be18770d11421cdb1b9947a45dd3f37e93092cbf377614828a319d5fee8"

28 | dependencies = [

29 | "hermit-abi",

30 | "libc",

31 | "winapi",

32 | ]

33 |

34 | [[package]]

35 | name = "autocfg"

36 | version = "1.0.1"

37 | source = "registry+https://github.com/rust-lang/crates.io-index"

38 | checksum = "cdb031dd78e28731d87d56cc8ffef4a8f36ca26c38fe2de700543e627f8a464a"

39 |

40 | [[package]]

41 | name = "bindgen"

42 | version = "0.58.1"

43 | source = "registry+https://github.com/rust-lang/crates.io-index"

44 | checksum = "0f8523b410d7187a43085e7e064416ea32ded16bd0a4e6fc025e21616d01258f"

45 | dependencies = [

46 | "bitflags",

47 | "cexpr",

48 | "clang-sys",

49 | "clap",

50 | "env_logger",

51 | "lazy_static",

52 | "lazycell",

53 | "log",

54 | "peeking_take_while",

55 | "proc-macro2",

56 | "quote",

57 | "regex",

58 | "rustc-hash",

59 | "shlex",

60 | "which",

61 | ]

62 |

63 | [[package]]

64 | name = "bitflags"

65 | version = "1.2.1"

66 | source = "registry+https://github.com/rust-lang/crates.io-index"

67 | checksum = "cf1de2fe8c75bc145a2f577add951f8134889b4795d47466a54a5c846d691693"

68 |

69 | [[package]]

70 | name = "bstr"

71 | version = "0.2.16"

72 | source = "registry+https://github.com/rust-lang/crates.io-index"

73 | checksum = "90682c8d613ad3373e66de8c6411e0ae2ab2571e879d2efbf73558cc66f21279"

74 | dependencies = [

75 | "lazy_static",

76 | "memchr",

77 | "regex-automata",

78 | "serde",

79 | ]

80 |

81 | [[package]]

82 | name = "bytes"

83 | version = "1.0.1"

84 | source = "registry+https://github.com/rust-lang/crates.io-index"

85 | checksum = "b700ce4376041dcd0a327fd0097c41095743c4c8af8887265942faf1100bd040"

86 |

87 | [[package]]

88 | name = "cc"

89 | version = "1.0.68"

90 | source = "registry+https://github.com/rust-lang/crates.io-index"

91 | checksum = "4a72c244c1ff497a746a7e1fb3d14bd08420ecda70c8f25c7112f2781652d787"

92 |

93 | [[package]]

94 | name = "cexpr"

95 | version = "0.4.0"

96 | source = "registry+https://github.com/rust-lang/crates.io-index"

97 | checksum = "f4aedb84272dbe89af497cf81375129abda4fc0a9e7c5d317498c15cc30c0d27"

98 | dependencies = [

99 | "nom",

100 | ]

101 |

102 | [[package]]

103 | name = "cfg-if"

104 | version = "1.0.0"

105 | source = "registry+https://github.com/rust-lang/crates.io-index"

106 | checksum = "baf1de4339761588bc0619e3cbc0120ee582ebb74b53b4efbf79117bd2da40fd"

107 |

108 | [[package]]

109 | name = "chrono"

110 | version = "0.4.19"

111 | source = "registry+https://github.com/rust-lang/crates.io-index"

112 | checksum = "670ad68c9088c2a963aaa298cb369688cf3f9465ce5e2d4ca10e6e0098a1ce73"

113 | dependencies = [

114 | "libc",

115 | "num-integer",

116 | "num-traits",

117 | "time",

118 | "winapi",

119 | ]

120 |

121 | [[package]]

122 | name = "clang-sys"

123 | version = "1.2.2"

124 | source = "registry+https://github.com/rust-lang/crates.io-index"

125 | checksum = "10612c0ec0e0a1ff0e97980647cb058a6e7aedb913d01d009c406b8b7d0b26ee"

126 | dependencies = [

127 | "glob",

128 | "libc",

129 | "libloading",

130 | ]

131 |

132 | [[package]]

133 | name = "clap"

134 | version = "2.33.3"

135 | source = "registry+https://github.com/rust-lang/crates.io-index"

136 | checksum = "37e58ac78573c40708d45522f0d80fa2f01cc4f9b4e2bf749807255454312002"

137 | dependencies = [

138 | "ansi_term",

139 | "atty",

140 | "bitflags",

141 | "strsim",

142 | "textwrap",

143 | "unicode-width",

144 | "vec_map",

145 | ]

146 |

147 | [[package]]

148 | name = "cmake"

149 | version = "0.1.46"

150 | source = "registry+https://github.com/rust-lang/crates.io-index"

151 | checksum = "b7b858541263efe664aead4a5209a4ae5c5d2811167d4ed4ee0944503f8d2089"

152 | dependencies = [

153 | "cc",

154 | ]

155 |

156 | [[package]]

157 | name = "csv"

158 | version = "1.1.6"

159 | source = "registry+https://github.com/rust-lang/crates.io-index"

160 | checksum = "22813a6dc45b335f9bade10bf7271dc477e81113e89eb251a0bc2a8a81c536e1"

161 | dependencies = [

162 | "bstr",

163 | "csv-core",

164 | "itoa",

165 | "ryu",

166 | "serde",

167 | ]

168 |

169 | [[package]]

170 | name = "csv-core"

171 | version = "0.1.10"

172 | source = "registry+https://github.com/rust-lang/crates.io-index"

173 | checksum = "2b2466559f260f48ad25fe6317b3c8dac77b5bdb5763ac7d9d6103530663bc90"

174 | dependencies = [

175 | "memchr",

176 | ]

177 |

178 | [[package]]

179 | name = "derivative"

180 | version = "2.2.0"

181 | source = "registry+https://github.com/rust-lang/crates.io-index"

182 | checksum = "fcc3dd5e9e9c0b295d6e1e4d811fb6f157d5ffd784b8d202fc62eac8035a770b"

183 | dependencies = [

184 | "proc-macro2",

185 | "quote",

186 | "syn",

187 | ]

188 |

189 | [[package]]

190 | name = "env_logger"

191 | version = "0.8.4"

192 | source = "registry+https://github.com/rust-lang/crates.io-index"

193 | checksum = "a19187fea3ac7e84da7dacf48de0c45d63c6a76f9490dae389aead16c243fce3"

194 | dependencies = [

195 | "atty",

196 | "humantime",

197 | "log",

198 | "regex",

199 | "termcolor",

200 | ]

201 |

202 | [[package]]

203 | name = "futures"

204 | version = "0.3.15"

205 | source = "registry+https://github.com/rust-lang/crates.io-index"

206 | checksum = "0e7e43a803dae2fa37c1f6a8fe121e1f7bf9548b4dfc0522a42f34145dadfc27"

207 | dependencies = [

208 | "futures-channel",

209 | "futures-core",

210 | "futures-executor",

211 | "futures-io",

212 | "futures-sink",

213 | "futures-task",

214 | "futures-util",

215 | ]

216 |

217 | [[package]]

218 | name = "futures-channel"

219 | version = "0.3.15"

220 | source = "registry+https://github.com/rust-lang/crates.io-index"

221 | checksum = "e682a68b29a882df0545c143dc3646daefe80ba479bcdede94d5a703de2871e2"

222 | dependencies = [

223 | "futures-core",

224 | "futures-sink",

225 | ]

226 |

227 | [[package]]

228 | name = "futures-core"

229 | version = "0.3.15"

230 | source = "registry+https://github.com/rust-lang/crates.io-index"

231 | checksum = "0402f765d8a89a26043b889b26ce3c4679d268fa6bb22cd7c6aad98340e179d1"

232 |

233 | [[package]]

234 | name = "futures-executor"

235 | version = "0.3.15"

236 | source = "registry+https://github.com/rust-lang/crates.io-index"

237 | checksum = "badaa6a909fac9e7236d0620a2f57f7664640c56575b71a7552fbd68deafab79"

238 | dependencies = [

239 | "futures-core",

240 | "futures-task",

241 | "futures-util",

242 | ]

243 |

244 | [[package]]

245 | name = "futures-io"

246 | version = "0.3.15"

247 | source = "registry+https://github.com/rust-lang/crates.io-index"

248 | checksum = "acc499defb3b348f8d8f3f66415835a9131856ff7714bf10dadfc4ec4bdb29a1"

249 |

250 | [[package]]

251 | name = "futures-macro"

252 | version = "0.3.15"

253 | source = "registry+https://github.com/rust-lang/crates.io-index"

254 | checksum = "a4c40298486cdf52cc00cd6d6987892ba502c7656a16a4192a9992b1ccedd121"

255 | dependencies = [

256 | "autocfg",

257 | "proc-macro-hack",

258 | "proc-macro2",

259 | "quote",

260 | "syn",

261 | ]

262 |

263 | [[package]]

264 | name = "futures-sink"

265 | version = "0.3.15"

266 | source = "registry+https://github.com/rust-lang/crates.io-index"

267 | checksum = "a57bead0ceff0d6dde8f465ecd96c9338121bb7717d3e7b108059531870c4282"

268 |

269 | [[package]]

270 | name = "futures-task"

271 | version = "0.3.15"

272 | source = "registry+https://github.com/rust-lang/crates.io-index"

273 | checksum = "8a16bef9fc1a4dddb5bee51c989e3fbba26569cbb0e31f5b303c184e3dd33dae"

274 |

275 | [[package]]

276 | name = "futures-util"

277 | version = "0.3.15"

278 | source = "registry+https://github.com/rust-lang/crates.io-index"

279 | checksum = "feb5c238d27e2bf94ffdfd27b2c29e3df4a68c4193bb6427384259e2bf191967"

280 | dependencies = [

281 | "autocfg",

282 | "futures-channel",

283 | "futures-core",

284 | "futures-io",

285 | "futures-macro",

286 | "futures-sink",

287 | "futures-task",

288 | "memchr",

289 | "pin-project-lite",

290 | "pin-utils",

291 | "proc-macro-hack",

292 | "proc-macro-nested",

293 | "slab",

294 | ]

295 |

296 | [[package]]

297 | name = "glob"

298 | version = "0.3.0"

299 | source = "registry+https://github.com/rust-lang/crates.io-index"

300 | checksum = "9b919933a397b79c37e33b77bb2aa3dc8eb6e165ad809e58ff75bc7db2e34574"

301 |

302 | [[package]]

303 | name = "hermit-abi"

304 | version = "0.1.19"

305 | source = "registry+https://github.com/rust-lang/crates.io-index"

306 | checksum = "62b467343b94ba476dcb2500d242dadbb39557df889310ac77c5d99100aaac33"

307 | dependencies = [

308 | "libc",

309 | ]

310 |

311 | [[package]]

312 | name = "humantime"

313 | version = "2.1.0"

314 | source = "registry+https://github.com/rust-lang/crates.io-index"

315 | checksum = "9a3a5bfb195931eeb336b2a7b4d761daec841b97f947d34394601737a7bba5e4"

316 |

317 | [[package]]

318 | name = "instant"

319 | version = "0.1.9"

320 | source = "registry+https://github.com/rust-lang/crates.io-index"

321 | checksum = "61124eeebbd69b8190558df225adf7e4caafce0d743919e5d6b19652314ec5ec"

322 | dependencies = [

323 | "cfg-if",

324 | ]

325 |

326 | [[package]]

327 | name = "itoa"

328 | version = "0.4.7"

329 | source = "registry+https://github.com/rust-lang/crates.io-index"

330 | checksum = "dd25036021b0de88a0aff6b850051563c6516d0bf53f8638938edbb9de732736"

331 |

332 | [[package]]

333 | name = "lazy_static"

334 | version = "1.4.0"

335 | source = "registry+https://github.com/rust-lang/crates.io-index"

336 | checksum = "e2abad23fbc42b3700f2f279844dc832adb2b2eb069b2df918f455c4e18cc646"

337 |

338 | [[package]]

339 | name = "lazycell"

340 | version = "1.3.0"

341 | source = "registry+https://github.com/rust-lang/crates.io-index"

342 | checksum = "830d08ce1d1d941e6b30645f1a0eb5643013d835ce3779a5fc208261dbe10f55"

343 |

344 | [[package]]

345 | name = "libc"

346 | version = "0.2.97"

347 | source = "registry+https://github.com/rust-lang/crates.io-index"

348 | checksum = "12b8adadd720df158f4d70dfe7ccc6adb0472d7c55ca83445f6a5ab3e36f8fb6"

349 |

350 | [[package]]

351 | name = "libloading"

352 | version = "0.7.1"

353 | source = "registry+https://github.com/rust-lang/crates.io-index"

354 | checksum = "c0cf036d15402bea3c5d4de17b3fce76b3e4a56ebc1f577be0e7a72f7c607cf0"

355 | dependencies = [

356 | "cfg-if",

357 | "winapi",

358 | ]

359 |

360 | [[package]]

361 | name = "libz-sys"

362 | version = "1.1.3"

363 | source = "registry+https://github.com/rust-lang/crates.io-index"

364 | checksum = "de5435b8549c16d423ed0c03dbaafe57cf6c3344744f1242520d59c9d8ecec66"

365 | dependencies = [

366 | "cc",

367 | "libc",

368 | "pkg-config",

369 | "vcpkg",

370 | ]

371 |

372 | [[package]]

373 | name = "lock_api"

374 | version = "0.4.4"

375 | source = "registry+https://github.com/rust-lang/crates.io-index"

376 | checksum = "0382880606dff6d15c9476c416d18690b72742aa7b605bb6dd6ec9030fbf07eb"

377 | dependencies = [

378 | "scopeguard",

379 | ]

380 |

381 | [[package]]

382 | name = "log"

383 | version = "0.4.14"

384 | source = "registry+https://github.com/rust-lang/crates.io-index"

385 | checksum = "51b9bbe6c47d51fc3e1a9b945965946b4c44142ab8792c50835a980d362c2710"

386 | dependencies = [

387 | "cfg-if",

388 | ]

389 |

390 | [[package]]

391 | name = "maplit"

392 | version = "1.0.2"

393 | source = "registry+https://github.com/rust-lang/crates.io-index"

394 | checksum = "3e2e65a1a2e43cfcb47a895c4c8b10d1f4a61097f9f254f183aee60cad9c651d"

395 |

396 | [[package]]

397 | name = "memchr"

398 | version = "2.4.0"

399 | source = "registry+https://github.com/rust-lang/crates.io-index"

400 | checksum = "b16bd47d9e329435e309c58469fe0791c2d0d1ba96ec0954152a5ae2b04387dc"

401 |

402 | [[package]]

403 | name = "mio"

404 | version = "0.7.13"

405 | source = "registry+https://github.com/rust-lang/crates.io-index"

406 | checksum = "8c2bdb6314ec10835cd3293dd268473a835c02b7b352e788be788b3c6ca6bb16"

407 | dependencies = [

408 | "libc",

409 | "log",

410 | "miow",

411 | "ntapi",

412 | "winapi",

413 | ]

414 |

415 | [[package]]

416 | name = "miow"

417 | version = "0.3.7"

418 | source = "registry+https://github.com/rust-lang/crates.io-index"

419 | checksum = "b9f1c5b025cda876f66ef43a113f91ebc9f4ccef34843000e0adf6ebbab84e21"

420 | dependencies = [

421 | "winapi",

422 | ]

423 |

424 | [[package]]

425 | name = "nom"

426 | version = "5.1.2"

427 | source = "registry+https://github.com/rust-lang/crates.io-index"

428 | checksum = "ffb4262d26ed83a1c0a33a38fe2bb15797329c85770da05e6b828ddb782627af"

429 | dependencies = [

430 | "memchr",

431 | "version_check",

432 | ]

433 |

434 | [[package]]

435 | name = "ntapi"

436 | version = "0.3.6"

437 | source = "registry+https://github.com/rust-lang/crates.io-index"

438 | checksum = "3f6bb902e437b6d86e03cce10a7e2af662292c5dfef23b65899ea3ac9354ad44"

439 | dependencies = [

440 | "winapi",

441 | ]

442 |

443 | [[package]]

444 | name = "num-integer"

445 | version = "0.1.44"

446 | source = "registry+https://github.com/rust-lang/crates.io-index"

447 | checksum = "d2cc698a63b549a70bc047073d2949cce27cd1c7b0a4a862d08a8031bc2801db"

448 | dependencies = [

449 | "autocfg",

450 | "num-traits",

451 | ]

452 |

453 | [[package]]

454 | name = "num-traits"

455 | version = "0.2.14"

456 | source = "registry+https://github.com/rust-lang/crates.io-index"

457 | checksum = "9a64b1ec5cda2586e284722486d802acf1f7dbdc623e2bfc57e65ca1cd099290"

458 | dependencies = [

459 | "autocfg",

460 | ]

461 |

462 | [[package]]

463 | name = "num_cpus"

464 | version = "1.13.0"

465 | source = "registry+https://github.com/rust-lang/crates.io-index"

466 | checksum = "05499f3756671c15885fee9034446956fff3f243d6077b91e5767df161f766b3"

467 | dependencies = [

468 | "hermit-abi",

469 | "libc",

470 | ]

471 |

472 | [[package]]

473 | name = "num_enum"

474 | version = "0.5.1"

475 | source = "registry+https://github.com/rust-lang/crates.io-index"

476 | checksum = "226b45a5c2ac4dd696ed30fa6b94b057ad909c7b7fc2e0d0808192bced894066"

477 | dependencies = [

478 | "derivative",

479 | "num_enum_derive",

480 | ]

481 |

482 | [[package]]

483 | name = "num_enum_derive"

484 | version = "0.5.1"

485 | source = "registry+https://github.com/rust-lang/crates.io-index"

486 | checksum = "1c0fd9eba1d5db0994a239e09c1be402d35622277e35468ba891aa5e3188ce7e"

487 | dependencies = [

488 | "proc-macro-crate",

489 | "proc-macro2",

490 | "quote",

491 | "syn",

492 | ]

493 |

494 | [[package]]

495 | name = "once_cell"

496 | version = "1.8.0"

497 | source = "registry+https://github.com/rust-lang/crates.io-index"

498 | checksum = "692fcb63b64b1758029e0a96ee63e049ce8c5948587f2f7208df04625e5f6b56"

499 |

500 | [[package]]

501 | name = "parking_lot"

502 | version = "0.11.1"

503 | source = "registry+https://github.com/rust-lang/crates.io-index"

504 | checksum = "6d7744ac029df22dca6284efe4e898991d28e3085c706c972bcd7da4a27a15eb"

505 | dependencies = [

506 | "instant",

507 | "lock_api",

508 | "parking_lot_core",

509 | ]

510 |

511 | [[package]]

512 | name = "parking_lot_core"

513 | version = "0.8.3"

514 | source = "registry+https://github.com/rust-lang/crates.io-index"

515 | checksum = "fa7a782938e745763fe6907fc6ba86946d72f49fe7e21de074e08128a99fb018"

516 | dependencies = [

517 | "cfg-if",

518 | "instant",

519 | "libc",

520 | "redox_syscall",

521 | "smallvec",

522 | "winapi",

523 | ]

524 |

525 | [[package]]

526 | name = "peeking_take_while"

527 | version = "0.1.2"

528 | source = "registry+https://github.com/rust-lang/crates.io-index"

529 | checksum = "19b17cddbe7ec3f8bc800887bab5e717348c95ea2ca0b1bf0837fb964dc67099"

530 |

531 | [[package]]

532 | name = "pin-project-lite"

533 | version = "0.2.7"

534 | source = "registry+https://github.com/rust-lang/crates.io-index"

535 | checksum = "8d31d11c69a6b52a174b42bdc0c30e5e11670f90788b2c471c31c1d17d449443"

536 |

537 | [[package]]

538 | name = "pin-utils"

539 | version = "0.1.0"

540 | source = "registry+https://github.com/rust-lang/crates.io-index"

541 | checksum = "8b870d8c151b6f2fb93e84a13146138f05d02ed11c7e7c54f8826aaaf7c9f184"

542 |

543 | [[package]]

544 | name = "pkg-config"

545 | version = "0.3.19"

546 | source = "registry+https://github.com/rust-lang/crates.io-index"

547 | checksum = "3831453b3449ceb48b6d9c7ad7c96d5ea673e9b470a1dc578c2ce6521230884c"

548 |

549 | [[package]]

550 | name = "proc-macro-crate"

551 | version = "0.1.5"

552 | source = "registry+https://github.com/rust-lang/crates.io-index"

553 | checksum = "1d6ea3c4595b96363c13943497db34af4460fb474a95c43f4446ad341b8c9785"

554 | dependencies = [

555 | "toml",

556 | ]

557 |

558 | [[package]]

559 | name = "proc-macro-hack"

560 | version = "0.5.19"

561 | source = "registry+https://github.com/rust-lang/crates.io-index"

562 | checksum = "dbf0c48bc1d91375ae5c3cd81e3722dff1abcf81a30960240640d223f59fe0e5"

563 |

564 | [[package]]

565 | name = "proc-macro-nested"

566 | version = "0.1.7"

567 | source = "registry+https://github.com/rust-lang/crates.io-index"

568 | checksum = "bc881b2c22681370c6a780e47af9840ef841837bc98118431d4e1868bd0c1086"

569 |

570 | [[package]]

571 | name = "proc-macro2"

572 | version = "1.0.27"

573 | source = "registry+https://github.com/rust-lang/crates.io-index"

574 | checksum = "f0d8caf72986c1a598726adc988bb5984792ef84f5ee5aa50209145ee8077038"

575 | dependencies = [

576 | "unicode-xid",

577 | ]

578 |

579 | [[package]]

580 | name = "quote"

581 | version = "1.0.9"

582 | source = "registry+https://github.com/rust-lang/crates.io-index"

583 | checksum = "c3d0b9745dc2debf507c8422de05d7226cc1f0644216dfdfead988f9b1ab32a7"

584 | dependencies = [

585 | "proc-macro2",

586 | ]

587 |

588 | [[package]]

589 | name = "rdkafka"

590 | version = "0.26.0"

591 | source = "registry+https://github.com/rust-lang/crates.io-index"

592 | checksum = "af78bc431a82ef178c4ad6db537eb9cc25715a8591d27acc30455ee7227a76f4"

593 | dependencies = [

594 | "futures",

595 | "libc",

596 | "log",

597 | "rdkafka-sys",

598 | "serde",

599 | "serde_derive",

600 | "serde_json",

601 | "slab",

602 | "tokio",

603 | ]

604 |

605 | [[package]]

606 | name = "rdkafka-sys"

607 | version = "4.0.0+1.6.1"

608 | source = "registry+https://github.com/rust-lang/crates.io-index"

609 | checksum = "54f24572851adfeb525fdc4a1d51185898e54fed4e8d8dba4fadb90c6b4f0422"

610 | dependencies = [

611 | "libc",

612 | "libz-sys",

613 | "num_enum",

614 | "pkg-config",

615 | ]

616 |

617 | [[package]]

618 | name = "redox_syscall"

619 | version = "0.2.9"

620 | source = "registry+https://github.com/rust-lang/crates.io-index"

621 | checksum = "5ab49abadf3f9e1c4bc499e8845e152ad87d2ad2d30371841171169e9d75feee"

622 | dependencies = [

623 | "bitflags",

624 | ]

625 |

626 | [[package]]

627 | name = "regex"

628 | version = "1.5.4"

629 | source = "registry+https://github.com/rust-lang/crates.io-index"

630 | checksum = "d07a8629359eb56f1e2fb1652bb04212c072a87ba68546a04065d525673ac461"

631 | dependencies = [

632 | "aho-corasick",

633 | "memchr",

634 | "regex-syntax",

635 | ]

636 |

637 | [[package]]

638 | name = "regex-automata"

639 | version = "0.1.10"

640 | source = "registry+https://github.com/rust-lang/crates.io-index"

641 | checksum = "6c230d73fb8d8c1b9c0b3135c5142a8acee3a0558fb8db5cf1cb65f8d7862132"

642 |

643 | [[package]]

644 | name = "regex-syntax"

645 | version = "0.6.25"

646 | source = "registry+https://github.com/rust-lang/crates.io-index"

647 | checksum = "f497285884f3fcff424ffc933e56d7cbca511def0c9831a7f9b5f6153e3cc89b"

648 |

649 | [[package]]

650 | name = "rsmgclient"

651 | version = "1.0.0"

652 | source = "registry+https://github.com/rust-lang/crates.io-index"

653 | checksum = "868b721984daa0a330ac19d8bae68fc76a3d470c32802810bad6b93732b9a826"

654 | dependencies = [

655 | "bindgen",

656 | "chrono",

657 | "cmake",

658 | "maplit",

659 | ]

660 |

661 | [[package]]

662 | name = "rust"

663 | version = "0.1.0"

664 | dependencies = [

665 | "chrono",

666 | "clap",

667 | "csv",

668 | "env_logger",

669 | "log",

670 | "rdkafka",

671 | "rsmgclient",

672 | "tokio",

673 | ]

674 |

675 | [[package]]

676 | name = "rustc-hash"

677 | version = "1.1.0"

678 | source = "registry+https://github.com/rust-lang/crates.io-index"

679 | checksum = "08d43f7aa6b08d49f382cde6a7982047c3426db949b1424bc4b7ec9ae12c6ce2"

680 |

681 | [[package]]

682 | name = "ryu"

683 | version = "1.0.5"

684 | source = "registry+https://github.com/rust-lang/crates.io-index"

685 | checksum = "71d301d4193d031abdd79ff7e3dd721168a9572ef3fe51a1517aba235bd8f86e"

686 |

687 | [[package]]

688 | name = "scopeguard"

689 | version = "1.1.0"

690 | source = "registry+https://github.com/rust-lang/crates.io-index"

691 | checksum = "d29ab0c6d3fc0ee92fe66e2d99f700eab17a8d57d1c1d3b748380fb20baa78cd"

692 |

693 | [[package]]

694 | name = "serde"

695 | version = "1.0.126"

696 | source = "registry+https://github.com/rust-lang/crates.io-index"

697 | checksum = "ec7505abeacaec74ae4778d9d9328fe5a5d04253220a85c4ee022239fc996d03"

698 | dependencies = [

699 | "serde_derive",

700 | ]

701 |

702 | [[package]]

703 | name = "serde_derive"

704 | version = "1.0.126"

705 | source = "registry+https://github.com/rust-lang/crates.io-index"

706 | checksum = "963a7dbc9895aeac7ac90e74f34a5d5261828f79df35cbed41e10189d3804d43"

707 | dependencies = [

708 | "proc-macro2",

709 | "quote",

710 | "syn",

711 | ]

712 |

713 | [[package]]

714 | name = "serde_json"

715 | version = "1.0.64"

716 | source = "registry+https://github.com/rust-lang/crates.io-index"

717 | checksum = "799e97dc9fdae36a5c8b8f2cae9ce2ee9fdce2058c57a93e6099d919fd982f79"

718 | dependencies = [

719 | "itoa",

720 | "ryu",

721 | "serde",

722 | ]

723 |

724 | [[package]]

725 | name = "shlex"

726 | version = "1.1.0"

727 | source = "registry+https://github.com/rust-lang/crates.io-index"

728 | checksum = "43b2853a4d09f215c24cc5489c992ce46052d359b5109343cbafbf26bc62f8a3"

729 |

730 | [[package]]

731 | name = "signal-hook-registry"

732 | version = "1.4.0"

733 | source = "registry+https://github.com/rust-lang/crates.io-index"

734 | checksum = "e51e73328dc4ac0c7ccbda3a494dfa03df1de2f46018127f60c693f2648455b0"

735 | dependencies = [

736 | "libc",

737 | ]

738 |

739 | [[package]]

740 | name = "slab"

741 | version = "0.4.3"

742 | source = "registry+https://github.com/rust-lang/crates.io-index"

743 | checksum = "f173ac3d1a7e3b28003f40de0b5ce7fe2710f9b9dc3fc38664cebee46b3b6527"

744 |

745 | [[package]]

746 | name = "smallvec"

747 | version = "1.6.1"

748 | source = "registry+https://github.com/rust-lang/crates.io-index"

749 | checksum = "fe0f37c9e8f3c5a4a66ad655a93c74daac4ad00c441533bf5c6e7990bb42604e"

750 |

751 | [[package]]

752 | name = "strsim"

753 | version = "0.8.0"

754 | source = "registry+https://github.com/rust-lang/crates.io-index"

755 | checksum = "8ea5119cdb4c55b55d432abb513a0429384878c15dde60cc77b1c99de1a95a6a"

756 |

757 | [[package]]

758 | name = "syn"

759 | version = "1.0.73"

760 | source = "registry+https://github.com/rust-lang/crates.io-index"

761 | checksum = "f71489ff30030d2ae598524f61326b902466f72a0fb1a8564c001cc63425bcc7"

762 | dependencies = [

763 | "proc-macro2",

764 | "quote",

765 | "unicode-xid",

766 | ]

767 |

768 | [[package]]

769 | name = "termcolor"

770 | version = "1.1.2"

771 | source = "registry+https://github.com/rust-lang/crates.io-index"

772 | checksum = "2dfed899f0eb03f32ee8c6a0aabdb8a7949659e3466561fc0adf54e26d88c5f4"

773 | dependencies = [

774 | "winapi-util",

775 | ]

776 |

777 | [[package]]

778 | name = "textwrap"

779 | version = "0.11.0"

780 | source = "registry+https://github.com/rust-lang/crates.io-index"

781 | checksum = "d326610f408c7a4eb6f51c37c330e496b08506c9457c9d34287ecc38809fb060"

782 | dependencies = [

783 | "unicode-width",

784 | ]

785 |

786 | [[package]]

787 | name = "time"

788 | version = "0.1.44"

789 | source = "registry+https://github.com/rust-lang/crates.io-index"

790 | checksum = "6db9e6914ab8b1ae1c260a4ae7a49b6c5611b40328a735b21862567685e73255"

791 | dependencies = [

792 | "libc",

793 | "wasi",

794 | "winapi",

795 | ]

796 |

797 | [[package]]

798 | name = "tokio"

799 | version = "1.8.0"

800 | source = "registry+https://github.com/rust-lang/crates.io-index"

801 | checksum = "570c2eb13b3ab38208130eccd41be92520388791207fde783bda7c1e8ace28d4"

802 | dependencies = [

803 | "autocfg",

804 | "bytes",

805 | "libc",

806 | "memchr",

807 | "mio",

808 | "num_cpus",

809 | "once_cell",

810 | "parking_lot",

811 | "pin-project-lite",

812 | "signal-hook-registry",

813 | "tokio-macros",

814 | "winapi",

815 | ]

816 |

817 | [[package]]

818 | name = "tokio-macros"

819 | version = "1.2.0"

820 | source = "registry+https://github.com/rust-lang/crates.io-index"

821 | checksum = "c49e3df43841dafb86046472506755d8501c5615673955f6aa17181125d13c37"

822 | dependencies = [

823 | "proc-macro2",

824 | "quote",

825 | "syn",

826 | ]

827 |

828 | [[package]]

829 | name = "toml"

830 | version = "0.5.8"

831 | source = "registry+https://github.com/rust-lang/crates.io-index"

832 | checksum = "a31142970826733df8241ef35dc040ef98c679ab14d7c3e54d827099b3acecaa"

833 | dependencies = [

834 | "serde",

835 | ]

836 |

837 | [[package]]

838 | name = "unicode-width"

839 | version = "0.1.8"

840 | source = "registry+https://github.com/rust-lang/crates.io-index"

841 | checksum = "9337591893a19b88d8d87f2cec1e73fad5cdfd10e5a6f349f498ad6ea2ffb1e3"

842 |

843 | [[package]]

844 | name = "unicode-xid"

845 | version = "0.2.2"

846 | source = "registry+https://github.com/rust-lang/crates.io-index"

847 | checksum = "8ccb82d61f80a663efe1f787a51b16b5a51e3314d6ac365b08639f52387b33f3"

848 |

849 | [[package]]

850 | name = "vcpkg"

851 | version = "0.2.15"

852 | source = "registry+https://github.com/rust-lang/crates.io-index"

853 | checksum = "accd4ea62f7bb7a82fe23066fb0957d48ef677f6eeb8215f372f52e48bb32426"

854 |

855 | [[package]]

856 | name = "vec_map"

857 | version = "0.8.2"

858 | source = "registry+https://github.com/rust-lang/crates.io-index"

859 | checksum = "f1bddf1187be692e79c5ffeab891132dfb0f236ed36a43c7ed39f1165ee20191"

860 |

861 | [[package]]

862 | name = "version_check"

863 | version = "0.9.3"

864 | source = "registry+https://github.com/rust-lang/crates.io-index"

865 | checksum = "5fecdca9a5291cc2b8dcf7dc02453fee791a280f3743cb0905f8822ae463b3fe"

866 |

867 | [[package]]

868 | name = "wasi"

869 | version = "0.10.0+wasi-snapshot-preview1"

870 | source = "registry+https://github.com/rust-lang/crates.io-index"

871 | checksum = "1a143597ca7c7793eff794def352d41792a93c481eb1042423ff7ff72ba2c31f"

872 |

873 | [[package]]

874 | name = "which"

875 | version = "3.1.1"

876 | source = "registry+https://github.com/rust-lang/crates.io-index"

877 | checksum = "d011071ae14a2f6671d0b74080ae0cd8ebf3a6f8c9589a2cd45f23126fe29724"

878 | dependencies = [

879 | "libc",

880 | ]

881 |

882 | [[package]]

883 | name = "winapi"

884 | version = "0.3.9"

885 | source = "registry+https://github.com/rust-lang/crates.io-index"

886 | checksum = "5c839a674fcd7a98952e593242ea400abe93992746761e38641405d28b00f419"

887 | dependencies = [

888 | "winapi-i686-pc-windows-gnu",

889 | "winapi-x86_64-pc-windows-gnu",

890 | ]

891 |

892 | [[package]]

893 | name = "winapi-i686-pc-windows-gnu"

894 | version = "0.4.0"

895 | source = "registry+https://github.com/rust-lang/crates.io-index"

896 | checksum = "ac3b87c63620426dd9b991e5ce0329eff545bccbbb34f3be09ff6fb6ab51b7b6"

897 |

898 | [[package]]

899 | name = "winapi-util"

900 | version = "0.1.5"

901 | source = "registry+https://github.com/rust-lang/crates.io-index"

902 | checksum = "70ec6ce85bb158151cae5e5c87f95a8e97d2c0c4b001223f33a334e3ce5de178"

903 | dependencies = [

904 | "winapi",

905 | ]

906 |

907 | [[package]]

908 | name = "winapi-x86_64-pc-windows-gnu"

909 | version = "0.4.0"

910 | source = "registry+https://github.com/rust-lang/crates.io-index"

911 | checksum = "712e227841d057c1ee1cd2fb22fa7e5a5461ae8e48fa2ca79ec42cfc1931183f"

912 |

--------------------------------------------------------------------------------

/backend/rust/Cargo.toml:

--------------------------------------------------------------------------------

1 | [package]

2 | name = "rust"

3 | version = "0.1.0"

4 | authors = ["Marko Budiselic"]

5 | edition = "2018"

6 |

7 | # See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

8 |

9 | [dependencies]

10 | chrono = "0.4.19"

11 | clap = "2.33.3"

12 | env_logger = "0.8.4"

13 | log = "0.4.14"

14 | rdkafka = "0.26.0"

15 | tokio = { version = "1.8.0", features = ["full"] }

16 | rsmgclient = "1.0.0"

17 | csv = "1.1.6"

18 |

--------------------------------------------------------------------------------

/backend/rust/README.md: