├── docs
│   ├── index.md
│   ├── images
│   │   ├── debezium-iceberg.png
│   │   ├── rdbms-debezium-iceberg.png
│   │   ├── rdbms-debezium-iceberg_white.png
│   │   ├── debezium-iceberg-architecture.drawio.png
│   │   └── debezium-iceberg.drawio
│   ├── contributing.md
│   ├── icebergevents.md
│   ├── python-runner.md
│   ├── faq.md
│   └── migration.md
├── .dockerignore
├── examples
│   ├── lakekeeper
│   │   ├── notebooks
│   │   │   └── .gitignore
│   │   ├── config
│   │   │   └── application.properties
│   │   └── produce_data.py
│   └── nessie
│       ├── config
│       │   └── application.properties
│       ├── produce_data.py
│       └── docker-compose.yaml
├── debezium-server-iceberg-dist
│   ├── src
│   │   └── main
│   │       └── resources
│   │           ├── distro
│   │           │   ├── jmx
│   │           │   │   ├── jmxremote.access
│   │           │   │   ├── jmxremote.password
│   │           │   │   └── enable_jmx.sh
│   │           │   ├── lib_metrics
│   │           │   │   └── enable_exporter.sh
│   │           │   ├── run.sh
│   │           │   ├── config
│   │           │   │   └── metrics.yml
│   │           │   └── debezium.py
│   │           └── assemblies
│   │               └── server-distribution.xml
│   └── README.md
├── .github
│   ├── dependabot.yml
│   └── workflows
│       ├── deploy-documentation.yml
│       ├── build.yml
│       ├── stale.yml
│       ├── codeql-analysis.yml
│       └── release.yml
├── debezium-server-iceberg-sink
│   └── src
│       ├── main
│       │   ├── resources
│       │   │   └── META-INF
│       │   │       └── beans.xml
│       │   └── java
│       │       └── io
│       │           └── debezium
│       │               └── server
│       │                   └── iceberg
│       │                       ├── mapper
│       │                       │   ├── IcebergTableMapper.java
│       │                       │   └── DefaultIcebergTableMapper.java
│       │                       ├── converter
│       │                       │   ├── SchemaConverter.java
│       │                       │   ├── AbstractVariantObject.java
│       │                       │   ├── IcebergSchemaInfo.java
│       │                       │   ├── EventConverter.java
│       │                       │   └── DateTimeUtils.java
│       │                       ├── batchsizewait
│       │                       │   ├── NoBatchSizeWait.java
│       │                       │   ├── BatchSizeWait.java
│       │                       │   └── MaxBatchSizeWait.java
│       │                       ├── GlobalConfig.java
│       │                       ├── history
│       │                       │   └── IcebergSchemaHistoryConfig.java
│       │                       ├── offset
│       │                       │   └── IcebergOffsetBackingStoreConfig.java
│       │                       ├── tableoperator
│       │                       │   ├── Operation.java
│       │                       │   ├── PartitionedAppendWriter.java
│       │                       │   ├── UnpartitionedDeltaWriter.java
│       │                       │   ├── PartitionedDeltaWriter.java
│       │                       │   ├── RecordWrapper.java
│       │                       │   ├── BaseDeltaTaskWriter.java
│       │                       │   └── IcebergTableWriterFactory.java
│       │                       ├── BatchConfig.java
│       │                       ├── storage
│       │                       │   └── BaseIcebergStorageConfig.java
│       │                       └── IcebergConfig.java
│       └── test
│           ├── resources
│           │   ├── json
│           │   │   ├── serde-unnested-delete-key-withschema.json
│           │   │   ├── serde-unnested-order-key-withschema.json
│           │   │   ├── serde-update.json
│           │   │   ├── unwrap-with-schema.json
│           │   │   ├── serde-with-array.json
│           │   │   └── serde-with-schema_geom.json
│           │   ├── mongodb
│           │   │   └── Dockerfile
│           │   └── META-INF
│           │       └── services
│           │           └── org.eclipse.microprofile.config.spi.ConfigSource
│           └── java
│               └── io
│                   └── debezium
│                       └── server
│                           └── iceberg
│                               ├── GlobalConfigProducer.java
│                               ├── IcebergConfigProducer.java
│                               ├── DebeziumConfigProducer.java
│                               ├── mapper
│                               │   ├── CustomMapper.java
│                               │   └── CustomMapperTest.java
│                               ├── converter
│                               │   ├── JsonEventConverterSchemaDataTest.java
│                               │   └── JsonEventConverterBuilderTest.java
│                               ├── testresources
│                               │   ├── CatalogJdbc.java
│                               │   ├── TestUtil.java
│                               │   ├── CatalogRest.java
│                               │   ├── SourceMongoDB.java
│                               │   ├── CatalogNessie.java
│                               │   ├── SourceMysqlDB.java
│                               │   ├── SourcePostgresqlDB.java
│                               │   └── S3Minio.java
│                               ├── tableoperator
│                               │   ├── UnpartitionedDeltaWriterTest.java
│                               │   └── BaseWriterTest.java
│                               ├── IcebergChangeConsumerJdbcCatalogTest.java
│                               ├── GlobalConfigTest.java
│                               ├── IcebergChangeConsumerRestCatalogTest.java
│                               ├── IcebergChangeConsumerConnectTest.java
│                               ├── IcebergChangeConsumerNessieCatalogTest.java
│                               ├── IcebergChangeConsumerDecimalTest.java
│                               ├── IcebergEventsChangeConsumerTest.java
│                               ├── IcebergChangeConsumerMongodbTest.java
│                               ├── IcebergChangeConsumerExcludedColumnsTest.java
│                               ├── batchsizewait
│                               │   └── MaxBatchSizeWaitTest.java
│                               ├── history
│                               │   └── IcebergSchemaHistoryTest.java
│                               ├── IcebergChangeConsumerMysqlTest.java
│                               ├── IcebergChangeConsumerTestUnwraapped.java
│                               └── IcebergChangeConsumerTemporalIsoStringTest.java
├── python
│   ├── debezium
│   │   ├── __main__.py
│   │   └── __init__.py
│   └── pyproject.toml
├── .run
│   ├── IcebergChangeConsumerTest.run.xml
│   ├── IcebergChangeConsumerTest.testSimpleUpload.run.xml
│   ├── All in debezium-server-iceberg-sink.run.xml
│   ├── package.run.xml
│   ├── dependency_tree.run.xml
│   └── clean,install.run.xml
├── mkdocs.yml
├── Dockerfile
├── README.md
└── .gitignore

/docs/index.md:

--------------------------------------------------------------------------------

1 | --8<-- "README.md"

--------------------------------------------------------------------------------

/.dockerignore:

--------------------------------------------------------------------------------

1 | Dockerfile

2 | **/target/

3 |

4 | .idea/

5 | .github/

6 | .run/

--------------------------------------------------------------------------------

/examples/lakekeeper/notebooks/.gitignore:

--------------------------------------------------------------------------------

1 | spark-warehouse

2 | .ipynb_checkpoints

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/jmx/jmxremote.access:

--------------------------------------------------------------------------------

1 | monitor readonly

2 | admin readwrite

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/jmx/jmxremote.password:

--------------------------------------------------------------------------------

1 | admin admin123

2 | monitor monitor123

--------------------------------------------------------------------------------

/docs/images/debezium-iceberg.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/debezium-server-iceberg/HEAD/docs/images/debezium-iceberg.png

--------------------------------------------------------------------------------

/docs/images/rdbms-debezium-iceberg.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/debezium-server-iceberg/HEAD/docs/images/rdbms-debezium-iceberg.png

--------------------------------------------------------------------------------

/docs/images/rdbms-debezium-iceberg_white.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/debezium-server-iceberg/HEAD/docs/images/rdbms-debezium-iceberg_white.png

--------------------------------------------------------------------------------

/docs/images/debezium-iceberg-architecture.drawio.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/debezium-server-iceberg/HEAD/docs/images/debezium-iceberg-architecture.drawio.png

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/README.md:

--------------------------------------------------------------------------------

1 | Copy of

2 | Debezium [debezium-server-dist](https://github.com/debezium/debezium/tree/master/debezium-server/debezium-server-dist)

3 | project

4 |

5 | Authors: Debezium Authors

--------------------------------------------------------------------------------

/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 | updates:

3 |   - package-ecosystem: "github-actions"

4 |     directory: "/"

5 |     schedule:

6 |       interval: "weekly"

7 |   - package-ecosystem: "maven"

8 |     directory: "/"

9 |     schedule:

10 |       interval: "weekly"

11 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/resources/META-INF/beans.xml:

--------------------------------------------------------------------------------

1 |

8 |

9 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/mapper/IcebergTableMapper.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.mapper;

2 |

3 | import org.apache.iceberg.catalog.TableIdentifier;

4 |

5 | public interface IcebergTableMapper {

6 | TableIdentifier mapDestination(String destination);

7 | }

8 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/json/serde-unnested-delete-key-withschema.json:

--------------------------------------------------------------------------------

1 | {

2 | "schema": {

3 | "type": "struct",

4 | "fields": [

5 | {

6 | "type": "int32",

7 | "optional": false,

8 | "field": "id"

9 | }

10 | ],

11 | "optional": false,

12 | "name": "testc.inventory.customers.Key"

13 | },

14 | "payload": {

15 | "id": 1004

16 | }

17 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/json/serde-unnested-order-key-withschema.json:

--------------------------------------------------------------------------------

1 | {

2 | "schema": {

3 | "type": "struct",

4 | "fields": [

5 | {

6 | "type": "int32",

7 | "optional": false,

8 | "field": "order_number"

9 | }

10 | ],

11 | "optional": false,

12 | "name": "testc.inventory.orders.Key"

13 | },

14 | "payload": {

15 | "order_number": 10004

16 | }

17 | }

--------------------------------------------------------------------------------

/docs/contributing.md:

--------------------------------------------------------------------------------

1 | # Contributing

2 |

3 | Debezium Iceberg consumer is a very young project and is looking for new maintainers. There are definitely many small and big

4 | improvements to make, ranging from documentation and new features to bug reports.

5 |

6 | Please feel free to send pull requests, report bugs, or open feature requests.

7 |

8 | ## License

9 |

10 | By contributing, you agree that your contributions will be licensed under the Apache 2.0 License.

11 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/lib_metrics/enable_exporter.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | # To enable Prometheus JMX exporter, set JMX_EXPORTER_PORT environment variable

3 |

4 | if [ -n "${JMX_EXPORTER_PORT}" ]; then

5 | JMX_EXPORTER_CONFIG=${JMX_EXPORTER_CONFIG:-"config/metrics.yml"}

6 | JMX_EXPORTER_AGENT_JAR=$(find lib_metrics -name "jmx_prometheus_javaagent-*.jar")

7 | export JAVA_OPTS="-javaagent:${JMX_EXPORTER_AGENT_JAR}=0.0.0.0:${JMX_EXPORTER_PORT}:${JMX_EXPORTER_CONFIG} ${JAVA_OPTS}"

8 | fi

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/converter/SchemaConverter.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.converter;

2 |

3 | import org.apache.iceberg.Schema;

4 | import org.apache.iceberg.SortOrder;

5 |

6 | public interface SchemaConverter {

7 | @Override

8 | int hashCode();

9 |

10 | @Override

11 | boolean equals(Object o);

12 |

13 | Schema icebergSchema(boolean withIdentifierFields);

14 |

15 | default Schema icebergSchema() {

16 | return icebergSchema(true);

17 | }

18 |

19 | SortOrder sortOrder(Schema schema);

20 | }

21 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/mongodb/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM mongo:6.0

2 |

3 | LABEL maintainer="Debezium Community"

4 |

5 | ENV REPLICA_SET_HOSTS="localhost"

6 |

7 | # Starting with MongoDB 4.4, authentication-enabled MongoDB requires a keyfile

8 | # for intra-replica-set communication

9 | RUN openssl rand -base64 756 > /etc/mongodb.keyfile &&\

10 | chown mongodb:mongodb /etc/mongodb.keyfile &&\

11 | chmod 400 /etc/mongodb.keyfile

12 |

13 | COPY start-mongodb.sh /usr/local/bin/

14 | RUN chmod +x /usr/local/bin/start-mongodb.sh

15 |

16 | ENTRYPOINT ["start-mongodb.sh"]

17 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/batchsizewait/NoBatchSizeWait.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg.batchsizewait;

10 |

11 | import jakarta.enterprise.context.Dependent;

12 | import jakarta.inject.Named;

13 |

14 | /**

15 | * No-op implementation: does not wait between batches, so the batch size is not controlled.

16 | *

17 | * @author Ismail Simsek

18 | */

19 | @Dependent

20 | @Named("NoBatchSizeWait")

21 | public class NoBatchSizeWait implements BatchSizeWait {

22 | }

23 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/batchsizewait/BatchSizeWait.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg.batchsizewait;

10 |

11 | /**

12 | * When enabled, adds waiting to the consumer to control the batch size. This turns the processing into batch processing.

13 | *

14 | * @author Ismail Simsek

15 | */

16 | public interface BatchSizeWait {

17 |

18 | default void initizalize() {

19 | }

20 |

21 | default void waitMs(Integer numRecordsProcessed, Integer processingTimeMs) throws InterruptedException {

22 | }

23 |

24 | }

25 |

--------------------------------------------------------------------------------
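To show how a strategy can plug into `waitMs(numRecordsProcessed, processingTimeMs)`, the sketch below computes a sleep time that grows as the last batch falls short of a target fraction of a maximum batch size. The class name `ThresholdBatchSizeWait`, its `maxBatchSize`, `maxWaitMs` and `targetFill` parameters, and the linear formula are hypothetical assumptions; this is not the algorithm used by `MaxBatchSizeWait`, which is not included in this listing. The interface is re-declared with only the `waitMs` signature so that the block compiles on its own.

```java
import java.util.concurrent.TimeUnit;

// Mirrors the waitMs(...) signature of the BatchSizeWait interface above.
interface BatchSizeWait {
  default void waitMs(Integer numRecordsProcessed, Integer processingTimeMs) throws InterruptedException {
  }
}

// Hypothetical strategy: the smaller the last batch relative to maxBatchSize,
// the longer the consumer sleeps, so more events can accumulate for the next batch.
class ThresholdBatchSizeWait implements BatchSizeWait {
  private final int maxBatchSize;
  private final long maxWaitMs;
  private final double targetFill; // e.g. 0.85 = aim for batches at 85% of maxBatchSize

  ThresholdBatchSizeWait(int maxBatchSize, long maxWaitMs, double targetFill) {
    this.maxBatchSize = maxBatchSize;
    this.maxWaitMs = maxWaitMs;
    this.targetFill = targetFill;
  }

  /** Sleep time in ms for a batch of the given size; 0 once the target fill is reached. */
  long computeSleepMs(int numRecordsProcessed, int processingTimeMs) {
    double fill = (double) numRecordsProcessed / maxBatchSize;
    if (fill >= targetFill) {
      return 0L;
    }
    // Scale the wait linearly with the missing fill, minus the time already spent processing.
    long wait = Math.round(maxWaitMs * (targetFill - fill) / targetFill) - processingTimeMs;
    // Clamp to the range [0, maxWaitMs].
    return Math.max(0L, Math.min(maxWaitMs, wait));
  }

  @Override
  public void waitMs(Integer numRecordsProcessed, Integer processingTimeMs) throws InterruptedException {
    long sleepMs = computeSleepMs(numRecordsProcessed, processingTimeMs);
    if (sleepMs > 0) {
      TimeUnit.MILLISECONDS.sleep(sleepMs);
    }
  }
}
```

Keeping the arithmetic in `computeSleepMs` separate from the actual sleep makes the policy testable without waiting. For example, with `maxBatchSize = 1000`, `maxWaitMs = 10000` and `targetFill = 0.85`, a batch of 425 records yields a 5000 ms wait, while a batch of 900 records yields none.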

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/GlobalConfig.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.quarkus.runtime.annotations.ConfigRoot;

4 | import io.smallrye.config.ConfigMapping;

5 | import io.smallrye.config.WithDefault;

6 | import io.smallrye.config.WithName;

7 | import io.smallrye.config.WithParentName;

8 | import org.jboss.logging.Logger;

9 |

10 | @ConfigRoot

11 | @ConfigMapping

12 | public interface GlobalConfig {

13 |

14 | @WithParentName

15 | IcebergConfig iceberg();

16 |

17 | @WithParentName

18 | DebeziumConfig debezium();

19 |

20 | @WithParentName

21 | BatchConfig batch();

22 |

23 | @WithName("quarkus.log.level")

24 | @WithDefault("INFO")

25 | Logger.Level quarkusLogLevel();

26 |

27 | }

--------------------------------------------------------------------------------

/.github/workflows/deploy-documentation.yml:

--------------------------------------------------------------------------------

1 | name: deploy-mkdocs-documentation

2 | on:

3 |   push:

4 |     branches:

5 |       - master

6 |       - main

7 |       - docs

8 | permissions:

9 |   contents: write

10 | jobs:

11 |   deploy:

12 |     runs-on: ubuntu-latest

13 |     steps:

14 |       - uses: actions/checkout@v4

15 |       - name: Configure Git Credentials

16 |         run: |

17 |           git config user.name github-actions[bot]

18 |           git config user.email 41898282+github-actions[bot]@users.noreply.github.com

19 |       - uses: actions/setup-python@v6

20 |         with:

21 |           python-version: 3.x

22 |       - run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV

23 |       - run: pip install mkdocs-material

24 |       - run: mkdocs gh-deploy --force

--------------------------------------------------------------------------------

/python/debezium/__main__.py:

--------------------------------------------------------------------------------

1 | import argparse

2 |

3 | from debezium import Debezium

4 |

5 |

6 | def main():

7 |     parser = argparse.ArgumentParser()

8 |     parser.add_argument('--debezium_dir', type=str, default=None,

9 |                         help='Directory of debezium server application')

10 |     parser.add_argument('--conf_dir', type=str, default=None,

11 |                         help='Directory of application.properties')

12 |     parser.add_argument('--java_home', type=str, default=None,

13 |                         help='JAVA_HOME directory')

14 |     _args, args = parser.parse_known_args()

15 |     ds = Debezium(debezium_dir=_args.debezium_dir, conf_dir=_args.conf_dir, java_home=_args.java_home)

16 |     ds.run(*args)

17 |

18 |

19 | if __name__ == '__main__':

20 |     main()

21 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/history/IcebergSchemaHistoryConfig.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.history;

2 |

3 | import io.debezium.config.Configuration;

4 | import io.debezium.server.iceberg.storage.BaseIcebergStorageConfig;

5 |

6 |

7 | public class IcebergSchemaHistoryConfig extends BaseIcebergStorageConfig {

8 | public IcebergSchemaHistoryConfig(Configuration config, String configuration_field_prefix) {

9 | super(config, configuration_field_prefix);

10 | }

11 |

12 | @Override

13 | public String tableName() {

14 | return this.config.getProperty("table-name", "debezium_database_history_storage");

15 | }

16 | public String getMigrateHistoryFile() {

17 | return config.getProperty("migrate-history-file", "");

18 | }

19 | }

20 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/offset/IcebergOffsetBackingStoreConfig.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.offset;

2 |

3 | import io.debezium.config.Configuration;

4 | import io.debezium.server.iceberg.storage.BaseIcebergStorageConfig;

5 |

6 |

7 | public class IcebergOffsetBackingStoreConfig extends BaseIcebergStorageConfig {

8 | public IcebergOffsetBackingStoreConfig(Configuration config, String configuration_field_prefix) {

9 | super(config, configuration_field_prefix);

10 | }

11 |

12 | @Override

13 | public String tableName() {

14 | return this.config.getProperty("table-name", "debezium_offset_storage");

15 | }

16 |

17 | public String getMigrateOffsetFile() {

18 | return this.config.getProperty("migrate-offset-file","");

19 | }

20 |

21 | }

22 |

--------------------------------------------------------------------------------
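Both storage config classes above only override `tableName()` and read their own keys with a default (`table-name`, `migrate-history-file`, `migrate-offset-file`). `BaseIcebergStorageConfig` is not included in this listing, so how it scopes `config` to `configuration_field_prefix` is not shown here. The sketch below assumes the prefix is stripped from matching keys, so the subclasses can look up short keys such as `table-name`; `PrefixedConfig` and `OffsetStorageConfigSketch` are hypothetical names, and any prefix passed to them is an illustrative assumption.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical stand-in for a prefix-scoped configuration: keeps only the keys that
// start with the given prefix and strips the prefix, so lookups use short names
// such as "table-name".
class PrefixedConfig {
  private final Map<String, String> scoped = new HashMap<>();

  PrefixedConfig(Properties all, String prefix) {
    for (String key : all.stringPropertyNames()) {
      if (key.startsWith(prefix)) {
        scoped.put(key.substring(prefix.length()), all.getProperty(key));
      }
    }
  }

  String getProperty(String key, String defaultValue) {
    return scoped.getOrDefault(key, defaultValue);
  }
}

// Mirrors IcebergOffsetBackingStoreConfig: same keys and defaults as the class above.
class OffsetStorageConfigSketch {
  private final PrefixedConfig config;

  OffsetStorageConfigSketch(Properties all, String prefix) {
    this.config = new PrefixedConfig(all, prefix);
  }

  String tableName() {
    return config.getProperty("table-name", "debezium_offset_storage");
  }

  String getMigrateOffsetFile() {
    return config.getProperty("migrate-offset-file", "");
  }
}
```

With no matching properties, `tableName()` falls back to `debezium_offset_storage`, matching the default in `IcebergOffsetBackingStoreConfig`. A history counterpart would differ only in its default table name (`debezium_database_history_storage`) and its migration key (`migrate-history-file`).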

/.run/IcebergChangeConsumerTest.run.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/json/serde-update.json:

--------------------------------------------------------------------------------

1 | {

2 | "op": "u",

3 | "ts_ms": 1465491411815,

4 | "before": {

5 | "id": 1004,

6 | "first_name": "Anne-Marie",

7 | "last_name": "Kretchmar",

8 | "email": "annek@noanswer.org"

9 | },

10 | "after": {

11 | "id": 1004,

12 | "first_name": "Anne",

13 | "last_name": "Kretchmar",

14 | "email": "annek@noanswer.org"

15 | },

16 | "source": {

17 | "version": "0.10.0.Final",

18 | "connector": "mysql",

19 | "name": "mysql-server-1",

20 | "ts_ms": 0,

21 | "snapshot": false,

22 | "db": "inventory",

23 | "table": "customers",

24 | "server_id": 0,

25 | "gtid": null,

26 | "file": "mysql-bin.000003",

27 | "pos": 154,

28 | "row": 0,

29 | "thread": 7,

30 | "query": "INSERT INTO customers (first_name, last_name, email) VALUES ('Anne', 'Kretchmar', 'annek@noanswer.org')"

31 | }

32 | }

--------------------------------------------------------------------------------

/.run/IcebergChangeConsumerTest.testSimpleUpload.run.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/.run/All in debezium-server-iceberg-sink.run.xml:

--------------------------------------------------------------------------------

1 |

8 |

9 |

10 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

--------------------------------------------------------------------------------

/python/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = ["setuptools", "setuptools-scm"]

3 | build-backend = "setuptools.build_meta"

4 |

5 | [project]

6 | name = "debezium"

7 | version = "0.1.0"

8 | authors = [

9 | { name = "Memiiso Organization" },

10 | ]

11 | description = "Debezium Server Python runner"

12 | # readme = "README.md"

13 | requires-python = ">=3.8"

14 | keywords = ["Debezium", "Replication", "Apache", "Iceberg"]

15 | license = { text = "Apache License 2.0" }

16 | classifiers = [

17 | "Development Status :: 5 - Production/Stable",

18 | "Programming Language :: Python :: 3",

19 | ]

20 | dependencies = [

21 | "pyjnius==1.6.1"

22 | ]

23 | [project.scripts]

24 | debezium = "debezium.__main__:main"

25 |

26 | [project.urls]

27 | Homepage = "https://github.com/memiiso/debezium-server-iceberg"

28 | Documentation = "https://github.com/memiiso/debezium-server-iceberg"

29 | Repository = "https://github.com/memiiso/debezium-server-iceberg"

30 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/META-INF/services/org.eclipse.microprofile.config.spi.ConfigSource:

--------------------------------------------------------------------------------

1 | #

2 | # /*

3 | # * Copyright memiiso Authors.

4 | # *

5 | # * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | # */

7 | #

8 |


33 | io.debezium.server.iceberg.TestConfigSource

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/run.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | #

3 | # /*

4 | # * Copyright memiiso Authors.

5 | # *

6 | # * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

7 | # */

8 | #

9 |

10 | LIB_PATH="lib/*"

11 |

12 | if [ "$OSTYPE" = "msys" ] || [ "$OSTYPE" = "cygwin" ]; then

13 | PATH_SEP=";"

14 | else

15 | PATH_SEP=":"

16 | fi

17 |

18 | if [ -z "$JAVA_HOME" ]; then

19 | JAVA_BINARY="java"

20 | else

21 | JAVA_BINARY="$JAVA_HOME/bin/java"

22 | fi

23 |

24 | RUNNER=$(ls debezium-server-*runner.jar)

25 |

26 | ENABLE_DEBEZIUM_SCRIPTING=${ENABLE_DEBEZIUM_SCRIPTING:-false}

27 | if [[ "${ENABLE_DEBEZIUM_SCRIPTING}" == "true" ]]; then

28 | LIB_PATH=$LIB_PATH$PATH_SEP"lib_opt/*"

29 | fi

30 |

31 | source ./jmx/enable_jmx.sh

32 | source ./lib_metrics/enable_exporter.sh

33 |

34 | exec "$JAVA_BINARY" $DEBEZIUM_OPTS $JAVA_OPTS -cp \

35 | $RUNNER$PATH_SEP$LIB_PATH io.debezium.server.Main

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/GlobalConfigProducer.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.smallrye.config.SmallRyeConfig;

4 | import jakarta.enterprise.context.ApplicationScoped;

5 | import jakarta.enterprise.inject.Produces;

6 | import jakarta.inject.Inject;

7 | import org.eclipse.microprofile.config.Config;

8 | import org.mockito.Mockito;

9 |

10 | /**

11 | * This class provides a mocked instance of GlobalConfig for testing purposes,

12 | * allowing selective overriding of configuration values while preserving the original

13 | * configuration.

14 | */

15 | public class GlobalConfigProducer {

16 | @Inject

17 | Config config;

18 |

19 | @Produces

20 | @ApplicationScoped

21 | @io.quarkus.test.Mock

22 | GlobalConfig appConfig() {

23 | GlobalConfig appConfig = config.unwrap(SmallRyeConfig.class).getConfigMapping(GlobalConfig.class);

24 | GlobalConfig appConfigSpy = Mockito.spy(appConfig);

25 | return appConfigSpy;

26 | }

27 |

28 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/IcebergConfigProducer.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.smallrye.config.SmallRyeConfig;

4 | import jakarta.enterprise.context.ApplicationScoped;

5 | import jakarta.enterprise.inject.Produces;

6 | import jakarta.inject.Inject;

7 | import org.eclipse.microprofile.config.Config;

8 | import org.mockito.Mockito;

9 |

10 | /**

11 | * This class provides a mocked instance of IcebergConfig for testing purposes,

12 | * allowing selective overriding of configuration values while preserving the original

13 | * configuration.

14 | */

15 | public class IcebergConfigProducer {

16 | @Inject

17 | Config config;

18 |

19 | @Produces

20 | @ApplicationScoped

21 | @io.quarkus.test.Mock

22 | IcebergConfig appConfig() {

23 | IcebergConfig appConfig = config.unwrap(SmallRyeConfig.class).getConfigMapping(IcebergConfig.class);

24 | IcebergConfig appConfigSpy = Mockito.spy(appConfig);

25 | return appConfigSpy;

26 | }

27 |

28 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/DebeziumConfigProducer.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.smallrye.config.SmallRyeConfig;

4 | import jakarta.enterprise.context.ApplicationScoped;

5 | import jakarta.enterprise.inject.Produces;

6 | import jakarta.inject.Inject;

7 | import org.eclipse.microprofile.config.Config;

8 | import org.mockito.Mockito;

9 |

10 | /**

11 | * This class provides a mocked instance of DebeziumConfig for testing purposes,

12 | * allowing selective overriding of configuration values while preserving the original

13 | * configuration.

14 | */

15 | public class DebeziumConfigProducer {

16 | @Inject

17 | Config config;

18 |

19 | @Produces

20 | @ApplicationScoped

21 | @io.quarkus.test.Mock

22 | DebeziumConfig appConfig() {

23 | DebeziumConfig appConfig = config.unwrap(SmallRyeConfig.class).getConfigMapping(DebeziumConfig.class);

24 | DebeziumConfig appConfigSpy = Mockito.spy(appConfig);

25 | return appConfigSpy;

26 | }

27 |

28 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/mapper/CustomMapper.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.mapper;

2 |

3 | import io.debezium.server.iceberg.GlobalConfig;

4 | import jakarta.enterprise.context.Dependent;

5 | import jakarta.inject.Inject;

6 | import jakarta.inject.Named;

7 | import org.apache.iceberg.catalog.Namespace;

8 | import org.apache.iceberg.catalog.TableIdentifier;

9 |

10 | @Named("custom-mapper")

11 | @Dependent

12 | public class CustomMapper implements IcebergTableMapper {

13 | @Inject

14 | GlobalConfig config;

15 |

16 | @Override

17 | public TableIdentifier mapDestination(String destination) {

18 | try {

19 | String[] parts = destination.split("\\.");

20 | String tableName = parts[parts.length - 1];

21 | return TableIdentifier.of(Namespace.of(config.iceberg().namespace()), "custom_mapper_" + tableName);

22 | } catch (Exception e) {

23 | System.out.println("Failed to map:" + destination);

24 | throw new RuntimeException(e);

25 | }

26 | }

27 | }

28 |

--------------------------------------------------------------------------------
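`CustomMapper` keeps only the last dot-separated segment of the Debezium destination and prefixes it with `custom_mapper_`, placing the table in the namespace from `config.iceberg().namespace()`. The dependency-free sketch below reproduces just that string handling so it can be checked without Iceberg on the classpath. `MappedTable` is a hypothetical stand-in for `TableIdentifier`, and the namespace is passed to the constructor instead of being read from `GlobalConfig`.

```java
import java.util.Objects;

// Hypothetical stand-in for org.apache.iceberg.catalog.TableIdentifier.
final class MappedTable {
  final String namespace;
  final String name;

  MappedTable(String namespace, String name) {
    this.namespace = namespace;
    this.name = name;
  }

  @Override
  public boolean equals(Object o) {
    if (!(o instanceof MappedTable)) {
      return false;
    }
    MappedTable other = (MappedTable) o;
    return namespace.equals(other.namespace) && name.equals(other.name);
  }

  @Override
  public int hashCode() {
    return Objects.hash(namespace, name);
  }

  @Override
  public String toString() {
    return namespace + "." + name;
  }
}

final class CustomMapperSketch {
  private final String namespace;

  CustomMapperSketch(String namespace) {
    this.namespace = namespace;
  }

  // Same string handling as CustomMapper.mapDestination: split on '.', keep the last part.
  MappedTable mapDestination(String destination) {
    String[] parts = destination.split("\\.");
    String tableName = parts[parts.length - 1];
    return new MappedTable(namespace, "custom_mapper_" + tableName);
  }
}
```

For a destination such as `testc.inventory.customers` (the topic prefix used in the JSON test fixtures), the table name becomes `custom_mapper_customers`; the database and topic-prefix segments are dropped.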

/mkdocs.yml:

--------------------------------------------------------------------------------

1 | site_name: Debezium Server Iceberg Consumer

2 | site_url: http://memiiso.github.io/debezium-server-iceberg

3 | repo_url: https://github.com/memiiso/debezium-server-iceberg

4 | theme:

5 |   name: material

6 |   features:

7 |     # - navigation.instant

8 |     - navigation.indexes

9 |     - navigation.tabs

10 |     # - navigation.expand

11 |     - toc.integrate

12 |     - content.code.copy

13 |     - content.tabs.link

14 | nav:

15 |   - Home: index.md

16 |   - iceberg Consumer: iceberg.md

17 |   - icebergevents Consumer: icebergevents.md

18 |   - Python Runner: python-runner.md

19 |   - Migration Guideline: migration.md

20 |   - FAQ: faq.md

21 |   - Contributing: contributing.md

22 |

23 | markdown_extensions:

24 |   - pymdownx.highlight:

25 |       anchor_linenums: true

26 |       line_spans: __span

27 |       pygments_lang_class: true

28 |   - pymdownx.inlinehilite

29 |   - pymdownx.snippets

30 |   - pymdownx.superfences

31 |   - admonition

32 |   - pymdownx.details

33 |   - abbr

34 |   - pymdownx.snippets:

35 |       base_path: [ !relative $config_dir ]

36 |       check_paths: true

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM maven:3.9.9-eclipse-temurin-21 AS builder

2 | ARG RELEASE_VERSION

3 | RUN apt-get -qq update && apt-get -qq install unzip

4 | COPY . /app

5 | WORKDIR /app

6 | RUN mvn clean package -Passembly -Dmaven.test.skip --quiet -Drevision=${RELEASE_VERSION}

7 | RUN unzip /app/debezium-server-iceberg-dist/target/debezium-server-iceberg-dist*.zip -d appdist

8 | RUN mkdir /app/appdist/debezium-server-iceberg/data && \

9 | chown -R 185 /app/appdist/debezium-server-iceberg && \

10 | chmod -R g+w,o+w /app/appdist/debezium-server-iceberg

11 |

12 | # Stage 2: Final image

13 | FROM registry.access.redhat.com/ubi8/openjdk-21

14 |

15 | ENV SERVER_HOME=/debezium

16 |

17 | USER root

18 | RUN microdnf clean all

19 |

20 | USER jboss

21 |

22 | COPY --from=builder /app/appdist/debezium-server-iceberg $SERVER_HOME

23 |

24 | # Set the working directory to the Debezium Server home directory

25 | WORKDIR $SERVER_HOME

26 |

27 | #

28 | # Expose the HTTP port and set up volumes for the configuration and data

29 | #

30 | EXPOSE 8080

31 | VOLUME ["/debezium/config","/debezium/data"]

32 |

33 | CMD ["/debezium/run.sh"]

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/tableoperator/Operation.java:

--------------------------------------------------------------------------------

1 | /*

2 | * Licensed to the Apache Software Foundation (ASF) under one

3 | * or more contributor license agreements. See the NOTICE file

4 | * distributed with this work for additional information

5 | * regarding copyright ownership. The ASF licenses this file

6 | * to you under the Apache License, Version 2.0 (the

7 | * "License"); you may not use this file except in compliance

8 | * with the License. You may obtain a copy of the License at

9 | *

10 | * http://www.apache.org/licenses/LICENSE-2.0

11 | *

12 | * Unless required by applicable law or agreed to in writing,

13 | * software distributed under the License is distributed on an

14 | * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

15 | * KIND, either express or implied. See the License for the

16 | * specific language governing permissions and limitations

17 | * under the License.

18 | */

19 | package io.debezium.server.iceberg.tableoperator;

20 |

21 | public enum Operation {

22 | INSERT,

23 | UPDATE,

24 | DELETE,

25 | READ

26 | }

27 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/jmx/enable_jmx.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | # To enable JMX functionality, export the JMX_HOST and JMX_PORT environment variables.

3 | # Modify the jmxremote.access and jmxremote.password files accordingly.

4 | if [ -n "${JMX_HOST}" ] && [ -n "${JMX_PORT}" ]; then

5 | export JAVA_OPTS="${JAVA_OPTS} -Dcom.sun.management.jmxremote.ssl=false \

6 | -Dcom.sun.management.jmxremote.port=${JMX_PORT} \

7 | -Dcom.sun.management.jmxremote.rmi.port=${JMX_PORT} \

8 | -Dcom.sun.management.jmxremote.local.only=false \

9 | -Djava.rmi.server.hostname=${JMX_HOST} \

10 | -Dcom.sun.management.jmxremote.verbose=true"

11 |

12 | if [ -f "jmx/jmxremote.access" ] && [ -f "jmx/jmxremote.password" ]; then

13 | chmod 600 jmx/jmxremote.password

14 | export JAVA_OPTS="${JAVA_OPTS} -Dcom.sun.management.jmxremote.authenticate=true \

15 | -Dcom.sun.management.jmxremote.access.file=jmx/jmxremote.access \

16 | -Dcom.sun.management.jmxremote.password.file=jmx/jmxremote.password"

17 | else

18 | export JAVA_OPTS="${JAVA_OPTS} -Dcom.sun.management.jmxremote.authenticate=false"

19 | fi

20 | fi

21 |

--------------------------------------------------------------------------------

/.run/package.run.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.run/dependency_tree.run.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/converter/JsonEventConverterSchemaDataTest.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.converter;

2 |

3 | import org.junit.jupiter.api.Test;

4 | import org.junit.jupiter.api.condition.DisabledIfEnvironmentVariable;

5 |

6 | import java.util.Set;

7 |

8 | import static org.junit.jupiter.api.Assertions.assertEquals;

9 |

10 | @DisabledIfEnvironmentVariable(named = "DEBEZIUM_FORMAT_VALUE", matches = "connect")

11 | class JsonEventConverterSchemaDataTest {

12 |

13 | @Test

14 | void testIcebergSchemaConverterDataBehaviourAndCloning() {

15 |

16 | IcebergSchemaInfo test = new IcebergSchemaInfo(5);

17 | test.identifierFieldIds().add(3);

18 | assertEquals(6, test.nextFieldId().incrementAndGet());

19 | assertEquals(Set.of(3), test.identifierFieldIds());

20 |

21 | // the copy shares nextFieldId with the original, so incrementing it on the copy also changes the original

22 | IcebergSchemaInfo copy = test.copyPreservingMetadata();

23 | assertEquals(6, test.nextFieldId().get());

24 | copy.nextFieldId().incrementAndGet();

25 | assertEquals(7, test.nextFieldId().get());

26 |

27 | // the copy also shares identifierFieldIds, so adding an id on the copy also changes the original

28 | assertEquals(Set.of(3), copy.identifierFieldIds());

29 | copy.identifierFieldIds().add(7);

30 | assertEquals(Set.of(3, 7), test.identifierFieldIds());

31 |

32 | }

33 |

34 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/BatchConfig.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.debezium.config.CommonConnectorConfig;

4 | import io.quarkus.runtime.annotations.ConfigRoot;

5 | import io.smallrye.config.ConfigMapping;

6 | import io.smallrye.config.WithDefault;

7 | import io.smallrye.config.WithName;

8 |

9 | @ConfigRoot

10 | @ConfigMapping

11 | public interface BatchConfig {

12 | @WithName("debezium.source.max.queue.size")

13 | @WithDefault(CommonConnectorConfig.DEFAULT_MAX_QUEUE_SIZE + "")

14 | int sourceMaxQueueSize();

15 |

16 | @WithName("debezium.source.max.batch.size")

17 | @WithDefault(CommonConnectorConfig.DEFAULT_MAX_BATCH_SIZE + "")

18 | int sourceMaxBatchSize();

19 |

20 | @WithName("debezium.sink.batch.batch-size-wait.max-wait-ms")

21 | @WithDefault("300000")

22 | int batchSizeWaitMaxWaitMs();

23 |

24 | @WithName("debezium.sink.batch.batch-size-wait.wait-interval-ms")

25 | @WithDefault("10000")

26 | int batchSizeWaitWaitIntervalMs();

27 |

28 | @WithName("debezium.sink.batch.batch-size-wait")

29 | @WithDefault("NoBatchSizeWait")

30 | String batchSizeWaitName();

31 |

32 | @WithName("debezium.sink.batch.concurrent-uploads")

33 | @WithDefault("1")

34 | int concurrentUploads();

35 |

36 | @WithName("debezium.sink.batch.concurrent-uploads.timeout-minutes")

37 | @WithDefault("60")

38 | int concurrentUploadsTimeoutMinutes();

39 |

40 |

41 | }

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/testresources/CatalogJdbc.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg.testresources;

10 |

11 | import io.quarkus.test.common.QuarkusTestResourceLifecycleManager;

12 |

13 | import java.util.Map;

14 | import java.util.concurrent.ConcurrentHashMap;

15 |

16 | import org.testcontainers.containers.MySQLContainer;

17 |

18 | public class CatalogJdbc implements QuarkusTestResourceLifecycleManager {

19 | public static final MySQLContainer<?> container = new MySQLContainer<>("mysql:8");

20 |

21 | @Override

22 | public Map<String, String> start() {

23 | container.start();

24 | System.out.println("Jdbc Catalog started: " + container.getJdbcUrl());

25 |

26 | Map<String, String> config = new ConcurrentHashMap<>();

27 |

28 | config.put("debezium.sink.iceberg.type", "jdbc");

29 | config.put("debezium.sink.iceberg.uri", container.getJdbcUrl());

30 | config.put("debezium.sink.iceberg.jdbc.user", container.getUsername());

31 | config.put("debezium.sink.iceberg.jdbc.password", container.getPassword());

32 | config.put("debezium.sink.iceberg.jdbc.schema-version", "V1");

33 |

34 | return config;

35 | }

36 |

37 | @Override

38 | public void stop() {

39 | container.stop();

40 | }

41 |

42 | }

43 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/tableoperator/PartitionedAppendWriter.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.tableoperator;

2 |

3 | import org.apache.iceberg.FileFormat;

4 | import org.apache.iceberg.PartitionKey;

5 | import org.apache.iceberg.PartitionSpec;

6 | import org.apache.iceberg.Schema;

7 | import org.apache.iceberg.data.InternalRecordWrapper;

8 | import org.apache.iceberg.data.Record;

9 | import org.apache.iceberg.io.FileAppenderFactory;

10 | import org.apache.iceberg.io.FileIO;

11 | import org.apache.iceberg.io.OutputFileFactory;

12 | import org.apache.iceberg.io.PartitionedWriter;

13 |

14 | public class PartitionedAppendWriter extends PartitionedWriter<Record> {

15 | private final PartitionKey partitionKey;

16 | final InternalRecordWrapper wrapper;

17 |

18 | public PartitionedAppendWriter(PartitionSpec spec, FileFormat format,

19 | FileAppenderFactory<Record> appenderFactory,

20 | OutputFileFactory fileFactory, FileIO io, long targetFileSize,

21 | Schema schema) {

22 | super(spec, format, appenderFactory, fileFactory, io, targetFileSize);

23 | this.partitionKey = new PartitionKey(spec, schema);

24 | this.wrapper = new InternalRecordWrapper(schema.asStruct());

25 | }

26 |

27 | @Override

28 | protected PartitionKey partition(Record row) {

29 | partitionKey.partition(wrapper.wrap(row));

30 | return partitionKey;

31 | }

32 | }

33 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/tableoperator/UnpartitionedDeltaWriterTest.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.tableoperator;

2 |

3 | import org.apache.iceberg.data.GenericRecord;

4 | import org.apache.iceberg.data.Record;

5 | import org.apache.iceberg.io.WriteResult;

6 | import org.junit.jupiter.api.Assertions;

7 | import org.junit.jupiter.api.Test;

8 |

9 | import java.io.IOException;

10 |

11 | class UnpartitionedDeltaWriterTest extends BaseWriterTest {

12 |

13 | @Test

14 | public void testUnpartitionedDeltaWriter() throws IOException {

15 | UnpartitionedDeltaWriter writer = new UnpartitionedDeltaWriter(table.spec(), format, appenderFactory, fileFactory,

16 | table.io(),

17 | Long.MAX_VALUE, table.schema(), identifierFieldIds, true);

18 |

19 | Record row = GenericRecord.create(SCHEMA);

20 | row.setField("id", "123");

21 | row.setField("data", "hello world!");

22 | row.setField("id2", "123");

23 | row.setField("__op", "u");

24 |

25 | writer.write(new RecordWrapper(row, Operation.UPDATE));

26 | WriteResult result = writer.complete();

27 |

28 | // in upsert mode, each write is a delete + append, so we'll have 1 data file and 1 delete file

29 | Assertions.assertEquals(1, result.dataFiles().length);

30 | Assertions.assertEquals(format, result.dataFiles()[0].format());

31 | Assertions.assertEquals(1, result.deleteFiles().length);

32 | Assertions.assertEquals(format, result.deleteFiles()[0].format());

33 | }

34 | }

35 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/tableoperator/UnpartitionedDeltaWriter.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.tableoperator;

2 |

3 | import java.io.IOException;

4 | import java.util.Set;

5 |

6 | import org.apache.iceberg.FileFormat;

7 | import org.apache.iceberg.PartitionSpec;

8 | import org.apache.iceberg.Schema;

9 | import org.apache.iceberg.data.Record;

10 | import org.apache.iceberg.io.FileAppenderFactory;

11 | import org.apache.iceberg.io.FileIO;

12 | import org.apache.iceberg.io.OutputFileFactory;

13 |

14 | class UnpartitionedDeltaWriter extends BaseDeltaTaskWriter {

15 | private final RowDataDeltaWriter writer;

16 |

17 | UnpartitionedDeltaWriter(PartitionSpec spec,

18 | FileFormat format,

19 | FileAppenderFactory<Record> appenderFactory,

20 | OutputFileFactory fileFactory,

21 | FileIO io,

22 | long targetFileSize,

23 | Schema schema,

24 | Set<Integer> identifierFieldIds,

25 | boolean keepDeletes) {

26 | super(spec, format, appenderFactory, fileFactory, io, targetFileSize, schema, identifierFieldIds, keepDeletes);

27 | this.writer = new RowDataDeltaWriter(null);

28 | }

29 |

30 | @Override

31 | RowDataDeltaWriter route(Record row) {

32 | return writer;

33 | }

34 |

35 | @Override

36 | public void close() throws IOException {

37 | writer.close();

38 | }

39 | }

40 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/main/java/io/debezium/server/iceberg/mapper/DefaultIcebergTableMapper.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg.mapper;

2 |

3 | import io.debezium.server.iceberg.GlobalConfig;

4 | import jakarta.enterprise.context.Dependent;

5 | import jakarta.inject.Inject;

6 | import jakarta.inject.Named;

7 | import org.apache.iceberg.catalog.Namespace;

8 | import org.apache.iceberg.catalog.TableIdentifier;

9 |

10 | @Named("default-mapper")

11 | @Dependent

12 | public class DefaultIcebergTableMapper implements IcebergTableMapper {

13 | @Inject

14 | GlobalConfig config;

15 |

16 | @Override

17 | public TableIdentifier mapDestination(String destination) {

18 | final String tableName = destination

19 | .replaceAll(config.iceberg().destinationRegexp().orElse(""), config.iceberg().destinationRegexpReplace().orElse(""))

20 | .replace(".", "_");

21 |

22 | if (config.iceberg().destinationUppercaseTableNames()) {

23 | return TableIdentifier.of(Namespace.of(config.iceberg().namespace()), (config.iceberg().tablePrefix().orElse("") + tableName).toUpperCase());

24 | } else if (config.iceberg().destinationLowercaseTableNames()) {

25 | return TableIdentifier.of(Namespace.of(config.iceberg().namespace()), (config.iceberg().tablePrefix().orElse("") + tableName).toLowerCase());

26 | } else {

27 | return TableIdentifier.of(Namespace.of(config.iceberg().namespace()), config.iceberg().tablePrefix().orElse("") + tableName);

28 | }

29 | }

30 | }

31 |
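`mapDestination` above derives the Iceberg table name from the Debezium destination in three steps. It first applies the optional `destinationRegexp` rewrite, then replaces dots with underscores. Finally, it prepends the optional table prefix and applies the configured case conversion, with uppercase taking precedence. The following is a dependency-free sketch of those steps. It assumes plain `Optional<String>` and `boolean` parameters in place of `GlobalConfig` and leaves out the `Namespace`/`TableIdentifier` wrapping. The class name, method name, and sample values are hypothetical.

```java
import java.util.Locale;
import java.util.Optional;

// Hypothetical, dependency-free sketch of the table-name steps in DefaultIcebergTableMapper.
public class TableNameMappingSketch {

    static String mapTableName(String destination,
                               Optional<String> regexp,
                               Optional<String> regexpReplace,
                               Optional<String> tablePrefix,
                               boolean uppercase,
                               boolean lowercase) {
        String tableName = destination
                // Step 1: optional regexp rewrite; an empty pattern leaves the name unchanged.
                .replaceAll(regexp.orElse(""), regexpReplace.orElse(""))
                // Step 2: turn "server.schema.table" style destinations into a single name.
                .replace(".", "_");

        // Step 3: the prefix is added first, so the case conversion applies to it as well.
        // Locale.ROOT is used here for locale-independent results.
        String prefixed = tablePrefix.orElse("") + tableName;
        if (uppercase) {
            return prefixed.toUpperCase(Locale.ROOT);
        } else if (lowercase) {
            return prefixed.toLowerCase(Locale.ROOT);
        }
        return prefixed;
    }

    public static void main(String[] args) {
        String destination = "testc.inventory.customers";
        // No options set: prints testc_inventory_customers
        System.out.println(mapTableName(destination, Optional.empty(), Optional.empty(),
                Optional.empty(), false, false));
        // Strip the server name, add a prefix, uppercase: prints DEBEZIUMCDC_INVENTORY_CUSTOMERS
        System.out.println(mapTableName(destination, Optional.of("^testc\\."), Optional.empty(),
                Optional.of("debeziumcdc_"), true, false));
    }
}
```

Because the prefix is concatenated before the case conversion, a mixed-case prefix such as `Pre_` becomes `pre_` when lowercase table names are enabled.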

--------------------------------------------------------------------------------

/.run/clean,install.run.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/docs/icebergevents.md:

--------------------------------------------------------------------------------

1 | # DEPRECATED

2 |

3 | This consumer is deprecated. To achieve the same results, use the `iceberg` consumer with the following settings:

4 |

5 | ```properties

6 | # Store nested data in variant fields

7 | debezium.sink.iceberg.nested-as-variant=true

8 | # Ensure event flattening is disabled (flattening is the default behavior)

9 | debezium.transforms=,

10 | ```

11 |

12 | # `icebergevents` Consumer

13 |

14 | This consumer appends all Change Data Capture (CDC) events as JSON strings to a single Iceberg table. The table is

15 | partitioned by `event_destination` and by the hour of `event_sink_timestamptz`, which keeps the data organized and speeds up queries that filter on these columns.

16 |

17 | ```properties

18 | debezium.sink.type=icebergevents

19 | debezium.sink.iceberg.catalog-name=default

20 | ```

21 |

22 | Iceberg table definition:

23 |

24 | ```java

25 | static final String TABLE_NAME = "debezium_events";

26 | static final Schema TABLE_SCHEMA = new Schema(

27 | required(1, "event_destination", Types.StringType.get()),

28 | optional(2, "event_key", Types.StringType.get()),

29 | optional(3, "event_value", Types.StringType.get()),

30 | optional(4, "event_sink_epoch_ms", Types.LongType.get()),

31 | optional(5, "event_sink_timestamptz", Types.TimestampType.withZone())

32 | );

33 | static final PartitionSpec TABLE_PARTITION = PartitionSpec.builderFor(TABLE_SCHEMA)

34 | .identity("event_destination")

35 | .hour("event_sink_timestamptz")

36 | .build();

37 | static final SortOrder TABLE_SORT_ORDER = SortOrder.builderFor(TABLE_SCHEMA)

38 | .asc("event_sink_epoch_ms", NullOrder.NULLS_LAST)

39 | .build();

40 | ```

41 |
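The `.hour("event_sink_timestamptz")` partition field groups rows by hour. Under the Iceberg table spec, the `hour` transform stores the number of whole hours since 1970-01-01 00:00 UTC. The sketch below computes that value in plain Java from an `event_sink_epoch_ms`-style millisecond timestamp, which can help when checking which partition an event should land in. It assumes floor rounding, so pre-1970 timestamps round down. It is an illustration, not code taken from the consumer, and `HourPartitionSketch` and its methods are hypothetical names.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;

// Hypothetical helper mirroring the Iceberg hour transform: whole hours since the Unix epoch (UTC).
public class HourPartitionSketch {

    static final long MILLIS_PER_HOUR = 3_600_000L;

    // Math.floorDiv rounds toward negative infinity, so timestamps before 1970
    // map to negative hour ordinals instead of being truncated toward zero.
    static long hourOrdinal(long epochMillis) {
        return Math.floorDiv(epochMillis, MILLIS_PER_HOUR);
    }

    // Converts an hour ordinal back to the start of that hour, e.g. for inspecting partitions.
    static Instant hourStart(long hourOrdinal) {
        return Instant.EPOCH.plus(hourOrdinal, ChronoUnit.HOURS);
    }

    public static void main(String[] args) {
        long sinkEpochMs = 1596309876678L; // 2020-08-01T19:24:36.678Z
        long hour = hourOrdinal(sinkEpochMs);
        // prints 443419 -> 2020-08-01T19:00:00Z
        System.out.println(hour + " -> " + hourStart(hour));
    }
}
```

All events whose sink timestamp falls between 19:00 and 19:59:59.999 UTC on that day share the hour ordinal `443419` and therefore the same `event_sink_timestamptz` partition (per `event_destination`).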

--------------------------------------------------------------------------------

/docs/python-runner.md:

--------------------------------------------------------------------------------

1 | # Python Runner for Debezium Server

2 |

3 | You can use Python to run and operate Debezium Server.

4 |

5 | For convenience, this project additionally provides Python scripts that automate the startup, shutdown, and configuration

6 | of Debezium Server.

7 | With Python, you can perform various Debezium Server operations and configure Debezium programmatically and dynamically.

8 | Example:

9 |

10 | ```commandline

11 | pip install git+https://github.com/memiiso/debezium-server-iceberg.git@master#subdirectory=python

12 | debezium

13 | # running with custom arguments

14 | debezium --debezium_dir=/my/debezium_server/dir/ --java_home=/my/java/homedir/

15 | ```

16 |

17 | ```python

18 | from debezium import Debezium

19 |

20 | d = Debezium(debezium_dir="/dbz/server/dir", java_home='/java/home/dir')

21 | java_args = []

22 | java_args.append("-Dquarkus.log.file.enable=true")

23 | java_args.append("-Dquarkus.log.file.path=/logs/dbz_logfile.log")

24 | d.run(*java_args)

25 | ```

26 |

27 | ```python

28 | import os

29 | from debezium import DebeziumRunAsyn

30 | my_custom_condition_check = True  # placeholder: replace with your own condition

31 | java_args = []

32 | # using Python, we can dynamically influence Debezium

33 | # by changing its config from within Python

34 | if my_custom_condition_check:

35 |     # Option 1: set config using a Java system property

36 |     java_args.append("-Ddebezium.source.snapshot.mode=always")

37 |     # Option 2: set config using an environment variable

38 |     os.environ["DEBEZIUM_SOURCE_SNAPSHOT_MODE"] = "always"

39 |

40 | java_args.append("-Dquarkus.log.file.enable=true")

41 | java_args.append("-Dquarkus.log.file.path=/logs/dbz_logfile.log")

42 | d = DebeziumRunAsyn(debezium_dir="/dbz/server/dir", java_home='/java/home/dir', java_args=java_args)

43 | d.run()

44 | d.join()

45 | ```

--------------------------------------------------------------------------------

/.github/workflows/build.yml:

--------------------------------------------------------------------------------

1 | name: Build Java Project

2 |

3 | on:

4 |   push:

5 |     branches: [ master, '*.*' ]

6 |     paths-ignore:

7 |       - '.github/**'

8 |       - '.idea/**'

9 |       - '.run/**'

10 |   pull_request:

11 |     branches: [ master, '*.*' ]

12 |     paths-ignore:

13 |       - '.github/**'

14 |       - '.idea/**'

15 |       - '.run/**'

16 |

17 | env:

18 |   SPARK_LOCAL_IP: 127.0.0.1

19 |

20 | jobs:

21 |   build-java-project-json-format:

22 |     name: Build-Test (Json Format)

23 |     runs-on: ubuntu-latest

24 |     env:

25 |       DEBEZIUM_FORMAT_VALUE: json

26 |       DEBEZIUM_FORMAT_KEY: json

27 |     steps:

28 |       - name: Checkout Repository

29 |         uses: actions/checkout@v4

30 |       - name: Set up Java

31 |         uses: actions/setup-java@v5

32 |         with:

33 |           distribution: 'temurin'

34 |           java-version: 21

35 |           cache: 'maven'

36 |       - name: Build with Maven

37 |         run: mvn -B --no-transfer-progress package --file pom.xml -Dsurefire.skipAfterFailureCount=1

38 |

39 |

40 |   build-java-project-connect-format:

41 |     name: Build-Test (Connect Format)

42 |     runs-on: ubuntu-latest

43 |     needs: build-java-project-json-format

44 |     env:

45 |       DEBEZIUM_FORMAT_VALUE: connect

46 |       DEBEZIUM_FORMAT_KEY: connect

47 |     steps:

48 |       - name: Checkout Repository

49 |         uses: actions/checkout@v4

50 |

51 |       - name: Set up Java

52 |         uses: actions/setup-java@v5

53 |         with:

54 |           distribution: 'temurin'

55 |           java-version: 21

56 |           cache: 'maven'

57 |       - name: Build with Maven (Connect Format)

58 |         run: mvn -B --no-transfer-progress package --file pom.xml -Dsurefire.skipAfterFailureCount=1

59 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/testresources/TestUtil.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg.testresources;

10 |

11 | import io.debezium.embedded.EmbeddedEngineChangeEvent;

12 | import io.debezium.engine.DebeziumEngine;

13 |

14 | import java.security.SecureRandom;

15 |

16 | import org.apache.kafka.connect.source.SourceRecord;

17 |

18 | public class TestUtil {

19 | static final String AB = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz";

20 | static final SecureRandom rnd = new SecureRandom();

21 |

22 |

23 | public static int randomInt(int low, int high) {

24 | return rnd.nextInt(high - low) + low;

25 | }

26 |

27 | public static String randomString(int len) {

28 | StringBuilder sb = new StringBuilder(len);

29 | for (int i = 0; i < len; i++)

30 | sb.append(AB.charAt(rnd.nextInt(AB.length())));

31 | return sb.toString();

32 | }

33 |

34 | public static DebeziumEngine.RecordCommitter getCommitter() {

35 | return new DebeziumEngine.RecordCommitter() {

36 | public synchronized void markProcessed(SourceRecord record) {

37 | }

38 |

39 | @Override

40 | public void markProcessed(Object record) {

41 | }

42 |

43 | public synchronized void markBatchFinished() {

44 | }

45 |

46 | @Override

47 | public void markProcessed(Object record, DebeziumEngine.Offsets sourceOffsets) {

48 | }

49 |

50 | @Override

51 | public DebeziumEngine.Offsets buildOffsets() {

52 | return null;

53 | }

54 | };

55 | }

56 |

57 | }

58 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/json/unwrap-with-schema.json:

--------------------------------------------------------------------------------

1 | {

2 | "schema": {

3 | "type": "struct",

4 | "fields": [

5 | {

6 | "type": "int32",

7 | "optional": false,

8 | "field": "id"

9 | },

10 | {

11 | "type": "int32",

12 | "optional": false,

13 | "name": "io.debezium.time.Date",

14 | "version": 1,

15 | "field": "order_date"

16 | },

17 | {

18 | "type": "int32",

19 | "optional": false,

20 | "field": "purchaser"

21 | },

22 | {

23 | "type": "int32",

24 | "optional": false,

25 | "field": "quantity"

26 | },

27 | {

28 | "type": "int32",

29 | "optional": false,

30 | "field": "product_id"

31 | },

32 | {

33 | "type": "string",

34 | "optional": true,

35 | "field": "__op"

36 | },

37 | {

38 | "type": "string",

39 | "optional": true,

40 | "field": "__table"

41 | },

42 | {

43 | "type": "int64",

44 | "optional": true,

45 | "field": "__lsn"

46 | },

47 | {

48 | "type": "int64",

49 | "optional": true,

50 | "field": "__source_ts_ms"

51 | },

52 | {

53 | "type": "string",

54 | "optional": true,

55 | "field": "__deleted"

56 | }

57 | ],

58 | "optional": false,

59 | "name": "testc.inventory.orders.Value"

60 | },

61 | "payload": {

62 | "id": 10003,

63 | "order_date": 16850,

64 | "purchaser": 1002,

65 | "quantity": 2,

66 | "product_id": 106,

67 | "__op": "r",

68 | "__table": "orders",

69 | "__lsn": 33832960,

70 | "__source_ts_ms": 1596309876678,

71 | "__deleted": "false"

72 | }

73 | }

74 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/testresources/CatalogRest.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg.testresources;

10 |

11 | import io.quarkus.test.common.QuarkusTestResourceLifecycleManager;

12 | import org.testcontainers.containers.GenericContainer;

13 | import org.testcontainers.containers.wait.strategy.Wait;

14 | import org.testcontainers.utility.DockerImageName;

15 |

16 | import java.util.Map;

17 | import java.util.concurrent.ConcurrentHashMap;

18 |

19 | public class CatalogRest implements QuarkusTestResourceLifecycleManager {

20 | public static final int REST_CATALOG_PORT = 8181;

21 | public static final String REST_CATALOG_IMAGE = "apache/iceberg-rest-fixture";

22 |

23 | public static final GenericContainer<?> container = new GenericContainer<>(DockerImageName.parse(REST_CATALOG_IMAGE))

24 | .withExposedPorts(REST_CATALOG_PORT)

25 | .waitingFor(Wait.forLogMessage(".*Started Server.*", 1));

26 |

27 | public static String getHostUrl() {

28 | return String.format("http://%s:%s", container.getHost(), container.getMappedPort(REST_CATALOG_PORT));

29 | }

30 |

31 | @Override

32 | public Map<String, String> start() {

33 | container.start();

34 | System.out.println("Rest Catalog started: " + getHostUrl());

35 |

36 | Map<String, String> config = new ConcurrentHashMap<>();

37 |

38 | config.put("debezium.sink.iceberg.type", "rest");

39 | config.put("debezium.sink.iceberg.uri", CatalogRest.getHostUrl());

40 |

41 | return config;

42 | }

43 |

44 | @Override

45 | public void stop() {

46 | container.stop();

47 | }

48 |

49 | }

50 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/IcebergChangeConsumerJdbcCatalogTest.java:

--------------------------------------------------------------------------------

1 | /*

2 | *

3 | * * Copyright memiiso Authors.

4 | * *

5 | * * Licensed under the Apache Software License version 2.0, available at http://www.apache.org/licenses/LICENSE-2.0

6 | *

7 | */

8 |

9 | package io.debezium.server.iceberg;

10 |

11 | import com.google.common.collect.Lists;

12 | import io.debezium.server.iceberg.testresources.CatalogJdbc;

13 | import io.debezium.server.iceberg.testresources.S3Minio;

14 | import io.debezium.server.iceberg.testresources.SourcePostgresqlDB;

15 | import io.quarkus.test.common.QuarkusTestResource;

16 | import io.quarkus.test.junit.QuarkusTest;

17 | import org.apache.iceberg.data.Record;

18 | import org.apache.iceberg.io.CloseableIterable;

19 | import org.awaitility.Awaitility;

20 | import org.junit.jupiter.api.Test;

21 |

22 | import java.time.Duration;

23 |

24 | /**

25 | * Integration test that verifies basic reading from PostgreSQL database and writing to iceberg destination.

26 | *

27 | * @author Ismail Simsek

28 | */

29 | @QuarkusTest

30 | @QuarkusTestResource(value = S3Minio.class, restrictToAnnotatedClass = true)

31 | @QuarkusTestResource(value = SourcePostgresqlDB.class, restrictToAnnotatedClass = true)

32 | @QuarkusTestResource(value = CatalogJdbc.class, restrictToAnnotatedClass = true)

33 | public class IcebergChangeConsumerJdbcCatalogTest extends BaseTest {

34 |

35 | @Test

36 | public void testSimpleUpload() {

37 | Awaitility.await().atMost(Duration.ofSeconds(120)).until(() -> {

38 | try {

39 | CloseableIterable<Record> result = getTableDataV2("testc.inventory.customers");

40 | return Lists.newArrayList(result).size() >= 3;

41 | } catch (Exception e) {

42 | return false;

43 | }

44 | });

45 | }

46 |

47 | }

48 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/resources/json/serde-with-array.json:

--------------------------------------------------------------------------------

1 | {

2 | "schema": {

3 | "type": "struct",

4 | "fields": [

5 | {

6 | "type": "string",

7 | "optional": true,

8 | "field": "name"

9 | },

10 | {

11 | "type": "array",

12 | "items": {

13 | "type": "int32",

14 | "optional": true

15 | },

16 | "optional": true,

17 | "field": "pay_by_quarter"

18 | },

19 | {

20 | "type": "array",

21 | "items": {

22 | "type": "string",

23 | "optional": true

24 | },

25 | "optional": true,

26 | "field": "schedule"

27 | },

28 | {

29 | "type": "string",

30 | "optional": true,

31 | "field": "__op"

32 | },

33 | {

34 | "type": "string",

35 | "optional": true,

36 | "field": "__table"

37 | },

38 | {

39 | "type": "int64",

40 | "optional": true,

41 | "field": "__source_ts_ms"

42 | },

43 | {

44 | "type": "string",

45 | "optional": true,

46 | "field": "__db"

47 | },

48 | {

49 | "type": "string",

50 | "optional": true,

51 | "field": "__deleted"

52 | }

53 | ],

54 | "optional": false,

55 | "name": "testc.inventory.array_data.Value"

56 | },

57 | "payload": {

58 | "name": "Bill",

59 | "pay_by_quarter": [

60 | 10000,

61 | 10001,

62 | 10002,

63 | 10003

64 | ],

65 | "schedule": [

66 | "[Ljava.lang.String;@508917a0",

67 | "[Ljava.lang.String;@7412bd2"

68 | ],

69 | "__op": "c",

70 | "__table": "array_data",

71 | "__source_ts_ms": 1638128893618,

72 | "__db": "postgres",

73 | "__deleted": "false"

74 | }

75 | }

76 |

--------------------------------------------------------------------------------

/debezium-server-iceberg-sink/src/test/java/io/debezium/server/iceberg/GlobalConfigTest.java:

--------------------------------------------------------------------------------

1 | package io.debezium.server.iceberg;

2 |

3 | import io.quarkus.test.junit.QuarkusTest;

4 | import io.quarkus.test.junit.QuarkusTestProfile;

5 | import io.quarkus.test.junit.TestProfile;

6 | import org.jboss.logging.Logger;

7 | import org.junit.jupiter.api.Assertions;

8 | import org.junit.jupiter.api.Test;

9 |

10 | import java.util.HashMap;

11 | import java.util.Map;

12 |

13 | import static io.debezium.server.iceberg.TestConfigSource.ICEBERG_CATALOG_NAME;

14 | import static io.debezium.server.iceberg.TestConfigSource.ICEBERG_WAREHOUSE_S3A;

15 |

16 | @QuarkusTest

17 | @TestProfile(GlobalConfigTest.TestProfile.class)

18 | public class GlobalConfigTest extends BaseTest {

19 |

20 | @Test

21 | void configLoadsCorrectly() {

22 | Assertions.assertEquals(ICEBERG_CATALOG_NAME, config.iceberg().catalogName());

23 | // tests run with upsert disabled

24 | Assertions.assertFalse(config.iceberg().upsert());

25 | Assertions.assertEquals(ICEBERG_WAREHOUSE_S3A, config.iceberg().warehouseLocation());

26 |

27 | Assertions.assertTrue(config.iceberg().icebergConfigs().containsKey("warehouse"));

28 | Assertions.assertTrue(config.iceberg().icebergConfigs().containsValue(ICEBERG_WAREHOUSE_S3A));

29 | Assertions.assertTrue(config.iceberg().icebergConfigs().containsKey("table-namespace"));

30 | Assertions.assertTrue(config.iceberg().icebergConfigs().containsKey("catalog-name"));

31 | Assertions.assertTrue(config.iceberg().icebergConfigs().containsValue(ICEBERG_CATALOG_NAME));

32 | Assertions.assertEquals(Logger.Level.ERROR, config.quarkusLogLevel());

33 | }

34 |

35 | public static class TestProfile implements QuarkusTestProfile {

36 | @Override

37 | public Map<String, String> getConfigOverrides() {

38 | Map<String, String> config = new HashMap<>();

39 | config.put("quarkus.log.level", "ERROR");

40 | return config;

41 | }

42 | }

43 |

44 | }

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [License: Apache 2.0](http://www.apache.org/licenses/LICENSE-2.0.html)

2 |

3 |

4 |

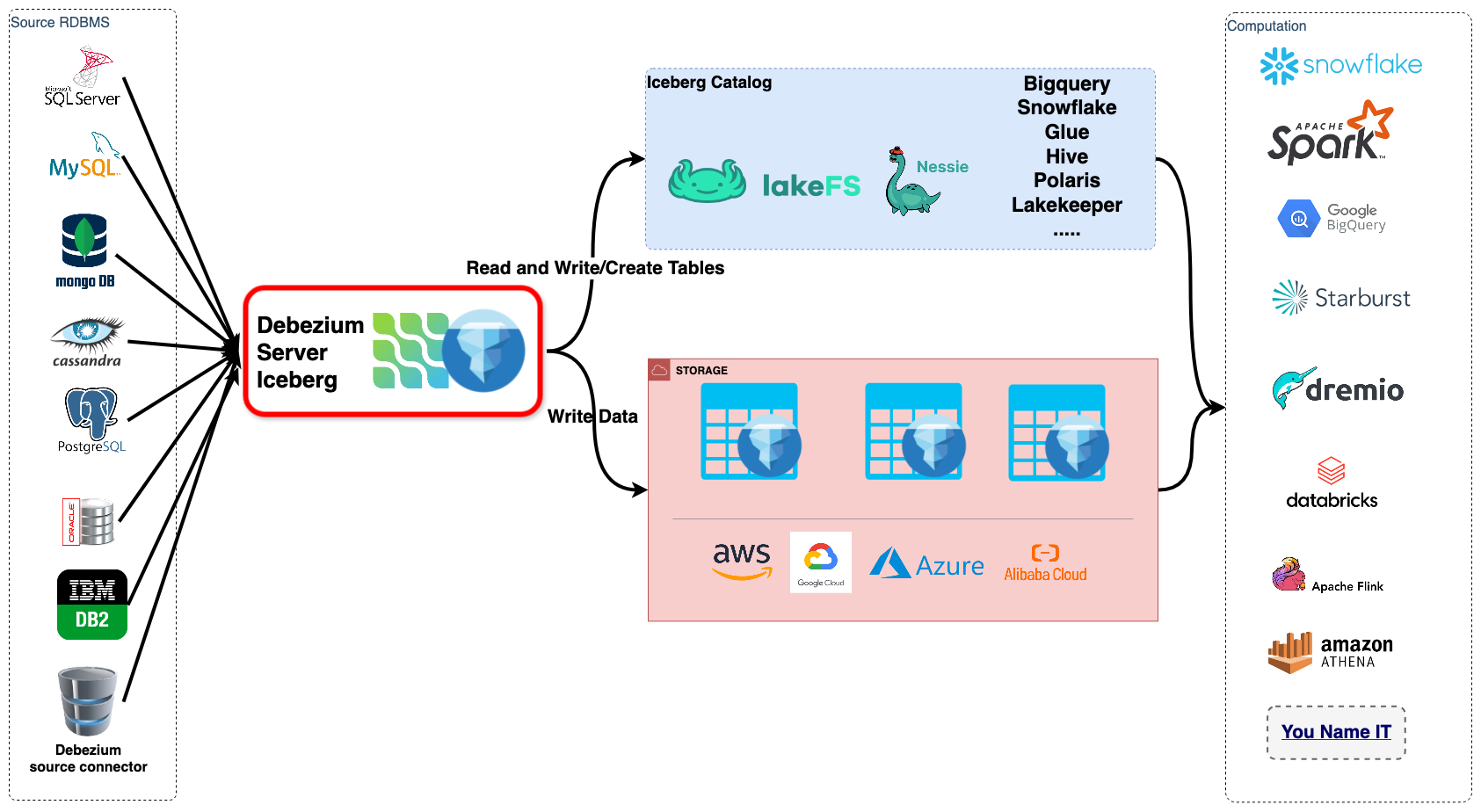

5 | # Debezium Iceberg Consumer

6 |

7 | This project implements an Iceberg consumer for

8 | [Debezium Server](https://debezium.io/documentation/reference/operations/debezium-server.html). It enables real-time

9 | replication of Change Data Capture (CDC) events from any Debezium-supported database to Iceberg tables, without requiring

10 | Spark, Kafka, or any other streaming platform in between.

11 |

12 | See the [Documentation Page](https://memiiso.github.io/debezium-server-iceberg/) for more details.

13 |

14 |

15 |

16 | ## Installation

17 | - Requirements:
18 |   - JDK 21
19 |   - Maven
20 | ### Building from source code

21 |

22 | ```bash

23 | git clone https://github.com/memiiso/debezium-server-iceberg.git

24 | cd debezium-server-iceberg

25 | mvn -Passembly -Dmaven.test.skip package

26 | # unzip and run the application

27 | unzip debezium-server-iceberg-dist/target/debezium-server-iceberg-dist*.zip -d appdist

28 | cd appdist/debezium-server-iceberg

29 | mv config/application.properties.example config/application.properties

30 | bash run.sh

31 | ```

32 |

33 | ## Contributing

34 |

35 | The Memiiso community welcomes anyone who wants to help out in any way, whether that means reporting problems,
36 | helping with documentation, or contributing code changes to fix bugs, add tests, or implement new features.
37 | See the [contributing document](docs/contributing.md) for details.

38 |

39 | ### Contributors

40 |

41 |

42 |
43 |

44 |

--------------------------------------------------------------------------------

/.github/workflows/stale.yml:

--------------------------------------------------------------------------------

1 | name: "Close Stale Issues and PRs"
2 | on:
3 |   schedule:
4 |     - cron: '0 0 * * *'
5 |
6 | permissions:
7 |   # All other permissions are set to none
8 |   issues: write
9 |   pull-requests: write
10 |
11 | jobs:
12 |   stale:
13 |     if: github.repository_owner == 'memiiso'
14 |     runs-on: ubuntu-22.04
15 |     steps:
16 |       - uses: actions/stale@v10.1.0
17 |         with:
18 |           # stale issues
19 |           stale-issue-label: 'stale'
20 |           exempt-issue-labels: 'not-stale'
21 |           days-before-issue-stale: 180
22 |           days-before-issue-close: 14
23 |           stale-issue-message: >
24 |             This issue has been automatically marked as stale because it has had no activity for 180 days.
25 |             It will be closed in the next 14 days if no further activity occurs. To
26 |             permanently prevent this issue from being considered stale, add the label 'not-stale',
27 |             but commenting on the issue is preferred when possible.
28 |           close-issue-message: >
29 |             This issue has been closed because it has not received any activity in the last 14 days
30 |             since being marked as 'stale'.
31 |           # stale PRs
32 |           stale-pr-label: 'stale'
33 |           exempt-pr-labels: 'not-stale,security'
34 |           stale-pr-message: 'This pull request has been marked as stale due to 30 days of inactivity. It will be closed in 1 week if no further activity occurs. If you think that’s incorrect or this pull request requires a review, please leave a comment. If closed, you can revive the PR at any time. Thank you for your contributions.'
35 |           close-pr-message: 'This pull request has been closed due to lack of activity. This is not a judgement on the merit of the PR in any way. It is just a way of keeping the PR queue manageable. If you think that is incorrect, or the pull request requires review, you can revive the PR at any time.'
36 |           days-before-pr-stale: 30
37 |           days-before-pr-close: 7
38 |           ascending: true
39 |           operations-per-run: 200

40 |
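The workflow's timing reduces to simple date arithmetic: an issue with no activity is labelled `stale` after 180 days and closed 14 days later (194 days of inactivity in total), while a pull request is labelled after 30 days and closed 7 days later (37 days in total). The sketch below models only that arithmetic. `StaleTimeline` is a hypothetical helper, and it deliberately ignores what `actions/stale` also does (exempt labels such as `not-stale` and `security`, removing the stale label when activity resumes, the once-a-day cron schedule, and the `operations-per-run` cap), so real labelling and closing can happen later than the computed dates.

```java
import java.time.LocalDate;

// Hypothetical model of the day counts configured in stale.yml; it does not
// reproduce actions/stale's label, exemption, or activity-detection logic.
public final class StaleTimeline {

    // Values copied from stale.yml.
    static final int ISSUE_DAYS_BEFORE_STALE = 180;
    static final int ISSUE_DAYS_BEFORE_CLOSE = 14;
    static final int PR_DAYS_BEFORE_STALE = 30;
    static final int PR_DAYS_BEFORE_CLOSE = 7;

    private StaleTimeline() {
    }

    // Earliest date an item with no further activity would be labelled 'stale'.
    static LocalDate staleOn(LocalDate lastActivity, int daysBeforeStale) {
        return lastActivity.plusDays(daysBeforeStale);
    }

    // Earliest date it would be closed if it stays inactive after being labelled.
    static LocalDate closeOn(LocalDate lastActivity, int daysBeforeStale, int daysBeforeClose) {
        return staleOn(lastActivity, daysBeforeStale).plusDays(daysBeforeClose);
    }

    public static void main(String[] args) {
        LocalDate last = LocalDate.of(2024, 1, 1); // example last-activity date
        System.out.println("issue stale: " + staleOn(last, ISSUE_DAYS_BEFORE_STALE)); // 2024-06-29
        System.out.println("issue close: "
                + closeOn(last, ISSUE_DAYS_BEFORE_STALE, ISSUE_DAYS_BEFORE_CLOSE)); // 2024-07-13
        System.out.println("PR stale:    " + staleOn(last, PR_DAYS_BEFORE_STALE)); // 2024-01-31
        System.out.println("PR close:    "
                + closeOn(last, PR_DAYS_BEFORE_STALE, PR_DAYS_BEFORE_CLOSE)); // 2024-02-07
    }
}
```

Using `java.time.LocalDate.plusDays` keeps month lengths and leap years (2024 in the example) correct without manual calendar math.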

--------------------------------------------------------------------------------

/debezium-server-iceberg-dist/src/main/resources/distro/config/metrics.yml:

--------------------------------------------------------------------------------

1 | startDelaySeconds: 0

2 | ssl: false

3 | lowercaseOutputName: false

4 | lowercaseOutputLabelNames: false

5 | rules:

6 | - pattern: "kafka.producer]+)><>([^:]+)"

7 | name: "kafka_producer_metrics_$2"

8 | type: GAUGE

9 | labels:

10 | client: "$1"

11 | - pattern: "kafka.producer]+), node-id=([^>]+)><>([^:]+)"

12 | name: "kafka_producer_node_metrics_$3"

13 | type: GAUGE

14 | labels:

15 | client: "$1"

16 | node: "$2"

17 | - pattern: "kafka.producer]+), topic=([^>]+)><>([^:]+)"

18 | name: "kafka_producer_topic_metrics_$3"

19 | type: GAUGE

20 | labels:

21 | client: "$1"

22 | topic: "$2"

23 | - pattern: "kafka.connect([^:]+):"

24 | name: "kafka_connect_worker_metrics_$1"

25 | type: GAUGE

26 | - pattern: "kafka.connect<>([^:]+)"

27 | name: "kafka_connect_metrics_$2"

28 | type: GAUGE

29 | labels:

30 | client: "$1"

31 | - pattern: "debezium.([^:]+)]+)><>RowsScanned"

32 | name: "debezium_metrics_RowsScanned"

33 | type: GAUGE

34 | labels:

35 | plugin: "$1"

36 | name: "$3"

37 | context: "$2"

38 | table: "$4"

39 | - pattern: "debezium.([^:]+)]+)>([^:]+)"

40 | name: "debezium_metrics_$6"

41 | type: GAUGE

42 | labels:

43 | plugin: "$1"

44 | name: "$2"

45 | task: "$3"

46 | context: "$4"

47 | database: "$5"

48 | - pattern: "debezium.([^:]+)]+)>([^:]+)"

49 | name: "debezium_metrics_$5"

50 | type: GAUGE

51 | labels:

52 | plugin: "$1"

53 | name: "$2"

54 | task: "$3"

55 | context: "$4"

56 | - pattern: "debezium.([^:]+)]+)>([^:]+)"

57 | name: "debezium_metrics_$4"