├── .github
│   ├── dependabot.yml
│   └── workflows
│       ├── deploy-documentation.yml
│       ├── release-test.yml
│       ├── release.yml
│       ├── tests-dbt-version.yml
│       └── tests.yml
├── .gitignore
├── .idea
│   ├── .gitignore
│   ├── git_toolbox_blame.xml
│   ├── git_toolbox_prj.xml
│   ├── misc.xml
│   ├── modules.xml
│   ├── opendbt.iml
│   ├── runConfigurations
│   │   ├── Python_tests_in_tests.xml
│   │   ├── pip_install.xml
│   │   └── pylint.xml
│   └── vcs.xml
├── .pylintrc
├── LICENSE
├── README.md
├── docs
│   ├── assets
│   │   ├── airflow-dbt-docs-link.png
│   │   ├── airflow-dbt-docs-page.png
│   │   ├── airflow-dbt-flow.png
│   │   ├── dbt-custom-adapter-python.png
│   │   ├── dbt-local-python.png
│   │   ├── docs-columns-transformation.png
│   │   ├── docs-dependencies.png
│   │   ├── docs-lineage.png
│   │   ├── docs-run-info-error.png
│   │   ├── docs-run-info.png
│   │   └── opendbt-airflow-ui.png
│   ├── catalog.md
│   ├── examples.md
│   ├── index.md
│   └── opendbtdocs
│       ├── catalog.json
│       ├── catalogl.json
│       ├── index.html
│       ├── manifest.json
│       ├── run_info.json
│       └── run_results.json
├── mkdocs.yml
├── opendbt
│   ├── __init__.py
│   ├── __main__.py
│   ├── airflow
│   │   ├── __init__.py
│   │   └── plugin.py
│   ├── catalog
│   │   └── __init__.py
│   ├── dbt
│   │   ├── __init__.py
│   │   ├── docs
│   │   │   ├── .gitignore
│   │   │   └── index.html
│   │   ├── shared
│   │   │   ├── __init__.py
│   │   │   ├── adapters
│   │   │   │   ├── __init__.py
│   │   │   │   └── impl.py
│   │   │   ├── cli
│   │   │   │   ├── __init__.py
│   │   │   │   └── main.py
│   │   │   └── task
│   │   │       ├── __init__.py
│   │   │       └── sqlfluff.py
│   │   ├── v17
│   │   │   ├── __init__.py
│   │   │   ├── adapters
│   │   │   │   ├── __init__.py
│   │   │   │   └── factory.py
│   │   │   ├── config
│   │   │   │   ├── __init__.py
│   │   │   │   └── runtime.py
│   │   │   └── task
│   │   │       ├── __init__.py
│   │   │       ├── docs
│   │   │       │   ├── __init__.py
│   │   │       │   └── generate.py
│   │   │       └── run.py
│   │   └── v18
│   │       ├── __init__.py
│   │       ├── adapters
│   │       │   ├── __init__.py
│   │       │   └── factory.py
│   │       ├── artifacts
│   │       │   ├── __init__.py
│   │       │   └── schemas
│   │       │       ├── __init__.py
│   │       │       └── run.py
│   │       ├── config
│   │       │   ├── __init__.py
│   │       │   └── runtime.py
│   │       └── task
│   │           ├── __init__.py
│   │           ├── docs
│   │           │   ├── __init__.py
│   │           │   └── generate.py
│   │           └── run.py
│   ├── examples.py
│   ├── logger.py
│   ├── macros
│   │   ├── executedlt.sql
│   │   ├── executepython.sql
│   │   └── executesql.sql
│   ├── runtime_patcher.py
│   └── utils.py
├── pyproject.toml
└── tests
    ├── base_dbt_test.py
    ├── resources
    │   ├── airflow
    │   │   ├── Dockerfile
    │   │   ├── airflow
    │   │   │   ├── airflow.cfg
    │   │   │   └── webserver_config.py
    │   │   ├── dags
    │   │   │   ├── dbt_mesh_workflow.py
    │   │   │   ├── dbt_tests_workflow.py
    │   │   │   └── dbt_workflow.py
    │   │   ├── docker-compose.yaml
    │   │   └── plugins
    │   │       └── airflow_dbtdocs_page.py
    │   ├── dbtcore
    │   │   ├── .gitignore
    │   │   ├── .sqlfluff
    │   │   ├── dbt_project.yml
    │   │   ├── models
    │   │   │   ├── my_core_table1.sql
    │   │   │   ├── my_executedlt_model.py
    │   │   │   ├── my_executepython_dlt_model.py
    │   │   │   ├── my_executepython_model.py
    │   │   │   ├── my_executesql_dbt_model.sql
    │   │   │   ├── my_failing_dbt_model.sql
    │   │   │   ├── my_first_dbt_model.sql
    │   │   │   ├── my_second_dbt_model.sql
    │   │   │   └── schema.yml
    │   │   └── profiles.yml
    │   └── dbtfinance
    │       ├── .gitignore
    │       ├── dbt_project.yml
    │       ├── dependencies.yml
    │       ├── macros
    │       │   └── generate_schema_name.sql
    │       ├── models
    │       │   ├── my_cross_project_ref_model.sql
    │       │   └── sources.yml
    │       └── profiles.yml
    ├── test_airflow.py
    ├── test_catalog.py
    ├── test_custom_adapter.py
    ├── test_dbt_docs.py
    ├── test_dbt_sqlfluff.py
    ├── test_executedlt_materialization.py
    ├── test_executepython_materialization.py
    ├── test_executesql_materialization.py
    ├── test_main.py
    ├── test_opendbt_airflow.py
    ├── test_opendbt_cli.py
    ├── test_opendbt_mesh.py
    └── test_opendbt_project.py

/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | version: 2
2 | updates:
3 |   - package-ecosystem: "github-actions"
4 |     directory: "/"
5 |     schedule:
6 |       interval: "weekly"
7 |   - package-ecosystem: "pip"
8 |     directory: "/"
9 |     schedule:
10 |       interval: "weekly"
11 |

--------------------------------------------------------------------------------

/.github/workflows/deploy-documentation.yml:

--------------------------------------------------------------------------------

1 | name: deploy-mkdocs-documentation
2 | on:
3 |   push:
4 |     branches:
5 |       - master
6 |       - main
7 |       - docs
8 | permissions:
9 |   contents: write
10 | jobs:
11 |   deploy:
12 |     runs-on: ubuntu-latest
13 |     steps:
14 |       - uses: actions/checkout@v4
15 |       - name: Configure Git Credentials
16 |         run: |
17 |           git config user.name github-actions[bot]
18 |           git config user.email 41898282+github-actions[bot]@users.noreply.github.com
19 |       - uses: actions/setup-python@v5
20 |         with:
21 |           python-version: 3.x
22 |       - run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV
23 |       - run: pip install mkdocs-material
24 |       - run: mkdocs gh-deploy --force

--------------------------------------------------------------------------------

/.github/workflows/release-test.yml:

--------------------------------------------------------------------------------

1 | name: Create Test Pypi Release
2 |
3 | on:
4 |   push:
5 |     branches: [ main ]
6 |
7 | jobs:
8 |   build:
9 |     if: github.repository_owner == 'memiiso'
10 |     runs-on: ubuntu-latest
11 |     strategy:
12 |       matrix:
13 |         python-version: [ 3.8 ]
14 |
15 |     steps:
16 |       - uses: actions/checkout@v4
17 |       - name: Set up Python ${{ matrix.python-version }}
18 |         uses: actions/setup-python@v5
19 |         with:
20 |           python-version: ${{ matrix.python-version }}
21 |       - name: Install pypa/build
22 |         run: |
23 |           python -m pip install build --user
24 |       - name: Build a binary wheel and a source tarball
25 |         run: |
26 |           python -m build --sdist --wheel --outdir dist/ .
27 |
28 |       - name: Publish main to Test Pypi
29 |         if: github.event_name == 'push' && startsWith(github.ref, 'refs/heads/main')
30 |         uses: pypa/gh-action-pypi-publish@release/v1
31 |         with:
32 |           user: __token__
33 |           password: ${{ secrets.TEST_PYPI_API_TOKEN }}
34 |           repository_url: https://test.pypi.org/legacy/
35 |           skip_existing: true

--------------------------------------------------------------------------------

/.github/workflows/release.yml:

--------------------------------------------------------------------------------

1 | name: Create Pypi Release
2 |
3 | on:
4 |   push:
5 |     tags:
6 |       - '*.*.*'
7 |
8 | jobs:
9 |   build:
10 |     if: github.repository_owner == 'memiiso'
11 |     runs-on: ubuntu-latest
12 |     strategy:
13 |       matrix:
14 |         python-version: [ 3.8 ]
15 |
16 |     steps:
17 |       - uses: actions/checkout@v4
18 |       - name: Set up Python ${{ matrix.python-version }}
19 |         uses: actions/setup-python@v5
20 |         with:
21 |           python-version: ${{ matrix.python-version }}
22 |       - name: Install pypa/build
23 |         run: |
24 |           python -m pip install build --user
25 |       - name: Build a binary wheel and a source tarball
26 |         run: |
27 |           python -m build --sdist --wheel --outdir dist/ .
28 |
29 |       - name: Publish to Pypi
30 |         if: github.event_name == 'push' && startsWith(github.ref, 'refs/tags')
31 |         uses: pypa/gh-action-pypi-publish@release/v1
32 |         with:
33 |           user: __token__
34 |           password: ${{ secrets.PYPI_API_TOKEN }}

--------------------------------------------------------------------------------

/.github/workflows/tests-dbt-version.yml:

--------------------------------------------------------------------------------

1 | name: Build and Test DBT Version
2 |
3 | on:
4 |   workflow_call:
5 |     inputs:
6 |       dbt-version:
7 |         required: true
8 |         type: string
9 |
10 | jobs:
11 |   test-dbt-version:
12 |     runs-on: macos-latest
13 |     strategy:
14 |       fail-fast: false
15 |       matrix:
16 |         python-version: [ "3.9", "3.10", "3.11", "3.12" ]
17 |     steps:
18 |       - uses: actions/checkout@v4
19 |       - name: Set up Python ${{ matrix.python-version }}
20 |         uses: actions/setup-python@v5
21 |         with:
22 |           python-version: ${{ matrix.python-version }}
23 |           cache: 'pip' # caching pip dependencies
24 |       - name: Build & Install DBT ${{ inputs.dbt-version }}
25 |         run: |
26 |           pip install -q coverage pylint
27 |           pip install -q dbt-core==${{ inputs.dbt-version }}.* dbt-duckdb==${{ inputs.dbt-version }}.* --force-reinstall --upgrade
28 |           # FIX for protobuf issue: https://github.com/dbt-labs/dbt-core/issues/9759
29 |           pip install -q "apache-airflow<3.0.0" "protobuf>=4.25.3,<5.0.0" "opentelemetry-proto<1.28.0" --prefer-binary
30 |           pip install -q .[test] --prefer-binary
31 |           pip install -q dbt-core==${{ inputs.dbt-version }}.* dbt-duckdb==${{ inputs.dbt-version }}.* --force-reinstall --upgrade
32 |           python --version
33 |           python -c "from dbt.version import get_installed_version as get_dbt_version;print(f'dbt version={get_dbt_version()}')"
34 |           python -m compileall -f opendbt
35 |           python -m pylint opendbt
36 |       - name: Run Tests
37 |         run: |
38 |           python -c "from dbt.version import get_installed_version as get_dbt_version;print(f'dbt version={get_dbt_version()}')"
39 |           python -m coverage run --source=./tests/ -m unittest discover -s tests/
40 |           python -m coverage report -m ./opendbt/*.py
41 |

--------------------------------------------------------------------------------

/.github/workflows/tests.yml:

--------------------------------------------------------------------------------

1 | name: Build and Test
2 |
3 | on:
4 |   workflow_dispatch:
5 |   push:
6 |     branches: [ main ]
7 |     paths-ignore:
8 |       - '.idea/**'
9 |       - '.run/**'
10 |   pull_request:
11 |     branches: [ main ]
12 |     paths-ignore:
13 |       - '.idea/**'
14 |       - '.run/**'
15 |
16 | jobs:
17 |   test-dbt-1-7:
18 |     uses: ./.github/workflows/tests-dbt-version.yml
19 |     with:
20 |       dbt-version: "1.7"
21 |     needs: test-dbt-1-8
22 |   test-dbt-1-8:
23 |     uses: ./.github/workflows/tests-dbt-version.yml
24 |     with:
25 |       dbt-version: "1.8"
26 |     needs: test-dbt-1-9
27 |   test-dbt-1-9:
28 |     uses: ./.github/workflows/tests-dbt-version.yml
29 |     with:
30 |       dbt-version: "1.9"

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | logs

2 | **.duckdb

3 | **.user.yml

4 | reset.sh

5 |

6 | ###### JetBrains ######

7 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio, WebStorm and Rider

8 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

9 |

10 | # User-specific stuff

11 | .idea/**/workspace.xml

12 | .idea/**/tasks.xml

13 | .idea/**/usage.statistics.xml

14 | .idea/**/dictionaries

15 | .idea/**/shelf

16 |

17 | # AWS User-specific

18 | .idea/**/aws.xml

19 |

20 | # Generated files

21 | .idea/**/contentModel.xml

22 |

23 | # Sensitive or high-churn files

24 | .idea/**/dataSources/

25 | .idea/**/dataSources.ids

26 | .idea/**/dataSources.local.xml

27 | .idea/**/sqlDataSources.xml

28 | .idea/**/dynamic.xml

29 | .idea/**/uiDesigner.xml

30 | .idea/**/dbnavigator.xml

31 |

32 | # Gradle

33 | .idea/**/gradle.xml

34 | .idea/**/libraries

35 |

36 | # Gradle and Maven with auto-import

37 | # When using Gradle or Maven with auto-import, you should exclude module files,

38 | # since they will be recreated, and may cause churn. Uncomment if using

39 | # auto-import.

40 | # .idea/artifacts

41 | # .idea/compiler.xml

42 | # .idea/jarRepositories.xml

43 | # .idea/modules.xml

44 | # .idea/*.iml

45 | # .idea/modules

46 | # *.iml

47 | # *.ipr

48 |

49 | # CMake

50 | cmake-build-*/

51 |

52 | # Mongo Explorer plugin

53 | .idea/**/mongoSettings.xml

54 |

55 | # File-based project format

56 | *.iws

57 |

58 | # IntelliJ

59 | out/

60 |

61 | # mpeltonen/sbt-idea plugin

62 | .idea_modules/

63 |

64 | # JIRA plugin

65 | atlassian-ide-plugin.xml

66 |

67 | # Cursive Clojure plugin

68 | .idea/replstate.xml

69 |

70 | # SonarLint plugin

71 | .idea/sonarlint/

72 |

73 | # Crashlytics plugin (for Android Studio and IntelliJ)

74 | com_crashlytics_export_strings.xml

75 | crashlytics.properties

76 | crashlytics-build.properties

77 | fabric.properties

78 |

79 | # Editor-based Rest Client

80 | .idea/httpRequests

81 |

82 | # Android studio 3.1+ serialized cache file

83 | .idea/caches/build_file_checksums.ser

84 |

85 |

86 | ###### Python ######

87 | # Byte-compiled / optimized / DLL files

88 | __pycache__/

89 | *.py[cod]

90 | *$py.class

91 |

92 | # C extensions

93 | *.so

94 |

95 | # Distribution / packaging

96 | .Python

97 | build/

98 | develop-eggs/

99 | dist/

100 | downloads/

101 | eggs/

102 | .eggs/

103 | lib/

104 | lib64/

105 | parts/

106 | sdist/

107 | var/

108 | wheels/

109 | share/python-wheels/

110 | *.egg-info/

111 | .installed.cfg

112 | *.egg

113 | MANIFEST

114 |

115 | # PyInstaller

116 | # Usually these files are written by a python script from a template

117 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

118 | *.manifest

119 | *.spec

120 |

121 | # Installer logs

122 | pip-log.txt

123 | pip-delete-this-directory.txt

124 |

125 | # Unit test / coverage reports

126 | htmlcov/

127 | .tox/

128 | .nox/

129 | .coverage

130 | .coverage.*

131 | .cache

132 | nosetests.xml

133 | coverage.xml

134 | *.cover

135 | *.py,cover

136 | .hypothesis/

137 | .pytest_cache/

138 | cover/

139 |

140 | # Translations

141 | *.mo

142 | *.pot

143 |

144 | # Django stuff:

145 | *.log

146 | local_settings.py

147 | db.sqlite3

148 | db.sqlite3-journal

149 |

150 | # Flask stuff:

151 | instance/

152 | .webassets-cache

153 |

154 | # Scrapy stuff:

155 | .scrapy

156 |

157 | # Sphinx documentation

158 | docs/_build/

159 |

160 | # PyBuilder

161 | .pybuilder/

162 | target/

163 |

164 | # Jupyter Notebook

165 | .ipynb_checkpoints

166 |

167 | # IPython

168 | profile_default/

169 | ipython_config.py

170 |

171 | # pyenv

172 | # For a library or package, you might want to ignore these files since the code is

173 | # intended to run in multiple environments; otherwise, check them in:

174 | # .python-version

175 |

176 | # pipenv

177 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

178 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

179 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

180 | # install all needed dependencies.

181 | #Pipfile.lock

182 |

183 | # poetry

184 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

185 | # This is especially recommended for binary packages to ensure reproducibility, and is more

186 | # commonly ignored for libraries.

187 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

188 | #poetry.lock

189 |

190 | # pdm

191 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

192 | #pdm.lock

193 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

194 | # in version control.

195 | # https://pdm.fming.dev/latest/usage/project/#working-with-version-control

196 | .pdm.toml

197 | .pdm-python

198 | .pdm-build/

199 |

200 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

201 | __pypackages__/

202 |

203 | # Celery stuff

204 | celerybeat-schedule

205 | celerybeat.pid

206 |

207 | # SageMath parsed files

208 | *.sage.py

209 |

210 | # Environments

211 | .env

212 | .venv

213 | env/

214 | venv/

215 | ENV/

216 | env.bak/

217 | venv.bak/

218 |

219 | # Spyder project settings

220 | .spyderproject

221 | .spyproject

222 |

223 | # Rope project settings

224 | .ropeproject

225 |

226 | # mkdocs documentation

227 | /site

228 |

229 | # mypy

230 | .mypy_cache/

231 | .dmypy.json

232 | dmypy.json

233 |

234 | # Pyre type checker

235 | .pyre/

236 |

237 | # pytype static type analyzer

238 | .pytype/

239 |

240 | # Cython debug symbols

241 | cython_debug/

242 |

243 | # PyCharm

244 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

245 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

246 | # and can be added to the global gitignore or merged into this file. For a more nuclear

247 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

248 | #.idea/

249 |

--------------------------------------------------------------------------------

/.idea/.gitignore:

--------------------------------------------------------------------------------

1 | # Default ignored files

2 | /shelf/

3 | /workspace.xml

4 |

--------------------------------------------------------------------------------

/.idea/git_toolbox_blame.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/.idea/git_toolbox_prj.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 | EmbeddedPerformanceJava

13 |

14 |

15 | Error handlingJava

16 |

17 |

18 | Groovy

19 |

20 |

21 | InitializationJava

22 |

23 |

24 | JVM languages

25 |

26 |

27 | Java

28 |

29 |

30 | Java 21Java language level migration aidsJava

31 |

32 |

33 | Java language level migration aidsJava

34 |

35 |

36 | Kotlin

37 |

38 |

39 | LoggingJVM languages

40 |

41 |

42 | MemoryJava

43 |

44 |

45 | PerformanceJava

46 |

47 |

48 | Probable bugsJava

49 |

50 |

51 | Python

52 |

53 |

54 | Redundant constructsKotlin

55 |

56 |

57 | RegExp

58 |

59 |

60 | Style issuesKotlin

61 |

62 |

63 | Threading issuesGroovy

64 |

65 |

66 | Threading issuesJava

67 |

68 |

69 | Verbose or redundant code constructsJava

70 |

71 |

72 |

73 |

74 |

75 |

76 |

77 |

78 |

--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/.idea/opendbt.iml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

--------------------------------------------------------------------------------

/.idea/runConfigurations/Python_tests_in_tests.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

--------------------------------------------------------------------------------

/.idea/runConfigurations/pip_install.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

--------------------------------------------------------------------------------

/.idea/runConfigurations/pylint.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 |

--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/.pylintrc:

--------------------------------------------------------------------------------

1 | [MASTER]
2 | disable=
3 |     C, # convention
4 |     W, # warnings
5 |     import-error,
6 |     no-name-in-module,
7 |     too-many-arguments,
8 |     too-many-positional-arguments,
9 |     too-few-public-methods,
10 |     no-member,
11 |     unexpected-keyword-arg,
12 |     R0801 # Similar lines in 2 files
13 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 | [](http://www.apache.org/licenses/LICENSE-2.0.html)

3 |

4 |

5 | # opendbt

6 |

7 | This project adds new capabilities to dbt-core by dynamically extending dbt's source code.

8 |

9 | dbt is a popular solution for batch data processing in data analytics. However, it operates on

10 | an [open-core model](https://opencoreventures.com/blog/2023-07-open-core-is-misunderstood-business-model/), which can

11 | sometimes limit the inclusion of community features in the open-source version. opendbt addresses this by

12 | offering a fully open-source package. **opendbt builds upon dbt-core, adding valuable

13 | features without changing dbt-core code.**

14 |

15 | With `opendbt` you can go beyond the core functionality of dbt, for example by seamlessly integrating your customized

16 | adapter and providing the Jinja context with additional adapter/Python methods.

17 |

18 | ## Features

19 |

20 | - :white_check_mark: Includes an enhanced [dbt catalog UI](https://memiiso.github.io/opendbt/opendbtdocs/): a user-friendly

21 | data catalog

22 | with column-level

23 | lineage; [see it here](https://memiiso.github.io/opendbt/opendbtdocs/).

24 | - :white_check_mark: Integrates Python and DLT jobs into dbt, enabling Extract & Load (EL) with dbt.

25 | - :white_check_mark: Supports dbt Mesh setups: run multiple projects whose models use cross-project

26 | references.

27 | - :white_check_mark: And many more features and customization options.

28 | - Customize existing adapters: add your custom logic to current adapters.

29 | - Extend the current adapter to provide more functions to Jinja.

30 | - Execute Local Python

31 | Code: [run local Python code](https://medium.com/@ismail-simsek/make-dbt-great-again-ec34f3b661f5). For example, you

32 | could import data from web APIs directly within your dbt model.

33 | - [Integrate DLT](https://github.com/memiiso/opendbt/issues/40). Run an end-to-end ETL pipeline with dbt and DLT.

34 | - [Use cross-project references in a multi-project dbt Mesh setup](https://docs.getdbt.com/docs/collaborate/govern/project-dependencies#how-to-write-cross-project-ref).

35 | - Until now, this feature was only available in "dbt Cloud Enterprise".

36 | - Granular Model-Level Orchestration with Airflow: Integrate Airflow for fine-grained control over model execution.

37 | - Serve dbt Docs in Airflow UI: Create a custom page on the Airflow server that displays dbt documentation as an

38 | Airflow

39 | UI page.

40 | - Register [dbt callbacks](https://docs.getdbt.com/reference/programmatic-invocations#registering-callbacks) within a

41 | dbt project to trigger custom actions or alerting based on selected dbt events.

42 |

43 | [See documentation for further details and detailed examples](https://memiiso.github.io/opendbt/).

44 |

45 |

46 |

47 | ## Installation

48 |

49 | Install from PyPI or GitHub:

50 |

51 | ```shell

52 | pip install opendbt==0.13.0

53 | # Or

54 | pip install https://github.com/memiiso/opendbt/archive/refs/tags/0.4.0.zip --upgrade --user

55 | ```

56 |

57 | ## **Your Contributions Matter**

58 |

59 | The project is completely open-source, using the Apache 2.0 license.

60 | opendbt is still a young project and there are things to improve.

61 | Please feel free to test it, give feedback, open feature requests, or send pull requests.

62 |

63 | ### Contributors

64 |

65 |

66 |

67 |

68 |

--------------------------------------------------------------------------------

/docs/assets/airflow-dbt-docs-link.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/airflow-dbt-docs-link.png

--------------------------------------------------------------------------------

/docs/assets/airflow-dbt-docs-page.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/airflow-dbt-docs-page.png

--------------------------------------------------------------------------------

/docs/assets/airflow-dbt-flow.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/airflow-dbt-flow.png

--------------------------------------------------------------------------------

/docs/assets/dbt-custom-adapter-python.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/dbt-custom-adapter-python.png

--------------------------------------------------------------------------------

/docs/assets/dbt-local-python.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/dbt-local-python.png

--------------------------------------------------------------------------------

/docs/assets/docs-columns-transformation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/docs-columns-transformation.png

--------------------------------------------------------------------------------

/docs/assets/docs-dependencies.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/docs-dependencies.png

--------------------------------------------------------------------------------

/docs/assets/docs-lineage.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/docs-lineage.png

--------------------------------------------------------------------------------

/docs/assets/docs-run-info-error.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/docs-run-info-error.png

--------------------------------------------------------------------------------

/docs/assets/docs-run-info.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/docs-run-info.png

--------------------------------------------------------------------------------

/docs/assets/opendbt-airflow-ui.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/memiiso/opendbt/812f2143c73f974d2f6e1932d9e29481071679ea/docs/assets/opendbt-airflow-ui.png

--------------------------------------------------------------------------------

/docs/catalog.md:

--------------------------------------------------------------------------------

1 | # Opendbt Catalog

2 |

3 | [See it in action](https://memiiso.github.io/opendbt/opendbtdocs/)

4 |

5 | Summary of the catalog files:

6 |

7 | - [catalog.json](catalog.json): Generated by dbt

8 | - [catalogl.json](catalogl.json): Generated by opendbt; contains extended catalog information with column-level lineage

9 | - [manifest.json](manifest.json): Generated by dbt

10 | - [run_info.json](run_info.json): Generated by opendbt, contains latest run information per object/model

11 |
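As a rough illustration of how `run_info.json` could be consumed outside the catalog UI, the sketch below groups nodes by their last run status. It assumes the layout visible in the sample file in this repository (a top-level `nodes` mapping whose entries carry `run_status`, `run_completed_at`, and `run_message`). The helper name `summarize_runs` and the inline sample values are hypothetical and only serve the example.

```python
import json

# Inline sample mirroring the shape of docs/opendbtdocs/run_info.json.
# The values below are illustrative, not copied from a real run.
SAMPLE_RUN_INFO = """
{
  "nodes": {
    "model.dbtcore.my_core_table1": {
      "run_status": "success",
      "run_completed_at": "2025-05-15 18:01:01",
      "run_message": "OK"
    },
    "model.dbtcore.my_failing_dbt_model": {
      "run_status": "error",
      "run_completed_at": "2025-05-15 18:01:02",
      "run_message": "illustrative error message"
    }
  }
}
"""


def summarize_runs(run_info: dict) -> dict:
    """Group node ids by their run_status (hypothetical helper)."""
    summary: dict = {}
    for node_id, info in run_info.get("nodes", {}).items():
        status = info.get("run_status", "unknown")
        summary.setdefault(status, []).append(node_id)
    return summary


run_summary = summarize_runs(json.loads(SAMPLE_RUN_INFO))
for status, node_ids in sorted(run_summary.items()):
    print(f"{status}: {', '.join(node_ids)}")
```

A summary like this could feed a simple alert on failed models, assuming the file is regenerated after each run.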

12 | ## Key Features

13 |

14 | ### Up to date Run information

15 |

16 |

17 |

18 | ### Run information with error messages

19 |

20 |

21 |

22 | ### Model dependencies including tests

23 |

24 |

25 |

26 | ### Column level dependency lineage, transformation

27 |

28 |

29 |
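The column-level lineage shown in the UI is backed by `catalogl.json`. A minimal sketch of reading it directly follows, assuming the structure seen in the sample file in this repository, where each column carries a `depends_on` list whose entries have a `column_fqn`. The helper `upstream_columns` is hypothetical and the sample is trimmed to a single column.

```python
import json

# Trimmed sample following the structure of docs/opendbtdocs/catalogl.json.
SAMPLE_CATALOGL = """
{
  "nodes": {
    "model.dbtcore.my_second_dbt_model": {
      "columns": {
        "pk_id": {
          "column_fqn": "dev.core.my_second_dbt_model.pk_id",
          "transformations": ["dev.core.my_first_dbt_model AS t1", "t1.id AS pk_id"],
          "depends_on": [
            {"column_fqn": "dev.core.my_first_dbt_model.id",
             "model_id": "model.dbtcore.my_first_dbt_model"}
          ]
        }
      }
    }
  }
}
"""


def upstream_columns(catalog: dict, model_id: str) -> dict:
    """Map each column of a model to the fully qualified names of its direct upstream columns."""
    columns = catalog.get("nodes", {}).get(model_id, {}).get("columns", {})
    return {
        name: [dep["column_fqn"] for dep in col.get("depends_on", [])]
        for name, col in columns.items()
    }


lineage = upstream_columns(json.loads(SAMPLE_CATALOGL), "model.dbtcore.my_second_dbt_model")
for column, parents in lineage.items():
    print(column, "<-", parents)
```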

30 | ### Dependency lineage

31 |

32 |

--------------------------------------------------------------------------------

/docs/examples.md:

--------------------------------------------------------------------------------

1 | # Examples

2 |

3 | ## Using dbt with User-Defined Adapters and Jinja Methods

4 |

5 | To add custom methods to an existing adapter and expose them to Jinja templates, follow these steps:

6 |

7 | **Step-1:** Extend the Adapter

8 | Create a new adapter class that inherits from the desired base adapter. Add the necessary methods to this class.

9 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/opendbt/examples.py#L10-L26

10 |

11 | **Step-2:** In your `dbt_project.yml` file, set the `dbt_custom_adapter` variable to the fully qualified name of your

12 | custom adapter class. This enables opendbt to recognize and activate your adapter.

13 | ```yml

14 | vars:

15 |   dbt_custom_adapter: opendbt.examples.DuckDBAdapterV2Custom

16 | ```

17 |
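To make the idea of a "fully qualified name" concrete, here is a minimal sketch of how such a string can be resolved to a class at runtime with `importlib`. This is not opendbt's actual loading code; the helper `load_class` is hypothetical, and the example resolves a standard-library class so that it runs without dbt installed.

```python
import importlib


def load_class(fully_qualified_name: str) -> type:
    """Resolve 'package.module.ClassName' to the class object (hypothetical helper)."""
    module_name, _, class_name = fully_qualified_name.rpartition(".")
    if not module_name:
        raise ValueError(f"Expected 'module.ClassName', got {fully_qualified_name!r}")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# Stand-in for a value such as "opendbt.examples.DuckDBAdapterV2Custom".
ordered_dict_cls = load_class("collections.OrderedDict")
print(ordered_dict_cls)
```

The practical consequence is that the module holding your adapter class must be importable (on `PYTHONPATH`) in the environment where dbt runs.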

18 | **Step-3:** Execute dbt commands as usual. dbt will now load and utilize your custom adapter class, allowing you to

19 | access the newly defined methods within your Jinja macros.

20 | ```python

21 | from opendbt import OpenDbtProject

22 |

23 | dp = OpenDbtProject(project_dir="/dbt/project_dir", profiles_dir="/dbt/profiles_dir")

24 | dp.run(command="run")

25 | ```

26 |

27 | ## Executing Python Models Locally with dbt

28 |

29 | By leveraging a customized adapter and a custom materialization, dbt can be extended to execute Python code locally.

30 | This powerful capability is particularly useful for scenarios involving data ingestion from external APIs, enabling

31 | seamless integration within the dbt framework.

32 |

33 | **Step-1:** We'll extend an existing adapter (like `DuckDBAdapter`) to add a new method, `submit_local_python_job`. This

34 | method will execute the provided Python code as a subprocess.

35 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/opendbt/examples.py#L10-L26

36 |
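The general technique of running Python code as a subprocess can be sketched with the standard library alone. The snippet below is an illustration under assumptions, not the linked `submit_local_python_job` implementation: the function name `run_python_code_locally`, the temporary file name, and the timeout parameter are all hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_python_code_locally(compiled_code: str, timeout_seconds: int = 60) -> str:
    """Write the code to a temporary file, run it with the current interpreter, return stdout."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        script_path = Path(tmp_dir) / "model.py"
        script_path.write_text(compiled_code, encoding="utf-8")
        completed = subprocess.run(
            [sys.executable, str(script_path)],
            capture_output=True,
            text=True,
            timeout=timeout_seconds,
            check=True,  # raise CalledProcessError on a non-zero exit code
        )
    return completed.stdout


output = run_python_code_locally("print('hello from a local python model')")
print(output.strip())
```

Running the code in a separate process keeps a failing or long-running model from taking down the calling process; with `check=True`, a non-zero exit code surfaces as an exception the caller can report.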

37 | **Step-2:** Create a new materialization named `executepython`. This materialization will call the newly added

38 | `submit_local_python_job` method from the custom adapter to execute the compiled Python code.

39 |

40 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/opendbt/macros/executepython.sql#L1-L26

41 |

42 | **Step-3:** Create a sample Python model that dbt will execute locally using the `executepython`

43 | materialization.

44 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/tests/resources/dbttest/models/my_executepython_dbt_model.py#L1-L22

45 |

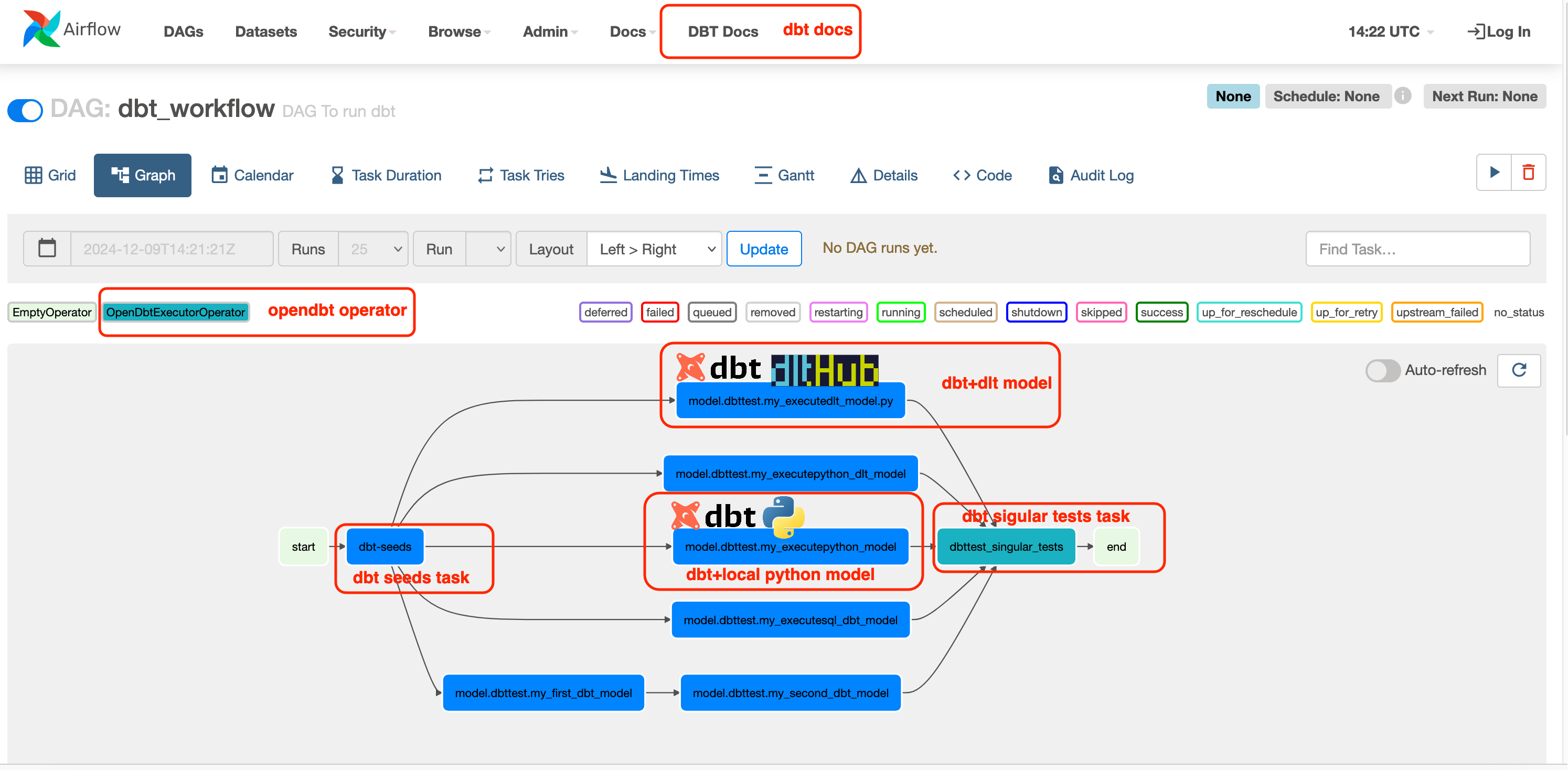

46 | ## Orchestrating dbt Models with Airflow

47 |

48 | **Step-1:** Let's create an Airflow DAG to orchestrate the execution of your dbt project.

49 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/tests/resources/airflow/dags/dbt_workflow.py#L17-L32

50 |

51 |

52 |

53 | #### Creating an Airflow DAG that selectively executes a specific subset of models from your dbt project

54 |

55 | ```python

56 | from opendbt.airflow import OpenDbtAirflowProject

57 |

58 | # Create dbt build tasks for models with the given tag (`dag`, `start` and `end` are assumed to be defined in the surrounding DAG file).

59 | p = OpenDbtAirflowProject(resource_type='model', project_dir="/dbt/project_dir", profiles_dir="/dbt/profiles_dir",

60 |                           target='dev', tag="MY_TAG")

61 | p.load_dbt_tasks(dag=dag, start_node=start, end_node=end)

62 | ```

63 |
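Conceptually, model-level orchestration needs two things: selecting nodes by resource type and tag, and ordering them so that upstream models run first. The sketch below shows that idea with the standard library only. It does not use Airflow and is not how `OpenDbtAirflowProject` is implemented; the `NODES` dictionary is a simplified stand-in for the `nodes` section of a dbt `manifest.json`, and the tags assigned here are illustrative.

```python
from graphlib import TopologicalSorter

# Simplified stand-in for the "nodes" section of a dbt manifest.json.
NODES = {
    "model.dbtcore.my_first_dbt_model": {
        "resource_type": "model", "tags": ["MY_TAG"], "depends_on": {"nodes": []},
    },
    "model.dbtcore.my_core_table1": {
        "resource_type": "model", "tags": [], "depends_on": {"nodes": []},
    },
    "model.dbtcore.my_second_dbt_model": {
        "resource_type": "model", "tags": ["MY_TAG"],
        "depends_on": {"nodes": ["model.dbtcore.my_first_dbt_model",
                                 "model.dbtcore.my_core_table1"]},
    },
}


def ordered_selection(nodes: dict, resource_type: str, tag: str) -> list:
    """Return ids of nodes matching resource_type and tag, upstream nodes first."""
    selected = {
        node_id for node_id, node in nodes.items()
        if node["resource_type"] == resource_type and tag in node["tags"]
    }
    # Keep only dependency edges between selected nodes.
    graph = {
        node_id: [dep for dep in nodes[node_id]["depends_on"]["nodes"] if dep in selected]
        for node_id in selected
    }
    return list(TopologicalSorter(graph).static_order())


task_order = ordered_selection(NODES, resource_type="model", tag="MY_TAG")
print(task_order)
```

In an orchestrator, each id in `task_order` would become one task, with the dependency edges in `graph` becoming task dependencies.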

64 | #### Creating a DAG to run dbt tests

65 |

66 | ```python

67 | from opendbt.airflow import OpenDbtAirflowProject

68 |

69 | # Create dbt test tasks for models with the given tag (`dag`, `start` and `end` are assumed to be defined in the surrounding DAG file).

70 | p = OpenDbtAirflowProject(resource_type='test', project_dir="/dbt/project_dir", profiles_dir="/dbt/profiles_dir",

71 |                           target='dev', tag="MY_TAG")

72 | p.load_dbt_tasks(dag=dag, start_node=start, end_node=end)

73 | ```

74 |

75 | ## Integrating dbt Documentation into Airflow

76 |

77 | Airflow, a powerful workflow orchestration tool, can be leveraged to streamline not only dbt execution but also dbt

78 | documentation access. By integrating dbt documentation into your Airflow interface, you can centralize your data

79 | engineering resources and improve team collaboration.

80 |

81 | Here is how:

82 | **Step-1:** Create a Python file. Navigate to your Airflow `{airflow}/plugins` directory,

83 | create a new Python file with an appropriate name, such as `dbt_docs_plugin.py`, and add the following code to

84 | the `dbt_docs_plugin.py` file.

85 | Ensure that the specified path accurately points to the folder where your dbt project generates its documentation.

86 | https://github.com/memiiso/opendbt/blob/a5a7a598a3e4f04e184b38257578279473d78cfc/tests/resources/airflow/plugins/airflow_dbtdocs_page.py#L1-L6

87 |

88 | **Step-2:** Restart Airflow to activate the plugin. Once the restart is complete, you should see a new link labeled

89 | `DBT Docs` within your Airflow web interface. This link will provide access to your dbt documentation.

90 |

91 |

92 | **Step-3:** Click on the `DBT Docs` link to open your dbt documentation.

93 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | --8<-- "README.md"

--------------------------------------------------------------------------------

/docs/opendbtdocs/catalog.json:

--------------------------------------------------------------------------------

1 | {

2 | "metadata": {

3 | "dbt_schema_version": "https://schemas.getdbt.com/dbt/catalog/v1.json",

4 | "dbt_version": "1.9.4",

5 | "generated_at": "2025-05-15T18:01:15.469569Z",

6 | "invocation_id": "4a81f777-5b15-45b3-8c88-b08d0f7bb9ea",

7 | "env": {}

8 | },

9 | "nodes": {

10 | "model.dbtcore.my_core_table1": {

11 | "metadata": {

12 | "type": "BASE TABLE",

13 | "schema": "core",

14 | "name": "my_core_table1",

15 | "database": "dev",

16 | "comment": null,

17 | "owner": null

18 | },

19 | "columns": {

20 | "id": {

21 | "type": "INTEGER",

22 | "index": 1,

23 | "name": "id",

24 | "comment": null

25 | },

26 | "row_data": {

27 | "type": "VARCHAR",

28 | "index": 2,

29 | "name": "row_data",

30 | "comment": null

31 | }

32 | },

33 | "stats": {

34 | "has_stats": {

35 | "id": "has_stats",

36 | "label": "Has Stats?",

37 | "value": false,

38 | "include": false,

39 | "description": "Indicates whether there are statistics for this table"

40 | }

41 | },

42 | "unique_id": "model.dbtcore.my_core_table1"

43 | },

44 | "model.dbtcore.my_executedlt_model": {

45 | "metadata": {

46 | "type": "BASE TABLE",

47 | "schema": "core",

48 | "name": "my_executedlt_model",

49 | "database": "dev",

50 | "comment": null,

51 | "owner": null

52 | },

53 | "columns": {

54 | "event_tstamp": {

55 | "type": "TIMESTAMP WITH TIME ZONE",

56 | "index": 1,

57 | "name": "event_tstamp",

58 | "comment": null

59 | },

60 | "event_id": {

61 | "type": "BIGINT",

62 | "index": 2,

63 | "name": "event_id",

64 | "comment": null

65 | },

66 | "_dlt_load_id": {

67 | "type": "VARCHAR",

68 | "index": 3,

69 | "name": "_dlt_load_id",

70 | "comment": null

71 | },

72 | "_dlt_id": {

73 | "type": "VARCHAR",

74 | "index": 4,

75 | "name": "_dlt_id",

76 | "comment": null

77 | },

78 | "event_tstamp__v_text": {

79 | "type": "VARCHAR",

80 | "index": 5,

81 | "name": "event_tstamp__v_text",

82 | "comment": null

83 | }

84 | },

85 | "stats": {

86 | "has_stats": {

87 | "id": "has_stats",

88 | "label": "Has Stats?",

89 | "value": false,

90 | "include": false,

91 | "description": "Indicates whether there are statistics for this table"

92 | }

93 | },

94 | "unique_id": "model.dbtcore.my_executedlt_model"

95 | },

96 | "model.dbtcore.my_first_dbt_model": {

97 | "metadata": {

98 | "type": "BASE TABLE",

99 | "schema": "core",

100 | "name": "my_first_dbt_model",

101 | "database": "dev",

102 | "comment": null,

103 | "owner": null

104 | },

105 | "columns": {

106 | "id": {

107 | "type": "INTEGER",

108 | "index": 1,

109 | "name": "id",

110 | "comment": null

111 | },

112 | "data_value": {

113 | "type": "VARCHAR",

114 | "index": 2,

115 | "name": "data_value",

116 | "comment": null

117 | },

118 | "column_3": {

119 | "type": "VARCHAR",

120 | "index": 3,

121 | "name": "column_3",

122 | "comment": null

123 | }

124 | },

125 | "stats": {

126 | "has_stats": {

127 | "id": "has_stats",

128 | "label": "Has Stats?",

129 | "value": false,

130 | "include": false,

131 | "description": "Indicates whether there are statistics for this table"

132 | }

133 | },

134 | "unique_id": "model.dbtcore.my_first_dbt_model"

135 | },

136 | "model.dbtcore.my_second_dbt_model": {

137 | "metadata": {

138 | "type": "BASE TABLE",

139 | "schema": "core",

140 | "name": "my_second_dbt_model",

141 | "database": "dev",

142 | "comment": null,

143 | "owner": null

144 | },

145 | "columns": {

146 | "pk_id": {

147 | "type": "INTEGER",

148 | "index": 1,

149 | "name": "pk_id",

150 | "comment": null

151 | },

152 | "data_value1": {

153 | "type": "VARCHAR",

154 | "index": 2,

155 | "name": "data_value1",

156 | "comment": null

157 | },

158 | "data_value2": {

159 | "type": "VARCHAR",

160 | "index": 3,

161 | "name": "data_value2",

162 | "comment": null

163 | },

164 | "event_tstamp": {

165 | "type": "TIMESTAMP WITH TIME ZONE",

166 | "index": 4,

167 | "name": "event_tstamp",

168 | "comment": null

169 | }

170 | },

171 | "stats": {

172 | "has_stats": {

173 | "id": "has_stats",

174 | "label": "Has Stats?",

175 | "value": false,

176 | "include": false,

177 | "description": "Indicates whether there are statistics for this table"

178 | }

179 | },

180 | "unique_id": "model.dbtcore.my_second_dbt_model"

181 | }

182 | },

183 | "sources": {},

184 | "errors": null

185 | }

--------------------------------------------------------------------------------

/docs/opendbtdocs/catalogl.json:

--------------------------------------------------------------------------------

1 | {

2 | "metadata": {

3 | "dbt_schema_version": "https://schemas.getdbt.com/dbt/catalog/v1.json",

4 | "dbt_version": "1.9.4",

5 | "generated_at": "2025-05-15T18:01:15.469569Z",

6 | "invocation_id": "4a81f777-5b15-45b3-8c88-b08d0f7bb9ea",

7 | "env": {}

8 | },

9 | "nodes": {

10 | "model.dbtfinance.my_cross_project_ref_model": {

11 | "stats": {},

12 | "columns": {

13 | "id": {

14 | "name": "id",

15 | "type": "unknown",

16 | "column_fqn": "dev.finance.my_cross_project_ref_model.id",

17 | "table_fqn": "dev.finance.my_cross_project_ref_model",

18 | "table_relative_fqn": "finance.my_cross_project_ref_model",

19 | "transformations": [],

20 | "depends_on": []

21 | },

22 | "row_data": {

23 | "name": "row_data",

24 | "type": "unknown",

25 | "column_fqn": "dev.finance.my_cross_project_ref_model.row_data",

26 | "table_fqn": "dev.finance.my_cross_project_ref_model",

27 | "table_relative_fqn": "finance.my_cross_project_ref_model",

28 | "transformations": [],

29 | "depends_on": []

30 | },

31 | "num_rows": {

32 | "name": "num_rows",

33 | "type": "unknown",

34 | "column_fqn": "dev.finance.my_cross_project_ref_model.num_rows",

35 | "table_fqn": "dev.finance.my_cross_project_ref_model",

36 | "table_relative_fqn": "finance.my_cross_project_ref_model",

37 | "transformations": [],

38 | "depends_on": []

39 | }

40 | },

41 | "metadata": {}

42 | },

43 | "model.dbtcore.my_first_dbt_model": {

44 | "stats": {},

45 | "columns": {

46 | "data_value": {

47 | "name": "data_value",

48 | "description": "",

49 | "meta": {},

50 | "data_type": null,

51 | "constraints": [],

52 | "quote": null,

53 | "tags": [],

54 | "granularity": null,

55 | "type": "unknown",

56 | "column_fqn": "dev.core.my_first_dbt_model.data_value",

57 | "table_fqn": "dev.core.my_first_dbt_model",

58 | "table_relative_fqn": "core.my_first_dbt_model",

59 | "transformations": [

60 | "'test-value' AS data_value",

61 | "'test-value' AS data_value",

62 | "'test-value' AS data_value",

63 | "'test-value' AS data_value",

64 | "source_data.data_value AS data_value"

65 | ],

66 | "depends_on": []

67 | },

68 | "column_3": {

69 | "name": "column_3",

70 | "description": "",

71 | "meta": {},

72 | "data_type": null,

73 | "constraints": [],

74 | "quote": null,

75 | "tags": [],

76 | "granularity": null,

77 | "type": "unknown",

78 | "column_fqn": "dev.core.my_first_dbt_model.column_3",

79 | "table_fqn": "dev.core.my_first_dbt_model",

80 | "table_relative_fqn": "core.my_first_dbt_model",

81 | "transformations": [

82 | "'test-value' AS column_3",

83 | "'test-value' AS column_3",

84 | "'test-value' AS column_3",

85 | "'test-value' AS column_3",

86 | "source_data.column_3 AS column_3"

87 | ],

88 | "depends_on": []

89 | },

90 | "id": {

91 | "name": "id",

92 | "description": "The **primary key** for this table",

93 | "meta": {},

94 | "data_type": null,

95 | "constraints": [],

96 | "quote": null,

97 | "tags": [],

98 | "granularity": null,

99 | "type": "unknown",

100 | "column_fqn": "dev.core.my_first_dbt_model.id",

101 | "table_fqn": "dev.core.my_first_dbt_model",

102 | "table_relative_fqn": "core.my_first_dbt_model",

103 | "transformations": [

104 | "NULL AS id",

105 | "2 AS id",

106 | "1 AS id",

107 | "1 AS id",

108 | "source_data.id AS id"

109 | ],

110 | "depends_on": []

111 | }

112 | },

113 | "metadata": {}

114 | },

115 | "model.dbtcore.my_executesql_dbt_model": {

116 | "stats": {},

117 | "columns": {},

118 | "metadata": {}

119 | },

120 | "model.dbtcore.my_failing_dbt_model": {

121 | "stats": {},

122 | "columns": {

123 | "my_failing_column": {

124 | "name": "my_failing_column",

125 | "type": "unknown",

126 | "column_fqn": "dev.core.my_failing_dbt_model.my_failing_column",

127 | "table_fqn": "dev.core.my_failing_dbt_model",

128 | "table_relative_fqn": "core.my_failing_dbt_model",

129 | "transformations": [],

130 | "depends_on": []

131 | }

132 | },

133 | "metadata": {}

134 | },

135 | "model.dbtcore.my_core_table1": {

136 | "stats": {},

137 | "columns": {

138 | "id": {

139 | "name": "id",

140 | "description": "",

141 | "meta": {},

142 | "data_type": null,

143 | "constraints": [],

144 | "quote": null,

145 | "tags": [],

146 | "granularity": null,

147 | "type": "unknown",

148 | "column_fqn": "dev.core.my_core_table1.id",

149 | "table_fqn": "dev.core.my_core_table1",

150 | "table_relative_fqn": "core.my_core_table1",

151 | "transformations": [

152 | "2 AS id",

153 | "1 AS id",

154 | "source_data.id AS id"

155 | ],

156 | "depends_on": []

157 | },

158 | "row_data": {

159 | "name": "row_data",

160 | "description": "",

161 | "meta": {},

162 | "data_type": null,

163 | "constraints": [],

164 | "quote": null,

165 | "tags": [],

166 | "granularity": null,

167 | "type": "unknown",

168 | "column_fqn": "dev.core.my_core_table1.row_data",

169 | "table_fqn": "dev.core.my_core_table1",

170 | "table_relative_fqn": "core.my_core_table1",

171 | "transformations": [

172 | "'row1' AS row_data",

173 | "'row1' AS row_data",

174 | "source_data.row_data AS row_data"

175 | ],

176 | "depends_on": []

177 | }

178 | },

179 | "metadata": {}

180 | },

181 | "model.dbtcore.my_second_dbt_model": {

182 | "stats": {},

183 | "columns": {

184 | "pk_id": {

185 | "name": "pk_id",

186 | "description": "The primary key for this table",

187 | "meta": {},

188 | "data_type": null,

189 | "constraints": [],

190 | "quote": null,

191 | "tags": [],

192 | "granularity": null,

193 | "type": "unknown",

194 | "column_fqn": "dev.core.my_second_dbt_model.pk_id",

195 | "table_fqn": "dev.core.my_second_dbt_model",

196 | "table_relative_fqn": "core.my_second_dbt_model",

197 | "transformations": [

198 | "dev.core.my_first_dbt_model AS t1",

199 | "t1.id AS pk_id"

200 | ],

201 | "depends_on": [

202 | {

203 | "name": "id",

204 | "type": "unknown",

205 | "column_fqn": "dev.core.my_first_dbt_model.id",

206 | "table_fqn": "dev.core.my_first_dbt_model",

207 | "table_relative_fqn": "core.my_first_dbt_model",

208 | "transformations": [],

209 | "depends_on": [],

210 | "model_id": "model.dbtcore.my_first_dbt_model"

211 | }

212 | ]

213 | },

214 | "data_value1": {

215 | "name": "data_value1",

216 | "description": "",

217 | "meta": {},

218 | "data_type": null,

219 | "constraints": [],

220 | "quote": null,

221 | "tags": [],

222 | "granularity": null,

223 | "type": "unknown",

224 | "column_fqn": "dev.core.my_second_dbt_model.data_value1",

225 | "table_fqn": "dev.core.my_second_dbt_model",

226 | "table_relative_fqn": "core.my_second_dbt_model",

227 | "transformations": [

228 | "dev.core.my_first_dbt_model AS t1",

229 | "t1.data_value AS data_value1"

230 | ],

231 | "depends_on": [

232 | {

233 | "name": "data_value",

234 | "type": "unknown",

235 | "column_fqn": "dev.core.my_first_dbt_model.data_value",

236 | "table_fqn": "dev.core.my_first_dbt_model",

237 | "table_relative_fqn": "core.my_first_dbt_model",

238 | "transformations": [],

239 | "depends_on": [],

240 | "model_id": "model.dbtcore.my_first_dbt_model"

241 | }

242 | ]

243 | },

244 | "data_value2": {

245 | "name": "data_value2",

246 | "description": "",

247 | "meta": {},

248 | "data_type": null,

249 | "constraints": [],

250 | "quote": null,

251 | "tags": [],

252 | "granularity": null,

253 | "type": "unknown",

254 | "column_fqn": "dev.core.my_second_dbt_model.data_value2",

255 | "table_fqn": "dev.core.my_second_dbt_model",

256 | "table_relative_fqn": "core.my_second_dbt_model",

257 | "transformations": [

258 | "dev.core.my_core_table1 AS t2",

259 | "dev.core.my_first_dbt_model AS t1",

260 | "dev.core.my_first_dbt_model AS t1",

261 | "CONCAT(t1.column_3, '-concat-1', t1.data_value, t2.row_data) AS data_value2"

262 | ],

263 | "depends_on": [

264 | {

265 | "name": "data_value",

266 | "type": "unknown",

267 | "column_fqn": "dev.core.my_first_dbt_model.data_value",

268 | "table_fqn": "dev.core.my_first_dbt_model",

269 | "table_relative_fqn": "core.my_first_dbt_model",

270 | "transformations": [],

271 | "depends_on": [],

272 | "model_id": "model.dbtcore.my_first_dbt_model"

273 | },

274 | {

275 | "name": "column_3",

276 | "type": "unknown",

277 | "column_fqn": "dev.core.my_first_dbt_model.column_3",

278 | "table_fqn": "dev.core.my_first_dbt_model",

279 | "table_relative_fqn": "core.my_first_dbt_model",

280 | "transformations": [],

281 | "depends_on": [],

282 | "model_id": "model.dbtcore.my_first_dbt_model"

283 | },

284 | {

285 | "name": "row_data",

286 | "type": "unknown",

287 | "column_fqn": "dev.core.my_core_table1.row_data",

288 | "table_fqn": "dev.core.my_core_table1",

289 | "table_relative_fqn": "core.my_core_table1",

290 | "transformations": [],

291 | "depends_on": [],

292 | "model_id": "model.dbtcore.my_core_table1"

293 | }

294 | ]

295 | },

296 | "event_tstamp": {

297 | "name": "event_tstamp",

298 | "description": "",

299 | "meta": {},

300 | "data_type": null,

301 | "constraints": [],

302 | "quote": null,

303 | "tags": [],

304 | "granularity": null,

305 | "type": "unknown",

306 | "column_fqn": "dev.core.my_second_dbt_model.event_tstamp",

307 | "table_fqn": "dev.core.my_second_dbt_model",

308 | "table_relative_fqn": "core.my_second_dbt_model",

309 | "transformations": [

310 | "dev.core.my_executedlt_model AS t3",

311 | "t3.event_tstamp AS event_tstamp"

312 | ],

313 | "depends_on": [

314 | {

315 | "name": "event_tstamp",

316 | "type": "unknown",

317 | "column_fqn": "dev.core.my_executedlt_model.event_tstamp",

318 | "table_fqn": "dev.core.my_executedlt_model",

319 | "table_relative_fqn": "core.my_executedlt_model",

320 | "transformations": [],

321 | "depends_on": [],

322 | "model_id": "model.dbtcore.my_executedlt_model"

323 | }

324 | ]

325 | }

326 | },

327 | "metadata": {}

328 | },

329 | "model.dbtcore.my_executepython_dlt_model": {

330 | "stats": {},

331 | "columns": {},

332 | "metadata": {}

333 | },

334 | "model.dbtcore.my_executedlt_model": {

335 | "stats": {},

336 | "columns": {

337 | "event_id": {

338 | "name": "event_id",

339 | "description": "",

340 | "meta": {},

341 | "data_type": null,

342 | "constraints": [],

343 | "quote": null,

344 | "tags": [],

345 | "granularity": null,

346 | "type": "unknown",

347 | "column_fqn": "dev.core.my_executedlt_model.event_id",

348 | "table_fqn": "dev.core.my_executedlt_model",

349 | "table_relative_fqn": "core.my_executedlt_model",

350 | "transformations": [],

351 | "depends_on": []

352 | },

353 | "event_tstamp": {

354 | "name": "event_tstamp",

355 | "description": "",

356 | "meta": {},

357 | "data_type": null,

358 | "constraints": [],

359 | "quote": null,

360 | "tags": [],

361 | "granularity": null,

362 | "type": "unknown",

363 | "column_fqn": "dev.core.my_executedlt_model.event_tstamp",

364 | "table_fqn": "dev.core.my_executedlt_model",

365 | "table_relative_fqn": "core.my_executedlt_model",

366 | "transformations": [],

367 | "depends_on": []

368 | }

369 | },

370 | "metadata": {}

371 | },

372 | "model.dbtcore.my_executepython_model": {

373 | "stats": {},

374 | "columns": {

375 | "event_id": {

376 | "name": "event_id",

377 | "description": "",

378 | "meta": {},

379 | "data_type": null,

380 | "constraints": [],

381 | "quote": null,

382 | "tags": [],

383 | "granularity": null,

384 | "type": "unknown",

385 | "column_fqn": "dev.core.my_executepython_model.event_id",

386 | "table_fqn": "dev.core.my_executepython_model",

387 | "table_relative_fqn": "core.my_executepython_model",

388 | "transformations": [],

389 | "depends_on": []

390 | },

391 | "event_tstamp": {

392 | "name": "event_tstamp",

393 | "description": "",

394 | "meta": {},

395 | "data_type": null,

396 | "constraints": [],

397 | "quote": null,

398 | "tags": [],

399 | "granularity": null,

400 | "type": "unknown",

401 | "column_fqn": "dev.core.my_executepython_model.event_tstamp",

402 | "table_fqn": "dev.core.my_executepython_model",

403 | "table_relative_fqn": "core.my_executepython_model",

404 | "transformations": [],

405 | "depends_on": []

406 | }

407 | },

408 | "metadata": {}

409 | },

410 | "source.dbtfinance.core.my_executepython_model": {

411 | "stats": {},

412 | "columns": {},

413 | "metadata": {}

414 | },

415 | "source.dbtfinance.core.my_executepython_dlt_model": {

416 | "stats": {},

417 | "columns": {},

418 | "metadata": {}

419 | }

420 | },

421 | "sources": {},

422 | "errors": null

423 | }

--------------------------------------------------------------------------------

/docs/opendbtdocs/run_info.json:

--------------------------------------------------------------------------------

1 | {

2 | "metadata": {

3 | "dbt_schema_version": "https://schemas.getdbt.com/dbt/run-results/v6.json",

4 | "dbt_version": "1.9.4",

5 | "generated_at": "2025-05-15T18:01:12.604966Z",

6 | "invocation_id": "03a957b9-3b54-4612-b157-2c69961dbcf9",

7 | "env": {}

8 | },

9 | "elapsed_time": 11.362421989440918,

10 | "args": {

11 | "warn_error_options": {

12 | "include": [],

13 | "exclude": []

14 | },

15 | "show": false,

16 | "which": "build",

17 | "state_modified_compare_vars": false,

18 | "export_saved_queries": false,

19 | "include_saved_query": false,

20 | "use_colors": true,

21 | "version_check": true,

22 | "log_format_file": "debug",

23 | "require_explicit_package_overrides_for_builtin_materializations": true,

24 | "log_level": "info",

25 | "source_freshness_run_project_hooks": false,

26 | "populate_cache": true,

27 | "defer": false,

28 | "select": [],

29 | "require_batched_execution_for_custom_microbatch_strategy": false,

30 | "print": true,

31 | "state_modified_compare_more_unrendered_values": false,

32 | "strict_mode": false,

33 | "require_yaml_configuration_for_mf_time_spines": false,

34 | "send_anonymous_usage_stats": true,

35 | "log_file_max_bytes": 10485760,

36 | "exclude": [],

37 | "resource_types": [],

38 | "log_format": "default",

39 | "partial_parse_file_diff": true,

40 | "write_json": true,

41 | "invocation_command": "dbt test_dbt_docs.py::TestDbtDocs::test_run_docs_generate",

42 | "quiet": false,

43 | "target": "dev",

44 | "vars": {},

45 | "favor_state": false,

46 | "log_path": "/Users/simseki/IdeaProjects/opendbt/tests/resources/dbtcore/logs",

47 | "macro_debugging": false,

48 | "exclude_resource_types": [],

49 | "require_nested_cumulative_type_params": false,

50 | "require_resource_names_without_spaces": false,

51 | "static_parser": true,

52 | "show_resource_report": false,

53 | "printer_width": 80,

54 | "introspect": true,

55 | "cache_selected_only": false,

56 | "log_level_file": "debug",

57 | "skip_nodes_if_on_run_start_fails": false,

58 | "profiles_dir": "/Users/simseki/IdeaProjects/opendbt/tests/resources/dbtcore",

59 | "project_dir": "/Users/simseki/IdeaProjects/opendbt/tests/resources/dbtcore",

60 | "use_colors_file": true,

61 | "empty": false,

62 | "partial_parse": true,

63 | "indirect_selection": "eager"

64 | },

65 | "nodes": {

66 | "model.dbtcore.my_core_table1": {

67 | "run_status": "success",

68 | "run_completed_at": "2025-05-15 18:01:01",

69 | "run_message": "OK",

70 | "run_failures": null,

71 | "run_adapter_response": {

72 | "_message": "OK"

73 | }

74 | },

75 | "model.dbtcore.my_executedlt_model": {

76 | "run_status": "success",

77 | "run_completed_at": "2025-05-15 18:01:09",

78 | "run_message": "Executed DLT pipeline",

79 | "run_failures": null,

80 | "run_adapter_response": {

81 | "_message": "Executed DLT pipeline",

82 | "code": "import dlt\nfrom dlt.pipeline import TPipeline\n\n\n@dlt.resource(\n columns={\"event_tstamp\": {\"data_type\": \"timestamp\", \"precision\": 3}},\n primary_key=\"event_id\",\n)\ndef events():\n yield [{\"event_id\": 1, \"event_tstamp\": \"2024-07-30T10:00:00.123\"},\n {\"event_id\": 2, \"event_tstamp\": \"2025-02-30T10:00:00.321\"}]\n\n\ndef model(dbt, pipeline: TPipeline):\n \"\"\"\n\n :param dbt:\n :param pipeline: Pre-configured dlt pipeline. dlt target connection and dataset is pre-set using the model config!\n :return:\n \"\"\"\n dbt.config(materialized=\"executedlt\")\n print(\"========================================================\")\n print(f\"INFO: DLT Pipeline pipeline_name:{pipeline.pipeline_name}\")\n print(f\"INFO: DLT Pipeline dataset_name:{pipeline.dataset_name}\")\n print(f\"INFO: DLT Pipeline dataset_name:{pipeline}\")\n print(f\"INFO: DLT Pipeline staging:{pipeline.staging}\")\n print(f\"INFO: DLT Pipeline destination:{pipeline.destination}\")\n print(f\"INFO: DLT Pipeline _pipeline_storage:{pipeline._pipeline_storage}\")\n print(f\"INFO: DLT Pipeline _schema_storage:{pipeline._schema_storage}\")\n print(f\"INFO: DLT Pipeline state:{pipeline.state}\")\n print(f\"INFO: DBT this:{dbt.this}\")\n print(\"========================================================\")\n load_info = pipeline.run(events(), table_name=str(str(dbt.this).split('.')[-1]).strip('\"'))\n print(load_info)\n row_counts = pipeline.last_trace.last_normalize_info\n print(row_counts)\n print(\"========================================================\")\n return None\n\n\n# This part is user provided model code\n# you will need to copy the next section to run the code\n# COMMAND ----------\n# this part is dbt logic for get ref work, do not modify\n\ndef ref(*args, **kwargs):\n refs = {}\n key = '.'.join(args)\n version = kwargs.get(\"v\") or kwargs.get(\"version\")\n if version:\n key += f\".v{version}\"\n dbt_load_df_function = kwargs.get(\"dbt_load_df_function\")\n return dbt_load_df_function(refs[key])\n\n\ndef source(*args, dbt_load_df_function):\n sources = {}\n key = '.'.join(args)\n return dbt_load_df_function(sources[key])\n\n\nconfig_dict = {}\n\n\nclass config:\n def __init__(self, *args, **kwargs):\n pass\n\n @staticmethod\n def get(key, default=None):\n return config_dict.get(key, default)\n\nclass this:\n \"\"\"dbt.this() or dbt.this.identifier\"\"\"\n database = \"dev\"\n schema = \"core\"\n identifier = \"my_executedlt_model\"\n \n def __repr__(self):\n return '\"dev\".\"core\".\"my_executedlt_model\"'\n\n\nclass dbtObj:\n def __init__(self, load_df_function) -> None:\n self.source = lambda *args: source(*args, dbt_load_df_function=load_df_function)\n self.ref = lambda *args, **kwargs: ref(*args, **kwargs, dbt_load_df_function=load_df_function)\n self.config = config\n self.this = this()\n self.is_incremental = False\n\n# COMMAND ----------\n\n\n",

83 | "rows_affected": -1

84 | }

85 | },

86 | "model.dbtcore.my_executepython_dlt_model": {

87 | "run_status": "success",

88 | "run_completed_at": "2025-05-15 18:01:12",